
ORIGINAL RESEARCH article

Front. Virtual Real., 17 January 2022

Sec. Technologies for VR

Volume 2 - 2021 | https://doi.org/10.3389/frvir.2021.743445

This article is part of the Research Topic "Asymmetric Mixed Reality: Exploring Interaction and Collaboration with Heterogeneous Mixed Reality Technology".

Collaboration is an essential part of data analysis, allowing multiple users to combine their expertise and to debate about the interpretation of data discoveries using their contextual knowledge. The design of collaborative interfaces within the context of Immersive Analytics remains challenging, particularly due to the various user-centered characteristics of immersive technologies. In this article, we present the use case of a system that enables multiple users to synchronously explore the same data in a collaborative scenario that combines immersive and non-immersive interfaces in an asymmetric role setup. Such a setup allows for bridging the gap when applying heterogeneous display and interaction technologies, enabling each analyst to have an independent and different view of the data, while maintaining important collaborative aspects during the joint data exploration. We developed an immersive VR environment (head-mounted display, 3D gestural input) and a non-immersive desktop terminal (monitor, keyboard and mouse) centered around spatio-temporal data exploration. Supported through a real-time communication interface, synchronous collaborative features are integrated in both interfaces, facilitating the users in their ability to establish a shared context and to make spatio-temporal references. We conducted an empirical evaluation with five participant pairs (within-subject design) to investigate aspects of usability, user engagement, and collaboration during a confirmative analysis task. Synthesis of questionnaire results in combination with additional log file analysis, audio activity analysis, and observations, revealed good usability scores, high user engagement, as well as overall close and balanced collaboration of enthusiastic pairs during the task completion independent of their interface type, validating our system approach in general. 
Further supported through the self-constructed Spatio-Temporal Collaboration Questionnaire, we are able to contribute with discussion and considerations of the presented scenario and the synchronous collaborative features for the design of similar applications.

Immersive technologies have been experiencing a renaissance in recent years. Hardware technologies that support immersive Virtual Reality (VR) and Mixed Reality (MR) experiences, for example head-mounted display (HMD), handheld, and tracking devices, have become increasingly ubiquitous over the past decade. Consequently, more and more developers and researchers are now able to create experiences that aim to benefit from these novel display and interaction modalities, resulting in emerging trends as well as interesting application and research directions. Immersive Analytics (IA) is one such emerging research field. IA is concerned with the investigation of immersive display and interaction technologies in order to provide tools that support and facilitate data exploration and analytical reasoning (Dwyer et al., 2018; Skarbez et al., 2019). Within the context of data analysis, collaboration between multiple users is a major component, allowing the analysts to combine their expertise, knowledge, and experience (Isenberg et al., 2011), and together analyse and interpret the data as well as discuss findings and observations along the way, which is in itself an inherently social process (Heer and Agrawala, 2008; Billinghurst et al., 2018). A recent review of research about collaborative MR systems, spanning from 1995 to 2018, reveals an increase in the number of relevant publications from 2012 onward compared to the lower publication numbers in the years prior, confirming the increased interest in this topic in recent years (Ens et al., 2019). Nevertheless, immersive technologies are commonly rather user-centered by default (Hackathorn and Margolis, 2016; Skarbez et al., 2019), putting them in strong contrast with the desired collaborative aspects, as important visual communication cues, e.g., gestures, facial expressions, body language, and spatial references, are often no longer conventionally available. 
Especially within the context of collaborative data analysis and shared virtual environments, such nonverbal cues are important and should thus be supported accordingly, which is no trivial task (Churchill and Snowdon, 1998; Nguyen and Duval, 2014; Cruz et al., 2015). Unfortunately, there is a lack of empirical research in regard to collaboration within the context of IA (Fonnet and Prié, 2021), encouraging further investigations in this direction. Collaboration and its various aspects are considered a major topic among the current challenges of IA (Ens et al., 2021). Furthermore, collaboration within this context is not limited to scenarios where each collaborator uses the same display and interaction technologies, nor should it be. In fact, hybrid solutions that combine the use of different technologies in asymmetric scenarios where each collaborator has a distinct role are encouraged and anticipated, each providing different perspectives, insights, and considerations during the data analysis workflow (Isenberg, 2014; Wang et al., 2019). In regard to IA, naturally a closer integration with (non-immersive) analytical tools comes to mind, for instance as covered within research fields such as Information Visualization (InfoVis) and Visual Analytics (VA). After all, InfoVis, VA, and IA are envisioned to synergize and complement each other. The design of systems that allow active collaboration is complex in general due to the demand of supporting two main objectives, i.e., supporting the individual user’s aspects as well as those relevant for the collaborative group efforts (Gutwin and Greenberg, 1998). When users work together using different systems rather than the same one, and these systems may additionally be based on different technologies, this endeavour arguably becomes more complex, as design objectives need to be met for multiple systems. 
Furthermore, such asymmetric collaboration scenarios that feature different types of technologies commonly implement a kind of expert–novice relationship between its users (Ens et al., 2019), even though an interplay between equal professionals is desired (Isenberg et al., 2011; Billinghurst et al., 2018; Thomsen et al., 2019).

This article aims to address these research challenges by reporting on the design, implementation, and empirical evaluation of a collaborative data exploration system that consists of an immersive and a non-immersive interface. These interfaces allow two collaborators to explore different aspects of the same multivariate dataset, one using a VR application that is based on an HMD and 3D gestural input and the other using a desktop workstation with keyboard and mouse input, connected in real-time. While arguably each interface could be used as a stand-alone application in isolation in order to gain some insights from the data, we are motivated to bridge these interfaces and allow synchronous collaboration. Therefore, each interface provides features that allow the analyst collaborators to make visual references to each other in regard to the spatial and temporal dimensions in the data, while they are also able to verbally communicate via an audio-link in a remote setup. Within the scope of a representative data analysis task, we conducted a within-subjects user interaction study with pairs of participants in order to empirically evaluate aspects such as usability, user engagement, and collaboration using a combination of qualitative and quantitative data collection methods. This allows us to provide valuable reflections as well as design considerations for future work within the presented context. To summarize, this article advances the emerging field of Collaborative Immersive Analytics (CIA) with the following primary (PC) and secondary (SC) contributions:

• [PC.1] We describe the design and implementation of a hybrid asymmetric data exploration system that allows for synchronous analysis of a multivariate dataset. An integral component of the system is the real-time communication interface that enables spatio-temporal referencing across the interfaces.

• [PC.2] We present and discuss the results in regard to usability, user engagement, and collaboration based on an empirical evaluation with five pairs of participants (n = 10), where each pair completed a representative data analysis task twice with no time limitations; each participant got to use both interfaces (within-subject design).

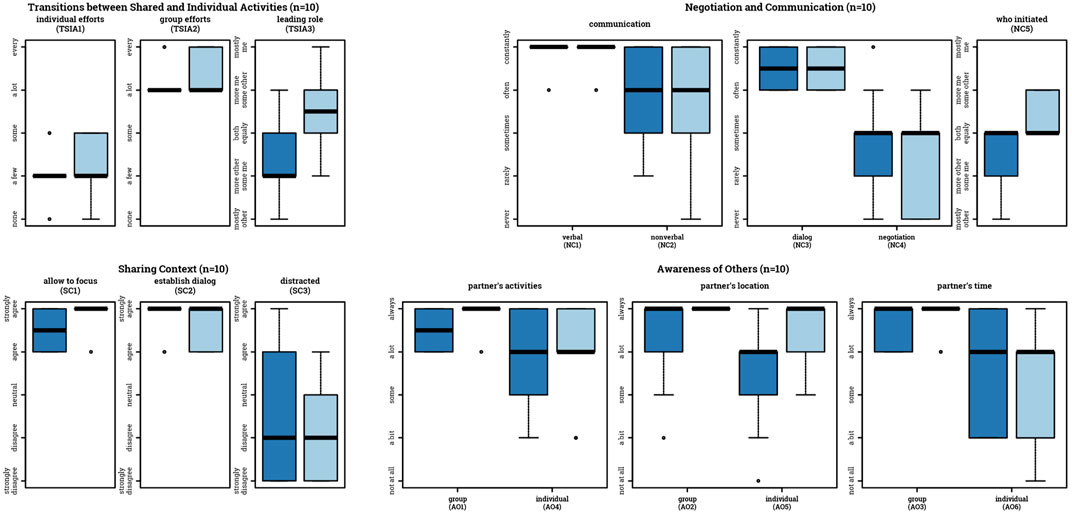

• [SC.1] To support our empirical evaluation of important collaborative aspects of the developed system, we created a self-constructed questionnaire. The Spatio-Temporal Collaboration Questionnaire aims to systematically assess four important collaboration dimensions as described by Churchill and Snowdon (1998) and Snowdon et al. (2001), in particular Transitions between Shared and Individual Activities, Negotiation and Communication, Sharing Context, and Awareness of Others. We report on the motivation and design for the questionnaire, and present the results of its application in practice.

• [SC.2] We devised a process to generate multivariate datasets consisting of timelines that are correlated according to a model, so that we could use multiple scenarios of equivalent complexity in our study. The process is flexible and easy to adapt for other studies that require similar datasets.
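
To illustrate the idea behind [SC.2], the following is a minimal sketch of how several scenarios of comparable complexity could be generated from correlated timelines. It is not the process used in the study: the linear mixing model, the function names `generate_timelines` and `pearson`, the variable names, and the chosen correlation values are all assumptions made for illustration only.

```python
import math
import random


def generate_timelines(n_steps, correlations, seed=0):
    """Return a dict with a 'base' timeline plus one timeline per entry in
    `correlations`, each mixed so that its Pearson correlation with the base
    is approximately the requested value (hypothetical linear mixing model,
    not the model used in the article)."""
    rng = random.Random(seed)  # a fixed seed makes a scenario reproducible
    base = [rng.gauss(0.0, 1.0) for _ in range(n_steps)]
    timelines = {"base": base}
    for name, rho in correlations.items():
        if not -1.0 <= rho <= 1.0:
            raise ValueError(f"correlation for {name!r} must be in [-1, 1]")
        # Weight of the independent noise so that the variance stays at 1.
        noise_weight = math.sqrt(1.0 - rho * rho)
        timelines[name] = [
            rho * b + noise_weight * rng.gauss(0.0, 1.0) for b in base
        ]
    return timelines


def pearson(xs, ys):
    """Sample Pearson correlation coefficient of two equally long series."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Two scenarios with the same correlation structure but different data,
# i.e., equivalent complexity for a within-subject design (assumed values).
model = {"follows": 0.9, "opposes": -0.8, "unrelated": 0.0}
scenario_a = generate_timelines(2000, model, seed=1)
scenario_b = generate_timelines(2000, model, seed=2)
```

Keeping the correlation model fixed and varying only the seed is one way to obtain different datasets that pose the same kind of analysis task, which is the property a within-subject design needs.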

The article is organized in the following way. It begins by describing relevant related work in Section 2, particularly in regard to CIA and asymmetric VR, providing important considerations and further motivations for our research objective. Section 3 formally defines our scenario and describes in detail all components of the developed collaborative system. The overall methodological approach for the empirical evaluation of the system is described in Section 4. The results, presented throughout Section 5, are discussed in Section 6. Finally, we conclude our work in Section 7, also providing some directions for future work.

Thematically aligned with the presented research objective, it is important to discuss relevant literature, providing valuable insights and starting points accordingly. This section first presents work related to the emerging topic of Collaborative Immersive Analytics. Thereafter, we summarize some relevant work in regard to asymmetric virtual reality experiences, in particular studies that investigated interaction aspects between immersed and non-immersed users. Finally, we reflect on the literature by stating further considerations and motivations for our research objective.

Billinghurst et al. (2018) recently defined Collaborative Immersive Analytics (CIA) as follows: “The shared use of immersive interaction and display technologies by more than one person for supporting collaborative analytical reasoning and decision making.”. CIA can be considered a multi-disciplinary research area that combines expertise from Immersive Analytics (IA) and Computer-Supported Cooperative Work (CSCW), two inherently multi-disciplinary research areas themselves (Snowdon et al., 2001; Dwyer et al., 2018; Skarbez et al., 2019). Some foundational characteristics and considerations in regard to collaborative virtual environments have already been described by Snowdon et al. back in 2001, for instance stating that these virtual spaces have the possibility to feature data representations and users at the same time. Snowdon et al. (2001) also highlight the importance for such spaces to be purposeful in order to overcome the users’ initial novelty reactions, and to eventually establish themselves as useful tools and places of interaction that can be visited more frequently. According to them, designing virtual spaces that support individual as well as group work, enabling users to collaborate and exchange information, may very well be such a meaningful purpose (Snowdon et al., 2001). Aligned with such collaborative virtual environments, Skarbez et al. (2019) recently described some interesting directions in order to investigate how collaboration can be facilitated through immersive technologies. This is particularly challenging as immersive technologies often tend to be inherently single user-centered (Skarbez et al., 2019). At the same time however, the application of interactive Virtual Reality (VR) technologies holds major potential to remove spatial boundaries and thus bring users closer together (LaValle, 2020, Chapter 10.4). 
There is a need to further explore aspects such as physical distribution (remote), temporal distribution (asynchronous), as well as collaboration using heterogeneous device types in order to evaluate potential benefits and limitations of using immersive technologies in collaborative scenarios (Skarbez et al., 2019). Billinghurst et al. (2018) expand on these aspects, further describing in detail various scenarios and use cases in regard to the application of CIA in alignment to the collaborators’ location in space (remote vs. co-located) and time (synchronous vs. asynchronous), as famously introduced by Johansen (1988). They reflect on the variety of opportunities for further research, for instance in regard to the appropriate design space and choice of immersive display and interaction technologies to support analytical tasks, the numbers of collaborators involved, and the lack of face-to-face communication in scenarios that involve HMDs (Billinghurst et al., 2018). Nevertheless, the evaluation of such complex immersive systems that involve multiple users remains challenging (Billinghurst et al., 2018; Skarbez et al., 2019; Ens et al., 2021).

Some interesting work that addresses aspects and challenges of CIA has been conducted. Collaboration features for three-dimensional virtual worlds (3DVWs) and their depiction in common CSCW taxonomies have been explored by Cruz et al. (2015). They highlight in particular the relevance of nonverbal communication cues to support collaboration in 3DVWs, even though these cues are often only implicitly included in holistic CSCW taxonomies. While CSCW concepts naturally inform the design of collaboration in virtual environments, it is equally important to not simply adopt these in isolation, but also consider the new perspectives and properties introduced by immersive virtual environments (Cruz et al., 2015). A review of collaborative Mixed Reality (MR) systems recently conducted by Ens et al. (2019) provides insights into the intersection of CSCW and MR research. Based on the categorisation of the research included in the review, namely time and space, symmetry, artificiality, focus, and scenario, it becomes apparent that most work related to MR systems that feature synchronous (time), remote (space), and asymmetric (symmetry) collaboration focuses on a remote expert scenario (Ens et al., 2019). Such scenarios commonly involve an expert user guiding a (more) novice user to one extent or the other. Asymmetric collaboration in remote synchronous setups using shared workspace scenarios, where the collaborators have rather equal roles, is much less common, with such scenarios more frequently focusing on symmetric collaboration instead (Ens et al., 2019). Besides communication and information exchange, collaboration between multiple users also features various social aspects and dimensions (Heer and Agrawala, 2008). Efforts towards the support of more natural social interactions in large virtual environments have already been investigated by Benford and Fahlén (1993) and Benford et al. (1994). 
In their papers, the authors describe the design and implementation of a spatial model to facilitate mutual awareness between multiple users in virtual environments through concepts such as aura, awareness, focus, nimbus, adapters and boundaries (Benford and Fahlén, 1993; Benford et al., 1994).

Wang et al. (2019) describe their vision of integrating immersive visualizations more closely into realistic scientific workflows. While highlighting some limitations of applying immersive technologies within such a practical day-to-day context, e.g., the still considerably high demand for calibration and maintenance, they also state that hybrid 2D/3D visualization environments that combine non-immersive and immersive visualizations and interactions may be much appreciated in the future (Wang et al., 2019). A similar vision for the complement and interplay of different interactive data exploration environments has been described by Isenberg (2014). Rather than having one visualization approach that satisfies all of an analyst’s needs, it is more likely to have multiple different ones for individual purposes that each benefit from their own advantages, ideally allowing for a seamless transition and data analysis workflow along the way (Isenberg, 2014).

Cavallo et al. (2019) explored how analysts work in a co-located collaborative hybrid reality environment within the context of explorative data analysis. Their data visualization system incorporated Augmented Reality (AR), high resolution display, as well as interactive surface projection technologies. A comparative evaluation of their hybrid reality environment with a desktop-based one, where both environments shared an overall similar design and almost equal functionalities, revealed trends towards the collaborative arrival at more insights in a shorter amount of time using the hybrid reality environment (Cavallo et al., 2019). Nevertheless, Cavallo et al. (2019) conclude by encouraging the design and development of immersive data analysis solutions that aim to complement rather than replace non-immersive ones, in line with the vision as described by Wang et al. (2019). A distributed multi-user platform that incorporates different types of immersive technologies within the context of collaborative visualization has been presented by Khadka et al. (2018). They conducted a comparative study where the participants had to collaboratively solve a data visualization task in two different conditions: either all collaborators were using the same immersive technologies, or the collaborators were using different types of immersive technologies (Khadka et al., 2018). The results indicated trends towards increased effectiveness in their collaboration, i.e., better performance and lower task duration, within the scenario where the collaborators used different types of technologies for the analysis, allowing them to explore the data from different perspectives and synchronize their insights accordingly (Khadka et al., 2018). Different design requirements for mixed-presence collaborative visualization have been derived from the literature by Kim et al. 
(2010), who also present some initial reflections on these based on an evaluation designed around synchronous remote collaboration using different interactive tabletop systems. Among others, their described design requirements include aspects such as mixed presence, role-based collaboration, group awareness, information access, voice communication, and collaboration styles (Kim et al., 2010). These are certainly also relevant outside a scenario that exclusively involves collaborations around shared interactive surfaces, providing intriguing starting points for further exploration in similar directions. Nguyen and Duval (2014) investigated different aspects of communication in collaborative virtual environments, such as audio communication, embodiment and nonverbal communication, visual metaphors, as well as text and 3D annotation. They emphasize the importance of supporting awareness and communication for successful collaborations in virtual environments, concluding that further research in regard to these aspects is required (Nguyen and Duval, 2014). Nguyen et al. (2019) present a collaborative experience that allows multiple analysts in the same VR environment (co-located or remotely) to explore multidimensional data. Different implemented interaction techniques support the collaborators with typical analytical tasks, e.g., the construction of decision trees to divide the dataset into smaller subsets for further analysis. Furthermore, they chose to represent other collaborators as simple avatars that translate their respective movements into the shared VR environment (Nguyen et al., 2019), addressing mutual user awareness similarly to Benford and Fahlén (1993).

Several practical toolkits that aim to facilitate the design and implementation of IA applications have been presented in recent years (Butcher et al., 2019; Cordeil et al., 2019; Sicat et al., 2019). Naturally, there is potential to expand such toolkits through the addition of modules that focus on collaboration, for instance as described by Casarin et al. (2018).

In the past, some insightful research has been reported that aims to explore asymmetric interactions involving at least one type of immersive VR interface. Wideström et al. (2000) conducted a study to compare two different settings, an asymmetric VR (connected Cave-type and desktop system) versus real world setup, in regard to collaboration, leadership, and performance aspects within the scope of a two-person puzzle solving task. Their results show that the participants reported their contribution to the task completion more unequally in the VR setup compared to the real world one, and that they felt a higher degree of collaboration in the real world task due to the lack of face-to-face communication in the VR setup (Wideström et al., 2000). Arguably, the integration of additional information cues to better support mutual awareness helps to overcome such an experienced lower degree of collaboration in the immersive setup (Benford et al., 1994).

A taxonomy for asymmetric immersive interfaces within collaborative educational settings has been described by Thomsen et al. (2019). Within the scope of their work, the authors follow the general concept of one user being immersed in VR, while one or multiple others are not, defining a distinct actor (VR) - assistant (non-immersed) relationship that their taxonomy is designed around (Thomsen et al., 2019). The taxonomy consists of different components (asymmetric mechanics, hardware components, game components, collaboration mechanics) in order to address varying degrees of collaboration asymmetry (low, medium, high) between actor and assistant (Thomsen et al., 2019). Peter et al. (2018) propose a set of features for a non-immersed user in a guiding role to support communication with an immersed VR user, similar to the actor-assistant relationship as described by Thomsen et al. (2019). Within their system’s setup, they envision the VR user to have a low degree of control but a high level of immersion, while it is the other way around for the VR-Guide, i.e., a high degree of control but a low level of immersion (Peter et al., 2018). The authors describe the design and implementation of a highlighting feature, comparing different variants, with the aim to focus the VR user’s attention on specific points of reference in the virtual environment based on the non-immersed user’s input (Peter et al., 2018). Similarly, Welsford-Ackroyd et al. (2020) evaluated their proposed system design that allows a non-immersed user, typically in a role as an outside spectator, to actively collaborate with a VR user using a large scale immersive display. Camera control and pointing features were provided to the spectator, the latter of which clearly facilitated the communication between the two collaborators in a task scenario where the VR user had to place objects at certain locations as indicated by the spectator (Welsford-Ackroyd et al., 2020). 
Their system shows similarities to the VR-Guide one by Peter et al. (2018) in that the non-immersed user directs the immersed one to a point-of-interest through visual highlights in the VR environment. In both cases, the VR user had arguably little to no awareness of the non-immersed user other than through the directed visual references, while the non-immersed user could somewhat “monitor” the VR user through the shared (mirrored) point-of-view at all times. This circumstance contributes to a rather unequal interplay between the users from the outset.

However, there are also some interesting examples that aim for more equal contributions in asymmetric user role setups. For instance, Sugiura et al. (2018) investigated asymmetric collaboration between a VR user and (potentially) multiple non-immersed users around an interactive tabletop system within the context of interior design. While the VR user got to perceive the living space from an in-situ, real world-like perspective, the tabletop system featured a top-down view that allowed its users to see the position and orientation of the VR user as well as providing an overview of the living space (Sugiura et al., 2018). Immersed and non-immersed users were provided with features to point to targets of interest that were visually indicated in their collaborator’s respective interface (Sugiura et al., 2018). Gugenheimer et al. (2017) describe design guidelines for co-located asymmetric VR experiences based on insights gained from studies using their developed ShareVR prototype. The prototype allowed different types of interaction between an HMD and a non-HMD user based on a combination of VR and floor projection technologies. Among others, they emphasize the importance of leveraging asymmetrical aspects, carefully considering each user’s role in order to design meaningful interactions for collaboration accordingly. Insights and experiences of co-located asymmetric interaction between an HMD and a non-HMD user are described by Lee et al. (2020). The authors designed an application where each user assumed a distinct role, designed after their respective level of immersion, assuming a spatially relevant role for the HMD user and a more temporal one for the non-HMD user (Lee et al., 2020). 
The presented prototype featured a game-like experience that tasked the two collaborators to actively work together in order to navigate successfully through a maze, and was used to evaluate presence, game experience, and different aspects of the users’ roles within the scope of multiple experiments (Lee et al., 2020). The results indicate a higher than usual level of immersion of the non-HMD user due to the more active role and involvement in the overall task setup, as well as similar levels of enjoyment and social interaction among both user roles (Lee et al., 2020).

The recent advances in immersive technologies in general, as well as reviewing existing work as described thematically through Section 2.1 and Section 2.2, provide exciting opportunities for further research in these directions. For instance, a recently published literature survey of IA research, covering the years from 1991 to 2018, revealed that out of the identified 127 system papers, i.e., papers that describe and potentially evaluate an IA system, only 15 focused on collaboration (Fonnet and Prié, 2021). Fonnet and Prié (2021) go on to put this lack of research further into perspective, arguing that collaboration is widely considered one of the major aspects for the future success of IA. Their argument is in line with the reports and statements of other IA research (Billinghurst et al., 2018; Skarbez et al., 2019; Wang et al., 2019). In fact, 17 key research challenges in regard to IA have been recently defined by 24 experts, five of which are dedicated towards the topic of Collaborative Analytics, further highlighting the importance of collaborative aspects within this context (Ens et al., 2021). While collaboration in the same immersive environment using similar technologies is certainly one interesting direction for research, there are also exciting possibilities of combining immersive and non-immersive display and interaction technologies. After all, IA aims to provide novel, intuitive, and purposeful 3D data analysis tools that complement and synergize with InfoVis and VA workflows rather than replacing them (Isenberg, 2014; Cavallo et al., 2019; Wang et al., 2019).

In addition to the insights and directions as presented in the current state-of-the-art, we are also motivated to further explore the matter of bridging interactive InfoVis and IA based on some of our initial investigations (Reski et al., 2020b). More specifically, we investigated the mixture of applying immersive and non-immersive interfaces within the scope of a real world case study in the context of the digital humanities, allowing pairs of language students to analyse language variability on social networks (Reski et al., 2020b). Based on a sociolinguistic context and an explorative data analysis scenario, i.e., undirected search without hypotheses (Aigner et al., 2011, Chapter 1.1), the immersed student analysed the social network data from a geospatial perspective, while the non-immersed student focused their efforts on aspects of textual analysis (Reski et al., 2020b). Both interfaces provided functionalities that allowed the students to send discrete signals to their peer, i.e., when they discovered something noteworthy in the data that they wanted to share, they could make a visual data annotation (Reski et al., 2020b). Based on the results of the user study, we were able to validate the usability of the presented interaction and collaboration between a pair of users where one was inside VR, while the other remained outside, each for their own dedicated data analysis purpose (Reski et al., 2020b). 
Based on these prior insights and experiences as well as the various described literature throughout Section 2.1 and Section 2.2, our overall stance in regard to CIA has not changed and involves 1) envisioning a synergy between immersive and non-immersive analytics applications, 2) endorsing the mentality that different visualization and interaction approaches can satisfy different data exploration and analysis needs, and 3) encouraging collaboration between multiple users to support joint analytical reasoning and data understanding, independent of their role and background, i.e., experts and novices are considered similarly (Reski et al., 2020b). For this purpose, we aim to investigate collaborative aspects in a scenario where two analysts explore a multivariate dataset at the same time from different perspectives, immersed and non-immersed, each assuming a distinct role in order to contribute to the joint data exploration activity. While various synchronous asymmetric research assumes the non-immersed user commonly in a more “guiding” or “assisting” role (Peter et al., 2018; Thomsen et al., 2019; Welsford-Ackroyd et al., 2020; Ens et al., 2021), our objective is to provide a use case where the involved analysts may contribute more equally, each based on their application and viewpoint. Furthermore, as IA technologies become more accessible in the future, it is of value to the community to investigate the integration with existing tools and practices that are common in the InfoVis and VA community, as for instance emphasized by Wang et al. (2019) and Cavallo et al. (2019). 
A fundamental aspect within this context is providing features that support and facilitate the collaborative workflow: while both immersive and non-immersive applications have to serve their own purpose and modality, it is important to consider anticipated means of communication and coordination between the collaborators in order to provide meaningful interface extensions that assist them with these endeavours. The design for collaborative information cues is particularly important within the context of immersive technologies, as they are often user-centered in nature, i.e., display and interaction technologies are by default rather tailored to be experienced by a single user (Skarbez et al., 2019). As such, they introduce more remote-like characteristics in regard to potential collaboration, even in co-located scenarios, and important visual information cues (gestures, facial expressions) are not as easily accessible, if at all. Consequently, nonverbal communication features become particularly important in such a setup (Cruz et al., 2015).

In order to move further in these directions, the objective of our investigation is to explore a representative use case that integrates immersive VR (HMD, 3D gestural input) technologies with non-immersive desktop ones within the context of CIA. Using our designed and implemented system, allowing pairs of participants to explore a spatio-temporal dataset, we aim to conduct an exploratory user interaction study in order to investigate important collaboration dimensions as outlined by Churchill and Snowdon (1998) and Snowdon et al. (2001), in particular Transitions between Shared and Individual Activities, Negotiation and Communication, Sharing Context, and Awareness of Others. Based on our methodology, we also intend to make assessments in regard to usability, user engagement, as well as additional collaborative aspects such as the pairs’ overall verbal communication activity and data exploration strategy, aiming to provide further insights about their collaboration within the presented context. 
In contrast to our earlier investigation (Reski et al., 2020b), the empirical evaluation presented in this article differs in some key aspects: 1) Both the immersive and the non-immersive interface focus on the analysis of spatio-temporal data under utilization of appropriate visualization approaches (Lundblad et al., 2010; Ward et al., 2015; Reski et al., 2020a); 2) The collaborative features to allow referencing across the interfaces are integrated more seamlessly through continuous signaling without the need to take dedicated actions to send discrete annotations; 3) Pairs of users use the developed system with the aim to complete a confirmative analysis task, i.e., a directed search to extract insights from the data (Aigner et al., 2011, Chapter 1.1); 4) In addition to usability (Brooke, 2013), we also examine aspects of user engagement (O’Brien et al., 2018) and collaboration in virtual environments (Churchill and Snowdon, 1998; Snowdon et al., 2001) including a quantitative audio activity analysis of the user pairs.

Based on our considerations and motivations as described in Section 2.3, we are particularly interested in scenarios that involve a hybrid asymmetric setup, enabling multiple users to explore and analyse a spatio-temporal dataset in synchronous collaboration. These key components are defined as follows:

• hybrid: The use of heterogeneous device types, i.e., a mixture of immersive 3D and non-immersive 2D display and interaction technologies.

• asymmetric: Multiple users assume different roles, naturally influenced by the interface they operate.

• explore and analyse: Explorative and confirmative data analysis according to the definitions by Aigner et al. (2011, Chapter 1.1), i.e., the exploration of a dataset to gain first insights or to confirm/reject hypotheses about the data.

• spatio-temporal dataset: A multivariate dataset, where each data item features data variables in regard to spatial (e.g., geolocation) and temporal (i.e., time) dimensions.

• synchronous: The data exploration and analysis activity is conducted by all users at the same time.

• collaboration: Multiple users work together, supported through means of communication and coordination.

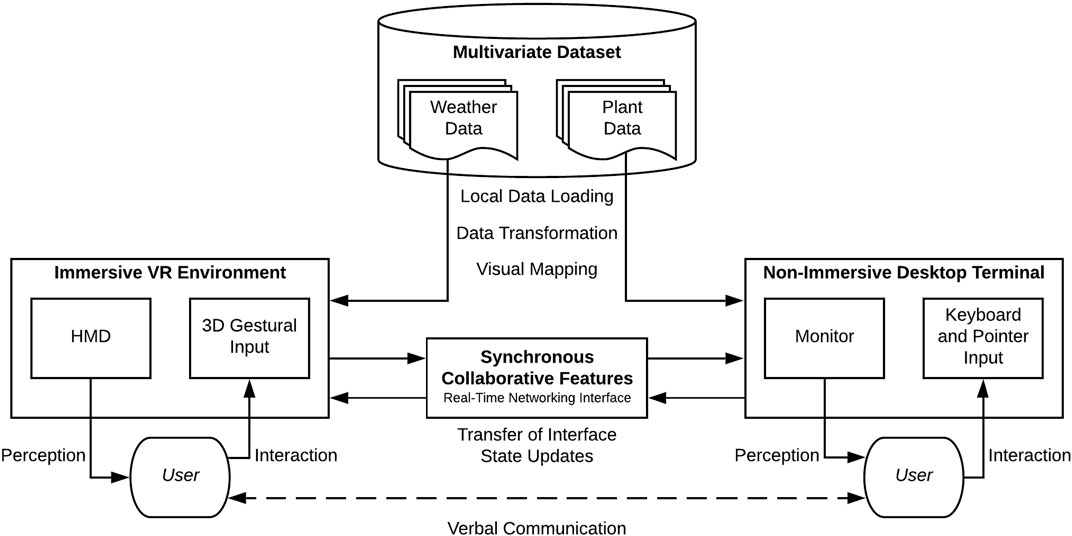

The objective with such a scenario is to satisfy a desired analytical workflow that incorporates different display types and interaction modalities (Wang et al., 2019; Cavallo et al., 2019; Isenberg, 2014), where collaborators are potentially coming from different domains, each providing their own perspectives and data insights, anticipating a rather equal interaction instead of a remote expert scenario (Ens et al., 2019). For this purpose, we set out to develop a system consisting of various components, as illustrated in Figure 1. An immersive VR environment allows for the interaction with spatio-temporal data using a 3D Radar Chart approach, as introduced and validated in our previous work (Reski et al., 2020a). Using a non-immersive desktop terminal, an analyst is able to explore different data variables of the same multivariate dataset in a representative interactive InfoVis interface. A real-time networking interface between the immersive VR environment and the non-immersive desktop terminal transfers state updates from each interface to the respective other one and is responsible for providing various synchronous collaborative features. Such state updates include features, implemented in both the immersive and non-immersive interface, that allow each analyst to send and retrieve spatio-temporal references in their interface, aiming to facilitate their overall collaboration. Furthermore, we envision that both analysts are able to verbally communicate, i.e., talk to each other, either locally in close physical proximity or remotely via an established audio-link. In anticipation of the designed task as part of our empirical evaluation (later described in Section 4.1.2), we created a multivariate dataset that features spatio-temporal plant and climate data variables, partially inspired by existing use cases and open data sources. 
Within the scope of our investigation, the immersed analyst assumes the role of the plant expert, while the non-immersed one assumes the role of the climate specialist. It is noteworthy that both interfaces are data-agnostic, thus the developed system is able to support similar other use cases in the future with only minimal programming and data processing efforts.

FIGURE 1. Overview of the system architecture, illustrating all major components: (1) Multivariate Dataset, (2) Immersive VR Environment, (3) Non-Immersive Desktop Terminal, and (4) Synchronous Collaborative Features.

The remainder of this section describes each of the system’s components in more detail, including some insights in the implementation. A video demonstrating the developed system in action is available online.1

We considered a variety of open data sources for real-world inspiration and potential use.2 With our anticipated empirical evaluation in mind, we wanted to present the collaborators with a task that would allow them to specifically investigate and search for targeted insights, much in line with the task concept of a confirmative analysis as opposed to a more open-ended explorative analysis task as featured in our prior study (Reski et al., 2020b). A confirmative analysis task allows for a more direct task performance comparison among the different study sessions. Therefore, we needed a more “benchmark-like” dataset that would allow us to define a representative real-world data exploration task that could be handed over to the participants in the user interaction study, and used to assess in a comparative way their ability to complete a specific task using the developed interfaces. Unfortunately, to the best of our assessment, none of the existing real world data would have allowed us to easily achieve this.

Consequently, we created our own custom, representative multivariate dataset featuring artificially generated data. The data context is kept purposefully simple to understand, allowing us to be as inclusive as possible in respect to the recruitment of participants, as no specialist knowledge is required. With the focus on spatio-temporal data, we generated time-series of plant and climate data variables for 39 countries (locations) in Europe. Each country features five plant data variables (different types of fruits or vegetables depending on the task scenario) as well as two climate data variables (sunlight and humidity). Finally, each of these seven data variables per location features 150 time events. Thus, there is a total of 40,950 data values in the generated dataset.3 The special property of this artificially generated dataset is that each of the five plant variables features either a positive or negative correlation to each of the two climate variables. While the values for all of the variables are diverse across the different locations, the correlations are consistent with a defined model, i.e., the correlations between the two climate and the five plant variables are the same independent of the location. Using this dataset, we are able to task the collaborators with the objective to analyse the data and identify these correlations by using their respective interfaces and implemented collaborative features (described in more detail in Section 4.1.2). Thus, the dataset of correlated timelines is appropriate for the design of confirmative analysis tasks (Aigner et al., 2011, Chapter 1.1).

For each location, the humidity and sunlight climate timelines were generated using an R function.4 Each of the five plant5 timelines was generated by adding the humidity and sunlight timelines, each multiplied by the weight dictated by the model (either one or minus one, to indicate a positive or negative correlation respectively). These timeline data were further validated to confirm their compliance with the model used.6 A repository containing the datasets and the R code used to generate them (with examples of usage) is available online.7
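
The generation procedure described above can be illustrated with a minimal sketch. It is written in Python rather than R and is not the authors' code: the Gaussian noise generator, the model weights, the plant names, and the sign-based compliance check are all assumptions made for illustration only.

```python
import random

N_EVENTS = 150  # time events per data variable, as stated in the text


def random_timeline(rng, n=N_EVENTS):
    """Assumed stand-in for the R generator: independent Gaussian noise."""
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def plant_timeline(humidity, sunlight, w_humidity, w_sunlight):
    """Weighted sum of the two climate timelines; each weight is +1 or -1."""
    return [w_humidity * h + w_sunlight * s for h, s in zip(humidity, sunlight)]


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def complies(plant, humidity, sunlight, w_humidity, w_sunlight):
    """Assumed validation: the sign of each correlation matches its weight."""
    return (pearson(plant, humidity) * w_humidity > 0
            and pearson(plant, sunlight) * w_sunlight > 0)


# Hypothetical model: one (humidity, sunlight) weight pair per plant variable.
MODEL = {"plant_a": (1, 1), "plant_b": (1, -1), "plant_c": (-1, 1),
         "plant_d": (-1, -1), "plant_e": (1, 1)}

rng = random.Random(42)  # fixed seed so the sketch is reproducible
humidity, sunlight = random_timeline(rng), random_timeline(rng)
plants = {name: plant_timeline(humidity, sunlight, wh, ws)
          for name, (wh, ws) in MODEL.items()}
```

Repeating this for 39 locations with seven variables of 150 time events each yields the 39 × 7 × 150 = 40,950 data values mentioned above.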

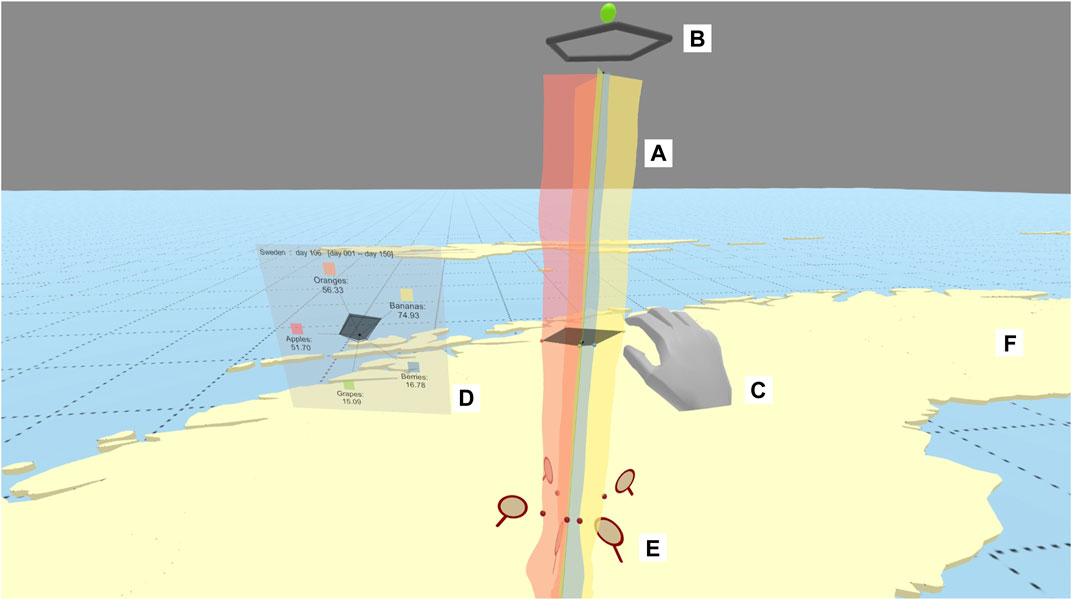

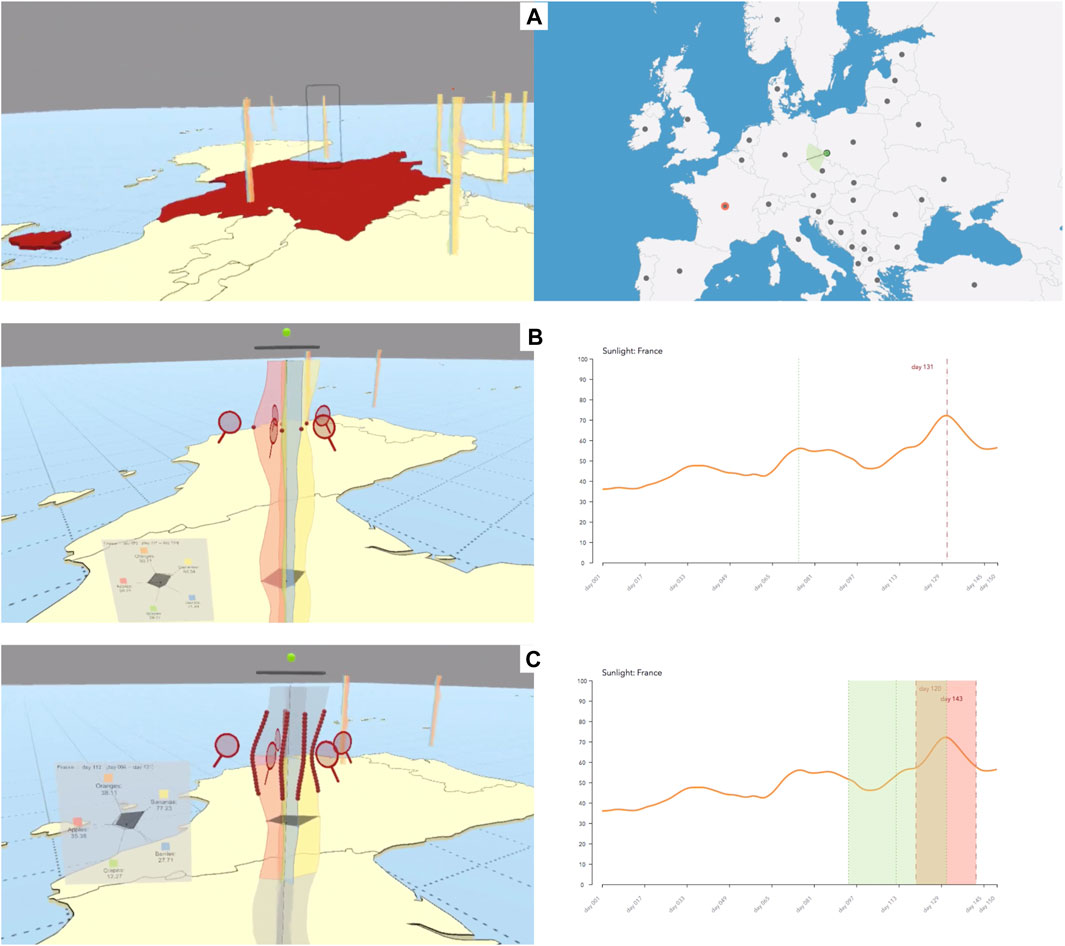

The immersive VR environment features an extended version of some of our earlier work (Reski et al., 2020a), utilizing a 3D Radar Chart approach for the interaction with time-oriented data. Adopted from the original two-dimensional approach, also known as Kiviat figures or star plots, presenting values for different data variables in a radial arrangement (Kolence, 1973; Kolence and Kiviat, 1973), we use the third dimension to visualize the time-series for each of the data variables. The result is a three-dimensional visualization of multiple data variable axes, radially arranged around a central time axis, with the individual data variable axes extending accordingly. Using immersive display technologies, i.e., an HMD, the user can observe and get impressions about all the different time-series data by naturally moving around and inspecting the 3D visualization, recent time events located at the top, while older ones are placed towards the bottom. Additionally, by utilizing 3D gestural input, certain interactive features are provided, allowing the user to interact with the visualization in a natural way by using their hands. The concept and design as well as reflections on the overall validated approach have been reported, indicating that the VR interface can be used for explorative data analysis (Reski et al., 2020a). Compared to the earlier version (Reski et al., 2020a), we made several changes and extensions to the VR interface, for instance 1) removing all graphical hand menus with the aim to focus on more natural hand selection and manipulation techniques instead of system control ones (LaViola et al., 2017, Chapters 7 and 9), and 2) implementing additional features, e.g., to support filter and reconfiguration tasks. Figure 2 and Figure 3 provide some impressions of the functionalities of the developed VR environment as described in detail throughout the remainder of this section.

FIGURE 2. Overview of the developed immersive VR environment (see Section 3.2). Annotations: (A) Data Variable Axes with (vertical) Time Axis representing the 3D Radar Chart; (B) Activation Toggle and Rotation Handle; (C) 3D Gestural Input and Time Slice; (D) Information Window; (E) Temporal Reference (time event) created by the non-immersed user (feature described in Section 3.4); (F) Country as 3D extruded polygon on the floor. A link to a video demonstration is available in Footnote 1.

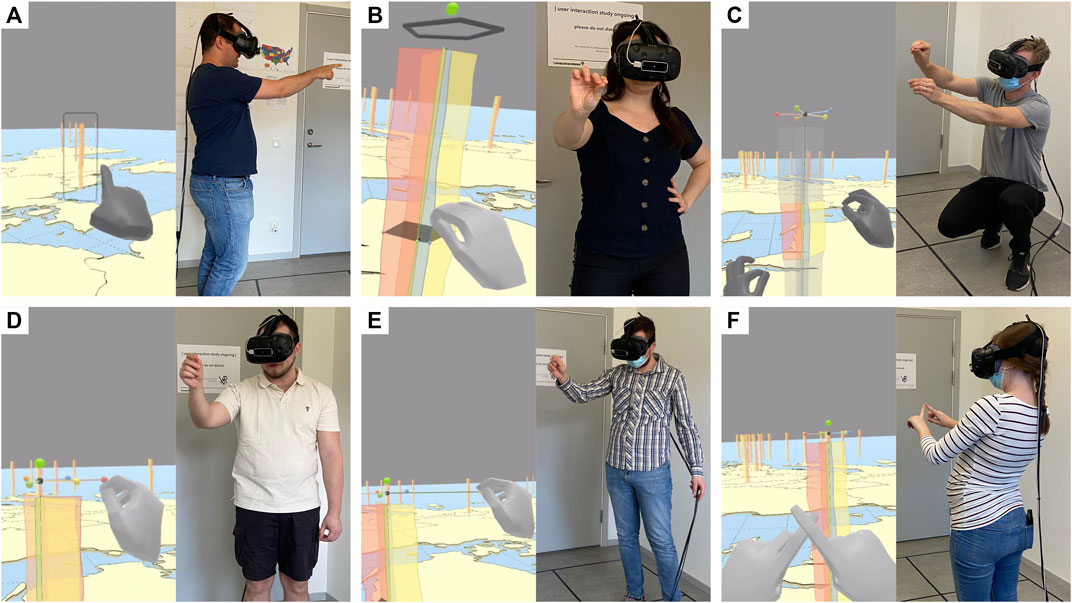

FIGURE 3. Different functionalities of the developed immersive VR environment (see Section 3.2), as operated through various participants during the empirical evaluation. Functionalities: (A) Target-based travel; (B) Time event selection via Time Slice grab; (C) Time range selection via “live sculpting”; (D) Data variable axes reconfiguration (sort); (E) Data variable axes filter; (F) State reset. A link to a video demonstration is available in Footnote 1.

The immersive VR environment features on the floor a visual representation of the European countries as 3D extruded polygons (see Figure 2F). Based on the generated data for 39 locations (see Section 3.1), 39 individual 3D Radar Charts are placed in the VR environment, each at the center of its associated country. Each 3D Radar Chart features five color coded, semitransparent data variable axes, one for each plant type, representing the data in that location (see Figure 2A). Thus, a total of 29,250 data values are displayed in the immersive VR environment.8 Based on a room-scale two-by-two meter area, the user, wearing an HMD with a 3D gestural input device attached, can walk around to investigate and interact with those charts in close proximity. The 3D gestural input (see Figures 2C, 3) allows for the implementation of various (hand) interaction techniques in the VR environment, in our case a mixture of hand-based grasping, indirect widget, and bimanual (gestural command) metaphors (LaViola et al., 2017, Chapters 7, 8, and 9).

To move closer to charts in the virtual environment that are placed “beyond” the physical real world limitations, a target-based travel mechanism has been implemented using a mixture of gaze-based input and gestural command: By simply looking around, the user can center their gaze on one of the several 3D Radar Charts in the VR environment, which will prompt a visual outline for user feedback, at which point the user can then make a hand posture to point towards the chart (index finger extended, all others not extended) in an “I want to go there”-like motion (see Figure 3A), initiating a translation of the user’s virtual position to the center of the chart. In addition to this spatial exploration, which serves to make general observations, get an overview, and potentially identify interesting visual patterns, the user can also engage in more active contextual interaction with an individual 3D Radar Chart, displaying details-on-demand in regard to the temporal data variables (Shneiderman, 1996). While the user is engaged in such a details-on-demand investigation with an individual 3D Radar Chart, the gaze-and-point target-based travel mechanics are inactive. In order to be able to move again to “far away” charts, the user is required to first disengage and deactivate the details-on-demand state of a 3D Radar Chart.
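
The gaze-and-point travel logic can be sketched roughly as follows. This is an illustrative Python approximation, not the Unity implementation: the 2D floor-plane simplification, the 5-degree gaze cone, and all names (`Chart`, `gazed_chart`, `try_travel`) are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Chart:
    name: str
    x: float
    z: float
    details_active: bool = False  # True while in a details-on-demand state


def gazed_chart(user_pos, gaze_dir, charts, max_angle_deg=5.0):
    """Return the chart best aligned with the gaze within a cone, else None."""
    best, best_angle = None, max_angle_deg
    for chart in charts:
        dx, dz = chart.x - user_pos[0], chart.z - user_pos[1]
        dist = math.hypot(dx, dz)
        norm = math.hypot(gaze_dir[0], gaze_dir[1])
        if dist == 0 or norm == 0:
            continue
        cos_a = (dx * gaze_dir[0] + dz * gaze_dir[1]) / (dist * norm)
        angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))
        if angle <= best_angle:
            best, best_angle = chart, angle
    return best


def try_travel(user_pos, gaze_dir, pointing, charts):
    """Return the new user position, or the old one if travel is not allowed."""
    if any(c.details_active for c in charts):
        return user_pos  # travel is inactive during details-on-demand
    target = gazed_chart(user_pos, gaze_dir, charts)
    if target is None or not pointing:
        return user_pos  # needs both a gazed chart and the pointing posture
    return (target.x, target.z)
```

The guard at the top of `try_travel` mirrors the requirement that the user first deactivates a chart before travelling to another one.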

Each chart features above it a minimalistic sphere as an Activation Toggle that the user can simply touch to iterate through three states: Activate/Rotate, Reconfigure/Filter, and Deactivate (see Figure 2B). Once activated, the chart will display its Time Slice, a 2D mesh representing the classical radar chart pattern, integrated to connect the values of the data variable axes in order to represent the currently selected time event (see Figure 2C). Using 3D gestural input, the user can grab the Time Slice to move it up and down in order to make selections forward and backward in time, automatically adjusting the Time Slice’s mesh to represent the values for the updated time event (see Figure 3B). The integrated Time Slice aims to facilitate data interpretation and visual pattern detection when investigating the time-series data in more detail (Reski et al., 2020a). An Information Window is anchored and displayed next to the Time Slice (see Figure 2D), providing additional numerical information about the selected time event, by presenting a traditional radar chart visualization with annotated numerical values, names, and color coding for the selected time event as well as an outlined radar that represents the averages for each data variable across the displayed time-series. By using a two-hand pinch technique (index finger and thumb touching in each hand respectively) close to the chart, the user can select a time range from the time-series to focus on (see Figure 3C). The pinching allows for a “live sculpting” of the desired time range, which is visually highlighted by removing the color from the data variable segments that are not included in the selection. By keeping the colorless semi-transparent segments outside the time range selection visible, the user is still able to perceive a preview of the time-series, maintaining information accordingly, which is another change compared to our initial version (Reski et al., 2020a). 
It is noteworthy that the outlined radar chart as part of the Information Window, representing the displayed averages across all data variables, updates to the applied time range selection. The visualization also features a Rotation Handle, allowing for convenient rotation of the 3D Radar Chart in place (see Figure 2B). The Reconfigure/Filter Handle features color coded spheres that are placed above each of the data variable axes and connected to the time axis origin. By grabbing the individual spheres, the user can manipulate the angular position of the linked data variable axis and thus reconfigure (sort) the radial arrangement (see Figure 3D). Additionally, the user can also grab and pull each individual sphere away far enough from the time axis origin until its visual connection “snaps”, effectively removing the linked data variable axis, i.e., filter out undesired data (see Figure 3E). Finally, by making a two-hand index finger cross posture, the user is able to reset the state of the entire 3D Radar Chart, displaying all available data variable axes as well as automatically selecting the entire available time-series range (see Figure 3F).
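
As a rough illustration of the chart interactions described above, the following Python sketch models the Activation Toggle as a three-state cycle and maps hand heights to time events. The state names follow the text; the linear height-to-index mapping, the assumption that each pinching hand marks one end of the range, and all function names are hypothetical.

```python
from enum import Enum


class ChartState(Enum):
    DEACTIVATED = 0
    ACTIVATE_ROTATE = 1
    RECONFIGURE_FILTER = 2


_ORDER = [ChartState.DEACTIVATED, ChartState.ACTIVATE_ROTATE,
          ChartState.RECONFIGURE_FILTER]


def next_state(state):
    """Advance to the next state on each touch of the Activation Toggle."""
    return _ORDER[(_ORDER.index(state) + 1) % len(_ORDER)]


def time_index_from_height(hand_y, axis_bottom_y, axis_top_y, n_events):
    """Map a height on the time axis to an index (0 = oldest, at the bottom)."""
    t = (hand_y - axis_bottom_y) / (axis_top_y - axis_bottom_y)
    t = max(0.0, min(1.0, t))  # clamp to the extent of the time axis
    return round(t * (n_events - 1))


def range_from_pinches(left_y, right_y, axis_bottom_y, axis_top_y, n_events):
    """Assumed 'live sculpting': each pinching hand marks one end of the range."""
    a = time_index_from_height(left_y, axis_bottom_y, axis_top_y, n_events)
    b = time_index_from_height(right_y, axis_bottom_y, axis_top_y, n_events)
    return (min(a, b), max(a, b))
```

Because recent time events are located at the top of the chart, moving the Time Slice upwards increases the index in this sketch.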

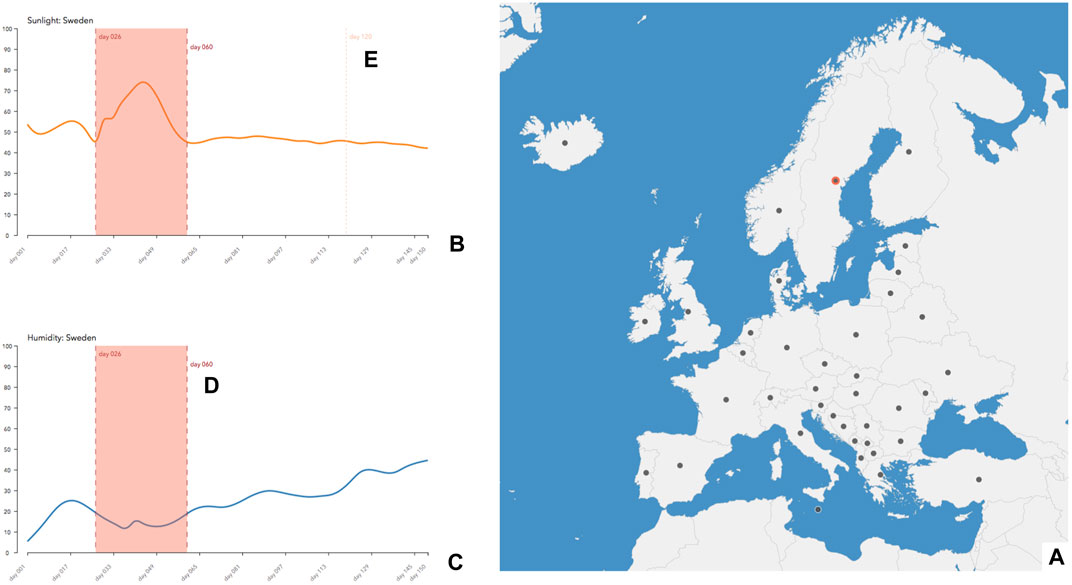

The non-immersive desktop terminal is designed as an interactive InfoVis, enabling its user to explore the climate data variables, i.e., sunlight and humidity, for each of the country locations based on the generated dataset (see Section 3.1). It is operated through a normal desktop monitor using keyboard and pointer (mouse) input. At this stage, the desktop terminal is kept purposefully minimalistic but representative given the data context, using typical views and visualization techniques for geospatial and time-oriented data, for instance as described by Ward et al. (2015, Chapters 6 and 7) or as illustrated by Lundblad et al. (2010), who use a similar setup in their application. Our intention with this approach is to focus on the integration of those interactive views that are relevant within the data context and that will contain collaborative features (see Section 3.4). Figure 4 provides an overview of the developed desktop terminal.

FIGURE 4. Overview of the developed non-immersive desktop terminal (see Section 3.3; without collaborative information cues from VR interface). Annotations: (A) Map View, with Sweden selected; (B) Climate View - Sunlight; (C) Climate View - Humidity; (D) Time Range Selection, synchronized across both Climate Views; (E) Preview Line (through pointer hover). A link to a video demonstration is available in Footnote 1.

The right part of the interface features a Map View, displaying the outlines of the individual countries across Europe (see Figure 4A). An interactive node is placed at the center of each country, allowing the user to left-click and select the corresponding location accordingly. It is noteworthy that each node is placed at the same position as the corresponding 3D Radar Chart in the immersive VR environment (see Section 3.2). Once a location has been selected, indicated through a colored node outline in the Map View, it updates the Climate View on the left part of the interface, composed of two line graphs, each representing one of the climate data variables (sunlight and humidity) for that country (see Figures 4B,C). Each line graph’s horizontal axis encodes time, while the vertical one encodes the data value. By hovering over a line graph, a vertical dashed Preview Line provides some additional visual feedback in regard to the hovered time event (see Figure 4E). The user can select the hovered time event via left-click. With a single time event selected, the user can also select a time range through a combination of holding the COMMAND-key and left-click, effectively spanning a continuous interval along the time-series data from the first time event selection to the new one, indicated through a visual overlay (see Figure 4D). Time event and range selections can be updated by simply making new time selections in the interface, replacing the prior ones accordingly. Furthermore, time event and range selections are synchronized across the two line graphs, i.e., making a selection in one line graph will automatically display the same selection in the respective other one.
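
The selection behaviour of the Climate View could be modelled along the following lines. This is a hedged Python sketch, not the JavaScript/D3.js implementation: the class name and the decision to treat a COMMAND-click without a prior selection as a plain click are assumptions.

```python
class TimeSelection:
    """Single selection model shared by both line graphs of the Climate View."""

    def __init__(self):
        self.event = None  # index of the selected time event, if any
        self.range = None  # inclusive (start, end) indices, if any

    def click(self, index, command_held=False):
        """Handle a left-click on the time event at position `index`."""
        if command_held and self.event is not None:
            # Span a continuous interval from the first selection to the new one.
            self.range = (min(self.event, index), max(self.event, index))
        else:
            # A plain click replaces any prior event or range selection.
            self.event = index
            self.range = None


selection = TimeSelection()
selection.click(40)                      # select a single time event
selection.click(10, command_held=True)   # extend to the range (10, 40)
```

Rendering both line graphs from the same `TimeSelection` instance is one simple way to keep them synchronized.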

As described throughout Section 2, providing system features to support collaboration within synchronous hybrid asymmetric data analysis is not a trivial task and requires careful design considerations. In order to investigate the various collaboration dimensions as illustrated by Snowdon et al. (2001) within the scope of our investigation (see Section 2.3 and Section 4.2.3), we partially draw from the insights gained in a previous investigation (Reski et al., 2020b). In particular, we intend to facilitate the collaborators’ verbal communication, easing the way they together discuss, interpret, and make meaning of the data, allowing for an overall natural joint data exploration independent of the applied display and interaction technologies. For that purpose, we designed and implemented a set of synchronous collaborative features across the immersive VR environment and the non-immersive desktop terminal with the following objectives in mind:

• Support of the collaborator’s mutual understanding during their joint data exploration, i.e., facilitate Common Ground and Awareness (Heer and Agrawala, 2008).

• Support for sending and retrieving spatio-temporal references in each of the collaborators’ respective interfaces during their joint data exploration, i.e., facilitate Reference and Deixis (Heer and Agrawala, 2008) and nonverbal communication cues in general (Churchill and Snowdon, 1998).

• Integration of any collaborative features in a seamless and ubiquitous manner, aiming to add collaborative information cues to the respective interfaces without unnecessarily increasing the complexity of their operability.

The following collaborative information cues from the immersive VR environment are displayed in the non-immersive desktop terminal. The Map View features an added node that represents in real-time the position and field-of-view, i.e., orientation, of the VR user, allowing the desktop user to have an understanding of the VR user’s location in space (see Figure 5A, right). The location node representing the 3D Radar Chart that the VR user is potentially actively interacting with (details-on-demand) is outlined accordingly in the Map View as well, indicating the VR user’s current engagement with it. If both collaborators are interacting with the same location, the Climate View features a vertical dashed line representing the VR user’s current time selection, i.e., the position of the Time Slice (see Figure 5B, right). Similarly, if the VR user applies a time range selection, an overlay in both of the line graphs is visualized in the Climate View accordingly (see Figure 5C, right). All interface elements representing information cues of the VR user are color coded differently to easily discern them from those of the desktop user.

FIGURE 5. Overview of the synchronous collaborative features as integrated across the immersive (left) and the non-immersive (right, excerpt) interface (see Section 3.4). The screenshots of both interfaces were taken at the same time. Annotations: (A) Spatial referencing through sharing the immersed user’s position and orientation in the Map View, with France selected by the non-immersed user and highlighted accordingly in VR; (B) Temporal referencing (time event) across both interfaces; (C) Temporal referencing (time range) across both interfaces. A link to a video demonstration is available in Footnote 1.

The other way around, the following collaborative information cues from the non-immersive desktop terminal are displayed in the immersive VR environment. Location selections made in the Map View will temporarily highlight the corresponding country, extruded in 3D on the floor in the VR environment, in a different color, assuming the VR user is not already actively interacting with the 3D Radar Chart at that location (see Figure 5A, left). Time event and time range selections made in any of the two line graphs of the Climate View will be represented as virtual annotations in the corresponding 3D Radar Chart (see Figures 5B,C, left). More specifically, the selected data values across all data variables in 3D will be highlighted, aiming to catch the VR user’s attention as well as, in case of a time range selection, indirectly providing information about the amount of selected time events (“resolution”). Furthermore, a virtual symbol in the form of a magnifying glass, aligned in space with the respective time selection, provides an additional cue to catch the VR user’s attention in the immersive VR environment, figuratively indicating that the desktop user is currently “investigating in this time context”.
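
The conditions under which the cues appear in each interface can be summarized in a small sketch. The dictionary keys and function names below are hypothetical and only illustrate the rules stated in the text; they do not reflect the actual data structures of the system.

```python
def desktop_cues(vr_state, desktop_location):
    """Cues about the VR user that the desktop terminal would display."""
    active = vr_state["active_location"]
    same_location = active is not None and active == desktop_location
    return {
        # Always shown in the Map View.
        "vr_position": vr_state["position"],
        "vr_orientation": vr_state["orientation"],
        "outlined_location": active,
        # Only shown in the Climate View when both look at the same location.
        "time_event_line": vr_state["time_event"] if same_location else None,
        "time_range_overlay": vr_state["time_range"] if same_location else None,
    }


def vr_cues(desktop_state, vr_active_location):
    """Cues about the desktop user that the VR environment would display."""
    location = desktop_state["location"]
    return {
        # The country is highlighted unless the VR user already works there.
        "highlight_country": location if location != vr_active_location else None,
        # Time selections become annotations in the corresponding chart.
        "annotated_chart": location,
        "time_event": desktop_state["time_event"],
        "time_range": desktop_state["time_range"],
    }
```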

The multivariate datasets (see Section 3.1) were created using R. The generated CSV files are loaded and parsed locally by both the immersive and non-immersive interface.

The immersive VR environment (see Section 3.2) utilizes a commercially available HTC Vive HMD (1080x1200 pixel resolution per eye, 90 Hz refresh rate), with a Leap Motion controller for the 3D gestural input attached to it and running the Ultraleap Hand Tracking V4 (Orion) software. The room-scale VR setup is calibrated as a two-by-two meter area for the HMD wearer to freely move in. The VR interface is developed using Unity 2019.3 under utilization of the additional packages SteamVR Plugin for Unity 1.2.3 and Leap Motion Core Assets 4.5.1. The initial version of the 3D Radar Chart implementation is available online,9 serving as the foundation for the applied changes and extensions as described throughout Section 3.2. A custom implementation to create extruded polygons10 was used in order to visualize the European countries on the floor as provided through the R package rworldmap.11 A logging system (see Section 4.2.1) has been integrated into the Unity application as well.12

The non-immersive desktop terminal (see Section 3.3) is running in fullscreen on a 27-inch display with a resolution of 2560x1440 pixels, operated through a standard keyboard and mouse. The interface is implemented using web technologies, i.e., HTML5, CSS, and JavaScript, as well as the D3.js (5.0.0) and TopoJSON (3.0.2) libraries.

The synchronous collaborative features (see Section 3.4) are realized through the implementation of a real-time communication interface based on the WebSocket Secure protocol. The server is implemented using Node.js (v.4.2.6), with respective WebSocket endpoint implementations in the Unity and JavaScript client applications accordingly.13
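
To illustrate what a state update exchanged over such a real-time interface might look like, the following Python sketch encodes and decodes JSON messages. The message schema (`sender`, `type`, `payload`) and the function names are assumptions for illustration; the actual message format of the Node.js server and its clients is not described in this article, and no WebSocket library is used here.

```python
import json


def encode_update(sender, kind, payload):
    """Serialize a state update; the field names are a hypothetical schema."""
    return json.dumps({"sender": sender, "type": kind, "payload": payload},
                      sort_keys=True)


def decode_update(message, own_id):
    """Parse an incoming update and ignore messages echoed back to the sender."""
    data = json.loads(message)
    if data.get("sender") == own_id:
        return None
    return data["type"], data["payload"]


# Example: the desktop terminal announces a time range selection for a location.
message = encode_update("desktop", "time_range",
                        {"location": "SE", "start": 10, "end": 40})
```

A client would pass each received text frame to `decode_update` and then update the matching information cue in its own interface.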

In order to assess how the developed system and its provided features are used by human users for collaborative data analysis in practice, we planned an empirical evaluation in the format of a user interaction study. This section describes the study design and applied measures.

The study was conducted with pairs of participants who alternated the roles of one person being immersed in VR, and the other using the non-immersive web application (within-subject design). One researcher was responsible for the practical conduct of the study and joined the pair, taking care of the study moderation, ensuring the developed system was functioning as intended, making observations and taking notes during the pair’s task completion, and furthermore documenting the process.

The study was set up in a controlled environment at our research group lab. The lab features a square two-by-two meter area, designated for the VR user to move freely without obstacles. There is enough space in the lab for the researcher’s workplace, from which the study moderation was conducted. Additionally, the lab features a workbench that is separated from the researcher’s workplace by a physical partition, allowing the VR user to complete their informed consent and questionnaires with pen and paper. The user operating the web application was seated alone in a dedicated separate office that featured a workbench with a computer, a 27-inch monitor, keyboard and mouse, as well as enough space to write down notes and complete the informed consent and questionnaires with pen and paper. Detailed information about hardware and software is provided in Section 3.5. Additional remarks in general as well as in regard to the ongoing COVID-19 pandemic during the time of the study are stated in the article’s Ethics Statement.

The pair of participants used different interfaces (hybrid); the choice of interface additionally affected which part of the dataset they had access to, and therefore determined their role (asymmetric). Using the non-immersive interface provided access only to the climate data within the desktop application, while using the immersive interface provided access only to the plant data within the virtual environment. This made the users “climate” and “plant” experts respectively; these roles would flip with the switching of the interfaces for the second study task (within-subject design).

The collaborative nature of the tasks required them to work together to determine the correlations of each of the climate parameters to each of the plant parameters; the non-immersive user was additionally tasked to write down the answers, and any worthwhile observations, for both participants (using printed sheets that were provided; these are included in this article’s Supplementary Material).

Due to the artificial nature of the datasets, each of the pair’s answers could be checked against the model used to generate them, making the nature of this study a confirmative analysis (Aigner et al., 2011, Chapter 1.1) compared to the explorative analysis of our previous study (Reski et al., 2020b).

The context (climate and flora at different locations across Europe, and how the climate conditions affect the plant growth) was chosen because it involves concepts familiar to all participants. At the same time, any previous knowledge of geography and agriculture had to be dismissed. Therefore, the study was presented to the participants with a “science fictional” description: they had to suspend their disbelief and pretend that they were exploring a parallel universe in the far future instead of working with real observations that followed known phenomena. The detailed task description, as it was presented to participants in our user interaction study, is included in the article’s Supplementary Material.

Each study session followed the same procedure of three stages: 1) introduction, 2) fruits task scenario, and 3) veggies task scenario. The overall duration was aimed at approximately 2 hours, including all three stages. The initial choice of which participant used which interface was random. For the two task stages, the participants were encouraged to explore the data and complete their task at their own pace. However, for practical purposes, the pair was given a duration of approximately 30 min to aim for and to have a frame of reference in regard to their task completion progress. Whether the pair required more or less time was up to them. Consequently, each participant was anticipated to spend approximately 35 min (5 min warm-up; 30 min task) immersed in VR.

In the introduction, the participants were first welcomed and then asked to fill out an informed consent form in regard to their participation. Afterwards, demographic information about the participants’ background and prior virtual reality experiences was collected. The moderator provided an overview of the two applications and their collaborative features, as well as of the data context and the task for their upcoming joint data explorations, i.e., the first and second task stages.

For the first task, each participant of the pair assumed their role and respective application. Using a special warm-up dataset, different from each of the two task scenario datasets, the participants were provided with the opportunity to warm up and become familiar with their interfaces and the collaborative features. Once the pair felt comfortable, the moderator loaded the task scenario dataset and signaled the start of the pair’s task by initiating the audio recording. During the tasks, the pair could only talk to each other, while the moderator refrained from making any comments to the pair, only writing down noteworthy observations. Once the pair considered themselves to be done with their task by speaking aloud “We are done with the data exploration” (or equivalent), the moderator stopped the audio recording. The participants were then asked to complete three questionnaires (in order): System Usability Scale (SUS), User Engagement Scale - Short Form (UES-SF), and our Spatio-Temporal Collaboration Questionnaire (see Section 4.2.3).

After a short break in which the moderator made several preparations, the participants switched their assumed roles and applications, and the second task stage started by following the same procedure as in the first one (warm-up, task, questionnaires). Finally, the pair was thanked for their participation and sent off. If they inquired about their task performance, they were informed after the study completion.

In order to investigate our research objective as described in Section 1 and Section 2.3, we assessed usability, user engagement, and aspects of the pair’s collaboration. For that purpose, we applied a mixture of quantitative and qualitative measures to collect data.

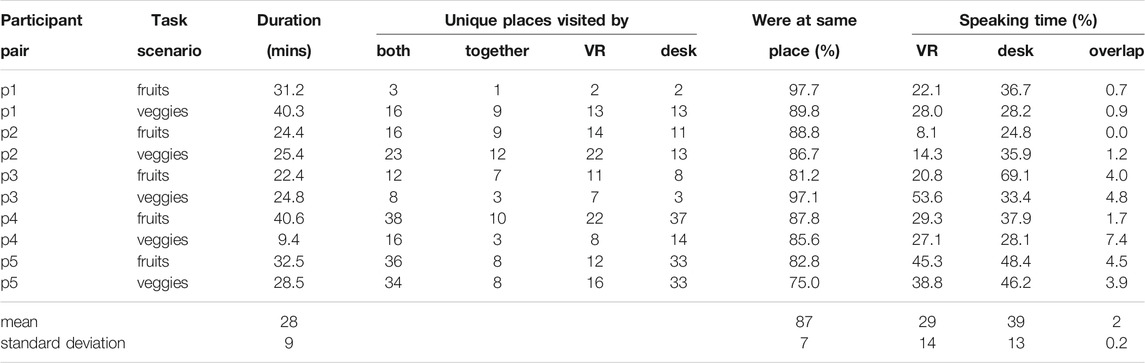

We collected system logs of all the participants’ interactions with their respective interfaces during the task sessions. Each log entry consists of a timestamp (in seconds), a user identifier, and multiple fields that describe the various contextual interactions in detail, e.g., a movement to a specific location or the selection of a specific time event or time range. Since all interactions in the non-immersive interface are communicated to the immersive interface via the real-time communication interface, we decided to log the interactions of both users in one place. The outcome is a single CSV file that can be easily processed according to our interests. For instance, an analysis can be conducted to identify when the collaborators were actively investigating the data in the same spatial location, or when each collaborator moved from location to location, to name just two examples. Within the scope of the presented collaborative system, we implemented the system logging for both interfaces as a separate lightweight module that is integrated as part of the VR application (see Section 3.5).
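As an illustration of the kind of log analysis described above, the following minimal sketch derives the intervals during which both collaborators were at the same spatial location. The column names (`timestamp`, `user`, `event`, `location`), the user identifiers, the event names, and the sample rows are hypothetical and chosen for illustration only; they are not the actual log format of the presented system.

```python
import csv
import io

# Hypothetical log excerpt; the real CSV layout may differ.
SAMPLE_LOG = """\
timestamp,user,event,location
0.0,vr,move,A
5.0,desktop,move,B
12.0,desktop,move,A
20.0,desktop,select_time,A
30.0,vr,move,C
41.0,desktop,move,C
"""


def parse_log(text):
    """Read the CSV text into a list of dicts with numeric timestamps."""
    rows = []
    for record in csv.DictReader(io.StringIO(text)):
        record["timestamp"] = float(record["timestamp"])
        rows.append(record)
    return rows


def colocation_intervals(rows, end_time):
    """Return (start, end, location) tuples during which both users
    were at the same location. Assumes exactly two users and that a
    'move' event marks the arrival at a new location."""
    current = {}   # user identifier -> last known location
    intervals = []
    start = None   # (timestamp, location) of the open interval, if any
    for row in sorted(rows, key=lambda r: r["timestamp"]):
        if row["event"] != "move":
            continue
        current[row["user"]] = row["location"]
        together = len(current) == 2 and len(set(current.values())) == 1
        if together and start is None:
            start = (row["timestamp"], row["location"])
        elif not together and start is not None:
            intervals.append((start[0], row["timestamp"], start[1]))
            start = None
    if start is not None:
        # Close an interval that is still open when the session ends.
        intervals.append((start[0], end_time, start[1]))
    return intervals


if __name__ == "__main__":
    for interval in colocation_intervals(parse_log(SAMPLE_LOG), end_time=50.0):
        print(interval)
```

Because both users' interactions are logged in one file, a single chronological pass is sufficient; no merging of separate per-user logs is needed.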

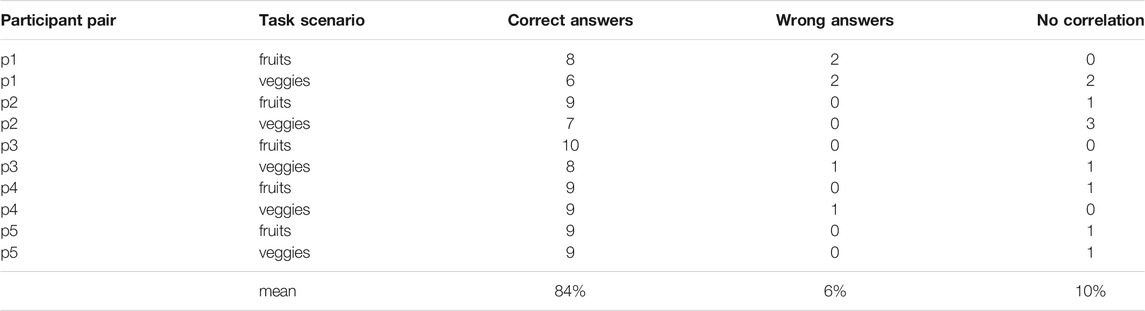

Based on the designed data analysis task as described in Section 4.1.2, we are able to assess the pair’s ability to collaboratively identify the potential correlations between the plant and the climate dimensions using their respective interfaces. For each task, this results in a total of ten correlation answers, i.e., five plant data dimensions × two climate data dimensions, each indicating either a negative, positive, or no correlation. While the option to answer no correlation was provided, a correlation was always defined by the models used in the fruits and veggies scenarios. Additionally, each of the ten correlation answers included an associated confidence (low, medium, high, or do not know), describing the pair’s reported confidence for their respective answers.
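The comparison of a pair's ten answers against the generating model can be sketched as follows. The dimension names (`plant_1` to `plant_5`, `climate_1`, `climate_2`), the answer labels, and the data structures are assumptions made for illustration; only the 5 × 2 layout, the three correlation options, and the four confidence options are taken from the description above.

```python
from collections import Counter
from itertools import product

# Hypothetical dimension names; the study used five plant and two climate dimensions.
PLANT_DIMS = [f"plant_{i}" for i in range(1, 6)]
CLIMATE_DIMS = ["climate_1", "climate_2"]
CORRELATIONS = {"negative", "positive", "none"}
CONFIDENCES = {"low", "medium", "high", "do not know"}


def score_answers(answers, model):
    """Compare answers with the model for all ten plant/climate pairs.

    answers: dict mapping (plant, climate) -> (correlation, confidence)
    model:   dict mapping (plant, climate) -> correlation defined by the model
    Returns (number correct, correct answers per confidence level,
             all answers per confidence level).
    """
    correct_by_conf = Counter()
    total_by_conf = Counter()
    for pair in product(PLANT_DIMS, CLIMATE_DIMS):
        correlation, confidence = answers[pair]
        if correlation not in CORRELATIONS or confidence not in CONFIDENCES:
            raise ValueError(f"invalid answer for {pair}")
        total_by_conf[confidence] += 1
        if correlation == model[pair]:
            correct_by_conf[confidence] += 1
    return sum(correct_by_conf.values()), dict(correct_by_conf), dict(total_by_conf)
```

Tallying correct answers per confidence level makes it possible to check whether a pair's reported confidence matches the correctness of their answers.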

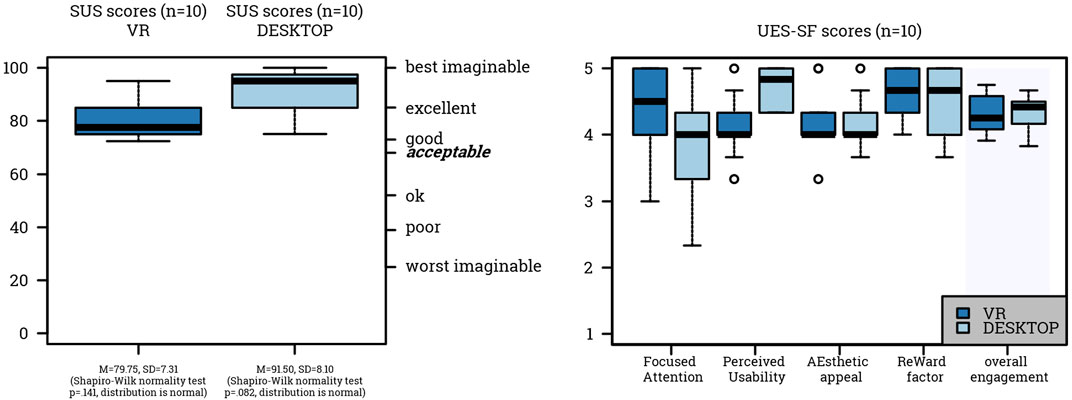

To make assessments about the general usability of each interface, the immersive VR and the non-immersive desktop one, we asked the participants to complete the System Usability Scale (SUS) questionnaire (Brooke, 2013, 1996). The SUS is composed of ten 5-point Likert scale items, resulting in an interpretable score between 0 (negative) and 100 (positive) (Brooke, 2013). Additionally, we chose to adopt the adjective ratings as proposed by Bangor et al. (2009), further facilitating the interpretation of the numerical score and explanation of the results.
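For reference, the standard SUS scoring procedure can be sketched as follows: odd-numbered (positively worded) items contribute their rating minus 1, even-numbered (negatively worded) items contribute 5 minus their rating, and the sum is multiplied by 2.5. The function name and the input format are illustrative assumptions.

```python
def sus_score(responses):
    """Compute the SUS score (0 to 100) from ten ratings on a 1-5 scale.

    responses: ratings for items 1..10 in questionnaire order.
    """
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("expected ten ratings between 1 and 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1   # odd items: positively worded
        else:
            total += 5 - rating   # even items: negatively worded
    return total * 2.5
```

A participant who answers every item with the neutral rating 3 therefore obtains a score of 50.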

Furthermore, to gain insights about the collaborators’ general engagement with their respective interface, we asked them to complete the User Engagement Scale - Short Form (UES-SF) questionnaire (O’Brien et al., 2018). As opposed to the much more extensive 30 item Long Form version, the UES-SF consists instead of only twelve 5-point Likert scale items, i.e., three items for each of its four factors: Focused Attention, Perceived Usability, Aesthetic Appeal, and Reward (O’Brien et al., 2018). Scores on a scale from 1 (negative) to 5 (positive) can be calculated for each of the four factors individually as well as an overall engagement score (O’Brien et al., 2018).
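The factor and overall scores can be computed as simple means, as in the following minimal sketch. It assumes that the three Perceived Usability items are reverse-coded before averaging, which should be verified against the scoring guidance by O’Brien et al. (2018); the factor abbreviations and the input format are likewise illustrative assumptions.

```python
from statistics import mean

# Hypothetical abbreviations for the four UES-SF factors.
FACTORS = ("FA", "PU", "AE", "RW")


def ues_sf_scores(responses, reverse_coded=("PU",)):
    """Compute per-factor and overall UES-SF scores on a 1-5 scale.

    responses: dict mapping factor abbreviation -> list of three ratings (1-5).
    reverse_coded: factors whose items are reverse-coded (assumption: PU).
    """
    coded = {}
    for factor in FACTORS:
        ratings = responses[factor]
        if len(ratings) != 3 or any(r not in (1, 2, 3, 4, 5) for r in ratings):
            raise ValueError(f"expected three ratings between 1 and 5 for {factor}")
        if factor in reverse_coded:
            ratings = [6 - r for r in ratings]  # 1 <-> 5, 2 <-> 4
        coded[factor] = ratings
    scores = {factor: mean(ratings) for factor, ratings in coded.items()}
    # Overall engagement: mean over all twelve (coded) items.
    scores["overall"] = mean(r for ratings in coded.values() for r in ratings)
    return scores
```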

Assessing system usability and user engagement within the scope of our task allows us to gain further insights into the implemented data exploration interfaces and their collaborative features. Since the two interfaces use fundamentally different display and interaction technologies, we believe it is important to identify factors that might impact the pair’s collaboration. The SUS and the UES-SF questionnaires are comparatively inexpensive data collection methods, and are both widely recognized and applied in the research community (Brooke, 2013; O’Brien et al., 2018).

With our research objective in mind, we are motivated to investigate aspects of the pair’s collaboration. In particular, in addition to observations by a researcher, we are interested in the pair’s own perception of their collaboration after the task completion. For that purpose, means of self-reporting by the participants are required, commonly implemented through Likert scale statements (quantitative) or open-ended interview-like questions (qualitative). To the best of our knowledge, there is no dedicated standardized CSCW questionnaire for the purpose of investigating collaboration in virtual environments. We also examined potential alternatives, for instance the Social Presence Module as part of the Game Experience Questionnaire (Poels et al., 2007; IJsselsteijn et al., 2013), but deemed those not specific enough within the scope and purpose of our investigation, where a pair is exploring and interacting with spatio-temporal data – a comparatively common use case (Fonnet and Prié, 2021).

Consequently, we set out to design a questionnaire to satisfy our needs. Based on relevant literature, we started by identifying important aspects and dimensions of collaboration. Dix (1994) presents a general framework for CSCW by dissecting its components into Cooperative Work and various aspects of Computer Support, i.e., Communication, Computerized Artefacts of Work, and Non-Computerized Artefacts. Throughout the framework, the importance of communicative aspects as part of cooperative work is emphasized, in particular as Computer Mediated Communication, arguing for its appropriate integration (Dix, 1994). Within the context of CSCW, four key features that collaborative virtual environments should strive to support are defined by Snowdon et al. (2001) as follows: Sharing Context, Awareness of Others, Negotiation and Communication, and Transitions between Shared and Individual Activities. A conceptual framework and taxonomy by Gutwin and Greenberg (2002) is dedicated to awareness within the context of group work. Awareness, seen as a state of being attentive and informed about the events in a situation and environment, can be maintained rather easily and naturally in face-to-face workspaces as opposed to groupware ones that do not feature face-to-face communication (Gutwin and Greenberg, 2002). Gutwin and Greenberg (2002) differentiate between situation awareness, workspace awareness, and awareness maintenance, and move on to propose a Workspace Awareness Framework to describe aspects related to environment, knowledge, exploration, and action. Pinelle et al. (2003) propose a task model to support Collaboration Usability Analysis. They categorize the mechanics of collaboration into different aspects of communication and coordination, and go on to describe their task model that consists of scenario, task (individual and collaborative), and action components.
Andriessen (2001) proposes a heuristic classification of the major activities involved in cooperative scenarios according to interpersonal exchange processes (communication), task-oriented processes (cooperation, coordination, information sharing and learning), and group-oriented processes (social interaction). Within the more specific context of Collaborative Visual Analytics, Heer and Agrawala (2008) discuss important design considerations to facilitate collaborative data exploration, among others relevant to Common Ground and Awareness, Reference and Deixis, and Incentives and Engagement.

Based on the insights and impressions gained from the various classifications according to the described literature, all discussing collaboration in regard to similar themes from slightly different perspectives, we subjectively decided to follow and adopt the descriptions by Snowdon et al. (2001), emphasizing various key aspects that collaborative virtual environments should aim to support. We believe that the investigation of Sharing Context, Awareness of Others, Negotiation and Communication, and Transitions between Shared and Individual Activities should allow for the retrieval of insights in regard to different important collaborative aspects, thus providing a “bigger picture” of the collaboration during the completion of an analytical task (Snowdon et al., 2001).

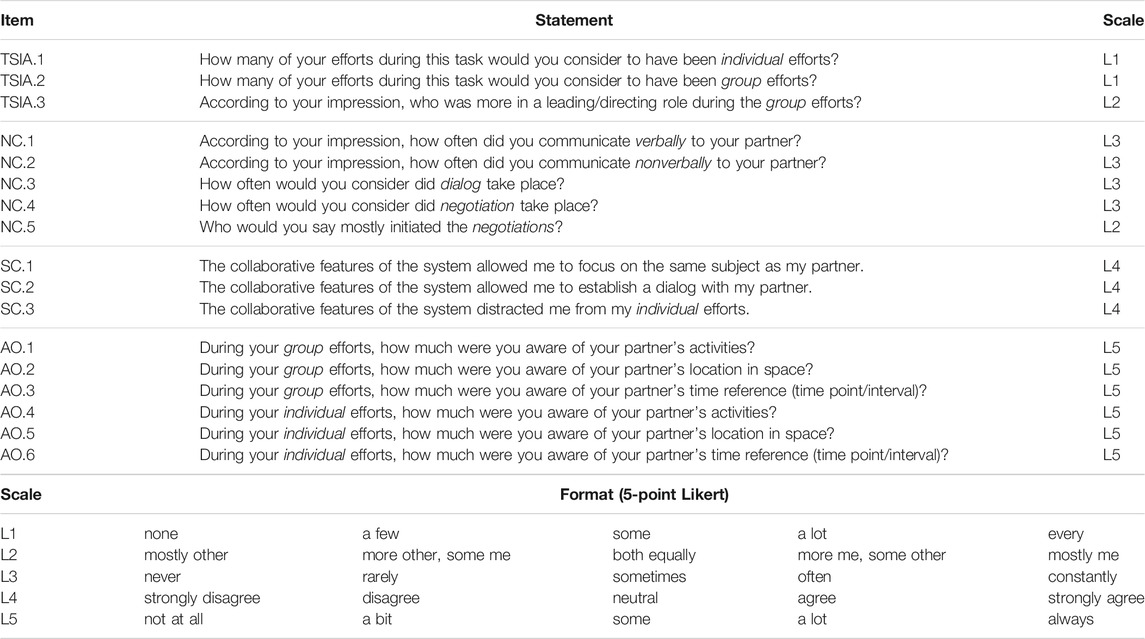

Our self-constructed questionnaire, named the Spatio-Temporal Collaboration Questionnaire, was designed to assess aspects of synchronous collaboration. It features seventeen 5-point Likert scale statements that are thematically relevant to the four dimensions adopted from Snowdon et al. (2001), which we describe as follows:

• Transitions between Shared and Individual Activities (TSIA): The interplay between individual and group efforts, including the ability to switch between these, within the scope of collaborative work.

• Negotiation and Communication (NC): Verbal conversation (i.e., talk) facilitated through the ability of utilizing nonverbal information cues in order to discuss and interpret any task-related aspects of the activity (e.g., findings in the data, roles and structure of task approach, and so on).

• Sharing Context (SC): Characteristics and features of the shared space that facilitate and support focused and unfocused collaborative work, leading to shared understandings.

• Awareness of Others (AO): The ability to understand your partner’s activity during times of 1) focused collaboration and active communication (i.e., group efforts), as well as 2) more independent and individual work.

Table 1 presents an overview of all item statements and their Likert scales across these four dimensions. The design of the individual item statements is kept purposefully generic, anticipating re-usability, remix, and further adoption for evaluations in similar contexts in the future. Under consideration of our overall scenario and task as described in Section 3 and Section 4.1.2, only the items AO.2, AO.3, AO.5, and AO.6 are rather use case specific in regard to the collaborator’s ability to send and retrieve spatio-temporal references using their respective interfaces. Therefore, these four items ask for ratings of the collaborator’s location in space and time reference during group and individual efforts. In practice, the questionnaire is to be filled out by each participating collaborator individually and directly after the respective task completion. The evaluation of the answers should allow for a quantitative analysis of the system’s collaborative features, and provide insights in regard to the collaboration as perceived by the collaborators themselves. Furthermore, the results should be interpreted within the context of the tested system and against its anticipated design, for instance to assess if an anticipated role distribution (equal, unequal) between the collaborators was fulfilled as intended, to name just one example. A two-page print version of the questionnaire, as it was presented to participants in our user interaction study, can be found in the article’s Supplementary Material.

TABLE 1. Overview of the items and Likert scales used in the designed Spatio-Temporal Collaboration Questionnaire.

As the participants were located in two physically separated rooms during the task sessions, there needed to be a way for them to verbally communicate (besides the nonverbal communication features of our system, i.e., the spatio-temporal referencing). The Zoom Cloud Meetings14 teleconferencing software was installed on both the machine that the non-immersed participant was using and the machine that ran the immersive application. This enabled the participants to talk to each other via an audio call, which was recorded. Zoom conveniently allows the recording of separate audio streams for each call participant; therefore, at the end of each task session, three audio files (one of the combined audio, and one from each user) were obtained. Using the Audacity15 audio editor software and its Sound Finder tool, it is possible to obtain timestamps that describe when sound was detected16 in each participant’s audio file, and therefore roughly when they were (individually) speaking. Summing up the time intervals provides an estimation of each participant’s “speaking” amount, and it was also possible to calculate when and how much participants were “overlapping” (talking at the same time). These timestamps were further synchronized with the system log timestamps, using the known start (and end) time of each session’s audio recording. This allows verbal communication activity to be related to system events (including nonverbal communication cues).
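The interval arithmetic described above can be sketched as follows. The sketch assumes that the detected sound intervals of each participant are available as sorted, non-overlapping `(start, end)` pairs in seconds relative to the start of the recording; the function names and this input format are illustrative and do not reflect a specific Audacity export format.

```python
def total_duration(intervals):
    """Sum of the interval lengths, i.e., the estimated 'speaking' amount."""
    return sum(end - start for start, end in intervals)


def overlap_intervals(a, b):
    """Intervals during which both participants were 'speaking'.

    a, b: sorted, non-overlapping lists of (start, end) tuples in seconds.
    """
    overlaps = []
    i = j = 0
    while i < len(a) and j < len(b):
        start = max(a[i][0], b[j][0])
        end = min(a[i][1], b[j][1])
        if start < end:
            overlaps.append((start, end))
        # Advance whichever interval ends first.
        if a[i][1] < b[j][1]:
            i += 1
        else:
            j += 1
    return overlaps


def to_log_time(intervals, recording_start):
    """Shift audio-relative intervals onto the system log's time axis,
    given the log timestamp at which the audio recording started."""
    return [(start + recording_start, end + recording_start)
            for start, end in intervals]
```

For two participants with intervals `a` and `b`, `total_duration(overlap_intervals(a, b))` estimates how long they talked at the same time, and `to_log_time` aligns any of these intervals with the logged interactions.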