- 1GW School of Medicine and Health Sciences, the George Washington University, Washington, DC, United States

- 2Distinguished Visiting Scholar at mediaX and Visiting Scholar at the Virtual Human Interaction Lab, Stanford University, Stanford, CA, United States

- 3Department of Family Medicine, School of Medicine, West Virginia University, Morgantown, WV, United States

- 4Department of Pharmaceutical Systems and Policy, West Virginia University, Morgantown, WV, United States

- 5Department of Neurological Surgery, Berkeley Medical Center, West Virginia University, Martinsburg, WV, United States

In recent years, the advancement of eXtended Reality (XR) technologies including Virtual and Augmented reality (VR and AR respectively) has created new human-computer interfaces that come increasingly closer to replicating natural human movements, interactions, and experiences. In medicine, there is a need for tools that accelerate learning and enhance the realism of training as medical procedures and responsibilities become increasingly complex and time constraints are placed on trainee work. XR and other novel simulation technologies are now being adapted for medical education and are enabling further interactivity, immersion, and safety in medical training. In this review, we investigate efforts to adopt XR into medical education curriculums and simulation labs to help trainees enhance their understanding of anatomy, practice empathetic communication, rehearse clinical procedures, and refine surgical skills. Furthermore, we discuss the current state of the field of XR technology and highlight the advantages of using virtual immersive teaching tools considering the COVID-19 pandemic. Finally, we lay out a vision for the next generation of medical simulation labs using XR devices summarizing the best practices from our and others’ experiences.

Introduction

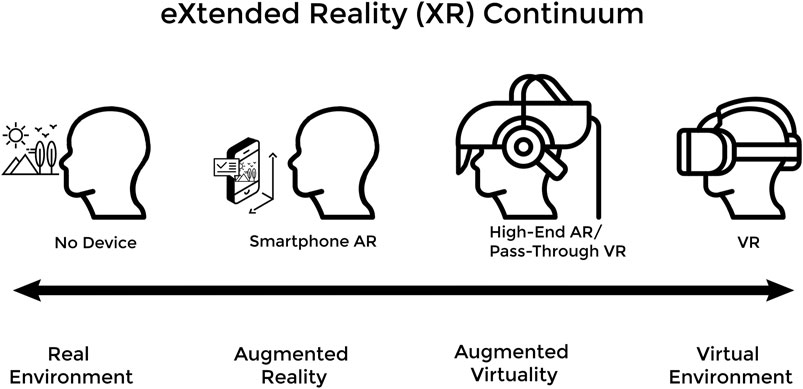

As technology advances, it is inevitably adapted to fulfill unmet needs in new applications. This phenomenon is currently underway in medical education as eXtended Reality (XR) technology has been increasingly adopted over the past decade to address shortcomings in the field (Hauze et al., 2019; Zweifach and Triola, 2019). XR describes a continuum of immersive computing experiences that includes both Augmented Reality (AR) and Virtual Reality (VR). In AR, the user can still view the real world, but reality is augmented with overlaid virtual elements (objects, content, and information). VR creates 3-dimensional (3D) virtual elements in entirely virtual environments (VEs), and the user (typically) cannot view or directly interact with the real world. A variety of experiences span the gap between AR and VR and incorporate elements of both along the XR continuum (Figure 1).

FIGURE 1. The XR Continuum shows a progression from an entirely real environment to an entirely virtual environment with various XR devices facilitating movement along the continuum.

In this review we delve into how medical education programs are now adopting advanced simulation and XR technologies to help trainees enhance their understanding of anatomy, practice empathetic communication, rehearse clinical procedures, and refine surgical skills. In each of these fields of medical training, XR and advanced simulation technologies provide solutions to shortcomings in conventional education. Traditional instruction in anatomy, empathetic communication, and medical procedures or surgery relied on educational tools such as cadavers, patient actors, and regular clinical exposure, respectively. As will be discussed later, these educational tools are often costly, hard to procure, or unsafe to use as the only source of practice to master these complex medical concepts. There are many benefits to using XR technologies in medical education. These include deepening understanding of complex 3D structures, and improving active learning, memory recall, objectivity of assessments, educational enjoyment, and accessibility to educational experiences. These educational advantages can be attributed to the immersive nature of XR experiences as well as the portable, wearable, and relatively inexpensive nature of newer XR devices.

Overview of XR Technology

In simplified terms, XR experiences are made possible through the marriage of XR software with specialized hardware including, but not limited to, head-mounted displays (HMDs), sensors, and motion controllers. Software designed for XR creates interactive virtual elements and VEs, and interfaces with XR hardware. The hardware delivers immersive experiences to the user via stereoscopic 3D displays, motion tracking, haptic feedback, and natural human-based user-interfaces (UIs). Current VR HMDs include organic light emitting diode (OLED) displays allowing for excellent response times (screen refresh rates), color quality, field of view (FOV), and image resolution in a lightweight package (Kourtesis et al., 2019). This enables VR users to view virtual objects in full stereoscopic 3D, mimicking the binocular vision through which they see the real world. Current high-end AR HMDs, like the Microsoft HoloLens 2, exist as wearable devices with holographic see-through displays, 3D environment mapping, and eye and hand tracking, all powered by a specialized processing unit (Liu et al., 2018; O’Connor et al., 2019). These features enable gesture-based and look-based UIs and allow for context-based placing of virtual elements on the user’s view of the real world (Liu et al., 2018; O’Connor et al., 2019).

Motion tracking of the user during XR experiences allows for natural human movements and gestures to be captured and converted into interactions in the VE. Sensors situated either outside or inside of the wearable XR hardware (HMDs, motion controllers, body trackers, haptic suits, etc.) precisely track the user’s body positions and movements within the real-world play area. XR software then converts these positions and movements into actions for the avatar (a virtual representation of the user) in the VE. In the modern generations of VR devices, motion tracking has been accomplished via either outside-in tracking, in which sensors external to the user and HMD detect user movement and position, or more recently inside-out tracking, in which sensors built into the HMD detect user movement and position (Kourtesis et al., 2019; Zweifach and Triola, 2019; Kourtesis et al., 2020). Both forms of tracking allow the user to walk within the play area, which is defined at the start of the VR session, while virtual locomotion such as teleportation allows the user’s avatar to move beyond the initial confines of the play area (Kourtesis et al., 2019). Modern VR headsets typically capture the position and motion of a user by tracking their HMD as well as a pair of handheld motion controllers to 6 degrees of freedom (6 DoF), allowing the XR software to provide users with proprioception and a sense of presence in the VE (Kourtesis et al., 2019). These motion controllers make human-computer interaction in XR much more natural than traditional computer interaction with mouse and keyboard, as users can physically reach out with their hands and interact with virtual objects using the motion controllers (Kourtesis et al., 2019).
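The conversion from tracked real-world movement to avatar movement can be illustrated with a minimal Python sketch. It is not taken from any XR software development kit: the names `Pose6DoF`, `to_virtual`, and `inside_play_area`, the choice of metres and degrees, and the rectangular play area are all assumptions made for illustration. The sketch represents a 6 DoF pose as three translational and three rotational values, and treats teleportation as a change in where the play-area origin sits in the VE.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Pose6DoF:
    """A tracked pose with 6 degrees of freedom (hypothetical representation)."""
    # Three translational degrees of freedom, in metres, in play-area coordinates
    x: float
    y: float
    z: float
    # Three rotational degrees of freedom, in degrees
    yaw: float
    pitch: float
    roll: float


def to_virtual(tracked: Pose6DoF, origin_offset: Tuple[float, float, float]) -> Pose6DoF:
    """Map a pose tracked in the real-world play area to an avatar pose in the VE.

    origin_offset is the location of the play-area origin inside the VE.
    In this sketch, teleportation simply changes origin_offset, so the avatar
    can travel beyond the physical play area while the user stays within it.
    """
    ox, oy, oz = origin_offset
    return Pose6DoF(
        tracked.x + ox, tracked.y + oy, tracked.z + oz,
        tracked.yaw, tracked.pitch, tracked.roll,
    )


def inside_play_area(pose: Pose6DoF, half_width: float, half_depth: float) -> bool:
    """Check whether a tracked pose lies within an assumed rectangular play area."""
    return abs(pose.x) <= half_width and abs(pose.z) <= half_depth


# Example: a head pose tracked in the room, then placed in the VE after a teleport
head = Pose6DoF(x=0.5, y=1.7, z=-0.25, yaw=90.0, pitch=0.0, roll=0.0)
avatar_head = to_virtual(head, origin_offset=(10.0, 0.0, 5.0))
```

The same mapping would apply to each handheld controller, which is why the avatar's hands follow the user's hands in the VE.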

Haptic feedback, using force and tactile sensing and output, further immerses the user and allows them to feel resistance and texture from virtual elements (Coles et al., 2011). Most commercially available XR devices solely provide haptic feedback in the form of handheld controller vibration, but there are many devices in development, such as haptic gloves and suits, that provide a greater degree of haptic feedback to the user (Coles et al., 2011; Kourtesis et al., 2019). Handheld motion controllers and newer hand/finger tracking allow users to conduct natural gestures and movements to interact with the VE, thus increasing the ergonomic nature of XR and deepening immersion (Coles et al., 2011; Hudson et al., 2019; Kourtesis et al., 2019; Aseeri and Interrante, 2021). Audiovisual (AV) output includes headphones and microphones built into HMDs allowing for 3D spatial sound, further immersing the user in the VE and also enabling social interactions with others connected to the same VE (Yarramreddy et al., 2018; Aseeri and Interrante, 2021).

Motion tracking and haptics, as well as AV output from the HMD, let the user have immersive, social, and meaningful interactions in XR, all of which facilitate the dynamic delivery of information to aid active learning in medical education (Yarramreddy et al., 2018; Alfalah et al., 2019; Hudson et al., 2019; Aseeri and Interrante, 2021). To work as described above, XR devices must have sufficient computational power, and the (onboard or external) processor, graphics card, sound card, and operating system must be optimized for each XR experience (Kourtesis et al., 2019).

Recent Developments and Educational Benefits of XR

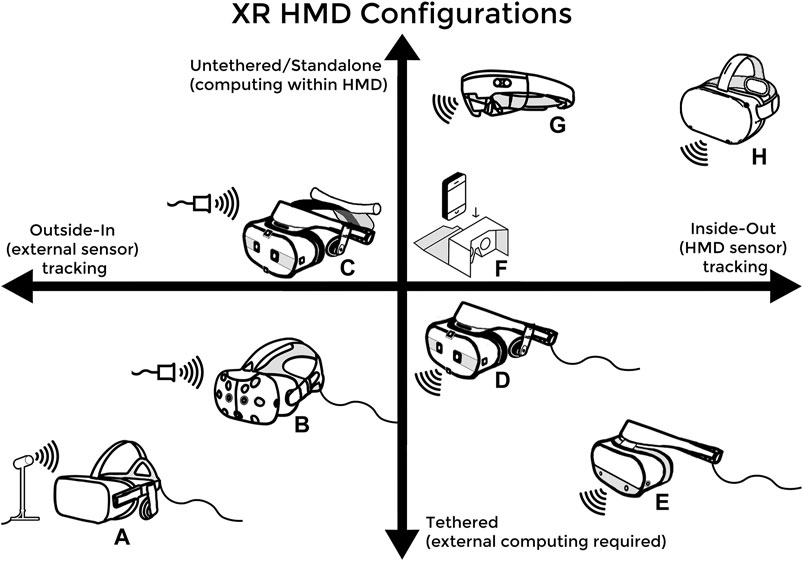

In 2015 and 2016, building on advances made in smartphone technology, multiple companies released the modern generation of commercial VR and AR devices (Kourtesis et al., 2019). Early iterations of modern VR devices consisted of an external personal computer (PC) with sufficient processing power, a connected HMD for 3D stereoscopic display and head-tracking, some form of gamepad or handheld motion controllers for user input and motion tracking, and an array of external sensors also connected to the PC to triangulate the user’s positions and movements within the VE and their designated play area (Kourtesis et al., 2019; Zweifach and Triola, 2019; Kourtesis et al., 2020). This configuration is referred to as tethered-VR with outside-in tracking (bottom left in Figure 2) and is now one of many possible XR HMD configurations (Figure 2). While this arrangement for VR was common in 2016, it necessitated an expensive external gaming PC and significant technological competency on the part of the user, and often resulted in prolonged setup time. The axes of Figure 2 can be understood from an educational standpoint as follows: freedom of user movement increases as one moves up and decreases downward, visual clarity and processing power increase as one moves down and decrease upward, tracking fidelity increases as one moves left and decreases to the right, and ease-of-setup increases as one moves to the right and decreases to the left. Early high-end AR devices, such as the Magic Leap 1 and Microsoft HoloLens (Figure 2G), were also prohibitively expensive for most individual creators, and many instead opted to use lower-end smartphone-based AR applications (Zweifach and Triola, 2019).

FIGURE 2. (A) the Oculus Rift and (B) the HTC Vive headsets are both tethered and externally-tracked VR headsets from 2016 (C) and (D) are two different variations of the HTC Vive Cosmos Headset that can be configured to have either external tracking and be untethered via a wireless adaptor or have inside-out tracking and be tethered to a PC (E) the Oculus Rift S, which features inside-out tracking and is PC tethered (F) simple phone VR devices such as the Google Cardboard, which requires no external trackers and uses the on-board smartphone as the processor and motion sensor (G) the Microsoft HoloLens AR headset, which features an on-board processor and inside-out tracking (H) the Oculus Quest 2, which represents the newest HMD configuration: standalone or untethered VR with inside-out tracking.
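The configurations in Figure 2 can also be summarized as a small data structure. The following Python sketch is illustrative only: the `HMDConfig` class and the helper functions are hypothetical names, and only the four headsets whose tethering and tracking are stated unambiguously in the caption are encoded.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class HMDConfig:
    """One headset configuration, using the two properties named in Figure 2."""
    name: str
    tethered: bool   # True if the headset must be connected to an external PC
    tracking: str    # "outside-in" (external sensors) or "inside-out" (built-in sensors)


# Values taken from the Figure 2 caption; other panels are omitted from this sketch
FIGURE_2_EXAMPLES: List[HMDConfig] = [
    HMDConfig("Oculus Rift", tethered=True, tracking="outside-in"),
    HMDConfig("HTC Vive", tethered=True, tracking="outside-in"),
    HMDConfig("Oculus Rift S", tethered=True, tracking="inside-out"),
    HMDConfig("Oculus Quest 2", tethered=False, tracking="inside-out"),
]


def standalone_inside_out(configs: List[HMDConfig]) -> List[str]:
    """Names of headsets needing neither an external PC nor external sensors."""
    return [c.name for c in configs if not c.tethered and c.tracking == "inside-out"]


def needs_external_sensors(configs: List[HMDConfig]) -> List[str]:
    """Names of headsets that rely on outside-in tracking."""
    return [c.name for c in configs if c.tracking == "outside-in"]
```

Filtering on these two properties mirrors the ease-of-setup and freedom-of-movement axes described for Figure 2.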

Commercial XR technologies have advanced quickly and now exist in more portable, accessible, inexpensive, and user-friendly forms (Kourtesis et al., 2019). For example, the Oculus Quest 2 (seen on the top right corner of the axes in Figure 2) represents the most compact, versatile, and cost-effective VR headset currently on the market: a standalone VR device that sells for $299 and has full motion-tracking without needing an external PC or sensors. Newer VR HMDs often have cameras as well as infrared and other sensors built in to enable inside-out tracking. Since the debut of these devices, software developers have flocked to the growing XR development space and, consequently, there are now many thriving online marketplaces for XR games and experiences created by both independent developers and large software studios (Yarramreddy et al., 2018). Thanks to these innovations, medical training using XR and simulation has received even more attention and mainstream support.

There are many benefits of using XR in medical education and a growing number of studies are published each year supporting XR as an educational tool. Advances in AV output, motion tracking, and haptics have improved XR’s ability to approximate real-world medical procedures and practices (Coles et al., 2011; Moro et al., 2017; Kourtesis et al., 2019). Spatial visualization of information in XR has been demonstrated to potentially increase memory recall (Krokos et al., 2018). Research has shown that XR can improve understanding of complex 3D structures and increase educational enjoyment and satisfaction with the learning experience in XR compared to other methods (Alfalah et al., 2019). The increased interactivity afforded by XR also works to further deepen learner immersion and satisfaction with the virtual experience, aiding in active learning and increasing learner motivation (Hariri et al., 2004; Stepan et al., 2017; Hudson et al., 2019). Due to the virtual nature of XR, educational experiences can be practiced repeatedly and learners can be objectively assessed through the virtual platform (Dubin et al., 2017; Bond et al., 2019; Maicher et al., 2019; Pottle, 2019). Thanks to their portable and relatively inexpensive form, new commercial XR devices can also increase accessibility to educational experiences outside of the classroom (Moro et al., 2017; Kourtesis et al., 2019). The COVID-19 pandemic has further stressed the importance of embracing virtual learning tools such as XR that enable continued learning off-campus (Franchi, 2020; Iwanaga et al., 2020; Pather et al., 2020; Bond and Franchi, 2021). We envision a post-COVID world in which virtual simulation will firmly secure its place in medical education. In this review, we explore the current state of the field of medical simulation and its use in anatomy, clinical communication, and procedure training while laying out a vision for the next generation of medical education utilizing XR devices.

Search Strategy

In writing this review, papers on XR in education were consulted from a variety of medical and technological journals. PubMed was broadly used to query medical literature, with search terms including “virtual reality” AND “medical education” amongst others. IEEE Xplore was used to query technological literature published by the Institute of Electrical and Electronics Engineers and its publishing partners using search terms similar to those above. References were chosen based on their relevance, findings, and contemporaneity, as many new applications of XR in medical education and XR innovations occurred within the past decade.

XR Applications for Gross Anatomy Education

Medical gross anatomy curriculum is a key component of preclinical education in medical school, and cadaveric dissection has traditionally been utilized to teach gross anatomy (Ghosh, 2017; Moro et al., 2017; Houser and Kondrashov, 2018; Franchi, 2020). This is because cadaver dissection allows students to directly observe the complex 3D spatial relationships between organs and structures that are needed to master human anatomy (Ghosh, 2017; Javan et al., 2020). Over the past two decades, medical schools around the world have steadily decreased contact hours in cadaver dissection laboratories (Ramsey-Stewart et al., 2010; Drake et al., 2014; McBride and Drake, 2018). There are several factors contributing to this trend, discussion of which is beyond the scope of this review. Although most medical schools still use cadaver dissection as a part of their anatomy curriculum (Ghosh, 2017; McBride and Drake, 2018), the resulting decrease in learning time has forced curriculum directors to consider new ways to teach anatomy to medical students (Moro et al., 2017). There is an ongoing debate over the role of new technologies in anatomy education (Ramsey-Stewart et al., 2010; Ghosh, 2017; Wilson et al., 2018), with new learning techniques being compared against the gold standard of textbook lessons and cadaver dissection. Recent studies and meta-analyses suggest that there is no difference in short-term learning outcomes between anatomy curriculums teaching primarily through cadaver dissections and those that utilize other techniques, and that XR anatomy modules may be at least as effective as traditional cadaver-based or textbook anatomy lessons (Hariri et al., 2004; Moro et al., 2017; Stepan et al., 2017; Wilson et al., 2018). While this may not be a strong argument for XR to completely replace conventional anatomy education, the technology has been shown to increase student engagement, enjoyment, motivation, and memory recall (Hariri et al., 2004; Moro et al., 2017; Stepan et al., 2017; Krokos et al., 2018; Erolin et al., 2019), suggesting it would be beneficial to adopt XR alongside traditional methods.

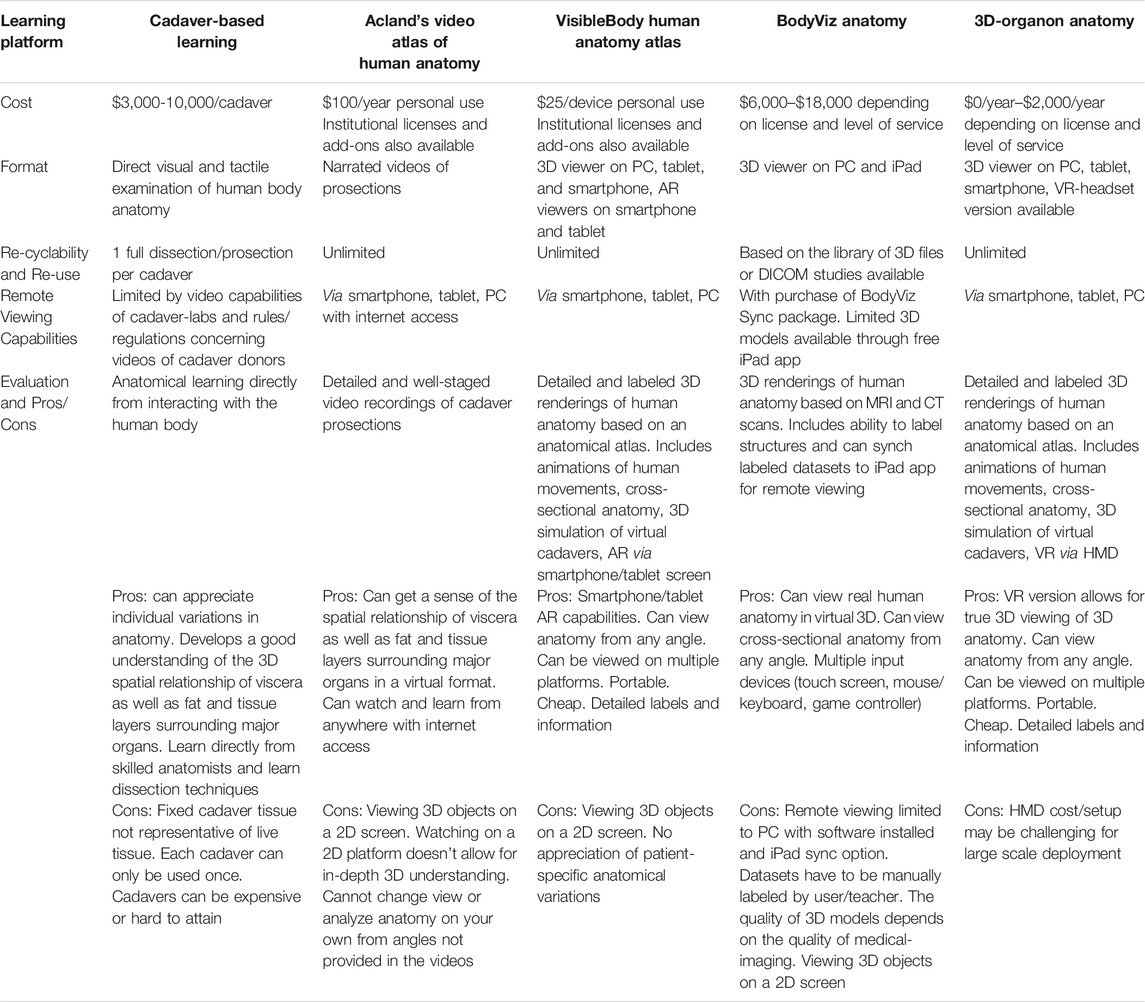

Several medical schools and affiliated hospitals have embraced this vision of the future and are using different XR tools or simulators to supplement their anatomy curricula (Erolin et al., 2019; Zweifach and Triola, 2019). Some are using purchasable XR devices and software while others have partnered with technology companies to design and pilot XR education programs specific to their institutions (Zweifach and Triola, 2019). There are now many different modalities for anatomy education ranging from cadaver dissection labs to smartphone apps, 3D reconstructions of medical images, and full XR anatomy visualizations, each with their own advantages and disadvantages as summarized in Table 1.

As seen in Table 1, the upfront costs of investing in XR solutions are still lower than the price of one cadaver, which can range from $3,000 to $10,000 (Grow and Shiffman, 2017), again suggesting that XR technologies could be a cost-effective adjunct to cadaver dissection programs. Additionally, many computer-based or smartphone/tablet-based XR platforms enable student learning outside of traditional classroom settings or laboratory environments (Erolin et al., 2019; Zweifach and Triola, 2019). This ability proved to be especially important during the COVID-19 pandemic, when many medical schools and hospitals were forced to restrict access to their physical learning spaces, including cadaver laboratories, or were no longer able to accept cadavers due to infection concerns early in the pandemic (Alsoufi et al., 2020; Franchi, 2020; Iwanaga et al., 2020; Ooi and Ooi, 2020; Pather et al., 2020; Theoret and Ming, 2020). Those institutions that had the foresight to invest in XR and other anatomy education tools with online capabilities were better able to adjust to virtual learning environments forced by the pandemic (Erolin et al., 2019; Franchi, 2020; Iwanaga et al., 2020; Pather et al., 2020; Bond and Franchi, 2021). Instead of suspending interactive anatomy education until social distancing practices and COVID-19 vaccines allowed schools to reopen cadaver laboratories, these institutions were able to shift curriculum focus from a host of education methods (including cadaver labs) to those that enabled online interactive education (Iwanaga et al., 2020; Pather et al., 2020; Bond and Franchi, 2021). Medical students and other trainees at these institutions were better able to continue learning without major disruptions and could explore anatomy through a variety of educational techniques (including video recordings of cadaver dissections and prosections, as well as 3D platforms and XR), thereby deepening their understanding and appreciation of anatomy (Franchi, 2020).

These tools can also be used to help doctors in training learn and master anatomy throughout their careers. Beyond medical school, XR and surgical simulators have been shown to aid in pre-surgical planning and in solidifying detailed anatomy knowledge for surgical residents and junior surgeons (Hariri et al., 2004; Stepan et al., 2017; Alfalah et al., 2019; Tai et al., 2020a; Javan et al., 2020; Jean et al., 2021). Technology enabling patient-specific volumetric 3D reconstructions of medical imaging (Advanced 3D Visualization and Volume Modeling, RRID:SCR_007353) allows for detailed study (via computer screen or XR HMD) of patient-specific variations in anatomy, thereby enhancing preoperative planning, and refining surgical approaches leading to improved safety for procedures in high-risk locations (e.g., neurosurgery, cardiac surgery, etc.). As surgical trainees require deeper knowledge of anatomy in their area of specialization, these 3D reconstructions and XR simulators have been shown to help further deepen this knowledge without endangering the patient (Hariri et al., 2004; Stepan et al., 2017; Tai et al., 2020b; Javan et al., 2020). For surgical trainees, these tools are invaluable to developing a 3D mental map of their practice-specific anatomy and can help give them further comfort in planning and executing procedures (Figure 3).

FIGURE 3. A neurosurgical resident uses the Surgical Theater 360°VR (Surgical Theater, Cleveland, OH) visualization software and an early Oculus DK2 HMD (Facebook, Menlo Park, CA) to examine patient-specific anatomy and plan the optimal surgical approach to a cerebral aneurysm. Used with permission.

Currently, while there is limited published evidence of a substantial benefit to using XR for anatomy education, many studies have shown equivalent educational effectiveness to traditional methods as well as increased student enjoyment, engagement, and motivation (Hariri et al., 2004; Moro et al., 2017; Stepan et al., 2017; Krokos et al., 2018; Wilson et al., 2018; Erolin et al., 2019). These findings combined with the potential for remote learning and mastery of anatomy concepts using XR make it an engaging and versatile tool for anatomy education. We anticipate further studies will continue to prove the effectiveness of XR in anatomy education and will add to the growing body of evidence to solidify this technology as an essential component of the future of medical education.

XR Applications for Communication and Empathy Training

Simulation and XR technology are also being deployed to help medical trainees develop clinical communication skills. It is widely accepted that competent verbal and non-verbal communication skills are essential to the successful practice of medicine free from poor outcomes and low patient satisfaction (Epstein et al., 2005; Courteille et al., 2014). Communication and empathy are crucial to history-taking, conducting a physical examination, and arriving at accurate diagnoses. These soft skills have traditionally been taught and practiced via standardized patients (SPs), actors or instructors trained to behave like a patient under examination, and eventually via real patients (Keifenheim et al., 2015; Uchida et al., 2019). However, it can be resource- and time-intensive to staff and train SPs and difficult to ensure consistency in SP instruction (Maicher et al., 2017). Additionally, students often have limited class time to practice with SPs (Keifenheim et al., 2015; Maicher et al., 2017; Uchida et al., 2019). To address these concerns, virtual standardized patients (VSPs) were designed to respond to students’ queries during a practice interview or examination in a standardized manner, allowing for controlled, repeatable practice and assessment of student communication and examination skills in a low-risk environment (Courteille et al., 2014; Guetterman et al., 2019; Pottle, 2019). In recent years, artificial intelligence (AI) and speech-to-text technology have advanced dramatically to allow for accurate VSP natural language processing via different UIs including text, speech, and AV (Guetterman et al., 2019; Maicher et al., 2017; McGrath et al., 2018). Recent XR innovations in motion tracking allow for natural human movements in the VE that help students practice body language and other nonverbal communication techniques (Kourtesis et al., 2020; Aseeri and Interrante, 2021). Innovative medical schools and affiliated hospitals are using a variety of VSP platforms constructed in-house or using proprietary technology developed with industry partners (Maicher et al., 2017; McGrath et al., 2018; Bond et al., 2019; Guetterman et al., 2019) (Figure 4).

FIGURE 4. A trainee uses the Acadicus VR platform (Acadicus, Arch Virtual, Madison, Wisconsin) to assess a VSP. The trainee avatar (a representation of the user in the VE) can be seen as the floating head and hands in the center of the image.

Research has shown that regular practice with VSPs can improve medical student proficiency in empathetic communication, discussing sensitive health information, and conflict resolution (Courteille et al., 2014; Kron et al., 2017; Fertleman et al., 2018). Several studies have demonstrated that different VSP platforms are effective in testing student ability to come up with correct differential diagnoses based on a VSP presentation, with about 80% of learners choosing the correct primary and secondary possible diagnoses (Maicher et al., 2017; Bond et al., 2019). VSP simulators with XR UIs can realistically test the clinical reasoning and decision-making skills of nursing and medical students in response to emergency scenarios such as anaphylactic shock, heart attack, and cardiac arrest without putting patients or students at risk (McGrath et al., 2018; Hauze et al., 2019; Pottle, 2019; Rushton et al., 2020). A study comparing varying degrees of immersion amongst these clinical simulators found that more immersive VSP interactions resulted in higher levels of nursing student competency and confidence in the completion of high-risk clinical tasks as compared to mannequin-based clinical skills assessment (Rushton et al., 2020).

Aside from testing diagnostic and crisis-response skills, another advantage of VSP platforms is the built-in objective assessment of student communication and diagnostic skills based on learner responses in the VE. Studies have compared the automated grading ability of VSP platforms to traditional grading by a human instructor and found them to be largely equivalent in accuracy of feedback, and superior in speed of feedback due to the electronic nature of the platforms (Bond et al., 2019; Maicher et al., 2019). Additionally, the virtual nature of VSP platforms allows for repeatable practice with variable scenarios outside of the confines of SP availability and classroom space (Pottle, 2019). These features of VSP platforms could allow students to practice more often and receive quantitative feedback quickly to guide further honing of their communication skills. The COVID-19 pandemic forced many schools to resort to virtual video-based lessons with SPs (Alsoufi et al., 2020; Theoret and Ming, 2020), again demonstrating the need for students to be able to practice communication and empathy in virtual learning environments. As XR, AI, and speech-to-text technology inevitably improve, VSPs will approach SPs in terms of educational effectiveness and availability in medical programs. Some subtleties of interacting with a real human (actor or patient) may never be fully mimicked by simulation. However, VSP platforms have demonstrated their utility in terms of accurate and immediate feedback, repeatability, and virtual access, solidifying them as an essential component of medical education of the future.

XR Applications for Surgical Simulation and Procedure Training

Simulation as an electromechanical technology first arose in 1929 to train airplane pilots for their high-risk profession in a low-risk environment (Yanagawa et al., 2019; Zweifach and Triola, 2019). Similarly, the field of surgery relies on the accurate and precise performance of procedures under pressure with little room for error (Vozenilek et al., 2004). However, unlike pilot training, there is a lack of opportunities for equivalent practice of surgical skills in low-risk settings. Clinical procedure and surgical skills training have traditionally been conducted under the adage “see one, do one, teach one,” in which complex skills are learned via limited observation and practice on live patients (Vozenilek et al., 2004; Dubin et al., 2017; Yanagawa et al., 2019; Zweifach and Triola, 2019). In medical systems around the world, an increasing emphasis on patient safety, restrictions on resident work hours, and cost-saving measures in hospitals have further reduced the opportunities for traditional practice for surgical trainees (Vozenilek et al., 2004; Dubin et al., 2017; Yanagawa et al., 2019; Zweifach and Triola, 2019). XR technology is uniquely positioned to help fill these needs in surgical training (Pottle, 2019). Recent advancements in XR technology that allow for motion-tracking of HMDs and hand controllers, as well as finger and hand tracking, help bring a degree of realism to attempted simulations of surgical procedures and can provide a cheaper alternative to expensive surgical simulators (Coles et al., 2011; Hudson et al., 2019; Kourtesis et al., 2019). Combined with the ubiquity of smartphones and the increased prevalence of AR, simulations that were once entirely virtual or entirely physical can now incorporate elements of both (Tang et al., 2019; UpSurgeOn, 2021). Importantly, practice on virtual trainers poses no risk to patients and can in some cases be conducted remotely on a trainee’s own time, reducing the amount a trainee needs to practice on live patients (Coles et al., 2011; Franzeck et al., 2012).

XR surgical simulators are still in their infancy and various groups have reported on their initial experiences. These pioneering studies demonstrate the need to further optimize XR simulators into an effective educational modality that allows trainees to enhance their technical skills efficiently (Seymour et al., 2002; Vargas et al., 2017; Nicolosi et al., 2018; Bracq et al., 2019; Guedes et al., 2019; Khan et al., 2019). Before the current era of consumer-ready XR devices, Seymour et al. demonstrated through a randomized double-blinded study that VR-trained surgical residents made significantly fewer mistakes and had faster procedure times when performing a laparoscopic cholecystectomy compared to a similar group of surgical residents trained via traditional methods (Seymour et al., 2002). This early study is especially relevant now as minimally invasive surgery (MIS) via laparoscopic or robotic instruments and XR surgical simulators are being used more often in surgical practice and training. A Swiss study conducted a decade later with more advanced laparoscopic simulators found that, although there was no difference in performance times between simulator-trained and patient-trained residents, simulator-trained residents required significantly less practice on live patients in the operating room to achieve this (Franzeck et al., 2012), thus improving patient safety. A promising recent study of the use of XR simulation in MIS training from Brazil found that VR simulator training resulted in superior trainee performance scores and completion times compared to standard training using laparoscopic instruments (Guedes et al., 2019). Due to the mechanical nature of laparoscopic tools, they can be more accurately simulated via XR haptic feedback devices (Coles et al., 2011).

A newer tool in MIS is the surgical robot. Vargas et al. evaluated the ability of robotic surgical simulators (VR training devices for robotic surgery platforms) to improve trainee proficiency at robotic surgery (Vargas et al., 2017). Their study analyzed medical student performance of a robotic cystotomy repair on a live porcine model with or without prior training on the da Vinci® Skills Simulator (DVSS). While the results did not show a significantly superior effect of simulation training on robotic performance, the authors hypothesize that a more targeted training curriculum or a better definition of surgical proficiency would yield more telling results (Vargas et al., 2017). As many surgeries become more instrument-based, further research is needed to examine the ways in which surgical trainees master these complicated new MIS tools via traditional simulators as well as XR trainers.

XR simulators have been utilized not only to enhance surgical skills but also to hone procedural skills. Bracq et al. used VR simulators to train scrub nurses to prepare the instrumentation table prior to a craniotomy in the operating room (Bracq et al., 2019). Their results showed no difference in performance between those familiar with operating room (OR) procedures and those with no prior OR experience, indicating the feasibility and reliability of VR simulators to bring novices up to speed with experts. The study noted that participants particularly appreciated the pedagogical interest, fun, and realism of the VR simulator, demonstrating the potential of XR simulators in procedural skills training for healthcare workers (Bracq et al., 2019). Encouragingly, recent meta-analyses have also supported the use of XR simulators for practicing procedural skills. Khan et al. performed a Cochrane review and meta-analysis investigating virtual reality simulation training in endoscopy (Khan et al., 2019). The aim was to investigate whether VR simulation could supplement conventional patient-based endoscopic training for healthcare providers in training with minimal prior experience. The results of their meta-analysis suggest that compared to no training at all, VR simulation training does offer advantages and can potentially supplement traditional endoscopic training (Khan et al., 2019).

Another potential advantage of virtual surgical and procedural simulators is that, like VSP simulators, they can provide immediate objective feedback to learners following a training session. Dubin et al. compared automated feedback from VR robotic surgical simulators to feedback given by human reviewers via a standardized assessment rubric and found the scores to be statistically equivalent (Dubin et al., 2017). This and other findings support the further use of simulators in surgical skills training and assessment, especially in busy healthcare settings where regular human mentor assessment of trainee skill may not be possible (Dubin et al., 2017; Pottle, 2019).

Although the use of XR simulation in surgical training is increasing, numerous issues need to be solved before these technologies are widely adopted. Accurate haptics remains an issue as the various XR hand-controllers cannot yet replicate the minute tactile techniques and sensations required to be proficient in surgery (Coles et al., 2011; McGrath et al., 2018; Goh and Sandars, 2020). As mentioned earlier, while trainee practice scores may have shown improvement, major improvements in surgical outcomes have yet to be demonstrated from prior XR or simulator training (Vargas et al., 2017; Yanagawa et al., 2019). These issues are significant yet far from insurmountable, and these hurdles will be overcome as the need for robust and safe surgical training increases and as XR technology inevitably advances.

Discussion

In this review, we have elaborated on the current use of XR and simulation technologies in medical education. While we highlighted the advantages of adopting XR and simulation in anatomy, empathetic communication, and procedural training, there are many more areas of medical education that could benefit from these rapidly evolving technologies. These include biochemistry, embryology, pathology, radiology (Javan et al., 2020), practicing teamwork, learning hospital layout, and promoting physician self-awareness and behavior change (Fertleman et al., 2018), amongst many others. Any medical field that deals with understanding 3D spatial information or requires learning by doing could potentially benefit from education using XR and simulation.

Challenges and Research Gaps

Despite the enormous potential afforded by XR technology, utilization of this tool in medical education is accompanied by certain obstacles. In spite of recent advances, technological issues exist in XR haptics, AV output, and motion tracking that need to be resolved to reduce the potential for cybersickness and maximize the utility of XR in medical education.

Tracking and haptic feedback are still not perfect, resulting in variable accuracy of virtual interactions (Coles et al., 2011; Kourtesis et al., 2019; Kourtesis et al., 2020). In medical simulation, especially of surgical and procedural tasks, accurate and precise haptic feedback is of the utmost importance to ensure proper trainee education (Basdogan et al., 2001; Coles et al., 2011). However, realistically simulating these minute haptic details is very computationally intensive and currently relies on numerous simplifications and assumptions in the computer modeling of these interactions (Basdogan et al., 2001; Kourtesis et al., 2019; Kourtesis et al., 2020). Additionally, there are currently very few practical haptic devices that provide tactile feedback and allow the user to experience the sense of touch when they interact with virtual objects (Coles et al., 2011; McGrath et al., 2018). As a result, current XR haptic input and feedback devices are still not accurate or precise enough to simulate intricate medical procedures realistically. Newer XR devices are examining hand and finger tracking as an alternative to bulky handheld controllers, but while hand-tracking may allow for more precise movements, it does not give the user the tactile feedback necessary for realistic practice of many medical procedures. Exciting new solutions are combining XR visualizations with traditional physical simulators (UpSurgeOn, 2021) to overcome the current limitations in haptics.

Cybersickness is a critical issue that affects many XR users. It is due to a discrepancy between the user’s visual and vestibular sensory systems (i.e., XR visual input tells the user they are moving, while the user’s brain and inner ear tell them they are stationary), caused by low quality of AV output, motion tracking, virtual locomotion, and virtual interactions (Moro et al., 2017; Weech et al., 2018; Kourtesis et al., 2019; Weech et al., 2019; Kourtesis et al., 2020; Yildirim, 2020). Cybersickness can induce nausea, disorientation, instability, dizziness, and fatigue, and can slow reaction time, significantly detracting from a user’s ability to learn from and participate in XR experiences (Nalivaiko et al., 2015; Kourtesis et al., 2019; Kourtesis et al., 2020). To prevent cybersickness, the XR experience must mimic the interactivity and fidelity of real-life interactions as closely as possible (Kourtesis et al., 2019; Weech et al., 2019; Kourtesis et al., 2020). This relies on both hardware and software to achieve optimal display resolution, refresh rate, FOV, and motion tracking. Motion tracking fidelity depends on the type and layout of XR sensors used to detect the user; to avoid cybersickness, tracking must have low latency and cover the entire play area (Kourtesis et al., 2019). Virtual locomotion is a necessary component of modern XR experiences that are often conducted in confined play areas. The majority of current XR locomotion consists of walking within the play area until reaching the physical boundary, at which point the user must use their handheld controller to teleport to a new area of the VE. Teleportation as a form of virtual locomotion combined with walking was found to alleviate cybersickness (Kourtesis et al., 2019; Kourtesis et al., 2020).
Examinations of the 2016 generation of consumer-grade VR HMDs have shown that even with the increased graphical resolution and refresh rate of modern headsets, cybersickness is still experienced (Yildirim, 2020). While this is clearly an issue that has to be resolved for XR to be adopted en masse, there is little published data on the overall prevalence of cybersickness amongst XR users and conflicting theories on which demographic factors (age, sex, prior gaming/XR experience, etc.) predispose one to experiencing cybersickness (Kourtesis et al., 2019; Yildirim, 2020). New research is attempting to predict user susceptibility to cybersickness prior to XR exposure; the results are promising yet as of now cannot be feasibly applied in regular XR use sessions (Weech et al., 2018). If XR is to be adopted on a large scale in medical education, further examination is needed to understand the overall prevalence of cybersickness and how to prevent its effects.

For institutions or education programs looking to invest in XR, the most salient barriers to XR technology include implementation time and cost, faculty resistance to change, and lack of conclusive evidence of educational superiority (Zweifach and Triola, 2019). The cost of most consumer-ready XR devices has dropped since their market debut, yet still ranges from $300 to $900 USD per HMD (Hauze et al., 2019; Kourtesis et al., 2019; Zweifach and Triola, 2019). Funding difficulties may restrict institutions from acquiring enough of these devices to distribute equitably among their students (Kourtesis et al., 2019; Zweifach and Triola, 2019). Even if funding is not an issue, setup and technological literacy challenges may prevent wide-scale adoption of XR tools. XR HMDs that are both tethered and outside-in tracked, like those seen in the bottom-left corner of Figure 2, need to be attached to a powerful PC and used in a designated area free from obstacles and hazards (Kourtesis et al., 2019). There is often significant setup time and troubleshooting required for these HMDs, which may decrease their regular usage in settings without sufficient technical support. XR devices with outside-in tracking, like those seen on the left side of Figure 2, require external sensors to be calibrated each time the XR experience is started. While not excessively difficult, the increased setup time may dissuade regular use at an educational institution, especially without assigned technical support. Additionally, the external gaming PCs required for these high-end tethered XR devices can cost thousands of dollars and often need ongoing technical support and upkeep (Kourtesis et al., 2019; Zweifach and Triola, 2019; Kourtesis et al., 2020).
Although newer XR devices are trending towards cheaper and easier-to-use standalone HMDs without the need for external trackers or PCs, as seen in the top right corner of Figure 2, currently available standalone headsets have relatively low processing power and cannot run very high-intensity software on their own (Kourtesis et al., 2019).

All these technological challenges can be exacerbated by faculty resistance to curriculum modification. Faculty not already familiar with XR technologies may be reluctant to adopt these new educational tools as conclusive data on their effectiveness is not yet widely agreed upon (Zweifach and Triola, 2019). Medical innovation is in a constant battle against the very appropriate and real need to maintain quality standards in medical practice. So as not to endanger patients, the global medical establishment must balance the need to keep up with the pace of technological advancement with the need for data on efficacy, safety, and outcomes on each new medical innovation (Dixon-Woods et al., 2011). Additionally, medical professionals spend decades learning and perfecting knowledge that was often only up-to-date much earlier during their instructors’ training and practice; these same individuals may be resistant to changes in thought and modern innovations once they are established enough to have a role in curriculum decisions. Medical educators and practitioners often fall into different categories on the innovation curve popularized by Everett Rogers: innovators, early adopters, early majority adopters, late majority adopters, and laggards (Rogers, 2003). Late majority adopters and laggards are resistant to adopting new technology until it is widely used and available, and these different schools of thought can significantly affect the rate at which XR and other innovations are accepted (Rogers, 2003). Professional groups and societies overseeing different medical disciplines can, paradoxically, often stifle the innovation and interdisciplinary collaboration needed to bring about lasting change (Dixon-Woods et al., 2011). XR also faces a unique challenge to being adopted in medical education and practice as it may come off as a consumer fad.
While there is intuitive appeal to XR use, there is limited conclusive evidence of its efficacy in mitigating patient harm: either through XR training improving outcomes, or XR training reducing nonessential practice on patients and resulting in equivalent or superior student competency (Vozenilek et al., 2004). This is very difficult to prove, and studies must be planned carefully to reduce confounding variables and show direct benefit. These issues, along with the high investment of money and effort, prevent XR and other similar innovative simulation technologies from being adopted at many institutions (Dixon-Woods et al., 2011).

These are all very real obstacles that cannot be ignored when determining how best to incorporate XR tools into a medical education program.

Promising New Developments

Despite these challenges, the latest generation of commercial XR devices (including the Valve Index, HTC Vive Pro, Oculus Rift S, and Oculus Quest 2) has made big strides in alleviating cybersickness by optimizing display resolution, refresh rate, FOV, and motion tracking and by providing superior UI (Kourtesis et al., 2019; Kourtesis et al., 2020). The newest XR devices are also substantially cheaper and more portable than earlier iterations (Kourtesis et al., 2019). As more institutions adopt XR for medical education, more studies and data supporting its use will be published, which could combat faculty resistance to implementation. Many of the seemingly insurmountable roadblocks to early XR adoption are currently being diminished, and with the pace of technological advancement ever-increasing and more research being conducted, we anticipate that in the next 5 years many of the current barriers to adoption will have been overcome. We hope that this review sheds light on current research efforts and encourages future exploration that will result in more evidence-based decisions to incorporate XR into medical training and care. Highlighted below are a few promising applications that we believe will garner more attention and interest in the future.

The COVID-19 pandemic has demonstrated that concepts and skills once exclusively taught in-person can also be taught virtually. Additionally, the past year has revealed anecdotally that medical curriculums that had already invested in innovative teaching methods were better equipped to adapt to the challenging virtual educational environments forced by the pandemic. There have yet to be studies thoroughly examining differences in educational disruption as well as student performance and satisfaction between institutions that had robust XR and virtual teaching tools prior to and during COVID and those that did not. The unprecedented, unpredictable, and deadly nature of the pandemic made it difficult for these sorts of studies to be conducted in real time, and retrospective review will be necessary after the pandemic and its aftereffects are no longer hampering medical research. XR as a tool in remote learning has not been studied extensively in medicine, and studies on its impact on education during the pandemic will help shed light on its utility in this application.

Earlier in this review we discussed the value of objective assessments of learners using XR in both empathetic communication and surgical training. While some research has been done to validate these electronic assessments against widely used and accepted assessment metrics (Dubin et al., 2017), this is an area that needs more study. Quality and protocol standards for XR use in different fields should be adopted by national medical professional societies to further vet the use of XR as an educational assessment tool (Vozenilek et al., 2004). Increased data on XR as an automated objective assessment tool and increased oversight and validation of its use will help expand its use in this way.

Beyond aiding in patient-specific surgical anatomy review, 3D reconstructions of high-quality medical scans are now being used with XR viewers to study new surgical approaches and population-wide differences in surgical anatomy, research that was previously limited by the availability of cadavers at most institutions (Tai et al., 2020b). A growing group of researchers are conducting high-powered retrospective reviews of patient-specific 3D models generated from large numbers of medical scans on hospital image servers to illustrate the ability of 3D reconstructions combined with XR visualizations to enhance the understanding of new surgical approaches and variations in surgical anatomy (Bendok et al., 2014; Jean et al., 2019; Tai et al., 2020a; Donofrio et al., 2020; Jean et al., 2021; Tai et al., 2021). The results are promising and highlight a new tool to research and understand surgical anatomy on a larger scale.

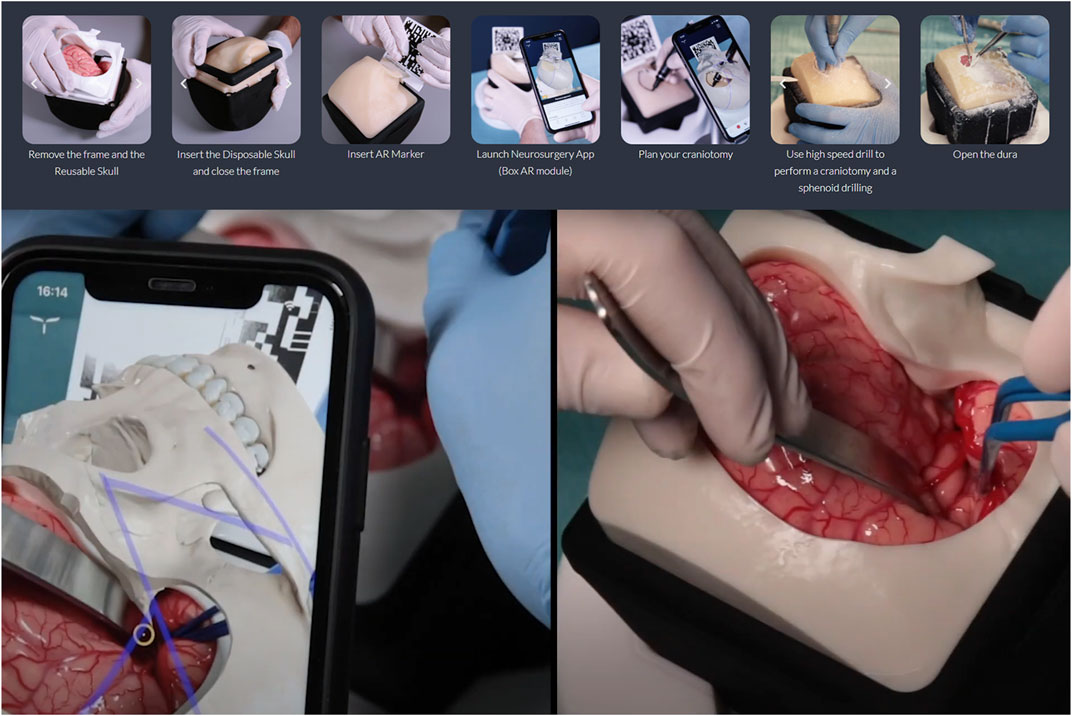

Microsurgical skills training often relies on animal models, cadavers, or even live patients to practice techniques and procedures. As has been discussed earlier, these resources can be costly or unsafe to use regularly in training (Grow and Shiffman, 2017; Nicolosi et al., 2018). New XR tools are now fusing physical and virtual training, creating a safe and replicable environment for learning complex surgical skills. The Italian company UpSurgeOn is advancing microsurgical training in neurosurgery by combining high-fidelity physical models of brains and skulls with smartphone-based AR overlays (UpSurgeOn, 2021). The physical models allow for tactile hands-on training, and even for practicing drilling and other surgical tasks (UpSurgeOn, 2021), while the AR overlays reveal more layers of information than the model alone could provide (Tang et al., 2019), as seen in Figure 5. The relatively low cost of the physical models and their modular nature, along with the ubiquity of smartphones, make this a good solution for high-fidelity neurosurgical training in low-to-middle income countries (Nicolosi et al., 2018). Additionally, the combination of XR with physical simulation is an innovative method for teaching complex manual surgical skills that rely on realistic haptic feedback as well as enhanced visualization, which traditionally could only be provided via practice on cadavers or live patients.

FIGURE 5. The UpSurgeOn BrainBox (UpSurgeOn, Milan, Italy) is an example of a new type of surgical simulator that fuses AR and a high-fidelity tactile physical model into a modular tool that allows for repetitive practice and perfection of precise neurosurgical skills. Screenshots of promotional images of UpSurgeOn BrainBox. Copyright of UpSurgeOn Srl. Used with permission.

Recommendations and Conclusion

It can be risky to adopt new technology before it has been proven to be effective, so we suggest using a phased approach to tailor new educational technologies to each institution’s medical curriculum needs. To be widely adopted, XR anatomy modules must align with the overall curriculum goals of the institution. A good understanding of inter- and intradepartmental funding, politics, and attitudes towards change and innovation is necessary prior to beginning a campaign to adopt XR technology at an institution of medical education. A 2019 review article from the NYU School of Medicine outlines a “Provider-Centered Approach” to driving the adoption of XR technology in medical education (Zweifach and Triola, 2019). Based on our own experiences, the authors of this review can corroborate this method of identifying a team of stakeholders including faculty, physician, administrator, technologist, and student champions to evaluate and drive iteration of the technology as it is adopted into practice (Zweifach and Triola, 2019). We recommend that the stakeholder team be chosen carefully to contain as many innovators and early adopters as possible, as defined by Everett Rogers’ innovation curve (Rogers, 2003), while still ensuring that some early and late majority adopters are on the team to temper the innovative spirit of the team with caution and practicality. Pilot programs and small-scale deployments using this stakeholder team could generate data on the technology’s utility and test its effectiveness in a controlled manner before curriculum-changing decisions are made. This could in turn help expand institutional investment in the wider deployment of XR technology.

Concerning what type of XR devices and which XR software programs to invest in, we recommend brainstorming with your stakeholder team how your institution wants to use XR in medical education. For programs requiring high degrees of accurate movement in the XR educational experience (e.g., simulating emergency protocols, nursing maneuvers, or surgical procedures), we currently recommend outside-in XR devices with high tracking accuracy as found on the bottom-left and left of Figure 2. For XR simulation of highly detailed tasks such as fine surgical skills and maneuvers, custom-made devices and software may be required. For programs requiring portability and off-campus use of XR experiences, we recommend XR devices in the top-right of Figure 2, with the caveat that these devices may be currently limited in terms of graphical output and processing power. These portable and cheaper devices are a good solution for at-home practice of concepts that require visual immersion without high-fidelity tactile immersion, such as anatomy or protocol review.

There will be challenges and pushback from more conservative educators, and this should be anticipated and planned for. All decisions regarding the project should be made by the stakeholder team, but with regular input from each stakeholder’s wider group on the general direction of the project; for example, the student team member should collect feedback from fellow students. By identifying further interested parties beyond the stakeholder team and keeping them informed and invested, your XR pilot program can garner more support from different areas of your institution, eventually leading to full adoption and backing.

In summary, medical simulation and specifically eXtended Reality technologies including Virtual and Augmented Reality are being adopted by many healthcare institutions and will be essential components of the post-pandemic future of medical education. As these technologies inevitably improve and are studied further, we anticipate more findings confirming their power to aid in medical education. Furthermore, we urge training programs for all medical disciplines and specialties to embrace these tools and “future-proof” their training programs to allow for immediate objective feedback to learners, remote training when necessary, and a more engaging and enjoyable learning experience for students to complement existing pedagogical methods.

Author Contributions

Author AH-R conducted the bulk of background research and used personal expertise to write the manuscript. Author NA was involved in background research and writing some subsections of the manuscript. Authors NA and JS reviewed drafts of the manuscript and provided feedback to author AH-R. Authors WG, DW, and AK conducted cursory reviews of the final manuscript prior to submission.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors would like to thank Dr. Federico Nicolosi and UpSurgeOn Srl. for permitting us to use images of their BrainBox simulation platform in this manuscript. The authors would also like to thank Jon Brouchoud and Arch Virtual for permitting us to use images of the Acadicus virtual simulation platform in this manuscript.

References

Alfalah, S. F. M., Falah, J. F. M., Alfalah, T., Elfalah, M., Muhaidat, N., and Falah, O. (2019). A Comparative Study between a Virtual Reality Heart Anatomy System and Traditional Medical Teaching Modalities. Virtual Reality 23 (3), 229–234. doi:10.1007/s10055-018-0359-y

Alsoufi, A., Alsuyihili, A., Msherghi, A., Elhadi, A., Atiyah, H., Ashini, A., et al. (2020). Impact of the COVID-19 Pandemic on Medical Education: Medical Students' Knowledge, Attitudes, and Practices Regarding Electronic Learning. PLoS ONE 15 (11), e0242905–20. doi:10.1371/journal.pone.0242905

Aseeri, S., and Interrante, V. (2021). The Influence of Avatar Representation on Interpersonal Communication in Virtual Social Environments. IEEE Trans. Vis. Comput. Graphics 27 (c), 2608–2617. doi:10.1109/TVCG.2021.3067783

Basdogan, C., Ho, C.-H., and Srinivasan, M. A. (2001). Virtual Environments for Medical Training: Graphical and Haptic Simulation of Laparoscopic Common Bile Duct Exploration. IEEE/ASME Trans. Mechatron. 6 (3), 269–285. doi:10.1109/3516.951365

Bendok, B. R., Rahme, R. J., Aoun, S. G., El Ahmadieh, T. Y., El Tecle, N. E., Hunt Batjer, H., et al. (2014). Enhancement of the Subtemporal Approach by Partial Posterosuperior Petrosectomy with Hearing Preservation. Neurosurgery 10 (Suppl. 2), 191–199. doi:10.1227/NEU.0000000000000300

Bond, G., and Franchi, T. (2021). Resuming Cadaver Dissection during a Pandemic. Med. Educ. Online 26 (1), 1842661. doi:10.1080/10872981.2020.1842661

Bond, W. F., Lynch, T. J., Mischler, M. J., Fish, J. L., McGarvey, J. S., Taylor, J. T., et al. (2019). Virtual Standardized Patient Simulation. Sim Healthc. 14 (4), 241–250. doi:10.1097/SIH.0000000000000373

Bracq, M.-S., Michinov, E., Arnaldi, B., Caillaud, B., Gibaud, B., Gouranton, V., et al. (2019). Learning Procedural Skills with a Virtual Reality Simulator: An Acceptability Study. Nurse Educ. Today 79 (February 2019), 153–160. doi:10.1016/j.nedt.2019.05.026

Coles, T. R., Meglan, D., and John, N. W. (2011). The Role of Haptics in Medical Training Simulators: A Survey of the State of the Art. IEEE Trans. Haptics 4 (1), 51–66. doi:10.1109/TOH.2010.19

Courteille, O., Josephson, A., and Larsson, L.-O. (2014). Interpersonal Behaviors and Socioemotional Interaction of Medical Students in a Virtual Clinical Encounter. BMC Med. Educ. 14, 64. doi:10.1186/1472-6920-14-64

Dixon-Woods, M., Amalberti, R., Goodman, S., Bergman, B., and Glasziou, P. (2011). Problems and Promises of Innovation: Why Healthcare Needs to Rethink its Love/hate Relationship with the New. BMJ Qual. Saf. 20 (Suppl. 1), i47–i51. doi:10.1136/bmjqs.2010.046227

Donofrio, C. A., Capitanio, J. F., Riccio, L., Herur-Raman, A., Caputy, A. J., and Mortini, P. (2020). Mini Fronto-Orbital Approach: "Window Opening" towards the Superomedial Orbit - A Virtual Reality-Planned Anatomic Study. Oper. Neurosurg. 19 (0), 330–340. doi:10.1093/ons/opz420

Drake, R. L., McBride, J. M., and Pawlina, W. (2014). An Update on the Status of Anatomical Sciences Education in United States Medical Schools. Anat. Sci. Educ. 7 (4), 321–325. doi:10.1002/ase.1468

Dubin, A. K., Smith, R., Julian, D., Tanaka, A., and Mattingly, P. (2017). A Comparison of Robotic Simulation Performance on Basic Virtual Reality Skills: Simulator Subjective versus Objective Assessment Tools. J. Minimally Invasive Gynecol. 24 (7), 1184–1189. doi:10.1016/j.jmig.2017.07.019

Epstein, R. M., Franks, P., Shields, C. G., Meldrum, S. C., Miller, K. N., Campbell, T. L., et al. (2005). Patient-Centered Communication and Diagnostic Testing. Ann. Fam. Med. 3 (5), 415–421. doi:10.1370/afm.348

Erolin, C., Reid, L., and McDougall, S. (2019). Using Virtual Reality to Complement and Enhance Anatomy Education. J. Vis. Commun. Med. 42 (3), 93–101. doi:10.1080/17453054.2019.1597626

Fertleman, C., Aubugeau-Williams, P., Sher, C., Lim, A.-N., Lumley, S., Delacroix, S., et al. (2018). A Discussion of Virtual Reality as a New Tool for Training Healthcare Professionals. Front. Public Health 6, 44. doi:10.3389/fpubh.2018.00044

Franchi, T. (2020). The Impact of the Covid‐19 Pandemic on Current Anatomy Education and Future Careers: A Student's Perspective. Anat. Sci. Educ. 13 (3), 312–315. doi:10.1002/ase.1966

Franzeck, F. M., Rosenthal, R., Muller, M. K., Nocito, A., Wittich, F., Maurus, C., et al. (2012). Prospective Randomized Controlled Trial of Simulator-Based versus Traditional In-Surgery Laparoscopic Camera Navigation Training. Surg. Endosc. 26 (1), 235–241. doi:10.1007/s00464-011-1860-5

Ghosh, S. K. (2017). Cadaveric Dissection as an Educational Tool for Anatomical Sciences in the 21st Century. Anat. Sci. Educ. 10 (3), 286–299. doi:10.1002/ase.1649

Goh, P.-S., and Sandars, J. (2020). A Vision of the Use of Technology in Medical Education after the COVID-19 Pandemic. MedEdPublish 9 (1), 1–8. doi:10.15694/mep.2020.000049.1

Grow, B., and Shiffman, J. (2017). “The Body Trade: Cashing in on the Donated Dead,” in Reuters Investigates. Reuters. https://www.reuters.com/investigates/section/usa-bodies/.

Guedes, H. G., Câmara Costa Ferreira, Z. M., Ribeiro de Sousa Leão, L., Souza Montero, E. F., Otoch, J. P., and Artifon, E. L. d. A. (2019). Virtual Reality Simulator versus Box-Trainer to Teach Minimally Invasive Procedures: A Meta-Analysis. Int. J. Surg. 61 (November 2018), 60–68. doi:10.1016/j.ijsu.2018.12.001

Guetterman, T. C., Sakakibara, R., Baireddy, S., Kron, F. W., Scerbo, M. W., Cleary, J. F., et al. (2019). Medical Students' Experiences and Outcomes Using a Virtual Human Simulation to Improve Communication Skills: Mixed Methods Study. J. Med. Internet Res. 21 (11), e15459. doi:10.2196/15459

Hariri, S., Rawn, C., Srivastava, S., Youngblood, P., and Ladd, A. (2004). Evaluation of a Surgical Simulator for Learning Clinical Anatomy. Med. Educ. 38 (8), 896–902. doi:10.1111/j.1365-2929.2004.01897.x

Hauze, S. W., Hoyt, H. H., Frazee, J. P., Greiner, P. A., and Marshall, J. M. (2019). Enhancing Nursing Education through Affordable and Realistic Holographic Mixed Reality: The Virtual Standardized Patient for Clinical Simulation. Adv. Exp. Med. Biol. 1120, 1–13. doi:10.1007/978-3-030-06070-1_1

Houser, J. J., and Kondrashov, P. (2018). Gross Anatomy Education Today: The Integration of Traditional and Innovative Methodologies. Mo. Med. 115 (1), 61–65.

Hudson, S., Matson-Barkat, S., Pallamin, N., and Jegou, G. (2019). With or without You? Interaction and Immersion in a Virtual Reality Experience. J. Business Res. 100, 459–468. doi:10.1016/j.jbusres.2018.10.062

Iwanaga, J., Loukas, M., Dumont, A. S., and Tubbs, R. S. (2020). A Review of Anatomy Education during and after the COVID-19 Pandemic: Revisiting Traditional and Modern Methods to Achieve Future Innovation. Clin. Anat. 34 (1), 108–114. doi:10.1002/ca.23655

Javan, R., Rao, A., Jeun, B. S., Herur-Raman, A., Singh, N., and Heidari, P. (2020). From CT to 3D Printed Models, Serious Gaming, and Virtual Reality: Framework for Educational 3D Visualization of Complex Anatomical Spaces from Within-The Pterygopalatine Fossa. J. Digit Imaging 33 (3), 776–791. doi:10.1007/s10278-019-00315-y

Jean, W. C., Tai, A. X., Hogan, E., Herur-Raman, A., Felbaum, D. R., Leonardo, J., et al. (2019). An Anatomical Study of the Foramen of Monro: Implications in Management of Pineal Tumors Presenting with Hydrocephalus. Acta Neurochir 161 (5), 975–983. doi:10.1007/s00701-019-03887-4

Jean, W. C., Yang, Y., Srivastava, A., Tai, A. X., Herur-Raman, A., Kim, J., et al. (2021). Study of Comparative Surgical Exposure to the Petroclival Region Using Patient-specific, Petroclival Meningioma Virtual Reality Models. Neurosurg. Focus. 51, E13. doi:10.3171/2021.5.focus201036

Keifenheim, K. E., Teufel, M., Ip, J., Speiser, N., Leehr, E. J., Zipfel, S., et al. (2015). Teaching History Taking to Medical Students: A Systematic Review. BMC Med. Educ. 15 (1), 159. doi:10.1186/s12909-015-0443-x

Khan, R., Plahouras, J., Johnston, B. C., Scaffidi, M. A., Grover, S. C., and Walsh, C. M. (2019). Virtual Reality Simulation Training in Endoscopy: a Cochrane Review and Meta-Analysis. Endoscopy 51 (7), 653–664. doi:10.1055/a-0894-4400

Kourtesis, P., Collina, S., Doumas, L. A. A., and MacPherson, S. E. (2019). Technological Competence Is a Pre-condition for Effective Implementation of Virtual Reality Head Mounted Displays in Human Neuroscience: A Technological Review and Meta-Analysis. Front. Hum. Neurosci. 13, 342. doi:10.3389/fnhum.2019.00342

Kourtesis, P., Korre, D., Collina, S., Doumas, L. A. A., and MacPherson, S. E. (2020). Guidelines for the Development of Immersive Virtual Reality Software for Cognitive Neuroscience and Neuropsychology: The Development of Virtual Reality Everyday Assessment Lab (VR-EAL), a Neuropsychological Test Battery in Immersive Virtual Reality. Front. Comput. Sci. 1, 12. doi:10.3389/fcomp.2019.00012

Krokos, E., Plaisant, C., and Varshney, A. (2018). Virtual Memory Palaces: Immersion Aids Recall. Virtual Reality 23 (1), 1–15. doi:10.1007/s10055-018-0346-3

Kron, F. W., Fetters, M. D., Scerbo, M. W., White, C. B., Lypson, M. L., Padilla, M. A., et al. (2017). Using a Computer Simulation for Teaching Communication Skills: A Blinded Multisite Mixed Methods Randomized Controlled Trial. Patient Educ. Couns. 100 (4), 748–759. doi:10.1016/j.pec.2016.10.024

Liu, Y., Dong, H., Zhang, L., and Saddik, A. E. (2018). Technical Evaluation of HoloLens for Multimedia: A First Look. IEEE Multimedia 25 (4), 8–18. doi:10.1109/MMUL.2018.2873473

Maicher, K., Danforth, D., Price, A., Zimmerman, L., Wilcox, B., Liston, B., et al. (2017). Developing a Conversational Virtual Standardized Patient to Enable Students to Practice History-Taking Skills. Sim Healthc. 12 (2), 124–131. doi:10.1097/sih.0000000000000195

Maicher, K. R., Zimmerman, L., Wilcox, B., Liston, B., Cronau, H., Macerollo, A., et al. (2019). Using Virtual Standardized Patients to Accurately Assess Information Gathering Skills in Medical Students. Med. Teach. 41 (9), 1053–1059. doi:10.1080/0142159x.2019.1616683

McBride, J. M., and Drake, R. L. (2018). National Survey on Anatomical Sciences in Medical Education. Anat. Sci. Educ. 11 (1), 7–14. doi:10.1002/ase.1760

McGrath, J. L., Taekman, J. M., Dev, P., Danforth, D. R., Mohan, D., Kman, N., et al. (2018). Using Virtual Reality Simulation Environments to Assess Competence for Emergency Medicine Learners. Acad. Emerg. Med. 25 (2), 186–195. doi:10.1111/acem.13308

Moro, C., Štromberga, Z., Raikos, A., and Stirling, A. (2017). The Effectiveness of Virtual and Augmented Reality in Health Sciences and Medical Anatomy. Anat. Sci. Educ. 10 (6), 549–559. doi:10.1002/ase.1696

Nalivaiko, E., Davis, S. L., Blackmore, K. L., Vakulin, A., and Nesbitt, K. V. (2015). Cybersickness Provoked by Head-Mounted Display Affects Cutaneous Vascular Tone, Heart Rate and Reaction Time. Physiol. Behav. 151, 583–590. doi:10.1016/j.physbeh.2015.08.043

Nicolosi, F., Rossini, Z., Zaed, I., Kolias, A. G., Fornari, M., and Servadei, F. (2018). Neurosurgical Digital Teaching in Low-Middle Income Countries: Beyond the Frontiers of Traditional Education. Neurosurg. Focus 45 (4), E17–E18. doi:10.3171/2018.7.FOCUS18288

O'Connor, P., Meekhof, C., McBride, C., Mei, C., Bamji, C., Rohn, D., et al. (2019). “Custom Silicon and Sensors Developed for a 2nd Generation Mixed Reality User Interface,” in IEEE Symposium on VLSI Circuits, Digest of Technical Papers, C186–C187. doi:10.23919/VLSIC.2019.8778092

Ooi, S. Z. Y., and Ooi, R. (2020). Impact of SARS-CoV-2 Virus Pandemic on the Future of Cadaveric Dissection Anatomical Teaching. Med. Educ. Online 25 (1), 1823089. doi:10.1080/10872981.2020.1823089

Pather, N., Blyth, P., Chapman, J. A., Dayal, M. R., Flack, N. A. M. S., Fogg, Q. A., et al. (2020). Forced Disruption of Anatomy Education in Australia and New Zealand: An Acute Response to the Covid‐19 Pandemic. Anat. Sci. Educ. 13 (3), 284–300. doi:10.1002/ase.1968

Pottle, J. (2019). Virtual Reality and the Transformation of Medical Education. Future Healthc. J. 6 (3), 181–185. doi:10.7861/fhj.2019-0036

Ramsey‐Stewart, G., Burgess, A. W., and Hill, D. A. (2010). Back to the Future: Teaching Anatomy by Whole‐body Dissection. Med. J. Aust. 193 (11–12), 668–671. doi:10.5694/j.1326-5377.2010.tb04099.x

Rushton, M. A., Drumm, I. A., Campion, S. P., and O'Hare, J. J. (2020). The Use of Immersive and Virtual Reality Technologies to Enable Nursing Students to Experience Scenario-Based, Basic Life Support Training-Exploring the Impact on Confidence and Skills. Comput. Inform. Nurs. 38 (6), 281–293. doi:10.1097/cin.0000000000000608

Seymour, N. E., Gallagher, A. G., Roman, S. A., O’Brien, M. K., Bansal, V. K., Andersen, D. K., et al. (2002). Virtual Reality Training Improves Operating Room Performance. Ann. Surg. 236 (4), 458–464. doi:10.1097/00000658-200210000-00008

Stepan, K., Zeiger, J., Hanchuk, S., Del Signore, A., Shrivastava, R., Govindaraj, S., et al. (2017). Immersive Virtual Reality as a Teaching Tool for Neuroanatomy. Int. Forum Allergy Rhinol. 7 (10), 1006–1013. doi:10.1002/alr.21986

Tai, A. X., Herur-Raman, A., and Jean, W. C. (2020a). The Benefits of Progressive Occipital Condylectomy in Enhancing the Far Lateral Approach to the Foramen Magnum. World Neurosurg. 134, e144–e152. doi:10.1016/j.wneu.2019.09.152

Tai, A. X., Sack, K. D., Herur-Raman, A., and Jean, W. C. (2020b). The Benefits of Limited Orbitotomy on the Supraorbital Approach: An Anatomic and Morphometric Study in Virtual Reality. Oper. Neurosurg. 18 (5), 542–550. doi:10.1093/ons/opz201

Tai, A. X., Srivastava, A., Herur-Raman, A., Cheng Wong, P. J., and Jean, W. C. (2021). Progressive Orbitotomy and Graduated Expansion of the Supraorbital Keyhole: A Comparison with Alternative Minimally Invasive Approaches to the Paraclinoid Region. World Neurosurg. 146, e1335–e1344. doi:10.1016/j.wneu.2020.11.173

Tang, K. S., Cheng, D. L., Mi, E., and Greenberg, P. B. (2019). Augmented Reality in Medical Education: a Systematic Review. Can. Med. Ed. J. 23. doi:10.36834/cmej.61705

Theoret, C., and Ming, X. (2020). Our Education, Our Concerns: The Impact on Medical Student Education of COVID‐19. Med. Educ. 54 (7), 591–592. doi:10.1111/medu.14181

Uchida, T., Park, Y. S., Ovitsh, R. K., Hojsak, J., Gowda, D., Farnan, J. M., et al. (2019). Approaches to Teaching the Physical Exam to Preclerkship Medical Students. Acad. Med. 94 (1), 129–134. doi:10.1097/ACM.0000000000002433

UpSurgeOn (2021). Summer 2021 Promotion Period. Available at: https://www.upsurgeon.com/.

Vargas, M. V., Moawad, G., Denny, K., Happ, L., Misa, N. Y., Margulies, S., et al. (2017). Transferability of Virtual Reality, Simulation-Based, Robotic Suturing Skills to a Live Porcine Model in Novice Surgeons: A Single-Blind Randomized Controlled Trial. J. Minimally Invasive Gynecol. 24 (3), 420–425. doi:10.1016/j.jmig.2016.12.016

Vozenilek, J., Huff, J. S., Reznek, M., and Gordon, J. A. (2004). See One, Do One, Teach One: Advanced Technology in Medical Education. Acad. Emerg. Med. 11 (11), 1149–1154. doi:10.1197/j.aem.2004.08.003

Weech, S., Kenny, S., and Barnett-Cowan, M. (2019). Presence and Cybersickness in Virtual Reality Are Negatively Related: A Review. Front. Psychol. 10, 158. doi:10.3389/fpsyg.2019.00158

Weech, S., Varghese, J. P., and Barnett-Cowan, M. (2018). Estimating the Sensorimotor Components of Cybersickness. J. Neurophysiol. 120 (5), 2201–2217. doi:10.1152/jn.00477.2018

Wilson, A. B., Miller, C. H., Klein, B. A., Taylor, M. A., Goodwin, M., Boyle, E. K., et al. (2018). A Meta-Analysis of Anatomy Laboratory Pedagogies. Clin. Anat. 31 (1), 122–133. doi:10.1002/ca.22934

Yanagawa, B., Ribeiro, R., Naqib, F., Fann, J., Verma, S., and Puskas, J. D. (2019). See One, Simulate Many, Do One, Teach One. Curr. Opin. Cardiol. 34 (5), 571–577. doi:10.1097/HCO.0000000000000659

Yarramreddy, A., Gromkowski, P., and Baggili, I. (2018). “Forensic Analysis of Immersive Virtual Reality Social Applications: A Primary Account,” in Proceedings - 2018 IEEE Symposium on Security and Privacy Workshops, SPW 2018, 186–196. doi:10.1109/SPW.2018.00034

Yildirim, C. (2020). Don't Make Me Sick: Investigating the Incidence of Cybersickness in Commercial Virtual Reality Headsets. Virtual Reality 24 (2), 231–239. doi:10.1007/s10055-019-00401-0

Keywords: virtual reality, extended reality, medical education, simulation, 3D, learning, surgical training, medical school

Citation: Herur-Raman A, Almeida ND, Greenleaf W, Williams D, Karshenas A and Sherman JH (2021) Next-Generation Simulation—Integrating Extended Reality Technology Into Medical Education. Front. Virtual Real. 2:693399. doi: 10.3389/frvir.2021.693399

Received: 10 April 2021; Accepted: 23 August 2021; Published: 07 September 2021.

Edited by:

Mel Slater, University of Barcelona, Spain

Reviewed by:

Panagiotis Kourtesis, Inria Rennes–Bretagne Atlantique Research Centre, France

Brandon Birckhead, Johns Hopkins Medicine, United States

Copyright © 2021 Herur-Raman, Almeida, Greenleaf, Williams, Karshenas and Sherman. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jonathan H. Sherman
