Abstract

Cochlear implants (CIs) enable hearing in individuals with sensorineural hearing loss, albeit with difficulties in speech perception and sound localization. In noisy environments, these difficulties are disproportionately greater for CI users than for children with no reported hearing loss. Parents of children with CIs are motivated to experience what CIs sound like, but options to do so are limited. This study proposes using virtual reality to simulate having CIs in a school environment with two contrasting settings: a noisy playground and a quiet classroom. To investigate differences between hearing conditions, an evaluation used a between-subjects design with 15 parents (10 female, 5 male; age M = 38.5, SD = 6.6) of children with CIs; the parents themselves had no reported hearing loss. In the virtual environment, a word recognition and sound localization test using an open-set speech corpus compared simulated unilateral CI, simulated bilateral CI, and normal hearing conditions in both settings. Results of both tests indicate that noise influences word recognition more than it influences sound localization, but ultimately affects both. Furthermore, simulated bilateral CIs performed equal to or significantly better than a simulated unilateral CI in both tests. A follow-up qualitative evaluation showed that the simulation enabled users to achieve a better understanding of what it means to be a hearing-impaired child.

1 Introduction

Hearing loss, defined as the partial inability to hear sounds (Nordqvist, 2016), is the most common sensory disability in the world (Vanderheiden, 1992). It affects around 5% of the world population, including 34 million children (1–2% of all children aged 5–14) (Stevens et al., 2011; Organization, 2020). Sensorineural hearing loss, the damage to the inner ear or to the auditory nerves, accounts for approximately 90% of cases (Hopkins, 2015; Nordqvist, 2016). Noisy environments at school entail increased difficulties for children with hearing loss, e.g., when listening to speech and taking part in a conversation (Oticon, 2018b). Such problems can quickly be overlooked by teachers and peers creating issues in inclusion and mutual understanding (Andersen, 2011). Consequently, children can be subject to bullying, victimization, and isolation, resulting in lower quality of life (Silton et al., 2019; Organization, 2020). For individuals with severe hearing loss, a cochlear implant (CI) can be surgically implanted; such a device processes sounds from the outside environment and sends electric currents to the auditory nerve (of America, 2018; on Deafness and Disorders, 2016). The nerve is stimulated and forwards signals to the brain to be interpreted as hearing (of America, 2018). For children with CIs, it is still crucial to have parents and peers understand the implications of and change their perception about living with CIs (Decibel, 2016; Oticon, 2018a). Understanding could lead to more empathetic behavior toward the child, with improved social inclusion. Wright and McCarthy identify empathy as an emerging trend within HCI studies involving attempts to more deeply understand and interpret user experiences (Wright and McCarthy, 2008).

Virtual reality (VR) technologies make it possible to simulate disabilities with a higher degree of ecological validity. Following Brewer and Crano (2000), Rizzo and Kim (2005), and Parsons (2015), we consider ecological validity to be the degree of similarity between the designed intervention and the real world. Studies show that, compared to non-VR interventions, users show greater empathy for people with disabilities if they have previously embodied an avatar with a disability in VR (Kalyanaraman et al., 2010).

This study proposes a VR simulation that allows parents to experience being a child with a CI, thereby enabling greater understanding of and insight into experiencing the world with CIs. We describe the simulated environment together with a quantitative and qualitative study involving 15 parents of children with a CI.

2 Background and Related Work

2.1 Cochlear Implants

A cochlear implant (CI) is a surgically implanted device processing sounds from the outside environment. A microphone captures the sound and converts it to electrical currents, sending them to the auditory nerve through an electrode planted in the cochlea (of America, 2018; on Deafness and Disorders, 2016). The nerve is stimulated, and forwards signals to the brain to be interpreted as hearing (of America, 2018). In a CI, a spectral channel in the electrode in the cochlea spans a range of frequencies. An electrical impulse from the implant, sent to the channel, stimulates the whole range at once (on Deafness and Disorders, 2016). A typical CI has around eight channels (on Deafness and Disorders, 2016). Having more channels enhances fidelity by dividing the electrode array further, generally improving hearing performance (Croghan et al., 2017). However, having channels closer to each other physically can also introduce interference between them, limiting speech understanding (Cucis et al., 2018). Cost can be another factor when choosing the number of channels in a CI. Worth noting is managing expectations, because the CI conversion of audio signals to electrical impulses sacrifices frequency ranges and quality of perceived sound, which is typically what people refer to as sounding ‘digital’ (Croghan et al., 2017). In general, the way CIs are simulated and how individuals hear with CIs are fundamentally different. Yet, several simulations of CIs have been made to approximate how individuals with HL hear speech, environmental sounds, and music (Goupell et al., 2008). As an example, CIs can be simulated digitally by using a vocoder (Shannon et al., 1995).

Tests with patients trying to match the sound of their functioning ear with the output coming from the ear with the implant reveal differences in perception (Dorman and Natale, 2018; Dorman and Natale, 2019). These matching sessions are only possible because of unilateral hearing impairment in individuals, since they can hear with their other ear and perceive differences. The simulations can therefore include several tweakable parameters to account for these differences (de la Torre Vega et al., 2004). These methods have mostly been used in situations of tweaking the implant to the individual with HL, or for use in further research and development. Some have, however, tried to use simulations for other peers, caretakers, or family to facilitate understanding of how the individual hears with CIs (Sensimetrics, 2007).

2.2 Benefits of Cochlear Implant in Children

HL in infants is discovered earlier than before, allowing them to benefit from receiving a CI when they are 12 months of age or even younger (Holman et al., 2013). Such early interventions help them catch up with normal-hearing peers in terms of developmental outcomes (Oticon, 2018c). Studies found that CIs offer advantages in hearing, educational, and communicational abilities, with an expected increase in quality of life and employability, while finding no harmful long-term effects (Waltzman et al., 2002). It should be noted that intrinsic characteristics of the child with a CI, including gender, family, socioeconomic status, age at onset of hearing loss, and pre-implant residual hearing, may predispose a child to greater or lesser post-implant benefit (Geers et al., 2007). Fitting bilateral CIs in children has become relatively common, and numerous studies compare bilateral CIs with unilateral CIs. Bilateral users are reported to have better horizontal localization of complex, broadband sound than unilateral users, but their abilities do not reach those of normal-hearing listeners (Preece, 2010). The largest improvements are seen in the localization of sounds known to the listener. Results differ between adults and children with CIs, with children’s results being less clear; they see improvement over unilateral listening, but not in all subjects. This is, in part, a developmental issue (Preece, 2010). In terms of speech understanding, some studies found a significant advantage with bilateral CIs over unilateral CIs, while other studies found no significant differences (Litovsky et al., 2006; Beijen et al., 2007).

2.3 Measuring Hearing Abilities

Hearing encompasses many aspects, including spatial hearing (localization and separation), speech-in-noise detection, and general intelligibility. Hearing difficulties can be caused by multiple issues, e.g., distance to the audio source, room reverberation, and competing speech or noise in the environment. For individuals to be able to communicate and understand each other, proper speech intelligibility and localization are crucial when participating in social development and interaction. Hearing abilities can be measured using, e.g., volume tests, frequency range tests, Hearing In Noise tests (HINT) (Nilsson et al., 1994), and Words In Noise tests (Wilson et al., 2007). Examples of quantitative evaluations of hearing abilities under varying amounts of hearing loss are presented below. One study measured sound localization abilities in children with cochlear implants (age range 2–5) (Beijen et al., 2007). The setup was based on an earlier localization test by Moore et al. (1975). They used two setups depending on whether the children had bilateral or unilateral CI fittings: the former with the loudspeakers positioned at 90 or −90° azimuth, and the latter at 30 or −30° azimuth. They played at least 15 melodies to each participant from speakers selected at random each time. Several studies used similar setups for evaluating adults (M = 57) with CIs fitted at an early age. Speech was played from the front, and noise was played from two sides, with azimuths 90° from the participant in both directions (Laszig et al., 2004; Ramsden et al., 2005). Some studies used more than three speakers to perform testing (Schoen et al., 2005; Verschuur et al., 2005). Other studies used word recognition tests to measure speech intelligibility, scored by how many words were correctly identified (Senn et al., 2005). Their setup was similar to the previously mentioned studies measuring sound localization, with three speakers located at azimuths 0, 90, and −90°, where speech was played from the front and noise from the side. Common to these studies was that noise had an impact on intelligibility, but some studies played sentences that turned out to be too predictable given the limited number of available sentences. As such, these studies typically result in very low speech recognition thresholds when the competing speech signals are spatially separated.

To achieve higher thresholds that correspond more closely to natural communication situations, a study developed an open-set speech corpus called DAT (Nielsen et al., 2014) for measuring speech-on-speech masking in noisy environments. Rather than using the common closed-set coordinate response measure (Bolia et al., 2000), this study developed a corpus featuring different talkers as well as a larger set of sentences. The evaluation of the corpus presents multiple identical sentences at the same time so as to replicate speech-on-speech masking situations, with a callsign (Asta, Tine, Dagmar) indicating which sentence to pay attention to. They evaluated the corpus similarly to the previously mentioned studies, with one target speaker in front and interfering speakers at the sides, at 50 and −50° azimuth. This open-set approach can approximate real life, where the listener does not necessarily know the voice speaking the sentence they have to attend to. Furthermore, having more sentences removes the ability to remember words (or combinations of words) spoken, and approximates interpreting speech that has not been heard before. Common to the results of the studies measuring hearing abilities through speech intelligibility and localization are perceivable differences depending on the assigned hearing conditions (e.g., cochlear implant conditions as well as varying levels of noise from several azimuths). Intelligibility and localization abilities are vastly improved with bilateral CIs over unilateral CIs, as well as when presenting audio with higher signal-to-noise ratios (SNRs).

2.4 Disability Simulations

Disability simulations in virtual environments (VE) allow users to embody an avatar designed to enable them to experience the world from a disabled person’s perspective (Silton et al., 2019). Simulations in VR can help immerse individuals in highly sensorial and realistic worlds, creating the feeling of presence (Cummings and Bailenson, 2016). Role-playing someone else’s perspective has been one of the main techniques to increase empathy toward specific groups of people (Lim et al., 2011; Smith et al., 2011). Experiencing the world from someone else’s point-of-view can reduce the gap between oneself and others and create a better understanding of the other person’s feelings, motivations, and challenges.

Studies show feelings of environmental presence and immersion in VR can in turn help foster greater feelings of empathy and compassion, when embodying an avatar and its traits one does not possess in real life (Yee et al., 2009; Kilteni et al., 2012; Bailey et al., 2016; Nilsson et al., 2016). This is in turn helped by the individual’s concept of Theory-Of-Mind (Silton et al., 2019). These feelings materialize more easily because of the ecological validity of VR. By substituting the users’ real-world sensory information with digitally generated ones, virtual reality can create the illusion of being in a computer-generated environment. This allows the creation of virtual simulations that will enable their users to experience the world from someone else’s perspective, making VR the “ultimate empathy machine” (Bailenson, 2018). If the VE is realistic and allows for similar interactions as in the real world, there is a higher potential for an immersive media experience while limiting cognitive load (Moreno and Mayer, 1999). Interplays between senses are important since they rely on each other, and out-of-the-box commercial VR systems can stimulate both visual, auditory, and haptic senses simultaneously.

Specific cases of using VR to create and evaluate embodied disability simulations are presented below, e.g., fostering empathy by embodying an avatar and its traits, an effect called the Proteus Effect (Yee et al., 2009). The experiments assess effectiveness by comparing groups of participants who either have or have not tried the virtual experience. They measure differences in positive post-intervention traits displayed toward the disabled individuals after exposure to the virtual embodied experience. As an example, results from a study conducted by Hasler et al. (2017) illustrated the ability of VR to make users feel like they are the person whose role they are playing in the virtual environment. A total of 32 white females participated in a study in which half of them embodied and controlled a black avatar and the other half a white avatar. In the virtual environment, they were asked to interact with either white or black avatars. During this interaction, the participants’ mimicry of the virtual avatars was measured. The results show that when embodying a black avatar, the participants tended to mimic black avatars more than white avatars and vice versa. Nonconscious behavioral mimicry is an unconscious way to show closeness to someone (Lakin and Chartrand, 2003), and the study by Hasler et al. (2017) shows that VR can increase closeness with someone with a different skin color when seeing the world from their point of view.

Other studies have investigated how VR can be used to create empathy with individuals with specific physical or mental disorders. Ahn et al. presented a study evaluating groups of participants in a virtual color blindness simulation (Ahn et al., 2013). Some participants were exposed to a red-green colorblind virtual embodied experience. Other participants were exposed to a normal-colored virtual perspective-taking experience and were instructed to imagine being colorblind. The first experiment compared the embodied experience against the perspective-taking experience and found that the embodied experience was effective for participants screened with lower tendencies to feel concern for others 24 h after treatment. The next experiment confirmed a heightened sense of realism during the embodied experience that led to greater self-other merging compared to the perspective-taking experience. The last experiment demonstrated that the effect of the embodied experience transferred into the physical world afterward, leading participants to voluntarily spend twice as much effort to help people with color blindness compared to participants who had only imagined being colorblind.

Another evaluation featured groups of participants in a schizophrenia simulation (Kalyanaraman et al., 2010). A 4-condition between-subjects experiment exposed participants to either a virtual simulation of schizophrenia, a written empathy-set induction of schizophrenia, a combination of both the simulation and written empathy conditions, or a control condition. Results indicated the combined condition induced greater empathy and a more positive perception toward people suffering from schizophrenia than the written empathy-set and control conditions. Interestingly, the greatest desire for social distance was seen in participants exposed to the simulation-only condition, while not significantly differing in empathy and attitude seen in either the written empathy or combined conditions.

Studies have illustrated how VR can be used to induce “other-oriented empathy” in its users. As an example, a virtual reality game was designed to foster empathy toward the Fukushima Daiichi nuclear disaster evacuees (Kors et al., 2020). Here, the player is given the role of a journalist interviewing an evacuee from the nuclear disaster, forcing the user to take an empathic approach toward the “other”. Results from the qualitative study indicate that the virtual reality game can increase the “other-oriented empathy” of its users.

VR has also been used to help individuals with disabilities. Zhao et al. (2018) present a device that helps visually impaired individuals to navigate using auditory and haptic feedback. For individuals with low vision, Zhao et al. (2019) present a set of tools that enable access to VR experiences.

Despite the increasing number of studies investigating the possibilities of interactive VR interventions designed to increase empathy and accessibility, to our knowledge, no previous simulation has investigated the impact of exposing parents to a simulation of how their children with hearing loss perceive the world.

In Bennett and Rosner (2019), the authors argue how empathy, as performed by designers in order to know their users, may actually distance designers from the very lives and experiences they hope to bring near. We would like to point out how we agree with the approach of learning to be affected and attending to difference without reifying that difference once again (Despret, 2004).

2.5 Advantages and Disadvantages of Virtual Reality Disability Simulations

For a disability simulation to be effective and leave an insightful experience, it must be safe and stress-free, as it can otherwise be harmful (Kiger, 1992). Disability simulations can be implemented as virtual environments (VEs) featuring an avatar the user can embody. The avatar can feature certain disabilities that complicate interaction with the immediate environment (Silton et al., 2019). Participants without disabilities are put in situations designed to briefly mirror the lives of those with disabilities as realistic as possible. Simulations in VR can help immerse individuals in highly sensorial and realistic worlds, creating the feeling of presence (Cummings and Bailenson, 2016). Studies show feelings of environmental presence and immersion can in turn help foster greater feelings of empathy and compassion, when embodying an avatar and its traits (Nilsson et al., 2016; Bailey et al., 2016), also known as the Proteus effect (Yee et al., 2009). This, in turn, is helped by the individual’s concept of theory of mind (Silton et al., 2019). These feelings materialize more easily because of the ecological validity of VR. Ecological validity refers to the degree of similarity between the designed intervention and the real world (Parsons, 2015; Brewer and Crano, 2000). If the VE is realistic and allows for similar interactions in the real world, there is a higher potential for an immersive mediated experience while limiting cognitive load (Moreno and Mayer, 1999). Interplays between senses are important since they rely on each other, and out-of-the-box commercial VR head-mounted displays and controllers can stimulate both visual, auditory, and haptic senses simultaneously. Disability simulations can be effective if certain ethical precautions are taken, e.g., activities are well-designed and evaluated, and the simulation exercises are closely linked to social or behavioral science theory (Kiger, 1992). 
However, limitations and weaknesses of disability simulations exist in the form of how participants perceive and act upon the stimuli introduced in the VE. On the one hand, actively engaging participants makes lessons livelier and more appealing, and therefore more likely to be recalled later (Silton et al., 2019). On the other hand, the disability is only presented to participants for short periods of time, and participants are not given the time to develop the coping skills necessary to successfully absorb the simulation experience, which can often lead to negative feelings toward the simulated disability (Kiger, 1992). In general, more studies are needed to verify the currently limited number of reported positive changes in the behavioral intentions and attitudes of typical participants following the simulations. The main issue is that, although the simulation does no long-term harm to participants, the positive effects may be negligible, and any positive attitudes or behavioral changes linked to disability simulations were reportedly brief (Silton et al., 2019). Simulations must be careful not to introduce or reinforce negative stereotypes and bias based on short experiences, which can leave participants feeling frustrated, insecure, or humiliated. Simulations focus on presenting limitations, which can negate accommodations or even strengths of a particular disability (Silton et al., 2019).

3 Virtual Reality Simulation Design

We developed the virtual reality simulation HearMeVR, which is a 3D representation of a school environment, including a playground and a classroom. A screen capture from the environments can be seen in Figure 1. The simulated auditory feedback reproduced experiencing the world with an 8-channel cochlear implant (Stickney et al., 2004). Specifically, each audio example was filtered through eight parallel bandpass filters, tuned to the same frequencies as those typically found in an 8-channel cochlear implant. The number of channels was empirically chosen to be suitable for speech intelligibility and understanding, and to match the most common models of CIs (Cucis et al., 2018). The simulator was designed in collaboration with experts working with children with cochlear implants, who provided information regarding the frequency range and characteristics of the cochlear implants used by the target group. The experts also provided input on the specific scenarios to design, e.g., a classroom and a courtyard. The auditory feedback was delivered through headphones in three different conditions: 1) binaural sound simulating a “normal” hearing person; 2) binaural sound simulating a bilateral cochlear implant, in which case the cochlear implant simulator was applied equally to the two ears; and 3) unilateral cochlear implant, in which case the sound was delivered through only one ear, while the other ear was acoustically isolated.

FIGURE 1

The inside and outside scenes.

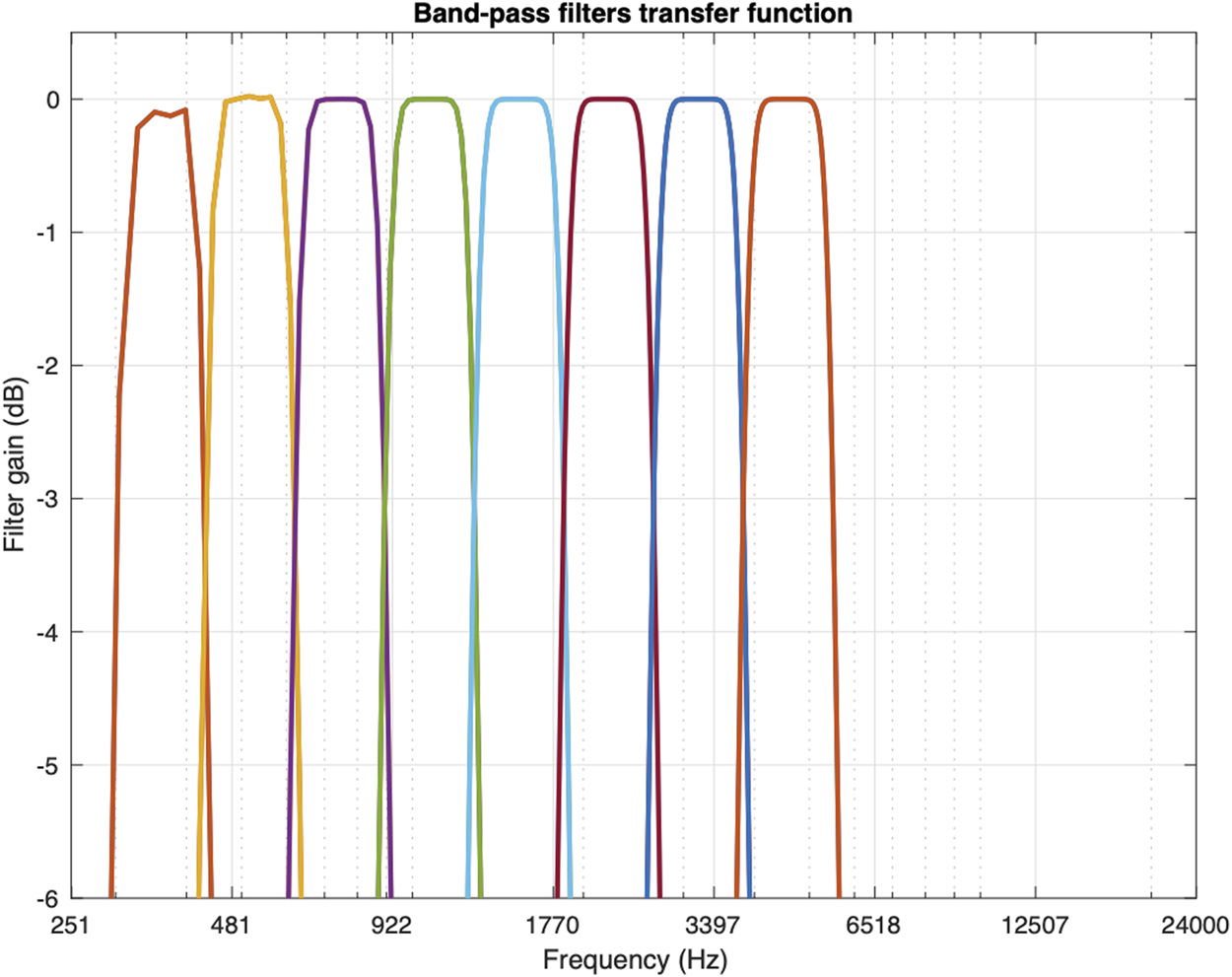

Figure 2 shows the frequency domain representation of the eight parallel bandpass filters used to simulate the cochlear implant.

FIGURE 2

The simulation of the CI in frequency domain. The eight frequency bands are clearly seen in the spectrum.
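
The eight parallel bandpass filters can be illustrated with a minimal Python sketch. It is not the HearMeVR implementation: the filter type (second-order biquads), the log-spaced band edges, and the 200–7000 Hz range are all assumptions made here for illustration, since the text only states that eight bands tuned to typical CI frequencies were used. The sketch also omits the envelope extraction step found in classic vocoder-based CI simulations.

```python
import math

def band_edges(n_bands=8, f_low=200.0, f_high=7000.0):
    """Log-spaced band edges in Hz (the 200-7000 Hz range is an assumption)."""
    ratio = (f_high / f_low) ** (1.0 / n_bands)
    return [f_low * ratio ** k for k in range(n_bands + 1)]

def bandpass(signal, fs, lo, hi):
    """Second-order (biquad) bandpass with unity gain at the band center."""
    f0 = math.sqrt(lo * hi)            # geometric center frequency
    q = f0 / (hi - lo)                 # quality factor from the bandwidth
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    b0, b2 = alpha / a0, -alpha / a0   # b1 is zero for this filter type
    a1, a2 = -2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0
    out = []
    x1 = x2 = y1 = y2 = 0.0
    for x in signal:
        y = b0 * x + b2 * x2 - a1 * y1 - a2 * y2
        out.append(y)
        x2, x1 = x1, x
        y2, y1 = y1, y
    return out

def filter_bank(signal, fs, n_bands=8):
    """Run the signal through all bands in parallel and sum the outputs."""
    edges = band_edges(n_bands)
    mixed = [0.0] * len(signal)
    for lo, hi in zip(edges[:-1], edges[1:]):
        band = bandpass(signal, fs, lo, hi)
        mixed = [m + b for m, b in zip(mixed, band)]
    return mixed

def tone(freq, fs, seconds):
    """Unit-amplitude sine tone used as a test signal."""
    return [math.sin(2.0 * math.pi * freq * n / fs) for n in range(int(fs * seconds))]

def rms(signal):
    """Root-mean-square level of a signal."""
    return math.sqrt(sum(v * v for v in signal) / len(signal))
```

Under these assumptions, a tone inside the covered range (e.g., 1 kHz) passes through with far more energy than a tone well below the lowest band (e.g., 50 Hz), which is the band-limiting behavior visible in the spectrum.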

Figure 3 shows the result of applying the CI algorithm to an input speech signal. The perceivable differences, mainly additional noise and lower intelligibility, can be seen in the spectrum as additional noise at low frequencies (see Figure 4). However, as expected, temporal variations (amplitude envelope) of the original speech signal are kept unvaried, and the two signals contain similar spectral components.

FIGURE 3

An example of the result of applying the cochlear implant simulator to an input file. Blue waveform: time domain waveform of the original signal; red waveform: waveform of the signal passed through the simulator.

FIGURE 4

Spectrogram of the input file from Figure 3. (A) Spectrogram of the original signal. (B) Spectrogram of the signal passed through the simulator. Notice the increased noisy components in the bottom signal.

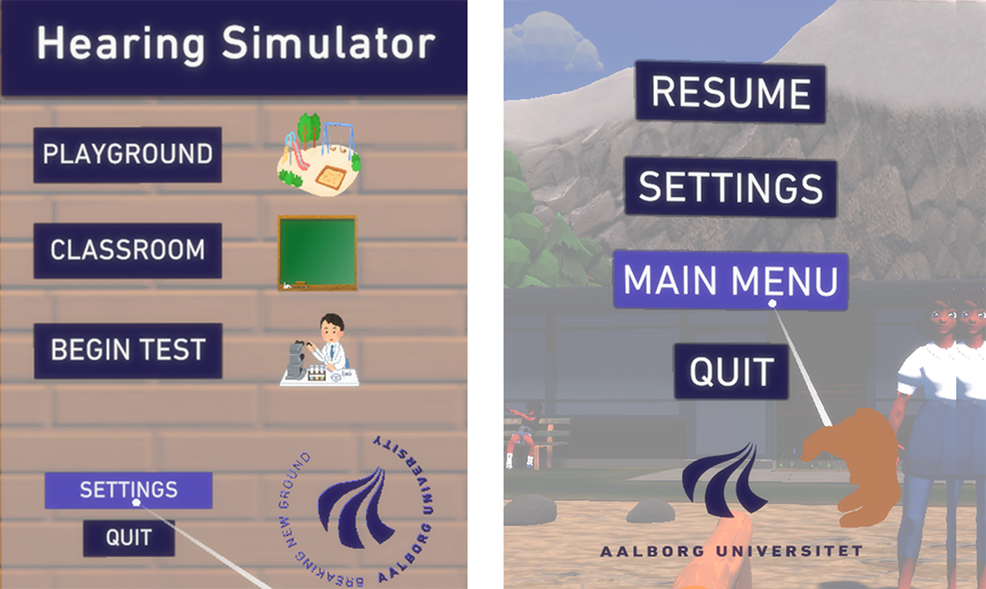

The VR simulation was created with two intended use cases: testing and free-roaming. The testing case requires an assistant to monitor and advance the test. The free-roaming case allows participants to explore the entire environment freely. When the simulation is run, the user is spawned outside a school building with an interactive main menu shown on the side of the building as seen in Figures 5, 6. In the menu, participants can initiate testing, go to the free-roam scenes, open a settings menu, or quit the simulation. From a technical perspective, the simulation was developed in Unity (v. 2019.3) (Unity, 2019) with supplementary software such as Blender (v. 2.8) (Foundation, 2019) and MATLAB (v. R2020a) (MATLAB, 2020). Blender was used to create models and textures. Support for VR was implemented using the OpenVR (v. 1.11) software development kit (Steam, 2019). The audio environment of the simulation is routed through the Google Resonance Audio software development kit, facilitating spatial audio in VR platforms (Google, 2018). Specifically, Resonance Audio uses generic head-related transfer functions (HRTFs) from the SADIE database (Kearney and Doyle, 2015). The HRTFs were used to place the sound sources at positions matching those of the corresponding visual elements. Each auditory source was first spatialized using the corresponding HRTF, one for each ear, and then processed through the cochlear implant simulator for those conditions where the simulator was used (see the experiment’s description below). The reverberation was chosen to fit the two different environments (outdoor and indoor). The simulation was delivered using a Vive Pro Head Mounted Display (HMD) and a pair of noise canceling headphones (Bose QC35 II).
Those headphones were chosen because, according to their specifications, they use both active and passive noise reduction: active noise reduction relies on proprietary electronics with microphones placed both inside and outside the earcups, while passive noise reduction is achieved by the combination of the acoustic design and the materials chosen for the earcups and cushions. They were chosen to provide better isolation than the default headphones embedded in the Vive Pro HMD. The Vive Pro was chosen, as opposed to portable HMDs such as Oculus Quest or Oculus Pro, for its ability to deliver higher-fidelity and more complex simulations. The Vive Pro was connected to a desktop PC that rendered both the auditory and visual feedback.
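
The processing order described above (spatialize per ear first, then apply the CI simulator depending on the hearing condition) can be sketched as follows. This is an illustrative sketch only: the function names, the `ci_simulator` callable, the use of direct time-domain convolution, and the `implanted_ear` parameter are assumptions introduced here; in the actual system the spatialization is handled by Resonance Audio inside Unity, and the text does not state which ear carried the simulated unilateral implant.

```python
def convolve(signal, impulse_response):
    """Direct time-domain convolution (assumes both inputs are non-empty)."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out

def render(source, hrir_left, hrir_right, condition, ci_simulator,
           implanted_ear="right"):
    """Return (left, right) ear signals for one mono source.

    hrir_left / hrir_right: head-related impulse responses for the source
    position (hypothetical inputs standing in for the SADIE HRTFs).
    ci_simulator: any callable mapping a signal to its CI-processed version.
    """
    # Step 1: spatialize the source separately for each ear.
    left = convolve(source, hrir_left)
    right = convolve(source, hrir_right)

    # Step 2: apply the hearing condition after spatialization.
    if condition == "normal":
        return left, right
    if condition == "bilateral":
        return ci_simulator(left), ci_simulator(right)
    if condition == "unilateral":
        # Only the implanted ear receives sound; the other ear is silent.
        if implanted_ear == "left":
            return ci_simulator(left), [0.0] * len(right)
        return [0.0] * len(left), ci_simulator(right)
    raise ValueError(f"unknown hearing condition: {condition}")
```

Applying the simulator after spatialization means the interaural time and level differences are already present in each ear signal before it is band-limited, which matches the order stated in the text.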

FIGURE 5

The menu scene, in which participants are initially spawned upon launching the simulation.

FIGURE 6

The main menu (A), and the pause menu accessed by clicking the menu button (B).

From the main menu, the user can either go to a playground or a classroom, see Figure 1. In the playground and classroom scenes, the user can explore the environment freely and walk around using controller-based continuous locomotion via the trackpad. The environment features both sounds representing normal hearing and sounds heard through a simulated CI. The soundscape is spatialized with respect to binaural differences in time, level, and timbre (Yantis and Abrams, 2014), enabling more realistic localization of sound in the environment. All scenes are populated with ambient spatialized sounds to create a realistic soundscape. Outside, ambient sounds of the forest can be heard, as well as sounds from non-player characters (NPCs). Inside, ambient sounds of items and people are audible. Additionally, footsteps are generated depending on the surface on which participants are moving and the speed at which they move. The settings menu allows users to select graphics presets and choose a hearing condition. The conditions are normal hearing, unilateral CI, and bilateral CI. The pause menu in Figure 6 is accessed by clicking the menu button on the controller, where participants can resume the simulation, access settings, go to the main menu, or quit the simulation.

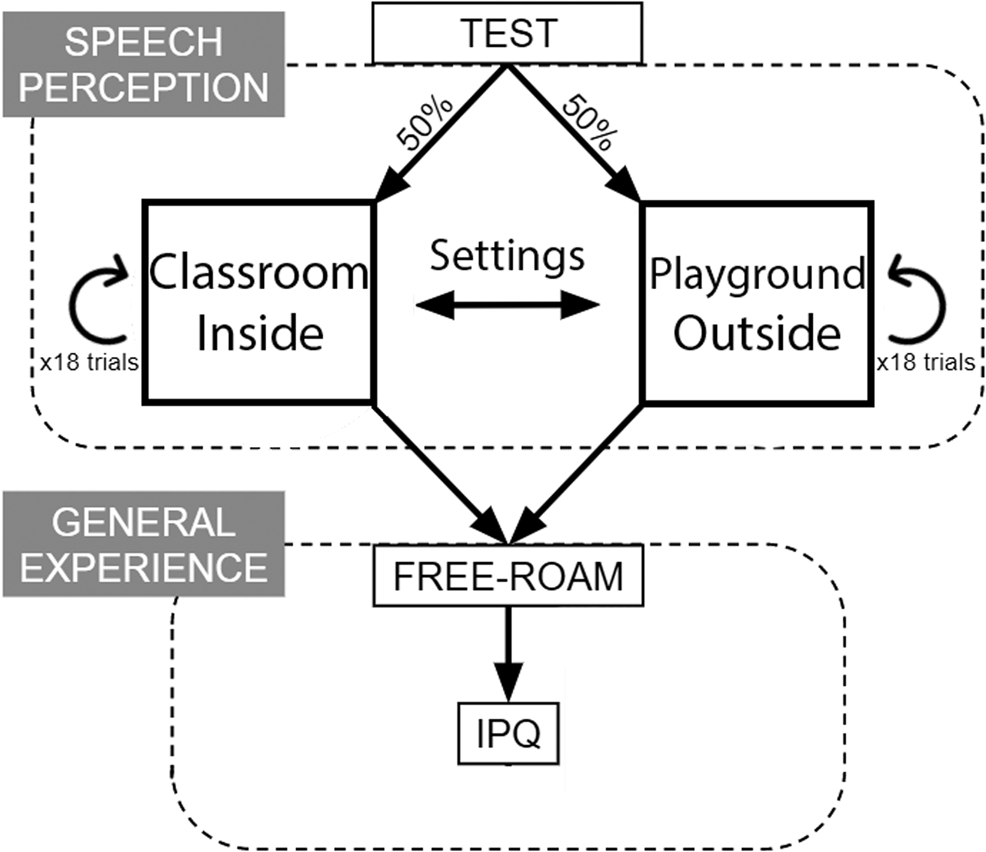

4 Evaluation

We recruited 15 participants (10 females, 5 males, aged 31–57, M = 38.5, SD = 6.6) using probability-based simple random sampling, with the main criterion of being a parent of a child who had hearing loss and needed a CI or had a CI already. The parents were recruited using a social media group of the association (Decibel). The experiment followed the guidelines from the ethical committee of the association (Decibel) and of University (Decibel). The experiment consisted first of a word recognition test followed by an exploration of the VR environments, both the playground and the classroom, while sitting on a rotating chair. A free exploration and a speech perception test were performed in order to ensure that the parents explored the different possibilities offered by the simulation (Figure 7). The experiment lasted on average 37 min (SD = 10.4 min); participants were asked if they agreed to be recorded.

FIGURE 7

An illustration of the experimental design procedure.

Upon proceeding, each participant was first introduced to the experiment, with a check that the hardware and software were working properly, and then spawned in either the classroom or the playground at random. When trials in the first setting were completed, they were spawned in the second setting to finish the remaining trials. Each participant was exposed to a total of 36 trials equally split between the two settings. In the classroom, each participant was virtually seated at a table facing the teacher, with children sitting at the other tables. Outside, the teacher was standing in the middle of the playground, with children playing in the vicinity. Sound localization and word recognition were tested by playing audio sentences from the DAT corpus from either 0, 90 or −90° azimuth in each trial. Figure 8 illustrates the three locations in both settings.

FIGURE 8

Overviews of the two settings ((A) the playground, (B) the classroom) in the VE. Green circles represent participants, and red speaker icons represent the three non-player characters talking during testing.

Sentences from the DAT corpus (Nielsen et al., 2014) are constructed in the form “Name thought about … and … yesterday”, where Name represents one of three Danish female names, and ellipses represent unique nouns. In each trial, two tasks were performed: 1) verbal repetition of the two nouns mentioned in the sentence, and 2) localizing where the sentence originated (left, right, or in front). Accuracy on word recognition and sound localization was logged in a text file for each participant. Word recognition was coded as correct if both nouns were stated correctly, and sound localization was coded as correct if a participant stated the correct direction of the sentence. The 18 trials in each setting were run in quasi-random order, ensuring 36 unique sentences with the same number of sentences played from every location. Exactly three sentences were presented at each of the three locations around the participant in both settings. Upon completing the word recognition and sound localization test, the participant was free to explore both settings in the VE and change their simulated hearing condition by themselves, guided by the researcher. Upon exiting the VR application, they were asked to fill out a questionnaire assessing their feelings of presence during the experiment in the VE. The iGroup Presence Questionnaire (IPQ) (igroup.org Project Consortium, 2016) was used, aiming to assess different aspects of presence, including general and spatial presence, as well as involvement with and experienced realness of the VE. After answering the IPQ, an informal discussion about their experience was conducted between the researcher and the participant.
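
The coding rule for the two tasks can be expressed as a short Python sketch. The function names, the trial record layout, and the example nouns are hypothetical and serve only to illustrate the rule stated above; whether the order of the two nouns mattered is not stated in the text, so the sketch assumes it did not.

```python
def score_trial(said_nouns, target_nouns, said_direction, target_direction):
    """Code one trial as (word_correct, localization_correct).

    Word recognition counts as correct only if BOTH nouns match.
    Assumption: noun order and letter case are ignored.
    """
    normalize = lambda nouns: sorted(n.strip().lower() for n in nouns)
    word_correct = normalize(said_nouns) == normalize(target_nouns)
    localization_correct = said_direction == target_direction
    return word_correct, localization_correct

def accuracy(trials):
    """Percentage of correct trials for each task over a list of scored trials."""
    n = len(trials)
    words = 100.0 * sum(1 for w, _ in trials if w) / n
    localization = 100.0 * sum(1 for _, loc in trials if loc) / n
    return words, localization

# Hypothetical example: three scored trials for one participant.
example = [
    score_trial(["house", "car"], ["car", "house"], "left", "left"),
    score_trial(["house", "cat"], ["car", "house"], "front", "front"),
    score_trial(["tree", "boat"], ["tree", "boat"], "left", "right"),
]
```

With this rule, a response with one wrong noun counts as a failed word recognition trial even when the direction is reported correctly, so the two accuracies are tracked independently.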

5 Results

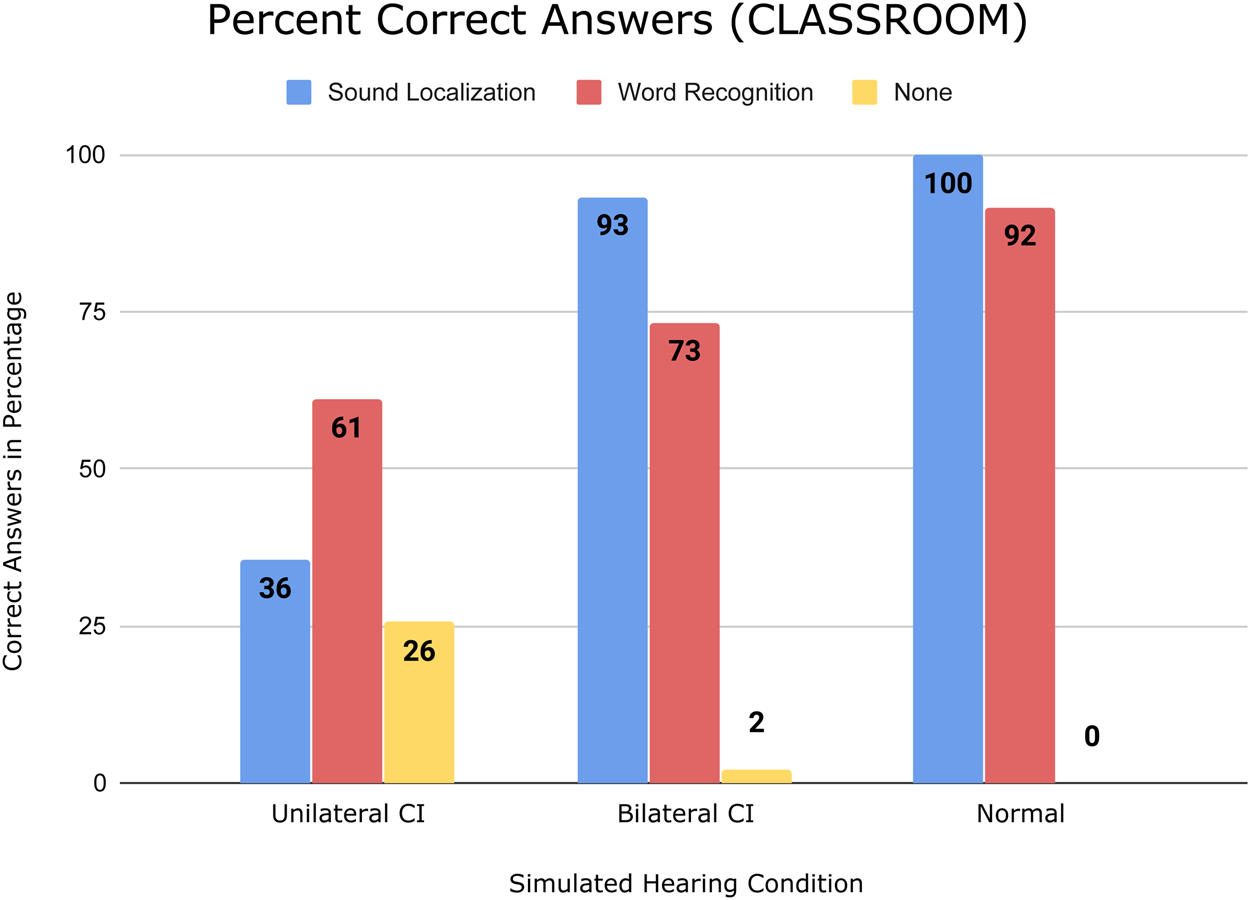

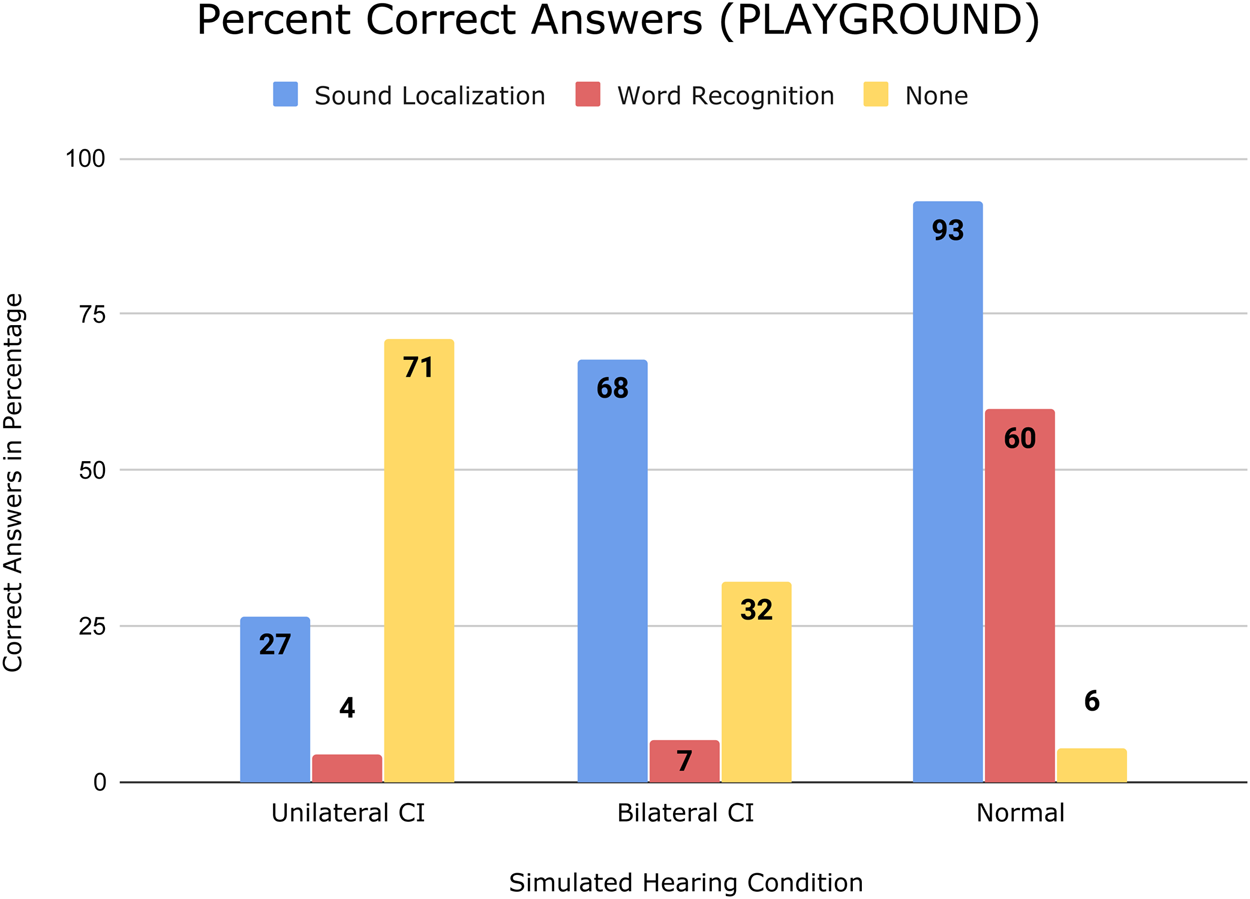

Figures 9, 10 show results of the word recognition and sound localization test in the classroom and playground setting, respectively.

FIGURE 9

Bar chart showing simulated hearing conditions on the horizontal axis and correct answers in percentage on the vertical axis, from testing in the classroom setting.

FIGURE 10

Bar chart showing simulated hearing conditions on the horizontal axis and correct answers in percentage on the vertical axis, from testing in the playground setting.

Figure 11 shows the IPQ results in a bar chart. Results are presented as the mean of every subscale of the IPQ: general presence (G), spatial presence (SP), involvement (INV), and realness (REAL), including error bars. The scores range from 1 to 7, based on the seven-point Likert scale format of the questionnaire.

FIGURE 11

Bar chart showing IPQ subscales on the horizontal axis and respective mean scores on the vertical axis, including standard deviations.
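The subscale summary behind such a chart can be sketched as follows. This is a minimal sketch under stated assumptions: each participant is assumed to already have one score per subscale (item averaging and any reverse-coding are not shown), the function name and input layout are hypothetical, and the sample standard deviation is used although the text does not state which variant was computed.

```python
from statistics import mean, stdev

SUBSCALES = ("G", "SP", "INV", "REAL")


def summarize_subscales(scores_by_participant):
    """Return {subscale: (mean, sample SD)} from per-participant subscale scores.

    scores_by_participant: list of dicts mapping each subscale name to that
    participant's score on the 1 to 7 scale (hypothetical input layout).
    """
    summary = {}
    for name in SUBSCALES:
        values = [participant[name] for participant in scores_by_participant]
        summary[name] = (mean(values), stdev(values))
    return summary
```

With 15 participants, the input would be a list of 15 such dictionaries, and the returned means and standard deviations correspond to the bar heights and error bars.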

Mann-Whitney U tests were conducted to test for significant differences between hearing conditions and between the two settings. In this study, the Mann-Whitney U test is one-tailed, with the alternative hypotheses expecting normal hearing test accuracies to be higher than those with bilateral CI, and bilateral CI test accuracies to be higher than those with unilateral CI. Finally, test accuracies in the classroom setting are expected to be higher than in the playground setting. Given the relatively small sample size, the results of the Mann-Whitney U test should be interpreted with caution. Figure 12 shows each alternative hypothesis regarding sound localization accuracy with its respective Mann-Whitney U test result, and Figure 13 shows the hypotheses regarding word recognition accuracy with the respective results.

FIGURE 12

Mann-Whitney U test results for each alternative hypothesis for the sound localization accuracy. The critical value of U at p < .05 is 4. UniCI, BiCI, and Norm denote simulated unilateral CI, bilateral CI, and normal hearing, respectively. Green and red colors indicate the hypothesis is accepted and rejected, respectively.

FIGURE 13

Mann-Whitney U test results for each alternative hypothesis for the word recognition accuracy. The critical value of U at p < .05 is 4. UniCI, BiCI, and Norm denote simulated unilateral CI, bilateral CI, and normal hearing, respectively. Green and red colors indicate the hypothesis is accepted and rejected, respectively.
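The decision rule in these captions can be illustrated with a small pure-Python sketch of a one-tailed Mann-Whitney U test against a tabulated critical value. The function names and the accuracy values in the usage note are hypothetical, not the study's data. The critical value of 4 is taken from the captions; that it corresponds to two groups of five (15 participants across three hearing conditions) is an inference, not something the text states.

```python
CRITICAL_U = 4  # from the figure captions; matches standard tables for n1 = n2 = 5 (assumed group sizes)


def mann_whitney_u(expected_higher, expected_lower):
    """U statistic counting the pairs that contradict 'expected_higher > expected_lower'.

    Each pair in which the value from `expected_higher` is smaller counts 1,
    and each tie counts 0.5. A small U supports the one-tailed alternative.
    """
    u = 0.0
    for high in expected_higher:
        for low in expected_lower:
            if high < low:
                u += 1.0
            elif high == low:
                u += 0.5
    return u


def supports_alternative(expected_higher, expected_lower, critical_u=CRITICAL_U):
    """True if U is at or below the critical value, i.e. the alternative is accepted."""
    return mann_whitney_u(expected_higher, expected_lower) <= critical_u
```

For example, with hypothetical accuracies `[100, 94, 89, 100, 94]` for normal hearing and `[72, 83, 67, 78, 61]` for bilateral CI, no pair contradicts the hypothesis, so U = 0 and the alternative would be accepted. With heavily overlapping groups, U rises above 4 and the hypothesis would be rejected.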

In both the classroom and the playground settings, results show that both sound localization and word recognition accuracy are higher with bilateral CI than with unilateral CI, and are highest with normal hearing. Comparing unilateral CI with bilateral CI, the biggest difference between the two dependent variables is seen in sound localization accuracy, whereas when comparing bilateral CI with normal hearing, the biggest difference is seen in word recognition accuracy.

5.1 Sound Localization

The Mann-Whitney U test indicates significantly higher localization accuracy in the classroom setting with bilateral CI than with unilateral CI. However, there is no significant difference between them in the playground setting. The difference in the classroom setting is likely due to the advantage of having binaural hearing with bilateral CI. The noisy environment in the playground setting, with a negative SNR, may have caused participants to completely miss the signal in some trials, which could explain why the difference was not significant there. Some participants with a unilateral CI during the test expressed that it was ‘obviously impossible’ to localize sounds, as they were only receiving them in one ear. However, two participants with unilateral CI stated they could exploit the visuals to localize where sounds came from, one stating: “I can see the mouth move in front of me so that is where the sound comes from, but I hear it coming from the left”; recall that NPCs were animated when speaking. Nevertheless, many participants did not take advantage of the possibility of rotating their head to look for the speaking NPC, possibly because twelve of the fifteen participants had never tried VR before, or because the participants focused on the listening aspect and did not consider using visual cues for localization. Real-life studies testing sound localization accuracy of bilateral CI users and unilateral CI users find tendencies similar to those of this study, namely bilaterally implanted users demonstrating significant benefits in localizing sounds (Litovsky et al., 2006; Beijen et al., 2007; Kerber and Seeber, 2012).

Comparing normal hearing with bilateral CI, sound localization accuracy is higher with normal hearing in both settings, although, according to the Mann-Whitney U tests, not significantly higher. As there are no immediate advantages of localizing sounds with normal hearing over bilateral CI, it is a reasonable assumption that there is no significant difference between the two. However, looking at the correct answers as a percentage (68% vs. 93% for bilateral CI and normal hearing, respectively; see Figure 10), a difference is seen in the playground setting between the two hearing conditions. Assuming there is a difference, the explanation for lower accuracy scores with simulated bilateral CI could be that participants were overwhelmed by the CI sounds in the environment, and may have focused more on the word recognition task, as that was demonstrably more difficult with simulated CI than with normal hearing. Real-life studies testing sound localization accuracy of normal hearing users and bilateral CI users find normal hearing to perform significantly better (Kerber and Seeber, 2012; Dorman et al., 2016). Looking at Figures 9, 10, a similar tendency is seen, although the Mann-Whitney U tests suggest no significant benefits.

Regarding the influence of the two settings, the Mann-Whitney U tests indicate significant differences with normal hearing and bilateral CI, but not with unilateral CI. With unilateral CI, sound localization accuracies in both the classroom at 36% and the playground at 27% are close to chance level (33%), indicating participants were simply guessing the location. Some participants even stated the same location in all trials, either left or right, depending on which side their simulated unilateral CI was, which explains the near-chance level scores. The possibility of utilizing binaural hearing to localize sounds with bilateral CI and normal hearing allowed participants to make an educated guess on the location based on what they heard. Thus, the soundscapes produce different results. Not surprisingly, the noisy soundscape in the playground setting entailed a significantly lower accuracy compared with the quiet classroom setting. This was possibly due to participants completely missing the signal in some trials. Comparing normal hearing with bilateral CI, the latter seems more affected by the noisy playground. This may be explained by CI sounds being overwhelming to the participants, and by participants focusing more on the word recognition task. Real-life studies testing sound localization accuracy of CI users and unilateral CI users find tendencies similar to those of this study, namely noisy environments negatively impacting localization accuracy in CI users (van Hoesel et al., 2008; Kerber and Seeber, 2012).

5.2 Word Recognition

In both the playground setting and the classroom setting, word recognition accuracy is seemingly higher with bilateral CI than with unilateral CI. However, the results of the Mann-Whitney U tests in Figure 13 indicate that it is only significantly higher in the classroom setting. Compared to the difference between unilateral CI and bilateral CI in the sound localization test, the differences here are much smaller, indicating that binaural hearing is not as advantageous in recognizing sentences as when localizing sounds. This is likely due to the sound qualities of bilateral CI and unilateral CI being identical. In the classroom setting, the significantly higher word recognition accuracy with bilateral CI might be explained by sound localization being less difficult, thereby allowing participants to focus more on recognizing words. In the playground setting, a potentially higher accuracy with bilateral CI may be explained by being able to separate noise from speech with binaural hearing compared to unilateral hearing. Real-life studies testing speech perception and word recognition of bilateral CI users and unilateral CI users find equal or higher scores with bilateral CI users (Müller et al., 2002; Peters et al., 2004). This tendency also shows in this study, as the difference between unilateral and bilateral CI was small, but favorable to the latter.

Word recognition accuracy is significantly higher with normal hearing than with bilateral CI in both settings, as indicated by the Mann-Whitney U tests. The difference is biggest in the playground setting. With normal hearing, the advantage of more natural sound qualities is present, explaining the significant difference in both settings. Compared to sound localization, the difference in word recognition accuracy in the playground setting is much bigger than in the classroom. This suggests that noise affects word recognition more than it affects sound localization. A real-life study assessing speech in noise for CI users found that background noise is a significant challenge, with results showing poorer performance in CI users compared to normal hearing peers, which is comparable to the findings of this study (Zaltz et al., 2020).

The word recognition accuracy is significantly higher in the classroom setting than in the playground setting for all hearing conditions. However, differences are disproportionately bigger with a CI compared with normal hearing, as seen when comparing the word recognition bars in Figures 9, 10. That is, participants with a CI condition are disproportionately more influenced by noise than are participants with normal hearing. Hence, the noisy environment results in a bigger difference between bilateral CI and normal hearing than the quiet environment. This disproportionality is also reflected in statements from participants while using CI hearing conditions: “It was a bit weird walking around the playground, where even if you were really close, you could barely hear it [a sound in the VE]. But with normal hearing you could suddenly hear what was going on” and “It was very difficult to understand sound at the playground due to background noise”. A study investigating how noise influences CI users shows that speech recognition deteriorates rapidly as the level of background noise increases (Fu and Nogaki, 2005). Considering the disproportionately bigger differences between the quiet and noisy environment with CI compared with normal hearing, a similar tendency is seen in this study.

5.3 Sense of Presence

Below, subscales of the IPQ (G, SP, INV, and REAL) are discussed individually. For the IPQ, the standard deviation might be affected by the number of participants (N = 15) and how they interpreted questions individually. Some participants asked researchers about some of the questions, as they were not sure what to answer.

The score for G (general presence), indicating a general sense of ‘being there’, was 5.73, but had the highest standard deviation of all subscales (SD = 1.38). Participants were motivated due to the subject matter, and video recordings revealed them wanting to explore the environment and listen to the sounds present with the different hearing conditions. They especially wanted to experience and pay attention to the hearing condition similar to that of their own child. It is worth noting that 12 of 15 participants had never tried VR before, which could also explain the standard deviation. The score is reflected in the scores from the other subscales, supported by SP and INV scores in particular. The mean score from questions of the REAL subscale could influence the standard deviation of G as well.

The score for SP (spatial presence) was 5.32 (SD = 0.49), which was slightly lower than the score for G, although with a lower standard deviation. SP is an indication of participants feeling physically present in the VE. The environment contains a number of features that help facilitate spatial presence, such as animated characters, lighting and shadows, and a realistic soundscape. Factors possibly reducing spatial presence could be participants clipping through objects in the VE (e.g., tables and walls), as seen from video recordings of their experience in the VE. Outbursts from participants when clipping could indicate a high level of spatial presence, as they experienced the collisions as being real.

The score for INV (involvement) was 5.18 (SD = 0.32). The subscale measures participants’ attention devoted to the VE, and whether they were distracted by the real world around them. The subject matter of the experiment could potentially cause participants to think about their own child, possibly lowering the INV score. Some participants also visited together with spouses or even children, who could distract the participant by physical touch or by noise. However, headphones with active noise-canceling technology could help mitigate this issue. Finally, due to the nature of the test and procedure, the researchers needed to communicate with the participant to give instructions on advancing the test, how to navigate the VE, and how to change their hearing condition (e.g., from normal hearing to bilateral CI). This could also have contributed to a lower score of involvement, as participants often needed to change their focus.

The score for REAL (realness), which concerns the subjective experience of realism in the VE, was 3.75 (SD = 0.90). Results might be affected by participants not being able to communicate or play with non-player characters, or in other ways interact with the world other than walking around and observing what happens in the VE. Played sentences of the DAT corpus might have sacrificed ecological validity in the VE. Spoken sentences were without context, which meant that connections between what was spoken and what one would assume is typically spoken at a school were removed. The stylized models of the NPCs could affect the sense of realism negatively. The implementation of NPCs looking at the player or moving around in the environment could enhance realism. Other effects such as adding visible footprints, adding view bobbing to the camera to minimize the sensation of floating, or adding more interactable objects might help reinforce the sense of realism. Furthermore, highly detailed graphics provide users with more realistic visuals, but come at the cost of performance. They can impact both loading times and the frame rate, possibly contributing to cybersickness (Rebenitsch and Owen, 2016). On the other hand, the simulation neither requires nor encourages any fast or rapid movements from the user, which reduces side effects of decreased performance and cybersickness.

Overall, scores for G, SP, and INV are high, but the score for REAL is lower. Focusing on improving realism by creating a more dynamic and interactive VE could benefit both feelings of presence and the general experience in VR. Note that the IPQ is taken post-simulation, so the experience and the measuring of presence are not captured in a continuous manner. Additionally, because participants experienced other hearing conditions before answering the IPQ, the scores cannot be used to compare conditions (unilateral CI, bilateral CI, and normal hearing); changes to the design of the experiment could allow for such a comparison.

5.4 Qualitative Evaluation

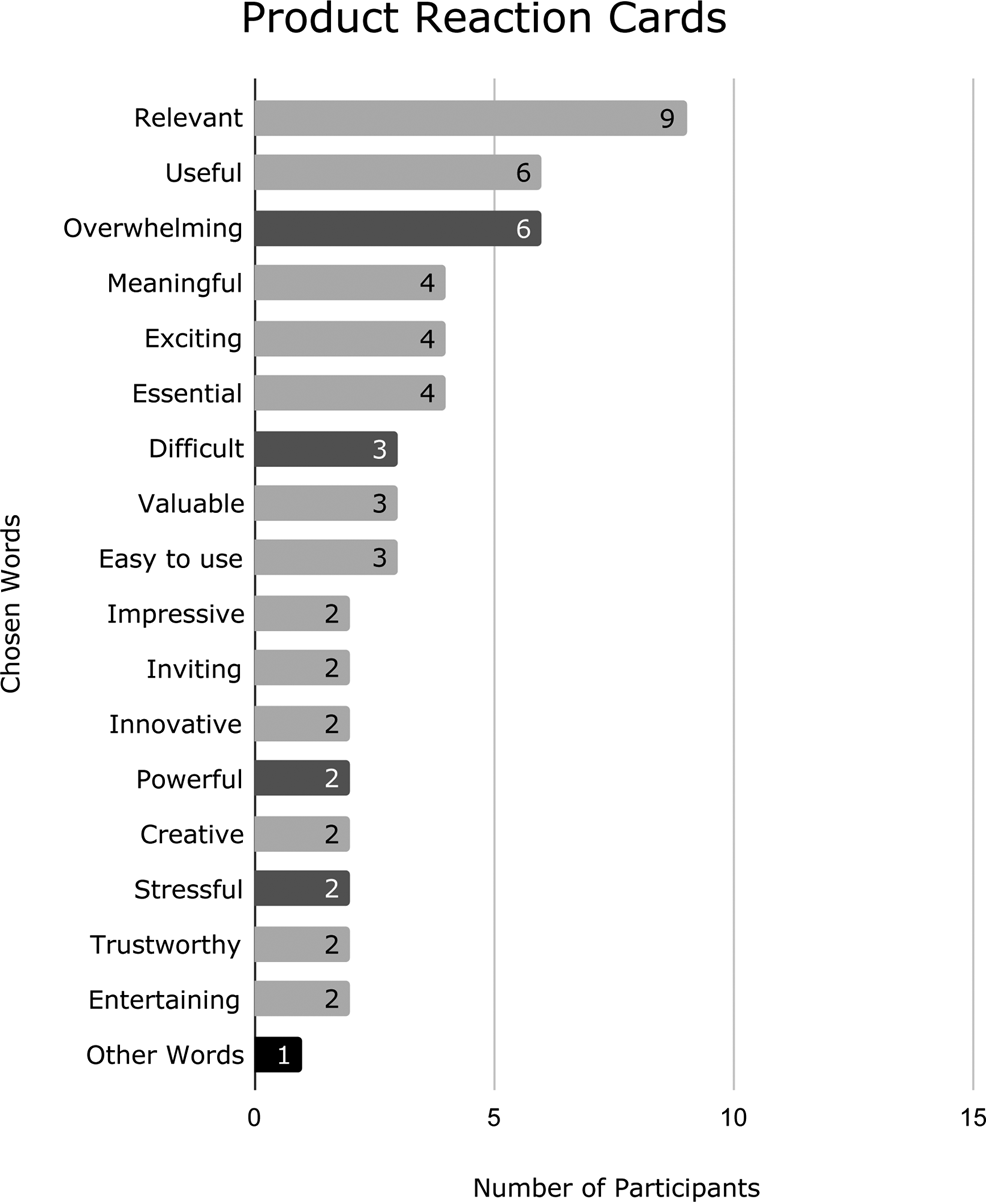

The qualitative evaluation was based on a product reaction cards (PRC) evaluation method from the Microsoft Desirability Toolkit (Benedek and Miner, 2002) followed by a semi-structured interview. For the PRC, from a list of 118 available words, participants were instructed to select five words that described their experience in the VE. Upon selecting the five words, they were asked to explain in detail why they selected those specific words. Their verbal explanations were recorded for later transcription.

A semi-structured interview was conducted following the PRC evaluation. The questions prepared asked both about participants’ previous experience with simulated cochlear implant examples and their general experience in the VE of this study.

Finally, an expert interview was conducted with an audiologist experienced in working with children using CI and their parents. After trying the VR intervention, they provided valuable feedback on the potentials of such an application.

Figure 14 shows the frequency distribution of the words chosen by the participants in the PRC evaluation.

FIGURE 14

Frequency distribution of the words chosen by participants in the PRC evaluation. Light gray represents positive words and dark gray represents either neutral or negative words. ‘Other Words’ comprise Disconnected, Engaging, Expected, Helpful, Inspiring, Intimidating, Intuitive, Straightforward, Optimistic, Compelling, Personal, Collaborative, Fun, Satisfying, Incomprehensible, Not secure, and Effective, which were each chosen once.
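A frequency distribution of this kind can be tallied with a few lines of Python. This is a minimal sketch: the function name and input layout are hypothetical, and it assumes each participant selects a given card at most once, so a count equals the number of participants who chose that card.

```python
from collections import Counter


def tally_cards(selections):
    """Return [(card, count, percent of participants)] sorted by descending count.

    selections: one list of chosen cards per participant (hypothetical layout).
    """
    counts = Counter(card for cards in selections for card in cards)
    n_participants = len(selections)
    return [
        (card, count, 100 * count / n_participants)
        for card, count in counts.most_common()
    ]
```

Under this layout, a card chosen by 9 of 15 participants would be reported with a count of 9 and a share of 60%.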

Statements from participants were translated from the participants’ native language. The card Relevant was chosen by 9 out of 15 participants (60%), mostly stating it was obviously relevant to them, as they have children with CI or soon will (Personal was chosen for the same reason). Some pointed out that the project could furthermore be relevant by allowing e.g. teachers at the school to experience having CI. Specifically, one participant stated: “I think parents are the wrong target group […] Parents are already convinced that there are a lot of difficulties. However, to convince others, such as teachers, is the issue, and this could be very effective at doing so”.

The reasons for choosing Useful, Meaningful, Essential, Valuable, Powerful, Optimistic, and Helpful are summed up by the following statement from a participant elaborating on why they chose Useful: “It gives an understanding of what everyday life requires, and where challenges occur, and what to be attentive about”. Another stated: “I think it gave an understanding of why it requires more of a child with CI to be in an institution” in regards to choosing the word Valuable. Finally, one participant mentioned it could be a very valuable tool for providing insights for professionals working with CI users, such as teachers, speech-language pathologists, as well as parents of children with CIs.

Overwhelming, Difficult, Stressful, Intimidating, Incomprehensible, and Disconnected were all chosen based on the participants’ experience of hearing with CI during their interaction with the simulation. In general, the parents found the NPCs talking incomprehensible or very difficult to understand, and it was stressful to be on the virtual playground. One of the participants stated: “I think it was overwhelming. I feel tired now. I spent a lot of energy listening around”, and another stated: “It was very stressing on the playground because there was so much noise that I could feel my pulse rise a little”. Some participants who chose Overwhelming and Intimidating expressed they felt a bit sad after the experience, because they realized how difficult it was to hear with CI, thinking of their child: “I was overwhelmed with negative feelings when I realized how hard it is for him [their child with CI] to hear and understand others. I got a bit sad. I feel he [their child with CI] hears more than what I experienced [in the simulation]. It was worse than I expected”. Lastly, one participant chose Difficult in relation to using the application, as they had never tried VR before.

Easy to use, Straightforward, Inviting, and Intuitive were chosen as participants found the navigation within the application intuitive and easy to use.

Participants chose Innovative, Creative, and Impressive, relating both to the concept of simulating CIs in VR being innovative or creative and to the implementation being impressive. One participant stated: “I think it is very impressive what you have created. It [the audio effect of the CI] is very much like my daughter has explained”.

Trustworthy, Compelling, and Effective were chosen, as participants found the experience believable and authentic. One participant expressed: “I felt I was a part of it, so in that regard it was authentic”.

Reasons for choosing Entertaining, Fun, Satisfying, and Exciting are summed up by the following statement by a participant: “I think it is entertaining to try. Both to hear, as well as VR itself because I have never tried it. Especially being inside another world”. One participant was excited that there is “[…] someone addressing it [the problems of children with CIs]”.

Inspiring was chosen “because it gives a new and better picture of what it is the child will experience”. One participant chose Engaging as they otherwise engage themselves as much as possible in research related to their child’s condition. For one participant, the simulation met their expectations, hence choosing Expected. This annoyed the participant, who had wanted to expand their knowledge of their child’s condition but felt the simulation did not add anything to it. Collaborative was chosen with the explanation: “One should think about the child’s needs a bit more. One should cooperate more with the child. You think it is more normal than it is”. Finally, Not secure was chosen as the participant stated: “I felt a bit uncertain if the condition is actually like that”.

The semi-structured interview generated statements addressing different aspects of the participants’ experiences in VR. The most relevant statements are categorized and presented in Figure 15.

A final expert evaluation was also performed where an audiologist working closely with children and families with CI tried the application. The expert pointed out how it is important to teach parents and peers not only to learn about but also to embrace the disabilities and train to a better life with them, in line with the considerations made in Bennett and Rosner (2019).

As an example, the expert saw a strong potential for the simulation as a tool to help children train their spatial awareness, in order to understand the best position in a classroom or playground for a better hearing experience. The classroom simulation included an aquarium. The expert found it particularly illuminating how the seats next to the aquarium created a worse listening experience if the task was to hear the teacher. In this case, VR would be used to train children to find the sweet spot of their hearing experience, and to train peers and teachers in where they should position themselves to be more inclusive toward hearing-impaired classmates. The expert also pointed out that these simulations, although important, should be experienced bearing in mind that the brain of children is still plastic (Kral and Tillein, 2006). We know the physical effect of a CI, but we do not yet completely understand the cognitive effects of how a brain adapts to having a CI.

6 Discussion

In this paper, we conducted a quantitative and qualitative evaluation of the VR application HearMeVR, designed to help parents of children with CI understand how their child is experiencing the world. The results of the PRC as well as specific analysis points and observations are discussed below. Following the PRC evaluation, a semi-structured interview allowed participants to express and elaborate on their points. As the order of questions was flexible, the authors could ask the prepared questions when the context of the conversation was fitting.

The VR simulation and evaluation design allowed participants to explore the virtual environment and switch between simulated hearing conditions (uni- and bilateral CI, and normal hearing) and locations at will. As expected, results showed a tendency of participants mainly choosing to replicate the condition of their own child (bilateral CI). However, the intended use of the application to compare hearing conditions was not utilized, as almost no participants seemed to feel the need to compare hearing conditions, either between CIs or with normal hearing. Interview feedback confirmed that this behavior was influenced by their main motivation and interest in the condition of their child.

During the evaluation, some participants suggested tailoring the simulation to other target groups, mentioning teachers or normal-hearing peers in classes with a child using CI. Their arguments emphasized that the parents are already sufficiently informed about their child’s condition. Differences in motivation between parents and others suggest differences in time and resources invested when learning about the condition. This can be relevant since children with CIs are affected in ordinary learning institutions that cannot afford or are unaware of the need for special care and attention. Feedback from participants 5 and 9 exemplified issues in social contexts of their children resulting in negative consequences such as bullying or social isolation. As suggested, if the simulation is tailored toward usage by teachers or peers, it could provide them with valuable insight into the condition. This could encourage them to adapt the physical environment and social behavior more optimally toward children with CIs.

Some participants were overwhelmed and expressed negative feelings toward the experience when giving feedback on the simulation. Regarding simulated conditions, ethical precautions should be taken when comparing normal hearing to hearing with CIs. The comparison can possibly result in a negative experience when listening with CIs because they sound uncomfortable in comparison with normal hearing. Some participants were skeptical regarding the purported realism of the CI simulation, as seen in Figure 15. In the interview with the expert audiologist, it was outlined that the adaptation process will train the brain to recognize sounds through the CI over the duration of months or years, a process not directly replicable by the simulation. For this reason, it is practically impossible to fully simulate a CI. It is important to be aware of these limitations when designing the simulation, as the experience for the parents can quickly feel subjectively worse than what is experienced by their children in real life. Yet, several positive responses of curiosity, value, and effectiveness were expressed by participants. The VE would give participants the needed knowledge and experience of the difference in hearing difficulty between normal and CI conditions, helping them realize what types of sounds are difficult for their children to pick up. This knowledge can then be applied in real-world situations afterward.

Most participants were positively inclined toward the experience. Considering that 12 of 15 participants had never tried VR before, the novelty of VR as a technology and its presence-facilitating capabilities regarding controls, graphics, and audio could influence these responses. One participant found it difficult to navigate and control the avatar. However, most found it easy to use, as seen in the PRC results. Observations indicated minimal problems related to navigating in VR. Regarding movement in the virtual environment, a controller-based continuous locomotion technique was implemented. Continuous locomotion approximates real-life walking more than does discrete locomotion, but 4 of 15 participants expressed symptoms of cybersickness such as feeling drunk or dizzy. Cybersickness is a sensation, similar to motion sickness, occurring when interacting with VR simulations, especially when there is an incongruency between sensory information of the user and movement data delivered by the VR system (Silton et al., 2019). These feelings were provoked even though participants were seated on a chair during the experiment. As the simulation was designed to feel as if one truly visited a playground or a classroom, the feelings of cybersickness could hinder the feelings of presence, and decrease the perceived senses of involvement and realism. As such, the effects of the simulation might suffer, possibly influencing the experiment.

Considering the above discussion, results suggest that the experience in VR had a positive influence on participants, combined with high interest and motivation for the concept itself. They felt the experience relayed an exciting and believable simulation of their child’s hearing condition, complementing and adding to their existing knowledge and beliefs on what their child can and cannot hear. Participants voiced skepticism about the realism of the simulation and about the possibility of simulating such a condition at all, possibly influenced by the short time they had to adjust to the new soundscape of simulated CIs. Together with signs of cybersickness, occasional visual and movement glitches in the experience made participants more aware of the real world around them during the experiment; yet they could immerse themselves and feel present in the virtual world. However, statements indicate that participants did not feel as if they were at the school themselves, rather engaging in perspective-taking when comparing conditions and soundscapes. Going forward, being able to foster this engagement can still help facilitate further empathic responses and behavior in participants toward the children. As a participant stated: “I’ve been against some of those assistive devices during class, because he [their child with CI] shouldn’t differ too much from his peers, but now I see it should maybe be reconsidered”.

7 Conclusion

In this paper, we presented a VR simulation of the experience of being present in a school setting while having a cochlear implant vs. having no hearing condition. When situated in two different virtual settings of contrasting soundscapes, participants of the experiment (parents of children with bilateral CI) tended to produce similar results as real-world studies of speech recognition and sound localization when comparing test results for unilateral CI, bilateral CI, and normal hearing. It was seen that noise influenced word recognition more than it influenced sound localization, but ultimately affected both. Comparing unilateral CI to bilateral CI, the bilateral CI improved sound localization, but also improved word recognition, especially when the environment was sufficiently quiet. Results also confirmed the disproportionately greater difficulties listening in noisy soundscapes compared to quiet soundscapes for users of CIs in real life. The IPQ indicated that participants in general had a high sense of presence in the VE, even though the disability simulation drastically impacted the sound quality for participants, making speech recognition and localization more difficult. The sense of realism was lower, partly due to a less dynamic environment with limited interaction, as suggested by the IPQ and post-evaluation informal discussion. However, the VE seemed real to participants regarding the soundscape, as well as the nature and setting of the VE in general. The combination of high levels of presence as well as sound localization and word recognition results being similar to findings in real-life studies, the VE should provide a more effective, intrinsically motivating, and ecologically valid experience of what it is like to hear with cochlear implants. Future studies could focus on the qualitative aspect of users’ experiences in the VE and how they perceive the possibilities of utilizing the technology to learn about the condition of living with CIs. 
We will also focus on complementing the qualitative evaluations and observations with behavioral measurements of participants, such as spatial trajectory data and eye-gaze information. We also plan to implement the software on HMDs such as the Oculus Quest 2 in order to make it more accessible to the target population.

Concepts such as embodiment and immersion allow for presence in ecologically valid virtual environments, facilitating greater empathy and understanding for users in disability simulations. Digital simulations are also effective in simulating disabilities, including hearing loss, the most common sensory disability in the world. This study proposed HearMeVR, a virtual simulation that lets parents experience listening with cochlear implants in environments that are common in children's everyday lives. The design adopted stylized graphics in an environment with naturally animated NPCs, spatialized audio, and a realistic simulation of hearing with cochlear implants. The results of a qualitative evaluation (N = 15) and feedback from expert interviews suggested that, by using the virtual experience to build awareness and empathy regarding the difficulties of hearing with cochlear implants, parents, teachers, and normal-hearing peers can better understand the struggles of hearing-impaired children.

Statements

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving human participants were reviewed and approved by the Ethical committee of Aalborg University. The patients/participants provided their written informed consent to participate in this study.

Author contributions

AJ, LE, CH, and DR implemented the system. Everyone contributed to the design of the experiment and writing the paper.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.
