OPINION article

Front. Virtual Real., 17 May 2021

Sec. Virtual Reality and Human Behaviour

Volume 2 - 2021 | https://doi.org/10.3389/frvir.2021.673547

Cassandra Complex: from Greek mythology; someone whose valid warnings or concerns are disbelieved by others

Recently, great strides have been made in making virtual, augmented, and mixed reality technologies (collectively referred to here as XR) accessible to a broad and diverse audience of end users. The breadth of use cases for these technologies has grown as they have been embedded in affordable, widely accessible, mobile devices (e.g., the iPhone) and combined with popular intellectual property (e.g., Pokémon Go). However, this growth has been accompanied by recognition of several ethical issues attached to the widespread use of XR technologies in everyday life. The XR domain raises concerns similar to those raised by the development and adoption of AI technologies. In addition, XR provides immersive experiences that blur the line between what is real and what is not, with consequences for human behavior and psychology (Javornik, 2016; Ramirez, 2019).

It is easy to dismiss concerns about XR technology as unfounded or premature. However, the current state of the art in XR already supports several use cases that we see as cause for concern.

1) Thanks to advances in computer vision, XR can generate realistic holograms of people. These holograms are lifelike and can be made to say or do things thanks to advances in deepfake technology, in which video footage of a person is generated in real time from large repositories of real captured footage (Westerlund, 2019). This can be used to promote disinformation. For example, a deepfake hologram could portray a movie celebrity sharing political propaganda that the celebrity does not endorse, targeting their fans and spreading lies about the incumbent leader’s political opponents. The hologram could also be made to harass or provoke viewers (Aliman and Kester, 2020), goading them into acting irrationally. This warrants ethical consideration when designing XR experiences for broadcasting and entertainment.

2) XR technology that can sense and interpret objects in the environment can be used to mask or delete the objects it recognizes. This can be used to promote misleading or anticompetitive behavior in consumer goods marketing. For example, while a user is browsing an XR marketplace, a soft drink manufacturer could identify a competitor’s can and make it look dented or otherwise undesirable, nudging the consumer to buy its own ‘superior’ looking product instead. In an XR environment, consumers interact with a product more directly than in traditional broadcast-based marketing, and XR provides powerful virtual affordances (Alcañiz et al., 2019) that can influence consumers and their purchase intentions. If technology that can track our every move and knows our preferences and desires becomes widespread and is given the power to make decisions on our behalf, it may have unintended consequences (Neuhofer et al., 2020). Therefore, the use of XR in marketing should be subject to ethical scrutiny.

3) XR experiences may be so immersive that they distract users from their surroundings, exposing them to harm. For example, there have been several reports of Pokémon Go players being hit by passing vehicles while playing the game,¹ completely immersed in the experience and unaware of what was happening around them in the real world. As these experiences revolve around storytelling, creators have an ethical responsibility to ensure safe passage through the experience for audiences and viewers (Millard et al., 2019). Because play takes place in real locations, creators must also consider whether it is appropriate to facilitate play at socio-historical or sacred sites (Carter and Egliston, 2020). Similarly, there have been calls for standards in the design of XR experiences for educational purposes (Steele et al., 2020).

4) XR technology can also be used to create realistic environments in which, although there is no physical harm, certain experiences may expose participants to psychological trauma. Although there is no real threat in the environment, the participant perceives the virtual representation as threatening: it looks and ‘feels’ real. Participants may become overwhelmed by intense anxiety and fear, as the level of graphical detail can be staggering (Reichenberger et al., 2017; Slater et al., 2020; Lavoie et al., 2021). In such cases, complex ethical situations arise when XR technologies are used in therapy and research.

These four use cases alone demonstrate the potential harm XR technologies may introduce for users, whether intentional or not, and whether physical, psychological, or social.

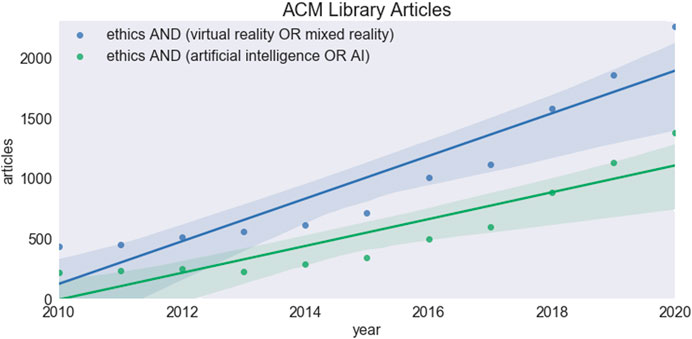

The social and political harms of emerging technologies such as social media are on the rise (Vaidhyanathan, 2018). New tools and technologies are emerging so quickly that as soon as we work out what to do about one problem, a new one arises. Searching the Association for Computing Machinery (ACM) Digital Library reveals a growing body of work on XR and ethics that shows no sign of slowing down (Figure 1). In short, XR is following the same trend in publication output as AI. Given the bumps in the road that AI ethics has encountered in the recent past, we anticipate that similar issues may soon begin to emerge in XR. Recent work has raised concerns about the practical utility of ethics documents written by governments, NGOs (non-governmental organisations), and private sector agents. Schiff et al. (2020) identified several motivations by coding more than 80 documents on ethical approaches to AI published between 2016 and 2020. They describe how the motivations for publishing such documents can interact with one another: some agents may be motivated to act responsibly, while others may be motivated to signal responsibility by publishing documents that increase their brand authority or establish a leadership position for competitive advantage. XR is at risk of similar problems if all we do is publish policy documents with the aim of taking positions on the global stage.

FIGURE 1. Number of articles returned per year by the ACM Digital Library for mixed reality (search terms: ethics, mixed reality, virtual reality) vs. artificial intelligence (search terms: ethics, artificial intelligence, AI).

As the application domain explodes, we must be vocal about the dangers to ethics and moral values in society. It may be necessary to impose applied ethics counsel upon developers and researchers: those who are creating and exploring XR technologies. Because content creators shape applications and their use cases, it is naïve to pass the responsibility to policymakers: by the time policy catches up, it is too late. We can no longer be reactive toward emerging ethical problems in XR. We must strive for a proactive approach that goes beyond local policies and guidelines. If engineers and computer scientists are the ones pushing the frontiers of XR technologies, we must accept our fallibility with grace and understand the biases we bring. We must work with philosophers of ethics and governance to create a shared vision of what we want XR to be, and with industry, NGOs, and government to decide the best approach. This shared vision must consider not just the technical challenges to overcome in bringing immersive XR experiences to users, but also how to do so in a way that is responsible and considers any and all hazardous consequences.

In the current global political climate, where science and technology are sometimes viewed with hostility and mistrust²,³,⁴ (Caprettini and Voth, 2020), there is a danger of modern Luddites arguing against AI, XR, and similar technologies and refusing to adopt them even where there are obvious benefits (e.g., for health or the environment). We do not want to throw the baby out with the bathwater. Instead, we need to identify and address issues convincingly and thoroughly, without misleading the public. The literature contains an overwhelming number of suggestions for reforming these technologies, along with guidelines for the design and development of algorithms, interfaces, and data collection. Although industry has adopted a few good practices, such as establishing ethics boards and accountability and privacy policies, these are self-regulated and self-governed. As a community, we lack a formula for approaching the challenges we have identified. We seem to act by policy reflex: while there are many well-cited papers in the literature, their proposals remain a series of ad hoc insights or fragments of a larger whole. A formula and a solid foundation would help bring all these fragmented but invaluable efforts together. We argue for bringing advocates, policymakers, citizens, researchers, technologists, and human rights activists from different jurisdictions worldwide into the discussion to steer XR technologies toward serving civil society.

We need not look far for inspiration, as promising steps toward reform have been made in other domains. For example, there are invaluable lessons to be learned from the response to the climate crisis. For many decades, scientists rang the alarm bell about thinning ice sheets in the Arctic and rising global temperatures. However, their international efforts were dismissed or ignored by the public and governments. As the overwhelming impact of global warming started affecting many populations around the world in the 1990s, the scientific community and activists were asked what they would propose. Thus the United Nations Framework Convention on Climate Change was established, with most nations on earth agreeing to stabilize human-induced greenhouse gas concentrations. In December 2015, official representatives from nearly every country in the world gathered in Paris for the United Nations Climate Change Conference (COP21), which produced a legally binding agreement. In adopting the Paris Agreement, governments agreed to work to limit the global temperature rise to well below 2 degrees Celsius above pre-industrial levels. Agreements like this seek to form alliances of like-minded nations across borders in relation to climate change. A collective, united nations approach to ethical practice for mixed reality applications may prove similarly beneficial, helping to enact real impact and provide protective measures for participants, citizens, and consumers of XR research and products.

We are currently seeing organized attempts to address the pressing ethical issues arising from the wide adoption and development of AI solutions. In June 2019, at the first European AI Assembly, the High-Level Expert Group on AI (AI HLEG) presented recommendations to guide trustworthy AI, promoting “sustainability, growth and competitiveness, as well as inclusion–while empowering, benefiting and protecting human beings.”⁵ In August of the same year, the US National Institute of Standards and Technology (NIST) released a plan for federal engagement in developing technical standards and related tools for AI.⁶ This document sets out actions the US federal government should take to protect public trust and confidence in AI as a priority, and emphasizes the importance of interdisciplinary research to increase understanding of issues around ethics and responsibility across society. Still, those actions lack the global approach that is needed in today’s interconnected world. It is encouraging to see early signs of a global approach, for example the recent EU proposals⁷ containing regulations and guidelines for excellence and trust in AI. Agreeing on a framework for emerging technologies such as XR will not place as great a demand as climate change does on the economic or political systems of the nations that produce and export such technologies. However, to avoid the pitfalls associated with AI in the past, progress needs to happen on a global stage rather than through localized approaches.

The more frustrated academics become at not being heard, the more tempted they may be to overstate their case, to provide ever more information, and to use imperatives, all of which lowers their communicative effectiveness. To avoid Cassandra’s fate, we need to appeal to people from all walks of life via an international assembly. We need to shift discussions from ethics of XR to ethics for XR, and researchers across academia and industry must communicate effectively with all stakeholders to build a unified ethical framework for the development and deployment of XR technologies.

DF, AZ, and PE wrote the paper. DF, AZ, and PE conducted the ACM Digital Library search. AZ generated Figure 1.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

1 https://time.com/4405221/pokemon-go-teen-hit-by-car/

2 https://www.independent.co.uk/life-style/gadgets-and-tech/news/google-artificial-intelligence-project-maven-ai-weaponise-military-protest-a8352751.html

3 https://www.libertyhumanrights.org.uk/campaign/resist-facial-recognition/

4 https://futureoflife.org/ai-open-letter

5 https://ec.europa.eu/digital-single-market/en/news/policy-and-investment-recommendations-trustworthy-artificial-intelligence

6 https://www.nist.gov/system/files/documents/2019/08/10/ai_standards_fedengagement_plan_9aug2019.pdf

7 https://digital-strategy.ec.europa.eu/en/library/proposal-regulation-laying-down-harmonised-rules-artificial-intelligence-artificial-intelligence

Alcañiz, M., Bigné, E., and Guixeres, J. (2019). Virtual Reality in Marketing: A Framework, Review, and Research Agenda. Front. Psychol. 10, 1530. doi:10.3389/fpsyg.2019.01530

Aliman, N.-M., and Kester, L. (2020). “Malicious Design in AIVR, Falsehood and Cybersecurity-oriented Immersive Defenses,” in 2020 IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR), 130–137. doi:10.1109/AIVR50618.2020.00031

Caprettini, B., and Voth, H.-J. (2020). Rage Against the Machines: Labor-Saving Technology and Unrest in Industrializing England. Am. Econ. Rev. Insights 2, 305–320. doi:10.1257/aeri.20190385

Carter, M., and Egliston, B. (2020). Ethical Implications of Emerging Mixed Reality Technologies. Sydney: The University of Sydney. doi:10.25910/5ee2f9608ec4d

Javornik, A. (2016). ‘It’s an illusion, but it looks real!’ Consumer Affective, Cognitive and Behavioural Responses to Augmented Reality Applications. J. Market. Manage. 32, 987–1011. doi:10.1080/0267257X.2016.1174726

Lavoie, R., Main, K., King, C., and King, D. (2021). Virtual Experience, Real Consequences: the Potential Negative Emotional Consequences of Virtual Reality Gameplay. Virtual Reality 25, 69–81. doi:10.1007/s10055-020-00440-y

Millard, D. E., Hewitt, S., O'Hara, K., Packer, H., and Rogers, N. (2019). “The Unethical Future of Mixed Reality Storytelling,” in NHT ’19: Proceedings of the 8th International Workshop on Narrative and Hypertext (New York, NY, USA: Association for Computing Machinery), 5–8. doi:10.1145/3345511.3349283

Neuhofer, B., Magnus, B., and Celuch, K. (2020). The Impact of Artificial Intelligence on Event Experiences: a Scenario Technique Approach. Electron Markets 7, 133. doi:10.1007/s12525-020-00433-4

Ramirez, E. J. (2019). Ecological and Ethical Issues in Virtual Reality Research: A Call for Increased Scrutiny. Philos. Psychol. 32, 211–233. doi:10.1080/09515089.2018.1532073

Reichenberger, J., Porsch, S., Wittmann, J., Zimmermann, V., and Shiban, Y. (2017). Social Fear Conditioning Paradigm in Virtual Reality: Social vs. Electrical Aversive Conditioning. Front. Psychol. 8, 1979. doi:10.3389/fpsyg.2017.01979

Schiff, D., Biddle, J., Borenstein, J., and Laas, K. (2020). “What’s Next for AI Ethics, Policy, and Governance? A Global Overview,” in AIES ’20: Proceedings of the AAAI/ACM Conference on AI, Ethics, and Society (New York, NY: Association for Computing Machinery), 153–158. doi:10.1145/3375627.3375804

Slater, M., Gonzalez-Liencres, C., Haggard, P., Vinkers, C., Gregory-Clarke, R., Jelley, S., et al. (2020). The Ethics of Realism in Virtual and Augmented Reality. Front. Virtual Real. 1, 113. doi:10.3389/frvir.2020.00001

Steele, P., Burleigh, C., Kroposki, M., Magabo, M., and Bailey, L. (2020). Ethical Considerations in Designing Virtual and Augmented Reality Products-Virtual and Augmented Reality Design with Students in Mind: Designers’ Perceptions. J. Educ. Technol. Syst. 49, 219–238. doi:10.1177/0047239520933858

Vaidhyanathan, S. (2018). Antisocial Media: How Facebook Disconnects Us and Undermines Democracy. Oxford: Oxford University Press.

Keywords: mixed reality, ethics, Cassandra Complex, virtual reality, artificial intelligence

Citation: Finnegan DJ, Zoumpoulaki A and Eslambolchilar P (2021) Does Mixed Reality Have a Cassandra Complex?. Front. Virtual Real. 2:673547. doi: 10.3389/frvir.2021.673547

Received: 27 February 2021; Accepted: 30 April 2021;

Published: 17 May 2021.

Edited by:

Evangelos Niforatos, Delft University of Technology, Netherlands

Reviewed by:

Aryabrata Basu, Emory University, United States

Copyright © 2021 Finnegan, Zoumpoulaki and Eslambolchilar. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Daniel J. Finnegan, finnegand@cardiff.ac.uk

†These authors have contributed equally to this work and share first authorship

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
