ORIGINAL RESEARCH article

Front. Virtual Real., 07 September 2021

Sec. Virtual Reality and Human Behaviour

Volume 2 - 2021 | https://doi.org/10.3389/frvir.2021.656473

This article is part of the Research Topic Presence and Beyond: Evaluating User Experience in AR/MR/VR

Mark Roman Miller1* and Jeremy N. Bailenson2

Augmented reality headsets in use today have a large area in which the real world can be seen, but virtual content cannot be displayed. Users’ perceptions of content in this area are not well understood. This work studies participants’ perception of a virtual character in this area by grounding this question in relevant theories of perception and performing a study using both behavioral and self-report measures. We find that virtual characters within the unaugmented periphery receive lower social presence scores, but we do not find a difference in task performance. These findings inform application design and encourage future work in theories of AR perception and perception of virtual humans.

One defining aspect of augmented reality (AR) is the integration of real and virtual content (Azuma, 1997). This integration is what separates AR from virtual reality and enables its unique applications. The development of AR headsets progresses towards a vision of computationally-mediated stimuli produced in fidelity high enough to be indistinguishable from reality.

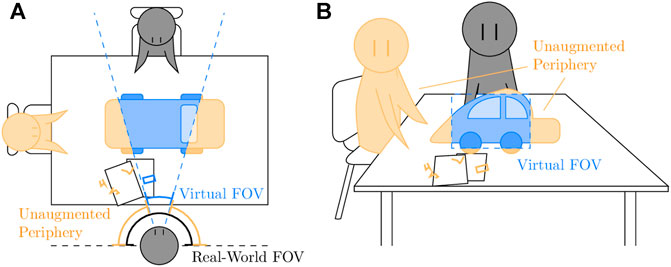

However, this vision has not yet come to fruition. The limitation with which this work concerns itself is the field-of-view (FOV). In all headsets in use today, there are regions of the visual field in which real objects are visible but virtual objects are not. This region has the technical name unaugmented periphery (Lee et al., 2018), defined as the area within the real-world FOV that is not included in the virtual FOV. Figure 1 illustrates these ranges from both a bird’s-eye and a first-person view.

FIGURE 1. Diagram of virtual and real-world fields of view. Virtual objects are drawn in blue and orange. Panel (A) shows a bird’s-eye view of a team collaborating over a table. On the table is a virtual object. The virtual FOV (blue dotted lines) is smaller than the real-world FOV (short grey lines), leading to large sections of virtual content in the unaugmented periphery (orange). Panel (B) shows a first-person view, denoting the virtual FOV (blue) and unaugmented periphery (orange). The grey character is visible, being physically present, while the orange character is not visible, being in the unaugmented periphery.

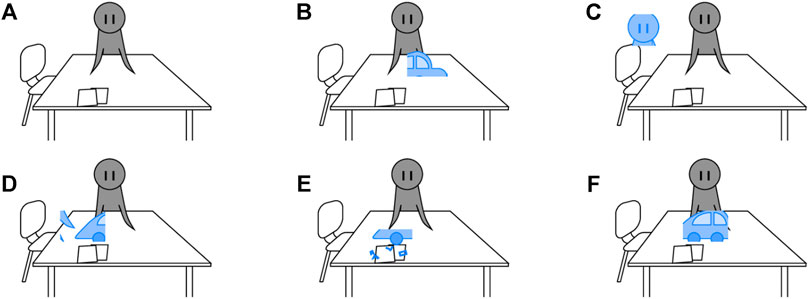

The narrow FOV of current headsets hampers interaction with virtual content. Anecdotally, we have observed first-time users become surprised and occasionally frustrated at the narrow FOV. To illustrate the effects of a narrow FOV, we provide a qualitative illustration of headset use in an example environment (Figure 2) and a quantitative calculation of the size of the unaugmented periphery in the Microsoft HoloLens.

FIGURE 2. Illustrations of a user’s view when using an AR headset with limited FOV. Virtual content is shown in blue. In this figure, there is no rectangle indicating the virtual field of view in order to more accurately show the user’s view of the scene. (A) The user is making eye contact with a physically co-located collaborator. (B) The user looks to part of the virtual car model that the collaborator is speaking about. The whole model does not fit into view, so only part is visible. (C) The user looks over at a remote collaborator. The virtual car is no longer visible. (D) The user looks down at the part of the car the remote collaborator is gesturing towards. The collaborator’s face is no longer visible. (E) The user checks notes, showing part of the virtual car above the virtual annotations made on the paper notes. (F) The user tries to view the entire car, but it does not fit within the headset’s FOV. To see the entire scene at once, see Figure 1 panel B.

To calculate the unaugmented periphery, the real-world FOV must be compared with the virtual FOV. Virtual FOV has a straightforward measurement because it is often reported as a technical specification. The HoloLens virtual FOV is about 30° horizontally by 18° vertically (Kreylos, 2015).

The real-world FOV must be estimated based on the device and the size of the human visual field. An estimate for the real-world FOV for this device is approximately 180° horizontally by 100° vertically. This estimate comes in two parts, horizontal and vertical. The vertical field of view is limited slightly by the headset – an object at the very top edge of the human visual field (about 50° above horizontal) would be occluded by the headset’s brim. A fair estimate of the maximum vertical angle visible through the HoloLens is about 30° above horizontal. Combined with the fact that minimum vertical angle is about 70° below horizontal (Jones et al., 2013), a fair estimate is approximately 100° vertical.

The horizontal range is unobstructed by the device, i.e., a real-world object at the far left or far right of the visual field is visible with or without the headset. Therefore, the horizontal field of view is approximately 180°, the horizontal field of view of the human visual system (Jones et al., 2013). In all, the unaugmented periphery of a user wearing the Microsoft HoloLens extends about 20° above the virtual FOV, 60° below it, and 75° to each side. While other headsets have a larger field of view, such as the Microsoft HoloLens 2 and the Magic Leap One, these headsets still have a sizable unaugmented periphery.
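The margins quoted above can be reproduced with a short calculation. The sketch below is illustrative only: it uses the angles given in the text and assumes that the virtual FOV is centered on the horizontal line of sight, which the text does not state explicitly. The function and variable names are ours.

```python
# Angles quoted in the text (degrees).
REAL_UP_DEG = 30.0      # highest angle above horizontal visible through the headset
REAL_DOWN_DEG = 70.0    # lowest angle below horizontal in the human visual field
REAL_HORIZ_DEG = 180.0  # horizontal extent of the human visual field
VIRT_HORIZ_DEG = 30.0   # HoloLens virtual FOV, horizontal
VIRT_VERT_DEG = 18.0    # HoloLens virtual FOV, vertical


def unaugmented_margins(real_up, real_down, real_horiz, virt_horiz, virt_vert):
    """Angular extent of the unaugmented periphery on each side of the virtual FOV.

    Assumption: the virtual FOV is centered on the horizontal line of sight.
    """
    half_vertical = virt_vert / 2
    return {
        "above": real_up - half_vertical,
        "below": real_down - half_vertical,
        "each_side": (real_horiz - virt_horiz) / 2,
    }


margins = unaugmented_margins(
    REAL_UP_DEG, REAL_DOWN_DEG, REAL_HORIZ_DEG, VIRT_HORIZ_DEG, VIRT_VERT_DEG
)
print(margins)  # {'above': 21.0, 'below': 61.0, 'each_side': 75.0}
```

Under this assumption the results (21°, 61°, and 75°) agree with the rounded figures of about 20°, 60°, and 75° given above.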

The unaugmented periphery raises some questions for AR researchers, designers, and users. How does the brain perceive virtual objects in the unaugmented periphery? In some way, this process is like perceiving an occluded object, which is an everyday occurrence. How does this process extend to cases in which the obscuring object is not a visible one, but rather is the invisible edge of some display? Furthermore, what does this odd kind of invisibility imply for presence of the virtual object within the unaugmented periphery? In this paper, we investigate this by intentionally placing a virtual human in this unaugmented periphery during a task, and we collect data on its social presence.

The task chosen for this work is the social facilitation and inhibition study in Miller et al. (2019). In that experiment, participants solved word puzzles at one of two levels of difficulty, either being ‘watched’ by a virtual person or with no virtual person present. By including both of these conditions in our study, in addition to a new condition of the virtual person being inside the resting unaugmented periphery, we can not only test a new hypothesis, but also perform a replication of this work.

The term unaugmented periphery was introduced by Lee et al. (2018) to refer to the area in which a user can see real-world objects but cannot see virtual objects, i.e., the area within the real-world FOV but not within the virtual FOV. In the study, Lee and colleagues explored differences between a restricted FOV, produced by blocking out the unaugmented periphery with opaque foam, and an unrestricted FOV. Participants walked between two locations about 6 m apart. In the middle of the two locations was an obstacle, either a real or virtual person. They found that a participant’s walking path around a real person was more similar to the walking path around a virtual person by a participant with restricted FOV than to the walking path around a virtual person by a participant with unrestricted FOV, which was interpreted as behavioral evidence that the restricted FOV causes the virtual person to have greater co-presence.

The sensory processing of visual information into coherent and persistent objects has been a subject of study in cognitive psychology. Object permanence, a concept rooted in Piaget (1954), is a person’s understanding that objects can remain in existence even though the person does not receive any sensory stimulation from the object. The natural next step in this line of work involves determining the conditions and causes in judging an object as permanent rather than transient.

We bring two theories to bear upon the user’s experience of the unaugmented periphery. In the first, we refer to work on the perceptual system and the importance of the visual patterns in the moments before the object ceases to be visible. In the second, we refer to a theory of presence that frames presence as the result of “successfully supported actions.”

Gibson et al. (1969) suggest the distinction between an object out of sight or out of existence is made based on whether the object’s final moments within view seem reversible. Reversible exits correspond to the object going out of sight, but non-reversible transitions correspond to objects going out of existence.

For example, picture an observer, Alice, in a hall watching Bob step into a room and close the door behind him. The moment Bob is no longer visible to Alice is the moment the door shuts. While Bob is closing the door, the sensory information that Alice receives indicating Bob’s existence is the portion of him that is visible behind both the doorframe and the door. As Bob is closing the door, that portion becomes thinner and thinner, and the edge of the occluding objects (the door and the doorframe) stay consistent. If this scene is played backwards, it is just as realistic from Alice’s point of view. Instead of the portion in which Bob is visible becoming smaller and smaller, it becomes larger and larger. This reversed scene would be visually very similar to Alice’s view of Bob opening the door. This plausibility of the scene played backwards is what Gibson specifies as the key perceptual difference in the brain’s conclusion of whether the object still exists.

As an example of a transition out of existence, consider a piece of newspaper burning up. If this scene is reversed in time, a newspaper would seem to appear from ashes. This implausible situation, according to Gibson’s theory, is a signal to the perceptual system that the object has gone out of existence, not merely out of sight.

The application of this theory to the unaugmented periphery is a straightforward one. The pattern of visual information from the virtual human follows the pattern of occlusion, which is reversible. Therefore, objects in the unaugmented periphery would be perceived as going out of view rather than out of existence.

The fact that the occluding object is invisible does not invalidate this theory’s application. Invisible occluding objects were discussed in Gibson’s original work, driven by the foundational work of Michotte et al. (1964), and have been the subject of subsequent studies, e.g., Scholl and Pylyshyn (1999).

A primary construct in the psychological study of virtual and augmented reality is presence, which we follow Lee (2004) in defining as the “perception of non-mediation.” One theory of presence claims “successfully supported actions” (Zahorik and Jenison, 1998) are the root of presence. When actions are made towards an object, the object reacts, in some fashion, to the action made. When the object’s response is congruent with the person’s expectations of the response, the action is said to be successfully supported.

A very simple example of a successfully supported action is the counter-rotation of a virtual object when the user rotates their head. If a user rotates their head left to right, an object in front of them moves, relative to their field of view, from right to left. A second example would be a glass tipping over when bumped by a user’s hand. The action is the hand contacting the glass, and the support is the production of a realistic tipping over effect.

Recent work in augmented reality aligns with this theory of presence being the result of “successfully supported actions.” Work by Lee et al. (2016) placed participants at one end of a half-virtual, half-physical table. At the virtual end of the table was a virtual human interviewing the participant. The independent variable of the study was whether the wobbling of the table would be coherent between the virtual and physical worlds. In the experimental condition, if either the participant or the virtual character leaned on the table, the physical side of the table wobbled as if connected to the virtual side. This experimental condition resulted in higher presence and social presence of the virtual human than the control condition. In a study by Kim et al. (2018), co-presence of a virtual human was higher when virtual content in an AR scene (specifically, a person, some sheets of paper, and window curtains) responded to the airflow from a real oscillating fan within the experiment room. In sum, realistic responses from virtual objects tend to increase presence.

When an object is within the unaugmented periphery, there is not only a lack of stimuli indicating existence, but also a violation of the user’s expectations as to the virtual object’s behavior, which are unsuccessfully supported actions. Because objects in front occlude objects in back, one expects that the virtual human should occlude the real-world objects behind it; this expectation is violated when the virtual human is virtually occluded in the unaugmented periphery. In sum, this theory of presence would predict objects in the unaugmented periphery to be less present than virtual objects within the virtual FOV.

Social facilitation and inhibition are complementary findings explaining the effect of an audience improving or impairing the performance of a task, depending on other contextual factors such as difficulty (Bond and Titus, 1983; Aiello and Douthitt, 2001). The earliest scientific mention of the social facilitation effect is from Triplett (1898) who found that children winding fishing reels of string in competition performed faster than those who wound alone.

These seemingly contradictory effects were synthesized into one theory by Zajonc (1965), who suggested that the effects were both due to the presence of others increasing arousal, and arousal increasing the likelihood of the dominant response. The bidirectional effects were due to the nature of the task. In the case the task was simple, and the dominant response was likely correct, an audience would improve performance. However, when the task was complex, the dominant response would be incorrect, and so an audience would impair performance.

The effects of social facilitation and inhibition can even occur due to the implied presence of others (Dashiell, 1930) or to virtual others (Hoyt, Blascovich and Swinth, 2003; Park and Catrambone, 2007; Zanbaka et al., 2007). In augmented reality, Miller et al. (2019) found evidence of a social facilitation and inhibition effect due to the presence of a virtual person displayed in augmented reality.

The current work builds upon work by Lee et al. (2018) and Miller et al. (2019), and so we take some space here to provide a more direct contrast with these works.

Our study design is similar to the work of Miller et al. (2019). In the study of social facilitation and inhibition, the first of the three reported in that paper, participants performed a cognitive task in two conditions, with or without a virtual person. That study did not investigate the unaugmented periphery. In contrast, in the current study, we examine both conditions from the previous work in addition to a third condition in which the virtual human is intended to be within the unaugmented periphery.

While Lee et al. (2018) do focus on the unaugmented periphery and use behavioral measures of presence, their contribution is the proposal and test of a technological solution for the challenges raised by the unaugmented periphery. Specifically, they proposed to reduce the real-world FOV to increase presence. While effective as a piecemeal solution, this approach leaves many unanswered questions, most prominently the perceptual status of a virtual person within the unaugmented periphery in contrast to a fully present or fully absent virtual person.

In sum, this work investigates the perception of virtual humans within the unaugmented periphery to better ground discussions of its importance and potential solutions.

The augmented reality display device was the Microsoft HoloLens headset, version 1. The field-of-view is 30° by 17.5°, and the resolution is 1,268 × 720 pixels for each eye. The device tracked its own position and orientation relative to the room and exported the data to a tracking file. The device also recorded the audio spoken by the participant during the task.

The cognitive task performed by the participant during the experiment was the anagram task used in both Park and Catrambone (2007) and Miller et al. (2019). Each anagram had five letters, and each participant was given ten anagrams to solve. Anagrams were broken up into easy and hard sets.

This study was performed in two locations to create a sufficiently large sample size. While this aggregation may reduce sensitivity, it also increases generalizability. The first was a booth at a science and technology museum in a large American city. This booth was approximately 2.5 m by 4 m. While seeing into the booth was not possible when the door was shut, the top of the booth was open and other museumgoers could easily be heard. In this location, the virtual person was 2.06 m from the participant on average.

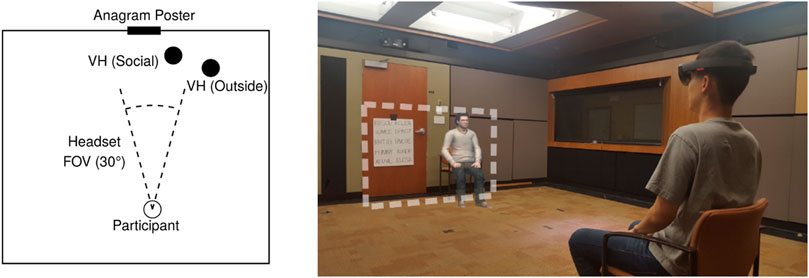

The second location was a study room on a medium-size, suburban private college campus, 5.6 m by 6.4 m. The layout of the room is depicted in Figure 3. This room was separated from other rooms, and while there was a window connecting this room to another, the experimenter ensured the other room was empty. The virtual person was 3.83 m from the participant on average.

FIGURE 3. The left panel is a bird’s‐eye diagram of the study setup. The right panel is a photo of the campus-based study location, with the researcher posing in the place of a participant. The white dotted area indicates the user’s virtual FOV.

The virtual content displayed to the participants consisted of a Rocketbox Virtual Character (Gonzalez-Franco et al., 2020). To reduce gender effects, the virtual human displayed to the participant was the same gender as the participant. When the researcher pressed a button, the virtual human spoke a 20 s recorded introduction with talking gestures. When not speaking, the virtual human idled using a looped animation with a small amount of head motion and tilt.

The study design was preregistered at the Open Science Foundation1, which included independent and dependent variables as well as covariates. Preregistering variables aims to reduce selective reporting of results based upon statistical significance (Nosek et al., 2018).

The study design was a 3 × 2 design, with three conditions of visibility and two conditions of task difficulty. Each participant only experienced one of these combinations, making both variables between-subjects.

The virtual human can be Social, Outside FOV, or Alone. In the Social condition, the virtual human is present and placed near the anagram poster. The placement was about five degrees horizontally from the poster, and was chosen so that the virtual human would be within the field of view during the experiment. In the Outside FOV condition, the virtual human was present, but was farther away from the anagram poster, about 25°. This value was chosen so that the virtual human would be outside the field of view, unless the participant looked in the direction of the virtual human. Note that the virtual human was visible as part of the pretest procedure even in the Outside FOV condition. Finally, there was the Alone condition, in which no virtual person was introduced or visible.

In order to test social facilitation and inhibition, rather than the virtual human changing performance across the board, there were two separate levels of difficulty, labeled as Easy and Hard. The anagram sets are the same ones as used in Miller et al. (2019). Each set consisted of 10 anagrams.

Social presence was measured as the average of the five-item Social Presence Questionnaire as used in Miller et al. (2019), with questions such as “To what extent did you feel like Chris was watching you?” These questions are included in the supplemental material. The scale gives five verbal options from “Not at All” to “Extremely” that are mapped to integer values 1 to 5. Social presence values in this study had a mean of 2.03, a standard deviation of 0.88, and a range from 1 to 4.6.

The primary measure for measuring social facilitation and inhibition is the number of anagrams solved in the 3 minutes given for completing the task. The range of possible values was 0–10, as there were 10 anagrams to solve on each poster. On average, participants solved 5.92 anagrams, with a standard deviation of 3.19, and a range from 0 to 10.

Based upon a definition of presence as successfully supported action, a virtual human that is visible more often would be predicted to have higher presence, as visibility can be thought of as an action that is successfully supported.

To calculate whether the virtual human was visible or not, we calculated the central angle around the head between the headset’s forward vector and the vector going from the headset to the virtual human. In short, this value measures the angular distance between the center of the field of view of the headset and the center of the virtual human. We chose 20° as the cut-off point such that the moments of time when the central angle was less than 20°, the virtual human was assumed to be visible, and the moments when the central angle was greater than 20°, the virtual human was assumed not to be visible. The final value used in statistics was the total time in seconds for which the virtual human was visible.
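As an illustration of the visibility measure described above, the following is a minimal sketch rather than the analysis code used in the study. The function names, the tuple-based vector representation, and the fixed sampling interval `dt` are assumptions made for the example. The 20° cut-off is taken from the text; a sample at exactly 20° is counted as not visible here, since the text does not specify that case.

```python
import math

VISIBLE_THRESHOLD_DEG = 20.0  # cut-off stated in the text


def central_angle_deg(head_pos, head_forward, target_pos):
    """Angle in degrees between the headset's forward vector and the
    vector from the headset to the target. Vectors must be nonzero."""
    to_target = [t - h for t, h in zip(target_pos, head_pos)]
    dot = sum(f * v for f, v in zip(head_forward, to_target))
    norm_forward = math.sqrt(sum(f * f for f in head_forward))
    norm_target = math.sqrt(sum(v * v for v in to_target))
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_angle = max(-1.0, min(1.0, dot / (norm_forward * norm_target)))
    return math.degrees(math.acos(cos_angle))


def visible_seconds(samples, target_pos, dt):
    """Total time the target is assumed visible.

    samples: iterable of (head_pos, head_forward) pairs, one per tracking frame.
    dt: seconds per frame (a fixed sampling interval is an assumption).
    """
    return sum(
        dt
        for head_pos, head_forward in samples
        if central_angle_deg(head_pos, head_forward, target_pos) < VISIBLE_THRESHOLD_DEG
    )


# Hypothetical data: virtual human 2 m straight ahead; the head turns 0, 10, then 30 degrees.
target = (0.0, 0.0, 2.0)
frames = [
    ((0.0, 0.0, 0.0), (math.sin(math.radians(a)), 0.0, math.cos(math.radians(a))))
    for a in (0.0, 10.0, 30.0)
]
total_visible = visible_seconds(frames, target, dt=0.5)
print(total_visible)  # 1.0
```

In the hypothetical frames, the first two head orientations fall under the threshold and the third does not, so one second of visibility is accumulated at half a second per frame.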

Participants began the study with an online pre-screening, disallowing participants with epilepsy or high susceptibility to motion sickness. For participants on campus, this pre-screening was done two to seven weeks before the study. For participants at the museum, this screening was included in the consent form and explicitly mentioned to participants. At both locations, participants over 18 filled out the consent form, while participants younger than 18 completed an assent form while a parent or legal guardian completed the parental consent form. Participants then were asked to complete a pre-survey.

The training phase began with an explanation and test in solving the anagrams. Participants were prompted with two-word puzzles and were given about 40 seconds to complete them. If they did not, the experimenter told them the answers and confirmed the answers made sense in the context of the instructions. Then, the experimenter introduced the HoloLens, including instructions for headset fit. At this point, we differ from the method in Miller et al. (2019) and do not have the participant walk towards virtual objects. This was due to the space constraints at the museum. Next, if the participant was in either the Social or Outside FOV conditions, the virtual human was visible and introduced himself or herself to the participant. Participants in the Alone condition did not see the character, hear the introduction, or otherwise receive sensory information suggesting the presence of a virtual character.

The testing phase occurred when the experimenter placed a poster of anagrams visible to the participant and started the 3 min timer. At this point the experimenter stepped out of the room and waited to return. Upon return, the experimenter collected the headset and asked the participant to complete a post-experiment survey. The text of this survey is included in the supplementary material. Finally, the participant was debriefed about the purpose and conditions of the study, and participants in the Alone condition were offered a chance to see the virtual person.

A total of 128 participants were recruited under a university-approved IRB protocol. Twelve participants were not included in the analysis: three were not recorded, two recordings stopped halfway through, two elected to end early and leave, two participants’ tracking data were lost, two participants were not clearly informed of the anagram instructions, and one participant was not given the pre-survey. In total, the data of 116 participants (51 female, 64 male, and 1 nonbinary) could be analyzed. There were 29 participants from the museum, whose ages had a mean of 37.28, a standard deviation of 18.65, and a range from 13 to 81, and there were 87 participants on campus, whose ages had a mean of 20.34, a standard deviation of 1.42, and a range from 18 to 25. Breaking down by virtual human visibility and difficulty, there were 35 participants in the Alone condition (17 easy, 18 hard), 40 in the Outside FOV condition (18 easy, 22 hard), and 41 in the Social condition (22 easy, 19 hard).

In this study, we follow the preregistration distinction between confirmatory and exploratory tests (Nosek et al., 2018). Clarifying which kind of test is being run solidifies interpretations of confirmatory p-values while also allowing unexpected results to be reported. According to the preregistration, the primary confirmatory test is social facilitation and inhibition, which is an interaction effect between difficulty and visibility of the virtual human. This test is followed by others related to social presence and visibility.

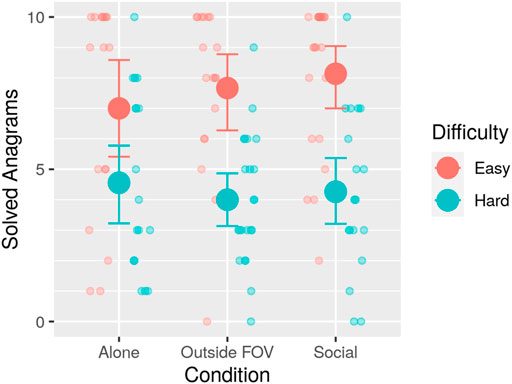

The confirmatory test in this study is an attempted replication of the first study in Miller et al. (2019), which showed an effect on task performance based upon the interaction of task difficulty and virtual human visibility. This effect is interpreted as a social facilitation and inhibition effect. All three conditions (Alone, Outside FOV, Social) are given in Figure 4.

FIGURE 4. Larger points represent means; smaller transparent points represent individual entries. Error bars are 95% bootstrapped confidence intervals of the mean. While the trends of social facilitation and inhibition are present, i.e., more easy anagrams and fewer hard anagrams are solved in the social condition than in the alone condition, the difference is not significant.

The statistical analysis performed was to predict the number of anagrams solved based on difficulty and visibility, with visibility limited to merely the Alone and Social conditions. The model was an ordinal logistic regression using the “polr” function from the “MASS” package for the R programming language. This was performed rather than a linear model due to the non-normality of the residuals indicated by the Shapiro-Wilk test (W = 0.951, p = 0.005).

The difference in anagram score due to difficulty was significant [t (72) = −2.55, p = 0.013, r = −0.29] such that participants solved fewer Hard anagrams than Easy anagrams. The visibility of the virtual human did not have a significant effect on anagram score [t (72) = 0.863, p = 0.390, r = 0.10]. The interaction effect between difficulty and visibility, which is our predicted indicator of social facilitation and inhibition, was not significant either [t (72) = −0.698, p = 0.488, r = −0.08]. For reference, the test was sensitive to an effect size of r = +/-0.35 at a power of 0.8.
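The text does not state how the effect sizes r were obtained. One common conversion from a t statistic, r = t / sqrt(t² + df), reproduces all three reported values, so the sketch below uses it as an assumption rather than as the documented method. The function name is ours.

```python
import math


def r_from_t(t, df):
    """Convert a t statistic to an effect size r (common conversion; assumed here)."""
    return t / math.sqrt(t * t + df)


reported = [("difficulty", -2.55), ("visibility", 0.863), ("interaction", -0.698)]
effect_sizes = {label: round(r_from_t(t, 72), 2) for label, t in reported}
print(effect_sizes)  # {'difficulty': -0.29, 'visibility': 0.1, 'interaction': -0.08}
```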

Other tests and results explore the consequences of virtual humans outside the device’s field of display. Because of their exploratory nature, these tests should encourage future confirmatory work.

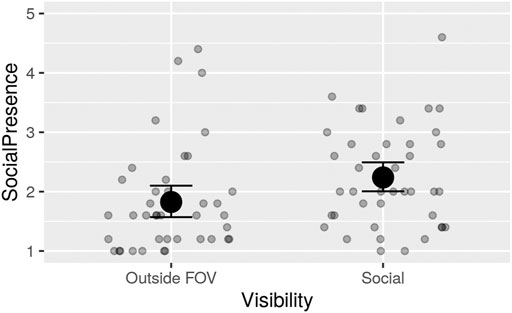

While the measure of anagrams solved was not sensitive enough to capture the social effect of others, the social presence questionnaire was able to. This questionnaire asked participants how socially present the virtual human felt to them during the experiment. Only participants in the Outside FOV and Social cases were asked this question, as there was no analogous question to ask when there was no virtual person. The results of this survey are plotted in Figure 5.

FIGURE 5. Self-reported social presence was significantly higher in the Social condition than the Outside FOV condition. Larger points represent means; smaller transparent points represent individual entries. Error bars are 95% confidence intervals of the mean.

The statistical analysis performed was to predict the social presence rating based on visibility, with visibility limited to the Social and Outside FOV conditions. The test was a Kruskal-Wallis rank sum test from the base library for the R programming language, as the linear test had non-normally distributed residuals. The effect of visibility on social presence was significant (χ2 (1, 79) = 6.87, p = 0.009, r = 0.27) such that participants in the Outside FOV condition reported less social presence than participants in the Social condition. This result validates intuition that when the virtual human is intentionally placed outside the device’s field of view, the participants feel the virtual human is less socially present.
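For readers unfamiliar with the rank sum test, the following is a minimal pure-Python sketch of the Kruskal-Wallis statistic for two groups with a tie correction. It is not the R routine used in the study, and the sample ratings are hypothetical. With two groups the statistic has one degree of freedom, so the chi-squared tail probability can be written as erfc(sqrt(H / 2)); applying that expression to the reported statistic of 6.87 gives a value that rounds to the reported p of 0.009.

```python
import math
from collections import Counter


def kruskal_wallis_two_groups(a, b):
    """Return (H, p) for two independent samples, with tie correction.

    Assumes the pooled data are not all identical (otherwise the
    tie correction is zero and H is undefined).
    """
    pooled = sorted(list(a) + list(b))
    n = len(pooled)
    # Assign each distinct value the mean of the ranks it occupies.
    rank_of = {}
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j] == pooled[i]:
            j += 1
        rank_of[pooled[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        i = j
    h = sum(sum(rank_of[x] for x in g) ** 2 / len(g) for g in (a, b))
    h = 12.0 / (n * (n + 1)) * h - 3 * (n + 1)
    ties = Counter(pooled)
    correction = 1 - sum(t ** 3 - t for t in ties.values()) / (n ** 3 - n)
    h /= correction
    p = math.erfc(math.sqrt(h / 2))  # chi-squared upper tail, 1 degree of freedom
    return h, p


# Hypothetical ratings, not data from the study.
h_stat, p_value = kruskal_wallis_two_groups([1, 2, 3], [4, 5, 6])

# Tail probability for the statistic reported in the text.
p_reported = round(math.erfc(math.sqrt(6.87 / 2)), 3)
print(p_reported)  # 0.009
```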

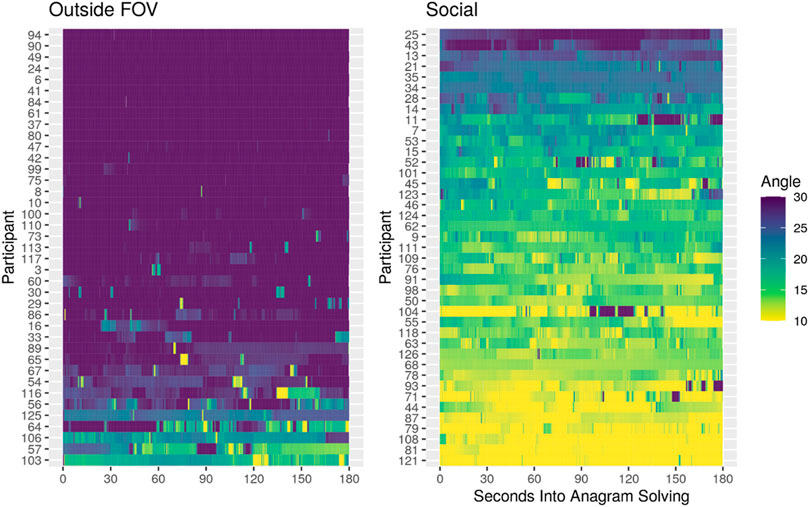

Gaze behavior of the participant is a useful behavioral variable. For the purposes of analysis, we have collapsed it into a single value, specifically, the amount of time the virtual human is in view while the participant is solving the anagrams. In this section, we wish to show the gaze behavior in finer detail. This serves two purposes: first, due to the few studies that investigate gaze behavior of objects within the unaugmented periphery, this can provide a global, intuitive sense of gaze behavior in this situation. Second, individual-level reporting of variables reveals unique features of participants (Molenaar and Campbell, 2009; Ram, Brose and Molenaar, 2013) and can provide opportunities to direct future lines of research.

Figure 6 displays how far, in degrees, the virtual human is from the center of the headset's field of view. In the plot, the angle values are clamped between 10 and 30° to focus on a more expressive range of values. This means that any values less than 10 are displayed as 10, and any values more than 30 are displayed as 30. These values were chosen because at a difference of 10°, the virtual human is certainly visible, and at 30°, the virtual human is certainly not visible.
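The clamping used for the plot can be sketched in a few lines. The function name and sample values are hypothetical; only the 10° and 30° bounds come from the text.

```python
def clamp_angle(angle_deg, low=10.0, high=30.0):
    """Limit an angle to the displayed range [low, high] in degrees."""
    return max(low, min(high, angle_deg))


clamped = [clamp_angle(a) for a in (4.0, 17.5, 42.0)]
print(clamped)  # [10.0, 17.5, 30.0]
```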

FIGURE 6. Angle between head-forward and direction of virtual human for each participant in the study over time.

Looking at the graph, the first visual feature is a strong visual difference in color between the two conditions. Because color represents visibility of the virtual human, this difference is interpreted as a manipulation check that participants did tend to focus on the anagram poster, and this made the virtual human in the Outside FOV condition usually not visible (>30°) while the virtual human in the Social condition was visible (<10°). A t-test between conditions confirms this (t (79) = -8.90, p < 0.001). Some participants never look back at the virtual human in the Outside FOV condition (e.g., 47, 49, 84), while others make a point of looking at the virtual person (e.g., 57, 103). Most participants in this condition look at the virtual human for only a few seconds (e.g., 29, 30, 86, 113).

The primary finding of this experiment is exploratory evidence that social presence of virtual humans in the unaugmented periphery is less than virtual humans in the augmented center. These findings are in accordance with a “successfully-supported-action” theory of presence.

The second finding is for augmented reality experiences. Application designers may expect some level of “curiosity” from users, e.g., to look around at other objects even when focusing on a task. This expectation should be calibrated against these results, which show that a portion of participants in the Outside FOV condition (16 of 40) never looked back at the virtual person once starting the task.

Finally, we address the lack of replication of the social facilitation and inhibition effect. With non-replications, there are points that can almost always be made, such as that the true effect is smaller than the originally reported effect. We also propose a few other reasons the effect may not have replicated. Because many in this participant pool had participated in previous AR studies, this population is not as naive as in previous work. According to our survey questions, 42 of 116 participants had previous experience with AR. Furthermore, one difference in the procedures between Miller et al. (2019) and this work is the pre-study interaction with AR content. The anagram-solving portion of the study does not require any physical movement on the participant’s part, so when the avatar is present the experience may feel like viewing a traditional screen-based character. Physical movement is known to correlate with presence in some situations (Slater et al., 1998; Markowitz et al., 2018). In the original study, there was some minimal interaction with virtual content (walking towards virtual shapes) that could have increased presence due to successfully supported actions.

In this work, we present mixed results on the social presence of a virtual person outside the field of view. Participants who did not see the virtual person much, i.e., for whom the virtual person mostly resided inside the unaugmented periphery, rated the virtual person as less socially present, but the behavioral measure of a word puzzle task was not significantly different. In addition, we attempt to replicate the first study in Miller et al. (2019) and do not find evidence of the same effect, but we do note the trends are in the same direction as originally reported.

One limitation of this study is the sample size. While the sample is twice as large as that of Miller et al. (2019), it may still be too small to estimate the effect size accurately, considering the test was sensitive to effect sizes of r = 0.35 or larger. In addition, while there are some participants from the general public, the majority are still college-age. Findings among users with different levels of comfort with digital devices may be different. Furthermore, augmented reality is not part of everyday experience in the locale where this study was performed, so effects occurring today may differ from those in the future due to novelty.

It is also worth noting that the two separate study locations (museum and campus) may have increased the variance or affected the looking behavior. While ideally this effect should be robust across locations and populations, it may have influenced the power of the results.

For the experimental design, it must be noted that the task did not involve any interaction with the virtual human and that the virtual human had low behavioral realism (Blascovich, 2002). This difference must be taken into account when application designers consider interaction with characters in the unaugmented periphery.

Questions remain about the nature of the social facilitation and inhibition effect in augmented reality. If this effect is absent or significantly weaker than in virtual reality, then the differences in features between the two media should be explored. For example, visual quality does not matter much for presence in virtual reality (Cummings and Bailenson, 2016), but with the juxtaposition of virtual content with the realism of the real world, there may be a much higher threshold in visual realism for augmented reality.

To continue the investigation of the unaugmented periphery, we propose two variables to investigate: modes of interaction and degrees of realism. For example, one may expect virtual characters that can interact verbally to be more socially present than those that interact only visually, especially when the character is in the unaugmented periphery.

Studying the unaugmented periphery relates back to questions of perception and opens questions about presence in augmented reality. Better understanding of these unique situations of augmented reality will lead to better designed experiences in the future.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by Stanford Institutional Review Board. The patients/participants provided their written informed consent to participate in this study.

JB and MM conceptualized the study. MM coordinated and performed the experiment. MM drafted the text and JB and MM edited substantially. JB provided study funding.

National Science Foundation, grants 1800922 and 1839974. The funders had no role in the study design, collection, analysis, or interpretation of the results.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2021.656473/full#supplementary-material

Aiello, J. R., and Douthitt, E. A. (2001). Social Facilitation from Triplett to Electronic Performance Monitoring. Group Dyn. Theor. Res. Pract. 5, 163–180. doi:10.1037/1089-2699.5.3.163

Azuma, R. T. (1997). A Survey of Augmented Reality. Presence: Teleoperators & Virtual Environments 6, 355–385. doi:10.1162/pres.1997.6.4.355

Blascovich, J. (2002). Social Influence within Immersive Virtual Environments, 127–145. doi:10.1007/978-1-4471-0277-9_8

Bond, C. F., and Titus, L. J. (1983). Social Facilitation: A Meta-Analysis of 241 Studies. Psychol. Bull. 94, 265–292. doi:10.1037/0033-2909.94.2.265

Cummings, J. J., and Bailenson, J. N. (2016). How Immersive Is Enough? A Meta-Analysis of the Effect of Immersive Technology on User Presence. Media Psychol. 19, 272–309. doi:10.1080/15213269.2015.1015740

Dashiell, J. F. (1930). An Experimental Analysis of Some Group Effects. J. Abnormal Soc. Psychol. 25, 190–199. doi:10.1037/h0075144

Gibson, J. J., Kaplan, G. A., Reynolds, H. N., and Kirk, W. (2019). The Change from Visible to Invisible: A Study of Optical Transitions. Perception & Psychophysics 5, 194–202. doi:10.4324/9780367823771-19

Gonzalez-Franco, M., Ofek, E., Pan, Y., Antley, A., Steed, A., Spanlang, B., et al. (2020). The Rocketbox Library and the Utility of Freely Available Rigged Avatars. Front. Virtual Real. 1, 1–23. doi:10.3389/frvir.2020.561558

Hoyt, C. L., Blascovich, J., and Swinth, K. R. (2003). Social Inhibition in Immersive Virtual Environments. Presence: Teleoperators & Virtual Environments 12, 183–195. doi:10.1162/105474603321640932

Jones, J. A., Swan, J. E., and Bolas, M. (2013). Peripheral Stimulation and its Effect on Perceived Spatial Scale in Virtual Environments. IEEE Trans. Vis. Comput. Graphics 19, 701–710. doi:10.1109/TVCG.2013.37

Kim, K., Bruder, G., and Welch, G. F. (2018). “Blowing in the Wind: Increasing Copresence with a Virtual Human via Airflow Influence in Augmented Reality,” in International Conference on Artificial Reality and Telexistence Eurographics Symposium on Virtual Environments. Editors G. Bruder, S. Cobb, and S. Yoshimoto.

Kreylos, O. (2015). On the Road for VR: Microsoft HoloLens at Build 2015, 5. San Francisco. Available at: http://doc-ok.org/?p=1223 (Accessed May 3, 2021).

Lee, K. M. (2004). Presence, Explicated. Commun. Theor. 14, 27–50. doi:10.1111/j.1468-2885.2004.tb00302.x

Lee, M., Bruder, G., Hollerer, T., and Welch, G. (2018). Effects of Unaugmented Periphery and Vibrotactile Feedback on Proxemics with Virtual Humans in AR. IEEE Trans. Vis. Comput. Graphics 24, 1525–1534. doi:10.1109/TVCG.2018.2794074

Lee, M., Kim, K., Daher, S., Raij, A., Schubert, R., Bailenson, J., and Welch, G. (2016). “The Wobbly Table: Increased Social Presence via Subtle Incidental Movement of a Real-Virtual Table,” in Proceedings - IEEE Virtual Reality, 2016-July: 11–17, Greenville, South Carolina. doi:10.1109/VR.2016.7504683

Markowitz, D. M., Laha, R., Perone, B. P., Pea, R. D., and Bailenson, J. N. (2018). Immersive Virtual Reality Field Trips Facilitate Learning about Climate Change. Front. Psychol. 9, 1–20. doi:10.3389/fpsyg.2018.02364

Michotte, A., Thines, G., and Crabbe, G. (1964). Amodal Completion of Perceptual Structures. Michotte's Experimental Phenomenology of Perception. New York, NY: Routledge.

Miller, M. R., Jun, H., Herrera, F., Yu Villa, J., Welch, G., and Bailenson, J. N. (2019). Social Interaction in Augmented Reality. PLOS ONE 14, e0216290. doi:10.1371/journal.pone.0216290

Molenaar, P. C. M., and Campbell, C. G. (2009). The New Person-specific Paradigm in Psychology. Curr. Dir. Psychol. Sci. 18, 112–117. doi:10.1111/j.1467-8721.2009.01619.x

Nosek, B. A., Ebersole, C. R., DeHaven, A. C., and Mellor, D. T. (2018). The Preregistration Revolution. Proc. Natl. Acad. Sci. USA 115, 2600–2606. doi:10.1073/pnas.1708274114

Park, S., and Catrambone, R. (2007). Social Facilitation Effects of Virtual Humans. Hum. Factors 49, 1054–1060. doi:10.1518/001872007X249910

Ram, N., Brose, A., Peter, C., and Molenaar, M. (2013). “Dynamic Factor Analysis: Modeling Person-specific Process,” in The Oxford Handbook of Quantitative Methods (Vol 2): Statistical Analysis. New York, NY: Oxford University Press, 441–457. Available at: http://www.redi-bw.de/db/ebsco.php/search.ebscohost.com/login.aspx?direct=true&db=psyh&AN=2013-01010-021&site=ehost-live. doi:10.1093/oxfordhb/9780199934898.013.0021

Scholl, B. J., and Pylyshyn, Z. W. (1999). Tracking Multiple Items through Occlusion: Clues to Visual Objecthood. Cogn. Psychol. 38, 259–290. doi:10.1006/cogp.1998.0698

Slater, M., McCarthy, J., and Maringelli, F. (1998). The Influence of Body Movement on Subjective Presence in Virtual Environments. Hum. Factors 40, 469–477. doi:10.1518/001872098779591368

Triplett, N. (1898). The Dynamogenic Factors in Pacemaking and Competition. Am. J. Psychol. 9, 507–533. doi:10.2307/1412188

Zahorik, P., and Jenison, R. L. (1998). Presence as Being-In-The-World. Presence: Teleoperators & Virtual Environments 7, 78–89. doi:10.1162/105474698565541

Keywords: augmented reality, field-of-view, unaugmented periphery, social presence, social facilitation, social inhibition

Citation: Miller MR and Bailenson JN (2021) Social Presence Outside the Augmented Reality Field of View. Front. Virtual Real. 2:656473. doi: 10.3389/frvir.2021.656473

Received: 20 January 2021; Accepted: 23 August 2021;

Published: 07 September 2021.

Edited by:

Mary C. Whitton, University of North Carolina at Chapel Hill, United States

Reviewed by:

Henrique Galvan Debarba, IT University of Copenhagen, Denmark

Copyright © 2021 Miller and Bailenson. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mark Roman Miller, bXJtaWxsckBzdGFuZm9yZC5lZHU=
