ORIGINAL RESEARCH article

Front. Virtual Real., 21 April 2021

Sec. Virtual Reality and Human Behaviour

Volume 2 - 2021 | https://doi.org/10.3389/frvir.2021.654088

This article is part of the Research Topic Perception and Neuroscience in Extended Reality (XR)

Walking is a fundamental physical activity in humans. Various virtual walking systems have been developed using treadmill or leg-support devices. Using optic flow, foot vibrations simulating footsteps, and a walking avatar, we propose a virtual walking system that does not require limb action for seated users. We aim to investigate whether a full-body or hands-and-feet-only walking avatar with either the first-person (experiment 1) or third-person (experiment 2) perspective can convey the sensation of walking in a virtual environment through optic flows and foot vibrations. The viewing direction of the virtual camera and the head of the full-body avatar were linked to the actual user's head motion. We discovered that the full-body avatar with the first-person perspective enhanced the sensations of walking, leg action, and telepresence, either through synchronous or asynchronous foot vibrations. Although the hands-and-feet-only avatar with the first-person perspective enhanced the walking sensation and telepresence, compared with the no-avatar condition, its effect was less prominent than that of the full-body avatar. However, the full-body avatar with the third-person perspective did not enhance the sensations of walking and leg action; rather, it impaired the sensations of self-motion and telepresence. Synchronous or rhythmic foot vibrations enhanced the sensations of self-motion, walking, leg action, and telepresence, irrespective of the avatar condition. These results suggest that the full-body or hands-and-feet avatar is effective for creating virtual walking experiences from the first-person perspective, but not the third-person perspective, and that the foot vibrations simulating footsteps are effective, regardless of the avatar condition.

Walking is a fundamental physical activity in the daily lives of humans. Rhythmic activity is controlled semi-automatically by a spinal locomotion network (central pattern generator) (MacKay-Lyons, 2002). Walking produces various perceptions that differ from standing, although humans are not typically conscious of them because of the semi-automatic process involved. For example, vestibular sensations occur during movements, visual elements move radially (optic flow), tactile sensations arise on the feet with the foot striking on the ground, and the proprioception of limbs changes each time a person moves their hands and feet. Conversely, a walking sensation can be induced by presenting stimuli perceived while walking.

Generating the pseudo-sensation of walking is a popular topic in the virtual reality (VR) field. A popular method entails having a person walk at a certain location in a stationary place (walking-in-place; e.g., Templeman et al., 1999) or using a specific apparatus such as omnidirectional treadmills (Darken et al., 1997; Iwata, 1999), foot-supporting motion platforms (Iwata et al., 2001), movable tiles (Iwata et al., 2005), and rotating spheres (Medina et al., 2008). Freiwald et al. (2020) developed a virtual walking system by mapping the cycling biomechanics of seated users' legs to virtual walking. These methods can produce a pseudo-sensation of walking because the participants move their legs, and the motor command and proprioception of the legs are similar to actual leg movements.

The perception of self-motion, which can be induced only by visual motion in a large visual field, is known as visually induced self-motion perception or vection (as a review, Dichgans and Brandt, 1978; Riecke, 2011; Palmisano et al., 2015). Vection is induced when motion is presented in a large visual field (Dichgans and Brandt, 1978; Riecke and Jordan, 2015), with background, rather than foreground motion (Brandt et al., 1975; Ohmi et al., 1987), and non-attended, rather than attended motion, (Kitazaki and Sato, 2003) being dominant. Vection is heightened by perspective jitter (Palmisano et al., 2000, 2003) and non-visual modality information, such as sound and touch (Riecke et al., 2009; Farkhatdinov et al., 2013). It is also facilitated by naturalistic and globally consistent stimuli (Riecke et al., 2005, 2006).

Several virtual walking systems that do not necessitate active leg movements have been developed using vection (Lécuyer et al., 2006; Terziman et al., 2012; Ikei et al., 2015; Kitazaki et al., 2019). Integrating simulated camera motions (similar to perspective jitter) with the vection stimulus, such as an expanding radial flow, improves the walking sensation of seated users (Lécuyer et al., 2006). By conveying tactile sensations to the feet in addition to vection, a pseudo-sensation of virtual walking can be induced (Terziman et al., 2012; Ikei et al., 2015; Kitazaki et al., 2019). Kitazaki et al. (2019) demonstrated that foot vibrations that were synchronous to the oscillating actual optic flow induced not only the sensation of self-motion but also the sensations of walking, leg action, and telepresence. Kruijff et al. (2016) demonstrated that walking-related vibrotactile cues on the feet, simulated head bobbing as a visual cue, and footstep sounds as auditory cues enhance vection and presence. Telepresence, one of the crucial factors in VR, refers to the perception of presence at a physically remote or simulated site (Draper et al., 1998).

However, users cannot observe their bodies in these virtual walking systems. This is inconsistent with our daily experience. Turchet et al. (2012) developed a multimodal VR system to enhance the realism of virtual walking by combining visual scenes, footstep sounds, and haptic feedback on the feet that vary according to the type of ground; they also incorporated a walking or running avatar from a third-person perspective. Although they demonstrated that consistent multimodal cues enhance the realism of walking, they did not evaluate the effect of the avatar. Humans can have illusory body ownership of an avatar when they receive synchronous tactile sensations with it, such as the rubber hand illusion (Botvinick and Cohen, 1998) and full-body illusion (Ehrsson, 2007; Lenggenhager et al., 2007; Petkova and Ehrsson, 2008), or when the avatar moves synchronously with them (Gonzalez-Franco et al., 2010; Maselli and Slater, 2013). The sense of agency is the subjective experience of controlling one's own actions and events in the virtual world (Haggard and Chambon, 2012; Haggard, 2017). Kokkinara et al. (2016) demonstrated that despite the absence of any corresponding activity, except head motions on the part of the seated users, they exhibited an illusory sense of ownership and agency of their walking avatars in a virtual environment. The illusory ownership and agency were stronger with a first-person perspective, compared to a third-person perspective, and the sway simulating head motion during walking reduced the sense of agency.

Therefore, we propose a new virtual walking system that induces the sense of ownership and agency in the users of a walking self-avatar through a combination of vection and leg action induced by optic flow and foot vibrations, respectively. The illusory ownership of the avatar's body and sense of agency could enhance the sensations of telepresence and walking (Sanchez-Vives and Slater, 2005).

The walking avatars we employed were hands-and-feet-only and full-body avatars. When the virtual hands and feet exhibit an appropriate spatial relationship and move synchronously with the user, the user feels as if the hands-and-feet-only avatar is their own body; furthermore, there is a sense of having an invisible trunk between the hands and feet (Kondo et al., 2018, 2020). This method of inducing illusory body ownership is easy to implement, requires low computing power, and can potentially reduce the conflict between the appearance of the actual self's body and the avatar's body. Hence, we aim to compare hands-and-feet-only and full-body avatars in experiments.

We employed a first-person perspective in experiment 1 and a third-person perspective in experiment 2. The first-person perspective is more effective for inducing illusory body ownership in virtual environments (Slater et al., 2010; Maselli and Slater, 2013; Kokkinara et al., 2016), more immersive (Monteiro et al., 2018), and better for dynamic task performance (Bhandari and O'Neill, 2020). However, walking persons typically view a limited part of the avatar's body (such as their hands and insteps in the periphery) from the first-person perspective; therefore, a mirror is typically used to view the full-body. However, the presence of a mirror on the scene or viewing their own body in the mirror might interfere with walking sensations. By contrast, a person can have illusory ownership of the avatar's entire body from behind the avatar from a third-person perspective (Lenggenhager et al., 2007, 2009; Aspell et al., 2009). It might be preferable to view the avatar's walking movements from a third-person perspective, rather than a first-person perspective. Therefore, we also investigated the effects of the third-person perspective on the illusory body ownership of the walking avatar, in addition to those of the first-person perspective.

In summary, the purpose of this study was to analyze whether an avatar representing a seated user's virtual walking can enhance the sensation of walking through optic flow and foot vibrations that are synchronous with the avatar's foot movements. The full-body and hands-and-feet-only avatars with both the first- and third-person perspectives were compared. We predicted that either the walking hands-and-feet-only avatar or the walking full-body avatar would enhance the virtual walking sensation. It must be noted that the preliminary analysis of experiment 1 has already been presented at a conference as a poster presentation (Matsuda et al., 2020).

Twenty observers (19 men) aged 21–23 (mean 21.95, SD 0.50) and 20 observers (all men) aged 19–24 (mean 21.65, SD 1.11) participated in experiments 1 and 2, respectively. Different participants took part in the two experiments. All observers had normal or corrected-to-normal vision. Informed consent was obtained from all the participants prior to the experiment. The experimental methods were approved by the Ethical Committee for Human-Subject Research at the Toyohashi University of Technology, and all methods were performed in accordance with the relevant guidelines and regulations. The sample size was determined via a priori power analysis using G*Power 3.1 (Faul et al., 2007, 2009): medium effect size f = 0.25, α = 0.05, power (1 – β) = 0.8, and repeated measures ANOVA (three avatar conditions × two vibration conditions).

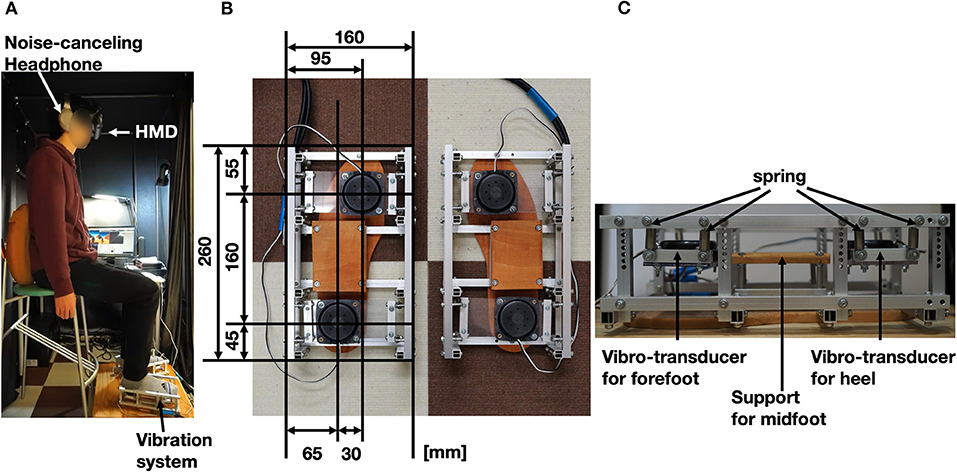

We used a computer (Intel Core i7 6700, NVIDIA GeForce GTX 1060 6GB) with Unity Pro to create and control the stimuli. The visual stimuli were presented using a head-mounted display [HMD; HTC VIVE, 1,080 (width) × 1,200 (height) pixels; refresh rate, 90 Hz; Figure 1A]. The Final IK Unity plugin was used to associate the participants' head movements with the avatar's head movement in yaw, roll, and pitch. Tactile stimuli were created using Audacity and presented via four vibro-transducers (Acouve Lab Vp408, 16 Hz–15 kHz), one each placed on the left and right forefeet and heels, by inputting sound signals from a power amplifier [Behringer EPQ450, 40 W (8 Ω) × 4 ch] through a USB multichannel preamplifier (Behringer FCA1616, input 16 ch, output 16 ch) controlled by the computer. The maximum input to the vibro-transducers was 6 W, and the amplitude was fixed throughout all the experiments. The amplitude of the vibrations was strong enough for all the participants to feel vibrations while wearing socks. The foot vibration system was made of aluminum frames, vibro-transducers, acrylic plates, springs, and wood plates (Figure 1B). The relative positions of the vibro-transducers were constant for all the participants. The wood plates were firmly connected to the aluminum frame and supported the participants' midfoot. The vibro-transducers for the forefoot and heel were connected to the frame via an acrylic plate with springs to prevent transmission of vibrations (Figure 1C). To eliminate sounds from the vibro-transducers, white noise (70 dBA) was presented to the participants using a noise-canceling headphone (SONY WH-1000XM2). The system latency was as high as 11.11 ms.

Figure 1. Apparatus. (A) System overview. The participants, who sat on a stool, wore a head-mounted display (HMD) and placed their feet on the vibration system. (B) Top view of the vibration system that comprised four vibro-transducers. (C) Side view of the vibration system. Vibro-transducers were connected to the aluminum frame with springs to prevent transmission of vibrations.
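The reported latency of 11.11 ms coincides with the duration of one display frame at the HMD's 90 Hz refresh rate. The short check below makes that arithmetic explicit. Reading the latency as a single frame period is an interpretation of the number, not something the text states, and `frame_period_ms` is a name introduced here for illustration.

```python
def frame_period_ms(refresh_rate_hz: float) -> float:
    """Duration of one display frame in milliseconds."""
    if refresh_rate_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_rate_hz


# HTC VIVE refresh rate given in the apparatus description.
period = frame_period_ms(90.0)
print(f"{period:.2f} ms")  # 11.11 ms
```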

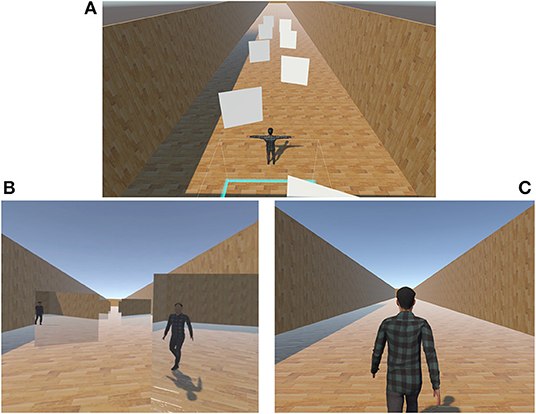

A virtual room and an avatar were presented in a virtual environment through an HMD. As shown in Figure 2A, the room comprised a textured floor with sidewalls (10-m width × 120-m depth × 5-m height); the textured floor was made of wooden materials. In experiment 1, 18 mirrors (2 × 2 m, 1.45 m left and right alternately from the center, every 5 m in the walking direction, oriented inward by 20°) were used to view the avatar in the first-person perspective.

Figure 2. Visual scenes. (A) Top view of virtual environment in experiment 1. In experiment 2, mirrors were excluded. (B) Example scene in experiment 1. (C) Example scene in experiment 2.
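The mirror arrangement in experiment 1 can be sketched as a list of positions and orientations. The count, lateral offset, spacing, and inward rotation come from the description above; the position of the first mirror, the side it starts on, and the sign convention for the rotation are assumptions made only for this illustration, and `Mirror` and `mirror_layout` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Mirror:
    x_m: float      # lateral offset from the centre line (negative = left)
    z_m: float      # distance along the walking direction
    yaw_deg: float  # rotation about the vertical axis, toward the centre line


def mirror_layout(n=18, lateral_m=1.45, spacing_m=5.0, inward_deg=20.0,
                  first_z_m=5.0, start_left=True):
    """Place mirrors alternately left and right of the walking path.

    first_z_m and start_left are assumed; the text only gives the spacing.
    """
    mirrors = []
    for i in range(n):
        on_left = (i % 2 == 0) == start_left
        side = -1.0 if on_left else 1.0
        # Assumed sign convention: a left-hand mirror turns toward +x,
        # a right-hand mirror toward -x, so both face the centre line.
        mirrors.append(Mirror(side * lateral_m,
                              first_z_m + i * spacing_m,
                              -side * inward_deg))
    return mirrors


layout = mirror_layout()
print(len(layout), layout[0], layout[-1])
```

Under these assumptions the last mirror sits 90 m along the 120-m room.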

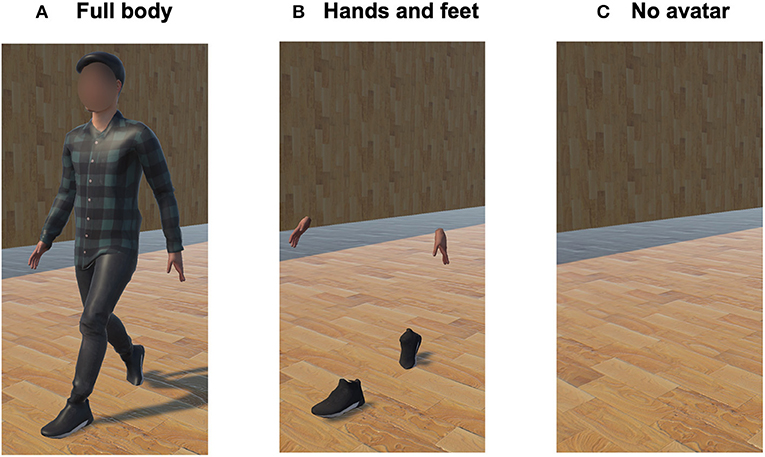

We used a three-dimensional human model, Toshiro (Renderpeople), as a full-body avatar for all participants. The height of the avatar was 170 cm, and the height of the eyes was 155 cm. The avatar walked forward (5.16 km/h, 2.02 steps/s) with the walking animation. Participants observed the scene from the avatar's viewpoint (first-person perspective) in experiment 1 and from the viewpoint 2 m behind the avatar's eyes (third-person perspective) in experiment 2 (Figures 2B,C, Supplementary Videos 1, 2). The participants were able to look around the virtual environment by turning their head, and the avatar's head motion was synchronized with the actual participant's head motion. The participants observed the avatar's body movements, including head motion, both in the mirrors and in direct viewing in experiment 1, and from the back of the avatar in experiment 2. For the hands-and-feet-only avatar, only the hands and feet of the Toshiro model were presented, similar to the avatar of Kondo et al. (2018, 2020) (Figure 3). The walking motions were identical for both the full-body and hands-and-feet-only avatars.

Figure 3. Avatar conditions. (A) Full-body avatar; (B) hands-and-feet-only avatar; (C) no-avatar condition.
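A few quantities follow directly from the avatar's walking speed and step rate. The sketch below derives the speed in m/s, the implied step length, and the distance covered during the 40-s walking period described in the procedure. These derived values are not reported in the text, and a constant walking speed is assumed.

```python
SPEED_KMH = 5.16            # avatar walking speed
CADENCE_STEPS_PER_S = 2.02  # avatar step rate
WALK_DURATION_S = 40.0      # length of the walking animation per trial
ROOM_DEPTH_M = 120.0        # depth of the virtual room

speed_ms = SPEED_KMH / 3.6                       # km/h to m/s
step_length_m = speed_ms / CADENCE_STEPS_PER_S   # metres per step
distance_m = speed_ms * WALK_DURATION_S          # metres walked per trial

print(f"speed: {speed_ms:.3f} m/s")           # speed: 1.433 m/s
print(f"step length: {step_length_m:.2f} m")  # step length: 0.71 m
print(f"distance: {distance_m:.1f} m")        # distance: 57.3 m
```

The distance per trial stays well within the depth of the virtual room.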

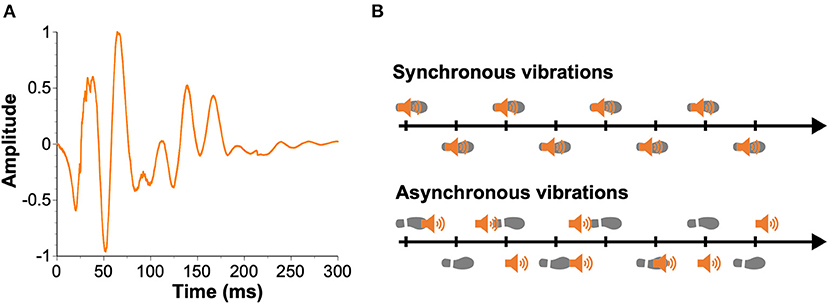

Foot vibrations were presented in the left and right forefeet and heels (Figure 1B), simulating the foot striking the ground. The vibration was generated from the footstep sound of walking on an asphalt-paved road (https://soundeffect-lab.info/) using Audacity software. The duration of the vibration was 300 ms, and the maximum amplitude occurred at 65 ms (Figure 4A). The same foot vibration was used for the heel and forefoot. The heel vibration was followed by the forefoot vibration. The stimulus onset asynchrony of the heel and forefoot vibrations was 105 ms. The timings of the foot vibrations were synchronous with the avatar's foot strikes on the floor in the synchronous condition, whereas they were randomized in the asynchronous condition (Figure 4B). The total number of vibrations was 158 under both conditions in each trial.

Figure 4. Tactile stimulus. (A) Amplitude profile of vibration. (B) Synchronous and asynchronous vibration conditions.
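The timing of the two vibration conditions can be sketched as event schedules. The 105-ms heel-to-forefoot asynchrony, the 300-ms vibration duration, the 158 events per trial, and the 2.02 steps/s step rate come from the text. How the asynchronous timings were randomized is not specified, so the uniform draw below is an assumption, as are the first strike at t = 0, the left foot starting, and keeping heel and forefoot paired in the asynchronous condition.

```python
import random

CADENCE_STEPS_PER_S = 2.02      # avatar step rate
HEEL_TO_FOREFOOT_SOA_S = 0.105  # onset asynchrony within one foot strike
VIBRATION_DURATION_S = 0.300
N_VIBRATIONS = 158              # heel + forefoot events per trial
TRIAL_DURATION_S = 40.0


def synchronous_schedule(first_foot="left"):
    """Heel and forefoot onsets locked to each foot strike of the avatar."""
    feet = ("left", "right") if first_foot == "left" else ("right", "left")
    events = []
    for step in range(N_VIBRATIONS // 2):
        strike_s = step / CADENCE_STEPS_PER_S
        foot = feet[step % 2]
        events.append((strike_s, foot, "heel"))
        events.append((strike_s + HEEL_TO_FOREFOOT_SOA_S, foot, "forefoot"))
    return events


def asynchronous_schedule(seed=None):
    """Same number of events, with strike onsets drawn uniformly (assumed)."""
    rng = random.Random(seed)
    latest_onset_s = (TRIAL_DURATION_S - HEEL_TO_FOREFOOT_SOA_S
                      - VIBRATION_DURATION_S)
    events = []
    for _ in range(N_VIBRATIONS // 2):
        strike_s = rng.uniform(0.0, latest_onset_s)
        foot = rng.choice(("left", "right"))
        events.append((strike_s, foot, "heel"))
        events.append((strike_s + HEEL_TO_FOREFOOT_SOA_S, foot, "forefoot"))
    return sorted(events)


print(synchronous_schedule()[:4])
```

With these values, 158 events correspond to 79 foot strikes, which fit inside the 40-s walking period.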

Three avatar conditions (full body, hands-and-feet only, and no avatar) and two foot-vibration conditions (synchronous and asynchronous timings) were used in both experiments 1 and 2. Twenty-four random trials (three avatar conditions × two foot vibration conditions × four repetitions) were performed by each participant. Hence, the avatar and vibration conditions were both within-subject factors.
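The factorial design can be written out as a randomized trial list. This is a minimal sketch of one way to build the 24 trials. The condition labels and the `make_trials` helper are hypothetical, and the text does not say whether any constraint was placed on the random order.

```python
import itertools
import random

AVATARS = ("full_body", "hands_and_feet_only", "no_avatar")
VIBRATIONS = ("synchronous", "asynchronous")
REPETITIONS = 4


def make_trials(seed=None):
    """Return every avatar x vibration cell four times, in random order."""
    cells = list(itertools.product(AVATARS, VIBRATIONS))
    trials = cells * REPETITIONS
    random.Random(seed).shuffle(trials)
    return trials


trials = make_trials(seed=0)
print(len(trials))  # 24
```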

Four items obtained from a previous study (Kitazaki et al., 2019) were presented to assess the participants' sensations after each stimulus (Figure 5).

1 : I felt that my entire body was moving forward (self-motion).

2 : I felt as if I was walking forward (walking sensation).

3 : I felt as if my feet were striking the ground (leg action).

4 : I felt as if I was present in the scene (telepresence).

The order of the items was constant throughout all the trials. The participants responded to these items using a visual analog scale (VAS); the leftmost side of the line implied no sensation, whereas the right side of the line implied the same sensation as in the actual walking experience. The data were digitized from 0 to 100 for analysis.
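Digitizing a VAS response amounts to mapping the position of the mark along the line onto 0–100. The sketch below shows one way to do this. The pixel coordinates and the clamping of marks placed slightly outside the line are assumptions, and `vas_score` is a hypothetical helper.

```python
def vas_score(mark_x: float, line_left_x: float, line_right_x: float) -> float:
    """Map a mark on the VAS line to 0 (no sensation) .. 100 (same as actual walking)."""
    if line_right_x <= line_left_x:
        raise ValueError("line_right_x must be greater than line_left_x")
    fraction = (mark_x - line_left_x) / (line_right_x - line_left_x)
    fraction = min(1.0, max(0.0, fraction))  # clamp marks outside the line
    return 100.0 * fraction


# A mark one quarter of the way along a line spanning x = 100..500.
print(vas_score(200, 100, 500))  # 25.0
```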

The participants sat on a stool and wore an HMD. The foot vibrators comprised four vibro-transducers and a headphone (Figure 1A). After a 5-s blank, a fixation point appeared for 2 s, following which the participants were instructed to study the environments for 5 s to confirm the contents of the virtual environment and embody the avatar. In the full-body condition, they observed the avatar's head motion in the mirrors in experiment 1 or from the third-person perspective in experiment 2, turning their heads to embody the avatar. Subsequently, they observed the walking animation from the first- (experiment 1) or third-person (experiment 2) perspective for 40 s. Then, a questionnaire was presented to the participants. All the participants participated in 24 trials (three avatar conditions × two vibration conditions × four repetitions). The order of the trials was randomized.

We calculated the mean digitized VAS data (0–100) for each question item and tested the normality of the data (Shapiro–Wilk test, α = 0.05). The leg action data deviated significantly from normality, whereas the other data did not. Thus, we conducted a two-way repeated measures ANOVA with the aligned rank transformation (ART) procedure (ANOVA-ART; Wobbrock et al., 2011) on the non-parametric data (leg action) based on the three avatar conditions (full body, hands-and-feet only, and no avatar) and two foot-vibration conditions (synchronous and asynchronous timings). The Tukey method with the Kenward-Roger degrees of freedom approximation was applied for the post-hoc multiple comparisons for the non-parametric ANOVA-ART. We conducted a two-way repeated measures ANOVA on the parametric data (self-motion, walking, and telepresence). When Mendoza's multisample sphericity test indicated a lack of sphericity, the reported values were adjusted using the Greenhouse–Geisser correction (Geisser and Greenhouse, 1958). Shaffer's modified sequentially rejective Bonferroni procedure was applied for post-hoc multiple comparisons for the parametric ANOVA.
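The Greenhouse–Geisser correction multiplies both degrees of freedom by an estimate ε of the departure from sphericity, which is why some F statistics in the results carry fractional degrees of freedom. The sketch below applies that adjustment for a within-subject factor. The value of ε used in the example is back-calculated from the reported F(1.41, 26.71) for the three-level avatar factor with 20 participants. It is not given in the text and is only approximate.

```python
def greenhouse_geisser_df(epsilon: float, n_levels: int, n_subjects: int):
    """Return the (effect, error) degrees of freedom scaled by epsilon."""
    lower_bound = 1.0 / (n_levels - 1)
    if not lower_bound <= epsilon <= 1.0:
        raise ValueError("epsilon must lie between 1/(k-1) and 1")
    df_effect = epsilon * (n_levels - 1)
    df_error = epsilon * (n_levels - 1) * (n_subjects - 1)
    return df_effect, df_error


# Uncorrected: three avatar conditions, 20 participants.
print(greenhouse_geisser_df(1.0, 3, 20))  # (2.0, 38.0)

# Back-calculated epsilon (assumption): reported error df / uncorrected error df.
eps = 26.71 / 38
df1, df2 = greenhouse_geisser_df(eps, 3, 20)
print(f"{df1:.2f}, {df2:.2f}")  # 1.41, 26.71
```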

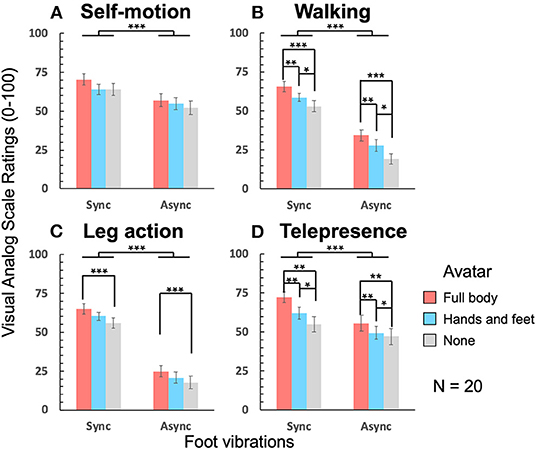

For the self-motion sensation, the ANOVA revealed significant main effects for the avatars [F(1.41, 26.71) = 4.03, p = 0.042, ηp² = 0.17] and foot vibrations [F(1, 19) = 15.74, p < 0.001, ηp² = 0.45] (Figure 6A). A post-hoc analysis of the avatars did not show any significant difference across the conditions, although the scores were slightly higher for the full-body avatar, compared to the others [t(19) = 2.13, adj. p = 0.101 between the full-body and hands-and-feet-only avatar conditions; t(19) = 2.29, adj. p = 0.101 between the full-body and no-avatar conditions]. The scores were higher in the synchronous vibration condition than in the asynchronous vibration condition, irrespective of the avatar conditions.

Figure 6. Results of experiment 1. (A) Self-motion, (B) walking sensation, (C) leg action sensation, and (D) telepresence. Bar plots indicate mean ± SEM. *p < 0.05; **p < 0.01; ***p < 0.001.

For the walking sensation, the ANOVA revealed significant main effects for the avatar [F(1.59, 30.22) = 14.33, p < 0.0001, ηp² = 0.43] and foot vibrations [F(1, 19) = 54.44, p < 0.0001, ηp² = 0.74] (Figure 6B). The post-hoc analysis of the avatar conditions showed that the scores for the full-body avatar were significantly higher than those for the hands-and-feet avatar [t(19) = 3.40, adj. p = 0.003] and the no-avatar conditions [t(19) = 4.45, adj. p < 0.001]. Additionally, the post-hoc analysis showed that the scores for the hands-and-feet avatar were significantly higher than those for the no-avatar condition [t(19) = 2.81, adj. p = 0.011]. The scores were higher in the synchronous vibration condition than in the asynchronous vibration condition, irrespective of the avatar conditions.

For leg action, the ANOVA with ART revealed significant main effects for the avatars [F(2, 38) = 8.32, p = 0.001, ηp² = 0.30] and foot vibrations [F(1, 19) = 86.62, p < 0.0001, ηp² = 0.82] (Figure 6C). The post-hoc analysis of the avatar conditions showed that the scores for the full-body avatar were significantly higher than those for the no-avatar condition [t(19) = 4.08, adj. p = 0.0006]. The scores were higher in the synchronous vibration condition than in the asynchronous vibration condition, irrespective of the avatar condition.

For telepresence, the ANOVA revealed significant main effects for the avatar [F(1.34, 25.41) = 10.10, p = 0.002, ηp² = 0.35] and foot vibrations [F(1, 19) = 15.08, p = 0.001, ηp² = 0.44] (Figure 6D). The post-hoc analysis of the avatar conditions showed that the scores for the full-body avatar were significantly higher than those for the hands-and-feet avatar [t(19) = 3.36, adj. p = 0.009] and no-avatar conditions [t(19) = 3.41, adj. p = 0.009]. Furthermore, the post-hoc analysis showed that the scores for the hands-and-feet avatar were significantly higher than those for the no-avatar condition [t(19) = 2.12, adj. p = 0.047]. The scores were higher in the synchronous vibration condition than in the asynchronous vibration condition, irrespective of the avatar conditions.

To summarize, the synchronous or rhythmic foot vibrations induced higher sensations of self-motion, walking, leg action, and telepresence in all the avatars. The full-body avatar induced greater walking and leg action sensations and telepresence than the other conditions, irrespective of the foot vibration timing. The walking sensation and telepresence induced by the hands-and-feet-only avatar were higher and lower than those of the no-avatar and full-body-avatar conditions, respectively.
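The effect size printed after each p-value can be recomputed from the F statistic and its degrees of freedom if it is read as partial eta squared (ηp²). That reading is an inference from the numbers, and the check below is a sketch of it rather than the authors' analysis code. Small discrepancies in the second decimal can arise because the reported F values are themselves rounded.

```python
def partial_eta_squared(f_value: float, df_effect: float, df_error: float) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Values taken from the experiment 1 results above.
print(round(partial_eta_squared(15.74, 1, 19), 2))  # 0.45 (self-motion, foot vibrations)
print(round(partial_eta_squared(54.44, 1, 19), 2))  # 0.74 (walking, foot vibrations)
print(round(partial_eta_squared(8.32, 2, 38), 2))   # 0.3  (leg action, avatar)
```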

We calculated the mean digitized VAS data (0–100) for each item and tested the normality of the data (Shapiro–Wilk test, α = 0.05). The self-motion and telepresence data deviated significantly from normality, whereas the other data did not. Thus, we conducted a two-way repeated measures ANOVA with ART on the non-parametric data (self-motion and telepresence) based on three avatar conditions (full body, hands-and-feet-only, and no avatar) and two foot-vibration conditions (synchronous and asynchronous timing). The Tukey method with the Kenward-Roger degrees of freedom approximation was applied for the post-hoc multiple comparisons of the non-parametric ANOVA with ART. We conducted a two-way repeated measures ANOVA on the parametric data (walking and leg action). When Mendoza's multisample sphericity test indicated a lack of sphericity, the reported values were adjusted using the Greenhouse–Geisser correction. Shaffer's modified sequentially rejective Bonferroni procedure was applied for post-hoc multiple comparisons for the parametric ANOVA.

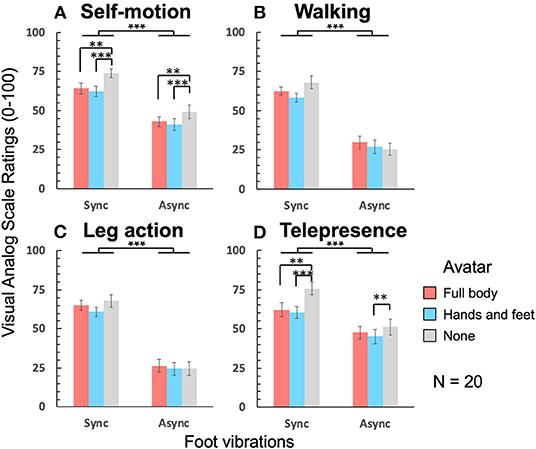

For self-motion, the ANOVA with ART revealed significant main effects for the avatar [F(2, 38) = 12.07, p < 0.0001, ηp² = 0.39] and foot vibrations [F(1, 19) = 45.85, p < 0.0001, ηp² = 0.71] (Figure 7A). The post-hoc analysis of the avatar conditions showed that the scores for the no-avatar condition were significantly higher than those for the full-body avatar [t(19) = 3.72, adj. p = 0.0018] and hands-and-feet-only avatar [t(19) = 4.64, adj. p = 0.0001]. The scores were higher in the synchronous vibration condition than in the asynchronous vibration condition, irrespective of the avatar conditions.

Figure 7. Results of experiment 2. (A) Self-motion, (B) walking sensation, (C) leg action sensation, and (D) telepresence. Bar plots indicate means ± SEM. *p < 0.05; **p < 0.01; ***p < 0.001.

For the walking sensation, the ANOVA revealed a significant main effect for foot vibrations [F(1, 19) = 76.70, p < 0.0001, ηp² = 0.80] and interaction [F(2, 38) = 6.07, p = 0.005, ηp² = 0.24] (Figure 7B). The analysis of the simple main effects showed that the scores were higher for the synchronous vibrations than the asynchronous vibrations in all the avatar conditions [full-body avatar: F(1, 19) = 57.63, p < 0.0001, ηp² = 0.75; hands-and-feet-only avatar: F(1, 19) = 54.88, p < 0.0001, ηp² = 0.74; no avatar: F(1, 19) = 71.88, p < 0.0001, ηp² = 0.79], whereas the avatar condition had slightly higher effects in the synchronous condition [F(1, 19) = 3.11, p = 0.056, ηp² = 0.14] than in the asynchronous condition [F(1, 19) = 2.35, p = 0.109, ηp² = 0.11], although the differences were not significant.

For leg action, the ANOVA revealed only a significant main effect for foot vibrations [F(1, 19) = 66.08, p < 0.0001, ηp² = 0.78] (Figure 7C). The scores were higher in the synchronous vibration condition than in the asynchronous vibration condition, irrespective of the avatar conditions.

For telepresence, the ANOVA with ART revealed significant main effects for the avatar [F(2, 38) = 8.77, p < 0.0001, ηp² = 0.32] and foot vibrations [F(1, 19) = 33.33, p < 0.0001, ηp² = 0.64], and interaction [F(2, 38) = 4.37, p = 0.0195, ηp² = 0.19] (Figure 7D). The analysis of the simple main effects showed that the scores were higher in the synchronous than asynchronous vibrations in all avatar conditions [full-body avatar: F(1, 19) = 19.64, p = 0.0003, ηp² = 0.51; hands-and-feet avatar: F(1, 19) = 26.25, p < 0.0001, ηp² = 0.58; no avatar: F(1, 19) = 35.35, p < 0.0001, ηp² = 0.65] and that the avatar difference presented significant effects both in the synchronous [F(2, 38) = 12.36, p < 0.0001, ηp² = 0.39] and asynchronous conditions [F(2, 38) = 5.19, p = 0.0102, ηp² = 0.21]. The post-hoc multiple comparison analysis of the simple effects of the avatar difference showed that the scores for the no-avatar condition were significantly higher than those for the full-body avatar [t(19) = 3.78, adj. p = 0.002] and hands-and-feet avatar conditions [t(19) = 4.69, adj. p = 0.0001] in the synchronous condition, whereas the scores for the no-avatar condition were significantly higher than those for the hands-and-feet avatar condition [t(19) = 3.20, adj. p = 0.008] in the asynchronous condition.

These results indicated that the avatar did not significantly enhance any of the walking-related sensations from the third-person perspective. In contrast, both the full-body and hands-and-feet avatars impaired the self-motion sensation and telepresence, irrespective of the foot vibrations, in comparison to the no-avatar condition.

We investigated whether the avatar enhanced the virtual walking sensation. Experiment 1 showed that the full-body avatar with the first-person perspective enhanced the sensations of walking, leg action, and telepresence, either with synchronous or asynchronous foot vibrations. Experiment 2 showed that the full-body avatar with the third-person perspective did not enhance the sensations of walking and leg action; rather, it impaired the sensations of self-motion and telepresence in comparison to the no-avatar condition. These results suggest that the role of the avatar in virtual walking differs, depending on whether it is first- or third-person perspective. Furthermore, experiment 1 showed that the hands-and-feet-only avatar with the first-person perspective enhanced the walking sensation and telepresence, in comparison with the no-avatar condition; however, the effect was less pronounced than that of the full-body avatar.

The effects of the avatar from the first-person perspective were significant in terms of walking sensation, leg action, and telepresence, but not significant in terms of self-motion sensation. When the participants observed the avatar walking, they felt an illusory sense of the agency of walking (Kokkinara et al., 2016). Hence, the walking sensation and leg action would be enhanced by the illusory agency of the walking action. The sense of agency and/or illusory body ownership of the avatar can improve telepresence. Furthermore, the effect was observed in the hands-and-feet-only avatar in terms of the walking sensation and telepresence from the first-person perspective. This is reasonable because the hands-and-feet-only avatar induced an illusory ownership of the full body by interpolating the hands and feet (Kondo et al., 2018, 2020).

Synchronous or rhythmic foot vibrations enhanced the sensations of self-motion, walking, leg action, and telepresence, irrespective of the avatar conditions, both in the first- and third-person perspectives. This is consistent with the results of a previous study (Kitazaki et al., 2019). However, the effects of the full-body and hands-and-feet-only avatars might be stronger with synchronous than with asynchronous foot vibrations because the synchronicity between the avatar's footsteps (visual stimuli) and foot vibrations (tactile stimuli) should improve the illusory body ownership of the avatar, similar to the rubber-hand illusion (Botvinick and Cohen, 1998). We speculated that the effect of rhythmic foot vibration on the spinal locomotion network (central pattern generator) was much stronger than the effect of illusory ownership of the walking avatar. Because the effect of foot vibration was more prominent in the walking and leg action sensations than in the self-motion and telepresence (see Figures 6, 7), the rhythmic foot vibrations simulating footsteps were particularly effective for the active components of walking.

The self-motion sensation and telepresence induced by the avatar in the third-person perspective were impaired, compared to those of the no-avatar condition; furthermore, the avatar exerted no significant influence on the walking and leg action sensations, irrespective of the foot vibration conditions. This may be explained by the participants comparing the no-avatar condition in the first-person perspective with the explicit third-person perspective conditions where the full-body or the hands-and-feet avatar was located in front of the participants. The first-person perspective is more immersive than the third-person perspective (Monteiro et al., 2018). This might result in heightened self-motion sensation and telepresence, compared to the no-avatar condition, as well as a juxtaposition of the advantages of the avatar and the disadvantage of the third-person perspective in terms of walking and leg action sensations.

In the third-person perspective, the avatar condition exerted a greater influence on the telepresence in the synchronous condition than in the asynchronous condition, and the scores for the no-avatar condition were significantly higher than those for the full-body avatar and hands-and-feet avatar conditions in the synchronous condition, but higher than those of the hands-and-feet-only avatar conditions in the asynchronous condition. Thus, the congruent foot vibration with the third-person avatar impaired telepresence, in comparison to the no-avatar condition. This might be due to a strange feeling of synchrony induced by the foot vibrations and the visual footsteps of the avatar in front of the observer. We speculate that the user's presence might be dissociated from the location of the viewpoint and that of the avatar from the third-person perspective because the sense of illusory body ownership or out-of-body experience was not sufficient in the experiment.

The scores for the no-avatar condition in experiment 2 were higher than those in experiment 1. This might be due to the exclusion of mirrors from the scene in experiment 2. We speculate that mirrors could interfere with the sensations of walking and telepresence because the visual motions in them were tiresome and confusing. As an exploratory analysis to investigate this difference between the experiments, we conducted a three-way mixed ANOVA based on three avatar conditions (full body, hands-and-feet only, and no avatar) and two foot-vibration conditions (synchronous and asynchronous timings) as within-subject variables (repeated factors) and two experiments as a between-subject variable because the participants were different. We applied the ANOVA for the walking sensation (parametric data) and the ANOVA-ART for self-motion, leg action, and telepresence (non-parametric data). We found significant interactions of the experiments and the avatar for all sensations [self-motion: F(2, 76) = 10.21, p = 0.0001, $\eta_p^2$ = 0.21; walking: F(2, 76) = 8.83, p = 0.0004, $\eta_p^2$ = 0.19; leg action: F(2, 76) = 4.40, p = 0.02, $\eta_p^2$ = 0.10; telepresence: F(2, 76) = 13.24, p < 0.0001, $\eta_p^2$ = 0.26]. The analysis of simple effects showed that the scores of experiment 2 (without mirror) in the no-avatar condition were significantly higher than those of experiment 1 (with mirror) for the sensation of walking [F(1, 38) = 7.46, p = 0.0100, $\eta_p^2$ = 0.16] and telepresence [F(1, 38) = 4.87, p = 0.0335, $\eta_p^2$ = 0.11], but not significant for the sensation of self-motion and leg action. For telepresence only, the three-way interaction of the experiment × avatar × foot vibration was significant [F(2, 76) = 6.61, p < 0.0001, $\eta_p^2$ = 0.15]. The analysis of simple effects showed that the scores for experiment 2 (without mirror) in the no-avatar condition were significantly higher, compared to experiment 1 (with mirror) only in the synchronous condition [F(1, 38) = 11.56, p = 0.0002, $\eta_p^2$ = 0.23]. This result indicated that the presence of mirrors impaired telepresence only when there was no avatar and the foot vibration was rhythmical. We cannot fully explain this result; however, we may conjecture that the combination of no reflection of the observer's own body in the mirrors with the rhythmical foot vibration might reduce the sense of body ownership and/or the sense of self-location and impair the telepresence in experiment 1.
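The effect sizes reported next to each F statistic in the exploratory analysis above are numerically consistent with partial eta squared, which can be recovered from an F value and its degrees of freedom through the standard identity η_p² = (F × df_effect) / (F × df_effect + df_error). The short Python sketch below is only an illustrative check of that arithmetic, not the analysis pipeline used in the study; the function and variable names are hypothetical, and reading the reported values as partial eta squared is an inference from the numbers rather than something stated in this section.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (label, F, df_effect, df_error, reported effect size), copied from the text.
# The labels are hypothetical shorthand for the tests described above.
REPORTED = [
    ("experiment x avatar: self-motion", 10.21, 2, 76, 0.21),
    ("experiment x avatar: walking", 8.83, 2, 76, 0.19),
    ("experiment x avatar: leg action", 4.40, 2, 76, 0.10),
    ("experiment x avatar: telepresence", 13.24, 2, 76, 0.26),
    ("simple effect (no avatar): walking", 7.46, 1, 38, 0.16),
    ("simple effect (no avatar): telepresence", 4.87, 1, 38, 0.11),
    ("three-way interaction: telepresence", 6.61, 2, 76, 0.15),
    ("simple effect (no avatar, synchronous): telepresence", 11.56, 1, 38, 0.23),
]


def check_reported(rows, tolerance=0.005):
    """Return (label, recomputed value, matches) for each reported test.

    A reported value rounded to two decimals can differ from the exact
    value by at most 0.005, so that is used as the default tolerance.
    """
    results = []
    for label, f_value, df_effect, df_error, reported in rows:
        recomputed = partial_eta_squared(f_value, df_effect, df_error)
        results.append((label, recomputed, abs(recomputed - reported) <= tolerance))
    return results


if __name__ == "__main__":
    for label, recomputed, matches in check_reported(REPORTED):
        print(f"{label}: recomputed = {recomputed:.3f}, consistent = {matches}")
```

Under this reading, every recomputed value agrees with the reported one to two decimal places, which supports interpreting the reported effect sizes as partial eta squared.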

The enhancing effect of the hands-and-feet-only avatar was smaller than that of the full-body avatar. This might be owing to the lack of visual–motor synchronicity between the avatar and the user. Without the avatar's head, the movements of the hands-and-feet-only avatar were independent of the user, whereas the full-body avatar's head was linked to the observer's head motion. We speculated that a body image alone, similar to biological motion in a point-light display (Johansson, 1973; Troje, 2008; Thompson and Parasuraman, 2012), might induce illusory body ownership and agency in the hands-and-feet-only avatar. However, visual discontinuity of the body, such as an arm with a missing wrist or an arm with a thin rigid wire connecting the forearm and the hand, weakens the illusion of body ownership (Tieri et al., 2015). The weakened sense of body ownership owing to the discontinuous body stimulus might decrease the virtual walking sensations, compared to the full-body avatar. Synchronous foot vibrations with the hands-and-feet-only avatar's footsteps might contribute to illusory body ownership; however, the avatar's effect was also significant with asynchronous foot vibrations. This issue is yet to be elucidated and must be further investigated in future studies. The hands-and-feet-only avatar required less computing power and presented less conflict arising from the difference in appearance between the actual body and the avatar's body; however, its effect was limited, and a tradeoff was observed. If this limitation can be overcome, then the hands-and-feet-only avatar will be useful.

Furthermore, although mirrors are effective for viewing self-avatars in the first-person perspective, it is unnatural and tiresome to have many mirrors in the scene. In our virtual walking system, we eliminated mirrors such that the self-avatar was observed from a third-person perspective in experiment 2. However, we did not demonstrate the enhancing effect of the avatar from the third-person perspective. Some studies have revealed that the third-person perspective improves spatial awareness in non-immersive video games (Schuurink and Toet, 2010; Denisova and Cairns, 2015) and decreases motion sickness in VR games (Monteiro et al., 2018). Hence, the third-person perspective offers several advantages. A recent study proposed a novel technique that combines the advantages of the first- and third-person perspectives by pairing immersive first-person perspective scenes with a third-person perspective miniature containing an avatar (Evin et al., 2020). In future studies, we shall consider a method that combines the advantages of the first- and third-person perspectives in a virtual walking system without a mirror.

Although the floor of the scene was wood, we used the sound of feet treading an asphalt-paved road for foot vibrations. We chose this sound based on our preliminary observation of consistency with the scene; the footstep sound was clear and suitable for foot vibration. Inconsistency between the sound and the floor in VR could affect the sensation of walking, as revealed by Turchet et al. (2012). The effect of the consistency of the vibration and the nature of the ground should be examined in future studies.

We did not measure the illusory body ownership of the avatars in this study. Particularly from the third-person perspective, how much the participants embodied the avatar and whether self-localization was shifted toward the avatar are crucial for considering the effect of the avatar on the illusory walking sensations. Illusory ownership should be measured and compared with walking-related sensations in a future study to clarify the relationship between illusory body ownership and illusory walking sensation.

Furthermore, we did not use standard questionnaires to measure presence (Usoh et al., 2000) and simulator sickness (Kennedy et al., 1993), although these are crucial issues in virtual walking systems. We shall use these questionnaires to measure presence and motion sickness in our virtual walking system in future studies. In a preliminary observation, some participants experienced motion sickness when the optic flow included perspective jitter. Kokkinara et al. (2016) demonstrated that perspective jitter simulating head motion during walking reduces the sense of agency. Thus, we did not include perspective jitter in the present study. Some studies have shown that vection intensity correlates with the degree of motion sickness (Diels et al., 2007; Palmisano et al., 2007; Bonato et al., 2008). However, several factors that modulate the intensity of vection do not affect motion sickness (Keshavarz et al., 2015, 2019; Riecke and Jordan, 2015). This is an open question that should be investigated further to develop an effective virtual walking system without discomfort arising from motion sickness.

Using optic flow, foot vibrations simulating footsteps, and a walking avatar, we developed a virtual walking system for seated users that does not require limb action, and we discovered that the full-body avatar in the first-person perspective enhanced the sensations of walking, leg action, and telepresence. Although the hands-and-feet-only avatar in the first-person perspective enhanced the walking sensation and telepresence more than the no-avatar condition, its effect was less prominent than that of the full-body avatar. However, the full-body avatar in the third-person perspective did not enhance the sensation of walking. Synchronous or rhythmic foot vibrations enhanced the sensations of self-motion, walking, leg action, and telepresence, irrespective of avatars. The hands-and-feet-only avatar could be useful for enhancing the virtual walking sensation because of its easy implementation, low computational cost, and reduced conflict between the appearance of the user's actual body and that of the self-avatar's body.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by Ethical Committee for Human-Subject Research at the Toyohashi University of Technology. The patients/participants provided their written informed consent to participate in this study.

YM, JN, TA, YI, and MK conceived and designed the experiments. JN collected and analyzed the data. YM, JN, and MK contributed to the manuscript preparation. All authors have reviewed the manuscript.

This research was supported in part by JST ERATO (JPMJER1701) to MK, JSPS KAKENHI JP18H04118 to YI, JP19K20645 to YM, and JP20H04489 to MK.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

We would like to thank Editage (www.editage.com) for English language editing. Preliminary analysis of Experiment 1 was presented at the IEEE VR conference (Matsuda et al., 2020) as a poster presentation.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2021.654088/full#supplementary-material

Supplementary Video 1. Example scene that the participant observed in experiment 1 (left) and the apparatus and an observer (right).

Supplementary Video 2. Example scene that the participant observed in experiment 2 (left) and the apparatus and observer (right).

Aspell, J. E., Lenggenhager, B., and Blanke, O. (2009). Keeping in touch with one's self: multisensory mechanisms of self-consciousness. PLoS ONE 4:e6488. doi: 10.1371/journal.pone.0006488

Bhandari, N., and O'Neill, E. (2020). “Influence of perspective on dynamic tasks in virtual reality,” in 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (Atlanta, GA: IEEE), 939–948. doi: 10.1109/VR46266.2020.00114

Bonato, F., Bubka, A., Palmisano, S., Phillip, D., and Moreno, G. (2008). Vection change exacerbates simulator sickness in virtual environments. Presence 17, 283–292. doi: 10.1162/pres.17.3.283

Botvinick, M., and Cohen, J. (1998). Rubber hands ‘feel’ touch that eyes see. Nature 391, 756–756. doi: 10.1038/35784

Brandt, T., Wist, E. R., and Dichgans, J. (1975). Foreground and background in dynamic spatial orientation. Percept. Psychophys. 17, 497–503. doi: 10.3758/BF03203301

Darken, R. P., Cockayne, W. R., and Carmein, D. (1997). “The omni-directional treadmill: a locomotion device for virtual worlds,” in Proceedings of the 10th Annual ACM Symposium on User Interface Software and Technology (New York, NY: ACM), 213–221. doi: 10.1145/263407.263550

Denisova, A., and Cairns, P. (2015). “First person vs. third person perspective in digital games: do player preferences affect immersion?,” in Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (Seoul: ACM), 145–148. doi: 10.1145/2702123.2702256

Dichgans, J., and Brandt, T. (1978). “Visual-vestibular interactions: effects on self-motion perception and postural control,” in Handbook of Sensory Physiology. Vol. 8, eds R. Held, H. W. Leibowitz, and H. L. Teuber (Berlin: Springer), 755–804. doi: 10.1007/978-3-642-46354-9_25

Diels, C., Ukai, K., and Howarth, P. A. (2007). Visually induced motion sickness with radial displays: effects of gaze angle and fixation. Aviat. Space Environ. Med. 78, 659–665.

Draper, J. V., Kaber, D. B., and Usher, J. M. (1998). Telepresence. Hum. Factors 40, 354–375. doi: 10.1518/001872098779591386

Ehrsson, H. H. (2007). The experimental induction of out-of-body experiences. Science 317:1048. doi: 10.1126/science.1142175

Evin, I., Pesola, T., Kaos, M. D., Takala, T. M., and Hämäläinen, P. (2020). “3PP-R: Enabling Natural Movement in 3rd Person Virtual Reality,” in Proceedings of the Annual Symposium on Computer-Human Interaction in Play (Ottawa, ON: ACM), 438–449. doi: 10.1145/3410404.3414239

Farkhatdinov, I., Ouarti, N., and Hayward, V. (2013). “Vibrotactile inputs to the feet can modulate vection,” in 2013 World Haptics Conference (WHC) (Daejeon: IEEE), 677–681. doi: 10.1109/WHC.2013.6548490

Faul, F., Erdfelder, E., Buchner, A., and Lang, A.-G. (2009). Statistical power analyses using G*Power 3.1: tests for correlation and regression analyses. Behav. Res. Methods 41, 1149–1160. doi: 10.3758/BRM.41.4.1149

Faul, F., Erdfelder, E., Lang, A.-G., and Buchner, A. (2007). G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39, 175–191. doi: 10.3758/BF03193146

Freiwald, J. P., Ariza, O., Janeh, O., and Steinicke, F. (2020). “Walking by cycling: a novel in-place locomotion user interface for seated virtual reality experiences,” in Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (Honolulu, HI: ACM), 1–12. doi: 10.1145/3313831.3376574

Geisser, S., and Greenhouse, S. W. (1958). An extension of Box's results on the use of the F distribution in multivariate analysis. Ann. Math. Stat. 29, 885–891. doi: 10.1214/aoms/1177706545

Gonzalez-Franco, M., Perez-Marcos, D., Spanlang, B., and Slater, M. (2010). “The contribution of real-time mirror reflections of motor actions on virtual body ownership in an immersive virtual environment,” in Proceedings of 2010 IEEE Virtual Reality Conference (VR) (Boston, MA: IEEE), 111–114. doi: 10.1109/VR.2010.5444805

Haggard, P. (2017). Sense of agency in the human brain. Nat. Rev. Neurosci. 18, 196–207. doi: 10.1038/nrn.2017.14

Haggard, P., and Chambon, V. (2012). Sense of agency. Curr. Biol. 22, R390–R392. doi: 10.1016/j.cub.2012.02.040

Ikei, Y., Shimabukuro, S., Kato, S., Komase, K., Okuya, Y., Hirota, K., et al. (2015). “Five senses theatre project: Sharing experiences through bodily ultra-reality,” in Proceedings of IEEE Virtual Reality (Arles: IEEE), 195–196. doi: 10.1109/VR.2015.7223362

Iwata, H. (1999). “Walking about virtual environments on an infinite floor,” in Proceedings IEEE Virtual Reality (Houston, TX: IEEE), 286–293.

Iwata, H., Yano, H., Fukushima, H., and Noma, H. (2005). “Circulafloor: a locomotion interface using circulation of movable tiles,” in Proceedings of IEEE Virtual Reality (Bonn: IEEE), 223–230.

Iwata, H., Yano, H., and Nakaizumi, F. (2001). “Gait master: A versatile locomotion interface for uneven virtual terrain,” in Proceedings of IEEE Virtual Reality (Yokohama: IEEE), 131–137.

Johansson, G. (1973). Visual perception of biological motion and a model for its analysis. Percept. Psychophys. 14, 201–211. doi: 10.3758/BF03212378

Kennedy, R. S., Lane, N. E., Berbaum, K. S., and Lilienthal, M. G. (1993). Simulator sickness questionnaire: an enhanced method for quantifying simulator sickness. Int. J. Aviat. Psychol. 3, 203–220. doi: 10.1207/s15327108ijap0303_3

Keshavarz, B., Philipp-Muller, A. E., Hemmerich, W., Riecke, B. E., and Campos, J. L. (2019). The effect of visual motion stimulus characteristics on vection and visually induced motion sickness. Displays 58, 71–81. doi: 10.1016/j.displa.2018.07.005

Keshavarz, B., Riecke, B. E., Hettinger, L. J., and Campos, J. L. (2015). Vection and visually induced motion sickness: how are they related? Front. Psychol. 6:472. doi: 10.3389/fpsyg.2015.00472

Kitazaki, M., Hamada, T., Yoshiho, K., Kondo, R., Amemiya, T., Hirota, K., and Ikei, Y. (2019). Virtual walking sensation by prerecorded oscillating optic flow and synchronous foot vibration. i-Perception 10:2041669519882448. doi: 10.1177/2041669519882448

Kitazaki, M., and Sato, T. (2003). Attentional modulation of self-motion perception. Perception 32, 475–484. doi: 10.1068/p5037

Kokkinara, E., Kilteni, K., Blom, K. J., and Slater, M. (2016). First person perspective of seated participants over a walking virtual body leads to illusory agency over the walking. Sci. Rep. 6:28879. doi: 10.1038/srep28879

Kondo, R., Sugimoto, M., Minamizawa, K., Hoshi, T., Inami, M., and Kitazaki, M. (2018). Illusory body ownership of an invisible body interpolated between virtual hands and feet via visual-motor synchronicity. Sci. Rep. 8:7541. doi: 10.1038/s41598-018-25951-2

Kondo, R., Tani, Y., Sugimoto, M., Inami, M., and Kitazaki, M. (2020). Scrambled body differentiates body part ownership from the full body illusion. Sci. Rep. 10:5274. doi: 10.1038/s41598-020-62121-9

Kruijff, E., Marquardt, A., Trepkowski, C., Lindeman, R. W., Hinkenjann, A., Maiero, J., et al. (2016). “On your feet! Enhancing vection in leaning-based interfaces through multisensory stimuli,” in Proceedings of the 2016 Symposium on Spatial User Interaction (Tokyo: ACM), 149–158. doi: 10.1145/2983310.2985759

Lécuyer, A., Burkhardt, J. M., Henaff, J. M., and Donikian, S. (2006). “Camera motions improve the sensation of walking in virtual environments,” in Proceedings of IEEE Virtual Reality Conference (Alexandria, VA: IEEE), 11–18.

Lenggenhager, B., Mouthon, M., and Blanke, O. (2009). Spatial aspects of bodily self-consciousness. Conscious. Cogn. 18, 110–117. doi: 10.1016/j.concog.2008.11.003

Lenggenhager, B., Tadi, T., Metzinger, T., and Blanke, O. (2007). Video ergo sum: manipulating bodily self-consciousness. Science 317, 1096–1099. doi: 10.1126/science.1143439

MacKay-Lyons, M. (2002). Central pattern generation of locomotion: a review of the evidence. Phys. Ther. 82, 69–83. doi: 10.1093/ptj/82.1.69

Maselli, A., and Slater, M. (2013). The building blocks of the full body ownership illusion. Front. Hum. Neurosci. 7:83. doi: 10.3389/fnhum.2013.00083

Matsuda, Y., Nakamura, J., Amemiya, T., Ikei, Y., and Kitazaki, M. (2020). “Perception of walking self-body avatar enhances virtual-walking sensation,” in 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW) (Atlanta, GA: IEEE), 733–734. doi: 10.1109/VRW50115.2020.00217

Medina, E., Fruland, R., and Weghorst, S. (2008). “Virtusphere: walking in a human size VR “hamster ball”,” in Proceedings of the Human Factors and Ergonomics Society Annual Meeting (New York, NY: SAGE Publications) 2102–2106. doi: 10.1177/154193120805202704

Monteiro, D., Liang, H. N., Xu, W., Brucker, M., Nanjappan, V., and Yue, Y. (2018). Evaluating enjoyment, presence, and emulator sickness in VR games based on first-and third-person viewing perspectives. Comput. Animat. Virtual. Worlds 29:e1830. doi: 10.1002/cav.1830

Ohmi, M., Howard, I. P., and Landolt, J. P. (1987). Circular vection as a function of foreground-background relationships. Perception 16, 17–22. doi: 10.1068/p160017

Palmisano, S., Allison, R. S., Schira, M. M., and Barry, R. J. (2015). Future challenges for vection research: definitions, functional significance, measures, and neural bases. Front. Psychol. 6:193. doi: 10.3389/fpsyg.2015.00193

Palmisano, S., Bonato, F., Bubka, A., and Folder, J. (2007). Vertical display oscillation effects on forward vection and simulator sickness. Aviat. Space Environ. Med. 78, 951–956. doi: 10.3357/ASEM.2079.2007

Palmisano, S. A., Burke, D., and Allison, R. S. (2003). Coherent perspective jitter induces visual illusions of self-motion. Perception 32, 97–110. doi: 10.1068/p3468

Palmisano, S. A., Gillam, B., and Blackburn, S. (2000). Global-perspective jitter improves vection in central vision. Perception 29, 57–67. doi: 10.1068/p2990

Petkova, V. I., and Ehrsson, H. H. (2008). If I were you: perceptual illusion of body swapping. PLoS ONE 3:e3832. doi: 10.1371/journal.pone.0003832

Riecke, B. E. (2011). “Compelling self-motion through virtual environments without actual self-motion: using self-motion illusions (“vection”) to improve user experience in VR,” in Virtual Reality, ed. J. Kim (London: IntechOpen), 149–176.

Riecke, B. E., and Jordan, J. D. (2015). Comparing the effectiveness of different displays in enhancing illusions of self-movement (vection). Front. Psychol. 6:713. doi: 10.3389/fpsyg.2015.00713

Riecke, B. E., Schulte-Pelkum, J., Avraamides, M. N., Heyde, M. V. D., and Bülthoff, H. H. (2006). Cognitive factors can influence self-motion perception (vection) in virtual reality. ACM Trans. Appl. Perception 3, 194–216. doi: 10.1145/1166087.1166091

Riecke, B. E., Schulte-Pelkum, J., Caniard, F., and Bulthoff, H. H. (2005). “Towards lean and elegant self-motion simulation in virtual reality,” in IEEE Proceedings. VR 2005 (Bonn: IEEE), 131–138.

Riecke, B. E., Väljamäe, A., and Schulte-Pelkum, J. (2009). Moving sounds enhance the visually-induced self-motion illusion (circular vection) in virtual reality. ACM Trans. Appl. Perception 6, 1–27. doi: 10.1145/1498700.1498701

Sanchez-Vives, M. V., and Slater, M. (2005). From presence to consciousness through virtual reality. Nat. Rev. Neurosci. 6, 332–339. doi: 10.1038/nrn1651

Schuurink, E. L., and Toet, A. (2010). Effects of third person perspective on affective appraisal and engagement: findings from SECOND LIFE. Simul. Gaming 41, 724–742. doi: 10.1177/1046878110365515

Slater, M., Spanlang, B., Sanchez-Vives, M. V., and Blanke, O. (2010). First person experience of body transfer in virtual reality. PLoS ONE 5:e10564. doi: 10.1371/journal.pone.0010564

Templeman, J. N., Denbrook, P. S., and Sibert, L. E. (1999). Virtual locomotion: Walking in place through virtual environments. Presence 8, 598–617. doi: 10.1162/105474699566512

Terziman, L., Marchal, M., Multon, F., Arnaldi, B., and Lécuyer, A. (2012). “The King-Kong effects: Improving sensation of walking in VR with visual and tactile vibrations at each step,” in Proceedings of 2012 IEEE Symposium on 3D User Interfaces (3DUI) (Costa Mesa, CA: IEEE), 19–26. doi: 10.1109/3DUI.2012.6184179

Thompson, J., and Parasuraman, R. (2012). Attention, biological motion, and action recognition. Neuroimage 59, 4–13. doi: 10.1016/j.neuroimage.2011.05.044

Tieri, G., Tidoni, E., Pavone, E. F., and Aglioti, S. M. (2015). Body visual discontinuity affects feeling of ownership and skin conductance responses. Sci. Rep. 5:17139. doi: 10.1038/srep17139

Troje, N. F. (2008). “Biological motion perception,” in The Senses: A Comprehensive References 1, eds R. H. Masland, T. D. Albright, P. Dallos, D. Oertel, D. V. Smith, S. Firestein, G. K. Beauchamp, A. Basbaum, M. Catherine Bushnell, J. H. Kaas, and E. P. Gardner (Oxford: Elsevier), 231–238. doi: 10.1016/B978-012370880-9.00314-5

Turchet, L., Burelli, P., and Serafin, S. (2012). Haptic feedback for enhancing realism of walking simulations. IEEE Trans. Haptics 6, 35–45. doi: 10.1109/TOH.2012.51

Usoh, M., Catena, E., Arman, S., and Slater, M. (2000). Using presence questionnaires in reality. Presence 9, 497–503. doi: 10.1162/105474600566989

Keywords: walking, self-motion, avatar, optic flow, tactile sensation, first-person perspective, third-person perspective

Citation: Matsuda Y, Nakamura J, Amemiya T, Ikei Y and Kitazaki M (2021) Enhancing Virtual Walking Sensation Using Self-Avatar in First-Person Perspective and Foot Vibrations. Front. Virtual Real. 2:654088. doi: 10.3389/frvir.2021.654088

Received: 15 January 2021; Accepted: 04 March 2021;

Published: 21 April 2021.

Edited by:

Jeanine Stefanucci, The University of Utah, United States

Reviewed by:

Omar Janeh, University of Hamburg, Germany

Copyright © 2021 Matsuda, Nakamura, Amemiya, Ikei and Kitazaki. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yusuke Matsuda; Junya Nakamura

†These authors have contributed equally to this work and share first authorship

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
