
REVIEW article

Front. Virtual Real., 30 April 2021

Sec. Technologies for VR

Volume 2 - 2021 | https://doi.org/10.3389/frvir.2021.645153

This article is part of the Research Topic Professional Training in Extended Reality: Challenges and Solutions

Biao Xie1, Huimin Liu2, Rawan Alghofaili1, Yongqi Zhang1, Yeling Jiang3, Flavio Destri Lobo3, Changyang Li1, Wanwan Li1, Haikun Huang1, Mesut Akdere3, Christos Mousas2 and Lap-Fai Yu1*

This study aimed to discuss the research efforts in developing virtual reality (VR) technology for different training applications. To begin with, we describe how VR training experiences are typically created and delivered using the current software and hardware. We then discuss the challenges and solutions of applying VR training to different application domains, such as first responder training, medical training, military training, workforce training, and education. Furthermore, we discuss the common assessment tests and evaluation methods used to validate VR training effectiveness. We conclude the article by discussing possible future directions to leverage VR technology advances for developing novel training experiences.

Thanks to the recent growth of consumer-grade virtual reality (VR) devices, VR has become much more affordable and available. Recent advances in VR technology also support the creation, application, evaluation, and delivery of interactive VR applications at a lower cost. The VR research community is more active than ever. The IEEE Virtual Reality conference received a record number of submissions in 2020, approximately 10% more than in 2019. The Oculus Quest 2, a commercial standalone VR headset released in 2020, has become the fastest-selling VR headset (Lang, 2021). Such trends contribute to the increasing popularity and success of VR training across different domains. For example, according to a recent survey, VR training is the most common use of VR within enterprises, at 62% (Ostrowski, 2018).

According to Merriam-Webster1, training is defined as either 1) the act, process, or method of one that trains or 2) the skill, knowledge, or experience acquired by one that trains, or the state of being trained. Conventionally, training happens in physical settings such as classrooms and laboratories, through presentations and hands-on practice. In some cases, trainees must also travel to specific facilities to receive proper training. VR technology now makes it possible to train people for real-world tasks in virtual environments while providing an effective and immersive training experience.

As virtual environments are used to train users to perform real-world tasks and procedures, it is important to compare real-world training with VR-based training. In general, real-world training has the following limitations: 1) it can be time-consuming because of the effort needed to set up the real-world training site and to travel to it; 2) it can be expensive because of the cost of preparing real-world training materials and hiring human coaches; 3) it can be unappealing and unintuitive because it lacks visual aids, such as 3D animations, for illustrating skills and processes; and 4) some skills, such as emergency procedures, cannot be practiced in the real world and can only be trained safely in simulators.

Depending on the domain, VR could drastically reduce the cost of training while increasing the number of training scenarios. As VR training scenarios mainly involve computer-generated 3D graphics, VR developers can easily create a variety of scenarios from existing 3D assets, which can be repeatedly applied for training different people. Delivered through the internet, the scenarios are also convenient and inexpensive to access. Although VR training does not guarantee a lower cost, the advantages that those systems bring to the training audience could justify the investment costs.

VR technologies allow trainees to learn in the comfort of their personal space. For example, a worker might learn to use a piece of equipment or follow safety procedures using VR at home. Depending on the domain, VR training can also provide privacy, which is especially important when trainees might feel uncomfortable about their actions in a real-world training process because of the presence of observers. However, some training needs to happen in the presence of instructors, who provide early feedback on the trainee’s performance and alert the trainee to issues they may be experiencing, including negative trends in their performance.

Moreover, VR training provides a safe environment with minimal exposure to dangerous situations [e.g., fires (Backlund et al., 2007; Conges et al., 2020), explosions, and natural disasters (Li et al., 2017)]. For example, an instructor can control a virtual fire (position, intensity, and damage caused) in which a firefighter is being trained to evacuate a building. Such scenarios could allow people to easily train in a safe environment that mimics real-world challenges.
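To make the idea of an instructor-controlled fire concrete, the following is a minimal Python sketch, not taken from any of the cited systems. The `FireHazard` and `InstructorConsole` names, the 3 m damage radius, and the linear fall-off of damage with distance are all hypothetical choices; a real simulator would model fire spread and smoke far more carefully.

```python
from dataclasses import dataclass
import math


@dataclass
class FireHazard:
    """Hypothetical instructor-adjustable fire (illustrative parameters only)."""
    x: float                  # position in the scene, in metres
    y: float
    intensity: float          # 0.0 = extinguished, 1.0 = fully developed
    damage_per_second: float  # health lost per second at the centre, at full intensity

    def damage_at(self, px: float, py: float, radius: float = 3.0) -> float:
        """Damage rate at (px, py); assumed to fall off linearly to zero at `radius`."""
        dist = math.hypot(px - self.x, py - self.y)
        if dist >= radius:
            return 0.0
        return self.damage_per_second * self.intensity * (1.0 - dist / radius)


class InstructorConsole:
    """Lets an instructor place, move, and scale fires during a session."""

    def __init__(self):
        self.fires = {}  # fire name -> FireHazard

    def place_fire(self, name, x, y, intensity=0.5, damage_per_second=10.0):
        self.fires[name] = FireHazard(x, y, intensity, damage_per_second)

    def move_fire(self, name, x, y):
        self.fires[name].x, self.fires[name].y = x, y

    def set_intensity(self, name, intensity):
        # Clamp so the instructor cannot push the fire outside the valid range.
        self.fires[name].intensity = max(0.0, min(1.0, intensity))

    def total_damage_rate(self, px, py):
        """Combined damage rate at the trainee's position from all fires."""
        return sum(f.damage_at(px, py) for f in self.fires.values())
```

For example, after `console.place_fire("kitchen", 0.0, 0.0, intensity=1.0)`, a trainee standing 1.5 m away takes damage at half the peak rate. Keeping the instructor's controls separate from the hazard model means the same scene can be replayed at different difficulty levels.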

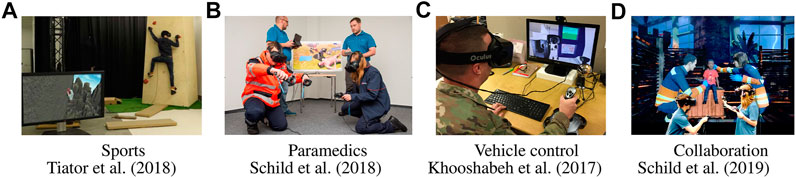

VR technologies alter users’ perceptions such that they are replaced by or blended with the output of a computer program. There are several elements of VR experiences distinguished roughly by the degree to which the user’s sensory perception is co-opted. Head-mounted VR displays with headphones, wearable haptic clothing, and equipment that allows the user to walk freely exemplify the most common consumer-grade VR setup. VR is not limited to visual information, as the auditory, haptic, kinesthetic, and olfactory senses can be technologically simulated. Additionally, high-quality virtual content designs greatly improve VR training experiences. We discuss how different types of VR setups and simulations are used to create effective VR experiences for different training purposes. Figure 1 shows some VR training applications.

FIGURE 1. VR training has been widely adopted by different domains. Image courtesy of the paper authors.

Motivated by recent advances in VR training, this study reviews previous works to inform the community about the current state and future directions of VR training research. Many works on this topic are published every year, and a workshop, “TrainingXR” at IEEE VR (TRAININGXR@IEEEVR 2020, 2020), was recently established to fill the gap in this subdomain of VR research. We explore the methods, techniques, and prior developments in VR training that have been discussed in previous works. Most of the works that we selected concern simulation-based training via VR technologies. These works often use a simulator that is either controlled by a real instructor or driven by a set of training tasks to improve the trainee’s performance on certain tasks or skills. Furthermore, each selected work has been validated through experiments and reports at least preliminary results. However, because a substantial amount of work satisfies these criteria, it is not possible to cover and discuss all of it in this study. Our goal is to show, through representative examples, how VR training can be applied to several major domains.

We limit the scope of this study to VR training. We did not consider training involving other extended reality domains (e.g., augmented and mixed reality). There are many conceivable perspectives through which to review VR training, from software choices to software development, from graphics fidelity to run-time efficiency, and from interaction techniques to interaction realism. To provide a succinct overview, in this article, we discuss VR training from the following perspectives:

Introduce the main technology for creating and delivering VR training experiences (Section 2)

Give an overview of VR training in different domains (Section 3)

Discuss the evaluation methods for assessing VR training effectiveness (Section 4)

Identify open issues and promising research directions for VR training (Section 5)

VR training applications have been developed for a variety of domains, including construction safety training (Filigenzi et al., 2000; Nasios, 2002; Li et al., 2018), natural disaster escape training (Smith and Ericson, 2009; Li et al., 2017), and education (Le et al., 2015; Wang et al., 2018). VR training applications are typically built around specific tasks, each characterized by a fixed procedure and a practical purpose for a specific training target. Thus, their implementations tend to be ad hoc and differ from one another (Lang et al., 2018; Si et al., 2018; Taupiac et al., 2019).

Xie et al. (2006) explored generalized descriptions of different training scenarios. They integrated the creation process of a VR safety training system with a scenario-based design method to construct a general framework for creating VR training experiences. Martin et al. (2009) and Martin and Hughes (2010) developed software for automatic scenario generation, exploring the conceptual design of scenario generation approaches. Lin et al. (2002) presented an architecture for VR-based training systems, along with an introduction to virtual training task planning and several task-oriented training scenario models. Overall, the creation pipeline of VR training can be roughly divided into three stages: 1) task analysis, 2) training scenario sketching, and 3) implementation.

One of the first steps to consider before training is task analysis, which is the foundation for developing any training objective. The aim of task analysis is to establish the information needs of the specific training task and to summarize all the factors that the training system should involve during the design process. Early attempts at task analysis date back to the 1940s. One famous example is the therbligs, a set of elemental motions proposed by Gilbreth and Carey (2013). Compared with the original scientific management methods, the therbligs improved considerably in describing and representing some cognitive tasks, such as search and find (Schraagen et al., 2000). However, most of them still focused on describing physical behavior and relatively simple decisions (Clark and Estes, 1996), and task analysis usually emphasized the detailed procedures of the task. Such low-level task analysis has gradually been replaced by more advanced problem-solving methods that capture not only overt physical behaviors but also complex cognitive tasks. Hierarchical task analysis (HTA) and cognitive task analysis (CTA) are the best-known and most widely used of these newer methods. Generally speaking, instead of concentrating only on simple tasks, HTA describes a task as a hierarchy of “goals,” each composed of smaller units, while CTA focuses more on dealing with unexpected situations. Salmon et al. (2010) provided a detailed comparison of the two methods.
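HTA is commonly written as a tree: each goal has a plan stating how its subgoals are sequenced, and the leaves are the concrete operations a trainee performs. The Python sketch below shows such a hierarchy; the `Goal` class and the simplified fire-extinguisher task are illustrative assumptions, not material from the cited works.

```python
from dataclasses import dataclass, field


@dataclass
class Goal:
    """A node in a hierarchical task analysis (HTA): a goal, its plan, and subgoals."""
    name: str
    plan: str = ""  # how the subgoals are sequenced, in free text
    subgoals: list = field(default_factory=list)

    def operations(self):
        """Leaf-level operations in depth-first order (what the trainee actually does)."""
        if not self.subgoals:
            return [self.name]
        ops = []
        for sub in self.subgoals:
            ops.extend(sub.operations())
        return ops

    def outline(self, number="0", depth=0):
        """Render the hierarchy using the usual HTA numbering: 0, 1, 2, 2.1, ..."""
        line = "  " * depth + f"{number} {self.name}"
        if self.plan:
            line += f"  [plan: {self.plan}]"
        lines = [line]
        for i, sub in enumerate(self.subgoals, start=1):
            child = str(i) if number == "0" else f"{number}.{i}"
            lines.extend(sub.outline(child, depth + 1))
        return lines


# Hypothetical example: a simplified fire-extinguisher task.
task = Goal("Extinguish a small fire", plan="do 1, then 2", subgoals=[
    Goal("Raise the alarm"),
    Goal("Operate the extinguisher", plan="do 2.1 to 2.4 in order", subgoals=[
        Goal("Pull the pin"),
        Goal("Aim at the base of the fire"),
        Goal("Squeeze the handle"),
        Goal("Sweep from side to side"),
    ]),
])

print("\n".join(task.outline()))
```

The leaf list returned by `operations()` is one possible starting point for the step-by-step checklist that a VR training scenario would later assess.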

The purpose of scenario sketching, also called scenario design, is to obtain detailed descriptions of how people accomplish a task. For example, Carroll (2000) provides a general definition of and introduction to scenario-based design. Farmer et al. (2017) explained a detailed methodology for specifying and analyzing training tasks. Recently, event templates have also been integrated into scenario descriptions for virtual training environments (Papelis and Watson, 2018). These efforts to devise efficient and comprehensive representations of training scenarios not only provide a general framework and new ideas for designing VR training applications but also offer a theoretical basis for developing procedural approaches that automatically generate virtual training environments.
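The sketch below is not Papelis and Watson’s formalism; it is a generic illustration, assuming that each event template pairs a trigger condition on the scenario state with an effect on that state and fires at most once. `EventTemplate`, `Scenario`, and the example events are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EventTemplate:
    """Hypothetical event template: when `trigger` holds, apply `effect` once."""
    name: str
    trigger: Callable[[dict], bool]  # reads the scenario state
    effect: Callable[[dict], None]   # modifies the scenario state
    fired: bool = False


class Scenario:
    """Advances a scenario clock and fires each template at most once."""

    def __init__(self, state, events):
        self.state = state
        self.events = events
        self.log = []  # (time, event name) pairs, e.g., for after-action review

    def step(self, dt):
        self.state["time"] += dt
        for ev in self.events:
            if not ev.fired and ev.trigger(self.state):
                ev.effect(self.state)
                ev.fired = True
                self.log.append((self.state["time"], ev.name))


# Hypothetical fire scenario: a smoke alarm sounds at t = 10 s, and the fire
# spreads at t = 30 s unless the trainee has raised the alarm by then.
scenario = Scenario(
    {"time": 0.0, "alarm_raised": False, "fire_rooms": {"kitchen"}},
    [
        EventTemplate("fire spreads to hallway",
                      lambda s: s["time"] >= 30 and not s["alarm_raised"],
                      lambda s: s["fire_rooms"].add("hallway")),
        EventTemplate("smoke alarm sounds",
                      lambda s: s["time"] >= 10,
                      lambda s: s.update(alarm_audible=True)),
    ],
)
for _ in range(40):
    scenario.step(1.0)
```

Because triggers and effects are plain data, a generator could assemble many scenario variants from the same template library, which is the link to procedural generation drawn in the paragraph above.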

While modeling and simulation are essential components of today’s VR training software, traditional training methods are generally based on technical manuals or multimedia resources (Chao et al., 2017). Although such resources are simple to use and easy to produce, they do not give trainees an immersive experience, and the resulting training outcomes are therefore not comparable to those of VR training (Hamilton et al., 2002; Lovreglio et al., 2020). In this article, we summarize VR training software in two aspects: development tools and 3D content creation.

A number of tools have been developed in the past. Among them, the Virtual Reality Modeling Language (VRML), first introduced in 1994, was intended for developing “virtual worlds” without dependency on headsets. After VRML faded out, a wide array of other languages and tools picked up where it left off, including 3DMLW (discontinued in 2016), COLLADA2, O3D3, and X3D4, the official successor of VRML. Nowadays, game engines are among the most popular development tools for VR applications, with multiple packages and software development kits (SDKs) that support mainstream VR devices. For example, Unity 3D (Linowes, 2015) and Unreal Engine (Whiting and Donaldson, 2014) are popular choices. Both provide integrated VR APIs that developers can use after installing the corresponding SDKs.

3D modeling is also important in creating virtual training environments. The Unity and Unreal asset stores offer plenty of resources, such as 3D models, 3D scenes, and sample projects, which make content creation easier for people who may not have any knowledge of or skills in 3D modeling. If developers want to customize their 3D content, they can use advanced 3D modeling software such as 3ds Max, Maya, and Blender to build and modify 3D models. In addition, the Virtual Reality Peripheral Network (VRPN) (Taylor et al., 2001) provides local or networked access to various tracking, button, joystick, sound, and other devices used in VR systems.

Procedural generation techniques are also widely used in creating virtual content such as virtual environments, 3D models, and game levels. Traditionally, such content is created manually by designers from scratch, a labor-intensive and time-consuming process that involves a lot of trial and error. Procedural generation is a common approach for creating certain types of 3D content [e.g., terrains (Smelik et al., 2009), cities (Parish and Müller, 2001), and room layouts (Yu et al., 2011)]. As described above, exploring scenario-based descriptions of VR training can help devise procedural generation methods for training scenarios. Related works include Smelik et al. (2011), Smelik et al. (2014), Freiknecht and Effelsberg (2017), Lang et al. (2018), and Li et al. (2020). The general process starts by specifying the training scenario, including the training tasks, environments, baselines, and randomness level. Then, based on this parameterized, predescribed scenario, a procedural generation framework is constructed that applies an automatic process (e.g., a stochastic optimization process) to generate the whole training scenario.
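The cited works each use their own formulations. As a generic illustration of the stochastic optimization step, the Python sketch below uses simulated annealing to place hazards in a rectangular room. The cost terms (a target mean distance from the exit acting as a difficulty knob, and a minimum separation between hazards), the room size, and all parameter values are hypothetical; the random seed lets one specification yield different scenario variants.

```python
import math
import random


def layout_cost(hazards, exit_pos, target_dist, min_sep):
    """Lower is better. Assumed design goals (hypothetical):
    (a) hazards sit, on average, `target_dist` metres from the exit (a difficulty knob);
    (b) no two hazards are closer than `min_sep` metres."""
    mean_d = sum(math.dist(h, exit_pos) for h in hazards) / len(hazards)
    cost = (mean_d - target_dist) ** 2
    for i in range(len(hazards)):
        for j in range(i + 1, len(hazards)):
            gap = math.dist(hazards[i], hazards[j])
            if gap < min_sep:
                cost += (min_sep - gap) ** 2
    return cost


def generate_layout(n_hazards, room=(10.0, 8.0), exit_pos=(0.0, 0.0),
                    target_dist=6.0, min_sep=2.0, iters=5000, t0=1.0, seed=0):
    """Simulated annealing over hazard positions inside a width x depth room."""
    rng = random.Random(seed)  # the seed selects one scenario variant
    w, d = room
    hazards = [(rng.uniform(0, w), rng.uniform(0, d)) for _ in range(n_hazards)]
    cur = layout_cost(hazards, exit_pos, target_dist, min_sep)
    best, best_cost = list(hazards), cur
    for k in range(iters):
        temp = t0 * (1 - k / iters) + 1e-6  # linear cooling schedule
        cand = list(hazards)
        i = rng.randrange(n_hazards)
        x, y = cand[i]
        # Perturb one hazard, keeping it inside the room.
        cand[i] = (min(w, max(0.0, x + rng.gauss(0, 0.5))),
                   min(d, max(0.0, y + rng.gauss(0, 0.5))))
        c = layout_cost(cand, exit_pos, target_dist, min_sep)
        # Metropolis rule: always accept improvements, sometimes accept worse moves.
        if c < cur or rng.random() < math.exp((cur - c) / temp):
            hazards, cur = cand, c
            if c < best_cost:
                best, best_cost = list(cand), c
    return best, best_cost
```

Accepting occasional worse moves early on helps the search escape poor local arrangements; as the temperature falls, the search settles on a layout that satisfies the specified constraints as well as it can.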

The development of VR training is closely tied to advances in VR devices. Before consumer-grade VR devices became available, and owing to the lack of computing power (Biocca and Delaney, 1995), many training tasks were conducted using 1) window (computer) systems that provide a portal into an interactive 3D virtual world, 2) mirror systems in which users observe themselves moving in a virtual world through a projection screen, 3) vehicle systems in which the user enters what appears to be a vehicle and operates controls that simulate movement in the virtual world, and 4) Cave Automatic Virtual Environment (CAVE) systems (Cruz-Neira et al., 1992) in which users enter a room surrounded by large screens that project a nearly continuous virtual space.

The accessibility of current VR devices brings many benefits to VR training. Anthes et al. (2016) summarized the most commonly used VR hardware and software. Although problems such as motion sickness persist, there have been efforts to identify situations in which available VR devices, such as head-mounted displays (HMDs), can help train skills. For example, Jensen and Konradsen (2018) found that cognitive, psychomotor, and affective skills can be better enhanced using HMDs than with less immersive methods.

Besides HMDs, tracking and interaction devices such as Leap Motion and Kinect sensors have also been used in previous VR training research. For example, Iosa et al. (2015) demonstrated the feasibility of using a Leap Motion sensor for the rehabilitation of subacute stroke by conducting therapy experiments with elderly people. Building on the conclusions of Iosa et al. (2015), Wang et al. (2017) developed a VR training application based on a Leap Motion sensor to facilitate upper limb functional recovery. Kinect sensors, on the other hand, are frequently used in motor-function recovery training of the limbs (Bao et al., 2013; Sin and Lee, 2013; Park et al., 2017). Bogatinov et al. (2017) explored integrating a Kinect sensor to provide low-cost firearms shooting training scenarios. Moreover, sports training applications such as dance (Kyan et al., 2015), motion games (Zhang et al., 2019), badminton (He et al., 2014), table tennis (Liu et al., 2020), and golf (Lin et al., 2013) commonly use a Kinect sensor.
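One way such rehabilitation applications can turn tracking data into a clinically meaningful measure is to compute a joint angle from three tracked joint positions using the dot product. The minimal Python sketch below assumes that 3D joint positions in metres have already been obtained from a skeleton tracker; it does not use any sensor SDK, and the function names are illustrative.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at joint b, between segments b->a and b->c (3D points)."""
    v1 = [a[i] - b[i] for i in range(3)]
    v2 = [c[i] - b[i] for i in range(3)]
    dot = sum(p * q for p, q in zip(v1, v2))
    norms = math.hypot(*v1) * math.hypot(*v2)
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, dot / norms))
    return math.degrees(math.acos(cos_theta))


def range_of_motion(frames):
    """Largest minus smallest angle over a recording of (a, b, c) joint triples."""
    angles = [joint_angle(*frame) for frame in frames]
    return max(angles) - min(angles)
```

With shoulder, elbow, and wrist positions as `a`, `b`, and `c`, `joint_angle` gives the elbow angle for one frame, and `range_of_motion` summarizes a whole exercise repetition.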

Depth-based sensors like Kinect and Leap Motion provide a direct and convenient solution for basic motion tracking of the human body at an affordable cost. Some companies use motion capture suits to increase tracking precision. Commercial products such as PrioVR, TESLASUIT, and HoloSuit all use embedded sensors and haptic devices to provide full-body tracking and haptic feedback. Besides body tracking, some devices improve VR experiences through locomotion. Treadmill-based platforms (e.g., Cyberith Virtualizer, KAT VR, and Virtuix Omni), which physically simulate the feeling of walking and running in VR, are popular devices for VR locomotion, allowing users to walk through a virtual environment.

Researchers have also investigated the relationship between training outcomes and different VR devices (Howard, 2019). As VR applications in education and training become increasingly popular, studies have compared the pedagogical uses of different VR headsets (Moro et al., 2017; Papachristos et al., 2017). Although it is disputed whether mobile VR headsets tend to induce nausea and blurred vision (Moro et al., 2017), previous results indicate little difference between high-end HMDs such as the Oculus Rift and low-end HMDs such as mobile VR headsets in aspects such as spatial presence (Amin et al., 2016), usability, and training outcomes (Papachristos et al., 2017).

In this section, we review the challenges and approaches of employing VR training in different domains. We reviewed a total of 48 VR training applications and works. Approximately 54% of the works used head-mounted displays (HMDs), 6% used mobile VR, 10% used CAVE-like VR displays, and 27% did not specify which VR devices they used. In addition, 12% of the works used additional trackers or sensors, for example, depth sensors to capture hand gestures; 10% used advanced devices such as treadmills or motion platforms to provide immersive navigation experiences; and 21% used custom hardware, such as Arduino boards or 3D-printed parts, to meet their tasks’ needs. Most of the works we reviewed (83%) are simulation-based applications, which simulate a real-world scenario for performing certain tasks in VR. Finally, 71% of the works involved a user evaluation, with an average of 34 ± 29 participants.

First responders (e.g., police officers, firefighters, and emergency medical services) frequently face dangerous situations that are uncommon in other fields (Reichard and Jackson, 2010). First responders’ ability to deal with critical events increases with the amount of training received. Successful mission completion requires intensive training, whereas inadequate training may cause first responders to fail their missions and may even lead to personnel injury. Setting up such a training environment in the real world can be expensive and time-consuming (Karabiyik et al., 2019). Training first responders in VR can significantly reduce training costs and improve performance (Koutitas et al., 2020). The training setup can be highly customized to train first responders to deal with critical events effectively. Some government departments have begun to use virtual training as part of combat training. For example, the New York Police Department (NYPD) uses VR for active shooter training (Melnick, 2019).

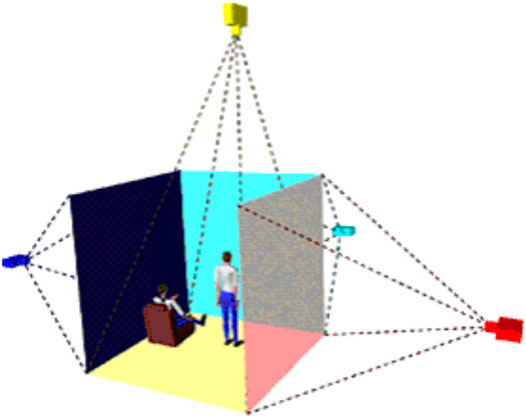

Wilkerson et al. (2008) proposed using an immersive simulation, delivered through a cave automatic virtual environment (CAVE; see Figure 2), to train first responders for mass casualty incidents. The CAVE projected stereo images onto the walls and floor to provide a fully immersive experience that included unrestricted navigation, interaction with virtual objects, and physical objects placed in the environment. Directional sound further enhanced the training experience. Similarly, a CAVE can be used to design and deliver other extreme, realistic, rare, but significant training scenarios, allowing trainees to train in a safe and immersive environment while reducing training costs.

FIGURE 2. An example of the cave automatic virtual environment (CAVE). Image courtesy of Wilkerson et al. (2008).

Conges et al. (2020) proposed the EGCERSIS project, which provides a framework for training a crisis management team on critical sites. The team consists of three actors: a police officer for traffic control (e.g., setting up a cordon to block an area), a firefighter for dealing with danger (e.g., using a fire hose to extinguish fires and clearing obstacles), and a medical doctor for rescuing the wounded (e.g., measuring vital signs and bandaging wounds). Each actor uses a unique set of tools to complete their mission. Using the framework, Conges et al. (2020) also demonstrated a scenario in which a first responder team rescued an injured man lying on the floor of a burning subway station platform. Mossel et al. (2017) proposed a VR platform that enables immersive on-site first responder training with squad leaders. Unlike training that targets team members’ professional and practical abilities, the training of team leaders focuses more on leadership. Mossel et al. (2017) designed four leadership indicators in their method, 1) PLAN, 2) DO, 3) CHECK, and 4) ACT, which together are referred to as the PDCA cycle. Specifically, the training involves exploring the site, making decisions, giving commands to request supply personnel, evaluating the results of commands, identifying deviations from the plan, and adapting actions. In an early presentation, Mossel et al. (2017) demonstrated a scenario prototype that simulated a small fire and a traffic accident with injured people and animated bystanders.
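The PDCA cycle can be read as a loop. The Python sketch below is a hypothetical illustration, not Mossel et al.’s implementation: the plan is a set of target values, the DO step applies the leader’s commands to a simulated scene, CHECK lists deviations from the plan, and ACT re-issues commands for the deviating items. The scene model, item names, and the `simulated_do` behavior are invented for illustration.

```python
def check(plan, world):
    """CHECK: map each plan item that deviates to (target, observed)."""
    return {k: (target, world.get(k)) for k, target in plan.items()
            if world.get(k) != target}


def run_pdca(plan, world, do, max_cycles=5):
    """Repeat PLAN-DO-CHECK-ACT until the scene matches the plan.

    plan  -- PLAN: target value for each item
    world -- simulated scene state, updated in place by `do`
    do    -- DO: callable(world, commands) applying the leader's commands
    Returns the deviations found in each cycle.
    """
    history = []
    commands = dict(plan)  # first cycle: issue the whole plan
    for _ in range(max_cycles):
        do(world, commands)
        deviations = check(plan, world)
        history.append(deviations)
        if not deviations:
            break
        # ACT: adapt by re-issuing commands only for the items that deviated.
        commands = {k: target for k, (target, _) in deviations.items()}
    return history


def simulated_do(world, commands):
    """Hypothetical scene: the first supply request arrives one crew short."""
    for key, value in commands.items():
        if key == "supply_crews" and "supply_requested" not in world:
            world["supply_requested"] = True
            world[key] = value - 1
        else:
            world[key] = value


plan = {"cordon_set": True, "supply_crews": 2, "casualties_triaged": 3}
history = run_pdca(plan, {}, simulated_do)
```

The per-cycle deviation history is the kind of record an assessor might use to judge how quickly a trainee leader notices and corrects problems.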

Firefighting is another important focus of VR training in the first responder domain. Backlund et al. (2007) presented Sidh, a gamified firefighter training simulator that runs in a CAVE. Players interact with the virtual environment through a set of sensors to complete 16 firefighter training levels. In addition to training professionals, some fire simulators also aim to teach the general public about fire safety (Cha et al., 2012).

Heirman et al. (2020) proposed using extended reality techniques to train naval firefighters. Because the Navy has strict firefighter training requirements, they devised a VR controller based on the fire hose used in daily training, so that trainees can operate the equipment in VR as closely as possible to how they would in the real world. Vehicles that use hydrogen as fuel may become a reality in the future, yet there are no suitable training tools for dealing with hydrogen vehicle accidents. Pinto et al. (2019) proposed using VR for rescue training for hydrogen vehicle accidents. Because hydrogen behaves differently from conventional gasoline, they used simulation technology to recreate the scene of a hydrogen vehicle after an accident in VR, providing a suitable training platform for rescuers. Their solution consists of three components: a tutorial mode, a training mode, and a certification mode.

Although training for rare, high-risk scenarios deserves attention, common low-risk scenarios should not be ignored. Along this direction, Haskins et al. (2020) identified a set of challenges in real-world training: communication, situational awareness, high-frequency low-risk incidents, and assessment training. They explored a set of immersive training scenarios for first responders addressing these challenges, including defusing a bomb, using a fire truck panel (see Figure 3), handling hazardous materials, and monitoring a police checkpoint. In the bomb defusal scenario, a trainee wearing an HMD played a first responder, while another trainee played a supporter giving complex defusal instructions. The supporter instructed the first responder on how to defuse the bomb, while the first responder described what they saw. Similarly, the other scenarios provided simulated virtual environments in which first responders could practice specialized missions safely and at low cost.

FIGURE 3. A fire truck panel in VR. Image courtesy of Haskins et al. (2020).

Koutitas et al. (2019) proposed using VR to train Ambulance Bus crews to improve their professional capabilities. Compared with a regular ambulance, an Ambulance Bus has more complicated internal facilities, and its work tasks require a higher level of professionalism. They presented the training environment inside the Ambulance Bus in both VR and AR. The user study divided the subjects into three groups, trained with AR, VR, and a PowerPoint presentation, respectively. The results showed that after a week of training, the error rates of subjects in the AR and VR groups were significantly lower than that of the PowerPoint group.

VR setups also have limitations. Their range of motion is limited, commonly to an area of 3 m × 2 m, which cannot adequately simulate training that requires an extensive range of movement; for example, long-distance chase training cannot be conducted. In addition, VR setups lack operational feedback, so they do not work well in training scenarios that require fine manipulation. For example, if a nurse does not bandage a patient’s wounds properly, the patient may suffer more, and such patient responses could be challenging to simulate realistically in VR-based nurse training.

VR training will likely supplement rather than replace traditional training (Heirman et al., 2020). Compared with traditional training, VR training’s low cost and rapid deployment make it suitable for novices who are just getting started, and traditional training can then serve as an assessment method once VR training is completed. In the future, alternating between traditional training and mature VR training will reduce costs and improve the quality of training.

Researchers have thoroughly explored using VR for medical training. VR has been used for treatment, therapy, and surgery, as well as for creating experiences that simulate impairments. Previous work suggested that properly designed simulation-based training can significantly reduce the errors of health-care workers and improve patient safety (Salas et al., 2005). Furthermore, researchers have tackled the challenges of creating realistic training experiences for medical professionals by enhancing virtual patient systems.

Some VR programs were created to treat visual impairments, while others aim to further the understanding of visual impairments through simulation. Hurd et al. (2019) explored the use of VR video games for amblyopia treatment. Amblyopia, commonly referred to as lazy eye, is a vision disorder that occurs because of faulty connections between the eyes and the brain. Typical treatments involve occluding the patient’s dominant eye with an eye patch to strengthen the neurological connection between the weak eye and the brain. Hurd et al. (2019) designed engaging video games that were played on a VR headset with blurred vision. In a user study, these therapy sessions were found to improve participants’ visual acuity.

ICthroughVR (Krösl et al., 2019) was created to simulate the effects of cataracts using VR as shown in Figure 4. This simulation is useful for evaluating architectural designs. More specifically, it evaluates lighting conditions along with the placement of emergency exit signs. The researchers adaptively reduced the HMD’s contrast to simulate these effects. They similarly blurred, clouded, and tinted the user’s vision. The researchers also utilized eye-tracking to simulate light sensitivity by monitoring changes in the user’s pupil size.
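ICthroughVR applies its effects to what the HMD displays; its implementation is not reproduced here. The CPU-only Python sketch below merely illustrates the kinds of per-pixel operations described above (blurring, contrast reduction, clouding, and tinting) on an RGB image stored as nested lists of floats in [0, 1]. The function names, parameter values, and order of operations are assumptions, and light sensitivity is not modeled.

```python
def box_blur(img, radius=1):
    """Average each pixel with its neighbours within `radius` (edges use fewer pixels)."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            acc, n = [0.0, 0.0, 0.0], 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    for c in range(3):
                        acc[c] += img[yy][xx][c]
                    n += 1
            row.append(tuple(a / n for a in acc))
        out.append(row)
    return out


def simulate_cataract(img, blur_radius=1, contrast=0.6, cloud=0.2,
                      tint=(1.0, 0.95, 0.75)):
    """Apply blur, contrast loss, a white haze, and a yellowish tint (assumed values)."""
    if blur_radius > 0:
        img = box_blur(img, blur_radius)
    h, w = len(img), len(img[0])
    mean = [sum(px[c] for row in img for px in row) / (h * w) for c in range(3)]
    out = []
    for row in img:
        new_row = []
        for px in row:
            rgb = []
            for c in range(3):
                v = mean[c] + (px[c] - mean[c]) * contrast  # pull values toward the mean
                v = v * (1.0 - cloud) + cloud               # blend toward a white haze
                v *= tint[c]                                # attenuate blue the most
                rgb.append(min(1.0, max(0.0, v)))
            new_row.append(tuple(rgb))
        out.append(new_row)
    return out
```

In an interactive system these operations would typically run on the GPU every frame; the pure-Python version is only meant to make each step explicit.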

FIGURE 4. ICthroughVR simulates the effects of cataracts in VR. Image courtesy of Krösl et al. (2019).

Medical professionals have also investigated using VR for surgical training. Gallagher et al. (1999) compared traditional training with VR training for overcoming the “fulcrum effect” of the body wall in laparoscopic surgery. The results indicated that the VR group performed significantly better than the traditional group. Grantcharov et al. (2004) also validated, in a randomized clinical trial of laparoscopic cholecystectomy performance, that VR training outperformed traditional training. Similar results have been found by Westwood et al. (1998), Seymour et al. (2002), and Gurusamy et al. (2009).
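The fulcrum effect follows from lever geometry: a rigid instrument pivots about its entry port in the body wall, so the tip moves opposite to the surgeon’s hand, scaled roughly by the ratio of the inserted length to the external length. The 2D Python sketch below illustrates this under simplifying assumptions (a fixed port, a constant insertion depth `inside_len`, and no tissue forces); it is not taken from the simulators used in the cited studies.

```python
import math


def tip_position(hand, port, inside_len):
    """2D position of the instrument tip.

    The shaft is a straight line from the hand through the fixed port;
    the tip lies `inside_len` beyond the port along that line.
    """
    dx, dy = port[0] - hand[0], port[1] - hand[1]
    norm = math.hypot(dx, dy)
    return (port[0] + dx / norm * inside_len, port[1] + dy / norm * inside_len)


port = (0.0, 0.0)    # entry point in the body wall
inside = 0.10        # 10 cm of shaft inside the body (assumed)
before = tip_position((0.00, 0.20), port, inside)  # hand 20 cm above the port
after = tip_position((0.02, 0.20), port, inside)   # hand moves 2 cm to the right
tip_dx = after[0] - before[0]
# The tip moves left, by roughly inside/outside = 0.5 times the hand motion.
```

This inverted, scaled mapping between hand and tip is what novices must learn to compensate for, which is why training devices that reproduce the pivot are valuable.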

Researchers have also used VR to mitigate phantom limb pain in amputees (Fukumori et al., 2014; Ambron et al., 2018; Willis et al., 2019). In mirror therapy, a mirror is placed in front of the amputee’s affected limb to hide it and to reflect the patient’s unaffected limb. This creates the illusion of motion in the affected limb and stimulates the corresponding areas of the brain, thereby reducing pain. Virtual mirror therapy places amputees in virtual environments and uses a data glove or visual tracking to simulate the movement of the affected limb (Fukumori et al., 2014; Ambron et al., 2018). Researchers have also incorporated tactile sensation into therapy sessions (Willis et al., 2019).
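The core of virtual mirror therapy can be sketched as reflecting the tracked unaffected limb across the body’s midline and using the result to animate the missing limb. The minimal Python sketch below assumes a tracker that reports joint positions with x as the left-right axis and a vertical midsagittal plane at `x = midline_x`; the joint naming scheme is hypothetical, and this is not the implementation used in the cited studies.

```python
def mirror_limb(joints, midline_x=0.0):
    """Reflect tracked joints across the body's midsagittal plane x = midline_x.

    `joints` maps names such as "left_hand" to (x, y, z) positions; each mirrored
    joint is renamed to the opposite side so it can drive the virtual affected limb.
    """
    mirrored = {}
    for name, (x, y, z) in joints.items():
        if name.startswith("left_"):
            name = "right_" + name[len("left_"):]
        elif name.startswith("right_"):
            name = "left_" + name[len("right_"):]
        mirrored[name] = (2 * midline_x - x, y, z)
    return mirrored
```

In a running system this mapping would be applied every frame, so that moving the unaffected hand produces a matching movement of the virtual affected hand.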

Several works explored the use of virtual agents to facilitate health-care training. Hochreiter et al. (2015) demonstrated the use of a projector and infrared (IR) camera setup to render an animated physical–virtual patient. The IR cameras enable the patient to sense touch events and react to them with an animation event. Daher et al. (2018) studied the efficacy of this physical–virtual patient in a medical training scenario, which was designed to train health-care professionals to assess patients who show neurological symptoms of a stroke. The researchers compared the physical–virtual patient with a high-fidelity touch-aware mannequin and found that the physical–virtual patient improved the health-care training experience for subjects.

VR has also been used for postwar recovery. Rizzo et al. (2014) used a VR system as an exposure therapy (VRET) tool to help soldiers suffering from posttraumatic stress disorder (PTSD). A Middle East–themed virtual city with deserts and roads, called Virtual Iraq, was created to mimic the experience of soldiers deployed in Iraq. A clinical trial was conducted with 20 active-duty service members who had recently been redeployed from Iraq and had experienced no improvement under traditional PTSD treatment, including group counseling and medication. After 5 weeks of VRET (fewer than 11 sessions per soldier on average), 16 of the 20 participants no longer showed significant signs of PTSD, and they showed a significant reduction in anxiety and depression. The improvement persisted at a 3-month posttreatment follow-up.

Researchers have also studied the usability of virtual patients in clinical psychology. Kenny et al. (2009) created a virtual patient simulating PTSD in the form of a 3D character that responds to clinicians’ speech and gestures. They also conducted a user study to evaluate psychological variables such as immersiveness and presence, and assessed the clinicians’ openness to absorbing experiences. Unlike other works that create virtual experiences mimicking traditional training, Huguet et al. (2016) explored the use of perturbed communication for medical training. They created a multiagent virtual experience simulating a hospital, in which the agents are equipped with an erroneous communication model to induce stress in the trainee.

Due to the importance of tactile feedback in medical professions, most training tools require tailor-made equipment. For example, Gallagher et al. (1999) and Grantcharov et al. (2004) used a VR setup outfitted with two laparoscopic instruments that the surgeon manipulated to simulate a surgical procedure. Hochreiter et al. (2015) and Daher et al. (2018) built a specialty rig to simulate a physical–virtual patient. In this subfield of training, researchers must not only provide a faithful visual VR experience but also develop realistic and responsive physical devices. Creating such training devices and experiences requires expertise in rendering, simulation, haptics, psychology, and physiology.

Military training can be dangerous. Every year, many soldiers die from noncombat causes such as accidents (Mann and Fischer, 2019). Simulation technologies make it possible to reproduce different environmental conditions, such as day and night, different weather types, and other scenarios (Rushmeier et al., 2019; Shirk, 2019). Militaries have begun to use simulation software and serious games as training tools. Hussain et al. (2009) developed the Flooding Control Trainer (FCT) to train new U.S. Navy recruits in various skills. U.S. Army Aviation began to experiment with VR training to supplement traditional hands-on training through the Aviator Training Next (ATN) program. Preliminary results suggested that VR training produced pilots of comparable quality and competence to those trained in a live aircraft (Dalladaku et al., 2020). Traditional training methods are often constrained by the real training environment and by specific devices or equipment. VR training provides a safe, controlled virtual environment at a relatively low cost in which military personnel can practice their technical skills and improve their cognitive functions (Zyda, 2005).

Many traditional training methods require specific locations or sets of equipment. For example, the Landing Signal Officer Trainer, Device 2H111, located in Oceana, Virginia, has been used to prepare all Landing Signal Officers (LSOs) for decades. To create an immersive environment, the room contains several large screen displays and physical renditions of the actual instruments. Because all LSOs must share the one room that houses this equipment, there are gaps between training periods. Greunke and Sadagic (2016) created a lightweight VR LSO training system, as shown in Figure 5, that uses commercial off-the-shelf devices to support the capabilities of Device 2H111. Beyond those basic capabilities, the VR LSO training system included features that Device 2H111 did not provide, such as speech recognition and visual cues to help trainees in the virtual environment. The system was presented to real-world LSOs at an LSO school for a side-by-side comparison, and the feedback was positive: the VR system supported all elements needed from Device 2H111, with additional features. Similarly, Doneda and de Oliveira (2020) created the Helicopter Visual Signal Simulator (HVSS) to help LSO trainees improve their skills after theoretical studies. A user study with 15 LSOs demonstrated that the HVSS is comparable to the actual training while incurring a much lower cost.

FIGURE 5. A user demonstrates using the Landing Signal Officer Display System for training. Image courtesy of Greunke and Sadagic (2016).

Furthermore, Girardi and de Oliveira (2019) observed that army artillery training is often constrained by high costs and limited instruction areas. They presented a Brazilian Army VR simulator with which the instructor can train observers at minimal cost and without space limitations. The simulator was validated with 13 experienced artillery officers, and the results indicated that it met expectations for both presence and effectiveness. Siu et al. (2016) created a VR surgical training system for health-care personnel transitioning between military operational and civilian settings. The training system incorporates the needs and skills that are essential to the military and adaptively generates optimal training tasks so that health-care workers can reskill efficiently.

Taupiac et al. (2019) presented another case in which VR greatly improved training quality and conditions compared to the traditional training method. The authors created VR training software that helps French Army infantrymen learn the calibration procedure for the IR sights of a combat system called FELIN. Before practicing on the actual FELIN system, soldiers must practice repeatedly on the traditional software until they make no mistakes. The traditional system provides only a 2D program for practicing the calibration procedure, whereas the new VR method lets soldiers practice in a virtual environment with a 3D-printed rifle model, as shown in Figure 6, which provides control and a feel similar to its real-world counterpart. An ad hoc study was conducted with a group of French soldiers to compare the two methods. The results indicated that the VR method greatly improved soldiers’ learning efficiency and their intrinsic motivation to perform training tasks.

FIGURE 6. A 3D-printed rifle rack that simulates the feel of the actual rifle for soldiers practicing the FELIN procedure in VR. Image courtesy of Taupiac et al. (2019).

Overall, VR technologies show significant benefits for technical training. They can greatly reduce military training expenses by replacing some expensive equipment with affordable VR devices. VR devices will not fully replace traditional equipment, but they can serve as a more affordable training alternative. Greunke and Sadagic (2016) and Taupiac et al. (2019) have validated that the effect of VR training is comparable to that of actual training in some cases, for example, for improving proficiency with certain military equipment.

Vehicle simulators provide a safe, controlled training environment in which people can practice before operating the actual vehicle. Companies use professional simulators such as the Iowa Driving Simulator (Freeman et al., 1995) to test their products or simulate driving experiences. These platform-based simulators are usually bulky and too expensive for general public use. On the other hand, traditional flat-screen–based training programs can be ineffective due to their lack of immersiveness. In current practice, people go to a driving school or hire a human coach to learn driving, which is labor-intensive and can be costly. Moreover, behind-the-wheel training can cause high anxiety in trainees, resulting in a steeper learning curve and a longer training time. As VR devices become more affordable, researchers have started to explore simulated driving training in virtual environments. VR fills the gap between platform-based and flat-screen–based simulators. A major advantage of VR simulation training is that it allows people to practice in immersive simulations of dangerous scenarios.

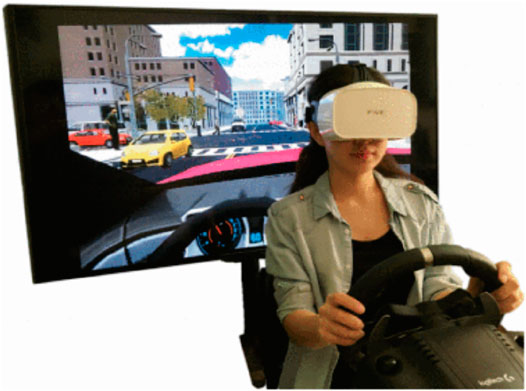

To improve driving habits, Lang et al. (2018) created a system that automatically synthesizes a training program to correct drivers’ bad driving habits (Figure 7). The system uses an eye-tracking VR headset to detect common driving errors such as not checking blind spots or not paying attention to pedestrians on the street. Based on the collected eye movement data, the system synthesizes a personalized training route along which the trainee encounters events and practices the relevant skills, thereby improving their driving habits. The results indicated that the personalized VR driving system reduced drivers’ bad driving habits, and that the training effect was more significant and longer-lasting than that of traditional training methods (e.g., booklets, videos).
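To make the idea of error-driven route synthesis concrete, the following Python sketch shows one simple way such personalization could work. It is not Lang et al.’s (2018) method: the segment catalog, the error labels (e.g., `"blind_spot"`), and the greedy selection under a route-length budget are hypothetical assumptions chosen for illustration. Errors detected from eye-tracking are converted into per-type weights, and road segments are ranked by how much weighted practice they offer per meter.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """A hypothetical road segment and the practice events it contains."""
    name: str
    length_m: float
    events: dict  # error type (e.g. "blind_spot") -> number of practice events


def error_weights(detected_errors):
    """Convert a log of detected error labels into relative frequencies."""
    counts = {}
    for err in detected_errors:
        counts[err] = counts.get(err, 0) + 1
    total = sum(counts.values())
    return {err: c / total for err, c in counts.items()}


def segment_value(seg, weights):
    """Weighted practice events per meter for this trainee's error profile."""
    return sum(weights.get(err, 0.0) * n for err, n in seg.events.items()) / seg.length_m


def synthesize_route(segments, detected_errors, max_length_m):
    """Greedily pick the highest-value segments that fit in the length budget."""
    weights = error_weights(detected_errors)
    ranked = sorted(segments, key=lambda s: segment_value(s, weights), reverse=True)
    route, used = [], 0.0
    for seg in ranked:
        if segment_value(seg, weights) <= 0:
            break  # remaining segments train nothing this trainee needs
        if used + seg.length_m <= max_length_m:
            route.append(seg)
            used += seg.length_m
    return route


segments = [
    Segment("school_zone", 400.0, {"pedestrian": 3}),
    Segment("highway_merge", 800.0, {"blind_spot": 4}),
    Segment("residential", 600.0, {"pedestrian": 1, "blind_spot": 1}),
]
# Hypothetical eye-tracking log: three missed blind-spot checks, one missed pedestrian.
log = ["blind_spot", "blind_spot", "blind_spot", "pedestrian"]
route = synthesize_route(segments, log, max_length_m=1300.0)
print([s.name for s in route])  # ['highway_merge', 'school_zone']
```

A greedy ranking keeps the sketch short; a practical system would more likely use a proper optimizer and would also need to respect road connectivity, which this sketch ignores.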

FIGURE 7. A user improves her driving skills through a personalized VR driving training program. Image courtesy of Lang et al. (2018).

Besides vehicle training simulators, VR is also employed for assistive transportation training, such as wheelchair training, for people with physical or cognitive disabilities. VR wheelchair training dates back to the late 1990s, when Inman et al. (1997) first applied VR technology to simulated wheelchair training. They found that children were more motivated in VR training than in traditional training. Even some children with significant learning disabilities were able to concentrate on the assigned tasks and follow instructions to gain operating skills. However, some children favored flat screens over HMDs for training, mainly due to the low computing power and technological limitations of HMDs at that time. Furthermore, Harrison et al. (2002) conducted an assessment suggesting that virtual environments are potentially useful for training inexperienced wheelchair users.

In view of recent advances in VR technology, John et al. (2018) conducted a validation study comparing VR training with traditional wheelchair training simulators. They created several tasks in the VR environment, including navigating through a maze, a room with obstacles, and other designed paths. The experiments showed a significant improvement in navigation performance and driving skills from using the VR training system. Niniss and Nadif (2000) first explored simulating the kinematic behavior of powered wheelchairs using VR. Herrlich et al. (2010) adopted driving characteristics commonly used in electric wheelchairs to create virtual simulated wheelchairs, and validated the system using a physics simulation engine. Rodriguez (2015) later developed a wheelchair simulator that can help disabled children familiarize themselves with a wheelchair. In addition, Li et al. (2020) developed an approach to automatically synthesize wheelchair training scenes for VR training (Figure 8). The approach can generate scenarios whose training paths vary in moving distance, number of rotations, and narrowness, for practicing different wheelchair control skills. After training with the generated scenes in VR, participants showed improved wheelchair control proficiency and precision.
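The three path properties mentioned above (moving distance, number of rotations, and narrowness) can be measured on a candidate path as in the Python sketch below. This is an illustrative assumption, not Li et al.’s (2020) implementation: the waypoint-polyline representation, the 45° turn threshold, the 0.72 m wheelchair width, and the narrowness ratio (wheelchair width divided by the narrowest clearance) are hypothetical choices.

```python
import math


def path_features(waypoints, clearances, chair_width_m, turn_threshold_deg=45.0):
    """Compute simple difficulty features for a 2D wheelchair training path.

    waypoints: list of (x, y) positions in meters along the path (hypothetical format).
    clearances: free passage width in meters at each waypoint.
    chair_width_m: width of the simulated wheelchair in meters.
    """
    # Moving distance: sum of straight-line segment lengths.
    length = sum(
        math.hypot(x1 - x0, y1 - y0)
        for (x0, y0), (x1, y1) in zip(waypoints, waypoints[1:])
    )

    # Rotations: count heading changes at least as large as the threshold.
    turns = 0
    for a, b, c in zip(waypoints, waypoints[1:], waypoints[2:]):
        h1 = math.atan2(b[1] - a[1], b[0] - a[0])
        h2 = math.atan2(c[1] - b[1], c[0] - b[0])
        delta = (h2 - h1 + math.pi) % (2 * math.pi) - math.pi  # wrap to [-pi, pi)
        if abs(math.degrees(delta)) >= turn_threshold_deg:
            turns += 1

    # Narrowness: how close the chair comes to filling the tightest passage (1.0 = no margin).
    narrowness = chair_width_m / min(clearances)
    return {"length_m": length, "turns": turns, "narrowness": narrowness}


# An L-shaped corridor that gets tighter toward the end.
features = path_features([(0, 0), (4, 0), (4, 3)], [1.5, 1.2, 0.9], chair_width_m=0.72)
print(features)
```

Each feature could then be targeted separately, for example, by generating longer paths to practice endurance, more turns to practice rotation, or tighter passages to practice precise control.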

FIGURE 8. A user performs a wheelchair training in VR. Image courtesy of Li et al. (2020).

The vehicle and wheelchair VR training examples demonstrate that VR training is suitable for improving driving skills. Furthermore, research indicates that participants are more focused and engaged in VR than in traditional driving training through a monitor (Lhemedu-Steinke et al., 2018). As mentioned previously, VR reduces the risks of training: beginner drivers can improve their driving skills in the virtual environment without the risk of accidents.

VR also benefits assistive training. For example, those who suffer from lower body disability due to accidents or sickness may need to practice the use of a wheelchair. VR provides a possible solution for wheelchair training in virtual environments. Overall, VR training in the transportation field is not limited to car driving or wheelchair control training. Researchers also explore the possibilities of other vehicle control training via VR, such as aircraft control (Wang et al., 2019), excavator operation (Sekizuka et al., 2019), and forklift operations (Lustosa et al., 2018), among others.

Many industrial projects and research efforts have been devoted to creating or adopting VR training, as VR can reduce the loss of productivity during training and compensate for the lack of qualified training instructors. For example, Manufacturing Extension Partnerships (MEPs) established by the U.S. government offer virtual training and resources to help manufacturers maintain productivity while also improving employee stability (Brahim, 2020). Retail businesses such as Walmart and Lowe’s use VR to present common and uncommon scenarios and train their employees, achieving a faster assimilation process (VIAR, 2019). In addition, VR vocational training could mitigate unemployment by offering a variety of hands-on experiences and workplace exploration to reskill the workforce. For example, Prasolova-Førland et al. (2019) presented a virtual internship project that introduced a variety of occupations and provided workforce training for young job seekers, while Baur et al. (2013) created a realistic job interview simulation that used social cues in mock interviews. Both simulators help bridge the communication gap between employers and job seekers in the labor market.

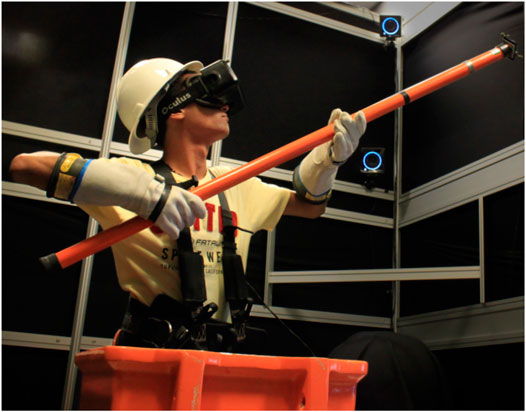

It is critical for a training session to faithfully mimic the real working environment and the challenges that a worker may face in the workplace. Compared to a traditional training environment, VR provides low-cost, replicable training that satisfies such needs while offering a mistake-tolerant environment that relieves the stress a new worker may feel (Mechlih, 2016). For example, Di Loreto et al. (2018) created a VR work-at-height simulator that reproduces real situations with high fidelity for training. Such a simulator not only allows companies to train their employees to perform work-at-height operations but also detects whether new workers have acrophobia and helps them adapt to a work-at-height environment. Similarly, training for power line operation and maintenance is necessary to prevent injuries or fatalities. Borba et al. (2016) developed a procedure-training simulator that focuses on workplace safety and risk assessment for common power line maintenance and operation tasks. This simulator, shown in Figure 9, includes a real basket and a stick to simulate the daily challenges that operators face in an urban setting. It also enables student workers to learn from the mistakes and successes of their peers. Moreover, a virtual application for safety training on construction sites was developed and assessed (Hafsia et al., 2018). Many researchers agree that VR training can add value by reducing safety and health issues in high-risk occupations. For example, immersive virtual construction safety training experiences have been developed (Li et al., 2012; Bhoir and Esmaeili, 2015; Le et al., 2015; Froehlich and Azhar, 2016). Sacks et al. (2013) indicated that virtual construction safety training is feasible and effective, especially for teaching workers to identify and inspect construction safety risks. Aati et al. (2020) developed an immersive virtual platform for enhancing the training of work zone inspectors. With the VR-based safety training programs developed by Zhao and Lucas (2015), users can effectively enhance their hazard inspection skills.

FIGURE 9. A user learning power line operation and maintenance skills in VR. Image courtesy of Borba et al. (2016).

Many scholars and industries have extensively researched integrating VR with virtual and physical factories by providing high-fidelity simulation tools that handle the concurrent evolution of products, processes, and production systems (Tolio et al., 2010). There is a growing trend toward designing VR training for assembly and manufacturing contexts. Abidi et al. (2019) evaluated VR assembly training in terms of effectiveness and transfer of training, and found that VR training reduces assembly time and error rate compared to traditional or baseline training groups. VR training can also save costs and reduce risks in normal factory operation practice, such as the risks of machinery and equipment operation, the risks associated with worker movement during work, and the risks of emergencies (Lacko, 2020). However, VR training does not always enable real-time collaboration with others. Yildiz et al. (2019) proposed a collaborative and coordinated VR training design model for a wind turbine assembly scenario. This simulation model addresses the challenge of integrating multiuser VR data with virtual and physical factories.

Last but not least, both employees and employers can benefit from workplace VR training. On the one hand, new workers can familiarize themselves with the workplace environment and operations through replicable, mistake-tolerant VR training. On the other hand, employers can use the training results to evaluate whether their employees are ready to work in the field. Typically using a headset together with a few motion capture sensors and motion trackers, workplace VR training research has mainly focused on two aspects: 1) improving workers’ skills in performing machinery and equipment operations (e.g., work-at-height training), and 2) raising workers’ awareness at work by providing safety and risk assessment training for high-risk occupations (e.g., hazard inspection at a construction site).

However, group training for emergency response (e.g., coping with fire and earthquake emergencies) still has much room for development, and real-time multiuser VR training remains an open research and engineering challenge. Moreover, we observe that most VR training setups use handheld controllers to capture users’ hand motion. For complex tasks (e.g., wind turbine assembly), hand-tracking devices such as VR gloves are needed to capture a trainee’s detailed hand motion and evaluate their work performance. We expect that the advancement and popularity of VR gloves will lead to more realistic and practical VR training applications.

Aside from training for highly specialized technical skills, VR has recently shown promise for interpersonal skills training. As VR hardware and software improve, rich interactions among people, a crucial factor for interpersonal skills development, become more feasible, especially due to the psychological experience of “being there” (Colbert et al., 2016). For instance, VR has recently shown promise for investigating communication in health-care teams, although little work has examined VR in training settings (Cordar et al., 2017). VR has also shown promise for helping people collaboratively build plans for startup companies while negotiating team roles as they progress (Kiss et al., 2015). Similarly, VR has demonstrated effectiveness in developing intercultural competence (Akdere et al., 2021a).

A recent meta-analysis suggests that ongoing technical advances position VR to deliver effective training for human resource management, including issues related to recruiting and selecting new employees, and increasing knowledge and skills of current employees (Stone et al., 2015). Furthermore, VR is being combined with other emerging technologies, such as haptic interfaces, to support workforce training (Grajewski et al., 2015). The most critical interpersonal skills include openness, empathy, and verbal and nonverbal communication.

Scholars have long recognized the importance of the affective and behavioral learning dimensions to honing communication skills (Greene, 2003); in other words, cognitive development alone is insufficient. Good communicators, those who are best suited to maximize organizational tacit knowledge both as senders/speakers and receivers/listeners, need positive attitudes. Intercultural openness is widely recognized as an important component of intercultural competency. For example, in the AAC&U’s VALUE rubric for intercultural knowledge and competence, the attitude of openness involves both willingness to interact with culturally different others and the capacity to suspend judgment and consider alternate interpretations during those interactions.

Many other models of intercultural competency, including Deardorff’s Pyramid model (Deardorff, 2006), highlight the importance of openness. In fact, Deardorff’s model places openness at the base of the pyramid, implying that intercultural competency cannot develop without this foundational attitude. As a well-recognized and foundational aspect of intercultural communication, openness is a suitable focus for VR-based training simulations. Given the nature of openness, a VR environment presents unique training opportunities, allowing learners to fully experience controversial issues and topics and enabling them to reflect on their individual convictions and opinions, thus developing the competence for being open. Contributing to the formation of cyberculture, VR training “is shown to bring the functional conditionality to the forefront” (Gilyazova, 2019). Openness is also critical in team settings. In a study among surgeons, personal traits such as openness were found to play “an important role in team performance, which in turn is highly relevant for optimal surgical performance” (Rosenthal et al., 2013). VR has been found to be “particularly useful for promoting change within students’ conceptualizations of representation, narrativity and affect” (Dittmer, 2010), thus promoting openness.

One way to evaluate openness development in the VR environment is to use a pre- and posttest design together with a valid and reliable scale such as the Attitudinal and Behavioral Openness Scale (Caligiuri et al., 2000). This demonstrably valid instrument is a 16-item self-report Likert-scale survey that is easily combined with other measures for online data collection in pre- and posttraining assessments. We also recommend a direct measure of openness. At both the beginning and the end of the VR simulation, one could collect data on a performance task incorporated seamlessly into the storyline. This task can involve choosing team members from a number of culturally diverse groups; the homogeneity or heterogeneity of the teams that participants construct then serves as a proxy for their willingness to engage across cultural differences.
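The two measures described above could be scored as in the following Python sketch. It makes two assumptions that the text does not specify. First, Likert responses are averaged after flipping any reverse-keyed items; the actual keying of the Attitudinal and Behavioral Openness Scale items is not reproduced here, so `reverse_items` and the four-item example are placeholders. Second, team heterogeneity is quantified with Blau’s index, 1 − Σ p_k², where p_k is the share of chosen team members from cultural group k; Blau’s index is our choice of proxy, not one prescribed by the cited work.

```python
from collections import Counter


def likert_score(responses, reverse_items=(), scale_max=5):
    """Mean item score; items whose index is in reverse_items are flipped (1 <-> scale_max)."""
    adjusted = [
        (scale_max + 1 - r) if i in reverse_items else r
        for i, r in enumerate(responses)
    ]
    return sum(adjusted) / len(adjusted)


def blau_index(team_groups):
    """Blau's heterogeneity index: 0 for a single-group team, higher for more diverse teams."""
    counts = Counter(team_groups)
    n = len(team_groups)
    return 1.0 - sum((c / n) ** 2 for c in counts.values())


# Hypothetical participant: a short 4-item example with item 1 reverse-keyed.
pre_score = likert_score([3, 4, 3, 2], reverse_items={1})
post_score = likert_score([4, 2, 5, 3], reverse_items={1})
# Team chosen at the start vs. end of the simulation (group labels are illustrative).
pre_team = blau_index(["A", "A", "A", "A"])
post_team = blau_index(["A", "B", "C", "D"])
print(post_score - pre_score, post_team - pre_team)  # 1.5 0.75
```

Reporting the self-report change and the behavioral change side by side lets a researcher check whether the two indicators of openness move together.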

Empathy is a complex skill that involves understanding the experiences, perspectives, and emotions of others. Intercultural empathy is especially challenging because cultural differences often act as barriers to such understanding. Deardorff places it toward the top of the pyramid in her model because performing this skill often requires positive attitudes such as openness as well as a certain threshold of cultural knowledge. Without these foundational components, empathetic responses are difficult, if not impossible. A recent study on the efficacy of VR environments in developing empathy in psychology education reported that “VR-based simulations of psychopathology may offer a promising platform for delivering a constructionist approach” (Formosa et al., 2018). Similarly, in the field of history, researchers argue that a VR environment is effective in helping learners develop historical empathy; they also explain why historical empathy is important and discuss how it is taught (Sweeney et al., 2018). van Loon et al. (2018) explored whether VR perspective-taking increases empathy and whether the effect extends to ostensibly real-stakes behavioral games, and reported that VR can increase prosocial behavior toward others.

In the context of intercultural competence, empathy is important because members of different cultures often struggle not only to understand the perspectives of other cultures but even to recognize those perspectives as valid (Johnson and Johnson, 2010). A VR training environment presents a new opportunity to help individuals develop empathy. One instrument developed to measure empathetic responses across ethnic and racial differences is the Scale of Ethnocultural Empathy (Wang et al., 2003). This self-report instrument encompasses four factors: empathetic feeling and expression, empathetic perspective-taking, acceptance of cultural differences, and empathetic awareness. It consists of 30 Likert-scale items and may be included with the other measures used in a pre- and posttest design.

Regardless of which other components a scholar may include in definitions of intercultural competence, verbal and nonverbal communication skills are a constant across all models (Spitzberg and Changnon, 2009). Roberts et al. (2016) demonstrated “an approach in which a wide range of nonverbal communication between client and therapist can be contextualized within a shared simulation, even when the therapist is in the clinic and the client at home.” They reported that VR allows participants to use nonverbal communication grounded in both the experience of the exposure and the current surroundings. Allmendinger (2010) studied “whether the actual use of nonverbal signals can affect the sense of social presence and thus help to establish and maintain the learner’s motivation and provide support for structuring social interaction in learning situations,” and reported that nonverbal communication can “support the conversational flow,” “provide cognitive support,” and “express emotions and attitudes.”

Verbal communication is equally critical for intercultural competence. In a study on enhancing verbal communication through VR environments in medical education, Chellali et al. (2011) reported that VR training “improves user’s collaborative performance.” Houtkamp et al. (2012) reported that “users may experience higher levels of presence, engagement, and arousal when sound is included in the simulations.”

Undoubtedly, the capacity to express oneself clearly and appropriately, and the reciprocal ability to accurately interpret others’ verbal and nonverbal messages, are vital to interacting successfully across cultural differences. The use of effective communication strategies can be measured with the Communication Scale (Barkman and Machtmes, 2001). This 23-item Likert-scale survey targets communication habits such as clearly articulating ideas, paying attention to how others feel, using one’s own body language to emphasize spoken messages, using others’ nonverbal signals to interpret their speech, and listening actively. This scale can also be included in the pre- and posttest design.

Training evaluation has traditionally focused on identifying participants’ immediate reactions to the overall training and on measuring their level of learning upon completion of training. Organizational calls for more accountability over the last decade shifted the focus to return on investment (Akdere and Egan, 2020). Current technological advancements have affected both the delivery of training (virtual and simulated) and the evaluation of training (biometrics and analytics). VR simulation training can be assessed and evaluated through both quantitative and qualitative modes of inquiry.

The most commonly used method is the quantitative method in general, and the pre- and posttest experiment design in particular. Measurements (such as the participant’s performance) are taken prior to and after a treatment. The measurements typically take the form of a set of questionnaires before the treatment and the same set of questionnaires administered after the intervention. This enables the evaluation of any impact of training on the participant. Using various statistical analysis techniques (e.g., t-tests), the quantitative approach allows us to determine if the training induces any statistically significant improvement in performance.
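As a minimal illustration of the pre- and posttest analysis described above, the Python sketch below computes a paired-samples t statistic on per-participant differences, along with Cohen’s d_z as an effect size. The scores are made-up example data, and the function name `paired_t` is hypothetical. Only the test statistic and degrees of freedom are computed with the standard library; in practice, `scipy.stats.ttest_rel` returns the statistic together with its p-value.

```python
import math
import statistics


def paired_t(pre, post):
    """Paired-samples t statistic for post - pre, with degrees of freedom and Cohen's d_z."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least 2 participants")
    diffs = [b - a for a, b in zip(pre, post)]
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd_d == 0:
        raise ValueError("differences have zero variance; t is undefined")
    t = mean_d / (sd_d / math.sqrt(len(diffs)))
    return t, len(diffs) - 1, mean_d / sd_d


# Hypothetical questionnaire scores for five trainees before and after VR training.
pre = [3.0, 2.5, 4.0, 3.5, 3.0]
post = [3.5, 3.0, 4.5, 3.5, 4.0]
t, df, d_z = paired_t(pre, post)
print(f"t({df}) = {t:.3f}, d_z = {d_z:.3f}")  # t(4) = 3.162, d_z = 1.414
```

Here t(4) ≈ 3.16 exceeds the two-tailed 5% critical value of about 2.776 for 4 degrees of freedom, so this toy improvement would be statistically significant. With real data, the exact p-value should be taken from the t distribution, and the paired design matters: each trainee serves as their own control, which removes between-person variation from the comparison.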

As a newly established form of research, biometrics is defined as the use of distinctive, measurable, and physical characteristics to describe individuals, which can generally be divided into two groups: those that serve as identifiers of the body (such as fingerprints and retina scanning) and those that pertain to psychophysiological measures of human behavior and psychological state (Jain et al., 2007). These include the following:

Eye-tracking, which uses the relative position of the pupil center and the corneal reflection to estimate gaze, revealing the fixations and fixation durations in an individual’s eye movement behavior (Bergstrom and Schall, 2014).

Facial electromyography (fEMG), which records facial muscle activity via electrodes placed on two major facial skin regions in response to different emotional expressions (Yoo et al., 2017; McGhee et al., 2018).

Galvanic skin response (GSR), which reflects the variation of skin conductance caused by changes in sweat gland activity (Tarnowski et al., 2018).

Heart rate variability (HRV), which reflects the variation in the time intervals between an individual’s successive heartbeats (Williams et al., 2015).
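Two of the measures listed above can be derived from raw sensor streams with simple computations. The Python sketch below shows (a) RMSSD and SDNN, two standard time-domain HRV indices computed from the intervals between successive heartbeats (RR intervals, in milliseconds), and (b) a basic dispersion-threshold (I-DT) fixation detector for gaze samples. The thresholds and sample data are hypothetical, and commercial eye trackers and HRV toolkits typically apply filtering and artifact correction that this sketch omits.

```python
import math
import statistics


def rmssd(rr_ms):
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def sdnn(rr_ms):
    """Sample standard deviation of RR intervals (ms)."""
    return statistics.stdev(rr_ms)


def detect_fixations(samples, max_dispersion, min_samples):
    """Dispersion-threshold (I-DT) fixation detection.

    samples: (x, y) gaze points recorded at a fixed sampling rate.
    max_dispersion: largest allowed (x range + y range) within a fixation.
    min_samples: minimum number of consecutive samples that counts as a fixation.
    Returns (start_index, end_index_exclusive, centroid_x, centroid_y) tuples.
    """
    def dispersion(window):
        xs = [p[0] for p in window]
        ys = [p[1] for p in window]
        return (max(xs) - min(xs)) + (max(ys) - min(ys))

    fixations = []
    start = 0
    while start + min_samples <= len(samples):
        end = start + min_samples
        if dispersion(samples[start:end]) <= max_dispersion:
            # Grow the window while the added samples stay within the threshold.
            while end < len(samples) and dispersion(samples[start:end + 1]) <= max_dispersion:
                end += 1
            window = samples[start:end]
            cx = sum(p[0] for p in window) / len(window)
            cy = sum(p[1] for p in window) / len(window)
            fixations.append((start, end, cx, cy))
            start = end  # continue after the fixation
        else:
            start += 1  # slide past a saccade sample
    return fixations


rr = [800, 810, 790, 805, 795]  # hypothetical RR intervals in ms
gaze = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1), (5.0, 5.0),
        (9.0, 9.0), (9.1, 9.0), (9.0, 9.1), (9.1, 9.1)]  # hypothetical gaze, degrees
print(rmssd(rr), sdnn(rr))
print(detect_fixations(gaze, max_dispersion=0.5, min_samples=3))
```

In the toy gaze stream, the detector reports two fixations separated by the single outlying sample at (5, 5), which it treats as part of a saccade; fixation counts and durations derived this way are the kind of eye-tracking indices referred to above.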

GSR, HRV, and eye-tracking are the most widely used biometric indices because of their ability to predict the affective state of the subject (Cacioppo et al., 2007). Including biometric measurements in VR training helps researchers collect and analyze trainees’ emotional feedback, broadening their understanding of VR experiences beyond traditional evaluation approaches (Hickman and Akdere, 2018). Because physiological responses to stimuli are involuntary and beyond conscious control, biometrics is best used in conjunction with conventional research methods. In sum, biometrics can provide valuable real-time information about the emotional and attentive state of the trainee, without the limitations of language or bias. However, biometric data safety and ethics should also be considered as part of biometric-based assessment and evaluation approaches (Akdere et al., 2021b).

The next approach to training evaluation is qualitative design, which has interpretivism as its main epistemological orientation and constructionism as its main ontological orientation (Bryman, 2006). It is typically used to describe complex phenomena (Zikmund et al., 2010). For VR training, qualitative research helps us understand an individual’s experiences related to a phenomenon. Such understanding is critical to enable and better support the “transfer of training,” which plays an important role as organizations equip their workforce with the knowledge, skills, and abilities required to perform various job activities (Burke and Hutchins, 2007). However, according to Grossman and Salas (2011), the transfer of learning or training remains a subject of continuous study and debate, making it difficult for organizations to determine exactly which critical factors to address. Using the Baldwin and Ford (1988) model of transfer, Grossman and Salas (2011) suggest that “the factors relating to trainee characteristics (cognitive ability, self-efficacy, motivation, and perceived utility of training), training design (behavioral modeling, error management, and realistic training environments), and work environment (transfer climate, support, opportunity to perform, and follow-up)” are the ones “that have exhibited the strongest, most consistent relationships with the transfer of training.” Bossard et al. (2008) also raise important concerns about the transfer of learning and training in virtual environments. The authors point out the importance of focusing on content in any training effort, referring to Detterman’s (1993) view on training transfer, which holds that people should be trained on exactly the areas or topics they need to learn.

Another potential challenge to the transfer of training in virtual environments relates to situated learning. Lave (1988) argued that knowledge and skills are context-bound, which makes it challenging to learn concepts in a simulated virtual environment and then apply them in a real-life environment (i.e., transfer of training). Because the design of a virtual environment strongly affects transfer of training, it should be considered from the design stage rather than after training, and the critical learning elements that will be assessed during training evaluation should be embedded in the environment (Bossard et al., 2008). This view is in line with Grossman and Salas’ (2011) identification of “training design” and “realistic training environments” as critical factors for improving transfer success.

Compared to positive or zero training transfer, negative training transfer raises more concern because it not only creates no value but also harms the organization (Wagner and Campbell, 1994). Negative transfer occurs when training hinders real-world performance or confuses employees about their work instead of helping them improve. This undermines the effective use of employees’ knowledge, skills, and abilities at work and complicates organizational training efforts. Such problems can arise in VR-based training when the virtual environment fails to simulate and reflect real-world tasks effectively (Rose et al., 2000; Michalski et al., 2019).

Some virtual environments fail to provide haptic feedback to trainees as they perform tasks. Handheld controllers and 3D mice are common input devices on VR training platforms; trainees manipulate objects in the virtual environment by clicking and holding triggers or buttons to grasp or pull them (Hamblin, 2005). Such interactions can be inaccurate compared to performing real-world tasks that require high-level motor skills, such as surgery and sports (Issurin, 2013; Våpenstad et al., 2017). When accuracy and precision are priorities, the VR training platform should combine visual, auditory, and haptic feedback to imitate the real-world environment. More broadly, VR-based training design should incorporate the training transfer phase into the process to achieve higher training transfer rates among participants.

For VR training, self-reported participant reflection is a commonly used qualitative approach that can be conducted verbally or in writing. Through probing questions, this method gives participants an opportunity to reflect more deeply on their training experiences. Reflection prompts typically ask how the training experience affected the participant, what new insights it generated, how the participant will feel or behave differently after the training, and so forth. Overall, self-reported reflection not only serves as an evaluation method for VR-based simulations but also provides participants with a further learning opportunity.

We introduced the VR training creation pipeline, including training scenario sketching and the implementation of VR training applications. We reviewed some of the latest work in many important VR training domains. We also discussed how to assess and evaluate VR training. In the following, we discuss some future directions for VR training.

To improve VR experiences, companies are working on increasing the resolution and field of view of VR headsets. Other efforts target different aspects of the experience, for example, using haptics to increase the sense of presence (SoP). In current practice, the haptics of VR experiences are often quite limited. Most VR setups use fixed-shape controllers with a joystick, and haptic feedback is limited to simple vibrations generated by a motor. Valve’s Index controller adds hand recognition that detects finger movements. Fang et al. (2020) developed a device called Wireality that enables users to physically feel the shape of virtual objects with their hands.

Besides haptics, other devices aim to improve the immersiveness of VR in other ways. For example, the FEELREAL Sensory Mask can simulate more than 200 different scents for a user to smell in VR (FEELREAL, 2020). Cai et al. (2020) developed a pneumatic glove called ThermAirGlove, which provides thermal feedback when a user interacts with virtual objects. Ranasinghe et al. (2017) developed a device called Ambiotherm, which uses a fan and other hardware to simulate temperature and wind conditions in VR. Future VR training applications could incorporate these devices to enhance the realism and immersiveness of training.

Networked VR enables multiple users to train simultaneously in a shared virtual space. Currently, applications such as VRChat and Rec Room allow multiple users to explore and chat in a virtual area for entertainment. While the Internet enables people to “chat” with strangers across the ocean, networked VR enables people to “meet” in the same place. With virtual avatars, hand gesture recognition, facial emotion mapping, and other emerging technologies, networked VR experiences will help people around the world socialize and collaborate. In addition, improvements in network quality and speed will make networked VR even more accessible: fifth-generation (5G) networks and cloud computing make it possible to use VR without an expensive local setup. In sum, networked VR will likely make VR training more available, accessible, and scalable.

Multiuser VR training is especially useful in some domains. For example, first responders such as firefighters usually work in squads in which each person has specific duties, and maximizing squad performance requires seamless cooperation. Multiuser VR training gives individuals an affordable way to practice their specialized and communication skills whenever and wherever they want. Similarly, a group of medical professionals could practice surgery together in VR even if some members are located remotely. In fact, the NYPD has started to use collaborative VR training software for active shooter training (Melnick, 2019). Beyond emergency training, multiuser VR training could motivate and engage groups of learners by inducing positive peer pressure, with individuals supporting each other during the training process.

Augmented reality (AR) overlays the real world with a layer of virtual objects or information. Common AR devices include AR headsets (e.g., Microsoft HoloLens and Magic Leap headsets) and AR-capable smartphones. Compared to navigating fully immersive VR scenes, users of AR devices retain more control over their physical surroundings. AR has been applied to training in domains such as manufacturing, construction, and medicine (Rushmeier et al., 2019). For example, AR reduced the difficulty and workload of mechanics by adding labels and indicators to machinery during training and maintenance (Ramakrishna et al., 2016). Medical professionals have adopted AR for teaching neurosurgical procedures (de Ribaupierre et al., 2015; Si et al., 2018). AR training applications have also been developed to improve work efficiency on construction sites (Kirchbach and Runde, 2011; Zollmann et al., 2014). Continued advances in AR devices and computer vision techniques (e.g., object recognition and tracking) promise to broaden the applicability of AR for in situ training in many real-world scenarios.

BX: manuscript preparation, figure design, and figure captions. HL, RA, YZ, FL, YJ, CL, and WL: manuscript preparation. HH, MA, CM, and L-FY: survey design, overall editing, and project oversight. All authors contributed to the article and approved the submitted version.

Publication of this article was funded by Purdue University Libraries Open Access Publishing Fund. This study was supported by two grants (award numbers: 1942531 & 2000779) funded by the National Science Foundation.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors would like to thank their colleagues from different disciplines for providing insights about the application of VR to different domains.

1 https://www.merriam-webster.com/dictionary/training

2 https://www.khronos.org/collada/

3 https://code.google.com/archive/p/o3d/

4 https://www.web3d.org/x3d/what-x3d

Aati, K., Chang, D., Edara, P., and Sun, C. (2020). Immersive Work Zone Inspection Training Using Virtual Reality. Transp. Res. Rec. 2674 (12), 224–232. doi:10.1177/0361198120953146

Abidi, M. H., Al-Ahmari, A., Ahmad, A., Ameen, W., and Alkhalefah, H. (2019). Assessment of Virtual Reality-Based Manufacturing Assembly Training System. Int. J. Adv. Manuf. Technol. 105, 3743–3759. doi:10.1007/s00170-019-03801-3

Akdere, M., and Egan, T. (2020). Transformational Leadership and Human Resource Development: Linking Employee Learning, Job Satisfaction, and Organizational Performance. Hum. Resour. Dev. Q. 31, 393–421. doi:10.1002/hrdq.21404

Akdere, M., Acheson, K., and Jiang, Y. (2021a). An Examination of the Effectiveness of Virtual Reality Technology for Intercultural Competence Development. Int. J. Intercult. Relat. 82, 109–120. doi:10.1016/j.ijintrel.2021.03.009

Akdere, M., Jiang, Y., and Lobo, F. (2021b). Evaluation and Assessment of Virtual Reality-Based Simulated Training: Exploring the Human-Technology Frontier. Eur. J. Train. Dev. doi:10.15405/epsbs.2021.02.17

Allmendinger, K. (2010). Social Presence in Synchronous Virtual Learning Situations: The Role of Nonverbal Signals Displayed by Avatars. Educ. Psychol. Rev. 22, 41–56. doi:10.1007/s10648-010-9117-8

Ambron, E., Miller, A., Kuchenbecker, K. J., Buxbaum, L. J., and Coslett, H. B. (2018). Immersive Low-Cost Virtual Reality Treatment for Phantom Limb Pain: Evidence from Two Cases. Front. Neurol. 9, 67. doi:10.3389/fneur.2018.00067

Amin, A., Gromala, D., Tong, X., and Shaw, C. (2016). “Immersion in Cardboard VR Compared to a Traditional Head-Mounted Display,” in Virtual, Augmented and Mixed Reality. Editors S. Lackey, and R. Shumaker (Cham: Springer International Publishing), 269–276. doi:10.1007/978-3-319-39907-2_25

Anthes, C., García-Hernández, R. J., Wiedemann, M., and Kranzlmüller, D. (2016). “State of the Art of Virtual Reality Technology,” in IEEE Aerospace Conf., Big Sky, MT, 1–19.

Backlund, P., Engstrom, H., Hammar, C., Johannesson, M., and Lebram, M. (2007). “Sidh – a Game Based Firefighter Training Simulation,” in 11th International Conference Information Visualization, Zurich, Switzerland, July 4–6, 2007 (New York, NY: IEEE), 899–907. doi:10.1109/iv.2007.100

Baldwin, T. T., and Ford, J. K. (1988). Transfer of Training: A Review and Directions for Future Research. Pers. Psychol. 41, 63–105. doi:10.1111/j.1744-6570.1988.tb00632.x

Bao, X., Mao, Y., Lin, Q., Qiu, Y., Chen, S., Li, L., et al. (2013). Mechanism of Kinect-Based Virtual Reality Training for Motor Functional Recovery of Upper Limbs after Subacute Stroke. Neural Regen. Res. 8, 2904–2913. doi:10.3969/j.issn.1673-5374.2013.31.003

Barkman, S., and Machtmes, K. (2001). Four-fold: A Research-Based Model Designing and Evaluating the Impact of Youth Development Programs. News & Views 54, 3–8.

Baur, T., Damian, I., Gebhard, P., Porayska-Pomsta, K., and André, E. (2013). “A Job Interview Simulation: Social Cue-Based Interaction with a Virtual Character,” in 2013 International Conference on Social Computing (IEEE), Alexandria, VA, Sep 8–14, 2013 (New York, NY: IEEE), 220–227.

Bergstrom, J. R., and Schall, A. (2014). Eye Tracking in User Experience Design. Amsterdam, Netherlands: Elsevier.

Bhoir, S., and Esmaeili, B. (2015). “State-of-the-art Review of Virtual Reality Environment Applications in Construction Safety,” in AEI 2015, Milwaukee, WI, March 24–27, 2015 (Reston, VA: American Society of Civil Engineers), 457–468.

Biocca, F., and Delaney, B. (1995). Immersive Virtual Reality Technology. Commun. Age Virtual Real. 15, 10–5555.

Bogatinov, D., Lameski, P., Trajkovik, V., and Trendova, K. M. (2017). Firearms Training Simulator Based on Low Cost Motion Tracking Sensor. Multimed. Tools Appl. 76, 1403–1418. doi:10.1007/s11042-015-3118-z

Borba, E. Z., Cabral, M., Montes, A., Belloc, O., and Zuffo, M. (2016). “Immersive and Interactive Procedure Training Simulator for High Risk Power Line Maintenance,” in ACM SIGGRAPH 2016 VR Village, Anaheim, California, July, 2016 (New York, NY: Association for Computing Machinery). doi:10.1145/2929490.2929497

Bossard, C., Kermarrec, G., Buche, C., and Tisseau, J. (2008). Transfer of Learning in Virtual Environments: a New Challenge? Virtual Real. 12, 151–161. doi:10.1007/s10055-008-0093-y

Brahim, Z. (2020). NHMEP Offers Virtual Training and Resources to Assist Manufacturers. New Hampshire Manufacturing Extension Partnership (NH MEP). Available at: https://www.nhmep.org/nhmep-offers-virtual-training-and-resources-to-assist-manufacturers/ (Accessed April 23, 2020).

Bryman, A. (2006). Integrating Quantitative and Qualitative Research: How Is it Done? Qual. Res. 6, 97–113. doi:10.1177/1468794106058877

Burke, L. A., and Hutchins, H. M. (2007). Training Transfer: An Integrative Literature Review. Hum. Resour. Dev. Rev. 6, 263–296. doi:10.1177/1534484307303035

Cacioppo, J. T., Tassinary, L. G., and Berntson, G. (2007). Handbook of Psychophysiology. Cambridge, United Kingdom: Cambridge University Press.

Cai, S., Ke, P., Narumi, T., and Zhu, K. (2020). “ThermAirGlove: A Pneumatic Glove for Thermal Perception and Material Identification in Virtual Reality,” in IEEE Conference on Virtual Reality and 3D User Interfaces, Atlanta, GA, March 22–26, 2020 (New York, NY: IEEE), 248–257.