BRIEF RESEARCH REPORT article

Front. Vet. Sci., 10 March 2025

Sec. Animal Behavior and Welfare

Volume 12 - 2025 | https://doi.org/10.3389/fvets.2025.1548906

This study investigates the potential of non-invasive, continuous temperature measurement techniques for assessing cattle welfare. We combined object detection algorithms with infrared thermography to accurately extract and continuously measure the temperatures of the eyes and muzzles of 11 calves over several months (33 samples in total). A mobile thermal imaging camera was paired with the Mask R-CNN object detection algorithm, trained on annotated datasets, to detect eye and muzzle regions accurately. Temperature data were processed by outlier rejection, standardization, and low-pass filtering to derive temperature change patterns. Cosine similarity and permutation tests were used to evaluate the uniqueness of these patterns among individuals. The average cosine similarity between eye and muzzle temperature changes in the same individual across the 33 samples was 0.72, and permutation tests yielded p-values <0.01 for most samples, indicating that the patterns are unique. This study highlights the potential of high-frequency, non-invasive temperature measurements for detecting subtle physiological changes in animals without causing distress.

Temperature is measured routinely in clinical settings and is a crucial diagnostic tool for assessing an animal's health status and detecting potential illnesses or physiological changes. The most common and traditional method is rectal temperature measurement. However, this method can be stressful for the animal, which has prompted investigations into alternative, non-invasive techniques. Recent studies have explored the correlation between eye temperature, measured using infrared cameras, and rectal temperature in various species, including cats (1), sheep (2), and cattle (3, 4). These studies have demonstrated medium to strong correlations, suggesting that eye or muzzle temperature measurements could serve as viable substitutes for core body temperature measurements. Despite these advances, current infrared camera-based temperature measurement techniques have two limitations. First, the region of interest (ROI) in the images is typically defined using rectangular or circular shapes (1–3, 5, 6), which may not allow precise extraction of the desired area. Second, manual temperature measurements result in discontinuous data collection; indeed, previous studies have consistently employed intermittent measurement protocols, with temporal intervals between data points (2, 4, 7, 8).

Recent advances in artificial intelligence have led to its widespread application in scientific fields. A recent study combining object detection with infrared camera technology for respiratory pattern analysis (9) demonstrated two key advantages: (1) accurate ROI identification and (2) continuous temperature measurement at a rate of 8.7 frames/s. Accurate ROI identification addresses the first limitation of infrared camera measurements described above. Continuous measurement allows eye and muzzle temperatures to be tracked consistently over time, potentially opening up new research possibilities. Before such continuous measurements can be used, however, their reliability and validity must be established. This study therefore addressed two questions. First, temperatures were measured accurately and continuously from two areas (eyes and muzzle) in cattle to determine whether the same individual showed similar temperature change patterns at both sites. Second, the observed patterns were compared with randomized patterns to determine whether short-term, continuously measured temperature change patterns have unique characteristics.

For this study, data from 11 calves (aged 12–14 weeks) were collected and analyzed at the Kobe University farm (Food Resources Education and Research Center, Graduate School of Agricultural Science, Kobe University). Each calf was imaged on three separate occasions at four-week intervals between February and September (ambient temperature range: 9°C–34.1°C). This yielded a dataset of 33 imaging samples (11 calves × 3 sessions). Imaging was conducted between 11:00 and 13:00 during the post-feeding rumination period. To minimize disturbance, the researchers approached the calves slowly and imaged them from a distance of 1 m. Each session comprised thermal imaging with the infrared camera and a conventional video recording (1–2 min) with the standard camera attached to the infrared camera device. The calves were housed in a semi-open facility at the Kobe University farm, with one side completely open to the external environment.

We used a mobile thermal imaging camera (FLIR One Pro for iOS [accuracy: ±3.0°C, sensitivity: 0.07°C, field of view: 55° × 43°, thermal resolution: 80 × 60 pixels, temperature range: −20°C–400°C], FLIR Systems Inc., Santa Barbara, CA, USA). The emissivity was set to 0.95. Although the emissivity generally used for animal tissue is 0.98, 0.95 was chosen because of the limited emissivity settings available on the device.

This study was approved by the Institutional Animal Care and Use Committee of the Osaka Metropolitan University (approval number: 24–024).

Image extraction and temperature derivation were performed according to the protocols established in previous research (9).

Training the algorithm for image extraction involves a series of methodological steps (annotation, training, and inference). Together, these steps enable it to identify and isolate specific features (namely, eyes and muzzle) in new red, green, and blue (RGB) images.

Annotation: the process of creating a dataset of annotated images for training. Annotated images are images to which labels have been added to highlight specific features of interest. These annotations may include bounding boxes, lines, arrows, or text that indicate and describe specific regions within the image. In this study, we used lines to outline the relevant features (eyes or muzzles). We annotated eye and muzzle regions in 1,000 images (700 for training and 300 for validation) using the VGG Image Annotator (VIA) (10). Once trained on these annotated images, models can perform tasks such as object detection, segmentation, and classification.
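Mask R-CNN learns from per-pixel masks, so outlined annotations have to be rasterized before training. The sketch below shows one way to do this. It assumes VIA 2.x-style region entries whose `shape_attributes` hold a closed outline as `all_points_x` and `all_points_y` lists. The helper names (`outline_to_mask`, `split_train_val`) are hypothetical. The random 700/300 split only illustrates the proportions reported above and is not presented as the authors' procedure. Each pixel centre is tested with the standard even-odd (ray-casting) rule.

```python
import numpy as np


def outline_to_mask(shape_attributes, height, width):
    """Rasterize a closed VIA outline into a boolean mask (even-odd rule).

    Assumes VIA 2.x-style `shape_attributes` with `all_points_x` and
    `all_points_y` lists; each pixel is tested at its integer (x, y) centre.
    """
    xs = np.asarray(shape_attributes["all_points_x"], dtype=float)
    ys = np.asarray(shape_attributes["all_points_y"], dtype=float)
    yy, xx = np.mgrid[0:height, 0:width]
    inside = np.zeros((height, width), dtype=bool)
    n = xs.size
    for i in range(n):
        x0, y0 = xs[i], ys[i]
        x1, y1 = xs[(i + 1) % n], ys[(i + 1) % n]  # wrap around to close the outline
        # Does this edge straddle the horizontal ray through each pixel centre?
        crosses = (y0 > yy) != (y1 > yy)
        with np.errstate(divide="ignore", invalid="ignore"):
            # x-coordinate where the edge meets that ray (unused where crosses is False)
            x_hit = x0 + (yy - y0) * (x1 - x0) / (y1 - y0)
            # Toggle pixels whose ray crosses this edge to the right of the centre
            inside ^= crosses & (xx < x_hit)
    return inside


def split_train_val(filenames, n_train=700, seed=0):
    """Hypothetical random split of annotated files into training/validation lists."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(filenames))
    train = [filenames[i] for i in order[:n_train]]
    val = [filenames[i] for i in order[n_train:]]
    return train, val


# Example: a square outline whose corners sit between pixel centres
region = {"shape_attributes": {"name": "polygon",
                               "all_points_x": [1.5, 5.5, 5.5, 1.5],
                               "all_points_y": [1.5, 1.5, 5.5, 5.5]}}
mask = outline_to_mask(region["shape_attributes"], height=10, width=10)
```

Placing the outline vertices between pixel centres, as in the example, avoids ambiguity for pixels that fall exactly on an edge.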

Training: the process by which an algorithm learns to locate objects in images through iterative learning on annotated datasets. For training, we used the Mask R-CNN architecture (11) with transfer learning, starting from a pre-trained model. Transfer learning improves learning outcomes by leveraging knowledge acquired from a previously mastered, related task. This approach is particularly beneficial when the available dataset is too small for training from scratch, because it allows a model trained on a similar, larger dataset to be reused. Our dataset of 1,000 images was deemed inadequate for full-scale training. We therefore used the COCO (Common Objects in Context) dataset (12), a large-scale object detection dataset developed by Microsoft. Because COCO includes various animal categories, it shares similarities with our target domain. We used ResNet-101 (13) as the backbone architecture and initialized it with weights pre-trained on COCO to expedite training. Starting from these pre-trained weights, we fine-tuned a new classifier specifically for the eye and muzzle detection task by adjusting the model's weights on our annotated images.

Inference: the process by which a trained model uses its learned weights to localize objects in new images. Weights in neural networks are numerical values that determine how strongly different features influence the model's decisions. During training, these weights are iteratively updated to improve the model's ability to recognize specific patterns, in this case eyes and muzzles. Using these optimized weights, the model can then locate regions of interest in new, unseen images.

To determine temperature variation patterns, we obtained (1) temperature sequence data from the infrared camera and (2) conventional video images from the standard camera for all 33 samples. The conventional video images were processed with the trained weights to extract the eye and muzzle regions. The detected eye and muzzle locations were then used to extract the corresponding pixels from the temperature sequence data. The mean temperature of those pixels was taken as the eye or muzzle temperature. This process was repeated for every frame to derive time-dependent temperature changes in the eyes and muzzles.
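Once a mask is available for each frame, the per-frame eye or muzzle temperature is simply the average of the thermal pixels under that mask. The sketch below rests on a hypothetical assumption: each frame yields one boolean mask that is already registered to the thermal image's field of view. The source does not describe how the RGB images and the 80 × 60 thermal images were aligned. The mask is rescaled to the thermal grid by nearest-neighbour sampling. Frames in which nothing was detected return NaN so that they can be handled downstream.

```python
import numpy as np


def resize_mask_nearest(mask, out_shape):
    """Nearest-neighbour rescale of a boolean mask to `out_shape` (rows, cols).

    Assumption: the RGB mask and the thermal frame cover the same field of view.
    """
    in_rows, in_cols = mask.shape
    out_rows, out_cols = out_shape
    rows = (np.arange(out_rows) * in_rows) // out_rows
    cols = (np.arange(out_cols) * in_cols) // out_cols
    return mask[np.ix_(rows, cols)]


def roi_mean_temperature(thermal_frame, rgb_mask):
    """Mean temperature of the thermal pixels under the ROI mask, or NaN if empty."""
    mask = resize_mask_nearest(np.asarray(rgb_mask, dtype=bool), thermal_frame.shape)
    if not mask.any():
        return float("nan")  # ROI not detected in this frame
    return float(thermal_frame[mask].mean())


def roi_temperature_series(thermal_frames, rgb_masks):
    """One mean ROI temperature per frame, giving a time series."""
    return np.array([roi_mean_temperature(t, m)
                     for t, m in zip(thermal_frames, rgb_masks)])


# Example: a synthetic 80 x 60 thermal frame with a warmer 20 x 10 patch,
# and a 640 x 480 RGB mask covering exactly that patch
thermal = np.full((60, 80), 30.0)
thermal[10:20, 20:40] = 35.0
rgb_mask = np.zeros((480, 640), dtype=bool)
rgb_mask[80:160, 160:320] = True
```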

To ensure the reliability and validity of the temperature variation analysis, we developed a multi-step data processing protocol that preserves data integrity and comparability. First, outliers were rejected using Tukey's hinge method (g = 1.5) (14), a widely accepted technique in statistical analysis. This method defines the acceptable range as follows:

$$
\left[\, Q_1 - g\,(Q_3 - Q_1),\; Q_3 + g\,(Q_3 - Q_1) \,\right]
$$

where $Q_1$ and $Q_3$ represent the first and third quartiles of the temperature distribution, respectively, and $g$ denotes Tukey's constant. Second, we standardized the data to address variability in absolute temperature arising from inter-individual differences and environmental factors. This step allows more meaningful comparisons across subjects and conditions. Finally, the signal contains various sources of noise, including camera frame artefacts, external environmental factors such as wind, and physiological interference from cardiac and respiratory activity. To mitigate these noise sources, a low-pass filter with a cut-off frequency of 0.08 Hz was applied. This sequence accounts for outliers, individual variability, and measurement-induced fluctuations, providing a solid foundation for the subsequent analysis of temperature change patterns.
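The three preprocessing steps can be sketched with NumPy and SciPy. Several details in this sketch are assumptions, because the source does not report them:

- Rejected samples are filled by linear interpolation so that the series stays uniformly sampled.
- "Standardization" is taken to mean a z-score.
- The low-pass filter is a zero-phase, 4th-order Butterworth filter.
- The sampling rate of 8.7 frames/s is borrowed from the earlier study (9).

Only the Tukey constant (g = 1.5) and the 0.08 Hz cut-off come from the text.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def tukey_reject(x, g=1.5):
    """Reject values outside [Q1 - g*IQR, Q3 + g*IQR].

    Assumption: rejected samples are replaced by linear interpolation from
    the retained neighbours, keeping the series uniformly sampled.
    """
    x = np.asarray(x, dtype=float)
    q1, q3 = np.percentile(x, [25, 75])
    iqr = q3 - q1
    keep = (x >= q1 - g * iqr) & (x <= q3 + g * iqr)
    idx = np.arange(x.size)
    return np.interp(idx, idx[keep], x[keep])


def standardize(x):
    """z-score (assumed form of standardization): zero mean, unit SD."""
    return (x - x.mean()) / x.std()


def lowpass(x, fs, cutoff=0.08, order=4):
    """Zero-phase Butterworth low-pass filter (filter type and order assumed)."""
    sos = butter(order, cutoff, btype="low", fs=fs, output="sos")
    return sosfiltfilt(sos, x)


def preprocess(x, fs=8.7):
    """Outlier rejection -> standardization -> low-pass filtering."""
    return lowpass(standardize(tukey_reject(x)), fs)
```

A zero-phase filter (`sosfiltfilt`) is used here so that filtering does not shift the pattern in time, which seems desirable when eye and muzzle series are compared frame by frame.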

To compare the temperature change patterns of the eye and muzzle, we used cosine similarity as the primary metric. Cosine similarity compares the shape of two series independently of their overall scale. The eye and muzzle temperature change patterns from the same individual were represented as vectors $\mathbf{A}$ and $\mathbf{B}$ of length $n$ (one element per frame). The cosine similarity between them was calculated as follows:

$$
\cos\theta = \frac{\mathbf{A}\cdot\mathbf{B}}{\lVert\mathbf{A}\rVert\,\lVert\mathbf{B}\rVert} = \frac{\sum_{i=1}^{n} A_i B_i}{\sqrt{\sum_{i=1}^{n} A_i^{2}}\,\sqrt{\sum_{i=1}^{n} B_i^{2}}}
$$

The resulting cosine similarity ranges from −1 to 1. Values close to 1 indicate highly similar variation patterns, values close to 0 indicate orthogonal (dissimilar) patterns, and negative values indicate inverse relationships.
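A direct NumPy translation of the cosine similarity formula is shown below as a minimal sketch. The length and zero-vector checks are illustrative additions, not steps reported in the source.

```python
import numpy as np


def cosine_similarity(a, b):
    """cos(theta) = (A . B) / (||A|| ||B||) for two equal-length series."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    if a.shape != b.shape:
        raise ValueError("series must have the same length (one value per frame)")
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    if denom == 0:
        raise ValueError("cosine similarity is undefined for an all-zero series")
    return float(np.dot(a, b) / denom)
```

For mean-centred vectors, cosine similarity is identical to the Pearson correlation coefficient. The series here are standardized (and therefore close to zero mean) before filtering, so the two measures should nearly coincide.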

To assess the statistical significance of the observed similarities, we conducted a permutation test (15) with 10,000 iterations. The procedure was as follows: (1) the observed cosine similarity between the two series (the eye and muzzle temperature change patterns from the same individual) was calculated; (2) the elements of one vector (the muzzle temperature change pattern) were randomly permuted 10,000 times; and (3) for each permutation, the cosine similarity with the unpermuted vector (the eye temperature change pattern) was calculated. The number of permuted similarities greater than or equal to the observed similarity was counted and divided by the total number of permutations (10,000) to obtain the p-value. This p-value quantifies the probability of observing such a similarity by chance. All computations and analyses were performed in Python 3.8 using the NumPy and SciPy libraries, and visualizations were generated with Matplotlib.
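The permutation test described above can be sketched with NumPy as follows. The function name and the `seed` parameter are hypothetical. Random shuffles come from NumPy's `Generator.permutation`. The p-value is computed as described in the text: the count of permuted similarities that are at least as large as the observed one, divided by the number of permutations.

```python
import numpy as np


def cosine_similarity(a, b):
    """cos(theta) = (A . B) / (||A|| ||B||)."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def permutation_p_value(eye, muzzle, n_iter=10_000, seed=None):
    """Return (observed similarity, fraction of permutations >= observed)."""
    eye = np.asarray(eye, dtype=float)
    muzzle = np.asarray(muzzle, dtype=float)
    rng = np.random.default_rng(seed)
    observed = cosine_similarity(eye, muzzle)             # step 1: observed similarity
    count = 0
    for _ in range(n_iter):
        shuffled = rng.permutation(muzzle)                # step 2: shuffle the muzzle series only
        if cosine_similarity(eye, shuffled) >= observed:  # step 3: compare with unpermuted eye series
            count += 1
    return observed, count / n_iter
```

With this definition the p-value can be exactly 0. Some authors instead report (count + 1) / (n_iter + 1) so that it never is, but that is not what the source describes.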

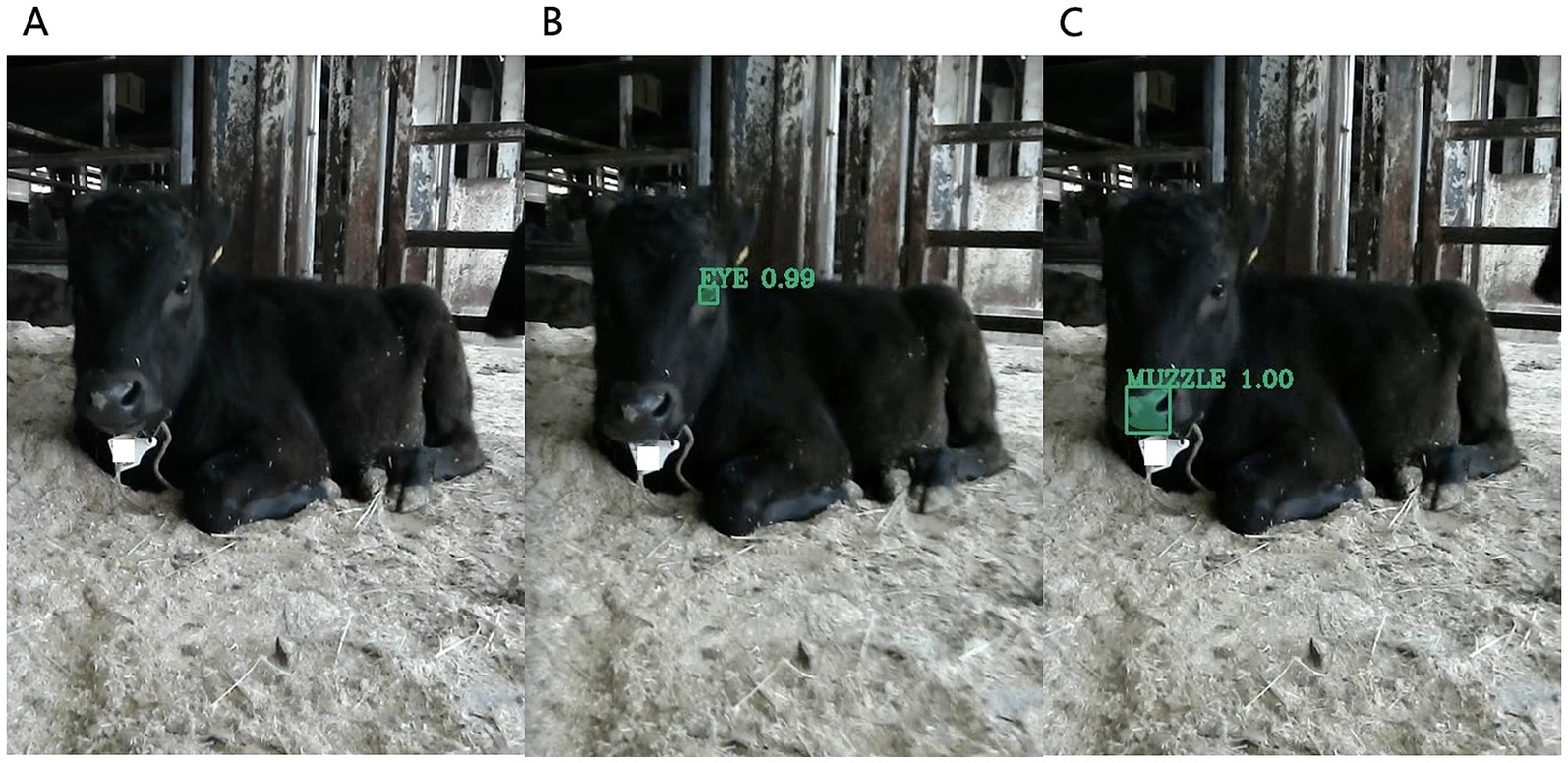

By training the Mask R-CNN algorithm on the annotated images, we obtained weights that enabled detection of the eyes and muzzles in the images captured for this study (Figure 1).

Figure 1. Results of using object detection to identify the eyes and muzzles of calves. (A) Image of a calf captured using a standard camera. (B) Image of the eye region extracted by object detection. (C) Image of the muzzle region. The green boxes represent the detected bounding boxes, while the green hatched areas denote pixel-wise detection, also known as segmentation.

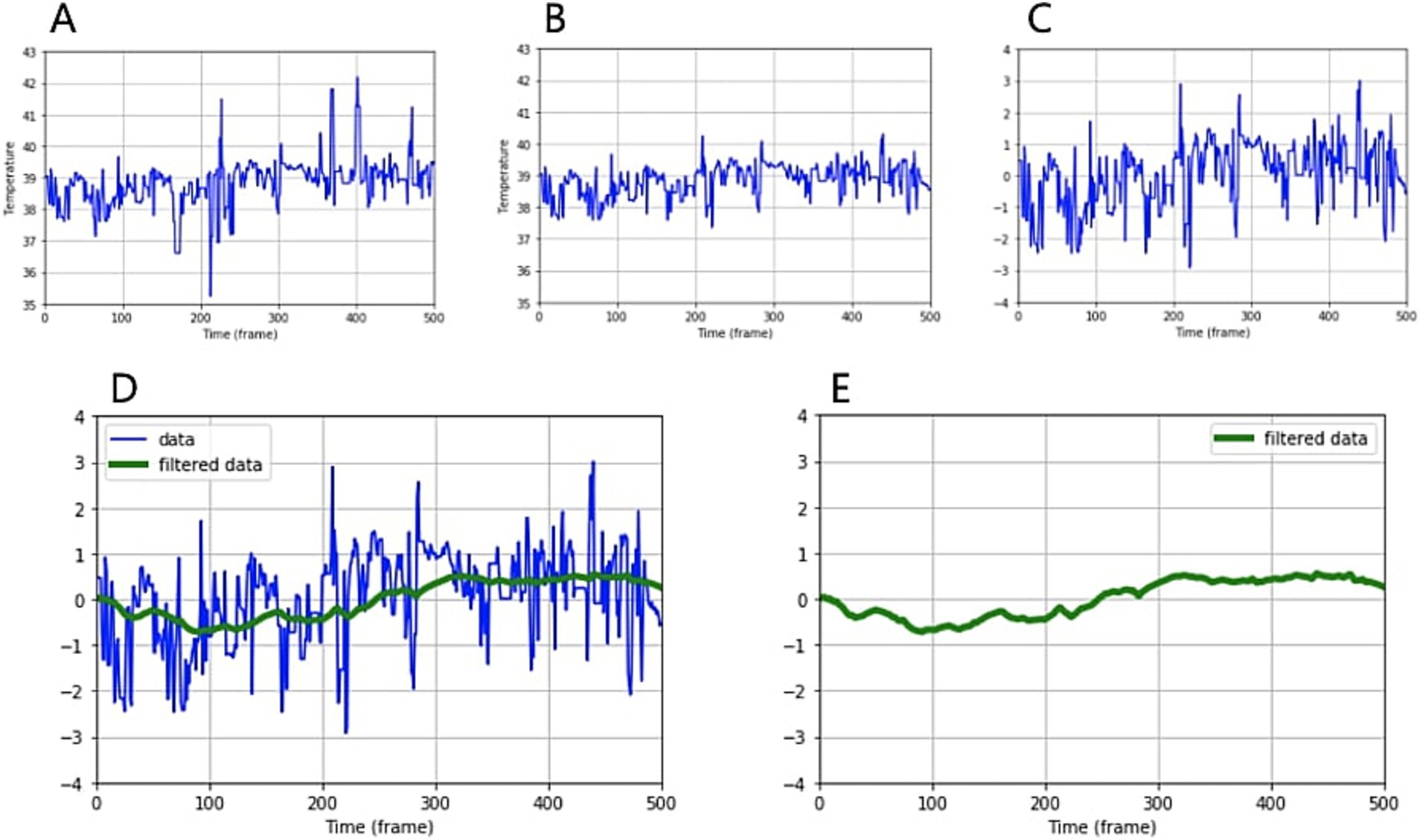

Subsequently, by combining temperature data from infrared cameras with the location data of eyes and muzzles extracted from standard images, we were able to derive temperature changes over time. After applying outlier rejection, standardization, and low-pass filtering, we obtained temperature change patterns for the eyes and muzzles (Figure 2).

Figure 2. Analysis and processing of temperature data over time. (A) Temperature graph over time obtained by combining infrared temperature data and object extraction. (B) After outlier rejection. (C) After standardization. (D) After low-pass filtering; the green line represents the filtered graph. (E) Resulting pattern of temperature change over time.

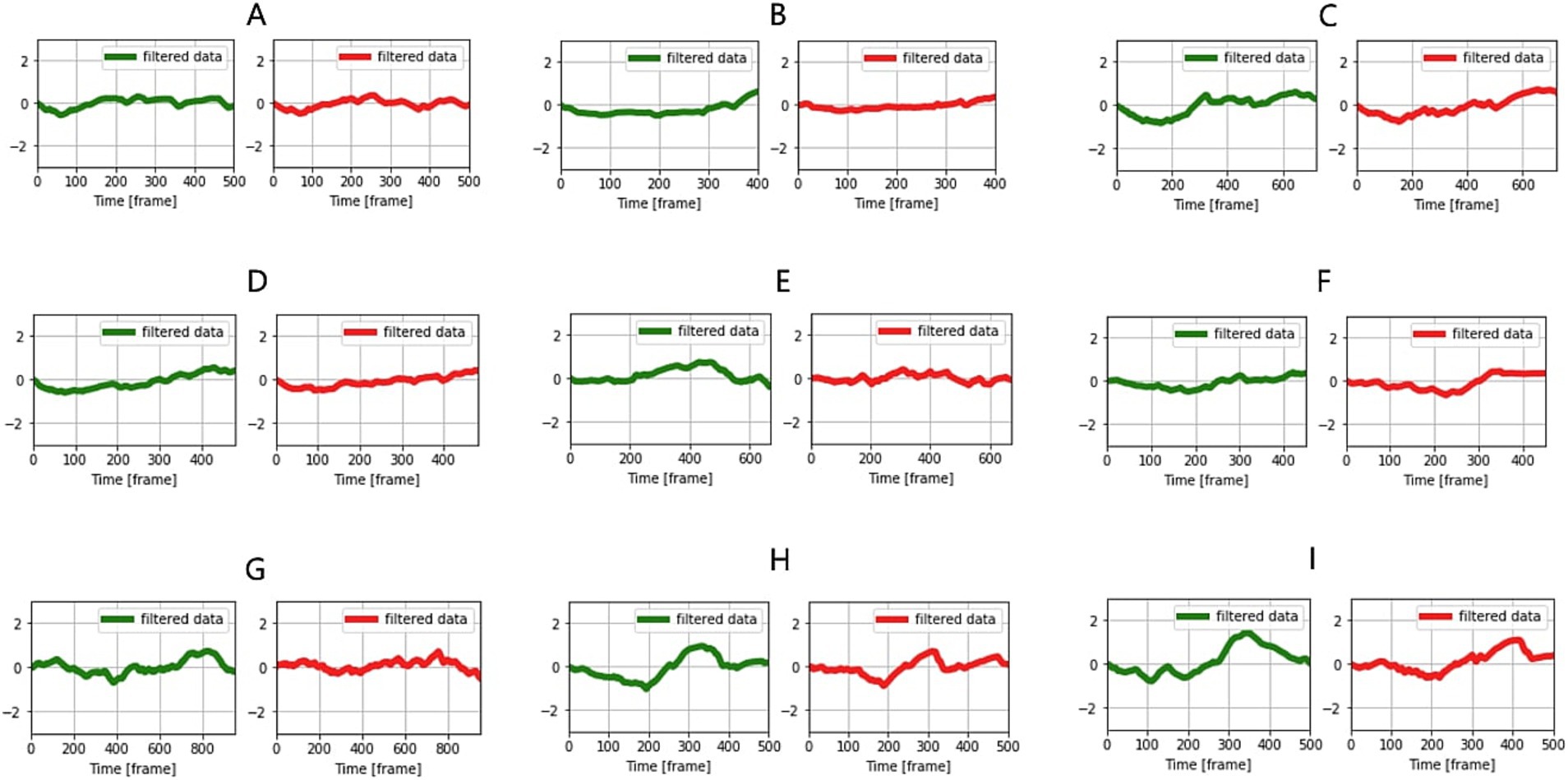

The average cosine similarity between the eye and muzzle temperature changes of the same individual across the 33 samples (Figure 3) was 0.72 (Table 1).

Figure 3. Comparison of temperature change patterns in the eye and muzzle in the same individual (A–I). Green indicates the eye and red the muzzle.

A permutation test was performed in which one series (muzzle temperature change) from an individual was randomized and compared with the non-randomized series (eye temperature change) from the same individual. This test yielded p < 0.01 in 29 of 33 samples. We interpreted this to mean that randomized series rarely reached the observed cosine similarity, and thus that the original temperature change patterns were unique. However, 4 of 33 samples showed cosine similarities close to 0 and p-values >0.5 in the permutation test. In these 4 samples, the eyes were difficult to detect in the captured images: detection either failed completely or misidentified other regions (skin or other body parts) as eyes. Additionally, 3 samples did not exceed a cosine similarity of 0.5. In these cases, the calves' heads were turned sideways, and the muzzle occupied only a small portion of the image with the nostrils prominently captured. This suggests that the muzzle temperatures were relatively strongly influenced by respiration.

In this study, we used object detection, an AI technology, to improve the accuracy of ROI detection. We also used temperature data from every frame of the infrared camera to capture high-frequency temperature variation. The reliability of this method was verified by comparing the variation at two areas (eye and muzzle). The results confirmed that short-term temperature changes (1–2 min) exhibit unique characteristics, and that these distinctive variations can be identified from both eye and muzzle temperatures. However, muzzle measurements may have been affected by strong respiration, or by the calf turning its head sideways, which reduced the muzzle area in the image. Notably, this study was conducted in a field setting rather than a controlled environment, which supports its potential for practical application in real-world scenarios.

Research using infrared thermography (IRT) to detect physiological changes in animals is increasingly supported by studies across diverse species and contexts. For instance, IRT has been validated as a tool to identify heat stress in farm animals by detecting vasodilation-mediated temperature changes in thermal windows such as the ocular region and muzzle (16). IRT has also been combined with heart rate variability (HRV) metrics to assess autonomic nervous system (ANS) activity during stress responses (17). In clinical applications, the non-invasive nature of IRT has proven valuable for monitoring ischemic events and tracking inflammatory responses during wound healing (18). Recent advances also highlight its utility in studying temporal body temperature dynamics: thermography has been used to quantify circadian rhythmicity (24-h cycles) (19) and short-term fluctuations (~10-min intervals) in core body temperature (20). Notably, avian studies have demonstrated rapid stress-induced thermal responses, such as transient drops in eye temperature followed by rebounds within 30 s. These findings underscore the need for sub-second measurement resolution to capture acute physiological shifts (21, 22). Accordingly, in this study, we aimed to measure temperature at intervals of less than 1 s using the temperature data from all consecutive frames of the infrared camera. We assessed reliability by measuring and comparing two locations: the eyes and the muzzle. High-frequency temperature measurement at intervals of ≤1 s, as employed in this study, represents a significant methodological advance in animal welfare monitoring. The difference is comparable to that between a simple heart rate measurement and continuous heart rate monitoring that enables HRV analysis, which reveals information that would otherwise be undetectable.
Continuous data collection allows various mathematical analyses. Its improved sensitivity might reveal subtle temperature fluctuations caused by minor stressors or physiological changes that are imperceptible with less frequent sampling. In fact, a human study (23) detected stress through continuous measurement of nasal tip temperature and mathematical analyses of these data. These analyses included the temperature difference between the start and the end of the recording, the slope of the thermal signal, and the standard deviation of successive differences of the thermal signal. The current study verified the validity and reliability of measuring temperature changes in calves' eyes and muzzles, which we believe will serve as a foundation for diverse analyses in future research.
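As an illustration of the kind of analysis that continuous data permit, the three features named for the human study (23) can be computed from a uniformly sampled series. This is a sketch only, because the exact definitions used in (23) are not given here. The following choices are all assumptions:

- the start-to-end difference is taken as end minus start;
- the slope is a least-squares fit in units per second;
- the SD of successive differences uses the sample standard deviation (ddof=1);
- the 8.7 frames/s sampling rate is borrowed from the earlier study (9).

`thermal_features` is a hypothetical helper.

```python
import numpy as np


def thermal_features(x, fs):
    """Illustrative summary features of a uniformly sampled thermal series.

    x  : temperature series (one value per frame)
    fs : sampling rate in frames per second (assumed known)
    """
    x = np.asarray(x, dtype=float)
    t = np.arange(x.size) / fs                    # time stamps in seconds
    slope = np.polyfit(t, x, 1)[0]                # least-squares slope (units per second)
    return {
        "start_end_difference": float(x[-1] - x[0]),     # end minus start (assumed sign)
        "slope_per_s": float(slope),
        "sdsd": float(np.std(np.diff(x), ddof=1)),        # SD of successive differences
    }


# Example: a 60-s linear warming trend of 0.01 per second
fs = 8.7  # frames/s, borrowed from the earlier study (9)
t = np.arange(0, 60, 1 / fs)
features = thermal_features(34.0 + 0.01 * t, fs)
```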

Recent advances in AI have led to its integration with existing technologies, rather than its use in isolation, enabling researchers to overcome the limitations of traditional devices. A prime example of this synergy is the integration of AI with infrared cameras. In bovine research, for instance, AI has been successfully combined with infrared camera imagery to detect digital dermatitis (24), mastitis (25), respiratory patterns (26), and body temperature (27). These applications demonstrate how AI can augment the analytical power of thermal imaging. Whereas these studies typically use AI to identify areas of interest within the infrared images themselves, our study takes a different approach. We address the inherent limitations of infrared imaging by applying object detection to RGB images, which allows us to overcome some of the constraints associated with thermal imaging alone. Traditional infrared camera-based measurements of eye or muzzle temperature were limited by rectangular or circular ROI settings (5) and by the need for manual configuration. These limitations often caused surrounding skin to be included in the ROI, potentially leading to inaccurate readings because of temperature differences between the eye and the surrounding skin. The only medium correlations between rectal and muzzle temperatures reported in previous studies (3) may be attributable to imprecise extraction of the muzzle area. In contrast, this study used the segmentation capabilities of Mask R-CNN, which enabled pixel-level detection of the eye and muzzle regions. This improved the accuracy of the temperature calculations and provided a more robust foundation for analyzing thermal variation patterns in cattle.

Despite these advances, a significant challenge in the AI-based detection of bovine eyes and muzzles in our study was distinguishing the eyes within the cranial region, owing to the chromatic similarity between the eye and the surrounding fur. Nevertheless, detection succeeded in most cases. The four samples with near-zero similarity scores were likely caused by detection failures, which can be attributed to external factors such as backlighting and overcast weather. Under such conditions, even human visual identification of the eye region would be considerably challenging.

Infrared camera temperature measurements in field settings are subject to numerous constraints. External environmental factors can influence measured temperatures, with cold or windy conditions often resulting in lower readings (28, 29). In this study, measurements were taken across several seasons (February to September; ambient temperature range: 9°C–34.1°C), so ambient conditions varied widely. In addition, infrared temperature readings are known to vary with individual factors such as skin thickness and hair density (30), differences in infrared emissivity associated with coat color (31), and breed-specific characteristics (32). Indeed, temperature differences between individuals were observed in this study. The continuous temperature measurements and the resulting variability analysis are significant because they can be standardized, which could help overcome these limitations. Standardization shifts the focus to relative changes over time rather than absolute values. This is expected to minimize differences in absolute temperature caused by environmental factors and individual variation among animals, allowing a clearer focus on the physiological changes of interest. It should therefore enable more reliable analyses of temperature variability in animals, despite the inherent challenges of field-based infrared thermography.
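A short worked example shows why standardization removes between-animal offsets. Two series that share the same temporal pattern but differ in baseline temperature and amplitude become identical after z-scoring. The two "calves" below are hypothetical and the values are invented for illustration. The invariance holds only for a positive scaling, and standardization discards absolute-temperature information entirely.

```python
import numpy as np


def zscore(x):
    """Standardize to zero mean and unit standard deviation."""
    x = np.asarray(x, dtype=float)
    return (x - x.mean()) / x.std()


# Hypothetical 2-min recordings sampled at ~8.7 frames/s
t = np.arange(0, 120, 1 / 8.7)
pattern = np.sin(2 * np.pi * t / 60)  # shared temporal pattern
calf_a = 35.0 + 0.3 * pattern         # cooler baseline, smaller swings
calf_b = 38.5 + 0.9 * pattern         # warmer baseline, larger swings

print(abs(calf_a.mean() - calf_b.mean()) > 3)       # True: absolute levels differ
print(np.allclose(zscore(calf_a), zscore(calf_b)))  # True: standardized patterns match
```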

This study demonstrates the efficacy of combining artificial intelligence-based object detection with infrared camera technology for continuous, non-invasive temperature measurement in cattle. The research yielded several significant findings. First, short-term temperature changes (1–2 min) in cattle exhibit unique characteristics, as evidenced by the high average cosine similarity (0.72) between eye and muzzle temperature patterns in the same individual. Second, the permutation test results (p < 0.01 in 29 of 33 samples) indicate that these temperature change patterns possess distinctive features that are not reproduced by random permutation. Finally, because the study was conducted in a field setting, it supports the method's potential for practical application in real-world scenarios. The methodology developed here offers a promising approach for enhancing animal welfare monitoring in field conditions, potentially enabling early detection of stress or health issues in livestock.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The animal study was approved by Institutional Animal Care and Use Committee of the Osaka Metropolitan University (approval number: 24-024). The study was conducted in accordance with the local legislation and institutional requirements.

SK: Conceptualization, Data curation, Formal analysis, Methodology, Software, Writing – original draft. NY: Writing – review & editing. SI: Writing – review & editing. ST: Writing – review & editing.

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

The authors would like to express sincere gratitude to Takeshi Honda (Food Resources Education and Research Center, Graduate School of Agricultural Science Kobe University) for his support throughout this research.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declare that no Gen AI was used in the creation of this manuscript.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Giannetto, C, Di Pietro, S, Falcone, A, Pennisi, M, Giudice, E, Piccione, G, et al. Thermographic ocular temperature correlated with rectal temperature in cats. J Therm Biol. (2021) 102:103104. doi: 10.1016/j.jtherbio.2021.103104

2. de Freitas, ACB, Vega, WHO, Quirino, CR, Junior, AB, David, CMG, Geraldo, AT, et al. Surface temperature of ewes during estrous cycle measured by infrared thermography. Theriogenology. (2018) 119:245–51. doi: 10.1016/j.theriogenology.2018.07.015

3. de Ruediger, FR, Yamada, PH, Barbosa, LGB, Chacur, MGM, Ferreira, JCP, de Carvalho, NAT, et al. Effect of estrous cycle phase on vulvar, orbital area and muzzle surface temperatures as determined using digital infrared thermography in buffalo. Anim Reprod Sci. (2018) 197:154–61. doi: 10.1016/j.anireprosci.2018.08.023

4. George, WD, Godfrey, RW, Ketring, RC, Vinson, MC, and Willard, ST. Relationship among eye and muzzle temperatures measured using digital infrared thermal imaging and vaginal and rectal temperatures in hair sheep and cattle. J Anim Sci. (2014) 92:4949–55. doi: 10.2527/jas.2014-8087

5. Soroko, M, Howell, K, Zwyrzykowska, A, Dudek, K, Zielińska, P, and Kupczyński, R. Maximum eye temperature in the assessment of training in racehorses: correlations with salivary cortisol concentration, rectal temperature, and heart rate. J Equine Vet Sci. (2016) 45:39–45. doi: 10.1016/j.jevs.2016.06.005

6. Johnson, SR, Rao, S, Hussey, SB, Morley, PS, and Traub-Dargatz, JL. Thermographic eye temperature as an index to body temperature in ponies. J Equine Vet Sci. (2011) 31:63–6. doi: 10.1016/j.jevs.2010.12.004

7. Hoffman, AA, Long, NS, Carroll, JA, Sanchez, NB, Broadway, PR, Richeson, JT, et al. Infrared thermography as an alternative technique for measuring body temperature in cattle. Applied Anim Sci. (2023) 39:94–8. doi: 10.15232/aas.2022-02360

8. Valera, M, Bartolomé, E, Sánchez, MJ, Molina, A, Cook, N, and Schaefer, AL. Changes in eye temperature and stress assessment in horses during show jumping competitions. J Equine Vet Sci. (2012) 32:827–30. doi: 10.1016/j.jevs.2012.03.005

9. Kim, S, and Hidaka, Y. Breathing pattern analysis in cattle using infrared thermography and computer vision. Animals. (2021) 11:207. doi: 10.3390/ani11010207

10. Dutta, A, and Zisserman, A. The VIA annotation software for images, audio and video. In: Proceedings of the 27th ACM international conference on multimedia. (2019). 2276–9.

11. He, K, Gkioxari, G, Dollár, P, and Girshick, R. Mask R-CNN. In: Proceedings of the IEEE international conference on computer vision. (2017). 2961–9.

12. Lin, TY, Maire, M, Belongie, S, Hays, J, Perona, P, Ramanan, D, et al. Microsoft COCO: common objects in context. In: Computer vision – ECCV 2014: 13th European conference, Zurich, Switzerland, September 6–12, 2014, proceedings, part V. Berlin: Springer International Publishing (2014). 740–55.

13. He, K, Zhang, X, Ren, S, and Sun, J. Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition. (2016). 770–8.

14. David, FN, and Tukey, JW. Exploratory data analysis. Biometrics. (1977) 33:768. doi: 10.2307/2529486

15. Ramdas, A, Barber, RF, Candès, EJ, and Tibshirani, RJ. Permutation tests using arbitrary permutation distributions. Sankhya A. (2023) 85:1156–77. doi: 10.1007/s13171-023-00308-8

16. Ghezzi, MD, Napolitano, F, Casas-Alvarado, A, Hernández-Ávalos, I, Domínguez-Oliva, A, Olmos-Hernández, A, et al. Utilization of infrared thermography in assessing thermal responses of farm animals under heat stress. Animals. (2024) 14:616. doi: 10.3390/ani14040616

17. Ghezzi, MD, Ceriani, MC, Domínguez-Oliva, A, Lendez, PA, Olmos-Hernández, A, Casas-Alvarado, A, et al. Use of infrared thermography and heart rate variability to evaluate autonomic activity in domestic animals. Animals. (2024) 14:1366. doi: 10.3390/ani14091366

18. Mota-Rojas, D, Ogi, A, Villanueva-García, D, Hernández-Ávalos, I, Casas-Alvarado, A, Domínguez-Oliva, A, et al. Thermal imaging as a method to indirectly assess peripheral vascular integrity and tissue viability in veterinary medicine: animal models and clinical applications. Animals. (2023) 14:142. doi: 10.3390/ani14010142

19. van der Vinne, V, Pothecary, CA, Wilcox, SL, McKillop, LE, Benson, LA, Kolpakova, J, et al. Continuous and non-invasive thermography of mouse skin accurately describes core body temperature patterns, but not absolute core temperature. Sci Rep. (2020) 10:20680. doi: 10.1038/s41598-020-77786-5

20. Morimoto, A, Nakamura, S, Koyano, K, Nishisho, S, Nakao, Y, Arioka, M, et al. Continuous monitoring using thermography can capture the heat oscillations maintaining body temperature in neonates. Sci Rep. (2024) 14:10449. doi: 10.1038/s41598-024-60718-y

21. Jerem, P, Herborn, K, McCafferty, D, McKeegan, D, and Nager, R. Thermal imaging to study stress non-invasively in unrestrained birds. J Vis Exp. (2015) (105). doi: 10.3791/53184-v

22. Jerem, P, Jenni-Eiermann, S, McKeegan, D, McCafferty, DJ, and Nager, RG. Eye region surface temperature dynamics during acute stress relate to baseline glucocorticoids independently of environmental conditions. Physiol Behav. (2019) 210:112627. doi: 10.1016/j.physbeh.2019.112627

23. Cho, Y, Bianchi-Berthouze, N, Oliveira, M, Holloway, C, and Julier, S. Nose heat: exploring stress-induced nasal thermal variability through mobile thermal imaging. In: 2019 8th international conference on affective computing and intelligent interaction (ACII). (2019). 566–72.

24. Feighelstein, M, Mishael, A, Malka, T, Magana, J, Gavojdian, D, Zamansky, A, et al. AI-based prediction and detection of early-onset of digital dermatitis in dairy cows using infrared thermography. Sci Rep. (2024) 14:29849. doi: 10.1038/s41598-024-80902-4

25. Xudong, Z, Xi, K, Ningning, F, and Gang, L. Automatic recognition of dairy cow mastitis from thermal images by a deep learning detector. Comput Electron Agric. (2020) 178:105754. doi: 10.1016/j.compag.2020.105754

26. Zhao, K, Duan, Y, Chen, J, Li, Q, Hong, X, Zhang, R, et al. Detection of respiratory rate of dairy cows based on infrared thermography and deep learning. Agriculture. (2023) 13:1939. doi: 10.3390/agriculture13101939

27. Jaddoa, MA, Gonzalez, L, Cuthbertson, H, and Al-Jumaily, A. Multiview eye localisation to measure cattle body temperature based on automated thermal image processing and computer vision. Infrared Phys Technol. (2021) 119:103932. doi: 10.1016/j.infrared.2021.103932

28. Church, JS, Hegadoren, PR, Paetkau, MJ, Miller, CC, Regev-Shoshani, G, Schaefer, AL, et al. Influence of environmental factors on infrared eye temperature measurements in cattle. Res Vet Sci. (2014) 96:220–6. doi: 10.1016/j.rvsc.2013.11.006

29. Jansson, A, Lindgren, G, Velie, BD, and Solé, M. An investigation into factors influencing basal eye temperature in the domestic horse (Equus caballus) when measured using infrared thermography in field conditions. Physiol Behav. (2021) 228:113218. doi: 10.1016/j.physbeh.2020.113218

30. Mota-Rojas, D, Pereira, AM, Wang, D, Martínez-Burnes, J, Ghezzi, M, Hernández-Avalos, I, et al. Clinical applications and factors involved in validating thermal windows used in infrared thermography in cattle and river buffalo to assess health and productivity. Animals. (2021) 11:2247. doi: 10.3390/ani11082247

31. Riaz, U, Idris, M, Ahmed, M, Ali, F, and Yang, L. Infrared thermography as a potential non-invasive tool for estrus detection in cattle and buffaloes. Animals. (2023) 13:1425. doi: 10.3390/ani13081425

32. Martello, LS, da Luz e Silva, S, da Costa Gomes, R, da Silva Corte, RRP, and Leme, PR. Infrared thermography as a tool to evaluate body surface temperature and its relationship with feed efficiency in Bos indicus cattle in tropical conditions. Int J Biometeorol. (2016) 60:173–81. doi: 10.1007/s00484-015-1015-9

Keywords: infrared thermography, non-invasive temperature measurement, AI object detection, temperature change patterns, cattle welfare monitoring

Citation: Kim S, Yamagishi N, Ishikawa S and Tsuchiaka S (2025) Unique temperature change patterns in calves eyes and muzzles: a non-invasive approach using infrared thermography and object detection. Front. Vet. Sci. 12:1548906. doi: 10.3389/fvets.2025.1548906

Received: 02 January 2025; Accepted: 20 February 2025;

Published: 10 March 2025.

Edited by:

Daniel Mota-Rojas, Metropolitan Autonomous University, Mexico

Reviewed by:

Marcelo Ghezzi, Universidad Nacional del Centro de Buenos Aires, Argentina

Copyright © 2025 Kim, Yamagishi, Ishikawa and Tsuchiaka. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sueun Kim, kim73@omu.ac.jp
