ORIGINAL RESEARCH article

Front. Vet. Sci. , 24 February 2025

Sec. Veterinary Humanities and Social Sciences

Volume 11 - 2024 | https://doi.org/10.3389/fvets.2024.1504598

Introduction: The integration of Artificial Intelligence (AI) into medical education and healthcare has grown steadily over the past few years, though its application in veterinary education and practice remains relatively underexplored. This study is among the first to introduce veterinary students to AI-generated cases (AI-cases) and AI-standardized clients (AI-SCs) for teaching and learning communication skills. The study aimed to evaluate students' beliefs and perceptions surrounding the use of AI in veterinary education, with specific focus on communication skills training.

Methods: Conducted at Texas Tech University School of Veterinary Medicine (TTU SVM) during the Spring 2024 semester, the study included pre-clinical veterinary students (n = 237), who participated in a 90-min communication skills laboratory activity. Each class was introduced to two AI-cases and two AI-SCs, developed using OpenAI's ChatGPT-3.5. The Calgary Cambridge Guide (CCG) served as the framework for practicing communication skills.

Results: Although students recognized the widespread use of AI in everyday life, their familiarity with, comfort with and application of AI in veterinary education were limited. Notably, upper-year students were more hesitant to adopt AI-based tools, particularly in communication skills training.

Discussion: The findings suggest that veterinary institutions should prioritize AI-literacy and further explore how AI can enhance and complement communication training, veterinary education and practice.

The study of mathematically explaining in detail the process of human learning and intelligence such that a machine can replicate it was first described in 1955 by John McCarthy (1). Around this time, the term Artificial Intelligence (AI) was coined to describe the scientific method of developing computer algorithms that simulate human cognition (2, 3). The integration of AI has progressed more rapidly in human healthcare than in veterinary medicine (4, 5). Presently in human medicine, AI-based solutions support clinical decision-making (6, 7), facilitate the understanding and analysis of written and verbal human language and generate appropriate dialogue, as experienced in chatbots (8), have the capacity to process unstructured clinical text to generate predictive clinical outputs (9), and offer diagnostic tools to prevent disease (10). In veterinary medicine, AI has been used successfully in the areas of radiology (4, 11), disease surveillance, diagnosis and decision-making (12, 13).

Despite significant advances and changes brought about by AI across various healthcare professions, veterinary medicine professionals remain skeptical about its applicability and the necessity to equip students with AI-related knowledge and skills (14, 15). Only a few examples in veterinary medical education have embraced AI, such as using it to support student learning, teach veterinary anatomy, and assess clinical skills (5, 16, 17).

Emerging technologies and evolving client and societal expectations are pushing for a paradigm shift in veterinary medical education. The growing emphasis on competency-based education, grounded in the principles of andragogy and student-centered learning (18), highlights the need to prepare veterinary graduates for a rapidly changing professional landscape. In response, veterinary graduates from member institutions of the Association of American Veterinary Medical Colleges (AAVMC) are expected to meet minimum competencies across nine domains: clinical reasoning and decision-making, individual animal care and management, animal population care and management, public health, communication, collaboration, professionalism and professional identity, financial and practice management, and scholarship (19, 20).

To ensure that students meet these competencies, veterinary education has increasingly integrated experiential learning methodologies. Kolb's experiential learning theory, for example, has proven effective in enhancing students' skills and confidence in clinical communication (21). Techniques such as role-play, practice with Standardized Clients (SCs), structured and constructive feedback, and presentation of skills in a helical approach along with repeated practice all align to develop well-rounded, competent veterinary professionals (22–25). Among the different approaches to communication skills training, the Calgary Cambridge Guide is a validated framework for teaching and assessing communication skills in veterinary education and has been widely used with a focus on relationship-centered medicine and effective and compassionate client interactions (26, 27).

Communication curricula are resource intensive, requiring administrative support, recruitment and training of SCs and facilitators, continuing case development and refinement, and investment in audiovisual software and facilities (23, 28). Few studies across healthcare professions report the use of AI in communication skills training; examples include virtual patients and chatbots used to practice the clinical interview and to provide feedback (29, 30). Additional use of AI could complement communication teaching, learning and assessment while reducing costs and increasing efficiency.

This is the first study to explore the integration of AI in a veterinary communication pre-clinical Doctor of Veterinary Medicine (DVM) program (5, 13). The study aimed to introduce veterinary students to AI-developed cases and AI-simulated clients (AI-SCs) and examine students' perceptions regarding the use of AI in veterinary education, particularly within communication skills training programs.

The Texas Tech University School of Veterinary Medicine (TTU SVM) follows an outcome- and competency-based 3-year pre-clinical curriculum followed by a 1-year practice through a community-based clinical learning network around Texas and parts of New Mexico. The clinical and professional skills program (CPS) engages students in 6 hours weekly of hands-on experiential practice across small animal, food animal, equine and exotic species, organized into three focus areas: surgery, medicine and communication skills. Specifically, communication skills are delivered in three 50-min active, student-centered presentations, where students are presented with theoretical frameworks and evidence-based delineation of communication skills, followed by three 3-h communication laboratories per semester across all three pre-clinical years. Communication teaching, learning outcomes and assessment are based on the Calgary Cambridge Guide (CCG) (25, 26). The CCG delineates 73 process skills and organizes the clinical interview into five sequential stages (initiating the session, gathering of information, physical examination, explanation and planning, and closing the session) and two stages that run throughout the encounter (building rapport and providing structure). In line with the CCG, students participate in laboratory sessions where they work in groups of 4–5, alongside a facilitator, to practice communication skills in simulated encounters with standardized clients (SCs). During these sessions, students receive real-time feedback while engaging in self-assessment and reflective practices to enhance their communication skills.

With advancements in AI rapidly transforming various fields, its application in veterinary education, particularly in communication skills training, remains largely unexplored. This study, conducted during the Spring semester of 2024, represents a pioneering effort to integrate AI into the development of veterinary communication skills. All students (n = 243) enrolled in semesters two (n = 102), four (n = 79), and six (n = 62) were invited to practice communication skills with AI-generated cases and AI-standardized clients (AI-SCs) as part of a course assignment. By examining students' perceptions regarding the use of AI in veterinary education, this research offers valuable insights into the potential of AI to enhance communication training.

The study was approved by the Institutional Review Board (IRB) at Texas Tech University, IRB # 2023-1125.

We developed six AI-cases using the free conversational OpenAI Chat Generative Pre-Trained Transformer version, ChatGPT-3.5. ChatGPT is trained to retrieve information from large datasets which enables adding depth and contextual nuances to clinical scenarios that can be used in experiential practice of communication skills (31). The AI cases were incorporated into semesters 2, 4, and 6 during the 2nd, 3rd, and 14th weeks of the respective semesters to align with and support the learning objectives of the labs and communication curriculum. The cases developed were designed to engage learners on addressing animal health communication and how best to address human differences including disability, illness, exposure to various racial and ethnic backgrounds, sexual orientations, socioeconomic status, and other demographic differences that students may encounter when they enter veterinary medical practice. All human characteristics were presented to the learner as part of the patient and client history before the exercise and interaction.

A prompt is a natural language text that describes a task that an AI should perform in response (32), while prompt engineering describes the process of structuring an instruction that can be interpreted and understood by AI (33). For our study, the following prompt template was used to create and enrich cases with desired social and cultural characteristics:

Name of the Owner: [INSERT NAME]
Ethnicity: [INSERT ETHNIC GROUP]
Location: [INSERT DEPARTMENT, RURAL/URBAN AREA]
Language Spoken: [INSERT LANGUAGE]
Occupation: [INSERT OCCUPATION]
Household Composition: [INSERT HOUSEHOLD COMPOSITION]
Type of Animal: [INSERT ANIMAL TYPE]
Animal's Name: [INSERT ANIMAL NAME]
Animal's Age: [INSERT AGE]
Reason for Veterinary Visit: [INSERT REASON]
Possible Socioeconomic or Cultural Factors Affecting the Case: [INSERT FACTORS]

Next, the scenarios were further enhanced with images using the photo-animating application Talkr Live version 2.3 (Talkr Inc., 2023) and the voice-changing hardware VT-4 voice transformer (Roland Corporation, 2018).
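As an illustration only, the case template described above could be filled programmatically before being pasted into a chat interface. The sketch below is not the authors' workflow: the function name `build_case_prompt` and the example values are hypothetical, and only the field labels and `[INSERT ...]` markers come from the template in the text.

```python
# Field labels and placeholder markers are copied from the template in the text.
# Everything else (function name, example values) is a hypothetical illustration.
TEMPLATE_FIELDS = {
    "Name of the Owner": "[INSERT NAME]",
    "Ethnicity": "[INSERT ETHNIC GROUP]",
    "Location": "[INSERT DEPARTMENT, RURAL/URBAN AREA]",
    "Language Spoken": "[INSERT LANGUAGE]",
    "Occupation": "[INSERT OCCUPATION]",
    "Household Composition": "[INSERT HOUSEHOLD COMPOSITION]",
    "Type of Animal": "[INSERT ANIMAL TYPE]",
    "Animal's Name": "[INSERT ANIMAL NAME]",
    "Animal's Age": "[INSERT AGE]",
    "Reason for Veterinary Visit": "[INSERT REASON]",
    "Possible Socioeconomic or Cultural Factors Affecting the Case": "[INSERT FACTORS]",
}


def build_case_prompt(values):
    """Return the template as text; fields without a value keep their marker."""
    lines = []
    for field, placeholder in TEMPLATE_FIELDS.items():
        lines.append(f"{field}: {values.get(field, placeholder)}")
    return "\n".join(lines)


# Hypothetical values, not one of the study's six cases.
example = build_case_prompt({
    "Name of the Owner": "Jane Doe",
    "Type of Animal": "Dog",
    "Animal's Age": "3 years",
})
print(example)
```

Leaving unfilled fields as visible markers makes it easy to spot an incomplete case description before it is submitted.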

We designed a 90-min communication laboratory to practice communication skills following an individualized and reflective approach. The AI-generated cases were hosted on the Blackboard Inc. Learning Management System (LMS), accessed through the TTU SVM intranet. The laboratory encompassed a 30-min theory and evidence component followed by 60 min of communication skills practice. The theoretical component covered the evidence and theory of communication skills, the use of AI in teaching and learning communication, and a review of core communication skills. The practical component was based on the premises of the CCG; students watched and listened to the AI-Standardized Client (AI-SC) case and were asked to develop a dialogue for a clinical veterinary encounter following the CCG framework, with specific attention to the AI-SC narrative. The practice session included several pauses, allowing students to ask questions and enabling two facilitators to provide clarifications as needed. Students were encouraged to work in pairs and practice their developed dialogue; one student took on the role of the “writer” and wrote out specific communication skills while the other student focused on being the “listener” and provided feedback on how skills were practiced. Both the “writer” and the “listener” contributed to developing the dialogue. Students were encouraged to write out in words how non-verbal communication skills would be practiced and applied during the encounter.

The AI cases for semester 2 students focused on developing skills aligned with the Calgary-Cambridge Guide (CCG), specifically targeting the stages of initiating the consultation, gathering of information, building rapport, and providing structure. Additionally, students had the opportunity to center communication around socioeconomic and cultural factors.

AI-SC Suzanne Ouais (Figure 1) presented with her 14-year-old Black Lab mix with diarrhea. Suzanne Ouais is a student of American/Lebanese descent who recently relocated to Lubbock from the Caribbean with her beloved 14-year-old mixed Black Lab, Pippa. Suzanne's limited finances and reliance on student loans make her budget-conscious, and she struggles to meet all of Pippa's healthcare needs. Suzanne is worried about Pippa's health, especially as she is getting older, and wants to ensure that she can provide the necessary care within her budget.

Figure 1. Still image of AI-SC Suzanne Ouais, a student of American/Lebanese descent, with her 14-year-old Black Lab mix with diarrhea.

AI-SC Erik Garcia (Figure 2) presented for a second opinion for purchasing a 200-head dairy farm. Students were encouraged to consider effective and compassionate communication to address socioeconomic, cultural factors and language barriers.

Figure 2. Still image of AI-SC Erik Garcia, a dairy owner of Latino descent, seeking a second opinion for purchasing a 200-head dairy farm.

Erik Garcia is a hardworking and family-oriented individual of Latino background and resides in the rural outskirts of Amarillo, Texas, where he and his family have been living for several years. Erik shared his strong commitment to ensuring the health and welfare of the dairy herd. He wants to provide top-quality care for his animals and ensure the success of the farm to support his family. Erik is bilingual, speaking both Spanish and English fluently, but he is more comfortable expressing himself in Spanish.

The AI cases for semester 4 students focused on developing skills aligned with the Calgary-Cambridge Guide (CCG), specifically targeting the stages of initiating the consultation, explanation and planning, building rapport, and providing structure. Students were encouraged to explore the client's concerns and perspective.

AI-SC Rebekka Stone (Figure 3) presented with her 6-year-old neutered Rottweiler, Rambo, to receive test results for a diagnosis of osteosarcoma. Rebekka Stone, a mom to three children and a high-school math teacher, is facing multiple challenges in her life. She has been diagnosed with stage 4 breast cancer and is currently undergoing chemotherapy. These factors impact her ability to provide the best care for her beloved Rottweiler, Rambo, who has been diagnosed with osteosarcoma. The costs associated with diagnosing and treating Rambo's cancer are a major concern for Rebekka, given her own ongoing medical expenses. Furthermore, Rebekka's chemotherapy treatments may limit her availability, ability and energy to care for Rambo during this challenging time.

Figure 3. Still image of AI-SC Rebekka Stone, a mother and high-school math teacher with stage 4 breast cancer, with her 6-year-old Rottweiler recently diagnosed with osteosarcoma.

AI-SCs 8-year-old Peter and his mom Anne Black (Figure 4) presented with DaisyBell, a therapy horse, for signs of colic. Students were encouraged to discuss the potential diagnosis of colic while exploring how best to accommodate and meet Peter's needs. Peter is diagnosed with autism and has difficulty expressing his feelings and struggles to understand what is happening with DaisyBell. Students were encouraged to use visual aids in discussing the diagnosis, treatment and risks.

Figure 4. Still image of AI-SCs 8-year-old Peter, an autistic child, and his mom Anne Black with DaisyBell, a therapy horse, presenting for signs of colic.

The AI cases for semester 6 students focused on developing skills aligned with six stages of the Calgary-Cambridge Guide (CCG): initiating the consultation, gathering of information, explanation and planning, building rapport, providing structure, and closing the consultation. Students were encouraged to explore communication skills that support compassionate care when working with vulnerable populations.

AI-SC Danny Erickson (Figure 5) and his 10-year-old dog, Rocket, were seen for hind limb lameness and a potential diagnosis of hip dysplasia. Danny Erickson is a marine veteran diagnosed with Post-Traumatic-Stress-Disorder (PTSD) and now homeless. Danny has a history of being in and out of the veteran's hospital, which also affects his ability to consistently care for Rocket, as he may have periods of hospitalization or treatment that prevent him from providing adequate care.

Figure 5. Still image of AI-SC Danny Erickson, a homeless marine veteran diagnosed with Post-Traumatic-Stress-Disorder (PTSD), with his 10-year-old dog Rocket presenting for hind limb lameness and potential hip dysplasia.

AI-SCs Phillip Nichols and his partner Marcus Stephen (Figure 6) were seen with their 13-year-old Savannah cat, Chloe, who is diagnosed with hypertrophic cardiomyopathy. Students were encouraged to discuss medical care for Chloe while considering the client's unique circumstances and medical condition. Phillip receives palliative care for Human Immunodeficiency Virus (HIV) and is very frail with limited mobility, which affects his ability to provide comprehensive care for Chloe.

Figure 6. Still image of AI-SCs Phillip Nichols, a client diagnosed with HIV, and his partner Marcus Stephen, with their 13-year-old Savannah cat Chloe presenting with hypertrophic cardiomyopathy.

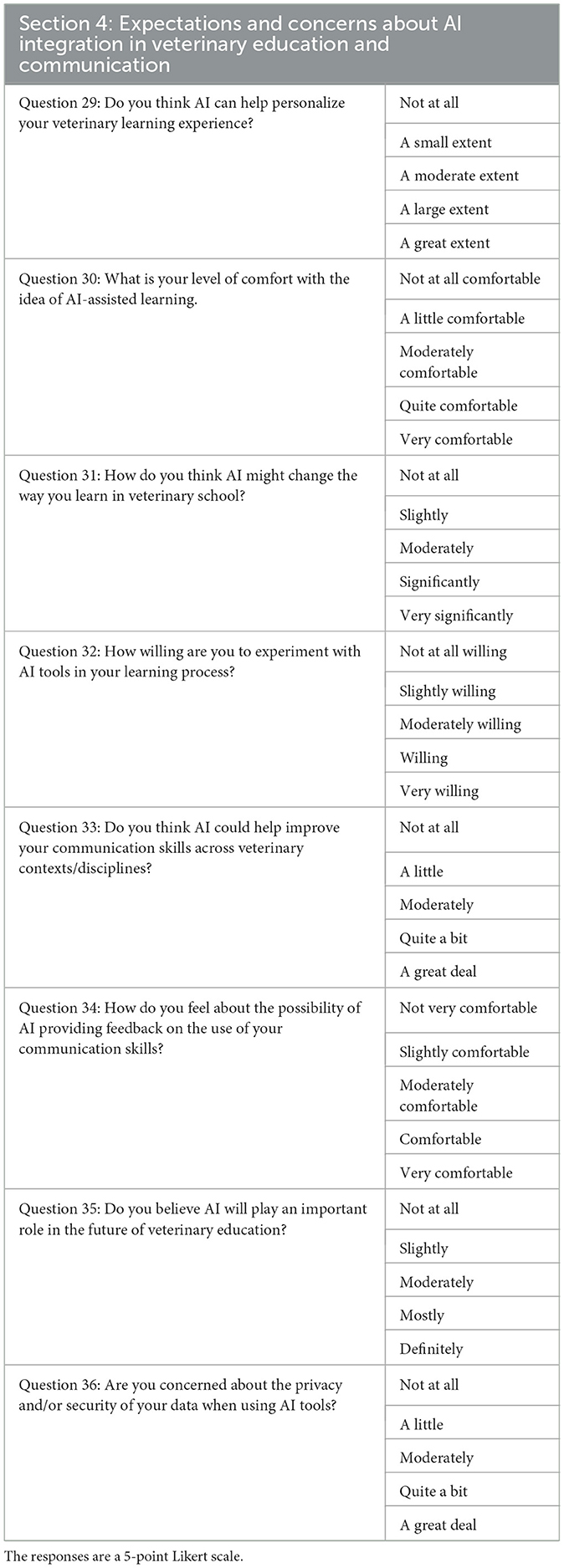

We utilized enterprise survey software to create and distribute an online questionnaire to understand veterinary students' perceptions regarding the use of AI and technology in their veterinary education and communication training. The questionnaire was piloted with veterinary and graduate students, and veterinary educators who were independent from the course. The final version of the survey included (i) four broad questions that established the student's semester of study, area of interest in veterinary medicine, age and gender, and (ii) four sections with 5-point Likert scale responses. The first section included nine questions that explored technology literacy and use in veterinary education (e.g., How often do you find yourself turning to software or apps to help with your coursework?, “Almost never = 0” to “All the time = 5”) (Table 1). Section 2 covered seven questions that investigated the student's experience with AI and GPTs (e.g., How familiar are you with the term Artificial Intelligence (AI)?, “Not at all familiar = 0” to “Very familiar = 5”) (Table 2). Section 3 comprised twelve questions that addressed students' experience with SCs and experiential practice (e.g., How effective are encounters with Standardized Clients (SCs) as part of your veterinary training in communication skills?, “Not effective = 0” to “Very effective = 5”) (Table 3). Lastly, the fourth section included eight questions (Q29–Q36) that focused on students' expectations and concerns about AI integration in veterinary education and communication (e.g., How do you think AI might change the way you learn in veterinary school?, “Not at all = 0” to “A great extent = 5”) (Table 4). Veterinary students completed the AI questionnaire at the end of the AI case experience.

Table 4. Expectations and concerns about AI integration in veterinary education and communication survey section.

Descriptive and inferential statistics were utilized to analyze survey results. Data was collected from a student assignment. All data was stored on TTU-approved University systems requiring two-factor authentication, accessible only to IRB-approved investigators. To further protect data privacy, the analysis was conducted using a dataset with all names removed and ages assigned to generations to ensure anonymity. We assigned students born between 1965 and 1980 to “Gen X,” those born between 1981 and 1996 to “Millennials,” and those born between 1997 and 2012 to “Gen Z.”
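The generation coding described above amounts to a simple lookup on birth year. The following is a minimal sketch of that rule; the function name and the labels used for missing or out-of-range years are assumptions introduced here, not part of the study's dataset.

```python
def assign_generation(birth_year):
    """Map a birth year to the generation bands stated in the text.

    The "Not reported" and "Other" labels are assumptions for this sketch;
    the article only defines the three bands below.
    """
    if birth_year is None:
        return "Not reported"
    if 1965 <= birth_year <= 1980:
        return "Gen X"
    if 1981 <= birth_year <= 1996:
        return "Millennials"
    if 1997 <= birth_year <= 2012:
        return "Gen Z"
    return "Other"


# Hypothetical birth years, used only to exercise each branch.
for year in (1972, 1990, 2001, None):
    print(year, assign_generation(year))
```

Replacing exact ages with these bands before analysis is one way to reduce the risk of re-identifying individual students in a small cohort.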

We performed all analyses using the RStudio “Chocolate Cosmos” release for macOS with R (version 4.4.0) (34). The data exhibited a non-normal distribution, leading to the selection of Spearman's correlation coefficient to assess correlations among all survey questions, as Winter et al. suggested for non-normally distributed data with outliers (35). We used the R packages rstatix (version 0.7.2) and GGally (version 2.2.1) to calculate Spearman's correlation coefficient, and subsequently the corrplot package (version 0.92) to construct visualizations of the results. We followed guidelines provided by Akoglu (36) to interpret correlation coefficients as weak with ρ = 0.10–0.39, moderate with ρ = 0.40–0.69, and strong with ρ = 0.70–1.
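To make the correlation step concrete, the sketch below computes Spearman's ρ as the Pearson correlation of average ranks and labels its size with the cut-offs quoted above. It is a pure-Python illustration, not the authors' R code; the function names, the example responses, and the label returned for |ρ| below 0.10 are assumptions.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # 1-based average rank of the tie group
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho; assumes equal lengths and that neither input is constant."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sqrt(sum((a - mx) ** 2 for a in rx))
    sy = sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


def interpret(rho):
    """Label |rho| using the thresholds quoted in the text."""
    size = abs(rho)
    if size >= 0.70:
        return "strong"
    if size >= 0.40:
        return "moderate"
    if size >= 0.10:
        return "weak"
    return "below weak threshold"  # label assumed; not defined in the text


# Hypothetical Likert responses for two questions from the same five students.
q_a = [1, 2, 2, 4, 5]
q_b = [2, 1, 3, 4, 5]
rho = spearman_rho(q_a, q_b)
print(round(rho, 2), interpret(rho))
```

Average ranks matter for Likert data because ties are the norm: with only five response options, many students share the same value on every question.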

We completed Kruskal-Wallis tests, with Holm-adjusted p-values, using the R package ggstatsplot (version 0.12.4) to determine if response differences existed between semesters, generations, and career interests. Any significant Kruskal-Wallis test was followed by Dunn's test of multiple comparisons using the dunn.test package (version 1.3.6) to determine which semesters were significantly different from each other. We constructed visualizations of the Likert data using the likert R package (version 1.3.5).
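The Holm adjustment mentioned above can be sketched in a few lines. This is an illustration of the standard step-down procedure, not the ggstatsplot implementation the authors used; the function name and the example p-values are hypothetical.

```python
def holm_adjust(p_values):
    """Return Holm step-down adjusted p-values in the original order.

    The i-th smallest p-value (0-based rank i) is multiplied by (m - i),
    capped at 1, and forced to be non-decreasing along the sorted order.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        candidate = min(1.0, (m - rank) * p_values[idx])
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Hypothetical raw p-values from three pairwise comparisons.
raw = [0.01, 0.04, 0.03]
print(holm_adjust(raw))
```

Holm's procedure controls the family-wise error rate like the Bonferroni correction but is uniformly less conservative, which is helpful when many questions are compared across three semesters.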

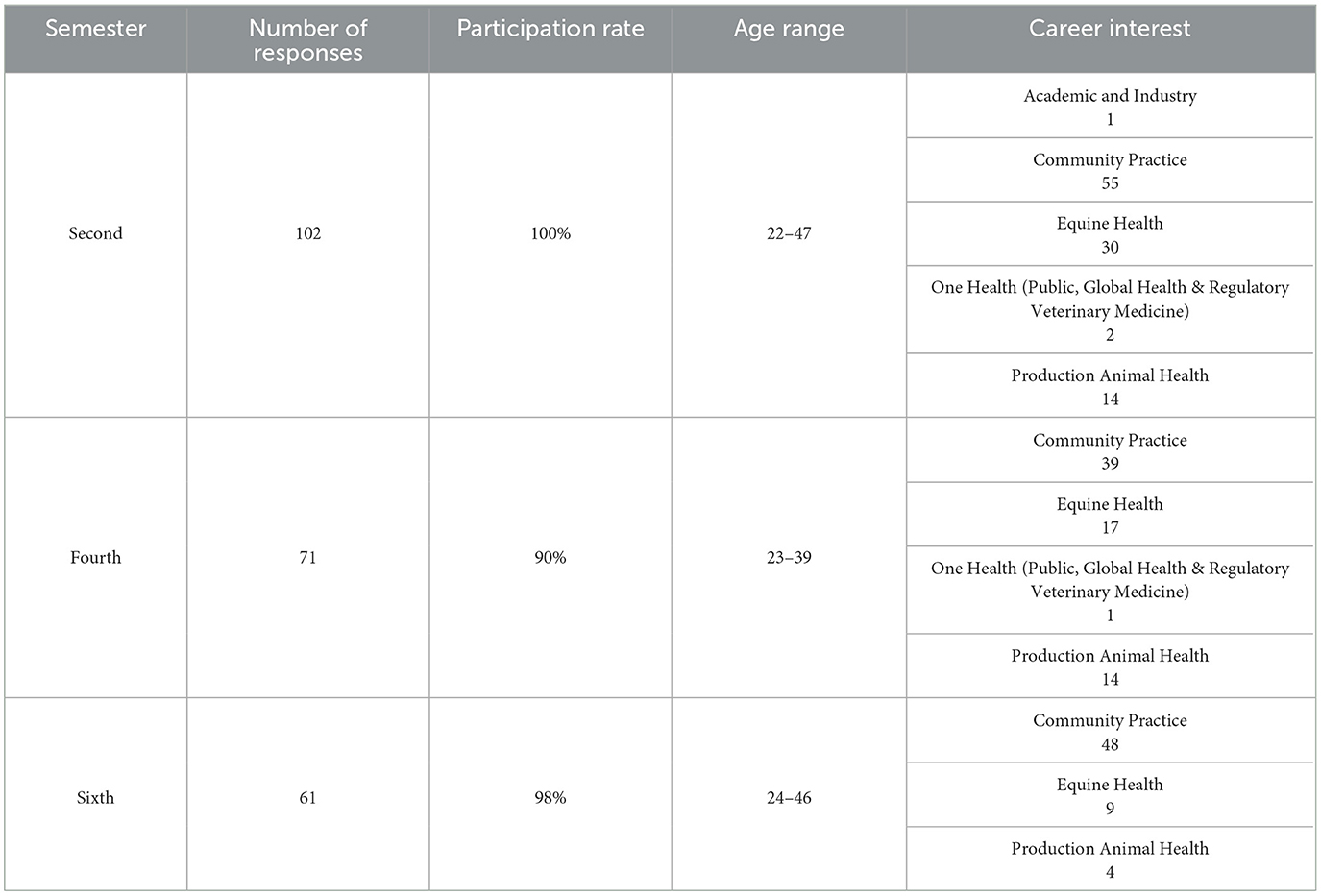

Table 5 shows the age range and career interest for the 237 students who agreed to participate in the study and completed the survey. One-hundred and thirty-eight students were identified as Gen Z, 51 as Millennials, and 2 as Gen X. One student did not report their age.

Table 5. Number of complete survey responses, participation rate, age range, and career interest by semester.

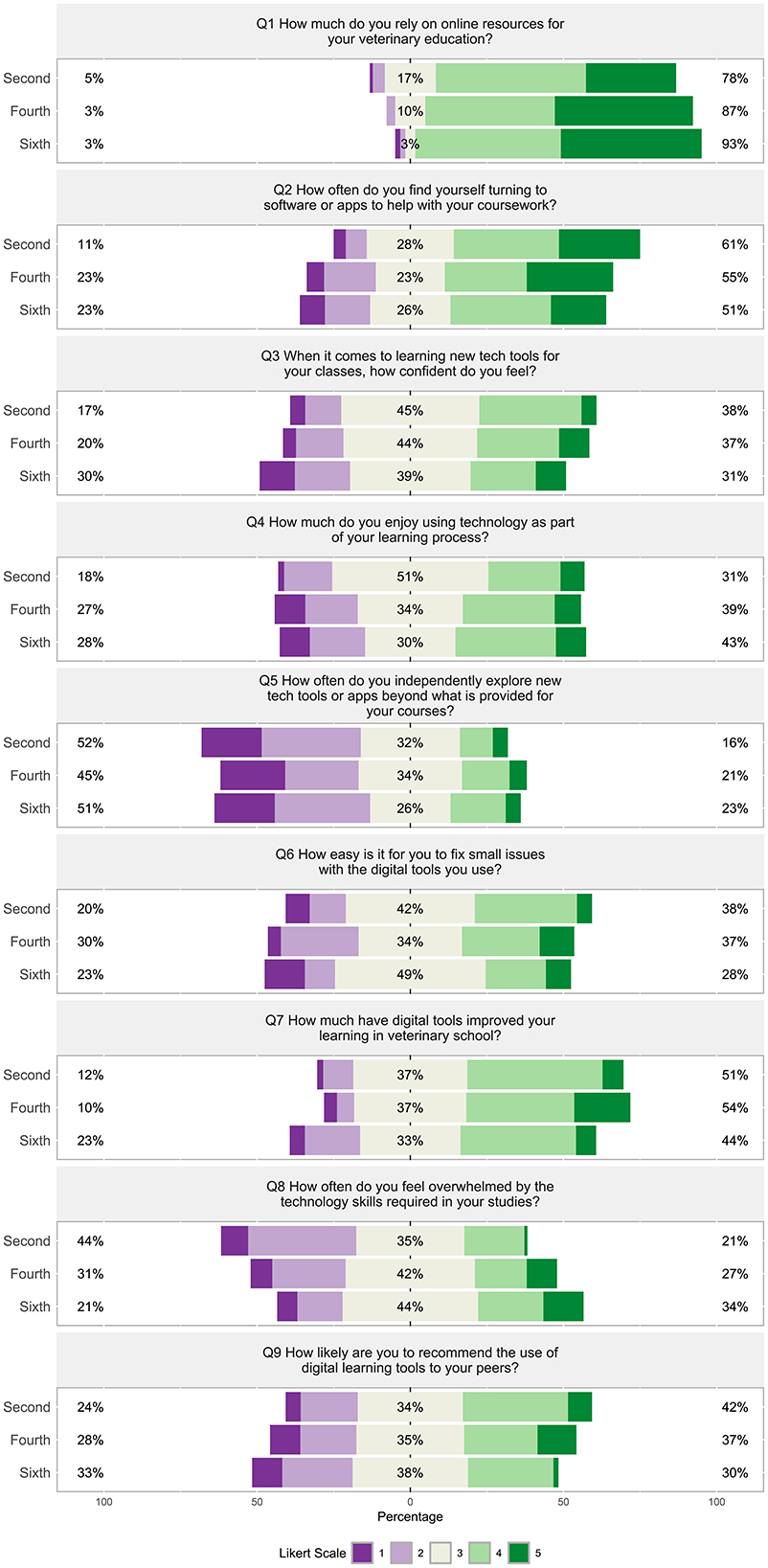

Across all semesters, students reported relying on online resources “often” or “all the time” (78%−93%), while relying less often upon software, apps, and new tech tools for their coursework (Figure 7, Q2). Forty-five to 52% responded that they “rarely” to “never” independently explore new tech tools or apps beyond what is provided in the course (Figure 7, Q5). However, 33%−37% of students felt digital tools moderately improved their learning, with 44%−55% reporting that digital tools improved their learning by “quite a bit” to a “great deal” (Figure 7, Q7). Forty-four percent of second semester students were “almost never” to “rarely” overwhelmed by the technology skills required, compared to 31% and 21% of fourth and sixth semester students, respectively (Figure 7, Q8).

Figure 7. (Q1–Q9): Visualization of the distribution of student responses across different semesters using the Likert scale for responding to questions in Section 1: technology and education survey section. Percentages on the left are more negative responses (Likert scale value 1 and 2: e.g., “almost never” and “very difficult”). The middle section shows the percentage of neutral responses (Likert scale value 3). Percentages on the right represent the more positive responses (Likert scale value 4 and 5: e.g., “all the time” and “a great deal”). Table 1 displays the Likert response options.
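The left, middle and right percentages described in the caption come from collapsing the five response values into three groups. The sketch below shows that grouping under the caption's coding (1 and 2 negative, 3 neutral, 4 and 5 positive); the function name and the example responses are hypothetical, and the authors produced their figures with an R package rather than this code.

```python
from collections import Counter


def likert_summary(responses):
    """Percentage of negative (1-2), neutral (3) and positive (4-5) responses.

    Assumes a non-empty list of integers coded 1..5, as in the figure caption.
    """
    counts = Counter(responses)
    n = len(responses)
    negative = counts[1] + counts[2]
    neutral = counts[3]
    positive = counts[4] + counts[5]
    return {
        "negative": 100 * negative / n,
        "neutral": 100 * neutral / n,
        "positive": 100 * positive / n,
    }


# Hypothetical responses from ten students to a single question.
summary = likert_summary([1, 2, 3, 4, 5, 5, 4, 4, 3, 1])
print(summary)
```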

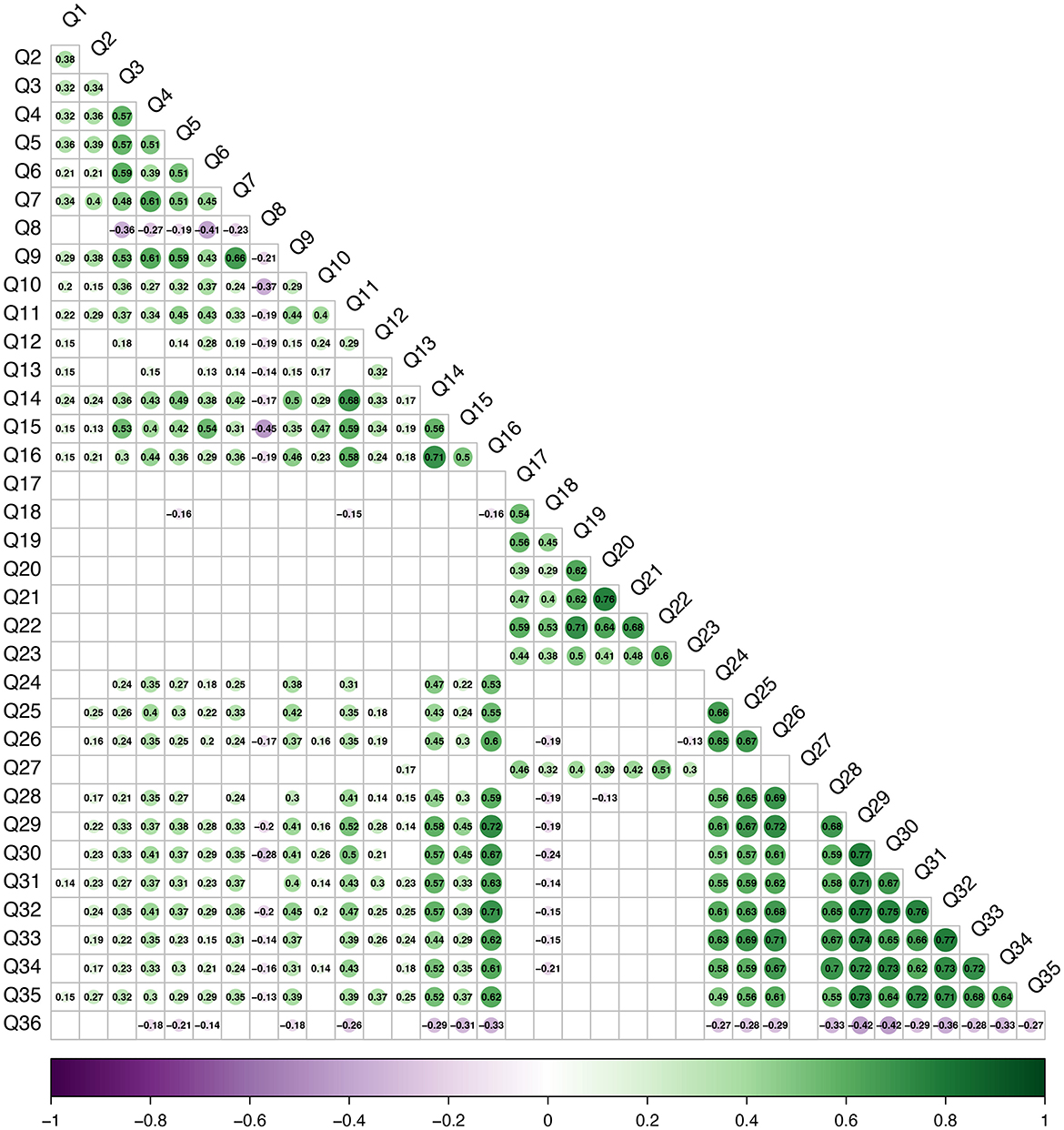

Questions 1 through 7 were weakly (ρ = 0.21) to moderately (ρ = 0.61) positively correlated (Figure 8, Q1–Q7). Question 8, which focused on students feeling overwhelmed by the technology skills, was weakly (ρ = −0.19) to moderately (ρ = −0.41) negatively correlated with questions 3 through 7 (Figure 8, Q8 and Q3–Q7). Weak (ρ = 0.29) to moderate (ρ = 0.66) positive correlations were observed between question 9, focusing on the use of digital learning tools, and questions 1 through 7 (Figure 8, Q9 and Q1–Q7). Figure 8 shows that question 8, “How often do you feel overwhelmed by the technology skills required in your studies?” was weakly (ρ = −0.14) to moderately (ρ = −0.45) negatively correlated with questions in all other sections of the survey. Question 5, “How often do you independently explore new tech tools or apps beyond what is provided for your course?” was weakly negatively correlated with the student's level of engagement during simulated client encounters (Figure 8, Q5 and Q18, ρ = −0.16) and with the student's concern about the privacy/security of their data (Figure 8, Q5 and Q36, ρ = −0.21). Figure 8 displays all significant correlations (p < 0.05) for all questions in the survey.

Figure 8. Statistically significant (p < 0.05) Spearman's correlation coefficients. The circle size and color scale indicate the strength of the correlation coefficient with purple representing negative coefficients and green indicating positive correlation coefficients.

Students across all semesters ranged from “not at all familiar” to “very familiar” with the term “Artificial Intelligence” (AI) (Figure 9, Q10). The majority of students (75%−80%) “almost never” or “rarely” use or interact with AI tools in any context (Figure 9, Q11) and 65%−72% were “not very likely” or only “slightly likely” to explore AI tools on their own for personal or educational purposes (Figure 9, Q14). When students used AI-driven tools, around half (47%−56%) found them “not at all easy” or “slightly easy” to understand and utilize (Figure 9, Q15), and most students (66%−84%) were “not at all interested” or “slightly interested” in learning more about AI and its applications in veterinary medicine (Figure 9, Q16). Sixty-two to 68% of students reported that AI is “often” to “all the time” involved in everyday technology (Figure 9, Q12) and the majority of students (85%−96%) “often” or “very often” noticed features like predictive text on their phones (Figure 9, Q13).

Figure 9. (Q10–Q16): Visualization of the distribution of student responses across different semesters using the Likert scale for responding to questions in Section 2: experience with AI and GPT survey section. Percentages on the left are more negative responses (Likert scale value 1 and 2: e.g., “almost never” and “not at all easy”). The middle section shows the percentage of neutral responses (Likert scale value 3). Percentages on the right represent the more positive responses (Likert scale value 4 and 5: e.g., “very familiar” and “very likely”). Table 2 displays the Likert response options.

Students' opinions about exploring AI tools strongly correlated to their interest in learning more about AI or its applications in veterinary medicine (Figure 8, Q14 and Q16, ρ = 0.71), and were moderately correlated with how often they use or interact with AI tools (Figure 8, Q14 and Q11, ρ = 0.68) and how easy they found them to understand and use (Figure 8, Q14 and Q15, ρ = 0.56). A moderate negative correlation was found between how easy it was for students to understand and use AI-driven tools and how often they were overwhelmed by the technology skills required in their studies (Figure 8, Q15 and Q8, ρ = −0.45). The question, “Rate your interest in learning more about AI and its applications in veterinary medicine” strongly correlated with the questions from Section 4, “Do you think AI can help personalize your veterinary learning experience?” (Figure 8, Q16 and Q29, ρ = 0.72) and “How willing are you to experiment with AI tools in your learning process?” (Figure 8, Q16 and Q32, ρ = 0.71).

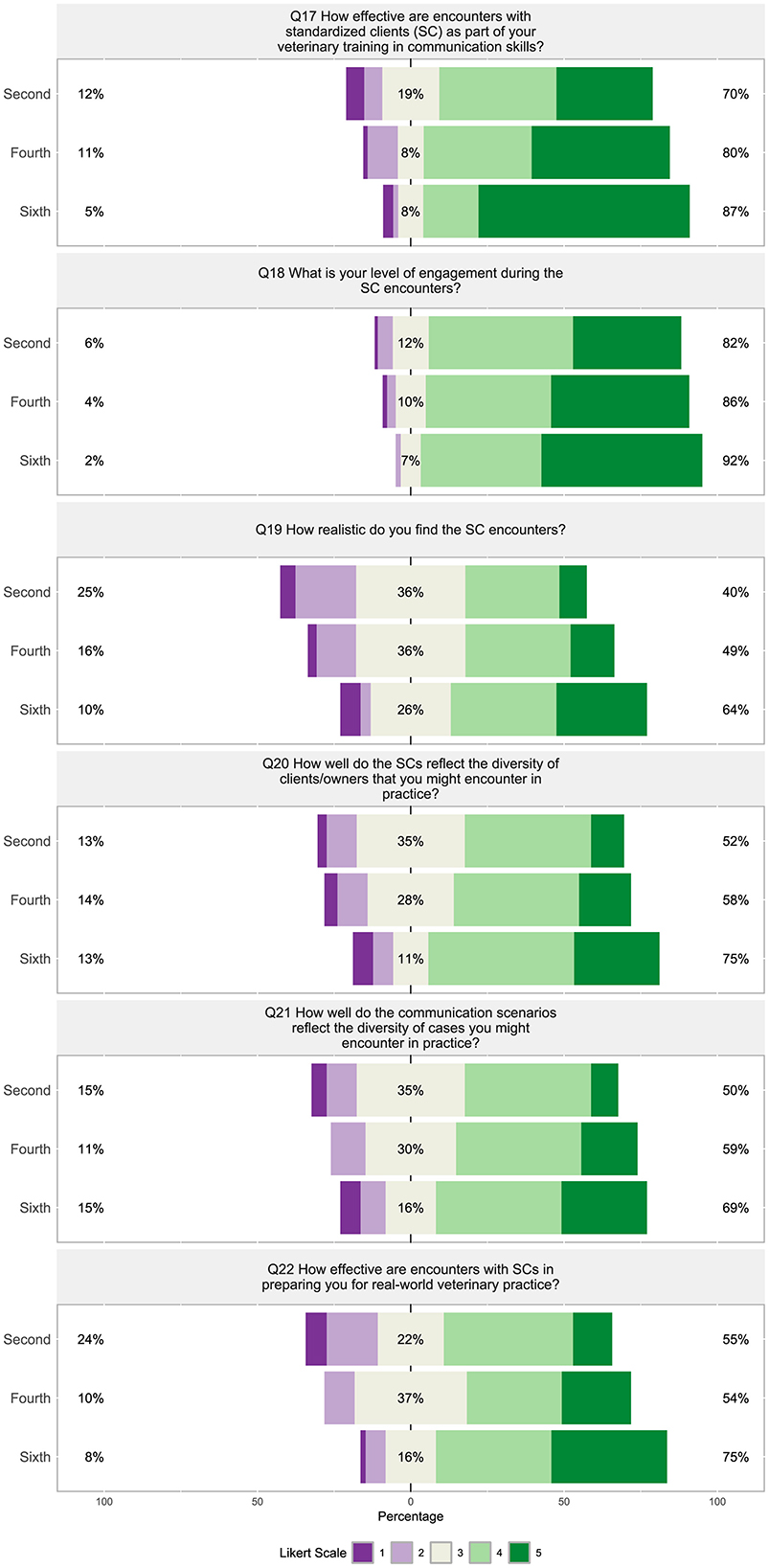

Students were overall positive toward SC encounters, with 70%−87% believing the encounters were “effective” to “very effective” (Figure 10, Q17), 82%−92% being “quite engaged” to “very engaged” (Figure 10, Q18), 59%−89% being “confident” to “very confident” in handling situations in real life (Figure 11, Q23), and 73%−82% believing feedback from instructors during SC encounters was “beneficial” to “very beneficial” (Figure 11, Q27). Overall, students felt SC encounters were “moderately realistic” (26%−36%) to “quite a bit realistic” or “very realistic” (40%−64%), and 52%−75% believed that the diversity of TTU SCs reflected the diversity of clients/owners that would be encountered in practice “quite a bit” to “very well” (Figure 10, Q19 and Q20). Students also agreed “quite a bit” to “a great deal” (50%−69%) that the communication scenarios were reflective of the breadth of cases they would encounter in practice (Figure 10, Q21).

Figure 10. (Q17–Q22): Visualization of the distribution of student responses across different semesters using the Likert scale for responding to questions 17 to 22 in Section 3: standardized clients survey section. Percentages on the left are more negative responses (Likert scale value 1 and 2: e.g., “not effective” and “not at all realistic”). The middle section shows the percentage of neutral responses (Likert scale value 3). Percentages on the right represent the more positive responses (Likert scale value 4 and 5: e.g., “very effective” and “very realistic”). Table 3 displays the Likert response options.

Figure 11. (Q23–Q28): Visualization of the distribution of student responses across different semesters using the Likert scale for responding to questions 23 to 28 in Section 3: standardized clients survey section. Percentages on the left are more negative responses (Likert scale value 1 and 2: e.g., “not effective” and “not at all realistic”). The middle section shows the percentage of neutral responses (Likert scale value 3). Percentages on the right represent the more positive responses (Likert scale value 4 and 5: e.g., “very effective” and “very realistic”). Table 3 displays the Likert response options.

Students were much less positive about technology within the veterinary communications program, with 65%−80% “not at all likely” to “slightly likely” to recommend the use of technologically advanced simulations (Figure 11, Q24), 62%−72% believing that technology can “not at all” or “a little” enhance SC encounters (Figure 11, Q25), 76%−87% being “not at all” to “slightly interested” in more technology-enhanced simulations like virtual reality being incorporated into communication training (Figure 11, Q26), and 76% to 84% believing that feedback from AI during the SC encounter would be “not beneficial” to “only slightly beneficial” (Figure 11, Q28).

A strong correlation was found between how students viewed the realism of SC encounters and how effective they considered the encounters for preparing them for real-world veterinary practice (Figure 8, Q19 and Q22, ρ = 0.71). Similarly, how students viewed the diversity of SCs compared to the diversity of clients/owners they may encounter in practice strongly correlated with how well the communication scenarios reflected the diversity of cases they may encounter in practice (Figure 8, Q20 and Q21, ρ = 0.76). The level of students' engagement was weakly negatively correlated with how interested they were in technology-enhanced simulations like virtual reality (Figure 8, Q18 and Q26, ρ = −0.19) and how beneficial they considered feedback from AI during an SC encounter (Figure 8, Q18 and Q28, ρ = −0.19). Questions surrounding the effectiveness (Q17, Q22) and realism (Q19) of SC encounters did not significantly correlate with questions about technology (Q24–Q26) and AI feedback (Q28) within the communications program (Figure 8). Question 18, “What is your level of engagement during the SC encounters?” did not significantly correlate with the use of technology in simulations (Q24) or with whether technology can enhance SC encounters (Q25). Figure 8 shows that the questions in this section correlated inconsistently, from weakly to moderately, with the other sections of the survey (p < 0.05).

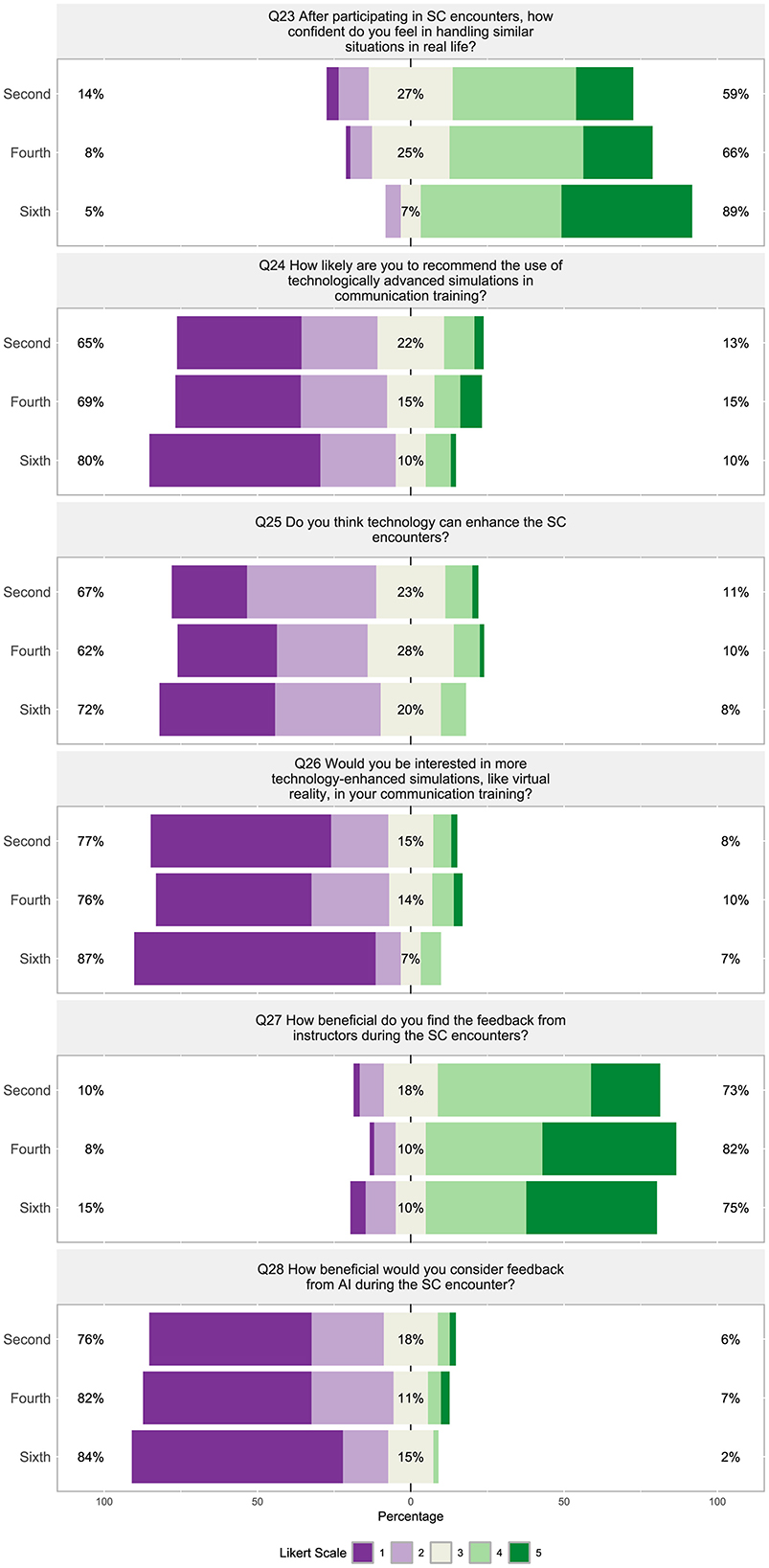

Overall, students appear to have a negative sentiment about AI integration in their veterinary program. Sixty-seven to 82% of students felt AI could “not at all” or only to “a small extent” personalize their learning experience (Figure 12, Q29) and 69%−82% were “not at all willing” or “slightly willing” to experiment with AI tools in their learning processes (Figure 12, Q32). Seventy-five to 82% of students were “not comfortable” to only “a little comfortable” with the idea of AI-assisted learning (Figure 12, Q30), while 63%−75% felt AI would “only slightly” or “not at all” change the way they learn in veterinary school (Figure 12, Q31). Students were not open to AI providing feedback on their communication skills, with 79%−87% “not very comfortable” or “slightly comfortable” with this possibility (Figure 12, Q34), while concurrently 72%−90% felt AI could “not at all” or “a little” help improve their communication skills across veterinary contexts/disciplines (Figure 12, Q33). Sixty to 82% believed AI will “not at all” or “only slightly” play an important role in the future of veterinary education (Figure 12, Q35). Nearly two-thirds of students (65%−69%) were “quite a bit” or “a great deal” concerned about the privacy and security associated with their data when using AI tools (Figure 12, Q36).

Figure 12. (Q29–Q36): Visualization of the distribution of student responses across different semesters using the Likert scale for questions in Section 4 of the survey: expectations and concerns about AI integration in veterinary education and communication. Percentages on the left are the more negative responses (Likert scale values 1 and 2: e.g., “not at all willing” and “not at all”). The middle section shows the percentage of neutral responses (Likert scale value 3). Percentages on the right represent the more positive responses (Likert scale values 4 and 5: e.g., “a great extent” and “very significant”). Table 4 displays the Likert response options.
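The grouping described in the caption above (values 1 and 2 as negative, 3 as neutral, 4 and 5 as positive) can be illustrated with a minimal Python sketch. This is not the authors' R code; the function name `collapse_likert` and the example responses are hypothetical and serve only to show the arithmetic.

```python
from collections import Counter


def collapse_likert(responses):
    """Collapse 1-5 Likert responses into negative/neutral/positive percentages."""
    n = len(responses)
    if n == 0:
        raise ValueError("at least one response is required")
    counts = Counter(responses)
    negative = counts[1] + counts[2]   # Likert values 1 and 2
    neutral = counts[3]                # Likert value 3
    positive = counts[4] + counts[5]   # Likert values 4 and 5
    return {
        "negative": 100.0 * negative / n,
        "neutral": 100.0 * neutral / n,
        "positive": 100.0 * positive / n,
    }


# Hypothetical responses from ten students to a single question.
example_responses = [1, 1, 2, 2, 2, 3, 3, 4, 5, 2]
summary = collapse_likert(example_responses)
print(summary)
```

Applying the function separately to each semester's responses would give the three percentages shown per bar in a figure of this kind.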

Nearly all the questions in section four were moderately (ρ = 0.55) to strongly (ρ = 0.77) significantly correlated with each other (Figure 8, Q29 through Q35), except question 36, “Are you concerned about the privacy and/or security of your data when using AI tools?” This question about privacy and/or security was moderately, negatively correlated with “Do you think AI can help personalize your veterinary learning experience?” (Figure 8, Q36 and Q29, ρ = −0.42), “What is your level of comfort with the idea of AI-assisted learning?” (Figure 8, Q36 and Q30, ρ = −0.42), and weakly correlated with the other questions (Figure 8, Q31–Q35). Question 29, “Do you think AI can help personalize your veterinary learning experience?” and question 32, “How willing are you to experiment with AI tools in your learning process?” strongly correlated to question 16, “Rate your interest in learning more about AI and its applications in veterinary medicine?” (Figure 8, Q29 and Q16, ρ = 0.71; Q32 and Q16, ρ = 0.72).
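For readers less familiar with the ρ values reported here, the following is a minimal Python sketch of a Spearman rank correlation between two Likert items, computed as the Pearson correlation of tie-averaged ranks. It is an illustration only, not the authors' R analysis; the function names and the example responses are hypothetical.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; undefined (division by zero) if x or y is constant."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of the tie-averaged ranks."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical paired Likert responses from eight students to two questions.
item_a = [1, 2, 2, 3, 4, 4, 5, 5]
item_b = [1, 1, 2, 3, 3, 4, 4, 5]
print(round(spearman_rho(item_a, item_b), 2))
```

Because Likert items have only five possible values, ties are common, which is why the ranks are averaged within each tied group.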

Out of the 36 questions, student Likert responses were statistically different by semester on 16 questions.
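The by-semester comparisons are reported as Kruskal-Wallis tests. As a rough illustration of that test for three groups, here is a minimal Python sketch with a tie correction. It is not the authors' R code, the function name and data are hypothetical, and the closed-form p-value exp(−H/2) applies only to the three-group case (chi-square with 2 degrees of freedom).

```python
import math
from collections import Counter


def kruskal_wallis_three_groups(group_a, group_b, group_c):
    """Return (H, p) for a tie-corrected Kruskal-Wallis test on three groups."""
    groups = [group_a, group_b, group_c]
    pooled = [value for group in groups for value in group]
    n_total = len(pooled)

    # Average rank of each distinct value in the pooled sample.
    counts = Counter(pooled)
    rank_of = {}
    below = 0
    for value in sorted(counts):
        rank_of[value] = below + (counts[value] + 1) / 2.0
        below += counts[value]

    # H statistic from the rank sums of each group.
    weighted = sum(sum(rank_of[v] for v in g) ** 2 / len(g) for g in groups)
    h = 12.0 / (n_total * (n_total + 1)) * weighted - 3.0 * (n_total + 1)

    # Tie correction; it is zero only if every response is identical.
    tie_term = sum(c ** 3 - c for c in counts.values())
    correction = 1.0 - tie_term / (n_total ** 3 - n_total)
    if correction == 0:
        raise ValueError("all responses are identical; the test is undefined")
    h = max(h / correction, 0.0)  # guard against tiny negative rounding error

    # Chi-square survival function with 2 degrees of freedom (three groups only).
    p_value = math.exp(-h / 2.0)
    return h, p_value


# Hypothetical Likert responses for three semesters.
second = [4, 4, 5, 3, 4, 5]
fourth = [3, 4, 3, 4, 2, 3]
sixth = [1, 2, 2, 1, 3, 2]
h_stat, p_val = kruskal_wallis_three_groups(second, fourth, sixth)
print(round(h_stat, 2), round(p_val, 4))
```

A significant overall result only indicates that at least one semester differs; pairwise follow-up comparisons with a multiple-testing adjustment identify which ones.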

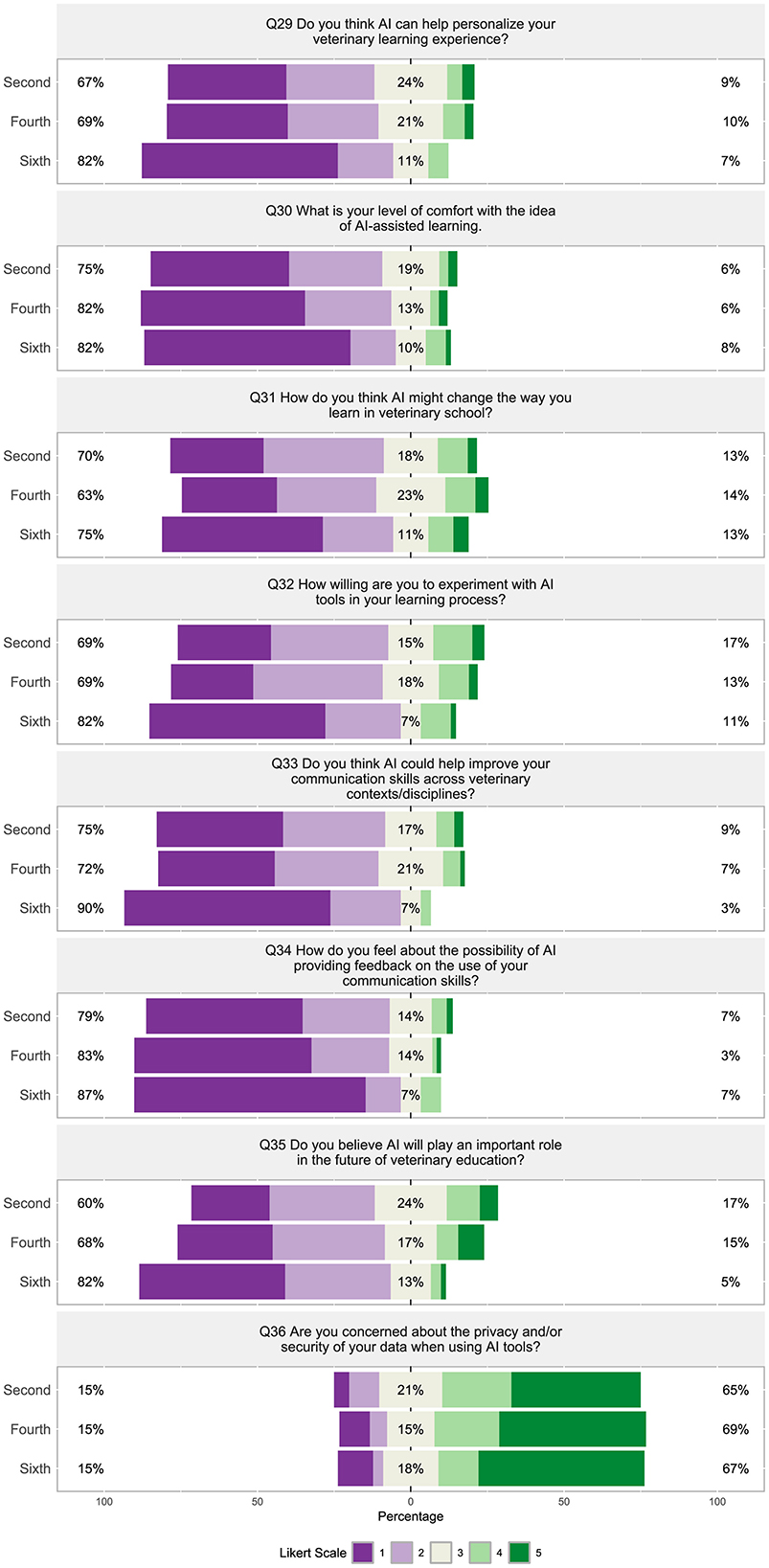

In response to the question “How much do you rely on online resources for your veterinary education?”, second semester students showed significantly different viewpoints compared to fourth and sixth semester students (Holm-adjusted p < 0.05) (Figure 13A, Q1). Additionally, second semester students significantly differed in their response to the question “How often do you feel overwhelmed by the technology skills required for your studies?” compared to fourth and sixth semester students (Holm-adjusted p < 0.01), with more second semester students reporting they are “rarely” overwhelmed by the required technological skills (Figure 13B, Q8).

Figure 13. Violin plots of the Kruskal-Wallis test results for [(A), Q1] “How much do you rely on online resources for your veterinary education?”, [(B), Q8] “How often do you feel overwhelmed by the technology skills required in your studies?”, [(C), Q16] “Rate your interest in learning more about AI and its applications in veterinary medicine.”, [(D), Q17] “How effective are encounters with standardized clients (SC) as part of your veterinary training in communication skills?” by semester. Significant findings between semesters are denoted by a line with the reported Holm-adjusted p-values.
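The Holm adjustment mentioned in the caption above controls the family-wise error rate across the pairwise semester comparisons. A minimal Python sketch of the step-down procedure follows. It is illustrative only: the raw p-values are hypothetical, and the source does not state which pairwise test produced the unadjusted values.

```python
def holm_adjust(p_values):
    """Return Holm-adjusted p-values in the same order as the input."""
    m = len(p_values)
    # Indices of the p-values from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for position, index in enumerate(order):
        # The smallest p-value is multiplied by m, the next by m - 1, and so on.
        candidate = min(1.0, (m - position) * p_values[index])
        # Enforce monotonicity so adjusted values never decrease with rank.
        running_max = max(running_max, candidate)
        adjusted[index] = running_max
    return adjusted


# Hypothetical raw p-values for the three pairwise semester comparisons:
# second vs. fourth, second vs. sixth, fourth vs. sixth.
raw = [0.01, 0.04, 0.03]
print(holm_adjust(raw))  # approximately [0.03, 0.06, 0.06]
```

In this example only the first comparison would remain significant at the 0.05 level after adjustment, although all three raw values were below 0.05.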

Sixth semester students were significantly less interested in learning more about AI and its applications in veterinary medicine compared to students in the second and fourth semester (Holm-adjusted p < 0.05, Figure 13C, Q16).

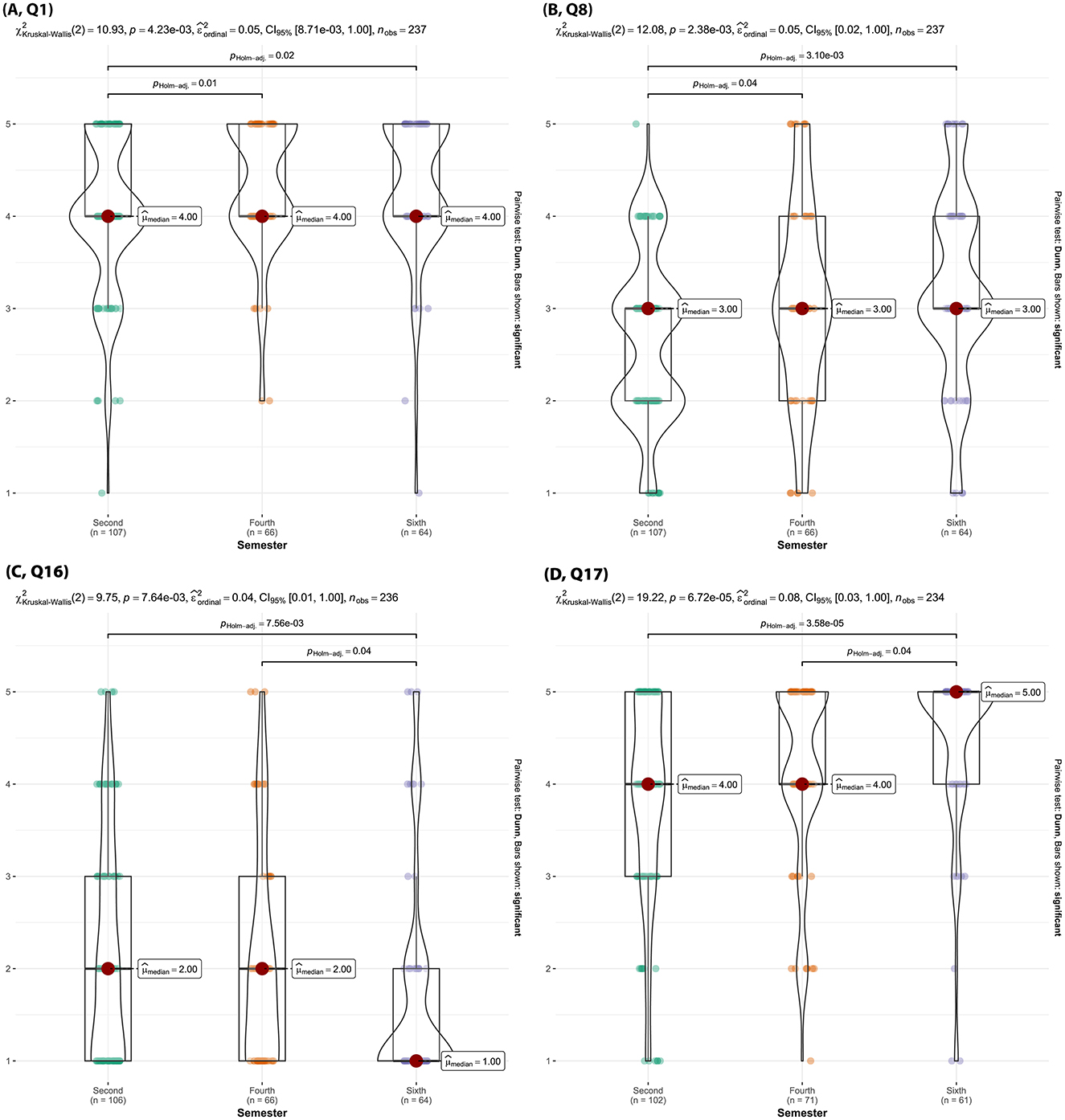

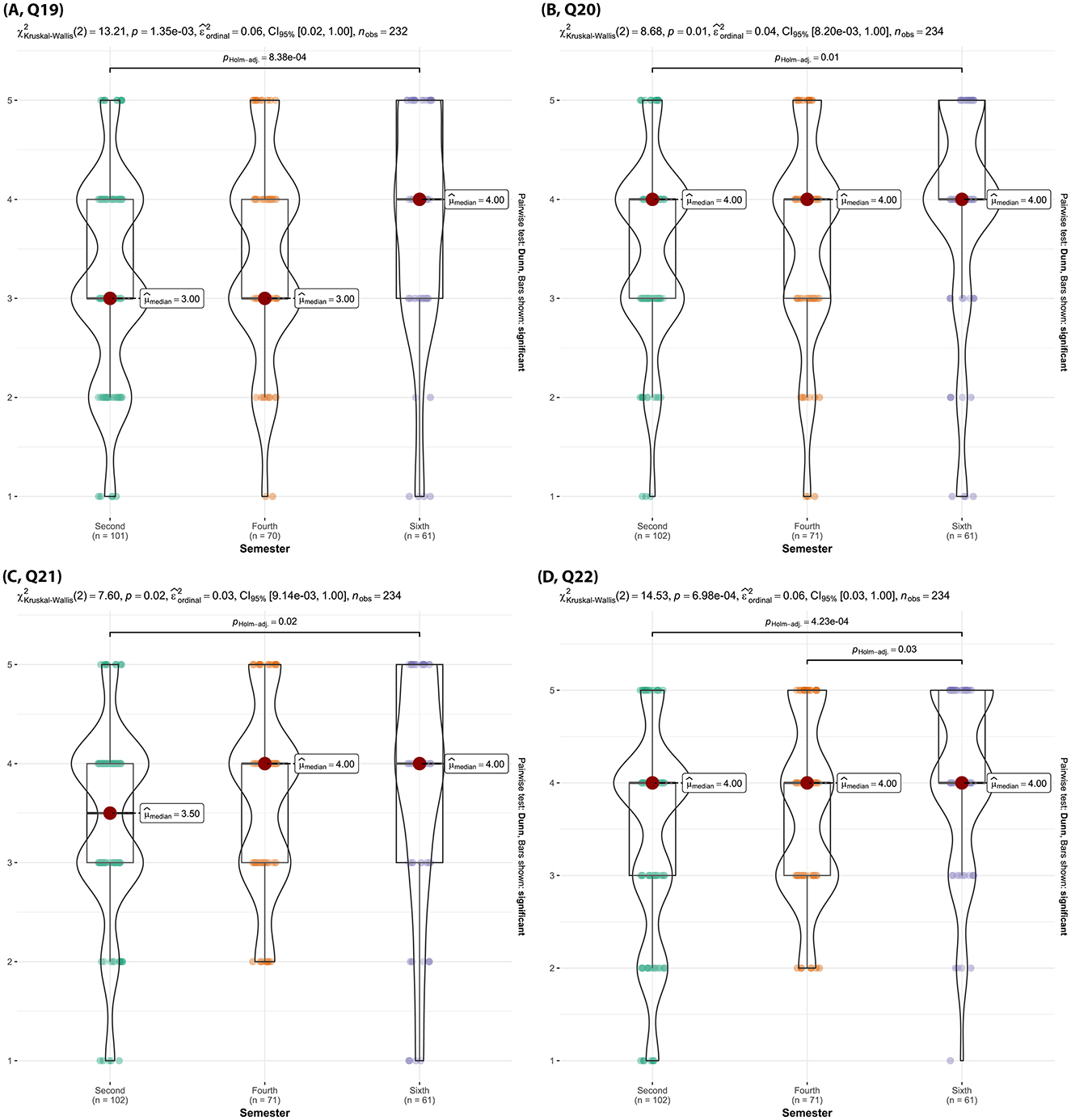

Sixth semester students rated encounters with SCs as “effective” to “very effective” significantly more often than second semester and fourth semester students did (Holm-adjusted p < 0.04) (Figure 13D, Q17). Sixth semester students also felt SC encounters were “quite a bit” realistic, whereas second semester students felt they were “moderately” realistic (Holm-adjusted p < 0.01), with fourth semester students' perceptions aligning more closely with those of the sixth semester (Figure 14A, Q19). Sixth semester students also believed more strongly than second semester students that TTU's SCs reflected the diversity of clients/owners, and that the communication scenarios reflected the diversity of cases, they would encounter in practice (Holm-adjusted p = 0.02) (Figures 14B, C, Q20 and Q21). Moreover, sixth semester students felt SC encounters were significantly more effective at preparing them for real-world veterinary practice compared to second semester and fourth semester students (Holm-adjusted p < 0.01, Figure 14D, Q22).

Figure 14. Violin plots of the Kruskal-Wallis test results for [(A), Q19] “How realistic do you find the SC encounters?”, [(B), Q20] “How well do the SCs reflect the diversity of clients/owners that you might encounter in practice?”, [(C), Q21] “How well do the communication scenarios reflect the diversity of cases you might encounter in practice?”, [(D), Q22] “How effective are encounters with SCs in preparing you for real-world veterinary practice?” by semester. Significant findings between semesters are denoted by a line with the reported Holm-adjusted p-values.

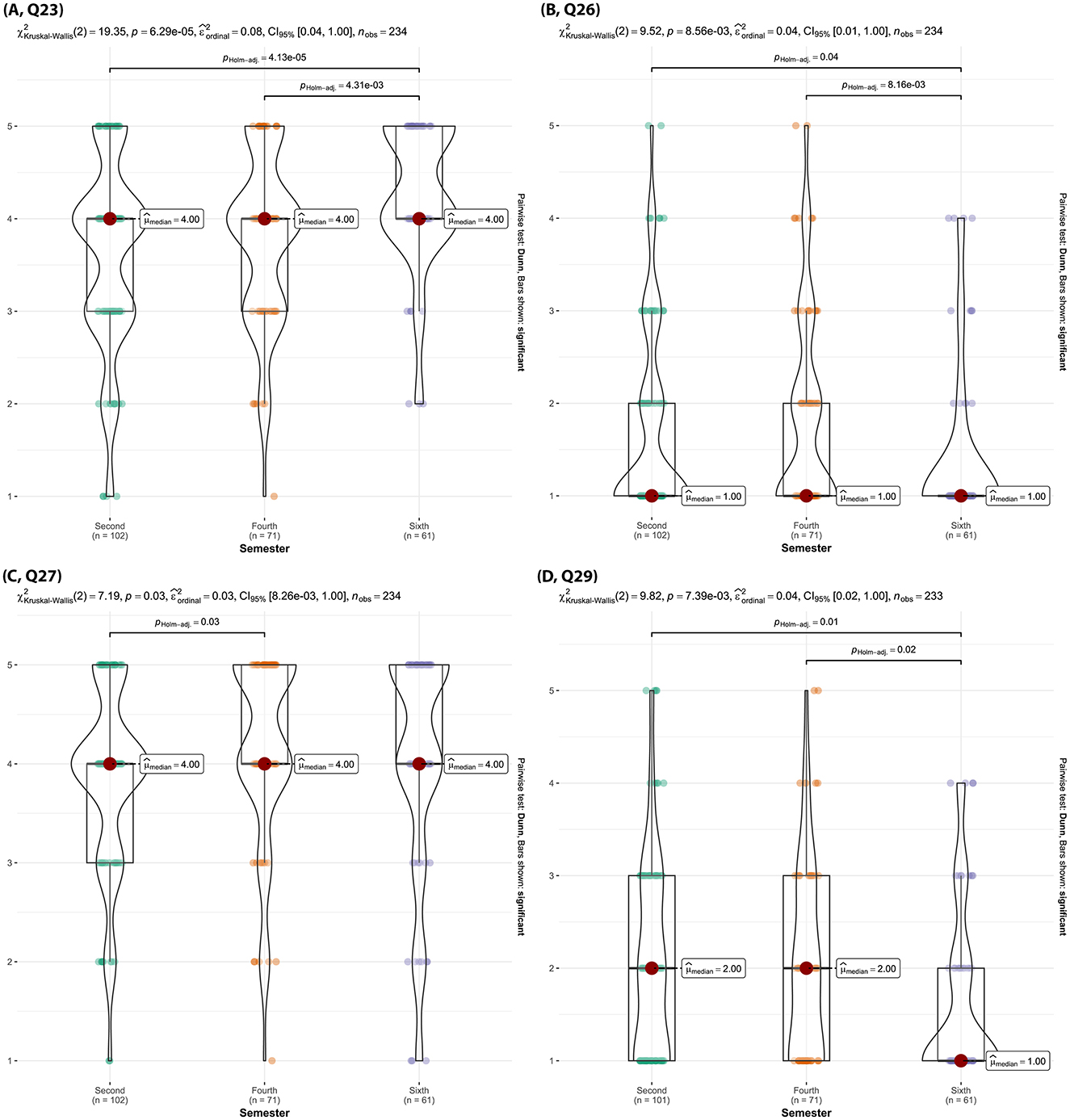

Second semester and fourth semester students felt significantly less confident in their ability to handle situations like those in the SC encounter scenarios compared to sixth semester students (Holm-adjusted p < 0.01, Figure 15A, Q23). Second semester and fourth semester students showed significantly greater interest in more technology-enhanced simulations like virtual reality compared to sixth semester students (Holm-adjusted p < 0.04, Figure 15B, Q26). Fourth semester students were significantly more positive than second semester students about the benefit of instructor feedback during SC encounters (Holm-adjusted p = 0.03, Figure 15C, Q27).

Figure 15. Violin plots of the Kruskal-Wallis test results for [(A), Q23] “After participating in SC encounters, how confident do you feel in handling similar situations in real life?”, [(B), Q26] “Would you be interested in more technology-enhanced simulations, like virtual reality, in your communication training?”, [(C), Q27] “How beneficial do you find the feedback from instructors during the SC encounters?”, [(D), Q29] “Do you think AI can help personalize your veterinary learning experience?” by semester. Significant findings between semesters are denoted by a line with the reported Holm-adjusted p-values.

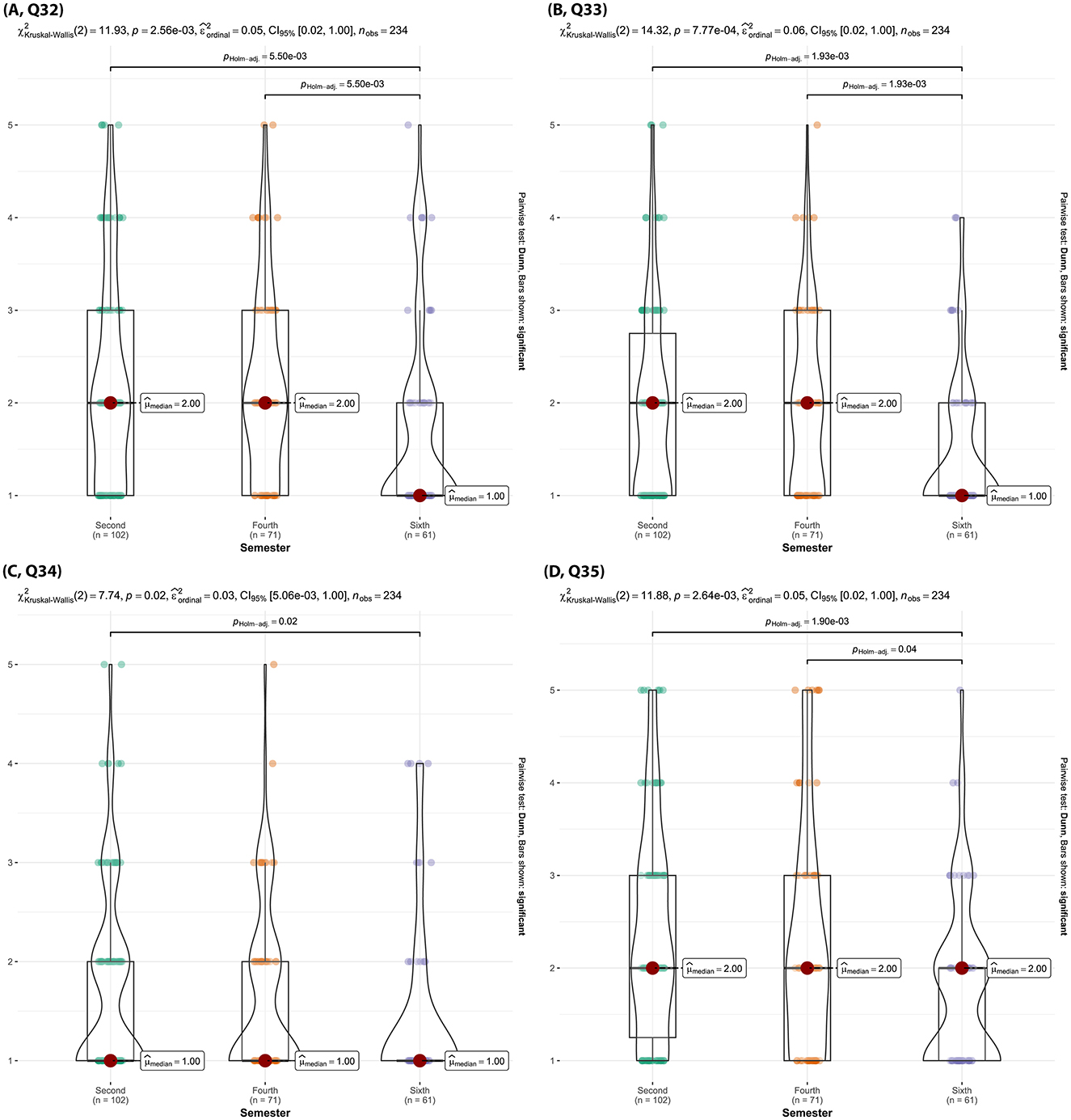

Sixth semester students were significantly more negative about AI's ability to personalize their veterinary learning experience compared to second semester students (Holm-adjusted p = 0.01, Figure 15D, Q29) and fourth semester students (Holm-adjusted p = 0.02, Figure 15D, Q29). Additionally, sixth semester students were significantly less willing to experiment with AI tools in their learning process (Figure 16A, Q32), less open to the idea that AI could help improve their veterinary communication skills (Figure 16B, Q33), and believed significantly less in AI's potential to play an important role in the future of veterinary education (Figure 16D, Q35) compared to second and fourth semester students (Holm-adjusted p < 0.05). Sixth semester students were also much less comfortable with the possibility of AI providing feedback on their communication skills compared to second semester students (Holm-adjusted p = 0.02, Figure 16C, Q34).

Figure 16. Violin plots of the Kruskal-Wallis test results for [(A), Q32] “How willing are you to experiment with AI tools in your learning process?”, [(B), Q33] “Do you think AI could help improve your communication skills across veterinary contexts/disciplines?”, [(C), Q34] “How do you feel about the possibility of AI providing feedback on the use of your communication skills?”, [(D), Q35] “Do you believe AI will play an important role in the future of veterinary education?” by semester. Significant findings between semesters are denoted by a line with the reported Holm-adjusted p-values.

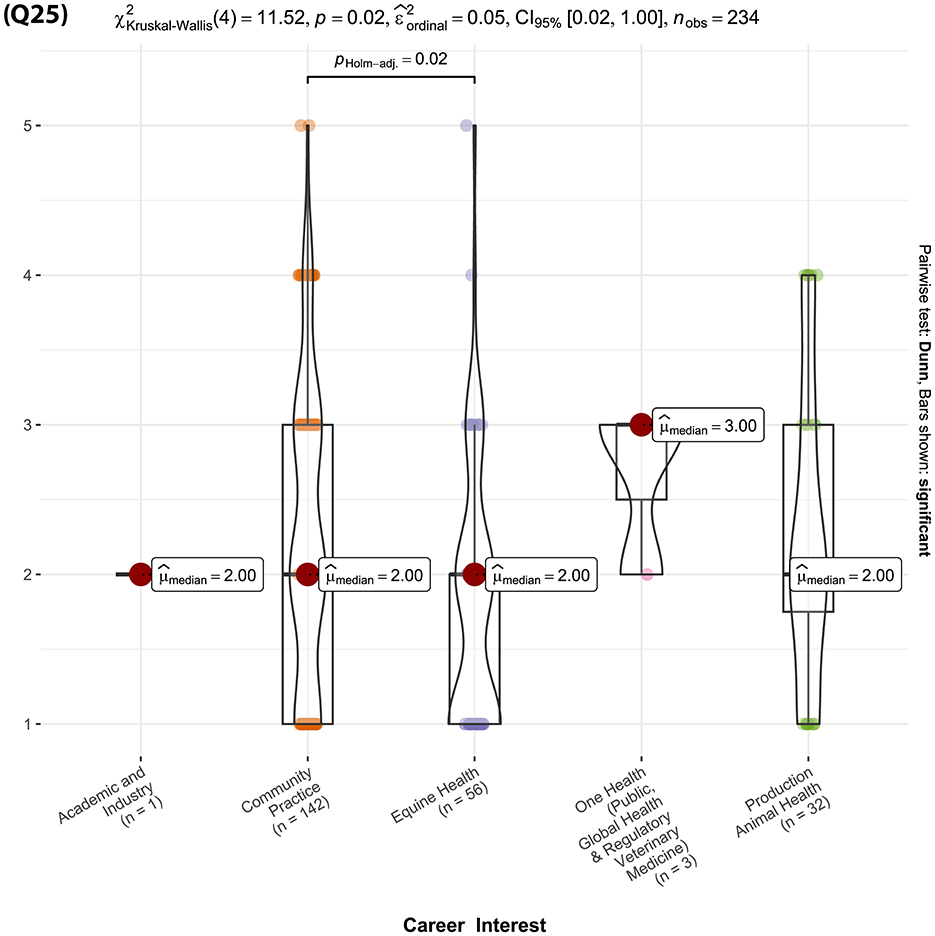

Out of the 36 survey questions, only one question, “Do you think technology can enhance the SC encounters?”, showed a significant difference between students planning to enter community practice and those planning to enter equine practice (Holm-adjusted p = 0.02, Figure 17, Q25). The responses of the single student interested in academia and industry were not included in the analysis.

Figure 17. (Q25): Violin plots of the Kruskal-Wallis test results for “Do you think technology can enhance the SC encounters?” by intended area of practice. Significant findings between students who plan to enter community practice and those planning to enter equine practice are denoted by a line with the reported Holm-adjusted p-values.

No significant differences were observed in the responses to any question among the Gen Z, Millennial, or Gen X generations.

Our study examined veterinary students' perceptions of AI-technology in veterinary education, particularly regarding the teaching, learning and practice of communication skills. As expected, given current enrollment trends, the majority of participants (58%) were members of Generation Z (born between 1995 and 2012), with 21.5% identifying as Millennials and a very small percentage (0.8%) belonging to Generation X. We recognize that generational differences in beliefs, attitudes, values, motivators and personality traits exist and appreciate that at the heart of generational changes lies the introduction and use of technology (37, 38). While Millennials typically engage with technology mainly for entertainment, Generation X is more information driven. In contrast, Generation Z, often described as “Millennials on steroids” or “iGenZ,” spends significant time daily in front of screens, communicates via emojis and short texts, and rapidly follows, adopts and becomes proficient with multiple technologies (39).

Our findings show no significant differences in veterinary students' perceptions surrounding the use of AI in veterinary medical education, and specifically for communication skills training, among the Gen Z, Millennial or Gen X generations. Despite the heavy use of online resources among veterinary students and about half of our student population reporting that digital tools improved their learning, most expressed little interest in learning more about AI. They also found AI-driven tools challenging to understand and unlikely to be useful for enhancing communication skills. Nearly two-thirds of the veterinary students shared concerns surrounding the privacy and security of their information/data, and these students felt most strongly that AI would not be able to personalize their veterinary learning experience.

There are several motivations for integrating technology into education, including increased accessibility, flexibility, and convenience, as well as improved learning outcomes, motivation, self-control, and interactivity among learners (40, 41). Consistent with studies on medical students' perceptions of technology and e-learning (42), most veterinary students in our study relied heavily on online resources. However, it was surprising to find that over 50% of students rarely explore new technological tools independently beyond what is provided in their courses. This may be related to their reported lack of confidence in learning new technologies. Outside of video tutorials on YouTube and other social media platforms (43), there is a general disinterest in using other types of technology during the learning process. Evidence suggests that educators often overestimate students' technical skills, overlooking their limited digital knowledge and abilities in advanced computing functions that may be required for coursework (44). Similarly, veterinary students frequently reported that they are unlikely to recommend the use of digital learning tools to their peers.

The growing utilization of AI in classrooms presents significant opportunities alongside potential challenges and risks in education (4, 45, 46). Modern AI-driven tools, such as Chatbots, are designed to enhance teaching and learning by engaging with users and providing sophisticated, tailored responses. ChatGPT, a generative pre-trained transformer model, leverages natural language processing (NLP) to interpret, engage, and generate human-like responses in real-time conversations. It can add new content, offer suggestions, respond to follow-up questions, identify errors, and even understand social and emotional cues and queries (47). For students, ChatGPT can provide feedback and assist with writing assignments, while it supports teachers by generating course content, syllabi, presentations, quizzes, and more (45). Additionally, in veterinary clinical practice, LLMs can compile data from unstructured veterinary records to help enhance patient outcomes and provide data in a format usable for animal disease surveillance (12). Our study findings demonstrate that veterinary students are generally aware of AI technology in everyday applications like social media and predictive text. However, while they are familiar with the term AI, they seldom use, interact with, or show interest in learning more about AI and its applications in veterinary education. These findings suggest that students may lack the technical skills to independently explore the use of technology and, concurrently, that they may not recognize the importance of understanding the use of AI in veterinary education and veterinary practice. Ethical considerations surrounding the use of such technologies in education and veterinary medicine necessitate clear guidelines to ensure acceptable and ethical usage, which are currently lacking in many institutions, potentially hindering the full use of AI (12, 47).

Small-group training that incorporates feedback and SCs is an effective approach to improving communication skills (22, 48). Likewise, students in our study reported that SC encounters are effective (69%−86%) and engaging (82%−91%) for practicing communication skills, resulting in enhanced confidence when handling similar encounters in practice (58%−86%). Furthermore, veterinary students found the SC encounters quite realistic (40%−64%), aligning with evidence supporting authenticity during client encounters (49, 50). Surprisingly, students in our study reported that SC encounters reflected the diversity that they may encounter in practice, yet evidence strongly delineates the need for expanding diversity across communication programs (51). A survey of AVMA-COE accredited institutions showed that the majority of SCs were primarily English speaking (77%), white (90.4%), non-Hispanic/Latinx (98.6%), female (57%), and over age 56 (64%) (51). SC demographics at TTU SVM are very similar, with 84% reporting as white, 78% as female, 100% as heterosexual, 10% and 89% as over age 30 and over age 56, respectively, and 98% as retired. Concurrently, our communication program explores cultural competence and exposes students to diverse contexts as well as a variety of cases across species, which may be contributing to a perceived adequate SC representation.

Our study reported that most veterinary students (66%−81%) found that technology, particularly technologically advanced simulation, cannot fully replace experiential practice with SCs. This finding aligns with results from studies that integrated technology using a blended approach (52, 53). There is mixed evidence about the use of emerging technologies. Some studies evaluated Virtual Patients (VP) and found their experience to be as effective as practicing with SCs, with VPs offering added visual and behavioral advantages (54, 55). However, other reports highlighted limitations when working with VPs. Unlike other studies, the majority (76%−88%) of veterinary students in our research showed little interest in technology-enhanced simulations, such as VPs, and emphasized the importance of expert feedback (73%−83%), while expressing little confidence in the benefits of AI-generated feedback (76%−84%). Other studies noted that both instructors and students appreciated AI feedback but acknowledged the importance of prioritizing instructor feedback generated before AI feedback. Additionally, students recognized the limitations of AI in interpreting non-verbal communication, attitudes and beliefs which can be complex (30).

Study results indicated that sixth-semester students reported a greater appreciation for and understanding of experiential learning with SCs when practicing communication skills. They recognized the effectiveness, realism, and diversity in these interactions, which reflected the challenges that they would face in clinical practice. This exposure gave them the confidence to handle similar situations, more so than students in earlier semesters, particularly those in semester two. Research evidence supports that senior students acknowledged effective communication as a core clinical competency for a successful veterinary career, highlighting the importance of communication skills training during both pre-clinical and clinical curricula (56, 57). In contrast, previous studies have shown that first-year students often report inflated levels of communication competence (58), with perhaps less appreciation for the necessity of communication skills training, which may also explain the views of our second semester students.

Interestingly, despite growing evidence of veterinary students' acceptance of AI in veterinary medicine (59), sixth-semester students expressed a strong lack of interest and reluctance toward using emerging technologies for communication training, particularly regarding the use of AI. Studies involving medical students and professionals have reported similar concerns, with fears that AI could replace physicians and lead to new professional liabilities. This personal and professional apprehension has contributed to a resistance to exploring AI's potential in academic and clinical settings (60). Our sixth-semester students informally shared that they were opposed to AI based on fears that AI would potentially replace the use of SCs in teaching and learning communication skills.

The fear of AI replacing SCs may have greatly contributed to the strong lack of interest and reluctance in incorporating AI into their veterinary education and veterinary communication training. Our sixth semester students began their veterinary education prior to the advent of readily accessible LLMs like ChatGPT-3.5, released in November 2022, and only recently have specialized LLM veterinary tutors begun to be developed. This suggests that the fears of replacement of SCs may be based on a lack of exposure to AI as an educational tool. This is supported by second semester students being more interested in technology-enhanced simulations and having AI tutor tools such as VetClinPathGPT, https://chatgpt.com/g/g-rfB5cBZ6X-vetclinpathgpt, available for use while studying for their courses.

The findings of this study reflect the perspectives of veterinary students at Texas Tech University School of Veterinary Medicine and are not fully generalizable to other veterinary institutions. The lack of prior experience with AI integration in the curriculum may have heightened skepticism among students, compounded by unclear guidelines surrounding AI use, concerns about the authenticity of their work, and perceived risks associated with this technology. While the study effectively captured concerns about data privacy, it was not designed to investigate or propose solutions to these issues. A broader, multi-institutional approach that compares student responses across academic years and institutions could provide a more comprehensive understanding of perceptions related to AI in veterinary communication, particularly in communication skills training. Future qualitative studies could explore more deeply the underlying reasons for negative attitudes and clarify institutional strategies to address data privacy concerns, reduce apprehensions, and support the thoughtful adoption of AI in veterinary education and training.

This study integrated artificial intelligence (AI) into the training of veterinary communication skills, offering valuable insights into the applicability and perception of AI within veterinary education. Our findings reveal that while students recognize the prevalence of AI in everyday technology, their familiarity and comfort with AI-driven tools in educational settings, particularly in communication training, remain limited. Furthermore, the data suggest that upper-semester students are less open to adopting AI-based tools in communication training compared to those in earlier semesters, likely due to their greater reliance on experiential learning with standardized clients (SCs). Future work should prioritize multi-institutional studies to assess the generalizability of these findings. Additionally, qualitative data and a longitudinal study (repeated measures with continued use of AI-generated cases) would offer further opportunities to understand how this technology and methodology could be used in a curriculum. Encouraging students to engage with AI in a structured, supportive environment may help alleviate some of the apprehension seen, paving the way for AI to play a more prominent role in veterinary education.

The dataset and the R code used for the statistical analysis in this study can be found in the AI_VeterinaryCommunications GitHub repository, available at https://github.com/MicroBatVet/AI_VeterinaryCommunications (61).

The studies involving humans were approved by the Texas Tech University Institutional Review Board. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

EA: Conceptualization, Data curation, Investigation, Methodology, Project administration, Resources, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing. SH: Data curation, Formal analysis, Resources, Software, Visualization, Writing – original draft, Writing – review & editing. LD: Data curation, Investigation, Methodology, Writing – review & editing. MS: Data curation, Investigation, Writing – review & editing. GG: Data curation, Investigation, Methodology, Resources, Software, Visualization, Writing – review & editing.

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

The authors would like to acknowledge and share their appreciation to TTU SVM students for their participation and continuing support and enthusiasm for the TTU SVM communication program. Further we acknowledge Diego Tejeda for his contribution with assisting in ChatGPT prompt and initial survey creation.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declare that Gen AI was used in the creation of this manuscript. Figures 1–6 were generated by Fotor AI. The same images were animated and voiced over for video production using the application Talkr Live. GPT-3.5 by OpenAI was specifically utilized to perform the task of randomly generating details such as social, cultural and socioeconomic demographics for the AI-SCs being portrayed in the cases.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Toosi A, Bottino AG, Saboury B, Siegel E, Rahmim A. A brief history of AI: how to prevent another winter (a critical review). PET Clin. (2021) 16:449–69. doi: 10.1016/j.cpet.2021.07.001

2. Hassani H, Silva ES, Unger S, TajMazinani M, Mac Feely S. Artificial intelligence (AI) or intelligence augmentation (IA): what is the future? AI. (2020) 1:8. doi: 10.3390/ai1020008

3. Paranjape K, Schinkel M, Panday RN, Car J, Nanayakkara P. Introducing artificial intelligence training in medical education. JMIR Med Educ. (2019) 5:e16048. doi: 10.2196/16048

4. Appleby RB, Basran PS. Artificial intelligence in veterinary medicine. J Am Vet Med Assoc. (2022) 260:819–24. doi: 10.2460/javma.22.03.0093

5. Owens A, Vinkemeier D, Elsheikha H. A review of applications of artificial intelligence in veterinary medicine. Companion Anim. (2023) 28:78–85. doi: 10.12968/coan.2022.0028a

6. Ghasemi A. Clinical reasoning and artificial intelligence. Ann Mil Health Sci Res. (2023) 21:58. doi: 10.5812/amh-134440

7. Reverberi C, Rigon T, Solari A, Hassan C, Cherubini P, Cherubini A. Experimental evidence of effective human–AI collaboration in medical decision-making. Sci Rep. (2022) 12:14952. doi: 10.1038/s41598-022-18751-2

8. Locke S, Bashall A, Al-Adely S, Moore J, Wilson A, Kitchen GB. Natural language processing in medicine: a review. Trends Anaesth Crit Care. (2021) 38:4–9. doi: 10.1016/j.tacc.2021.02.007

9. Kreimeyer K, Foster M, Pandey A, Arya N, Halford G, Jones SF, et al. Natural language processing systems for capturing and standardizing unstructured clinical information: a systematic review. J Biomed Inform. (2017) 73:14–29. doi: 10.1016/j.jbi.2017.07.012

10. Abràmoff MD, Lavin PT, Birch M, Shah N, Folk JC. Pivotal trial of an autonomous AI-based diagnostic system for detection of diabetic retinopathy in primary care offices. NPJ Digit Med. (2018) 1:39. doi: 10.1038/s41746-018-0040-6

11. Currie G, Hespel A-M, Carstens A. Australian perspectives on artificial intelligence in veterinary practice. Vet Radiol Ultrasound. (2023) 64:473–83. doi: 10.1111/vru.13234

12. Akinsulie OC, Idris I, Aliyu VA, Shahzad S, Banwo OG, Ogunleye SC, et al. The potential application of artificial intelligence in veterinary clinical practice and biomedical research. Front Vet Sci. (2024) 11:1347550. doi: 10.3389/fvets.2024.1347550

13. Pamuji E, Setiadi T, Yuliati T, Karli I, Hazin M, Winata MD, et al. Bridging the gap: human and AI interpersonal communication capacities for veterinary care management. J Electr Syst. (2024) 20:1857–66. doi: 10.52783/jes.3796

14. Lane IF, Root Kustritz MV, Schoenfeld-Tacher RM. Veterinary curricula today: curricular management and renewal at AAVMC member institutions. J Vet Med Educ. (2017) 44:381–439. doi: 10.3138/jvme.0417.048

15. Masters K. Artificial intelligence in medical education. Med Teach. (2019) 41:976–80. doi: 10.1080/0142159X.2019.1595557

16. Choudhary OP, Saini J, Challana A. ChatGPT for veterinary anatomy education: an overview of the prospects and drawbacks. Int J Morphol. (2023) 41:1198–202. doi: 10.4067/S0717-95022023000401198

17. Kuzminsky J, Phillips H, Sharif H, Moran C, Gleason HE, Topulos SP, et al. Reliability in performance assessment creates a potential application of artificial intelligence in veterinary education: evaluation of suturing skills at a single institution. Am J Vet Res. (2023) 84:58. doi: 10.2460/ajvr.23.03.0058

18. Bok HG, Jaarsma DA, Teunissen PW, van der Vleuten CP, van Beukelen P. Development and validation of a competency framework for veterinarians. J Vet Med Educ. (2011) 38:262–9. doi: 10.3138/jvme.38.3.262

19. Chaney KP, Hodgson JL, Banse HE, Danielson JA, Foreman JH, Kedrowicz AA, et al. AAVMC Council on Outcomes-based Veterinary Education, CBVE 2.0 Model. Washington, DC: American Association of Veterinary Medical Colleges (2024).

20. Matthew SM, Bok HG, Chaney KP, Read EK, Hodgson JL, Rush BR, et al. Collaborative development of a shared framework for competency-based veterinary education. J Vet Med Educ. (2020) 47:578–93. doi: 10.3138/jvme.2019-0082

21. Meehan MP, Menniti MF. Final-year veterinary students' perceptions of their communication competencies and a communication skills training program delivered in a primary care setting and based on Kolb's Experiential Learning Theory. J Vet Med Educ. (2014) 41:371–83. doi: 10.3138/jvme.1213-162R1

22. Adams CL, Kurtz S. Coaching and feedback: enhancing communication teaching and learning in veterinary practice settings. J Vet Med Educ. (2012) 39:217–28. doi: 10.3138/jvme.0512-038R

23. Adams CL, Kurtz SM. Building on existing models from human medical education to develop a communication curriculum in veterinary medicine. J Vet Med Educ. (2006) 33:28–37. doi: 10.3138/jvme.33.1.28

24. MacDonald-Phillips KA, McKenna SL, Shaw DH, Keefe GP, VanLeeuwen J, Artemiou E, et al. Communication skills training and assessment of food animal production medicine veterinarians: a component of a voluntary Johne's disease control program. J Dairy Sci. (2022) 105:2487–98. doi: 10.3168/jds.2021-20677

25. Kurtz S. Teaching and learning communication in veterinary medicine. J Vet Med Educ. (2006) 33:11–9. doi: 10.3138/jvme.33.1.11

26. Kurtz S, Silverman J, Benson J, Draper J. Marrying content and process in clinical method teaching: enhancing the Calgary–Cambridge guides. Acad Med. (2003) 78:802–9. doi: 10.1097/00001888-200308000-00011

27. Manalastas G, Noble LM, Viney R, Griffin AE. What does the structure of a medical consultation look like? A new method for visualising doctor-patient communication. Patient Educ Couns. (2021) 104:1387–97. doi: 10.1016/j.pec.2020.11.026

28. Shaw JR. Evaluation of communication skills training programs at North American veterinary medical training institutions. J Am Vet Med Assoc. (2019) 255:722–33. doi: 10.2460/javma.255.6.722

29. Shorey S, Ang E, Yap J, Ng ED, Lau ST, Chui CK, et al. A virtual counseling application using artificial intelligence for communication skills training in nursing education: development study. J Med Internet Res. (2019) 21:e14658. doi: 10.2196/14658

30. Stamer T, Steinhäuser J, Flägel K. Artificial intelligence supporting the training of communication skills in the education of health care professions: scoping review. J Med Internet Res. (2023) 25:e43311. doi: 10.2196/43311

31. Rasul T, Nair S, Kalendra D, Robin M, de Oliveira Santini F, Ladeira WJ, et al. The role of ChatGPT in higher education: Benefits, challenges, and future research directions. J Appl Learn Teach. (2023) 6:41–56. doi: 10.37074/jalt.2023.6.1.29

32. White J, Fu Q, Hays S, Sandborn M, Olea C, Gilbert H, et al. A prompt pattern catalog to enhance prompt engineering with ChatGPT. arXiv preprint arXiv:2302.11382 (2023).

33. Giray L. Prompt engineering with ChatGPT: a guide for academic writers. Ann Biomed Eng. (2023) 51:2629–33. doi: 10.1007/s10439-023-03272-4

34. R Core Team. R: a language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing (2024).

35. de Winter JCF, Gosling SD, Potter J. Comparing the Pearson and Spearman correlation coefficients across distributions and sample sizes: a tutorial using simulations and empirical data. Psychol Methods. (2016) 21:273–90. doi: 10.1037/met0000079

36. Akoglu H. User's guide to correlation coefficients. Turk J Emerg Med. (2018) 18:91–3. doi: 10.1016/j.tjem.2018.08.001

37. Balon R. An explanation of generations and generational changes. Acad Psychiatry. (2024) 48:280–2. doi: 10.1007/s40596-023-01921-3

38. Calvo-Porral C, Pesqueira-Sanchez R. Generational differences in technology behaviour: comparing millennials and Generation X. Kybernetes. (2020) 49:2755–72. doi: 10.1108/K-09-2019-0598

39. Rue P. Make way, millennials, here comes Gen Z. Campus. (2018) 23:5–12. doi: 10.1177/1086482218804251

40. Lai JW, Bower M. How is the use of technology in education evaluated? A systematic review. Comput Educ. (2019) 133:27–42. doi: 10.1016/j.compedu.2019.01.010

41. Raja R, Nagasubramani PC. Impact of modern technology in education. J Appl Adv Res. (2018) 3:33–5. doi: 10.21839/jaar.2018.v3iS1.165

42. Edirippulige S, Gong S, Hathurusinghe M, Jhetam S, Kirk J, Lao H, et al. Medical students' perceptions and expectations regarding digital health education and training: a qualitative study. J Telemed Telecare. (2022) 28:258–65. doi: 10.1177/1357633X20932436

43. Muca E, Cavallini D, Odore R, Baratta M, Bergero D, Valle E. Are veterinary students using technologies and online learning resources for didactic training? A mini-meta analysis. Educ Sci. (2022) 12:573. doi: 10.3390/educsci12080573

44. Royal K, Hedgpeth M-W, McWhorter D. Students' perceptions of and experiences with educational technology: a survey. JMIR Med Educ. (2016) 2:e5135. doi: 10.2196/mededu.5135

45. Chu CP. ChatGPT in veterinary medicine: a practical guidance of generative artificial intelligence in clinics, education, and research. Front Vet Sci. (2024) 11:1395934. doi: 10.3389/fvets.2024.1395934

46. Coghlan S, Quinn T. Ethics of using artificial intelligence (AI) in veterinary medicine. AI Soc. (2024) 39:2337–48. doi: 10.1007/s00146-023-01686-1

47. Ngo TTA. The perception by university students of the use of ChatGPT in education. Int J Emerg Technol Learn Online. (2023) 18:39019. doi: 10.3991/ijet.v18i17.39019

48. Adams CL, Ladner L. Implementing a simulated client program: bridging the gap between theory and practice. J Vet Med Educ. (2004) 31:138–45. doi: 10.3138/jvme.31.2.138

49. Englar RE. Tracking veterinary students' acquisition of communication skills and clinical communication confidence by comparing student performance in the first and twenty-seventh standardized client encounters. J Vet Med Educ. (2019) 46:235–57. doi: 10.3138/jvme.0917-117r1

50. van Gelderen I, Taylor R. Developing communication competency in the veterinary curriculum. Animals. (2023) 13:3668. doi: 10.3390/ani13233668

51. Soltero E, Villalobos CD, Englar RE, Graham Brett T. Evaluating communication training at AVMA COE–accredited institutions and the need to consider diversity within simulated client pools. J Vet Med Educ. (2022) 50:192–204. doi: 10.3138/jvme-2021-0146

52. Artemiou E, Adams CL, Toews L, Violato C, Coe JB. Informing web-based communication curricula in veterinary education: a systematic review of web-based methods used for teaching and assessing clinical communication in medical education. J Vet Med Educ. (2014) 41:44–54. doi: 10.3138/jvme.0913-126R

53. Artemiou E, Adams CL, Vallevand A, Violato C, Hecker KG. Measuring the effectiveness of small-group and web-based training methods in teaching clinical communication: a case comparison study. J Vet Med Educ. (2013) 40:242–51. doi: 10.3138/jvme.0113-026R1

54. Sezer B, Sezer TA. Teaching communication skills with technology: creating a virtual patient for medical students. Australas J Educ Technol. (2019) 35:183–98. doi: 10.14742/ajet.4957

55. Sridharan K, Sequeira RP. Evaluation of artificial intelligence-generated drug therapy communication skill competencies in medical education. Br J Clin Pharmacol. (2024) 16:144. doi: 10.1111/bcp.16144

56. Barron D, Khosa D, Jones-Bitton A. Experiential learning in primary care: impact on veterinary students' communication confidence. J Exp Educ. (2017) 40:349–65. doi: 10.1177/1053825917710038

57. Brandt JC, Bateman SW. Senior veterinary students' perceptions of using role play to learn communication skills. J Vet Med Educ. (2006) 33:76–80. doi: 10.3138/jvme.33.1.76

58. Kedrowicz AA. The impact of a group communication course on veterinary medical students' perceptions of communication competence and communication apprehension. J Vet Med Educ. (2016) 43:135–42. doi: 10.3138/jvme.0615-100R1

59. Worthing KA, Roberts M, Šlapeta J. Surveyed veterinary students in Australia find ChatGPT practical and relevant while expressing no concern about artificial intelligence replacing veterinarians. Vet Rec Open. (2024) 11:e280. doi: 10.1002/vro2.80

60. Jussupow E, Spohrer K, Heinzl A. Identity threats as a reason for resistance to artificial intelligence: survey study with medical students and professionals. JMIR Form Res. (2022) 6:e28750. doi: 10.2196/28750

Keywords: artificial intelligence (AI), ChatGPT, prompt engineering, communication skills, veterinary medical education, standardized clients (SCs), AI-standardized clients (AI-SCs), AI-cases

Citation: Artemiou E, Hooper S, Dascanio L, Schmidt M and Gilbert G (2025) Introducing AI-generated cases (AI-cases) & standardized clients (AI-SCs) in communication training for veterinary students: perceptions and adoption challenges. Front. Vet. Sci. 11:1504598. doi: 10.3389/fvets.2024.1504598

Received: 01 October 2024; Accepted: 10 December 2024; Published: 24 February 2025.

Edited by:

April Kedrowicz, North Carolina State University, United States

Reviewed by:

Christianne Magee, Colorado State University, United States

Copyright © 2025 Artemiou, Hooper, Dascanio, Schmidt and Gilbert. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Elpida Artemiou, elpida.artemiou@ttu.edu; Guy Gilbert, Guy.gilbert@ttu.edu

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
