ORIGINAL RESEARCH article

Front. Syst. Neurosci., 05 May 2023

Volume 17 - 2023 | https://doi.org/10.3389/fnsys.2023.1123221

This article is part of the Research Topic Brain Connectivity in Neurological Disorders

Moebius syndrome (MBS) is characterized by the congenital absence or underdevelopment of cranial nerves VII and VI, leading to facial palsy and impaired lateral eye movements. As a result, individuals with MBS cannot produce facial expressions and have not developed motor programs for facial expressions. In the latest model of sensorimotor simulation, an iterative communication between somatosensory, motor/premotor cortices, and visual regions has been proposed, which should allow more efficient discrimination among subtle facial expressions. Accordingly, individuals with congenital facial motor disability, specifically with MBS, should exhibit atypical communication within this network. Here, we aimed to test this facet of the sensorimotor simulation models. We estimated the functional connectivity between the visual cortices for face processing and the sensorimotor cortices in healthy and MBS individuals. To this end, we studied the strength of beta band functional connectivity between these two systems using high-density EEG, combined with a change detection task with facial expressions (and a control condition involving non-face stimuli). The results supported our hypothesis: when discriminating subtle facial expressions, participants affected by congenital facial palsy (compared to healthy controls) showed reduced connectivity strength between sensorimotor regions and visual regions for face processing. This effect was absent for the condition with non-face stimuli. These findings support sensorimotor simulation models and the communication between sensorimotor and visual areas during subtle facial expression processing.

Moebius Syndrome (MBS; Moebius, 1888) is a rare congenital neurological disorder characterized by impairment of cranial nerves VI and VII (Briegel, 2006), leading to impaired lateral eye movements and complete or nearly complete (usually bilateral) facial paralysis. In addition, other congenital conditions are sometimes present, such as limb anomalies (e.g., clubfoot and missing/underdeveloped fingers or hands; Richards, 1953; Verzijl et al., 2003). On the psychological side, individuals with MBS show difficulties in social interactions with different degrees of severity, mostly because they cannot express their emotions to others through their faces (Bogart and Matsumoto, 2010). Therefore, by definition, MBS individuals are characterized by a deficit in the production of facial expressions.

A prominent theoretical model supports the existence of a close relationship between production (e.g., of gestures and facial expressions) and perception (e.g., of gestures and facial expressions) (Preston and de Waal, 2002; Rizzolatti and Sinigaglia, 2016). Several studies provided evidence in favor of shared neural representations of emotional facial expressions between production and perception in different brain regions. These include the inferior, middle, and superior frontal gyri, the amygdala, and the insula (Molenberghs et al., 2012), suggesting that these shared representations could hinge on mirror mechanisms (Van Overwalle and Baetens, 2009). Overall, this “shared representational system” is thought to subserve others’ social understanding and emotion perception by motor simulation (Goldman and Sripada, 2005; Bastiaansen et al., 2009; Likowski et al., 2012). It has recently been hypothesized that the primary mechanism through which motor simulation supports emotion perception is that of an iterative communication between motor, premotor, somatosensory cortices (overall the sensorimotor system), and the visual cortices (Wood et al., 2016a,b). Specifically, iterative communication would increase the quality/precision of the visual percept, allowing for more efficient discriminations of facial expressions (Wood et al., 2016a,b). In this context, facial mimicry, the visible or invisible contraction of the facial muscles congruent with the observed expression, is conceived as a peripheral manifestation of the central sensorimotor simulation. Sensorimotor simulation models (Goldman and Sripada, 2005; Bastiaansen et al., 2009; Likowski et al., 2012; Wood et al., 2016a,b) assume that facial mimicry contributes to the motor simulation through the feedback provided to motor areas.

Within this theoretical framework, MBS individuals should be characterized by altered facial feedback to the central nervous system (especially to the motor cortex) because of facial palsy, and, as a consequence of the congenital condition, they should not have facial motor programs for facial expressions (or at least not complete ones). In short, MBS individuals should not be able to use the hypothesized sensorimotor simulation mechanism efficiently when recognizing/discriminating facial expressions. Nevertheless, it is possible that, through mechanisms of plasticity and compensation, individuals with MBS achieve normotypical performance (Vannuscorps et al., 2020) and have developed alternative and efficient neural pathways for the recognition/discrimination of facial expressions (Sessa et al., 2022). Therefore, investigations using neuroimaging techniques are necessary to explore the neural bases of emotional expression processing in MBS individuals beyond their behavioral performance (in terms of accuracy and/or reaction times), which could be normotypical.

Due to the absence of a shared representational system/motor simulation, one might expect that the neurological population of MBS is characterized by: (a) impaired recognition/discrimination of emotional facial expressions (in the case of lack of compensation) and (b) lower degree of connectivity (compared to healthy individuals) between sensorimotor and visual systems during subtle discrimination of emotional facial expressions.

In a previous investigation, our findings corroborated the hypothesis of compensatory mechanisms, which, in terms of neural pathways, might hinge on the recruitment of different brain regions in MBS compared to healthy individuals during emotional expression discrimination tasks (Sessa et al., 2022). The specific aim of the present study, instead, is precisely to test the predicted reduced connectivity between sensorimotor and visual systems in MBS, compared to healthy controls.

To this end, we administered an emotional expression discrimination task to our participants, both healthy and MBS. Cortical activity was recorded with high-density electroencephalography (hd-EEG) to investigate functional connectivity, i.e., the degree to which activity in a pair of brain regions covaries or correlates over time (Lachaux et al., 1999). In the case of EEG signals, phase synchronization is one of the most widely used indices of functional connectivity, under the assumption that the phases of two oscillations in different brain regions should be correlated if the two regions are functionally connected (Lachaux et al., 1999; López et al., 2014). We computed a phase locking value (the corrected imaginary phase locking value; ciPLV; see section “Materials and methods”) of the beta oscillatory activity, based on previous convincing evidence that links the processing of stimuli with affective value to long-distance EEG connectivity in the beta band (Aftanas et al., 2002; Miskovic and Schmidt, 2010; Zhang et al., 2013; Wang et al., 2014; Kheirkhah et al., 2020; Kim et al., 2021).

Although not made explicit by the motor simulation models (Wood et al., 2016a,b), the visual cortices involved in the iterative communication must primarily include regions dedicated to the visual analysis of faces. The most accredited neural model of face processing, i.e., the distributed model of face processing by Haxby et al. (2000) and Haxby and Gobbini (2011), indeed encompasses a core system for the visual processing of faces (comprising the fusiform face area, the occipital face area, and the posterior superior temporal sulcus; Haxby et al., 2000; Grill-Spector et al., 2004; Winston et al., 2004; Yovel and Kanwisher, 2004; Ishai et al., 2005; Rotshtein et al., 2005; Lee et al., 2010; Gobbini et al., 2011), and an extended system for additional non-visual processing steps, including the attribution of meaning to facial expressions in terms of emotion (comprising the sensorimotor cortices; Haxby and Gobbini, 2011).

Based on this knowledge, we expected phase synchronization (i.e., the connectivity index) between the sensorimotor system and the core system to be significantly greater in healthy participants than in MBS participants. As preliminary evidence to circumscribe and characterize this effect as face-sensitive, we included an identical task but involving non-face stimuli (i.e., animal shapes). We did not expect to observe any difference between healthy participants and MBS participants with regard to the strength of the connectivity index for non-face stimuli.

In this research we enrolled 14 adults: seven MBS participants (MBS group: 4 females and 3 males; mean age = 40.43 years; s.d. = 11.03) and seven healthy control participants. Controls were matched for age, gender and level of education. Participants in the MBS group had a diagnosis of unilateral or bilateral facial paralysis (Terzis and Noah, 2003). See Table 1 for demographic data and clinical information for MBS participants. None of the participants reported any psychiatric or physical illness.

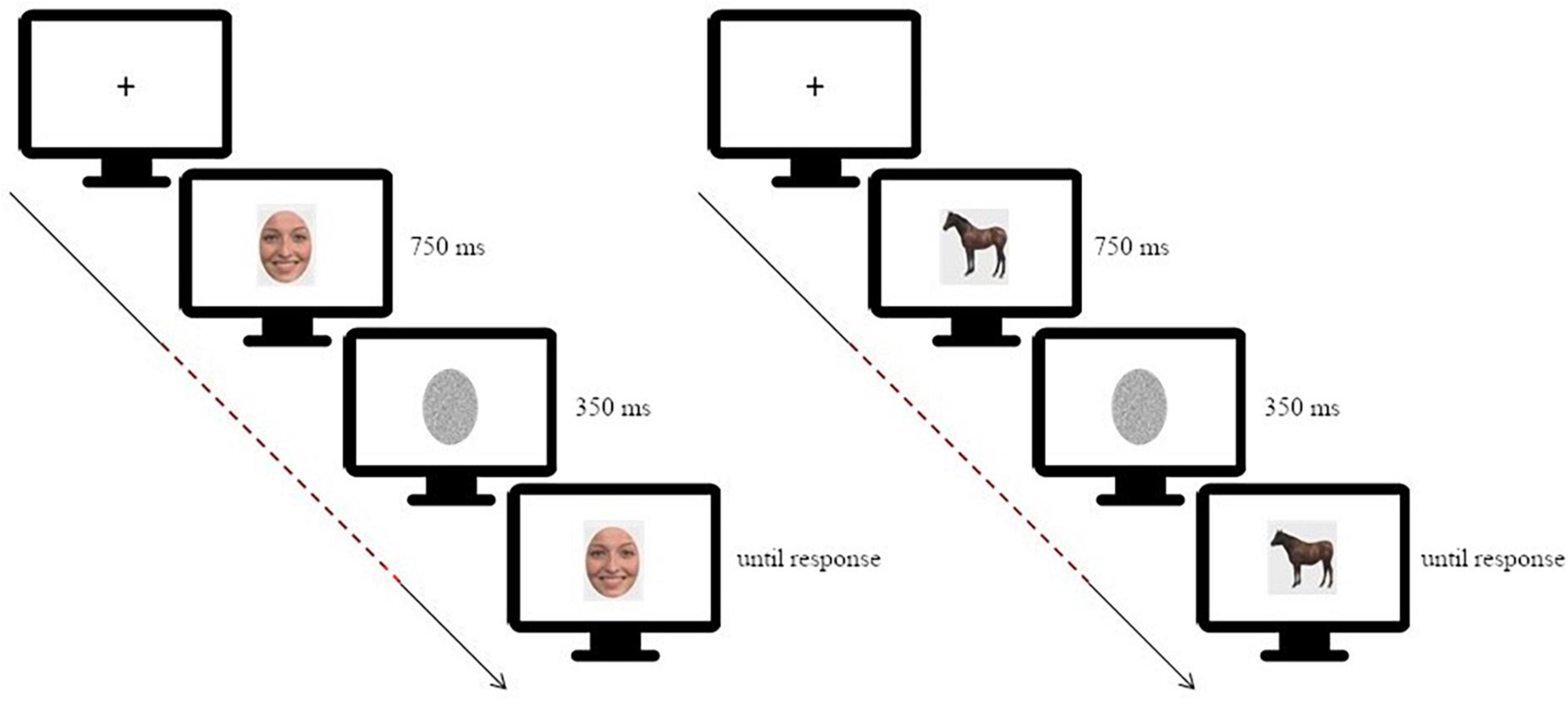

Participants performed a simple change detection task in which they had to judge whether a test image differed from a target image. This task has been successfully used to investigate changes in neural activity, as well as connectivity, during face processing (Wood et al., 2016a; Lomoriello et al., 2021; Maffei and Sessa, 2021a). In each trial, the target image was presented on a screen for 750 ms, masked with noise for 350 ms, and then followed by the test image, which remained on screen until response (Figure 1).

Figure 1. Schematic depiction of the experimental paradigm [on the left: for the facial expression discrimination task (morphing continua happiness-disgust); on the right: for the animal shape discrimination task]. The dashed line highlights the time window considered for the connectivity analysis, starting at target stimulus onset and ending before test stimulus onset. Adapted from Sessa et al. (2022).

The stimuli were 11 digital images of faces and animals.

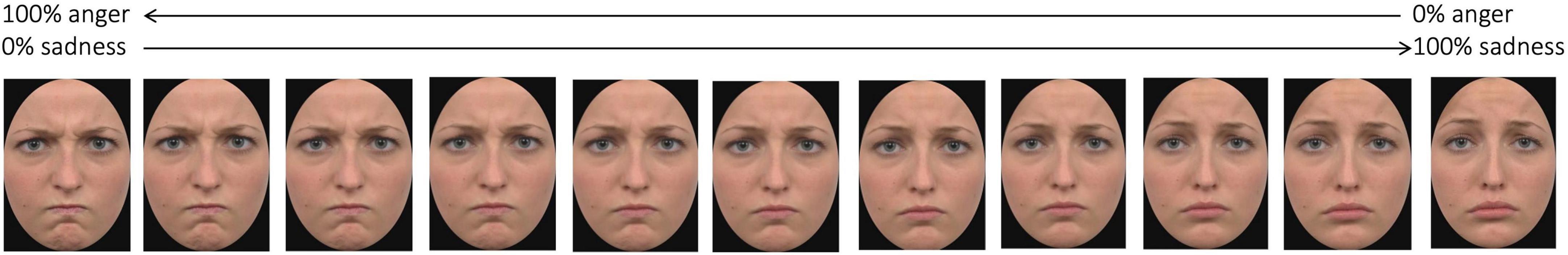

For each category, we created the morphing continuum as follows: for the face stimuli we had two continua, one ranging between the expression of anger and the expression of sadness, and one ranging between the expression of happiness and the expression of disgust (Figure 2 shows the stimuli of one of the morphing continua); for the animal stimuli the continuum ranged between the image of a horse and the image of a cow, both presented in the same posture. Each continuum started with an expression/animal shape consisting of 100% of one expression/animal shape and 0% of the other (i.e., 100% anger–0% sadness or 100% happiness–0% disgust; 100% horse–0% cow), and then changed in 20% increments/decrements until reaching the opposite end (e.g., 0% anger–100% sadness, 0% horse–100% cow). The stimuli are available in the Open Science Framework repository at osf.io/krpfb.

Figure 2. Example of stimuli of one of the morphing continua. Adapted from Sessa et al. (2022).

On each trial, the target stimulus was randomly selected from one of the continua; it was then followed by the mask, and finally the test stimulus was presented. The test stimulus was selected pseudorandomly from the same continuum as the target, such that it was at most 40% apart from the target on the morph continuum, to control for discrimination difficulty across participants.
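The selection rule described above can be sketched as follows. This is only an illustration under stated assumptions, not the presentation script used in the study: the names `MORPH_LEVELS`, `MAX_DISTANCE` and `pick_trial` are hypothetical, the 20% step is taken from the stimulus description, and allowing the test to equal the target (a "same" trial) is an assumption.

```python
import random

# Morph levels in 20% steps, as described in the text
# (0 = 100% of the first endpoint, 100 = 100% of the second endpoint).
MORPH_LEVELS = list(range(0, 101, 20))
MAX_DISTANCE = 40  # the test image is at most 40% away from the target


def pick_trial(rng):
    """Return a (target, test) pair of morph levels from one continuum.

    Assumption: the test may coincide with the target ("same" trial).
    """
    target = rng.choice(MORPH_LEVELS)
    # Keep only the levels within the allowed distance from the target.
    candidates = [lvl for lvl in MORPH_LEVELS if abs(lvl - target) <= MAX_DISTANCE]
    test = rng.choice(candidates)
    return target, test


if __name__ == "__main__":
    rng = random.Random(2023)  # fixed seed so the sequence is reproducible
    for _ in range(5):
        print(pick_trial(rng))
```

Bounding the target-test distance keeps every "different" trial within a comparable difficulty range, which is the stated purpose of the constraint.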

Electroencephalographic activity was recorded from 128 channels using a HydroCel Geodesic Sensor Net (HCGSN-128) connected to a Geodesic EEG System (EGI GES 300). Data were collected continuously with a sampling rate of 500 Hz using the vertex as online reference. Channel impedance was kept under 60 kΩ. For the purpose of the present research, we analyzed the preprocessed data used in Sessa et al. (2022). Briefly, pre-processing consisted of downsampling the data to 250 Hz, band-pass filtering (0.1–45 Hz), epoching from −500 to 1500 ms relative to target onset, rejecting artifactual components after ICA using the ICLabel algorithm (Pion-Tonachini et al., 2019), interpolating bad channels, and re-referencing to the average of all channels. Further details regarding the pre-processing can be found in Sessa et al. (2022). The preprocessed data as well as the pre-processing script can be accessed at https://osf.io/krpfb/.

To estimate brain activity from the preprocessed scalp recordings, we first created a forward model using the three-layer boundary element method (BEM) from OpenMEEG, implemented in Brainstorm, and then estimated an inverse solution with weighted Minimum Norm Estimation (wMNE) using default parameters. Finally, the estimated distributed source activity was downsampled to the 148 cortical parcels of the Destrieux atlas (Destrieux et al., 2010) by averaging the activity of all the vertices included in each parcel.

Functional connectivity was estimated using the phase locking value, a widely used statistic that quantifies the degree of phase synchronization in a given frequency band (Lachaux et al., 1999; Maffei and Sessa, 2021a,b). Specifically, we employed an updated version of the original PLV statistic, recently introduced by Bruña et al. (2018): the corrected imaginary part of PLV (ciPLV). As with the original PLV, ciPLV analysis first requires a time-frequency decomposition of the signals, which can be obtained either through wavelets or by applying the Hilbert transform to narrow-band filtered signals. Then, for each pair of signals, ciPLV is estimated from the imaginary part of the phase difference between the two signals. Unlike the classic PLV, taking only the imaginary part of the phase difference discards any zero-lag interactions, making ciPLV robust to volume conduction and/or source leakage, which are known to inflate the classic PLV (Bruña et al., 2018).
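For reference, the two statistics can be written compactly as below, following the definition in Bruña et al. (2018) as we understand it. The symbols are introduced here for illustration only: Δφ(t) denotes the instantaneous phase difference between the two signals at sample t, and T the number of samples in the analysis window.

```latex
\mathrm{PLV} = \left| \frac{1}{T} \sum_{t=1}^{T} e^{\,i\,\Delta\varphi(t)} \right|,
\qquad
\mathrm{ciPLV} =
\frac{\left| \dfrac{1}{T} \sum_{t=1}^{T} \operatorname{Im}\!\left( e^{\,i\,\Delta\varphi(t)} \right) \right|}
     {\sqrt{1 - \left( \dfrac{1}{T} \sum_{t=1}^{T} \operatorname{Re}\!\left( e^{\,i\,\Delta\varphi(t)} \right) \right)^{2}}}
```

A purely zero-lag coupling (Δφ(t) = 0 for all t) gives a numerator of zero, which is why instantaneous mixing between sources does not contribute to ciPLV.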

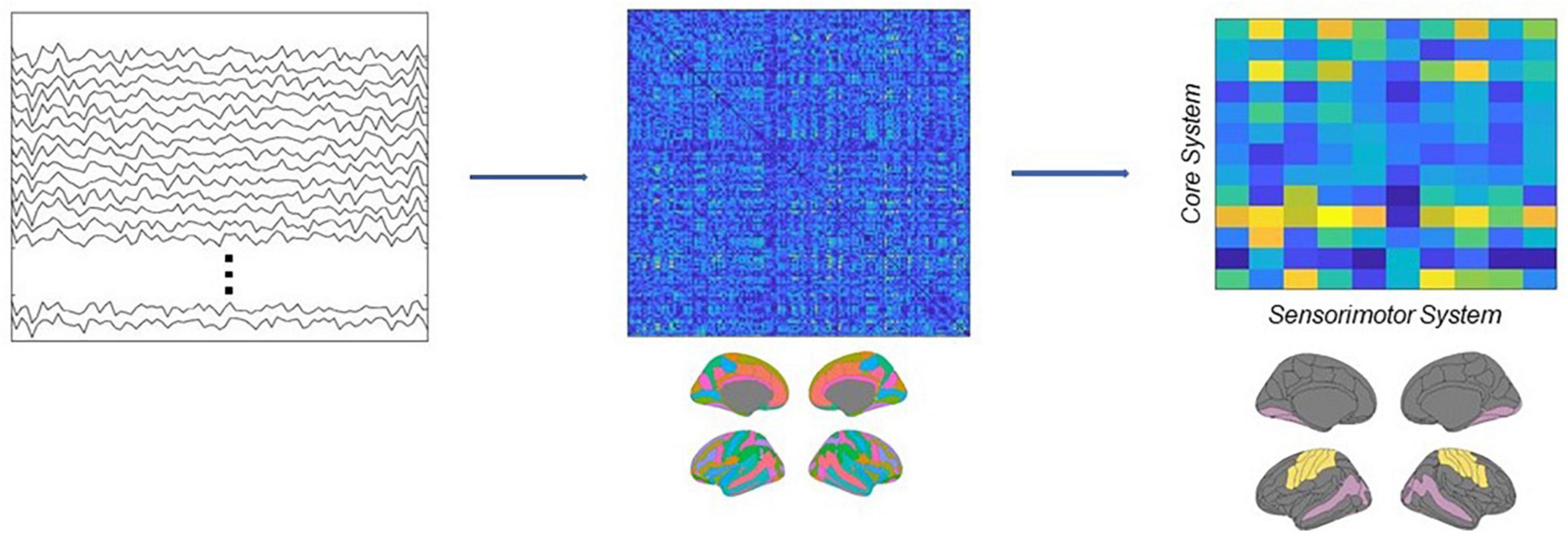

In this research, we first band-pass filtered the source-estimated activity in the beta range (13–30 Hz), then applied the Hilbert transform to derive the analytic representation of the signals, and finally computed ciPLV for each pair of ROIs of the Destrieux atlas in the time range between the onset of the target image and the onset of the test image (0–1100 ms). This workflow resulted in a 148 × 148 symmetric matrix M, where each entry represents the connectivity strength between a pair of regions. We then subsampled this matrix to extract a new rectangular matrix R, where the columns identify the regions belonging to the core system of the face processing network (Haxby and Gobbini, 2011; Maffei and Sessa, 2021a) and the rows identify the primary and secondary motor and somatosensory cortices (see Supplementary material). Each entry of this matrix thus represents the value of connectivity between a ROI belonging to the core system and a ROI belonging to the sensorimotor system. Finally, we computed the connectivity strength between the two systems as the sum of the entries of this matrix, $w = \sum_{i,j} R_{i,j}$ (see Figure 3).
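The last two steps of this workflow (pairwise ciPLV, then the between-system strength w) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the analysis code of the study: the function names `ciplv` and `system_strength` are hypothetical, the instantaneous phases are assumed to have been extracted already (e.g., from the Hilbert transform of beta-filtered signals), and the toy matrix and ROI indices are placeholders rather than Destrieux parcels.

```python
import cmath
import math


def ciplv(phases_a, phases_b):
    """Corrected imaginary PLV between two equal-length phase series (radians)."""
    n = len(phases_a)
    # Average unit phasor of the phase difference across time samples.
    mean_vec = sum(cmath.exp(1j * (a - b)) for a, b in zip(phases_a, phases_b)) / n
    # Correction term; clipped at zero to guard against rounding error.
    denom = math.sqrt(max(1.0 - mean_vec.real ** 2, 0.0))
    # Pure zero-lag coupling makes the denominator vanish: report 0 in that case.
    return abs(mean_vec.imag) / denom if denom > 0 else 0.0


def system_strength(matrix, rows, cols):
    """Sum of matrix[i][j] over sensorimotor rows i and core-system columns j."""
    return sum(matrix[i][j] for i in rows for j in cols)


if __name__ == "__main__":
    # Toy symmetric connectivity matrix for 3 hypothetical ROIs.
    toy_m = [
        [0.0, 1.0, 2.0],
        [1.0, 0.0, 3.0],
        [2.0, 3.0, 0.0],
    ]
    # ROI 0 plays the sensorimotor role, ROIs 1 and 2 the core system.
    print(system_strength(toy_m, rows=[0], cols=[1, 2]))  # 1.0 + 2.0 = 3.0
```

Because only cross-system entries are summed, connections within the core system or within the sensorimotor system do not contribute to w.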

Figure 3. Schematic depiction of the analytical pipeline. Source activity was reconstructed from EEG recordings, then connectivity was estimated using the ciPLV in the beta band (13–30 Hz). Finally, the connectivity strength between the core and the sensorimotor systems was extracted from the full adjacency matrices.

The main goal of this research was to test if participants with MBS are characterized by an impaired connectivity between visual and somatomotor regions during the processing of facial expressions. To test this hypothesis we performed an independent samples t-test on the connectivity strength estimated from trials in which participants were presented with facial expressions. The null hypothesis was that the two groups, MBS and controls, should not differ in the degree of functional connectivity between visual and sensorimotor regions. We also performed an additional analysis on the connectivity strength estimated from trials in which participants were presented with images of animals as a preliminary assessment to test the face-sensitivity of this effect. We present this analysis with caution as we are aware of the limitations of the statistical approach due to the extreme rarity of the MBS condition.
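As an illustration of the comparison described above, the sketch below computes the independent-samples t statistic (pooled variance), its degrees of freedom and Cohen's d using only the Python standard library. The function name `independent_t` and the toy values are hypothetical and are not the study's data. Obtaining a p-value additionally requires the t distribution, for example via `scipy.stats.ttest_ind`.

```python
import math
import statistics


def independent_t(group_a, group_b):
    """Return Student's t (pooled variance), degrees of freedom, and Cohen's d."""
    n_a, n_b = len(group_a), len(group_b)
    mean_diff = statistics.mean(group_a) - statistics.mean(group_b)
    # Pooled variance from the two unbiased sample variances.
    pooled_var = (
        (n_a - 1) * statistics.variance(group_a)
        + (n_b - 1) * statistics.variance(group_b)
    ) / (n_a + n_b - 2)
    pooled_sd = math.sqrt(pooled_var)
    t_value = mean_diff / (pooled_sd * math.sqrt(1 / n_a + 1 / n_b))
    cohen_d = mean_diff / pooled_sd
    return t_value, n_a + n_b - 2, cohen_d


if __name__ == "__main__":
    # Toy connectivity strengths (illustrative values only).
    group_one = [1.0, 2.0, 3.0]
    group_two = [2.0, 3.0, 4.0]
    print(independent_t(group_one, group_two))
```

With two groups of seven participants, as in the present sample, the same function yields 12 degrees of freedom.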

Readers interested in the analysis of the behavioral performance are referred to Sessa et al. (2022).

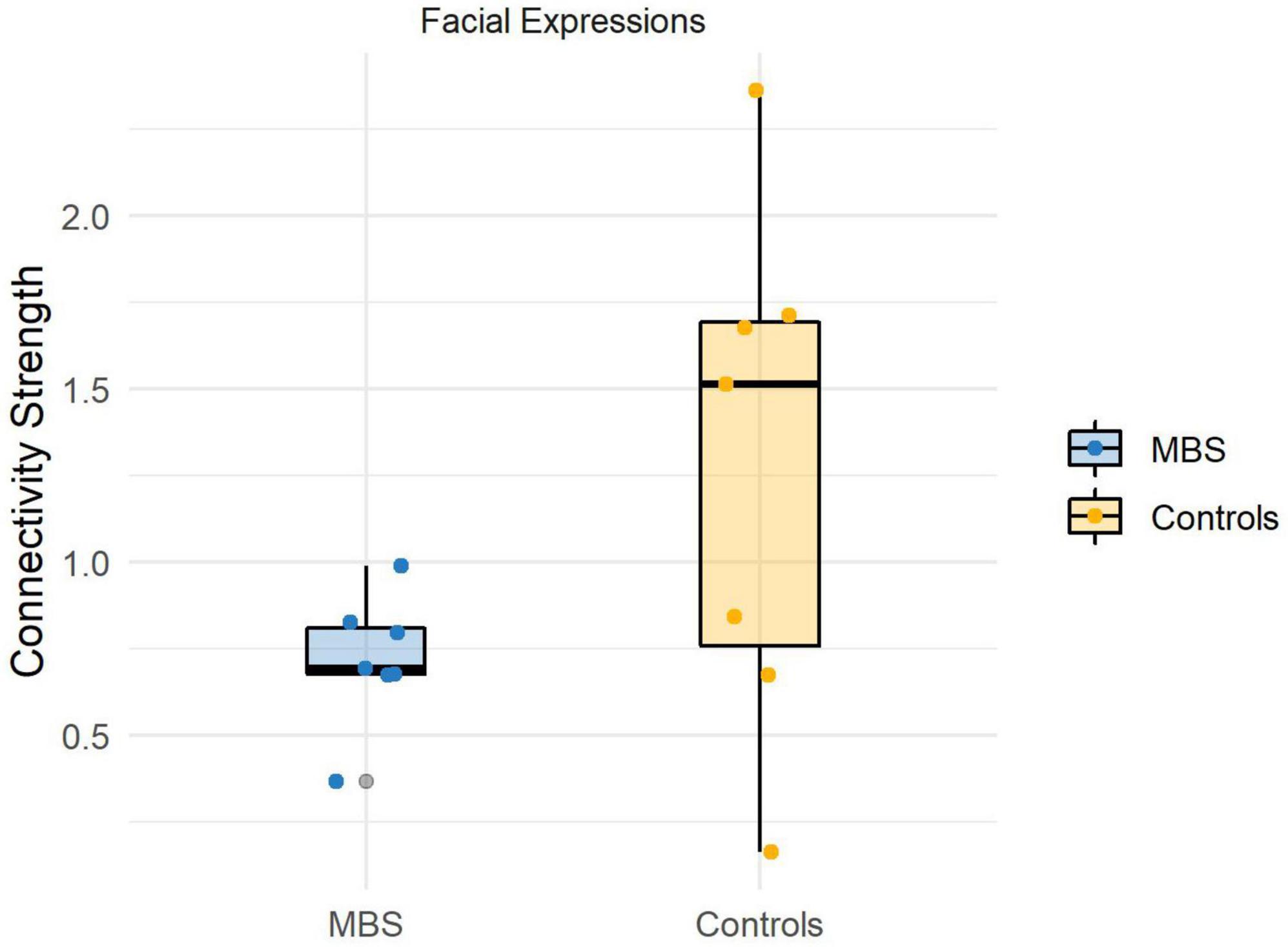

Before running the statistical comparisons, we used the Shapiro-Wilk test to check for the normality of the data; the test suggested no violation of normality ($W_{\text{face, Moebius}}$ = 0.92, p = 0.52; $W_{\text{face, Controls}}$ = 0.96, p = 0.83; $W_{\text{animal, Moebius}}$ = 0.93, p = 0.61; $W_{\text{animal, Controls}}$ = 0.97, p = 0.93). The analysis performed for the Face condition revealed a significant difference [t(12) = −1.91, p < 0.05, d = −1.2] between the two groups, showing that participants affected by facial palsy, compared to healthy controls, were characterized by a reduced connectivity strength between sensorimotor regions and visual regions included in the core system of the face processing network ($M_{\text{MBS}}$ = 0.7, $M_{\text{CTRL}}$ = 1.2; see Figure 4). Conversely, the analysis performed for the Animal condition did not reveal any significant difference between the two groups [t(12) = −1.36, p = 0.1].

Figure 4. Individual connectivity strengths during facial expressions processing in the beta band (13–30 Hz) for each group.

Over the last 20 years, various models of (sensori)motor simulation (Goldman and Sripada, 2005; Bastiaansen et al., 2009; Likowski et al., 2012; Wood et al., 2016a,b) have been proposed, all sharing the central theoretical hypothesis that the recognition and fine discrimination of facial expressions are supported by the recruitment, in the observer, of motor programs congruent with the facial expression observed, positing a relationship between production and recognition abilities. It follows that individuals affected by clinical conditions limiting production abilities should also show recognition deficits. Although the congenital disorder in MBS individuals could trigger plastic cerebral modifications leading to alternative and efficient neural pathways to recognize emotional expressions, the deficiency of the simulation mechanism, if true, should necessarily translate into reduced functional connectivity between sensorimotor and visual systems, which is a central tenet of the most recent sensorimotor simulation models (see Wood et al., 2016b).

Here, we tested this hypothesis by comparing the functional connectivity between the core system and the primary and secondary motor and somatosensory cortices in MBS and healthy individuals. We implemented a change detection task with facial expressions and animal shapes, and studied brain connectivity in terms of phase locking (corrected imaginary phase locking value; ciPLV; see section “Materials and methods”). We restricted our analysis to beta oscillatory activity as the best approach to capture putative long-distance EEG connectivity involved in processing stimuli with affective value (Aftanas et al., 2002; Miskovic and Schmidt, 2010; Zhang et al., 2013; Wang et al., 2014; Kheirkhah et al., 2020; Kim et al., 2021).

The results supported our hypothesis. As expected, for the discrimination of facial expressions, reduced connectivity strength between sensorimotor regions and visual regions included in the core system of the face processing network was found in participants affected by facial palsy when compared to the matched healthy controls. Such a difference in connectivity strength between the two groups was not observed for the animal shape condition. We are aware that the rarity of the syndrome and, consequently, the small sample size do not allow us to conclude (statistically) that the effect is selective for facial expressions.

Nevertheless, this reduction in the connectivity index for facial expressions was significant and, as such, it might indicate that the simulation mechanism is (at least) deficient in individuals with MBS. This result is in line with our hypothesis, since the alteration or absence of cranial nerves VI and VII as a consequence of the congenital condition restricts facial feedback to the central nervous system and, more importantly, impairs the development of (at least complete) facial motor programs for facial expressions. Consequently, MBS individuals should not exhibit connectivity between the sensorimotor and the visual systems in the absence of a simulation mechanism (or, in the case of residual muscular functioning, they should exhibit reduced connectivity when compared to healthy individuals).

If the functional significance of this connectivity, as predicted in the model by Wood et al. (2016b), is to favor the fine processing of emotional facial expressions, one might expect individuals with MBS to be less efficient in tasks that require this type of processing. Notably, however, studies that have investigated the ability to recognize emotional expressions in MBS individuals have produced conflicting results (see De Stefani et al., 2019 for a review), although the most convincing evidence seems to indicate that individuals with the syndrome may exhibit normotypical performance, at least in terms of accuracy, when recognizing/discriminating emotional faces (Rives Bogart and Matsumoto, 2010; Vannuscorps et al., 2020; Sessa et al., 2022). These results, in turn, are in line with compensatory/plasticity mechanisms, plausibly starting from birth, as supported by a recent study by Sessa et al. (2022). That study provided evidence in favor of the recruitment of an alternative neural pathway in Moebius individuals (vs. healthy controls), which does not seem to involve the motor and somatosensory regions, but rather more ventral areas (from the occipital face area/fusiform face area to the anterior temporal lobe; compatible with the proposals by Duchaine and Yovel, 2015; Pitcher and Ungerleider, 2021). In healthy individuals, these areas contribute to the processing of emotional expressions, although they are preferentially involved in the processing of form information, for instance, for face identity processing (Vuilleumier et al., 2001; Ishai et al., 2004; Ganel et al., 2005; Xu and Biederman, 2010).

The present study has some limitations which should be mentioned.

First, the degree and extent of nerve alteration in MBS differ from one patient to another. Because of this, and given the extreme rarity of the syndrome, it is not always possible to recruit a homogeneous group; in our sample, one of the seven patients had a deficit of the facial (VII) nerve alone, without a concomitant impairment of the abducens (VI) nerve (see Table 1).

Second, another potential limitation regards smile surgery, which allows MBS individuals to produce smile-like facial movements. Crucially, after smile surgery, MBS individuals could develop smile-like motor programs and the associated motor representations, which could also be dysfunctional for simulation. In the present study, the experimental procedure also involved the fine discrimination of facial expressions of happiness. However, the behavioral and connectivity analyses were not carried out on each category of emotional expression separately. As a consequence, we cannot examine whether the processing (at the behavioral and neural level) of expressions of happiness in patients who received smile surgery (all but one in our sample) differs from that of other emotional expressions.

Third, in the present study, we have used static facial expressions to investigate the impact of congenital facial palsy on the connectivity between the sensorimotor and visual systems. However, it is important to note that the dorsal pathway, which is involved in the processing of facial expressions, is more sensitive and more strongly recruited when dynamic rather than static facial expressions are processed (Duchaine and Yovel, 2015). This suggests that the sensorimotor simulation mechanism is more strongly triggered by dynamic facial expressions. Therefore, in the present study, we might have underestimated the impact of congenital facial palsy on the connectivity between the sensorimotor and visual systems. Future studies should consider using dynamic facial expressions to provide a more accurate understanding of the impact of congenital facial palsy on the sensorimotor and visual systems.

To conclude, our results support sensorimotor simulation models and the communication between sensorimotor and visual regions of the core system during subtle facial expression discrimination. Furthermore, they indicate that this communication is atypical in MBS individuals for facial expression processing.

Publicly available datasets were analyzed in this study. These data can be found here: https://osf.io/krpfb/.

The studies involving human participants were reviewed and approved by the Ethics Committee of the University of Padua (Protocol No. 2855). The patients/participants provided their written informed consent to participate in this study.

TQ: methodology and writing—original draft. AM: conceptualization, methodology, formal analysis, and writing—review and editing. FG: methodology and writing—review and editing. PF: conceptualization and writing—review and editing. PS: conceptualization, supervision, project administration, funding acquisition, and writing—review and editing. All authors contributed to the article and approved the submitted version.

This work was supported by funding assigned to PS (Fondazione Cariparo–“Ricerca Scientifica d’Eccellenza”, call 2021).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnsys.2023.1123221/full#supplementary-material

Aftanas, L. I., Varlamov, A. A., Pavlov, S. V., Makhnev, V. P., and Reva, N. V. (2002). Time-dependent cortical asymmetries induced by emotional arousal: EEG analysis of event-related synchronization and desynchronization in individually defined frequency bands. Int. J. Psychophysiol. 44, 67–82. doi: 10.1016/S0167-8760(01)00194-5

Bastiaansen, J. A. C. J., Thioux, M., and Keysers, C. (2009). Evidence for mirror systems in emotions. Philos. Trans. R. Soc. London Series B Biol. Sci. 364, 2391–2404. doi: 10.1098/rstb.2009.0058

Bogart, K. R., and Matsumoto, D. (2010). Living with Moebius syndrome: adjustment, social competence, and satisfaction with life. Cleft Palate-Craniofacial J. 47, 134–142. doi: 10.1597/08-257.1

Briegel, W. (2006). Neuropsychiatric findings of Möbius sequence – a review. Clin. Genet. 70, 91–97. doi: 10.1111/j.1399-0004.2006.00649.x

Bruña, R., Maestú, F., and Pereda, E. (2018). Phase locking value revisited: teaching new tricks to an old dog. J. Neural Eng. 15:056011. doi: 10.1088/1741-2552/aacfe4

De Stefani, E., Nicolini, Y., Belluardo, M., and Ferrari, P. F. (2019). Congenital facial palsy and emotion processing: the case of Moebius syndrome. Genes Brain Behav. 18:e12548. doi: 10.1111/gbb.12548

Destrieux, C., Fischl, B., Dale, A., and Halgren, E. (2010). Automatic parcellation of human cortical gyri and sulci using standard anatomical nomenclature. NeuroImage 53, 1–15. doi: 10.1016/j.neuroimage.2010.06.010

Duchaine, B., and Yovel, G. (2015). A revised neural framework for face processing. Ann. Rev. Vis. Sci. 1, 393–416. doi: 10.1146/annurev-vision-082114-035518

Ganel, T., Valyear, K. F., Goshen-Gottstein, Y., and Goodale, M. A. (2005). The involvement of the “fusiform face area” in processing facial expression. Neuropsychologia 43, 1645–1654. doi: 10.1016/j.neuropsychologia.2005.01.012

Gobbini, M. I., Gentili, C., Ricciardi, E., Bellucci, C., Salvini, P., Laschi, C., et al. (2011). Distinct neural systems involved in agency and animacy detection. J. Cogn. Neurosci. 23, 1911–1920. doi: 10.1162/jocn.2010.21574

Goldman, A. I., and Sripada, C. S. (2005). Simulationist models of face-based emotion recognition. Cognition 94, 193–213. doi: 10.1016/j.cognition.2004.01.005

Grill-Spector, K., Knouf, N., and Kanwisher, N. (2004). The fusiform face area subserves face perception, not generic within-category identification. Nat. Neurosci. 7, 555–562. doi: 10.1038/nn1224

Haxby, J., and Gobbini, M. I. (2011). “Distributed neural systems for face perception,” in Oxford Handbook of Face Perception, eds G. Rhodes, A. Calder, M. Johnson, and J. Haxby (Oxford: OUP Oxford), 93–110. doi: 10.1093/oxfordhb/9780199559053.013.0006

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233. doi: 10.1016/S1364-6613(00)01482-0

Ishai, A., Pessoa, L., Bikle, P. C., and Ungerleider, L. G. (2004). Repetition suppression of faces is modulated by emotion. Proc. Natl. Acad. Sci. U S A. 101, 9827–9832. doi: 10.1073/pnas.0403559101

Ishai, A., Schmidt, C. F., and Boesiger, P. (2005). Face perception is mediated by a distributed cortical network. Brain Res. Bull. 67, 87–93. doi: 10.1016/j.brainresbull.2005.05.027

Kheirkhah, M., Baumbach, P., Leistritz, L., Brodoehl, S., Götz, T., Huonker, R., et al. (2020). The temporal and spatial dynamics of cortical emotion processing in different brain frequencies as assessed using the cluster-based permutation test: an MEG study. Brain Sci. 10:352. doi: 10.3390/brainsci10060352

Kim, H., Seo, P., Choi, J. W., and Kim, K. H. (2021). Emotional arousal due to video stimuli reduces local and inter-regional synchronization of oscillatory cortical activities in alpha- and beta-bands. PLoS One 16:e0255032. doi: 10.1371/journal.pone.0255032

Lachaux, J. P., Rodriguez, E., Martinerie, J., and Varela, F. J. (1999). Measuring phase synchrony in brain signals. Hum. Brain Mapp. 8, 194–208. doi: 10.1002/(SICI)1097-0193(1999)8:4<194::AID-HBM4>3.0.CO;2-C

Lee, L. C., Andrews, T. J., Johnson, S. J., Woods, W., Gouws, A., Green, G. G. R., et al. (2010). Neural responses to rigidly moving faces displaying shifts in social attention investigated with fMRI and MEG. Neuropsychologia 48, 477–490. doi: 10.1016/j.neuropsychologia.2009.10.005

Likowski, K. U., Mühlberger, A., Gerdes, A. B. M., Wieser, M. J., Pauli, P., and Weyers, P. (2012). Facial mimicry and the mirror neuron system: simultaneous acquisition of facial electromyography and functional magnetic resonance imaging. Front. Hum. Neurosci. 6:214. doi: 10.3389/fnhum.2012.00214

Lomoriello, A. S., Maffei, A., Brigadoi, S., and Sessa, P. (2021). Altering sensorimotor simulation impacts early stages of facial expression processing depending on individual differences in alexithymic traits. Brain Cogn. 148:105678. doi: 10.1016/j.bandc.2020.105678

López, M. E., Bruña, R., Aurtenetxe, S., Pineda-Pardo, J. Á., Marcos, A., Arrazola, J., et al. (2014). Alpha-band hypersynchronization in progressive mild cognitive impairment: a magnetoencephalography study. J. Neurosci. 34, 14551–14559. doi: 10.1523/JNEUROSCI.0964-14.2014

Maffei, A., and Sessa, P. (2021a). Event-related network changes unfold the dynamics of cortical integration during face processing. Psychophysiology 58:e13786. doi: 10.1111/psyp.13786

Maffei, A., and Sessa, P. (2021b). Time-resolved connectivity reveals the “how” and “when” of brain networks reconfiguration during face processing. Neuroimage: Rep. 1:100022. doi: 10.1016/j.ynirp.2021.100022

Miskovic, V., and Schmidt, L. A. (2010). Cross-regional cortical synchronization during affective image viewing. Brain Res. 1362, 102–111. doi: 10.1016/j.brainres.2010.09.102

Moebius, P. J. (1888). Ueber angeborene doppelseitige Abducens-Facialis-Lähmung. Münchener Medizinische Wochenschrift 35, 91–94.

Molenberghs, P., Cunnington, R., and Mattingley, J. B. (2012). Brain regions with mirror properties: a meta-analysis of 125 human fMRI studies. Neurosci. Biobehav. Rev. 36, 341–349. doi: 10.1016/j.neubiorev.2011.07.004

Pion-Tonachini, L., Kreutz-Delgado, K., and Makeig, S. (2019). ICLabel: an automated electroencephalographic independent component classifier, dataset, and website. NeuroImage 198, 181–197. doi: 10.1016/j.neuroimage.2019.05.026

Pitcher, D., and Ungerleider, L. G. (2021). Evidence for a third visual pathway specialized for social perception. Trends Cogn. Sci. 25, 100–110. doi: 10.1016/j.tics.2020.11.006

Preston, S. D., and de Waal, F. B. M. (2002). Empathy: its ultimate and proximate bases. Behav. Brain Sci. 25, 1–20; discussion 20–71. doi: 10.1017/S0140525X02000018

Richards, R. N. (1953). The Möbius syndrome. J. Bone Joint Surg. 35, 437–444. doi: 10.2106/00004623-195335020-00017

Rives Bogart, K., and Matsumoto, D. (2010). Facial mimicry is not necessary to recognize emotion: facial expression recognition by people with Moebius syndrome. Soc. Neurosci. 5, 241–251. doi: 10.1080/17470910903395692

Rizzolatti, G., and Sinigaglia, C. (2016). The mirror mechanism: a basic principle of brain function. Nat. Rev. Neurosci. 17, 757–765. doi: 10.1038/nrn.2016.135

Rotshtein, P., Henson, R. N. A., Treves, A., Driver, J., and Dolan, R. J. (2005). Morphing Marilyn into Maggie dissociates physical and identity face representations in the brain. Nat. Neurosci. 8, 107–113. doi: 10.1038/nn1370

Sessa, P., Schiano Lomoriello, A., Duma, G. M., Mento, G., De Stefani, E., and Ferrari, P. F. (2022). Degenerate pathway for processing smile and other emotional expressions in congenital facial palsy: an hdEEG investigation. Philos. Trans. R. Soc. London Series B Biol. Sci. 377:20210190. doi: 10.1098/rstb.2021.0190

Terzis, J. K., and Noah, E. M. (2003). Dynamic restoration in Möbius and Möbius-like patients. Plastic Reconstruct. Surg. 111, 40–55. doi: 10.1097/00006534-200301000-00007

Van Overwalle, F., and Baetens, K. (2009). Understanding others’ actions and goals by mirror and mentalizing systems: a meta-analysis. NeuroImage 48, 564–584. doi: 10.1016/j.neuroimage.2009.06.009

Vannuscorps, G., Andres, M., and Caramazza, A. (2020). Efficient recognition of facial expressions does not require motor simulation. eLife 9:e54687. doi: 10.7554/eLife.54687

Verzijl, H. T. F. M., van der Zwaag, B., Cruysberg, J. R. M., and Padberg, G. W. (2003). Möbius syndrome redefined: a syndrome of rhombencephalic maldevelopment. Neurology 61, 327–333. doi: 10.1212/01.WNL.0000076484.91275.CD

Vuilleumier, P., Armony, J. L., Driver, J., and Dolan, R. J. (2001). Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron 30, 829–841. doi: 10.1016/S0896-6273(01)00328-2

Wang, X.-W., Nie, D., and Lu, B.-L. (2014). Emotional state classification from EEG data using machine learning approach. Neurocomputing 129, 94–106. doi: 10.1016/j.neucom.2013.06.046

Winston, J. S., Henson, R. N. A., Fine-Goulden, M. R., and Dolan, R. J. (2004). fMRI-Adaptation reveals dissociable neural representations of identity and expression in face perception. J. Neurophysiol. 92, 1830–1839. doi: 10.1152/jn.00155.2004

Wood, A., Lupyan, G., Sherrin, S., and Niedenthal, P. (2016a). Altering sensorimotor feedback disrupts visual discrimination of facial expressions. Psychon. Bull. Rev. 23, 1150–1156. doi: 10.3758/s13423-015-0974-5

Wood, A., Rychlowska, M., Korb, S., and Niedenthal, P. (2016b). Fashioning the face: sensorimotor simulation contributes to facial expression recognition. Trends Cogn. Sci. 20, 227–240. doi: 10.1016/j.tics.2015.12.010

Xu, X., and Biederman, I. (2010). Loci of the release from fMRI adaptation for changes in facial expression, identity, and viewpoint. J. Vis. 10:36. doi: 10.1167/10.14.36

Yovel, G., and Kanwisher, N. (2004). Face perception: domain specific, not process specific. Neuron 44, 889–898. doi: 10.1016/S0896-6273(04)00728-7

Keywords: Moebius syndrome, facial palsy, facial expressions, motor simulation, EEG functional connectivity, face processing

Citation: Quettier T, Maffei A, Gambarota F, Ferrari PF and Sessa P (2023) Testing EEG functional connectivity between sensorimotor and face processing visual regions in individuals with congenital facial palsy. Front. Syst. Neurosci. 17:1123221. doi: 10.3389/fnsys.2023.1123221

Received: 13 December 2022; Accepted: 17 April 2023; Published: 05 May 2023.

Edited by:

Alessandra Griffa, Université de Genève, Switzerland

Reviewed by:

Marianne Latinus, INSERM U1253 Imagerie et Cerveau (iBrain), France

Copyright © 2023 Quettier, Maffei, Gambarota, Ferrari and Sessa. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Paola Sessa, paola.sessa@unipd.it

†These authors have contributed equally to this work and share first authorship

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
