
REVIEW article

Front. Syst. Biol., 10 November 2022

Sec. Multiscale Mechanistic Modeling

Volume 2 - 2022 | https://doi.org/10.3389/fsysb.2022.959665

This article is part of the Research Topic “Combining Mechanistic Modeling with Machine Learning to Study Multiscale Biological Processes”.

Agent-based modeling (ABM) is a well-established computational paradigm for simulating complex systems in terms of the interactions between individual entities that comprise the system’s population. Machine learning (ML) refers to computational approaches whereby algorithms use statistical methods to “learn” from data on their own, i.e., without imposing any a priori model/theory onto a system or its behavior. Biological systems—ranging from molecules, to cells, to entire organisms, to whole populations and even ecosystems—consist of vast numbers of discrete entities, governed by complex webs of interactions that span various spatiotemporal scales and exhibit nonlinearity, stochasticity, and variable degrees of coupling between entities. For these reasons, the macroscopic properties and collective dynamics of biological systems are generally difficult to accurately model or predict via continuum modeling techniques and mean-field formalisms. ABM takes a “bottom-up” approach that obviates common difficulties of other modeling approaches by enabling one to relatively easily create (or at least propose, for testing) a set of well-defined “rules” to be applied to the individual entities (agents) in a system. Quantitatively evaluating a system and propagating its state over a series of discrete time-steps effectively simulates the system, allowing various observables to be computed and the system’s properties to be analyzed. Because the rules that govern an ABM can be difficult to abstract and formulate from experimental data, at least in an unbiased way, there is a uniquely synergistic opportunity to employ ML to help infer optimal, system-specific ABM rules. Once such rule-sets are devised, running ABM calculations can generate a wealth of data, and ML can be applied in that context too—for example, to generate statistical measures that accurately and meaningfully describe the stochastic outputs of a system and its properties. 
As an example of synergy in the other direction (from ABM to ML), ABM simulations can generate plausible (realistic) datasets for training ML algorithms (e.g., for regularization, to mitigate overfitting). In these ways, one can envision a variety of synergistic ABM⇄ML loops. After introducing some basic ideas about ABMs and ML, and their limitations, this Review describes examples of how ABM and ML have been integrated in diverse contexts, spanning spatial scales that include multicellular and tissue-scale biology to human population-level epidemiology. In so doing, we have used published studies as a guide to identify ML approaches that are well-suited to particular types of ABM applications, based on the scale of the biological system and the properties of the available data.

Rapid advances in experimental methodologies now enable us to obtain vast quantities of data describing the individual entities in a population, such as single cells within complex, multicellular tissues, or individual patients in large-scale epidemiological systems. Hence, a growing focus of systems biology and biomedical research involves elucidating patterns in these large datasets—what are the associations between the discrete, individual entities themselves, and what are the mechanisms by which the behaviors, states and interactions of these autonomous agents contribute to broader-scale, population-wide outcomes? For example, single-cell RNA sequencing (Hwang et al., 2018; Potter, 2018; Kulkarni et al., 2019), single-cell proteomics (Irish et al., 2006; Marx, 2019), and flow cytometry (Wu et al., 2012; Wu et al., 2013; Chen et al., 2019; Argüello et al., 2020) provide snapshots of an individual cell’s state at a single point in time. Yet, understanding the tissue-level and organ-level implications of these single-cell data requires a fundamentally different set of analytical approaches, with the capacity to spatiotemporally integrate potentially disparate data along at least two “dimensions”: across entire populations of entities, as well as across multiple scales (cellular → organismal). For instance, using single-cell RNA-Seq one can detect the presence and quantify the amount of RNAs in each of the cells in a tumor, but these data alone cannot illuminate how that particular collection of tumor cells, which undergo different behaviors (e.g., proliferation and migration) as dictated by their unique cellular states, contributes to tissue-level and organ-level outcomes such as angiogenesis and metastasis.

Epidemiological datasets have similarly expanded in recent years (Andreu Perez et al., 2015; Ehrenstein et al., 2017; Saracci, 2018). Many factors have driven this growth, including: 1) improved standardization and systematization of electronic health records [EHRs; (Andreu Perez et al., 2015; Booth et al., 2016; Casey et al., 2016; Ponjoan et al., 2019)], with concomitantly increased data storage, more sophisticated cyberinfrastructure (e.g., cloud computing) and improved data-mining capacities, 2) adoption of high-resolution, diagnostic medical imaging (Backer et al., 2005; Smith-Bindman et al., 2008; Andreu Perez et al., 2015; Preim et al., 2016), 3) acquisition of genomic and other “omics” big data, largely via next-generation sequencing (Thomas, 2006; Andreu Perez et al., 2015; Maturana et al., 2016; Chu et al., 2019), 4) technologies such as wearable patient health sensors (Atallah et al., 2012; Andreu Perez et al., 2015; Guillodo et al., 2020; Perez-Pozuelo et al., 2021), and 5) acquisition of spatial and environmental data from geographical information systems [GIS; (Krieger, 2003; Rytkönen, 2004; Andreu Perez et al., 2015)]. Despite these many technological advances, without a sound analytical and methodological framework to integrate and then explore these data it remains difficult to understand how, for example, certain lifestyle behaviors or healthcare policies might contribute to the spread of disease in a population of unique individuals. The challenges and unrealized potential of big data also hold true at the finer scale of physiological systems, from organs and tissues down to cellular communities, individual cells, and even at the subcellular scale. To handle biological big data, virtually all modern computational analysis pipelines employ machine learning (ML) methods, described below. 
While ML offers a powerful family of approaches for handling and analyzing big data, as well as drawing inferences, mechanistic insights and questions of causality are generally not as readily elucidated via ML; for this, we turn to mechanistic modeling.

The quantitative determination and forecasting of how individual mechanisms contribute to system-level outcomes (known as “emergent properties”) underpins much of basic research, and is also critical to applied areas such as creating targeted treatments, mitigating disease spread and, ultimately, guiding more informed healthcare policies. As described by Bonabeau (2002), these general types of problems—deciphering the global, collective behavior that emerges in a complex system, composed of a statistically large collection of (locally) interacting, individual components—are particularly amenable to the approach known as agent-based modeling (ABM). Thus, one can imagine synergistically integrating ABM and ML, leveraging the respective strengths of each: the mechanistic nature of ABMs is a potentially powerful instrument with which to analyze the (non-mechanistic, black-box) predictions that are accessible via ML. After briefly introducing ABM and ML, the remainder of this Review describes their potential synergy, including the relative strengths and limitations that have surfaced in recent studies that integrate these disparate approaches and apply them at various scales, from cellular to ecological.

ABM is now a well-established computational paradigm for simulating a system’s population-level outcomes based on interactions between the individual entities comprising the population. Such an approach or ABM “mindset” (Bonabeau, 2002) is necessarily bottom-up, and the general method has been applied to domains ranging from the dynamics of macroscopic systems, such as global financial markets (Bonabeau, 2002), to the microscopic dynamics of mRNA export from the cellular nucleus (Soheilypour and Mofrad, 2018). ABMs simulate spatially-discrete, autonomous individuals, or “agents”, that follow relatively simple “rules” across a series of discrete time-steps. Rules are formulated to describe the discrete, well-defined, individual behaviors that a single agent can enact at a given time-step depending upon (and possibly in response to) both its own state and its local environment; also, rules can be either probabilistic or deterministic, and can flexibly take into account prior agent states, simulation time, and other system-specific parameters. The agents—which can represent proteins, biological cells, individual organisms, or really any definable entity (e.g., individual traders in a stock market)—exhibit specific behaviors over time, and the collection of these behaviors (e.g., patterns of pairwise interactions) gives rise to population-level outcomes in the system/ensemble, such as in embryonic development (Longo et al., 2004; Thorne et al., 2007a; Robertson et al., 2007), blood vessel growth (Peirce et al., 2004; Thorne et al., 2011; Walpole et al., 2015), disease progression within a given organism (Martin et al., 2015; Virgilio et al., 2018), or infectious disease spread among subsets of organisms in a population (Cuevas, 2020; Rockett et al., 2020). 
As noted in a recent study that developed a “biological lattice-gas cellular automaton” (BIO-LGCA) for the collective behavior of cellular entities in complex multicellular systems (Deutsch et al., 2021), classical, on-lattice ABMs and cellular automata (CA) are similar approaches. In particular, CAs also simulate phenomena via a grid-based spatial representation, but often do not explicitly consider interactions between agents (in that way, CAs may be viewed as simplified forms of the ABM). For a recent introductory overview of ABMs, from a mathematical perspective and with an illustrative application to a three-state disease transmission model, see Shoukat and Moghadas (2020).
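To make the rule-based, time-stepped character of an ABM concrete, the following is a minimal sketch of a three-state (susceptible/infected/recovered) model on a square lattice, in the spirit of the disease-transmission example mentioned above. The lattice size, the infection and recovery probabilities, the synchronous update scheme, and all function names are illustrative assumptions, not taken from any cited study.

```python
import random

S, I, R = 0, 1, 2  # agent states: susceptible, infected, recovered


def neighbors(x, y, n):
    """Von Neumann neighborhood on an n x n lattice with periodic boundaries."""
    return [((x + 1) % n, y), ((x - 1) % n, y), (x, (y + 1) % n), (x, (y - 1) % n)]


def step(grid, p_infect, p_recover, rng):
    """Apply every agent's rules once (synchronous update) and return the new grid."""
    n = len(grid)
    new = [row[:] for row in grid]
    for x in range(n):
        for y in range(n):
            state = grid[x][y]
            if state == S:
                # Probabilistic rule: each infected neighbor independently
                # transmits with probability p_infect (depends on local environment).
                k = sum(grid[i][j] == I for i, j in neighbors(x, y, n))
                if rng.random() < 1 - (1 - p_infect) ** k:
                    new[x][y] = I
            elif state == I:
                # Probabilistic rule: recover with probability p_recover per step.
                if rng.random() < p_recover:
                    new[x][y] = R
    return new


def run(n=20, steps=50, p_infect=0.3, p_recover=0.1, seed=0):
    """Seed one infected agent at the center; return (S, I, R) counts per step."""
    rng = random.Random(seed)
    grid = [[S] * n for _ in range(n)]
    grid[n // 2][n // 2] = I
    history = []
    for _ in range(steps):
        history.append(tuple(sum(row.count(s) for row in grid) for s in (S, I, R)))
        grid = step(grid, p_infect, p_recover, rng)
    return history


history = run()
print(history[-1])  # population-level outcome emerging from agent-level rules
```

Updating all agents from a copy of the previous grid keeps the outcome independent of the order in which agents are visited; asynchronous (random-order) updating is a common alternative with somewhat different dynamics.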

The discretized nature of ABMs, in time and space, allows them to capture the temporal stochasticity and spatial heterogeneities that are inherent to most complex dynamical systems, biological or otherwise. The variabilities that arise from stochasticity and heterogeneity¹ can be handled in ABM simulations in a manner that is numerically robust (e.g., to singularities, divergences) and, thus, capable of emulating how real biological processes may progress towards potentially different outcomes (i.e., non-deterministic behavior), based on 1) heterogeneity among the unique agents in a population, 2) stochasticity at the level of individual agents (i.e., inherent variability stemming from differential responses of each individual agent), or 3) variability in the environment and the coupling of agents to that potentially dynamic environment (i.e., spatial inhomogeneities). Moreover, ABMs can quantitatively predict numerous outcomes for a dynamical system that may be difficult or impossible to quantify experimentally, at least with sufficient spatial and temporal resolution². ABMs can also be leveraged to predict system behaviors in response to a wide range of different perturbations and initial conditions, allowing for a more comprehensive understanding of biomedical systems than is accessible via experimentation alone. Another key benefit of ABM is that its accuracy as a modeling approach does not hinge on average-based assumptions about a system (fully-mixed, mean-field approximations, etc.), in contrast to partial differential equation (PDE)–based approaches or other modeling frameworks that treat a system as a smooth continuum, free of singularities and irregularities.
Combined with methodological approaches such as sensitivity analysis (ten Broeke et al., 2014; ten Broeke et al., 2016; Ligmann-Zielinska et al., 2020), these attributes make ABMs particularly useful tools for examining the dependencies of population-level phenomena on the behaviors and interactions of the individuals that comprise the population [e.g., using ABM simulations to map a “response surface”, in terms of some underlying set of features/explanatory variables (Willett et al., 2015)].
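Because ABM outputs are stochastic, an observable is usually summarized over many independent replicates before its dependence on a parameter is examined. The sketch below maps a one-dimensional “response surface” (a one-at-a-time parameter sweep) by averaging a scalar output over seeded replicates. The toy model `toy_abm`, a Reed–Frost-style outbreak among individual agents, and the choice of final outbreak size as the output are hypothetical stand-ins for a real ABM and its observables.

```python
import random
import statistics


def toy_abm(p, n_agents=100, seed=0):
    """Hypothetical stand-in ABM (Reed-Frost-style outbreak).

    Each step, every susceptible agent is infected independently with
    probability 1 - (1 - p)**I, where I is the number of currently infected
    agents; infected agents recover after one step. Returns final outbreak size.
    """
    rng = random.Random(seed)
    susceptible, infected, total = n_agents - 1, 1, 1
    while infected > 0:
        p_inf = 1 - (1 - p) ** infected
        new = sum(rng.random() < p_inf for _ in range(susceptible))
        susceptible -= new
        infected = new
        total += new
    return total


def response_curve(param_values, n_reps=30):
    """(parameter, mean output, standard deviation) over seeded replicates."""
    curve = []
    for p in param_values:
        outputs = [toy_abm(p, seed=rep) for rep in range(n_reps)]
        curve.append((p, statistics.mean(outputs), statistics.stdev(outputs)))
    return curve


for p, mean, sd in response_curve([0.0, 0.01, 0.02, 0.05]):
    print(f"p={p:.2f}  mean outbreak size={mean:.1f}  sd={sd:.1f}")
```

Reporting the spread alongside the mean matters here: near an epidemic threshold, replicates of the same parameter value can end in very different outcomes.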

ABMs have been used extensively to model multicellular processes, such as tissue patterning and morphogenesis (Thorne et al., 2007a; Thorne et al., 2007b; Robertson et al., 2007; Taylor et al., 2017), tumorigenesis (Wang et al., 2007; Gerlee and Anderson, 2008; Zangooei and Habibi, 2017; Oduola and Li, 2018; Warner et al., 2019), vascularization (Walpole et al., 2015; Walpole et al., 2017; Bora et al., 2019), immune responses (An, 2006; Bailey et al., 2007; Woelke et al., 2011; Xu et al., 2018), and pharmacodynamics (Hunt et al., 2013; Cosgrove et al., 2015; Kim et al., 2015; Ji et al., 2016; Gallaher et al., 2018). In the field of epidemiology, ABMs have also been used to represent human individuals in a population in order to study infectious disease transmission and to create simulations of disease spread in a cohort of individuals over time (Marshall and Galea, 2015; Tracy et al., 2018; Çağlayan et al., 2018; Cuevas, 2020; Rockett et al., 2020); note that in epidemiological, geographic, social, economic and some other settings, the terms ABM and “microsimulations” are often used interchangeably, though they are distinct approaches (Heard et al., 2015; Bae et al., 2016; Ballas et al., 2019). Like CAs, microsimulations also simulate individual entities in a discretized space over discrete time-steps, but individual agents do not interact³. While ABMs have been applied widely and fruitfully in biomedical research, they are not without recognized limitations. For example, the rules that govern ABMs can be difficult to abstract and formulate from experimental data alone, at least in a minimally-biased way. In addition, running ABMs can be computationally expensive, and selecting statistical measures that accurately and meaningfully summarize stochastic outputs can be challenging (ten Broeke et al., 2016). Some of these constraints and limitations may be alleviated by leveraging machine learning (ML) approaches as part of an ABM pipeline.

Machine learning is a vast set of approaches whereby algorithms use statistical formalisms and methods to “learn” from data on their own—that is, without being explicitly programmed to do so. In ML, whatever relationships, patterns or other associations may latently exist in a body of data are gleaned from the data, without requiring an a priori theory or model to specify the details of the possible relationships in advance. That said, some general form for a model must still be posited (e.g., we assume that a dataset can be fit by a linear function, can be represented by a neural network, or consists of natural clusters of associations); how these unavoidable assumptions manifest is known as the inductive bias of an ML model or learning algorithm. The next section introduces some foundational ML concepts and terminology via a basic description of supervised learning; the remaining subsections then consider the issue of evaluating ML models (Section 1.3.2) and, finally, offer a broader perspective on ML (Section 1.3.3), including a taxonomic overview of ML approaches (supervised, unsupervised, etc.).

Most broadly, every ML project begins with a question, data, and a model. Armed with a model, and as cogently put by Alpaydin (2021), “it is not the programmers anymore but the data itself that defines what to do next”. How this learning is achieved can be understood by considering ML, most generally, as a way to determine a function that optimally maps a dataset (captured as a set of independent variables, often termed “features”) to a set of results/outcomes (dependent variables). That is, we aim to find a function ℱ that maps the data to the “results”,

$$\mathcal{F}:\ \text{data (features)} \rightarrow \text{results (outcomes)}.$$
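As a minimal illustration of learning ℱ from labeled data, the sketch below assumes the simplest inductive bias, a linear model with a single feature, y ≈ w·x + b, and fits it by ordinary least squares. The data are synthetic and the function names are hypothetical.

```python
def fit_linear(xs, ys):
    """Ordinary least-squares fit of y = w*x + b for a single feature.

    Returns (w, b), the slope and intercept minimizing sum((y - (w*x + b))**2).
    """
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    w = sxy / sxx
    b = y_mean - w * x_mean
    return w, b


# Synthetic training data, generated roughly by y = 2x + 1 plus small noise.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.0]
w, b = fit_linear(xs, ys)


def F(x):
    """The learned map from a feature value to a predicted outcome."""
    return w * x + b


print(w, b)    # approximately 1.97 and 1.06
print(F(5.0))  # prediction for an unseen feature value
```

Nothing in the fit explains *why* y grows with x; the model only reproduces the association, which is the black-box limitation discussed later in this Review.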

Model training, selection, and evaluation are critical stages of any ML development pipeline, including when considering potential integration with an ABM framework. Even in broad, highly generic terms—considering, e.g., classification problems versus regression problems, ANN-based ML versus other types of ML, and so on—interrelated topics such as model selection and performance evaluation of ML models comprise an entire field unto itself. For a thorough treatment of this topic, we suggest Raschka (2018). Here, we simply note a few points. First, model training (i.e., the core learning part of the ML pipeline) and model selection are generally not separable issues, e.g., when constructing “splits” of an overall dataset into training (say 80% of the data) and test (say 20% of the data) sets, and then further carving out a validation subset (disjoint from the rest of the training set). How an ML approach is evaluated greatly depends on the form and complexity of its model, and an evaluation approach that may be suitable for regression-based or other “shallow” learning methods, both in terms of the ratios of splits as well as how data are assigned to training/validation/test sets, may not be as justifiable for more complex models, such as DNNs. Second, model development and performance are closely linked to the key goal of mitigating overfitting/underfitting; optimally achieving that balance yields the most successful model, with the lowest “generalization error” (i.e., the expected error of future predictions on unseen data, particularly data-points that lie distant from the training set). Third, 1) the general approaches (e.g., cross-validation, Bayesian model selection, etc.) and 2) the types of evaluation metrics (e.g., a log-loss function for classification tasks, a mean-squared error for regression, etc.) can vary greatly with the type of ML being performed (e.g., ANN versus non-ANN).
In short, when considering ML approaches for integration with an ABM framework, care must be taken not to inadvertently confound the various approaches used in training, testing, validating, and otherwise evaluating ML models; as with all applications of ML, validation and evaluation strategies should be formulated with the type of ML (e.g., ANN- versus non-ANN-based) in mind.
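The splitting step described above can be sketched as a seeded shuffle of example indices into disjoint training, validation, and test subsets, together with a mean-squared-error metric for regression. The 80/20 train/test ratio follows the text; holding out 20% of the remaining training portion as a validation set is an assumption for illustration. When the examples are ABM outputs, one might instead split by simulation configuration, so that stochastic replicates of the same parameter set do not land on both sides of a split.

```python
import random


def split_indices(n_examples, test_frac=0.2, val_frac=0.2, seed=0):
    """Shuffle indices 0..n_examples-1 into disjoint train/validation/test lists.

    test_frac is a fraction of the whole dataset; val_frac is a fraction of
    what remains after the test set has been held out.
    """
    idx = list(range(n_examples))
    random.Random(seed).shuffle(idx)
    n_test = int(round(test_frac * n_examples))
    test, rest = idx[:n_test], idx[n_test:]
    n_val = int(round(val_frac * len(rest)))
    val, train = rest[:n_val], rest[n_val:]
    return train, val, test


def mse(model, xs, ys):
    """Mean-squared error, a typical evaluation metric for regression."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


train, val, test = split_indices(100)
print(len(train), len(val), len(test))  # 64 16 20
```

The validation subset guides choices such as model complexity; the test subset is touched only once, at the end, to estimate generalization error.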

While what precisely distinguishes an algorithm as a machine learning algorithm (cf. statistical modeling, for example) is debatable, here we consider ML algorithms as broadly defined by Mitchell’s criterion (Mitchell, 1997): an algorithm is said to “learn” from experience E, with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E.

TABLE 1. This taxonomy of ML algorithms organizes a few popular approaches using a scheme based on Mitchell’s definition of ML.

Numerous useful reviews of ML in the biosciences have appeared in recent years, including the revolution in learning via DNNs [i.e., deep learning (DL)]. As but one example, Ching et al. (2018) have thoroughly reviewed the challenges and opportunities posed by applications of ML to big data in the biosciences. While focused on deep learning (LeCun et al., 2015; Goodfellow et al., 2016; Tang et al., 2019), many of the principles of that review apply to ML more broadly, e.g., viewing deep networks as analogous to ML’s classic regression methods, but sufficiently generalized so as to allow for nonlinear relationships among features⁵. In the biomedical realm, ML has emerged as a powerful and generalized paradigm for integrating data to classify and predict phenotypes, genetic similarities, and disease states within various biological processes; here, again, numerous reviews are available (Chicco, 2017; Park et al., 2018; Serra et al., 2018; Jones, 2019; Nicholson and Greene, 2020; Su et al., 2020; Peng et al., 2021; Tchito Tchapga et al., 2021).

Notwithstanding its many major successes, there are a few significant limitations associated with the application of ML to biology. Creating an accurate and robust ML model requires large amounts of experimental data, such as patient data, cellular-scale measurements, “omics” data, etc.; such data may be challenging to obtain for various reasons, including measurement inaccuracies, inherent sparsity of datasets, or other concerns such as paucity of health data stemming from privacy policies. Perhaps most fundamentally vexing for modelers, ML architectures and algorithms generally pursue optimality criteria in a manner that yields “black-box” solutions, and unfortunately the internal mechanisms that may link predictor and outcome variables remain unknown; thus, ML algorithms often do not illuminate the causal mechanisms that underlie system-wide behavior (in the language above, we have found a map ℱ, but with no explanatory basis for it). For these types of reasons, “explainable A.I.” has become a highly active research area (Jiménez-Luna et al., 2020; Confalonieri et al., 2021; Vilone and Longo, 2021). An interesting notion arises if we consider 1) the black-box property of ML methods, in tandem with 2) the bottom-up, mechanistic design of ABMs (e.g., in terms of their discrete, well-defined rule-sets), and view it through the lens of 3) the theory of causal inference (Pearl, 2000). Namely, ABM rule-sets are essentially collections of low-level causal mechanisms (i.e., structural causal models). Therefore, might it be possible to synergistically apply the data from ABM simulations to examine (high-level) ML-based predictions (i.e., hypotheses)? And, could doing so help “unwind” the “ladder of causation” (Bareinboim et al., 2022), or causal hierarchy, that is induced by the ABM’s set of causal mechanisms and that, at least loosely speaking, might underpin the “inner workings” (black-box) of the corresponding ML model? 
These types of questions can be explored by judiciously integrating ML and ABM.

With their respective strengths and limitations, the ABM and ML methodologies can be viewed as complementary approaches for modeling biological systems—particularly for systems and problems wherein the strengths of one type of methodology (ABM or ML) can be leveraged to address specific shortcomings of the other. For example, ML algorithms (e.g., DNNs) are often criticized because their predictions are arrived at in a black-box manner; in addition, most supervised algorithms require large amounts of accurately labeled training data, and overfitting is a common pitfall in many ML approaches. ABMs, on the other hand, 1) are built upon explicit representations/formulations of the precise interactions between system components (these rules are “low-level”, and thus relatively easily formulated), and 2) can easily generate, via suites of simulations, large quantities of output data. Similarly, while the creation of rules in ABMs is frequently accomplished by manual and subjective curation of the literature, which can lead to a biased or oversimplified abstraction of the true biological complexity, ML approaches such as reinforcement learning can be used to computationally infer optimal rule-sets for agents and their interactions. Thus, there is a naturally synergistic relationship between these pairs of relative strengths and weaknesses of ABM and ML.

Inspired by this potential synergy, the remainder of this Review highlights some published studies that have integrated ML with ABMs in the following ways, in order to create more advanced and accurate computational models of biological systems, at both the multicellular and epidemiological scales:

• Learning ABM Rules via ML: Reinforcement learning and supervised learning methods can be used to infer and refine agent rules, which are critical inasmuch as these rules are applied at each discrete time step and, thus, largely define the ABM.

• Parameter Calibration and Surrogate Models of ABMs: Stochastic optimization approaches, such as genetic algorithms and particle-swarm methods, can be used to calibrate ABM parameters. Supervised learning algorithms can be trained to create surrogate models of an ABM, which also aids in calibration and mitigates the computational cost of executing numerous ABM simulations.

• Exploring ABMs via ML: ML methods can help explore the complex, high-dimensional parameter space of an ABM, in terms of sensitivity analysis, model robustness, and so on.
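As a sketch of the calibration idea in the second item, the code below tunes one parameter of a stochastic model so that its replicate-averaged output matches a target value. The search is a stripped-down relative of a genetic algorithm (truncation selection plus Gaussian mutation, with no crossover), not a full GA or particle-swarm method. The model `simulate`, the target value, and all hyperparameters are hypothetical.

```python
import random


def simulate(p, n_agents=200, seed=0):
    """Hypothetical stand-in ABM: each agent independently adopts a behavior
    with probability p; returns the fraction of agents that adopted it."""
    rng = random.Random(seed)
    return sum(rng.random() < p for _ in range(n_agents)) / n_agents


def loss(p, target, n_reps=5):
    """Squared error between the replicate-averaged output and the target.

    Fixed seeds (common random numbers) make the loss deterministic in p.
    """
    mean_out = sum(simulate(p, seed=r) for r in range(n_reps)) / n_reps
    return (mean_out - target) ** 2


def evolve(target, pop_size=20, generations=30, sigma=0.05, seed=1):
    """Mutation-and-selection search for p in [0, 1]."""
    rng = random.Random(seed)
    pop = [rng.random() for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=lambda p: loss(p, target))
        parents = pop[: pop_size // 2]  # keep the fitter half (elitism)
        children = [
            min(1.0, max(0.0, rng.choice(parents) + rng.gauss(0, sigma)))
            for _ in range(pop_size - len(parents))
        ]
        pop = parents + children
    return min(pop, key=lambda p: loss(p, target))


p_hat = evolve(target=0.35)
print(f"calibrated p = {p_hat:.3f}")
```

Each loss evaluation here runs the model several times, which is exactly the cost that motivates replacing the ABM with a cheap surrogate during calibration.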

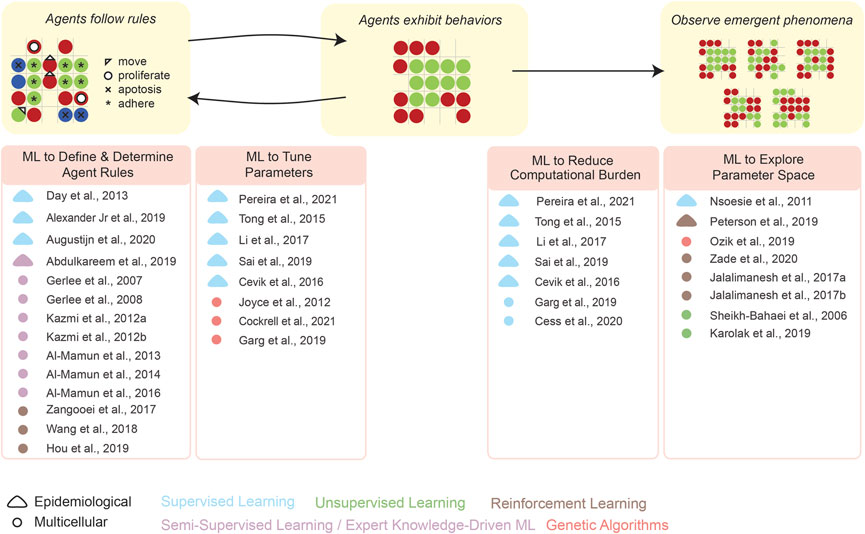

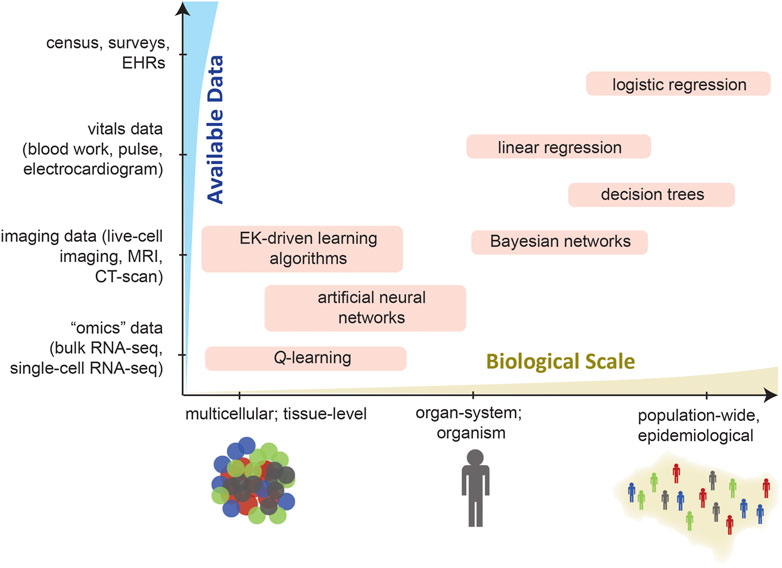

As an overview of the high-level organization of this Review, Figure 1 schematizes how individual studies, reported in the recent literature, have integrated ML into each step of formulating and analyzing an ABM. We emphasize that our present Review is by no means comprehensive: we have merely focused on specific examples of the above types of integrations. Several prior reviews have described how ML can be leveraged in computational modeling, e.g., by Alber et al. (2019) and by Peng et al. (2021). In addition, the idea of synergistically integrating ML and ABM (Figure 2) has existed since at least Rand’s (2006) early report, and includes more recent works such as by Giabbanelli (2019), Brearcliffe and Crooks (2021), and Zhang et al. (2021). The remainder of our present Review focuses more on the utility of ML within ABMs, and attempts to offer some guiding principles on how and when these integrations are feasible in simulating different scales of biology.

FIGURE 1. This overview schematizes how ML can aid the various stages in the development and application of an ABM—define/determine agent rules, tune parameters, explore parameter space, etc. Representative examples are given for various types of synergistic ML–ABM couplings (see literature citations).

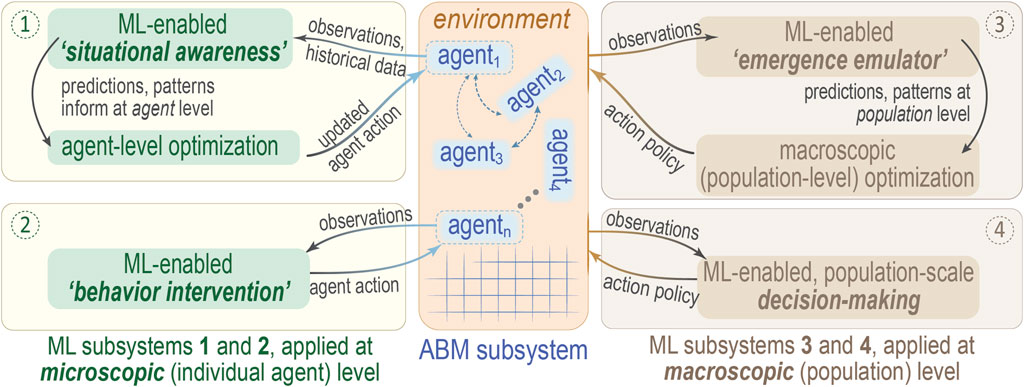

FIGURE 2. Schematic overview of potential ML/ABM integration schemes. This diagram suggests four modes (numbered circles) by which one might integrate ML and ABM, depending on whether the ML subsystem acts to optimize at the microscopic scale of individual agents (① and ②, left side) or else the macroscopic level of an entire population of agents (③ and ④, right side). Agents 1, 2, … , n are drawn in the ABM subsystem (middle panel), with coupling between agents denoted by dashed arrows; the square lattice is purely to emphasize the discrete nature of the ABM approach. As an example of how to interpret this diagram, note that mode ②, “behavior intervention”, entails application of “online” ML methods to modify agent behavior/action policies, which is essentially reinforcement learning. Note that this illustration is adapted from one that appears in Zhang et al. (2021), where further details may be found.

An ABM’s rules define the autonomous actions that an agent can perform as a function of its state and in response to changes in its local environment. For instance, a cell may undergo apoptosis if it experiences sustained hypoxia, or a healthy individual may become infected with a virus when in close proximity to an infected individual. Note that the words “can” and “may” occur in the previous sentences because ABM rules are often defined probabilistically. Rules link cause and effect in a manner that is enacted by individual agents/entities (molecules, cells, human individuals, etc.) in the population under consideration. Traditionally, these rules are manually generated by the modeler, who must curate and interpret empirical data describing the system, and synthesize them with expert opinion and/or dogma in the literature. In an ABM, the rule-set is only validated after ABM simulations have been run and predictions are compared to independent experimental data, or to a validation dataset. Hence, a common criticism of the traditional ABM rule-generation process is that there is inherent subjectivity on the part of the model-builder that could introduce bias in the rules, thereby skewing the biological relevance of the model’s downstream results and predictions.
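The hypoxia example above can be written as a single, probabilistic agent rule. In the sketch below, a cell agent counts consecutive time-steps spent below an oxygen threshold and, once that count reaches a minimum duration, undergoes apoptosis with some per-step probability. The class name, threshold, duration, and probability are hypothetical placeholders; in a traditional ABM they would be chosen by the modeler from the literature, which is the source of the subjectivity discussed above.

```python
import random
from dataclasses import dataclass


@dataclass
class Cell:
    """Hypothetical cell agent with a hypoxia-triggered apoptosis rule."""

    hypoxic_steps: int = 0
    alive: bool = True

    def update(self, local_o2, rng, o2_threshold=0.02,
               min_duration=3, p_apoptosis=0.5):
        """Apply the rule for one time-step, given the local oxygen level.

        All parameter values are illustrative assumptions, not measurements.
        """
        if not self.alive:
            return
        if local_o2 < o2_threshold:
            self.hypoxic_steps += 1
        else:
            self.hypoxic_steps = 0  # hypoxia must be sustained, so reset
        if self.hypoxic_steps >= min_duration and rng.random() < p_apoptosis:
            self.alive = False


rng = random.Random(0)
cells = [Cell() for _ in range(1000)]
for _ in range(10):
    for cell in cells:
        cell.update(local_o2=0.01, rng=rng)  # uniformly hypoxic environment
print(sum(not c.alive for c in cells), "of 1000 cells underwent apoptosis")
```

Because the rule is stochastic, identical cells in identical environments can meet different fates, which is one way ABMs represent agent-level variability.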

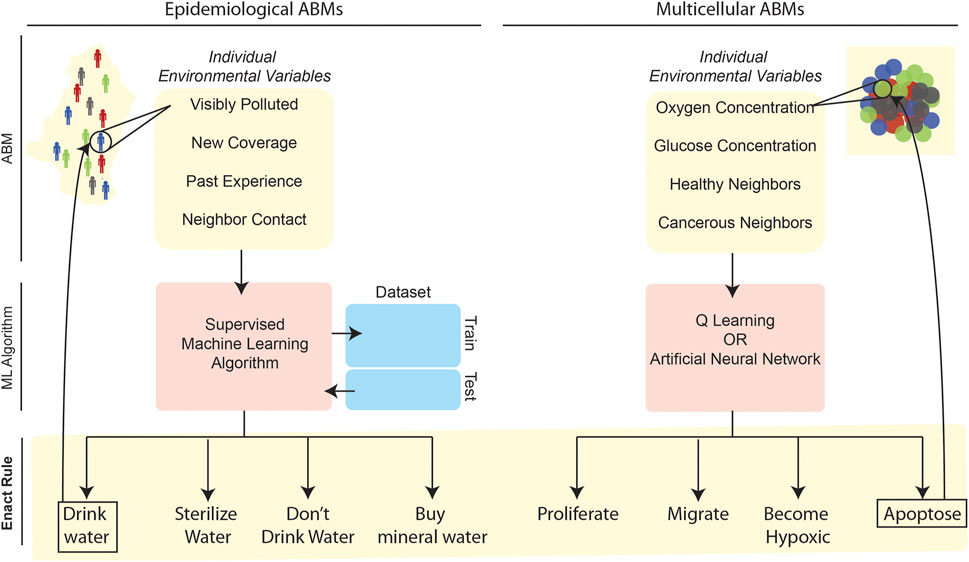

To overcome this potential issue, recent ABMs have begun leveraging ML to computationally determine—in a less ad hoc and heuristic manner—the rules governing agent behaviors based on an agent’s spatial environment at a given time-step. Instead of manually-generated rules, which could be unwittingly biased towards a particular set of predictions that are not statistically representative of the target population or system behavior at large, ML algorithms can learn the rules, parameterizations, and so forth more objectively—by examining experimental data or by applying fundamental mathematical relationships (Figure 3); indeed, this “learn from the data” capacity stems directly from the roots of ML in information theory and statistical learning (Hastie et al., 2009).

FIGURE 3. Application of ML to define rulesets in epidemiological (left) and multicellular (right) ABMs. In these two illustrative examples, ML-related stages are in red or blue while ABM-related steps are highlighted in yellow. In both contexts, individual agents survey environmental variables at a given time-step of the simulation. These environmental variables form the input for an ML algorithm that outputs a decision for the agent to enact. The left example refers to an ABM developed to simulate cholera spread in Kumasi, Ghana, wherein supervised learning algorithms trained on survey data were used to select the most probable behavior based on environmental variables (Abdulkareem et al., 2019; Augustijn et al., 2020). Several other epidemiological ABMs have leveraged large datasets to train supervised learning algorithms to determine agent behavior (Day et al., 2013; Abdulkareem et al., 2019; Alexander Jr et al., 2019; Augustijn et al., 2020). The right-hand example references multicellular ABMs that simulate individual cell behaviors in a tissue, with cellular “decisions” being made based on either Q-learning (Zangooei and Habibi, 2017) or ANN approaches (Gerlee and Anderson, 2007, 2008; Kazmi et al., 2012a; Kazmi et al., 2012b; Al-Mamun et al., 2013; Al-Mamun et al., 2014; Al-Mamun et al., 2016; Abdulkareem et al., 2019).
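To illustrate the “environment in, decision out” loop in Figure 3, here is a minimal tabular Q-learning sketch in which a cell agent learns whether to proliferate or remain quiescent given a discretized local nutrient level. The states, actions, reward function, and hyperparameters are hypothetical and far simpler than those in the cited studies; the sketch only shows the structure of ε-greedy action selection and the standard update Q(s, a) ← Q(s, a) + α[r + γ max_a′ Q(s′, a′) − Q(s, a)].

```python
import random

STATES = ("low_nutrient", "high_nutrient")
ACTIONS = ("quiesce", "proliferate")


def reward(state, action):
    """Hypothetical reward: proliferating pays off only when nutrients are high."""
    if action == "proliferate":
        return 1.0 if state == "high_nutrient" else -1.0
    return 0.0


def q_learn(n_steps=2000, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Learn a Q-table by interacting with a toy, randomly fluctuating environment."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    state = rng.choice(STATES)
    for _ in range(n_steps):
        # Epsilon-greedy: explore with probability epsilon, otherwise exploit.
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)
        else:
            action = max(ACTIONS, key=lambda a: q[(state, a)])
        r = reward(state, action)
        next_state = rng.choice(STATES)  # toy environment: nutrients fluctuate at random
        best_next = max(q[(next_state, a)] for a in ACTIONS)
        q[(state, action)] += alpha * (r + gamma * best_next - q[(state, action)])
        state = next_state
    return q


def policy(q):
    """Greedy decision rule extracted from the learned Q-table."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in STATES}


print(policy(q_learn()))
```

The learned policy then plays the role of a hand-written rule: at each time-step, the agent looks up its state and enacts the highest-valued action.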

Supervised learning algorithms have been leveraged in epidemiological ABMs to define agent behaviors in simulations of the spread of both infectious and non-communicable diseases. Indeed, supervised learning can be useful in ABM rule generation because of the capacity to “learn” agent rules from labeled datasets that map agent features to agent behaviors under systematically varying conditions or circumstances. For example, a microsimulation (Day et al., 2013) of diabetic retinopathy (DR) in a cohort of individuals used a multivariate logistic regression algorithm to help build the rules that determine when each human agent will advance to the next stage of DR, based on features such as age, gender, duration of diabetes, current tobacco use, and hypertension. Instead of manually estimating DR stage advancement probabilities from the literature, these rules were computationally learned by training a multivariate logistic regression model on a dataset describing 535 DR patients. The logistic regression algorithm learned a function relating individual patient features to the probability of DR stage advancement, and at the beginning of every simulated year in the ABM this function was used to determine whether each human agent would advance to the next stage of DR. This approach showed that a simulated cohort of 501 patients had no significant differences from an actual live-patient cohort. Moreover, the logistic regression method was useful in identifying key predictors of DR stage advancement (Day et al., 2013). Finally, note that this example illustrates the general principle that regression models are highly applicable to constructing rules when large volumes of patient data are available.
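The pattern in this example, a fitted logistic model queried once per simulated year to decide whether each agent advances a stage, can be sketched as follows. The coefficients, the subset of features, and the number of stages are invented placeholders, not the values estimated by Day et al. (2013); in practice the coefficients would come from fitting the logistic regression to patient data.

```python
import math
import random

# Hypothetical fitted coefficients (NOT from Day et al., 2013): an intercept plus
# one weight per feature, on the log-odds scale.
COEFS = {"intercept": -3.0, "age": 0.02, "diabetes_years": 0.08,
         "smoker": 0.4, "hypertension": 0.5}
MAX_STAGE = 4  # illustrative number of disease stages


def p_advance(agent):
    """Logistic-regression probability that an agent advances one stage this year."""
    z = COEFS["intercept"] + sum(
        COEFS[k] * agent[k] for k in COEFS if k != "intercept"
    )
    return 1.0 / (1.0 + math.exp(-z))


def simulate_year(cohort, rng):
    """At the start of each simulated year, apply the learned rule to every agent."""
    for agent in cohort:
        if agent["stage"] < MAX_STAGE and rng.random() < p_advance(agent):
            agent["stage"] += 1
        agent["age"] += 1
        agent["diabetes_years"] += 1


rng = random.Random(42)
cohort = [dict(age=60, diabetes_years=10, smoker=i % 2, hypertension=i % 3 == 0,
               stage=0) for i in range(500)]
for _ in range(10):
    simulate_year(cohort, rng)
print(sum(a["stage"] > 0 for a in cohort), "of 500 agents advanced at least one stage")
```

Because the rule is a fitted function of agent features, its coefficients double as a ranking of predictors, which mirrors how the logistic model in the cited study helped identify key predictors of stage advancement.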

Another study (Alexander Jr et al., 2019) evaluated multiple supervised learning methods to predict, in the context of an ABM platform, individual patient responses to pregabalin, a medication that targets the gabapentin receptor and is used to treat several conditions, including diabetic neuropathy. The study found that “ensemble” methods combining several “instance-based” learning methods, including supervised k-nearest neighbors and fuzzy c-means, yielded the highest classification accuracy (Alexander Jr et al., 2019).
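For readers unfamiliar with instance-based learning, the sketch below shows the core of a k-nearest-neighbors classifier: a new patient is assigned the majority label among the k most similar training patients. The two features, the labels and the data points are hypothetical, and the ensemble and fuzzy c-means components used by Alexander Jr et al. (2019) are not reproduced.

```python
import math
from collections import Counter


def knn_predict(training, query, k=3):
    """Majority vote among the k training instances closest to `query` (Euclidean distance)."""
    nearest = sorted(training, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical training set: (baseline pain score, dose in arbitrary units) -> observed response
training = [
    ((2.0, 1.0), "non-responder"), ((2.5, 1.5), "non-responder"), ((3.0, 1.0), "non-responder"),
    ((7.0, 4.0), "responder"), ((7.5, 4.5), "responder"), ((8.0, 4.0), "responder"),
]
print(knn_predict(training, (7.2, 4.2)))  # -> responder
```

No model is "trained" in the usual sense; the stored instances themselves are the model, which is what makes such methods instance-based.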

Much recent effort in biomedical informatics has focused on developing approaches to automatically and systematically extract and infer statistically rigorous new information, so-called “real-world evidence” (RWE), from primary data sources such as electronic health records (EHRs). The aims of such efforts are manifold, including the discovery of new uses for drugs already known to be safe and efficacious (an approach known as “repurposing”) and, ultimately, the formulation of high-confidence, clinically actionable recommendations (e.g., a particular drug for a specific indication), ideally in a personalized, “precision medicine” manner. A potentially synergistic interface exists between ABMs and RWE-related studies, for example using raw (low-level), patient-derived data both to develop ABMs (define rule-sets, parameterizations, etc.) and to deploy them for predictive purposes. For instance, real-world data about the spread of COVID-19 in hospitals and other settings have been used to develop and deploy ABMs for optimizing policy measures and exploring other epidemiological questions (Gaudou et al., 2020; Hinch et al., 2021; Park et al., 2021). More broadly, recent ABM-based studies of “information diffusion” have been used in the development of advanced community health resources (Lindau et al., 2021) and to examine how “medical innovation” might propagate among specific communities, such as cardiologists (Borracci and Giorgi, 2018).

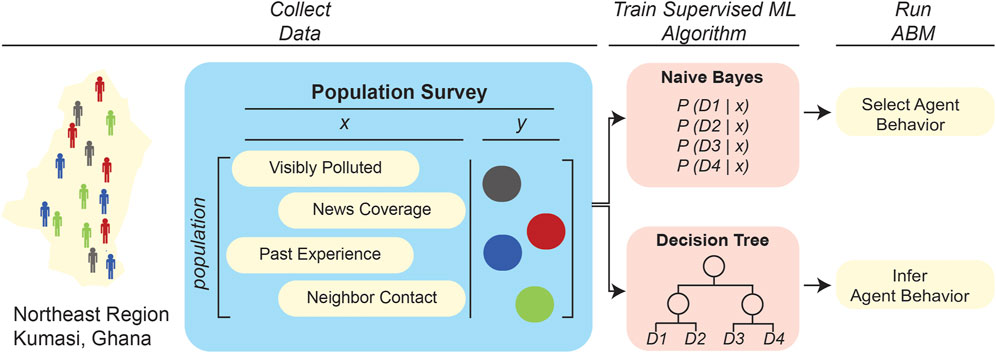

Other studies have demonstrated the utility of ML algorithms in ABM rule generation even when training data are limited. Some studies train supervised learning algorithms on the available data and augment the learned functions with expert knowledge. Bayesian networks (BNs), for example, are a supervised learning approach commonly paired with expert knowledge. First, the BN is trained on datasets to determine conditional probabilities of a certain event occurring given predictor values, such as the probability of a sick individual infecting a healthy individual given the physical distance between them. Then, in a type of approach that has been termed “informed ML” (von Rueden et al., 2021), domain experts can subjectively adjust these learned probabilities based on experience and published literature. One study (Abdulkareem et al., 2019) used this approach to determine human agent rules in a previously developed ABM of cholera spread in Kumasi, Ghana. That work (Figure 3) compared four different BNs, trained with varying combinations of survey data and expert opinion support, to define a rule on whether a human agent would decide to use river water based on varying levels of 1) visual pollution, 2) media influence, 3) communication with neighbors, and 4) past experience. The ABM was found to be most accurate when the BNs combined low-level data with expert knowledge; this is somewhat unsurprising, as the available training data came from a limited number of participants who did not holistically represent the modeled population. Moreover, the study found that a “sequential learning” approach further improved the accuracy of the ABM. Sequential learning refers to training the BN in an “online” manner, simultaneously with the ABM simulation, such that the BN is re-trained on data that are generated during the ABM simulation.
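One simple way to see how learned probabilities, expert opinion and sequential learning fit together is a single conditional probability table (CPT), the building block of a BN. In the hypothetical sketch below, expert opinion enters as "virtual" prior counts, survey records add real counts, and records generated during a simulation could be fed back through the same `observe` method, a much-simplified stand-in for sequential learning. The class name, the pollution levels and all numbers are illustrative assumptions, not values from Abdulkareem et al. (2019).

```python
import random


class RiverUseCPT:
    """P(agent uses river water | perceived pollution level): one node of a hypothetical BN."""

    def __init__(self, expert_prior, prior_weight):
        # expert_prior: {level: P(use river)} elicited from domain experts (hypothetical).
        # prior_weight (> 0): how many "virtual observations" the expert opinion is worth.
        self.counts = {level: [prior_weight * p, prior_weight * (1.0 - p)]
                       for level, p in expert_prior.items()}

    def observe(self, level, used_river):
        """Add one data record (survey data now, simulation-generated data later)."""
        self.counts[level][0 if used_river else 1] += 1

    def prob(self, level):
        yes, no = self.counts[level]
        return yes / (yes + no)

    def decide(self, level, rng):
        """Agent rule: sample the decision from the current conditional probability."""
        return rng.random() < self.prob(level)


cpt = RiverUseCPT({"low": 0.8, "high": 0.2}, prior_weight=10)
for used in [True] * 5 + [False] * 5:  # hypothetical survey records at high pollution
    cpt.observe("high", used)
print(cpt.prob("high"))  # (2 + 5) / (10 + 10) = 0.35
```

The larger `prior_weight` is, the more data are needed before the survey (or simulation) evidence outweighs the experts' initial estimate.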
The study by Abdulkareem et al. (2019) thus shows not only that Bayesian networks are a viable learning algorithm for incorporating expert opinion into ABMs when the available training data are limited, but also that the feedback process in sequential learning can further improve the accuracy of a learning algorithm by utilizing data generated by the ABM simulation. In another study, Augustijn et al. (2020) took an alternative approach to determining human agent water use in the aforementioned cholera-spread ABM: that work trained decision trees on the same training dataset as the earlier study (Abdulkareem et al., 2019) in order to determine whether an agent will use river water based on the same predictor variables. The decision tree scheme differs from the BN approach in that it does not require expert opinion or sequential learning; instead, it derives (novel) agent rules from scratch by determining a tree-like model/path of how each agent considers the predictor variables to arrive at a decision regarding usage of river water. The decision tree-based approach yielded ABM predictions of the number of infected individuals that differed from those of the BN-based approach (Augustijn et al., 2020). This discrepancy could be anticipated because, as outlined in Figure 4, the two ML integrations led to two fundamentally different rulesets, thus affecting the emergent properties/outcomes of the system. That the two studies' integrations of ML yielded different ABM results underscores the importance of testing multiple ML approaches and integration strategies in order to assess which method will yield the most accurate ABMs for the particular systems being examined.
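For contrast, the sketch below grows a small classification tree from binary survey features using greedy splits on Gini impurity (a CART-style criterion) and then uses the tree directly as an agent's rule. The feature names, the toy dataset and the split criterion are assumptions made for illustration; Augustijn et al. (2020) may have used a different tree-induction algorithm and different settings.

```python
from collections import Counter
from itertools import product


def gini(labels):
    """Gini impurity: chance of mislabeling a record drawn and labeled at random from this node."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def build_tree(rows, labels, features, depth=0, max_depth=3):
    """Greedy top-down induction; leaves are labels, internal nodes are (feature, if_false, if_true)."""
    majority = Counter(labels).most_common(1)[0][0]
    if depth == max_depth or len(set(labels)) == 1 or not features:
        return majority
    best = None
    for f in features:
        left = [i for i, row in enumerate(rows) if not row[f]]
        right = [i for i, row in enumerate(rows) if row[f]]
        if not left or not right:
            continue
        weighted = (len(left) * gini([labels[i] for i in left])
                    + len(right) * gini([labels[i] for i in right])) / len(rows)
        if best is None or weighted < best[0]:
            best = (weighted, f, left, right)
    if best is None:
        return majority
    _, f, left, right = best
    rest = [g for g in features if g != f]

    def grow(idx):
        return build_tree([rows[i] for i in idx], [labels[i] for i in idx],
                          rest, depth + 1, max_depth)

    return (f, grow(left), grow(right))


def decide(tree, agent):
    """Walk the tree with an agent's current observations until a leaf (decision) is reached."""
    while isinstance(tree, tuple):
        feature, if_false, if_true = tree
        tree = if_true if agent[feature] else if_false
    return tree


FEATURES = ["polluted", "media_warning", "neighbor_uses_river"]
rows = [dict(zip(FEATURES, values)) for values in product([False, True], repeat=3)]
# Hypothetical survey outcome: river used only when water looks clean and there is no media warning
labels = [not r["polluted"] and not r["media_warning"] for r in rows]
tree = build_tree(rows, labels, FEATURES)
print(tree)  # ('polluted', ('media_warning', True, False), False)
```

Note that the learned tree ignores `neighbor_uses_river` entirely, because that feature does not help separate the labels in this toy dataset; a different dataset or learner could produce a quite different ruleset.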

FIGURE 4. Contrasting two applications of supervised learning algorithms to define agent rulesets in epidemiological ABMs. Two studies applied ML to define agent rulesets in an ABM developed to simulate cholera spread in Kumasi, Ghana. The first study (upper pathway) applied a naive Bayes model to predict water usage of individual agents based on environmental variables (Abdulkareem et al., 2019), while the second one (lower path) trained a decision tree to derive agent behavior based on the same environmental variables (Augustijn et al., 2020). The two ML/ABM integrations predicted different numbers of total infected individuals in the population, illustrating that the ML algorithm used to adaptively refine an agent’s behavior can impact the overall system-wide trends predicted by an ABM.

Supervised learning approaches that are expert knowledge–driven have also been applied in studies that integrate artificial feed-forward neural networks (ANNs) into ABMs of multicellular systems. ANNs, the predecessors of today’s deep NNs, process information in a way inspired by the hierarchical, multi-layered, densely interconnected patterns of signaling and information flow between layers of neurons in the human brain. In an ANN, each neuron (or “hidden unit”) processes input variables, e.g., via a linear summation, and “decides” how to pass this information on to the next neuron (downstream), the decision being based on whether or not the computed numerical values exceed an “activation threshold”. [The foundations of NNs are treated in the classic text by Haykin (2009).] Ideally, an ANN’s input variables capture salient features of a system in terms of its dynamics, local environment, and so on; non-numerical information (e.g., categorical data) can be captured as input via a process known as feature encoding. Also, note that the activation function and the neuron’s input-combining functionality can range from relatively simple (e.g., a binary step function or a weighted linear combination of arguments) to more sophisticated forms, such as 1) those based on the hyperbolic tangent (or the similar logistic function, both of which saturate sigmoidally), or 2) the more recent piecewise-linear “rectified linear units” (ReLU), which have been found to work well in training DNNs (Glorot et al., 2011).
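The computation performed by each neuron, a weighted sum of its inputs plus a bias passed through an activation function, can be written compactly. The sketch below implements the step, logistic and ReLU activations and a forward pass through a tiny two-layer network; the weights and inputs are arbitrary illustrative values, not taken from any cited study.

```python
import math


def step(x, threshold=0.0):
    """Binary step: the neuron 'fires' (1) only if its input exceeds the activation threshold."""
    return 1.0 if x > threshold else 0.0


def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))  # sigmoid; saturates at 0 and 1 (math.tanh works the same way)


def relu(x):
    return max(0.0, x)  # rectified linear unit


def neuron(inputs, weights, bias, activation):
    """Weighted linear combination of the inputs, followed by a nonlinearity."""
    return activation(sum(w * x for w, x in zip(weights, inputs)) + bias)


def layer(inputs, weight_rows, biases, activation):
    return [neuron(inputs, w, b, activation) for w, b in zip(weight_rows, biases)]


# Tiny feed-forward pass: 2 inputs -> 2 ReLU hidden units -> 1 logistic output.
x = [1.0, 0.5]
hidden = layer(x, [[0.4, -0.2], [0.3, 0.8]], [0.0, -0.1], relu)
output = layer(hidden, [[1.0, -1.0]], [0.0], logistic)[0]
print(hidden, output)
```

Swapping `relu` for `step` or `math.tanh` changes only the nonlinearity; the weighted-sum structure of each neuron stays the same.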

As an ANN/ABM example, one study incorporated an expert knowledge-based feed-forward ANN to determine cellular behavior based on environmental conditions in an ABM of tumor growth (Gerlee and Anderson, 2007). Within the ABM, each cellular agent encoded an ANN that decided cell phenotype based on inputs describing a cell’s local environment, such as the number of cellular neighbors, local oxygen concentration, glucose concentration, and pH (Gerlee and Anderson, 2007). Each ANN processed these inputs to select one of a limited number of discrete phenotypic responses, such as proliferation, quiescence, movement, or apoptosis. Also, in that work the connection weights and activation thresholds of each neuron were manually set, thus “tuning” the ANN such that overall cellular behavior resembled that of cancer cells (i.e., a certain percentage of cells in the population had each of the output phenotypes), instead of training the ANN on actual cellular data. As cells proliferated, they implicitly passed on their ANN to successive generations. Genetic mutations were incorporated into the simulation model by introducing random fluctuations in the ANN weights and thresholds when passed on to daughter cells (Gerlee and Anderson, 2007). These simulated genetic mutations allowed the authors to study clonal evolution in tumors and the environmental factors that contribute to the emergence of the glycolytic phenotype, a cellular state characterized by upregulated glycolysis that is known to increase the invasiveness of a tumor (Gerlee and Anderson, 2007, 2008).
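The idea of a heritable, mutable decision network can be sketched as follows. This is a deliberately simplified, hypothetical version: a single layer maps four environmental inputs to one score per phenotype, the highest-scoring phenotype is chosen, and daughter cells inherit the parent's weights and thresholds with Gaussian noise added. The network structure, the input scaling and the mutation size `sd` are assumptions, not the architecture or parameters of Gerlee and Anderson (2007).

```python
import random
from dataclasses import dataclass

PHENOTYPES = ("proliferate", "quiescent", "move", "apoptosis")


@dataclass
class CellAgent:
    weights: list     # one row of 4 input weights per phenotype (hypothetical values)
    thresholds: list  # one activation threshold per phenotype

    def decide(self, env):
        """env = (n_neighbours, oxygen, glucose, pH); return the most strongly activated phenotype."""
        scores = [sum(w * x for w, x in zip(row, env)) - th
                  for row, th in zip(self.weights, self.thresholds)]
        return PHENOTYPES[max(range(len(scores)), key=scores.__getitem__)]

    def divide(self, rng, sd=0.05):
        """Daughter inherits the network; 'mutations' perturb every weight and threshold."""
        def mutate(v):
            return v + rng.gauss(0.0, sd)
        return CellAgent([[mutate(w) for w in row] for row in self.weights],
                         [mutate(t) for t in self.thresholds])


parent = CellAgent(weights=[[0, 1, 0, 0], [0, 0, 0, 0], [1, 0, 0, 0], [0, -1, 0, 0]],
                   thresholds=[0.5, 0.0, 0.5, 0.5])
print(parent.decide((0, 1.0, 1.0, 7.4)))  # well oxygenated: proliferate
print(parent.decide((0, 0.1, 1.0, 7.4)))  # poorly oxygenated: quiescent
daughter = parent.divide(random.Random(0))
```

Over many divisions, lineages whose mutated networks happen to suit the local environment leave more descendants, which is the mechanism that allows clonal evolution to emerge in such simulations.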

The above ANN framework was incorporated into follow-up studies aimed at modeling drug delivery and hypoxia. Those further studies increased the complexity of the cellular ANN by adding growth and inhibitory factors as inputs (Kazmi et al., 2012a; Kazmi et al., 2012b), and also by introducing infusion of a bioreductive drug into the ABM; these studies explored the effects of protein binding on drug transport (Kazmi et al., 2012a; Kazmi et al., 2012b). Other studies used a similar ANN architecture to pinpoint effects of hypoxia on tumor growth (Al-Mamun et al., 2014), and explored the efficacy of a chemotherapeutic agent, maspin, on tumor metastasis (Al-Mamun et al., 2013; Al-Mamun et al., 2016). Overall, this design scheme—i.e., “embedding” ANNs into the agent entities of an ABM—illustrates an intriguing and creative type of synergy that is possible when integrating ML and ABM–based approaches.

Another type of learning algorithm used in multicellular ABMs is reinforcement learning (RL), which learns cellular behaviors as “policies” that maximize a (cumulative) reward based on the surrounding environment and transitions of the system from one “state” to the next (Table 1). Conceptual similarities between RL and ABM rule-sets run deep: the RL approach can be largely viewed as being a type of agent-based Markov decision process (Puterman, 1990). The key elements of this approach are four interrelated concepts: 1) the state that is occupied by an agent at a given instant (e.g., a cell can be in a “quiescent” versus “proliferating” state); 2) the action which an agent can take (e.g., apoptose versus divide); 3) a probabilistic policy map, specifying the chance (and rewards) of transitions between a given combination of states and actions (call it

Recent studies of multicellular systems (Zangooei and Habibi, 2017; Wang et al., 2018; Hou et al., 2019) have exploited a type of “model-free” RL algorithm known as Q-learning (Table 1) to quantitatively learn which cellular behavior, or action, an agent should take on, based on its surroundings (environmental context). In this approach, state-action pairs (see above) are mapped to a reward space by a quality function, Q, which can be roughly viewed as the expected cumulative (discounted) reward obtained by taking a given action in a given state and following the policy thereafter. Q-learning seeks state-action policies that are optimal in the sense of maximizing the overall/cumulative reward. As one might imagine, achieving this goal involves both exploration and exploitation in the solution space: 1) roughly speaking, “exploration” means trying actions that deviate from the current best-known policy, thereby sampling new, potentially distant regions of a system’s universe of possible state-action pairs (which may pay off only as a long-term/delayed reward), whereas 2) “exploitation” means (re)sampling an already characterized and advantageous region of the space (e.g., a local energy minimum). In practice, the exploration/exploitation trade-off is usually set by the action-selection rule rather than by the Q update itself, e.g., via the probability ε of taking a random action in an “ε-greedy” policy; the (adjustable) learning rate instead controls how strongly each new reward revises the current Q estimate. Intuitively, one can imagine that more exploration occurs relatively early in training (at which point the solution space, or policy space, has been less mapped-out), whereas the balance might shift towards exploitation in later stages (once the algorithm has learned more productive/rewarding types of actions, corresponding to particular regions of the state-action space).
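The update at the heart of tabular Q-learning is Q(s, a) ← Q(s, a) + α[r + γ max over a′ of Q(s′, a′) − Q(s, a)], where α is the learning rate and γ the discount factor. The toy sketch below applies it to a hypothetical cell that must choose between proliferating and quiescing depending on local oxygen; the two-state environment, its reward values and the ε-greedy exploration scheme are illustrative assumptions, not the setup of any cited study.

```python
import random

STATES = ("low_oxygen", "high_oxygen")
ACTIONS = ("proliferate", "quiesce")


def reward(state, action):
    """Hypothetical reward: dividing pays off only when oxygen is plentiful."""
    if action == "proliferate":
        return 1.0 if state == "high_oxygen" else -1.0
    return 0.0


def train_q(n_steps=5000, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    state = rng.choice(STATES)
    for _ in range(n_steps):
        # epsilon-greedy: explore a random action with probability epsilon, otherwise exploit
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)
        else:
            action = max(ACTIONS, key=lambda a: q[(state, a)])
        r = reward(state, action)
        next_state = rng.choice(STATES)  # assumption: local oxygen fluctuates at random
        # temporal-difference update toward r + gamma * max_a' Q(s', a')
        target = r + gamma * max(q[(next_state, a)] for a in ACTIONS)
        q[(state, action)] += alpha * (target - q[(state, action)])
        state = next_state
    return q


def greedy_policy(q):
    """Best-known action in each state after learning."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in STATES}


print(greedy_policy(train_q()))
```

In this sketch `epsilon`, not the learning rate `alpha`, sets how often the agent explores; a common refinement is to decay `epsilon` as training proceeds.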

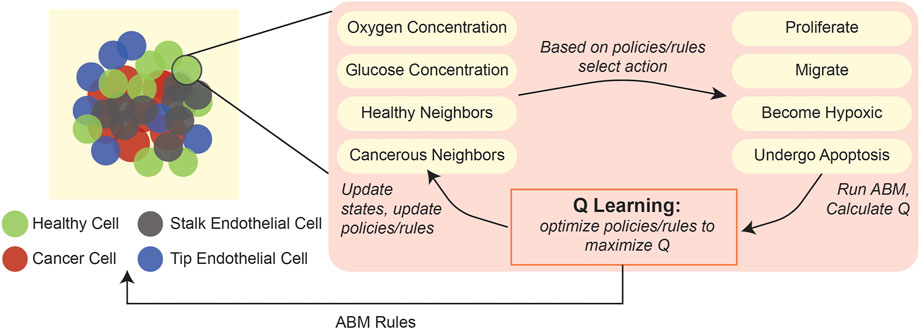

As an example of the applicability of this type of ML in ABMs, one study developed a 3D hybrid agent-based model of a vascularized tumor, wherein a Q-learning algorithm dynamically determined individual cell phenotypes based on features of their surrounding environment (Figure 5), such as local oxygen and glucose concentrations, cell division count, and number of healthy and cancerous neighbors (Zangooei and Habibi, 2017). Comparison with predictions from other, validated ODE-based models (Wodarz and Komarova, 2009; Gerlee, 2013) indicated that the ABM could accurately recapitulate cell phenotype selection and angiogenesis behaviors.

FIGURE 5. Application of Q-learning to update cellular states in an ABM of tumorigenesis. An ABM of 3D tumorigenesis (Zangooei and Habibi, 2017) applied Q-learning to find the optimal cell actions (proliferate, migrate, become hypoxic, undergo apoptosis) based on an individual cell’s surrounding environmental variables, including oxygen concentration, glucose concentration, number of healthy cell neighbors, and number of cancerous cell neighbors.

Q-learning has also been used to model cell migration behaviors in multicellular systems. Cell migration is an intricate and challenging process to model because a subtle combination of chemotactic gradients, cell···substrate interactions, and other factors influences the direction of movement. One study, which used Q-learning to develop cell migration rules in an ABM of C. elegans embryogenesis (Wang et al., 2018), trained a deep-Q network that optimizes individual cell migratory behaviors in the system. Deep-Q networks are a deep-RL approach that integrates deep NNs (e.g., deep convolutional neural nets) with the Q-learning framework in order to improve the power and efficiency of a basic RL approach (Alpaydin, 2021); this improvement is achieved by using a DNN, rather than an explicit table of values, to represent the Q-function mentioned above (which, again, underlies the mapping of state-action pairs and probabilistic policies to the reward space), while the network is still trained toward targets given by a variant of Bellman’s equation from dynamic programming (Eddy, 2004). In a similar way, Q-learning also has been used to define cell migration behaviors in leader-follower systems (Hou et al., 2019). In these contexts in particular, RL methods can be seen as a complement to popular “swarm intelligence”-based approaches (Table 1), such as the particle-swarm, ant-colony, and dragonfly stochastic optimization algorithms (Meraihi et al., 2020; Jin et al., 2021), which feature ants, dragonflies, etc., as the discrete agent-like entities.

Ideally, an ABM’s ruleset captures the underlying mechanisms that govern the behaviors of individual entities in response to their local surroundings. Thus, an implication of applying ML to infer agent rules is that the structure and quantitative formulation of the ML model itself accurately express the decision-making process of an agent, and that the model is generalizable (at least within some sufficient bounds). As an early example of using ANNs to model agent rules, Gerlee and Anderson (2007) embedded ANNs in cellular agents of a growing tumor to predict cell behaviors, such as proliferation, quiescence, movement, or apoptosis. In that approach, the ANN modeled the “response network” (or rules) governing the behavior of cellular agents in response to their local microenvironments, an assumption being that an NN architecture could reasonably well represent how individual cells enact behaviors in the complex tumor microenvironment. With somewhat similar aims but a different approach, Zangooei and Habibi (2017) applied an RL algorithm to define cancer cell agent behaviors in a growing three-dimensional tumor. The contrasting approaches used by these studies suggest that it could be interesting to assess their relative strengths in representing cellular decision-making in the intricate microenvironment of a tumor. Indeed, there is now an opportunity to evaluate how different ML approaches affect the accuracy and generality of ABM predictions. Augustijn et al. (2020) performed such a head-to-head comparison in an epidemiological ABM of cholera spread: specifically, they contrasted a decision-tree–based algorithm and an EK-driven naïve Bayes approach to simulating decision-making in the ABM. As might be expected, the study found that the emergent predictions of the ABM varied based on which ML approach was used. As was emphasized above for ML model validation, we stress here that there is no “one-size-fits-all” approach to integrating ML and ABM.
While a key principle is to strive for an optimal “match” between the structure/architecture of the ML approach and the ABM simulation system, it nevertheless pays to systematically analyze the behavior of as many ML/ABM integration schemes as resources permit.

The choice of ML algorithm will likely be influenced by two factors: 1) the type and availability of data and 2) the ability to validate the ML algorithm, both on its own and after being integrated with an ABM framework. In epidemiological settings, survey data and EHRs enable the creation of massive training datasets that are amenable to training and validating supervised learning algorithms in isolation to define agent behaviors. Here, several supervised learning algorithms can be trained, and the algorithm that exhibits the highest predictive accuracy can be embedded in an ABM simulation. In contrast, far fewer data are available describing cell behaviors within tissue contexts. In these situations, training and validating supervised learning algorithms may be less feasible; there, most studies leverage EK-driven ANNs or RL algorithms. As was alluded to earlier, recall that NNs are “universal predictors,” meaning that a sufficiently large network can fit essentially any dataset. In biomedical datasets, which are generally high-dimensional and often noisy, this can easily lead to overfitting of the model to the data. To mitigate overfitting, deep learning models require large training datasets, together with held-out data for validation. Moreover, the effects of perturbations to NNs cannot be assessed using only the training data (see the aforementioned notes on causal hierarchy); examining them thoroughly requires new instances. We suggest that as many ML/ABM integrations be tested as is feasible, and that justification be provided (again, to the extent possible) for the specific learning algorithm applied for rule-making. Finally, one opportunity that can be envisioned in this field is to integrate ANNs with simulations, perturb the ANNs, and study the resulting emergent differences in the ABM.

Typically, ABMs include a variety of parameters that dictate agent behaviors and impact model outcomes. While some of these parameters may be experimentally accessible and well-characterized, such as the time for a cell to divide or the contagious period of an infected individual, many parameters are often unknown and difficult or impossible to measure experimentally. For example, the probability of two cells forming an adherens junction or the physical distance over which a virus spreads from individual to individual are parameters that are difficult to determine accurately. Also, certain parameters are intrinsically broadly distributed. For example, at the molecular level the diffusive properties of proteins and other molecules can vary greatly based on cytosolic crowding, facilitated transport, etc., to such a degree that the values of a single parameter (e.g., the translational diffusion coefficient) can span more than an order of magnitude. An acute challenge in ABM development is calibrating such parameter values so that model outputs are statistically similar to experimentally measured values, including their distributions. Parameter calibration typically involves the modeler formulating an appropriate “error” or “fitness” function that compares model outputs with experimental outputs; the calibration algorithm optimizes multiple parameters so as to minimize error or, equivalently, maximize fitness. For example, in an ABM of infectious disease transmission, an error function may be defined as the squared difference between the final fraction of infected individuals in the model and that observed in a real-world outbreak.
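As a concrete, hypothetical instance of such an error function, the sketch below runs a minimal stochastic susceptible-infected-recovered ABM several times for a candidate transmission parameter `beta`, averages the final fraction of agents ever infected across replicates, and returns the squared difference from an observed value. The model structure, the fixed recovery probability and all numbers are assumptions chosen for brevity.

```python
import random
import statistics


def run_abm(beta, gamma, n_agents, n_steps, rng, n_seed=1):
    """Minimal stochastic SIR-type ABM; returns the final fraction of agents ever infected."""
    state = ["I"] * n_seed + ["S"] * (n_agents - n_seed)
    for _ in range(n_steps):
        n_infected = state.count("I")
        if n_infected == 0:
            break
        p_infect = beta * n_infected / n_agents  # well-mixed contact assumption
        new_state = []
        for s in state:
            if s == "S" and rng.random() < p_infect:
                new_state.append("I")
            elif s == "I" and rng.random() < gamma:
                new_state.append("R")
            else:
                new_state.append(s)
        state = new_state
    return (n_agents - state.count("S")) / n_agents


def calibration_error(beta, observed_fraction, n_replicates=20, seed=0):
    """Squared error between the replicate-averaged model output and the observed value."""
    rng = random.Random(seed)
    finals = [run_abm(beta, gamma=0.1, n_agents=200, n_steps=100, rng=rng)
              for _ in range(n_replicates)]
    return (statistics.mean(finals) - observed_fraction) ** 2


for beta in (0.1, 0.2, 0.4):
    print(beta, calibration_error(beta, observed_fraction=0.6))
```

Averaging over replicates is what makes the error a usable objective: a single stochastic run would give a noisy, unrepeatable score for the same `beta`.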
The parameter calibration algorithm would then seek an ABM parameter combination that minimizes this error function. This is a daunting computational task: exhaustive, brute-force “parameter sweeps” rapidly become intractable, even for relatively coarse sampling, because of a combinatorial explosion in the size of the search space [i.e., the curse of dimensionality (Donoho, 2000)]. ABMs generally have high-dimensional parameter spaces, making it critical to have an efficient calibration pipeline that can rapidly explore this space and limit the number of parameter combinations that require evaluation. Genetic algorithms and other “evolutionary algorithms” offer effective stochastic optimization approaches for high-dimensional searches, as has been recognized in the context of ABMs (Calvez and Hutzler, 2005; Stonedahl and Wilensky, 2011). Therefore, the following section considers genetic algorithms in somewhat more detail, as an example of these types of biologically-inspired ML algorithms and their interplay with ABMs.

Genetic algorithms (GAs) are a widely used ML approach in parameter calibration and, more generally, in any sort of numerical problem that attempts to identify global optima in vast, multi-dimensional search spaces. Inspired by the native biological processes of molecular evolution and natural selection, as described in a timeless piece by Holland (1992), GAs are particularly adept at locating combinations of parameters (as “solutions” or “individuals” in an in silico population) that stochastically optimize a fitness function. A description of GAs in terms of the broader landscape of evolutionary computation can be found in Foster (2001); more recently, Jin et al. (2021) have provided a pedagogically helpful review of GAs in relation to swarm-based techniques and other population-based “metaheuristic” approaches for stochastic optimization problems (Table 1).

In general, a GA operates via several distinct stages: 1) initialization of a population of individuals as (randomized) parameter combinations that are encoded as chromosomes (e.g., as bit-strings, each chromosome corresponding to one individual), 2) numerical evaluation of the fitness of each individual in the population at cycle n, 3) a selection step, wherein parameter combinations/individuals of relatively high fitness are chosen as “parents” based on specific criteria/protocols, thereby biasing the population towards greater overall fitness [the selection protocol’s algorithm and its thresholds can be stochastic to varying degrees, e.g., “tournament selection”, “roulette wheel selection” or similar approaches (Zhong et al., 2005)], and 4) the stochastic application of well-defined genetic operators, such as crossover (recombination) and mutation, to a subset of the population, thereby yielding the next generation of individuals as “offspring”. This (n+1)th generation then becomes the current generation n, and the steps from stage 2) onwards are iteratively repeated. Over successive iterations, certain allelic variants (“flavors” of a gene) are likely to become enriched at specific chromosomal regions, indicating convergence with respect to those genes/regions. The GA cycles can terminate after a specific number of iterations/generations, or once a convergence threshold is reached. At the conclusion of this process, the set of available individuals (with encoded genotypes) will represent various “solutions” to the original problem; that is, the solution is read out as the “genetic sequence” (i.e., genotype) of the final set of chromosomes, representing the “fittest” individuals (corresponding to optimal phenotypes).
As the iterations of evaluate fitness → select → reproduce/mutate proceed, ideally exploring new regions of the search space at each stage, the average fitness of a generation improves toward an optimum (e.g., maximal traffic flow, minimal free energy, minimal loss/error function). Once fitness stops improving appreciably, the GA can be considered as having converged and identified a parameter combination that (approximately) optimizes the fitness function; as a stochastic heuristic, however, a GA does not guarantee that this is the global optimum.
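The cycle above can be sketched compactly. In the hypothetical example below, two model parameters are each encoded as 8 bits of a chromosome and decoded to [0, 1]; fitness is the negative squared distance to an assumed "true" parameter pair, standing in for the negative of an ABM calibration error. Tournament selection, one-point crossover, bit-flip mutation and retention of the single best individual (elitism) are common textbook choices, not the only options.

```python
import random

BITS_PER_PARAM = 8
N_PARAMS = 2
TARGET = (0.3, 0.7)  # hypothetical "true" parameter values the calibration should recover


def decode(chromosome):
    """Split the bit-string into one gene per parameter and map each gene to [0, 1]."""
    values = []
    for i in range(N_PARAMS):
        gene = chromosome[i * BITS_PER_PARAM:(i + 1) * BITS_PER_PARAM]
        values.append(int("".join(map(str, gene)), 2) / (2 ** BITS_PER_PARAM - 1))
    return tuple(values)


def error(params):
    """Stand-in for an ABM calibration error (squared distance to TARGET)."""
    return sum((p - t) ** 2 for p, t in zip(params, TARGET))


def fitness(chromosome):
    return -error(decode(chromosome))


def tournament(population, scores, rng, size=3):
    """Pick `size` individuals at random; the fittest of them becomes a parent."""
    contenders = rng.sample(range(len(population)), size)
    return population[max(contenders, key=lambda i: scores[i])]


def crossover(a, b, rng):
    cut = rng.randrange(1, len(a))  # one-point crossover
    return a[:cut] + b[cut:]


def mutate(chromosome, rng, rate):
    return [1 - bit if rng.random() < rate else bit for bit in chromosome]


def run_ga(pop_size=40, generations=60, crossover_rate=0.9, seed=1):
    rng = random.Random(seed)
    length = BITS_PER_PARAM * N_PARAMS
    # stage 1: random initial population of bit-string chromosomes
    population = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    history = []  # best fitness seen in each generation
    for _ in range(generations):
        scores = [fitness(c) for c in population]                  # stage 2: evaluate
        best = max(range(pop_size), key=lambda i: scores[i])
        history.append(scores[best])
        offspring = [population[best]]                             # elitism
        while len(offspring) < pop_size:
            p1 = tournament(population, scores, rng)               # stage 3: select
            p2 = tournament(population, scores, rng)
            child = crossover(p1, p2, rng) if rng.random() < crossover_rate else list(p1)
            offspring.append(mutate(child, rng, rate=1.0 / length))  # stage 4: vary
        population = offspring
    best_chromosome = max(population, key=fitness)
    history.append(fitness(best_chromosome))
    return decode(best_chromosome), history


params, history = run_ga()
print(params, error(params))
```

Replacing `error` with a function that runs the ABM (for example, a replicate-averaged calibration error) turns this into a calibration loop. Because elitism copies the best individual unchanged, the best fitness never decreases from one generation to the next.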

While GAs can find parameters that optimize multi-objective fitness functions without running an ABM for every possible parameter combination, they can still be quite computationally expensive. Because of its inherent stochasticity, an ABM must be run multiple times to obtain a stable estimate of the fitness of a single parameter combination (genotype) in each generation of the GA. Thus, as the complexity and computational burden of the ABM increase, traditional GAs become a less feasible option, particularly for calibrating a sophisticated ABM. Nevertheless, GAs have been used in tandem with ABMs in areas as diverse as calibrating models of financial and retail markets (Heppenstall et al., 2007; Fabretti, 2013), parameterizing an ABM “of the functional human brain” (Joyce et al., 2012), and model refinement and rule-discovery in a “high-dimensional” ABM of systemic inflammation (Cockrell and An, 2021). An active area of research concerns the development of strategies by which GA or GA-like approaches can navigate a search space in a manner that is more numerically efficient and computationally robust (e.g., to a pathological fitness landscape). Such stochastic optimization methods include, for example, a family of covariance matrix adaptation–evolution strategy (CMA-ES) algorithms [see Slowik and Kwasnicka (2020) for this and related approaches] and, somewhat related, probabilistic model-building GAs (PMBGAs) that “guide the search for the optimum by building and sampling explicit probabilistic models of promising candidate solutions” (Wikipedia, 2022).
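A rough count makes these costs concrete. Suppose each parameter combination is simulated R times, an exhaustive grid samples k values for each of d parameters, and a GA evaluates a population of P individuals over G generations. With the purely illustrative values R = 20, k = 10, d = 10, P = 50 and G = 100:

$$
N_{\text{grid}} = R\,k^{d} = 20 \times 10^{10} = 2 \times 10^{11},
\qquad
N_{\text{GA}} \approx R\,P\,G = 20 \times 50 \times 100 = 10^{5}.
$$

The GA needs about two million times fewer runs than the grid, yet at one minute per ABM run, 10^5 runs still amount to roughly 70 days of serial computation.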

One way to increase the computational efficiency of GAs is to reduce the number of parameters being optimized, thereby reducing the dimensionality of the overall search space of the GA and the number of steps required to achieve convergence. In this context, ML methods can be applied to conduct sensitivity analyses on an ABM and identify the most sensitive/critical parameters to target for calibration. Random forests (RFs), which are composed of an ensemble of decision trees (Table 1), are a popular supervised learning algorithm for conducting sensitivity analysis (Strobl et al., 2007; Strobl et al., 2008; Criminisi et al., 2012). A study by Garg et al. (2019) used RFs to identify sensitive parameters in a multicellular ABM of three different cell populations in vocal fold surgical injury and repair. In that work, the ABM was first run for a variety of input parameter values to generate outputs and create a training dataset that relates input parameter combinations to output values. Then, an RF was trained on these data to classify model outputs based on initial parameter values. The RF ranks input parameters by their Gini importance. Gini impurity measures the probability that a randomly chosen output would be misclassified if it were labeled at random according to the distribution of output classes at a node of a tree, and a parameter’s Gini importance is the decrease in impurity achieved by splits on that parameter, summed over those splits and averaged over all trees in the forest. Viewing this quantity as a measure of feature importance, a parameter with a higher Gini importance can be seen as disproportionately influencing the outcome (relative to other parameters), because splitting the model runs on that parameter separates the output classes most effectively. After training the RF, this study selected the top three parameters associated with each cell type in the model for calibration with a GA (Garg et al., 2019).
This integrative and multipronged approach is mentioned here because it reduced the number of parameters required for calibration via the GA, thus improving the computational efficiency of the overall model calibration process.
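The impurity-based ranking can be illustrated on a toy dataset. The sketch below computes, for each parameter separately, the largest decrease in Gini impurity achievable by a single threshold split; a random forest (e.g., the impurity-based `feature_importances_` attribute of scikit-learn's `RandomForestClassifier`) instead accumulates such decreases over every split in many bootstrapped trees, so this is only a simplified illustration. The parameter names and the labeling rule that generates the "ABM outputs" are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Probability of mislabeling a random sample labeled according to the node's class mix."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_impurity_decrease(values, labels):
    """Largest drop in weighted Gini impurity from one threshold split on this parameter."""
    parent = gini(labels)
    pairs = sorted(zip(values, labels))
    best = 0.0
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # thresholds can only fall between distinct parameter values
        left = [lab for _, lab in pairs[:i]]
        right = [lab for _, lab in pairs[i:]]
        weighted = (len(left) * gini(left) + len(right) * gini(right)) / len(pairs)
        best = max(best, parent - weighted)
    return best


def rank_parameters(samples, labels, names):
    scores = {name: best_impurity_decrease([s[j] for s in samples], labels)
              for j, name in enumerate(names)}
    return sorted(names, key=scores.get, reverse=True), scores


# Hypothetical ABM runs on a parameter grid; the output class depends only on the first parameter.
names = ["proliferation_rate", "adhesion_strength"]
samples = [(p / 10, a / 10) for p in range(1, 9) for a in range(1, 9)]
labels = ["high_repair" if p > 0.45 else "low_repair" for p, _ in samples]
ranking, scores = rank_parameters(samples, labels, names)
print(ranking, scores)
```

Keeping `ranking[:k]` would give the k parameters to hand on to a GA, mirroring the "top three parameters per cell type" selection described above.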

Beyond computational efficiency, can GAs and ABMs be integrated in ways that might extend the low-level functionality of one (or both) of these fundamental algorithmic approaches? For example, can GAs enable adaptable agents in an ABM? As reviewed by DeAngelis and Diaz (2019), largely in the context of ecological sciences, the plasticity of agent rule-sets and decision-making processes is a key component in achieving greater accuracy and realism in modeling and simulating complex adaptive systems. Here, the decision-making rules and processes that govern the behavior of an individual agent, at a particular time-step in the simulation, are “generally geared to optimize some sort of fitness measure”, and—critically—there is the capacity for the decision-making process to change (evolve) via selection processes as a simulation unfolds. As concrete examples of approaches that have been taken to incorporate plasticity and heterogeneity in agent behaviors (across individual agent entities, and across time), we note that fuzzy cognitive maps (FCMs) have been employed with GAs and agent-based methodologies in at least two distinct ways: 1) In building a framework that uses FCMs to model gene-regulatory networks, Liu and Liu (2018) employed a multi-agent GA and random forests to address the high-dimensional search problem that arises in finding optimal parameters for their large FCM-based models. 2) More recently, Wozniak et al. (2022) devised a GA-based algorithm to efficiently create agent-level FCMs (i.e., one FCM per unique agent, versus a single global FCM for all agents); importantly, the capacity for agent-specific FCMs, or for agents to “have different traits and also follow different rules”, enables the emergence of more finely-grained (and more realistic) population-level heterogeneity. GA-based approaches to enable agents to be more adaptable will make ABMs more “expressive”, affording a more realistic and nuanced view of the systems being modeled. 
Drawing upon the parallel between the “agents” (real or virtual) in an ABM and those in RL, we note that Sehgal et al. (2019) found that using a GA afforded significantly more efficient discovery of optimal parameterization values for the agent’s learning algorithms in a deep RL framework, wherein agents were updated via Q-learning approaches [specifically, deep deterministic policy gradients (DDPGs) combined with hindsight experience replay (HER)]; although that work was in the context of robotics, it can be viewed as ABM-related because an RL algorithm’s “agents” are essentially abstracted, intelligent (non-random) agents that enact decisions based upon a host of feedback factors, at the levels of 1) intra-agent (i.e., an agent’s internal state), 2) inter-agent interactions (with neighbors, near or far) and 3) agent···environment interactions. Finally, we end with one “inverse” example: Yousefi et al. (2018) took the approach of developing “ensemble metamodels” (in order to reduce the number of models requiring eventual evaluation by a GA) that were trained on ABM-generated data, and then used the metamodels effectively as fitness functions in their GA framework, aimed at solving the constrained optimization problem of on-the-fly resource allocation in hospital emergency departments. We describe this as “inverse” because, rather than using a GA to aid in an underlying agent-based framework, ABMs were used to inform the GA process (albeit via the ML-based metamodels). We believe that much synergy of this sort is possible.

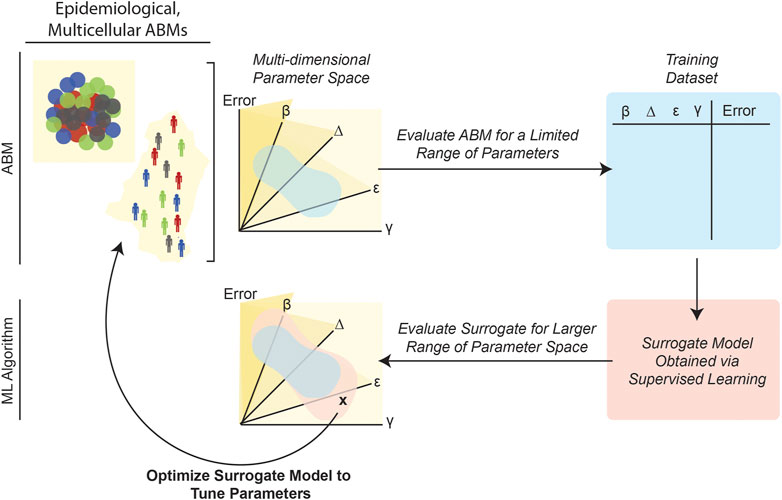

Supervised learning algorithms that create a more easily evaluated meta-model or “surrogate model” of an original ABM can also significantly reduce computational burden and make model calibration processes more computationally tractable. As schematized in Figure 6, this approach involves evaluating the ABM on an initial set of parameter combinations by computing the fitness, given an objective function constructed by the modeler. Then, a supervised learning algorithm is trained on these data in order to create a surrogate ML model that can predict ABM outputs for various parameter combinations. Finally, parameter-calibration approaches, such as GAs, particle swarm optimization or other methodologies amenable to vast search spaces (Table 1), can be applied to this surrogate model. Often, the surrogate model runs significantly faster than the ABM because the inference stage in an ML pipeline involves simply applying the already-trained model to new data (also, the ML model/function is evaluated for single data items instead of an entire simulation’s worth of data points). In order to reduce the run-time of an epidemic model, Pereira et al. (2021) employed this general strategy by training a deep neural network (DNN) on data generated by ABM simulations. Application of the DNN (i.e., inference) was more computationally efficient than executing numerous ABM simulations; and, unlike the ABM, the DNN run-time did not increase as the number of ABM agents increased. This DNN-based surrogate model was then used for parameter calibration (Pereira et al., 2021). Other studies have taken similar approaches, for example, by using regression algorithms to train surrogate meta-models of an ABM of interest (Tong et al., 2015; Li et al., 2017; Sai et al., 2019; Lutz and Giabbanelli, 2022).
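The three-step workflow described above (sample the ABM, fit a surrogate, optimize on the surrogate) can be sketched in a few lines of Python. The “ABM” here is a hypothetical noisy function standing in for an expensive simulation scored against data, the surrogate is a quadratic least-squares regression, and the optimizer is a basic global-best particle swarm with common textbook coefficients; all of these are illustrative choices rather than those of the cited studies.

```python
import numpy as np

def toy_abm_error(theta, rng):
    """Hypothetical stand-in for one expensive, stochastic ABM run scored against data."""
    return (theta[0] - 0.3) ** 2 + (theta[1] - 0.7) ** 2 + rng.normal(0, 0.01)

def quad_features(T):
    t1, t2 = T[:, 0], T[:, 1]
    return np.column_stack([np.ones(len(T)), t1, t2, t1 * t2, t1 ** 2, t2 ** 2])

def fit_surrogate(T, err):
    """Quadratic least-squares surrogate mapping parameters to calibration error."""
    coef, *_ = np.linalg.lstsq(quad_features(T), err, rcond=None)
    return lambda X: quad_features(np.atleast_2d(X)) @ coef

def pso(f, n_particles=30, n_iter=100, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Basic global-best particle swarm minimizing f over the unit square."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(0, 1, (n_particles, 2))
    v = np.zeros_like(x)
    pbest, pbest_val = x.copy(), f(x)
    g = pbest[np.argmin(pbest_val)].copy()
    for _ in range(n_iter):
        r1, r2 = rng.random((2, n_particles, 1))
        v = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (g - x)
        x = np.clip(x + v, 0, 1)
        val = f(x)                                   # cheap: evaluates the surrogate, not the ABM
        better = val < pbest_val
        pbest[better], pbest_val[better] = x[better], val[better]
        g = pbest[np.argmin(pbest_val)].copy()
    return g

rng = np.random.default_rng(42)
T = rng.uniform(0, 1, (60, 2))                       # initial design: 60 "ABM runs"
err = np.array([toy_abm_error(t, rng) for t in T])
surrogate = fit_surrogate(T, err)
best = pso(surrogate)
```

Only the 60 initial runs touch the “ABM”; the roughly 3,000 evaluations made by the swarm all hit the cheap surrogate.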

FIGURE 6. Training surrogate ML models can reduce the computational burden of ABM calibration. Because it requires repeatedly evaluating potentially complex numerical expressions, for multiple agents over numerous timesteps, ABM parameterization can be computationally expensive. Once trained (i.e., as applied in the inference stage), ML models are generally less computationally costly because they entail evaluating a single function to generate a prediction. Several studies (Tong et al., 2015; Cevik et al., 2016; Li et al., 2017; Sai et al., 2019; Pereira et al., 2021) have leveraged this advantage of ML by first evaluating an ABM for a limited range of parameters, and then creating a “surrogate” dataset that relates the ABM parameters to final error. Next, a surrogate supervised learning algorithm can be trained on this data, and the surrogate model can then be used to explore broader regions of the original ABM’s parameter space.

A relatively recent microsimulation study (Cevik et al., 2016), tracking the progression of breast cancer in women, used a novel active learning⁷ approach for parameter calibration. In that work, a surrogate ensemble of ANNs (a “bag” of ANNs, or “bagANN”) was trained on ABM-generated training datasets. Then, the bagANN model was used to predict fitness for untested parameter combinations. Parameter combinations with low predicted fitness were reevaluated by the ABM, and a refined training dataset was developed to further train the bagANN. The bagANN was repeatedly trained on parameter combinations with increasing fitness, until the fitness converged at some maximal value. In this way, the overall computational pipeline essentially contained an iterative “bouncing” between the ML (bagANN, in this case) and the ABM stages, not unlike that described by Rand (2006)⁸ in a game-theoretic social sciences context. Finally, we note that the bagANN study revealed that the active learning approach could find the optimal parameter combination while evaluating only 2% of the parameter combinations that a prior study had needed to sample in calibrating the same model (Batina et al., 2013; Cevik et al., 2016).
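A compact sketch of this kind of active learning loop follows. To keep training closed-form, each bag member is a single-hidden-layer network with fixed random tanh hidden units and a ridge-regression readout (an extreme learning machine), which is a simplification rather than the bagANN of Cevik et al. (2016). The fitness function is a hypothetical stand-in for the microsimulation, and re-evaluating the candidates with the highest predicted fitness is an assumed acquisition rule.

```python
import numpy as np

def toy_abm_fitness(theta, rng):
    """Hypothetical stand-in for a costly stochastic simulation; higher fitness = better match to data."""
    return -((theta[:, 0] - 0.6) ** 2 + (theta[:, 1] - 0.2) ** 2) + rng.normal(0, 0.005, len(theta))

class BaggedELM:
    """Bag of one-hidden-layer networks with random tanh hidden units and a ridge-regression readout."""

    def __init__(self, rng, n_models=10, n_hidden=50, ridge=1e-2):
        self.rng, self.n_models, self.n_hidden, self.ridge = rng, n_models, n_hidden, ridge

    def fit(self, X, y):
        self.members = []
        for _ in range(self.n_models):
            idx = self.rng.integers(0, len(X), len(X))               # bootstrap resample
            W = self.rng.normal(0, 3, (X.shape[1], self.n_hidden))   # fixed random input weights
            b = self.rng.uniform(-3, 3, self.n_hidden)
            H = np.tanh(X[idx] @ W + b)
            beta = np.linalg.solve(H.T @ H + self.ridge * np.eye(self.n_hidden), H.T @ y[idx])
            self.members.append((W, b, beta))
        return self

    def predict(self, X):
        return np.mean([np.tanh(X @ W + b) @ beta for W, b, beta in self.members], axis=0)

def active_calibration(n_init=20, n_rounds=8, batch=5, seed=0):
    rng = np.random.default_rng(seed)
    pool = rng.uniform(0, 1, (2000, 2))                          # candidate parameter combinations
    first = rng.choice(len(pool), n_init, replace=False)
    X, y = pool[first], toy_abm_fitness(pool[first], rng)
    pool = np.delete(pool, first, axis=0)
    for _ in range(n_rounds):
        model = BaggedELM(rng).fit(X, y)                         # retrain the bag on all runs so far
        chosen = np.argsort(model.predict(pool))[-batch:]        # most promising untested candidates
        X = np.vstack([X, pool[chosen]])
        y = np.concatenate([y, toy_abm_fitness(pool[chosen], rng)])  # "run the ABM" on them
        pool = np.delete(pool, chosen, axis=0)
    return X, y

X, y = active_calibration()
best_theta = X[np.argmax(y)]
```

With these settings the loop runs the “simulation” on 60 of the 2,000 candidate combinations (3%).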

Surrogate ML models also present novel opportunities to capture and explore continuous system behaviors via ABMs. ABMs of multicellular interactions can represent cell–cell interactions, such as migration, adhesion and proliferation, that occur over discrete time steps. However, these discrete cell–cell behaviors stem from molecular processes that occur over an effectively continuous time domain, such as the rapid expression of proteins driven by complex intracellular signaling cascades. Multiscale models attempt to represent phenomena that span intra-cellular and inter-cellular biological scales in a more realistic and accurate manner than is otherwise possible; this is pursued by employing continuous approaches that predict intracellular signaling dynamics, and using these predictions to update the discrete rules describing cell–cell behaviors. However, a key challenge in these multiscale models is the increased computational cost of evaluating a continuum model, say of reaction kinetics, at each discrete time-step of an ABM. To address such challenges, a promising new family of approaches views ABMs and conventional, equation-based modeling not as mutually exclusive approaches (Van Dyke Parunak et al., 1998) but rather as opportunities to calibrate ABMs (Ye et al., 2021) and/or “learn” (in the ML sense and beyond) a system’s dynamics in terms of classic, differential-equation–based frameworks; see, e.g., Nardini et al. (2021) for “a promising, novel and unifying approach” for developing and analyzing biological ABMs.
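To see where the cost of such hybrid models comes from, consider the following hypothetical Python sketch. Every cell integrates a simple intracellular ODE (a linear production/degradation equation, chosen only for illustration) across each ABM time step, and the resulting protein level then drives a discrete phenotype switch and cytokine secretion to neighbors on a ring. The number of ODE sub-steps grows as cells × ABM steps × integration sub-steps.

```python
import numpy as np

def integrate_cell_ode(p, cytokine, k_prod=1.0, k_deg=0.5, dt=0.01, n_sub=100):
    """Forward-Euler integration of dp/dt = k_prod * cytokine - k_deg * p over one ABM step."""
    for _ in range(n_sub):
        p = p + dt * (k_prod * cytokine - k_deg * p)
    return p

def run_hybrid_abm(n_cells=50, n_steps=20, n_sub=100, threshold=1.0, seed=0):
    rng = np.random.default_rng(seed)
    p = np.zeros(n_cells)                        # intracellular protein level of each cell
    cytokine = rng.uniform(0, 1, n_cells)        # local cytokine concentration seen by each cell
    ode_substeps = 0
    for _ in range(n_steps):
        # Continuous scale: each cell integrates its own ODE across the ABM time step.
        for i in range(n_cells):
            p[i] = integrate_cell_ode(p[i], cytokine[i], n_sub=n_sub)
            ode_substeps += n_sub
        # Discrete scale: cells above threshold switch phenotype and secrete onto ring neighbours.
        activated = p > threshold
        secretion = 0.05 * np.roll(activated, 1) + 0.05 * np.roll(activated, -1)
        cytokine = np.minimum(cytokine + secretion, 2.0)
    return activated, ode_substeps

activated, cost = run_hybrid_abm()
```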

As regards equation-based mechanistic models, a recent study leveraged the training of surrogate models to improve the computational efficiency of a multiscale model of immune cell interactions in the tumor microenvironment (Cess and Finley, 2020). That work utilized an ODE–based mechanistic model to predict macrophage phenotype, based on surrounding cytokine concentrations, and employed an ABM to represent resultant interactions between cells in the tumor. To mitigate the computational burden of evaluating the mechanistic model at each time step, the authors trained an NN on the mechanistic model in order to reduce the model to “a simple input/output system”, wherein the inputs were local cytokine concentrations and the outputs were cell phenotype. The NN achieved an accuracy exceeding 98% and, by revealing that the detailed intracellular mechanistic model could be recapitulated by a simple binary model, reduced the overall computational complexity of the hybrid, multi-scale ABM (Cess and Finley, 2020).
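A minimal sketch of this surrogate idea: a hypothetical linear ODE maps two local cytokine concentrations to a binary phenotype, and a logistic-regression classifier (the simplest one-neuron network, swapped in here for the NN used by Cess and Finley, 2020) is trained to reproduce that input/output map so that the ABM could call it in place of the ODE solver. The rate constants and the two-cytokine input are assumptions.

```python
import numpy as np

def mechanistic_phenotype(c, k1=1.0, k2=1.5, d=0.5, dt=0.1, n_steps=100):
    """Hypothetical intracellular ODE dx/dt = k1*c1 - k2*c2 - d*x (forward Euler from x = 0).
    Returns phenotype 1 if x(T) > 0, else 0."""
    x = np.zeros(len(c))
    for _ in range(n_steps):
        x = x + dt * (k1 * c[:, 0] - k2 * c[:, 1] - d * x)
    return (x > 0).astype(int)

def train_logistic_surrogate(c, y, lr=0.5, n_iter=2000):
    """Fit a one-neuron (logistic regression) classifier by gradient descent on cross-entropy."""
    mu, sd = c.mean(axis=0), c.std(axis=0)

    def design(c_in):
        return np.hstack([(c_in - mu) / sd, np.ones((len(c_in), 1))])  # standardized inputs + bias

    X, w = design(c), np.zeros(c.shape[1] + 1)
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ w))
        w -= lr * X.T @ (p - y) / len(y)
    return lambda c_new: (design(c_new) @ w > 0).astype(int)

rng = np.random.default_rng(1)
c_train = rng.uniform(0, 1, (500, 2))            # sampled local cytokine concentrations
surrogate = train_logistic_surrogate(c_train, mechanistic_phenotype(c_train))
c_test = rng.uniform(0, 1, (1000, 2))
accuracy = np.mean(surrogate(c_test) == mechanistic_phenotype(c_test))
```

Because the phenotype boundary of this toy ODE is linear in the inputs, a single neuron suffices; richer intracellular models would likely call for a deeper network.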

While surrogate models present an opportunity to reduce the computational burden of repeatedly re-running an ABM, the choice of supervised learning algorithm, and of the training process used to obtain a surrogate model, is critical to ensuring that the surrogate model can accurately recapitulate ABM results under many circumstances and conditions. Such conditions include predictions with “out-of-distribution” (OOD) data (Hendrycks and Gimpel, 2017), i.e., data that are highly dissimilar/distant from the data used for training; again, this closely relates to the generalization power of the implemented ML, and recent reviews of OOD challenges can be found in Ghassemi and Fazl-Ersi (2022) and in Sanchez et al. (2022) (see Section 4.3 of the latter for a biomedical context). Surrogate models are generally trained on a subset of the parameter space and then applied to predict ABM behaviors in an unexplored region of the parameter space. However, obtaining a well-trained surrogate model that can be successfully applied in a broad range of scenarios (including OOD) may simply be infeasible for ABMs of “difficult” systems, such as those which are highly stochastic, which predict a multitude of possibilities for a single combination of parameter values (a one-to-many mapping), which are characterized by exceedingly high intrinsic variability (imagine a difficult functional form, in a high-dimensional space, and with only sparsely-sampled data available), and so on.
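One simple safeguard, offered here as an assumption rather than a method from the cited reviews, is to flag a query parameter set as OOD when its distance to the nearest training point (after min-max scaling) exceeds, say, the 99th percentile of nearest-neighbor distances within the training design. The sketch below implements that heuristic; it detects only novelty in parameter space and does not address the one-to-many (stochastic) difficulties described above.

```python
import numpy as np

def fit_ood_detector(train_params, quantile=0.99):
    """Flag query parameter sets whose nearest training neighbour is unusually far away."""
    lo, hi = train_params.min(axis=0), train_params.max(axis=0)
    scale = np.where(hi > lo, hi - lo, 1.0)            # min-max scaling, guarding constant columns
    Z = (train_params - lo) / scale
    D = np.linalg.norm(Z[:, None, :] - Z[None, :, :], axis=-1)
    np.fill_diagonal(D, np.inf)                        # ignore each point's distance to itself
    radius = np.quantile(D.min(axis=1), quantile)      # typical spacing of the training design

    def is_ood(query):
        Q = (np.atleast_2d(query) - lo) / scale
        d = np.linalg.norm(Q[:, None, :] - Z[None, :, :], axis=-1).min(axis=1)
        return d > radius
    return is_ood

rng = np.random.default_rng(0)
train = rng.uniform(0, 1, (300, 3))        # parameter sets on which a surrogate was trained
is_ood = fit_ood_detector(train)
flags = is_ood(np.array([train[0] + 1e-3, [3.0, 3.0, 3.0]]))
```

Queries flagged this way would be routed back to the ABM itself rather than trusted to the surrogate.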

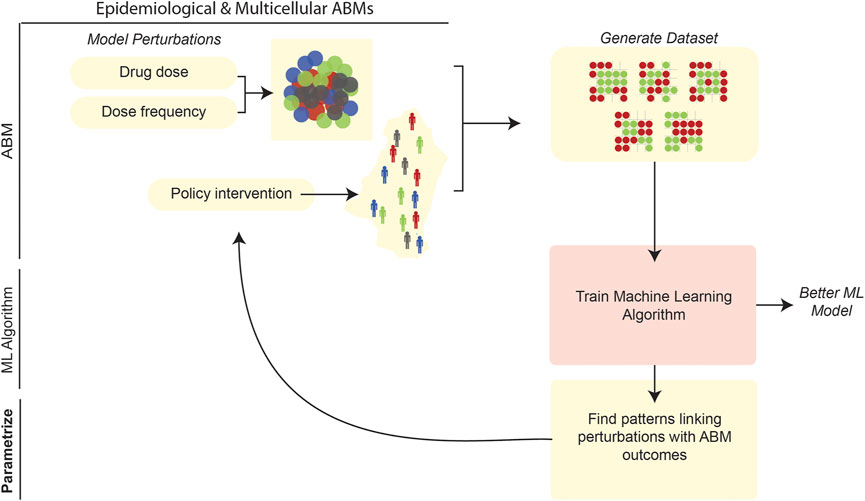

After model development and calibration, the behavior of an ABM can be quantitatively explored and used to address various questions. One common approach to such exploration is sensitivity analysis (SA). In this general approach, one perturbs an ABM and its independent parameters, while monitoring predicted changes in dependent variables and other relevant outputs (ten Broeke et al., 2016). SA is an especially fine-grained instrument for probing the behavior of an ABM system. To assess the coarser-scale behavior of a model, e.g., for “what-if” type analyses, one might create interventions in the ABM (e.g., by setting certain parameter values to specific ranges corresponding to the intervention), make drastic alterations to thresholds, and/or make similarly large-scale changes to the ABM and its input. On a finer scale, examining regions of a parameter space via SA could aim to determine the optimal values (or assess the effects) of an intervention; for example, in an ABM of tumorigenesis the modeler could find the optimal dosage and scheduling of a drug agent to minimize tumor size. Another goal of parameter-space exploration might be to use ABM simulations to predict real-world responses. In an epidemiological ABM of infectious disease transmission, for example, one can use ABM simulations to predict the timeline of disease spread during an ongoing pandemic. Discrete simulation models also have been applied at the level of viral cell-to-cell transmission within a single human; for example, using CA approaches to model intra-host HIV-1 spread, Giabbanelli et al. (2019) examined model parameter estimations in terms of prediction accuracies (accounting for available biological/mechanistic information). Recent ABMs have leveraged a variety of ML approaches to aid in testing a wide range of perturbations and in characterizing stochastic results (Figure 7).
Interestingly, our review of the literature reveals that the goal of parameter-space exploration (whether optimizing the efficacy of an intervention, training a predictive model on ABM simulation results, or something else) tends to be closely associated with the type of ML algorithm used.
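A one-at-a-time (OAT) local sensitivity analysis of a stochastic ABM can be sketched as follows. The toy, well-mixed SIR agent model and its parameter values are hypothetical. Each parameter is perturbed by ±20% around a baseline, outputs are averaged over replicate runs, and the same random seeds are reused across perturbations (common random numbers) to reduce sampling noise in the differences.

```python
import numpy as np

def toy_sir_abm(beta, gamma, n_agents=200, n_init=5, n_steps=100, seed=0):
    """Hypothetical well-mixed agent-based SIR model; returns the fraction of agents ever infected."""
    rng = np.random.default_rng(seed)
    state = np.zeros(n_agents, dtype=int)          # 0 = susceptible, 1 = infected, 2 = recovered
    state[:n_init] = 1
    for _ in range(n_steps):
        n_inf = np.sum(state == 1)
        if n_inf == 0:
            break
        p_inf = 1.0 - (1.0 - beta / n_agents) ** n_inf    # per-susceptible infection probability
        new_inf = (state == 0) & (rng.random(n_agents) < p_inf)
        recover = (state == 1) & (rng.random(n_agents) < gamma)
        state[new_inf] = 1
        state[recover] = 2
    return np.mean(state > 0)

def oat_sensitivity(model, baseline, rel_step=0.2, n_reps=30):
    """Central-difference OAT sensitivity of the replicate-mean output to each parameter."""
    def mean_output(params):
        # common random numbers: the same seeds are reused for every parameter setting
        return np.mean([model(**params, seed=s) for s in range(n_reps)])

    sens = {}
    for name, value in baseline.items():
        up = dict(baseline, **{name: value * (1 + rel_step)})
        down = dict(baseline, **{name: value * (1 - rel_step)})
        # change in mean output per unit relative change in the parameter
        sens[name] = (mean_output(up) - mean_output(down)) / (2 * rel_step)
    return sens

sens = oat_sensitivity(toy_sir_abm, {"beta": 0.3, "gamma": 0.1})
```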

FIGURE 7. ML can be applied to parameter space exploration of ABMs. ABMs can be used to generate vast volumes of data and explore how system perturbations affect population-level outcomes in the model. After generating simulation data from an ABM, ML can be used to characterize patterns in the ABM (Karolak et al., 2019). In addition, the datasets generated by ABMs can be used to train more robust ML algorithms (Sheikh-Bahaei and Hunt, 2006; Nsoesie et al., 2011).

Reinforcement learning methods have been applied to optimization problems in epidemiological and multicellular ABMs, wherein an intervention, such as a drug, is considered an agent and ML is used to find the optimal policy to achieve an output of interest (e.g., minimizing tumor size). In a precision-medicine setting, deep reinforcement learning (DRL) was applied to a multicellular ABM of systemic inflammation to find the optimal multi-cytokine therapy dosage for sepsis patients (Petersen et al., 2019); in that work, the DRL algorithm found the optimal dosage of 12 cytokines to promote patient recovery. RL also has been used, in a multicellular ABM of glioblastoma, to identify an optimal schedule of temozolomide treatment for tumor size minimization (Zade et al., 2020). Similarly, RL has been used to optimize radiotherapy treatment of heterogeneous, vascularized tumors (Jalalimanesh et al., 2017a; Jalalimanesh et al., 2017b).
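The RL framing, in which the intervention acts as the agent, can be illustrated with tabular Q-learning on a deliberately tiny, hypothetical tumor model: the state is a discretized tumor-size class, the action is to dose or withhold the drug, and the reward penalizes both tumor burden and a per-dose toxicity cost. In the cited studies the environment is a full multicellular ABM and, in Petersen et al. (2019), the learner is a deep RL agent; here a deterministic lookup rule stands in for the ABM.

```python
import numpy as np

def tumour_step(s, a, max_size=9, dose_cost=1.0):
    """Hypothetical deterministic tumour model: untreated growth +1 size class, a dose shrinks it by 2."""
    s_next = max(s - 2, 0) if a == 1 else min(s + 1, max_size)
    reward = -s_next - dose_cost * a                 # penalize tumour burden and drug toxicity
    return s_next, reward

def q_learning(n_states=10, n_episodes=3000, horizon=20, alpha=0.1, gamma=0.9, eps=0.2, seed=0):
    rng = np.random.default_rng(seed)
    Q = np.zeros((n_states, 2))                      # actions: 0 = withhold drug, 1 = dose
    for _ in range(n_episodes):
        s = int(rng.integers(n_states))              # random initial tumour size class
        for _ in range(horizon):
            # epsilon-greedy action selection
            a = int(rng.integers(2)) if rng.random() < eps else int(np.argmax(Q[s]))
            s_next, r = tumour_step(s, a, max_size=n_states - 1)
            # Q-learning (off-policy TD) update toward r + gamma * max_a' Q(s', a')
            Q[s, a] += alpha * (r + gamma * np.max(Q[s_next]) - Q[s, a])
            s = s_next
    return Q

Q = q_learning()
policy = Q.argmax(axis=1)     # learned dosing decision for each tumour size class
```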

The aforementioned active learning approaches have been used to accelerate the parameter space exploration of ABMs. Using the ABM framework PhysiCell (Ghaffarizadeh et al., 2018), a recent study compared GA-based and active learning–based parameter exploration approaches in the context of tumor and immune cell interactions (Ozik et al., 2019). The goal of the parameter space exploration was to optimize six different immune cell parameters, including apoptosis rate, kill rate and attachment rate, in order to reduce overall tumor cell count. In the GA approach, optimal parameters were found by iteratively selecting parameter combinations that reduced mean tumor cell count. The active learning approach involved training a surrogate RF classifier on the ABM, such that the RF predicts whether a set of input ABM parameters would yield mean tumor cell counts less than a predefined threshold. Then, in a divide-and-conquer–like strategy, the active learning approach selectively samples “viable” parameter subspaces that yield tumor cell counts less than the threshold in order to find a solution (Ozik et al., 2019). That work illustrates the possibilities of integrating ML-guided adaptive sampling strategies with ABM-based approaches.
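The classifier-guided active learning strategy can be sketched in Python as follows. Two substitutions are made for brevity and should be read as assumptions: a k-nearest-neighbor classifier replaces the random forest used by Ozik et al. (2019), and a deterministic formula replaces the PhysiCell simulation when labeling a parameter set as “viable” (mean tumor count below a threshold). New simulations are requested only where the classifier is least certain, i.e., near the estimated boundary of the viable region.

```python
import numpy as np

def toy_abm_viable(theta):
    """Hypothetical stand-in for an ABM run: 1 if the mean tumour count is below 100, else 0."""
    tumour_count = 1000 * ((theta[:, 0] - 0.7) ** 2 + (theta[:, 1] - 0.7) ** 2)
    return (tumour_count < 100).astype(int)

def knn_proba(X_train, y_train, X_query, k=5):
    """Fraction of the k nearest labelled parameter sets that were viable."""
    d = np.linalg.norm(X_query[:, None, :] - X_train[None, :, :], axis=-1)
    nearest = np.argsort(d, axis=1)[:, :k]
    return y_train[nearest].mean(axis=1)

def active_learning(n_init=40, n_rounds=10, batch=10, seed=0):
    rng = np.random.default_rng(seed)
    pool = rng.uniform(0, 1, (2000, 2))              # unlabelled candidate parameter sets
    X = rng.uniform(0, 1, (n_init, 2))
    y = toy_abm_viable(X)                            # initial "simulations"
    for _ in range(n_rounds):
        p = knn_proba(X, y, pool)
        query = np.argsort(np.abs(p - 0.5))[:batch]  # most uncertain candidates
        X = np.vstack([X, pool[query]])
        y = np.concatenate([y, toy_abm_viable(pool[query])])
        pool = np.delete(pool, query, axis=0)
    return X, y

X, y = active_learning()
theta_test = np.random.default_rng(1).uniform(0, 1, (2000, 2))
accuracy = np.mean((knn_proba(X, y, theta_test) > 0.5) == toy_abm_viable(theta_test))
```

Uncertainty sampling tends to concentrate the simulation budget near the boundary of the viable region, which is where a classifier-guided search is expected to gain most over uniform sampling.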