- 1 Dipartimento di Fisica “E.R.Caianiello”, Università di Salerno, Fisciano, Italy

- 2 INFN, Sezione di Napoli e Gruppo Coll. di Salerno, Naples, Italy

- 3 Dipartimento di Scienze Fisiche, Università di Napoli Federico II, Naples, Italy

- 4 CNR-SPIN, Unità di Napoli, Naples, Italy

We study the storage and retrieval of phase-coded patterns as stable dynamical attractors in recurrent neural networks, for both an analog and an integrate and fire spiking model. The synaptic strength is determined by a learning rule based on spike-timing-dependent plasticity, with an asymmetric time window depending on the relative timing between pre and postsynaptic activity. We store multiple patterns and study the network capacity. For the analog model, we find that the network capacity scales linearly with the network size, and that both the capacity and the oscillation frequency of the retrieval state depend on the asymmetry of the learning time window. In addition to fully connected networks, we study sparse networks, where each neuron is connected only to a small number z ≪ N of other neurons. Connections can be short range, between neighboring neurons placed on a regular lattice, or long range, between randomly chosen pairs of neurons. We find that a small fraction of long range connections is able to amplify the capacity of the network. This implies that a small-world network topology is optimal, as a compromise between the cost of long range connections and the capacity increase. The crucial result of storage and retrieval of multiple phase-coded patterns is also observed in the spiking integrate and fire model. The capacity of the fully connected spiking network is investigated, together with the relation between the oscillation frequency of the retrieval state and the window asymmetry.

Recent advances in brain research have generated renewed awareness and appreciation that the brain operates as a complex non-linear dynamic system, and that synchronous and phase-locked oscillations may play a crucial role in information processing, such as feature grouping, saliency enhancement (Singer, 1999; Fries, 2005; Fries et al., 2007) and phase-dependent coding of objects in short-term memory (Siegel et al., 2009). Many results have led to the conjecture that synchronized and phase-locked oscillatory neural activity plays a fundamental role in perception, memory, and sensory computation (Buzsaki and Draguhn, 2004; Gelperin, 2006; Düzel et al., 2010).

There is increasing evidence that information encoding may depend on the temporal dynamics between neurons, namely, the specific phase alignment of spikes relative to rhythmic activity across the neuronal population (as reflected in the local field potential, or LFP) (O’Keefe and Recce, 1993; König et al., 1995; Laurent, 2002; Mehta et al., 2002; Kayser et al., 2009; Siegel et al., 2009). Indeed, phase-dependent coding, which exploits the precise temporal relations between the discharges of neurons, may be an effective strategy to encode information (Singer, 1999; Scarpetta et al., 2002a; Scarpetta et al., 2002b; Yoshioka et al., 2007; Latham and Lengyel, 2008; Kayser et al., 2009; Siegel et al., 2009). Data from rodents indicate that spatial information may be encoded at specific phases of ongoing population theta oscillations in the hippocampus (O’Keefe and Recce, 1993), and that spike sequences can be replayed on a different time scale (Diba and Buzsaki, 2007), while data from monkeys (Siegel et al., 2009) show that phase coding may be a more general coding scheme.

The existence of a periodic spatio-temporal pattern of precisely timed spikes, as an attractor of neural dynamics, has been investigated in different recurrent neural models (Gerstner et al., 1993; Borisyuk and Hoppensteadt, 1999; Jin, 2002; Lengyel et al., 2005b; Memmesheimer and Timme, 2006a,b). In Jin (2002) it was shown that periodic spike sequences are attractors of the dynamics, and that the stronger the global inhibition of the network, the faster the rate of convergence to a periodic pattern. In Memmesheimer and Timme (2006a,b) the problem of finding the set of all networks that exhibit a predefined periodic pattern of precisely timed spikes was studied.

Here we study how a spike timing dependent plasticity (STDP) learning rule can encode many different periodic patterns in a recurrent network, in such a way that a pattern can be retrieved by initializing the network in a state similar to it, or by inducing a short train of spikes extracted from the pattern.

In our model, information about an item is encoded in the specific phases of firing, and each item corresponds to a different pattern of phases among units. Multiple items can be memorized in the synaptic connections, and the intrinsic network dynamics recall the specific phases of firing when a partial cue is presented.

Each item with specific phases of firing corresponds to specific relative timings between neurons. Therefore it seems that phase coding may be well suited to facilitate long-term storage of items by means of STDP (Markram et al., 1997; Bi and Poo, 1998, 2001).

Indeed, experimental findings on STDP have further underlined the importance of precise temporal relationships in the dynamics, by showing that long-term changes in synaptic strength depend on the precise relative timing of pre and postsynaptic spikes (Magee and Johnston, 1997; Markram et al., 1997; Bi and Poo, 1998, 2001; Debanne et al., 1998; Feldman, 2000).

The computational role and functional implications of STDP have been explored from many points of view (see for example Gerstner et al., 1996; Kempter et al., 1999; Song et al., 2000; Rao and Sejnowski, 2001; Abarbanel et al., 2002; Lengyel et al., 2005b; Drew and Abbott, 2006; Wittenberg and Wang, 2006; Masquelier et al., 2009 and papers of this special issue). STDP has also been hypothesized to play a role in the hippocampal theta phase precession phenomenon (Mehta et al., 2002; Scarpetta and Marinaro, 2005; Lengyel et al., 2005a; Florian and Muresan, 2006), even though other explanations have also been proposed for this phenomenon (see Leibold et al., 2008; Thurley et al., 2008 and references therein). Here we analyze the role of a learning rule based on STDP in storing multiple phase-coded memories as attractor states of the neural dynamics, and the ability of the network to selectively retrieve a stored memory when a partial cue is presented. The framework of storage and retrieval of memories as attractors of the dynamics is widely accepted, and has recently received experimental support, for example in the work of Wills et al. (2005), which gives strong experimental evidence for the expression of rate-coded attractor states in the hippocampus.

Another characteristic of the neural network, crucial to its functioning, is its topology, that is, the average number of neurons connected to a neuron, the average length of the shortest path connecting two neurons, and so on. In the last decade, there has been growing interest in the study of the topological structure of brain networks (Sporns and Zwi, 2004; Sporns et al., 2004; Bullmore and Sporns, 2009). This interest has been stimulated by the simultaneous development of the science of complex networks, which studies how the behavior of complex systems (such as societies, computer networks, brains, etc.) is shaped by the way their constituent elements are connected.

A network may have the property that the degree distribution, i.e. the probability that a randomly chosen node is connected to k other nodes, has a slow power-law decay. Networks having this property are called “scale-free”. Barabási and Albert (1999) demonstrated that this property can originate from a growth process in which each new node attaches preferentially to nodes that already have a high degree. Scale-free properties have been found in functional network topology using functional magnetic resonance imaging of the human brain (Eguíluz et al., 2005), and have been investigated in some models in relation to scale-free avalanche brain activity and criticality (Pellegrini et al., 2007; de Arcangelis and Herrmann, 2010).
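The growth mechanism of Barabási and Albert (1999) can be illustrated with a short simulation. In this minimal Python sketch, each new node attaches to m distinct existing nodes, chosen with probability proportional to their current degree. Sampling uniformly from a list in which every node appears once per incident edge implements exactly this proportional choice. The function names and the complete-graph starting seed are illustrative choices, not part of the original model description.

```python
import random
from collections import Counter

def preferential_attachment(n, m, seed=0):
    """Grow an undirected graph of n nodes; each new node links to m distinct
    existing nodes chosen with probability proportional to their degree."""
    rng = random.Random(seed)
    # Start from a complete graph on m + 1 nodes so every node has degree >= m
    edges = [(i, j) for i in range(m + 1) for j in range(i + 1, m + 1)]
    # Each node appears in `ends` once per incident edge, so a uniform draw
    # from `ends` picks a node with probability proportional to its degree
    ends = [v for e in edges for v in e]
    for new in range(m + 1, n):
        targets = set()
        while len(targets) < m:
            targets.add(rng.choice(ends))
        for t in targets:
            edges.append((new, t))
            ends.extend((new, t))
    return edges

def degrees(edges):
    """Degree of every node, counted from the edge list."""
    return Counter(v for e in edges for v in e)

edges = preferential_attachment(1000, 2)
deg = degrees(edges)
print(len(edges), max(deg.values()))
```

With n = 1000 and m = 2 the graph has m(m + 1)/2 + m(n − m − 1) = 1997 edges. A few early nodes typically become hubs whose degree is well above the mean of about 4.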

Another important class of complex networks is the so-called “small world” networks (Watts and Strogatz, 1998). They combine two important properties. The first is a high level of clustering, that is, a high probability of a direct connection between two nodes, given that they are both connected to a third node. This property usually occurs in networks where the nodes are connected preferentially to the nearest nodes in a physical (for example three-dimensional) space. The second property is the shortness of the paths connecting any two nodes, characteristic of random networks. Therefore, the small-worldness of a network can be measured by the ratio of the clustering coefficient to the path length, which is high for small world networks.

There is increasing evidence that the connections of neurons in many areas of the nervous system have a small world structure (Hellwig, 2000; Sporns and Zwi, 2004; Sporns et al., 2004; Yu et al., 2008; Bullmore and Sporns, 2009; Pajevic and Plenz, 2009). Up to now, the only nervous system to have been comprehensively mapped at the cellular level is that of Caenorhabditis elegans (White et al., 1986; Achacoso and Yamamoto, 1991), and it has indeed been found to have a small world structure. The same property was found for the correlation network of neurons in the visual cortex of the cat (Yu et al., 2008).

In this paper we focus on the ability of STDP to memorize multiple phase-coded items, in both fully connected and sparse networks, with varying degree of small-worldness, in a way that each phase-coded item is an attractor of the network.

Partial presentation of the pattern, i.e. short externally induced spike sequences with phases similar to those of the stored phase pattern, induces the network to selectively retrieve the stored item, as long as the number of stored items is not larger than the network capacity. If the network retrieves one of the stored items, the neural population spontaneously fires with the specific phase alignments of that pattern, until an external input changes the state of the network.

We find that the proposed learning rule is indeed able to store multiple phase-coded patterns, and we study the network capacity, i.e. how many phase-coded items can be stored and retrieved in the network as a function of the parameters of the network and of the learning rule.

In Section 1 we describe the learning rule and the analog model used. In Section 2 we study the case of an analog fully connected network, that is, a network in which each neuron is connected to every other neuron. In Section 3 we study instead the case of an analog sparse network, where each neuron is connected to a finite number of other neurons, with a varying degree of small-worldness. In Section 4 we study the case of a fully connected spiking integrate and fire (IF) model, and finally the summary and discussion are in Section 5.

The Model

We consider a network of N neurons, with N(N − 1) possible (directed) connections Jij. During the learning mode, when the patterns to be stored are presented, the synaptic connections Jij are subject to plasticity and change their efficacy according to a learning rule inspired by STDP. In STDP (Magee and Johnston, 1997; Markram et al., 1997; Bi and Poo, 1998, 2001; Debanne et al., 1998; Feldman, 2000) synaptic strength increases or decreases depending on whether the presynaptic spike precedes or follows the postsynaptic one by a few milliseconds, with a degree of change that depends on the delay between pre and postsynaptic spikes through a temporally asymmetric learning window. We indicate with xi(t) the activity, or firing rate, of the ith neuron at time t. This means that the firing probability of unit i in the interval (t, t + Δt) is proportional to xi(t)Δt in the limit Δt → 0. According to the learning rule used in this work, already introduced in (Scarpetta et al., 2001, 2002a; Yoshioka et al., 2007), the change in the connection Jij occurring in the time interval [−T, 0] can be formulated as follows:

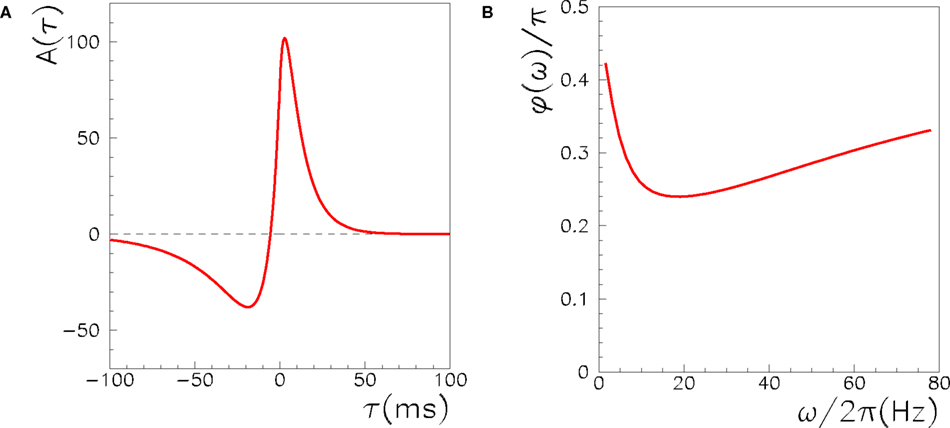

where xj(t) is the activity of the presynaptic neuron and xi(t) the activity of the postsynaptic one. The learning window A(τ) measures the strength of the synaptic change when there is a time delay τ between pre and postsynaptic activity. To model the experimental results on STDP, the learning window A(τ) should be an asymmetric function of τ, mainly positive (LTP) for τ > 0 and mainly negative (LTD) for τ < 0. The shape of A(τ) strongly affects Jij and the dynamics of the network, as discussed in the following. An example of the learning window used here is shown in Figure 1.

Figure 1. (A) The learning window A(τ) used in the learning rule in Eq. (1) to model STDP. The window is the one introduced and motivated by Abarbanel et al. (2002),

$$
A(\tau) =
\begin{cases}
a_p\, e^{-\tau/T_p} - a_D\, e^{-\eta\tau/T_p} & \text{if } \tau > 0,\\
a_p\, e^{\eta\tau/T_D} - a_D\, e^{\tau/T_D} & \text{if } \tau < 0,
\end{cases}
$$

with the same parameters used in Abarbanel et al. (2002) to fit the experimental data of Bi and Poo (1998), $a_p = \gamma\,[1/T_p + \eta/T_D]^{-1}$, $a_D = \gamma\,[\eta/T_p + 1/T_D]^{-1}$, with Tp = 10.2 ms, TD = 28.6 ms, η = 4, γ = 42. Notably, this function satisfies the condition $\int_{-\infty}^{\infty} A(\tau)\, d\tau = 0$, i.e. Ã(0) = 0. (B) The phase of the Fourier transform of A(τ) as a function of the frequency.
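As a numerical check of this caption, the following minimal Python sketch evaluates the window with the quoted parameters, verifies that Ã(0) = 0, and computes the phase of the Fourier transform at 20 Hz. It assumes the Abarbanel et al. (2002) form A(τ) = a_p e^{−τ/T_p} − a_D e^{−ητ/T_p} for τ > 0 and a_p e^{ητ/T_D} − a_D e^{τ/T_D} for τ < 0. It also assumes the Fourier convention Ã(ω) = ∫A(τ)e^{iωτ}dτ; with the opposite sign in the exponent the phase simply changes sign. The function names are illustrative.

```python
import cmath
import math

# Parameters quoted for Figure 1 (times in ms)
T_P, T_D, ETA, GAMMA = 10.2, 28.6, 4.0, 42.0
A_P = GAMMA / (1.0 / T_P + ETA / T_D)
A_D = GAMMA / (ETA / T_P + 1.0 / T_D)

def window(tau):
    """Assumed Abarbanel-type window A(tau), with tau = t_post - t_pre in ms."""
    if tau > 0:
        return A_P * math.exp(-tau / T_P) - A_D * math.exp(-ETA * tau / T_P)
    return A_P * math.exp(ETA * tau / T_D) - A_D * math.exp(tau / T_D)

def window_ft(omega):
    """Closed-form integral of A(tau) * exp(1j * omega * tau) over all tau (omega in rad/ms).

    A piece exp(-r * |tau|) contributes 1 / (r - 1j*omega) on the tau > 0 side
    and 1 / (r + 1j*omega) on the tau < 0 side.
    """
    ltp_side = A_P / (1.0 / T_P - 1j * omega) - A_D / (ETA / T_P - 1j * omega)
    ltd_side = A_P / (ETA / T_D + 1j * omega) - A_D / (1.0 / T_D + 1j * omega)
    return ltp_side + ltd_side

a0 = window_ft(0.0)                    # total area of A(tau): should vanish
omega_20hz = 2 * math.pi * 20e-3       # 20 Hz expressed in rad/ms
phase = cmath.phase(window_ft(omega_20hz))
print(f"|A~(0)| = {abs(a0):.1e}, phase at 20 Hz = {phase / math.pi:.3f} pi")
```

Under these assumptions the phase at 20 Hz comes out close to 0.24π, which is the value of φ* used for Figure 2.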

By writing Eq. (1), we have implicitly assumed that the effects of separate spike pairs due to STDP sum linearly. However, note that non-linear effects have been observed when both pre and postsynaptic neurons fire simultaneously at more than 40 Hz (Sjostrom et al., 2001; Froemke and Dan, 2002). Therefore our model holds only for lower firing rates, and in cases where linear summation is a good approximation.
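The linear-summation assumption can be made concrete. Under it, the weight change produced by two spike trains is the sum of A(t_post − t_pre) over all pre/post spike pairs. The following minimal sketch uses an assumed Abarbanel-type window, A(τ) = a_p e^{−τ/T_p} − a_D e^{−ητ/T_p} for τ > 0 and a_p e^{ητ/T_D} − a_D e^{τ/T_D} for τ < 0, with the parameters quoted for Figure 1. The helper names are illustrative.

```python
import math
from itertools import product

# Assumed Abarbanel-type window with the Figure 1 parameters (times in ms)
T_P, T_D, ETA, GAMMA = 10.2, 28.6, 4.0, 42.0
A_P = GAMMA / (1.0 / T_P + ETA / T_D)
A_D = GAMMA / (ETA / T_P + 1.0 / T_D)

def window(tau):
    """A(tau), tau = t_post - t_pre: mainly LTP for tau > 0, mainly LTD for tau < 0."""
    if tau > 0:
        return A_P * math.exp(-tau / T_P) - A_D * math.exp(-ETA * tau / T_P)
    return A_P * math.exp(ETA * tau / T_D) - A_D * math.exp(tau / T_D)

def pair_based_change(pre_spikes, post_spikes):
    """Weight change under linear summation: every pre/post pair adds A(t_post - t_pre)."""
    return sum(window(t_post - t_pre) for t_pre, t_post in product(pre_spikes, post_spikes))

pre, post = [0.0, 50.0], [8.0, 45.0]
print(pair_based_change(pre, post))
```

By construction the result is additive over spike pairs. This is exactly the property that breaks down at the high firing rates where non-linear interactions are observed.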

We consider periodic patterns of activity in which the information is encoded in the relative phases, that is, in the relative timing of the maximum firing rate of each neuron. Therefore, we define the pattern to be stored by

where the phases $\phi_i^\mu$ are randomly chosen from a uniform distribution in [0, 2π), and ωμ/2π is the frequency of oscillation of the neurons (see Figure 2A). Each pattern μ is therefore defined by its frequency ωμ/2π and by the specific phases of the neurons i = 1, …, N.

Figure 2. The activity of 10 randomly chosen neurons in a network of N = 3000 fully connected analog neurons, with P = 30 stored patterns. The learning rule is given by Eq. (4) with φ* = 0.24π. Neurons are sorted by increasing phase in the first pattern, and shifted correspondingly on the vertical axis. (A) The first stored pattern, that is, the activity of the network given by Eq. (2) used to encode the pattern in the learning mode, with frequency ωμ/2π = 20 Hz. (B) The self-sustained dynamics of the network when the initial condition is given by the first pattern at t = 0. The retrieved pattern has the same phase relationships as the encoded one. In this case the overlap is …, and the output frequency is in agreement with the analytical value tan(φ*)/2πτm = 15 Hz. (C) Same as in (B), but with φ* = 0.45π. The output frequency is in agreement with the analytical value tan(φ*)/2πτm = 100 Hz.

In the limit of large T, when the network is forced into the state given by Eq. (2), Eq. (1) gives the following change in the synaptic strength:

where Ã(ω) is the Fourier transform of the kernel, defined by

and φ(ω) = arg[Ã(ω)] is the phase of the Fourier transform. The factor η depends on the learning rate and on the total learning time T (Scarpetta et al., 2002a, 2008).
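The following short derivation shows where the cosine dependence on phase differences comes from. It rests on explicitly assumed forms, which may differ from the paper's Eqs. (1) to (3) in constants and sign conventions:

- Eq. (1) is taken to be $\delta J_{ij} = \frac{\eta}{T}\int_{-T}^{0}dt\int dt'\, x_i(t)\,A(t-t')\,x_j(t')$.
- The stored pattern is taken to be $x_i(t) = \tfrac12[1+\cos(\omega t-\phi_i)]$.
- The Fourier convention is $\tilde A(\omega) = \int A(\tau)\,e^{i\omega\tau}d\tau = |\tilde A(\omega)|\,e^{i\phi(\omega)}$.

With these forms, the two steps are:

$$
\int_{-\infty}^{\infty} A(\tau)\, x_j(t-\tau)\, d\tau = \tfrac12\,\tilde A(0) + \tfrac12\,|\tilde A(\omega)|\cos\big(\omega t - \phi_j - \phi(\omega)\big),
$$

$$
\delta J_{ij} \;\xrightarrow{\;T\to\infty\;}\; \eta\Big[\tfrac14\,\tilde A(0) + \tfrac18\,|\tilde A(\omega)|\cos\big(\phi_i - \phi_j - \phi(\omega)\big)\Big].
$$

The time average over [−T, 0] removes all terms oscillating at ω and 2ω. Under these assumptions, each stored pattern therefore contributes a term proportional to cos(φ_i − φ_j − φ(ω)), plus a constant proportional to Ã(0).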

When we store multiple patterns μ = 1, 2, …, P, the learned weights are the sum of the contributions from the individual patterns. After learning P patterns, each with frequency ωμ/2π and phase shifts $\phi_i^\mu$, we get the connections

In this paper we choose Ã(0) = ∫A(τ)dτ = 0, which gives a balance between potentiation and depression. Notably, the condition Ã(0) = 0 also holds for the learning window of Figure 1. In the present study, we choose to store patterns all with the same ωμ, and to ease the notation we define φ* = φ(ωμ).

In the retrieval mode, the connections are fixed to the values given in Eq. (4). In the analog model, the dynamic equations for unit xi are given by

where the transfer function F(h) denotes the input–output relationship of the neurons, hi(t) = ΣjJijxj(t) is the local field acting on neuron i, τm is the time constant of neuron i (for simplicity, τm has the same value for all neurons), and Jij is the connection after the learning procedure given in Eq. (4). The spontaneous activity of the coupled non-linear system is therefore determined by the function F(h) and by the coupling matrix Jij. We take the function F(h) to be the Heaviside function Θ(h). Note that in this case the learning factor η is immaterial, since Θ(ηh) = Θ(h) for any η > 0.

During the retrieval mode, the network selectively replays one of the stored phase-coded patterns, depending on the initial conditions. This means that if we force the network, for t < 0, with an input that resembles one of the phase-coded patterns, and then switch off the input for t > 0, the network spontaneously produces sustained oscillatory activity with the relative phases of the retrieved pattern, while the frequency can be different (see Figure 2). For the analog model (5), where the state of the network is represented by the rates xi(t), the same can be achieved by simply initializing the rates at t = 0 with the values of one of the phase-coded patterns, or of a partially corrupted version of it. Analytical calculations (Yoshioka et al., 2007; Scarpetta et al., 2008) show that the output frequency of oscillation is given by tan(φ*)/2πτm,

and this is confirmed by numerical simulations of Eq. (5) with connections given by Eq. (4).

As an example, with the learning window in Figure 1 and a stored pattern frequency ωμ/2π = 20 Hz, we get φ* = 0.24π and an output frequency of oscillation tan(φ*)/2πτm = 15 Hz (with τm = 10 ms). Numerical simulations of the network with φ* = 0.24π, N = 3000 fully connected neurons and P = 30 stored patterns are shown in Figure 2B. In Figure 2C the case φ* = 0.45π is shown. Here the output frequency is much higher, while the oscillations of the firing rates around the mean value 1/2 are much smaller.
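The arithmetic behind these numbers is easy to reproduce. This short sketch evaluates tan(φ*)/2πτm for the two values of φ* used in Figure 2. It also checks an interpretation that holds if Eq. (5) is a first-order leaky rate equation: a unit with time constant τm lags a drive at angular frequency ω by atan(ωτm), and at the retrieval frequency this lag equals φ*. The function name is illustrative.

```python
import math

def output_frequency_hz(phi_star, tau_m_ms=10.0):
    """Retrieval-state frequency tan(phi*) / (2 pi tau_m), with tau_m given in ms."""
    return math.tan(phi_star) / (2.0 * math.pi * tau_m_ms * 1e-3)

for phi_star in (0.24 * math.pi, 0.45 * math.pi):
    f = output_frequency_hz(phi_star)
    # Phase lag of a first-order low-pass unit (tau_m = 10 ms = 10e-3 s) driven at f
    lag = math.atan(2.0 * math.pi * f * 10e-3)
    print(f"phi* = {phi_star / math.pi:.2f} pi: {f:.1f} Hz, lag = {lag / math.pi:.2f} pi")
```

The two cases give roughly 15 Hz and 100 Hz. In both cases the recovered lag equals the input φ*.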

In the following sections, we analyze the behavior when multiple patterns are stored and we study the network capacity as a function of learning window parameters and as a function of connectivity topology.

Capacity of the Fully Connected Network

In this section we study the network capacity in the case of a fully connected network, where all the N(N − 1) connections are subject to the learning process given by Eq. (4).

During the retrieval mode, the spontaneous dynamics of the network selectively replays one of the stored phase-coded patterns, depending on the initial condition. When retrieval is successful, the spontaneous activity of the network is therefore an oscillating pattern with phases of firing equal to the stored phases $\phi_i^\mu$, while the frequency of oscillation is governed by the time scale of the single neuron and by the parameter φ* of the learning window. The similarity between the network activity during the retrieval mode and the stored phase-coded pattern is measured by the overlap |mμ|, introduced in (Scarpetta et al., 2002a) and studied in (Yoshioka et al., 2007),

If the activity xi(t) is equal to the pattern $x_i^\mu(t)$ in Eq. (2), then the overlap is equal to 1/4 (perfect retrieval), while it is of order $1/\sqrt{N}$ when the phases of firing are unrelated to the stored phases. We study numerically the capacity of the network, αc = Pmax/N, where N is the number of neurons and Pmax is the maximum number of items that can be stored and retrieved successfully.
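The two limiting values of the overlap can be checked numerically. This minimal sketch rests on two assumptions:

- The pattern of Eq. (2) is taken to be $x_i(t) = \tfrac12[1+\cos(\omega t - \phi_i)]$, which is consistent with the mean rate 1/2 mentioned in the text.
- The overlap of Eq. (6) is taken to be $m = \frac1N\sum_j x_j(t)\,e^{-i\phi_j}$, computed with the stored phases.

The helper names are illustrative.

```python
import cmath
import math
import random

def pattern_rates(phases, t, omega):
    """Assumed pattern form x_i(t) = 0.5 * (1 + cos(omega * t - phi_i))."""
    return [0.5 * (1.0 + math.cos(omega * t - ph)) for ph in phases]

def overlap(rates, phases):
    """Assumed overlap m = (1/N) sum_j x_j exp(-i phi_j) with the stored phases."""
    return sum(x * cmath.exp(-1j * ph) for x, ph in zip(rates, phases)) / len(phases)

rng = random.Random(0)
n = 10_000
omega = 2 * math.pi * 20e-3          # 20 Hz in rad/ms
stored = [rng.uniform(0.0, 2 * math.pi) for _ in range(n)]
unrelated = [rng.uniform(0.0, 2 * math.pi) for _ in range(n)]

t = 7.0                              # any time in ms; |m| depends on t only through O(1/sqrt(N)) terms
m_perfect = abs(overlap(pattern_rates(stored, t, omega), stored))
m_random = abs(overlap(pattern_rates(unrelated, t, omega), stored))
print(f"perfect: {m_perfect:.3f}  unrelated phases: {m_random:.3f}")
```

With these choices, a perfectly retrieved pattern gives |m| ≈ 1/4. Activity with unrelated phases gives |m| of order 1/√N, which is well below the 0.1 retrieval threshold used in the capacity measurements.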

We extract P different random patterns, choosing the phases $\phi_i^\mu$ randomly from a uniform distribution in [0, 2π). Then we define the connections Jij with the rule Eq. (4). The firing rates are initialized at time t = 0 to the values of the first pattern, given by Eq. (2) with t = 0 and μ = 1. The dynamics in Eq. (5) is then simulated, and the overlap Eq. (6) with μ = 1 is evaluated. If the absolute value |mμ(t)| tends to a constant greater than 0.1 at long times, we consider that the pattern has been encoded and replayed well by the network. The maximum value of P at which the network is able to replay the pattern gives Pmax, and hence the capacity αc = Pmax/N. We have verified that a small noise in the initialization does not change the results. A systematic study of the robustness of the basins of attraction to noise in the initialization has not been carried out yet.
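The retrieval protocol can be sketched for a small network. The following minimal Python sketch is not the authors' code. It rests on these assumed forms:

- Pattern: $x_i(t) = \tfrac12[1+\cos(\omega t-\phi_i)]$.
- Coupling, standing in for Eq. (4): $J_{ij} = \frac1N\sum_\mu \cos(\phi_i^\mu-\phi_j^\mu-\phi^*)$.
- Dynamics, standing in for Eq. (5): $\tau_m \dot x_i = -x_i + \Theta(h_i)$.
- Overlap, standing in for Eq. (6): $m^\mu = \frac1N\sum_j x_j\,e^{-i\phi_j^\mu}$.

Because this coupling has low rank, the local field can be computed from the overlaps as $h_i = \sum_\mu \mathrm{Re}[e^{i(\phi_i^\mu-\phi^*)}m^\mu]$. This costs O(NP) per time step instead of O(N²), and it also includes the negligible O(1/N) self-coupling j = i. The retrieval criterion |m¹| > 0.1 is the one stated above.

```python
import cmath
import math
import random

def retrieval_run(n=1000, p=3, phi_star=0.24 * math.pi, tau_m=10.0,
                  dt=0.5, t_max=300.0, seed=1):
    """Euler-integrate tau_m dx_i/dt = -x_i + Theta(h_i) from pattern 1 (times in ms).

    Hypothetical coupling J_ij = (1/N) sum_mu cos(phi_i^mu - phi_j^mu - phi*),
    evaluated through the overlaps: h_i = sum_mu Re[exp(i(phi_i^mu - phi*)) m^mu].
    Returns |m^1| and the largest |m^mu| among the other stored patterns.
    """
    rng = random.Random(seed)
    phases = [[rng.uniform(0.0, 2.0 * math.pi) for _ in range(n)] for _ in range(p)]
    # exp(-i phi_j^mu): weights defining m^mu = (1/N) sum_j x_j exp(-i phi_j^mu)
    to_overlap = [[cmath.exp(-1j * ph) for ph in row] for row in phases]
    # exp(i(phi_i^mu - phi*)) stored per neuron, one entry per pattern
    to_field = [tuple(cmath.exp(1j * (phases[mu][i] - phi_star)) for mu in range(p))
                for i in range(n)]

    def overlaps(x):
        return [sum(xj * wj for xj, wj in zip(x, row)) / n for row in to_overlap]

    # Initial condition: first stored pattern 0.5 * (1 + cos(omega*t - phi_i)) at t = 0
    x = [0.5 * (1.0 + math.cos(ph)) for ph in phases[0]]
    for _ in range(int(t_max / dt)):
        m = overlaps(x)
        for i in range(n):
            h = sum((c * mm).real for c, mm in zip(to_field[i], m))
            # Heaviside transfer function F(h) = Theta(h), synchronous Euler update
            x[i] += (dt / tau_m) * (-x[i] + (1.0 if h > 0.0 else 0.0))
    m = overlaps(x)
    return abs(m[0]), max((abs(v) for v in m[1:]), default=0.0)

m1, m_other = retrieval_run()
print(f"|m^1| = {m1:.3f}, largest other overlap = {m_other:.3f}")
```

Here N = 1000 and P = 3, far below capacity, so retrieval is expected. In the large-N limit of this idealized model, a short mean-field argument suggests that |m¹| settles near cos(φ*)/π ≈ 0.23, while the other overlaps stay of order 1/√N. Estimating the capacity would mean repeating the run for increasing P and recording the largest P that still passes the 0.1 threshold.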

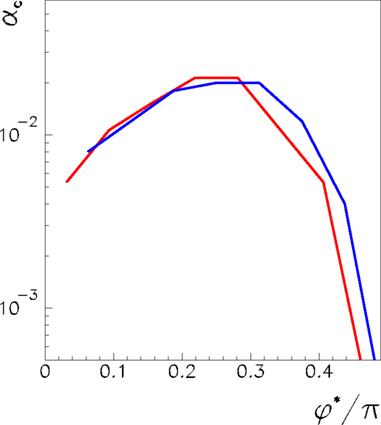

Here we study the dependence of the network capacity on the learning rule parameter φ*. The parameter φ* depends on the learning window shape and on the frequency of oscillation ωμ/2π of the pattern presented during the learning process. In Figure 3 we plot the capacity as a function of φ* in the range 0 < φ* < π/2, for a fully connected network with N = 3000 and N = 6000. Here Pmax is the maximum number of patterns such that the retrieved patterns have overlaps |mμ| > 0.1. The capacity is approximately independent of the network size, showing that the maximum number of patterns Pmax scales linearly with the number of neurons.

Figure 3. Maximum capacity αc = Pmax/N of a network of N = 3000 (red) and N = 6000 (blue) fully connected analog neurons, as a function of the learning window asymmetry φ*. The limit φ* = 0 corresponds to a symmetric learning window (Jij = Jji), that is, to an output frequency tan(φ*)/2πτm that tends to zero. The limit φ* = π/2 corresponds instead to a perfectly anti-symmetric learning window, and to an output frequency that tends to infinity. The intermediate value φ* ≃ 0.25π gives the best performance of the network.

We see that the capacity strongly depends on the shape of the learning window through the parameter φ*. The limit φ* → 0 corresponds to an output frequency tan(φ*)/2πτm equal to zero, and therefore to the limit of static output. The capacity of the oscillating network is larger than in the static limit over a large range of frequencies. When φ* approaches π/2, the output frequency tends to infinity and the capacity decreases. The best performance is obtained at intermediate values of φ*. Since φ* depends on the degree of time asymmetry of the learning window, there is therefore a range of time asymmetries that provides good capacity. Both the perfectly symmetric learning window (φ* = 0) and the perfectly anti-symmetric learning window (φ* = π/2) give worse capacity. Interestingly, the learning window in Figure 1 gives intermediate values of φ* over a large interval of frequencies ωμ.

Note that the decrease in the capacity of the network when the phase φ* approaches π/2 is essentially due to the fact that the oscillations of the firing rates with respect to the mean value 1/2 become small in this regime. When the firing rates tend to a constant, the overlap defined by Eq. (6) goes to zero.
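A one-line calculation makes this explicit, assuming Eq. (5) is the leaky rate equation τm dxi/dt = −xi + F(hi). A drive oscillating at angular frequency ω is attenuated by the low-pass factor 1/√(1 + (ωτm)²), and at the retrieval frequency ωτm = tan φ*, so that

$$
\frac{1}{\sqrt{1+(\omega\tau_m)^2}} \;=\; \frac{1}{\sqrt{1+\tan^2\phi^*}} \;=\; \cos\phi^* \;\xrightarrow{\;\phi^*\to\pi/2\;}\; 0 .
$$

Under this assumption, the oscillatory part of xi(t), and with it the overlap, shrinks in proportion to cos φ* as φ* approaches π/2.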

Capacity of the Sparse Network

In this section we study the capacity of the network described by Eq. (5) in the case of sparse connectivity, where only a fraction of the connections is subject to the learning rule given by Eq. (4), while all the others are set to zero. The role of the connectivity topology is also investigated. We start from a network in which neurons are placed on the vertices of a two- or three-dimensional lattice. Each neuron is connected only to neurons within a given distance (in units of lattice spacings), and we call z the number of connections of a single neuron. For each neuron, we then “rewire” a finite fraction γ of its connections, deleting the existing short-range connections and creating, in their place, long range connections to randomly chosen neurons.

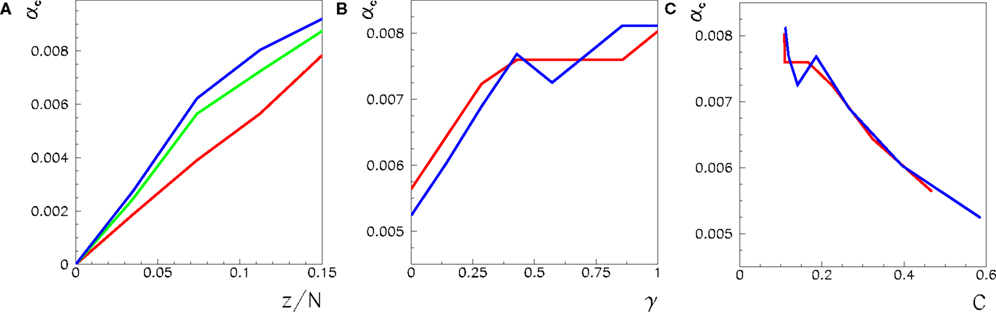

We consider a three-dimensional network with 24³ neurons, and a two-dimensional one with 118² neurons. In Figure 4A we plot the maximum capacity αc = Pmax/N as a function of the connectivity z/N for three different values of γ, in the three-dimensional case. The value γ = 0 (red curve) corresponds to the pure short-range network, in which all connections are between neurons within a given distance on the three-dimensional lattice. The value γ = 1 (blue curve) corresponds to the random network, where the three-dimensional topology is completely lost. The value γ = 0.3 (green curve) corresponds to an intermediate case, where 30% of the connections are long range and the others are short range.

Figure 4. (A) Maximum capacity αc = Pmax/N for a network with 24³ analog neurons, with z connections per neuron and φ* fixed to its optimal value 0.24π. The red curve corresponds to γ = 0, that is, to a network with only short-range connections, the green one to γ = 0.3, and the blue one to γ = 1, that is, to a random network where the finite-dimensional topology is completely lost. (B) Maximum capacity αc = Pmax/N, for the same values of N and φ* and for connectivity z/N = 0.11, as a function of γ, for the 24³ lattice (red) and for a two-dimensional 118² lattice (blue). (C) Same data as in (B), but as a function of the clustering coefficient C.

Considering that the capacity of the fully connected network (z/N = 1) is αc ≃ 0.02, we see that the random network with z/N ≃ 0.1 already has ∼40% of the capacity of the fully connected network. This means that the capacity does not scale linearly with the density of connections. As the density of connections grows there is a sort of saturation effect, due to the presence of partially redundant connections.
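In numbers, using only the approximate values quoted in this paragraph:

$$
\frac{\alpha_c(z/N \simeq 0.1)}{\alpha_c(z/N = 1)} \simeq 0.4
\qquad\text{versus}\qquad
\frac{z_{\text{sparse}}}{z_{\text{full}}} \simeq 0.1 .
$$

Per connection, the sparse random network therefore stores roughly 0.4/0.1 ≈ 4 times more patterns than the fully connected one.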

We then look at how the capacity depends on the fraction of short range and long range connections for z/N ≃ 0.11. In Figure 4B (red curve), the capacity is plotted as a function of γ for z/N = 0.11. It shows that the capacity gain with respect to the short-range network given by 30% long range connections (γ = 0.3) is about 80% of that of the fully random network (γ = 1). Therefore, the presence of a small number of long range connections is able to amplify the capacity of the network. Note that this effect is less important in smaller networks, for example with N = 18³ neurons, where the capacity of the short-range network is closer to that of a random network. It therefore seems plausible that, for very large networks, the amplifying effect of a small fraction of long range connections will be even stronger.
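The comparison can be stated compactly with a relative gain. The symbol G is introduced here only as illustrative notation, with all capacities taken at the same z/N ≃ 0.11:

$$
G(\gamma) = \frac{\alpha_c(\gamma) - \alpha_c(0)}{\alpha_c(1) - \alpha_c(0)},
\qquad G(0) = 0,\quad G(1) = 1,\quad G(0.3) \approx 0.8 .
$$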

It is reasonable to suppose that the fraction of long range connections in real networks is determined by a trade-off between the increase in capacity given by long range connections and their higher wiring cost. A large amplifying effect of long range connections on the capacity, together with a large wiring cost with respect to short range ones, will make the optimal topology of the network small-world like, with a small fraction of long range connections. Such a topology is observed in nervous systems ranging from C. elegans (White et al., 1986; Achacoso and Yamamoto, 1991) to the visual cortex of the cat (Yu et al., 2008).

The simulations on a two-dimensional lattice with 118² neurons do not show any qualitative difference with respect to the three-dimensional case. In Figure 4B we plot the capacity of the two-dimensional network (blue curve) as a function of γ, along with that of the three-dimensional one (red curve).

Figure 4C shows the same data as Figure 4B, but as a function of the clustering coefficient C. This coefficient is defined as the probability that two sites that are neighbors of a given site are themselves neighbors. As reported in Figure 4C, for a fixed number of connections per neuron z, the lower the clustering coefficient, the higher the capacity of the network. This is reasonable, because a high clustering coefficient means that connections are partially redundant, as already observed for the dependence on the connectivity z/N.
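The construction and the measurement can be sketched in a few lines. The following minimal Python sketch has three parts:

- It builds a periodic two-dimensional lattice in which each site is linked to the sites within Chebyshev distance 1. This is an assumption, since the text only says "within a given distance".
- It rewires each connection to a random target with probability γ. This is a Watts-Strogatz-style variant of rewiring a fixed fraction γ.
- It computes the clustering coefficient as defined here, treating connections as undirected.

All function names are illustrative.

```python
import itertools
import random

def lattice_edges(side, radius=1):
    """Undirected edges of a side x side periodic lattice, linking sites within Chebyshev radius."""
    edges = set()
    for x, y in itertools.product(range(side), repeat=2):
        for dx, dy in itertools.product(range(-radius, radius + 1), repeat=2):
            if (dx, dy) == (0, 0):
                continue
            a = x * side + y
            b = ((x + dx) % side) * side + (y + dy) % side
            edges.add((min(a, b), max(a, b)))
    return edges

def rewire(edges, n, gamma, rng):
    """With probability gamma, move each edge (a, b) to (a, k) with k a random non-neighbour of a."""
    adj = {i: set() for i in range(n)}
    for a, b in edges:
        adj[a].add(b)
        adj[b].add(a)
    for a, b in sorted(edges):
        if rng.random() < gamma:
            candidates = [k for k in range(n) if k != a and k not in adj[a]]
            if candidates:
                k = rng.choice(candidates)
                adj[a].discard(b)
                adj[b].discard(a)
                adj[a].add(k)
                adj[k].add(a)
    return adj

def clustering(adj):
    """Average, over sites, of the fraction of neighbour pairs that are themselves linked."""
    total = 0.0
    for nbrs in adj.values():
        k = len(nbrs)
        if k < 2:
            continue  # sites with fewer than two neighbours contribute zero
        links = sum(1 for u, v in itertools.combinations(nbrs, 2) if v in adj[u])
        total += links / (k * (k - 1) / 2)
    return total / len(adj)

rng = random.Random(0)
base = lattice_edges(10)
c_lattice = clustering(rewire(base, 100, 0.0, rng))
c_random = clustering(rewire(base, 100, 1.0, rng))
print(f"C(gamma=0) = {c_lattice:.3f}, C(gamma=1) = {c_random:.3f}")
```

In the unrewired lattice every site has 8 neighbours, and 12 of the 28 neighbour pairs are linked, so C = 3/7. Full rewiring drives C down towards the random-graph value of about z/N.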

Note that, for the considered value z/N = 0.11, the mean path length λ decreases with γ from γ = 0 up to γ = 0.01, and then remains practically constant for higher values of γ. Hence the ratio between C and λ, i.e., the small-worldness of the network, has a maximum around γ = 0.01. The capacity is therefore not an increasing function of the small-worldness: only when the wiring cost of the connections is taken into account does the optimal topology turn out to be one with a small fraction of long-range connections. Note also that for more realistic values of z/N, much lower than 0.11, the maximum of the small-worldness shifts to higher values of γ.
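The mean path length λ entering the ratio C/λ can likewise be sketched with a breadth-first search from every node. This Python example is illustrative only: it assumes the same dictionary-of-sets representation of the network, uses a hypothetical one-dimensional ring helper `ring` in place of the two- and three-dimensional lattices used here, and ignores pairs of nodes that are not connected by any path.

```python
from collections import deque


def mean_path_length(adj: dict[int, set[int]]) -> float:
    """Mean shortest-path length over ordered pairs of distinct nodes.

    Breadth-first search from every node; pairs with no connecting path
    are ignored (a convention of this sketch).
    """
    total, pairs = 0, 0
    for source in adj:
        dist = {source: 0}
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(dist.values())  # dist[source] = 0 adds nothing
        pairs += len(dist) - 1
    return total / pairs if pairs else 0.0


def ring(n: int, k: int) -> dict[int, set[int]]:
    """Hypothetical helper: ring of n nodes, each linked to k neighbors per side."""
    return {i: {(i + d) % n for d in range(-k, k + 1) if d != 0} for i in range(n)}


print(mean_path_length(ring(6, 1)))  # 1.8
```

Dividing a clustering coefficient by this λ gives the small-worldness ratio C/λ discussed above; a few random shortcuts shrink λ much faster than they reduce C, which is why the ratio peaks at small γ.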

Integrate and Fire Model

The previous results refer to the network dynamics described by Eq. (5), which is simple enough to admit analytical predictions when the connectivity is given by Eq. (4). The model defined by Eq. (5) has state variables xi(t), representing the instantaneous firing rate or probability of firing, and only one time scale, the time constant τm of a single unit; this allows us to focus on the effect of the learning window shape and of the connectivity structure. However, we expect that, while details of the dynamics may depend on the single-unit model, the crucial results, namely the storage and recall of phase-coded patterns, can also be seen in a spiking model. We therefore simulate a leaky integrate and fire (IF) spiking model, using the spike response model (SRM) formulation (Gerstner et al., 1993; Gerstner and Kistler, 2002). While integrate and fire models are usually defined in terms of differential equations, the SRM expresses the membrane potential at time t as an integral over the past (Gerstner and Kistler, 2002). This allows an event-driven implementation, which makes the numerical simulations faster than with the differential-equation formulation.

Each presynaptic spike of neuron j, arriving at time tj, is assumed to add to the membrane potential of neuron i a postsynaptic potential of the form Jij ε(t − tj), with

$$\varepsilon(t) = K\left[e^{-t/\tau_m} - e^{-t/\tau_s}\right]\Theta(t), \qquad (7)$$

where τm is the membrane time constant (here 10 ms), τs is the synaptic time constant (here 5 ms), Θ(t) is the Heaviside step function, and K is a multiplicative constant chosen so that the maximum value of the kernel is 1. The sign of the synaptic connection Jij sets the sign of the postsynaptic potential change. The synaptic signals received by neuron i after the time ti of its last spike are summed to give the total postsynaptic potential

$$h_i(t) = \sum_j J_{ij} \sum_{t_i < t_j < t} \varepsilon(t - t_j), \qquad (8)$$

where the inner sum runs over the spikes of neuron j.
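The normalization constant K can be made explicit. Assuming the difference-of-exponentials kernel ε(t) = K[exp(−t/τm) − exp(−t/τs)]Θ(t) with τm > τs (an assumption of this sketch), setting dε/dt = 0 gives the peak time t_peak = τm τs ln(τm/τs)/(τm − τs), and K is the inverse of the unnormalized kernel at that time. For τm = 10 ms and τs = 5 ms this gives t_peak = 10 ln 2 ≈ 6.93 ms and K = 4. The helper names below are hypothetical.

```python
import math


def kernel_peak(tau_m: float, tau_s: float) -> tuple[float, float]:
    """Peak time and normalization K of eps(t) = K[exp(-t/tau_m) - exp(-t/tau_s)].

    Assumes tau_m > tau_s > 0. Setting d eps/dt = 0 gives
    t_peak = tau_m * tau_s / (tau_m - tau_s) * ln(tau_m / tau_s).
    """
    t_peak = tau_m * tau_s / (tau_m - tau_s) * math.log(tau_m / tau_s)
    unnormalized_max = math.exp(-t_peak / tau_m) - math.exp(-t_peak / tau_s)
    return t_peak, 1.0 / unnormalized_max


def eps(t: float, tau_m: float = 10.0, tau_s: float = 5.0) -> float:
    """Normalized PSP kernel (times in ms); zero for t < 0 (Heaviside factor)."""
    if t < 0:
        return 0.0
    _, K = kernel_peak(tau_m, tau_s)
    return K * (math.exp(-t / tau_m) - math.exp(-t / tau_s))


t_peak, K = kernel_peak(10.0, 5.0)  # t_peak = 10 ln 2 ms, K = 4 up to rounding
```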

When the postsynaptic potential of neuron i reaches the threshold T, a postsynaptic spike is scheduled and the postsynaptic potential is reset to the resting value zero. We simulate this model with Jij taken from the learning rule of Eq. (4), with P patterns in a network of N units. The learning rule of Eq. (4) results from the learning process of Eq. (1) when a sequence of spikes is learned, with spikes generated so that the probability that unit i fires in the interval (t, t + Δt) is proportional to Δt times the rate given by Eq. (2), in the limit Δt → 0. The Jij are measured in units such that  .
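The threshold-and-reset rule above can be illustrated for a single unit. The Python sketch below is a hypothetical, time-stepped simplification, not the event-driven SRM code used for the simulations: it assumes the kernel ε(t) = K[exp(−t/τm) − exp(−t/τs)]Θ(t) with τm = 10 ms and τs = 5 ms, evaluates the potential on a fixed grid of step `dt`, and implements the reset by discarding all inputs that arrived before the unit's last spike.

```python
import math

TAU_M, TAU_S = 10.0, 5.0  # ms, the values quoted in the text
T_PEAK = TAU_M * TAU_S / (TAU_M - TAU_S) * math.log(TAU_M / TAU_S)
K = 1.0 / (math.exp(-T_PEAK / TAU_M) - math.exp(-T_PEAK / TAU_S))


def eps(t: float) -> float:
    """Assumed difference-of-exponentials PSP kernel with peak value 1."""
    return K * (math.exp(-t / TAU_M) - math.exp(-t / TAU_S)) if t > 0 else 0.0


def srm_neuron(inputs: list[tuple[float, float]], threshold: float,
               t_end: float, dt: float = 0.01) -> list[float]:
    """Output spike times (ms) of one SRM unit evaluated on a fixed grid.

    inputs: (arrival time t_j, weight J_ij) pairs. Only inputs arriving
    after the unit's last output spike contribute, implementing the reset.
    """
    out: list[float] = []
    last = -math.inf
    for step in range(int(t_end / dt) + 1):
        t = step * dt
        h = sum(w * eps(t - tj) for tj, w in inputs if last < tj <= t)
        if h >= threshold:
            out.append(t)
            last = t  # reset to zero: earlier inputs no longer count
    return out


spikes = srm_neuron([(0.0, 2.0)], threshold=1.0, t_end=50.0)
```

Here a single input spike at t = 0 with weight 2 and threshold 1 produces exactly one output spike, about 1.6 ms later, when the kernel first reaches 0.5; after the reset that input no longer contributes. An event-driven implementation would instead compute crossing times directly between input events, avoiding the grid error.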

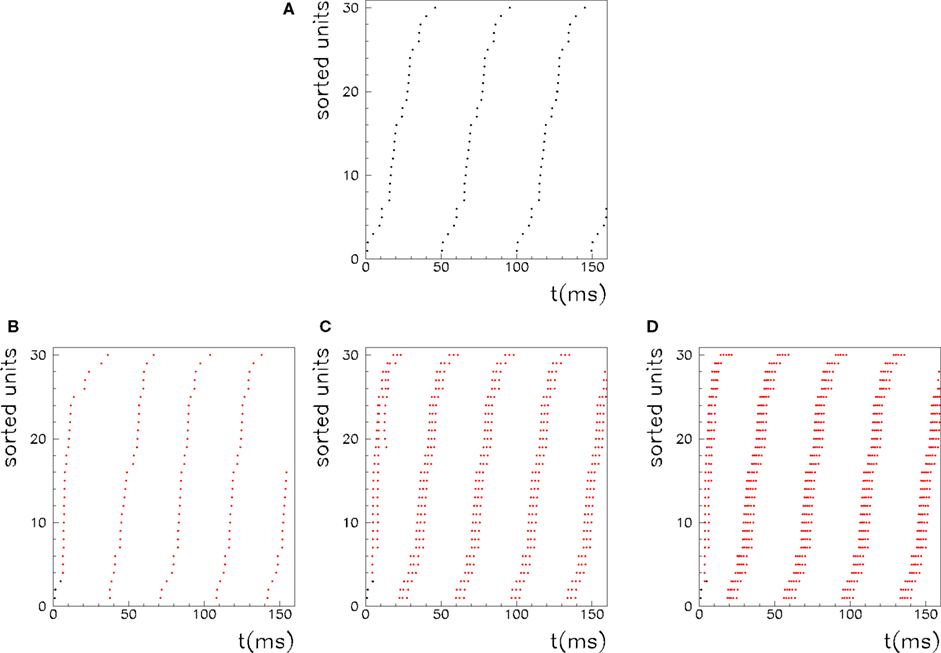

After the learning process, to recall one of the encoded patterns, we give an initial signal made up of M < N spikes taken from the stored pattern μ, and we check that after this short signal the spontaneous dynamics of the network gives sustained activity with spikes aligned to the phases of pattern μ (Figure 5).

Figure 5. Recall of a pattern by the spiking IF model, with N = 1000 neurons and φ* = 0.24π. One pattern (P = 1), defined by its phases, is stored in the network with the rule given by Eq. (4). Then a short train of M = 150 spikes, timed according to the phases of the pattern with ωμ/2π = 20 Hz, is induced on the M neurons with the lowest phases. This short train triggers the replay of the pattern by the network. Depending on the value of T, the phase-coded pattern is replayed with a different number of spikes per cycle. Note that changing T in our model may correspond to a change in the value of the physical threshold or in the value of the parameter ηÃ(ωμ) appearing in the learning rule for the synaptic connections Jij. (A) Thirty neurons of the network are randomly chosen and sorted by their phase in the pattern; the phases of the encoded pattern are shown by plotting the corresponding times on the x axis and the neuron label on the y axis. (B) The replayed pattern with threshold T = 85, plotting the spike times on the x axis and the label of the spiking neuron on the y axis. Black dots represent externally induced spikes, while red dots represent spikes generated by the intrinsic dynamics of the network. (C) The replayed pattern with threshold T = 50. (D) The replayed pattern with threshold T = 35.
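The partial cue used in the recall experiments can be sketched as follows. The exact cue spike times are not reproduced in this text, so this Python example assumes that each of the M cued neurons (those with the lowest phases) fires once, at t = φ/ω within the first cycle, with ω/2π = 20 Hz; the function `cue_train` and the uniformly random phases are hypothetical.

```python
import math
import random


def cue_train(phases: list[float], m: int,
              freq_hz: float = 20.0) -> list[tuple[int, float]]:
    """Partial cue: one spike for each of the m neurons with the lowest phases.

    Assumption of this sketch: neuron i fires once, at t_i = phi_i / omega
    (in ms), within the first cycle of a pattern oscillating at freq_hz.
    Returns (neuron index, spike time in ms) pairs sorted by time.
    """
    omega = 2.0 * math.pi * freq_hz / 1000.0  # angular frequency in rad/ms
    lowest = sorted(range(len(phases)), key=lambda i: phases[i])[:m]
    return [(i, phases[i] / omega) for i in lowest]


rng = random.Random(1)
phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(1000)]  # N = 1000
cue = cue_train(phases, m=150)  # M = 150 cue spikes
```

Choosing the lowest phases makes the cue a contiguous initial segment of one cycle, so the network receives a short, correctly ordered fragment of the stored sequence.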

We also investigate the role of the threshold T. As shown in Figure 5, when the threshold T is lower, a burst of activity takes place within each cycle, with phases aligned with the pattern. The same phase-coded pattern is therefore retrieved, but with a different number of spikes per cycle. This opens the possibility of a coding scheme in which the phases encode the pattern information, while the rate within each cycle represents the strength or saliency of the retrieval, or encodes another variable. The recall of the same phase-coded pattern with different numbers of spikes per cycle, shown in Figure 5, accords well with recent observations by Huxter et al. (2003) of the same firing phases occurring at different rates in hippocampal place cells. They showed that the phase of firing and the firing rate are dissociable and can represent two independent variables, e.g., the animal's location within the place field and its speed of movement through the field. Note that a change of T in our model may correspond to a change in the value of the physical threshold, or to a change in the value of the parameter ηÃ(ωμ) appearing in the synaptic connections Jij.

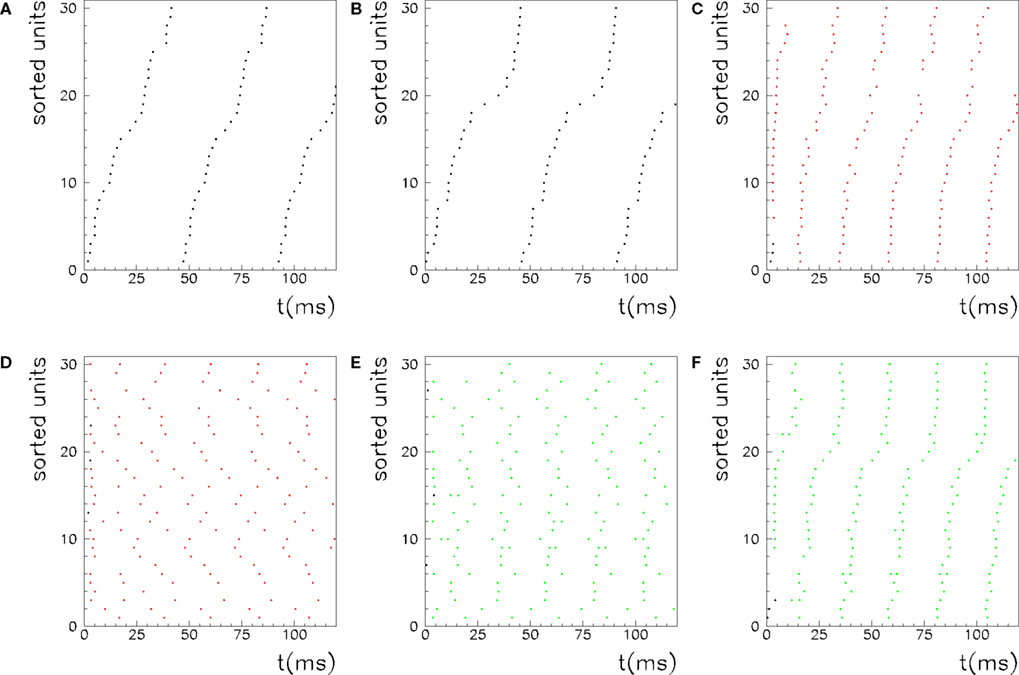

The parameter φ* in the connections Jij of Eq. (4) sets the output frequency of the collective oscillation during retrieval. As in the analog model of Eq. (5), lower values of φ* correspond to lower frequencies, although in the spiking model both time constants of the single neuron (τm and τs) contribute to setting the output frequency. Therefore, the simple formula for the output frequency of the analog model does not hold in the spiking model. The output activity during recall for φ* = 0.24π is shown in Figure 5, while the output activity for φ* = 0.4π, which has a higher frequency, is shown in Figure 6. Figure 6 shows the selective recall of two of the stored patterns when P = 5 patterns are stored and φ* = 0.4π.

Figure 6. Example of selective retrieval of different patterns, in a network with N = 1000 IF neurons and P = 5 stored patterns. Here we choose the asymmetry parameter to be φ* = 0.4π. Thirty neurons are chosen randomly, and then sorted by their phase in pattern μ = 1 in (A–C), and by their phase in pattern μ = 2 in (D–F). (A) and (D) show the phases of the first two stored patterns, μ = 1 and μ = 2 respectively, plotted as the corresponding times. (B) and (E) show the dynamics generated when a short train of M = 100 spikes corresponding to pattern μ = 1 is induced on the network, while (C) and (F) show the dynamics when pattern μ = 2 is triggered instead. The network selectively replays the stored pattern, depending on the partial cue. Note that, because φ* here is greater than in Figure 5, the frequency of the retrieved pattern is higher.

To estimate the network capacity of the spiking model, we did numerical simulations of the IF network in Eqs. (7) and (8), with N = 3000 neurons, and connections Jij given by Eq. (4), with different number of patterns P. We give an initial short train of M = 300 spikes chosen at times  from pattern μ = 1. To check if the initial train triggers the replay of pattern μ = 1 at large times, we measure the overlap mμ(t) between the spontaneous dynamics of the network and the phases of pattern μ = 1. In analogy with Eq. (6), the overlap mμ(t) is defined as

from pattern μ = 1. To check if the initial train triggers the replay of pattern μ = 1 at large times, we measure the overlap mμ(t) between the spontaneous dynamics of the network and the phases of pattern μ = 1. In analogy with Eq. (6), the overlap mμ(t) is defined as

where  is the spike timing of neuron j during the spontaneous dynamics, and T* is an estimation of the period of the collective spontaneous dynamics. The overlap in Eq. (9) is equal to 1 when the phase-coded pattern is perfectly retrieved (even though on a different time scale), and is of order

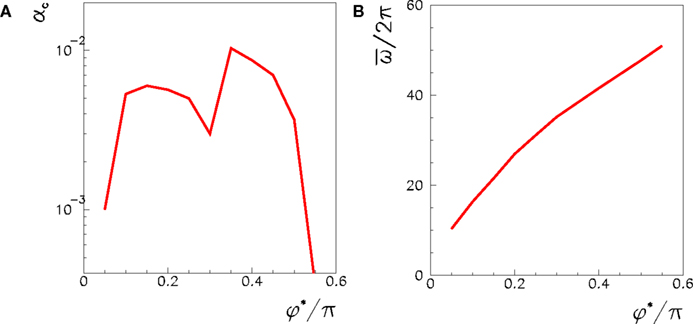

is the spike timing of neuron j during the spontaneous dynamics, and T* is an estimation of the period of the collective spontaneous dynamics. The overlap in Eq. (9) is equal to 1 when the phase-coded pattern is perfectly retrieved (even though on a different time scale), and is of order  when the phases of spikes have nothing to do with the stored phases of pattern μ. We consider a successful recall each time the overlap (averaged over 50 runs) is larger then 0.7. The capacity of the fully connected spiking network as a function of φ* is shown in Figure 7, for threshold T = 200 and N = 3000.

when the phases of spikes have nothing to do with the stored phases of pattern μ. We consider a successful recall each time the overlap (averaged over 50 runs) is larger then 0.7. The capacity of the fully connected spiking network as a function of φ* is shown in Figure 7, for threshold T = 200 and N = 3000.

Figure 7. (A) Maximum capacity αc = Pmax/N of a network of N = 3000 fully connected spiking IF neurons, as a function of the learning window asymmetry φ*. The spontaneous dynamics induced by a short train of M = 300 spikes with ωμ/2π = 20 Hz and phases given by the encoded pattern μ = 1 is considered, with threshold T = 200. (B) The output frequency of the replay as a function of φ*, for P = 1, T = 200, and N = 3000.
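The overlap measure and the recall criterion can be sketched in a few lines. This Python example assumes one spike time per neuron, a known estimate T* of the replay period, and an overlap of the form |(1/N) Σj exp(2πi tj/T*) exp(−iφjμ)|, which has the properties stated for Eq. (9): it equals 1 for perfect retrieval on any time scale and is of order 1/√N for unrelated phases. All names and the 40 ms period are hypothetical.

```python
import cmath
import math
import random


def overlap(spike_times: list[float], phases: list[float], period: float) -> float:
    """Assumed overlap |(1/N) sum_j exp(2*pi*i*t_j/T*) * exp(-i*phi_j)|.

    spike_times[j]: one spike time of neuron j (ms); phases[j]: stored phase
    of neuron j in the pattern; period: estimated collective period T* (ms).
    """
    z = sum(cmath.exp(1j * (2.0 * math.pi * t / period - phi))
            for t, phi in zip(spike_times, phases))
    return abs(z) / len(phases)


rng = random.Random(0)
N = 3000
phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(N)]
T_star = 40.0  # hypothetical replay period, different from the learned one

# Perfect replay, shifted by 7 ms and on the time scale T*: overlap close to 1.
replay = [7.0 + T_star * phi / (2.0 * math.pi) for phi in phases]
# Spike times unrelated to the stored phases: overlap of order 1/sqrt(N).
noise = [rng.uniform(0.0, T_star) for _ in range(N)]

recalled = overlap(replay, phases, T_star) > 0.7  # success criterion in the text
print(overlap(replay, phases, T_star), overlap(noise, phases, T_star), recalled)
```

Because the overlap depends only on spike times relative to T*, a replay that is compressed or stretched in time still scores 1, while a global time shift only multiplies every term by the same unit-modulus factor.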

While in the analog model the output frequency tends to infinity as φ* → π/2, in the spiking model the output frequency increases but does not diverge at π/2, as shown in Figure 7B. As in the analog model, the capacity decreases as soon as φ* approaches or exceeds π/2. With the parameters of Figure 7, for φ* larger than 0.55π the stimulation spikes are unable to initiate the recall of the pattern and the network remains silent, unless a lower threshold is chosen. This behavior resembles the small oscillation obtained in the analog model as φ* → π/2.

A systematic study of the dependence of the storage capacity on the threshold T, on the number M of spikes used to trigger the recall, and on the time constants τm and τs remains to be done. Future work will also consider the case in which the patterns to be learned are defined not through the rate in Eq. (2), but as sequences of spikes whose timing is given exactly by the phases of the pattern. We expect a higher storage capacity in this case.

Summary and Discussion

In this paper we studied the storage and recall of patterns in which information is encoded in the timing of firing relative to the cycle, i.e., in its phase. We analyzed the ability of the learning rule of Eq. (4) to memorize multiple phase-coded patterns, such that the spontaneous dynamics of the network, defined by Eq. (5), selectively gives sustained activity matching one of the stored phase-coded patterns, depending on the initialization of the network. This means that if one of the stored items is presented as input to the network at times t < 0 and switched off for t ≥ 0, the spontaneous activity of the network at t > 0 is sustained, with phase alignments that match those presented before. That the network performs retrieval competently is not trivial, as analog associative memory is hard (Treves, 1990).

We computed the storage capacity of phase-coded patterns in the analog model, finding a linear scaling of the number of patterns with network size, with maximal capacity αc ≃ 0.02 for the fully connected network. Our model cannot easily be compared to classical models such as Hopfield's, since dynamical phase-coded patterns are very different from classical static rate-coded patterns. Whereas the capacity of rate-coded networks is well understood, that of spiking or phase-coded networks is not. We are not aware of previous work measuring the storage capacity of phase-coded patterns, except for the phase-coded patterns in the spin model of Yoshioka (2009), whose capacity is in quantitative agreement with our analog model results. Nevertheless, the scaling of capacity with connectivity displays properties similar to those of classical models. For strong dilution (z ≪ N), the capacity Pmax, i.e., the maximum number of patterns that can be encoded in the network, is proportional to z rather than to N. For weak dilution (z of the order of N), on the other hand, Pmax is proportional to the number of neurons N.

Our results on the IF spiking model show qualitative agreement between the analog and the spiking models in their ability to store multiple phase-coded patterns and recall them selectively.

We also studied the storage capacity of the analog model for different degrees of sparseness and small-worldness of the connections. We place neurons on the vertices of a two- or three-dimensional lattice, and connect each neuron to the neurons closer than a given distance, measured in lattice spacings. A fraction γ of these connections is then rewired, by deleting the short-range connection and creating a long-range connection to a randomly chosen neuron. The existing connections are then defined by the learning rule of Eq. (4), while all other connections are set to zero.
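The construction just described can be sketched for the two-dimensional case. This Python example is a hypothetical illustration, not the code used for Figure 4: it assumes periodic boundaries, Euclidean distance in lattice spacings, and undirected links; when a link is rewired, it keeps one endpoint and moves the other to a uniformly chosen neuron, avoiding self-connections and duplicate links, so that the total number of connections is preserved. The learning rule of Eq. (4) would then be applied only to the links that exist.

```python
import random


def small_world_lattice(side: int, radius: float, gamma: float,
                        seed: int = 0) -> dict[int, set[int]]:
    """2D periodic lattice with short-range links; a fraction gamma is rewired.

    Sketch assumptions: periodic boundaries, Euclidean distance in lattice
    spacings, undirected links, rewiring keeps endpoint i and moves endpoint j
    to a random neuron k (no self-loops, no duplicate links).
    """
    rng = random.Random(seed)
    n = side * side
    adj: dict[int, set[int]] = {i: set() for i in range(n)}
    edges = []
    for i in range(n):
        xi, yi = divmod(i, side)
        for j in range(i + 1, n):
            xj, yj = divmod(j, side)
            dx = min(abs(xi - xj), side - abs(xi - xj))  # periodic distance
            dy = min(abs(yi - yj), side - abs(yi - yj))
            if dx * dx + dy * dy <= radius * radius:
                edges.append((i, j))
    for i, j in edges:
        adj[i].add(j)
        adj[j].add(i)
    for i, j in edges:
        if rng.random() < gamma:
            candidates = [k for k in range(n) if k != i and k not in adj[i]]
            if not candidates:
                continue
            k = rng.choice(candidates)
            adj[i].discard(j)  # delete the short-range link
            adj[j].discard(i)
            adj[i].add(k)  # create a link to a random neuron
            adj[k].add(i)
    return adj


net = small_world_lattice(side=6, radius=1.5, gamma=0.3)
```

With side 6 and radius 1.5 each neuron has 8 short-range neighbors (144 links in total); any γ keeps 144 links while redirecting roughly a fraction γ of them to random targets.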

By varying the fraction γ of rewired (long-range) connections, we go from a two- or three-dimensional network with only short-range connections (γ = 0) to a random network (γ = 1). Small but finite values of γ give a “small world” topology, similar to that found in many areas of the nervous system. For system size N = 243, a random network with only 10% connectivity already reaches about 40% of the capacity of the fully connected network, showing a saturation effect when the density of connections grows above 10%.

Looking at the dependence on the fraction of short-range and long-range connections, we see that at z/N = 0.11 the capacity gain with respect to the short-range network given by 30% long-range connections is already about 80% of the gain given by the fully random network. This fraction is likely to increase for larger system sizes, because the larger the system, the more long-range connections differ from short-range ones. This is interesting, considering that a long-range connection clearly has a higher cost than a short-range one, and it implies that a small-world network topology is optimal, as a compromise between the cost of long-range connections and the capacity increase.

These results were obtained for the analog model of Eq. (5). However, this is not truly a spiking model, since only the “firing activity” or “probability of spiking” is evaluated. We therefore performed numerical simulations of an IF model with spike response kernels, to show that, while details of the dynamics may depend on the single-unit model, the crucial result, the ability to store and recall phase-coded memories, can also be seen in a spiking model. Indeed, numerical simulations of the spiking model of Eq. (7) show competent storage and recall of phase-coded memories. A pattern of spikes with the stored phases, i.e., with the stored timing of spikes relative to the cycle, is activated by the intrinsic dynamics of the network when a partial cue of the stored pattern (a few spikes at the proper times) is presented for a short time. Figure 6 shows that the network is able to store multiple phase-coded memories and selectively replay one of them depending on the partial cue presented. Changing the parameter φ* of the learning rule changes the period of the cycle during recall, while changing the value of T changes the number of spikes per cycle without changing the phase pattern. Hence the same phase-coded pattern can be recalled with one spike per cycle or with a short burst per cycle. This agrees well with recent observations, such as the occurrence of phase precession at very low as well as high firing rates (Huxter et al., 2003). The number of spikes per cycle acts as a measure of the strength or saliency of recall. This leaves open the possibility that variations in the number of spikes per cycle convey additional information about other variables not coded in the phase pattern, as suggested for place cells (Huxter et al., 2003; Huhn et al., 2005; Wu and Yamaguchi, 2010), or about the saliency of the retrieved pattern (Lengyel and Dayan, 2007).

In our treatment, we distinguish a learning mode, in which connections are plastic and activity is clamped to the phase-coded pattern to be stored in the synaptic connections Jij, from a recall mode, in which connection strengths do not change. Of course, this distinction is somewhat artificial; real neural dynamics may not be separated so clearly into distinct modes. Nevertheless, data on cholinergic neuromodulation in cortical structures (Hasselmo, 1993, 1999), suggesting that high levels of acetylcholine selectively suppress intrinsic but not afferent fiber transmission and enhance long-term plasticity, seem to provide a possible neurophysiological mechanism for this distinction between two operational modes.

Another issue concerns the physical constraints on the sign of the synaptic connections Jij: for a given presynaptic unit j, they are all positive when j is excitatory and all negative when j is inhibitory, a condition not respected so far by our learning rule of Eq. (4). As a remedy, one may add to each connection a background weight J0, independent of i and j, such that Jij + J0 > 0 for all i and j, making all units excitatory and all plastic connections positive; one then has to add a global inhibition equal to −J0 times the mean field y = Σjxj to preserve the balance between excitation and inhibition. In this way, we obtain a network of N excitatory units with positive couplings and a global inhibition,

which is mathematically equivalent to Eq. (5) when y = Σjxj, and numerically gives the same results also when y = N/2.
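The equivalence follows term by term, since Σj (Jij + J0) xj − J0 Σj xj = Σj Jij xj. The Python check below illustrates it with hypothetical Gaussian couplings rather than those of Eq. (4), choosing J0 just above −min Jij so that every shifted coupling is positive; the function name `shifted_input` is also hypothetical.

```python
import random


def shifted_input(J: list[list[float]], x: list[float], J0: float) -> list[float]:
    """Input to each unit with positive couplings J_ij + J0 and a global
    inhibition -J0 * y, where y = sum_j x_j is the mean field."""
    y = sum(x)
    return [sum((Jij + J0) * xj for Jij, xj in zip(row, x)) - J0 * y for row in J]


rng = random.Random(0)
N = 50
J = [[rng.gauss(0.0, 1.0) for _ in range(N)] for _ in range(N)]  # hypothetical couplings
x = [rng.random() for _ in range(N)]  # activities in [0, 1)
J0 = max(0.0, -min(min(row) for row in J)) + 1e-3  # makes every J_ij + J0 > 0
direct = [sum(Jij * xj for Jij, xj in zip(row, x)) for row in J]
shifted = shifted_input(J, x, J0)  # equal to direct up to rounding error
```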

For the IF model of the section “Integrate and Fire Model”, we can likewise consider a network of excitatory neurons only, with positive plastic connections Jij + J0, where J0 is a constant such that Jij + J0 > 0, and then add a suitable inhibitory term to preserve the balance between excitation and inhibition. In this way, the postsynaptic potential of neuron i, after the time ti of its last spike, is given by

$$h_i(t) = \sum_j \left(J_{ij} + J_0\right) \sum_{t_i < t_j < t} \varepsilon(t - t_j) \;-\; J_0 \sum_j \sum_{t_i < t_j < t} \varepsilon(t - t_j).$$

The inhibitory term can be realized in different ways, for example by imagining that each excitatory unit j has a fast inhibitory interneuron that emits a spike each time its excitatory unit does and is connected to all the other excitatory neurons with constant weight J0.

The task of storing and recalling phase-coded memories has also been investigated by Lengyel et al. (2005b) in the framework of probabilistic inference. While we study the effects of the couplings of Eq. (4) in the network model of Eq. (5) and in a network of IF neurons given by Eq. (7), Lengyel et al. (2005b) approach this problem from a normative theory of autoassociative memory, in which the variable xi of neuron i represents its spike timing with respect to a reference point of an ongoing field potential, and the interaction H(xi, xj) between units is mediated by the derivative of the synaptic plasticity rule used to store memories. Lengyel et al. (2005b) also study the case of limited connectivity, showing how recall performance depends on the degree of connectivity when connections are cut randomly. Here we show that performance also depends on the topology of the connectivity: capacity depends not only on the number of connections but also on the fraction of long-range versus short-range connections.

The role of STDP in learning and detecting spatio-temporal patterns has recently been studied by Masquelier et al. (2009). They showed that a repeating spatio-temporal spike pattern, hidden in equally dense distracter spike trains, can be robustly detected by a set of “listening” neurons equipped with STDP. When a spatio-temporal pattern repeats periodically, it can be considered a periodic phase-coded pattern. While Masquelier et al. (2009) investigate the detection of the pattern when it is the input of the “listening” neurons, here we investigate the associative memory property, which makes the pattern imprinted in the connectivity of the population an attractor of the dynamics. When a partial cue of pattern μ is presented (or, in the analog case, when the network is initialized according to pattern μ), the original stored pattern is replayed. Unlike in Masquelier et al. (2009), here pattern μ is imprinted in the neural population in such a way that exactly the same phase-coded pattern is replayed during persistent spontaneous activity. This associative memory behavior, which replays the stored sequence, may be a mechanism for recognizing an item, by activating the same memorized pattern in response to a similar input, or a way to transfer the memorized item to another brain area (as in memory consolidation during sleep).

Our results show that in the spiking model a critical role is played by the threshold T: changing the threshold, one goes from a silent state to a spontaneously active phase-coded pattern with one spike per cycle, and then to the same phase-coded pattern with many spikes per cycle (bursting). This suggests the conjecture that, when the replay of a periodic spatio-temporal pattern is observed, changing the spiking threshold of the neurons should yield the same phase pattern with more or fewer spikes per cycle.

In future work we will also investigate the capacity when patterns with different frequencies are encoded in the same network. In this paper we computed the capacity when all encoded patterns have the same frequency; it would be interesting to see how the network behaves with different frequencies. Preliminary results on the analog model (Scarpetta et al., 2008) show that it is possible to store patterns at two different frequencies such that both are stable, provided a relationship holds between the two frequencies and the shape of the learning window. We plan to investigate this in both the analog and the spiking model.

Concerning the shape of the learning kernel A(τ), in our model the values of the connections Jij depend on the kernel shape only through the time integral of the kernel and the Fourier transform of the kernel at the frequency of the encoded pattern. As shown in our previous paper (Yoshioka et al., 2007), the best results are obtained when the time integral of the kernel is zero, ∫ A(τ) dτ = 0. This choice ensures the global balance between excitation and inhibition. Hence in the present paper we set this integral to zero and varied only the phase φ* of the Fourier transform. A purely symmetric kernel corresponds to φ* = 0, while a purely anti-symmetric (causal) one corresponds to φ* = π/2. We find that intermediate values 0 < φ* < π/2 work better than values π/2 < φ* < π, for which the network is not able to replay patterns competently. Here we study positive values of φ*, i.e., a causal kernel (potentiation for pre–post and depression for post–pre pairings); an anti-causal kernel would give a negative value of φ* and a negative replay frequency, meaning that the pattern would be replayed in time-reversed order (Scarpetta et al., 2008).
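To make the relation between kernel shape and φ* concrete, the following Python sketch uses a hypothetical exponential window with potentiation time constant τp and depression time constant τd, the depression amplitude being chosen so that the time integral vanishes. It assumes the convention Ã(ω) = ∫ A(τ) exp(iωτ) dτ, chosen here so that a purely antisymmetric window gives φ* = π/2 as stated in the text. For this window the phase is φ* = π/2 + arctan(ωτp) − arctan(ωτd), which the code checks against a direct numerical integral. The time constants 17 ms and 34 ms are illustrative only.

```python
import cmath
import math


def stdp_kernel(tau: float, a_p: float, tau_p: float, tau_d: float) -> float:
    """Hypothetical exponential STDP window A(tau), tau = t_post - t_pre (ms).

    Potentiation for tau > 0, depression for tau < 0; the depression
    amplitude a_d = a_p * tau_p / tau_d makes the time integral exactly zero.
    """
    a_d = a_p * tau_p / tau_d
    if tau > 0:
        return a_p * math.exp(-tau / tau_p)
    if tau < 0:
        return -a_d * math.exp(tau / tau_d)
    return 0.0


def phase_analytic(omega: float, tau_p: float, tau_d: float) -> float:
    """arg of the integral of A(tau) exp(+i omega tau), in closed form."""
    return math.pi / 2 + math.atan(omega * tau_p) - math.atan(omega * tau_d)


def phase_numeric(omega: float, a_p: float, tau_p: float, tau_d: float,
                  span: float = 400.0, dt: float = 0.01) -> float:
    """Same phase from a midpoint-rule approximation of the Fourier integral."""
    total = 0j
    for k in range(int(2 * span / dt)):
        tau = -span + (k + 0.5) * dt
        total += stdp_kernel(tau, a_p, tau_p, tau_d) * cmath.exp(1j * omega * tau) * dt
    return cmath.phase(total)


omega = 2.0 * math.pi * 20.0 / 1000.0  # 20 Hz pattern, in rad/ms
phi_star = phase_analytic(omega, 17.0, 34.0)
print(phi_star / math.pi, phase_numeric(omega, 1.0, 17.0, 34.0) / math.pi)
```

With equal time constants the window is antisymmetric and the phase is exactly π/2; a broader depression window (τd > τp) pulls φ* below π/2, into the range where the text reports the best retrieval.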

As for the variety of learning-window shapes observed in the brain, they may serve different needs, such as maximizing storage capacity or setting the time scale of replay, which in our model depends on the kernel shape. Notably, in our model we observe good storage capacity for values of the phase φ* that correspond to a wide range of oscillation frequencies during replay; storage capacity is therefore good also for values of φ* that correspond to a time-compressed replay of the phase pattern on a very short time scale. Future work will investigate the relation between this framework and the time-compressed replay of spatial experience in the rat. It has indeed been observed that during pauses in exploration and during sleep, ensembles of place cells in the rat hippocampus and cortex re-express firing sequences corresponding to past spatial experience (Lee and Wilson, 2002; Foster and Wilson, 2006; David et al., 2007; Diba and Buzsaki, 2007; Hasselmo, 2008; Davidson et al., 2009). Such time-compressed hippocampal replay of behavioral sequences co-occurs with ripple events: high-frequency oscillations that are associated with increased hippocampal–cortical communication. An intriguing hypothesis is that sequence replay during ripples is the recall of one of multiple stored phase-coded memories, a replay that occurs on a fast time scale (high replay frequency), triggered by a short sequence of spikes.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Abarbanel, H., Huerta, R., and Rabinovich, M. I. (2002). Dynamical model of long-term synaptic plasticity. Proc. Natl. Acad. Sci. 99, 10132–10137.

Achacoso, T. B., and Yamamoto, W. S. (1991). AY’s Neuroanatomy of C. elegans for Computation. Boca Raton: CRC Press.

Barabási, A. L., and Albert, R. (1999). Emergence of scaling in random networks. Science 286, 509–512.

Bi, G. Q., and Poo, M. M. (1998). Precise spike timing determines the direction and extent of synaptic modifications in cultured hippocampal neurons. J. Neurosci. 18, 10464–10472.

Bi, G. Q., and Poo, M. M. (2001). Synaptic modification by correlated activity: Hebb’s postulate revisited. Ann. Rev. Neurosci. 24, 139–166.

Borisyuk, R. M., and Hoppensteadt, F. C. (1999). Oscillatory models of the hippocampus: a study of spatio-temporal patterns of neural activity. Biol. Cybern. 81, 359–371.

Bullmore, E., and Sporns, O. (2009). Complex brain networks: graph theoretical analysis of structural and functional systems. Nat. Rev. Neurosci. 10, 186–198.

Buzsaki, G., and Draguhn, A. (2004). Neuronal oscillations in cortical networks. Science 304, 1926–1929.

David, R. E., Masami, T., and McNaughton, B. L. (2007). Fast-forward playback of recent memory sequences in prefrontal cortex during sleep. Science 318, 1147–1150.

Davidson, T. J., Kloosterman, F., and Wilson, M. A. (2009). Hippocampal replay of extended experience. Neuron 63, 497–507.

de Arcangelis, L., and Herrmann, H. J. (2010). Learning as a phenomenon occurring in a critical state. Proc. Natl. Acad. Sci. 107, 3977–3981.

Debanne, D., Gähwiler, B. H., and Thompson, S. M. (1998). Long-term synaptic plasticity between pairs of individual CA3 pyramidal cells in rat hippocampal slice cultures. J. Physiol. 507, 237–247.

Diba, K., and Buzsaki, G. (2007). Forward and reverse hippocampal place-cell sequences during ripples. Nat. Neurosci. 10, 1241–1242.

Drew, P. J., and Abbott, L. F. (2006). Extending the effects of spike-timing-dependent plasticity to behavioral timescales. Proc. Natl. Acad. Sci. 103, 8876–8881.

Düzel, E., Penny, W. D., and Burgess, N. (2010). Brain oscillations and memory. Curr. Opin. Neurobiol. 20, 143–149.

Eguíluz, V. M., Chialvo, D. R., Cecchi, G. A., Baliki, M., and Apkarian, A. V. (2005). Scale-free brain functional networks. Phys. Rev. Lett. 94, 018102.

Feldman, D. E. (2000). Timing-based LTP and LTD and vertical inputs to layer II/III pyramidal cells in rat barrel cortex. Neuron 27, 45–56.

Florian, R. V., and Muresan, R. C. (2006). Phase precession and recession with STDP and anti-STDP. Lect. Notes Comp. Sci. 4131, 718–727.

Foster, D. J., and Wilson, M. A. (2006). Reverse replay of behavioral sequences in hippocampal place cells during the awake state. Nature 440, 680–683.

Fries, P. (2005). A mechanism for cognitive dynamics: neuronal communication through neuronal coherence. Trends Cogn. Sci. 9, 474–480.

Froemke, R. C., and Dan, Y. (2002). Spike-timing-dependent synaptic modification induced by natural spike trains. Nature 416, 433–438.

Gerstner, W., and Kistler, W. (2002). Spiking Neuron Models: Single Neurons, Populations, Plasticity. New York, NY: Cambridge University Press.

Gerstner, W., Kempter, R., van Hemmen, J. L., and Wagner, H. (1996). A neuronal learning rule for sub-millisecond temporal coding. Nature 383, 76–78.

Gerstner, W., Ritz, R., and van Hemmen, J. L. (1993). Why spikes? Hebbian learning and retrieval of time-resolved excitation patterns. Biol. Cybern. 69, 503–515.

Hasselmo, M. E. (1993). Acetylcholine and learning in a cortical associative memory. Neural Comput. 5, 32–44.

Hasselmo, M. E. (1999). Neuromodulation: acetylcholine and memory consolidation. Trends Cogn. Sci. 3, 351.

Hasselmo, M. E. (2008). Temporally structured replay of neural activity in a model of entorhinal cortex, hippocampus and postsubiculum. Eur. J. Neurosci. 28, 1301–1315.

Hellwig, B. (2000). A quantitative analysis of the local connectivity between pyramidal neurons in layers 2/3 of the rat visual cortex. Biol. Cybern. 82, 111–121.

Huhn, Z., Orbán, G., Érdi, P., and Lengyel, M. (2005). Theta oscillation-coupled dendritic spiking integrates inputs on a long time scale. Hippocampus 15, 950–962.

Huxter, J., Burgess, N., and O’Keefe, J. (2003). Independent rate and temporal coding in hippocampal pyramidal cells. Nature 425, 828–832.

Jin, D. Z. (2002). Fast convergence of spike sequences to periodic patterns in recurrent networks. Phys. Rev. Lett. 89, 208102.

Kayser, C., Montemurro, M. A., Logothetis, N. K., and Panzeri, S. (2009). Spike-phase coding boosts and stabilizes information carried by spatial and temporal spike patterns. Neuron 61, 597–608.

Kempter, R., Gerstner, W., and van Hemmen, J. L. (1999). Hebbian learning and spiking neurons. Phys. Rev. E 59, 4498–4514.

König, P., Engel, A. K., Roelfsema, P. R., and Singer, W. (1995). How precise is neuronal synchronization? Neural Comput. 7, 469–485.

Latham, P. E., and Lengyel, M. (2008). Phase coding: spikes get a boost from local fields. Curr. Biol. 18, R349–R351.

Laurent, G. (2002). Olfactory network dynamics and the coding of multidimensional signals. Nat. Rev. Neurosci. 3, 884–895.

Lee, A. K., and Wilson, M. A. (2002). Memory of sequential experience in the hippocampus during slow wave sleep. Neuron 36, 1183–1194.

Leibold, C., Gundlfinger, A., Schmidt, R., Thurley, K., Schmitz, D., and Kempter, R. (2008). Temporal compression mediated by short-term synaptic plasticity. Proc. Natl. Acad. Sci. U.S.A. 105, 4417–4422.

Lengyel, M., and Dayan, P. (2007). “Uncertainty, phase and oscillatory hippocampal recall,” in Advances in Neural Information Processing Systems 19, eds B. Schölkopf et al. (Cambridge, MA: MIT Press), 833–840.

Lengyel, M., Huhn, Z., and Érdi, P. (2005a). Computational theories on the function of theta oscillations. Biol. Cybern. 92, 393–408.

Lengyel, M., Kwag, J., Paulsen, O., and Dayan, P. (2005b). Matching storage and recall: hippocampal spike timing-dependent plasticity and phase response curves. Nat. Neurosci. 8, 1677–1683.

Magee, J. C., and Johnston, D. (1997). A synaptically controlled associative signal for Hebbian plasticity in hippocampal neurons. Science 275, 209–212.

Markram, H., Lübke, J., Frotscher, M., and Sakmann, B. (1997). Regulation of synaptic efficacy by coincidence of postsynaptic APs and EPSPs. Science 275, 213–215.

Masquelier, T., Guyonneau, R., and Thorpe, S. J. (2009). Competitive STDP-based spike pattern learning. Neural Comput. 21, 1259–1276.

Mehta, M. R., Lee, A. K., and Wilson, M. A. (2002). Role of experience and oscillations in transforming a rate code into a temporal code. Nature 417, 741–746.

Memmesheimer, R.-M., and Timme, M. (2006a). Designing the dynamics of spiking neural networks. Phys. Rev. Lett. 97, 188101.

O’Keefe, J., and Recce, M. L. (1993). Phase relationship between hippocampal place units and the EEG theta rhythm. Hippocampus 3, 317–330.

Pajevic, S., and Plenz, D. (2009). Efficient network reconstruction from dynamical cascades identifies small-world topology of neuronal avalanches. PLoS Comput. Biol. 5, e1000271. doi:10.1371/journal.pcbi.1000271.

Pellegrini, G. L., de Arcangelis, L., Herrmann, H. J., and Perrone-Capano, C. (2007). Activity-dependent neural network model on scale-free networks. Phys. Rev. E 76, 016107.

Rao, R. P., and Sejnowski, T. J. (2001). Spike-timing-dependent Hebbian plasticity as temporal difference learning. Neural Comput. 13, 2221–2237.

Scarpetta, S., and Marinaro, M. (2005). A learning rule for place fields in a cortical model: theta phase precession as a network effect. Hippocampus 15, 979–989.

Scarpetta, S., Yoshioka, M., and Marinaro, M. (2008). Encoding and replay of dynamic attractors with multiple frequencies. Lect. Notes Comp. Sci. 5286, 38–61.

Scarpetta, S., Zhaoping, L., and Hertz, J. (2001). “Spike-timing-dependent learning for oscillatory networks,” in NIPS, Vol. 13, eds T. Leen, T. Dietterich and V. Tresp (Cambridge, MA: MIT Press), 152–165.

Scarpetta, S., Zhaoping, L., and Hertz, J. (2002a). Hebbian imprinting and retrieval in oscillatory neural networks. Neural Comput. 14, 2371–2396.

Scarpetta, S., Zhaoping, L., and Hertz, J. (2002b). “Learning in an oscillatory cortical model,” in Scaling and Disordered Systems, eds F. Family, M. Daoud, H. Herrmann and H. E. Stanley (Singapore: World Scientific Publishing), 292.

Siegel, M., Warden, M. R., and Miller, E. K. (2009). Phase-dependent neuronal coding of objects in short-term memory. Proc. Natl. Acad. Sci. U.S.A. 106, 21341–21346.

Singer, W. (1999). Neuronal synchrony: a versatile code for the definition of relations. Neuron 24, 49–65.

Sjöström, P. J., Turrigiano, G., and Nelson, S. B. (2001). Rate, timing, and cooperativity jointly determine cortical synaptic plasticity. Neuron 32, 1149–1164.

Song, S., Miller, K. D., and Abbott, L. F. (2000). Competitive Hebbian learning through spike-timing-dependent synaptic plasticity. Nat. Neurosci. 3, 919–926.

Sporns, O., Chialvo, D., Kaiser, M., and Hilgetag, C. C. (2004). Organization, development and function of complex brain networks. Trends Cogn. Sci. 8, 418–425.

Sporns, O., and Zwi, J. D. (2004). The small world of the cerebral cortex. Neuroinformatics 2, 145–162.

Thurley, K., Leibold, C., Gundlfinger, A., Schmitz, D., and Kempter, R. (2008). Phase precession through synaptic facilitation. Neural Comput. 20, 1285–1324.

Treves, A. (1990). Graded-response neurons and information encodings in autoassociative memories. Phys. Rev. A 42, 2418.

Watts, D. J., and Strogatz, S. H. (1998). Collective dynamics of “small-world” networks. Nature 393, 440–442.

White, J. G., Southgate, E., Thomson, J. N., and Brenner, S. (1986). The structure of the nervous system of the nematode Caenorhabditis elegans. Philos. Trans. R. Soc. Lond. B Biol. Sci. 314, 1–340.

Wills, T., Lever, C., Cacucci, F., Burgess, N., and O’Keefe, J. (2005). Attractor dynamics in the hippocampal representation of the local environment. Science 308, 873–876.

Wittenberg, G. M., and Wang, S. S.-H. (2006). Malleability of spike-timing-dependent plasticity at the CA3-CA1 synapse. J. Neurosci. 26, 6610–6617.

Wu, Z., and Yamaguchi, Y. (2010). Independence of the unimodal tuning of firing rate from theta phase precession in hippocampal place cells. Biol. Cybern. 102, 95–107.

Yoshioka, M. (2009). Learning of spatiotemporal patterns in Ising-spin neural networks: analysis of storage capacity by path integral methods. Phys. Rev. Lett. 102, 158102.

Yoshioka, M., Scarpetta, S., and Marinaro, M. (2007). Spatiotemporal learning in analog neural networks using spike-timing-dependent synaptic plasticity. Phys. Rev. E 75, 051917.

Keywords: phase-coding, associative memory, storage capacity, replay, STDP

Citation: Scarpetta S, de Candia A and Giacco F (2010) Storage of phase-coded patterns via STDP in fully-connected and sparse network: a study of the network capacity. Front. Syn. Neurosci. 2:32. doi: 10.3389/fnsyn.2010.00032

Received: 31 January 2010;

Paper pending published: 26 February 2010;

Accepted: 28 June 2010;

Published online: 23 August 2010

Edited by:

Wulfram Gerstner, Ecole Polytechnique Fédérale de Lausanne, Switzerland

Reviewed by:

Mate Lengyel, University of Cambridge, UK

Marc Timme, Max-Planck-Institute for Dynamics and Self-Organization, Germany

Copyright: © 2010 Scarpetta, de Candia and Giacco. This is an open-access article subject to an exclusive license agreement between the authors and the Frontiers Research Foundation, which permits unrestricted use, distribution, and reproduction in any medium, provided the original authors and source are credited.

*Correspondence: Silvia Scarpetta, Dipartimento di Fisica “E.R. Caianiello”, Università di Salerno, via Ponte Don Melillo, Fisciano, Italy. e-mail: silvia@sa.infn.it