- ¹Frontiers Science Center for Intelligent Autonomous Systems, Shanghai, China

- ²Department of Control Science and Engineering, College of Electronics and Information Engineering, Tongji University, Shanghai, China

- ³National Key Laboratory of Autonomous Intelligent Unmanned Systems, Tongji University, Shanghai, China

- ⁴Department of Electrical Engineering, Prince Mohammad Bin Fahd University, Al Khobar, Saudi Arabia

- ⁵Department of Computer Science, Faculty of Computer Sciences, Ilma University, Karachi, Pakistan

- ⁶Department of Electrical and Communication Engineering, College of Engineering, United Arab Emirates University, Al Ain, United Arab Emirates

Explainable Artificial Intelligence (XAI) is increasingly pivotal in Unmanned Aerial Vehicle (UAV) operations within smart cities, enhancing trust and transparency in AI-driven systems by addressing the 'black-box' limitations of traditional Machine Learning (ML) models. This paper provides a comprehensive overview of the evolution of UAV navigation and control systems, tracing the transition from conventional methods such as GPS and inertial navigation to advanced AI- and ML-driven approaches. It investigates the transformative role of XAI in UAV systems, particularly in safety-critical applications where interpretability is essential. A key focus of this study is the integration of XAI into monocular vision-based navigation frameworks, which, despite their cost-effectiveness and lightweight design, face challenges such as depth perception ambiguities and limited fields of view. Embedding XAI techniques enhances the reliability and interpretability of these systems, providing clearer insights into navigation paths, obstacle detection, and avoidance strategies. This advancement is crucial for UAV adaptability in dynamic urban environments, including infrastructure changes, traffic congestion, and environmental monitoring. Furthermore, this work examines how XAI frameworks foster transparency and trust in UAV decision-making for high-stakes applications such as urban planning and disaster response. It explores critical challenges, including scalability, adaptability to evolving conditions, balancing explainability with performance, and ensuring robustness in adverse environments. Additionally, it highlights the emerging potential of integrating vision models with Large Language Models (LLMs) to further enhance UAV situational awareness and autonomous decision-making. Accordingly, this study provides actionable insights to advance next-generation UAV technologies, ensuring reliability and transparency. 
The findings underscore XAI's role in bridging existing research gaps and accelerating the deployment of intelligent, explainable UAV systems for future smart cities.

1 Introduction

Unmanned Aerial Vehicles (UAVs) have evolved from early military reconnaissance tools into essential assets across various industries (Mozaffari et al., 2019; Sharma et al., 2020). Initially developed for surveillance during World War I and later refined with radio-controlled advancements in World War II (Fahlstrom et al., 2022; Sullivan, 2006), UAVs remained largely confined to military applications until the late 20th century (Keane and Carr, 2013). Advances in consumer electronics, including lightweight batteries, high-resolution cameras, and compact processors, alongside regulatory changes, drove their expansion into civilian and commercial domains, enabling applications in photography, disaster response, environmental monitoring, and humanitarian efforts (Xing et al., 2024; Battsengel et al., 2020; Fotouhi et al., 2019; Nex et al., 2022).

Between 2000 and 2010, research focused on improving UAV design, navigation, control systems, and communication technologies, enhancing their ability to execute sophisticated autonomous operations (Hassanalian and Abdelkefi, 2017; Gyagenda et al., 2022; Gu et al., 2020; Ebeid et al., 2018). These advancements facilitated AI-driven navigation, adaptive flight control, and real-time decision-making, allowing UAVs to support critical applications such as disaster relief and climate monitoring (Hernández et al., 2021; Adade et al., 2021). With increasing adoption, modern UAVs now face pressing challenges, including scalability, efficient autonomy, and enhanced situational awareness. Addressing these challenges requires novel approaches such as Explainable AI (XAI) to improve trust, transparency, and decision-making in dynamic environments.

As UAVs have evolved, AI has emerged as a transformative force in navigation and control, enabling unprecedented levels of autonomy and precision. AI-driven systems process large datasets in real-time, allowing UAVs to adapt to dynamic environments and perform complex tasks such as obstacle avoidance, route optimization, and accurate target tracking (Lahmeri et al., 2021; Rovira-Sugranes et al., 2022; Su et al., 2023). These advancements have significantly expanded UAV operational scope, reducing human intervention and improving efficiency in applications like surveillance, environmental monitoring, and disaster response. A key component of AI-enabled navigation is monocular vision, which relies on a single camera to perceive the environment, offering a cost-effective and lightweight alternative to multi-sensor systems. By leveraging AI and Machine Learning (ML) algorithms, monocular vision enables UAVs to estimate depth, detect obstacles, and construct environmental maps in real time, facilitating precise navigation and responsive control even in dynamic and unpredictable environments (Padhy et al., 2018b). This integration allows UAVs to operate efficiently without the reliance on expensive or bulky multi-sensor setups, making monocular vision an essential technology for autonomous UAV operations.

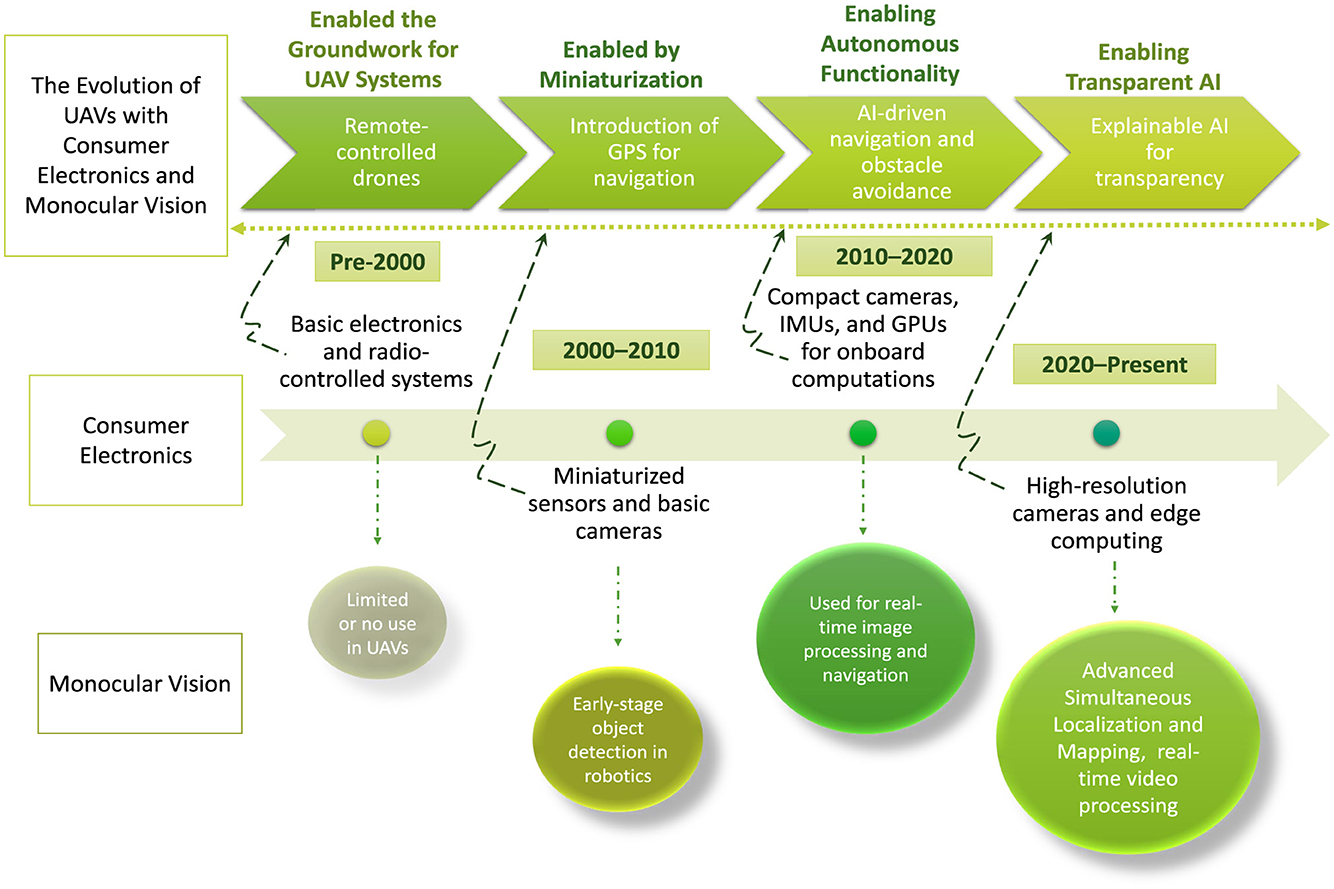

However, as UAVs become increasingly autonomous, the need for transparency and trust in their decision-making processes has brought Explainable Artificial Intelligence (XAI) to the forefront. XAI frameworks address the "black-box" nature of AI algorithms by providing interpretable and human-understandable explanations for UAV navigation decisions (Minh et al., 2022; Došilović et al., 2018). For instance, XAI techniques can explain how a UAV interprets sensor data, including data from consumer electronics-based components such as high-resolution cameras and lightweight processors, to avoid obstacles or prioritize routes under specific constraints. XAI is also important for monocular vision, where it can explain the UAV's interpretations of visual data, such as how depth was inferred or why a specific obstacle avoidance maneuver was chosen. This is especially critical in high-stakes applications such as environmental monitoring and urban planning, where stakeholders require assurance about the reliability and rationale of UAV operations (Kurunathan et al., 2023; Wilson et al., 2021). Therefore, by combining AI-driven automation with XAI interpretability and advances in consumer electronics technologies, UAVs can achieve both operational efficiency and the transparency needed for greater acceptance and trust in their capabilities (Shakhatreh et al., 2019; Sharma et al., 2020). Figure 1 illustrates how advancements in consumer electronics and monocular vision have collectively driven the evolution of UAVs, enabling innovations such as GPS navigation, autonomous functionality, and XAI.

Figure 1. The evolution of UAVs over time through combined advances in consumer electronics and monocular vision.
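To make the idea of explaining a vision-based decision more concrete, the following minimal Python sketch illustrates occlusion sensitivity, one common post-hoc explanation technique: image patches are masked one at a time, and the resulting drop in the model's output indicates which regions the decision depended on. The function names, the tiny grayscale image, and especially `toy_obstacle_score` (a hypothetical stand-in for a learned obstacle detector) are assumptions made for illustration and are not taken from the surveyed works.

```python
from typing import Callable, List

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def toy_obstacle_score(image: Image) -> float:
    """Hypothetical stand-in for a learned model: mean brightness of the image centre."""
    h, w = len(image), len(image[0])
    rows = range(h // 4, 3 * h // 4)
    cols = range(w // 4, 3 * w // 4)
    total = sum(image[r][c] for r in rows for c in cols)
    return total / (len(rows) * len(cols))


def occlusion_sensitivity(
    image: Image,
    score_fn: Callable[[Image], float],
    patch: int = 4,
    baseline: float = 0.0,
) -> Image:
    """Return a heatmap holding, for each patch, the score drop caused by masking it."""
    h, w = len(image), len(image[0])
    base_score = score_fn(image)
    heatmap = [[0.0] * w for _ in range(h)]
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            # Copy the image and overwrite one patch with the baseline value.
            occluded = [row[:] for row in image]
            for r in range(top, min(top + patch, h)):
                for c in range(left, min(left + patch, w)):
                    occluded[r][c] = baseline
            drop = base_score - score_fn(occluded)
            # A large drop means the model relied on this region.
            for r in range(top, min(top + patch, h)):
                for c in range(left, min(left + patch, w)):
                    heatmap[r][c] = drop
    return heatmap


def make_test_image(size: int = 16) -> Image:
    """Dark image with one bright 4x4 block standing in for an obstacle."""
    image = [[0.0] * size for _ in range(size)]
    for r in range(4, 8):
        for c in range(8, 12):
            image[r][c] = 1.0
    return image
```

Calling `occlusion_sensitivity(make_test_image(), toy_obstacle_score)` yields a heatmap that is nonzero only over the bright block, which is the kind of visual evidence an operator could inspect to check that an avoidance decision was driven by the obstacle rather than by background clutter.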

1.1 Related surveys

State-of-the-art survey papers have significantly advanced UAV navigation efforts by addressing various challenges and proposing innovative solutions. For instance, Lu et al. (2018) reviewed vision-based methods for UAV navigation, focusing on key components like visual localization, mapping, obstacle avoidance, and path planning. Similarly, Rezwan and Choi (2022) provided a comprehensive survey of AI approaches for autonomous UAV navigation, categorizing optimization-based and learning-based techniques while exploring their fundamentals, working principles, and applications. Gyagenda et al. (2022) analyzed independent navigation solutions for GNSS-denied environments, emphasizing perception, localization, and motion planning, whereas Balamurugan et al. (2016) reviewed vision-based navigation techniques, including Visual Odometry (VO) and Simultaneous Localization and Mapping (SLAM), alongside sensor fusion methods for enhanced performance. Alam and Oluoch (2021) examined adaptive navigation techniques for safe flight and landing, while Gu et al. (2020) focused on ANN-based flight controllers for optimization. Ebeid et al. (2018) highlighted the role of open-source platforms in accelerating UAV research and development.

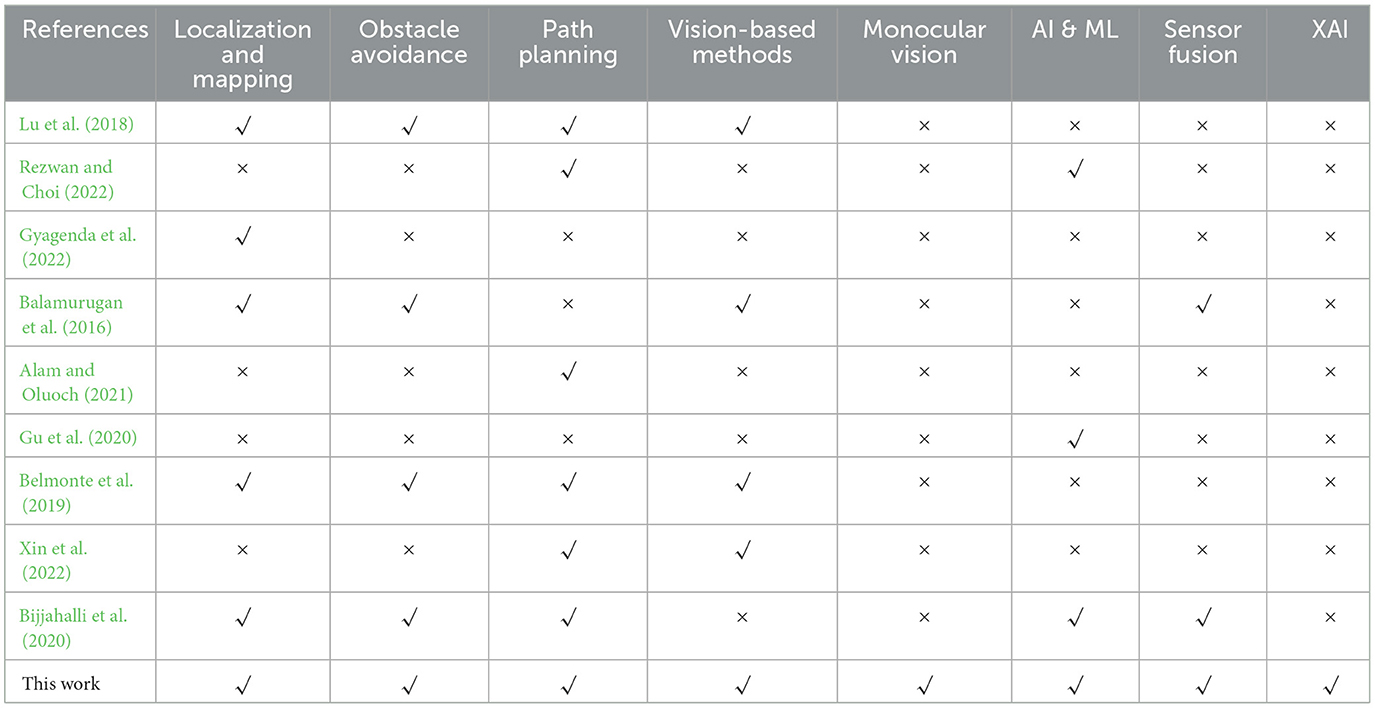

In the context of monocular vision, existing reviews such as Kakaletsis et al. (2021), Yang et al. (2018), and Tong et al. (2023) have explored its applications in UAV navigation. Belmonte et al. (2019) examined vision-based autonomous UAVs for tasks like navigation, control, tracking or guidance, and sense-and-avoid, emphasizing advancements in vision systems, algorithms, UAV platforms, and validation methods. Their study highlighted the growing potential of UAVs as personal aerial assistants enabled by computer vision technologies. Similarly, Xin et al. (2022) investigated vision-based autonomous landing for UAVs, showcasing advantages like strong autonomy, low cost, and high anti-interference capability, while categorizing landing scenarios into static, dynamic, and complex environments. Bijjahalli et al. (2020) emphasized the use of AI-based methods, including Artificial Neural Networks (ANNs), Support Vector Machines (SVMs), and ensemble approaches, to enhance localization, obstacle detection, and data fusion for small UAVs. Collectively, these studies demonstrate the transformative impact of advanced navigation and control strategies, pushing UAV capabilities in increasingly complex environments. Table 1 presents a comparative analysis of existing survey papers and this review. The selected surveys were chosen based on their significant contributions to UAV navigation, obstacle avoidance, path planning, and vision-based methodologies. These works represent foundational studies in AI-driven UAV operations, encompassing techniques such as sensor fusion and monocular vision. However, despite their relevance, none of these surveys comprehensively integrates XAI into its framework, highlighting the unique contribution of this review.

1.2 Motivation and contribution

Existing survey papers have primarily focused on UAV navigation technologies, monocular vision, or the challenges of AI integration in isolation. However, the critical issue of interpretability in AI-driven UAV systems remains largely unaddressed. This review bridges these domains by examining how XAI can enhance trust, reliability, and decision-making in UAV navigation. The study provides a comprehensive analysis of UAV navigation's evolution, from traditional methods to modern AI and ML approaches, while critically assessing the limitations of monocular vision and the transformative potential of XAI. Unlike prior works, it adopts a holistic perspective, emphasizing the synergy between XAI, monocular vision, and consumer electronics. By leveraging advancements such as high-resolution cameras and compact processors, it demonstrates how consumer-grade hardware facilitates the integration of XAI and monocular vision, making UAV navigation more accessible and interpretable. Furthermore, this review identifies key research gaps and offers actionable insights to enhance the reliability and performance of AI-driven UAV systems, particularly in high-stakes applications. By addressing these critical challenges, it represents a significant step toward bridging the gap between cutting-edge innovation and real-world deployment in UAV operations. The key contributions of this work are as follows:

• First, this paper provides a comprehensive overview of the evolution of UAV navigation and control systems, highlighting the transition from traditional methods, such as GPS and inertial navigation, to advanced AI- and ML-driven approaches. It investigates the role of XAI in UAV systems, addressing the "black-box" nature of traditional ML models. This study explores how XAI enhances autonomous navigation and decision-making in safety-critical applications, emphasizing the integration of technologies, including monocular vision and modern consumer electronics, to expand UAV capabilities across diverse use cases.

• Then, this work underscores the transformative potential of XAI in monocular vision frameworks, which, despite being lightweight and cost-effective, face challenges such as depth perception ambiguities and limited fields of view. This study demonstrates how the incorporation of XAI significantly improves the reliability and interpretability of monocular vision systems by providing clearer insights into navigation paths, obstacle detection, and avoidance strategies.

• The work then examines how XAI frameworks enhance transparency and trust in UAV decision-making, particularly in high-stakes applications like urban planning and environmental monitoring. It identifies key challenges, including scalability, adaptability to dynamic environments, balancing explainability with performance, ensuring robustness in adverse conditions, and fostering collaboration between vision models and Large Language Models (LLMs) to improve UAV operations. By exploring opportunities for integrating advancements in consumer electronics, monocular vision, and XAI, the paper provides actionable insights that pave the way for future research and the development of next-generation UAV technologies that are both reliable and transparent.

The rest of the paper is organized as follows: Section 2 presents the methodology, Section 3 discusses UAV navigation and control, and Section 4 explores monocular vision for UAVs, highlighting challenges and advances. The subsequent sections focus on XAI integration for UAV navigation and control, address the challenges and future research directions, and conclude the paper.

2 Methodology

This study employs a structured methodological approach to investigate the role of XAI in monocular vision-based UAV navigation for smart cities. The methodology includes a systematic literature review and a methodology-driven analysis of research gaps and future directions, focusing on the integration of XAI into UAV systems to enhance interpretability, trust, and overall performance in dynamic urban environments.

2.1 Systematic literature review approach

The hypothesis driving this study asserts that the integration of XAI with monocular vision-based UAV navigation systems substantially improves interpretability, trust, and overall performance, especially within the dynamic and complex environments of smart cities. By addressing the "black-box" nature of AI models, XAI offers greater transparency into the decision-making processes of UAVs. This increased transparency is crucial for improving the safety, reliability, and adaptability of UAV operations in rapidly evolving urban settings. To establish the current state of UAV navigation, monocular vision techniques, and XAI applications, a comprehensive and systematic literature review was conducted. The review process was structured as follows:

• Selection Criteria: Articles and survey papers were selected from high-impact journals, conference proceedings, and technical reports to ensure credibility. The selection was based on relevance to UAV navigation, AI-based path planning, monocular vision methodologies, and explainability in AI-driven UAV applications. Only articles with more than 20 citations were initially considered to ensure the inclusion of high-quality, well-established research. Additionally, newer papers with fewer citations but emerging relevance were selected to capture the latest advancements in the field. Papers addressing advances in AI-driven UAV systems and their practical implementations in smart cities were prioritized.

• Classification of Studies: The studies were classified into key areas that reflect the core aspects of UAV navigation, monocular vision, and the integration of XAI. The first category, UAV Navigation Evolution, examines the transition from traditional navigation systems such as GPS and Inertial Measurement Units (IMUs) to AI-driven approaches, which leverage ML and data fusion techniques to enhance the autonomy, precision, and adaptability of UAV systems. The second category, Monocular Vision Limitations, addresses challenges inherent in monocular vision systems, such as depth perception issues, occlusions, and environmental sensitivities, which can limit the effectiveness of vision-based navigation in UAVs. Finally, the Role of XAI in UAV Decision-Making explores how various XAI techniques, including post-hoc explainability methods and interpretable model architectures, have been applied to improve transparency, trust, and decision-making in UAV operations. This classification emphasizes the evolution of these fields and underscores their interconnectedness in advancing UAV technologies, particularly for applications in smart cities.

• Comparative Analysis: A detailed comparative analysis was performed to assess the strengths and limitations of existing studies on UAV navigation, monocular vision, and XAI integration. While much of the existing literature addresses individual aspects such as AI-driven navigation or monocular vision techniques, there is a significant gap in studies that integrate XAI into monocular vision-based UAV systems. This gap highlights the need for further research in this area, particularly to enhance the interpretability and decision-making capabilities of UAV systems in dynamic environments. The current study aims to address this gap by focusing on the synergy between XAI and monocular vision, contributing to more reliable and transparent UAV operations.

2.2 Methodology-driven research gaps and future directions

A critical evaluation of existing UAV navigation studies identified several challenges and research gaps. These include scalability challenges, particularly the integration of XAI in UAV systems that operate at large scales in both urban and rural environments. There is also a need to explore computational efficiency improvements to deploy XAI without compromising UAV battery life and processing capacity. Furthermore, adaptability to environmental conditions is a key challenge, as weather variations, lighting conditions, and terrain differences impact the performance of XAI-based UAV navigation systems. Techniques for adaptive learning and real-time model adjustments must be examined to enhance performance in dynamic environments. The balancing of explainability with performance remains an ongoing challenge, requiring the development of hybrid approaches where simpler, interpretable models can assist more complex deep-learning models in critical decision-making processes. Ensuring robustness in adverse conditions is another key area of focus, particularly the resilience of XAI-enhanced UAV navigation in challenging environments, such as disaster zones and low-visibility conditions. Redundancy mechanisms should be investigated to ensure system stability in unpredictable scenarios.

Looking toward the future, synergy between XAI, vision models, and LLMs presents promising opportunities. This study explores the potential of multimodal AI models for UAVs, where LLMs are combined with computer vision models to improve UAV situational awareness and autonomous decision-making. The integration of LLMs with computer vision in UAV-based perception systems is examined through real-world applications. Human-UAV interaction via natural language explanations is also explored, leveraging LLMs to provide human-readable explanations of UAV decision-making processes, thereby enhancing human trust in autonomous UAV operations. Finally, enhancing UAV decision-making with LLMs involves evaluating how LLMs can be integrated into UAV mission planning, coordination, and adaptive response strategies, alongside examining novel architectures that blend vision, language understanding, and real-time UAV decision logic.

Based on these findings, several actionable insights and recommendations for advancing XAI-integrated UAV technologies are provided. These include guidelines for the effective deployment of XAI in UAV applications, strategies for selecting the most appropriate XAI models based on operational requirements, and the development of hybrid AI models that optimize the trade-offs between transparency and efficiency. The study also proposes the use of lightweight explainable models suited for real-time UAV applications and explores the feasibility of leveraging consumer-grade hardware, such as compact processors and high-resolution cameras, combined with edge AI techniques, to support cost-effective UAV navigation solutions. These insights inform the subsequent sections, which delve deeper into the application of these recommendations and explore real-world use cases.

3 UAV navigation and control

Navigation and control have been primary areas of research since the inception of UAVs, undergoing significant evolution over the years. The focus has gradually shifted from basic navigation techniques to advanced systems that enable UAVs to operate autonomously in complex environments. Rapid advancements in consumer electronics have further bridged the gap between high-end military UAVs and affordable civilian models by introducing compact, high-performance components originally designed for consumer markets. This section provides a detailed discussion of the evolution of UAV navigation systems, the advancements in consumer electronics for UAV applications, the integration of AI and ML technologies, and the challenges in achieving robust UAV navigation and control.

3.1 Evolution and current state of UAV navigation systems

Initially, navigation and control techniques focused on remotely piloted or semi-autonomous systems. These early systems spurred growing interest in the more sophisticated mechanisms used in UAVs today, such as Global Positioning System (GPS) guidance (Javaid et al., 2023b; Kwak and Sung, 2018), Inertial Navigation Systems (INS) (Zhang and Hsu, 2018), and visual navigation through cameras and computer vision (Elkaim et al., 2015). Over time, advancements in consumer electronics introduced compact, high-resolution cameras and lightweight processors, enabling more accessible and efficient visual navigation systems. These developments supported the adoption of control algorithms such as Proportional-Integral-Derivative (PID), Linear Quadratic Regulator (LQR), Model Predictive Control (MPC), adaptive control, robust control, sliding mode control, and fuzzy logic controllers (Kim et al., 2006). These control techniques aim to enhance UAV stability, maneuverability, and the ability to respond to changing environmental conditions. With the rapid growth in UAV applications, new control strategies have been introduced to address the increasing complexity of tasks and environments. State-of-the-art schemes (Balamurugan et al., 2016; Gu et al., 2020; Ebeid et al., 2018) have also focused on addressing challenges in complex and GPS-denied environments through autonomous solutions that enhance perception, localization, and motion planning. Vision-based techniques have also been developed to ensure safe flight in dynamic conditions. Neural network-based controllers optimize performance, and open-source platforms have accelerated innovation, making advanced tools more accessible. These advancements have made UAVs highly versatile and capable of operating autonomously in a wide range of situations, from agriculture and logistics to disaster response.
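As an illustration of the simplest controller in the list above, the following minimal Python sketch implements a textbook discrete-time PID loop and applies it to a toy one-dimensional altitude-hold problem. The `PID` class, the `simulate_altitude_hold` function, the gains, and the unit-mass vertical dynamics are all illustrative assumptions rather than details from the cited works; actuator limits, sensor noise, and attitude dynamics are deliberately not modeled.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook discrete-time PID controller (illustrative, untuned for real hardware)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement
        # Integral term accumulates past error and removes steady-state offset.
        self.integral += error * dt
        # Derivative term damps the response; zero on the first call.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_altitude_hold(
    target: float = 10.0, steps: int = 3000, dt: float = 0.01
) -> float:
    """Toy vertical model (assumption): unit mass, acceleration = thrust - gravity."""
    gravity = 9.81
    pid = PID(kp=4.0, ki=1.0, kd=3.0)  # hypothetical gains chosen for stability
    altitude, velocity = 0.0, 0.0
    for _ in range(steps):
        thrust = pid.update(target, altitude, dt)
        acceleration = thrust - gravity
        velocity += acceleration * dt
        altitude += velocity * dt
    return altitude
```

In this sketch the integral term is what compensates for gravity: a purely proportional-derivative loop would settle below the target altitude, whereas the accumulated error supplies the constant thrust needed to hover.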

3.2 AI and ML-based navigation and challenges

The integration of AI and ML has transformed UAV navigation systems, enabling unprecedented autonomy and precision in operational performance. Techniques such as Deep Reinforcement Learning (DRL) allow UAVs to optimize navigation strategies by learning from dynamic environments through trial-and-error frameworks (Wang C. et al., 2019; Wang et al., 2017). Similarly, Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) are employed for real-time feature extraction and sequence analysis in tasks such as obstacle detection and trajectory prediction (Maravall et al., 2015; Padhy et al., 2018a). Advanced sensor fusion techniques integrate data from multiple sources, including monocular vision, LiDAR, and Radar, to provide UAVs with comprehensive situational awareness (Alam and Oluoch, 2021). These AI-driven methodologies allow UAVs to execute complex tasks, such as multi-agent coordination, real-time path planning, and adaptive flight control, in challenging and GPS-denied environments.

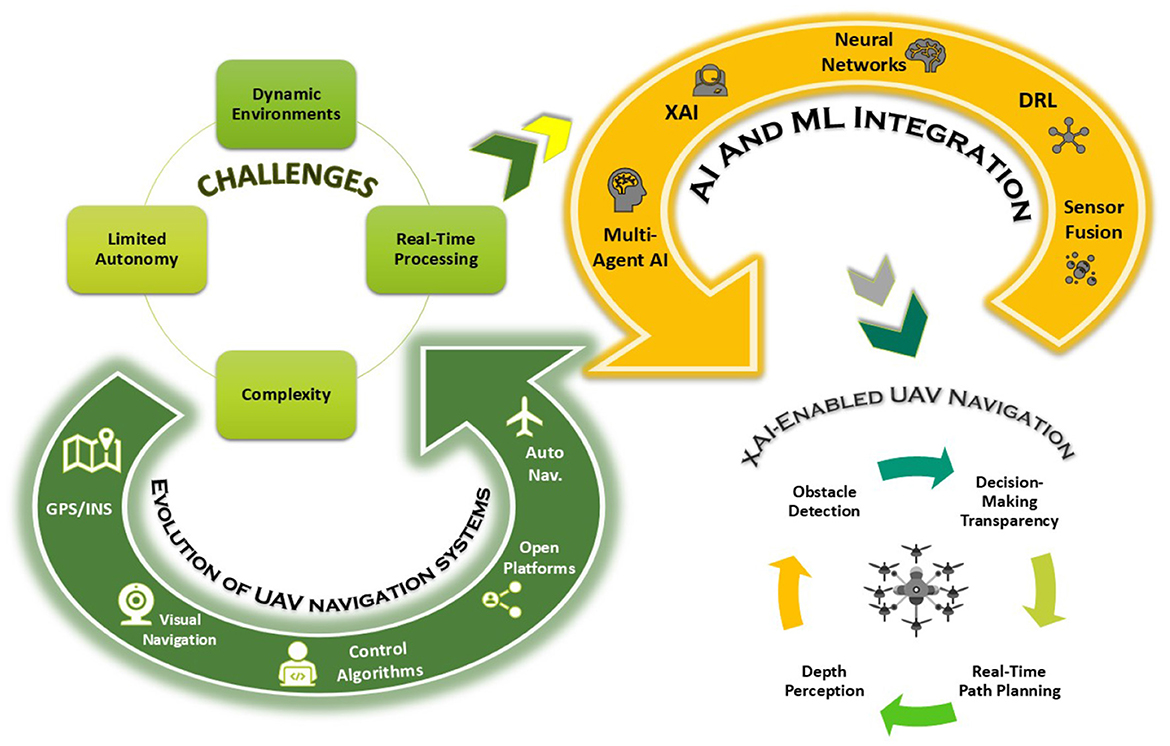

Despite these advancements, the integration of AI and ML in UAVs poses several challenges. Computational limitations remain a significant barrier, as real-time AI processing often exceeds the onboard capabilities of lightweight UAV platforms, even with optimized processors (Hashesh et al., 2022). Furthermore, the generalizability of AI models is hindered by the variability in operational environments, such as diverse terrains, dynamic obstacles, and unpredictable weather conditions. The reliance on training data introduces risks of bias and underperformance in novel scenarios, requiring robust domain adaptation techniques (Puente-Castro et al., 2022; Kurunathan et al., 2023). Additionally, the black-box nature of AI algorithms raises concerns about interpretability and trust, particularly in high-stakes applications. Integrating diverse sensors, including consumer electronics-based monocular vision and advanced sensing technologies such as LiDAR, introduces synchronization and computational challenges, further complicating real-time data fusion and decision-making. Addressing these challenges requires leveraging advancements in consumer electronics, which have introduced innovative hardware solutions to enhance UAV navigation and control capabilities. These contributions are explored in detail in the following section (Sun et al., 2024; McEnroe et al., 2022). Figure 2 further illustrates the progression from traditional UAV navigation to AI/ML integration, emphasizing XAI's role in enhancing capabilities such as obstacle detection.

3.3 Consumer electronics in UAV navigation

Advancements in consumer electronics have profoundly transformed UAV systems, driving remarkable improvements in navigation accuracy, operational efficiency, and system versatility. High-resolution cameras, lightweight and powerful processors optimized for edge computing, and energy-efficient batteries have enabled smaller UAVs to perform computationally intensive tasks under stringent resource constraints (Bebortta et al., 2024). Previously, UAVs depended on bulky multi-sensor arrays and centralized processing units, which limited their agility and deployment flexibility. The shift to monocular vision systems, utilizing compact and consumer-grade cameras, has revolutionized depth estimation and environmental mapping by leveraging cutting-edge advancements in image sensor technology and optical design. These systems efficiently capture high-fidelity, real-time visual data crucial for tasks such as obstacle detection, terrain mapping, and target tracking, offering a cost-effective alternative to traditional setups like stereo vision or LiDAR.

Furthermore, the integration of consumer-grade Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs) has significantly enhanced UAV computational capabilities by enabling the deployment of on-device AI models. Modern GPUs deliver accelerated processing power tailored for ML inference, reducing latency in decision-making processes. This advancement facilitates real-time navigation and rapid response maneuvers, critical for operations in dynamic and unpredictable environments (Ahmed and Jenihhin, 2022). Edge computing frameworks, enabled by these processors, allow UAVs to process data locally, reducing reliance on ground-based control systems and increasing the reliability of autonomous operations. Energy-efficient battery technologies, such as Lithium-Polymer (LiPo) batteries, developed through consumer electronics innovations, have further bolstered UAV performance by extending flight durations and increasing payload capacities. These batteries, characterized by high energy density and improved discharge rates, support longer missions and enable UAVs to carry additional sensors or communication equipment without sacrificing efficiency. Complementary advancements in power management systems and lightweight electronic components have also contributed to UAV miniaturization, enabling the development of agile and versatile designs suitable for a wide range of applications (Sarkar, 2024; Mahmood et al., 2023).

The collaboration between consumer electronics and UAV technology has additionally fostered the creation of modular and customizable UAV systems. Wireless communication technologies, including Wi-Fi and 4G/5G modules, enhance UAV capabilities by enabling seamless data transmission and real-time remote control, essential for applications that require continuous monitoring and rapid intervention (Ullah et al., 2020; Huang et al., 2024). These synergies have propelled UAV systems into a new era of operational excellence, making them indispensable tools across various domains. Notably, monocular vision systems, driven by compact and consumer-grade cameras, exemplify how advancements in consumer electronics have reshaped UAV navigation. These systems have become a transformative solution for depth estimation and environmental mapping, offering cost-effective and efficient alternatives to traditional approaches, as explored in the next section (Yang et al., 2018; Padhy et al., 2019).

4 Monocular vision for UAVs: challenges and advances

Monocular vision systems have emerged as a pivotal innovation in UAV navigation, offering lightweight and cost-effective solutions for visual sensing. This section provides an overview of these systems, examines their key challenges, and discusses existing methodologies that can enhance their performance and reliability in diverse applications.

4.1 Overview and challenges

Monocular vision enables UAVs to perceive depth and three-dimensional objects using a single camera, unlike binocular vision, which relies on two cameras for stereopsis. While monocular vision offers a cost-effective and lightweight alternative to stereoscopic systems, its primary drawback is limited depth perception, making accurate distance estimation inherently challenging. This limitation directly impacts essential UAV functions such as obstacle detection, navigation, and scene understanding (Miclea and Nedevschi, 2021; Madhuanand et al., 2021), increasing the risk of navigation errors and collisions.

Despite these challenges, monocular vision remains fundamental to UAV perception, thanks to recent technological advancements. High-resolution cameras, lightweight processors, and efficient image-processing algorithms have enhanced UAVs' ability to estimate distances, detect objects, and construct real-time environmental maps. These innovations provide a streamlined alternative to multi-sensor setups, reducing the size, weight, and complexity of onboard equipment while maintaining robust performance. However, while these advancements improve reliability, they do not eliminate the fundamental limitations of monocular vision, particularly in complex and dynamic environments.

In addition to depth estimation issues, monocular vision systems face several challenges that hinder UAV navigation and control (Lin et al., 2017; Choi and Kim, 2014; Zhao et al., 2019b). Size estimation remains problematic, as a single camera struggles to distinguish objects at varying distances, leading to ambiguities in detection (Kendall et al., 2014). A limited field of view further restricts situational awareness, particularly in complex urban environments where UAVs must track multiple moving objects such as vehicles and pedestrians. The constrained visual range reduces the UAV's ability to anticipate obstacles, often requiring frequent reorientation to compensate for blind spots. This leads to increased computational demands, energy consumption, and potential delays in real-time decision-making. In dense areas such as intersections or pedestrian zones, UAVs may fail to detect fast-moving objects in time, increasing the risk of navigation errors or inefficient path planning. Additionally, lighting variations, environmental textures, and sudden scene changes can degrade the reliability of object detection and tracking (Al-Kaff et al., 2016). The high mobility of UAVs introduces motion blur, reducing visual data quality and complicating feature extraction. Occlusions, where objects block one another within the camera's field of view, further hinder effective tracking (Lin et al., 2017; Bharati et al., 2018). Adverse weather conditions such as rain, snow, fog, and low light significantly affect monocular vision performance, limiting UAVs' ability to operate reliably. Ensuring precise camera calibration and alignment is also critical, as misalignment can cause navigation errors (Mori and Scherer, 2013).

To mitigate these limitations, ongoing research explores AI-driven image processing, sensor fusion, and adaptive enhancement techniques. Deep learning models refine depth estimation from single-camera inputs, improving object recognition and spatial awareness. Integrating monocular vision with other sensing modalities, such as LiDAR and IMUs, enhances depth perception and environmental mapping. Self-powered sensors (Javaid et al., 2023a) further improve UAV perception by enabling continuous environmental monitoring while reducing reliance on external power sources, contributing to more efficient and sustainable navigation. These advancements continue to refine monocular vision, ensuring it remains a viable solution for autonomous UAV operations.

4.2 Current state of monocular vision

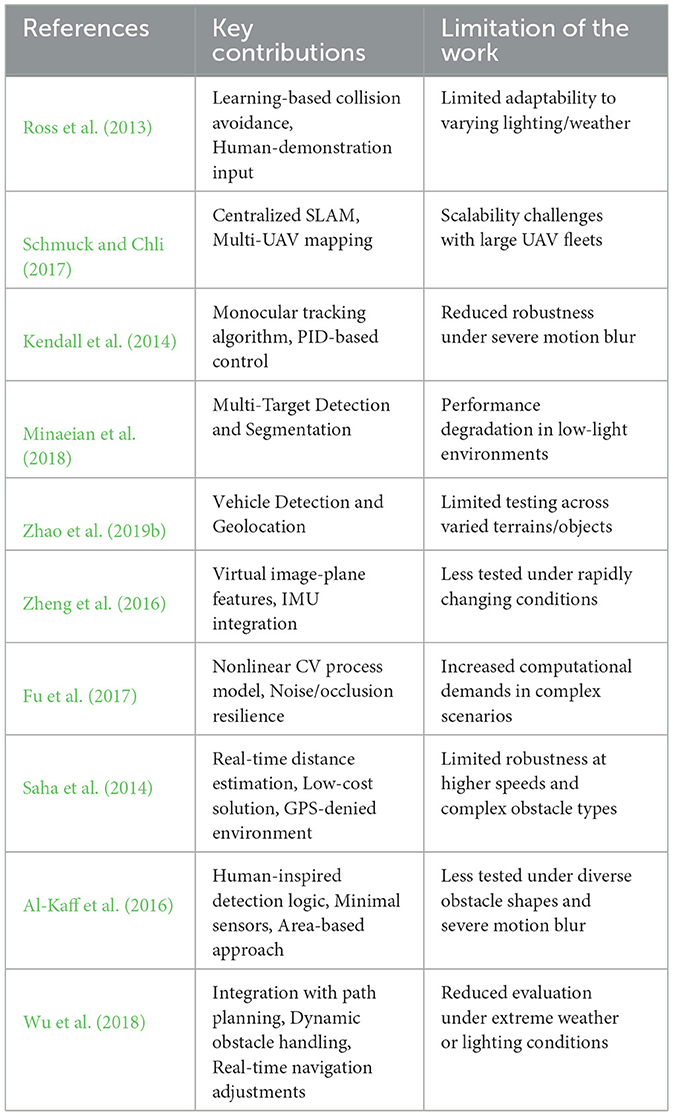

State-of-the-art literature aims to address the aforementioned challenges and enhance monocular vision by developing robust algorithms and techniques (Qin and Shen, 2017). Improved depth estimation algorithms, ML approaches, and sensor fusion techniques enhance UAVs' understanding of their surroundings, enabling more accurate obstacle avoidance, scene reconstruction, and overall autonomous capabilities. For instance, Ross et al. (2013) introduced a method for high-speed, autonomous flight of micro aerial vehicles in densely forested environments using monocular vision. The proposed algorithm learns from human expertise, allowing micro aerial vehicles to predict and avoid collisions with trees and foliage using affordable visual sensors. Schmuck and Chli (2017) introduced a centralized architecture for collaborative monocular SLAM using multiple small UAVs. Each UAV independently explores the environment, performing limited-memory SLAM, and sends its mapping data to a central server with greater computational resources, leading to enhanced mapping accuracy and efficiency. Kendall et al. (2014) developed a cost-effective, self-contained quadcopter controller using monocular vision for object tracking without GPS or external sensors. The system employs a low-frequency algorithm and parallel PID controllers to track colored objects, enabling reliable and efficient UAV applications. Minaeian et al. (2018) introduced an efficient and robust method for the accurate detection and segmentation of multiple independently moving foreground targets within video sequences captured by UAVs. The scheme estimates camera motion by tracking background key points and combines local motion history with spatiotemporal differencing over a sliding window to robustly detect multiple moving targets. Zhao et al. (2019b) proposed a framework combining a monocular camera, GPS, and inertial sensors to detect, track, and geolocate moving vehicles. Using correlation filters for visual tracking and a passive geolocation method, the system calculates vehicle GPS coordinates and guides the UAV to follow the target based on image processing results.
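To make the idea of vision-driven PID tracking concrete, the following is a minimal sketch of two PID loops running in parallel on the pixel offset of a tracked object. It is not the controller of Kendall et al. (2014): the class `PID`, the function `tracking_commands`, the choice of yaw and altitude as the controlled axes, and the gains are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class PID:
    """Textbook discrete PID controller (gains are illustrative only)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        self.integral += error * dt
        # Skip the derivative on the first call to avoid a start-up kick.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def tracking_commands(cx: float, cy: float, width: int, height: int,
                      pid_yaw: PID, pid_alt: PID, dt: float) -> Tuple[float, float]:
    """Map the target's pixel centroid to (yaw, climb) commands.

    Errors are normalised to [-1, 1]. A positive x error means the target is
    right of the image centre; a positive y error means it is above the
    centre (image rows grow downward, hence the sign flip).
    """
    err_x = (cx - width / 2) / (width / 2)
    err_y = (height / 2 - cy) / (height / 2)
    return pid_yaw.update(err_x, dt), pid_alt.update(err_y, dt)
```

Each axis has its own controller state, so the loops can be tuned independently; the centroid `(cx, cy)` would come from whatever colour or feature tracker runs on the camera frames.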

Zheng et al. (2016) developed a robust monocular vision-based control system for quadrotor UAVs, utilizing an onboard monocular camera and IMU sensors to enable precise navigation and positioning. Their approach defines image features on a virtual image plane, simplifying control and enhancing the UAV's ability to regulate its position, making it suitable for applications such as monitoring, landing, and other scenarios requiring accurate and efficient positioning. Similarly, Fu et al. (2017) introduced an improved pose estimation method for multirotor UAVs using an off-board camera. The scheme employed a novel nonlinear constant-velocity process model tailored to multirotor UAV characteristics, enhancing observability and robustness against noise and occlusion for improved vision-based pose estimation.

For collision avoidance, Saha et al. (2014) developed a real-time obstacle detection and avoidance system for low-cost UAVs operating in unstructured, GPS-denied environments. Their mathematical model estimates the relative distance from the UAV's camera to obstacles, enabling effective collision avoidance while ensuring safety and applicability in complex scenarios. In another study, Al-Kaff et al. (2016) proposed a real-time obstacle detection and avoidance method for micro and small UAVs with limited onboard sensors due to payload and battery constraints. This method mimics human-like behavior by tracking changes in obstacle size within the image sequence, identifying feature points, and extracting obstacles with a high likelihood of approaching the UAV. By comparing area ratio changes in detected obstacles and estimating their 2D positions in the image, the UAV determines the presence of obstacles and takes appropriate avoidance actions. Additionally, Wu et al. (2018) combined obstacle detection with waypoint tracking to further refine avoidance maneuvers, enhancing the overall safety and operability of lightweight UAVs. Table 2 summarizes the main contributions of these schemes.

These advancements in monocular vision have significantly improved UAV capabilities in autonomous navigation, optimized localization, pose estimation, and collision avoidance. However, as UAVs become increasingly autonomous, the need for transparency and trust in their decision-making processes has brought XAI to the forefront. The following section explores the role of XAI in enhancing UAV navigation and control, offering insights into how interpretable AI frameworks can address the “black-box” nature of traditional algorithms and improve operational reliability.
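The size-expansion cue mentioned above for Al-Kaff et al. (2016) can be illustrated with a short geometric sketch. This is not their algorithm: it only shows why a growing image area signals an approaching obstacle, under the assumptions of a rigid obstacle, a head-on approach, and a constant closing speed. The function names and the `min_ttc` threshold are hypothetical.

```python
import math


def time_to_contact(area_prev: float, area_curr: float, dt: float) -> float:
    """Estimate time-to-contact in seconds, measured from the current frame.

    Under the stated assumptions the image area scales as 1 / Z**2 with
    distance Z, so the linear size ratio sqrt(area_curr / area_prev) equals
    Z_prev / Z_curr. Returns math.inf if the obstacle is not growing.
    """
    if area_prev <= 0 or area_curr <= 0 or dt <= 0:
        raise ValueError("areas and dt must be positive")
    scale = math.sqrt(area_curr / area_prev)
    if scale <= 1.0:
        return math.inf
    return dt / (scale - 1.0)


def should_avoid(area_prev: float, area_curr: float, dt: float,
                 min_ttc: float = 2.0) -> bool:
    """Flag an obstacle when the estimated time-to-contact is below min_ttc."""
    return time_to_contact(area_prev, area_curr, dt) < min_ttc
```

For example, an obstacle whose area grows from 64 to 100 pixels over one second has a linear scale ratio of 1.25, which corresponds to four seconds to contact at the current closing speed. No absolute distance is needed, which is what makes the cue attractive for a single camera.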

5 Explainable Artificial Intelligence (XAI) integration for UAV navigation and control

This section examines how XAI enhances UAV navigation and monocular vision systems by improving the interpretability and reliability of navigation decisions. It also highlights the role of XAI in seamlessly integrating with monocular vision techniques to optimize depth perception, obstacle detection, and overall efficiency, enabling transparent and effective UAV operations in complex environments.

5.1 Role of XAI in UAV navigation

XAI plays a pivotal role in enhancing the navigation capabilities of UAVs by providing transparency, interpretability, and trustworthiness to the autonomous decision-making processes. Traditional AI models, particularly deep learning algorithms, often function as "black boxes", making it challenging to understand why UAVs make certain navigation decisions. XAI addresses this limitation by clarifying the underlying mechanisms and factors influencing UAV navigation, ensuring more reliable and accountable operations.

One of the primary contributions of XAI in UAV navigation is improving obstacle detection and avoidance. Monocular vision systems on UAVs capture real-time visual data, which XAI-enhanced algorithms analyze to identify potential obstacles and determine safe flight paths. State-of-the-art techniques such as saliency maps, Local Interpretable Model-agnostic Explanations (LIME), and SHAP highlight the most critical regions in the visual input that influence navigation decisions, making the decision-making process more interpretable. By providing visual justifications for avoidance maneuvers, XAI allows developers and operators to verify and refine navigation models, ultimately improving UAV safety and adaptability in complex environments (Çiçek et al., 2021; Gundermann, 2020).
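As a hedged illustration of how a saliency map can be obtained without access to model internals, the sketch below uses occlusion sensitivity: each image patch is masked in turn and the resulting drop in the model's score is recorded. The function `toy_obstacle_score` is a stand-in invented for this example, not a real navigation model, and the patch size and fill value are arbitrary choices.

```python
from typing import Callable, List

Image = List[List[float]]


def occlusion_saliency(image: Image, score_fn: Callable[[Image], float],
                       patch: int = 2, fill: float = 0.0) -> List[List[float]]:
    """Return a coarse saliency grid: the score drop when each patch is masked."""
    rows, cols = len(image), len(image[0])
    base = score_fn(image)
    saliency = []
    for r0 in range(0, rows, patch):
        row_out = []
        for c0 in range(0, cols, patch):
            occluded = [row[:] for row in image]  # copy so the input is untouched
            for r in range(r0, min(r0 + patch, rows)):
                for c in range(c0, min(c0 + patch, cols)):
                    occluded[r][c] = fill
            row_out.append(base - score_fn(occluded))
        saliency.append(row_out)
    return saliency


def toy_obstacle_score(image: Image) -> float:
    """Stand-in for a learned model: mean brightness of the image centre."""
    rows, cols = len(image), len(image[0])
    centre = [image[r][c]
              for r in range(rows // 4, 3 * rows // 4)
              for c in range(cols // 4, 3 * cols // 4)]
    return sum(centre) / len(centre)
```

Patches with a large positive value are those the score depends on most. The cost is one extra model evaluation per patch, which is one reason gradient-based alternatives are often preferred on embedded hardware.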

Moreover, XAI enhances the adaptability of UAVs in diverse operational scenarios. In various applications, including search and rescue, environmental monitoring, and urban traffic management, UAVs must dynamically adjust their navigation strategies based on changing conditions and mission objectives. XAI provides valuable insights into how environmental factors such as weather variations, moving obstacles, and terrain shifts influence UAV navigation. Understanding these influences allows AI models to be fine-tuned, thereby increasing the robustness and flexibility of UAV navigation systems across different operational landscapes.

As XAI becomes more embedded in UAV navigation, it is critical to establish standardized evaluation metrics to assess its effectiveness and reliability consistently. Current evaluation methods often prioritize accuracy and performance but lack metrics for explainability and user trust. Future research should focus on developing benchmark datasets and evaluation protocols that incorporate interpretability scores, explanation fidelity, uncertainty quantification, and real-time obstacle avoidance accuracy. Establishing universally accepted evaluation frameworks will allow for a comparative analysis of XAI-driven navigation models, ultimately ensuring the scalability and robustness of these systems in real-world UAV applications.
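One way explanation fidelity is commonly quantified is a deletion curve: features are removed in the order an explanation ranks them, and a faithful ranking should make the model's score fall quickly. The sketch below is a minimal version of that idea under assumed names (`deletion_auc`, `score_fn`, `baseline`); it is offered as an example of what such a metric could look like, not as an established benchmark protocol for UAV navigation.

```python
from typing import Callable, List, Sequence


def deletion_auc(features: Sequence[float], attributions: Sequence[float],
                 score_fn: Callable[[List[float]], float],
                 baseline: float = 0.0) -> float:
    """Area under the score curve as features are deleted, most important first.

    A lower value suggests a more faithful attribution ranking, because
    removing the features it marks as important degrades the score faster.
    """
    if not features or len(features) != len(attributions):
        raise ValueError("features and attributions must be non-empty and equal length")
    order = sorted(range(len(features)), key=lambda i: attributions[i], reverse=True)
    current = list(features)
    scores = [score_fn(current)]
    for i in order:
        current[i] = baseline
        scores.append(score_fn(current))
    # Trapezoidal rule over the fraction of deleted features (0 to 1).
    step = 1.0 / len(features)
    return sum((a + b) / 2 * step for a, b in zip(scores, scores[1:]))
```

Comparing this value across explanation methods on the same model and inputs gives a relative fidelity ranking; the absolute number depends on the score scale and the chosen baseline.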

In addition, trust and accountability are significantly strengthened through the integration of XAI in UAV navigation. Operators, regulatory bodies, and end-users are more likely to trust autonomous UAV systems when they can understand and verify the decision-making processes. XAI fosters this trust by providing clear explanations for route selection, speed adjustments, and obstacle avoidance maneuvers, which can be audited and validated against regulatory safety standards and operational protocols. This transparency is essential for regulatory compliance and for ensuring the broader acceptance of autonomous UAV technologies in industries such as logistics, surveillance, and disaster management (He et al., 2021; Zhu et al., 2024).

Furthermore, XAI supports human-in-the-loop decision-making, where human operators collaborate with UAVs to oversee navigation in complex or high-risk environments. In scenarios that require real-time intervention, such as search-and-rescue missions or disaster response, XAI-powered UAVs can explain their navigational choices, allowing human operators to assess the reasoning behind a maneuver and intervene if necessary. For example, if a UAV initiates an emergency rerouting due to an unexpected obstacle, XAI can clarify whether this action was based on obstacle proximity, terrain constraints, or learned behavioral patterns. This transparent and interpretable decision-making process ensures that UAVs operate with both autonomy and human oversight, reinforcing trust and reliability in critical missions.

5.2 Integration of XAI with monocular vision

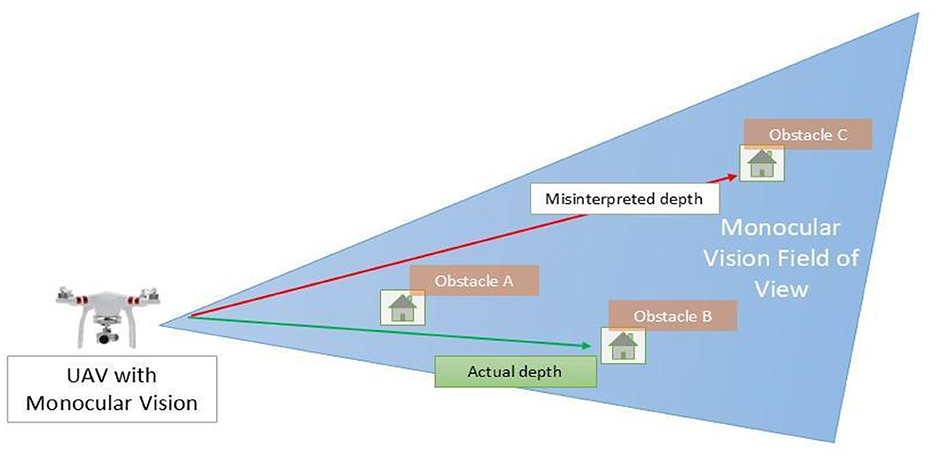

The integration of XAI with monocular vision systems significantly enhances the autonomy, reliability, and transparency of UAV operations. XAI provides interpretable insights into the visual perception and decision-making processes, fostering trust among users and stakeholders while facilitating effective human-AI collaboration. This, in turn, strengthens the adaptability and robustness of UAVs in dynamic and unpredictable environments. Despite their cost and weight advantages, monocular vision systems face inherent limitations such as depth perception ambiguities and sensitivity to lighting variations. Figure 3 illustrates the challenges of depth perception in monocular vision-based UAV navigation. The UAV perceives obstacles within its field of view, but due to the lack of stereo depth cues, it misinterprets the actual distance of objects. Obstacle C is misjudged as being closer than it actually is (red arrow), while Obstacle B represents the correct depth perception (green arrow). Such depth estimation errors can lead to inaccurate obstacle avoidance and inefficient path planning in UAV operations.

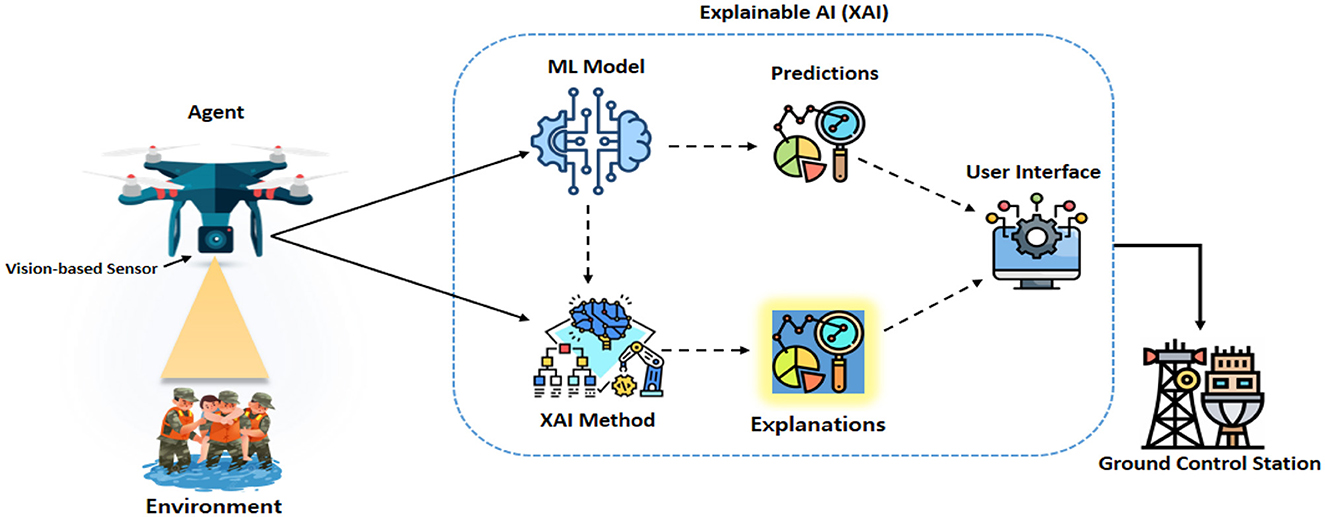

Embedding XAI within these AI-driven monocular vision systems has the potential to improve interpretability and reliability and to bridge the gap between autonomous UAV functionality and human oversight, ensuring more transparent and dependable operations (Ghasemieh and Kashef, 2024). Figure 4 illustrates UAV navigation leveraging the integration of XAI and monocular vision. However, to fully realize the benefits of XAI in monocular vision-based UAV navigation, it is crucial to address inherent challenges such as depth estimation ambiguities and environmental sensitivity. This necessitates the use of AI-driven adaptive techniques, which are explored in the following section.

Figure 4. Illustration of UAV navigation leveraging the integration of Explainable AI (XAI) and monocular vision.

5.2.1 AI-driven adaptive techniques for robust vision-based UAV navigation

To mitigate the impact of lighting variations and adverse weather conditions on vision-based UAV navigation, several adaptive techniques have been developed. AI-driven image enhancement methods, such as deep learning-based dehazing, contrast enhancement, and super-resolution techniques, improve visibility in challenging conditions such as fog, rain, and low-light environments (Cai et al., 2016; Ren et al., 2018). Dehazing networks leverage CNNs to restore clear images from foggy environments. Additionally, Contrast-Limited Adaptive Histogram Equalization (CLAHE) enhances image contrast in low-light conditions, improving overall visibility. Super-resolution algorithms, such as the Enhanced Deep Super-Resolution Network (EDSR), reconstruct finer details in degraded images, improving the UAV's perception of obstacles and terrain (Lim et al., 2017).

Moreover, the integration of multi-spectral and infrared imaging further enhances situational awareness, allowing UAVs to detect thermal signatures and obstacles beyond the visible spectrum (Maes and Steppe, 2019). Infrared (IR) sensors help UAVs function during nighttime operations, heavy fog, or extreme glare conditions, where traditional RGB cameras fail. AI-based sensor fusion techniques combine thermal and visible-light images to improve real-time detection of objects, pedestrians, and vehicles (Zhao et al., 2019a). Additionally, polarization-based vision correction methods reduce glare from reflective surfaces such as water bodies, wet roads, or glass structures, making UAV navigation more stable in urban and coastal environments (Liu et al., 2021).

ML models also play a crucial role in weather condition classification and real-time adaptation. AI-powered frameworks, such as deep weather recognition networks, analyze environmental conditions and adjust UAV camera settings dynamically (Tahir et al., 2024). RL-based models further enable UAVs to adapt to varying illumination and weather conditions, ensuring reliable navigation in rapidly changing scenarios (Chronis et al., 2023). These systems improve UAV decision-making by adjusting exposure settings, sensor sensitivity, and image filtering techniques based on real-time weather data.

Moreover, sensor fusion approaches, such as combining monocular vision with LiDAR or radar, provide robust depth estimation and obstacle detection, reducing dependency on lighting conditions (Aung et al., 2024). LiDAR-assisted UAV navigation enhances depth perception in low-visibility environments, while radar-based object detection ensures obstacle avoidance even in extreme weather such as heavy rain or snow (Tang et al., 2021). By integrating multiple sensing modalities, UAVs can create a more comprehensive environmental model, ensuring greater accuracy in navigation, object tracking, and collision avoidance. These AI-driven advancements significantly enhance UAV autonomy, enabling safer and more efficient navigation in complex, dynamic environments. By leveraging adaptive image enhancement, multi-sensor fusion, and real-time weather-aware AI models, UAVs can maintain reliable operations across diverse terrains and environmental conditions, ensuring greater mission success in applications such as disaster response, surveillance, and environmental monitoring (Adão et al., 2017; Singh et al., 2020).
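A simple way to see why adding a second sensor improves depth estimation is inverse-variance weighting, a standard fusion rule for independent, unbiased measurements. The sketch below is a generic illustration rather than the method of any cited work; the function name `fuse_depths` and the example variances are assumptions.

```python
from typing import List, Tuple


def fuse_depths(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse independent depth estimates given as (depth_m, variance_m2) pairs.

    Uses inverse-variance weighting, the minimum-variance linear combination
    when the errors are independent and unbiased.
    Returns (fused_depth_m, fused_variance_m2).
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(var <= 0 for _, var in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    fused = sum(w * depth for w, (depth, _) in zip(weights, estimates)) / total
    return fused, 1.0 / total
```

With a monocular estimate of 12 m (variance 4 m²) and a LiDAR return of 10 m (variance 1 m²), the fused depth is 10.4 m with variance 0.8 m², lower than either input. In poor lighting the monocular variance would grow and the result would lean further toward the LiDAR reading, which matches the reduced dependency on lighting described above.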

However, while these sensor fusion approaches enhance depth perception and environmental modeling, they do not inherently improve the interpretability of UAV decision-making. In safety-critical applications, it is equally important to understand the reasoning behind UAV navigation choices to ensure trust and accountability. This necessitates the integration of XAI techniques, which provide insights into UAV perception and decision-making processes.

5.2.2 XAI-driven interpretability and decision transparency in UAV navigation

One of the primary benefits of integrating XAI with monocular vision is the enhancement of perceptual understanding. Monocular vision systems capture real-time visual data, which DRL models process to perform tasks such as object detection, segmentation, and scene understanding. XAI facilitates the generation of interpretable insights into how visual features are utilized in decision-making processes. Saliency maps and Gradient-weighted Class Activation Mapping (Grad-CAM) can highlight regions of interest within the captured images that significantly influence the UAV's perception and subsequent actions. This enhanced perceptual understanding enables operators to verify that the UAV correctly identifies and prioritizes relevant objects, such as obstacles or points of interest, thereby improving the accuracy and reliability of autonomous operations (Zablocki et al., 2022).
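For reference, the standard Grad-CAM formulation can be written compactly. Here $A^k$ denotes the $k$-th feature map of the chosen convolutional layer, $y^c$ the pre-softmax score for class $c$ (for example, "obstacle"), and $Z$ the number of spatial locations in the feature map; these symbols are introduced for this note only.

$$
\alpha_k^c = \frac{1}{Z}\sum_{i}\sum_{j}\frac{\partial y^c}{\partial A^k_{ij}},
\qquad
L^c_{\text{Grad-CAM}} = \mathrm{ReLU}\!\left(\sum_k \alpha_k^c A^k\right).
$$

The weights $\alpha_k^c$ average the gradient of the class score over each feature map, and the ReLU keeps only regions that contribute positively to class $c$. The resulting map has the resolution of the feature maps and is upsampled to the input image size for display, which is why Grad-CAM heatmaps appear coarse compared with pixel-level saliency maps.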

Improving decision-making transparency is another critical aspect of integrating XAI with monocular vision. Autonomous UAVs must make critical decisions based on visual inputs, such as navigating through complex environments or selecting optimal paths for mission objectives. XAI provides transparency in these decision-making processes by elucidating the rationale behind each choice. For instance, when a UAV opts to alter its flight path to avoid an obstacle, XAI algorithms can generate explanations detailing the factors considered, such as the size, speed, and proximity of the obstacle. This transparency is crucial for debugging AI models, ensuring compliance with safety standards, and fostering trust among users and stakeholders. By making the decision-making process transparent, XAI facilitates a better understanding of UAV behavior, which is essential for both developers and end-users.

The integration of XAI also facilitates adaptive learning and robustness in UAV operations. The dynamic nature of UAV missions, especially in environments with unpredictable elements, demands adaptive learning capabilities to maintain robust performance. XAI-enabled monocular vision systems allow UAVs to adapt their learning strategies based on explainable feedback. For example, if a UAV consistently misidentifies a particular type of obstacle under certain lighting conditions, XAI can provide insights into the underlying reasons, such as feature misrepresentation or model bias. This feedback allows developers to fine-tune AI models, enhancing their ability to generalize across diverse scenarios and improving the overall robustness of UAV operations (Hwu et al., 2021).

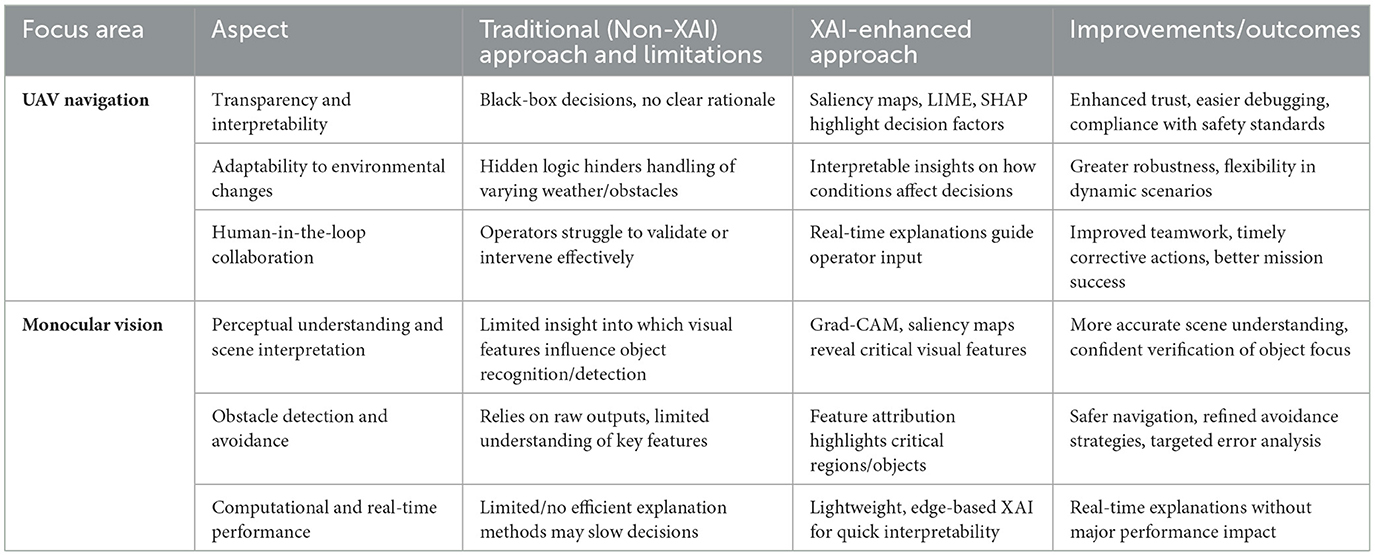

Furthermore, a real-world implementation of XAI with monocular vision can be seen in the Lifeseeker system, which integrates XAI-driven monocular vision for search and rescue operations. UAVs utilize monocular depth estimation and AI-powered scene segmentation to locate missing individuals in smoke-covered or remote areas. By leveraging XAI-powered saliency maps, the system highlights temperature variations and movement patterns, allowing human operators to validate and refine UAV decisions, reducing false detections and improving mission success rates. This points to a broader advantage of XAI-integrated monocular vision: it supports human-in-the-loop systems, which are particularly crucial in real-time, high-stakes applications such as search and rescue or urban surveillance. XAI-enabled explanations provide critical insights that empower operators to make informed decisions and corrective interventions. For instance, when a UAV encounters an ambiguous visual scenario, XAI can articulate the reasoning behind its tentative classifications or actions, enabling the operator to validate, override, or adjust the UAV's behavior accordingly (He et al., 2021). Table 3 further highlights the role of XAI in UAV navigation and monocular vision.

Table 3. Comparing traditional approaches with XAI-enhanced strategies for UAV navigation and monocular vision.

5.3 Use cases

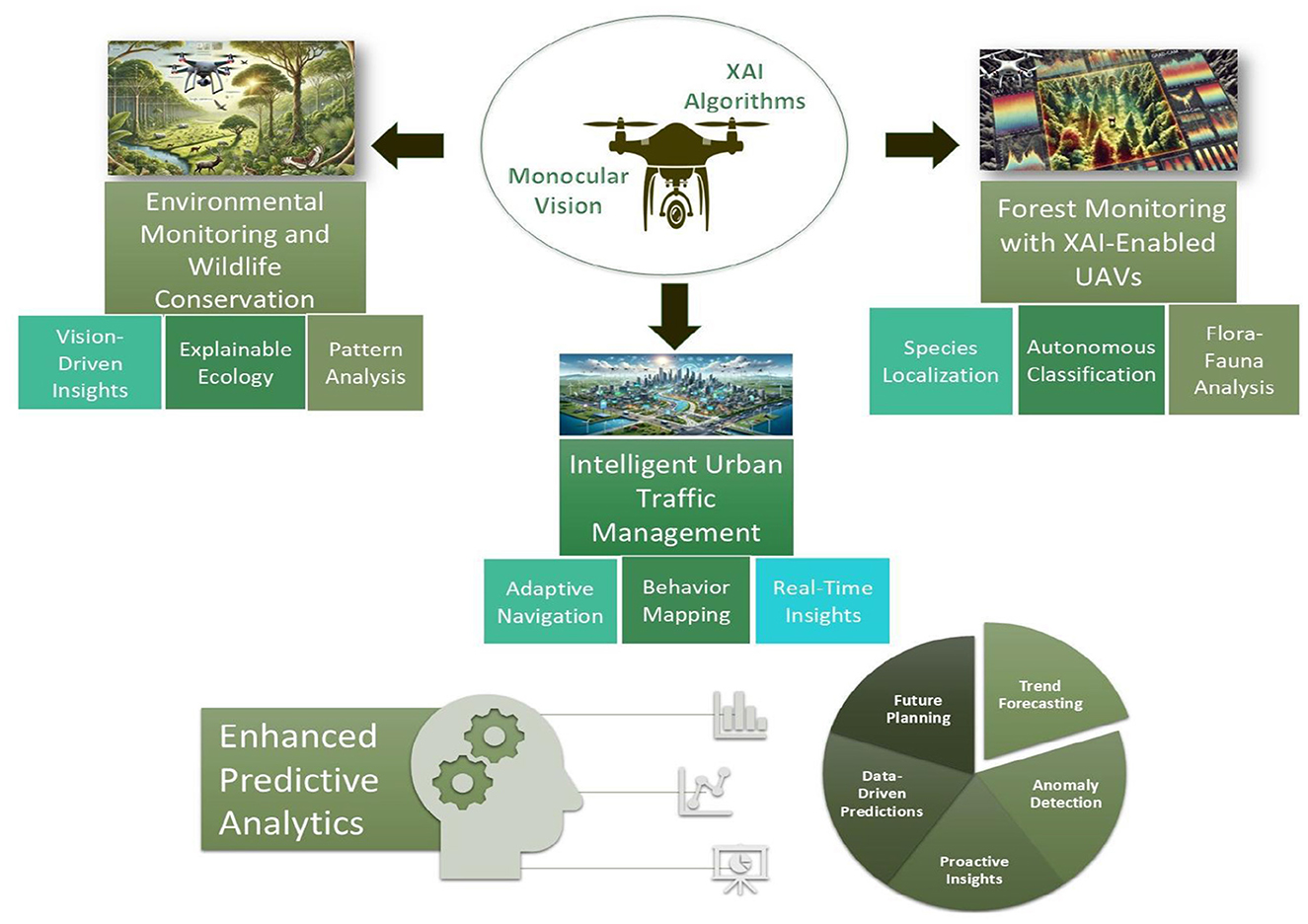

This section highlights the use cases for integrating XAI into UAV systems to showcase how it enhances their performance by improving decision-making transparency, enabling real-time adaptability, and optimizing navigation and operational efficiency in complex environments. Figure 5 illustrates the integration of monocular vision and XAI in key use cases, enabling enhanced predictive analytics for actionable insights.

Figure 5. Illustration of monocular vision and XAI algorithms in UAVs facilitating key use cases and contributing to enhanced predictive analytics for actionable insights.

5.3.1 Environmental monitoring and wildlife conservation

Consumer-grade drones equipped with single-camera systems can be deployed to monitor large and remote natural areas, such as forests, wetlands, and wildlife reserves, providing continuous aerial surveillance without the high costs associated with specialized equipment. Monocular vision enables these drones to capture detailed visual data, which can be used to track animal movements, detect changes in vegetation, and identify signs of environmental stress or illegal activities like poaching and deforestation. XAI processes this visual information to identify and classify different species or environmental conditions and to provide clear, understandable reasons for its assessments. For example, if the AI detects a decline in a particular plant species, it can highlight the affected areas and explain the potential causes, such as disease signs or adverse weather conditions. This transparency allows conservationists and environmental scientists to trust the AI's findings and make informed decisions based on accurate, actionable insights. Additionally, XAI can assist in predicting future environmental changes by analyzing trends and patterns in the data, offering explanations for its predictions that help stakeholders understand the underlying factors. The affordability and accessibility of consumer electronics make it feasible to deploy these drone systems widely, enabling comprehensive monitoring efforts even in budget-constrained projects. By leveraging monocular vision and XAI, UAVs can play a crucial role in preserving biodiversity, managing natural resources sustainably, and responding swiftly to environmental threats, thereby supporting global conservation initiatives in an efficient and transparent manner.

5.3.2 Intelligent urban traffic management

In densely populated cities, consumer-grade drones equipped with single-camera systems can be deployed to monitor traffic flow, detect congestion, and identify traffic violations in real-time. Monocular vision allows these drones to capture continuous video streams of streets, intersections, and public spaces without the complexity and cost of multi-camera setups. XAI processes the visual data to analyze patterns such as vehicle speeds, pedestrian movements, and the occurrence of traffic incidents. For example, if the AI detects a sudden slowdown or an accident, it can provide clear, understandable explanations by highlighting the specific vehicles involved, the location of the incident, and the factors contributing to the disruption, such as a traffic light malfunction or a jaywalking pedestrian.

This transparency is crucial for city planners and law enforcement agencies, as it builds trust in the system's assessments and decisions. Urban authorities can use these insights to make informed decisions about traffic light timings, road maintenance, and emergency response strategies. Additionally, XAI can assist in optimizing traffic flow by suggesting adjustments based on observed behaviors, such as rerouting traffic to alleviate congestion hotspots or implementing new pedestrian safety measures. The use of consumer-grade electronics ensures that these drone systems are affordable and scalable, allowing widespread deployment across various urban areas without significant investment. Moreover, the user-friendly nature of consumer drones enables local communities to participate in monitoring and improving their neighborhoods, fostering a collaborative approach to urban management.

5.3.3 Forest monitoring with XAI-enabled UAVs for flora and fauna classification

The integration of XAI into UAV systems significantly enhances their functionality by enabling the autonomous identification and classification of various flora and fauna while providing transparent explanations for these classifications. A fleet of consumer-grade UAVs equipped with high-resolution monocular cameras can be deployed across a sprawling forest ecosystem. These UAVs, integrated with advanced XAI algorithms, process real-time visual data to identify and classify different plant and animal species. The monocular vision system captures continuous video feeds, which are analyzed by DRL models trained on extensive datasets of local flora and fauna. XAI frameworks enable these models to generate interpretable insights into their decision-making processes. Techniques such as Grad-CAM and LIME highlight specific regions within captured images that influenced the UAVs' classifications. For instance, when a UAV identifies a particular tree species, Grad-CAM highlights distinctive features such as leaf patterns or bark textures, while LIME attributions can be summarized as textual explanations, such as "The tree was identified as Oak due to its lobed leaf structure and rough bark texture."

The deployment of XAI-enabled UAVs provides substantial benefits for environmental monitoring and research. Researchers can collect comprehensive data on species distribution and abundance across large areas with greater accuracy and efficiency compared to traditional ground surveys. The explainable outputs generated by XAI algorithms facilitate data validation by allowing researchers to cross-reference UAV classifications with field observations. For example, during a survey of the forest canopy, UAVs successfully identified several rare plant species that were previously under-documented. The explanations provided by XAI enable researchers to verify these findings by inspecting highlighted features and understanding the rationale behind each classification. This level of transparency increases trust in UAV-collected data and empowers researchers to refine models by addressing potential misclassifications or biases in the AI algorithms.

Furthermore, the ability of XAI-enabled UAVs to autonomously classify fauna, such as bird species and small mammals, offers valuable insights into ecosystem dynamics and species interactions. Real-time explanations facilitate immediate validation and adaptation of monitoring strategies, improving the overall effectiveness of conservation efforts. For instance, when a UAV detects an unusual concentration of a particular bird species, XAI explanations help researchers understand behavioral patterns or environmental factors contributing to the observation, informing targeted conservation actions.

6 Challenges and future research directions

This section examines key challenges that limit the application of XAI in UAV navigation and monocular vision systems and proposes potential future research directions. It emphasizes critical areas for development, including scalability, interpretability, adaptability to dynamic environments, and robustness in real-world applications.

6.1 Scalability and interpretability in XAI for monocular vision systems

One of the foremost challenges in integrating XAI with monocular vision systems is scalability across different UAV models and environments. UAV platforms vary significantly in sensor configurations, computational capacities, and environmental operating conditions, requiring extensive model fine-tuning for each system. Ensuring that XAI methods can generalize effectively across diverse UAV architectures without excessive retraining is critical for large-scale deployment. Additionally, monocular vision systems generate high-resolution visual data that must be processed in real-time for navigation and decision-making. Balancing computational efficiency with explainability is particularly challenging, as XAI models must provide interpretable insights while maintaining low latency in dynamic and unpredictable environments (Das and Rad, 2020; Ding et al., 2022).

To address these scalability concerns, future research should focus on developing lightweight, adaptive XAI models that can efficiently process high-resolution data across various UAV platforms. Techniques such as meta-learning, transfer learning, and model compression can enhance the generalizability of XAI methods, reducing the need for extensive system-specific retraining. Additionally, edge-based explainability and hybrid cloud-edge frameworks should be explored to optimize computational workloads and enable real-time decision-making. Future studies should also prioritize the development of contextual and hierarchical explainability techniques, breaking down UAV decisions into intuitive, multi-layered insights to improve interpretability across different operational contexts. Establishing benchmark datasets and standardized evaluation protocols tailored for multi-platform XAI scalability will further facilitate the development of widely adoptable, efficient, and robust explainable UAV systems.

6.2 Adapting XAI for dynamic environmental changes

Monocular vision systems in UAVs operate within highly dynamic environments where lighting conditions, terrain variations, and the presence of moving or static obstacles can change rapidly. These fluctuations pose significant challenges for XAI models, which may struggle to maintain the accuracy and relevance of their explanations in real-time. Traditional XAI frameworks often produce static or generalized explanations that do not capture the nuanced decision-making required in such unpredictable scenarios, thereby reducing user trust and operational reliability (Shah and Konda, 2021; Confalonieri et al., 2021).

To address these challenges, it is essential to develop adaptive XAI frameworks that incorporate real-time environmental context. Integrating DRL with XAI allows UAVs to continuously update their explainability models based on evolving environmental data, so that explanations remain accurate and contextually relevant even in highly dynamic settings. Additionally, context-aware XAI techniques can improve the adaptability of UAVs by using temporal and spatial data to inform explanations, so that explanations evolve with changing conditions and offer more precise, situation-specific insights. Implementing transfer learning and domain adaptation strategies further enhances the generalizability of XAI models, enabling UAVs to perform reliably across diverse and unforeseen environments.

6.3 Balancing explainability and performance

One of the critical challenges in building trust in UAV navigation using XAI and monocular vision lies in balancing the trade-off between explainability and system performance. High levels of explainability often require additional computational resources, leading to increased inference time, higher energy consumption, and reduced real-time responsiveness (Silva et al., 2024). Many explainability techniques, such as SHAP and Grad-CAM, require additional backward passes or repeated model evaluations on top of normal inference, which significantly increases computational overhead. This additional processing load can be particularly problematic for UAVs operating in resource-constrained environments, where battery life, processing power, and response time are critical constraints. The trade-off is especially evident in autonomous UAV surveillance and disaster response missions, where UAVs must quickly analyze complex environments, detect anomalies, and make real-time navigational adjustments while simultaneously providing interpretable decisions to human operators. In high-risk applications, delays caused by computationally expensive XAI techniques can negatively impact situational awareness, collision avoidance, and time-sensitive decision-making. Excessive computational demands also drain the battery more rapidly, limiting the UAV's flight duration and mission effectiveness. As UAVs become increasingly autonomous, addressing the trade-off between explainability and computational efficiency is essential to ensure that these systems remain both transparent and operationally viable.
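To illustrate where part of this overhead comes from, the following minimal Python sketch shows the core Grad-CAM combination step: each feature map is weighted by the spatial mean of its gradient, the weighted maps are summed, and a ReLU keeps only positive evidence. The function name `grad_cam_map` and the nested-list inputs are assumptions made for illustration; in a real system the activations and gradients would come from a deep learning framework, and obtaining the gradients requires the extra backward pass discussed above.

```python
from typing import List

Matrix = List[List[float]]


def grad_cam_map(activations: List[Matrix], gradients: List[Matrix]) -> Matrix:
    """Combine K feature maps (each H x W) into one H x W relevance map.

    activations[k] is the k-th feature map of a convolutional layer;
    gradients[k] is the gradient of the target score w.r.t. that map.
    Both are assumed to be precomputed elsewhere (hypothetical inputs).
    """
    if not activations or len(activations) != len(gradients):
        raise ValueError("activations and gradients must be non-empty and aligned")

    height, width = len(activations[0]), len(activations[0][0])

    # Channel weight: global average of the gradient over all spatial positions.
    weights = [sum(sum(row) for row in grad) / (height * width) for grad in gradients]

    # Weighted sum of the feature maps.
    cam = [[0.0] * width for _ in range(height)]
    for weight, fmap in zip(weights, activations):
        for i in range(height):
            for j in range(width):
                cam[i][j] += weight * fmap[i][j]

    # ReLU: keep only regions that support the target decision.
    cam = [[max(0.0, value) for value in row] for row in cam]

    # Normalize to [0, 1] for display, unless the map is all zeros.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam
```

The combination step itself is cheap; the dominant cost in practice is the backward pass needed to obtain `gradients`, which is why per-frame heatmaps can be expensive on embedded UAV hardware.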

Future research should focus on developing lightweight XAI models optimized for real-time UAV deployment. Several approaches can help mitigate computational overhead while maintaining interpretability. Techniques such as model compression (e.g., knowledge distillation and weight pruning) reduce the complexity of deep learning models while preserving essential explainability features (Hinton et al., 2015). Edge computing architectures allow UAVs to offload explainability computations to low-power onboard processors or external edge nodes, reducing the burden on real-time decision-making (Xu et al., 2023). Additionally, selective explainability mechanisms can be implemented, where explanations are provided only for anomalies, high-risk decisions, or human-in-the-loop scenarios, rather than for every prediction (Wang D. et al., 2019). This adaptive XAI approach allows UAVs to dynamically adjust the level of explainability based on mission requirements, prioritizing transparency when necessary while conserving computational resources in routine operations. Furthermore, hardware-accelerated explainability solutions, such as dedicated GPUs, TPUs, and neuromorphic processors, can significantly enhance computational efficiency by parallelizing explainability computations (Tahir et al., 2024). These specialized hardware components can enable UAVs to perform real-time, interpretable decision-making without compromising flight endurance or mission effectiveness. Balancing explainability and computational efficiency thus remains an open challenge in deploying AI-driven UAVs. Future advancements should focus on energy-aware explainability techniques, mission-adaptive XAI frameworks, and real-time optimization strategies to ensure that UAVs remain both transparent and operationally effective across diverse applications. Addressing this trade-off will be crucial for the next generation of autonomous UAVs, particularly in time-sensitive, resource-constrained environments where real-time decision-making and interpretability are equally vital.
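The selective explainability idea described above can be sketched as a simple gate that decides, per decision, whether the costly explanation step should run at all. This is a minimal illustration under stated assumptions: the `Decision` fields, the `should_explain` function, and the threshold values are hypothetical and are not taken from the cited works.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str                     # e.g. "hold_course" or "avoid_left"
    confidence: float               # model confidence in [0, 1]
    anomaly_score: float            # output of a hypothetical anomaly detector, in [0, 1]
    operator_in_loop: bool = False  # True when a human is supervising this decision


def should_explain(
    decision: Decision,
    min_confidence: float = 0.8,    # assumed threshold, to be tuned per mission
    max_anomaly: float = 0.5,       # assumed threshold, to be tuned per mission
) -> bool:
    """Return True only when an explanation is worth its computational cost."""
    return (
        decision.operator_in_loop
        or decision.confidence < min_confidence
        or decision.anomaly_score > max_anomaly
    )


# Routine, confident decision: skip the explanation to save energy.
routine = Decision("hold_course", confidence=0.95, anomaly_score=0.1)
# Uncertain avoidance maneuver: generate an explanation for the operator.
risky = Decision("avoid_left", confidence=0.55, anomaly_score=0.1)
print(should_explain(routine), should_explain(risky))
```

A gate of this kind keeps the explanation budget proportional to the number of unusual or supervised decisions rather than to the frame rate, which is the resource saving the selective approach aims for.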

6.4 Ensuring robustness of XAI in adverse conditions

XAI models integrated with monocular vision systems face difficulties in maintaining reliability under adverse conditions, such as low light, fog, rain, or high-motion scenarios. These conditions can degrade visual data quality, leading to less accurate explanations and undermining user trust in UAV decisions. Future efforts should focus on developing XAI models that incorporate uncertainty quantification to account for degraded input quality. By integrating techniques such as Bayesian neural networks or ensemble learning, XAI systems can provide confidence scores for their explanations, helping users assess the reliability of UAV decisions in adverse conditions. Furthermore, future research should leverage multimodal data fusion by integrating monocular vision with complementary sensors to enhance robustness and provide trustworthy explanations, even in challenging environments (Bai et al., 2021; Ali et al., 2023).
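As a rough illustration of how ensemble learning can yield a confidence score, the sketch below averages the class probabilities of several independently trained models and converts the entropy of the averaged distribution into a score in [0, 1]. The function name `ensemble_confidence` and the entropy-based score are assumptions chosen for simplicity; other uncertainty measures, such as the variance across members, would serve equally well.

```python
import math
from typing import List, Tuple


def ensemble_confidence(member_probs: List[List[float]]) -> Tuple[int, float]:
    """Return (predicted class index, confidence in [0, 1]).

    member_probs[m][c] is the probability that ensemble member m assigns
    to class c. Confidence is 1 minus the normalized predictive entropy:
    1.0 when all members agree with certainty, 0.0 when the averaged
    prediction is uniform over the classes.
    """
    n_members = len(member_probs)
    n_classes = len(member_probs[0])

    # Average the probability vectors across ensemble members.
    mean = [sum(p[c] for p in member_probs) / n_members for c in range(n_classes)]

    # Predictive entropy of the averaged distribution (0 * log 0 treated as 0).
    entropy = -sum(p * math.log(p) for p in mean if p > 0)
    max_entropy = math.log(n_classes)
    confidence = 1.0 - entropy / max_entropy if max_entropy > 0 else 1.0

    label = max(range(n_classes), key=lambda c: mean[c])
    return label, confidence


# Clear conditions: members agree, so confidence is high.
print(ensemble_confidence([[0.9, 0.1], [0.95, 0.05], [0.92, 0.08]]))
# Foggy frame: members disagree, so confidence drops.
print(ensemble_confidence([[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]))
```

Attaching such a score to each explanation lets an operator discount explanations produced from degraded imagery instead of treating them as equally reliable.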

Future research should focus on developing adaptive explainability mechanisms that dynamically adjust explanation complexity based on operational context. In high-visibility environments with reliable sensor data, UAVs should be able to generate detailed, feature-rich explanations, while in low-confidence scenarios such as heavy fog or fast motion, XAI models should be designed to simplify explanations while incorporating uncertainty estimates to prevent misleading interpretations. Additionally, future work should explore the integration of physics-informed AI models to enhance the robustness of explanations by leveraging physical constraints, such as scene geometry and optical flow, to improve depth perception and motion estimation in degraded visual conditions. Future research should also investigate how context-aware explainability frameworks can be developed to ensure that interpretability dynamically adapts to environmental and operational uncertainties. Furthermore, advancements in self-supervised learning and reinforcement learning-based XAI models should be investigated to enhance UAV autonomy, enabling models to learn optimal explainability strategies from real-world interactions and ensuring a balance between transparency, accuracy, and operational feasibility.
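One way to picture an adaptive explainability mechanism of this kind is a function that selects the level of detail from a confidence estimate. The sketch below is illustrative only: `build_explanation`, the feature names, and the 0.7 threshold are hypothetical, and the confidence value is assumed to come from an uncertainty estimator such as an ensemble.

```python
from typing import List, Tuple


def build_explanation(
    action: str,
    top_features: List[Tuple[str, float]],  # (feature name, attribution), most important first
    confidence: float,                      # estimated reliability in [0, 1]
    detail_threshold: float = 0.7,          # assumed cut-off between detailed and simplified output
) -> str:
    """Produce a detailed or a simplified explanation depending on confidence."""
    if not top_features:
        raise ValueError("at least one feature attribution is required")

    if confidence >= detail_threshold:
        # Reliable input: list every contributing feature with its signed weight.
        details = ", ".join(f"{name} ({weight:+.2f})" for name, weight in top_features)
        return f"{action}: driven by {details}; confidence {confidence:.2f}."

    # Degraded input: report only the dominant factor and flag the uncertainty.
    dominant_name, _ = top_features[0]
    return (
        f"{action}: mainly due to {dominant_name}; "
        f"LOW CONFIDENCE ({confidence:.2f}), treat with caution."
    )


features = [("obstacle ahead", 0.62), ("optical flow", 0.21)]
print(build_explanation("climb", features, confidence=0.91))
print(build_explanation("climb", features, confidence=0.35))
```

The simplified branch deliberately withholds secondary attributions, since fine-grained detail computed from fog-degraded or motion-blurred frames may mislead more than it informs.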

6.5 Collaborative decision-making between vision models and LLMs with XAI

Monocular vision systems, while proficient in object detection and scene analysis, face challenges in contextualizing their outputs in real-world scenarios. Traditional AI models, such as CNNs, excel at recognizing patterns and detecting objects but lack interpretability, providing only binary or probability-based classifications without insight into the reasoning behind their decisions. For example, a CNN-based vision model can detect an obstacle but cannot determine its significance, that is, whether it is a temporary inconvenience or a critical mission threat. Similarly, existing XAI methods, though designed to enhance transparency, often struggle to provide intuitive and contextually relevant justifications, particularly in dynamic and high-stakes applications such as UAV navigation (Berwo et al., 2023). Furthermore, most current XAI implementations rely on static explanations that do not adapt to real-time environmental changes, which reduces user trust and limits deployment in critical missions.

Future work should focus on integrating monocular vision systems with contextual reasoning algorithms, such as LLMs, to bridge the gap between perception and contextual awareness (Javaid et al., 2024a). Unlike traditional AI models, LLMs can generate accurate, natural language explanations tailored to specific decision contexts, improving trust and usability. However, LLM-based vision models present significant challenges in handling real-world navigation complexities, particularly in dynamic environments where real-time decision-making is crucial. One of the key limitations is contextual misinterpretation, as LLMs rely on pre-trained datasets and lack direct interaction with real-world scenes. This limitation can lead to misclassification of transient obstacles, such as pedestrians or moving vehicles, which UAVs may treat as permanent barriers, resulting in inefficient route planning or unnecessary evasive maneuvers.

In addition, LLM-vision models struggle with spatiotemporal reasoning, making it difficult for UAVs to anticipate object movement patterns and differentiate between static and dynamic elements in complex environments. Unlike human pilots who instinctively recognize environmental cues and adapt, LLMs lack continuous learning mechanisms that allow them to refine their perception of transient vs. permanent objects in real-time. Future research should explore hybrid approaches, integrating LLM-based reasoning with sensor fusion techniques such as LiDAR, radar, and event-based cameras to enhance environmental understanding. Furthermore, adaptive and interactive explainability techniques should be developed to ensure that UAVs can dynamically adjust the level of interpretability based on mission constraints and operational uncertainties. Another important research direction is the development of lightweight LLM architectures or hardware-accelerated LLM inference, ensuring that real-time UAV applications maintain low latency and high computational efficiency while benefiting from improved contextual awareness. Addressing these limitations will be critical for deploying LLM-enhanced vision models in UAVs for real-world applications, ensuring both operational safety and mission success (Javaid et al., 2024b).

7 Conclusion

This paper provides a comprehensive exploration of the advancements and challenges in integrating Explainable Artificial Intelligence (XAI) into UAV navigation and monocular vision systems. It outlines the progression of UAV navigation from traditional methods, such as GPS and inertial navigation, to modern AI- and ML-driven approaches, highlighting the transformative role of monocular vision and consumer electronics in enhancing UAV capabilities across various applications. The study focuses on the role of XAI in addressing the "black-box" nature of AI algorithms, which has long hindered trust and transparency in UAV systems. By providing interpretable insights into navigation and control decisions, XAI fosters greater reliability and usability, particularly in high-stakes scenarios such as urban planning and environmental monitoring. The integration of XAI with monocular vision further demonstrates its potential to mitigate limitations in depth perception and obstacle detection, enhancing the overall performance and adaptability of UAVs. By examining the interaction between advancements in consumer electronics, monocular vision, and XAI, this paper offers actionable insights to address open challenges such as scalability, adaptability to changing conditions, the balance between explainability and performance, and robustness in adverse environments, and to pave the way for future research and development. This work underscores the critical importance of combining technological innovation with explainability to achieve more reliable, efficient, and scalable UAV operations. The findings serve as a foundation for further exploration into the intersection of XAI, UAV navigation, and monocular vision, promoting advancements in next-generation autonomous UAV systems.

Author contributions

SJ: Conceptualization, Formal analysis, Investigation, Methodology, Writing – original draft. MK: Investigation, Project administration, Writing – review & editing. HF: Data curation, Formal analysis, Writing – original draft. BH: Supervision, Validation, Writing – review & editing. NS: Project administration, Resources, Supervision, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was supported by the National Natural Science Foundation of China under Grant No. 62088101.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Adão, T., Hruška, J., Pádua, L., Bessa, J., Peres, E., Morais, R., et al. (2017). Hyperspectral imaging: a review on UAV-based sensors, data processing and applications for agriculture and forestry. Remote Sens. 9:1110. doi: 10.3390/rs9111110

Adade, R., Aibinu, A. M., Ekumah, B., and Asaana, J. (2021). Unmanned aerial vehicle (UAV) applications in coastal zone management–a review. Environ. Monit. Assess. 193, 1–12. doi: 10.1007/s10661-021-08949-8

Ahmed, F., and Jenihhin, M. (2022). A survey on UAV computing platforms: a hardware reliability perspective. Sensors 22:6286. doi: 10.3390/s22166286

Alam, M. S., and Oluoch, J. (2021). A survey of safe landing zone detection techniques for autonomous unmanned aerial vehicles (UAVs). Expert Syst. Appl. 179:115091. doi: 10.1016/j.eswa.2021.115091

Ali, S., Abuhmed, T., El-Sappagh, S., Muhammad, K., Alonso-Moral, J. M., Confalonieri, R., et al. (2023). Explainable artificial intelligence (XAI): What we know and what is left to attain trustworthy artificial intelligence. Inform. Fusion 99:101805. doi: 10.1016/j.inffus.2023.101805

Al-Kaff, A., Meng, Q., Martín, D., de la Escalera, A., and Armingol, J. M. (2016). “Monocular vision-based obstacle detection/avoidance for unmanned aerial vehicles,” in 2016 IEEE Intelligent Vehicles Symposium (IV) (Gothenburg: IEEE), 92–97.

Aung, N. H. H., Sangwongngam, P., Jintamethasawat, R., Shah, S., and Wuttisittikulkij, L. (2024). A review of LiDAR-based 3D object detection via deep learning approaches towards robust connected and autonomous vehicles. IEEE Trans. Intellig. Vehicl. 2024, 1–23. doi: 10.1109/TIV.2024.3415771

Bai, X., Wang, X., Liu, X., Liu, Q., Song, J., Sebe, N., et al. (2021). Explainable deep learning for efficient and robust pattern recognition: a survey of recent developments. Pattern Recognit. 120:108102. doi: 10.1016/j.patcog.2021.108102

Balamurugan, G., Valarmathi, J., and Naidu, V. P. S. (2016). “Survey on UAV navigation in GPS denied environments,” in 2016 International Conference on Signal Processing, Communication, Power and Embedded System (SCOPES) (Piscataway, NJ: Institute of Electrical and Electronics Engineers (IEEE)), 198–204.