ORIGINAL RESEARCH article

Front. Surg., 07 November 2022

Sec. Visceral Surgery

Volume 9 - 2022 | https://doi.org/10.3389/fsurg.2022.939079

Anas Taha1,2*†, Stephanie Taha-Mehlitz3,†, Vincent Ochs1, Bassey Enodien2, Michael D. Honaker4, Daniel M. Frey2,‡, Philippe C. Cattin1,‡

Hospitals are burdened with predicting, calculating, and managing various cost-affecting parameters regarding patients and their treatments. Accuracy in cost prediction is further affected when a patient suffers from other health issues that hinder the traditional prognosis. This can lead to an unavoidable deficit in the final revenue of medical centers. This study aims to determine whether machine learning (ML) algorithms can predict cost factors based on patients undergoing colon surgery. For the forecasting, multiple predictors will be taken into the model to provide a tool that can be helpful for hospitals to manage their costs, ultimately leading to operating more cost-efficiently. This proof of principle will lay the groundwork for an efficient ML-based prediction tool based on multicenter data from a range of international centers in the subsequent phases of the study. With a mean absolute percentage error result of 18%–25.6%, our model's prediction showed decent results in forecasting the costs regarding various diagnosed factors and surgical approaches. There is an urgent need for further studies on predicting cost factors, especially for cases with anastomotic leakage, to minimize unnecessary hospital costs.

Colorectal cancer (CRC) is one of the most prevalent cancers in the world today, with about 1.8 million cases diagnosed and about 0.7 million related deaths annually. CRC accounts for 10% of all newly diagnosed cancers, a considerable social and economic burden for many nations worldwide (1). One of the treatment modalities for colorectal cancer is surgery, which aims to obtain an adequate oncologic resection while re-establishing intestinal continuity. Over time, there have been improvements in the way the disease is treated. However, existing patient comorbidities can limit surgical procedures, and the time required to prepare patients for surgery and address their comorbidities contributes to increased surgical costs. Despite many improvements, significant complications still occur during, and especially after, a surgical procedure. To mitigate this, the patient is placed in postoperative care for 5 to 7 days after the operation. Other postoperative risk factors further add to the surgical cost, but their prediction is imprecise because sufficient datasets are lacking. These include performing a colorectal anastomosis, anastomotic leak (2), delirium or prolonged ileus (3), other emergency surgeries, longer intraoperative time, and peritoneal contamination.

The comorbidities and longer stays result in a cost burden for patients and hospitals. This is why prediction models are now being updated to determine the costs for anastomotic insufficiency. Prediction models are normally used to estimate the probability of achieving a particular outcome (4). Many prediction models have been developed, but only a small number are used because not all models accurately predict the desired outcome (5). This study focuses on developing and validating a multivariable prediction model to predict costs for patients undergoing colon surgery while considering their stay in the hospital. This will help determine the cost burden due to variable hospital length of stay (LOS) and days spent in intensive care units (ICUs). The medical context is prognostic in that it is focused on predicting the cost of overall expenditure involved in colon surgery for the clinical center and the patient.

The rationale for developing and validating the multivariable model is that it will help accurately predict the costs associated with colon surgery. Accurate prediction will help patients and hospitals make more informed decisions and will aid in policies enacted by the government. The results obtained with the model will also aid in surgical planning. In short, developing and validating the multivariable model will provide insight into the costs of colon surgery. In turn, it will allow revisions in care and help develop strategies for improved management. Similar prediction studies have been conducted in the field of medicine. For example, Musunuri et al. used machine learning to predict 90-day liver disease mortality. Focusing on acute-on-chronic liver failure, they achieved a model with an accuracy of 94.12% and an area under the curve of 0.915 (6). Hameed et al. wrote about the impact of artificial intelligence on urological diseases. In their literature review, they pointed to multiple publications using models such as support vector machines, nearest neighbor, random forest, convolutional neural networks, or artificial neural networks to predict and classify diseases like prostate cancer, urothelial cancer, renal cancer, or urolithiasis. What distinguishes our work from those publications is that they use classification models, whereas we use a regression model. A regression model predicts continuous values, whereas a classification model predicts discrete class labels. Because cost is a continuous quantity, a machine learning regression model is used here to predict the costs associated with colon surgery. Using this approach, we aim to help close an existing gap in this field (7).

• To develop prediction models for the final costs in patients based on multiple predictors.

• To test the models in terms of their ability to accurately predict the final costs associated with colon surgery in patients.

Data were extracted from a registry of patients who underwent colonic anastomosis for various reasons such as tumors, diverticulitis, mesenteric ischemia, iatrogenic or traumatic perforation, or inflammatory bowel disease (aggregated as “nontumor”) at the Hospital of Wetzikon from January 1, 2013, to December 31, 2019. No patients were excluded from the initial data collection. Furthermore, this study was completed based on the transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD) statement checklist for prediction model development (8).

Utilizing these data, we developed a machine learning model to predict the costs of colon surgery.

The registry data were approved by an institutional review board, where the patients’ informed consent was waived. The study was registered at [Req 2021–01107].

Recorded variables include insurance (general/semiprivate/private), age, surgical procedure (Hartmann/left-sided hemicolectomy and extended left-sided hemicolectomy/right-sided hemicolectomy and extended right-sided hemicolectomy/sigmoid resection), surgical approach (open/laparoscopic), diagnosis (tumor/nontumor), final cost (the sum of all cost factors), length of stay (in days), intensive care unit stay (in days), operation time (in minutes), anesthesia time (in minutes), ASA score (I, II, III, IV), gender (male/female), CCI (Charlson comorbidity index), anastomotic insufficiency, and emergent/nonemergent. The data on the final cost, which is the sum of all the costs incurred during the hospital stay for surgery, were collected in CHF (Swiss Francs). The individual cost components (administrative costs, costs of hospitality, nurse costs, costs of infrastructure, doctor costs, medical costs, operational costs, anesthesia costs, and care costs) are not incorporated as separate variables since they add up to the final cost.

Data were randomly split into two sets; 80% of the data was put into a training set to build the models, and 20% was utilized for a test set to validate the models and assess their performance internally. The two sets had approximately the same class distribution (Gaussian). The following 14 predictors were chosen to predict the final costs based on regression and clinical insights: age, gender, insurance, diagnosis, operation, surgical approach, hospitalization, intensive care unit stay, surgical procedure, anesthesia time, CCI, ASA score, anastomotic insufficiency, and emergency surgery (9).
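The random 80/20 split described above can be illustrated with a minimal sketch. The study itself was carried out in R; the Python below is only an illustration, and the function name `train_test_split`, the seed, and the integer patient IDs are assumptions introduced here, not details from the study.

```python
import random

def train_test_split(records, test_fraction=0.2, seed=42):
    """Randomly partition records into (train, test) lists."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(records)               # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)  # seeded for a reproducible split
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical patient IDs; 347 matches the cohort size reported in the study
patient_ids = list(range(347))
train_ids, test_ids = train_test_split(patient_ids)
print(len(train_ids), len(test_ids))  # 278 69
```

Fixing the seed makes the partition reproducible, which matters when several models are compared on the same test set.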

By including variables such as the CCI and the ASA score, we can cover a large number of diseases that are included in the comorbidity index.

A variety of machine learning models were developed, including generalized boosted regression, random forest, and decision trees. An interaction depth of 3 and a total of 500 trees were chosen, and the random forest was set up as a regression model. The predictive performance was measured using the mean absolute percentage error (MAPE), also known as mean absolute percentage deviation, where a result of <10% was classified as highly accurate, <20% denoted a good forecast, 20%–50% denoted a reasonable forecast, and >50% denoted an inaccurate forecast (10). Continuous data were reported as mean ± standard deviation (SD) or median [interquartile range (IQR)], and categorical data were reported as numbers (percentages). Hyperparameters were tuned, and the final model was selected based on the MAPE. The final model chosen was the random forest model based on its superior performance.
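As a rough illustration of how MAPE and the accuracy bands quoted above fit together, the following sketch computes the metric and maps it to a band. The function names and the cost values are hypothetical and are not taken from the study data.

```python
def mape(actual, predicted):
    """Mean absolute percentage error, in percent."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    total = sum(abs((a - p) / a) for a, p in zip(actual, predicted))
    return 100.0 * total / len(actual)

def interpret_mape(value):
    """Map a MAPE value (in percent) to the bands quoted in the text."""
    if value < 10:
        return "highly accurate"
    if value < 20:
        return "good"
    if value <= 50:
        return "reasonable"
    return "inaccurate"

# Hypothetical costs in CHF, for illustration only
actual_costs = [20000.0, 30000.0, 50000.0]
predicted_costs = [25000.0, 27000.0, 40000.0]
score = mape(actual_costs, predicted_costs)
print(round(score, 2), interpret_mape(score))  # 18.33 good
```

The three relative errors here are 25%, 10%, and 20%, so their mean is about 18.33%, which falls in the "good" band.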

The analysis was carried out using R version 4.0.4. The randomForest library was used for the random forest models, the Metrics library was used for the calculation of the performance measurements, the gbm library was used for the generalized boosted regression models, and the rpart library was used for the other models.

The best-performing model will be deployed as a web-based, user-friendly application using RShiny to predict the final cost that considers the different cost factors. (Accessed at: https://colonsurgerycost.shinyapps.io/Final_Cost/).

A total of 347 patients were included in our study. This number consists of all patients from the center who suffered from the diagnosed factors in this section and had to undergo the type of operations mentioned. The mean age was 67 ± 14 years (range 28–94). A total of 162 (47%) patients were men, and 185 (53%) were women. Tables 1 and 2 provide all baseline variables and their descriptive statistics. Continuous variables were recorded as mean ± SD (range) in Table 1.

Categorical variables were recorded as numbers (%) in Table 2. No missing values were detected. Table 3 provides the variables' characteristics and descriptive statistics that are not mentioned in Tables 1 and 2 and are based on their impact on the final costs.

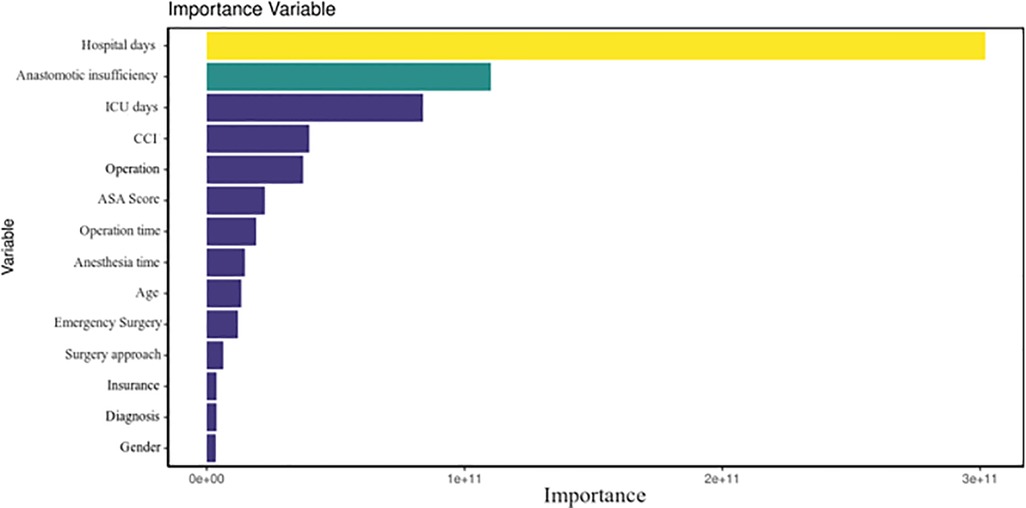

During internal validation, the performance of all three models was tested and reported with mean values and 95% confidence intervals (Table 3). The random forest model provided the lowest MAPE for predicting the final cost (21.4%). Thus, it was the model with the best internal validation performance and was subsequently used for predicting costs (11). In comparison, the decision tree and generalized boosted regression models yielded MAPEs of 25.5% and 29.7%, respectively. A MAPE of 21.4% means that, on average, the forecast of this prediction model for the final cost is off by 21.4%. Since a MAPE of <20% is considered “good,” this result falls just short of a good forecast and sits at the lower end of the “reasonable” range. The percentage of variance explained by the random forest models varied from 73.81% to 81.05%. Feature importance according to the random forest model is displayed as the Gini index in Figure 1, while Figure 2 compares the random forest predictions with the actual observed values from the test data set for the final cost.

Figure 1. Total decrease in node impurities, measured by the Gini index from splitting on the variable, averaged over all trees.

In Figure 1, one can see that LOS, anastomotic insufficiency, and intensive care unit stay are the best predictors in our model. This could be explained by these variables often being correlated with postoperative complications and thus with higher costs. Hospitalization is a plausible predictor of cost because the overall costs for a hospital increase if the patient is not progressing after surgery. The same can be said about the intensive care unit stay. For the anastomotic insufficiency cases, it is evident that these complications place a higher burden on the final costs. The mean decrease in the Gini index is the mean of a variable's total decrease in node impurity, weighted by the proportion of samples reaching that node in each individual decision tree in the random forest. A higher mean decrease in the Gini index indicates higher variable importance. In other words, a variable is important when splitting on it makes the resulting nodes more homogeneous; this is distinct from permutation importance, which measures how much the model error increases when a variable is randomly shuffled.
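The weighted impurity decrease described above can be sketched for a single split. The text reports importance as a Gini index, which is defined for class labels; for a continuous outcome such as cost, variance is a common impurity measure, and using it here is an assumption of this sketch rather than a detail confirmed by the study. The function names and the cost values are hypothetical.

```python
def variance(values):
    """Population variance, used here as the node impurity for a numeric outcome."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)

def weighted_impurity_decrease(parent, left, right, n_total):
    """Impurity decrease of one split, weighted by the share of samples at the node."""
    n = len(parent)
    child_impurity = (len(left) * variance(left) + len(right) * variance(right)) / n
    return (n / n_total) * (variance(parent) - child_impurity)

# Hypothetical costs (in thousand CHF) at a root node, split on length of stay
parent = [20.0, 22.0, 24.0, 60.0, 62.0, 64.0]
left, right = parent[:3], parent[3:]
decrease = weighted_impurity_decrease(parent, left, right, n_total=len(parent))
print(round(decrease, 2))  # 400.0
```

Summing such decreases over every node that splits on a given variable, and averaging over all trees, gives an importance score of the kind plotted in Figure 1.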

Figure 2 indicates that the predicted values are not far off the actual observed values based on our data set. For most of the observations, our model was able to perform decently in predicting the final costs.

Figure 3 displays the Bland–Altman plot. The following information can be derived visually from the diagram: (1) an estimate of the true value on the x-axis (mean), (2) standard deviation, (3) whether and to what extent systematic measurement errors (bias) lead to the deviations (variability was eliminated by difference formation on the y-axis), (4) whether the deviation of the methods or the dispersion of the deviation depends on the level of the measured values, and (5) whether outliers are present. Based on the plot, one can infer that the values are mostly well distributed and that few outliers occur.

Figure 3. Bland–Altman plot, measured by the difference of both measured values (S1 − S2) plotted on the y-axis against their mean value ((S1 + S2)/2) on the x-axis.
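The quantities behind a Bland–Altman plot can be computed as in the sketch below. The function name `bland_altman`, the 1.96 multiplier for 95% limits of agreement, and the cost values are illustrative assumptions; the source does not state how its limits were drawn.

```python
from statistics import mean, stdev

def bland_altman(observed, predicted):
    """Return per-pair means and differences plus bias and 95% limits of agreement."""
    if len(observed) != len(predicted) or len(observed) < 2:
        raise ValueError("need two equal-length series with at least two pairs")
    means = [(o + p) / 2 for o, p in zip(observed, predicted)]  # x-axis: (S1 + S2)/2
    diffs = [o - p for o, p in zip(observed, predicted)]        # y-axis: S1 - S2
    bias = mean(diffs)
    spread = 1.96 * stdev(diffs)  # based on the sample SD of the differences
    return {"means": means, "diffs": diffs, "bias": bias,
            "lower": bias - spread, "upper": bias + spread}

# Hypothetical observed and predicted final costs in CHF
observed = [20000.0, 30000.0, 50000.0, 40000.0]
predicted = [22000.0, 29000.0, 47000.0, 42000.0]
result = bland_altman(observed, predicted)
print(result["bias"], result["means"][0])  # 0.0 21000.0
```

A bias near zero suggests no systematic over- or underestimation, while points outside the limits of agreement are candidates for outliers.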

Cost and finance play an increasingly important role in today's healthcare system. It is imperative that hospitals control their costs more accurately beforehand and estimate the expenditure so that they do not get into financial difficulties.

Especially in surgery, and specifically colon surgery, this predictive model allows surgeons and hospitals to better manage and optimize the process.

As indicated, three models were developed and tested in this study. The results show that the random forest model has the lowest MAPE for the costs examined.

The lowest MAPE percentage for the random forest model indicates that this model is the most accurate at predicting costs associated with surgeries compared to the other two models examined. Typically, MAPE is a measure of error. It is used to measure the accuracy of a forecast (12). In calculating MAPE, the difference between the actual value and the forecast value is determined and expressed as a percentage. This means that if the difference between the actual value and the forecast value is small, the percentage is small (13). On the other hand, if the difference between the actual value and the forecast value is large, the MAPE percentage is large. This implies that a small MAPE percent indicates that the forecast value is near the actual value. In other words, the forecast value is more accurate (14). In the case of the three models, since the random forest model had the lowest MAPE percent value for all the costs compared to the other models considered, it is the most effective model in predicting the cost.
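The calculation described above corresponds to the standard definition of MAPE, written out below. The symbols $A_i$ (actual value), $F_i$ (forecast value), and $n$ (number of observations) are notation introduced here for illustration.

```latex
\mathrm{MAPE} = \frac{100\%}{n} \sum_{i=1}^{n} \left| \frac{A_i - F_i}{A_i} \right|
```

For example, a single case with an actual cost of 30,000 CHF and a forecast of 27,000 CHF contributes an absolute percentage error of $|30000 - 27000| / 30000 = 10\%$.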

Why is random forest the most effective predictive model compared to the decision tree and generalized boosted regression models? This question can be answered by examining the model. The random forest model is a machine learning technique that is used to solve classification and regression problems (15). This model uses ensemble learning, a technique that combines many classifiers to obtain solutions to complex problems. A random forest algorithm comprises multiple decision trees. The forest that is generated by the algorithm is trained through bootstrap aggregating or bagging (16). Bagging is a meta-algorithm that improves the machine learning algorithms’ accuracy.

The random forest algorithm establishes the result from the predictions of decision trees. It predicts by taking the mean of the prediction output of the various trees (17). This implies that the predicted outcome by the algorithm becomes more accurate when the number of decision trees is increased.

One of the features of the random forest model that makes it more accurate in predicting cost outcomes is it reduces the overfitting problem normally experienced when using the decision tree model. As indicated, the model uses an ensemble learning method based on bagging (15, 18). In other words, the model creates many decision trees and then considers the outcomes of all the trees in its final prediction, enhancing the prediction accuracy by the model.
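The bagging idea described above, fitting many trees on bootstrap samples and averaging their predictions, can be sketched as follows. This is a simplified illustration and not the study's model: it uses one-split trees ("stumps") on a single predictor and omits the random feature selection that a full random forest adds. All function names and data values are hypothetical.

```python
import random
from statistics import mean

def fit_stump(xs, ys):
    """Fit a one-split regression tree: choose the threshold minimising squared error."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # exclude the max so both sides are non-empty
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        left_mean, right_mean = mean(left), mean(right)
        error = (sum((y - left_mean) ** 2 for y in left)
                 + sum((y - right_mean) ** 2 for y in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    if best is None:  # all x values identical: always predict the overall mean
        overall = mean(ys)
        return (float("inf"), overall, overall)
    return best[1:]

def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean

def fit_bagged_stumps(xs, ys, n_trees=50, seed=0):
    """Fit each stump on a bootstrap sample drawn with replacement."""
    rng = random.Random(seed)
    n = len(xs)
    stumps = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]
        stumps.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return stumps

def predict_bagged(stumps, x):
    """Ensemble prediction: the mean of the individual tree predictions."""
    return mean([predict_stump(s, x) for s in stumps])

# Hypothetical data: length of stay (days) and final cost (thousand CHF)
stay_days = [3, 4, 5, 6, 7, 10, 12, 14, 18, 21]
cost_kchf = [15, 16, 18, 19, 21, 30, 34, 39, 50, 58]
forest = fit_bagged_stumps(stay_days, cost_kchf)
short_stay = predict_bagged(forest, 4)
long_stay = predict_bagged(forest, 20)
```

Because each stump sees a different bootstrap sample, their thresholds differ, and averaging them smooths out the abrupt jump that any single stump would produce.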

However, despite the higher accuracy of the random forest model when compared to the decision tree and generalized boosted regression models, the model does not have the highest possible accuracy when considered alone. Normally, when examining the accuracy of a prediction using MAPE, a result of less than 10% is considered highly accurate. A MAPE of less than 20% denotes a good forecast, while one between 20% and 50% is considered a reasonable forecast (12). The results show that the random forest model gives mostly reasonable forecasts rather than highly accurate ones, since its MAPE was over 20%. This means that while it is the most accurate of the models compared, when considered alone it has only reasonable accuracy and does not predict the cost incurred with high accuracy.

A number of similar studies have been carried out on the random forest model in terms of its accuracy in predicting outcomes. For example, Mei et al. examined the prediction accuracy of the random forest model when applied to real-time price forecasting in the New York electricity market (18). In reviewing the model's prediction accuracy, its results were compared to those of the auto-regressive-moving-average model and an artificial neural network model. It was established that the random forest model exhibited a lower MAPE value. The results of the study by Mei et al. are similar to those of this study, which also show that the random forest model makes smaller prediction errors than the other models it was compared with (18). However, a shortcoming of the study by Mei et al. is that it compared the random forest model to only two other models. This does not provide adequate insight into the model's prediction accuracy (18). A comparison with additional models would have helped determine whether the random forest model was the most accurate prediction model or if others were more accurate.

Another similar approach to comparing algorithms was taken by Xu et al., who developed and tested a prediction model based on the random forest algorithm (19). They evaluated the prediction of inland water quality. To evaluate the performance of the model, the researchers compared it to other models, namely, multilayer perceptron, SVR (support vector regression), KNN (K-nearest neighbor), ridge regression, gradient boosting regression, bagging, and decision tree. It was established that the random forest-based prediction model had the highest level of accuracy when compared to all the other prediction models examined. This suggests that random forest provides highly accurate outcomes when used for prediction. The results of the study by Xu et al. align with those of this study, since it was also established that the random forest model is the most accurate compared to other models. The study by Xu et al. provides better insight into the accuracy of the random forest model because it compared it to multiple models (19). It indicates that the random forest model is one of the most accurate prediction models that can be used to predict costs for surgery.

Finally, the results are in line with those of Toqué et al., who also established that the random forest model has higher accuracy than other models (20). In their study, Toqué et al. built and tested machine learning models for forecasting the Montreal subway smart card entry logs using event data to find an optimal model that accurately predicts the number of incoming passengers at each station of a transportation network (20). The prediction models were random forest, gradient boosting decision trees, artificial neural networks, and kernel-based models, including a support vector regressor and a Gaussian process (20). The results showed that the random forest models performed best when evaluated using root mean squared error and performed decently using MAPE and mean absolute error.

The results in this study show that all models have reasonable accuracy as the MAPE for each cost highlighted is below 50%. This means that all models can be used to predict the costs to some level of accuracy. However, when compared, it can be seen that the random forest model is a more accurate predictor. These results are evident in similar studies showing that the random forest model is a more accurate prediction model.

One of the implications of the results is that hospitals and other concerned parties can employ the random forest model to forecast costs not only for colon surgery but also for the other risks and conditions mentioned previously. This work lays the foundation for further work and research in this area and will allow for better financial calculations for hospitals. Through such a predictive model, it is possible to better estimate medical costs, which is especially important when factors such as LOS in the hospital and ICU, as well as complications such as anastomotic insufficiency, can have a large impact on the final cost. The results show that the random forest model provides more accurate predictions compared to other models like generalized boosted regression and decision tree models. For concerned parties to achieve more accurate results when predicting the costs of conditions or any other outcome, the random forest model should be employed.

Another implication is that further research is needed to enhance the accuracy of the random forest model. The results show that for the final costs examined, the MAPE is more than 20%. This is only reasonable accuracy and falls short of the desired value. As indicated, a MAPE of less than 20% indicates a good forecast, while one of less than 10% shows that the forecast is highly accurate. While achieving a highly accurate forecast is unlikely, any useful prediction model should give at least a good forecast. Because the random forest model was the most accurate of the models tested, it should be developed further so that it gives more credible results when used to predict outcomes.

Despite the good implications and the wide range of applications, the ethical aspect should not be ignored. Naik et al. have shown in their work that there are currently no well-defined guidelines when treating people with an application such as this. They mention that transparency must be created when working with such algorithms. Furthermore, weaknesses such as cyber attacks and privacy invasions should not be ignored if this field of research is to advance (21).

The main limitation of the study is the lack of a representative sample. The focus was on patients undergoing colon surgery, but the dataset comprised only 347 individuals from a single center, so the sample cannot be assumed to be representative of patients undergoing colon surgery in general. Larger datasets generally yield more accurate results. The limited number of individuals with common reasons for higher costs meant that it was not possible to test the developed models thoroughly in terms of their ability to predict costs associated with the disease. For such models, adequate and detailed data are needed to ensure they are tested thoroughly. Additionally, as the values in Table 4 suggest, an overall increase in the sample size could result in more precise models. In particular, the number of events per predictor should be larger.

Postoperative complications such as anastomotic insufficiency and ICU or hospital LOS increase the cost burden for patients and hospitals. Also, preoperative conditions like CCI increase the cost. However, there is no way of predicting these costs so that a patient or healthcare system can prepare adequately to handle the condition. This study thereby aimed to develop and validate a prediction model to accurately predict costs and develop strategies to eliminate or cover them. Out of the three tested models, the results obtained based on MAPE analysis showed that the random forest model is the most accurate. Therefore, the results imply this model should be adopted for prediction. However, the fact that MAPE results were mostly above 20% means that further research should be undertaken to improve its accuracy.

The raw data supporting the conclusions of this article will be made available by the authors upon reasonable request.

Conceptualization, AT and BE; data collection, BE; analysis, VO and AT; visualization, AT; writing—original draft preparation, AT, STM and VO; writing—review and editing, DMF, MDH, and PCC. All authors contributed to the article and approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Xi Y, Xu P. Global colorectal cancer burden in 2020 and projections to 2040. Transl Oncol. (2021) 14(10):101174. doi: 10.1016/j.tranon.2021.101174

2. Soeters PB, de Zoete JPJGM, Dejong CHC, Williams NS, Baeten CGMI. Colorectal surgery and anastomotic leakage. Dig Surg. (2002) 19(2):150. doi: 10.1159/000052031

3. Karliczek A, Harlaar NJ, Zeebregts CJ, Wiggers T, Baas PC, Van Dam GM. Surgeons lack predictive accuracy for anastomotic leakage in gastrointestinal surgery. Int J Colorectal Dis. (2009) 24(5):569–76. doi: 10.1007/s00384-009-0658-6

4. Kourou K, Exarchos TP, Exarchos KP, Karamouzis MV, Fotiadis DI. Machine learning applications in cancer prognosis and prediction. Comput Struct Biotechnol J. (2015) 13:8–17. doi: 10.1016/j.csbj.2014.11.005

5. Mosavi A, Ozturk P, Chau KW. Flood prediction using machine learning models: literature review. Water (Basel). (2018) 10(11):1536. doi: 10.3390/w10111536

6. Musunuri B, Shetty S, Shetty D, Vanahalli M, Pradhan A, Naik N, et al. Acute-on-chronic liver failure mortality prediction using an artificial neural network. Eng Sci. (2021) 15:187–96. doi: 10.30919/es8d515

7. Hameed BMZ, Dhavileswarapu AVLS, Raza SZ, Karimi H, Khanuja HS, Shetty DK, et al. Artificial intelligence and its impact on urological diseases and management: a comprehensive review of the literature. J Clin Med. (2021) 10:1864. doi: 10.3390/jcm10091864

8. Collins GS, Reitsma JB, Altman DG, Moons KG. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): the TRIPOD statement. Br J Surg. (2015) 102(3):148–58. doi: 10.1002/bjs.9736

9. Bolenz G, Roehrborn L. Predictors of costs for robotic assisted laparoscopic radical prostatectomy, urologic oncology: seminars and original investigations. Urol Oncol. (2011) 29(3):325–9. doi: 10.1016/j.urolonc.2011.01.016

10. Abidin S, Jaffar MM. Forecasting share prices of small size companies in Bursa Malaysia. Appl Math Inf Sci. (2014) 8:107–12. doi: 10.12785/amis/080112

11. Sushmita N, Marquardt R, Prasad DC, Teredesai A. Population cost prediction on public healthcare datasets. Proceedings of the 5th International Conference on Digital Health 2015. New York, NY: Association for Computing Machinery (2015). p. 87–94.

12. Coleman CD, Swanson DA. On MAPE-R as a measure of cross-sectional estimation and forecast accuracy. J Econ Soc Meas. (2007) 32(4):219–33. doi: 10.3233/JEM-2007-0290

13. Hyndman RJ, Koehler AB. Another look at measures of forecast accuracy. Int J Forecast. (2006) 22(4):679–88. doi: 10.1016/j.ijforecast.2006.03.001

14. Rayer S. Population forecast accuracy: does the choice of summary measure of error matter? Popul Res Policy Rev. (2007) 26(2):163–84. doi: 10.1007/s11113-007-9030-0

15. Sinha P, Gaughan AE, Stevens FR, Nieves JJ, Sorichetta A, Tatem AJ. Assessing the spatial sensitivity of a random forest model: application in gridded population modeling. Comput Environ Urban Syst. (2019) 75:132–45. doi: 10.1016/j.compenvurbsys.2019.01.006

16. Bharathidason S, Venkataeswaran CJ. Improving classification accuracy based on random forest model with uncorrelated high performing trees. Int J Comput Appl. (2014) 101(13):26–30. doi: 10.5120/17749-8829

17. Wang L, Liu ZP, Zhang XS, Chen L. Prediction of hot spots in protein interfaces using a random forest model with hybrid features. Protein Eng, Des Sel. (2012) 25(3):119–26. doi: 10.1093/protein/gzr066

18. Mei J, He D, Harley R, Habetler T, Qu G. A random forest method for real-time price forecasting in New York electricity market. 2014 IEEE PES General Meeting | Conference & Exposition. National Harbor, MD, USA: IEEE (2014). p. 1–5.

19. Xu J, Xu Z, Kuang J, Lin C, Xiao L, Huang X, et al. An alternative to laboratory testing: random forest-based water quality prediction framework for inland and nearshore water bodies. Water (Basel). (2021) 13:3262. doi: 10.3390/w13223262

20. Toqué F, Côme E, Trépanier M, Oukellou F. Forecasting of the Montreal subway smart card entry logs with event data. TRB, CIRRELT-2020-33 (2020).

Keywords: cost prediction, colon surgery, machine learning, colon surgery cost, anastomotic insufficiency

Citation: Taha A, Taha-Mehlitz S, Ochs V, Enodien B, Honaker MD, Frey DM and Cattin PC (2022) Developing and validating a multivariable prediction model for predicting the cost of colon surgery. Front. Surg. 9:939079. doi: 10.3389/fsurg.2022.939079

Received: 8 May 2022; Accepted: 11 October 2022;

Published: 7 November 2022.

Edited by:

Falk Rauchfuß, Friedrich Schiller University Jena, Germany

Reviewed by:

Dasharathraj K. Shetty, Manipal Institute of Technology, India

© 2022 Taha, Taha-Mehlitz, Ochs, Enodien, Honaker, Frey and Cattin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Anas Taha, anas.taha@unibas.ch

†These authors have contributed equally to this work and share first authorship

‡These authors have contributed equally to this work and share last authorship

Specialty Section: This article was submitted to Visceral Surgery, a section of the journal Frontiers in Surgery
