
MINI REVIEW article

Front. Surg., 14 March 2022

Sec. Genitourinary Surgery and Interventions

Volume 9 - 2022 | https://doi.org/10.3389/fsurg.2022.862322

This article is part of the Research Topic: Technology Advancements, Social Media and Innovations in Uro-Oncology and Endourology

Nithesh Naik1,2, B. M. Zeeshan Hameed2,3, Dasharathraj K. Shetty4*, Dishant Swain5, Milap Shah2,6, Rahul Paul7, Kaivalya Aggarwal8, Sufyan Ibrahim2,9, Vathsala Patil10, Komal Smriti10, Suyog Shetty3, Bhavan Prasad Rai2,11, Piotr Chlosta12, Bhaskar K. Somani2,13

The legal and ethical issues that confront society due to artificial intelligence (AI) include privacy and surveillance, bias or discrimination, and, potentially, the philosophical challenge of the role of human judgment. The use of newer digital technologies has raised concerns that they may become a new source of inaccuracy and data breaches. In healthcare, mistakes in procedure or protocol can have devastating consequences for the patient who is the victim of the error. This is crucial to remember because patients come into contact with physicians at moments in their lives when they are most vulnerable. Currently, there are no well-defined regulations in place to address the legal and ethical issues that may arise from the use of AI in healthcare settings. This review attempts to address these pertinent issues, highlighting the need for algorithmic transparency, privacy and protection of all the beneficiaries involved, and cybersecurity of associated vulnerabilities.

Increasing patient demand, chronic disease, and resource constraints put pressure on healthcare systems. Simultaneously, the usage of digital health technologies is rising, and data have expanded in all healthcare settings. If properly harnessed, these data could allow healthcare practitioners to focus on the causes of illness and keep track of the success of preventative measures and interventions. Policymakers, legislators, and other decision-makers should therefore be aware of this potential. For this to happen, computer and data scientists and clinical entrepreneurs argue that artificial intelligence (AI), especially machine learning, will be one of the most critical aspects of healthcare reform (1). AI is a term used in computing to describe a computer program's capacity to execute tasks associated with human intelligence, such as reasoning and learning. It also includes processes such as adaptation, sensory understanding, and interaction. Traditional computational algorithms, simply expressed, are software programmes that follow a set of rules and consistently do the same task, such as an electronic calculator: “if this is the input, then this is the output.” An AI system, on the other hand, learns the rules (function) through exposure to training data (input). AI has the potential to change healthcare by producing new and essential insights from the vast amount of digital data created during healthcare delivery (2).
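The contrast between a fixed rule and a learned rule can be illustrated with a minimal Python sketch. It is not taken from the cited works: the function names (`rule_based`, `fit_line`, `learned`) and the toy data are assumptions made purely for illustration, and ordinary least squares stands in here for "learning" in general.

```python
def rule_based(x):
    """Traditional algorithm: the programmer writes the rule explicitly."""
    return 2 * x + 1


def fit_line(xs, ys):
    """Learn a slope and intercept from example pairs (ordinary least squares)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical training data: inputs paired with the outputs we want reproduced.
train_x = [0, 1, 2, 3, 4]
train_y = [rule_based(x) for x in train_x]

# The learning step never sees the rule itself, only the examples.
slope, intercept = fit_line(train_x, train_y)


def learned(x):
    """Learned function: the rule was recovered from data, not written by hand."""
    return slope * x + intercept


print(rule_based(10), learned(10))
```

In this toy case the learned function matches the hand-written rule because the training data are clean and complete; with noisy or unrepresentative data the learned rule would differ, which is where many of the concerns discussed in this review originate.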

AI is typically implemented as a system comprising both software and hardware. From a software standpoint, AI is mainly concerned with algorithms. An artificial neural network (ANN) is a conceptual framework for developing AI algorithms. It is a model loosely based on the human brain, made up of an interconnected network of neurons linked by weighted communication channels. AI uses various algorithms to find complex non-linear correlations in massive datasets (analytics). Machines learn by correcting minor algorithmic errors (training), thereby boosting prediction model accuracy (confidence) (3, 4).
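As a hedged illustration of "weighted communication channels" and of learning by correcting small errors, the sketch below trains a single artificial neuron (a perceptron) on the logical AND function. This is a deliberately simplified stand-in, not the method of any system discussed in this review; the names `predict` and `train` and the data are assumptions for the example.

```python
def predict(weights, bias, inputs):
    """One artificial neuron: a weighted sum of inputs followed by a threshold."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if z > 0 else 0


def train(samples, epochs=20, lr=1):
    """Perceptron rule: nudge each weight in proportion to the prediction error."""
    weights = [0, 0]
    bias = 0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


# Toy training data: the logical AND of two binary inputs.
samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train(samples)
predictions = [predict(weights, bias, inputs) for inputs, _ in samples]
print(weights, bias, predictions)
```

Real networks stack many such units and use gradient-based training rather than this threshold rule, but the principle is the same: the weights are adjusted repeatedly until the errors on the training data shrink.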

The use of new technology raises concerns that it will become a new source of inaccuracy and data breaches. In the high-risk area of healthcare, mistakes can have severe consequences for the patient who is the victim of the error. This is critical to remember since patients come into contact with clinicians at times in their lives when they are most vulnerable (5). If harnessed effectively, AI-clinician cooperation, in which AI offers evidence-based management and provides medical decision guidance to the clinician (AI-Health), can be effective. It can support healthcare in diagnosis, drug discovery, epidemiology, personalized care, and operational efficiency. However, as Ngiam and Khor point out, if AI solutions are to be integrated into medical practice, a sound governance framework is required to protect humans from harm, including harm resulting from unethical behavior (6–17). Ethical standards in medicine can be traced back to those of the physician Hippocrates, in which the Hippocratic Oath is rooted (18–24).

Machine learning healthcare applications (ML-HCAs), once seen as a tantalizing future possibility, have become a present clinical reality following the Food and Drug Administration (FDA) approval of an autonomous artificial intelligence diagnostic system based on machine learning (ML). These systems use algorithms to learn from large data sets and make predictions without being explicitly programmed (25).

The use of data created for electronic health records (EHR) is an important field of AI-based health research. Such data may be difficult to use if the underlying information technology system and database do not prevent the spread of heterogeneous or low-quality data. Nonetheless, AI in electronic health records can be used for scientific study, quality improvement, and clinical care optimization. Before going down the typical path of scientific publishing, guideline formation, and clinical support tools, AI that is correctly created and trained with enough data can help uncover clinical best practices from electronic health records. By analyzing clinical practice trends acquired from electronic health data, AI can also assist in developing new clinical practice models of healthcare delivery (26).

In the future, AI is expected to simplify and accelerate pharmaceutical development. By utilizing robotics and models of genetic targets, drugs, organs, diseases and their progression, pharmacokinetics, safety, and efficacy, AI can convert drug discovery from a labor-intensive process into a capital- and data-intensive one, making drug discovery and development faster, more cost-effective, and more efficient. AI has previously been used to identify potential Ebola virus medicines, although, as with any drug study, identifying a lead molecule does not guarantee the development of a safe and successful therapy (26).

There is a continuing debate regarding whether AI “fits within existing legal categories or whether a new category with its special features and implications should be developed.” The application of AI in clinical practice has enormous promise to improve healthcare, but it also poses ethical issues that we must now address. To fully achieve the potential of AI in healthcare, four major ethical issues must be addressed: (1) informed consent to use data, (2) safety and transparency, (3) algorithmic fairness and biases, and (4) data privacy (27). Whether AI systems may be considered legal persons is not only a legal question but also a politically contentious one (Resolution of the European Parliament, 16 February 2017) (28).

The aim is to help policymakers ensure that the ethical challenges raised by implementing AI in healthcare settings are tackled proactively (17). Limited algorithmic transparency is a concern that has dominated most legal discussions on artificial intelligence. The rise of AI in high-risk situations has increased the need for accountable, equitable, and transparent AI design and governance. The accessibility and comprehensibility of information are the two most important aspects of transparency. Information about the functionality of algorithms is frequently and deliberately made difficult to obtain (29).

Our capacity to trace culpability back to the maker or operator is allegedly threatened by machines that can operate by unfixed rules and learn new patterns of behavior. The supposed “ever-widening” divide is a cause for alarm, as it threatens “both the moral framework of society and the foundation of the liability idea in law.” The use of AI may leave us without anyone to hold accountable for any sort of damage done. The extent of danger is unknown, and the use of machines will severely limit our ability to assign blame and take ownership of the decision-making (30).

Modern computing approaches can hide the reasoning behind the output of an artificial intelligence system (AIS), making meaningful scrutiny impossible. The technique through which an AIS generates its outputs is therefore “opaque.” A procedure used by an AIS may be so sophisticated that it is effectively concealed from a clinical user without technical training, while remaining straightforward to understand for a specialist skilled in that area of computer science (5).

AISs, like IBM's Watson for Oncology, are meant to support clinical users and hence directly influence clinical decision-making. The AIS evaluates the patient's information and recommends a course of care. The use of AISs to assist clinicians could revolutionize clinical decision-making and, if adopted, create new stakeholder dynamics and a new healthcare paradigm. Clinicians (including doctors, nurses, and other health professionals) have a stake in the safe roll-out of new technologies in the clinical setting (5).

The scope of emerging ML-HCAs in terms of what they intend to achieve, how they might be built, and where they might be used is very broad. ML-HCAs range from fully autonomous artificial intelligence diagnosis of diabetic retinopathy in primary care settings to non-autonomous mortality predictions used to guide policy and resource allocation (25). Researchers should describe how the outputs of these systems, including their predictions, will be incorporated into the research. This information is essential for establishing the value of the clinical trial and guiding clinical research (31).

AI applied in healthcare needs to adjust to a continuously changing environment with frequent disruptions, while maintaining ethical principles to ensure the well-being of patients (24). However, a simple but key component of determining the safety of any healthcare software is the ability to inspect the software and understand how it might fail. In this respect, examining a software programme is comparable to examining the ingredients and physiologic mechanisms of medications or the components of mechanical devices. ML-HCAs, on the other hand, can present a “black box” issue, with workings that are not visible to evaluators, doctors, or patients (25).

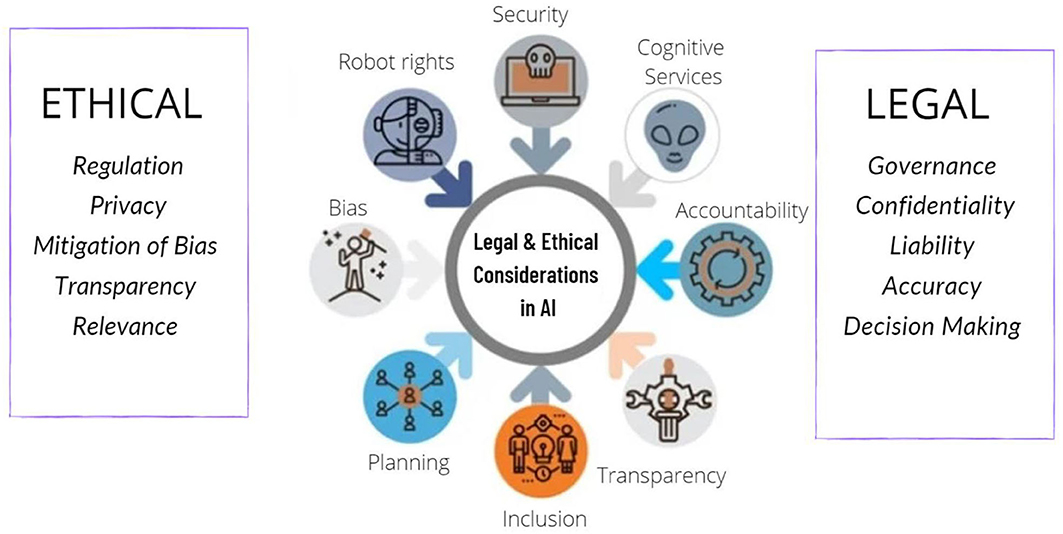

The Resolution of the European Parliament was based on research commissioned, supervised, and published by the policy department for “Citizens' Rights and Constitutional Affairs” in response to a request from the European Parliament's Committee on Legal Affairs. The report emphasizes the critical nature of a resolution calling for the immediate creation of a legislative instrument governing robots and AI, capable of anticipating and adapting to any scientific breakthroughs anticipated in the medium term (29). The various ethical and legal concerns associated with the use of AI in healthcare settings have been highlighted in Figure 1.

Figure 1. Various ethical and legal conundrums involved with the usage of artificial intelligence in healthcare.

When the setting or context changes, AI systems can fail unexpectedly and drastically. AI can go from being extremely intelligent to extremely naive in an instant. All AI systems will have limits, even if AI bias is managed. The human decision-maker must be aware of the system's limitations, and the system must be designed so that it fits the demands of the human decision-maker. When a medical diagnostic and treatment system is mostly accurate, medical practitioners who use it may grow complacent, failing to maintain their skills or take pleasure in their work. Furthermore, people may accept decision-support system results without questioning their limits. This sort of failure will be repeated in other areas, such as criminal justice, where judges have modified their decisions based on risk assessments later revealed to be inaccurate (32).

The use of AI without human mediation raises concerns about vulnerabilities in cyber security. According to a RAND perspectives report, applying AI for surveillance or cyber security in national security creates a new attack vector based on “data diet” vulnerabilities. The study also discusses domestic security issues, such as governments' (growing) employment of artificial agents for citizen surveillance (e.g., predictive policing algorithms). These have been highlighted as potentially jeopardizing citizens' fundamental rights. These are serious concerns because they put key infrastructures at risk, putting lives and human security and resource access at risk. Cyber security weaknesses can be a severe threat because they are typically hidden and only discovered after the event (after the damage is caused) (28).

In recent years, there has been an uptick in interest in the feasibility, design, and ethics of lethal autonomous weapon systems (LAWS). These machines would combine the vast discretion of AI autonomy with the power to kill and inflict damage on humans. While these advancements may offer considerable advantages, various questions have been raised concerning the morality of developing and implementing LAWS (33).

Selection bias in the datasets used to construct AI algorithms is a common problem. As established by Buolamwini and Gebru, there is bias in automated facial recognition and the associated datasets, resulting in lower accuracy in recognizing darker-skinned individuals, particularly women. ML requires a huge number of data points, and the majority of frequently utilized clinical trial research databases come from selected populations. As a result, when applied to underserved and consequently probably underrepresented patient groups, the resulting algorithms may be more likely to fail (34).
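One simple way to surface this kind of failure is to report a model's accuracy separately for each patient group rather than as a single overall figure. The Python sketch below is a minimal illustration under stated assumptions: the helper `accuracy_by_group`, the group labels, and the hand-made records are all hypothetical and do not come from the cited studies.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for (group, true_label, predicted_label) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        if y_true == y_pred:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Hypothetical, hand-made records: (group, true label, model prediction).
records = (
    [("well_represented", 1, 1)] * 4
    + [("well_represented", 0, 0)] * 3
    + [("well_represented", 1, 0)] * 1
    + [("underrepresented", 1, 1)] * 1
    + [("underrepresented", 0, 0)] * 1
    + [("underrepresented", 1, 0)] * 2
)

per_group = accuracy_by_group(records)
overall = sum(1 for _, y_true, y_pred in records if y_true == y_pred) / len(records)
gap = max(per_group.values()) - min(per_group.values())
print(per_group, overall, gap)
```

In this made-up example the overall accuracy (0.75) hides the fact that the model is right 87.5% of the time for one group and only 50% of the time for the other. Accuracy is only one possible metric; sensitivity or false-negative rate per group may matter more in a clinical setting.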

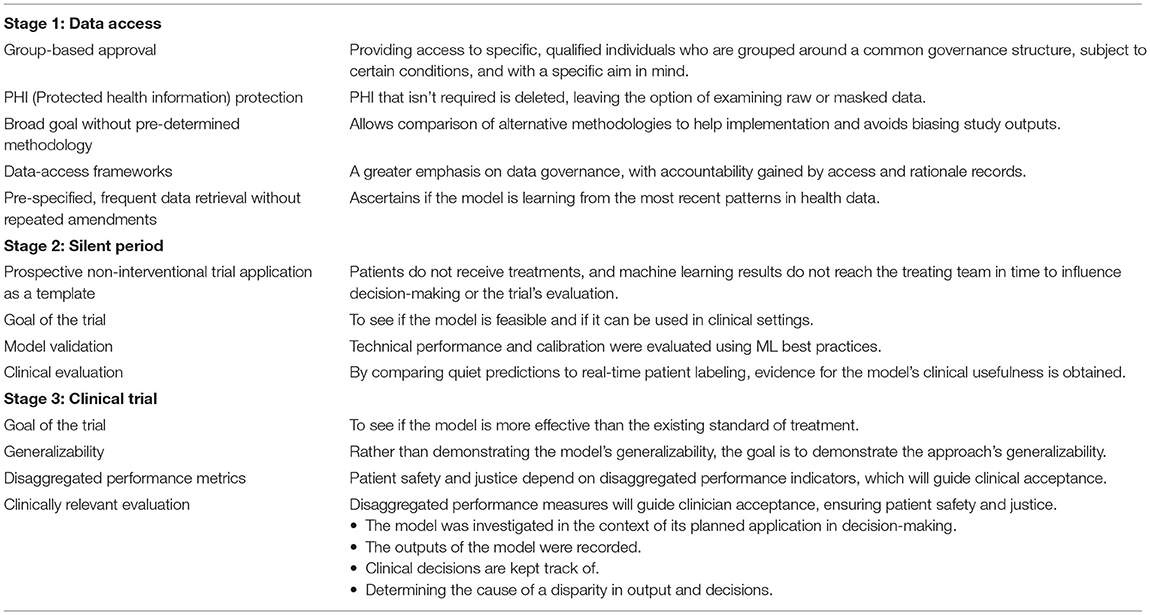

Unlike doctors, technologists are not obligated by law to be accountable for their actions; instead, ethical principles of practice are applied in this sector. This comparison summarizes the dispute over whether technologists should be held accountable if an AIS is used in a healthcare context and directly affects patients. If clinicians cannot account for the output of the AIS they are employing, they will not be able to appropriately justify their actions if they choose to use that output. This lack of accountability raises concerns about the possible safety consequences of using unverified or unvalidated AISs in clinical settings. Some scenarios show how opacity affects each stakeholder. Table 1 shows the procedural and conceptual changes to be considered in the ethical review of healthcare-based machine learning research. This is indeed a challenging aspect of the technology. We think that a new framework and approach are needed for the approval of AI systems, but practitioners and hospitals using them need to be trained and hence bear the ultimate responsibility for their use. AI-based medical devices will help individuals make decisions and carry out treatments and procedures; they will not replace those individuals entirely. There is a dearth of literature in this regard, and a detailed framework needs to be developed by the highest policy-making bodies.

Table 1. Considerations for ethical review for healthcare-based Machine learning research: procedural and conceptual changes (31).

AISs should be evaluated and validated, according to the Association for the Advancement of Artificial Intelligence. It is critical to establish, test, measure, and assess the dependability, performance, safety, and ethical compliance of such robots and artificial intelligence systems logically and statistically/probabilistically before they are implemented. If a clinician chooses to employ an AIS, verification and validation may help them account for their activities reasonably. As previously mentioned, clinical rules of professional conduct do not allow for unaccountable behavior. It has been suggested, however, that AIS is not the only thing that may be opaque, and doctors can also be opaque. If AIS cannot be punished, it will be unable to take on jobs involving human care. Managers of AIS users should make it clear that physicians cannot evade accountability by blaming the AIS (5).

Assistive ML-HCAs support healthcare providers by offering “suggestions” for treatment, diagnosis, or management, while relying on human interpretation of those suggestions to make judgments. Autonomous ML-HCAs provide direct diagnostic and management statements without the intervention of a clinician or any other human. Because the developer's choice of an ML-HCA's level of autonomy has clear implications for the assumption of responsibility and liability, this level of autonomy must be transparent (25). Instead of asking whether users were aware of the hazards and of poor decision-making, the question should be whether they could have grasped and recognized those risks (35).

Evidence suggests that AI models can embed and deploy human and social biases at scale. However, it is the underlying data rather than the algorithm itself that is to be held responsible. Models can be trained on data that contain human decisions or on data that reflect the second-order effects of social or historical inequities. Additionally, the way data are collected and used can contribute to bias, and user-generated data can act as a feedback loop that reinforces it. To our knowledge, there are no guidelines or set standards for reporting and comparing these models, but future work should address this to guide researchers and clinicians (36, 37).

AI is moving beyond “nice-to-have” to becoming an essential part of modern digital systems. As we rely more and more on AI for decision-making, it becomes absolutely essential to ensure that those decisions are made ethically and are free from unjust biases. We see a need for responsible AI systems that are transparent, explainable, and accountable. AI systems are increasingly used to improve patient pathways and surgical outcomes, and they outperform humans in some fields. AI is likely to merge with, co-exist with, or replace current systems, starting the healthcare age of artificial intelligence, and not using AI is possibly unscientific and unethical (38).

AI is going to be increasingly used in healthcare and hence needs to be morally accountable. Data bias needs to be avoided by using appropriate algorithms based on unbiased real-time data. Diverse and inclusive programming groups are needed, and frequent audits of the algorithm, including its implementation in a system, need to be carried out. While AI may not be able to completely replace clinical judgment, it can help clinicians make better decisions. Where medical competence is lacking in a resource-limited context, AI could be utilized to conduct screening and evaluation. In contrast to human decision-making, all AI judgments, even the quickest, are systematic since algorithms are involved. As a result, even if activities do not have legal repercussions (because efficient legal frameworks have not been developed yet), they always lead to accountability, not by the machine, but by the people who built it and the people who utilize it. While there are moral dilemmas in the use of AI, it is likely to merge with, co-exist with, or replace current systems, starting the healthcare age of artificial intelligence, and not using AI is also possibly unscientific and unethical.

NN, DSh, BH, and BS contributed to the conception and design of the study. MS, SI, DSw, SS, and KA organized the database. DSw, KA, VP, KS, SS, and SI wrote the first draft of the manuscript. NN, DSh, KS, BR, VP, and BH wrote sections of the manuscript. PC, BR, and BS critically reviewed and edited the manuscript. All authors contributed to manuscript revision, read, and approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Morley J, Floridi L. An ethically mindful approach to AI for Health Care. SSRN Electron J. (2020) 395:254–5. doi: 10.2139/ssrn.3830536

2. Drukker L, Noble JA, Papageorghiou AT. Introduction to artificial intelligence in ultrasound imaging in Obstetrics and Gynecology. Ultrasound Obstetr Gynecol. (2020) 56:498–505. doi: 10.1002/uog.22122

3. Rong G, Mendez A, Bou Assi E, Zhao B, Sawan M. Artificial intelligence in healthcare: review and prediction case studies. Engineering. (2020) 6:291–301. doi: 10.1016/j.eng.2019.08.015

4. Miller DD, Brown EW. Artificial Intelligence in medical practice: the question to the answer? Am J Med. (2018) 131:129–33. doi: 10.1016/j.amjmed.2017.10.035

5. Smith H. Clinical AI: opacity, accountability, responsibility and liability. AI Soc. (2020) 36:535–45. doi: 10.1007/s00146-020-01019-6

6. Taddeo M, Floridi L. How AI can be a force for good. Science. (2018) 361:751–2. doi: 10.1126/science.aat5991

7. Arieno A, Chan A, Destounis SV. A review of the role of augmented intelligence in breast imaging: from Automated Breast Density Assessment to risk stratification. Am J Roentgenol. (2019) 212:259–70. doi: 10.2214/AJR.18.20391

8. De Fauw J, Ledsam JR, Romera-Paredes B, Nikolov S, Tomasev N, Blackwell S, et al. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nat Med. (2018) 24:1342–50. doi: 10.1038/s41591-018-0107-6

9. Kunapuli G, Varghese BA, Ganapathy P, Desai B, Cen S, Aron M, et al. A decision-support tool for renal mass classification. J Digit Imaging. (2018) 31:929–39. doi: 10.1007/s10278-018-0100-0

10. Álvarez-Machancoses Ó, Fernández-Martínez JL. Using artificial intelligence methods to speed up drug discovery. Expert Opin Drug Discov. (2019) 14:769–77. doi: 10.1080/17460441.2019.1621284

11. Hay SI, George DB, Moyes CL, Brownstein JS. Big data opportunities for global infectious disease surveillance. PLoS Med. (2013) 10:e1001413. doi: 10.1371/journal.pmed.1001413

12. Barton C, Chettipally U, Zhou Y, Jiang Z, Lynn-Palevsky A, Le S, et al. Evaluation of a machine learning algorithm for up to 48-hour advance prediction of sepsis using six vital signs. Comput Biol Med. (2019) 109:79–84. doi: 10.1016/j.compbiomed.2019.04.027

13. Cowie J, Calveley E, Bowers G, Bowers J. Evaluation of a digital consultation and self-care advice tool in primary care: a multi-methods study. Int J Environ Res Public Health. (2018) 15:896. doi: 10.3390/ijerph15050896

14. Dudley JT, Listgarten J, Stegle O, Brenner SE, Parts L. Personalized medicine: from genotypes, molecular phenotypes and the Quantified Self, towards improved medicine. Pac Symp Biocomput. (2015) 342–6. doi: 10.1142/9789814644730_0033

15. Wang Z. Data Integration of electronic medical record under administrative decentralization of medical insurance and healthcare in China: a case study. Israel J Health Policy Res. (2019) 8:24. doi: 10.1186/s13584-019-0293-9

16. Nelson A, Herron D, Rees G, Nachev P. Predicting scheduled hospital attendance with Artificial Intelligence. npj Digit Med. (2019) 2:26. doi: 10.1038/s41746-019-0103-3

17. Morley J, Machado CCV, Burr C, Cowls J, Joshi I, Taddeo M, et al. The ethics of AI in health care: a mapping review. Soc Sci Med. (2020) 260:113172. doi: 10.1016/j.socscimed.2020.113172

18. Price J, Price D, Williams G, Hoffenberg R. Changes in medical student attitudes as they progress through a medical course. J Med Ethics. (1998) 24:110–7. doi: 10.1136/jme.24.2.110

19. Bore M, Munro D, Kerridge I, Powis D. Selection of medical students according to their moral orientation. Med Educ. (2005) 39:266–75. doi: 10.1111/j.1365-2929.2005.02088.x

20. Rezler AG, Lambert P, Obenshain SS, Schwartz RL, Gibson JM, Bennahum DA. Professional decisions and ethical values in medical and law students. Acad Med. (1990) 65:. doi: 10.1097/00001888-199009000-00030

21. Rezler A, Schwartz R, Obenshain S, Lambert P, McLgibson J, Bennahum D. Assessment of ethical decisions and values. Med Educ. (1992) 26:7–16. doi: 10.1111/j.1365-2923.1992.tb00115.x

22. Hebert PC, Meslin EM, Dunn EV. Measuring the ethical sensitivity of medical students: a study at the University of Toronto. J Med Ethics. (1992) 18:142–7. doi: 10.1136/jme.18.3.142

23. Steven H. Miles, The Hippocratic Oath and the Ethics of Medicine (New York: Oxford University Press, 2004), xiv + 208 pages. HEC Forum. (2005) 17:237–9. doi: 10.1007/s10730-005-2550-2

24. Mirbabaie M, Hofeditz L, Frick NR, Stieglitz S. Artificial intelligence in hospitals: providing a status quo of ethical considerations in academia to guide future research. AI Soc. (2021). doi: 10.1007/s00146-021-01239-4. [Epub ahead of print].

25. Char DS, Abràmoff MD, Feudtner C. Identifying ethical considerations for Machine Learning Healthcare Applications. Am J Bioethics. (2020) 20:7–17. doi: 10.1080/15265161.2020.1819469

26. Stephenson J. WHO offers guidance on use of Artificial Intelligence in medicine. JAMA Health Forum. (2021) 2:e212467. doi: 10.1001/jamahealthforum.2021.2467

27. Gerke S, Minssen T, Cohen G. Ethical and legal challenges of artificial intelligence-driven healthcare. Artif Intell Healthcare. (2020) 295–336. doi: 10.1016/B978-0-12-818438-7.00012-5

28. Rodrigues R. Legal and human rights issues of AI: gaps, challenges and vulnerabilities. J Respons Technol. (2020) 4:100005. doi: 10.1016/j.jrt.2020.100005

29. Report on the Proposal for a Regulation of the European Parliament and of the Council on the Protection of Individuals With Regard to the Processing of Personal Data and on the Free Movement of Such Data (General Data Protection Regulation). Europarleuropaeu. Available online at: https://www.europarl.europa.eu/doceo/document/A-7-2013-0402_EN.html (accessed February 3, 2022).

30. Tigard DW. There is no techno-responsibility gap. Philos Technol. (2020) 34:589–607. doi: 10.1007/s13347-020-00414-7

31. McCradden MD, Stephenson EA, Anderson JA. Clinical research underlies ethical integration of Healthcare Artificial Intelligence. Nat Med. (2020) 26:1325–6. doi: 10.1038/s41591-020-1035-9

32. Mannes A. Governance, risk, and Artificial Intelligence. AI Magazine. (2020) 41:61–9. doi: 10.1609/aimag.v41i1.5200

33. Taylor I. Who is responsible for killer robots? Autonomous Weapons, group agency, and the military-industrial complex. J Appl Philos. (2020) 38:320–34. doi: 10.1111/japp.12469

34. Safdar NM, Banja JD, Meltzer CC. Ethical considerations in Artificial Intelligence. Eur J Radiol. (2020) 122:108768. doi: 10.1016/j.ejrad.2019.108768

35. Henz P. Ethical and legal responsibility for artificial intelligence. Discov Artif Intell. (2021) 1:2. doi: 10.1007/s44163-021-00002-4

36. Nelson GS. Bias in artificial intelligence. North Carolina Med J. (2019) 80:220–2. doi: 10.18043/ncm.80.4.220

37. Shah M, Naik N, Somani BK, Hameed BMZ. Artificial Intelligence (AI) in urology-current use and future directions: an iTRUE study. Turk J Urol. (2020) 46(Suppl. 1):S27–S39. doi: 10.5152/tud.2020.20117

Keywords: artificial intelligence, machine learning, ethical issues, legal issues, social issues

Citation: Naik N, Hameed BMZ, Shetty DK, Swain D, Shah M, Paul R, Aggarwal K, Ibrahim S, Patil V, Smriti K, Shetty S, Rai BP, Chlosta P and Somani BK (2022) Legal and Ethical Consideration in Artificial Intelligence in Healthcare: Who Takes Responsibility? Front. Surg. 9:862322. doi: 10.3389/fsurg.2022.862322

Received: 25 January 2022; Accepted: 18 February 2022;

Published: 14 March 2022.

Edited by:

Dmitry Enikeev, I.M. Sechenov First Moscow State Medical University, Russia

Reviewed by:

Konstantin Kolonta, Moscow State University of Medicine and Dentistry, Russia

Copyright © 2022 Naik, Hameed, Shetty, Swain, Shah, Paul, Aggarwal, Ibrahim, Patil, Smriti, Shetty, Rai, Chlosta and Somani. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Dasharathraj K. Shetty, raja.shetty@manipal.edu
