- Department of Spine Surgery, Hospital for Special Surgery, New York, NY, United States

Background: Augmented reality (AR) is an emerging technology that can overlay computer graphics onto the real world and enhance visual feedback from information systems. Over the past several decades, AR innovations have been integrated into daily life; however, their application in medicine, specifically in minimally invasive spine surgery (MISS), may be the most important to understand. AR navigation provides auditory and haptic feedback that can further enhance surgeons’ capabilities and improve safety.

Purpose: The purpose of this article is to address previous and current applications of AR, AR in MISS, limitations of today's technology, and future areas of innovation.

Methods: A literature review related to applications of AR technology in previous and current generations was conducted.

Results: AR systems have been implemented in spinal surgery in recent years, and AR may be an alternative to current approaches such as traditional navigation, robotically assisted navigation, fluoroscopic guidance, and free hand. Because AR can project patient anatomy directly onto the surgical field, it can address concerns about surgeon attention shift from the surgical field to remote navigation screens, line-of-sight interruption, and cumulative radiation exposure as the demand for MISS increases.

Conclusion: AR is a novel technology that can improve spinal surgery, and how its current limitations are addressed will likely have a great impact on future technology.

Introduction

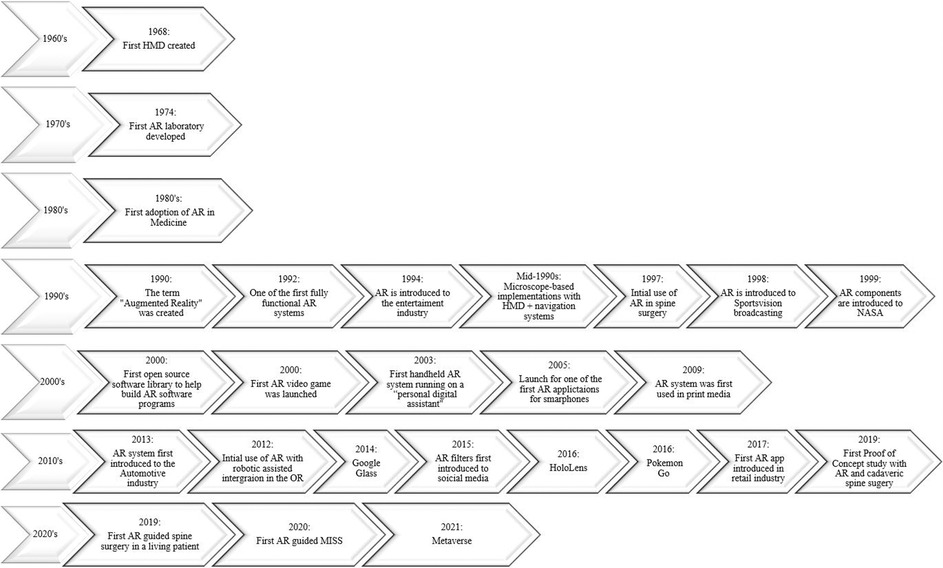

Augmented reality (AR) is an emerging technology that can overlay computer graphics onto the real world and enhance visual feedback from information systems (1). Advances in optics, sensing, and computer systems have allowed researchers to expand its applications (1). Modern AR systems have been integrated into daily life, including but not limited to social media, video games, retail, television broadcasting, wearable accessories, education, and medicine, specifically minimally invasive spine surgery (MISS) (Figure 1).

Over the past several decades, AR has become an area of interest across many surgical fields, particularly spinal surgery. Recently, AR systems have been implemented in the treatment of degenerative cervical, thoracic, and lumbar spine diseases (2, 3). Studies have described AR as an alternative to current approaches such as traditional navigation, robotically assisted navigation (RAN), fluoroscopic guidance, and free hand, because it can project patient anatomy directly onto the surgical field (2, 4–10). It can therefore avoid the surgeon's attention shift from the patient to the monitor for guidance (11, 12); line-of-sight interruption during live computer navigation, which may result in the loss of live navigation (13); and cumulative exposure to ionizing radiation as patient demand for MISS continues to grow (14).

Although AR is a novel technology that may distinguish itself from other state-of-the-art navigation systems, it is still in its nascency, and several limitations are important to recognize, such as mechanical and visual discomfort (15) and a learning curve that may depend on whether surgeons belong to a generation that grew up playing video games (16–18). Finally, this is still a new field of research, and although pedicle screw insertion can be guided with AR, there is not yet an established system for grading pedicle screw accuracy (19).

The purpose of this article is to address previous and current applications of AR, AR navigation in MISS, limitations of today's technology, and future areas of innovation.

Methods

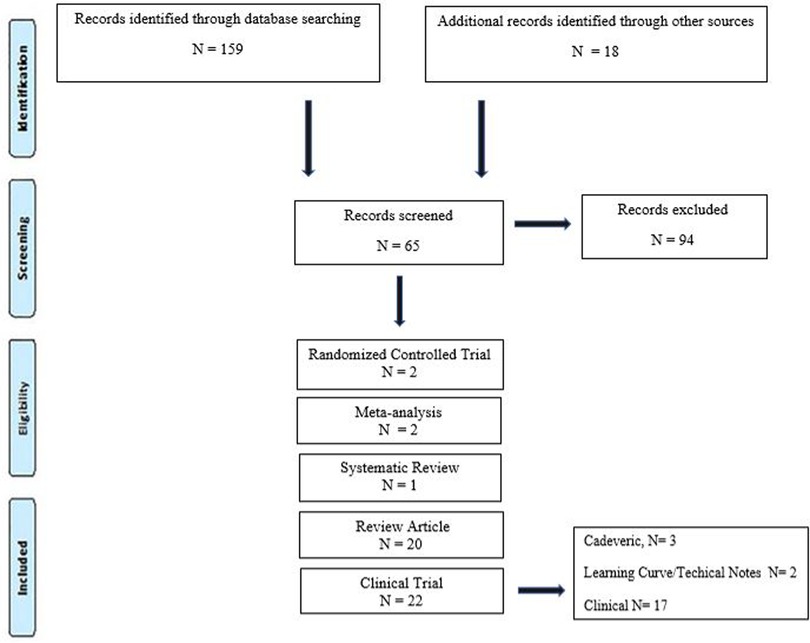

A systematic review was conducted using the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) guidelines.

Search strategy and data inclusion

Evidence published from May 1997 to August 2022 in the PubMed, Medline, and Google Scholar databases was recorded. The keywords augmented reality, robotics-assisted surgery, navigation, heads-up display, minimally invasive surgery, spine surgery, pedicle screw, and accuracy were combined with the Boolean operators AND and OR, and the search was limited to English-language publications. Studies were classified as clinical trials (cadaveric, clinical, and learning curve/technical notes), meta-analyses, randomized controlled trials, review articles, systematic reviews, or additional sources. Articles were selected if they were published in or supported by an indexed scientific database (Figure 1).

After each search, potentially relevant articles were identified by reading the title and abstract. The following information was extracted from each included source: authors, year of publication, historical background, significant findings on AR and RAN, and possible limitations. Finally, both authors (FA and DRL) assessed the risk of bias and study quality, evaluating selection bias, detection bias, reporting bias, and other biases. Ethical approval was not applicable to this systematic review and meta-analysis.

AR: past and current applications

AR has become a point of interest in multidisciplinary research fields over the last few decades, as it has been used in different applications to enhance visual feedback from information systems (1). Modern AR systems build on systems created decades earlier (Figure 2). In 1968, Ivan Sutherland, a Harvard professor and computer engineer, created the world's first head-mounted display (HMD), known as the “Sword of Damocles” (20). The HMD tracked the user’s head via an ultrasonic position sensor or a mechanical linkage and drew three-dimensional (3D) line graphics that appeared stationary in the room (21). This allowed users to experience computer-generated graphics that enhanced their sensory perception of the world and paved the way for the AR systems in use today (21). In 1974, Myron Krueger, a computer researcher and artist, created a laboratory dedicated solely to AR at the University of Connecticut (20, 21). In the laboratory, projection and camera technology displayed users’ silhouettes onscreen to create an interactive experience (20, 21).

AR in flight

Throughout the 1970s and 1980s, Myron Krueger, Dan Sandin, Scott Fisher, and others experimented with mixing human interaction and computer-generated overlays on video for interactive art experiences (22). In 1990, the term “Augmented Reality” was coined by the Boeing researchers Thomas Caudell and David Mizell (20, 22). Their technology assisted airplane factory workers by displaying wire bundle assembly schematics in a see-through HMD (22). Around the same time, AR was being explored in other independent research fields, which led to one of the first fully functioning AR systems, known as “Virtual Fixtures,” created by Louis Rosenberg, a researcher at the U.S. Air Force Armstrong Laboratory (20). This system allowed military personnel to virtually control and guide machinery to perform tasks, with applications such as training U.S. Air Force pilots in safer flying practices. In 1999, NASA created the first hybrid synthetic vision system for its X-38 spacecraft. This form of AR technology displayed map data on the pilot's screen to aid navigation during flights (20).

AR released to the public

Components of AR were later introduced to the public, particularly in entertainment, television, games, social media, and wearable devices. AR debuted in the entertainment industry in 1994, when writer and producer Julie Martin brought it to her theater production “Dancing in Cyberspace,” which featured acrobats dancing alongside virtual objects projected onto the physical stage (20). In 1998, Sportvision introduced AR to broadcasting by drawing the virtual “1st & Ten” first-down line during an NFL game (20, 21). Around the turn of the century, ARToolKit, the first open-source software library for building AR applications, was released, and the first AR game, ARQuake, was launched (20, 23, 24). Players wore an HMD and a backpack containing a computer and gyroscopes, allowing them to walk around the real world and play Quake against virtual monsters (24).

By 2003, the first handheld AR system running autonomously on a personal digital assistant, a precursor of today's smartphones, had been created (22). In 2005, Nokia created “AR Tennis,” one of the first face-to-face collaborative AR applications for mobile phones (23, 25). In 2016, Niantic and Nintendo launched Pokémon Go, which became a popular location-based AR game (20). This brought AR to a mass audience and led to the development of similar games (20). AR has also reached print media: in 2009, Esquire Magazine used AR for the first time, and readers who scanned the cover saw a celebrity speaking to them (20).

In 2013, AR entered the automotive industry when Volkswagen launched the Mobile Augmented Reality Technical Assistance application (20). This was a groundbreaking adaptation of AR because the system gave technicians step-by-step repair instructions within the service manual, an approach later applied to align and streamline processes in many other industries (20). The following year, Google released Google Glass to the public, a pair of AR glasses that users wore for an immersive experience, communicating with the Internet via natural language commands and accessing applications such as Google Maps, Google+, and Gmail (20). Two years later, Microsoft created its own wearable AR technology, HoloLens, which is more advanced than Google Glass because the headset runs Windows 10 and is essentially a wearable computer. It also allows users to scan their surroundings and create AR experiences (20). Later, a newer iteration, the HoloLens 2 headset, was created to target business and medicine (20).

The social media and retail industries later applied AR software to products targeting everyday consumers. In 2015, Snapchat introduced its “Lenses” feature, which overlays various filters onto the camera's field of view to alter how the user appears; Instagram and Facebook followed suit in 2017 with similar software (20, 26, 27). In the same year, IKEA introduced AR into the retail industry with its IKEA Place app, which allows customers to virtually preview home decor before making a purchase (20). In 2021, Meta (formerly Facebook) introduced the “Metaverse,” described as the first hyper-real alternative online virtual world, which incorporates AR, virtual reality, 3D holographic avatars, video, and other means of communication (28).

Applications of AR in surgery

Cranial neurosurgery was among the first medical fields to adopt AR, in the 1980s (15). Attempts to integrate image injection systems into operating microscopes led to microscope-based implementations with integrated HMD and navigation systems in the mid-1990s (29). In 1997, Peuchot et al. first described a system known as “Vertebral Vision with Virtual Reality,” which superimposed fluoroscopy-generated, transparent 3D views of the vertebrae onto the operative field (30, 31). Many subsequent generations were developed as AR became able to blend intraoperative imaging or models with the surgical scene (31). This approach was innovative at the time because it let surgeons watch vertebral displacement without the distraction of looking at a monitor and had the potential to lower exposure to ionizing radiation (31). Studies such as Theocharopoulos et al. reported that levels of ionizing radiation from intraoperative fluoroscopy in spinal surgery are considerably higher than those in other subspecialties, and AR systems have been reported to deliver significantly lower radiation doses (31, 32).

In 2012, Leven et al. and later Schneider et al. proposed “flashlight” visualization, which overlays the intraoperative ultrasound image onto a 3D representation of the imaging plane in the stereo view of the console; this work later led to a drop-in tool for registering transrectal ultrasound images with laparoscopic video (21). As AR technology became available to the public, the 2016 release of HoloLens was followed by HoloLens 2, a headset that surgeons can use in the operating field (20, 33). The headset fits over the surgeon's head and displays transparent images that hover in the surgeon's field of vision. The application aligns images of the patient's anatomy with the real-life view, so the surgeon can walk around the patient and view 3D holographic images of internal structures from different vantage points (33). Surgeons may also use voice commands or hand gestures to enlarge images or move information around, and even the patient's vital signs can be projected into the field of vision (33).

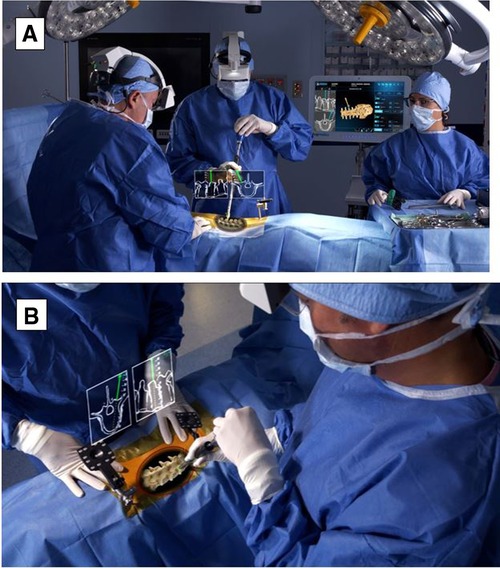

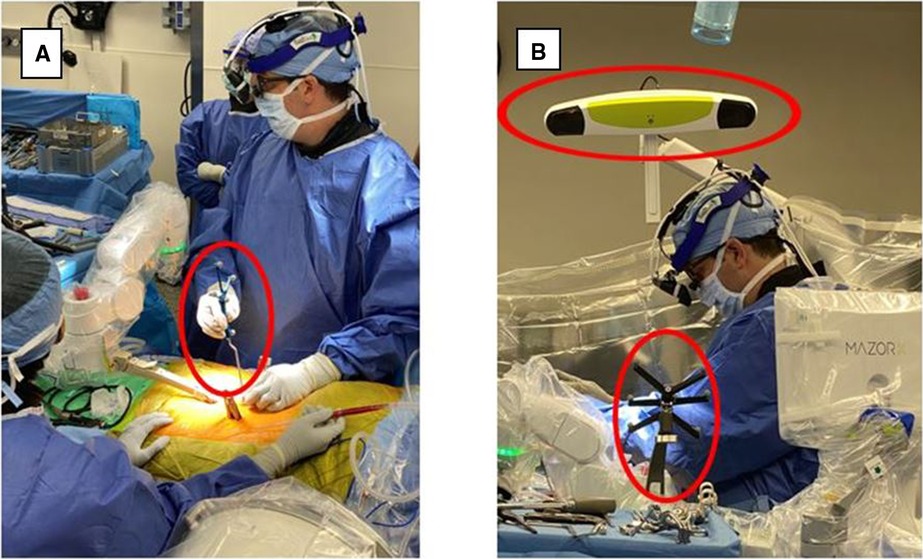

In 2020, Dr. Timothy Witham performed the first AR-guided spine surgery in a living patient at Johns Hopkins University, using the Xvision system by Augmedics (34, 35). In the first procedure, on June 8, 2020, six screws were placed during a spinal fusion of three vertebrae to relieve the patient's chronic back pain (34). In the second, on June 10, 2020, surgeons removed a cancerous tumor from a patient's spine (34). Earlier, in 2019, the first proof-of-concept cadaveric study of AR-assisted pedicle screw insertion had been published by Dr. Frank Phillips at Rush University. The following year, Phillips performed the first AR-guided MISS procedure using the same Augmedics system (19, 36): a lumbar fusion with spinal implants in a patient with spinal instability (36). During the procedure, the headset projected a 3D visualization of the navigation data onto the surgeon's retina, allowing the surgeon to see a 3D image of the patient's spine with the skin intact, along with two-dimensional (2D) computed tomography (CT) images of the instruments’ path and trajectory, while looking directly at the surgical field (36) (Figure 3).

Figure 3. (A) The HMD projects a 3D visualization onto the surgeon's retina. (B) The surgeon sees a 3D image of the patient's spine with two-dimensional CT images of the instruments’ path and trajectory while looking directly at the surgical field. HMD, head-mounted display; 3D, three-dimensional; CT, computed tomography. Courtesy of Augmedics.

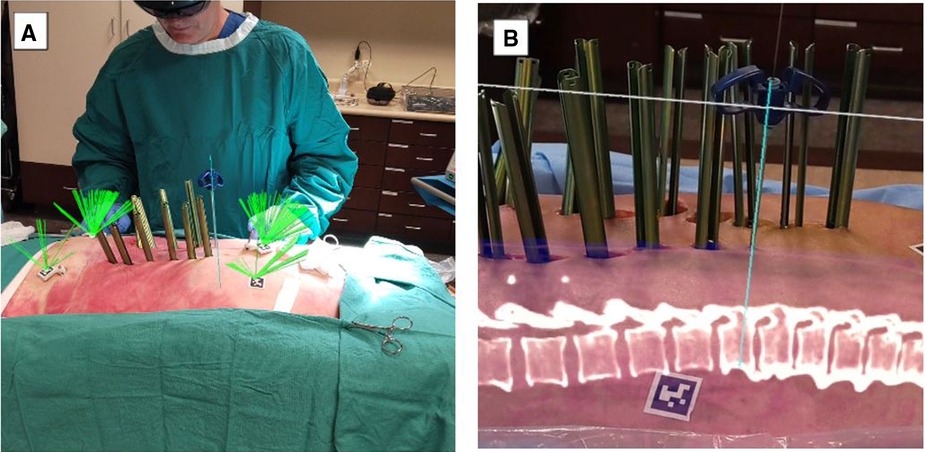

Wearable devices such as HMDs are now commonly used to display AR views because they are easy and fast to use, and they have demonstrated feasibility, accuracy, and safety in workflows ranging from open cases to MISS (14, 19). Key features of current platforms include reducing attention shift from the surgical field to monitors, line-of-sight interruption, and cumulative exposure to ionizing radiation. Newer AR systems, such as Xvision (Augmedics) and VisAR, can overlay virtual bony structures and preplanned screw trajectories on patients in the OR, providing real-time feedback on the position of all instruments relative to anatomical structures (36) (Figures 3, 4). This removes the need for surgeons to look away at a screen and for the traditional use of markers and tracking cameras, because AR systems are designed to align the surgeon's hands and eyes (37, 38). Real-time 3D capabilities thus allow surgeons to “augment” the information available beyond what their eyes alone can perceive (3, 39) (Figures 3, 4).

Figure 4. MISS navigation procedure utilizing VisAR technology. (A) The green ray (usually not seen) continuously monitors the center of each AprilTag for ongoing adjustment of the registration, if required. AprilTags are adhered to the skin and also placed on platforms stabilized by bone pins. The Jamshidi needle has been aligned with the virtual needle/pathway and inserted percutaneously. (B) Lateral view of a MISS procedure in progress under VisAR navigation. Note that the Jamshidi needle has successfully penetrated the underlying pedicle. The needle's inner stylet has been removed, and a K-wire has been inserted to guide a cannulated screw. An optical fiducial (AprilTag) appears below the vertebrae. MISS, minimally invasive spine surgery. Courtesy of VisAR.

Pedicle screw accuracy in current AR platforms

Since the first AR-guided MISS procedures, pedicle screw accuracy has been assessed on current platforms. Studies have reported pedicle screw accuracies of at least 97.7% with Xvision by Augmedics (40). Felix et al. compared pedicle screw accuracy between open and MISS procedures, both guided by the AR system VisAR (14). A total of 124 pedicle screws were inserted under VisAR navigation with 96% accuracy (Gertzbein–Robbins grades A and B), indicating that AR, an emerging technology, can be highly accurate in both types of surgery (14).
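The accuracy figures above count a screw as accurate when it is graded A or B on the Gertzbein–Robbins scale. The Python sketch below illustrates only that arithmetic. It assumes the commonly cited breach thresholds (A, no cortical breach; B, breach under 2 mm; C, under 4 mm; D, under 6 mm; E, 6 mm or more), with boundary values assigned to the higher grade; the function names and breach measurements are hypothetical and are not data from any cited study.

```python
from collections import Counter


def gertzbein_robbins_grade(breach_mm: float) -> str:
    """Map a cortical breach distance (mm) to a Gertzbein-Robbins grade.

    Assumed cut-offs for illustration: A = 0 mm, B < 2, C < 4, D < 6, E >= 6.
    A breach of exactly 2, 4, or 6 mm falls into the higher grade.
    """
    if breach_mm < 0:
        raise ValueError("breach distance cannot be negative")
    if breach_mm == 0:
        return "A"
    if breach_mm < 2:
        return "B"
    if breach_mm < 4:
        return "C"
    if breach_mm < 6:
        return "D"
    return "E"


def clinical_accuracy(breaches_mm: list[float]) -> float:
    """Percentage of screws graded A or B (the usual 'clinically accurate' definition)."""
    if not breaches_mm:
        raise ValueError("no screws to grade")
    grades = Counter(gertzbein_robbins_grade(b) for b in breaches_mm)
    accurate = grades["A"] + grades["B"]
    return 100.0 * accurate / len(breaches_mm)


# Hypothetical breach measurements (mm), for illustration only.
example = [0.0, 0.0, 1.2, 0.0, 2.5, 0.0, 0.8, 0.0, 0.0, 4.1]
print(f"{clinical_accuracy(example):.1f}%")  # 8 of 10 screws graded A or B -> 80.0%
```

The sketch ignores breach direction, which is why a purely distance-based grade is debated for deliberate in–out–in thoracic trajectories.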

Discussion

The additive value of AR in RAN

With the emergence of AR and its multidisciplinary applications, AR has been reported to assist RAN in more complex surgeries (41). Miller et al. reported prior AR work on the da Vinci robot that superimposed a 3D model of the patient's prostate on the intraoperative view (41, 42). Forte et al. explored alternative uses of and interaction methods for AR in RAN and presented a robot-independent hardware and software system that provides four intuitive AR functions through computer graphics and computer vision (41). Some of these functions bring additional visual information into the surgeon's view, while others leverage computer vision to provide more sophisticated computational capabilities (41). The system relies only on vision, rather than on the robot, to provide precise visual alignment between AR markers and the instrument and to avoid lengthy calibration procedures that are challenging for nontechnical personnel to perform (41, 43).

Although AR may add value to RAN, the established RAN workflow for pedicle screw instrumentation raises some concerns. Over the past decade, RAN has been implemented as a practical tool to advance the field of MISS (44, 45). The first robotic-assisted system for adult spine surgery received U.S. Food and Drug Administration (FDA) clearance in 2004 (37). Compared with freehand (FH) techniques, robotic systems with integrated surgical navigation have the potential for improved accuracy, shorter time per screw placement, less fluoroscopy/radiation time, and shorter hospital stay (46). Since then, newer robotic devices have been developed and cleared by the FDA for use in spine surgery (28). The RAN workflow for pedicle screw instrumentation can be simplified into three steps: preoperative planning, intraoperative registration, and robot-guided screw placement (47).

First, preoperative CT images are loaded into the robotic planning software (48). Screw insertion is then planned in the software, including the screw entry point, screw size, and trajectories in the axial and sagittal views. Next, intraoperative registration begins when a robotic mount is attached to a posterior superior iliac spine (PSIS) or spinous process reference clamp, and ends with confirmatory fluoroscopic imaging that is colocalized with the software planning template. During this step, all required surgical instruments can be registered and verified via 3Define cameras (Figure 5). Finally, robot-guided screw placement begins when the robotic arm is positioned over a single planned pedicle and ends when the arm is retracted after screw insertion (48) (Figure 6).

Figure 5. RAN registration process: all required surgical instrumentation was registered and verified via 3Define cameras. RAN, robotically assisted navigation.

Figure 6. RAN surgeries incorporating registered navigated instrumentation, drill guides, and surgical monitors. Drill guide during surgical procedure combined with real-time visual feedback from the surgical navigation monitors. The navigation monitor shows real-time visual feedback based on the positioning of the navigated instrument. RAN, robotically assisted navigation.

However, concerns related to RAN include surgeon attention shift from the surgical field to navigated remote screens, line-of-sight interruption, and cumulative radiation exposure as the demand for MISS increases.

Surgeon attention shift from the surgical field to navigated remote screens

Conventional navigation methods require the surgeon to shift attention from the surgical field to a remote navigation screen. As with manual navigation systems, RAN requires the surgeon to observe the navigated screw insertion trajectory on a remote screen, making it equally susceptible to attention-shift errors (19). Molina et al. reported issues related to attention shift, including errors in preoperative planning, soft tissue pressure on instrumentation, shifts in the entry point and instrument position, and starting-point morphology causing skiving (19, 48).

Attention shifts have been shown to impair both cognitive and motor tasks and to increase the time needed to perform them (11, 12). Goodell et al. evaluated laparoscopic surgical simulation tasks designed to replicate the cognitive and motor demands of surgical procedures and found a 30%–40% increase in task completion time in the distracted vs. undistracted condition (49). In addition, Léger et al. counted the attention shifts needed to perform a simple surgical planning task with AR and with conventional navigation and found that the AR systems (mobile and desktop) differed significantly from conventional navigation but not from each other (11). Projecting navigation guidance directly onto the surgical field can remove the errors associated with attention shift by allowing surgeons to keep their attention on the surgical field (19).

Furthermore, Léger et al. described that when AR is used, the subject's attention remains on the guidance images almost the whole time (90%–95%), whereas other guidance systems split attention almost 50/50 between the patient and the monitor (11). The ratio of time spent looking at the screen to total task time may also estimate the user's confidence; the higher ratios obtained for AR systems may therefore indicate that AR gives users more confidence that they are correct with respect to the data presented (11).
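The screen-time ratio and the attention-shift count described above are simple arithmetic once gaze has been annotated. The sketch below assumes a hypothetical log of contiguous, non-overlapping gaze intervals, each labeled either “guidance” or “patient”; it computes the fraction of total time spent on the guidance images and counts an attention shift whenever consecutive intervals change target. The data format, labels, and example timings are invented for illustration and do not reproduce Léger et al.'s protocol.

```python
from dataclasses import dataclass


@dataclass
class GazeInterval:
    start_s: float  # interval start, seconds from task start
    end_s: float    # interval end, seconds from task start
    target: str     # hypothetical label: "guidance" (screen/AR view) or "patient"


def attention_summary(intervals: list[GazeInterval]) -> tuple[float, int]:
    """Return (fraction of total time on guidance images, number of attention shifts).

    Assumes the intervals are contiguous and non-overlapping, so the task's total
    time is the sum of their durations.
    """
    if not intervals:
        raise ValueError("no gaze data")
    ordered = sorted(intervals, key=lambda iv: iv.start_s)
    total = sum(iv.end_s - iv.start_s for iv in ordered)
    if total <= 0:
        raise ValueError("total duration must be positive")
    on_guidance = sum(iv.end_s - iv.start_s for iv in ordered if iv.target == "guidance")
    # An attention shift is any change of target between consecutive intervals.
    shifts = sum(1 for prev, cur in zip(ordered, ordered[1:]) if prev.target != cur.target)
    return on_guidance / total, shifts


# Hypothetical 60-second sessions, for illustration only.
ar_session = [GazeInterval(0, 45, "guidance"), GazeInterval(45, 48, "patient"),
              GazeInterval(48, 60, "guidance")]
conventional = [GazeInterval(0, 15, "guidance"), GazeInterval(15, 30, "patient"),
                GazeInterval(30, 45, "guidance"), GazeInterval(45, 60, "patient")]

for name, log in (("AR", ar_session), ("conventional", conventional)):
    ratio, shifts = attention_summary(log)
    print(f"{name}: {ratio:.2f} of time on guidance, {shifts} attention shifts")
```

With these invented timings, the AR session spends 0.95 of the time on guidance with 2 shifts, and the conventional session 0.50 with 3 shifts, mirroring the pattern the text describes without representing measured data.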

Line-of-sight interruption

Aside from attention shift, another common limitation of RAN guidance is line-of-sight interruption (19). During procedures, an obstacle may block the remote tracking camera's view of the tracking markers, interrupting live computer navigation until the obstruction is resolved (19). Such interruptions may increase operative time and decrease accuracy (14). To overcome this barrier, newer AR systems combine an adjustable headset, a built-in tracking system, and an integrated headlight, and project the AR image onto a small optical display or directly onto the surgeon's retina. This form of AR is known as AR-HMD (Figures 3A, 4A) (14).

Patient anatomy is obtained by automated segmentation of the intraoperative cone beam CT scan. The surgeon can also see 2D sagittal and axial projections within the headset (Figures 3B, 4B). The headset projects holograms directly onto the surgeon's retina, allowing 3D superimposition of the anatomy over the real spine, and clinical accuracy is then measured (19). Molina et al. reported the first cadaveric experience with an AR-HMD, inserting 120 pedicle screws with overall accuracies of 96.7% and 94.6% on the Heary–Gertzbein and Gertzbein–Robbins grading scales, respectively (19). Similarly, Liu et al. published a study of 205 consecutive pedicle screw placements in 28 patients (49). Graded only with the Gertzbein–Robbins scale, screw placement accuracy was 98.0%, in line with the reported accuracy of navigation (49, 50). Although AR navigation appears highly accurate on these nonstandardized grading scales, initial use of the system may cause sensory overload, and a learning curve is to be expected (14). Nonetheless, AR-HMD retains the major advantage of minimizing line-of-sight interruption and can be used in both open surgery and MISS (14, 35, 51).

Cumulative radiation exposure as the demand for MISS increases

Radiation exposure of spine surgeons, OR staff, and patients has been a concern for many years (14). According to Bratschitsch et al., the use of radiation for diagnostic procedures in the United States has increased by more than 600% since the 1980s (52). A 2014 report described a cancer risk increased by up to 13% among members of the Scoliosis Research Society and advised robust safety measures for staff and spine surgeons (53).

Over the years, intraoperative imaging and surgical approaches have evolved with spine surgery, generally guiding surgeons toward less invasive approaches such as MISS. Stanford Medicine reported that MISS techniques and RAN integration are associated with shorter operating times and reduced pain and discomfort for patients (54). Furthermore, a meta-analysis comparing percutaneous and open pedicle screw placement for thoracic and lumbar spine fractures suggested that MISS is the superior approach for pedicle screw placement (55). However, MISS requires more imaging guidance and ultimately increases radiation exposure, because fluoroscopy remains necessary to confirm vertebral levels, check spinal alignment, and guide implant placement (56, 57).

Technologies that reduce intraoperative radiation have real potential to lower long-term risks. Because AR guidance is wearable and independent of navigation monitors, it can avoid attention shift and decrease OR clutter, and it does not use or require ionizing radiation for real-time surgical guidance. Studies such as Felix et al. have described recent advancements in AR as a “paradigm shift” in a variety of surgical fields, including orthopedics, neurosurgery, and spine surgery (14).

Technical pearls associated with AR

Although AR is a novel technology that may distinguish itself from other state-of-the-art navigation systems, it is still in its nascency, and several technical limitations are important to recognize. First, AR devices such as AR headwear (AR-HMD) may cause mechanical and visual discomfort (15). Visual obstruction of anatomy by holographic images and the need for intraoperative, rather than preoperative, CT scans for registration also limit its applications (15). A surgeon's initial experience with an AR system may be disorienting, mostly because real visual input is mixed with holographic data projected onto the retina; this can result in sensory overload, and a learning curve is to be expected (50).

Delays in the AR learning curve may reflect a surgeon's ability to adapt and may depend on whether the surgeon belongs to a generation that grew up playing video games (16–18). In spine surgery, the learning curve involves replicating in-line maneuvers while placing instrumentation and adopting and developing proper technique with image-guided technology. Rosser et al. described a correlation between faster completion and fewer errors in laparoscopic surgery and a background of more than 3 h per week of video game play (16, 58).

Finally, the literature on AR assistance in the pedicle screw placement workflow is limited, and there is no universally accepted standard method for grading screw accuracy and/or safety (14). Common systems such as the Gertzbein–Robbins classification are typically used; however, they measure only medial, superior, and inferior cortical pedicle breaches and partial lateral breaches (such as in the in/out technique) (14). Heary et al. recognized that grading thoracic pedicle screw accuracy without considering the direction of the breach is inadequate, specifically for deliberate in–out–in thoracic pedicle screw trajectories (59). Further studies are therefore needed to establish a standardized approach as AR technology develops rapidly.

Future perspectives

With the advancements of the last several years, the use of AR in spinal surgery promises an exciting future. Future versions of AR technology and wearable devices may improve the ability to manipulate displays and radiographic scans, enhance surgeons’ alertness when approaching critical structures via haptic feedback, and reduce intraoperative complications (15). AR also has the potential to serve as a critical tool for preoperative planning and as an educational tool for future medical students, residents, and fellows.

Furthermore, several RAN and AR systems for spine surgery have been released and tested in numerous clinical studies (42). RAN has many advantages, such as lower rates of neurovascular damage, reoperation, and postoperative infection and shorter time to ambulation and length of stay, and it is typically studied for its pedicle screw accuracy compared with FH; however, many limitations are of concern (42). These include complications from hardware or software failure, a demanding learning curve, a lack of consensus regarding operative time, cannula misplacement, and skiving of the drill tip on the pedicle surface due to the morphology of the starting point (48). Newer technologies such as AR systems have been reported to be more competitive in terms of ease of approach and setup and real-time monitoring of patient positioning for correction of surgical plans (42), and they may address the concerns associated with RAN discussed above.

AR in spine surgery is a rapidly evolving field in which new technology and surgical techniques can help maximize surgical efficiency, precision, and accuracy. With collaboration among clinicians, engineers, and video game designers, this technology can profoundly improve components of MISS. It is conceivable that within the next few years surgeons will be wearing AR glasses during patient consultation, rehabilitation, training, and surgery. Such advancements, among others, will continue to drive the value of AR 3D navigation in MISS.

Author contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Conflict of interest

DRL receives royalties from and is a consultant with Stryker; receives royalties from NuVasive; is a consultant with DePuy Synthes; is on the advisory board of and has an ownership interest in Remedy Logic; is a consultant with and has an ownership interest in Viseon, Inc.; has an ownership interest in Woven Orthopedic Technologies; receives research support from Medtronic; and is a consultant for and on the advisory board of Choice Spine, Vestia Ventures MiRus Investment LLC, ISPH II LLC, and HS2 LLC.

The remaining author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Makhataeva Z, Varol HA. Augmented reality for robotics: a review. Robotics. (2020) 9(2):21. doi: 10.3390/robotics9020021

2. Yoo JS, Patel DS, Hrynewycz NM, Brundage TS, Singh K. The utility of virtual reality and augmented reality in spine surgery. Ann Transl Med. (2019) 7(Suppl 5):S171. doi: 10.21037/atm.2019.06.38

3. Vadalà G, De Salvatore S, Ambrosio L, Russo F, Papalia R, Denaro V. Robotic spine surgery and augmented reality systems: a state of the art. Neurospine. (2020) 17(1):88–100. doi: 10.14245/ns.2040060.030

4. Burström G, Persson O, Edström E, Elmi-Terander A. Augmented reality navigation in spine surgery: a systematic review. Acta Neurochir. (2021) 163(3):843–52. doi: 10.1007/s00701-021-04708-3

5. Carl B, Bopp M, Saß B, Nimsky C. Microscope-based augmented reality in degenerative spine surgery: initial experience. World Neurosurg. (2019) 128:e541–51. doi: 10.1016/j.wneu.2019.04.192

6. Auloge P, Cazzato RL, Ramamurthy N, de Marini P, Rousseau C, Garnon J, Charles YP, Steib JP, Gangi A. Augmented reality and artificial intelligence-based navigation during percutaneous vertebroplasty: a pilot randomised clinical trial. Eur Spine J. (2020) 29(7):1580–9. doi: 10.1007/s00586-019-06054-6

7. Abe Y, Sato S, Kato K, Hyakumachi T, Yanagibashi Y, Ito M, Abumi K. A novel 3D guidance system using augmented reality for percutaneous vertebroplasty. J Neurosurg Spine. (2013) 19(4):492–501. doi: 10.3171/2013.7.SPINE12917

8. Carl B, Bopp M, Saß B, Pojskic M, Voellger B, Nimsky C. Spine surgery supported by augmented reality. Global Spine J. (2020) 10(Suppl_2):41S–55S. doi: 10.1177/2192568219868217

9. Yuk FJ, Maragkos GA, Sato K, Steinberger J. Current innovation in virtual and augmented reality in spine surgery. Ann Transl Med. (2021) 9(1):94. doi: 10.21037/atm-20-1132

10. Bhatt FR, Orosz LD, Tewari A, Boyd D, Roy R, Good CR, Schuler TC, Haines CM, Jazini E. Augmented reality-assisted spine surgery: an early experience demonstrating safety and accuracy with 218 screws. Global Spine J. (2022). doi: 10.1177/21925682211069321. [Epub ahead of print]

11. Léger É, Drouin S, Collins DL, Popa T, Kersten-Oertel M. Quantifying attention shifts in augmented reality image-guided neurosurgery. Healthc Technol Lett. (2017) 4(5):188–92. doi: 10.1049/htl.2017.0062

12. Wachs JP. Gaze, posture and gesture recognition to minimize focus shifts for intelligent operating rooms in a collaborative support system. Int J Comput Commun Control. (2010) 5(1):106–24. doi: 10.15837/ijccc.2010.1.2467

13. Silbermann J, Riese F, Allam Y, Reichert T, Koeppert H, Gutberlet M. Computer tomography assessment of pedicle screw placement in lumbar and sacral spine: comparison between free-hand and O-arm based navigation techniques. Eur Spine J. (2011) 20:875–81. doi: 10.1007/s00586-010-1683-4

14. Felix B, Kalatar SB, Moatz B, Hofstetter C, Karsy M, Parr R, et al. Augmented reality spine surgery navigation. Spine. (2022) 47(12):865–72. doi: 10.1097/BRS.0000000000004338

15. Hersh A, Mahapatra S, Weber-Levine C, Awosika T, Theodore JN, Zakaria HM, et al. Augmented reality in spine surgery: a narrative review. HSS J. (2021) 17(3):351–8. doi: 10.1177/15563316211028595

16. Rahmathulla G, Nottmeier EW, Pirris SM, Deen HG, Pichelmann MA. Intraoperative image-guided spinal navigation: technical pitfalls and their avoidance. Neurosurg Focus. (2014) 36(3):E3. doi: 10.3171/2014.1.FOCUS13516

17. Bai YS, Zhang Y, Chen ZQ, Wang CF, Zhao YC, Shi ZC, et al. Learning curve of computer-assisted navigation system in spine surgery. Chin Med J. (2010) 123:2989–94. doi: 10.3760/cma.j.issn.0366-6999.2010.21.007

18. Sasso RC, Garrido BJ. Computer-assisted spinal navigation versus serial radiography and operative time for posterior spinal fusion at L5-S1. J Spinal Disord Tech. (2007) 20:118–22. doi: 10.1097/01.bsd.0000211263.13250.b1

19. Molina CA, Theodore N, Ahmed AK, Westbroek EM, Mirovsky Y, Harel R, et al. Augmented reality-assisted pedicle screw insertion: a cadaveric proof-of-concept study. J Neurosurg Spine. (2019):1–8. doi: 10.3171/2018.12.SPINE181142. [Epub ahead of print]

20. “A brief history of augmented reality (+future trends & impact)—G2.” G2 (2019), Available at: https://www.g2.com/articles/history-of-augmented-reality. (accessed October 2022)

21. Qian L, Wu JY, DiMaio SP, Navab N, Kazanzides P. A review of augmented reality in robotic-assisted surgery. IEEE Trans Med Robot Bionics. (2020) 2(1):1–16. doi: 10.1109/TMRB.2019.2957061

22. Schmalstieg D, Höllerer T. Chapter 1. Introduction to augmented reality. In: Augmented reality: principles and practice. Crawfordsville, Indiana: Addison-Wesley (2016).

23. Yianni C. “Infographic: history of augmented reality—news.” Blippar. Available at: https://www.blippar.com/blog/2018/06/08/history-augmented-reality. (accessed October 2022)

24. “ARQuake: interactive outdoor augmented reality collaboration system.” ARQuake—Wearable Computer Lab. Available at: http://www.tinmith.net/arquake/. (accessed October 2022)

25. Henrysson A, Billinghurst M, Ollila M. AR Tennis. In: ACM SIGGRAPH 2006 emerging technologies (SIGGRAPH ‘06). (2006). doi: 10.1145/1179133.1179135

26. Banuba. “Filters on Snapchat: What’s behind the augmented reality curtain.” Banuba AR Technologies: All-In-One AR SDK for Business (2021). Available at: https://www.banuba.com/blog/snapchat-filter-technology-whats-behind-the-curtain. (accessed October 2022)

27. INDE Team. “The brief history of social media augmented reality filters—Inde—the World Leader in Augmented Reality.” INDE—The World Leader in Augmented Reality (2020). Available at: https://www.indestry.com/blog/the-brief-history-of-social-media-ar-filters. (accessed October 2022)

28. Snider M, Molina B. “Everyone wants to own the Metaverse including Facebook and Microsoft. But what exactly is it?” USA Today, Gannett Satellite Information Network (2022). Available at: https://www.usatoday.com/story/tech/2021/11/10/metaverse-what-is-it-explained-facebook-microsoft-meta-vr/6337635001/. (accessed October 2022)

29. Carl B, Bopp M, Saß B, Nimsky C. Microscope-based augmented reality in degenerative spine surgery: initial experience. World Neurosurg. (2019) 128:e541–51. doi: 10.1016/j.wneu.2019.04.192

30. Peuchot B, Tanguy A, Eude M. Augmented reality in spinal surgery. Stud Health Technol Inform. (1997) 37:441–4.

31. Sakai D, Joyce K, Sugimoto M, Horikita N, Hiyama A, Sato M, Devitt A, Watanabe M. Augmented, virtual and mixed reality in spinal surgery: a real-world experience. J Orthop Surg. (2020) 28(3):1–12. doi: 10.1177/2309499020952698

32. Theocharopoulos N, Perisinakis K, Damilakis J, Papadokostakis G, Hadjipavlou A, Gourtsoyiannis N. Occupational exposure from common fluoroscopic projections used in orthopaedic surgery. J Bone Jt Surg Series A. (2003) 85(9):1698–703. doi: 10.2106/00004623-200309000-00007

33. Marill MC. “Hey surgeon, is that a Hololens on your head?” Wired, Conde Nast (2019). Available at: https://www.wired.com/story/hey-surgeon-is-that-a-hololens-on-your-head/. (accessed October 2022)

34. Johns Hopkins Medicine. “Johns Hopkins performs its first augmented reality surgeries in patients.” (2021). Available at: https://www.hopkinsmedicine.org/news/articles/johns-hopkins-performs-its-first-augmented-reality-surgeries-in-patients. (accessed October 2022)

35. Molina CA, Sciubba DM, Greenberg JK, Khan M, Witham T. Clinical accuracy, technical precision, and workflow of the first in human use of an augmented-reality head-mounted display stereotactic navigation system for spine surgery. Oper Neurosurg. (2021) 20(3):300–9. Erratum in Oper Neurosurg. (2021) 20(4):433. doi: 10.1093/ons/opaa398

36. Rush University. “New technology simulates x-ray vision for surgeons.” News | Rush University (2020). Available at: https://www.rushu.rush.edu/news/new-technology-simulates-x-ray-vision-surgeons. (accessed October 2022)

37. Sandberg J. “Xvision spine system: augmedics: United States.” Augmedics (2022). Available at: https://augmedics.com/. (accessed October 2022)

38. “Visar: augmented reality surgical guidance.” Novarad. Available at: https://www.novarad.net/product/visar. (accessed October 2022)

39. Uddin SA, Hanna G, Ross L, Molina C, Urakov T, Johnson P, et al. Augmented reality in spinal surgery: highlights from augmented reality lectures at the emerging technologies annual meetings. Cureus. (2021) 13(10):e19165. doi: 10.7759/cureus.19165

40. Harel R, Anekstein Y, Raichel M, Molina CA, Ruiz-Cardozo MA, Orrú E, et al. The XVS system during open spinal fixation procedures in patients requiring pedicle screw placement in the lumbosacral spine. World Neurosurg. (2022) 164:e1226–32. doi: 10.1016/j.wneu.2022.05.134

41. Forte M-P, Gourishetti R, Javot B, Engler T, Gomez ED, Kuchenbecker KJ. Design of interactive augmented reality functions for robotic surgery and evaluation in dry-lab lymphadenectomy. Int J Med Robot. (2022) 18(2):e2351. doi: 10.1002/rcs.2351

42. Giri S, Sarkar DK. Current status of robotic surgery. Indian J Surg. (2012) 74(3):242–7. doi: 10.1007/s12262-012-0595-4

43. Reis G, Yilmaz M, Rambach J, Pagani A, Suarez-Ibarrola R, Miernik A, Lesur P, Minaskan N. Mixed reality applications in urology: requirements and future potential. Ann Med Surg. (2021) 66:102394. doi: 10.1016/j.amsu.2021.102394

44. Weiner JA, McCarthy MH, Swiatek P, Louie PK, Qureshi SA. Narrative review of intraoperative image guidance for transforaminal lumbar interbody fusion. Ann Transl Med. (2021) 9(1):89. doi: 10.21037/atm-20-1971

45. Devito DP, Kaplan L, Dietl R, Pfeiffer M, Horne D, Silberstein B, et al. Clinical acceptance and accuracy assessment of spinal implants guided with SpineAssist surgical robot. Spine. (2010) 35(24):2109–15. doi: 10.1097/BRS.0b013e3181d323ab

46. Avrumova F, Sivaganesan A, Alluri RK, Vaishnav A, Qureshi S, Lebl DR. Workflow and efficiency of robotic-assisted navigation in spine surgery. HSS J. (2021) 17(3):302–7. doi: 10.1177/15563316211026658

47. Molliqaj G, Schatlo B, Alaid A, Solomiichuk V, Rohde V, Schaller K, et al. Accuracy of robot-guided versus freehand fluoroscopy-assisted pedicle screw insertion in thoracolumbar spinal surgery. Neurosurg Focus. (2017) 42(5):E14. doi: 10.3171/2017.3.FOCUS179

48. Avrumova F, Morse KW, Heath M, Widmann RF, Lebl DR. Evaluation of K-wireless robotic and navigation assisted pedicle screw placement in adult degenerative spinal surgery: learning curve and technical notes. J Spine Surg. (2021) 7(2):141–54. doi: 10.21037/jss-20-687

49. Goodell KH, Cao CG, Schwaitzberg SD. Effects of cognitive distraction on performance of laparoscopic surgical tasks. J Laparoendosc Adv Surg Tech A. (2006) 16(2):94–8. doi: 10.1089/lap.2006.16.94

50. Liu A, Jin Y, Cottrill E, Khan M, Westbroek E, Ehresman J, et al. Clinical accuracy and initial experience with augmented reality-assisted pedicle screw placement: the first 205 screws. J Neurosurg Spine. (2022) 36(3):351–7. doi: 10.3171/2021.2.SPINE202097

51. Dibble CF, Molina CA. Device profile of the XVisionspine (XVS) augmented-reality surgical navigation system: overview of its safety and efficacy. Expert Rev Med Devices. (2021) 18:1–8. doi: 10.1080/17434440.2021.1865795

52. Bratschitsch G, Leitner L, Stücklschweiger G, Guss H, Sadoghi P, Puchwein P, Leithner A, Radl R. Radiation exposure of patient and operating room personnel by fluoroscopy and navigation during spinal surgery. Sci Rep. (2019) 9:17652. doi: 10.1038/s41598-019-53472-z

53. Mastrangelo G, Fedeli U, Fadda E, Giovanazzi A, Scoizzato L, Saia B. Increased cancer risk among surgeons in an orthopedic hospital. Occup Med. (2005) 55:498–500. doi: 10.1093/occmed/kqi048

55. Tian NF, Huang QS, Zhou P, et al. Pedicle screw insertion accuracy with different assisted methods: a systematic review and meta-analysis of comparative studies. Eur Spine J. (2011) 20:846–59. doi: 10.1007/s00586-010-1577-5

56. Wang CC, Sun YD, Wei XL, Jing ZP, Zhao ZQ. Advances in the classification and treatment of isolated superior mesenteric artery dissection (in Chinese). Zhonghua Wai Ke Za Zhi. (2023) 61(1):81–5. doi: 10.3760/cma.j.cn

57. Yu E, Khan SN. Does less invasive spine surgery result in increased radiation exposure? A systematic review. Clin Orthop Relat Res. (2014) 472(6):1738–48. doi: 10.1007/s11999-014-3503-3

58. Rosser JC Jr., Lynch PJ, Cuddihy L, Gentile DA, Klonsky J, Merrell R. The impact of video games on training surgeons in the 21st century. Arch Surg. (2007) 142:181–6. doi: 10.1001/archsurg.142.2.181

Keywords: AR, MISS, fusion, pedicle screw, robotic-assisted navigation

Citation: Avrumova F and Lebl DR (2023) Augmented reality for minimally invasive spinal surgery. Front. Surg. 9:1086988. doi: 10.3389/fsurg.2022.1086988

Received: 1 November 2022; Accepted: 28 December 2022;

Published: 27 January 2023.

Edited by:

Qingquan Kong, Sichuan University, China

Reviewed by:

Jiangming Yu, Shanghai Jiaotong University, China
Michel Roethlisberger, University Hospital of Basel, Switzerland

© 2023 Avrumova and Lebl. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Darren R. Lebl research@leblspinemd.com

Specialty Section: This article was submitted to Orthopedic Surgery, a section of the journal Frontiers in Surgery

Fedan Avrumova, Darren R. Lebl*