- 1BIASlab, Department of Electrical Engineering, Eindhoven University of Technology, Eindhoven, Netherlands

- 2Nested Minds Solutions, Liverpool, United Kingdom

- 3GN Hearing, Eindhoven, Netherlands

In this paper we present Active Inference-Based Design Agent (AIDA), which is an active inference-based agent that iteratively designs a personalized audio processing algorithm through situated interactions with a human client. The target application of AIDA is to propose on-the-spot the most interesting alternative values for the tuning parameters of a hearing aid (HA) algorithm, whenever a HA client is not satisfied with their HA performance. AIDA interprets searching for the “most interesting alternative” as an issue of optimal (acoustic) context-aware Bayesian trial design. In computational terms, AIDA is realized as an active inference-based agent with an Expected Free Energy criterion for trial design. This type of architecture is inspired by neuro-economic models on efficient (Bayesian) trial design in brains and implies that AIDA comprises generative probabilistic models for acoustic signals and user responses. We propose a novel generative model for acoustic signals as a sum of time-varying auto-regressive filters and a user response model based on a Gaussian Process Classifier. The full AIDA agent has been implemented in a factor graph for the generative model and all tasks (parameter learning, acoustic context classification, trial design, etc.) are realized by variational message passing on the factor graph. All verification and validation experiments and demonstrations are freely accessible at our GitHub repository.

1 Introduction

Hearing aids (HA) are often equipped with specialized noise reduction algorithms. These algorithms are developed by teams of engineers who aim to create a single optimal algorithm that suits any user in any situation. Taking a one-size-fits-all approach to HA algorithm design leads to two problems that are prevalent throughout today’s hearing aid industry. First, modeling all possible acoustic environments is simply infeasible. The daily lives of HA users are varied and the different environments they traverse even more so. Given differing acoustic environments, a single static HA algorithm cannot possibly account for all eventualities—even without taking into account the particular constraints imposed by the HA itself, such as limited computational power and allowed processing delays (Kates and Arehart, 2005). Secondly, hearing loss is highly personal and can differ significantly between users. Each HA user consequently requires their own, individually tuned HA algorithm that compensates for their unique hearing loss profile (Nielsen et al., 2015; van de Laar and de Vries, 2016; Alamdari et al., 2020) and satisfies their personal preferences for parameter settings (Reddy et al., 2017). Considering that HAs nowadays often consist of multiple interconnected digital signal processing units with many integrated parameters, the task of personalizing the algorithm requires exploring a high-dimensional search space of parameters, which often do not yield a clear physical interpretation. The current most widespread approach to personalization requires the HA user to physically travel to an audiologist who manually tunes a subset of all HA parameters. This is a burdensome activity that is not guaranteed to yield an improved listening experience for the HA user.

From these two problems, it becomes clear that we need to move towards a new approach for hearing aid algorithm design that empowers the user. Ideally, users should be in control of their own HA algorithms and should be able to change and update them at will instead of having to rely on teams of engineers that operate with long design cycles, separated from the users’ living experiences.

The question then becomes, how do we move the HA algorithm design away from engineers and into the hands of the user? While a naive implementation that allows for tuning HA parameters with sliders on, for example, a smartphone is trivial to develop, even a small number of adjustable parameters gives rise to a large, high-dimensional search space that the HA user needs to learn to navigate. This puts a large burden on the user, essentially asking them to be their own trained audiologist. Clearly, this is not a trivial task and this approach is only feasible for a small set of parameters, which carry a clear physical interpretation. Instead, we wish to support the user with an agent that intelligently proposes new parameter trials. In this setting, the user is only tasked to cast (positive or negative) appraisals of the current HA settings. Based on these appraisals, the agent will autonomously traverse the search space with the goal of proposing satisfying parameter values for that user under the current environmental conditions in as few trials as possible.

Designing an intelligent agent that learns to efficiently navigate a parameter space is not trivial. In the solution approach in this paper, we rely on a probabilistic modeling approach inspired by the free energy principle (FEP) (Friston et al., 2006). The FEP is a framework originally designed to explain the kinds of computations that biological, intelligent agents (such as the human brain) might be performing. Recent years have seen the FEP applied to the design of synthetic agents as well (Millidge, 2019; van de Laar and de Vries, 2019; van de Laar et al., 2019; Tschantz et al., 2020). A hallmark feature of FEP-based agents is that they exhibit a dynamic trade-off between exploration and exploitation (Friston et al., 2015; Da Costa et al., 2020; Friston K. et al., 2021), which is a highly desirable property when learning to navigate an HA parameter space. Concretely, the FEP proposes that intelligent agents should be modeled as probabilistic models. These types of models do not only yield point estimates of variables, but also capture uncertainty through modeling full posterior probability distributions. Furthermore, user appraisals and actions can be naturally incorporated by simply extending the probabilistic model. Taking a model-based approach also allows for fewer parameters than alternative data-driven solutions, as we can incorporate field-specific knowledge, making it more suitable for computationally constrained hearing aid devices. The novelty of our approach is rooted in the fact that the entire proposed system is framed as a probabilistic generative model in which we can perform (active) inference through (expected) free energy minimization.

In this paper we present AIDA, an active inference-based design agent for the situated development of context-dependent audio processing algorithms, which provides the user with her own controllable audio processing algorithm. This approach embodies an FEP-based agent that operates in conjunction with an acoustic model and actively learns optimal context-dependent tuning parameter settings. After formally specifying the problem and solution approach in Section 2 we make the following contributions:

(1) We develop a modular probabilistic model that embodies situated, (acoustic) scene-dependent, and personalized design of its corresponding hearing aid algorithm in Section 3.1.

(2) We develop an expected free energy-based agent (AIDA) in Section 3.2, whose proposals for tuning parameter settings are well-balanced in terms of seeking more information about the user’s preferences (explorative agent behavior) versus seeking to optimize the user’s satisfaction levels by taking advantage of previously learned preferences (exploitative agent behavior).

(3) Inference in the acoustic model and AIDA is elaborated upon in Section 4 and their operations are individually verified through representative experiments in Section 5. Furthermore, all elements are jointly validated through a demonstrator application in Section 5.4.

We have intentionally postponed a more thorough review of related work to Section 6 as we deem it more relevant after the introduction of our solution approach. Finally, Section 7 discusses the novelty and limitations of our approach and Section 8 concludes this paper.

2 Problem Statement and Proposed Solution Approach

2.1 Automated Hearing Aid Tuning by Optimization

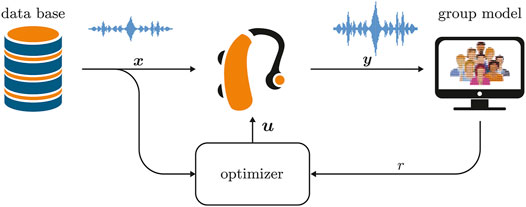

In this paper we consider the problem of choosing values for the tuning parameters u of a hearing aid algorithm that processes an acoustic input signal x to output signal y. In Figure 1, we sketch an automated optimization-based approach to this problem. Assume that we have access to a generic “signal quality” model which rates the quality of a HA output signal y = f(x, u), as a function of the HA input x and parameters u, by a rating r(x, u) ≜ r(y). If we run this system on a representative set of input signals x ∈ 𝒳, then the tuning problem reduces to the optimization task

$$u^{*} = \arg\max_{u} \sum_{x \in \mathcal{X}} r(x, u). \tag{1}$$

FIGURE 1. A schematic overview of the conventional approach to hearing aid algorithm tuning. Here the parameters of the hearing aid u are optimized with respect to some generic user rating model r(y) for a large database of input signals.

Unfortunately, in commercial practice, this optimization approach does not always result in satisfactory HA performance, for two reasons. First, the signal quality models in the literature have been trained on large databases of preference ratings from many users and therefore only model the average HA client rather than any specific client (Rix et al., 2001; Kates and Arehart, 2010; Taal et al., 2011; Beerends et al., 2013; Hines et al., 2015; Chinen et al., 2020). Secondly, the optimization approach averages over a large set of different input signals, so it will not deal with acoustic context-dependent client preferences. By acoustic context, we mean signal properties that depend on environmental conditions such as being inside, outside, in a car or at the mall. Generally, client preferences for HA tuning parameters are both highly personal and context-dependent. Therefore, there is a need to develop a personalized, context-sensitive controller for tuning HA parameters u.

2.2 Situated Hearing Aid Tuning With the User In-The-Loop

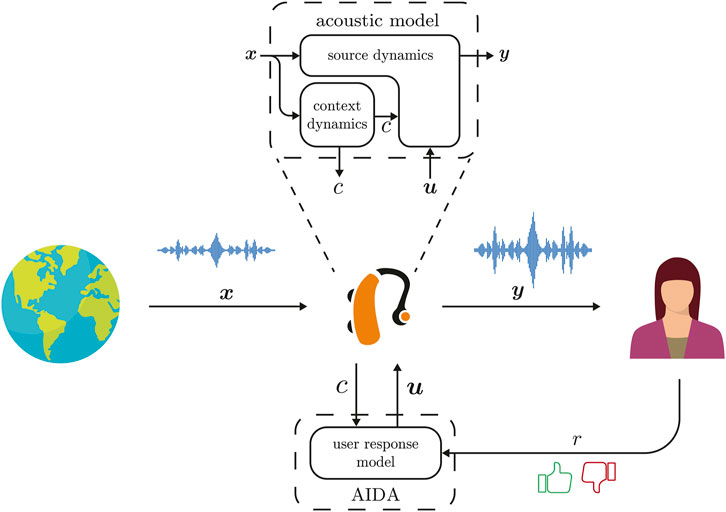

In this paper, we will develop a personalized, context-aware design agent, based on the architecture shown in Figure 2. In contrast to Figure 1, the outside world (rather than a database) produces an input signal x under situated conditions that is processed by a hearing aid algorithm to produce an output signal y. A particular human hearing aid client listens to the signal y and is invited to cast at any time binary appraisals r ∈ {0, 1} about the current performance of the hearing aid algorithm, where 1 and 0 correspond to the user being satisfied and unsatisfied, respectively. Context-aware trials for HA tuning parameters are provided by AIDA. Rather than an offline design procedure, the whole system designs continually under situated conditions. The HA device itself houses a custom hearing aid algorithm, based on state inference in a generative acoustic model. The acoustic model contains two sub-models: 1) a source dynamics model and 2) a context dynamics model.

FIGURE 2. A schematic overview of the proposed situated HA design loop containing AIDA. An incoming signal x enters the hearing aid and is used to infer the context of the user c. Based on this context and previous user appraisals, AIDA proposes a new set of parameters u for the hearing aid algorithm. Based on the input signal, the proposed parameters and the current context, the hearing aid algorithm determines the output y. The parameters u are actively optimized by AIDA, based on the inferred context c from the input signal x and appraisals r from the user in the loop. All individual subsystems represent parts of a probabilistic generative model as described in Section 3, where the corresponding algorithms follow from performing probabilistic inference in these models as described in Section 4.

Inference in the acoustic model is based on the observed signal x and yields the output y and context c. Based on this context signal c and previous user appraisals r, AIDA will actively propose new parameter trials u with the goal of making the user happy. Technically, the objective is that AIDA expects to receive fewer negative appraisals in the future, relative to not making parameter adaptations; see Section 3.2 for details.

The design of AIDA is non-trivial. For instance, since there is a priori no personalized model of HA ratings for any particular user, AIDA will have to build such a model on-the-fly from the context c and user appraisals r. Since the system operates under situated conditions, we want to impose as little burden on the end user as possible. As a result, most users will only once in a while cast an appraisal and this complicates the learning of a personalized HA rating model.

To make this desire for very light-weight interactions concrete, we now sketch how we envision a typical interaction between AIDA and a HA client. Assume that the HA client is in a conversation with a friend at a restaurant. The signal of interest, in this case, is the friend’s speech signal while the interfering signal is an environmental babble noise signal. The HA algorithm tries to separate the input signal x into its constituent speech and noise source components, then applies gains u to each source component and sums these weighted source signals to produce output y. If the HA client is happy with the performance of her HA, she will not cast any appraisals. After all, she is in the middle of a conversation and has no imperative to change the HA behavior. However, if she cannot understand her conversation partner, the client may covertly tap her watch or make another gesture to indicate that she is not happy with her current HA settings. In response, AIDA, which may be implemented as a smartwatch application, will reply instantaneously by sending a tuning parameter update u to the hearing aid algorithm in an effort to fix the client’s current hearing problem. Since the client’s preferences are context-dependent, AIDA needs to incorporate information about the acoustic context from HA input x. As an example, the HA user might leave the restaurant for a walk outside. Walking outside presents a different type of background noise and consequently requires different parameter settings.

Crucially, we would like HA clients to be able to tune their hearing aids without interruption of any ongoing activities. Therefore, we will not demand that the client has to focus visual attention on interacting with a smartphone app. At most, we want the client to apply a tap or make a simple gesture that does not draw any attention away from the ongoing conversation. A second criterion is that we do not want the conversation partner to notice that the client is interacting with the agent. The client may actually be in a situation (e.g., a business meeting) where it is not appropriate to demonstrate that her priorities have shifted to tuning her hearing aids. In other words, the interactions must be very light-weight and covert. A third criterion is that we want the agent to learn from as few appraisals as possible. Note that, if the HA has 10 tuning parameters and 5 interesting values (very low, low, middle, high, very high) per parameter, then there are 5^10 (about 10 million) parameter settings. We do not want the client to get engaged in an endless loop of disapproving new HA proposals as this will lead to frustration and distraction from the ongoing conversation. Clearly, this means that each update of the HA parameters cannot be selected randomly: we want the agent to propose the most interesting values for the tuning parameters, based on all observed past information and certain goal criteria for future HA behavior. In Section 4.2, we will quantify what most interesting means in this context.

In short, the goal of this paper is to design an intelligent agent that supports user-driven situated design of a personalized audio processing algorithm through a very light-weight interaction protocol.

In order to accomplish this task, we will draw inspiration from the way human brains design algorithms (e.g., for speech and object recognition, riding a bike, etc.) solely through environmental interactions. Specifically, we base the design of AIDA on the Active Inference (AIF) framework. Originating from the field of computational neuroscience, AIF proposes to view the brain as a prediction engine that models sensory inputs. Formally, AIF accomplishes this through specifying a probabilistic generative model of incoming data. Performing approximate Bayesian inference in this model by minimizing free energy then constitutes a unified procedure for both data processing and learning. To select tuning parameter trials, an AIF agent predicts the expected free energy in the near future, given a particular choice of parameter settings. AIF provides a single, unified method for designing all components of AIDA. The design of a HA system that is controlled by an AIF-based design agent involves solving the following tasks:

(1) Classification of acoustic context.

(2) Selecting acoustic context-dependent trials for the HA tuning parameters.

(3) Execution of the HA signal processing algorithm (that is controlled by the trial parameters).

Task 1 (context classification) involves determining the most probable current acoustic environment. Based on a dynamic context model (described in Section 3.1.2), we infer the most probable acoustic environment as described in Section 4.1.

Task 2 (trial design) encompasses proposing alternative settings for the HA tuning parameters. Sections 3.2 and 4.2 describe the user response model and the execution of AIDA’s trial selection procedure based on expected free energy minimization, respectively.

Finally, task 3 (hearing aid algorithm execution) concerns performing variational free energy minimization with respect to the state variables in a generative probabilistic model for the acoustic signal. In Section 3.1 we describe the generative acoustic model underlying the HA algorithm and Section 4.3 describes the inferred HA algorithm itself.

Crucially, in the AIF framework, all three tasks can be accomplished by variational free energy minimization in a generative probabilistic model for observations. Since we can automate variational free energy minimization by a probabilistic programming language, the only remaining task for the human designer is to specify the generative models. The next section describes the model specification.

3 Model Specification

In this section, we present the generative model of the AIDA controlled HA system, as illustrated in Figure 2. In Section 3.1, we describe a generative model for the HA input and output signals x and y respectively. In this model, the hearing aid algorithm follows through performing probabilistic inference, as will be discussed in Section 4. Part of the hearing aid algorithm is a mechanism for inferring the current acoustic context. In Section 3.2 we introduce a model for agent AIDA that is used to infer new parameter trials. A concise summary of the generative model is also presented in Supplementary Appendix SB and an overview of the corresponding symbols is given in Supplementary Appendix Table SA1.

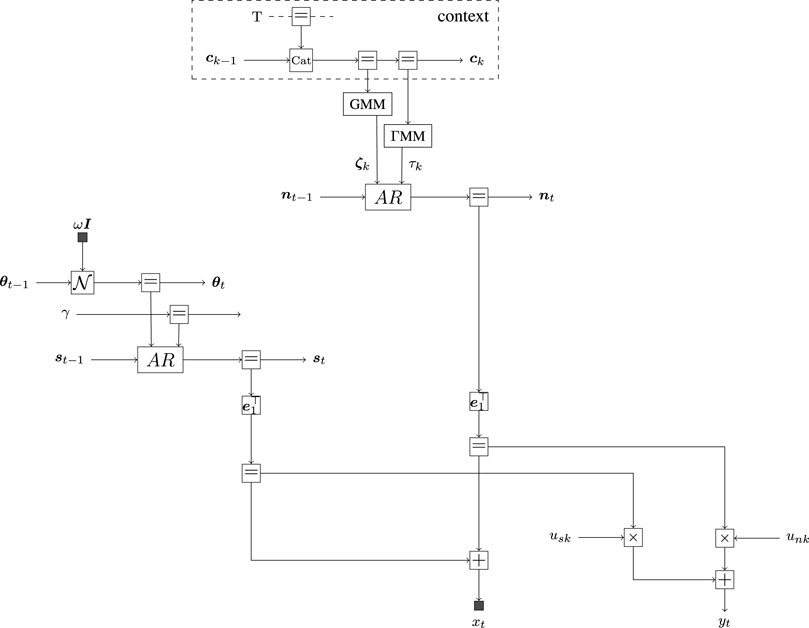

Throughout this section, we will make use of factor graphs for visualization of probabilistic models. In this paper we focus on Forney-style factor graphs (FFG), as introduced in Forney (2001) with notational conventions adopted from Loeliger (2004). FFGs represent factorized functions by undirected graphs, whose nodes represent the individual factors of the global function. The nodes are connected by edges representing the mutual arguments of the factors. In an FFG, a node can be connected to an arbitrary number of edges, but edges are constrained to have a maximum degree of two. A more detailed review of probabilistic modeling and factor graphs has been provided in Supplementary Appendix SA.

3.1 Acoustic Model

Our acoustic model of the observed signal and hearing aid output consists of a model of the source dynamics of the underlying signals and a model for the context dynamics.

3.1.1 Model of Source Dynamics

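We assume that the observed acoustic signal x consists of a speech signal (or more generally, a target signal that the HA client wants to focus on) and an additive noise signal (that the HA client is not interested in), as

$$x_t = s_t + n_t, \tag{2}$$

where xt denotes the observed signal at time index t, and st and nt denote the latent speech and noise signals, respectively.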

FIGURE 3. A Forney-style factor graph representation of the acoustic source signals model as specified by Eqs 3–11 at time index t. The observation xt is specified as the sum of a latent speech signal st and a latent noise signal nt. The speech signal is modeled by a time-varying auto-regressive process, where its coefficients θt are modeled by a Gaussian random walk. The noise signal is a context-dependent auto-regressive process, modeled by Gaussian (GMM) and Gamma mixture models (ΓMM) for the parameters ζk and τk, respectively. The selection variable of these mixture models represents the context ck. The model for the context dynamics is enclosed by the dashed box. The composite AR factor node represents the auto-regressive transition dynamics specified by Eq. 3b. The output of the hearing aid yt is modeled as the weighted sum of the extracted speech and noise signals.

Historically, Auto-Regressive (AR) models have been widely used to represent speech signals (Kakusho and Yanagida, 1982; Paliwal and Basu, 1987). As the dynamics of the vocal tract exhibit non-stationary behavior, speech is usually segmented into individual frames that are assumed to be quasi-stationary. Unfortunately, the signal is often segmented without any prior information about the phonetic structure of the speech signal. Therefore the quasi-stationarity assumption is likely to be violated and time-varying dynamics are more likely to occur in the segmented frames (Vermaak et al., 2002). To address this issue, we can use a time-varying prior for the coefficients of the AR model, leading to a time-varying AR (TVAR) model (Rudoy et al., 2011).

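$$\theta_t \sim \mathcal{N}\big(\theta_{t-1},\; \omega I_M\big), \tag{3a}$$

$$\mathbf{s}_t \sim \mathcal{N}\big(A(\theta_t)\,\mathbf{s}_{t-1},\; V(\gamma)\big), \tag{3b}$$

where θt = [θ1t, …, θMt]⊤ and st = [st, st−1, …, st−M+1]⊤ are the coefficients and states of an Mth-order TVAR model, ω is the variance of the random walk on the coefficients, and γ is the process noise precision. The companion matrix A(θ) and the covariance matrix V(γ) are defined as

$$A(\theta) = \begin{bmatrix} \theta^{\top} \\ I_{M-1} \quad \mathbf{0} \end{bmatrix}, \qquad V(\gamma) = \frac{1}{\gamma}\, e_1 e_1^{\top}, \tag{4}$$

where e1 denotes the first Cartesian unit vector.

Multiplication of a state vector by this companion matrix, such as A(θt)st−1, basically performs two operations: an inner product θt⊤st−1, which predicts the next sample, and a shift of st−1 by one time step into the past.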
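The two operations performed by the companion matrix can be made concrete with a small simulation. The following Python sketch draws samples from a TVAR process under stated assumptions: the coefficients follow a Gaussian random walk with variance `omega`, and process noise with precision `gamma` enters only the newest sample. The function names and the default values of `omega` and `gamma` are illustrative choices, not values from the paper.

```python
import random


def companion_step(theta, state):
    """Apply the companion matrix A(theta) to a state vector.

    The first element becomes the inner product theta^T state; the
    remaining elements are the old state shifted one step into the past.
    """
    prediction = sum(th * s for th, s in zip(theta, state))
    return [prediction] + state[:-1]


def simulate_tvar(theta0, n_steps, omega=1e-6, gamma=1e2, seed=0):
    """Draw n_steps samples from a TVAR process (illustrative sketch)."""
    rng = random.Random(seed)
    theta = list(theta0)
    state = [0.0] * len(theta)
    samples = []
    for _ in range(n_steps):
        # Gaussian random walk on the AR coefficients (variance omega).
        theta = [th + rng.gauss(0.0, omega ** 0.5) for th in theta]
        # Deterministic companion-matrix transition ...
        state = companion_step(theta, state)
        # ... plus process noise (precision gamma) on the newest sample only.
        state[0] += rng.gauss(0.0, gamma ** -0.5)
        samples.append(state[0])
    return samples
```

Calling `simulate_tvar([0.5, -0.2], 100)` returns 100 samples of a second-order process whose coefficients drift slowly over time.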

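The acoustic model also encompasses a model for background noise, such as the sounds at a bar or train station. Many of these background sounds can be well represented by colored noise (Popescu and Zeljkovic, 1998), which in turn can be modeled by a low-order AR model (Gibson et al., 1991; Gannot et al., 1998)

$$\mathbf{n}_t \sim \mathcal{N}\big(A(\zeta_k)\,\mathbf{n}_{t-1},\; V(\tau_k)\big), \tag{5}$$

where nt collects the most recent noise samples, ζk contains the AR coefficients, A(ζk) is the corresponding companion matrix, and V(τk) is the process noise covariance, whose only non-zero element is the variance 1/τk of the newest sample, with τk the process noise precision. In contrast to the speech model, these parameters are indexed by a context index k, which evolves more slowly than the sample index t and is related to it by

$$k = \left\lceil \frac{t}{W} \right\rceil. \tag{6}$$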

Here, ⌈⋅⌉ denotes the ceiling function that returns the smallest integer larger than or equal to its argument, while W is the window length. The above equation makes sure that k is intuitively aligned with segments of length W, i.e., t ∈ [1, W] corresponds to k = 1. To denote the start and end indices of the time segment corresponding to context index k, we define t− = (k − 1)W + 1 and t+ = kW as an implicit function of k, respectively. The context can be assumed to be stationary over a longer period of time than the speech signal. However, abrupt changes in the dynamics of background noise may occasionally occur. For example, if the user moves from a train station to a bar, the parameters of the AR model that are attributed to the train station will now inadequately describe the background noise of the new environment. To deal with these changing acoustic environments, we introduce context-dependent priors for the background noise, using a Gaussian and Gamma mixture model:

$$\zeta_k \sim \prod_{l=1}^{L} \mathcal{N}(\mu_l, \Sigma_l)^{c_{lk}}, \qquad \tau_k \sim \prod_{l=1}^{L} \Gamma(\alpha_l, \beta_l)^{c_{lk}}. \tag{7}$$

The context at time index k, denoted by ck, comprises a 1-of-L binary vector with elements clk ∈ {0, 1}, which are constrained by ∑lclk = 1. Γ(α, β) represents a Gamma distribution with shape and rate parameters α and β, respectively. The hyperparameters μl, Σl, αl and βl define the characteristics of the different background noise environments.
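As an illustration of the indexing and of the context-dependent priors, the Python sketch below maps a sample index t to its context index k, returns the segment bounds t− and t+, and draws noise parameters for the active context. It is a minimal sketch under assumptions: the covariance Σl is taken to be diagonal for simplicity, and all function and argument names are hypothetical.

```python
import random


def context_index(t, W):
    """Context index k = ceil(t / W) for a 1-based sample index t."""
    return -(-t // W)  # integer ceiling division


def segment_bounds(k, W):
    """First and last sample index (t-, t+) of context segment k."""
    return (k - 1) * W + 1, k * W


def sample_noise_parameters(c, mu, sigma2, alpha, beta, rng):
    """Draw AR coefficients and noise precision for the active context.

    c is a one-hot list. mu[l] and sigma2[l] hold per-coefficient means and
    variances (diagonal covariance assumed here); alpha[l] and beta[l] are
    the Gamma shape and rate of context l.
    """
    l = c.index(1)  # the one-hot vector selects a single mixture component
    zeta = [rng.gauss(m, v ** 0.5) for m, v in zip(mu[l], sigma2[l])]
    # random.gammavariate takes (shape, scale), so scale = 1 / rate.
    tau = rng.gammavariate(alpha[l], 1.0 / beta[l])
    return zeta, tau
```

With W = 4, samples t = 1, …, 4 map to k = 1 and samples t = 5, …, 8 map to k = 2, matching the segment bounds returned by `segment_bounds`.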

Now that an acoustic model of the environment has been formally specified, we will extend this model with the goal of obtaining a HA algorithm. The principal goal of a HA algorithm is to improve audibility and intelligibility of acoustic signals. Audibility can be improved by amplifying the received input signal. Intelligibility can be improved by increasing the Signal-to-Noise Ratio (SNR) of the received signal. Assuming that we can infer the constituent source signals st and nt from received signal xt, the desired HA output signal can be modeled by

$$y_t = u_{sk}\, s_t + u_{nk}\, n_t, \tag{8}$$

where uk = [usk, unk]⊤ is a vector of two tuning parameters (gains) that weight the speech and noise signals, respectively.

Finding good values for the gains u can be a difficult task because the preferred parameter settings may depend on the specific listener and on the acoustic context.
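To see how the gains affect intelligibility, the following Python sketch forms the output as a weighted sum of the source signals and compares the SNR before and after weighting. It assumes the separated signals are already available as plain lists, which in the actual system would come from inference; the function names and the names `u_s` and `u_n` for the two gains are illustrative.

```python
import math


def hearing_aid_output(s, n, u_s, u_n):
    """Weighted sum of speech and noise samples: y_t = u_s*s_t + u_n*n_t."""
    return [u_s * si + u_n * ni for si, ni in zip(s, n)]


def snr_db(s, n):
    """Signal-to-noise ratio in dB of a speech and noise signal pair."""
    signal_power = sum(v * v for v in s)
    noise_power = sum(v * v for v in n)
    return 10.0 * math.log10(signal_power / noise_power)


def output_snr_db(s, n, u_s, u_n):
    """SNR in dB after applying the gains to the separated sources."""
    return snr_db([u_s * v for v in s], [u_n * v for v in n])
```

Under this model the SNR changes by 20 log10(u_s / u_n) dB, so halving the noise gain while keeping the speech gain at one improves the SNR by roughly 6 dB.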

Next, we describe the acoustic context model that will allow AIDA to make context-dependent parameter proposals.

3.1.2 Model of Context Dynamics

As HA clients move through different acoustic background settings (such as being in a car, doing groceries, watching TV at home, etc.), the preferred parameter settings for HA algorithms tend to vary. The context signal makes it possible to distinguish between these different acoustic environments.

The hidden context state variable ck at time index k is a 1-of-L encoded binary vector with elements clk ∈ {0, 1}, which are constrained by ∑lclk = 1. This context is responsible for the operations of the noise model in Eq. 7. Context transitions are supported by a dynamic model

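$$\mathbf{c}_k \sim \mathrm{Cat}\big(T\,\mathbf{c}_{k-1}\big), \tag{9}$$

where the elements of the transition matrix T are defined as Tij = p(cik = 1∣cj,k−1 = 1), which are constrained by Tij ∈ [0, 1] and ∑i Tij = 1. Each column of T is given a Dirichlet prior,

$$T_{1:L,\,j} \sim \mathrm{Dir}(\alpha_j), \tag{10}$$

where αj denotes the vector of concentration parameters corresponding to the jth column of T. The context state is initialized by a categorical distribution as

$$\mathbf{c}_0 \sim \mathrm{Cat}(\pi), \tag{11}$$

where the vector π contains the event probabilities of the initial context.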
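The context dynamics can be illustrated by sampling from such a model. The Python sketch below draws a column-stochastic transition matrix from per-column Dirichlet priors and then simulates a sequence of context indices. It is a minimal sketch: contexts are represented by integer indices rather than one-hot vectors, and the function names and the name `pi` for the initial event probabilities are assumptions.

```python
import random


def sample_dirichlet(alpha, rng):
    """Draw from a Dirichlet distribution by normalizing Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]


def sample_transition_matrix(alphas, rng):
    """alphas[j] is the concentration vector for column j of T.

    Returns T as a list of rows with T[i][j] = p(c_k = i | c_{k-1} = j).
    """
    columns = [sample_dirichlet(a, rng) for a in alphas]
    size = len(columns)
    return [[columns[j][i] for j in range(size)] for i in range(size)]


def sample_context_sequence(T, pi, n_steps, rng):
    """Simulate context indices from the initial distribution pi and T."""
    size = len(pi)
    idx = rng.choices(range(size), weights=pi)[0]
    sequence = [idx]
    for _ in range(n_steps - 1):
        column = [T[i][idx] for i in range(size)]  # p(c_k | previous context)
        idx = rng.choices(range(size), weights=column)[0]
        sequence.append(idx)
    return sequence
```

Choosing concentration vectors with a dominant diagonal entry, for example `[[5.0, 1.0], [1.0, 5.0]]`, favors contexts that persist across consecutive segments.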

3.2 Active Inference-Based Design Agent’s User Response Model

The goal of AIDA is to continually provide the most “interesting” settings for the HA tuning parameters uk, where interesting has been quantitatively interpreted by minimization of Expected Free Energy. But how does AIDA know what the client wants? In order to learn the client’s preferences, she is invited to cast at any time her appraisal rk ∈ {∅, 0, 1} of current HA performance. To keep the user interface very light, we will assume that appraisals are binary, encoded by rk = 0 for disapproval and rk = 1 indicating a positive experience. If a user does not cast an appraisal, we will just record a missing value, i.e., rk = ∅. The subscript k for rk indicates that we record appraisals at the same rate as the context dynamics.

If a client submits a negative appraisal rk = 0, AIDA interprets this as an expression that the client is not happy with the current HA settings uk in the current acoustic context ck (and vice versa for positive appraisals). To learn client preferences from these appraisals, AIDA holds a context-dependent generative model to predict user appraisals and updates this model after observing actual appraisals. In this paper, we opt for a Gaussian Process Classifier (GPC) model as the generative model for binary user appraisals. A Gaussian Process (GP) is a very flexible probabilistic model and GPCs have successfully been applied to preference learning in a variety of tasks before (Chu and Ghahramani, 2005; Houlsby et al., 2011; Huszar, 2011). For an in-depth discussion on GPs, we refer the reader to Rasmussen and Williams (2006). Specifically, the context-dependent user response model is defined as

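$$v_k(\cdot) \sim \prod_{l=1}^{L} \mathcal{GP}\big(m_l(\cdot),\, K_l(\cdot, \cdot)\big)^{c_{lk}}, \tag{12a}$$

$$r_k \sim \mathrm{Ber}\big(\Phi(v_k(u_k))\big), \qquad \text{if } r_k \in \{0, 1\}. \tag{12b}$$

In Eq. 12a, vk(⋅) is a latent function drawn from a mixture of GPs with mean functions ml(⋅) and kernels Kl(⋅, ⋅). Evaluating vk(⋅) at the point uk provides an estimate of user preferences. Without loss of generality, we can set ml(⋅) = 0. Since ck is one-hot encoded, raising to the power clk serves to select the GP that corresponds to the active context. Φ(⋅) denotes the Gaussian cumulative distribution function, defined as

$$\Phi(x) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{x} \exp\left(-\frac{z^{2}}{2}\right) \mathrm{d}z.$$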
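A generative reading of this response model can be sketched in a few lines of Python: select the kernel of the active context, draw a zero-mean latent function at a set of candidate gains, and pass it through the Gaussian CDF to obtain appraisal probabilities. The squared-exponential kernel, its hyperparameters, the jitter term and all function names are assumptions made for illustration; the text does not specify the kernel.

```python
import math
import random


def probit(x):
    """Gaussian cumulative distribution function Phi(x)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def se_kernel(u1, u2, lengthscale=0.5, variance=1.0):
    """Squared-exponential kernel (an assumed choice of K_l)."""
    sq = sum((a - b) ** 2 for a, b in zip(u1, u2))
    return variance * math.exp(-0.5 * sq / lengthscale ** 2)


def cholesky(K):
    """Lower-triangular Cholesky factor of a positive definite matrix."""
    n = len(K)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            acc = K[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            low[i][j] = math.sqrt(acc) if i == j else acc / low[j][j]
    return low


def sample_latent(points, kernel, rng, jitter=1e-8):
    """Draw a zero-mean GP sample v at the given parameter settings."""
    n = len(points)
    K = [[kernel(points[i], points[j]) + (jitter if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    low = cholesky(K)
    z = [rng.gauss(0.0, 1.0) for _ in range(n)]
    return [sum(low[i][k] * z[k] for k in range(i + 1)) for i in range(n)]


def sample_appraisals(points, c, kernels, rng):
    """Return (p(r = 1), sampled r) per setting for one-hot context c."""
    kernel = kernels[c.index(1)]  # the active context selects its GP
    v = sample_latent(points, kernel, rng)
    probs = [probit(vi) for vi in v]
    return [(p, 1 if rng.random() < p else 0) for p in probs]
```

Each context carries its own kernel, so the same gains can receive different appraisal probabilities in different acoustic environments.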

4 Solving Tasks by Probabilistic Inference

This section elaborates on solving the three tasks of Section 2.2: 1) context classification, 2) trial design and 3) hearing aid algorithm execution. All tasks can be solved through probabilistic inference in the generative model specified by Eqs 2–12 in Section 3. In this section, the inference goals are formally specified based on the previously proposed generative model.

For the realization of the inference tasks we will use variational message passing in a factor graph representation of the generative model. Message passing-based inference is highly efficient, modular and scales well to large inference tasks (Loeliger et al., 2007; Cox et al., 2019). With message passing, inference tasks in the generative model reduce to automatable procedures revolving around local computations on the factor graphs.

A thorough discussion on message passing and related topics is omitted here for readability, but made available in Supplementary Appendix SA to serve as reference.

4.1 Inference for Context Classification

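The acoustic context ck describes the dynamics of the background noise model through Eqs 5, 7. For determining the current environment of the user, the goal is to infer the current context based on the preceding observations. Technically we are interested in determining the marginal distribution

$$p(c_k \mid x_{1:t^{+}}) \propto \underbrace{p(x_{t^{-}:t^{+}} \mid c_k)}_{\text{observation model}} \sum_{c_{k-1}} \underbrace{p(c_k \mid c_{k-1})}_{\text{context dynamics}}\; \underbrace{p(c_{k-1} \mid x_{1:t^{-}-1})}_{\text{prior}}. \tag{13}$$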

The observation model is fully specified by the model specification in Section 3, as are the context dynamics. The prior distribution is a joint result of the iterative execution of both Eqs 13 and 18, where the latter refers to the HA algorithm execution from Section 4.3. The calculation of this marginal distribution is intractable and therefore exact inference of the context is not possible. This is a result of 1) the intractability resulting from the autoregressive model and 2) the intractability that results from performing message passing with mixture models. In Eq. 7 the model structure contains a Normal and Gamma mixture model for the AR-coefficients and process noise precision parameter, respectively. Exact inference with these mixture models quickly leads to intractable inference through message passing, especially when multiple background noise models are involved. Therefore, we need to resort to a variational approximation where the output messages of these mixture models are constrained to be within the exponential family.

Although variational inference with the mixture models is feasible (Bishop, 2006; van de Laar, 2019; Podusenko et al., 2021b), it is prone to converge to local minima of the Bethe free energy (BFE) for more complicated models. The variational messages originating from the mixture models are constrained to either Normal or Gamma distributions, possibly losing important multi-modal information, and as a result they can lead to suboptimal inference of the context variable. Because the context is vital for the above underdetermined source separation stage, we wish to limit the amount of (variational) approximations during context inference. At the cost of an increased computational complexity, we will remove the variational approximation around the mixture models and instead expand the mixture components into distinct models. As a result, each distinct model now contains one of the mixture components for a given context and now results in exact messages originating from the priors of ζk and τk. Therefore we only need to resort to a variational approximation for the auto-regressive node. By expanding the mixture models into distinct models to reduce the number of variational approximations, calculation of the posterior distribution of the context p(ck ∣ x1:t+) reduces to an approximate Bayesian model comparison problem.
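Once each context has its own expanded model, the context posterior can be updated recursively from per-model evidence values. The Python sketch below shows one such filtering step under assumptions: `log_evidence[i]` stands for an (approximate) log-evidence of the current signal segment under context i, for instance a negative free energy, and the function name and argument layout are hypothetical.

```python
import math


def context_filter_step(prior, T, log_evidence):
    """One recursive update of the context posterior.

    prior[j]        : posterior probability of context j after segment k-1
    T[i][j]         : transition probability p(c_k = i | c_{k-1} = j)
    log_evidence[i] : approximate log-evidence of segment k under context i
    """
    size = len(prior)
    # Prediction step: propagate the previous posterior through T.
    predicted = [sum(T[i][j] * prior[j] for j in range(size))
                 for i in range(size)]
    # Update step: weight by model evidence, shifted for numerical stability.
    shift = max(log_evidence)
    unnormalized = [predicted[i] * math.exp(log_evidence[i] - shift)
                    for i in range(size)]
    total = sum(unnormalized)
    return [value / total for value in unnormalized]
```

For example, with a uniform prior over two contexts, a symmetric transition matrix and an evidence ratio of three to one in favor of the first context, the updated posterior is [0.75, 0.25].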

4.2 Inference for Trial Design of Hearing Aid Tuning Parameters

The goal of proposing alternative HA tuning parameter settings (task 2) is to receive positive user responses in the future. Free energy minimization over desired future user responses can be achieved through a procedure called Expected Free Energy (EFE) minimization (Friston et al., 2015; Sajid et al., 2021).

EFE as a trial selection criterion induces a natural trade-off between explorative (information seeking) and exploitative (reward seeking) behavior. In the context of situated HA personalization, this is desirable because soliciting user feedback can be burdensome and invasive, as described in Section 2.2. From the agent’s point of view, this means that striking a balance between gathering information about user preferences and satisfying learned preferences is vital. The EFE provides a way to tackle this trade-off, inspired by neuro-scientific evidence that brains operate under a similar protocol (Friston et al., 2015; Parr and Friston, 2017). The EFE is defined as (Friston et al., 2015)

where the subscript indicates that the EFE is a function of a trial u. The EFE can be decomposed into (Friston et al., 2015)

which contains an information gain term and a utility-driven term. Minimization of the EFE reduces to maximization of both these terms. Maximization of the utility drive pushes the agent towards matching predicted user responses q(r∣u) with a goal prior over desired user responses p(r). This goal prior allows encoding of beliefs about future observations that we wish to observe. Setting the goal prior to match positive user responses then drives the agent towards parameter settings that it believes make the user happy in the future. The information gain term in Eq. 15 drives agents that optimize the EFE to seek out responses that are maximally informative about latent states v.
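To make the two terms concrete, the following Python sketch evaluates them for a binary response r and a scalar latent value v with a Gaussian belief q(v∣u) = N(μ, σ²). This is an illustration under stated assumptions, not AIDA's implementation: the logistic response model, the Gauss-Hermite quadrature, the goal prior value `goal_p1`, and the function names are all hypothetical. Under these assumptions the information gain is the mutual information between r and v, and the utility drive is the expected log goal prior under the predicted responses.

```python
import numpy as np


def bernoulli_entropy(p):
    """Entropy (in nats) of a Bernoulli variable with success probability p."""
    p = np.clip(p, 1e-12, 1.0 - 1e-12)
    return -(p * np.log(p) + (1.0 - p) * np.log(1.0 - p))


def efe_binary(mu, var, goal_p1=0.99, n_nodes=32):
    """Return (EFE, information gain, utility) for a belief v ~ N(mu, var).

    Assumes a logistic response model p(r=1|v) = sigmoid(v) and a goal
    prior p(r=1) = goal_p1. Both are illustrative choices.
    """
    # Probabilists' Gauss-Hermite nodes and weights, normalized so that the
    # weighted sum approximates an expectation under a standard normal.
    nodes, weights = np.polynomial.hermite_e.hermegauss(n_nodes)
    weights = weights / weights.sum()

    v = mu + np.sqrt(var) * nodes
    p_r1_given_v = 1.0 / (1.0 + np.exp(-v))

    # Predicted response distribution q(r=1|u).
    q_r1 = np.sum(weights * p_r1_given_v)

    # Information gain: H[q(r|u)] - E_q(v|u)[ H[p(r|v)] ].
    info_gain = bernoulli_entropy(q_r1) - np.sum(
        weights * bernoulli_entropy(p_r1_given_v)
    )

    # Utility drive: E_q(r|u)[ ln p(r) ] with the goal prior p(r).
    utility = q_r1 * np.log(goal_p1) + (1.0 - q_r1) * np.log(1.0 - goal_p1)

    # Minimizing the EFE maximizes both terms.
    return -info_gain - utility, info_gain, utility
```

In this toy setting a confident belief in a positive response (large μ, small σ²) yields a low EFE through the utility term, while a broad belief (large σ²) yields a low EFE through the information gain term.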

To select the next set of gains u to propose to the user, we need to find

Intuitively, one can think of Eq. 16 as a two-step procedure with an inner and an outer loop. The inner loop finds the approximate posterior q using (approximate) Bayesian inference, conditioned on a particular action parameter u. The outer loop evaluates the resulting EFE as a function of u and proposes a new set of gains to bring the EFE down. For our experiments we consider a candidate grid of possible gains. For each candidate we compute the resulting EFE and then select the candidate with the lowest EFE as the next set of gains to be presented to the user.
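The outer loop over a candidate grid can be sketched as follows. The function `select_next_gains`, the grid resolution, and the unit-square domain are assumptions made for illustration. The callable `efe_fn` stands in for the inner loop, which in AIDA would involve approximate inference for each candidate.

```python
import itertools

import numpy as np


def select_next_gains(efe_fn, points_per_dim=11, dim=2):
    """Evaluate efe_fn on a regular grid over [0, 1]^dim and return the
    candidate with the lowest score together with that score."""
    axis = np.linspace(0.0, 1.0, points_per_dim)
    candidates = np.array(list(itertools.product(axis, repeat=dim)))
    scores = np.array([efe_fn(u) for u in candidates])
    best = int(np.argmin(scores))
    return candidates[best], float(scores[best])


# Toy stand-in for the EFE: a quadratic bowl with its minimum at (0.8, 0.2).
def toy_efe(u):
    return float(np.sum((u - np.array([0.8, 0.2])) ** 2))


best_u, best_score = select_next_gains(toy_efe)
```

A grid keeps the outer loop simple and avoids gradients of the EFE, at the price of a cost that grows exponentially with the number of gains.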

The probabilistic model used for AIDA is a mixture GPC. For simplicity we will restrict inference to the GP corresponding to the MAP estimate of ck. Between trials, the corresponding GP needs to be updated to adapt to the new data gathered from the user. Specifically, we are interested in finding the posterior over the latent user preference function.

where we assume AIDA has access to a dataset consisting of previous queries u1:k−1 and appraisals r1:k−1 and we are querying the model at uk. While this inference task in the GPC is intractable, there exist a number of techniques for approximate inference, such as variational Bayesian methods, Expectation Propagation, and the Laplace approximation (Rasmussen and Williams, 2006). Supplementary Appendix SC2 describes in detail how the inference tasks of AIDA are realized.

4.3 Inference for Executing the Hearing Aid Algorithm

The main goal of the proposed hearing aid algorithm is to improve audibility and intelligibility by re-weighting inferred source signals in the HA output signal. In our model of the observed signal in Eqs 2–7 we are interested in iteratively inferring the marginal distribution over the latent speech and noise signals p(st, nt∣x1:t). This inference task is sometimes referred to in the literature as informed source separation (Knuth, 2013). Inferring the latent speech and noise signals amounts to optimally disentangling these signals from the observed signal based on the sub-models of the speech and noise sources. This requires us to compute the posterior distributions associated with the speech and noise signals. To do so, we perform probabilistic inference by means of message passing in the acoustic model of Eqs 2–7. The posterior distributions can be calculated in an online manner using sequential Bayesian updating by solving the Chapman-Kolmogorov equation (Särkkä, 2013).

where zt and Ψk denote the sets of dynamic states and static parameters zt = {θt, st, nt} and Ψk = {γ, τk, ζk}, respectively. Here, the states and parameters correspond to the latent AR and TVAR models of Eqs 3, 5. Furthermore, we assume that the context does not change, i.e., k is fixed. When the context does change Eq. 18 will need to be extended by integrating over the varying parameters. Unfortunately, the solution of Eq. 18 is not analytically tractable. This happens because of 1) the integration over large state spaces, 2) the non-conjugate prior-posterior pairing, and 3) the absence of a closed-form solution for the evidence factor (Podusenko et al., 2021a). To circumvent this issue, we resort to a hybrid message passing algorithm that combines structured variational message passing (SVMP) and loopy belief propagation for the minimization of Bethe free energy (Şenöz et al., 2021). Supplementary Appendix SA describes these concepts in more detail.
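Although Eq. 18 has no closed form for the full model, the predict-update recursion it describes can be illustrated on a tractable special case. The sketch below assumes a scalar AR(1) state with a known coefficient and known noise variances, for which the Chapman-Kolmogorov prediction and the Bayesian update reduce to the Kalman filter. All names and parameter values are hypothetical, and this is not the hybrid message passing algorithm used by AIDA, where the parameters themselves are latent.

```python
import numpy as np


def kalman_ar1(observations, a, q_var, r_var, m0=0.0, p0=1.0):
    """Sequential Bayesian updating for s_t = a * s_{t-1} + process noise,
    x_t = s_t + observation noise, with known variances q_var and r_var.

    Returns the posterior means and variances of s_t given x_{1:t}.
    """
    m, p = m0, p0
    means, variances = [], []
    for x in observations:
        # Prediction step (Chapman-Kolmogorov): push the previous posterior
        # through the state transition.
        m_pred = a * m
        p_pred = a * a * p + q_var

        # Update step (Bayes rule): combine the prediction with the new sample.
        gain = p_pred / (p_pred + r_var)
        m = m_pred + gain * (x - m_pred)
        p = (1.0 - gain) * p_pred

        means.append(m)
        variances.append(p)
    return np.array(means), np.array(variances)
```

The recursion only stores the latest posterior, which is what makes the online setting feasible. In the full model the same structure holds, but each step requires an approximation.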

For the details of the SVMP and BP algorithms, we refer the reader to Supplementary Appendix SA, Dauwels (2007), and Şenöz et al. (2021). Owing to the modularity of the factor graphs, the message passing update rules can be tabulated and only need to be derived once for each of the included factor nodes. The derivations of the sum-product update rules for elementary factor nodes can be found in Loeliger et al. (2007) and the derived structured variational rules for the composite AR node can be found in Podusenko et al. (2021a). The variational updates in the mixture models can be found in van de Laar (2019) and Podusenko et al. (2021b). The required approximate marginal distribution of some variable z can be computed by multiplying the incoming and outgoing variational messages on the edges corresponding to the variables of our interest as

Based on the inferred posterior distributions of st and nt, the hearing aid output can be inferred through Eq. 8 to produce a personalized output that trades off residual noise against speech distortion.

5 Experimental Verification and Validation

In this section, we first verify our approach for the three design tasks of Section 2.2. Specifically, in Section 5.1 we evaluate the context inference approach by reporting the classification performance of correctly classifying the context corresponding to a signal segment. In Section 5.2 we evaluate the performance of our intelligent agent that actively proposes hearing aid settings and learns user preferences. The execution of the hearing aid algorithm is verified in Section 5.3 by evaluating the source separation performance. To conclude this section, we present a demonstrator for the entire system in Section 5.4.

All algorithms have been implemented in the scientific programming language Julia (Bezanson et al., 2017). Probabilistic inference in our model is automated using the open source Julia package ReactiveMP2 (Bagaev and de Vries, 2022). All of the experiments presented in this section can be found at our AIDA GitHub repository.3

5.1 Context Classification Verification

To verify that the context is appropriately inferred through Bayesian model selection, we generated synthetic data from the following generative model:

with priors

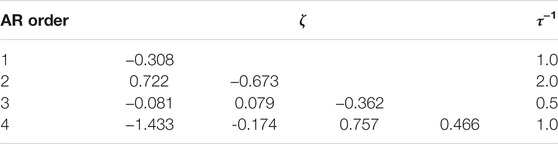

where co is chosen to have length L = 4. The event probabilities π and concentration parameters αj are defined as π = [0.25,0.25,0.25,0.25]⊺ and αj = [1.0,1.0,1.0,1.0]⊺, respectively. We generated a sequence of 1,000 frames, each containing 100 samples, such that we have 100 × 1,000 data points. Each frame is associated with one of the four different contexts. Each context corresponds to an AR model with the parameters presented in Table 1.

TABLE 1. The parameters of autoregressive processes that are used for generating a time series with simulated context dynamics.

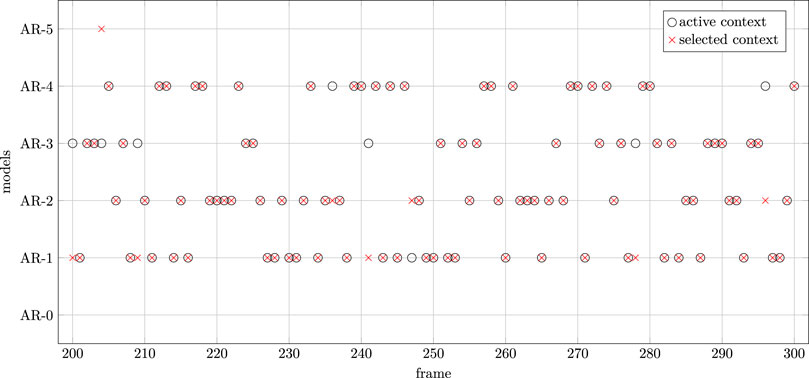

For verification of the context classification procedure, we wish to identify which model best approximates the observed data. To do that, we employed four models with the same specifications as those used to generate the dataset. We used informative priors for the coefficients and precision of AR models. Additionally, we extended our set of models with an AR(5) model with weakly informative priors and a Gaussian i.i.d. model that can be viewed as an AR model of zeroth order, i.e., AR(0). The frames of 100 samples each were processed individually and we computed the Bethe free energy for each of the different models. The Bethe free energy is introduced in Supplementary Appendix SA4. By approximating the true model evidence using the Bethe free energy as described in Supplementary Appendix SC1, we performed approximate Bayesian model selection by selecting the model with the lowest Bethe free energy. This model then corresponds to the most likely context that we are in. We highlight the obtained inference result in Figure 4.
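The frame-wise selection rule can be sketched in Python under a strong simplification: each candidate context is an AR model with fixed, known parameters, so that the negative log-likelihood of a frame can serve as a stand-in for the Bethe free energy (with no latent variables left to infer, the two coincide). The helper names, the two toy contexts, and the random seed are hypothetical and are not taken from Table 1.

```python
import numpy as np


def simulate_ar(coeffs, noise_var, n, rng):
    """Draw n samples from x_t = sum_i coeffs[i-1] * x_{t-i} + e_t."""
    order = len(coeffs)
    x = np.zeros(n + order)
    for t in range(order, n + order):
        past = x[t - order:t][::-1]  # x[t-1], x[t-2], ...
        x[t] = np.dot(coeffs, past) + rng.normal(scale=np.sqrt(noise_var))
    return x[order:]


def neg_log_evidence(frame, coeffs, noise_var, start):
    """Negative log-likelihood of frame[start:] under a fixed AR model."""
    pred = np.zeros(len(frame) - start)
    for lag, c in enumerate(coeffs, start=1):
        pred += c * frame[start - lag:len(frame) - lag]
    resid = frame[start:] - pred
    return 0.5 * (
        len(resid) * np.log(2.0 * np.pi * noise_var)
        + np.sum(resid ** 2) / noise_var
    )


def select_context(frame, models):
    """Index of the model with the lowest score for this frame."""
    # Score every model on the same samples so that the scores are comparable.
    start = max(len(c) for c, _ in models)
    scores = [neg_log_evidence(frame, c, v, start) for c, v in models]
    return int(np.argmin(scores))


rng = np.random.default_rng(1)
models = [(np.array([0.9]), 1.0), (np.array([-0.9]), 1.0)]  # toy contexts
true_context = rng.integers(0, 2, size=200)
frames = [simulate_ar(*models[k], n=100, rng=rng) for k in true_context]
inferred = np.array([select_context(f, models) for f in frames])
accuracy = float(np.mean(inferred == true_context))
```

With priors over the parameters, as in the experiment described above, the score would additionally penalize model complexity, which is what allows models of different order to be compared fairly.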

FIGURE 4. True and inferred evolution of contexts from frames 200 to 300. Each frame consists of 100 data points. Circles denote the active contexts that were used to generate the frame. Crosses denote the model that achieves the lowest Bethe free energy for a specific frame.

We evaluate the performance of the context classification procedure using approximate Bayesian model selection by computing the categorical accuracy metric defined as

$$\mathrm{acc} = \frac{tp + tn}{N},$$

where tp, tn are the number of true positive and true negative values, respectively. N corresponds to the number of total observations, which in this experiment is set to N = 1,000. In this context classification experiment, we have achieved a categorical accuracy of acc = 0.94.

5.2 Trial Design Verification

Evaluating the performance of the intelligent agent is not trivial. Because the agent adaptively trades off exploration and exploitation, accuracy is not an adequate metric. There are reasons for the agent to veer away from what it believes is the optimum to obtain more information. As a verification experiment we can investigate how the agent interacts with a simulated user. Our simulated user samples binary appraisals rk based on the HA parameters uk as

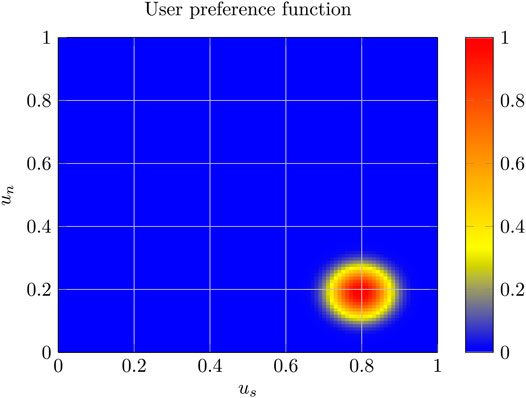

where u* denotes the optimal parameter setting, uk is the set of parameters proposed by AIDA at time k, and Λuser is a diagonal weighting matrix that controls how quickly the probability of positive appraisals decays with the squared distance to u*. The constant 2 ensures that when uk = u*, the probability of positive appraisals is 1 instead of 0.5. For our experiments, we set u* = [0.8,0.2]⊺ and the diagonal elements of Λuser to 0.004. This results in the user preference function p(rk = 1∣uk) as shown in Figure 5.
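A simulated user of this kind can be sketched as below. The functional form is an assumption chosen to match the verbal description: twice a logistic function of the negative weighted squared distance, so that the probability equals 1 at u* and decays away from it. The function names are hypothetical, and how the reported value 0.004 enters the weighting matrix is not specified here, so `weights` is left as a free argument.

```python
import numpy as np


def positive_appraisal_prob(u, u_opt, weights):
    """Probability of a positive appraisal for gains u (assumed form).

    d is the weighted squared distance to the optimum. For d = 0 the
    result is 2 / (1 + 1) = 1, and it decays towards 0 as d grows.
    """
    diff = np.asarray(u, dtype=float) - np.asarray(u_opt, dtype=float)
    d = diff @ weights @ diff
    return 2.0 / (1.0 + np.exp(d))


def sample_appraisal(u, u_opt, weights, rng):
    """Draw a binary appraisal (1 = positive, 0 = negative)."""
    return int(rng.random() < positive_appraisal_prob(u, u_opt, weights))
```

Because the weighted distance is non-negative for a positive semi-definite `weights`, the returned value always lies in (0, 1] and is a valid Bernoulli parameter.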

FIGURE 5. Simulated user preference function p(rk = 1∣uk). The coloring corresponds to the probability of the user giving a positive appraisal for the search space of gains

The kernel used for AIDA is a squared exponential kernel, given by

where l and σ are the hyperparameters of this kernel. Intuitively, σ is a static noise parameter and l encodes the smoothness of the kernel function. Both hyperparameters were initialized to σ = l = 0.5, which is uninformative on the scale of the experiment. We let the agent search for 80 trials and update hyperparameters every fifth trial using conjugate gradient descent as implemented in Optim.jl (Mogensen and Riseth, 2018). We constrain both hyperparameters to the domain [0.1, 1] to ensure stability of the optimization. As we will see, for large parts of each experiment AIDA only receives negative appraisals. The generative model of AIDA is fundamentally a classifier and unconstrained optimization can therefore lead to degenerate results when the data set only contains examples of a single class. For all experiments, the first proposal of AIDA was a randomly sampled parameter from the admissible set of parameters, because AIDA has no prior knowledge about the user preference function. This random initial proposal led to distinct behavior for all simulated agents.
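For reference, a squared exponential kernel in its common parameterization, σ² exp(−‖u − u′‖² / (2l²)), can be computed as in the Python sketch below. The parameterization is the textbook one and is assumed here, so the scaling may differ from the one used in the experiments. The function name and defaults are illustrative.

```python
import numpy as np


def squared_exponential_kernel(X1, X2, sigma=0.5, length=0.5):
    """Kernel matrix with entries sigma^2 * exp(-||x - x'||^2 / (2 * length^2)).

    X1 has shape (n, d) and X2 has shape (m, d). The result has shape (n, m).
    """
    X1 = np.atleast_2d(np.asarray(X1, dtype=float))
    X2 = np.atleast_2d(np.asarray(X2, dtype=float))
    sq_dist = (
        np.sum(X1 ** 2, axis=1)[:, None]
        + np.sum(X2 ** 2, axis=1)[None, :]
        - 2.0 * X1 @ X2.T
    )
    # Guard against small negative values caused by rounding.
    sq_dist = np.maximum(sq_dist, 0.0)
    return sigma ** 2 * np.exp(-sq_dist / (2.0 * length ** 2))
```

A smaller `length` makes the prior over preference functions vary more quickly across the gain space, so fewer neighbouring proposals are informed by each appraisal.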

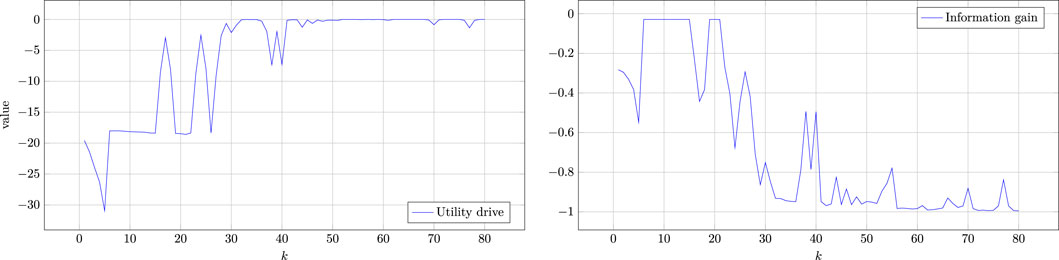

We provide two verification experiments for AIDA. First, we will thoroughly examine a single run in order to investigate how AIDA switches between exploratory and exploitative behavior. Secondly, we examine the aggregate performance of an ensemble of agents to test the average performance. To assess the performance for a single run, we can examine the evolution of the distinct terms in the EFE decomposition of Eq. 15 over time. We expect that when AIDA is primarily exploring, the utility drive is relatively low while the information gain is relatively high. When AIDA is primarily engaged in exploitation, we expect the opposite pattern. We show these terms separately in Figure 6.

FIGURE 6. Evolution of the utility drive and negative information gain throughout a single experiment.

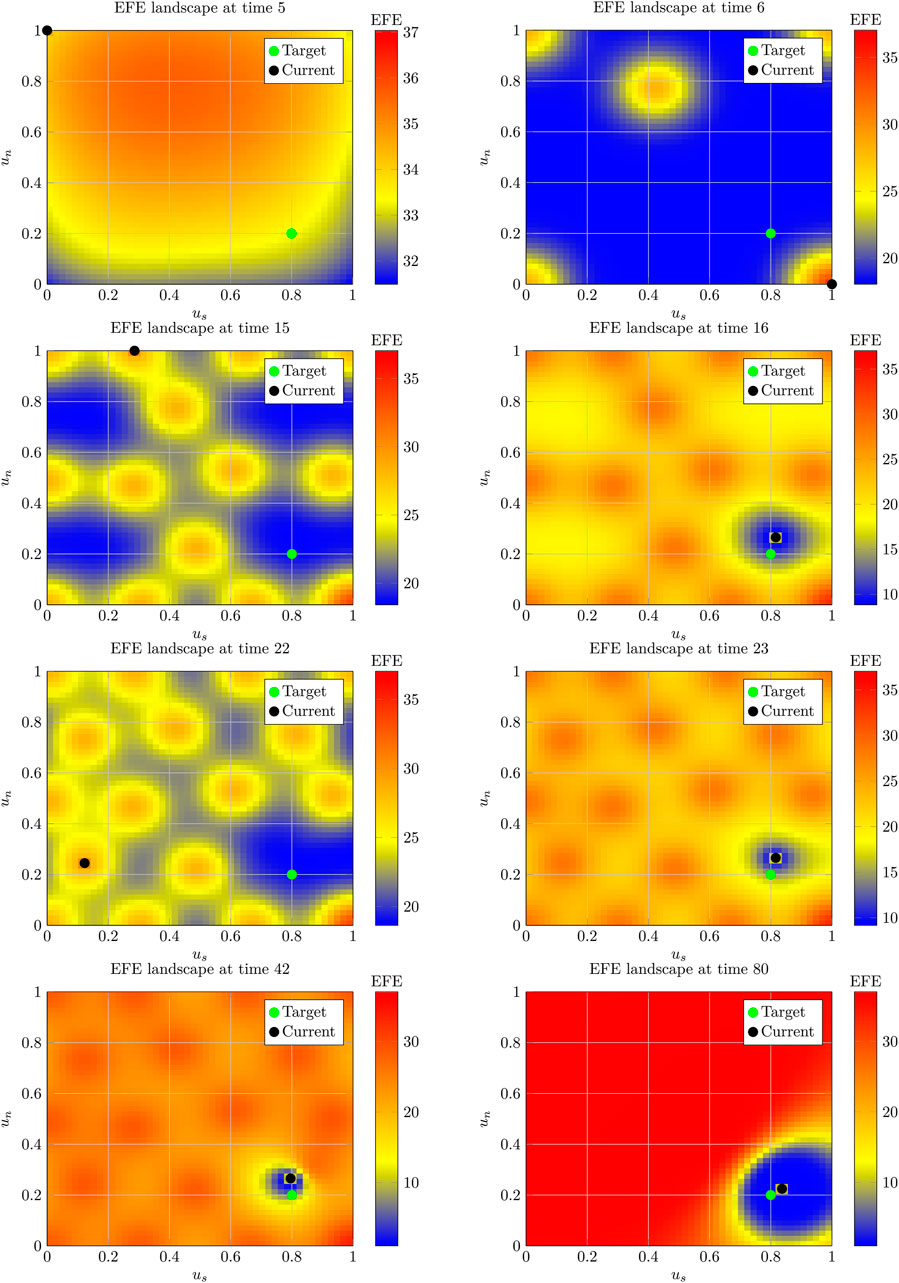

Figure 6 shows that there are distinct phases to the experiment. In the beginning (k < 5) AIDA sees a sharp decrease in utility drive and information gain terms. This indicates a saturation of the search space such that no points present good options. This happens early due to uninformative hyperparameter settings in the GPC. After trial 5, these hyperparameters are optimized and the agent no longer thinks it has saturated the search space, which explains the jumps in Figure 6 from trial 5 to 6. From trial 6 through 15 we observe a relatively high information gain and relatively low utility drive, meaning that the agent is still exploring the search space for parameter settings which yield a positive user appraisal. The agent obtains its first positive appraisal at k = 16, as denoted by the jump in utility drive and drop in information gain. This first positive appraisal is followed by a period of oscillations in both terms, where the agent is refining its parameters. Finally, AIDA settles down to predominantly exploitative behavior starting from the 41st trial. To examine the first transition, we can visualize the EFE landscape at k = 5 and k = 6, shown in the upper row of Figure 7.

FIGURE 7. Snapshot of EFE landscape at different time points as a function of gains us and un. The black dot denotes the current parameter settings and the green dot denotes u*.

Recall that AIDA is minimizing EFE. Therefore, it is looking for the lowest values corresponding to blue regions and avoiding the high values corresponding to red regions. Between k = 5 and k = 6 we perform the first hyperparameter update, which drastically changes the EFE landscape. This indicates that initial parameter settings were not informative, as we did not cover the majority of the search space within the first 5 iterations. The yellow regions at k = 6 indicate regions corresponding to previous proposals of AIDA that resulted in negative appraisals. We can visualize snapshots of the exploration phase starting from k = 6 in a similar manner. The second row of Figure 7 displays the EFE landscape at two different time instances during the exploration phase. It shows that over the course of the experiment, AIDA gradually builds a representation over the search space. In trial 16 this takes the form of patterns of connected regions that denote areas that AIDA believes are unlikely to result in positive appraisals.

Once AIDA receives its first positive appraisal at k = 16, it switches from exploring the search space to focusing only on the local region. If we examine Figure 6, we see that at this time the information gain term is still reasonably high. This indicates a subtle point: once AIDA receives a positive appraisal, it starts with local exploration around where the optimum might be located. However, the agent was located near the boundary of the optimum and next receives a negative appraisal. Therefore in trials 18 to 22 AIDA queries points which it deems most informative. At time 23 the position of AIDA in the search space (black dot in the third row of Figure 7) returns to the edge of the user preference function in Figure 5. This causes AIDA to receive a mixture of positive and negative appraisals in the following trials, leading to the oscillations seen in Figure 6. Finally, we can examine the landscape after AIDA has confidently located the optimum and switched to purely exploitative behavior. This happens at k = 42 where the utility drive goes to 0 and the information gain concentrates around −1.

The last row of Figure 7 shows that once u* is confidently located, AIDA disregards the remainder of the search space in favour of providing good parameter settings. Finally, if the user continues to supply data to AIDA, it will gradually extend the potential region of samples around the optimum. This indicates that if a user keeps requesting updated parameters, AIDA will once again perform local exploration around the optimum. This further indicates that AIDA accommodates gradual retraining as the user’s hearing loss profile changes over time.

Having thoroughly examined an example run and investigated the types of behavior produced by AIDA, we can now turn our attention to aggregate performance over an ensemble of agents. To that end we repeat the experiment 80 times with identical hyperparameters, but with different initial proposals. The metric we are most interested in is how quickly AIDA is able to locate the optimum and produce a positive appraisal.

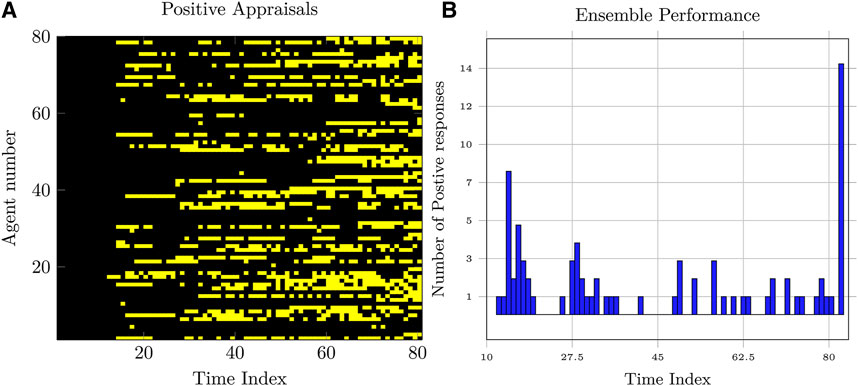

Figure 8 shows a heatmap of when each agent obtains positive responses. Positive responses are indicated by yellow squares and negative responses by black squares. Each row contains results for a single AIDA-agent and each column indicates a time step of the experiment. Consistent with the results for a single agent, we see that each experiment starts with a period of exploration. A large number of rows also show a yellow square within the first 35 trials, indicating that the optimum was found. Interestingly, no agents receive only positive responses, even after locating the optimum. This follows from AIDA actively trading off exploration and exploitation. When exploring, AIDA can select parameters that are suboptimal with respect to eliciting positive user responses, to gather more information. Figure 8 also shows a histogram indicating when each agent obtains its first positive appraisal. The rightmost column of the histogram shows agents that failed to locate the optimum within the designated number of trials. In total, 66/80 agents correctly solve the task, corresponding to a success rate of 82.5%. Disregarding unsuccessful runs, on average, AIDA obtains a positive response in 37.8 trials with a median of 29.5 trials.
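Summary statistics of this kind can be derived from a binary response matrix with one row per agent and one column per trial, as in the following Python sketch. The function name, the 1-based trial indexing, and the toy matrix are assumptions for illustration and do not reproduce the experimental data.

```python
import numpy as np


def first_positive_stats(responses):
    """Success rate and first-positive-trial statistics.

    responses: array of shape (n_agents, n_trials) with 1 for a positive
    appraisal and 0 for a negative one. Trial indices are reported 1-based.
    """
    responses = np.asarray(responses, dtype=bool)
    solved = responses.any(axis=1)
    # argmax returns the first True per row. It is only meaningful for rows
    # that contain at least one positive response, hence the mask below.
    first = responses.argmax(axis=1) + 1
    first_solved = first[solved]
    if not solved.any():
        return {"success_rate": 0.0, "mean_first": float("nan"),
                "median_first": float("nan")}
    return {
        "success_rate": float(solved.mean()),
        "mean_first": float(first_solved.mean()),
        "median_first": float(np.median(first_solved)),
    }


# Toy example with four agents and four trials. The second agent never
# obtains a positive appraisal.
toy = [[0, 0, 1, 0],
       [0, 0, 0, 0],
       [1, 1, 0, 1],
       [0, 1, 0, 0]]
stats = first_positive_stats(toy)
```

Excluding unsuccessful agents from the mean and median mirrors the way the averages above are reported, but it also means these averages are optimistic whenever the success rate is below 100%.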

FIGURE 8. (A) Heatmap showing ensemble performance over 80 agents. Positive and negative responses are indicated with yellow and black squares, respectively. (B) Histogram showing time indices where the agents receive their first positive response. The rightmost column indicates agents that failed to obtain a positive appraisal. In total, 66/80 agents solve the task, corresponding to a success rate of 82.5%.

5.3 Hearing Aid Algorithm Execution Verification

To verify the proposed inference methodology for the hearing aid algorithm execution, we synthesized data by sampling from the following generative model:

with priors

where M and N are the orders of TVAR and AR models, respectively, and where M ≥ N holds, as we assume that the noise signal can be modeled by a lower AR order in comparison to the speech signal. We use an uninformative prior for the output of the hearing aid yt as in Figure 3 to prevent interactions from that part of the graph. We generated 1,000 distinct time series of length 100. For each generated time series, the (TV)AR orders M and N were sampled from the discrete domains [4, 8] and [1, 4], respectively. We resampled the priors that initially resulted in unstable TVAR and AR processes.
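The resampling step can be sketched as rejection sampling on the AR coefficients: draw a candidate, test stability via the roots of the characteristic polynomial, and redraw on failure. The sampling distribution, its scale, and the function names in this Python sketch are assumptions. For a TVAR process the check would have to hold at every time step, which is not shown.

```python
import numpy as np


def is_stable(coeffs):
    """True if x_t = sum_i coeffs[i-1] * x_{t-i} + e_t is stable.

    Stability holds when all roots of
    z^p - a_1 z^(p-1) - ... - a_p lie strictly inside the unit circle.
    """
    poly = np.concatenate(([1.0], -np.asarray(coeffs, dtype=float)))
    return bool(np.all(np.abs(np.roots(poly)) < 1.0))


def sample_stable_ar(order, rng, scale=0.5, max_tries=10_000):
    """Draw Gaussian AR coefficients, resampling until the process is stable."""
    for _ in range(max_tries):
        coeffs = rng.normal(scale=scale, size=order)
        if is_stable(coeffs):
            return coeffs
    raise RuntimeError("no stable coefficient vector found")


rng = np.random.default_rng(42)
noise_order = int(rng.integers(1, 5))  # order drawn from {1, ..., 4}
noise_coeffs = sample_stable_ar(noise_order, rng)
```

Rejection sampling keeps the prior shape intact on the stable region, but the acceptance rate drops as the order grows, which is why a cap on the number of tries is included.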

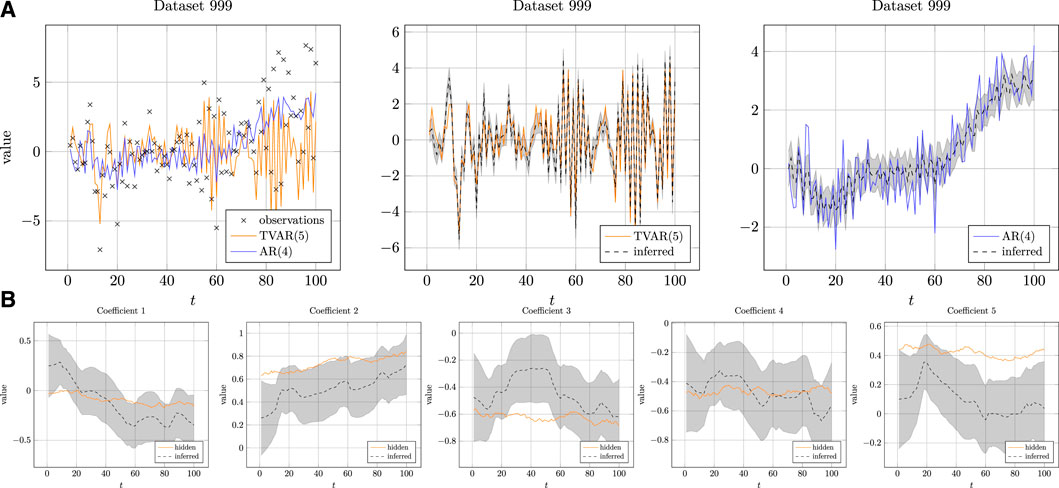

The generated time series were used in the following experiment. We first created a probabilistic model with the same specifications as the generative model in Eq. 24. However, we used non-informative priors for the states and parameters of the model that corresponds to the TVAR process in Eq. 24b. To ensure the identifiability of the separated sources, we used weakly informative priors for the parameters of the AR process in Eq. 24c. Specifically, the mean of the prior for ζ was centered around the real AR coefficients that were used in the data generation process. The goals of the experiment are 1) to verify that the proposed inference procedure recovers the hidden states θt, st and nt for each generated dataset and 2) to verify convergence of the BFE as convergence is not guaranteed, because our graph contains loops (Murphy et al., 1999). For a typical case, the inference results for the hidden states st and nt are shown in Figure 9A.

FIGURE 9. (A) Inference results for the hidden states st and nt of coupled (TV) AR process on dataset 999. (left) The generated observed signal xt with underlying generated signals st and nt. (center) The latent signal st and its corresponding posterior approximation. (right) The latent signal nt and its corresponding posterior approximation. The dashed lines correspond to the mean of the posterior estimates. The transparent regions represent the corresponding remaining uncertainty as plus-minus one standard deviation from the mean. (B) Inference results for the coefficients θt of dataset 999. The solid lines correspond to the true latent AR coefficients. The dashed lines correspond to the mean of the posterior estimates of the coefficients and the transparent regions correspond to plus-minus one standard deviation from the mean of the estimated coefficients.

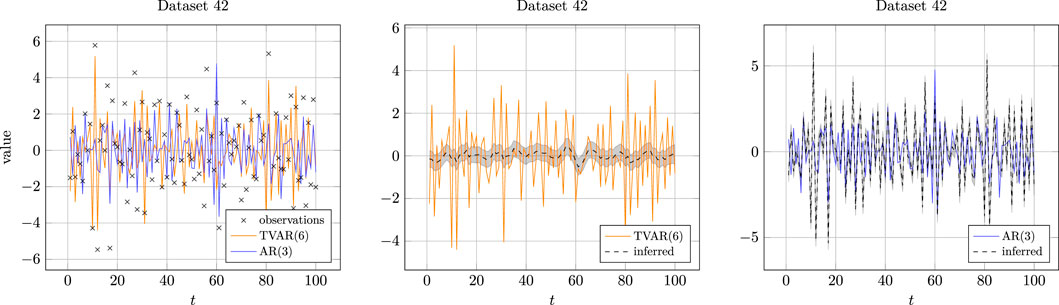

Figure 9B shows the tracking of the time-varying coefficients θt. This plot does not show the correlation between the inferred coefficients, whereas this actually contains vital information for modeling an acoustic signal. Namely, the coefficients together specify a set of poles, which influence the characteristics of the frequency spectrum of the signal. An interesting example is depicted in Figure 10. We can see that the inference results for the latent states st and nt are swapped with respect to the true underlying signals. This behavior is undesirable in standard algorithms when the output of the HA is produced based on hard-coded gains. However, our intelligent agent can still find the optimal gains for this situation. The automation of the hearing aid algorithm and intelligent agent will relieve this burden on HA clients.

FIGURE 10. Inference results for the hidden states st and nt of coupled (TV) AR process on dataset 42. In this particular case it can be noted that the inferred states are swapped with respect to the true underlying signals. However, the accompanying intelligent agent is able to cope with these kinds of situations, such that the HA clients do not experience any problems as a result.

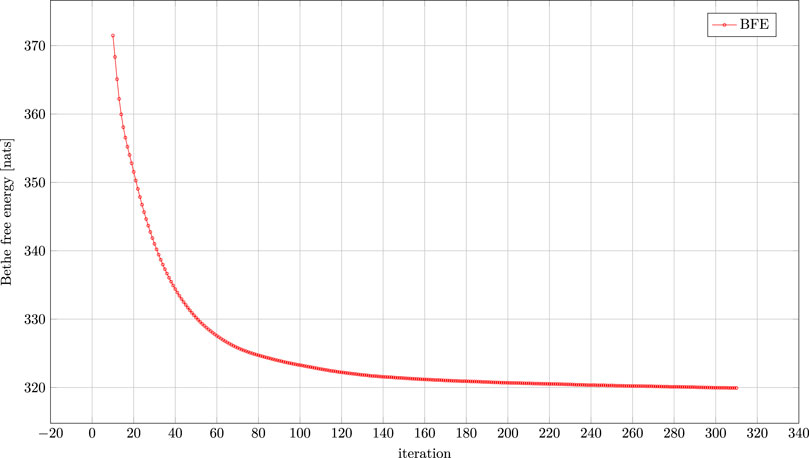

As can be seen from Figure 11, the Bethe free energy averaged over all generated time series monotonically decreases. Note that even though the proposed hybrid message passing algorithm results in a stationary solution, it does not provide convergence guarantees.

FIGURE 11. Evolution of the Bethe free energy for the coupled autoregressive model averaged over all generated time series. The iteration index specifies the number of marginal updates for all edges in the graph.

5.4 Validation Experiments

For the validation of the proposed HA algorithm and AIDA, we created an interactive web application4 to demonstrate the joint system. Figure 12 shows the interface of the demonstrator.

FIGURE 12. Screenshot of the interactive web application of AIDA. The dashboard consists of four distinct cells. The top cell Environment allows the user to change the interfering noise signal from a generated noise signal (synthetic) to a real noise signal. Furthermore it contains a reset button for resetting the application. The Hearing Aid cell provides an interactive plot of the input, separated speech, separated noise, and generated output waveform signals. Each waveform can be played when the corresponding button is pressed. The NEXT button loads a new audio file for evaluation. The thumbs-up and thumbs-down buttons correspond to providing AIDA with positive and negative appraisals, respectively. The brain button starts optimization of the parameters of GPC. The EFE Agent cell reflects the agent’s beliefs about optimal parameters for the user as an EFE heatmap. The Classifier cell shows the Bethe free energy (BFE) score for the different models, corresponding to the different contexts. For the real noise signal, the algorithm automatically determines whether we are surrounded by babble noise, or by noise from a train station.

The user listens to the output of the hearing aid algorithm by pressing the “output” button. The buttons “speech” and “noise” correspond to the beliefs of AIDA about the constituent signals of the HA input. Note that in reality the user does not have access to this information and can only listen to the HA output. After listening to the output signal, the user is invited to assess the performance of the current HA setting. The user can send positive and negative appraisals by pressing the thumbs-up or thumbs-down buttons, respectively. Once the appraisal is sent, AIDA updates its beliefs about the parameter space and provides new settings for the HA algorithm to make the user happy. As AIDA models user appraisals using a GPC, we provide an additional button that forces AIDA to optimize the parameters of the GPC. This could be useful when AIDA has already collected some feedback from the user that contains both positive and negative appraisals.

The demonstrator works in two environments: synthetic and real. The synthetic environment allows the user to listen to a spoken sentence with two artificial noise sources, i.e., either interference from a sinusoidal wave or a drilling machine. In the synthetic environment the hearing aid algorithm exploits the knowledge about acoustic contexts, i.e., it uses informative priors for the AR model that corresponds to noise. The real environment uses the data from the NOIZEUS speech corpus.5 In particular, the real environment consists of 30 sentences pronounced in two different noise environments. Here the user is either experiencing surrounding noise at a train station or babble noise. In the real environment, the HA algorithm uses weakly informative priors for the background noise, which influences the performance of the HA algorithm. Both the HA algorithm and AIDA determine the acoustic context based on the Bethe free energy score, which is also shown in the demonstrator. The context with the lower Bethe free energy score corresponds to the selected acoustic context.

6 Related Work

The problem of hearing aid personalization has been explored in various works. In Nielsen et al. (2015) the HA parameters are tuned according to pairwise user assessment tests, during which the user’s perception is encoded using Gaussian processes. The intractable posterior distribution corresponding to the user’s perception is then computed using a Laplace approximation with Expected Improvement as the acquisition function used to select the next set of gains. Our agent improves upon Nielsen et al. (2015) in two concrete ways. Firstly, AIDA places a lower cognitive load on the user by not requiring pairwise comparisons. This means the user does not need to keep in her memory what the HA sounded like at the previous trial but only needs to consider the current HA output. AIDA accomplishes this without requiring more trials for training. In fact, since AIDA does not require pre-training but can be trained fully online under in-situ conditions, AIDA requires less data to locate optimal gains. Secondly, AIDA can be trained and retrained in a continual learning fashion. In case the user’s preferences change over time, for instance by a change in the hearing loss profile, AIDA can smoothly accommodate the user as long as she continues to provide the agent with feedback. Using EFE as acquisition function means the agent will engage in local exploration once the optimum is located, leading the agent to naturally learn shifts in the user’s preferences by balancing exploration and exploitation. In Alamdari et al. (2020), personalization of the hearing aid compression algorithm is framed in terms of deep reinforcement learning. In contrast, in our work we take inspiration from the active inference framework where agents act to maximize model evidence of their underlying generative model. Importantly, this does not require us to explicitly specify a loss function that drives exploitative and epistemic behavior. In the recent work of Ignatenko et al. (2021), the hearing aid preference learning algorithm is implemented through sequential Bayesian optimization with pairwise comparisons. Their hearing aid system comprises two subsystems representing a user with their preferences and the agent that guides the learning process. However, Ignatenko et al. (2021) focus only on exploration through maximizing information gain with a parametric model. The EFE additionally adds a goal directed term that ensures the agent will stay near the optimum once located, even if other parameter settings provide more information. Extending the model of Ignatenko et al. (2021) to employ the full EFE is an exciting potential direction for future work. Finally, neither Nielsen et al. (2015) nor Ignatenko et al. (2021) takes context dependence into account.

Friston Karl J. et al. (2021) introduce Active Listening (AL), which performs speech recognition based on the principles of active inference. They regard listening as an active process that is largely influenced by lexical, speaker and prosodic information. Their approach distinguishes itself from conventional audio processing algorithms because it explicitly includes the process of word boundary selection before word classification and recognition, and regards this as an active process. Word boundaries are selected from a group of candidate word boundaries, based on Bayesian model selection, by choosing the word boundary that optimizes the VFE during classification. In the future, we see the potential of incorporating the AL approach into AIDA. Active inference is also successfully applied in the work of Holmes et al. (2021), which models selective attention in a cocktail party listening setup.

The audio processing components of AIDA essentially perform informed source separation (Knuth, 2013), where sources are separated based on prior knowledge. Even though blind source separation approaches (Xie et al., 2012; Laufer and Gannot, 2021) always use some degree of prior information, we do not focus on this direction and instead we actively try to model the underlying sources based on variations of auto-regressive processes. For audio processing applications source separation has often been performed in the log-power domain (Frey et al., 2001; Rennie et al., 2006; Rennie et al., 2009). However, the interaction of the signals in this domain is no longer linear. The intractability that results from performing exact inference in this model is often resolved by simplifying the interaction function (Radfar et al., 2006; Hershey et al., 2010). Although this approach has been shown to be successful in the past, its performance is limited because it neglects phase information.

7 Discussion

We have introduced a design agent that is capable of tuning the context-dependent parameters of a hearing aid algorithm by incorporating user feedback. Throughout the paper, we have made several design choices whose implications we briefly review in this section.

The audio model introduced in Section 3.1 describes the dynamics of the speech signal perturbed by colored noise. Despite the fact that the proposed inference algorithm allows for the decomposition of such signals into speech and noise components, there are a few limitations that must be highlighted. First, the identifiability of the coupled AR model depends on the selected priors. Non-informative priors can lead to poor source estimation (Kleibergen and Hoek, 1995; Hsiao, 2008). To tackle the identifiability issue, we use informative context-dependent priors. In other words, for each context, we use a different set of priors that better describe the dynamics of the acoustic signal in that context. Secondly, throughout our experiments we used fixed orders of TVAR and AR models. In reality, we do not have prior information about the actual order of the underlying signals. Therefore, to continuously update our models of the underlying sources we need to perform active order selection, which can be realized using Bayesian model reduction (Friston and Penny, 2011; Friston et al., 2018). Thirdly, our model assumes that the hearing aid device only has access to a monaural input, which means that the observed signal originates from single microphone. As a result we do not use any spatial information about an acoustic signal that could have been obtained using multiple microphones. This assumption is mostly influenced by our desire to focus on the concept of designing a novel class of hearing aid algorithms rather than building real-world HA engine. Fortunately, the proposed framework allows for the easy substitution of source models with more versatile models that might be better suited for speech. For instance, one can use several microphones, as commonly done in beamforming (Ozerov and Fevotte, 2010), or use a frequency decomposition for improving the source separation performance (Frey et al., 2001; Rennie et al., 2006, 2009). 
Inevitably, a more complex model will also likely result in a higher computational burden. Hence, the implementation of this algorithm on an embedded device remains a challenge.
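
To make the identifiability issue concrete, the following minimal sketch simulates a monaural observation as the sum of two autoregressive sources. It is an illustration only: it uses fixed rather than time-varying AR coefficients, and the function name `simulate_ar`, the coefficient values, and the noise levels are assumptions chosen for the example, not settings taken from the experiments.

```python
import random


def simulate_ar(coeffs, noise_std, n_samples, rng):
    """Simulate x[t] = sum_i coeffs[i] * x[t-1-i] + e[t], with e[t] ~ N(0, noise_std^2)."""
    order = len(coeffs)
    x = [0.0] * order  # zero initial conditions
    for _ in range(n_samples):
        past = x[-1:-order - 1:-1]  # the `order` most recent samples, newest first
        x.append(sum(a * p for a, p in zip(coeffs, past)) + rng.gauss(0.0, noise_std))
    return x[order:]  # drop the initial conditions


rng = random.Random(0)
# Hypothetical, stable coefficients: an AR(2) "speech-like" source
# and an AR(1) "noise-like" source.
speech = simulate_ar([1.5, -0.8], noise_std=1.0, n_samples=200, rng=rng)
noise = simulate_ar([0.5], noise_std=0.5, n_samples=200, rng=rng)

# The device only observes the sum; the individual sources are hidden.
observed = [s + n for s, n in zip(speech, noise)]
```

Since only `observed` is available to the device, different pairs of sources can explain the same signal, which is why the choice of priors matters for recovering the decomposition.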

The power of the agent comes from the choice of the objective function. Since the objective is independent of the generative model, a straightforward approach to improving the agent is to adapt the generative model. In particular, a GPC is a nonparametric model with very few assumptions on the underlying function. Placing constraints on the preference function, as was done in Cox and de Vries (2017) and Ignatenko et al. (2021), is likely to improve the data efficiency of the agent. Arguably, a core move of Cox and de Vries (2017) and Ignatenko et al. (2021) is to acknowledge that user preferences are likely to be peaked around one or a few optima. Even if the true preference function has multiple modes, assuming a single peak for the agent is safe since it only needs to locate one of the modes to provide good parameter settings. Making this assumption allows the authors to work with a parametric model over user preferences. Working with a less flexible model predictably leads to higher data efficiency, which can improve the performance of the agent. Given that the target demographic for AIDA consists of HA users, it is of paramount importance that the agent is able to learn an adequate representation of user preferences in as few trials as possible to avoid inconveniencing the user.
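
As an illustration of what a single-peak parametric preference model could look like, the sketch below maps a control setting to the probability of a positive appraisal through a logistic function of a negative quadratic. This particular form, the function name `preference_probability`, and all parameter values are assumptions made for the example; it is not necessarily the parameterization used in Cox and de Vries (2017) or Ignatenko et al. (2021).

```python
import math


def preference_probability(u, optimum, widths, peak_logit=2.0):
    """Probability of a positive appraisal for setting `u` under a single-peak model.

    The logit is a downward-opening quadratic centred on `optimum`, so the
    probability is highest at the optimum and decays with distance from it.
    """
    distance = sum(((ui - mi) / wi) ** 2 for ui, mi, wi in zip(u, optimum, widths))
    return 1.0 / (1.0 + math.exp(-(peak_logit - distance)))


# Hypothetical parameter values for a two-dimensional control variable.
optimum = [0.7, 0.3]
widths = [0.2, 0.4]

p_at_optimum = preference_probability(optimum, optimum, widths)
p_far_away = preference_probability([0.0, 1.0], optimum, widths)
```

For a D-dimensional control variable, this sketch has only 2D + 1 free parameters (the optimum, the widths, and the peak logit), which gives a sense of why such a model may need fewer trials to fit than a nonparametric GPC.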

During model specification in Section 3.2, we make some assumptions on the control variable uk and user appraisals rk. First, we set the domain of the elements of the control variable uk to [0, 1]. Note that this is an arbitrary constraint which we use for illustrative purposes. The domain can be easily rescaled without loss of generality. For example, in our demonstrator, we use the default domain uk ∈ [0, 2]². Secondly, we opt for binary user appraisals, i.e., rk ∈ {∅, 0, 1}. This design choice follows from the requirement of allowing users to communicate covertly with AIDA. Binary user appraisals can more easily be linked to, for example, covert wrist movements when wearing a smartwatch to update the control variables. With continuous user appraisals, e.g., rk ∈ [0, 1], or pairwise comparison tests, the convergence of AIDA can be greatly improved, as these appraisals yield more information per appraisal. However, providing AIDA with these appraisals requires more attention from the user, which is undesirable in certain circumstances, for example during business meetings.
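
The rescaling of the control domain and the encoding of appraisals can be sketched as follows. The helper names `rescale` and `responded_trials` are hypothetical, and the sketch assumes that ∅ denotes a trial without a user response, represented here by `None`.

```python
from typing import List, Optional, Sequence, Tuple

Trial = Tuple[Sequence[float], Optional[int]]


def rescale(u_unit: Sequence[float], low: float, high: float) -> List[float]:
    """Map each element of u from [0, 1] to [low, high] with an affine transform."""
    return [low + (high - low) * x for x in u_unit]


def responded_trials(history: Sequence[Trial]) -> List[Trial]:
    """Keep only the trials in which the user gave an appraisal (r is 0 or 1)."""
    return [(u, r) for u, r in history if r is not None]


# `None` stands in for the empty appraisal (assumed: no response from the user).
history = [([0.25, 0.5], 1), ([0.75, 0.0], None), ([1.0, 1.0], 0)]

# Settings expressed on the [0, 2] x [0, 2] domain mentioned for the demonstrator.
demo_settings = [rescale(u, 0.0, 2.0) for u, _ in history]
responded = responded_trials(history)
```

Because the transform is affine and invertible, a model defined on [0, 1] can be used unchanged by mapping settings back and forth at the interface.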

Real-world testing of AIDA has not been included in our work. The performance evaluation with human HA clients is not straightforward. To evaluate the performance of AIDA, we need to conduct a randomized controlled trial (RCT), where HA clients should be randomly assigned to either an experimental group or a control group. While the current intelligent AIDA agent can interact with users in real time, the source separation framework currently limits the actual real-time performance. Under the current model assumptions, i.e., two auto-regressive filters under a variational approximation, we obtain good source separation performance at the cost of high computational complexity. Hence, the complete framework is not yet suitable for a proper RCT setting. Nonetheless, we provide a demo that simulates AIDA and can be tested freely. In future work, we shall focus on specifying source models that are computationally cheap, allowing us to run the source separation algorithms in real time.

8 Conclusion

This paper has presented AIDA, an active inference design agent for novel situation-aware personalized hearing aid algorithms. AIDA and the corresponding hearing aid algorithm are based on probabilistic generative models that model the user and the underlying speech and context-dependent background noise signals of the observed acoustic signal, respectively. Through probabilistic inference by means of message passing, we perform informed source separation in this model and use the separated signals to perform source-specific filtering. AIDA then learns personalized source-specific gains through user interaction, depending on the environment that the user is in. Users can give a binary appraisal, after which the agent will make an improved proposal based on expected free energy minimization, which encourages both exploitative and epistemic behavior. AIDA’s operations are context-dependent and use the context inferred by the hearing aid algorithm, which is based on Bayesian model selection. Experimental results show that hybrid message passing is capable of finding the hidden states of the coupled AR model that are associated with the speech and noise components. Moreover, Bayesian model selection has been tested for the context inference problem where each source is modelled by an AR process. The experiments on preference learning showed the potential of applying expected free energy minimization for finding the optimal settings of the hearing aid algorithm. Although real-world implementations still present challenges, this novel class of audio processing algorithms has the potential to change the leading approach to hearing aid algorithm design. Future plans encompass developing AIDA towards real-time applications.

Data Availability Statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found in the article/Supplementary Material.

Author Contributions

Conceptualization, AP, BvE, MK, and BdV; methodology, AP, BvE, and MK; software, AP, BvE, and MK; validation, AP, BvE, and MK; formal analysis, AP, BvE, MK, and BdV; writing—original draft preparation, AP, BvE, and MK; writing—review and editing, AP, BvE, MK, and BdV; visualization, AP, BvE, and MK; supervision, BdV; project administration, BdV; funding acquisition, BdV.

Funding

This work was partly financed by GN Advanced Science, which is the research department of GN Hearing A/S, and by research programs ZERO and EDL with project numbers P15-06 and P16-25, respectively, which are (partly) financed by the Netherlands Organisation for Scientific Research (NWO). The funders were not involved in the study design, collection, analysis, interpretation of data, the writing of this article or the decision to submit it for publication.

Conflict of Interest

During execution of the project, MK was also employed by Nested Minds Solutions. BdV is also employed by GN Hearing A/S.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors would like to thank the BIASlab team members for insightful discussions on various topics related to this work.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frsip.2022.842477/full#supplementary-material

Footnotes

1Aida is a girl’s name of Arabic origin, meaning “happy.” We use this name as an abbreviation for an “Active Inference-based Design Agent” that aims to make an end user “happy.”

2ReactiveMP (Bagaev and de Vries, 2022) is available at https://github.com/biaslab/ReactiveMP.jl

3The AIDA GitHub repository with all experiments is available at https://github.com/biaslab/AIDA

4A web application of AIDA is available at https://github.com/biaslab/AIDA-app/

5The NOIZEUS database is available at https://ecs.utdallas.edu/loizou/speech/noizeus/

References

Alamdari, N., Lobarinas, E., and Kehtarnavaz, N. (2020). Personalization of Hearing Aid Compression by Human-In-The-Loop Deep Reinforcement Learning. IEEE Access 8, 203503–203515. doi:10.1109/ACCESS.2020.3035728

Bagaev, D., and de Vries, B. (2022). Reactive Message Passing for Scalable Bayesian Inference. Submitted to the Journal of Machine Learning Research.

Beerends, J. G., Schmidmer, C., Berger, J., Obermann, M., Ullmann, R., Pomy, J., et al. (2013). Perceptual Objective Listening Quality Assessment (POLQA), the Third Generation ITU-T Standard for End-To-End Speech Quality Measurement Part I—Temporal Alignment. J. Audio Eng. Soc. 61, 366–384.

Bezanson, J., Edelman, A., Karpinski, S., and Shah, V. B. (2017). Julia: A Fresh Approach to Numerical Computing. SIAM Rev. 59, 65–98. doi:10.1137/141000671

Bishop, C. M. (2006). Pattern Recognition and Machine Learning. New York: Springer-Verlag New York, Inc.

Chinen, M., Lim, F. S. C., Skoglund, J., Gureev, N., O’Gorman, F., and Hines, A. (2020). ViSQOL V3: An Open Source Production Ready Objective Speech and Audio Metric. arXiv:2004.09584 [cs, eess].

Chu, W., and Ghahramani, Z. (2005). “Preference Learning with Gaussian Processes,” in ICML ’05: Proceedings of the 22nd international conference on Machine learning (New York, NY, USA: Association for Computing Machinery), 137–144. doi:10.1145/1102351.1102369

Cox, M., and de Vries, B. (2017). “A Parametric Approach to Bayesian Optimization with Pairwise Comparisons,” in NIPS Workshop on Bayesian Optimization (BayesOpt 2017), Long Beach, USA, 1–5.

Cox, M., van de Laar, T., and de Vries, B. (2019). A Factor Graph Approach to Automated Design of Bayesian Signal Processing Algorithms. Int. J. Approximate Reasoning 104, 185–204. doi:10.1016/j.ijar.2018.11.002

Da Costa, L., Parr, T., Sajid, N., Veselic, S., Neacsu, V., and Friston, K. (2020). Active Inference on Discrete State-Spaces: A Synthesis. arXiv:2001.07203 [q-bio].

Dauwels, J. (2007). “On Variational Message Passing on Factor Graphs,” in IEEE International Symposium on Information Theory, Nice, France, 2546–2550. doi:10.1109/ISIT.2007.4557602

Forney, G. D. (2001). Codes on Graphs: Normal Realizations. IEEE Trans. Inform. Theor. 47, 520–548. doi:10.1109/18.910573

Frey, B. J., Deng, L., Acero, A., and Kristjansson, T. (2001). “ALGONQUIN: Iterating Laplace’s Method to Remove Multiple Types of Acoustic Distortion for Robust Speech Recognition,” in Proceedings of the Eurospeech Conference, Aalborg, Denmark, 901–904.

Friston, K., Da Costa, L., Hafner, D., Hesp, C., and Parr, T. (2021a). Sophisticated Inference. Neural Comput. 33, 713–763. doi:10.1162/neco_a_01351

Friston, K. J., Sajid, N., Quiroga-Martinez, D. R., Parr, T., Price, C. J., and Holmes, E. (2021b). Active Listening. Hearing Res. 399, 107998. doi:10.1016/j.heares.2020.107998

Friston, K., Kilner, J., and Harrison, L. (2006). A Free Energy Principle for the Brain. J. Physiology-Paris 100, 70–87. doi:10.1016/j.jphysparis.2006.10.001

Friston, K., Parr, T., and Zeidman, P. (2018). Bayesian Model Reduction. arXiv:1805.07092 [stat].

Friston, K., and Penny, W. (2011). Post Hoc Bayesian Model Selection. Neuroimage 56, 2089–2099. doi:10.1016/j.neuroimage.2011.03.062

Friston, K., Rigoli, F., Ognibene, D., Mathys, C., Fitzgerald, T., and Pezzulo, G. (2015). Active Inference and Epistemic Value. Cogn. Neurosci. 6, 187–214. doi:10.1080/17588928.2015.1020053

Gannot, S., Burshtein, D., and Weinstein, E. (1998). Iterative and Sequential Kalman Filter-Based Speech Enhancement Algorithms. IEEE Trans. Speech Audio Process. 6, 373–385. doi:10.1109/89.701367

Gibson, J. D., Koo, B., and Gray, S. D. (1991). Filtering of Colored Noise for Speech Enhancement and Coding. IEEE Trans. Signal. Process. 39, 1732–1742. doi:10.1109/78.91144

Hershey, J. R., Olsen, P., and Rennie, S. J. (2010). “Signal Interaction and the Devil Function,” in Proceedings of the Interspeech 2010, Makuhari, Chiba, Japan, 334–337. doi:10.21437/interspeech.2010-124

Hines, A., Skoglund, J., Kokaram, A. C., and Harte, N. (2015). ViSQOL: an Objective Speech Quality Model. J. Audio Speech Music Proc. 2015. doi:10.1186/s13636-015-0054-9

Holmes, E., Parr, T., Griffiths, T. D., and Friston, K. J. (2021). Active Inference, Selective Attention, and the Cocktail Party Problem. Neurosci. Biobehavioral Rev. 131, 1288–1304. doi:10.1016/j.neubiorev.2021.09.038

Houlsby, N., Huszár, F., Ghahramani, Z., and Lengyel, M. (2011). Bayesian Active Learning for Classification and Preference Learning. arXiv:1112.5745 [cs, stat].

Hsiao, T. (2008). Identification of Time-Varying Autoregressive Systems Using Maximum a Posteriori Estimation. IEEE Trans. Signal. Process. 56, 3497–3509. doi:10.1109/TSP.2008.919393

Huszar, F. (2011). “A GP Classification Approach to Preference Learning,” in NIPS Workshop on Choice Models and Preference Learning, Sierra Nevada, Spain, 4.

Ignatenko, T., Kondrashov, K., Cox, M., and de Vries, B. (2021). On Sequential Bayesian Optimization with Pairwise Comparison. arXiv:2103.13192 [cs, math, stat].

Mogensen, P. K., and Riseth, A. N. (2018). Optim: A Mathematical Optimization Package for Julia. J. Open Source Softw. 3, 615. doi:10.21105/joss.00615