- ¹ The School of Physical and Engineering Sciences, Heriot-Watt University, Edinburgh, United Kingdom

- ² The National Robotarium, Edinburgh, United Kingdom

Introduction: This paper addresses the challenges of vision-based manipulation for autonomous cutting and unpacking of transparent plastic bags in industrial setups, contributing to the Industry 4.0 paradigm. Industry 4.0, emphasizing data-driven processes, connectivity, and robotics, enhances accessibility and sustainability across the value chain. Integrating autonomous systems, including collaborative robots (cobots), into industrial workflows is crucial for improving efficiency and safety.

Methods: The proposed system employs advanced Machine Learning algorithms, particularly Convolutional Neural Networks (CNNs), to identify transparent plastic bags under diverse lighting and background conditions. Tracking algorithms and depth-sensing technologies are integrated to enable 3D spatial awareness during pick-and-place operations. The system uses a Franka Emika robot arm and incorporates vacuum gripping technology with compliance control, targeting optimal grasping and manipulation points.

Results: The system successfully demonstrates its capability to automate the unpacking and cutting of transparent plastic bags for an 8-stack bulk-loader. Rigorous lab testing showed high accuracy in bag detection and manipulation under varying environmental conditions, as well as reliable performance in handling and processing tasks. The approach effectively addressed challenges related to transparency, plastic bag manipulation and industrial automation.

Discussion: The results indicate that the proposed solution is highly effective for industrial applications requiring precision and adaptability, aligning with the principles of Industry 4.0. By combining advanced vision algorithms, depth sensing, and compliance control, the system offers a robust method for automating challenging tasks. The integration of cobots into such workflows demonstrates significant potential for enhancing efficiency, safety, and sustainability in industrial settings.

1 Introduction

Industry 4.0 (also called the Fourth Industrial Revolution, or 4IR) is the next phase in the digitization of the manufacturing sector, driven by disruptive trends including the rise of data and connectivity, analytics, human-machine interaction, and improvements in robotics (Adel, 2022; Zhong et al., 2017). This could make products and services more easily accessible and transmissible for businesses, consumers, and stakeholders all along the value chain (Goel and Gupta, 2020). Preliminary data indicate that successfully scaling 4IR technology makes supply chains more efficient and sustainable (Javaid et al., 2021), creates a safer and more productive environment for employees, and reduces occupational accidents and factory waste, among other benefits.

Autonomous manipulation of plastic packages in industrial setups typically involves the use of robotic systems and automation technologies (Md et al., 2018). These systems are designed to handle, move, and manipulate plastic packages in a variety of industrial processes, such as packaging, recycling and sorting, food processing, and quality control (Gabellieri et al., 2023; Chen et al., 2023).

Collaborative robots, or cobots, are widely used in various industrial applications, working alongside humans without the need for extensive safety barriers, cages, or other restrictive measures (Chen et al., 2018). These robots use various sensors to perceive their environment and recognise objects, and they are programmed for accessibility, flexibility and repeatability. Examples can be found in the textile industry: Longhini et al. (2021) proposed a dual-arm collaborative system for textile material identification in which, by imitating human behavior, the robots use actions such as pulling and twisting to learn about textile properties. In recent years, the recycling and waste management industry has begun to use vision-based robotic systems, such as those of Zen Robotics¹, AMP², and Robenso³, for the classification and accurate sorting of waste materials. Indicative examples can be found in different recycling industries for the management of construction waste (Chen et al., 2022; Lukka et al., 2014), recyclable materials (Koskinopoulou et al., 2021; Papadakis et al., 2020) or electronic parts (Parajuly et al., 2016; Naito et al., 2021). Prior work (?) describes the application of various cobots, such as the UR5, for handling plastic materials, but notes that a common solution has yet to be found for these applications. This work aims to address this challenge by developing a robotic automation solution for handling plastic bags using the Franka Emika Panda robot and integrating it with a custom-made automation solution developed for a specific industrial application.

The vision-based manipulation and autonomous cutting of transparent plastic bags present a set of intricate challenges and a compelling need for innovative AI solutions (Billard and Kragic, 2019; Tian et al., 2023). The transparency of the bags makes accurate detection difficult because of the reflection and refraction of light, demanding sophisticated computer vision algorithms for reliable identification (Sajjan et al., 2020). Background noise further impedes detection. The deformable nature of plastic bags adds complexity to grasping and manipulation, necessitating advanced robotic control strategies to handle their variability (Makris et al., 2023). This study tackles these detection and handling challenges, which create significant obstacles for vision-based autonomous cutting.

High-speed image processing is essential for this project to ensure timely detection, decision-making, and task execution. Delays in image processing can lead to missed targets or misaligned actions, such as the robot dropping the plastic bag in the wrong location. YOLOv5 addresses these challenges with its highly optimized architecture, offering millisecond-level inference times while maintaining robust object detection and localization accuracy. Additionally, autonomous cutting requires a well-considered mechanical design and precise vision-guided tools to identify optimal cutting points while avoiding unintended damage. Ensuring the safety and efficiency of these systems in real-time, dynamic environments further amplifies the challenge. The need for such technologies arises from the growing demand for automated waste management, recycling, and packaging processes, where vision-based systems can enhance efficiency, reduce human intervention, and support sustainable practices by enabling the effective processing of transparent plastic bags (Hajj-Ahmad et al., 2023).

In this work, the system uses advanced Machine Learning algorithms based on Convolutional Neural Networks (CNNs) to identify transparent plastic bags within its visual field, accounting for variations in lighting and background. Once the bags are detected, the system uses tracking algorithms to follow their pick and placement and integrates depth-sensing technologies for 3D spatial awareness. The next steps involve developing algorithms for robotic grasping and manipulation that account for the challenges posed by the deformable and transparent nature of plastic bags. These include the selection of optimal grasping points, compliance control using vacuum gripping technology, and real-time automation and processing to ensure effective and safe interaction with the bags in dynamic environments.

The rest of the paper is organized as follows. Section 2 describes the mechanical design of the proposed system. Section 3 presents the object detection and manipulation approach based on deep-learning and Section 4 presents the autonomous cutting mechanism and the automation process. The testing of the pilot proof-of-concept prototype is presented in Section 5. Finally, the last section discusses the obtained results and highlights directions for future work.

2 Mechanical design

Integrating robotic manipulation with an industrial automation solution presents several significant challenges, such as space limitations, alignment with existing conveyor systems and environmental constraints. To address these issues, a robust system was developed whose dimensions match those of the other sections in the automation setup. The mechanical design encompasses three key components:

(i) Feeding: This involves the precise picking and placing of eight packaged plastic-container stacks from an adjacent tote into eight individual enclosures aligned with the bulk loader’s feeding system.

(ii) Cutting: This entails the opening, removal, and disposal of the packaging surrounding the plastic-container stacks. This operation is performed while the plastic-container stacks are securely held within the eight individual enclosures.

(iii) Delivery: This stage involves opening the enclosures containing the plastic-containers and strategically placing the unpackaged stacks into the bulk loader, facilitating the seamless integration of the plastic-containers into the larger industrial process.

This section details each subsystem within the design of the prototype.

2.1 Feeding

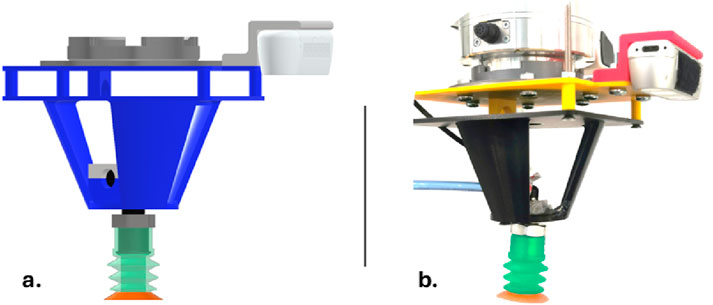

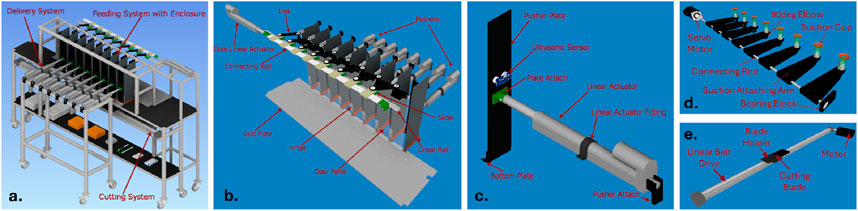

In the feeding system, a collaborative robot arm (the 7-axis Franka Emika Panda, equipped with an Intel RealSense D435 camera and a custom suction cup gripper) is employed for manipulation and vision tasks (Figure 1B). The gripper comprises a Schmalz PSPF 33 SI-30/55 G1/8-AG suction cup, an SBP 15 G02 SDA vacuum generator, and a VS VP8 SA M8-4 pressure sensor enclosed in a custom 3D-printed housing (Figure 1A shows the CAD model of the custom gripper and Figure 1B the real printed gripping system). The feeding process begins with the camera capturing a top-down image of the tote, identifying the tops of the plastic stacks, and assigning each a value that establishes the picking order. Guided by this order, the robot arm uses suction to pick individual stacks and place each into one of eight custom enclosures made of aluminum extrusion and acrylic (Figure 2A). Stack placement is verified using HC-SR04 ultrasonic sensors on the enclosures' back walls. To facilitate picking, the tote containing the stacks is inclined at an angle (12

Figure 1. (A) CAD model of the vision-based gripping system; (B) Real system embedded in the Franka robot.

Figure 2. CAD model designs of the system’s components: (A) Eight-stack design assembly; (B) Delivery system; (C) Pushing mechanism; (D) Bottom suction mechanism; (E) Cutting mechanism.

2.2 Cutting

The cutting system consists of two interconnected components: a gripping mechanism and a cutting mechanism. The gripping mechanism employs eight vacuum-driven suction cup grippers to securely hold the bottom of each stack's packaging. A vacuum line, routed through two compressors and solenoid valves, divides the vacuum between the cobot arm's end-effector gripper and the eight suction cup grippers. Each of the eight air conduits is equipped with a dedicated vacuum generator, providing sufficient vacuum for secure suction, along with a pressure sensor to measure the suction level. The suction cups, mounted on 3D-printed arms driven by motors, swing into contact with the stack bases (Figure 2D). The cutting mechanism features a scalpel blade housed in a custom 3D-printed mount, affixed to a linear belt drive (Figure 2E).

The cutting process begins by opening the solenoid valve, which supplies pressure to the vacuum generators. The swinging rod brings each suction cup gripper into contact with the bottom of all eight packages. As suction secures the packages, the sensor on each gripper measures the suction level. Once the required suction is reached, the rod rotates, creating tension in the packaging. Simultaneously, the cutting mechanism traverses the linear rail, slicing through the packaging. Upon completion, the valves close, releasing the cut plastic into a container beneath the aluminum frame. After all eight stacks have been opened, the cobot arm reverses the pick-and-place task, using its suction cup gripper to grasp the top of each remaining piece of packaging and place it in a designated disposal bin. This cutting system thus provides precise and efficient packaging removal as part of the overall automated process for unpacking and cutting transparent plastic bags in industrial setups.

2.3 Delivery

The delivery system incorporates nine WL-22040921 linear actuators, with eight arranged in parallel through a two-channel relay module to create the pushing mechanism against the back walls of the enclosures. The remaining actuator, also connected to a two-channel relay module, is dedicated to the custom door mechanism. In the pushing mechanism (Figure 2C), each linear actuator, positioned against the back walls, executes forward movements, serving as pushers, and integrates ultrasonic sensors for precision. A custom plate at the base catches the stacks, facilitating their smooth displacement into the bulk loader. For the custom door mechanism (Figure 2B), a linear guide rail, linear actuator, door hinges, and acrylic doors are utilized. Eight individual sliders on the rail correspond to the linear actuator, doors, and hinges, enabling synchronized opening and closing. A limit switch at the rail’s end prevents excess movement, safeguarding the door mechanism. The delivery process initiates after the cobot arm removes all packaging, with the linear actuator autonomously opening the doors, and the eight linear actuators pushing the unpackaged stacks onto an acrylic skid plate. Upon successful placement, the linear actuators revert to their initial position, retracting the enclosure back walls, and closing the doors. Upon completion, the system undergoes a reset for the subsequent delivery cycle. This intricately designed delivery system ensures efficient and controlled movement of unpackaged stacks, contributing to the seamless integration of the transparent plastic bag unpacking and cutting process in industrial setups.

3 Deep-learning-based object detection and manipulation

This section presents the vision system's workflow for real-time detection and tracking of transparent bags. The camera framework runs on ROS Noetic and uses the ROS Wrapper for Intel RealSense Devices provided by Intel. Once the RealSense camera package is launched, the camera publishes depth and color (RGB) data, which other nodes can subscribe to as needed. The captured images are converted to monochrome and passed to the subsequent detection stages. QR codes are used to divide the tote into zones and to aid depth estimation. Detection is performed with YOLOv5, integrated into ROS through a ROS wrapper; chosen for its efficiency, YOLOv5 detects the plastic-container stacks in real time and provides precise picking locations to the robotic system.

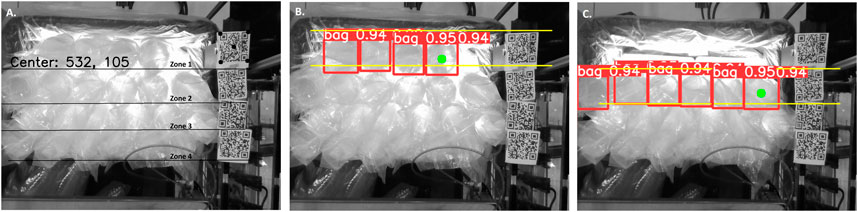

3.1 QR code based depth estimation and tracking

Depth estimation is required to convert camera coordinates into Cartesian coordinates. However, this is non-trivial, as depth readings from the current camera are not always robust due to inconsistencies caused by the transparency of the bags and plastic containers. To overcome this, QR codes have been placed at the side of the tote, as shown in Figure 3A. The QR codes provide clear reference points and an accurate depth reading at the beginning of the task. Four QR codes are used in total, each corresponding to one of the four rows of stacks in the tote. The distance between a stack and the camera is then calculated from the distance between the camera and the respective QR code. This also allows the bags to be grouped into zones, as shown in Figure 3B. Picking the stacks requires reliable tracking, so that the robot can return to the next stack in the sequence after loading the feeding system. To achieve this, bag detection is performed per zone rather than over the whole tote. Figure 3B shows the detection of the stacks in zone 1, with 4 bags identified by red bounding boxes together with their confidence levels. The green dot within the right-hand red box indicates the target stack that the robot will pick next. Figure 3C illustrates the sequence after the zone 1 stacks have been successfully picked and placed by the robot, showing the detection of the 6 stacks in zone 2. By finding the coordinates of the leftmost stack, the robot can detect and pick the stacks one by one from left to right. Once all stacks in zone 1 have been picked, it proceeds to zones 2, 3 and finally 4.

Figure 3. Plastic-container stack detection process. (A) Zone identification and QR-code detection of zone 1, along with its center co-ordinates; (B) First zone stack detection and tracking of the next picking target (marked with the green dot); (C) plastic-container stack detection of zone 2.
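The depth-from-QR and left-to-right ordering described above can be illustrated with a short sketch. It assumes a simple pinhole camera model without lens distortion, assumes that all stack tops in a zone lie at the QR code's measured depth plus an optional fixed offset, and uses placeholder intrinsics rather than the D435's calibrated values. `Intrinsics`, `zone_depth_from_qr`, `deproject` and `picking_order` are hypothetical names, not the authors' code.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Intrinsics:
    """Pinhole intrinsics in pixels (placeholder values, not the authors' calibration)."""
    fx: float
    fy: float
    cx: float
    cy: float


Detection = Tuple[float, float, float]  # (u, v, confidence): bounding-box centre in pixels


def zone_depth_from_qr(qr_depth_m: float, offset_m: float = 0.0) -> float:
    """Assumption: every stack top in a zone sits at the QR code depth plus a fixed offset."""
    return qr_depth_m + offset_m


def deproject(u: float, v: float, depth_m: float, k: Intrinsics) -> Tuple[float, float, float]:
    """Back-project pixel (u, v) at depth depth_m into the camera optical frame (metres)."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


def picking_order(zone: List[Detection]) -> List[Detection]:
    """Order one zone's detections left to right by pixel column u."""
    return sorted(zone, key=lambda d: d[0])


if __name__ == "__main__":
    k = Intrinsics(fx=615.0, fy=615.0, cx=320.0, cy=240.0)   # hypothetical intrinsics
    zone1 = [(410.0, 200.0, 0.91), (150.0, 210.0, 0.88), (280.0, 205.0, 0.93)]
    depth = zone_depth_from_qr(0.62)                          # hypothetical QR depth (m)
    u, v, conf = picking_order(zone1)[0]                      # leftmost stack first
    print(deproject(u, v, depth, k), conf)
```

With a real camera, the RealSense SDK function `rs2_deproject_pixel_to_point` performs the same back-projection using the intrinsics and distortion model reported by the device; the sketch only makes the geometry explicit.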

3.2 Data acquisition

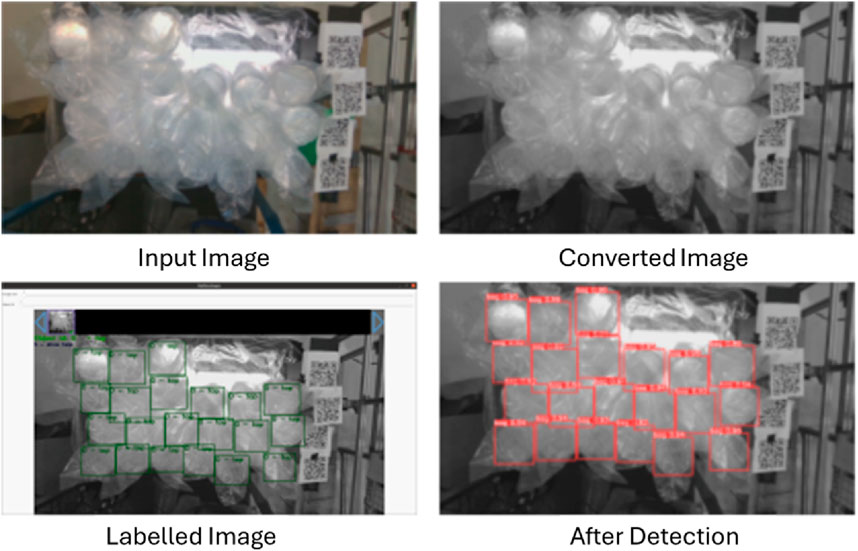

A custom dataset was created with the same Intel D435 camera used for object detection, by capturing images of the plastic-container stacks and labelling them as training data for the algorithm. A sample raw image is shown in Figure 4 (Original). The images were split into training, validation and test sets of 150, 40 and 24 images, respectively. Since the project was developed for a specific application, the dimensions of the objects under detection were fixed: the bags used in this study have a height of 365 mm and a weight of 400 g, and each bag contains 24 plastic containers with a top diameter of 92 mm and a bottom diameter of 86 mm. The data were collected under different environmental lighting conditions and at various times during the project. The 24 test images were captured during experimentation, while emptying the tote by picking the stacks one by one. The camera parameters used for data collection in OBS Studio are listed in Table 1.

Figure 4. YOLOv5 training process: Original is the captured image; Converted is the grayscale image; Labelled is the ground truth; and the last panel shows the detection result.
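The text gives the split sizes but not how the 150/40 split was drawn. A minimal sketch, assuming a seeded random shuffle of the 190 pre-experiment images, with the 24 test images kept out of the pool because they were captured separately during experimentation. The file names and `split_train_val` are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def split_train_val(image_paths: Sequence[str], n_train: int = 150, n_val: int = 40,
                    seed: int = 0) -> Tuple[List[str], List[str]]:
    """Shuffle the pre-experiment images reproducibly and split them into train/val.

    The 24 test images are not drawn from this pool: per the text they were
    captured during experimentation while emptying the tote.
    """
    if len(image_paths) != n_train + n_val:
        raise ValueError(f"expected {n_train + n_val} images, got {len(image_paths)}")
    paths = list(image_paths)
    random.Random(seed).shuffle(paths)   # local RNG so the split is repeatable
    return paths[:n_train], paths[n_train:]


if __name__ == "__main__":
    pool = [f"images/stack_{i:03d}.png" for i in range(190)]   # hypothetical file names
    train, val = split_train_val(pool)
    print(len(train), len(val))
```

Using a dedicated `random.Random(seed)` instance keeps the split reproducible without touching the global random state.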

The raw images are then converted to monochrome to reduce noise, and their sharpness and contrast are adjusted to improve detection results. Figure 4 (Converted) shows the result of this image-processing step.
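The preprocessing step can be sketched with NumPy alone. This is an illustration, not the authors' pipeline: the BT.601 grayscale weights, the linear contrast parameters `alpha`/`beta` and the 3x3 sharpening kernel are assumptions, since the text does not give the exact settings. The input is assumed to be in RGB channel order.

```python
import numpy as np


def to_gray(rgb: np.ndarray) -> np.ndarray:
    """Grayscale with ITU-R BT.601 luma weights; assumes RGB channel order."""
    weights = np.array([0.299, 0.587, 0.114])
    gray = rgb[..., :3].astype(np.float64) @ weights
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)


def adjust_contrast(gray: np.ndarray, alpha: float = 1.3, beta: float = -20.0) -> np.ndarray:
    """Linear contrast/brightness change out = alpha * in + beta, clipped to [0, 255]."""
    out = alpha * gray.astype(np.float64) + beta
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)


def sharpen(gray: np.ndarray) -> np.ndarray:
    """Apply the kernel [[0,-1,0],[-1,5,-1],[0,-1,0]] with edge-replicated borders."""
    g = np.pad(gray.astype(np.float64), 1, mode="edge")
    centre = g[1:-1, 1:-1]
    out = 5 * centre - g[:-2, 1:-1] - g[2:, 1:-1] - g[1:-1, :-2] - g[1:-1, 2:]
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)


def preprocess(rgb: np.ndarray, alpha: float = 1.3, beta: float = -20.0) -> np.ndarray:
    """Grayscale -> contrast -> sharpen, mirroring the order described in the text."""
    return sharpen(adjust_contrast(to_gray(rgb), alpha, beta))


if __name__ == "__main__":
    frame = np.random.default_rng(0).integers(0, 256, size=(480, 640, 3), dtype=np.uint8)
    print(preprocess(frame).shape)
```

Because the sharpening kernel sums to one, flat regions are left unchanged while edges, such as the rims of the transparent containers, are amplified.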

The dataset was prepared and labelled using the annotation tool YOLO_mark⁴, as shown in Figure 4 (Labelled). YOLO_mark was selected after testing multiple offline labelling tools because it provided reliable results.
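YOLO-style label files store one object per line as `class x_center y_center width height`, with all four box values normalised by the image size. The helpers below are a hypothetical sketch of converting a pixel bounding box to and from that format; the example box and image size are placeholders.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def to_yolo_line(class_id: int, box: Box, img_w: int, img_h: int) -> str:
    """Pixel box -> 'class cx cy w h' with values normalised to [0, 1]."""
    x_min, y_min, x_max, y_max = box
    if x_max <= x_min or y_max <= y_min:
        raise ValueError("box must have positive width and height")
    cx = (x_min + x_max) / 2 / img_w
    cy = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"


def from_yolo_line(line: str, img_w: int, img_h: int) -> Tuple[int, Box]:
    """'class cx cy w h' -> (class_id, pixel box)."""
    class_id, cx, cy, w, h = line.split()
    cx, w = float(cx) * img_w, float(w) * img_w
    cy, h = float(cy) * img_h, float(h) * img_h
    return int(class_id), (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


if __name__ == "__main__":
    line = to_yolo_line(0, (100, 50, 300, 250), 640, 480)   # hypothetical stack box
    print(line)
    print(from_yolo_line(line, 640, 480))
```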

To train the YOLOv5⁵ object-detection model, we used the data collected during the data acquisition process described above. Training can be done either on a local machine with a sufficiently capable NVIDIA GPU or on a Google Colab cloud GPU; for the scope of this work, Google Colab was used.
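For readers reproducing the training step, a YOLOv5 run is typically driven by a small dataset description file and the `train.py` script of the ultralytics/yolov5 repository. The sketch below only builds that file's text and the argument list; the dataset root, the single class name `stack`, and the epoch, batch and image-size values are hypothetical, not the settings used in this work. YOLOv5 finds label files by replacing `images` with `labels` in each image path.

```python
from typing import List, Sequence


def data_yaml_text(root: str, names: Sequence[str]) -> str:
    """Build a YOLOv5-style dataset description (split paths relative to root)."""
    quoted = ", ".join(f"'{n}'" for n in names)
    return "\n".join([
        f"path: {root}",
        "train: images/train",
        "val: images/val",
        "test: images/test",
        f"nc: {len(names)}",
        f"names: [{quoted}]",
    ]) + "\n"


def train_command(data_yaml: str, img: int = 640, batch_size: int = 16,
                  epochs: int = 100, weights: str = "yolov5s.pt") -> List[str]:
    """Argument list for yolov5's train.py (values are placeholders)."""
    return ["python", "train.py", "--img", str(img), "--batch-size", str(batch_size),
            "--epochs", str(epochs), "--data", data_yaml, "--weights", weights]


if __name__ == "__main__":
    print(data_yaml_text("/content/datasets/stacks", ["stack"]))
    print(" ".join(train_command("stacks.yaml")))
```

In a Colab notebook, the text would be written to `stacks.yaml` inside the cloned repository and the joined command run as a shell cell.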

3.3 Robot control for pick-n-place of detected plastic bags

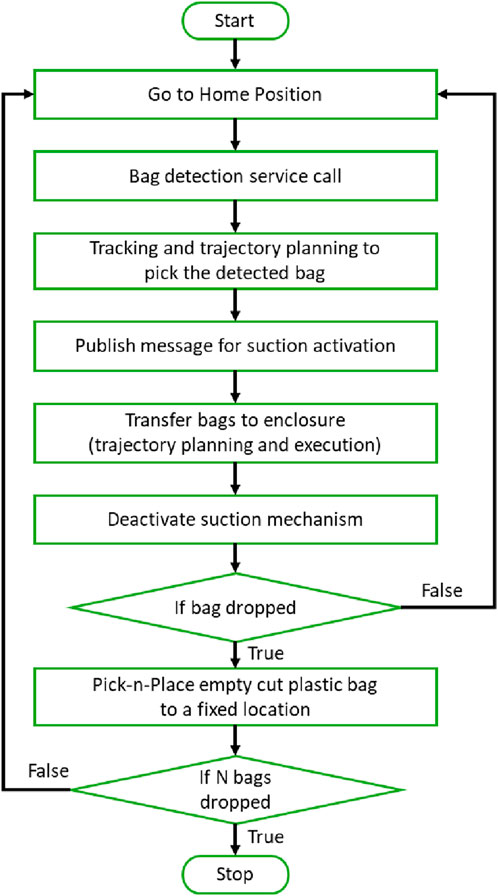

The robotic manipulation process begins at a fixed Home Pose, a predefined location from which the robot has a complete view of all the packaged stacks within the tote. This home position serves as the starting point for subsequent operations. Next, in the third workflow step, the robot initiates packaged-stack detection by invoking the detection code through a ROS service. The outcome of this service query is the next single packaged stack to be picked within the camera's visual frame. Figure 5 presents the overall automation logic of the robot control for this pick-n-place task.

Once a packaged stack has been detected, its three-dimensional coordinates in the camera's optical frame are transformed into the robot's reference frame using the MoveIt hand-eye calibration package⁶. The calibration process generates a calibration file specific to the robotic setup, which is used to compute the cobot's target pose from the camera's three-dimensional coordinates.
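Since the camera is mounted on the gripper (Figure 1B), the camera-to-base transform is the product of the end-effector pose at image-capture time and the fixed hand-eye transform from calibration. The NumPy sketch below shows that composition with homogeneous 4x4 matrices; in ROS this lookup would normally go through tf2, and the numeric transforms here are placeholders, not the calibrated values.

```python
from typing import Sequence

import numpy as np


def make_transform(rotation: Sequence[Sequence[float]], translation: Sequence[float]) -> np.ndarray:
    """Assemble a 4x4 homogeneous transform from a 3x3 rotation and a translation."""
    T = np.eye(4)
    T[:3, :3] = np.asarray(rotation, dtype=float)
    T[:3, 3] = np.asarray(translation, dtype=float)
    return T


def camera_to_base(point_cam: Sequence[float], T_base_ee: np.ndarray,
                   T_ee_cam: np.ndarray) -> np.ndarray:
    """Map a 3D point from the camera optical frame to the robot base frame.

    T_base_ee: end-effector pose at capture time (forward kinematics).
    T_ee_cam:  eye-in-hand calibration result (camera pose in the end-effector frame).
    """
    T_base_cam = T_base_ee @ T_ee_cam
    p = np.append(np.asarray(point_cam, dtype=float), 1.0)   # homogeneous coordinates
    return (T_base_cam @ p)[:3]


if __name__ == "__main__":
    T_base_ee = make_transform(np.eye(3), [0.4, 0.0, 0.5])   # hypothetical arm pose
    T_ee_cam = make_transform(np.eye(3), [0.0, 0.0, 0.05])   # hypothetical calibration
    print(camera_to_base([0.1, 0.2, 0.3], T_base_ee, T_ee_cam))
```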

The cobot then initiates the fourth workflow step, which involves trajectory planning and execution. The robot uses the integrated MoveIt Pilz Industrial Motion Planner with its linear (LIN) command to plan and execute a straight-line path to the identified packaged stack. LIN uses the Franka Emika Panda's Cartesian constraints to create a trapezoidal velocity profile in Cartesian space, so the cobot accelerates, moves at constant speed, and then decelerates. This approach is particularly effective when handling packaged stacks, which can deform after collisions with objects in the workspace if the speed profile is not controlled.

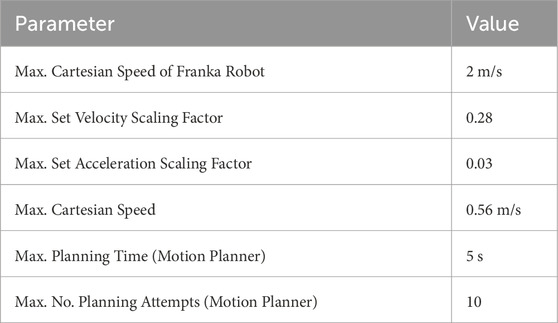

To further refine control, scaling factors for Cartesian velocity and acceleration are integrated into the system, imposing a maximum speed limit on the trajectories generated by the planner. The speed and planning parameters used for the robot's pick-n-place testing are listed in Table 2.
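The trapezoidal profile and the effect of the scaling factors can be illustrated with a one-dimensional sketch along the straight-line path. This is not the Pilz planner's implementation: it assumes the scaling factors simply multiply the Cartesian velocity and acceleration limits, and the limit values in the example are placeholders rather than the Panda's specifications or the Table 2 settings.

```python
import math
from typing import Tuple


def trapezoid_profile(distance: float, v_limit: float, a_limit: float,
                      vel_scale: float = 1.0, acc_scale: float = 1.0) -> Tuple[float, float, float]:
    """Return (t_acc, t_const, v_peak) for a rest-to-rest move of `distance` metres."""
    if not (0 < vel_scale <= 1 and 0 < acc_scale <= 1):
        raise ValueError("scaling factors must lie in (0, 1]")
    v_max = vel_scale * v_limit          # scaled Cartesian speed limit
    a_max = acc_scale * a_limit          # scaled Cartesian acceleration limit
    if distance <= 0:
        return 0.0, 0.0, 0.0
    if distance >= v_max ** 2 / a_max:
        # Trapezoid: accelerate to v_max, cruise, then decelerate symmetrically.
        t_acc = v_max / a_max
        t_const = (distance - v_max ** 2 / a_max) / v_max
        return t_acc, t_const, v_max
    # Triangle: the move is too short to reach v_max before braking.
    v_peak = math.sqrt(distance * a_max)
    return v_peak / a_max, 0.0, v_peak


def velocity_at(t: float, t_acc: float, t_const: float, v_peak: float) -> float:
    """Path speed at time t for the profile returned by trapezoid_profile."""
    t_end = 2 * t_acc + t_const
    if t <= 0 or t >= t_end:
        return 0.0
    if t < t_acc:
        return v_peak * t / t_acc
    if t <= t_acc + t_const:
        return v_peak
    return v_peak * (t_end - t) / t_acc


if __name__ == "__main__":
    # Hypothetical 1 m move with 1.0 m/s and 2.0 m/s^2 limits scaled by 0.5.
    t_acc, t_const, v_peak = trapezoid_profile(1.0, 1.0, 2.0, vel_scale=0.5, acc_scale=0.5)
    print(t_acc, t_const, v_peak, 2 * t_acc + t_const)
```

Halving both limits in this example turns a 1 m move into a 0.5 s ramp, a 1.5 s cruise and a 0.5 s ramp, which shows how the scaling factors trade cycle time for gentler handling of the deformable stacks.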

Following the workflow pipeline of Figure 5, once the target position is reached, the robot publishes a message to the ROS framework to activate the suction mechanism and grasp the identified packaged stack. The robot then transfers the stack to an empty enclosure in the cutting module, completing the sixth workflow step. This is accomplished by using the motion planner to plan a path through a set of predefined waypoints that ensures collision avoidance while navigating through the workspace.

After reaching the specified enclosure, the cobot begins the next workflow step: through the ROS framework, it publishes another message to the suction node, which cuts off the suction supply and releases the packaged stack safely into the enclosure. This process is repeated for a further seven packaged stacks to fill the eight cutting-module enclosures; in the eighth workflow step, a decision block loops back through the previous steps until all enclosures are filled. The robot carries out this sequence, ensuring that each stack is picked up, transferred, and released with precision.
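The loop formed by the decision block can be summarised as a small control function. This is a sketch under stated assumptions, not the authors' node: `detect_next`, `pick` and `place` are hypothetical stand-ins for the ROS service and topic calls, and the `max_attempts` cap is an added safeguard not mentioned in the text.

```python
from typing import Callable, Optional, Tuple

Target = Tuple[float, float, float]  # stack position in the robot base frame (metres)


def fill_enclosures(detect_next: Callable[[], Optional[Target]],
                    pick: Callable[[Target], bool],
                    place: Callable[[int], bool],
                    n_enclosures: int = 8,
                    max_attempts: int = 20) -> int:
    """Repeat detect -> pick -> place until every enclosure holds a stack.

    detect_next() returns the next target, or None when the tote is empty;
    pick(target) reports whether the suction grip succeeded; place(slot)
    reports whether the enclosure sensors confirmed the stack (it is assumed
    to handle its own re-drop retries). Returns the number of filled enclosures.
    """
    filled = 0
    attempts = 0
    while filled < n_enclosures and attempts < max_attempts:
        attempts += 1
        target = detect_next()
        if target is None:          # tote empty: nothing left to pick
            break
        if not pick(target):        # grip failed: return and re-detect
            continue
        if place(filled):           # stack confirmed in enclosure number `filled`
            filled += 1
    return filled


if __name__ == "__main__":
    grips = iter([True, False] * 10)   # simulated: every second grip fails
    n = fill_enclosures(lambda: (0.5, 0.0, 0.2), lambda t: next(grips), lambda slot: True)
    print(n)
```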

Next, the cutting mechanism is activated and the robot is used to retrieve the cut plastic bags. The robot uses the Pilz motion planner to plan and execute paths through sets of predefined waypoints that move the cobot from above the enclosures to a designated bin for disposing of the cut plastic bags; this constitutes the ninth step in the workflow. The end effector is positioned above the enclosure and the suction is activated so that it grasps the top of the cut plastic packaging. The packaging is removed from the stack by a vertical upward movement of the cobot end effector. The robot then moves its end effector above the designated bin, and the suction node is invoked to deactivate the suction mechanism and release the cut plastic bag into the bin. This process is repeated for all eight packaged stacks in the enclosures. The tenth step in the workflow repeats the above actions until all twenty-four plastic bags are cut and disposed of, which ends the workflow.

4 Autonomous cutting & control

The whole system is integrated through robotic control and electronic automation. The robotic control is developed in the Robot Operating System (ROS) by customising libraries such as MoveIt and its motion planners. A custom-made automation solution oversees the whole operation using feedback from various sensors and the electronic actuation of motors. The automation logic is implemented on a Raspberry Pi, which acts as the master controller and commands an Arduino module; the Arduino in turn handles actuation and sensor feedback according to the commands sent by the master.

The automation system uses a combination of Arduino Mega, sensors (including ultrasonic and pressure sensors), linear actuators, solenoids, and a Raspberry Pi 4 for automation. The sensors and actuators are linked to the Arduino Mega. The Arduino communicates with the Raspberry Pi 4 via serial communication. The Raspberry Pi 4 reads sensor data transmitted by the Arduino and sends commands to activate solenoids, motors, and linear actuators.
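The text does not specify the serial message format between the Arduino Mega and the Raspberry Pi 4. As an illustration only, the sketch assumes a hypothetical line-based protocol of comma-separated `KEY=value` fields (for example `US3=12.4` for an ultrasonic reading, `P3=-61.5` for a pressure reading, `SOL1=1` to switch a solenoid on). The port name and baud rate in the pyserial usage comment are also assumptions.

```python
from typing import Dict


def parse_sensor_line(line: str) -> Dict[str, float]:
    """Parse a hypothetical 'KEY=value,KEY=value' sensor line sent by the Arduino."""
    readings: Dict[str, float] = {}
    for field in line.strip().split(","):
        if not field:
            continue
        key, sep, value = field.partition("=")
        if not sep:
            raise ValueError(f"malformed field: {field!r}")
        readings[key.strip()] = float(value)
    return readings


def format_command(device: str, index: int, on: bool) -> str:
    """Encode a hypothetical actuator command, e.g. 'SOL3=1' switches solenoid 3 on."""
    return f"{device}{index}={int(on)}\n"


# Usage with pyserial on the Raspberry Pi (port and baud rate are assumptions):
# import serial
# with serial.Serial("/dev/ttyACM0", 115200, timeout=1) as port:
#     port.write(format_command("SOL", 1, True).encode("ascii"))
#     print(parse_sensor_line(port.readline().decode("ascii")))

if __name__ == "__main__":
    print(parse_sensor_line("US1=12.4,P1=-61.5\n"))
    print(repr(format_command("SOL", 3, True)))
```

Keeping parsing and encoding separate from the serial I/O lets the protocol be tested without hardware attached.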

The Raspberry Pi, which serves as the automation controller in this implementation, is programmed in Python using ROS. It coordinates actions with the cobot by publishing and subscribing to the relevant topics at the appropriate times.

The operation starts with the Raspberry Pi controller publishing a "system ready" message to the cobot, which initializes the cobot operation. The cobot then moves to a position over the tote, from which it can detect the stacks, and sends a "ready for picking" message to the controller. The Raspberry Pi then switches on the solenoid valve connected to the cobot suction gripper, enabling the bags to be picked from the tote. The cobot then approaches the bag, while the controller monitors the pressure sensor value from the cobot gripper to check whether the pick has succeeded. If the pick fails, the cobot moves to the reset position and restarts the picking process. If the pick succeeds, the cobot moves to the dropping position over the enclosure and sends the drop command to the Raspberry Pi, indicating that it is over the dropping zone. The controller then activates the bottom suction solenoid valves and rotates the motor to position the suction cups below the stacks. The bottom suction remains in this state for the rest of the cycle.

Feedback from the ultrasonic sensor is checked to confirm the stack's position within the enclosure. If the stack is detected, feedback is sent to the cobot; if not, the cobot moves back to the drop position and reattempts the placement. Meanwhile, the pressure sensors are monitored: if the pressure drops below a cut-off value while the ultrasonic sensor still detects a stack, feedback is given that triggers the cobot to start the next feeding operation for the adjacent enclosure. If the bottom-suction pressure drop is not detected within the scheduled time, a failed state is fed back to the cobot, which moves back to the drop position to repeat the drop. This cycle continues until all eight enclosure positions are filled with bags. Once all eight positions are filled, a "finished cycle" message is received from the cobot controller, which triggers the bottom suction motor to rotate and create tension on the bag. Cutting is performed separately through the motor control interface, and automatic control of the system is paused until these operations are completed.
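The placement check just described combines two sensor conditions with a deadline. A minimal sketch, assuming time-stamped samples of bottom-suction pressure and ultrasonic distance; the units, the cut-off, the distance threshold and the timeout in the example are hypothetical values, not the system's calibrated settings.

```python
from typing import Iterable, Tuple

Sample = Tuple[float, float, float]  # (seconds since drop, pressure, ultrasonic distance in cm)


def placement_confirmed(samples: Iterable[Sample], pressure_cutoff: float,
                        max_stack_distance_cm: float, timeout_s: float) -> bool:
    """True if, before the timeout, the ultrasonic sensor sees a stack and the
    bottom-suction pressure has dropped below the cut-off at the same time."""
    for t, pressure, distance_cm in samples:
        if t > timeout_s:
            break                          # scheduled time elapsed: failed state
        stack_present = distance_cm <= max_stack_distance_cm
        if stack_present and pressure < pressure_cutoff:
            return True                    # seal formed on a detected stack
    return False


if __name__ == "__main__":
    # Hypothetical trace: stack arrives at 0.5 s, suction seals at 1.0 s.
    trace = [(0.0, -10.0, 30.0), (0.5, -10.0, 4.0), (1.0, -70.0, 4.0)]
    print(placement_confirmed(trace, pressure_cutoff=-50.0,
                              max_stack_distance_cm=8.0, timeout_s=2.0))
```

A `False` result corresponds to the failed state in the text, after which the cobot returns to the drop position and repeats the drop.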

Once cutting is complete, the Raspberry Pi controller publishes a message to start removing the bags, turns off the bottom suction, and rotates the suction cups back to their home position. The cobot then moves above the enclosures to remove the packaging and publishes a "ready for removal" message to the controller. The controller then activates the cobot suction and starts monitoring the pressure sensor value. The cobot moves towards the stack, picks the bag from the enclosure, and moves to a safe drop position, where it publishes a "removing bag" status message that prompts the Raspberry Pi to turn off the cobot suction and release the bag.

This cycle is repeated for all 8 stacks, after which the pushing mechanism starts with the doors swinging open. The pushers are then activated, pushing the unpacked plastic containers over the skid plate into the bulk loader. Once delivery is complete, the pushers return to their home position and the doors close. This completes one cycle of operations for all 8 stacks. The system is then reset by the Raspberry Pi controller publishing "system ready" to continue with the next feeding cycle. A simplified representation of the automation logic is shown in Figure 6.
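The full cycle described in this section can be condensed into a small state machine with one phase per stage. The sketch is a simplification for illustration: the phase names mirror the text, but the event strings are hypothetical labels for the ROS messages exchanged between the Raspberry Pi controller and the cobot, and the per-stack retries are folded into each phase.

```python
from enum import Enum, auto
from typing import Dict, Tuple


class Phase(Enum):
    SYSTEM_READY = auto()   # controller has published "system ready"
    FEEDING = auto()        # cobot fills the eight enclosures
    CUTTING = auto()        # bottom suction tensions the bags, blade traverses the rail
    REMOVAL = auto()        # cobot removes and bins the cut packaging
    DELIVERY = auto()       # doors open, pushers load the bulk loader, doors close


# (current phase, completion event) -> next phase; event names are hypothetical.
TRANSITIONS: Dict[Tuple[Phase, str], Phase] = {
    (Phase.SYSTEM_READY, "cobot_initialised"): Phase.FEEDING,
    (Phase.FEEDING, "finished_cycle"): Phase.CUTTING,
    (Phase.CUTTING, "cutting_complete"): Phase.REMOVAL,
    (Phase.REMOVAL, "all_packaging_removed"): Phase.DELIVERY,
    (Phase.DELIVERY, "doors_closed"): Phase.SYSTEM_READY,
}


def step(phase: Phase, event: str) -> Phase:
    """Advance on a recognised event; ignore events that do not apply to this phase."""
    return TRANSITIONS.get((phase, event), phase)


if __name__ == "__main__":
    phase = Phase.SYSTEM_READY
    for event in ["cobot_initialised", "finished_cycle", "cutting_complete",
                  "all_packaging_removed", "doors_closed"]:
        phase = step(phase, event)
        print(event, "->", phase.name)
```

Ignoring out-of-phase events, rather than raising, mirrors the text's pause-until-complete behaviour: a stray message cannot push the cell into the next stage early.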

5 Experimental results

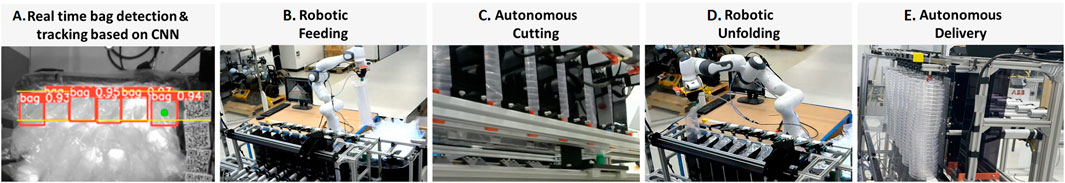

To evaluate the performance of our proposed vision-based manipulation system for the autonomous cutting and unpacking of transparent plastic bags, we conducted a series of experiments consisting of 10 iterations of the complete cycle. Each cycle involved five distinct phases as shown in Figure 7: real-time bag detection and tracking, robot feeding, autonomous cutting, robotic unfolding, and autonomous delivery to the bulk loader. The following sections detail the outcomes and observations for each phase.

Figure 7. Experimental procedure with five identified phases: (A) Real time bag-detection and tracking; (B) Robot feeding; (C) Autonomous cutting; (D) Robot unfolding and (E) Autonomous delivery.

5.1 Real-time bag detection and tracking: vision detection performance

The first phase involved the detection and tracking of transparent plastic bags using Convolutional Neural Networks (CNNs). The system demonstrated high accuracy in identifying the bags under varying lighting and background conditions. Across the 10 iterations, the average detection accuracy was 96.8%, with a standard deviation of 1.2%. The tracking algorithm maintained a robust performance, ensuring continuous monitoring of the bags’ positions with an average tracking error of 1.5 mm.

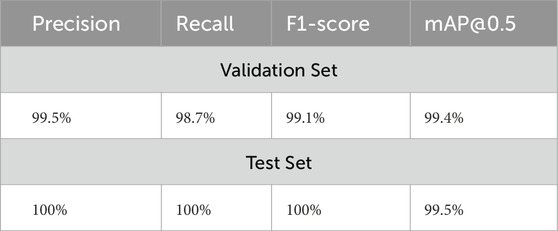

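To assess the performance of the trained network, we followed the standard evaluation procedure using three metrics, namely (i) precision (Equation 1), (ii) recall (Equation 2), and (iii) F1-score (Equation 3), which are calculated as follows:

$$
\mathrm{Precision} = \frac{tp}{tp + fp} \tag{1}
$$

$$
\mathrm{Recall} = \frac{tp}{tp + fn} \tag{2}
$$

$$
F_1 = 2 \cdot \frac{\mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{3}
$$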

In these equations, tp, fp and fn denote the true positive, false positive and false negative identifications of the plastic bags, respectively. A detection was counted as a true positive when the IoU score of the predicted and ground-truth bounding-box pair exceeded the threshold IoU = 0.5. Table 3 summarises the bag detection performance on both the validation and test sets. As the model was trained on a targeted dataset acquired from the same environment, the inference results are very high, averaging 100% accuracy across all the experiments conducted.
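The evaluation above (IoU threshold, then precision, recall and F1 from tp, fp and fn) can be sketched as follows. Boxes are `(x_min, y_min, x_max, y_max)` in pixels. The greedy one-to-one matching by descending IoU is a simplification chosen for illustration; the text does not state which matching rule was used. A pair counts only if its IoU strictly exceeds 0.5, following the wording "exceeds the imposed threshold".

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(preds: List[Box], gts: List[Box], thr: float = 0.5) -> Tuple[int, int, int]:
    """Greedy one-to-one matching by descending IoU; returns (tp, fp, fn)."""
    pairs = sorted(((iou(p, g), i, j) for i, p in enumerate(preds) for j, g in enumerate(gts)),
                   reverse=True)
    used_p, used_g = set(), set()
    tp = 0
    for score, i, j in pairs:
        if score <= thr:
            break                      # remaining pairs do not exceed the threshold
        if i in used_p or j in used_g:
            continue
        used_p.add(i)
        used_g.add(j)
        tp += 1
    return tp, len(preds) - tp, len(gts) - tp


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Equations 1 to 3, returning 0.0 where a denominator would be zero."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


if __name__ == "__main__":
    preds = [(0, 0, 10, 10), (20, 20, 30, 30)]   # hypothetical predictions
    gts = [(1, 1, 10, 10)]                       # hypothetical ground truth
    print(precision_recall_f1(*match_detections(preds, gts)))
```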

5.2 Robot feeding

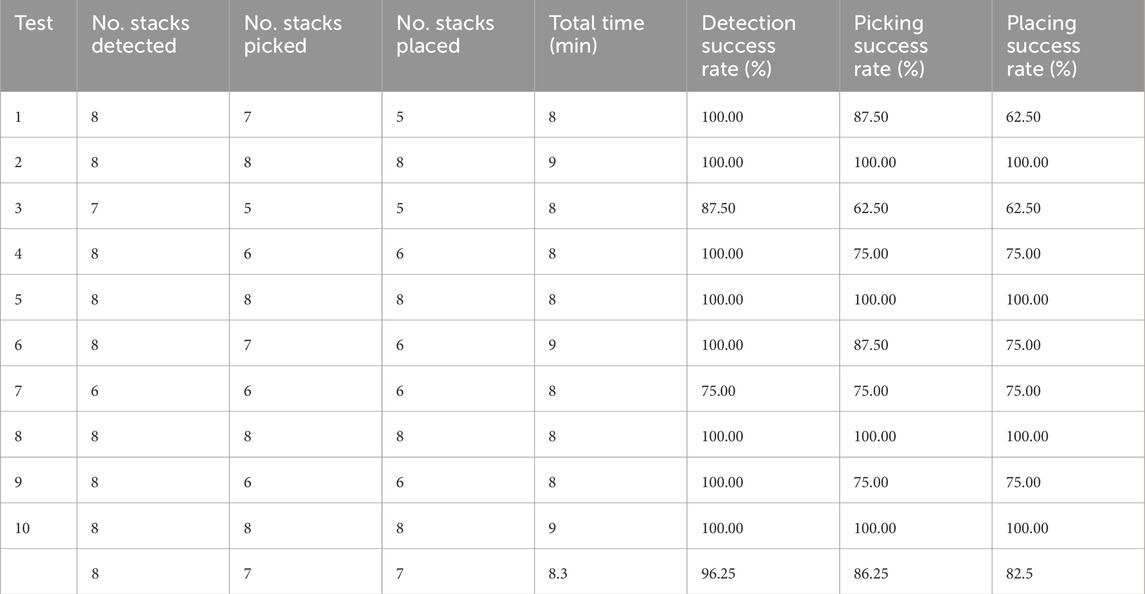

In the second phase, the FRANKA robot arm picked the bags one by one from the tote and placed them into the enclosures of the feeding system until all eight enclosures were filled. Table 4 provides counts of the stacks detected, picked, and placed, with a maximum of eight for each. The average success rates for picking and placing were 86.25% and 82.5%, respectively. The average time taken for the robot to complete this task was 8.3 min per iteration, with a standard deviation of 0.5 min. Challenges in this phase included grip failures of the suction system and workspace constraints, which led to collisions and placement errors. Overall, as tabulated in Table 4, an average of seven out of eight stacks were successfully picked and placed per iteration.
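As a consistency check, the reported rates can be converted back into counts. Reading them as averages over 10 iterations of eight stacks each (our interpretation of the setup), 86.25% and 82.5% correspond to 69 picks and 66 placements out of 80 attempts, that is 6.9 and 6.6 stacks per iteration, both of which round to the seven out of eight quoted in this subsection. The helper name is hypothetical.

```python
from typing import Tuple


def implied_counts(rate: float, iterations: int = 10,
                   stacks_per_iteration: int = 8) -> Tuple[int, float]:
    """Convert an average success rate into (total successes, successes per iteration)."""
    total_attempts = iterations * stacks_per_iteration   # 80 stack attempts in total
    successes = round(rate * total_attempts)
    return successes, successes / iterations


print(implied_counts(0.8625))  # picking: (69, 6.9)
print(implied_counts(0.825))   # placing: (66, 6.6)
```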

5.3 Autonomous cutting

During the third phase, the autonomous cutting mechanism was activated to cut all eight bags. The cutting process was highly efficient, with an average completion time of 15.7 s per iteration and no cutting errors observed across all 10 iterations. The precision of the cuts was within an acceptable margin, with an average deviation of 0.2 mm from the intended cut lines. This phase did not experience the interdependent issues observed in detection, picking, and placing, thus maintaining consistent performance.

5.4 Robotic unfolding

The fourth phase involved the robotic unfolding of each of the eight bags. The FRANKA robot arm demonstrated high dexterity and control, successfully unfolding all bags in each iteration. The average time taken for unfolding all eight bags was 38.9 s, with a standard deviation of 2.8 s. The system’s compliance control with vacuum gripping technology ensured minimal damage to the bags and plastic containers during the unfolding process.

5.5 Autonomous delivery to bulk loader

In the final phase, the unfolded bags were autonomously delivered to the bulk loader by activating the pushers. The system successfully delivered all eight bags in each iteration, with an average delivery time of 18.4 s and a standard deviation of 1.9 s. The placement accuracy was consistently high, with an average deviation of 0.1 mm from the target position.
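The unfolding and delivery timings are reported as a mean and standard deviation over the 10 iterations, and the cutting and placement accuracies as average deviations from the intended cut line or target position. A minimal sketch of how such summaries could be computed is given below. It assumes a sample standard deviation (the text does not state which estimator was used) and interprets deviation as the Euclidean distance between achieved and target positions; both are assumptions, and the function names are illustrative.

```python
import math
import statistics
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def summarize(samples: Sequence[float]) -> Tuple[float, float]:
    """Mean and sample standard deviation (n - 1 denominator) of per-iteration values."""
    return statistics.mean(samples), statistics.stdev(samples)


def mean_deviation(achieved: Sequence[Point], targets: Sequence[Point]) -> float:
    """Mean Euclidean distance between achieved and target positions (same units as input)."""
    if len(achieved) != len(targets) or not achieved:
        raise ValueError("need equally sized, non-empty sequences")
    return statistics.mean(math.dist(a, t) for a, t in zip(achieved, targets))
```

Reporting the sample rather than the population standard deviation is the usual choice when the 10 iterations are treated as a sample of the system's behaviour.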

5.5.1 Overall system performance

The integrated system’s performance across all 10 iterations was evaluated based on the cumulative time taken to complete all five phases, the accuracy of each task, and the overall reliability. The average total cycle time per iteration was recorded as 8.3 min, which includes the detection, picking, placing, cutting, unfolding, and delivery processes. The system demonstrated a high level of reliability, with no critical failures observed throughout the experiments.

The results from Table 4 emphasize the interdependence of the robot’s actions: a failure in detection directly impacts the picking and placing activities. For instance, in test 7, despite achieving complete success in picking and placing, full cycle success could not be attained due to detection failures. This underlines the importance of reliable detection to ensure overall process success. A Supplementary Video with the whole experimental procedure can be found at this link: https://youtu.be/MXxTeyBVJWg.
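The interdependence described above can be expressed as a simple gating rule: an iteration counts as a full-cycle success only if every stage reaches all eight stacks, and the earliest stage that falls short is reported as the cause. The sketch below encodes that rule; the field and function names are hypothetical and do not necessarily reproduce the layout of Table 4.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class IterationCounts:
    """Counts for one iteration (hypothetical field names)."""
    detected: int
    picked: int
    placed: int
    total: int = 8  # eight stacks per bulk-loader fill


def first_failed_stage(c: IterationCounts) -> Optional[str]:
    """Return the earliest stage that did not reach the full count, or None if all did."""
    for stage in ("detected", "picked", "placed"):
        if getattr(c, stage) < c.total:
            return stage
    return None


def full_cycle_success(c: IterationCounts) -> bool:
    """A cycle succeeds only if every stage handled all bags."""
    return first_failed_stage(c) is None
```

A hypothetical iteration with counts detected = 7, picked = 8 and placed = 8 is flagged as failing at detection even though picking and placing reached eight, which is the situation described for test 7.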

The successful testing and validation of the proposed solution in the laboratory environment with the FRANKA robot arm showcases its potential for widespread industrial applications. The system effectively automated the unpacking and cutting of transparent plastic bags for an 8-stack bulk-loader, meeting the specific requirements and demonstrating robustness under rigorous testing conditions. These results highlight the system’s capability to enhance efficiency and safety in industrial processes, aligning with the Industry 4.0 paradigm.

6 Discussion and conclusion

In this paper, we have presented a comprehensive and innovative system for the autonomous cutting and unpacking of transparent plastic bags in industrial setups, aligned with the principles of Industry 4.0. Leveraging advanced Machine Learning algorithms, particularly CNNs, our system successfully identifies and manipulates transparent plastic bags using a collaborative robot arm equipped with a custom suction cup gripper and depth sensing technologies. The cutting process is facilitated by a combination of vacuum-driven suction cup grippers and a precise linear belt-driven scalpel blade. The delivery system, employing linear actuators and custom door mechanisms, ensures the smooth transition of unpackaged stacks into the bulk loader.
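The combination of CNN detection and depth sensing described above implies a step that maps a detected bag in the image to a 3D grasp point for the arm. The sketch below shows one standard way to do this, assuming an undistorted pinhole camera with intrinsics fx, fy, cx, cy and a known 4x4 homogeneous transform from the camera frame to the robot base frame obtained through hand-eye calibration (see footnote 6). All names and numeric values are illustrative, and this is not the authors' implementation. In an eye-in-hand configuration, the transform would be the current end-effector pose composed with the calibrated camera offset.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def bbox_center(box: Tuple[float, float, float, float]) -> Tuple[float, float]:
    """Pixel centre of an (x_min, y_min, x_max, y_max) bounding box."""
    x0, y0, x1, y1 = box
    return ((x0 + x1) / 2.0, (y0 + y1) / 2.0)


def deproject(u: float, v: float, depth_m: float,
              fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Back-project pixel (u, v) with metric depth into the camera frame (pinhole model, no distortion)."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def transform(T: Sequence[Sequence[float]], p: Vec3) -> Vec3:
    """Apply a 4x4 homogeneous transform (e.g. base <- camera) to a 3D point."""
    ph = (p[0], p[1], p[2], 1.0)
    out = [sum(T[r][c] * ph[c] for c in range(4)) for r in range(3)]
    return (out[0], out[1], out[2])
```

With hypothetical intrinsics fx = fy = 600 and (cx, cy) = (320, 240), a bounding-box centre at pixel (380, 240) with a depth of 0.5 m back-projects to (0.05, 0.0, 0.5) m in the camera frame; a pure translation of (0.1, 0.0, 0.2) m then places it at (0.15, 0.0, 0.7) m in the base frame.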

Despite these achievements, there are avenues for further exploration and improvement. Future work could involve enhancing the system's robustness to variations in lighting and background conditions, refining the accuracy of the detection and control algorithms through deep learning, segmentation and more sophisticated path planning, and extending the capabilities of the cutting mechanism to accommodate different types of packaging materials. Integration with more advanced artificial intelligence techniques and adaptive control strategies may further increase the autonomy and efficiency of the unpacking and cutting process. Multispectral or hyperspectral imaging could also be investigated for transparent object detection to enhance the system's capabilities. Additionally, exploring the scalability of the system for diverse industrial applications, including integration with the industrial Internet of Things (IIoT) for better collaboration, and evaluating its performance in real-world scenarios will be crucial for its widespread adoption. Continuous refinement and adaptation to evolving technologies will be essential to maximize the system's potential in the dynamic landscape of industrial automation.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

FA: Conceptualization, Investigation, Methodology, Software, Validation, Writing–original draft, Writing–review and editing. AK: Conceptualization, Investigation, Methodology, Software, Validation, Writing–original draft, Writing–review and editing. PS: Conceptualization, Investigation, Methodology, Software, Validation, Writing–original draft, Writing–review and editing. SS: Conceptualization, Investigation, Methodology, Software, Validation, Writing–original draft, Writing–review and editing. FV: Writing–original draft, Writing–review and editing. UU: Conceptualization, Investigation, Methodology, Software, Validation, Writing–original draft, Writing–review and editing. RY: Conceptualization, Investigation, Methodology, Software, Validation, Writing–original draft, Writing–review and editing. AP: Conceptualization, Supervision, Validation, Writing–original draft, Writing–review and editing. EP: Conceptualization, Investigation, Supervision, Writing–original draft, Writing–review and editing. RP: Project administration, Supervision, Writing–original draft, Writing–review and editing. YP: Conceptualization, Project administration, Supervision, Writing–original draft, Writing–review and editing. MK: Methodology, Supervision, Validation, Writing–original draft, Writing–review and editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Acknowledgments

This work has been partially supported by the MSc placement program of Heriot-Watt University, Edinburgh, United Kingdom.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

4https://github.com/AlexeyAB/Yolo_mark

5https://github.com/ultralytics/yolov5

6https://github.com/moveit/moveit_tutorials/tree/master/doc/hand_eye_calibration

References

Adel, A. (2022). Future of industry 5.0 in society: human-centric solutions, challenges and prospective research areas. J. Cloud Comput. 11, 40. doi:10.1186/s13677-022-00314-5

Billard, A., and Kragic, D. (2019). Trends and challenges in robot manipulation. Science 364, eaat8414. doi:10.1126/science.aat8414

Chen, B., Wan, J., Shu, L., Li, P., Mukherjee, M., and Yin, B. (2018). Smart factory of industry 4.0: key technologies, application case, and challenges. IEEE Access 6, 6505–6519. doi:10.1109/ACCESS.2017.2783682

Chen, L. Y., Shi, B., Seita, D., Cheng, R., Kollar, T., Held, D., et al. (2023). “Autobag: learning to open plastic bags and insert objects,” in 2023 IEEE international conference on robotics and automation (ICRA), 3918–3925. doi:10.1109/ICRA48891.2023.10161402

Chen, X., Huang, H., Liu, Y., Li, J., and Liu, M. (2022). Robot for automatic waste sorting on construction sites. Automation Constr. 141, 104387. doi:10.1016/j.autcon.2022.104387

Gabellieri, C., Palleschi, A., Pallottino, L., and Garabini, M. (2023). Autonomous unwrapping of general pallets: a novel robot for logistics exploiting contact-based planning. IEEE Trans. Automation Sci. Eng. 20, 1194–1211. doi:10.1109/TASE.2022.3182362

Goel, R., and Gupta, P. (2020). Robotics and industry 4.0. Cham: Springer International Publishing, 157–169. doi:10.1007/978-3-030-14544-6_9

Hajj-Ahmad, A., Kaul, L., Matl, C., and Cutkosky, M. (2023). Grasp: grocery robot’s adhesion and suction picker. IEEE Robotics Automation Lett. 8, 6419–6426. doi:10.1109/LRA.2023.3300572

Javaid, M., Haleem, A., Singh, R. P., and Suman, R. (2021). Substantial capabilities of robotics in enhancing industry 4.0 implementation. Cogn. Robot. 1, 58–75. doi:10.1016/j.cogr.2021.06.001

Koskinopoulou, M., Raptopoulos, F., Papadopoulos, G., Mavrakis, N., and Maniadakis, M. (2021). Robotic waste sorting technology: toward a vision-based categorization system for the industrial robotic separation of recyclable waste. IEEE Robotics & Automation Mag. 28, 50–60. doi:10.1109/MRA.2021.3066040

Longhini, A., Welle, M. C., Mitsioni, I., and Kragic, D. (2021). “Textile taxonomy and classification using pulling and twisting,” in IEEE/RSJ international conference on intelligent robots and systems (IROS). Prague, Czech Republic: IEEE, 7564–7571. doi:10.1109/IROS51168.2021.9635992

Lukka, T. J., Tossavainen, T., Kujala, J. V., and Raiko, T. (2014). Zenrobotics recycler - robotic sorting using machine learning

Makris, S., Dietrich, F., Kellens, K., and Hu, S. (2023). Automated assembly of non-rigid objects. CIRP Ann. 72, 513–539. doi:10.1016/j.cirp.2023.05.003

Md, H. A., Aizat, K., Yerkhan, K., Zhandos, T., and Anuar, O. (2018). Vision-based robot manipulator for industrial applications. Procedia Comput. Sci. 133, 205–212. International Conference on Robotics and Smart Manufacturing (RoSMa2018). doi:10.1016/j.procs.2018.07.025

Naito, K., Shirai, A., Kaneko, S.-i., and Capi, G. (2021). “Recycling of printed circuit boards by robot manipulator: a deep learning approach,” in 2021 IEEE international symposium on robotic and sensors environments (ROSE), 1–5. doi:10.1109/ROSE52750.2021.9611773

Papadakis, E., Raptopoulos, F., Koskinopoulou, M., and Maniadakis, M. (2020). “On the use of vacuum technology for applied robotic systems,” in 2020 6th international conference on mechatronics and robotics engineering (ICMRE), 73–77. doi:10.1109/ICMRE49073.2020.9065189

Parajuly, K., Habib, K., Cimpan, C., Liu, G., and Wenzel, H. (2016). End-of-life resource recovery from emerging electronic products – a case study of robotic vacuum cleaners. J. Clean. Prod. 137, 652–666. doi:10.1016/j.jclepro.2016.07.142

Sajjan, S., Moore, M., Pan, M., Nagaraja, G., Lee, J., Zeng, A., et al. (2020). “Clear grasp: 3d shape estimation of transparent objects for manipulation,” in 2020 IEEE international conference on robotics and automation (ICRA), 3634–3642. doi:10.1109/ICRA40945.2020.9197518

Tian, H., Song, K., Li, S., Ma, S., Xu, J., and Yan, Y. (2023). Data-driven robotic visual grasping detection for unknown objects: a problem-oriented review. Expert Syst. Appl. 211, 118624. doi:10.1016/j.eswa.2022.118624

Keywords: autonomous systems, industrial applications, vision-guided manipulation, transparent bag detection, robotic solutions

Citation: Adetunji F, Karukayil A, Samant P, Shabana S, Varghese F, Upadhyay U, Yadav RA, Partridge A, Pendleton E, Plant R, Petillot YR and Koskinopoulou M (2025) Vision-based manipulation of transparent plastic bags in industrial setups. Front. Robot. AI 12:1506290. doi: 10.3389/frobt.2025.1506290

Received: 04 October 2024; Accepted: 06 January 2025;

Published: 27 January 2025.

Edited by:

Rajkumar Muthusamy, Dubai Future Foundation, United Arab Emirates

Reviewed by:

Kuppan Chetty Ramanathan, SASTRA Deemed to be University, India

Ganesan Subramanian, Symbiosis International University Dubai, United Arab Emirates

Copyright © 2025 Adetunji, Karukayil, Samant, Shabana, Varghese, Upadhyay, Yadav, Partridge, Pendleton, Plant, Petillot and Koskinopoulou. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: M. Koskinopoulou, m.koskinopoulou@hw.ac.uk
