- Collaborative Robotics and Intelligent Systems Institute, Oregon State University, Corvallis, OR, United States

Human-robot teams collaborating to achieve tasks under various conditions, especially in unstructured, dynamic environments will require robots to adapt autonomously to a human teammate’s state. An important element of such adaptation is the robot’s ability to infer the human teammate’s tasks. Environmentally embedded sensors (e.g., motion capture and cameras) are infeasible in such environments for task recognition, but wearable sensors are a viable task recognition alternative. Human-robot teams will perform a wide variety of composite and atomic tasks, involving multiple activity components (i.e., gross motor, fine-grained motor, tactile, visual, cognitive, speech and auditory) that may occur concurrently. A robot’s ability to recognize the human’s composite, concurrent tasks is a key requirement for realizing successful teaming. Over a hundred task recognition algorithms across multiple activity components are evaluated based on six criteria: sensitivity, suitability, generalizability, composite factor, concurrency and anomaly awareness. The majority of the reviewed task recognition algorithms are not viable for human-robot teams in unstructured, dynamic environments, as they only detect tasks from a subset of activity components, incorporate non-wearable sensors, and rarely detect composite, concurrent tasks across multiple activity components.

1 Introduction

Human-robot teams (HRTs) are often required to operate in dynamic, unstructured environments, which poses a unique set of task recognition challenges that must be overcome to enable a successful collaboration. Consider a post-tornado disaster response that requires locating, triaging and transporting victims, securing infrastructure, clearing debris, and locating and securing potentially dangerous goods from looting (e.g., weapons at a firearms shop, drugs at a pharmacy). Achieving effective human-robot collaboration in such scenarios requires natural teaming between the human first responders and their robot teammates (e.g., quadrotors or ground vehicles). An important element of such teaming is the robot teammates’ ability to infer the tasks performed by the human teammates in order to adapt their interactions autonomously based on their human teammates’ state.

Tasks performed by human teammates can be classified into two categories: 1) Atomic, and 2) Composite. Atomic tasks are simple activities that are either short in duration, or involve repetitive actions that cannot be decomposed further. A Composite task aggregates multiple atomic actions or activities into a more complex task (Chen K. et al., 2021). For example, Clearing a dangerous item is a composite task comprised of several atomic tasks: a) Scanning the environment for suspicious items, b) Evaluating the threat level, c) Taking a picture, d) Discussing with the incident commander via walkie-talkie, and e) moving on to the next area.

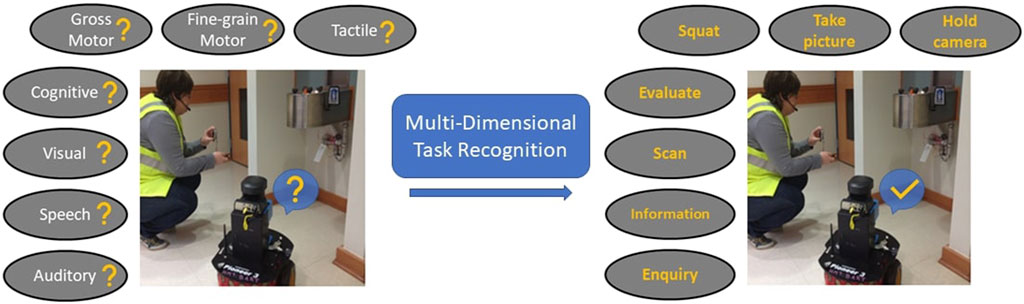

Humans conduct tasks using a breadth of their capabilities, as depicted in Figure 1. Depending on the complexity, the tasks can involve multiple activity components: gross motor, fine-grained motor, tactile, cognitive, visual, speech, and auditory (Heard et al., 2018a). For example, the Clearing a dangerous item task aggregates a visual component of scanning the environment to locate the item, a cognitive component of evaluating the item’s threat level, taking a picture task involves gross motor, fine-grained motor and tactile components, while discussing the next steps with the incident commander via walkie-talkie involves auditory and speech components for listening to queries and providing information.

FIGURE 1. An example of a multi-dimensional task recognition framework enabling a robot to detect a human teammate’s tasks across all activity components when the HRT is clearing a dangerous item.

HRTs often perform a wide variety of tasks, such that the set of tasks performed by human teammates may involve differing combinations of multi-dimensional activity components. The focus on disaster response environments, which are dynamic, uncertain, and unstructured, does not permit the use of environmentally embedded sensors (e.g., motion capture systems and cameras). Wearable sensors are a viable alternative that facilitate gathering the objective data necessary to identify a human’s task. Robots will require a multi-dimensional task recognition algorithm capable of detecting composite tasks composed of differing combinations of the activity components. Current state-of-the-art HRT task recognition using wearable sensors generally focuses on gross motor and some fine-grained atomic tasks. Prior research identified visual, cognitive, and some auditory tasks using wearable sensors; however, none of those methods recognize composite tasks across all activity components.

Humans often complete multiple tasks concurrently (Chen K. et al., 2021). For example, while clearing dangerous items, a human teammate may receive a communication request from the incident command center seeking important information and the teammate will be required to do both tasks simultaneously. Detecting this task concurrency will allow robots to better adapt to their teammate’s interactions, priorities or appropriations, which will improve the team’s overall collaboration and performance. A limitation of most existing algorithms assume that the human performs a single composite task at a time.

Individual differences are expected, even if the humans receive identical training, resulting in differing task completion steps, such as different atomic tasks being completed in different orders or with differing completion times. These differences can result in one task being mapped to multiple different sensor readings. For example, while applying tourniquet, a first responder may skip securing the excess band, as it is not a necessary step to stop the bleeding, while another responder may pack the wound with gauze, an additional step. Algorithmically identifying such individual differences is challenging. The existing approaches to addressing individual differences only considered trivial gross-motor and fine-grained motor atomic tasks (Mannini and Intille, 2018; Akbari and Jafari, 2020; Ferrari et al., 2020), not composite tasks involving multiple activity components.

Human responders train to respond to disasters, but each disaster differs and often requires the completion of unique tasks or tasks in a different manner. Performing tasks for which the robot has not been previously trained are called out-of-class tasks (Laput and Harrison, 2019). Misclassification of an out-of-class task can result in a robot adapting its behavior incorrectly, causing more harm than good.

This literature review evaluates task recognition algorithms within the context of HRTs, operating in uncertain, dynamic environments, by developing a set of relevant criteria. Sections 2, 3 provide the necessary background on task recognition and discuss prior literature reviews, respectively. Section 4 provides an overview of the relevant task recognition metrics, while Section 5 reviews relevant algorithms. Section 6 discusses the limitations of the current approaches, while Sections 7, 8 provide insights for future directions and concluding remarks, respectively.

2 Background

Task recognition involves classifying a human’s task action based on a set of domain relevant activities, or tasks (Wang et al., 2019). A task, tk, belongs to a task set T, for which a sequence of sensor readings Sk corresponds to the task. Task recognition is intended to identify a function f that predicts the task performed based on the sensor readings Sk, such that the discrepancy between the predicted task

Generally, human’s tasks encompass multiple activity components: Physical movements, cognitive, visual, speech and auditory. Physical movements can be categorized based on motion granularity: 1) Gross motor, 2) Fine-grained motor, and 3) Tactile. Gross motor tasks’ physical movements displace the entire body, such as walking, running, and climbing stairs, or major portions, such as swinging an arm (e.g., Cleland et al., 2013; Chen and Xue, 2015; Allahbakhshi et al., 2020). Fine-grained motor tasks involve body extremities’ motion (i.e., wrists and fingers), such as grasping and object manipulation (e.g., Zhang et al., 2008; Fathi et al., 2011; Laput and Harrison, 2019). Tactile tasks’ physical movements result in sense of touch, such as mouse clicks, keyboard strokes, and carrying a backpack (e.g., Hsiao et al., 2015; Lee and Nicholls, 1999). Cognitive tasks use the brain to process new information, as well as recall or retrieve information from memory (e.g., Kunze et al., 2013a; Yuan et al., 2014; Salehzadeh et al., 2020). Visual task examples include identifying different objects, and reading (e.g., Bulling et al., 2010; Ishimaru et al., 2017; Srivastava et al., 2018). Speech-reliant tasks are voice articulation dependent, such as communicating over the radio (e.g., Dash et al., 2019; Abdulbaqi et al., 2020), while auditory tasks are acoustic events in the environment, such as an important announcement or emergency sounds (e.g., Stork et al., 2012; Hershey et al., 2017; Laput et al., 2018). Most existing task recognition approaches focus primarily on detecting physical gross motor and fine-grained motor tasks. However, some tasks involve little to no physical movement. Robots need a holistic understanding of tasks’ various activity components to detect them accurately.

3 Related work

Human task recognition has been an active field of research for more than a decade; therefore, a number of review papers with a wide range of scope and objectives exist in the literature (Lara and Labrador, 2013; Cornacchia et al., 2017; Nweke et al., 2018; Wang et al., 2019; Chen K. et al., 2021; Ramanujam et al., 2021; Bian et al., 2022; Zhang S. et al., 2022). This manuscript focuses on wearable sensor-based human task recognition; thus, this section is only focused on this domain. A brief overview of the latest task recognition surveys from the last decade is presented.

One of the earliest surveys provided an overall task recognition framework, along with its primary components incorporating wearable sensors (Lara and Labrador, 2013). The survey categorized the manuscripts based on their learning approach (supervised or semi-supervised) and response time (offline or online), and qualitatively evaluated them in terms of recognition performance, energy consumption, obtrusiveness, and flexibility. The highlighted open problems included the need for composite and concurrent task recognition. Other comprehensive surveys of task recognition using wearable sensors discussed how different task types can be detected by i) breadth of sensing modalities, ii) choosing appropriate on-body sensor locations, iii) and learning approaches (Cornacchia et al., 2017; Elbasiony and Gomaa, 2020).

The prior reviews primarily surveyed classical machine learning based approaches (e.g., Support Vector Machines and Decision Trees) for task recognition. More recent surveys reviewed manuscripts that leveraged deep learning (Nweke et al., 2018; Wang et al., 2019; Chen K. et al., 2021; Ramanujam et al., 2021; Zhang S. et al., 2022). Nweke et al. (2018) and Wang et al. (2019) presented a taxonomy of generative, discriminative, and hybrid deep learning algorithms for task recognition, while Ramanujam et al. (2021) categorized the deep learning algorithms by convolutional neural network, long short-term memory, and hybrid methods to conduct an in-depth analysis on the benchmark datasets. A comprehensive review of the deep learning challenges and opportunities was presented (Chen K. et al., 2021). Zhang S. et al. (2022) focused on the most recent cutting-edge deep learning methods, such as generative adversarial networks and deep reinforcement learning, along with a thorough analysis in terms of model comparison, selection, and deployment.

Previous task recognition surveys primarily focused on discussing algorithms using wearable sensors. An in-depth understanding of the state-of-art sensing modalities is as important as the algorithmic solutions. A recent survey categorized task recognition-related sensing modalities into five classes: mechanical kinematic sensing, field-based sensing, wave-based sensing, physiological sensing, and hybrid (Bian et al., 2022). Specific sensing modalities were presented by category, along with the strengths and weaknesses of each modality across the categorization.

Other surveys focused solely on video-based task recognition algorithms, typically using surveillance-based datasets (Ke et al., 2013; Wu et al., 2017; Zhang H.-B. et al., 2019; Pareek and Thakkar, 2021), while Liu et al. (2019) reviewed algorithms that leveraged the change in wireless signals, such as the received signal strength indicator and Doppler shift to recognize tasks. The in situ, potentially deconstructed (e.g., 2023 Turkey–Syria earthquake) first response HRT cannot rely on such sensors for task recognition.

Most existing literature reviews focus primarily on algorithms that detect tasks involving physical movements; thus, the reviewed algorithms are biased to only include gross and fine-grained motor task recognition methods. Compared to the prior literature reviews, this manuscript acknowledges that tasks have multiple dimensions and proposes a task taxonomy based on motion granularity (i.e., gross motor, fine-grained motor, and tactile), as well as other task component channels (i.e., visual, cognitive, auditory and speech). The manuscript’s primary contributions are summarized below.

• A comprehensive list of wearable sensor-based metrics are evaluated to assess the metrics’ ability to detect tasks within the context of the first-response HRT domain.

• A systematic review of relevant manuscripts over the years that are categorized by the seven activity components and grouped based on the machine learning methods.

• A set of criteria to evaluate the reviewed manuscripts’ ability to recognize tasks performed by first-response human teammates operating in HRTs.The criteria developed to evaluate the metrics and algorithms may appear restrictive, as they are grounded in the first-response domain; however, the classifications provided are widely applicable for domains that involve unstructured, dynamic environments that require wearable sensors and demand a holistic understanding of a human’s task state.

4 Task recognition metrics

Task recognition algorithms require metrics (e.g., inertial measurements, pupil dilation, and heart-rate) to detect the tasks performed by humans. The task recognition metrics incorporated by an algorithm inform how accurately a given set of tasks and the associated activity components can be detected for a particular task domain; therefore, selecting the right set of metrics takes precedence over algorithm development. Thirty task recognition metrics were identified across the existing literature. Three criteria were developed to evaluate the metrics’ ability to detect tasks performed by first-response HRTs operating in uncertain, unstructured environments.

Sensitivity refers to a metric’s ability to detect tasks reliably. A metric’s sensitivity is classified as High if at least three citations indicate that the metric detects tasks with

Versatility refers to a metric’s ability to detect tasks across different task domains. A metric’s versatility is High if the metric is cited for discriminating tasks in at least two or more task domains. Similarly, if the metric was used for classifying tasks belonging to only one task domain, the versatility is Low.

Suitability evaluates a metric’s feasibility to detect tasks in various physical environments (i.e., structured vs unstructured), which depends on the sensor technology for gathering the metric. Some metrics (e.g., eye gaze) can be acquired using many different sensors and technologies, but the review’s focus on disaster response encourages the use of wearable sensors over environmentally embedded sensors. A metric’s suitability is conforming if it is cited to be gathered by a wearable sensor that is unaffected by disturbances (e.g., sensor displacement noise, excessive perspiration, and change in lighting conditions), while non-conforming otherwise.

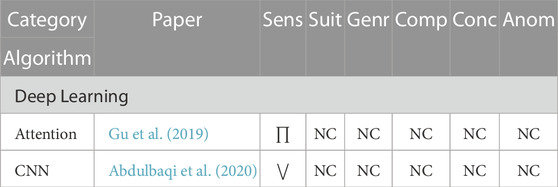

The task recognition metrics and the corresponding sensitivity, versatility, and suitability classifications are provided in Table 1. The Activity Component column in Table 1 indicates which component(s) (i.e., gross motor, fine-grained motor, tactile, visual, cognitive, auditory, and speech) are associated with the metric. The metrics are categorized based on their sensing properties. Each metric is evaluated based on the three evaluation criteria in order to identify the most reliable, minimal set of metrics required to recognize HRT tasks.

TABLE 1. Metrics evaluation overview by Sensitivity (Sens.), Versatility (Verst.), and Suitability (Suit.), where ⋁, ∏, and ⋀, represent Low, Medium, and High, respectively (.) indicates Indeterminate, while * indicate hypothesis predicted for the particular metric. Suitability is classified as conforming (C) or non-conforming (NC).

4.1 Inertial metrics

Inertial metrics consists of: i) linear acceleration, which measures a body region’s three-dimensional movement via an accelerometer; and ii) the body part’s three-dimensional orientation (i.e., rotation and rotational rate) using gyroscope and magnetometer. Inertial metrics are primarily used for detecting physical tasks, which includes gross motor, fine-grained motor and tactile tasks (Cleland et al., 2013; Lara and Labrador, 2013; Vepakomma et al., 2015; Cornacchia et al., 2017).

Linear acceleration is the most widely employed, and can be used as a standalone task recognition metric. Orientation is often used in combination with linear acceleration. The type and number of tasks detected by the inertial metrics can be linked to the number and placement of the sensors on the body (Atallah et al., 2010; Cleland et al., 2013). For example, inertial metric sensors to detect gross motor tasks are placed at central or lower body locations (i.e., chest, waist and thighs) (Ravi et al., 2005; Kwapisz et al., 2011; Mannini and Sabatini, 2011; Gjoreski et al., 2014), while fine-grained motor tasks require the sensor on the forearms and wrists (Zhang et al., 2008; Koskimaki et al., 2009; Min and Cho, 2011; Heard et al., 2019a), and tactile tasks place the sensors at the hand’s dorsal side and fingers (Jing et al., 2011; Cha et al., 2018; Liu et al., 2018). Linear acceleration has high sensitivity, while standalone orientation is indeterminate. The inertial metrics have high versatility and conform with suitability criteria.

4.2 Eye gaze metrics

Eye gaze metrics record the coordinates (gx, gy) of the gaze point over time. Raw eye gaze data is often processed to yield eye movement metrics representative of a human’s visual behavior and can be leveraged for task recognition. Fixations, saccades, scanpath, blink rate, and pupil dilation represent some of the important eye gaze-based metrics (Kunze et al., 2013b; Steil and Bulling, 2015; Martinez et al., 2017; Hevesi et al., 2018; Srivastava et al., 2018; Kelton et al., 2019; Landsmann et al., 2019). These metrics are commonly used for recognizing visual tasks, while some prior research have also detected cognitive tasks (Kunze et al., 2013c; Islam et al., 2021).

Fixations are stationary eye states during which gaze is held upon a particular location (Bulling et al., 2010), while saccades are the simultaneous movement of both eyes between two fixations (Bulling et al., 2010). Fixation has high sensitivity (e.g., Steil and Bulling, 2015; Kelton et al., 2019; Landsmann et al., 2019), while a saccade has medium sensitivity (e.g., Bulling et al., 2010; Ishimaru et al., 2017; Srivastava et al., 2018). Both metrics have high versatility and conform with suitability.

A scanpath is a fixation-saccade-fixation sequence (Martinez et al., 2017). Scanpaths have medium sensitivity (Martinez et al., 2017; Srivastava et al., 2018; Islam et al., 2021), conform with suitability, and have low versatility.

Blink rate represents the number of blinks (i.e., opening and closing eyelids) per unit time (Bulling et al., 2010). Blink rate is often used in conjunction with fixations and saccades to provide further context. The metric’s sensitivity is indeterminate, but it is hypothesized to have low sensitivity and is used predominantly to detect desktop or office-based visual tasks (e.g., Bulling et al., 2010; Ishimaru et al., 2014a). Prior research reviews indicate that blink rate highly correlates with the cognitive workload (Marquart et al., 2015; Heard et al., 2018a); therefore, blink rate is hypothesized to have high sensitivity toward cognitive task recognition. This metric is also hypothesized to have high versatility given its potential to be used in multiple task domains, and it conforms with suitability.

Pupil dilation, or pupillometry, is the change in pupil diameter. The metric’s sensitivity is indeterminate; however, hypothesized to have high sensitivity and versatility toward cognitive and visual task recognition based on its ability to reliably detect cognitive workload (Ahlstrom and Friedman-Berg, 2006; Marquart et al., 2015; Heard et al., 2018a), and its high correlation in various visual search tasks (Porter et al., 2007; Privitera et al., 2010; Wahn et al., 2016). Environmental lighting changes can significantly impact the metric’s acquisition, so it does not conform with suitability.

4.3 Electrophysiological metrics

The electrophysiological metrics refer to the electrical signals associated with various body parts (e.g., muscles, brain and eyes). These signals can be leveraged for task recognition, as they are highly correlated with tasks humans conduct. The most common electrophysiological metrics are electromyography, electrooculography, electroencephalography, and electrocardiography.

Electromyography measures the potential difference caused by contracting and relaxing muscle tissues. Surface-electromyography (sEMG) is a non-invasive technique, wherein electrodes placed on the skin measure the electromyography signals. A forearm positioned sEMG commonly detects fine-grained motor tasks (e.g., Koskimäki et al., 2017; Heard et al., 2019b; Frank et al., 2019), while upper limb positioned sEMG can detect gross motor tasks (e.g., Scheme and Englehart, 2011; Trigili et al., 2019). sEMG has high sensitivity and versatility for detecting gross and fine-grained motor tasks. The metric has been employed for detecting various finger and intricate hand motions (e.g., Chen et al. (2007a; b); Zhang et al. (2009)); thus, it is hypothesized to have high sensitivity for detecting tactile tasks. Finally, the metric does not conform with suitability, as sweat accumulation underneath the electrodes may compromise the sEMG sensor’s adherence to the skin, as well as the associated signal fidelity (Abdoli-Eramaki et al., 2012).

The Electrooculography (EOG) metric measures the potential difference between the cornea and the retina caused by eye movements. The metric has medium sensitivity for classifying visual tasks (e.g., typing, web browsing, reading and watching videos) (Bulling et al., 2010; Ishimaru et al., 2014b; Islam et al., 2021). The metric is capable of detecting cognitive tasks (e.g., Datta et al., 2014; Lagodzinski et al., 2018), but its cognitive sensitivity is indeterminate. The metric has high versatility (Datta et al., 2014; Lagodzinski et al., 2018). EOG does not conform with suitability, because it is susceptible to noise introduced by facial muscle movements (Lagodzinski et al., 2018).

Electroencephalography (EEG) collects electrical neurophysiological signals from different parts of the brain. EEG measures two different metrics: i) the event-related potential measures the voltage signal produced by the brain in response to a stimulus (e.g., Zhang X. et al., 2019; Salehzadeh et al., 2020); and ii) the power spectral density measures the power present in the signal spectrum (e.g., Kunze et al., 2013a; Sarkar et al., 2016). Both metrics have high sensitivity. EEG signals may be inaccurate when a human is physically active, so the metrics are best suited for detecting cognitive tasks in a sedentary environment. Therefore, the EEG metrics have low versatility. EEG signals suffer from low signal-to-noise ratios (Schirrmeister et al., 2017; Zhang X. et al., 2019), and incorrect sensor placement can create inaccuracies; therefore, EEG metrics do not conform with suitability.

Electrocardiography (ECG) measures the heart’s electrical activity. Standalone ECG signals are not sensitive enough to detect tasks; therefore, ECG is often used in conjunction with inertial metrics to detect gross motor tasks (Jia and Liu, 2013; Kher et al., 2013). ECG signal has low versatility, as it has been used to detect only ambulatory tasks (e.g., Jia and Liu, 2013; Kher et al., 2013), but it does conform with suitability.

The ECG signals can be used to measure two other metrics: i) heart-rate measures the number of heart beats per minute, while ii) heart-rate variability measures the variation in the heart-rate’s beat-to-beat interval. Heart-rate has low sensitivity for detecting gross motor tasks (e.g., Tapia et al., 2007; Park et al., 2017; Nandy et al., 2020) and is often used for distinguishing the humans’ intensity when performing physical tasks (Tapia et al., 2007; Nandy et al., 2020). Heart-rate has low versatility, as it can only detect ambulatory tasks. The heart-rate metric conforms with suitability if a human’s stress and fatigue levels remain constant. The heart-rate variability metric has seldom been used for task recognition (Park et al., 2017), but it is sensitive to large variations in cognitive workload (Heard et al., 2018a). Therefore, the metric is hypothesized to have high sensitivity for cognitive task recognition. The metric conforms with suitability, and is hypothesized to have high versatility.

4.4 Vision-based metrics

Vision-based metrics (e.g., optical flow, human-body pose and object detection) use videos and images containing human motions in order to infer the tasks being performed (Fathi et al., 2011; Garcia-Hernando et al., 2018; Ullah et al., 2018; Neili Boualia and Essoukri Ben Amara, 2021). These metrics are acquired via environmentally embedded cameras installed at fixed locations, or using wearable cameras mounted on a human’s shoulders, head, or chest (e.g., Mayol and Murray, 2005; Fathi et al., 2011; Matsuo et al., 2014).

Vision-based metrics detect gross and fine-grained motor tasks by enabling various computer vision algorithms [e.g., object detection, localization, and motion tracking He et al. (2016); Deng et al. (2009); Redmon et al. (2016)], which are relevant for task recognition. The metrics’ use is discouraged for the intended HRT domain, because i) environmentally embedded cameras are not readily available in unstructured domains; ii) high susceptibility to background noise from lighting, vibrations, and occlusion; iii) raise privacy concerns, and iv) computationally expensive to process (Lara and Labrador, 2013). The metrics have medium to high sensitivity, high versatility, and non-conforming with suitability.

4.5 Acoustic metrics

Acoustic metrics leverage the characteristic sounds in order to detect auditory events in the surrounding environment. Auditory event recognition algorithms commonly use two types of frequency-domain metrics. A spectrogram is a three-dimensional acoustic metric representing a sound signal’s amplitude over time at various frequencies (Haubrick and Ye, 2019). The spectrogram has high sensitivity and versatility (e.g., Laput et al., 2018; Haubrick and Ye, 2019; Liang and Thomaz, 2019). Cepstrum represents the short-term power spectrum of a sound, and is obtained by applying an inverse Fourier transformation on a sound wave’s spectrum. The Mel Frequency Cepstral Coefficients (MFCCs) represent the amplitudes of the resulting cepstrum on a Mel scale. The MFCC metric has high sensitivity and versatility (e.g., Min et al., 2008; Stork et al., 2012). Both metrics conform with suitability, as long as the audio is captured via a wearable microphone.

Noise level measures a task environment’s loudness in decibels. Noise level correlates to an increase in auditory workload (Heard et al., 2018b), but has not been used for task recognition; however, the metric is hypothesized to detect auditory events when the events are fewer

4.6 Speech metrics

Communication exchanges between human teammates can be translated into text, or a Verbal transcript, such that the message is captured as it was spoken. Transcripts can be generated manually (Gu et al., 2019), or using an automatic speech recognition tool [e.g., SPHINX (Lee et al., 1990), Kaldi (Povey et al., 2011), Wav2Letter++ (Pratap et al., 2019)]. The transcribed words are encoded into n − dimensional vectors [e.g., GloVe vector embeddings (Pennington et al., 2014)] to be used as inputs for detecting speech-reliant tasks (Gu et al., 2019). Representative keywords that are spoken more frequently can be used for detecting tasks (Abdulbaqi et al., 2020). Keywords can be detected for every utterance automatically using word-spotting (Tsai and Hao, 2019; Gao et al., 2020). Identifying keywords for each task is non-trivial and requires considerable human effort. Both transcript and keywords metrics’ sensitivity is indeterminate, but is hypothesized to be medium (Gu et al., 2019; Abdulbaqi et al., 2020). The metrics conform with suitability, provided the speech audio is obtained using a wearable microphone that minimize extraneous ambient noise. The metrics are exceptionally domain specific; therefore, their versatility is hypothesized to be low.

Several speech-related metrics (e.g., speech rate, pitch, and voice intensity) that do not rely on natural language processing have proven effective for estimating speech workload (Heard et al., 2018a; Heard et al., 2019a; Fortune et al., 2020). Speech rate captures verbal communications’ articulation rate by measuring the number of syllables uttered per unit time (Fortune et al., 2020). Voice intensity is the speech signal’s root-mean-square value, while Pitch is the signal’s dominant frequency over a time period (Heard et al., 2019a). These metrics have not been used for task recognition; therefore, additional evidence is required to substantiate their evaluation criteria. The metrics’ sensitivity and versatility are hypothesized to be high. The suitability criterion is conforming, assuming that the speech audio is obtained via wearable microphones.

4.7 Localization-based metrics

Localization-based metrics infer tasks by analyzing either the absolute or relative position of items of interest, including humans. The outdoor localization metric measures a human’s absolute location (i.e., latitude and longitude coordinates) using satellite navigation systems. This metric has low sensitivity, (Liao et al., 2007), but can support task recognition by providing context (Reddy et al., 2010; Riboni and Bettini, 2011). The metric is highly versatile and non-conforming with suitability (Lara and Labrador, 2013).

Indoor localization determines the relative position of items, including humans, relative to a known reference point in indoor environments by acquiring the change in radio signals. Radio Frequency Identification (RFID) tags and wireless modems installed at stationary locations are the standard options. The indoor localization metric infers tasks by determining humans’ location or identifying objects lying in close proximity (Cornacchia et al., 2017; Fan et al., 2019). The metric has high sensitivity and versatility. The metric requires environmentally embedded sensors; therefore, it is non-conforming for suitability.

4.8 Physiological metrics

Physiological metrics provide precise information about a human’s vital state. Several physiological metrics exist: i) Galvanic skin response, which measures the skin’s conductivity, ii) Respiration rate, which represents the number of breaths taken per minute, iii) Posture Magnitude, which measures a human’s trunk flexion (leaning forward) and extension (leaning backward) angle in degrees, and iv) Skin temperature, are the most commonly used physiological metrics for task recognition (Min and Cho, 2011; Lara et al., 2012). The physiological metrics have low sensitivity, as they react to activity changes with a time delay. The metrics have high versatility and conform with suitability.

5 Task recognition algorithms

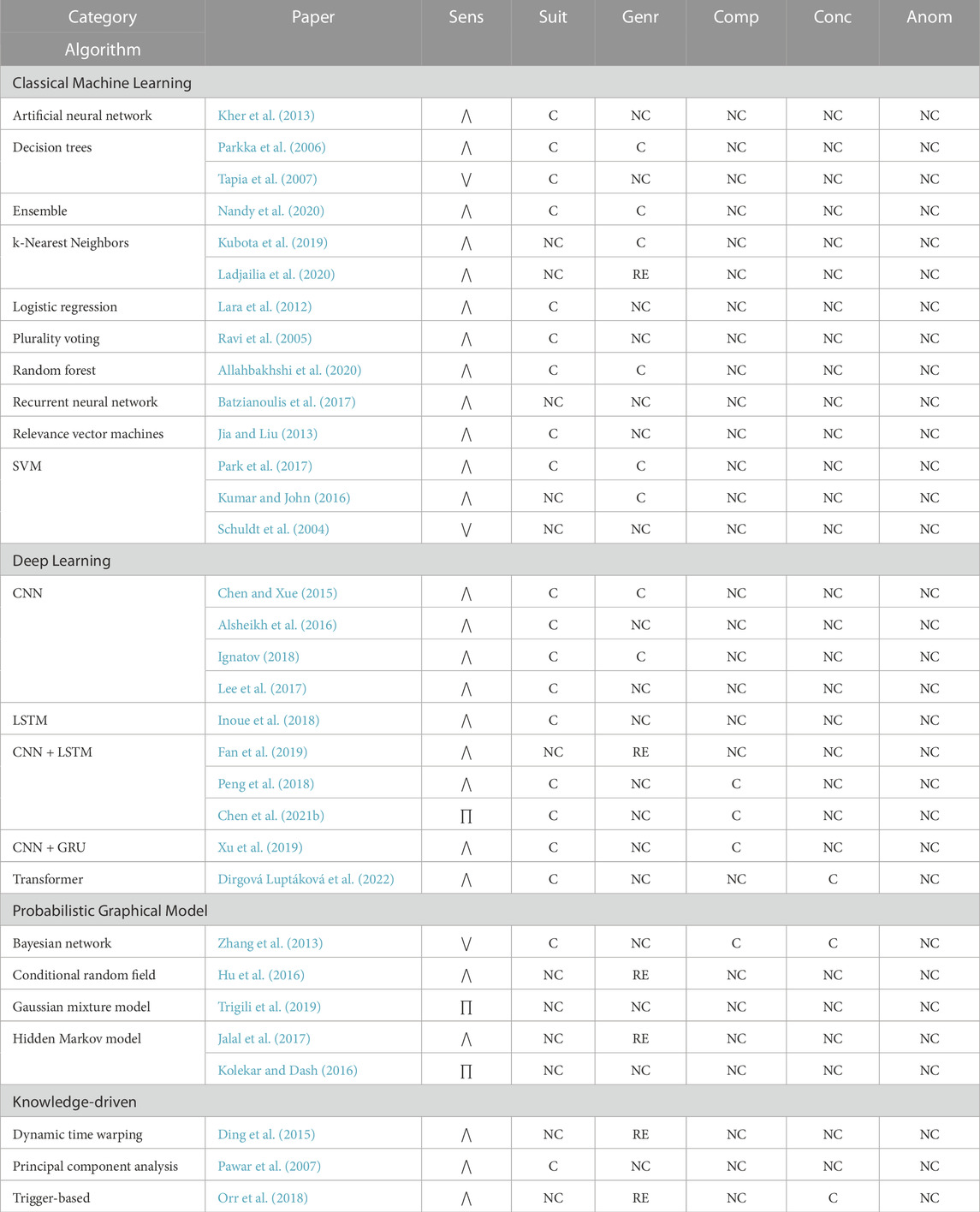

Over one hundred task recognition algorithms across different activity components and task domains were identified and reviewed. The algorithms are evaluated using the following criteria: sensitivity, suitability, generalizability, composite factor, concurrency, and anomaly awareness. The evaluation criteria and the corresponding requirements were chosen in order to assess an algorithm’s viability for detecting tasks in a human-robot teaming domain.

5.1 Evaluation criteria

Sensitivity refers to an algorithm’s ability to detect tasks reliably. An algorithm’s sensitivity is classified as High if the algorithm detects tasks with

An algorithm’s suitability evaluates its feasibility for detecting tasks in various physical environments. An algorithm conforms if it can detect tasks independent of the environment by incorporating wearable, reliable metrics, and is non-conforming otherwise.

Generalizability represents an algorithm’s ability to identify tasks across humans. The generalizability criterion depends on the achieved accuracy, given the algorithm’s validation method. An algorithm conforms if it achieves

The Composite factor criterion determines whether an algorithm can detect tasks composed of multiple atomic activities. If a detected task incorporates two or more atomic activities, then the algorithm conforms with the composite task criterion. Typically, long duration tasks that incorporate multiple action sequences per task are composite in nature.

Concurrency determines if the algorithm can detect tasks executed simultaneously. Concurrency has multiple forms: i) a task may be initiated prior to completing a task, such that a portion of the task overlaps with the prior task (i.e., interleaved tasks), and ii) multiple tasks performed at the same time (i.e., simultaneous tasks) (Allen and Ferguson, 1994; Liu et al., 2017). An algorithm conforms if it can detect at least one form of concurrency, and is non-conforming otherwise.

Anomaly Awareness determines an algorithm’s ability to detect an out-of-class task instance, which arises when an algorithm encounters sensor data that does not correspond to any of the algorithm’s learned tasks. An algorithm conforms with anomaly awareness if it can detect out-of-class instances.

Most task recognition algorithms can only detect a predefined set of atomic tasks and are unable to detect concurrent tasks or out-of-class instances (Lara and Labrador, 2013; Cornacchia et al., 2017). Thus, unless identified otherwise, the reviewed algorithms do not conform with composite factor, concurrency and anomaly awareness.

5.2 Overview of task recognition algorithm categories

Task recognition algorithms typically incorporate supervised machine learning to identify the tasks from the sensor data (see Section 2). These algorithms can be grouped into several categories based on feature extraction, ability to handle uncertainty, and heuristics. Three common data-driven task recognition algorithm categories exist in the literature, which are.

• Classical machine learning rely on features extracted from raw sensor data to learn a prediction model. Classical approaches are suitable when there is sufficient domain knowledge to extract meaningful features, and the training dataset is small.

• Deep learning avoids designing handcrafted features, learns the features automatically (Goodfellow et al., 2016), and is generally suitable when a large amount of data is available for training the model. Deep learning approaches leverage data to extract high-level features, while simultaneously training a model to predict the tasks.

• Probabilistic graphical models utilize probabilistic network structures (e.g., Bayesian Networks (Du et al., 2006), Hidden Markov Models (Chung and Liu, 2008), Conditional Random Fields (Vail et al., 2007)) to model uncertainties and the tasks’ temporal relationships, while also identifying composite, concurrent tasks.The data-driven models’ primary limitations are that they i) cannot be interpreted easily, and ii) may require large amount of training data to be robust enough to handle individual differences across humans and generalize across multiple domains.

Knowledge-driven task recognition models exploit heuristics and domain knowledge to recognize the tasks using reasoning-based approaches [e.g., ontology and first-order logic (Triboan et al., 2017; Safyan et al., 2019; Tang et al., 2019)]. Knowledge-driven models are logically elegant and easier to interpret, but do not have enough expressive power to model uncertainties. Additionally, creating logical rules to model temporal relations becomes impractical when there are a large number of tasks with intricate relationships (Chen and Nugent, 2019; Liao et al., 2020).

5.3 Gross motor tasks

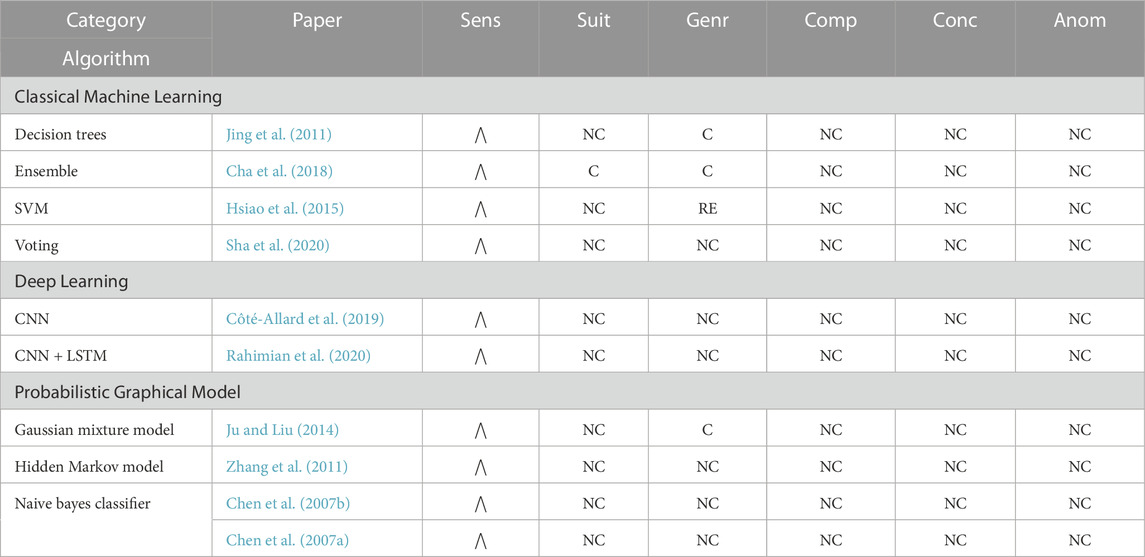

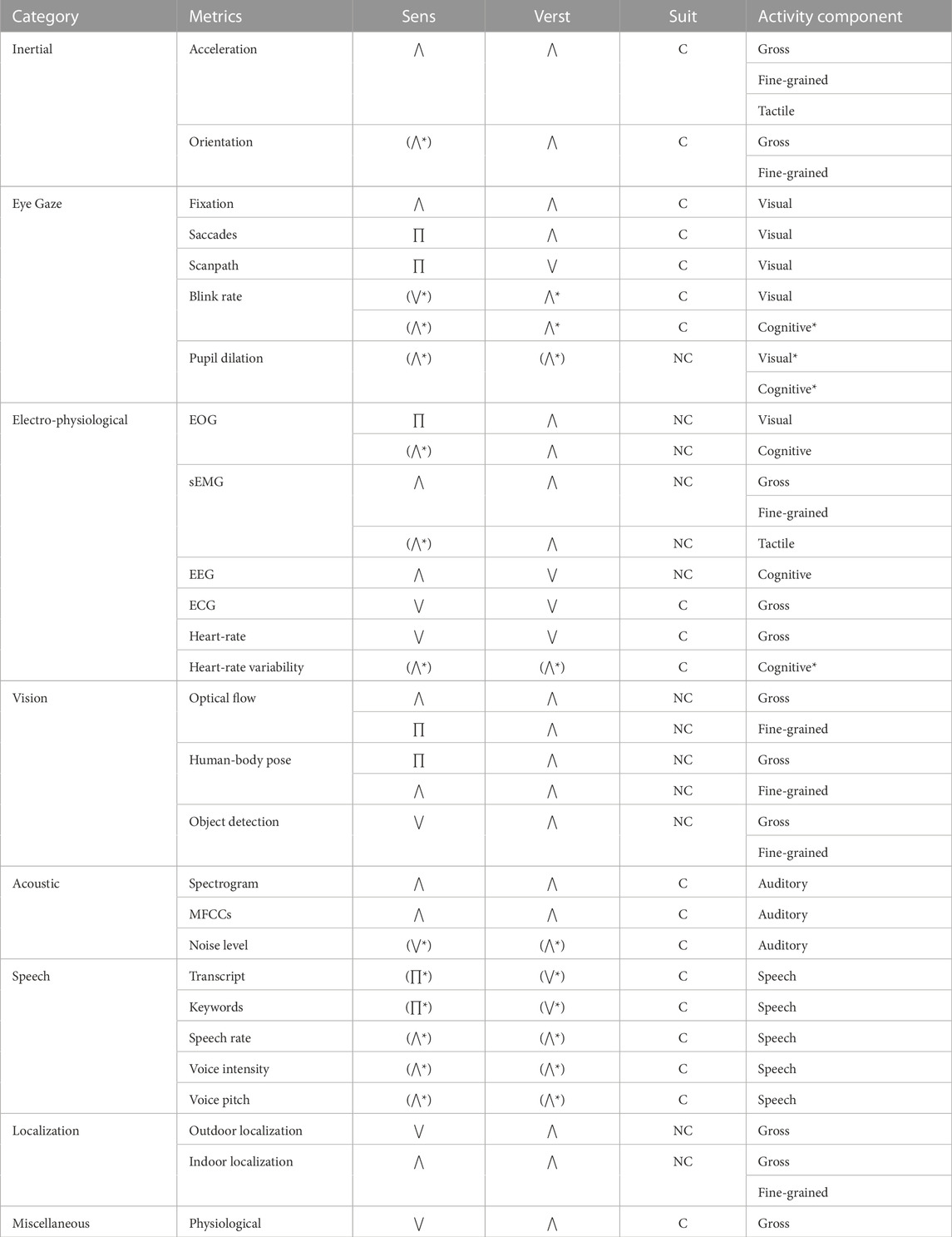

Gross motor tasks occur across multiple task categories, such as Activities of Daily Living (ADL), fitness, and, industrial. A high-level overview of the reviewed algorithms with regard to the evaluation criteria is presented by algorithm category in Table 2.

TABLE 2. Gross motor task recognition algorithms evaluation overview by Sensitivity (Sens.), Suitability (Suit.), Generalizability (Genr.), Composite Factor (Comp.), Concurrency (Conc.), and Anomaly Awareness (Anom.). Sensitivity is classified as Low (⋁), Medium (∏), or High (⋀), while other criteria are classified as conforming (C), non-conforming (NC), or requiring additional evidence (RE).

5.3.1 Classical machine learning

Most gross motor task recognition algorithms incorporate classical machine learning using inertial metrics, often measured at central and lower body locations (Cornacchia et al., 2017), such as the chest (e.g., Li et al., 2010; Lara et al., 2012; Braojos et al., 2014), waist (e.g., Arif et al., 2014; Weng et al., 2014; Capela et al., 2015; Allahbakhshi et al., 2020), and thighs (e.g., Braojos et al., 2014; Gjoreski et al., 2014; Weng et al., 2014). Generally, inertial metrics measured at upper peripheral locations (e.g., forearms and wrists) are not suited for detecting gross motor tasks (Kubota et al., 2019).

Algorithms may also combine inertial data with physiological metrics, such as ECG, heart-rate, respiration rate, or skin temperature (e.g., Parkka et al., 2006; Jia and Liu, 2013; Kher et al., 2013; Park et al., 2017; Nandy et al., 2020). These algorithms extract time- and frequency-domain features and use conventional classifiers [e.g., Support Vector Machine (SVM) (Jia and Liu, 2013; Park et al., 2017), Decision Trees (Parkka et al., 2006; Tapia et al., 2007), Random Forest (Allahbakhshi et al., 2020; Nandy et al., 2020), or Logistic Regression (Lara et al., 2012)]. Physiological data can increase recognition accuracy by providing additional context, such as distinguishing between intensity levels [e.g., running and running with weights (Nandy et al., 2020)]. However, the metrics may also disrupt real-time task recognition, as they are not sensitive to sudden changes in physical activity. For example, incorporating heart-rate reduced performance when heart-rate remained high after performing physically demanding tasks, even when the human was lying or sitting (Tapia et al., 2007).

Classical machine learning algorithms involving vision-based metrics leverage optical flow extracted from stationary cameras for gross motor task recognition (e.g., Kumar and John, 2016; Ladjailia et al., 2020). Task specific motion descriptors derived from optical flow are used as features to train a machine learning classifier (e.g., SVM (Kumar and John, 2016) or k-Nearest Neighbors (Ladjailia et al., 2020)).

Generally, classical machine learning based gross motor task detection algorithms typically have high sensitivity, primarily due to the atomic and repetitive nature of gross motor tasks. These algorithms conform with suitability when the metrics incorporated are wearable and reliable (Ravi et al., 2005; Parkka et al., 2006; Tapia et al., 2007; Lara et al., 2012; Jia and Liu, 2013; Kher et al., 2013; Park et al., 2017; Allahbakhshi et al., 2020; Nandy et al., 2020), and are non-conforming otherwise (Schuldt et al., 2004; Kumar and John, 2016; Kubota et al., 2019; Ladjailia et al., 2020). Overall, the algorithms conform with generalizability, as they typically achieved high accuracy using a leave-one-subject-out cross-validation (Parkka et al., 2006; Kumar and John, 2016; Park et al., 2017; Kubota et al., 2019; Allahbakhshi et al., 2020; Nandy et al., 2020). All evaluated algorithms are non-conforming for the concurrency, composite factor, and anomaly awareness criteria.

5.3.2 Deep learning methods

Classical machine learning algorithms require handcrafted features that are highly problem-specific, and generalize poorly across task categories (Saez et al., 2017). Additionally, those algorithms cannot represent the composite relationships among atomic tasks, and require significant human effort to select features and sensor data thresholding (Saez et al., 2017). Comparative studies indicate deep learning algorithms outperform classical machine learning when large amount of training data is available (Gjoreski et al., 2016; Saez et al., 2017; Shakya et al., 2018).

Deep learning algorithms involving inertial metrics typically require little to no sensor data preprocessing. A Convolutional Neural Network (CNN) detected eight gross motor tasks (e.g., falling, running, jumping, walking, ascending and descending a staircase) using raw acceleration data (Chen and Xue, 2015). Although inertial data preprocessing is not required, it may be advantageous in some situations. For example, a CNN algorithm transformed the x, y, and z acceleration into vector magnitude data in order to minimize the acceleration’s rotational interference (Lee et al., 2017). The acceleration signal’s spectrogram, which is a three dimensional representation of changes in the acceleration signal’s energy as a function of frequency and time, was used to train a CNN model (Alsheikh et al., 2016). Employing the spectrogram improved the classification accuracy and reduced the computational complexity significantly (Alsheikh et al., 2016).

Most recent algorithms leverage publicly available huge benchmark datasets (e.g., Roggen et al., 2010; Reiss and Stricker, 2012) to build deeper and more complex task recognition models. Deep learning algorithms combine CNNs with sequential modeling networks (e.g., Long Short-term Memory (LSTMs) (Peng et al., 2018; Chen L. et al., 2021), Gated Recurrent Units (GRUs) (Xu et al., 2019)) to detect composite gross motor tasks from inertial data. The DEBONAIR algorithm (Chen L. et al., 2021) incorporated multiple convolutional sub-networks to extract features based on the input metrics’ dynamicity and passed the sub-networks’ feature maps to LSTM networks to detect composite gross motor tasks (e.g., vacuuming, nordic walking, and rope jumping). The AROMA algorithm (Peng et al., 2018) recognized atomic and composite tasks jointly by adopting a CNN + LSTM architecture, while InnoHAR algorithm (Xu et al., 2019) combined the Inception CNN module with GRUs to detect composite gross motor tasks. Several other algorithms draw inspiration from natural language processing to detect gross motor task transitions (Thu and Han, 2021) and concurrency (Dirgová Luptáková et al., 2022) by utilizing bi-directional LSTMs and Transformers, respectively. Bi-directional LSTMs concatenate information from positive as well as negative time directions in order to predict tasks, whereas Transformers incorporate self-attention mechanisms to draw long-term dependencies by focusing on the most relevant parts of the input sequence.

RFID indoor localization is common for task recognition (e.g., Ding et al., 2015; Hu et al., 2016; Fan et al., 2019). The RFID’s received signal strength indicator and phase angle metrics are used to determine the relative distance and orientation of the tags with respect to the associated embedded environment readers (Scherhäufl et al., 2014). The two common task identification methods are: i) tag-attached, and ii) tag-free (Fan et al., 2019). DeepTag (Fan et al., 2019) introduced an advanced RFID-based task recognition algorithm that identified tasks in both tag-attached and tag-free scenarios. The deep learning-based algorithm used a preprocessed received signal strength indicator and phase angle information that combined a CNN with LSTMs in order to predict seven ADL tasks. Generally, the gross motor task recognition algorithms involving indoor localization have high sensitivity, but do not conform with suitability and composite factor.

Deep learning algorithms’ increased network complexity and abstraction alleviates most of the classical machine learning algorithms’ limitation, resulting in high sensitivity, especially when the data is abundant (Gjoreski et al., 2016; Saez et al., 2017); however, caution must be exercised to not overfit the algorithms. Deep learning algorithms can achieve high classification accuracy on multi-modal sensor data without requiring special feature engineering for each modality. For example, a hybrid deep learning algorithm trained using an 8-channel sEMG and inertial data detected thirty gym exercises (e.g., dips, bench press, rowing) (Frank et al., 2019). Deep learning algorithms rarely validate their results via leave-one-subject-out cross-validation, as in most cases the algorithms are validated by splitting all the available data randomly into training and validation datasets; therefore, the algorithms’ generalizability criteria either requires additional evidence, or is non-conforming.

5.3.3 Probabilistic graphical models

Algorithms’ task predictions are not always accurate, as there is always some uncertainty associated with the predictions, especially when tasks overlap with one another, or share similar motion patterns (e.g., running vs running with weights). Additionally, humans may perform two or more tasks simultaneously, which complicates task identification when using classical and deep learning methods that are typically trained to predict only one task occurring at a time. Probabilistic graphical task recognition algorithms are adept at managing these uncertainties, and have the ability to model simultaneous tasks.

Probabilistic graphical models can detect gross motor tasks across various metrics [e.g., indoor localization (Hu et al., 2016), sEMG (Trigili et al., 2019), inertial (Lee and Cho, 2011; Kim et al., 2015), human-body pose (Jalal et al., 2017), optical flow (Kolekar and Dash, 2016), and object detection (Zhang et al., 2013)]. Hidden Markov Models are the most widely utilized probabilistic graphical algorithm for gross motor task recognition (e.g., Lee and Cho, 2011; Kim et al., 2015; Kolekar and Dash, 2016; Jalal et al., 2017), because Hidden Markov Model’s sequence modeling properties can be exploited for continuous task recognition (Kim et al., 2015). Hidden Markov Models also allow for modeling the tasks hierarchically (Lee and Cho, 2011), and can distinguish tasks with intra-class variances and inter-class similarities (Kim et al., 2015). Other probabilistic models [e.g., Gaussian Mixture Models (Trigili et al., 2019)] can also detect gross motor tasks. A probabilistic graphical model, the Interval-temporal Bayesian Network, unified Bayesian network’s probabilistic representation with interval algebra’s (Allen and Ferguson, 1994) ability to represent temporal relationships between atomic events (Zhang et al., 2013) to detect composite and concurrent gross motor tasks. The algorithm’s sensitivity and generalizability are low and non-conforming, respectively. The algorithm’s suitability is non-conforming, as it employed vision-based metrics. Finally, the algorithm’s composite factor and concurrency conform.

5.3.4 Knowledge-driven algorithms

Gross motor rule-based task recognition algorithms incorporate template matching or thresholding to recognize tasks. A Dynamic Time Warping (Salvador and Chan, 2007) based algorithm detected free-weight exercises by computing the similarity between Doppler shift profiles of the reflected RFID signals (Ding et al., 2015). A principal component analysis thresholding algorithm detected ambulatory task transitions by analyzing the motion artifacts in ECG data induced by body movements (Pawar et al., 2007; 2006).

Rule-based algorithms can detect concurrent tasks, if the rules are relatively simple to derive using the sensor data. A multiagent algorithm (Orr et al., 2018) detected up to seven gross motor atomic tasks (e.g., dressing, cleaning, and food preparation). The algorithm detected up to two concurrent tasks using environmentally-embedded proximity sensors.

Rule-based systems are ideal for gross motor task detection when the sensor data is limited and can be comprehended in a relatively straightforward manner. For example, the prior rule-based multiagent algorithm detected concurrent tasks, as it was easy to form the rules using the proximity sensor data. Rule-based algorithms are unsuitable when the sensor data cannot be interpreted easily (i.e., instances of high dimensionality), or when there are a large number of tasks that have intricate relationships.

5.3.5 Discussion

Most machine learning based algorithms can detect gross motor tasks reliably with acceptable suitability and generalizability when the tasks are atomic and non-concurrent with repetitive motions (e.g., Parkka et al., 2006; Chen and Xue, 2015; Park et al., 2017; Allahbakhshi et al., 2020). The human-robot teaming domain often involves composite tasks that may occur concurrently. None of the existing gross motor task detection algorithms satisfy all the required criteria for the intended domain.

The interval-temporal algorithm (Zhang et al., 2013) is the preferred approach for gross motor task detection. The algorithm can detect concurrent and composite tasks, but had low sensitivity and is non-conforming for suitability and generalizability, which can be attributed to the vision-based metrics and low-level Bayesian network’s poor classification accuracy. However, the algorithm is independent of the metrics (Zhang et al., 2013), as it operates hierarchically, utilizing the low-level atomic event predictions. Therefore, a modified version more suited to the intended domain may incorporate a classical machine learning algorithm [e.g., Random Forest (Allahbakhshi et al., 2020)] or a deep network [e.g., CNN (Chen and Xue, 2015)], depending on the amount of data available, to detect the low-level atomic tasks using inertial metrics. The interval-temporal algorithm can be used to detect the composite and concurrent gross motor tasks.

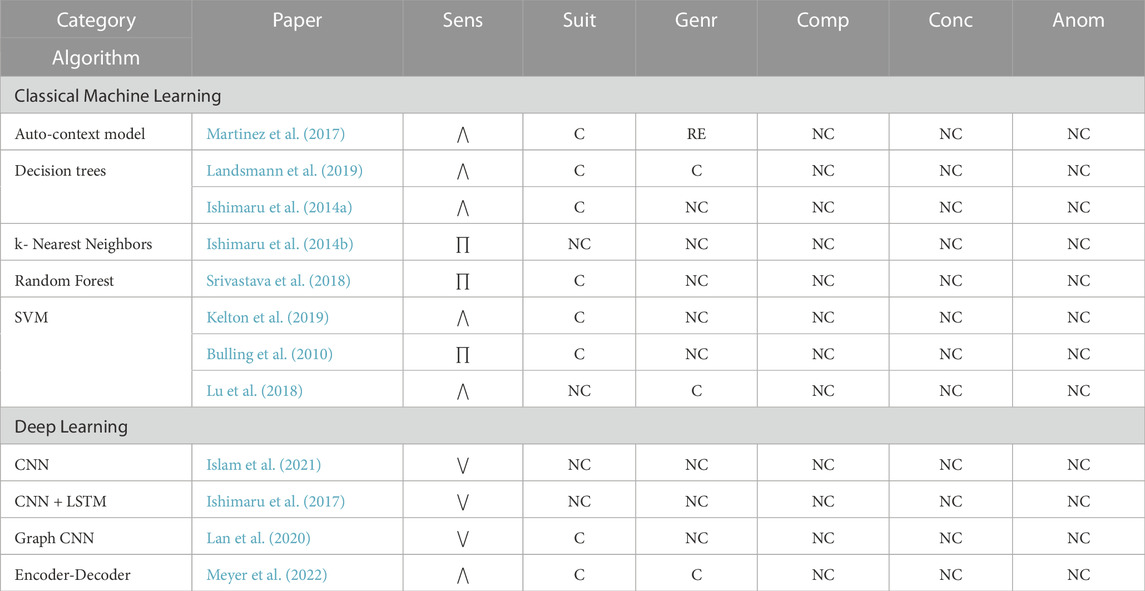

5.4 Fine-grained motor tasks

Fine-grained motor tasks often involve highly articulated and dexterous motions that can be performed in multiple ways. The execution and the time taken to complete the tasks differ from one human to the other. These aspects of fine-grained motor tasks can create ambiguity in the sensor data, making it difficult for the algorithms to detect such tasks; therefore, a wide range of methods adopting various sensing modalities exist for detecting fine-grained tasks accurately. The evaluation criteria for each reviewed fine-grained task recognition algorithm by algorithm category is provided in Table 3.

5.4.1 Classical machine learning

Classical machine learning algorithms are suitable for detecting fine-grained motor tasks only when the tasks are short in duration, atomic, or repetitive (Heard et al., 2019a). Among the classical machine learning algorithms, k-Nearest Neighbors (e.g., Koskimaki et al., 2009; Kubota et al., 2019; Ladjailia et al., 2020), Random Forest (e.g., Wang et al., 2015; Li et al., 2016a; Heard et al., 2019b), and SVM (e.g., Min and Cho, 2011; Pirsiavash and Ramanan, 2012; Matsuo et al., 2014) are the most popular choices for fine-grained motor task detection.

Several classical machine learning algorithms use egocentric wearable camera videos for detecting ADL tasks (e.g., Pirsiavash and Ramanan, 2012; Matsuo et al., 2014; Garcia-Hernando et al., 2018). Image processing techniques (e.g., histogram of orientation or spatial pyramids) are used to detect objects and conventional machine learning algorithms recognize the tasks from the detected objects. These algorithms may also incorporate saliency detectors (Matsuo et al., 2014), or depth information (Garcia-Hernando et al., 2018) to identify the objects being manipulated. Temporal motion descriptive features from optical flow can also be used for recognizing fine-grained tasks. A k-Nearest Neighbors algorithm classified the fine-grained motor tasks (Ladjailia et al., 2020) based on a histogram constructed using the motion descriptors from optical flow.

Forearm sEMG signals can detect tasks that are difficult for a vision-based algorithm to differentiate when using the same conventional classifiers. A comparison between an sEMG [i.e., Myo armband (Sathiyanarayanan and Rajan, 2016)] and a motion capture sensor revealed that the former had higher efficacy in recognizing fine-grained motions (e.g., grasps and assembly part manipulation tasks) (Kubota et al., 2019). The classifiers with the sEMG data detected the minute variation in the muscle associated with each grasp; resulting in significantly higher recognition accuracy than using the motion capture data.

Some classical machine learning algorithms that use a single Inertial Measurement Unit (IMU) can classify fine-grained ADL tasks [e.g., eating and drinking (Zhang et al., 2008)], and assembly line activities [e.g., hammering and tightening screws Koskimaki et al. (2009)]. This approach is suitable for tasks involving a single hand (i.e., the dominant), when the number of recognized tasks is small (e.g.,

A recent system attempted to recognize twenty-three composite clinical procedures by using metrics from two Myo armbands and statically embedded cameras (Heard et al., 2019a). The Myo’s sEMG and inertial metrics were combined with the camera’s human body pose metric to train a Random Forest classifier with majority voting. Many clinical procedures require multiple articulated fine-grained motions that range from

A multi-modal framework, incorporating five inertial sensors, data gloves and a bio-signal sensor, detected eleven atomic tasks (e.g., writing, brushing, typing) and eight composite tasks (e.g., exercising, working, meeting) (Min and Cho, 2011). A hybrid ensemble approach combined classifier selection and output fusion. The sensors’ inputs were initially recognized by a Naive Bayes selection module. The selection module’s task probabilities chose a set of task-specific SVM classifiers that fused their predictions into a matrix in order to identify the tasks.

Classical machine learning algorithms’ classification accuracies range between 45% and 65%; thus, they generally have low sensitivity. The algorithms’ suitability criterion depend on the metrics employed. The composite factor and generalizability criteria also vary across algorithms, as they depend on the tasks detected and the validation methodology. Overall, most algorithms are non-conforming for the concurrency and composite factors, making them unsuitable for detecting fine-grained motor tasks for the intended HRT domain.

5.4.2 Deep learning

The ambiguous, convoluted sensor data from fine-grained motor tasks causes the feature engineering and extraction to be laborious. Deep learning algorithms overcome this limitation by automating the feature extraction process. There are three different types of deep learning algorithms for fine-grained motor task recognition: i) Convolutional, ii) Recurrent, and iii) Hybrid. Convolutional algorithms typically incorporate only CNNs to learn the spatial features from sensor data for each task and distinguish them by comparing the spatial patterns (e.g., Castro et al., 2015; Li et al., 2016b; Ma et al., 2016; Laput and Harrison, 2019; Li L. et al., 2020). Recurrent algorithms detect the tasks by capturing the sequential information present in the sensor data, typically using memory cells (e.g., Garcia-Hernando et al., 2018; Zhao et al., 2018). Hybrid algorithms extract spatial features and learn the temporal relationships simultaneously by combining convolutional and recurrent networks (Ullah et al., 2018; Fan et al., 2019; Frank et al., 2019).

Deep learning algorithms using egocentric videos from wearable cameras combine object detection with task recognition. A CNN with a late fusion ensemble predicted the tasks from a chest-mounted wearable camera (Castro et al., 2015) by incorporating relevant contextual information (e.g., time and day of the week) to boost the classification accuracy. Two separate CNNs were combined together to recognize objects of interest and hand motions (Ma et al., 2016). The networks were fine tuned jointly using a triplet loss function to recognize fine-grained ADL tasks with medium to high sensitivity.

Analyzing changes in body poses spatially and temporally can provide important cues for fine-grained motor task recognition (Lillo et al., 2017). An end-to-end CNN network exploited camera images for estimating fifteen upper body joint positions (Neili Boualia and Essoukri Ben Amara, 2021). The estimated joint positions permitted discriminating features to recognize tasks. The CNN architecture had two levels: i) fully-convolutional layers that extracted the salient feature, or heat maps, and ii) fusion layers that learned the spatial dependencies between the joints by concatenating the convolutional layers. The CNN-estimated joint positions served as input to train a multi-class SVM that predicted twelve ADL tasks with high sensitivity.

Hybrid deep learning algorithms are becoming increasingly popular for task recognition across metrics (Ullah et al., 2018; Fan et al., 2019; Frank et al., 2019). An optical flow-based algorithm (Ullah et al., 2018) leveraged deep learning to extract temporal optical flow features from the salient frames, and incorporated a multilayer LSTM to predict the tasks using the temporal optical flow features. Another hybrid deep learning algorithm (Frank et al., 2019) trained on the sEMG and inertial metrics detected assembly tasks. The algorithm’s CNN layers extracted spatial features from the merits at each timestep, while the LSTM layers learned how the spatial features evolved temporally. Hybrid algorithms can provide excellent expressive and predictive capabilities; however, these algorithms’ performance relies heavily on the size of training dataset (Frank et al., 2019).

A CNN-based algorithm incorporated inertial metrics from an off-the-shelf smartwatch to detect twenty-five atomic tasks (e.g., operating a drill, cutting paper, and writing) (Laput and Harrison, 2019). A Fourier transform was applied to the acceleration data to obtain the corresponding spectrograms. The CNN identified the spatial-temporal relationships encoded in the spectrograms by generating distinctive activation patterns for each task. The algorithm also rejected (i.e., detected) unknown instances.

Deep learning algorithms can recognize concurrent and composite fine-grained motor tasks directly from raw sensor data using complex network architectures, provided sufficient data is available (Ordóñez and Roggen, 2016; Zhao et al., 2018). Human task trajectories are continuous in that the current task depends on both past and future information. A deep residual bidirectional LSTM algorithm (Zhao et al., 2018) detected the Opportunity dataset’s composite tasks by incorporating information from positive as well as negative time directions. The dataset contains five composite ADLs (e.g., relaxation, preparing coffee, preparing breakfast, grooming, cleaning), involving a total number of 211 atomic events (e.g., walk, sit, lying, open doors, reach for an object). Several metrics, including acceleration and orientation of various body parts, and three-dimensional indoor position were gathered.

Recent deep learning algorithms leverage attention mechanisms to model long-term dependencies from inertial data (Al-qaness et al., 2022; Zhang Y. et al., 2022; Mekruksavanich et al., 2022). The ResNet-SE algorithm (Mekruksavanich et al., 2022) classified composite fine-grained motor tasks on three publicly available datasets. The algorithm incorporated residual networks to address loss degradation, followed by a squeeze-and-excite attention function to modulate the relevance of each residual feature map. The Multi-ResAtt algorithm (Al-qaness et al., 2022) incorporated residual networks to process inertial metrics from IMUs distributed over different body locations, followed by bidirectional GRUs with attention mechanism to learn time-series features.

Generally, deep learning algorithms are highly effective at detecting atomic fine-grained motor tasks, but their ability to detect composite and concurrent tasks reliably is indeterminate. The latter may be due to insufficient ecologically-valid composite, concurrent task recognition datasets available publicly. Utilizing generative adversarial networks (Wang et al., 2018; Li X. et al., 2020) to expand datasets by producing synthetic sensor data may alleviate the issue.

5.4.3 Probabilistic graphical models

Bayesian networks are the most common probabilistic graphical models for fine-grained motor task detection (e.g., Wu et al., 2007; Fortin-Simard et al., 2015; Liu et al., 2017; Hevesi et al., 2018), followed by Gaussian Mixture Models (e.g., Min et al., 2007; Min et al., 2008). Many such algorithms augment the inertial data with a different sensing modality (e.g., Min et al., 2007; Wu et al., 2007; Min et al., 2008; Hevesi et al., 2018) in order to provide more task context, which enables discriminating a broader set of tasks. Recognition of up to three day-to-day early morning tasks (Min et al., 2007) augmented with a microphone, resulted in the recognition of six tasks (Min et al., 2008). The intended HRT domain requires multiple sensors to detect tasks belonging to different activity components, although adding new modalities arbitrarily may deteriorate the classifier performance (Frank et al., 2019).

Hierarchical graphical models detect composite tasks by decomposing them into a set of smaller classification problems. Fathi et al.’s (2011) meal preparation task detection algorithm decomposed hand manipulations into numerous atomic actions, and learned tasks from a hierarchical action sequence using conditional random fields. Another hierarchical model that operated at three levels of abstraction detected composite, concurrent tasks using body poses (Lillo et al., 2017).

Identifying the causality (i.e., action and reaction pair) between two events allows for easier human interpretation, and for modeling far more intricate temporal relationships (Liao et al., 2020). A graphical algorithm incorporated the Granger-causality (Granger, 1969; 1980) test for uncovering cause-effect relationships among atomic events (Liao et al., 2020). The algorithm employed a generic Bayesian network to detect the atomic events. A temporal causal graph was generated via the Granger-causality test between atomic events. Each graph represented a particular task instance. The graph nodes represented the atomic events and directed links with weights represented the cause-effect relationships between the atomic events. An artificial neural network is trained using these graphs as inputs to predict the composite, concurrent tasks. The algorithm was evaluated on the Opportunity (Roggen et al., 2010) and OSUPEL (Brendel et al., 2011) datasets, indicating that the algorithm is independent of the metrics.

Overall, probabilistic graphical models typically have high sensitivity for detecting fine-grained motor tasks. The algorithms, especially hierarchical (e.g., Lillo et al., 2017) and the Granger-causality based temporal graph (Liao et al., 2020), are independent of the metrics due to data abstraction; therefore, their suitability is classified as conforming. Most task recognition algorithms are susceptible to individual differences (see Table 3). Even those that conform with generalizability may experience a significant decrease in accuracy when classifying an unknown human’s data (Laput and Harrison, 2019); thus, the generalizability criterion requires additional evidence. Algorithms can only identify tasks reliably for humans on which they were trained, suggesting that online and self-learning mechanisms are needed to accommodate new humans (Wang et al., 2012). The composite factor and concurrency vary across algorithms, but are non-conforming overall. The anomaly awareness criterion is classified as non-conforming, as most probabilistic graphical models do not detect out-of-class tasks.

5.4.4 Discussion

Classical machine learning algorithms are unreliable for detecting fine-grained motor tasks due to poor sensitivity and generalizability. Deep learning algorithms can detect the atomic fine-grained motor tasks reliably, but not composite, concurrent tasks. Moreover, deep learning typically requires a large number of parameters, very large datasets and can be difficult to train (Lillo et al., 2017). Deep learning’s automatic feature learning capability prohibits exploiting explicit relationships among tasks and semantic knowledge, making it difficult to detect composite, concurrent fine-grained motor tasks. Probabilistic graphical models offer some suitable alternatives; however, none of the existing algorithms satisfy all the required criteria for the intended domain.

The Granger-causality based temporal graph algorithm (Liao et al., 2020) and the three-level hierarchical algorithm (Lillo et al., 2017) are the most suitable for fine-grained motor task detection given all the other algorithms. Both algorithms have high sensitivity and can detect concurrent and composite tasks. The Granger-causality algorithm conforms with suitability, but requires additional evidence to substantiate its generalizability. The hierarchical algorithm conforms with generalizability, but is non-conforming with suitability, as it employed a vision-based system for estimating human-body pose metric. However, the metric can be estimated using a series of inertial motion trackers (Faisal et al., 2019); therefore, a human-robot teaming domain friendly version of both algorithms can be developed theoretically.

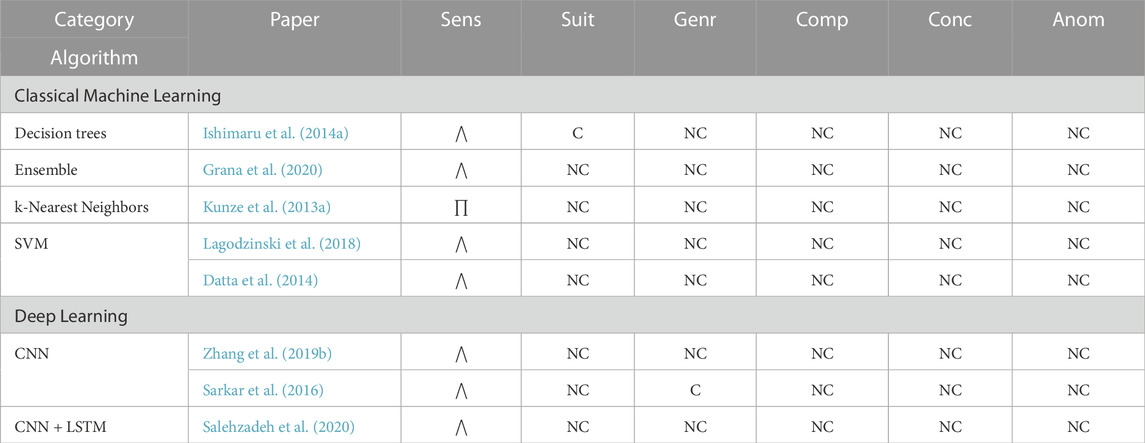

5.5 Tactile tasks

Tactile interaction occurs when humans interact with objects around them (e.g., keyboard typing, mouse-clicking and finger gestures). Individual classifications for each tactile task algorithm by its category are provided in Table 4.

5.5.1 Classical machine learning

Most classical machine learning algorithms incorporate inertial metrics measured at the fingers or dorsal side of the hand. These approaches typically detect finger gestures and keystrokes depending on the measurement site (e.g., Jing et al., 2011; Cha et al., 2018; Liu et al., 2018; Sha et al., 2020). Several of these approaches use multiple ring-like accelerometer device worn on the fingers (e.g., Jing et al., 2011; Zhou et al., 2015; Sha et al., 2020). The time- and frequency-domain features (e.g., minimum, maximum, standard deviation, energy, and entropy) extracted from the acceleration signals were used to train classical machine learning algorithms (e.g., decision tree classifier and majority voting) to detect finger gestures (e.g., finger rotation and bending) and keystrokes. Although these approaches incorporated inertial metrics, none conform with suitability due to lack of reproducibility (i.e., the ring-like sensor is not commercially available) and wearing a ring-like device may hinder humans’ dexterity, impacting task performance negatively.

Inertial metrics from the dorsal side of the hand detected seven office tasks (e.g., keyboard typing, mouse-clicking, writing) (Cha et al., 2018). Time- and frequency-domain features extracted from the acceleration signals were used to train an ensemble classifier. The algorithm achieved high accuracy

Classical machine learning algorithms’ generally have high sensitivity. The algorithms’ are typically non-conforming for the suitability criterion, as many supporting research efforts focus on developing and validating new sensor technology for sensing tactility, rather than detecting tactile tasks (e.g., Iwamoto and Shinoda, 2007; Ozioko et al., 2017; Kawazoe et al., 2019). The generalizability criteria also vary across algorithms, as they depend on the validation methodology. Finally, the algorithms are non-conforming for the concurrency and composite factors, making them unsuitable for detecting tactile tasks for the intended HRT domain.

5.5.2 Deep learning

Several publicly available sEMG-based hand gesture datasets (e.g., Atzori et al., 2014; Amma et al., 2015; Kaczmarek et al., 2019) support deep learning algorithms to detect tactile hand gestures (e.g., Côté-Allard et al., 2019; Rahimian et al., 2020). A hybrid deep learning model consisting of two parallel paths (i.e., one LSTM path and one CNN path) was developed (Rahimian et al., 2020). A fully connected multilayer fusion network combined the outputs of the two paths to classify the hand gestures.

Recognizing tactile tasks is an under-developed area of research, as the tasks are nuanced and often overshadowed by fine-grained motor tasks. Generally, deep learning algorithms have high sensitivity; however, the incorporated sEMG metrics with a random dataset split for validation cause them to not conform with the suitability and generalizability criteria.

5.5.3 Probabilistic graphical model

Probabilistic graphical models for tactile task recognition typically involve simple algorithms (e.g., Hidden Markov Models (Zhang et al., 2011), Gaussian Mixture Models (Ju and Liu, 2014), and Bayesian Networks (Chen et al., 2007a; b)) when compared to the prior gross motor and fine-grained motor sections (see Sections 5.3.3 and 5.4.3), as the tasks detected are inherently atomic (e.g., hand and finger gestures). sEMG signals are one of the most frequently used metrics for detecting hand and finger gestures (e.g., Chen et al., 2007b; Zhang et al., 2011; Côté-Allard et al., 2019; Rahimian et al., 2020). Chen et al.’s (2007b) gesture recognition algorithm pioneered the use of sEMG signals. Twenty-five hand gestures (i.e., six wrist actions and seventeen finger gestures) were detected using a 2-channel sEMG placed on the forearm. A Bayesian classifier was trained using the mean absolute value and autoregressive model coefficients extracted from the sEMG. The algorithm was extended to include two accelerometers, one placed on the wrist and the other placed on the dorsal side of the hand (Chen et al., 2007a).

Overall, probabilistic graphical models also tend to have high sensitivity for detecting tactile tasks. Most algorithms are susceptible to individual differences and incorporate sEMG metrics; thus, the algorithms are non-conforming for the suitability and generalizability criteria. Additionally, the algorithms are non-conforming for the concurrency and composite factors, as the evaluated tactile tasks are inherently atomic.

5.5.4 Discussion

All data-driven algorithms can detect tactile tasks with

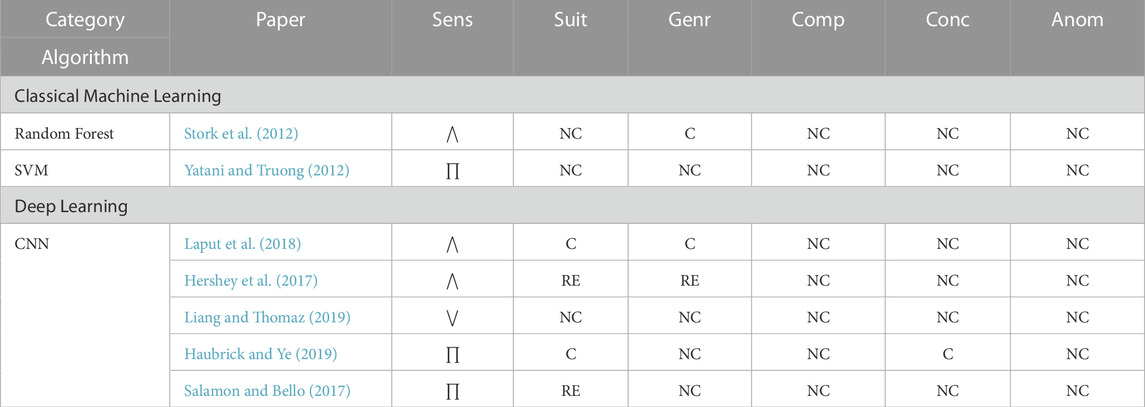

5.6 Visual tasks

Eye movement is closely associated with humans’ goals, tasks, and intentions, as almost all tasks performed by humans involve visual observation. This association makes oculography a rich source of information for task recognition. Fixation, saccades, blink rate, and scanpath are the most commonly used metrics for detecting visual tasks (Bulling et al., 2010; Martinez et al., 2017; Srivastava et al., 2018), followed by EOG potentials (Ishimaru et al., 2014b; Ishimaru et al., 2017; Lu et al., 2018). Visual tasks typically occur in office or desktop-based environments, where the participants are sedentary. The classifications of the reviewed visual task recognition algorithms are presented by algorithm category in Table 5.

5.6.1 Classical machine learning

Classical machine learning using eye gaze metrics (e.g., saccades, fixation, and blink rate) for visual task recognition was pioneered by Bulling et al. (2010). Statistical features (e.g., mean, max, variance) extracted from the gaze metrics, as well as the character-based representation to encode eye movement patterns, were used to train a SVM classifier to detect five office-based tasks. The algorithm’s primary limitation is that the classification is provided at each time instance t independently and does not integrate long-range contextual information continuously (Martinez et al., 2017). A temporal contextual learning algorithm, the Auto-context model, overcame this limitation by including the past and future decision values from the discriminative classifiers (e.g., SVM and k-Nearest Neighbors) recursively until convergence (Martinez et al., 2017).

Low-level eye movement metrics (e.g., saccades and fixations) are versatile and easy to compute, but are vulnerable to overfitting, whereas high-level metrics (e.g., Area-of-Focus) may offer better abstraction, but requires domain and environment knowledge (Srivastava et al., 2018). These limitations can be mitigated by exploiting low-level metrics to yield mid-level metrics that provide additional context. The mid-level metrics were built on intuitions about expected task relevant eye movements. Two different mid-level metrics were identified: shape-based pattern and distance-based pattern (Srivastava et al., 2018). The shape-based pattern metrics were based on encoding different combinations of saccade and scanpath, while distance-based pattern metrics were generated using consecutive fixations. The low- and mid-level metrics were combined to train a Random Forest classifier to detect eight office-based tasks, including five desktop-based tasks and three software engineering tasks.

Various algorithms were developed focused solely on detecting reading tasks using classical machine learning (e.g., Kelton et al., 2019; Landsmann et al., 2019). The complexity of the reading task varied across algorithms. Reading detection can be as rudimentary as classifying active reading or not (Landsmann et al., 2019), or as complex as distinguishing between reading thoroughly vs skimming text (Kelton et al., 2019).

Based on feature mining, existing reading detection algorithms can be categorized into two methods: i) Global methods that mine eye movement metrics over an extended period

Classical machine learning algorithms (e.g., Bulling et al., 2010; Ishimaru et al., 2014b; Martinez et al., 2017; Srivastava et al., 2018; Landsmann et al., 2019) have medium to high sensitivity. Most algorithms conform with the suitability criterion, while rarely conforming with the generalizability criterion. All algorithms are non-conforming for the concurrency and composite factors, making them unsuitable for the intended HRT domain.

5.6.2 Deep learning

Recent deep learning algorithms leverage CNNs to detect visual tasks directly using raw 2D gaze data obtained via wearable eye trackers. GazeGraph (Lan et al., 2020) algorithm converted 2D eye gaze sequence into a spatial-temporal graph representation that preserved important eye movement details, but rejected large irrelevant variations. A three-layered CNN trained on this representation detected various desktop and document reading tasks. An encoder-decoder based convolutional network detected seven mixed physical and visual tasks by combining 2D gaze data with head inertial metrics (Meyer et al., 2022).

Several other algorithms apply deep learning techniques using EOG potentials to detect reading task (Ishimaru et al., 2017; Islam et al., 2021). Two deep networks, a CNN and a LSTM, were developed to recognize reading in a natural setting (i.e., outside of the laboratory). Three metrics (i.e., blink rate, 2-channel EOG signals, and acceleration) from wearable EOG glasses were used to train the deep learning models.

Obtaining datasets at a large scale is difficult, due to high annotation costs and human effort, while lack of labeled data inhibits deep learning methods’ effectiveness. A sample efficient, self-supervised CNN detected reading task (Islam et al., 2021) using less labeled data. The self-supervised CNN employed a “pretext” task to bootstrap the network before training it for the actual target task. Three reading tasks (i.e., reading English documents, reading Japanese documents, both horizontally and vertically), as well as a no reading class, were detected by the self-supervised network. The pretext task recognized the transformation (i.e., rotational, translational, noise addition) applied to the input signal. The pretext pre-training phase initialized the network with good weights, which were fine-tuned by training the network on the target task (i.e., reading detection task) dataset.

The deep learning algorithms (e.g., Ishimaru et al., 2017; Lan et al., 2020; Islam et al., 2021) typically tend to have low sensitivity. Further, the EOG deep learning algorithms do not conform with suitability, as the employed metrics are unreliable, as it is susceptible to noise introduced by facial muscle movements (Lagodzinski et al., 2018). These limitations discourage the use of deep learning for visual task recognition.

5.6.3 Discussion

None of the existing algorithms detected visual tasks within the targeted HRT context. The two classical machine learning algorithms: i) Auto-context model (Martinez et al., 2017) and ii) Srivastava et al.’s (2018) algorithm appear to be more appropriate for detecting visual tasks. Both algorithms had

5.7 Cognitive tasks