- 1Neuroscience, Engagement, and Smart Tech (NEST) Laboratory, Department of Medicine, Division of Neurology, The University of British Columbia, Vancouver, BC, Canada

- 2Neuroscience, Engagement, and Smart Tech (NEST) Laboratory, British Columbia Children’s and Women’s Hospital, Vancouver, BC, Canada

As the market for commercial children’s social robots grows, manufacturers’ claims around the functionality and outcomes of their products have the potential to impact consumer purchasing decisions. In this work, we qualitatively and quantitatively assess the content and scientific support for claims about social robots for children made on manufacturers’ websites. A sample of 21 robot websites was obtained using location-independent keyword searches on Google, Yahoo, and Bing from April to July 2021. All claims made on manufacturers’ websites about robot functionality and outcomes (n = 653 statements) were subjected to content analysis, and the quality of evidence for these claims was evaluated using a validated quality evaluation tool. Social robot manufacturers made clear claims about the impact of their products in the areas of interaction, education, emotion, and adaptivity. Claims tended to focus on the child rather than the parent or other users. Robots were primarily described in the context of interactive, educational, and emotional uses, rather than being for health, safety, or security. The quality of the information used to support these claims was highly variable and at times potentially misleading. Many websites used language implying that robots had interior thoughts and experiences; for example, that they would love the child. This study provides insight into the content and quality of parent-facing manufacturer claims regarding commercial social robots for children.

1 Introduction

Socially assistive robots can provide companionship, facilitate education, and assist with healthcare for diverse populations, including children. We define social robots as possessing three elements: sensors to detect information, a physical form with actuators to manipulate the environment, and an interface that can interact with humans on a social level (del Moral et al., 2009). Social robots’ interactions with humans have four key aspects: 1) they are physical, 2) they can flexibly react to novel events, 3) they are equipped to realize complex goals, and 4) they are capable of social interaction with humans in pursuit of their goals (Duffy et al., 1999).

Child-specific uses of social robots in healthcare include providing support during pediatric hospitalization (Okita, 2013; Farrier et al., 2020), reducing distress during medical procedures (Trost et al., 2019), mitigating the effects of a short-term stressor (Crossman et al., 2018), and acting as a social skills intervention for children with Autism Spectrum Disorder (Diehl et al., 2012; Pennisi et al., 2016; Prescott and Robillard, 2021). While research on social robots has increased over the last decade, results have oftentimes been inconclusive, mixed, or limited due to small sample sizes (Dawe et al., 2019; Trost et al., 2019). Existing work has also been limited by a restricted focus on highly developed countries, the study of a limited number of robotic platforms, implementation of a heterogeneous set of control conditions and outcome measures, and a lack of transparency in reporting (Kabacińska et al., 2021). Previous research on social robots has predominantly occurred in clinical (Ullrich et al., 2016) or laboratory contexts (Crossman et al., 2018). Some individual social robots have received extensive investigation from the scientific community, often with a particular focus on children with autism (Beran et al., 2015; Arent et al., 2019; Rossi et al., 2020). Due to rapid turnover in the commercial robot market, there are relatively few studies that focus on social robots currently available for purchase, as researchers oftentimes modify existing commercial social robots to better suit their experimental goals (Ullrich et al., 2016; Tulli et al., 2019). Social robots’ intended uses in the real world have received minimal investigation, and a description of the larger environment of child-specific commercial social robot functionality and impact is lacking from the scientific literature. The present study aimed to characterize how robots are marketed towards child consumers.

There are potential ethical ramifications of building social robots for children. Some have argued that the use of social technologies may diminish human-human interaction (Turkle, 2011), that ascribing moral standing to social robots is problematic (Coeckelbergh, 2014), and that deception is integral to human-robot relationships (Sætra, 2020). Others have suggested there is little evidence that introducing social robots reduces human interaction and that social isolation is not due to robots but to systematic and societal issues in how social needs are valued (Prescott and Robillard, 2021). It has been proposed that social robots may instead act as a “social bridge” to friends, relatives, and teachers of children (Dawe et al., 2019; Prescott and Robillard, 2021). The question of whether robots are “overhyped” has also been considered (Parviainen and Coeckelbergh, 2021), as many highly anticipated social robotics start-ups have had difficulty transferring technological breakthroughs in research fields to commercial and industrial applications (Tulli et al., 2019).

Despite this debate, COVID-19 has accelerated the demand for social robots as people seek to maintain social interaction while reducing potential disease exposure (Scassellati and Vázquez, 2020; Tavakoli et al., 2020; Zeng et al., 2020; Prescott and Robillard, 2021). When deciding whether to invest considerable sums of money into a social robot for a child, parents and caregivers are likely to seek information about these devices through the internet. Studies of how robots are incorporated into everyday life identify different stages of social robot acceptance. The first of these is an expectation or pre-adoption stage (de Graaf et al., 2018; Sung et al., 2010). In this stage, potential buyers seek to gather more information about the technology, form an idea of its value to them, and finally begin to create expectations for the technology, often using the internet as a tool for information gathering. Therefore, the content of online information about child-specific robots is likely to form a large part of potential users’ expectations for these devices. Despite the growing market for social robots, there is little knowledge of what parents and caregivers are exposed to when making decisions around robot adoption. To address this knowledge gap, we: 1) described the current landscape of social robots available to children from a prospective consumer’s perspective, 2) captured and assessed the claims made by manufacturers around their functionality, and 3) evaluated the quality of the evidence supporting these claims. Qualitative analysis of manufacturer claims, in the manner done here, and evidence quality evaluation using the QUEST tool have been successfully used to understand the markets for health-related topics including wearable brain technologies, e-cigarettes, infant formula, dementia prevention, and prescription drugs (Kaphingst et al., 2004; Perry et al., 2013; Yao et al., 2016; Robillard and Feng, 2017; Coates et al., 2019; Pomeranz et al., 2021).

2 Materials and methods

2.1 Sampling

Google, Yahoo, and Bing, the most popular search engines in North America, were searched using a combination of keywords for “child” and “social robot,” as well as their synonyms (“kid,” “teen,” “youth,” “pediatric,” “paediatric,” “adolescent,” “robotic,” “social robot”). This method allowed the researchers to take the perspective of a consumer looking for social robots marketed towards children via the internet. The IP address used was in Vancouver, British Columbia, localizing search results to North America, and the personalization of search results was minimized using strict privacy settings and blockers.
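The keyword strategy described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' actual procedure: the keyword lists are taken from the text, but the split into "child" and "robot" term lists, the way terms are paired into query strings, and the names `build_queries` and `search_plan` are assumptions made for the example.

```python
from itertools import product

# Keywords come from the sampling description; grouping them into two lists
# and pairing them one-to-one is an assumption made for illustration.
CHILD_TERMS = ["child", "kid", "teen", "youth", "pediatric", "paediatric", "adolescent"]
ROBOT_TERMS = ["social robot", "robotic"]
ENGINES = ["Google", "Yahoo", "Bing"]


def build_queries(child_terms, robot_terms):
    """Return one query string per (child term, robot term) pair."""
    return [f"{child} {robot}" for child, robot in product(child_terms, robot_terms)]


queries = build_queries(CHILD_TERMS, ROBOT_TERMS)

# Every query is run on every engine (hypothetical plan structure).
search_plan = [(engine, query) for engine in ENGINES for query in queries]

print(f"{len(queries)} queries across {len(ENGINES)} engines = {len(search_plan)} searches")
```

Enumerating the combinations up front makes it easy to confirm that each engine received the same set of queries, which matters when the frequency of robot mentions across results is later compared.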

Based on search engine user behaviour, the first three pages of search results from each engine, excluding advertisements, were considered (Beitzel and Jensen, 2007). Each search result page was loaded and manually screened for the names of potential social robots.

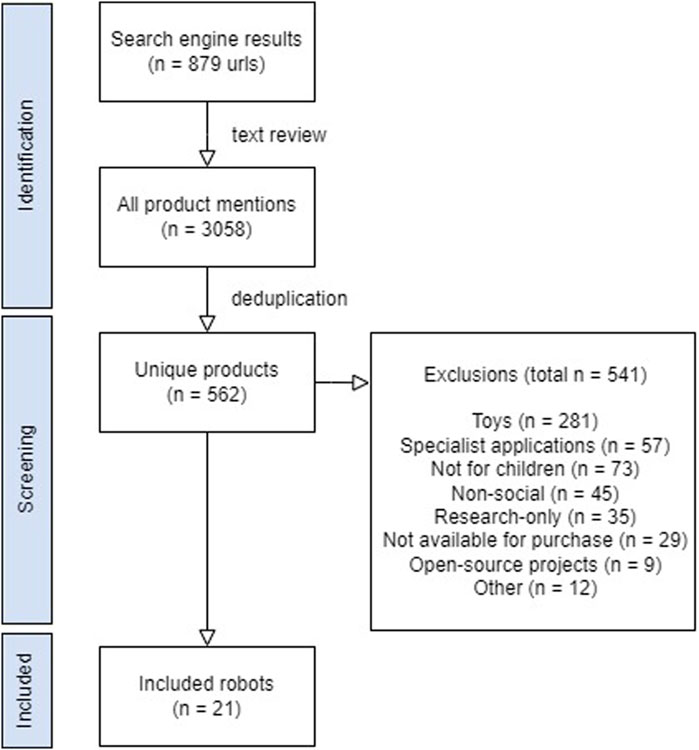

Inclusion criteria for robots were: 1) product is consistent with the above-stated definition of a social robot; 2) product is targeted towards consumers 18 years of age or younger; 3) product is physically embodied and ready-to-use; and 4) product is commercially available for purchase or pre-order in North America at the time of the search or was out-of-stock after previously being available. Exclusion criteria were that the robot: 1) was not directed towards children or did not have any child-specific functionality; 2) was sold on secondary marketplace websites (e.g., Amazon, eBay); 3) required consumer-initiated communication with the manufacturer (e.g., a direct email) as opposed to a manufacturer-created step towards purchasing the robot (e.g., a price quote or inquiry website field for the consumer to fill out, a “buy robot” page); and 4) was considered one of the following robot types: telepresence robots, open-access 3D printable robots, research-only robots, prototypes, and crowdfunded robots. A full list of inclusion and exclusion criteria can be found in Supplementary Table S1. These criteria were developed iteratively by all authors based on an agreed-upon definition of “social robot” (sensors, actuators, and a social interface) and through discussion of sample products. The final sample was achieved through consensus from all authors (Figure 1). Only the initial reason to exclude a product was documented. Figure 1 therefore reports each product only once, even though many products would have met multiple criteria for exclusion had they been screened further (e.g., surgical robots are also not targeted to children, nor are they available to the public to easily purchase).

2.2 Claim analysis

Websites were reviewed, and claims were extracted and coded, during the period of April 12–July 6, 2021. Manufacturer claims around the social robots marketed towards children were collected by examining the manufacturers’ websites in full, which were archived at the time of coding. The text of each website was then coded for sentences that made claims about the robot. The inclusion criteria were that the claim: 1) was related to the child-specific uses or impacts of the robot; 2) was explicitly stated in the text; and 3) pertained to the social robot, the purchase-maker (typically the parent/guardian), or the child/children. A claim was not included if it only described the social robot’s physical features (e.g., degrees of freedom, touch sensors, cameras), as these were not informative in terms of the social robot’s claimed benefits or functionality. It was also not included if it was a customer testimonial, as the researchers did not consider this a direct manufacturer claim. For each claim, we identified the user being referenced (e.g., parent vs. child), the theme of the claim via content analysis, and the specific topic of the claim.

Claim themes were classified by first coding the specific topic around which each claim centered, often the verb of the manufacturer’s sentence (e.g., coding “communication” if the manufacturer claim stated “robot will help your child communicate more effectively”). These specific topics were then grouped into several themes. In this example, communication was grouped into the “Interaction” theme, which also included specific manufacturer claim topics such as collaboration and listening.
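The two-level coding scheme (specific topic, then theme) can be illustrated as a simple lookup. The sketch below is hypothetical: only the three topics named in the text and their "Interaction" assignment come from the source, while the names `TOPIC_TO_THEME` and `theme_for`, the "Uncoded" fallback, and the example list of coded topics are invented for illustration.

```python
from collections import Counter

# Topic-to-theme assignments named in the text; a full coding guide would
# contain many more entries.
TOPIC_TO_THEME = {
    "communication": "Interaction",
    "collaboration": "Interaction",
    "listening": "Interaction",
}


def theme_for(topic):
    """Look up the theme for a coded topic; unknown topics are flagged for review."""
    return TOPIC_TO_THEME.get(topic.lower(), "Uncoded")


# Hypothetical topics coded from four claims (not real study data).
coded_topics = ["communication", "listening", "collaboration", "communication"]

theme_counts = Counter(theme_for(topic) for topic in coded_topics)
print(theme_counts)
```

Returning an explicit "Uncoded" label rather than raising an error mirrors the iterative process described in the paper, in which edge cases are brought back to the group for discussion.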

The quality of each website as a whole was characterized using a subset of items from the Quality Evaluation Scoring Tool (QUEST) for online health information (Robillard et al., 2018). Data were analyzed and visualized in R using tidyverse packages (Wickham et al., 2019). The complete dataset is available at https://osf.io/nbj9t/.

3 Results

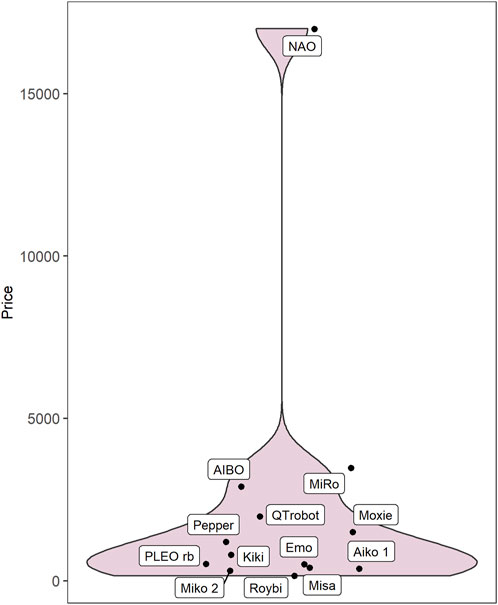

On the whole, robots that met all criteria for inclusion were frequently mentioned in our web searches (occurring in 26.7 unique web results on average), while excluded products were mentioned less frequently (3.6 results). Twenty-one robots met our inclusion criteria. They were manufactured in the United States (n = 6), Japan (n = 4), China (n = 3), France (n = 2), Spain (n = 2), and India, Luxembourg, Taiwan, and the United Kingdom (n = 1 each). Where it was possible to identify robot prices from manufacturers’ websites (13 of 21 robots), they ranged from 150 to 17,000 USD, with a median price of 799 USD (Figure 2).

3.1 Manufacturer claims

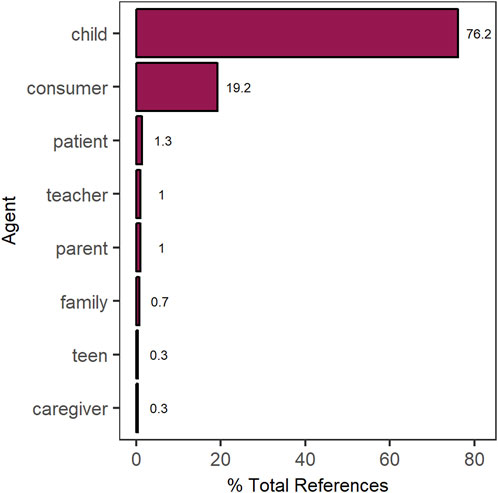

A total of 653 individual claims were identified, with a mean of 31 claims per robot (range: 5–75). For each claim, we identified the individual being referenced (Figure 3). Most claims focused on what the robot could do and how the child would benefit from social robot intervention. For example, “Aiko also could be your mentor to learn and develop your cognitive, emotional and social skills.” A minority of claims were centered around what the robot could do for a general consumer, e.g., “BUDDY is your personal assistant.” Claims referenced others, like parents or teachers, only rarely.

We used inductive content analysis to organize these claims into larger themes. The research team reviewed a random sample of claims (approximately 10% of the sample or 65 claims) as a group to develop an initial coding guide. This was applied to a new, similarly sized set of statements by one coder, who then brought their results and any edge cases to the group for discussion. This process was repeated several times until all authors felt that the coding guide was robust, at which point a single coder coded the entire sample.
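For readers who wish to reproduce a similar workflow, the sampling step for developing the coding guide might look like the following sketch. It assumes claims are identified by integer IDs and uses fixed seeds so the draws are repeatable; the function name `draw_coding_sample`, the seeds, and the decision to make the second set non-overlapping with the first are assumptions, not details reported by the authors.

```python
import random

N_CLAIMS = 653          # total number of claims reported in the text
SAMPLE_FRACTION = 0.10  # "approximately 10% of the sample"


def draw_coding_sample(claim_ids, k, seed):
    """Draw k claim IDs without replacement using a seeded generator."""
    rng = random.Random(seed)
    return sorted(rng.sample(claim_ids, k))


claim_ids = list(range(1, N_CLAIMS + 1))
sample_size = round(N_CLAIMS * SAMPLE_FRACTION)

# Round 1: reviewed by the whole team to draft the coding guide.
first_round = draw_coding_sample(claim_ids, sample_size, seed=1)

# Round 2: a new, similarly sized set drawn from claims not yet reviewed.
already_seen = set(first_round)
remaining = [cid for cid in claim_ids if cid not in already_seen]
second_round = draw_coding_sample(remaining, sample_size, seed=2)

print(sample_size, len(first_round), len(second_round))
```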

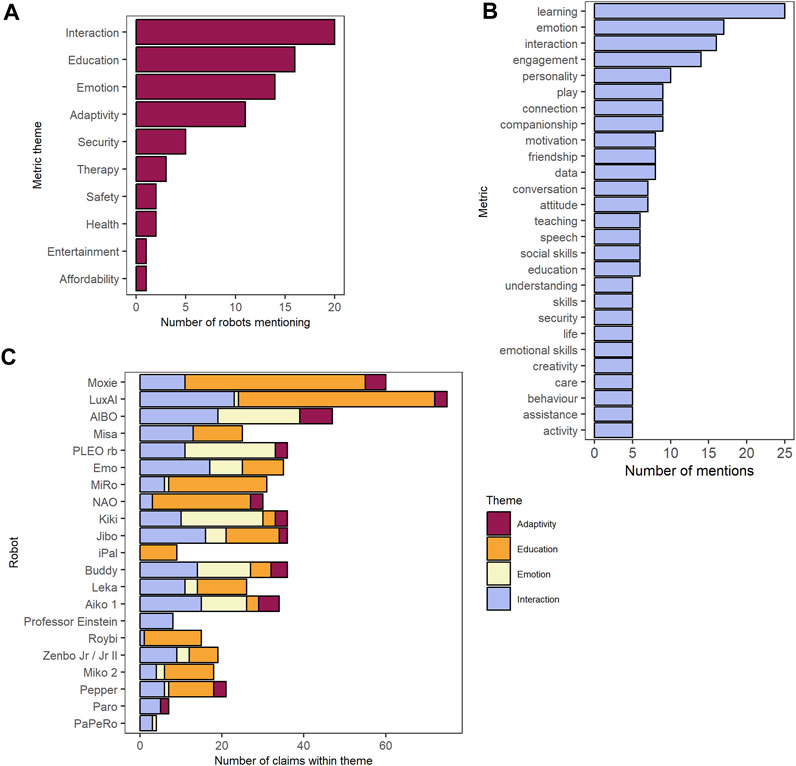

We identified eight major themes into which all claims fell (Figure 4A). These were Education (n = 251 claims), Interaction (n = 205), Emotion (n = 111), Adaptivity (n = 41), Health and Wellbeing (n = 20), Safety and Security (n = 19), Entertainment (n = 5), and Affordability (n = 1). Education claims centered on new or improved outcomes that could develop as a result of using the robot. For example, “QTrobot is an expressive social robot designed to increase the efficiency of special needs education … ” Interaction claims displayed the social robots’ capabilities of exchanging information with consumers or its environment. They also included the robot’s impact on the consumer’s interactions with the robot itself, and other humans, such as “[u]se Zenbo Lab to create interactive conversations and activities to help students practice speaking and listening skills.” Emotional claims predominantly illustrate the social robot’s capabilities to express emotion, or its effects on the emotions of the consumer: “[Kiki] is fully aware of her surroundings and expresses a diversity of emotions and reactions.” Finally, Adaptivity referred to claims revolving around the social robot’s ability to grow and learn from its interactions with the world, e.g., “Curiosity drives aibo, with new experiences fusing fun and learning together into growth. It’s these experiences that shape aibo’s unique personality and behavior.”
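As a quick arithmetic check on the theme counts reported above, the sketch below confirms that they sum to the 653 claims in the sample and expresses each theme as a share of the total. The counts are copied from the text; the percentages are derived here for illustration and are not figures reported by the authors.

```python
# Theme counts as reported in the text.
THEME_COUNTS = {
    "Education": 251,
    "Interaction": 205,
    "Emotion": 111,
    "Adaptivity": 41,
    "Health and Wellbeing": 20,
    "Safety and Security": 19,
    "Entertainment": 5,
    "Affordability": 1,
}
N_ROBOTS = 21

total = sum(THEME_COUNTS.values())

# Share of all claims per theme, in percent (derived, not reported in the source).
shares = {theme: round(100 * n / total, 1) for theme, n in THEME_COUNTS.items()}

# Combined share of the four largest themes.
top_four = sorted(THEME_COUNTS.values(), reverse=True)[:4]
top_four_share = round(100 * sum(top_four) / total, 1)

mean_claims_per_robot = round(total / N_ROBOTS, 1)

for theme, pct in shares.items():
    print(f"{theme:<22}{THEME_COUNTS[theme]:>4}  {pct:>5}%")
print("Top four themes:", top_four_share, "% of claims")
print("Mean claims per robot:", mean_claims_per_robot)
```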

FIGURE 4. (A) Number of robots mentioning each metric theme at least once. (B) Metrics occurring for five or more claims. (C) Number of claims per robot for each of the four most common metric themes.

For each robot, we identified the theme for which the largest number of claims were made. Metrics were coded directly from the manufacturer’s own wording whenever possible, and were converted to singular nouns (e.g., “children have learned” would be coded as “learning”; “has many actions” would be coded as “action”). We collected 290 unique metrics (Figure 4C). Those which occurred more than four times in the total sample are shown in Figure 4B. The five most common were learning [“(t)his results in more attention and concentration from children and helps them to learn more effectively”], emotion (“BUDDY has a range of emotions that he will express naturally throughout the day based on his interactions with family members”), interaction (“BUDDY connects, protects and interacts with every member of your family”), engagement (“Leka makes it easier to keep each child engaged and motivated”), and personality (“Aibo even has likes and dislikes—another dimension of its personality”).

3.2 Evidence quality

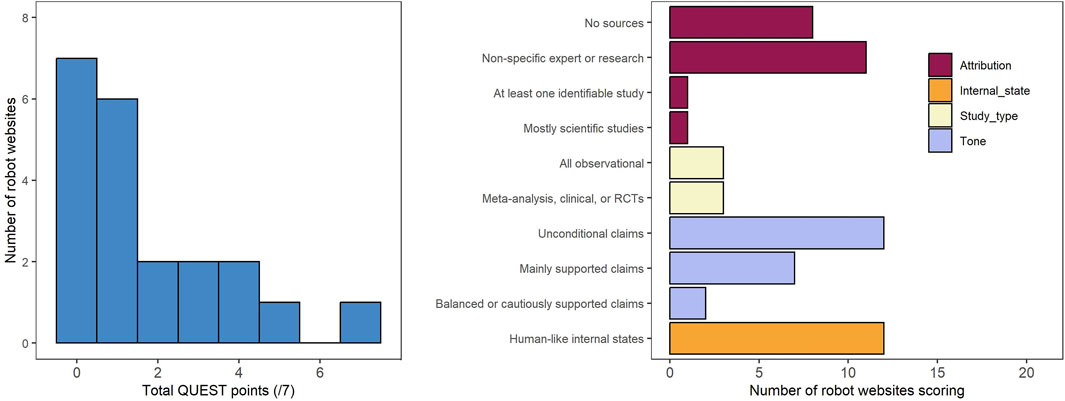

We evaluated the quality of the manufacturers’ claims (Figure 5) using a subset of relevant items from the QUEST instrument (Robillard et al., 2018). First, we looked at claim attribution: whether and how websites referenced sources. Eight websites mentioned no sources (receiving a score of 0). Eleven mentioned expert sources or research findings that could not be traced to a specific study, or provided links to sites, advocacy bodies, or similar (score of 1). One referenced at least one identifiable scientific study (score of 2). One referenced mainly (>50% of claims) identifiable scientific studies (score of 3).

Next, we considered the types of studies that were mentioned by manufacturers. Three websites included mention of all observational work (score of 1), and three mentioned studies that were meta-analyses, randomized clinical trials, or clinical studies (score of 2). We also considered the tone of the claims that were made. Twelve websites used language that supported their claims without conditions (score of 0). Language included terms like “will,” “guarantee,” “does,” and there was no discussion of limitations. Seven websites made mainly supported claims, using language like “can reduce” or “may improve,” but with no discussion of limitations (score of 1). Two websites made claims with balanced or cautious support (score of 2), with statements of limitations or mention of findings that contradict claims of efficacy.
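The attribution and tone score distributions reported above can be tallied as follows. This is an illustrative sketch: the counts per score are taken from the text, but the mean scores are a summary computed here for convenience and are not statistics reported in the paper, and the names `ATTRIBUTION`, `TONE`, and `mean_score` are hypothetical.

```python
N_WEBSITES = 21

# Number of websites receiving each QUEST item score, as reported in the text.
ATTRIBUTION = {0: 8, 1: 11, 2: 1, 3: 1}
TONE = {0: 12, 1: 7, 2: 2}


def mean_score(distribution):
    """Mean item score across websites, given a {score: count} mapping."""
    n = sum(distribution.values())
    return sum(score * count for score, count in distribution.items()) / n


# Each item should account for every website exactly once.
for name, dist in (("attribution", ATTRIBUTION), ("tone", TONE)):
    if sum(dist.values()) != N_WEBSITES:
        raise ValueError(f"{name} counts do not sum to {N_WEBSITES}")

# Websites scoring 0 or 1 on tone included no discussion of limitations.
no_limitations = TONE[0] + TONE[1]

print("Mean attribution score:", round(mean_score(ATTRIBUTION), 2))
print("Mean tone score:", round(mean_score(TONE), 2))
print("Websites without stated limitations:", no_limitations, "of", N_WEBSITES)
```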

Finally, while not part of the QUEST instrument, we also coded websites for the presence of language attributing human-like internal states like emotions or motivations to the robot. We found these types of statements on 12 of 21 websites. Examples included: “A robot that understands how you feel and cheers you on…,” “He will be happy to give you a warm welcome to come home to

4 Discussion

Our analysis showed that the marketing of current commercial social robots was focused on their potential to act as emotional companions or educators for children. However, the quality of the information used to support these claims was highly variable and at times potentially misleading, such that parent-facing information about social robots for children may not be an accurate representation of the strength of the evidence in this area.

There is some evidence for the efficacy of social robots on each of the four main application dimensions identified in our sample (adaptivity, education, emotion, and interaction). However, the quality of the claims we documented is not aligned with the available evidence. Nineteen of the twenty-one websites we evaluated had no statements of limitations on their claims at all, and these claims were sometimes broad (e.g., “This smart robot will perform brilliantly with many services useful to all”). The gap between robot capabilities and user expectations likely contributes to the current limited success of commercial social robots (Tulli et al., 2019). Below, we consider the four largest themes we identified and existing research evidence for each.

Interactivity was among the most common themes in claim statements, which described the robot as interactive and capable of play, companionship, connection, and friendship. The child-robot interaction research literature does indeed prioritize questions of interactivity and social behaviour (Belpaeme et al., 2013; Di Dio et al., 2020; Parsonage, 2020), and the responsivity of a social robot has been shown to change human behaviour and willingness to use the devices (Birnbaum et al., 2016). However, the claims we documented (e.g., “a predictable and tireless new friend,” “she’s your loyal companion,” “develops a familiarity with people over time”) suggest long-term, intimate relationship formation between children and robots that is not evidence-based. For instance, a 2020 review of interactive social robotics work reports that most studies on robots for companionship take place over short time scales and focus on the best social cues for a robot to produce (Lambert et al., 2020). Relatively few studies look at the effect of human-robot interaction on the human user. Creating a truly interactive social robot is computationally very difficult, particularly if speech or emotion modeling are desired (Dosso et al., 2022). To circumvent these challenges, many studies of human-robot interaction rely on a Wizard-of-Oz methodology in which the device is controlled by a human operator. A 2021 scoping review of social robots as mental health interventions for children found only five papers featuring autonomously behaving robots, only two of which were speech-capable (Kabacińska et al., 2021). Market-ready devices vary in their degree of social immersiveness, but manufacturers’ claims that their robots can serve as a true friend to users run ahead of available data.

We also documented a number of claims based on robots’ putative educational functions, with language around supporting learning, providing motivation, teaching specific social and emotional skills, supporting creativity, and leading to academic success. Education has long been targeted as a potential application area for social robotics. A 2018 review of 101 articles on the subject reported positive outcomes of social robot use on cognitive (e.g., speed, number of attempts) and affective (e.g., persistence, anxiety) outcomes and documented different conceptualizations of a robot’s role in education: as tutor or teacher, peer, and novice (Belpaeme et al., 2018). However, they also point to logistical challenges of robot implementation, risks associated with delegating education to robots rather than human teachers, and technical limitations for existing robots. Educational robots were more effective when they targeted specific skills or topics, rather than being general purpose or used by multiple students. Relatedly, a 2020 systematic review found that robots were effective for supporting student engagement more so than teaching complex material (Lambert et al., 2020). Compared to the theme of interactivity, claims within the theme of education are perhaps better supported by the existing research literature. However, the particular robots being sold, and the fact that they are being marketed for unstructured home use, limits the generalizability of the data in the present study.

Turning next to the theme of emotion, we recorded a large number of claims that robots could feel love and happiness, have moods and feelings, were attuned to a user’s emotions, could cheer up the user or make them happy, and could be trusted. In terms of emotional impacts of social robots for children, there is evidence for mitigation of negative emotional responses and memories in children receiving vaccines, and robots may help with patient anxiety and pain perception (Beran et al., 2015; Trost et al., 2019). There has also been extensive research and protocols developed for modeling emotional systems in social robotics that mimic ones displayed by humans and animals (Paiva, 2014; Pessoa, 2017). However, research on social robots as mental health supports for adults has been recently described as “nascent,” with few conditions being studied and limited generalizability (Scoglio et al., 2019; Guemghar et al., 2022), and this is also true for children’s robots (Kabacińska et al., 2021). A 2022 review of work on robotic emotions identifies this as a topic of accelerating research interest (Stock-Homburg, 2022), but reports that studies are largely short-term, lab-based, and focused on robot development rather than a user’s own emotional responses to the encounter (e.g., asking people to rate a robot’s facial expression). As with the first two themes, we argue that manufacturer claims around robot emotion are overstated relative to available evidence. Interestingly, we noticed that many of the Emotion claims were about the robot’s own supposed inner experience (e.g., “loves you”). This may shed light on the intention of these websites–to create a fantasy narrative about how to relate to the product (i.e., to conceive of it as sentient and engaged in a relationship with the user) rather than making causal and factual claims about function.

The final major theme among the claims we examined was adaptivity. We noted statements that robots had evolving personalities, adaptable behaviour, and could learn, grow, and be customized. Models for social robot creation have incorporated behavioural adaptation, that is, the ability of the robot to grow and learn from social interactions (Salter et al., 2008). However, most existing work on this topic centers around robots adapting to a user’s performance on a pre-specified task, usually during a one-off interaction (Ahmad et al., 2017). Limited work on robot adaptation to simple elements of user personality, such as introversion, has been conducted (e.g., Tapus et al., 2008), and no studies on robots adapting to a user’s culture or demographic background were reported in a recent systematic review (Ahmad et al., 2017). As with the other themes, we argue that manufacturer claims about robots adapting to their child users, especially with the implication that robots detect complex features of the user like their temperament and personal quirks, are misaligned with the capabilities of existing robots.

There was often a mismatch between the strength of evidence for a particular robot and the degree to which its website referenced data and research. For example, one manufacturer’s website featured a page highlighting research on social robots titled “The Science Behind [Robot]”. The page contained graphics outlining results from peer-reviewed social robot research (conducted with robots other than the one being sold). The tone used in these claims meets the criteria for a rating of “0” for Tone on the QUEST scale, indicating full support of the claims by mostly using non-conditional verb tenses (“can,” “will”), with no discussion of limitations. While the website did not claim that these studies’ results pertained to their robot specifically, they used graphics in the robot’s signature colour scheme and intermixed the text with videos, pictures, and the silhouette of the robot. By contrast, the website of one of the most-researched social robots (NAO) made very few references to research or evidence. These two examples together illustrate the difficult task that faces consumers if they would like to evaluate the strength of evidence for social robots based on the information available from manufacturers. In this work, we only examined whether social robot claims referenced published work; we did not evaluate the quality of that published work itself or the presence of sources of bias in it (e.g., studies funded or conducted by robot manufacturers versus independent investigators). An examination of the types of evidence manufacturers reference is a worthy area of investigation for future study.

This analysis reveals the priorities of robot manufacturers, which is helpful for the Human-Robot Interaction (HRI) research community. First, the claim attribution analysis shows that HRI work is not being put in front of consumers in these contexts very often, pointing to an opportunity for improved science communication. Second, we see that social robots are offered primarily around the 800 USD price point, suggesting that researchers who want to study robots that may plausibly enter the home should focus their attention on this band of devices. Third, we note that health and safety are mentioned by relatively few websites, in contrast with the focus on these themes in the scientific literature. This may mean that devices supporting these functionalities tend not to be marketed to families (instead to medical institutions, for example), or that these technologies are not yet market-ready. Finally, it is interesting to observe that emotion was a top-three metric theme for manufacturers’ claims. Computational models of human-robot emotional alignment are still emerging (e.g., Dosso et al., 2022) and this work suggests that manufacturers see this as a priority.

Recommendations for adults looking to purchase robots for children are lacking. Online resources about purchasing smart toys remind consumers to vet toys’ privacy capabilities before purchasing, know toy features, be wary of hacking and data misuse, look out for cheap toys with poor safety features, educate children about digital privacy, focus on reputable companies and retailers, and look at certifications like COPPA or the Federal Trade Commission’s kidSAFE Seal (InternetMatters, 2023; Miranda, 2019; Fowler, 2018; Best, 2022; Gummer, 2023; Gallacher and Magid, 2021; Safe Search Kids, 2022; Knorr, 2020).

We propose three additional recommendations based on our analysis and the unique properties of social robots as a child-facing product. We encourage potential customers to: 1) pay attention to the tone of the claims made in promoting a product; qualified language like “may support” and statements of limitations are indications of quality; 2) be cautious with claims that robots will adapt to the user, will respond to their emotions, or will create a long-term bond, as these statements are unlikely to be true on technical grounds, especially for robots at a low price point; 3) be aware that social robots for children is a product category with high turnover. Devices frequently go off the market and ongoing technical support once purchased is not guaranteed. Products with longer histories are more likely to be supported by more stable companies, but all devices should be purchased with caution.

A limitation of the current study is our focus on simulating the buyer’s experience. By using a search engine-based collection of robot names, we intended to mimic a potential consumer’s initial search process. While this process may not have captured every possible social robot in the “long tail” of the market, we feel confident that we identified the large majority of commercial social robots for children. Most robots included in our sample arose repeatedly in our search process. We chose to focus our study specifically on claims made by manufacturers themselves, rather than secondary sellers, to avoid cases where resellers might misunderstand the products, intentionally mislead consumers (e.g., due to sponsorships or paid partnerships), or over- or under-state robot capabilities. We also limited the analysis to robots that were currently available for purchase or easy to pre-order, excluding manufacturers’ websites listing defunct robots as simply “out of stock” or inviting visitors to “contact us.” These decisions were intended to create a sample of robots that were currently available and manufacturers’ current conceptions of their features and applications.

The present work establishes a baseline understanding of which social robots are currently being marketed for children and how manufacturers describe these devices, and it prompts several new lines of inquiry. One would be to gather more information on how consumers, particularly parents of children, make decisions around purchasing social robots. Another would be to consider in more detail the 290 metrics that were identified, many of which pointed to specific robot features. Which of these features are most important to parents and children when actually using the robot? Which are primary drivers of purchasing behaviour? Another topic arising in previous research is claims around the data security, privacy, and recording features of social robots (Chatterjee et al., 2021). Such claims were included in our sample and could be further investigated. Whether these claims are supported by the design of the robots, and the ethical implications of these design decisions, are outstanding questions.

Our results highlight the challenges faced by potential consumers when attempting to purchase a social robot for a child online. The information available from manufacturers is of mixed quality but shows that current social robots tend to be marketed as interactive, educational, emotional, and adaptive. Researchers have a responsibility to communicate high quality scientific information about the current state of social robotics to the public.

Manufacturer claims about child-facing social robots are not well grounded in evidence. This is likely to make consumers’ decision-making difficult. Furthermore, the research evidence that is available may not reflect the way that commercial devices are actually marketed and used. More research on the home-based applications of social robots, as well as greater transparency from manufacturers at the decision-making stage, are needed for consumers to be fully informed on the research-backed effects and uses of child-marketed social robots.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found in the article/Supplementary Material.

Author contributions

JD: Conceptualization, Methodology, Investigation, Data curation, Formal analysis, Project administration, Validation, Visualization, Funding acquisition, Writing–original draft, Writing–review and editing. AR: Conceptualization, Methodology, Investigation, Data curation, Formal analysis, Project administration, Writing–original draft. JR: Conceptualization, Methodology, Investigation, Data curation, Formal analysis, Project administration, Funding acquisition, Writing–review and editing, Supervision. All authors approved the final manuscript as submitted and agree to be accountable for all aspects of the work.

Acknowledgments

The authors gratefully acknowledge the support of Michael Smith Health Research BC, the BC Children’s Hospital Research Institute and BC Children’s Hospital Foundation, and BC SUPPORT Unit. The authors thank Katelyn Teng for support in identifying existing consumer recommendations for parents.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frobt.2023.1080157/full#supplementary-material

References

Ahmad, M., Mubin, O., and Orlando, J. (2017). A systematic review of adaptivity in human-robot interaction. Multimodal Technol. Interact. 1 (3), 14. doi:10.3390/mti1030014

Arent, K., Kruk-Lasocka, J., Niemiec, T., and Szczepanowski, R. (2019). “Social robot in diagnosis of autism among preschool children,” in 2019 24th International Conference on Methods and Models in Automation and Robotics (MMAR) (IEEE), 652–656. doi:10.1109/MMAR.2019.8864666

Beitzel, S. M., Jensen, E. C., Chowdhury, A., Frieder, O., and Grossman, D. (2007). Temporal analysis of a very large topically categorized web query log. J. Am. Soc. Inf. Sci. Technol. 58 (2), 166–178. doi:10.1002/asi.20464

Belpaeme, T., Kennedy, J., Ramachandran, A., Scassellati, B., and Tanaka, F. (2018). Social robots for education: a review. Sci. Robotics 3 (21), eaat5954. doi:10.1126/scirobotics.aat5954

Belpaeme, T., Baxter, P., de Greeff, J., Kennedy, J., Read, R., Looije, R., et al. (2013). “Child-robot interaction: perspectives and challenges,” in Social robotics. Editors G. Herrmann, M. J. Pearson, A. Lenz, P. Bremner, A. Spiers, and U. Leonards (Cham: Springer International Publishing), 452–459. Lecture Notes in Computer Science. doi:10.1007/978-3-319-02675-6_45

Beran, T. N., Ramirez-Serrano, A., Vanderkooi, O. G., and Kuhn, S. (2015). Humanoid robotics in health care: an exploration of children’s and parents’ emotional reactions. J. Health Psychol. 20 (7), 984–989. doi:10.1177/1359105313504794

Best, B. (2022). “Educational toys buying guide. Best buy blog.” Available at: https://blog.bestbuy.ca/toys/educational-toys-buying-guide.

Birnbaum, G. E., Moran, M., Hoffman, G., Reis, H. T., Finkel, E. J., and Sass, O. (2016). “Machines as a source of consolation: robot responsiveness increases human approach behavior and desire for companionship,” in 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (IEEE), 165–172.

Chatterjee, S., Chaudhuri, R., and Vrontis, D. (2021). Usage intention of social robots for domestic purpose: from security, privacy, and legal perspectives. Inf. Syst. Front. Sept. doi:10.1007/s10796-021-10197-7

Coates, M. C., Lau, C., Minielly, N., and Illes, J. (2019). Owning ethical innovation: claims about commercial wearable brain technologies. Neuron 102 (4), 728–731. doi:10.1016/j.neuron.2019.03.026

Coeckelbergh, M. (2014). The moral standing of machines: towards a relational and non-cartesian moral hermeneutics. Philosophy Technol. 27 (1), 61–77. doi:10.1007/s13347-013-0133-8

Crossman, M. K., Kazdin, A. E., and Kitt, E. R. (2018). The influence of a socially assistive robot on mood, anxiety, and arousal in children. Prof. Psychol. Res. Pract. 49 (1), 48–56. doi:10.1037/pro0000177

Dawe, J., Sutherland, C., Barco, A., and Broadbent, E. (2019). Can social robots help children in healthcare contexts? A scoping review. BMJ Paediatr. Open 3 (1), e000371. doi:10.1136/bmjpo-2018-000371

de Graaf, M. M., Ben Allouch, S., and van Dijk, J. A. (2018). A phased framework for long-term user acceptance of interactive technology in domestic environments. New Media Soc. 20 (7), 2582–2603. doi:10.1177/1461444817727264

del Moral, S., Pardo, D., and Angulo, C. (2009). “Social robot paradigms: an overview,” in Bio-inspired systems: computational and ambient intelligence: 10th international work-conference on artificial neural networks, IWANN 2009, Salamanca, Spain, June 10–12, 2009 (Berlin, Heidelberg: Springer), 773–780. doi:10.1007/978-3-642-02478-8_97

Di Dio, C., Manzi, F., Peretti, G., Cangelosi, A., Harris, P. L., Massaro, D., et al. (2020). Shall I trust you? From child–robot interaction to trusting relationships. Front. Psychol. 11, 469. doi:10.3389/fpsyg.2020.00469

Diehl, J. J., Schmitt, L. M., Villano, M., and Crowell, C. R. (2012). The clinical use of robots for individuals with autism spectrum disorders: a critical review. Res. Autism Spectr. Disord. 6 (1), 249–262. doi:10.1016/j.rasd.2011.05.006

Dosso, J. A., Bandari, E., Malhotra, A., Guerra, G. K., Michaud, F., Prescott, T. J., et al. (2022). User perspectives on emotionally aligned social robots for older adults and persons living with dementia. J. Rehabilitation Assistive Technol. Eng. 9, 205566832211083. doi:10.1177/20556683221108364

Duffy, B. R., Rooney, C., O’Hare, G. M. P., and O’Donoghue, R. (1999). What is a social robot? Available at: https://researchrepository.ucd.ie/handle/10197/4412.

Farrier, C. E., Pearson, J. D. R., and Beran, T. N. (2020). Children’s fear and pain during medical procedures: a quality improvement study with a humanoid robot. Can. J. Nurs. Res. 52 (4), 328–334. doi:10.1177/0844562119862742

Fowler, B. (2018). Parents should be cautious with connected toys, CR testing shows. Consumer Reports. Available at: https://www.consumerreports.org/electronics-computers/privacy/test-of-connected-toys-shows-parents-should-be-cautious-a5673937392/ (December 19, 2018).

Gallacher, K., and Magid, L. (2021). Parent’s guide to tech for tots - ConnectSafely. ConnectSafely.Org. January 4, 2021. https://connectsafely.org/tots/.

Graaf, M. M. A. D., Ben Allouch, S., and Jan, A. V. D. (2018). A phased framework for long-term user acceptance of interactive technology in domestic environments. New Media & Soc. 20 (7), 2582–2603. doi:10.1177/1461444817727264

Guemghar, I., Pires de Oliveira Padilha, P., Abdel-Baki, A., Jutras-Aswad, D., Paquette, J., and Pomey, M.-P. (2022). Social robot interventions in mental health care and their outcomes, barriers, and facilitators: scoping review. JMIR Ment. Health 9 (4), e36094. doi:10.2196/36094

Gummer, A. (2023). “Smart toys, smarter kids: a parent’s guide to connected toys.” The Good Play Guide (blog). Available at: https://www.goodplayguide.com/blog/connected-toys/.

InternetMatters (2023). “Guide to tech buying a smart toy.” Internet Matters (blog). Available at: https://www.internetmatters.org/resources/guide-to-tech-buying-a-smart-toy (Accessed November 3, 2023).

Kabacińska, K., Prescott, T. J., and Robillard, J. M. (2021). Socially assistive robots as mental health interventions for children: a scoping review. Int. J. Soc. Robotics 13 (5), 919–935. doi:10.1007/s12369-020-00679-0

Kaphingst, K. A., DeJong, W., Rudd, R. E., and Daltroy, L. H. (2004). A content analysis of direct-to-consumer television prescription drug advertisements. J. Health Commun. 9 (6), 515–528. doi:10.1080/10810730490882586

Knorr, C. (2020). “Parents’ ultimate guide to smart devices.” Common Sense Media (blog). Available at: https://www.commonsensemedia.org/articles/parents-ultimate-guide-to-smart-devices.

Lambert, A., Norouzi, N., Bruder, G., and Welch, G. (2020). A systematic review of ten years of research on human interaction with social robots. Int. J. Human–Computer Interact. 36 (19), 1804–1817. doi:10.1080/10447318.2020.1801172

Coeckelbergh, M. (2021). How to use virtue ethics for thinking about the moral standing of social robots: a relational interpretation in terms of practices, habits, and performance. Int. J. Soc. Robotics 13 (1), 31–40. doi:10.1007/s12369-020-00707-z

Miranda, C. (2019). “What to ask before buying internet-connected toys.” Consumer Advice. Available at: https://consumer.ftc.gov/consumer-alerts/2019/12/what-ask-buying-internet-connected-toys (Accessed December 9, 2019).

Moral, S. d., Pardo, D., and Angulo, C. (2009). “Social robot paradigms: an overview,” in Bio-inspired systems: computational and ambient intelligence. Editors J. Cabestany, F. Sandoval, A. Prieto, and J. M. Corchado (Berlin, Heidelberg: Springer), 773–780. Lecture Notes in Computer Science. doi:10.1007/978-3-642-02478-8_97

Okita, S. Y. (2013). Self–Other’s perspective taking: the use of therapeutic robot companions as social agents for reducing pain and anxiety in pediatric patients. Cyberpsychology, Behav. Soc. Netw. 16 (6), 436–441. doi:10.1089/cyber.2012.0513

Paiva, A., Leite, I., and Ribeiro, T. (2014). Emotion modeling for social robots. The Oxford handbook of affective computing, 296.

Parsonage, G., Horton, M., and Read, J. C. (2020). “Designing experiments for children and robots,” in Proceedings of the 2020 ACM interaction design and children conference: extended abstracts, 169–174. doi:10.1145/3397617.3397841

Parviainen, J., and Coeckelbergh, M. (2021). The political choreography of the sophia robot: beyond robot rights and citizenship to political performances for the social robotics market. AI Soc. 36 (3), 715–724. doi:10.1007/s00146-020-01104-w

Pennisi, P., Tonacci, A., Tartarisco, G., Billeci, L., Ruta, L., Gangemi, S., et al. (2016). Autism and social robotics: a systematic review. Autism Res. 9 (2), 165–183. doi:10.1002/aur.1527

Perry, J. E., Cox, A. D., and Cox, D. (2013). Direct-to-Consumer drug advertisements and the informed patient: a legal, ethical, and content analysis. Am. Bus. Law J. 50 (4), 729–778. doi:10.1111/ablj.12019

Pessoa, L. (2017). Do intelligent robots need emotion? Trends Cognitive Sci. 21 (11), 817–819. doi:10.1016/j.tics.2017.06.010

Pomeranz, J. L., Chu, X., Groza, O., Cohodes, M., and Harris, J. L. (2021). Breastmilk or infant formula? Content analysis of infant feeding advice on breastmilk substitute manufacturer websites. Public Health Nutr. 26, 934–942. doi:10.1017/S1368980021003451

Prescott, T. J., and Robillard, J. M. (2019). Robotic automation can improve the lives of people who need social care. BMJ 364, l62. doi:10.1136/bmj.l62

Prescott, T. J., and Robillard, J. M. (2021). Are friends electric? The benefits and risks of human-robot relationships. iScience 24 (1), 101993. doi:10.1016/j.isci.2020.101993

Robillard, J. M., and Feng, T. L. (2017). Health advice in a digital world: quality and content of online information about the prevention of alzheimer’s disease. J. Alzheimer’s Dis. 55 (1), 219–229. doi:10.3233/JAD-160650

Robillard, J. M., Jun, J. H., Lai, J.-A., and Feng, T. L. (2018). The QUEST for quality online health information: validation of a short quantitative tool. BMC Med. Inf. Decis. Mak. 18 (1), 87. doi:10.1186/s12911-018-0668-9

Rossi, S., Larafa, M., and Ruocco, M. (2020). Emotional and behavioural distraction by a social robot for children anxiety reduction during vaccination. Int. J. Soc. Robotics 12 (3), 765–777. doi:10.1007/s12369-019-00616-w

Sætra, H. S. (2020). The foundations of a policy for the use of social robots in care. Technol. Soc. 63, 101383. doi:10.1016/j.techsoc.2020.101383

Safe Search Kids (2022). “Navigating smart technology in the home.” Available at: https://www.safesearchkids.com/navigating-smart-technology-in-the-home/.

Salter, T., Werry, I., and Michaud, F. (2008). Going into the wild in child–robot interaction studies: issues in social robotic development. Intell. Serv. Robot. 1 (2), 93–108. doi:10.1007/s11370-007-0009-9

Scassellati, B., and Vázquez, M. (2020). The potential of socially assistive robots during infectious disease outbreaks. Sci. Robotics 5 (44), eabc9014. doi:10.1126/scirobotics.abc9014

Scoglio, A. A. J., Reilly, E. D., Gorman, J. A., and Drebing, C. E. (2019). Use of social robots in mental health and well-being research: systematic review. J. Med. Internet Res. 21 (7), e13322. doi:10.2196/13322

Stock-Homburg, R. (2022). Survey of emotions in human–robot interactions: perspectives from robotic psychology on 20 Years of research. Int. J. Soc. Robotics 14 (2), 389–411. doi:10.1007/s12369-021-00778-6

Sung, J. Y., Grinter, R. E., and Christensen, H. I. (2010). Domestic robot ecology. Int. J. Soc. Robotics 2 (4), 417–429. doi:10.1007/s12369-010-0065-8

Tapus, A., Ţăpuş, C., and Matarić, M. J. (2008). User—robot personality matching and assistive robot behavior adaptation for post-stroke rehabilitation therapy. Intell. Serv. Robot. 1 (2), 169–183. doi:10.1007/s11370-008-0017-4

Tavakoli, M., Carriere, J., and Torabi, A. (2020). Robotics, smart wearable technologies, and autonomous intelligent systems for healthcare during the COVID-19 pandemic: an analysis of the state of the art and future vision. Adv. Intell. Syst. 2 (7), 2000071. doi:10.1002/aisy.202000071

Trost, M. J., Ford, A. R., Kysh, L., Gold, J. I., and Matarić, M. (2019). Socially assistive robots for helping pediatric distress and pain: a review of current evidence and recommendations for future research and practice. Clin. J. Pain 35 (5), 451–458. doi:10.1097/AJP.0000000000000688

Tulli, S., Ambrossio, D. A., Najjar, A., and Rodríguez Lera, F. J. (2019). Great expectations & aborted business initiatives: the paradox of social robot between research and industry. BNAIC/BENELEARN 1–10.

Turkle, S. (2011). Alone together: why we expect more from technology and less from each other. New York, NY, US: Basic Books.

Ullrich, D., Diefenbach, S., and Butz, A. (2016). “Murphy miserable robot: a companion to support children’s well-being in emotionally difficult situations,” in Proceedings of the 2016 CHI conference extended abstracts on human factors in computing systems, 3234–3240. doi:10.1145/2851581.2892409

Wickham, H., Averick, M., Bryan, J., Chang, W., McGowan, L. D., François, R., et al. (2019). Welcome to the tidyverse. J. Open Source Softw. 4 (43), 1686. doi:10.21105/joss.01686

Yao, T., Jiang, N., Grana, R., Ling, P. M., and Glantz, S. A. (2016). A content analysis of electronic cigarette manufacturer websites in China. Tob. Control 25 (2), 188–194. doi:10.1136/tobaccocontrol-2014-051840

Keywords: social robot, health, child development, consumer information, internet, social interaction, parents, emotion

Citation: Dosso JA, Riminchan A and Robillard JM (2023) Social robotics for children: an investigation of manufacturers’ claims. Front. Robot. AI 10:1080157. doi: 10.3389/frobt.2023.1080157

Received: 08 November 2022; Accepted: 15 November 2023;

Published: 19 December 2023.

Edited by:

Ramana Vinjamuri, University of Maryland, Baltimore County, United States

Reviewed by:

Hooi Min Lim, University of Malaya, Malaysia

Katriina Heljakka, University of Turku, Finland

Copyright © 2023 Dosso, Riminchan and Robillard. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Julie M. Robillard, jrobilla@mail.ubc.ca
