- Perception and Robotics Group, University of Maryland, College Park, MD, United States

Tactile sensing for robotics is achieved through a variety of mechanisms, including magnetic, optical-tactile, and conductive fluid. Currently, fluid-based sensors strike the right balance between anthropomorphic size and shape and the accuracy of tactile response measurement. However, this design suffers from a low Signal to Noise Ratio (SNR), because the fluid-based sensing mechanism “damps” the measured values in ways that are hard to model. To this end, we present a spatio-temporal gradient representation of the data obtained from fluid-based tactile sensors, inspired by the neuromorphic principles of event-based sensing. We present a novel algorithm (GradTac) that converts discrete data points from spatial tactile sensors into spatio-temporal surfaces and tracks tactile contours across these surfaces. Processing the tactile data in the proposed spatio-temporal domain is robust, is less susceptible to the inherent noise of fluid-based sensors, and allows more accurate tracking of regions of touch than using the raw data. We evaluate GradTac and demonstrate its efficacy in many real-world experiments performed with the Shadow Dexterous Hand equipped with BioTac SP sensors. Specifically, we use it to track tactile input across the sensor’s surface, measure relative forces, detect linear and rotational slip, and track edges. We also release an accompanying task-agnostic dataset for the BioTac SP, which we hope will provide a resource to compare and quantify novel approaches and motivate further research.

1 Introduction

Computational tactile sensing has myriad applications in robotics, especially in tasks related to grasping and manipulation. The robotics community has put significant effort into designing hardware and algorithms that equip robots with tactile sensing capabilities rivaling those of human skin. Decades of research have made fluid-based sensing mechanisms the gold standard for balancing anthropomorphic shape, size, and response. However, although computational algorithms use such sensors widely, some largely unexplored issues persist: these sensors behave non-linearly in both their spatial and temporal responses, owing to external factors that are hard to model (Wettels et al. (2008)).

First, these sensors have low Signal to Noise Ratios (SNR), because the fluid that transmits forces from the skin to the sensing electronics “damps” the values. Second, because the sensing elements are non-uniformly distributed within the mechanical construction, each sensing element has a different sensing range and its own bias. These issues have prevented the development of a standard representation of the data, and processing techniques have been engineered for particular sets of tasks rather than being general.

Many approaches have been proposed for interpreting the sensor data, ranging from highly accurate computer models Narang et al. (2021b,a) on one end to a variety of signal processing techniques applied to the raw data Wettels and Loeb (2011) on the other. Both approaches are computationally expensive and require extensive hand-crafted calibration before they can be used.

In contrast, biological systems calibrate for these environmental factors on the fly by processing tactile information as spikes, or events, which offers advantages for transmission and processing along with built-in robustness. This idea inspired neuromorphic engineers to develop sensors and low-power hardware that record and process events Brandli et al. (2014), as well as algorithms that operate on events Mitrokhin et al. (2018); Gallego et al. (2020); Sanket et al. (2020). Event-based hardware has recently become available to the research community. The best known example is the Dynamic Vision Sensor (DVS) Brandli et al. (2014); Lichtsteiner et al. (2008); another is the event-based audio cochlea Yang et al. (2016). Event-based processing has also been introduced to the olfactory domain Jing et al. (2016) and to tactile data Janotte et al. (2021).

We propose a novel intermediate representation computed directly from the raw fluid-based tactile data such as that of the BioTac SP sensor. Instead of accurately simulating the deformations and forces on the sensor, as in Narang et al. (2021a,b), we compute robust features from the spatio-temporal changes in the tactile data, which carry essential information about the sensor’s deformation and forces at the location of touch. The approach is computationally inexpensive and sufficiently accurate to perform a series of tasks.

The main idea is to compute, from a sequence of raw data, the significant changes in the values of individual sensors, which we call Tactile Events, and then to compute the essential tactile features from these events via spatial interpolation. Specifically, by temporally accumulating the tactile events, we construct surface contours that serve as a generic representation for tracking touch across the BioTac SP skin. Our approach addresses the challenges mentioned above, i.e., it accounts for noise and individual sensor biases.

1.1 Problem Formulation and Contribution

The question we tackle in this work can be summarised as “What representation do we need to handle noisy data from a Fluid Based Tactile Sensor (FBTS)?”. To answer this question, we draw inspiration from neuromorphic computing and propose a computational model for representing tactile data using spatio-temporal gradients. Our contributions are formally described next.

• We present an intuition for the relationship between the volumetric deformations of the skin and fluid on a fluid based tactile sensor and spatio-temporal gradients. We further discuss why our method can robustly compute the maximal region of deformation.

• We present a computational model to convert raw tactile signals from an FBTS into an interpolated spatio-temporal surface. This surface is then used to track regions of applied stimulus across the sensor’s skin, which correspond to the regions of touch.

• We demonstrate the capabilities of our proposed approach on several real-world experiments, including detecting slippage during grasp, detecting relative direction of motion between fingers, and following planar shape contours.

• We release a novel dataset containing the various experiments we perform on the BioTac SP. It can be used not only to validate our method but also to compare other tracking algorithms for the BioTac SP, and we hope it will help push the field forward.

1.2 Prior Work

Tactile sensors fall into several broad categories, including but not limited to piezoresistive, piezoelectric, optical, capacitive, and elastoresistive. We further group them into two main classes based on their sensing modality: optical-tactile (i.e., indirect) and direct. This grouping is based on whether the sensing element makes direct contact with the surface being touched. The main tasks performed with tactile data in the literature include: 1) estimation of the contact location and the net force vector, 2) estimation of high-density deformations on the sensor surface, 3) slip detection and classification, and 4) tracking object edges. We next discuss state-of-the-art works that use the various classes of tactile sensors to solve tasks related to those mentioned above.

Studies that perform estimation directly on the sensor data include Lin et al. (2013), who present an analytical method to estimate the 3D point of contact and the net force acting on the BioTac sensor from electrode values, assuming that each electrode measures force in the direction of its normal. Su et al. (2015) discuss several methods for force estimation from tactile data, including Locally Weighted Projection Regression and neural-network-based regression. They also present a signal processing technique for slip detection with the BioTac and compare their results against measurements from an IMU. Sundaralingam et al. (2019) introduce a learning-based approach to infer forces from tactile data. They build a 3D voxel grid to preserve the spatial relations of the data and use a convolutional neural network to map tactile signals to forces.

Recently, some studies have modeled a mapping between sensor readings and the field of deformations over the whole sensor surface. Narang Y. S. et al. (2021) presented a finite element model for the 19-taxel BioTac sensor and demonstrated the most accurate simulations of the sensor thus far, relating forces applied at specific locations to the sensor’s skin deformation. Using data they collected, they learn the mapping from 3D contact locations and net force vectors to the 19 taxel readings; then, by combining the FEM simulation with experimental data, they extrapolate a mapping from taxel measurements to skin deformations and vice versa. In Narang Y. et al. (2021), the authors extended this work by using variational autoencoder networks to represent both FEM deformations and electrode signals as low-dimensional latent variables, and performed cross-modal learning over these latent variables. This improved the accuracy of the previously obtained mapping between taxel readings and skin deformations. However, they also showed that for unseen indenter shapes these methods generalise poorly when predicting deformation magnitudes and distributions from electrode values.

Lepora et al. (2019) use a TacTip optical-tactile sensor (Ward-Cherrier et al. (2018)) and train a CNN to perform reliable edge detection, which they then use in a visual servoing control policy for tracking and moving along object contours. In related work (Cramphorn et al. (2018)), the authors present a Voronoi tessellation based processing pipeline to predict contact location, as well as shear direction and magnitude on the surface of the sensor. This method is novel in that it uses no classification or regression techniques and is purely analytical.

Taunyazoz et al. (2020) use NeuTouch, a novel event-based tactile sensor, along with a Visual-Tactile Spiking Neural Network to perform object classification and rotational slip detection. They also perform ablation studies with an event-based camera and compare their spiking neural networks to traditional architectures such as 3D convolutional networks and Gated Recurrent Units.

We draw motivation for our pipeline from the prior work described above and use the experiments validated there as proofs of concept for our approach. We perform slip detection experiments as in Su et al. (2015), perform edge tracking using visual servoing as in Lepora et al. (2019), and compute forces from touch as described by Sundaralingam et al. (2019).

1.3 Organization of the Paper

Our paper is organized as follows: In Section 2, we present the motivation for using the BioTac SP sensor for tactile sensing, and how our method offers a practical solution to the challenges posed by this particular type of sensor. We describe in detail why spatio-temporal gradients are an intuitive way to compute features of deformation on the BioTac SP.

Section 3 discusses our high-level pipeline and our experimental setup. We then describe in detail our algorithm for generating spatio-temporal gradients, i.e., events, from raw tactile data, and how we generate contour surfaces from these events. Lastly, we discuss how we use these spatio-temporal surfaces to track touch stimuli across the BioTac SP skin. Section 4 describes the accompanying dataset.

In Section 5, we demonstrate our pipeline on three distinctly different tasks and discuss their results. We first show that our contour surfaces accurately track a tactile stimulus moving across the surface of the BioTac SP skin. We then discuss experiments with varying forces applied to the BioTac SP, where we show that our contour surfaces can distinguish between different levels of force. Lastly, we apply our algorithm to the more practical task of slippage detection during grasping, where we detect the time of slippage and distinguish between longitudinal and rotational slippage.

2 Methods

2.1 Motivation

In this work, we consider the BioTac SP tactile sensor, whose sensing mechanism is unique among contemporary tactile sensors. Commercially available tactile sensing mechanisms lie on a spectrum ranging from accurate sensing capabilities on one end to biomimetic form factors on the other. Most sensors on this spectrum trade off form factor to provide high accuracy. The BioTac SP strikes a balance in the middle of this range: its physical shape and sensing mechanism are very close to those of the human fingertip, but this comes at the cost of sensing accuracy and fidelity.

2.2 Challenges With Fluid-Conductive Sensors

Unlike optical-tactile, magnetic or capacitive tactile sensors, fluid based tactile sensors use a conductive fluid to transmit electrical impulses from spatially distributed excitation electrodes to a few sensing locations (taxels) distributed over a solid core. The values generated by the taxels are thus primarily dependent on the characteristics of the fluid, specifically its conductivity.

The conductivity of a fluid, such as the electrolytic solution in the BioTac SP sensor, is non-linearly related to various external factors. These include, but are not limited to, the temperature of the fluid, the humidity of the surroundings, the area of and distance between the excitation and sensing electrodes, and the concentration of the conductive fluid. Each of these factors contributes non-linearly (Wettels et al. (2008)) to the noise of the individual taxels. Furthermore, the noise characteristics of the sensor electronics are also non-linear, which further exacerbates the situation.

We also need to consider sources of noise in the electronic implementation of each taxel’s sensing mechanism, which include amplification and analog-to-digital conversion circuitry among others.

2.3 Modelling Fluid-Conductive Sensors

While it might be feasible to model each of the aforementioned sources of noise independently, modelling their combination and interactions within the full system is an arduous task. There have been several attempts to develop a physical model of the BioTac sensor, the most recent of which is presented by Narang Y. et al. (2021). The authors present a finite element model (FEM) of an ideal BioTac sensor and provide an accurate simulation of the skin, the sensing core, and the internal fluid. While the FEM approach provides physically accurate estimates of the deformation of the skin and fluid under force stimuli, it does not account for the sources of noise described earlier, because the sensor electronics are not modelled alongside the computationally challenging fluid. To the best of our knowledge, there is currently no mathematical model relating sensor readings to skin deformations, which hinders research in this area that relies on raw sensor measurements.

2.4 Bio-Inspired Motivation for Logarithmic Change

In our work, we draw inspiration from nature regarding how changes over the skin surface may relate to the location of touch and relative forces. To this end, we break away from the core robotics assumption that useful tasks require a complex and accurate mathematical model or a very high quality sensor. In particular, we are driven by nature’s efficient and parsimonious implementations, which perform remarkably well with low-quality sensors and very simple computation.

To build such an efficient data representation for fluid-based sensors, we turn to the Weber-Fechner laws of psychophysics, which state that the perceived intensity of a stimulus on any of the human senses grows with the logarithm of the actual stimulus intensity (equivalently, the actual stimulus is an exponential function of the perceived one). As a result, humans perceive stimuli such as touch, sound, or light through changes in the logarithm of the stimulus, i.e., through the ratio between new and existing values rather than their absolute difference. It is thus not surprising that the manufacturers of the BioTac SP sensor, who designed it to be as anthropomorphic as possible, also recommend processing data from such fluid-based sensors using relative changes instead of raw taxel values.

In practice, the two main challenges with the BioTac SP sensor are that 1) the different taxels do not share the same baseline value, and 2) the taxel values exhibit a low signal to noise ratio. By computing only the taxel changes on a logarithmic scale, our values become independent of the baseline and more robust to noise, which tackles both issues.
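To make the baseline argument concrete, the sketch below assumes (a simplification of ours, not a model of the sensor electronics) that two taxels observe the same underlying signal scaled by different per-taxel gains; the signal and the gain values are hypothetical. Their raw changes differ by the gain ratio, whereas their changes in natural log coincide.

```python
import numpy as np

def log_changes(x):
    """Change in natural log between consecutive samples of a positive signal."""
    x = np.asarray(x, dtype=float)
    return np.diff(np.log(x))

signal = np.array([1.0, 1.2, 1.5, 1.5, 1.1])   # hypothetical common stimulus
taxel_a = 2000.0 * signal                       # hypothetical per-taxel gains
taxel_b = 3100.0 * signal

raw_a, raw_b = np.diff(taxel_a), np.diff(taxel_b)          # differ by the gain ratio
log_a, log_b = log_changes(taxel_a), log_changes(taxel_b)  # equal up to rounding
```

An additive offset between taxels would not cancel in the same way, so this sketch only illustrates baseline differences that act as a per-taxel gain.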

2.5 Computing Events From Raw Data

A primary step in our pipeline is generating “events” from raw tactile data. The concept of an event comes from the neuromorphic research community: an event is essentially a timestamped data point that is “fired” only when the stimulus changes by more than a specified threshold.

We consider two consecutive packets of taxel data, at times t and t + δ respectively. Each of these packets contains the raw taxel values

In other words, a positive event is said to be “fired” when

and a negative event when

This gives us intermediate taxel values between times t and t + δ, and their respective timestamps for each taxel j ∈ [1, 24].

We know from the design of the sensor, as well as from ideal sensor simulations, that the largest change in taxel values correlates with the region of highest tactile stimulus. This change also depends on the forces already present at the region of touch, and the raw values saturate and exhibit hysteresis. Our algorithm accounts for this by non-linearly interpolating the taxel data on a log scale. The taxel events obtained above thus give us a temporal gradient of the change in taxel values caused by the deformation of the skin and fluid under the applied force stimulus. Intuitively, these intermediate events between two discrete taxel readings signify the change in the local volume of skin and fluid over time due to the applied tactile stimulus.

As part of our algorithm, we then convert these spatially discrete events at the 24 taxel locations into a continuous, interpolated surface. We compute a Voronoi tessellation of the discrete, irregular grid and perform Natural Neighbors Interpolation to construct an event surface that gives an interpolated event value at each point. This surface indirectly depicts the deformation of the skin and fluid due to the applied stimulus. Since the deformation due to applied forces on the BioTac SP is greatest at the region of touch, we generate iso-contours of the event surface and take only the maximal-valued contour as the region of touch.

3 Our Approach

3.1 Pipeline

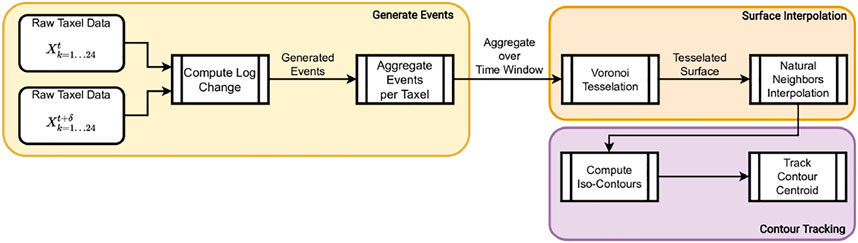

Figure 1 shows an overview of the proposed framework, which starts from the 24 raw tactile values of the BioTac SP sensor and outputs a contact trajectory. The pipeline converts the raw data into events, aggregates these events by spatial clusters, performs Voronoi tessellation on the aggregated events, and uses the interpolated values to generate a contour plot whose centroid is tracked over time. We elaborate on each step of the pipeline below.
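The last two stages, extracting the maximal contour and tracking its centroid, can be illustrated with a minimal sketch under stated assumptions: the interpolated event surface is sampled on a regular 2D grid, and the “maximal contour” is approximated by the grid samples at or above the highest of `n_levels` evenly spaced contour levels. The function name, the default number of levels, and the Gaussian bumps in the usage lines are hypothetical.

```python
import numpy as np

def top_contour_centroid(surface, xs, ys, n_levels=10):
    """Return ((cx, cy), area) of the region inside the highest contour level.

    surface : 2D array of shape (len(ys), len(xs)), the interpolated event surface
    xs, ys  : 1D, evenly spaced grid coordinates
    n_levels: number of evenly spaced iso-contour levels (hypothetical default)
    """
    surface = np.asarray(surface, dtype=float)
    levels = np.linspace(surface.min(), surface.max(), n_levels + 1)
    # Samples at or above the highest level below the peak form the maximal contour region.
    mask = surface >= levels[-2]
    gx, gy = np.meshgrid(xs, ys)  # both of shape (len(ys), len(xs))
    cx, cy = gx[mask].mean(), gy[mask].mean()
    cell_area = (xs[1] - xs[0]) * (ys[1] - ys[0])
    return (float(cx), float(cy)), float(mask.sum() * cell_area)

# Usage: synthetic bumps stand in for one frame of the event surface.
xs = np.linspace(0.0, 1.0, 201)
ys = np.linspace(0.0, 1.0, 201)
gx, gy = np.meshgrid(xs, ys)
narrow = np.exp(-((gx - 0.3) ** 2 + (gy - 0.6) ** 2) / (2 * 0.05 ** 2))
wide = np.exp(-((gx - 0.3) ** 2 + (gy - 0.6) ** 2) / (2 * 0.10 ** 2))
centre, area_narrow = top_contour_centroid(narrow, xs, ys)
_, area_wide = top_contour_centroid(wide, xs, ys)
```

Calling the function once per aggregated frame and collecting the centroids yields a contact trajectory, and the returned area is the kind of quantity that Section 5.3 relates to applied force; the wider synthetic bump gives a larger top-contour area, which is only an analogy to a larger contact region.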

3.2 Setup and Methodology

Our hardware setup consists of a UR-10 manipulator equipped with the Shadow Dexterous Hand, which has BioTac SP sensors attached to each fingertip. The BioTac SP provides a ROS interface for the raw data at a rate of 100 Hz. The data consist of 24 electrode values, which we term “taxels” (tactile elements), as well as the overall fluid pressure and temperature flux. Our pipeline uses only the 24 taxel readings, which result from contact forces and the resulting compression of the skin and the enclosed fluid. The pipeline can also process readings from any other tactile sensor, as long as its sensing elements are spatially distributed across a surface and each data packet is timestamped. We apply basic min-max normalization and Savitzky-Golay filtering before using the data. Our event-generation algorithm is influenced by the principles of event-based sensors, which record logarithmic changes of the signal on individual sensing elements, independently and asynchronously.

3.3 Generating Events From Raw Tactile Data

In Section 2.5, we established that our approach does not approximate the entire sensor surface but only the regions with maximum tactile stimulus. Data from the BioTac SP sensor arrive at a rate of 100 Hz, i.e., one packet every 0.01 s. Our method computes the number of events at each taxel, where each event corresponds to a change of threshold value τ. The threshold sets the granularity of the change we measure: more events correspond to a larger change, which correlates with the amount of force applied to a particular region. For each triggered event, we also generate a corresponding timestamp between t and t + δ. Taking inspiration from the Weber-Fechner laws of psychophysics discussed in Section 2.4, we trigger events based on the natural log of the threshold τ. Intuitively, this means that events are more frequent near time t and gradually taper off as the value approaches that at time t + δ.
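The event generation for a single taxel between two packets can be sketched as follows. This is an illustrative formulation under our own assumptions, not a transcription of the paper’s equations: taxel values are positive (e.g. normalized values plus a small offset), an event fires each time the natural log of the value crosses another multiple of τ away from its value at t, and the raw value is linearly interpolated in time between t and t + δ to place each event’s timestamp. The function name `taxel_events` is hypothetical.

```python
import math

def taxel_events(x0, x1, t, delta, tau):
    """Return a list of (timestamp, polarity) events for one taxel.

    x0, x1 : positive taxel values at times t and t + delta
    tau    : threshold on the change in natural log of the value
    """
    if x0 <= 0 or x1 <= 0:
        raise ValueError("log-scale events need positive taxel values")
    # Number of log-threshold crossings between the two packets.
    n = int(abs(math.log(x1) - math.log(x0)) // tau)
    polarity = 1 if x1 > x0 else -1
    events = []
    for k in range(1, n + 1):
        level = x0 * math.exp(polarity * k * tau)  # raw value at the k-th crossing
        frac = (level - x0) / (x1 - x0)            # fraction of the way from t to t + delta
        events.append((t + frac * delta, polarity))
    return events

# Usage: a rising taxel value sampled 0.01 s apart (100 Hz).
events = taxel_events(1.0, 3.0, t=0.0, delta=0.01, tau=0.25)
```

For a rising value, the crossing levels are geometrically spaced, so with linear interpolation the gaps between successive timestamps grow: events are denser near t, in line with the description above. A different interpolation between packets would change this spacing.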

Once events have been computed for all raw tactile data points, we aggregate them into temporal frames. The size of the temporal aggregation window is an important heuristic that can be tuned to favor either robustness to noise or a more sensitive tactile response.
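Aggregating events into temporal frames amounts to binning them by timestamp and counting per taxel. The sketch below assumes each event is a `(taxel_index, timestamp, polarity)` tuple and offers an optional signed sum of polarities; the function name, the tuple layout, the `signed` option, and the window and timestamps in the usage lines are hypothetical choices for illustration.

```python
import numpy as np

def aggregate_events(events, n_taxels, t_start, t_end, window, signed=False):
    """Accumulate events into per-taxel values over consecutive temporal windows.

    events : iterable of (taxel_index, timestamp, polarity) tuples
    window : frame length, in the same time unit as the timestamps
    signed : if True, sum polarities instead of counting events
    Returns an array of shape (n_frames, n_taxels).
    """
    n_frames = int(np.ceil((t_end - t_start) / window))
    frames = np.zeros((n_frames, n_taxels))
    for taxel, ts, polarity in events:
        k = int((ts - t_start) // window)  # index of the frame containing ts
        if 0 <= k < n_frames:
            frames[k, taxel] += polarity if signed else 1
    return frames

# Usage with three hypothetical events on a 24-taxel sensor.
evts = [(0, 0.1, 1), (0, 0.6, -1), (3, 0.7, 1)]
counts = aggregate_events(evts, n_taxels=24, t_start=0.0, t_end=1.0, window=0.5)
```

A longer window pools more events per frame (smoother, less sensitive); a shorter one reacts faster but passes more noise through, which is the trade-off described above.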

3.4 Natural Neighbors Based Interpolation

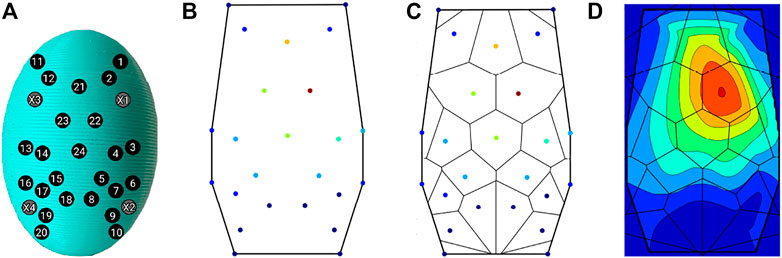

The 24 taxels are located in 3D space inside the BioTac SP, as per the sensor’s design. We project these locations, which lie on an ellipsoidal surface, onto a 2D plane, shown in Figure 2A, to obtain an irregular grid of locations. For each of these 24 2D points, we have the aggregate event count, as shown in Figure 2B.

FIGURE 2. Contour Generation Pipeline. (A) 24 taxel locations, projected onto 2D plane, (B) Initial event aggregates per taxel, (C) Voronoi tessellation of the grid, (D) Contours generated from the interpolated surface.

We then compute the Voronoi tessellation of this grid, over which the aggregate event values are interpolated, as shown in Figure 2C. Compared to other interpolation methods, such as Inverse Distance Weighting or Gaussian interpolation, interpolation over the Voronoi tessellation provides a more accurate representation of the underlying function. Because our data are unstructured, i.e., the taxels form an irregular grid, traditional interpolation methods do not account for the different area of influence of each taxel when computing the interpolated function. Natural neighbor interpolation on the Voronoi tessellation does: whenever a new point is interpolated, its cell “steals” area from the neighboring polygons, and each sample point is weighted by the area stolen from it Lucas (2021).

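This is mathematically represented by:

$$G(x) = \sum_{i=1}^{n} w_i\, f(x_i), \qquad w_i = \frac{A(x_i)}{A(x)},$$

where G(x) is the estimate computed at x, w_i are weights, and f(x_i) are the known data values at x_i, which are obtained from the n = 24 event aggregate values. A(x) is the area of the new cell centered at x, and A(x_i) is the area of the intersection between the new cell centered at x and the old cell centered at x_i. Since the new cell is exactly the union of the areas stolen from its natural neighbors, the weights sum to one, and taxels that are not natural neighbors of x receive zero weight.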
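Computing exact Voronoi cell intersections requires some computational geometry. As a compact illustration of the weights w_i = A(x_i)/A(x), the sketch below swaps in a discrete (rasterized) approximation of Sibson’s natural neighbor interpolation, chosen for brevity: it samples the plane on a regular grid, assigns each sample to its nearest taxel to form the old cells, and counts the samples that would move into the new cell of x. The per-taxel counts approximate A(x_i) and their sum approximates A(x). The function name, grid resolution, padding, and the 5 × 5 layout in the usage lines are hypothetical and do not reflect the BioTac SP taxel geometry.

```python
import numpy as np

def discrete_sibson(points, values, query, resolution=200, pad=0.1):
    """Approximate natural neighbor (Sibson) interpolation G(x) at one query point.

    points : (n, 2) taxel locations on the projected 2D plane
    values : (n,) known values f(x_i), e.g. aggregated event counts
    query  : (2,) location x at which to estimate G(x)
    """
    points = np.asarray(points, dtype=float)
    values = np.asarray(values, dtype=float)
    query = np.asarray(query, dtype=float)

    d_query = np.linalg.norm(points - query, axis=1)
    if d_query.min() < 1e-12:                 # query coincides with a taxel
        return float(values[d_query.argmin()])

    # Regular grid over the points' bounding box, enlarged by `pad`.
    lo, hi = points.min(axis=0), points.max(axis=0)
    lo, hi = lo - pad * (hi - lo), hi + pad * (hi - lo)
    gx, gy = np.meshgrid(np.linspace(lo[0], hi[0], resolution),
                         np.linspace(lo[1], hi[1], resolution))
    grid = np.column_stack([gx.ravel(), gy.ravel()])

    # Old Voronoi cells: each grid sample belongs to its nearest taxel.
    d_old = np.linalg.norm(grid[:, None, :] - points[None, :, :], axis=2)
    owner, d_owner = d_old.argmin(axis=1), d_old.min(axis=1)

    # New cell of x: samples closer to x than to any taxel.
    stolen = np.linalg.norm(grid - query, axis=1) < d_owner
    if not stolen.any():                      # new cell smaller than one grid sample
        return float(values[d_query.argmin()])

    # A(x_i): stolen samples previously owned by taxel i; A(x): all stolen samples.
    area_i = np.bincount(owner[stolen], minlength=len(points))
    weights = area_i / area_i.sum()           # w_i = A(x_i) / A(x)
    return float(weights @ values)

# Usage on a hypothetical 5 x 5 layout with a linear test function.
px, py = np.meshgrid(np.linspace(0, 1, 5), np.linspace(0, 1, 5))
pts = np.column_stack([px.ravel(), py.ravel()])
vals = pts[:, 0] + 2 * pts[:, 1]
estimate = discrete_sibson(pts, vals, [0.43, 0.61])
```

Sibson weights reproduce linear functions, so the estimate should land close to 0.43 + 2 × 0.61 = 1.65, with a small error that shrinks as `resolution` grows. Evaluating the function over a grid of query points produces a continuous surface like the one in Figure 2D.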

Owing to the irregular arrangement of the sensing locations (taxels) within the BioTac SP, we want an interpolation method that weights each taxel’s stimulus according to that taxel’s region of influence. Intuitively, Voronoi tessellation partitions the space into irregularly shaped polygons, each representing the region of influence of one taxel. This is better than, say, nearest neighbors interpolation, which spreads each taxel’s value uniformly over its region and yields a piecewise-constant surface. Natural Neighbors interpolation is also a weighted average, but it weights values by the proportion of area stolen from each neighbor rather than by the raw values alone, which makes the resulting interpolation a more “truthful” representation of the underlying surface function than other methods. Evaluating this interpolation over the 2D surface of the BioTac SP gives a continuous surface (Figure 2D) whose values correspond to the amount of force on each taxel and, consequently, to the deformation of that region of the BioTac SP skin.

4 Dataset

There is a lack of standardized datasets in the tactile sensing community, especially when sensors like the BioTac SP are concerned. Most datasets available today are task-specific, or are from optical-tactile sensors. This makes quantitative comparisons difficult for novel algorithms being introduced to the field.

As part of our work, we are releasing an accompanying dataset on tactile motion on the BioTac SP sensor, which is independent of any particular task. The dataset samples include the following:

• Tactile responses from various indenter sizes, applied at different forces

• Motion across the sensor surface in various directional trajectories. We include 1) top-to-bottom, 2) bottom-to-top, 3) left-to-right, 4) right-to-left, 5) diagonal top-to-bottom, 6) diagonal bottom-to-top, 7) clockwise and 8) counter-clockwise data samples.

• Longitudinal slippage for various objects from a labelled list of objects, as well as the ground-truth timestamps for slip events.

• Rotational slippage for a cylindrical object on a constant-speed turntable, as well as the ground-truth timestamps for slip events.

All our data are provided in both NumPy and CSV formats and include all 24 raw taxel values as well as their timestamps. For ease of adoption and use, we do not use the ROS Bag format in this dataset, but it may be made available on request.

5 Experiments and Results

5.1 Experimental Setup

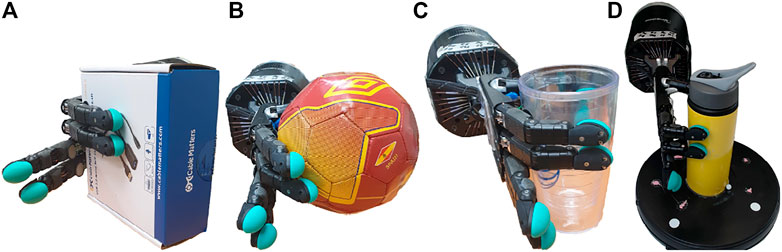

The hardware used to perform all experiments, shown in Figure 3, consists of a UR-10 manipulator equipped with a Shadow Dexterous Hand, with one BioTac SP sensor attached to each of the five finger tips.

Alongside the 24 taxel values from each BioTac SP sensor, the setup also provides us with the 6 DoF pose of the arm and each finger, relative to a world coordinate system at the base of the manipulator. This information is used in the shape tracking experiments.

Replicating the experiments described in this work is only feasible with access to the BioTac SP hardware. Nevertheless, our algorithm can be applied to, and modified for, other sensors. We will release an accompanying dataset with the labelled data collected for each experiment, along with their respective ground truth values.

5.2 Tracking Location of Touch

We collected data from the BioTac SP at a rate of 100 Hz by making contact at different sensor locations. Three probes with different indenter diameters (1, 2, and 5 mm) were used to gather this dataset. The taxel values are time-synchronised with an RGB camera feed, which provides visual ground truth of the contact location at every instant. These data were then used to generate events according to the method described in Section 3.3.

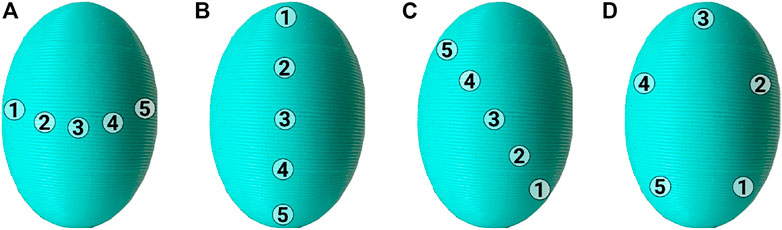

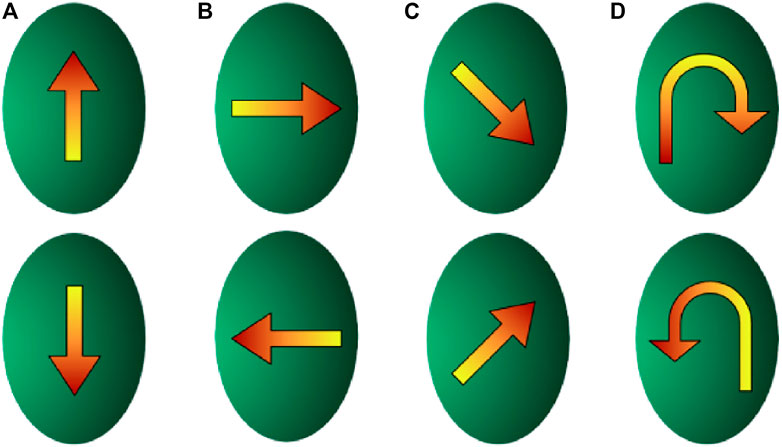

We evaluate our method of tracking contact by comparing it qualitatively with the ground truth trajectories of the probes obtained from the RGB images. We hand-label several marker locations on the physical sensor (shown in Figure 4) and align them in image coordinate space to the 2D projected locations of the taxels. We used 8 different trajectories, as shown in Figure 5.

FIGURE 4. Touch Tracking Ground Truth Marker Locations. (A) Markers for tracking horizontal trajectory, (B) Markers for tracking vertical trajectory, (C) Markers for tracking diagonal trajectory, and (D) Markers for tracking circular trajectory.

FIGURE 5. Touch tracking trajectories. (A) Up and down trajectories, (B) left and right trajectories, (C) diagonal trajectories, (D) circular trajectories.

We move the indenters along various trajectories on the surface of the BioTac SP, as shown in Figure 5: top to bottom and bottom to top (Figure 5A), left to right and right to left (Figure 5B), diagonally top to bottom and diagonally bottom to top (Figure 5C), and circular clockwise and circular counter-clockwise (Figure 5D).

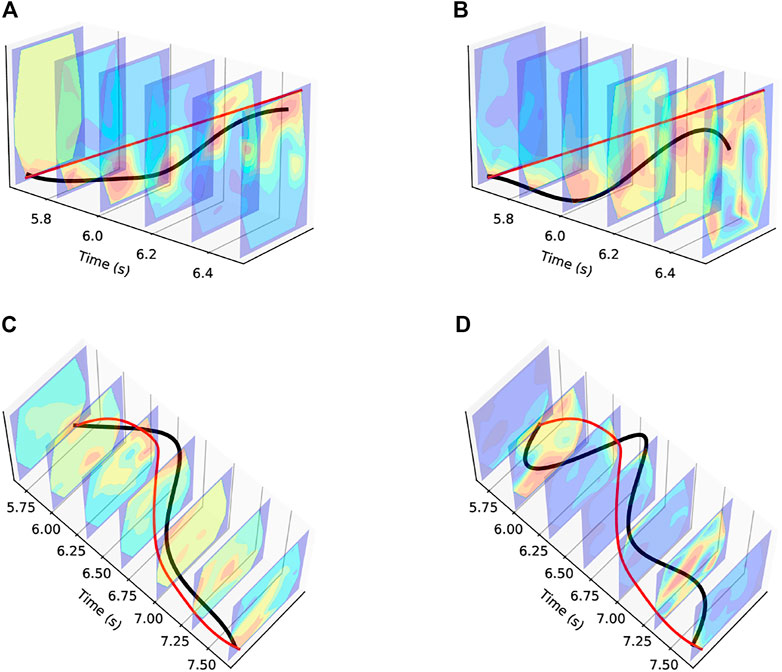

Figure 6 shows the outputs from two sample trajectories: diagonal motion from bottom left to top right, and counter-clockwise circular motion. Figure 6A and Figure 6C show the trajectories overlaid on the contour surfaces generated from the event aggregates stacked along the time axis. In both outputs, the event aggregates in red clearly represent the current region of touch. Tracking these across time yields a trajectory of touch across the skin surface.

FIGURE 6. Touch Tracking Trajectory Plots. The red line denotes the ground truth trajectory. (A) Diagonal Trajectory using Events Data, (B) Diagonal Trajectory using Raw Data, (C) Circular Trajectory using Events Data, (D) Circular Trajectory using Raw Data.

For comparison, Figures 6B,D show the outputs obtained from the filtered, but otherwise unprocessed, raw data from the BioTac SP. The outputs from our approach, shown in Figures 6A,C, clearly produce smoother trajectories with less noise.

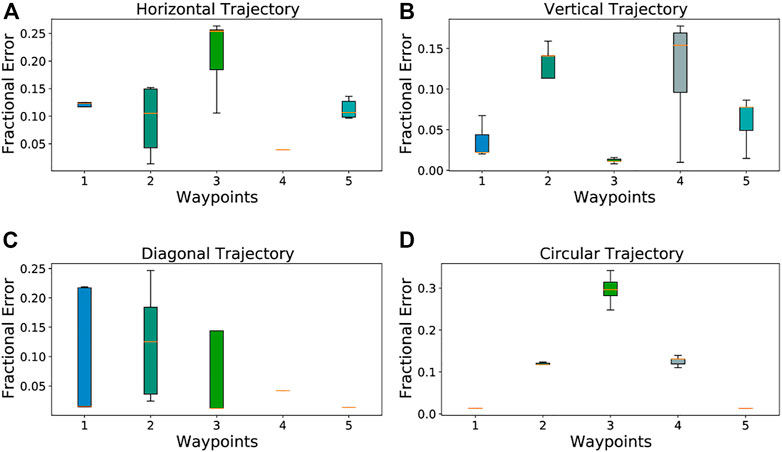

Figure 7 quantifies the median error in touch tracking for each waypoint, for each of the four classes of trajectories (horizontal, vertical, diagonal and circular). Our results are more accurate for waypoints near the center of the BioTac SP than for those near the edges, which we attribute to the shape and fluid density of the underlying sensor.

FIGURE 7. Touch Tracking Error Plots. The middle bar represents the median error, the width of each bar is the Interquartile Range (IQR), and the fence widths are 1.5 × IQR. (A) Average Deviation for Vertical Trajectory, (B) Average Deviation for Horizontal Trajectory, (C) Average Deviation for Diagonal Trajectory, (D) Average Deviation for Circular Trajectory.

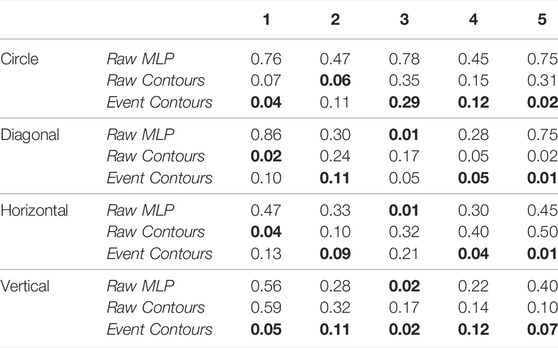

Table 1 compares the average pixel-wise error in tracking the known waypoints (Figure 4), computed with three different methods. First, we take the 24 taxel values at the timestamps when the indenter is on each of the known waypoints 1 through 5 and train a fully connected neural network to regress the contact location; the average pixel-wise errors are reported under the Raw MLP heading. Second and third, we obtain the average pixel-wise errors for each of the five waypoints using the contours from raw data and from event data, reported under the Raw Contours and Event Contours headings, respectively, in Table 1.
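The error statistics reported in Table 1 and Figure 7 can be sketched as follows, under our own reading of the normalization: predicted and ground-truth contact locations are 2D pixel coordinates in the camera image, and each Euclidean error is divided by the BioTac SP width measured in pixels in the same image. The box-plot summary uses the median, the IQR, and Tukey fences at 1.5 × IQR. The function names and inputs are hypothetical.

```python
import numpy as np

def normalized_errors(pred_px, true_px, sensor_width_px):
    """Euclidean contact-location error of each sample, divided by the sensor width."""
    diff = np.asarray(pred_px, dtype=float) - np.asarray(true_px, dtype=float)
    return np.linalg.norm(diff, axis=-1) / sensor_width_px

def box_stats(errors):
    """Mean, median, IQR, and Tukey fences (1.5 x IQR) of an error sample."""
    q1, median, q3 = np.percentile(errors, [25, 50, 75])
    iqr = q3 - q1
    return {"mean": float(np.mean(errors)), "median": float(median), "iqr": float(iqr),
            "fences": (float(q1 - 1.5 * iqr), float(q3 + 1.5 * iqr))}

# Usage: two hypothetical predictions against the same waypoint.
errs = normalized_errors([[3, 4], [0, 0]], [[0, 0], [0, 0]], sensor_width_px=10)
```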

TABLE 1. Mean contact-location errors at each waypoint over different trajectories, expressed as a ratio of the BioTac SP width. The values in bold refer to the best results for a given trajectory.

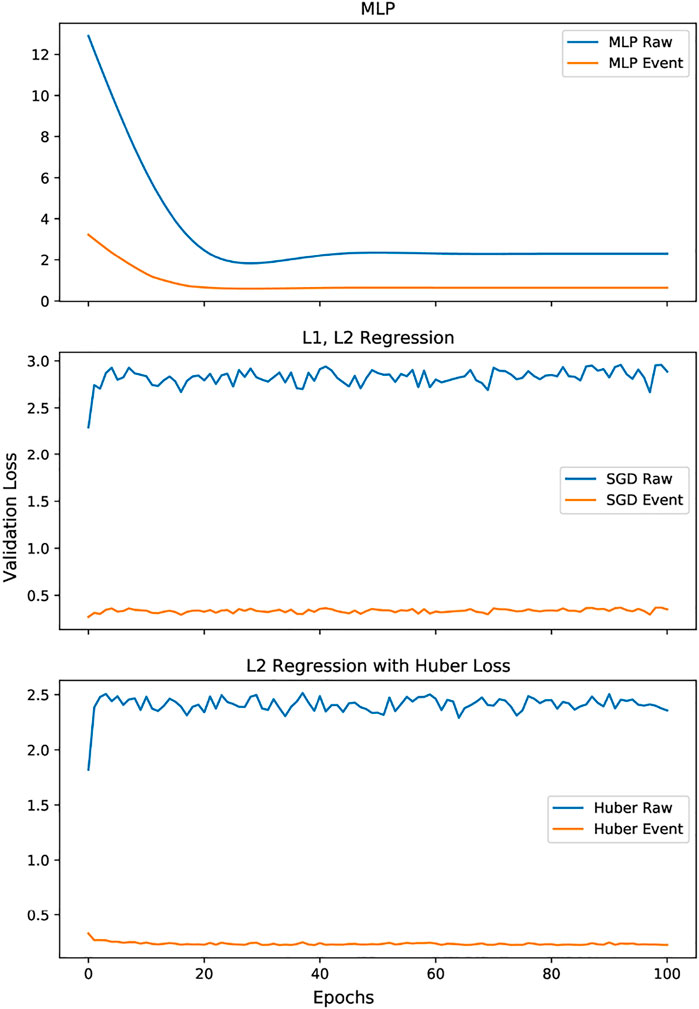

5.3 Magnitude of Force

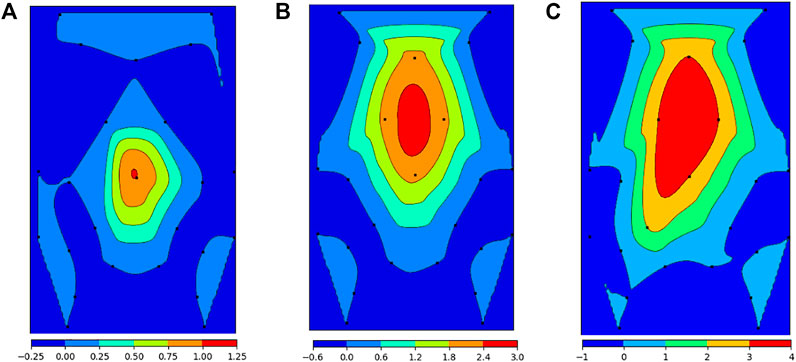

We applied varying forces on the BioTac SP skin using a 2 mm indenter to show that the area of the maximal event contour correlates with the magnitude of the contact force. The ground truth forces were measured with a calibrated and accurate force sensor.

To obtain the relationship between contour areas and applied force, we trained a fully connected neural network with 7 hidden layers of widths 8, 16, 32, 64, 32, 16, and 8, using an L2 loss. This network was used to compute a regression curve mapping the forces to the contour areas. We applied a logistic activation function and used an inversely scaled learning rate. The network was trained for 5,000 epochs on 200 data points. As a point of comparison, we applied two other regression methods: a stochastic gradient descent regression with ElasticNet regularization and log loss, and an L2-regularized regression with Huber loss. We used the mean absolute percentage error, defined as

$$\mathrm{MAPE} = \frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{y_i - \hat{y}_i}{y_i}\right|,$$

where $n$ is the number of samples, $y_i$ is the ground-truth value of the $i$-th sample, and $\hat{y}_i$ is the corresponding predicted value.
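The MAPE definition above translates directly into code. This is a minimal sketch; the function name and the sample forces and predictions are hypothetical, chosen to echo the 3 N, 6 N, and 12 N loads of Figure 9.

```python
def mean_absolute_percentage_error(y_true, y_pred):
    """MAPE in percent; y_true must be non-empty and contain no zeros."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for actual, predicted in zip(y_true, y_pred):
        if actual == 0:
            raise ValueError("MAPE is undefined when a ground-truth value is 0")
        # Relative error of this sample, independent of its scale.
        total += abs((actual - predicted) / actual)
    return 100.0 * total / len(y_true)


# Hypothetical ground-truth forces (N) and predictions from a regressor.
forces = [3.0, 6.0, 12.0]
predicted = [3.3, 5.4, 12.0]
mape = mean_absolute_percentage_error(forces, predicted)
print(mape)
```

Because each term is divided by its own ground-truth value, a 0.3 N error at 3 N counts as much as a 1.2 N error at 12 N, which suits comparisons across a wide force range.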

Each of these methods was trained for 1,000 epochs over 200 data points, but for brevity Figure 8 displays only 100 epochs. The figure also shows the results of the same regression techniques applied to the contours generated from raw data. Across all learning algorithms, the plots show that event-based data, compared with raw data, yields a better validation loss curve during training and a lower overall loss at testing.
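The force-regression network described above can be sketched as a plain forward pass. This is not the authors' implementation: we assume one input (contour area) and one output (force), uniform random initialization, and an identity output layer, and we omit training. The paper does not name a library; its terms (logistic activation, inverse-scaling learning rate) match options of scikit-learn's `MLPRegressor`, where the inverse-scaling rate is `learning_rate_init / t**power_t`, but that is our observation rather than a statement about the authors' code.

```python
import math
import random

# Hidden widths from the text; the single input (contour area) and single
# output (force) are assumptions of this sketch.
LAYER_SIZES = [1, 8, 16, 32, 64, 32, 16, 8, 1]


def count_parameters(sizes):
    # Each dense layer has fan_in * fan_out weights plus fan_out biases.
    return sum(a * b + b for a, b in zip(sizes[:-1], sizes[1:]))


def logistic(x):
    # Numerically stable logistic (sigmoid) function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def init_network(sizes, seed=0):
    rng = random.Random(seed)
    layers = []
    for fan_in, fan_out in zip(sizes[:-1], sizes[1:]):
        bound = 1.0 / math.sqrt(fan_in)
        weights = [[rng.uniform(-bound, bound) for _ in range(fan_in)]
                   for _ in range(fan_out)]
        biases = [0.0] * fan_out
        layers.append((weights, biases))
    return layers


def forward(layers, x):
    activations = list(x)
    for index, (weights, biases) in enumerate(layers):
        z = [sum(w * a for w, a in zip(row, activations)) + b
             for row, b in zip(weights, biases)]
        # Logistic activation on hidden layers, identity on the regression output.
        is_output = index == len(layers) - 1
        activations = z if is_output else [logistic(v) for v in z]
    return activations


def inverse_scaling_lr(initial_lr, step, power=0.5):
    # Inverse scaling: the learning rate decays as initial_lr / step**power.
    return initial_lr / (step ** power)


network = init_network(LAYER_SIZES)
print(count_parameters(LAYER_SIZES))
print(forward(network, [0.5]))
print(inverse_scaling_lr(0.001, step=4))
```

With these assumed input and output sizes the network has only a few thousand parameters, small relative to the 200 training samples times 5,000 epochs, which is consistent with the short training runs reported.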

Figure 9 visualizes the contour regions correlated with the applied forces. Qualitatively, higher forces correspond to larger regions of tactile stimulus, as shown by the highest contour regions in red.

FIGURE 9. Contour region areas correlated with applied force. (A) 3 N applied force, (B) 6 N applied force, (C) 12 N applied force.
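The area of a contour region can be computed from its boundary polygon with the shoelace formula. This is a sketch assuming each contour is available as an ordered list of (x, y) vertices in sensor-surface pixel coordinates; the function name and example shapes are hypothetical, and the paper does not state how its areas are computed.

```python
def polygon_area(vertices):
    """Area enclosed by a closed contour via the shoelace formula.

    vertices: list of (x, y) points in order around the contour; the last
    point is implicitly connected back to the first.
    """
    if len(vertices) < 3:
        return 0.0
    twice_area = 0.0
    # Pair each vertex with the next one, wrapping around to the first.
    for (x1, y1), (x2, y2) in zip(vertices, vertices[1:] + vertices[:1]):
        twice_area += x1 * y2 - x2 * y1
    # abs() makes the result independent of clockwise/counter-clockwise order.
    return abs(twice_area) / 2.0


square = [(0, 0), (2, 0), (2, 2), (0, 2)]
triangle = [(0, 0), (4, 0), (0, 3)]
print(polygon_area(square), polygon_area(triangle))  # 4.0 6.0
```

Applied to the maximal contour at each time step, this yields a scalar area (in square pixels) that can serve as the input to the force regressors discussed above.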

5.4 Slippage Detection and Classification

Slippage detection with the BioTac SP has been achieved in many different ways (Su et al. (2015); Veiga et al. (2020); Calandra et al. (2018); Naeini et al. (2019)), most of them designed specifically for that task. Here we show that our generic method of spatio-temporal contours can also be used for slippage detection and classification, demonstrating that our approach adapts readily to new tasks.

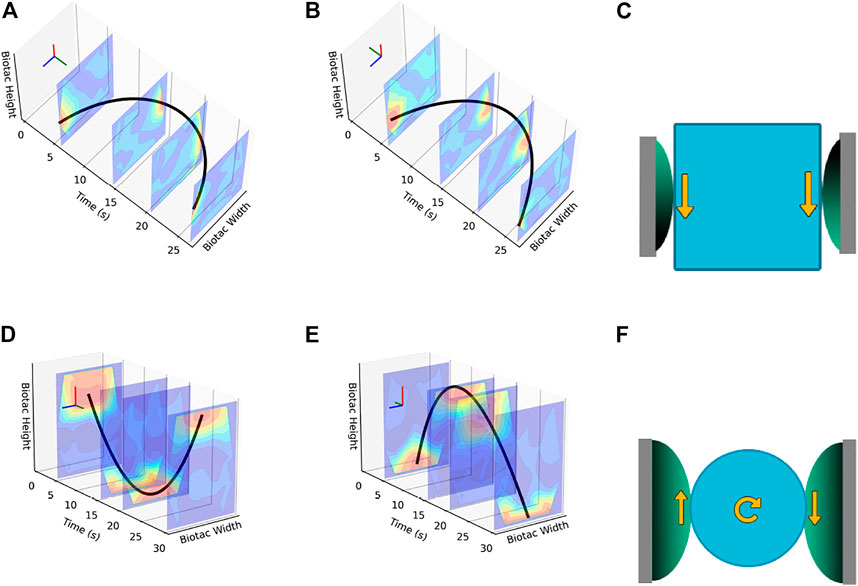

By tracking the contours spatio-temporally, we are able to detect both the time at which slippage occurs and its direction. In the case of longitudinal slip, i.e., when the object moves linearly between the fingers, we can classify the direction as “up” or “down”. In the case of rotational slip, i.e., when the object rotates between the fingers, we can distinguish clockwise from counter-clockwise rotation.

We do this by comparing the event contours from fingers on opposite sides of the object while the object is fully grasped by the Shadow Hand, as shown in Figure 10. By tracking and comparing the trajectories generated by the contours on the first finger and the thumb, we can deduce both the time at which slippage occurs and its direction. In the case of longitudinal slippage (Figure 11C), given the orientation of the BioTac SP sensors with respect to the object, both contour trajectories have the same direction of motion. In the case of rotational slippage (Figure 11F), the opposing shear forces experienced by the thumb and the first finger cause the contour trajectories to move in opposite directions.
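The same-direction versus opposite-direction rule above can be sketched with the sign of a dot product between the two contour displacements. This is an illustration, not the authors' classifier: we assume each trajectory is a list of contour positions expressed in its own sensor's surface frame, and the helper names and the `min_motion` threshold are hypothetical.

```python
import math


def net_displacement(trajectory):
    """Displacement from the first to the last contour position in a window."""
    (x0, y0), (x1, y1) = trajectory[0], trajectory[-1]
    return (x1 - x0, y1 - y0)


def classify_slip(finger_traj, thumb_traj, min_motion=1.0):
    """Classify slip from two contour trajectories in their own sensor frames.

    Following the text: same direction of motion -> longitudinal slip,
    opposite directions -> rotational slip. min_motion is a hypothetical
    threshold below which no slip is reported.
    """
    fx, fy = net_displacement(finger_traj)
    tx, ty = net_displacement(thumb_traj)
    if math.hypot(fx, fy) < min_motion or math.hypot(tx, ty) < min_motion:
        return "no slip"
    # Positive dot product: the displacements point in broadly the same direction.
    dot = fx * tx + fy * ty
    if dot > 0:
        return "longitudinal"
    if dot < 0:
        return "rotational"
    return "ambiguous"  # perpendicular displacements


finger = [(0, 0), (1, 0), (3, 0)]
print(classify_slip(finger, [(0, 1), (2, 1), (4, 1)]))  # longitudinal
print(classify_slip(finger, [(4, 1), (2, 1), (0, 1)]))  # rotational
```

Mapping the result to “up”/“down” or clockwise/counter-clockwise would additionally require the fixed orientation of each sensor relative to the object, which this sketch leaves out.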

FIGURE 10. Objects Used for Longitudinal and Rotational Slip Detection. (A) Box shape, (B) Spherical shape, (C) Cylinder shape, (D) Tumbler on constant-speed turntable.

FIGURE 11. Examples of trajectories during longitudinal and rotational slippage. (A), (B) First Finger and Thumb trajectories for longitudinal slippage, (C) Directional Diagram for longitudinal slippage, (D), (E) First Finger and Thumb trajectories for rotational slippage, (F) Directional Diagram for rotational slippage.

For the longitudinal slippage scenario, the object is allowed to slide down and is then gradually pulled back up while a stable grasp is maintained. This can be seen in the contours moving from left to right across the sensor’s surface, and then from right back to left. As the trajectories show, because the shear forces act in the same direction on both sensors, the respective trajectories also move in the same direction. For the rotational slippage scenario, the object is affixed to a constant-speed turntable and allowed to rotate slowly between the opposing fingers. In this motion, the resulting opposing shear forces cause the contour trajectories for the index finger and the thumb to move in clearly opposite directions.

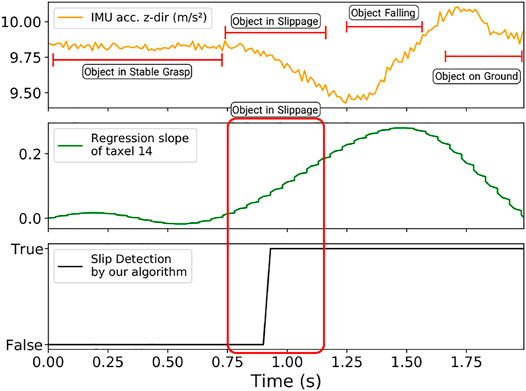

The event contour outputs of the longitudinal and rotational slippage experiments are shown in Figures 11A,B and Figures 11D,E, respectively. In both cases, the contours on the BioTac SP sensors are tracked over time, separately for the index finger and the thumb, which, as the directional diagrams (Figures 11C,F) show, have different orientations. We obtain ground truth for our experiments using a 6-axis IMU mounted on each object, and use time-synchronized outputs from the IMU to compute the time of slip. We compare our event-based approach to a regression slope computed on the raw data; the results of one such experiment are shown in Figure 12.

FIGURE 12. Slip Detection Comparison Plots. From top to bottom, we have a low-pass filtered acceleration on the z-axis, the regression slope on the raw data, and the binary slip detection results from event contours.
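The raw-data baseline of Figure 12 can be sketched as a sliding-window least-squares slope with a threshold. The paper does not give the window length, the threshold, or which raw signal is used, so all of these (and the function names) are hypothetical; here the input is a single raw taxel time series.

```python
def least_squares_slope(values):
    """Slope of the ordinary least-squares line through (i, values[i])."""
    n = len(values)
    mean_t = (n - 1) / 2.0
    mean_v = sum(values) / n
    num = sum((t - mean_t) * (v - mean_v) for t, v in enumerate(values))
    den = sum((t - mean_t) ** 2 for t in range(n))
    return num / den


def detect_slip_by_slope(signal, window=5, threshold=0.5):
    """Return the first sample index where the windowed |slope| exceeds threshold.

    Returns None if the threshold is never exceeded.
    """
    if window < 2:
        raise ValueError("window must contain at least two samples")
    for end in range(window, len(signal) + 1):
        if abs(least_squares_slope(signal[end - window:end])) > threshold:
            return end - 1  # index of the newest sample in the window
    return None


# Hypothetical raw taxel trace: flat, then a ramp as the object starts to slip.
raw = [10] * 9 + [12, 14, 16, 18, 20]
print(detect_slip_by_slope(raw))
```

Because the slope is fitted over a window, the baseline reports slip a few samples after the ramp begins, and its sensitivity to the fluid-induced noise in the raw data depends directly on the chosen threshold.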

5.5 Tracking Edges Using Contact Location

As another application of our contour tracking pipeline, we demonstrate a simple controller that takes the contour location relative to the UR-10 manipulator and outputs a motion vector for the finger to follow. The controller is based on a simple tactile servoing algorithm that tries to keep the contour location at the center of the sensor’s surface frame.

As the finger and the attached sensor move over the edge, only one portion of the BioTac SP is in contact with the edge surface. Our controller detects and tracks this contact, and since we start the controller execution with the sensor’s center touching the edge, any deviation of the contours from this center is compensated by an opposing motion vector sent to the UR-10 manipulator as a control command.
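A proportional version of this servoing rule is sketched below. It models only the correction term: the gain, the step limit, and the function name are hypothetical, and the transformation from the sensor’s surface frame to UR-10 commands, as well as any motion along the edge, are not modeled.

```python
import math


def servo_command(contour_xy, center_xy=(0.0, 0.0), gain=0.5, max_step=2.0):
    """Proportional correction that pushes the contour back toward the center.

    contour_xy: current contour location in the sensor's surface frame.
    Returns a (dx, dy) motion vector opposing the deviation, clipped so its
    length never exceeds max_step. gain and max_step are hypothetical values.
    """
    ex = contour_xy[0] - center_xy[0]
    ey = contour_xy[1] - center_xy[1]
    # Move opposite to the deviation, proportionally to its size.
    dx, dy = -gain * ex, -gain * ey
    norm = math.hypot(dx, dy)
    if norm > max_step:
        # Limit the step size so a large deviation cannot cause a jump.
        dx, dy = dx * max_step / norm, dy * max_step / norm
    return dx, dy


print(servo_command((2.0, 0.0)))   # small deviation: proportional correction
print(servo_command((10.0, 0.0)))  # large deviation: clipped correction
```

Clipping the step keeps the finger from overshooting when the contact jumps, for example at the corners of the triangle or zig-zag shapes.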

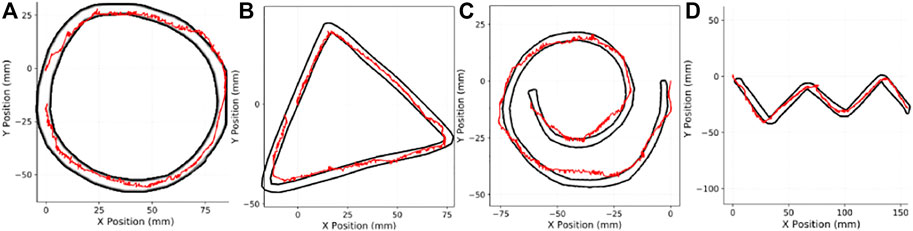

We track the edges of several non-trivial patterns, namely a circle, a spiral, a triangle, and a zig-zag. We overlay the ground-truth image of each shape on the finger trajectory obtained from the robot’s pose data. Barring minor alignment issues between the surface and the finger, and some sliding during execution, the controller guides the finger across the edges relatively accurately. The results, with the ground-truth shapes, are shown in Figure 13. In each plot, the trajectory of the BioTac SP in world coordinates is shown in red, and the black polygons denote the inner and outer diameters of the edges of the tracked shapes, also in world coordinates, measured in millimeters. We perform pixel-wise trajectory alignment to align the sensor pose to the ground-truth boundary.

FIGURE 13. Edge tracking shapes and results. (A) Circular edge, (B) Triangular edge, (C) Spiral edge, (D) Zig-zag edge.

6 Discussion

In conclusion, this work proposes a novel method to convert raw tactile data from the BioTac SP sensor into a spatio-temporal gradient (events) surface that closely tracks the regions of maximum tactile stimulus. Our algorithm approximates the region of touch on the skin of the BioTac SP sensor accurately enough to perform various tactile feedback tasks. Specifically, we experimentally demonstrated the usefulness of the new representation for tracking tactile stimulus across the sensor, measuring relative force, detecting slippage and classifying its direction, and tracking edges on a plane. Compared with other methods for processing data from fluid-based tactile sensors, our method runs in real time and requires minimal computational overhead. Our approach provides a robust, analytical method for detecting and tracking the location of tactile stimulus on the BioTac SP from just 24 data points, improves the signal-to-noise ratio of the raw data, and is independent of the baseline taxel values. The benefits of this approach should be even more apparent in hardware-based implementations of our algorithm, because event-based processing inherently transmits only changes in tactile stimulus. Lastly, our approach is also independent of any particular sensor type, and we present an accompanying dataset of task-agnostic data samples gathered with the BioTac SP sensor. These include motion tracking over known trajectories with time-synchronized RGB images, force sensor readings for varying forces applied to the surface using indenters of different diameters, and slippage data for several objects with accompanying ground-truth timestamps.
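The claim that an event representation is independent of baseline taxel values can be illustrated with a generic change detector in the spirit of event cameras (Lichtsteiner et al., 2008). This is not the GradTac algorithm: it thresholds raw value changes per taxel rather than log intensity, the threshold is hypothetical, and the spatio-temporal surface construction and contour tracking are omitted.

```python
def taxel_events(samples, threshold):
    """Emit (time_index, taxel_index, polarity) events from raw taxel frames.

    samples: list of frames, each a list of taxel values (e.g. 24 on the
    BioTac SP). An event fires when a taxel has changed by at least
    threshold since its last event; polarity is +1 or -1.
    """
    if not samples:
        return []
    # Per-taxel reference value, updated only when an event fires.
    reference = list(samples[0])
    events = []
    for t, frame in enumerate(samples[1:], start=1):
        for i, value in enumerate(frame):
            delta = value - reference[i]
            if abs(delta) >= threshold:
                events.append((t, i, 1 if delta > 0 else -1))
                reference[i] = value
    return events


frames = [[100, 200, 300], [100, 200, 300], [105, 200, 296], [105, 200, 296]]
offset = [[v + 1000 for v in frame] for frame in frames]
print(taxel_events(frames, threshold=3))
print(taxel_events(offset, threshold=3))
```

Adding a constant offset to every taxel leaves the events unchanged, because only differences relative to each taxel’s own reference are ever compared.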

Data Availability Statement

The raw data supporting the conclusion of this article will be made available by the authors, without undue reservation.

Author Contributions

KG and PM were the primary researchers involved in presenting the work. KG conceptualized the algorithms and prepared the codebase, performed the experiments, and prepared the drafts of the manuscript. PM collected data, performed experiments, prepared plots, and analyzed results for comparisons and the dataset. CP suggested modifications to the experiments and presentation of the drafts, as well as provided domain knowledge. CF suggested the topic of this study, was involved in discussions on the work and contributed to the paper writing. NS suggested modifications to all sections of the manuscript, was involved in proofreading, and provided overall structural changes to the final manuscript. YA was the academic advisor, and provided expert advice on the work. All authors contributed to manuscript revision, read, and approved the submitted version.

Funding

The support of the National Science Foundation under grants BCS 1824198 and OISE 2020624, and the Maryland Robotics Center for a Graduate Student Fellowship to KG are gratefully acknowledged.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors would like to acknowledge Behzad Sadrfaridpour and Chahat Deep Singh, for their contributions to the presented work. This work has been submitted to ArXiv for pre-print publication.

References

Brandli, C., Berner, R., Yang, M., Liu, S.-C., and Delbruck, T. (2014). A 240 × 180 130 dB 3 μs Latency Global Shutter Spatiotemporal Vision Sensor. IEEE J. Solid-State Circuits 49, 2333–2341. doi:10.1109/jssc.2014.2342715

Calandra, R., Owens, A., Jayaraman, D., Lin, J., Yuan, W., Malik, J., et al. (2018). More Than a Feeling: Learning to Grasp and Regrasp Using Vision and Touch. IEEE Robot. Autom. Lett. 3, 3300–3307. doi:10.1109/lra.2018.2852779

Cramphorn, L., Lloyd, J., and Lepora, N. (2018). “Voronoi Features for Tactile Sensing: Direct Inference of Pressure, Shear, and Contact Locations,” in 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, May 21–25, 2018. Institute of Electrical and Electronics Engineers (IEEE). doi:10.1109/ICRA.2018.8460644

Gallego, G., Delbrück, T., Orchard, G. M., Bartolozzi, C., Taba, B., Censi, A., et al. (2020). Event-based Vision: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. PP, 154–180. doi:10.1109/TPAMI.2020.3008413

Janotte, E., Mastella, M., Chicca, E., and Bartolozzi, C. (2021). Touch in Robots: A Neuromorphic Approach. ERCIM News 2021, 34–51. doi:10.5465/ambpp.2021.13070abstract

Jing, Y.-Q., Meng, Q.-H., Qi, P.-F., Zeng, M., and Liu, Y.-J. (2016). Signal Processing Inspired from the Olfactory Bulb for Electronic Noses. Meas. Sci. Technol. 28, 015105. doi:10.1088/1361-6501/28/1/015105

Lepora, N. F., Church, A., De Kerckhove, C., Hadsell, R., and Lloyd, J. (2019). From Pixels to Percepts: Highly Robust Edge Perception and Contour Following Using Deep Learning and an Optical Biomimetic Tactile Sensor. IEEE Robot. Autom. Lett. 4, 2101–2107. doi:10.1109/LRA.2019.2899192

Lichtsteiner, P., Posch, C., and Delbruck, T. (2008). A 128 × 128 120 dB 15 μs Latency Asynchronous Temporal Contrast Vision Sensor. IEEE J. Solid-State Circuits 43, 566–576. doi:10.1109/jssc.2007.914337

Lin, C.-h., Fishel, J., and Loeb, G. E. (2013). Estimating Point of Contact, Force and Torque in a Biomimetic Tactile Sensor with Deformable Skin. Available at: https://syntouchinc.com/wp-content/uploads/2016/12/2013_Lin_Analytical-1.pdf.

Lucas, G. (2021). A Fast and Accurate Algorithm for Natural Neighbor Interpolation. Available at: https://gwlucastrig.github.io/TinfourDocs/NaturalNeighborTinfourAlgorithm/index.html.

Mitrokhin, A., Fermüller, C., Parameshwara, C., and Aloimonos, Y. (2018). “Event-based Moving Object Detection and Tracking,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (IEEE), 1–9. doi:10.1109/iros.2018.8593805

Naeini, F. B., AlAli, A. M., Al-Husari, R., Rigi, A., Al-Sharman, M. K., Makris, D., et al. (2019). A Novel Dynamic-Vision-Based Approach for Tactile Sensing Applications. IEEE Trans. Instrum. Meas. 69, 1881–1893.

Narang, Y., Sundaralingam, B., Macklin, M., Mousavian, A., and Fox, D. (2021a). Sim-to-real for Robotic Tactile Sensing via Physics-Based Simulation and Learned Latent Projections. arXiv preprint arXiv:2103.16747.

Narang, Y. S., Sundaralingam, B., Van Wyk, K., Mousavian, A., and Fox, D. (2021b). Interpreting and Predicting Tactile Signals for the Syntouch Biotac. arXiv preprint arXiv:2101.05452.

Sanket, N. J., Parameshwara, C. M., Singh, C. D., Kuruttukulam, A. V., Fermüller, C., Scaramuzza, D., et al. (2020). “Evdodgenet: Deep Dynamic Obstacle Dodging with Event Cameras,” in 2020 IEEE International Conference on Robotics and Automation (ICRA) (IEEE), 10651–10657. doi:10.1109/icra40945.2020.9196877

Su, Z., Hausman, K., Chebotar, Y., Molchanov, A., Loeb, G. E., Sukhatme, G. S., et al. (2015). “Force Estimation and Slip Detection/classification for Grip Control Using a Biomimetic Tactile Sensor,” in IEEE-RAS International Conference on Humanoid Robots (Humanoids) (IEEE), 297–303. doi:10.1109/humanoids.2015.7363558

Sundaralingam, B., Lambert, A., Handa, A., Boots, B., Hermans, T., Birchfield, S., et al. (2019). Robust Learning of Tactile Force Estimation through Robot Interaction. arXiv preprint arXiv:1810.06187.

Taunyazoz, T., Sng, W., See, H. H., Lim, B., Kuan, J., Ansari, A. F., et al. (2020). “Event-driven Visual-Tactile Sensing and Learning for Robots,” in Proceedings of Robotics: Science and Systems (IEEE).

Veiga, F., Edin, B., and Peters, J. (2020). Grip Stabilization through Independent Finger Tactile Feedback Control. Sensors 20, 1748. doi:10.3390/s20061748

Ward-Cherrier, B., Pestell, N., Cramphorn, L., Winstone, B., Giannaccini, M. E., Rossiter, J., et al. (2018). The Tactip Family: Soft Optical Tactile Sensors with 3d-Printed Biomimetic Morphologies. Soft Robot. 5, 216–227. doi:10.1089/soro.2017.0052

Wettels, N., and Loeb, G. E. (2011). “Haptic Feature Extraction from a Biomimetic Tactile Sensor: Force, Contact Location and Curvature,” in 2011 IEEE International Conference on Robotics and Biomimetics (IEEE), 2471–2478. doi:10.1109/robio.2011.6181676

Wettels, N., Santos, V. J., Johansson, R. S., and Loeb, G. E. (2008). Biomimetic Tactile Sensor Array. Adv. Robot. 22, 829–849. doi:10.1163/156855308x314533

Keywords: tactile-sensing, tactile-events, active-perception, event-based, bio-inspired

Citation: Ganguly K, Mantripragada P, Parameshwara CM, Fermüller C, Sanket NJ and Aloimonos Y (2022) GradTac: Spatio-Temporal Gradient Based Tactile Sensing. Front. Robot. AI 9:898075. doi: 10.3389/frobt.2022.898075

Received: 16 March 2022; Accepted: 18 May 2022;

Published: 17 June 2022.

Edited by:

Shan Luo, University of Liverpool, United Kingdom

Reviewed by:

Qiang Li, Bielefeld University, Germany

Guanqun Cao, University of Liverpool, United Kingdom

Copyright © 2022 Ganguly, Mantripragada, Parameshwara, Fermüller, Sanket and Aloimonos. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Kanishka Ganguly, kganguly@terpmail.umd.edu

Kanishka Ganguly

Pavan Mantripragada

Chethan M. Parameshwara

Cornelia Fermüller

Nitin J. Sanket

Yiannis Aloimonos