- Geomatics Group, Institute of Geography, Ruhr-University Bochum (RUB), Bochum, Germany

Post-industrial areas in Europe, such as the Rhine-Ruhr Metropolitan region in Germany, include cultural heritage sites that foster local and regional identities rooted in the industrial past. Today, these landmarks are popular places of interest for visitors. In addition to portable camera devices, low-budget ultra-lightweight unmanned aerial vehicles, such as micro quadcopter drones, are on their way to becoming established as mass photography equipment. This low-cost hardware is not only useful for recreational purposes but also supports individualized remote sensing with optical images and facilitates the acquisition of 3D point clouds of the targeted object(s). Both data sets are valuable and accurate geospatial data resources for the further processing of textured 3D models. To make these 3D models quickly accessible, they can be imported directly into game engines, where they can be extended with modern interaction techniques and additional (semantic) information. The visualized data can be explored in immersive virtual environments. This allows, for instance, urban planners to use low-cost microdrones to map the human impact on the environment in 3D and to preserve this state in a 3D model that can be analyzed and explored in subsequent steps. A case example of the old wage hall of the Zeche “Bonifacius” (Essen, Germany), a building with a simple structure, showed that it is possible to generate a detailed and accurate 3D model from microdrone data. The point cloud on which the 3D model of the old wage hall was based partly showed better data accuracy than the point clouds derived from airborne laser scanning and offered by public agencies as open data. The mean cloud-to-cloud distance between the two point clouds was 0.7 m, while the mean cloud-to-mesh distance between the airborne laser scanning point cloud and the 3D model was −0.02 m.
Matching high-quality textures of the building facades adds a new aspect of 3D data quality that can be exploited when creating immersive virtual environments with the Unity engine. The example of the wage hall makes it clear that the use of low-cost drones and the subsequent data processing can result in valuable sources of point clouds and textured 3D models.

1 Introduction

The Rhine–Ruhr metropolitan region is known for its cultural heritage related to old industrial times (Müller and Carr 2009; Otto 2019). After manufacturing and, especially, coal mining declined, brownfields, (often contaminated) mining heaps, and other industrial sites were revitalized. In the course of large structural change initiatives in the Ruhr area, many former industrial sites became destinations for recreational activities, which led to new forms of human–nature interaction in this region (Kilper 1999). Industrial urbanity is being idealized, and revitalized urban sites from industrial times shape a new symbolism of a “wild nature” (Kühne 2019). These sites are often considered “landmarks” of cultural heritage (Edler et al., 2019).

Landmarks such as old mining sites attract many citizens. Such visits are accompanied by the use of technologies to store impressions, such as photography. Modern photography includes not only handheld camera devices but also microdrones, which can be considered affordable mass media devices. They can be flown individually around areas of interest, such as tourist sites, and their media output goes beyond 2D images. These microdrones facilitate the acquisition of 3D point cloud data coupled with 2D images that can be used to derive matching 3D textures (Carvajal-Ramírez et al., 2019; Neitzel and Klonowski 2011; Nex and Remondino 2014). Based on established processing steps and modern software, this microdrone-based data collection can lead to photorealistic 3D models that can be imported into computer game engines and then visualized and experienced in immersive virtual environments.

Different studies exist which are methodologically built on microdrones and structure-from-motion (SfM) approaches (e.g., Mora et al., 2019; Aboutalebi et al., 2019; Kwon et al., 2017). Mora et al. (2019) used a DJI Phantom 4 Pro. They examined the accuracy of a point cloud of a parking lot in Ontario, California, derived from a small unmanned aircraft system (sUAS), by comparing it to ground control points (GCPs). Aboutalebi et al. (2019) proposed a methodology to incorporate 3D information extracted from a UAV point cloud into a two-source energy balance (TSEB) model. Their drone, AggieAir, was developed by Utah State University. Kwon et al. (2017) used a DJI Phantom 3 Advanced to develop a hybrid scanning method for the 3D modeling and analysis of atypical ground shapes that change continually with the construction situation. These studies point to the diversity of sensors, methodologies, and applications. As recently stated by Ventura et al. (2022) with reference to an application in underwater monitoring, “no standardized methods currently exist to provide 3-dimensional high spatial resolution and accuracy cartographic products” (Balletti et al., 2015). Moreover, the potential for exploring the strengths and usefulness of UAS data acquired with low-budget equipment is considered high (Papakonstantinou et al., 2019). This lack of established standard methods, which also applies to the 3D investigation and visualization of cultural heritage sites, calls for an exploration of new sensors and methodologies.

In an exploratory research approach, we investigated the potential but also the limitations of the data accuracy achieved with a low-budget microdrone (DJI Mavic Mini, 249 g). To consider a typical post-industrial colliery site in the Ruhr area (“Zeche Bonifacius” in Essen) and, methodologically speaking, to enable a more differentiated analysis, we selected a case example containing a post-industrial wage hall (used as a hotel today). As an example of citizen science, the applied method allows experts and non-experts to generate and evaluate 3D reconstructions using a customary (low-budget) ultralight and foldable drone.

In this study, we 1) present a methodological workflow for creating an immersive virtual environment from low-budget microdrone data, with all processing steps carried out in open-source software. Moreover, we 2) discuss the data accuracy for the wage hall in our case example in comparison to other openly available (geospatial) data sources. The key question of this exploratory approach is: how do the point clouds generated by the low-budget microdrone perform in comparison to airborne laser-scanning (ALS) point clouds provided by governmental surveying departments as open geospatial data sets? The performance analysis uses the established 3D deviation measures cloud-to-cloud distance and cloud-to-mesh distance (Esposito et al., 2017; Sofonia et al., 2019).

In terms of the methodological workflow, the processing steps of the OpenDroneMap software for deriving point clouds and 3D models are based on several freely available software components. First, a point cloud is created from the overlapping drone images using a structure-from-motion method. The point cloud is then processed into a polygon mesh using the Screened Poisson surface reconstruction algorithm. Based on the input images, this polygon mesh is textured with the MVS-Texturing software. The result is a textured 3D model in the open Wavefront OBJ file format (.obj), which can be imported into Unity and other software applications compatible with this format.
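The structure-from-motion step recovers 3D points by intersecting viewing rays from several overlapping images. As a heavily simplified illustration, and not OpenDroneMap's actual implementation (which also matches image features, estimates camera poses, and refines everything jointly), the Python sketch below triangulates one point from two rays whose camera centres and directions are assumed to be known already. It uses the midpoint method: the result is the midpoint of the shortest segment between the two rays. The camera positions are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2."""
    w0 = _sub(c1, c2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("viewing rays are (nearly) parallel")
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = _add(c1, _scale(d1, s))
    p2 = _add(c2, _scale(d2, t))
    return _scale(_add(p1, p2), 0.5)


# Two hypothetical camera centres looking at the same facade point (1, 2, 10).
point = triangulate_midpoint((0.0, 0.0, 0.0), (1.0, 2.0, 10.0),
                             (4.0, 0.0, 0.0), (-3.0, 2.0, 10.0))
print(point)
```

With real, noisy image measurements the rays rarely intersect exactly, so the midpoint acts as a compromise; practical pipelines typically use more than two views and a joint least-squares refinement.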

Section 2 describes the hardware and software used to acquire images and process them in order to create point clouds and 3D models. In addition, the description of the workflow explains how to evaluate the created point clouds and 3D models using the cloud-to-cloud distance and cloud-to-mesh distance. The results of the evaluation are presented in Section 3 and are then discussed in Section 4. Finally, Section 5 summarizes the results and provides an outlook on further research topics.

2 Case Study, Materials, and Methods (From Unmanned Aerial Vehicle Images to Immersive Virtual Environments)

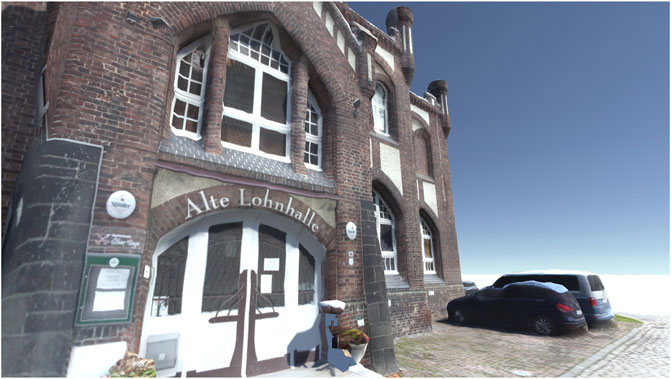

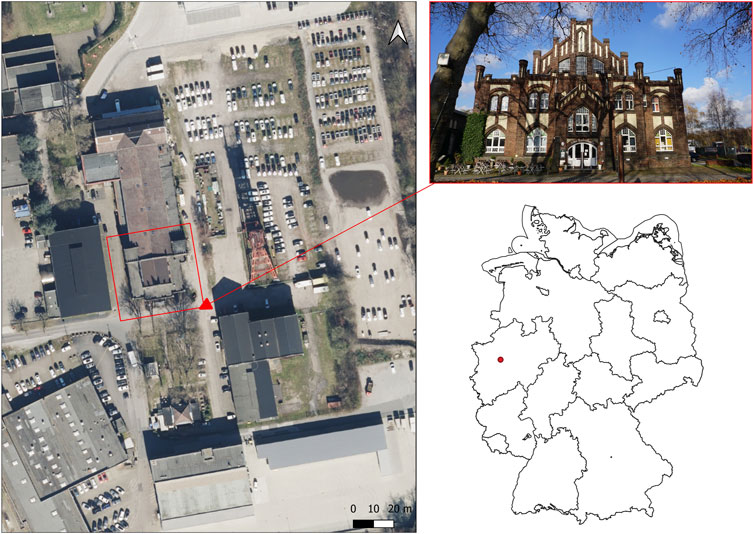

The study object (case study) is located on the site of the former Bonifacius colliery in the Kray district of the city of Essen. The mine was established in 1851 (Historisches Portal Essen, 2022), and the colliery has been under monument protection since 1985. The wage hall has a neo-Gothic facade and is now used as a restaurant and hotel (Regionalverband Ruhr 2021). Figure 1 shows the study site and the wage hall. The wage hall is visually recognizable by its dark bricks; in addition, its facades feature plaster and white window elements. The words “Alte Lohnhalle” (old wage hall) can be read above the entrance area. Today, the Bonifacius colliery is part of the Industrial Heritage Trail (Regionalverband Ruhr 2021).

FIGURE 1. Location of the former colliery site in Essen-Kray [digital orthophotos from the district government of Cologne and the administrative boundaries of Germany from GeoBasis-DE/BKG (2022)].

The drone used for data collection was the DJI Mavic Mini, a model for private use weighing 249 g. Its camera has a 1/2.3″ CMOS sensor with a resolution of 12 megapixels (DJI 2019). The camera is mounted on a gimbal that allows tilt angles from −90° to +20°. Downward-facing vision and infrared sensors are located on the bottom of the microdrone (DJI 2019); obstacle detection is not implemented in this model. The microdrone is operated via a remote control to which a smartphone is attached, serving as the screen for the manufacturer’s software. Signals between the microdrone and the controller are transmitted at 2.4 and 5.8 GHz (DJI 2019). The microdrone was controlled with the DJI Fly app (v1.2.1), whose user interface allows flight settings to be made and basic flight information to be read before and during the flight. The drone and the associated software were not modified; they were used as delivered.

2.1 Workflow (Low-Budget Microdrone Images to the 3D Model and Point Clouds)

The applied workflow consists of three parts. First, images of the examination object were acquired with the microdrone. No ground control points were used during data collection; the georeferencing of the drone imagery was based on the GPS coordinates stored in the image metadata. The drone and the camera trigger were operated manually. Before each shot, the drone was stopped, and the image was then taken while hovering in order to prevent blurry images. The flight altitudes were set via the software. Second, the collected images were processed with the free software OpenDroneMap, which reconstructs point clouds and textured 3D models of the survey objects from the images. Third, the accuracy of the point clouds and 3D models was analyzed with the CloudCompare software.
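Because georeferencing relied solely on the GPS positions in the image metadata, it may help to see how such positions are usually stored. The EXIF standard records GPSLatitude and GPSLongitude as three rational numbers (degrees, minutes, seconds) plus a hemisphere reference (GPSLatitudeRef "N"/"S", GPSLongitudeRef "E"/"W"). The Python sketch below converts such values to signed decimal degrees. Reading the tags from the image files is left out, and the coordinates are illustrative, not those recorded during the survey.

```python
from fractions import Fraction
from typing import Sequence, Union

Number = Union[int, float, Fraction]


def dms_to_decimal(dms: Sequence[Number], ref: str) -> float:
    """Convert EXIF-style (degrees, minutes, seconds) plus hemisphere to decimal degrees."""
    degrees, minutes, seconds = (float(x) for x in dms)
    value = degrees + minutes / 60.0 + seconds / 3600.0
    # Southern latitudes and western longitudes are negative.
    return -value if ref.strip().upper() in ("S", "W") else value


lat = dms_to_decimal((Fraction(51), Fraction(27), Fraction(36)), "N")
lon = dms_to_decimal((7, 4, Fraction(1800, 100)), "E")
print(round(lat, 6), round(lon, 6))
```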

2.2 Data Acquisition During Flight Campaign

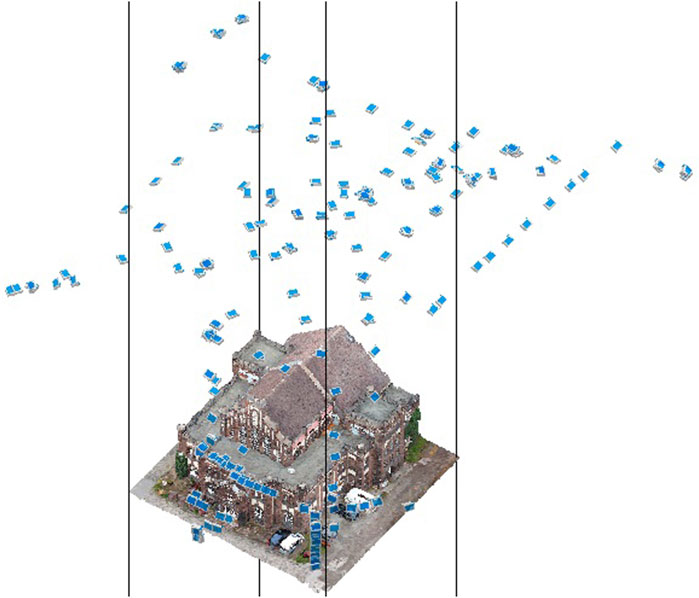

The aerial survey of the wage hall was conducted on 26 November 2020, starting at 11:01 a.m., and resulted in 382 images. It was divided into four individual flight sections, each using a freshly charged battery. The camera locations are shown in Figure 2. The data acquisition lasted 66 min from the first to the last image. During the survey, no shadows were visible in the study area because cloud cover provided diffuse illumination. There was no wind during data collection, and the DJI app did not display any warnings about increased wind speeds. During the flight, care was taken to capture a high number of oblique images of the building facade to test in how much detail the facade could be reconstructed and textured.

2.3 Image Data Processing and Point Cloud Preparation

In the next step, the collected images of the wage hall were converted into a point cloud and a textured 3D model using the freely available software WebODM, a web interface for the OpenDroneMap software. The images were processed in WebODM with the high-resolution setting.

Prior to the analysis, the point cloud from the drone and the official 3D measurement data were cropped to a uniform study area, since the analysis focused on the wage hall. In the following, the 3D measurement data will be referred to as the ALS (airborne laser scanning) point cloud, whereas the point cloud generated from the microdrone data will be referred to as the UAV (unmanned aerial vehicle) point cloud. The 3D measurement data of the district government of Cologne are available as tiles on a municipal scale. The area of the Bonifacius colliery is covered by the tile 3dm_32_366_5704_1_nw, which contains a total of 14,772,007 points. For the comparison, both data sets were limited to the study object to prevent the analysis from being affected by other objects in the study area. This cropping was carried out in the CloudCompare software. In a first step, a vector file in shapefile format covering the area of the old wage hall was created in the geoinformation software QGIS.
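The cropping step amounts to keeping the points whose horizontal position falls inside the study-area polygon. The Python sketch below uses the even-odd (ray casting) rule and assumes that the polygon vertices and the points share the same projected coordinate system. It illustrates the idea only, not CloudCompare's implementation, and the square polygon and points are made-up examples.

```python
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]
Vertex = Tuple[float, float]


def point_in_polygon(x: float, y: float, polygon: Sequence[Vertex]) -> bool:
    """Even-odd rule: count crossings of a horizontal ray starting at (x, y)."""
    inside = False
    j = len(polygon) - 1
    for i in range(len(polygon)):
        xi, yi = polygon[i]
        xj, yj = polygon[j]
        # Only edges that straddle the ray's y value can be crossed.
        if (yi > y) != (yj > y):
            x_cross = xi + (y - yi) * (xj - xi) / (yj - yi)
            if x < x_cross:
                inside = not inside
        j = i
    return inside


def crop_to_polygon(points: Sequence[Point3], polygon: Sequence[Vertex]) -> List[Point3]:
    """Keep points whose (x, y) lies inside the polygon; z is ignored (2D clip)."""
    return [p for p in points if point_in_polygon(p[0], p[1], polygon)]


study_area = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
cloud = [(5.0, 5.0, 1.0), (15.0, 5.0, 2.0), (0.5, 9.5, 3.0)]
print(crop_to_polygon(cloud, study_area))
```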

These vector files served as templates for cropping the point clouds in CloudCompare. The coordinate system of both vector files was transformed to EPSG:32632 (WGS 84 / UTM zone 32N). The 3D measurement data from the Cologne district government, however, are referenced to EPSG:25832 (ETRS89 / UTM zone 32N). To crop the point cloud from this data source, the vector data were therefore transformed into this coordinate system. Afterward, the point clouds were trimmed to the study area.

Both point clouds were aligned before the cloud-to-cloud distance was calculated. Weinmann (2016) refers to this processing step as point cloud registration, point set registration, or 3D scan matching. In most cases, the registration of two point clouds consists of a coarse initial alignment that is then refined by precise matching (Weinmann 2016). The registration was implemented with the fine registration function in CloudCompare, which is based on the iterative closest point (ICP) algorithm (CloudCompare 2015). This algorithm aligns 3D models based on their geometric relation to each other (Rusinkiewicz and Levoy 2001).
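To illustrate the iterative closest point idea, the sketch below alternates between (1) pairing every source point with its nearest target point and (2) shifting the source by the mean offset of those pairs, until the shift becomes negligible. It is deliberately simplified: it estimates a translation only, whereas CloudCompare's fine registration estimates rotation as well as translation, and it uses a brute-force nearest-neighbor search. The grid and offset in the example are synthetic.

```python
from typing import Sequence, Tuple

Point3 = Tuple[float, float, float]


def _nearest(p: Point3, cloud: Sequence[Point3]) -> Point3:
    """Brute-force nearest neighbour (fine for a toy example, slow for real clouds)."""
    return min(cloud, key=lambda q: (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2 + (p[2] - q[2]) ** 2)


def icp_translation(source: Sequence[Point3], target: Sequence[Point3],
                    max_iter: int = 50, tol: float = 1e-12) -> Point3:
    """Translation-only ICP: returns (tx, ty, tz) that moves source onto target."""
    tx = ty = tz = 0.0
    n = len(source)
    for _ in range(max_iter):
        moved = [(x + tx, y + ty, z + tz) for x, y, z in source]
        pairs = [(p, _nearest(p, target)) for p in moved]
        # For a pure translation, the least-squares update is the mean residual.
        dx = sum(q[0] - p[0] for p, q in pairs) / n
        dy = sum(q[1] - p[1] for p, q in pairs) / n
        dz = sum(q[2] - p[2] for p, q in pairs) / n
        tx, ty, tz = tx + dx, ty + dy, tz + dz
        if dx * dx + dy * dy + dz * dz < tol:
            break
    return (tx, ty, tz)


target = [(float(x), float(y), float(z)) for x in range(5) for y in range(5) for z in range(2)]
source = [(x - 0.3, y + 0.2, z - 0.1) for x, y, z in target]
print(icp_translation(source, target))
```

The example converges because the offset is small relative to the point spacing, which is exactly why a coarse pre-alignment usually precedes ICP.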

The accuracy of the 3D models reconstructed by the software was also evaluated against the ALS point cloud. For this purpose, the cloud-to-mesh distance function was chosen, which calculates the distance between the point cloud and the polygon mesh (CloudCompare 2015). Since the polygon mesh did not cover the ground below the wage hall, the data points representing this area were removed from the clipped ALS point cloud so that they would not affect the evaluation. In the dataset, ground points are classified with a value of 2 (Köln 2020a), while the remaining points were classified with a value of 3,032. The two classes were separated with the filter-by-value function in CloudCompare, and the ground points were removed. In addition, data points belonging to a tree in front of the wage hall were removed using the segmentation function. After this processing step, the ALS point cloud contained 15,833 points.
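Filtering by classification value simply drops every point whose class equals the value to be removed; in the ASPRS LAS specification, class 2 denotes ground, matching the ground class of the ALS data. The sketch below mirrors this filter-by-value step in plain Python. The `LidarPoint` type and the sample points are hypothetical; in practice, the points would be read from the LAZ file with a LAS-capable library.

```python
from typing import List, NamedTuple, Sequence


class LidarPoint(NamedTuple):
    x: float
    y: float
    z: float
    classification: int


GROUND_CLASS = 2  # ground class value of the ALS data, as stated in the text


def remove_class(points: Sequence[LidarPoint], class_value: int = GROUND_CLASS) -> List[LidarPoint]:
    """Return all points whose classification differs from class_value."""
    return [p for p in points if p.classification != class_value]


sample = [
    LidarPoint(0.0, 0.0, 60.1, 2),    # ground
    LidarPoint(1.0, 0.5, 72.4, 20),   # hypothetical non-ground class value
    LidarPoint(2.0, 1.0, 60.3, 2),    # ground
    LidarPoint(2.5, 1.5, 75.0, 20),
]
print(remove_class(sample))
```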

2.4 Evaluation of the Point Cloud Data Accuracy

For the accuracy analysis, the point cloud (.las) created by the software was used. It was compared with the freely available 3D measurement data published by the Cologne district government, which were acquired by airborne laser scanning (Köln 2020b). These data sets are available as point clouds in the compressed LAS format (.laz). Their positional accuracy is given as ±30 cm and their height accuracy as ±15 cm (two standard deviations). The evaluation procedure for the point clouds is described first, followed by the evaluation of the polygon meshes against the ALS point cloud.

To analyze the point cloud properties, the surface density of the point clouds was calculated. In this approach, CloudCompare counts, for each point, the neighboring points within a predefined radius r and divides this number by the area of the corresponding circle, πr² (CloudCompare 2015). Search radii of 1, 0.3, and 0.15 m were selected. In addition, the point density per square meter was calculated by dividing the number of points by the size of the comparison area. Point density is an important factor for the reconstruction of surfaces, since point clouds represent a surface as a set of points from which the reconstruction is calculated (Berger et al., 2017).
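A minimal brute-force version of the surface density is sketched below. It assumes that the query point itself is counted as a neighbor. This assumption is consistent with the ALS result reported in Section 3.1, where a mean surface density of 14.15 at r = 0.15 m equals 1/(π·0.15²), i.e., one point per neighborhood. CloudCompare uses an octree for the neighbor search, so this O(n²) loop only illustrates the definition.

```python
import math
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def surface_density(points: Sequence[Point3], radius: float,
                    include_self: bool = True) -> List[float]:
    """Neighbours within `radius` (3D sphere) per point, divided by pi * radius**2."""
    r2 = radius * radius
    disc_area = math.pi * r2
    densities = []
    for i, p in enumerate(points):
        count = 0
        for j, q in enumerate(points):
            if i == j and not include_self:
                continue
            if (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2 + (p[2] - q[2]) ** 2 <= r2:
                count += 1
        densities.append(count / disc_area)
    return densities


# Two isolated points: each neighbourhood contains only the point itself.
sparse = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
print([round(d, 2) for d in surface_density(sparse, 0.15)])
```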

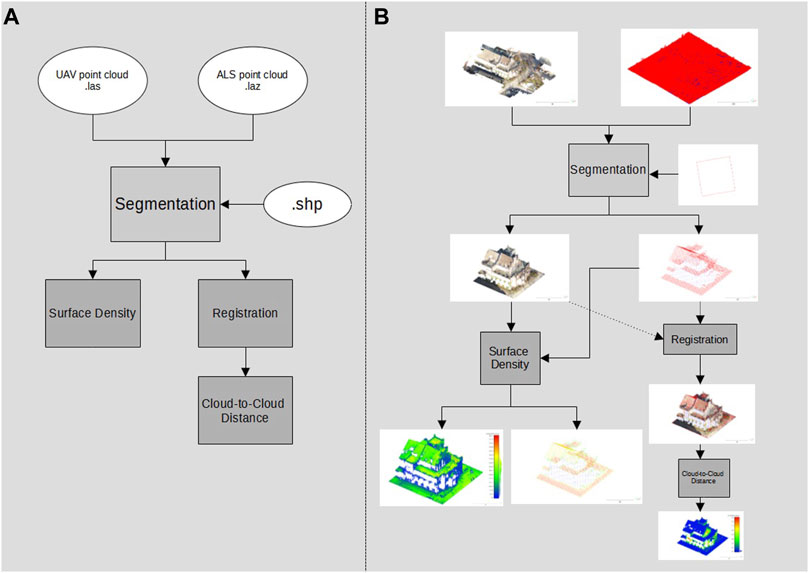

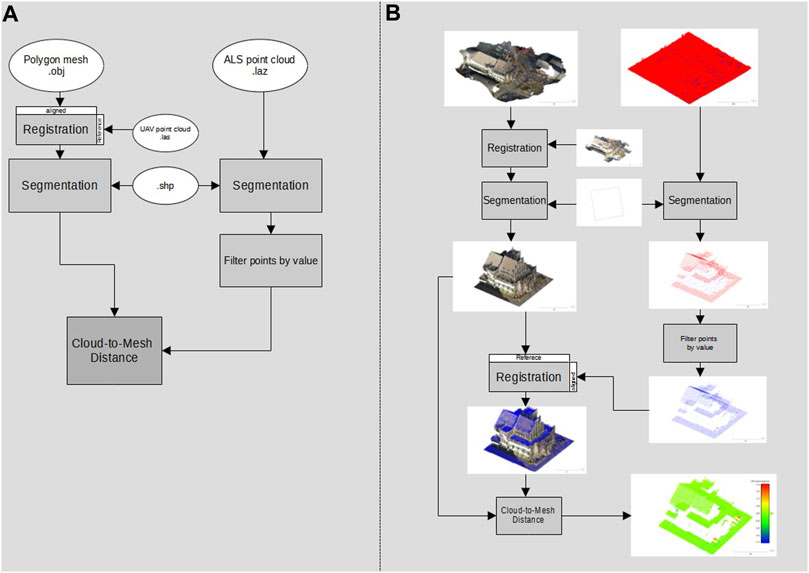

As a further analysis approach, the difference between the UAV point cloud and the ALS point cloud was calculated with the cloud-to-cloud distance function. For each point of the compared point cloud, this function computes the Euclidean distance to the nearest point of the reference point cloud (CloudCompare 2015). The ALS point cloud was used as the reference and compared with the UAV point cloud. Inaccuracies can occur if the reference point cloud has a lower density than the compared point cloud (CloudCompare 2015). A summary of the workflow used to evaluate the data accuracy of the point clouds, including the preceding data preparation, is presented in Figure 3.
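The nearest-neighbor principle behind the cloud-to-cloud distance can be sketched in a few lines of Python. This brute-force version is only an illustration; CloudCompare uses an octree-based search. The toy data also show why a sparse reference inflates distances: the third compared point lies where the reference has no points, so its distance reflects the data gap rather than a positional error.

```python
import math
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def cloud_to_cloud(compared: Sequence[Point3], reference: Sequence[Point3]) -> List[float]:
    """For each compared point, the Euclidean distance to its nearest reference point."""
    return [min(math.dist(p, q) for q in reference) for p in compared]


reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
compared = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.5), (3.0, 0.0, 0.0)]
print(cloud_to_cloud(compared, reference))
```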

FIGURE 3. Workflow of the point cloud evaluation (the cloud-to-cloud distance)—overview of data processing steps and formats (A) and the illustrated workflow output referring to the case study (B).

2.5 Evaluation of the 3D Reconstruction

The accuracy of the 3D reconstruction was evaluated using the polygon mesh generated by WebODM in the Wavefront OBJ (.obj) format. Here, the ALS point cloud again served as the comparison data set. To evaluate the accuracy, the cloud-to-mesh distance was calculated: the software computes the distance from each point of the comparison point cloud to the nearest triangle of the polygon mesh of the 3D reconstruction (CloudCompare 2015). Figure 4 shows the workflow, from data preparation to the cloud-to-mesh distance.
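The sketch below illustrates the cloud-to-mesh principle for a triangle mesh. For each point, it finds the closest point on every triangle (with a standard closest-point-on-triangle test that checks the triangle's vertex, edge, and face regions) and keeps the distance to the nearest one. The sign convention, positive on the side the triangle normal points to, with the normal derived from counter-clockwise vertex order, is an assumption made for illustration. The brute-force loop over all triangles is not how CloudCompare organizes the search, and the roof patch is hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _mul(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def closest_point_on_triangle(p: Vec3, a: Vec3, b: Vec3, c: Vec3) -> Vec3:
    """Closest point to p on triangle abc, checking vertex, edge and face regions."""
    ab, ac, ap = _sub(b, a), _sub(c, a), _sub(p, a)
    d1, d2 = _dot(ab, ap), _dot(ac, ap)
    if d1 <= 0 and d2 <= 0:
        return a  # vertex region A
    bp = _sub(p, b)
    d3, d4 = _dot(ab, bp), _dot(ac, bp)
    if d3 >= 0 and d4 <= d3:
        return b  # vertex region B
    vc = d1 * d4 - d3 * d2
    if vc <= 0 and d1 >= 0 and d3 <= 0:
        return _add(a, _mul(ab, d1 / (d1 - d3)))  # edge AB
    cp = _sub(p, c)
    d5, d6 = _dot(ab, cp), _dot(ac, cp)
    if d6 >= 0 and d5 <= d6:
        return c  # vertex region C
    vb = d5 * d2 - d1 * d6
    if vb <= 0 and d2 >= 0 and d6 <= 0:
        return _add(a, _mul(ac, d2 / (d2 - d6)))  # edge AC
    va = d3 * d6 - d5 * d4
    if va <= 0 and (d4 - d3) >= 0 and (d5 - d6) >= 0:
        w = (d4 - d3) / ((d4 - d3) + (d5 - d6))
        return _add(b, _mul(_sub(c, b), w))  # edge BC
    denom = 1.0 / (va + vb + vc)
    return _add(a, _add(_mul(ab, vb * denom), _mul(ac, vc * denom)))  # face interior


def signed_distance(p: Vec3, tri: Triangle) -> float:
    """Distance from p to tri, negative behind the normal implied by the vertex order."""
    a, b, c = tri
    diff = _sub(p, closest_point_on_triangle(p, a, b, c))
    dist = math.sqrt(_dot(diff, diff))
    return math.copysign(dist, _dot(diff, _cross(_sub(b, a), _sub(c, a))))


def cloud_to_mesh(points: Sequence[Vec3], mesh: Sequence[Triangle]) -> List[float]:
    """For each point, the signed distance to the nearest triangle of the mesh."""
    return [min((signed_distance(p, t) for t in mesh), key=abs) for p in points]


# Hypothetical 1 m x 1 m roof patch at z = 0, split into two upward-facing triangles.
patch = [((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)),
         ((0.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0))]
als_like = [(0.25, 0.75, 0.4), (0.5, 0.2, -0.1), (2.0, 0.5, 0.0)]
print(cloud_to_mesh(als_like, patch))
```

Averaging such signed values lets positive and negative deviations cancel, which is why a mean close to zero is usually read together with the standard deviation.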

FIGURE 4. Workflow for the evaluation of the generated 3D model (the cloud-to-mesh distance)—overview of data processing steps and formats (A) and the illustrated workflow output referring to the case study (B).

3 Results

In line with the main research question on the comparability of UAV-generated point clouds with freely available ALS point clouds, the results are presented in three parts. First, the point cloud properties are described in terms of surface density and number of points. Then, the accuracy, measured as the distance between the UAV and ALS point clouds, is presented based on the cloud-to-cloud distance. Last, the accuracy of the 3D reconstruction of the old wage hall compared with the ALS point cloud is shown based on the cloud-to-mesh distance.

3.1 Comparison of Point Cloud Properties

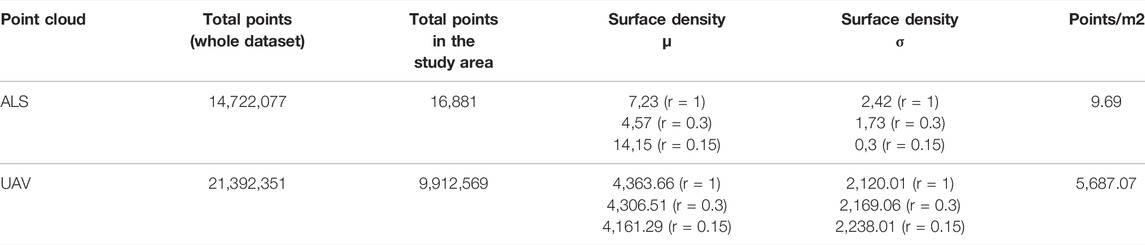

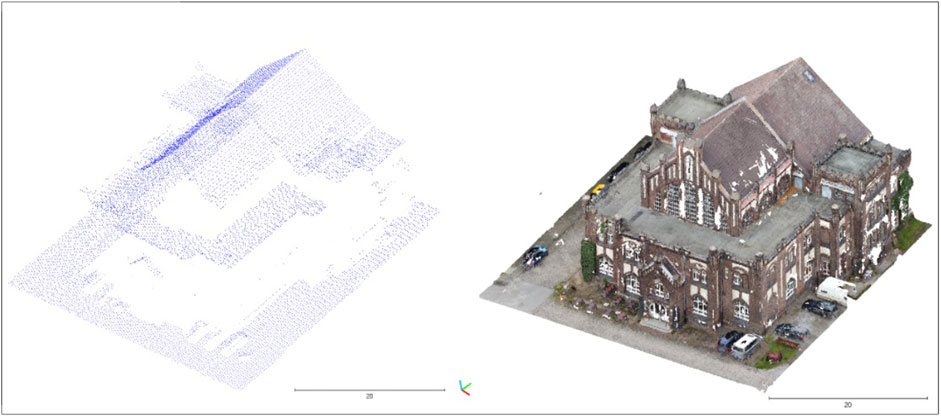

Table 1 shows the properties of the point clouds: the number of points in the entire data set, the number of points in the study area, the surface density, and the number of points per square meter. The entire data tile of the ALS point cloud contains 14,722,007 points. After it was cropped to the vector file, 16,881 points remained. This results in a point density of 9.68 points per square meter for the comparison area of the wage hall. The mean surface density at a radius of 1 m (r = 1 m) was 7.23 points/m², with a standard deviation of 2.42 points/m². At a radius of 0.3 m, the mean surface density was 4.57 points/m², with a standard deviation of 1.73 points/m². At a radius of 0.15 m, the mean surface density was 14.15 points/m², with a standard deviation of 0.3 points/m². Visual inspection showed that the facades of the wage hall were barely covered by data points in the ALS point cloud (Figure 5). On the east side of the wage hall, data points were visible in the bay window area. In the visual comparison, the higher number of data points of the UAV point cloud in the area of the building facades is apparent. The properties of the UAV point cloud are described next.

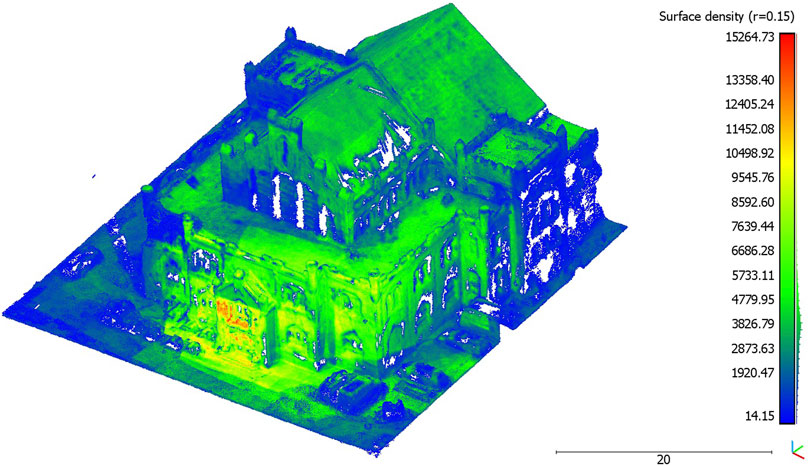

The UAV point cloud of the wage hall contained a total of 21,392,351 points. After it was cropped to the study area, 9,912,569 points remained, resulting in a point density of 5,687.07 points per square meter. The mean surface density at a radius of 1 m was 4,363.66 points/m², with a standard deviation of 2,120.01 points/m². At a radius of 0.3 m, the mean surface density was 4,306.51 points/m², with a standard deviation of 2,169.06 points/m². At a radius of 0.15 m, the mean surface density was 4,161.29 points/m², with a standard deviation of 2,238.01 points/m². The visualized surface density (r = 0.15 m) in Figure 6 shows that the entrance area of the wage hall had a higher surface density than the rest of the point cloud. The point cloud in the roof area of the wage hall was in the range of the average surface density. The right part of the front facade showed an increased surface density, whereas the left part showed lower surface densities and missing data points. Overall, increased surface densities occur at the front facade and the entrance area of the wage hall.
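Since point density per square meter is the number of points divided by the comparison area, the reported values can be cross-checked by back-calculating that area. The short Python computation below uses only the figures given in this section; the area itself is not stated in the text, so the result is an implied value rather than a reported one.

```python
# Figures reported above for the cropped study area (ALS and UAV point clouds).
ALS_POINTS, ALS_DENSITY = 16_881, 9.68          # points, points per m²
UAV_POINTS, UAV_DENSITY = 9_912_569, 5_687.07   # points, points per m²


def implied_area(n_points: int, density_per_m2: float) -> float:
    """Comparison area (m²) implied by a point count and a point density."""
    return n_points / density_per_m2


als_area = implied_area(ALS_POINTS, ALS_DENSITY)
uav_area = implied_area(UAV_POINTS, UAV_DENSITY)
density_ratio = UAV_DENSITY / ALS_DENSITY

print(f"ALS: {als_area:.0f} m², UAV: {uav_area:.0f} m², density ratio: {density_ratio:.0f}x")
```

Both counts imply a comparison area of roughly 1,743 to 1,744 m², so the two densities refer to the same footprint, and the UAV point cloud is roughly 590 times denser than the ALS point cloud in this area.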

3.2 Cloud-To-Cloud Distance

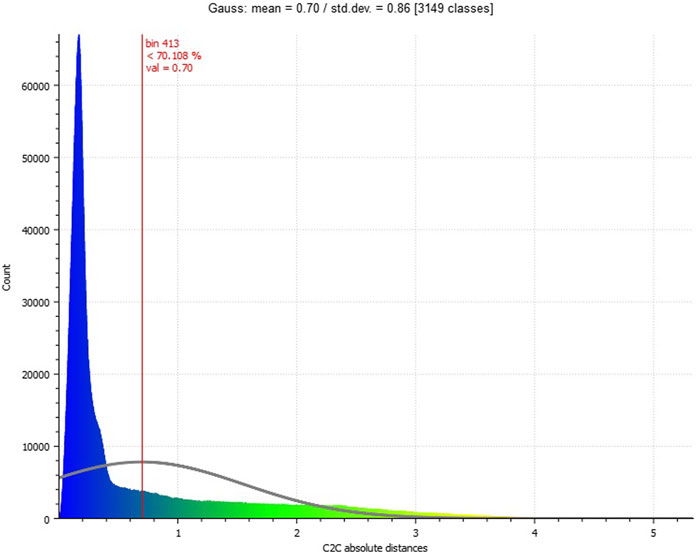

The result of the cloud-to-cloud distance is shown in Table 2, which gives the mean nearest-neighbor distance between the ALS and UAV point clouds (search radius 0.15 m), including the standard deviation. The visualization of the cloud-to-cloud distance in Figure 7 shows increased distances for the UAV point cloud of the wage hall in the area of the building facades. These increased distances may be related to the low density and poor coverage of data points on the facades in the ALS point cloud. Notably, low distances prevail in those facade areas where the ALS point cloud does contain data points. In the roof and floor areas, which are covered by data points in both point clouds, Figure 7 shows small distances. Moreover, the value distribution (histogram) of the cloud-to-cloud distance (Figure 8) shows that 70.1% of the distances are below the mean distance of 0.7 m.
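The histogram statement can be reproduced from a list of per-point distances with a few lines of Python. The values below are made up to show the effect of a right-skewed distribution, as produced by a few large facade distances: the mean is pulled upward, so more than half of the distances fall below it. Whether the reported standard deviation is a population or a sample statistic is not stated; the sketch assumes the population form.

```python
from statistics import mean, pstdev
from typing import Sequence, Tuple


def summarize_distances(distances: Sequence[float]) -> Tuple[float, float, float]:
    """Return mean, population standard deviation, and share of values below the mean."""
    m = mean(distances)
    share_below = sum(1 for d in distances if d < m) / len(distances)
    return m, pstdev(distances), share_below


# Illustrative distances (m): mostly small roof/ground values plus a few large facade gaps.
example = [0.05, 0.1, 0.1, 0.15, 0.2, 0.2, 0.3, 2.5, 3.0, 3.4]
m, sd, share = summarize_distances(example)
print(f"mean={m:.2f} m, sd={sd:.2f} m, below mean={share:.0%}")
```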

TABLE 2. Cloud-to-cloud distance of UAV point clouds to the ALS point cloud (wage hall flight campaign).

FIGURE 7. Cloud-to-cloud distance between ALS and UAV point clouds visualized on the UAV point cloud.

3.3 Cloud-To-Mesh Distance

The visual impression of the 3D reconstruction of the wage hall, which serves as the data basis for the cloud-to-mesh distance, can be seen in Figure 9. In terms of appearance, the old wage hall and its (partly vegetated) historical facades are well represented in the 3D reconstruction. Around the building, reconstructions of additional objects, such as cars, can also be visually identified.

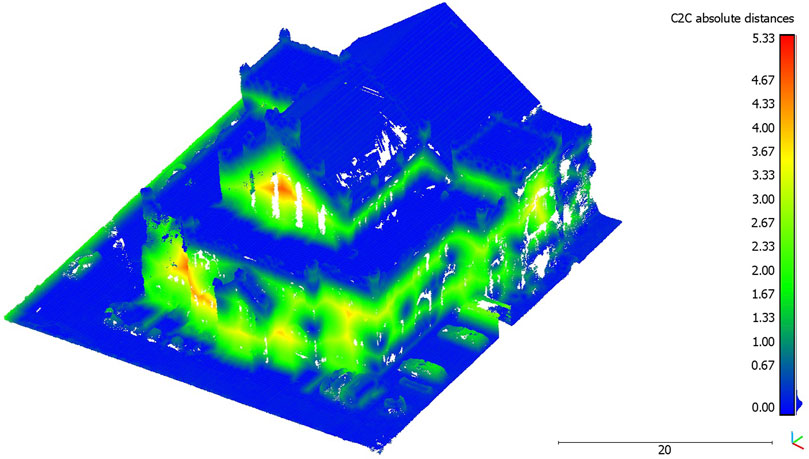

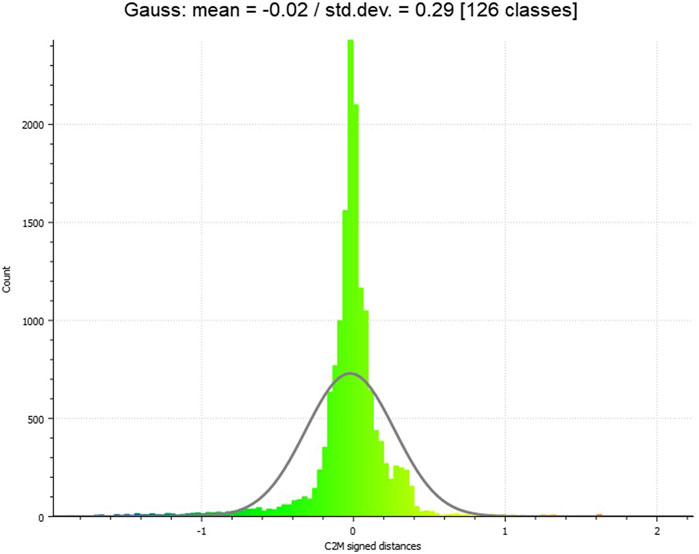

Table 3 gives the result of the cloud-to-mesh distance, and Figure 10 visualizes it. Between the 3D reconstruction of the wage hall and the ALS point cloud, the mean distance was −0.02 m, with a standard deviation of 0.29 m. Increased deviations occurred along the eastern edge of the study area. These could be caused by a partly inaccurate georeferencing of the UAV point cloud, which resulted in non-matching comparison surfaces when the point clouds were clipped to the study area. In addition, increased distances occur at locations that can be recognized as vehicles in the ALS point cloud; the 3D reconstruction contains no vehicles at these locations. Because the cloud-to-mesh distance is computed from each point of the ALS point cloud to the polygon mesh, facade areas without ALS data points are not included in the evaluation, a data gap that also affected the cloud-to-cloud distance (Section 3.2). Distances are, thus, only calculated where ALS data points are available. The roof and floor areas in Figure 10 show small distances, which is also reflected in the data distribution in the histogram (Figure 11).

FIGURE 11. Histogram of the cloud-to-mesh distance distribution.

3.4 The Microdrone-Based 3D Model Within an Immersive Virtual Environment

Unity Engine supports 3D models with the file formats Filmbox (.fbx), COLLADA (.dae), Drawing Interchange File Format (.dxf) and Wavefront OBJ (.obj) (Unity Technologies 2022). For technical use in Unity, the 3D model created in the workflow (in Wavefront OBJ file format) does not require any conversion steps and can, thus, be imported directly into the game engine.
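Part of the reason the model can be imported directly is that Wavefront OBJ is a simple plain-text format: `v` lines hold vertex coordinates, `vt` lines hold texture coordinates, `f` lines list 1-based vertex indices per face (optionally as `v/vt` or `v/vt/vn`, with negative indices counting back from the last vertex), and textures are referenced through a separate material library declared with `mtllib`. The minimal reader below extracts only vertices and faces. It is a sketch for inspecting an exported model, for example counting its vertices and faces, not a replacement for Unity's importer, and the sample content is hypothetical.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def parse_obj(text: str) -> Tuple[List[Vec3], List[Tuple[int, ...]]]:
    """Read 'v' and 'f' records of a Wavefront OBJ file; returns 0-based face indices."""
    vertices: List[Vec3] = []
    faces: List[Tuple[int, ...]] = []
    for line in text.splitlines():
        parts = line.split()
        if not parts or parts[0].startswith("#"):
            continue  # skip blank lines and comments
        if parts[0] == "v":
            x, y, z = (float(c) for c in parts[1:4])
            vertices.append((x, y, z))
        elif parts[0] == "f":
            indices = []
            for token in parts[1:]:
                i = int(token.split("/")[0])  # vertex index before any '/vt/vn'
                # Positive indices are 1-based; negative ones count back from the last vertex.
                indices.append(i - 1 if i > 0 else len(vertices) + i)
            faces.append(tuple(indices))
    return vertices, faces


SAMPLE_OBJ = """\
# hypothetical textured triangle pair
mtllib model.mtl
v 0.0 0.0 0.0
v 1.0 0.0 0.0
v 1.0 1.0 0.0
vt 0.0 0.0
vt 1.0 0.0
vt 1.0 1.0
f 1/1 2/2 3/3
f -3/1 -1/3 -2/2
"""

verts, faces = parse_obj(SAMPLE_OBJ)
print(len(verts), faces)
```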

The potential of presenting photogrammetric 3D records of cultural heritage sites with the modern possibilities of freely accessible game engines and compatible virtual reality systems has been addressed in several recent studies (e.g., Büyüksalih et al., 2020; Kersten et al., 2021; Koutsoudis et al., 2007). The possibilities of low-budget ultra-lightweight UAVs, such as the DJI Mavic Mini microdrone, for deriving and creating immersive virtual environments have hardly been explored so far.

Figure 12 gives an impression of the entrance facade of the case example (the historical wage hall of a Ruhr area coal-mining site, today used as a restaurant). The view is taken ‘through the virtual eyes’ of the avatar. The image indicates the achievable quality: the complex facade, including its various ornaments, is represented, and the microdrone-based acquisition of the 3D data and image (RGB) textures facilitates detailed representations. Textual ornaments of different sizes on the facade can be read, such as the name of the building (“Alte Lohnhalle”) and the name of a local brewery (“Stauder”); a menu card is also visible. The figure also reveals limitations of the final model that lead to slightly unrealistic impressions, which might affect the user’s feeling of immersion. For example, the offset window above the entrance is distorted because the 3D point cloud did not capture the offset. Additional objects, such as the parked cars or flowerpots, however, indicate a good reconstruction quality of the objects surrounding the building.

4 Discussion

The data processing was based on open-source software solutions. The performance of the microdrone-based data was analyzed with two measures: the cloud-to-cloud distance (comparative analysis of the point cloud data accuracy) and the cloud-to-mesh distance (comparative analysis of the 3D reconstruction based on the point cloud data).

The analyses based on the cloud-to-cloud distance indicate the potential of low-budget microdrones for highly accurate 3D point clouds. Compared with professional official data sets (open geospatial data), the microdrone-based approach leads to a very similar data quality in terms of spatial accuracy (Figure 7). The distribution of the values (Figure 8) shows that more than 70 percent of the distances are below the mean value (0.7 m, Table 2). Higher distances are caused by a lack of data in the officially offered ALS data, whose acquisition does not cover building facades. The flexibility of easy-to-fly microdrones in individualizing the acquisition points (Figure 2) not only fills data gaps on building facades but also allows an increase in acquisition points and, consequently, in the (surface) density of the point clouds (Figure 6). This can improve the data accuracy of, for example, complex (historical) facades, which is in line with previous studies on drone-based 3D reconstruction of cultural heritage sites (Febro 2017; Adami et al., 2019; Bakirman et al., 2020).

The results of the cloud-to-cloud distance analysis are supported by the cloud-to-mesh distance analysis. The mean (signed) distance (−0.02 m, Table 3) and its low standard deviation (0.29 m; Figure 11) indicate a high accuracy of the 3D reconstruction. The meshed and textured 3D model of the wage hall (Figure 9) gives a visual impression of the results and underlines this accuracy. This 3D model, originating from the microdrone flight in this exploratory study, can be imported into a game engine to support the experience of an immersive virtual environment of the represented post-industrial landmark.

Due to the low number of data points in the ALS point cloud (Table 1), it would be interesting to compare other professionally generated point clouds with the UAV point cloud, with a focus on the building facades. In this study, the UAV point cloud contained considerably more data points on the facade than the ALS point cloud; a direct comparison of the facade with the ALS point cloud was not possible owing to the lack of ALS data coverage (Figure 5).

5 Summary and Outlook

This exploratory study indicates that photorealistic 3D models can be generated from low-cost microdrone images in combination with freely available software. The point clouds derived from the drone data had a spatial accuracy similar to official airborne laser scanning (ALS) data sets provided by governmental open data initiatives in North Rhine–Westphalia, Germany. The two measures used, the cloud-to-cloud distance and the cloud-to-mesh distance, also pointed to an advantage of the microdrone-based approach over the ALS data sets when it comes to applying image textures and representing building facades. This is also reflected in the surface density data. In this study, the number and distribution of GCPs were not considered, but they should be included as an additional component in future approaches [cf. Balletti et al. (2015)].

This study provides a workflow based on low-budget data acquisition and on data processing with open-source software solutions. It allows various users, including citizen scientists, to create and evaluate 3D reconstructions of an individual area of interest. Non-experts may apply this workflow to create individualized and flexible image-textured 3D models. It may also encourage users to explore and exploit the full potential of mass media photography equipment beyond traditional 2D photography of places of interest, such as post-industrial landmarks. Future research should further explore the suitability of the detailed point clouds and (immersive) 3D models for specific application purposes in spatial disciplines, particularly including user feedback and empirical data on the (cognitive) processing of these 3D applications. It could also be a valuable extension to use other microdrones equipped with RTK/PPK-capable receivers to further improve the data accuracy.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Funding

We acknowledge the support from the Open Access Publication Funds of the Ruhr-Universität Bochum.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Aboutalebi, M., Torres-Rua, A. F., McKee, M., Kustas, W. P., Nieto, H., Alsina, M. M., et al. (2020). Incorporation of Unmanned Aerial Vehicle (UAV) Point Cloud Products into Remote Sensing Evapotranspiration Models. Remote Sens. 12, 50. doi:10.3390/rs12010050

Adami, A., Fregonese, L., Gallo, M., Helder, J., Pepe, M., and Treccani, D. (2019). Ultra Light UAV Systems for the Metrical Documentation of Cultural Heritage: Applications for Architecture and Archaeology. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. XLII-2/W17, 15–21. doi:10.5194/isprs-archives-xlii-2-w17-15-2019

Bakirman, T., Bayram, B., Akpinar, B., Karabulut, M. F., Bayrak, O. C., Yigitoglu, A., et al. (2020). Implementation of Ultra-light UAV Systems for Cultural Heritage Documentation. J. Cult. Herit. 44, 174–184. doi:10.1016/j.culher.2020.01.006

Balletti, C., Beltrame, C., Costa, E., Guerra, F., and Vernier, P. (2015). Photogrammetry in Maritime and Underwater Archaeology: Two Marble Wrecks from Sicily. Proc. Optics Arts Archit. Archaeol. V, SPIE 9527, 95270M. doi:10.1117/12.2184802

Berger, M., Tagliasacchi, A., Seversky, L. M., Alliez, P., Guennebaud, G., Levine, J. A., et al. (2017). A Survey of Surface Reconstruction from Point Clouds. Comput. Graph. Forum 36, 301–329. doi:10.1111/cgf.12802

Büyüksalih, G., Kan, T., Özkan, G. E., Meriç, M., Isın, L., and Kersten, T. P. (2020). Preserving the Knowledge of the Past through Virtual Visits: From 3D Laser Scanning to Virtual Reality Visualisation at the Istanbul Çatalca İnceğiz Caves. PFG 88, 133–146. doi:10.1007/s41064-020-00091-3

Carvajal-Ramírez, F., Navarro-Ortega, A. D., Agüera-Vega, F., Martínez-Carricondo, P., and Mancini, F. (2019). Virtual Reconstruction of Damaged Archaeological Sites Based on Unmanned Aerial Vehicle Photogrammetry and 3D Modelling. Study Case of a Southeastern Iberia Production Area in the Bronze Age. Measurement 136, 225–236. doi:10.1016/j.measurement.2018.12.092

CloudCompare (2015). User Manual. Available at: https://www.cloudcompare.org/doc/qCC/CloudCompare%20v2.6.1%20-%20User%20manual.pdf (Accessed February 25, 2022).

DJI (2019). Mavic Mini Benutzerhandbuch. Available at: https://dl.djicdn.com/downloads/Mavic_Mini/20200930/Mavic_Mini_User_Manual_v1.0_DE.pdf (Accessed February 25, 2022).

Edler, D., Keil, J., Wiedenlübbert, T., Sossna, M., Kühne, O., and Dickmann, F. (2019). Immersive VR Experience of Redeveloped Post-industrial Sites: The Example of "Zeche Holland" in Bochum-Wattenscheid. Kn. J. Cartogr. Geogr. Inf. 69, 267–284. doi:10.1007/s42489-019-00030-2

Esposito, G., Mastrorocco, G., Salvini, R., Oliveti, M., and Starita, P. (2017). Application of UAV Photogrammetry for the Multi-Temporal Estimation of Surface Extent and Volumetric Excavation in the Sa Pigada Bianca Open-Pit Mine, Sardinia, Italy. Environ. Earth Sci. 76, 6409. doi:10.1007/s12665-017-6409-z

Febro, J. D. (2017). 3D Documentation of Cultural Heritage Sites Using Drone and Photogrammetry: A Case Study of Philippine UNESCO-Recognized Baroque Churches. Int. Transc. J. Engineer. Manage. Applied Sci. Tech. 11 (8), 11A8M, 1–14. doi:10.14456/ITJEMAST.2020.154

Historisches Portal Essen (2022). Zeche Bonifacius. Die Geschichte der Zeche Bonifacius. Available at: https://historischesportal.essen.de/startseite_7/industrie/bergbau/bonifacius.de.html (Accessed February 25, 2022).

Kilper, H. (1999). Die internationale Bauausstellung Emscher Park. Eine Studie zur Steuerungsproblematik komplexer Erneuerungsprozesse in einer alten Industrieregion. Wiesbaden: Springer.

Bezirksregierung Köln (2020a). 3D-Messdaten. Available at: https://www.bezreg-koeln.nrw.de/brk_internet/geobasis/hoehenmodelle/3d-messdaten/index.html (Accessed February 25, 2022).

Bezirksregierung Köln (2020b). Nutzerinformationen für die 3D-Messdaten aus dem Laserscanning für NRW. Available at: https://www.bezregkoeln.nrw.de/brk_internet/geobasis/hoehenmodelle/nutzerinformationen.pdf (Accessed February 28, 2022).

Koutsoudis, A., Arnaoutoglou, F., and Chamzas, C. (2007). On 3D Reconstruction of the Old City of Xanthi. A Minimum Budget Approach to Virtual Touring Based on Photogrammetry. J. Cult. Herit. 8, 26–31. doi:10.1016/j.culher.2006.08.003

Kwon, S., Park, J.-W., Moon, D., Jung, S., and Park, H. (2017). Smart Merging Method for Hybrid Point Cloud Data Using UAV and LIDAR in Earthwork Construction. Procedia Eng. 196, 21–28. doi:10.1016/j.proeng.2017.07.168

Mora, O. E., Suleiman, A., Chen, J., Pluta, D., Okubo, M. H., and Josenhans, R. (2019). Comparing sUAS Photogrammetrically-Derived Point Clouds with GNSS Measurements and Terrestrial Laser Scanning for Topographic Mapping. Drones 3, 64. doi:10.3390/drones3030064

Müller, S., and Carr, C. (2009). “Image Politics and Stagnation in the Ruhr Valley,” in Whose Urban Renaissance? An International Comparison of Urban Regeneration Strategies. Editors L. Porter and K. Shaw (London: Routledge), 84.

Neitzel, F., and Klonowski, J. (2011). Mobile 3D Mapping with a Low-Cost UAV System. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. XXXVIII-1/C22, 39–44. doi:10.5194/isprsarchives-xxxviii-1-c22-39-2011

Nex, F., and Remondino, F. (2014). UAV for 3D Mapping Applications: a Review. Appl. Geomat. 6, 1–15. doi:10.1007/s12518-013-0120-x

Otto, K. H. (2019). “(Post-)Industrielle Stadtnatur,” in Die Stadt der Städte. Das Ruhrgebiet und seine Umbrüche. Editors M. Farrenkopf, S. Goch, M. Rasch, and H. W. Wehling (Essen: Klartext), 61.

Papakonstantinou, A., Kavroudakis, D., Kourtzellis, Y., Chtenellis, M., Kopsachilis, V., Topouzelis, K., et al. (2019). Mapping Cultural Heritage in Coastal Areas with UAS: The Case Study of Lesvos Island. Heritage 2, 1404–1422. doi:10.3390/heritage2020089

Regionalverband Ruhr (2021). Themenroute 17 Rheinische Bergbauroute. Available at: https://www.route-industriekultur.ruhr/fileadmin/user_upload/03_Route_Industriekultur_Microsite/8_Themenrouten/X_PDF/2021_TR17_Rheinische_Bergbauroute_RIK.pdf (Accessed January 15, 2022).

Rusinkiewicz, S., and Levoy, M. (2001). “Efficient Variants of the ICP Algorithm,” in Proceedings Third International Conference on 3-D Digital Imaging and Modeling, Quebec City, QC, May 28–June 1, 2001. doi:10.1109/im.2001.924423

Sofonia, J. J., Phinn, S., Roelfsema, C., Kendoul, F., and Rist, Y. (2019). Modelling the Effects of Fundamental UAV Flight Parameters on LiDAR Point Clouds to Facilitate Objectives-Based Planning. ISPRS J. Photogrammetry Remote Sens. 149, 105–118. doi:10.1016/j.isprsjprs.2019.01.020

Unity Technologies (2021). Model File Formats. Available at: https://docs.unity3d.com/Manual/3D-formats.html (Accessed February 25, 2022).

Ventura, D., Mancini, G., Casoli, E., Pace, D. S., Lasinio, G. J., Belluscio, A., et al. (2022). Seagrass Restoration Monitoring and Shallow-Water Benthic Habitat Mapping through a Photogrammetry-Based Protocol. J. Environ. Manag. 304, 114262. doi:10.1016/j.jenvman.2021.114262

Keywords: microdrones, UAV, 3D mapping, point clouds, virtual reality

Citation: Weißmann M, Edler D and Rienow A (2022) Potentials of Low-Budget Microdrones: Processing 3D Point Clouds and Images for Representing Post-Industrial Landmarks in Immersive Virtual Environments. Front. Robot. AI 9:886240. doi: 10.3389/frobt.2022.886240

Received: 28 February 2022; Accepted: 20 April 2022;

Published: 24 May 2022.

Edited by:

Nuno Gracias, University of Girona, Spain

Reviewed by:

Ioannis Kansizoglou, Democritus University of Thrace, Greece
Daniele Ventura, Sapienza University of Rome, Italy

Copyright © 2022 Weißmann, Edler and Rienow. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Marco Weißmann, marco.weissmann@rub.de