- 1Interdepartmental Neuroscience Program, Yale School of Medicine, New Haven, CT, United States

- 2Brain Function Laboratory, Department of Psychiatry, Yale School of Medicine, New Haven, CT, United States

- 3Social Robotics Laboratory, Department of Computer Science, Yale University, New Haven, CT, United States

- 4Departments of Neuroscience and Comparative Medicine, Yale School of Medicine, New Haven, CT, United States

- 5Department of Medical Physics and Biomedical Engineering, University College London, London, United Kingdom

Robot design to simulate interpersonal social interaction is an active area of research with applications in therapy and companionship. Neural responses to eye-to-eye contact in humans have recently been employed to determine the neural systems that are active during social interactions. Whether eye-contact with a social robot engages the same neural systems remains to be seen. Here, we employ a similar approach to compare human-human and human-robot social interactions. We assume that if human-human and human-robot eye-contact elicit similar neural activity in the human, then the perceptual and cognitive processing is also the same for human and robot; that is, the robot is processed similarly to the human. However, if neural effects are different, then perceptual and cognitive processing is assumed to be different. In this study, neural activity was compared for human-to-human and human-to-robot conditions using near infrared spectroscopy for neural imaging and a robot (Maki) with eyes that blink and move right and left. Eye-contact was confirmed by eye-tracking for both conditions. Increased neural activity was observed in human social systems, including the right temporoparietal junction and the dorsolateral prefrontal cortex, during human-human eye-contact but not human-robot eye-contact. This suggests that the type of human-robot eye-contact used here is not sufficient to engage the right temporoparietal junction in the human. This study establishes a foundation for future research into human-robot eye-contact to determine how elements of robot design and behavior impact human social processing within this type of interaction, and it may offer a method for capturing difficult-to-quantify components of human-robot interaction, such as social engagement.

Introduction

In the era of social distancing, the importance of direct social interaction to personal health and well-being has never been clearer (Brooke and Jackson, 2020; Okruszek et al., 2020). Fields such as social robotics take innovative approaches to addressing this concern by using animatronics and artificial intelligence to simulate social interaction, such as that found in human-human interaction (Belpaeme et al., 2018; Scassellati et al., 2018a) or human-pet companionship (Wada et al., 2010; Kawaguchi et al., 2012; Shibata, 2012). With our experiences and norms of social interaction changing in response to the COVID-19 pandemic, the innovation, expansion, and integration of such tools into society are in demand. Scientific advancements have enabled the development of robots that can accurately recognize and respond to the world around them. One approach to simulating social interaction between humans and robots requires a grasp of how elements of robot design influence the neurological systems of the human brain involved in recognizing and processing direct social interaction, and of how that processing compares to processing during human-human interaction (Cross et al., 2019; Henschel et al., 2020; Wykowska, 2020).

The human brain is distinctly sensitive to social cues (Di Paolo and De Jaegher, 2012; Powell et al., 2018), and many of the roles that robots could potentially fill require not only that the robot recognize and produce subtle social cues, but also that humans recognize the robot’s social cues as valid. The success of human-human interaction hinges on a mix of verbal and non-verbal behavior that conveys information to others and influences their internal state, and in some situations robots will be required to engage in equally complex ways. Eye-to-eye contact is one example of a behavior that carries robust social implications, which affect the outcome of an interaction via changes to the internal emotional state of those engaged in it (Kleinke, 1986). It is also an active area of research in human-robot interaction (Admoni and Scassellati, 2017) and has been shown to meaningfully impact engagement with robots (Kompatsiari et al., 2019). Assessing neural processing during eye-contact is one way to understand human perception of robot social behavior, as this complex internal cascade is, anecdotally, ineffable and thus difficult to capture via questionnaires. Understanding how a particular robot in a particular situation affects the neural processing of those who interact with it, as compared to another human in the same role, will enable robots to be tailored to roles that may benefit from having some, but not all, elements of human-human interaction (Disalvo et al., 2002; Sciutti et al., 2012a). This kind of inquiry also benefits cognitive neuroscience. Robots occupy a unique space on the spectrum from object to agent, and their position on it can be manipulated through robot behavior, perception, and reaction, as well as through the expectations and beliefs about the robot that develop in the human interacting partner. Due to these qualities, robots are a valuable tool for parsing elements of human neural processing, and comparing the processing of humans and of robots as social partners is fruitful ground for discovery (Rauchbauer et al., 2019; Sciutti et al., 2012a).

The importance of such understanding for the development of artificial social intelligence has long been recognized (Dautenhahn, 1997). However, due to technological limitations on the collection of neural activity during direct interaction, little is known about the neurological processing of the human brain during human-robot interaction as compared to human-human interaction. Much of what is known has been acquired via functional magnetic resonance imaging (fMRI) (Gazzola et al., 2007; Hegel et al., 2008; Chaminade et al., 2012; Sciutti et al., 2012b; Özdem et al., 2017; Rauchbauer et al., 2019), but this technique imposes many practical limitations on ecological validity. The development of functional near-infrared spectroscopy (fNIRS) has made such data collection accessible. fNIRS uses the absorption of near-infrared light to measure hemodynamic oxyhemoglobin (OxyHb) and deoxyhemoglobin (deOxyHb) concentrations in the cortex as a proxy for neural activity (Jobsis, 1977; Ferrari and Quaresima, 2012; Scholkmann et al., 2014). Advances in acquisition and in signal processing methods have made fNIRS a viable alternative to fMRI for investigating adult perception and cognition, particularly in natural conditions. Techniques for representing fNIRS signals in three-dimensional space allow easy comparison with results acquired using fMRI (Noah et al., 2015; Nguyen et al., 2016; Nguyen and Hong, 2016).

Presented here are the results of a study demonstrating how fNIRS applied to human-robot and human-human interaction can be used to make inferences about human social brain function as well as the efficacy of social robot design. This study used fNIRS during an established eye-contact paradigm to compare neurological processing during human-human and human-robot interaction. Participants engaged in periods of structured eye-contact with another human and with “Maki”, a simplified human-like robot head (Payne, 2018; Scassellati et al., 2018b). It was hypothesized that participants would show significant activity in the right temporoparietal junction (rTPJ) when making eye-contact with their human partner but not with their robot partner. In this context, we assume a direct correspondence between neural activity and perceptual and cognitive processing. Therefore, if eye-contact with a human and eye-contact with a robot elicit similar neural activity in sociocognitive regions, then the cognitive processing can be assumed to be the same. If, however, the neural effects of a human partner and a robot partner differ, then we can conclude that sociocognitive processing also differs. Such differences might occur even when overt behavior appears the same.

The right temporoparietal junction (rTPJ) was chosen because it is a processing hub for social cognition (Carter and Huettel, 2013) that is believed to be involved in reasoning about the internal mental states of others, referred to as theory of mind (ToM) (Molenberghs et al., 2016; Premack and Woodruff, 1978; Saxe and Kanwisher, 2003; Saxe, 2010). Not only has past research shown that the rTPJ is involved in explicit ToM (Sommer et al., 2007; Aichhorn et al., 2008), but it is also spontaneously engaged during tasks with implicit ToM implications (Molenberghs et al., 2016; Dravida et al., 2020; Richardson and Saxe, 2020). It is active during direct human eye-to-eye contact at a much higher level than during eye-contact with pictures or videos of humans (Hirsch et al., 2017; Noah et al., 2020). This is true even in the absence of explicit sociocognitive task demands. Together, these past studies suggest that: 1) human-human eye-contact has a uniquely strong impact on activity in the rTPJ; 2) spontaneous involvement of the rTPJ suggests that individuals engage in implicit ToM during human-human eye-contact; and 3) appearance, movement, and coordinated task behavior (those things shared between a real person and a simulation of a person) are not sufficient to engage this region comparably to direct human-human interaction. These highly replicable findings suggest that studying the human brain during eye-contact with a robot may give unique insight into the successes and failures of social robot design, based on the patterns of activity observed and their similarity or dissimilarity to those seen when processing humans. Additionally, assessing human-robot eye-contact will shed light on how the rTPJ is affected by characteristics that are shared by a human and a robot but are not captured by traditional controls such as pictures or videos of people.

Methods and Materials

Participants

Fifteen healthy adults (66% female; 66% white; mean age 30.1 ± 12.2 years; 93% right-handed (Oldfield, 1971)) participated in the study. All participants provided written informed consent in accordance with guidelines approved by the Yale University Human Investigation Committee (HIC #1501015178) and were reimbursed for participation.

Experimental Eye-Contact Procedure

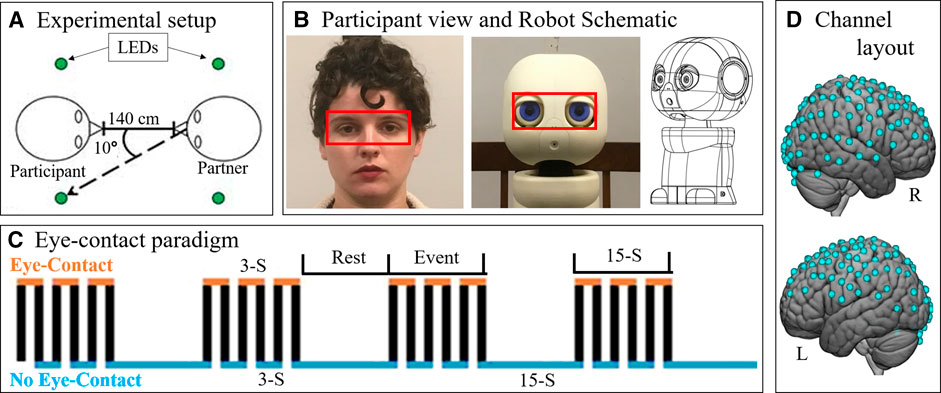

Participants completed an eye-contact task; Figure 1A shows a schematic of the room layout during the task. The eye-contact task used in this experiment is similar to those in previously published studies (Hirsch et al., 2017; Noah et al., 2020). Participants were seated at a table facing a partner (either the human or the robot; Figure 1B) at a distance of 140 cm. At the start of each task, an auditory cue prompted participants to gaze at the eyes of their partner. Subsequent auditory tones cued participants to alternate their gaze between one of two light-emitting diodes (LEDs) and the partner’s eyes, according to the protocol time series (Figure 1C). Each task run consisted of six 30 s epochs, each comprising one 15 s event block and one 15 s rest block, for a total run length of 3 min. Event blocks consisted of alternating 3 s periods of gaze at the partner’s eyes and gaze at an LED. Rest blocks consisted of gaze at an LED.
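The protocol time series described above can be sketched as a per-second label vector. This is a minimal illustration only: the 1 Hz sampling rate, the names `task_timeline` and `EPOCHS`, and the assumption that every event block begins with eye contact are ours, not details reported in the text.

```python
import numpy as np

# Timing from the task description: six 30 s epochs, each a 15 s event block
# followed by a 15 s rest block; event blocks alternate 3 s of eye contact
# with 3 s of gaze at an LED. Starting each event block with eye contact is
# an assumption of this sketch.
EPOCHS = 6
BLOCK_S = 15
PERIOD_S = 3

def task_timeline(fs: float = 1.0) -> np.ndarray:
    """Per-sample labels for one run: 1 = eye contact, 0 = gaze at an LED.

    fs is a hypothetical sampling rate in Hz, used only for illustration.
    """
    labels = []
    for _ in range(EPOCHS):
        # Event block: eye contact (1) and LED gaze (0) alternate every 3 s.
        for k in range(BLOCK_S // PERIOD_S):
            labels.extend([1 - (k % 2)] * int(PERIOD_S * fs))
        # Rest block: gaze stays on the LED for the full 15 s.
        labels.extend([0] * int(BLOCK_S * fs))
    return np.array(labels)

timeline = task_timeline(fs=1.0)
print(len(timeline))  # -> 180 (3 min at 1 Hz)
```

Under these assumptions each event block contains three 3 s eye-contact periods, so a run contains 54 s of eye contact in total.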

FIGURE 1. Paradigm. (A) Schematic (view from above) showing the experimental setup. Participants were seated approximately 140 cm from their partner. On either side of the participant's and the partner's head were LEDs (green circles). Participants (n = 15) alternated between looking into the eyes of their partner and diverting their gaze 10° to one of the lights. (B) Participants completed the eye-contact task with two partners: a human (left) and a robot (participant view: center; schematic: right). Red boxes indicate the eye boxes used to analyze eye-tracking data in order to assess task compliance. Eye boxes subtended approximately the same visual angle for the participant. Schematic source: HelloRobo, Atlanta, Georgia. (C) Paradigm timeline for a single run. Each run consisted of alternating 15 s event and rest blocks. Event blocks consisted of alternating 3 s periods of eye contact (orange) and no eye contact (blue). Rest blocks consisted of no eye contact. (D) Hemodynamic data were collected during the task from 134 channels spread across both hemispheres of the cortex via 80 optodes (40 emitter-detector pairs) placed against the scalp. Blue dots represent points of data collection relative to the cortex.

Every participant completed the task with two partners: another human (a confederate employed by the lab) and a robot bust (Figure 1B). The order of partners was counterbalanced. In both partner conditions, the partner completed the eye-contact task concurrently with the participant, so that participant and partner diverted their gaze to an LED, and looked toward each other, at the same time. The timing and range of motion of participant eye movements were comparable for both partners. The task was completed twice with each partner, for a total of four runs. During the task, hemodynamic and behavioral data were collected using fNIRS and desk-mounted eye-tracking.

Robot Partner

The robot partner was a 3D-printed humanoid bust named Maki (HelloRobo, Atlanta, Georgia) (Payne, 2018; Scassellati et al., 2018b), which can simulate human eye-movements but otherwise lacks human features, movements, or reactive capabilities. Maki’s eyes were designed with components comparable to those of human eyes: whites surrounding a colored iris with a black “pupil” in the center (Figure 1B). Maki’s movements were produced by six servo motors controlled through the Arduino IDE, giving it six degrees of freedom: head turn left-right and tilt up-down; left eye move left-right and up-down; right eye move left-right and up-down; and eyelids open-close. This robot was chosen because of its design emphasis on eye movements and its simplified similarity to the overall size and organization of the human face, which was hypothesized to be sufficient to control for the appearance of a face (Haxby et al., 2000; Ishai, 2008; Powell et al., 2018).

Participants were introduced to each partner immediately prior to the start of the experiment. For Maki’s introduction, the robot was initially positioned with its eyes closed and its head down. Participants then watched the robot being turned on, at which point Maki opened its eyes, moved its head to a neutral, straight-ahead position, and began blinking in a naturalistic pattern (Bentivoglio et al., 1997). Participants then watched as Maki carried out eye-movement behaviors akin to those performed in the task. This was done to minimize any neural effects related to novelty or surprise at Maki’s movements. The introduction to Maki consisted of telling participants its name and indicating that it would be performing the task along with them. No additional abilities were insinuated or addressed. For the introduction to the human partner, the partner walked into the room, sat down across from the participant, and introduced themselves. Dialogue between the two was neither encouraged nor discouraged. This approach to introduction was chosen to avoid giving participants any preconceptions about either the robot or the human partner.

The robot completed the task via pre-coded movements timed to appear as if it were performing the task concurrently with the participant. Robot behaviors during the task were triggered via PsychoPy. The robot shifted its gaze between the participant’s face and the LED at timed intervals that matched the task design, so that the robot appeared to look toward and away from the participant at the same times that the participant was instructed to look toward or away from the robot. The robot was neither designed for nor capable of replicating all naturalistic human eye-behavior. While it can simulate general eye movements, fast behaviors like saccades and subtle movements like pupil size changes are beyond its abilities. Additionally, the robot did not perform gaze fixations, as it lacked any visual feedback or input. To simulate the naturalistic processing delay found in humans, a 300-ms delay was incorporated between the audible cue to shift gaze and the robot’s gaze shift. The robot also engaged in a randomized naturalistic blinking pattern.
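A minimal sketch of how such a pre-coded schedule could be laid out, assuming gaze cues at every 3 s transition within the event blocks and at the start of each rest block, with every robot command offset by the 300-ms delay. The function names, the blink-interval parameters, and the use of exponentially distributed inter-blink intervals are hypothetical choices for illustration; the text does not specify how the PsychoPy triggers or the randomized blink pattern were implemented.

```python
import numpy as np

CUE_DELAY_S = 0.3   # 300-ms delay between auditory cue and robot gaze shift
EPOCH_S = 30        # 15 s event block + 15 s rest block
EPOCHS = 6
RUN_S = EPOCH_S * EPOCHS

def gaze_commands():
    """Hypothetical (time_s, target) schedule of robot gaze shifts for one run."""
    commands = []
    for epoch in range(EPOCHS):
        t0 = epoch * EPOCH_S
        # Assumed cues at 0, 3, 6, 9, 12 s (alternating face/LED) and at 15 s,
        # when gaze moves to the LED for the rest block.
        for step in range(6):
            target = "face" if step % 2 == 0 else "led"
            commands.append((t0 + 3 * step + CUE_DELAY_S, target))
    return commands

def blink_times(mean_interval_s=3.5, min_interval_s=1.0, seed=0):
    """Randomized blink onsets in seconds; both interval parameters are hypothetical.

    Each inter-blink interval is a fixed minimum gap plus an exponential draw,
    so the mean interval equals mean_interval_s.
    """
    rng = np.random.default_rng(seed)
    t, onsets = 0.0, []
    while True:
        t += min_interval_s + rng.exponential(mean_interval_s - min_interval_s)
        if t >= RUN_S:
            return onsets
        onsets.append(t)
```

Keeping gaze shifts and blinks as separate schedules lets blinks fall at any time, including during eye-contact periods, as they would for a human partner.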

fNIRS Data Acquisition, Signal Processing and Data Analysis

Functional NIRS signal acquisition, optode localization, and signal processing, including global mean removal, were similar to methods described previously (Dravida et al., 2018; Dravida et al., 2020; Hirsch et al., 2017; Noah et al., 2015; Noah et al., 2020; Piva et al., 2017; Zhang et al., 2016; Zhang et al., 2017) and are summarized below. Hemodynamic signals were acquired using an 80-fiber multichannel, continuous-wave fNIRS system (LABNIRS, Shimadzu Corporation, Kyoto, Japan). Each participant was fitted with an optode cap with predefined channel distances, selected based on head circumference: large (60 cm circumference), medium (56.5 cm), or small (54.5 cm). Optode distances of 3 cm were designed for the 60 cm cap layout and were scaled linearly for the smaller caps. A lighted fiber-optic probe (Daiso, Hiroshima, Japan) was used to clear hair from the optode holders prior to optode placement, to ensure optode contact with the scalp. Optodes consisting of 40 emitter-detector pairs were arranged in a matrix across the participant’s scalp, yielding 134 channels per participant (Figure 1D). To our knowledge, fNIRS data collection of this extent has not previously been applied to human-robot interaction. For consistency, the most anterior optode-holder of the cap was centered 1 cm above the nasion.
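As a worked example of the linear scaling described above, the reference 3 cm spacing scales in proportion to cap circumference. The resulting spacings (2.825 cm for the medium cap and 2.725 cm for the small cap) are derived here from the stated circumferences and were not reported in the text.

```python
# Linear scaling of the 3 cm reference optode spacing (60 cm cap) to the
# smaller caps, as described in the text. Outputs are derived values.
REFERENCE_CIRC_CM = 60.0
REFERENCE_SPACING_CM = 3.0
CAPS_CM = {"large": 60.0, "medium": 56.5, "small": 54.5}

def scaled_spacing(circumference_cm: float) -> float:
    """Optode spacing proportional to head circumference."""
    return REFERENCE_SPACING_CM * circumference_cm / REFERENCE_CIRC_CM

for name, circ in CAPS_CM.items():
    print(f"{name}: {scaled_spacing(circ):.3f} cm")
# large: 3.000 cm
# medium: 2.825 cm
# small: 2.725 cm
```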

To ensure acceptable signal-to-noise ratios, light attenuation was measured for each channel prior to the experiment, and adjustments were made as needed until all recording optodes were calibrated and able to sense known quantities of light at each wavelength (Tachibana et al., 2011; Ono et al., 2014; Noah et al., 2015). After completion of the tasks, the anatomical locations of optodes relative to standard head landmarks were determined for each participant using a Patriot 3D Digitizer (Polhemus, Colchester, VT, USA) (Okamoto and Dan, 2005; Eggebrecht et al., 2012).

Shimadzu LABNIRS systems use laser diodes at three wavelengths of light (780 nm, 805 nm, and 830 nm). Raw optical density variations were converted into changes in relative chromophore concentrations using a Beer-Lambert equation (Hazeki and Tamura, 1988; Matcher et al., 1995). Signals were recorded at 30 Hz. Baseline drift was removed using the wavelet detrending provided in NIRS-SPM (Ye et al., 2009). Subsequent analyses were performed using MATLAB 2019a. Global components attributable to blood pressure and other systemic effects (Tachtsidis and Scholkmann, 2016) were removed using a principal component analysis spatial global-component filter (Zhang et al., 2016; Zhang et al., 2017) prior to general linear model (GLM) analysis (Friston et al., 1994). Comparisons between conditions were based on GLM procedures using the NIRS-SPM software package (Ye et al., 2009). Event and rest epochs within the time series (Figure 1C) were convolved with the canonical hemodynamic response function provided in SPM8 (Penny et al., 2011) and fit to the data, providing individual “beta values” for each channel per participant across conditions. Montreal Neurological Institute (MNI) coordinates (Mazziotta et al., 1995) for each channel were obtained using NIRS-SPM software (Ye et al., 2009) and the 3-D coordinates acquired with the Polhemus Patriot digitizer.
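The GLM step can be illustrated with a minimal NumPy/SciPy sketch: a 15 s event/15 s rest boxcar is convolved with a double-gamma HRF using SPM’s default shape parameters (response and undershoot gamma shapes of 6 and 16, undershoot ratio 1/6), and a least-squares fit yields one task beta per channel. This is not the NIRS-SPM implementation and omits its noise modeling and other steps; the function names are hypothetical, and the sketch assumes a single preprocessed channel sampled at 30 Hz.

```python
import numpy as np
from scipy.stats import gamma

FS = 30.0  # sampling rate reported for the recordings (Hz)

def canonical_hrf(fs=FS, length_s=32.0):
    """Double-gamma HRF with SPM's default shapes, normalized to unit sum."""
    t = np.arange(0.0, length_s, 1.0 / fs)
    h = gamma.pdf(t, 6) - gamma.pdf(t, 16) / 6.0
    return h / h.sum()

def block_regressor(fs=FS, epochs=6, block_s=15.0):
    """Boxcar: 1 during 15 s event blocks, 0 during 15 s rest blocks."""
    n = int(block_s * fs)
    one_epoch = np.r_[np.ones(n), np.zeros(n)]
    return np.tile(one_epoch, epochs)

def fit_beta(signal, fs=FS):
    """Ordinary least-squares GLM; returns the task beta for one channel."""
    box = block_regressor(fs)
    reg = np.convolve(box, canonical_hrf(fs))[: len(box)]
    X = np.column_stack([reg, np.ones_like(reg)])  # task regressor + constant
    coef, *_ = np.linalg.lstsq(X, signal, rcond=None)
    return coef[0]
```

With rest modeled implicitly by the constant term, the task beta plays the role of the task-versus-rest contrast described in the analysis.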

Once in normalized space, the individual channel-wise beta values were projected into voxel-wise space. Group results of voxel-wise t-scores based on these beta values were rendered on a standard MNI brain template. All analyses were performed on the combined OxyHb + deOxyHb signal (Silva et al., 2000; Dravida et al., 2018), calculated by adding together the absolute values of the changes in OxyHb and deOxyHb concentration. This combined signal is used because it captures, in a single value, the stereotypical task-related anti-correlation between an increase in OxyHb and a decrease in deOxyHb (Seo et al., 2012). As such, the combined signal reflects task-related activity more accurately by incorporating both components of the expected signal change rather than just one or the other.
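Taken literally, the combined signal described above is an element-wise sum of absolute concentration changes. The sketch below assumes the two inputs are already-aligned arrays of ΔOxyHb and ΔdeOxyHb for one channel; the toy values are invented purely to show the anti-correlated case.

```python
import numpy as np

def combined_signal(d_oxy, d_deoxy):
    """|ΔOxyHb| + |ΔdeOxyHb| per sample, following the description in the text."""
    return np.abs(np.asarray(d_oxy, float)) + np.abs(np.asarray(d_deoxy, float))

# Toy, anti-correlated changes: OxyHb rises while deOxyHb falls.
d_oxy = np.array([0.0, 0.2, 0.4, 0.2])
d_deoxy = np.array([0.0, -0.1, -0.2, -0.1])
combined = combined_signal(d_oxy, d_deoxy)  # approximately [0.0, 0.3, 0.6, 0.3]
```

Because absolute values are summed, a rise in OxyHb and a simultaneous fall in deOxyHb both increase the combined value.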

For each condition (human partner and robot partner), task-related activity was determined by contrasting voxel-wise activity during task blocks with that during rest blocks, which identifies regions more active during eye-contact than at baseline. The resulting single-condition results for human eye-contact and robot eye-contact were then contrasted with each other to identify regions that showed more activity when making eye-contact with one partner than with the other. A region of interest (ROI) analysis was performed on the rTPJ using a mask from a previously published study (Noah et al., 2020). Effect size (classic Cohen’s d) was calculated from the ROI beta values of all participants, using the condition means and the pooled standard deviation. Whole-cortex analyses were also performed as exploratory analyses.
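The classic Cohen’s d mentioned above divides the difference in condition means by the pooled standard deviation. A minimal sketch follows; the numbers stand in for per-participant ROI betas and are made up, not study data.

```python
import numpy as np

def cohens_d(a, b):
    """Classic Cohen's d: (mean_a - mean_b) / pooled SD.

    pooled SD = sqrt(((n_a - 1) * s_a**2 + (n_b - 1) * s_b**2) / (n_a + n_b - 2)),
    where s_a and s_b are sample (ddof=1) standard deviations.
    """
    a = np.asarray(a, float)
    b = np.asarray(b, float)
    n_a, n_b = a.size, b.size
    pooled_var = ((n_a - 1) * a.var(ddof=1) + (n_b - 1) * b.var(ddof=1)) / (n_a + n_b - 2)
    return (a.mean() - b.mean()) / np.sqrt(pooled_var)

# Hypothetical ROI betas for two conditions (not study data).
print(cohens_d([2.0, 4.0, 6.0], [1.0, 3.0, 5.0]))  # -> 0.5
```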

Eye-Tracking Acquisition

Participant eye-movements during the experiment were recorded using a desk-mounted Tobii X3-120 eye-tracking system (Stockholm, Sweden). The eye-tracker recorded eye behavior at 120 Hz. The reference scene video was recorded at 30 Hz and 1280 × 720 pixels using a Logitech c920 camera (Lausanne, Switzerland) positioned directly behind and above the participant’s head. Eye-tracking data were analyzed using an “eye-box” determined by the anatomical layout of the partner’s face (Figure 1B, red boxes). When the participant’s gaze fell within the bounds of the partner’s eye-box, it was considered a “hit”. To assess results, the task time was divided into 3 s increments, the length of a single eye-contact period. The percentage of frames with a hit was calculated for every 3 s period of the task. Data points in which at least one eye was not detected (due to technical difficulties or eye-blinks) were considered invalid and excluded from this calculation. Datasets in which more than one third of data points were invalid due to dropped signals, blinks, or noise were excluded from analyses. Due to collection error, researcher error, or technical difficulties, 17 of 60 eye-tracking datasets were excluded. These were nearly evenly distributed between the two partner conditions (9 human and 8 robot) and therefore should not differentially impact the two conditions. If the participant complied with the task, the proportion of eye-box hits per increment should reflect a pattern similar to the paradigm timeline in Figure 1C, in which eye-contact periods show a high proportion of hits and no-eye-contact periods show few or no hits.
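The hit-proportion and exclusion rules above can be sketched as follows. This assumes gaze samples have already been mapped into scene-video pixel coordinates and that a per-sample validity flag marks samples where either eye was lost; the array layout, the rectangular `eye_box`, and computing proportions over eye-tracker samples rather than video frames are assumptions for illustration.

```python
import numpy as np

FS_HZ = 120           # eye-tracker sampling rate
BIN_S = 3             # length of one eye-contact period
MAX_INVALID = 1 / 3   # exclude datasets with more invalid samples than this

def hit_proportions(gaze_xy, valid, eye_box):
    """Per-3 s proportion of valid samples falling inside the partner's eye box.

    gaze_xy: (n, 2) gaze points in scene-video pixels (assumed layout)
    valid:   (n,) bool, False where at least one eye was not detected
    eye_box: (x_min, y_min, x_max, y_max), a hypothetical rectangle
    Returns None when the dataset exceeds the invalid-data threshold.
    """
    gaze_xy = np.asarray(gaze_xy, float)
    valid = np.asarray(valid, bool)
    if 1.0 - valid.mean() > MAX_INVALID:
        return None  # dataset excluded from analysis
    x0, y0, x1, y1 = eye_box
    inside = ((gaze_xy[:, 0] >= x0) & (gaze_xy[:, 0] <= x1)
              & (gaze_xy[:, 1] >= y0) & (gaze_xy[:, 1] <= y1))
    per_bin = FS_HZ * BIN_S
    n_bins = len(valid) // per_bin
    props = np.full(n_bins, np.nan)  # NaN marks bins with no valid samples
    for i in range(n_bins):
        sl = slice(i * per_bin, (i + 1) * per_bin)
        v = valid[sl]
        if v.any():
            props[i] = inside[sl][v].mean()  # invalid samples are dropped
    return props
```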

Results

Behavioral Eye-Tracking Results

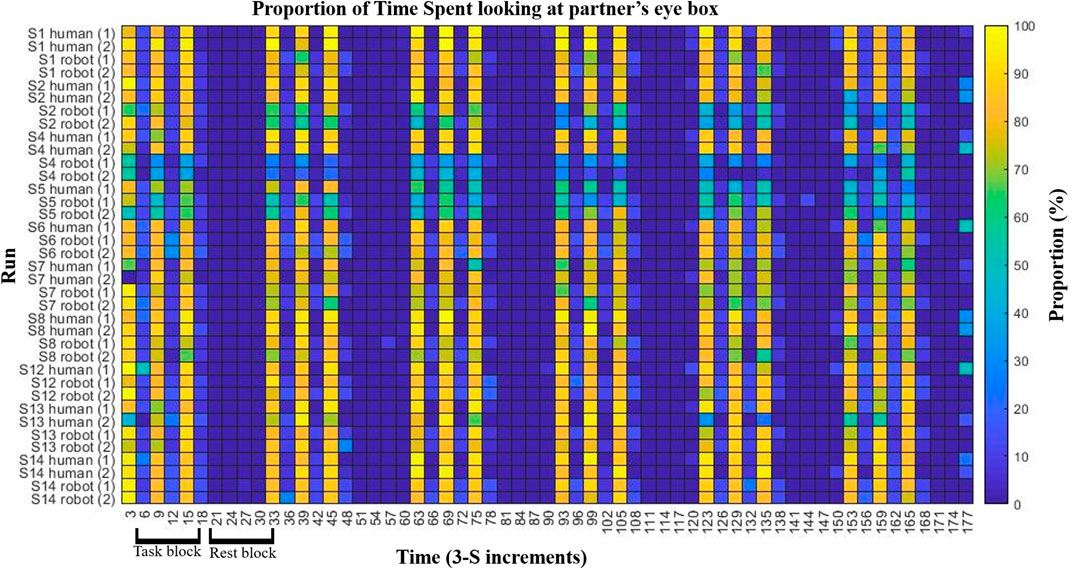

Figure 2 shows a colormap of eye-tracking behavioral results for participants who had at least one valid eye-tracking dataset per condition. The proportion of time per 3 s period spent looking at the partner’s eye-box is indicated by block color, with warmer colors indicating more hits and cooler colors fewer. The figure shows that each participant made more eye-contact during the eye-contact periods of the task block than during no-eye-contact periods, demonstrating task compliance regardless of partner. Two participants (subjects 2 and 4) appeared to make less eye-contact with their robot partner than with their human partner, though both still showed task compliance with the robot. To ensure that hemodynamic differences in processing the two partners were not confounded by the behavioral differences of these two subjects, contrasts were performed on the group both including (Figure 3, n = 15) and excluding the two subjects. Results were not meaningfully changed by their exclusion.

FIGURE 2. Eye tracking and task compliance. Behavioral results indicate task compliance for both partners. The task timeline was divided into 3 s increments (x-axis), and the proportion of hits on the partner’s eye box was calculated per increment. Subjects who had at least one valid eye-tracking dataset per condition are shown (y-axis). Color indicates the proportion of each 3 s increment spent in the partner’s eye box, after excluding data points in which one or both eyes were not recorded (e.g., blinks). Subjects showed more eye-box hits during eye-contact periods (every other increment during task blocks) than during no-eye-contact periods (the remaining task block increments as well as all rest block increments). Two subjects, S02 and S04, appear to have made less eye-contact with the robot than with the human partner. To ensure that this did not confound results, hemodynamic analyses were performed both including and excluding those two subjects.

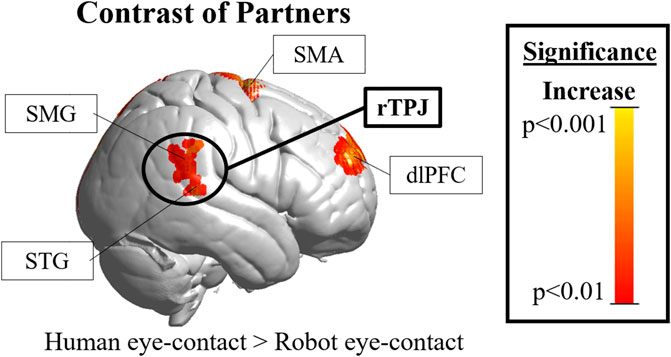

FIGURE 3. Cortical hemodynamic results. The rTPJ (black circle, the region of interest) was significantly more active during human than robot eye-contact (peak T-score = 2.8, uncorrected p = 0.007; significant at p < 0.05, FDR-corrected). rTPJ = right temporoparietal junction; DLPFC = right dorsolateral prefrontal cortex; SMA = supplementary motor area; SMG = supramarginal gyrus; STG = superior temporal gyrus.

Hemodynamic Results

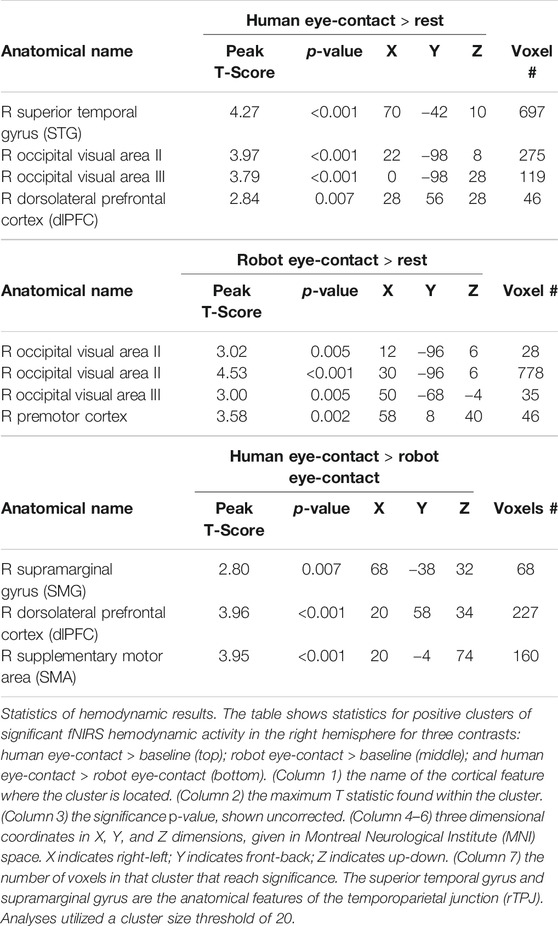

Right-hemisphere results of the hemodynamic contrasts are shown in Figure 3, Supplementary Figure S1, and Table 1. The black circle indicates the rTPJ region of interest. Eye-contact with another human contrasted with rest (Supplementary Figure S1, left) resulted in significant activity in the rTPJ (peak T-score = 4.27; p < 0.05, FDR-corrected). ROI descriptive statistics: beta value x̄ = 5.12 × 10⁻⁴; σ = 9.31 × 10⁻⁴; 95% confidence interval: 4.87 × 10⁻⁴ to 5.38 × 10⁻⁴. Eye-contact with a robot contrasted with rest (Supplementary Figure S1, right) resulted in no significant activity in the rTPJ. ROI descriptive statistics: beta value x̄ = −4.25 × 10⁻⁴; σ = 0.002; 95% confidence interval: −4.81 × 10⁻⁴ to −3.69 × 10⁻⁴.

A comparison of the two partners (Figure 3) revealed significant differences in the rTPJ (peak T-score = 2.8, p < 0.05, FDR-corrected; Cohen’s d = 0.5844). Exploratory whole-brain analyses were also performed to identify other regions that might be of interest in future studies. Unhypothesized significant differences were found in the right dorsolateral prefrontal cortex (rDLPFC) in the comparison of human eye-contact with rest (peak T-score = 3.96, p < 0.001, uncorrected) as well as in the comparison of human with robot eye-contact (peak T-score = 2.84, p = 0.007, uncorrected). A list of all regions that reached significance in the exploratory analyses can be found in Table 1.
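The text reports FDR-corrected thresholds without naming the procedure. For readers unfamiliar with FDR control, the widely used Benjamini-Hochberg step-up procedure is sketched below as an assumption; it is not necessarily the correction applied in this study, and the p-values in the example are hypothetical.

```python
import numpy as np

def benjamini_hochberg(pvals, q=0.05):
    """Boolean mask of tests declared significant at false discovery rate q."""
    p = np.asarray(pvals, float)
    m = p.size
    order = np.argsort(p)
    # Rank-specific thresholds q * k / m for ranks k = 1..m.
    thresholds = q * np.arange(1, m + 1) / m
    below = p[order] <= thresholds
    significant = np.zeros(m, dtype=bool)
    if below.any():
        # Step-up rule: reject every hypothesis up to the largest passing rank,
        # even if some smaller p-values missed their own thresholds.
        k_max = np.nonzero(below)[0].max()
        significant[order[: k_max + 1]] = True
    return significant

# Hypothetical voxel-wise p-values (not study data).
print(benjamini_hochberg([0.001, 0.02, 0.03, 0.5]))
```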

Conclusion and Discussion

The paradigm used here demonstrates how assessment of neural activity can provide insight that may not be available from behavior alone, and it offers an effective way to address some questions about naturalistic interaction with both humans and robots. Past applications of fNIRS to human-robot interaction have explored its use as a signal transducer from human to robot as well as an evaluation tool (Kawaguchi et al., 2012; Solovey et al., 2012; Canning and Scheutz, 2013; Strait et al., 2014; Nuamah et al., 2019). This study builds on that work by expanding data collection to the full superficial cortex and by comparing neural processing during human-robot interaction with that during human-human interaction. It establishes a foundation for novel approaches to assessing social robot design that may complement existing approaches (Gazzola et al., 2007; Sciutti et al., 2012a; Sciutti et al., 2012b).

As hypothesized, differences in rTPJ activity were found between processing of the human and the robot partner, and these differences shed light on human social processing as well as on Maki’s success in engaging it. While Maki’s simplified appearance was enough to control for low-level visual features of making eye-contact with a human, as suggested by the lack of significant differences in occipital visual face-processing areas (Dravida et al., 2019; Haxby et al., 2000; Ishai et al., 2005), Maki’s performance was not able to engage the rTPJ (Figure 3, black circle). This suggests that the combination of features that Maki shares with another human is not sufficient to engage the rTPJ: superficial appearance, dynamic motion, coordinated behavior, physical embodiment (Wang and Rau, 2019), and co-presence (Li, 2015). These results also give insight into Maki’s success in simulating a human partner: when encountered as a novel partner whose abilities participants had no preconceptions about, and when engaging in simulated eye-contact, this robot was not effective at engaging the naturalistic social processing network, and this difference was found despite comparable behavior with both partners.

These results inspire many questions: What in robot design is necessary and sufficient to engage the human rTPJ? Is it possible for Maki to engage it, and if so, what characteristics would do so? What impact would rTPJ engagement have on interpersonal relationship building with and recognition of Maki as “something like me” (Dautenhahn, 1995)? How does rTPJ engagement and any corresponding differences in impression and interaction relate to short- and long-term individual and public health, such as combatting loneliness?

The presented data offer additional insight: in addition to the hypothesized rTPJ differences, the right dorsolateral prefrontal cortex (rDLPFC) also showed significant differences between human-human and human-robot interaction, though these results were exploratory and did not reach FDR-corrected significance. The rDLPFC has been hypothesized to be critical for evaluating “motivational and emotional states and situational cues” (Forbes and Grafman, 2010), as well as for monitoring errors in predictions about social situations (Burke et al., 2010; Brown and Brüne, 2012) and for implicit theory of mind (Molenberghs et al., 2016). This suggests that participants were spontaneously predicting their human partner’s, but not their robot partner’s, mindset, and were monitoring for deviations from their expectations about their partner. rDLPFC activity has been tied to processing of eye-contact during human-human dialogue (Jiang et al., 2017) and to disengagement of attention from emotional faces (Sanchez et al., 2016). This suggests that candidate features for influencing this system include subtle facial features meaningful for communication and reciprocation, such as eyebrows or saccades, or behavioral cues such as moving the gaze around the face during eye-contact. Activity in the rDLPFC has also been tied to strategizing in human-human but not human-computer competition (Piva et al., 2017). This suggests that top-down influences, such as the preconceptions people have of other people and of robots relating to agency, ability, or intention, are also important, and past research suggests that both bottom-up and top-down features are likely at play (Ghiglino et al., 2020a; Ghiglino et al., 2020b; Klapper et al., 2014). Lack of prior experience, which would create such preconceptions, may also be driving this difference.

Applying fNIRS to human-robot interaction benefits both social neuroscience and social robotics (Henschel et al., 2020). fNIRS can be used to test whether interaction with a robot elicits naturalistic sociocognitive activity, how different elements of robot design affect that neural processing, and how such differences relate to human behavior. It can also be used to parse the relationship between stimuli and human social processing. The data presented here offer a starting point for research into how appearance and behavior, taken together, shape social processing during direct eye-to-eye contact in human-human and human-robot interaction. Future studies may explore how several factors affect human processing: dynamic elements of robot design, subtle components of complex robot behavior, and pre-existing experience with or beliefs about the robot. Such work would give insight into the function of the human social processing system and address whether a robot can engage it. Processing during eye-contact should also be related to self-reported changes in impression of and engagement with the robot across situations. Behavioral studies have shown that participants report greater engagement with a robot if it has made eye-contact with them before a task (Kompatsiari et al., 2019). Whether these differences correlate with neural activity remains to be seen.

These techniques can also be applied to other behaviors and brain regions. For instance, humans engage in anticipatory gaze when viewing goal-directed robot behavior in a way more similar to viewing other humans than to viewing self-propelled objects (Sciutti et al., 2012b). Moreover, the rTPJ is just one system with implications for social perception of a robot. Motor resonance and the mirror neuron system are influenced by the goals of behavior. They show comparable engagement when human and robot behavior imply similar end goals, even when the kinematics of the behavior differ (Gazzola et al., 2007). Future studies can explore how robot eye-contact behavior affects this and other such systems (Sciutti et al., 2012a). This approach may also be valuable for assessing nebulous but meaningful components of social interaction such as social engagement (Sidner et al., 2005; Corrigan et al., 2013; Salam and Chetouani, 2015; Devillers and Dubuisson Duplessis, 2017).

The promise of robots as tools for therapy and companionship (Belpaeme et al., 2018; Kawaguchi et al., 2012; Scassellati et al., 2018a; Tapus et al., 2007) also suggests that this approach can give valuable insight into the role of social processing in both health and disease. For instance, interaction with a social seal robot (Kawaguchi et al., 2012) has been linked to improvements in EEG-measured brain activity in patients with dementia (Wada et al., 2005). This suggests a complex relationship among social interaction, social processing, and health, and points to the value of assessing human-robot interaction as a function of health. Social dysfunction is found in many mental disorders, including autism spectrum disorder (ASD) and schizophrenia, and social robots are already being used to better understand these populations. Social robots with simplified humanoid appearances have been associated with improvements in social functioning in individuals with ASD (Pennisi et al., 2016; Ismail et al., 2019). This highlights how social robots can provide insight that is otherwise inaccessible. Individuals with ASD often avoid eye-contact with other people but seem to gravitate socially toward therapeutic robots. Conversely, patients with schizophrenia show greater variability than neurotypical individuals in perceiving social robots as intentional agents (Gray et al., 2011; Raffard et al., 2018). They also show distinct and complex deficits in processing facial expressions in robots (Raffard et al., 2016; Cohen et al., 2017). Applying the paradigm used here to these clinical populations may therefore help identify how eye-contact behaviors and expressions in robots and in people affect processing and exacerbate or ameliorate dysfunction.

There are some notable limitations to this study. The sample size was small, and some results are exploratory and do not survive FDR correction. Future studies should therefore confirm these findings in a larger sample. Additionally, fNIRS in its current form cannot measure activity in medial or subcortical regions. Past studies have shown that, for example, the medial prefrontal cortex is involved in aspects of social understanding (Mitchell et al., 2006; Ciaramidaro et al., 2007; Forbes and Grafman, 2010; Bara et al., 2011; Powell et al., 2018). It is thus hypothesized to be important to the paradigm used here. Such regions are needed for a comprehensive understanding of this system, so some details of processing are missed when using fNIRS.
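The limitation above depends on correcting for multiple comparisons across many fNIRS channels. The sketch below implements the Benjamini-Hochberg step-up procedure in Python to show how an exploratory effect can pass an uncorrected threshold yet fail FDR correction. It is a minimal illustration under stated assumptions. The text does not say which FDR procedure was used, the function name `benjamini_hochberg` is hypothetical, and the p-values in the example are made up rather than taken from this study.

```python
from typing import List


def benjamini_hochberg(p_values: List[float], q: float = 0.05) -> List[bool]:
    """Flag tests that remain significant after Benjamini-Hochberg FDR control at level q.

    Hypothetical helper for illustration; returns one boolean per input p-value,
    in the original input order.
    """
    m = len(p_values)
    # Rank tests from smallest to largest p-value.
    order = sorted(range(m), key=lambda i: p_values[i])

    # Step-up rule: find the largest rank k with p_(k) <= (k / m) * q.
    largest_passing_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            largest_passing_rank = rank

    # Every test ranked at or below k is declared significant.
    significant = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= largest_passing_rank:
            significant[idx] = True
    return significant


if __name__ == "__main__":
    # Made-up p-values for four hypothetical channels (not data from this study).
    p = [0.04, 0.001, 0.3, 0.015]
    uncorrected = [pv < 0.05 for pv in p]
    corrected = benjamini_hochberg(p, q=0.05)
    print("uncorrected:", uncorrected)  # [True, True, False, True]
    print("FDR (BH):   ", corrected)    # [False, True, False, True]
```

In this example, the hypothetical channel with p = 0.04 passes an uncorrected 0.05 threshold. It is not retained after correction, however, because its rank-based threshold is 3/4 × 0.05 = 0.0375.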

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the Yale Human Research Protection Program. The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual in Figure 1 for the publication of potentially identifiable images included in this article.

Author Contributions

MK, JH, and BS conceived of the idea. MK primarily developed and carried out the experiment and carried out data analysis. JH, AN, and XZ provided fNIRS, technical, and data analysis expertise as well as mentorship and guidance where needed. BS provided robotics expertise. MK along with JH wrote the manuscript.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors are grateful for the significant contributions of members of the Yale Brain Function and Social Robotics Labs, including Jennifer Cuzzocreo, Courtney DiCocco, Raymond Cappiello, and Jake Brawer.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frobt.2020.599581/full#supplementary-material.

FIGURE S1 | Cortical hemodynamic results. Results of a contrast of task and rest blocks when partnered with the human (left) and the robot (right). (Left) Significantly greater activity during human eye-contact was found in visual regions (V2 and V3) as well as the rTPJ. (Right) Significantly greater activity during robot eye-contact was found in visual regions. rTPJ = right temporoparietal junction; rDLPFC = right dorsolateral prefrontal cortex; V2 & V3 = Visual Occipital Areas II and III.

References

Admoni, H., and Scassellati, B. (2017). Social Eye Gaze in Human-robot Interaction: A Review. J. Hum.-Robot Interact 6 (1), 25–63. doi:10.5898/JHRI.6.1.Admoni

Aichhorn, M., Perner, J., Weiss, B., Kronbichler, M., Staffen, W., and Ladurner, G. (2008). Temporo-parietal junction activity in theory-of-mind tasks: falseness, beliefs, or attention. J. Cognit. Neurosci 21 (6), 1179–1192. doi:10.1162/jocn.2009.21082

Bara, B. G., Ciaramidaro, A., Walter, H., and Adenzato, M. (2011). Intentional minds: a philosophical analysis of intention tested through fMRI experiments involving people with schizophrenia, people with autism, and healthy individuals. Front. Hum. Neurosci 5, 7. doi:10.3389/fnhum.2011.00007

Belpaeme, T., Kennedy, J., Ramachandran, A., Scassellati, B., and Tanaka, F. (2018). Social robots for education: a review. Science Robotics 3 (21), eaat5954. doi:10.1126/scirobotics.aat5954

Bentivoglio, A. R., Bressman, S. B., Cassetta, E., Carretta, D., Tonali, P., and Albanese, A. (1997). Analysis of blink rate patterns in normal subjects. Mov. Disord 12 (6), 1028–1034. doi:10.1002/mds.870120629

Brooke, J., and Jackson, D. (2020). Older people and COVID-19: isolation, risk and ageism. J. Clin. Nurs 29 (13–14), 2044–2046. doi:10.1111/jocn.15274

Brown, E. C., and Brüne, M. (2012). The role of prediction in social neuroscience. Front. Hum. Neurosci 6, 147. doi:10.3389/fnhum.2012.00147

Burke, C. J., Tobler, P. N., Baddeley, M., and Schultz, W. (2010). Neural mechanisms of observational learning. Proc. Natl. Acad. Sci. Unit. States Am 107 (32), 14431–14436. doi:10.1073/pnas.1003111107

Canning, C., and Scheutz, M. (2013). Functional Near-infrared Spectroscopy in Human-robot Interaction. J. Hum.-Robot Interact 2 (3), 62–84. doi:10.5898/JHRI.2.3.Canning

Carter, R. M., and Huettel, S. A. (2013). A nexus model of the temporal–parietal junction. Trends Cognit. Sci 17 (7), 328–336. doi:10.1016/j.tics.2013.05.007

Chaminade, T., Fonseca, D. D., Rosset, D., Lutcher, E., Cheng, G., and Deruelle, C. (2012). “FMRI study of young adults with autism interacting with a humanoid robot,” in 2012 IEEE RO-MAN: the 21st IEEE International Symposium on Robot and Human Interactive Communication, Paris, France, September 9–13, 2012, 380–385. doi:10.1109/ROMAN.2012.6343782

Ciaramidaro, A., Adenzato, M., Enrici, I., Erk, S., Pia, L., Bara, B. G., et al. (2007). The intentional network: how the brain reads varieties of intentions. Neuropsychologia 45 (13), 3105–3113. doi:10.1016/j.neuropsychologia.2007.05.011

Cohen, L., Khoramshahi, M., Salesse, R. N., Bortolon, C., Słowiński, P., Zhai, C., et al. (2017). Influence of facial feedback during a cooperative human-robot task in schizophrenia. Sci. Rep 7 (1), 15023. doi:10.1038/s41598-017-14773-3

Corrigan, L. J., Peters, C., Castellano, G., Papadopoulos, F., Jones, A., Bhargava, S., et al. (2013). “Social-task engagement: striking a balance between the robot and the task,” in Embodied Commun. Goals Intentions Workshop ICSR 13, 1–7.

Cross, E. S., Hortensius, R., and Wykowska, A. (2019). From social brains to social robots: applying neurocognitive insights to human–robot interaction. Phil. Trans. Biol. Sci 374 (1771), 20180024. doi:10.1098/rstb.2018.0024

Dautenhahn, K. (1995). Getting to know each other—artificial social intelligence for autonomous robots. Robot. Autonom. Syst 16(2), 333–356. doi:10.1016/0921-8890(95)00054-2

Dautenhahn, K. (1997). I could Be you: the phenomenological dimension of social understanding. Cybern. Syst 28 (5), 417–453. doi:10.1080/019697297126074

Devillers, L., and Dubuisson Duplessis, G. (2017). Toward a context-based approach to assess engagement in human-robot social interaction. in Dialogues with Social Robots: Enablements, Analyses, and Evaluation, 293–301. doi:10.1007/978-981-10-2585-3_23

Di Paolo, E. A., and De Jaegher, H. (2012). The interactive brain hypothesis. Front. Hum. Neurosci 6, 163. doi:10.3389/fnhum.2012.00163

Disalvo, C., Gemperle, F., Forlizzi, J., and Kiesler, S. (2002). “All robots are not created equal: the design and perception of humanoid robot heads,” in Proceedings of DIS02: Designing Interactive Systems: Processes, Practices, Methods, & Techniques, 321–326. doi:10.1145/778712.778756

Dravida, S., Noah, J. A., Zhang, X., and Hirsch, J. (2018). Comparison of oxyhemoglobin and deoxyhemoglobin signal reliability with and without global mean removal for digit manipulation motor tasks. Neurophotonics 5, 011006. doi:10.1117/1.NPh.5.1.011006

Dravida, S., Noah, J. A., Zhang, X., and Hirsch, J. (2020). Joint attention during live person-to-person contact activates rTPJ, including a sub-component associated with spontaneous eye-to-eye contact. Front. Hum. Neurosci 14, 201. doi:10.3389/fnhum.2020.00201

Dravida, S., Ono, Y., Noah, J. A., Zhang, X. Z., and Hirsch, J. (2019). Co-localization of theta-band activity and hemodynamic responses during face perception: simultaneous electroencephalography and functional near-infrared spectroscopy recordings. Neurophotonics 6 (4), 045002. doi:10.1117/1.NPh.6.4.045002

Eggebrecht, A. T., White, B. R., Ferradal, S. L., Chen, C., Zhan, Y., Snyder, A. Z., et al. (2012). A quantitative spatial comparison of high-density diffuse optical tomography and fMRI cortical mapping. NeuroImage 61 (4), 1120–1128. doi:10.1016/j.neuroimage.2012.01.124

Ferrari, M., and Quaresima, V. (2012). A brief review on the history of human functional near-infrared spectroscopy (fNIRS) development and fields of application. Neuroimage 63 (2), 921–935. doi:10.1016/j.neuroimage.2012.03.049

Forbes, C. E., and Grafman, J. (2010). The role of the human prefrontal cortex in social cognition and moral judgment. Annu. Rev. Neurosci 33 (1), 299–324. doi:10.1146/annurev-neuro-060909-153230

Friston, K. J., Holmes, A. P., Worsley, K. J., Poline, J.-P., Frith, C. D., and Frackowiak, R. S. J. (1994). Statistical parametric maps in functional imaging: a general linear approach. Hum. Brain Mapp 2 (4), 189–210. doi:10.1002/hbm.460020402

Gazzola, V., Rizzolatti, G., Wicker, B., and Keysers, C. (2007). The anthropomorphic brain: the mirror neuron system responds to human and robotic actions. Neuroimage 35 (4), 1674–1684. doi:10.1016/j.neuroimage.2007.02.003

Ghiglino, D., Tommaso, D. D., Willemse, C., Marchesi, S., and Wykowska, A. (2020a). Can I get your (robot) attention? Human sensitivity to subtle hints of human-likeness in a humanoid robot’s behavior. PsyArXiv. doi:10.31234/osf.io/kfy4g

Ghiglino, D., Willemse, C., Tommaso, D. D., Bossi, F., and Wykowska, A. (2020b). At first sight: robots’ subtle eye movement parameters affect human attentional engagement, spontaneous attunement and perceived human-likeness. Paladyn, Journal of Behavioral Robotics 11 (1), 31–39. doi:10.1515/pjbr-2020-0004

Gray, K., Jenkins, A. C., Heberlein, A. S., and Wegner, D. M. (2011). Distortions of mind perception in psychopathology. Proc. Natl. Acad. Sci. Unit. States Am 108 (2), 477–479. doi:10.1073/pnas.1015493108

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cognit. Sci 4 (6), 223–233. doi:10.1016/S1364-6613(00)01482-0

Hazeki, O., and Tamura, M. (1988). Quantitative analysis of hemoglobin oxygenation state of rat brain in situ by near-infrared spectrophotometry. J. Appl. Physiol 64 (2), 796–802. doi:10.1152/jappl.1988.64.2.796

Hegel, F., Krach, S., Kircher, T., Wrede, B., and Sagerer, G. (2008). Theory of Mind (ToM) on robots: a functional neuroimaging study. 2008 3rd ACM/IEEE International Conference on Human-Robot Interaction (HRI), Amsterdam, Netherlands, March 12–15, 2008, (IEEE). doi:10.1145/1349822.1349866

Henschel, A., Hortensius, R., and Cross, E. S. (2020). Social cognition in the age of human–robot interaction. Trends Neurosci 43 (6), 373–384. doi:10.1016/j.tins.2020.03.013

Hirsch, J., Zhang, X., Noah, J. A., and Ono, Y. (2017). Frontal, temporal, and parietal systems synchronize within and across brains during live eye-to-eye contact. Neuroimage 157, 314–330. doi:10.1016/j.neuroimage.2017.06.018

Ishai, A. (2008). Let's face it: it's a cortical network. NeuroImage 40 (2), 415–419. doi:10.1016/j.neuroimage.2007.10.040

Ishai, A., Schmidt, C. F., and Boesiger, P. (2005). Face perception is mediated by a distributed cortical network. Brain Res. Bull 67 (1), 87–93. doi:10.1016/j.brainresbull.2005.05.027

Ismail, L. I., Verhoeven, T., Dambre, J., and Wyffels, F. (2019). Leveraging robotics research for children with autism: a review. International Journal of Social Robotics 11 (3), 389–410. doi:10.1007/s12369-018-0508-1

Jiang, J., Borowiak, K., Tudge, L., Otto, C., and von Kriegstein, K. (2017). Neural mechanisms of eye contact when listening to another person talking. Soc. Cognit. Affect Neurosci 12 (2), 319–328. doi:10.1093/scan/nsw127

Jobsis, F. F. (1977). Noninvasive, infrared monitoring of cerebral and myocardial oxygen sufficiency and circulatory parameters. Science 198 (4323), 1264–1267. doi:10.1126/science.929199

Kawaguchi, Y., Wada, K., Okamoto, M., Tsujii, T., Shibata, T., and Sakatani, K. (2012). Investigation of brain activity after interaction with seal robot measured by fNIRS. 2012 IEEE RO-MAN: the 21st IEEE International Symposium on robot and human interactive communication, Paris, France, September 9–13, 2012, (IEEE), 571–576. doi:10.1109/ROMAN.2012.6343812

Klapper, A., Ramsey, R., Wigboldus, D., and Cross, E. S. (2014). The control of automatic imitation based on bottom–up and top–down cues to animacy: insights from brain and behavior. J. Cognit. Neurosci 26 (11), 2503–2513. doi:10.1162/jocn_a_00651

Kompatsiari, K., Ciardo, F., Tikhanoff, V., Metta, G., and Wykowska, A. (2019). It’s in the eyes: the engaging role of eye contact in HRI. Int. J. Social Robotics. doi:10.1007/s12369-019-00565-4

Li, J. (2015). The benefit of being physically present: a survey of experimental works comparing copresent robots, telepresent robots and virtual agents. Int. J. Hum. Comput. Stud 77, 23–37. doi:10.1016/j.ijhcs.2015.01.001

Matcher, S. J., Elwell, C. E., Cooper, C. E., Cope, M., and Delpy, D. T. (1995). Performance comparison of several published tissue near-infrared spectroscopy algorithms. Anal. Biochem 227 (1), 54–68. doi:10.1006/abio.1995.1252

Mazziotta, J. C., Toga, A. W., Evans, A., Fox, P., and Lancaster, J. (1995). A probabilistic Atlas of the human brain: theory and rationale for its development. Neuroimage 2 (2), 89–101.

Mitchell, J. P., Macrae, C. N., and Banaji, M. R. (2006). Dissociable medial prefrontal contributions to judgments of similar and dissimilar others. Neuron 50 (4), 655–663. doi:10.1016/j.neuron.2006.03.040

Molenberghs, P., Johnson, H., Henry, J. D., and Mattingley, J. B. (2016). Understanding the minds of others: a neuroimaging meta-analysis. Neurosci. Biobehav. Rev 65, 276–291. doi:10.1016/j.neubiorev.2016.03.020

Nguyen, H.-D., and Hong, K.-S. (2016). Bundled-optode implementation for 3D imaging in functional near-infrared spectroscopy. Biomed. Optic Express 7 (9), 3491–3507. doi:10.1364/BOE.7.003491

Nguyen, H.-D., Hong, K.-S., and Shin, Y.-I. (2016). Bundled-optode method in functional near-infrared spectroscopy. PloS One 11 (10), e0165146. doi:10.1371/journal.pone.0165146

Noah, J. A., Ono, Y., Nomoto, Y., Shimada, S., Tachibana, A., Zhang, X., et al. (2015). FMRI validation of fNIRS measurements during a naturalistic task. JoVE 100, e52116. doi:10.3791/52116

Noah, J. A., Zhang, X., Dravida, S., Ono, Y., Naples, A., McPartland, J. C., et al. (2020). Real-time eye-to-eye contact is associated with cross-brain neural coupling in angular gyrus. Front. Hum. Neurosci 14, 19. doi:10.3389/fnhum.2020.00019

Nuamah, J. K., Mantooth, W., Karthikeyan, R., Mehta, R. K., and Ryu, S. C. (2019). Neural efficiency of human–robotic feedback modalities under stress differs with gender. Front. Hum. Neurosci 13, 287. doi:10.3389/fnhum.2019.00287

Özdem, C., Wiese, E., Wykowska, A., Müller, H., Brass, M., and Overwalle, F. V. (2017). Believing androids – fMRI activation in the right temporo-parietal junction is modulated by ascribing intentions to non-human agents. Soc. Neurosci 12 (5), 582–593. doi:10.1080/17470919.2016.1207702

Okamoto, M., and Dan, I. (2005). Automated cortical projection of head-surface locations for transcranial functional brain mapping. NeuroImage 26 (1), 18–28. doi:10.1016/j.neuroimage.2005.01.018

Okruszek, L., Aniszewska-Stańczuk, A., Piejka, A., Wiśniewska, M., and Żurek, K. (2020). Safe but lonely? Loneliness, mental health symptoms and COVID-19. PsyArXiv. doi:10.31234/osf.io/9njps

Oldfield, R. C. (1971). The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9 (1), 97–113. doi:10.1016/0028-3932(71)90067-4

Ono, Y., Nomoto, Y., Tanaka, S., Sato, K., Shimada, S., Tachibana, A., et al. (2014). Frontotemporal oxyhemoglobin dynamics predict performance accuracy of dance simulation gameplay: temporal characteristics of top-down and bottom-up cortical activities. NeuroImage 85, 461–470. doi:10.1016/j.neuroimage.2013.05.071

Pennisi, P., Tonacci, A., Tartarisco, G., Billeci, L., Ruta, L., Gangemi, S., et al. (2016). Autism and social robotics: a systematic review. Autism Res 9 (2), 165–183. doi:10.1002/aur.1527

Penny, W. D., Friston, K. J., Ashburner, J. T., Kiebel, S. J., and Nichols, T. E. (2011). Statistical parametric mapping: the analysis of functional brain images. Amsterdam: Elsevier.

Piva, M., Zhang, X., Noah, J. A., Chang, S. W. C., and Hirsch, J. (2017). Distributed neural activity patterns during human-to-human competition. Front. Hum. Neurosci 11, 571. doi:10.3389/fnhum.2017.00571

Powell, L. J., Kosakowski, H. L., and Saxe, R. (2018). Social origins of cortical face areas. Trends Cognit. Sci 22 (9), 752–763. doi:10.1016/j.tics.2018.06.009

Premack, D., and Woodruff, G. (1978). Does the chimpanzee have a theory of mind? Behavioral and Brain Sciences 1 (4), 515–526. doi:10.1017/S0140525X00076512

Raffard, S., Bortolon, C., Cohen, L., Khoramshahi, M., Salesse, R. N., Billard, A., et al. (2018). Does this robot have a mind? Schizophrenia patients’ mind perception toward humanoid robots. Schizophr. Res 197, 585–586. doi:10.1016/j.schres.2017.11.034

Raffard, S., Bortolon, C., Khoramshahi, M., Salesse, R. N., Burca, M., Marin, L., et al. (2016). Humanoid robots versus humans: how is emotional valence of facial expressions recognized by individuals with schizophrenia? An exploratory study. Schizophr. Res 176 (2), 506–513. doi:10.1016/j.schres.2016.06.001

Rauchbauer, B., Nazarian, B., Bourhis, M., Ochs, M., Prévot, L., and Chaminade, T. (2019). Brain activity during reciprocal social interaction investigated using conversational robots as control condition. Phil. Trans. Biol. Sci 374 (1771), 20180033. doi:10.1098/rstb.2018.0033

Richardson, H., and Saxe, R. (2020). Development of predictive responses in theory of mind brain regions. Dev. Sci 23 (1), e12863. doi:10.1111/desc.12863

Salam, H., and Chetouani, M. (2015). A multi-level context-based modeling of engagement in Human-Robot Interaction. 2015 11th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Ljubljana, Slovenia, May 4–8, 2015. doi:10.1109/FG.2015.7284845

Sanchez, A., Vanderhasselt, M.-A., Baeken, C., and De Raedt, R. (2016). Effects of tDCS over the right DLPFC on attentional disengagement from positive and negative faces: an eye-tracking study. Cognit. Affect Behav. Neurosci 16 (6), 1027–1038. doi:10.3758/s13415-016-0450-3

Saxe, R., and Kanwisher, N. (2003). People thinking about thinking people: the role of the temporo-parietal junction in “theory of mind”. Neuroimage 19 (4), 1835–1842. doi:10.1016/S1053-8119(03)00230-1

Saxe, R. (2010). The right temporo-parietal junction: a specific brain region for thinking about thoughts. in Handbook of Theory of Mind (Taylor and Francis), 1–35.

Scassellati, B., Boccanfuso, L., Huang, C.-M., Mademtzi, M., Qin, M., Salomons, N., et al. (2018a). Improving social skills in children with ASD using a long-term, in-home social robot. Science Robotics 3 (21), eaat7544. doi:10.1126/scirobotics.aat7544

Scassellati, B., Brawer, J., Tsui, K., Nasihati Gilani, S., Malzkuhn, M., Manini, B., et al. (2018b). “Teaching language to deaf infants with a robot and a virtual human,” in Proceedings of the 2018 CHI Conference on human Factors in computing systems, 1–13. doi:10.1145/3173574.3174127

Scholkmann, F., Kleiser, S., Metz, A. J., Zimmermann, R., Mata Pavia, J., Wolf, U., et al. (2014). A review on continuous wave functional near-infrared spectroscopy and imaging instrumentation and methodology. Neuroimage 85, 6–27. doi:10.1016/j.neuroimage.2013.05.004

Sciutti, A., Bisio, A., Nori, F., Metta, G., Fadiga, L., Pozzo, T., et al. (2012a). Measuring human-robot interaction through motor resonance. International Journal of Social Robotics 4 (3), 223–234. doi:10.1007/s12369-012-0143-1

Sciutti, A., Bisio, A., Nori, F., Metta, G., Fadiga, L., and Sandini, G. (2012b). Anticipatory gaze in human-robot interactions. Gaze in HRI from modeling to communication Workshop at the 7th ACM/IEEE International Conference on Human-Robot Interaction, Boston, Massachusetts.

Seo, Y. W., Lee, S. D., Koh, D. K., and Kim, B. M. (2012). Partial least squares-discriminant analysis for the prediction of hemodynamic changes using near infrared spectroscopy. J. Opt. Soc. Korea 16, 57. doi:10.3807/JOSK.2012.16.1.057

Shibata, T. (2012). Therapeutic seal robot as biofeedback medical device: qualitative and quantitative evaluations of robot therapy in dementia Care. Proc. IEEE 100 (8), 2527–2538. doi:10.1109/JPROC.2012.2200559

Sidner, C. L., Lee, C., Kidd, C., Lesh, N., and Rich, C. (2005). Explorations in engagement for humans and robots. ArXiv

Silva, A. C., Lee, S. P., Iadecola, C., and Kim, S. (2000). Early temporal characteristics of cerebral blood flow and deoxyhemoglobin changes during somatosensory stimulation. J. Cerebr. Blood Flow Metabol 20 (1), 201–206. doi:10.1097/00004647-200001000-00025

Solovey, E., Schermerhorn, P., Scheutz, M., Sassaroli, A., Fantini, S., and Jacob, R. (2012). Brainput: enhancing interactive systems with streaming fNIRS brain input. Proceedings of the SIGCHI Conference on human Factors in computing systems, 2193–2202. doi:10.1145/2207676.2208372

Sommer, M., Döhnel, K., Sodian, B., Meinhardt, J., Thoermer, C., and Hajak, G. (2007). Neural correlates of true and false belief reasoning. Neuroimage 35 (3), 1378–1384. doi:10.1016/j.neuroimage.2007.01.042

Strait, M., Canning, C., and Scheutz, M. (2014). Let me tell you: investigating the effects of robot communication strategies in advice-giving situations based on robot appearance, interaction modality and distance. 2014 9th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Bielefeld, Germany, March 3–6, 2014, (IEEE), 479–486.

Tachibana, A., Noah, J. A., Bronner, S., Ono, Y., and Onozuka, M. (2011). Parietal and temporal activity during a multimodal dance video game: an fNIRS study. Neurosci. Lett 503 (2), 125–130. doi:10.1016/j.neulet.2011.08.023

Tachtsidis, I., and Scholkmann, F. (2016). False positives and false negatives in functional near-infrared spectroscopy: issues, challenges, and the way forward. Neurophotonics 3 (3), 031405. doi:10.1117/1.NPh.3.3.031405

Tapus, A., Mataric, M. J., and Scassellati, B. (2007). Socially assistive robotics [grand challenges of robotics]. IEEE Robot. Autom. Mag 14 (1), 35–42. doi:10.1109/MRA.2007.339605

Wada, K., Ikeda, Y., Inoue, K., and Uehara, R. (2010). Development and preliminary evaluation of a caregiver’s manual for robot therapy using the therapeutic seal robot Paro. 19th International Symposium in Robot and Human Interactive Communication, Viareggio, Italy, September 13–15, 2010, 533–538. doi:10.1109/ROMAN.2010.5598615

Wada, K., Shibata, T., Musha, T., and Kimura, S. (2005). Effects of robot therapy for demented patients evaluated by EEG. 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, Alta, August 2–6, 2005, 1552–1557. doi:10.1109/IROS.2005.1545304

Wang, B., and Rau, P.-L. P. (2019). Influence of embodiment and substrate of social robots on users’ decision-making and attitude. Int. J. Social Robotics 11 (3), 411–421. doi:10.1007/s12369-018-0510-7

Wykowska, A. (2020). Social robots to test flexibility of human social cognition. Int. J. Social Robotics. doi:10.1007/s12369-020-00674-5

Ye, J. C., Tak, S., Jang, K. E., Jung, J., and Jang, J. (2009). NIRS-SPM: statistical parametric mapping for near-infrared spectroscopy. Neuroimage 44 (2), 428–447. doi:10.1016/j.neuroimage.2008.08.036

Zhang, X., Noah, J. A., Dravida, S., and Hirsch, J. (2017). Signal processing of functional NIRS data acquired during overt speaking. Neurophotonics 4 (4), 041409. doi:10.1117/1.NPh.4.4.041409

Keywords: social cognition, fNIRS, human-robot interaction, eye-contact, temporoparietal junction, social engagement, dorsolateral prefrontal cortex, TPJ

Citation: Kelley MS, Noah JA, Zhang X, Scassellati B and Hirsch J (2021) Comparison of Human Social Brain Activity During Eye-Contact With Another Human and a Humanoid Robot. Front. Robot. AI 7:599581. doi: 10.3389/frobt.2020.599581

Received: 27 August 2020; Accepted: 07 December 2020;

Published: 29 January 2021.

Edited by:

Astrid Weiss, Vienna University of Technology, Austria

Reviewed by:

Jessica Lindblom, University of Skövde, Sweden

Soheil Keshmiri, Advanced Telecommunications Research Institute International (ATR), Japan

Copyright © 2021 Kelley, Noah, Zhang, Scassellati and Hirsch. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Joy Hirsch, Joy.Hirsch@yale.edu
