ORIGINAL RESEARCH article

Front. Robot. AI, 14 October 2020

Sec. Robotic Control Systems

Volume 7 - 2020 | https://doi.org/10.3389/frobt.2020.580415

This article is part of the Research Topic: The Art of Human-Robot Interaction: Creative Perspectives from Design and the Arts

In this paper, we present a robotic painting system whereby a team of mobile robots equipped with different color paints create pictorial compositions by leaving trails of color as they move throughout a canvas. We envision this system to be used by an external user who can control the concentration of different colors over the painting by specifying density maps associated with the desired colors over the painting domain, which may vary over time. The robots distribute themselves according to such color densities by means of a heterogeneous distributed coverage control paradigm, whereby only those robots equipped with the appropriate paint will track the corresponding color density function. The painting composition therefore arises as the integration of the motion trajectories of the robots, which lay paint as they move throughout the canvas tracking the color density functions. The proposed interactive painting system is evaluated on a team of mobile robots. Different experimental setups in terms of paint capabilities given to the robots highlight the effects and benefits of considering heterogeneous teams when the painting resources are limited.

The intersection of robots and arts has become an active object of study as both researchers and artists push the boundaries of the traditional conceptions of different forms of art by making robotic agents dance (Nakazawa et al., 2002; LaViers et al., 2014; Bi et al., 2018), create music (Hoffman and Weinberg, 2010), support stage performances (Ackerman, 2014), create paintings (Lindemeier et al., 2013; Tresset and Leymarie, 2013), or become art exhibits by themselves (Dean et al., 2008; Dunstan et al., 2016; Jochum and Goldberg, 2016; Vlachos et al., 2018). On a smaller scale, the artistic possibilities of robotic swarms have also been explored in the context of choreographed movements to music (Ackerman, 2014; Alonso-Mora et al., 2014; Schoellig et al., 2014), emotionally expressive motions (Dietz et al., 2017; Levillain et al., 2018; St.-Onge et al., 2019; Santos and Egerstedt, 2020), or interactive music generation based on the interactions between agents (Albin et al., 2012), among others.

In the context of robotic painting, the focus has been primarily on robotic arms capable of rendering input images according to some aesthetic specifications (Lindemeier et al., 2013; Scalera et al., 2019), or even reproducing scenes from the robot's surroundings, e.g., portraits (Tresset and Leymarie, 2013) or inanimate objects (Kudoh et al., 2009). The production of abstract paintings with similar robotic arm setups remains mostly unexplored, with some exceptions (Schubert, 2017). While the idea of swarm painting has been substantially investigated in the context of computer-generated paintings, where virtual painting agents move inspired by ant behaviors (Aupetit et al., 2003; Greenfield, 2005; Urbano, 2005), the creation of paintings with embodied robotic swarms remains scarce. Furthermore, in the existing instances of robotic swarm painting, the generation paradigm is analogous to those employed in simulation: the painting emerges as a result of the agents' movement according to some behavioral, preprogrammed controllers (Moura and Ramos, 2002; Moura, 2016). The robotic swarm thus acts in a completely autonomous fashion once deployed, which prevents any interactive influence of the human artist once the creation process has begun. Even in such cases where the human artist participates in the creation of the painting along with the multi-robot system (Chung, 2018), the role of the human artist has been limited to that of a co-creator of the work of art, since they can add strokes to the painting but their actions do not influence the operation of the multi-robot team.

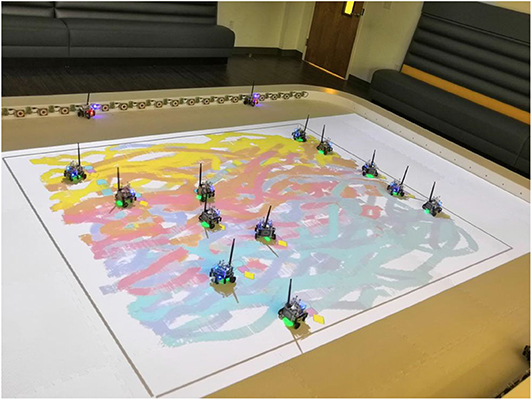

In this paper, we present a multi-robot painting system based on ground robots that lay color trails as they move throughout a canvas, as shown in Figure 1. The novelty of this approach lies in the fact that a human user can influence the movement of robots capable of painting specific colors, thus controlling the concentration of certain pigments on different areas of the painting canvas. Inspired by Diaz-Mercado et al. (2015), this human-swarm interaction is formalized through the use of scalar fields, which we refer to as density functions, associated with the different colors such that, the higher the color density specified at a particular point, the more attracted the robots equipped with that color will be to that location. Upon the specification of the color densities, the robots move over the canvas by executing a distributed controller that optimally covers such densities taking into account the heterogeneous painting capabilities of the robot team (Santos and Egerstedt, 2018; Santos et al., 2018). Thus, the system provides the human user with a high-level way to control the painting behavior of the swarm as a whole, agnostic to the total number of robots in the team or the specific painting capabilities of each of them.

Figure 1. A group of 12 robots generates a painting based on the densities specified by a human user for five different colors: cyan, blue, pink, orange, and yellow. The robots lay colored trails as they move throughout the canvas, distributing themselves according to their individual painting capabilities. The painting arises as a result of the motion trails integrating over time.

The remainder of the paper is organized as follows: In section 2, we formally introduce the problem of coverage control and its extension to heterogeneous robot capabilities, as it enables the human-swarm interaction modality used in this paper. Section 3 elaborates on the generation, based on the user input, of color densities to be tracked by the multi-robot system along with the color selection strategy adopted by each robot for its colored trail. Experiments conducted on a team of differential-drive robots are presented in section 4, where different painting compositions arise as a result of various setups in terms of painting capabilities assigned to the robots. The effects of these heterogeneous resources on the final paintings are analyzed and discussed in section 5, which evaluates the color distribution in the paintings, both through color distances and chromospectroscopy, and includes a statistical analysis that illustrates the consistency of results irrespective of initial conditions in terms of robot poses. Section 6 concludes the paper.

The interactive multi-robot painting system presented in this paper operates based on the specification of desired concentration of different colors over the painting canvas. As stated in section 1, this color preeminence is encoded through color density functions that the human user can set over the domain to influence the trajectories of the robots and, thus, produce the desired coloring effect. In this section, we recall the formulation of the coverage control problem as it serves as the mathematical backbone for the human-swarm interaction modality considered in this paper.

The coverage control problem deals with the question of how to distribute a team of N robots with positions $x_i \in D$, $i \in \{1, \dots, N\} =: \mathcal{N}$, to optimally cover the environmental features of a domain $D \subset \mathbb{R}^d$, with d = 2 and d = 3 for ground and aerial robots, respectively. The question of how well the team is covering a domain is typically asked with respect to a density function, ϕ : D ↦ [0, ∞), that encodes the importance of the points in the domain (Cortes et al., 2004; Bullo et al., 2009). Denoting the aggregate positions of the robots as $x = [x_1^T, \dots, x_N^T]^T$, a natural way of distributing coverage responsibilities among the team is to let Robot i be in charge of those points closest to it,

$$V_i(x) = \{ q \in D \mid \|q - x_i\| \le \|q - x_j\|, \; \forall j \in \mathcal{N} \},$$

that is, its Voronoi cell with respect to the Euclidean distance. The quality of coverage of Robot i over its region of dominance can be encoded as,

$$h_i(x) = \int_{V_i(x)} \|q - x_i\|^2 \phi(q)\, dq, \tag{1}$$

where the square of the Euclidean distance between the position of the robot and the points within its region of dominance reflects the degradation of the sensing performance with distance. The performance of the multi-robot team with respect to ϕ can then be encoded through the locational cost in Cortes et al. (2004),

$$\mathcal{H}(x) = \sum_{i \in \mathcal{N}} \int_{V_i(x)} \|q - x_i\|^2 \phi(q)\, dq, \tag{2}$$

with a lower value of the cost corresponding to a better coverage. A necessary condition for (2) to be minimized is that the position of each robot corresponds to the center of mass of its Voronoi cell (Du et al., 1999), given by

$$c_i(x) = \frac{\int_{V_i(x)} q\, \phi(q)\, dq}{\int_{V_i(x)} \phi(q)\, dq}.$$

This spatial configuration, referred to as a centroidal Voronoi tessellation, can be achieved by letting the multi-robot team execute the well-known Lloyd's algorithm (Lloyd, 1982), whereby

$$\dot{x}_i = \kappa \left( c_i(x) - x_i \right), \quad \kappa > 0. \tag{3}$$
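A minimal sketch of one discretized Lloyd update may help make this procedure concrete. It approximates the Voronoi cells and their centers of mass on a regular grid over a rectangular domain, then moves each robot a fraction of the way toward the center of mass of its cell. The function name `lloyd_step`, the grid resolution, the step size `dt`, and the rectangular domain are illustrative assumptions and are not taken from the system described in this paper.

```python
def lloyd_step(robots, density, width=1.0, height=1.0, nx=40, ny=40,
               gain=1.0, dt=0.5):
    """One explicit-Euler step of x_i <- x_i + dt * gain * (c_i - x_i).

    robots  : list of (x, y) robot positions
    density : callable phi(qx, qy) >= 0 (hypothetical user-supplied density)
    """
    n = len(robots)
    mass = [0.0] * n
    moment_x = [0.0] * n
    moment_y = [0.0] * n
    for a in range(nx):
        for b in range(ny):
            # Center of the grid cell (a, b)
            qx = (a + 0.5) * width / nx
            qy = (b + 0.5) * height / ny
            # Voronoi assignment: the closest robot owns this grid point
            owner = min(range(n),
                        key=lambda k: (qx - robots[k][0]) ** 2
                                      + (qy - robots[k][1]) ** 2)
            w = density(qx, qy)
            mass[owner] += w
            moment_x[owner] += w * qx
            moment_y[owner] += w * qy
    updated = []
    for i, (x, y) in enumerate(robots):
        if mass[i] > 0.0:
            cx = moment_x[i] / mass[i]
            cy = moment_y[i] / mass[i]
            updated.append((x + dt * gain * (cx - x), y + dt * gain * (cy - y)))
        else:
            updated.append((x, y))  # empty cell: centroid undefined, stay put
    return updated


# Two robots under a uniform density on the unit square settle near the
# centers of the left and right halves of the domain.
robots = [(0.2, 0.5), (0.8, 0.5)]
for _ in range(50):
    robots = lloyd_step(robots, lambda qx, qy: 1.0)
```

The grid approximation trades accuracy for simplicity; an implementation on real robots would more likely compute the cells and integrals geometrically.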

The power of the locational cost in (2) lies in its ability to influence which areas of the domain the robots should concentrate on by specifying a single density function, ϕ, irrespective of the number of robots in the team. This makes coverage control an attractive paradigm for human-swarm interaction, as introduced in Diaz-Mercado et al. (2015), since a human operator can influence the collective behavior of an arbitrarily large swarm by specifying a single density function, e.g., drawing a shape, tapping, or dragging with the fingers on a tablet-like interface. In this paper, however, we consider a scenario where a human operator can specify multiple density functions associated with the different colors to be painted and, thus, a controller encoding such color heterogeneity must be considered. The following section recalls a formulation of the coverage problem for multi-robot teams with heterogeneous capabilities and a control law that allows the robots to optimally cover a number of different densities.

The human-swarm interaction modality considered in this paper allows the painter to specify a set of density functions associated with different colors to produce desired concentrations of colors over the canvas. To this end, we recover the heterogeneous coverage control formulation in Santos and Egerstedt (2018). Let be the set of paint colors and ϕj : D ↦ [0, ∞), , the family of densities associated with the colors in defined over the convex domain, D, i.e., the painting canvas. In practical applications, the availability of paints given to each individual robot may be limited due to payload limitations, resource depletion, or monetary constraints. To this end, let Robot i, , be equipped with a subset of the paint colors, , such that it can paint any of those colors individually or a color that results from their combination. The specifics concerning the color mixing strategy executed by the robots are described in detail in section 3.

Analogously to (1), the quality of coverage performed by Robot i with respect to Color j can be encoded through the locational cost

where is the region of dominance of Robot i with respect to Color j. A natural choice to define the boundaries of is for Robot i to consider those robots in the team capable of painting Color j that are closest to it. If we denote as the set of robots equipped with Color j,

then the region of dominance of Robot i with respect to Color j ∈ p(i) is the Voronoi cell in the tessellation whose generators are the robots in ,

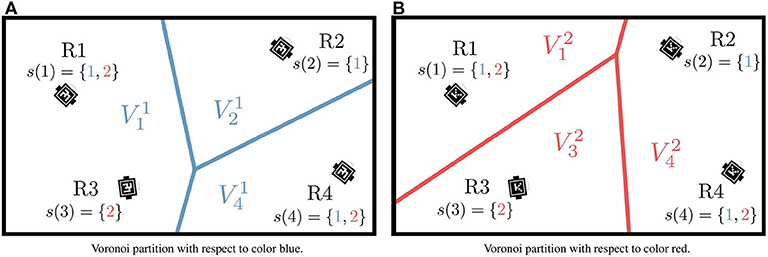

Note that, if Robot i is the only robot equipped with Color j, then the robot is in charge of covering the whole canvas, i.e., . Under this partition strategy, as illustrated in Figure 2, the area that Robot i is responsible for with respect to Color j, , can differ from the region to be monitored with respect to Color k, , j, k ∈ p(i).

Figure 2. Regions of dominance for four neighboring robots with respect to colors blue () (A), and red () (B). For each color, the resulting Voronoi cells are generated only by those robots equipped with that painting color. Source: Adapted from Santos and Egerstedt (2018).

With the regions of dominance defined, we can now evaluate the cost in (4). Thus, the overall performance of the team can be evaluated by considering the complete set of robots and color equipments through the heterogeneous locational cost formulated in Santos and Egerstedt (2018),

with a lower value of the cost corresponding to a better coverage of the domain with respect to the family of color density functions ϕj, .

Letting Robot i follow a negative gradient descent of establishes the following control law.

Theorem 1 (Heterogeneous Gradient Descent, Santos and Egerstedt, 2018). Let Robot i, with planar position xi, evolve according to the control law , where

with and , respectively, the heterogeneous mass and center of mass of Robot i with respect to Color j, defined as

Then, as t → ∞, the robots will converge to a critical point of the heterogeneous locational cost in (5) under a positive gain κ > 0.

Proof: See Santos and Egerstedt (2018).

Therefore, the controller that minimizes the heterogeneous locational cost in (5) makes each robot move according to a weighted sum where each term corresponds with a continuous-time Lloyd descent—analogous to (3)—over a particular color density ϕj, weighted by the mass corresponding to that painting capability.
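To illustrate the structure of this mass-weighted sum, the following sketch evaluates a grid approximation of the control input for every robot. For each color, only the robots equipped with that color generate the Voronoi partition, and each robot accumulates the product of its mass and the offset to its center of mass. The function name `heterogeneous_control`, the dictionary-based interface, and the grid discretization are assumptions made for illustration only.

```python
def heterogeneous_control(positions, equipment, densities, width=1.0,
                          height=1.0, nx=40, ny=40, gain=1.0):
    """Grid approximation of u_i = gain * sum_j m_i^j * (c_i^j - x_i).

    positions : list of (x, y) robot positions
    equipment : list of sets, equipment[i] = colors carried by robot i
    densities : dict color -> callable phi_j(qx, qy) >= 0 (hypothetical)
    """
    n = len(positions)
    u = [[0.0, 0.0] for _ in range(n)]
    cell_area = (width / nx) * (height / ny)
    for color, phi in densities.items():
        # Only robots equipped with this color generate its Voronoi cells
        owners = [i for i in range(n) if color in equipment[i]]
        if not owners:
            continue  # no robot can paint this color
        mass = {i: 0.0 for i in owners}
        moment = {i: [0.0, 0.0] for i in owners}
        for a in range(nx):
            for b in range(ny):
                qx = (a + 0.5) * width / nx
                qy = (b + 0.5) * height / ny
                i = min(owners,
                        key=lambda k: (qx - positions[k][0]) ** 2
                                      + (qy - positions[k][1]) ** 2)
                w = phi(qx, qy) * cell_area
                mass[i] += w
                moment[i][0] += w * qx
                moment[i][1] += w * qy
        for i in owners:
            # m * (c - x) equals (first moment) - m * x, which avoids a
            # division when the mass of a cell is zero
            u[i][0] += gain * (moment[i][0] - mass[i] * positions[i][0])
            u[i][1] += gain * (moment[i][1] - mass[i] * positions[i][1])
    return u


# Robot 0 carries cyan only and is pulled toward the cyan density;
# robot 1 carries magenta only and receives no input from a cyan density.
u = heterogeneous_control([(0.2, 0.5), (0.8, 0.5)],
                          [{"C"}, {"M"}],
                          {"C": lambda qx, qy: 1.0})
```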

The controller in (6) thus enables an effective human-swarm interaction modality for painting purposes where the human painter only has to specify color density functions for the desired color composition and the controller allows the robots in the team to distribute themselves over the canvas according to their heterogeneous painting capabilities. Note that, while other human-swarm interaction paradigms based on coverage control have considered time-varying densities to model the input provided by an external operator (Diaz-Mercado et al., 2015), in the application considered in this paper the heterogeneous formulation of the coverage control problem, while considering static densities, suffices to model the information exchange between the human and the multi-robot system.

In section 2, we established a human-swarm interaction paradigm that allows the user to influence the team of robots so that they distribute themselves throughout the canvas according to a desired distribution of color and their painting capabilities. But how is the painting actually created? In this section, we present a strategy that allows each robot to choose the proportion in which the colors available in its equipment should be mixed in order to produce paintings that reflect, to the extent possible, the distributions of color specified by the user.

The multi-robot system considered in this paper is conceived to create a painting by means of each robot leaving a trail of color as it moves over a white canvas. While the paintings presented in section 4 do not use physical paint but, rather, projected trails over the robot testbed, the objective of this section is to present a color model that both allows the robots to produce a wide range of colors with minimal painting equipment and that closely reflects how the color mixing would occur in a scenario where physical paint were to be employed. To this end, in order to represent a realistic scenario where robots lay physical paint over a canvas, we use the subtractive color mixing model (see Berns, 2000 for an extensive discussion on color mixing), which describes how dyes and inks are to be combined over a white background to absorb different wavelengths of white light to create different colors. In this model, the primary colors that act as a basis to generate all the other color combinations are cyan, magenta, and yellow (CMY).

The advantage of using a simple model like CMY is two-fold. Firstly, one can specify the desired presence of an arbitrary color in the canvas by defining in which proportion these primaries should mix at each point and, secondly, the multi-robot system as a collective can generate a wide variety of colors being equipped with just cyan, magenta and yellow paint, i.e., with $\mathcal{P} = \{C, M, Y\}$ as the set of paint colors in the heterogeneous multi-robot control strategy in section 2.2. The first aspect reduces the interaction complexity between the human and the multi-robot system: the painter can specify a desired set of colors throughout the canvas by defining the CMY representation of each color as $\beta = [\beta_C, \beta_M, \beta_Y]$, with each component in [0, 1], and its density function over the canvas ϕβ(q), q ∈ D. Note that a color specified in the RGB color model (red, green, and blue), represented by the triple [βR, βG, βB], can be directly converted to the CMY representation by subtracting the RGB values from 1, i.e., [βC, βM, βY] = 1 − [βR, βG, βB]. Given that the painting capabilities of the multi-robot system are given by $\mathcal{P} = \{C, M, Y\}$, the densities that the robots are to cover according to the heterogeneous coverage formulation in section 2.2 can be obtained as,

$$\phi_j(q) = \bigoplus_{\beta} \beta_j\, \phi_\beta(q), \quad j \in \mathcal{P},$$

where ⊕ is an appropriately chosen composition operator applied over the colors β specified by the user. The choice of composition operator reflects how the densities associated with the different colors should be combined in order to compute the overall density function associated with each CMY primary color. For example, one way to combine the density functions is to compute the maximum value at each point,

$$\phi_j(q) = \max_{\beta} \beta_j\, \phi_\beta(q), \quad j \in \mathcal{P}.$$
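The RGB-to-CMY conversion and the pointwise-maximum composition can be sketched in a few lines. The helper names `rgb_to_cmy` and `compose_max` are hypothetical, colors are assumed to be normalized to [0, 1], and scaling each user density by the corresponding CMY component of its color before taking the maximum is an assumption about how the composition is applied.

```python
def rgb_to_cmy(rgb):
    """Convert a normalized RGB triple to CMY by subtracting from 1."""
    return tuple(1.0 - channel for channel in rgb)


def compose_max(colors, q):
    """Combine user densities into one density per CMY primary at point q.

    colors : list of (cmy_triple, density) pairs, where density is a
             callable phi(qx, qy) >= 0 (hypothetical user input)
    q      : (qx, qy) point on the canvas
    """
    combined = {}
    for index, primary in enumerate("CMY"):
        combined[primary] = max(
            (beta[index] * phi(q[0], q[1]) for beta, phi in colors),
            default=0.0,
        )
    return combined


# Pure red in RGB corresponds to full magenta and yellow, with no cyan.
red_cmy = rgb_to_cmy((1.0, 0.0, 0.0))

# Two user-specified colors with constant densities, evaluated at one point.
densities_at_q = compose_max(
    [(red_cmy, lambda qx, qy: 2.0),
     ((1.0, 0.0, 0.0), lambda qx, qy: 3.0)],  # second color: pure cyan
    (0.5, 0.5),
)
```

Other composition operators, such as a sum, would replace the call to `max` while leaving the rest of the structure unchanged.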

The question remaining is how a robot should combine its available pigments in its color trail to reflect the desired color density functions. The formulation of the heterogeneous locational cost in (5) implies that Robot i is in charge of covering Color j within the region of dominance $V_i^j$ and of covering Color k within $V_i^k$, j, k ∈ p(i). However, depending on the values of the densities ϕj and ϕk within these Voronoi cells, the ratio between the corresponding coverage responsibilities may be unbalanced. In fact, such responsibilities are reflected naturally through the heterogeneous mass, $m_i^j(x)$, defined in (7). Let us denote as $\alpha_i^j \in [0, 1]$, $j \in p(i)$, with $\sum_{j \in p(i)} \alpha_i^j = 1$, the color proportion in the CMY basis to be used by Robot i in its paint trail. Then, a color mixing strategy that reflects the coverage responsibilities of Robot i can be given by,

$$\alpha_i^j = \frac{m_i^j(x)}{\sum_{k \in p(i)} m_i^k(x)}, \quad j \in p(i). \tag{8}$$

Note that, when $m_i^j(x) = 0$ for all j ∈ p(i), the robot is not covering any density and, thus, $\alpha_i^j$, j ∈ p(i), can be undefined.
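As a rough sketch of this mixing rule, the proportions can be computed by normalizing the heterogeneous masses of the colors a robot carries, and the resulting CMY mix can be mapped to RGB for display. The names `mixing_proportions` and `trail_rgb`, as well as returning `None` in the undefined case, are choices made here for illustration and are not described in the paper.

```python
def mixing_proportions(masses):
    """Normalize the masses of a robot's equipped colors into proportions.

    masses : dict color -> heterogeneous mass of that color for the robot
    Returns None when the robot covers no density (proportions undefined).
    """
    total = sum(masses.values())
    if total <= 0.0:
        return None
    return {color: m / total for color, m in masses.items()}


def trail_rgb(proportions):
    """Map CMY proportions to a normalized RGB triple via RGB = 1 - CMY."""
    cmy = [proportions.get(primary, 0.0) for primary in "CMY"]
    return tuple(1.0 - value for value in cmy)


# A robot carrying magenta and yellow with equal coverage responsibilities
# mixes both paints in equal parts.
proportions = mixing_proportions({"M": 2.0, "Y": 2.0})
rgb = trail_rgb(proportions)
```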

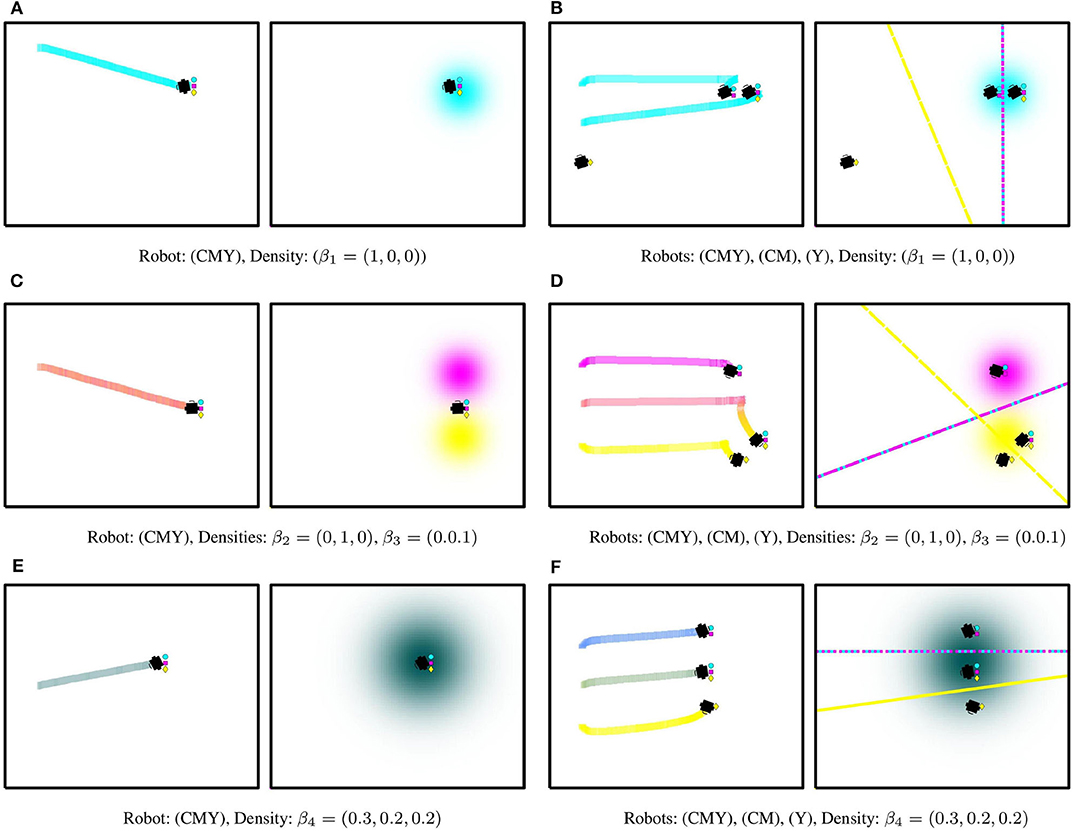

Figure 3 illustrates the operation of this painting mechanism for three different density color specifications. Firstly, the mechanism is simulated for a robot equipped with all three colors—cyan (C), magenta (M), and yellow (Y)—in Figures 3A,C,E. As seen, the robot lays a cyan trail as it moves to optimally cover a single cyan density function in Figure 3A. In Figure 3C, two different density functions are specified, one magenta and one yellow, and the robot lays down a trail whose color is a combination of both paints. Finally, in Figure 3E, the robot is tasked to cover a density that is a combination of the CMY colors. Since the robot is equipped with all three colors, the trail on the canvas exactly replicates the colors desired by the user.

Figure 3. Painting mechanism based on heterogeneous coverage control. Each subfigure shows the color trails laid by the robots (left) as they move to optimally cover a user-specified color density function (right) by executing the controller in (6). The symbols located to the right of the robot indicate its painting capabilities. (A,C,E) Show the operation of the painting mechanism in section 3 for a single robot equipped with all three colors, i.e., cyan (C), magenta (M), and yellow (Y), thus capable of producing all color combinations in the CMY basis. In (A), the robot lays a cyan trail according to the density color specification β1. The robot equally mixes magenta and yellow in (C) according to the color mixing strategy in (8), producing a color in between the two density color specifications, β2 and β3. Finally, in (E), the robot exactly replicates the color specified by β4. On the other hand, (B,D,F) depict the operation of the painting mechanism with a team of 3 robots, where the Voronoi cells (color coded according to the CMY basis) are shown on the density subfigures.

For the same input density specifications, Figures 3B,D,F illustrate the trails generated by a team of three robots equipped with different subsets of the color capabilities. As seen, the color of each individual robot trail evolves as a function of the robot's equipment, the equipment of its neighbors, and the specified input density functions. A simulation depicting the operation of this painting mechanism can be found in the video included in the Supplementary Materials.

The proposed multi-robot painting system is implemented on the Robotarium, a remotely accessible swarm robotics testbed at the Georgia Institute of Technology (Wilson et al., 2020). The experiments, uploaded via web, are remotely executed on a team of up to 20 custom-made differential-drive robots. On each iteration, run at a maximum rate of 120 Hz, the Robotarium provides the poses of the robots, tracked by a motion capture system, and allows the control program to specify the linear and angular velocities to be executed by each robot in the team. An overhead projector affords the visualization of time-varying images onto the test bed during the execution of the experiments. The data is made available to the user once the experiment is finalized.

The human-swarm interaction paradigm for color density coverage presented in section 2 and the trail color mixing strategy from section 3 are illustrated experimentally on a team of 12 robots over a 2.4 × 2 m canvas. The robots lay trails of color as they cover a set of user-defined color density functions according to the control law in (6), where κ = 1 for all the experiments and the single integrator dynamics are converted into linear and angular velocities executable by the robots using the near-identity diffeomorphism from Olfati-Saber (2002), a functionality available in the Robotarium libraries. In order to study how the limited availability of painting resources affects the resulting painting, for the same painting task, nine different experimental setups in terms of paint equipment assigned to the multi-robot team are considered. While no physical paint is used in the experiments included in this paper, the effectiveness of the proposed painting system is illustrated by visualizing the robots' motion trails over the canvas with an overhead projector.
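The conversion from single-integrator commands to unicycle velocities can be sketched with the commonly used form of a near-identity diffeomorphism, in which the single-integrator velocity is assigned to a point a small distance ahead of the robot along its heading. The function name and the projection distance `l = 0.05` are assumptions; the paper does not state the parameters used, and this sketch does not reproduce the Robotarium library code.

```python
import math


def single_integrator_to_unicycle(u, theta, l=0.05):
    """Map a planar velocity u = (ux, uy) to unicycle inputs (v, omega).

    theta : robot heading in radians
    l     : distance of the controlled point ahead of the wheel axis
            (assumed value, chosen for illustration)
    """
    ux, uy = u
    # Component of u along the heading drives the linear velocity
    v = math.cos(theta) * ux + math.sin(theta) * uy
    # Component of u perpendicular to the heading, scaled by 1/l,
    # drives the angular velocity
    omega = (-math.sin(theta) * ux + math.cos(theta) * uy) / l
    return v, omega


# A robot facing along +x that is commanded to move along +y
# turns in place instead of translating.
v, omega = single_integrator_to_unicycle((0.0, 1.0), 0.0)
```

A smaller `l` tracks the single-integrator command more closely at the cost of larger angular velocities, so in practice the outputs would also need to be saturated to the actuator limits of the robots.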

The experiment considers a scenario where the multi-robot team has to simultaneously cover a total of six different color density functions over a time horizon of 300 s. These density functions aim to represent commands that would be interactively generated by the user, who would be observing the painting being generated and could modify the commands for the color densities according to his or her artistic intentions. Note that, in this paper, these time-varying density functions are common to all the experiments and simulations included in sections 4 and 5 to allow the evaluation of the paintings as a function of the equipment setups in Table 2. In an interactive scenario, the density commands are to be generated in real time by the user, by means of a tablet-like interface, for example. In this experiment, the color density functions involved are of the form,

with , q = [qx, qy]T ∈ D. The color associated with each density as well as its parameters are specified in Table 1, and and are given by

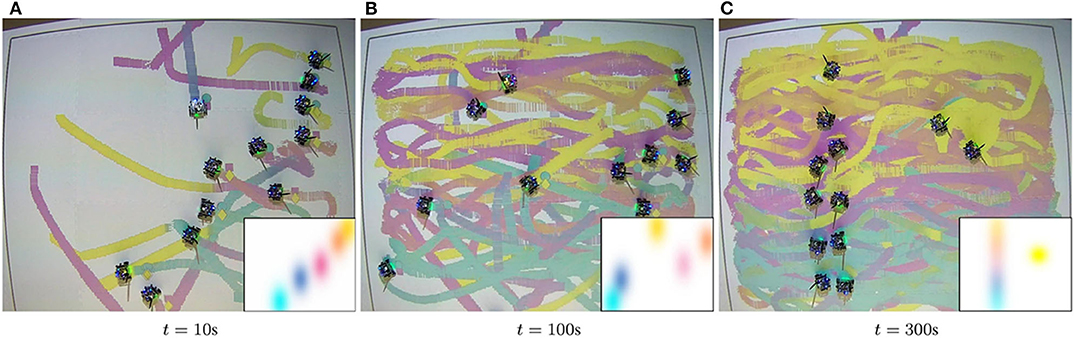

Figure 4 illustrates the evolution of the painting for a specific equipment setup as the robots move to cover these densities at t = 100s and t = 300s.

Figure 4. Evolution of the painting according to the density parameters in Table 1, for Setup 3 in Table 2. The robots distribute themselves over the domain in order to track the density functions as they evolve through the canvas. In each snapshot, the densities that the multi-robot team is tracking at that specific point in time are depicted in the bottom right corner of the image. The color distribution of the color trails reflects the colors specified for the density functions within the painting capabilities of the robots. Even though none of the robots is equipped with the complete CMY equipment and, thus, none can reproduce exactly the colors specified by the user, the integration of the colors over time produces a result that is close to the user's density specification.

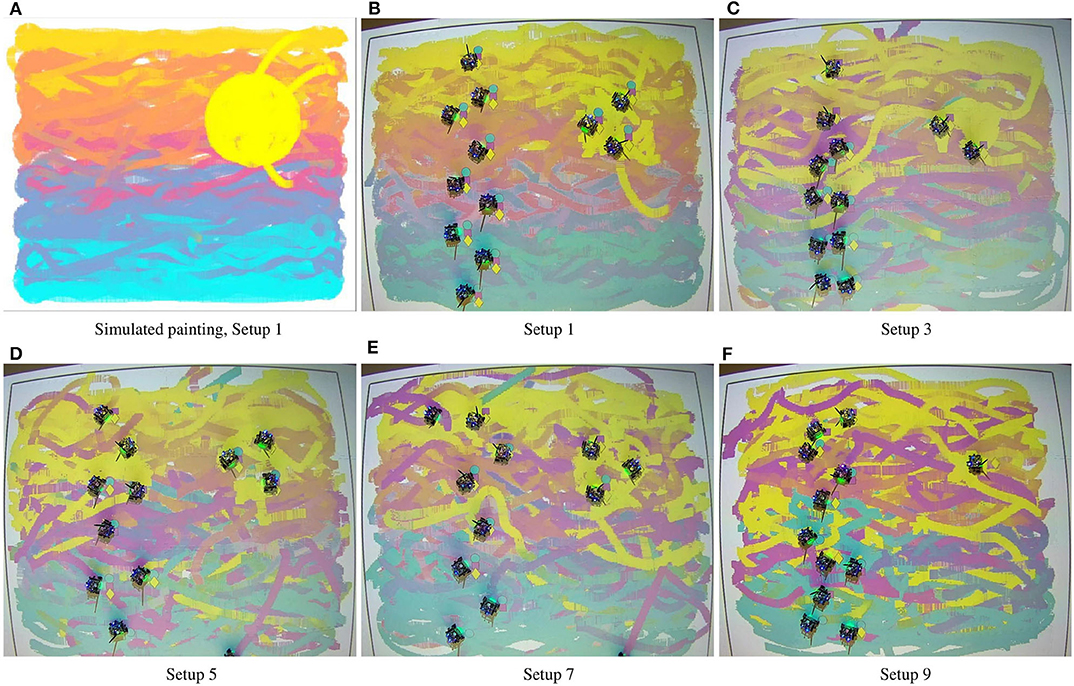

The multi-robot painting strategy is evaluated under a series of painting equipment setups to assess the differences that result from the heterogeneity of the team, which can be motivated by the scarcity or depletion of painting resources or by a design choice of the human user, for example. Table 2 outlines the color painting capabilities available to each of the robots in the different experimental setups. The paintings which result from five of these configurations (the ones with an odd setup ID) are shown in Figure 5. The generative process for the paintings in Figure 5 is illustrated in the video included in the Supplementary Materials. Note that all these experiments were run with identical initial conditions in terms of robot poses, according to the identifiers in Table 2. For the purpose of benchmarking, a simulated painting is generated for painting setup 1, i.e., with a homogeneous equipment capable of reproducing any color. This simulated painting is created under the same heterogeneous density coverage control and color mixing strategies as in the robotic experiments, but considering unicycle dynamics without actuator limits or saturation and with no communication delays (Figure 5A). Given the paintings in Figures 5B–F, we can observe how the closest color distribution to the simulated painting is achieved in Figure 5B, which corresponds to the case where all the robots have all the painting capabilities (i.e., the team is homogeneous) and, thus, can reproduce any combination of colors in the CMY basis.

Figure 5. Paintings generated for the densities in (9), with the team of 12 robots in their final positions. (A) Corresponds to a simulated painting and it is used for benchmarking. According to the painting equipment setups in Table 2 we can see how, as the robots in the team are equipped with more painting capabilities, the color gradients become smoother and more similar to the ideal outcome.

It is interesting to note the significant changes in the characteristics of the painting for different equipment configurations of the robots. For equipment setups 3, 5, 7, and 9, where some or all of the robots are not equipped with all the color paints, the corresponding paintings do not show color gradients as smooth as those in Figure 5B. However, the distribution of color for these paint setups still qualitatively reflects the color specification given by the densities in Table 1. Even in the extreme case of Setup 9 (see Figure 5F), where none of the robots is equipped with all CMY paints (in fact, half of the robots only have one paint and the other half have pairwise combinations), the robot team still renders a painting that, while presenting colors with less smooth blending than the other setups, still represents the color distribution specified by the densities in Table 1. For Setups 3 and 7, the team has the same total number of CMY painting capabilities but the distribution is different among the team members: in Setup 3 none of the robots is equipped with the three colors, while in Setup 7 there are some individuals that can paint any CMY combination and others that can paint only one color. Observing Figures 5C,E, while the resulting colors are less vibrant for the equipment in Setup 3, there seems to be a smoother blending between them along the vertical axis. Setup 7 produces a painting where overall the colors are more faithful to the ideal outcome presented in Figure 5A, but that also contains stronger trails of pure primary colors throughout the painting. If we compare Figures 5D,E we can see how, by adding a small amount of painting capabilities to the system, the color gradients are progressively smoothed. This observation motivates a further analysis of the variations that appear in the paintings as a function of the heterogeneous equipment configurations of the different setups. This will be the focus of the next section.

As described in section 1, the robotic painting system developed in this paper generates illustrations via an interaction between the color density functions specified by the user and the different color equipment present on the robots. In particular, the different equipments not only affect the color trails left by the robots, but also affect their motion as they track the density functions corresponding to their equipment. While Figure 5 qualitatively demonstrates how the nature of the painting varies with different equipment setups, this section presents a quantitative analysis of the variations among paintings resulting from different equipment setups. We also analyze the reproducibility characteristics of the multi-robot painting system, by investigating how paintings vary among different realizations using the same equipment setups.

Let S denote the number of distinct equipment setups of the robots in the team, where each unique configuration denotes a robot species. We denote sι ∈ [0, 1] as the probability that a randomly chosen agent belongs to species ι, ι ∈ {1, …, S}, such that

$$\sum_{\iota = 1}^{S} s_\iota = 1.$$

For each equipment setup in Table 2, these probabilities can be calculated as a function of how many agents are equipped with each subset of the paint colors.

We adopt the characterization developed in Twu et al. (2014), and quantify the heterogeneity of a multi-robot team as,

where E(s) represents the complexity and Q(s), the disparity within the multi-robot system for a given experimental setup, s. More specifically, E(s) can be modeled as the entropy of the multi-agent system,

and Q(s) is the Rao's Quadratic Entropy,

with δ a metric distance between species of robots. More specifically, δ represents the differences between the abilities of various species in the context of performing a particular task. For example, if we have three robots, one belonging to species s5 (p(s5) = {C}) and two belonging to species s8 (p(s8) = {C, M, Y}) and we have to paint only cyan, then the distance between agents should be zero, since all of them can perform the same task. However, if the task were to paint a combination of yellow and magenta, then the species s5 could not contribute to that task and, therefore, δ > 0.
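The ingredients of this heterogeneity characterization can be sketched as follows: species probabilities obtained by counting identical equipment sets, the Shannon entropy as the complexity term, and a Rao-type quadratic entropy as the disparity term. The textbook forms used here (natural logarithm, distances entering linearly) and the 0/1 placeholder distance are assumptions; the exact definitions and the task-dependent distance in Twu et al. (2014) may differ.

```python
import math
from collections import Counter


def species_probabilities(equipment):
    """Fraction of robots in each species (unique equipment set)."""
    counts = Counter(frozenset(e) for e in equipment)
    total = len(equipment)
    return {species: count / total for species, count in counts.items()}


def shannon_entropy(probs):
    """Complexity term: -sum_i s_i * ln(s_i) (natural log assumed)."""
    return -sum(p * math.log(p) for p in probs.values() if p > 0.0)


def rao_quadratic_entropy(probs, distance):
    """Disparity term: sum_{a, b} s_a * s_b * distance(a, b).

    distance : callable on pairs of species (placeholder for the
               task-dependent metric described in the text)
    """
    return sum(probs[a] * probs[b] * distance(a, b)
               for a in probs for b in probs)


# One cyan-only robot and two robots with the full CMY equipment.
probs = species_probabilities([{"C"}, {"C", "M", "Y"}, {"C", "M", "Y"}])
complexity = shannon_entropy(probs)
# Placeholder distance: 0 for identical species, 1 otherwise.
disparity = rao_quadratic_entropy(probs, lambda a, b: 0.0 if a == b else 1.0)
```

A homogeneous team has a single species with probability 1, so both terms vanish regardless of the distance chosen.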

Similar to Twu et al. (2014), we formalize this idea by introducing a task space, represented by the tuple (T, γ), where T denotes the set of tasks and γ : T ↦ ℝ+ represents an associated weight function. In this paper, the set of tasks T simply corresponds to the different colors specified by the user, as shown in Table 1. Consequently, a task corresponds to the component j, j ∈ {C, M, Y}, of color input β. The corresponding weight functions for the tasks are calculated as,

With this task-space, the task-map, ω, as defined in Twu et al. (2014), directly relates the different robot species with the CMY colors, i.e., if the color equipment of species ι is denoted as p(ι), then it can execute the tasks associated with component j if j ∈ p(ι).

Having defined the task-space, (T, γ), and the task-map, ω, the distance between two agents i and j can be calculated as in Twu et al. (2014),

This task-dependent distance metric between different robot species can then be used to compute the disparity as shown in (11).

Having completely characterized the disparity, Q(s), and the complexity, E(s), of an experimental setup under a specific painting task, one can compute the heterogeneity measure associated with them according to (10). To this end, the third column in Table 2 represents the heterogeneity measure of the different setups. The heterogeneity values have been computed for the sunset-painting task from Table 1, as well as for a generic painting task that considers the whole 8-bit RGB color spectrum as objective colors to be painted by the team. This latter task is introduced in this analysis with the purpose of serving as a baseline to evaluate the comprehensiveness of the proposed sunset painting task. As can be observed in Table 2, the heterogeneity values obtained for the sunset and the 8-bit RGB tasks are quite similar and the relative ordering of the setups with respect to the heterogeneity measure is the same, thus suggesting that the sunset task used in this paper requires a diverse enough set of painting objectives for all the equipment setups proposed. Armed with this quantification of team heterogeneity, we now analyze how the spatial characteristics of the painting differ as the equipment configurations change.

We first analyze the complex interplay between motion trails and equipment setups by computing the spatial distance between the mean location of the desired input density function specified by the user, and the resulting manifestation of the color in the painting. To this end, we use the color distance metric introduced in Androutsos et al. (1998) to characterize the distance from the color obtained in every pixel of the resulting painting to each of the input colors specified in Table 1.

Let ρ(q) represent the 8-bit RGB vector value for a given pixel q in the painting. Then, the color distance between two pixels qi and qj is given as,

Using (12), we can compute the distance from the color of each pixel to each of the input colors specified by the user (given in this paper by Table 1). For a given pixel in the painting q and input color β, these distances can be interpreted as a color-distance density function over the domain, denoted as φ

where, with an abuse of notation, dp(q, β) represents the color distance between the color β and the color at pixel q. For the experiments conducted in this paper, ς² was chosen to be 0.1.
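The expressions for the color distance (12) and the density φ are not reproduced in this text, so the following is only a rough sketch of how such quantities could be computed. It assumes a combined angle-magnitude distance of the kind discussed in Androutsos et al. (1998) and a hypothetical exponential kernel for the density; the function names, the handling of black pixels, and the kernel form are assumptions made for illustration. Only the value ς² = 0.1 comes from the text.

```python
import numpy as np

# Largest possible Euclidean distance between two 8-bit RGB vectors.
MAX_NORM = np.sqrt(3 * 255.0**2)


def color_distance(rgb_a, rgb_b):
    """Combined angle-magnitude color distance in [0, 1] (assumed form)."""
    a = np.asarray(rgb_a, dtype=float)
    b = np.asarray(rgb_b, dtype=float)
    norm_a, norm_b = np.linalg.norm(a), np.linalg.norm(b)
    if norm_a == 0.0 or norm_b == 0.0:
        # The angle is undefined for black; as an assumption, the angular
        # term is ignored and only the magnitude term is used.
        angle_term = 1.0
    else:
        cos_angle = np.clip(a @ b / (norm_a * norm_b), -1.0, 1.0)
        angle_term = 1.0 - (2.0 / np.pi) * np.arccos(cos_angle)
    magnitude_term = 1.0 - np.linalg.norm(a - b) / MAX_NORM
    return 1.0 - angle_term * magnitude_term


def color_density(distance, var=0.1):
    """Hypothetical kernel mapping a color distance to a density value.

    The exponential form is an assumption; var plays the role of the
    parameter chosen as 0.1 in the text.
    """
    return np.exp(-np.asarray(distance, dtype=float) ** 2 / var)
```

Under these assumptions, identical colors are at distance 0 and therefore have density 1, while colors with orthogonal RGB vectors (e.g., pure red and pure green) are at distance 1.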

Since we are interested in understanding the spatial characteristics of colors in the painting, we compute the center of mass of a particular color β in the painting,

The covariance ellipse for the color β at a pixel q is given as,

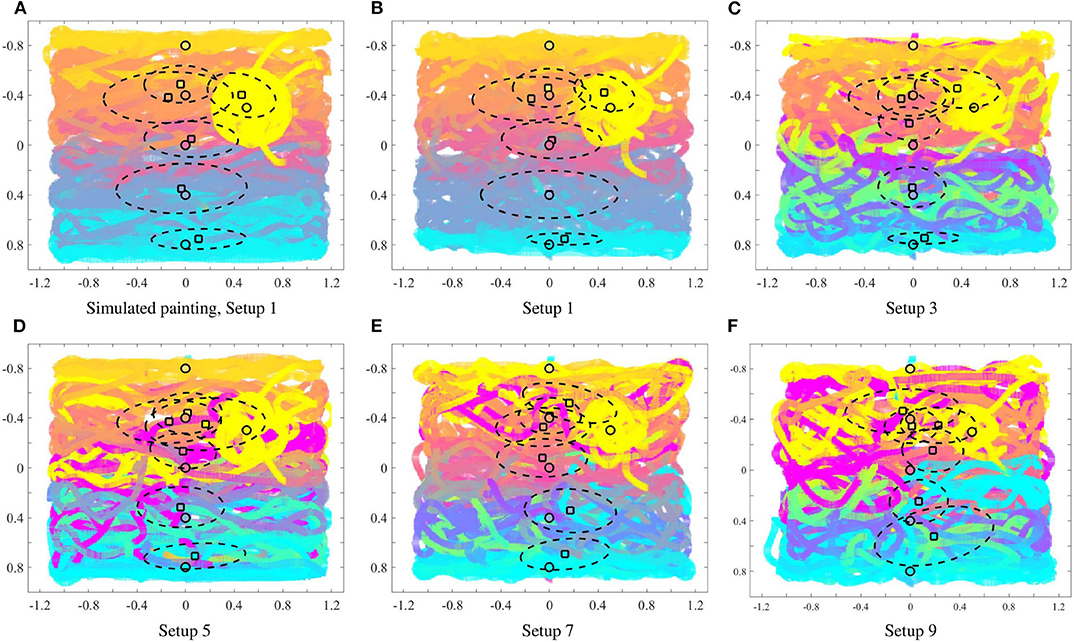

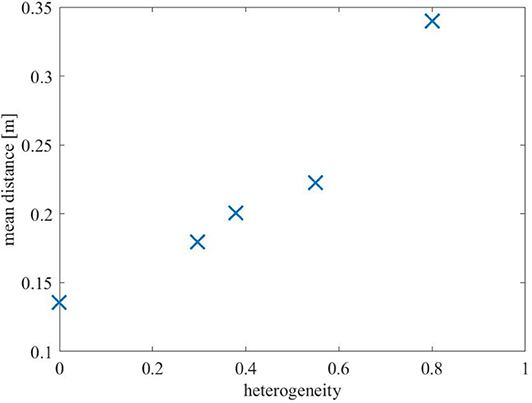

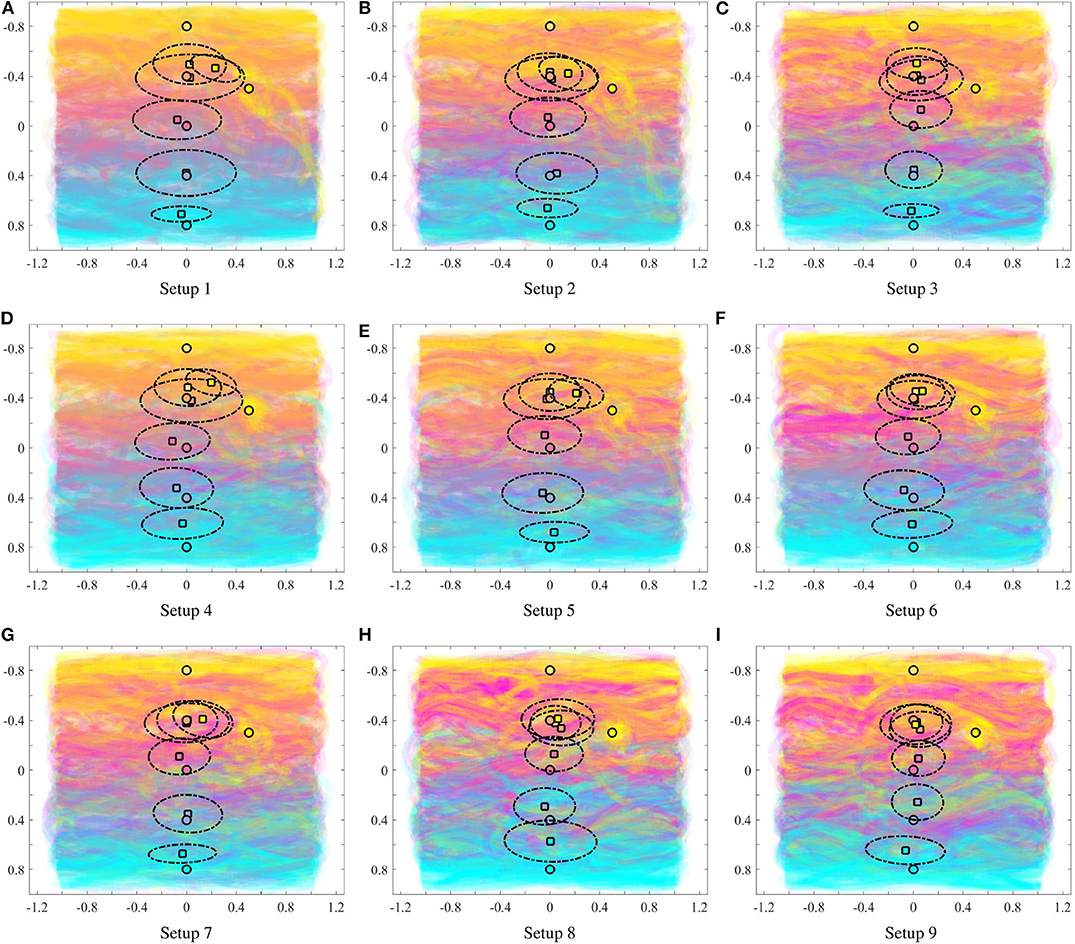
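As an illustration of the spatial statistics described above, the sketch below computes a density-weighted center of mass and covariance matrix over a pixel grid. It uses the standard weighted definitions, which are assumed (not confirmed by this text) to match (13) and (14); the function name and the (x, y) = (column, row) pixel convention are choices made for this example.

```python
import numpy as np


def center_of_mass_and_covariance(phi):
    """Weighted center of mass and covariance of a 2-D density map.

    phi[row, col] holds the non-negative density of one color at each pixel.
    Coordinates are returned as (x, y) = (col, row), an assumed convention.
    """
    phi = np.asarray(phi, dtype=float)
    rows, cols = np.indices(phi.shape)
    coords = np.stack([cols.ravel(), rows.ravel()], axis=1).astype(float)
    weights = phi.ravel()
    total = weights.sum()
    if total <= 0.0:
        raise ValueError("density map must have positive total mass")
    # Center of mass: density-weighted average of the pixel coordinates.
    center = (weights[:, None] * coords).sum(axis=0) / total
    # Covariance: density-weighted second moment about the center of mass.
    centered = coords - center
    covariance = (centered * weights[:, None]).T @ centered / total
    return center, covariance
```

The axes of a covariance ellipse can then be obtained from the eigenvectors and eigenvalues of the returned matrix, for example with `np.linalg.eigh`.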

For each of the input colors, Figure 6 illustrates the extent to which the color centers of mass (computed by (13) and depicted by the square filled with the corresponding color) differ from the mean locations of the input density functions (depicted by the circle). For all the painting equipment setups in Figure 6, as the heterogeneity of the team increases, the mean of the input density function for each color and the resulting center of mass become progressively more distant. This phenomenon is illustrated in Figure 7, where the mean distance between the input density and the resulting color center of mass is plotted as a function of the heterogeneity of the equipment of the robots. For a given painting P, this distance is computed as,

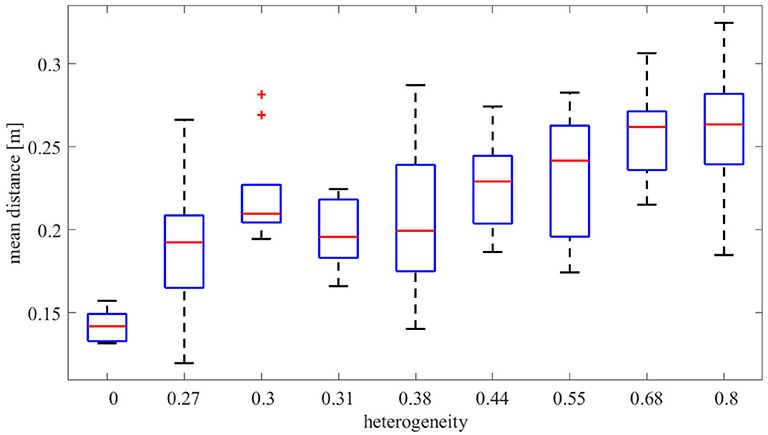

where the average is taken over the set of input colors, and μβ represents the mean of the input density function for color β. As seen, with increasing heterogeneity, the mean distance increases because fewer painting capabilities on the robots do not allow them to exactly reproduce the input color distributions. However, even with highly heterogeneous setups, such as Setups 7 or 9, the multi-robot team is still able to preserve highly distinguishable color distributions throughout the canvas, which suggests that the coverage control paradigm for multi-robot painting is quite robust to highly heterogeneous robot teams and resource deprivation.
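The mean distance plotted in Figure 7 can be sketched as follows, assuming that it is the unweighted average of the Euclidean distances between each color's center of mass and the mean of its input density. The function name and the dictionary-based interface are hypothetical and serve only as an illustration.

```python
import numpy as np


def mean_center_distance(centers_of_mass, input_means):
    """Average Euclidean distance between resulting and desired color locations.

    Both arguments map a color label to a 2-D point. The unweighted average
    and the Euclidean norm are assumptions about the form of (15).
    """
    distances = [
        np.linalg.norm(
            np.asarray(centers_of_mass[color], dtype=float)
            - np.asarray(input_means[color], dtype=float)
        )
        for color in input_means
    ]
    return float(np.mean(distances))
```

For instance, with two colors whose centers of mass lie 0 and 5 units away from their input means, the function returns 2.5.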

Figure 6. For each input color (given in Table 1): mean of the input density function (circle), and center of mass of the resulting color according to (13) (square). The dotted lines depict the covariance ellipse according to (14). As seen, the heterogeneity of the multi-robot team [as defined in (10)] impacts how far the colors are painted from the location of the input, as given by the user.

Figure 7. Average distance from mean density input to the resulting center of mass over the input colors of the painting as a function of the heterogeneity among the robots [as defined in (10)]. As seen, with increasing sparsity of painting equipment on the robots (signified by increasing heterogeneity), the mean distance increases, indicating that colors get manifested farther away from where the user specifies them.

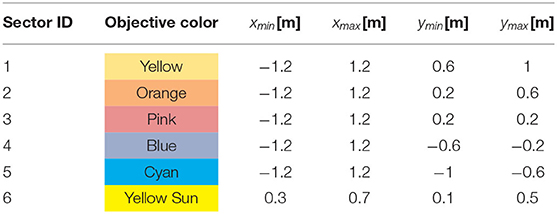

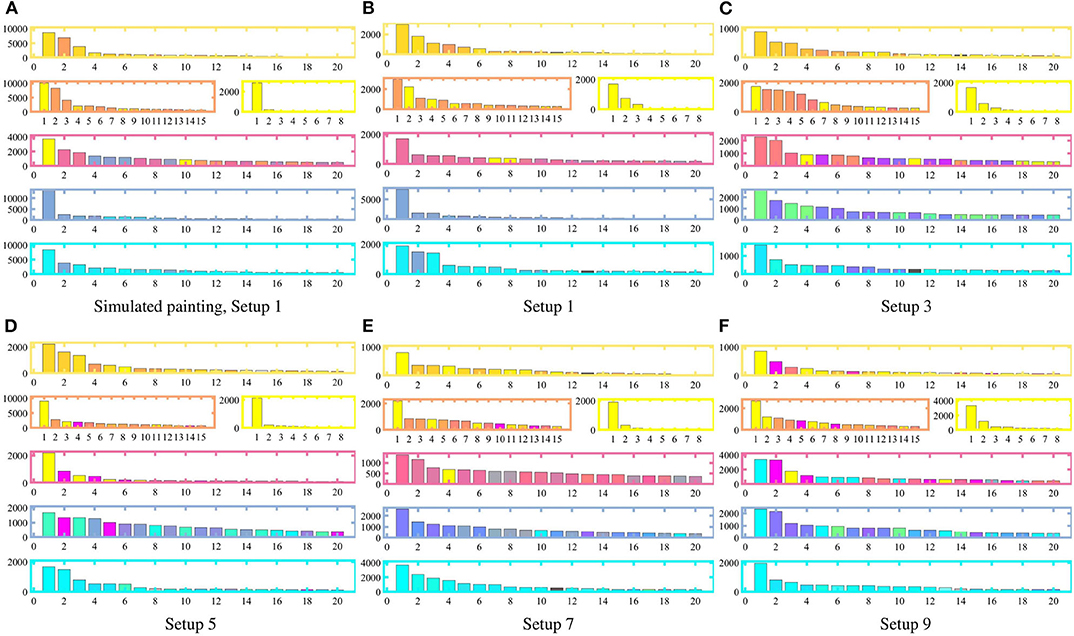

The second method we use to quantify the differences among the paintings as a function of the heterogeneity in the robot team is chromospectroscopy (Kim et al., 2014), which analyzes the frequency of occurrence of a particular color over the canvas. To this end, the painting is divided according to the sectors described in Table 3, which are closely related to the areas of high incidence of the objective color densities in Table 1. A histogram representing the frequency of occurrence of each input color per sector is shown in Figure 8. For the purposes of the chromospectroscopy analysis, the 8-bit RGB color map of the canvas is converted into a 5-bit RGB color map by reducing the resolution of the color map and grouping very similar colors together, i.e., for an input color β ∈ [0, 255]³, the modified color for the chromospectroscopy analysis in Figure 8 is computed with b = 2³.
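A minimal sketch of this kind of analysis is given below. It assumes that the 5-bit color is obtained by integer division of each 8-bit channel by b = 2³, and that sectors are horizontal bands of the canvas given by row ranges; neither the exact quantization formula nor the sector geometry of Table 3 is reproduced in this text, so both are assumptions, as are the function names.

```python
import numpy as np


def quantize_to_5bit(image_rgb, b=2**3):
    """Map each 8-bit channel value to one of 32 levels by integer division.

    Floor division by b is an assumed form of the quantization step.
    """
    return np.asarray(image_rgb, dtype=np.uint8) // b


def sector_histogram(image_rgb, row_start, row_stop):
    """Count occurrences of each quantized color in rows [row_start, row_stop).

    Treating a sector as a horizontal band of rows is an assumption.
    """
    sector = np.asarray(image_rgb)[row_start:row_stop]
    quantized = quantize_to_5bit(sector).reshape(-1, 3)
    colors, counts = np.unique(quantized, axis=0, return_counts=True)
    return {
        tuple(int(v) for v in color): int(count)
        for color, count in zip(colors, counts)
    }
```

With this grouping, two nearly identical reds such as (255, 0, 0) and (250, 7, 3) fall into the same bin, (31, 0, 0), so they are counted as a single color in the histogram.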

Table 3. Color sectors throughout the painting used for the chromospectroscopy analysis, according to the density parameters specified in Table 1.

Figure 8. Chromospectroscopy by sectors on the canvas (as indicated in Table 3) for each equipment configuration (as specified in Table 2). With increasing heterogeneity, and consequently, sparser painting capabilities of the robots, colors distinctly different from the target colors begin to appear in each sector. For teams with lower heterogeneity (Setups 1 and 3), anomalous colors in the chromospectroscopy typically appear from neighboring sectors only.

As seen in Figure 8, the heterogeneity of the robot team significantly affects the resulting color distribution within each sector. More specifically, as the heterogeneity of the team increases, thus depriving the team of painting capabilities, the canvas presents more outlier colors that appear outside their corresponding target sectors. This is apparent in highly heterogeneous teams (Setup 9), where magenta-like colors appear in the top-most sector and cyan appears in the central sector. The three central sectors show a high occurrence of non-target colors. For slightly less heterogeneous teams, while the occurring colors often do not correspond with the target colors in the sectors (e.g., green in Sector 4 of Setup 3), the colors seem consistent in their presence and correspond to limitations on the equipment of the robots: in Setup 3, all robots are equipped with only two colors, thus no robot is able to exactly replicate any target color with 3 CMY components by itself. In the case of teams with low heterogeneity, e.g., Setup 1 and Setup 3, the resulting colors are mostly consistent with the input target colors. The colors that do not match the input typically belong to the neighboring sectors. Some specific examples include: (i) Setup 1: the presence of yellow in Sector 3, orange in Sector 2, and blue in Sector 5; (ii) Setup 3: the presence of orange in Sector 1, and blue in Sector 5; (iii) Setup 5: magenta and cyan-like colors in Sector 4.

Indeed, as one could expect, the chromospectroscopy reveals that color distributions become less precise as the differences in the painting capabilities of the robots become more acute, which is observable as distinct paint streaks in Figure 5 that stand out from the surrounding colors. Nevertheless, the distribution of colors on each sector still matches the color density inputs even for the case of highly heterogeneous teams, which suggests that the multi-robot painting paradigm presented in this paper is robust to limited painting capabilities on the multi-robot team due to restrictions on the available paints, payload limitations on the robotic platforms, or even the inherent resource depletion that may arise from the painting activity.

To understand whether the statistics reported above remain consistent across multiple paintings generated by the robotic painting system, we ran 10 different experiments with random initial conditions in terms of robot poses for each of the 9 equipment configurations described in Table 2. Figure 9 shows the average of the paintings generated for each equipment configuration, along with the color density averages, computed using (13). Although averaging the 10 rounds seems to dampen the presence of outliers, we can still observe how the distance between the objective color (represented by a circle) and the resulting color distribution (square) generally increases as the team becomes more heterogeneous. Furthermore, if we observe the color gradient along the vertical axis of the painting, the blending of the colors becomes more uneven as the heterogeneity of the team increases. This phenomenon becomes quite apparent if we compare the top row of Figure 9 (A–C) to the bottom row (G–I).

Figure 9. Averaged paintings over 10 trials. Mean of the input densities (circle), center of mass of the resulting colors according to φ from (13) (square), and covariance ellipse (dotted lines). The heterogeneity in the painting equipment of the robots has a significant impact on the nature of the paintings.

Quantitatively, this distancing between objective and obtained color density distribution is summarized in Figure 10, which shows the mean distance between the input density and the resulting colors. Analogously to the analysis in Figure 7, which contained data for one run in the Robotarium for five out of the nine setups, the average distances shown in Figure 10 show that the resulting color distributions tend to deviate from the objective ones as the team becomes more heterogeneous.

Figure 10. Box plots of the average distance between the mean density input and the resulting center of mass as computed in (15) for the 9 different equipment configurations. The results are presented for 10 different experiments conducted for each equipment configuration. As seen, the average distance increases with increasing heterogeneity among the robots' painting equipment.

The results of this statistical analysis thus support the observations made in the analysis of the paintings obtained in the Robotarium. Therefore, the characterization of the painting outcome with respect to the resources of the team seems consistent throughout different runs and independent of the initial spatial conditions of the team.

This paper presents a robotic swarm painting system based on mobile robots that leave trails of paint as they move, where a human user can influence the outcome of the painting by specifying desired color densities over the canvas. The interaction between the human user and the painting is enabled by means of a heterogeneous coverage paradigm where the robots distribute themselves over the domain according to the desired color outcomes and their painting capabilities, which may be limited. A color mixing strategy is proposed to allow each robot to adapt the color of its trail according to the color objectives specified by the user, within the painting capabilities of each robot. The proposed multi-robot painting system is evaluated experimentally to assess how the proposed color mixing strategy and the color equipment of the robots affect the resulting painted canvas. A series of experiments is run for a set of objective density functions, where the painting equipment is varied among the robots in the team to study how it affects the painting outcome. Analysis of the resulting paintings suggests that, while higher heterogeneity results in larger deviations with respect to the user-specified density functions (as compared to homogeneous, i.e., fully equipped, teams), the paintings produced by the control strategy in this paper still achieve a distribution of color over the canvas that closely resembles the input even when the team has limited resources.

All datasets generated for this study are included in the article/Supplementary Material.

MS, GN, SM, and ME contributed to the conception and design of the study. MS, GN, and SM programmed the control of the robotic system and performed the design of experiments. MS conducted the data processing and the experiment analysis. MS, GN, and SM each wrote major sections of the manuscript. ME is the principal investigator associated with this project. All authors contributed to manuscript revision, read, and approved the submitted version.

This work was supported by ARL DCIST CRA W911NF-17-2-0181 and by la Caixa Banking Foundation under Grant LCF/BQ/AA16/11580039.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frobt.2020.580415/full#supplementary-material

Albin, A., Weinberg, G., and Egerstedt, M. (2012). “Musical abstractions in distributed multi-robot systems,” in 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems (Vilamoura), 451–458. doi: 10.1109/IROS.2012.6385688

Alonso-Mora, J., Siegwart, R., and Beardsley, P. (2014). “Human-Robot swarm interaction for entertainment: from animation display to gesture based control,” in Proceedings of the 2014 ACM/IEEE International Conference on Human-robot Interaction, HRI '14 (New York, NY: ACM), 98. doi: 10.1145/2559636.2559645

Androutsos, D., Plataniotis, K. N., and Venetsanopoulos, A. N. (1998). “Distance measures for color image retrieval,” in Proceedings 1998 International Conference on Image Processing. ICIP98 (Cat. No.98CB36269), Vol. 2 (Chicago, IL), 770–774. doi: 10.1109/ICIP.1998.723652

Aupetit, S., Bordeau, V., Monmarche, N., Slimane, M., and Venturini, G. (2003). “Interactive evolution of ant paintings,” in The 2003 Congress on Evolutionary Computation, 2003. CEC '03, Vol. 2 (Canberra, ACT), 1376–1383. doi: 10.1109/CEC.2003.1299831

Berns, R. (2000). Billmeyer and Saltzman's Principles of Color Technology, 3rd Edn. New York, NY: Wiley.

Bi, T., Fankhauser, P., Bellicoso, D., and Hutter, M. (2018). “Real-time dance generation to music for a legged robot,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (Madrid), 1038–1044. doi: 10.1109/IROS.2018.8593983

Bullo, F., Cortes, J., and Martinez, S. (2009). Distributed Control of Robotic Networks: A Mathematical Approach to Motion Coordination Algorithms. Applied Mathematics Series. Princeton, NJ: Princeton University Press. doi: 10.1515/9781400831470

Chung, S. (2018). Omnia Per Omnia. Available online at: https://sougwen.com/project/omniaperomnia (accessed September 8, 2019).

Cortes, J., Martinez, S., Karatas, T., and Bullo, F. (2004). Coverage control for mobile sensing networks. IEEE Trans. Robot. Autom. 20, 243–255. doi: 10.1109/TRA.2004.824698

Dean, M., D'Andrea, R., and Donovan, M. (2008). Robotic Chair. Vancouver, BC: Contemporary Art Gallery.

Diaz-Mercado, Y., Lee, S. G., and Egerstedt, M. (2015). “Distributed dynamic density coverage for human-swarm interactions,” in 2015 American Control Conference (ACC) (Chicago, IL), 353–358. doi: 10.1109/ACC.2015.7170761

Dietz, G., E, J. L., Washington, P., Kim, L. H., and Follmer, S. (2017). “Human perception of swarm robot motion,” in Proceedings of the 2017 CHI Conference Extended Abstracts on Human Factors in Computing Systems (Denver, CO), 2520–2527. doi: 10.1145/3027063.3053220

Du, Q., Faber, V., and Gunzburger, M. (1999). Centroidal voronoi tessellations: applications and algorithms. SIAM Rev. 41, 637–676. doi: 10.1137/S0036144599352836

Dunstan, B. J., Silvera-Tawil, D., Koh, J. T. K. V., and Velonaki, M. (2016). “Cultural robotics: robots as participants and creators of culture,” in Cultural Robotics, eds J. T. Koh, B. J. Dunstan, D. Silvera-Tawil, and M. Velonaki (Cham: Springer International Publishing), 3–13. doi: 10.1007/978-3-319-42945-8_1

Greenfield, G. (2005). “Evolutionary methods for ant colony paintings,” in Applications of Evolutionary Computing, eds F. Rothlauf, J. Branke, S. Cagnoni, D. W. Corne, R. Drechsler, Y. Jin, P. Machado, E. Marchiori, J. Romero, G. D. Smith, and G. Squillero (Berlin; Heidelberg: Springer Berlin Heidelberg), 478–487. doi: 10.1007/978-3-540-32003-6_48

Hoffman, G., and Weinberg, G. (2010). “Gesture-based human-robot jazz improvisation,” in 2010 IEEE International Conference on Robotics and Automation (Anchorage, AK), 582–587. doi: 10.1109/ROBOT.2010.5509182

Jochum, E., and Goldberg, K. (2016). Cultivating the Uncanny: The Telegarden and Other Oddities. Singapore: Springer Singapore. doi: 10.1007/978-981-10-0321-9_8

Kim, D., Son, S.-W., and Jeong, H. (2014). Large-scale quantitative analysis of painting arts. Sci. Rep. 4:7370. doi: 10.1038/srep07370

Kudoh, S., Ogawara, K., Ruchanurucks, M., and Ikeuchi, K. (2009). Painting robot with multi-fingered hands and stereo vision. Robot. Auton. Syst. 57, 279–288. doi: 10.1016/j.robot.2008.10.007

LaViers, A., Teague, L., and Egerstedt, M. (2014). Style-Based Robotic Motion in Contemporary Dance Performance. Cham: Springer International Publishing. doi: 10.1007/978-3-319-03904-6_9

Levillain, F., St.-Onge, D., Zibetti, E., and Beltrame, G. (2018). “More than the sum of its parts: assessing the coherence and expressivity of a robotic swarm,” in 2018 IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN) (Nanjing), 583–588. doi: 10.1109/ROMAN.2018.8525640

Lindemeier, T., Pirk, S., and Deussen, O. (2013). Image stylization with a painting machine using semantic hints. Comput. Graph. 37, 293–301. doi: 10.1016/j.cag.2013.01.005

Lloyd, S. (1982). Least squares quantization in PCM. IEEE Trans. Inform. Theory 28, 129–137. doi: 10.1109/TIT.1982.1056489

Moura, L., and Ramos, V. (2002). Swarm Paintings–Nonhuman Art. Architopia: Book, Art, Architecture, and Science. Lyon; Villeurbanne: Institut d'Art Contemporain.

Nakazawa, A., Nakaoka, S., Ikeuchi, K., and Yokoi, K. (2002). “Imitating human dance motions through motion structure analysis,” in IEEE/RSJ International Conference on Intelligent Robots and Systems, Vol. 3 (Lausanne), 2539–2544. doi: 10.1109/IRDS.2002.1041652

Olfati-Saber, R. (2002). “Near-identity diffeomorphisms and exponential ϵ-tracking and ϵ-stabilization of first-order nonholonomic Se(2) vehicles,” in Proceedings of the 2002 American Control Conference, Vol. 6 (Anchorage, AK), 4690–4695.

Santos, M., Diaz-Mercado, Y., and Egerstedt, M. (2018). Coverage control for multirobot teams with heterogeneous sensing capabilities. IEEE Robot. Autom. Lett. 3, 919–925. doi: 10.1109/LRA.2018.2792698

Santos, M., and Egerstedt, M. (2018). “Coverage control for multi-robot teams with heterogeneous sensing capabilities using limited communications,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (Madrid), 5313–5319. doi: 10.1109/IROS.2018.8594056

Santos, M., and Egerstedt, M. (2020). From motions to emotions: can the fundamental emotions be expressed in a robot swarm? Int. J. Soc. Robot. doi: 10.1007/s12369-020-00665-6

Scalera, L., Seriani, S., Gasparetto, A., and Gallina, P. (2019). Watercolour robotic painting: a novel automatic system for artistic rendering. J. Intell. Robot. Syst. 95, 871–886. doi: 10.1007/s10846-018-0937-y

Schoellig, A. P., Siegel, H., Augugliaro, F., and D'Andrea, R. (2014). So You Think You Can Dance? Rhythmic Flight Performances With Quadrocopters. Cham: Springer International Publishing. doi: 10.1007/978-3-319-03904-6_4

Schubert, A. (2017). An optimal control based analysis of human action painting motions (Ph.D. thesis). Universität Heidelberg, Heidelberg, Germany.

St.-Onge, D., Levillain, F., Zibetti, E., and Beltrame, G. (2019). Collective expression: how robotic swarms convey information with group motion. Paladyn J. Behav. Robot. 10, 418–435. doi: 10.1515/pjbr-2019-0033

Tresset, P., and Leymarie, F. F. (2013). Portrait drawing by Paul the robot. Comput. Graph. 37, 348–363. doi: 10.1016/j.cag.2013.01.012

Twu, P., Mostofi, Y., and Egerstedt, M. (2014). “A measure of heterogeneity in multi-agent systems,” in 2014 American Control Conference (Portland, OR), 3972–3977. doi: 10.1109/ACC.2014.6858632

Urbano, P. (2005). “Playing in the pheromone playground: experiences in swarm painting,” in Applications of Evolutionary Computing, eds F. Rothlauf, J. Branke, S. Cagnoni, D. W. Corne, R. Drechsler, Y. Jin, P. Machado, E. Marchiori, J. Romero, G. D. Smith, and G. Squillero (Berlin; Heidelberg: Springer Berlin Heidelberg), 527–532. doi: 10.1007/978-3-540-32003-6_53

Vlachos, E., Jochum, E., and Demers, L. (2018). “Heat: the harmony exoskeleton self-assessment test,” in 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN) (Nanjing), 577–582. doi: 10.1109/ROMAN.2018.8525775

Keywords: interactive robotic art, robotic swarm, painting, human-swarm interaction, heterogeneous multi-robot teams

Citation: Santos M, Notomista G, Mayya S and Egerstedt M (2020) Interactive Multi-Robot Painting Through Colored Motion Trails. Front. Robot. AI 7:580415. doi: 10.3389/frobt.2020.580415

Received: 06 July 2020; Accepted: 11 September 2020; Published: 14 October 2020.

Edited by: David St.-Onge, École de Technologie Supérieure (ÉTS), Canada

Reviewed by: Sachit Butail, Northern Illinois University, United States

Copyright © 2020 Santos, Notomista, Mayya and Egerstedt. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: María Santos, maria.santos@gatech.edu

