
ORIGINAL RESEARCH article

Front. Robot. AI, 16 November 2020

Sec. Biomedical Robotics

Volume 7 - 2020 | https://doi.org/10.3389/frobt.2020.567491

This article is part of the Research Topic Interfacing Humans and Machines for Rehabilitation and Assistive Devices.

Human intention detection is fundamental to the control of robotic devices that assist humans according to their needs. This paper presents a novel approach for detecting hand motion intention, i.e., rest, open, close, and grasp, and for estimating grasping force using force myography (FMG). The output is further used to control a soft hand exoskeleton called the SEM Glove. In this method, two sensor bands constructed using force sensing resistor (FSR) sensors are used to detect hand motion states and muscle activities. With both bands placed on the forearm, the sensors measure the normal forces caused by muscle contraction and relaxation. The sensor data are then processed, and hand motions are identified through a threshold-based classification method. The developed method has been tested on human subjects in object-grasping tasks. The results show that the developed method can detect hand motions accurately and provide assistance according to the task requirements.

Grasping tasks are performed repeatedly both at home and in the workplace. Studies have shown that a person in a work or home environment performs grasps and transitions between different grasps approximately 4,700 times within a 7.45 h window (Zheng et al., 2011; Bullock et al., 2013). Performing these tasks repeatedly over long periods can cause fatigue and injuries. Hand exoskeletons (Gull et al., 2020) can assist in these tasks, reducing human effort and the risk of injury and fatigue.

Proper control of the exoskeleton depends mainly on accurate detection of human intention. Several intention-detection methods have been proposed based on electromyography (EMG) (Anam et al., 2017; Meng et al., 2017; Pinzón-Arenas et al., 2019; Qi et al., 2019; Zhang et al., 2019; Asif et al., 2020) and force myography (FMG) (Islam and Bai, 2019; Xiao and Menon, 2019, 2020). Leonardis et al. (2015) used EMG to control a hand exoskeleton for bilateral rehabilitation: the paretic hand was given grasping assistance by estimating the grasping force of the non-paretic hand. In another work (Lu et al., 2019), pattern-recognition-based hand exoskeleton control was proposed for patients with spinal cord injury. Cho et al. (2016) proposed an FMG-based hand gesture classification method to control a hand prosthesis, classifying 10 hand grips with linear discriminant analysis. Radmand et al. (2016) proposed a high-density FMG-based hand and wrist gesture classification approach and reported an RMSE of 0.33% for static hand postures. Although variation in upper-limb position reduces the accuracy, better performance can be achieved by introducing limb-position variation into the training protocol. Several other works on force estimation and pattern-recognition-based hand exoskeleton control have also been reported (Wege and Zimmermann, 2007; Rasouli et al., 2016; Ferigo et al., 2017; Secciani et al., 2019; Arteaga et al., 2020).

In all of the reported works, methods to control a hand exoskeleton are limited to either pattern recognition or force estimation. Furthermore, these methods use machine learning and deep learning techniques, which require large training datasets to achieve good classification or estimation accuracy.

In this work, we develop a new sensing method for both pattern recognition and force estimation using FMG. A multi-mode task detection approach, i.e., motion classification and grasp force estimation, is proposed for controlling a hand exoskeleton. In this method, four hand motion states are classified, i.e., rest, open, close, and grasp. The classification algorithm is based on a threshold approach and requires only a small training dataset. Once a grasp is detected, the control mode is switched to grasp assistance. This is achieved with two sensor bands built with FSRs, which can detect muscle activities conveniently and effectively. As a sensing method, FMG has exhibited better performance than EMG in classification and estimation tasks in terms of accuracy, repeatability, and cost (Ravindra and Castellini, 2014; Jiang et al., 2017). Moreover, unlike EMG, FMG is not affected by skin conditions and has a simple electronic interface.

This paper is organized as follows. The design and implementation of the sensor band and exoskeleton control strategy are described in section 2. Section 3 presents the data processing and algorithm design for grasp detection and assistance. Experimental setup and testing results are described in section 4. Discussion on the developed method is presented in section 5. The work is concluded in section 6.

In this section, a methodology to detect hand motions, i.e., rest, open, close, and grasp, is described. The sensor bands, the hand exoskeleton, and the control methods are also presented.

In this work, four hand motion states are classified, i.e., rest, open, close, and grasp. The last three motion states are classified as dynamic states, whereas rest is identified as a static hand state in any posture, e.g., keeping the hand fully opened/closed or holding an object in a fixed posture.

In object grasping, the fingers have to be flexed. During flexion, the muscle belly shortens and contracts toward the elbow joint, which is referred to as isotonic muscle contraction. As the object comes into contact with the hand, muscle shortening stops and an isometric contraction state begins. In this state, the muscle belly along the forearm contracts until the force applied to hold the object reaches a steady state.

In this work, the contraction states and the transition between them, i.e., isotonic and isometric, are measured through FMG using sensor bands built with FSR sensors. In this method, the normal forces that muscle contraction applies to the sensor band, hereafter called muscle contraction-induced (MCI) forces, are measured. The flexor digitorum profundus and flexor digitorum superficialis are the prime muscles governing finger flexion to close the hand. During hand closing, these muscles shorten and contract toward the elbow joint. The MCI force near the elbow therefore increases, while it decreases near the wrist joint. As soon as the object is grasped, the muscles stop shortening and isometric contraction takes over. In this case, the MCI forces over the muscle belly increase. The same principle explains hand opening. When the grip on the object is first loosened, the MCI force at both ends of the forearm decreases. Then, as the object is released and the fingers are further extended, the MCI force measured near the elbow decreases, while the force measured near the wrist increases. From these changes in MCI force, hand motion states can be determined with suitable algorithms.

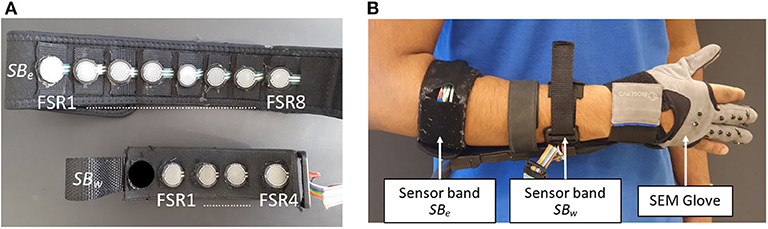

The hand motion detection described above relies on an effective and convenient method to detect MCI forces. To this end, two sensor bands were constructed at the Aalborg University exoskeleton lab, as shown in Figure 1A.

Figure 1. (A) FSR sensors placement inside sensor bands SBw and SBe and (B) SEM Glove and sensor bands placement on forearm.

The sensor bands are designed to be placed on the forearm, as shown in Figure 1B. One band, referred to as SBe, is placed near the elbow joint and consists of eight FSR sensors. The other band, referred to as SBw, is placed near the wrist joint and has an array of four embedded FSR sensors. The placement of the FSR sensors inside the sensor bands is shown in Figure 1A. All FSR sensors are FSR-402 sensors made by Interlink Electronics, which can measure forces of 0.1–10 N. More information on the construction of the sensor bands can be found in Islam and Bai (2019).

In this work, the soft hand exoskeleton SEM Glove (Nilsson et al., 2012; Hashida et al., 2019) is used to provide physical grasping assistance, as shown in Figure 1B. The SEM Glove is equipped with FSR sensors at the middle and index fingertips and at the thumb, which can measure the assistance provided by the exoskeleton. The SEM Glove's own control unit also uses the same sensor data to control the assistance level: the tighter the object is grasped, the higher the assistance level. In this work, however, the assistance level provided by the SEM Glove is controlled through the MCI force measured by the sensor band near the elbow joint instead of the SEM Glove's own sensors.

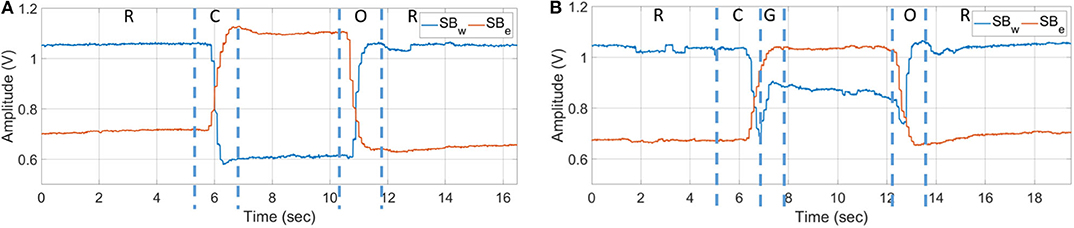

The sensor bands allow hand motion data to be collected effectively. An example dataset of rest, open, close, and grasp, labeled “R,” “O,” “C,” and “G,” respectively, is shown in Figure 2. Isotonic contraction during opening and closing of the hand can be seen in Figure 2A. Figure 2B shows the data of an object being grasped, when isometric contraction occurs; the state in which the object is grasped is labeled “G.”

Figure 2. Net output voltage measured from sensor bands for opening and closing of hand (A) without grasping and (B) grasping an object.

In the hand opening task shown in Figure 2B, the sensor amplitude first goes down. This muscle activity represents loosening of the grip on the object. Afterwards, an increase in muscle activity at SBw and a decrease at SBe are observed, which represent finger extension to open the hand. In the implementation phase, loosening of the grip is treated as a steady state.

In this work, a multi-mode control approach is used to assist in grasping, which is shown in Figure 3.

The control strategy is divided into two stages, i.e., motion classification and grasp force assistance. Motion classification is based on a threshold approach. Once the algorithm identifies the grasp action among the four actions (rest, open, close, and grasp), the control mode is switched to grasp force assistance. In this mode, a proportional controller is implemented, in which the assistance force is determined from the MCI force measured through SBe.

The FSR sensors in the two sensor bands are interfaced with a non-inverting amplifier. The output voltage of the amplifier is thus given by the following equation:

$$V_{out} = V_{in}\left(1 + \frac{R_{ref}}{R_{fsr}}\right) \tag{1}$$

Here, Vout is the output voltage of the amplifier, Vin is the input voltage applied to the positive terminal of the amplifier, Rref is the reference resistance, and Rfsr represents the FSR resistance, which decreases as the force applied to the sensor increases.

With this amplifier design, the range of force measured by the FSR can be changed, either by changing the reference resistance Rref or the input voltage Vin. In our design, the reference resistance is fixed at 100 kΩ, so the input voltage Vin is adjusted through a DAC port of the microcontroller; this adjustment is the sensor calibration task.
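To illustrate why adjusting Vin changes the usable range, the following Python sketch evaluates the standard non-inverting amplifier relation Vout = Vin(1 + Rref/Rfsr) with Rref = 100 kΩ. It assumes the FSR sits in the gain-setting leg; the saturation limit `V_SAT` (3.3 V) and the FSR resistances in the usage loop are hypothetical values chosen for illustration, not measurements from the sensor bands.

```python
# Sketch of the FSR front end as a non-inverting amplifier.
# Assumption: the FSR is in the gain-setting leg, so gain = 1 + R_ref / R_fsr.
R_REF_OHM = 100_000.0  # reference resistance stated in the text (100 kOhm)
V_SAT = 3.3            # hypothetical output saturation limit, in volts


def amplifier_output(v_in: float, r_fsr_ohm: float,
                     r_ref_ohm: float = R_REF_OHM, v_sat: float = V_SAT) -> float:
    """Return V_out = V_in * (1 + R_ref / R_fsr), clipped at the saturation limit."""
    if r_fsr_ohm <= 0:
        raise ValueError("FSR resistance must be positive")
    v_out = v_in * (1.0 + r_ref_ohm / r_fsr_ohm)
    return min(v_out, v_sat)


if __name__ == "__main__":
    # A harder press lowers R_fsr, which raises the gain; raising V_in scales every output.
    for r_fsr in (1_000_000.0, 100_000.0, 50_000.0):
        print(f"R_fsr={r_fsr:>9.0f} ohm: "
              f"V_in=0.5 V -> {amplifier_output(0.5, r_fsr):.3f} V, "
              f"V_in=1.0 V -> {amplifier_output(1.0, r_fsr):.3f} V")
```

Under these assumptions, doubling Vin doubles Vout until the output saturates, which is the lever the calibration step relies on.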

In the calibration stage, the input voltage Vin is adjusted so that at least three FSR sensors in each of SBe and SBw reach the maximum voltage limit. In this way, the sensor bands achieve high resolution in all detections.

During calibration of SBw, the subject is asked to keep the hand open, as shown in Figure 4A; this posture initiates the calibration procedure. An automated program counts the sensors whose outputs are above the threshold level. If fewer than three are, the input voltage Vin is increased gradually until at least three sensors are above the threshold limit. A similar procedure is followed for SBe, but with the closed-hand gesture shown in Figure 4B, to complete the calibration. In the current setup, the threshold level is set to 1.5 V.
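The calibration loop described above can be sketched in Python as follows. `read_band` is a hypothetical callback that applies a given Vin through the DAC and returns one output voltage per FSR in the band; the 1.5 V default reflects our reading of the threshold quoted above, and the start voltage, step size, and upper limit are illustrative assumptions.

```python
from typing import Callable, Sequence


def calibrate_band(read_band: Callable[[float], Sequence[float]],
                   threshold_v: float = 1.5,
                   v_start: float = 0.1,
                   v_step: float = 0.05,
                   v_limit: float = 3.3,
                   min_active: int = 3) -> float:
    """Raise V_in step by step until at least `min_active` sensors exceed `threshold_v`.

    `read_band(v_in)` is a hypothetical hardware hook: it should apply `v_in`
    through the DAC and return the band's FSR outputs while the subject holds
    the calibration gesture (open hand for SBw, closed hand for SBe).
    """
    step = 0
    while True:
        v_in = v_start + step * v_step  # from the step index, so errors do not accumulate
        if v_in > v_limit + 1e-9:
            raise RuntimeError("calibration failed: too few sensors above threshold")
        active = sum(1 for v in read_band(v_in) if v > threshold_v)
        if active >= min_active:
            return v_in
        step += 1


if __name__ == "__main__":
    gains = (1.0, 2.0, 3.0, 4.0)  # hypothetical per-sensor gains of a simulated band

    def simulated_band(v_in: float) -> list:
        return [min(g * v_in, 3.3) for g in gains]

    print(f"calibrated V_in = {calibrate_band(simulated_band):.2f} V")
```

The same function would be called once for SBw and once for SBe, each with its own gesture held.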

Figure 4. Gestures used in calibration and training stage. (A) open hand gesture to calibrate SBw, (B) close hand gesture to calibrate SBe, and (C) rest state gesture to collect data for threshold determination.

An example dataset of the calibration stage is shown in Figure 5. It represents the task of closing the hand from a fully opened state: Figure 5A shows the data collected before calibration and Figure 5B the data collected after calibration.

The improvement in signal resolution, ν, is computed by taking the ratio of change in signal amplitude, from open to close hand gesture, to the standard deviation of signal value during the steady state condition. Mathematically it is given as,

Here, VO and VC represent the net voltage measured from the sensor bands for the open-hand and closed-hand gestures, respectively, and μ and σ are the mean and standard deviation, respectively. The results obtained with this equation are provided in Table 1. They show that the resolution of both sensor bands increases significantly after calibration, by more than a factor of two.
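A minimal Python sketch of this resolution measure, under one stated assumption: the numerator is |μ(VC) − μ(VO)| and σ is the population standard deviation of the open-hand steady-state segment (which steady-state segment the text uses is our assumption). The improvement factor of the kind reported in Table 1 would then be the ratio of ν after calibration to ν before.

```python
import statistics
from typing import Sequence


def resolution(v_open: Sequence[float], v_closed: Sequence[float]) -> float:
    """Resolution nu: open-to-closed amplitude change divided by steady-state noise.

    Assumption: sigma is computed over the open-hand steady-state samples.
    """
    noise = statistics.pstdev(v_open)
    if noise == 0:
        raise ValueError("steady-state segment has zero variance")
    return abs(statistics.mean(v_closed) - statistics.mean(v_open)) / noise


def improvement(nu_before: float, nu_after: float) -> float:
    """Factor by which calibration increases the resolution."""
    return nu_after / nu_before


if __name__ == "__main__":
    # Synthetic net voltages for illustration only, not data from the paper.
    nu_before = resolution([1.0, 1.2, 0.8, 1.0], [2.0, 2.0, 2.0])
    nu_after = resolution([1.0, 1.2, 0.8, 1.0], [4.0, 4.0, 4.0])
    print(f"nu before={nu_before:.2f}, after={nu_after:.2f}, "
          f"factor={improvement(nu_before, nu_after):.2f}")
```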

While an object is being grasped, the sensor readings depend strongly on the shape and weight of the object. Donning and doffing the sensor band also affects the sensor response. Developing a threshold- or machine-learning-based task-detection algorithm would therefore require a large amount of data if the signal amplitude or its RMS value were used as the input feature. Ideally, when a user takes off the sensor band and puts it back on, the band should be placed at exactly the same location and with the same tightness, but this is very difficult in practice. All these factors affect the classification performance.

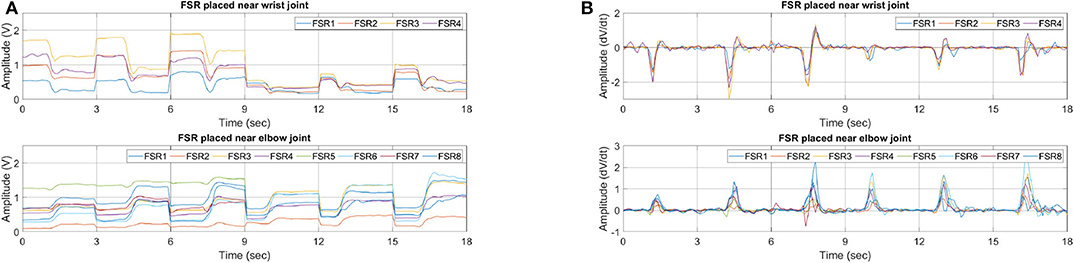

Experiments showed that the slope feature gives consistent results with less deviation. This feature represents the change in signal amplitude with respect to time. An example dataset of grasping objects of different shapes and weights is shown in Figure 6. The grasping data for each object are shown in 3 s windows. From 0 to 3, 3 to 6, and 6 to 9 s, objects A, B, and C (shown in Figure 8) are grasped sequentially. From 9 to 18 s, a dumbbell bar is grasped three times with different weights hung on its sides; the weights applied from t = 9 to 12, 12 to 15, and 15 to 18 s were 1.2, 2.3, and 3.4 kg, respectively. The data sessions from 0 to 9 s and from 9 to 18 s were recorded separately. Figure 6A shows a large variation in the FSR readings, since they depend on the shape and weight of the object. However, the slope feature in Figure 6B shows a similar pattern, with different peaks. Initially, the fingers are flexed, so opposite slopes are observed for the FSR sensors placed near the elbow and near the wrist joint. As soon as an object is grasped, positive slopes are observed for both sensor bands. With a carefully selected threshold value, the grasp action can therefore be detected effectively. For this reason, the slope feature was selected for hand motion detection in this work.

Figure 6. FSR feature dataset for grasping objects of different sizes and weights. (A) RMS and (B) slope.

Two features are extracted from the raw sensor data, i.e., root mean square (RMS) and slope. The RMS of the raw sensor data is computed over a 150 ms window, of which 100 ms is new (non-overlapping) data and 50 ms overlaps the previous window. After the RMS values are calculated for each FSR sensor, the slopes are obtained using the following equation:

$$\kappa = \frac{R_i - R_{i-1}}{t_{ws}} \tag{3}$$

Here, κ represents the slope feature, Ri represents the newest sample of RMS data, Ri−1 is the preceding RMS sample, and tws is the window time used to extract features.
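The two-stage feature extraction can be sketched in Python as follows. The sampling rate is not given in the text, so `fs_hz` is a parameter, and we assume tws equals the 100 ms hop between consecutive RMS samples; both are assumptions for illustration.

```python
import math
from typing import List, Sequence


def rms_features(signal: Sequence[float], fs_hz: float,
                 win_s: float = 0.150, hop_s: float = 0.100) -> List[float]:
    """RMS over 150 ms windows that advance by 100 ms (50 ms overlap with the previous window)."""
    win = int(round(win_s * fs_hz))
    hop = int(round(hop_s * fs_hz))
    if win <= 0 or hop <= 0:
        raise ValueError("sampling rate too low for the chosen window")
    out = []
    for start in range(0, len(signal) - win + 1, hop):
        segment = signal[start:start + win]
        out.append(math.sqrt(sum(x * x for x in segment) / win))
    return out


def slope_features(rms: Sequence[float], t_ws: float = 0.100) -> List[float]:
    """kappa_i = (R_i - R_{i-1}) / t_ws for each new RMS sample (t_ws assumed = hop)."""
    return [(rms[i] - rms[i - 1]) / t_ws for i in range(1, len(rms))]


if __name__ == "__main__":
    fs = 100.0  # hypothetical sampling rate in Hz
    raw = [0.5] * 30 + [0.5 + 0.02 * k for k in range(30)]  # synthetic rising FSR signal
    print(slope_features(rms_features(raw, fs)))
```

In practice, one slope sequence would be computed per FSR, giving the rows of slope samples used for threshold determination.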

In this method, each state, i.e., rest, open, close, and grasp, is identified using a threshold-based classification approach. To determine the threshold limits, the subject is asked to hold the rest state, shown in Figure 4C, for 5 s. The raw data obtained in this task are post-processed to obtain slopes, which are then used to determine the threshold limits.

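After the computation of the slope feature, the minimum and maximum slope values for each FSR were computed:

$$\boldsymbol{\kappa}_{w}^{\min} = \min_{\text{rows}} \Delta_w, \quad \boldsymbol{\kappa}_{w}^{\max} = \max_{\text{rows}} \Delta_w, \quad \boldsymbol{\kappa}_{e}^{\min} = \min_{\text{rows}} \Delta_e, \quad \boldsymbol{\kappa}_{e}^{\max} = \max_{\text{rows}} \Delta_e \tag{4}$$

with

$$\Delta_w = \begin{bmatrix} \kappa^{w}_{1,1} & \cdots & \kappa^{w}_{1,N} \\ \vdots & \ddots & \vdots \\ \kappa^{w}_{I,1} & \cdots & \kappa^{w}_{I,N} \end{bmatrix}, \quad \Delta_e = \begin{bmatrix} \kappa^{e}_{1,1} & \cdots & \kappa^{e}_{1,M} \\ \vdots & \ddots & \vdots \\ \kappa^{e}_{I,1} & \cdots & \kappa^{e}_{I,M} \end{bmatrix} \tag{5}$$

Here, N and M are the numbers of FSR sensors embedded in the sensor bands SBw and SBe, respectively. $\boldsymbol{\kappa}_{w}^{\min}$ and $\boldsymbol{\kappa}_{w}^{\max}$ are row matrices of order 1 × N containing the minimum and maximum slope values of the SBw data computed for the rest state; the minimum and maximum are taken over the rows, i.e., separately for each sensor. $\boldsymbol{\kappa}_{e}^{\min}$ and $\boldsymbol{\kappa}_{e}^{\max}$ are row matrices of order 1 × M containing the corresponding values for SBe. Δw is an I × N matrix, where I is the number of slope feature samples computed from the rest gesture data, and Δe is an I × M matrix.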
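The per-sensor minimum and maximum over the rest-state slope matrix can be computed in plain Python as below. `rest_limits` is a hypothetical helper name; each row of `delta` is one of the I slope samples and each column is one FSR.

```python
from typing import List, Sequence, Tuple


def rest_limits(delta: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Column-wise min and max of an I x N (or I x M) slope matrix recorded at rest."""
    if not delta:
        raise ValueError("no rest-state samples")
    width = len(delta[0])
    if any(len(row) != width for row in delta):
        raise ValueError("every row must hold one slope per FSR")
    columns = list(zip(*delta))  # one tuple of I slope samples per FSR sensor
    return [min(col) for col in columns], [max(col) for col in columns]
```

Calling it once on the SBw slope matrix and once on the SBe slope matrix yields the four row matrices of per-sensor rest limits.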

Using (4) and (5), threshold conditions to detect each task are given as

Here, HR, HO, HC, and HG are the thresholds for rest, open, close, and grasp detection. The slope features of SBw and SBe computed during real-time testing are likewise stored in row matrices, with their entries in the same order as the columns of Δw and Δe.
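The following Python sketch is a hypothetical rule set, not the conditions HR, HO, HC, and HG themselves. It encodes the qualitative MCI patterns described earlier (closing: SBe rising and SBw falling; opening: the reverse; grasp: both rising; grip loosening treated as rest). A sensor counts as rising when its slope exceeds its rest-state maximum and as falling when it drops below its rest-state minimum; the per-band majority vote is our assumption.

```python
from typing import Optional, Sequence, Tuple

Limits = Tuple[Sequence[float], Sequence[float]]  # (per-sensor rest minima, rest maxima)


def band_trend(slopes: Sequence[float], limits: Limits,
               min_count: Optional[int] = None) -> int:
    """Return +1 if the band is rising, -1 if falling, 0 if within its rest limits.

    Hypothetical rule: a band rises (falls) when at least `min_count` sensors,
    by default a simple majority, exceed their rest maximum (drop below their rest minimum).
    """
    lo, hi = limits
    if min_count is None:
        min_count = len(slopes) // 2 + 1
    rising = sum(1 for s, h in zip(slopes, hi) if s > h)
    falling = sum(1 for s, m in zip(slopes, lo) if s < m)
    if rising >= min_count and rising > falling:
        return 1
    if falling >= min_count and falling > rising:
        return -1
    return 0


def classify(slopes_w: Sequence[float], slopes_e: Sequence[float],
             limits_w: Limits, limits_e: Limits) -> str:
    """Map the trends of SBw (wrist) and SBe (elbow) to one of four hand states."""
    trend_w = band_trend(slopes_w, limits_w)
    trend_e = band_trend(slopes_e, limits_e)
    if trend_w > 0 and trend_e > 0:
        return "grasp"  # isometric contraction: both bands rise
    if trend_e > 0 and trend_w < 0:
        return "close"  # flexors shorten toward the elbow
    if trend_e < 0 and trend_w > 0:
        return "open"   # finger extension
    return "rest"       # includes grip loosening (both bands falling)
```

Raising `min_count` or widening the rest limits makes the classifier more conservative, which mirrors the precision/recall trade-off discussed in the results.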

During the motion classification stage, if grasp action is detected, the control method is switched to grasp assistance. In this mode, we need to determine and control the grasp assistance provided by the SEM Glove. In this work, it is determined using the following equation:

Here, u is the control input relayed to the SEM Glove, K is the proportional gain, and the controller acts on the net FSR output measured from the sensor band SBe. LBe is the net FSR output measured at the time of grasp detection and is given by the following equation:

Here, i is the sample when grasp action was detected, and i−1 represents the sample before.
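A minimal Python sketch of the proportional assistance law. Because the exact expressions for u and LBe are not shown above, it assumes u = K(V − LBe), where V is the current net SBe output, and that LBe is the mean of the net SBe output at samples i − 1 and i. These forms, the clipping range, and the function names are hypothetical.

```python
from typing import Sequence


def grasp_baseline(net_e: Sequence[float], i: int) -> float:
    """Hypothetical L_Be: mean of the net SBe output at detection sample i and at i - 1."""
    if not 1 <= i < len(net_e):
        raise IndexError("detection index must have a preceding sample")
    return 0.5 * (net_e[i] + net_e[i - 1])


def assistance_command(net_e_now: float, baseline: float, gain: float,
                       u_max: float = 1.0) -> float:
    """Assumed law u = K * (net SBe output - L_Be), clipped to [0, u_max]."""
    u = gain * (net_e_now - baseline)
    return max(0.0, min(u, u_max))
```

Under this assumption, subtracting the baseline means the glove adds assistance only for MCI force beyond the level present when the grasp was detected.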

The performance of the task detection technique is analyzed with four parameters, namely precision, recall, F1-score, and accuracy (Powers, 2011). They are calculated as

$$P_{pre} = \frac{N_{TP}}{N_{TP} + N_{FP}}, \qquad P_{rec} = \frac{N_{TP}}{N_{TP} + N_{FN}},$$

$$P_{F1} = \frac{2\,P_{pre}\,P_{rec}}{P_{pre} + P_{rec}}, \qquad P_{acc} = \frac{N_{TP} + N_{TN}}{N_{TP} + N_{TN} + N_{FP} + N_{FN}} \times 100\%$$

Here, NTP, NTN, NFP, and NFN represent the numbers of true positive, true negative, false positive, and false negative samples, respectively, as illustrated in Figure 7. Ppre, Prec, PF1, and Pacc are the performance measures representing precision, recall, F1-score, and accuracy, respectively. Precision, recall, and F1-score lie in the range 0–1, whereas accuracy is expressed as a percentage.
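The per-task measures can be computed from sample-wise reference and predicted labels by treating each task one-vs-rest, as in the following Python sketch. Returning 0.0 when a denominator is zero is our convention, and the single-letter labels follow Figure 2.

```python
from typing import Dict, Sequence


def class_metrics(reference: Sequence[str], predicted: Sequence[str],
                  label: str) -> Dict[str, float]:
    """One-vs-rest precision, recall, F1-score (0-1) and accuracy (%) for one task label."""
    if len(reference) != len(predicted) or not reference:
        raise ValueError("label sequences must be non-empty and of equal length")
    tp = fp = fn = tn = 0
    for ref, pred in zip(reference, predicted):
        if pred == label and ref == label:
            tp += 1
        elif pred == label:
            fp += 1
        elif ref == label:
            fn += 1
        else:
            tn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = 100.0 * (tp + tn) / len(reference)
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


if __name__ == "__main__":
    ref = ["R", "R", "G", "G", "O"]
    pred = ["R", "G", "G", "G", "O"]
    for task in ("R", "O", "G"):
        print(task, class_metrics(ref, pred, task))
```

Only the accuracy uses true negatives, which is why, with unbalanced classes, precision and recall are the more informative pair.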

Using these four parameters, the classification performance can be evaluated comprehensively and in an unbiased manner. The equations show that the fundamental difference between accuracy and the other parameters is that only accuracy includes the TN samples. In our experiment, the number of samples per class is not balanced, and in such cases precision and recall provide particularly useful insight into classification performance. Taking the rest task as an example, precision measures how many of the samples classified as rest were actually rest, whereas recall measures how many of the samples recorded while the user was instructed to hold the rest state were correctly identified as rest. Finally, the F1-score, the harmonic mean of precision and recall, expresses the balance between them.

With the developed method, three experiments are performed, i.e., task identification, influence of sensor placement, and grasping assistance. The details and results of each are provided in the following sections.

Six subjects participated in this experiment. All of them were healthy, right-handed, and aged between 25 and 35 years. Ethical approval for these experiments was obtained from the ethics committee of Region Nordjylland, Denmark.

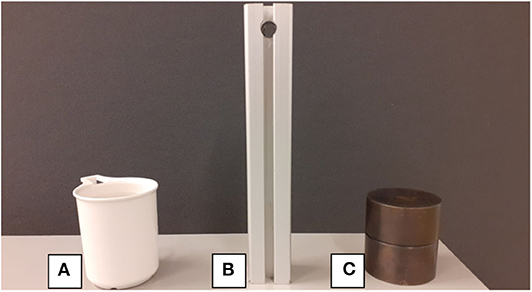

In this experiment, the performance measures, i.e., precision, recall, F1-score, and accuracy, are computed to evaluate the classification performance. For this purpose, an experiment was designed in which a subject opens and closes the hand, first without any object and then with three objects of different attributes, shown in Figure 8.

Figure 8. Objects of different shape and weight that are grasped during task identification experiment, (A) empty cup, (B) aluminum bar, and (C) solid metal cylinder.

The protocol of the experiment is as follows. The subject is instructed to sit in a chair with their hands resting on the table beside the objects. The first task the subject performs is calibration, as explained in section 3.1, followed by the rest state gesture shown in Figure 4C, which is held for 5 s to determine the threshold limits. Afterwards, real-time testing tasks are performed. For the open and close tasks, the subject lifts his/her hand from the table and keeps it in the open state, as shown in Figure 4A. The subject closes the hand when the instruction is shown on the screen and opens it when the instruction to open is shown again. The subject is instructed that an open hand posture should be maintained throughout the experiment. For the grasp task, the hand is lifted from the table and kept open, as shown in Figure 4A. When the grasp instruction is shown on the screen, the subject grasps the object and lifts it slightly from the table, with a clearance of approximately 1.0 cm.

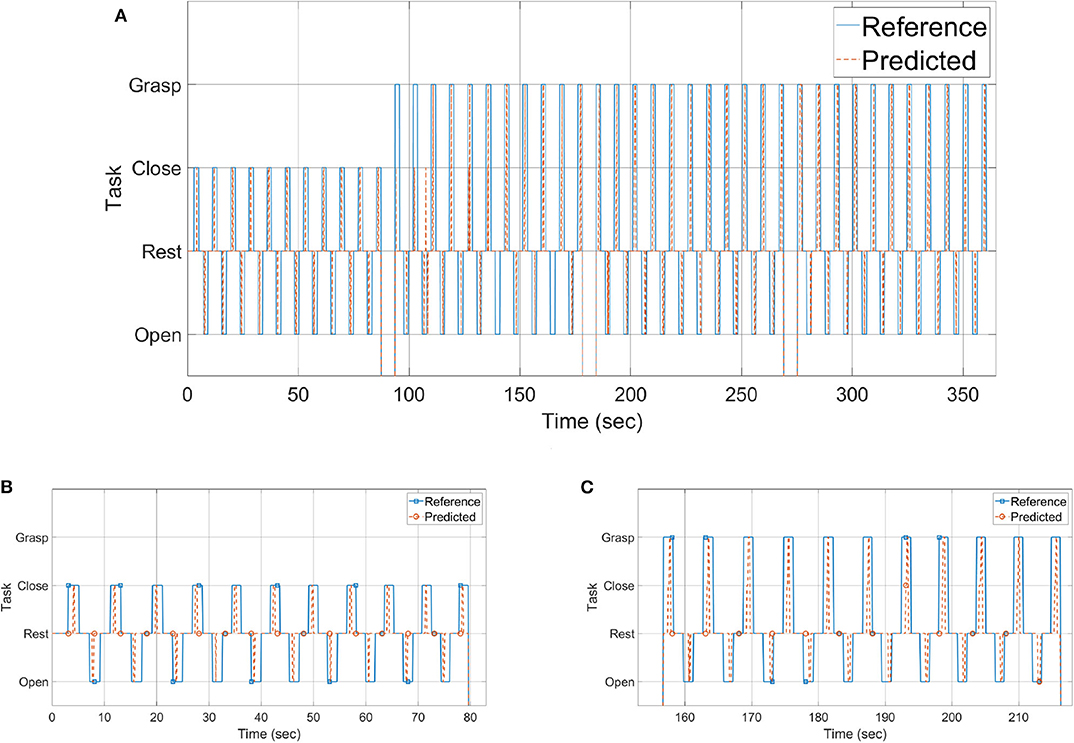

The results of the experiment are shown in Figures 9–12 and summarized in Table 2. Figure 9 shows the experimental results for one of the subjects. Figure 9A shows the reference and predicted tasks. In the first 80 s of the experiment, the subject is instructed to perform the rest, open, and close tasks. From t = 80 to 155, t = 155 to 220, and t = 220 to 285 s, the subject is instructed to grasp objects A, B, and C, respectively. In this figure, the solid blue line shows the task to be performed and the dotted red line the task predicted by the classifier. A zoomed-in view of the open and close tasks is shown in Figure 9B, and of the grasping task for object B in Figure 9C.

Figure 9. Tasks performed during (A) the whole span of time, (B) opening and closing of the hand, and (C) grasping object B.

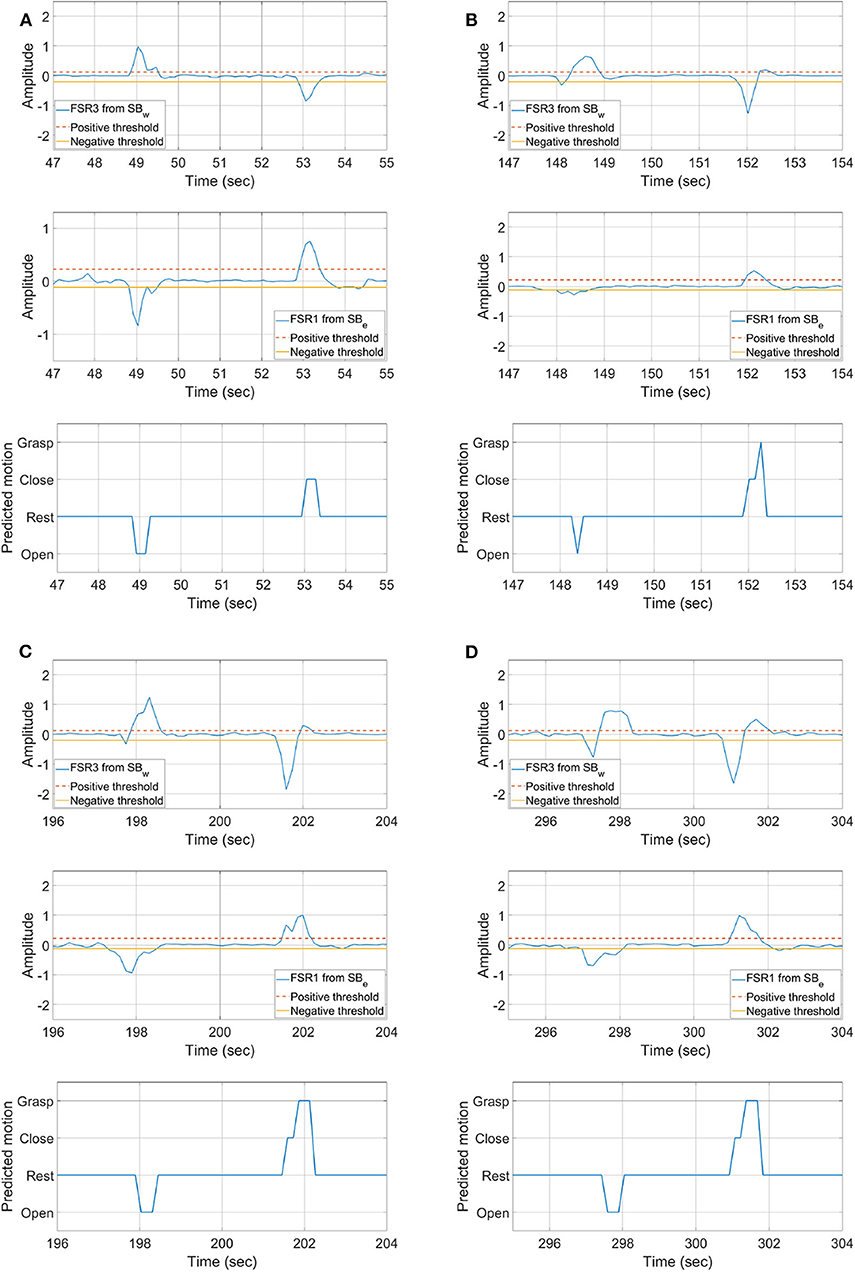

Figure 10. Results of single instances (A) open/close, grasping objects (B) A, (C) B, and (D) C, shown in Figure 8.

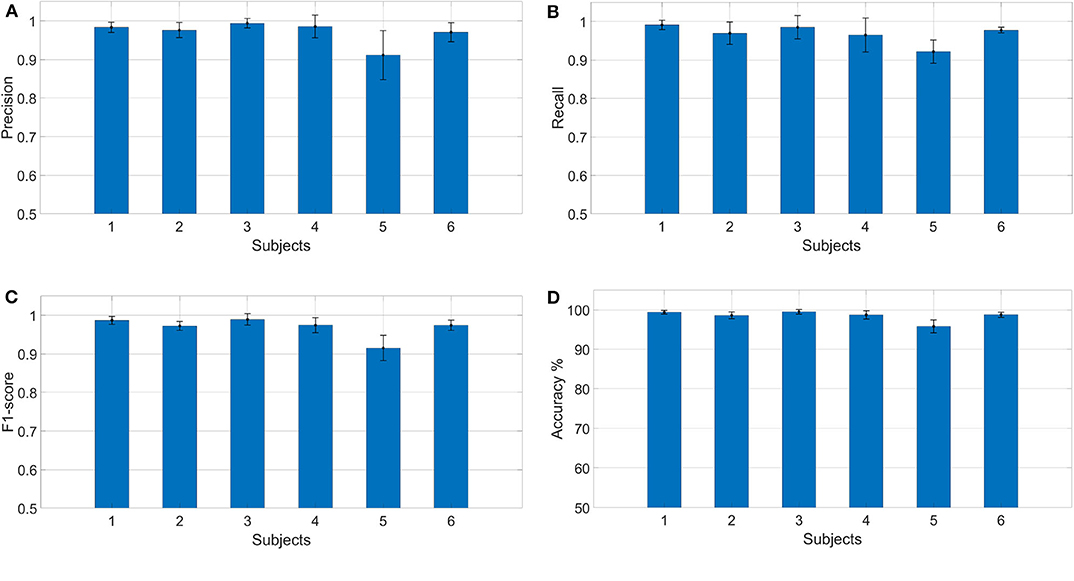

Figure 11. Results calculated for each subject individually (A) precision, (B) recall, (C) F1-score, and (D) accuracy.

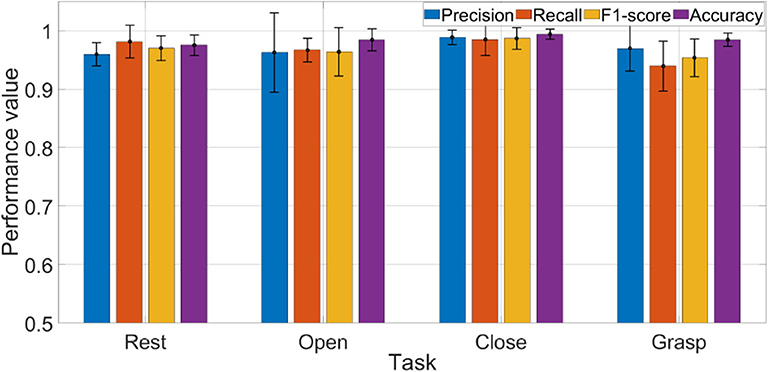

Figure 12. Average results of each performance measure w.r.t each task. Accuracy plot is shown normalized between 0 and 1.

Single instances of the above-mentioned tasks are shown in Figure 10. Figure 10A shows an open and close task. Initially, the hand is in the closed state; as the subject opens the hand, a drop in signal amplitude near the elbow and an increase near the wrist joint are observed, and the classifier detects the opening as the movement is performed. When the hand is then closed, the inverse muscle activity pattern can be seen, and the classifier again detects the closing as the movement is performed.

The instances of grasping objects A, B, and C are shown in Figures 10B–D, respectively, with the data presented in the same order as in Figure 10A. Initially, the subject is holding the object. As the hand is opened, the FSR readings show that the associated muscle contraction increases near the wrist and decreases near the elbow. When the subject, starting from the open hand state, is instructed to grasp the object, the classifier first detects that the hand is closing; the FSR readings correspondingly increase near the elbow and decrease near the wrist. As the object is grasped, the readings on both sensor bands increase, and the classifier correctly detects that an object is being grasped. These results show that the threshold-based classifier can accurately distinguish between all four motion states, i.e., rest/steady, open, close, and grasp.

Results in terms of precision, recall, F1-score, and accuracy are shown in Figures 11, 12 and Table 2. In the figures, the error bar represents the performance deviation within the tasks, i.e., rest, open, close, and grasp.

The average performance values for each task are shown in Figure 12. For the rest state, the average recall is 0.98, which means that only 2% of the rest-state samples were not detected. Note that the rest state was held in all postures, i.e., open hand, closed hand, and grasp. This result is critical for real-time operation, because any misclassification can cause undesired movement, especially if the subject is holding an object. The results show that the algorithm is highly accurate in detecting the rest state. The precision for the rest state is 0.96, which shows that only in a few cases (about 4% of the samples classified as rest) was another task (open, close, or grasp) detected as the rest state.

For the open and close tasks, the recall and precision scores are very similar. For grasp, precision (0.97) is higher than recall (0.94). The precision value means that, of all samples classified as grasp, only 3% were misclassifications. Meanwhile, the recall value means that in 6% of the grasp samples, the classifier did not detect a grasp. To improve precision, the threshold level should be raised, but this will reduce recall. Lowering the threshold has the opposite effect: it improves recall but might reduce precision. With the current setup, the classification performance of the algorithm therefore depends on the trade-off between recall and precision, and the threshold levels can be tuned to suit the application. The performance can be improved by incorporating more FSR sensors or by using more features for threshold determination.

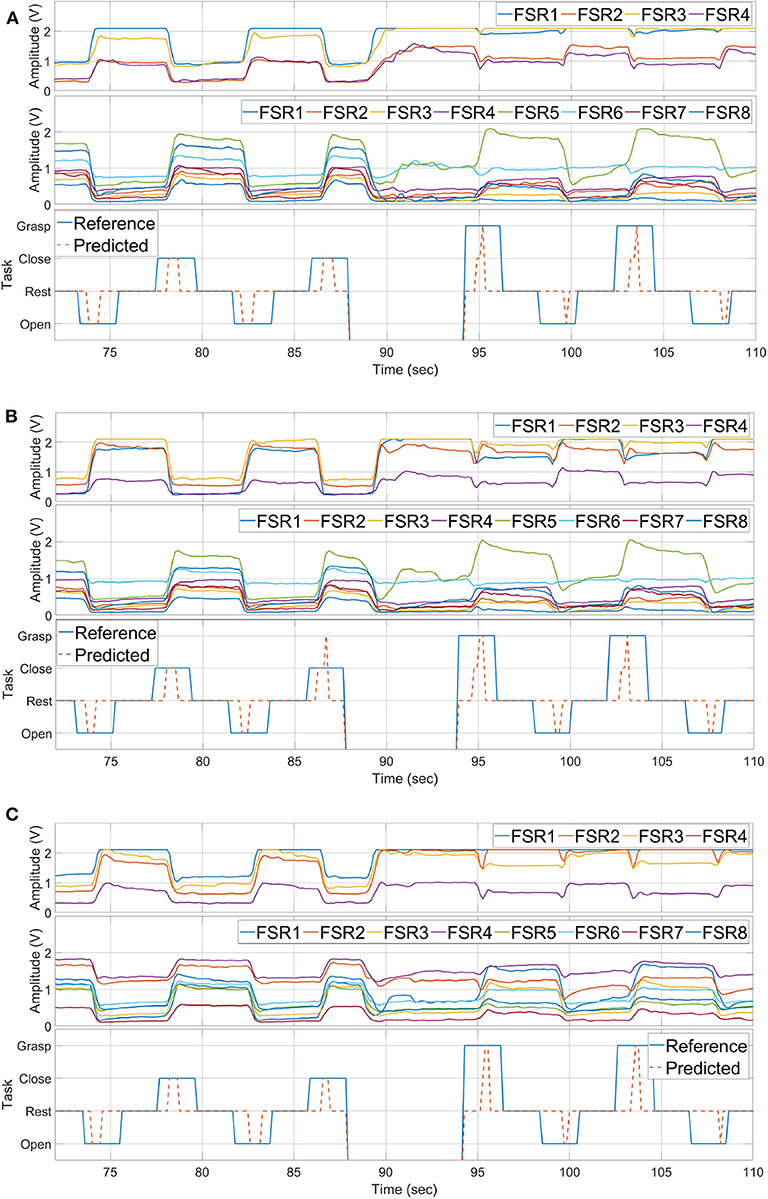

In this experiment, the effect of sensor placement on motion detection is studied. To this end, the sensor bands are placed over the forearm in three different orientations, as shown in Figure 13. In placement A, FSR1 of SBe and FSR1 of SBw are aligned with the brachioradialis and near the insertion of the brachioradialis, respectively. In placement B, they are aligned with the brachioradialis and the flexor carpi ulnaris, respectively. Finally, in placement C, they are aligned with the palmaris longus and near the insertion of the brachioradialis, respectively.

Figure 13. Three placements of sensor bands, (A) two FSR1 from SBe and SBw are aligned with brachioradialis and near insertion of brachioradialis, (B) aligned with brachioradialis and flexor carpi ulnaris, (C) aligned with palmaris longus and near insertion of brachioradialis.

Tasks performed for each placement of sensor bands are as follows:

• Open and close of hand without grasping any object

• Grasping object C as shown in Figure 8.

Each task is performed 10 times under the same conditions as explained in section 4.1. The results are shown in Figure 14, where Figures 14A–C show the results for placements A, B, and C, respectively. In each sub-figure of Figure 14, the first plot shows the data of the FSR sensors in the band near the wrist, the second shows the data of the FSR sensors in the band near the elbow, and the third displays the reference and predicted tasks.

Figure 14. Hand motion detection with three placements of the sensor bands, (A) with placement A, (B) with placement B, (C) with placement C.

Although the raw data differ between sensor placements, the developed method detects all four hand gestures accurately, and task detection performance is only slightly affected. Averaged over the three placements, the rest state, hand opening, closing, and grasping achieved accuracies of 98.15, 99.24, 100, and 98.16%, respectively.

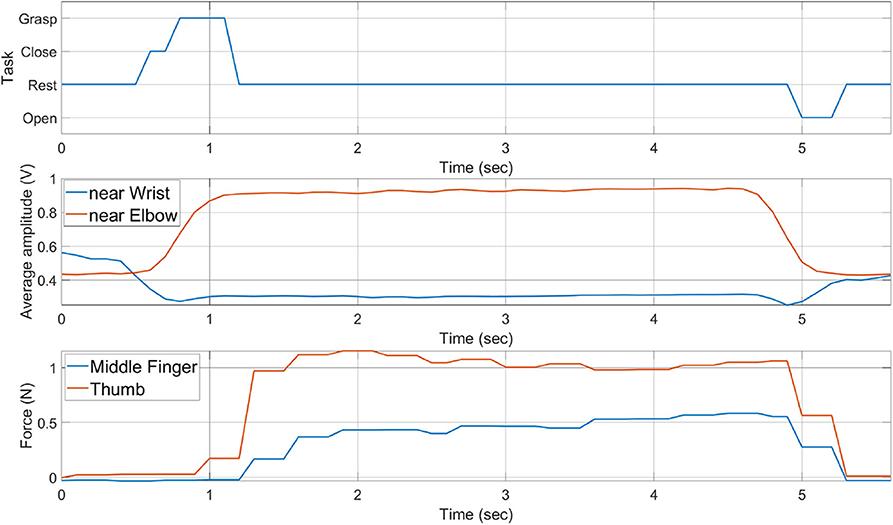

In this work, grasping assistance is provided by the SEM Glove, where the desired assistance level is regulated by a proportional control scheme. The block diagram of the control scheme is shown in Figure 15. Referring to Equation (11), the input of the proportional controller is the average MCI force measured by the sensor band near the elbow, and its output u is relayed to the exoskeleton. The grasping assistance provided by the SEM Glove is further validated by measuring the grasping force with the force sensors embedded in the SEM Glove.

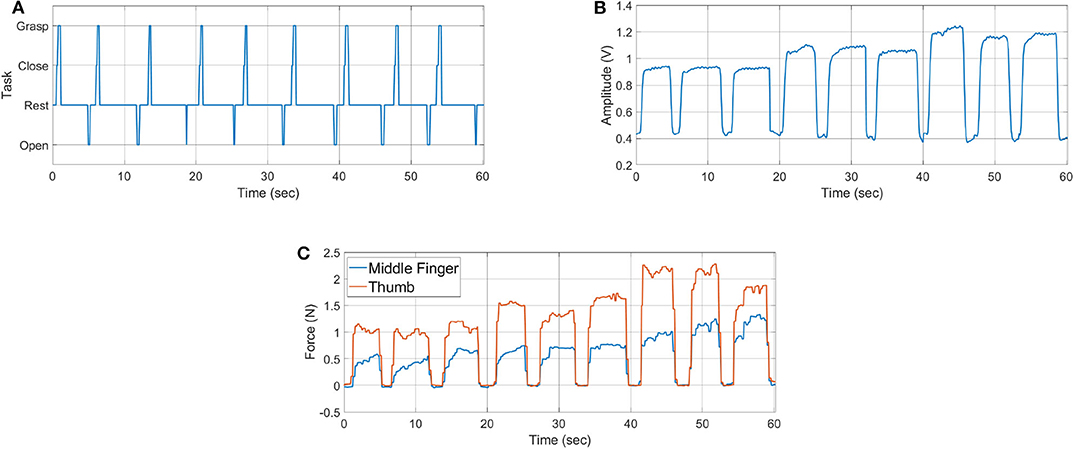

In this experiment, the sensor bands are worn on the right forearm and the exoskeleton on the left hand. Three different payloads, i.e., 1.2, 2.3, and 3.4 kg, applied from t = 0 to 20, t = 20 to 40, and t = 40 to 60 s, respectively, are each grasped three times. The results of the experiment are shown in Figure 16.

Figure 16. Hand exoskeleton control results: (A) task identified, (B) MCI force measured from sensor band placed near elbow joint, and (C) assistance force provided by SEM Glove.

Figure 16A shows the task predicted by the classifier. The net MCI force measured by the SBe sensor band is shown in Figure 16B, and the resulting grasping force measured by the SEM Glove sensors is shown in Figure 16C. A single instance of the grasp task is shown in Figure 17. Once a grasping task is detected, assistance based on the MCI force is provided by the exoskeleton, as is evident from the SEM Glove sensor readings.

Figure 17. History of task performed, average MCI forces, and grasping forces measured by SEM Glove.

Figures 16B,C show that the MCI forces increase with the payload grasped by the subject, and so do the forces read by the sensors at the middle finger and thumb. These grasping forces are caused by the physical interaction between the fingertips and the object. When the assistance provided by the exoskeleton increases, the exoskeleton grasps the object more tightly, and in turn the grasping force measured by the fingertip sensors increases. This confirms that, as the MCI force in Figure 16B increases, the exoskeleton provides correspondingly more grasping assistance.

In this work a novel method is developed for hand motion detection and for the provision of assistance in carrying out an object grasping task. We also addressed the challenge of data collection for training and proposed an alternative solution for it.

The new method reduces complexity and increases the usability of the system over longer periods. In an AI-based pattern recognition method, obtaining a correct and sufficient training dataset is one of the major challenges. Moreover, even if the training data are obtained correctly, reusing them from time to time remains a challenge, because the sensors must be placed at exactly the same location and muscle activity levels change. The method proposed in this work effectively addresses these challenges. It requires only sensor calibration and rest-state data of the hand; afterwards, the system detects hand motions based on changes in activity level. The requirement to place the sensor band at an exact location is thus relaxed. Moreover, the calibration procedure increases the sensor sensitivity and solves the problem of sensor resolution when the band tightness changes from one day to the next.

Another advantage of this method is the dual working modes of the sensor band. Besides motion recognition, the sensor band is also used to control assistance level in grasping an object, which is proportional to the MCI force measured.

The results of this work are significant for physical assistance in workplaces. In a workplace environment, any solution must be accurate and robust, require little training, and be insensitive to environmental conditions. Compared with other detection methods such as sEMG, which is highly prone to noise caused by sensor placement, orientation, and skin conditions, our method is less affected by skin conditions and can be worn without exact orientation and placement. Moreover, the developed method needs only a small training dataset. In Arteaga et al. (2020) and Pinzón-Arenas et al. (2019), each gesture was repeated more than 10 times, whereas in our method, besides calibration, rest data are recorded only once. As a result, the user can take off the device and put it back on conveniently without worrying about its performance.

This novel method using FSR sensor bands offers a robust and accurate alternative for human-robot interaction. The work presented in this paper and in previous studies (Islam et al., 2018; Islam and Bai, 2019) shows that FSR-based sensor bands can be applied in different ways to control upper-body assistive exoskeletons. Beyond these, sensor bands can be applied to other upper-limb and lower-limb exoskeleton applications. Moreover, this method can be used to assess muscle activity for medical purposes and for the design of control strategies.

Besides these advantages, the method has some limitations. External contact with the sensor band can change the sensor readings, which can result in incorrect motion detection. Hand motion speed can also lead to misclassification: if a motion is performed slowly, the algorithm might not detect it. These challenges can be addressed either by placing the FSR array outside of the sensor band or by implementing robust AI techniques for fault detection. The movement-speed challenge, in particular, can be addressed by increasing the window size during the feature extraction stage. However, a larger window can introduce a delay in the exoskeleton response.
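The window-size trade-off can be illustrated with a hypothetical feature: the change in normalized band force across a window of n samples, evaluated once per non-overlapping window. For a motion whose force rises by s per sample, that change is s·n, so a fixed threshold θ is exceeded only when s > θ/n; a larger n therefore exposes slower motions. Because a decision is issued only once per window, however, even a fast motion may wait up to n/f_s seconds before it is reported. The feature, the non-overlapping windows, the 0.1 threshold, and the 100 Hz sampling rate f_s are assumptions for illustration, not the feature set used in this work.

```python
def first_detection(signal, n, threshold):
    """Evaluate the change signal[t] - signal[t - n] once per non-overlapping
    n-sample window (t = n, 2n, ...) and return the first t at which it exceeds
    the threshold, or None if the motion is never detected."""
    for t in range(n, len(signal), n):
        if signal[t] - signal[t - n] > threshold:
            return t
    return None


def decision_interval_s(n, fs_hz):
    """Time between successive decisions for an n-sample window (seconds)."""
    return n / fs_hz


# Illustrative signals (normalized band force), not data from the study.
slow = [0.01 * i for i in range(100)]  # slow motion: +0.01 per sample
fast = [0.05 * i for i in range(100)]  # fast motion: +0.05 per sample
threshold = 0.1
fs_hz = 100.0  # hypothetical sampling rate

for n in (5, 20):
    print(n, first_detection(slow, n, threshold),
          first_detection(fast, n, threshold), decision_interval_s(n, fs_hz))
```

With n = 5 the slow motion is never detected (its change per window is about 0.05), while the fast motion is flagged at sample 5. With n = 20 the slow motion is detected at sample 20, but the fast motion is now also reported only at sample 20, which is the response delay mentioned above.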

This work aims to develop an effective and convenient method for detecting hand motions, i.e., rest, open, close, and grasp, using FSR-based sensor bands; the detected motions are further used to control a hand exoskeleton and provide assistance in grasping tasks. These objectives are achieved with a threshold-based task detection algorithm that determines hand motion from changes in MCI forces. Moreover, once a grasping task is detected, proportional force control is applied to provide assistance through a soft hand exoskeleton.

The contribution of this work is to experimentally validate whether the sensor bands can be used to detect hand motion and to implement proportional assistance control. Further contributions are the detection of hand motion with minimal training data and its validation through tests on multiple subjects. The results showed that the developed method can detect each task with high precision, recall, and accuracy. Experimental verification of proportional assistance control with the SEM Glove in a grasping task is another contribution of this work. The results have shown that the developed method can be used with a soft exoskeleton to assist workers in grasping tasks.
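Per-task precision, recall, and accuracy can be computed one-vs-rest from a confusion matrix using the standard definitions: precision = TP/(TP + FP), recall = TP/(TP + FN), and accuracy = (TP + TN)/N. The sketch below assumes per-class one-vs-rest accuracy, which may differ from how accuracy was aggregated in this study, and the confusion counts are invented for illustration; they are not the reported results.

```python
def per_class_metrics(confusion, labels):
    """One-vs-rest precision, recall, and accuracy for each class.

    confusion[i][j] counts samples whose true class is labels[i] and whose
    predicted class is labels[j].
    """
    total = sum(sum(row) for row in confusion)
    metrics = {}
    for k, label in enumerate(labels):
        tp = confusion[k][k]
        fp = sum(row[k] for row in confusion) - tp  # predicted k, true class differs
        fn = sum(confusion[k]) - tp                 # true k, predicted otherwise
        tn = total - tp - fp - fn
        metrics[label] = {
            "precision": tp / (tp + fp) if tp + fp else 0.0,
            "recall": tp / (tp + fn) if tp + fn else 0.0,
            "accuracy": (tp + tn) / total if total else 0.0,
        }
    return metrics


# Invented counts for illustration only (rows: true class, columns: predicted).
labels = ["rest", "open", "close", "grasp"]
confusion = [
    [9, 1, 0, 0],
    [0, 10, 0, 0],
    [0, 0, 8, 2],
    [0, 0, 0, 10],
]
for label, m in per_class_metrics(confusion, labels).items():
    print(label, {k: round(v, 3) for k, v in m.items()})
```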

In this work, experiments were performed in a controlled environment. In order to test the method in daily routine activities, our future work will focus on sensor fusion techniques to improve robustness against disturbances, such as those caused by other limb movements. Furthermore, the method can be extended to detect other hand gestures as well as elbow and lower-extremity motions.

The raw data supporting the conclusions will be made available by the lead author on reasonable request.

The studies involving human participants were reviewed and approved by the ethics committee of Region Nordjylland, Denmark. The participants provided their written informed consent to participate in this study.

MI and SB defined and developed this research work. MI developed the initial protocol draft, collected data, performed the analysis, and wrote the first draft of the manuscript. SB finalized the protocol, reviewed the manuscript, and approved the final version. Both authors contributed to the article and approved the submitted version.

This work was supported by Innovation Fund Denmark for project EXO-AIDER (https://www.exo-aider.dk).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors would like to thank participants for their time contributed to this study.

Anam, K., Rosyadi, A. A., Sujanarko, B., and Al-Jumaily, A. (2017). “Myoelectric control systems for hand rehabilitation device: a review,” in 2017 4th International Conference on Electrical Engineering, Computer Science and Informatics (EECSI), 1–6. doi: 10.1109/EECSI.2017.8239091

Arteaga, M. V., Castiblanco, J. C., Mondragon, I. F., Colorado, J. D., and Alvarado-Rojas, C. (2020). EMG-driven hand model based on the classification of individual finger movements. Biomed. Signal Process. Control 58:101834. doi: 10.1016/j.bspc.2019.101834

Asif, A. R., Waris, A., Gilani, S. O., Jamil, M., Ashraf, H., Shafique, M., et al. (2020). Performance evaluation of convolutional neural network for hand gesture recognition using EMG. Sensors 20:1642. doi: 10.3390/s20061642

Bullock, I. M., Zheng, J. Z., De La Rosa, S., Guertler, C., and Dollar, A. M. (2013). Grasp frequency and usage in daily household and machine shop tasks. IEEE Trans. Hapt. 6, 296–308. doi: 10.1109/TOH.2013.6

Cho, E., Chen, R., Merhi, L.-K., Xiao, Z., Pousett, B., and Menon, C. (2016). Force myography to control robotic upper extremity prostheses: a feasibility study. Front. Bioeng. Biotechnol. 4:18. doi: 10.3389/fbioe.2016.00018

Ferigo, D., Merhi, L.-K., Pousett, B., Xiao, Z. G., and Menon, C. (2017). A case study of a force-myography controlled bionic hand mitigating limb position effect. J. Bion. Eng. 14, 692–705. doi: 10.1016/S1672-6529(16)60435-3

Gull, M. A., Bai, S., and Bak, T. (2020). A review on design of upper limb exoskeletons. Robotics 9:16. doi: 10.3390/robotics9010016

Hashida, R., Matsuse, H., Bekki, M., Omoto, M., Morimoto, S., Hino, T., et al. (2019). Evaluation of motor-assisted gloves (SEM Glove) for patients with functional finger disorders: a clinical pilot study. Kurume Med. J. 64, 1–18. doi: 10.2739/kurumemedj.MS652007

Islam, M. R., Xu, K., and Bai, S. (2018). “Position sensing and control with FMG sensors for exoskeleton physical assistance,” in International Symposium on Wearable Robotics (Pisa: Springer), 3–7. doi: 10.1007/978-3-030-01887-0_1

Islam, M. R. U., and Bai, S. (2019). Payload estimation using forcemyography sensors for control of upper-body exoskeleton in load carrying assistance. Model. Identif. Control 40, 189–198. doi: 10.4173/mic.2019.4.1

Jiang, X., Merhi, L.-K., Xiao, Z. G., and Menon, C. (2017). Exploration of force myography and surface electromyography in hand gesture classification. Med. Eng. Phys. 41, 63–73. doi: 10.1016/j.medengphy.2017.01.015

Leonardis, D., Barsotti, M., Loconsole, C., Solazzi, M., Troncossi, M., Mazzotti, C., et al. (2015). An EMG-controlled robotic hand exoskeleton for bilateral rehabilitation. IEEE Trans. Hapt. 8, 140–151. doi: 10.1109/TOH.2015.2417570

Lu, Z., Stampas, A., Francisco, G. E., and Zhou, P. (2019). Offline and online myoelectric pattern recognition analysis and real-time control of a robotic hand after spinal cord injury. J. Neural Eng. 16:036018. doi: 10.1088/1741-2552/ab0cf0

Meng, Q., Meng, Q., Yu, H., and Wei, X. (2017). “A survey on sEMG control strategies of wearable hand exoskeleton for rehabilitation,” in 2017 2nd Asia-Pacific Conference on Intelligent Robot Systems (ACIRS) (Wuhan), 165–169. doi: 10.1109/ACIRS.2017.7986086

Nilsson, M., Ingvast, J., Wikander, J., and von Holst, H. (2012). “The soft extra muscle system for improving the grasping capability in neurological rehabilitation,” in 2012 IEEE-EMBS Conference on Biomedical Engineering and Sciences (Langkawi), 412–417. doi: 10.1109/IECBES.2012.6498090

Pinzón-Arenas, J. O., Jiménez-Moreno, R., and Herrera-Benavides, J. E. (2019). “Convolutional neural network for hand gesture recognition using 8 different EMG signals,” in 2019 XXII Symposium on Image, Signal Processing and Artificial Vision (STSIVA) (Bucaramanga), 1–5. doi: 10.1109/STSIVA.2019.8730272

Powers, D. M. (2011). Evaluation: from precision, recall and F-measure to ROC, informedness, markedness and correlation. J. Mach. Learn. Technol. 2, 37–63.

Qi, J., Jiang, G., Li, G., Sun, Y., and Tao, B. (2019). Intelligent human-computer interaction based on surface EMG gesture recognition. IEEE Access 7, 61378–61387. doi: 10.1109/ACCESS.2019.2914728

Radmand, A., Scheme, E., and Englehart, K. (2016). High-density force myography: a possible alternative for upper-limb prosthetic control. J. Rehabil. Res. Dev. 53, 443–456. doi: 10.1682/JRRD.2015.03.0041

Rasouli, M., Chellamuthu, K., Cabibihan, J.-J., and Kukreja, S. L. (2016). “Towards enhanced control of upper prosthetic limbs: a force-myographic approach,” in 2016 6th IEEE International Conference on Biomedical Robotics and Biomechatronics (BioRob) (University Town), 232–236. doi: 10.1109/BIOROB.2016.7523629

Ravindra, V., and Castellini, C. (2014). A comparative analysis of three non-invasive human-machine interfaces for the disabled. Front. Neurorobot. 8:24. doi: 10.3389/fnbot.2014.00024

Secciani, N., Bianchi, M., Meli, E., Volpe, Y., and Ridolfi, A. (2019). A novel application of a surface electromyography-based control strategy for a hand exoskeleton system: a single-case study. Int. J. Adv. Robot. Syst. 16, 1–13. doi: 10.1177/1729881419828197

Wege, A., and Zimmermann, A. (2007). “Electromyography sensor based control for a hand exoskeleton,” in 2007 IEEE International Conference on Robotics and Biomimetics (ROBIO) (Sanya), 1470–1475. doi: 10.1109/ROBIO.2007.4522381

Xiao, Z. G., and Menon, C. (2019). A review of force myography research and development. Sensors 19:4557. doi: 10.3390/s19204557

Xiao, Z. G., and Menon, C. (2020). Towards the investigation on the effect of the forearm rotation on the wrist FMG signal pattern using a high-density FMG sensing matrix. Cogent Eng. 7:1795051. doi: 10.1080/23311916.2020.1795051

Zhang, Z., Yang, K., Qian, J., and Zhang, L. (2019). Real-time surface EMG pattern recognition for hand gestures based on an artificial neural network. Sensors 19:3170. doi: 10.3390/s19143170

Keywords: human intention detection, FSR sensor band, exoskeleton control, grasping assistance, soft hand exoskeletons

Citation: Islam MRU and Bai S (2020) Effective Multi-Mode Grasping Assistance Control of a Soft Hand Exoskeleton Using Force Myography. Front. Robot. AI 7:567491. doi: 10.3389/frobt.2020.567491

Received: 02 June 2020; Accepted: 04 September 2020;

Published: 16 November 2020.

Edited by:

Carlos A. Cifuentes, Escuela Colombiana de Ingeniería Julio Garavito, Colombia

Reviewed by:

Dongming Gan, Purdue University, United States

Copyright © 2020 Islam and Bai. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Shaoping Bai, shb@mp.aau.dk

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
