
ORIGINAL RESEARCH article

Front. Robot. AI, 04 November 2020

Sec. Human-Robot Interaction

Volume 7 - 2020 | https://doi.org/10.3389/frobt.2020.561015

This article is part of the Research Topic Legacy Disruptors in Applied Telerobotics

Pascal Gliesche1*, Tobias Krick2,3, Max Pfingsthorn4, Sandra Drolshagen4, Christian Kowalski1 and Andreas Hein1,5

Ensuring care is one of the biggest humanitarian challenges of the future, since an acute shortage of nursing staff is expected. At the same time, this creates opportunities for new technologies in nursing, such as robotic systems. One potential use case is outpatient care, which nowadays involves traveling long distances. Here, telerobotics could provide major relief for the nursing staff, as it could spare them many of these, sometimes long, journeys. Since autonomous robotic systems are not desired for ethical reasons, at least in Germany, this paper evaluates the design of a telemanipulation system for outpatient care built from off-the-shelf components. Furthermore, we investigated the suitability of two different input devices for control: a kinesthetic device (Novint Falcon) and a keyboard plus mouse. We conducted the investigations with 25 nurses in a laboratory study. The laboratory replicates a realistic environment of an elderly person's home and a remote care service center. Tasks common in outpatient care, such as handing over objects (manipulation) and examining body parts (setting the camera view), were used in the study. After a short training period, all nurses were able to control a manipulator with both input devices and perform the two tasks. It was shown that the Falcon leads to shorter execution times (on average 0:54.82 min, compared to 1:10.92 min with keyboard and mouse), whereas the participants completed the tasks more successfully with keyboard plus mouse. There was no difference in usability and cognitive load. Moreover, we found that access to this kind of technology is considered desirable, and we identified further usage scenarios.

The future of nursing care faces several important challenges, such as an aging society and the shortage of nursing staff. New technology is a promising way to counteract the otherwise expected shortage of nursing staff and the increasing number of patients in need of care, with innovative approaches based on robotics and human-technology interaction (Hülsken-Giesler, 2015).

In Germany, 78.8% of those in need of long-term care are cared for on an outpatient basis. Thirty-two percent of them are cared for jointly by an outpatient (formal) nursing service and their relatives (informal caregivers), or only by the nursing service (Statistisches Bundesamt (Destatis), 2019). Furthermore, completely autonomous robots that support all parties involved in care are not realistic in the near future (Becker et al., 2013). The care service centers from which nursing staff travel to their patients generally serve destinations within a radius of up to 25 km, which results in average journey times of 6 min; in rural areas, this rises to 11 min on average (Neumeier, 2015). In some cases, caring relatives could also rely on the professional expertise and guidance of a nurse. The (remotely) assisting nurse must be able to get a picture of the situation. If the person on site then needs to be instructed, being able to hand over objects or point to them with the manipulator helps.
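To relate these journey times to the remote tasks studied here, the following back-of-the-envelope sketch compares them with the mean task execution times reported in the abstract (0:54.82 min with the Falcon, 1:10.92 min with keyboard and mouse). It assumes, as a simplification that the study does not make, that an avoided visit saves exactly one round trip (twice the one-way time) and ignores all other overheads; the function names are illustrative.

```python
# Back-of-the-envelope comparison: assumed round-trip travel time vs. mean remote
# task time. Travel times: Neumeier (2015); task times: abstract of this study.
# ASSUMPTION: an avoided visit saves a full round trip (2x the one-way time) and
# nothing else (no parking, handover, or documentation time is modeled).


def to_minutes(m_ss: str) -> float:
    """Convert an 'm:ss.xx' duration string to minutes."""
    minutes, seconds = m_ss.split(":")
    return int(minutes) + float(seconds) / 60.0


ONE_WAY_TRAVEL_MIN = {"average": 6.0, "rural": 11.0}
MEAN_TASK_MIN = {
    "Falcon": to_minutes("0:54.82"),
    "keyboard+mouse": to_minutes("1:10.92"),
}


def round_trip_ratio(one_way_min: float, task_min: float) -> float:
    """How many times longer the assumed round trip is than the remote task."""
    return 2.0 * one_way_min / task_min


if __name__ == "__main__":
    for area, travel in ONE_WAY_TRAVEL_MIN.items():
        for device, task in MEAN_TASK_MIN.items():
            ratio = round_trip_ratio(travel, task)
            print(f"{area:8s} {device:15s} round trip = {ratio:.1f} x task time")
```

Under these assumptions, the round trip is roughly 10 to 24 times longer than the remote task. This is only an indication: a real assessment would also have to account for connection setup, communication with the person on site, and costs.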

A general problem of engineering-driven solutions is that the individual application conditions in nursing are often not sufficiently considered during technology development. As a result, caregivers are skeptical of, or even reject, these innovations when it comes to implementing them in their daily work (Berger, 2017; Fehling and Dassen, 2017; Merda et al., 2017).

This shows that systematic research approaches are needed to adequately include (formal and informal) caregivers, patients, and the conditions of use—but also ethical and legal issues—when developing new solutions and implementing them in nursing practice.

The system under investigation is being developed within the framework of integrated technology development (BMBF, 2015), which means that technology development takes place in close cooperation with other departments and starts with a needs assessment. The technology we developed is intended to support and relieve nursing staff; as illustrated in Figure 1, it should support caregivers rather than replace them or impose additional burdens.

Figure 1. Vision of telemanipulation in outpatient care: the patient (B) lies in bed and receives support from the nurse (A) via the telemanipulated robotic arm, in this case with the remote control that had fallen out of bed and that he could no longer reach on his own.

Our work focuses on developing a system that helps formal and informal caregivers. To fulfill this ambition, we follow a rigorous participatory design and development methodology. In a first step, the nursing sciences determined the initial situation by means of a scoping review (Krick et al., 2019). This was then compared with the demands determined by Seibert et al. (2019). Aiming at useful and accepted robotic assistance systems, Gliesche et al. (2018) identified promising scenarios for robotic assistance. One of these scenarios in home care was “Picking up personal items that have fallen out of bed and hand them to the patient.” However, when informal caregivers are absent or need support, even this short task requires a nurse to come to the patient, which significantly increases the workload of nurses, especially in rural areas. Such small tasks, which do not necessarily require a nurse to be present with the patient, are particularly suited for (semi-)automation. Which use case to consider first, and its exact formulation, were decided in discussions with the nursing staff. We carried out the subsequent development steps in constant coordination with them: we tested each expansion stage in the laboratory with the nursing staff, discussed it, and then adapted the further development. Furthermore, ethicists accompanied these meetings, and we considered their concerns.

However, care in Germany is a field in which considerable reservations are raised against the use of autonomous robots. It is feared that people in need of care or assistance may receive less social and emotional support in the future due to the use of robotic technology (Sparrow and Sparrow, 2006). Professional caregivers also feel threatened by a change in their job description toward less relationship-oriented care (Kuhlmey et al., 2019). In its statement on healthcare robotics, the German Ethics Council (Deutscher Ethikrat) also states that nursing robots used instead of human caregivers are not desirable (Deutscher, 2020). To circumvent this ethical conflict, we used telemanipulation in this work as an intermediate solution. This also led to the decision to use only commercially available and established components. We believe that partial automation can be implemented step by step, based on the experience and training data gained during telemanipulation. In this way, acceptance can increase.

The benefits and challenges of new solutions in the field of human-technology interaction used in nursing care have so far mostly been studied with methods at a low level of evidence (Krick et al., 2019). For the specific field of application described in this study, there is no experience yet as to which input device nursing staff can best use to control a manipulator and thus support tasks.

The design of our teleoperation system derives from the needs of care. It focuses on supporting formal and informal caregivers in bed-centered outpatient care. Outpatient care carried out or supported by telemanipulation could reduce long travel times, that is, time that caregivers cannot spend on their actual work, while avoiding the ethical problems of automated robots in care. Furthermore, this approach would allow caregivers who are physically no longer able to work in traditional nursing care to remain in the profession. Their wealth of experience could thus be retained in nursing care for longer.

The initial scenario is as follows: a patient is mainly cared for by a relative, and from time to time a nursing service supports activities that the relative cannot perform alone or when the relative is not present. We use a simplified setup consisting of a manipulator with a camera mounted on the end effector, a user interface at the control station showing the robot state and the camera image, and standard input devices.

We carried out the following study to determine whether it is possible to control a manipulator in a nursing setting with standard input devices and a classic operating concept. In addition, we compared the input devices, further specified and expanded the application scenarios, and surveyed the acceptance and usefulness of the technology.

We conducted the study with professional caregivers. In two rounds, each participant performed two tasks with each of the two input devices: one manipulation task and one position reachability task. We recorded and evaluated the time required for the tasks and the workload of the participants.

Telemanipulation is a field that has long been studied (Chopra et al., 2008). Usually, telemanipulation is used to work in regions to which a person has no access or which are dangerous to access. Examples of these are radioactive (Castro et al., 2018) or extra-terrestrial regions (Deml, 2004). In medicine, it is also used to perform minimally invasive operations precisely [e.g., with the da Vinci robot (Kim et al., 2002)].

Even in these well-researched areas, there are still innovations, as recent work shows (Gancet et al., 2015; Attard et al., 2018; Skilton et al., 2018; Klamt et al., 2019; Pervez et al., 2019). In addition, advances in telemanipulation are enabled by new technologies such as tactile feedback (Kuchenbecker et al., 2010; Fishel et al., 2016), augmented reality (Lee and Park, 2018), and virtual reality (Sagardia et al., 2015).

Telemanipulation is also being brought into new application areas close to people, such as care, with the goal of assisting patients (e.g., Vogel et al., 2018) or relieving caregivers (Boll et al., 2018).

Most investigations of the suitability of different input devices were carried out on mobile robots, as the review by Fong and Thorpe (2001) shows, or on concentric-tube robots in a surgical setting (e.g., Burgner et al., 2014; Fellmann et al., 2015; El-Hussieny et al., 2018). Fellmann et al. (2015) compared different commercially available input devices for controlling a concentric-tube robot. The comparison uses three tasks (Position Reachability, Pick and Place, and Follow Path) and three off-the-shelf input devices (3DConnexion SpaceMouse, Novint Falcon Haptic Controller, and a gamepad). The Novint Falcon performed best in the Position Reachability task, and the SpaceMouse in the other tasks.

Although there has been significant progress in telemanipulation in recent years and it is used successfully in some areas, research on suitable input devices is still needed (Abi-Farraj et al., 2018). In particular, the use of a telemanipulator in telecare has not been investigated yet (Krick et al., 2019). This paper intends to lay the foundations for this area.

Current telecare systems mostly comprise monitoring and personal interaction (telephone or videoconference) systems. The remote caregiver can interact both with the patient and with the caregiver on site. So far, there is no physical interaction (van den Berg et al., 2012; Becker et al., 2013).

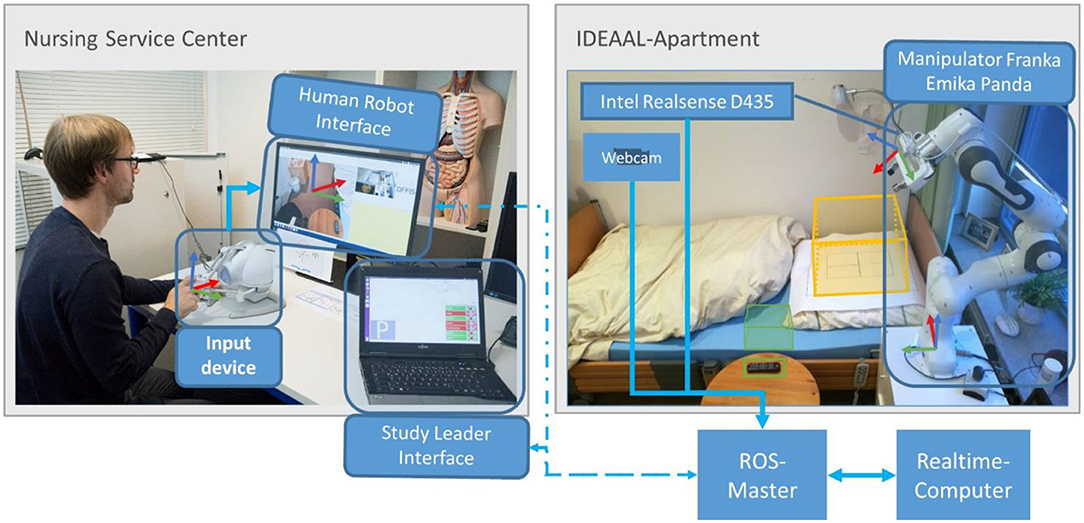

The experiments are performed in the IDEAAL apartment in Oldenburg, Germany (Kröger et al., 2011). This is a realistic replica of a typical senior apartment and thus forms a familiar environment for the nursing staff. The manipulator is installed in the bedroom at the head end of the patient's bed, as shown in Figure 2. The master side is situated in another lab, which is a replica of a care service center.

Figure 2. Architecture diagram of the system. It shows the division of the system into rooms and their connections.

The aim of our research is to relieve the strain on nursing staff. In this case, it is particularly important to reduce unnecessary trips to patients with relatives on site. We evaluated the following two scenarios:

(1) A relative is carrying out personal hygiene with the patient in bed. If the relative notices a skin irritation, a wound, or a similar change on the patient and is unsure whether or how to treat it, he or she can contact the care service. Thanks to a camera mounted on the robot, the nursing staff can assess the situation remotely and give their opinion. The staff can freely choose the point of view and do not depend on unsuitable perspectives in mobile phone pictures taken by the family member.

(2) In this scenario, we assume a bedridden patient living alone. The system enables professional support from a remote nurse in the care service center if the patient or a junior nurse requires care or help. A care service center is a place where experienced nursing staff, who may no longer be physically able to provide care themselves, are available to relatives and new nursing staff as a remote service. With the support of a nurse via telemanipulation, the junior nurse can perform tasks that he or she could not do alone. The robot serves as an additional arm on site for the nurse. The ability to grasp objects allows the remote nurse to participate actively in the nursing process. The tasks of the remote caregiver can vary greatly, for example, administering on-demand medication, holding the patient, or handing over personal items that have fallen out of bed. This great variety of tasks makes automation difficult.

For the system, this means that it should enable an experienced caregiver both to support complex sequences remotely and to accomplish small activities that do not require a caregiver to travel. These are some of the critical activities that a nurse should be able to perform with a manipulator. We selected the following two tasks from a variety of options for the study, as they cover basic skills:

• To set a specific camera image

• Pick and place an object

We define the tasks in more detail in section Tasks.

Patients' bedrooms are often small and confined. To work effectively with a robot in a limited space, we use a 7-degree-of-freedom manipulator. Compared to a 6-degree-of-freedom manipulator, it offers a larger set of configurations for reaching a given pose and therefore better maneuverability in narrow spaces. This matters especially because objects, including the person on site, may be located between the robot and the patient; a manipulator with seven degrees of freedom can reach around them.
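The benefit of the redundant seventh joint can be illustrated on a smaller analogue: a planar arm with three revolute joints that only has to position its tip in 2D also has one degree of freedom more than the task requires, just as a 7-degree-of-freedom arm does for a 6-degree-of-freedom end-effector pose. The minimal NumPy sketch below uses a hypothetical arm with illustrative link lengths (it is not a model of the Panda). It moves along the null space of the Jacobian and traces a family of configurations that all keep the tip at the same point, the kind of “self-motion” that lets a redundant arm reach around obstacles.

```python
import numpy as np

# Hypothetical planar arm (NOT the Panda): three revolute joints, 2D position task.
LINKS = np.array([0.4, 0.3, 0.2])  # link lengths in metres (illustrative values)


def fk(q):
    """End-effector (x, y) position of the planar serial arm."""
    angles = np.cumsum(q)
    return np.array([np.sum(LINKS * np.cos(angles)), np.sum(LINKS * np.sin(angles))])


def jacobian(q):
    """2x3 positional Jacobian: joint i moves every link from i outwards."""
    angles = np.cumsum(q)
    J = np.zeros((2, len(q)))
    for i in range(len(q)):
        J[0, i] = -np.sum(LINKS[i:] * np.sin(angles[i:]))
        J[1, i] = np.sum(LINKS[i:] * np.cos(angles[i:]))
    return J


def null_space_direction(q):
    """Unit joint velocity that leaves the tip position unchanged to first order."""
    _, _, vt = np.linalg.svd(jacobian(q))
    return vt[-1]


def self_motion(q0, steps=200, step_size=0.01):
    """Trace joint configurations that all reach the same point."""
    q = np.array(q0, dtype=float)
    target = fk(q)
    direction = null_space_direction(q)
    path = [q.copy()]
    for _ in range(steps):
        n = null_space_direction(q)
        if np.dot(n, direction) < 0:  # SVD sign is arbitrary; keep moving the same way
            n = -n
        direction = n
        q = q + step_size * n
        # one Newton step back onto the target removes the second-order drift
        q = q + np.linalg.pinv(jacobian(q)) @ (target - fk(q))
        path.append(q.copy())
    return np.array(path), target


if __name__ == "__main__":
    path, target = self_motion([0.2, 1.2, 1.0])
    drift = max(np.linalg.norm(fk(q) - target) for q in path)
    print("joint change:", np.round(path[-1] - path[0], 3), "max tip drift:", drift)
```

The joints change substantially while the tip stays put; with only as many joints as task dimensions, the arm would instead have a finite set of solutions for each tip position.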

The implementation of a telemanipulator for outpatient care consists of the following components, shown in Figure 2:

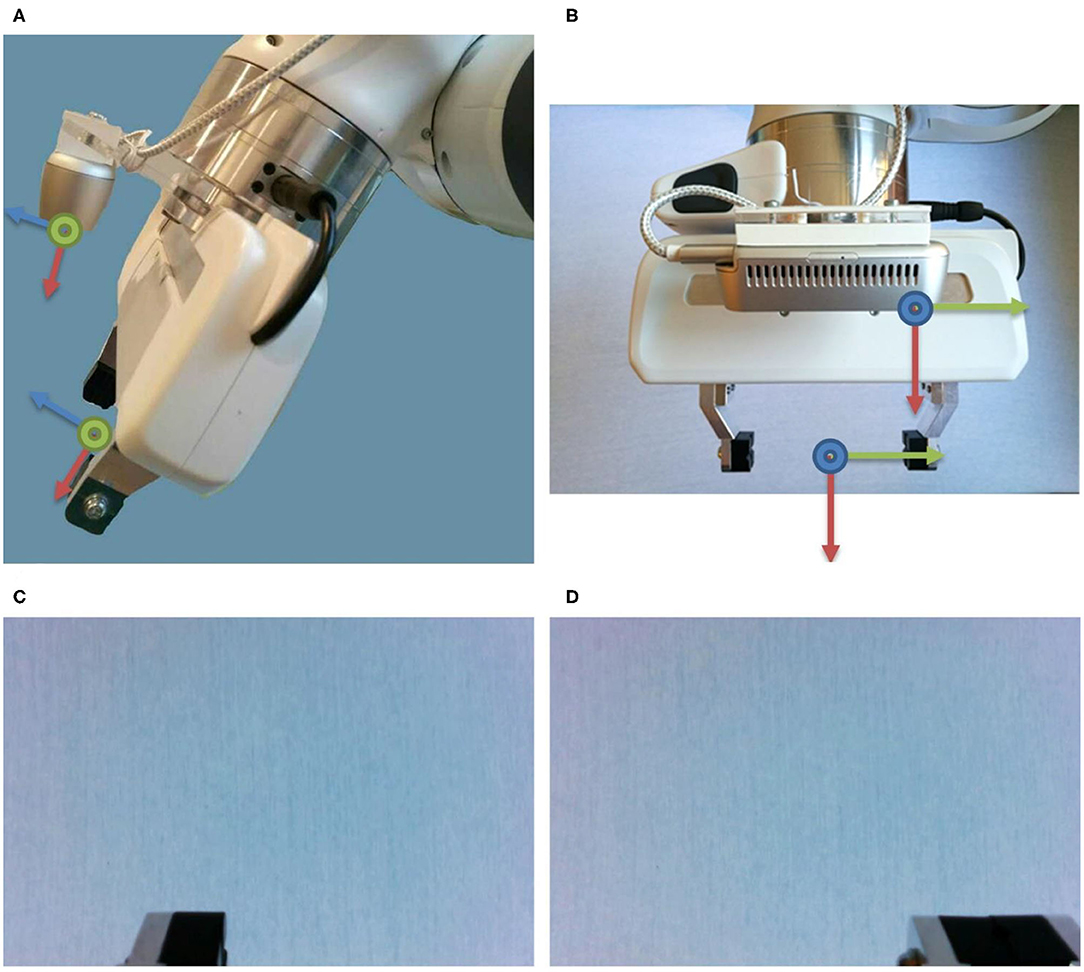

• Slave side (IDEAAL apartment): manipulator represented by the Franka Emika Panda, controlled by a real-time computer and equipped with an Intel RealSense D435 RGB-D camera (Figure 3) for recording RGB video.

• Master side (care service center):

° The human-robot interface application to control the manipulator and display the camera images to the user.

° Two input devices to record the control commands, which should be compared.

Figure 3. The Intel RealSense D435 is mounted centrally, 2.56 cm above the gripper (the Panda Hand), and (A) tilted by 16.7°. (B) The center of the RGB camera is shifted by 6.2 cm in the negative y-direction relative to the tool center point (TCP). The resulting RGB image seen by the participant with the gripper (C) open and (D) closed. The last 2 cm of the fingers (only the left finger when the hand is open, both when it is closed) are visible.

Figure 2 shows the physical structure: the manipulator is located next to the bed on a cupboard in the bedroom. This cabinet houses the real-time computer for robot control and the desktop master computer. The controller of the robot is located in another cabinet. The input devices and the user computer are located in an adjacent room, the care service center. All computers, except the controller of the robot, are in the same network.

The manipulator is a Franka Emika Panda, a 7-degree-of-freedom arm with force-torque sensors in each joint. It is a collaborative robot that may be operated in a workspace shared with humans.

Between the flange of the robot and the Panda Hand, we mounted a camera mount for the Intel RealSense D435. The viewing direction of the camera is approximately parallel to the gripper fingers but tilted by 16.7° so that the rear half of the fingers is still displayed in the camera image. The exact position of the camera was determined by hand-eye calibration (Tsai and Lenz, 1989).

The controller of the robot is connected to a computer running a real-time Linux kernel on Ubuntu. We enhanced the Cartesian impedance example controller from Franka Emika by removing the interactive marker and adding a simple avoidance of joint limits. The current deflection of each joint is monitored. As soon as it reaches 95% of its maximum deflection, the torque of the affected joint is limited: it can take on at most the value needed to statically hold the current Cartesian position of the end effector. Torques that reduce the joint deflection are still permitted. The controller receives the Cartesian position to be set via ROS (Quigley et al., 2009). These Cartesian positions are derived from the input commands arriving from the user computer. Whether the task conditions were achieved was monitored automatically, and all data were recorded.
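The joint-limit rule can be written as a per-joint torque clamp. The following sketch is one possible reading of the description, not the authors' controller: it assumes that deflection is measured from the middle of each joint's range and that the holding torque for the current pose is supplied from elsewhere, for example a dynamics model (both hypothetical). Near a limit, the commanded torque may not exceed the holding torque toward that limit, while torques that let the joint move back pass through unchanged.

```python
import numpy as np


def limit_joint_torques(q, tau_cmd, tau_hold, q_min, q_max, threshold=0.95):
    """Sketch of a joint-limit guard in the spirit of the text (names hypothetical).

    All arguments are per-joint arrays. `tau_hold` is the torque that would
    statically hold the current pose; it is ASSUMED to be supplied by the caller.
    Deflection is measured relative to the middle of each joint's range (an
    assumption), so +/-1 means "at the limit".
    """
    q = np.asarray(q, dtype=float)
    tau = np.array(tau_cmd, dtype=float)  # copy; the caller's array is not modified
    tau_hold = np.asarray(tau_hold, dtype=float)
    q_min = np.asarray(q_min, dtype=float)
    q_max = np.asarray(q_max, dtype=float)

    deflection = (q - 0.5 * (q_min + q_max)) / (0.5 * (q_max - q_min))
    near_upper = deflection >= threshold
    near_lower = deflection <= -threshold

    # Near the upper limit, the command is capped at the holding torque, so the
    # joint is not driven further toward the limit; smaller torques pass through.
    tau[near_upper] = np.minimum(tau[near_upper], tau_hold[near_upper])
    # Mirror image for the lower limit.
    tau[near_lower] = np.maximum(tau[near_lower], tau_hold[near_lower])
    return tau
```

Joints below the 95% threshold are left untouched, so the clamp only changes behavior close to the limits.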

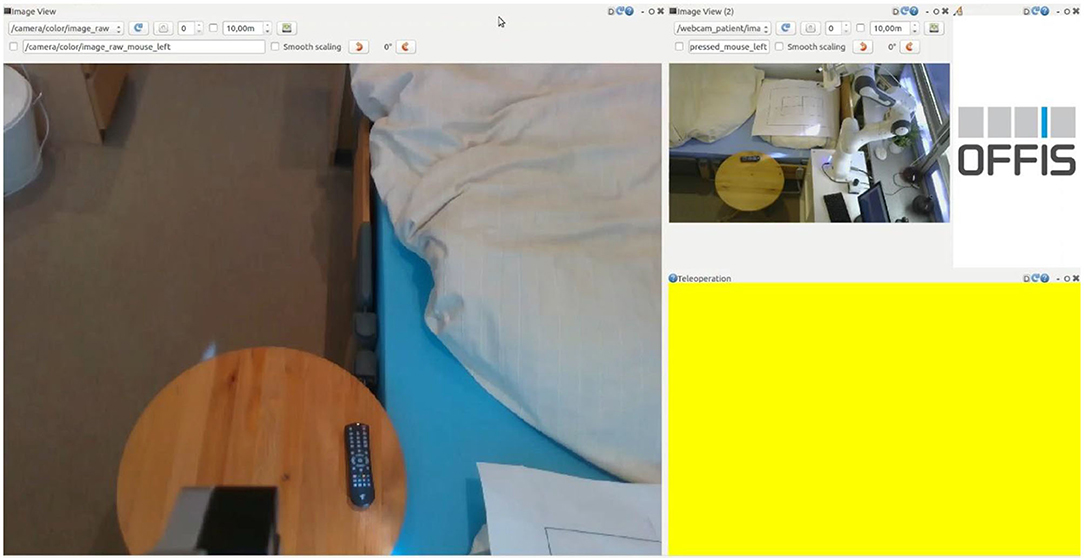

The Human-Robot interface consists of a graphical user interface (GUI) for the operator, as shown in Figure 4, and the input device. The user interface contains only the two camera images and a widget for recording the mouse position.

Figure 4. Graphical user interface on the master side. On the left is the video image from the camera at the end effector; the manipulator is controlled from this perspective. On the top right is the video image from the bird's eye view, which provides an overview of the overall situation. The yellow area at the bottom right of the GUI indicates where on the screen the mouse must be located to control the manipulator. When the mouse is over this area and control is enabled, the area turns green.

The user does not actively control one of the seven degrees of freedom, because steering all seven degrees of freedom is very complex. To reduce this complexity, the manipulator is steered from the perspective of the camera at the end effector, as shown on the left side of Figure 4. The users only determine the movement of the image of the camera at the end effector: the camera frame is fixed on the left side of the graphical user interface. With the input device, the user shifts the desired Cartesian position of the camera frame, shown in Figure 2. The robot controller determines the corresponding robot configuration. For the operator, this reduces the degrees of freedom to six, while the controller still uses all seven degrees of freedom to reach the desired end-effector position. The disadvantage is that it is very difficult for the operator to maintain overall situational awareness. Therefore, a second camera perspective from a bird's eye view is necessary; it is shown in a smaller size on the right side of the graphical user interface (Figure 4).
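Commanding the robot from the camera's perspective means that each input is an increment expressed in the camera frame, which must be mapped to the robot base frame before the controller can use it. The sketch below shows this with 4×4 homogeneous transforms in NumPy. The function names and the convention that the camera's z-axis is its viewing direction are assumptions; the fixed camera-to-end-effector transform would come from a calibration such as the hand-eye calibration mentioned above.

```python
import numpy as np


def rotvec_to_matrix(w):
    """Rodrigues' formula: rotation vector (unit axis * angle in rad) -> 3x3 matrix."""
    w = np.asarray(w, dtype=float)
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3)
    k = w / theta
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)


def make_pose(R, p):
    """4x4 homogeneous transform from rotation R and translation p."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = p
    return T


def shift_camera_target(T_base_cam, d_trans_cam, d_rotvec_cam):
    """Apply an increment given in the CAMERA frame to a camera pose given in the
    robot BASE frame. Right-multiplication = motion relative to the camera itself."""
    return T_base_cam @ make_pose(rotvec_to_matrix(d_rotvec_cam), d_trans_cam)


def ee_target_from_camera(T_base_cam, T_ee_cam):
    """End-effector pose that puts the camera at T_base_cam, given the fixed
    camera mounting T_ee_cam (e.g., obtained by hand-eye calibration)."""
    return T_base_cam @ np.linalg.inv(T_ee_cam)
```

Because the increment is right-multiplied, pushing “forward” always moves the camera along its own viewing axis, however the camera is currently oriented in the room; the end-effector target then follows from the fixed camera mounting.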

With the input device, the user continuously shifts the target pose away from the current position, by at most 0.17 m/s in translation and 0.25 rad/s in rotation. The input commands are read at a rate of 30 Hz. The resulting target position is set by the Cartesian impedance controller with joint limit avoidance.
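The stated limits translate into a fixed maximum step per input cycle: at 30 Hz, the target can move by at most 0.17/30 ≈ 5.7 mm and rotate by at most 0.25/30 ≈ 0.0083 rad (about 0.48°) per cycle. The sketch below computes this increment from normalized device commands. It assumes, which the text does not specify, that the limits bound the norm of the translational and rotational velocity vectors rather than each axis separately; the function names are illustrative.

```python
import numpy as np

MAX_LIN_SPEED = 0.17  # m/s, stated in the text
MAX_ANG_SPEED = 0.25  # rad/s, stated in the text
RATE_HZ = 30.0        # input sampling rate, stated in the text


def clamp_norm(v, limit):
    """Scale vector v down so that its Euclidean norm does not exceed limit."""
    n = np.linalg.norm(v)
    return v if n <= limit else v * (limit / n)


def increment_per_cycle(lin_cmd, ang_cmd, rate_hz=RATE_HZ):
    """Map normalized device commands (each axis in [-1, 1]) to the translation (m)
    and rotation-vector (rad) increment applied to the target in one input cycle.
    ASSUMPTION: the speed limits bound the vector norm, not each axis separately."""
    dt = 1.0 / rate_hz
    lin = np.clip(np.asarray(lin_cmd, dtype=float), -1.0, 1.0)
    ang = np.clip(np.asarray(ang_cmd, dtype=float), -1.0, 1.0)
    v = clamp_norm(MAX_LIN_SPEED * lin, MAX_LIN_SPEED)
    w = clamp_norm(MAX_ANG_SPEED * ang, MAX_ANG_SPEED)
    return v * dt, w * dt
```

Holding full deflection for one second (30 cycles) therefore moves the target by 0.17 m; a diagonal command is scaled down so that it is not faster than a single-axis one.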

We used the system described in this section in a user study to test its acceptance by nursing staff and its possible applications. In addition, we compared commercially available input devices to determine which one is best suited for controlling a robot arm (manipulator). We selected the subset of input devices used in this study in a preliminary study (section Preliminary Study). For this purpose, the subjects performed two tasks with each of two different input devices.

The manipulator and its operator are located in two different labs. The operator looks at a camera image transmitted from the tool of the manipulator and controls from this perspective (Figure 4). As an additional aid, a camera image of the overall situation that is considerably smaller is also available.

The user should concentrate only on controlling the robot and completing the tasks. Therefore, a researcher configures each trial on a separate interface. It contains buttons to set the condition, start the recording, switch the input device, and return the manipulator to the start configuration.

We conducted a preliminary study to determine the most promising input devices for the study. As described in section Preliminary Study, we selected the Novint Falcon 3D Haptic Controller and a keyboard together with a mouse as input devices. These represent two extremes on a spectrum of complexity: one is an advanced, specialized device, while the other is very widely known. The commands of the input devices are additive, which means that diagonal movements are also possible. For the keyboard and mouse, this means that combined rotational and translational movements can also be performed. With the Novint Falcon 3D Haptic Controller, this is not possible in our implementation.
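The additive combination of commands can be sketched as follows. The key bindings and the Falcon mode flag are hypothetical (the paper does not describe its exact mapping), and mouse input is left out. The point is only that summing per-key contributions yields diagonal and combined rotational plus translational motion, whereas a device whose three axes are routed either to translation or to rotation cannot combine the two.

```python
from typing import Dict, Iterable, Tuple

# (vx, vy, vz, wx, wy, wz) in the camera frame, each component in [-1, 1]
Command = Tuple[float, float, float, float, float, float]

# HYPOTHETICAL key bindings for illustration; the paper does not list its mapping.
KEY_AXES: Dict[str, Command] = {
    "d": (1, 0, 0, 0, 0, 0), "a": (-1, 0, 0, 0, 0, 0),  # sideways
    "e": (0, 1, 0, 0, 0, 0), "q": (0, -1, 0, 0, 0, 0),  # vertical in the image
    "w": (0, 0, 1, 0, 0, 0), "s": (0, 0, -1, 0, 0, 0),  # along the view axis
    "l": (0, 0, 0, 0, 0, 1), "j": (0, 0, 0, 0, 0, -1),  # roll about the view axis
}


def keyboard_command(pressed: Iterable[str]) -> Command:
    """Add up the contributions of all pressed keys. Because commands are additive,
    diagonal moves and combined translation + rotation arise naturally."""
    total = [0.0] * 6
    for key in pressed:
        for axis, value in enumerate(KEY_AXES.get(key, (0, 0, 0, 0, 0, 0))):
            total[axis] += value
    return tuple(max(-1.0, min(1.0, t)) for t in total)


def falcon_command(offset_xyz, rotation_mode: bool) -> Command:
    """The Falcon grip moves along three translational axes. In this sketch a
    (hypothetical) mode flag routes them EITHER to translation OR to rotation,
    so the two can never be combined, mirroring the restriction in the text."""
    x, y, z = offset_xyz
    return (0.0, 0.0, 0.0, x, y, z) if rotation_mode else (x, y, z, 0.0, 0.0, 0.0)
```

Pressing “w” and “l” together, for example, yields a forward motion with a simultaneous roll, which has no counterpart in the Falcon mapping above.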

The supplementary questionnaires [two pages per device (SUS (Brooke, 1996) and NASA TLX (Hart and Staveland, 1988)) and a concluding sheet] provide information about the usability and the cognitive and physical stress for the controlling person. In addition, we expect information on the usefulness, acceptance, and usability of the overall system described below.
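For reference, the standard scoring of the two instruments can be sketched as below. SUS scoring follows Brooke (1996). The text does not state whether the NASA TLX was used in its weighted form (with 15 pairwise comparisons, Hart and Staveland, 1988) or as the unweighted “raw” TLX, so both variants are shown; the subscale keys are our own labels.

```python
from typing import Mapping, Sequence

TLX_SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def sus_score(responses: Sequence[int]) -> float:
    """System Usability Scale: ten items rated 1-5. Odd-numbered items contribute
    (r - 1), even-numbered items (5 - r); the sum (0-40) is multiplied by 2.5
    to give a score from 0 to 100."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS expects ten responses, each between 1 and 5")
    # enumerate() starts at 0, so index 0 is item 1 (an odd-numbered item)
    total = sum(r - 1 if i % 2 == 0 else 5 - r for i, r in enumerate(responses))
    return 2.5 * total


def raw_tlx(ratings: Mapping[str, float]) -> float:
    """Unweighted ("raw") NASA-TLX: mean of the six 0-100 subscale ratings."""
    return sum(ratings[s] for s in TLX_SUBSCALES) / len(TLX_SUBSCALES)


def weighted_tlx(ratings: Mapping[str, float], weights: Mapping[str, int]) -> float:
    """Weighted NASA-TLX: weights are tallies from the 15 pairwise comparisons
    of the subscales, so they sum to 15."""
    if sum(weights[s] for s in TLX_SUBSCALES) != 15:
        raise ValueError("pairwise-comparison weights must sum to 15")
    return sum(ratings[s] * weights[s] for s in TLX_SUBSCALES) / 15.0
```

A neutral SUS answer (3) on every item, for example, gives a score of 50.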

The main objective of this study was to find out whether nursing staff can remotely control a manipulator and thus complete tasks in a reasonable amount of time. The study is based on a within-subject design. The experiment consists of four conditions, which result from the combinations of input devices and tasks. We randomized the order of the input devices but did not counterbalance it. We defined the following main task performance indicators:

• Task execution times

• Cognitive load

• Usability
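The difference between randomizing and counterbalancing the device order can be made concrete with a short sketch (device labels and function names are illustrative). Each round draws an independent random order, so nothing guarantees that both devices come first equally often across the 25 participants, whereas a counterbalanced scheme would alternate the starting device.

```python
import random
from typing import List, Optional, Sequence

DEVICES = ("Novint Falcon", "keyboard + mouse")


def randomized_device_orders(n_rounds: int = 2,
                             rng: Optional[random.Random] = None) -> List[List[str]]:
    """Draw an independent random device order for each round of one participant.
    Nothing forces the orders to be used equally often across participants,
    i.e., the design is randomized but not counterbalanced."""
    rng = rng or random.Random()
    orders = []
    for _ in range(n_rounds):
        order = list(DEVICES)
        rng.shuffle(order)
        orders.append(order)
    return orders


def counterbalanced_first_device(participant_index: int,
                                 devices: Sequence[str] = DEVICES) -> str:
    """For contrast: counterbalancing alternates the starting device across participants."""
    return devices[participant_index % len(devices)]
```

With 25 participants, even the counterbalanced scheme is necessarily off by one (13 versus 12 starts per device); pure randomization can be off by considerably more.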

In addition, we wanted to examine the nursing staff's view on the use of a robotic manipulator in nursing. For this purpose, we designed a questionnaire using SUS (Brooke, 1996), NASA TLX (Hart and Staveland, 1988), and selected questions from TUI (Kothgassner et al., 2013) and TAM2 (Venkatesh and Davis, 2000). The end-effector trajectories driven by the users allow us to identify potential improvements to the control, for example, automatic rotation so that the camera is always perpendicular to a selected surface. For this purpose, these trajectories and the corresponding images from the camera at the end effector are recorded. We formulated the following hypotheses, which we address in section Discussion:

• H1: Caregivers can remotely control a manipulator.

• H2: Remote control service is economically viable for the tested support actions.

• H3: The operator's task time decreases with the Novint Falcon 3D Haptic Controller compared with keyboard and mouse.

• H4: The cognitive load and usability increase with the Novint Falcon 3D Haptic Controller.

• H5: The technology is well-accepted and considered to be helpful.

• H6: Users with prior joystick experience achieve shorter execution times.

• H7: The control of the orientation is more difficult for the users than that of the translation.

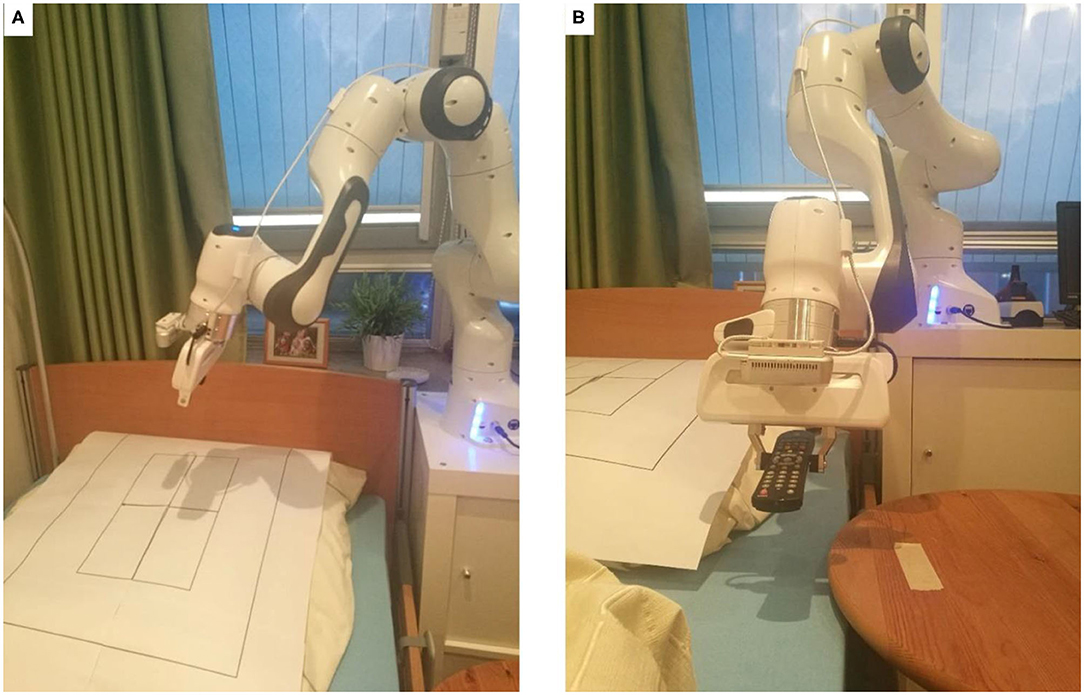

The tasks commonly used to compare input devices for manipulators are (1) Pick and Place and (2) Position Reachability, as also described in Burgner et al. (2014), Fellmann et al. (2015), and El-Hussieny et al. (2018). We selected equivalent tasks from the needs of care: (1) as the equivalent of Pick and Place, handing over objects, in this case a remote control; (2) as the equivalent of “Position Reachability,” setting the camera image to get an idea of the situation on site.

To make the tasks from the nursing context comparable for the study, we abstracted and standardized them.

Both tasks have the same initial configuration of the manipulator: the end effector with the camera is located above the base and is aligned to the remote control. The goal of the task “Set Camera View” had to be abstracted further from the actual care activity so that it is clearly defined. Therefore, we designed a target consisting of a target cross with an indicating arrow and two rectangular boxes, as shown in Figure 5. The participant has to adjust the camera image so that the inner box is completely visible and the outer box is not visible. The orientation must be chosen such that the arrow of the target cross points upwards in the image. This task requires translation and rotation of the end effector.
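The success criterion for “Set Camera View” can be expressed as a geometric test. The following is a hypothetical sketch, not the study's implementation: it assumes that the corners of the inner box and of the inner edge of the outer frame have already been located in the image (pixel coordinates) and that the arrow's angle relative to image “up” is known. The 10° tolerance is an illustrative value that the paper does not specify.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def inside_convex(polygon: Sequence[Point], p: Point) -> bool:
    """True if p lies inside or on a convex polygon (vertices in consistent order)."""
    sign = 0
    for i in range(len(polygon)):
        (x1, y1), (x2, y2) = polygon[i], polygon[(i + 1) % len(polygon)]
        cross = (x2 - x1) * (p[1] - y1) - (y2 - y1) * (p[0] - x1)
        if cross != 0:
            side = 1 if cross > 0 else -1
            if sign == 0:
                sign = side
            elif side != sign:
                return False
    return True


def camera_view_is_set(inner_box: Sequence[Point],
                       outer_frame_inner_edge: Sequence[Point],
                       arrow_angle_deg: float, width: int, height: int,
                       tolerance_deg: float = 10.0) -> bool:
    """HYPOTHETICAL success test for "Set Camera View", in pixel coordinates.

    inner_box: projected corners of the inner box (must be fully visible).
    outer_frame_inner_edge: projected inner edge of the outer frame; the whole
        image must lie within it, so that no part of the frame is visible.
    arrow_angle_deg: angle of the target arrow relative to image "up".
    """
    image = [(0, 0), (width, 0), (width, height), (0, height)]
    inner_visible = all(inside_convex(image, p) for p in inner_box)
    outer_hidden = all(inside_convex(outer_frame_inner_edge, c) for c in image)
    # wrap the angle into [-180, 180) before comparing with the tolerance
    arrow_up = abs((arrow_angle_deg + 180.0) % 360.0 - 180.0) <= tolerance_deg
    return inner_visible and outer_hidden and arrow_up
```

All three conditions must hold at once, which is why the task requires both translation (distance and position) and rotation (arrow orientation) of the end effector.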

Figure 5. The two tasks: (A) the task “Set Camera View” with the target cross, above which the camera should be placed vertically. The distance should be chosen so that the outer black frame is no longer visible and the inner black box is completely visible. (B) The manipulation task, with the starting position of the remote control marked on the table and the bed as the target.

The remote control lies on a table in a defined position in front of the bed; this position is fixed and marked, as shown in Figure 5. The target for the “Manipulation” task is the area of the bed directly next to the table, where the remote control is to be placed. This is also approximately where the patient's hand would be expected to be. This task can be solved with translational movements of the end effector only. The orientation of the remote control at the target position is not relevant for this task.

A total of 25 nurses from various care institutions in northern Germany were invited as subjects. The mean age was 40.36 years (median 42) with a standard deviation of 13.59. Figure 6 shows the exact distribution. Twenty-two of the subjects were right-handed and two left-handed. More than half (14) had limited visual abilities and six subjects were familiar with the use of joysticks, PC game control, or remote control of drones or cars. Most of the test persons spend little working time at the PC (mean 2.87 h, median 2.25 h), as shown in Figure 6. Gender was not recorded because no correlations were expected here.

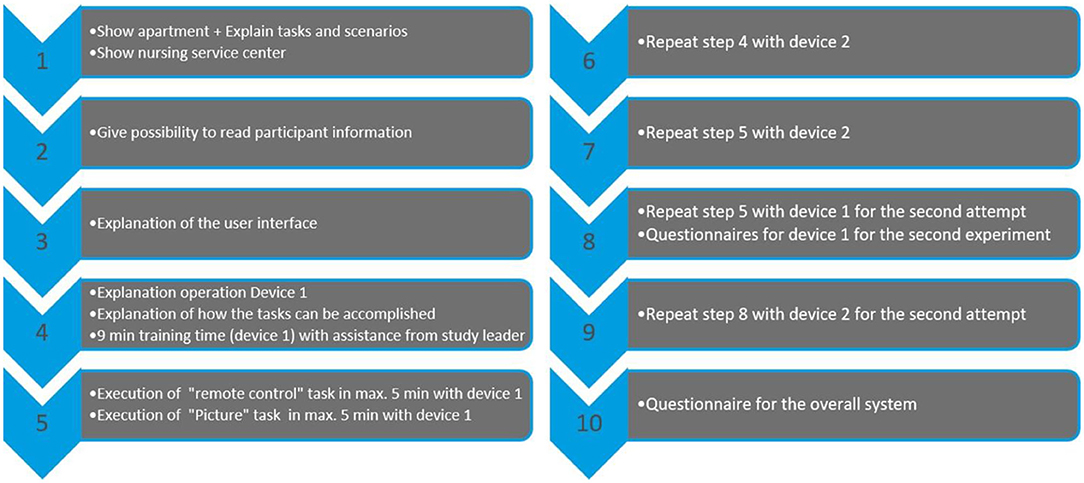

The study was then conducted in two rounds; within each round, the order of the input devices was determined randomly (see also Figure 7).

Figure 7. Study procedure for one participant: After an introduction to the system (steps 1 to 3), the actual study is conducted in two rounds (round 1 is steps 4 to 7, round 2 is steps 8 and 9). In each round, both input devices are used in random order. The study is completed with step 10.

The first round began with an explanation of the first input device and a 9-min training phase with this device, during which the researcher assisted. Then the two tasks were carried out, for which the subject had a maximum of 5 min. The task timer stopped automatically as soon as the target area was reached. Then these steps were repeated with the second device.

The second round started directly with the execution of the two tasks with the same input devices in a new randomly selected order.

After the second round, usability is assessed with the SUS (Brooke, 1996) questionnaire and workload with the NASA TLX (Hart and Staveland, 1988) questionnaire for the first device.

A training phase as well as two rounds are also performed with the second device.

Finally, the subject is asked to complete a custom questionnaire, consisting of three questions from TUI (Kothgassner et al., 2013):

• Would you want access to this technology?

• This technology would help me to do my daily tasks more comfortably.

• Using this technology would bring me more disadvantages than advantages.

and one from TAM2 (Venkatesh and Davis, 2000):

• Given that I have access to the system, I predict that I would use it.

In addition, custom questions asked about improvement suggestions, application possibilities, age, handedness, visual restrictions, working time at the PC, and prior experience in handling joysticks or PC game controllers.

In the literature, similar studies are often performed with different input devices. Based on Zhai (1995), Fong and Thorpe (2001), and Fellmann et al. (2015) we roughly divide them into four classes:

• Simple (e.g., keyboard, mouse, gamepads or simple joysticks)

• Specific for six-dimensional inputs (e.g., 3DConnexion SpaceMouse or Vive Controller)

• Very specific for six-dimensional inputs in combination with force feedback (e.g., Novint Falcon 3D Haptic Controller or Phantom Omni)

• Robots (e.g., the same manipulator for master and slave side or custom designed input devices).

For our study, we wanted to use commercially available, affordable input devices that are not too complex, so that the results are relevant for nursing care and the study is reproducible. We therefore selected the following devices from the first three groups:

• Keyboard and mouse

• XBOX Controller

• Novint Falcon 3D Haptic Controller

• 3DConnexion SpaceMouse.

The study procedure described in section Procedure would have been too time-consuming for the subjects if they used all four input devices. Therefore, a preliminary study with four subjects was conducted. The structure and procedure of the preliminary study correspond exactly to that of the main study, but the tasks were performed with all four input devices. One subject, therefore, needed 2 h to complete the study.

The results of the preliminary study in Table 1 show that the Novint Falcon 3D Haptic Controller achieves the best execution times. The usability and stress results in Table 1 do not follow the same order as the times: here, keyboard and mouse were perceived as the easiest to operate and the least stressful. Not all subjects were able to successfully complete every task with every device.

Based on these results, we decided to conduct the study with keyboard and mouse, as the most comfortable device to use, and the Novint Falcon 3D Haptic Controller, as the device with the best execution times.

The teleoperation system presented in this work also has several limitations. We discuss the most important ones in the following:

• Telerobot: the joints of the manipulator have limits. These restrict the workspace and introduce singularities, which the user experiences as inexplicable, invisible barriers.

• Robot mounting: the manipulator is mounted on a fixed base beside the bed, which restricts the working area to a radius of ~0.85 m. A movable base would enlarge the working area and thus the range of tasks that can be performed.

• Connectivity: since this is a study on input methods, network latency is outside its scope.

• Human-Robot Interface: the Human-Robot Interface strongly influences the usability of the whole system. Our system displays only the 2D camera images and the current mouse displacement, so it conveys no depth information.

• Input device: the work focuses on translation and rotation input for the end effector. It does not address higher-level commands or haptic feedback.
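To make the mounting limitation concrete, the following minimal sketch checks whether a target point lies within the ~0.85 m reach stated above. The base position, the coordinate frame, and the helper name `within_reach` are hypothetical, and a sphere test deliberately ignores joint limits and singularities, so it can only rule targets out, not guarantee that they are reachable.

```python
import math

# Approximate reach radius of the fixed-base manipulator, from the text (~0.85 m).
REACH_RADIUS_M = 0.85


def within_reach(base_xyz, target_xyz, radius=REACH_RADIUS_M):
    """Return True if the target lies inside a sphere of `radius` around the base.

    Hypothetical helper: modelling the workspace as a plain sphere ignores
    joint limits and singularities, so passing this check is necessary but
    not sufficient for the manipulator to actually reach the target.
    """
    return math.dist(base_xyz, target_xyz) <= radius


# Hypothetical base at the origin of a bed-side frame (metres).
base = (0.0, 0.0, 0.0)
print(within_reach(base, (0.5, 0.3, 0.2)))  # about 0.62 m away -> True
print(within_reach(base, (0.9, 0.2, 0.0)))  # about 0.92 m away -> False
```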

The study involved no medical experiments, and no biometric data were collected from participants. Apart from age, no personal data were collected, and all data were fully anonymized. All subjects were informed in writing about the study and their rights. For the subjects there was (a) no physical risk, as they sat in a room separate from the robot, and (b) no psychological risk in terms of load, as the duration of the study was limited to 1 h and there was no person in the room with the robot whom they could have injured. It was not necessary for an external ethics committee to review the study.

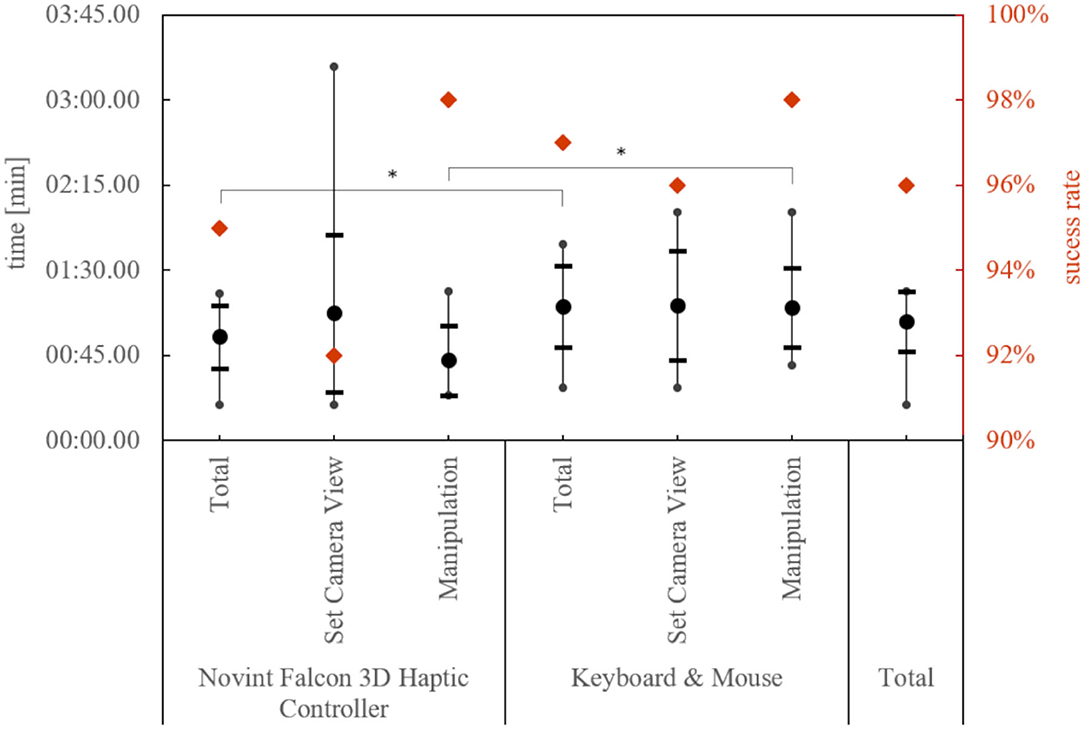

All 25 subjects had individual appointments and tested both control systems, the Novint Falcon 3D Haptic Controller and keyboard and mouse. In total, there were 200 attempts to master the tasks, 192 of which were successful (96%). Figure 8 shows the success rate in detail for both devices, in total and separately for each of the two tasks, together with the respective execution times. The average values are close to 1 min; we analyze the times in detail in the next section. Every subject successfully completed each task at least once. In addition, the SUS and NASA-TLX questionnaires were each answered 50 times, and our own questionnaire 25 times. Forty percent and 68% of the subjects answered the free-text questions “Suggestion for improvement” and “Further meaningful application possibilities in care,” respectively.

Figure 8. Success rate and mean of the subjects' minimum execution times by device and task. The thick dot represents the mean value, the horizontal lines the standard deviation, and the vertical lines the min/max limit. Significant differences in the execution times are marked with a *.
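The reported counts can be cross-checked with a short calculation. The sketch assumes two attempts per subject, task, and device; this is implied by the total of 200 attempts and by the later comparison of first and second attempts, but it is not stated explicitly.

```python
# Study design as described in the text: 25 subjects, 2 devices, 2 tasks.
# ASSUMPTION: 2 attempts per subject, device, and task; this is inferred
# from the reported total of 200 attempts, not stated explicitly.
SUBJECTS = 25
DEVICES = 2    # Novint Falcon, keyboard and mouse
TASKS = 2      # "Manipulation", "Set Camera View"
ATTEMPTS = 2   # assumed number of attempts per subject, device, and task

total_attempts = SUBJECTS * DEVICES * TASKS * ATTEMPTS
successful = 192  # reported number of successful attempts
success_rate = successful / total_attempts

# SUS and NASA-TLX: one questionnaire per subject and device.
questionnaires_per_instrument = SUBJECTS * DEVICES

print(total_attempts, f"{success_rate:.0%}", questionnaires_per_instrument)  # 200 96% 50
```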

We divide the results into five parts: comparison of the input devices (1) based on the execution times and (2) based on the SUS and NASA-TLX questionnaires, (3) the assessment of the overall system, (4) the combination of the execution times with the other values, and (5) the analysis of the trajectories.

For the first part, we tested each subject's best time with the respective device, independent of the task, against the null hypothesis of equal means using a paired t-test. The execution time was approximately normally distributed, as assessed by the Kolmogorov-Smirnov test, p > 0.05. The subjects' best execution times were significantly lower with the Novint Falcon 3D Haptic Controller, t(24) = −6.38, p < 0.001. The same holds for the times of the task “Manipulation,” t(24) = −7.68, p < 0.001, but not for the task “Set Camera View,” t(24) = −0.55, p = 0.30. In addition, the subjects completed the task “Manipulation” significantly faster than the task “Set Camera View,” t(24) = 3.17, p = 0.004. With the Novint Falcon 3D Haptic Controller, execution times improved significantly from the first to the second attempt, t(24) = 2.23, p = 0.035, but not with keyboard and mouse, t(24) = 1.51, p = 0.144.
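Both the paired comparison above and the one-sided tests of the questionnaire ratings reduce to a one-sample t statistic: on the per-subject differences between devices, or on the ratings against a reference value such as 5. The following standard-library sketch uses hypothetical data and is not the authors' analysis code; the p-value step, which needs the Student t distribution, is omitted.

```python
import math
import statistics


def one_sample_t(values, mu0=0.0):
    """One-sample t statistic and degrees of freedom for H0: mean == mu0.

    t = (mean - mu0) / (s / sqrt(n)), with s the sample standard deviation
    (n - 1 denominator) and df = n - 1. The p-value would come from the
    Student t distribution (one- or two-sided) and is omitted here.
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    s = statistics.stdev(values)
    if s == 0:
        raise ValueError("zero variance: t statistic is undefined")
    return (statistics.mean(values) - mu0) / (s / math.sqrt(n)), n - 1


def paired_t(x, y):
    """Paired t statistic: a one-sample test on the differences x - y."""
    if len(x) != len(y):
        raise ValueError("paired samples must have equal length")
    return one_sample_t([a - b for a, b in zip(x, y)])


# Hypothetical best times in seconds for five subjects (not study data).
falcon = [50.0, 55.0, 48.0, 60.0, 52.0]
keyboard_mouse = [65.0, 70.0, 61.0, 72.0, 68.0]
t, df = paired_t(falcon, keyboard_mouse)
print(f"t({df}) = {t:.2f}")  # negative t: Falcon times are lower
```

For a questionnaire item, `one_sample_t(ratings, mu0=5)` gives the statistic for the test against 5; the one-sided p-value is then read from the upper tail of the t distribution with n − 1 degrees of freedom.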

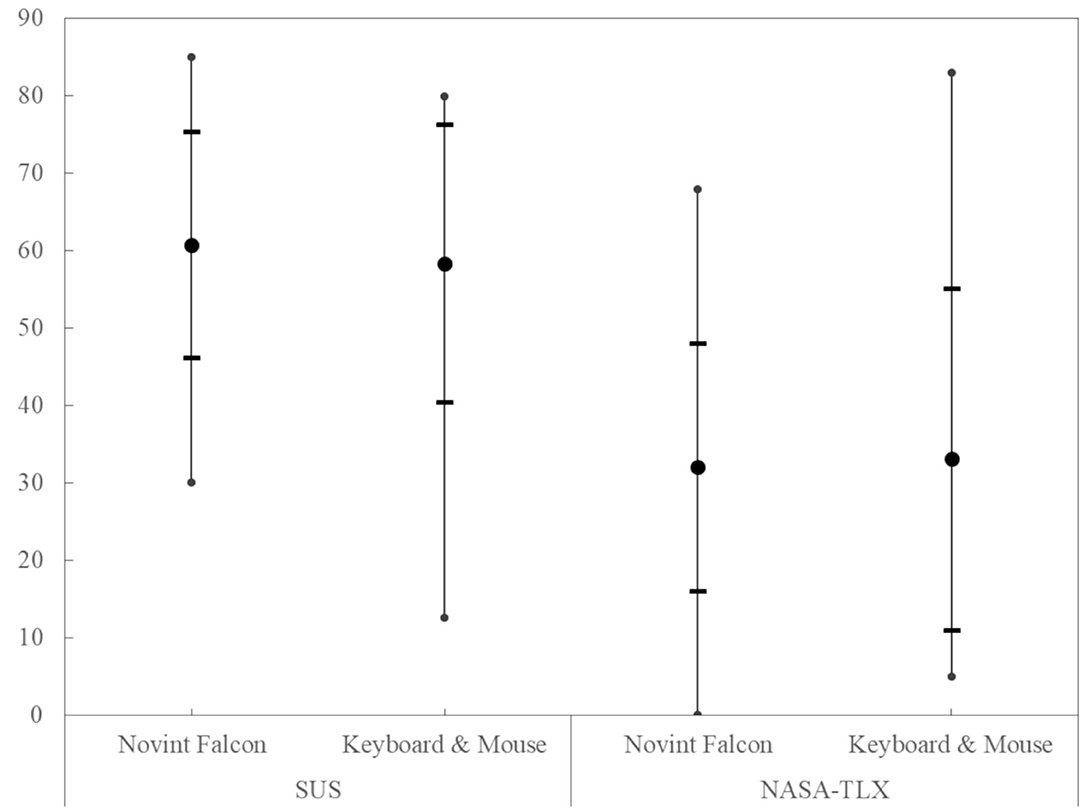

The usability (SUS score) and the workload (NASA-TLX) of the two input devices, shown in Figure 9, are again compared with a paired t-test. The SUS and NASA-TLX scores were approximately normally distributed, as assessed by the Kolmogorov-Smirnov test, p > 0.05. The mean values hardly differ, and the ratings cover almost the entire scale. For neither score could a difference between the input devices be determined, and the individual NASA-TLX items show no difference between the devices either.

Figure 9. The comparison of the SUS and NASA-TLX scores for the two input devices shows no significant difference. The thick dot represents the mean value, the horizontal lines the standard deviation, and the vertical lines the min/max limit.
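For readers unfamiliar with the SUS, the sketch below shows the standard scoring rule from Brooke (1996), which maps ten 1–5 ratings onto a 0–100 score. It also checks a score against the “O.K.” interval from Bangor et al. (2009) that is quoted in the discussion. The responses are hypothetical, and the article does not show its own scoring code.

```python
def sus_score(responses):
    """System Usability Scale score (0-100) from ten 1-5 Likert responses.

    Standard SUS scoring (Brooke, 1996): odd-numbered items contribute
    (response - 1), even-numbered items contribute (5 - response), and the
    sum of the contributions is multiplied by 2.5.
    """
    if len(responses) != 10 or not all(1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs ten responses on a 1-5 scale")
    total = 0
    for item, r in enumerate(responses, start=1):
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5


def is_ok_rating(score):
    """True if the score lies in the "O.K." interval (Bangor et al., 2009)."""
    return 50.9 <= score <= 71.4


# Hypothetical questionnaire, not data from the study.
example = [4, 2, 4, 2, 3, 3, 4, 2, 3, 3]
score = sus_score(example)
print(score, is_ok_rating(score))  # 65.0 True
```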

In our own questionnaire, we asked about the acceptance of such a system and whether it would be helpful. The answers were approximately normally distributed on a 7-point Likert scale, as assessed by the Kolmogorov-Smirnov test, p > 0.05. Therefore, we tested the following statements with one-sided one-sample t-tests. Asked whether they wanted access to this technology, the subjects answered positively, significantly above 5 on the 7-point Likert scale, where 7 is the most positive, t(24) = 2.69, p = 0.006. On the same scale, their willingness to use the technology if they had access to it is also positive and greater than 4, t(24) = 4.24, p < 0.001. The statement that this technology would help them do their daily tasks more comfortably is rated higher than 4 on the same scale, t(24) = 5.69, p < 0.001. In addition, the subjects agree that this technology would bring them more advantages than disadvantages, with ratings significantly below 3 on a scale where 1 means complete agreement, t(24) = −5.93, p < 0.001. We found no age dependency for any of the questions.

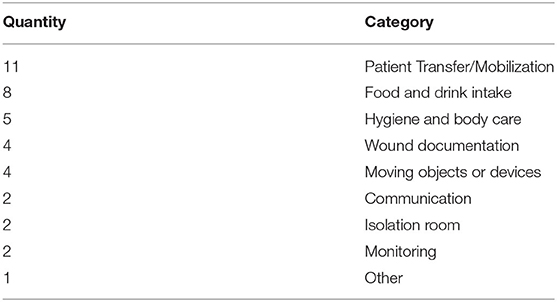

Table 2 shows the qualitative evaluation of the question of which application possibilities the nursing staff can imagine for this technology.

Table 2. Qualitative evaluation of the question: “Can you imagine further meaningful application possibilities of the technology in nursing?”

Here, we analyzed correlations with the subjects' daily working time on the PC. There was no correlation between daily PC working time and the execution times with keyboard and mouse, ρ = 0.02, t(24) = 0.08, p = 0.933. However, daily PC working time did correlate with the SUS score and the NASA-TLX score for keyboard and mouse: with increasing PC working time, the rated usability of keyboard and mouse decreases [ρ = −0.63, t(24) = −3.92, p = 0.001] and the NASA-TLX score increases, ρ = 0.46, t(24) = 2.47, p = 0.022.

No further dependencies of the execution times could be determined. We investigated the following factors using correlation tests or two-sided two-sample t-tests assuming equal variances: age, ρ = 0.23, t(24) = 1.13, p = 0.269; visual impairment, t(24) = 0.18, p = 0.859; joystick experience, t(24) = −0.66, p = 0.517; handedness, t(24) = −0.60, p = 0.555.
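The t values reported alongside the correlation coefficients correspond to the standard conversion t = ρ√(n − 2)/√(1 − ρ²) with n − 2 degrees of freedom. A minimal sketch, assuming this conversion was used; the article states neither the conversion nor whether ρ is Pearson's or Spearman's coefficient.

```python
import math


def correlation_t(rho, n):
    """t statistic for testing a correlation coefficient against zero.

    Uses t = rho * sqrt(n - 2) / sqrt(1 - rho**2) with df = n - 2. This is
    exact for Pearson's r and the usual approximation for Spearman's rho.
    """
    if not -1.0 < rho < 1.0:
        raise ValueError("rho must lie strictly between -1 and 1")
    if n < 3:
        raise ValueError("need at least 3 observations")
    df = n - 2
    return rho * math.sqrt(df) / math.sqrt(1.0 - rho ** 2), df


# Coefficients reported in the text, with n = 25 participants.
for rho in (0.23, 0.46, -0.63):
    t, df = correlation_t(rho, 25)
    print(f"rho = {rho:+.2f} -> t({df}) = {t:.2f}")
```

Because the coefficients are rounded to two decimals, recomputed t values can deviate slightly from the reported ones.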

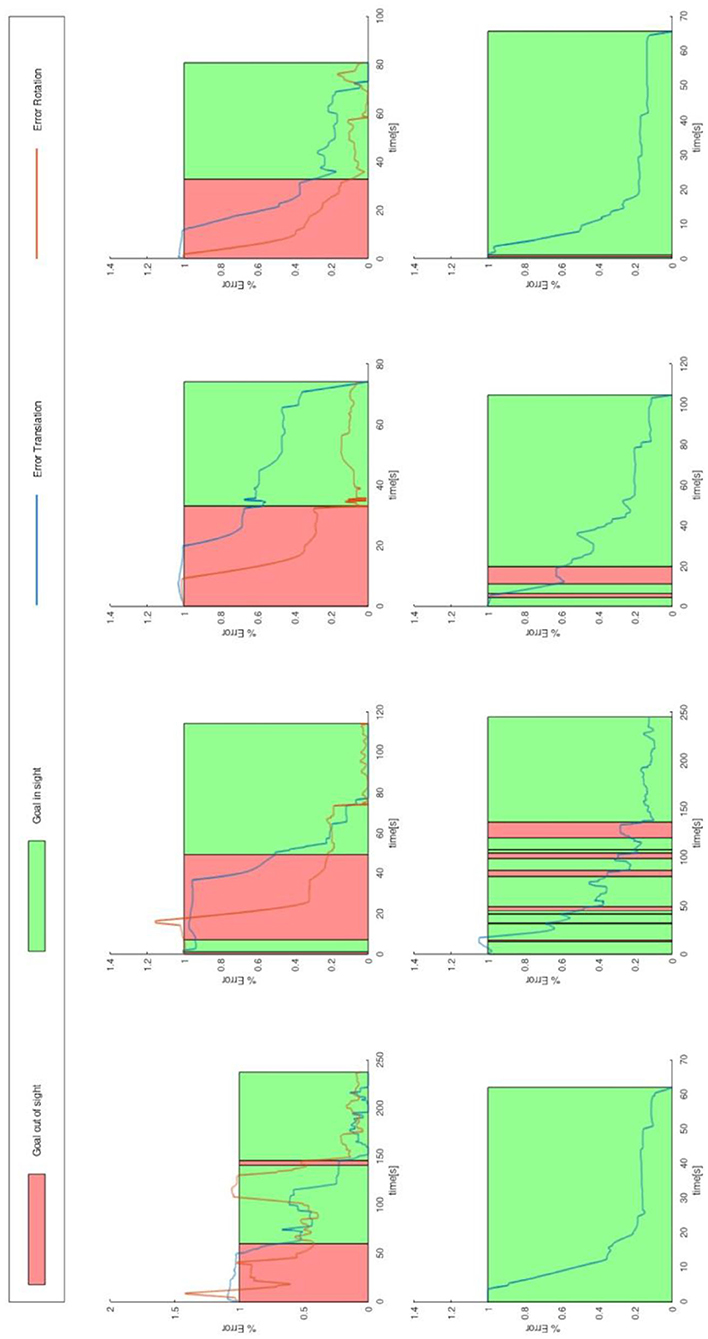

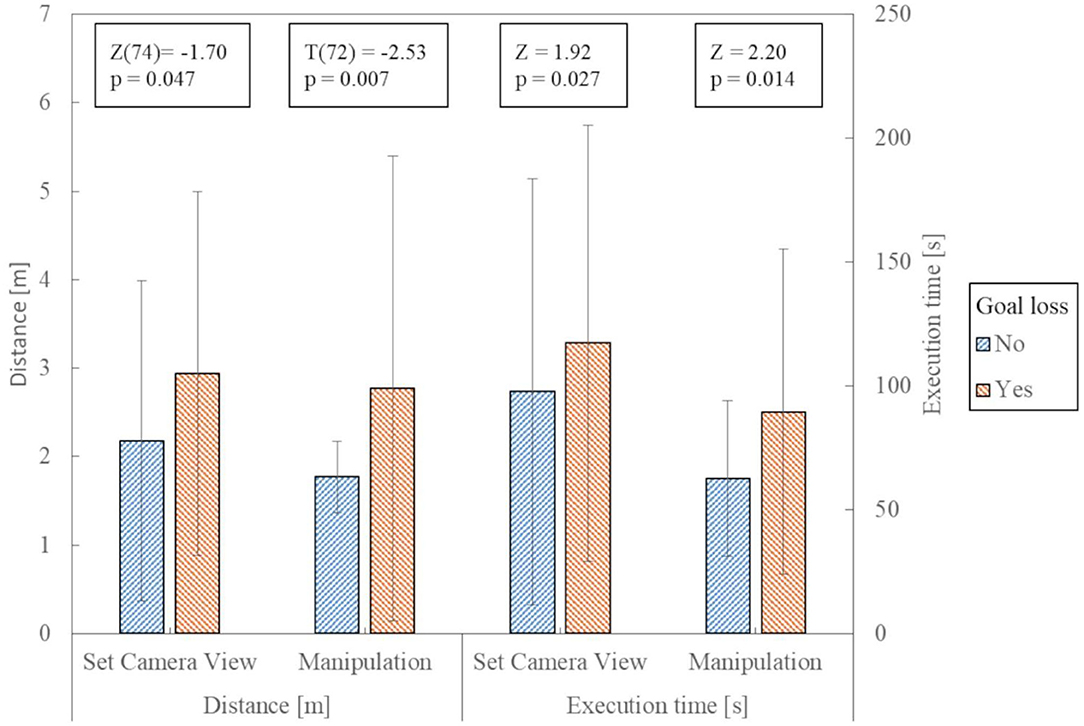

The task “Set Camera View” requires combined translations and rotations of the gripper relative to the camera image. The trajectory analysis shows that the translation reached the target range faster than the rotation: on average, only 74.58% of the total execution time was needed to set the translation correctly, t(18) = −2.19, p = 0.047, tested against 90% with a one-sided t-test. Figure 10 also illustrates this. The data show that the subjects had the greatest difficulty positioning the camera vertically above the target, which was the goal of the task. They often did not know in which direction to rotate to achieve the desired result, and they frequently lost sight of the target. We therefore investigated whether this affects control: we manually segmented the trajectories and labeled the segments with two categories:

(i) Target was visible to the subjects in the camera image

(ii) Target was not visible.

We compare the following trajectories:

• Task “Manipulation”: trajectories containing only label (i) with those where the label (ii) also occurs at least once.

• Task “Set Camera View”: trajectories containing label (ii) once (at the beginning), with those containing the label (ii) multiple times.

Figure 10. Relative error of translation and rotation of the end effector, with 100% of the error at the start position and 0% at the target. The data shown are eight executions by one subject. The upper row shows the task “Set Camera View” and the lower row the task “Manipulation.” The intervals in which the subject could not see the target are highlighted in red. The outer columns were performed with the Novint Falcon 3D Haptic Controller, the two inner columns with keyboard and mouse. No rotation target was defined for the task “Manipulation,” so no rotation error is given for this task.
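The normalization used in Figure 10, and the share of execution time needed to set the translation, can be sketched as follows. The settling criterion (error staying at or below a fixed percentage until the end) and the threshold value are hypothetical; the article does not define them, and the trajectory below is invented for illustration.

```python
import math


def relative_errors(positions, target):
    """Relative translation error (%) along a trajectory.

    Follows the normalization described for Figure 10: 100% at the start
    position, 0% at the target.
    """
    start_error = math.dist(positions[0], target)
    if start_error == 0:
        raise ValueError("trajectory starts at the target; cannot normalize")
    return [100.0 * math.dist(p, target) / start_error for p in positions]


def settle_time_fraction(times, rel_errors, threshold_pct):
    """Share of the total time after which the error stays <= threshold_pct.

    HYPOTHETICAL criterion: the text does not state which tolerance counts
    as "set correctly". Returns None if the last sample is above threshold.
    """
    duration = times[-1] - times[0]
    if duration <= 0:
        raise ValueError("trajectory needs a positive duration")
    settle_index = None
    # Walk backwards until the error was last above the threshold.
    for i in range(len(rel_errors) - 1, -1, -1):
        if rel_errors[i] > threshold_pct:
            break
        settle_index = i
    if settle_index is None:
        return None
    return (times[settle_index] - times[0]) / duration


# Hypothetical trajectory moving along x towards a target at the origin.
positions = [(1.0, 0.0, 0.0), (0.5, 0.0, 0.0), (0.05, 0.0, 0.0),
             (0.02, 0.0, 0.0), (0.0, 0.0, 0.0)]
times = [0.0, 1.0, 2.0, 3.0, 4.0]
errors = relative_errors(positions, (0.0, 0.0, 0.0))
print(settle_time_fraction(times, errors, threshold_pct=10.0))
```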

A significant difference can be observed in the time and distance required: the execution time and the distance traveled are longer when the target is lost from view [the trajectory also contains label (ii)]. We tested the difference in time with a Mann-Whitney U-test and the difference in distance traveled with a t-test. Figure 11 shows the test results.

Figure 11. Comparison of the trajectories concerning temporal and spatial length. The error bars show the standard deviation. The Z/T and p-values apply to the comparison of the data under them.
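The two quantities compared in Figure 11 can be sketched with the standard library: the distance traveled as the summed segment length of a sampled trajectory, and the Mann-Whitney U statistic used for the time comparison. The data are hypothetical, and the z and p values, which require the statistic's null distribution, are left to a statistics package.

```python
import math


def path_length(positions):
    """Distance traveled: sum of straight-line lengths between samples."""
    return sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))


def mann_whitney_u(x, y):
    """Mann-Whitney U statistic of sample x relative to sample y.

    Counts over all pairs how often a value in x exceeds a value in y, with
    ties counting 0.5. U_x + U_y == len(x) * len(y); tests commonly report
    the smaller of the two.
    """
    u = 0.0
    for xi in x:
        for yj in y:
            if xi > yj:
                u += 1.0
            elif xi == yj:
                u += 0.5
    return u


# Hypothetical execution times (s): target always visible vs. lost at least once.
visible = [42.0, 38.5, 51.0, 45.2]
lost = [60.3, 72.1, 55.0]
u_visible = mann_whitney_u(visible, lost)
u_lost = len(visible) * len(lost) - u_visible
print(u_visible, u_lost)
print(path_length([(0.0, 0.0, 0.0), (0.3, 0.4, 0.0), (0.3, 0.4, 0.2)]))
```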

After a short training phase, the nursing staff can control the telemanipulation system and complete the given tasks in 96% of the cases. Together with an average SUS rating of “O.K.” for both input devices (rating according to Bangor et al., 2009: the “O.K.” interval is 50.9–71.4 on a scale from 0 to 100), which indicates that the system is usable, this supports hypothesis H1 that caregivers can control a manipulator.

Hypothesis H2, which states that telemanipulation makes economic sense, can be confirmed under certain conditions. Using the task “Manipulation” as an example, we carry out the following calculation: in 2020, an outpatient nursing service in Lower Saxony, Germany, can bill 4.14 € per started 10 min for activities of “domestic care,” under which this task would fall. This means that 4.14 € can be charged even for the ~1 min the activity took on average here. In addition, a flat rate of 4.30 € can be charged for the journey to the patient during the day. With conventional care, the nursing service would thus earn 8.44 € in ~13 min (6 min journey to the patient, an estimated 1 min of activity at the patient's home, 6 min return journey). With the telemanipulator, only 4.14 € could be invoiced per patient, but the travel time is omitted, and further patients can be cared for in that time. Consequently, the telemanipulator is more lucrative if at least three patients are treated within the 13 min required for one conventional visit (3 × 4.14 € = 12.42 € exceeds 8.44 €, whereas 2 × 4.14 € = 8.28 € does not).
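The break-even reasoning can be written out as a small calculation. Like the text, this sketch assumes that each remote task fits into one started 10-min billing interval and ignores costs such as equipment and staff time; amounts are kept in euro cents to avoid floating-point rounding. The function names are illustrative only.

```python
# Billing figures quoted in the text (Lower Saxony, 2020), in euro cents.
DOMESTIC_CARE_PER_10_MIN = 414  # 4.14 EUR per started 10-min interval
TRAVEL_FLAT_RATE = 430          # 4.30 EUR per daytime visit


def conventional_revenue_cents():
    # One home visit: ~1 min of activity (one started interval) plus travel flat rate.
    return DOMESTIC_CARE_PER_10_MIN + TRAVEL_FLAT_RATE


def telemanipulation_revenue_cents(patients):
    # Remote care: no travel flat rate, one started interval per patient.
    return patients * DOMESTIC_CARE_PER_10_MIN


def break_even_patients():
    """Smallest number of remote patients in the ~13 min of one conventional
    visit whose combined revenue exceeds that of the conventional visit."""
    n = 1
    while telemanipulation_revenue_cents(n) <= conventional_revenue_cents():
        n += 1
    return n


print(conventional_revenue_cents() / 100, break_even_patients())  # 8.44 3
```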

The Novint Falcon 3D Haptic Controller proved best in terms of execution time (confirming hypothesis H3), but showed no difference from keyboard and mouse in usability and workload (refuting hypothesis H4). For both devices, usability is only “O.K.” The execution times show that tasks requiring primarily translational movements (task “Manipulation”) were easier for the subjects. Regarding the two input devices, the combined execution of all translation directions, which is more intuitive with the Novint Falcon 3D Haptic Controller, is advantageous for such tasks. For tasks that require both rotational and translational movements, it depends on the user whether separating translational and rotational movements or allowing their parallel execution is more successful.

The results support hypothesis H5 that the nursing staff desire and could accept the described telemanipulation system. The nurses interviewed could particularly well imagine using it to support patients and caregivers during transfer or mobilization, to hand over food, drinks, and care utensils, and to pass on know-how. Contrary to expectations, technology acceptance and readiness do not decrease with age.

We could not confirm the dependencies expected in hypothesis H6 between coping with such a system and age or prior joystick experience. The lack of dependency on joystick experience may be due either to the control not being similar enough to classic joystick control in games or to the developed concept being easy enough to use even for novices. In fact, it seems to be a mixture of both. Subjects with joystick experience rated the usability of the input devices (mean of both) better [t(23) = 2.17, p = 0.020], but with an average value of 67.14 it is still only “O.K.” The cognitive load shows no difference, t(23) = −1.24, p = 0.115, which means that subjects without prior joystick experience found the tasks just as easy as those with experience.

The observed decrease in usability and increase in workload with keyboard and mouse among subjects who spend much of their working day at the PC is unexpected. However, the nursing staff use keyboard and mouse in their daily work in a completely different way than was necessary here. This habituation may have made it harder for these subjects to learn the new way of using them. The available data, though, do not allow a clear explanation, and further research could provide clarity here.

The result that relatively less time is needed to change a position than to change an orientation confirms hypothesis H7 that adjusting the orientation is more challenging for the subjects. Together with the longer execution times in trials in which the target was lost from view, this leads us to conclude that letting the subject choose a surface to which the camera automatically aligns vertically would relieve the pilot and lead to faster success. In this study, this corresponds to an average savings potential of 25% (24 s), and up to 88% (112 s) at the peak.

In our work, we presented the first draft of a telemanipulation system for nursing and showed that nursing staff can operate it. The system already allows caregivers to execute tasks remotely within a reasonable time. There are strong indications that the users accept the system. This shows that such a system could in principle be suitable for outpatient care.

Unlike related work, we compared only commercially available input devices for telemanipulation and used a standard manipulator. In addition, we conducted the study exclusively with professional nurses, who are technical non-experts. However, the study also revealed possible points for improvement. The qualitative results indicate further potential fields of application for telemanipulation, which should be investigated in future studies.

The results suggest that the next step should be to automate rotation control, choosing the orientation so that the target always remains in view. This is relevant for both tasks: in the task “Set Camera View,” the subjects needed relatively more time to set the rotation correctly, and in the task “Manipulation,” they needed longer when they lost sight of the target. Visualizing objects outside the field of view could also help. Here, current augmented reality techniques such as EyeSeeX (Gruenefeld et al., 2018) could be applied to telemanipulation.
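One common way to keep a target in view, offered here only as an illustration of the idea and not as the authors' planned controller, is a “look-at” orientation that points the camera's optical axis at the target. The sketch assumes a camera convention of z forward, x right, y down, and a user-chosen `up_hint` that fixes the roll; it ignores joint limits and the camera-to-gripper offset. For the top-down view of “Set Camera View,” the viewing direction is parallel to world z, so a horizontal hint must be supplied.

```python
import math


def _sub(a, b):
    return tuple(ai - bi for ai, bi in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(v):
    norm = math.sqrt(sum(c * c for c in v))
    if norm < 1e-12:
        raise ValueError("cannot normalize a zero-length vector")
    return tuple(c / norm for c in v)


def look_at_rotation(camera_pos, target_pos, up_hint=(0.0, 0.0, 1.0)):
    """3x3 rotation whose columns are the camera x, y, z axes in the world
    frame, with the optical z-axis pointing from the camera to the target.

    ASSUMED convention: z forward, x right, y down. `up_hint` only fixes the
    roll about the viewing axis and must not be parallel to it.
    """
    z = _normalize(_sub(target_pos, camera_pos))
    x_raw = _cross(z, up_hint)
    if math.sqrt(sum(c * c for c in x_raw)) < 1e-9:
        raise ValueError("up_hint is parallel to the viewing direction")
    x = _normalize(x_raw)
    y = _cross(z, x)  # completes a right-handed frame, pointing away from up_hint
    return [[x[i], y[i], z[i]] for i in range(3)]


# Hypothetical top-down view: camera 0.6 m above a target on the bed.
R = look_at_rotation((0.4, 0.0, 0.6), (0.4, 0.0, 0.0), up_hint=(1.0, 0.0, 0.0))
for row in R:
    print([round(v, 3) for v in row])
```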

In future work, we will extend the control system toward semi-automatic operation and compare it with the results achieved here. Follow-up work will also consider latency in more detail and take into account the newly identified deployment scenarios. In particular, we should consider displaying depth information. It may also be useful to display 3D images or higher-level information, such as possible grasping points. Tactile feedback should also be considered.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethical review and approval were not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

PG, TK, MP, and AH contributed to the conception of the design of the study. PG implemented and designed the user interface and the robotic system, wrote the first draft of the manuscript, and performed the statistical analyses of the study. SD implemented and performed the calibration of the camera position. MP implemented the widget for the keyboard and mouse control. TK performed the qualitative analysis of the study. All authors contributed to manuscript revision, read, and approved the submitted version.

This work was funded in part by the German Federal Ministry of Education and Research (Project No. 16SV7819K).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

We would like to thank all participants for their time and effort.

Abi-Farraj, F., Pacchierotti, C., and Giordano, P. R. (2018). “User evaluation of a haptic-enabled shared-control approach for robotic telemanipulation,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (Madrid: IEEE), 1–9. doi: 10.1109/IROS.2018.8594030

Attard, L., Debono, C. J., Valentino, G., and Di Castro, M. (2018). Tunnel inspection using photogrammetric techniques and image processing: a review. ISPRS J. Photogramm. Remote Sens. 144, 180–188. doi: 10.1016/j.isprsjprs.2018.07.010

Bangor, A., Kortum, P., and Miller, J. (2009). Determining what individual SUS scores mean: Adding an adjective rating scale. J. Usability Stud. 4, 114–123.

Becker, H., Scheermesser, M., Früh, M., Treusch, Y., Auerbach, H., Hüppi, R. A., et al. (2013). Robotik in Betreuung und Gesundheitsversorgung. TATup 22, 62–64. doi: 10.14512/tatup.22.1.62

Berger, R. (2017). ePflege. Informations- und Kommunikationstechnologie für die Pflege. Studie im Auftrag des Bundesministeriums für Gesundheit (with Deutsches Institut für angewandte Pflegeforschung and Philosophisch-Theologische Hochschule Vallendar), 91. Available online at: http://www.dip.de/fileadmin/data/pdf/projekte/BMG_ePflege_Abschlussbericht_final.pdf (accessed December 5, 2017).

BMBF (2015). Technik zum Menschen bringen. Available online at: https://www.bmbf.de/de/technik-zum-menschen-bringen-149.html (accessed March 22, 2019).

Boll, S., Hein, A., Kadmon, M., Wolf-Ostermann, K., Heuten, W., Lindemann, G., et al. (2018). “Pflegeinnovationszentrum,” in Zukunft der Pflege: Tagungsband der 1. Clusterkonferenz 2018 (Oldenburg), 1–7.

Brooke, J. (1996). “SUS - A quick and dirty usability scale,” in Usability Evaluation in Industry, eds P. W. Jordan, B. Thomas, B. A. Weerdmeester, and A. L. McClelland (London: CRC Press), 189–194.

Burgner, J., Rucker, D. C., Gilbert, H. B., Swaney, P. J., Russell, P. T., Weaver, K. D., et al. (2014). A telerobotic system for transnasal surgery. IEEE/ASME Trans. Mechatronics 19, 996–1006. doi: 10.1109/TMECH.2013.2265804

Di Castro, M., Ferre, M., and Masi, A. (2018). CERNTAURO: a modular architecture for robotic inspection and telemanipulation in harsh and semi-structured environments. IEEE Access 6, 37506–37522. doi: 10.1109/ACCESS.2018.2849572

Chopra, N., Ferre, M., and Peer, A. (2008). The field of telerobotics [From the Guest Editors]. IEEE Robot. Autom. Mag. 15:9. doi: 10.1109/MRA.2008.930402

Deml, B. (2004). Telepräsenzsysteme: Gestaltung der Mensch-System-Schnittstelle (Doctoral dissertation). Univ.-Bibl. der Univ. der Bundeswehr.

Deutscher Ethikrat (2020). Robotik für gute Pflege. Available online at: https://www.ethikrat.org/pressekonferenzen/veroeffentlichung-der-stellungnahme-robotik-fuer-gute-pflege/ (accessed July 16, 2020).

El-Hussieny, H., Mehmood, U., Mehdi, Z., Jeong, S. G., Usman, M., Hawkes, E. W., et al. (2018). “Development and evaluation of an intuitive flexible interface for teleoperating soft growing robots,” in IEEE International Conference on Intelligent Robots and Systems (IROS) (Madrid: IEEE), 4995–5002. doi: 10.1109/IROS.2018.8593896

Fehling, P., and Dassen, T. (2017). Motive und Hürden bei der Etablierung technischer Assistenzsysteme in Pflegeheimen: eine qualitative Studie. Klin. Pflegeforsch. 3, 61–71. doi: 10.6094/KlinPfleg.3.61

Fellmann, C., Kashi, D., and Burgner-Kahrs, J. (2015). Evaluation of input devices for teleoperation of concentric tube continuum robots for surgical tasks. Proceedings 9415:2076741. doi: 10.1117/12.2076741

Fishel, J. A., Loeb, G. E., Matulevich, B., and Davoodi, R. (2016). U.S. Patent Application No. 14/796,647.

Fong, T., and Thorpe, C. (2001). Vehicle teleoperation interfaces. Auton. Robots 11, 9–18. doi: 10.1023/A:1011295826834

Gancet, J., Urbina, D., Letier, P., Ilzkovitz, M., Weiss, P., Gauch, F., et al. (2015). “DexROV: Enabling effective dexterous ROV operations in presence of communication latency,” in MTS/IEEE OCEANS 2015 - Genova: Discovering Sustainable Ocean Energy for a New World (Genova). doi: 10.1109/OCEANS-Genova.2015.7271691

Gliesche, P., Kowalski, C., Krahn, T., Drolshagen, S., Hein, A., and Pfingsthorn, M. (2018). A Qualitative Survey on Challenges and Use-Cases for Robotic Assistants in Nursing Care. Available online at: https://www.idiap.ch/workshop/iros2018/files/08f-2018-rfalws-survey-use-cases-final.pdf (accessed November 12, 2018).

Gruenefeld, U., Hsiao, D., and Heuten, W. (2018). “EyeSeeX: visualization of out-of-view objects on small field-of-view augmented and virtual reality devices,” in Proceedings of the 7th ACM International Symposium on Pervasive Displays PerDis '18. (New York, NY, USA: Association for Computing Machinery). doi: 10.1145/3205873.3210706

Hart, S. G., and Staveland, L. E. (1988). Development of NASA-TLX (Task Load Index): results of empirical and theoretical research. Adv. Psychol. 52, 139–183. doi: 10.1016/S0166-4115(08)62386-9

Hülsken-Giesler, M. (2015). Technische Assistenzsysteme in der Pflege in pragmatischer Perspektive der Pflegewissenschaft. Tech. des Alltags, 117.

Kim, V. B., Chapman III, W. H. H., Albrecht, R. J., Bailey, B. M., Young, J. A., Nifong, L. W., et al. (2002). Early experience with telemanipulative robot-assisted laparoscopic cholecystectomy using da Vinci. Surg. Laparosc. Endosc. Percutaneous Tech. 12, 33–40. doi: 10.1097/00019509-200202000-00006

Klamt, T., Rodriguez, D., Baccelliere, L., Chen, X., Chiaradia, D., Cichon, T., et al. (2019). Flexible disaster response of tomorrow – final presentation and evaluation of the CENTAURO system. arXiv preprint arXiv:1909.08812. doi: 10.1109/MRA.2019.2941248

Kothgassner, O. D., Felnhofer, A., Hauk, N., Kastenhofer, E., Gomm, J., and Kryspin-Exner, I. (2013). Technology Usage Inventory (TUI). Wien: Manual.

Krick, T., Huter, K., Domhoff, D., Schmidt, A., Rothgang, H., and Wolf-Ostermann, K. (2019). Digital technology and nursing care: a scoping review on acceptance, effectiveness and efficiency studies of informal and formal care technologies. BMC Health Serv. Res. 19:400. doi: 10.1186/s12913-019-4238-3

Kröger, T., Brell, M., Müller, F., Lipprandt, M., Helmer, A., and Hein, A. (2011). “IDEAAL-Der Mensch im Mittelpunkt,” in Proceedings of Deutscher Kongress, Ambient Assisted Living, Vol. 4.

Kuchenbecker, K. J., Gewirtz, J., McMahan, W., Standish, D., Martin, P., Bohren, J., et al. (2010). “VerroTouch: high-frequency acceleration feedback for telerobotic surgery,” in International Conference on Human Haptic Sensing and Touch Enabled Computer Applications (Amsterdam), 189–196. doi: 10.1007/978-3-642-14064-8_28

Kuhlmey, A., Blüher, S., and Nordheim, J. (2019). Technik in der Pflege – Einstellungen von professionell Pflegenden zu Chancen und Risiken neuer Technologien und technischer Assistenzsysteme. Berlin: Zentrum für Qualität in der Pflege.

Lee, D., and Park, Y. S. (2018). “Implementation of augmented teleoperation system based on robot operating system (ROS),” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (IEEE), 5497–5502. doi: 10.1109/IROS.2018.8594482

Merda, M., Schmidt, K., and Kähler, B. (2017). Pflege 4.0–Einsatz moderner Technologien aus der Sicht professionell Pflegender. Hamburg: Forschungsbericht für die BGW.

Neumeier, S. (2015). Lokale Verteilung Ambulanter Pflegedienste nach SGB XI in Deutschland auf Basis eines rasterbasierten GIS-Erreichbarkeitsmodells. doi: 10.22004/ag.econ.210093

Pervez, A., Latifee, H., Ryu, J.-H., and Lee, D. (2019). “Human-agent shared teleoperation: a case study utilizing haptic feedback,” in International AsiaHaptics Conference (Incheon), 247–251. doi: 10.1007/978-981-13-3194-7_56

Quigley, M., Conley, K., Gerkey, B., Faust, J., Foote, T., Leibs, J., et al. (2009). “ROS: an open-source robot operating system,” in ICRA Workshop on Open Source Software (Kobe), 5.

Sagardia, M., Hertkorn, K., Hulin, T., Schätzle, S., Wolff, R., Hummel, J., et al. (2015). “VR-OOS: The DLR's virtual reality simulator for telerobotic on-orbit servicing with haptic feedback,” in 2015 IEEE Aerospace Conference (IEEE), 1–17. doi: 10.1109/AERO.2015.7119040

Seibert, K., Domhoff, D., Huter, K., Krick, T., Rothgang, H., and Wolf-Ostermann, K. (2019). “Innovative Technologien in der ambulanten und stationären Pflege – Ergebnisse einer nationalen Bedarfsanalyse,” in Zukunft der Pflege: Tagungsband der 2. Clusterkonferenz 2019, 31.

Skilton, R., Hamilton, N., Howell, R., Lamb, C., and Rodriguez, J. (2018). MASCOT 6: achieving high dexterity tele-manipulation with a modern architectural design for fusion remote maintenance. Fusion Eng. Des. 136, 575–578. doi: 10.1016/j.fusengdes.2018.03.026

Sparrow, R., and Sparrow, L. (2006). In the hands of machines? The future of aged care. Minds Mach. 16, 141–161. doi: 10.1007/s11023-006-9030-6

Statistisches Bundesamt (Destatis) (2019). Pflegebedürftige nach Versorgungsart, Geschlecht und Pflegegrade. Available online at: https://www.destatis.de/DE/Themen/Gesellschaft-Umwelt/Gesundheit/Pflege/Tabellen/pflegebeduerftige-pflegestufe.html (accessed December 9, 2019).

Tsai, R. Y., and Lenz, R. K. (1989). A new technique for fully autonomous and efficient 3D robotics hand/eye calibration. IEEE Trans. Robot. Autom. 5, 345–358. doi: 10.1109/70.34770

van den Berg, N., Schumann, M., Kraft, K., and Hoffmann, W. (2012). Telemedicine and telecare for older patients—a systematic review. Maturitas 73, 94–114. doi: 10.1016/j.maturitas.2012.06.010

Venkatesh, V., and Davis, F. D. (2000). A theoretical extension of the technology acceptance model: four longitudinal field studies. Manage. Sci. 46, 186–204. doi: 10.1287/mnsc.46.2.186.11926

Vogel, J., Leidner, D., Hagengruber, A., and Panzirsch, M. (2018). “Das Projekt SMiLE,” in Zukunft der Pflege: Tagungsband der 1. Clusterkonferenz 2018, 212–216.

Keywords: teleoperation systems, telerobotics, telecare, human-robot interface, telemanipulation, outpatient care, input device

Citation: Gliesche P, Krick T, Pfingsthorn M, Drolshagen S, Kowalski C and Hein A (2020) Kinesthetic Device vs. Keyboard/Mouse: A Comparison in Home Care Telemanipulation. Front. Robot. AI 7:561015. doi: 10.3389/frobt.2020.561015

Received: 11 May 2020; Accepted: 15 October 2020;

Published: 04 November 2020.

Edited by:

Ioannis Dimitrios Zoulias, United Kingdom Atomic Energy Authority, United Kingdom

Reviewed by:

Fabio Stroppa, Stanford University, United States

Copyright © 2020 Gliesche, Krick, Pfingsthorn, Drolshagen, Kowalski and Hein. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Pascal Gliesche, pascal.gliesche@offis.de

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
