
ORIGINAL RESEARCH article

Front. Robot. AI, 16 February 2017

Sec. Virtual Environments

Volume 4 - 2017 | https://doi.org/10.3389/frobt.2017.00003

This article is part of the Research Topic Virtual and Augmented Reality for Education and Training.

Immersive Mixed Reality for Manufacturing Training

Mar Gonzalez-Franco1, Rodrigo Pizarro1,2, Julio Cermeron3, Katie Li1,3, Jacob Thorn1,4, Windo Hutabarat3, Ashutosh Tiwari3 and Pablo Bermell-Garcia1*

In complex manufacturing, considerable resources are devoted to training workers and developing new skills. Increasing the effectiveness of these processes while reducing the investment they require remains an open issue. In this paper, we present an experiment (n = 20) showing how modern metaphors such as collaborative mixed reality can be used to transmit procedural knowledge and could eventually replace other forms of face-to-face training. We implemented a mixed reality setup with see-through cameras attached to a Head-Mounted Display. The setup allowed real-time collaborative interaction and simulated conventional forms of training. We tested the system on a manufacturing procedure for an aircraft maintenance door. The results indicate that performance in the immersive mixed reality training was not significantly different from that in the conventional face-to-face training condition. We discuss these results and their implications for future training and for the use of virtual reality, mixed reality, and augmented reality paradigms in this context.

Modern mass assembly lines for high-value manufacturing are either robotized or rely heavily on skilled workers. Training new workers in complex tasks nevertheless remains an outstanding challenge for the industry (Mital et al., 1999). On the one hand, it requires dedicating limited physical equipment and professional staff to instructing new personnel (Bal, 2012). On the other hand, operating dangerous equipment can raise health and safety concerns (Sun and Tsai, 2012). In this context, using novel technologies to train future workers on these processes could both increase safety and reduce training costs, which would eventually translate into higher productivity.

Several computer-based approaches have been proposed to reduce the impact of these hurdles in industrial training. Previous work includes the use of Virtual Environments (VEs), which allow users to practice and rehearse situations that might be dangerous in a real environment (Williams-Bell et al., 2015). Despite some controversy over how efficiently skills trained in VEs transfer to real-life setups (Kozak et al., 1993), these approaches have been used successfully for training in a variety of disciplines, including health and safety (Dickinson et al., 2011; Kang and Jain, 2011), medical training (Bartoli et al., 2012; Gonzalez-Franco et al., 2014a,b), fire services (Williams-Bell et al., 2015), and industrial training (Muratet et al., 2011). In industrial setups, several studies have examined the effects of virtual training (Oliveira et al., 2000; Stone, 2001; Lin et al., 2002) and found that it significantly improved users’ skills in equivalent real scenarios, particularly when the training reproduced a face-to-face Virtual Physical System (Webel et al., 2013; Bharath and Rajashekar, 2015).

However, most computer-based training systems are of low fidelity, to the extent that they are not realistic enough to completely replace conventional face-to-face training in complex manufacturing. This is partly because in real life workers have access to physical equipment that they manipulate on demand, whereas computer-based training requires a digital version of that equipment to be created. Augmented Reality (AR) can provide higher levels of fidelity and make digital training more tangible. A prior study (Gavish et al., 2015) compared AR training for manufacturing and maintenance scenarios with video instructions and non-immersive computer training. The authors found that the AR groups tended to perform better after training than the groups that were only shown a video with the instructions. However, they found no significant differences between the computer training and AR groups, and argued that a ceiling effect was the likely cause. Moreover, that study did not compare the performance of real face-to-face training with that of AR training. Other authors have explored AR for guiding an assembly process (Yuan et al., 2008), with results indicating that AR is an effective way to improve performance. This is consistent with reviews of the state of the art in AR applications (Ong et al., 2008), and several AR-based training scenarios have been developed (Webel et al., 2013). In many AR applications, the user needs to hold a device in their hands to experience the augmentation. In this context, head-mounted devices are the only ones that can provide a hands-free experience and potentially a better face-to-face Virtual Physical System (Webel et al., 2013).

Face-to-face interaction is a prominent characteristic of assembly training and seems to play a major role in learning (Lipponen, 2002). To achieve the level of immersive interaction needed for better face-to-face training, we turn to Mixed Reality (MR) and Virtual Reality (VR), where it has been shown that objects can be manipulated naturally and from a first-person perspective when the participants’ position and movements are tracked (Chen and Sun, 2002; Spanlang et al., 2014).

Immersive VR applications are especially powerful when participants experience the Presence illusion: the feeling of actually “being there” inside the simulation. Presence has been described as a combination of two factors: the plausibility illusion, the sense that the events taking place are real, and the place illusion, the sensation of being transported to a new location (Sanchez-Vives and Slater, 2005; Slater et al., 2009). These illusions, especially when combined, can produce realistic behavior in participants (Meehan et al., 2002). VR has, for instance, successfully reproduced classical moral dilemmas to observe how people react without compromising their integrity (Slater et al., 2006; Friedman et al., 2014). Such realistic behavior can also benefit training, and several authors have already used VR as a tool for training and rehearsal in medical situations (Seymour et al., 2002; von Websky et al., 2013), disaster relief (Farra et al., 2013), and other motor-control skills (Kishore et al., 2014; Padrao et al., 2016). However, while VR may be an excellent approach for individual training, it becomes considerably more complex to use for collaborative training or face-to-face setups (Churchill and Snowdon, 1998; Monahan et al., 2008; Bourdin et al., 2013; Gonzalez-Franco et al., 2015). Such scenarios require several computers, complex network synchronization, and labor-intensive application development. Furthermore, self-representation and virtual body tracking become critically important (Spanlang et al., 2014), since we usually rely on body language to collaborate and communicate face to face (Garau et al., 2001).

One approach to overcoming the self-representation issue and simplifying the tracking system is a mixed reality paradigm based on calibrated see-through Head-Mounted Displays (HMDs). Such displays enable the exploration of digital objects from a first-person perspective while still showing the real setup, with collocated real objects and people (Steptoe et al., 2014; Thorn et al., 2016). This paradigm is particularly interesting for collaborative scenarios in which instructor and trainee share the same space rather than being remotely located. With this technology, participants can see the instructor guiding them through the process without the risk of physical harm posed by the real operation. Additionally, the experience is hands-free and can support a high degree of presence.

In this paper, we evaluate whether an MR setup can be used for complex manufacturing training, and we compare the results with conventional face-to-face training on a physical scaled model.

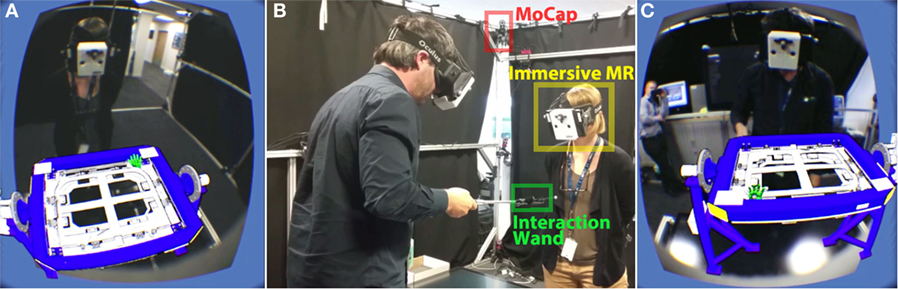

We built a mixed reality setup by modifying an Oculus Rift DK1 HMD with a resolution of 1,280 × 800 (640 × 800 per eye), a 110° diagonal field of view (FOV), and an approximately 90° horizontal FOV. A pair of cameras was mounted on the HMD to form a see-through mixed reality setup, as in Steptoe et al. (2014) and Thorn et al. (2016). The scenario was implemented in Unity 3D, and head tracking was performed with a NaturalPoint Motive motion capture system (24× Flex 13 cameras) running at 120 Hz, which streamed the head’s position and rotation to our application with centimetric precision. With this information, we could display the virtual objects from a first-person perspective, providing strong sensorimotor contingencies as participants moved their heads (Gonzalez-Franco et al., 2010; Spanlang et al., 2014). Using the same motion capture system, real-world objects fitted with reflective markers were tracked, and their spatial coordinates were used to render corresponding 3D objects. The 2D camera feed was rendered onto planes in the background of the HMD view and calibrated to match the 3D spatial axes using the camera lens and HMD specifications (Steptoe et al., 2014; Thorn et al., 2016). The optical distortion of the camera lenses was corrected in real time by a shader using the camera calibration parameters (Zhang, 2000). Although the cameras ran at a lower frame rate than the HMD (~45 and 60 Hz, respectively), the system operated in real time with minimal perceptual lag; indeed, none of the participants reported simulator sickness when using the technology. To interact with the virtual jig, we attached a rigid-body reflective marker to an Ipow z07-5 stick; participants could thus see a virtual object matching the position of the marker and press the stick’s button to interact with the virtual jig (see Video S1 in Supplementary Material). This MR system supported multi-user collaboration, with participants interacting with each other through a PhotonServer installed in the laboratory (Figure 1; see Video S1 in Supplementary Material).
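The real-time lens-distortion correction mentioned above can be illustrated with the per-pixel mapping such a shader typically performs. The sketch below is a minimal Python version, assuming Zhang’s (2000) model with two radial coefficients (k1, k2) and no tangential terms; the class and function names and all intrinsic values are hypothetical placeholders, not the calibration used for this rig. For each pixel of the corrected output image, it computes where the raw camera frame should be sampled.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class CameraCalibration:
    """Pinhole intrinsics plus two radial distortion coefficients (hypothetical values)."""
    fx: float  # focal length in pixels, x
    fy: float  # focal length in pixels, y
    cx: float  # principal point, x
    cy: float  # principal point, y
    k1: float  # first radial distortion coefficient
    k2: float  # second radial distortion coefficient


def source_pixel(u: float, v: float, cal: CameraCalibration) -> Tuple[float, float]:
    """Map an output (undistorted) pixel to the raw-camera pixel that should be sampled."""
    # Pixel coordinates -> normalized image coordinates.
    x = (u - cal.cx) / cal.fx
    y = (v - cal.cy) / cal.fy
    # Radial model: the scale factor depends on the squared distance from the center.
    r2 = x * x + y * y
    scale = 1.0 + cal.k1 * r2 + cal.k2 * r2 * r2
    # Distorted normalized coordinates -> raw-camera pixel coordinates.
    return cal.fx * x * scale + cal.cx, cal.fy * y * scale + cal.cy


def build_remap_table(width: int, height: int,
                      cal: CameraCalibration) -> List[List[Tuple[float, float]]]:
    """Precompute the sampling position for every output pixel (row-major)."""
    return [[source_pixel(float(u), float(v), cal) for u in range(width)]
            for v in range(height)]


if __name__ == "__main__":
    cal = CameraCalibration(fx=500.0, fy=500.0, cx=320.0, cy=240.0, k1=-0.1, k2=0.01)
    print(source_pixel(620.0, 460.0, cal))
```

On a GPU the same computation would run once per fragment, with the resulting coordinate used to sample the camera texture; precomputing a remap table, as `build_remap_table` does, is a CPU-side alternative that trades memory for per-frame work.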

Figure 1. Mixed reality setup. (A) Trainer’s view: see-through view with the virtual assembly jig. (B) Laboratory equipped with 24 motion capture cameras and two participants wearing mixed reality setups configured for collaboration: the trainer carries the interaction wand while the second person observes the operation. (C) Participant’s view. The interaction wand is represented by the green actuator.

For the conventional face-to-face training condition, we manufactured a laser-cut physical model of the jig in transparent plastic (see Video S1 in Supplementary Material).

Twenty-four volunteers (mean age = 32.5 years, SD = 9.6; three females) participated in the user study. Because of the confidential nature of the manufacturing content, only employees of the institution took part. The volunteers had no previous manufacturing knowledge and completed a demographic questionnaire before participating. Following the Declaration of Helsinki, all participants gave informed consent. This study was approved by the Science and Engineering Research Ethics Committee (SEREC) of Cranfield University.

We reproduced an aircraft maintenance door training manual in our MR setup. Through the proposed training, new operators are expected to reach a reasonable level of knowledge of the assembly procedure before they are exposed to the real physical manufacturing equipment. Civil aircraft manufacturing is subject to strict procedures because of the legal and safety implications of non-conformities. In this context, the ultimate goal of the training is to reduce the Cost of Non-Conformance (CONC) through a more interactive and cost-effective approach that minimizes product defects and deviations from the design during production. The experiment implemented two training conditions: (i) conventional face-to-face training, in which participants were taught in a traditional face-to-face scenario using a scaled assembly jig; and (ii) MR training, in which participants were taught face to face while wearing a see-through HMD. The MR approach facilitated collaboration over a rendered digital model of the assembly jig and implemented the virtual manipulations and interactions with the jig required by the training. In both conditions, participants followed the same procedural script, taken from a complex manufacturing manual for an aircraft maintenance door. Participants were then evaluated to assess how much knowledge they had acquired during the training (Figure 2). The training process was complex enough that the tests could not be completed successfully without prior training, yet procedural enough that participants could complete the task and tests after a single training exposure.

Participants were randomly assigned to one of the two experimental conditions in a between-subjects design and, after completing the demographic questionnaire, underwent the following phases:

Training phase. The trainer inspected and operated the moving parts of a door assembly jig following the manual. During this phase, the trainee observed what the trainer was doing and tried to remember as much as possible for the evaluation phase.

Evaluation phase. After the training, the trainee completed two tests, a knowledge retention test and a knowledge interpretation test, to compare the two types of training. The knowledge retention test was a written multiple-choice test with eight questions (Table 1), designed to evaluate how much factual knowledge was retained from the training (Kang and Jain, 2011). The knowledge interpretation test evaluated whether the whole assembly procedure had been properly captured. It was carried out on a scaled physical jig, and the trainee was asked to perform the significant parts of the assembly training step by step until completing the whole operation. Each time the participant skipped a step or required intervention from the experimenter (e.g., one of the drills was not performed), one point was deducted from the score. The maximum score was 43, equal to the number of actions required to complete the operation.
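The scoring rule for the knowledge interpretation test can be expressed as a short function. This is a sketch under stated assumptions: each of the 43 required actions is recorded with a hypothetical outcome label (`done`, `skipped`, or `assisted`), and at most one point is deducted per action, even if it was both skipped and assisted. The names are illustrative and do not come from the study materials.

```python
from typing import Sequence

# Number of actions in the procedure; equals the maximum score stated in the text.
MAX_SCORE = 43

# Hypothetical outcome labels recorded by the experimenter for each action.
DONE, SKIPPED, ASSISTED = "done", "skipped", "assisted"


def interpretation_score(outcomes: Sequence[str]) -> int:
    """Return 43 minus one point for every action that was skipped or needed help."""
    if len(outcomes) != MAX_SCORE:
        raise ValueError(f"expected {MAX_SCORE} outcomes, got {len(outcomes)}")
    unknown = set(outcomes) - {DONE, SKIPPED, ASSISTED}
    if unknown:
        raise ValueError(f"unknown outcome labels: {sorted(unknown)}")
    # Assumption: at most one point is deducted per action.
    deductions = sum(1 for outcome in outcomes if outcome != DONE)
    return MAX_SCORE - deductions


if __name__ == "__main__":
    example = [DONE] * 40 + [SKIPPED, SKIPPED, ASSISTED]
    print(interpretation_score(example))  # 40
```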

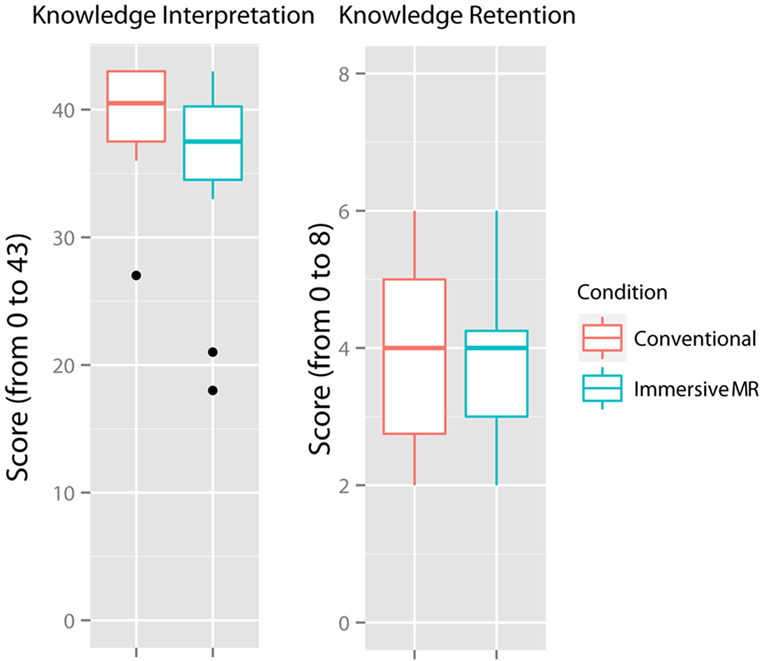

No significant differences were found in knowledge retention (scores from 0 to 8) between the two conditions [Kruskal–Wallis rank sum test χ²(1) = 0.1, p = 0.7]. The mean score was 3.75 (SD = 1.21) in the MR condition and 3.91 (SD = 1.44) in the conventional condition. The two training methods did not yield significantly different levels of factual knowledge, although that level was not very high in either: both group means were below 4 out of a maximum of 8 (Figure 3).

Figure 3. Knowledge retention and interpretation scores for both conventional face-to-face and MR conditions.

No significant differences were found in knowledge interpretation (scores from 0 to 43) between the two conditions [Kruskal–Wallis rank sum test χ²(1) = 1.9, p = 0.16]. The mean score was 35.41 (SD = 8.03) in the MR condition and 39.25 (SD = 4.86) in the conventional face-to-face condition. Given the high scores in both conditions, the procedural training can be considered successful (Figure 3).
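To show how a Kruskal–Wallis result like those above is obtained, here is a minimal standard-library sketch for the two-group case. It ranks the pooled scores (tied values receive their mean rank), computes H with the usual tie correction, and takes the p-value from the χ² distribution with one degree of freedom, whose survival function is erfc(√(H/2)). The function names are illustrative; we do not know which statistics package the authors used, and in practice an established package would normally be preferred.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_two_groups(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Tie-corrected Kruskal-Wallis H and its chi-square(1) p-value for two groups."""
    if not a or not b:
        raise ValueError("both groups need at least one observation")
    pooled = list(a) + list(b)
    n, n_a, n_b = len(pooled), len(a), len(b)
    ranks = average_ranks(pooled)
    r_a, r_b = sum(ranks[:n_a]), sum(ranks[n_a:])
    h = 12.0 / (n * (n + 1)) * (r_a ** 2 / n_a + r_b ** 2 / n_b) - 3 * (n + 1)
    # Tie correction: 1 - sum(t^3 - t) / (n^3 - n) over groups of tied values.
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    correction = 1.0 - ties / (n ** 3 - n)
    if correction == 0.0:  # every observation identical: no evidence of a difference
        return 0.0, 1.0
    h = max(h / correction, 0.0)  # clamp tiny negative rounding errors
    # Survival function of chi-square with 1 df: P(X > h) = erfc(sqrt(h / 2)).
    return h, math.erfc(math.sqrt(h / 2.0))
```

With two groups, Kruskal–Wallis is equivalent to a two-sided Mann–Whitney U test with a normal approximation, which is why the χ² reference distribution has a single degree of freedom here.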

We additionally ran a Two One-Sided Tests (TOST) procedure for equivalence. For knowledge retention, the two populations showed equivalence at a confidence level above 93%, indicating a trend toward equivalence in retention between the MR and conventional face-to-face conditions. For knowledge interpretation, the same test did not support equivalence (p = 0.84). The knowledge interpretation results are therefore inconclusive: the two conditions were neither significantly different nor significantly equivalent.
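The TOST logic can be sketched as follows, assuming two independent samples, a pooled-variance Student t statistic, and a symmetric equivalence margin. The paper does not report the margin or the exact TOST variant used, so the `margin` argument is an assumption left to the analyst, and the function names are illustrative. To stay within the standard library, the t CDF is computed with the classic closed-form series for integer degrees of freedom.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def t_cdf(t: float, df: int) -> float:
    """CDF of Student's t for a positive integer df, via the closed-form series."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    theta = math.atan2(abs(t), math.sqrt(df))
    s, c = math.sin(theta), math.cos(theta)
    c2 = c * c
    if df % 2 == 1:
        # Odd df: A = (2/pi) * [theta + s*c*(1 + (2/3)c^2 + (2*4)/(3*5)c^4 + ...)]
        series, term = 0.0, 1.0
        if df > 1:
            series = 1.0
            for k in range(1, (df - 3) // 2 + 1):
                term *= c2 * (2 * k) / (2 * k + 1)
                series += term
        a = (2.0 / math.pi) * (theta + s * c * series)
    else:
        # Even df: A = s * (1 + (1/2)c^2 + (1*3)/(2*4)c^4 + ...)
        series, term = 1.0, 1.0
        for k in range(1, (df - 2) // 2 + 1):
            term *= c2 * (2 * k - 1) / (2 * k)
            series += term
        a = s * series
    # a = P(|T| < |t|); fold it back into a one-sided CDF.
    return 0.5 + 0.5 * a if t >= 0 else 0.5 - 0.5 * a


def tost_independent(a: Sequence[float], b: Sequence[float], margin: float,
                     alpha: float = 0.05) -> Tuple[float, bool]:
    """Two one-sided t tests of H0: |mean(a) - mean(b)| >= margin (pooled variance)."""
    n1, n2 = len(a), len(b)
    if n1 < 2 or n2 < 2 or margin <= 0:
        raise ValueError("need two samples of size >= 2 and a positive margin")
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    se = math.sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    if se == 0.0:
        raise ValueError("both samples are constant; the t statistic is undefined")
    diff = mean(a) - mean(b)
    # Lower test rejects diff <= -margin; upper test rejects diff >= +margin.
    p_lower = 1.0 - t_cdf((diff + margin) / se, df)
    p_upper = t_cdf((diff - margin) / se, df)
    p = max(p_lower, p_upper)
    return p, p < alpha
```

Equivalence is declared only when both one-sided tests reject, which is why the overall p-value is the larger of the two; the same decision corresponds to the 90% confidence interval of the difference lying entirely within ±margin when alpha = 0.05.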

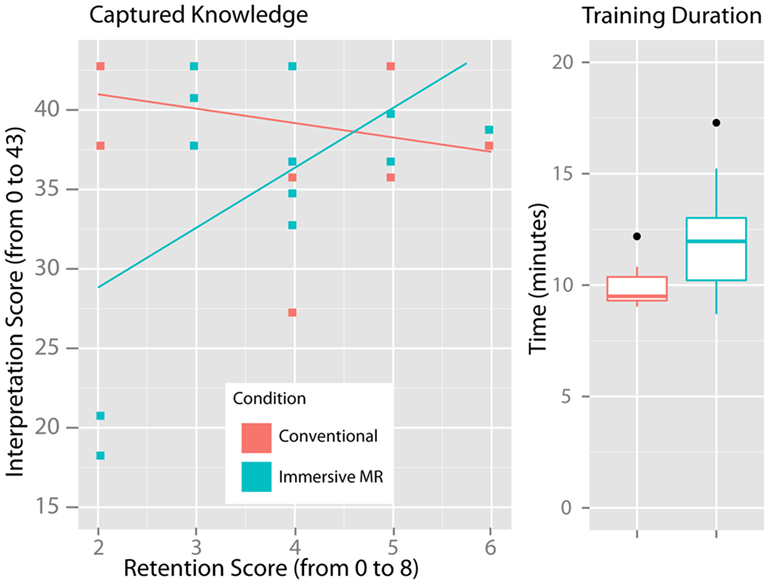

When studying the relation between the two kinds of knowledge capture, we found a trend toward correlation between interpretation and retention scores in the MR condition [Pearson r(12) = 0.57, p = 0.052], but not in the conventional face-to-face training condition (p > 0.39) (Figure 4). Moreover, top-performing participants in the MR condition appeared to perform as well as those in the conventional training, whereas low-performing participants in the MR condition performed worse. We hypothesize that low performers may have been overwhelmed by the setup, which constrained their capacity to capture knowledge; this effect may fade as participants become more used to the technology itself.
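The relation reported above is a Pearson correlation. A minimal standard-library sketch of how r and its associated t statistic, t = r√((n − 2)/(1 − r²)) with n − 2 degrees of freedom, are computed from paired scores is shown below; the function names are illustrative, and the paired retention and interpretation scores themselves are not reproduced here.

```python
import math
from typing import Sequence, Tuple


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation of two paired samples."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least three paired observations")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r: float, n: int) -> Tuple[float, int]:
    """t statistic for H0: rho = 0, returned with its n - 2 degrees of freedom."""
    if abs(r) >= 1.0:
        raise ValueError("|r| must be below 1 to give a finite t statistic")
    df = n - 2
    return r * math.sqrt(df / (1.0 - r * r)), df
```

The two-sided p-value then follows from the Student t distribution with n − 2 degrees of freedom.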

Figure 4. (Left) Correlation between knowledge retention and interpretation scores for both MR and conventional conditions. (Right) Training duration for both conditions.

Training took significantly longer in the MR condition (M = 12.1 min, SD = 2.5) than in the conventional face-to-face training condition (M = 9.9 min, SD = 0.9) [Kruskal–Wallis rank sum test χ²(1) = 0.64, p = 0.01] (Figure 4). This may be partly due to the extra time some participants took to familiarize themselves with the interaction metaphors and the novelty of the MR setup.

Overall, we found that the knowledge levels acquired in the mixed reality setup and in the conventional face-to-face setup were not significantly different. Interpretation scores were very high in both conditions, with over 80% accuracy after a single training session on a manufacturing operation that was entirely novel to participants, even though the training process was complex enough that the tests could not be completed successfully without prior training. These results validate our training methodology, which was a practical example of a complex aircraft door manufacturing procedure. However, the equivalence tests did not reach significance for either measure. For retention, a trend toward equivalence (93% confidence) was found between participants in the conventional face-to-face training and those in the MR training, which suggests that MR scenarios can potentially provide a successful metaphor for collaborative training. Retention scores were generally low, and we hypothesize two reasons for the gap between retention and interpretation performance. First, the task may be complex enough to require several training sessions to be properly retained. Second, given the type of training, participants may have developed a hands-on memory of the procedure rather than abstract knowledge. Indeed, many participants could remember the number of bolts involved in an operation when the jig was in front of them, but could not recall it when asked in a written test. We did, however, find a trend toward correlation between interpretation and retention scores among participants trained in MR, which was not found in the conventional face-to-face training results.
This trend suggests that participants who did better in the interpretation task also did better in the retention task, while those who performed poorly did so in both tests. These results are in line with previous studies showing that novel technologies initially impose a higher cognitive load (Chen et al., 2007); the MR setup may have taken some participants out of their comfort zone, leaving them unable to remember or guess what to do next. This would also help explain why participants took longer in the MR condition than in the conventional face-to-face condition, since they were less familiar with the environment. Nevertheless, post-training knowledge scores were not significantly different between participants in the MR condition and in the physical one, suggesting considerable potential for MR in complex manufacturing training. We hypothesize that these positive results are closely linked to theories of first-person interaction with digital objects (Spanlang et al., 2014).

This paper has presented and validated the use of mixed reality metaphors for complex manufacturing training through a user study measuring post-training knowledge retention and interpretation scores. The results show a trend toward equivalent knowledge retention between MR training and conventional face-to-face training. However, neither significant differences nor significant equivalence were found between the two conditions for knowledge interpretation. These results support the idea that MR setups can achieve high performance in the context of collaborative training. Implementing this technology in industry would bring several benefits: this form of training does not require the physical equipment to be present, which would reduce training costs and eliminate safety issues and operational hazards. However, this setup would not be a complete substitute for face-to-face training, since professional trainers would still be needed; therefore, only part of the overhead training costs would be reduced. These results have implications not only for the manufacturing industry but also for the MR and AR community, as they show how existing metaphors for collaborative work can be implemented in immersive MR.

This study was approved by the Science and Engineering Research Ethics Committee (SEREC) of Cranfield University. Following the Declaration of Helsinki, all participants were given an information sheet explaining the experiment and could ask questions before participating, after which they signed informed consent and agreed to participate in the study. Because of the confidential nature of the manufacturing content, this study was conducted using only employees of the Airbus Group who volunteered to participate. They were recruited via email.

MG-F, PB-G, and AT conceived the study; KL and JC prepared the testing material and ran the study; MG-F, RP, JT, and WH implemented the system and the real-time technology; MG-F analyzed the data; and MG-F, RP, JC, and KL wrote the paper.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

This study was funded by an Innovate UK grant (TSB 101251). The authors are grateful for the support of the Engineering and Physical Sciences Research Council (EPSRC) through a Doctoral Training Centre (DTC) award to KL (EP/K502820/1). The authors would also like to thank Richard Middleton for his insight on manufacturing operations, and Karl Jones and Paul Hannah for their help with 3D modeling and animations. All data are provided in full in Section “Results” of this paper.

The Supplementary Material for this article can be found online at http://journal.frontiersin.org/article/10.3389/frobt.2017.00003/full#supplementary-material.

Video S1. Demonstration of the Immersive Mixed Reality System.

Bal, M. (2012). “Virtual manufacturing laboratory experiences for distance learning courses in engineering technology,” in 119th ASEE Annual Conference and Exposition (San Antonio, TX: American Society for Engineering Education), 1–12.

Bartoli, G., Del Bimbo, A., Faconti, M., Ferracani, A., Marini, V., Pezzatini, D., et al. (2012). “Emergency medicine training with gesture driven interactive 3D simulations,” in 2012 ACM International Workshop on User Experience in E-Learning and Augmented Technologies in Education UXeLATE (Nara: ACM), 25–29.

Bharath, V., and Rajashekar, P. (2015). “Virtual manufacturing: a review,” in National Conference Emerging Research Areas Mechanical Engineering Conference Proceedings, Vol. 3 (Bangalore: IJERT), 355–364.

Bourdin, P., Sanahuja, J., Tomàs, M., Moya, C. C., Haggard, P., and Slater, M. (2013). “Persuading people in a remote destination to sing by beaming there,” in Proceedings 19th ACM Symposium on Virtual Reality Software and Technology – VRST ’13 (New York, NY: ACM Press), 123.

Chen, H., and Sun, H. (2002). “Real-time haptic sculpting in virtual volume space,” in Proceedings ACM Symposium on Virtual Reality Software and Technology (New York, NY: ACM), 81–88.

Chen, J. Y. C., Haas, E. C., and Barnes, M. J. (2007). Human performance issues and user interface design for teleoperated robots. IEEE Trans. Syst. Man. Cybern. C Appl. Rev. 37, 1231–1245. doi:10.1109/TSMCC.2007.905819

Churchill, E. F., and Snowdon, D. (1998). Collaborative virtual environments: an introductory review of issues and systems. Virtual Real. 3, 3–15. doi:10.1007/BF01409793

Dickinson, J. K., Woodard, P., Canas, R., Ahamed, S., and Lockston, D. (2011). Game-based trench safety education: development and lessons learned. J. Inform. Tech. Construct. 16, 119–134.

Farra, S., Miller, E., Timm, N., and Schafer, J. (2013). Improved training for disasters using 3-D virtual reality simulation. West. J. Nurs. Res. 35, 655–671. doi:10.1177/0193945912471735

Friedman, D., Pizarro, R., Or-Berkers, K., Neyret, S., Pan, X., and Slater, M. (2014). A method for generating an illusion of backwards time travel using immersive virtual reality – an exploratory study. Front. Psychol. 5:943. doi:10.3389/fpsyg.2014.00943

Garau, M., Slater, M., Bee, S., and Sasse, M. A. (2001). “The impact of eye gaze on communication using humanoid avatars,” in Proceedings SIGCHI Conference on Human Factors in Computing Systems CHI 01 (Seattle, WA: ACM Press), 309–316.

Gavish, N., Gutiérrez, T., Webel, S., Rodríguez, J., Peveri, M., Bockholt, U., et al. (2015). Evaluating virtual reality and augmented reality training for industrial maintenance and assembly tasks. Interact. Learn. Environ. 23, 778–798. doi:10.1080/10494820.2013.815221

Gonzalez-Franco, M., Gilroy, S., and Moore, J. O. (2014a). “Empowering patients to perform physical therapy at home,” in 36th Annual International Conference IEEE Engineering in Medicine and Biology Society EMBC 2014 (Chicago, IL: IEEE), 6308–6311.

Gonzalez-Franco, M., Hall, M., Hansen, D., Jones, K., Hannah, P., and Bermell-Garcia, P. (2015). “Framework for remote collaborative interaction in virtual environments based on proximity,” in IEEE Symposium on 3D User Interfaces (Arles: IEEE), 153–154.

Gonzalez-Franco, M., Peck, T. C., Rodríguez-Fornells, A., and Slater, M. (2014b). A threat to a virtual hand elicits motor cortex activation. Exp. Brain Res. 232, 875–887. doi:10.1007/s00221-013-3800-1

Gonzalez-Franco, M., Perez-Marcos, D., Spanlang, B., and Slater, M. (2010). “The contribution of real-time mirror reflections of motor actions on virtual body ownership in an immersive virtual environment,” in 2010 IEEE Virtual Reality Conference (Waltham, MA: IEEE), 111–114.

Kang, J., and Jain, N. (2011). “Merit of computer game in tacit knowledge acquisition and retention,” in 28th International Symposium on Automation and Robotics in Construction ISARC 2011 (Seoul: IAARC), 1091–1096.

Kishore, S., González-Franco, M., Hintermuller, C., Kapeller, C., Guger, C., Slater, M., et al. (2014). Comparison of SSVEP BCI and eye tracking for controlling a humanoid robot in a social environment. Presence – Teleop. Virt. 23, 242–252. doi:10.1162/PRES_a_00192

Kozak, J. J., Hancock, P. A., Arthur, E. J., and Chrysler, S. T. (1993). Transfer of training from virtual reality. Ergonomics 36, 777–784. doi:10.1080/00140139308967941

Lin, F., Ye, L., Duffy, V. G., and Su, C. J. (2002). Developing virtual environments for industrial training. Inform. Sci. 140, 153–170. doi:10.1016/S0020-0255(01)00185-2

Lipponen, L. (2002). “Exploring foundations for computer-supported collaborative learning,” in CSCL ’02 Proceedings Conference on Computer Support for Collaborative Learning Found a CSCL Community (Boulder, CO: ACM), 72–81.

Meehan, M., Insko, B., Whitton, M., and Brooks, F. P. (2002). “Physiological measures of presence in stressful virtual environments,” in Proceedings 29th Annual Conference Computer Graphics and Interactive Techniques – SIGGRAPH ’02 (New York, NY: ACM Press), 645.

Mital, A., Pennathur, A., Huston, R. L., Thompson, D., Pittman, M., Markle, G., et al. (1999). The need for worker training in advanced manufacturing technology (AMT) environments: a white paper. Int. J. Ind. Ergon. 24, 173–184. doi:10.1016/S0169-8141(98)00024-9

Monahan, T., McArdle, G., and Bertolotto, M. (2008). Virtual reality for collaborative e-learning. Comput. Educ. 50, 1339–1353. doi:10.1016/j.compedu.2006.12.008

Muratet, M., Torguet, P., Viallet, F., and Jessel, J. P. (2011). Experimental feedback on prog and play: a serious game for programming practice. Comput. Graph. Forum. 30, 61–73. doi:10.1111/j.1467-8659.2010.01829.x

Oliveira, J. C., Shen, X., and Georganas, N. D. (2000). “Collaborative virtual environment for industrial training and e-commerce,” in IEEE VRTS (Seoul: ACM), 288.

Ong, S. K., Yuan, M. L., and Nee, A. Y. C. (2008). Augmented reality applications in manufacturing: a survey. Int. J. Prod. Res. 46, 2707–2742. doi:10.1080/00207540601064773

Padrao, G., Gonzalez-Franco, M., Sanchez-Vives, M. V., Slater, M., and Rodriguez-Fornells, A. (2016). Violating body movement semantics: neural signatures of self-generated and external-generated errors. Neuroimage 124, 147–156. doi:10.1016/j.neuroimage.2015.08.022

Sanchez-Vives, M. V., and Slater, M. (2005). From presence to consciousness through virtual reality. Nat. Rev. Neurosci. 6, 332–339. doi:10.1038/nrn1651

Seymour, N. E., Gallagher, A. G., Roman, S. A., O’Brien, M. K., Bansal, V. K., Andersen, D. K., et al. (2002). Virtual reality training improves operating room performance: results of a randomized, double-blinded study. Ann. Surg. 236, 458. doi:10.1097/00000658-200210000-00008

Slater, M. (2009). Place illusion and plausibility can lead to realistic behaviour in immersive virtual environments. Philos. Trans. R. Soc. Lond. B Biol. Sci. 364, 3549–3557. doi:10.1098/rstb.2009.0138

Slater, M., Antley, A., Davison, A., Swapp, D., Guger, C., Barker, C., et al. (2006). A virtual reprise of the Stanley Milgram obedience experiments. PLoS ONE 1:e39. doi:10.1371/journal.pone.0000039

Slater, M., Perez-Marcos, D., Ehrsson, H. H., and Sanchez-Vives, M. V. (2009). Inducing illusory ownership of a virtual body. Front. Neurosci. 3:214–220. doi:10.3389/neuro.01.029.2009

Spanlang, B., Normand, J. M., Borland, D., Kilteni, K., Giannopoulos, E., Pomes, A., et al. (2014). How to build an embodiment lab: achieving body representation illusions in virtual reality. Front. Robot. AI 1:1–22. doi:10.3389/frobt.2014.00009

Steptoe, W., Julier, S., and Steed, A. (2014). “Presence and discernability in conventional and non-photorealistic immersive augmented reality,” in ISMAR (Munich: IEEE), 213–218.

Stone, R. (2001). Virtual reality for interactive training: an industrial practitioner’s viewpoint. Int. J. Hum. Comput. Stud. 55, 699–711. doi:10.1006/ijhc.2001.0497

Sun, S. H., and Tsai, L. Z. (2012). Development of virtual training platform of injection molding machine based on VR technology. Int. J. Adv. Manuf. Technol. 63, 609–620. doi:10.1007/s00170-012-3938-1

Thorn, J., Pizarro, R., Spanlang, B., Bermell-Garcia, P., and Gonzalez-Franco, M. (2016). “Assessing 3D scan quality through paired-comparisons psychophysics test,” in Proceedings 2016 ACM Multimedia Conference (Amsterdam: ACM), 147–151.

von Websky, M. W., Raptis, D. A., Vitz, M., Rosenthal, R., Clavien, P. A., and Hahnloser, D. (2013). Access to a simulator is not enough: the benefits of virtual reality training based on peer-group-derived benchmarks – a randomized controlled trial. World J. Surg. 37, 2534–2541. doi:10.1007/s00268-013-2175-6

Webel, S., Bockholt, U., Engelke, T., Gavish, N., Olbrich, M., and Preusche, C. (2013). An augmented reality training platform for assembly and maintenance skills. Rob. Auton. Syst. 61, 398–403. doi:10.1016/j.robot.2012.09.013

Williams-Bell, F. M., Murphy, B. M., Kapralos, B., Hogue, A., and Weckman, E. J. (2015). Using serious games and virtual simulation for training in the fire service: a review. Fire Technol. 51, 553–584. doi:10.1007/s10694-014-0398-1

Yuan, M. L., Ong, S. K., and Nee, A. Y. C. (2008). Augmented reality for assembly guidance using a virtual interactive tool. Int. J. Prod. Res. 46, 1745–1767. doi:10.1080/00207540600972935

Keywords: mixed reality, immersive augmented reality, training, manufacturing, head-mounted displays

Citation: Gonzalez-Franco M, Pizarro R, Cermeron J, Li K, Thorn J, Hutabarat W, Tiwari A and Bermell-Garcia P (2017) Immersive Mixed Reality for Manufacturing Training. Front. Robot. AI 4:3. doi: 10.3389/frobt.2017.00003

Received: 08 July 2016; Accepted: 27 January 2017;

Published: 16 February 2017

Edited by:

John Quarles, University of Texas at San Antonio, USA

Reviewed by:

Ana Tajadura-Jiménez, Loyola University Andalusia, Spain

Copyright: © 2017 Gonzalez-Franco, Pizarro, Cermeron, Li, Thorn, Hutabarat, Tiwari and Bermell-Garcia. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Pablo Bermell-Garcia, cGFibG8uYmVybWVsbEBhaXJidXMuY29t

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
