Over the past ∼20 years, the proliferation of sensors and platforms in both the public and private sectors, combined with the open data policies of NASA, ESA, and other national space agencies, has resulted in a dramatic increase in the availability of remotely sensed data and in the widespread adoption of remote sensing as an essential tool for a wide range of applications. Unlike many of the specialty research topics covered by the Frontiers journals, remote sensing image analysis and classification spans a wide range of disparate research disciplines, so identifying its grand challenges differs fundamentally from doing so in more narrowly focused disciplines. For this reason, the challenges discussed in this article concern topically relevant standards intended to maximize the utility of the work published by the journal.

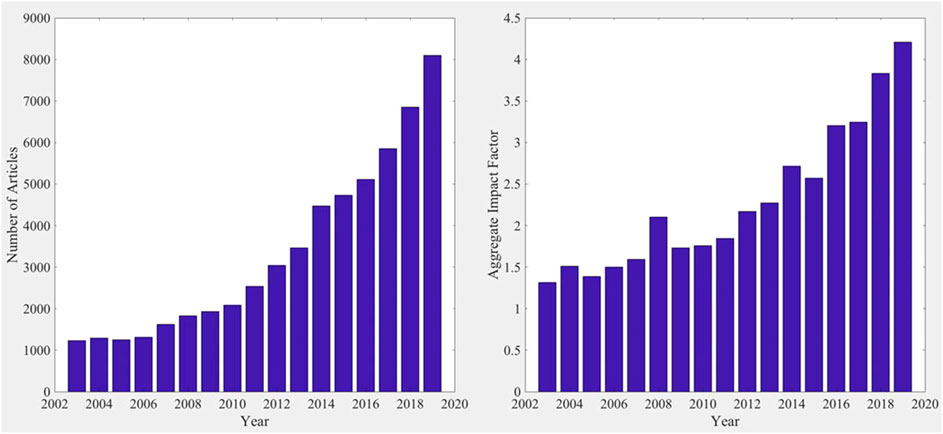

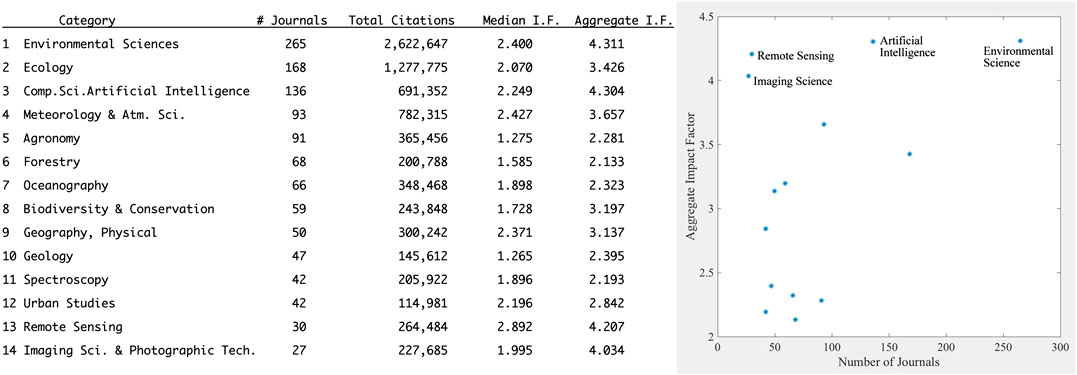

Discipline-specific bibliometrics compiled since the early 2000s suggest that remote sensing articles achieve disproportionate impact, even relative to their rapid and sustained growth in number. Figure 1 quantifies both trends since 2003: the aggregate impact factor of remote sensing publications more than tripled while the number of articles published per year grew almost 8-fold. This may be due, in part, to the wide range of disciplines in which remote sensing is now used. Figure 2 compares bibliometrics for remote sensing and several of these disciplines, as well as two disciplines (Imaging Science and Artificial Intelligence, including machine learning) that contribute methodologies long used in remote sensing image analysis and classification. Despite the comparatively small number of remote sensing journals (30 in 2019), the average number of citations per article is among the highest of these disciplines. Taken together, the continued growth in both the volume and the impact of remote sensing articles, and their relevance to such a wide range of related disciplines, emphasize the importance of standards going forward. This brief essay discusses three criteria that are fundamental to the standard of articles published in the Image Analysis and Classification specialty section.

FIGURE 1. Parallel growth of remote sensing articles and aggregate impact factor since 2003. While the number of articles published per year has increased almost 8-fold, the average number of citations per paper has more than tripled over the same time interval. Bibliometric data provided by Clarivate Analytics (www.clarivate.com).

FIGURE 2. Aggregate impact factor vs. number of journals for remote sensing and other disciplines that use or contribute to remote sensing image analysis and classification. The aggregate impact factor is the average number of citations received in 2019 by articles published in the two prior years (2017–2018). Relative to their smaller numbers of journals (and papers), remote sensing and imaging science have comparatively high aggregate impact factors. Bibliometric data provided by Clarivate Analytics (www.clarivate.com).

Replicability

Despite the ongoing debate about the existence of a “reproducibility crisis” in science (e.g., Ioannidis, 2005; Fanelli, 2018), there is no debate about the necessity of replicability of analyses published in scientific journals. Authors must provide sufficient detail about data, models, assumptions, and methodology to allow their results to be replicated by colleagues. Moreover, a paper advancing a new analytical methodology must provide sufficient detail to allow interested readers to apply the methodology to their own analyses. While these details are often included in appendices and supplementary materials, many authors adopt a narrative approach to describing analyses and sometimes omit critical details. Standardizing the reporting of critical details could make it easier for authors to retain the narrative approach when appropriate, while still providing all information necessary for replicability.

It has been suggested that the scientific paper, in its current form, is now obsolete (Somers, 2018) and should be replaced by documents containing live code, equations, visualizations, and narrative text (e.g., Jupyter notebooks). While this could certainly facilitate replicability of analyses done with open-source code and small datasets, many types of analyses do not satisfy these constraints. A standardized format for reporting data sources, analytical procedures, models, and hyperparameter settings could simplify both description by authors and implementation by readers. At the very least, sufficient detail to allow replicability can be made a standard review criterion.
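As a sketch of what a standardized report could look like, the record below captures data sources, procedure, model, and hyperparameter settings in one machine-readable structure that could accompany a narrative methods section. It is a minimal illustration under stated assumptions: the class names (`DataSource`, `AnalysisReport`), the field names, and the example values are hypothetical and do not correspond to any schema adopted by the journal or by an existing standard.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class DataSource:
    """One input dataset (hypothetical fields, not a published standard)."""
    sensor: str                   # instrument and platform
    product: str                  # product name / processing level as distributed
    acquisition_dates: list[str]  # ISO 8601 dates of the scenes used
    access: str                   # where a reader can obtain the same data


@dataclass
class AnalysisReport:
    """Hypothetical reporting record accompanying a narrative methods section."""
    data_sources: list[DataSource]
    procedure: list[str]                # ordered preprocessing and analysis steps
    model: str                          # model or classifier family
    hyperparameters: dict[str, object]  # all settings, including unchanged defaults
    random_seed: int                    # seed for any stochastic step
    training_samples: str               # how training samples were selected
    validation: str                     # reference data and accuracy-assessment design

    def missing_fields(self) -> list[str]:
        """Names of top-level fields left empty (a simple review checklist)."""
        return [name for name, value in asdict(self).items()
                if value is None or value == "" or value == [] or value == {}]

    def to_json(self) -> str:
        """Serialize the whole record, including nested data sources."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# Illustrative values only.
report = AnalysisReport(
    data_sources=[DataSource(
        sensor="Landsat 8 OLI",
        product="Level-2 surface reflectance",
        acquisition_dates=["2019-06-14", "2019-08-17"],
        access="USGS EarthExplorer",
    )],
    procedure=["cloud masking", "band stacking", "supervised classification"],
    model="random forest",
    hyperparameters={"n_trees": 500, "max_features": "sqrt", "min_samples_leaf": 1},
    random_seed=42,
    training_samples="stratified random sample, 200 polygons per class",
    validation="independent stratified random reference sample",
)
print(report.missing_fields())  # []
print(report.to_json())
```

Recording every setting, including defaults that were left unchanged, matters because software defaults can change between versions; an empty-field check such as `missing_fields` is one simple way replicability could be applied as a review criterion.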

Applicability

As the name suggests, the explicit focus of Frontiers in Remote Sensing: Image Analysis and Classification is on methodology and implementation. To be generally useful, a methodology should be applicable to a variety of settings rather than specific to a particular location. If a methodology is specific to a particular type of target (e.g., land cover, water body, or object class) associated with a specific geography, then it should be demonstrated to be effective across a range of examples of that type of target in different settings. The nature of most remote sensing applications leads to geographic specificity of analyses. Because the geography of the study area may indeed be the focus of many studies, such studies may be better suited to geography or regional science journals than to a methodology-oriented journal. In a meta-analysis of 6,771 remote sensing papers published since 1972, Yu et al. (2014) found that 1,783 of these papers provided explicit geographic bounds of the study area and that the median area of these was less than 10,000 km² (100 km × 100 km). It is not clear what percentage of these studies were methodologically focused and what percentage were geographically focused, but the result suggests a commingling of methodological and geographical focus in the remote sensing literature. It is common for remote sensing articles to have a geographic focus but introduce a methodology specifically developed for the geography of interest. In these cases, it is often not clear that the methodology is generally applicable beyond the geography for which it was developed. From a methodological perspective, this could be considered analogous to overfitting. Given the journal’s focus on methodology, applicability should be demonstrated over a variety of geographies.
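One common way to demonstrate applicability across geographies is to hold out whole regions during validation instead of randomly selected pixels, so that each test reflects a geography the method never saw during development. The sketch below assumes labelled samples tagged with a hypothetical region identifier and uses a deliberately simple nearest-centroid classifier as a stand-in for whatever methodology is being proposed; the two-band values, class names, and region names are synthetic.

```python
import math
from collections import defaultdict


def fit_centroids(samples):
    """Mean feature vector per class; samples are (features, label, region) tuples."""
    sums, counts = {}, defaultdict(int)
    for x, y, _ in samples:
        if y not in sums:
            sums[y] = [0.0] * len(x)
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict(centroids, x):
    """Assign x to the class whose centroid is nearest (Euclidean distance)."""
    return min(centroids, key=lambda y: math.dist(x, centroids[y]))


def leave_one_region_out(samples):
    """Train on all regions but one, test on the held-out region, for every region."""
    regions = sorted({r for _, _, r in samples})
    scores = {}
    for held_out in regions:
        train = [s for s in samples if s[2] != held_out]
        test = [s for s in samples if s[2] == held_out]
        centroids = fit_centroids(train)
        correct = sum(predict(centroids, x) == y for x, y, _ in test)
        scores[held_out] = correct / len(test)
    return scores


# Synthetic two-band samples; in region D the water is brighter than elsewhere.
samples = [
    ((0.05, 0.02), "water", "A"), ((0.30, 0.45), "vegetation", "A"),
    ((0.06, 0.03), "water", "B"), ((0.28, 0.40), "vegetation", "B"),
    ((0.10, 0.08), "water", "C"), ((0.25, 0.35), "vegetation", "C"),
    ((0.20, 0.25), "water", "D"), ((0.30, 0.45), "vegetation", "D"),
]
scores = leave_one_region_out(samples)
print(scores)  # {'A': 1.0, 'B': 1.0, 'C': 1.0, 'D': 0.5}
```

In this toy example the held-out score drops only for region D, whose synthetic water samples fall outside anything seen in the other regions; a region-to-region gap of this kind is the geographic analogue of overfitting.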

The question of applicability, and the consequences of geographic specificity, are particularly relevant to thematic classification. Because supervised classifications can be sensitive to both training sample selection and hyperparameter settings, the uniqueness of a result can be an important question. The result of a classification is generally presented (either explicitly or implicitly) as the map of the geography of interest, but it is usually just one of a potentially very large number of maps that could result from different choices of training samples and hyperparameter settings. While some classification approaches incorporate some degree of optimization, the model design manifest in the analyst’s choice of hyperparameter settings will necessarily influence the resulting classification, sometimes considerably. For this reason, the rationale for the model design should be addressed explicitly. Accuracy assessments based on reference data indicate how accurate the resulting map can be expected to be (if the reference data are truly representative), but they say little or nothing about how much more or less accurate maps derived from slightly different combinations of training samples and hyperparameter settings might be. Sensitivity analysis offers some potential to address the question of uniqueness by demonstrating the stability of a result over a range of hyperparameter settings. The assumptions inherent in the model design should be made explicit when the classification model is introduced.
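A minimal sketch of such a sensitivity analysis follows. It assumes a k-nearest-neighbour classifier, with k standing in for the hyperparameter settings, and repeated random draws from a pool of candidate training samples standing in for alternative training sample selections; the data, class names, and function names are synthetic or hypothetical. Rather than a single accuracy figure, it reports the spread of accuracy across runs and how often the resulting maps agree pixel by pixel.

```python
import math
import random
import statistics
from collections import Counter


def knn_predict(train, x, k):
    """Majority vote among the k training samples nearest to x (Euclidean distance)."""
    nearest = sorted(train, key=lambda s: math.dist(s[0], x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def sensitivity_runs(pool, pixels, reference, ks, n_draws, train_size, seed=0):
    """Classify the same pixels for every combination of training draw and k.

    Returns a list of (k, accuracy, labels) tuples, one per combination."""
    rng = random.Random(seed)
    runs = []
    for _ in range(n_draws):
        train = rng.sample(pool, train_size)  # one alternative set of training samples
        for k in ks:
            labels = [knn_predict(train, x, k) for x in pixels]
            acc = sum(p == r for p, r in zip(labels, reference)) / len(reference)
            runs.append((k, acc, labels))
    return runs


def agreement(map_a, map_b):
    """Fraction of pixels assigned to the same class in two maps."""
    return sum(a == b for a, b in zip(map_a, map_b)) / len(map_a)


# Synthetic two-band samples for three hypothetical classes (illustration only).
gen = random.Random(1)


def synth(label, center, n):
    return [((gen.gauss(center[0], 0.05), gen.gauss(center[1], 0.05)), label)
            for _ in range(n)]


CENTERS = {"water": (0.05, 0.03), "vegetation": (0.08, 0.40), "soil": (0.25, 0.30)}
pool = [s for label, c in CENTERS.items() for s in synth(label, c, 30)]
ref_samples = [s for label, c in CENTERS.items() for s in synth(label, c, 20)]
pixels = [x for x, _ in ref_samples]
reference = [y for _, y in ref_samples]

runs = sensitivity_runs(pool, pixels, reference, ks=[1, 5, 15], n_draws=10, train_size=30)
accs = [acc for _, acc, _ in runs]
maps = [labels for _, _, labels in runs]
pairwise = [agreement(a, b) for i, a in enumerate(maps) for b in maps[i + 1:]]
print(f"accuracy across {len(runs)} runs: {min(accs):.2f} to {max(accs):.2f}")
print(f"mean pairwise map agreement: {statistics.mean(pairwise):.2f}")
```

A narrow accuracy range with high map agreement would support the stability of a result; a wide range, or maps that disagree on many pixels despite similar accuracies, would indicate that the published map is one of many plausible alternatives.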

Interpretability

The simultaneous increase in remote sensing data dimensionality (e.g., hyperspectral, multi-sensor, and multi-temporal) and in the application of machine learning (ML) approaches to image analysis and classification presents both opportunities and challenges. A significant challenge is the interpretability of results obtained from ML-based analyses. Many ML algorithms, and neural networks (NNs) in particular, suffer from a lack of transparency of mechanism (e.g., Knight, 2017). This is a potential obstacle to their use in the natural sciences, where transparency is key to understanding the process that is the focus of the analysis. With low-dimensional data (e.g., multispectral) and relatively simple algorithms (e.g., maximum likelihood), the basis of a classification can be easily understood in the context of the N-dimensional structure of the feature space and the statistical basis for assigning decision boundaries, but higher-dimensional data combined with the emergent properties of NNs (Achille, 2019) presents a more serious challenge. Recognition of this challenge has led to the development of interpretable machine learning approaches (e.g., Doshi-Velez and Kim, 2017; Escalante et al., 2018; Molnar, 2020). Some types of ML approaches are naturally more amenable to interpretation than others (e.g., Palczewska et al., 2014; Fabris et al., 2018). Given the journal’s focus on physically based remote sensing, it is essential for authors to explicitly address the question of why a methodology produced a given result. For example, an analysis describing a discrete classification of hyperspectral reflectance spectra that successfully distinguishes among different vegetation species should discuss the specific absorption features responsible for the spectral separability that underlies the result. Accuracy is a necessary, but not sufficient, condition for methodological progress. Understanding and explaining the basis of the methodology is essential.
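One model-agnostic way to begin answering why a classifier produced a given result is permutation feature importance (covered, for example, in Molnar, 2020): shuffle one band at a time and measure how much accuracy drops. The sketch below is illustrative only; it uses synthetic four-band spectra for two hypothetical species that differ only in band 2, and a simple nearest-mean classifier in place of a real model.

```python
import math
import random
import statistics


def fit_class_means(X, y):
    """Mean spectrum (one value per band) for each class."""
    means = {}
    for c in sorted(set(y)):
        members = [x for x, lab in zip(X, y) if lab == c]
        means[c] = tuple(statistics.mean(band) for band in zip(*members))
    return means


def nearest_mean(means, x):
    """Assign x to the class with the nearest mean spectrum (Euclidean distance)."""
    return min(means, key=lambda c: math.dist(x, means[c]))


def accuracy(means, X, y):
    return sum(nearest_mean(means, x) == lab for x, lab in zip(X, y)) / len(y)


def permutation_importance(means, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when one band's values are shuffled across samples."""
    rng = random.Random(seed)
    baseline = accuracy(means, X, y)
    importance = {}
    for band in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [x[band] for x in X]
            rng.shuffle(column)  # breaks the link between this band and the labels
            X_perm = [x[:band] + (v,) + x[band + 1:] for x, v in zip(X, column)]
            drops.append(baseline - accuracy(means, X_perm, y))
        importance[band] = statistics.mean(drops)
    return importance


# Hypothetical 4-band spectra: two species identical except in band 2,
# which stands in for a diagnostic absorption feature.
gen = random.Random(42)
TRUE_MEANS = {"species_a": (0.05, 0.08, 0.30, 0.45),
              "species_b": (0.05, 0.08, 0.50, 0.45)}


def draw(n):
    X, y = [], []
    for label, mu in TRUE_MEANS.items():
        for _ in range(n):
            X.append(tuple(gen.gauss(m, 0.02) for m in mu))
            y.append(label)
    return X, y


X_train, y_train = draw(20)
X_test, y_test = draw(20)
means = fit_class_means(X_train, y_train)
importance = permutation_importance(means, X_test, y_test)
for band, drop in importance.items():
    print(f"band {band}: mean accuracy drop {drop:.3f}")
```

Mapping the important bands to their wavelengths is what would let an author connect a result to specific absorption features. Permutation importance shows which bands a model relies on, not the physical reason they matter, and correlated neighbouring bands in hyperspectral data can share or mask importance, so it complements rather than replaces the physical discussion called for above.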

In summary, the challenges discussed here are examples of characteristics of methodology-oriented articles that can either facilitate or impede the transmission of knowledge from authors to readers. The existing remote sensing literature provides a full spectrum of articles that collectively span the space defined by these characteristics. It is our hope that maintaining editorial practices that prioritize standards like these will facilitate knowledge transfer among the diverse researchers and practitioners engaged in the analysis of remotely sensed data.

Author Contributions

The author confirms being the sole contributor of this work and has approved it for publication.

Conflict of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Achille, A. (2019). Emergent properties of deep neural networks. Doctoral dissertation. Los Angeles, CA: UCLA, 119.

Doshi-Velez, F., and Kim, B. (2017). Towards a rigorous science of interpretable machine learning. ArXiv. Available at: https://arxiv.org/pdf/1702.08608.pdf (Accessed September 11, 2020)

Escalante, H. J., Escalera, S., Guyon, I., Baró, X., Güçlütürk, Y., Güçlü, U., et al. (Editors) (2018). Explainable and interpretable models in computer vision and machine learning. Cham, Switzerland: Springer Nature.

Fabris, F., Doherty, A., Palmer, D., de Magalhães, J. P., and Freitas, A. A. (2018). A new approach for interpreting Random Forest models and its application to the biology of ageing. Bioinformatics 34 (14), 2449–2456. doi:10.1093/bioinformatics/bty087

Fanelli, D. (2018). Opinion: is science really facing a reproducibility crisis, and do we need it to? Proc. Natl. Acad. Sci. U.S.A. 115 (11), 2628–2631. doi:10.1073/pnas.1708272114

Ioannidis, J. P. A. (2005). Why most published research findings are false. PLoS Med. 2 (8), e124. doi:10.1371/journal.pmed.0020124

Knight, W. (2017). “The dark secret at the heart of AI,” in MIT Technology Review (Cambridge, MA: Massachusetts Institute of Technology).

Molnar, C. (2020). Interpretable machine learning: a guide for making black box models interpretable. Victoria, BC, Canada: LeanPub, 315 pp.

Palczewska, A., Palczewski, J., Robinson, R. M., and Neagu, D. (2014). “Interpreting random forest classification models using a feature contribution method,” in Integration of reusable systems. Advances in intelligent systems and computing. Editors T. Bouabana-Tebibel, and S. Rubin (Cham, Switzerland: Springer).

Keywords: image analysis, remote sensing, classification, machine learning, standards

Citation: Small C (2021) Grand Challenges in Remote Sensing Image Analysis and Classification. Front. Remote Sens. 1:605220. doi: 10.3389/frsen.2020.605220

Received: 11 September 2020; Accepted: 28 September 2020;

Published: 01 April 2021.

Edited and reviewed by:

Jose Antonio Sobrino, University of Valencia, Spain

Copyright © 2021 Small. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Christopher Small, csmall@columbia.edu
