94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Rehabil. Sci., 05 December 2022

Sec. Rehabilitation in Children and Youth

Volume 3 - 2022 | https://doi.org/10.3389/fresc.2022.1051579

This article is part of the Research TopicInsights in Pediatric Rehabilitation 2022View all 6 articles

Danielle DuPlessis1,2

Danielle DuPlessis1,2 Emily Lam1,3

Emily Lam1,3 Fanny Hotze1

Fanny Hotze1 Ajmal Khan1

Ajmal Khan1 Hiba Al-Hakeem1

Hiba Al-Hakeem1 Stephanie McFarland1

Stephanie McFarland1 Andrea Hickling1,4

Andrea Hickling1,4 Michael Hutchison5,6,7

Michael Hutchison5,6,7 F. Virginia Wright1,6

F. Virginia Wright1,6 Nick Reed2,3

Nick Reed2,3 Elaine Biddiss1,2,3

Elaine Biddiss1,2,3 Shannon E. Scratch1,2,7*

Shannon E. Scratch1,2,7*

Objective: To design a multi-domain return-to-play assessment system (R2Play) for youth athletes with concussion.

Methods: The R2Play system was developed using an overarching user-centered approach, the Design Thinking Framework, and research activities included: 1) structured brainstorming within our research team, 2) interviews with clinician and youth sports coaches, 3) building a testable prototype, and 4) interface testing through cognitive walkthroughs with clinician partners.

Results: Clinician and coach participants provided feedback on the R2Play concept, which was integrated into the design process and provided future directions for research. Examples of feedback-driven design choices included reducing assessment time, increasing ecological validity by adding in background noise, and developing youth-friendly graphical results screens. Following refinement based on stakeholder feedback, the R2Play system was outlined in detail and a testable prototype was developed. It is made up of two parts: a clinician tablet, and a series of tablet “buttons” that display numbers and letters. Youth athletes run between the buttons to connect a “trail” in ascending alphanumeric order, 1-A-2-B, etc. Their performance across a series of levels of increasing difficulty is logged on the clinician tablet. Initial testing with five clinicians showed the system's interface to have excellent usability with a score of 81% (SD = 8.02) on the System Usability Scale.

Conclusion: Through this research, a prototype of the R2Play system was innovated and evaluated by clinician and coach stakeholders. Initial usability was excellent and directions for future iterations were highlighted. Outcomes suggest the potential benefits of using technologies to assist in complex clinical assessment, as well as utilizing a user-centered approach to design.

Pediatric concussion is a serious public health concern. While estimating the burden of these injuries is a challenge due to variable access to care and underreporting, a recent study on a Canadian sample estimates that more than 2000 per 100,000 youth will experience concussion annually (1, 2). Sport is a major cause of concussion among youth people aged 11–19 years; per United States data, approximately 41% of all concussions in this age group are sustained while playing sports (3).

Compared to adults, youth athletes may also be more susceptible to repeat concussion and are more likely to experience prolonged recovery (4). However, this population is historically underrepresented in research, resulting in few age-appropriate measures of assessment for youth (4). Many practices in pediatric concussion are carried over from adult populations, despite the fact that children and adolescents are not “little adults”: from the pathophysiology of the developing brain, to their neurobehavioral outcomes, to their return-to-activity goals, youth are unique (5, 6).

The consequences of misdiagnosis or inadequate treatment of concussion in youth athletes can be severe, ranging from prolonged recovery, to repeat concussions, to subsequent musculoskeletal injury, and, in extremely rare cases, death (6–11). As such, determining when the brain has recovered from concussion and athletes are ready to return to the playing field is of critical importance. While no youth-specific guidelines exist, the current concussion consensus statement best practice guidelines for youth and adult return-to-play recommend a multi-modal approach to assessment, which incorporates symptom self-reporting, neuropsychological, and physiological measures (12). This is typically carried out as a battery of single-domain assessments, where processes affected by concussion (e.g., balance, memory, exertion) are assessed in isolation (13).

Sport, however, is not a single-domain activity. Rather, it involves a complex multi-domain environment that requires integration of physical, cognitive, and socio-emotional skills (14–17). While performance on single-domain assessments may return to pre-injury levels as early as 7–10 days post-injury, a growing body of literature suggests that subclinical deficits persist and can be elicited by more complex tasks. These subtle differences in gait, postural control, and cognitive processing may have serious consequences if they manifest on the playing field (8, 16, 18–22).

Emerging research calls for implementation of more ecologically relevant, multi-domain assessments of concussion prior to return-to-play (23). While there has been an increase in the use of multi-domain assessments in research, barriers exist to translating this work into clinical practice (24). Current assessment paradigms often fall short of effectively simulating sport, and are challenging to implement and score (16, 24–27).

We aimed to bridge this gap by working collaboratively with stakeholders to develop a user-driven multi-domain assessment (R2Play) to help bolster return-to-play decision making following pediatric concussion. Ultimately, following rigorous testing, we hope that this multi-domain assessment will help promote the safety of youth athletes, and allow clinicians, athletes, and parents to have confidence in return-to-play clearance. This paper describes the co-creation process undertaken to develop the R2Play system along with usability testing of its clinician interface. To guide this work, we adopted the Design-Thinking Framework (28). This framework is a lens through which a problem can be understood and solved by working through a series of iterative modes: empathize, define, ideate, prototype, and test (28, 29). A design-thinking mindset places empathy with end-users and meaningful collaboration at the forefront (30) and is considered especially applicable to the kinds of complex, multidimensional challenges often seen in a healthcare context (30). This approach can encompass diverse research activities, applying a multi-method approach to fostering research innovation (30). Examples include:“needfinding” (i.e., defining the problem and identifying user priorities) through reviewing current approaches, conducting environmental scans, and interviewing end-users; brainstorming both within the research group and by seeking out opinions from multidisciplinary partners; and rapid prototyping in collaboration with end-users (31). Aligned with this framework, our three research objectives were:

• Objective One: to conduct (a) a scoping review (24), (b) discussion and brainstorming within our interdisciplinary design team composed of key partners and (c) qualitative interviews to capture broader stakeholder feedback for the purposes of empathizing with end users, defining our problem, and ideating on possible solutions.

• Objective Two: to build a prototype of the R2Play system. This involved collaboratively refining our ideas and incorporating stakeholder feedback to move from low, to medium, then high-fidelity prototypes.

• Objective Three: to test the R2Play system prototype. Of note, validation of R2Play is anticipated to be a multiyear iterative process. In this paper, we describe usability testing of the R2Play software interface conducted via cognitive walkthroughs with clinician stakeholders.

This paper is organized with respect to the three research objectives with separate methods and results section for each, followed by an integrated discussion section. Ethics approvals for all studies were obtained from the Holland Bloorview Kids Rehabilitation Hospital (HBKRH) Research Ethics Board (REB #19–855). Due to restrictions associated with the COVID-19 pandemic, all phases of this research were carried out virtually using a videoconferencing platform.

This project emerged from a clinician-identified desire for more comprehensive assessment tools to increase confidence in return-to-play decision making for youth athletes post-concussion. An interdisciplinary team of health practitioners, engineers, athletes, and researchers was established to address this need. Knowledge-sharing activities were carried out amongst the team. Clinical partners demonstrated how return-to-play assessments are currently carried out at HBKRH (e.g., neurocognitive tests, balance and endurance tests), engineering partners showcased different technologies that could be used to support assessments (e.g., 3D depth sensors, tablets, inertial sensors), and youth and family leaders with a history of concussion described their experiences. A scoping review, reported in detail in another paper (24), of sixty-four articles describing 36 unique assessments was conducted. Overall, this scoping review revealed (i) a lack of assessments that effectively simulated the speed and complexity at which cognitive processing occurs in sport, and, (ii) multiple barriers to clinical implementation of multidomain assessments including cost, set-up time, space, and the cognitive load on clinicians while scoring (24). Guided by learnings from the scoping review, knowledge sharing activities, and the clinical experience of our interdisciplinary team, a set of design objectives for a novel return-to-play assessment were established (Table 1).

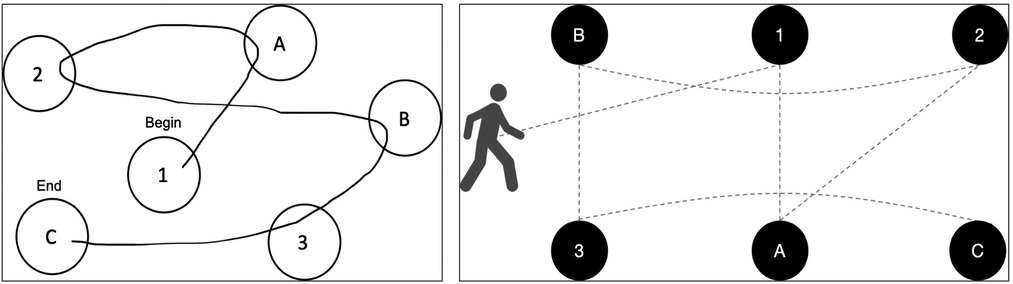

These design objectives guided early brainstorming and ideation from which two themes emerged: 1) the potential use of technology to support clinicians in administering and scoring more complex sport-like assessments; and, 2) the idea of integrating components of existing neuropsychological tests that clinicians are familiar with (e.g., Color-Word Interference (34), Trail Making (34), Contingency Naming (35), Digit Span (34)) into a sport-like activity. Further discussion with clinicians and amongst the team identified the Trail Making Test as a promising candidate to serve as the cognitive backbone of R2Play, due to its loading on fluid cognitive abilities, working memory, and processing speed (36). Traditionally, the Trail Making Test is completed by connecting numbers and/or letters in sequence using a pencil on paper (37). The task is easy to explain and routinely used by clinicians to assess executive functioning and neuropsychological impairment in children and adults (38, 39). In our early conceptualization, we envisioned recreating the test in a physical space wherein athletes connect the trail by running and pressing buttons displaying the letters and numbers. With this concept in mind, we created a series of rough prototype drawings to present to stakeholders for feedback and refinement (Figure 1).

Figure 1. The Trail Making Test (left), and a diagram depicting the embodied R2Play task concept (right).

To engage with stakeholders more broadly, we conducted a series of semi-structured qualitative interviews. Two groups were included in the interviews: clinicians, who are the target end-users of the R2Play system, and youth sports coaches, who are experienced in designing ecological sports drills and engaging with youth athletes. The research question guiding this inquiry was: “How can we design the R2Play system to best meet the needs of clinician end-users?”. Notably, we anticipate that the R2Play system is most relevant for youth athletes returning to open-skill sports which are among the highest risk for concussion and require a high degree of multi-domain processing (40, 41). As such, coaches were primarily recruited from open-skill sport backgrounds.

We sampled purposively to seek out information-rich stakeholders and worked deliberately to include diverse perspectives (42). As interviews were carried out virtually, clinicians and youth sports coaches were recruited from across Canada. Inclusion criteria: clinicians – ≥ one year of experience working with children and adolescents (6–19 years) who had sustained a concussion; coaches – ≥ one year of experience coaching youth (6–19 years) in organized sport. Participants were recruited until the sample had sufficient information power to address our research question, which corresponded to 6 clinicians and 4 youth sports coaches (43).

Semi-structured interviews (carried out by DD) were conducted over the online videoconferencing platform Zoom (44). Audio was recorded for review. Interviews began by exploring experiences with return-to-play broadly. Next, the interviewer shared their screen with participants and presented a brief demonstration of the low-fidelity prototype using Microsoft PowerPoint (45). Afterwards, participants were encouraged to share their impressions and give targeted feedback on the assessment concept. See Supplementary Materials Table S2 for complete interview guide. Following each interview, a reflexive analytic audio memo was recorded by the interviewer (DD) describing impressions and insights.

Interview data were analyzed using content analysis (46). Codes were derived inductively from the data without imposing a theoretical framework (46). In doing so, we helped ensure that the codes were grounded in the participant's experiences (46). The first three interview transcripts were read by two reviewers (DD, EL) to familiarize themselves with the data. During this time, the interviews were pre-coded by highlighting key ideas, important passages, and potential codes (47). Analytic memos also provided context to this pre-coding process. After transcripts were reviewed, the reviewers fully coded the first three interviews independently. Whenever possible, concepts were coded “in vivo”, using participants' own words as codes (47). Next, codes were discussed in team meetings, and then codes, their definitions, and instances of the codes were gathered in an iterative codebook. The codebook formed the beginning of an audit trail, which tracked the evolution of data from codes, to categories, to themes, to study findings (47, 48). The codes from the first three interviews were then transitioned into NVivo qualitative data analysis software (49), which facilitated the coding of the remainder of the dataset (49). Coding was a cyclical process, such that as new codes were added, earlier interviews were iteratively re-coded. Strategies for rigour consisted of collaborative coding of the first three interviews (DD, EL), verification of codes by a team member (EL), documentation of analysis using an iterative code book, and discussions of codes and themes within the research group. This analysis led to the creation of a “design table” summarizing stakeholder-identified considerations for the R2Play system and prototype. For each consideration, potential design recommendations and revisions were brainstormed and included to track the iterative co-creation process. Proposed design revisions were categorized as: implemented (i.e., incorporated into the R2Play system prototype), iterating (i.e., compatible with the R2Play concept, but not yet integrated), or incompatible. While not all feedback could be implemented in the first iteration of the R2Play design, the “design table” provided a medium to explain which pieces were addressed, why, and how. Additionally, we documented the feedback that we were unable to incorporate to increase the transparency of our work.

Table 2 summarizes demographics of the 10 participants.

Feedback collected during the interviews was positioned within the following constructed themes: 1) the individuals being assessed, 2) the R2Play system (assessment tasks, system hardware, clinician software interface, scoring), and 3) clinic integration (time, space, training). Detailed feedback and resultant design iterations are presented in the “design table” (See Supplementary Materials Table One). Here we summarize stakeholder feedback with respect to each of the above themes broadly, and focus on feedback that was integrated into the early R2Play prototype.

In thinking about the individuals being assessed, stakeholders indicated the importance of designing for accessibility (e.g., for wheelchair users, individuals with colour blindness, dyslexia or other learning disabilities, language) and for diverse ages. Understanding each athletes' individual context was also a stakeholder priority (e.g., concussion history, psychosocial influences, school, symptoms before/after, mental health, sport training profile, co-morbidities). Comments relating to capturing individual contexts were incorporated into the R2Play system through an optional pre-assessment client profile, where clinicians are guided through a detailed clinical history. To improve accessibility, the R2Play tablets were placed in raised stands. Pending promising usability and psychometric data, future work will strive to further improve accessibility of the R2Play system and to adapt our system to be suitable for younger children.

With respect to the R2Play system, feedback was provided on early ideation of the R2Play tasks, hardware, software interface, and scoring:

• Tasks: The R2Play tasks were considered sport-like by coach stakeholders who served as our “ecological validity experts,” taxing physical skills like speed, agility, and hand-eye coordination; perceptual skills like visual scanning and auditory cues; cognitive skills like planning and decision-making; and socio-emotional aspects associated with scoring/competition in sport. Stakeholders also introduced the need for dynamic decision-making and reaction (perception-action integration) and the possibility of auditory interference in line with a noisy sporting environment.

• Hardware: User-friendliness and durability were important components of system hardware.

• Software interface: Key stakeholder-identified considerations for the system software interface were the need for child-friendly performance reports as well as an interface for clinicians to document observational notes.

• Scoring: Lastly, scoring and interpretation of the R2Play assessment was a prominent theme. There was interest in establishing clinical normative data and also in establishing target heart rate zones for exertion. There was also interest in automatically logging errors and reaction times to support clinical interpretation and decision-making.

The addition of background noise and a scramble condition stresses perception-action integration were implemented (see Section 3.1 for detail). Soft rubber cases were added to increase the durability of the R2Play tablets. To assist in recording observations and errors, a virtual notepad was developed to be used by clinicians on the R2Play interface. Results screens were designed to be graphic and simple, to assist in communicating assessment findings with youth clients.

Not all user feedback was able to be implemented. At this time, the task does not include sport-specific implements (e.g., sticks, balls) or contact/physicality (these may be incorporated in future iterations). Additionally, it does not simulate communication with teammates or coaches, which may also be an area for future development. Next steps for the R2Play system will also be focused on contextualizing and interpreting R2Play results, and understanding the psychometric properties of the assessment.

Lastly, in providing feedback on R2Play and considering needs for a multidomain return-to-play assessment, the theme of clinic integration emerged from the data and in particular, issues of timing, space, and training. Clinician feedback indicated a preferred assessment time of <30 min. The ability to set-up R2Play in different spatial configurations (e.g., a gym, a long hallway) was also expressed. Understanding the optimal spatial arrangement that can accommodate space constraints while still providing a sport-like, physically taxing experience was considered an important area of research and development by our stakeholders. Lastly, the importance of providing training resources both to navigate system setup and use, as well as for scoring/interpretation was emphasized. Comments relating to clinicians' use of time were especially salient, and R2Play levels were streamlined to shorten the duration of the assessment. A flexible system was developed to accommodate different assessment spaces, although additional research is required to understand impacts of different layouts on scoring/interpreting R2Play performance.

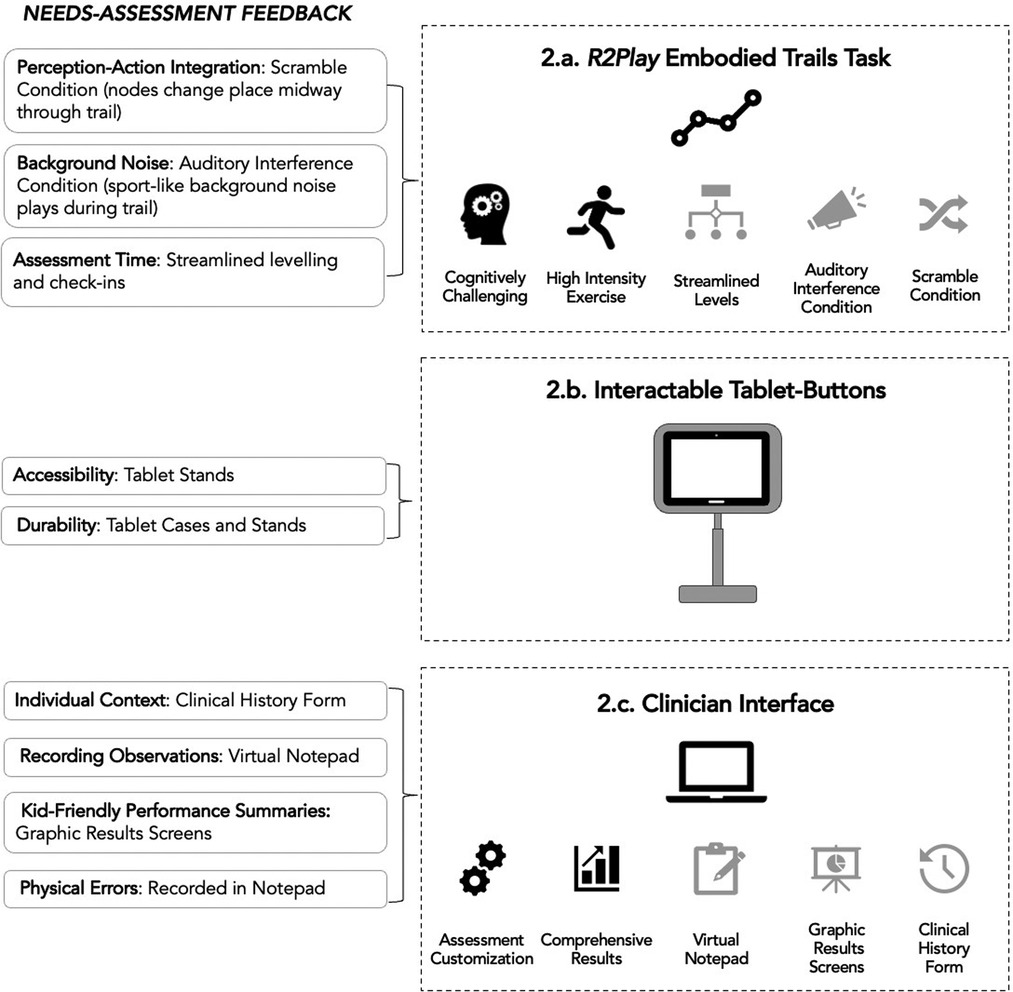

Following analysis of objective one interviews, our team (DD, EL, AK, FH) set out to build a testable high-fidelity R2Play prototype that incorporated user feedback. Figure 2 depicts some of the design iterations made and how they relate to the themes described above. The following objective two sections describe the design process and updated prototype with respect to key elements of the R2Play system, namely: (i) the task itself, (ii) the clinician software interface for setting up and administering the task, (iii) the hardware interface, and (iv) the scoring system (12).

Figure 2. Integration of interview feedback into a second iteration of the R2Play prototype. Black icons indicate priorities that were identified and integrated into the R2Play concept prior to interviews, while grey icons indicate changes made following stakeholder feedback.

Guided by objective one findings, the R2Play assessment was designed to include levels of varying physical effort and cognitive complexity, plus a pre- and post- motor task. The length of the assessment is customizable to either 6, 12, or 18 nodes in the trail with four levels: number-letter trail, exertion trail, go/no-go trail, and Stroop trail, depicted in Figure 3. The most basic level, the number-letter trail, is accomplished by pressing tablets in order (1-A-2-B-3-C). During the exertion trail, a sound cues participants to stop and complete a set of exercises (e.g., 3 burpees) before completing the trail. The type of exercise completed and the number of repetitions is also customizable, to suit the age and ability of the athlete. The go-no-go trail requires participants to exercise inhibitory control by completing the trail using only green numbers/letters and excluding any that are red. This rule is then reversed in the Stroop trail, where participants must select only the red numbers/letters and exclude any that are green. Each athlete completes four repetitions per level. Two of these repetitions have layered challenges: a scramble condition where the letters/numbers change places midway and the participant must adapt in a way that taxes perception-action integration, and an auditory interference condition where background noise play (e.g., gym sounds, cheering). A video demonstration is available. Each level begins with a training session carried out by the clinician interface software, followed by multiple repetitions, and ends with a brief check-in of perceived exertion and concussion symptoms. Additionally, it was recognized that a symbol trail could be preferable for younger children or children with different learning needs (e.g., dyslexia) and this will be considered for future extensions.

To assist in translating R2Play from a concept to a working prototype, a hierarchical task analysis (50) was conducted to build out an in-depth breakdown of the R2Play assessment structure and workflow. Following the established roadmap, team members (DD, EL) designed the graphical user interface for R2Play's clinician interface. This preliminary prototype consisted of a series of low-fidelity wireframes, which were two-dimensional illustrations of the layout of content clinicians would see while administering the task. The goal for the interface was to create a simple, user-friendly means of working through the R2Play assessment. Key functionalities for the interface include: connecting to the tablet-buttons, creating an athlete profile with optional fields for documenting a detailed history, creating an assessment layout, collecting a pre-assessment resting heart rate and adolescent postconcussion symptom inventory (51), training youth athletes on rules of the R2Play task, carrying out the assessment, and recording results, including functionality to document clinical observations and display results via child-friendly visuals. The graphical user interface and described functionalities were implemented by one of our engineers (FH) using Unity and C#.

The stakeholder team engaged in collaborative brainstorming exercises to develop a list of interactive technologies that could be used to support this novel return-to-play assessment concept with reference to our system design objectives (see Table 1). Using low-cost tablets as “buttons” was determined to be a suitable option due to their cost-effectiveness and flexibility to serve as a display to guide the task, as well as an interface which can register, log, and communicate participants' inputs throughout the task. We decided to implement a six-tablet system which would support varying trail lengths. Of note, for trails longer than 6 nodes, the tablet displays refresh after the first 6 nodes are completed so that the athlete can double-back to continue the trail. The final R2Play hardware configuration consisted of six low-cost Android tablet (e.g., Lenovo Tab E10) with cases and tablet stands, a clinician Windows tablet (e.g., Microsoft Surface Pro 7 with an Intel core i5 processor), a Polar H10 heart rate monitor, a wireless router, and a speaker. The system uses a single-server multi-client network model where the clinician interface acts as a server, and communicates with the six interactable tablet-buttons, or clients, via TCP/IP, using a custom communication protocol. The wireless router allows R2Play to use a local Wi-Fi network, thus ensuring it is not dependent on infrastructure Wi-Fi. During the assessment, the clinician interface sends relevant commands to the tablet-buttons (including the character to display and the color of the button), plays appropriate sounds (scramble tone, auditory interference noise, exercise cue, etc.), and receives information from the tablets each time the athlete touches their screens. Heart rate data are streamed into the clinician interface by means of a custom WPF desktop application which communicates with the Polar H10 heart rate monitor via Bluetooth Low Energy, using the standard GATT heart rate profile.

Data from tablet-button presses and the heart rate monitor are logged by the clinician interface and displayed live as the athlete completes each level. The system quantifies:

• Number of errors: Instances where the wrong button in the sequence is pressed.

• Completion time: How long it takes to complete a repetition of a given level. This is calculated relative to the number of buttons the athlete must press (e.g. number-letter trail would have six button presses, while the Go/No-Go level would have four button presses), and all trails are standardized to be approximately the same distance of path travelled by the athlete.

• Heart rate: ECG heart rate streamed from a Polar H10 heart rate monitor worn by the athlete.

Using these data, the system calculates a series of “costs” that reflect the change in performance between two levels or conditions. This analysis is aligned with traditional dual-task cost equations, where cost is calculated by computing the percent increase in completion time between a baseline level and another more challenging level. In this way, the R2Play system can be used to explore the “cost” of physical exertion (exertion trail vs. number-letter trail), cognitive processing (Go/No-Go or Stroop vs. number-letter trail), scramble (all scramble repetitions vs. all original repetitions), auditory interference (all auditory interference repetitions vs. all original repetitions), and fatigue (post-assessment motor trail vs. pre-assessment motor trail). Each of these costs is displayed within a “cost summary” in the R2Play results (Figure 4). While a larger study is required to understand the sensitivity of the R2Play assessment and the interpretation of R2Play results, cost analyses have shown promise in simpler dual-task assessments of concussion recovery (20, 52, 53), and in neurological populations outside of concussion (54, 55).

Lastly, the interface also provides input fields to record clinician's notes/observations (e.g., loss of balance) throughout the assessment and athlete's perceived exertion and concussion symptoms after each level. These data are synthesized, and results are presented upon task completion.

Having designed and developed a functional hi-fidelity stakeholder-guided prototype, the next stage of our design-thinking process was to test the system. Of note, evaluating a complex system like R2Play is anticipated to span multiple years with ongoing iteration. In this section, we describe the first stage of prototype testing, where we examine the usability of the clinician interface (i.e., the app that clinicians will use to facilitate administration of the R2Play assessment) in a streamlined cognitive walkthrough format. A cognitive walkthrough allows a system's designer to pinpoint areas where users are challenged by the interface or encounter usability problems. Through this process, we aimed to address the following questions: (1) Are clinicians able to use and effectively navigate the R2Play software interface as measured by the number of recoverable and irrecoverable errors? (2) Are participants satisfied with the R2Play interface as indicated on the System Usability Scale, and (3) What modifications or suggestions do clinicians have that will help inform the development of R2Play?

We employed convenience sampling to recruit five clinicians for the cognitive walkthrough. It has been established previously that approximately 75% of usability problems are typically identified by as few as three to five evaluators (56, 57). The inclusion criterion for clinicians was experience assessing children and adolescents with concussion aged 6–19 years.

Cognitive walkthroughs were carried out over the online videoconferencing platform Zoom (44). The researcher (DD) began by opening the R2Play interface on their computer, and then shared their screen with each clinician participant and gave them “remote control” of the screen. In this way, the walkthrough procedure was carried out without deploying software to individual participants. To begin, the researcher provided context to the walkthrough by presenting the clinician with an example scenario: the return-to-play assessment of a teenage athlete named Emma. The participant was then required to use the interface to navigate through tasks like “create an athlete profile for Emma” or “begin Emma”s R2Play assessment session”. Supplementary Table S3 provides a list of all key tasks included in the R2Play cognitive walkthroughs. Participants were also encouraged to “think aloud” to provide real-time feedback as they explored the interface, commenting on features that they liked, disliked, or found confusing.

Video of the screen recording and audio of the participant “thinking out loud” were recorded. After task completion, the System Usability Scale (SUS) was administered online as a subjective assessment of overall usability (58). This scale consists of ten five-point Likert questions and is a widely used validated measure of perceived usability, which allows researchers to rapidly appraise user experience (59). On this scale, systems with a score of over 68% are deemed to have above average usability (60). Each clinician also participated in a semi-structured exit interview where they were prompted to share any further comments on the interface, suggestions for changes, and discussions about any obstacles or difficulties with task completion.

A standardized observation checklist was developed to help document task performance, usability problems, and their severity. If the participant was unable to complete a task without help, this was classified as a severe usability problem. In some instances, participants recovered from errors on their own during the session and were eventually able to complete their tasks. These errors, documented as recoverable, were still considered as usability issues that should be addressed. Following review of field notes, screen/audio recordings, exit interviews, and task completion scoring, a copy of each interface screen was annotated to reflect areas where participants had difficulties and suggestions for changes (DD). These changes were then discussed within the broader group (DD, EL, EB, SS). This allowed the research team to compile a prioritized list of usability problems to address. The SUS score for the R2Play interface was also computed to quantify the usability of the system.

Demographic information for the 5 clinician participants is shown in Table 3.

The R2Play interface scored an average of 81% (SD = 8.02) on the SUS, placing it in the “good” to “excellent” usability range (60). This positive result was reflected in the analysis of cognitive walkthrough data, as feedback was largely related to refining layout and tweaking details, as opposed to serious usability problems. Participants seemed comfortable with navigating the R2Play interface and found the “flow” easy to follow. Specific feedback was given regarding the layout of the home screen and assessment screen, as well as the wording of the post-assessment check-in screen. Multiple participants made recoverable errors, such as clicking on areas of the screen that were not enabled as buttons, or had difficulty locating buttons including “create profile” and “start assessment”. No unrecoverable errors (i.e., terminating the assessment) were made during the cognitive walkthroughs. Following walkthroughs, the research team created an updated set of wireframes to depict design changes including adjustments to wording, font size, and screen behavior.

The goal of this project was to work collaboratively with stakeholders to develop a prototype of a multi-domain assessment to assist with return-to-play decision making after pediatric concussion. To this end, our research team leveraged a variety of methodological and design strategies, which were guided by an overarching design-thinking framework. This type of user-centered approach is well-suited for the type of complex, multifaceted problem that building a novel return-to-play assessment encompasses (30).

This paper documented the development of the R2Play system from inception to preliminary prototype testing. We aspired to build an assessment that reflected user needs and could be translated into clinical practice, addressing a gap in current concussion return-to-play protocols. We would like to highlight not only the system itself, but also two key takeaways from our research process: the benefits of a user-centered approach to design, and the potential of technology to bolster clinical assessment.

Multi-domain paradigms implemented in research are commonly dual-task assessments, consisting of a simple gait condition paired with an easy-to-explain cognitive task (e.g., walking while subtracting serially by sevens). The R2Play system, on the other hand, taxes youth athletes through multi-sensory stimuli both physically and cognitively in a way that strives to be more sport-like. Athletes must respond to a dynamic environment and carry out a series of increasingly complex cognitive tasks, all while exerting themselves physically. This aligns with best practice guidelines for return-to-play and may provide clinicians with richer clinical data due to the complexity of integrating across domains simultaneously, as opposed to in silos (18, 19).

Our system development was informed by feedback from clinicians and youth sports coaches through multiple iterations of design. In doing so, we aimed to identify and circumvent barriers to R2Play adoption from the outset and build a prototype that reflects the needs of end-users. Key criteria that were considered in the R2Play prototype design were that it should be: sport-like, fun for youth athletes, easy-to-use, low-cost, flexible, and clinically informative.

As a result of our user-centered design process, the R2Play system has a drastically different look and feel than current dual-task return-to-play assessments used predominantly in research (18, 19, 24). While common dual-task assessments prioritize experimental control by creating highly constrained testing environments, the R2Play task is designed to feel sport-like in terms of difficulty and skills (24).

Although seeking user feedback added to the initial workload of system development, it allowed us to move forward with a flexible concept that was firmly grounded in the feedback of end-users. Because of this early investment, refinements made later down the line were mostly minor changes. We also have a wealth of information extracted from interviews to guide next steps for iterative refinement of the R2Play system in a way that reflects the priorities of stakeholders. Our valuable experiences in R2Play development reflect the broader user-centered design literature, which point to seeking stakeholder engagement throughout the research process as a highly beneficial undertaking (30, 61).

The development of R2Play also sets an example for future work in health and rehabilitation. We have highlighted one way for low-cost technology to potentially support and fill a gap in clinical assessments when thoughtfully designed. The R2Play technology was chosen with a focus on flexibility and low-cost to eliminate possible barriers to clinical implementation. In our system, the tablet-buttons have a dual-purpose: they display the trail stimuli and assist in scoring the task. This sets the R2Play system apart from other multi-domain assessments, which are typically scored through motion capture or inertial sensors (24). While these scoring methods are useful for research, they are not readily available or amenable for use in clinical settings.

By logging button presses within the R2Play system, we are able to capture metrics like time to completion and errors, which can then be compared through “cost” analyses (see “scoring” section). Further work is being done to incorporate low-cost wearable sensors into the R2Play scoring system.

The system described here is an early prototype. Limitations to our design process exist, as we had relatively small samples for stakeholder feedback interviews and did not include youth athlete feedback in the initial design process of R2Play. It is important to note, however, that youth athletes will be continuously engaged with for feedback during the ongoing testing and refinement of the R2Play system prototype (currently in progress). Due to challenges in recruitment (likely aggravated by the COVID-19 pandemic), our sample of youth sports coaches was smaller than targeted. As a result, the study may be lacking perspectives from lacrosse, field hockey, or volleyball coaches, for example. This work merits expansion through future studies, as bolstering the ecological validity of multi-domain assessment through simulating sport was a central goal of our work. One possible avenue to capture a broader concept of “sport” and critical sports skills would be allowing a breadth of youth sport coaches to observe piloting of the R2Play assessment with healthy youth athletes to garner feedback on its relevance to their sports.

Next steps for R2Play include testing the system in its entirety with clinicians and healthy youth athletes to develop an understanding of whether our design objectives (Table 1) were met, if it is feasible in practice, and areas in need of refinement. Through this testing, we anticipate further iterations to R2Play based on the perspectives gained from youth athletes regarding whether R2Play is fun, appropriately challenging, and sport-like. Work must also be done to support clinicians in implementing the R2Play system, by developing training resources and validating the scoring of the R2Play assessment.

During interviews, clinicians and youth sports coaches also expressed excitement that R2Play could be translated to populations outside of concussion, including moderate-severe brain injuries, other types of sports injuries, or as a training tool for healthy athletes. The potential for R2Play to positively impact other populations also bears investigation in the future.

The current paper has described the first steps in the development of the R2Play system, summarizing a two-year research program which sought to build a novel multi-domain return-to-play assessment for youth athletes following concussion. This process was guided by the following core objectives: 1) to capture end-user perspectives, define our problem, and ideate solutions; 2) to build an R2Play system prototype; and, 3) to test the R2Play interface. To address these, we integrated various methodological and design strategies: from qualitative interviews, to iterative prototyping, to cognitive walkthroughs. Ultimately, we have described the design process behind the R2Play system and highlighted the strengths of a user-centered approach and potential applications for technology to support clinical practice. Through this work, we hope to establish a new standard of care for youth athletes after concussion, by implementing an assessment that is designed with their activities and needs in mind. In the future, R2Play will help promote the safety of youth athletes and allow clinicians, youth, and their caregivers to have confidence when returning to play following a concussion.

Data will be made available by the authors on request and in line with ethical standards of practice and institutional data transfer agreements.

The studies involving human participants were reviewed and approved by Holland Bloorview Research Ethics Board; Bloorview Research Institute. The patients/participants provided their written informed consent to participate in this study.

Author DD prepared the manuscript, carried out interviews and cognitive walkthroughs, analysed data, and collaborated with team members to refine the R2Play prototype. Author EL was the second coder for interview data, and contributed significantly to R2Play protoype design and refinement, as well as overarching study design for cognitive walkthroughs. Author FH integrated feedback from users and the research team into a working, testable R2Play prototype with support from AK. Author HA-H led the research ethics application for the various studies carried out. Authors AH, SM, MH, NR, and VW supported study design and R2Play iteration with valuable feedback. Authors EB and SS supervised this project, secured project funding, assisted with overarching study design, and collaborated on R2Play at the early ideation phase and throughout refinements. All authors reviewed the present manuscript. All authors contributed to the article and approved the submitted version.

This work was supported by the Centre for Leadership at Holland Bloorview Kids Rehabilitation Hospital.

We would like to acknowledge our participants who generously shared their time and expertise with us, as well as the clinicians at the Holland Bloorview Concussion Clinic for supporting our work.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fresc.2022.1051579/full#supplementary-material.

1. Langer L, Levy C, Bayley M. Increasing incidence of concussion: true epidemic or better recognition? J Head Trauma Rehabil. (2020) 35(1):E60. doi: 10.1097/HTR.0000000000000503

2. Statistics Canada. Focus on Geography Series, 2016 Census - Table 1.5 Population by five-year age groups and sex, 2016. 2016. Available at: https://www12.statcan.gc.ca/census-recensement/2016/as-sa/fogs-spg/desc/Facts-desc_Age.cfm?LANG=Eng&GK=PR&GC=35&TOPIC=2&#fd1_5

3. Meehan WP, Mannix R. Pediatric concussions in United States emergency departments in the years 2002–2006. J Pediatr. (2010) 157(6):889–93. doi: 10.1016/j.jpeds.2010.06.040

4. Pfister T, Pfister K, Hagel B, Ghali WA, Ronksley PE. The incidence of concussion in youth sports: a systematic review and meta-analysis. Br J Sports Med. (2016) 50(5):292–7. doi: 10.1136/bjsports-2015-094978

5. Kirkwood MW, Yeates KO, Wilson PE. Pediatric sport-related concussion: a review of the clinical management of an oft-neglected population. Pediatr. (2006) 117(4):1359–71. doi: 10.1542/peds.2005-0994

6. Purcell L. Child and adolescent athletes. In: Bloom GA, Caron JG, editors. Psychological aspects of sport-related concussions. London: Routledge (2019). p. 147–67.

7. Brooks MA, Peterson K, Biese K, Sanfilippo J, Heiderscheit BC, Bell DR. Concussion increases odds of sustaining a lower extremity musculoskeletal injury after return to play among collegiate athletes. Am J Sports Med. (2016) 44(3):742–7. doi: 10.1177/0363546515622387

8. Howell DR, Lynall RC, Buckley TA, Herman DC. Neuromuscular control deficits and the risk of subsequent injury after a concussion: a scoping review. Sports Med Auckl NZ. (2018) 48(5):1097–115. doi: 10.1007/s40279-018-0871-y

9. McCrea M, Guskiewicz K, Randolph C, Barr WB, Hammeke TA, Marshall SW, et al. Effects of a symptom-free waiting period on clinical outcome and risk of reinjury after sport-related concussion. Neurosurg. (2009) 65(5):876–83. doi: 10.1227/01.NEU.0000350155.89800.00

10. Tator C, Starkes J, Dolansky G, Quet J, Michaud J, Vassilyadi M. Fatal second impact syndrome in rowan stringer, A 17-year-old rugby player. Can J Neurol Sci J Can Sci Neurol. (2019) 46(3):351–4. doi: 10.1017/cjn.2019.14

11. Vagnozzi R, Signoretti S, Cristofori L, Alessandrini F, Floris R, Isgrò E, et al. Assessment of metabolic brain damage and recovery following mild traumatic brain injury: a multicentre, proton magnetic resonance spectroscopic study in concussed patients. Brain. (2010) 133(11):3232–42. doi: 10.1093/brain/awq200

12. McCrory P, Meeuwisse W, Dvorak J, Aubry M, Bailes J, Broglio S, et al. Consensus statement on concussion in sport—the 5th international conference on concussion in sport held in Berlin, October 2016. Br J Sports Med. (2017) 51:838–47. doi: 10.1136/bjsports-2017-097699

13. Haider MN, Leddy JJ, Pavlesen S, Kluczynski M, Baker JG, Miecznikowski JC, et al. A systematic review of criteria used to define recovery from sport-related concussion in youth athletes. Br J Sports Med. (2018) 52(18):1179–90. doi: 10.1136/bjsports-2016-096551

14. Balk YA, De Jonge J, Oerlemans WGM, Geurts SAE, Fletcher D, Dormann C. Balancing demands and resources in sport: adaptation and validation of the demand-induced strain compensation questionnaire for use in sport. J Sports Sci Med. (2018) 17(2):237–44. https://www.jssm.org/hfabst.php?id=jssm-17-237.xml29769825

15. Balk YA, Jonge JD, Oerlemans WGM, Geurts SAE. “What a match!”: the specific role of resources in the relation between demands and vigour in elite sport. Appl Psychol. (2020) 69(1):120–47. doi: 10.1111/apps.12188

16. Eagle SR, Kontos AP, Pepping GJ, Johnson CD, Sinnott A, LaGoy A, et al. Increased risk of musculoskeletal injury following sport-related concussion: a perception-action coupling approach. Sports Med Auckl. (2020) 50(1):15–23. doi: 10.1007/s40279-019-01144-3

17. Eime RM, Young JA, Harvey JT, Charity MJ, Payne WR. A systematic review of the psychological and social benefits of participation in sport for children and adolescents: informing development of a conceptual model of health through sport. Int J Behav Nutr Phys Act. (2013) 10(1):98. doi: 10.1186/1479-5868-10-98

18. Fino PC, Parrington L, Pitt W, Martini DN, Chesnutt JC, Chou LS, et al. Detecting gait abnormalities after concussion or mild traumatic brain injury: a systematic review of single-task, dual-task, and complex gait. Gait Posture. (2018) 62:157–66. doi: 10.1016/j.gaitpost.2018.03.021

19. Kleiner M, Wong L, Dubé A, Wnuk K, Hunter SW, Graham LJ. Dual-Task assessment protocols in concussion assessment: a systematic literature review. J Orthop Sports Phys Ther. (2018) 48(2):87–103. doi: 10.2519/jospt.2018.7432

20. Cossette I, Gagné MÈ, Ouellet MC, Fait P, Gagnon I, Sirois K, et al. Executive dysfunction following a mild traumatic brain injury revealed in early adolescence with locomotor-cognitive dual-tasks. Brain Inj. (2016) 30(13–14):1648–55. doi: 10.1080/02699052.2016.1200143

21. Fait P, Swaine B, Cantin JF, Leblond J, McFadyen BJ. Altered integrated locomotor and cognitive function in elite athletes 30 days postconcussion: a preliminary study. J Head Trauma Rehabil. (2013) 28(4):293–301. doi: 10.1097/HTR.0b013e3182407ace

22. Howell DR, Osternig LR, Chou LS. Dual-Task effect on gait balance control in adolescents with concussion. Arch Phys Med Rehabil. (2013) 94(8):1513–20. doi: 10.1016/j.apmr.2013.04.015

23. Toong T, Wilson KE, Hunt AW, Scratch S, DeMatteo C, Reed N. Sensitivity and specificity of a multimodal approach for concussion assessment in youth athletes. J Sport Rehabil. (2021) 30(6):1–10. doi: 10.1123/jsr.2020-0279

24. DuPlessis D. R2Play and Stakeholder Needs: Fostering User-Driven Technology to Support Return-to-Play Decision-Making [manuscript-based thesis]. [Ontario, Canada]: University of Toronto; 2021.

25. Prim JH, Favorov OV, Cecchini AS, Scherer MR, Weightman MM, McCulloch KL. Clinical utility and analysis of the run-roll-aim task: informing return-to-duty readiness decisions in active-duty service members. Mil Med. (2019) 184(5–6):e268–77. doi: 10.1093/milmed/usy425

26. Robitaille N, Jackson PL, Hébert LJ, Mercier C, Bouyer LJ, Fecteau S, et al. A virtual reality avatar interaction (VRai) platform to assess residual executive dysfunction in active military personnel with previous mild traumatic brain injury: proof of concept. Disabil Rehabil Assist Technol. (2017) 12(7):758–64. doi: 10.1080/17483107.2016.1229048

27. Scherer MR, Weightman MM, Radomski MV, Davidson LF, McCulloch KL. Returning service members to duty following mild traumatic brain injury: exploring the use of dual-task and multitask assessment methods. Phys Ther. (2013) 93(9):1254–67. doi: 10.2522/ptj.20120143

28. Thoring K, Müller RM. Understanding design thinking: a process model based on method engineering. 69 Proc EPDE 2011 13th Int Conf Eng Prod Des Educ Lond UK 08-09092011. (2011):493–8. https://www.designsociety.org/publication/30932/Understanding+Design+Thinking%3A+A+Process+Model+based+on+Method+Engineering

29. Luchs M, Swan S, Griffin A. Design Thinking New Product Development Essentials from the PDMA. Wiley-Blackwell; 2015 [cited 2021 Jun 9]. 448 p. Available at: https://www.perlego.com/book/998883/design-thinking-new-product-development-essentials-from-the-pdma-pdf

30. Roberts JP, Fisher TR, Trowbridge MJ, Bent C. A design thinking framework for healthcare management and innovation. Healthcare. (2016) 4(1):11–4. doi: 10.1016/j.hjdsi.2015.12.002

31. Seidel VP, Fixson SK. Adopting design thinking in novice multidisciplinary teams: the application and limits of design methods and reflexive practices. J Prod Innov Manag. (2013) 30(S1):19–33. doi: 10.1111/jpim.12061

32. King G, Chiarello LA, Ideishi R, D’Arrigo R, Smart E, Ziviani J, et al. The nature, value, and experience of engagement in pediatric rehabilitation: perspectives of youth, caregivers, and service providers. Dev Neurorehabilitation. (2020) 23(1):18–30. doi: 10.1080/17518423.2019.1604580

33. de Grood C, Raissi A, Kwon Y, Santana MJ. Adoption of e-health technology by physicians: a scoping review. J Multidiscip Healthc. (2016) 9:335–44. doi: 10.2147/JMDH.S103881

34. Delis DC, Kaplan E, Kramer JH. Delis-Kaplan executive function system (D–KEFS). [Database record]. APA PsycTests (2001). doi: 10.1037/t15082/000

35. Taylor HG, Albo VC, Phebus CK, Sachs BR, Bierl PG. Postirradiation treatment outcomes for children with acute lymphocytic leukemia: clarification of risks. J Pediatr Psychol. (1987) 12(3):395–411. doi: 10.1093/jpepsy/12.3.395

36. Salthouse TA. What cognitive abilities are involved in trail-making performance? Intelligence. (2011) 39(4):222–32. doi: 10.1016/j.intell.2011.03.001

37. Reitan RM. Validity of the trail making test as an indicator of organic brain damage. Percept Mot Skills. (1958) 8(3):271–6. doi: 10.2466/pms.1958.8.3.271

38. Tombaugh TN. Trail making test A and B: normative data stratified by age and education. Arch Clin Neuropsychol. (2004) 19(2):203–14. doi: 10.1016/S0887-6177(03)00039-8

39. Reitan RM. Trail making test results for Normal and brain-damaged children. Percept Mot Skills. (1971) 33(2):575–81. doi: 10.2466/pms.1971.33.2.575

40. Wang CH, Chang CC, Liang YM, Shih CM, Chiu WS, Tseng P, et al. Open vs. Closed skill sports and the modulation of inhibitory control. PLOS ONE. (2013) 8(2):e55773. doi: 10.1371/journal.pone.0055773

41. Zemek RL, Grool AM, Rodriguez Duque D, DeMatteo C, Rothman L, Benchimol EI, et al. Annual and seasonal trends in ambulatory visits for pediatric concussion in Ontario between 2003 and 2013. J Pediatr. (2017) 181:222–8. doi: 10.1016/j.jpeds.2016.10.067

42. Patton MQ. Two decades of developments in qualitative inquiry: a personal, experiential perspective. Qual Soc Work. (2002) 1(3):261–83. doi: 10.1177/1473325002001003636

43. Malterud K, Siersma V, Guassora AD. Sample size in qualitative interview studies: guided by information power. Qual Health Res. (2015) 1:1753–60. doi: 10.1177/1049732315617444

46. Hsieh HF, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res. (2005) 15(9):1277–88. doi: 10.1177/1049732305276687

47. Saldaña J. The coding manual for qualitative researchers. 2nd ed. Los Angeles: SAGE; 2013. 303 p.

48. Wolf ZR. Exploring the audit trail for qualitative investigations. Nurse Educ. (2003) 28(4):175–8. doi: 10.1097/00006223-200307000-00008

49. NVivo. QSR International Pty Ltd.; 2018. Available at: https://www.qsrinternational.com/nvivo-qualitative-data-analysis-software/home

50. Annett J. Hierarchical task analysis. In: Hollnagel E, editor. Handbook of cognitive task design 2. Boca Raton: CRC Press (2003). p. 17–35.

51. Sady MD, Vaughan CG, Gioia GA. Psychometric characteristics of the postconcussion symptom inventory in children and adolescents. Arch Clin Neuropsychol. (2014) 29(4):348–63. doi: 10.1093/arclin/acu014

52. Howell DR, Buckley TA, Lynall RC, Meehan WP. Worsening dual-task gait costs after concussion and their association with subsequent sport-related injury. J Neurotrauma. (2018) 35(14):1630–6. doi: 10.1089/neu.2017.5570

53. Cossette I, Ouellet MC, McFadyen BJ. A preliminary study to identify locomotor-cognitive dual tasks that reveal persistent executive dysfunction after mild traumatic brain injury. Arch Phys Med Rehabil. (2014) 95(8):1594–7. doi: 10.1016/j.apmr.2014.03.019

54. Liu YC, Yang YR, Tsai YA, Wang RY. Cognitive and motor dual task gait training improve dual task gait performance after stroke - A randomized controlled pilot trial. Sci Rep. (2017) 7(1):4070. doi: 10.1038/s41598-017-04165-y

55. Montero-Odasso MM, Sarquis-Adamson Y, Speechley M, Borrie MJ, Hachinski VC, Wells J, et al. Association of dual-task gait with incident dementia in mild cognitive impairment: results from the gait and brain study. JAMA Neurol. (2017) 74(7):857–65. doi: 10.1001/jamaneurol.2017.0643

56. Nielsen J. Usability engineering. San Francisco. CA, USA: Morgan Kaufmann Publishers Inc (1994). 362.

57. Nielsen J, Landauer TK. A mathematical model of the finding of usability problems. In: Proceedings of the SIGCHI conference on human factors in computing systems - CHI ‘93. Amsterdam, The Netherlands: ACM Press; 1993 [cited 2021 Jun 14]. p. 206–13. Available at: http://portal.acm.org/citation.cfm?doid=169059.169166

58. Brooke J. SUS - A quick and dirty usability scale. Usability Eval Ind. (1996) 8. doi: 10.1201/9781498710411

59. Lewis JR. The system usability scale: past, present, and future. Int J Human–Computer Interact. (2018) 34(7):577–90. doi: 10.1080/10447318.2018.1455307

60. Sauro J, Lewis J. Quantifying the User Experience. Elsevier; 2012 [cited 2021 Jun 14]. Available at: https://linkinghub.elsevier.com/retrieve/pii/C20100651923

Keywords: pediatrics, concussion, assessment, user-centered design, sport medicine, return-to-play

Citation: DuPlessis D, Lam E, Hotze F, Khan A, Al-Hakeem H, McFarland S, Hickling A, Hutchison M, Wright FV, Reed N, Biddiss E and Scratch SE (2022) R2Play development: Fostering user-driven technology that supports return-to-play decision-making following pediatric concussion. Front. Rehabilit. Sci. 3:1051579. doi: 10.3389/fresc.2022.1051579

Received: 23 September 2022; Accepted: 7 November 2022;

Published: 5 December 2022.

Edited by:

Erika Molteni, King's College London, United KingdomReviewed by:

Elizabeth Teel, Concordia University, Canada© 2022 DuPlessis, Lam, Hotze, Khan, Al-Hakeem, McFarland, Hickling, Hutchison, Wright, Reed, Biddiss and Scratch. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Shannon Scratch c3NjcmF0Y2hAaG9sbGFuZGJsb29ydmlldy5jYQ==

Specialty Section: This article was submitted to Pediatric Rehabilitation, a section of the journal Frontiers in Rehabilitation Sciences

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.