- ¹Department of Computer Science, University of Wah, Wah Cantt, Pakistan

- ²Department of Computer Science, COMSATS University Islamabad, Islamabad, Pakistan

- ³Department of Computer Science, Shah Abdul Latif University, Khairpur, Pakistan

- ⁴Department of Computer Science, Design and Journalism, Creighton University, Omaha, NE, United States

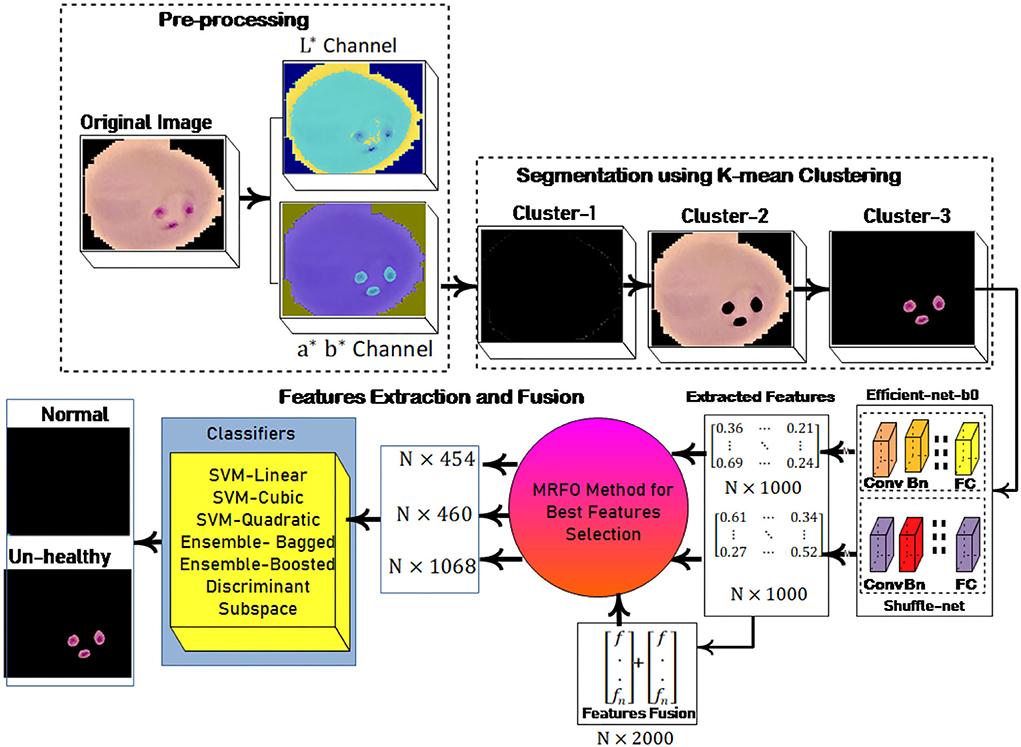

Malaria is a serious and potentially lethal disease; the World Health Organization (WHO) has reported an estimated 219 million new cases and 435,000 deaths globally. The most common malaria detection method relies mainly on specialists who examine samples under a microscope, so a computerized malaria diagnosis system is required. In this article, malaria cell segmentation and classification methods are proposed. The malaria cells are segmented using color-based k-means clustering with a selected number of clusters. After segmentation, deep features are extracted using the pre-trained EfficientNet-b0 and ShuffleNet models, and the best features are selected using the Manta Ray Foraging Optimization (MRFO) method. Two classification experiments are performed using 10-fold cross-validation: the first uses the best features selected from each pre-trained model individually, while the second uses the best features selected from the fusion of the features extracted by both models. The proposed method achieved a classification accuracy of 99.2% using the linear kernel of the SVM classifier. The empirical study demonstrates that the fused feature vector yields better results than the individually selected feature vectors and than the latest existing methods.

Introduction

Malaria is a mosquito-borne disease that causes approximately 200 million infections every year. It claimed the lives of 405,000 people in 2018, and children under the age of five accounted for almost 67% of these deaths (1). It is caused by Plasmodium parasites, of which four distinct species can infect humans. The disease has been labeled endemic in 38 different parts of the globe. The initial symptoms of malaria, such as fever, chills, vomiting, and headache, may be mild and hard to diagnose, and untreated malaria can cause severe illness and death (2). Malaria is generally linked to poverty and is most common in developing countries. The gold standard for malaria diagnosis is microscopic examination of blood slides; an alternative is the polymerase chain reaction (PCR) test (3). Slide microscopy is the most commonly used method for malaria diagnosis, but its sensitivity depends on the pathologist's expertise, and manual slide examination is a tedious and time-consuming task that can lead to misdiagnosis. This problem can be addressed through a low-cost computer-aided diagnosis system (4). A neural network has been used to segment red blood cells, providing an accuracy of 93.72% (5). A convolutional neural network (CNN) has been used for malaria detection with an accuracy of 75.39% (6). A deep learning algorithm has also been embedded on mobile devices so that it can be used as an application for online malaria cell detection. This technique consists of two steps: a global minimum screening method first screens the malaria slides, and a CNN trained from scratch then classifies the infected cells, providing a prediction accuracy of 93.46% (7). Supervised and unsupervised learning models are widely used for the analysis of malaria cells.
Several techniques have been developed to detect malaria, but a gap remains in this field because of the large variation among malaria cells. Furthermore, extracting and selecting pertinent features is a challenge for accurate malaria cell segmentation and classification (8). Microscopic malaria images suffer from noise, poor contrast, uneven illumination, staining artifacts, low quality, and variations in intensity, size, and shape within the region of interest, which makes accurate detection of malaria a challenging task (9). Therefore, the microscopic malaria images are preprocessed to improve their quality for accurate detection of malaria. After that, the deep features of two pre-trained models are fused, and the best features are selected using the MRFO method, which provides good malaria classification results. In this research, a novel model is proposed for malaria cell segmentation and classification. The main contributions are as follows:

1. Color-based k-means clustering with three selected clusters is applied to segment the malaria cells.

2. Deep features extracted from the segmented images are serially fused, and the most informative features are then selected using MRFO for malaria cell classification.

The rest of this article is organized as follows: Section Related works discusses the related work; Section Proposed methodology elaborates on the steps of the proposed method; the results are presented in Section Results and discussion; and the conclusion is given in Section Conclusion.

Related works

In the literature, several optimization, clustering, and classification techniques are widely used for the analysis of malaria cells, some of which are discussed in this section (8, 10–14). Classifiers used for the diagnosis of malaria cells include AdaBoost (15), Naïve Bayes Tree (16), SVM (17), DT (18), and Linear Discriminant (19). Transfer learning models such as VGG16, AlexNet, DenseNet121, ResNet50, and Xception are commonly used for malaria cell classification, with accuracies of 91.50–95.9%; among them, VGG16 and ResNet50 provided the most competitive results. Custom convolutional neural models, which are also widely used for the analysis of malaria cells, outperformed these transfer learning models with an accuracy of 97.37% (20). The Dense Attentive Circular Network has been used for malaria cell detection, and its results are compared with transfer learning models such as DPN-92 and DenseNet121; this method provided accuracies of 97.47 and 87.88% on DenseNet121 and DPN-92, respectively (21). The pre-trained networks ResNet50, AlexNet, and VGG19 have been used for malaria classification, providing accuracies of 93.88, 96.33, and 93.72%, respectively. Features extracted from a pre-trained VGG-16 have been input to an SVM to discriminate between infected and uninfected malaria cells with 93.1% accuracy (22). Custom CNN (23–31) and pre-trained EfficientNet-b0 models have been used for feature extraction, providing accuracies of 97.74 and 98.82%, respectively (20). A DCNN model has been used to classify blood smear images with 94.79% accuracy (32). In another approach, the original malaria cell images are preprocessed in the L*a*b* color space, deep features are extracted through DenseNet-169 and DenseNet-53, and the best features are selected using the whale optimization method for malaria cell classification with 99.67% accuracy (33). Malaria cells are classified using the deep-sweep software with an ROC above 0.95 (34). Features extracted from the transfer learning models DenseNet-201, DenseNet-121, ResNet-101, ResNet-50, VGG-16, and VGG-19 have been input to SVM, NB, and KNN classifiers for malaria cell classification (35). LeNet, GoogLeNet, and AlexNet models have been used for feature extraction, achieving 94% accuracy for malaria cell classification (36). Mask R-CNN has been used for malaria cell segmentation with a correct rate of 94.57 (37). Faster R-CNN and the single shot multibox detector (SSD) have been trained to localize malaria cells with a prediction rate of 0.94 (38).

Proposed methodology

In this research, a method is proposed for the segmentation and classification of malaria cells. The malaria cells are first segmented using k-means clustering with three clusters. The segmented images are then fed to the proposed classification model, in which features are extracted from the pre-trained ShuffleNet (39) and EfficientNet-b0 (40) models, each yielding a feature vector of dimension N × 1,000. From these, the best N × 454 ShuffleNet features and N × 460 EfficientNet-b0 features are selected. In addition, the features extracted by both models are fused serially to create a fused feature vector (FV) of dimension N × 2,000, from which the best N × 1,068 features are selected using the proposed MRFO method and passed to benchmark classifiers to classify infected and uninfected malaria cells. The steps of the proposed method are illustrated in Figure 1; the segmented normal image is black because it contains no infected region.
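A minimal sketch of the feature bookkeeping described above, assuming the two networks' FC-layer outputs are already available as N × 1,000 NumPy arrays. Serial fusion is taken here to mean column-wise concatenation with the EfficientNet-b0 features placed first; that ordering, the helper names `serial_fusion` and `apply_selection`, and the random placeholder data and mask are illustrative assumptions, not the authors' code.

```python
import numpy as np


def serial_fusion(f_a: np.ndarray, f_b: np.ndarray) -> np.ndarray:
    """Serially fuse two N x d feature matrices by column-wise concatenation."""
    if f_a.shape[0] != f_b.shape[0]:
        raise ValueError("both feature matrices must describe the same N images")
    return np.concatenate([f_a, f_b], axis=1)


def apply_selection(features: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Keep only the feature columns flagged True in a boolean mask."""
    return features[:, mask]


rng = np.random.default_rng(0)
N = 8  # number of images (placeholder)
f_effnet = rng.normal(size=(N, 1000))   # stand-in for EfficientNet-b0 FC features
f_shuffle = rng.normal(size=(N, 1000))  # stand-in for ShuffleNet FC features

fused = serial_fusion(f_effnet, f_shuffle)  # N x 2,000

# Placeholder mask with 1,068 active columns; in the paper this mask comes from MRFO.
mask = np.zeros(fused.shape[1], dtype=bool)
mask[rng.choice(fused.shape[1], size=1068, replace=False)] = True
selected = apply_selection(fused, mask)
print(fused.shape, selected.shape)  # (8, 2000) (8, 1068)
```

The same `apply_selection` call would be used with the individual N × 1,000 matrices to obtain the N × 460 and N × 454 vectors.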

Segmentation of the malaria cells using k-means clustering

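The RGB input images φ are converted into the L*a*b* color space for contrast adjustment. With X, Y, and Z denoting the CIE XYZ tristimulus values of a pixel of φ, the conversion is defined as:

$$L^* = 116\,f\!\left(\frac{Y}{Y_\partial}\right) - 16, \qquad a^* = 500\left[f\!\left(\frac{X}{X_\partial}\right) - f\!\left(\frac{Y}{Y_\partial}\right)\right], \qquad b^* = 200\left[f\!\left(\frac{Y}{Y_\partial}\right) - f\!\left(\frac{Z}{Z_\partial}\right)\right]$$

$$f(t) = \begin{cases} t^{1/3}, & t > \left(\frac{6}{29}\right)^3 \\ \frac{1}{3}\left(\frac{29}{6}\right)^2 t + \frac{4}{29}, & \text{otherwise} \end{cases}$$

Here, X∂, Y∂, and Z∂ denote the tristimulus values of the reference white point.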
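For reference, the conversion can be sketched in NumPy with the standard CIE definitions, assuming sRGB input scaled to [0, 1] and the D65 reference white (X∂, Y∂, Z∂) = (0.95047, 1.0, 1.08883). The white point, the sRGB-to-XYZ matrix, and the function name `rgb_to_lab` are assumptions, since the text does not state which variant was used; libraries such as scikit-image (`skimage.color.rgb2lab`) provide an equivalent conversion.

```python
import numpy as np

# Assumed sRGB (D65) -> CIE XYZ matrix and D65 reference white (X_d, Y_d, Z_d).
_RGB2XYZ = np.array([[0.4124564, 0.3575761, 0.1804375],
                     [0.2126729, 0.7151522, 0.0721750],
                     [0.0193339, 0.1191920, 0.9503041]])
_WHITE_D65 = np.array([0.95047, 1.0, 1.08883])


def _f(t: np.ndarray) -> np.ndarray:
    """CIE companding function: cube root above (6/29)^3, linear segment below."""
    delta = 6.0 / 29.0
    return np.where(t > delta ** 3, np.cbrt(t), t / (3 * delta ** 2) + 4.0 / 29.0)


def rgb_to_lab(rgb: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 sRGB image with values in [0, 1] to CIE L*a*b*."""
    rgb = np.asarray(rgb, dtype=float)
    # Undo the sRGB gamma to obtain linear RGB.
    linear = np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    xyz = linear @ _RGB2XYZ.T                      # per-pixel matrix product
    fx, fy, fz = np.moveaxis(_f(xyz / _WHITE_D65), -1, 0)
    L = 116.0 * fy - 16.0                          # luminance channel
    a = 500.0 * (fx - fy)
    b = 200.0 * (fy - fz)
    return np.stack([L, a, b], axis=-1)


img = np.array([[[1.0, 1.0, 1.0], [0.0, 0.0, 0.0]]])  # one white and one black pixel
lab = rgb_to_lab(img)
print(np.round(lab, 3))
```

The luminance channel used for clustering would then be `lab[..., 0]`, which ranges from 0 (black) to about 100 (reference white).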

K-means clustering is applied to the luminance channel for segmentation, with the initial centroids chosen by k-means++ seeding (41). The first centroid c1 is selected uniformly at random from the input data X, and the distance between an observation xm and a centroid cj is denoted d(xm, cj). The next centroid c2 is then selected at random from X, where observation xm is chosen with probability:

$$P(x_m) = \frac{d^2(x_m, c_1)}{\sum_{j=1}^{n} d^2(x_j, c_1)}$$

where n is the number of observations in X.

The distance from each observation to each centroid is then computed, and each observation is allocated to its nearest centroid. This is mathematically expressed as:

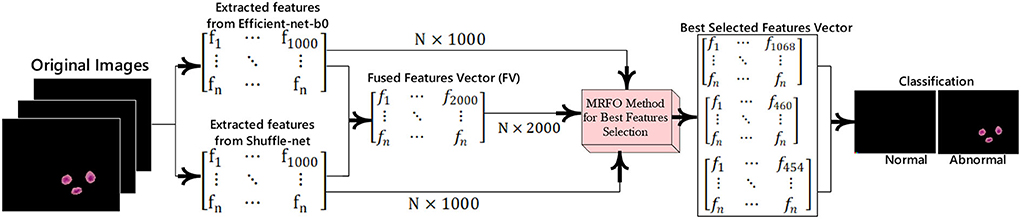

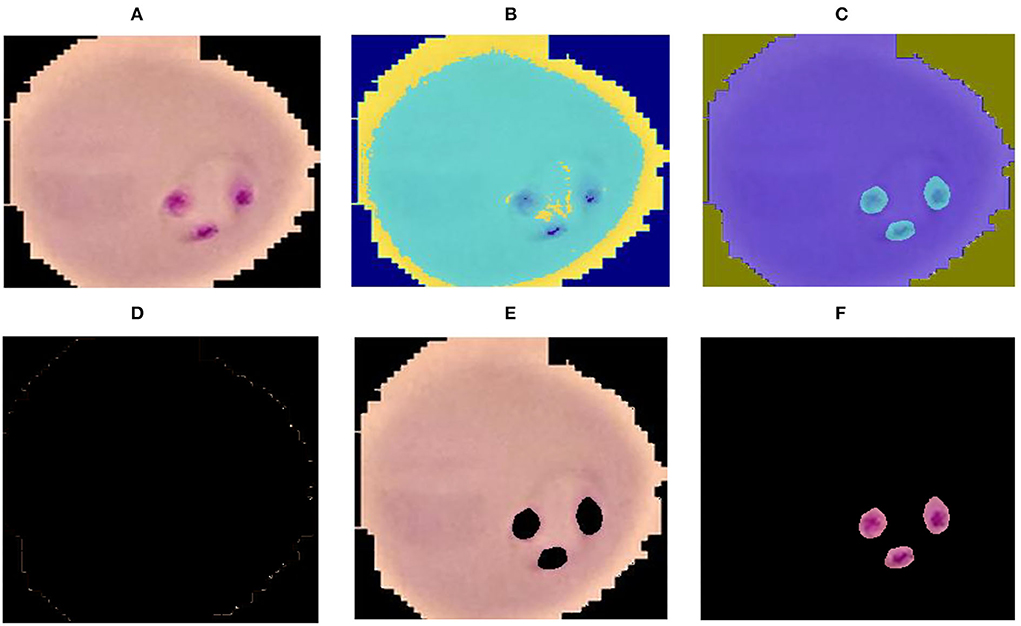

where cp denotes the centroid closest to an observation and xm is an observation assigned to cp. In the proposed method, the malaria-infected region is segmented with k = 3 clusters. The segmentation results are shown in Figure 2.

Figure 2. Segmentation results (A) input image (B) luminance channel (C) a and b channel (D) cluster-1 (E) cluster-2 and (F) cluster-3.
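The seeding and assignment steps can be sketched as standard k-means++ initialization (probabilities normalized over all observations) followed by Lloyd iterations. This is a generic NumPy sketch, not the authors' implementation; the function names, the iteration cap, and the synthetic three-blob data are hypothetical. For an image, the pixel values of the chosen channel(s) would first be reshaped into an (H·W) × c observation matrix, and the returned labels reshaped back to H × W to obtain the k = 3 cluster maps.

```python
import numpy as np


def _sq_dists(X: np.ndarray, C: np.ndarray) -> np.ndarray:
    """Squared Euclidean distances between every observation and every centroid."""
    return ((X[:, None, :] - C[None, :, :]) ** 2).sum(axis=-1)


def kmeans_pp_init(X: np.ndarray, k: int, rng: np.random.Generator) -> np.ndarray:
    """k-means++ seeding: first centroid uniform, later ones with probability ~ d^2."""
    n = X.shape[0]
    centroids = [X[rng.integers(n)]]
    for _ in range(1, k):
        # d^2 from each observation to its nearest already-chosen centroid.
        d2 = _sq_dists(X, np.array(centroids)).min(axis=1)
        total = d2.sum()
        probs = d2 / total if total > 0 else np.full(n, 1.0 / n)
        centroids.append(X[rng.choice(n, p=probs)])
    return np.array(centroids)


def kmeans(X: np.ndarray, k: int = 3, n_iter: int = 100, seed: int = 0):
    """Lloyd's k-means with k-means++ seeding; returns (labels, centroids)."""
    rng = np.random.default_rng(seed)
    C = kmeans_pp_init(X, k, rng)
    for _ in range(n_iter):
        labels = _sq_dists(X, C).argmin(axis=1)  # assign x_m to its closest c_p
        # Move each centroid to the mean of its members (keep it if the cluster is empty).
        new_C = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else C[j]
                          for j in range(k)])
        converged = np.allclose(new_C, C)
        C = new_C
        if converged:
            break
    return _sq_dists(X, C).argmin(axis=1), C


# Synthetic demo: three well-separated 2-D blobs of 50 points each.
rng = np.random.default_rng(1)
centers = np.array([[0.0, 0.0], [10.0, 10.0], [-10.0, 10.0]])
X = np.vstack([c + rng.normal(scale=0.3, size=(50, 2)) for c in centers])
labels, C = kmeans(X, k=3)
print(np.bincount(labels, minlength=3))
```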

Malaria cells classification

The malaria cells are classified using transfer learning with two pre-trained convolutional neural networks, EfficientNet-b0 and ShuffleNet. The pre-trained EfficientNet-b0 consists of 290 layers, including convolutional (65), batch-normalization (49), sigmoid (65), element-wise multiplication (65), grouped convolution (15), global average pooling (16), addition (9), fully connected (FC), addition (15), classification, softmax, and global average pooling (16) layers. ShuffleNet consists of 172 layers, including input (1), convolution (49), batch-normalization (49), ReLU (33), max-pooling (1), average pooling (2), channel shuffling (16), fully connected (1), softmax (1), classification (1), addition (15), global average pooling (1), and depth concatenation (2) layers. In this research, features are extracted from the MatMul FC layer of EfficientNet-b0 and the node-202 FC layer of ShuffleNet. The extracted features are fused serially and fed to the MRFO method for the selection of optimum features. The proposed feature extraction and selection process is presented in Figure 3.

Best feature selection using the MRFO method

The Manta Ray Foraging Optimization (MRFO) method is used for best feature selection. MRFO consists of three main steps: chain foraging, cyclone foraging, and somersault foraging (42–44).

Chain foraging

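In MRFO, manta rays observe the position of plankton and swim toward it; the higher the concentration of plankton at a position, the better that position is. Although the optimal solution is unknown, MRFO assumes that the best solution found so far is the plankton with the highest concentration, which the manta rays want to approach and eat. Manta rays form a foraging chain by lining up head to tail. Except for the first individual, each manta ray moves toward both the food and the individual in front of it. Thus, in each iteration, every individual is updated by the best solution found so far and by the solution ahead of it. Chain foraging is mathematically defined as:

$$x_i^d(t+1) = \begin{cases} x_i^d(t) + \partial\,\big(x_{best}^d(t) - x_i^d(t)\big) + \alpha\,\big(x_{best}^d(t) - x_i^d(t)\big), & i = 1 \\ x_i^d(t) + \partial\,\big(x_{i-1}^d(t) - x_i^d(t)\big) + \alpha\,\big(x_{best}^d(t) - x_i^d(t)\big), & i = 2, \dots, n_{pop} \end{cases}$$

$$\alpha = 2\,\partial\,\sqrt{\lvert \log \partial \rvert}$$

Here, ∂ is a random value in the range [0, 1], α represents the learning rate, d denotes the dimension, x_i^d(t) is the position of the i-th individual in dimension d at time t, x_best^d(t) is the plankton with the highest concentration (the best solution found so far), and n_pop is the number of manta rays.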

Cyclone foraging

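When a school of manta rays spots a patch of plankton in deep water, they form a long foraging chain and swim toward the food along a spiral. The whale optimization algorithm (WOA) uses a comparable spiral foraging strategy. In the cyclone foraging of a manta ray swarm, every manta ray moves toward the one ahead of it while also spiraling toward the food:

$$x_i^d(t+1) = \begin{cases} x_{best}^d + \partial\,\big(x_{best}^d - x_i^d(t)\big) + \beta\,\big(x_{best}^d - x_i^d(t)\big), & i = 1 \\ x_{best}^d + \partial\,\big(x_{i-1}^d(t) - x_i^d(t)\big) + \beta\,\big(x_{best}^d - x_i^d(t)\big), & i = 2, \dots, n_{pop} \end{cases}$$

$$\beta = 2\,e^{\partial_1 \frac{T - t + 1}{T}}\,\sin(2\pi\partial_1)$$

Because all individuals search randomly around the food as their reference position, cyclone foraging exploits the region around the best solution found so far well. The same behavior is also used to substantially improve exploration: by assigning a random position in the entire search space as the reference point, every individual is forced to search for a new position far from the current best one:

$$x_{rand}^d = Lb^d + \partial\,(Ub^d - Lb^d)$$

$$x_i^d(t+1) = \begin{cases} x_{rand}^d + \partial\,\big(x_{rand}^d - x_i^d(t)\big) + \beta\,\big(x_{rand}^d - x_i^d(t)\big), & i = 1 \\ x_{rand}^d + \partial\,\big(x_{i-1}^d(t) - x_i^d(t)\big) + \beta\,\big(x_{rand}^d - x_i^d(t)\big), & i = 2, \dots, n_{pop} \end{cases}$$

where β represents the weight coefficient, ∂ and ∂1 are random numbers in [0, 1], T is the total number of iterations, x_best^d is the best position found so far, x_rand^d is a random position, Lb^d and Ub^d are the lower and upper bounds of dimension d, and n_pop is the number of manta rays.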

Somersault foraging

This behavior mainly drives the search and allows MRFO to perform a global search. The position of the food is viewed as a pivot: each individual swims to and fro around the pivot and somersaults to a new position. Consequently, the individuals always update their positions around the best position found so far.

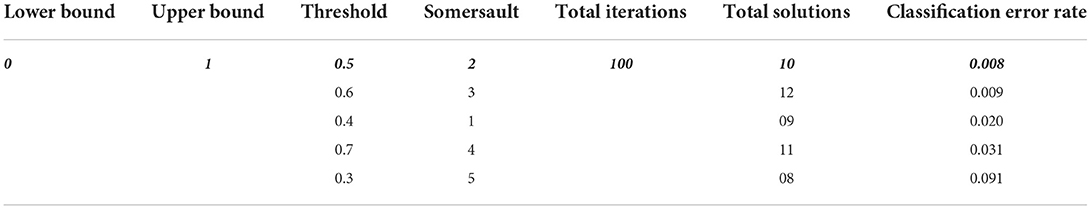

Here, S denotes the somersault factor that determines the somersault range (S = 2), and ∂2 and ∂3 are two random numbers in the range [0, 1]. The MRFO parameters selected for best feature selection in this research are presented in Table 1.

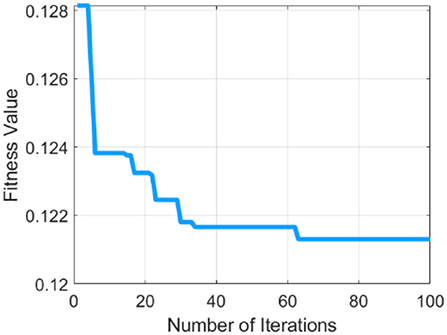

Table 1 lists the MRFO parameters: a threshold of 0.5, a somersault factor of 2, 100 iterations, and 10 solutions. These values are used for model training and further processing because they yield a lower error rate. The model converges after 60 epochs at a fitness value of 0.128, as presented in Figure 4.

The MRFO method is applied both to each individual feature vector and to the fused feature vector. In this experiment, N × 454 features are selected from ShuffleNet and N × 460 features from EfficientNet-b0, and N × 1,068 best features are selected from the fused feature vector. The selected feature vectors are fed to ensemble and SVM classifiers for malaria cell classification.
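The three foraging behaviors can be combined into a binary feature selector roughly as follows. This sketch is modeled on the update rules published with the original MRFO (42) and on the Table 1 settings (10 solutions, 100 iterations, somersault factor S = 2, threshold 0.5): positions are kept in [0, 1], and a feature counts as selected when its coordinate exceeds the threshold. The function name and the fitness function are hypothetical placeholders; the text does not specify the fitness, which in practice would typically be a cross-validated classification error on the selected columns.

```python
import numpy as np


def mrfo_select(fitness, dim, n_pop=10, n_iter=100, S=2.0, threshold=0.5, seed=0):
    """Binary feature selection with Manta Ray Foraging Optimization (MRFO).

    Positions live in [0, 1]^dim; feature j is selected when x[j] > threshold.
    `fitness(mask)` maps a boolean mask to a value that is minimized.
    """
    rng = np.random.default_rng(seed)
    lb, ub = 0.0, 1.0
    X = rng.uniform(lb, ub, size=(n_pop, dim))
    fit = np.array([fitness(x > threshold) for x in X], dtype=float)
    best, best_fit = X[fit.argmin()].copy(), fit.min()

    def greedy_update(X_new):
        """Evaluate candidates, keep improvements, and refresh the global best."""
        nonlocal best, best_fit
        X_new = np.clip(X_new, lb, ub)
        new_fit = np.array([fitness(x > threshold) for x in X_new], dtype=float)
        improved = new_fit < fit
        X[improved] = X_new[improved]
        fit[improved] = new_fit[improved]
        if fit.min() < best_fit:
            best, best_fit = X[fit.argmin()].copy(), fit.min()

    for t in range(1, n_iter + 1):
        X_new = np.empty_like(X)
        for i in range(n_pop):
            r = 1.0 - rng.random(dim)  # in (0, 1], so log(r) below is finite
            if rng.random() < 0.5:
                # Cyclone foraging: spiral toward a reference point.
                r1 = rng.random(dim)
                beta = 2 * np.exp(r1 * (n_iter - t + 1) / n_iter) * np.sin(2 * np.pi * r1)
                if t / n_iter < rng.random():
                    ref = rng.uniform(lb, ub, size=dim)  # exploration: random reference
                else:
                    ref = best                           # exploitation: best so far
                ahead = ref if i == 0 else X[i - 1]
                X_new[i] = ref + r * (ahead - X[i]) + beta * (ref - X[i])
            else:
                # Chain foraging: move toward the individual ahead and the best.
                alpha = 2 * r * np.sqrt(np.abs(np.log(r)))
                ahead = best if i == 0 else X[i - 1]
                X_new[i] = X[i] + r * (ahead - X[i]) + alpha * (best - X[i])
        greedy_update(X_new)

        # Somersault foraging: flip around the best position used as a pivot.
        r2 = rng.random((n_pop, dim))
        r3 = rng.random((n_pop, dim))
        greedy_update(X + S * (r2 * best - r3 * X))

    return best > threshold, best_fit


# Toy demo with a hypothetical fitness: the first 10 of 40 features are "relevant",
# and the fitness is the fraction of features whose selection status is wrong.
target = np.zeros(40, dtype=bool)
target[:10] = True


def toy_fitness(mask):
    return float(np.mean(mask != target))


mask, score = mrfo_select(toy_fitness, dim=40)
print(int(mask.sum()), score)
```

Each candidate replaces its parent only when it improves the fitness, so the returned score never exceeds the best score of the initial population.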

Results and discussion

The results of the proposed method are evaluated on a publicly available malaria benchmark dataset containing 2,750 images of two classes: 1,375 infected and 1,375 uninfected (45). The experiments are implemented on a Core i7 machine running the Windows operating system with an NVIDIA RTX 2070 GPU.

Experiment#1: Malaria classification

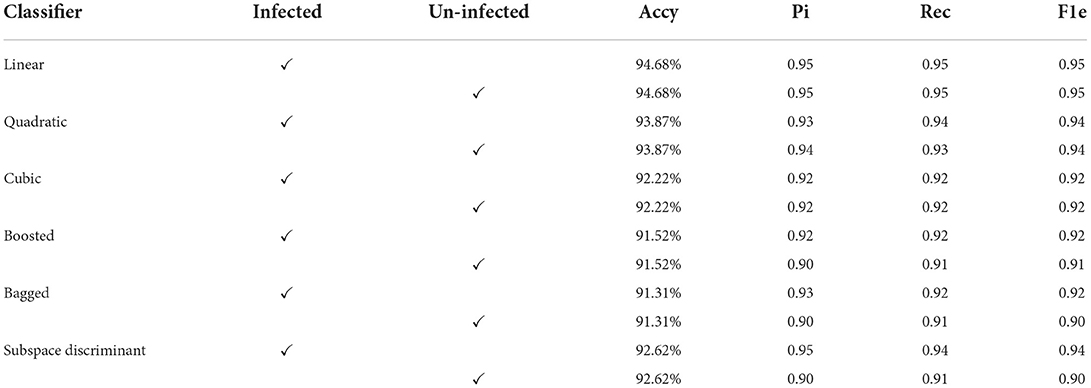

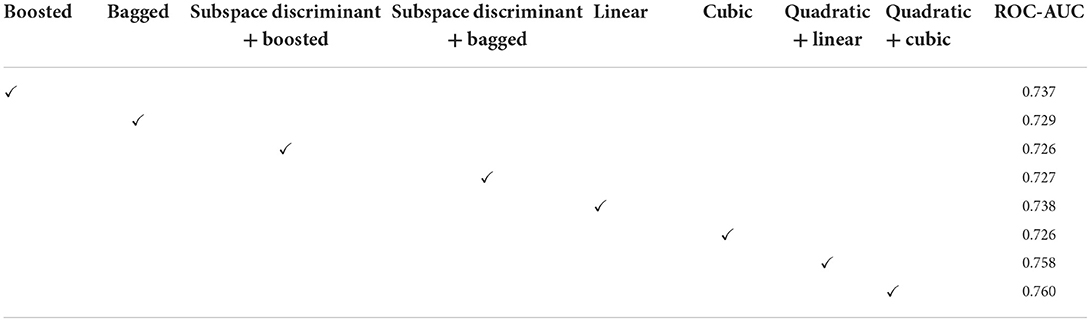

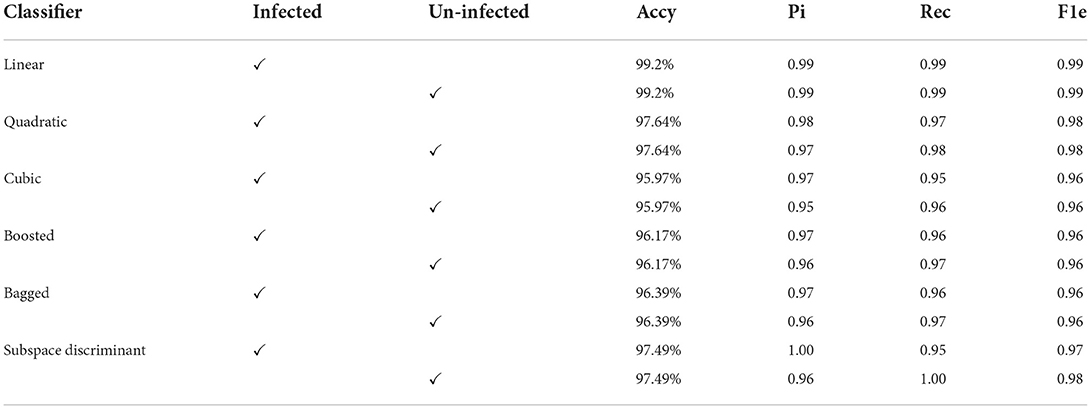

The input microscopic malaria cells are segmented using color-based k-means clustering. In this experiment, malaria cells are classified into two classes, and performance is evaluated using precision (Pi), accuracy (Accy), recall (Rec), and F1-score (F1e), as presented in Tables 2, 3. The classification results of the proposed method using EfficientNet-b0 features are shown in Table 2.
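The four reported metrics follow from the binary confusion matrix. A minimal pure-Python sketch, assuming label 1 marks an infected cell (the positive class); the function name and the toy labels are illustrative.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1-score for a two-class problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


y_true = [1, 1, 1, 1, 0, 0, 0, 0]  # 1 = infected, 0 = uninfected
y_pred = [1, 1, 1, 0, 0, 0, 1, 0]  # one missed infection, one false alarm
print(binary_metrics(y_true, y_pred))
# {'accuracy': 0.75, 'precision': 0.75, 'recall': 0.75, 'f1': 0.75}
```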

In Table 2, features are extracted from EfficientNet-b0, and the best features selected using MRFO are fed to the SVM and ensemble classifiers. In this experiment, the linear SVM achieved the maximum accuracy of 94.68%, higher than the other classifiers. Ten-fold cross-validation is also used to compute the classification results for the best-selected ShuffleNet feature vector of dimension N × 454, as presented in Table 3.
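Ten-fold cross-validation can be sketched as below, assuming stratified folds so that each fold keeps the 1:1 infected/uninfected ratio of the dataset; stratification, the random seed, and the helper name are assumptions. Each selected feature matrix would be indexed with the returned train/test indices, a classifier fitted on the training part, and the per-fold metrics averaged.

```python
import numpy as np


def stratified_kfold_indices(y, n_splits=10, seed=0):
    """Yield (train_idx, test_idx) pairs whose test folds preserve class proportions."""
    y = np.asarray(y)
    rng = np.random.default_rng(seed)
    fold_of = np.empty(len(y), dtype=int)
    for cls in np.unique(y):
        idx = np.flatnonzero(y == cls)
        rng.shuffle(idx)
        # Deal this class's shuffled samples round-robin over the folds.
        fold_of[idx] = np.arange(len(idx)) % n_splits
    for k in range(n_splits):
        yield np.flatnonzero(fold_of != k), np.flatnonzero(fold_of == k)


y = np.array([1] * 1375 + [0] * 1375)  # 1,375 infected and 1,375 uninfected images
folds = list(stratified_kfold_indices(y))
print(len(folds), [len(test) for _, test in folds][:3])  # 10 [276, 276, 276]
```

Because 1,375 = 10 × 137 + 5, five folds receive 138 images of each class and the other five receive 137, giving test sets of 276 or 274 images.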

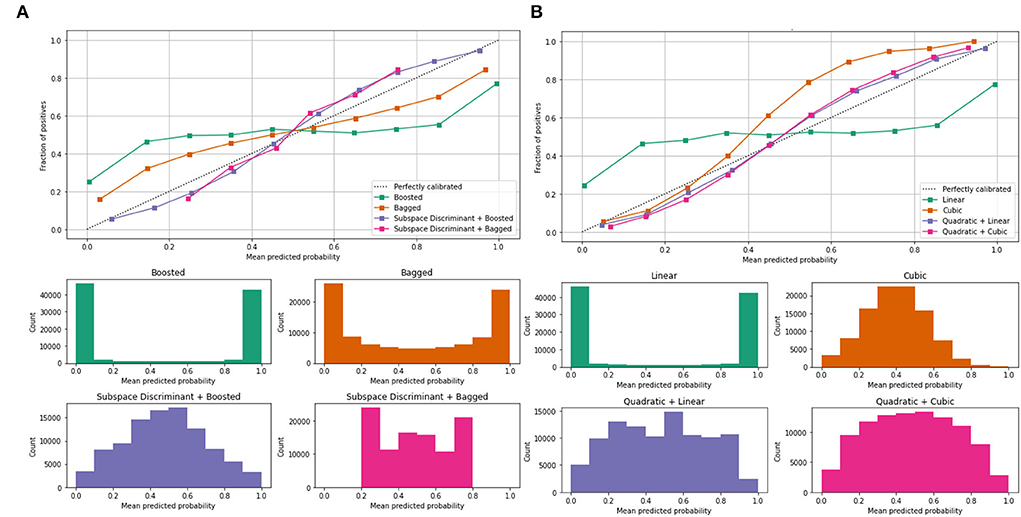

Mean predicted scores are also computed to validate the performance of the proposed method, as shown in Table 4 and Figure 5.

Figure 5. Mean predicted scores on the benchmark classifiers (A) ensemble (B) SVM. The ROC-AUC values are computed on benchmark classifiers as presented in Table 3.

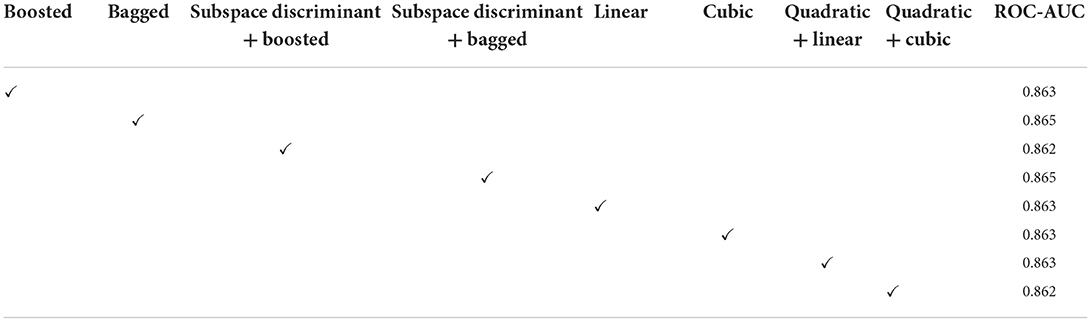

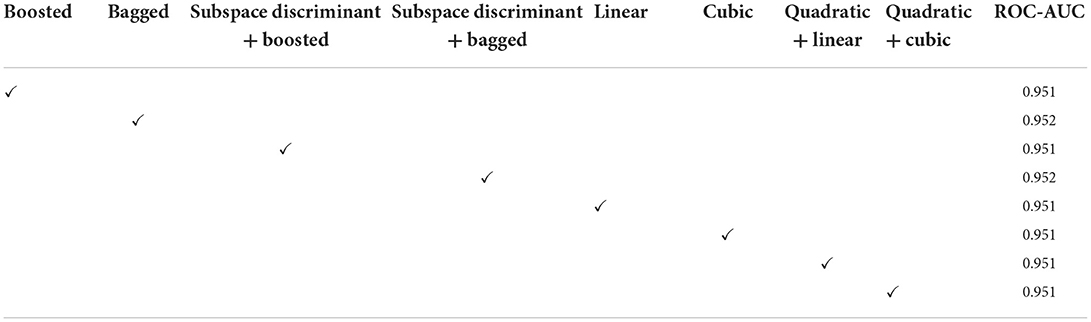

As an additional evaluation, Table 3 reports the ROC–AUC values for the bagged, boosted, linear, and cubic classifiers and for the fused subspace discriminant + boosted, subspace discriminant + bagged, quadratic + linear, and quadratic + cubic classifiers. In this experiment, the fusion of the quadratic + cubic classifiers achieved the highest ROC–AUC of 0.760. The classification results based on ShuffleNet features are presented in Table 4.
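ROC–AUC can be computed from classifier scores as the probability that a randomly chosen infected cell receives a higher score than a randomly chosen uninfected one (the Mann–Whitney formulation), with ties counted as one half. The sketch below is a direct pairwise version for illustration; the function name is hypothetical, and how the fused classifiers combine their scores is not specified in the text.

```python
def roc_auc(y_true, scores, positive=1):
    """ROC-AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0  # infected cell scored above an uninfected cell
            elif p == n:
                wins += 0.5  # ties count as half a correct ranking
    return wins / (len(pos) * len(neg))


y = [1, 1, 0, 0]          # 1 = infected, 0 = uninfected
s = [0.9, 0.4, 0.6, 0.1]  # classifier scores for the infected class
print(roc_auc(y, s))      # 0.75
```

For the 2,750-image dataset the pairwise loop still covers only about 1.9 million pairs; a rank-based or library implementation would be the usual choice in practice.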

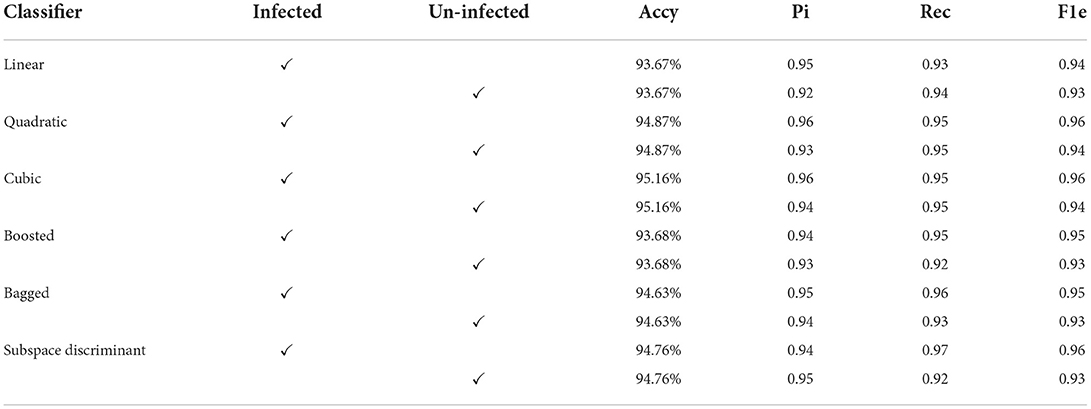

As shown in Table 4, the cubic SVM achieved an accuracy of 95.16%, higher than the other classifiers. The classification results are also computed in terms of ROC–AUC, both for the individual classifiers and for the fusions of the boosted and bagged ensembles with the subspace discriminant and of the linear and cubic SVM kernels with the quadratic kernel. The quantitative analysis of the ShuffleNet feature vector is presented in Figure 6 and Table 5.

Figure 6 shows the mean predicted scores of the benchmark classifiers, and the computed quantitative results are given in Table 5.

In the classification of malaria cells, a ROC–AUC of 0.865 is achieved using the bagged and the subspace discriminant + bagged classifiers. The classification results obtained using the fused feature vector are presented in Table 6.

As shown in Table 6, the fused feature vector yields an accuracy of 99.2% with the linear SVM and 97.64% with the quadratic kernel. The fused feature vector outperforms the individual feature vectors, which supports the novelty of the proposed method. The mean correct prediction scores for the fused feature vector are presented in Table 7.

Table 7. Classification of malaria cells in terms of mean predicted scores using fused features vector.

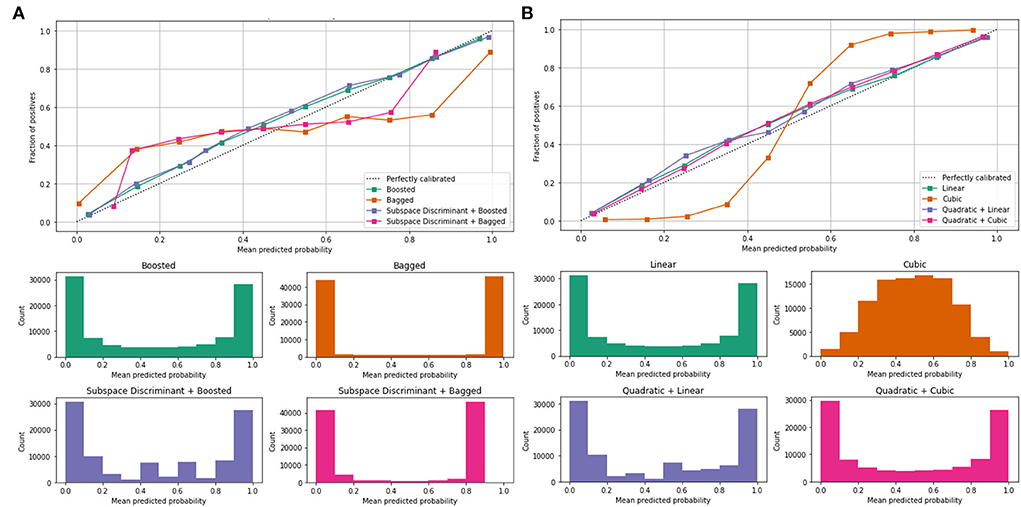

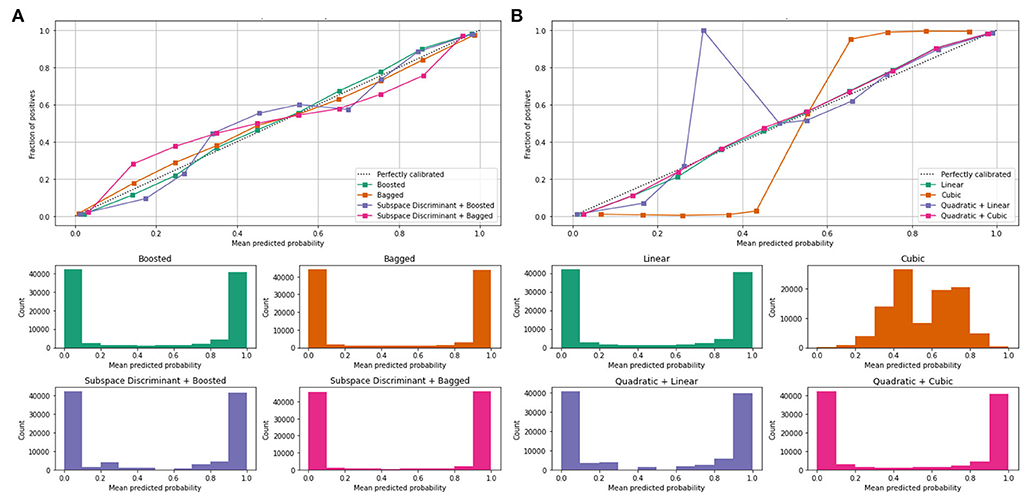

Table 7 presents the mean values of the correct prediction scores; the highest ROC-AUC, 0.952, is obtained using the bagged and the subspace discriminant + bagged classifiers. Figure 7 plots the ROC curves of the selected classifiers together with the mean predicted probabilities of the benchmark kernels: linear, cubic, quadratic, boosted, bagged, and discriminant.

Figure 7. Mean predicted scores using fused features vector (A) boosted, bagged, and discriminant kernels of ensemble classifier and (B) linear, cubic, and quadratic kernels of SVM.

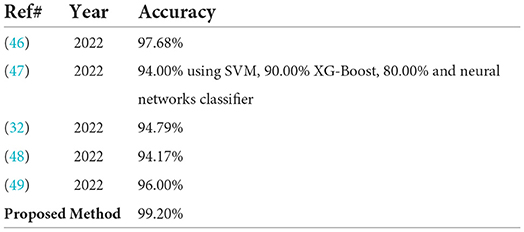

Figure 7 shows the classification results of the individual classifiers and of the bagged + discriminant, linear + quadratic, and quadratic + cubic fusions. The results of the proposed method are compared with existing methods in Table 8.

In Table 8, the results of the proposed classification method are compared with the most recent works published on the same benchmark dataset. A pre-trained ResNet-18 model used for malaria classification gives an accuracy of 98.68% (46). SVM, neural network, and XGBoost classifiers used for malaria classification achieve accuracies of 94, 90, and 80%, respectively (47). A DAG–CNN model used for malaria parasite classification gives an accuracy of 94.79% (32). An NC–GCN model used for feature extraction classifies malaria cells with 94.17% accuracy (48). Pre-trained Inception-v3 and VGG-19 models have also been used for feature extraction, with an average accuracy of 96%.

In contrast to these recent techniques, this research investigates deep feature analysis using EfficientNet-b0 and ShuffleNet. These features are used for classification in two ways. First, the MRFO method is applied to the features extracted from each model, and the best-selected features are passed to the classifiers. Second, the extracted features are serially fused, and optimum features are selected from the fused vector using the MRFO method. We observed that the fused feature vector yields a clear improvement in malaria cell classification.

Conclusion

Malaria parasite segmentation and classification is an intricate task because of the large variation and uneven illumination in microscopic malaria images. Therefore, in this research, a method is proposed in which the quality of the input images is first improved by pre-processing. The RGB microscopic malaria images are converted into the CIELab color space, and the luminance channel is selected for further processing to improve the image contrast. The best segmentation of the malaria cells is obtained in the third cluster. The segmented images are fed to the proposed classification model, which classifies the malaria cells more accurately owing to feature fusion and the selection of optimum features by the MRFO method. The proposed method provides an accuracy of 95.16% on the individual best-selected feature vector and 99.2% on the fused best-selected feature vector. Furthermore, to validate the performance of the proposed classification method, ROC-AUC values are computed for individual classifiers (linear, cubic, bagged, and boosted) and for the fusions cubic + quadratic, linear + quadratic, bagged + subspace discriminant, and boosted + subspace discriminant. The highest ROC-AUC of 0.95 was achieved using the bagged + subspace discriminant classifier. Compared with the most recent approaches, the experimental results show that the fused feature vector produces the best outcomes.

Data availability statement

The original contributions presented in the study are included in the article/supplementary files; further inquiries can be directed to the corresponding author/s.

Author contributions

JA wrote the draft and performed the conceptualization and implementation. MS contributed as part of the result validation team and wrote the conclusion of the paper. SF and GM contributed to the data curation, investigation, and literature reviews. SF contributed to the analysis and editing of the original draft. GM contributed to the resources and project administration. All authors contributed to the article and approved the submitted version.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. World Health Organization. World Malaria Report 2021 (2012). Available online at: https://www.who.int/malaria/publications/world-malaria-report-2017/report/en/ (accessed April 4, 2022).

2. Griffith KS, Lewis LS, Mali S, Parise ME. Treatment of malaria in the United States: a systematic review. JAMA. (2007) 297:2264–77. doi: 10.1001/jama.297.20.2264

3. Siwal N, Singh US, Dash M, Kar S, Rani S, Rawal C, et al. Malaria diagnosis by PCR revealed differential distribution of mono and mixed species infections by Plasmodium falciparum and P vivax in India. PLoS ONE. (2018) 13:e0193046. doi: 10.1371/journal.pone.0193046

4. Murphy SC, Shott JP, Parikh S, Etter P, Prescott WR, Stewart VA. Malaria diagnostics in clinical trials. Am J Trop Med Hyg. (2013) 89:824. doi: 10.4269/ajtmh.12-0675

5. Delgado-Ortet M, Molina A, Alférez S, Rodellar J, Merino A. A deep learning approach for segmentation of red blood cell images and malaria detection. Entropy. (2020) 22:657. doi: 10.3390/e22060657

6. Huertas-Tato J, Martín A, Fierrez J, Camacho D. Fusing CNNs and statistical indicators to improve image classification. Inform Fusion. (2022) 79:174–87. doi: 10.1016/j.inffus.2021.09.012

7. Yang F, Poostchi M, Yu H, Zhou Z, Silamut K, Yu J, et al. Deep learning for smartphone-based malaria parasite detection in thick blood smears. IEEE J Biomed Health Inform. (2019) 24:1427–38. doi: 10.1109/JBHI.2019.2939121

8. Das DK, Mukherjee R, Chakraborty C. Computational microscopic imaging for malaria parasite detection: a systematic review. J Microsc. (2015) 260:1–19. doi: 10.1111/jmi.12270

10. Setyawan D, Wardoyo R, Wibowo ME, Murhandarwati EE, Jamilah J. Malaria classification using convolutional neural network: a review. In: 2021 Sixth International Conference on Informatics and Computing (ICIC). (2021). 1–9. doi: 10.1109/ICIC54025.2021.9632998

11. Amin J, Anjum MA, Sharif A, Raza M, Kadry S, Nam Y. Malaria parasite detection using a quantum-convolutional network. Comput Mater Contin. (2022) 70:6023–39. doi: 10.32604/cmc.2022.019115

12. Elavarasan D, Vincent PM DR, Srinivasan K, Chang CY. A hybrid CFS filter and RF-RFE wrapper-based feature extraction for enhanced agricultural crop yield prediction modeling. Agriculture. (2020) 10:400. doi: 10.3390/agriculture10090400

13. Mahendran N, PM DR. A deep learning framework with an embedded-based feature selection approach for the early detection of the Alzheimer's disease. Comput Biol Med. (2022) 141:105056. doi: 10.1016/j.compbiomed.2021.105056

14. Dey S, Nath P, Biswas S, Nath S, Ganguly A. Malaria detection through digital microscopic imaging using Deep Greedy Network with transfer learning. J Med Imaging. (2021) 8:054502. doi: 10.1117/1.JMI.8.5.054502

15. Zhang Z, Ong LS, Fang K, Matthew A, Dauwels J, Dao M, et al. Image classification of unlabeled malaria parasites in red blood cells. In: 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). (2016) 3981–4. doi: 10.1109/EMBC.2016.7591599

16. Aliyu A, Prasad R, Fonkam M. A framework for predicting malaria using naïve Bayes classifier. Int J Telemed Appl. (2018) 3:78–93. doi: 10.1504/IJTMCP.2018.093623

17. Pinkaew A, Limpiti T, Trirat A. Automated classification of malaria parasite species on thick blood film using support vector machine. In: 2015 8th Biomedical Engineering International Conference (BMEiCON). (2015) 1–5. doi: 10.1109/BMEiCON.2015.7399524

18. Arowolo MO, Adebiyi M, Adebiyi A, Okesola O. PCA model for RNA-Seq malaria vector data classification using KNN and decision tree algorithm. In: 2020 International Conference in Mathematics, Computer Engineering and Computer Science (ICMCECS). (2020) 1–8. doi: 10.1109/ICMCECS47690.2020.240881

19. Jimoh RG, Abisoye OA, Uthman MM. Ensemble feed-forward neural network and support vector machine for prediction of multiclass malaria infection. Int J Inf Commun Technol. (2022) 21:117–48. doi: 10.32890/jict2022.21.1.6

20. Marques G, Ferreras A, de la Torre-Diez I. An ensemble-based approach for automated medical diagnosis of malaria using EfficientNet. Multimed Tools Appl. (2022) 29:1–18. doi: 10.1007/s11042-022-12624-6

21. Shi B, Zhou X, Qin Z, Sun L, Xu Y. Corn ear quality recognition based on DCGAN data enhancement and transfer learning. In: The 4th International Conference on Electronics, Communications and Control Engineering. (2021). 62–68. doi: 10.1145/3462676.3462686

22. Rajaraman S, Antani SK, Poostchi M, Silamut K, Hossain MA, Maude RJ, et al. Pre-trained convolutional neural networks as feature extractors toward improved malaria parasite detection in thin blood smear images. PeerJ. (2018) 6:e4568. doi: 10.7717/peerj.4568

23. Amin J, Anjum MA, Gul N, Sharif M. A secure two-qubit quantum model for segmentation and classification of brain tumor using MRI images based on blockchain. Neural Comput Appl. (2022). 1–14. doi: 10.1007/s00521-022-07388-x

24. Amin J, Anjum MA, Malik M. Fused information of DeepLabv3+ and transfer learning model for semantic segmentation and rich features selection using equilibrium optimizer (EO) for classification of NPDR lesions. Knowl Based Syst. (2022) 249:108881. doi: 10.1016/j.knosys.2022.108881

25. Amin J, Anjum MA, Sharif M, Jabeen S, Kadry S, Moreno Ger P, et al. A new model for brain tumor detection using ensemble transfer learning and quantum variational classifier. Comput Intell Neurosci. (2022). doi: 10.1155/2022/3236305

26. Amin J, Anjum MA, Sharif M, Kadry S, Nadeem A, Ahmad SF. Liver tumor localization based on YOLOv3 and 3D-semantic segmentation using deep neural networks. Diagnostics. (2022) 12:823. doi: 10.3390/diagnostics12040823

27. Amin J, Sharif M, Fernandes SL, Wang SH, Saba T, Khan AR. Breast microscopic cancer segmentation and classification using unique 4-qubit-quantum model. Microsc Res Tech. (2022) 85:1926–36. doi: 10.1002/jemt.24054

28. Amin J, Sharif M, Anjum MA, Nam Y, Kadry S, Taniar D. Diagnosis of COVID-19 infection using three-dimensional semantic segmentation and classification of computed tomography images. Comput Mater Contin. (2021) 68:2451–67. doi: 10.32604/cmc.2021.014199

29. Amin J, Sharif M, Anjum MA, Siddiqa A, Kadry S, Nam Y, et al. 3D semantic deep learning networks for leukemia detection. Comput Mater Contin. (2021). doi: 10.32604/cmc.2021.015249

30. Amin J, Anjum MA, Sharif A, Sharif MI. A modified classical-quantum model for diabetic foot ulcer classification. Intell Decis Technol. (2022) 16:1–6. doi: 10.3233/IDT-210017

31. Sadaf D, Amin J, Sharif M, Yasmin M. Detection of diabetic foot ulcer using machine/deep learning. Med Image Anal. (2000) 101–123. doi: 10.1201/9781003230540-7

32. Oyewola DO, Dada EG, Misra S, Damaševičius R. A novel data augmentation convolutional neural network for detecting malaria parasite in blood smear images. Appl Artif Intell. (2022) 1–22. doi: 10.1080/08839514.2022.2033473

33. Imran T, Sharif M, Tariq U, Zhang YD, Nam Y, Nam Y, et al. Malaria blood smear classification using deep learning and best features selection. Comput Mater Contin. (2021) 71:1–15. doi: 10.32604/cmc.2022.018946

34. Deelder W, Benavente ED, Phelan J, Manko E, Campino S, Palla L, et al. Using deep learning to identify recent positive selection in malaria parasite sequence data. Malar J. (2021) 20:1–9. doi: 10.1186/s12936-021-03788-x

35. Abubakar A, Ajuji M, Yahya IU. DeepFMD: computational analysis for malaria detection in blood-smear images using deep-learning features. Appl Syst Innov. (2021) 4:82. doi: 10.3390/asi4040082

36. Dong Y, Jiang Z, Shen H, Pan WD, Williams LA, Reddy VV, et al. Evaluations of deep convolutional neural networks for automatic identification of malaria infected cells. In: 2017 IEEE EMBS international conference on biomedical & health informatics (BHI). (2017). 101–4. doi: 10.1109/BHI.2017.7897215

37. Loh DR, Yong WX, Yapeter J, Subburaj K, Chandramohanadas R. A deep learning approach to the screening of malaria infection: automated and rapid cell counting, object detection and instance segmentation using Mask R-CNN. Comput Med Imaging Graph. (2021) 88:101845. doi: 10.1016/j.compmedimag.2020.101845

38. Nakasi R, Mwebaze E, Zawedde A, Tusubira J, Akera B, Maiga G, et al. A new approach for microscopic diagnosis of malaria parasites in thick blood smears using pre-trained deep learning models. SN Appl Sci. (2020) 2:1–7. doi: 10.1007/s42452-020-3000-0

39. Zhang X, Zhou X, Lin M, Sun J. Shufflenet: an extremely efficient convolutional neural network for mobile devices. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. (2018). 6848–56. doi: 10.1109/CVPR.2018.00716

40. Tan M, Le Q. EfficientNet: rethinking model scaling for convolutional neural networks. In: International Conference on Machine Learning. (2019). 6105–14.

41. Arthur D, Vassilvitskii S. k-means++: the advantages of careful seeding. In: Proceedings of the 18th Annual ACM-SIAM Symposium on Discrete Algorithms. (2006). 1027–35.

42. Zhao W, Zhang Z, Wang L. Manta ray foraging optimization: an effective bio-inspired optimizer for engineering applications. Eng Appl Artif Intell. (2020) 87:103300. doi: 10.1016/j.engappai.2019.103300

43. Got A, Zouache D, Moussaoui A. MOMRFO: multi-objective Manta ray foraging optimizer for handling engineering design problems. Knowl Based Syst. (2022) 237:107880. doi: 10.1016/j.knosys.2021.107880

44. El-Shorbagy MA, Omar HA, Fetouh T. Hybridization of manta-ray foraging optimization algorithm with pseudo parameter-based genetic algorithm for dealing optimization problems and unit commitment problem. Mathematics. (2022) 10:2179. doi: 10.3390/math10132179

45. Narayanan BN, Ali R, Hardie RC. Performance analysis of machine learning and deep learning architectures for malaria detection on cell images. In: Applications of Machine Learning. (2019) 111390W. doi: 10.1117/12.2524681

46. Loddo A, Fadda C, Di Ruberto C. An empirical evaluation of convolutional networks for malaria diagnosis. J Imaging. (2022) 8:66. doi: 10.3390/jimaging8030066

47. Alharbi AH, Lin M, Ashwini B, Jabarulla MY, Shah MA. Detection of peripheral malarial parasites in blood smears using deep learning models. Comput Intell Neurosci. (2022). doi: 10.1155/2022/3922763

48. Meng X, Ha Y, Tian J. Neighbor correlated graph convolutional network for multi-stage malaria parasite recognition. Multimed Tools Appl. (2022) 81:11393–414. doi: 10.1007/s11042-022-12098-6

Keywords: clusters, malaria, K-mean, MRFO, features

Citation: Amin J, Sharif M, Mallah GA and Fernandes SL (2022) An optimized features selection approach based on Manta Ray Foraging Optimization (MRFO) method for parasite malaria classification. Front. Public Health 10:969268. doi: 10.3389/fpubh.2022.969268

Received: 14 June 2022; Accepted: 03 August 2022;

Published: 06 September 2022.

Edited by:

Zhenxing Zhang, University of Illinois at Urbana-Champaign, United States

Reviewed by:

Durai Raj Vincent P M, VIT University, India

Venkatesan Rajinikanth, St. Joseph's College of Engineering, India

Copyright © 2022 Amin, Sharif, Mallah and Fernandes. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Javeria Amin, Javeria.amin@uow.edu.pk
