Abstract

Early detection of vessels from fundus images can effectively prevent the permanent retinal damage caused by retinopathies such as glaucoma, hypertension, and diabetes. Because both the retinal vessels and the background are red, and because vessel morphology varies, current vessel detection methodologies fail to segment thin vessels and to discriminate them in the regions where permanent retinopathies mainly occur. This research proposes a novel approach that combines the benefits of traditional template-matching methods with recent deep learning (DL) solutions. The two are combined by using the response of a Cauchy matched filter to replace the noisy red channel of the fundus images. A U-shaped fully convolutional neural network (U-net) is then trained end-to-end to segment pixels into vessel and background classes. Each preprocessed image is divided into several patches to provide enough training images and to speed up training per instance. The proposed method was tested on the public DRIVE database, and Accuracy, Precision, Sensitivity, and Specificity were measured for evaluation. The evaluation indicates that the average extraction accuracy of the proposed model is 0.9640 on the employed dataset.

Introduction

Retinal vessel detection and the identification of blood vessel properties, including diameter, shape, bifurcation, and tortuosity, mainly aim to diagnose various eye abnormalities and retinopathies (1). The evaluation of bifurcation points and tortuosity also assists in diagnosing cardiovascular ailments, and analyzing vessel width can help prevent retinopathies induced by hypertension (2). Glaucoma prevention is only possible if fundus retinal images are analyzed regularly to detect any abnormal modification of the blood vessels around the optic disc (3). Diabetic retinopathy is another disease characterized by changes in blood vessel structure and distribution and by the growth of new vessels; late diagnosis may result in adult blindness (4). These critical medical applications highlight the importance of vessel extraction from retinal fundus images for ophthalmologists in diagnosing diseases and acting properly for their patients.

Unfortunately, despite the significant role of retinal vessel detection, blood vessels are not easily visible in retinal images taken by fundus cameras. The red background of the retina, together with the red color of the retinal vessels, is combined with faint small vessels spread around the retina. Therefore, manual detection of blood vessels is a subjective, time-consuming task that requires specific expertise (5). The apparent vessel width varies with the actual breadth of the vessel and with the image resolution. The retinal boundary, the optic disc, and pathologies such as cotton wool patches, bright and dark lesions, and exudates can also be seen in ocular fundus images (3).

Image processing methods have improved the recognition of blood vessels by changing the intensity of images and normalizing the color distribution. These techniques can enhance the reading of retinal images; however, fully automatic extraction requires understanding the properties of the vessels and detecting them in the noisy background of retinal images, in which low-contrast regions are distributed non-uniformly. Computer-Aided-Detection (CAD) methods have been employed to diagnose retinopathies for more than three decades. Unfortunately, most traditional methods are not well-suited to recent, more accurate sensors. For instance, as the resolution of fundus scanners has increased, standard image-processing methods have shown their drawbacks in detecting small, faint vessels. In short, traditional systems underperform because different capture devices produce variations in the intensity and resolution of retinal images; therefore, machine learning-based approaches are more suitable (6).

For vascular segmentation of retinal images, various machine learning (ML) approaches and procedures are available. These methods are divided into supervised and unsupervised approaches. Support vector machines (SVM), neural networks, and other classification-based methods are examples of supervised methods. Matched filtering, vessel tracking, and mathematical morphology are examples of unsupervised approaches (7).

The remainder of this paper is organized as follows. Section Literature Review briefly reviews the approaches that target the objective of this work via various techniques, and closes by highlighting the importance of fusion approaches that combine novel DL methods with successful traditional approaches. Section Methodology presents the main idea of the research and details the dataset used and the structure of the employed DL model. Section Results and Discussions reports the results and evaluates the strengths and limitations of the suggested approach compared with other similar methods. Section Conclusion and Future Work wraps up the discussed methodology.

Literature Review

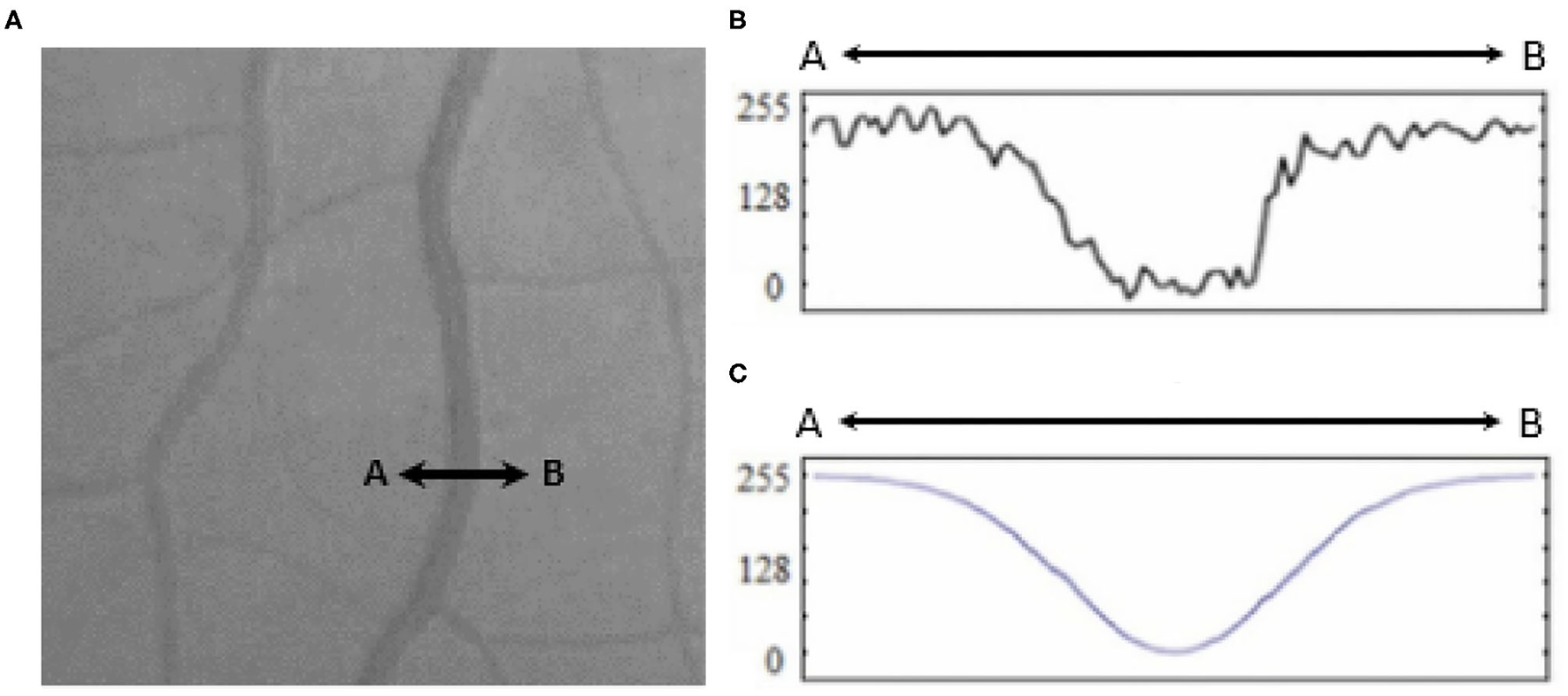

A broad look at the literature covering retinal blood vessel detection reveals that current methodologies can be categorized into three classes (8): template matching-based, tracking-based, and classification-based. In template matching approaches, a kernel is selected that fits a vessel well. This kernel is then convolved with each image. At the final stage, pixels with the highest responses are collected via a proper thresholding method to provide a binary vessel/background map. A Gaussian-shaped curve proposed by Chaudhuri et al. (9) is used to highlight the vessels based on the intensity distribution of the vessel's cross-section (as illustrated in Figure 1).

Figure 1

(A) Retinal vessel cross-section (A to B), (B) intensities of the cross-section, and (C) fitted Gaussian curve proposed by Chaudhuri et al. (9).

A later improvement was proposed by Al-Rawi et al. (10), who tuned the parameters to increase Sensitivity and Accuracy and to decrease false positive detections. Gaussian, Cauchy, and Gabor distributions have been employed for the same purpose (3).

In contrast to template matching, in which a template of the vessels plays an essential role in the detection, tracking approaches use a model to trace vessels via seed growing. Each vessel can be traced separately from a starting point (which can be an ending point of the parent vessel) to the ending point at which the vessel branches. Considering the contrast between vessels and the retinal background intensity, Wu et al. (11) determined the mid-line of a vessel from a starting point inside it. Once the vessel's width has been calculated, the orientation is traced automatically by assuming that the vessel boundaries are parallel to the mid-line. Several approaches have been discussed for detecting the initial seed point via manual annotation or automatic template matching (7). For instance, the methodology proposed by Dizdaro et al. used level-set thresholding to identify an initial seed (12). The work carried out by Zhang et al., which applied active contours started from a seed found via a Hessian vessel response (13), also belongs to this second group of tracking approaches. Ali et al. used a B-COSFIRE filter blended with adaptive thresholding for the binarization of retinal blood vessels (14).

Classification approaches mostly rely on ML techniques. ML methods focus on two sets of features: those that are common among pixels belonging to vessels and those that discriminate between background and vessel pixels. Providing such features can increase the accuracy of a model trained by artificial intelligence (AI) (15, 16).

On the one hand, supervised ML approaches are trained on pixel-based feature maps to identify vessel patterns. Various methodologies have been proposed to detect vessels using k-Nearest Neighbors (17), Decision Trees (18), SVM (19), and Neural Networks (20, 21). On the other hand, unsupervised methods employ rule-based algorithms, including filters, gradients, and thresholds, for the same classification purpose. For instance, the work presented in (22) employs a neural network trained to model a matched filter.

Traditional ML algorithms and recent deep-learning methods together form the third set of approaches. Recent studies on DL take two perspectives: the first addresses retinal vessel detection via pixel-level classification, while the latter uses DL to achieve semantic segmentation between the two classes of pixels. An example is the pipeline proposed by Wang et al. (23), which extracts a set of required features using DL and subsequently classifies each pixel via Random Forests.

Ensemble learning has also been used in several investigations. Lahiri et al. (24) suggested using an ensemble of stacked denoising autoencoders, in which the final decision is made via voting of a SoftMax layer over the autoencoder outputs. In another work, Maji et al. (25) suggested an ensemble of 12 deep convolutional neural networks (CNN), in which the final decision is made by averaging the network outputs for each pixel. Few studies have targeted deep neural networks (DNN) and formulated the objective as a pixel-to-pixel classification (26, 27); however, these approaches underperform in both training and testing because each pixel is picked and classified individually. A practical solution was proposed by Dasgupta et al. (28), in which both the training and testing phases are carried out over small retinal patches from an end-to-end perspective. These patches are independent of each other, and hence they can be processed simultaneously.

Wu et al. (29) suggested employing a CNN to extract binary masks, followed by a generalized particle filtering technique that extracts the retinal vessel tree via a probabilistic tracking framework. To account for long-range interactions between pixels, Fu et al. (30) carried out vessel detection with a Conditional Random Field (CRF) built on a multi-level CNN model.

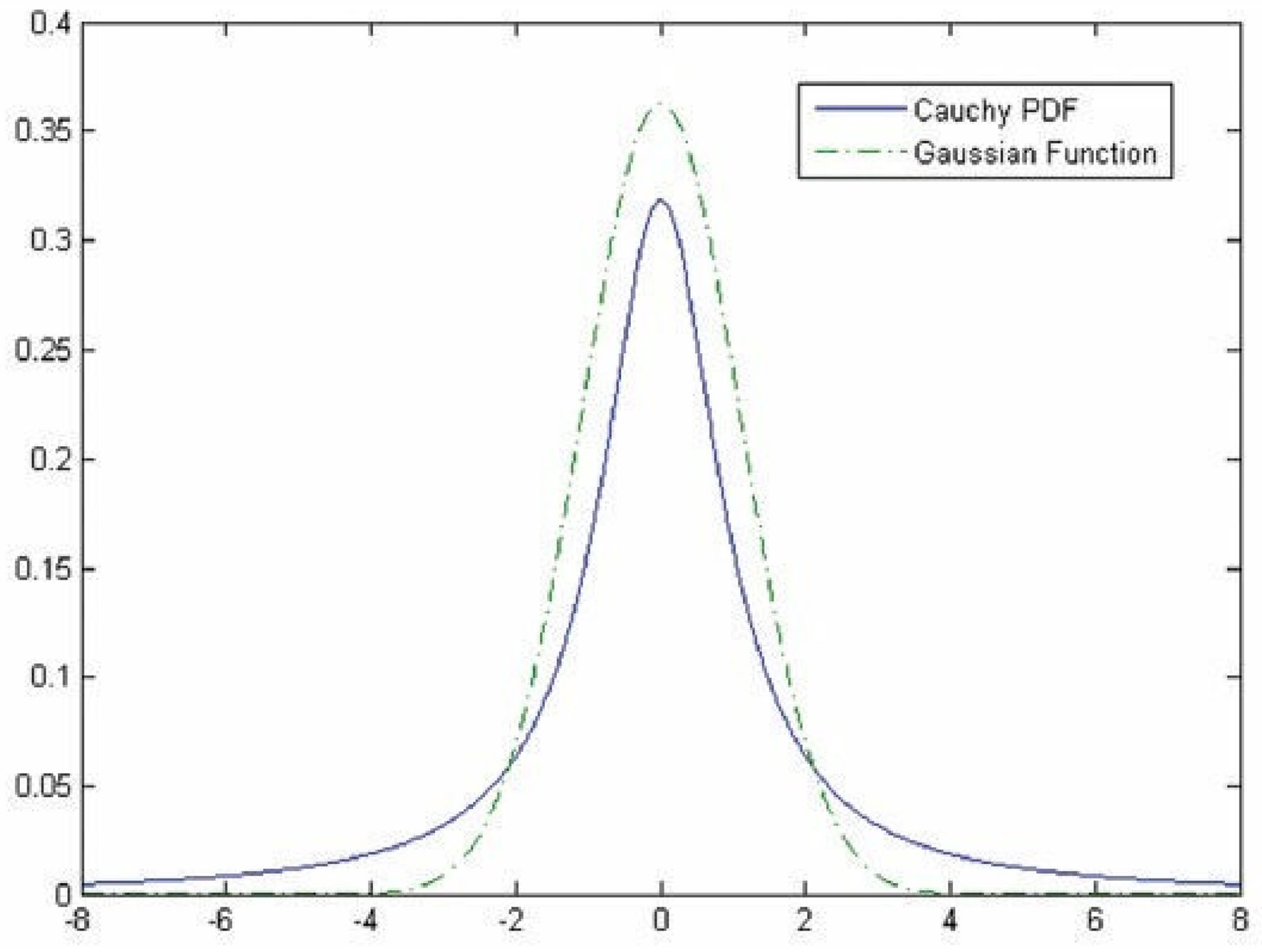

Hybrid methodologies can be built by combining the approaches mentioned above. For instance, template matching can be used within the third approach to provide more features for classifying vessels. Studies combine these approaches sequentially: template matching is applied in the preprocessing stage to highlight features that are later used by the ML approach to discriminate pixels belonging to either retinal tissue or vessel (31, 32). For instance, the work proposed by Gao et al. (33) combines a Gaussian matched filter (as a preprocessing stage) with a U-Net CNN to improve vessel detection. In another study, Zolfagharnasab et al. (34) showed that the Cauchy probability distribution function (PDF) can model the cross-section of vessels more accurately than the Gaussian. The Gaussian and Cauchy curves for generating a retinal vessel template are compared in Figure 2.

Figure 2

Comparison of the Gaussian and the Cauchy curves for generating a template for retinal vessels [The image is adapted from (34)].

Accordingly, this study makes two main contributions to the pipeline proposed by Gao et al. (33). First, supported by the investigation conducted in (34), we employ the Cauchy PDF instead of the Gaussian. Second, a novel feature map is prepared instead of raw pixel intensities by proposing a new three-channel image. Among other related work, Roy and Sharma presented a Residual Y-net design for retinal vessel detection, inspired by U-net, that helps in diabetes detection (35). Siddique et al. reviewed U-Net and its variants for image segmentation and specifically highlighted their applications in retinal fundus image segmentation (36). Yuliang et al. proposed a retinal blood vessel segmentation model that combines a multiscale matched filter with a U-Net and was tested on various publicly available datasets (37). Shabbir et al. described in detail different types of ML models for glaucoma detection from fundus images, such as multiscale, texture feature-based, segmentation-based, CNN, and ensemble learning approaches (38). Wang et al. proposed a structure using Dense U-net and a patch-based learning approach for clinical applications (39). Table 1 summarizes the related work discussed in this section.

Table 1

| References | Used techniques | Main idea of paper |
|---|---|---|
| (24) | Unsupervised hierarchical feature learning and ensemble | Suggested using an ensemble of stacked denoising autoencoders; the final decision is made via voting of a SoftMax layer over the autoencoder outputs. |
| (29) | Deep CNN and nearest neighbor search | Suggested employing a CNN to extract binary masks, followed by a generalized particle filtering technique that extracts the retinal vessel tree via a probabilistic tracking framework. |
| (30) | Conditional Random Field (CRF), multi-level CNN | Carried out vessel detection with a CRF built on a multi-level CNN model. |
| (34) | Cauchy PDF and Gaussian function | Showed that the Cauchy probability distribution function (PDF) can model the cross-section of vessels more accurately than the Gaussian. |
| (33) | Gaussian matched filter with U-Net | Used a Gaussian matched filter in the preprocessing stage with a U-Net CNN to improve the detection of vessels. |
| (35) | Y-net | Presented a Residual Y-net design for retinal vessel detection, inspired by U-net, that helps in diabetes detection. |
| (36) | U-Net | Reviewed U-Net and its variants for image segmentation and specifically highlighted their applications in retinal fundus image segmentation. |
| (37) | Multiscale matched filter, U-Net | Proposed a model that combines a multiscale matched filter with a U-Net and was tested on various publicly available datasets. |
| (38) | Multiscale, texture feature-based, segmentation-based, CNN, and ensemble learning approaches | Described in detail different types of ML models for glaucoma detection from fundus images. |
| (39) | Dense U-net | Proposed a structure using Dense U-net and a patch-based learning approach for clinical applications. |

Related works.

Methodology

The end-to-end implementation of retinal vessel detection requires a suitable set of features per pixel with which the model can find meaningful discrimination between vessels and background. The intensity of each pixel in the fundus image is denoted I(x, y), where x and y are the pixel coordinates and I is the pixel intensity reported as an RGB vector. Several studies have suggested a preprocessing step to enhance the brightness of fundus images (29, 40).

Pre-processing

As illustrated in Figure 1, the original fundus images present low intensity differences between the retinal tissue and the vessels; therefore, contrast limited adaptive histogram equalization (CLAHE) is used to improve the overall image contrast (1). The CLAHE operation was performed for each color channel, and the pixel intensity values of each channel were then normalized via (1).

$$I'(x, y) = \frac{I(x, y) - \mu}{\sigma} \qquad (1)$$

where μ is the mean and σ is the standard deviation of each channel.
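The per-channel normalization with the channel mean μ and standard deviation σ can be illustrated with a small sketch. It is only an illustration under stated assumptions: the channel is a plain nested list, the function name `standardize_channel` is hypothetical, the population standard deviation is used, and the CLAHE step itself is not reproduced here.

```python
from statistics import fmean, pstdev

def standardize_channel(channel):
    """Return (value - mean) / std for every pixel of one 2-D channel."""
    flat = [value for row in channel for value in row]
    mu = fmean(flat)
    sigma = pstdev(flat)  # population standard deviation (an assumption)
    if sigma == 0:
        # A constant channel carries no contrast; map it to zeros.
        return [[0.0 for _ in row] for row in channel]
    return [[(value - mu) / sigma for value in row] for row in channel]

# Made-up 2 x 2 green channel, used only to show the call.
green = [[10, 20], [30, 40]]
normalized = standardize_channel(green)
```

After this step each channel has zero mean and unit variance, so the three channels of the feature image share a comparable scale.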

Because blood vessels are red and the retinal texture is supplied by micro-vessels, the mean intensity of the red channel is mostly higher than that of the other RGB channels. For this reason, removing the red channel from the processing of retinal vessels has been reported in previous work. The green and blue channels can be used instead, as they provide more information about the spread of blood vessels. An initial step that decomposes the image into its channels is therefore required, and the omitted channel is replaced with an extra feature channel that highlights the presence of vessels across the retinal texture.

Construction of the Feature Map

As discussed in the literature review, the vessel cross-section can be modeled as a Gaussian curve; however, in (10), it was shown that the Cauchy PDF (2) fits better than the traditional Gaussian, as compared in Figure 2.

$$f(x; x_0, \gamma) = \frac{1}{\pi \gamma \left[1 + \left(\frac{x - x_0}{\gamma}\right)^2\right]} \qquad (2)$$

where γ and x stand for the scaling parameter (defining the slope of the curve) and the pixel intensity, respectively. By selecting non-zero values for x0, the curve peak is shifted horizontally. To generate the bank of filters, γ is set to 1, the truncation value of the template curve (T) is set to 9, and the length of each template (L) is set to 8, following the recommendations for the DRIVE dataset in (2).
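To make the role of γ and T concrete, the following sketch samples a one-dimensional Cauchy cross-section profile. Several points are assumptions rather than details taken from the paper: T is read as the bound on the sampled offsets, the curve is inverted because vessels are taken to be darker than the background, and the mean is subtracted so that flat regions give no response. The function names are hypothetical.

```python
import math

def cauchy_pdf(x, x0=0.0, gamma=1.0):
    """Standard Cauchy probability density with location x0 and scale gamma."""
    return 1.0 / (math.pi * gamma * (1.0 + ((x - x0) / gamma) ** 2))

def matched_profile(gamma=1.0, trunc=9):
    """Zero-mean, inverted Cauchy profile at integer offsets in [-trunc, trunc]."""
    offsets = range(-trunc, trunc + 1)
    raw = [-cauchy_pdf(x, 0.0, gamma) for x in offsets]  # inverted: dark vessel
    mean = sum(raw) / len(raw)
    return [value - mean for value in raw]

profile = matched_profile(gamma=1.0, trunc=9)
```

With γ = 1 and T = 9 the profile has 19 samples, its minimum sits at the vessel center, and its values sum to zero.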

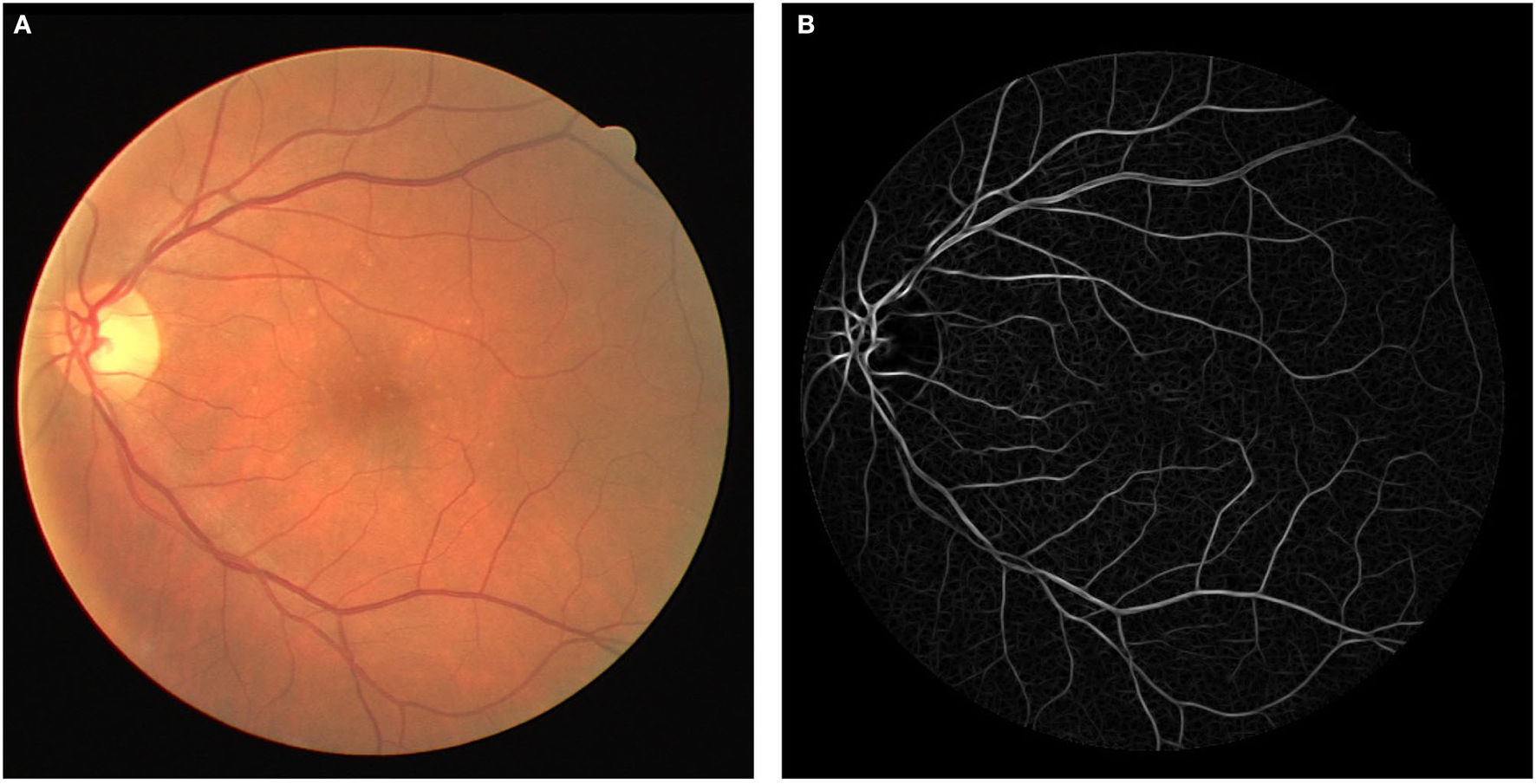

The template is then rotated in fixed angular steps to detect vessels in different orientations. To prepare an image for either training or testing, the image is first convolved with each filter in the bank, and the highest response at each pixel is stored. Figure 3 depicts an image with its response after the convolution.

Figure 3

(A) Original fundus image and (B) Cauchy matched filter response.
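The rotation of the template and the per-pixel maximum over the filter bank can be sketched as follows. This is a simplified illustration, not the authors' implementation: the rotation step is not stated in the text, so the 15 degree value is a placeholder; border pixels are replicated; the kernel is inverted and zero-mean; and the demo uses a smaller kernel (T = 3, L = 4) on a synthetic image so that it runs quickly in pure Python. All function names are hypothetical.

```python
import math

def cauchy_pdf(x, gamma=1.0):
    return 1.0 / (math.pi * gamma * (1.0 + (x / gamma) ** 2))

def rotated_kernel(theta_deg, gamma=1.0, trunc=9, length=8):
    """Sparse zero-mean kernel as (dy, dx, weight) taps for one orientation."""
    theta = math.radians(theta_deg)
    c, s = math.cos(theta), math.sin(theta)
    half = math.ceil(math.hypot(trunc, length / 2))
    taps = []
    for dy in range(-half, half + 1):
        for dx in range(-half, half + 1):
            u = dx * c + dy * s    # offset across the vessel
            v = -dx * s + dy * c   # offset along the vessel
            if abs(u) <= trunc and abs(v) <= length / 2:
                taps.append((dy, dx, -cauchy_pdf(u, gamma)))
    mean = sum(weight for _, _, weight in taps) / len(taps)
    return [(dy, dx, weight - mean) for dy, dx, weight in taps]

def max_response(image, step_deg=15, **kernel_args):
    """Highest filter response per pixel over all orientations in [0, 180)."""
    height, width = len(image), len(image[0])
    best = [[-math.inf] * width for _ in range(height)]
    for theta in range(0, 180, step_deg):  # step_deg must be an integer
        taps = rotated_kernel(theta, **kernel_args)
        for y in range(height):
            for x in range(width):
                acc = 0.0
                for dy, dx, weight in taps:
                    yy = min(max(y + dy, 0), height - 1)  # replicate borders
                    xx = min(max(x + dx, 0), width - 1)
                    acc += weight * image[yy][xx]
                if acc > best[y][x]:
                    best[y][x] = acc
    return best

# Synthetic 21 x 21 image: bright background with a dark vertical line at column 10.
image = [[0.0 if x == 10 else 1.0 for x in range(21)] for _ in range(21)]
response = max_response(image, step_deg=15, gamma=1.0, trunc=3, length=4)
```

The loop computes a correlation; because the kernel is point-symmetric, this coincides with a convolution. On the synthetic image the response is high on the dark line and close to zero on the flat background.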

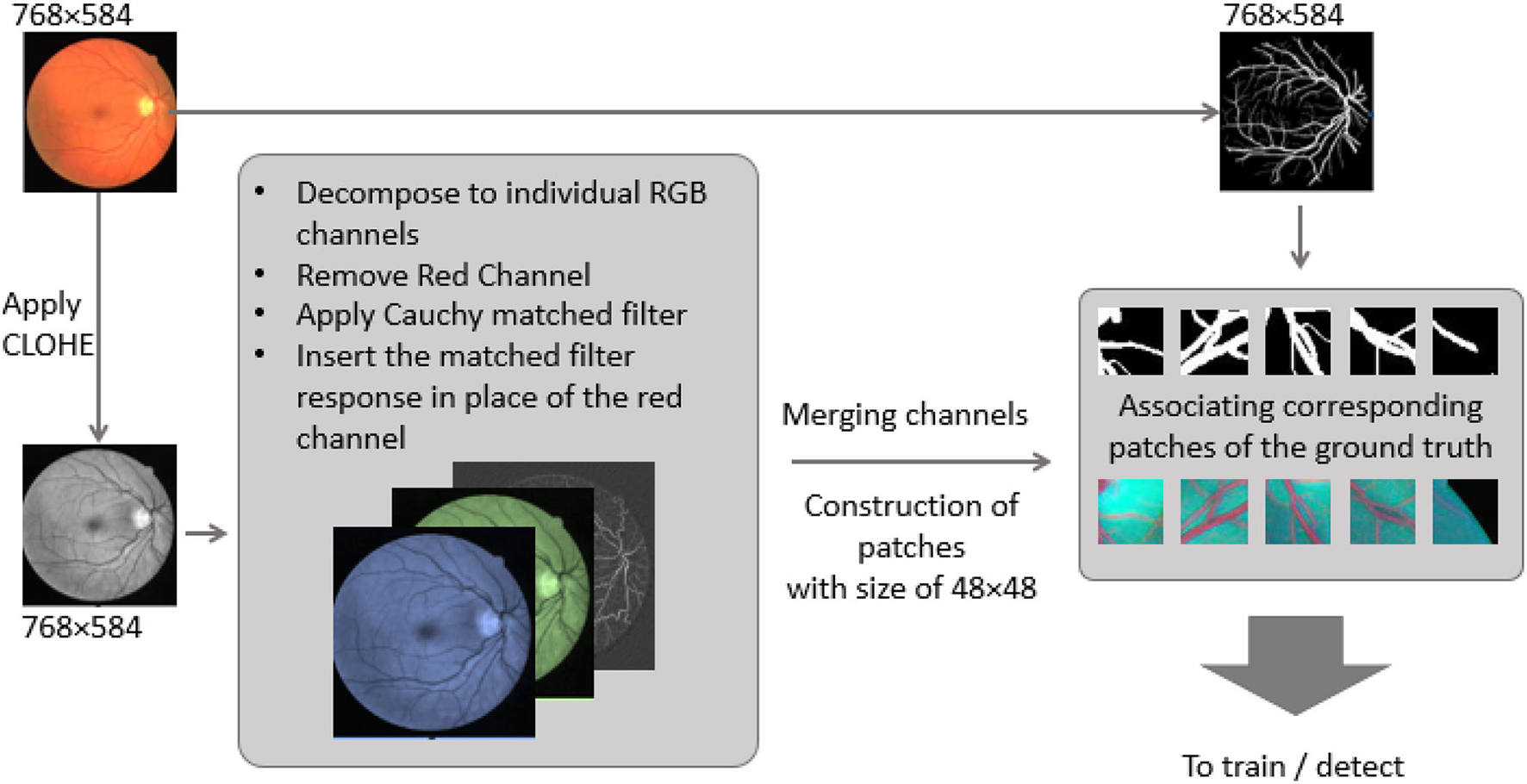

As the red channel was initially removed from the original image, the matched filter response is inserted in its place to form the new feature images (Cauchy-G-B) from which patches are created. Since there are few training images (20 for the dataset), a total of 500 overlapping 64 × 64 patches were generated per training image. In addition, data augmentation was applied by flipping patches horizontally and vertically. This operation ensured that more than 40k patches were available for the training and validation process. For testing purposes, however, the total ROI of each test image is segmented into 64 × 64 patches with no overlap. This ensures that once a prediction is available for each patch, the patches can be regrouped to form the complete predicted image. The image preparation pipeline and the patches are presented in Figure 4.

Figure 4

Image preparation pipeline, from original Fundus image to feature map patches.
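The patch handling described for training and testing can be sketched as below. The sketch works on a single 2-D channel stored as nested lists; random sampling positions, the fixed seed, and zero-padding of the right and bottom edges are assumptions, since the text does not state how patch locations are chosen or how image borders are handled. All function names are hypothetical.

```python
import random

def random_patches(image, count, size=64, seed=0):
    """Sample `count` possibly overlapping size x size patches."""
    rng = random.Random(seed)
    height, width = len(image), len(image[0])
    patches = []
    for _ in range(count):
        top = rng.randint(0, height - size)
        left = rng.randint(0, width - size)
        patches.append([row[left:left + size] for row in image[top:top + size]])
    return patches

def flip_horizontal(patch):
    return [row[::-1] for row in patch]

def flip_vertical(patch):
    return [list(row) for row in patch[::-1]]

def tile(image, size=64, fill=0):
    """Split an image into a grid of non-overlapping tiles (edges are padded)."""
    height, width = len(image), len(image[0])
    n_rows = -(-height // size)  # ceiling division
    n_cols = -(-width // size)
    padded = [list(row) + [fill] * (n_cols * size - width) for row in image]
    padded += [[fill] * (n_cols * size) for _ in range(n_rows * size - height)]
    return [
        [[row[c * size:(c + 1) * size] for row in padded[r * size:(r + 1) * size]]
         for c in range(n_cols)]
        for r in range(n_rows)
    ]

def untile(tiles, height, width):
    """Regroup a grid of tiles and crop back to the original size."""
    rows = []
    for tile_row in tiles:
        for i in range(len(tile_row[0])):
            rows.append([value for patch in tile_row for value in patch[i]])
    return [row[:width] for row in rows[:height]]

# Tiny demo with 4 x 4 patches on a 6 x 10 image so the round trip is easy to check.
demo = [[y * 10 + x for x in range(10)] for y in range(6)]
tiles = tile(demo, size=4)
restored = untile(tiles, height=6, width=10)
train = random_patches(demo, count=5, size=4)
augmented = train + [flip_horizontal(p) for p in train] + [flip_vertical(p) for p in train]
```

Because the test tiles do not overlap, regrouping the per-patch predictions is a pure rearrangement, which is what allows the predicted image to be rebuilt exactly.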

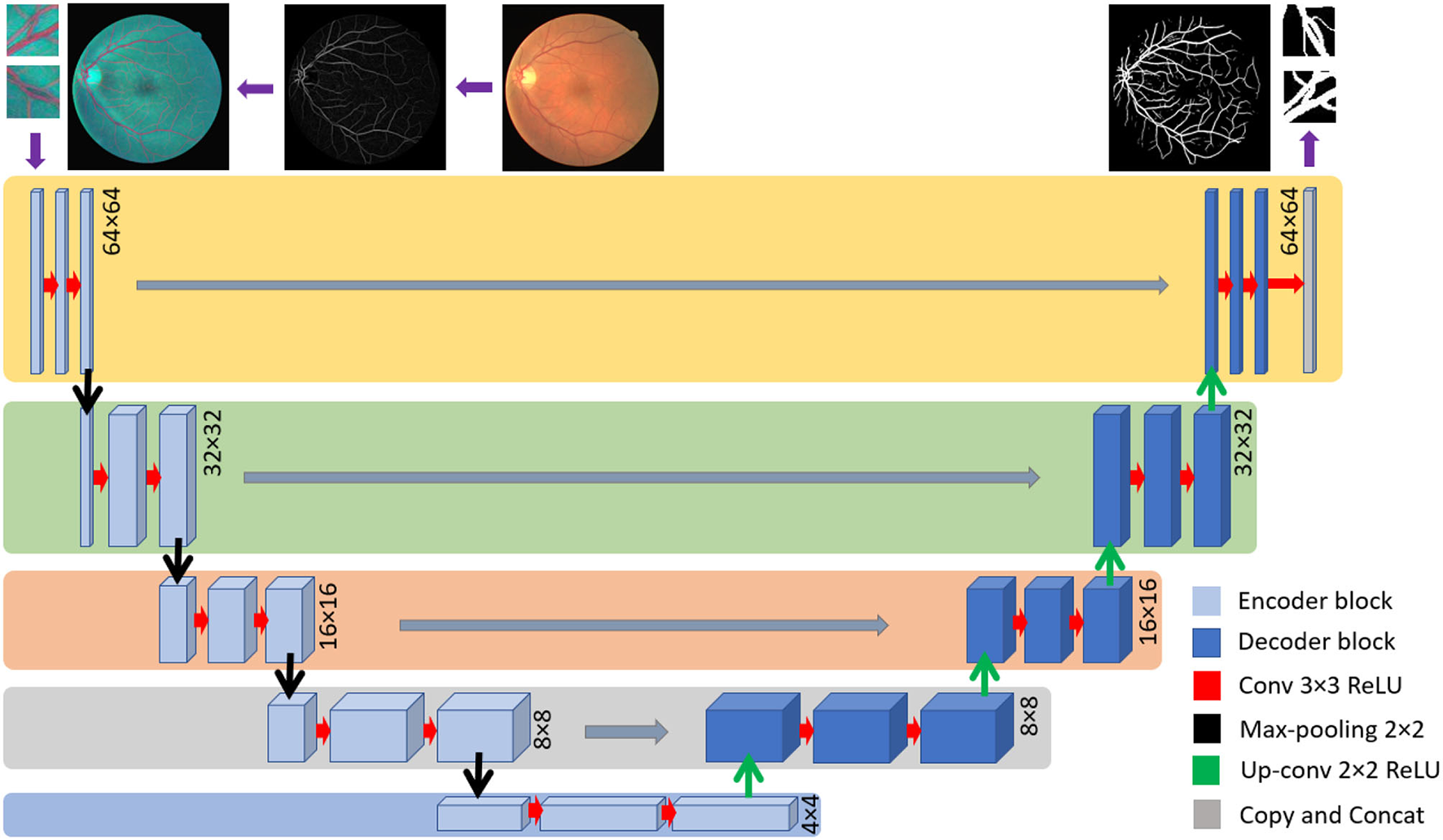

U-Net

The U-Net is widely employed among the different CNN architectures used for retinal vessel detection (41). It has shown promising output in various segmentation tasks, especially in retinal vessel segmentation (3, 4). For the end-to-end training of pixels, it uses multi-level decomposition to incorporate low-level features and multi-channel filtering. On the one hand, employing U-net enhances the training of useful low-level features. On the other hand, during testing it discards the trained features, which degrades its learning ability.

The employed U-net model is based on a fully convolutional network structure comprising an input layer, convolutional layers, pooling layers, and up-sampling layers, followed by the output layer. The encoding stage includes four down-sampling steps, each with two convolutional layers and one pooling layer. The decoding stage consists of four up-sampling steps, each with two convolutional layers and one up-sampling layer. The input layer accepts patches of size 64 × 64 × 3, while the output is designed to generate a 64 × 64 × 1 floating-point image. The first and second convolutional layers use 32 filters, with padding chosen so that the output size equals the input size. The Rectified Linear Unit (ReLU) activation function was used throughout the model except in the last layer, where the SoftMax presented in (3) was employed.

$$p_k(x) = \frac{\exp\left(a_k(x)\right)}{\sum_{k'=1}^{K} \exp\left(a_{k'}(x)\right)} \qquad (3)$$

where ak(x) stands for the activation in feature channel k at pixel position x. K is the number of classes, and pk(x) is the approximated maximum function. The U-net structure is depicted in Figure 5.
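As a small worked illustration of the SoftMax over the K channel activations of one pixel, the sketch below uses made-up activation values; the function name `pixel_softmax` is hypothetical, and subtracting the maximum is a common numerical-stability step rather than something stated in the text.

```python
import math

def pixel_softmax(activations):
    """Softmax over the K channel activations a_k(x) of a single pixel."""
    peak = max(activations)  # subtract the maximum for numerical stability
    exps = [math.exp(a - peak) for a in activations]
    total = sum(exps)
    return [e / total for e in exps]

# Made-up activations for the two classes [vessel, background].
probs = pixel_softmax([2.0, 0.5])
```

The outputs are positive and sum to one, and the class with the largest activation receives the largest probability, which is why pk(x) acts as an approximated maximum function.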

Figure 5

U-net structure implemented in this investigation.
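The feature-map sizes implied by four down-sampling and four up-sampling steps on a 64 × 64 × 3 patch can be traced with a short sketch. It relies on assumptions that the text does not confirm: 2 × 2 pooling, a bottleneck block between encoder and decoder, and a filter count that doubles at each level starting from 32 (the usual U-Net convention). Skip connections are not modeled, and the function name is hypothetical.

```python
def unet_shapes(size=64, in_channels=3, base_filters=32, depth=4, out_channels=1):
    """List (stage, height, width, channels) along an assumed U-net layout."""
    shapes = [("input", size, size, in_channels)]
    filters, side = base_filters, size
    for level in range(1, depth + 1):
        shapes.append((f"down{level} conv x2", side, side, filters))
        side //= 2  # 2 x 2 pooling halves the spatial size (assumed)
        shapes.append((f"down{level} pool", side, side, filters))
        filters *= 2  # filter doubling per level (assumed)
    shapes.append(("bottleneck conv x2", side, side, filters))
    for level in range(depth, 0, -1):
        side *= 2
        shapes.append((f"up{level} upsample", side, side, filters))
        filters //= 2
        shapes.append((f"up{level} conv x2", side, side, filters))
    shapes.append(("output", side, side, out_channels))
    return shapes

trace = unet_shapes()
for stage, height, width, channels in trace:
    print(f"{stage:20s} {height:3d} x {width:3d} x {channels}")
```

Under these assumptions the 64 × 64 patch shrinks to 4 × 4 at the deepest level and returns to 64 × 64 at the output, which matches the stated input and output sizes.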

Results and Discussions

Database

The DRIVE database contains 40 images of 768 × 584 pixels captured digitally with a Canon CR5 camera at a 45° field of view (42). Seven images in total show some lesions corresponding to degrees of retinopathy. In addition, binary maps containing manual segmentations of the vessels are provided, together with masks that cover the retinal boundaries. Unfortunately, the images are provided in a lossy compression format (JPEG), which has affected some small details; however, most researchers have used this dataset as a reference for evaluation. The images are split into two groups, training and test, each containing 20 images with three images presenting retinopathy symptoms.

Implementation Details

We first extracted patches from the DRIVE retinal images to train a DL model. We then fed them to the designed network as input, together with the corresponding label patches obtained from the ground truth data. In other words, following the end-to-end strategy for training a U-net, the preprocessed patches and their corresponding ground truth patches were used to obtain a model specific to the DRIVE database images. Data splitting was carried out by using 90% of all extracted patches for training and 10% for validation. This strategy has been adopted in several previous studies, including (33) and (42–44). A total of 200 epochs was used for the training process.
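The 90%/10% split of the extracted patches can be sketched as an index split. The shuffling, the fixed seed, and the patch count of 40,000 are illustrative assumptions; the text only states that more than 40k patches were available.

```python
import random

def split_patches(n_patches, train_fraction=0.9, seed=42):
    """Shuffle patch indices and split them into training and validation sets."""
    indices = list(range(n_patches))
    random.Random(seed).shuffle(indices)
    cut = int(round(n_patches * train_fraction))
    return indices[:cut], indices[cut:]

train_idx, val_idx = split_patches(40_000)
```

Splitting indices rather than the patches themselves keeps each feature patch paired with its ground-truth label patch.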

Training Details

The Adam optimizer was employed to speed up the training process and to avoid becoming trapped in local minima. Its parameters were set to β1 = 0.9 and β2 = 0.999 to handle sparse gradients on noisy problems (45). The initial learning rate was set to η = 0.01 and was decreased at a rate of ηi = 0.9ηi−1 every 10 epochs when the value of the loss function saturated.
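The learning-rate shrinkage can be illustrated with a simple step-decay sketch. It is a simplification: the decay is applied unconditionally every 10 epochs, whereas the text ties it to saturation of the loss. The function name `step_decay` is hypothetical.

```python
def step_decay(epoch, initial_lr=0.01, factor=0.9, every=10):
    """Learning rate at a given epoch with a 0.9x shrink every 10 epochs."""
    return initial_lr * factor ** (epoch // every)

# Learning rate for each of the 200 training epochs.
schedule = [step_decay(epoch) for epoch in range(200)]
```

Under this simplification the rate is multiplied by 0.9 a total of 19 times over 200 epochs, which is the lowest value the rate could reach if the loss saturated in every 10-epoch window.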

The system configuration used for the training and testing process includes an Intel Core i7-7300 CPU with 64GB of memory. The code was developed in Python 3.7 using Anaconda and Keras as the DL framework and TensorFlow. The preprocessing, including the preparation of multi-channel feature images, was quite fast per each image (<10 s), but instead, each training epoch required more than 2 h to complete.

Evaluation Metric

Retinal vessel segmentation is commonly treated as a binary classification task. The predicted label of each pixel is compared with the ground truth class, which is either vessel (positive) or retinal tissue (negative). Each predicted pixel therefore falls into one of four groups:

- True Positive (TP) pixels are predicted as vessels and belong to vessels in the ground truth images (correct prediction).
- True Negative (TN) pixels are predicted as non-vessels and belong to retinal tissue (correct prediction).
- False Positive (FP) pixels are predicted as vessels but belong to retinal tissue (incorrect prediction).
- False Negative (FN) pixels are predicted as retinal background but belong to vessels (incorrect prediction).

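For evaluation purposes, the performance of the trained models is measured by Sensitivity (Sen), Specificity (Spc), Accuracy (Acc), and Precision (Prc), which are standard measures for evaluating binary results and are given in (4–7).

$$Sen = \frac{TP}{TP + FN} \qquad (4)$$

$$Spc = \frac{TN}{TN + FP} \qquad (5)$$

$$Acc = \frac{TP + TN}{TP + TN + FP + FN} \qquad (6)$$

$$Prc = \frac{TP}{TP + FP} \qquad (7)$$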
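The four metrics can be computed from the confusion counts as in the sketch below. It uses the standard definitions (FP: predicted vessel but actually background; FN: predicted background but actually vessel), flattened label lists, and made-up labels; it does not guard against empty classes, and the function names are hypothetical.

```python
def confusion_counts(predicted, truth):
    """Count TP, TN, FP, FN for flattened binary label sequences."""
    tp = tn = fp = fn = 0
    for p, t in zip(predicted, truth):
        if p and t:
            tp += 1
        elif not p and not t:
            tn += 1
        elif p and not t:
            fp += 1
        else:
            fn += 1
    return tp, tn, fp, fn

def metrics(tp, tn, fp, fn):
    """Sensitivity, specificity, accuracy, and precision from the counts."""
    return {
        "Sen": tp / (tp + fn),
        "Spc": tn / (tn + fp),
        "Acc": (tp + tn) / (tp + tn + fp + fn),
        "Prc": tp / (tp + fp),
    }

# Made-up labels: 1 = vessel, 0 = retinal tissue.
predicted = [1, 1, 0, 0, 1, 0, 0, 0]
truth = [1, 0, 0, 0, 1, 1, 0, 0]
counts = confusion_counts(predicted, truth)
scores = metrics(*counts)
```

For these labels the counts are TP = 2, TN = 4, FP = 1, FN = 1, giving Sen = 2/3, Spc = 0.8, Acc = 0.75, and Prc = 2/3.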

In addition, the area under the Receiver Operating Characteristic curve (AUROC) for comparison with contemporary methods is obtained, as well.

The overall output of the proposed method is assessed via the receiver operating characteristic (ROC) curve, which reflects the relationship between the true positive and false positive rates at different thresholds when the network output is mapped into binary classes. For this purpose, global thresholding with different threshold values was applied: sensitivity and the false-positive ratio (FPR) were calculated at each threshold value, and the ROC curve was plotted from the resulting sensitivity and FPR pairs. The same strategy is adopted for obtaining the average precision, computed via (7) at the various threshold values.
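The threshold sweep behind the ROC curve can be sketched as follows. The scores and labels are made up, the thresholds 0.01 to 0.99 are an illustrative grid, the area is estimated with the trapezoidal rule after adding the (0, 0) and (1, 1) end points, and both classes are assumed to be present. The function names are hypothetical.

```python
def roc_points(scores, truth, thresholds):
    """Return sorted (FPR, sensitivity) pairs, one per global threshold."""
    positives = sum(truth)
    negatives = len(truth) - positives
    points = []
    for tau in thresholds:
        tp = sum(1 for s, t in zip(scores, truth) if s >= tau and t)
        fp = sum(1 for s, t in zip(scores, truth) if s >= tau and not t)
        points.append((fp / negatives, tp / positives))
    return sorted(points)

def auc(points):
    """Trapezoidal area under the curve, with the (0, 0) and (1, 1) end points."""
    curve = sorted([(0.0, 0.0)] + list(points) + [(1.0, 1.0)])
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(curve, curve[1:]))

# Made-up network outputs for six pixels: 1 = vessel, 0 = retinal tissue.
scores = [0.9, 0.8, 0.35, 0.6, 0.2, 0.1]
truth = [1, 1, 1, 0, 0, 0]
thresholds = [i / 100 for i in range(1, 100)]
points = roc_points(scores, truth, thresholds)
area = auc(points)
```

In this toy case eight of the nine vessel/background pairs are ranked correctly, so the area equals 8/9.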

Sensitivity Analysis of Global Threshold

To obtain robust evaluations for the metrics that are sensitive to the employed global threshold (Acc, Sen, Spc, and Prc), a range of threshold values τi ∈ {0.01, …, 0.99} was studied. The study revealed that the changes in the metrics are slight around τ = 0.5, which indicates that this threshold value can be used for further evaluations.

Discussion and Comparison With Previous Approaches

Based on the obtained results, the inclusion of an additional feature channel (the Cauchy matched filter response) improved the detection rate of vessels by enhancing vessel contrast against the background. At the same time, this strategy filtered out the noisy red channel. In addition, using a bank of filters at different scales for the matched filter allowed vessels of various diameters to be segmented, which assisted the DL method in improving the proposed classifier.

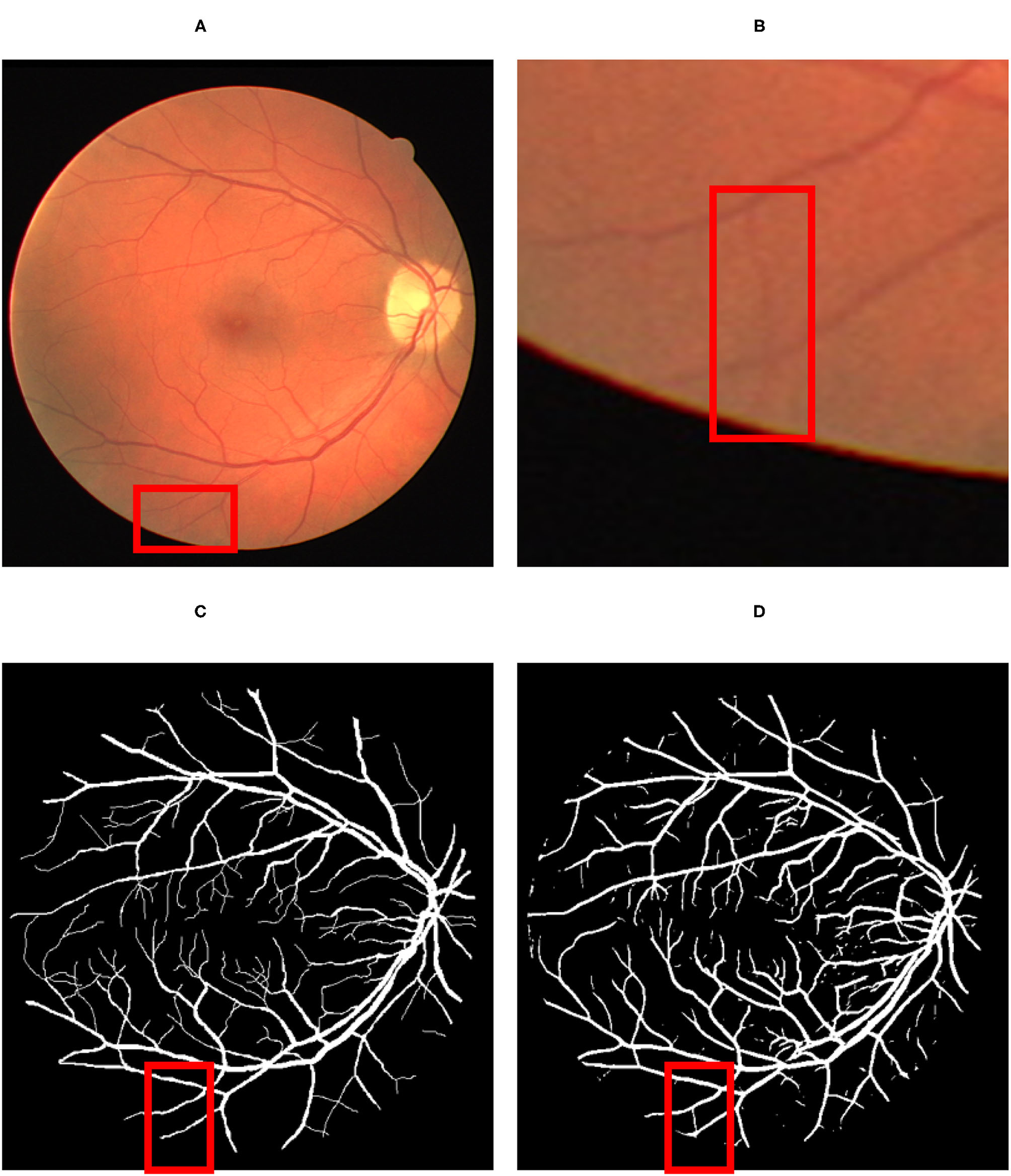

Concerning the subjective characteristic of manual labeling of the ground-truth data, a detailed visual comparison between the predicted vessel map (Figure 6D) and the ground-truth (Figure 6C) reveals that the proposed method can extract more vessels that had not been labeled before in ground truth images (Figures 6A,B).

Figure 6

The result of the detection: (A) original fundus image; (B) a thin vessel; (C) the corresponding ground truth map, which does not contain the vessel; (D) the thin vessel detected by the proposed model. The region marked by the red square in (A) corresponds to the region shown in the ground truth map (C). Similarly, the region marked by the red square in (B) corresponds to the region marked by the red square in (D).

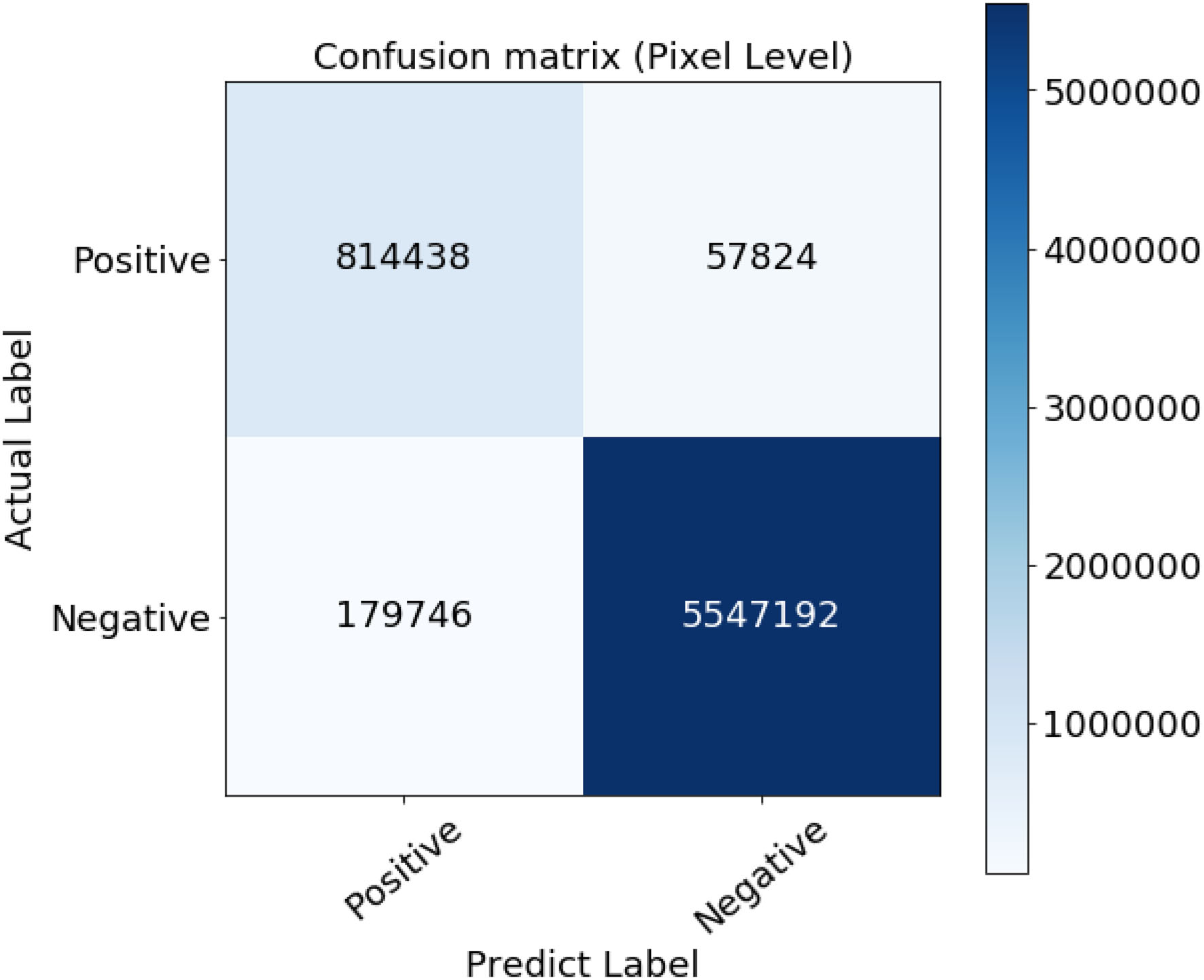

However, since those newly extracted vessels are not included in the subjectively extracted ground truth images, the total TP and TN pixels decreased while FP and FN increased, respectively. Such incomplete labeling has kept the metrics slightly short of a perfect detection. Fortunately, since the ground truth data is common to all the compared methodologies, they can be evaluated on the same basis. The confusion matrix presented in Figure 7 gives the details of the evaluation.

Figure 7

Confusion matrix of the evaluation results.

Additional visual comparison shows that the proposed method detected thinner vessels in the central regions of the retina, where vessels are distributed at low density. This is because the shape of these vessels fits the employed Cauchy curve better.

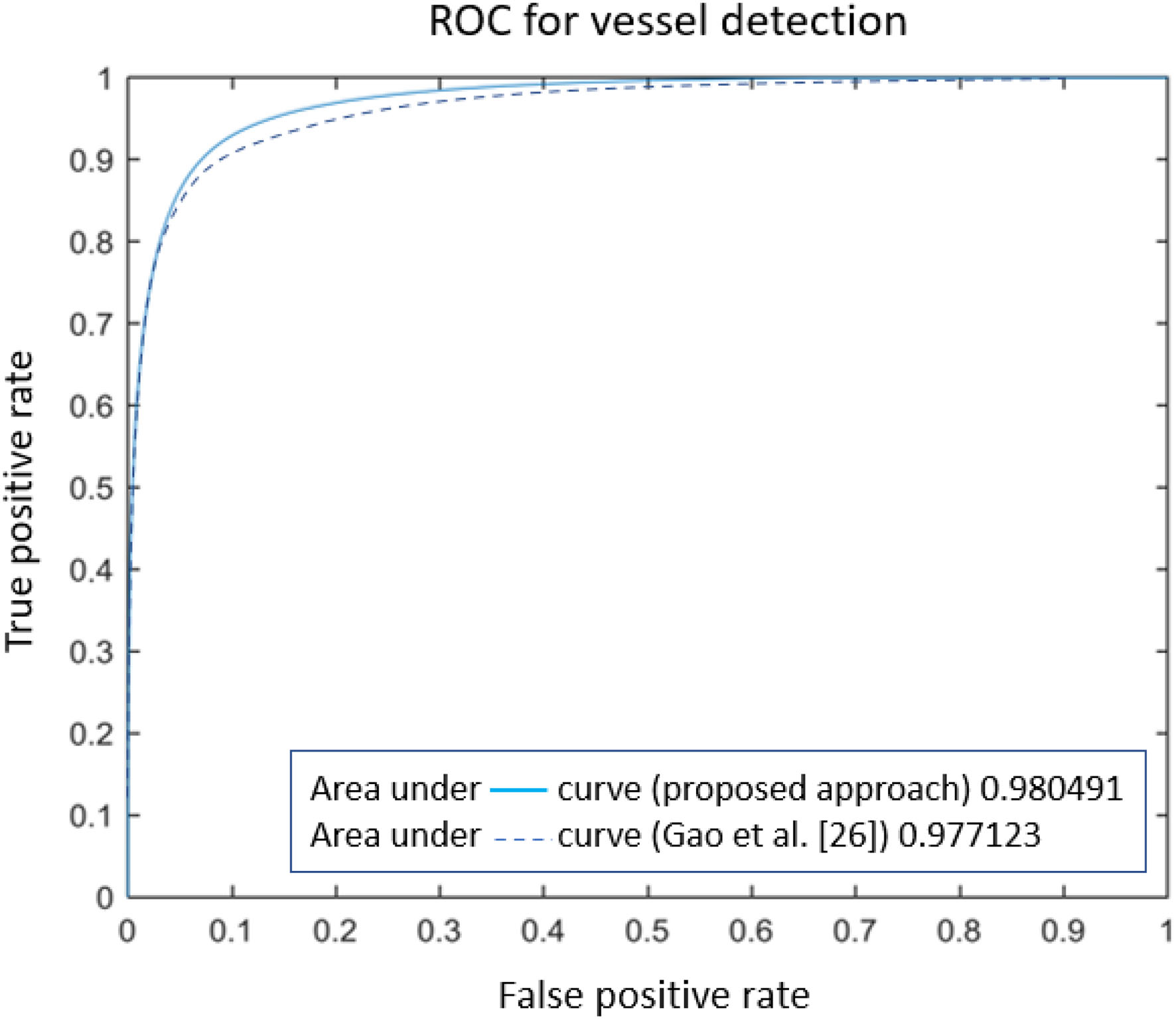

Numerical comparison with other approaches (reported in Table 2) shows that the proposed model ranks among the state-of-the-art methods in terms of Sensitivity, Specificity, Accuracy, and area under the ROC curve. However, some methods rank higher [such as the one presented in (44)], as they employed octave convolutions to extract multiple-spatial-frequency features in order to capture retinal vasculature of different thicknesses. This technique allowed them to improve the U-net structure and, at the same time, to benefit from these features to enhance the detection rate significantly. Figure 8 illustrates the ROC curve of the proposed method, which shows a larger area under the curve (0.9805) than the baseline model (0.9771) presented by Gao et al. (33).

Table 2

| Method | Acc | Sen | Spc | Prc | AUROC |
|---|---|---|---|---|---|
| Fraz et al. (40) | 0.9422 | 0.7302 | 0.9742 | 0.9600 | 0.9502 |
| Nicola et al. (48) | 0.9467 | 0.7731 | 0.9724 | NA | 0.9588 |
| Lahiri et al. (24) | 0.9480 | 0.7500 | 0.9800 | NA | 0.9500 |
| Melinscak et al. (27) | 0.9466 | 0.7276 | 0.9785 | NA | 0.9749 |
| Fu et al. (47) | 0.9470 | 0.7294 | NA | NA | 0.9523 |
| Gao et al. (33) | 0.9636 | 0.7802 | 0.7802 | 0.8948 | 0.9771 |
| Mou et al. (43) | 0.9607 | 0.8132 | 0.9783 | NA | 0.9796 |
| Fan et al. (44) | 0.9664 | 0.8374 | 0.9790 | NA | 0.9835 |
| Wang et al. (39) | 0.9511 | 0.7986 | 0.9736 | NA | 0.9740 |
| Saroj et al. (46) | 0.9509 | 0.7278 | 0.9724 | NA | 0.8501 |
| Proposed method | 0.9640 | 0.8192 | 0.9896 | 0.9337 | 0.9805 |

Assessment of the obtained results with contemporary approaches.

Figure 8

The comparison of ROC curves for retinal vessel detection methods.

Wang et al. proposed a model that provides an accuracy of 0.9511 (39), while the model proposed by Saroj et al. shows an accuracy of 0.9509 (46). Therefore, it can be concluded that the accuracy of the model proposed in this paper (0.9640) is superior to that of these models.

Conclusion and Future Work

Targeting retinal vessel detection, this paper offers a novel approach that combines the response of a traditional matched filter with a fully convolutional U-net. The novelty of the suggested method is to replace the noisy red channel of fundus images with a channel that provides more vessel details. This is motivated by the fact that the red blood vessels are barely visible against the red retinal tissue. The new pseudo-color image, called a multi-channel feature map, is processed by generating several patches for training the U-net. The corresponding labels for the training patches are provided by the ground truth data. This technique accelerates the training process and provides enough data to train the DL model. Evaluation of the results shows that vessel detection can be accomplished with an accuracy of 96.40% and a sensitivity of around 82%. This difference arises because thin vessels were discarded during the subjective annotation of the ground truth images. In future work, the blue channel of retinal images can be replaced with a Gabor-filter response, and the filter bank can be extended to cover vessels of a variety of widths. Additional channels will also be investigated to assist the DL model in associating pixel values with the vessel or retinal tissue classes. Finally, in comparison with other state-of-the-art methodologies, the proposed method shows a promising result, highlighting the importance of combining feature maps to improve the detection of retinal vessels via U-net assisted DL approaches.

Gathering large quantities of fundus images is one of the most challenging aspects of creating strong deep-learning systems. Designing algorithms that can work from limited data is one solution. Apart from the limited images available in public datasets, there is still an opportunity to test blood vessel segmentation approaches on noisy real-life pathological retinal images. The proposed model has been tested only on the DRIVE dataset; it can also be tested on the other available datasets to evaluate its accuracy and to compare it with other models. The proposed model also needs to be tested on real-time pathological retinal images.

Funding

This work was supported by the Deanship of Scientific Research, King Faisal University, Saudi Arabia (grant number NA000256).

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Statements

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

SA and MS: conceptualization, writing—original draft, and preparation. SA, MH, and SB: methodology. SB: validation. MH and FJ: resources. SB, MH, and RA: writing—review and editing and funding acquisition. MS, FJ, and SA: supervision. SA, RA, and SB: project administration. All authors have read and agreed to the published version of the manuscript.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1.

Jusoh F Haron H Ibrahim R Che Azemin MZ . An overview of retinal blood vessels segmentation. In: Advanced Computer and Communication Engineering Technology. Phuket: Springer International Publishing. (2016) p. 63–71. 10.1007/978-3-319-24584-3_6

2.

GeethaRamani R Balasubramanian L . Retinal blood vessel segmentation employing image processing and data mining techniques for computerized retinal image analysis. Biocybern Biomed Eng. (2016) 36:102–18. 10.1016/j.bbe.2015.06.004

3.

Fraz MM Remagnino P Hoppe A Uyyanonvara B Rudnicka AR Owen CG et al . Blood vessel segmentation methodologies in retinal images-a survey. Comput Methods Programs Biomed. (2012) 108:407–33. 10.1016/j.cmpb.2012.03.009

4.

Soares J Leandro J Cesar Junior R Jelinek H Cree M . Retinal vessel segmentation using the 2-D gabor wavelet and supervised classification. IEEE Trans Med Imaging. (2006) 25:1214–1222. 10.1109/TMI.2006.879967

5.

Orujov F Maskeliunas R Damaševičius R Wei W . Fuzzy based image edge detection algorithm for blood vessel detection in retinal images. Appl Soft Comput. (2020) 94:106452. 10.1016/j.asoc.2020.106452

6.

Leopold HA Orchard J Zelek J Lakshminarayanan V . Use of Gabor filters and deep networks in the segmentation of retinal vessel morphology. In: Proc. SPIE. (2017). 10.1117/12.2252988

7.

Imran J Li Y Pei J Yang J Wang Q . Comparative analysis of vessel segmentation techniques in retinal images. IEEE Access. (2019) 7:114862–87. 10.1109/ACCESS.2019.2935912

8.

Vermeer KA Vos FM Lemij HG Vossepoel AM . A model based method for retinal blood vessel detection. Comput Biol Med. (2004) 34:209–219. 10.1016/S0010-4825(03)00055-6

9.

Chaudhuri S Chatterjee S Katz N Nelson M Goldbaum M . Detection of blood vessels in retinal images using two-dimensional matched filters. IEEE Trans Med Imaging. (1989) 8:263–269. 10.1109/42.34715

10.

Al-Rawi M Qutaishat M Arrar M . An improved matched filter for blood vessel detection of digital retinal images. Comput Biol Med. (2007) 37:262–267. 10.1016/j.compbiomed.2006.03.003

11.

Wu C Agam G Stanchev P . A general framework for vessel segmentation in retinal images. In: 2007 International Symposium on Computational Intelligence in Robotics and Automation. (2007) p. 37–42. 10.1109/CIRA.2007.382924

12.

Dizdaro B Ataer-Cansizoglu E Kalpathy-Cramer J Keck K Chiang MF Erdogmus D . Level sets for retinal vasculature segmentation using seeds from ridges and edges from phase maps. IEEE International Workshop on Machine Learning for Signal Processing. (2012) 1–6. 10.1109/MLSP.2012.6349730

13.

Zhang J Tang Z Gui W Liu J . Retinal vessel image segmentation based on correlational open active contours model. Chinese Automation Congress (CAC). (2015) 993–8.

14.

Ali A Zaki WMDW Hussain A . Retinal blood vessel segmentation from retinal image using B-COSFIRE and adaptive thresholding. Indones J Electr Eng Comput Sci. (2019) 13:1199–207. 10.11591/ijeecs.v13.i3.pp1199-1207

15.

Dhanamjayulu C et al . Identification of malnutrition and prediction of BMI from facial images using real-time image processing and machine learning. IET Image Process. (2021) ipr2.12222.

16.

Srinivasu PN Bhoi AK Jhaveri RH Reddy GT Bilal M . Probabilistic deep Q network for real-time path planning in censorious robotic procedures using force sensors. J Real Time Image Process. (2021) 18:1773–1785. 10.1007/s11554-021-01122-x

17.

Rehman A Harouni M Karimi M Saba T Bahaj SA Awan MJ . Microscopic retinal blood vessels detection and segmentation using support vector machine and K-nearest neighbors. Microsc Res Tech. (2022) 1–16. 10.1002/jemt.24051

18.

Gu SH Nicolas V Lalis A Sathirapongsasuti N Yanagihara R . Complete genome sequence and molecular phylogeny of a newfound hantavirus harbored by the Doucet's musk shrew (Crocidura douceti) in Guinea. Infect Genet Evol. (2021) 20:118–123. 10.1016/j.meegid.2013.08.016

19.

Singla M Soni S Saini P Chaudhary A Shukla KK . Diabetic retinopathy detection using twin support vector machines. Adv Intell Syst Comput. (2020) 1064:91–104. 10.1007/978-981-15-0339-9_9

20.

Badar M Haris M Fathima A . Application of deep learning for retinal image analysis: A review. Comp Sci Rev. (2020) 35:100203. 10.1016/j.cosrev.2019.100203

21.

Gadekallu TR Rajput DS Reddy MPK Lakshmanna K Bhattacharya S Singh S . A novel PCA-whale optimization-based deep neural network model for classification of tomato plant diseases using GPU. J Real Time Image Process. (2020) 18:1383–96. 10.1007/s11554-020-00987-8

22.

Wu H Zhao S Zhang X Sang A Dong J Jiang K . Back-propagation artificial neural network for early diabetic retinopathy detection based on a priori knowledge. J Phys: Conf Ser. (2020) 1437:012019. 10.1088/1742-6596/1437/1/012019

23.

Wang S Yin Y Cao G Wei B Zheng Y Yang G . Hierarchical retinal blood vessel segmentation based on feature and ensemble learning. Neurocomputing. (2015) 149:708–717. 10.1016/j.neucom.2014.07.059

24.

Lahiri A Roy AG Sheet D Biswas PK . Deep neural ensemble for retinal vessel segmentation in fundus images towards achieving label-free angiography. 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). (2016) 1340–1343. 10.1109/EMBC.2016.7590955

25.

Maji D Santara A Mitra P Sheet D . Ensemble of deep convolutional neural networks for learning to detect retinal vessels in fundus images. CoRR. (2016) ArXiv abs/1603.04833:1–4.

26.

Liskowski P Krawiec K . Segmenting retinal blood vessels with deep neural networks. IEEE Trans Med Imaging. (2016) 35:2369–80. 10.1109/TMI.2016.2546227

27.

Melinscak M Prentasic P Loncaric S . Retinal vessel segmentation using deep neural networks. VISAPP 2015. - 10th Int Conf Comput Vis Theory Appl VISIGRAPP, Proc. (2015) 1:577–582. 10.5220/0005313005770582

28.

Dasgupta A Singh S . A fully convolutional neural network based structured prediction approach towards the retinal vessel segmentation. IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017). (2017) 248–251. 10.1109/ISBI.2017.7950512

29.

Wu A Xu Z Gao M Buty M Mollura DJ . Deep Vessel Tracking: A Generalized Probabilistic Approach via Deep Learning. (2016). Available online at: ieeexplore.ieee.org. 10.1109/ISBI.2016.7493520

30.

Fu H Xu Y Lin S Wong DWK Liu J . Deepvessel: retinal vessel segmentation via deep learning and conditional random field. Lect Notes Comput Sci. (2016) 9901:132–139. 10.1007/978-3-319-46723-8_16

31.

Quasim MT Shaikh A Shuaib M Sulaiman A Alam S Asiri Y . Smart healthcare management evaluation using fuzzy decision making method. Res Sq. (2021) 1–19. 10.21203/rs.3.rs-424702/v1

32.

Upreti K Syed M Alam M Alhudhaif A Shuaib M . Generative adversarial networks based cognitive feedback analytics system for integrated cyber-physical system and industrial iot networks. Res Sq. (2021) 1–14. 10.21203/rs.3.rs-924288/v1

33.

Gao X Cai Y Qiu C Cui Y . Retinal blood vessel segmentation based on the Gaussian matched filter and U-net. 10th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI). (2017) 1–5. 10.1109/CISP-BMEI.2017.8302199

34.

Zolfagharnasab H Naghsh-Nilchi AR . Cauchy based matched filter for retinal vessels detection. J Med Signals Sens. (2014) 4:1. 10.4103/2228-7477.128432

35.

Roy A , KS. Retinal vessel detection using residual Y-net. (2021). Available online at: ieeexplore.ieee.org. 10.1109/SMARTGENCON51891.2021.9645749

36.

Siddique N Paheding S et al . U-net and its variants for medical image segmentation: A review of theory and applications. IEEE Access. (2021). Available online at: ieeexplore.ieee.org. 10.1109/ACCESS.2021.3086020

37.

Ma Y Zhu Z Dong Z Shen T . Multichannel Retinal Blood Vessel Segmentation Based on the Combination of Matched Filter U-Net Network. (2021). Available online at: hindawi.com. 10.1155/2021/5561125

38.

Shabbir A Rasheed A Shehraz H Saleem A Zafar B Sajid M et al . Blood vessel segmentation methodologies in retinal images-a survey. Math Biosci Eng. (2021) 18:2033–76. 10.3934/mbe.2021106

39.

Wang C Zhao Z Ren Q Xu Y . Dense U-net based on patch-based learning for retinal vessel segmentation. (2019). Available online at: mdpi.com. 10.3390/e21020168

40.

Fraz MM Basit A Barman SA . Application of morphological bit planes in retinal blood vessel extraction. J Digit Imaging. (2013) 26:274–286. 10.1007/s10278-012-9513-3

41.

Gadekallu TR et al . Hand gesture classification using a novel CNN-crow search algorithm. Complex Intell Syst. (2021) 7:1855–68. 10.1007/s40747-021-00324-x

42.

Staal J Abràmoff MD Niemeijer M Viergever MA Van Ginneken B . Ridge-based vessel segmentation in color images of the retina. IEEE Trans Med Imaging. (2004) 23:501–9. 10.1109/TMI.2004.825627

43.

Mou L Chen L Cheng J Gu Z Zhao Y Liu J . Dense dilated network with probability regularized walk for vessel detection. IEEE Trans Med Imaging. (2020) 39:1392–403. 10.1109/TMI.2019.2950051

44.

Fan Z et al . Accurate retinal vessel segmentation via octave convolution neural network. arXiv. (2019) abs/1906.1.

45.

He K Zhang X Ren S Sun J . Delving deep into rectifiers: surpassing human-level performance on imagenet classification. Proc IEEE Int Conf Comput Vis. (2015) 2015:1026–1034. 10.1109/ICCV.2015.123

46.

Saroj S Kumar R . Frechet PDF based matched filter approach for retinal blood vessels segmentation. Elsevier. (2020). 10.1016/j.cmpb.2020.105490

47.

Fu H Xu Y Wong DWK Liu J . Retinal vessel segmentation via deep learning network and fully-connected conditional random fields. In: Proceedings - International Symposium on Biomedical Imaging. (2016) p. 698–701. 10.1109/ISBI.2016.7493362

48.

Strisciuglio N Azzopardi G Vento M Petkov N . Supervised vessel delineation in retinal fundus images with the automatic selection of B-COSFIRE filters. Mach Vis Appl. (2016) 27:1137–49. 10.1007/s00138-016-0781-7

Keywords

multichannel, retinal vessels, retinopathy, U-Net, Cauchy distribution

Citation

Bhatia S, Alam S, Shuaib M, Hameed Alhameed M, Jeribi F and Alsuwailem RI (2022) Retinal Vessel Extraction via Assisted Multi-Channel Feature Map and U-Net. Front. Public Health 10:858327. doi: 10.3389/fpubh.2022.858327

Received

19 January 2022

Accepted

04 February 2022

Published

17 March 2022

Volume

10 - 2022

Edited by

Thippa Reddy Gadekallu, VIT University, India

Reviewed by

Praveen Kumar, VIT University, India; Haider Khalaf Jabbar, Imam Jafar Al-Sadiq University, Iraq

Copyright

© 2022 Bhatia, Alam, Shuaib, Hameed Alhameed, Jeribi and Alsuwailem.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Surbhi Bhatia sbhatia@kfu.edu.sa

This article was submitted to Digital Public Health, a section of the journal Frontiers in Public Health
