- Department of Applied Linguistics, Faculty of Informatics and Management, University of Hradec Kralove, Hradec Kralove, Czechia

Nowadays, artificial intelligence (AI) affects our lives every day and brings both benefits and risks to all spheres of human activity, including education. Among these risks, the most striking appear to be the ethical issues of AI use, such as the misuse of private data or the surveillance of people's lives. Therefore, the aim of this systematic review is to describe the key ethical issues related to the use of AI-driven mobile apps in education and to outline some of the implications emerging from the studies identified on this topic. The methodology of this review was based on the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) guidelines. The results indicate four key ethical principles that should be followed; in addition, the AI-specific principle of algorithmovigilance should be considered in order to monitor, understand, and prevent the adverse effects of algorithms in the use of AI in education. Furthermore, all stakeholders should be identified and should engage and collaborate jointly to ensure the ethical use of AI in education. Thus, the contribution of this study lies in emphasizing the need for joint cooperation and research among all stakeholders when using AI-driven mobile technologies in education, with special attention to ethical issues, since review-based research on this topic is scarce and has so far been neglected.

Introduction

At present, artificial intelligence (AI) is an indispensable part of people's lives and affects all fields of human activity, including education, where it helps to enhance personalized learning and thus make learning more student-centered, for example through exploratory and collaborative learning, automatic assessment systems (1, 2), mobile game-based learning (3, 4), or conversational chatbots for developing foreign language skills (5–7). However, many aspects of AI use still need to be researched because data in these areas remain scarce, for instance on the impact of AI-driven tools on enhancing human cognition or on second language acquisition. What is known is that the use of AI technology in class not only contributes to students' learning and learning outcomes but can also reduce teachers' workload to some extent (8–10). Fortunately, however, AI-driven technology cannot in any way replace teachers' pedagogical work (11), since it cannot judge which methods best meet students' learning needs.

Despite the undeniable benefits that AI technology brings to both students and teachers, there are certain risks and threats associated with ethical issues, and these should be carefully evaluated in both conceptual and empirical studies that clearly delineate where the potential threats lie. One major risk concerns privacy, or the lack of it. AI technology based on algorithmic applications deliberately collects personal data from its users, who do not know exactly what kind of data, or how much of it, is being collected. Although user consent is legally required before using any AI technology in many countries and regions (such as the European Union), users still do not know what happens to their data within the system (12). Therefore, AI technology companies should minimize the data they collect and include only the information that can enhance student learning (1).

In addition, the use of chatbots for developing foreign language speaking and writing skills, for instance, raises another problem: the monitoring of students' ideas, which may decrease students' engagement with the tool because they do not want to be tracked, or even stalked, for their ideas [cf. (13)]. This aspect is also related to students' autonomy, i.e., their ability to govern their own learning, since algorithms can predict students' actions from the information they provide (14). As Reiss (15) puts it, every AI system contains the fruits of countless hours of human thinking. A further risk is gender bias, for example in machine translation tools (16), which can create an environment that is unfair from a gender perspective.

To reduce these risks, in October 2022 the European Commission (17) published a set of ethical guidelines for primary and secondary school teachers and school leaders, intended to help them integrate AI technology and data into school education effectively and to raise awareness of their possible threats. The ethical use of AI and data in learning, teaching, and assessment is based on four key ethical considerations: human agency, fairness, humanity, and justified choice. In addition, the document lists new competencies that educators need for the ethical use of AI technology and data for educational purposes, such as being able to critically describe the positive and negative impacts of AI and data use in education, or to understand the basics of AI and learning analytics.

The aim of this review is therefore to describe the key ethical issues related to the use of AI-driven mobile apps in education and to draw the academic community's attention to issues that might be set aside in the quest for research outcomes. The following research questions were formulated:

1. What are the major ethical issues that could be observed when using AI-driven mobile apps for educational purposes?

2. What future lines of research on this topic can be derived from the available studies?

Methodology

To answer the research questions, the study strictly followed the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) guidelines. This approach was used to delineate the main trajectory of the topic so that further implications could be drawn. The following inclusion and exclusion criteria were applied.

Inclusion criteria

• All studies focusing on the research topic.

• Published between January 2018 and December 2022, i.e., the last five years.

• Scopus and Web of Science databases.

• Peer-reviewed journal articles written in English.

• Search terms applied to the title, abstract, or keywords of the articles.

• Open access journals.

Exclusion criteria

• Published earlier than 1 January 2018.

• AI-driven apps that are not used for educational purposes.

• Other (less reputable) databases.

• Languages other than English.

• Other than open access articles (such as gold and hybrid access, etc.).

Search string

The following search string was applied to create a dataset of all relevant articles.

(“AI” OR “artificial intelligence”) AND education AND ethic*.
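To make the logic of this search string explicit, the following Python sketch applies it to the title, abstract, and keywords of a bibliographic record. It is only an approximation under stated assumptions: the function name `matches_search_string` and its parameters are hypothetical, matching is case-insensitive on whole words, and `ethic*` is treated as a prefix wildcard (e.g., ethics, ethical). Scopus and Web of Science apply their own tokenization and variant-matching rules, which this sketch does not reproduce.

```python
import re
from typing import Sequence

# Each operand of the query as a case-insensitive whole-word pattern.
# Assumption: real databases tokenize and match variants differently.
AI_TERMS = re.compile(r"\b(?:ai|artificial intelligence)\b", re.IGNORECASE)
EDUCATION_TERM = re.compile(r"\beducation\b", re.IGNORECASE)
ETHIC_WILDCARD = re.compile(r"\bethic\w*", re.IGNORECASE)  # ethic* as a prefix wildcard


def matches_search_string(title: str, abstract: str = "", keywords: Sequence[str] = ()) -> bool:
    """Hypothetical helper: apply ("AI" OR "artificial intelligence") AND education AND ethic*
    to the combined title, abstract, and keywords of one record."""
    text = " ".join([title, abstract, *keywords])
    # AND semantics: every operand must match somewhere in the combined text.
    return all(p.search(text) is not None for p in (AI_TERMS, EDUCATION_TERM, ETHIC_WILDCARD))


# Usage with made-up records (not studies from the review):
print(matches_search_string("Ethical issues of AI-driven mobile apps", "Implications for education."))  # True
print(matches_search_string("Artificial intelligence in medicine", "Ethics of diagnosis.", ["ethics"]))  # False
```

Under the exact-word assumption, a record that mentions only "educational" would not match the unwildcarded term `education`; the databases themselves may behave differently, which is one reason manual screening remained necessary.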

As the search string was rather broad, all irrelevant articles had to be eliminated manually so that only those contributing significantly to the topic remained. The initial search generated 118 documents from Scopus and 467 from the Web of Science. After all inclusion and exclusion criteria were applied and duplicates were removed, 44 studies remained for analysis. The authors also conducted a backward search, i.e., they searched the reference lists of the detected studies for relevant research that the database search might have missed. This yielded another 2 studies, so altogether 46 studies were identified for full-text analysis. After this initial screening, the research team carefully checked all the studies for relevance to the topic, and only 8 documents remained for inclusion in this analysis, as they all presented breakthrough ideas pertaining to the topic and a novel, unbiased approach.
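The screening figures reported above can be checked with a simple tally. The Python sketch below reproduces only the counts stated in the text; the class name `PrismaFlow` and its field names are illustrative and not part of the review. Because the text does not report duplicates separately from records excluded by the criteria, the sketch treats them as a single screening step.

```python
from dataclasses import dataclass


@dataclass
class PrismaFlow:
    """Hypothetical container for the record counts reported in the review."""
    scopus: int           # records retrieved from Scopus
    wos: int              # records retrieved from Web of Science
    after_screening: int  # left after inclusion/exclusion criteria and duplicate removal
    backward_search: int  # extra studies found in reference lists
    included: int         # studies retained after the full-text relevance check

    @property
    def identified(self) -> int:
        return self.scopus + self.wos

    @property
    def removed_at_screening(self) -> int:
        # Duplicates and criteria-based exclusions combined (not reported separately).
        return self.identified - self.after_screening

    @property
    def full_text(self) -> int:
        return self.after_screening + self.backward_search

    @property
    def excluded_at_full_text(self) -> int:
        return self.full_text - self.included


flow = PrismaFlow(scopus=118, wos=467, after_screening=44, backward_search=2, included=8)
print(flow.identified, flow.removed_at_screening, flow.full_text, flow.excluded_at_full_text)
# prints: 585 541 46 38
```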

Results

The following studies were included because they take a clearly systematic approach to the topic and focus fully on the ethical issues of AI in mobile apps (14, 18–24). The other texts identified offered only relatively superficial comments on ethics, touching on isolated issues such as privacy [e.g., (25–28)]. These studies are interesting and important; however, they could not be included in this specific conceptual research frame.

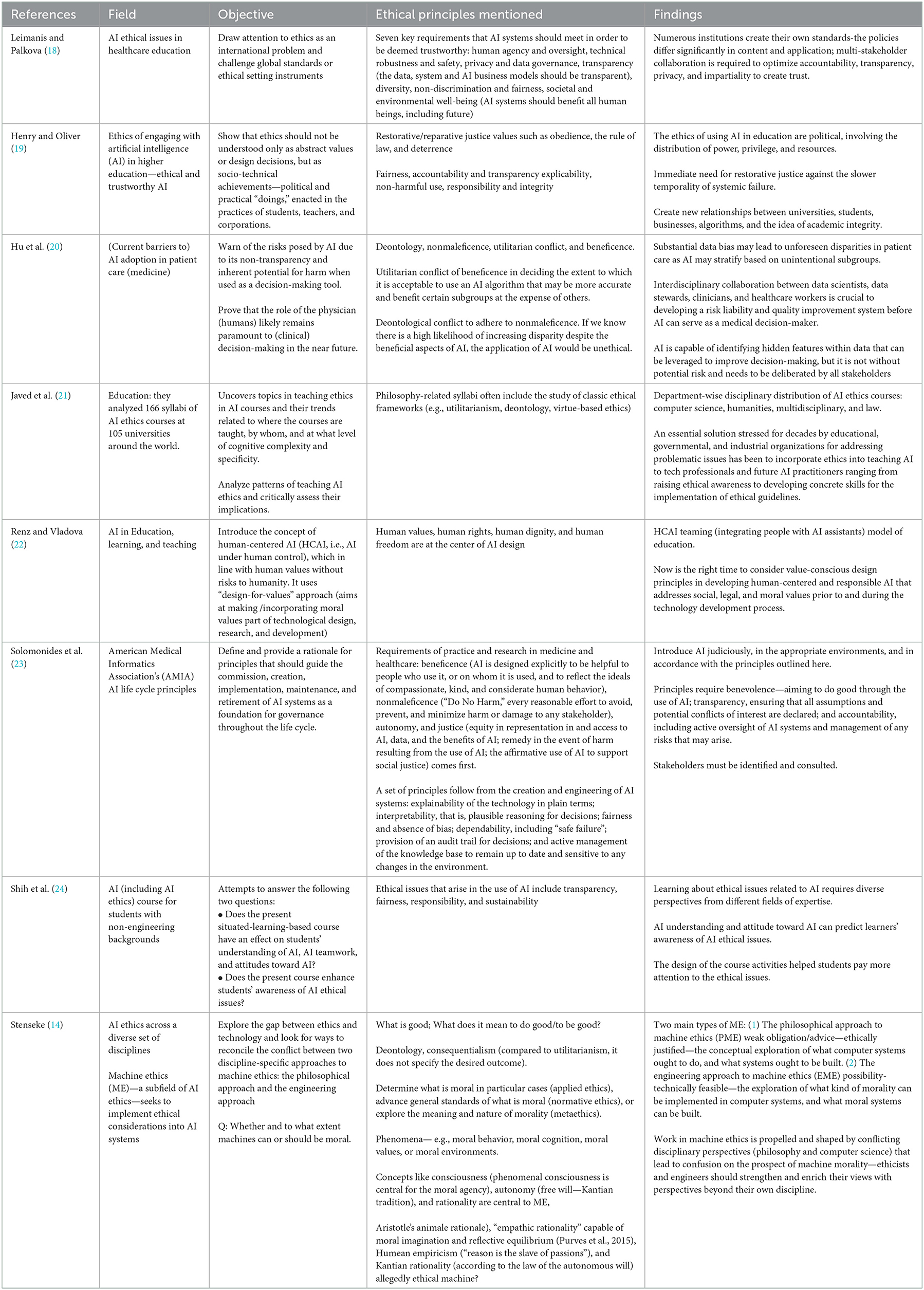

The selected studies can be further divided into those that address purely educational issues related to AI (19, 21, 22, 24) and those that discuss the use of AI in medicine (20, 23), which were nevertheless relevant to this study; Leimanis and Palkova (18) address ethical issues of AI in medical education. The remaining study (14) deals with the ethics of AI across various disciplines, focusing on machine ethics, a subfield of AI ethics; because it provides insightful comments related to education, it was also included in this review. The key findings are summarized in Table 1.

From a theoretical point of view, the texts studied at least mention the key areas of ethics. Stenseke (14) explains that ethics asks what is good in particular cases (applied ethics), proposes general norms (normative ethics), and explores the nature of morality (metaethics). The most widespread ethical theories are mentioned in at least one of the examined texts. These include deontology (14, 20, 21), utilitarianism (14, 20, 21), consequentialism (14), and virtue ethics (14, 21). These conceptual clarifications seem relevant and provide the reader with the necessary theoretical background, which can be further extended into practical applications.

As for the ethical principles most often associated with AI ethics, those discussed in the analyzed texts [e.g., Solomonides et al. (23) list the four prima facie ethical principles of beneficence, nonmaleficence, autonomy, and justice] can be grouped as follows:

• Beneficence (beneficence, benevolence, nonmaleficence, do good, be good, goodness).

• Accountability (accountability, (risk) liability, responsibility, but also trust, explainability, interpretability, auditability, trustworthiness, transparency, sustainability, dependability).

• Justice (equality, justice, fairness, equity, no bias, no discrimination).

• Human values (human rights, dignity, freedom, autonomy, moral behavior, consciousness, rationality).
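The four clusters above can be read as a simple coding scheme. The following Python sketch is offered only as a hypothetical illustration: the dictionary `PRINCIPLE_CLUSTERS` and the function `cluster_of` are not part of the reviewed studies, and they map a term to its cluster by exact, case-insensitive matching, whereas coding real texts would require human judgment about context and synonyms.

```python
from typing import Dict, Optional, Set

# Term clusters as listed above; "(risk) liability" is split into two entries.
PRINCIPLE_CLUSTERS: Dict[str, Set[str]] = {
    "Beneficence": {"beneficence", "benevolence", "nonmaleficence", "do good", "be good", "goodness"},
    "Accountability": {
        "accountability", "liability", "risk liability", "responsibility", "trust",
        "explainability", "interpretability", "auditability", "trustworthiness",
        "transparency", "sustainability", "dependability",
    },
    "Justice": {"equality", "justice", "fairness", "equity", "no bias", "no discrimination"},
    "Human values": {
        "human rights", "dignity", "freedom", "autonomy", "moral behavior",
        "consciousness", "rationality",
    },
}


def cluster_of(term: str) -> Optional[str]:
    """Hypothetical helper: return the cluster of a term, or None if it is not in the scheme."""
    normalized = term.strip().lower()
    for cluster, terms in PRINCIPLE_CLUSTERS.items():
        if normalized in terms:
            return cluster
    return None


# Usage: classify the four prima facie principles named by Solomonides et al. (23).
for principle in ["beneficence", "nonmaleficence", "autonomy", "justice"]:
    print(principle, "->", cluster_of(principle))
```

Applied this way, the four prima facie principles fall into three of the clusters: beneficence and nonmaleficence into Beneficence, autonomy into Human values, and justice into Justice. A term such as "privacy", which the review treats as an isolated issue, is not covered by the scheme and returns `None`.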

The texts studied yield several findings that appear to be very important for understanding the current situation. First, the most significant finding, reported in six studies (14, 18–20, 23, 24), is that interdisciplinary, multi-stakeholder collaboration that takes into account different perspectives across disciplines is essential for establishing globally acceptable standards of AI ethics. These stakeholders include end-users and developers of AI systems, as well as other organizational and societal stakeholders, including manufacturers and indeed anyone who comes into contact with AI systems (23). Currently, there appear to be various barriers to such collaboration, as policies vary in content and application from institution to institution [e.g., Leimanis and Palkova (18)]. Perspectives also differ significantly from discipline to discipline (e.g., social science, philosophy, engineering, law), and ethicists and engineers rarely find common ground (14).

Javed et al. (21) studied how AI ethics courses are distributed across departments and report that these courses are mainly delivered by computer science and humanities departments, but also by law departments, and that some courses are multidisciplinary, i.e., offered by at least two different departments. The same authors identify three basic approaches to teaching AI ethics, namely Build (engineering and technical aspects, aimed at trustworthy technical solutions), Assess (multidisciplinary teams focused on philosophy and application, aimed at judgments based on fundamental principles), and Govern (humanities and law disciplines focused on developing general knowledge, aimed at stakeholder protection). They argue for holistic teaching that removes disciplinary, topical, and other barriers (21). In the same vein, Stenseke (14) emphasizes the importance of avoiding a narrow disciplinary perspective by analytically understanding the different ways in which each discipline understands underlying concepts, conducts its research, and produces results.

Second, based on the texts studied, it can be assumed that the most promising areas for the development of AI ethics are medicine, education, and engineering (i.e., engineering directly related to AI). However, these fields should not be seen as strictly separate, but rather as overlapping or intertwined. This is especially so because interdisciplinary collaboration is repeatedly described in the research as highly beneficial for AI ethics [see, e.g., (14, 18–24)]. Engineering primarily encompasses the manufacturing of AI systems and includes everyone involved in the entire AI system life cycle; it mainly covers the technical aspects of the existence and use of AI. Medical ethics, owing to its systematic nature and the thoroughness of its long tradition, has the qualities needed to develop ethical theory and practice in the field of AI applied, among other areas, to education. Education brings all stakeholders together, because everyone can be educated in the ethics of AI. Education opens up new horizons for students, encourages them to ask new questions, and makes them aware of the different short-, medium-, and long-term possibilities related to AI.

Third, there is a potential fundamental conflict within AI ethics: whether to prioritize human-centered AI (HCAI, i.e., AI under human control) (22) or machine ethics (ME) (14). The former puts humans in the position of decision-makers, while the latter sees the future in (potential) AI being ethical in its own right, without (unnecessary) human intervention. Stenseke (14) considers machine ethics a subfield of AI ethics and adds that there are two types of machine ethics, namely the philosophical approach to machine ethics (PME) and the engineering approach to machine ethics (EME). While the former is based on weak obligations or advice (what AI ought to do and what AI systems ought to be created), the latter focuses on possibilities or technical feasibility (what morality can be implemented in AI and what moral AI systems can be created).

At least for now, we believe that AI should be controlled by humans. Although AI systems are (usually) designed for beneficial purposes, they can go awry and behave in ways that are unexpected, unclear, and counterintuitive from a human perspective (23). Nevertheless, theoretical discussions and research regarding artificial moral agents (AMAs, i.e., autonomous machines capable of making human-like moral decisions) are already underway (14).

Fourth, Solomonides et al. (23) and, to some extent, Renz and Vladova (22) consider the entire life cycle of AI systems. The main idea is that ethical principles and guidelines should cover all activities in the life cycle of AI systems, from specification or commissioning, through creation and design, implementation, and maintenance, to decommissioning. Several key recommendations stem from this. One is the continuing engagement of identified stakeholders (23). Another is the application of an AI-specific ethical principle, algorithmovigilance, which is essentially the ongoing oversight of AI systems (23). Last but not least, social, legal, and moral values need to be consciously taken into account at all times (22).

Discussion

AI ethics, as a practical human endeavor studied with the help of theoretical insight, can provide valuable comments and ideas that need further verification in practice. Moreover, it requires multi-stakeholder, interdisciplinary collaboration that embraces different perspectives, because this is the only way to obtain reliable results that can be used in education, medicine, and other fields that employ AI and other digital tools. Since such tools can potentially pose a threat to the human mind, they must be studied from various perspectives, ethics being one of them.

Fortunately, many authors (14, 18–20, 23, 24) are already aware of the need to respect and employ diverse perspectives on AI ethics when designing, programming, and creating mobile apps for various purposes, including education. In education, it must be remembered that these tools will be widely used by children and young people, who are undergoing formal and informal education and will therefore be heavily affected by these technologies. Although they belong to the technologically savvy Generation Z and Millennials (29), they remain vulnerable, perhaps even more so, to the threats they are exposed to, such as surveillance or sexual harassment. Ethical issues also seem likely to gain momentum as various kinds of virtual, augmented, and mixed reality become an everyday part of our lives. For all these reasons, interdisciplinary interconnectedness seems crucial: technological aspects will need to be connected with ethical considerations, which will enable our survival both as a society and as its individual members (30).

Furthermore, regarding interdisciplinarity, Stenseke (14) suggests ways to make interdisciplinary integration and collaboration more effective by exploiting the possibilities of different perspectives while remaining aware of their limitations. Perspectives differ not only by discipline (e.g., social science, philosophy, engineering, and law) but also by time horizon, i.e., short-term considerations as opposed to potential long-term risks (14). While all stakeholders can point out various practical ethical problems related to AI and offer possible solutions, ethicists are needed to refine these with respect to ethical theory and to point out the possible pitfalls of a lay perspective. Still, even if (globally accepted) ethical guidelines for AI exist [e.g., Javed et al. (21) mention the ACM ethics guidelines; see https://ethics.acm.org/code-of-ethics/software-engineering-code/], they will not necessarily lead to the ethical functioning of AI. Businesses and institutions can misuse them for ethics-washing, i.e., a strategy to cover up unethical behavior (14).

The ethical functioning of AI also involves questions of AMAs and machine ethics. Whether, and to what extent, machines should or could be held accountable is extremely difficult to answer. We lean toward human-controlled artificial intelligence, but technology is evolving so rapidly that it is almost impossible to predict what machines or artificial intelligence will be able to do in a few decades. Even so, as long as stakeholders raise and discuss potential problems, AI ethics should be able to offer relevant solutions to the looming problems posed by human interaction with AI (31).

The main limitation of this study is the lack of clear-cut empirical research on AI and its ethical issues in education. There is a need for at least descriptive studies that analyze the ethical issues currently arising in mobile apps, as they appear in the authors' everyday use of these apps. The available data are surprisingly limited, too limited to draw any firm conclusions, and many authors make bold and unjustified suggestions regarding the implementation of AI in all teaching practices without recognizing its potential problems and dangers [e.g., (32)]. Nevertheless, at least preliminary conclusions are needed and should be considered essential for the further development of this vast area of AI in mobile apps for educational purposes. If the urgency of the topic is ignored, a whole generation of young users of these apps could easily be put at risk, and the resulting harm may be irreparable.

In conclusion, the answers to the research questions can be summarized as follows. The major ethical issues that could be observed when using AI-driven mobile apps for educational purposes relate to four key ethical principles that should be followed: beneficence, nonmaleficence, autonomy, and justice. In addition, the AI-specific principle of algorithmovigilance should be considered in order to monitor, understand, and prevent the adverse effects of algorithms in the use of AI in education [cf. (33)]. Furthermore, all stakeholders should be identified and should engage and collaborate jointly to ensure the ethical use of AI in education.

As for the second research question, i.e., future lines of research on the topic, the findings of this systematic review first reveal a clear impetus for further studies: initially descriptive ones, later developed into experimental studies that could establish the current extent of the ethical threats involved in using AI in education. Second, the review shows that AI-related issues in mobile apps for education are still largely unknown, although recent sources suggest what the possible theoretical framing and practical consequences of the AI-driven environment could be. Finally, it stresses that the topic must always be approached from a multidisciplinary perspective. The reason is that whether the implementation of AI is relevant, dangerous, or beneficial can never be evaluated by an information specialist, a designer, a teacher, or a user alone; it must always rest on a consensual evaluation of the status quo, from which further steps can be taken to ensure the safety and security of data, individuals, and society as a whole.

Thus, the contribution of this study lies in emphasizing the need for joint cooperation and research among all stakeholders when using AI-driven mobile technologies in education, with special attention to ethical issues, since review-based research on this topic is scarce and has so far been neglected.

Author contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Acknowledgments

This research is part of the Excellence 2023 project run at the Faculty of Informatics and Management at the University of Hradec Kralove, Czech Republic.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Akgun S, Greenhow C. Artificial intelligence in education: addressing ethical challenges in K-12 settings. AI Ethics. (2022) 2:431–40. doi: 10.1007/s43681-021-00096-7

2. Holmes W, Bialik M, Fadel C. Artificial Intelligence in Education: Promises and Implications for Teaching and Learning. Boston, MA: Center for Curriculum Redesign (2019).

3. Krouska A, Troussas C, Sgouropoulou C. Applying genetic algorithms for recommending adequate competitors in mobile game-based learning environments. In: Kumar V, Troussas C, editors. Intelligent Tutoring Systems. ITS 2020. Lecture Notes in Computer Science, 12149. Cham: Springer (2020).

4. Krouska A, Troussas C, Sgouropoulou C. Mobile game-based learning as a solution in COVID-19 era: modeling the pedagogical affordance and student interactions. Educ Inf Technol. (2022) 27:229–41. doi: 10.1007/s10639-021-10672-3

5. Meunier F, Pikhart M, Klimova B. Editorial: new perspectives of L2 acquisition related to human-computer interaction (HCI). Front Psychol. (2022) 13:1098208. doi: 10.3389/fpsyg.2022.1098208

6. Smutny P, Schreiberova P. Chatbots for learning: a review of educational chatbots for the Facebook Messenger. Comput Educ. (2020) 151:1–11. doi: 10.1016/j.compedu.2020.103862

7. Troussas C, Krouska A, Virvou M. Integrating an adjusted conversational agent into a mobile-assisted language learning application. In: 2017 IEEE 29th International Conference on Tools With Artificial Intelligence (ICTAI). Boston, MA: IEEE (2017). pp. 1153–7.

8. Chrysafiadi K, Troussas C, Virvou M. Personalized instructional feedback in a mobile-assisted language learning application using fuzzy reasoning. Int J Learn Technol. (2022) 17:53–76. doi: 10.1504/IJLT.2022.123676

9. Kerr K. Ethical Considerations When Using Artificial Intelligence-Based Assistive Technologies in Education. (2020). Available online at: https://openeducationalberta.ca/educationaltechnologyethics/chapter/ethical-considerations-when-using-artificial-intelligence-based-assistive-technologies-in-education/ (accessed December 4, 2022).

10. Troussas C, Krouska A, Sgouropoulou C. Enriching mobile learning software with interactive activities and motivational feedback for advancing users' high-level cognitive skills. Computers. (2022) 11:18. doi: 10.3390/computers11020018

11. Johnson G. In praise of AI's Inadequacies. (2020). Available online at: https://www.timescolonist.com/opinion/geoff-johnson-in-praise-of-ais-inadequacies-4678319 (accessed December 4, 2022).

12. Stahl BC, Wright D. Ethics and privacy in AI and big data: implementing responsible research and innovation. IEEE Secur Priv. (2018) 16:26–33. doi: 10.1109/MSP.2018.2701164

13. Regan PM, Jesse J. Ethical challenges of edtech, big data and personalized learning: twenty-first century student sorting and tracking. Ethics Inf Technol. (2019) 21:167–79. doi: 10.1007/s10676-018-9492-2

14. Stenseke J. Interdisciplinary confusion and resolution in the context of moral machines. Sci Eng Ethics. (2022) 28:24. doi: 10.1007/s11948-022-00378-1

15. Reiss MJ. The use of AI in education: practicalities and ethical considerations. Lond Rev Educ. (2021) 19:5. doi: 10.14324/LRE.19.1.05

16. Klimova B, Pikhart M, Benites AD, Lehr C, Sanchez-Stockhammer C. Neural machine translation in foreign language teaching and learning: a systematic review. Educ Inf Technol. (2022). doi: 10.1007/s10639-022-11194-2

17. European Commission Directorate-General for Education Youth Sport Culture. Ethical Guidelines on the Use of Artificial Intelligence (AI) and Data in Teaching and Learning for Educators. Publications Office of the European Union (2022). Available online at: https://data.europa.eu/

18. Leimanis A, Palkova K. Ethical guidelines for artificial intelligence in healthcare from the sustainable development perspective. Eur J Sustain Dev. (2021) 10:90. doi: 10.14207/ejsd.2021.v10n1p90

19. Henry JV, Oliver M. Who will watch the watchmen? The ethico-political arrangements of algorithmic proctoring for academic integrity. Postdigit Sci Educ. (2022) 4:330–53. doi: 10.1007/s42438-021-00273-1

20. Hu Z, Hu R, Yau O, Teng M, Wang P, Hu G, et al. Tempering expectations on the medical artificial intelligence revolution: the medical trainee viewpoint. JMIR Med Inform. (2022) 10:e34304. doi: 10.2196/34304

21. Javed RT, Nasir O, Borit M, Vanhee L, Zea E, Gupta S, et al. Get out of the BAG! Silos in AI ethics education: unsupervised topic modeling analysis of global AI curricula. J Artif Intell Res. (2022) 73:933–65. doi: 10.1613/jair.1.13550

22. Renz A, Vladova G. Reinvigorating the discourse on human-centered artificial intelligence in educational technologies. Technol Innovat Manag Rev. (2021) 11:5–16. doi: 10.22215/timreview/1438

23. Solomonides AE, Koski E, Atabaki SM, Weinberg S, McGreevey III JD, Kannry JL, et al. Defining AMIA's artificial intelligence principles. J Am Med Inform Assoc. (2022) 29:585–91. doi: 10.1093/jamia/ocac006

24. Shih P-K, Lin C-H, Wu LY, Yu C-C. Learning ethics in AI—teaching non-engineering undergraduates through situated learning. Sustainability. (2021) 13:3718. doi: 10.3390/su13073718

25. Yang C, Lin C, Fan X. Cultivation model of entrepreneurship from the perspective of artificial intelligence ethics. Front Psychol. (2022) 13:885376. doi: 10.3389/fpsyg.2022.885376

26. Bamatraf S, Amouri L, El-Haggar N, Moneer A. Exploring the socio-economic implications of artificial intelligence from higher education student's perspective. Int J Adv Comput Sci Appl. (2021) 12:2021. doi: 10.14569/IJACSA.2021.0120641

27. Zhang H, Lee I, Ali S, DiPaola D, Cheng Y, Breazeal C. Integrating ethics and career futures with technical learning to promote AI literacy for middle school students: an exploratory study. Int J Artif Intell Educ. (2022). doi: 10.1007/s40593-022-00293-3

28. Blease C, Kharko A, Annoni M, Gaab J, Locher C. Machine learning in clinical psychology and psychotherapy education: a mixed methods pilot survey of postgraduate students at a Swiss University. Front Public Health. (2021) 9:623088. doi: 10.3389/fpubh.2021.623088

29. Pikhart M, Klímová B. eLearning 4.0 as a sustainability strategy for Generation Z language learners: applied linguistics of second language acquisition in younger adults. Societies. (2020) 10:38. doi: 10.3390/soc10020038

30. Zhang W, Wang Z. Theory and practice of VR/AR in K-12 science education—A systematic review. Sustainability. (2021) 13:12646. doi: 10.3390/su132212646

31. Alberola-Mulet I, Iglesias-Martínez MJ, Lozano-Cabezas I. Teachers' beliefs about the role of digital educational resources in educational practice: a qualitative study. Educ Sci. (2021) 11:239. doi: 10.3390/educsci11050239

32. Ahmad SF, Rahmat MK, Mubarik MS, Alam MM, Hyder SI. Artificial intelligence and its role in education. Sustainability. (2021) 13:12902. doi: 10.3390/su132212902

Keywords: artificial intelligence, mobile apps, ethics, ethical principles, education

Citation: Klimova B, Pikhart M and Kacetl J (2023) Ethical issues of the use of AI-driven mobile apps for education. Front. Public Health 10:1118116. doi: 10.3389/fpubh.2022.1118116

Received: 07 December 2022; Accepted: 23 December 2022;

Published: 11 January 2023.

Edited by:

Denise Veelo, Amsterdam University Medical Center, Netherlands

Reviewed by:

Christos Troussas, University of West Attica, Greece

Copyright © 2023 Klimova, Pikhart and Kacetl. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Marcel Pikhart, marcel.pikhart@uhk.cz


Blanka Klimova, Marcel Pikhart, Jaroslav Kacetl