ORIGINAL RESEARCH article

Front. Public Health, 02 November 2022

Sec. Infectious Diseases: Epidemiology and Prevention

Volume 10 - 2022 | https://doi.org/10.3389/fpubh.2022.1023098

This article is part of the Research Topic: Emerging Technologies in Infectious Disease Treatment, Prevention and Control

Introduction: In this study, we developed a simplified artificial intelligence to support the clinical decision-making of medical personnel in a resource-limited setting.

Methods: We selected seven infectious disease categories that impose a heavy disease burden in the central Vietnam region: mosquito-borne disease, acute gastroenteritis, respiratory tract infection, pulmonary tuberculosis, sepsis, central nervous system infection, and viral hepatitis. We developed a set of questionnaires to collect information on the current symptoms and history of patients suspected to have infectious diseases. We used data collected from 1,129 patients to develop and test a diagnostic model. We used the XGBoost, LightGBM, and CatBoost algorithms to build artificial intelligence models for clinical decision support. We validated the models with 4-fold cross-validation, then tested them on a separate test dataset and estimated the diagnostic accuracy of each model.

Results: We recruited 1,129 patients for final analyses. The artificial intelligence model developed with the CatBoost algorithm showed the best performance, with 87.61% accuracy and an F1-score of 87.71%. The F1-score of the CatBoost model by disease entity ranged from 0.80 to 0.97. Diagnostic accuracy was the lowest for sepsis and the highest for central nervous system infection.

Conclusion: Simplified artificial intelligence could be helpful in clinical decision support in settings with limited resources.

Although there have been successes in decreasing the burden of infectious diseases, new infectious diseases have emerged dramatically, and they remain significant public health challenges (1). Southeast Asia is one of the ‘hot spots' for infectious diseases, and it has experienced a rapid surge of infectious diseases and emerging infectious diseases. This rapid increase in disease burden has multiple causes, including environmental factors (2, 3), changes in biodiversity (4), and economic factors (5). Several infectious diseases such as dengue fever (6), malaria (7), and central nervous system infection (8) are imposing a heavy disease burden on Southeast Asia as their prevalence grows.

Artificial intelligence (AI) is increasingly being used in medical practice. In particular, AI has been shown to assist medical decision-making. For example, AIs for diagnosing respiratory diseases (9, 10), cardiovascular diseases (11), and infectious diseases (12, 13) have been developed and used for diagnostic assistance. For infectious disease management specifically, AIs have been developed to support clinical decision-making for emerging infectious diseases, including tuberculosis (14, 15), vector-borne diseases (16, 17), and COVID-19 (18, 19). These models have achieved high diagnostic accuracy, demonstrating their effectiveness in medical decision-making for infectious diseases (16, 20, 21).

Previously, regression-based classifiers were widely used to develop prediction models for clinical decision-making, as they are highly intuitive and are often among the models with the highest predictive performance for dichotomous outcomes (22–24). However, to apply a logistic regression model, the data must conform to statistical assumptions such as the absence of multicollinearity among independent variables and the independence of observations (25). Machine learning classifiers can avoid this issue and can be applied to a wider range of unstructured datasets, and have therefore been used to develop and improve artificial intelligence models for disease (20, 26). A number of machine learning methods, including artificial neural networks (27), XGBoost (28), and support vector machine methods (29, 30), are being used for diagnostic assistance and clinical decision-making.

To date, however, little has been documented on applying AI for public health in resource-limited settings of low- and middle-income countries (LMICs) (31). Although there is vigorous activity in developing and using AI for public health in resource-limited settings (32–34), its application is still at an early stage. Several difficulties hinder the effective use of AI for public health in the resource-limited settings of LMICs, such as challenges in building and maintaining expert systems (35), limitations in IT infrastructure (36), differences in socioeconomic contexts (36, 37), and a lack of personnel to supervise development and application (38). To increase the availability and accessibility of AI in LMICs, AI needs to be tailored to resource-limited settings and fit local sociomedical contexts and infrastructure needs (31, 39).

The objective of this research was to develop a simplified version of AI that could be applied effectively in resource-limited settings. We conducted a pilot study in collaboration with local authorities and healthcare institutions in Da Nang, Vietnam. We aimed to develop an AI for diagnosing infectious diseases that impose a heavy disease burden in the Central region of Vietnam.

We selected seven infectious disease categories that were most common in central Vietnam or imposed a heavy disease burden on central Vietnam: mosquito-borne diseases, acute gastroenteritis, respiratory tract infection including coronavirus disease (COVID-19), pulmonary tuberculosis, sepsis, central nervous system (CNS) infection, and viral hepatitis. Disease entities were classified according to ICD-10 diagnostic codes (Supplemental material 1). We used the Delphi method to develop questionnaires for patient assessment and history taking. A preventive medicine specialist initially created the questionnaire in English. It was then reviewed by a Korean preventive medicine specialist, a Korean public health specialist, two Vietnamese internal medicine specialists, a Vietnamese public health specialist, and an English-Vietnamese interpreter. After review and feedback, a professional translator translated the questionnaire set into Vietnamese. We have attached the final questionnaire as Supplemental material 2. Before data collection, we conducted two rounds of a pilot study to receive feedback and edit the questionnaires: once with 5 Vietnamese individuals residing in Korea and once with 10 Vietnamese individuals recruited from Da Nang.

After the pilot study, a Korean preventive medicine specialist developed an instruction manual for research personnel, which was used to train physicians and nurses at the Department of Tropical Medicine, Da Nang Hospital, before conducting the survey. In addition, a survey application was developed and installed on the tablet PCs used for data collection.

We recruited patients who had been diagnosed with any of the target disease entities. About 160 participants were recruited for each disease category to ensure the model's predictive power. Because outpatient visits decreased during the COVID-19 outbreak in Vietnam, we used a two-track recruitment strategy. Patients admitted to the Department of Tropical Medicine, Da Nang Hospital, and Da Nang Lung Hospital from November 8th, 2021, to January 1st, 2022, were recruited and responded to the survey. Simultaneously, research personnel conducted a phone survey of patients who had been diagnosed with target diseases. We recruited a total of 1,131 patients either prospectively or retrospectively. Two participants who were diagnosed with other conditions were excluded from the final analysis.

All participants then underwent an interview using the developed questionnaire and a physical examination. Data were collected using the application on a tablet PC, then directly transmitted to the server. We measured systolic and diastolic blood pressure with a standardized sphygmomanometer after 5 min of rest. In addition, other vital signs such as pulse rate, respiratory rate, and body temperature were measured by physicians and nurses at the Department of Tropical Medicine, Da Nang Hospital. For retrospectively recruited participants, we collected data from the electronic medical records (EMRs) of Da Nang Hospital and conducted an additional telephone survey. After the survey, the physician gave each participant a final diagnosis, which was recorded with its corresponding ICD-10 code. Based on the ICD-10 codes and diagnoses, participants were categorized into seven disease entity subgroups.

Survey results were processed into a dataset with 211 independent variables. We used three different algorithms (XGBoost, LightGBM, CatBoost) to develop AI for prediction and compared the predictive accuracy of the three models. XGBoost, one of the Gradient Boosting Models (GBM), is an ensemble model of decision trees (40). By implementing parallel processing and CART (Classification and Regression Tree) model-based regression, XGBoost runs much faster than earlier gradient boosting models and efficiently handles the overfitting problem of GBM (40). LightGBM uses gradient-based one-side sampling (GOSS) and Exclusive Feature Bundling (EFB), and adopts a leaf-wise tree growth algorithm, unlike the level-wise growth algorithms commonly used in previous GBMs (41). LightGBM produces considerably fewer false predictions because of leaf-wise tree growth, and it trains faster and uses less memory than conventional GBMs such as XGBoost by efficiently reducing the number of data instances and features (41). However, because of these characteristics, an insufficient sample size may cause overfitting in LightGBM. CatBoost builds the base model with the residual error of an independently sampled sub-dataset. The model is continuously updated by taking the residual error with the remaining dataset, which solves the problem of prediction shift (42). In addition, CatBoost creates clusters for each category during training, efficiently reflecting categorical features in the model algorithm (42). The implementation of the ordered boosting algorithm and ordered target statistics (TS) accelerates the training process, increases predictability, and reduces the possibility of overfitting (42). Moreover, the base parameters are already optimized in CatBoost, which minimizes the need for hyperparameter tuning (42).
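To make the idea of ordered target statistics more concrete, the following is a minimal sketch in Python. It follows the general formulation in the CatBoost paper (42), in which each sample's category is encoded using only the targets of samples that precede it in a random permutation, smoothed toward a prior. The function name, the smoothing weight `a`, and the toy data are illustrative assumptions; this is not the CatBoost library's implementation, and the text does not state that the authors relied on this encoding for their features.

```python
import random
from typing import Hashable, List, Optional, Sequence


def ordered_target_statistics(
    categories: Sequence[Hashable],
    targets: Sequence[float],
    prior: float,
    a: float = 1.0,                       # assumed smoothing weight
    order: Optional[List[int]] = None,    # permutation of sample indices
    seed: int = 0,
) -> List[float]:
    """Encode a categorical feature using only 'earlier' samples.

    For sample k: (sum of targets of preceding samples with the same
    category + a * prior) / (count of such samples + a).
    """
    n = len(categories)
    if order is None:
        order = list(range(n))
        random.Random(seed).shuffle(order)

    running_sum = {}    # category -> sum of targets seen so far
    running_count = {}  # category -> number of samples seen so far
    encoded = [0.0] * n
    for idx in order:
        cat = categories[idx]
        s = running_sum.get(cat, 0.0)
        c = running_count.get(cat, 0)
        encoded[idx] = (s + a * prior) / (c + a)
        # Only now does the current sample become visible to later ones.
        running_sum[cat] = s + targets[idx]
        running_count[cat] = c + 1
    return encoded


# Toy example with hypothetical values; identity order for readability.
cats = ["A", "B", "A", "A", "B"]
ys = [1, 0, 0, 1, 1]
ts = ordered_target_statistics(cats, ys, prior=0.6, order=[0, 1, 2, 3, 4])
print(ts)
```

Because a sample never sees its own target, the encoding avoids the target leakage that a plain per-category mean would introduce.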

We pre-processed the raw dataset into a numerical dataset. For numeric variables, we set missing values to “−1” and left all other responses unchanged before including them in the final dataset. For non-numeric features, we set the value to “1” if an answer existed and to “−1” if not. We did not consider the specific response to the question, as the presence of the feature was more important in predicting results.
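The encoding rule described above can be sketched as follows. This is an illustrative Python example, not the authors' code: the field names are hypothetical, and treating an empty string as "no answer" is an assumption made for the sketch.

```python
from typing import Optional

MISSING = -1  # sentinel used for missing numeric values and absent answers


def encode_numeric(value: Optional[float]) -> float:
    """Keep a numeric response unchanged; map a missing one to -1."""
    return MISSING if value is None else value


def encode_presence(answer: Optional[str]) -> int:
    """Map a non-numeric response to 1 if any answer exists, else -1."""
    has_answer = answer is not None and answer.strip() != ""
    return 1 if has_answer else MISSING


# Hypothetical survey record; field names are illustrative only.
record = {
    "body_temperature": 38.5,
    "pulse_rate": None,
    "headache_location": "frontal",
    "travel_history": "",
}
numeric_fields = {"body_temperature", "pulse_rate"}

encoded = {
    name: encode_numeric(value) if name in numeric_fields else encode_presence(value)
    for name, value in record.items()
}
print(encoded)
```

Using the same sentinel for missing numeric values and absent answers keeps the feature matrix fully numeric, which tree-based boosting models can split on directly.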

The preprocessed dataset was divided into a training set, validation set, and test set. First, we divided the preprocessed data into a training set and a test set at a ratio of 9:1. Although there is no firm theoretical basis for the optimal training/test split ratio, a recent study suggested that the optimal training/test ratio with p features is approximately $\sqrt{p}$:1 (43). Since our study has 211 features, the optimal ratio would have been ~14.5:1, but we followed the precedent of previous studies on AI development for clinical purposes (44–46). We used the 9:1 split to secure the number of cases available for training and cross-validation. Then we separated the training set into four groups for 4-fold cross-validation, using each group in turn for validation and the rest for training (Figure 1). Finally, we adjusted the values of selected hyperparameters for each algorithm to optimize model performance. The optimal value of each hyperparameter is shown in Supplemental material 3.
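The splitting scheme can be sketched with the Python standard library alone. The sketch below assumes a simple random (non-stratified) shuffle with a fixed seed; the text does not say whether the authors stratified by disease category, so that choice and the helper names are assumptions.

```python
import random
from typing import Iterator, List, Tuple


def train_test_split_indices(
    n: int, test_fraction: float = 0.1, seed: int = 0
) -> Tuple[List[int], List[int]]:
    """Shuffle sample indices and hold out a fraction as the test set."""
    rng = random.Random(seed)
    indices = list(range(n))
    rng.shuffle(indices)
    n_test = round(n * test_fraction)
    return indices[n_test:], indices[:n_test]


def k_fold(indices: List[int], k: int = 4) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (training, validation) index lists, rotating the validation fold."""
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        validation = folds[i]
        training = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield training, validation


# 1,129 participants, 9:1 split, then 4-fold cross-validation on the training part.
train_idx, test_idx = train_test_split_indices(1129, test_fraction=0.1)
for fold_no, (tr, va) in enumerate(k_fold(train_idx, k=4), start=1):
    print(f"fold {fold_no}: {len(tr)} training, {len(va)} validation")
print(f"held-out test set: {len(test_idx)}")
```

The test indices are set aside before cross-validation begins, so no test sample influences model selection or hyperparameter tuning.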

Feature importance, defined as ‘the increase in the model's prediction error after permuting the feature,' was calculated for each variable included in the prediction model (47, 48). Global diagnostic accuracy and F1-score were measured to evaluate the developed prediction model. Global diagnostic accuracy was defined as the proportion of correct classifications (Equation 1). Precision is defined as the proportion of true positives among samples classified as positive (Equation 2). Recall, or sensitivity, is defined as the proportion of true positives among positive samples (Equation 3). The F1-score, the harmonic mean of precision and recall, summarizes the performance of the developed model (Equation 4). After the global test for AI performance, we calculated performance parameters (precision, recall, specificity, and F1-score) by disease category.
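The metric definitions above can be illustrated with a short Python sketch that computes global accuracy and per-class precision, recall, and F1-score in a one-vs-rest fashion. The labels are toy values, not study data, and returning 0.0 when a denominator is zero is a convention assumed here.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence, Tuple


def classification_report(
    y_true: Sequence[Hashable], y_pred: Sequence[Hashable]
) -> Tuple[float, Dict[Hashable, Dict[str, float]]]:
    """Return global accuracy and per-class precision, recall, and F1."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = Counter(zip(y_true, y_pred))  # (true, predicted) -> count

    # Accuracy: correct classifications / all classifications.
    accuracy = sum(pairs[(c, c)] for c in labels) / len(y_true)

    per_class = {}
    for c in labels:
        tp = pairs[(c, c)]
        fp = sum(pairs[(t, c)] for t in labels if t != c)
        fn = sum(pairs[(c, p)] for p in labels if p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0  # TP / (TP + FP)
        recall = tp / (tp + fn) if tp + fn else 0.0     # TP / (TP + FN)
        f1 = (
            2 * precision * recall / (precision + recall)
            if precision + recall
            else 0.0
        )  # harmonic mean of precision and recall
        per_class[c] = {"precision": precision, "recall": recall, "f1": f1}
    return accuracy, per_class


# Toy labels for illustration only.
y_true = ["sepsis", "sepsis", "cns", "cns", "hepatitis", "hepatitis"]
y_pred = ["sepsis", "hepatitis", "cns", "cns", "hepatitis", "hepatitis"]
accuracy, report = classification_report(y_true, y_pred)
print(round(accuracy, 3))
for label, scores in report.items():
    print(label, {name: round(value, 3) for name, value in scores.items()})
```

In the toy example, one sepsis case is misclassified as hepatitis, which lowers recall for sepsis and precision for hepatitis while leaving the CNS scores untouched.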

A multi-comparison table was constructed for each classifier to further compare model performance.

The institutional review board of Da Nang Hospital, Da Nang, Vietnam reviewed and approved the study protocol (Supplemental material 4). In addition, we acquired informed consent from all participants of this study. All procedures contributing to this work comply with the ethical standards of the relevant national and institutional committees on human experimentation and with the 1975 Declaration of Helsinki, as revised in 2008.

We recruited a total of 1,129 participants for the survey. The number of patients diagnosed with mosquito-borne diseases, acute gastroenteritis, viral hepatitis, respiratory tract infection, pulmonary tuberculosis, sepsis, and CNS infection was 163 (14.44%), 162 (14.35%), 162 (14.35%), 158 (13.99%), 161 (14.26%), 161 (14.26%), and 162 (14.35%), respectively. The mean age of participants was 45.96 years (standard deviation [SD] 17.88) at the point of diagnosis. Six hundred sixty-nine patients (59.26%) were male, and 460 (40.74%) were female.

There were significant differences in patterns of symptoms by disease entity. In mosquito-borne disease, fever/chill, fatigue, headache, anorexia, diarrhea, and myalgia were common. Gastrointestinal tract symptoms such as diarrhea, constipation, and abdominal pain were common in acute gastroenteritis. Systemic symptoms such as fever/chill and fatigue were the most common in sepsis and viral hepatitis, with no other prominent symptoms present (Table 1).

Figure 2 presents the top 20 influential features of the global model with their feature importance values. The x-axis indicates the relative feature importance, and the y-axis shows the feature names. Headache and its duration were the most influential variables, followed by cough, fever, and pulse rate. Feature importance of variables by disease entity is presented in Supplemental material 5.
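As an illustration of how permutation-based feature importance values of this kind can be obtained, the sketch below shuffles one feature column at a time and records the resulting increase in misclassification rate, following the definition quoted in the Methods. The toy model, data, number of repeats, and function names are assumptions; the text does not specify the exact tooling the authors used.

```python
import random
from typing import Callable, List, Sequence


def permutation_importance(
    predict: Callable[[List[float]], int],
    X: Sequence[List[float]],
    y: Sequence[int],
    n_repeats: int = 10,   # assumed number of shuffles per feature
    seed: int = 0,
) -> List[float]:
    """Mean increase in error rate after shuffling each feature column."""
    rng = random.Random(seed)

    def error_rate(rows: Sequence[List[float]]) -> float:
        wrong = sum(predict(row) != target for row, target in zip(rows, y))
        return wrong / len(y)

    baseline = error_rate(X)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and y
            permuted = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(error_rate(permuted) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


def toy_model(row: List[float]) -> int:
    """Hypothetical classifier that uses only the first feature."""
    return 1 if row[0] > 0 else 0


X = [[1.0, 5.0], [2.0, 1.0], [3.0, 4.0], [4.0, 2.0],
     [-1.0, 3.0], [-2.0, 5.0], [-3.0, 1.0], [-4.0, 2.0]]
y = [toy_model(row) for row in X]
importances = permutation_importance(toy_model, X, y)
print(importances)
```

A feature the model ignores, such as the second column here, gets an importance of exactly zero, while shuffling an informative feature raises the error.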

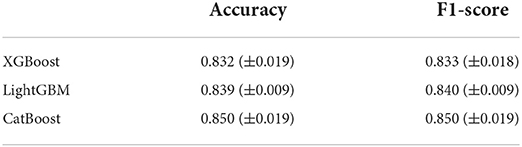

Table 2 shows the global diagnostic accuracy and F1-score of the developed AI models. The model developed with the CatBoost algorithm showed the highest global diagnostic accuracy of 87.61%, and the model developed with the XGBoost algorithm showed the lowest global accuracy of 83.18%. Results from 4-fold cross-validation also showed that diagnostic accuracy was the highest for the CatBoost classifier: the mean diagnostic accuracies of the XGBoost, LightGBM, and CatBoost classifiers were 0.832 (standard deviation [SD] 0.019), 0.839 (SD 0.009), and 0.850 (SD 0.019), respectively. The mean F1-scores of the XGBoost, LightGBM, and CatBoost classifiers were 0.833 (SD 0.018), 0.840 (SD 0.009), and 0.850 (SD 0.019), respectively.

The multi-comparison table for each classifier showed that the performance of the CatBoost algorithm was higher than that of XGBoost and LightGBM. False prediction rates of the XGBoost, LightGBM, and CatBoost classifiers were 16.81, 16.81, and 11.76, respectively (Table 3). The result from the multi-comparison table is consistent with Table 2, which shows higher global accuracy for the CatBoost classifier than for the other two classifiers.

Table 3. Results from 4-fold cross-validation on the models developed by the XGBoost, LightGBM, and CatBoost algorithms.

AI performance parameters by disease category are shown in Table 4. Precision and recall were the lowest in the “sepsis” category and the highest in the “CNS infection” category. Across categories, precision, recall, and sensitivity were generally higher in the AI developed with the CatBoost algorithm, with exceptions such as the “mosquito-borne disease” category and the “CNS infection” category.

In this pilot study, we achieved a global diagnostic accuracy of around 85% with AI developed from limited data, including only medical histories, physical examination results, and symptom assessments. The global accuracy increases up to 90% after excluding sepsis, a condition that requires a complex diagnostic procedure for accurate assessment (49). The accuracy achieved in this study is reasonably high, considering that the prediction model did not include any laboratory test results or radiologic findings. For instance, machine learning algorithms for predicting hepatitis C in patients enrolled in the National Treatment Program of HCV patients in Egypt showed an accuracy of 66–84.4%, while our CatBoost model showed an accuracy of 88% (50). The accuracy of predicting pulmonary tuberculosis was 87% in our CatBoost model, while previous models based on chest X-ray showed an area under the curve of 0.75–0.99 (51). Considering that the artificial intelligence in this study relied solely on a survey questionnaire, the diagnostic accuracy of the models developed in this study is relatively high compared to previous studies.

The global diagnostic accuracy of the AI developed in this study is relatively low compared to other AIs, which usually achieve a diagnostic accuracy of 90% or higher (12, 52). However, these AIs typically rely on additional tests such as radiologic studies, laboratory tests, and pathologic results. Our study developed a survey-based AI without additional tests except for vital sign assessments and physical examination, making it applicable to resource-limited settings (53).

While the developed AI cannot replace the clinical decision-making process, it could be used for screening and initial disease evaluation where medical expertise is insufficient, which is a common condition in LMICs (53, 54). However, there are significant challenges in applying AI in LMICs, mostly related to local governance capacity and AI literacy (31, 55). Using tailored AI with high cost-efficacy, in collaboration with experts in AI development and management, could help screen for and manage the disease of interest effectively. Our study showed that a simplified survey-based AI provides certain benefits in detecting and controlling infectious diseases in the Central region of Vietnam. We plan to further improve the diagnostic accuracy of the AI and to evaluate its cost and efficacy by applying it in multiple hospitals in Hue, Vietnam, and Da Nang, Vietnam.

This study is one of the few academic studies on the development and application of AI in LMICs. It is a product of international collaboration among epidemiologists, global health experts, professional programmers, and local physicians in Vietnam, which facilitated questionnaire development, data collection, and AI development.

As this is a pilot study, it has several limitations. First, we only used data from a single hospital in Da Nang, Vietnam, so additional validation is required before applying the model to other regions. Second, because fewer patients visited the hospital, a certain proportion of patients were recruited retrospectively via telephone survey. Although we tried to maintain data integrity by reviewing EMRs, the validity of the retrospective data might have affected the results. Finally, our AI could not diagnose infectious diseases without definite clinical manifestations, such as sepsis. To correctly identify complex diseases and syndromes, we should include in-depth assessments of symptoms and clinical features in the model. To address these shortcomings, we plan to develop the assessment questionnaires further, distribute the AI to multiple collaborating hospitals and healthcare centers in Vietnam, and assess the efficacy of the AI in those institutions.

This study is one of the few academic studies on AIs in resource-limited settings. Our results imply that even survey-based questionnaires without laboratory or radiologic tests could be beneficial in screening for infectious diseases in LMICs. Additional studies at other collaborating institutions will further develop and validate the current model and provide epidemiologic evidence on the effectiveness of AI application in resource-limited settings.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by the institutional review board of Da Nang Hospital. The patients/participants provided their written informed consent to participate in this study.

Concept and design: KK, M-kL, and SK. Drafting of the manuscript: KK and SK. Statistical analysis: KK, HL, and BK. Obtained funding: M-kL and HS. Administrative, technical, or material support: M-kL, HS, HL, and SK. Supervision: M-kL and SK. All authors had full access to all the data in the study and take responsibility for the integrity of the data, the accuracy of the data analysis, the acquisition, analysis, and interpretation of data, and critical revision of the manuscript for important intellectual content.

This study was funded by RIGHT Fund (Investment ID RF-TAA-2021-H01). However, the funding source had no role in designing and conducting the study.

The authors hereby thank Acryl for providing technical assistance for AI development. We also would like to thank Department of Tropical Medicine, Da Nang Hospital for participating in data collection and dataset construction.

Author HS was employed by company Acryl. Authors HL, BK and HS were employed by company FineHealthcare.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpubh.2022.1023098/full#supplementary-material

1. Jones KE, Patel NG, Levy MA, Storeygard A, Balk D, Gittleman JL, et al. Global trends in emerging infectious diseases. Nature. (2008) 451:990–3. doi: 10.1038/nature06536

2. McMichael C. Climate change-related migration and infectious disease. Virulence. (2015) 6:548–53. doi: 10.1080/21505594.2015.1021539

3. Shah HA, Huxley P, Elmes J, Murray KA. Agricultural land-uses consistently exacerbate infectious disease risks in Southeast Asia. Nat Commun. (2019) 10:1–13. doi: 10.1038/s41467-019-12333-z

4. Morand S, Jittapalapong S, Suputtamongkol Y, Abdullah MT, Huan TB. Infectious diseases and their outbreaks in Asia-Pacific: biodiversity and its regulation loss matter. PLoS ONE. (2014) 9:e90032. doi: 10.1371/journal.pone.0090032

5. Coker RJ, Hunter BM, Rudge JW, Liverani M, Hanvoravongchai P. Emerging infectious diseases in Southeast Asia: regional challenges to control. Lancet. (2011) 377:599–609. doi: 10.1016/S0140-6736(10)62004-1

6. Tsheten T, Gray DJ, Clements ACA, Wangdi K. Epidemiology and challenges of dengue surveillance in the WHO South-East Asia Region. Trans R Soc Trop Med Hyg. (2021) 115:583–99. doi: 10.1093/trstmh/traa158

7. Hamilton WL, Amato R, van der Pluijm RW, Jacob CG, Quang HH, Thuy-Nhien NT, et al. Evolution and expansion of multidrug-resistant malaria in Southeast Asia: a genomic epidemiology study. Lancet Infect Dis. (2019) 19:943–51. doi: 10.1016/S1473-3099(19)30392-5

8. Robertson FC, Lepard JR, Mekary RA, Davis MC, Yunusa I, Gormley WB, et al. Epidemiology of central nervous system infectious diseases: a meta-analysis and systematic review with implications for neurosurgeons worldwide. J Neurosurg. (2018) 130:1107–26. doi: 10.3171/2017.10.JNS17359

9. Exarchos KP, Beltsiou M, Votti C-A, Kostikas K. Artificial intelligence techniques in asthma: a systematic review and critical appraisal of the existing literature. Eur Respirat J. (2020) 56:2000521. doi: 10.1183/13993003.00521-2020

10. Gonem S, Janssens W, Das N, Topalovic M. Applications of artificial intelligence and machine learning in respiratory medicine. Thorax. (2020) 75:695–701. doi: 10.1136/thoraxjnl-2020-214556

11. Iannattone PA, Zhao X, VanHouten J, Garg A, Huynh T. Artificial intelligence for diagnosis of acute coronary syndromes: a meta-analysis of machine learning approaches. Canad J Cardiol. (2020) 36:577–83. doi: 10.1016/j.cjca.2019.09.013

12. Harmon SA, Sanford TH, Xu S, Turkbey EB, Roth H, Xu Z, et al. Artificial intelligence for the detection of COVID-19 pneumonia on chest CT using multinational datasets. Nat Commun. (2020) 11:1–7. doi: 10.1038/s41467-020-17971-2

13. Smith KP, Kirby JE. Image analysis and artificial intelligence in infectious disease diagnostics. Clin Microbiol Infect. (2020) 26:1318–23. doi: 10.1016/j.cmi.2020.03.012

14. Dande P, Samant P. Acquaintance to artificial neural networks and use of artificial intelligence as a diagnostic tool for tuberculosis: a review. Tuberculosis. (2018) 108:1–9. doi: 10.1016/j.tube.2017.09.006

15. Jamal S, Khubaib M, Gangwar R, Grover S, Grover A, Hasnain SE. Artificial Intelligence and Machine learning based prediction of resistant and susceptible mutations in Mycobacterium tuberculosis. Sci Rep. (2020) 10:1–16. doi: 10.1038/s41598-020-62368-2

16. Kaur I, Sandhu AK, Kumar Y. Artificial intelligence techniques for predictive modeling of vector-borne diseases and its pathogens: a systematic review. Archiv Comput Methods Eng. (2022) 1–31. doi: 10.1007/s11831-022-09724-9

17. Pley C, Evans M, Lowe R, Montgomery H, Yacoub S. Digital and technological innovation in vector-borne disease surveillance to predict, detect, and control climate-driven outbreaks. Lancet Planetary Health. (2021) 5:e739–45. doi: 10.1016/S2542-5196(21)00141-8

18. Chen J, See KC. Artificial intelligence for COVID-19: rapid review. J Med Internet Res. (2020) 22:e21476. doi: 10.2196/21476

19. Jin C, Chen W, Cao Y, Xu Z, Tan Z, Zhang X, et al. Development and evaluation of an artificial intelligence system for COVID-19 diagnosis. Nat Commun. (2020) 11:1–14. doi: 10.1038/s41467-020-18685-1

20. Chiu H-YR, Hwang C-K, Chen S-Y, Shih F-Y, Han H-C, King C-C, et al. Machine learning for emerging infectious disease field responses. Sci Rep. (2022) 12:1–13. doi: 10.1038/s41598-021-03687-w

21. Malik YS, Sircar S, Bhat S, Ansari MI, Pande T, Kumar P, et al. How artificial intelligence may help the Covid-19 pandemic: pitfalls and lessons for the future. Rev Med Virol. (2021) 31:1–11. doi: 10.1002/rmv.2205

22. Buchlak QD, Esmaili N, Leveque J-C, Farrokhi F, Bennett C, Piccardi M, et al. Machine learning applications to clinical decision support in neurosurgery: an artificial intelligence augmented systematic review. Neurosurg Rev. (2020) 43:1235–53. doi: 10.1007/s10143-019-01163-8

23. Grigsby J, Kramer RE, Schneiders JL, Gates JR, Brewster Smith W. Predicting outcome of anterior temporal lobectomy using simulated neural networks. Epilepsia. (1998) 39:61–66. doi: 10.1111/j.1528-1157.1998.tb01275.x

24. Mohamadou Y, Halidou A, Kapen PT. A review of mathematical modeling, artificial intelligence and datasets used in the study, prediction and management of COVID-19. Appl Intell. (2020) 50:3913–25. doi: 10.1007/s10489-020-01770-9

25. Song X, Liu X, Liu F, Wang C. Comparison of machine learning and logistic regression models in predicting acute kidney injury: a systematic review and meta-analysis. Int J Med Inform. (2021) 151:104484. doi: 10.1016/j.ijmedinf.2021.104484

26. Chen X, Huang L, Xie D, Zhao Q. EGBMMDA: extreme gradient boosting machine for MiRNA-disease association prediction. Cell Death Dis. (2018) 9:1–16. doi: 10.1038/s41419-017-0003-x

27. Elveren E, Yumuşak N. Tuberculosis disease diagnosis using artificial neural network trained with genetic algorithm. J Med Syst. (2011) 35:329–32. doi: 10.1007/s10916-009-9369-3

28. Alim M, Ye G-H, Guan P, Huang D-S, Zhou B-S, Wu W. Comparison of ARIMA model and XGBoost model for prediction of human brucellosis in mainland China: a time-series study. BMJ Open. (2020) 10:e039676. doi: 10.1136/bmjopen-2020-039676

29. Kim J, Ahn I. Infectious disease outbreak prediction using media articles with machine learning models. Sci Rep. (2021) 11:4413. doi: 10.1038/s41598-021-83926-2

30. Sartakhti JS, Zangooei MH, Mozafari K. Hepatitis disease diagnosis using a novel hybrid method based on support vector machine and simulated annealing (SVM-SA). Comput Methods Programs Biomed. (2012) 108:570–9. doi: 10.1016/j.cmpb.2011.08.003

31. Wahl B, Cossy-Gantner A, Germann S, Schwalbe NR. Artificial intelligence (AI) and global health: how can AI contribute to health in resource-poor settings? BMJ Global Health. (2018) 3:e000798. doi: 10.1136/bmjgh-2018-000798

32. Hornyak T. Mapping dengue fever hazard with machine learning. Eos. (2017) 98. doi: 10.1029/2017EO076019

33. Naseem M, Akhund R, Arshad H, Ibrahim MT. Exploring the potential of artificial intelligence and machine learning to combat COVID-19 and existing opportunities for LMIC: a scoping review. J Prim Care Community Health. (2020) 11:2150132720963634. doi: 10.1177/2150132720963634

34. Owoyemi A, Owoyemi J, Osiyemi A, Boyd A. Artificial intelligence for healthcare in Africa. Front Digital Health. (2020) 2:6. doi: 10.3389/fdgth.2020.00006

35. Sheikhtaheri A, Sadoughi F, Hashemi Dehaghi Z. Developing and using expert systems and neural networks in medicine: a review on benefits and challenges. J Med Syst. (2014) 38:1–6. doi: 10.1007/s10916-014-0110-5

36. Caliskan A, Bryson JJ, Narayanan A. Semantics derived automatically from language corpora contain human-like biases. Science. (2017) 356:183–6. doi: 10.1126/science.aal4230

37. Angwin J, Larson J, Mattu S, Kirchner L. Machine Bias. Ethics of Data and Analytics. Boca Raton: Auerbach Publications (2016). p. 254–64. doi: 10.1201/9781003278290-37

38. Jiang L, Wu Z, Xu X, Zhan Y, Jin X, Wang L, et al. Opportunities and challenges of artificial intelligence in the medical field: current application, emerging problems, and problem-solving strategies. J Int Med Res. (2021) 49:03000605211000157. doi: 10.1177/03000605211000157

39. Schwalbe N, Wahl B. Artificial intelligence and the future of global health. Lancet. (2020) 395:1579–86. doi: 10.1016/S0140-6736(20)30226-9

40. Chen T, Guestrin C. Xgboost: A scalable tree boosting system. Proceedings of the 22nd ACM sigkdd International Conference on Knowledge Discovery and Data Mining. (2016). p. 785–94. doi: 10.1145/2939672.2939785

41. Ke G, Meng Q, Finley T, Wang T, Chen W, Ma W, Ye Q, Liu T-Y. Lightgbm: a highly efficient gradient boosting decision tree. Adv Neural Inf Process Syst. (2017) 30.

42. Prokhorenkova L, Gusev G, Vorobev A, Dorogush AV, Gulin A. CatBoost: unbiased boosting with categorical features. Adv Neural Inf Process Syst. (2018) 31.

43. Joseph VR. Optimal ratio for data splitting. Stat Anal Data Mining ASA Data Sci J. (2022) 15:531–8. doi: 10.1002/sam.11583

44. Irino T, Kawakubo H, Matsuda S, Mayanagi S, Nakamura R, Wada N, et al. Prediction of lymph node metastasis in early gastric cancer using artificial intelligence technology. J Clin Oncol. (2020) 38:289. doi: 10.1200/JCO.2020.38.4_suppl.289

45. Li L, Qin L, Xu Z, Yin Y, Wang X, Kong B, et al. Artificial intelligence distinguishes COVID-19 from community acquired pneumonia on chest CT. Radiology. (2020).

46. Nelson A, Herron D, Rees G, Nachev P. Predicting scheduled hospital attendance with artificial intelligence. NPJ Digital Med. (2019) 2:1–7. doi: 10.1038/s41746-019-0103-3

48. Fisher A, Rudin C, Dominici F. All models are wrong, but many are useful: learning a variable's importance by studying an entire class of prediction models simultaneously. J Mach Learn Res. (2019) 20:1–81.

49. Chen AX, Simpson SQ, Pallin DJ. Sepsis guidelines. N Engl J Med. (2019) 380:1369–71. doi: 10.1056/NEJMclde1815472

50. Hashem S, Esmat G, Elakel W, Habashy S, Raouf SA, Elhefnawi M, et al. Comparison of machine learning approaches for prediction of advanced liver fibrosis in chronic hepatitis C patients. IEEE/ACM Trans Comput Biol Bioinform. (2017) 15:861–8. doi: 10.1109/TCBB.2017.2690848

51. Harris M, Qi A, Jeagal L, Torabi N, Menzies D, Korobitsyn A, et al. A systematic review of the diagnostic accuracy of artificial intelligence-based computer programs to analyze chest x-rays for pulmonary tuberculosis. PLoS ONE. (2019) 14:e0221339. doi: 10.1371/journal.pone.0221339

52. Li L, Qin L, Xu Z, Yin Y, Wang X, Kong B, et al. Using artificial intelligence to detect COVID-19 and community-acquired pneumonia based on pulmonary CT: evaluation of the diagnostic accuracy. Radiology. (2020) 296:E65–71. doi: 10.1148/radiol.2020200905

53. World Health Organization. Ethics and Governance of Artificial Intelligence for Health: WHO Guidance (2021).

54. Shukla VV, Eggleston B, Ambalavanan N, McClure EM, Mwenechanya M, Chomba E, et al. Predictive modeling for perinatal mortality in resource-limited settings. JAMA Network Open. (2020) 3:e2026750. doi: 10.1001/jamanetworkopen.2020.26750

Keywords: communicable diseases, artificial intelligence, Asia Southeastern, international health, low- & middle-income countries

Citation: Kim K, Lee M-k, Shin HK, Lee H, Kim B and Kang S (2022) Development and application of survey-based artificial intelligence for clinical decision support in managing infectious diseases: A pilot study on a hospital in central Vietnam. Front. Public Health 10:1023098. doi: 10.3389/fpubh.2022.1023098

Received: 22 August 2022; Accepted: 17 October 2022; Published: 02 November 2022.

Edited by:

Buket Baddal, Near East University, Cyprus

Reviewed by:

Emre Ozbilge, Cyprus International University, Cyprus

Copyright © 2022 Kim, Lee, Shin, Lee, Kim and Kang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Kwanghyun Kim; Sunjoo Kang

†ORCID: Hyun Kyung Shin orcid.org/0000-0001-5505-8312

Boram Kim orcid.org/0000-0002-6912-0698

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
