- 1 Department of Biomedicine, Biotechnology, and Public Health, University of Cadiz, Cadiz, Spain

- 2 School of Public Health, Imperial College London, London, United Kingdom

- 3 Department of Applied Health Research, University College London, London, United Kingdom

Background: The widespread use of social media represents an unprecedented opportunity for health promotion. We now have access to more information and evidence-based, health-related knowledge than ever before, for instance about healthy habits or possible risk behaviors. However, these tools also carry disadvantages, since they open the door to new social and health risks, particularly during health emergencies. This systematic review aims to study the determinants of infodemics during disease outbreaks, drawing on studies that used both quantitative and qualitative methods.

Methods: We searched for research articles in PubMed, Scopus, Medline, Embase, CINAHL, Sociological Abstracts, Cochrane Library, and Web of Science. Additional studies were identified by searching the bibliographies of electronically retrieved review articles.

Results: A total of 42 studies were included in the review. Five determinants of infodemics were identified: (1) information sources; (2) online communities' structure and consensus; (3) communication channels (i.e., mass media, social media, forums, and websites); (4) message content (e.g., quality of information and sensationalism); and (5) context (e.g., social consensus, health emergencies, and public opinion). The studies selected in this systematic review identified different measures to combat misinformation during outbreaks.

Conclusion: The clarity of health promotion messages has proven essential to prevent the spread of a particular disease and to avoid potential risks, but it is also fundamental to understand the network structure of social media platforms and the emergency context in which misinformation might dynamically evolve. Therefore, to prevent future infodemics, special attention will need to be paid both to increasing the visibility of evidence-based knowledge generated by health organizations and academia and to detecting possible sources of mis/disinformation.

Introduction

The COVID-19 pandemic has been accompanied by a massive “infodemic”, which has recently been defined as “an over-abundance of information – some accurate and some not – that makes it hard for people to find trustworthy sources and reliable guidance when they need it” (1). As a consequence, the excessive amount of information on the SARS-CoV-2 virus and COVID-19 is making it more difficult to identify and assess possible solutions. In this context, the boundaries between evidence-based knowledge, anecdotal evidence, and health misinformation have become blurred (2, 3). The massive diffusion of health information in traditional and new media is a serious problem, not only because of the excess of noise (i.e., an overabundance of signals about a given topic), but also because of the flawed judgments of opinion leaders, which can contribute to misconceptions and risk behaviors that might in turn undermine the effectiveness of countermeasures taken by governments and health authorities (3).

Although the formation of collective opinions is a widely studied theme in social sciences such as sociology, political science, or communication (4), this topic has recently gained special relevance in other areas of study such as the health sciences (5). This new interest is associated with the use and expansion of Information and Communication Technologies (ICT) for health promotion and, in particular, with the progressive proliferation of health misinformation on social media platforms such as Twitter, Facebook, Instagram, WhatsApp, or YouTube. These tools have been incorporated into multiple spheres of daily life and have radically changed the ways we interact with our peers (6). The way we communicate, share, receive, use, and search for both general and health-specific information has been deeply altered over the last 20 years. At present, 40% of the world population has access to the Internet, social media reach around 39% of the world population, and 1.5 billion people use mobile devices to access the Internet instantly (7). In the EU, 75% of citizens considered ICT a good tool for finding health information (8). However, recent studies have shown that 40–50% of websites related to common diseases contained misinformation (9–11), and social media are also contributing to the spread of fake news on different health topics (5).

Research evidence shows that the widespread use of social media represents an unprecedented opportunity for health promotion (12). We have more access than ever before to information and knowledge about healthy habits, social and economic determinants of health, possible risk behaviors, and health promotion (5). Access to health content has increased dramatically, and health information can now be found across multiple sources: health forums, thematic channels, direct (online) access to health experts or agencies, online news, or social media (13). At the same time, there is greater availability of public data and content about opinions, attitudes, and behaviors that are continuously generated online and can be useful for encouraging healthy habits. In addition, the online expansion of health-related knowledge makes it possible for patients to access and share medical information with peers, gain self-efficacy in following treatments, and improve adherence to therapies (12, 14–18). However, these tools also bring disadvantages, since they open the door to new social and health risks (19, 20). For instance, the lack of oversight of health information on the Internet highlights the need to regulate the quality and public availability of health information online (21). Furthermore, unequal access to information and unequal skills in using new media can produce inequalities in access to health-related information, and therefore in the health and social well-being of the population (6, 8). The spread of self-medication, the proliferation of miracle diets and treatments, anti-vaccine movements and growing vaccine hesitancy, uninformed decision-making about health-related questions, and inexpert diagnosis are some of the common risks associated with the use of new media (22).

Although the advantages may appear to outweigh the disadvantages, outbreaks such as H1N1, Ebola, or Zika have shown that, in times of social emergency, misinformation can have serious consequences, since the mechanisms of social influence can amplify fears during epidemics (15, 16). Although the concept of infodemics has only recently been defined, fear and massive misinformation through social platforms have undermined the actions taken to tackle outbreaks during the last two decades (23, 24). During the H1N1 and Ebola epidemics, for example, misinformation on social media fostered unfounded myths and fears (25). The recent outbreak of Zika virus infections in South and Central America also gave rise to persistent myths and rumors about its pathophysiology, prevention, and possible treatments that captured massive public attention (26, 27). This problem has become even more evident during the COVID-19 pandemic, in which an unprecedented social and health emergency has generated an excess of information that is ultimately hindering the search for solutions to control the pandemic. Understanding the determinants of infodemics during outbreaks has therefore become a major priority for national governments and international health organizations (3).

To fill this knowledge gap, the present work aims to study the determinants of infodemics during disease outbreaks. Specifically, this systematic review seeks to (1) identify the factors that enable the spread of medical/health misinformation during outbreaks and (2) identify needs and future directions for developing new protocols that might help assess and control information quality in future infodemics.

Methods

A systematic review was conducted to explore the determinants of infodemics during disease outbreaks. We focused on health misinformation on epidemics and pandemics that have been widely reported and discussed in new and traditional media. This systematic review followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (28).

Search Strategy for Study Identification

Databases were searched up to December 2019. Search strategies were tailored to the characteristics of each search tool, with specific strategies designed for Scopus, Medline, Embase, CINAHL, Sociological Abstracts, Cochrane Library, plus gray literature. The searches were limited to the period from 1 January 2002 to 20 December 2019. The search terms related to three basic dimensions: (1) epidemics; (2) misinformation; and (3) internet and social media.

Specifically, we used the following search strategy and search terms: (“opinion” OR “opinions” OR “information” OR “misinformation” OR “rumor” OR “rumour” OR “rumors” OR “rumours” OR “gossip” OR “hoax” OR “hoaxes” OR “urban legend” OR “urban legends” OR “myth” OR “myths” OR “fallacy” OR “fallacies”) AND (“epidemics” OR “pandemics” OR “ebola” OR “ebola virus” OR “Ebola virus disease” OR “EVD” OR “zika” OR “zika virus” OR “zika fever” OR “H1N1” OR “Influenza A Virus” OR “Influenza in Birds” OR “avian flu” OR “avian influenza” OR “SARS” OR “severe acute respiratory syndrome”) AND (“online” OR “internet” OR “social media” OR “world wide web” OR “www” OR “social networks” OR “twitter” OR “facebook” OR “youtube” OR “whatsapp” OR “instagram” OR “forums”). The free-text search was complemented with the MeSH terms “information seeking behavior,” “epidemics,” “internet,” and “social media” (additional information on the search strategy can be found in Supplementary Table 1).
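The structure of this search (one OR group of synonyms per dimension, with the groups joined by AND) can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' tooling: the helper names `or_group` and `build_query` are hypothetical, the term lists are abbreviated, and each real database adds its own field tags and syntax, which are not shown. De-duplicating terms while preserving their order also guards against a synonym being entered twice.

```python
def or_group(terms):
    """Quote each term and join with OR, dropping duplicates but keeping order."""
    unique = list(dict.fromkeys(terms))
    return "(" + " OR ".join(f'"{t}"' for t in unique) + ")"


def build_query(*groups):
    """Combine one OR group per search dimension with AND."""
    return " AND ".join(or_group(g) for g in groups)


# Abbreviated, illustrative term lists (hypothetical subset of the full strategy)
misinformation_terms = ["misinformation", "rumor", "rumour", "rumor", "hoax", "myth"]
epidemic_terms = ["epidemics", "pandemics", "ebola", "zika", "H1N1", "SARS"]
online_terms = ["online", "internet", "social media", "twitter", "facebook"]

query = build_query(misinformation_terms, epidemic_terms, online_terms)
print(query)
```

The repeated "rumor" in the first list appears only once in the generated query, which keeps long hand-maintained term lists free of accidental duplicates.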

Inclusion and Exclusion Criteria

Given the multidisciplinary nature of our research objective, this systematic review collected studies of the determinants of infodemics during disease outbreaks that drew on both quantitative and qualitative methods. We focused on published research articles, written in English, addressing the problem of health/medical misinformation during epidemics and pandemics. We included different methodological perspectives: (a) Quantitative research: studies based on experimental research and survey methods focused on the analysis of health misinformation through social media and the internet. In this category, we also included studies based on quantitative content analysis techniques; (b) Qualitative research: studies focused on preferences and/or individual predisposition to certain messages or sources of (mis)information through these new communication channels, and qualitative studies based on the thematic analysis of misinformation; (c) Computational methods: studies using more innovative computational approaches to study complex processes of social contagion, such as text mining, big data, machine learning algorithms, simulations, and social network analysis.

We chose studies related to outbreaks that have been widely discussed on social media platforms and the Internet (e.g., SARS, H1N1, Ebola, and Zika virus). Studies focused on non-epidemic diseases, on vaccination, or on information from other channels (i.e., traditional mass media) were excluded. By document type, we excluded abstracts, doctoral theses, editorials, press articles, commentaries, letters, and book reviews.
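The inclusion and exclusion criteria can be read as a single screening predicate applied to each record. The sketch below is a hypothetical encoding for illustration only: the `Record` fields and the `meets_criteria` function are assumptions, not the coding sheet the authors used, and in practice each field is a reviewer's judgment rather than a stored flag.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """Hypothetical bibliographic record with fields coded during screening."""
    language: str
    doc_type: str               # e.g. "research article", "editorial", "letter"
    outbreak_topic: bool        # about an epidemic/pandemic (SARS, H1N1, Ebola, Zika, ...)
    misinformation_topic: bool  # addresses health/medical misinformation
    online_channel: bool        # studies the internet/social media, not only mass media
    vaccination_topic: bool     # focused on vaccination


def meets_criteria(r: Record) -> bool:
    """Apply the inclusion and exclusion criteria as one boolean test."""
    return (
        r.language == "English"
        and r.doc_type == "research article"  # rules out abstracts, theses, editorials, letters, ...
        and r.outbreak_topic
        and r.misinformation_topic
        and r.online_channel
        and not r.vaccination_topic
    )


zika_twitter_study = Record("English", "research article", True, True, True, False)
print(meets_criteria(zika_twitter_study))
```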

Studies Quality Assessment

To assess the quality of the selected studies, we used two instruments, one for quantitative studies and one for qualitative studies (29, 30). The tool for quantitative studies included 10 items, each rated (0) “Can't tell,” (1) “poor,” (2) “fair,” or (3) “good”: (1) Did the study address a clearly focused issue?, (2) Did the authors use an appropriate method to answer their question?, (3) Was the study population clearly specified and defined?, (4) Were measures taken to accurately reduce measurement bias?, (5) Were the study data collected in a way that addressed the research issue?, (6) Did the study have enough participants to minimize the play of chance?, (7) Did the authors take sufficient steps to assure the quality of the study data?, (8) Was the data analysis sufficiently rigorous?, (9) How complete is the discussion?, and (10) To what extent are the findings generalizable to other international contexts?

The tool for assessment of qualitative studies included eight items: (1) Were steps taken to increase rigor in the sampling?, (2) Were steps taken to increase rigor in the data collected?, (3) Were steps taken to increase rigor in the analysis of the data? (where (0) “No, not at all/ Not stated/Can't tell,” (1) “Yes, a few steps were taken,” (2) “Yes, several steps were taken,” (3) “Yes, a fairly thorough attempt was made”), (4) Were the findings of the study grounded in/supported by the data? [(1) “Limited grounding/support,” (2) “Fair grounding/support,” (3) “Good grounding/support”], (5) Please rate the findings of the study in terms of their breadth and depth [(0) “Limited breadth or depth,” (1) “Good/fair breadth but very little depth,” (2) “Good/fair depth but very little breadth,” (3) “Good/fair breadth and depth”], (6) To what extent does the study privilege the perspectives and experiences of health care professionals and population health? [(0) “Not at all,” (1) “A little,” (2) “Somewhat,” (3) “A lot”], (7) Overall, what weight would you assign to this study in terms of the reliability/trustworthiness of its findings? [(1) “Low”, (2) “Medium,” (3) “High”], (8) What weight would you assign to this study in terms of the usefulness of its findings for this review? [(1) “Low,” (2) “Medium,” (3) “High”].

Results

Selection of the Studies

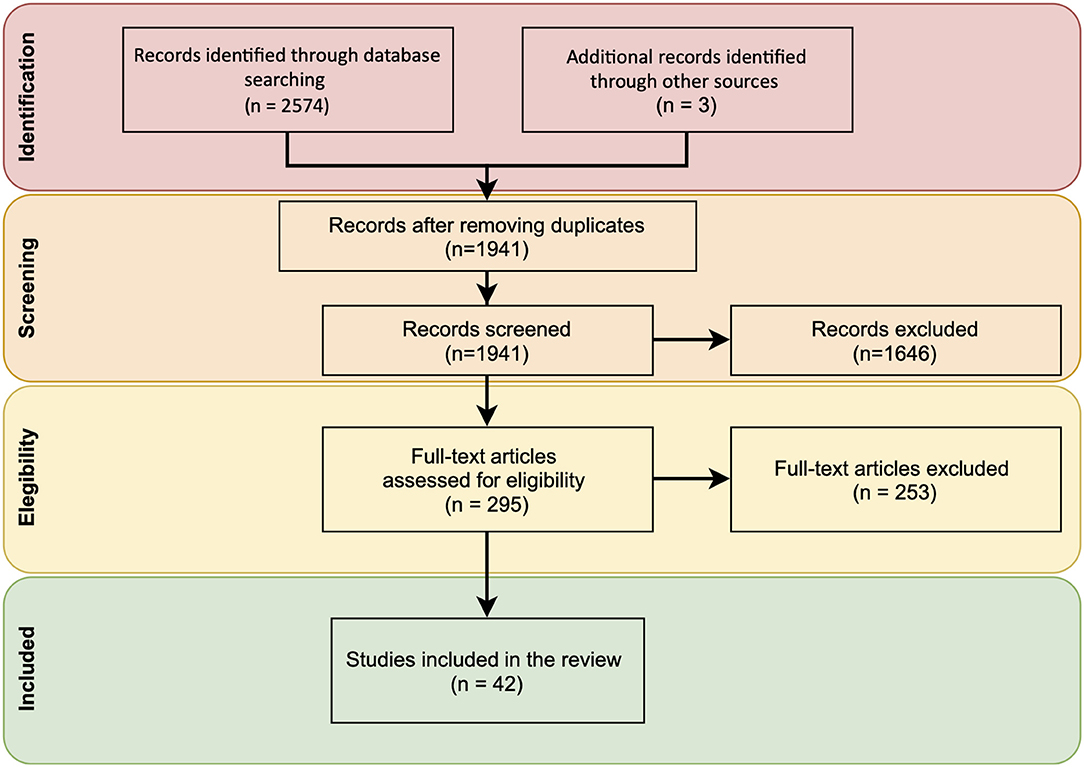

After searching the main biomedical databases and consulting key papers, 2,577 references were retrieved. After duplicate records were removed, 1,941 references were screened by title and abstract, and 295 of them were assessed in full text. The authors independently carried out the full-text selection process for inclusion, and discrepancies were discussed and resolved by mutual agreement. The main reason for excluding references was that the studies did not address misinformation during epidemics or pandemics. Finally, 42 studies were included in the review for further assessment (Figure 1).
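The counts reported for each stage imply how many records were dropped between consecutive stages. The short sketch below derives them, assuming that every difference between two consecutive counts corresponds to records removed at that stage; the derived figures are arithmetic on the reported numbers rather than values stated in the text, and Figure 1 remains the authoritative flow diagram. The function name `prisma_flow` is hypothetical.

```python
def prisma_flow(retrieved, screened, full_text, included):
    """Derive the number of records removed at each stage of the selection."""
    return {
        "duplicates_removed": retrieved - screened,
        "excluded_title_abstract": screened - full_text,
        "excluded_full_text": full_text - included,
    }


flow = prisma_flow(retrieved=2577, screened=1941, full_text=295, included=42)
for stage, n in flow.items():
    print(f"{stage}: {n}")
```

Run on the reported counts, it gives 636 duplicates removed, 1,646 records excluded at title/abstract screening, and 253 excluded after full-text assessment.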

The inter-rater reliability of the study selection between two of the authors was assessed using Cronbach's alpha and was high (α = 0.97).
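For readers unfamiliar with the statistic, Cronbach's alpha treats each rater's scores as one "item": α = k/(k − 1) × (1 − Σ var(rater scores) / var(summed scores)), where k is the number of raters. The sketch below computes it with Python's standard library. The include (1) / exclude (0) decisions are invented for illustration and do not reproduce the review's α = 0.97. The function name `cronbach_alpha` is an assumption.

```python
from statistics import variance


def cronbach_alpha(*raters):
    """Cronbach's alpha, treating each rater's scores as one 'item'.

    alpha = k / (k - 1) * (1 - sum(var_i) / var(total)),
    where k is the number of raters and total is the per-record sum of scores.
    """
    k = len(raters)
    totals = [sum(scores) for scores in zip(*raters)]
    item_var = sum(variance(scores) for scores in raters)
    return k / (k - 1) * (1 - item_var / variance(totals))


# Hypothetical include (1) / exclude (0) decisions for the same ten records
rater_a = [1, 1, 0, 0, 1, 0, 0, 1, 0, 0]
rater_b = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
print(round(cronbach_alpha(rater_a, rater_b), 2))
```

With these invented decisions the raters disagree on one record out of ten and alpha is about 0.89; identical decisions give exactly 1.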

Characteristics of the Studies

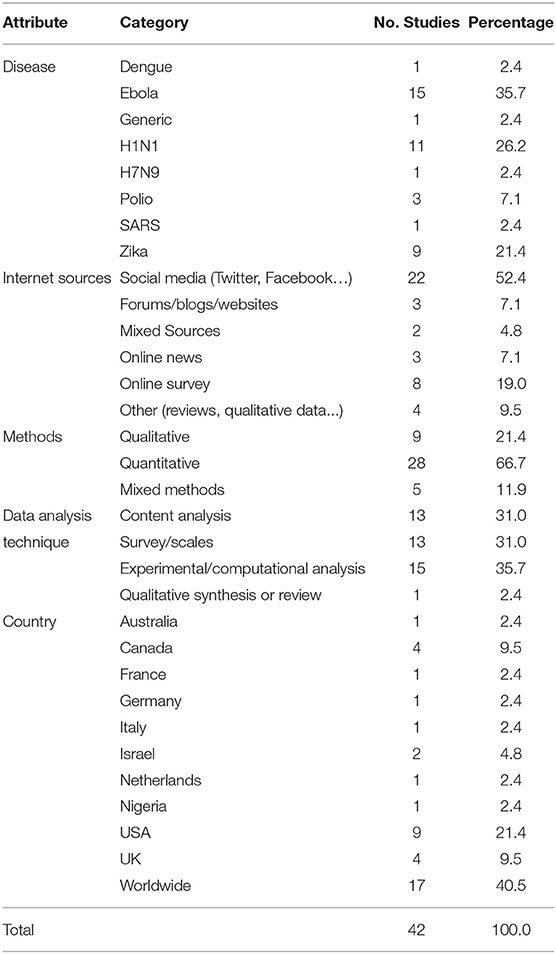

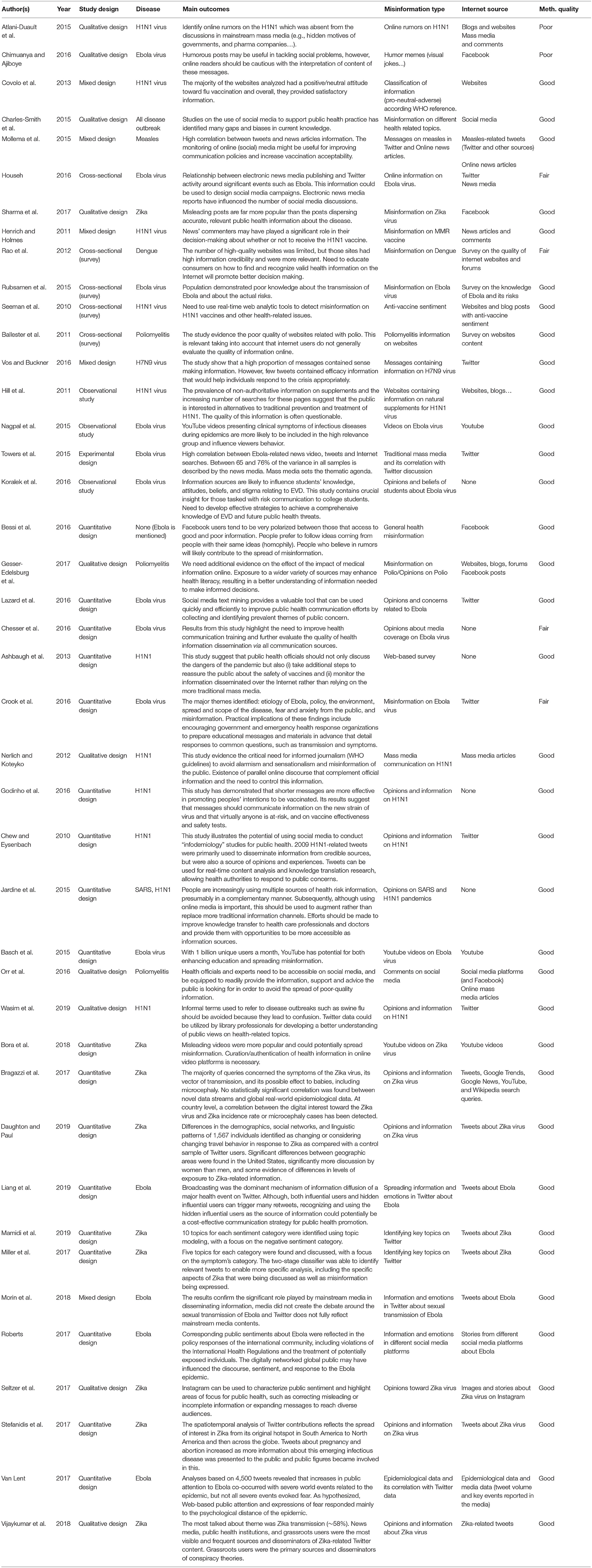

Included papers focused on eight communicable disease topics: Dengue (1), Ebola (15), Generic diseases (1), H1N1 (11), H7N9 (1), Poliomyelitis (3), SARS (1), and Zika (9). Most studies were published in the period 2011–2019, the second decade of the 21st century, when the use of social media platforms became widespread. The main sources under study were social media (52.4%) and online surveys (19.0%). Most studies were based on quantitative methods (66.7%), but we also found qualitative (21.4%) and mixed-methods studies (11.9%). Because the selected studies were based on opinions and content generated on the Internet, a substantial proportion of them (40.5%) had no specific geographic area of study. The main characteristics of the included studies are described in Table 1.
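A quick arithmetic check shows that these breakdowns are internally consistent with the 42 included studies. The disease-topic counts are taken directly from the text; the counts behind the percentages (28, 9, and 5 studies by method; 22, 8, and 17 for social media, online surveys, and no specific area) are back-calculated from the reported percentages, assuming a denominator of 42, and are not figures stated by the authors. The helper `pct` is hypothetical.

```python
def pct(n, total=42):
    """Percentage of the 42 included studies, rounded to one decimal place."""
    return round(100 * n / total, 1)


# Disease topics and counts as listed in the text
topics = {"Dengue": 1, "Ebola": 15, "Generic diseases": 1, "H1N1": 11,
          "H7N9": 1, "Poliomyelitis": 3, "SARS": 1, "Zika": 9}

# Counts back-calculated from the reported percentages (assumption: denominator 42)
methods = {"quantitative": 28, "qualitative": 9, "mixed": 5}

print(len(topics), sum(topics.values()))        # number of topics, total studies
print({m: pct(n) for m, n in methods.items()})  # method shares
print(pct(22), pct(8), pct(17))                 # social media, online surveys, no area
```

The topic counts add up to 42, and the back-calculated counts reproduce every reported percentage after rounding to one decimal place.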

Across the included studies, five determinants of infodemics were identified: (1) information sources (i.e., senders); (2) online communities' structure and consensus (i.e., receivers); (3) communication channels (e.g., mass media, social media, and forums); (4) message content (e.g., quality of information and sensationalism); and (5) the health emergency context (e.g., social consensus, health emergencies, and public opinion).

Health Misinformation Sources

Health misinformation can propagate through influencers or well-positioned individuals who may act as distractors or judges in specific social networks (31). In addition, certain individuals, such as members of anti-vaccine groups, look for alternative information and treatments online (32), and the same applies to groups with low educational attainment, people with low digital health literacy (33), or people with poor general knowledge of specific diseases such as Ebola or Zika (34). However, gaps and biases in current scientific knowledge have also been found (35); misinformation can therefore also derive from poor-quality scientific knowledge (e.g., misleading scientific papers and/or studies based on preliminary or biased results), which can subsequently be spread through mass and social media. In fact, recent studies have found a high correlation between the content of social media tweets/posts and that of news articles (36). Traditional media can therefore also contribute to the misinterpretation of existing scientific evidence, and thus to the massive spread of poor-quality messages that are often echoed on social media (24).

Online Communities' Structure

The assessed studies show that opinion polarization and echo chamber effects, driven by homophily among social media users, can widen (mis)information divides and increase resistance to evidence-based knowledge and behavioral change among community members (37, 38). For instance, on social media such as Facebook or Twitter, people tend to spread both good and bad information to their friends. Although expert knowledge from health authorities is also widely distributed on these platforms, misleading health content propagates and reverberates within relatively closed online communities that ultimately reject expert recommendations and research evidence. Anti-vaccine groups are again a case in point: they increase vaccine hesitancy by promoting public debates around the medical benefits and the ethical and legal issues of vaccination. Consequently, health misinformation and infodemics can spread easily within online communities composed of individuals with similar beliefs and interests (39), and this includes the scientific community, which is constantly exposed to trending research topics (35).

Communication Channels

According to the selected studies, although mass media can also contribute to the propagation of poor-quality information during public health emergencies, social media seem to be an ideal channel for spreading anecdotal evidence, rumors, fake news, and general misinformation about treatments and existing evidence-based knowledge on health topics (40, 41). For example, poor-quality and misleading information on the MMR vaccine (and its alleged relationship with autism) is easy to find both on the Internet and on social media such as Twitter, Facebook, or YouTube (31). In general, studies found that high-quality websites were very scarce (42), although there is also evidence that certain online sources may enhance the health literacy (43) and treatment self-efficacy of specific health-information seekers (e.g., chronic patients looking for health solutions) (8).

Messages Content

Alarmist, misleading, and shorter messages, as well as anecdotal evidence, seem to have a stronger impact on the spread of health-related misinformation on epidemiological topics, which can generate and sustain infodemics. For instance, misleading posts about the Zika virus have been found to be more popular than posts disseminating accurate information (25, 27, 44). Narratives of misinformation and misleading content commonly induce fear, anxiety, and mistrust of government and health institutions (45). However, shorter messages have also been found effective for promoting people's health, specifically in vaccination campaigns aimed at fighting vaccine hesitancy and thus promoting vaccination (46). Twitter, as a microblogging service, can propagate evidence-based health knowledge very quickly, and YouTube videos can easily be used to increase knowledge of specific epidemiological topics (or related concerns) that can be difficult to convey through other communication channels (47).

Health Emergency Context

Regarding the emergency context of epidemiological crises, particularly pandemics, the studies showed ambivalent findings. Although alarmist or misleading messages on social media during health crises might lead to risky behaviors (e.g., use of alternative medicines, miracle remedies, dangerous treatments, or vaccine rejection), these platforms have also been found helpful for managing health information uncertainty and health behaviors through the rapid propagation of the evidence-based knowledge needed to control specific diseases (46). During emergencies, platforms such as Twitter, Facebook, Instagram, or YouTube have the potential to enhance educational content on the etiology and prevention of disease, but also to spread health misinformation (48–52). Therefore, during health crises, social media can be used both to promote and to combat health misinformation.

On the other hand, traditional media can also contribute to the propagation and reproduction of misleading content, which may ultimately affect the development and prolongation of infodemics (53, 54). In this vein, recent studies point to the need to avoid alarmist and sensationalist messages in order to prevent public misconceptions (55) and the reproduction of these messages on social media (24). These results are summarized in Table 2.

Quality Assessment of Included Studies

Most of the studies analyzed met the basic criteria of methodological quality. Of the 42 studies evaluated in this review, 36 (85.7%) received a good quality assessment, 4 (9.5%) a moderate assessment, and 2 (4.8%) a poor assessment (mainly because certain methodological criteria could not be completed). The risk of bias therefore appears relatively low. Scores for each item of the quality assessment instruments are included in Supplementary Table 2.

Discussion

Although the concept of infodemics was only recently formulated to explain the difficulty of screening the current overabundance of information on SARS-CoV-2 and COVID-19, our review shows that this problem is not new, since the same dynamics occurred in previous outbreaks (24–27, 32, 37, 44–47, 49–51, 56–77). These studies show that infodemics are closely related to the rapid and free flow of (mis)information through the Internet, social media platforms, and online news (78). Although these new media have a high potential to spread evidence-based knowledge massively, the speed and lack of control over health information content (even from the scientific community) can easily undermine basic standards for trustworthy evidence and increase the risk of bias in research conclusions (79).

As many studies hypothesize, misinformation can easily propagate through social media platforms such as Twitter, Facebook, YouTube, or WhatsApp. Misconceptions about the H1N1, Zika, or Ebola outbreaks are common on social media, but this unofficial narrative is generally absent from discussions in mainstream mass media (56). The same trend can be observed in rumors about the alleged association between vaccination and autism (particularly regarding the MMR vaccine), which generally proliferate on social media (41, 80), and in messages around conspiracy theories about governments and pharmaceutical companies, which are common in social media communities (81). However, studies also show that social media may be very useful for fighting misinformation during public health crises (42, 74). These platforms also have an immense capacity to propagate evidence-based health information, which might help mitigate rumors and anecdotal evidence (41). In fact, social media such as Twitter or Facebook have been found to be particularly effective tools in combating rumors, as they can complement and support general information from the mainstream media (53).

These findings indicate that these tools also have great potential to fight health misinformation by promoting health-related educational content and materials that answer common questions, such as those related to transmission, symptoms, and prevention, as long as they are properly coordinated through specific programmes and interventions from governments and health organizations (66). Nevertheless, given that anecdotal evidence and rumors seem to be more popular on social media than evidence-based knowledge (44), public health authorities should find alternative ways to reach health-information seekers while combating both official and unofficial sources of misinformation, in particular unauthorized and suspicious social media accounts whose affiliation is not clearly defined (57, 82). In this context, it is also necessary to consider the particular case of “expert patients,” who might ambivalently promote both accurate health information and anecdotal experiences (83). Although patients' expertise might be a valuable resource for understanding the patient's perspective, health professionals are better prepared to confront situations of uncertainty and to identify concrete solutions that lead to positive health outcomes (84). In any case, it is fundamental to understand that during health emergencies such as the COVID-19 pandemic, even researchers and health professionals can contribute to obscuring the scientific evidence, since the hasty search for solutions can lead to biased conclusions (85). This argument also applies to opinion leaders. For example, after Donald Trump suggested in the media that disinfectants could be used to treat the coronavirus, the Georgia Poison Center reported that two men drank cleaning solution the following weekend in misguided attempts to prevent COVID-19.

To understand how misinformation might propagate on social media, it is useful to introduce the distinction between “simple” and “complex contagion” in the framework of opinion spreading in social networks. The fundamental difference between these concepts is that, while simple contagion depends on network connectivity (e.g., the epidemiological contagion of disease), the complex contagion of opinions and ideas requires multiple reinforcements based on legitimacy within online communities and normative social consensus (86, 87). Consequently, the effective propagation of misinformed ideas and anecdotal evidence on health topics depends on the connectivity between social media users, but in particular on the social legitimacy of sharing these ideas in different normative contexts (88). Therefore, the processes of health misinformation spreading that might contribute to the development of infodemics through social media are also related to the social consensus between groups and the social structure of their online communities (i.e., the degree of connectivity between users and the topological configuration of their social network) (89).
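The difference between simple and complex contagion can be illustrated with a deterministic threshold model, a common toy formalization. This is an assumption here: the cited studies may use other models, and the network builders `ring_lattice` and `bridged_clusters` are hypothetical illustrations. A node adopts an idea once at least `threshold` of its neighbors have adopted it. A threshold of 1 mimics simple contagion, while a threshold of 2 requires reinforcement from multiple contacts.

```python
def ring_lattice(n, k, offset=0):
    """Clustered network: each node links to its k nearest neighbors on each side."""
    return {
        offset + i: {offset + (i + d) % n for d in range(-k, k + 1) if d != 0}
        for i in range(n)
    }


def bridged_clusters(n, k):
    """Two ring lattices of n nodes joined by a single 'bridge' tie."""
    graph = {**ring_lattice(n, k), **ring_lattice(n, k, offset=n)}
    graph[n - 1].add(n)
    graph[n].add(n - 1)
    return graph


def spread(graph, seeds, threshold):
    """Threshold contagion: a node adopts once >= threshold neighbors have adopted.

    threshold=1 behaves like simple contagion; threshold>=2 is complex contagion.
    Iterates until no further node adopts and returns the set of adopters.
    """
    adopted = set(seeds)
    changed = True
    while changed:
        changed = False
        for node, neighbors in graph.items():
            if node not in adopted and len(neighbors & adopted) >= threshold:
                adopted.add(node)
                changed = True
    return adopted


ring = ring_lattice(20, 2)
print(len(spread(ring, {0}, threshold=1)))     # -> 20 (simple contagion, one seed)
print(len(spread(ring, {0}, threshold=2)))     # -> 1 (complex contagion, one seed)
print(len(spread(ring, {0, 1}, threshold=2)))  # -> 20 (two adjacent seeds)

bridged = bridged_clusters(10, 2)
print(len(spread(bridged, {0}, threshold=1)))     # -> 20
print(len(spread(bridged, {0, 1}, threshold=2)))  # -> 10
```

On the clustered ring, a single seed reaches every node under simple contagion but no one beyond itself under complex contagion. Two adjacent seeds, however, let complex contagion spread through the overlapping neighborhoods. Across the single bridge tie, complex contagion stays inside the first cluster. This mirrors the point that connectivity alone is not sufficient when adoption depends on reinforcement within a community.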

The diversity of the studies we found reflects the general complexity of delimiting evidence-based knowledge during health emergencies in which, owing to the very situation of uncertainty and either to an overabundance of evidence or to an increase in false news, disinformation tends to emerge naturally (85, 90). Although the negative impact of health misinformation on public health is widely assumed in different studies (5), there is still little evidence on three central questions: (a) how to measure the prevalence of health misinformation on social media; (b) how to analyse the relationship between exposure to health-related misinformation and health behaviors and outcomes; and, in particular, (c) how to reduce the spread of and exposure to health misinformation through specific interventions and programmes (5). Understanding the complex contagion of misinformation during health emergencies will therefore be essential to explain the emergence and reproduction of future infodemics.

The studies selected in this systematic review described different measures to combat health misinformation in mass and social media: (1) developing training programs for professional intervention and better knowledge transfer (91); (2) improving health-related content in mass media by using existing (peer-reviewed) scientific evidence (78); (3) promoting the development of multimedia products (videos, images, tutorials, infographics, etc.) from trustworthy sources such as academic institutions and health organizations (i.e., research evidence should be particularly oriented to the public so that scientific knowledge can have social impact) (27); (4) developing coordinated information campaigns between the media and health authorities, specifically during periods of health crisis (53); (5) increasing the (digital) health literacy of health-information seekers (42, 48, 66); and (6) developing new tools and information systems for health misinformation monitoring and health evidence quality assessment (61, 92). Given the highly changing and dynamic ecosystem in which infodemics arise and evolve, the actions of knowledge producers and disseminators are central to controlling fake news, anecdotal evidence, and false and misleading content. Health organizations, reputed hospitals, universities, research institutes, mass media, and social media companies may therefore play a more proactive role in the fight against health mis/disinformation. In general, scientific knowledge should have a larger presence and visibility in “information societies,” particularly during health emergencies such as the COVID-19 pandemic, in which an overabundance of information might induce contradictory views, public fear, and undesired social consequences such as rejection of new government rules, lack of trust in health authorities, denial of expert advice, and vaccine and treatment hesitancy, among other social side effects.

Limitations and Future Directions

We found some limitations when conducting our systematic review. First, owing to the novelty of the research topic, we found great heterogeneity in the results of the included articles. Most of the selected studies had observational designs based on survey methods or descriptive content analysis applied to different population groups (students, the general population, or platform users), but we also found works based on computational methods (e.g., using online or social media data) and even on qualitative evidence. Second, considering the idiosyncrasies of each platform (e.g., microblogging services and video sharing), additional studies are needed to obtain platform-oriented conclusions and further methodological insights on how to effectively address the determinants of health misinformation during disease outbreaks and subsequent infodemics across different social media platforms.

Finally, it is worth mentioning that, as the authors are editing this paper, the COVID-19 infodemic is still taking place. The consequences of misinformation about the symptoms of the new disease, possible treatments, and public health strategies to cope with the new pandemic are therefore still unknown. Our search covered the period up to December 2019 and so did not capture studies on COVID-19. Nevertheless, the COVID-19 infodemic highlights a problematic and recurrent social phenomenon that reinforces the need to collate and analyse evidence about the relationship between disease outbreaks and health misinformation. The COVID-19 pandemic thus confirms the importance of addressing this research topic internationally through a multidisciplinary approach that integrates existing evidence from different but complementary research fields.

Conclusion

Our systematic review provides a comprehensive characterization of the determinants of infodemics and describes different measures to combat health misinformation during disease outbreaks. The clarity of health promotion messages has proven essential to prevent the spread of a particular disease and to avoid potential risks, but it is also fundamental to understand the network structure of social media platforms and the context in which misinformation and/or misleading evidence might dynamically evolve. Therefore, in order to prevent future infodemics, special attention will need to be paid both to increasing the visibility of evidence-based knowledge generated by health organizations and academia, and to detecting the possible sources of mis/disinformation. In any case, this fight against health misinformation will not be possible without the help of mass media and social media companies, particularly the latter, since these platforms have a high penetration in our societies and, consequently, the capacity to work on the health literacy of the population. We must consider that the battle against misinformation is not exclusive to the health field. Misinformation is also rooted in the political and economic system of our societies but, as we can observe in these days of pandemic, it is probably during social and health emergencies that the damage to our way of life may be greatest.

Author Contributions

JA-G conceived the study. AR-G and VS-L contributed to the selection of studies, the screening process, and the grading of evidence. JA-G and AR-G wrote the first draft of the manuscript. All authors contributed to the interpretation of results, contributed substantially to the development of the paper, and approved the final version of the manuscript for submission to this journal.

Funding

JA-G was subsidized by the Ramon & Cajal research programme operated by the Ministry of Economy and Business (RYC-2016-19353), and the European Social Fund.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to acknowledge the support of the INDESS (University of Cadiz), the Computational Social Science DataLab team, and the Ramon & Cajal programme of the Ministry of Economy and Business.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpubh.2021.603603/full#supplementary-material

References

1. WHO. EPI-WIN (2020). Available online at: https://www.epi-win.com/ (accessed March 9, 2020).

2. Naeem SB, Bhatti R. The COVID-19 ‘infodemic': a new front for information professionals. Health Info Libr J. (2020) 37:233–9. doi: 10.1111/hir.12311

3. Zarocostas J. How to fight an infodemic. Lancet. (2020) 395:676. doi: 10.1016/S0140-6736(20)30461-X

4. de Boer C. Public Opinion. In: Ritzer G, editor. The Blackwell Encyclopedia of Sociology. New York, NY: Blackwell Publishing. (2007). doi: 10.1002/9781405165518.wbeosp119

5. Chou WYS, Oh A, Klein WMP. Addressing health-related misinformation on social media. JAMA. (2018) 320:2417–8. doi: 10.1001/jama.2018.16865

6. Xiong F, Liu Y. Opinion formation on social media: an empirical approach. Chaos. (2014) 24:013130. doi: 10.1063/1.4866011

7. Kuss D, Griffiths M, Karila L, Billieux J. Internet addiction: a systematic review of epidemiological research for the last decade. Curr Pharm Des. (2014) 20:4026–52. doi: 10.2174/13816128113199990617

8. Alvarez-Galvez J, Salinas-Perez JA, Montagni I, Salvador-Carulla L. The persistence of digital divides in the use of health information: a comparative study in 28 European countries. Int J Public Health. (2020) 65:325–33. doi: 10.1007/s00038-020-01363-w

9. Bratu S. Fake news, health literacy, and misinformed patients: the fate of scientific facts in the era of digital medicine. Anal Metaphys. (2018) 17:122–7. doi: 10.22381/AM1720186

10. Waszak PM, Kasprzycka-Waszak W, Kubanek A. The spread of medical fake news in social media – the pilot quantitative study. Health Policy Technol. (2018) 7:115–8. doi: 10.1016/j.hlpt.2018.03.002

11. Allcott H, Gentzkow M, Yu C. Trends in the diffusion of misinformation on social media. Res Polit. (2019) 6:1–8. doi: 10.3386/w25500

12. McGloin AF, Eslami S. Digital and social media opportunities for dietary behaviour change. Proc Nutr Soc. (2015) 74:139–48. doi: 10.1017/S0029665114001505

13. Sama PR, Eapen ZJ, Weinfurt KP, Shah BR, Schulman KA. An evaluation of mobile health application tools. JMIR Mhealth Uhealth. (2014) 2:e19. doi: 10.2196/mhealth.3088

14. Altevogt BM, Stroud C, Hanson SL, Hanfling D, Gostin LOE, Institute of Medicine. Guidance for Establishing Crisis Standards of Care for Use in Disaster Situations. Washington, DC: National Academies Press (2009).

15. Funk S, Gilad E, Watkins C, Jansen VAA. The spread of awareness and its impact on epidemic outbreaks. Proc Natl Acad Sci U S A. (2009) 106:6872–7. doi: 10.1073/pnas.0810762106

16. Funk S, Salathé M, Jansen VAA. Modelling the influence of human behaviour on the spread of infectious diseases: a review. J R Soc Interface. (2010) 7:1247–56. doi: 10.1098/rsif.2010.0142

17. Kim SJ, Han JA, Lee T-Y, Hwang T-Y, Kwon K-S, Park KS, et al. Community-based risk communication survey: risk prevention behaviors in communities during the H1N1 crisis, 2010. Osong Public Health Res Perspect. (2014) 5:9–19. doi: 10.1016/j.phrp.2013.12.001

18. Scarcella C, Antonelli L, Orizio G, Rossmann C, Ziegler L, Meyer L, et al. Crisis communication in the area of risk management: the CriCoRM project. J Public Health Res. (2013) 2:e20. doi: 10.4081/jphr.2013.e20

19. Cavallo DN, Chou WYS, McQueen A, Ramirez A, Riley WT. Cancer prevention and control interventions using social media: user-generated approaches. Cancer Epidemiol Biomarkers Prev. (2014) 23:1953–6. doi: 10.1158/1055-9965.EPI-14-0593

20. Naslund JA, Grande SW, Aschbrenner KA, Elwyn G. Naturally occurring peer support through social media: the experiences of individuals with severe mental illness using YouTube. PLoS ONE. (2014) 9:e110171. doi: 10.1371/journal.pone.0110171

21. Freeman B, Kelly B, Baur L, Chapman K, Chapman S, Gill T, et al. Digital junk: food and beverage marketing on Facebook. Am J Public Health. (2014) 104:e56–64. doi: 10.2105/AJPH.2014.302167

22. Levy JA, Strombeck R. Health benefits and risks of the Internet. J Med Syst. (2002) 26:495–510. doi: 10.1023/A:1020288508362

23. Betsch C. The role of the Internet in eliminating infectious diseases. Managing perceptions and misperceptions of vaccination. Bundesgesundheitsblatt Gesundheitsforschung Gesundheitsschutz. (2013) 56:1279–86. doi: 10.1007/s00103-013-1793-3

24. Towers S, Afzal S, Bernal G, Bliss N, Brown S, Espinoza B, Jackson J, Judson-Garcia J, Khan M, Lin M, et al. Mass media and the contagion of fear: the case of Ebola in America. PLoS ONE. (2015) 10:e0129179. doi: 10.1371/journal.pone.0129179

25. Seltzer EK, Horst-Martz E, Lu M, Merchant RM. Public sentiment and discourse about Zika virus on Instagram. Public Health. (2017) 150:170–5. doi: 10.1016/j.puhe.2017.07.015

26. Bragazzi NL, Alicino C, Trucchi C, Paganino C, Barberis I, Martini M, et al. Global reaction to the recent outbreaks of Zika virus: insights from a big data analysis. PLoS ONE. (2017) 12:e0185263. doi: 10.1371/journal.pone.0185263

27. Bora K, Das D, Barman B, Borah P. Are internet videos useful sources of information during global public health emergencies? A case study of YouTube videos during the 2015–16 Zika virus pandemic. Pathog Glob Health. (2018) 112:320–8. doi: 10.1080/20477724.2018.1507784

28. Moher D, Liberati A, Tetzlaff J, Altman DG, Antes G, et al. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. (2009) 6:e1000097. doi: 10.1371/journal.pmed.1000097

29. Rojas-García A, Turner S, Pizzo E, Hudson E, Thomas J, Raine R. Impact and experiences of delayed discharge: a mixed-studies systematic review. Health Expect. (2018) 21:41–56. doi: 10.1111/hex.12619

30. Barratt H, Rojas-García A, Clarke K, Moore A, Whittington C, Stockton S, et al. Epidemiology of mental health attendances at emergency departments: systematic review and meta-analysis. PLoS ONE. (2016) 11:e0154449. doi: 10.1371/journal.pone.0154449

31. Hara N, Sanfilippo MR. Co-constructing controversy: content analysis of collaborative knowledge negotiation in online communities. Inf Commun Soc. (2016) 19:1587–604. doi: 10.1080/1369118X.2016.1142595

32. Hill S, Mao J, Ungar L, Hennessy S, Leonard CE, Holmes J. Natural supplements for H1N1 influenza: retrospective observational infodemiology study of information and search activity on the Internet. J Med Internet Res. (2011) 13:e36. doi: 10.2196/jmir.1722

33. Gesser-Edelsburg A, Diamant A, Hijazi R, Mesch GS. Correcting misinformation by health organizations during measles outbreaks: a controlled experiment. PLoS ONE. (2018) 13:e0209505. doi: 10.1371/journal.pone.0209505

34. Rubsamen N, Castell S, Horn J, Karch A, Ott JJ, Raupach-Rosin H, et al. Ebola risk perception in Germany, 2014. Emerg Infect Dis. (2015) 21:1012–8. doi: 10.3201/eid2106.150013

35. Charles-Smith LE, Reynolds TL, Cameron MA, Conway M, Lau EHY, Olsen JM, et al. Using social media for actionable disease surveillance and outbreak management: a systematic literature review. PLoS ONE. (2015) 10:e0139701. doi: 10.1371/journal.pone.0139701

36. Mollema L, Harmsen IA, Broekhuizen E, Clijnk R, De Melker H, Paulussen T, et al. Disease detection or public opinion reflection? Content analysis of tweets, other social media, and online newspapers during the measles outbreak in the Netherlands in 2013. J Med Internet Res. (2015) 17:e128. doi: 10.2196/jmir.3863

37. Liang H, Fung ICH, Tse ZTH, Yin J, Chan CH, Pechta LE, et al. How did Ebola information spread on Twitter: broadcasting or viral spreading? BMC Public Health. (2019) 19:1–11. doi: 10.1186/s12889-019-6747-8

38. Salathé M, Khandelwal S. Assessing vaccination sentiments with online social media: implications for infectious disease dynamics and control. PLoS Comput Biol. (2011) 7:e1002199. doi: 10.1371/journal.pcbi.1002199

39. Shao C, Ciampaglia GL, Varol O, Yang K-C, Flammini A, Menczer F. The spread of low-credibility content by social bots. Nat Commun. (2018) 9:4787. doi: 10.1038/s41467-018-06930-7

40. Idoiaga Mondragon N. Social networks in times of risk: analyzing Ebola through Twitter. Opcion. (2016) 32:740–56.

41. Wang Y, McKee M, Torbica A, Stuckler D. Systematic literature review on the spread of health-related misinformation on social media. Soc Sci Med. (2019) 240:112552. doi: 10.1016/j.socscimed.2019.112552

42. Rao NR, Mohapatra M, Mishra S, Joshi A. Evaluation of dengue-related health information on the internet. Perspect Health Inf Manag. (2012) 9:1c.

43. Gesser-Edelsburg A, Walter N, Shir-Raz Y. The “New Public” and the “Good Ol' Press”: evaluating online news sources during the 2013 polio outbreak in Israel. Health Commun. (2017) 32:169–79. doi: 10.1080/10410236.2015.1110224

44. Sharma M, Yadav K, Yadav N, Ferdinand KC. Zika virus pandemic—analysis of Facebook as a social media health information platform. Am J Infect Control. (2017) 45:301–2. doi: 10.1016/j.ajic.2016.08.022

45. Bessi A, Petroni F, Del Vicario M, Zollo F, Anagnostopoulos A, Scala A, et al. Homophily and polarization in the age of misinformation. Eur Phys J Spec Top. (2016) 225:2047–59. doi: 10.1140/epjst/e2015-50319-0

46. Godinho CA, Yardley L, Marcu A, Mowbray F, Beard E, Michie S. Increasing the intent to receive a pandemic influenza vaccination: testing the impact of theory-based messages. Prev Med. (2016) 89:104–11. doi: 10.1016/j.ypmed.2016.05.025

47. Nagpal SJS, Karimianpour A, Mukhija D, Mohan D, Brateanu A. YouTube videos as a source of medical information during the Ebola hemorrhagic fever epidemic. Springerplus. (2015) 4:1–5. doi: 10.1186/s40064-015-1251-9

48. Basch CH, Basch CE, Ruggles KV, Hammond R. Coverage of the Ebola virus disease epidemic on YouTube. Disaster Med Public Health Prep. (2015) 9:531–5. doi: 10.1017/dmp.2015.77

49. Stefanidis A, Vraga E, Lamprianidis G, Radzikowski J, Delamater PL, Jacobsen KH, et al. Zika in Twitter: temporal variations of locations, actors, and concepts. JMIR Public Health Surveill. (2017) 3:e22. doi: 10.2196/publichealth.6925

50. Van Lent LGG, Sungur H, Kunneman FA, Van De Velde B, Das E. Too far to care? Measuring public attention and fear for Ebola using Twitter. J Med Internet Res. (2017) 19:e193. doi: 10.2196/jmir.7219

51. Daughton AR, Paul MJ. Identifying protective health behaviors on Twitter: observational study of travel advisories and Zika virus. J Med Internet Res. (2019) 21:e13090. doi: 10.2196/13090

52. Bragazzi NL, Barberis I, Rosselli R, Gianfredi V, Nucci D, Moretti M, et al. How often people Google for vaccination: qualitative and quantitative insights from a systematic search of the web-based activities using Google Trends. Hum Vaccin Immunother. (2017) 13:464–9. doi: 10.1080/21645515.2017.1264742

53. Househ M. Communicating Ebola through social media and electronic news media outlets: a cross-sectional study. Health Informatics J. (2016) 22:470–8. doi: 10.1177/1460458214568037

54. Catalan-Matamoros D, Peñafiel-Saiz C. How is communication of vaccines in traditional media: a systematic review. Perspect Public Health. (2019) 139:34–43. doi: 10.1177/1757913918780142

55. Nerlich B, Koteyko N. Crying wolf? Biosecurity and metacommunication in the context of the 2009 swine flu pandemic. Health Place. (2012) 18:710–7. doi: 10.1016/j.healthplace.2011.02.008

56. Atlani-Duault L, Mercier A, Rousseau C, Guyot P, Moatti JP. Blood libel rebooted: traditional scapegoats, online media, and the H1N1 epidemic. Cult Med Psychiatry. (2015) 39:43–61. doi: 10.1007/s11013-014-9410-y

57. Chimuanya L, Ajiboye E. Socio-semiotics of humour in Ebola awareness discourse on Facebook. Anal Lang Humor Online Commun. (2016):252–73. doi: 10.4018/978-1-5225-0338-5.ch014

58. Covolo L, Mascaretti S, Caruana A, Orizio G, Caimi L, Gelatti U. How has the flu virus infected the Web? 2010 influenza and vaccine information available on the internet. BMC Public Health. (2013) 13:83. doi: 10.1186/1471-2458-13-83

59. Henrich N, Holmes B. What the public was saying about the H1N1 vaccine: perceptions and issues discussed in on-line comments during the 2009 H1N1 pandemic. PLoS ONE. (2011) 6:e18479. doi: 10.1371/journal.pone.0018479

60. Rubsamen N, Castell S, Horn J, Karch A, Ott JJ, Raupach-Rosin H, et al. Risk perceptions during the 2014 Ebola virus disease epidemic: results of a German survey in Lower Saxony. Eur J Epidemiol. (2015) 30:816. doi: 10.1186/s12889-018-5543-1

61. Seeman N, Ing A, Rizo C. Assessing and responding in real time to online anti-vaccine sentiment during a flu pandemic. Healthc Q. (2010) 13:8–15. doi: 10.12927/hcq.2010.21923

62. Koralek T, Runnerstrom MG, Brown BJ, Uchegbu C, Basta TB. Lessons from Ebola: sources of outbreak information and the associated impact on UC Irvine and Ohio University College students. PLoS Curr. (2016) 8. doi: 10.1371/currents.outbreaks.f1f5c05c37a5ff8954f38646cfffc6a2

63. Glowacki EM, Lazard AJ, Wilcox GB, Mackert M, Bernhardt JM, Joob B, et al. Identifying the public's concerns and the Centers for Disease Control and Prevention's reactions during a health crisis: an analysis of a Zika live Twitter chat. Am J Infect Control. (2016) 44:1709–11. doi: 10.1016/j.ajic.2016.05.025

64. Chesser AK, Keene Woods N, Mattar J, Craig T. Promoting health for all Kansans through mass media: lessons learned from a pilot assessment of student Ebola perceptions. Disaster Med Public Health Prep. (2016) 10:641–3. doi: 10.1017/dmp.2016.61

65. Ashbaugh AR, Herbert CF, Saimon E, Azoulay N, Olivera-Figueroa L, Brunet A. The decision to vaccinate or not during the H1N1 pandemic: selecting the lesser of two evils? PLoS ONE. (2013) 8:e58852. doi: 10.1371/journal.pone.0058852

66. Crook B, Glowacki EM, Suran MK, Harris J, Bernhardt JM. Content analysis of a live CDC Twitter chat during the 2014 Ebola outbreak. Commun Res Rep. (2016) 33:349–55. doi: 10.1080/08824096.2016.1224171

67. Chew C, Eysenbach G. Pandemics in the age of Twitter: content analysis of tweets during the 2009 H1N1 outbreak. PLoS ONE. (2010) 5:e14118. doi: 10.1371/journal.pone.0014118

68. Ahmed W, Bath PA, Sbaffi L, Demartini G. Novel insights into views towards H1N1 during the 2009 Pandemic: a thematic analysis of Twitter data. Health Info Libr J. (2019) 36:60–72. doi: 10.1111/hir.12247

69. Mamidi R, Miller M, Banerjee T, Romine W, Sheth A. Identifying key topics bearing negative sentiment on Twitter: insights concerning the 2015-2016 Zika epidemic. JMIR Public Health Surveill. (2019) 5:e11036. doi: 10.2196/11036

70. Miller M, Banerjee T, Muppalla R, Romine W, Sheth A. What are people tweeting about Zika? An exploratory study concerning its symptoms, treatment, transmission, and prevention. JMIR Public Heal Surveill. (2017) 3:e38. doi: 10.2196/publichealth.7157

71. Morin C, Bost I, Mercier A, Dozon J-P, Atlani-Duault L. Information circulation in times of Ebola: Twitter and the sexual transmission of Ebola by survivors. PLoS Curr. (2018) 10. doi: 10.1371/currents.outbreaks.4e35a9446b89c1b46f8308099840d48f

72. Roberts H, Seymour B, Fish SA, Robinson E, Zuckerman E. Digital health communication and global public influence: a study of the Ebola epidemic. J Health Commun. (2017) 22:51–8. doi: 10.1080/10810730.2016.1209598

73. Vijaykumar S, Nowak G, Himelboim I, Jin Y. Virtual Zika transmission after the first U.S. case: who said what and how it spread on Twitter. Am J Infect Control. (2018) 46:549–57. doi: 10.1016/j.ajic.2017.10.015

74. Vos SC, Buckner MM. Social media messages in an emerging health crisis: tweeting bird flu. J Health Commun. (2016) 21:301–8. doi: 10.1080/10810730.2015.1064495

75. Ballester R, Bueno E, Sanz-Valero J. Information, self-help, and identity creation: information and communication technologies (ICTs) and associations for the physically disabled. The example of poliomyelitis. Salud Colect. (2011) 7:39–47.

76. Jardine CG, Boerner FU, Boyd AD, Driedger SM. The more the better? A comparison of the information sources used by the public during two infectious disease outbreaks. PLoS ONE. (2015) 10:e0140028. doi: 10.1371/journal.pone.0140028

77. Orr D, Baram-Tsabari A, Landsman K. Social media as a platform for health-related public debates and discussions: the Polio vaccine on Facebook. Isr J Health Policy Res. (2016) 5:1–11. doi: 10.1186/s13584-016-0093-4

78. Suarez-Lledo V, Alvarez-Galvez J. Prevalence of health misinformation on social media: systematic review. J Med Internet Res. (2021) 23:e17187. doi: 10.2196/17187

79. Moynihan R, Macdonald H, Bero L, Godlee F. Commercial influence and covid-19. BMJ. (2020) 369:1–2. doi: 10.1136/bmj.m2456

80. Aquino F, Donzelli G, De Franco E, Privitera G, Lopalco PL, Carducci A. The web and public confidence in MMR vaccination in Italy. Vaccine. (2017) 35:4494–8. doi: 10.1016/j.vaccine.2017.07.029

81. Guidry JPD, Carlyle K, Messner M, Jin Y. On pins and needles: how vaccines are portrayed on Pinterest. Vaccine. (2015) 33:5051–6. doi: 10.1016/j.vaccine.2015.08.064

82. Venkatraman A, Garg N, Kumar N. Greater freedom of speech on web 2.0 correlates with dominance of views linking vaccines to autism. Vaccine. (2015) 33:1422–5. doi: 10.1016/j.vaccine.2015.01.078

83. Seymour B, Getman R, Saraf A, Zhang LH, Kalenderian E. When advocacy obscures accuracy online: digital pandemics of public health misinformation through an antifluoride case study. Am J Public Health. (2015) 105:517–23. doi: 10.2105/AJPH.2014.302437

84. Shaw J, Baker M. “Expert patient”: dream or nightmare? BMJ. (2004) 328:723–4. doi: 10.1136/bmj.328.7442.723

85. London AJ, Kimmelman J. Against pandemic research exceptionalism. Science. (2020) 368:476–7. doi: 10.1126/science.abc1731

86. Alvarez-Galvez J. Network models of minority opinion spreading: using agent-based modeling to study possible scenarios of social contagion. Soc Sci Comput Rev. (2016) 34:567–81. doi: 10.1177/0894439315605607

87. Centola D. How Behavior Spreads: the Science of Complex Contagions. Princeton, NJ: Princeton University Press (2018). doi: 10.2307/j.ctvc7758p

88. Centola D, Macy M. Complex contagions and the weakness of long ties. Am J Sociol. (2007) 113:702–34. doi: 10.1086/521848

89. Tessone CJ, Toral R, Amengual P, Wio HS, San Miguel M. Neighborhood models of minority opinion spreading. Eur Phys J B. (2004) 39:535–44. doi: 10.1140/epjb/e2004-00227-5

90. Larson HJ. The biggest pandemic risk? Viral misinformation. Nature. (2018) 562:309–10. doi: 10.1038/d41586-018-07034-4

91. Afshar S, Roderick PJ, Kowal P, Dimitrov BD, Hill AG. Multimorbidity and the inequalities of global ageing: a cross-sectional study of 28 countries using the World Health Surveys. BMC Public Health. (2015) 15:1–10. doi: 10.1186/s12889-015-2008-7

Keywords: infodemics, social media, misinformation, epidemics, outbreaks

Citation: Alvarez-Galvez J, Suarez-Lledo V and Rojas-Garcia A (2021) Determinants of Infodemics During Disease Outbreaks: A Systematic Review. Front. Public Health 9:603603. doi: 10.3389/fpubh.2021.603603

Received: 07 September 2020; Accepted: 24 February 2021;

Published: 29 March 2021.

Edited by:

Pietro Ghezzi, Brighton and Sussex Medical School, United Kingdom

Reviewed by:

Cristina M. Pulido, Universitat Autònoma de Barcelona, Spain
Luigi Roberto Biasio, Sapienza University of Rome, Italy

Copyright © 2021 Alvarez-Galvez, Suarez-Lledo and Rojas-Garcia. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Javier Alvarez-Galvez, javier.alvarezgalvez@uca.es
