- 1 Department of Pediatrics, Faculty of Nursing, Owerko Centre, Alberta Children's Hospital Research Institute, University of Calgary, Calgary, AB, Canada

- 2 Department of Pediatrics, Faculty of Nursing, University of Calgary, Calgary, AB, Canada

- 3 Faculty of Nursing, University of Calgary, Calgary, AB, Canada

- 4 Department of Community Health Sciences, Faculty of Nursing, Owerko Centre, Alberta Children's Hospital Research Institute, University of Calgary, Calgary, AB, Canada

Nurses play an important role in promoting positive childhood development through early interventions intended to support parenting. Despite recognition of the need to deliver vital parenting programs, monitoring of their fidelity has largely been ignored. Fidelity refers to the degree to which healthcare programs follow a well-defined set of criteria specifically designed for a particular program model. With increasing demand for early intervention programs to be delivered by non-specialists, rigorous yet pragmatic strategies for maintaining fidelity are needed. This paper describes the step-by-step development and evaluation of a program fidelity measure, using the Attachment and Child Health (ATTACH™) parenting program as an exemplar. The overall quality index for program delivery ranged from "very good" to "excellent," with a mean of 4.3/5. Development of checklists like the ATTACH™ fidelity assessment checklist enables the systematic evaluation of program delivery and the identification of therapeutic components, which in turn enables targeted efforts at improvement. Future research should examine links between program fidelity and targeted outcomes to ascertain whether higher fidelity scores yield more favorable effects of parenting programs.

Introduction

Parents influence children's affective and cognitive development, with lifelong impacts (1, 2). Nurses play an important role in intervening early to support parenting and promote healthy child development (3, 4). Despite recognition of the need to deliver vital parenting programs, monitoring of their fidelity has historically been neglected (5, 6). Fidelity refers to the extent to which a healthcare program follows an explicit set of criteria specifically designed for its particular program model (7–9). However, defining and operationalizing program fidelity for parenting programs is difficult because of their interactive and dynamic nature (10, 11). Program developers have attempted to ensure adherence by creating training and protocol manuals, but these alone may not be sufficient to ensure fidelity of implementation (8, 12). With increasing demand for early intervention to be delivered widely by non-specialists, rigorous yet pragmatic strategies for maintaining program fidelity are needed.

In the United States and Canada, the development and implementation of parenting programs to bolster healthy child development has increased over the last 20 years (13). There is growing awareness of the significance of developing and applying fidelity measures to evaluate the implementation of such programs (12, 14, 15). However, practical guidelines and exemplars are lacking. This paper describes how to develop and evaluate a program fidelity checklist, using the step-by-step developmental process our research team followed for the Attachment and Child Health (ATTACH™) parenting program. ATTACH™ is a psycho-educational parenting program that promotes healthy child development by fostering parental reflective function (RF), the ability of parents to envision mental states in themselves and their children (16, 17). Compared with routine care, ATTACH™ has been shown to be effective in randomized controlled trials and quasi-experimental studies (16, 17); however, a program fidelity assessment was needed to contextualize these results and ensure ongoing internal validity in support of wider implementation. Thus, the purpose of this paper is to demonstrate the step-by-step process of developing a fidelity checklist, specifically for ATTACH™, and to provide preliminary data on its utility.

Background

The practice of assessing fidelity in community-based interventions was adopted from pharmacotherapy (drug) trials, in which strict adherence to protocol is a critical requirement (18, 19). In contrast to drug trials, systematic evaluation of the fidelity of psycho-educational programs, such as parenting programs, is more difficult because of the dynamic and often highly individualized interactions between facilitator and parent (20, 21). It is also more challenging to identify the therapeutic elements of parenting programs (22), as they tend to be tailored to parents' individual needs and preferences. However, tailoring a program does not mean that the facilitator may improvise freely during program administration; rather, the program elements that are standardized and those that are customized must be clearly defined and monitored (22, 23). Stated simply, facilitators need to be assessed on whether they used judgment and discretion appropriately when delivering the program.

Evaluation of program fidelity answers the question: Did facilitators deliver the program as intended? (24). Strategies such as reviewing audio- or video-recorded intervention sessions, or direct observation, to detect deviations in program delivery have been recommended (25, 26). While helpful, these strategies offer insufficient guidance for rigorous evaluation of fidelity. The National Institutes of Health (NIH) Behaviour Change Consortium identified five elements to promote program fidelity (i.e., design, training, delivery, receipt, and enactment) (18, 27, 28); however, simply including these five elements does not ensure fidelity (14, 28, 29). They offer little specific direction on (1) how to conduct a fidelity assessment, (2) which types of assessment are appropriate for a given program, or (3) how to define the degree of adherence to, and any deviations from, program protocols (24, 30).

Assessing program fidelity involves consideration of both content and process (20, 30–32). Content fidelity (or adherence) refers to the degree to which each main element is implemented as intended and whether any unplanned elements are delivered (30, 31). Process fidelity (or competence) refers to the degree to which effective communication skills are used in response to facilitator and participant needs and situations; essentially, how well each intervention element is delivered (20, 21, 24, 30, 32–34). Whereas adherence refers to the quantity of recommended behaviors, competence refers to skillfulness in implementing the intervention (12, 35).

The cost and time required to develop a fidelity measure, train raters to code intervention sessions, and establish inter-rater reliability between coders contribute to the scarcity of reports of systematic fidelity assessment. Although nurses may benefit from using extant program evaluation measures, instruments created for one program may not be readily adaptable to others (36), as evaluation of program fidelity must be tailored to the program being tested (12). Additionally, nursing interventions are increasingly being delivered by other health professionals (37, 38); therefore, it is crucial to describe a step-by-step process for developing a fidelity checklist that many types of professionals can use effectively.

How fidelity is measured and how checklists are developed matter a great deal in assessing adherence to any kind of practice (39–41). Psycho-educational parenting programs like ATTACH™ require a great deal of mutual interaction between the facilitator and the participant, which contributes to the skillful delivery of program elements; this interaction may also make it difficult to train facilitators and to examine the quality of program delivery (42, 43). A checklist to assess program fidelity may facilitate systematic evaluation of program delivery and of facilitators' training, and help with interpretation of intervention effects.

Materials and Methods

To develop and test the fidelity checklist for ATTACH™, we evaluated existing measures for monitoring fidelity of parenting programs. We determined their applicability to the ATTACH™ program and identified challenges that needed to be resolved for our fidelity checklist. Then we created the ATTACH™ fidelity checklist by tailoring standard recommendations (18, 24, 30, 32, 34, 44–47) to ATTACH™'s guiding theory and program structure.

The ATTACH™ Program

Details of the ATTACH™ program and its guiding theory are published elsewhere (16, 17). Briefly, ATTACH™ is a face-to-face intervention of 10–12 one-hour sessions with dyadic (mother and infant) and triadic (mother, infant, and co-parent) elements. ATTACH™ is designed to help parents strengthen a skill called parental Reflective Function (RF): the capacity of parents to think about mental states (thoughts, feelings, and intentions) in themselves and their children, and to consider how their own mental states might affect their children, in order to regulate behavior (16, 17). Parental RF is distinct from many related concepts, including mindful parenting (48–50), mindblindedness (51), empathy (52), insightfulness (53), and mind-mindedness (54). ATTACH™ can be delivered by nurses or by other health professionals with an undergraduate degree in health sciences, social work, psychology, or sociology, or with some post-secondary education related to child welfare. During the weekly ATTACH™ sessions, which involve review of parent-child play sessions and discussion of real-life and hypothetical stressful social situations, the facilitator helps parents learn new RF skills by leading by example, asking questions, and practicing RF skills with them. The ATTACH™ studies were approved by an appropriate institutional review board.

Review of Extant Parenting Program Fidelity Measures

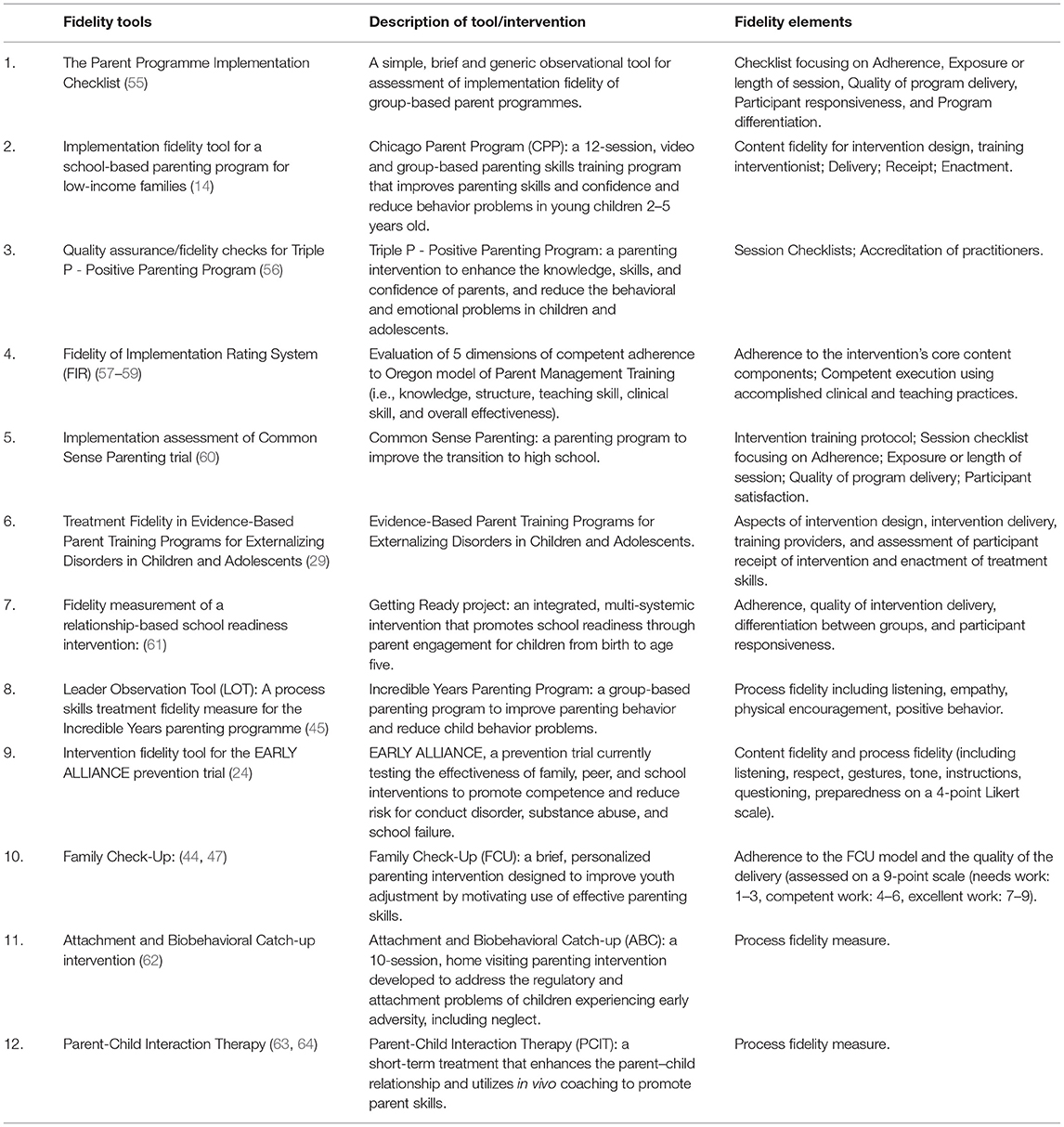

To examine their utility for the evaluation of ATTACH™, we reviewed twelve fidelity measures to determine whether and how content, process, adherence, and competence fidelity were assessed (Table 1). All measures included "adherence" to program content elements. Additionally, the developers of the Leadership Observation Tool (45) and of the fidelity checklists for the Common Sense Parenting Trial (60), the Getting Ready Project (61), the Family Check-Up (44, 47), and the EARLY ALLIANCE prevention trial (24) included competence, either within the process elements or as a separate part of their checklists, to capture facilitators' process skills. Furthermore, "participants' responsiveness" was taken into consideration in the Chicago Parent Program fidelity tool (14) and the Fidelity of Implementation Rating System (FIRS) (57, 58, 65) to ensure that the program was received and understood by participants. Finally, the Chicago Parent Program checklist (14) assigned an overall score, using a specific criterion, to rate the "overall quality index of program delivery."

One of the major limitations of the extant fidelity measures was the vague boundary between fidelity to content elements (adherence) and fidelity to process elements (competence), both of which are expected to be consistent with the theoretical model of the program (24, 45). Most of the fidelity measures we reviewed focused on monitoring a skill set or approach (a process element) as a means of measuring content fidelity (24, 56, 62–64). Because the content and conceptual model of ATTACH™ are closely tied to the theoretical underpinnings of parental RF, we needed to evaluate directly, in addition to adherence, the use of the parental RF principles deemed essential to the program. For example, exploration of the parent's RF representations was expected to be consistent with the theoretical model of ATTACH™ in each element of the intervention.

Another challenge was the lack of attention to identifying unplanned program elements, an element of process fidelity (56). To describe the relationships between intervention delivery and outcomes accurately, it was essential to assess not only which and how many program elements were implemented but also whether, and how many, unplanned elements were delivered (57, 58, 65). This knowledge could improve facilitator training and monitoring, and guide targeted retraining to support facilitators' adherence to the program protocol.

Adequate pacing, which allows facilitators to deliver each step of the intervention within a set duration without rushing or dragging, is another important aspect of process fidelity (66). However, none of the parenting program fidelity measures we reviewed evaluated facilitators' pacing. Although one fidelity tool (55) required calculating facilitator and participant talk time, talk time does not capture facilitators' pacing through the program steps, which was necessary for our purposes. Also, cut-offs or criterion ratings for satisfactory levels of adherence are rarely used in the parenting intervention fidelity literature. Although Caron et al. (67) described certification levels for the ABC fidelity measure, the cut-offs appeared to have been chosen arbitrarily. To overcome these challenges, we turned for guidance to the broader fidelity literature on psycho-educational interventions outside parenting (e.g., Song et al. (66) and Miller et al. (68–70)).

ATTACH™ Fidelity Assessment Checklist

In developing the ATTACH™ Fidelity Assessment Checklist, we extracted the elements of extant measures that best met the fidelity requirements of ATTACH™. We created a dictionary of operational definitions of the program elements and checklist items, which is available on request. It includes, but is not limited to, definitions of thoughts and feelings; liked, disliked, and interesting moments; hypothetical and real-life situations; and rushing, connecting, disconnecting, dragging, resistant, going along, and pacing. For example, during the video feedback component of the intervention, the facilitator invites the parent to choose a part of the free-play interaction that they liked or found interesting, to ascertain what the parent is thinking (e.g., the mother thinks her child is smart as the child shakes the rattle) and feeling (e.g., the mother describes pride), and what the parent thinks the baby is thinking (e.g., the child wants to shake the rattle) and feeling (e.g., the child is happy) in those moments.

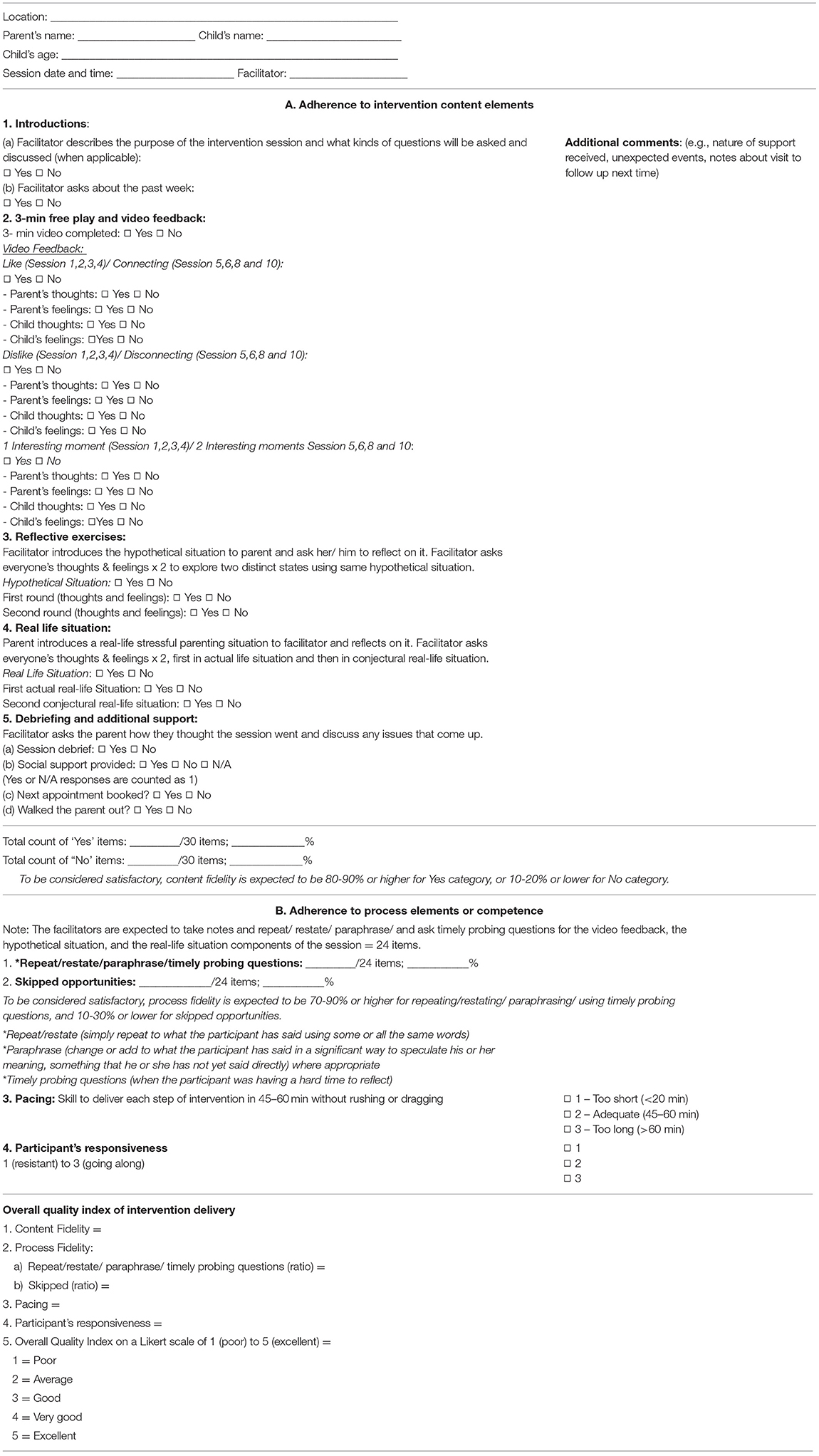

Adherence to Program Content Elements

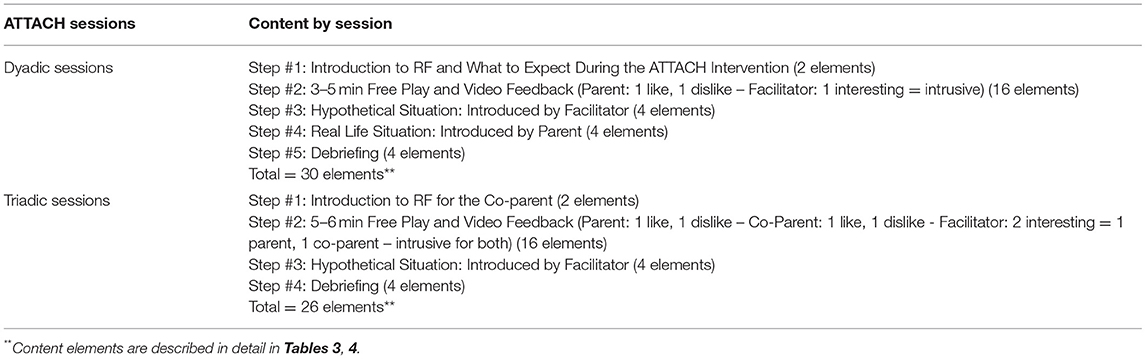

To explicitly define and evaluate delivery of the theoretical components of ATTACH™, we determined the program elements from the program's guiding principle and prescribed steps. The five steps of the dyadic sessions and the four steps of the triadic sessions comprised a total of 30 and 26 elements, respectively (Table 2). Each content element included several prescribed questions (Tables 3, 4). To evaluate adherence to program content elements, each element was coded as Yes (attempted: implemented as intended) or No (not attempted: never asked or failed to perform). To evaluate overall adherence, the Yes and No codes were summed separately, divided by the total number of elements in the session (30 for dyadic and 26 for triadic sessions), and multiplied by 100 to obtain percentages; a higher percentage in the Yes category reflected higher content fidelity. An intervention is typically regarded as implemented with high fidelity when adherence to content exceeds 80–90% (45, 62, 66, 71). Therefore, to be considered satisfactory, content fidelity was expected to be 90% or higher for the Yes category, or 10–20% or lower for the No category. At this stage of checklist development, all elements were treated as equal in importance.
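
The content adherence calculation is simple arithmetic; a minimal Python sketch follows. It assumes each element is coded as `True` (Yes) or `False` (No) and that the denominator is the number of elements in the session (30 for a dyadic session, per Table 2). The function names and the `threshold` parameter are illustrative assumptions, not part of the published checklist.

```python
from typing import Sequence, Tuple


def content_adherence(codes: Sequence[bool]) -> Tuple[float, float]:
    """Return (% Yes, % No) for one session's content-element codes.

    True = Yes (attempted, implemented as intended);
    False = No (not attempted: never asked or failed to perform).
    """
    total = len(codes)
    if total == 0:
        raise ValueError("no content elements were coded")
    yes = sum(1 for code in codes if code)  # count Yes codes
    no = total - yes                        # every remaining element is No
    return 100.0 * yes / total, 100.0 * no / total


def content_satisfactory(yes_pct: float, threshold: float = 90.0) -> bool:
    """Hypothetical helper: apply the 90%-or-higher Yes criterion."""
    return yes_pct >= threshold


# Hypothetical dyadic session: 30 elements, 2 of them skipped.
codes = [True] * 28 + [False] * 2
yes_pct, no_pct = content_adherence(codes)
print(f"Yes: {yes_pct:.1f}%  No: {no_pct:.1f}%")  # Yes: 93.3%  No: 6.7%
print(content_satisfactory(yes_pct))               # True
```

With two of 30 elements skipped, the Yes percentage is 28/30 ≈ 93.3%, which clears the 90% criterion; all elements carry equal weight, matching the unweighted sum described above.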

Adherence to Process Elements or Competence

For the ATTACH™ fidelity checklist, we adapted the concepts of individual process skills largely from the Leadership Observation Tool (LOT) (45), the Common Sense Parenting Trial (60), the Getting Ready Project (61), and the EARLY ALLIANCE prevention trial (24). According to the ATTACH™ protocol, the facilitator was expected to maintain positive communication behaviors (e.g., repeating or restating, paraphrasing, and asking probing questions), making notes for each component of the program while using higher levels of RF skills. For fidelity purposes, the attempted communication behaviors were counted and converted to percentages. To be considered adequate, process fidelity (competence) was expected to be 70–90% or higher, with 10–30% or fewer skipped opportunities, as suggested in the literature (45, 62, 66). For accuracy, we deemed it important to consider both attempted communication behaviors and skipped opportunities when rating competence, or adherence to process elements (45, 62, 66). A skipped opportunity is defined as a missed opportunity for the facilitator to repeat/restate, paraphrase, or ask a timely probing question, e.g., about the child's thoughts and feelings.
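
The section does not state the denominator used for the process percentages. The sketch below assumes that every coded opportunity is either an attempted communication behavior or a skipped opportunity, so the two percentages sum to 100. The defaults of 70% and 30% are the lenient ends of the ranges cited above (stricter cut-offs such as 90% and 10% can be passed instead), and the function names are hypothetical.

```python
from typing import Tuple


def process_competence(attempted: int, skipped: int) -> Tuple[float, float]:
    """Return (% attempted, % skipped) communication opportunities.

    Assumption: the denominator is attempted + skipped opportunities.
    """
    if attempted < 0 or skipped < 0:
        raise ValueError("counts must be non-negative")
    total = attempted + skipped
    if total == 0:
        raise ValueError("no communication opportunities were coded")
    return 100.0 * attempted / total, 100.0 * skipped / total


def competence_adequate(attempted_pct: float, skipped_pct: float,
                        min_attempted: float = 70.0,
                        max_skipped: float = 30.0) -> bool:
    """Both criteria must hold: enough attempts and few enough skips."""
    return attempted_pct >= min_attempted and skipped_pct <= max_skipped


# Hypothetical session: 45 repeats/paraphrases/probes attempted, 5 skipped.
attempted_pct, skipped_pct = process_competence(45, 5)
print(attempted_pct, skipped_pct)                        # 90.0 10.0
print(competence_adequate(attempted_pct, skipped_pct))   # True
```

Checking both percentages, rather than attempts alone, reflects the decision above to take skipped opportunities into account when rating competence.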

Pacing of the Program Delivery

For ATTACH™ to be adequately paced, the facilitator was expected to deliver all five steps of a dyadic session, or all four steps of a triadic session, in 45–60 min. We analyzed video-recorded sessions from pilot studies to determine recommended durations for the steps (17). For example, the duration of Step 2 may vary with the time needed to establish rapport with the participant; we expected this step to last at least 10 min, to explore all perspectives of the parent's RF representations, and no more than 20 min, so that the remaining steps could be completed without rushing. A 3-point rating scale from 1 (too short) to 3 (too long) was used to rate the actual duration against the recommended duration range (55, 60, 66). Overall adherence to pacing was calculated as the mean of these ratings.
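
The pacing rating can be sketched as follows. The section names only the endpoints of the 3-point scale, so treating 2 as "within the recommended range" is an assumption, as are all step ratings in the example; only the 10–20 min range for Step 2 and the 45–60 min session range come from the protocol description.

```python
from statistics import mean
from typing import Sequence, Tuple

TOO_SHORT, WITHIN_RANGE, TOO_LONG = 1, 2, 3  # assumed meaning of the 3 points


def pacing_rating(actual_min: float, recommended: Tuple[float, float]) -> int:
    """Rate one step's (or session's) duration against its (min, max) range."""
    low, high = recommended
    if actual_min < low:
        return TOO_SHORT
    if actual_min > high:
        return TOO_LONG
    return WITHIN_RANGE


def overall_pacing(ratings: Sequence[int]) -> float:
    """Overall adherence to pacing: the mean of the ratings."""
    return mean(ratings)


# Step 2 is recommended to last 10-20 min.
print(pacing_rating(12, (10, 20)))      # 2
print(pacing_rating(25, (10, 20)))      # 3
# Hypothetical ratings for the five steps of one dyadic session.
print(overall_pacing([2, 2, 3, 2, 2]))  # 2.2
```

Under this reading, a mean above 2 indicates a tendency to drag and a mean below 2 a tendency to rush.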

Participants' Responsiveness

Although participants' responsiveness to the program is distinct from facilitators' adherence or competence, participants' acceptance of a program may affect fidelity (61). For example, if a participant is hesitant to discuss reflective questions further, this may hinder achieving the goals of the program. Others have considered participants' responsiveness to be a potent mediator of the relationship between program or practice fidelity and participant outcomes (72, 73). For our checklist, we created items assessing responsiveness as a determinant of fidelity, based on concepts used in the Chicago Parent Program fidelity tool (14) and FIRS (57, 58, 65), to assess the degree to which ATTACH™ was received and understood by the participant. We used a 3-point Likert scale ranging from 1 (resistant) to 3 (going along) to rate participants' responsiveness (68).

Overall Quality Index of Program Delivery

The overall quality index was an overall assessment of program delivery, made after reviewing the entire video-recorded session for adherence to intervention content elements, competence, pacing, and participants' responsiveness (14, 66, 68). This index was used to rate the quality of program delivery against a predetermined criterion. The criterion focused on facilitators' adherence to the program content elements and their competence in delivering those elements, without placing too much emphasis on the frequencies of specific behaviors (14, 66, 70).

As described by Miller et al. (69, 70), this quality index rating typically reflected the extent to which the facilitator balanced the use of positive communication skills with discretion in delivering the program, on a 5-point Likert scale ranging from 1 (poor) to 5 (excellent). Each rating was defined. For example, the overall quality index was expected to be rated 5 when the facilitator strictly adhered to the program components, used higher levels of clarification skills (e.g., repeating, reframing), made gentle and timely transitions, used probing questions ranging from fact to emotion probing, and missed few opportunities. It was expected to be rated 1 when the facilitator hardly used clarification skills (e.g., repeating, paraphrasing, reframing) or probing questions, often missed opportunities to explore further or promote RF, and the entire session felt like a question-answer session.

Decisions About Percentages and Ratings

The decisions about percentages and ratings were adopted from fidelity assessments of psycho-educational interventions in both parenting and non-parenting programs (24, 30, 44, 45, 55, 66, 71). The ATTACH™ fidelity checklist uses percentages for overall adherence to intervention content and process elements, and ratings for pacing, participant responsiveness, and the overall quality index of intervention delivery. In deciding the number of points for each rating scale (e.g., binary or Likert-type), we considered whether the scale would allow raters to differentiate among the behaviors and activities to be coded (74), and whether such a detailed rating would be useful and purposeful in evaluating fidelity. Taking these aspects into consideration, we elected to use 3-point Likert-type ratings for pacing and participant responsiveness, and a 5-point Likert-type rating for the overall quality index of program delivery (66).
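
To make the mix of percentages and ratings concrete, the sketch below records one session's scores, rejects values outside each scale's range (0–100 for percentages, 1–3 for pacing and responsiveness, 1–5 for the overall quality index), and averages each score across sessions, as in a facilitator-level summary. The class, field, and function names are hypothetical, and the example values are illustrative rather than study data.

```python
from dataclasses import dataclass, fields
from statistics import mean
from typing import Dict, List

# Allowed range for each score, following the scales described above.
RANGES = {
    "content_yes_pct": (0.0, 100.0),
    "process_pct": (0.0, 100.0),
    "pacing": (1.0, 3.0),           # mean of 3-point ratings
    "responsiveness": (1.0, 3.0),   # 3-point rating
    "overall_quality": (1.0, 5.0),  # 5-point rating
}


@dataclass
class SessionScores:
    content_yes_pct: float
    process_pct: float
    pacing: float
    responsiveness: float
    overall_quality: float

    def __post_init__(self) -> None:
        # Reject any score that falls outside its scale.
        for name, (low, high) in RANGES.items():
            value = getattr(self, name)
            if not low <= value <= high:
                raise ValueError(f"{name}={value} is outside [{low}, {high}]")


def summarize(sessions: List[SessionScores]) -> Dict[str, float]:
    """Mean of each score across sessions."""
    if not sessions:
        raise ValueError("no sessions to summarize")
    return {f.name: mean(getattr(s, f.name) for s in sessions)
            for f in fields(SessionScores)}


sessions = [
    SessionScores(100.0, 100.0, 2.0, 3.0, 4.5),
    SessionScores(93.3, 98.0, 2.4, 3.0, 4.0),
]
print(summarize(sessions)["overall_quality"])  # 4.25
```

Validating each value against its own scale at entry time catches coding slips, such as a 4 entered on a 3-point item, before they distort the averages.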

Application of the ATTACH™ Fidelity Assessment Checklist

The resulting fidelity checklist was implemented as part of testing the ATTACH™ program (see Tables 3, 4). Two facilitators, authors LA and MH, were responsible for program delivery. The facilitators completed comprehensive competency-based training that relied on role-playing, skill demonstration, and training manuals. The facilitators delivered the ATTACH™ sessions, which were video-recorded, and completed ATTACH™ visit forms. The video-recorded sessions were then assessed to examine the fidelity of the ATTACH™ sessions.

Data Analysis

To obtain a representative sample, we selected video recordings of one dyadic and one triadic session for each of the 18 participants who completed ATTACH™, choosing alternate sessions from among the dyadic and triadic sessions. Thus, we examined 36 video-recorded sessions (from pilot studies #1 and #2). Two trained raters independently coded session fidelity using the ATTACH™ fidelity checklist. We assessed inter-rater agreement between the coders by computing intraclass correlation coefficients [ICC; Type A (two-way mixed) with an absolute agreement definition; (55, 75)] in IBM SPSS Statistics version 26. Coders were also asked to comment on the utility of the checklist.
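
The study computed ICCs in SPSS. For readers who want to see what an absolute-agreement ICC measures, the following is a minimal pure-Python sketch of the two-way, absolute-agreement, single-measures coefficient (McGraw and Wong's ICC(A,1)), assuming a complete sessions × raters matrix with no missing ratings. The point estimate is the same under two-way random and two-way mixed models; only its interpretation differs. Whether single or average measures were reported is not stated here, and the average-measures form uses a different denominator. The check values come from the well-known Shrout and Fleiss (1979) worked example, not from ATTACH™ data.

```python
from typing import Sequence


def icc_a1(ratings: Sequence[Sequence[float]]) -> float:
    """Two-way, absolute-agreement, single-measures ICC (ICC(A,1)).

    `ratings` has one row per session (target) and one column per rater.
    """
    n, k = len(ratings), len(ratings[0])
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a complete matrix with >= 2 targets and >= 2 raters")
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Sums of squares for targets (rows), raters (columns), and residual error.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    # Systematic rater differences (ms_cols) lower agreement, which is what
    # distinguishes absolute agreement from consistency.
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Worked example from Shrout & Fleiss (1979): 6 targets rated by 4 judges.
example = [
    [9, 2, 5, 8], [6, 1, 3, 2], [8, 4, 6, 8],
    [7, 1, 2, 6], [10, 5, 6, 9], [6, 2, 4, 7],
]
print(round(icc_a1(example), 2))  # 0.29
```

With two coders, each row would hold the two coders' scores for one session (for example, the content adherence percentage), and perfect agreement yields an ICC of 1.0.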

Results

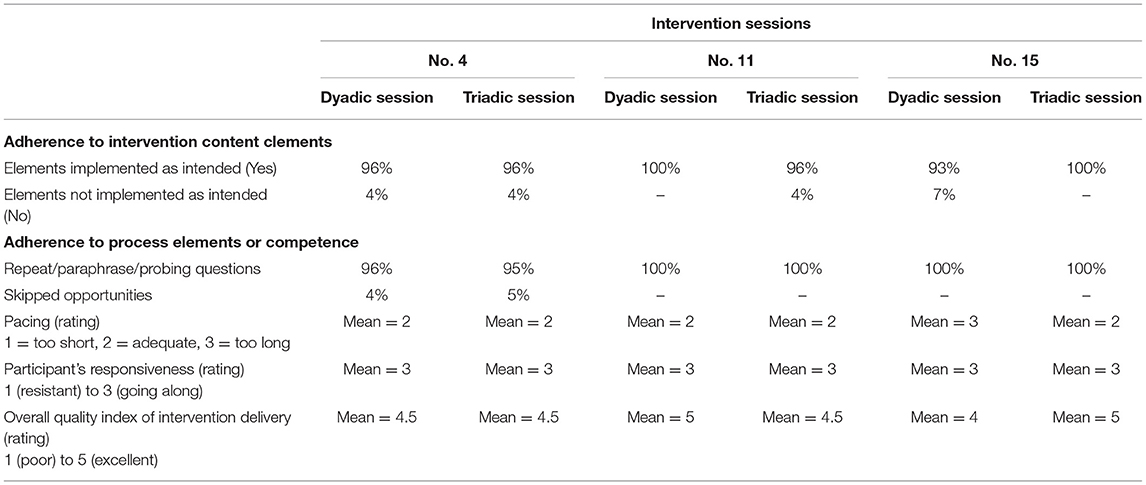

Table 5 shows example ATTACH™ sessions from three participants, assessed using the ATTACH™ fidelity assessment checklist. We selected six sessions from three participants (a dyadic and a triadic session for each) to illustrate the full range of assigned ratings and how the checklist may be used for monitoring fidelity and for training. For example, for the first participant (No. 4), the overall quality index rating was 4.5 for both the dyadic and triadic sessions. This rating was based on 96–100% adherence on content and process fidelity, adequate pacing of program delivery, and a high rating of participant responsiveness in the dyadic session. For the second participant (No. 11), an overall rating of 5 for the dyadic session reflected completion of all content elements (100%) and process elements (100%) with no missed opportunities, completion of the session within time (adequate pacing), and a high rating of participant responsiveness in the triadic session. For the third participant (No. 15), the overall rating was 4 for the dyadic session; adherence to content elements was 93%, meaning that the facilitator skipped two items, and the facilitator took longer than the protocol required to complete the session.

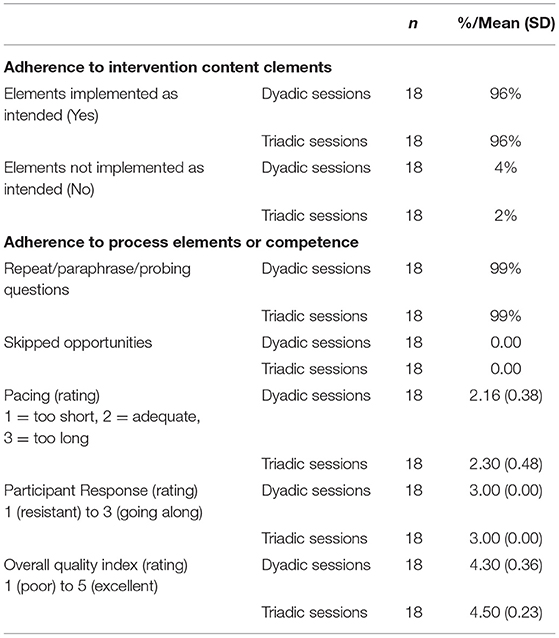

Table 6 summarizes the adherence and competence of the ATTACH™ facilitators. For adherence to program content elements, 96% of elements were implemented as intended in both dyadic and triadic sessions; as expected, the percentage of elements not implemented as intended was low (2–3%). For adherence to program process elements, fidelity for repeating/paraphrasing/probing was 99%, and, as expected, there were no skipped opportunities. The mean pacing rating was 2.16 for dyadic and 2.3 for triadic sessions (of a possible 3). The mean participant responsiveness rating was 3 for both dyadic and triadic sessions (of a possible 3). The overall quality index for program delivery ranged from "very good" to "excellent," with a mean of 4.3/5.

The coders provided positive feedback on the checklist, describing it as user-friendly, and requested minor clarifications. For example, our preliminary checklist included only what the parent liked/disliked about the play session. However, the coders noticed that in later sessions the facilitator shifted from asking what the parent liked/disliked about the play session to asking about moments in which the parent felt connected/disconnected with their child. We revised the checklist accordingly. Comparison of sessions from earlier and later participants showed that the facilitators improved over time, as reflected in improved fidelity ratings. The ATTACH™ fidelity assessment checklist could therefore be used both for training and for assessing fidelity.

The ICC for agreement between the two coders on three elements of the fidelity checklist (adherence to ATTACH™ content elements, process elements, and the overall quality index) was good to excellent (ICC = 0.85–1.00), suggesting strong inter-rater agreement.

Discussion

The ATTACH™ Fidelity Assessment Checklist was developed to evaluate facilitators' adherence and competence in delivering an evidence-based, psycho-educational parenting program to high-risk families. We found that facilitators' adherence to program content and process elements was close to 100%. Facilitators paced the sessions adequately, completing them within 30–45 min without dragging or rushing, and maintained excellent participant responsiveness. The overall quality index of program delivery ranged from 4 to 5 on a 5-point Likert scale (1 = poor, 5 = excellent). The coders helped to further refine the checklist by requesting minor changes.

Building on previous research, we developed the ATTACH™ fidelity checklist to measure adherence to content and process elements, as well as participant responsiveness (44, 47, 55, 60, 62–64). To our knowledge, overall pacing of program delivery, which is included in our checklist, has not been included in previous parenting program fidelity checklists; this item was adapted from fidelity measures of psycho-educational interventions (66). Whereas overall fidelity of program delivery has been rated in only a few parenting programs, mostly on the basis of content elements (14, 55, 57), we created an overall quality index rating, drawn from the psycho-educational intervention fidelity literature, that is based on both content and process elements (66, 70, 76–78). Because ATTACH™ is a psycho-educational parenting program, we deemed it important to include both pacing and overall assessment items to capture the essence of program delivery.

As summarized in Table 1, most extant fidelity checklists for parenting programs are limited because they were developed primarily to minimize or eliminate the possibility that a practitioner will miss or fail to perform one or more steps or actions. However, assessing adherence to process elements, or competence, using only "Yes" and "No" categories may be insufficient, particularly for psycho-educational interventions such as ATTACH™ (44, 57, 66). We therefore also drew on insights from non-parenting intervention fidelity measures (such as those of Song et al. and Miller et al.) to more fully understand and assess process elements, or competence. We also provided an overall quality index of intervention delivery, as supported by the fidelity literature on both parenting and non-parenting interventions (14, 57, 66, 68).

The ATTACH™ fidelity checklist will be particularly important for evaluating the efficacy of the program on outcomes, exploring ways to improve the program, and learning how to overcome challenges and barriers to program implementation (8, 55). The checklist may also improve the efficiency of facilitator training and the systematic evaluation of facilitators' adherence and competence (79). Assessing facilitators' competence in program delivery is crucial because a facilitator may implement all of the program content, but in a non-prescribed manner that can reduce the program's efficacy (14). Using our fidelity checklist to assess program sessions allows deviations in the delivery of program elements, and failures to use positive communication behaviors, to be identified and corrected.

Although only two facilitators delivered the ATTACH™ sessions evaluated here, the approach to monitoring and assessment is applicable to multiple facilitators. While we developed the fidelity checklist for training and monitoring fidelity in research contexts, it has not been evaluated in a clinical setting. Although inter-rater reliability was high (80), only two highly trained coders were assessed; inter-rater reliability with clinical raters and a larger sample of sessions is needed to confirm these results. A second limitation is the lack of variability in some fidelity scores (e.g., participant responsiveness). Although this indicates good overall adherence and competence in delivering the ATTACH™ intervention, which is a positive finding, the lack of variability in fidelity items limits the ability to identify the distinct items that may influence outcomes (12). This limitation also points to a direction for future research. Further work may focus on a sample of community-based facilitators, whose fidelity scores may vary more; it may therefore be valuable to retain these items until the measure can be validated in a community implementation setting.

Questions remain as to whether variations in program implementation related to participants' responsiveness should be evaluated as part of program fidelity, treated as moderators of program effects on outcomes, or both. In future research, different elements may be weighted once the critical and core elements have been identified; however, such weighting could be difficult to implement in clinical settings. There are also important cost considerations in using fidelity measures (81): we found that evaluating a single hour-long session took up to 2.5 h of coding and 30 min of scoring by an experienced coder/rater. To address potential time and cost issues, other fidelity measures (e.g., 58, 60) did not rate a full intervention session; instead, they rated a session segment, taking as little as 5 min. Because ATTACH™ was in its creation and testing phase, we did not know whether significant differences exist between the major program elements (video feedback and RF exercises). Thus, we treated all steps as equal in importance and expected them to be delivered within the time range set for the intervention in the protocol.

A future extension of this research should examine links between program fidelity and targeted outcomes (e.g., whether higher fidelity scores yield more favorable effects or outcomes) to help ascertain whether the benefits outweigh the costs (62, 82, 83). This further step would provide evidence for the validity of the ATTACH™ fidelity checklist. Measuring fidelity is arguably even more important when interventions are implemented in community settings outside of research studies, where there is less control and oversight over fidelity and where decreases in fidelity are linked to reduced intervention outcomes [e.g., (84)].

Conclusion

The program content elements are unique to the ATTACH™ program; therefore, the process of developing and evaluating a fidelity checklist is described specifically for the ATTACH™ parenting program. However, the ATTACH™ fidelity checklist described in this paper may inform, and be adapted for, fidelity evaluation tools for other parenting programs. High fidelity scores in ATTACH™ sessions provided evidence of the measure's validity. Establishing treatment differentiation between ATTACH™ and other manualised interventions would be a further step in validating this measure (33). We have designed a reliable measure and shown how nurses may operationalize program adherence and competence to evaluate facilitators' delivery, so that the elements of parenting program implementation become more evident. Our evaluation showed that fidelity to the ATTACH™ intervention was high. Development of checklists like the ATTACH™ fidelity assessment checklist enables the systematic evaluation of program delivery and the identification of therapeutic components, which in turn enables targeted efforts at improvement.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author/s.

Ethics Statement

The studies involving human participants were reviewed and approved by the Conjoint Health Research Ethics Board, University of Calgary, Calgary, Canada. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

LA: formulated the research question; oversaw all aspects of manuscript preparation, from the literature review onward; identified all of the relevant articles for fidelity assessment checklist development; developed and refined the checklist with the help of KB and NL; conducted the data analysis; interpreted the results; and undertook writing and submission. KB: helped oversee the research question, literature review, checklist development, interpretation of the results, and writing. CE: helped oversee the literature review, reviewed the relevant articles, interpreted the results, and contributed to writing. MH: helped review the relevant articles and develop and refine the checklist, interpreted the results, and contributed to writing. NL: helped formulate the research question, oversaw the literature review and reviewed the relevant articles, helped develop and refine the fidelity checklist, interpreted the results, and contributed to writing. All authors have read and approved the final manuscript.

Funding

We are truly thankful to the Harvard Frontiers of Innovation for supporting this work.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Knauer HA, Ozer EJ, Dow WH, Fernald LC. Parenting quality at two developmental periods in early childhood and their association with child development. Early Child Res Q. (2019) 47:396–404. doi: 10.1016/j.ecresq.2018.08.009

2. Kopala-Sibley DC, Cyr M, Finsaas MC, Orawe J, Huang A, Tottenham N, et al. Early childhood parenting predicts late childhood brain functional connectivity during emotion perception and reward processing. Child Dev. (2020) 91:110–28. doi: 10.1111/cdev.13126

3. Kemp L, Bruce T, Elcombe EL, Anderson T, Vimpani G, Price A, et al. Quality of delivery of “right@home”: implementation evaluation of an Australian sustained nurse home visiting intervention to improve parenting and the home learning environment. PLoS ONE. (2019) 14:e0215371. doi: 10.1371/journal.pone.0215371

4. Reticena KDO, Yabuchi VDNT, Gomes MFP, Siqueira LDE, Abreu CPD, Fracolli LA, et al. Role of nursing professionals for parenting development in early childhood: a systematic review of scope. Rev Lat Am Enfermagem. (2019) 27:e3213. doi: 10.1590/1518-8345.3031.3213

5. Schoenwald SK, Garland AF. A review of treatment adherence measurement methods. Psychol Assess. (2013) 25:146–56. doi: 10.1037/a0029715

6. Seay KD, Byers K, Feely M, Lanier P, Maguire-Jack K, McGill T. Scaling up: replicating promising interventions with fidelity. In: Daro D, Cohn Donnelly A, Huang L, Powell B, editors. Advances in Child Abuse Prevention Knowledge: The Perspective of New Leadership. New York, NY: Springer (2015). p. 179–201.

7. Biel CH, Buzhardt J, Brown JA, Romano MK, Lorio CM, Windsor KS, et al. Language interventions taught to caregivers in homes and classrooms: a review of intervention and implementation fidelity. Early Child Res Q. (2019) 50:140–56. doi: 10.1016/j.ecresq.2018.12.002

8. Bond GR, Drake RE. Assessing the fidelity of evidence-based practices: history and current status of a standardized measurement methodology. Adm Policy Ment Health. (2019) 47:874–84. doi: 10.1007/s10488-019-00991-6

9. Dusenbury L, Brannigan R, Falco M, Hansen WB. A review of research on fidelity of implementation: implications for drug abuse prevention in school settings. Health Educ Res. (2003) 18:237–56. doi: 10.1093/her/18.2.237

10. Goense PB, Boendermaker L, van Yperen T. Measuring treatment integrity: use of and experience with measurements in child and youth care organizations. J Behav Health Serv Res. (2018) 45:469–88. doi: 10.1007/s11414-018-9600-4

11. Lange AM, van der Rijken RE, Delsing MJ, Busschbach JJ, Scholte RH. Development of therapist adherence in relation to treatment outcomes of adolescents with behavioral problems. J Clin Child Adolesc Psychol. (2019) 48 (Suppl. 1):S337–46. doi: 10.1080/15374416.2018.1477049

12. Breitenstein SM, Gross D, Garvey CA, Hill C, Fogg L, Resnick B. Implementation fidelity in community-based interventions. Res Nurs Health. (2010) 33:164–73. doi: 10.1002/nur.20373

13. Shonkoff JP, Richmond J, Levitt P, Bunge SA, Cameron JL, Duncan GJ, et al. From Best Practices to Breakthrough Impacts: A Science-Based Approach to Building a More Promising Future for Young Children and Families. Cambridge, MA: Harvard University, Center on the Developing Child (2016).

14. Bettencourt AF, Gross D, Breitenstein S. Evaluating implementation fidelity of a school-based parenting program for low-income families. J School Nurs. (2018) 35:325–36. doi: 10.1177/1059840518786995

15. Wilson KR, Havighurst SS, Harley AE. Tuning in to Kids: an effectiveness trial of a parenting program targeting emotion socialization of preschoolers. J Family Psychol. (2012) 26:56–65. doi: 10.1037/a0026480

16. Anis L, Letourneau NL, Benzies K, Ewashen C, Hart MJ. Effect of the Attachment and Child Health parent training program on parent–child interaction quality and child development. Can J Nurs Res. (2020) 52:157–68. doi: 10.1177/0844562119899004

17. Letourneau N, Anis L, Ntanda H, Novick J, Steele M, Steele H, et al. Attachment & Child Health (ATTACH) pilot trials: effect of parental reflective function intervention for families affected by toxic stress. Infant Ment Health J. (2020) 41:445–62. doi: 10.1002/imhj.21833

18. Borrelli B. The assessment, monitoring, and enhancement of treatment fidelity in public health clinical trials. J Public Health Dent. (2011) 71:S52–63. doi: 10.1111/j.1752-7325.2011.00233.x

19. McLeod BD, Southam-Gerow MA, Jensen-Doss A, Hogue A, Kendall PC, Weisz JR. Benchmarking treatment adherence and therapist competence in individual cognitive-behavioral treatment for youth anxiety disorders. J Clin Child Adolesc Psychol. (2019) 48 (Suppl. 1):S234–46. doi: 10.1080/15374416.2017.1381914

20. Carroll KM, Nich C, Sifry RL, Nuro KF, Frankforter TL, Ball SA, et al. A general system for evaluating therapist adherence and competence in psychotherapy research in the addictions. Drug Alcohol Depend. (2000) 57:225–38. doi: 10.1016/S0376-8716(99)00049-6

21. Santacroce SJ, Maccarelli LM, Grey M. Intervention fidelity. Nurs Res. (2004) 53:63–6. doi: 10.1097/00006199-200401000-00010

22. Reed D, Titler MG, Dochterman JM, Shever LL, Kanak M, Picone DM. Measuring the dose of nursing intervention. Int J Nurs Terminol Classifications. (2007) 18:121–30. doi: 10.1111/j.1744-618X.2007.00067.x

23. Hoffmann TC, Glasziou PP, Boutron I, Milne R, Perera R, Moher D, et al. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ. (2014) 348:g1687. doi: 10.1136/bmj.g1687

24. Dumas JE, Lynch AM, Laughlin JE, Smith EP, Prinz RJ. Promoting intervention fidelity: Conceptual issues, methods, and preliminary results from the EARLY ALLIANCE prevention trial. Am J Prev Med. (2001) 20:38–47. doi: 10.1016/S0749-3797(00)00272-5

25. Resnick B, Bellg AJ, Borrelli B, De Francesco C, Breger R, Hecht J, et al. Examples of implementation and evaluation of treatment fidelity in the BCC studies: where we are and where we need to go. Ann Behav Med. (2005) 29:46. doi: 10.1207/s15324796abm2902s_8

26. Resnick B, Inguito P, Orwig D, Yahiro JY, Hawkes W, Werner M, et al. Treatment fidelity in behavior change research: a case example. Nurs Res. (2005) 54:139–43. doi: 10.1097/00006199-200503000-00010

27. Bellg AJ, Borrelli B, Resnick B, Hecht J, Minicucci DS, Ory M, et al. Enhancing treatment fidelity in health behavior change studies: best practices and recommendations from the NIH Behavior Change Consortium. Health Psychol. (2004) 23:443–51. doi: 10.1037/0278-6133.23.5.443

28. Radziewicz RM, Rose JH, Bowman KF, Berila RA, O'Toole EE, Given B. Establishing treatment fidelity in a coping and communication support telephone intervention for aging patients with advanced cancer and their family caregivers. Cancer Nurs. (2009) 32:193–202. doi: 10.1097/NCC.0b013e31819b5abe

29. Garbacz LL, Brown DM, Spee GA, Polo AJ, Budd KS. Establishing treatment fidelity in evidence-based parent training programs for externalizing disorders in children and adolescents. Clin Child Fam Psychol Rev. (2014) 17:230–47. doi: 10.1007/s10567-014-0166-2

30. Carroll C, Patterson M, Wood S, Booth A, Rick J, Balain S. A conceptual framework for implementation fidelity. Implement Sci. (2007) 2:40. doi: 10.1186/1748-5908-2-40

31. Dane AV, Schneider BH. Program integrity in primary and early secondary prevention: are implementation effects out of control? Clin Psychol Rev. (1998) 18:23–45. doi: 10.1016/S0272-7358(97)00043-3

32. Moncher FJ, Prinz RJ. Treatment fidelity in outcome studies. Clin Psychol Rev. (1991) 11:247–66. doi: 10.1016/0272-7358(91)90103-2

33. Perepletchikova F, Kazdin AE. Treatment integrity and therapeutic change: issues and research recommendations. Clin Psychol Sci Pract. (2005) 12:365–83. doi: 10.1093/clipsy.bpi045

34. Stein KF, Sargent JT, Rafaels N. Intervention research: establishing fidelity of the independent variable in nursing clinical trials. Nurs Res. (2007) 56:54–62. doi: 10.1097/00006199-200701000-00007

35. Feely M, Seay KD, Lanier P, Auslander W, Kohl PL. Measuring fidelity in research studies: a field guide to developing a comprehensive fidelity measurement system. Child Adolesc Soc Work J. (2018) 35:139–52. doi: 10.1007/s10560-017-0512-6

36. Faulkner MS. Intervention fidelity: ensuring application to practice for youth and families. J Special Pediatr Nurs. (2012) 17:33–40. doi: 10.1111/j.1744-6155.2011.00305.x

37. Chartier M, Enns JE, Nickel NC, Campbell R, Phillips-Beck W, Sarkar J, et al. The association of a paraprofessional home visiting intervention with lower child maltreatment rates in First Nation families in Canada: a population-based retrospective cohort study. Child Youth Serv Rev. (2020) 108:104675. doi: 10.1016/j.childyouth.2019.104675

38. Lorber MF, Olds DL, Donelan-McCall N. The impact of a preventive intervention on persistent, cross-situational early onset externalizing problems. Prevent Sci. (2019) 20:684–94. doi: 10.1007/s11121-018-0973-7

39. Gawande A. The Checklist Manifesto: How to Get Things Right. New York, NY: Metropolitan Books (2009).

40. Perry AG, Potter PA, Ostendorf W. Skills Performance Checklists for Clinical Nursing Skills and Techniques. 8th ed. Maryland Heights, MO: Elsevier Mosby (2014).

41. Wilson C. Credible Checklists and Quality Questionnaires: A User-Centered Design Method. Waltham, MA: Morgan Kaufmann (2013).

42. Becqué YN, Rietjens JA, van Driel AG, van der Heide A, Witkamp E. Nursing interventions to support family caregivers in end-of-life care at home: a systematic narrative review. Int J Nurs Stud. (2019) 97:28–39. doi: 10.1016/j.ijnurstu.2019.04.011

43. Eymard AS, Altmiller G. Teaching nursing students the importance of treatment fidelity in intervention research: students as interventionists. J Nurs Educ. (2016) 55:288–91. doi: 10.3928/01484834-20160414-09

44. Chiapa A, Smith JD, Kim H, Dishion TJ, Shaw DS, Wilson MN. The trajectory of fidelity in a multiyear trial of the Family Check-Up predicts change in child problem behavior. J Consult Clin Psychol. (2015) 83:1006. doi: 10.1037/ccp0000034

45. Eames C, Daley D, Hutchings J, Hughes JC, Jones K, Martin P, et al. The leader observation tool: a process skills treatment fidelity measure for the Incredible Years parenting programme. Child Care Health Dev. (2008) 34:391–400. doi: 10.1111/j.1365-2214.2008.00828.x

46. Rixon L, Baron J, McGale N, Lorencatto F, Francis J, Davies A. Methods used to address fidelity of receipt in health intervention research: a citation analysis and systematic review. BMC Health Serv Res. (2016) 16:663. doi: 10.1186/s12913-016-1904-6

47. Smith JD, Dishion TJ, Shaw DS, Wilson MN. Indirect effects of fidelity to the Family Check-Up on changes in parenting and early childhood problem behaviors. J Consult Clin Psychol. (2013) 81:962. doi: 10.1037/a0033950

48. Alexander K. Integrative review of the relationship between mindfulness-based parenting interventions and depression symptoms in parents. J Obstetr Gynecol Neonatal Nurs. (2018) 47:184–90. doi: 10.1016/j.jogn.2017.11.013

49. Friedmutter R. The effectiveness of mindful parenting interventions: a meta analysis. In: Dissertation Abstracts International: Section B: The Sciences and Engineering. New York, NY (2016). p. 76.

50. Townshend K, Jordan Z, Stephenson M, Tsey K. The effectiveness of mindful parenting programs in promoting parents' and children's wellbeing: a systematic review. JBI Database Syst Rev Implement Rep. (2016) 14:139–80. doi: 10.11124/JBISRIR-2016-2314

51. Lombardo MV, Baron-Cohen S. The role of the self in mindblindness in autism. Conscious Cogn. (2011) 20:130–40. doi: 10.1016/j.concog.2010.09.006

52. Elliott R, Bohart AC, Watson JC, Greenberg LS. Empathy. Psychotherapy. (2011) 48:43–9. doi: 10.1037/a0022187

53. Oppenheim D, Koren-Karie N, Dolev S, Yirmiya N. Maternal insightfulness and resolution of the diagnosis are associated with secure attachment in preschoolers with autism spectrum disorders. Child Dev. (2009) 80:519–27. doi: 10.1111/j.1467-8624.2009.01276.x

54. Meins E, Fernyhough C, Wainwright R, Das Gupta M, Fradley E, Tuckey M. Maternal mind–mindedness and attachment security as predictors of theory of mind understanding. Child Dev. (2002) 73:1715–26. doi: 10.1111/1467-8624.00501

55. Bywater T, Gridley N, Berry V, Blower S, Tobin K. The parent programme implementation checklist (PPIC): the development and testing of an objective measure of skills and fidelity for the delivery of parent programmes. Child Care Pract. (2019) 25:281–309. doi: 10.1080/13575279.2017.1414031

56. Proctor KB, Brestan-Knight E. Evaluating the use of assessment paradigms for preventive interventions: a review of the Triple P – Positive Parenting Program. Child Youth Serv Rev. (2016) 62:72–82. doi: 10.1016/j.childyouth.2016.01.018

57. Forgatch MS, Patterson GR, DeGarmo DS. Evaluating fidelity: predictive validity for a measure of competent adherence to the Oregon model of parent management training. Behav Ther. (2005) 36:3–13. doi: 10.1016/S0005-7894(05)80049-8

58. Forgatch MS, DeGarmo DS, Beldavs ZG. An efficacious theory-based intervention for stepfamilies. Behav Ther. (2005) 36:357–65. doi: 10.1016/S0005-7894(05)80117-0

59. Thijssen J, Albrecht G, Muris P, de Ruiter C. Treatment fidelity during therapist initial training is related to subsequent effectiveness of parent management training—Oregon model. J Child Family Stud. (2017) 26:1991–9. doi: 10.1007/s10826-017-0706-8

60. Oats RG, Cross WF, Mason WA, Casey-Goldstein M, Thompson RW, Hanson K, et al. Implementation assessment of widely used but understudied prevention programs: an illustration from the Common Sense Parenting trial. Eval Program Plann. (2014) 44:89–97. doi: 10.1016/j.evalprogplan.2014.02.002

61. Knoche LL, Sheridan SM, Edwards CP, Osborn AQ. Implementation of a relationship-based school readiness intervention: a multidimensional approach to fidelity measurement for early childhood. Early Child Res Q. (2010) 25:299–313. doi: 10.1016/j.ecresq.2009.05.003

62. Caron E, Bernard K, Dozier M. In vivo feedback predicts parent behavior change in the Attachment and Biobehavioral Catch-up intervention. J Clin Child Adolesc Psychol. (2018) 47 (Suppl. 1):S35–46. doi: 10.1080/15374416.2016.1141359

63. Barnett ML, Niec LN, Acevedo-Polakovich ID. Assessing the key to effective coaching in parent–child interaction therapy: the therapist-parent interaction coding system. J Psychopathol Behav Assess. (2014) 36:211–23. doi: 10.1007/s10862-013-9396-8

64. Barnett ML, Niec LN, Peer SO, Jent JF, Weinstein A, Gisbert P, et al. Successful therapist–parent coaching: how in vivo feedback relates to parent engagement in parent–child interaction therapy. J Clin Child Adolesc Psychol. (2017) 46:895–902. doi: 10.1080/15374416.2015.1063428

65. Forgatch MS, Patterson GR, Gewirtz AH. Looking forward: the promise of widespread implementation of parent training programs. Perspect Psychol Sci. (2013) 8:682–94. doi: 10.1177/1745691613503478

66. Song MK, Happ MB, Sandelowski M. Development of a tool to assess fidelity to a psycho-educational intervention. J Adv Nurs. (2010) 66:673–82. doi: 10.1111/j.1365-2648.2009.05216.x

67. Caron E, Weston-Lee P, Haggerty D, Dozier M. Community implementation outcomes of Attachment and Biobehavioral Catch-up. Child Abuse Negl. (2016) 53:128–37. doi: 10.1016/j.chiabu.2015.11.010

68. Miller WR, Moyers TB, Ernst D, Amrhein P. Manual for the Motivational Interviewing Skill Code, Vol. 2007. Albuquerque, NM: University of New Mexico (2000).

69. Miller WR, Rollnick S. Motivational Interviewing: Preparing People to Change. New York, NY: The Guilford Press (2002).

70. Miller WR, Moyers TB, Arciniega L, Ernst D, Forcehimes A. Training, supervision and quality monitoring of the COMBINE Study behavioral interventions. J Stud Alcohol Drugs. (2005) 66 (Suppl. 15):188–95. doi: 10.15288/jsas.2005.s15.188

71. Bragstad LK, Bronken BA, Sveen U, Hjelle EG, Kitzmüller G, Martinsen R, et al. Implementation fidelity in a complex intervention promoting psychosocial well-being following stroke: an explanatory sequential mixed methods study. BMC Med Res Methodol. (2019) 19:59. doi: 10.1186/s12874-019-0694-z

72. Hurley JJ. Social validity assessment in social competence interventions for preschool children: a review. Topics Early Childhood Special Educ. (2012) 32:164–74. doi: 10.1177/0271121412440186

73. Leko MM. The value of qualitative methods in social validity research. Remed Special Educ. (2014) 35:275–86. doi: 10.1177/0741932514524002

74. Beckstead JW. Content validity is naught. Int J Nurs Stud. (2009) 46:1274–83. doi: 10.1016/j.ijnurstu.2009.04.014

75. Koo TK, Li MY. A guideline of selecting and reporting intraclass correlation coefficients for reliability research. J Chiropract Med. (2016) 15:155–63. doi: 10.1016/j.jcm.2016.02.012

76. Miller WR, Moyers TB, Ernst D, Amrhein P. Manual for the Motivational Interviewing Skill Code (MISC). Unpublished Manuscript. Albuquerque: Center on Alcoholism, Substance Abuse and Addictions, University of New Mexico (2003).

77. Miller WR, Rollnick S. Motivational Interviewing: Helping People Change. New York, NY: Guilford Press (2012).

78. Madson MB, Campbell TC. Measures of fidelity in motivational enhancement: a systematic review. J Subst Abuse Treat. (2006) 31:67–73. doi: 10.1016/j.jsat.2006.03.010

79. Orwin RG. Assessing program fidelity in substance abuse health services research. Addiction. (2000) 95:309–27. doi: 10.1080/09652140020004250

80. Streiner DL, Norman GR. Health Measurement Scales: A Practical Guide to Their Development and Use. New York, NY: Oxford University Press (2003).

81. Tappin DM, McKay C, McIntyre D, Gilmour WH, Cowan S, Crawford F, et al. A practical instrument to document the process of motivational interviewing. Behav Cogn Psychother. (2000) 28:17–32. doi: 10.1017/S1352465800000035

82. Al-Ubaydli O, List JA, Suskind D. The Science of Using Science: Towards an Understanding of the Threats to Scaling Experiments. Working Paper 25848. National Bureau of Economic Research (2019). Available online at: http://www.nber.org/papers/w25848 (accessed October 20, 2020).

83. Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation Research: A Synthesis of the Literature. University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network (FMHI Publication #231) (2005).

Keywords: parenting, intervention, program, fidelity tools, checklist, measure, early childhood, ATTACH

Citation: Anis L, Benzies KM, Ewashen C, Hart MJ and Letourneau N (2021) Fidelity Assessment Checklist Development for Community Nursing Research in Early Childhood. Front. Public Health 9:582950. doi: 10.3389/fpubh.2021.582950

Received: 23 November 2020; Accepted: 08 April 2021;

Published: 14 May 2021.

Edited by:

Wanzhen Chen, East China University of Science and Technology, China

Reviewed by:

Carl Dunst, Orelena Hawks Puckett Institute, United States

Dawn Frambes, Calvin University, United States

Copyright © 2021 Anis, Benzies, Ewashen, Hart and Letourneau. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Lubna Anis, lanis@ucalgary.ca
