- 1 College of Landscape and Horticulture, Yunnan Agricultural University, Kunming, China

- 2 Barcelona School of Architecture, Universitat Politècnica de Catalunya, Barcelona, Spain

- 3 Department of Ultrasound, Shenzhen Second People’s Hospital, Shenzhen, China

1 Introduction

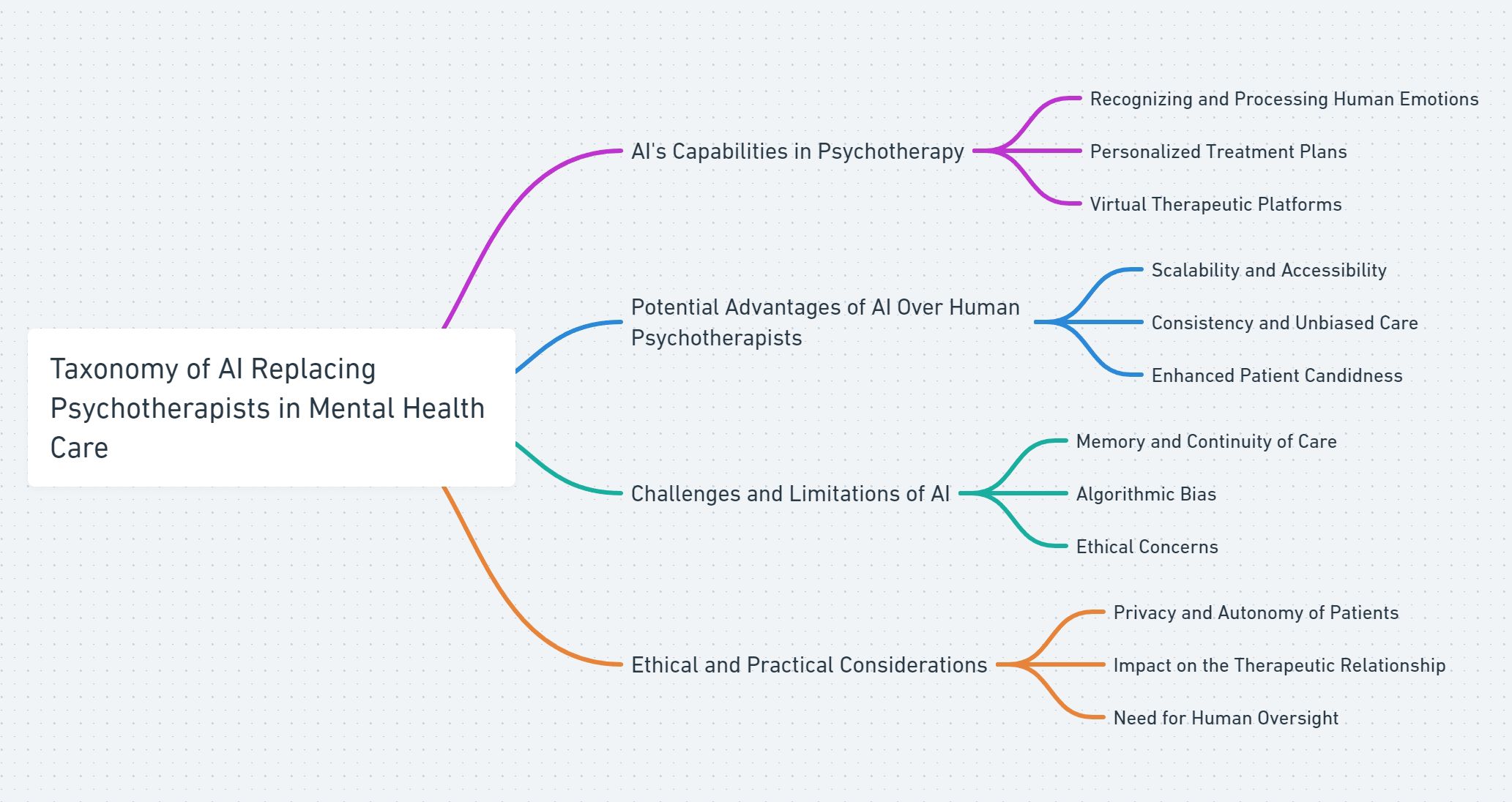

In the current technological era, artificial intelligence (AI) has transformed operations across numerous sectors, from manufacturing automation to intelligent decision-support systems in financial services (1, 2). In health care in particular, AI has improved the accuracy of disease diagnosis (3) and driven advances in personalized medicine (4). The mental health field, amid a global crisis characterized by rising demand and insufficient resources, is undergoing a significant paradigm shift facilitated by AI, with novel approaches that could reshape traditional models of mental health care (5, 6) (see Figure 1).

Figure 1. Taxonomy of AI’s role in replacing psychotherapists, highlighting AI’s ability to recognize human emotions, potential advantages, challenges, and ethical considerations in mental health care.

Mental health, once a stigmatized aspect of health care, is now recognized as a critical component of overall well-being, with disorders such as depression among the leading causes of disability worldwide (WHO). Traditional mental health care, which relies on in-person consultations, is increasingly seen as inadequate given the growing prevalence of mental health problems (7, 8). AI’s role in mental health care is multifaceted, encompassing predictive analytics, therapeutic interventions, clinician support tools, and patient monitoring systems (9). For instance, machine learning algorithms are increasingly used to support psychiatric assessment, such as the diagnosis of major depressive disorder, by analyzing patient data (10). Meanwhile, AI-powered interventions, such as virtual reality exposure therapy and chatbot-delivered cognitive behavioral therapy, are being explored, although they are at varying stages of validation (11, 12). Each of these applications is evolving at its own pace, shaped by technological advances and by the need for rigorous clinical validation (13, 14).

Furthermore, AI may take over certain functions traditionally performed by human psychotherapists. Advances in machine learning and natural language processing have enabled AI systems such as ChatGPT to identify and respond to expressions of complex human emotions, supporting interactions that once required the nuanced understanding of trained therapists (16, 17). Preliminary studies suggest that AI-powered chatbots may help alleviate symptoms of anxiety and depression (18–20). However, these studies often involve small samples and lack long-term follow-up, making it difficult to draw definitive conclusions about effectiveness. Consequently, while AI interventions hold promise, large-scale randomized controlled trials are needed to establish their efficacy and durability over time.

As AI continues to evolve and becomes more deeply integrated into mental health care, its potential to transform the field is considerable. At a time when mental health problems have reached pandemic proportions globally, affecting productivity and quality of life (23, 24), the need for innovative solutions is urgent. Integrating AI into mental health services offers promising avenues for improving the delivery, efficacy, and efficiency of care. However, this evolution must be approached with caution. The limitations of AI, such as algorithmic bias, ethical concerns, and the need for human oversight, must be addressed carefully to avoid creating new disparities and to ensure that AI complements rather than replaces the essential human elements of psychotherapy. This balanced approach will be key to harnessing AI’s benefits while safeguarding the quality and accessibility of mental health care.

2 AI’s capabilities and progress in psychotherapy

The integration of AI into psychotherapy represents a significant phase in the evolution of mental health care, using technology to improve both treatment efficacy and accessibility. Early experiments in the 1960s, notably the ELIZA program, demonstrated AI’s potential for therapeutic applications by mimicking human-like conversation (25). This pioneering work laid the foundation for AI’s growing role in psychological therapy and assessment over the following decades.

The development of AI systems in the 1980s aimed to replicate human psychological expertise, leading to advanced diagnostic and therapeutic tools across various psychological disciplines (26, 27). By the end of the 20th century, this evolution gave rise to computerized cognitive behavioral therapy (CBT) programs, which were designed to provide structured, evidence-based interventions for common mental health conditions (28). Although these early applications were more basic than current AI technologies, they marked a pivotal shift toward enhancing the accessibility of mental health services through digital means (28). As technology advanced, the role of AI in mental health care rapidly expanded to encompass early detection of mental health issues, the creation of personalized treatment plans, and the introduction of virtual therapists and teletherapy enhancements (4, 15).

Continued advances in AI, driven by growing computational power and breakthroughs in machine learning and natural language processing (NLP), have enabled more sophisticated interactions between AI systems and users. Models built on the transformer architecture, such as OpenAI’s ChatGPT (version GPT-4o), show a notable capacity to recognize emotional content and the nuances of language (17). These models can sustain engaging conversations that interpret users’ emotional states and provide contextually and emotionally relevant responses (29). Planned enhancements include more natural, real-time voice interaction and conversations with ChatGPT through real-time video, which would broaden the potential applications of AI in psychotherapy (30). The anticipated GPT-5 is expected to surpass GPT-4’s current capabilities, which could further increase the effectiveness of AI applications in mental health care and extend the range of services available to patients (31).

2.1 ChatGPT in emotional awareness and mental health

Elyoseph et al. used the Levels of Emotional Awareness Scale (LEAS) to assess ChatGPT’s ability to recognize and articulate emotions in hypothetical scenarios (16). The findings indicate that ChatGPT can generate responses reflecting a level of emotional awareness that matches or exceeds general-population norms. However, ChatGPT’s ‘understanding’ rests on pattern recognition and language modeling, not on genuine emotional comprehension. While ChatGPT can mimic emotional responses, it does not experience emotions as humans do, and its capabilities in this area are limited to textual analysis and generation.

2.2 Applications in mental health interventions

This ability to recognize and articulate emotions makes ChatGPT a potentially valuable tool in psychotherapy and mental health care. For example, vignette studies have evaluated how well ChatGPT identifies early warning signs of serious mental health problems, such as suicide risk (32). Moreover, a recent comparative analysis reported that general-purpose AI models, such as Gemini Pro and GPT-4, outperformed dedicated therapeutic chatbots, such as Wysa and Youper, in correcting cognitive biases including overtrust, the fundamental attribution error, and the just-world hypothesis; in these areas, GPT-4 scored highest and Wysa lowest (33).

2.3 Practical application: a hypothetical scenario

To illustrate how ChatGPT-4 can function in a therapeutic context, consider the following hypothetical scenario involving an individual seeking support from an AI-powered platform.

Practical Application of ChatGPT-4 in Psychotherapy

Alex is experiencing symptoms of anxiety related to work stress. Unable to access immediate professional help, Alex turns to a mental health app powered by ChatGPT-4 for support.

Alex: “I’ve been feeling really overwhelmed with work lately. Deadlines are piling up, and I can’t seem to catch up.”

ChatGPT-4: “I’m sorry to hear that you’re feeling this way. It sounds like you’re under a lot of pressure. Would you like to talk more about what’s causing these feelings, or perhaps explore some strategies to manage your stress?”

Alex: “I just feel like no matter how much I do, it’s never enough. I’m worried I’m going to let everyone down.”

ChatGPT-4: “It seems you’re experiencing a fear of disappointing others, which can be quite stressful. Remember, it’s important to acknowledge your efforts. Would discussing some time management techniques or ways to set realistic expectations be helpful?”

In this interaction, ChatGPT-4 responds empathetically, validates Alex’s feelings, and offers to explore coping strategies. The example illustrates how AI-driven support could offer immediate assistance, especially to individuals who lack access to mental health professionals or who prefer the anonymity and convenience of a digital platform.

2.4 Implications for future psychotherapeutic practice

Recent research points to several ways in which AI could transform psychotherapeutic practice. Ran’s work on emotion analysis of dialogue text with ChatGPT demonstrates AI’s ability to recognize emotional nuances in communication, suggesting potential for more empathetic interactions in therapy (34). Graham et al. review AI’s use in diagnosing and treating a range of mental health disorders, suggesting gains in the accuracy and efficacy of treatment plans (35). Gabriel et al.’s evaluation of large language model responses for mental health support points to AI’s capacity to broaden access to mental health services (36), while emphasizing the need for stringent ethical standards. Finally, Gutierrez et al.’s review of online mental health care highlights AI’s role in improving treatment adherence and patient engagement through continuous monitoring and support (37). Collectively, these studies suggest that AI can substantially augment psychotherapeutic practice, provided its ethical implications are carefully considered.

3 Limitations of human psychotherapists

3.1 Resource constraints

Human psychotherapists, however committed to their practice, face significant constraints of time and physical resources that limit their ability to manage large caseloads. The traditional model of psychotherapy, built on one-on-one sessions lasting from thirty minutes to an hour, inherently caps the number of patients a therapist can see each day. This limitation is particularly acute in regions where demand for mental health services is high but few professionals are available. The resulting scarcity lengthens wait times, which can delay critical interventions and allow patients’ conditions to deteriorate, posing serious challenges to mental health systems globally (38, 39).

3.2 Emotional labor and professional burnout

The work of psychotherapists involves intense emotional labor as therapists routinely engage with the emotional and psychological distress of their clients. This constant exposure to high-stress situations requires a substantial emotional investment and can lead to significant professional burnout. Symptoms of burnout among psychotherapists often manifest as emotional exhaustion, depersonalization, and a reduced sense of personal accomplishment, which not only impacts their personal health and job satisfaction but also affects their professional performance. Over time, this can result in reduced empathy and attentiveness—key components of effective therapy—thereby negatively impacting therapeutic outcomes and patient satisfaction. The emotional toll of psychotherapy can thus lead to a higher turnover among mental health professionals, further straining the system and impacting the quality of care provided to patients (40–42).

3.3 Limited scope for personalization

Personalization is crucial to the effectiveness of therapy, yet human therapists face inherent limits on how extensively and accurately they can tailor their approach to each patient. Despite their best efforts, constraints of human memory and cognitive biases can affect a therapist’s ability to consistently recall and integrate detailed patient histories or subtle behavioral nuances across extended treatment periods (43–45). These limitations can hinder fully personalized care, which is especially important for patients with complex, comorbid conditions that require a nuanced understanding and approach. In contrast, AI technologies, which can store and process large amounts of information, offer promising prospects for supporting more personalized and precise mental health interventions. AI can analyze complex datasets, identify patterns, and retrieve details from recorded patient interactions more consistently than human recall allows, potentially improving treatment planning and outcomes, although AI systems have memory and bias limitations of their own (see Section 5).

4 Advantages of AI over human capabilities

4.1 Scalability and accessibility

AI can substantially improve the scalability and accessibility of mental health services. Unlike traditional therapy, which often requires physical presence and is limited by geographic and resource constraints, AI-powered systems can provide support worldwide and operate around the clock without fatigue. This accessibility is especially valuable in regions where mental health professionals are scarce (46, 47). AI-driven interventions may also reduce health care costs, making mental health care more affordable. The economic and operational benefits of using AI to extend the reach of mental health services have been highlighted (48).

4.2 Enhanced candidness in patient interaction

One distinctive advantage of AI in mental health is the greater candor and openness that patients often show when interacting with machines. Studies have shown that individuals are sometimes more willing to disclose sensitive information to AI systems because they perceive machines as non-judgmental (49, 50). This can lead to more honest exchanges, allowing more accurate assessments and potentially more effective treatment. The absence of perceived judgment may also reduce the stigma associated with seeking help for mental health issues, thereby enhancing patient engagement (21).

4.3 Consistency and unbiased care

AI systems can offer a consistency in mental health care delivery that human practitioners find hard to achieve, given natural variations in mood, fatigue, and personal bias. AI-driven tools can apply the same standards and protocols to every interaction, helping ensure that patients receive a more uniform quality of care (51, 52). AI also has the potential to reduce biases that influence human judgment: in principle, these systems can be designed to disregard irrelevant factors such as race, gender, or socioeconomic status, promoting a more equitable health care environment (53), although bias can still enter through training data (see Section 5.2).

5 Limitations of AI in psychotherapy

5.1 Memory

AI systems such as GPT-4 can track information well within a single conversation, but they still face significant challenges with long-term memory retention and integration across sessions. Current models often rely on external processes to summarize and track interactions over time, which limits their ability to maintain a continuous and coherent therapeutic relationship without human oversight (54–56). This can undermine continuity of care: if information from past sessions is lost or imperfectly integrated, therapeutic interactions may become fragmented or inconsistent. Because effective psychotherapy depends on recalling and integrating past information, this remains a key area where human therapists currently hold an advantage over AI systems.
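To make the external summarize-and-track process more concrete, the following minimal Python sketch shows one common pattern: keep the most recent conversational turns verbatim and fold older turns into a running summary that is prepended to the next prompt. It is an illustration under stated assumptions, not a description of how GPT-4 or any particular product works. The `SessionMemory` class, its `max_turns` window, and the `naive_summarize` function are hypothetical; `naive_summarize` merely keeps the first sentence of each evicted turn, where a real system would presumably call a language model.

```python
from dataclasses import dataclass, field
from typing import Callable, List


def naive_summarize(previous: str, turns: List[str]) -> str:
    """Hypothetical placeholder summarizer: keeps only the first sentence of each
    evicted turn. A real system would call a language model here."""
    first_sentences = [turn.split(".")[0].strip() for turn in turns]
    parts = ([previous] if previous else []) + first_sentences
    return " | ".join(parts)


@dataclass
class SessionMemory:
    """Hypothetical rolling memory: recent turns kept verbatim, older turns summarized."""
    max_turns: int = 4
    summarize: Callable[[str, List[str]], str] = naive_summarize
    summary: str = ""
    recent: List[str] = field(default_factory=list)

    def add_turn(self, text: str) -> None:
        self.recent.append(text)
        overflow = len(self.recent) - self.max_turns
        if overflow > 0:
            # Move the oldest turns out of the verbatim window into the lossy summary.
            evicted, self.recent = self.recent[:overflow], self.recent[overflow:]
            self.summary = self.summarize(self.summary, evicted)

    def build_context(self) -> str:
        """Text that would be prepended to the model prompt before the next reply."""
        header = f"Summary of earlier turns: {self.summary or '(none)'}"
        return "\n".join([header, *self.recent])


# Usage: client turns adapted from the hypothetical Alex scenario in Section 2.3.
# A window of one turn is used only so that summarization is visible.
memory = SessionMemory(max_turns=1)
for turn in [
    "I've been feeling really overwhelmed with work lately. Deadlines are piling up.",
    "I feel like no matter how much I do, it's never enough.",
    "I'm worried I'm going to let everyone down.",
]:
    memory.add_turn(turn)
print(memory.build_context())
```

Because everything outside the recent window survives only as a lossy summary (here, "Deadlines are piling up" is already gone), details the summarizer drops cannot be recovered later, which is one concrete way the fragmentation described above can arise.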

5.2 Bias

Algorithmic bias is a critical concern in applying AI to mental health care. As highlighted by Akter, biases can arise from the data used to train AI models, leading to unequal treatment of individuals based on race, gender, or socioeconomic status and thus perpetuating existing disparities in mental health care (57). Despite efforts to minimize them, such biases are often unavoidable because of decisions made during the development of AI systems. They can shape how AI interacts with patients and how effective it is in mental health settings, for example by influencing how information is presented or which therapeutic approaches are emphasized. In this way, the biases and priorities of developers can have unintended consequences for the care patients receive.

5.3 Long-term efficacy

Although AI-based therapies have reduced symptoms of anxiety and depression in the short term, their long-term efficacy remains uncertain. A meta-analysis of randomized controlled trials indicated that the initial benefits of AI-driven interventions often diminish over time, with no significant long-term improvements observed (22). This may reflect AI’s current inability to adapt to the evolving and complex nature of patients’ mental health needs over extended periods. Unlike human therapists, who adjust their strategies on the basis of ongoing interactions and a deepening understanding of a patient’s history, AI systems may lack the flexibility needed to sustain therapeutic effectiveness over the long term. This limitation underscores the need for continuous human oversight and, potentially, hybrid models in which AI supports but does not replace the human element of psychotherapy.

5.4 Ethical considerations

Beyond technical limitations like memory and bias, the use of AI in mental health care raises significant ethical questions regarding privacy, autonomy, and the potential stigmatization of patients. As noted by Walsh, AI systems, particularly those that utilize biomarkers and other sensitive data, must navigate the complex balance between improving care and respecting patient privacy and autonomy (58). The design and implementation of AI in healthcare must prioritize ethical considerations, including strategies to mitigate bias, ensure transparency, and maintain patient trust (59). Without careful ethical oversight, AI could lead to unintended consequences such as misdiagnosis or the erosion of the therapeutic relationship between patients and human therapists. Additionally, the concept of precision psychiatry, which leverages AI to tailor interventions to individual needs, presents its own set of ethical challenges. Fusar-Poli et al. (2022) emphasize the need for human oversight to ensure that AI complements rather than replaces the nuanced understanding that human therapists bring to psychiatric care (60).

5.5 Comparison to human psychotherapists

Despite advances in AI technology, AI still falls short of human psychotherapists in several critical areas. First, AI systems lack genuine empathy and the ability to form deep emotional connections with patients. Human therapists draw on their own emotional understanding to build trust and rapport, which is fundamental to therapy (61, 62); AI cannot authentically replicate this emotional resonance because it does not experience emotions. Second, human therapists rely on professional intuition and ethical judgment to navigate complex therapeutic situations (63). They interpret subtle cues and adapt their approach in real time, which current AI systems do far less reliably because their responses are generated from patterns learned during training rather than from clinical experience and judgment. Third, human therapists are adept at interpreting non-verbal communication, such as body language and facial expressions (64), which provides deeper insight into a patient’s emotional state; AI systems limited to text-based interaction cannot perceive these cues. Fourth, cultural competence and sensitivity are areas where human therapists excel. They can tailor their approach to a patient’s cultural background (65), whereas AI may not fully grasp cultural nuances, potentially leading to misunderstandings. Finally, human therapists provide continuity of care and personalized treatment plans based on an evolving understanding of the patient’s history (66). AI may struggle to personalize care to the same extent, potentially leading to less effective therapy.

6 Conclusion

As artificial intelligence (AI) technology continues to advance, its increasing sophistication holds significant potential to reshape the landscape of mental health services. AI may play an important role in supporting psychotherapy, particularly in addressing the global mental health service gap where access to trained professionals is limited. By augmenting traditional mental health care, AI can offer scalable and cost-effective solutions that reduce barriers such as cost, stigma, and logistical challenges.

However, as AI becomes more integrated into mental health care, it is essential to recognize and address its limitations. Challenges related to memory retention, algorithmic bias, and ethical considerations underscore the need for a balanced approach. AI systems lack genuine empathy, ethical judgment, and the ability to interpret non-verbal cues—qualities that are intrinsic to human therapists. Therefore, these systems must be carefully designed and implemented to complement rather than replace the critical human elements of empathy, cultural competence, and nuanced understanding in therapy.

In this emerging paradigm, AI is envisioned not as a replacement for human therapists but as a powerful tool that extends the reach of mental health services. By automating routine tasks and providing support in areas where human professionals are scarce, AI can help ensure that individuals have greater access to essential mental health resources. Nevertheless, the integration of AI into mental health care must be pursued with caution, emphasizing fairness, transparency, respect for patient rights, and the necessity of human oversight. Ongoing research and rigorous clinical validation are crucial to establish the long-term efficacy and safety of AI interventions. When managed responsibly, this progression can be a pivotal step toward a more inclusive and effective global mental health strategy, blending technological innovation with the irreplaceable value of human connection.

Author contributions

ZZ: Writing – review & editing, Writing – original draft, Software, Investigation, Conceptualization. JW: Writing – review & editing, Writing – original draft, Supervision, Conceptualization.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Howard J. Artificial intelligence: Implications for the future of work. Am J Ind Med. (2019) 62:917–26. doi: 10.1002/ajim.23037

2. Morosan C. The role of artificial intelligence in decision-making. Proc Comput Sci. (2016) 91:1036–42.

3. Lee D, Yoon SN. Application of artificial intelligence-based technologies in the healthcare industry: Opportunities and challenges. Int J Environ Res Public Health. (2021) 18:271. doi: 10.3390/ijerph18010271

4. Johnson KB, Wei WQ, Weeraratne D, Frisse ME, Misulis K, Rhee K, et al. Precision medicine, AI, and the future of personalized health care. Clin Trans Sci. (2021) 14:86–93. doi: 10.1111/cts.12884

5. Lee EE, Torous J, De Choudhury M, Depp CA, Graham SA, Kim HC, et al. Artificial intelligence for mental health care: clinical applications, barriers, facilitators, and artificial wisdom. Biol Psychiatry: Cogn Neurosci Neuroimaging. (2021) 6:856–64. doi: 10.1016/j.bpsc.2021.02.001

6. Espejo G, Reiner W, Wenzinger M. Exploring the role of artificial intelligence in mental healthcare: Progress, pitfalls, and promises. Cureus. (2023) 15:e44748. doi: 10.7759/cureus.44748

7. Wainberg ML, Scorza P, Shultz JM, Helpman L, Mootz JJ, Johnson KA, et al. Challenges and opportunities in global mental health: a research-to-practice perspective. Curr Psychiatry Rep. (2017) 19:28. doi: 10.1007/s11920-017-0780-z

8. Qin X, Hsieh C-R. Understanding and addressing the treatment gap in mental healthcare: economic perspectives and evidence from China. INQUIRY: J Health Care Organization Provision Financing. (2020) 57. doi: 10.1177/0046958020950566

9. Milne-Ives M, Selby E, Inkster B, Lam C, Meinert E. Artificial intelligence and machine learning in mobile apps for mental health: A scoping review. PloS Digital Health. (2022) 1:e0000079. doi: 10.1371/journal.pdig.0000079

10. Colledani D, Anselmi P, Robusto E. Machine learning-decision tree classifiers in psychiatric assessment: An application to the diagnosis of major depressive disorder. Psychiatry Res. (2023) 322:115127. doi: 10.1016/j.psychres.2023.115127

11. Carl E, Stein AT, Levihn-Coon A, Pogue JR, Rothbaum B, Emmelkamp P, et al. Virtual reality exposure therapy for anxiety and related disorders: A meta-analysis of randomized controlled trials. J Anxiety Disord. (2019) 61:27–36. doi: 10.1016/j.janxdis.2018.08.003

12. Balcombe L. AI chatbots in digital mental health. Inf (MDPI). (2023) 10:82. doi: 10.3390/informatics10040082

13. Bajwa J, Munir U, Nori A, Williams B. Artificial intelligence in healthcare: transforming the practice of medicine. Future Healthcare J. (2021) 8:e188. doi: 10.7861/fhj.2021-0095

14. Nilsen P, Svedberg P, Nygren J, Frideros M, Johansson J, Schueller S. Accelerating the impact of artificial intelligence in mental healthcare through implementation science. Implementation Res Pract. (2022) 3:26334895221112033. doi: 10.1177/26334895221112033

15. Minerva F, Giubilini A. Is AI the future of mental healthcare? Topoi. (2023) 42:809–17. doi: 10.1007/s11245-023-09932-3

16. Elyoseph Z, Hadar-Shoval D, Asraf K, Lvovsky M. ChatGPT outperforms humans in emotional awareness evaluations. Front Psychol. (2023) 14:1199058. doi: 10.3389/fpsyg.2023.1199058

17. Cheng SW, Chang CW, Chang WJ, Wang HW, Liang CS, Kishimoto T, et al. The now and future of ChatGPT and GPT in psychiatry. Psychiatry Clin Neurosci. (2023) 77:592–6. doi: 10.1111/pcn.v77.11

18. Liu H, Peng H, Song X, Xu C, Zhang M. Using AI chatbots to provide self-help depression interventions for university students: A randomized trial of effectiveness. Internet Interventions. (2022) 27:100495. doi: 10.1016/j.invent.2022.100495

19. Ren X. Artificial intelligence and depression: How AI powered chatbots in virtual reality games may reduce anxiety and depression levels. J Artif Intell Pract. (2020) 3:48–58.

20. Zhong W, Luo J, Zhang H. The therapeutic effectiveness of artificial intelligence-based chatbots in alleviation of depressive and anxiety symptoms in short-course treatments: A systematic review and meta-analysis. J Affect Disord. (2024) 356:459–69. doi: 10.1016/j.jad.2024.04.057

21. Fulmer R, Joerin A, Gentile B, Lakerink L, Rauws M, et al. Using psychological artificial intelligence (Tess) to relieve symptoms of depression and anxiety: randomized controlled trial. JMIR Ment Health. (2018) 5:e9782. doi: 10.2196/mental.9782

22. He Y, Yang L, Qian C, Li T, Su Z, Zhang Q, et al. Conversational agent interventions for mental health problems: systematic review and meta-analysis of randomized controlled trials. J Med Internet Res. (2023) 25:e43862. doi: 10.2196/43862

24. Arias D, Saxena S, Verguet S. Quantifying the global burden of mental disorders and their economic value. EClinicalMedicine. (2022) 54:101675. doi: 10.1016/j.eclinm.2022.101675

25. Weizenbaum J. Eliza—a computer program for the study of natural language communication between man and machine. Commun ACM. (1966) 9:36–45. doi: 10.1145/365153.365168

26. Tai MCT. The impact of artificial intelligence on human society and bioethics. Tzu chi Med J. (2020) 32:339–43. doi: 10.4103/tcmj.tcmj_71_20

27. Luxton DD. Artificial intelligence in psychological practice: Current and future applications and implications. Prof Psychology: Res Pract. (2014) 45:332. doi: 10.1037/a0034559

28. Blackwell SE, Heidenreich T. Cognitive behavior therapy at the crossroads. Int J Cogn Ther. (2021) 14:1–22. doi: 10.1007/s41811-021-00104-y

29. Amin MM, Cambria E, Schuller BW. Will affective computing emerge from foundation models and general artificial intelligence? A first evaluation of ChatGPT. IEEE Intelligent Syst. (2023) 38:15–23. doi: 10.1109/MIS.2023.3254179

30. GPT-4o (2024). OpenAI. Available online at: https://openai.com/index/hello-gpt-4o/ (Accessed June 1, 2024).

31. Priyanka, Kumari R, Bansal P, Dev A. (2024). Evolution of ChatGPT and different language models: A review. In: Senjyu T, So-In C, Joshi A, editors. Smart trends in computing and communications. SmartCom 2024. Lecture notes in networks and systems, vol 949. Singapore: Springer. doi: 10.1007/978-981-97-1313-4_8

32. Levkovich I, Elyoseph Z. Suicide risk assessments through the eyes of ChatGPT-3.5 versus ChatGPT-4: vignette study. JMIR Ment Health. (2023) 10:e51232.

33. Rzadeczka M, Sterna A, Stolińska J, Kaczyńska P, Moskalewicz M. The efficacy of conversational artificial intelligence in rectifying the theory of mind and autonomy biases: Comparative analysis. arXiv preprint arXiv:2406.13813. (2024).

34. Ran C. (2023). “Emotion analysis of dialogue text based on ChatGPT: a research study,” Proc. SPIE 12941, International conference on algorithms, high performance computing, and artificial intelligence (AHPCAI 2023). 1294137. doi: 10.1117/12.3011507

35. Graham S, Depp C, Lee EE, Nebeker C, Tu X, Kim HC, et al. Artificial intelligence for mental health and mental illnesses: an overview. Curr Psychiatry Rep. (2019) 21:1–18. doi: 10.1007/s11920-019-1094-0

36. Gabriel S, Puri I, Xu X, Malgaroli M, Ghassemi M. Can AI relate: Testing large language model response for mental health support. arXiv preprint arXiv:2405.12021. (2024).

37. Gutierrez G, Stephenson C, Eadie J, Asadpour K, Alavi N. Examining the role of AI technology in online mental healthcare: opportunities, challenges, and implications, a mixed-methods review. Front Psychiatry. (2024) 15:1356773. doi: 10.3389/fpsyt.2024.1356773

38. Trusler K, Doherty C, Mullin T, Grant S, McBride J. Waiting times for primary care psychological therapy and counselling services. Counselling Psychother Res. (2006) 6:23–32. doi: 10.1080/14733140600581358

39. van Dijk D, Meijer R, van den Boogaard TM, Spijker J, Ruhé H, Peeters F. Worse off by waiting for treatment? the impact of waiting time on clinical course and treatment outcome for depression in routine care. J Affect Disord. (2023) 322:205–11. doi: 10.1016/j.jad.2022.11.011

40. Simionato GK, Simpson S. Personal risk factors associated with burnout among psychotherapists: A systematic review of the literature. J Clin Psychol. (2018) 74:1431–56. doi: 10.1002/jclp.2018.74.issue-9

42. Deutsch CJ. Self-reported sources of stress among psychotherapists. Prof Psychology: Res Pract. (1984) 15:833. doi: 10.1037/0735-7028.15.6.833

43. Markin RD, Kivlighan DM Jr. Bias in psychotherapist ratings of client transference and insight. Psychotherapy: Theory, Research, Practice, Training. (2007) 44:300. doi: 10.1037/0033-3204.44.3.300

44. Dougall JL, Schwartz RC. The influence of client socioeconomic status on psychotherapists’ attributional biases and countertransference reactions. Am J Psychother. (2011) 65:249–65. doi: 10.1176/appi.psychotherapy.2011.65.3.249

45. Smith ML. Sex bias in counseling and psychotherapy. Psychol Bull. (1980) 87:392. doi: 10.1037/0033-2909.87.2.392

46. Saxena S, Thornicroft G, Knapp M, Whiteford H. Resources for mental health: scarcity, inequity, and inefficiency. Lancet. (2007) 370:878–89. doi: 10.1016/S0140-6736(07)61239-2

47. Olawade DB, Wada OZ, Odetayo A, David-Olawade AC, Asaolu F, Eberhardt J. Enhancing mental health with artificial intelligence: Current trends and future prospects. J Med Surg Public Health. (2024) 3:100099. doi: 10.1016/j.glmedi.2024.100099

48. Abbasi J, Hswen Y. One day, AI could mean better mental health for all. JAMA. (2024) 331:1691–4. doi: 10.1001/jama.2023.27727

49. Chaudhry BM, Debi HR. User perceptions and experiences of an AI-driven conversational agent for mental health support. mHealth. (2024) 10. doi: 10.21037/mhealth-23-55

50. Sundar SS, Kim J. Machine heuristic: when we trust computers more than humans with our personal information. In: Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI '19). New York, NY, USA: Association for Computing Machinery (2019). 538:1–9. doi: 10.1145/3290605.3300768

51. Wahl B, Cossy-Gantner A, Germann S, Schwalbe NR. Artificial intelligence (AI) and global health: how can AI contribute to health in resource-poor settings? BMJ Glob Health. (2018) 3:e000798. doi: 10.1136/bmjgh-2018-000798

52. Challen R, Denny J, Pitt M, Gompels L, Edwards T, Tsaneva-Atanasova K. Artificial intelligence, bias and clinical safety. BMJ Qual Saf. (2019) 28:231–7. doi: 10.1136/bmjqs-2018-008370

53. Lin YT, Hung TW, Huang LTL. Engineering equity: How AI can help reduce the harm of implicit bias. Philosophy Technol. (2021) 34:65–90. doi: 10.1007/s13347-020-00406-7

54. Bai L, Liu X, Su J. ChatGPT: The cognitive effects on learning and memory. Brain-X. (2023) 1:e30. doi: 10.1002/brx2.v1.3

55. Zhong W, Guo L, Gao Q, Ye H, Wang Y. MemoryBank: enhancing large language models with long-term memory. In: Proceedings of the AAAI Conference on Artificial Intelligence. (2024) 38:19724–31. doi: 10.1609/aaai.v38i17.29946

56. Wang Q, Ding L, Cao Y, Tian Z, Wang S, Tao D, et al. Recursively summarizing enables long-term dialogue memory in large language models. arXiv preprint arXiv:2308.15022. (2023). doi: 10.48550/arXiv.2308.15022

57. Akter S, McCarthy G, Sajib S, Michael K, Dwivedi YK, D’Ambra J, et al. Algorithmic bias in data-driven innovation in the age of AI. Int J Inf Manage. (2021) 60:102387. doi: 10.1016/j.ijinfomgt.2021.102387

58. Walsh CG, Chaudhry B, Dua P, Goodman KW, Kaplan B, Kavuluru R, et al. Stigma, biomarkers, and algorithmic bias: recommendations for precision behavioral health with artificial intelligence. JAMIA Open. (2020) 3:9–15. doi: 10.1093/jamiaopen/ooz054

59. Li F, Ruijs N, Lu Y. Ethics & AI: A systematic review on ethical concerns and related strategies for designing with AI in healthcare. AI. (2022) 4:28–53. doi: 10.3390/ai4010003

60. Fusar-Poli P, Manchia M, Koutsouleris N, Leslie D, Woopen C, Calkins ME, et al. Ethical considerations for precision psychiatry: a roadmap for research and clinical practice. Eur Neuropsychopharmacol. (2022) 63:17–34. doi: 10.1016/j.euroneuro.2022.08.001

61. Staemmler FM. Empathy in psychotherapy: How therapists and clients understand each other. New York: Springer Publishing Company (2012).

62. Miller SD, Hubble MA, Chow D. Better results: Using deliberate practice to improve therapeutic effectiveness. American Psychological Association (2020). doi: 10.1037/0000191-000

63. Naik N, Hameed BZ, Shetty DK, Swain D, Shah M, Paul R, et al. Legal and ethical consideration in artificial intelligence in healthcare: who takes responsibility? Front Surg. (2022) 9:862322. doi: 10.3389/fsurg.2022.862322

64. Knapp ML, Hall JA, Horgan TG. Nonverbal communication in human interaction. Vol. 1. New York: Holt, Rinehart and Winston (1978).

65. Aggarwal NK, Lam P, Castillo EG, Weiss MG, Diaz E, Alarcón RD, et al. How do clinicians prefer cultural competence training? Findings from the DSM-5 Cultural Formulation Interview field trial. Acad Psychiatry. (2016) 40:584–91. doi: 10.1007/s40596-015-0429-3

Keywords: Artificial Intelligence (AI), psychotherapy, algorithmic bias, mental health care, ethical considerations

Citation: Zhang Z and Wang J (2024) Can AI replace psychotherapists? Exploring the future of mental health care. Front. Psychiatry 15:1444382. doi: 10.3389/fpsyt.2024.1444382

Received: 06 June 2024; Accepted: 02 October 2024;

Published: 31 October 2024.

Edited by:

David M. A. Mehler, University Hospital RWTH Aachen, Germany

Reviewed by:

Eduardo L. Bunge, Palo Alto University, United States

Beenish Chaudhry, University of Louisiana at Lafayette, United States

Copyright © 2024 Zhang and Wang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jing Wang, 16697987@qq.com

Zhihui Zhang and Jing Wang3*