- 1 Department of Information Medicine, National Institute of Neuroscience, National Center of Neurology and Psychiatry, Tokyo, Japan

- 2 Department of Psychiatry, Yokohama City University, School of Medicine, Yokohama, Japan

- 3 Department of Psychiatry and Behavioral Sciences, Graduate School of Medical and Dental Sciences, Tokyo Medical and Dental University, Tokyo, Japan

Background: Recent advancements in generative artificial intelligence (AI) for image generation have presented significant opportunities for medical imaging, offering a promising way to generate realistic virtual medical images while protecting patient privacy. Generating large numbers of virtual medical images with AI has the potential to augment training datasets for discriminative AI models, particularly in fields with limited data availability, such as neuroimaging. Current studies on generative AI in neuroimaging have mainly focused on disease discrimination; however, its potential for simulating complex phenomena in psychiatric disorders remains unknown. In this study, we aimed to present a novel generative AI model that transforms magnetic resonance imaging (MRI) images of healthy individuals into images resembling those of patients with schizophrenia (SZ), and to explore its application to simulation.

Methods: We used anonymized public datasets from the Center for Biomedical Research Excellence (SZ, 71 patients; healthy subjects [HSs], 71 subjects) and the Autism Brain Imaging Data Exchange (autism spectrum disorder [ASD], 79 subjects; HSs, 105 subjects). We developed a model to transform MRI images of HSs into MRI images of SZ using cycle generative adversarial networks. The efficacy of the transformation was evaluated using voxel-based morphometry to assess differences in brain region volumes and by comparing the accuracy of age prediction pre- and post-transformation. In addition, the model was examined for its applicability in simulating disease comorbidities and disease progression.

Results: The model successfully transformed HS images into SZ-like images, producing brain volume changes consistent with existing case-control studies. We also applied this model to ASD MRI images; simulations comparing SZ with and without ASD backgrounds highlighted differences in brain structure attributable to comorbidity. Furthermore, simulating disease progression while preserving individual characteristics demonstrated the model's ability to reflect realistic disease trajectories.

Discussion: The results suggest that our generative AI model can capture subtle changes in brain structures associated with SZ, providing a novel tool for visualizing brain changes in different diseases. The potential of this model extends beyond clinical diagnosis to advances in the simulation of disease mechanisms, which may ultimately contribute to the refinement of therapeutic strategies.

1 Introduction

Rapid advancements in image-generative artificial intelligence (AI) have opened new possibilities in various fields (1). Significant breakthroughs include the emergence of DALL-E (2) and Stable Diffusion (3), which have made the potential of AI for generating realistic and complex images widely known (4). This evolution has profound implications, particularly for medical imaging, where privacy, ethical, and legal concerns have historically constrained the sharing of patient data.

Generative AI models have shown realistic and comprehensive results in generating two-dimensional medical images, such as chest radiographs and fundus photographs (5), and three-dimensional (3D) medical images, including magnetic resonance imaging (MRI) of the brain, chest, and knees (6). These studies have highlighted the potential of generative AI for synthesizing authentic medical images without compromising the confidentiality of sensitive patient information.

The limited availability of medical images, in contrast to the abundance of natural images, underscores the importance of generative AI, which makes large amounts of labeled data available for model training. In neuroimaging, generative AI has been used to generate brain MRI (7), single-photon emission computed tomography (SPECT) (8), and positron emission tomography (PET) (9) images. In medical imaging, generative AI is most commonly used to improve model performance by generating large numbers of images for use as training data, that is, for data augmentation (10–12). Because it is difficult to increase the number of MRI samples from patients with actual psychiatric and neurological disorders, strategies using generative deep learning techniques, such as generative adversarial networks (GANs), have been adopted to enhance learning by expanding the sample size (11, 13–15). Zhou et al. developed a GAN-based data augmentation framework to generate brain MRI images, improving performance and accuracy in classifying Alzheimer's disease and mild cognitive impairment (16). Zhao et al. introduced a functional network connectivity-based GAN to distinguish patients with schizophrenia (SZ) from healthy subjects (HSs) using functional MRI data (17). Generative AI has also demonstrated strength in neuroimaging segmentation (18). The efficacy of style transfer based on generative AI also warrants investigation. Style transfer applies the characteristics, or style, of one image to another while preserving the content of the latter. This technique holds promise for transforming easily obtainable images, such as computed tomography scans, into images available only at a limited number of facilities, such as MRI scans (19).
In addition, style transfer is expected to effectively reduce bias in image quality caused by differences in imaging equipment and sites (20), which is unavoidably present in MRI images (21).

Although existing neuroimaging research using generative AI has been applied to data augmentation and image quality harmonization, its primary goals have largely been limited to specific tasks, such as disease diagnosis and lesion detection. Using these techniques to simulate more complex clinical phenomena is an interesting avenue for further exploring the potential of generative AI in neuroimaging research (22). Examples outside medicine include simulations for autonomous driving (23) and the design of novel proteins (24).

Previous studies have highlighted the challenges of comorbidity and heterogeneity in psychiatric disorders (25, 26). Specifically, these studies have focused on the relationship between SZ and autism spectrum disorder (ASD). SZ and ASD are defined as distinct disorder groups based on diagnostic criteria but share some common features, such as difficulties in social interaction and communication (27). Furthermore, common features in brain structures and genetic alterations have been noted (28, 29). Owing to the complexity of their etiology, the relationship between these two disorders and their comorbid phenotypes remains to be elucidated. The ability of generative AI to simulate these conditions can shed light on the intricate relationship between these disorders and their overlapping phenotypes. However, to our knowledge, no such efforts have been made yet.

As a first step, we developed a CycleGAN-based generative AI model that simulates brain volume changes associated with SZ. This model transforms brain images of healthy individuals into images resembling those of patients diagnosed with SZ. We validated this artificial schizophrenic brain simulator by analyzing the brain regions affected by the transformation and comparing them with existing findings on SZ. Furthermore, we aimed to evaluate the feasibility of our “SZ brain generator” for simulating the disease process of SZ and the comorbidity of ASD and SZ.

2 Materials and methods

2.1 Dataset description

In this study, we used the Center for Biomedical Research Excellence (COBRE; http://fcon_1000.projects.nitrc.org/indi/retro/cobre.html) dataset, which is anonymized and publicly available. All the subjects were diagnosed and screened using the Structured Clinical Interview for the Diagnostic and Statistical Manual of Mental Disorders, 4th edition Axis I Disorders (SCID) (30, 31). Individuals with a history of head trauma, neurological illness, serious medical or surgical illness, or substance abuse were excluded. We selected 142 subjects from this database, including 71 patients with SZ and 71 HSs.

We also used the Autism Brain Imaging Data Exchange (ABIDE; http://fcon_1000.projects.nitrc.org/indi/abide/) dataset, a multicenter project focusing on ASD that includes more than 1,000 ASD and typically developing (TD) subjects. Data from the New York University site were used in this study. In total, we included 184 subjects from this dataset: 79 subjects with ASD and 105 TD subjects.

The demographic and clinical characteristics of the COBRE and ABIDE datasets are presented in Supplementary Table 1.

This study was conducted in accordance with the current Ethical Guidelines for Medical and Health Research Involving Human Subjects in Japan and was approved by the Committee on Medical Ethics of the National Center of Neurology and Psychiatry.

2.2 Data preprocessing

MRI data were preprocessed using the Statistical Parametric Mapping software (SPM12, Wellcome Department of Cognitive Neurology, London, UK, https://www.fil.ion.ucl.ac.uk/spm/software/spm12/) with the Diffeomorphic Anatomical Registration Through Exponentiated Lie Algebra (DARTEL) registration algorithm (32). The MR images were bias-field corrected to account for field nonuniformity and then segmented into gray matter (GM), white matter, and cerebrospinal fluid using tissue probability maps based on the International Consortium for Brain Mapping template. Individual GM images were normalized to the Montreal Neurological Institute template with a voxel size of 1.5 × 1.5 × 1.5 mm³ and modulated to preserve GM volumes. All normalized GM images were smoothed with a Gaussian kernel of 8 mm full width at half maximum (FWHM). Consequently, the input images for the proposed model were 121 × 145 × 121 voxels in size.
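The 8 mm smoothing kernel is specified as a FWHM in millimetres, whereas many array libraries parameterize a Gaussian by its standard deviation in voxels. The short sketch below shows the conversion for the 1.5 mm grid described above; it is only an illustration of the arithmetic, since SPM12 performs the smoothing internally and the function name `fwhm_to_sigma` is our own.

```python
import math

def fwhm_to_sigma(fwhm_mm: float, voxel_mm: float) -> float:
    """Convert a Gaussian FWHM in millimetres to a standard deviation in voxels.

    For a Gaussian, FWHM = 2 * sqrt(2 * ln 2) * sigma (about 2.3548 * sigma).
    """
    sigma_mm = fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))
    return sigma_mm / voxel_mm

# 8 mm FWHM kernel on a 1.5 mm isotropic grid
sigma_vox = fwhm_to_sigma(8.0, 1.5)
print(f"sigma = {sigma_vox:.3f} voxels")  # sigma = 2.265 voxels
```

A value computed this way could, for example, be passed as the `sigma` argument of `scipy.ndimage.gaussian_filter` when reproducing the smoothing step outside SPM.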

2.3 Cycle generative adversarial networks

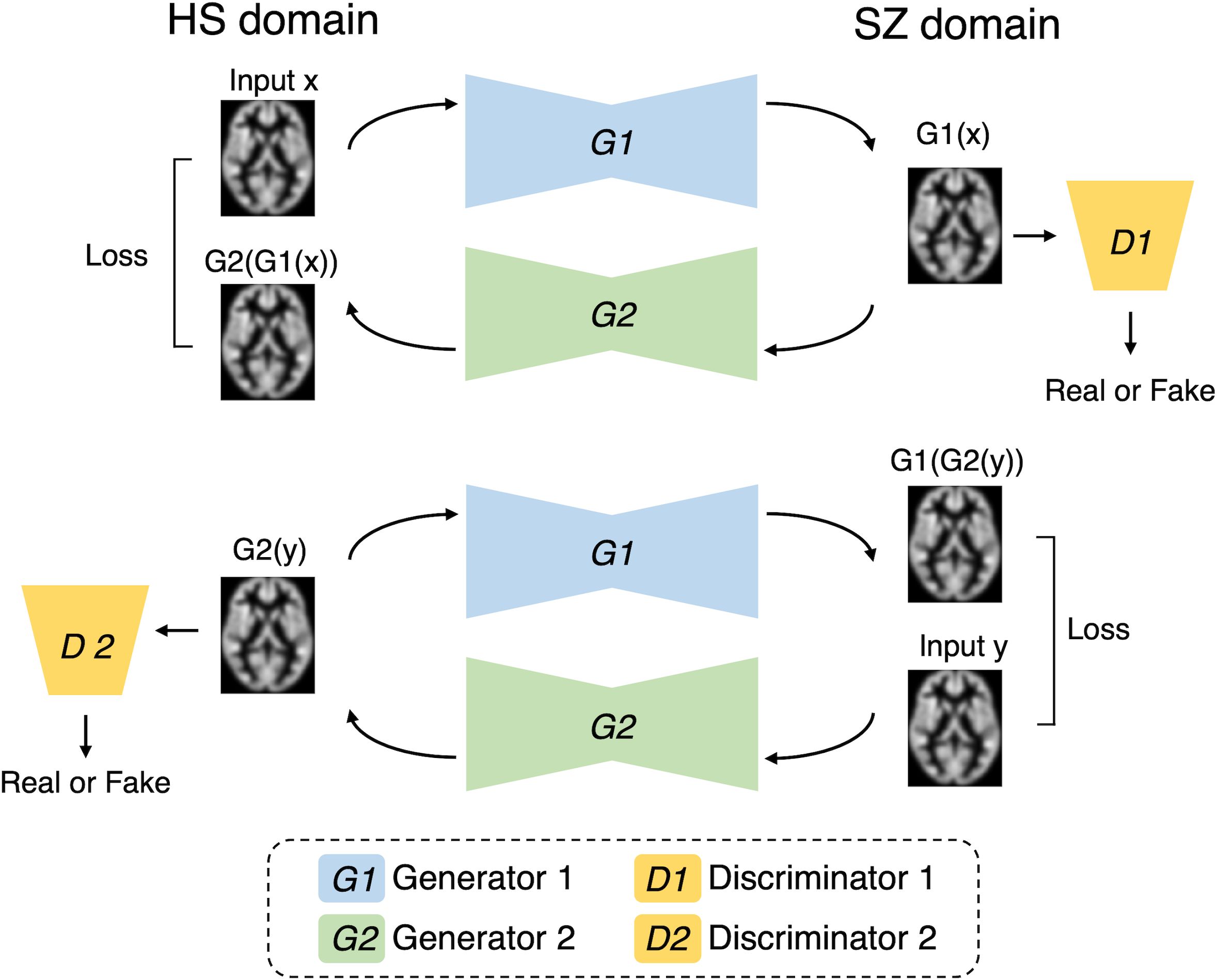

In this study, we used the CycleGAN algorithm (33), a generative AI method that has been widely used in recent years for style transformation. Using two unpaired datasets of different styles, the algorithm simultaneously learns to generate Style B from Style A and Style A from Style B, while discriminators learn to judge whether each image is real or generated (fake). This enables style transformation without paired (supervised) training data. Through this adversarial process, the generators progressively learn to produce realistic, high-resolution images. Figure 1 shows the schematic workflow of the proposed method. We constructed a model that learns two styles, MRI of HSs and MRI of patients with SZ, used the trained model to transform HS images into SZ-like images, and investigated how brain regions changed between pre- and post-transformation.

Figure 1. Our cycle generative adversarial network. The model is designed to enable the transformation between domains of healthy subjects (HSs) and patients with schizophrenia (SZ). Generator 1 (G1) is responsible for transforming HS into SZ, whereas Generator 2 (G2) performs the conversion from SZ back to HS. Discriminator 1 (D1) discriminates between real SZ images and virtually generated SZ-like images. Similarly, Discriminator 2 (D2) discriminates between real HS images and synthetic HS-like images. The loss function is configured to optimize each component.

Figure 1 shows the proposed CycleGAN architecture. To retain more spatial information, 3D brain images were input into the model, with the background cropped as much as possible (resulting in an image size of 96 × 120 × 104 voxels). In the training phase, each training HS MRI was input into Generator 1 (HS to SZ) to generate an SZ MRI (called virtual SZ). This virtual SZ was then input into Generator 2 (SZ to HS) to generate an HS MRI (called reconstructed HS). Similarly, the training SZ MRI data were fed into the two generators in reverse order to generate a virtual HS and a reconstructed SZ. Next, two discriminators were used to judge whether the virtual and reconstructed images were real or generated.
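The crop offsets are not reported. A minimal NumPy sketch, assuming a symmetric centre crop from the 121 × 145 × 121 preprocessed grid to 96 × 120 × 104, is shown below. It also checks one property that may explain the chosen size (our inference, not stated in the text): if each of the three encoding blocks halves the spatial size with 2× pooling (the pooling factor is an assumption), every dimension must be divisible by 2³ = 8, which all three are.

```python
import numpy as np

FULL_SHAPE = (121, 145, 121)  # preprocessed GM image size
CROP_SHAPE = (96, 120, 104)   # model input size reported in the text

def center_crop(volume: np.ndarray, target: tuple) -> np.ndarray:
    """Crop a 3D volume symmetrically around its centre (assumed offsets)."""
    slices = []
    for size, keep in zip(volume.shape, target):
        if keep > size:
            raise ValueError("target shape is larger than the input volume")
        start = (size - keep) // 2
        slices.append(slice(start, start + keep))
    return volume[tuple(slices)]

vol = np.zeros(FULL_SHAPE, dtype=np.float32)
cropped = center_crop(vol, CROP_SHAPE)
print(cropped.shape)  # (96, 120, 104)

# Three assumed 2x pooling stages: 96->48->24->12, 120->60->30->15, 104->52->26->13
sizes = [CROP_SHAPE]
for _ in range(3):
    sizes.append(tuple(s // 2 for s in sizes[-1]))
print(sizes[-1])  # (12, 15, 13)
assert all(s % 8 == 0 for s in CROP_SHAPE)
```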

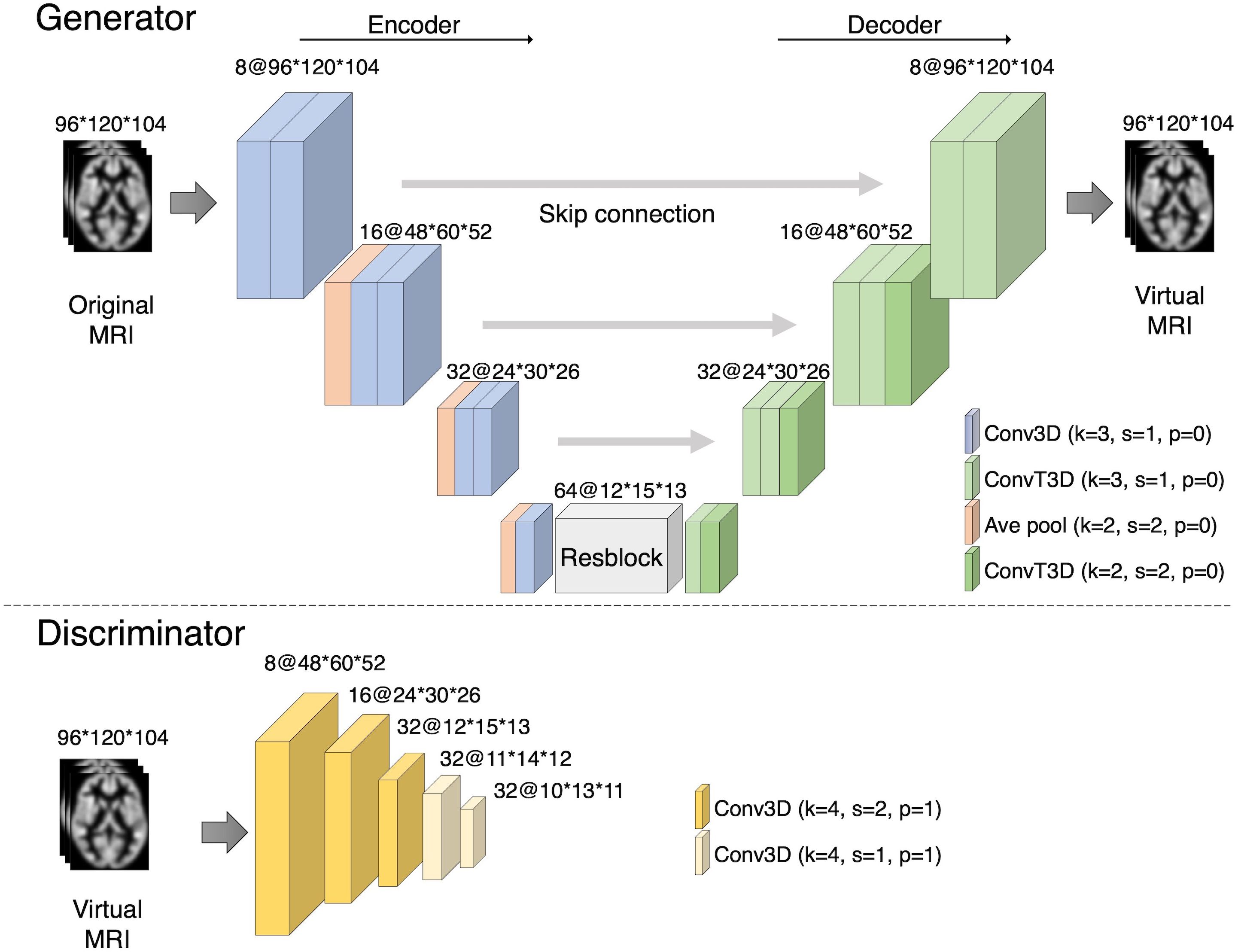

The networks of Generators 1 and 2 were based on U-Net (34), an encoder-decoder architecture with skip connections. Our U-Net consisted of six consecutive 3D convolutional blocks (three encoding and three decoding blocks) with instance normalization and rectified linear unit (ReLU) activation. Each encoding block consisted of two convolutional layers and one pooling layer, and each decoding block consisted of three transposed convolutional layers. In addition, six residual blocks (ResBlocks), as used in residual networks to learn residual functions between the inputs and outputs of layers (35), were inserted at the bottleneck of the U-Net. This structure is often used in GANs (2, 36, 37). The discriminator consisted of five blocks, each comprising a 3D convolutional layer with instance normalization and leaky ReLU activation. The proposed CycleGAN loss function comprises two parts: adversarial loss and cycle consistency loss. The adversarial loss optimizes the generator's ability to produce images that the discriminator cannot distinguish from real images of the target domain. Generator G1 transforms an image in domain X into domain Y, and DY denotes the discriminator for domain Y.
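For reference, in this notation the adversarial loss of the original CycleGAN formulation (33) is shown below; whether the authors used exactly this log-likelihood form, rather than a least-squares variant, is not stated, so this is an assumption. Here $D_Y(\cdot)$ is the probability that its input is a real domain-Y image; $G_1$ tries to minimize the objective, and $D_Y$ tries to maximize it.

$$
\mathcal{L}_{\mathrm{GAN}}(G_1, D_Y, X, Y) = \mathbb{E}_{y \sim p_{\mathrm{data}}(y)}\left[\log D_Y(y)\right] + \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\left[\log\left(1 - D_Y(G_1(x))\right)\right]
$$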

In addition, the same formulation is applied to generator G2, transforming an image in domain Y into domain X, with DX as the corresponding discriminator.

The cycle consistency loss ensures that an image translated into the other domain and then back again is reconstructed as the original image (for example, G2(G1(x)) ≈ x).
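For reference, the original CycleGAN formulation (33) penalizes the L1 reconstruction error in both directions, and the full objective adds this term, weighted by λ, to the two adversarial losses. Here $\mathcal{L}_{\mathrm{GAN}}(G_1, D_Y, X, Y)$ and $\mathcal{L}_{\mathrm{GAN}}(G_2, D_X, Y, X)$ denote the adversarial losses of G1 and G2 described above. The L1 form and the value of λ used in this study are not reported, so both are assumptions; the original CycleGAN paper set λ = 10.

$$
\mathcal{L}_{\mathrm{cyc}}(G_1, G_2) = \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\left[\lVert G_2(G_1(x)) - x \rVert_1\right] + \mathbb{E}_{y \sim p_{\mathrm{data}}(y)}\left[\lVert G_1(G_2(y)) - y \rVert_1\right]
$$

$$
\mathcal{L}(G_1, G_2, D_X, D_Y) = \mathcal{L}_{\mathrm{GAN}}(G_1, D_Y, X, Y) + \mathcal{L}_{\mathrm{GAN}}(G_2, D_X, Y, X) + \lambda\, \mathcal{L}_{\mathrm{cyc}}(G_1, G_2)
$$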

The network structure was determined through preliminary exploration based on previous experiments (38, 39). The details of the architecture of our framework are shown in Figure 2.
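To make the adversarial and cycle consistency terms described above concrete, here is a minimal NumPy sketch that evaluates them on placeholder arrays. It is not the authors' PyTorch training code: discriminator outputs are supplied directly as probabilities, the L1 term is averaged over voxels, and the weight `lam = 10` follows the original CycleGAN paper rather than a value reported in this study.

```python
import numpy as np

EPS = 1e-7  # keeps log() finite when a discriminator output is exactly 0 or 1

def gan_value(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """L_GAN = E[log D(real)] + E[log(1 - D(G(source)))].

    The discriminator tries to maximise this value; the generator tries to minimise it.
    """
    d_real = np.clip(d_real, EPS, 1.0 - EPS)
    d_fake = np.clip(d_fake, EPS, 1.0 - EPS)
    return float(np.mean(np.log(d_real)) + np.mean(np.log(1.0 - d_fake)))

def cycle_loss(x, x_rec, y, y_rec) -> float:
    """L_cyc = E|G2(G1(x)) - x| + E|G1(G2(y)) - y|, with the L1 norm averaged over voxels."""
    return float(np.mean(np.abs(x_rec - x)) + np.mean(np.abs(y_rec - y)))

def full_objective(dy_real, dy_fake, dx_real, dx_fake, x, x_rec, y, y_rec, lam=10.0) -> float:
    """L_GAN(G1, D_Y) + L_GAN(G2, D_X) + lam * L_cyc (lam = 10 is an assumed weight)."""
    return (gan_value(dy_real, dy_fake)
            + gan_value(dx_real, dx_fake)
            + lam * cycle_loss(x, x_rec, y, y_rec))

# Toy example: two 8x8x8 placeholder "volumes" per domain (random numbers, not MRI data).
rng = np.random.default_rng(0)
x = rng.random((2, 8, 8, 8))   # HS domain
y = rng.random((2, 8, 8, 8))   # SZ domain
x_rec = x + 0.1                # imperfect reconstruction G2(G1(x))
y_rec = y.copy()               # perfect reconstruction G1(G2(y))
half = np.full(2, 0.5)         # discriminator outputs at the GAN equilibrium

print(round(gan_value(half, half), 4))           # -1.3863 (= -log 4)
print(round(cycle_loss(x, x_rec, y, y_rec), 4))  # 0.1
print(round(full_objective(half, half, half, half, x, x_rec, y, y_rec), 4))  # -1.7726
```

At the equilibrium where each discriminator outputs 0.5 everywhere, each adversarial term equals −log 4, so any remaining change in the total objective comes from the cycle consistency term.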

Figure 2. Our proposed CycleGAN architecture. The numbers describe the number of channels and the size of the images. The generator consists of an encoder and a decoder, and the bottleneck contains a ResBlock. The encoder and decoder were designed as a U-Net with skip connections. The discriminator, a convolutional neural network, is trained to discriminate between the generated images and the ground truth.

We conducted the experiments in Python 3.8 using the PyTorch v1.9.1 library (40). Our network was implemented on a workstation with 64 GB of memory and an NVIDIA Quadro RTX 8000 GPU.

2.4 Verification of generated virtual schizophrenia brain

In this study, we used the trained CycleGAN model to generate virtual SZ MRI images from HS images. We then analyzed regional volume differences between the pre-transformation (original HS) and post-transformation (virtual SZ converted from HS) images and verified whether the results were consistent with the SZ-related brain changes reported in previous case-control neuroimaging studies.

To ensure robustness, we employed 10-fold cross-validation. The COBRE dataset was divided into 10 subsets; each subset served once as the validation set while the model was trained on the remaining nine subsets. Each validation subset comprised 14 or 16 samples (7 or 8 HS samples and 7 or 8 SZ samples).
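The exact splitting procedure is not reported. One way to obtain exactly the fold sizes described above (one validation subset of 8 + 8 and nine of 7 + 7) is to split each group separately and pair the k-th HS chunk with the k-th SZ chunk, as in this NumPy sketch; the function name and the random seed are hypothetical.

```python
import numpy as np

def group_balanced_folds(n_hs: int, n_sz: int, n_folds: int = 10, seed: int = 0):
    """Split HS and SZ indices into folds separately, then pair the k-th HS chunk
    with the k-th SZ chunk so every validation subset contains both groups."""
    rng = np.random.default_rng(seed)
    hs = rng.permutation(n_hs)               # HS subjects: indices 0 .. n_hs - 1
    sz = rng.permutation(n_sz) + n_hs        # SZ subjects: indices after the HS subjects
    hs_chunks = np.array_split(hs, n_folds)  # earlier chunks receive any extra items
    sz_chunks = np.array_split(sz, n_folds)
    all_idx = np.arange(n_hs + n_sz)
    folds = []
    for hs_val, sz_val in zip(hs_chunks, sz_chunks):
        val = np.concatenate([hs_val, sz_val])
        train = np.setdiff1d(all_idx, val)   # the remaining nine subsets
        folds.append((train, val))
    return folds

folds = group_balanced_folds(71, 71)
print([len(val) for _, val in folds])  # [16, 14, 14, 14, 14, 14, 14, 14, 14, 14]
```

Because 71 = 7 × 10 + 1, `np.array_split` gives the first chunk of each group eight subjects and the rest seven, which reproduces the 14-or-16 pattern.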

For verification, we performed voxel-based morphometry (VBM) using SPM12 (41, 42). VBM compares GM volumes across segmented MRI images using statistical parametric mapping to identify region-specific differences. Standard and optimized VBM techniques have been used to detect brain structural changes in psychiatric and neurological disorders (43–48). Whole-brain voxel-wise t-tests were performed; paired t-tests were used for the pre- versus post-transformation comparisons. To account for potential scaling differences between pre- and post-transformation brain images, global scaling was applied to normalize the overall intensity of each image. Correction for multiple comparisons was conducted at a combined voxel level of P < 0.001.
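The core of the paired comparison can be sketched in NumPy as follows, assuming proportional global scaling (each image divided by its mean intensity) followed by a voxel-wise paired t statistic. This is a simplified illustration, not SPM12's implementation: SPM computes its own global estimate and fits a general linear model, and masking and multiple-comparison correction are omitted here.

```python
import numpy as np

def global_scale(images: np.ndarray) -> np.ndarray:
    """Proportional global scaling: divide each image by its own mean intensity.

    SPM computes its own global estimate; averaging over all voxels here is a
    simplification for the toy example.
    """
    means = images.reshape(len(images), -1).mean(axis=1)
    return images / means.reshape((-1,) + (1,) * (images.ndim - 1))

def paired_t_map(pre: np.ndarray, post: np.ndarray) -> np.ndarray:
    """Voxel-wise paired t statistic for (post - pre), with df = n_subjects - 1."""
    diff = post - pre
    se = diff.std(axis=0, ddof=1) / np.sqrt(diff.shape[0])
    with np.errstate(divide="ignore", invalid="ignore"):
        return diff.mean(axis=0) / se

rng = np.random.default_rng(0)
pre = 1.0 + 0.01 * rng.standard_normal((10, 4, 4, 4))  # 10 toy "HS" volumes
post = pre.copy()
post[:, 0, 0, 0] *= 0.8                                 # simulated 20% local reduction
post += 0.001 * rng.standard_normal(post.shape)         # small generation noise
t = paired_t_map(global_scale(pre), global_scale(post))
print(t.shape, t[0, 0, 0] < 0)  # (4, 4, 4) True
```

In this toy example, proportional scaling also shifts every other voxel slightly upward, because the global mean of the post images drops; this is an inherent property of proportional scaling worth keeping in mind when interpreting volume increases.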

To verify that the generated images retained the characteristics of the original individuals, we additionally assessed variations in the accuracy of age prediction between pre- and post-transformation images. Owing to the very high dimensionality of the brain images, principal component analysis was performed for dimensionality reduction, retaining all 71 components (equal to the number of HS samples). Age was then predicted using linear regression with 5-fold cross-validation. The ages predicted from pre- and post-transformation brain images were compared using a t-test, with the significance level set at P < 0.05.
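The age-prediction check can be sketched with NumPy alone: principal component scores via SVD (keeping all min(n, p) components), out-of-fold predictions from ordinary least squares, and a paired comparison of the two sets of predictions. The data below are synthetic placeholders, the function names are hypothetical, and the paper does not state which variant of Cohen's d was used; d_z (mean difference divided by the SD of the differences) is one common choice for paired data.

```python
import numpy as np

def pca_scores(X: np.ndarray) -> np.ndarray:
    """Scores on all principal components (min(n_samples, n_features) of them), via SVD."""
    Xc = X - X.mean(axis=0)
    U, S, _ = np.linalg.svd(Xc, full_matrices=False)
    return U * S

def cv_predict_age(Z: np.ndarray, age: np.ndarray, n_folds: int = 5, seed: int = 0) -> np.ndarray:
    """Out-of-fold age predictions from ordinary least squares with an intercept."""
    n = len(age)
    order = np.random.default_rng(seed).permutation(n)
    pred = np.empty(n)
    for val in np.array_split(order, n_folds):
        train = np.setdiff1d(order, val)
        A = np.column_stack([np.ones(len(train)), Z[train]])
        # With more components than training subjects, lstsq returns the minimum-norm solution.
        coef, *_ = np.linalg.lstsq(A, age[train], rcond=None)
        pred[val] = np.column_stack([np.ones(len(val)), Z[val]]) @ coef
    return pred

def paired_t_and_cohens_dz(a: np.ndarray, b: np.ndarray):
    """Paired t statistic and Cohen's d_z for the differences a - b."""
    diff = a - b
    dz = diff.mean() / diff.std(ddof=1)
    return dz * np.sqrt(len(diff)), dz

# Synthetic data: 71 subjects x 500 "voxels" whose values depend weakly on age.
rng = np.random.default_rng(0)
age = rng.uniform(18, 65, size=71)
loading = rng.standard_normal(500)
pre = np.outer(age, loading) / 50 + rng.standard_normal((71, 500))
post = pre + 0.1 * rng.standard_normal(pre.shape)  # stand-in for transformed images

pred_pre = cv_predict_age(pca_scores(pre), age)
pred_post = cv_predict_age(pca_scores(post), age)
t, dz = paired_t_and_cohens_dz(pred_pre, pred_post)
print(f"t = {t:.3f}, d_z = {dz:.3f}")
```

With 71 components and about 57 training subjects per fold, the regression is underdetermined, so `np.linalg.lstsq` returns the minimum-norm fit; keeping fewer components or adding a ridge penalty would be a more conventional alternative.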

2.5 Brain alteration simulations using a schizophrenia brain generator

To simulate brain alterations, we chose to utilize a model trained on the entire COBRE dataset rather than on models derived from individual cross-validation folds. This decision was based on the observation that the model trained on all available data produced more stable and reliable results, which are essential for generating accurate simulation outcomes.

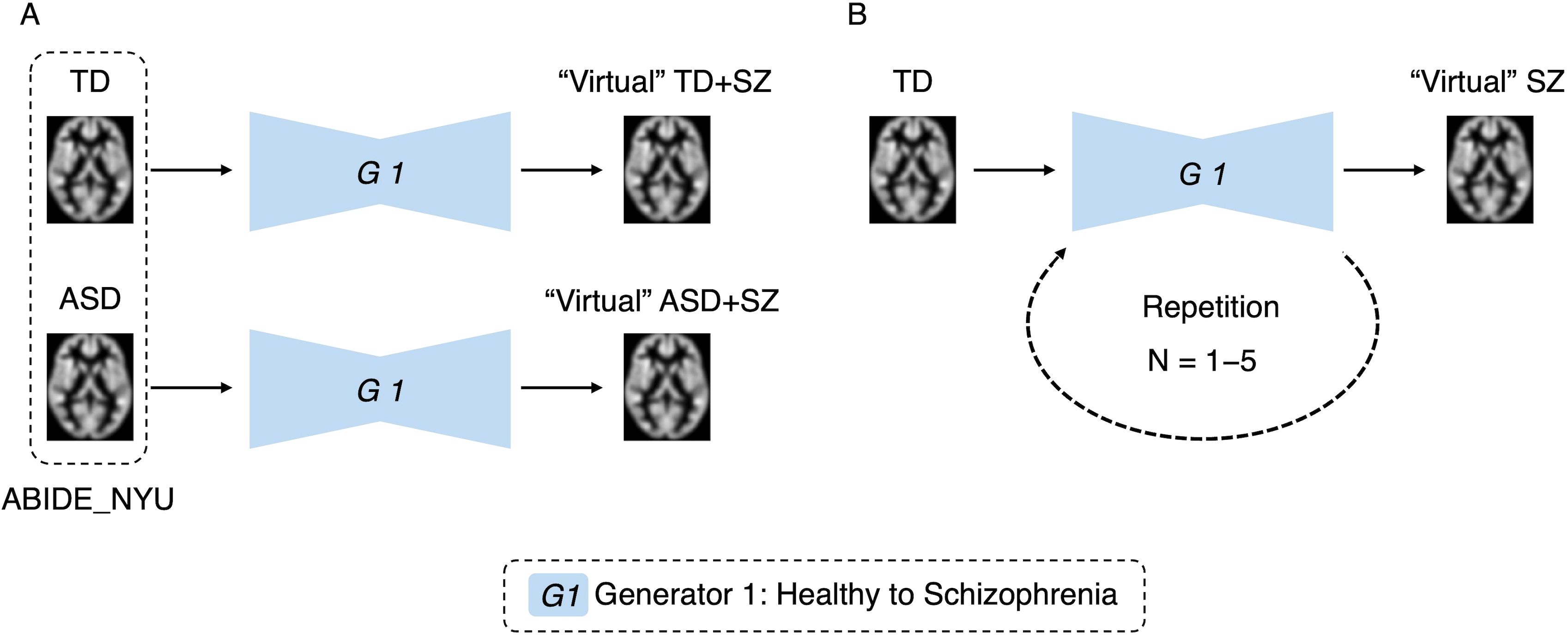

Two experiments were conducted to verify whether the developed generator can simulate brain alterations. The first involved simulating the comorbidities of the disease (Figure 3A). We applied the SZ brain generator to an independent dataset called ABIDE. This approach enables the virtual generation of an image depicting individuals with ASD and SZ. Using the original TD and ASD individuals as baselines, the SZ brain generator was used to generate “virtual” TD + SZ, where TD is transformed into SZ, and “virtual” ASD + SZ, where ASD is transformed into SZ. A comparative analysis was then performed pre- and post-transformation of ASD, specifically comparing ASD with “virtual” ASD + SZ to observe the effects of SZ on individuals with ASD. Furthermore, a comparative analysis of “virtual” TD + SZ and “virtual” ASD + SZ was conducted to identify brain differences that emerge with or without ASD in the context of SZ. This method employed VBM to examine and quantify changes in brain regions, allowing us to identify specific areas affected by the transformation and explore the interaction effects between SZ and ASD.
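The two comorbidity contrasts can be sketched as follows. `sz_generator` is a hypothetical stand-in for the trained Generator 1 (any callable that maps a batch of GM volumes to SZ-like volumes); the toy generator below simply shrinks one slab of voxels by 5% and is not the trained model. The ASD versus “virtual” ASD + SZ contrast uses a voxel-wise paired t statistic, and the “virtual” ASD + SZ versus “virtual” TD + SZ contrast uses Welch's two-sample t statistic, an illustrative choice because the exact SPM design options are not reported. Masking and multiple-comparison correction are omitted.

```python
import numpy as np

def paired_t_map(pre: np.ndarray, post: np.ndarray) -> np.ndarray:
    """Voxel-wise paired t statistic for (post - pre); arrays are (n_subjects, *volume)."""
    diff = post - pre
    se = diff.std(axis=0, ddof=1) / np.sqrt(diff.shape[0])
    with np.errstate(divide="ignore", invalid="ignore"):
        return diff.mean(axis=0) / se  # NaN where post == pre for every subject

def welch_t_map(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Voxel-wise two-sample Welch t statistic for mean(a) - mean(b)."""
    se = np.sqrt(a.var(axis=0, ddof=1) / len(a) + b.var(axis=0, ddof=1) / len(b))
    with np.errstate(divide="ignore", invalid="ignore"):
        return (a.mean(axis=0) - b.mean(axis=0)) / se

def comorbidity_contrasts(sz_generator, td: np.ndarray, asd: np.ndarray) -> dict:
    """Apply the SZ generator to TD and ASD images and compute both contrasts."""
    virtual_td_sz = sz_generator(td)    # "virtual" TD + SZ
    virtual_asd_sz = sz_generator(asd)  # "virtual" ASD + SZ
    return {
        # effect of adding SZ to ASD (same subjects before and after)
        "asd_vs_virtual_asd_sz": paired_t_map(asd, virtual_asd_sz),
        # SZ with versus without an ASD background (different subjects)
        "virtual_asd_sz_vs_virtual_td_sz": welch_t_map(virtual_asd_sz, virtual_td_sz),
    }

def toy_sz_generator(images: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for the trained generator: shrink the first two x-slices by 5%."""
    out = images.copy()
    out[:, :2] *= 0.95
    return out

rng = np.random.default_rng(1)
td = 1.0 + 0.1 * rng.random((12, 4, 4, 4))   # 12 toy TD volumes
asd = 1.0 + 0.1 * rng.random((10, 4, 4, 4))  # 10 toy ASD volumes
maps = comorbidity_contrasts(toy_sz_generator, td, asd)
print(np.all(maps["asd_vs_virtual_asd_sz"][:2] < 0))  # True: the shrunken slab has negative t
```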

Figure 3. Simulation experiments using generated brain images. Panel (A) shows an experiment simulating disease comorbidity: autism spectrum disorder and typically developing data were transformed into virtual images with the schizophrenia brain generator. Panel (B) shows an experiment simulating disease progression: images were generated by applying the schizophrenia brain generator one to five times.

In the second experiment, we simulated disease progression, as shown in Figure 3B. We repeatedly applied the trained generator to the images and compared the results with those of the original images to validate the changes. Our analysis aimed to ascertain the effectiveness of this method for simulating brain alterations associated with disease progression. To confirm that the original individual characteristics were retained after repeated transformations of brain images, age predictions were made using each transformed image. An analysis of variance (ANOVA) was used to confirm the absence of significant differences in these predictions. The significance level was set at P < 0.05.
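The progression experiment amounts to applying the generator repeatedly, x_n = G1(x_(n−1)), and then testing whether predicted age differs across steps. The paper does not state whether a between-subjects or repeated-measures ANOVA was used; the sketch below assumes a one-way repeated-measures design, because the same subjects appear at every step, and reports partial eta squared. The lambda passed to `iterate_generator` is a hypothetical stand-in for the trained generator.

```python
import numpy as np

def iterate_generator(generator, images: np.ndarray, n_steps: int = 5) -> list:
    """Return [x0, G(x0), G(G(x0)), ...]: the original plus n_steps repeated transformations."""
    outputs = [images]
    for _ in range(n_steps):
        outputs.append(generator(outputs[-1]))
    return outputs

def rm_anova(y: np.ndarray):
    """One-way repeated-measures ANOVA; y has shape (n_subjects, k_conditions).

    Returns (F, df_condition, df_error, partial eta squared).
    """
    n, k = y.shape
    grand = y.mean()
    ss_cond = n * np.sum((y.mean(axis=0) - grand) ** 2)
    ss_subj = k * np.sum((y.mean(axis=1) - grand) ** 2)
    ss_err = np.sum((y - grand) ** 2) - ss_cond - ss_subj  # condition x subject residual
    df_cond, df_err = k - 1, (k - 1) * (n - 1)
    f_stat = (ss_cond / df_cond) / (ss_err / df_err)
    eta_p2 = ss_cond / (ss_cond + ss_err)
    return float(f_stat), df_cond, df_err, float(eta_p2)

# Hypothetical stand-in generator: a uniform 2% shrink per application.
steps = iterate_generator(lambda imgs: imgs * 0.98, np.ones((3, 4, 4, 4)), n_steps=5)
print(len(steps))  # 6 (original + 5 transformations)

# Worked check on a 3-subject x 2-condition table: with two conditions,
# the repeated-measures F equals the squared paired t statistic (here t = 4).
y = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 7.0]])
f_stat, df1, df2, eta = rm_anova(y)
print(round(f_stat, 4), df1, df2, round(eta, 4))  # 16.0 1 2 0.8889
```

For the analysis described above, `y` would hold one column of predicted ages per transformation step (the original plus five), and a P value could be obtained from the F distribution, for example with `scipy.stats.f.sf(f_stat, df1, df2)`.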

3 Results

3.1 Generation of virtual schizophrenia brain

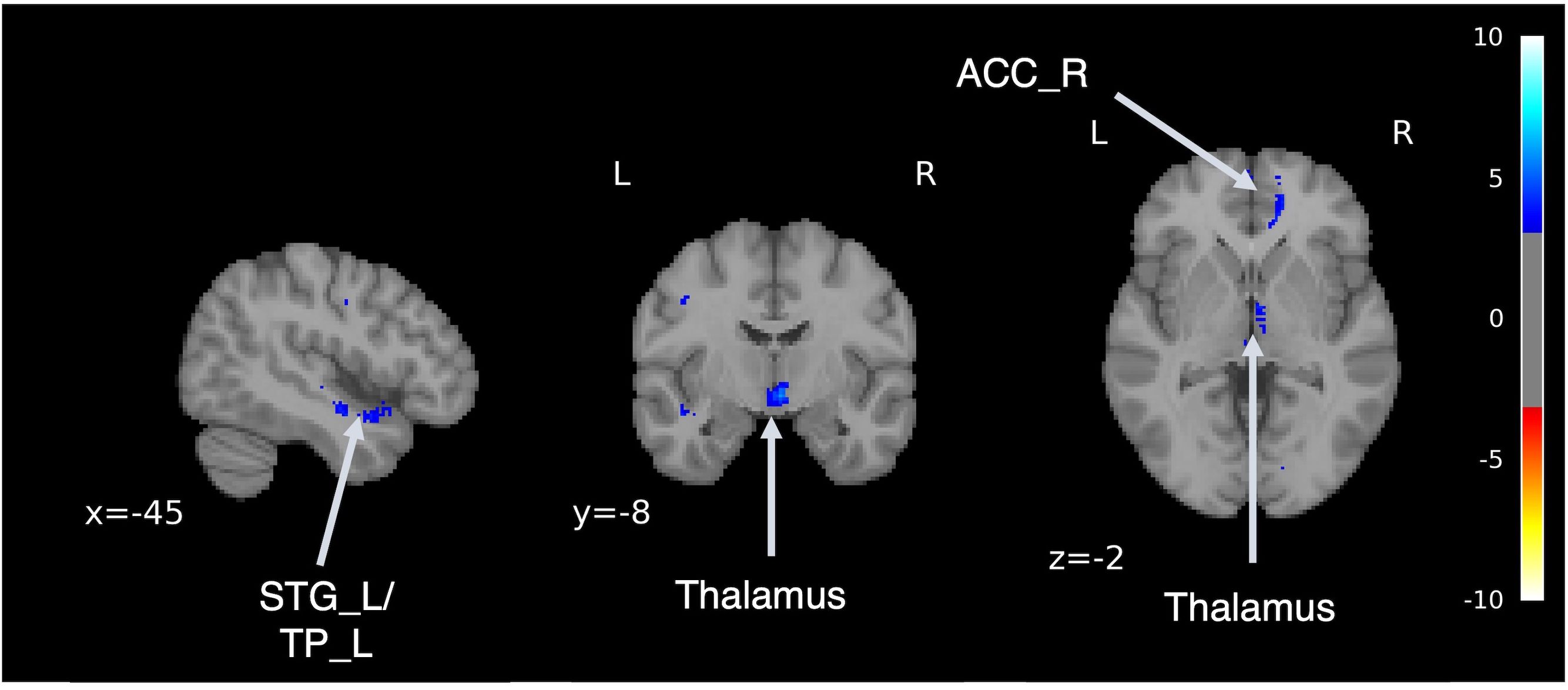

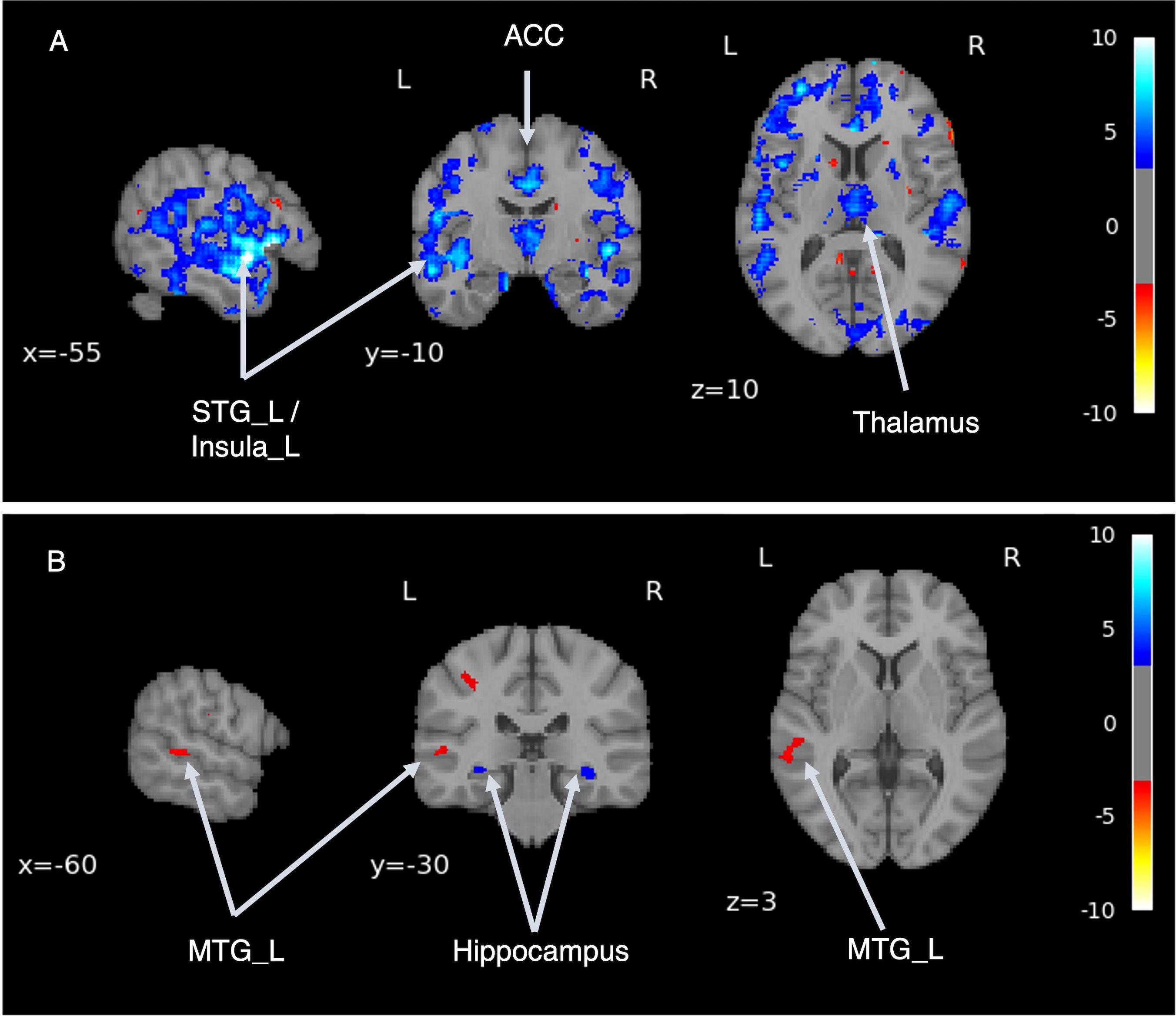

After adequate training, our model generated brain MRI images of qualitatively realistic appearance (Supplementary Figure 1). To confirm that the model transforming MRI of HSs into MRI of patients with SZ captured the characteristics of schizophrenic brain structure, we performed VBM analysis between pre- and post-transformation images. VBM revealed volume reductions after transformation to an SZ-like state in regions including the bilateral anterior cingulate cortex, thalamus, orbitofrontal cortex, insula, and temporal pole, as well as the left superior temporal gyrus (Figure 4, Supplementary Table 2). These results are consistent with the findings of previous case-control studies on SZ (49–54), suggesting that the model can reproduce the brain volume changes associated with SZ.

Figure 4. Difference in brain volume pre- and post-transformation. Voxel-based morphometry analysis was performed between the original magnetic resonance imaging images of HSs and the same images after transformation into schizophrenia-like images. Cold colors represent a decrease, and warm colors represent an increase. STG, superior temporal gyrus; TP, temporal pole; ACC, anterior cingulate cortex.

In addition, age prediction was performed using brain images obtained pre- and post-transformation, and there was no significant difference in the predicted values (P = 0.495). Cohen's d indicated a very small effect size (d = 0.115) (Supplementary Figure 2).

We applied the generator model, which transforms the MRI of HSs into images with the MRI characteristics of patients with SZ, to MRI images and examined the volume differences of brain regions pre- and post-transformation. After application of the SZ brain generator, volume reduction was observed in the bilateral superior temporal gyrus, right middle temporal gyrus, right hippocampus, and a region extending from the bilateral medial frontal gyrus to the anterior cingulate gyrus.

3.2 Simulation analysis of virtual schizophrenia with autism spectrum disorder brain

We performed a simulation using the trained SZ brain generator, generating virtual MRI images of ASD with SZ (“virtual” ASD + SZ) by transforming existing ASD MRI images. We then analyzed the differences in brain structure before and after transformation to SZ (i.e., comparing ASD with “virtual” ASD + SZ). In Figure 5A, the cold-colored areas represent regions where volume was reduced when ASD was comorbid with SZ. Volume reduction was observed mainly in the bilateral temporal lobes and insular cortex.

Figure 5. Disease comorbidity simulation. (A) shows the volume differences before and after the application of the schizophrenia brain generator, which enabled the generation of an image of autism spectrum disorder (ASD) combined with schizophrenia (SZ). (B) shows the volume differences between the virtual SZ-like images with and without ASD. STG, superior temporal gyrus; ACC, anterior cingulate cortex; MTG, middle temporal gyrus.

Next, we performed a simulation to examine whether brain structure differed between individuals with SZ with and without background ASD. Virtual SZ MRI images were generated from ASD and TD MRI images using the SZ brain generator. In Figure 5B, cold regions indicate volume reductions in the virtual SZ generated from ASD (“virtual” ASD + SZ) compared with the virtual SZ generated from TD (“virtual” TD + SZ); volume reduction was observed in the bilateral hippocampus. Warm regions indicate volume increases in the “virtual” ASD + SZ images; an increase in volume was observed in the left middle temporal gyrus.

3.3 Simulation analysis of repetitive transformations

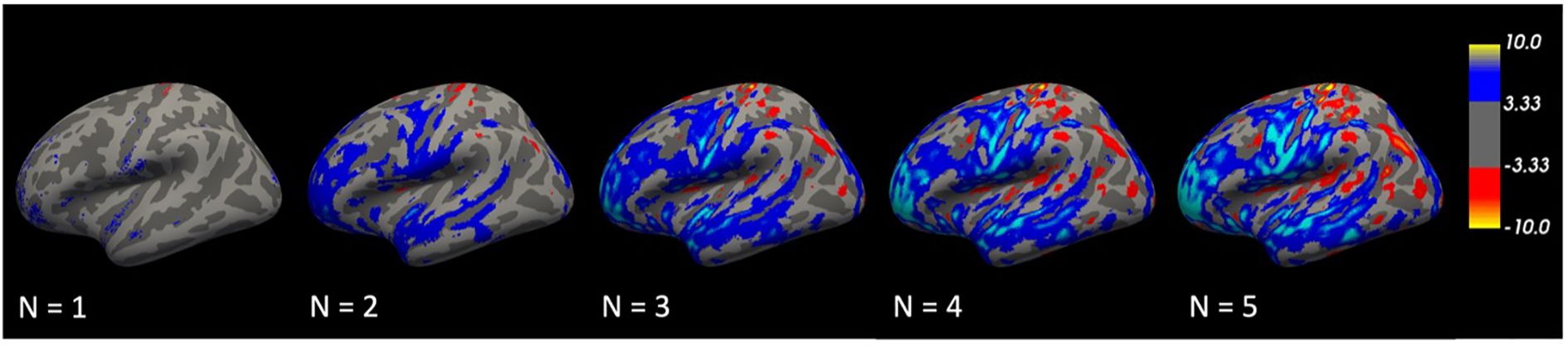

We hypothesized that repeated transformations of brain images could reveal the progression trajectory of a disorder. To test this hypothesis, we conducted VBM analyses between the original images and the nth iterated transformation (n = 1–5). The regions of difference expanded with each repeated transformation: initially localized, they became progressively more widespread by the fifth transformation, particularly in the temporal lobe (Figure 6). This progressive expansion suggests that the model may capture the cumulative structural changes associated with the disorder.

Figure 6. Repetitive transformations. The volume differences were relatively localized at first but gradually became more extensive, especially in the temporal lobe, by the fifth transformation.

To confirm that the original individual characteristics were retained after repeated transformations of the brain images, age predictions were conducted using each transformed image.

Five-fold cross-validation and linear regression were used to predict age from the repeatedly transformed brain images, and differences in the predicted ages were evaluated using ANOVA. There were no significant differences in age prediction (P = 0.932, η²p = 0.01) (Supplementary Figure 3), suggesting that the original individual characteristics are preserved after multiple transformations.

4 Discussion

In this study, we introduced a generative AI model capable of depicting structural brain differences associated with psychiatric disorders. Specifically, we developed a generative AI model that transforms brain images into SZ-like images. Our results support the potential of the model to facilitate several simulation experiments related to psychiatric disorders.

Our model was qualitatively successful in transforming the MRI images of HSs into images resembling those of patients with SZ. Subsequent VBM analysis confirmed that the transformation-induced changes align with the findings of previous SZ studies. Patients with SZ exhibit structural anomalies in the superior temporal gyrus, thalamus, and hippocampus compared with healthy individuals, a finding that corroborates our results (53–55). This approach may help address the problem of insufficient neuroimaging data by generating data that capture the characteristics of SZ-related brain changes (56).

Subsequent simulations using the trained SZ brain generator extended the investigation to individuals with comorbid SZ and ASD. SZ and ASD are distinct disorders with unique clinical profiles and natural histories. However, individuals with ASD have a three- to six-fold higher risk of developing SZ than TD individuals (57, 58), and recent studies have indicated a convergence between SZ and ASD. To investigate this intricate relationship, we conducted a virtual brain simulation and proposed a new hypothesis. This exploration revealed volume reduction patterns, concentrated primarily in the bilateral temporal lobes and insular cortex, that may be characteristic of the comorbid condition. This observation suggests that the model captures structural changes specific to this subgroup, demonstrating its potential to help unravel the complex interplay between different psychiatric conditions.

Further simulations were performed assuming a retrospective study design. Here, we generated brain images of patients with SZ with and without ASD and examined whether differences in their brain structure could be analyzed. The volume reductions observed in the bilateral hippocampus in the ASD + SZ group indicate a potential structural divergence associated with the comorbidity. Conversely, the volume increase in the left middle temporal gyrus in the same group offers an interesting avenue for understanding structural variations unique to this population. Zheng et al. reported that higher autistic traits were associated with less improvement in psychiatric symptoms and life functioning after one year (59). Therefore, determining the presence or absence of ASD in patients with SZ is important for predicting prognosis and planning treatment, and the proposed model could potentially support decision-making on treatment strategies.

The repeated transformation approach, designed to explore the trajectory of brain changes, provides novel insights into disease progression. The expansion of the difference regions with each repeated transformation, culminating in a widespread pattern centered on the temporal lobe, underscores the model's ability to capture and amplify the cumulative effects of structural alterations. A meta-analysis of longitudinal studies on SZ revealed that patients with SZ exhibited significantly greater volume loss over time (49), involving the entire cortical GM, left superior temporal gyrus, left anterior temporal gyrus, and left Heschl's gyrus. These findings are consistent with the simulation results generated by the proposed model. This repeated approach could help elucidate the progressive impact of the disorder on brain structure.

Examining whether the original individual characteristics were preserved after repeated transformations added an essential dimension to the study. By evaluating age predictions across the transformed images, this study supported the robustness of the model in retaining individual-specific features. The absence of statistically significant differences in age predictions supports the view that repeated transformations preserve the key characteristics of the original images.

Generative models capable of producing high-resolution 3D brain images with morphological features similar to those of real brain images have been developed using large-scale datasets, and methods for modeling changes caused by brain aging and Alzheimer's disease have been investigated (60, 61). However, to our knowledge, no attempts have been made to generate brain images of SZ combined with other psychiatric disorders or to simulate the progression of the disease. Such simulations have the potential to enhance our understanding of the neural basis of psychiatric disorders and contribute to the development of novel therapeutic and diagnostic methods.

Despite these promising findings, this study has several limitations. One limitation is the reliance on a simulated data approach, which may not fully capture the complexity of real-world brain structural variations. Additionally, although the accuracy of age prediction did not differ for the generated brain images, this does not guarantee that the full range of demographic factors that could influence brain structure was preserved. The different age and gender distributions of the COBRE and ABIDE datasets may also have affected the outcomes. Furthermore, illness duration and medication dosage are well established to influence brain structure in patients with SZ, and incorporating these parameters may be essential for elucidating the intricacies of this complex illness. However, CycleGAN requires the input factors to be consistent across domains, which prevents training from using information unavailable for HSs, such as illness duration and medication. Finally, although this study simulated brains with comorbid ASD and SZ, real clinical cases cannot be fully represented by a simple overlay of images. The primary aim of this study was to propose an application of generative AI for simulation, focusing on a straightforward model that transforms brain images.
Future research would benefit from developing models that incorporate a wider range of confounding factors, potentially leading to more realistic and comprehensive simulations.

In conclusion, this study demonstrated the potential of the developed model to capture and simulate brain structural alterations associated with SZ and its comorbidity with ASD. These findings provide a foundation for exploring the mechanisms underlying these conditions and their interconnections.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author/s.

Ethics statement

The studies involving humans were approved by National Center of Neurology and Psychiatry. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required from the participants or the participants’ legal guardians/next of kin in accordance with the national legislation and institutional requirements.

Author contributions

HY: Writing – original draft, Writing – review & editing. GS: Writing – review & editing. MS: Writing – review & editing. YY: Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This work was partially supported by JSPS KAKENHI (Grant Numbers JP18K07597, JP20H00625, JP22K15777, and JP22K07574), JST CREST (Grant Number JPMJCR21P4), and an Intramural Research Grant (4-6, 6-9) for Neurological and Psychiatric Disorders of the NCNP.

Acknowledgments

We thank all the authors of the included studies. We also would like to thank Editage (www.editage.com) for English language editing. This manuscript has been released as a pre-print at https://www.medrxiv.org/content/10.1101/2024.05.29.24308097v1.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyt.2024.1437075/full#supplementary-material

References

1. Gong C, Jing C, Chen X, Pun CM, Huang G, Saha A, et al. Generative AI for brain image computing and brain network computing: a review. Front Neurosci. (2023) 17:1203104. doi: 10.3389/fnins.2023.1203104

2. Ramesh A, Dhariwal P, Nichol A, Chu C, Chen M. Hierarchical Text-Conditional Image Generation with CLIP Latents (2022). Available online at: http://arxiv.org/abs/2204.06125. (Accessed October, 2023).

3. Rombach R, Blattmann A, Lorenz D, Esser P, Ommer B. (2022). High-resolution image synthesis with latent diffusion models, in: 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). (Washington, DC: IEEE Computer Society), pp. 10674–85. doi: 10.1109/CVPR52688.2022.01042

4. Xiao J, Bi X. Model-guided generative adversarial networks for unsupervised fine-grained image generation. IEEE Trans Multimed. (2024) 26:1188–99. doi: 10.1109/TMM.2023.3277758

5. Müller-Franzes G, Niehues JM, Khader F, Arasteh ST, Haarburger C, Kuhl C, et al. Diffusion probabilistic models beat GANs on medical images (2022). Available online at: http://arxiv.org/abs/2212.07501. (Accessed June, 2023).

6. Khader F, Müller-Franzes G, Tayebi Arasteh S, Han T, Haarburger C, Schulze-Hagen M, et al. Denoising diffusion probabilistic models for 3D medical image generation. Sci Rep. (2023) 13:7303. doi: 10.1038/s41598-023-34341-2

7. Kwon G, Han C, Kim D. Generation of 3D brain MRI using auto-encoding generative adversarial networks. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol. 11766 LNCS, eds. Shen D, Liu T, Peters T, Staib LH, Essert C, Zhou S, et al (Cham: Springer) (2019). pp. 118–26. doi: 10.1007/978-3-030-32248-9_14.

8. Werner RA, Higuchi T, Nose N, Toriumi F, Matsusaka Y, Kuji I, et al. Generative adversarial network-created brain SPECTs of cerebral ischemia are indistinguishable to scans from real patients. Sci Rep. (2022) 12:18787. doi: 10.1038/s41598-022-23325-3

9. Islam J, Zhang Y. GAN-based synthetic brain PET image generation. Brain Inf. (2020) 7:3. doi: 10.1186/s40708-020-00104-2

10. Choi J, Chae H. methCancer-gen: a DNA methylome dataset generator for user-specified cancer type based on conditional variational autoencoder. BMC Bioinf. (2020) 21:181. doi: 10.1186/s12859-020-3516-8

11. Shorten C, Khoshgoftaar TM. A survey on image data augmentation for deep learning. J Big Data. (2019) 6:60. doi: 10.1186/s40537-019-0197-0

12. Kumar S, Arif T. CycleGAN-based Data Augmentation to Improve Generalizability Alzheimer’s Diagnosis using Deep Learning. Research Square. Version 1. (2024). pp. 1–13. doi: 10.21203/rs.3.rs-4141650/v1.

13. Shin H-C, Tenenholtz NA, Rogers JK, Schwarz CG, Senjem ML, Gunter JL, et al. Medical image synthesis for data augmentation and anonymization using generative adversarial networks. In: Gooya A, Goksel O, Oguz I, Burgos N, editors. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). Cham: Springer International Publishing (2018). p. 1–11. doi: 10.1007/978-3-030-00536-8_1

14. Goodfellow IJ, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, et al. Generative adversarial networks (2014). Available online at: http://arxiv.org/abs/1406.2661. (Accessed June, 2021).

15. Zhang Y, Wang Q, Hu B. MinimalGAN: diverse medical image synthesis for data augmentation using minimal training data. Appl Intell. (2023) 53:3899–916. doi: 10.1007/s10489-022-03609-x

16. Zhou X, Qiu S, Joshi PS, Xue C, Killiany RJ, Mian AZ, et al. Enhancing magnetic resonance imaging-driven Alzheimer’s disease classification performance using generative adversarial learning. Alzheimers Res Ther. (2021) 13:60. doi: 10.1186/s13195-021-00797-5

17. Zhao J, Huang J, Zhi D, Yan W, Ma X, Yang X, et al. Functional network connectivity (FNC)-based generative adversarial network (GAN) and its applications in classification of mental disorders. J Neurosci Methods. (2020) 341:108756. doi: 10.1016/j.jneumeth.2020.108756

18. Chen X, Peng Y, Li D, Sun J. DMCA-GAN: dual multilevel constrained attention GAN for MRI-based hippocampus segmentation. J Digit Imaging. (2023) 36:2532–53. doi: 10.1007/s10278-023-00854-5

19. Li W, Li Y, Qin W, Liang X, Xu J, Xiong J, et al. Magnetic resonance image (MRI) synthesis from brain computed tomography (CT) images based on deep learning methods for magnetic resonance (MR)-guided radiotherapy. Quant Imaging Med Surg. (2020) 10:1223–36. doi: 10.21037/QIMS-19-885

20. Cackowski S, Barbier EL, Dojat M, Christen T. comBat versus cycleGAN for multi-center MR images harmonization. In: Proceedings of Machine Learning Research-Under Review. (2021), 1–11.

21. Fortin J-P, Cullen N, Sheline YI, Taylor WD, Aselcioglu I, Cook PA, et al. Harmonization of cortical thickness measurements across scanners and sites. Neuroimage. (2018) 167:104–20. doi: 10.1016/j.neuroimage.2017.11.024

22. Wang H, Fu T, Du Y, Gao W, Huang K, Liu Z, et al. Scientific discovery in the age of artificial intelligence. Nature. (2023) 620:47–60. doi: 10.1038/s41586-023-06221-2

23. Xu M, Niyato D, Chen J, Zhang H, Kang J, Xiong Z, et al. Generative AI-empowered simulation for autonomous driving in vehicular mixed reality metaverses. IEEE J Sel Top Signal Process. (2023) 17:1064–79. doi: 10.1109/JSTSP.2023.3293650

24. Anishchenko I, Pellock SJ, Chidyausiku TM, Ramelot TA, Ovchinnikov S, Hao J, et al. De novo protein design by deep network hallucination. Nature. (2021) 600:547–52. doi: 10.1038/s41586-021-04184-w

25. Feczko E, Miranda-Dominguez O, Marr M, Graham AM, Nigg JT, Fair DA. The heterogeneity problem: approaches to identify psychiatric subtypes. Trends Cognit Sci. (2019) 23:584–601. doi: 10.1016/j.tics.2019.03.009

26. Owen MJ. New approaches to psychiatric diagnostic classification. Neuron. (2014) 84:564–71. doi: 10.1016/j.neuron.2014.10.028

27. Shi L, Zhou H, Wang Y, Shen Y, Fang Y, He Y, et al. Altered empathy-related resting-state functional connectivity in adolescents with early-onset schizophrenia and autism spectrum disorders. Asian J Psychiatr. (2020) 53:102167. doi: 10.1016/j.ajp.2020.102167

28. Anttila V, Bulik-Sullivan B, Finucane HK, Walters RK, Bras J, Duncan L, et al. Analysis of shared heritability in common disorders of the brain. Science. (2018) 360:eaap8757. doi: 10.1126/science.aap8757

29. Opel N, Goltermann J, Hermesdorf M, Berger K, Baune BT, Dannlowski U. Cross-disorder analysis of brain structural abnormalities in six major psychiatric disorders: A secondary analysis of mega- and meta-analytical findings from the ENIGMA consortium. Biol Psychiatry. (2020) 88:678–86. doi: 10.1016/j.biopsych.2020.04.027

30. American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders. 5th ed. Washington, DC: American Psychiatric Association (2013).

31. First MB, Spitzer RL, Gibbon M, Williams JBW. Structured clinical interview for DSM-IV Axis I Disorders SCID-I. Washington, DC: American Psychiatric Publishing (1997). Available at: https://books.google.co.jp/books?id=h5_lwAEACAAJ.

32. Ashburner J. A fast diffeomorphic image registration algorithm. Neuroimage. (2007) 38:95–113. doi: 10.1016/j.neuroimage.2007.07.007

33. Zhu J-Y, Park T, Isola P, Efros AA. Unpaired image-to-image translation using cycle-consistent adversarial networks, in: 2017 IEEE International Conference on Computer Vision (ICCV). (Washington, DC: IEEE Computer Society) (2017), pp. 2242–51. doi: 10.1109/ICCV.2017.244

34. Ronneberger O, Fischer P, Brox T. U-net: Convolutional networks for biomedical image segmentation. Lect Notes Comput Sci (including Subser Lect Notes Artif Intell Lect Notes Bioinformatics). (2015) 9351:234–41. doi: 10.1007/978-3-319-24574-4_28

35. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition, in: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition. (Washington, DC: IEEE Computer Society) (2016), pp. 770–8. doi: 10.1109/CVPR.2016.90

36. Xu W, Long C, Wang R, Wang G. DRB-GAN: A Dynamic ResBlock Generative Adversarial Network for Artistic Style Transfer. In: 2021 IEEE/CVF International Conference on Computer Vision (ICCV). (Washington, DC: IEEE Computer Society) (2022). pp. 6363–72. doi: 10.1109/iccv48922.2021.00632

37. Deng H, Wu Q, Huang H, Yang X, Wang Z. InvolutionGAN: lightweight GAN with involution for unsupervised image-to-image translation. Neural Comput Appl. (2023) 35:16593–605. doi: 10.1007/s00521-023-08530-z

38. Yamaguchi H, Hashimoto Y, Sugihara G, Miyata J, Murai T, Takahashi H, et al. Three-dimensional convolutional autoencoder extracts features of structural brain images with a “Diagnostic label-free” Approach: application to schizophrenia datasets. Front Neurosci. (2021) 15:652987. doi: 10.3389/fnins.2021.652987

39. Hashimoto Y, Ogata Y, Honda M, Yamashita Y. Deep feature extraction for resting-state functional MRI by self-supervised learning and application to schizophrenia diagnosis. Front Neurosci. (2021) 15:696853. doi: 10.3389/fnins.2021.696853

40. Paszke A, Gross S, Massa F, Lerer A, Bradbury J, Chanan G, et al. PyTorch: an imperative style, high-performance deep learning library. Adv Neural Inf Process Syst. (2019), 32. http://arxiv.org/abs/1912.01703.

41. Ashburner J, Friston KJ. Voxel-based morphometry - The methods. Neuroimage. (2000) 11:805–21. doi: 10.1006/nimg.2000.0582

42. Good CD, Johnsrude IS, Ashburner J, Henson RNA, Friston KJ, Frackowiak RSJ. A voxel-based morphometric study of ageing in 465 normal adult human brains. Neuroimage. (2001) 14:21–36. doi: 10.1006/nimg.2001.0786

43. Falcon C, Tucholka A, Monté-Rubio GC, Cacciaglia R, Operto G, Rami L, et al. Longitudinal structural cerebral changes related to core CSF biomarkers in preclinical Alzheimer’s disease: A study of two independent datasets. NeuroImage Clin. (2018) 19:190–201. doi: 10.1016/j.nicl.2018.04.016

44. Chételat G, Landeau B, Eustache F, Mézenge F, Viader F, de la Sayette V, et al. Using voxel-based morphometry to map the structural changes associated with rapid conversion in MCI: A longitudinal MRI study. Neuroimage. (2005) 27:934–46. doi: 10.1016/j.neuroimage.2005.05.015

45. Kim GW, Kim YH, Jeong GW. Whole brain volume changes and its correlation with clinical symptom severity in patients with schizophrenia: A DARTEL-based VBM study. PLoS One. (2017) 12:e0177251. doi: 10.1371/journal.pone.0177251

46. Schnack HG, van Haren NEM, Brouwer RM, van Baal GCM, Picchioni M, Weisbrod M, et al. Mapping reliability in multicenter MRI: Voxel-based morphometry and cortical thickness. Hum Brain Mapp. (2010) 31:1967–82. doi: 10.1002/hbm.20991

47. García-Martí G, Aguilar EJ, Lull JJ, Martí-Bonmatí L, Escartí MJ, Manjón JV, et al. Schizophrenia with auditory hallucinations: A voxel-based morphometry study. Prog Neuropsychopharmacol Biol Psychiatry. (2008) 32:72–80. doi: 10.1016/j.pnpbp.2007.07.014

48. Zhang T, Koutsouleris N, Meisenzahl E, Davatzikos C. Heterogeneity of structural brain changes in subtypes of schizophrenia revealed using magnetic resonance imaging pattern analysis. Schizophr Bull. (2015) 41:74–84. doi: 10.1093/schbul/sbu136

49. Vita A, De Peri L, Deste G, Sacchetti E. Progressive loss of cortical gray matter in schizophrenia: a meta-analysis and meta-regression of longitudinal MRI studies. Transl Psychiatry. (2012) 2:e190. doi: 10.1038/tp.2012.116

50. Walton E, Hibar DP, van Erp TGM, Potkin SG, Roiz-Santiañez R, Crespo-Facorro B, et al. Positive symptoms associate with cortical thinning in the superior temporal gyrus via the ENIGMA Schizophrenia consortium. Acta Psychiatr Scand. (2017) 135:439–47. doi: 10.1111/acps.12718

51. Modinos G, Costafreda SG, van Tol M-J, McGuire PK, Aleman A, Allen P. Neuroanatomy of auditory verbal hallucinations in schizophrenia: A quantitative meta-analysis of voxel-based morphometry studies. Cortex. (2013) 49:1046–55. doi: 10.1016/j.cortex.2012.01.009

52. Fan F, Xiang H, Tan S, Yang F, Fan H, Guo H, et al. Subcortical structures and cognitive dysfunction in first episode schizophrenia. Psychiatry Res Neuroimaging. (2019) 286:69–75. doi: 10.1016/j.pscychresns.2019.01.003

53. van Erp TGM, Walton E, Hibar DP, Schmaal L, Jiang W, Glahn DC, et al. Cortical brain abnormalities in 4474 individuals with schizophrenia and 5098 control subjects via the enhancing neuro imaging genetics through meta analysis (ENIGMA) consortium. Biol Psychiatry. (2018) 84:644–54. doi: 10.1016/j.biopsych.2018.04.023

54. Van Erp TGM, Hibar DP, Rasmussen JM, Glahn DC, Pearlson GD, Andreassen OA, et al. Subcortical brain volume abnormalities in 2028 individuals with schizophrenia and 2540 healthy controls via the ENIGMA consortium. Mol Psychiatry. (2016) 21:547–53. doi: 10.1038/mp.2015.63

55. Honea R, Crow TJ, Passingham D, Mackay CE. Regional deficits in brain volume in schizophrenia: A meta-analysis of voxel-based morphometry studies. Am J Psychiatry. (2005) 162:2233–45. doi: 10.1176/appi.ajp.162.12.2233

56. Yamashita A, Yahata N, Itahashi T, Lisi G, Yamada T, Ichikawa N, et al. Harmonization of resting-state functional MRI data across multiple imaging sites via the separation of site differences into sampling bias and measurement bias. PLoS Biol. (2019) 17:e3000042. doi: 10.1371/journal.pbio.3000042

57. Jutla A, Foss-Feig J, Veenstra-VanderWeele J. Autism spectrum disorder and schizophrenia: An updated conceptual review. Autism Res. (2022) 15:384–412. doi: 10.1002/aur.2659

58. Chien Y, Wu C, Tsai H. The comorbidity of schizophrenia spectrum and mood disorders in autism spectrum disorder. Autism Res. (2021) 14:571–81. doi: 10.1002/aur.2451

59. Zheng S, Chua YC, Tang C, Tan GMY, Abdin E, Lim VWQ, et al. Autistic traits in first-episode psychosis: Rates and association with 1-year recovery outcomes. Early Interv Psychiatry. (2021) 15:849–55. doi: 10.1111/eip.13021

60. Tudosiu P-D, Pinaya WHL, Graham MS, Borges P, Fernandez V, Yang D, et al. Morphology-Preserving Autoregressive 3D Generative Modelling of the Brain. In: Zhao C, Svoboda D, Wolterink JM, Escobar M editors. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). Cham: Springer International Publishing (2022). p. 66–78. doi: 10.1007/978-3-031-16980-9_7

Keywords: generative AI, deep learning, CycleGAN, brain MRI simulation, schizophrenia, disease simulation

Citation: Yamaguchi H, Sugihara G, Shimizu M and Yamashita Y (2024) Generative artificial intelligence model for simulating structural brain changes in schizophrenia. Front. Psychiatry 15:1437075. doi: 10.3389/fpsyt.2024.1437075

Received: 23 May 2024; Accepted: 12 September 2024;

Published: 04 October 2024.

Edited by:

Quan Wang, Chinese Academy of Sciences (CAS), China

Reviewed by:

Yunzhi Pan, Central South University, China

Ruochen Dang, Chinese Academy of Sciences (CAS), China

Copyright © 2024 Yamaguchi, Sugihara, Shimizu and Yamashita. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yuichi Yamashita, yamay@ncnp.go.jp
