- ¹School of Computing and Information Systems, University of Melbourne, Parkville, VIC, Australia

- ²Division of Psychology and Mental Health, School of Health Sciences, Manchester Academic Health Sciences Centre, University of Manchester, Manchester, United Kingdom

- ³Complex Trauma and Resilience Research Unit, Greater Manchester Mental Health NHS Foundation Trust, Manchester, United Kingdom

Fully automated mental health smartphone apps show strong promise in increasing access to psychological support. It is therefore crucial to understand how to make these apps effective. The therapeutic alliance (TA), or the relationship between healthcare professionals and clients, is considered fundamental to successful treatment outcomes in face-to-face therapy. Understanding the TA in the context of fully automated apps could therefore offer insights into building effective smartphone apps that engage users. However, the concept of a digital therapeutic alliance (DTA) in the context of fully automated mental health smartphone apps is nascent and under-researched, and only a handful of studies have been published in this area. In particular, no published review has examined the DTA in the context of fully automated apps. The objective of this review was to integrate the extant literature to identify research gaps and future directions in the investigation of the DTA in relation to fully automated mental health smartphone apps. Our findings suggest that the DTA in relation to fully automated smartphone apps needs to be conceptualized differently to the traditional face-to-face TA. First, the role of bond in the context of fully automated apps is unclear. Second, human components of face-to-face TA, such as empathy, are hard to achieve in the digital context. Third, some users may perceive apps as more non-judgmental and flexible, which may further influence DTA formation. Subdisciplines of computer science, such as affective computing and positive computing, and some human-computer interaction (HCI) theories, such as those of persuasive technology and human-app attachment, could help to foster a sense of empathy, support tasks and goals, and develop a bond or attachment between users and apps, which may further contribute to DTA formation in fully automated smartphone apps.
Whilst the review identified a relatively small body of literature, this reflects the novelty of the topic and the need for further research.

Introduction

More than one in 10 people globally live with a mental health condition (1), and more than half of the population in middle- and high-income countries will experience mental health problems during their lives (2). Mental health problems cause high levels of distress and impair the quality of life of the people experiencing them and their families (3, 4). In the UK, mental health problems account for about 14% of the total National Health Service (NHS) budget (5). Economists predict that by 2030, the global cost of treating common mental health problems, such as depression and anxiety, will rise to US $147 billion (6).

Psychological interventions, such as cognitive behavioral therapy (CBT), are effective in treating a range of mental health problems, in addition to or in place of pharmacological treatments (7). However, access to face-to-face psychological therapy remains low. According to the World Health Organization (WHO) (8), the number of mental health professionals trained to deliver therapy does not meet the level of need. In addition to inadequate staffing, various other barriers, such as stigma (9, 10), prevent people from seeking professional help for mental health problems.

Digital mental health interventions are considered viable solutions for increasing the accessibility of mental health services, reducing the financial burden on governments, and helping to overcome the barrier of stigma (11–13). Among the various types of digital health interventions, mental health smartphone apps show strong promise in increasing access to psychological support due to their availability, flexibility, scalability and relatively low price (14, 15). In particular, unguided mental health apps (also termed fully automated mental health apps), which can be used in the absence of a clinician, could reduce clinicians' workloads (16).

There are various types of mental health apps available on the market. Self-guided, unguided, self-supported, or fully automated apps operate without human support and rely entirely on self-use. Guided apps are used with the support of a healthcare professional. Apps are sometimes used in the context of blended therapy, an approach that uses “elements of both face-to-face and Internet-based interventions, including both the integrated and the sequential use of both treatment formats” (17). In blended therapy, smartphone apps form only part of the treatment plan and aim to support and augment face-to-face therapy.

Theory-driven and evidence-based mental health smartphone apps show promising signs of efficacy in delivering digital therapy. One meta-analysis found that both guided and unguided smartphone interventions can reduce anxiety (18). Other studies found that digital health interventions, including smartphone apps, produced significant clinical improvements in depression and anxiety (19) and in psychosis (20). Although research shows that apps with human support are more effective than automated digital interventions (21), guided apps could potentially increase healthcare professionals' workload (16). As such, there is a tradeoff between ensuring psychological support and providing therapy that is scalable and accessible, whilst balancing staff workloads and the availability of face-to-face resources.

The therapeutic alliance (TA), also termed working alliance (22), refers to the relationship between a healthcare professional and a client, and is considered a fundamental factor in face-to-face psychological therapy. The best-known conceptualization of TA is Bordin's theory (22), which proposes that TA is composed of three components: agreement on goals, bonds between healthcare professionals and clients, and agreement on the tasks that need to be undertaken to achieve those goals. The Working Alliance Inventory (WAI), a scale developed from Bordin's conceptual model, is commonly used to measure TA in face-to-face therapy. Another scale used to measure TA is the Agnew Relationship Measure (ARM), which comprises five dimensions: bond, partnership, confidence in therapy, client initiative, and openness (23). TA is important for building engagement (24) and improving clinical outcomes in face-to-face therapy. Previous research has found that the TA generally has moderate but reliable correlations with clinical outcomes across all types of mental health problems and treatment approaches, in both young people and adults (25–27).

Although researchers primarily understand TA as occurring in face-to-face therapy, Bordin (22) argued that a TA can exist between a person seeking change and a change agent, who need not be a human healthcare professional. Interpreting this in a modern digital context, we propose that agents other than human healthcare professionals, including mental health smartphone apps, could act as such change agents for clients seeking change. However, no healthcare professional is present in fully automated mental health apps, meaning that a potentially important mediator of change is absent. Therefore, understanding whether a digital therapeutic alliance (DTA) exists in the fully automated mental health app context, and how it may differ from the TA in traditional face-to-face therapy, is important for understanding how fully automated apps can be developed to achieve better clinical outcomes.

Whether a TA can be formed in the digital context is unclear, and the concept of a DTA is nascent and under-researched. Theories from Human-Computer Interaction (HCI) which explain how individuals relate to digital technologies suggest the potential for DTA by explaining how apps can build empathy (28), persuade users to perform tasks and support them in task achievement (29), provide the flexibility that facilitates the availability of therapy (14), promote attachment to the app (30) and support self-determination (31). All of these theories may play a part in helping to understand how a DTA can evolve.

A few studies have investigated the TA in the digital context with different digital interventions, such as apps, internet-based CBT, and virtual reality (VR). Most quantitative studies have used traditional in-person TA scales, such as the WAI (32) and the Agnew Relationship Measure (14), to analyze the TA in the digital context, while some studies have created DTA instruments by adapting the WAI or ARM (33–37). Several reviews have concluded that, across a range of digital health interventions, alliance ratings are generally as high as in face-to-face therapy (25, 38–40). However, some studies have not reported meaningful correlations between these ratings and clinical outcomes in the digital context (32, 36, 41–43). A handful of studies have attempted to measure the DTA with mental health smartphone apps, and we are aware of only one review that focused specifically on the DTA with both guided and fully automated smartphone apps (44). Henson et al. (44) found only five papers that met their inclusion criteria, and only one of these measured the DTA with a scale or measure. More research has been conducted in this area since that review, yet no published review has focused specifically on the DTA in the context of fully automated mental health smartphone apps. In addition, Henson and colleagues' review focused on serious mental illness, whereas a broader range of mental health problems also needs to be considered.

Therefore, the objectives of this narrative review are to: (1) integrate the extant but growing literature on the DTA in the context of fully automated mental health smartphone apps; (2) examine the research gaps; (3) identify future research directions in the investigation of DTA as applied to fully automated mental health smartphone apps.

Methods

A narrative review was conducted using PsycINFO, PubMed and Google Scholar, which were searched from inception to August 2021. DTA-related articles were identified using snowball sampling and citation network analysis (45–47). Lecy and Beatty (45) argued that, for a given research topic, researchers should first identify highly cited publications as seed articles. The seed articles should be highly cited and several years old, so that they have been exposed to a broad audience. To expand the set of relevant papers, researchers then find papers that cite the seed articles; in the second round, they find the papers that cite those first-round papers, and so on. Lecy and Beatty further suggested that the process be conducted over four rounds. Whereas keyword-based literature searches can be limited by cognitive biases, snowball sampling and citation network analysis offer a more comprehensive way to map a broad range of literature (45). We therefore considered snowball sampling and citation network analysis suitable for reaching a broad range of studies.

Specific keywords (see Table 1) were used to search for seed articles. After the seed articles were identified, relevant papers citing them were kept in the first round, relevant papers citing the first-round articles were kept in the second round, and so on. Four rounds of searching were conducted, and all abstracts were examined to decide whether the full text needed detailed examination. After four rounds, papers that were clearly within the scope of the topic at full-text screening were selected for review. Articles were included based on the following eligibility criteria. Inclusion criteria: (i) studies that investigated DTA-related concepts in the context of fully automated mental health smartphone apps; (ii) both quantitative and qualitative studies. Exclusion criteria: (i) papers that focused only on text, email, online counseling, or video-conferencing; (ii) papers that focused only on guided apps or apps used in the context of blended therapy; (iii) studies that involved apps but also required other digital devices, such as virtual reality (VR) headsets; (iv) review papers.
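The round-by-round procedure described above can be sketched as forward citation snowballing over a citation graph. This is a minimal illustration under stated assumptions, not the authors' tooling. It assumes a hypothetical `cited_by` mapping from each paper to the papers that cite it, which in practice would be assembled by hand from database "cited by" listings. It also assumes a hypothetical `is_relevant` predicate standing in for manual abstract screening. Full-text eligibility screening after the final round is not modeled.

```python
from typing import Callable, Dict, Iterable, List


def snowball(
    seeds: Iterable[str],
    cited_by: Dict[str, List[str]],
    is_relevant: Callable[[str], bool],
    rounds: int = 4,
) -> Dict[int, List[str]]:
    """Return the relevant papers newly kept in each round of forward snowballing."""
    frontier = set(seeds)   # papers whose citing papers are searched next
    seen = set(frontier)    # seed articles plus everything already kept
    kept_by_round: Dict[int, List[str]] = {}
    for round_no in range(1, rounds + 1):
        found = set()
        for paper in frontier:
            for citer in cited_by.get(paper, []):
                # Keep each citing paper once, and only if it passes screening.
                if citer not in seen and is_relevant(citer):
                    found.add(citer)
        seen |= found
        kept_by_round[round_no] = sorted(found)
        frontier = found    # the next round searches papers citing this round's papers
    return kept_by_round


# Toy citation graph with hypothetical IDs; "S1" plays the role of a seed article.
toy_cited_by = {
    "S1": ["A", "B"],
    "A": ["C"],
    "B": ["C", "D"],
    "C": ["E"],
    "E": ["F"],
}
result = snowball(["S1"], toy_cited_by, is_relevant=lambda p: p != "D")
print(result)  # {1: ['A', 'B'], 2: ['C'], 3: ['E'], 4: ['F']}
```

Tracking `seen` across rounds means a paper that cites several earlier-round papers is kept only once, in the first round in which it appears.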

Results

Twenty highly cited articles (minimum citation count of 100) on the topic of DTA (not limited to DTA in the context of fully automated mental health smartphone apps) were selected as seed articles. Because these seed articles were highly cited and had been published several years earlier, they had become central works on the topic of DTA. We therefore expected that articles on DTA, including articles on DTA in the context of fully automated mental health apps, would cite them. In this way, we were able to reach a broad range of studies.

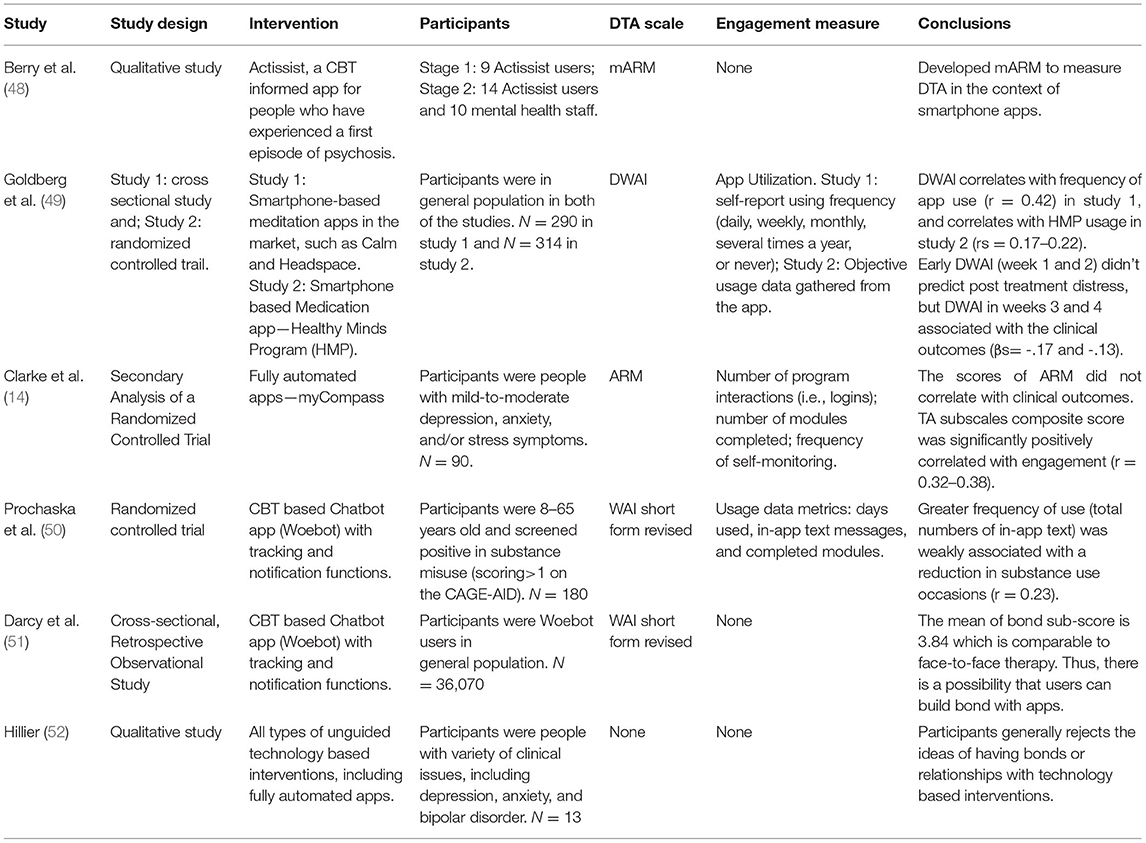

After four rounds of snowballing and citation network analysis, six studies met the eligibility criteria. Reflecting how under-researched the DTA is in the context of fully automated mental health apps, none of the six had enough citations to qualify as a seed article. Three were quantitative studies, two were qualitative studies, and one used a mixed methods approach. Basic study characteristics and conclusions are outlined in Table 2.

Ways in Which DTA Has Previously Been Measured in the Context of Fully Automated Mental Health Apps

Two studies measured the DTA using the WAI short form, and one measured it using the ARM. In addition, two instruments, the Mobile Agnew Relationship Measure (mARM) (48) and the Digital Working Alliance Inventory (DWAI) (49), have been proposed to measure the DTA in fully automated mental health apps. The mARM was created by replacing the word “therapist” with “app” and by adding, deleting, and rewording some questions based on qualitative feedback from both clients and healthcare professionals (48). The DWAI is a short six-item survey based on the WAI, created by choosing two items from each WAI subscale and replacing the term “therapist” with “app” (53).
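The two-items-per-subscale structure described above implies a simple scoring scheme. The sketch below is hypothetical. The item labels, their assignment to the task, goal and bond subscales, and the 1 to 5 response range are placeholders rather than the published DWAI items or scoring instructions. It also assumes that subscale and total scores are computed as item means.

```python
from typing import Dict, Tuple

# Hypothetical layout: two items drawn from each WAI subscale (labels are placeholders).
SUBSCALE_ITEMS: Dict[str, Tuple[str, str]] = {
    "task": ("q1", "q2"),
    "goal": ("q3", "q4"),
    "bond": ("q5", "q6"),
}


def score_alliance(responses: Dict[str, int], low: int = 1, high: int = 5):
    """Return (subscale means, overall mean) for a six-item, three-subscale survey."""
    all_items = [item for items in SUBSCALE_ITEMS.values() for item in items]
    for item in all_items:
        if item not in responses:
            raise ValueError(f"missing response for {item}")
        if not low <= responses[item] <= high:
            raise ValueError(f"{item} is outside the assumed {low}-{high} range")
    subscales = {
        name: sum(responses[i] for i in items) / len(items)
        for name, items in SUBSCALE_ITEMS.items()
    }
    overall = sum(responses[i] for i in all_items) / len(all_items)
    return subscales, overall


example = {"q1": 4, "q2": 5, "q3": 3, "q4": 3, "q5": 2, "q6": 4}
print(score_alliance(example))  # ({'task': 4.5, 'goal': 3.0, 'bond': 3.0}, 3.5)
```

Keeping the items grouped by subscale makes it easy to report bond separately from task and goal. The studies in this review compare ratings at that level.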

Relationships Between DTA and Clinical Outcomes

Only two of the identified studies examined relationships between the DTA and clinical outcomes in the context of fully automated mental health apps, and their findings were mixed. Goldberg et al. (49) assessed the DTA using the DWAI in fully automated meditation apps and found that DWAI scores at week 3 or 4 were correlated only with reductions in psychological distress (βs = −0.17 and −0.13). By contrast, Clarke et al. (14) examined the relationship between the DTA (measured by the ARM) and clinical outcomes with a fully automated mental health app comprising educational modules and multiple other functions, and found no statistically significant correlation. These mixed findings are inconsistent with findings from face-to-face therapy (25–27). One possible explanation is that the DTA is somewhat different from the TA, and that existing DTA scales do not comprehensively measure all aspects of the DTA. Even where studies have found correlations between scale ratings and clinical outcomes, these could be chance findings. For example, Goldberg et al. (49) used the six-item DWAI to measure the DTA with meditation apps. However, the DWAI was seemingly developed by selecting two items from each subscale of the WAI short form without a formal scale construction method. Thus, whether the DWAI adequately and comprehensively measures the DTA is questionable.

Potential Differences in Characteristics Between TA and DTA in the Context of Fully Automated Mental Health Apps

First, bond is considered a critical element of the face-to-face TA conceptual model and is a subscale of both the WAI and the ARM. However, the role of bond in the DTA is unknown. One qualitative study found that users generally rejected the idea of having a connection or a bond with fully automated digital mental health interventions (52). In a study of the fully automated app myCompass, Clarke et al. (14) found that bond subscale ratings measured post-treatment did not predict clinical outcomes, whereas the task and goal subscales were moderately correlated with clinical outcomes. However, two studies suggested that users could potentially build a bond with chatbot apps. A study of Woebot (a therapeutic chatbot that delivers CBT), used to reduce substance misuse during the COVID-19 pandemic, found that bond subscale ratings were higher than task and goal subscale ratings (50). Similarly, another study of Woebot found that bond subscale ratings were high and comparable to those in face-to-face therapy (51).

Second, some elements of the in-person TA are difficult to achieve in the digital context. Two studies using qualitative interviews found that although apps can mimic human support in some ways, users felt that apps were less understanding than human professionals (14, 52).

On the other hand, some positive feelings, such as the sense of not being judged and the feeling of being accompanied, may arise more easily in a DTA. Three qualitative studies found that users felt more comfortable interacting with technology than with healthcare professionals because they were less fearful of being judged and consequently felt less stigmatized (14, 48, 52). In addition, two interview studies found that interactivity might be an important component of the DTA that is not captured by any TA subscale (14, 52). Clarke et al. (14) also argued that flexibility in time, location and duration is a characteristic that apps can provide beyond human health professionals.

Impact of DTA on Engagement

TA is fundamental to building adherence or engagement with therapy (24, 54, 55). However, the importance of adherence and engagement with mental health apps remains unclear, and there is no standard way of measuring engagement with these apps. Two studies defined engagement as app usage, but the types of usage data they collected differed. Clarke et al. (14) measured the number of program interactions (i.e., logins), the number of modules completed, and the frequency of self-monitoring, whereas Prochaska et al. (50) measured days of use, in-app text messages, and completed modules. In addition, Goldberg et al. (49) used self-reported usage frequency as the engagement measure in Study 1 and usage data in Study 2, without specifying which types of usage data were gathered.

Only one study analyzed correlations between engagement and outcomes. A study of the chatbot app Woebot, used to help reduce substance misuse, found that greater frequency of use (number of in-app text messages) was not significantly associated with a reduction in substance use occasions (r = 0.23) (50).

To the best of our knowledge, two studies have examined correlations between engagement and the DTA in fully automated apps, using different measurement approaches. Clarke et al. (14) found that, in the fully automated myCompass app, the composite score of the ARM subscales was significantly positively correlated with engagement, measured as logins, number of modules completed and frequency of self-monitoring (r = 0.32–0.38). Goldberg et al. (49) found that in fully automated meditation apps, DWAI scores were significantly positively related to app use, measured either by self-reported use frequency (r = 0.42) or by usage data gathered from the app (rs = 0.17–0.22).
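Coefficients like those reported above are typically Pearson's r between an alliance score and an engagement measure. We assume Pearson's r here; the individual studies may have used other coefficients. The sketch below computes r from first principles. The paired values are invented for illustration and are not data from any study reviewed.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between paired observations x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 pairs")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / math.sqrt(var_x * var_y)


# Invented example: alliance scores and number of app logins for five users.
alliance = [2.5, 3.0, 3.5, 4.0, 4.5]
logins = [3, 5, 4, 8, 9]
print(round(pearson_r(alliance, logins), 2))  # 0.92
```

r describes only the linear association at the time points measured. It does not establish that alliance causes engagement, or the reverse.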

Discussion

TA originally referred to the relationship that can develop between healthcare professionals and clients, and the concept has mostly been applied to face-to-face therapy. Researchers suggest that a form of TA may exist in the digital context (48), but the nature and quality of this alliance need further explication. Some researchers have examined the DTA in the context of fully automated apps by using, or slightly modifying, face-to-face therapy measurement scales such as the ARM and WAI. However, the nature of the DTA remains unclear. Although two studies showed that the DTA was positively associated with engagement, the approaches to measuring engagement were inconsistent. In addition, previous studies did not show consistent and reliable correlations between the DTA and clinical outcomes in the context of fully automated apps (14, 49). This finding is at odds with the conclusions drawn from face-to-face therapy, where there is a robust association between alliance and outcomes. One possible explanation is that the DTA differs from the TA and that existing DTA scales do not capture all of its aspects. Where correlations between scale ratings and clinical outcomes have been found, they may be chance findings.

It is worth noting that an app can lead to positive clinical outcomes without building a DTA. However, an app may still achieve better or more reliable clinical outcomes when it builds a DTA with users. In addition, some researchers have argued that a positive correlation between TA and clinical outcome at a single time point is not sufficient to prove the importance of TA in face-to-face therapy (56). Moreover, app outcomes might not be direct clinical outcomes but indirect outcomes arising from behavior change, such as increased help-seeking. Thus, the association between the DTA and clinical outcomes, and how to assess the importance of the DTA, require further investigation. However, the priority is re-conceptualizing the DTA in the context of fully automated mental health apps.

Current evidence suggests that the conceptualization of a DTA in the context of fully automated apps may differ in significant ways from that of the traditional TA, for the following reasons: (1) the role of bond in the DTA is unknown; (2) some human components of face-to-face TA, such as empathy, are hard to achieve in the digital context; and (3) some users may perceive apps as more non-judgmental and flexible, which may further influence DTA formation. For example, researchers found that time convenience and interactivity can enhance users' relationship commitment to smartphone apps (57). Given these discrepancies, differences appear to exist between the DTA and the in-person TA. Thus, a new scale exploring the nuances of the DTA in the context of fully automated apps is warranted.

Herrero et al. (35) suggested that modifying the wording of TA scales is not sufficient to develop new DTA scales. According to Boateng et al. (58), the first step in developing a new scale is defining its domains. Therefore, instead of modifying TA scales, researchers need to understand what the key components of a DTA are. Multiple questions need to be answered. For example, is it possible for users to build a bond or an attachment with an app? Can users and apps agree on goals? What components can apps provide beyond what therapists provide? The subdisciplines of computer science and the HCI theories mentioned in the Introduction may help to answer some of these questions.

Affective Computing: Building an Empathic App

While empathy is fundamental to building a client-therapist TA, clinicians have expressed concerns about building empathy in fully automated digital health interventions (14). Affective computing, defined as “computing that relates to, arises from, or influences emotions” (59), could help to build a DTA in the following three ways.

First, affective computing allows computers to detect users' emotions from text, which can help computers understand users' individual needs. Bradley and Lang (60) created the Affective Norms for English Words (ANEW), which provides emotional and affective ratings for a large number of English words. Similarly, computer scientists developed WordNet-Affect to identify whether a word is positive or negative (61). Emotion can also be detected from physiological signals: Calvo and Peters (62) indicated that emotion can be detected from measures of heart rate, respiration, blood pressure, skin conductance, and so on. One benefit of smartphones and wearable devices is that they contain sensors that can track users' physiological states and detect their emotional states. This allows apps and accompanying wearable devices to tailor activities or wellness suggestions to users' changing emotional state (63), much as human healthcare professionals provide customized plans.
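Lexicon-based detection of the kind that ANEW-style word ratings enable can be sketched by averaging the valence ratings of the lexicon words that appear in a user's message. The ratings below are invented placeholders on an assumed 1 (unpleasant) to 9 (pleasant) scale; they are not actual ANEW values. The threshold and the suggestion strings in `suggest_activity` are also hypothetical. They are included only to show how an app might tailor a response to the detected emotion.

```python
import re
from typing import Dict, Optional

# Hypothetical valence ratings (1 = very unpleasant, 9 = very pleasant); NOT real ANEW values.
TOY_VALENCE: Dict[str, float] = {
    "happy": 8.0,
    "calm": 7.0,
    "tired": 3.5,
    "lonely": 2.0,
    "sad": 1.5,
}


def mean_valence(text: str, lexicon: Dict[str, float] = TOY_VALENCE) -> Optional[float]:
    """Average valence of the lexicon words found in text, or None if none match."""
    words = re.findall(r"[a-z']+", text.lower())
    scores = [lexicon[w] for w in words if w in lexicon]
    return sum(scores) / len(scores) if scores else None


def suggest_activity(text: str, threshold: float = 4.0) -> str:
    """Pick a hypothetical in-app suggestion based on the detected valence."""
    valence = mean_valence(text)
    if valence is None:
        return "ask how the user is feeling"
    if valence < threshold:
        return "offer a breathing exercise"
    return "offer a gratitude journal prompt"


print(mean_valence("I feel sad and lonely today"))  # 1.75
print(suggest_activity("I feel sad and lonely today"))  # offer a breathing exercise
```

Word-level lookup ignores negation ("not happy") and context. For that reason a simple lexicon is usually only a starting point for emotion detection.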

Second, according to narrative empathy theory (28), digital health interventions can convey empathy through appropriate design and wording. Narrative empathy is “the sharing of feeling and perspective-taking induced by reading, viewing, hearing, or imagining narratives of another's situation and condition” (28). Designers can express empathy by using high-impact graphs (62) and by creating scenarios (64). For example, Wright and McCarthy (64) argued that, because novels and films usually draw out people's empathic feelings, designers can use novel-like scenarios to convey empathy in technology. Choosing text appropriately is another way in which designers can show empathy and enhance the user experience. In a study of an app designed for Syrian refugees, users expressed fondness for language with a Syrian accent, since it gave them the feeling of interacting with real people (65). Similarly, users of the app myCompass expressed appreciation for the empathic voice of the content (14).

Third, relational agents (RAs), which can mimic the behavior of human healthcare professionals, could be built using affective computing. Relational agents are computer programs that can hold conversations with users and potentially build relationships with them (66). An embodied agent aims to “produce an intelligent agent that is at least capable of certain social behaviors and which can draw upon its visual representation to reinforce the belief that it is a social entity” (67). A non-embodied agent is a text-based agent (68), sometimes called a chatbot. Bickmore and Gruber (66) further argued that RAs can build long-term relationships with clients when certain design strategies, such as variability and self-disclosure, are used. A review of mobile health interventions concluded that people automatically respond to computers in social ways and that relational agents can be used to develop TA by providing empathy and respect (69). In a study of a health education and behavior change counseling app, researchers found that users felt embodied conversational agents could mimic humans, which could in turn lead to a better DTA (70). Other researchers found that users can trust, feel cared for by, and build an alliance with relational agents in various tablet- and computer-based apps, such as alcohol and substance misuse counseling apps and stress management apps (71–74). Similarly, Suganuma et al. (75) used an embodied relational agent in a fully automated mental health app and found that it produced positive outcomes. They further argued that RAs can enhance the DTA.

Computer scientists have also developed relational agents based on the theory of rapport. Rapport is regarded as a relationship quality that emerges during social interactions. It can be built through both verbal behaviors (prosody, words uttered, etc.) and non-verbal behaviors (nodding, directed gaze, gesture, etc.) (76). Researchers further found that relational agents built on the theory of rapport can induce openness in users (77, 78). As openness is a subscale of the ARM, relational agents could help to enhance the DTA by building rapport with users.

However, users may also distrust relational agents. Concerns about cybersecurity and information accuracy deter some people from using and trusting relational agents (79). Thus, how relational agents can be used to build a DTA needs further exploration.

Persuasive Technology

Building Task and Goal With Persuasive Technology

Goals and tasks are subscales of the WAI and could also be dimensions of the DTA, since some studies found that task and goal ratings are linked with clinical outcomes in the context of fully automated mental health apps (80, 81). Persuasive technology can be used to support tasks and goals in fully automated mental health apps.

Persuasive technology, also termed behavior change technology, is defined as “any interactive computing system designed to change people's attitudes and behaviors” (82). Fogg (82) further argued that computers can act as (1) tools that change users' attitudes and behaviors by making desired outcomes easier to achieve; (2) media that provide simulated experiences to change users' behavior and attitudes; and (3) persuasive social actors that trigger social responses in humans. Beyond these roles, Fogg (82) stressed the importance of mobility and connectivity, arguing that persuasiveness increases when people interact with the right people or things at the right time.

Drawing on Fogg's work, Oinas-Kukkonen and Harjumaa (29) introduced the Persuasive Systems Design (PSD) model, which is divided into four categories:

• Primary task support: This category supports users in carrying out their primary tasks. It includes reduction, tunneling, tailoring, personalization, self-monitoring, simulation and rehearsal.

• Dialogue support: This category provides feedback to users and includes praise, rewards, reminders, suggestion, similarity, liking, and social role.

• System credibility support: This category describes ways of designing a computer or system to make it more credible, and consists of trustworthiness, expertise, surface credibility, real-world feel, authority, third-party endorsement, and verifiability.

• Social support: This category builds on Fogg's emphasis on mobility and connectivity, and contains social learning, social comparison, normative influence, social facilitation, cooperation, competition, and recognition.

The primary task support strategy is used to make task completion easier. Theoretically, it can help users complete tasks and achieve their goals, which would further strengthen the DTA. In addition, in face-to-face therapy, one important strategy for building a positive TA is providing customized treatment plans for different individuals (83). Similarly, tailoring and personalization in primary task support could enhance the DTA. Previous research supports this idea: personalization and tailoring features were highly requested by users (84, 85). Users also favor self-monitoring, another primary task support strategy. Clinicians found that the mood-tracking feature of BlueWatch, a mobile app for adults with depressive symptoms, received positive reviews from users (86). Moreover, self-monitoring has been shown to be effective. For example, a study using smartphone apps to treat eating disorder symptoms found that a standard self-monitoring app led to significant improvements in outcomes (87).

Kelders et al. (88) found that greater use of dialogue support was associated with better adherence, with reminders and notifications contributing particularly to higher adherence. A meta-analysis found that apps with reminders have significantly lower attrition rates (89). From the DTA perspective, reminders and notifications can help users focus on completing tasks and achieving their goals. For example, one study of an app for reducing alcohol consumption found that its feedback function was highly rated by clients (87).

Building Flexibility With Persuasive Technology

Flexibility (in terms of when, where, for how long, and in what way users use and interact with apps) is a characteristic that apps can offer beyond what human health professionals can provide (14). Clarke et al. (14) further suggested that flexibility should be added as a subscale in future conceptualizations of the DTA. Persuasive technology strategies can help fully automated apps maximize their flexibility and potentially further enhance the DTA. One advantage of flexibility is that users can get support whenever and wherever they want. Dialogue support strategies can be applied to apps to provide real-time responses. Tailoring and personalization within primary task support can also increase flexibility by allowing users to choose how they use an app. For example, some users liked that the myCompass app allowed them to choose freely between structured programs and self-paced study (14).

Human-App Attachment: Building a Bond or Attachment With Mental Health Apps

As a subscale of both the WAI and the ARM, bond is considered critical in face-to-face therapy. However, whether and how users can build a bond with apps remains unclear, as some users have rejected the idea that they could form a relationship with digital mental health interventions (52). Thus, understanding how to help users build an attachment or a bond may be important for DTA formation in the context of fully automated apps.

Li et al. (90) indicated that when clients suffer from pain, illness, tiredness, or anxiety, they tend to seek external help and may bond with health applications. Certain features are believed to help build human-app attachment. Computer scientists have argued that users can trust, open up to, and maintain long-term relationships with relational agents (77, 78, 91, 92). In a study of technology for older people, participants described their experiences with relational agents as like talking to a friend (30). Zhang et al. (93) found that, through interactions with the device and personalized feedback, users can form an emotional bond with mobile health services. Game elements and gamification may offer another route to human-app attachment, as studies have reported that users can become attached to customized game characters (94, 95).

Persuasive technology can also be applied to help users build bonds with apps. For example, a study of a smartphone app designed for children with autism spectrum disorders found that user-app attachment could be enhanced by persuasive technology techniques (96). System credibility support strategies may also be needed to build a bond. Trust and respect are considered important in building deeper bonds with healthcare professionals, which in turn enhances the client-therapist therapeutic relationship (22, 97). Thus, system credibility support strategies may strengthen the DTA by helping users develop trust in and respect for mental health apps. In a qualitative study of a blended therapy app for men presenting to hospital with intentional self-harm, Mackie et al. (98) found that, when the TA is built with an app rather than with a person, trust in the app's function and effectiveness is crucial. Additionally, some items in the mARM are relevant to this strategy. For instance, the item "I have confidence in the app and the things it suggests" draws on the trustworthiness, authority, and expertise principles.

However, not all users can build an attachment or a bond with digital health interventions (52). Kim et al. (95) argued that human-app attachment is influenced by the self-connection and social connection that users gain through apps: users are more likely to build an attachment with apps when they feel that the apps express who they are and connect them closely with the social world. Individual attachment style may also influence human-app attachment. Attachment style originally described how people think, react, and behave in relationships with other people (99). In recent years, researchers have also applied attachment style to the context of artificial intelligence (AI) and information technology (IT) (100, 101). For example, Gillath et al. (100) found that people with higher attachment anxiety may have less trust in AI. However, how these theories can help users build attachment with fully automated mental health apps requires further investigation.

Positive Computing and Positive Psychology

Positive psychology research shows how positive human functioning, such as life purpose, self-realization, and self-knowledge, can contribute to well-being (102). It also suggests that well-being or happiness can be measured subjectively. Self-determination theory (SDT) in positive psychology examines how inherent human capabilities and psychological needs are influenced by biological, social, and cultural conditions (103). Although SDT comprises multiple mini-theories, the best known and most widely accepted is Basic Psychological Needs Theory. Ryan and Deci (31) propose that people are motivated by the satisfaction of three basic needs: competence, relatedness, and autonomy. Competence refers to feeling effective in one's interactions; relatedness refers to feeling connected with and cared for by other people; and autonomy refers to being the source of one's own behavior or values (31).

Positive computing is an area of computing concerned with how to develop technology that supports human well-being (62). It usually incorporates eudaimonic or positive psychological theories, such as SDT, into the technology design process (104). Motivation and TA are believed to be interrelated in face-to-face therapy (105, 106). Thus, positive computing that draws on SDT may contribute to DTA formation in fully automated mental health apps. Goldberg et al. (49) argued that fully automated mental health apps could deliver motivation-boosting content to enhance the DTA when users report a low alliance rating. Consistent with this idea, Lederman et al. (107) found that SDT-based online platform design can support TA. SDT can also be combined with persuasive technology to enhance the DTA. Villalobos-Zúñiga and Cherubini (108) argued that persuasive technology features link with SDT, and categorized these features according to the SDT need they support:

• Autonomy: reminders, goal setting, motivational messages, pre-commitment

• Competence: activity feedback, history, log/self-monitoring, rewards

• Relatedness: performance sharing, messaging

In summary, multiple areas of computer science and HCI offer theories and methodologies that may help build the DTA in fully automated mental health apps, and developers and researchers should choose approaches appropriate to the relevant determinants and the purpose of each app.

Limitations

This review has some limitations. First, we identified only six studies that examined DTA in fully automated mental health apps. Thus, it is still unclear whether the conclusions reached in these studies apply to all types of fully automated mental health apps. In addition, since this is not a systematic review, some relevant literature may not have been captured. However, by examining and drawing out the similarities between these related contexts, we have raised some valuable points for consideration. Second, we did not include studies that examined DTA in tablet or computer apps. We acknowledge that tablet and computer apps can be similar to smartphone apps. However, they differ in ways that are significant for our investigation. For example, tablets and computers are not as flexible or convenient as smartphones. In addition, tablets and computers may not be as accessible as smartphones, since more people own smartphones than tablets. These factors can influence the ways that users build DTA with apps, so DTA in fully automated smartphone apps may differ from DTA in tablet or computer apps. Third, we acknowledge that the concept of TA has historically attracted many critiques. Some researchers have questioned the importance of TA in therapy and have criticized Bordin's conceptual model (56, 109, 110). However, TA remains a well-established concept and has been measured in many studies, including studies of digital health interventions. Thus, our arguments in this paper are based on the assumption that TA is a central factor in therapy.

Future Directions

In terms of future directions, many questions need to be answered to understand the development of DTA with fully automated mental health apps, but the priority is to formulate a valid conceptualization of DTA and a formal way to measure it. A new qualitative, interview-based study of users of fully automated mental health apps is needed to re-conceptualize and redefine the DTA. Once a comprehensive new DTA scale suitable for fully automated mental health apps has been developed, the impact of DTA can be investigated further, including whether the DTA predicts outcomes and engagement. Choosing accurate approaches to measuring engagement is necessary when examining the relationship between DTA and engagement. Moreover, whether engagement is necessary for better clinical outcomes needs further study: Melvin et al. (111) indicated that an app for suicide prevention can still help users cope with suicidal thoughts even if they use it on only one occasion. In addition, selecting appropriate methods to assess the association between DTA and clinical outcomes is important. Furthermore, testing the DTA across various types of fully automated mental health apps is essential. Whether the concept of DTA is influenced by different therapeutic approaches also needs further investigation. For example, does the DTA develop differently in apps informed by CBT and apps informed by dialectical behavior therapy (DBT)? Future studies could also investigate which app features contribute to DTA formation.

Conclusion

In conclusion, understanding DTA is critical in examining how fully automated mental health smartphone apps can be developed to lead to better clinical outcomes. Currently, this topic is under-researched and more studies are needed. We found that the conceptualization of DTA may differ from that of TA for three reasons. First, the role of bond in the context of fully automated apps is unclear. Second, human components of face-to-face TA, such as empathy, are hard to achieve in the digital context. Third, some users may perceive apps as more non-judgmental and flexible, which may further influence DTA formation. Thus, the priority for future research is to develop a new DTA scale that can be administered in the context of fully automated mental health apps. Subdisciplines of computer science, such as affective computing and positive computing, and some human-computer interaction theories, such as those of persuasive technology and human-app attachment, can potentially help researchers to understand DTA formation in fully automated apps.

Author Contributions

FT performed the article selection, developed the theoretical formalism, and took the lead in writing the manuscript. RL, SD'A, KB, and SB equally contributed to the research by providing critical feedback, helping shape the research, and editing the manuscript. All authors contributed to the article and approved the submitted version.

Funding

FT was supported by a University of Melbourne and University of Manchester Graduate Research Group PhD Scholarship.

Conflict of Interest

SB is a director and shareholder of CareLoop Health Ltd, which develops and markets digital therapeutics for mental health problems.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors would like to thank Dr. Simone Schmidt for providing comments on an earlier version of this article.

References

1. Ritchie H, Roser M. Mental Health. Our World in Data. (2018). Available online at: https://ourworldindata.org/mental-health (accessed July 7, 2020).

2. Trautmann S, Rehm J, Wittchen H. The economic costs of mental disorders. EMBO Rep. (2016) 17:1245–9. doi: 10.15252/embr.201642951

3. Connell J, Brazier J, O'Cathain A, Lloyd-Jones M, Paisley S. Quality of life of people with mental health problems: a synthesis of qualitative research. Health Qual Life Outcomes. (2012) 10:138. doi: 10.1186/1477-7525-10-138

4. Szmukler GI, Herrman H, Bloch S, Colusa S, Benson A. A controlled trial of a counselling intervention for caregivers of relatives with schizophrenia. Soc Psychiatry Psychiatr Epidemiol. (1996) 31:149–55. doi: 10.1007/BF00785761

5. Baker C. Mental Health Statistics for England: Prevalence, Services Funding [Internet]. UK Parliament (2020). Available online at: https://dera.ioe.ac.uk/34934/1/SN06988%20(redacted).pdf

6. Chisholm D, Sweeny K, Sheehan P, Rasmussen B, Smit F, Cuijpers P, et al. Scaling-up treatment of depression and anxiety: a global return on investment analysis. Lancet Psychiatry. (2016) 3:415–24. doi: 10.1016/S2215-0366(16)30024-4

7. National Institute of Mental Health. Psychotherapies. (2016). Available online at: https://www.nimh.nih.gov/health/topics/psychotherapies/index.shtml (accessed June 9, 2020).

9. Sharp M-L, Fear NT, Rona RJ, Wessely S, Greenberg N, Jones N, et al. Stigma as a barrier to seeking health care among military personnel with mental health problems. Epidemiol Rev. (2015) 37:144–62. doi: 10.1093/epirev/mxu012

10. Suurvali H, Cordingley J, Hodgins DC, Cunningham J. Barriers to seeking help for gambling problems: a review of the empirical literature. J Gambl Stud. (2009) 25:407–24. doi: 10.1007/s10899-009-9129-9

12. Carswell K, Harper-Shehadeh M, Watts S, van't Hof E, Abi Ramia J, Heim E, et al. Step-by-Step: a new WHO digital mental health intervention for depression. Mhealth. (2018) 4:34. doi: 10.21037/mhealth.2018.08.01

14. Clarke J, Proudfoot J, Whitton A, Birch M-R, Boyd M, Parker G, et al. Therapeutic alliance with a fully automated mobile phone and web-based intervention: secondary analysis of a randomized controlled trial. JMIR Mental Health. (2016) 3:e10. doi: 10.2196/mental.4656

15. Torous J, Myrick KJ, Rauseo-Ricupero N, Firth J. Digital mental health and COVID-19: using technology today to accelerate the curve on access and quality tomorrow. JMIR Mental Health. (2020) 7:e18848. doi: 10.2196/18848

16. Richards P, Simpson S, Bastiampillai T, Pietrabissa G, Castelnuovo G. The impact of technology on therapeutic alliance and engagement in psychotherapy: the therapist's perspective. Clin Psychol. (2018) 22:171–81. doi: 10.1111/cp.12102

17. Erbe D, Eichert H-C, Riper H, Ebert DD. Blending face-to-face and internet-based interventions for the treatment of mental disorders in adults: systematic review. J Med Internet Res. (2017) 19:e306. doi: 10.2196/jmir.6588

18. Firth J, Torous J, Nicholas J, Carney R, Rosenbaum S, Sarris J. Can smartphone mental health interventions reduce symptoms of anxiety? A meta-analysis of randomized controlled trials. J Affect Disord. (2017) 218:15–22. doi: 10.1016/j.jad.2017.04.046

19. Ebert DD, Van Daele T, Nordgreen T, Karekla M, Compare A, Zarbo C, et al. Internet- and mobile-based psychological interventions: applications, efficacy, and potential for improving mental health. Eur Psychol. (2018) 23:167–87. doi: 10.1027/1016-9040/a000318

20. Bucci S, Barrowclough C, Ainsworth J, Machin M, Morris R, Berry K, et al. Actissist: proof-of-concept trial of a theory-driven digital intervention for psychosis. Schizophr Bull. (2018) 44:1070–80. doi: 10.1093/schbul/sby032

21. Possemato K, Kuhn E, Johnson E, Hoffman JE, Owen JE, Kanuri N, et al. Using PTSD Coach in primary care with and without clinician support: a pilot randomized controlled trial. Gen Hosp Psychiatry. (2016) 38:94–8. doi: 10.1016/j.genhosppsych.2015.09.005

22. Bordin ES. The generalizability of the psychoanalytic concept of the working alliance. Psychother Theory Res Pract. (1979) 16:252–60. doi: 10.1037/h0085885

23. Agnew-Davies R, Stiles WB, Hardy GE, Barkham M, Shapiro DA. Alliance structure assessed by the Agnew Relationship Measure (ARM). Br J Clin Psychol. (1998) 37:155–72. doi: 10.1111/j.2044-8260.1998.tb01291.x

24. Thompson SJ, Bender K, Lantry J, Flynn PM. Treatment engagement: building therapeutic alliance in home-based treatment with adolescents and their families. Contemp Fam Ther. (2007) 29:39–55. doi: 10.1007/s10591-007-9030-6

25. Flückiger C, Del Re AC, Wampold BE, Horvath AO. The alliance in adult psychotherapy: a meta-analytic synthesis. Psychotherapy. (2018) 55:316. doi: 10.1037/pst0000172

26. Karver MS, De Nadai AS, Monahan M, Shirk SR. Meta-analysis of the prospective relation between alliance and outcome in child and adolescent psychotherapy. Psychotherapy. (2018) 55:341. doi: 10.1037/pst0000176

27. Mander J, Neubauer AB, Schlarb A, Teufel M, Bents H, Hautzinger M, et al. The therapeutic alliance in different mental disorders: a comparison of patients with depression, somatoform, and eating disorders. Psychol Psychother Theory Res Pract. (2017) 90:649–67. doi: 10.1111/papt.12131

29. Oinas-Kukkonen H, Harjumaa M. Persuasive systems design: key issues, process model, and system features. Commun Assoc Inform Syst. (2009) 24:28. doi: 10.17705/1CAIS.02428

30. Bickmore T, Caruso L, Clough-Gorr K, Heeren T. 'It's just like you talk to a friend': relational agents for older adults. Interact Comput. (2005) 17:711–35. doi: 10.1016/j.intcom.2005.09.002

31. Ryan RM, Deci EL. Overview of self-determination theory: an organismic dialectical perspective. In: Deci EL, Ryan RM, editors. Handbook of Self-Determination Research. Rochester, NY: University of Rochester Press (2002). p. 3–33.

32. Anderson RE, Spence SH, Donovan CL, March S, Prosser S, Kenardy J. Working alliance in online cognitive behavior therapy for anxiety disorders in youth: comparison with clinic delivery and its role in predicting outcome. J Med Internet Res. (2012) 14:e88. doi: 10.2196/jmir.1848

33. Berger T, Boettcher J, Caspar F. Internet-based guided self-help for several anxiety disorders: a randomized controlled trial comparing a tailored with a standardized disorder-specific approach. Psychotherapy. (2014) 51:207–19. doi: 10.1037/a0032527

34. Gómez Penedo JM, Berger T, Holtforth M grosse, Krieger T, Schröder J, Hohagen F, et al. The Working Alliance Inventory for guided Internet interventions (WAI-I). J Clin Psychol. (2019) 76:973–86. doi: 10.1002/jclp.22823

35. Herrero R, Vara M, Miragall M, Botella C, García-Palacios A, Riper H, et al. Working Alliance Inventory for Online Interventions-Short Form (WAI-TECH-SF): the role of the therapeutic alliance between patient and online program in therapeutic outcomes. Int J Environ Res Public Health. (2020) 17:6169. doi: 10.3390/ijerph17176169

36. Kiluk BD, Serafini K, Frankforter T, Nich C, Carroll KM. Only connect: the working alliance in computer-based cognitive behavioral therapy. Behav Res Ther. (2014) 63:139–46. doi: 10.1016/j.brat.2014.10.003

37. Miragall M, Baños RM, Cebolla A, Botella C. Working alliance inventory applied to virtual and augmented reality (WAI-VAR): psychometrics and therapeutic outcomes. Front Psychol. (2015) 6:1531. doi: 10.3389/fpsyg.2015.01531

38. Berger T. The therapeutic alliance in internet interventions: a narrative review and suggestions for future research. Psychother Res. (2017) 27:511–24. doi: 10.1080/10503307.2015.1119908

39. Pihlaja S, Stenberg J-H, Joutsenniemi K, Mehik H, Ritola V, Joffe G. Therapeutic alliance in guided internet therapy programs for depression and anxiety disorders–a systematic review. Internet Interv. (2018) 11:1–10. doi: 10.1016/j.invent.2017.11.005

40. Sucala M, Schnur JB, Constantino MJ, Miller SJ, Brackman EH, Montgomery GH. The therapeutic relationship in e-therapy for mental health: a systematic review. J Med Internet Res. (2012) 14:e110. doi: 10.2196/jmir.2084

41. Andersson G, Paxling B, Wiwe M, Vernmark K, Felix CB, Lundborg L, et al. Therapeutic alliance in guided internet-delivered cognitive behavioural treatment of depression, generalized anxiety disorder and social anxiety disorder. Behav Res Ther. (2012) 50:544–50. doi: 10.1016/j.brat.2012.05.003

42. Kooistra L, Ruwaard J, Wiersma J, van Oppen P, Riper H. Working alliance in blended versus face-to-face cognitive behavioral treatment for patients with depression in specialized mental health care. J Clin Med. (2020) 9:347. doi: 10.3390/jcm9020347

43. Ormrod JA, Kennedy L, Scott J, Cavanagh K. Computerised cognitive behavioural therapy in an adult mental health service: a pilot study of outcomes and alliance. Cogn Behav Ther. (2010) 39:188–92. doi: 10.1080/16506071003675614

44. Henson P, Wisniewski H, Hollis C, Keshavan M, Torous J. Digital mental health apps and the therapeutic alliance: initial review. BJPsych Open. (2019) 5:e15. doi: 10.1192/bjo.2018.86

45. Lecy J, Beatty K. Structured literature reviews using constrained snowball sampling and citation network analysis. (2012) 15.

46. Skolarus TA, Lehmann T, Tabak RG, Harris J, Lecy J, Sales AE. Assessing citation networks for dissemination and implementation research frameworks. Implement Sci. (2017) 12:97. doi: 10.1186/s13012-017-0628-2

47. Wnuk K, Garrepalli T. Knowledge management in software testing: a systematic snowball literature review. e-Informatica Softw Eng J. (2018) 12:51–78. doi: 10.5277/e-Inf180103

48. Berry K, Salter A, Morris R, James S, Bucci S. Assessing therapeutic alliance in the context of mhealth interventions for mental health problems: development of the Mobile Agnew Relationship Measure (mARM) questionnaire. J Med Internet Res. (2018) 20:e90. doi: 10.2196/jmir.8252

49. Goldberg SB, Baldwin SA, Riordan KM, Torous J, Dahl CJ, Davidson RJ, et al. Alliance with an unguided smartphone app: validation of the digital working alliance inventory. Assessment. (2021) 1–15. doi: 10.1177/10731911211015310

50. Prochaska JJ, Vogel EA, Chieng A, Baiocchi M, Maglalang DD, Pajarito S, et al. A randomized controlled trial of a therapeutic relational agent for reducing substance misuse during the COVID-19 pandemic. Drug Alcohol Depend. (2021) 227:108986. doi: 10.1016/j.drugalcdep.2021.108986

51. Darcy A, Daniels J, Salinger D, Wicks P, Robinson A. Evidence of human-level bonds established with a digital conversational agent: cross-sectional, retrospective observational study. JMIR Form Res. (2021) 5:e27868. doi: 10.2196/27868

52. Hillier L. Exploring the nature of the therapeutic alliance in technology-based interventions for mental health problems [Masters]. Lancaster: Lancaster University (2018).

53. Henson P, Peck P, Torous J. Considering the therapeutic alliance in digital mental health interventions. Harv Rev Psychiatry. (2019) 27:268–73. doi: 10.1097/HRP.0000000000000224

54. Brown A, Mountford VA, Waller G. Is the therapeutic alliance overvalued in the treatment of eating disorders? Int J Eat Disord. (2013) 46:779–82. doi: 10.1002/eat.22177

55. Meier PS, Donmall MC, McElduff P, Barrowclough C, Heller RF. The role of the early therapeutic alliance in predicting drug treatment dropout. Drug Alcohol Depend. (2006) 83:57–64. doi: 10.1016/j.drugalcdep.2005.10.010

56. Zilcha-Mano S. Is the alliance really therapeutic? Revisiting this question in light of recent methodological advances. Am Psychol. (2017) 72:311. doi: 10.1037/a0040435

57. Kim S, Baek TH. Examining the antecedents and consequences of mobile app engagement. Telemat Informat. (2018) 35:148–58. doi: 10.1016/j.tele.2017.10.008

58. Boateng GO, Neilands TB, Frongillo EA, Melgar-Quiñonez HR, Young SL. Best practices for developing and validating scales for health, social, and behavioral research: a primer. Front Public Health. (2018) 6:149. doi: 10.3389/fpubh.2018.00149

59. Picard R. Affective Computing. MIT Media Laboratory Perceptual Computing Section Technical Report No. 321. (1995). p. 1–26.

60. Bradley MM, Lang PJ. Affective norms for English words (ANEW): Instruction manual and affective ratings. In: Technical Report C-1, the Center for Research in Psychophysiology. University of Florida (1999).

61. Strapparava C, Valitutti A, Stock O. The affective weight of lexicon. In: LREC. (2006). p. 423–6.

62. Calvo RA, Peters D. Positive Computing: Technology for Wellbeing and Human Potential. Cambridge, MA; London: MIT Press (2014).

63. Ghandeharioun A, McDuff D, Czerwinski M, Rowan K. EMMA: an emotion-aware wellbeing chatbot. In: 2019 8th International Conference on Affective Computing and Intelligent Interaction (ACII). (2019). p. 1–7.

64. Wright P, McCarthy J. Empathy and experience in HCI. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. Florence (2008). p. 637–46.

65. Burchert S, Alkneme MS, Bird M, Carswell K, Cuijpers P, Hansen P, et al. User-centered app adaptation of a low-intensity e-mental health intervention for Syrian refugees. Front Psychiatry. (2019) 9:663. doi: 10.3389/fpsyt.2018.00663

66. Bickmore T, Gruber A. Relational agents in clinical psychiatry. Harv Rev Psychiatry. (2010) 18:119–30. doi: 10.3109/10673221003707538

67. Isbister K, Doyle P. The blind men and the elephant revisited. In: Ruttkay Z, Pelachaud C, editors. From Brows to Trust: Evaluating Embodied Conversational Agents. Human-Computer Interaction Series. Dordrecht: Springer Netherlands (2004). p. 3–26.

68. Hone K. Empathic agents to reduce user frustration: the effects of varying agent characteristics. Interact Comput. (2006) 18:227–45. doi: 10.1016/j.intcom.2005.05.003

69. Grekin ER, Beatty JR, Ondersma SJ. Mobile health interventions: exploring the use of common relationship factors. JMIR Mhealth Uhealth. (2019) 7:e11245. doi: 10.2196/11245

70. Olafsson S, Parmar D, Kimani E, O'Leary TK, Bickmore T. 'More like a person than reading text in a machine': predicting choice of embodied agents over conventional GUIs on smartphones. In: Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems. (2021). p. 1–6.

71. Olafsson S, Wallace BC, Bickmore TW. Towards a computational framework for automating substance use counseling with virtual agents. In: AAMAS. Auckland (2020). p. 966–74.

72. Zhou S, Bickmore T, Rubin A, Yeksigian C, Lippin-Foster R, Heilman M, et al. A Relational Agent for Alcohol Misuse Screening and Intervention in Primary Care. Washington, DC. p. 6.

73. Bickmore T, Rubin A, Simon S. Substance use screening using virtual agents: towards automated Screening, Brief Intervention, and Referral to Treatment (SBIRT). In: Proceedings of the 20th ACM International Conference on Intelligent Virtual Agents. Glasgow; Virtual Event Scotland UK: ACM (2020). p. 1–7.

74. Shamekhi A, Bickmore T, Lestoquoy A, Gardiner P. Augmenting group medical visits with conversational agents for stress management behavior change. In: de Vries PW, Oinas-Kukkonen H, Siemons L, Beerlage-de Jong N, van Gemert-Pijnen L, editors. Persuasive Technology: Development and Implementation of Personalized Technologies to Change Attitudes and Behaviors. Lecture Notes in Computer Science; vol. 10171. Cham: Springer International Publishing (2017). p. 55–67. Available online at: http://link.springer.com/10.1007/978-3-319-55134-0_5 (accessed April 11, 2022).

75. Suganuma S, Sakamoto D, Shimoyama H. An embodied conversational agent for unguided internet-based cognitive behavior therapy in preventative mental health: feasibility and acceptability pilot trial. JMIR Mental Health. (2018) 5:e10454. doi: 10.2196/10454

76. Tickle-Degnen L, Rosenthal R. The nature of rapport and its nonverbal correlates. Psychol Inquiry. (1990) 1:285–93. doi: 10.1207/s15327965pli0104_1

77. Gratch J, Artstein R, Lucas G, Stratou G, Scherer S, Nazarian A, et al. The distress analysis interview corpus of human and computer interviews. In: Proceedings of the Ninth International Conference on Language Resources and Evaluation (LREC'14). Reykjavik (2014) p. 3123–8.

78. Lucas GM, Rizzo A, Gratch J, Scherer S, Stratou G, Boberg J, et al. Reporting mental health symptoms: breaking down barriers to care with virtual human interviewers. Front Robot AI. (2017) 4:51. doi: 10.3389/frobt.2017.00051

79. Nadarzynski T, Miles O, Cowie A, Ridge D. Acceptability of artificial intelligence (AI)-led chatbot services in healthcare: a mixed-methods study. Digital Health. (2019) 5:1–12. doi: 10.1177/2055207619871808

80. Gómez Penedo JM, Babl AM, Holtforth M grosse, Hohagen F, Krieger T, Lutz W, et al. The association of therapeutic alliance with long-term outcome in a guided internet intervention for depression: secondary analysis from a randomized control trial. J Med Internet Res. (2020) 22:e15824. doi: 10.2196/15824

81. Scherer S, Alder J, Gaab J, Berger T, Ihde K, Urech C. Patient satisfaction and psychological well-being after internet-based cognitive behavioral stress management (IB-CBSM) for women with preterm labor: a randomized controlled trial. J Psychosom Res. (2016) 80:37–43. doi: 10.1016/j.jpsychores.2015.10.011

82. Fogg BJ. Persuasive Technology: Using Computers to Change What We Think and Do. San Francisco, CA: Elsevier Science & Technology (2003). Available online at: http://ebookcentral.proquest.com/lib/unimelb/detail.action?docID=294303 (accessed August 18, 2020).

83. Muran JC, Barber JP. The Therapeutic Alliance: An Evidence-Based Guide to Practice. New York, NY: Guilford Publications (2010). Available online at: http://ebookcentral.proquest.com/lib/unimelb/detail.action?docID=570366 (accessed February 4, 2021).

84. Bakker D, Kazantzis N, Rickwood D, Rickard N. Mental health smartphone apps: review and evidence-based recommendations for future developments. JMIR Mental Health. (2016) 3:e7. doi: 10.2196/mental.4984

85. Stawarz K, Preist C, Tallon D, Wiles N, Coyle D. User experience of cognitive behavioral therapy apps for depression: an analysis of app functionality and user reviews. J Med Internet Res. (2018) 20:e10120. doi: 10.2196/10120

86. Fuller-Tyszkiewicz M, Richardson B, Klein B, Skouteris H, Christensen H, Austin D, et al. A mobile app–based intervention for depression: end-user and expert usability testing study. JMIR Mental Health. (2018) 5:e54. doi: 10.2196/mental.9445

87. Crane D, Garnett C, Michie S, West R, Brown J. A smartphone app to reduce excessive alcohol consumption: identifying the effectiveness of intervention components in a factorial randomised control trial. Sci Rep. (2018) 8:4384. doi: 10.1038/s41598-018-22420-8

88. Kelders SM, Kok RN, Ossebaard HC, Van Gemert-Pijnen JE. Persuasive system design does matter: a systematic review of adherence to web-based interventions. J Med Internet Res. (2012) 14:e152. doi: 10.2196/jmir.2104

89. Linardon J, Fuller-Tyszkiewicz M. Attrition and adherence in smartphone-delivered interventions for mental health problems: a systematic and meta-analytic review. J Consult Clin Psychol. (2020) 88:1–13. doi: 10.1037/ccp0000459

90. Li J, Zhang C, Li X, Zhang C. Patients' emotional bonding with MHealth apps: an attachment perspective on patients' use of MHealth applications. Int J Inform Manag. (2019) 51:102054. doi: 10.1016/j.ijinfomgt.2019.102054

91. Bickmore T. Relational agents: effecting change through human-computer relationships [Ph.D. thesis]. Massachusetts Institute of Technology, Cambridge, MA, United States (2003).

92. Kulms P, Kopp S. A social cognition perspective on human–computer trust: the effect of perceived warmth and competence on trust in decision-making with computers. Front Dig Human. (2018) 5:14. doi: 10.3389/fdigh.2018.00014

93. Zhang X, Guo X, Ho SY, Lai K, Vogel D. Effects of emotional attachment on mobile health-monitoring service usage: an affect transfer perspective. Inform Manag. (2020) 58:103312. doi: 10.1016/j.im.2020.103312

94. Bopp JA, Müller LJ, Aeschbach LF, Opwis K, Mekler ED. Exploring emotional attachment to game characters. In: Proceedings of the Annual Symposium on Computer-Human Interaction in Play. Barcelona (2019). p. 313–24.

95. Kim K, Schmierbach MG, Chung M-Y, Fraustino JD, Dardis F, Ahern L. Is it a sense of autonomy, control, or attachment? Exploring the effects of in-game customization on game enjoyment. Comput Hum Behav. (2015) 48:695–705. doi: 10.1016/j.chb.2015.02.011

96. Mintz J. The role of user emotional attachment in driving the engagement of children with autism spectrum disorders (ASD) in using a smartphone app designed to develop social and life skill functioning. In: International Conference on Computers for Handicapped Persons. Paris: Springer (2014). p. 486–93.

97. Crits-Christoph P, Rieger A, Gaines A, Gibbons MBC. Trust and respect in the patient-clinician relationship: preliminary development of a new scale. BMC Psychol. (2019) 7:91. doi: 10.1186/s40359-019-0347-3

98. Mackie C, Dunn N, MacLean S, Testa V, Heisel M, Hatcher S. A qualitative study of a blended therapy using problem solving therapy with a customised smartphone app in men who present to hospital with intentional self-harm. Evid Based Ment Health. (2017) 20:118–22. doi: 10.1136/eb-2017-102764

99. Ainsworth MDS, Blehar MC, Waters E, Wall SN. Patterns of Attachment: A Psychological Study of the Strange Situation. New York, NY; East Sussex: Psychology Press (2015). p. 467.

100. Gillath O, Ai T, Branicky MS, Keshmiri S, Davison RB, Spaulding R. Attachment and trust in artificial intelligence. Comput Hum Behav. (2021) 115:106607. doi: 10.1016/j.chb.2020.106607

102. Ryff CD. Psychological well-being revisited: advances in the science and practice of eudaimonia. Psychother Psychosom. (2014) 83:10–28. doi: 10.1159/000353263

103. Ryan RM, Deci EL. Self-Determination Theory: Basic Psychological Needs in Motivation, Development, Wellness. New York, NY: Guilford Publications (2017). Available online at: https://ebookcentral.proquest.com/lib/unimelb/detail.action?docID=4773318 (accessed August 19, 2020).

104. D'Alfonso S, Lederman R, Bucci S, Berry K. The digital therapeutic alliance and human-computer interaction. JMIR Mental Health. (2020) 7:e21895. doi: 10.2196/21895

105. Cudd T. Therapeutic alliance and motivation: the role of the recreational therapist and youth with behavioral problems [Doctoral dissertation]. Oklahoma State University, Stillwater, OK, United States (2015).

106. Meier PS, Donmall MC, Barrowclough C, McElduff P, Heller RF. Predicting the early therapeutic alliance in the treatment of drug misuse. Addiction. (2005) 100:500–11. doi: 10.1111/j.1360-0443.2005.01031.x

107. Lederman R, Gleeson J, Wadley G, D'Alfonso S, Rice S, Santesteban-Echarri O, et al. Support for carers of young people with mental illness: design and trial of a technology-mediated therapy. ACM Trans Comput Hum Interact. (2019) 26:1–33. doi: 10.1145/3301421

108. Villalobos-Zúñiga G, Cherubini M. Apps that motivate: a taxonomy of app features based on self-determination theory. Int J Hum Comput Stud. (2020) 140:102449. doi: 10.1016/j.ijhcs.2020.102449

109. Safran JD, Muran JC. Has the concept of the therapeutic alliance outlived its usefulness? Psychother Theory Res Pract Train. (2006) 43:286–91. doi: 10.1037/0033-3204.43.3.286

110. Elvins R, Green J. The conceptualization and measurement of therapeutic alliance: an empirical review. Clin Psychol Rev. (2008) 28:1167–87. doi: 10.1016/j.cpr.2008.04.002

Keywords: digital mental health, digital therapeutic alliance, mHealth, smartphone app, human-computer interaction

Citation: Tong F, Lederman R, D'Alfonso S, Berry K and Bucci S (2022) Digital Therapeutic Alliance With Fully Automated Mental Health Smartphone Apps: A Narrative Review. Front. Psychiatry 13:819623. doi: 10.3389/fpsyt.2022.819623

Received: 22 November 2021; Accepted: 30 May 2022;

Published: 22 June 2022.

Edited by:

Michael Patrick Schaub, University of Zurich, Switzerland

Reviewed by:

Eva Hudlicka, Psychometrix Associates, United States

Deborah Richards, Macquarie University, Australia

Copyright © 2022 Tong, Lederman, D'Alfonso, Berry and Bucci. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Fangziyun Tong, fangziyunt@student.unimelb.edu.au

Fangziyun Tong, Reeva Lederman, Simon D'Alfonso, Katherine Berry2,3 and Sandra Bucci