ORIGINAL RESEARCH article

Front. Psychiatry, 22 February 2022

Sec. Digital Mental Health

Volume 13 - 2022 | https://doi.org/10.3389/fpsyt.2022.812965

This article is part of the Research Topic Digital Mental Health Research: Understanding Participant Engagement and Need for User-centered Assessment and Interventional Digital Tools.

Punit Virk1,2*, Ravia Arora2, Heather Burt1,2, Anne Gadermann1,2,3, Skye Barbic3,4, Marna Nelson5, Jana Davidson2,6, Peter Cornish7 and Quynh Doan1,2,8

Background: Mental health challenges are highly prevalent in the post-secondary educational setting. Screening instruments have been shown to improve early detection and intervention. However, these tools often focus on specific diagnosable conditions, are not always designed with students in mind, and lack resource navigational support.

Objective: The aim of this study was to describe the adaptation of existing psychosocial assessment (HEARTSMAP) tools into a version that is fit-for-purpose for post-secondary students, called HEARTSMAP-U.

Methods: We undertook a three-phase, multi-method tool adaptation process. First, a diverse study team proposed a preliminary version of HEARTSMAP-U and its conceptual framework. Second, we conducted a cross-sectional expert review study with Canadian mental health professionals (N = 28) to evaluate the clinical validity of tool content. Third, we conducted an iterative series of six focus groups with diverse post-secondary students (N = 54) to refine tool content and language and to ensure comprehensibility and relevance to end-users.

Results: The adaptation process resulted in the HEARTSMAP-U self-assessment and resource navigational support tool, which evaluates psychosocial challenges across 10 sections. In Phase two, clinician experts reported that HEARTSMAP-U's content aligned with their own professional experiences working with students. In Phase three, students identified multiple opportunities to improve the tool's end-user relevance by calling for more “common language,” such as adding examples and definitions and avoiding technical jargon.

Conclusions: The HEARTSMAP-U tool is well-positioned for further studies of its quantitative psychometric properties and clinical utility in the post-secondary educational setting.

In recent years, post-secondary students have reported increasing levels of mental health challenges, including psychological distress and diagnosed conditions (e.g., anxiety, depression) (1). While the post-secondary years are often a period of self-exploration and interpersonal growth (2), they have also been associated with high stress, peer pressure, and greater responsibilities with reduced social supports (3, 4). For young adults, this period coincides with significant physiological, psychological, and social development (5, 6). In 2019, Canadian data from the National College Health Assessment (N = 55,284) showed that, within the last 12 months, most post-secondary students reported experiencing overwhelming anxiety (68.9%) and at least half reported functionally impairing depression (51.6%) (7). Among the sample, 16.4% of students endorsed active suicidal ideation in the last 12 months, compared to 2.5% of the general Canadian adult population and 6% of young adults (ages 15–24 years) that same year (8, 9). During the COVID-19 pandemic, the rate of mental health concerns escalated in the student population; one study (N = 1,388) reported a 30-day prevalence of anxiety and/or depressive symptoms of 75% among Canadian students during the pandemic's first wave (up to May 2020) (10). Similarly, the Healthy Minds survey (N = 18,764) found an increased prevalence of depression and lower levels of resiliency among American students compared to pre-pandemic estimates (11). The pandemic has compounded psychological and social challenges (psychosocial stressors) (12, 13), magnifying an already severe campus mental health crisis (14).

Students experience individual- and system-level barriers that may impede timely access to age-appropriate care. Low mental health literacy, poor system navigation support, and service saturation (e.g., wait-times) all impede help-seeking (15–18). National Canadian standards for student mental health and well-being call for institutions to have early identification and preventative infrastructures (19), which can improve long-term mental health outcomes and timely connection to services (20). Universal mental health screening and navigational support tools can help institutions identify mental health concerns and connect students to care. Such measures have been successfully integrated within post-secondary health systems (21–23). Digital screening tools may alleviate the need for in-person intake assessment and triaging, and more seamlessly bridge in-person and digital resources (3, 24, 25). Digital self-reporting of psychosocial challenges is also associated with higher disclosure rates and may be preferred over clinician-administered or paper-based assessment (26–28), offering users privacy, time, and space to articulate their needs.

Notwithstanding the potential of screening, existing scales often focus exclusively on common psychological issues, such as the PHQ-9 (depression), GAD-7 (anxiety), AUDIT (substance use), and SBQ-R (suicidality) (29–32). These tools are diagnosis-specific, were not developed with student engagement, and generally lack comprehensive validity evidence in student populations (33–36). However, several instruments have been developed or adapted with students' unique contextual (e.g., academic stress, social autonomy) and clinical needs (e.g., emerging adulthood) in mind. Downs et al. (37) previously developed the 34-item Symptoms and Assets Screening Scale specifically for college students to self-screen for common mental health challenges (e.g., eating disorder, substance abuse, anxiety, depressive symptoms) and generalized distress. Similarly, Alschuler et al. (38) developed the 11-item College Health Questionnaire, which facilitates behavioral screening of psychological (e.g., anxiety, depression) and social concerns (e.g., academic problems, relationships, finances). Other post-secondary-specific screening and assessment measures include the Counseling Center Assessment of Psychological Symptoms and the Mental Health Continuum model. However, these assessment tools lack an actionable, resource navigational component, which may support students' help-seeking and contribute to the utility of screening (39–41).

Our team has previously developed, validated, and implemented psychosocial instruments for the pediatric population. The clinical HEARTSMAP assessment and management guiding tool supports pediatric acute care providers with psychosocial interviewing and disposition planning (42). MyHEARTSMAP is a self-administered version allowing self- or proxy-screening, to facilitate universal screening by youth and parents (43). Both instruments have demonstrated strong psychometric properties (42–45), high clinical utility (46, 47), and user acceptability (48). These instruments expand on the seminal HEADSS psychosocial interview and history-taking tool (49, 50) and assess ten broad psychosocial sections: Home, Education and activities, Alcohol and drugs, Relationships and bullying, Thoughts and anxiety, Safety, Sexual Health, Mood, Abuse, and Professionals and resources. These psychosocial issues are clinically significant and theoretically supported within human development and socio-ecological models. According to Maslow's Hierarchy of Needs, individuals work up from physiological (e.g., Home, Safety) and psychological needs (e.g., Relationships) toward self-fulfillment-oriented needs (e.g., Education and activities) (51, 52). Within socio-ecological models, these psychosocial areas illustrate how youths' mental well-being is shaped through the interplay of individual (e.g., Mood, Thoughts and anxiety, Safety risk), interpersonal (e.g., Relationships, Abuse, Sexual Health), institutional (e.g., Education and activities), and community factors (e.g., Professionals and resources) (19, 53, 54). We provide further details on the HEARTSMAP tools' measurement model, assessment structure, and resource recommendation decision-making algorithm in Web-Appendix A.

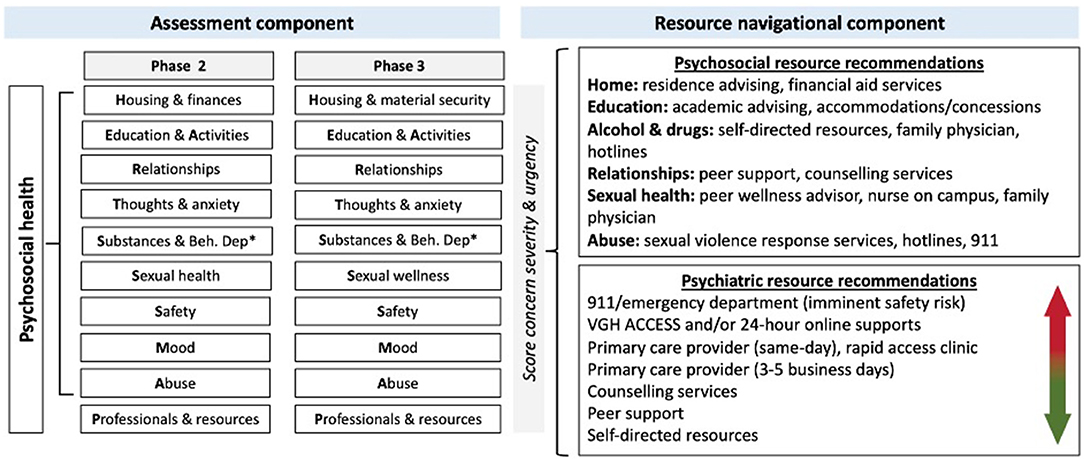

Adapted specifically for post-secondary students, HEARTSMAP-U is a brief, digital, self-administered psychosocial screening tool. Like previous HEARTSMAP versions, HEARTSMAP-U assesses ten psychosocial areas ranging from Housing to Abuse. For each section, students first score their concerns on a 4-point Likert-type scale ranging from 0 (no concern) to 3 (severe concern), using anchor descriptions for each scoring option. Second, students indicate whether they have previously accessed services related to that section (yes/no). After students have answered these questions for all 10 sections, their responses feed into a built-in algorithm that triggers urgency-specific resource recommendations for identified mental health needs (13, 16, 19).
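The flow from section scores to recommendations can be illustrated with a small sketch. The actual HEARTSMAP-U decision-making algorithm is documented in Web-Appendix A and is not reproduced here: the tier labels, the score-to-tier thresholds, and the rule that students already connected to services are pointed back to that care are all hypothetical assumptions. The only rule borrowed from this paper is that no more than mild concern (a score of 0 or 1) triggers strength-building suggestions, which this sketch applies section by section.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical tier labels (assumption): the real tool maps needs onto the
# institution's own tiers of resources and services.
STRENGTH_BUILDING = "strength-building resources"
ROUTINE = "routine campus support"
RECONNECT = "reconnect with current provider"
URGENT = "same-day or urgent support"


@dataclass(frozen=True)
class SectionResponse:
    score: int               # 0 = no concern, 1 = mild, 2 = moderate, 3 = severe
    accessed_services: bool  # previously accessed services for this section (yes/no)


def recommend(responses: dict[str, SectionResponse]) -> dict[str, str]:
    """Map each section's response to a hypothetical urgency tier."""
    recommendations: dict[str, str] = {}
    for section, response in responses.items():
        if response.score not in (0, 1, 2, 3):
            raise ValueError(f"{section}: score must be 0-3, got {response.score}")
        if response.score == 3:
            recommendations[section] = URGENT
        elif response.score == 2:
            # Assumption: students already linked to care are directed back to it first.
            recommendations[section] = RECONNECT if response.accessed_services else ROUTINE
        else:
            # No more than mild concern: offer strength-building material.
            recommendations[section] = STRENGTH_BUILDING
    return recommendations


if __name__ == "__main__":
    example = {
        "Mood": SectionResponse(score=3, accessed_services=False),
        "Relationships": SectionResponse(score=2, accessed_services=True),
        "Education and activities": SectionResponse(score=1, accessed_services=False),
    }
    for section, tier in recommend(example).items():
        print(f"{section}: {tier}")
```

Because the sketch works section by section, one student could receive strength-building suggestions for some sections and urgent support for others in the same session.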

The current paper describes the three-phase process by which previously developed HEARTSMAP tools were adapted into HEARTSMAP-U, a version that is fit-for-purpose for the post-secondary student population, to help students self-identify psychosocial support needs. Our study will serve as a foundational paper on the HEARTSMAP-U tool and its preliminary adaptation. We will collect multi-faceted evidence of instrument validity and reliability in an ongoing manner and report it in later studies.

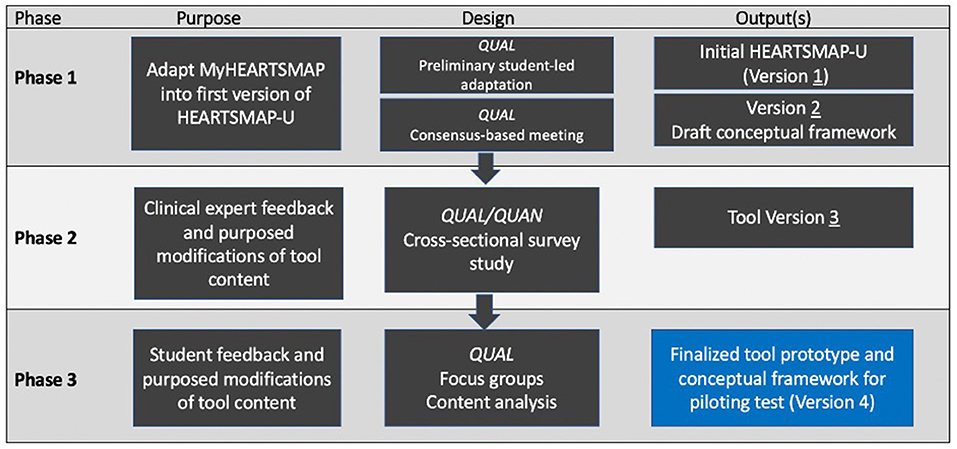

Our tool adaptation process includes three phases and has been informed by established guidelines for developing patient-reported outcome measures in the literature (55–58), and by expertise from diverse stakeholders, including clinical experts and student end-users. We used an iterative, multi-method approach, outlined in Figure 1. For each phase we describe the design, study procedures, and analytic approach. We obtained approval from our institutional research ethics board for Phases two and three, in which research participants were recruited.

Figure 1. Schematic outlining our reported multiphasic tool adaptation process. Figure adapted from Riff et al. (59). QUAN, quantitative; QUAL, qualitative.

We conducted virtual working meetings between November 2018 and April 2019 with a diversely assembled study team of students and co-investigators. The purpose of our one-on-one student consultations was to generate ideas, through a collaborative and consensus-based process, on how HEARTSMAP-U should be adapted to be fit for purpose in the university context. Our co-investigators included a family physician, a clinical psychologist, a youth psychiatrist, an addiction psychiatrist, a patient-reported outcome measurement expert, and a graduate student researcher. The purpose of our co-investigator meetings was to formalize HEARTSMAP-U's intended use and conceptual framework. This included ensuring that the tool assessed relevant psychosocial stressors (e.g., student-specific, age-related) and that its resource recommendations were accessible and matched the desired clinical flow (e.g., how and when specific supports should be accessed).

Prior to co-investigator meetings, a gender- and racially diverse group of student researchers (medical, undergraduate, and graduate) reviewed the pediatric MyHEARTSMAP tool and changed its language and content to suit the post-secondary student population. We placed no restrictions or parameters on the student researchers' proposed modifications. This exercise resulted in the first HEARTSMAP-U version.

Co-investigators used the first HEARTSMAP-U version and the existing HEARTSMAP conceptual framework as a starting point for tool modification. Discussions were free-flowing and open-ended, and investigators' feedback and suggestions were not constrained to the measurement model and conceptual framework of existing HEARTSMAP tools. We used a consensus-based decision-making process: proposed tool changes required 100% investigator consensus, and when we could not reach consensus, we held discussions until all investigators came to agreement. The lead investigator (PV) took comprehensive notes documenting all team decision-making and made approved tool modifications between meetings. We held meetings until the team collectively felt a clinically and contextually relevant tool version had been reached.

Throughout all meetings, we summarized and reported general impressions and key discussion points. We made necessary tool modifications between co-investigator team meetings.

We conducted a cross-sectional survey study with Canadian mental health clinicians who support post-secondary students, guided by an expert review methodology (60).

We recruited a convenience and snowball sample of participants through our professional networks, until data saturation was reached. Participation was self-paced and took place remotely, over our secure study website from July 2019 to September 2019.

First, participants watched a mandatory 3-min instructional video explaining the study procedures, the digital platform, and HEARTSMAP-U (purpose, structure). Second, we asked participants to reflect on their professional experience and formulate a fictional clinical vignette describing a student presenting to their practice in psychosocial distress (mild to severe). Clinicians were expected to provide a brief description of their vignette and to use this information as they progressed through the tool.

Next, for each tool section, clinicians reviewed all HEARTSMAP-U guiding questions and scoring criteria, scored their fictional student's concerns (if any), and completed a survey item asking “Do HEARTSMAP-U's [guiding questions/scoring descriptors] sufficiently capture the full range of [section]-related stressors that youth in your practice might experience? (yes/no)” As a follow-up item, irrespective of their prior response, all participants were asked to provide a qualitative response to “what could be added or changed so the [guiding questions/scoring descriptors] better capture the range of concerns students may experience in relation to [section]?” Clinicians also provided high-level feedback (e.g., tool impressions, content suggestions). All qualitative responses were collected through open-ended survey questions (textbox response).

After scoring all sections, clinicians reviewed the tool-generated support recommendations and assessed whether they over- or underestimated the fictional student's needs. Clinicians had the option of completing a second evaluation with a new vignette. Upon study completion, the core research team analyzed all feedback and identified opportunities to further adapt each tool section (e.g., content, language) so that it covered the full range of concern severity, in terms of both distress and functional impairment. We used the HEARTSMAP-U version resulting from Phase two as the starting point for the Phase three student focus group discussions.

We summarized clinician demographics and responses to dichotomous survey items (yes/no) as counts and proportions. A blended/abductive approach to qualitative content analysis was taken to synthesize and analyze all qualitative responses (61). Based on an initial, holistic exploration of the raw data (inductive process) and existing healthcare measurement literature (deductive process) (62), we developed a tentative coding framework that would encompass participants' qualitative responses (e.g., content coverage, context of use, etc.). We coded qualitative data in three cycles, each introducing an added layer of interpretation and data abstraction. Our research team used reflective memos documented throughout the data collection stage to support the coding process and interpretation. First, we conducted attribute coding, whereby all qualitative survey feedback was structurally coded and organized by tool section, to support feedback interpretation. Second, we conducted descriptive coding and, for each tool section, mapped all clinician responses to our pre-defined coding framework/categories. We separately analyzed and coded guiding question and scoring descriptor feedback. Third, we performed pattern coding to explore variations and sub-categories within existing codes. For clinicians who responded “no” to whether guiding questions and/or scoring descriptors aligned with their professional experience, we coded their qualitative responses into the most appropriate feedback category. For each tool section and feedback category, we report count data on the total number of clinicians/responses that map to them. Two investigators conducted qualitative coding, HB (first cycle) and PV (second, third cycle). We conducted analyses using Microsoft Office Excel and NVivo 12.0.
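The count-and-proportion summaries described above were produced in Excel and NVivo. As a rough illustration of the same arithmetic only, the Python sketch below tallies one yes/no survey item and the share of coded comments per feedback category. The category labels loosely echo the four categories reported in the Results; the input lists are made-up placeholders, not study data.

```python
from __future__ import annotations

from collections import Counter


def yes_no_summary(answers: list[bool]) -> tuple[int, int, float]:
    """Return (number of 'yes' answers, number of answers, proportion 'yes')."""
    total = len(answers)
    yes = sum(answers)  # True counts as 1, False as 0
    return yes, total, (yes / total if total else 0.0)


def category_proportions(coded_comments: list[str]) -> dict[str, tuple[int, float]]:
    """Count coded comments per category and express each as a share of all comments."""
    counts = Counter(coded_comments)
    total = sum(counts.values())
    return {category: (n, n / total) for category, n in counts.most_common()}


if __name__ == "__main__":
    # Placeholder inputs for illustration only (not the study's responses).
    item_answers = [True, True, False, True]
    comments = ["severity coverage", "context of use", "context of use", "language"]
    print(yes_no_summary(item_answers))
    print(category_proportions(comments))
```

Keeping the raw count next to each proportion mirrors how the paper reports both the number of clinicians or comments and the corresponding percentage.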

We conducted a qualitative study with UBC-Vancouver students, guided by cognitive testing and iterative design methodologies (63–65). Similar to Phase two, we incorporated a variation of verbal probing, asking participants targeted questions on tool content and functionality. Through a series of sequential focus groups, we iteratively modified HEARTSMAP-U based on participants' feedback on guiding questions, scoring criteria, tool language (e.g., unclear, insensitive), and other suggestions (e.g., new tool section, format/structure). Focus groups took place between November 2019 and May 2020. Initially, we held in-person sessions, but later moved them online to allow remote participation and to comply with COVID-19 restrictions.

We recruited students through an existing partnership with university administration, health centers, and student organizations. Prospective participants completed an online expression-of-interest and demographic form. Using this information, we recruited a purposive sample of UBC-Vancouver students aged 17 years and older and set up heterogeneous focus groups. We strove for proportional representation of the overall UBC student population across demographics: age, gender and sexual identity, program type, year of study, race/ethnicity, international/domestic status, and lived mental health experiences (Web-Appendix B). We excluded students who were uncomfortable with being audiotaped.

During each focus group, we first gave participants a high-level introduction to HEARTSMAP-U (e.g., purpose, components). Next, we reviewed the tool components (guiding questions, scoring criteria) for each tool section. During this time, we asked participants to share their first impressions and engage in a dialogue around the tool's (1) comprehensiveness (issues important to you and your peers), (2) relevance (realistic content reflecting your experiences), and (3) understandability (easily understood language). We encouraged participants to suggest tool modifications for the study team's consideration, either through group discussion or written feedback. We audiotaped focus groups, had them professionally transcribed verbatim, and checked the transcripts against the original audio for accuracy.

We conducted two sets of analyses using focus group data. Consistent with analytic guidelines, we treated the focus group as our unit of analysis (66). First, between focus groups, the core research team reviewed notes taken by RA documenting the tool modifications proposed by students. For each comment or suggested modification, we considered the general response from other focus group members (e.g., endorsed, objected) and whether it was consistent with clinical guidelines and earlier focus groups. Focus groups were held until data saturation was achieved, that is, until a session yielded no feedback that investigators had not already considered, or the new considerations were mostly minor (e.g., word choice, grammar) (66).
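The saturation rule can be expressed as a check run after each session. In this sketch, each piece of feedback is reduced to a hypothetical issue key plus a modification level borrowed from Coons et al.'s minor/moderate/substantial scale used in the content analysis. Reading "mostly minor" as "every new item is minor" is a stricter assumption than the paper states, and in the study this judgment was made by the investigators rather than computed.

```python
from __future__ import annotations

from enum import IntEnum
from typing import Optional


class Level(IntEnum):
    MINOR = 1        # e.g., word choice, grammar
    MODERATE = 2     # subtle content/meaning changes
    SUBSTANTIAL = 3  # extensive content/meaning changes


def is_saturated(session: list[tuple[str, Level]], considered: set[str]) -> bool:
    """True if every issue not already considered is only a minor modification."""
    new_levels = [level for key, level in session if key not in considered]
    return all(level == Level.MINOR for level in new_levels)


def first_saturated_session(sessions: list[list[tuple[str, Level]]]) -> Optional[int]:
    """Return the 1-based number of the first session that adds nothing substantive."""
    considered: set[str] = set()
    for number, session in enumerate(sessions, start=1):
        # Saturation is judged against earlier sessions, so session 1 never qualifies.
        if number > 1 and is_saturated(session, considered):
            return number
        considered.update(key for key, _ in session)
    return None


if __name__ == "__main__":
    # Hypothetical feedback keys for illustration only.
    sessions = [
        [("finances scope", Level.SUBSTANTIAL), ("jargon", Level.MINOR)],
        [("finances scope", Level.SUBSTANTIAL), ("add examples", Level.MODERATE)],
        [("add examples", Level.MODERATE), ("typo", Level.MINOR)],
    ]
    print(first_saturated_session(sessions))  # prints 3
```

Session 2 still introduces a moderate change, so the rule only fires at session 3, where the one new item is a typo.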

After reaching sufficient data saturation, we performed an in-depth, abductive qualitative content analysis, with inductive and deductive components, using verbatim transcripts and research memos. First, an investigator (RA) deductively conducted attribute-based coding to organize and sort all student comments by session and tool section. A second investigator (PV) interpretively performed descriptive coding using Stewart et al.'s framework to categorize sectional feedback as either content- or format/interface-related (67). Tool content-related feedback and modification suggestions were further analyzed through pattern coding using two additional frameworks. The COSMIN content validity framework and its operational definitions for content relevance, representativeness, and understandability were used to analyze and characterize students' proposed modifications with respect to these categories (55). Coons et al.'s framework was used to classify modifications as (1) minor, those not expected to change content or meaning (e.g., switching format from paper to online); (2) moderate, subtle content/meaning changes (e.g., item wording, ordering); or (3) substantial, extensive content/meaning changes (e.g., changing response options, new guiding questions) (68). Inductive, descriptive coding was also performed to characterize and report comments and feedback that did not fit within our a priori analytic frameworks.

For each tool section, we report representative quotes for each modification type and inductively derived category, and reference quotes by focus group number (FG X). We summarize participant sociodemographics using descriptive statistics and use the chi-square test of independence (alpha = 0.05) to compare the demographic profile of participating students with that of students who expressed interest but did not take part in the study (e.g., not invited, declined).
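As a sketch of the participant versus non-participant comparison, the function below computes Pearson's chi-square statistic for an r × c contingency table (for example, rows = participated or not, columns = categories of one demographic variable), its degrees of freedom, and a p-value from the chi-square distribution. It is written in plain Python to stay self-contained. It applies no continuity correction, which is an assumption since the paper does not say whether one was used, and the usual caveat applies that the approximation is unreliable when expected cell counts are small.

```python
from __future__ import annotations

import math


def _lower_regularized_gamma(a: float, x: float) -> float:
    """P(a, x) from its power series; adequate for moderate x (roughly x < 500)."""
    if x <= 0:
        return 0.0
    term = total = 1.0 / a
    denom = a
    for _ in range(100_000):
        denom += 1.0
        term *= x / denom
        total += term
        if term < total * 1e-15:
            break
    return min(1.0, total * math.exp(-x + a * math.log(x) - math.lgamma(a)))


def chi_square_independence(table: list[list[int]]) -> tuple[float, int, float]:
    """Pearson chi-square test of independence without continuity correction.

    Returns (statistic, degrees of freedom, p-value).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    if len(row_totals) < 2 or len(col_totals) < 2:
        raise ValueError("need at least a 2 x 2 table")
    n = sum(row_totals)
    statistic = 0.0
    for row, row_total in zip(table, row_totals):
        for observed, col_total in zip(row, col_totals):
            expected = row_total * col_total / n if n else 0.0
            if expected == 0:
                raise ValueError("every row and column needs at least one observation")
            statistic += (observed - expected) ** 2 / expected
    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    # Survival function of the chi-square distribution: 1 - P(dof / 2, statistic / 2).
    p_value = 1.0 - _lower_regularized_gamma(dof / 2, statistic / 2)
    return statistic, dof, p_value


if __name__ == "__main__":
    # Illustrative counts only, not the study's demographic data.
    stat, dof, p = chi_square_independence([[10, 20], [20, 10]])
    print(f"chi2 = {stat:.3f}, df = {dof}, p = {p:.4f}")
```

For comparison, `scipy.stats.chi2_contingency(table, correction=False)` computes the same Pearson statistic and p-value; with its default `correction=True`, SciPy applies Yates' continuity correction when there is one degree of freedom, which yields a smaller statistic for 2 × 2 tables.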

A total of five students took part in preliminary tool adaptation activities: two undergraduate students and three medical students. Subsequently, five co-investigators took part in three rounds of discussion and iterative tool modification, after which all co-investigators agreed on the HEARTSMAP-U prototype content. One clinical investigator contributed feedback outside of the organized group discussions.

We largely retained MyHEARTSMAP's conceptual framework, recognizing the universality of the measured constructs; however, several sections were redefined. MyHEARTSMAP's “Home” section measures only the safety and supportiveness of the home environment, which may not capture the transient nature of student housing. For HEARTSMAP-U, we modified this section into “Housing arrangements and finances” to include an assessment of housing stability and ease of managing housing-related responsibilities (e.g., paying bills, cleaning, cooking), in addition to housing safety/supportiveness. Finalized construct definitions are reported in Web-Appendix C and our conceptual framework is illustrated in Figure 2.

Figure 2. Conceptual framework of the finalized HEARTSMAP-U tool version, following adaptation among Canadian mental health professionals (Phase 2) and post-secondary students (Phase 3).

Investigators decided that MyHEARTSMAP's severity scoring spectrum (none to severe) required modification to accurately reflect the student population. For example, “Alcohol and drugs” needed to reflect the social acceptability of leisurely drinking and marijuana use among young adults. For several sections, investigators agreed that two different concepts were being measured together (e.g., thought disturbances and anxiety), and that these needed to be assessed consistently and delineated across all severity levels using “OR” Boolean operators. The team modified HEARTSMAP-U's resource recommendations so that they reflected the appropriate tier of resources/services as outlined by the post-secondary institution (69). Investigators also identified opportunities to incorporate strength-building recommendations, triggered when students report no more than mild concerns. Feedback across all three working group sessions is summarized by tool section in Web-Appendix C.

Participating mental health clinicians (N = 28) mostly identified as women (89%) and worked at large Canadian post-secondary institutions (96%). Most clinicians were either registered counselors (32.1%) or psychologists (32.1%) and were affiliated with their institution's counseling (60.9%) and/or health services (30.4%). Complete demographic details are summarized in Table 1.

Clinician-prepared vignettes were scored across all severity levels (0–3) for all sections except “Alcohol and drugs” and “Abuse,” which were only scored from no concern (0) to moderate concern (2). Of the 46 completed fictional cases, most described mild (46%) or moderate (44%) psychosocial concerns. Of cases reporting psychological challenges, participants assessed 20% as severe, compared to only 2–4% of cases reporting on other psychosocial issues. A total of 18 (64%) clinicians chose to complete a second vignette evaluation and 17 (61%) expressed interest in referring a colleague to join the study.

Participating clinicians felt that HEARTSMAP-U's guiding questions (46–86%) and scoring criteria (54–82%) aligned with their own clinical characterization of each tool section. A majority felt that the tool was “very thorough,” that guiding questions were “simple yet broad,” that scoring criteria were “easy” to understand, and that there was “nothing to add.” Conversely, between 14% (Housing; Professionals and resources) and 54% (Education and activities) of clinicians felt that the guiding questions, and between 18% (Housing; Abuse) and 46% (Sexual Health) felt that the scoring descriptors, required more characterization to match their observations of each psychosocial construct. From clinicians' qualitative responses, we derived four feedback categories: (1) coverage of concern severity, consistent with the tool's intended use; (2) tool suitability in the clinician's own context of use; (3) minor language/wording issues with minimal impact on sectional content/meaning; and (4) content that clinicians perceived as missing but that was covered elsewhere in the tool. We elaborate on each of these categories below. Counts and proportions of participants' feedback by coding category are summarized in Tables 2 and 3 for guiding questions and scoring descriptors, respectively.

Of all guiding question and scoring criteria comments, 23% and 39%, respectively, focused on how well sections captured the behaviors and experiences students need in order to self-evaluate the presence of concerns across the entire spectrum of severity. Two major sub-categories emerged from these comments: improving scale gradation and broadening content. Clinicians felt scoring descriptors needed to accommodate students who may fit “in-between” existing criteria. For example, in the “Relationships” section, one participating clinician suggested we:

“Address [the] situation where someone is not losing connections but is working on building confidence to have romantic connections.”

We changed the score 1 descriptor to include instances where students may have emotionally supportive connections but may struggle to build or maintain them. For “Education and activities,” clinicians indicated two instances where partial criteria could be met, and students may struggle to score themselves:

“Need to capture that mental health concerns are impacting academic performance, but student is still actively engaging in studies”

“Need options that capture languishing in one area only. Academics and activities are separate constructs. You can be functioning in one and not in the other.”

We used an “OR” Boolean operator to create two scoring pathways across scores 1–3, distinguishing academics from other activities, and allowing students to select the most severe score applicable to their situation. Under score 1, we have also taken into consideration instances where students may be engaged in class, but their academic performance may be declining.
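The “OR” rule amounts to taking the more severe of the two pathways. A minimal sketch, assuming each pathway (academics, other activities) has already been rated on the 0–3 scale; the pathway names are illustrative, and the real tool presents anchor descriptions rather than asking for two separate numbers.

```python
from __future__ import annotations


def or_pathway_score(pathway_scores: dict[str, int]) -> int:
    """Combine 'OR' pathways by selecting the most severe applicable score (0-3).

    A section with no endorsed pathway scores 0 (no concern).
    """
    for pathway, score in pathway_scores.items():
        if score not in (0, 1, 2, 3):
            raise ValueError(f"{pathway}: score must be 0-3, got {score}")
    return max(pathway_scores.values(), default=0)


if __name__ == "__main__":
    # Engaged academically but struggling with other activities.
    print(or_pathway_score({"academics": 1, "other activities": 3}))  # prints 3
```

Taking the maximum means a student who is functioning in class but withdrawing from other activities is not scored as having only a mild concern overall, which reflects the clinician comment that the two constructs can diverge.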

Feedback often focused on broadening certain criteria and guiding questions to encompass a larger cross-section of the general student population, examples include:

“What about behavioural addictions (e.g., gambling, gaming)” (Alcohol and drugs)

“Include family relationships” (Relationships)

“Financial abuse is not listed - some family members have taken a client's student loan and used it for themselves” (Abuse)

“Needs to encompass more range of emotions - anger and shame in particular are missing.” (Mood)

The “Alcohol and drugs” section was expanded to include additional substances (marijuana, prescribed medication, illicit substances) and behavioral addictions (e.g., excessive exercise or sex, gambling).

A sizable proportion of guiding question (41%) and scoring criteria (28%) feedback focused on introducing a diagnostic level of detail and specificity to each section's content. Clinicians requested that the tool assess sub-categories of its existing broad psychosocial areas. For example, in the “Relationships” section, clinicians felt that HEARTSMAP-U did not explore specific relationship types or problems, and they proposed guiding questions that consider:

“Parental expectations to perform or excel impacting relationships, being able to communicate with one's parents.”

“Break-ups specifically”

“Friends nearby, versus those only met through social media (and not physically available)”

HEARTSMAP-U's general assessment of relationship challenges at mild, moderate, and severe levels is suitable for its intended use as a multi-domain psychosocial screen. The study team deemed feedback calling for added detail and subcategorization most relevant to the clinician's own assessment context, rather than to initial screening. A lengthier tool may also reduce usability and increase respondent burden.

A large portion of the concerns raised about guiding questions (31%) and scoring descriptors (27%) had already been addressed in other tool sections that participants may not yet have reviewed. In the “Thoughts and anxiety” section, participants expressed that:

“I'm not sure if this is coming later in the questionnaire but adding more depressive symptom questions. Perhaps that will be in the mood section I haven't come to yet.”

Language-related concerns made up a small proportion of guiding question (4%) and scoring descriptor (8%) feedback. These comments flagged language that students may misinterpret or find confusing, such as “psychosocial,” “intoxication,” and “intrusive thoughts.” In another instance, participants felt that the tool's singular use of “partner” may stigmatize students in polyamorous relationships.

Participants rated the appropriateness of 265 triggered recommendations and judged most of them (70%) to be consistent with the fictional students' support needs. Participants felt the remaining tool-generated recommendations underestimated (18%) or overestimated (12%) support needs. Participants also expressed concerns about recommending emergency services (e.g., 911, emergency department) in the absence of imminent safety risk; instead, they considered same-day primary care, rapid access clinics, and 24/7 e-counseling to be appropriate supports.

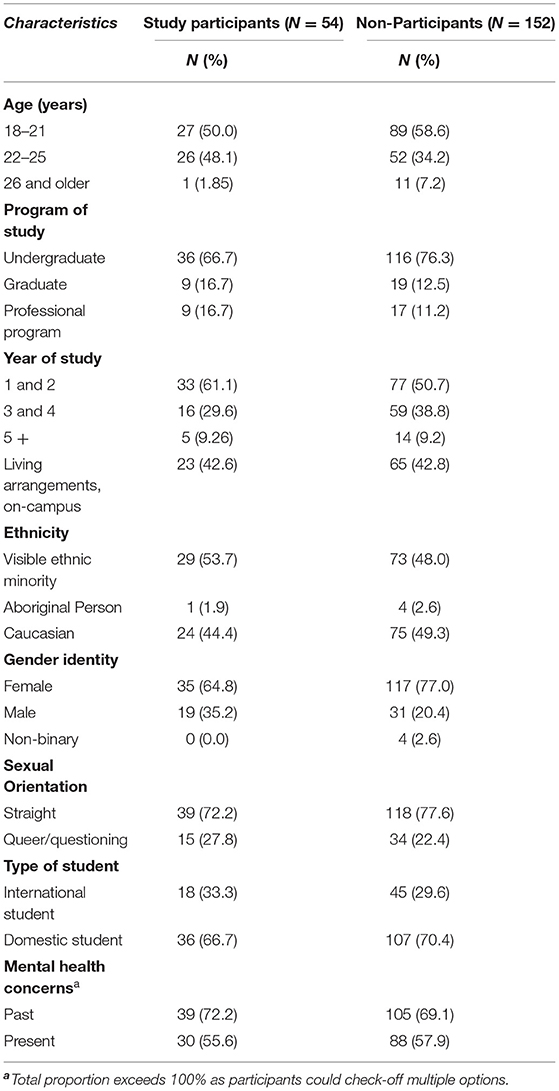

Demographic distributions did not differ significantly (P > 0.05) between participating students and those not invited to a focus group session (non-participating students). A total of 54 students took part in six focus group sessions, each 2 hours long. We had nearly equal proportions of students aged 18–21 (50%) and 22–25 years (48.1%). Approximately two-thirds of participants identified as female and as undergraduate students. Most participants were in their first or second year (61.1%), lived off-campus (57.4%), identified as straight (72.2%), and identified as part of a visible ethnic minority (53.7%). Over 80% reported experiencing mental health challenges in the past (72.2%) and/or present (55.6%). Demographic details are summarized in Table 4.

Table 4. Demographic characteristics of phase three student participants and non-participating students who expressed interest.

Earlier focus groups (FG 1–3) emphasized substantial content-related modifications concerning the relevance and representativeness of HEARTSMAP-U's content. In later sessions, students raised mild to moderate content suggestions. The proportion of focus group participants engaged in group discussion remained consistent across sessions (Web-Appendix D).

Participants suggested several interface-related modifications, summarized below. First, a privacy disclaimer at the beginning of the tool, so that users are aware of the scope, intended purpose, and confidentiality implications of completing HEARTSMAP-U. Second, a progress bar with a coordinated color scheme (e.g., green = complete, orange = in progress) to motivate users to complete the tool. Third, the ability to download screening results, potentially to share with a care provider, and tool recommendations that link to service information the user can act on directly. Finally, participants felt that pairing the tool with calendar apps would ease repeat screening and appointment booking.

When probed, students did not name any novel psychosocial concepts that were completely missing from the tool. However, in sessions one and two, participants felt that a student's financial situation is a crucial stressor affecting their mental well-being, yet the tool assessed it only through housing-related finances (e.g., bills, rent). One participant summarized the issue as:

“Regarding finances, this is quite broad, perhaps distinguish between living affordability (house/shelter, food, health) and school (tuition); perhaps a better term would be security or financial stability/security.” (FG1)

After the first focus group session, study investigators revised the overall concept to measure “Housing and Material Security,” shifting the focus away from strictly housing and financial difficulties and toward whether necessities in general were met. Figure 2 displays our conceptual framework before and after the focus groups.

Across all focus group sessions, most students felt that HEARTSMAP-U's psychosocial areas applied to their lived experiences and captured the challenges they experience within and outside the post-secondary educational context. One student described the tool's multi-dimensional nature as:

“Going into the different facets could be really helpful… people sometimes underestimate how much other stuff can really influence their mental health. Like if you're really struggling with school or rent money, that really has an impact on mental health. But sometimes we don't realize it. We just think oh, it's because I'm just having a hard time.” (FG 4)

Participants found the graded scoring spectrum to be an important attribute as it recognizes a middle ground, which could allow more students to see themselves in the options. One participant expressed:

“I like the use of “but” in [the] sections, a lot of questionnaires have all or nothing questions when sometimes you do struggle with the problems but have implemented coping skills.” (FG 3)

However, many students were concerned that the scoring gradation was not always clearly delineated in psychiatric sections such as “Mood” and “Thoughts and Anxiety”: participants felt that descriptors for scores 0 and 1 were “blurred” and they “had a little bit of trouble distinguishing them.” Students also felt that descriptors should emphasize functional impairment and “refer more to actions” associated with each level of concern severity, rather than focusing only on how students are feeling. Participants also noted that score 0 was strength-oriented, whereas the remaining options reflected a gradient of deficits. They felt the score 0 language should be more neutral and should not assume that the student is flourishing. One participant suggested:

“Resolve the language for 0 since it seems— it sounds a little idealistic for students. Instead of the word 'satisfied', …say like, “I'm keeping up and maintaining my academics and activities”…I think that would be a better capture of the baseline.” (FG 2)

Where applicable, the research team revised the scoring criteria to follow a more consistent pattern across sections. A score of 0 would indicate no perceived challenges (neutral); a 1 would indicate challenges with no to minimal functional impairment or distress (i.e., the student can still manage self-care and daily activities); a 2 would indicate challenges with moderate functional impact (i.e., difficulty managing self-care and daily activities); and a 3 would indicate challenges with severe impairment or distress that prevent self-care and daily activities. Participants in later sessions affirmed and supported these changes.
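The revised gradient can be read as a small lookup from functional impact to score. The sketch below is illustrative only: it assumes a hypothetical input describing whether the student perceives any challenge and how much it affects self-care and daily activities, and the names `ImpactScore` and `score_from_impact` are ours, not part of HEARTSMAP-U, whose actual descriptors are worded differently in each section.

```python
from enum import IntEnum


class ImpactScore(IntEnum):
    """0-3 gradient paraphrased from the revised scoring pattern (hypothetical names)."""
    NO_CHALLENGES = 0    # no perceived challenges (neutral wording)
    MINIMAL_IMPACT = 1   # challenges, but self-care/daily activities still manageable
    MODERATE_IMPACT = 2  # challenges that make self-care/daily activities difficult
    SEVERE_IMPACT = 3    # severe impairment/distress preventing self-care/daily activities


# Hypothetical vocabulary for how much a challenge affects self-care and daily activities.
_IMPACT_LEVELS = {
    "none": ImpactScore.MINIMAL_IMPACT,  # a challenge is present, but with no impairment
    "minimal": ImpactScore.MINIMAL_IMPACT,
    "moderate": ImpactScore.MODERATE_IMPACT,
    "severe": ImpactScore.SEVERE_IMPACT,
}


def score_from_impact(has_challenges: bool, functional_impact: str = "none") -> ImpactScore:
    """Map a perceived challenge and its functional impact onto the 0-3 gradient."""
    if not has_challenges:
        # Score 0 only records the absence of perceived challenges;
        # it does not assume the student is flourishing.
        return ImpactScore.NO_CHALLENGES
    try:
        return _IMPACT_LEVELS[functional_impact]
    except KeyError:
        raise ValueError(f"unknown functional impact level: {functional_impact!r}") from None


print(score_from_impact(False).name)             # NO_CHALLENGES
print(score_from_impact(True, "moderate").name)  # MODERATE_IMPACT
```

Keying the score on functional impact rather than on feelings alone mirrors the students' request that descriptors “refer more to actions”; the impact vocabulary here is an assumption made for illustration.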

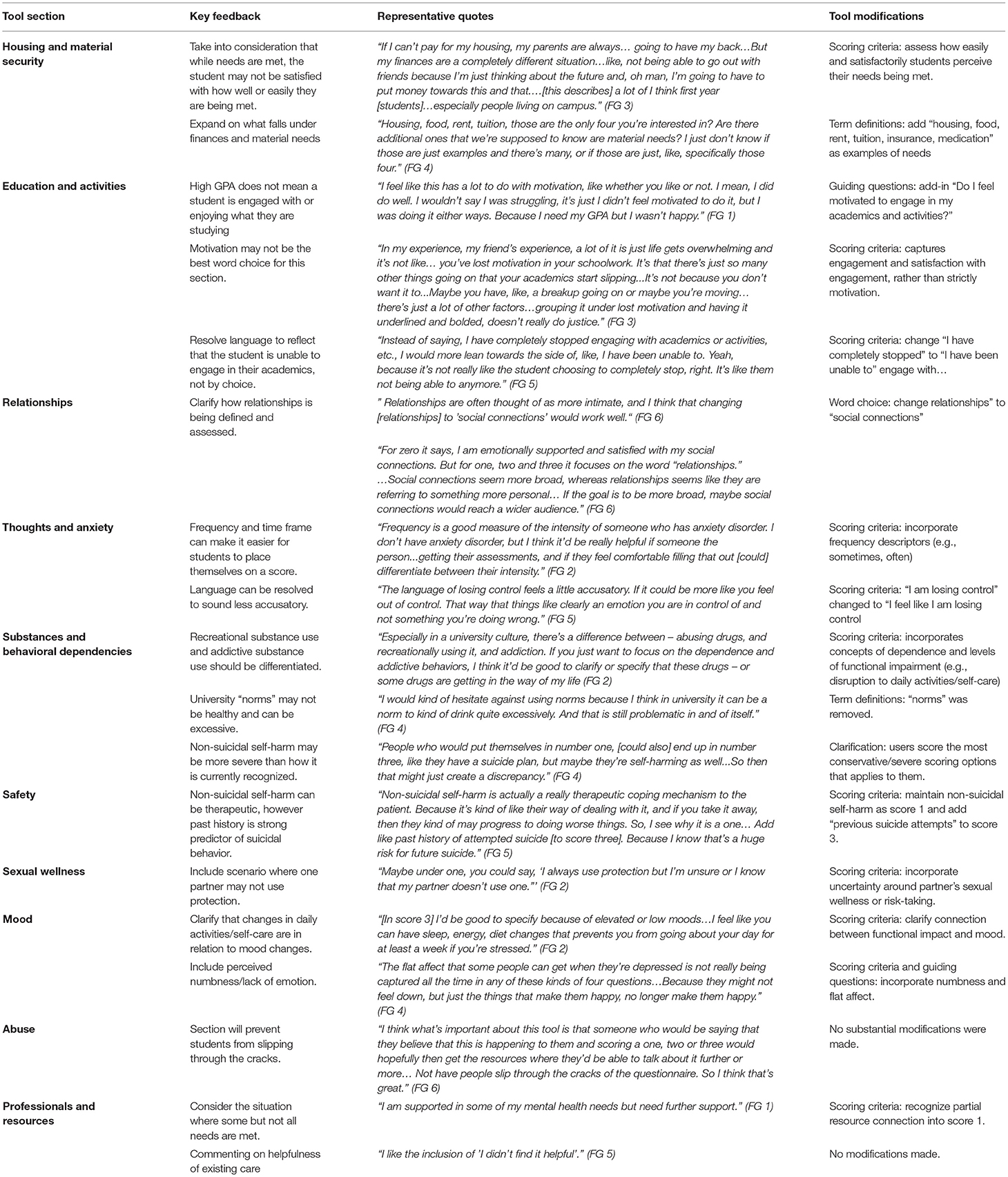

For each section, students highlighted opportunities to refine content and improve its relevance to the concept it maps to. For example, in sessions one and two, participants expressed that engagement level and satisfaction should be included in “Education and Activities” to help evaluate how academics and extracurricular activities interact with students' mental well-being. One student expressed that being “engaged and [also] unsatisfied should be included [in the tool] because in that way [the University] can measure how meaningful or successful the activities [on] campus are for students.” (FG 6). In another instance, participants felt the “Mood” section focused too heavily on sadness- or anger-related emotions and needed to incorporate situations where students may perceive “no emotions or numb.” For “Sexual wellness,” students felt that score 0 (healthy sexual relationships) needed to clearly reflect protection-less, consensual sex between long-term, responsible intimate partners, and that score 3 (high-risk behavior) needed to integrate discussion of capacity to consent. Table 5 reports representative quotes and corresponding modifications relating to students' perceptions of HEARTSMAP-U's relevance.

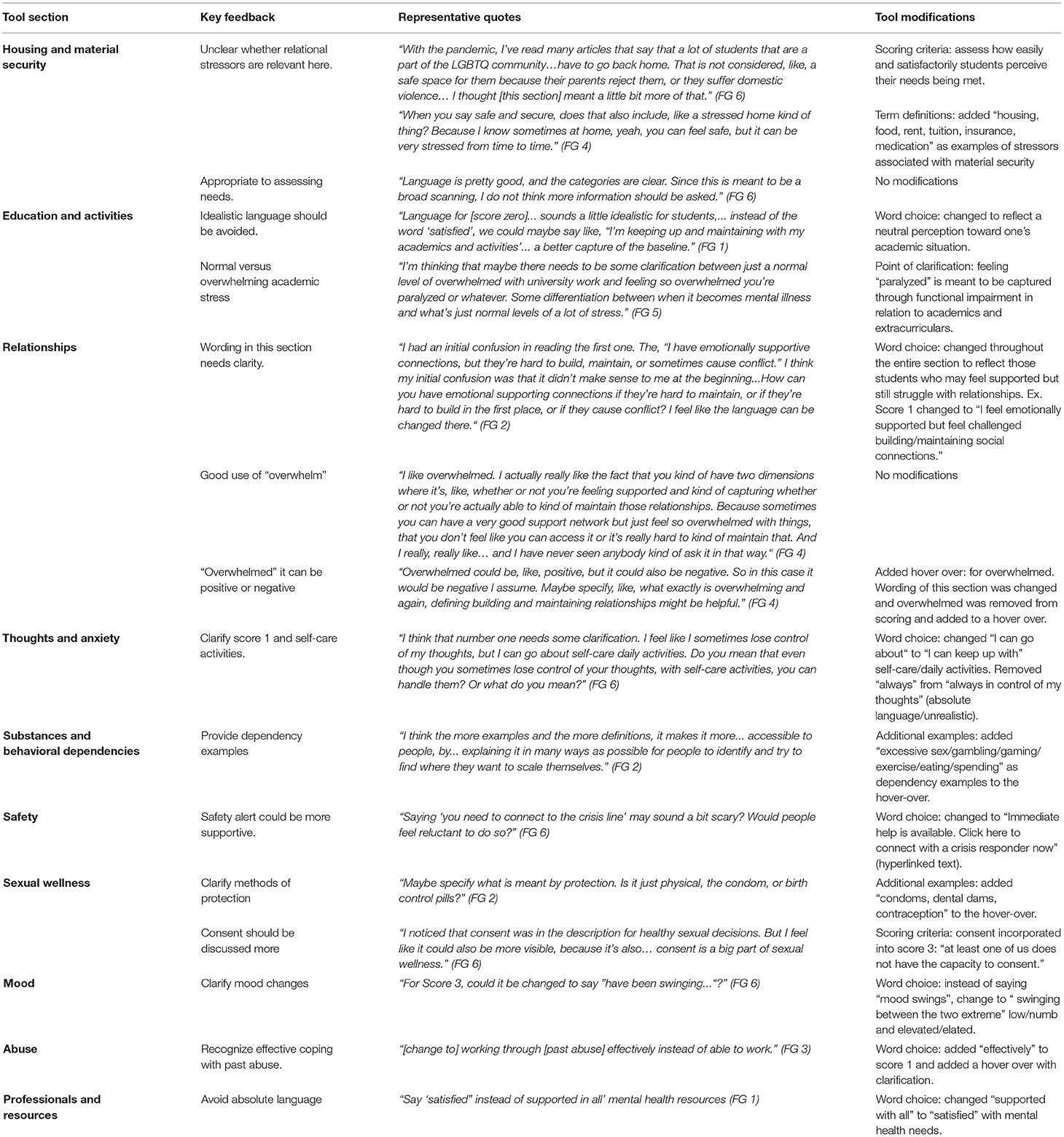

Table 5. Content-relevance related phase three student feedback with representative quotes and tool modifications.

Students agreed that, overall, HEARTSMAP-U's scoring descriptors, guiding questions, and purpose were clear and easily understood. Guiding questions were perceived as helpful and provided additional “clarification” on the section to be scored. Students suggested multiple modifications to improve content understandability. In session one, participants felt many terms and phrases (e.g., control over thoughts, basic needs, emotional support) were unfamiliar or ambiguous. Participants expressed the need for a “common language” between the tool user and researcher, so that students comprehend questions and scoring criteria as intended, “that way connotations aren't playing as much of a role.” In response, we introduced a “hover-over” feature for any term or phrase about which students expressed uncertainty or confusion. In sessions 2–6, students consistently approved of this feature and helped build a library of concise definitions in student-friendly language. Students stressed the need for a clear instructions page at the beginning of the tool, to ensure students knew how to approach each section. Participants felt that if the user is uncertain between two scoring options (e.g., score 1 or 2), they should select the more conservative (higher) score that applies to their situation. For example, under “Relationship,” “some relationships might be fine, but others aren't. Then you're basing [your score] on the struggling ones.” Table 6 summarizes participants' comprehension-related feedback for each tool section, followed by the study team's agreed-upon modifications. Overall, students in later sessions (5–6) affirmed and supported content modifications made in response to concerns raised in earlier sessions (1–4).
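The rule participants proposed for uncertain cases amounts to taking the maximum over the options that plausibly apply. A minimal sketch, assuming scores are integers on the 0–3 scale; `conservative_score` is a hypothetical helper for illustration, not part of the tool:

```python
from typing import Iterable


def conservative_score(candidate_scores: Iterable[int]) -> int:
    """Return the highest (most conservative) of the candidate scores.

    Covers both situations students described: a user torn between two
    options (e.g., 1 or 2), and a section such as "Relationship" where some
    relationships are fine but others are not, so the score follows the
    struggling ones.
    """
    scores = list(candidate_scores)
    if not scores:
        raise ValueError("at least one candidate score is required")
    for s in scores:
        if not 0 <= s <= 3:
            raise ValueError(f"score out of range 0-3: {s}")
    return max(scores)


print(conservative_score([1, 2]))     # 2  (uncertain between 1 and 2)
print(conservative_score([0, 0, 2]))  # 2  (two relationships fine, one struggling)
```

Taking the maximum errs toward over- rather than under-identifying need, which matches the participants' rationale; the sketch does not model how section scores feed into the tool's recommendation algorithm.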

Table 6. Comprehension-related phase three student feedback with representative quotes and tool modification.

We document the multiphasic adaptation of previously developed pediatric psychosocial assessment tools into HEARTSMAP-U, a version fit-for-purpose for the post-secondary student population. In Phase one, the study team arrived at a prototype considered clinically and contextually suitable for post-secondary students. In Phase two, clinician participants saw alignment between HEARTSMAP-U's content and their clinical experiences. Among those who offered constructive feedback, a substantial share (28–41%) called for diagnostic-level content detail and specificity, which may not be relevant for screening purposes. Between 23% and 39% of clinicians provided modifications or feedback related to sectional content and severity coverage, consistent with the tool's intended use as a self-administered screener. In Phase three, students provided feedback for improving content relevance and understandability. Modifications focused on creating a common language between tool users and researchers, and on ensuring scoring options were realistic and distinguishable. Students did not propose any novel psychosocial domains that HEARTSMAP-U does not already measure, directly or indirectly.

Our tool adaptation process and methods built on the existing screening literature and on prior student-specific rapid screening tools. The Symptoms and Assets Screening Scale is a longer (34-item) instrument whose content focuses mostly on psychological concerns. In the absence of more generalized psychosocial screening, students' resource needs may be underestimated or only partially understood. The College Health Questionnaire addresses these concerns and allows for multiple-domain screening. Both instruments display promising reliability and construct validity evidence. However, reporting of their development processes is limited and describes a traditional “top–down” approach, with little mention of involvement from students or from clinical experts outside the investigator team. Engaging the target population has important implications for refining tool content, language, and instructions: it contributes evidence toward the instrument's content validity and helps ensure the measure reflects students' lived experiences and vernacular (63). Although not intended for screening or assessing mental health issues, the Post-Secondary Stressor Index (PSSI) is an institution-facing tool that evaluates students' exposure to stress and supports targeted mental health intervention and programming (70, 71). In developing and evaluating the PSSI through an extensive process of student engagement, Linden et al. noted that their tool showed markedly stronger psychometric properties than similar tools previously developed without student involvement. The ISPOR Patient-Reported Outcomes Good Research Practices Task Force Report highlights the critical contribution that end-user engagement makes to the content validity argument for an instrument and to its quantitative psychometric properties (72, 73).
In line with this literature, HEARTSMAP-U's adaptation was closely informed by students, who are content experts in their own lived experiences and in the collective experience of being a post-secondary student. Whereas previously described measures have focused exclusively on assessment, scoring on HEARTSMAP-U feeds into a complex decision-making algorithm that generates severity- and urgency-specific recommendations for both psychiatric and social/functional resources. The tool's action-oriented approach to assessment may help avoid “run-around” and potentially unnecessary referrals to already scarce psychiatric services.

During Phase two expert review, a minority of clinicians (23–39%) identified opportunities to improve severity coverage across HEARTSMAP-U's sections, particularly for concerns that fall between the “none” and “mild” scoring options. Capturing subthreshold and milder cases is a critical challenge for existing self-report measures (74). If transient and non-severe issues are not explicitly reflected in the scoring criteria, these cases may go underreported due to stigma and remain unmanaged until they reach crisis. Recently, transdiagnostic clinical staging models of mental illness have received considerable attention as an improved means of characterizing the progression of mental disorders into adulthood. HEARTSMAP-U's symptomatic and functional characterization of low- to high-severity concerns may support its utility in screening for mental disorders at their earliest stages, from non-specific to subthreshold symptoms (75). A sizable proportion of clinicians (28–41%) provided feedback better suited to their own practice and context of use (e.g., diagnostic-level probing), which would not be consistent with HEARTSMAP-U's intended use as a brief screener. These comments may reflect outstanding assessment needs and challenges in post-secondary counseling settings, where validated, standardized intake procedures and measures are infrequently used, difficult to interpret, and can be time-consuming (76). Some clinicians (18%) scored the tool's resource recommendations as underestimating the support needs of their fictional case. This may have been an artifact of our online survey setup, in which we asked participants to assess the appropriateness of each individual recommendation. By design, HEARTSMAP-U pairs intensive and lower-tier resources, recognizing that multiple treatment and self-management modalities can help students cope with the long wait times associated with scarcely available psychiatric resources (77).
We believe that if clinicians had been asked to assess the appropriateness of their case's service recommendations holistically (low-tier and intensive options together), support needs would have been perceived as sufficiently met. Future studies with a modified data collection instrument would help verify whether this was a methodological flaw.

In Phase three focus group sessions, students felt the severity gradation (impairment, frequency, intensity) needed to be more distinguishable across scoring options. These comments are unsurprising given that internal emotional states can be difficult to self-score concretely, and numeric scales often have arbitrary scaling, with unclear distances between answer options (78). Student feedback also allowed us to revise tool language and build in mechanisms (e.g., hover-overs, examples) that avoid assumptions and gender- and culturally specific references, and to use person-first language where possible (79). Future validation studies will confirm whether students interpret and respond to tool content as intended.

Post-secondary student mental well-being is a growing national and international priority, with recent standards calling for student-centeredness to be integrated within campus mental health strategies, to ensure responsiveness to students' perceived needs and experiences (19, 25). In striving toward these principles, our work demonstrates the development of early detection capacities built for, by, and with post-secondary students. Growing research demonstrates the potential of campus-based mental health screening interventions to help students identify unmet support needs and initiate resource-seeking (33, 35, 80). Unfortunately, measuring what matters most to end-users and patients has not traditionally been prioritized in the psychological instrument development literature (81). Diverse student engagement was a key strength of the current study. Purposive sampling allowed us to ensure that focus groups reflected the voices of students from a range of socially co-created realities, who may differ in their experiences of stigma, mental health literacy, barriers to care, and systemic challenges (e.g., oppression, discrimination). Another methodological strength is our use of vignettes during Phase two expert review, which allowed us to engage clinicians interactively and elicit their feedback on tool content, given they could not self-administer the tool.

We note several study limitations. Phase one discussions and outputs may have been biased by the study team's proximity to the project. However, subsequent feedback and insights from clinicians and students offered additional perspectives and opportunities to further refine HEARTSMAP-U's content. In Phase two, we did not outline clear parameters for vignette development. As a result, no vignettes evaluated the tool's scoring criteria for severe “Alcohol and drug” and “Abuse” concerns. However, clinical investigators reviewed the tool's service provisions mapped to these severe scores and found they matched current clinical safety protocols. Additionally, we restricted focus groups to students of a single large post-secondary institution in Western Canada. Students from smaller institutions (e.g., community colleges, vocational schools), rural regions, and francophone communities may see the need for further modification of tool content to align with their experiences and learning environments (82, 83). Still, our findings may be transferable to other similarly large, research-intensive institutions.

HEARTSMAP-U has undergone a rigorous, systematic, and multi-stage tool adaptation process with clinical experts and student end-users. Subsequent validity investigations will report evidence on HEARTSMAP-U's measurement properties, which will be crucial in gauging the tool's suitability for universal screening and the early detection of students' mental health needs.

The datasets presented in this article are not readily available because the study participants did not agree for their data to be shared publicly, due to the nature of the research. Requests to access the datasets should be directed to Punit Virk, pvirk@bcchr.ca.

The studies involving human participants were reviewed and approved by University of British Columbia Behavioural Research Ethics Board. The patients/participants provided their written informed consent to participate in this study.

PV and QD contributed to conception and design of the study. PV and RA conducted data collection. PV, RA, and HB organized the database. PV performed the analysis and wrote the first draft of the manuscript. All authors contributed to manuscript revision, read, and approved the submitted version.

This work was supported by The University of British Columbia Faculty of Medicine Strategic Investment Fund and The University of British Columbia Office of the Vice-President, Students. Funding sources had no involvement in study design, data collection, data analysis, writing the report, or the decision to submit for publication.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

We would like to express our deepest gratitude to Dr. Heather Robertson for her insights and guidance throughout Phase one of this work. We are grateful for all the time, support, and expertise of our student researchers Paula Gosse, Lea Separovic, Vanessa Samuel, Allison Lui, Max LeBlanc, Andy Lui, and Bohan Hans Yang during the early stage of this work. We extend our deepest gratitude to Ms. Karly Stillwell for her coordination and administrative support. We thank Caitlin Pugh for her critical review of our draft manuscript. We would also like to acknowledge Gurm Dhugga, Naveen Karduri, and Vincent Lum for their IT support. We are also thankful to the University of British Columbia Mental Health Network for their support of our work and their commitment to advocating for student mental health.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyt.2022.812965/full#supplementary-material

1. Linden B, Boyes R, Stuart H. Cross-sectional trend analysis of the NCHA II survey data on Canadian post-secondary student mental health and wellbeing from 2013 to 2019. BMC Public Health. (2021) 21:590. doi: 10.1186/s12889-021-10622-1

2. Ibrahim R, Sarirete A. Student development in higher education: a constructivist approach. In: Lytras MD, Ordonez De Pablos P, Avison D, et al., editors. Technology Enhanced Learning. Quality of Teaching and Educational Reform. Berlin, Heidelberg: Springer Berlin Heidelberg (2010). p. 467–73. doi: 10.1007/978-3-642-13166-0_66

3. Pedrelli P, Nyer M, Yeung A, Zulauf C, Wilens T. College students: mental health problems and treatment considerations. Acad Psychiatry. (2015) 39:503–11. doi: 10.1007/s40596-014-0205-9

4. Giamos D, Young Soo A, Suleiman A, Heather S, Shu-Ping C. Understanding campus culture and student coping strategies for mental health issues in five Canadian colleges and universities. Can J High Educ. (2017) 47:136–51. doi: 10.47678/cjhe.v47i3.187957

5. Kessler RC, Amminger P, Aguilar-Gaxiola S, Alonso J, Lee S, Ustün TB. Age of onset of mental disorders: a review of recent literature. Curr Opin Psychiatry. (2007) 20:359–64. doi: 10.1097/YCO.0b013e32816ebc8c

6. Singh SP, Tuomainen H. Transition from child to adult mental health services: needs, barriers, experiences and new models of care. World Psychiatry. (2015) 14:358–61. doi: 10.1002/wps.20266

7. American College Health Association. National College Health Assessment II: Canadian Consortium Executive Summary Spring 2019. Silver Spring, MD (2019).

8. Public Health Agency of Canada. Suicide in Canada: Key statistics (infographic). Available at: https://www.canada.ca/en/public-health/services/publications/healthy-living/suicide-canada-key-statistics-infographic.html. Published 2019 (accessed October 6, 2020).

9. Findlay L. Depression and Suicidal Ideation among Canadians Aged 15 to 24. Vol 28 (2017). Available at: http://www.ncbi.nlm.nih.gov/pubmed/28098916

10. Vigo D, Jones L, Munthali R, et al. Investigating the effect of COVID-19 dissemination on symptoms of anxiety and depression among university students. BJPsych Open. (2021) 7:e69. doi: 10.1192/bjo.2021.24

11. The Healthy Minds Network, American College Health Association. The Impact of COVID-19 on College Student Well-Being (2020).

12. Vizzotto ADB, de Oliveira AM, Elkis H, Cordeiro Q, Buchain PC. Psychosocial characteristics. In: Gellman MD, Turner JR, editors. Encyclopedia of Behavioral Medicine. New York, NY: Springer New York (2013). p. 1578–80. doi: 10.1007/978-1-4419-1005-9_918

13. Jenei K, Cassidy-Matthews C, Virk P, Lulie B, Closson K. Challenges and opportunities for graduate students in public health during the COVID-19 pandemic. Can J Public Heal. (2020) 111:408–9. doi: 10.17269/s41997-020-00349-8

14. Lederer AM, Hoban MT, Lipson SK, Zhou S, Eisenberg D. More Than Inconvenienced: The Unique Needs of US College Students During the COVID-19 Pandemic. Heal Educ Behav. (2020) 48:14–9. doi: 10.1177/1090198120969372

15. Bray SR, Born HA. Transition to University and Vigorous Physical Activity: Implications for Health and Psychological Well-Being. J Am Coll Heal. (2004) 52:181–8. doi: 10.3200/JACH.52.4.181-188

16. Mahmoud JSR, Staten RT, Hall LA, Lennie TA. The relationship among young adult college students' depression, anxiety, stress, demographics, life satisfaction, and coping styles. Issues Ment Health Nurs. (2012) 33:149–56. doi: 10.3109/01612840.2011.632708

17. Eisenberg D, Golberstein E, Gollust SE. Help-seeking and access to mental health care in a university student population. Med Care. (2007) 45:594–601. doi: 10.1097/MLR.0b013e31803bb4c1

18. Czyz EK, Horwitz AG, Eisenberg D, Kramer A, King CA. Self-reported barriers to professional help seeking among college students at elevated risk for suicide. J Am Coll Heal. (2013) 61:398–406. doi: 10.1080/07448481.2013.820731

19. Canadian Standards Association & Mental Health Commission of Canada. National Standard of Canada (Z2003:20): Mental Health and Well-Being for Post-Secondary Students. Toronto (2020). Available at: https://www.mentalhealthcommission.ca/English/studentstandard

20. Hetrick SE, Parker AG, Hickie IB, Purcell R, Yung AR, McGorry PD. Early identification and intervention in depressive disorders: towards a clinical staging model. Psychother Psychosom. (2008) 77:263–70. doi: 10.1159/000140085

21. Frick MG, Butler SA, deBoer DS. Universal suicide screening in college primary care. J Am Coll Heal. (2019) 69:1–6. doi: 10.1080/07448481.2019.1645677

22. Klein MC, Ciotoli C, Chung H. Primary care screening of depression and treatment engagement in a university health center: a retrospective analysis. J Am Coll Health. (2011) 59:289–95. doi: 10.1080/07448481.2010.503724

23. Forbes F-JM, Whisenhunt BL, Citterio C, Jordan AK, Robinson D, Deal WP. Making mental health a priority on college campuses: implementing large scale screening and follow-up in a high enrollment gateway course. J Am Coll Heal. (2019) 69:1–8. doi: 10.1080/07448481.2019.1665051

24. Canady VA. University mental health screening kiosk promotes awareness, prevention. Ment Heal Wkly. (2015) 25:1–5. doi: 10.1002/mhw.30334

25. Linden B, Grey S, Stuart H. National Standard for Psychological Health and Safety of Post-Secondary Students—Phase I: Scoping Literature Review. Kingston, ON (2018).

26. Bradford S, Rickwood D. Young People's Views on Electronic Mental Health Assessment: Prefer to Type than Talk? J Child Fam Stud. (2015) 24:1213–21. doi: 10.1007/s10826-014-9929-0

27. Bradford S, Rickwood D. Acceptability and utility of an electronic psychosocial assessment (myAssessment) to increase self-disclosure in youth mental healthcare: a quasi-experimental study. BMC Psychiatry. (2015) 15:305. doi: 10.1186/s12888-015-0694-4

28. Murrelle L, Ainsworth BE, Bulger JD, Holliman SC, Bulger DW. Computerized Mental Health Risk Appraisal for College Students: User Acceptability and Correlation with Standard Pencil-and-Paper Questionnaires. Am J Heal Promot. (1992) 7:90–2. doi: 10.4278/0890-1171-7.2.90

29. Garlow SJ, Rosenberg J, Moore JD, et al. Depression, desperation, and suicidal ideation in college students: Results from the American Foundation for Suicide Prevention College Screening Project at Emory University. Depress Anxiety. (2008) 25:482–8. doi: 10.1002/da.20321

30. Osman A, Bagge CL, Gutierrez PM, Konick LC, Kopper BA, Barrios FX. The suicidal behaviors questionnaire-revised (SBQ-R): Validation with clinical and nonclinical samples. Assessment. (2001) 8:443–54. doi: 10.1177/107319110100800409

31. Campbell CE, Maisto SA. Validity of the AUDIT-C screen for at-risk drinking among students utilizing university primary care. J Am Coll Heal. (2018) 66:774–82. doi: 10.1080/07448481.2018.1453514

32. Bártolo A, Monteiro S, Pereira A. Factor structure and construct validity of the Generalized Anxiety Disorder 7-item (GAD-7) among Portuguese college students. Cad Saude Publica. (2017) 33:e00212716. doi: 10.1590/0102-311x00212716

33. Williams A, Larocca R, Chang T, Trinh NH, Fava M, Kvedar J, et al. Web-based depression screening and psychiatric consultation for college students: a feasibility and acceptability study. Int J Telemed Appl. (2014) 2014:580786. doi: 10.1155/2014/580786

34. Chung H, Klein MC, Silverman D, Corson-Rikert J, Davidson E, Ellis P, et al. A Pilot for Improving Depression Care on College Campuses: Results of the College Breakthrough Series-Depression (CBS-D) Project. Vol 59 (2011). doi: 10.1080/07448481.2010.528097

35. Mackenzie S, Wiegel JR, Mundt M, Brown D, Saewyc E, Heiligenstein E, et al. Depression and suicide ideation among students accessing campus health care. Am J Orthopsychiatry. (2011) 81:101–7. doi: 10.1111/j.1939-0025.2010.01077.x

36. Khubchandani J, Brey R, Kotecki J, Kleinfelder J, Anderson J. The psychometric properties of PHQ-4 depression and anxiety screening scale among college students. Arch Psychiatr Nurs. (2016) 30:457–62. doi: 10.1016/j.apnu.2016.01.014

37. Downs A, Boucher LA, Campbell DG, Dasse M. Development and initial validation of the Symptoms and Assets Screening Scale. J Am Coll Health. (2013) 61:164–74. doi: 10.1080/07448481.2013.773902

38. Alschuler KN, Hoodin F, Byrd MR. Rapid Assessment for psychopathology in a college health clinic: utility of college student specific questions. J Am Coll Heal. (2009) 58:177–9. doi: 10.1080/07448480903221210

39. Bewick BM, West R, Gill J, O'May F, Mulhern B, Barkham M, et al. Providing Web-based feedback and social norms information to reduce student alcohol intake: A multisite investigation. J Med Internet Res. (2010) 12:e1461. doi: 10.2196/jmir.1461

40. Ebert DD, Franke M, Kählke F, Küchler AM, Bruffaerts R, Mortier P, et al. Increasing intentions to use mental health services among university students. Results of a pilot randomized controlled trial within the World Health Organization's World Mental Health International College Student Initiative. Int J Methods Psychiatr Res. (2019) 28:e1754. doi: 10.1002/mpr.1754

41. King CA, Eisenberg D, Zheng K, Czyz E, Kramer A, Horwitz A, et al. Online suicide risk screening and intervention with college students: a pilot randomized controlled trial. J Consult Clin Psychol. (2015) 83:630–6. doi: 10.1037/a0038805

42. Lee A, Deevska M, Stillwell K, Black T, Meckler G, Park D, et al. A psychosocial assessment and management tool for children and youth in crisis. Can J Emerg Med. (2019) 21:87–96. doi: 10.1017/cem.2018.1

43. Virk P, Laskin S, Gokiert R, Richardson C, Newton M, Stenstrom R, et al. MyHEARTSMAP: development and evaluation of a psychosocial self-assessment tool, for and by youth. BMJ Paediatr Open. (2019) 3:1–9. doi: 10.1136/bmjpo-2019-000493

44. Doan Q, Wright B, Atwal A, et al. Utility of MyHEARTSMAP for Psychosocial screening in the Emergency Department. J Pediatr. (2020) 219:56–61. doi: 10.1016/j.jpeds.2019.12.046

45. Li BCM, Wright B, Black T, Newton AS, Doan Q. Utility of MyHEARTSMAP in Youth Presenting to the Emergency Room with Mental Health Concerns. J Pediatr. (2021) 235:124–9. doi: 10.1016/j.jpeds.2021.03.062

46. Koopmans E, Black T, Newton A, Dhugga G, Karduri N, Doan Q. Provincial dissemination of HEARTSMAP, an emergency department psychosocial assessment and disposition decision tool for children and youth. Paediatr Child Heal. (2019) 24:359–65. doi: 10.1093/pch/pxz038

47. Gill C, Arnold B, Nugent S, et al. Reliability of HEARTSMAP as a tool for evaluating psychosocial assessment documentation practices in emergency departments for pediatric mental health complaints. Acad Emerg Med. (2018) 25:1375–84. doi: 10.1111/acem.13506

48. Virk P, Atwal A, Wright B, Doan Q. Exploring parental acceptability of psychosocial screening in pediatric emergency departments. J Adolesc Heal. (2021) Submitted.

49. van Amstel LL, Lafleur DL, Blake K. Raising Our HEADSS: adolescent psychosocial documentation in the emergency department. Acad Emerg Med. (2004) 11:648–55. doi: 10.1197/j.aem.2003.12.022

50. Cohen E, Mackenzie RG, Yates GL. HEADSS, a psychosocial risk assessment instrument: Implications for designing effective intervention programs for runaway youth. J Adolesc Heal. (1991) 12:539–44. doi: 10.1016/0197-0070(91)90084-Y

51. Gawel JE. Herzberg's theory of motivation and Maslow's hierarchy of needs. Pract Assessment, Res Eval. (1997) 5:1996–7.

52. Freitas FA, Leonard LJ. Maslow's hierarchy of needs and student academic success. Teach Learn Nurs. (2011) 6:9–13. doi: 10.1016/j.teln.2010.07.004

53. Versaevel LN. Canadian Post-Secondary Students, Stress, Academic Performance—A Socio-Ecological Approach. (2014). Available at: https://ir.lib.uwo.ca/cgi/viewcontent.cgi?article=4054&context=etd

54. Woodgate RL, Gonzalez M, Stewart D, Tennent P, Wener P. A Socio-Ecological Perspective on Anxiety Among Canadian University Students. Can J Couns Psychother. (2020) 54:486–519. Available at: https://cjc-rcc.ucalgary.ca/article/view/67904

55. Terwee CB, Prinsen CAC, Chiarotto A, et al. COSMIN methodology for evaluating the content validity of patient-reported outcome measures: a Delphi study. Qual Life Res. (2018) 27:1159–70. doi: 10.1007/s11136-018-1829-0

56. American Educational Research Association, American Psychological Association, National Council on Measurement in Education, Joint Committee on Standards for Educational and Psychological Testing. Standards for Educational and Psychological Testing (2014).

57. US Food and Drug Administration. Guidance for Industry. Patient-Reported Outcome Measures: Use in Medical Product Development to Support Labeling Claims. (2009). Available at: https://www.fda.gov/media/77832/download

58. Boateng GO, Neilands TB, Frongillo EA, Melgar-Quiñonez HR, Young SL. Best practices for developing and validating scales for health, social, and behavioral research: a primer. Front Public Health. (2018) 6:149. doi: 10.3389/fpubh.2018.00149

59. Wong Riff KW, Tsangaris E, Goodacre T, Forrest CR, Pusic AL, Cano SJ, et al. International multiphase mixed methods study protocol to develop a cross-cultural patient-reported outcome instrument for children and young adults with cleft lip and/or palate (CLEFT-Q). BMJ Open. (2017) 7:1–9. doi: 10.1136/bmjopen-2016-015467

60. Davis LL. Instrument review: Getting the most from a panel of experts. Appl Nurs Res. (1992) 5:194–7. doi: 10.1016/S0897-1897(05)80008-4

61. Assarroudi A, Heshmati Nabavi F, Armat MR, Ebadi A, Vaismoradi M. Directed qualitative content analysis: the description and elaboration of its underpinning methods and data analysis process. J Res Nurs. (2018) 23:42–55. doi: 10.1177/1744987117741667

62. Barbic S, Celone D, Dewsnap K, Duque SR, Osiecki S, Valevicius D, et al. Mobilizing Measurement into Care: A Guidebook for Service Providers Working with Young Adults Aged 12–24 Years (2021).

63. Rothman M, Burke L, Erickson P, Leidy NK, Patrick DL, Petrie CD. Use of existing patient-reported outcome (PRO) instruments and their modification: The ISPOR good research practices for evaluating and documenting content validity for the use of existing instruments and their modification PRO task force report. Value Health. (2009) 12:1075–83. doi: 10.1111/j.1524-4733.2009.00603.x

64. Geisen E, Romano Bergstrom J. Think aloud and verbal-probing techniques. In: Usability Testing for Survey Research. Boston: Morgan Kaufmann (2017). p. 131–61. doi: 10.1016/B978-0-12-803656-3.00006-3

65. Alwashmi MF, Hawboldt J, Davis E, Fetters MD. The iterative convergent design for mobile health usability testing: mixed methods approach. JMIR mHealth uHealth. (2019) 7:e11656. doi: 10.2196/11656

66. Carlsen B, Glenton C. What about N? A methodological study of sample-size reporting in focus group studies. BMC Med Res Methodol. (2011) 11:26. doi: 10.1186/1471-2288-11-26

67. Stewart AL, Thrasher AD, Goldberg J, Shea JA. A framework for understanding modifications to measures for diverse populations. J Aging Health. (2012) 24:992–1017. doi: 10.1177/0898264312440321

68. Coons SJ, Gwaltney CJ, Hays RD, Lundy JJ, Sloan JA, Revicki DA, et al. Recommendations on evidence needed to support measurement equivalence between electronic and paper-based patient-reported outcome (PRO) measures: ISPOR ePRO good research practices task force report. Value Health. (2009) 12:419–29. doi: 10.1111/j.1524-4733.2008.00470.x

69. UBC Student Health and Wellbeing. Student Health & Wellbeing (Green Folder): Supporting Students in Distress in a Virtual Learning Environment. (2021). Available at: https://wellbeing.ubc.ca/sites/wellbeing.ubc.ca/files/u9/2021.01_Green%20Folder_with_date.pdf

70. Linden B, Boyes R, Stuart H. The post-secondary student stressors index: proof of concept and implications for use. J Am Coll Health. (2020) 1–9. doi: 10.1080/07448481.2020.1754222

71. Linden B, Stuart H. Psychometric assessment of the Post-Secondary Student Stressors Index (PSSI). BMC Public Health. (2019) 19:1139. doi: 10.1186/s12889-019-7472-z

72. Brod M, Pohlman B, Tesler Waldman L. Qualitative research and content validity. In: Michalos AC, editor. Encyclopedia of Quality of Life and Well-Being Research. Dordrecht: Springer Netherlands (2014). p. 5257–65. doi: 10.1007/978-94-007-0753-5_3848

73. Patrick DL, Burke LB, Gwaltney CJ, Leidy NK, Martin ML, Molsen E, et al. Content validity—Establishing and reporting the evidence in newly developed patient-reported outcomes (PRO) instruments for medical product evaluation: ISPOR PRO good research practices task force report: Part 2—Assessing respondent understanding. Value Health. (2011) 14:978–88. doi: 10.1016/j.jval.2011.06.013

74. Tannenbaum C, Lexchin J, Tamblyn R, Romans S. Indicators for measuring mental health: towards better surveillance. Healthc Policy. (2009) 5:177–86. doi: 10.12927/hcpol.2013.21180

75. Shah JL, Scott J, McGorry PD, Cross SPM, Keshavan MS, Nelson B, et al. Transdiagnostic clinical staging in youth mental health: a first international consensus statement. World Psychiatry. (2020) 19:233–42. doi: 10.1002/wps.20745

76. Broglia E, Millings A, Barkham M. Challenges to addressing student mental health in embedded counselling services: a survey of UK higher and further education institutions. Br J Guid Counc. (2018) 46:441–55. doi: 10.1080/03069885.2017.1370695

77. Lipson SK, Lattie EG, Eisenberg D. Increased rates of mental health service utilization by U.S. college students: 10-year population-level trends (2007–2017). Psychiatr Serv. (2019) 70:60–3. doi: 10.1176/appi.ps.201800332

78. Blanton H, Jaccard J. Arbitrary metrics in psychology. Am Psychol. (2006) 61:27–41. doi: 10.1037/0003-066X.61.1.27

79. Thomas J, McDonagh D. Shared language: towards more effective communication. Australas Med J. (2013) 6:46–54. doi: 10.4066/AMJ.2013.1596

80. Shepardson RL, Funderburk JS. Implementation of universal behavioral health screening in a university health setting. J Clin Psychol Med Settings. (2014) 21:253–66. doi: 10.1007/s10880-014-9401-8

81. Krause KR, Bear HA, Edbrooke-Childs J, Wolpert M. Review: what outcomes count? a review of outcomes measured for adolescent depression between 2007 and 2017. J Am Acad Child Adolesc Psychiatry. (2019) 58:61–71. doi: 10.1016/j.jaac.2018.07.893

82. Hussain R, Guppy M, Robertson S, Temple E. Physical and mental health perspectives of first year undergraduate rural university students. BMC Public Health. (2013) 13:848. doi: 10.1186/1471-2458-13-848

83. Cooke M, Huntly J. White Paper on Postsecondary Student Mental Health. (2015). Available at: https://occccco.files.wordpress.com/2015/05/ccvps-white-paper-on-postsecondary-student-mental-health-april-2015.pdf

Keywords: mental health, screening, validity, post-secondary students, focus groups

Citation: Virk P, Arora R, Burt H, Gadermann A, Barbic S, Nelson M, Davidson J, Cornish P and Doan Q (2022) HEARTSMAP-U: Adapting a Psychosocial Self-Screening and Resource Navigation Support Tool for Use by Post-secondary Students. Front. Psychiatry 13:812965. doi: 10.3389/fpsyt.2022.812965

Received: 10 November 2021; Accepted: 24 January 2022;

Published: 22 February 2022.

Edited by:

Abhishek Pratap, Centre for Addiction and Mental Health, Canada

Reviewed by:

Lauren McGillivray, University of New South Wales, Australia

Copyright © 2022 Virk, Arora, Burt, Gadermann, Barbic, Nelson, Davidson, Cornish and Doan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Punit Virk, pvirk@bcchr.ca

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
