- 1 Department of Psychology, Faculty of Education, Hubei University, Wuhan, China

- 2 School of Artificial Intelligence, Changchun University of Science and Technology, Changchun, China

Depression is associated with deficits in emotion processing, and everyday emotional processing is crossmodal. This article investigates whether audiovisual emotional integration differs between a depression group and a normal group, using a high-resolution event-related potential (ERP) technique. We designed a visual and/or auditory detection task. The behavioral results showed that responses to bimodal audiovisual stimuli were faster than those to unimodal auditory or visual stimuli, indicating that crossmodal integration of emotional information occurred in both the depression and normal groups. The ERP results showed that the N2 amplitude induced by sadness was significantly larger than that induced by happiness. Participants in the depression group showed larger N1 and P2 amplitudes, and the average LPP amplitude evoked over the frontocentral lobe was significantly lower in the depression group than in the normal group. These results indicate that depressed and non-depressed college students differ in their audiovisual emotional processing mechanisms.

Introduction

Depression is a common mood disorder characterized by depressed mood, anhedonia and cognitive deficits (1). Approximately 8% of men and 15% of women are likely to experience depression in their lifetime (2). Because depression is an affective disorder with high morbidity, recurrence and disability rates, it poses a serious threat to physical and mental health and has long been an important research topic (3). Depression is related to defects in emotion processing, which manifest as biased emotion perception and dysfunction in the psychological mechanisms of emotion regulation (4, 5). Specifically, compared with healthy individuals, depressed individuals are more sensitive to negative emotional stimuli and less sensitive to positive emotional stimuli, differences that appear in perception, attention and memory (6). In addition, depressed individuals process neutral stimuli differently from healthy individuals. According to the emotion context insensitivity model, depressed individuals are more sensitive to neutral emotional stimuli than normal individuals (7). Other studies have shown that people with depression are more likely to perceive neutral faces as sad, because depression affects the perception of negative stimuli (8, 9).

At the neurobiological level, many studies have also explored the relationship between depression and emotional processing deficits. For example, a magnetic resonance imaging (MRI) study showed that participants with a history of depression had a significant attentional bias toward negative emotional stimuli (10), and this negative attentional bias may be a key factor in depression recurrence (11). An event-related potential (ERP) study that examined the late positive potential (LPP) evoked in depressed participants while they processed sad and happy pictures found no significant difference in LPP amplitude between the two picture types (12). In healthy participants, however, the LPP amplitude induced by sad pictures was significantly larger than that induced by happy pictures (13), and negative emotional stimuli could induce stronger electrophysiological responses (14–16), so healthy participants may devote more attention to negative emotional stimuli and thereby encode them in finer detail. The difference between depressed and healthy participants in the LPP amplitudes elicited by positive and negative stimuli indicates that depressed participants' fine-grained processing of negative emotional stimuli is weakened, or that negative information may fail to induce strong emotional and motivational responses in depressed individuals (12). Similarly, research on emotional sounds has shown that depressed participants report very low arousal to negative emotional sounds while showing very strong physiological responses; this keeps the autonomic nervous system in an abnormal state for long periods and can easily lead to physical discomfort (12).
In addition, compared with controls, depressed patients showed relatively larger N2 and reduced P3 amplitudes to negative than to positive target stimuli, as well as marginally reduced N2 amplitudes to positive target stimuli (17).

However, most of the findings above concern single-channel emotional processing, that is, judging others' emotions through visual (e.g., observing facial expressions) or auditory (e.g., analyzing tone of voice) stimuli alone, whereas in daily life people process emotion crossmodally. Many studies have shown that the result of single-channel processing can be affected by input from other channels (18–22); therefore, results from single-channel research cannot be generalized directly to people's daily lives. The neurocognitive processes that integrate separate streams of information from different sensory channels into a unified experience are often referred to as multisensory integration (MSI) (23). Studies of healthy adults (18), patients with bipolar disorder (24), and patients with schizophrenia (25, 26) have shown that multisensory integration of emotional cues is beneficial, because integrating information from multiple channels increases the speed and hit rate of emotion recognition.

For people with depression, although researchers agree that emotion recognition may be impaired, more ecologically valid explorations of crossmodal emotional integration are lacking. Therefore, this study uses perceptual crossmodal integration of auditory and visual stimuli, together with ERP recordings, to examine the cross-channel integration of emotional information in depressed individuals, in order to characterize their crossmodal audiovisual emotional integration and provide an experimental basis for understanding the pathogenesis of depression. In previous studies on crossmodal emotional integration, N1 and P2 were the two main components affected by the integration of visual and vocal emotional information (27, 28). N1 is associated with stimulus detection (29). The N1 amplitude induced by crossmodal stimuli with congruent audiovisual emotional valence has been found to be lower than that induced by unimodal auditory stimuli, and this N1 suppression is considered a specific indicator of audiovisual emotional integration (30, 31). However, other studies have found that congruent bimodal audiovisual emotional information induces larger N1 amplitudes than unimodal emotional information (32). P2 is an important indicator of the rapid detection of emotional stimuli (31, 33–35). Researchers believe that N1 and P2 reflect the automatic integration of visual and auditory emotional information within 200 ms, representing the processing of low-level visual features of emotional stimuli (36). Previous studies have therefore used N1 and P2 as important indicators of crossmodal emotional integration. In addition, we analyzed the N2 and LPP components to test whether depression affects the integration of emotional information.
The N2 component reflects active inhibition of negative stimuli (37), and the LPP component reflects higher-level cognitive processing of emotion (38). We therefore hypothesized that the N1, P2, N2 and LPP amplitudes would be relatively larger in the depression group than in the normal group.

Methods and Materials

Participants

Forty-six college students from a university in Hubei participated in the experiment: 23 in the healthy normal group (10 males and 13 females; age: 19.83 ± 1.30 years; depression score: 6.74 ± 2.83) and 23 in the depression group (10 males and 13 females; age: 20.14 ± 1.17 years; depression score: 24.78 ± 5.26). All participants had normal hearing and normal or corrected-to-normal vision and were right-handed. The screening procedure was as follows. First, the Beck Depression Inventory-II (BDI-II) was distributed online (https://www.wjx.cn/) to undergraduates at a university in Hubei Province, China. A total of 352 people submitted the questionnaire; after excluding those with incomplete information or scale items, 337 questionnaires remained. College students with BDI-II scores ≥ 19 were selected for the depression group, and those with BDI-II scores ≤ 13 were selected for the healthy control screening group (12). Then, within 1–2 weeks, 47 college students (24 in the depression group and 23 in the healthy normal group) were invited to take part in a structured telephone interview. Based on the Structured Clinical Interview for DSM (SCID), one depressed participant who was taking medication was excluded. Finally, 23 participants were enrolled in the depression group and 23 in the healthy normal group. All participants provided written informed consent before entering the study, which was approved by the Ethics Committee of Hubei University, and all received payment for their time.
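The BDI-II screening rule above can be expressed as a small function. This is a minimal Python sketch, not the authors' screening procedure: the function names and example scores are hypothetical, and it models only the questionnaire cut-offs (≥ 19 and ≤ 13), not the subsequent SCID interview.

```python
from typing import Dict, List

# Hypothetical sketch of the BDI-II cut-offs described in the text.
# Scores >= 19 -> depression candidate; scores <= 13 -> control candidate;
# scores 14-18 fall between the cut-offs and are not invited.
DEPRESSION_CUTOFF = 19
CONTROL_CUTOFF = 13


def screen_bdi(score: int) -> str:
    """Return the screening outcome for one BDI-II total score."""
    if not 0 <= score <= 63:  # BDI-II totals: 21 items scored 0-3
        raise ValueError(f"BDI-II total out of range: {score}")
    if score >= DEPRESSION_CUTOFF:
        return "depression"
    if score <= CONTROL_CUTOFF:
        return "control"
    return "excluded"


def screen_all(scores: Dict[str, int]) -> Dict[str, List[str]]:
    """Group respondent IDs by screening outcome (input order preserved)."""
    groups: Dict[str, List[str]] = {"depression": [], "control": [], "excluded": []}
    for respondent_id, score in scores.items():
        groups[screen_bdi(score)].append(respondent_id)
    return groups


if __name__ == "__main__":
    # Made-up scores for illustration only.
    print(screen_all({"s01": 25, "s02": 7, "s03": 16, "s04": 19, "s05": 13}))
```

Keeping the two cut-offs as named constants makes the gap between them (scores 14–18, which belong to neither group) explicit.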

Stimuli

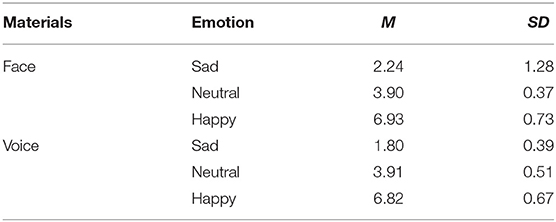

The visual materials for this experiment were selected from the Chinese Affective Face Picture System (CAFPS): 10 happy, 10 neutral and 10 sad emotional face pictures (half male and half female), 30 in total. The auditory materials were selected from the Montreal Affective Voices (MAV): 10 happy, 10 neutral and 10 sad emotional voices, 30 in total. Fifty college students were invited in advance to rate the valence and arousal of the visual and auditory materials on a 7-point scale (1 means very sad; 4 means neutral; 7 means very happy). The ratings were compared with the neutral midpoint of 4 using one-sample t-tests. Three happy pictures and two sad pictures whose ratings did not differ significantly from 4 were excluded.
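The one-sample comparison against the neutral midpoint can be written out directly as t = (mean − 4) / (SD / √n) with n − 1 degrees of freedom. The Python sketch below is illustrative only; the function name and the example ratings are hypothetical and are not the study's rating data.

```python
import math
import statistics
from typing import List, Tuple


def one_sample_t(ratings: List[float], mu0: float = 4.0) -> Tuple[float, int]:
    """Return (t, df) for H0: the mean rating equals mu0 (the neutral midpoint)."""
    n = len(ratings)
    if n < 2:
        raise ValueError("need at least two ratings")
    mean = statistics.fmean(ratings)
    sd = statistics.stdev(ratings)  # sample SD with n - 1 in the denominator
    if sd == 0:
        raise ValueError("ratings have zero variance; t is undefined")
    t = (mean - mu0) / (sd / math.sqrt(n))
    return t, n - 1


if __name__ == "__main__":
    # Hypothetical valence ratings for one "happy" picture (not the study's data).
    t, df = one_sample_t([6, 7, 6, 5, 7, 6])
    print(f"t({df}) = {t:.2f}")
```

A p-value is obtained by comparing t with a t distribution on n − 1 degrees of freedom; in practice a library routine such as `scipy.stats.ttest_1samp(ratings, 4)` returns both the statistic and the p-value.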

Because the participants in this experiment included people with depression, who are less sensitive to emotion, we aimed to maximize their emotional arousal while avoiding any influence of gender. We therefore selected, from the qualifying happy pictures and voices (female faces and voices), the picture and the voice with the highest average scores, and, from the qualifying sad pictures and voices (female faces and voices), the picture and the voice with the lowest average scores. The final evaluation results of the materials are shown in Table 1.

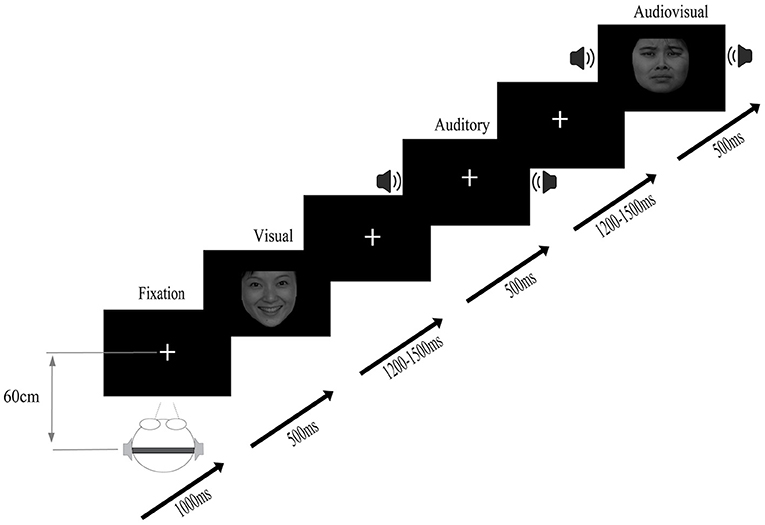

Procedure

The experiment was carried out in a quiet, soundproof laboratory at a comfortable temperature. Participants sat ~60 cm in front of a 15.6-inch color LCD monitor (resolution 1,024 × 768 pixels, refresh rate 100 Hz). The experimental program was developed in E-Prime 2.0 (Psychology Software Tools, Inc., Pittsburgh, PA, USA). During the experiment, as shown in Figure 1, participants fixated on a central cross. Their task was to respond to the target stimuli as quickly and accurately as possible using both hands on the keyboard (“S” for sad, “G” for neutral, and “K” for happy; key assignments were counterbalanced across participants), regardless of whether the stimulus was auditory, visual or audiovisual. The stimulus stream consisted of visual, auditory and audiovisual stimuli, all presented using Presentation software (Neurobehavioral Systems Inc., Albany, California, USA). The stimulus order was randomized across streams. Each participant completed 5 blocks, each containing 720 trials, and was allowed to rest after each block.
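A randomized 3 (modality) × 3 (emotion) trial sequence of the kind described above can be generated as follows. This Python sketch is illustrative only: the study used E-Prime/Presentation rather than this code, the default of 80 trials per cell is taken from the epoch counts given in the ERP analysis section, and the key-counterbalancing scheme (cycling through all six key orders) is a hypothetical example, since the text does not specify how counterbalancing was done.

```python
import itertools
import random
from collections import Counter
from typing import Dict, List, Optional, Tuple

MODALITIES = ("A", "V", "AV")           # auditory, visual, audiovisual
EMOTIONS = ("sad", "neutral", "happy")
KEYS = ("S", "G", "K")                  # response keys named in the text


def make_trial_list(n_per_cell: int = 80, seed: Optional[int] = None) -> List[Tuple[str, str]]:
    """Return a shuffled list of (modality, emotion) trials, n_per_cell per cell."""
    cells = itertools.product(MODALITIES, EMOTIONS)
    trials = [cell for cell in cells for _ in range(n_per_cell)]
    random.Random(seed).shuffle(trials)  # seeded RNG makes the order reproducible
    return trials


def key_mapping(participant_index: int) -> Dict[str, str]:
    """Hypothetical counterbalancing: rotate through all 6 emotion-to-key orders."""
    orders = list(itertools.permutations(KEYS))
    keys = orders[participant_index % len(orders)]
    return dict(zip(EMOTIONS, keys))


if __name__ == "__main__":
    trials = make_trial_list(seed=1)
    print(len(trials), Counter(trials)[("AV", "sad")], key_mapping(0))
```

Using a per-participant seed keeps each sequence random but reproducible, which helps when matching logged responses back to stimulus types.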

Figure 1. Stimuli were randomly presented in audio, visual, and audiovisual conditions, and participants were asked to respond to target stimuli (auditory target, visual target, and audiovisual target).

Apparatus

The behavioral and electroencephalographic data were recorded simultaneously. Stimulus presentation was controlled using E-prime 2.0, and participants responded via the keyboard. The auditory stimuli were presented through earphones. Electroencephalography (EEG) signals were recorded by an EEG system (BrainAmp plus, Gilching, Germany) through 32 electrodes (Easy-cap, Herrsching–Breitbrunn, Germany). Horizontal eye movements were detected using an electro-oculogram (EOG) electrode placed at the outer canthus of the left eye, and vertical eye movements and blinks were measured by an EOG electrode positioned ~1 cm below the participant's right eye. Impedance was kept below 5 kΩ for all electrodes. All electrodes were referenced online to FCz and re-referenced offline to the average of both mastoids. The EEG was digitized at a sampling rate of 500 Hz.
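The offline re-referencing step (from the online FCz reference to the average of both mastoids) amounts to subtracting the mean of the two mastoid channels from every channel. The Python sketch below assumes the mastoid channels are labeled TP9 and TP10, as in the ERP analysis section, and that each channel is a plain list of samples; it is an illustration, not BrainVision Analyzer's implementation.

```python
from typing import Dict, List, Sequence

Channels = Dict[str, List[float]]


def rereference_linked_mastoids(
    data: Channels,
    mastoids: Sequence[str] = ("TP9", "TP10"),
    online_ref: str = "FCz",
) -> Channels:
    """Re-reference from an online reference to the mean of the mastoid channels.

    `data` maps channel name -> samples (µV) recorded against `online_ref`,
    which is therefore absent from `data` (its recorded value is implicitly 0 µV).
    """
    if online_ref in data:
        raise ValueError(f"{online_ref} should be the implicit online reference")
    n_samples = len(data[mastoids[0]])
    # New reference signal: sample-wise mean of the mastoid channels.
    ref = [sum(data[ch][i] for ch in mastoids) / len(mastoids) for i in range(n_samples)]
    out = {ch: [x - r for x, r in zip(samples, ref)] for ch, samples in data.items()}
    # Recover the online reference site: 0 µV minus the new reference.
    out[online_ref] = [-r for r in ref]
    return out
```

Restoring the online reference site as a channel is what allows FCz-adjacent sites to be analyzed after re-referencing, since FCz itself was never recorded as a separate signal.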

Data Analysis

Behavioral Data Analysis

The collected data were preprocessed, and participants were excluded according to the following criteria: (1) an average hit rate below 80% and (2) error responses. Data from 20 participants in the depression group and 22 participants in the normal group were eventually included in the analysis. For each participant, the mean response time in each condition was calculated after deleting responses more than three standard deviations from the mean. To analyze the hit rates and response times (RTs) of the two groups, we performed a 2 (group: depression, control) × 3 (modality: visual, audio, audiovisual) × 3 (emotion: happy, neutral, sad) mixed-factors ANOVA with group as a between-subject factor and modality and emotion as within-subject factors. Mauchly's test was used to assess sphericity, and the Greenhouse-Geisser correction was applied where sphericity was violated. The significance level was set at p < 0.05. Partial eta squared (ηp²) is reported as the effect size.
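The ±3 SD trimming rule can be sketched as below, assuming that trimming was done separately for each participant and condition, which the text implies but does not state outright. The function names and the (condition, RT) input format are hypothetical.

```python
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def trimmed_mean_rt(rts: List[float], n_sd: float = 3.0) -> Tuple[float, int]:
    """Mean RT after dropping values more than n_sd SDs from the mean.

    Returns (trimmed mean, number of trials removed).
    """
    if len(rts) < 2:
        raise ValueError("need at least two RTs to estimate an SD")
    mean = statistics.fmean(rts)
    sd = statistics.stdev(rts)
    kept = [rt for rt in rts if abs(rt - mean) <= n_sd * sd]
    return statistics.fmean(kept), len(rts) - len(kept)


def condition_means(trials: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Trimmed mean RT per condition for one participant's (condition, RT) trials."""
    by_condition: Dict[str, List[float]] = defaultdict(list)
    for condition, rt in trials:
        by_condition[condition].append(rt)
    return {cond: trimmed_mean_rt(rts)[0] for cond, rts in by_condition.items()}
```

Note that an extreme value inflates the SD it is tested against, so with few trials per condition a ±3 SD criterion may remove nothing; that is a property of the rule, not of this sketch.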

ERP Data Analysis

The EEG data were analyzed using Brain Vision Analyzer software (Version 2.0, Brain Products GmbH, Munich, Bavaria, Germany). Only trials with correct responses were used in further analyses. All signals were re-referenced to the average of both mastoids (TP9/TP10). EEG signals were bandpass filtered at 0.01–60 Hz and segmented into epochs from 100 ms before to 800 ms after stimulus onset (80 audiovisual, 80 visual and 80 auditory epochs for each emotion; 720 epochs in total). Baseline correction was then performed using the −100 to 0 ms interval relative to stimulus onset. Artifacts were handled by rejecting epochs exceeding ±100 μV. An average was computed for each stimulus type. The data were then bandpass filtered again at 0.3–30 Hz, and baseline correction was repeated using the −100 to 0 ms interval. Grand-averaged data were obtained for each stimulus type at each electrode, separately for each group and emotion. Participants who lost more than 70% of the epochs for any one stimulus type were excluded.
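The epoching, baseline-correction and ±100 μV rejection steps described above can be sketched for a single channel as follows. This is a simplified Python illustration, not what BrainVision Analyzer does internally: it omits the 0.01–60 Hz and 0.3–30 Hz filters, assumes stimulus onsets are already available as sample indices, and applies the rejection threshold per channel, whereas the text does not say whether rejection was applied per channel or across all channels.

```python
from typing import List, Sequence, Tuple

FS_HZ = 500          # sampling rate given in the Apparatus section
PRE_MS = 100         # epoch starts 100 ms before stimulus onset
POST_MS = 800        # and ends 800 ms after it
REJECT_UV = 100.0    # ±100 µV rejection threshold


def ms_to_samples(ms: float, fs: int = FS_HZ) -> int:
    return int(round(ms * fs / 1000))


def extract_epochs(signal: Sequence[float], onsets: Sequence[int]) -> Tuple[List[List[float]], int]:
    """Cut baseline-corrected epochs around onsets (sample indices) for one channel.

    Returns (kept epochs, number rejected). Epochs that would run past either
    end of the recording are also counted as rejected.
    """
    pre, post = ms_to_samples(PRE_MS), ms_to_samples(POST_MS)
    kept: List[List[float]] = []
    rejected = 0
    for onset in onsets:
        start, stop = onset - pre, onset + post
        if start < 0 or stop > len(signal):
            rejected += 1
            continue
        segment = list(signal[start:stop])
        baseline = sum(segment[:pre]) / pre  # mean of the -100 to 0 ms interval
        corrected = [x - baseline for x in segment]
        if max(abs(x) for x in corrected) > REJECT_UV:
            rejected += 1
            continue
        kept.append(corrected)
    return kept, rejected


def average(epochs: List[List[float]]) -> List[float]:
    """Sample-wise average across epochs (the per-condition ERP)."""
    return [sum(column) / len(column) for column in zip(*epochs)]


def too_many_lost(n_total: int, n_rejected: int, max_fraction: float = 0.70) -> bool:
    """Exclusion rule from the text: more than 70% of a stimulus type's epochs lost."""
    return n_rejected / n_total > max_fraction
```

The band-pass filtering could be added before epoching with, for example, `scipy.signal.butter(..., btype="bandpass", fs=500)` followed by `scipy.signal.filtfilt`; the filter type and order here would be an assumption, since the text gives only the pass bands.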

The difference wave [AV − (A + V)] was calculated as the index of audiovisual integration (39, 40); that is, audiovisual integration was defined as the difference between the ERP to bimodal (AV) stimuli and the sum of the ERPs to the unimodal stimuli (A + V) (41–45). The ERP components analyzed in the present study were N1, P2, N2 and LPP. Component amplitudes and latencies were measured at the component peaks. N1 was scored as the most negative amplitude within 120–140 ms after stimulus onset; P2 as the most positive amplitude within 160–200 ms; N2 as the most negative peak within 300–340 ms; and LPP as the most positive peak within 380–420 ms. Three regions of interest (ROIs) were selected for further analysis: frontocentral (FC1, FC2, Fz), parietal (P3, P4, Pz) and central (C3, C4, Cz). A mixed-measures analysis of variance (ANOVA) was conducted on each ERP component with group as a between-subject factor and emotion and ROI as within-subject factors. The Greenhouse-Geisser adjustment was applied to the degrees of freedom of the F ratios as necessary. All statistical analyses were carried out using SPSS 21.0.
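The integration measure and peak scoring described above can be sketched as follows. This Python sketch is illustrative, not the authors' analysis code: the windows, polarities and ROI channel lists come from the text, the 500 Hz rate and −100 ms epoch start come from the preceding sections, and averaging the channel waveforms within an ROI before peak picking is an assumption, since the text does not say whether peaks were scored per channel or on ROI averages.

```python
from typing import Dict, List, Sequence, Tuple

FS_HZ = 500
EPOCH_START_MS = -100  # first sample of each epoch is at -100 ms

# Scoring windows (ms after onset) and peak polarity, as stated in the text.
WINDOWS: Dict[str, Tuple[int, int, str]] = {
    "N1": (120, 140, "neg"),
    "P2": (160, 200, "pos"),
    "N2": (300, 340, "neg"),
    "LPP": (380, 420, "pos"),
}
ROIS: Dict[str, Tuple[str, ...]] = {
    "frontocentral": ("FC1", "FC2", "Fz"),
    "parietal": ("P3", "P4", "Pz"),
    "central": ("C3", "C4", "Cz"),
}


def difference_wave(av: Sequence[float], a: Sequence[float], v: Sequence[float]) -> List[float]:
    """AV - (A + V), sample by sample, computed on averaged ERPs."""
    if not len(av) == len(a) == len(v):
        raise ValueError("waveforms must have the same length")
    return [x_av - (x_a + x_v) for x_av, x_a, x_v in zip(av, a, v)]


def sample_index(ms: float) -> int:
    return int(round((ms - EPOCH_START_MS) * FS_HZ / 1000))


def roi_wave(waves: Dict[str, Sequence[float]], roi: str) -> List[float]:
    """Average the waveforms of the channels in one ROI (an assumption, see text)."""
    channels = ROIS[roi]
    return [sum(samples) / len(channels) for samples in zip(*(waves[ch] for ch in channels))]


def score_peak(wave: Sequence[float], component: str) -> Tuple[float, float]:
    """Return (peak amplitude, latency in ms) within the component's window."""
    start_ms, end_ms, polarity = WINDOWS[component]
    lo, hi = sample_index(start_ms), sample_index(end_ms)
    segment = list(wave[lo:hi + 1])  # window is inclusive at both ends
    amplitude = min(segment) if polarity == "neg" else max(segment)
    idx = lo + segment.index(amplitude)
    return amplitude, EPOCH_START_MS + idx * 1000 / FS_HZ
```

In use, `difference_wave` is applied per channel to the averaged AV, A and V ERPs, the result is passed through `roi_wave`, and `score_peak` is then called for each component.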

Results

Behavioral Results

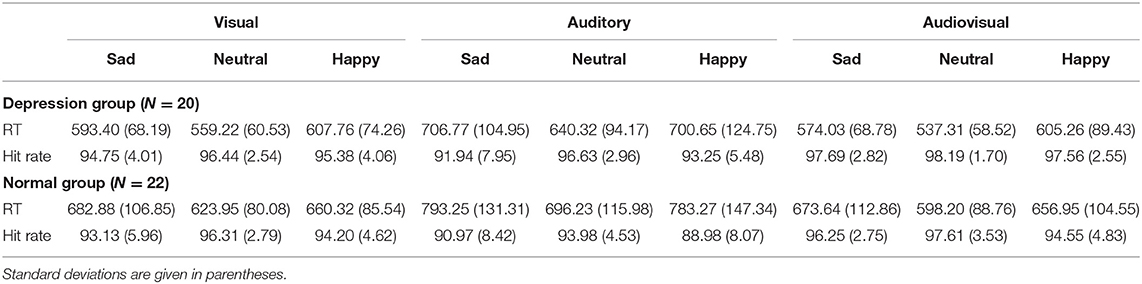

The response time and hit rate of the participants under various conditions are shown in Table 2.

Table 2. Mean response times (ms) and hit rates (%) in both the depression and normal groups in response to different emotions.

Hit Rate

The hit rate was analyzed with a 2 (group: depression group, normal group) × 3 (emotion: sad, neutral, happy) × 3 (modality: visual, auditory, audiovisual) mixed ANOVA, with group as a between-subject factor and emotion and modality as within-subject factors. The main effect of modality was significant, F(2, 80) = 29.01, p < 0.001, ηp² = 0.42. The hit rate in the audiovisual modality (97%) was significantly higher than in the visual (95%) and auditory (93%) modalities, showing an advantage of crossmodal processing. The main effect of emotion was significant, F(2, 80) = 14.01, p < 0.001, ηp² = 0.259. Further analysis showed that the hit rate for happy stimuli was significantly higher than for sad and neutral stimuli (both p < 0.001), whereas the hit rates for sad and neutral stimuli did not differ significantly. The main effect of group was not significant, F(1, 40) = 3.03, p = 0.089, ηp² = 0.07; the hit rate in the depression group (96%) did not differ significantly from that in the normal group (94%). The three-way interaction was not significant, F(4, 160) = 1.16, p = 0.329, ηp² = 0.028. Neither the modality × group interaction, F(2, 80) = 0.88, p = 0.390, ηp² = 0.022, nor the emotion × group interaction, F(2, 80) = 1.56, p = 0.217, ηp² = 0.038, was significant. The modality × emotion interaction was significant, F(4, 160) = 3.04, p = 0.035, ηp² = 0.071. Simple effect analysis showed that under visual, auditory and audiovisual conditions, the hit rate for happy stimuli was significantly higher than for neutral and sad stimuli (p < 0.001), while neutral and sad stimuli did not differ (p > 0.05).
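The effect sizes reported alongside these F tests are consistent with partial eta squared (ηp²), which can be recomputed from each F ratio and its degrees of freedom; for example, F(2, 80) = 29.01 gives 58.02 / 138.02 ≈ 0.42. The small Python helper below (a hypothetical name, not part of SPSS) performs this check and can be used to verify other reported values.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio and its degrees of freedom.

    Uses the identity  ηp² = SS_effect / (SS_effect + SS_error)
                           = F·df_effect / (F·df_effect + df_error).
    """
    return f * df_effect / (f * df_effect + df_error)


if __name__ == "__main__":
    # F values taken from the hit-rate analysis above.
    print(round(partial_eta_squared(29.01, 2, 80), 3))  # modality main effect
    print(round(partial_eta_squared(14.01, 2, 80), 3))  # emotion main effect
    print(round(partial_eta_squared(3.03, 1, 40), 3))   # group main effect
```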

Response Time

A 2 (group: depression group, normal group) × 3 (emotion: sad, neutral, happy) × 3 (modality: visual, auditory, audiovisual) mixed ANOVA was carried out on response times. The main effect of modality was significant, F(2, 80) = 165.85, p < 0.001, ηp² = 0.806. Further analysis showed that responses to audiovisual stimuli (M = 608 ms, SD = 13 ms) were significantly faster than those to unimodal visual stimuli (M = 621 ms, SD = 12 ms, p = 0.001) and auditory stimuli (M = 720 ms, SD = 18 ms, p < 0.001), indicating an advantage for crossmodal processing; responses to auditory stimuli were the slowest (p < 0.001). The main effect of emotion was significant, F(2, 80) = 51.49, p < 0.001, ηp² = 0.563. Responses to happy stimuli (M = 609 ms, SD = 13 ms) were significantly faster than those to sad (M = 671 ms, SD = 15 ms) and neutral (M = 669 ms, SD = 16 ms) stimuli (both p < 0.001), and response times to sad and neutral stimuli did not differ significantly (p = 0.744). The main effect of group was significant, F(1, 40) = 6.55, p = 0.014, ηp² = 0.141; response times were significantly shorter in the depression group (M = 614 ms) than in the normal group (M = 685 ms). The three-way interaction was not significant, F(4, 160) = 2.51, p = 0.075, ηp² = 0.002. The modality × group interaction was not significant, F(2, 80) = 0.08, p = 0.82, ηp² = 0.141. The emotion × group interaction was marginally significant, F(2, 80) = 3.09, p = 0.055, ηp² = 0.072. The modality × emotion interaction was significant, F(4, 160) = 6.17, p = 0.002, ηp² = 0.134. Simple effect analysis showed that under visual, auditory and audiovisual conditions, responses to happy stimuli were significantly faster than those to neutral and sad stimuli (p < 0.001), while responses to neutral and sad stimuli did not differ (p > 0.05).

ERP Results

N1 Component

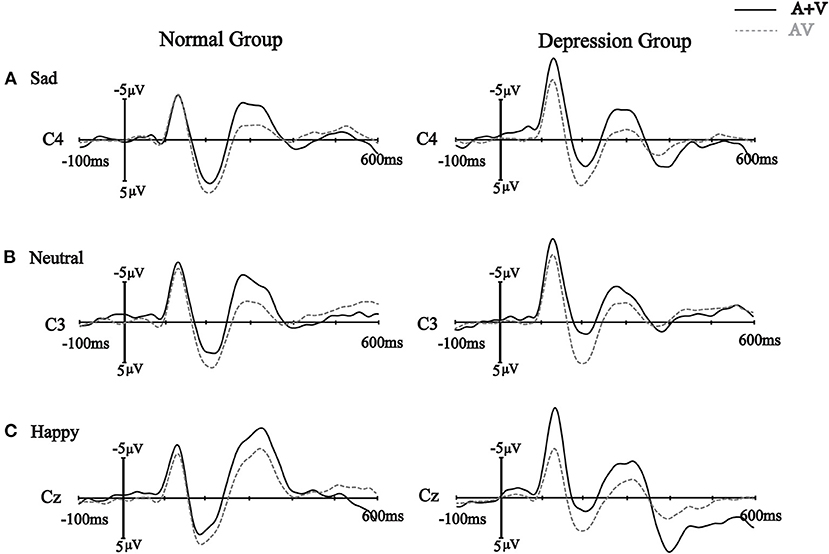

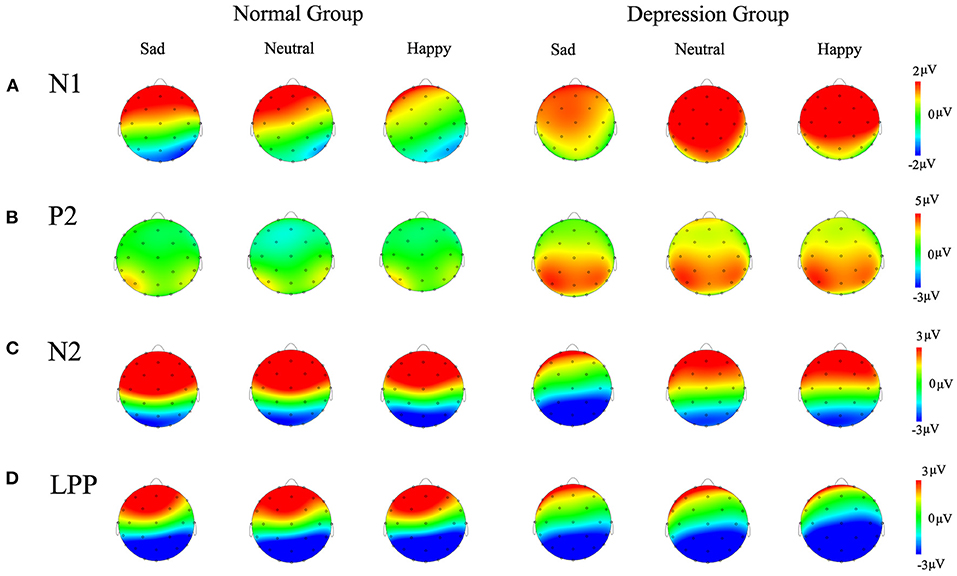

In the 120–140 ms interval, the amplitude of ERP(AV) − [ERP(A) + ERP(V)] was analyzed using a 2 (group: depression group, normal group) × 3 (emotion: sad, neutral, happy) × 3 (ROI: parietal lobe, central lobe, frontocentral lobe) mixed-factors ANOVA with group as a between-subject factor and emotion and ROI as within-subject factors. The main effect of emotion was not significant, F(2, 80) = 0.27, p = 0.74, ηp² = 0.007. The main effect of group was significant, F(1, 40) = 5.52, p = 0.02, ηp² = 0.121; N1 amplitude was higher in the depression group (M = 1.65 μV) than in the normal group (M = 0.51 μV; p = 0.02). The main effect of ROI was significant, F(2, 80) = 26.22, p < 0.001, ηp² = 0.396; the average N1 amplitude in the frontocentral lobe (M = 1.81 μV) was significantly higher than in the central lobe (M = 1.20 μV; p < 0.001) and the parietal lobe (M = 0.23 μV; p < 0.001). The three-way interaction was significant, F(4, 160) = 3.70, p = 0.02, ηp² = 0.085. Neither the emotion × group interaction, F(2, 80) = 0.68, p = 0.50, ηp² = 0.017, nor the group × ROI interaction, F(2, 80) = 2.20, p = 0.14, ηp² = 0.052, was significant. However, the emotion × ROI interaction was significant, F(4, 160) = 8.05, p < 0.001, ηp² = 0.168. Simple effect analysis showed that for all three emotions, N1 amplitudes in the frontocentral lobe (neutral: M = 2.02 μV, happy: M = 2.07 μV, sad: M = 1.28 μV) were significantly larger than those in the central lobe (happy: M = 1.33 μV, neutral: M = 1.41 μV, sad: M = 0.78 μV; p < 0.001) and the parietal lobe (happy: M = −0.14 μV, neutral: M = 0.11 μV, sad: M = 0.60 μV; p < 0.001) (Figure 2).

Figure 2. The sum of the event-related potentials to the unimodal stimuli (A + V) and the event-related potentials to the bimodal stimuli (AV) at a subset of electrodes, shown from 100 ms before to 600 ms after stimulus onset for each group with (A) sad, (B) neutral and (C) happy emotional stimuli.

P2 Component

The ANOVA showed that the main effect of emotion was not significant, F(2, 80) = 0.21, p = 0.81, ηp² = 0.005. The main effect of group was significant, F(1, 40) = 8.54, p = 0.01, ηp² = 0.176; the P2 amplitude in the depression group (M = 2.86 μV) was significantly higher than in the normal group (M = 0.87 μV). The main effect of ROI was significant, F(2, 80) = 16.53, p < 0.001, ηp² = 0.006. Further analysis showed that the P2 amplitude in the parietal lobe (M = 2.95 μV) was significantly larger than in the central lobe (M = 1.70 μV; p < 0.001) and the frontocentral lobe (M = 0.94 μV; p < 0.001). The three-way interaction was not significant, F(4, 160) = 1.47, p = 0.23, ηp² = 0.035. The emotion × group interaction (F(2, 80) = 0.25, p = 0.71, ηp² = 0.006), the group × ROI interaction (F(2, 80) = 0.23, p = 0.65, ηp² = 0.006) and the emotion × ROI interaction (F(4, 160) = 0.64, p = 0.56, ηp² = 0.016) were not significant.

N2 Component

The ANOVA showed that the main effect of emotion was significant, F(2, 80) = 4.85, p = 0.01, ηp² = 0.108: the average N2 amplitude induced by sadness was larger than that induced by happiness, whereas the differences between sad and neutral stimuli and between neutral and happy stimuli were not significant (all p > 0.05). The main effect of group was not significant, F(1, 40) = 1.75, p = 0.19, ηp² = 0.042. The main effect of ROI was significant, F(2, 80) = 107.97, p < 0.001, ηp² = 0.730; the N2 amplitude in the frontocentral lobe (M = 3.65 μV) was significantly larger than in the central lobe (M = 1.64 μV; p < 0.001) and the parietal lobe (M = −2.55 μV; p < 0.001). The three-way interaction was not significant, F(4, 160) = 2.02, p = 0.13, ηp² = 0.048. Neither the emotion × group interaction, F(2, 80) = 0.71, p = 0.49, ηp² = 0.017, nor the emotion × ROI interaction, F(4, 160) = 1.11, p = 0.34, ηp² = 0.027, was significant. The group × ROI interaction was significant, F(2, 80) = 5.86, p = 0.02, ηp² = 0.128. Simple effect analysis showed that the average N2 amplitude evoked in the frontocentral lobe (depression group: M = 2.37 μV; normal group: M = 4.94 μV) was significantly larger than that in the central lobe (depression group: M = 2.37 μV; normal group: M = 4.94 μV) and the parietal lobe (depression group: M = −2.38 μV, normal group: M = −2.71 μV).

LPP Component

The mixed ANOVA with emotion, ROI and group factors on the LPP component showed that the main effect of emotion was not significant, F(2, 80) = 1.37, p = 0.26, ηp² = 0.033. The main effect of group was not significant, F(1, 40) = 0.19, p = 0.66, ηp² = 0.005. The main effect of ROI was significant, F(2, 80) = 103.74, p < 0.001, ηp² = 0.722: the average LPP amplitude in the frontocentral lobe was significantly larger than in the central lobe (M = −0.74 μV, p < 0.001) and the parietal lobe (M = −4.96 μV, p < 0.001), and the average LPP amplitude in the central lobe was significantly larger than in the parietal lobe (p < 0.001). The three-way interaction was significant, F(4, 160) = 34.05, p < 0.001, ηp² = 0.460. We therefore conducted a 2 (group: depression group, normal group) × 3 (ROI: frontocentral lobe, central lobe, parietal lobe) mixed-factors ANOVA separately for each of the three emotions. For all three emotions, the average LPP amplitude evoked in the frontocentral lobe was significantly larger than in the central and parietal lobes (all p < 0.001), and the amplitude in the central lobe was significantly larger than in the parietal lobe (p < 0.001). In the sadness condition, the average LPP amplitude in the frontocentral lobe was significantly lower in the depression group (M = 1.32 μV) than in the normal group (M = 5.04 μV), with no significant group differences in the other ROIs. However, in the happy condition, there was no significant difference in the average amplitude of the LPP component between the two groups. The average amplitude of LPP evoked in the frontocentral lobe in the depression group (M = 0.81 μV) was significantly lower than that in the normal group (M = 3.12 μV). There were no significant differences in the average amplitudes of the LPP components between the two groups in the other ROIs.

To examine the differences among the sad, neutral and happy conditions further, we carried out a 3 (emotion: sad, neutral, happy) × 3 (ROI: frontocentral lobe, central lobe, parietal lobe) repeated-measures ANOVA separately for the depression group and the normal group. The main effect of emotion was significant in both groups. In the depression group, the average LPP amplitude induced by sadness (M = −1.09 μV) was significantly higher than that induced by happiness (M = −3.24 μV; p = 0.005), and there were no significant differences between the other emotions. The normal group showed the opposite pattern: the average LPP amplitude induced by sad stimuli was significantly lower than that induced by happy stimuli (M = −0.65 μV; p = 0.001) and neutral stimuli (M = −0.77 μV; p = 0.004), and there was no significant difference between neutral and happy stimuli (Figure 3).

Figure 3. Topography maps of the difference waves (A) N1 component, (B) P2 component, (C) N2 component, (D) LPP component are shown in the different groups for different emotions.

Discussion

In this study, we examined how depressed participants process visual, auditory and audiovisual stimuli conveying happy, neutral and sad emotions, and used the event-related potential technique to study crossmodal integration of emotional information in these participants. Participants showed higher hit rates and shorter response times to audiovisual target stimuli, and the depression group responded to emotional stimuli faster than the normal group. In addition, the N2 amplitudes induced by sadness were significantly larger than those induced by happiness. The depression group showed larger N1 and P2 amplitudes, and a significantly lower average LPP amplitude over the frontocentral lobe, than the normal group.

First, participants had higher hit rates and shorter response times to audiovisual targets than to visual or auditory targets, showing that individuals benefit from crossmodal integration when processing emotional information. This is consistent with previous findings that, when participants made emotional category judgments, voices congruent with the emotional valence of a face facilitated processing of the face, resulting in faster responses and higher hit rates (46). In addition, the depression group responded to emotional stimuli faster than the normal group (their hit rate was also numerically, though not significantly, higher), suggesting that people with depression were more sensitive to emotional information. A number of studies have suggested that depression is linked with emotional reactivity (47–51). Emotional reactivity refers to the intensity, duration and breadth of emotional experiences (52); thus, the emotional stimuli may have activated the depressed participants more strongly, leading to better task performance.

This ERP study found that crossmodal emotional facial expressions and sounds induced smaller N1 waves than unimodal facial or sound stimuli. This is consistent with previous studies showing that audiovisual integration occurs automatically as early as 110 ms after stimulus onset (30, 53); moreover, with more complex presentations (sound, facial expression and body posture), bimodal emotional information induced smaller N1 amplitudes than unimodal information (54). This study also found that participants in the depression group showed larger N1 amplitudes than those in the normal group. Since N1 reflects attention-related brain activity in the perceptual stage of early information processing (55), this result may suggest that the depression group had weaker processing abilities in the early cognitive stages after stimulus onset and needed more cognitive resources than the normal group.

In addition, the average P2 amplitudes for all emotional stimuli were higher in the depression group than in the normal group. Electrophysiological studies have shown that changes in P2 amplitude are related to the redundant, coherent, and convergent processing of emotional information across two channels (31, 33). P2 has also been taken to reflect more refined processing of perceptual stimuli (56), and greater excitation by emotional stimuli has been associated with larger P2 amplitudes (57). Furthermore, studies of patients with depression have found that sad faces induced larger P2 amplitudes in the patient group, indicating that increased P2 amplitudes reflect heightened attention to sad faces (58). Therefore, the larger P2 amplitudes in the depression group in this study probably indicate that emotional stimuli were allocated more attentional resources in this group and were consequently given priority in processing.

Regarding the mid-latency component N2, we found that sad stimuli induced significantly larger N2 amplitudes than happy stimuli. This result supports the “negativity bias” framework of emotional processing (13, 59), in which negativity bias is considered the result of rapid allocation of attentional resources to negative stimuli. This rapid response to negative stimuli is adaptive for individuals and populations because it helps people detect and avoid threatening situations more quickly (13). Accordingly, attention is automatically directed toward threatening stimuli (60) but not toward positive stimuli, which gives threatening stimuli processing priority. In addition, some researchers believe that larger N2 amplitudes reflect stronger active inhibition (37). Therefore, participants faced with negative stimuli may automatically suppress them, thus inducing larger N2 amplitudes.

Finally, we found that the average LPP amplitude over the frontocentral region was significantly lower in the depression group than in the normal group. This is consistent with previous studies showing that, in emotional information processing, depressed patients and individuals with higher levels of depression exhibit weaker LPP amplitudes to both positive and negative stimuli (61–64). In addition, in the depression group, sad stimuli elicited significantly larger LPP amplitudes than happy stimuli, whereas the opposite pattern appeared in the normal group, in which happy stimuli elicited larger LPP amplitudes. This may suggest that negative emotional stimuli induced higher arousal in patients with depression, thus inducing greater LPP amplitudes (15), which could allow these individuals to devote more attentional resources to the fine encoding of negative emotional stimuli (13). For example, depression-related words and sad faces readily reveal patients' negative attentional bias (65). Moreover, studies have shown that the LPP reflects motivational intensity, with desired stimuli associated with higher motivational intensity inducing greater LPP amplitudes (66). Similarly, patients with anxiety show greater LPP amplitudes when viewing angry and fearful faces (63). Consistent with this account, the depressed participants in this study showed greater LPP amplitudes in response to sad stimuli. These data indicate that negative stimuli can induce stronger emotional and motivational responses in depressed individuals; that is, depressed participants have a stronger motivation to approach negative stimuli. In contrast, participants in the normal group showed larger LPP amplitudes to happy stimuli, indicating that non-depressed individuals have a stronger motivation to approach positive stimuli.
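The "average LPP amplitude over the frontocentral region" is a mean taken over a time window and a cluster of electrodes. As a minimal sketch of that measurement, assuming an averaged ERP stored as a channels × samples array, the function below averages over a chosen cluster and window. The function name, the channel labels (Fz, FCz, Cz), the 500–800 ms window, the 500 Hz sampling rate, and the 200 ms pre-stimulus baseline are hypothetical choices for illustration; this section does not specify the electrodes or window used in the study.

```python
import numpy as np


def mean_window_amplitude(erp, ch_names, picks, sfreq, tmin, window):
    """Mean amplitude of an averaged ERP over a channel cluster and time window.

    erp      : array of shape (n_channels, n_samples), e.g. in microvolts
    ch_names : channel labels, in the same order as the rows of `erp`
    picks    : labels of the channels forming the cluster (e.g. frontocentral)
    sfreq    : sampling rate in Hz
    tmin     : time (s) of the first sample relative to stimulus onset
    window   : (start, end) of the measurement window in seconds, inclusive
    """
    times = tmin + np.arange(erp.shape[1]) / sfreq
    in_window = (times >= window[0]) & (times <= window[1])
    rows = [ch_names.index(ch) for ch in picks]
    # np.ix_ selects the cluster rows and the window columns as a sub-array.
    return float(erp[np.ix_(rows, in_window)].mean())


# Hypothetical example: 4 channels, 500 Hz, epoch starting 200 ms before onset.
ch_names = ["Fz", "FCz", "Cz", "Pz"]
erp = np.zeros((4, 600))
erp[:3] = 4.0   # frontocentral channels
erp[3] = -10.0  # parietal channel, excluded from the cluster
lpp = mean_window_amplitude(erp, ch_names, ["Fz", "FCz", "Cz"], 500.0, -0.2, (0.5, 0.8))
print(lpp)  # 4.0
```

Computing this value per participant and per condition (for example, sad versus happy) yields the cell means that group-by-emotion comparisons of the kind reported here are based on.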

In conclusion, our results show that crossmodal integration of emotional information occurs during the processing of emotional stimuli and that the effects of emotional stimuli differ between the depression group and the normal group. This difference in integration effects is important for understanding the processing bias toward negative stimuli in people with depression. We speculate that this abnormal processing of emotional information may lead to abnormal development of social cognition in patients with depression. If so, the problems with social and emotional abilities observed in patients with depression are not entirely caused by the social environment; early information processing may also play an important role. Based on these results, we suggest that future studies use more naturalistic experimental designs to investigate emotional and social deficits in depression. These findings also have implications for exploring potential treatments for depression; for example, future intervention programs could include training in crossmodal integration using personalized emotional stimuli.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the Ethics Committee of Hubei University. The participants provided their written informed consent to participate in this study.

Author Contributions

TL and XZ wrote the manuscript. YC, XZ, ZG, and NW performed the experiments. JY, SL, and WY analyzed the data. TL, WY, and SL conceived and designed the experiments. JY, WY, ZG, and NW revised the manuscript and approved the final version. All authors contributed to the article and approved the submitted version.

Funding

This study was supported by the Humanity and Social Science Youth Foundation of the Education Bureau of Hubei Province of China (19Q004), the Natural Science Youth Foundation of Hubei University (201611113000001), the National Social Science Foundation of China (19FJKY004), and the National Natural Science Foundation of China (61806025).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Kupfer DJ, Frank E, Phillips ML. Major depressive disorder: new clinical, neurobiological, treatment perspectives. Lancet. (2012) 379:1045–55. doi: 10.1016/s0140-6736(11)60602-8

2. Gold PW, Machado-Vieira R, Pavlatou MG. Clinical and biochemical manifestations of depression: relation to the neurobiology of stress. Neural Plasticity. (2015) 2015:1–11. doi: 10.1155/2015/581976

3. Phillips MR, Zhang J, Shi Q, Song Z, Ding Z, Pang S, et al. Prevalence, treatment, and associated disability of mental disorders in four provinces in China during 2001-05: an epidemiological survey. Lancet. (2009) 373:2041–53. doi: 10.1016/S0140-6736(09)60660-7

4. Disner SG, Beevers CG, Haigh EAP, Beck AT. Neural mechanisms of the cognitive model of depression. Nat Rev Neurosci. (2011) 12:467–77. doi: 10.1038/nrn3027

5. Roiser JP, Sahakian BJ. Hot and cold cognition in depression. CNS Spectrums. (2013) 18:139–49. doi: 10.1017/s1092852913000072

6. He Z, Zhang D, Luo Y. Mood-congruent cognitive bias in depressed individuals. Adv Psychol Sci. (2015) 23:2118–28. doi: 10.3724/SP.J.1042.2015.02118

7. Rottenberg J, Hindash AC. Emerging evidence for emotion context insensitivity in depression. Curr Opin Psychol. (2015) 4:1–5. doi: 10.1016/j.copsyc.2014.12.025

8. Bourke C, Douglas K, Porter R. Processing of facial emotion expression in major depression: a review. Aust N Z J Psychiatry. (2010) 44:681–96. doi: 10.3109/00048674.2010.496359

9. Elliott R, Zahn R, Deakin JFW, Anderson IM. Affective cognition and its disruption in mood disorders. Neuropsychopharmacology. (2010) 36:153–82. doi: 10.1038/npp.2010.77

10. Albert K, Gau V, Taylor WD, Newhouse PA. Attention bias in older women with remitted depression is associated with enhanced amygdala activity and functional connectivity. J Affect Disord. (2017) 210:49–56. doi: 10.1016/j.jad.2016.12.010

11. Elgersma HJ, Koster EHW, van Tuijl LA, Hoekzema A, Penninx BWJH, Bockting CLH, et al. Attentional bias for negative, positive, and threat words in current and remitted depression. PLoS ONE. (2018) 13:e0205154. doi: 10.1371/journal.pone.0205154

12. Li H, Yang X, Zheng W, Wang C. Emotional regulation goals of young adults with depression inclination: An event-related potential study. Acta Psychol Sinica. (2019) 6:5–15. doi: 10.3724/SP.J.1041.2019.00637

13. Olofsson JK, Nordin S, Sequeira H, Polich J. Affective picture processing: An integrative review of ERP findings. Biol Psychol. (2008) 77:247–65. doi: 10.1016/j.biopsycho.2007.11.006

14. Baumeister RF, Bratslavsky E, Finkenauer C, Vohs KD. Bad is stronger than good. Rev Gen Psychol. (2001) 5:323–70. doi: 10.1037/1089-2680.5.4.323

15. Cuthbert BN, Schupp HT, Bradley MM, Birbaumer N, Lang PJ. Brain potentials in affective picture processing: covariation with autonomic arousal and affective report. Biol Psychol. (2000) 52:95–111. doi: 10.1016/s0301-0511(99)00044-7

16. Schupp HT, Cuthbert BN, Bradley MM, Cacioppo JT, Ito T, Lang PJ. Affective picture processing: the late positive potential is modulated by motivational relevance. Psychophysiology. (2000) 37:257–61. doi: 10.1111/1469-8986.3720257

17. Yang W, Zhu X, Wang X, Wu D, Yao S. Time course of affective processing bias in major depression: An ERP study. Neurosci Lett. (2011) 487:372–7. doi: 10.1016/j.neulet.2010.10.059

18. Chen X, Han L, Pan Z, Luo Y, Wang P. Influence of attention on bimodal integration during emotional change decoding: ERP evidence. Int J Psychophysiol. (2016) 106:14–20. doi: 10.1016/j.ijpsycho.2016.05.009

19. de Gelder B, Vroomen J. The perception of emotions by ear and by eye. Cognit Emot. (2000) 14:289–311. doi: 10.1080/026999300378824

20. Paulmann S, Pell MD. Contextual influences of emotional speech prosody on face processing: How much is enough? Cognit Affect Behav Neurosci. (2010) 10:230–42. doi: 10.3758/cabn.10.2.230

21. Collignon O, Girard S, Gosselin F, Roy S, Saint-Amour D, Lassonde M, et al. Audio-visual integration of emotion expression. Brain Res. (2008) 1242:126–35. doi: 10.1016/j.brainres.2008.04.023

22. Logeswaran N, Bhattacharya J. Crossmodal transfer of emotion by music. Neurosci Lett. (2009) 455:129–33. doi: 10.1016/j.neulet.2009.03.044

23. Lugo JE, Doti R, Wittich W, Faubert J. Multisensory integration. Psychol Sci. (2008) 19:989–97. doi: 10.1111/j.1467-9280.2008.02190.x

24. Van Rheenen TE, Rossell SL. Multimodal emotion integration in bipolar disorder: an investigation of involuntary cross-modal influences between facial and prosodic channels. J Int Neuropsychol Soc. (2014) 20:525–33. doi: 10.1017/s1355617714000253

25. Dondaine T, Robert G, Peron J, Grandjean D, Verin M, Drapier D, et al. Biases in facial and vocal emotion recognition in chronic schizophrenia. Front Psychol. (2014) 5:900. doi: 10.3389/fpsyg.2014.00900

26. de Gelder B, Vroomen J, de Jong SJ, Masthoff ED, Trompenaars FJ, Hodiamont P. Multisensory integration of emotional faces and voices in schizophrenics. Schizophrenia Res. (2005) 72:195–203. doi: 10.1016/j.schres.2004.02.013

27. Knowland VCP, Mercure E, Karmiloff-Smith A, Dick F, Thomas MSC. Audio-visual speech perception: a developmental ERP investigation. Dev Sci. (2013) 17:110–24. doi: 10.1111/desc.12098

28. Romero YR, Senkowski D, Keil J. Early and late beta-band power reflect audiovisual perception in the McGurk illusion. J Neurophysiol. (2015) 113:2342–50. doi: 10.1152/jn.00783.2014

29. Eggermont J, Ponton C. The neurophysiology of auditory perception: From single units to evoked potentials. Audiol Neuro-otol. (2002) 7:71–99. doi: 10.1159/000057656

30. Jessen S, Kotz SA. The temporal dynamics of processing emotions from vocal, facial, bodily expressions. NeuroImage. (2011) 58:665–74. doi: 10.1016/j.neuroimage.2011.06.035

31. Ho HT, Schröger E, Kotz SA. Selective attention modulates early human evoked potentials during emotional face–voice processing. J Cognit Neurosci. (2015) 27:798–818. doi: 10.1162/jocn_a_00734

32. Pourtois G, de Gelder B, Vroomen J, Rossion B, Crommelinck M. The time-course of intermodal binding between seeing and hearing affective information. NeuroReport. (2000) 11:1329–33. doi: 10.1097/00001756-200004270-00036

33. Balconi M, Carrera A. Cross-modal integration of emotional face and voice in congruous and incongruous pairs: The P2 ERP effect. J Cognit Psychol. (2011) 23:132–9. doi: 10.1080/20445911.2011.473560

34. Huang XQ, Zhang J, Liu J, Sun L, Zhao HY, Lu YG, et al. C-reactive protein promotes adhesion of monocytes to endothelial cells via NADPH oxidase-mediated oxidative stress. J Cell Biochem. (2012) 113:857–67. doi: 10.1002/jcb.23415

35. Klasen M, Kenworthy CA, Mathiak KA, Kircher TTJ, Mathiak K. Supramodal representation of emotions. J Neurosci. (2011) 31:15218. doi: 10.1523/JNEUROSCI.2833-11.2011

36. Dai Q, Feng Z. Deficient inhibition of return for emotional faces in depression. Acta Psychol Sinica. (2009) 12:57–70. doi: 10.3724/SP.J.1041.2009.01175

37. Lewis MD, Granic I, Lamm C, Zelazo PD, Stieben J, Todd RM, et al. Changes in the neural bases of emotion regulation associated with clinical improvement in children with behavior problems. Dev Psychopathol. (2008) 20:913–39. doi: 10.1017/s0954579408000448

38. Suess F, Rabovsky M, Abdel Rahman R. Perceiving emotions in neutral faces: expression processing is biased by affective person knowledge. Soc Cognit Affect Neurosci. (2014) 10:531–6. doi: 10.1093/scan/nsu088

39. Barth DS, Goldberg N, Brett B, Di S. The spatiotemporal organization of auditory, visual, and auditory-visual evoked potentials in rat cortex. Brain Res. (1995) 678:177–90. doi: 10.1016/0006-8993(95)00182-p

40. Rugg MD, Doyle MC, Wells T. Word and nonword repetition within- and across-modality: an event-related potential study. J Cognit Neurosci. (1995) 7:209–27. doi: 10.1162/jocn.1995.7.2.209

41. Yang W, Yang J, Gao Y, Tang X, Ren Y, Takahashi S, et al. Effects of sound frequency on audiovisual integration: an event-related potential study. PLoS ONE. (2015) 10:e0138296. doi: 10.1371/journal.pone.0138296

42. Giard MH, Peronnet F. Auditory-visual integration during multimodal object recognition in humans: a behavioral and electrophysiological study. J Cognit Neurosci. (1999) 11:473–90. doi: 10.1162/089892999563544

43. Teder-Sälejärvi WA, Di Russo F, McDonald JJ, Hillyard SA. Effects of spatial congruity on audio-visual multimodal integration. J Cognit Neurosci. (2005) 17:1396–409. doi: 10.1162/0898929054985383

44. Molholm S, Sehatpour P, Mehta AD, Shpaner M, Gomez-Ramirez M, Ortigue S, et al. Audio-visual multisensory integration in superior parietal lobule revealed by human intracranial recordings. J Neurophysiol. (2006) 96:721–9. doi: 10.1152/jn.00285.2006

45. Van Wassenhove V, Grant KW, Poeppel D. Visual speech speeds up the neural processing of auditory speech. Proc Natl Acad Sci USA. (2005) 102:1181–6. doi: 10.1073/pnas.0408949102

46. Föcker J, Gondan M, Röder B. Preattentive processing of audio-visual emotional signals. Acta Psychol. (2011) 137:36–47. doi: 10.1016/j.actpsy.2011.02.004

47. Bylsma LM, Morris BH, Rottenberg J. A meta-analysis of emotional reactivity in major depressive disorder. Clin Psychol Rev. (2008) 28:676–91. doi: 10.1016/j.cpr.2007.10.001

48. Carver CS, Johnson SL, Joormann J. Major depressive disorder and impulsive reactivity to emotion: toward a dual-process view of depression. Br J Clin Psychol. (2013) 52:285–99. doi: 10.1111/bjc.12014

49. Hankin BL, Wetter EK, Flory K. Appetitive motivation and negative emotion reactivity among remitted depressed youth. J Clin Child Adolesc Psychol. (2012) 41:611–20. doi: 10.1080/15374416.2012.710162

50. Shapero BG, Abramson LY, Alloy LB. Emotional reactivity and internalizing symptoms: moderating role of emotion regulation. Cognit Therapy Res. (2015) 40:328–40. doi: 10.1007/s10608-015-9722-4

51. Van Rijsbergen GD, Bockting CLH, Burger H, Spinhoven P, Koeter MWJ, Ruhé HG, et al. Mood reactivity rather than cognitive reactivity is predictive of depressive relapse: A randomized study with 5.5-year follow-up. J Consult Clin Psychol. (2013) 81:508–17. doi: 10.1037/a0032223

52. Nock MK, Wedig MM, Holmberg EB, Hooley JM. The emotion reactivity scale: development, evaluation, and relation to self-injurious thoughts and behaviors. Behav Therapy. (2008) 39:107–16. doi: 10.1016/j.beth.2007.05.005

53. Kokinous J, Kotz SA, Tavano A, Schröger E. The role of emotion in dynamic audiovisual integration of faces and voices. Soc Cognit Affect Neurosci. (2014) 10:713–20. doi: 10.1093/scan/nsu105

54. Yeh P, Geangu E, Reid V. Coherent emotional perception from body expressions and the voice. Neuropsychologia. (2016) 91:99–108. doi: 10.1016/j.neuropsychologia.2016.07.038

55. Frings C, Groh-Bordin C. Electrophysiological correlates of visual identity negative priming. Brain Res. (2007) 1176:82–91. doi: 10.1016/j.brainres.2007.07.093

56. Schutter DJLG, de Haan EHF, van Honk J. Functionally dissociated aspects in anterior and posterior electrocortical processing of facial threat. Int J Psychophysiol. (2004) 53:29–36. doi: 10.1016/j.ijpsycho.2004.01.003

57. Dai Q, Feng Z. More excited for negative facial expressions in depression: Evidence from an event-related potential study. Clin Neurophysiol. (2012) 123:2172–9. doi: 10.1016/j.clinph.2012.04.018

58. Costafreda SG, Brammer MJ, David AS, Fu CHY. Predictors of amygdala activation during the processing of emotional stimuli: A meta-analysis of 385 PET and fMRI studies. Brain Res Rev. (2008) 58:57–70. doi: 10.1016/j.brainresrev.2007.10.012

59. Krendl AC, Zucker HR, Kensinger EA. Examining the effects of emotion regulation on the ERP response to highly negative social stigmas. Soc Neurosci. (2016) 12:349–60. doi: 10.1080/17470919.2016.1166155

60. Nieuwenhuis S, Yeung N, van den Wildenberg W, Ridderinkhof KR. Electrophysiological correlates of anterior cingulate function in a go/no-go task: Effects of response conflict and trial type frequency. Cognit Affect Behav Neurosci. (2003) 3:17–26. doi: 10.3758/cabn.3.1.17

61. Admon R, Pizzagalli DA. Dysfunctional reward processing in depression. Curr Opin Psychol. (2015) 4:114–8. doi: 10.1016/j.copsyc.2014.12.011

62. Hajcak G, MacNamara A, Olvet DM. Event-related potentials, emotion, and emotion regulation: an integrative review. Dev Neuropsychol. (2010) 35:129–55. doi: 10.1080/87565640903526504

63. MacNamara A, Kotov R, Hajcak G. Diagnostic and symptom-based predictors of emotional processing in generalized anxiety disorder and major depressive disorder: an event-related potential study. Cognit Therapy Res. (2015) 40:275–89. doi: 10.1007/s10608-015-9717-1

64. Proudfit GH, Bress JN, Foti D, Kujawa A, Klein DN. Depression and event-related potentials: emotional disengagement and reward insensitivity. Curr Opin Psychol. (2015) 4:110–3. doi: 10.1016/j.copsyc.2014.12.018

65. Joormann J, Gotlib IH. Selective attention to emotional faces following recovery from depression. J Abnormal Psychol. (2007) 116:80–5. doi: 10.1037/0021-843x.116.1.80

Keywords: ERPs, audiovisual integration, multisensory processing, depression, emotion

Citation: Lu T, Yang J, Zhang X, Guo Z, Li S, Yang W, Chen Y and Wu N (2021) Crossmodal Audiovisual Emotional Integration in Depression: An Event-Related Potential Study. Front. Psychiatry 12:694665. doi: 10.3389/fpsyt.2021.694665

Received: 14 April 2021; Accepted: 21 June 2021;

Published: 20 July 2021.

Edited by:

Yuan-Pang Wang, University of São Paulo, Brazil

Reviewed by:

Xiaoyu Tang, Liaoning Normal University, China

Wu Qiong, Suzhou University of Science and Technology, China

Copyright © 2021 Lu, Yang, Zhang, Guo, Li, Yang, Chen and Wu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Weiping Yang, swywp@163.com

†These authors have contributed equally to this work

Ting Lu

Jingjing Yang2†

Weiping Yang