94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

METHODS article

Front. Plant Sci. , 06 March 2025

Sec. Sustainable and Intelligent Phytoprotection

Volume 16 - 2025 | https://doi.org/10.3389/fpls.2025.1521594

The annual global economic losses from pest-induced crop damage are substantial and difficult to quantify. Real-time monitoring of pest dynamics and timely control strategies are crucial for food security. Among the primary monitoring techniques, sex pheromone-baited trapping technology is instrumental in the detection and management of agricultural pests. To address existing limitations-such as manual insect collection and counting in conventional traps, inaccuracies in photoelectric counting devices, and the requirement for manual replacement of sticky boards in image-based traps—an advanced agricultural pest monitoring system utilizing sex pheromone bait was designed and developed in this paper. The system integrates smart electro-killing pheromone traps, a pest detection model, and a pheromone monitoring platform. Male pests attracted to pheromones are neutralized by an electric grid and deposited on an image acquisition platform. A network camera captures images of the pests, which are processed by a YOLOv9-TrapPest detection model to identify and quantify them. This model incorporates an AKConv module to enhance feature extraction, reducing false detections from limb separation. The CBAM-PANet structure improves detection rates of sticky pests, while the FocalNet module optimizes fine-grained feature capture, excluding non-target pests. The YOLOv9-TrapPest model outperforms other detection models, achieving 97.5% average precision and 98.3% mAP50 for detecting seven pest species. Furthermore, a pest pheromone monitoring platform displays the images and identification results, supporting pest control decisions. This system incorporates automated functions for pest trapping, killing, counting, and clearing, thereby achieving complete automation in the monitoring of pests attracted by sex pheromones.

The annual global economic losses caused by pests damaging crops are incalculable (Savary et al., 2019). Real-time monitoring of pest population dynamics, combined with the timely implementation of effective control measures, is crucial for ensuring food security. In the realm of agricultural pest monitoring, alongside traditional manual field survey methods, various intelligent devices are frequently employed. These include ground light traps, sex pheromone-baited traps, searchlight traps, and insect radar, which are utilized to trap and monitor pests. The trapped pests are subsequently identified and counted, enabling accurate detection and reporting of pest populations (Jiang et al., 2021). Among them, sex pheromone-baited traps, which utilize synthetic pheromone lures to trap target pest males, are commonly used for the physical control and monitoring of pests. They have been widely used in agricultural pest monitoring and control due to their simplicity, low cost, and environmental friendliness.

At present, sex pheromone-baited traps can be classified into two main categories based on the methods used for capturing and counting pests attracted by pheromone lures. These categories have been widely adopted in the field for pest monitoring and management. (1) Simple sex pheromone-baited traps are traps that utilize sticky boards or buckets to capture pests and employ a manual identification and counting method (Yang et al., 2020). (2) Bucket traps are traps designed to prevent pests from escaping and use photoelectric counters to automatically count the pests (Potamitis et al., 2014; Zhou, 2020). The two types of traps have the following shortcomings: (1) Simple sex pheromone-baited traps, though cost-effective, require regular field visits by surveyors to check the number of trapped insects and manually report the data. This process is both time-consuming and labor-intensive. Moreover, the data are not available in real-time and are difficult to track for historical analysis. Additionally, these traps are easily damaged and cannot be reused. (2) Traps utilizing sticky boards necessitate timely manual replacement of the boards when large numbers of insects are captured, further increasing labor demands. (3) Since synthetic pheromone lures cannot guarantee a high degree of specificity, and the proportion of pheromone lures’ components may vary across regions for a particular pest, these lures often attract various similar pest species (Shen et al., 2019), or non-target pests that mistakenly enter the trap. This leads to inflated counts of target pests by photoelectric counters and thus reduces the reliability of target pest monitoring (Luo et al., 2016).

With the continuous development of machine vision technology and its successful application across various domains, a growing number of researchers are exploring its integration with sticky boards in pest sex pheromone-baited monitoring systems to improve the intelligence and precision of pest monitoring. In this approach, pests are first attracted by pheromone lures, and they are glued to death when they touch the sticky boards. The machine vision system then captures images of the sticky boards, which are subsequently analyzed using pattern recognition or deep learning techniques to identify and count the trapped pests. Traditional pattern recognition methods typically follow these steps: background segmentation, feature extraction of the pests, and the identification and counting of target pests using similarity measures or classifiers (Yalcin, 2015; Li et al., 2019). Due to prolonged exposure of sticky boards to the environment, the image backgrounds can become complex. When a large number of pests are captured, challenges such as pests sticking together and interference from visually similar species arise. Traditional pattern recognition methods often struggle to handle these complexities, leading to poor generalization and a reduced ability to continuously and accurately identify pests. Consequently, these methods have not seen widespread adoption in the field. In recent years, the exceptional performance of deep learning in object recognition and target detection has led to its application in pheromone-baited pests images recognition. For instance, Ding and Taylor (2016) utilized a sliding window approach and convolutional neural network to identify codling moths on pheromone-baited sticky board images. Qiu et al. (2021) used the YOLOv5 model to identify Spodoptera frugiperda on both the collecting boards of the pest image monitoring device FAAS-Pest Monitor, and the sticky boards, achieving a precision rate of 96.23% and a recall rate of 91.85%. However, the study was limited to a single pest species, restricting its broader applicability to other pest types in agricultural monitoring systems. Tian et al. (2023) designed a pest monitoring device to capture pest images, and proposed the MD-YOLO model to detect three major orchard pests Adoxophyes orana, Grapholitha molesta Busck, and Carposina niponensis Walsingham, on sticky board images. The model achieved a mAP50 of 86.2%. Zhang et al. (2022) designed smart sex pheromone-baited traps to capture images of Cnaphalocrocis medinalis on sticky boards. To address the reduced recognition rate caused by complex backgrounds, sticky pests, and false detections of similar pests, a two-layer network model incorporating an improved YOLOv3 and DBTNet-101 was developed. This model achieved a recognition precision of 97.6% and a recall rate of 98.6%. However, the image capture modules of these sex pheromone-baited traps are not enclosed. The sticky boards are exposed to the environment and the pests on the boards can damaged and decayed, which cause missed and false detections of model. Moreover, the background complexity of the sticky boards images increases over time, making the pest identification more difficult.

To address the challenges of frequent replacements of sticky boards and the increasing complexity of image backgrounds due to outdoor exposure, an electro-killing intelligent monitoring system was developed for agricultural pests based on machine vision. In this system, to eliminate the need of frequent replacements of sticky boards, we replace sticky boards with an electro-killing mechanism and utilize a rotating flat bottom tray to clear the pests. And the electro-killing mechanism is enclosed, which prevents the image background from becoming more complex, thereby reducing the missed and false detections of model. Moreover, to address specific challenges in pest recognition for images captured by electro-killing mechanisms, such as false detections of pests with fragmented bodies and similar pest species, missed detections of pests adhere to one another, we designed the YOLOv9-TrapPest pest identification model by incorporating the AKConv module, CBAM-PANet structure, and FocalNet module into the YOLOv9 model. This system realizes the unattended sex pheromone-baited pest monitoring, including automatic pest attraction, electrocution of pests, image capture, pest identification, pest counting, and data traceability. And it provides real-time data support for the monitoring and control of agricultural pests attracted by pheromone lures.

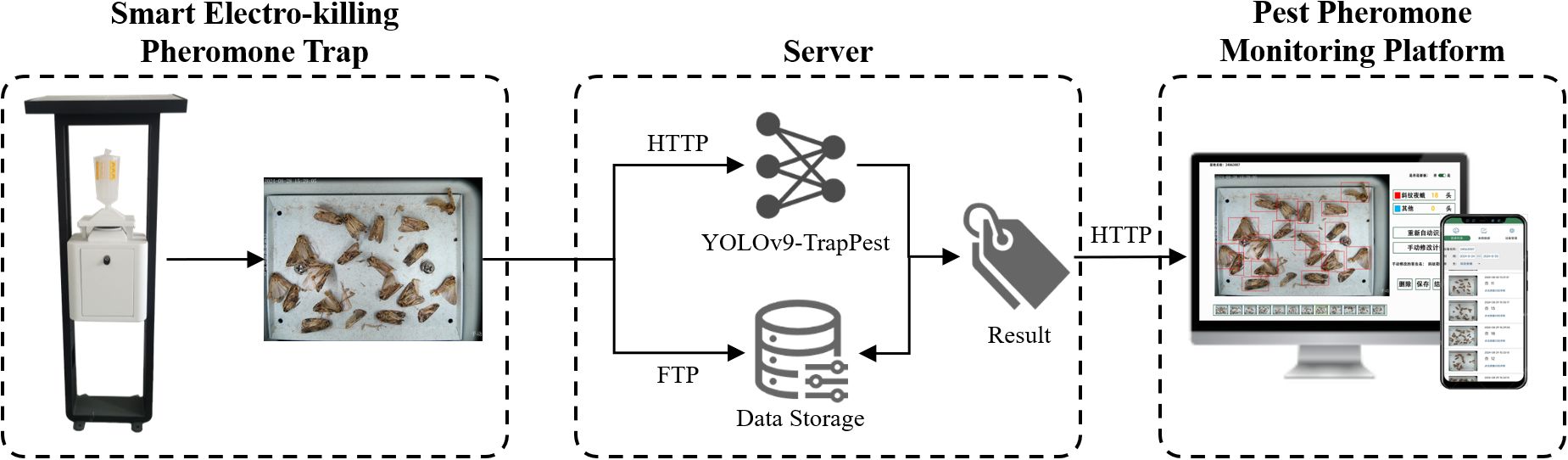

The electro-killing pheromone-baited intelligent agricultural pest monitoring system mainly consists of three modules, smart electrical pheromone traps, a server for data access and model deployment, and a platform for pest reporting, as shown in Figure 1. The smart electrical pheromone trap is responsible for trapping and electrocuting the pests and capturing the pest images. These images are then transferred to the server via FTP protocol, where a pest identification and counting model is deployed. The results are subsequently displayed on the platform.

Figure 1. Architecture of the electro-killing pheromone-baited intelligent agricultural pest monitoring system.

The smart electro-killing pheromone trap is mainly composed of four parts: solar electric panel, trap cage, electric shock module and machine vision module. The structure is shown in Figure 2. Among them, the machine vision module includes a control mainboard, a 1200w pixel industrial camera, a shooting flat bottom tray, a flip motor, and an insect collector.

Given the challenges of obtaining a reliable electricity supply in remote farmland, solar panels are utilized as the power source for the smart electro-killing pheromone traps, ensuring environmental sustainability. A dedicated software system has been developed on the control mainboard to manage various functions. This includes controlling the timing of the power supply to the electric grid, activating the camera to capture images, and rotating the flat bottom tray 360°, facilitating comprehensive pest monitoring and system efficiency.

Smart electro-killing pheromone traps attract pests using pheromone lures placed within the trap cage. When a pest enters the trap, it collides with the cage wall or cover and falls onto the electric shock module, where it is electrocuted. Since a large number of pests may lead to pest adhesion, we will set the number of times the electric grid operates to ensure that the number of trapped pests does not become too large. The pest then falls through the gaps in the electric grid of the shock module onto the flat bottom tray of the machine vision module. The control mainboard schedules the camera to automatically capture images of the pests on the flat bottom tray. The image files are uploaded to the server via FTP protocol for storage, while additional data—such as device number, device status, GIS coordinates, fault information, and image paths—are transmitted via HTTP protocol and stored in the server’s database. The server processes the uploaded images using an automatic identification and counting model to perform data analysis and generate statistical reports. Afterward, the control mainboard activates a flip motor that rotates the flat bottom tray 360° to reset it, causing the pests to fall into an insect collector located at the bottom of the trap. This automated sequence allows the smart electro-killing pheromone trap to fully automate the entire process of pest attraction, electrocution, image capture, data transmission, and analysis. As a result, the system enables completely unattended operation for pheromone-baited pest monitoring.

From 2023 to 2024, a total of 7 smart electro-killing pheromone traps were deployed across farms located in Jinhua City, Zhejiang Province; Zhuhai City, Guangdong Province; and Ningjiang District, Songyuan City, Jilin Province. Each device was equipped with a specific pheromone lure for monitoring pheromone-baited pests. Over the course of the deployment, 1,796 images were collected, capturing seven types of agricultural pests. The images, each with a resolution of 4000×3000 pixels, were stored in JPG format. The detailed data information is presented in Table 1.

The LabelImg tool was used to annotate the pests in the images, with category information and location information saved in XML files. After labelling, the pest image data was converted into YOLO dataset format. However, due to the insufficient solar power supply caused by consecutive rainy and cloudy days, the voltage provided by the solar panels was low. This low voltage led to images with insufficient brightness. To address this issue, the brightness augmentation technique was applied to enhance image quality, ensuring that the final detection results were not affected. Further, to improve the generalization capabilities of the pest detection model, additional data augmentation techniques were applied. These include rotation, mirroring, noise addition, and mosaic augmentation, helping the model become more robust in recognizing pests under various environmental and image conditions. The dataset was then split into training sets, validation sets and test sets in an 8:1:1 ratio.

YOLOv9 (Wang et al., 2024) is an improved target detection model built upon the YOLO framework. It utilizes the Programmable Gradient Information (PGI) technique to generate more reliable gradients through reversible branches, thereby improving the convergence and performance of the model. Additionally, the model introduces the Generalized Efficient Layer Aggregation Network (GELAN) to optimize parameter utilization and computational efficiency. Experimental results showed that YOLOv9 achieved performance comparable to YOLOv7AF (Wang et al., 2023) on the MS COCO dataset (Lin et al., 2014) with an AP of 53%, while using 42% fewer parameters and 21% less computational power. This makes it particularly suitable for resource-constrained environments. To meet the requirements for high-precision pest identification and counting in real-time monitoring systems while maintaining a lightweight and efficient model, the YOLOv9-TrapPest model was developed in this paper, based on YOLOv9, to detect and count seven types of agricultural pests in the images captured by smart electrical pheromone traps.

The difficulty of model identification is increased by the fact that pests struggle when they touch the electric grid after being lured over by the pheromone lures, which tends to cause separation or fragmentation of the pest limbs. In this paper, Alterable Kernel Convolution (AKConv) (Zhang et al., 2023) was introduced into the backbone network to improve the feature extraction and fusion module, RepNCSPELAN4, to reduce the interference of pests’ limbs. During peak pest periods, heavy adhesion of pests leads to a reduction in the model’s recall rate. To address this issue, we proposed the CBAM-PANet structure based on the attention mechanism, which improved the Path Aggregation Network (PANet) structure (Liu et al., 2018) in the Neck network of YOLOv9. By incorporating both channel attention and spatial attention mechanisms, the model’s ability to focus on important features and critical regions was significantly enhanced (Woo et al., 2018), allowing for more accurate identification and localization of targets in images with complex backgrounds and adhered pests. Although pheromone lures are specific, some closely related species have similar chemical components in their pheromone lures, which can easily attract similar pests. To reduce the false detections of similar pests, the Focal Modulation Network (FocalNet) (Yang et al., 2022) was employed in this paper to replace the SPPELAN module in the Head network. By introducing the focal modulation mechanism, the feature extraction network’s capacity to focus on high-confidence regions was enhanced, allowing the model to capture fine-grained features more effectively. This improvement enables the model to better distinguish between similar pest species by concentrating on the most relevant areas within an image. The structure of the YOLOv9-TrapPest model is illustrated in Figure 3.

RepNCSPELAN4 is the feature extraction-fusion module in YOLOv9, which combines two architectures with gradient path planning: CSPNet and ELAN. It is designed as a generalized efficient layer aggregation network, considering factors such as lightweight design, inference speed, and accuracy.

In convolutional neural networks, standard convolution operations are limited to a local window of fixed shape, with the kernel size fixed at k × k, resulting in a quadratic growth in the number of parameters. In pest recognition, the fixed sampling windows and convolutional kernels make it difficult for the model to accurately identify pests with partial or damaged bodies caused by the electric shock module, which in turn reduces the model’s recognition accuracy, as shown in Figure 4. Therefore, in this paper, the AKConv was introduced to enhance the feature extraction and fusion capabilities of the RepNCSPELAN4 module. The improved module, named AKRepNCE, and its model structure is shown in Figure 5.

AKConv enables the convolution kernel to adaptively adjust its shape and parameters according to the features of the input image by integrating Deformable Convolution and Dynamic Convolution techniques. This flexibility enables the kernel to better accommodate pests with different morphologies. Even when the body parts of pests are disarticulated or fragmented, the convolutional kernel can adaptively identify the primary characteristics of the pest, without being influenced by the disjointed fragments.

The neck network of YOLOv9 adopts PANet, which introduces a bottom-up path augmentation structure based on the Feature Pyramid Network (Lin et al., 2017). PANet improves the locational expressiveness of the feature pyramid by introducing the bottom-up path aggregation mechanism, enabling the upward transfer and fusion of low-level feature information. This enhancement notably strengthens the model’s ability to accurately detect objects across varying scales and in complex backgrounds. However, during peak periods of agricultural pest infestations, pests may adhere to one another, leading to issues of missed detection. To address this, the CBAM attention mechanism was integrated into the PANet architecture, resulting in the development of the CBAM-PANet module. The CBAM attention mechanism combines channel attention and spatial attention, enhancing the model’s focus on key features. As shown in Figure 6, regarding the input feature map, the CBAM attention mechanism infers attention maps sequentially across two independent dimensions—channel and spatial. These maps are then multiplied with the input feature map to adaptively refine features, resulting in enhanced feature details. By emphasizing critical features in both the channel and spatial dimensions, the model can more accurately identify and localize target pests that are adhered together.

YOLOv9 employs the SPPELAN module for spatial pyramid pooling, which integrates spatial pyramid pooling with an enhanced local attention network. However, in the context of this paper, it demonstrates some limitations in effectively handling feature diversity and multi-scale features. To more accurately identify similar agricultural pests (for example, as show in Figure 4, Spodoptera frugiperda and Spodoptera litura in this paper, which are difficult to recognize because many of the insects are positioned belly-up), the SPPELAN module was replaced with FocalNet.

In computer vision, different regions of an image contribute variably to a given task. Some regions contain critical information necessary for classification or detection, while others consist of background or irrelevant elements. The core concept of FocalNet is to replace the self-attention module with a focal modulation mechanism, which captures long-range dependencies and contextual information within the image. This mechanism allows the model to concentrate more precisely on essential features while reducing focus on irrelevant areas, enabling a more refined and targeted modulation of features. This approach not only improves the model’s sensitivity to key features but also reduces the computational complexity typically associated with traditional self-attention mechanisms. The network architecture of FocalNet is depicted in Figure 7.

FocalNet obtains contextual and global information by projecting input feature vectors into a new feature space and then using deep convolution and global average pooling. These features are then compressed into modulators and a dot product operation is performed using gated weights G to obtain the intermediate output Zout as shown in (Equation 1).

Zl represents the feature representation of layer l and Gl is the corresponding gating weight. Finally, the final output yi is obtained by combining the linear layer h and the query q to achieve the communication between different channels, as shown in (Equation 2).

With the integration of FocalNet, the model can effectively minimize background noise interference and more accurately capture small yet important feature differences within an image. This capability is especially beneficial for agricultural pest detection tasks, where it allows for the differentiation between pest species with visually similar characteristics, thereby reducing the likelihood of false detections.

The computer processor used in this study is an Intel Core i7 9700, with a base clock frequency of 3.0 GHz. The system is equipped with an 8TB hard drive and 16GB of memory. The graphics card is an RTX 1080 Ti. The development environment includes Ubuntu 20.04, Python 3.6, Anaconda 9.12, and CUDA version 11.6. During model training, the following hyperparameters were configured: the number of epochs was set to 200, the momentum was set to 0.937, the weight decay was set to 0.0005, and the batch size was set to 4.

To objectively evaluate the detection effect of the proposed agricultural pest detection model, Precision (P), Recall (R), Average Precision (AP), and mean Average Precision (mAP) were selected as the evaluation metrics, and the formulas were computed as shown in (Equations 3–6), respectively.

Precision Pk indicates the proportion of correctly identified target pests of class k in all samples identified as that target pest of class k. Recall Rk indicates the proportion of correctly identified target pests of class k in all samples of that target pest of class k. The average precision APkintegrates precision and recall, and the model is evaluated by calculating the area under the precision-recall curves at different thresholds to assess the model performance. mAP is the mean value of the average precision of all pest classes, which is a key indicator for comprehensively evaluating the model performance in multi-classification tasks. A higher mAP value indicates better detection performance, as it reflects the model’s ability to accurately detect and classify multiple pest species.

The pest pheromone monitoring platform adopts the architecture of front-end and back-end separation. The front-end includes the Web end and App end. The interactive interface for the Web end was developed using Vue, while the App end was built on Android. The back-end utilizes the SpringBoot framework to handle data processing and other operations, with MySQL serving as the database for data storage.

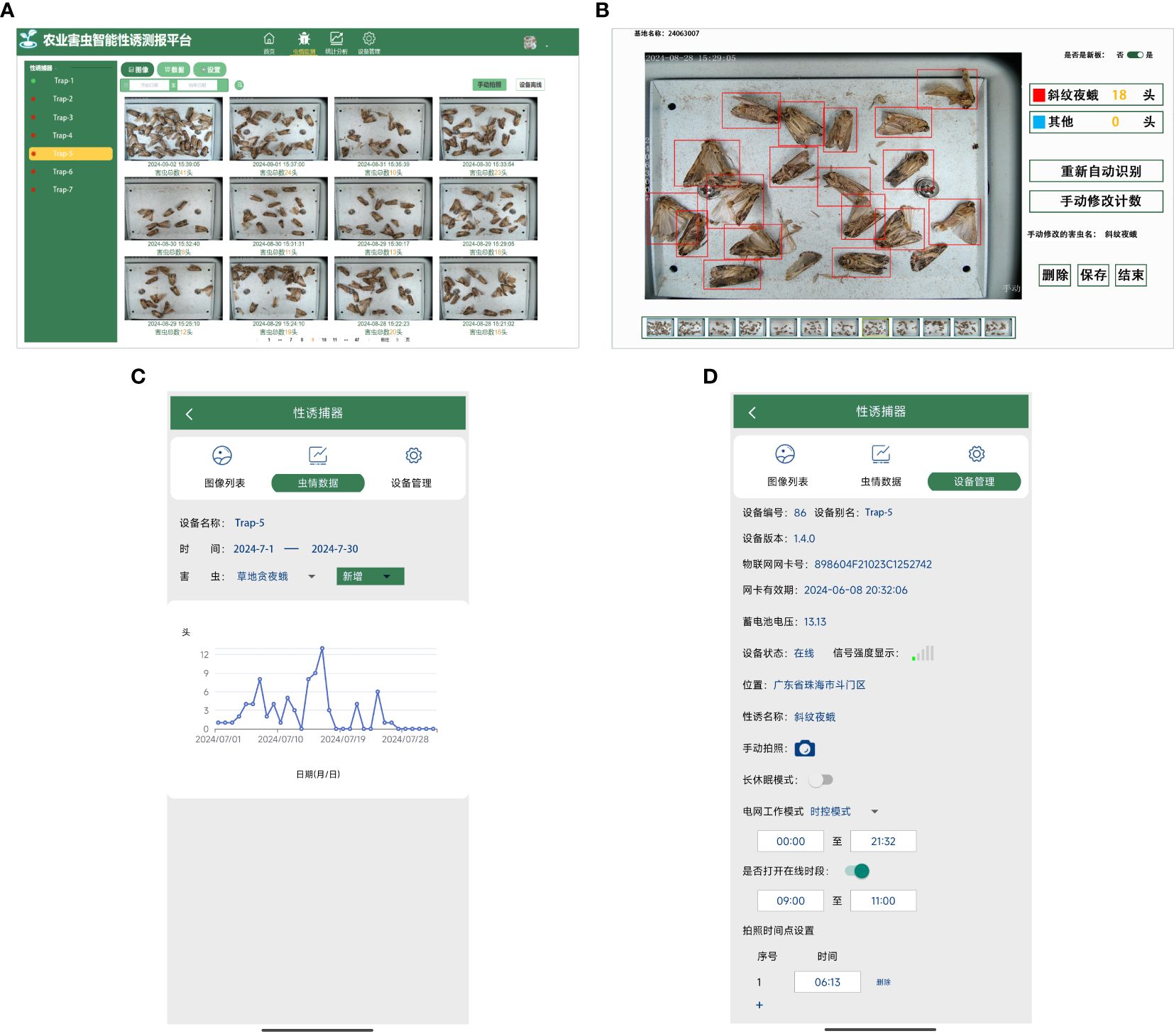

The functions of the pest pheromone monitoring platform are shown in Figure 8, and include features such as an image list, data analysis, and device management. After logging in, users can access the image list interface to view detailed information about pest identification results. Through the data analysis interface, users can review pest statistics, while the device management interface allows them to remotely send control commands. These commands can be used to configure equipment operating modes, set image capture times, and manually control the equipment to take pictures. The system ensures seamless collaboration between the front and back ends, providing users with accurate, real-time pest monitoring and analysis data.

To validate the effectiveness of the three improved strategies in the proposed YOLOv9-TrapPest model for pest recognition, the Precision, Recall, and mAP50 of each improved model were compared using the same test set. The results of the ablation experiments are summarized in Table 2, where the mark “✓” indicates the improvement strategy applied.

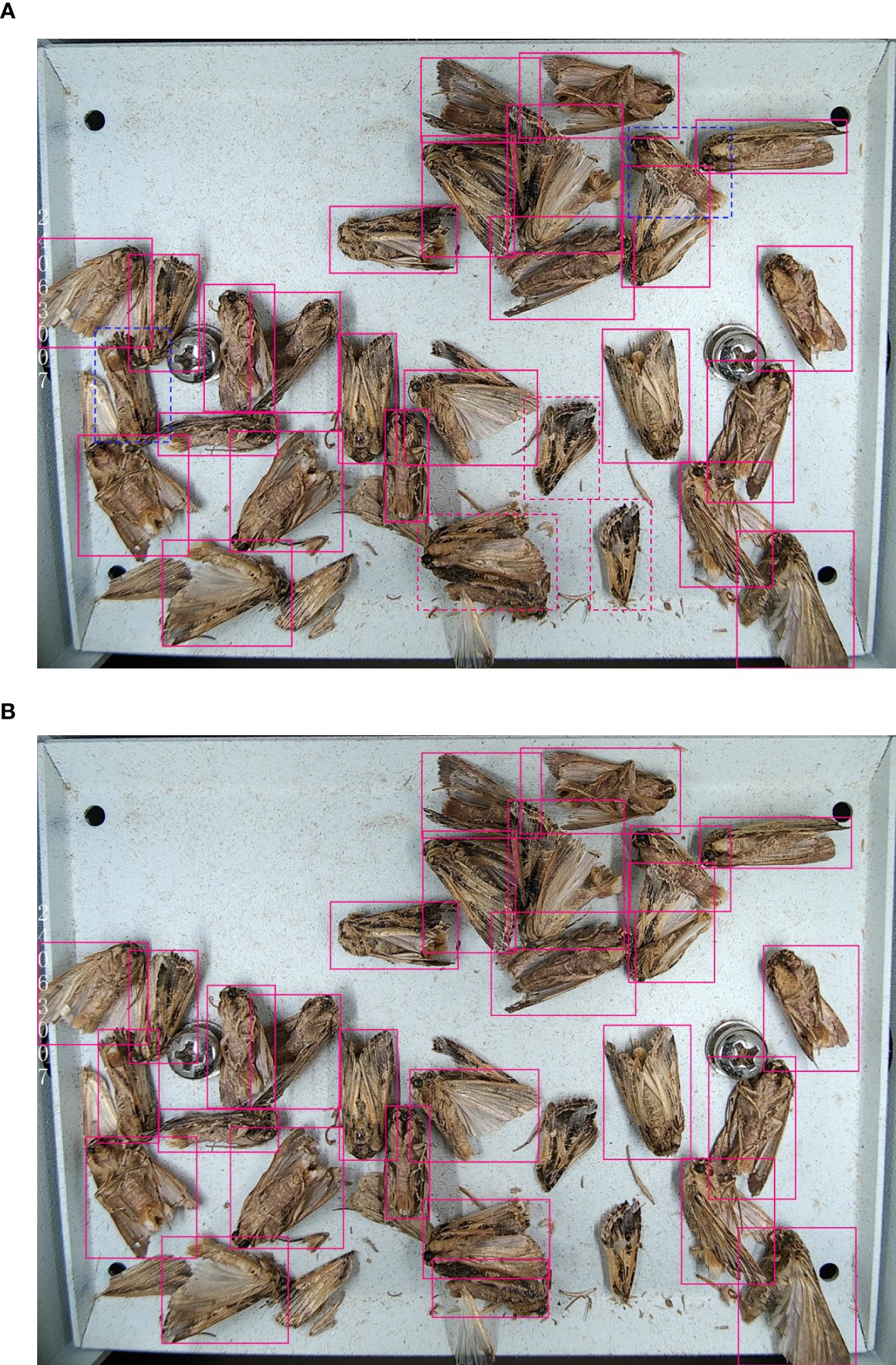

As shown in the table, original YOLOv9 model achieved a recall rate of 91.5% and a mAP50 of 93.9%. However, it exhibited several limitations, including a tendency to falsely detect separated limbs of pests as individual target pests, to incorrectly recognize multiple overlapping pests as a single target, and to misclassify visually similar pest species, leading to false detections. In Exp2, the introduction of the AKConv module resulted in a 1.9% increase in recall rate and a 1.2% increase in mAP50. AKConv addresses the issue of false detection of limb separation by adaptively adjusting the shape and parameters of the convolution kernel, better adapting the model to the morphological characteristics of various pests, and accurately capturing the entire target. In Exp3, the further addition of the CBAM-PANet module resulted in a 2.1% improvement in mAP50. This module enhances the model’s attention to important features and key regions by introducing the channel attention and spatial attention mechanisms, which makes the model perform better in processing adhesive pest images. In Exp4, the introduction of the FocalNet module resulted in a recall rate of 96.6% and a mAP50 of 98.3%. The focal modulation mechanism of FocalNet enhances the model’s ability to focus on high-confidence regions and capture fine-grained features, significantly reducing background interference and improving its capacity to distinguish between agricultural pest species with similar characteristics. As illustrated in Figure 9, the original YOLOv9 model faced challenges such as false detections of pest wings as target pests, missed detections due to adhered pests, and false detections when detecting visually similar pests. Yet, after incorporating the improved strategies proposed in this paper, these issues were effectively mitigated, leading to more accurate and reliable pest detection.

Figure 9. Comparison of test results. The red dashed box represents a missed detection of the Spodoptera litura, and the blue dashed box represents a false detection as the Spodoptera frugiperda: (A) YOLOv9 test result, (B) YOLOv9-TrapPest test result.

To validate the detection effect of the proposed agricultural pest detection model, YOLOv6m, YOLOv8m, YOLOv9, Cascade R-CNN, and DETR were selected for the comparison experiments in this paper, and the same training set, test set, and training parameter settings were chosen for all the detection models. The detection results of different models are shown in Table 3.

According to the results, the YOLOv9-TrapPest obtained the highest mAP50, which was 7.7%, 4.1%, 3.4%, 3.9%, and 4.5% higher compared to YOLOv6m, YOLOv8m, YOLOv9, Cascade R-CNN and DETR, respectively. The results of the confusion matrix for the YOLOv9-TrapPest model are shown in Figure 10. Due to the distinctive characteristics of the pest wings, some wings that were separated by the electric shock module were still falsely detected as target pests.

The interface of the proposed pest pheromone monitoring platform for agricultural pests is shown in Figure 11. It includes the image list interface, recognition result interface, data analysis interface and device management interface. In the image list interface, the images of pests acquired by smart electro-killing pheromone traps are displayed. The recognition result interface shows the results of these images processed by the algorithm, including detailed information such as the time of taking pictures, the type of pictures, and the number of various target pests. The data analysis interface statistically analyses the collected data, showing the occurrence of each type of target pest in a specific period, providing a scientific basis for agricultural pest control. The device management interface contains detailed information about the smart electro-killing pheromone traps, such as the serial number of the device, the latitude and longitude information of the device, and the power information of the device. The interface also has a remote-control function, through which users can remotely control the device to take pictures manually, thus improving the convenience and flexibility of device operation.

Figure 11. Display of the interface of the pest pheromone monitoring platform: (A) Image list interface in Web, (B) Recognition result interface in Web, (C) Data analysis interface in App, (D) Device management interface in App.

As a critical monitoring tool, sex pheromone-baited technology plays an essential role in the prevention and control of agricultural pests. By employing synthetic pheromone lures to attract and trap adult pests, this technology enables precise monitoring of pest population dynamics, providing a reliable basis for developing scientifically sound pest control strategies. This method is not only efficient, environmentally friendly, and cost-effective, but it also significantly reduces the reliance on chemical pesticides, thereby lowering environmental pollution and the risk of pesticide residues on crops. Ultimately, this approach contributes to truly sustainable and green pest prevention and control practices. There are a number of commercially available sex pheromone-baited traps in the international market, such as Trapview, iSCOUT and SightTrap (Preti et al., 2021; Suto, 2022). Nonetheless, fully automated monitoring of agricultural pests remains unattainable, primarily due to the necessity of manually replacing sticky boards once a certain number of pests have adhered to them. Moreover, Schrader et al. (2022) noted that commercial remote monitoring traps are adopted hesitantly by growers due to their high costs (approximately USD 1375/ha). To solve the aforementioned problems and realize an unattended and cheaper intelligent pest monitoring system, a smart electrical pheromone trap was designed in this paper. The trap utilizes an electric shock module in place of the traditional sticky board for pest elimination, allowing for continuous dynamic updating of the photographed area. This is achieved by rotating the flat bottom tray, ensuring that the area for pest monitoring remains unobstructed and up-to-date.

Despite advancements in sex pheromone-baited traps, field placement results in pest images with complex backgrounds. This necessitates the development of robust and highly generalized pest recognition models. Miao and Zhou (2019) enhanced the SSD model to detect six types of grain storage pests, addressing challenges such as complex backgrounds, small pest sizes, and varied postures in sticky board images. Their improvements included refining the object bounding box regression strategy and loss function, optimizing the feature extraction network, and incorporating scratch-window sampling alongside multi-scale feature fusion. These enhancements led to a mAP of 81.36% for the model. Li et al. (2021) used a modified Faster-RCNN to detect whitefly and thrips on sticky traps in the greenhouse. To address the problem of poor results in detecting small targets, the scale of the anchor frames was adjusted for better coverage of small pests and RoIAlign was used instead of RoIPooling to improve the positioning accuracy of the candidate frames, with a mAP of 95.2%. Zhang et al. (2022) established a two-layer network model based on improved YOLOv3 and DBTNet-101 to detect the Cnaphalocrocis medinalis. To address the challenges of complex backgrounds, sticky pests, and reduced recognition rates caused by false detections of similar pests, the authors introduced several improvements. They incorporated DropBlock regularization to mitigate the false detection of non-targets and replaced the traditional NMS with DIoU-NMS, which effectively minimized the loss of prediction frames for sticky targets. Further, by cascading a layer of the deep bilinear transform classification network, DBTNet-101, they reduced the mutual misdetection between target and interfering pests. As a result of these enhancements, the recognition precision and recall rates for Cnaphalocrocis medinalis reached 97.6% and 98.6%, respectively.

In this paper, to address the issue of reduced model recognition accuracy caused by localized and broken pests due to the electric shock module, the AKConv module was introduced to enhance the backbone network and minimize interference from pest limbs. To tackle the problem of pests sticking together during peak infestation periods, which reduced the model’s recall rate, a CBAM-PANet structure based on the attention mechanism was proposed. This structure enables the model to more accurately identify and locate targets within complex backgrounds and images containing sticky pests. To further address the issue of false detection of similar pest species, FocalNet was incorporated in place of the SPPELAN module in the Head network. This modification allows the model to capture fine-grained features, improving its ability to differentiate between visually similar pest species.

The electro-killing pheromone-baited intelligent agricultural pest monitoring system is fully unattended and can be applied in any location where pheromone-based pest monitoring is required. This includes diverse agricultural settings such as greenhouses, orchards, and rice paddies. And its pest recognition model can be further trained to detect more pest species. Moreover, compared to pheromone-based monitoring systems utilizing machine vision, this system is more cost-effective. By integrating an electric shock module, it eliminates the need for sticky boards, thereby reducing material costs and minimizing environmental pollution caused by the sticky boards. Additionally, it reduces labor costs by eliminating the need to replace sticky boards, making it a more budget-friendly choice for growers.

In this paper, an electro-killing pheromone-baited intelligent agricultural pest monitoring system based on machine vision was designed and implemented. It consists of smart electro-killing pheromone traps, a server for data access and model deployment and a pest pheromone monitoring platform. Using an electric shock module and base plate flipping technology, the smart electro-killing pheromone traps efficiently electrocute pests and capture images. To address the issues of false detections caused by pest limbs after electrocution, as well as the false detection of similar pests and missed detections due to large quantities of insects sticking together during peak periods, the AKConv module, CBAM-PANet structure, and FocalNet module were integrated into the YOLOv9 model. These enhancements significantly improved the identification of seven agricultural pests, achieving an average precision rate of 97.5%, an average recall rate of 96.6%, and a mAP50 of 98.3%. The electro-killing pheromone-baited intelligent agricultural pest monitoring system eliminates the need for regular replacement of sticky boards, while providing real-time data on the number of pests trapped each day, thereby offering crucial support for informed pest control decisions.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

GL: Conceptualization, Methodology, Validation, Visualization, Writing – original draft, Writing – review & editing, Software. ZL: Resources, Writing – review & editing, Data curation, Formal analysis, Validation, Software. ZF: Software, Supervision, Writing – review & editing, Data curation, Validation. JL: Formal analysis, Writing – review & editing, Investigation, Methodology, Validation. BL: Resources, Writing – review & editing, Formal analysis. GC: Writing – review & editing, Data curation, Resources. QY: Conceptualization, Formal analysis, Methodology, Supervision, Writing – review & editing, Funding acquisition.

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This study is supported by the Key R&D Program of Zhejiang (No. 2022C02004).

Authors ZL, BL, and GC were employed by the Guizhou Aerospace Intelligent Agriculture Co., Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declare that no Generative AI was used in the creation of this manuscript.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Ding, W., Taylor, G. (2016). Automatic moth detection from trap images for pest management. Comput. Electron. Agric. 123, 17–28. doi: 10.1016/j.compag.2016.02.003

Jiang, Y., Liu, J., Zeng, J., Huang, C., Zhang, T. (2021). Occurrence of, and damage caused by, major migratory pests and techniques for monitoring and forecasting these in China. Chin. J. Appl. Entomology 58, 542–551. doi: 10.7679/j.issn.2095-1353.2021.056

Li, W., Chen, M., Li, M., Sun, C., Wang, L. (2019). Conference on Control Technology and Applications, (Jilin, China: Springer) p. 40–53.

Li, W., Wang, D., Li, M., Gao, Y., Wu, J., Yang, X. (2021). Field detection of tiny pests from sticky trap images using deep learning in agricultural greenhouse. Comput. Electron. Agric. 183, 106048. doi: 10.1016/j.compag.2021.106048

Lin, T.-Y., Dollár, P., Girshick, R., He, K., Hariharan, B., Belongie, S. (2017). “Feature pyramid networks for object detection,” in 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). (Honolulu, HI, USA: IEEE). 936–944. doi: 10.1109/CVPR.2017.106

Lin, T.-Y., Maire, M., Belongie, S., Hays, J., Perona, P., Ramanan, D., et al. (2014). “"Microsoft coco: Common objects in context,” in Computer Vision–ECCV 2014: 13th European Conference. (Zurich, Switzerland: Springer). p. 740–755.

Liu, S., Qi, L., Qin, H., Shi, J., Jia, J. (2018). “Path aggregation network for instance segmentation,” in 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. (Salt Lake City, UT, USA: IEEE). p. 8759–8768. doi: 10.1109/CVPR.2018.00913

Luo, J., Chen, L., Lu, F., Lu, H., Zhao, J. (2016). Application of sex pheromone-baited forecasting system in the monitoring of the Spodoptera litura. China Plant Prot. 36, 50–53. doi: 10.3969/j.issn.1672-6820.2016.10.011

Miao, H., Zhou, H. (2019). Detection of stored-grain insects image on sticky board using deep learning. J. Chin. Cereals Oils Assoc. 34, 93–99. doi: 10.3969/j.issn.1003-0174.2019.12.016

Potamitis, I., Rigakis, I., Fysarakis, K. (2014). The electronic McPhail trap. Sensors 14, 22285–22299. doi: 10.3390/s141222285

Preti, M., Verheggen, F., Angeli, S. (2021). Insect pest monitoring with camera-equipped traps: strengths and limitations. J. Pest. Sci. 94, 203–217. doi: 10.1007/s10340-020-01309-4

Qiu, R.-Z., Zhao, J., He, Y.-X., Chen, S.-P., Huang, M.-L., Chi, M.-X., et al. (2021). An automatic identification and counting method of Spodoptera frugiperda (Lepidoptera: Noctuidae) adults based on sex pheromone trapping and deep learning. Acta Entomologica Sin. 64, 1444–1454. doi: 10.16380/j.kcxb.2021.12.010

Savary, S., Willocquet, L., Pethybridge, S. J., Esker, P., McRoberts, N., Nelson, A. (2019). The global burden of pathogens and pests on major food crops. Nat. Ecol. Evol. 3, 430–439. doi: 10.1038/s41559-018-0793-y

Schrader, M. J., Smytheman, P., Beers, E. H., Khot, L. R. (2022). An open-source low-cost imaging system plug-in for pheromone traps aiding remote insect pest population monitoring in fruit crops. Machines 10, 52. doi: 10.3390/machines10010052

Shen, J., Jian, Y., Wang, L., Yang, L. (2019). Experiments on the trapping of Spodoptera frugiperda with different formulations of sexual attractants. China Plant Prot. 39, 59–60. doi: 10.3969/j.issn.1672-6820.2019.12.012

Suto, J. (2022). Codling moth monitoring with camera-equipped automated traps: A review. Agriculture 12, 1721. doi: 10.3390/agriculture12101721

Tian, Y., Wang, S., Li, E., Yang, G., Liang, Z., Tan, M. (2023). MD-YOLO: Multi-scale Dense YOLO for small target pest detection. Comput. Electron. Agric. 213, 108233. doi: 10.1016/j.compag.2023.108233

Wang, C.-Y., Bochkovskiy, A., Liao, H.-Y. M. (2023). “YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors,” in 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). (Vancouver, BC, Canada: IEEE). p. 7464–7475. doi: 10.1109/CVPR52729.2023.00721

Wang, C.-Y., Yeh, I.-H., Liao, H.-Y. M. (2024). Yolov9: Learning what you want to learn using programmable gradient information. arXiv. doi: 10.48550/arXiv.2402.13616

Woo, S., Park, J., Lee, J.-Y., Kweon, I. S. (2018). “Cbam: Convolutional block attention module,” in Proceedings of the European conference on computer vision (ECCV), (Cham, Switzerland). p. 3–19.

Yalcin, H. (2015). “Vision based automatic inspection of insects in pheromone traps,” in 2015 Fourth International Conference on Agro-Geoinformatics (Agro-geoinformatics). 333–338 (Istanbul, Turkey: IEEE).

Yang, J., Li, C., Dai, X., Gao, J. (2022). Focal modulation networks. Adv. Neural Inf. process. Syst. 35, 4203–4217. doi: 10.48550/arXiv.2203.11926

Yang, L.-P., Song, Z.-X., Li, Y.-H., Wang, L., Yu, W.-J., Wang, S.-K., et al. (2020). Evaluation of the trapping effect of different types of attractant lures and traps combinations on Spodoptera frugiperda. J. Environ. Entomology 42, 1344–1351. doi: 10.3969/j.issn.1674-0858.2020.06.8

Zhang, X., Song, Y., Song, T., Yang, D., Ye, Y., Zhou, J., et al. (2023). AKConv: Convolutional kernel with arbitrary sampled shapes and arbitrary number of parameters. arXiv. doi: 10.48550/arXiv.2311.11587

Zhang, Z., Sun, G., Yang, B., Liu, S., Lu, J., Yao, Q., et al. (2022). Pheromone-baited intelligent monitoring system of Cnaphalocrocis medinalis (Lepidoptera: Pyralidae) based on machine vision and deep learning. Acta Entomologica Sin. 65, 1045–1055. doi: 10.16380/j.kcxb.2022.08.013

Keywords: agricultural pests, machine vision, smart electro-killing pheromone traps, YOLOv9-TrapPest, pest pheromone monitoring platform

Citation: Li G, Liu Z, Feng Z, Lyu J, Li B, Chen G and Yao Q (2025) Research on a machine vision-based electro-killing pheromone-baited intelligent agricultural pest monitoring method. Front. Plant Sci. 16:1521594. doi: 10.3389/fpls.2025.1521594

Received: 02 November 2024; Accepted: 07 February 2025;

Published: 06 March 2025.

Edited by:

Xiao Ming Zhang, Yunnan Agricultural University, ChinaReviewed by:

Pappu Kumar Yadav, South Dakota State University, United StatesCopyright © 2025 Li, Liu, Feng, Lyu, Li, Chen and Yao. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Qing Yao, cS15YW9AenN0dS5lZHUuY24=

†These authors have contributed equally to this work and share first authorship

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.