- College of Agriculture, Health, and Natural Resources, Kentucky State University, Frankfort, KY, United States

Phenotypic traits like plant height are crucial in assessing plant growth and physiological performance. Manual plant height measurement is labor- and time-intensive, low throughput, and error-prone. Hence, aerial phenotyping using aerial imagery-based sensors combined with image processing techniques is quickly emerging as a more effective alternative to estimate plant height and other morphophysiological parameters. Studies have demonstrated the effectiveness of both RGB and LiDAR images in estimating plant height in several crops. However, there is limited information on their comparison, especially in soybean (Glycine max [L.] Merr.). As a result, there is not enough information to decide on the appropriate sensor for plant height estimation in soybean. Therefore, this study was conducted to identify the most effective sensor for high throughput aerial phenotyping to estimate plant height in soybean. Aerial images were collected in a field experiment at multiple time points during the soybean growing season using an unmanned aerial vehicle (UAV or drone) equipped with RGB and LiDAR sensors. Our method established the relationship between manually measured plant height and the height obtained from aerial platforms. We found that the LiDAR sensor performed better (R2 = 0.83) than the RGB camera (R2 = 0.53) when compared with ground reference height during pod growth and seed filling stages. However, RGB was more reliable in estimating plant height at physiological maturity, when the LiDAR could not capture an accurate plant height measurement. The results from this study contribute to identifying ideal aerial phenotyping sensors to estimate plant height in soybean during different growth stages.

1 Introduction

Soybean (Glycine max (L.) Merrill) is a vital source of oil and plant protein globally, recognized for its high nutritional value (Wilcox, 2016). In the U.S., it ranks as the second most cultivated crop, following corn (Vaiknoras and Hubbs, 2023), and it plays a significant role in agricultural exports, with the U.S. being the second-largest soybean exporter, accounting for 38% of global soybean trade. To meet the growing global demand and maintain its status as a top exporter, the U.S. must significantly enhance soybean yield. Like other crops, yield in soybean is significantly influenced by a complex interaction of genetic traits, environmental factors, and agricultural practices. Key yield-related traits like pod number and seeds per pod (Ning et al., 2018), seed size (Liu et al., 2011), plant architecture like plant height (Jin et al., 2010), phenology (Kantolic and Slafer, 2001), photosynthetic efficiency (Wang et al., 2023), reproductive efficiency (Tischner et al., 2003) and nitrogen fixation efficiency (Imsande, 1992) are critical to influence final yield. Among these traits, plant height (PH) is one of the main critical yield-related traits in soybean, impacting the crop’s ability to compete for light and, consequently, its overall productivity (Gawęda et al., 2020). Defined as the distance from the ground to the top of the primary photosynthetic tissue (Cornelissen et al., 2003), PH impacts essential factors such as biomass (Bendig et al., 2014; Tilly et al., 2015; Brocks and Bareth, 2018), crop yield (Yin et al., 2011; Sharma et al., 2016; Zhang et al., 2017), and soil nutrient availability (Yin and McClure, 2013). This makes it a pivotal trait in plant breeding and crop improvement programs. Traditionally, measuring PH involves using rulers in the field, a method that is labor-intensive, time-consuming, and susceptible to errors, especially over extensive areas. 
These manual measurement techniques also suffer from spatial and temporal limitations that can compromise the accuracy of this vital plant phenotype data. To address these challenges, non-destructive image-based phenotyping has become increasingly popular, providing a more efficient and accurate means to assess PH.

Advancements in remote sensing have led to the exploration of various sensor-based methods for effective PH assessment. Passive sensors like satellites have been explored to measure PH in forests (Petrou et al., 2012) and crops like corn (Gao et al., 2013) and rice (Erten et al., 2016). Cloud cover and the revisit time of satellites (Zhang et al., 2020) can limit their effectiveness in precision agriculture. In response to these limitations, recent decades have seen a shift towards proximal field phenotyping technologies. Devices such as ultrasonic sensors, RGB depth cameras, and Terrestrial laser scanners fitted in fixed platforms, tractors, or autonomous robots have become prominent for high throughput field phenotyping. These technologies have proven successful in various crops, including cotton (Jiang et al., 2016; Sun et al., 2017, 2018; Thompson et al., 2019), corn (Hämmerle and Höfle, 2016; Qiu et al., 2019), and soybean (Ma et al., 2019). However, these proximal sensor platforms face several challenges including high cost, limited area coverage, and reduced mobility as the crops reach advanced stages of growth (Deery et al., 2014).

To further enhance the scope and efficiency of phenotyping, high throughput aerial phenotyping (HTAP) using unmanned aerial vehicles (UAV) like drones has gained popularity. UAVs, equipped with various sensors provide rapid, extensive data collection capabilities. Among these, RGB-based photogrammetry has become a popular technique for estimating PH across different crop species, including cotton (Xu et al., 2019; Ye et al., 2023), wheat (Madec et al., 2017; Khan et al., 2018; Yuan et al., 2018; Volpato et al., 2021), maize (Han et al., 2018; Malambo et al., 2018; Su et al., 2019; Gao et al., 2022; Liu et al., 2024) and sorghum (Watanabe et al., 2017; Tunca et al., 2024). UAV-based RGB cameras are popularly used to estimate PH using the structure from motion (SfM) technique (Kalacska et al., 2017; Coops et al., 2021). The PH estimation techniques using RGB cameras are considered a low-cost and user-friendly approach (Li et al., 2019). However, some studies argued that the derived canopy height from the SfM technique showed some issues in height measurement (Cunliffe et al., 2016; Wijesingha et al., 2019). The overestimation of the digital surface model (DSM) by the RGB camera is attributed to its inability to penetrate the canopy and give precise information (Madec et al., 2017). Hence, the LiDAR technique is more popular for vertical structure measurement as its pulses have powerful penetration capacity (Lefsky et al., 2002). LiDAR is particularly noted for its capacity to provide detailed 3D structural information by penetrating dense canopies and differentiating between ground and non-ground points using multiple reflections of laser pulses (Calders et al., 2020; Coops et al., 2021). This technology has effectively estimated canopy height in forests, shrubs, and various crops (Liu et al., 2018; Zhao et al., 2022). 
Additionally, this technology has successfully predicted PH in many crops like cotton (Sun et al., 2017, 2018; Thompson et al., 2019), wheat (Madec et al., 2017; Yuan et al., 2018; Guo et al., 2019; ten Harkel et al., 2019; Blanquart et al., 2020), maize (Andújar et al., 2013; Zhou et al., 2020; Gao et al., 2022), sorghum (Hu et al., 2018; Wang et al., 2018; Waliman and Zakhor, 2020; Patel et al., 2023) and rice (Tilly et al., 2014a; Phan and Takahashi, 2021; Sun et al., 2022; Jing et al., 2023).

Despite these advancements, there remains a gap in comprehensive UAV-based HTAP studies specifically for estimating soybean height. Previous studies using an imaging system of RGB camera and photonic mixer detector (PMD) have provided valuable insights into PH in a controlled setting (Guan et al., 2018; Ma et al., 2019). Structure from motion (SFM) techniques yield PH that helps spot ideotype in soybeans (Roth et al., 2022). Canopy height and their temporal changes across the growing season were recorded using RGB imagery captured with a drone in different soybean cultivars (Borra-Serrano et al., 2020). Reliable information about soybean height was found when using a low-cost depth camera mounted on a ground-based phenomics platform (Morrison et al., 2021). This study further verified the PH information using information recorded in the field manually and using single-point LiDAR (SPL) with high precision, assuring the ability of LiDAR to perform precise PH estimation in soybeans. Similarly, Luo et al. (2021) recorded data using UAV-based LiDAR and explored the potential of UAV-based LiDAR sensors to estimate soybean height. To our knowledge, no other studies have used UAV-LiDAR multiple times to measure PH in soybeans. Furthermore, none of the previous studies compared the effectiveness of UAV-based LiDAR and RGB for PH estimation in soybean. As a result, there is not yet clear information regarding which aerial phenotyping sensors and timing are ideal for assessing PH in soybean. Hence, in this study, we used UAV-based RGB cameras and LiDAR sensors in the same field experiment to estimate soybean plant height across different periods. This study aims to evaluate the potential of UAV-based RGB and LiDAR sensors for accurately estimating soybean height at different growth and developmental stages. 
Specifically, the objectives of this study are to assess the uncertainty in estimating soybean height with UAV-based RGB and LiDAR sensors and to identify the best high throughput aerial phenotyping sensor for PH estimation in soybean.

2 Materials and methods

2.1 Experimental design

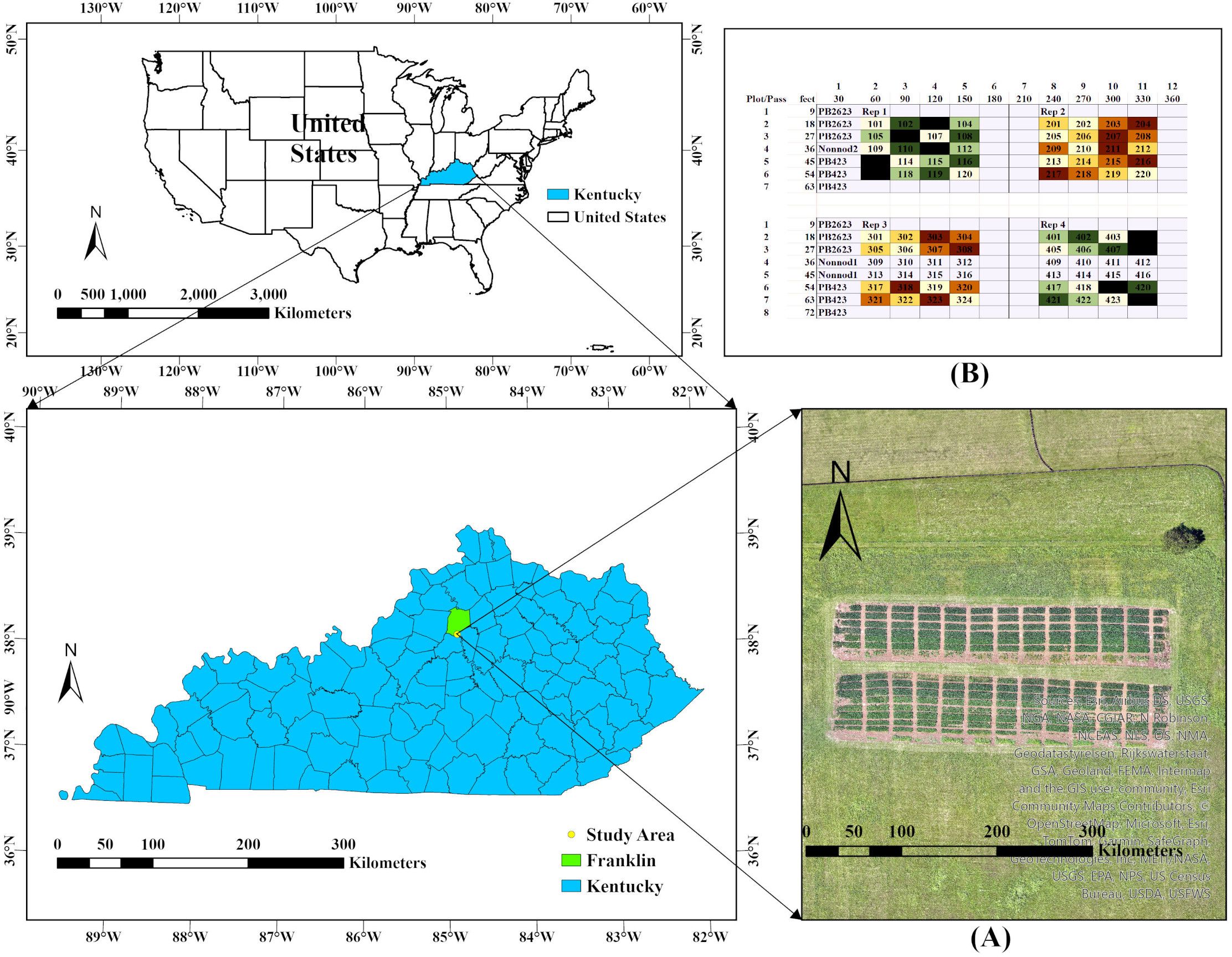

A field experiment was conducted in the 2023 soybean growing season at Kentucky State University’s Harold R. Benson Research and Demonstration Farm (38°07′ N, 84°53′ W; 207 masl). The experiment was set up as a split-split-plot randomized complete block design with four replications (Figure 1). The main plot was biochar application: no application or biochar application at 12 tons/ha before planting. Four soybean genotypes (two commercial cultivars, PB2623 and PB423, and two advanced breeding non-nodulating soybean lines, KS4120NSGT and KS4120NSGT_NN_NIL-268) were used as the subplots. The sub-subplots were four levels of late-season N fertilization: 0, 40, 80, and 120 kg N ha⁻¹. There were 88 plots, each measuring 7.32 m (24 ft) long and 1.83 m (6 ft) wide, with a 90 cm (3 ft) alley separating the plots. Each plot had five rows spaced 38 cm (15 inches) apart. Urea was used as the N fertilizer and was split equally into three doses applied at R5, 1 week after R5, and 2 weeks after R5. Soybean was planted in mid-May and harvested in the last week of September.

Figure 1. Study area location and experimental design: (A) Experimental area location and (B) Experimental design in the field.

2.2 Data acquisition

2.2.1 UAV data

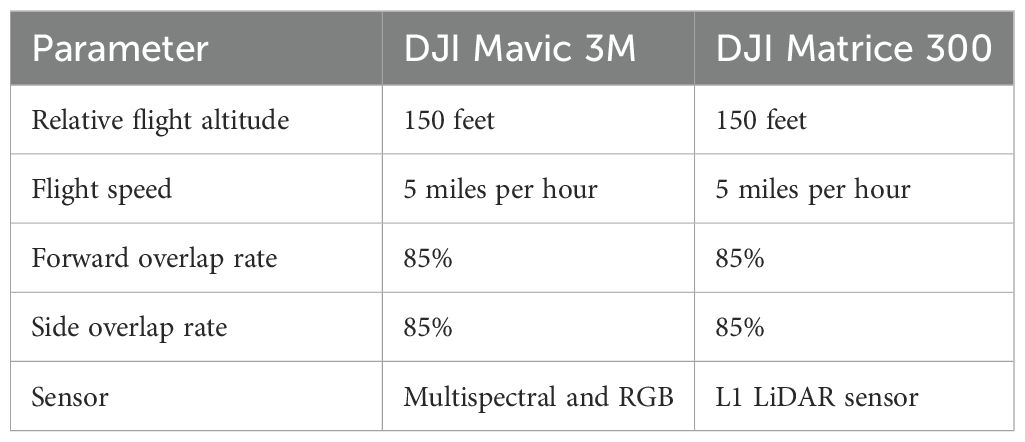

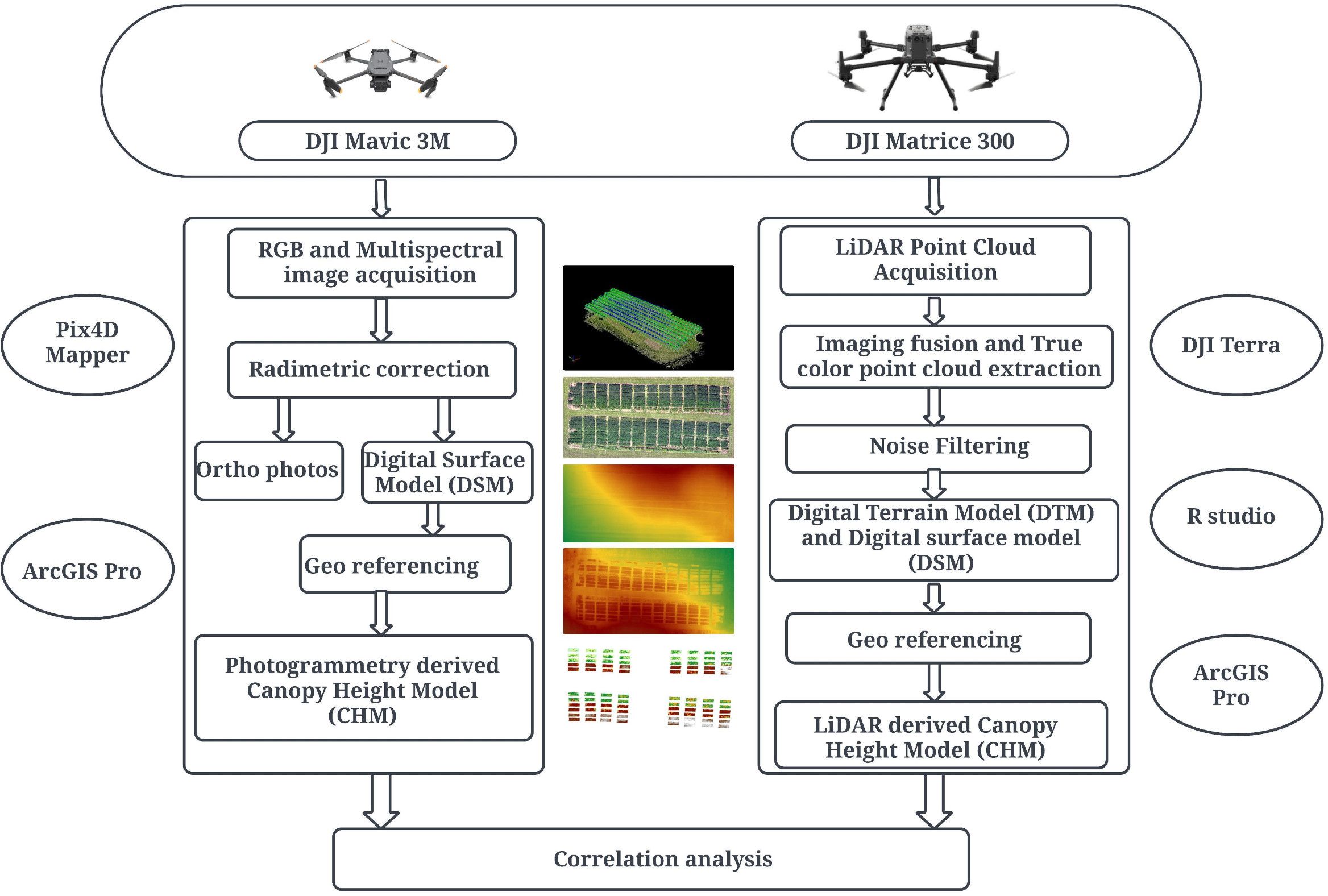

A DJI Mavic 3M UAV (DJI Technology Co., Ltd., Shenzhen, China), fitted with RGB and multispectral (MS) sensors, was employed to capture aerial images of the crop. The imaging sensor was a 1/2.8-inch CMOS with a 25 mm focal length, capturing images at a resolution of 5280 × 3956 pixels. DroneDeploy was used to identify the target area for aerial photography on a satellite map and to plan the flight route by entering the necessary flight and camera parameters. Additionally, a DJI Matrice 300 fitted with a Zenmuse L1 LiDAR sensor was used to gather aerial LiDAR data. Table 1 presents the detailed parameters of the drones used in the study. Flights were conducted around solar noon, between 10 AM and 2 PM, for optimal lighting conditions, reduced shadows, and uniform illumination. The UAVs maintained a consistent altitude of 150 feet (about 46 m) above ground level and a flight speed of 5 miles per hour (about 2.2 m/s) throughout the aerial data collection period.

Data collection with the UAV-LiDAR and RGB images occurred on June 6, July 7, July 18, and August 29. The first flight generated the reference ground to compute the digital terrain model (DTM). The rest of the dates correspond to critical growth stages of the crop, providing essential data for monitoring its development.

Six ground control points (GCPs) were set up and evenly distributed in the field, with three GCPs on each side. A Trimble R12 receiver (Trimble Inc., Sunnyvale, California) was used to record the positions of the GCPs and checkpoints.

2.2.2 Field data

In the field, PH was measured manually from the base to the tip of the main stem to serve as the ground reference height. Within each plot, three sampling locations were randomly chosen, each consisting of one plant from the middle three rows to reduce border effects. This sampling approach ensures sufficient representation of plot-level crop height (Dhami et al., 2020). The height of these plants was measured using a 1-meter ruler, and the results were recorded in a field notebook. Height measurements were taken on July 7, July 18, and August 29, aligning with key developmental stages of the soybean: R3 (beginning of pod development), R5 (onset of seed filling), and R7 (start of physiological maturity of the pod). Figure 2 shows the subsequent steps carried out after the acquisition of the aerial and field data.

2.3 UAV data processing

In Pix4Dmapper (Pix4D, Lausanne, Switzerland), the captured RGB images were geometrically corrected through orthorectification and stitched together by mosaicking. Orthorectification ensures spatial consistency by correcting geometric distortions caused by terrain variation and camera orientation (Toutin, 2004). Similarly, high overlap rates provide redundancy in image alignment and reduce gaps and mismatches in the processed images (Turner et al., 2012; Colomina and Molina, 2014). These two steps were instrumental in minimizing splicing errors during mosaicking. The processing was carried out in Pix4Dmapper, which is recognized for its precision in photogrammetric processing (Gonçalves and Henriques, 2015). Finally, the digital surface model (DSM) and orthophotos were generated using the software’s structure from motion (SfM) workflow.

The raw LiDAR point clouds collected by the UAV were uploaded to DJI Terra software (DJI Technology Co., Ltd., Shenzhen, China), where noise filtering was conducted. The data were exported as LAS files with specified output coordinates. These LAS files were further post-processed in RStudio using the lidR package, which involved setting the scan angle range, classifying ground points, and normalizing elevations to produce the final digital terrain model (DTM) and DSM.

The generated DTM and DSM were imported into ArcGIS Pro (Esri Inc., Redlands, United States). Here, each image was georeferenced using the georeferencing tool to add control points, utilizing the x and y locations of 6 ground control points (GCPs) and 14 checkpoints. Subsequent elevation adjustments were made to ensure accuracy in the final geographic positioning and elevation data.

2.4 Establishment of ground or terrain reference

We used the earliest date of LiDAR data available, classified ground points, filtered for the ground class only, and used these points to represent the bare earth surface. We then used the ground control points (GCPs) to establish the adjustment needed to offset the generated DTM elevation to match the actual elevation recorded at the GCPs. Several GCPs were located on bare or near-bare earth, which allowed us to measure the deviation between the DTM and the surveyed GCP elevations and thereby derive the adjustment needed to match the elevations. Throughout, all data were kept in a projected Kentucky State Plane coordinate reference system.

The adjustment primarily corrects an error introduced by using different takeoff locations (i.e., different elevations) and by the tendency of GPS-equipped devices to slightly misjudge the vertical elevation of the takeoff location (e.g., 234.5 msl versus 245.1 msl recorded at the same spot) on any given day. While the global baseline is shifted, the relative elevation variation within each image is unaffected before normalization. The corrected images were further georeferenced, and elevation adjustment was performed to align the images to accurate terrain before generating plot-level data.

In the case of RGB images, the earliest date from the RGB camera was used to generate a bare earth surface using the photogrammetry technique in the Pix4Dmapper. After generating terrain in the software, georeferencing and elevation adjustment were conducted in the ArcGIS Pro software.

2.5 Determination of optimum LiDAR scan angle and elevation adjustment

The LiDAR scan angle, which is the angle at which laser pulses are directed toward the ground, plays a crucial role in generating a precise DTM using a LiDAR sensor. The study focused on minimizing the scan angle, as angles closer to zero tend to yield more accurate elevation data (Ehlert and Heisig, 2013). To fine-tune our methodology, we conducted several trials to determine the most effective scan angle range. We aimed to select angles close to zero that still provided precise elevation information to generate an accurate DTM.

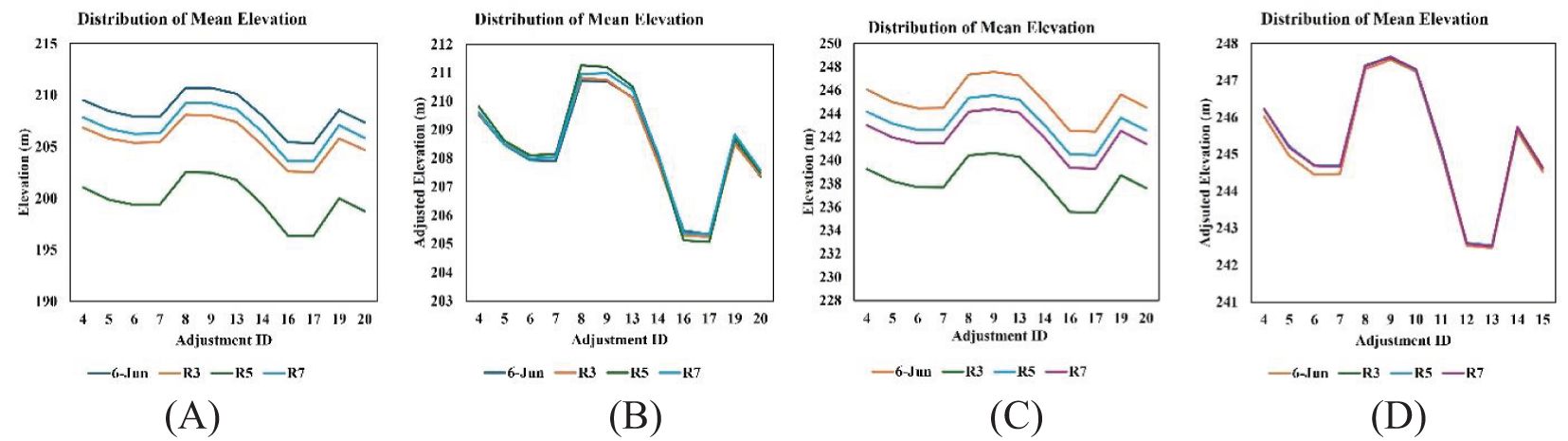

Terrain irregularities and slope variations can significantly affect the accuracy of height measurements from aerial data (Smith et al., 2019). As our study field had irregular terrain, elevation adjustment was carried out. To adjust the elevation values, buffers were created around adjustment points in the field. The mean elevation value of the buffers was computed, and the offset values were generated, which were further used to adjust the DTM and DSM raster. Figure 3 illustrates the distribution in mean elevation values before adjustment and corrected mean elevation values after adjustment across various GCPs and checkpoints used as adjustment IDs.

Figure 3. Distribution of mean elevation values across different GCPs and checkpoints: (A) before adjustment (RGB images); (B) after adjustment (RGB images); (C) before adjustment (LiDAR images); (D) after adjustment (LiDAR images).

This step ensures that all rasters accurately represent the terrain by aligning them with the surveyed elevation data. It provides an accurate base for further analysis, such as creating a canopy height model (CHM) from the adjusted DTM and DSM.
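As an illustration of the elevation adjustment, the following minimal Python sketch assumes that a single constant vertical offset is applied per raster, computed as the mean difference between surveyed elevations and buffer-mean raster elevations at the adjustment points. The function names, the dictionary layout, and the example values are hypothetical and are not taken from the ArcGIS Pro workflow used in the study.

```python
from statistics import mean


def elevation_offset(raster_means, surveyed):
    """Return the mean (surveyed - raster) elevation difference.

    raster_means: buffer-mean raster elevation per adjustment point ID.
    surveyed: surveyed elevation per adjustment point ID (e.g., GCPs).
    Assumption: one constant vertical offset per raster is sufficient.
    """
    shared = [pid for pid in surveyed if pid in raster_means]
    if not shared:
        raise ValueError("no shared adjustment points")
    return mean(surveyed[pid] - raster_means[pid] for pid in shared)


def apply_offset(raster, offset, nodata=None):
    """Add the offset to every valid cell of a raster stored as a 2D list."""
    return [
        [v if (nodata is not None and v == nodata) else v + offset for v in row]
        for row in raster
    ]


# Hypothetical values for illustration only (meters).
raster_means = {"GCP1": 100.0, "GCP2": 101.0}
surveyed = {"GCP1": 102.5, "GCP2": 103.5}
offset = elevation_offset(raster_means, surveyed)
adjusted_dsm = apply_offset(
    [[100.0, 100.5], [-9999.0, 101.0]], offset, nodata=-9999.0
)
```

Applying the same kind of offset to both the DTM and the DSM keeps their difference meaningful when the two rasters come from flights with different vertical baselines.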

2.6 DTM, DSM, and CHM extraction

For this study, we first generated a bare-ground digital terrain model (DTM) by applying photogrammetry to RGB imagery captured during the early growth phase on June 6. Following this, digital surface models (DSMs) were created using RGB imagery acquired from UAV flights during the reproductive stages. We integrated and spatially aligned the DTM and DSMs using ground control points (GCPs) within ArcGIS Pro.

For LiDAR point clouds, the DTM was constructed by isolating ground points from the dense point cloud data and interpolating between these points to form a continuous ground elevation model. Following Evans and Hudak (2007), the multiscale curvature classification algorithm was used to differentiate ground and non-ground points. These points were further refined using a Triangulated Irregular Network (TIN) algorithm to produce the DTM for the LiDAR data recorded during the initial crop growth stage. Finally, the ‘pixel_metrics’ function from the lidR package was used to compute the DTM value from the LiDAR point cloud.

The DSM for subsequent dates was derived with the same function (‘pixel_metrics’), which calculated each pixel’s minimum, maximum, and their difference. The maximum value represents the DSM and was used to compute the CHM after georeferencing and elevation adjustment in ArcGIS Pro. We computed the CHM of the soybean crop in ArcGIS Pro using the Raster Calculator tool by subtracting the DTM from the DSM at the pixel level. We further refined the CHM at the plot level using the ‘Extract by Mask’ tool with the plot shapefile feature class containing 88 identical plot shapes. Then, plot-level statistics were derived using the ‘Zonal Statistics as Table’ tool from the Spatial Analyst toolbox. Through this approach, we generated CHM data from both RGB images and LiDAR point cloud data in ArcGIS Pro.
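The raster subtraction and plot-level aggregation can be sketched in plain Python as follows. This is only an illustration of the arithmetic, assuming the DSM, the DTM, and a grid of plot IDs are already aligned on the same pixel grid. The function names and the toy values are hypothetical; the study itself used ArcGIS Pro tools for these steps.

```python
def canopy_height_model(dsm, dtm):
    """CHM = DSM - DTM, computed pixel by pixel on aligned 2D lists."""
    return [
        [s - t for s, t in zip(dsm_row, dtm_row)]
        for dsm_row, dtm_row in zip(dsm, dtm)
    ]


def plot_means(chm, plot_ids):
    """Mean CHM value per plot ID (cells whose ID is None are ignored)."""
    sums, counts = {}, {}
    for chm_row, id_row in zip(chm, plot_ids):
        for height, pid in zip(chm_row, id_row):
            if pid is None:
                continue
            sums[pid] = sums.get(pid, 0.0) + height
            counts[pid] = counts.get(pid, 0) + 1
    return {pid: sums[pid] / counts[pid] for pid in sums}


# Toy 2 x 3 rasters in meters; values are made up for illustration.
dsm = [[10.5, 10.75, 10.0], [10.25, 11.0, 10.0]]
dtm = [[10.0, 10.0, 10.0], [10.0, 10.0, 10.0]]
plot_ids = [[1, 1, None], [2, 2, None]]  # None marks alley pixels

chm = canopy_height_model(dsm, dtm)
heights = plot_means(chm, plot_ids)
```

In this sketch the plot-ID grid plays the role of the plot shapefile mask, and the per-plot mean corresponds to one row of a zonal statistics table.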

2.7 Statistical analysis

The PH data derived from RGB, LiDAR, and manual measurements were analyzed using simple linear regression, with manually measured PH as the dependent variable and sensor-based PH as the explanatory variable. We validated the soybean heights estimated from RGB and LiDAR against the manually measured PH. To evaluate the accuracy of these estimates, we calculated the coefficient of determination (R2), root mean square error (RMSE), and mean absolute error (MAE). R2 measures how closely the estimated values align with the measured values, with higher values indicating a better fit. Conversely, lower RMSE and MAE values indicate greater accuracy, as both quantify the difference between the estimated and measured values. The metrics were calculated as:

$$R^2 = 1 - \frac{\sum_{i=1}^{N}(y_i - \hat{y}_i)^2}{\sum_{i=1}^{N}(y_i - \bar{y})^2}$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}(y_i - \hat{y}_i)^2}$$

$$\mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N}\left|y_i - \hat{y}_i\right|$$

where $N$ is the number of samples, $y_i$ and $\hat{y}_i$ are the measured and estimated PH, respectively, $\left|y_i - \hat{y}_i\right|$ is the absolute error for the $i$-th data point, and $\bar{y}$ is the average measured PH.
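For readers who want to reproduce the accuracy metrics, a minimal Python sketch is given here. It assumes the standard definitions, with R2 taken as one minus the ratio of the residual to the total sum of squares around the mean measured PH. The function name and the example heights are hypothetical.

```python
import math


def regression_metrics(measured, estimated):
    """Return (R2, RMSE, MAE) for paired measured and estimated values."""
    n = len(measured)
    if n == 0 or n != len(estimated):
        raise ValueError("inputs must be non-empty and of equal length")
    mean_measured = sum(measured) / n
    # Residual and total sums of squares.
    ss_res = sum((y - y_hat) ** 2 for y, y_hat in zip(measured, estimated))
    ss_tot = sum((y - mean_measured) ** 2 for y in measured)
    if ss_tot == 0:
        raise ValueError("measured values have zero variance")
    r2 = 1.0 - ss_res / ss_tot
    rmse = math.sqrt(ss_res / n)
    mae = sum(abs(y - y_hat) for y, y_hat in zip(measured, estimated)) / n
    return r2, rmse, mae


# Made-up plant heights in meters, for illustration only.
manual_ph = [0.40, 0.60, 0.90]
sensor_ph = [0.35, 0.65, 0.80]
r2, rmse, mae = regression_metrics(manual_ph, sensor_ph)
```

RMSE and MAE are in the same unit as the heights (meters here), whereas R2 is unitless.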

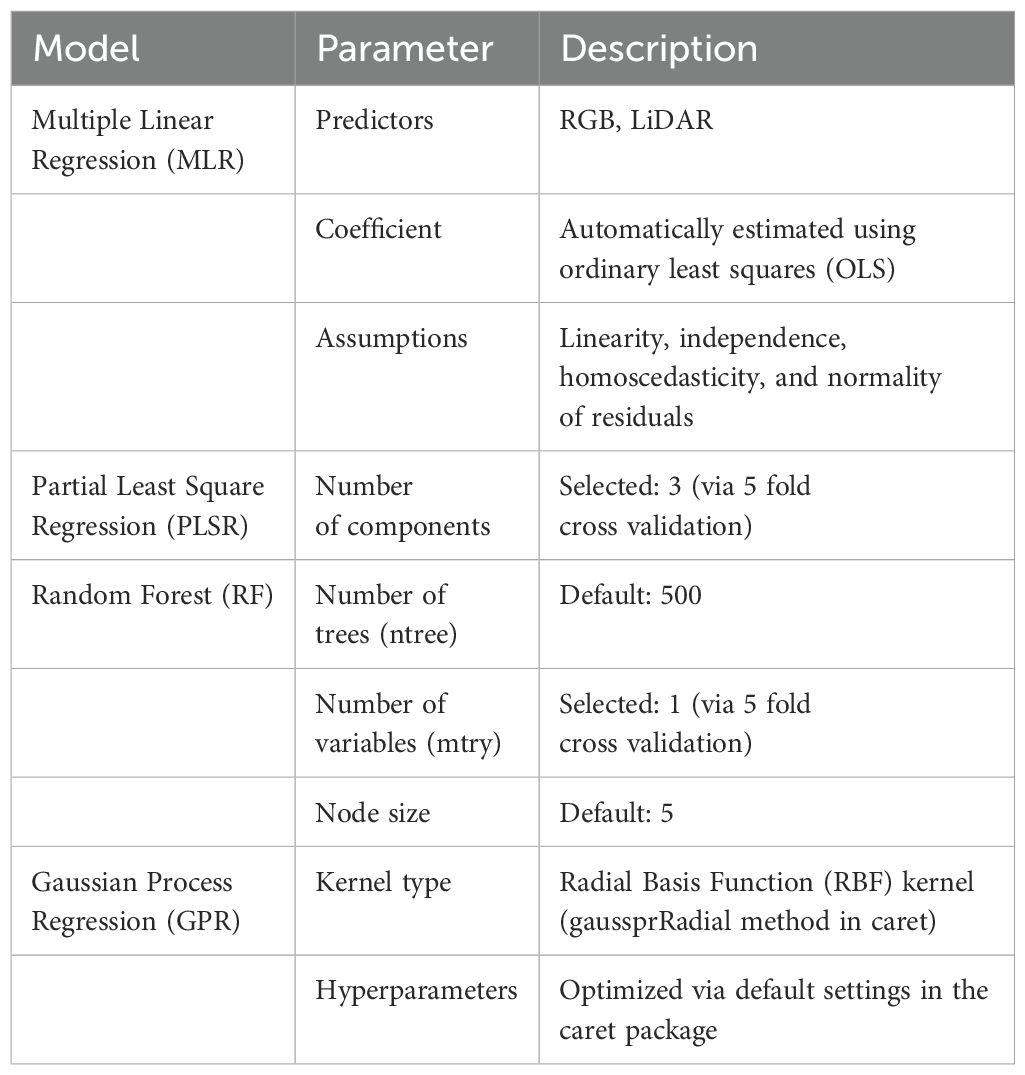

2.8 Modeling calibration and validation

Initially, we assessed individual sensor performance using simple linear regression. We then fitted several regression models, namely multiple linear regression (MLR), partial least square regression (PLSR), random forest (RF), and Gaussian process regression (GPR), on the combined RGB and LiDAR dataset to improve predictive accuracy by leveraging the strengths of both datasets. The dataset was split into training (80%) and testing (20%) sets by random partitioning. The split was performed at the plot level, so the training set did not include any measurements from test plots. The training set was used to train the models, and the testing set was used to compute the performance metrics. MLR and PLSR are linear methods, while RF and GPR can capture non-linear relationships. Each model was trained on the training dataset using the ‘caret’ package in RStudio. Model performance was evaluated using three metrics: R2, RMSE, and MAE. Five-fold cross-validation was performed to assess the generalizability of the models. The parameter configuration for each model is given in Table 2.
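The plot-level partitioning can be illustrated with a short Python sketch. It is not the ‘caret’ workflow used in the study; it only shows one way to keep all observations from a plot on the same side of an 80/20 split and to assign plots to five folds. The function names, the random seed, and the assumed layout of three observations per plot are hypothetical.

```python
import random


def split_by_plot(plot_ids, test_fraction=0.2, seed=0):
    """Split row indices so that no plot appears in both train and test."""
    unique_plots = sorted(set(plot_ids))
    rng = random.Random(seed)
    rng.shuffle(unique_plots)
    n_test = max(1, round(len(unique_plots) * test_fraction))
    test_plots = set(unique_plots[:n_test])
    train_idx = [i for i, p in enumerate(plot_ids) if p not in test_plots]
    test_idx = [i for i, p in enumerate(plot_ids) if p in test_plots]
    return train_idx, test_idx


def k_fold_plots(plots, k=5, seed=0):
    """Assign plots to k folds of near-equal size after shuffling."""
    shuffled = list(plots)
    random.Random(seed).shuffle(shuffled)
    return [shuffled[i::k] for i in range(k)]


# Assumed layout: 88 plots, each observed on three dates.
plot_ids = [plot for plot in range(88) for _ in range(3)]
train_idx, test_idx = split_by_plot(plot_ids, test_fraction=0.2, seed=1)
train_plots = sorted({plot_ids[i] for i in train_idx})
folds = k_fold_plots(train_plots, k=5, seed=1)
```

Splitting on plot IDs rather than on individual rows avoids leakage, because repeated measurements of the same plot are correlated across dates.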

3 Results

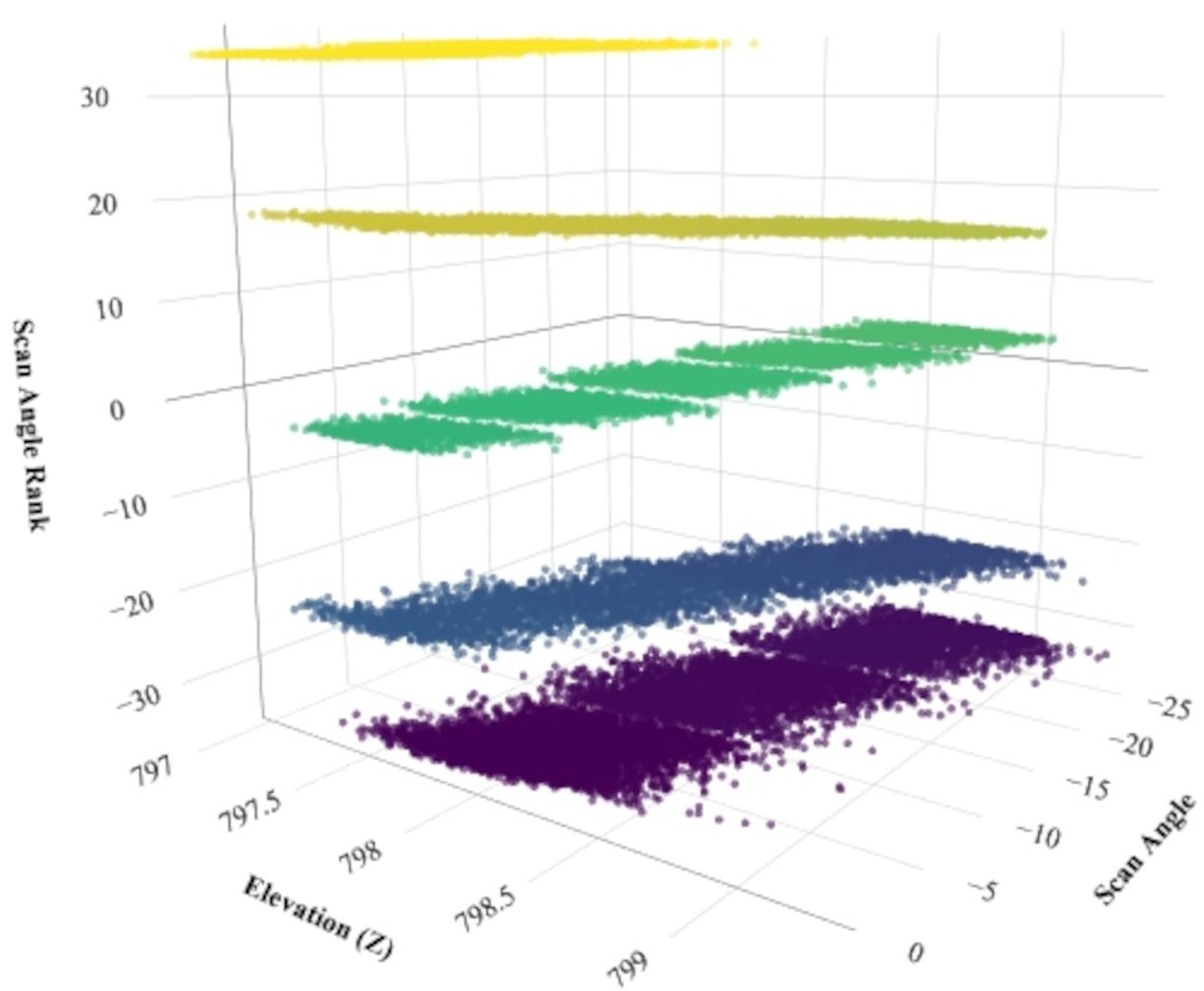

3.1 Estimation of scan angle for the soybean PH study

Points closer to nadir tend to provide more consistent elevation readings because the LiDAR pulses hit the ground more directly (closer to perpendicular), reducing distortion. In our study, the most consistent and precise capture of ground elevation for DTM generation occurred within the scan angle range of −15° to +15°, as shown in Figure 4. The LiDAR returns within this range are more tightly clustered, indicating consistent elevation information with reduced variability. At scan angles farther from nadir, more outlier values appeared in the dataset.
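The effect of restricting the scan angle can be sketched in Python as follows. The point structure, the function names, and the sample points are hypothetical; the study applied the scan angle range within its lidR workflow, and this sketch only illustrates the filtering idea.

```python
from typing import NamedTuple


class LidarPoint(NamedTuple):
    x: float
    y: float
    z: float           # elevation in meters
    scan_angle: float  # degrees from nadir, negative to one side


def filter_by_scan_angle(points, max_abs_angle=15.0):
    """Keep points whose scan angle lies within +/- max_abs_angle degrees."""
    return [p for p in points if abs(p.scan_angle) <= max_abs_angle]


def elevation_range(points):
    """Spread (max - min) of elevation, a crude indicator of scatter."""
    zs = [p.z for p in points]
    return max(zs) - min(zs)


# Made-up ground returns along a transect, for illustration only.
points = [
    LidarPoint(0.0, 0.0, 207.0, -28.0),
    LidarPoint(1.0, 0.0, 205.0, -15.0),
    LidarPoint(2.0, 0.0, 205.5, 0.0),
    LidarPoint(3.0, 0.0, 208.0, 22.0),
]
near_nadir = filter_by_scan_angle(points, max_abs_angle=15.0)
```

Comparing `elevation_range(points)` with `elevation_range(near_nadir)` gives a quick check of whether the near-nadir subset is more tightly clustered in elevation.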

Figure 4. Distribution of LiDAR pulses across different scan angles in various elevations within a transect in the experimental field.

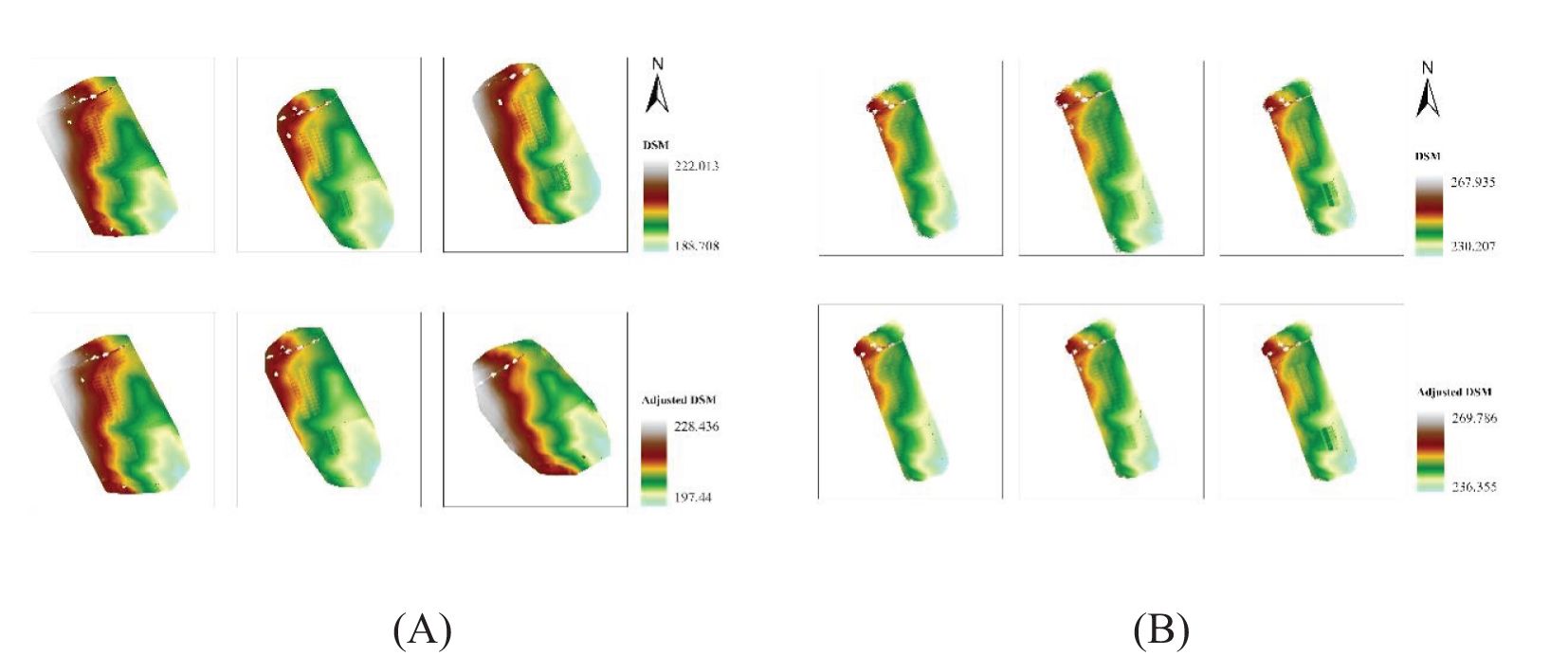

3.2 Elevation adjustment

The DSM and DTM rasters were adjusted to place the aerial products at their absolute position on the ground. Using the offset values generated around the GCPs and checkpoints, the rasters created from both the RGB and LiDAR platforms were adjusted. A shift in elevation values was observed in both the RGB- and LiDAR-generated DSMs after correction. In the DSM generated using the RGB camera, the uppermost elevation value shifted from 222.013 meters to 228.436 meters (Figure 5A). In the DSM generated from aerial LiDAR, the uppermost elevation value shifted to 269.786 meters in the adjusted DSM (Figure 5B).

Figure 5. Digital surface model generated from (A) RGB and (B) LiDAR sensors on different dates. The upper panels show the DSM before elevation adjustment, and the lower panels show the adjusted DSM.

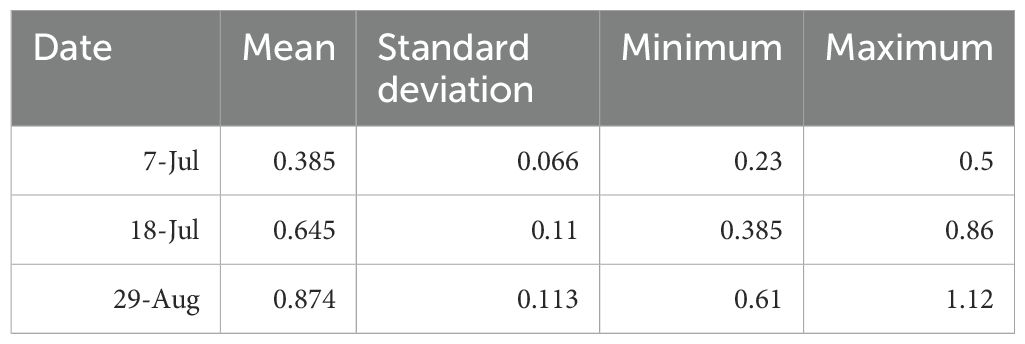

3.3 Ground reference height

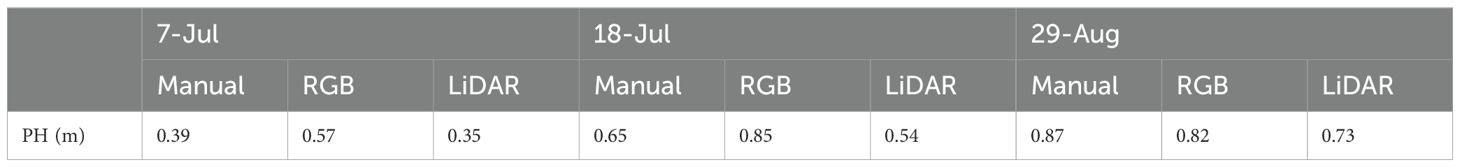

Table 3 shows the results from the manual height measurement in the field. It shows the descriptive data of field-measured PH conducted across 88 plots at three different soybean growth and developmental dates. The average PHs recorded on July 7, July 18, and August 29 were 0.39 meters, 0.65 meters, and 0.88 meters, respectively.

3.4 Estimation of crop height using CHM

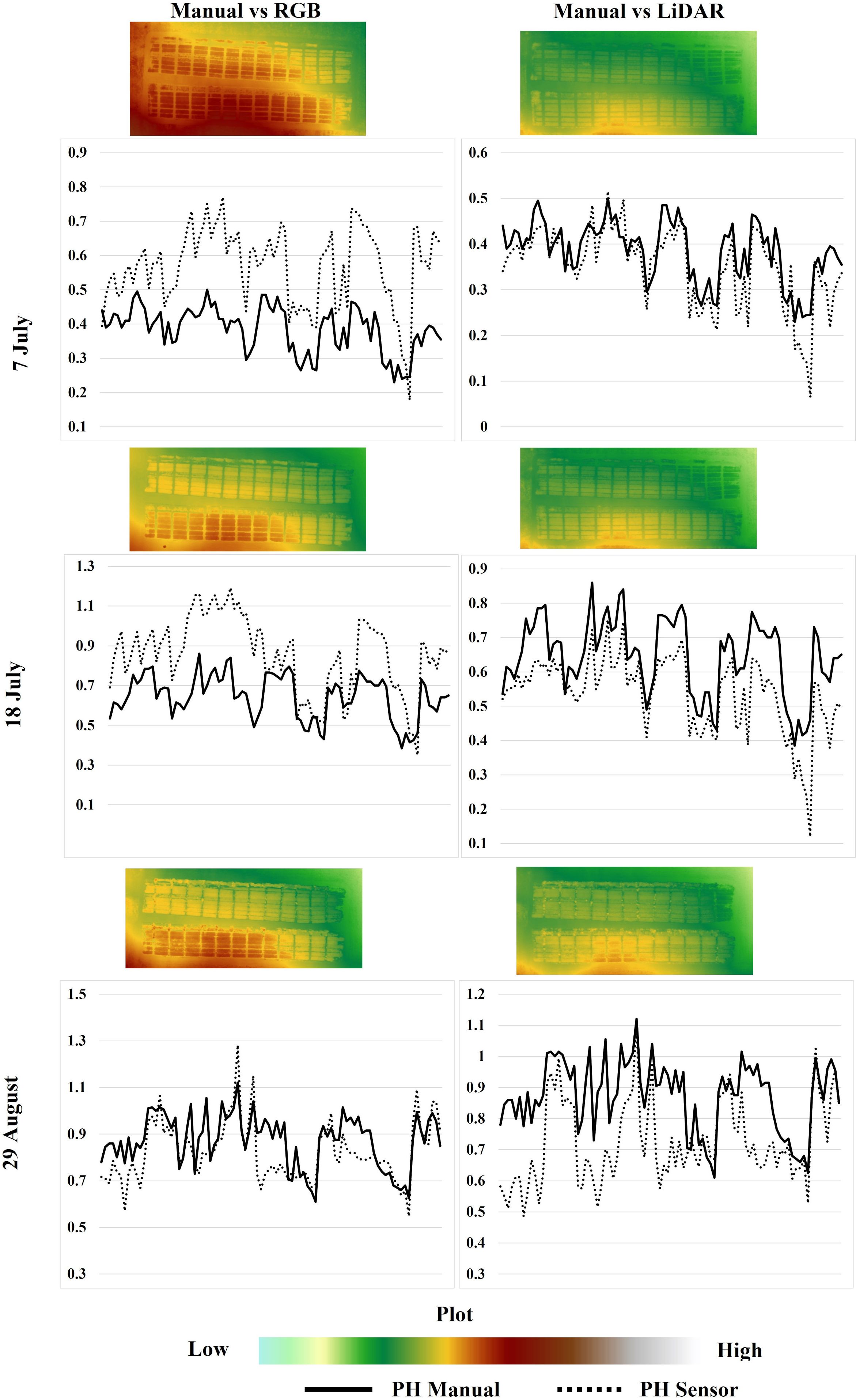

The PH measured manually in the field using a ruler was similar to the values estimated using the aerial sensors mounted on the drones. The comparison can be seen in Table 4, which shows the distribution of height values for the different sensors and the ground reference height. In general, the pattern of PH distribution is similar on all three dates. However, considerable variation in the PH distribution can be noticed on July 7 and July 18 for RGB vs. manual PH and on August 29 for LiDAR vs. manual PH. The complete distribution of ground reference PH and the PH collected using the different aerial sensors across dates can be visualized in Figure 6.

Figure 6. PH distribution among 88 plots across three dates showing ground reference height by the solid line and sensor-based height by dotted line. The 2D plots were generated using ArcGIS Pro for RGB and LiDAR images.

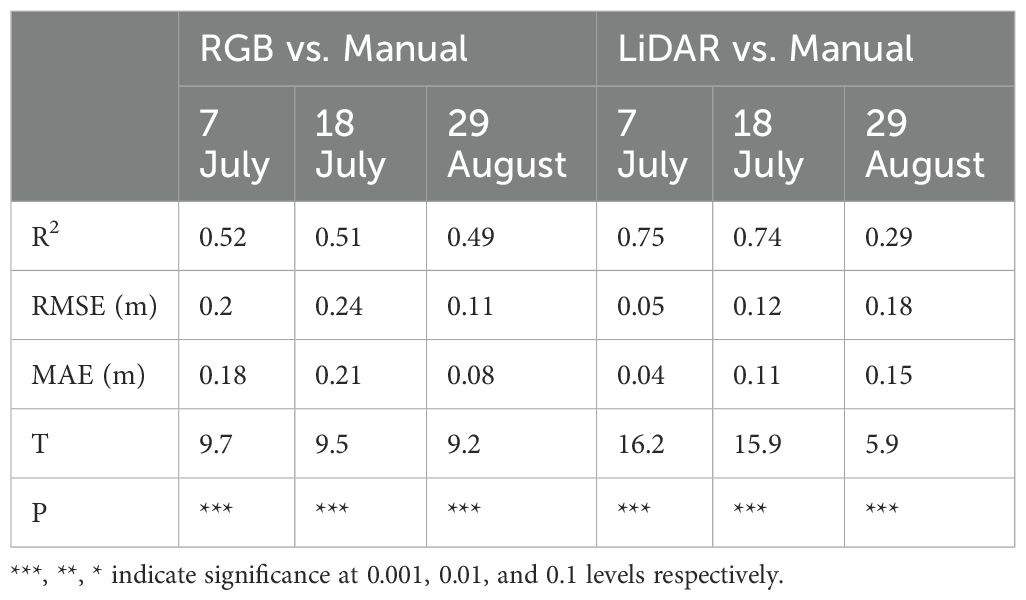

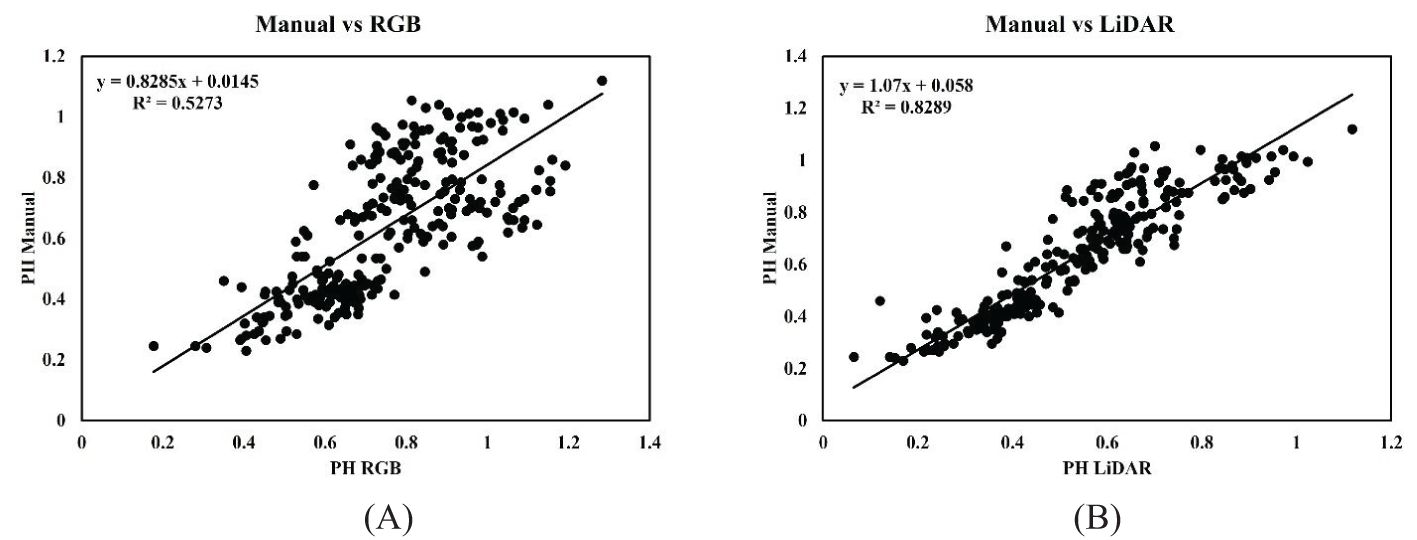

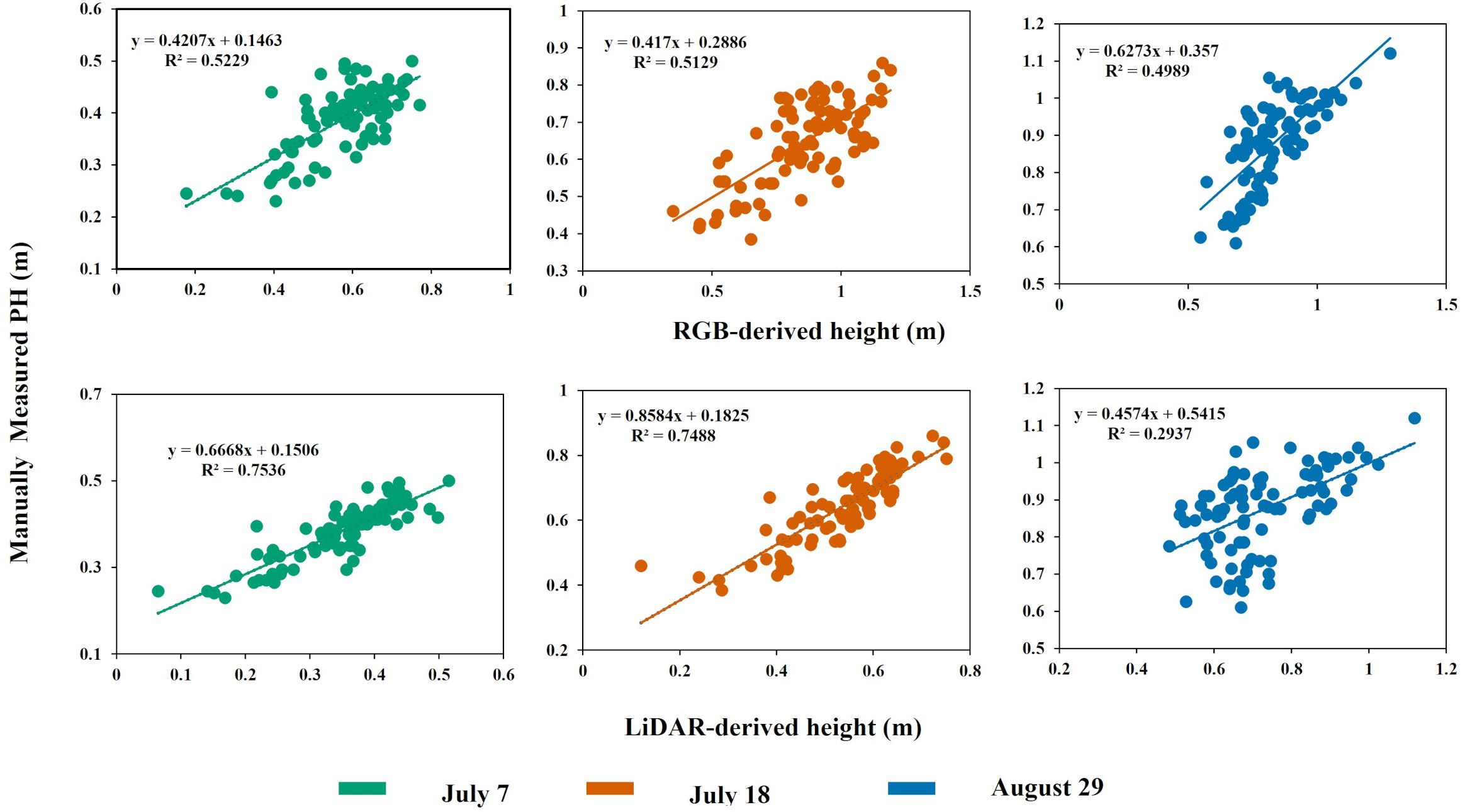

A simple linear regression analysis assessed the relationship between PHs derived from Canopy Height Models (CHM) using RGB and LiDAR sensors and the heights measured manually in the field across different dates. The ground reference PH and RGB-derived PH showed a moderate correlation with the coefficient of determination (R2) equal to 0.52. The comparison between manually measured soybean height against the LiDAR-derived PH showed a strong correlation with the coefficient of determination (R2) of 0.82. Scatterplots illustrating these comparisons are displayed in Figure 7: Figure 7A for UAV-based RGB vs manually measured PH, and Figure 7B for UAV-based LiDAR vs manually measured PH across various stages.

Figure 7. Linear relationship between PH estimated using a ruler in the field and the PH measured using (A) RGB camera and the (B) LiDAR sensor throughout the season.

For manually measured PH versus RGB-derived PH, the coefficient of determination (R2) decreased from 0.52 to 0.49 across July 7, July 18, and August 29. The correlation remained relatively consistent on July 7 and July 18, with an R2 of approximately 0.52, and decreased slightly to 0.49 on August 29. For ground-measured versus LiDAR-derived PH, the pattern across dates was similar to that of RGB vs. manual PH: R2 decreased from 0.75 to 0.29 across the three dates, as shown in Figure 8. The highest R2 was recorded on July 7; by August 29, it had dropped markedly to 0.29, suggesting that the precision of LiDAR may decrease as the crop progresses toward physiological maturity. In terms of RMSE and MAE (Table 5), the comparison between manual measurements and LiDAR-based PH showed the lowest values on July 7. In contrast, the comparison between RGB-derived PH and ground reference PH on July 18 produced the highest RMSE and MAE values, indicating weaker agreement between ground reference height and RGB-derived height at that stage of the crop. Lower RMSE and MAE values indicate closer agreement between the ground reference PH and sensor-measured height. Despite the varying R2 values between sensor-derived PH and ground reference PH, their relationship remained statistically significant (p < 0.001) across all growth and developmental periods.

Figure 8. Comparison of the manually measured PH and estimated PH using aerial (top) RGB and (bottom) LiDAR sensor.

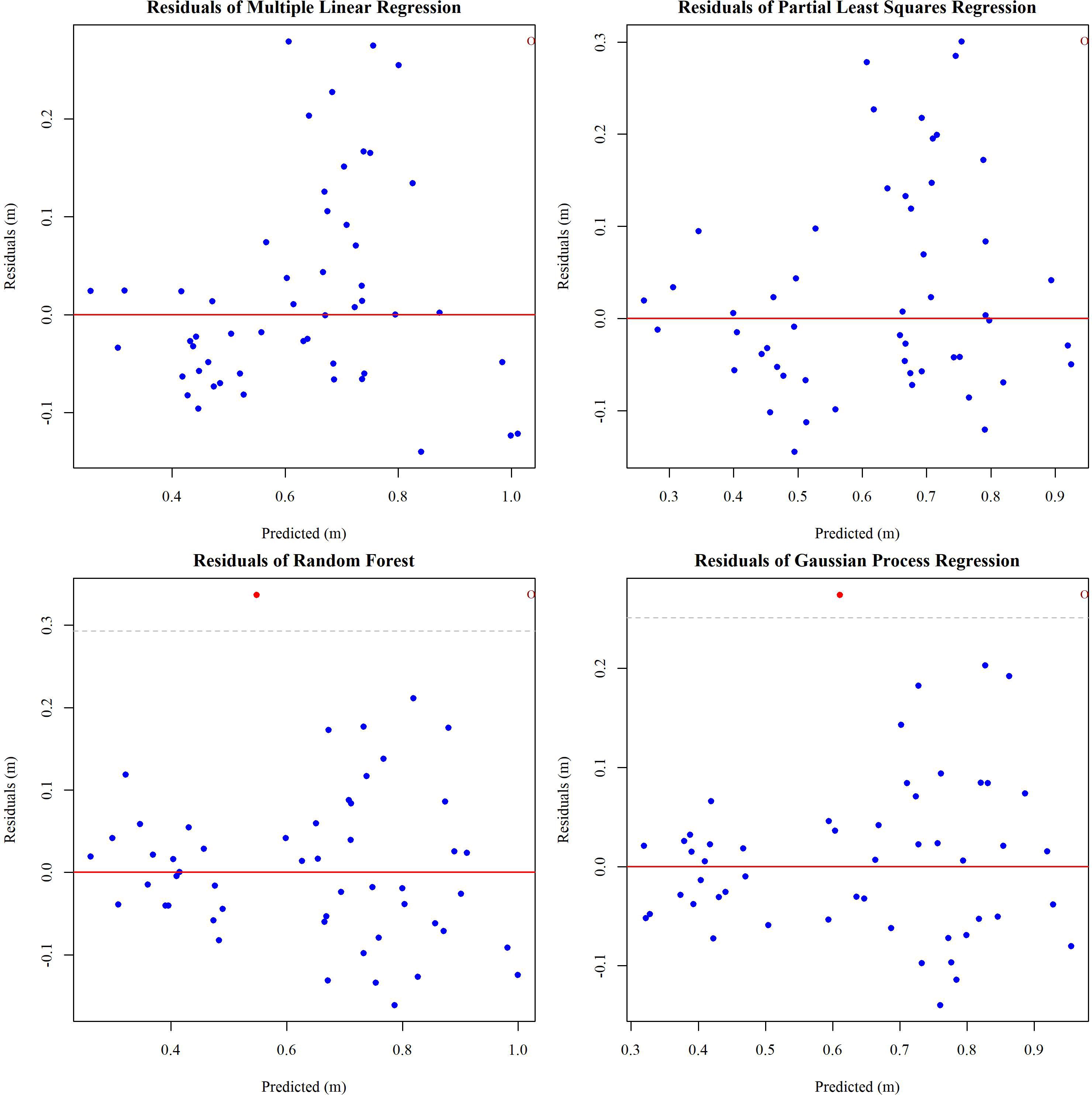

Various regression models were employed to evaluate the accuracy and effectiveness of LiDAR and RGB sensors in predicting soybean PH. Outliers were identified using residual diagnostics, as shown in Figure 9. They were retained in the analysis unless they exceeded 3 standard deviations, as their effect on the model was minimal.
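One way to implement such a residual screen is sketched below in Python. It assumes a simple linear fit and residuals scaled by their population standard deviation; the study does not state which diagnostic or scaling was used, so the function names, the threshold handling, and the synthetic data are illustrative assumptions only.

```python
from statistics import mean, pstdev


def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    mean_x, mean_y = mean(x), mean(y)
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values have zero variance")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def flag_outliers(x, y, threshold=3.0):
    """Flag points whose residual exceeds threshold residual SDs."""
    slope, intercept = fit_line(x, y)
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    sd = pstdev(residuals)
    if sd == 0:
        return [False] * len(residuals)
    return [abs(r) / sd > threshold for r in residuals]


# Synthetic data: a perfect line with one perturbed observation.
sensor = [float(i) for i in range(20)]
manual = [2.0 * s for s in sensor]
manual[10] += 5.0
flags = flag_outliers(sensor, manual)
```

With few observations a fixed threshold of 3 standard deviations may never be exceeded, so a screen of this kind is most informative on the pooled dataset rather than on small subsets.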

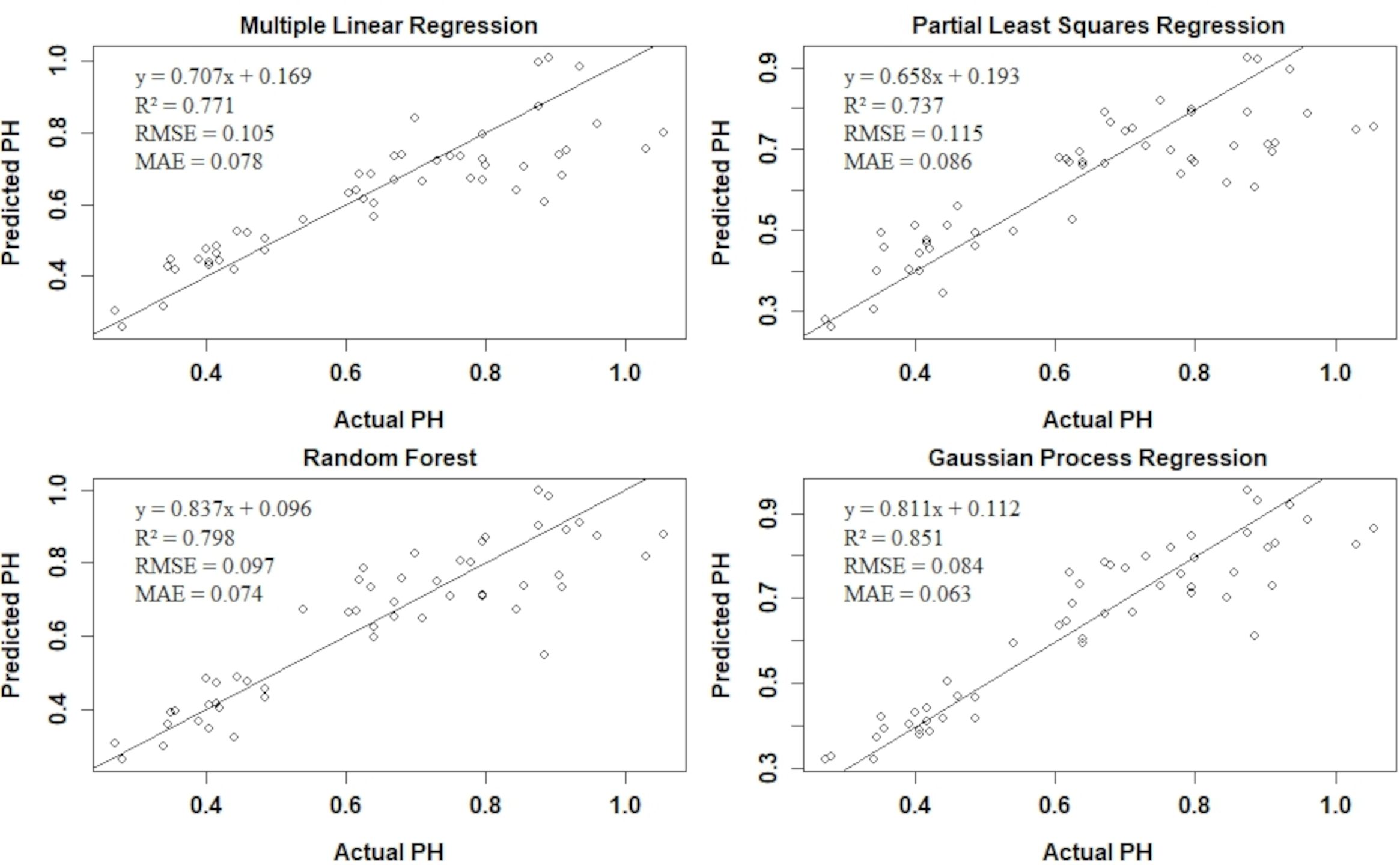

To evaluate the performance of our regression models in predicting PH, we calculated R2, RMSE, and MAE on the test dataset, as summarized in Figure 10.

Figure 10. Performance metrics for different regression models (Multiple linear regression, partial least square regression, random forest, and Gaussian process regression) on the test data set.

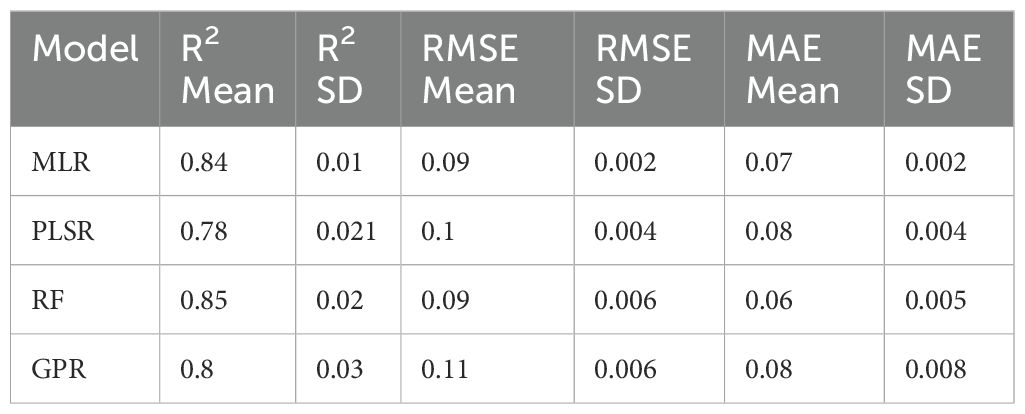

The GPR model exhibited the highest R2 value of 0.85, indicating that it explains 85% of the variance in the PH data. Additionally, GPR has the lowest RMSE and MAE, suggesting superior predictive accuracy and consistency compared to the other models. The RF model also performed well, with an R2 of 0.79, RMSE of 0.09, and MAE of 0.07. The MLR model achieved an R2 of 0.77, RMSE of 0.1, and MAE of 0.078, indicating reasonable performance with higher prediction error compared to GPR and RF. The PLSR model had the lowest performance, with an R2 of 0.73, RMSE of 0.11, and MAE of 0.08.

To assess the generalizability of the models, 5-fold cross-validation was performed, with the results summarized in Table 6. The cross-validation metrics provide a more robust estimate of the model performance by averaging the result across multiple folds. The RF model maintained strong performance in cross-validation, with a mean R2 of 0.85, RMSE of 0.09, and MAE of 0.06. The GPR model showed consistent results with a mean R2 of 0.8, RMSE of 0.11, and MAE of 0.08, demonstrating its reliability and stability.

The MLR model achieved a mean R2 of 0.84, RMSE of 0.09, and MAE of 0.07, indicating good performance with low variability, as reflected by the slight standard deviation. The PLSR model had a mean R2 of 0.78, RMSE of 0.1, and MAE of 0.08, showing slightly lower performance compared to the other models but with acceptable stability.

4 Discussion

This study focused on identifying the more effective of the two most widely used sensors (RGB camera and LiDAR) for PH estimation using UAVs. HTAP was conducted at three times across the crop growth cycle. Our results showed that PH estimation in soybean using UAV-based LiDAR (R2 = 0.83) was more reliable than with the UAV-mounted RGB camera (R2 = 0.53) in the pod growth and seed filling stages. However, the results also showed that RGB cameras were more reliable at the physiological maturity stage, when LiDAR-derived PH was poorly correlated with ground reference height. The study also highlighted that factors such as scan angle and elevation adjustment are critical in canopy height generation using aerial platforms.

4.1 Estimation of crop height

In this study, we assessed crop height using RGB and LiDAR sensors across three soybean growth stages. To enhance the precision of crop height predictions from RGB imagery, we adjusted the DTM and DSM rasters by applying elevation adjustment. These adjustments used offset values calculated from buffers around GCPs and checkpoints. For LiDAR point clouds, we set appropriate scan angles and performed ground classification using the multiscale curvature classification (MCC) method, which effectively distinguishes between ground and non-ground points. The MCC algorithm, along with the progressive morphological filter (PMF) and cloth simulation filter (CSF), was evaluated to refine the DTM accuracy. PMF, as described by Zhang et al. (2003), classifies points as ground or non-ground based on a dual-threshold approach. CSF, as explained by Zhang et al. (2016), simulates a virtual cloth dropped over an inverted point cloud to identify ground points, and MCC uses curvature thresholds to interpolate the ground surface, as explained by Evans and Hudak (2007). The PMF algorithm might remove essential terrain details by classifying ground points as non-ground (Zhang et al., 2003). The CSF algorithm struggles with the classification of low vegetation, whereas MCC is well-suited for the classification of complex vegetated surfaces (Roberts et al., 2019). In our study, ground classification was done during the extraction of the DTM dataset at the early growth stage of soybeans, so MCC was preferred over CSF to obtain accurate terrain information.

For UAV-based data collection, RGB cameras offer a cost-effective solution despite their limited ability to penetrate dense canopies (Cao et al., 2021; Luo et al., 2021). The images can also be processed in user-friendly software. The structure from motion (SfM) photogrammetry technique is used to derive canopy height and structure from high-resolution images, as it can generate a point cloud from multiple images (Kalacska et al., 2017; Coops et al., 2021). PH estimated with a UAV-based RGB camera proved to be an important proxy for dry biomass in summer barley (Bendig et al., 2015). A UAV-based imaging system quantified PH with minimal error in cotton (Feng et al., 2019). PH and leaf area index (LAI) of different soybean varieties were estimated indoors using a Kinect 2.0 sensor (Ma et al., 2019). In contrast, LiDAR can penetrate crop canopies to measure PH accurately and is unaffected by external lighting (Yuan et al., 2018). Its advantages include the ability to penetrate the crop canopy and reach the ground (Dalla Corte et al., 2022) and to supply 3D structural information that is invaluable for HTAP (Parker et al., 2004; Omasa et al., 2006). Terrestrial laser scanning (TLS) produced promising PH estimates and showed potential for non-destructive biomass estimation in maize (Tilly et al., 2014b) and rice (Tilly et al., 2014a). Multiple sensors mounted on commercial wild blueberry harvesters proved very efficient at estimating PH and fruit yield (Farooque et al., 2013). A study on winter wheat using a field phenomics platform (FPP) equipped with LiDAR and a time-of-flight (ToF) camera produced PH estimates that were strongly correlated with manually measured height (Zhou et al., 2015). UAV-LiDAR was effective in estimating PH in sugar beet and wheat, but less so in potatoes because of the complex canopy structure and the uneven terrain created by ridges and furrows (ten Harkel et al., 2019).

Our study found that the UAV-mounted LiDAR predicted soybean height more accurately, which aligns with similar conclusions in other crops such as wheat (Madec et al., 2017; Jimenez-Berni et al., 2018; Yuan et al., 2018), sorghum (Maimaitijiang et al., 2020), and maize (Liu et al., 2024). There was a strong correlation between the PH obtained from the LiDAR sensor and manual measurements on July 7 and July 18. The R2 values obtained in all three growth stages were greater than those reported by Luo et al. (2021) using UAV-LiDAR in soybean. The lower correlation in the earlier study may be attributed to an overestimated DTM. To avoid DTM overestimation, our study used aerial data collected when the soybean field was completely visible and the soybean plants were at a very early growth stage. We observed a decline in R2 at the R7 stage, which aligns with a similar study in maize by Zhu et al. (2020), where a significant decrease in plant length, PH, canopy height, and plant width was observed as plants progressed toward maturity and leaves fell off. At the R7 stage, soybeans undergo senescence, leading to leaf drop and changes in canopy structure. These changes might affect the LiDAR’s ability to capture accurate plant height: reduced canopy density and increased exposure of the underlying structure might have lowered the plot-level mean aggregated over all pixels. Photogrammetry-derived PH showed a moderate correlation at the R3, R5, and R7 stages, as demonstrated by moderate R2 values. However, the increasing RMSE indicated a growing deviation of RGB-derived PH from manual measurements. Similar results were reported in earlier studies, where the CHM created using the SfM technique exhibited inaccuracies in height measurement that were especially noticeable in shorter plants (Cunliffe et al., 2016; Wijesingha et al., 2019).
Our finding that RGB-derived PH showed a moderate correlation aligns with conclusions from previous research on corn (Grenzdörffer, 2014; Bareth et al., 2016), which indicated that the photogrammetry technique struggles to reconstruct the uppermost parts of the canopy accurately. The overestimation of PH from the RGB camera relative to the LiDAR sensor may be partly due to the difference in spatial resolution between the two sensor systems as well as differences in canopy penetration capacity (Madec et al., 2017).
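
The distinction drawn above between R2 and RMSE can be made concrete with a short sketch. It assumes that R2 is the squared Pearson correlation of a simple linear fit between manual and sensor-derived PH, and that RMSE is computed directly on the paired values. The function names are illustrative; the statistical workflow actually used is described in the methods.

```python
from math import sqrt


def r_squared(measured, estimated):
    """Squared Pearson correlation between manual and sensor-derived heights
    (equal to R2 of a simple linear regression)."""
    n = len(measured)
    mean_m = sum(measured) / n
    mean_e = sum(estimated) / n
    s_me = sum((m - mean_m) * (e - mean_e) for m, e in zip(measured, estimated))
    s_mm = sum((m - mean_m) ** 2 for m in measured)
    s_ee = sum((e - mean_e) ** 2 for e in estimated)
    if s_mm == 0 or s_ee == 0:
        raise ValueError("heights must vary for a correlation to be defined")
    return (s_me * s_me) / (s_mm * s_ee)


def rmse(measured, estimated):
    """Root mean square error of sensor-derived heights against manual ones."""
    n = len(measured)
    return sqrt(sum((e - m) ** 2 for m, e in zip(measured, estimated)) / n)


# Hypothetical plot heights in metres: the sensor reads a constant 0.1 m too high.
manual_ph = [0.5, 0.6, 0.7, 0.8]
sensor_ph = [0.6, 0.7, 0.8, 0.9]
print(r_squared(manual_ph, sensor_ph), rmse(manual_ph, sensor_ph))
```

A constant bias leaves the correlation essentially perfect while the RMSE stays well above zero, which is why the two metrics need to be read together when comparing sensors.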

Overall, the regression models validated the PH predictions, with Gaussian Process Regression (GPR) showing the best performance and MLR and PLSR the weakest. Incorporating the model improved soybean height prediction, as shown by the increase in R2 from 0.83 (LiDAR) to 0.85 (GPR). A similar result was obtained in a study of maize PH using UAV-based oblique photography and a LiDAR sensor (Liu et al., 2024). This study underscores the effectiveness of integrating multiple sensing technologies and analytical models to optimize the accuracy of crop height assessments throughout different stages of plant growth.

4.2 Influence of scan angle in PH prediction using LiDAR sensor

Complex plant morphology hinders laser penetration, which makes it difficult to obtain accurate measurements (Saeys et al., 2009). Earlier studies in winter wheat and winter rye demonstrated that overestimation is low at smaller angles and increases with increasing angle (Ehlert and Heisig, 2013). They further concluded that the contribution of scan angle to measurement error needs to be evaluated for individual crop species. Xu et al. (2023) developed a correction model based on scan angle to improve grassland canopy height estimation and demonstrated considerable improvement in PH from their corrected model. Guo et al. (2019) showed how varying scan angles and positions significantly influence the accuracy of wheat height measurement throughout its growth stages. Apart from these, earlier studies using LiDAR to predict PH have rarely explored the influence of scan angle. We therefore examined the distribution of LiDAR pulses across different scan angles to identify an appropriate scan angle range. Reducing the scan angle towards zero can significantly enhance the accuracy of elevation data, particularly in establishing the digital elevation model (Lohr, 1998). To restrict our scan angle range to values near zero, we evaluated the distribution of LiDAR pulses across various scan angles and found that the -15 to 15-degree range consistently captured our ground features. Our choice of scan angle range was further supported when ground points obtained using the MCC algorithm were uniformly distributed around the zero elevation value. Thus, our study identified an appropriate scan angle range for soybean PH estimation. Further studies are needed to quantify the effect of different scan angle ranges on PH.
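
The scan angle screening described above could be sketched as follows. The sketch assumes each LiDAR return is stored as an (x, y, z, scan_angle) tuple with the angle in degrees; this layout, the function names, and the 5-degree bin width are illustrative assumptions. Only the -15 to 15-degree range comes from this study.

```python
from collections import Counter


def scan_angle_histogram(points, bin_width=5):
    """Count returns per scan-angle bin; each key is the lower edge of a bin
    in degrees."""
    counts = Counter()
    for *_, angle in points:
        lower_edge = (angle // bin_width) * bin_width
        counts[lower_edge] += 1
    return dict(sorted(counts.items()))


def filter_by_scan_angle(points, min_angle=-15.0, max_angle=15.0):
    """Keep only returns whose scan angle lies within [min_angle, max_angle]."""
    return [p for p in points if min_angle <= p[3] <= max_angle]


# Hypothetical returns: (x, y, z in metres, scan angle in degrees).
returns = [
    (0.0, 0.0, 100.1, -22.0),
    (1.0, 0.0, 100.0, -15.0),
    (2.0, 0.0, 100.6, 0.0),
    (3.0, 0.0, 100.5, 14.9),
    (4.0, 0.0, 100.2, 30.0),
]
near_nadir = filter_by_scan_angle(returns)
```

Inspecting the histogram first and filtering second mirrors the order of the text: the pulse distribution informs the choice of range, and the filter then applies it before ground classification.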

4.3 Significance of elevation adjustment in PH estimation

In our study, we observed inconsistencies in field elevation, underscoring the necessity of elevation adjustment to enhance the accuracy of PH predictions. PH estimation is particularly susceptible to biases stemming from errors in Digital Terrain Models (DTM) and Digital Surface Models (DSM), as well as the effects of wind (Han et al., 2018). Accurate elevation information is crucial for precision agriculture, as it allows for a detailed understanding of elevation gradients across the research field, which is essential for estimating precise elevation. Despite advancements in remote sensing technologies such as LiDAR and InSAR, which offer improved vegetation height assessments, adjustments for elevation are still required to refine these measurements (Breidenbach et al., 2008). The variability in ground profiles, influenced by external factors and the operation of agricultural machinery, further complicates accurate ground-level detection (Sun et al., 2022). Malambo et al. (2018) also highlight the significant impact that accurate ground surface detection has on PH estimation accuracy.

The role of elevation is particularly critical in ecological studies, such as predicting plant species distribution in mountainous areas, where the pattern of elevation is a key determinant (Oke and Thompson, 2015). Additionally, the creation of DTMs from aerial data, whether from LiDAR or photogrammetric methods, is susceptible to inherent inaccuracies due to sensor noise, atmospheric conditions, or the angle of data acquisition (Hug et al., 2004). Tilly et al. (2015) noted that elevation adjustments are essential when integrating multiple datasets collected at different times or using various technologies, to ensure a consistent reference point across datasets.

Adjusting for elevation not only enhances the precision of PH measurements but also improves the overall interpretation of remote sensing data, facilitating applications such as crop monitoring, yield prediction, and precision farming (Bendig et al., 2014; Mulla and Belmont, 2018). While many studies have acknowledged biases in PH prediction, few have addressed the influence of elevation adjustment as comprehensively as Tilly et al. (2015) and Bendig et al. (2014). In this study, we employed this approach to derive the improved DTMs and DSMs, leading to improved PH estimations from both aerial RGB and LiDAR sensors. This methodological advancement contributes significantly to the field of precision agriculture by providing more reliable data for crop management and research.

4.4 Practical application of the study

RGB cameras and LiDAR are the two most popular sensors used for high-throughput plant height estimation across agricultural operations on proximal or aerial platforms. Multiple studies across various crops have already demonstrated the usefulness of both sensors for reliable plant height estimation. Those results have been valuable resources for farmers, agronomists, and breeders engaged in high-throughput plant phenotyping. In soybean, there are fewer studies on UAV-based plant height estimation. The results of this study suggest that LiDAR is the more effective sensor for soybean plant height estimation from pod development to seed filling. However, low-cost RGB cameras were found to be more effective for predicting plant height at a later stage (onset of physiological maturity). Thus, the experimental results from this study should be useful to agricultural researchers and farmers in selecting the most effective sensor for plant height estimation during different soybean growth stages. Recording plant height at an appropriate time using the most effective sensor will help farmers make informed decisions regarding crop management, as plant height is one of the most important proxies for estimating soybean yield and biomass.

5 Conclusion

This study explored low-cost RGB and LiDAR sensors (the most popular for PH studies) to evaluate which sensor produced more effective results for PH estimation in soybean. An appropriate scan angle range was identified during data processing, and ground classification was performed using the MCC algorithm to compute precise DTM values. The CHM-based PH obtained from RGB and LiDAR sensors was compared with ground reference PH collected manually. Low-cost RGB cameras showed a moderate and consistent correlation across all three growth stages. In contrast, LiDAR demonstrated superior accuracy for soybean height estimation, although the timing of aerial data collection and the scan angle could significantly influence the result. Furthermore, low-cost RGB cameras could still be a more reliable option than LiDAR sensors for estimating soybean height at a later stage. This study verified the potential of low-cost RGB cameras and LiDAR for assessing soybean PH at different growth stages. The results should help in selecting appropriate aerial phenotyping sensors for estimating PH during different soybean growth stages.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding author.

Author contributions

LP: Investigation, Project administration, Data curation, Formal Analysis, Methodology, Writing – original draft. JS: Investigation, Funding acquisition, Supervision, Writing – review & editing. DK: Investigation, Writing – review & editing. SP: Investigation, Writing – review & editing. SK: Investigation, Writing – review & editing. BG: Writing – review & editing, Funding acquisition, Supervision. MG: Funding acquisition, Writing – review & editing, Supervision. AC: Conceptualization, Funding acquisition, Writing – review & editing, Investigation, Project administration, Resources, Supervision, Validation.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This study was funded by USDA-NIFA 1890 Capacity Building Grant (Award Number 2023-38821-39960) to the Kentucky State University.

Acknowledgments

The authors are grateful to the farm staff at Harold R. Benson Research and Demonstration Farm, Kentucky State University, for their help with experimental field management and related farm operations throughout the crop growing season.

Conflict of interest

The authors declare that the research was conducted without any commercial or financial relationships that could potentially create a conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Andújar, D., Escolà, A., Rosell-Polo, J. R., Fernández-Quintanilla, C., Dorado, J. (2013). Potential of a terrestrial LiDAR-based system to characterise weed vegetation in maize crops. Comput. Electron Agric. 92, 11–15. doi: 10.1016/j.compag.2012.12.012

Bareth, G., Bendig, J., Tilly, N., Hoffmeister, D., Aasen, H., Bolten, A. (2016). A comparison of UAV-and TLS-derived plant height for crop monitoring: using polygon grids for the analysis of crop surface models (CSMs). Photogramm. Fernerkund. Geoinf 2016, 85–94. doi: 10.1127/pfg/2016/0289

Bendig, J., Bolten, A., Bennertz, S., Broscheit, J., Eichfuss, S., Bareth, G. (2014). Estimating biomass of barley using crop surface models (CSMs) derived from UAV-based RGB imaging. Remote Sens (Basel) 6, 10395–10412. doi: 10.3390/rs61110395

Bendig, J., Yu, K., Aasen, H., Bolten, A., Bennertz, S., Broscheit, J., et al. (2015). Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Observation Geoinformation 39, 79–87. doi: 10.1016/j.jag.2015.02.012

Blanquart, J.-E., Sirignano, E., Lenaerts, B., Saeys, W. (2020). Online crop height and density estimation in grain fields using LiDAR. Biosyst. Eng. 198, 1–14. doi: 10.1016/j.biosystemseng.2020.06.014

Borra-Serrano, I., De Swaef, T., Quataert, P., Aper, J., Saleem, A., Saeys, W., et al. (2020). Closing the phenotyping gap: high resolution UAV time series for soybean growth analysis provides objective data from field trials. Remote Sens (Basel) 12, 1644. doi: 10.3390/rs12101644

Breidenbach, J., Koch, B., Kändler, G., Kleusberg, A. (2008). Quantifying the influence of slope, aspect, crown shape and stem density on the estimation of tree height at plot level using lidar and InSAR data. Int. J. Remote Sens 29, 1511–1536. doi: 10.1080/01431160701736364

Brocks, S., Bareth, G. (2018). Estimating barley biomass with crop surface models from oblique RGB imagery. Remote Sens (Basel) 10, 268. doi: 10.3390/rs10020268

Calders, K., Adams, J., Armston, J., Bartholomeus, H., Bauwens, S., Bentley, L. P., et al. (2020). Terrestrial laser scanning in forest ecology: Expanding the horizon. Remote Sens Environ. 251, 112102. doi: 10.1016/j.rse.2020.112102

Cao, X., Liu, Y., Yu, R., Han, D., Su, B. (2021). A comparison of UAV RGB and multispectral imaging in phenotyping for stay green of wheat population. Remote Sens (Basel) 13, 5173. doi: 10.3390/rs13245173

Colomina, I., Molina, P. (2014). Unmanned aerial systems for photogrammetry and remote sensing: A review. ISPRS J. Photogrammetry Remote Sens. 92, 79–97. doi: 10.1016/j.isprsjprs.2014.02.013

Coops, N. C., Tompalski, P., Goodbody, T. R. H., Queinnec, M., Luther, J. E., Bolton, D. K., et al. (2021). Modelling lidar-derived estimates of forest attributes over space and time: A review of approaches and future trends. Remote Sens Environ. 260, 112477. doi: 10.1016/j.rse.2021.112477

Cornelissen, J. H. C., Lavorel, S., Garnier, E., Díaz, S., Buchmann, N., Gurvich, D. E., et al. (2003). A handbook of protocols for standardised and easy measurement of plant functional traits worldwide. Aust. J. Bot. 51, 335. doi: 10.1071/BT02124

Cunliffe, A. M., Brazier, R. E., Anderson, K. (2016). Ultra-fine grain landscape-scale quantification of dryland vegetation structure with drone-acquired structure-from-motion photogrammetry. Remote Sens Environ. 183, 129–143. doi: 10.1016/j.rse.2016.05.019

Dalla Corte, A. P., de Vasconcellos, B. N., Rex, F. E., Sanquetta, C. R., Mohan, M., Silva, C. A., et al. (2022). Applying high-resolution UAV-liDAR and quantitative structure modelling for estimating tree attributes in a crop-livestock-forest system. Land (Basel) 11, 507. doi: 10.3390/land11040507

Deery, D., Jimenez-Berni, J., Jones, H., Sirault, X., Furbank, R. (2014). Proximal remote sensing buggies and potential applications for field-based phenotyping. Agronomy 4, 349–379. doi: 10.3390/agronomy4030349

Dhami, H., Yu, K., Xu, T., Zhu, Q., Dhakal, K., Friel, J., et al. (2020). “Crop height and plot estimation for phenotyping from unmanned aerial vehicles using 3D liDAR,” in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (Las Vegas, NV, USA: IEEE), 2643–2649. doi: 10.1109/IROS45743.2020.9341343

Ehlert, D., Heisig, M. (2013). Sources of angle-dependent errors in terrestrial laser scanner-based crop stand measurement. Comput. Electron Agric. 93, 10–16. doi: 10.1016/j.compag.2013.01.002

Erten, E., Lopez-Sanchez, J. M., Yuzugullu, O., Hajnsek, I. (2016). Retrieval of agricultural crop height from space: A comparison of SAR techniques. Remote Sens Environ. 187, 130–144. doi: 10.1016/j.rse.2016.10.007

Evans, J. S., Hudak, A. T. (2007). A multiscale curvature algorithm for classifying discrete return LiDAR in forested environments. IEEE Trans. Geosci. Remote Sens. 45, 1029–1038. doi: 10.1109/TGRS.2006.890412

Farooque, A. A., Chang, Y. K., Zaman, Q. U., Groulx, D., Schumann, A. W., Esau, T. J. (2013). Performance evaluation of multiple ground based sensors mounted on a commercial wild blueberry harvester to sense plant height, fruit yield and topographic features in real-time. Comput. Electron Agric. 91, 135–144. doi: 10.1016/j.compag.2012.12.006

Feng, A., Zhang, M., Sudduth, K. A., Vories, E. D., Zhou, J. (2019). Cotton yield estimation from UAV-based plant height. Trans. ASABE 62, 393–404. doi: 10.13031/trans.13067

Gao, S., Niu, Z., Huang, N., Hou, X. (2013). Estimating the Leaf Area Index, height and biomass of maize using HJ-1 and RADARSAT-2. Int. J. Appl. Earth Observation Geoinformation 24, 1–8. doi: 10.1016/j.jag.2013.02.002

Gao, M., Yang, F., Wei, H., Liu, X. (2022). Individual maize location and height estimation in field from UAV-borne LiDAR and RGB images. Remote Sens (Basel) 14, 2292. doi: 10.3390/rs14102292

Gawęda, D., Nowak, A., Haliniarz, M., Woźniak, A. (2020). Yield and economic effectiveness of soybean grown under different cropping systems. Int. J. Plant Prod 14, 475–485. doi: 10.1007/s42106-020-00098-1

Gonçalves, J. A., Henriques, R. (2015). UAV photogrammetry for topographic monitoring of coastal areas. ISPRS J. Photogrammetry Remote Sens. 104, 101–111. doi: 10.1016/j.isprsjprs.2015.02.009

Grenzdörffer, G. J. (2014). Crop height determination with UAS point clouds. Int. Arch. Photogrammetry Remote Sens. Spatial Inf. Sci. XL–1, 135–140. doi: 10.5194/isprsarchives-XL-1-135-2014

Guan, H., Liu, M., Ma, X., Yu, S. (2018). Three-dimensional reconstruction of soybean canopies using multisource imaging for phenotyping analysis. Remote Sens (Basel) 10, 1206. doi: 10.3390/rs10081206

Guo, T., Fang, Y., Cheng, T., Tian, Y., Zhu, Y., Chen, Q., et al. (2019). Detection of wheat height using optimized multi-scan mode of LiDAR during the entire growth stages. Comput. Electron Agric. 165, 104959. doi: 10.1016/j.compag.2019.104959

Hämmerle, M., Höfle, B. (2016). Direct derivation of maize plant and crop height from low-cost time-of-flight camera measurements. Plant Methods 12, 50. doi: 10.1186/s13007-016-0150-6

Han, L., Yang, G., Yang, H., Xu, B., Li, Z., Yang, X. (2018). Clustering field-based maize phenotyping of plant-height growth and canopy spectral dynamics using a UAV remote-sensing approach. Front. Plant Sci. 9. doi: 10.3389/fpls.2018.01638

Hu, P., Chapman, S. C., Wang, X., Potgieter, A., Duan, T., Jordan, D., et al. (2018). Estimation of plant height using a high throughput phenotyping platform based on unmanned aerial vehicle and self-calibration: Example for sorghum breeding. Eur. J. Agron. 95, 24–32. doi: 10.1016/j.eja.2018.02.004

Hug, C., Ullrich, A., Grimm, A. (2004). Litemapper-5600-a waveform-digitizing LiDAR terrain and vegetation mapping system. Int. Arch. Photogrammetry Remote Sens. Spatial Inf. Sci. 36, W2.

Imsande, J. (1992). Agronomic characteristics that identify high yield, high protein soybean genotypes. Agron. J. 84, 409–414. doi: 10.2134/agronj1992.00021962008400030012x

Jiang, Y., Li, C., Paterson, A. H. (2016). High throughput phenotyping of cotton plant height using depth images under field conditions. Comput. Electron Agric. 130, 57–68. doi: 10.1016/j.compag.2016.09.017

Jimenez-Berni, J. A., Deery, D. M., Rozas-Larraondo, P., Condon, A. G., Rebetzke, G. J., James, R. A., et al. (2018). High throughput determination of plant height, ground cover, and above-ground biomass in wheat with LiDAR. Front. Plant Sci. 9. doi: 10.3389/fpls.2018.00237

Jin, J., Liu, X., Wang, G., Mi, L., Shen, Z., Chen, X., et al. (2010). Agronomic and physiological contributions to the yield improvement of soybean cultivars released from 1950 to 2006 in Northeast China. Field Crops Res. 115, 116–123. doi: 10.1016/j.fcr.2009.10.016

Jing, L., Wei, X., Song, Q., Wang, F. (2023). Research on estimating rice canopy height and LAI based on LiDAR data. Sensors 23, 8334. doi: 10.3390/s23198334

Kalacska, M., Chmura, G. L., Lucanus, O., Bérubé, D., Arroyo-Mora, J. P. (2017). Structure from motion will revolutionize analyses of tidal wetland landscapes. Remote Sens Environ. 199, 14–24. doi: 10.1016/j.rse.2017.06.023

Kantolic, A. G., Slafer, G. A. (2001). Photoperiod sensitivity after flowering and seed number determination in indeterminate soybean cultivars. Field Crops Res. 72, 109–118. doi: 10.1016/S0378-4290(01)00168-X

Khan, Z., Chopin, J., Cai, J., Eichi, V.-R., Haefele, S., Miklavcic, S. J. (2018). Quantitative estimation of wheat phenotyping traits using ground and aerial imagery. Remote Sens (Basel) 10, 950. doi: 10.3390/rs10060950

Lefsky, M. A., Cohen, W. B., Parker, G. G., Harding, D. J. (2002). Lidar remote sensing for ecosystem studies: Lidar, an emerging remote sensing technology that directly measures the three-dimensional distribution of plant canopies, can accurately estimate vegetation structural attributes and should be of particular interest to forest, landscape, and global ecologists. Bioscience 52, 19–30. doi: 10.1641/0006-3568(2002)052[0019:LRSFES]2.0.CO;2

Li, X., Bull, G., Coe, R., Eamkulworapong, S., Scarrow, J., Salim, M., et al. (2019). “High-throughput plant height estimation from RGB Images acquired with Aerial platforms: a 3D point cloud based approach,” in 2019 Digital Image Computing: Techniques and Applications (DICTA) (Perth, WA, Australia: IEEE), 1–8.

Liu, W., Kim, M. Y., Van, K., Lee, Y.-H., Li, H., Liu, X., et al. (2011). QTL identification of yield-related traits and their association with flowering and maturity in soybean. J. Crop Sci. Biotechnol. 14, 65–70. doi: 10.1007/s12892-010-0115-7

Liu, K., Shen, X., Cao, L., Wang, G., Cao, F. (2018). Estimating forest structural attributes using UAV-LiDAR data in Ginkgo plantations. ISPRS J. Photogrammetry Remote Sens. 146, 465–482. doi: 10.1016/j.isprsjprs.2018.11.001

Liu, T., Zhu, S., Yang, T., Zhang, W., Xu, Y., Zhou, K., et al. (2024). Maize height estimation using combined unmanned aerial vehicle oblique photography and LIDAR canopy dynamic characteristics. Comput. Electron Agric. 218, 108685. doi: 10.1016/j.compag.2024.108685

Lohr, U. (1998). Digital elevation models by laser scanning. Photogrammetric Rec. 16, 105–109. doi: 10.1111/0031-868X.00117

Luo, S., Liu, W., Zhang, Y., Wang, C., Xi, X., Nie, S., et al. (2021). Maize and soybean heights estimation from unmanned aerial vehicle (UAV) LiDAR data. Comput. Electron Agric. 182, 106005. doi: 10.1016/j.compag.2021.106005

Ma, X., Zhu, K., Guan, H., Feng, J., Yu, S., Liu, G. (2019). High-throughput phenotyping analysis of potted soybean plants using colorized depth images based on A proximal platform. Remote Sens (Basel) 11, 1085. doi: 10.3390/rs11091085

Madec, S., Baret, F., de Solan, B., Thomas, S., Dutartre, D., Jezequel, S., et al. (2017). High-throughput phenotyping of plant height: comparing unmanned aerial vehicles and ground LiDAR estimates. Front. Plant Sci. 8. doi: 10.3389/fpls.2017.02002

Maimaitijiang, M., Sagan, V., Erkbol, H., Adrian, J., Newcomb, M., LeBauer, D., et al. (2020). UAV-based sorghum growth monitoring: A comparative analysis of Lidar and photogrammetry. ISPRS Ann. Photogrammetry Remote Sens. Spatial Inf. Sci. V-3–2020, 489–496. doi: 10.5194/isprs-annals-V-3-2020-489-2020

Malambo, L., Popescu, S. C., Murray, S. C., Putman, E., Pugh, N. A., Horne, D. W., et al. (2018). Multitemporal field-based plant height estimation using 3D point clouds generated from small unmanned aerial systems high-resolution imagery. Int. J. Appl. Earth Observation Geoinformation 64, 31–42. doi: 10.1016/j.jag.2017.08.014

Morrison, M. J., Gahagan, A. C., Lefebvre, M. B. (2021). Measuring canopy height in soybean and wheat using a low-cost depth camera. Plant Phenome J. 4, 1–6. doi: 10.1002/ppj2.20019

Mulla, D. J., Belmont, S. (2018). Identifying and Characterizing Ravines with GIS Terrain Attributes for Precision Conservation. (Madison, WI, USA: ASA, CSSA, SSSA), 109–129. doi: 10.2134/agronmonogr59.c6

Ning, H., Yuan, J., Dong, Q., Li, W., Xue, H., Wang, Y., et al. (2018). Identification of QTLs related to the vertical distribution and seed-set of pod number in soybean [Glycine max (L.) Merri]. PLoS One 13, e0195830. doi: 10.1371/journal.pone.0195830

Oke, O. A., Thompson, K. A. (2015). Distribution models for mountain plant species: The value of elevation. Ecol. Modell 301, 72–77. doi: 10.1016/j.ecolmodel.2015.01.019

Omasa, K., Hosoi, F., Konishi, A. (2006). 3D lidar imaging for detecting and understanding plant responses and canopy structure. J. Exp. Bot. 58, 881–898. doi: 10.1093/jxb/erl142

Parker, G. G., Harding, D. J., Berger, M. L. (2004). A portable LIDAR system for rapid determination of forest canopy structure. J. Appl. Ecol. 41, 755–767. doi: 10.1111/j.0021-8901.2004.00925.x

Patel, A. K., Park, E.-S., Lee, H., Priya, G. G. L., Kim, H., Joshi, R., et al. (2023). Deep learning-based plant organ segmentation and phenotyping of sorghum plants using LiDAR point cloud. IEEE J. Sel Top. Appl. Earth Obs Remote Sens 16, 8492–8507. doi: 10.1109/JSTARS.2023.3312815

Petrou, Z. I., Tarantino, C., Adamo, M., Blonda, P., Petrou, M. (2012). Estimation of vegetation height through satellite image texture analysis. Int. Arch. Photogrammetry Remote Sens. Spatial Inf. Sci. XXXIX-B8, 321–326. doi: 10.5194/isprsarchives-xxxix-b8-321-2012

Phan, A. T. T., Takahashi, K. (2021). Estimation of rice plant height from a low-cost UAV-based lidar point clouds. Int. J. Geoinformatics 17, 89–98. doi: 10.52939/ijg.v17i2.1765

Qiu, Q., Sun, N., Bai, H., Wang, N., Fan, Z., Wang, Y., et al. (2019). Field-based high-throughput phenotyping for maize plant using 3D LiDAR point cloud generated with a “Phenomobile”. Front. Plant Sci. 10. doi: 10.3389/fpls.2019.00554

Roberts, K. C., Lindsay, J. B., Berg, A. A. (2019). An analysis of ground-point classifiers for terrestrial LiDAR. Remote Sens (Basel) 11, 1915. doi: 10.3390/rs11161915

Roth, L., Barendregt, C., Bétrix, C.-A., Hund, A., Walter, A. (2022). High-throughput field phenotyping of soybean: Spotting an ideotype. Remote Sens Environ. 269, 112797. doi: 10.1016/j.rse.2021.112797

Saeys, W., Lenaerts, B., Craessaerts, G., De Baerdemaeker, J. (2009). Estimation of the crop density of small grains using LiDAR sensors. Biosyst. Eng. 102, 22–30. doi: 10.1016/j.biosystemseng.2008.10.003

Sharma, L. K., Bu, H., Franzen, D. W., Denton, A. (2016). Use of corn height measured with an acoustic sensor improves yield estimation with ground based active optical sensors. Comput. Electron Agric. 124, 254–262. doi: 10.1016/j.compag.2016.04.016

Smith, T., Rheinwalt, A., Bookhagen, B. (2019). Determining the optimal grid resolution for topographic analysis on an airborne lidar dataset. Earth Surface Dynamics 7, 475–489. doi: 10.5194/esurf-7-475-2019

Su, W., Zhang, M., Bian, D., Liu, Z., Huang, J., Wang, W., et al. (2019). Phenotyping of corn plants using unmanned aerial vehicle (UAV) images. Remote Sens (Basel) 11, 2021. doi: 10.3390/rs11172021

Sun, S., Li, C., Paterson, A. (2017). In-field high-throughput phenotyping of cotton plant height using LiDAR. Remote Sens (Basel) 9, 377. doi: 10.3390/rs9040377

Sun, S., Li, C., Paterson, A. H., Jiang, Y., Xu, R., Robertson, J. S., et al. (2018). In-field high throughput phenotyping and cotton plant growth analysis using LiDAR. Front. Plant Sci. 9, 16. doi: 10.3389/fpls.2018.00016

Sun, Y., Luo, Y., Zhang, Q., Xu, L., Wang, L., Zhang, P. (2022). Estimation of crop height distribution for mature rice based on a moving surface and 3D point cloud elevation. Agronomy 12, 836. doi: 10.3390/agronomy12040836

ten Harkel, J., Bartholomeus, H., Kooistra, L. (2019). Biomass and crop height estimation of different crops using UAV-based Lidar. Remote Sens (Basel) 12, 17. doi: 10.3390/rs12010017

Thompson, A. L., Thorp, K. R., Conley, M. M., Elshikha, D. M., French, A. N., Andrade-Sanchez, P., et al. (2019). Comparing nadir and multi-angle view sensor technologies for measuring in-field plant height of upland cotton. Remote Sens (Basel) 11, 700. doi: 10.3390/rs11060700

Tilly, N., Aasen, H., Bareth, G. (2015). Fusion of plant height and vegetation indices for the estimation of barley biomass. Remote Sens (Basel) 7, 11449–11480. doi: 10.3390/rs70911449

Tilly, N., Hoffmeister, D., Cao, Q., Huang, S., Lenz-Wiedemann, V., Miao, Y., et al. (2014a). Multitemporal crop surface models: accurate plant height measurement and biomass estimation with terrestrial laser scanning in paddy rice. J. Appl. Remote Sens 8, 83671. doi: 10.1117/1.JRS.8.083671

Tilly, N., Hoffmeister, D., Schiedung, H., Hütt, C., Brands, J., Bareth, G. (2014b). Terrestrial laser scanning for plant height measurement and biomass estimation of maize. Int. Arch. Photogrammetry Remote Sens. Spatial Inf. Sci. XL–7, 181–187. doi: 10.5194/isprsarchives-XL-7-181-2014

Tischner, T., Allphin, L., Chase, K., Orf, J. H., Lark, K. G. (2003). Genetics of seed abortion and reproductive traits in soybean. Crop Sci. 43, 464–473. doi: 10.2135/cropsci2003.4640

Toutin, T. (2004). Review article: Geometric processing of remote sensing images: models, algorithms and methods. Int. J. Remote Sens 25, 1893–1924. doi: 10.1080/0143116031000101611

Tunca, E., Köksal, E. S., Taner, S.Ç., Akay, H. (2024). Crop height estimation of sorghum from high resolution multispectral images using the structure from motion (SfM) algorithm. Int. J. Environ. Sci. Technol. 21, 1981–1992. doi: 10.1007/s13762-023-05265-1

Turner, D., Lucieer, A., Watson, C. (2012). An automated technique for generating georectified mosaics from ultra-high resolution unmanned aerial vehicle (UAV) imagery, based on structure from motion (SfM) point clouds. Remote Sens (Basel) 4, 1392–1410. doi: 10.3390/rs4051392

Vaiknoras, K., Hubbs, T. (2023). Characteristics and Trends of US Soybean Production Practices, Costs, and Returns Since 2002. (Washington, DC, USA: U.S. Department of Agriculture, Economic Research Service).

Volpato, L., Pinto, F., González-Pérez, L., Thompson, I. G., Borém, A., Reynolds, M., et al. (2021). High throughput field phenotyping for plant height using UAV-based RGB imagery in wheat breeding lines: feasibility and validation. Front. Plant Sci. 12. doi: 10.3389/fpls.2021.591587

Waliman, M., Zakhor, A. (2020). Deep learning method for height estimation of sorghum in the field using LiDAR. Electronic Imaging 32, 1–7. doi: 10.2352/ISSN.2470-1173.2020.14.COIMG-343

Wang, X., Singh, D., Marla, S., Morris, G., Poland, J. (2018). Field-based high-throughput phenotyping of plant height in sorghum using different sensing technologies. Plant Methods 14, 53. doi: 10.1186/s13007-018-0324-5

Wang, L., Yang, Y., Yang, Z., Li, W., Hu, D., Yu, H., et al. (2023). GmFtsH25 overexpression increases soybean seed yield by enhancing photosynthesis and photosynthates. J. Integr. Plant Biol. 65, 1026–1040. doi: 10.1111/jipb.13405

Watanabe, K., Guo, W., Arai, K., Takanashi, H., Kajiya-Kanegae, H., Kobayashi, M., et al. (2017). High-throughput phenotyping of sorghum plant height using an unmanned aerial vehicle and its application to genomic prediction modeling. Front. Plant Sci. 8. doi: 10.3389/fpls.2017.00421

Wijesingha, J., Moeckel, T., Hensgen, F., Wachendorf, M. (2019). Evaluation of 3D point cloud-based models for the prediction of grassland biomass. Int. J. Appl. Earth Observation Geoinformation 78, 352–359. doi: 10.1016/j.jag.2018.10.006

Wilcox, J. R. (2016). World Distribution and Trade of Soybean. (Madison, WI, USA: American Society of Agronomy), 1-14–2. doi: 10.2134/agronmonogr16.3ed.c1

Xu, R., Li, C., Paterson, A. H. (2019). Multispectral imaging and unmanned aerial systems for cotton plant phenotyping. PloS One 14, e0205083. doi: 10.1371/journal.pone.0205083

Xu, C., Zhao, D., Zheng, Z., Zhao, P., Chen, J., Li, X., et al. (2023). Correction of UAV LiDAR-derived grassland canopy height based on scan angle. Front. Plant Sci. 14. doi: 10.3389/fpls.2023.1108109

Ye, Y., Wang, P., Zhang, M., Abbas, M., Zhang, J., Liang, C., et al. (2023). UAV-based time-series phenotyping reveals the genetic basis of plant height in upland cotton. Plant J. 115, 937–951. doi: 10.1111/tpj.16272

Yin, X., McClure, M. A. (2013). Relationship of corn yield, biomass, and leaf nitrogen with normalized difference vegetation index and plant height. Agron. J. 105, 1005–1016. doi: 10.2134/agronj2012.0206

Yin, X., McClure, M. A., Jaja, N., Tyler, D. D., Hayes, R. M. (2011). In-season prediction of corn yield using plant height under major production systems. Agron. J. 103, 923–929. doi: 10.2134/agronj2010.0450

Yuan, W., Li, J., Bhatta, M., Shi, Y., Baenziger, P., Ge, Y. (2018). Wheat height estimation using LiDAR in comparison to ultrasonic sensor and UAS. Sensors 18, 3731. doi: 10.3390/s18113731

Zhang, K., Chen, S.-C., Whitman, D., Shyu, M.-L., Yan, J., Zhang, C. (2003). A progressive morphological filter for removing nonground measurements from airborne LIDAR data. IEEE Trans. Geosci. Remote Sens. 41, 872–882. doi: 10.1109/TGRS.2003.810682

Zhang, C., Marzougui, A., Sankaran, S. (2020). High-resolution satellite imagery applications in crop phenotyping: An overview. Comput. Electron Agric. 175, 105584. doi: 10.1016/j.compag.2020.105584

Zhang, W., Qi, J., Wan, P., Wang, H., Xie, D., Wang, X., et al. (2016). An easy-to-use airborne LiDAR data filtering method based on cloth simulation. Remote Sens (Basel) 8, 501. doi: 10.3390/rs8060501

Zhang, Y., Yu, C., Lin, J., Liu, J., Liu, B., Wang, J., et al. (2017). OsMPH1 regulates plant height and improves grain yield in rice. PloS One 12, e0180825. doi: 10.1371/journal.pone.0180825

Zhao, X., Su, Y., Hu, T., Cao, M., Liu, X., Yang, Q., et al. (2022). Analysis of UAV lidar information loss and its influence on the estimation accuracy of structural and functional traits in a meadow steppe. Ecol. Indic 135, 108515. doi: 10.1016/j.ecolind.2021.108515

Zhou, L., Gu, X., Cheng, S., Yang, G., Shu, M., Sun, Q. (2020). Analysis of plant height changes of lodged maize using UAV-LiDAR data. Agriculture 10, 146. doi: 10.3390/agriculture10050146

Zhou, J., Zhang, C., Sankaran, S., Khot, L. R., Pumphrey, M. O., Carter, A. H. (2015). “Crop height estimation in wheat using proximal sensing techniques,” in 2015 ASABE Annual International Meeting (St. Joseph, MI, USA: American Society of Agricultural and Biological Engineers), 1.

Keywords: soybean, plant height, high throughput aerial phenotyping, unmanned aerial vehicles, RGB, lidar

Citation: Pun Magar L, Sandifer J, Khatri D, Poudel S, KC S, Gyawali B, Gebremedhin M and Chiluwal A (2025) Plant height measurement using UAV-based aerial RGB and LiDAR images in soybean. Front. Plant Sci. 16:1488760. doi: 10.3389/fpls.2025.1488760

Received: 30 August 2024; Accepted: 13 January 2025;

Published: 30 January 2025.

Edited by:

Zhenghong Yu, Guangdong Polytechnic of Science and Technology, China

Reviewed by:

Yikun Huang, Fujian Normal University, China

Zejun Zhang, Zhejiang Normal University, China

Copyright © 2025 Pun Magar, Sandifer, Khatri, Poudel, KC, Gyawali, Gebremedhin and Chiluwal. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Anuj Chiluwal, anuj.chiluwal@kysu.edu
