- 1Department of Electronics and Communication Engineering, Sengunthar Engineering College, Tiruchengode, India

- 2Department of Computer Science and Engineering, Kalpataru Institute of Technology, Tiptur, India

- 3Department of Statistics and Operations Research, College of Science, King Saud University, Riyadh, Saudi Arabia

- 4Department of Animal Science, Michigan State University, East Lansing, MI, United States

- 5Department of Mathematics, Faculty of Science, Mansoura University, Mansoura, Egypt

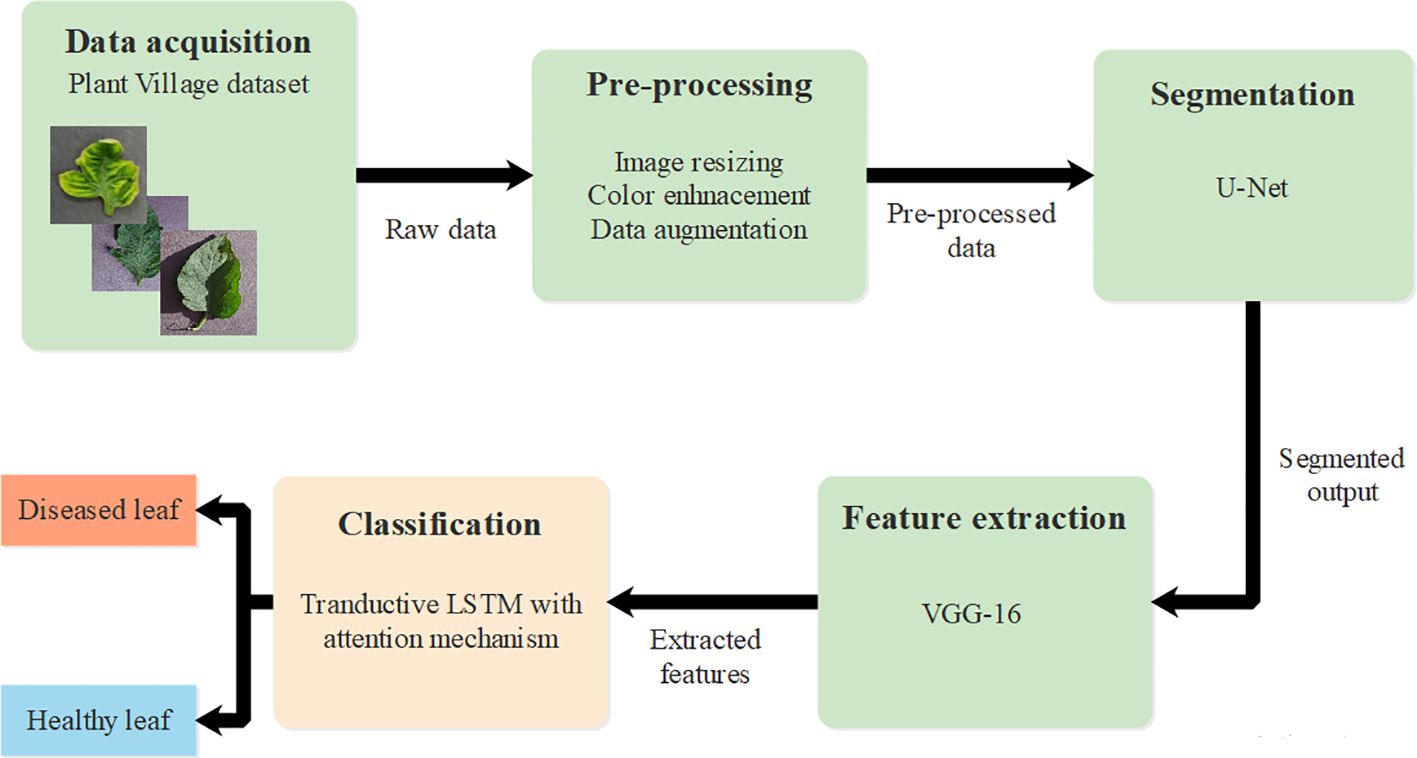

Tomatoes are considered one of the most valuable vegetables worldwide owing to their wide usage and short harvesting period. However, effective harvesting remains a major issue because tomatoes are highly susceptible to weather conditions and other types of attack. Numerous research studies have therefore applied deep learning models to the classification of tomato leaf disease. However, a single architecture does not provide the best results because of limited computational ability and the complexity of the classification task. This research therefore uses a Transductive Long Short-Term Memory (T-LSTM) network with an attention mechanism. The attention mechanism introduced in the T-LSTM can focus on different parts of the image sequence. Transductive learning exploits the specific characteristics of the training instances, such as the relationships and patterns observed within the dataset, to make accurate predictions. The T-LSTM follows the transductive learning approach, and scaled dot-product attention evaluates the weight of each step from the hidden state and the image patches, which supports effective classification. The data were gathered from the PlantVillage dataset, and pre-processing consisted of image resizing, color enhancement, and data augmentation. The pre-processed images were then segmented with the U-Net architecture. After segmentation, the VGG-16 architecture was used for feature extraction, and classification was performed by the proposed T-LSTM with an attention mechanism. The experimental results show that the proposed classifier achieved an accuracy of 99.98%, which is better than existing convolutional neural network models with transfer learning and IBSA-Net.

1 Introduction

In most Asian and African nations, agriculture is one of the primary sources of revenue (Raja Kumar et al., 2023). Plant disease identification and classification are essential for precision agriculture, since they increase farmers' production and raise their standard of living. Early identification and classification of plant diseases can reduce the financial losses of farmers and of the country (Ashwinkumar et al., 2022; Pandian et al., 2022). Because leaves carry out photosynthesis and supply the nutrients and minerals needed for plant growth, leaf diseases must be treated as a crucial concern (Espejo-Garcia et al., 2021; Reddy et al., 2023). Plant disease is a major issue that affects production quality and causes economic loss for individuals and society (Sreedevi and Manike, 2023). Plant diseases are caused by bacteria, fungi, viruses and virus-like organisms, and other pathogens, and they are a major factor affecting the lifespan of plants (Harakannanavar et al., 2022; Fukada et al., 2023; Singh et al., 2023). Automated systems based on machine learning (ML) and deep learning therefore facilitate the efficient detection and classification of plant diseases (Mahato et al., 2022; Alzahrani and Alsaade, 2023). The rapid development of ML has produced efficient results for plant disease identification.

The availability of cost-effective devices has allowed researchers to capture images in real time and obtain superior results with ML. Several ML models, including decision trees (DT) (Kiran and Chandrappa, 2023), support vector machines (SVM) (Sagar and Singh, 2023), and K-nearest neighbors (KNN) (Chong et al., 2023), have been assessed for classification. These models have a robust design and work well with little training data. However, they cannot cope with interference such as intensity disparities, color variations, and illumination changes (Borugadda et al., 2023; Tabbakh and Barpanda, 2023). Furthermore, these traditional models involve trade-offs between classification and detection (Sanida et al., 2023). Deep learning (DL) models have helped researchers overcome these drawbacks of traditional ML models. DL models such as convolutional neural networks (CNNs), recurrent neural networks (RNNs) (Chen et al., 2021), and Long Short-Term Memory (LSTM) networks (Lin and Leong, 2023) have proven dependable in identifying plant diseases (Zhou et al., 2023) and are commonly used in comparative evaluations. Owing to these properties, DL models are well suited to agriculture and plant disease classification (Zhong et al., 2023). Nevertheless, existing techniques for tomato leaf classification face substantial issues such as overfitting, low scalability, and reduced accuracy, especially when working with simulated data and larger databases. These limitations arise from noise and from feature extraction methods that struggle to isolate the relevant features, which compromises performance. Crop disease detection is a vital part of modern agriculture because it enables farmers to recognize the diseases that affect crop yield and quality.

This research therefore addresses these problems by introducing a scalable and accurate crop disease detection technique based on DL models (Shahi et al., 2023). It introduces an effective classification approach, Transductive LSTM (T-LSTM) with an attention mechanism, to classify diseased tomato leaves while improving scalability and reducing overfitting.

The major contributions of this study are as follows:

1. The pre-processing based on color enhancement, image resizing, and data augmentation was performed and the data was then fed into the segmentation phase that used U-Net architecture.

2. The extraction of features from the segmented output used VGG-16 and finally, the classification of diseased leaves and healthy leaves was conducted using T-LSTM with an attention mechanism.

3. The transductive LSTM with an attention mechanism is introduced to classify tomato leaf disease. The T-LSTM is based on the transductive learning approach and an attention mechanism evaluates the weights of each step based on the hidden state and image patches.

This paper is organized as follows. Section 2 outlines related work on leaf disease classification. Section 3 presents the proposed methodology (materials and methods). Section 4 reports the experimental results obtained when evaluating the proposed framework. Finally, Section 5 discusses the findings and concludes the paper.

2 Related works

This section outlines studies on the detection of tomato leaf disease, together with their limitations.

Abbas et al. (2021) described a DL-based technique for detecting tomato leaf disease that employs a conditional generative adversarial network (C-GAN) to produce synthetic tomato leaf images. The DenseNet121 model was then trained, with the pre-trained model fine-tuned on real and synthetic images. The C-GAN-based augmentation improves generalizability and prevents overfitting. However, data replication occurs in DenseNet121 when feature maps are concatenated with those of previous layers. Saeed et al. (2023) developed a smart tomato leaf disease detection method based on transfer learning with a CNN. The first layer of the network was removed and replaced by softmax layers. The method classified better because the dropout rate was lowered, but random connections of feature maps caused overfitting in the CNN.

Ahmad et al. (2021) demonstrated an approach for categorizing leaf disease from its symptoms using a CNN. The dataset was first evaluated for class imbalance, and a stepwise transfer learning approach was used to reduce CNN convergence time. The approach was tested on the PlantVillage and pepper disease databases and produced accurate results. However, it suffered from long running times and high computational costs. Wu et al. (2020) used GAN-based data augmentation to improve leaf disease classification accuracy. A deep convolutional GAN (DCGAN) produced augmented images and GoogleNet predicted the disease. However, the noise-to-image GANs introduced inefficiency because they rendered healthy leaves as diseased leaves.

Huang et al. (2023) introduced a fully convolutional-switchable normalization dual path network (FC-SNDPN) to detect tomato leaf disease. A fully convolutional network (FCN) was used to improve segmentation capability, and an improved DPN was then used for feature extraction; the SNDPN combines DenseNet and ResNet layers. The switchable normalization (SN) layer optimized the parameters of the DPN by switching between normalization layers, which improved versatility. However, the framework was unsuitable for large datasets because of its constrained architecture. Chen et al. (2022) introduced an AlexNet-based CNN to detect and classify leaf disease. A CNN was used in the pre-processing stage, and classification was performed with a modified AlexNet. The combined CNN and AlexNet model was deployed on a mobile platform with limited memory capacity. This approach helps users identify the type of disease and manage it at early stages. However, the framework is not compatible with newer mobile devices, which is a drawback of this approach.

Kaur et al. (2022) introduced a modified Mask Region-based CNN (Mask R-CNN) for automated segmentation of leaf disease. The framework is built on an R-CNN that helps conserve memory. Data pre-processing consisted of light subtraction, noise reduction, and normalization. Adjusting the anchor sizes in the region proposal network (RPN) improved detection accuracy and overall performance. However, feature extraction based on color and texture was not considered. Bhujel et al. (2022) introduced a lightweight attention-based CNN for the classification of tomato leaf disease. The approach used an attention module to reduce the complexity of the CNN during classification. Key features were determined by their location, and the critical features were selected by position. The lightweight module improved the CNN's representational power and provided better classification results.

Arun Pandian and Kanchanadevi (2022) introduced a dense convolutional neural network with five dense blocks, referred to as 5DB-DenseConvNet, to detect plant leaf disease. The 5DB-DenseConvNet architecture comprised five dense blocks and four transition layers. The dataset size was increased using different augmentation approaches and a GAN. However, the DenseNet architecture suffered from data replication, which reduced the classification efficiency of the model. Attallah (2023) introduced a leaf disease classification model that uses compact CNNs along with transfer learning and feature selection. The approach used three compact CNN structures with deep layers but few parameters to reduce running time and complexity. However, misclassification occurred for images with complex backgrounds. Zhang et al. (2023) introduced IBSA-Net for disease identification based on transfer learning with small sample data. IBSA-Net combines an inverted bottleneck network with a shuffle attention model and incorporates a hard swish activation and an IBMax function. The approach extracts multi-level features and locates the disease region at fine granularity. However, misjudgment and inappropriate detection occurred because of growth defects in the tomato leaves.

The findings of existing research show that problems arise from the size of the datasets and from data duplication when classifying tomato leaves. Furthermore, overfitting occurs because of the feature maps and the presence of noise, which affects overall efficiency. These challenges call for new solutions. This research therefore introduces an effective classification approach using transductive LSTM with an attention mechanism, which is described in the following section.

3 Materials and methods

This research introduces an effective deep learning classification technique using T-LSTM with an attention mechanism. The proposed approach classifies tomato leaf disease using a T-LSTM that incorporates transductive learning. The T-LSTM has a uniform impact on the model parameters, such as the weights and biases. First, raw data were obtained from PlantVillage (Dataset) and pre-processed by image resizing, color enhancement, and data augmentation. Segmentation was then performed with the U-Net architecture, and features were extracted with VGG-16. Finally, the T-LSTM with an attention mechanism classified the leaves as healthy or diseased. The complete tomato leaf disease classification process is shown in Figure 1.

3.1 Data acquisition

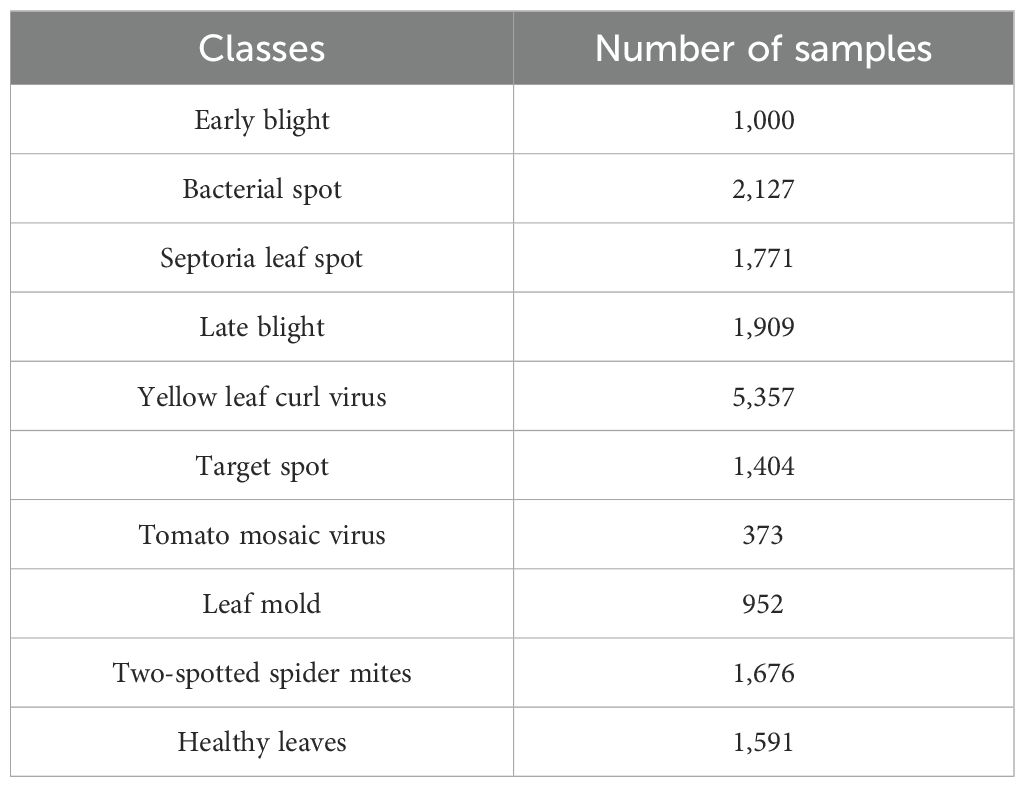

Data acquisition is the first stage. This research uses the publicly available PlantVillage dataset (Dataset), which comprises 16,012 leaf images in 10 classes. Of these, nine classes are disease-affected tomato leaves and the remaining class is healthy leaves. For effective evaluation, the images were resized to a common input size. The dataset was divided into training, testing, and validation sets in the ratio 60:30:10. The class distribution of the dataset is given in Table 1.

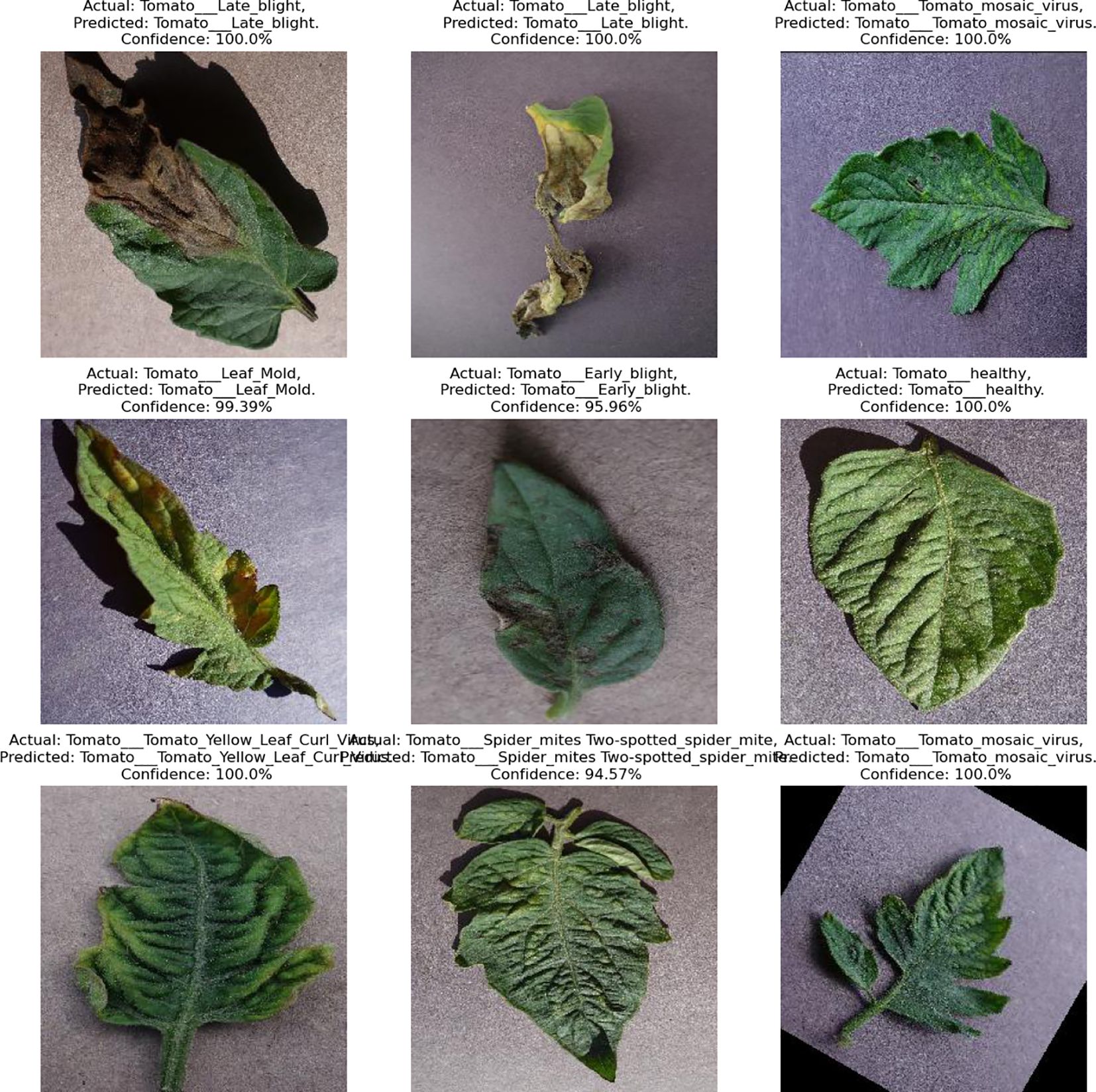

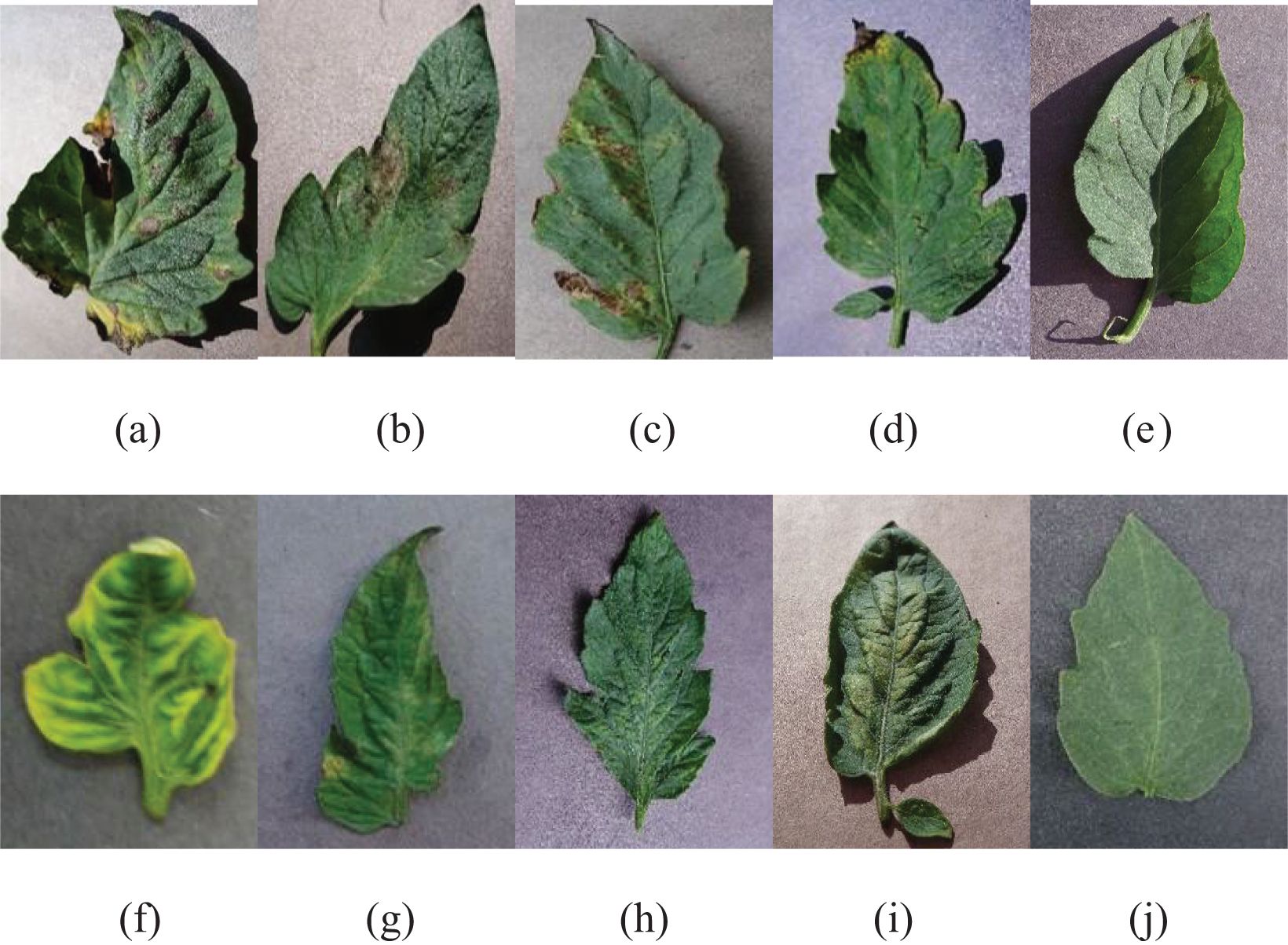

The data samples gathered from the tomato PlantVillage dataset are presented in Figure 2.

Figure 2. Sample images of the tomato leaf disease dataset: (A) Early blight; (B) Late blight; (C) Bacterial spot; (D) Septoria leaf spot; (E) Target spot; (F) Yellow leaf curl virus; (G) Leaf mold; (H) Tomato mosaic virus; (I) Two-spotted spider mites; (J) Healthy leaf.

3.2 Pre-processing

The raw images obtained from the stated database were pre-processed using image resizing, color enhancement, and data augmentation. These stages are briefly discussed below.

3.2.1 Image resizing

Image resizing (Rahman et al., 2023) is a pre-processing technique that rescales each image to match the size of the network's input layer. In this research, the input images were resized to a fixed size. Resizing standardized the image sizes and reduced the computational complexity during classification.
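As a minimal sketch of this step, the function below resizes an image array with centre-aligned nearest-neighbour sampling using only NumPy. It is an illustration, not the study's implementation: the 224×224 target in the usage note is an assumption (the usual VGG-16 input size), since the exact sizes are not stated here, and a production pipeline would more likely use bilinear interpolation from an image library.

```python
import numpy as np

def resize_nearest(image: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbour resize of an (H, W) or (H, W, C) array."""
    in_h, in_w = image.shape[:2]
    # Map the centre of each output pixel back to the source grid and take the
    # source pixel it falls in (indices always stay below in_h / in_w).
    rows = ((np.arange(out_h) + 0.5) * in_h / out_h).astype(int)
    cols = ((np.arange(out_w) + 0.5) * in_w / out_w).astype(int)
    # Broadcast row and column indices to an (out_h, out_w) grid; channels are kept.
    return image[rows[:, None], cols[None, :]]
```

For example, `resize_nearest(img, 224, 224)` turns a 256×256×3 array into a 224×224×3 array (both sizes are assumptions for illustration) while keeping the dtype unchanged.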

3.2.2 Color enhancement

Color enhancement improves visual quality by adjusting the colors of the image. In this research, it was performed using contrast-limited adaptive histogram equalization (CLAHE) (Pavan et al., 2023), which produces a realistic rendering of the image by enhancing its color and brightness.
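CLAHE equalizes the histogram of each image tile, clips each histogram to limit contrast amplification, and blends neighbouring tiles with bilinear interpolation. The NumPy sketch below shows only the contrast-limiting core on a single global tile, so it is a simplified variant rather than the tiled CLAHE used in the study. Expressing `clip_limit` as a multiple of the mean bin height is an assumption of this sketch. In practice, OpenCV's `cv2.createCLAHE(clipLimit=..., tileGridSize=...)` provides the full algorithm, and for color images it is commonly applied to a luminance channel (for example, L in the Lab color space) rather than to each RGB channel.

```python
import numpy as np

def clip_limited_equalize(channel: np.ndarray, clip_limit: float = 2.0) -> np.ndarray:
    """Contrast-limited histogram equalization of a uint8 channel (one global tile).

    Simplified sketch: real CLAHE repeats this per tile and interpolates between tiles.
    """
    hist = np.bincount(channel.ravel(), minlength=256).astype(float)
    # Clip every bin at clip_limit times the mean bin height (assumed convention).
    limit = clip_limit * hist.mean()
    excess = np.clip(hist - limit, 0.0, None).sum()
    # Redistribute the clipped counts uniformly; the total count is preserved.
    hist = np.minimum(hist, limit) + excess / 256
    cdf = np.cumsum(hist)
    # Stretch the cumulative distribution onto the full 0..255 intensity range.
    cdf = (cdf - cdf[0]) / max(cdf[-1] - cdf[0], 1e-12)
    lut = np.round(cdf * 255).astype(np.uint8)
    return lut[channel]  # look-up table applied pixel-wise
```

Clipping before equalization is the design choice that distinguishes CLAHE from plain histogram equalization: it caps how steep the mapping can become, so large uniform regions such as leaf backgrounds do not have their noise over-amplified.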

3.2.3 Data augmentation

Data augmentation (Maliki and Prayoga, 2023) was used to enlarge the training dataset by applying transformations such as flipping, rotation, and zooming. Random flip, random zoom, and random rotation operations were used, respectively, to flip the image by reversing its pixel columns, to zoom in or out, and to rotate it. Data augmentation also helps mitigate class imbalance during classification.
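The three transformations can be sketched in NumPy as follows. This is a simplified stand-in, not the study's implementation: rotation is restricted to multiples of 90 degrees, only zoom-in (a random crop resized back to the original size by nearest-neighbour sampling) is shown, `max_zoom` is a hypothetical parameter, and square inputs are assumed so that rotation preserves the shape. The Keras preprocessing layers `RandomFlip`, `RandomRotation`, and `RandomZoom` additionally support arbitrary rotation angles and zoom-out.

```python
import numpy as np

def augment(image: np.ndarray, rng: np.random.Generator, max_zoom: float = 0.2) -> np.ndarray:
    """Random horizontal flip, random 90-degree rotation and random zoom-in.

    Assumes a square (H, W, C) image; max_zoom is a hypothetical setting.
    """
    out = image
    if rng.random() < 0.5:
        out = out[:, ::-1]  # flip by reversing the pixel columns
    out = np.rot90(out, k=int(rng.integers(0, 4)), axes=(0, 1))  # rotate by k * 90 degrees
    # Zoom in: crop a random window covering (1 - zoom) of each side ...
    h, w = out.shape[:2]
    scale = 1.0 - rng.uniform(0.0, max_zoom)
    ch, cw = max(1, int(h * scale)), max(1, int(w * scale))
    top = int(rng.integers(0, h - ch + 1))
    left = int(rng.integers(0, w - cw + 1))
    crop = out[top:top + ch, left:left + cw]
    # ... then resize it back to (h, w) with nearest-neighbour sampling.
    rows = ((np.arange(h) + 0.5) * ch / h).astype(int)
    cols = ((np.arange(w) + 0.5) * cw / w).astype(int)
    return crop[rows[:, None], cols[None, :]]
```

Applying `augment` several times to each training image with a seeded `np.random.default_rng` produces varied but reproducible copies, which is how augmentation can be used to add samples to under-represented classes.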

3.3 Segmentation

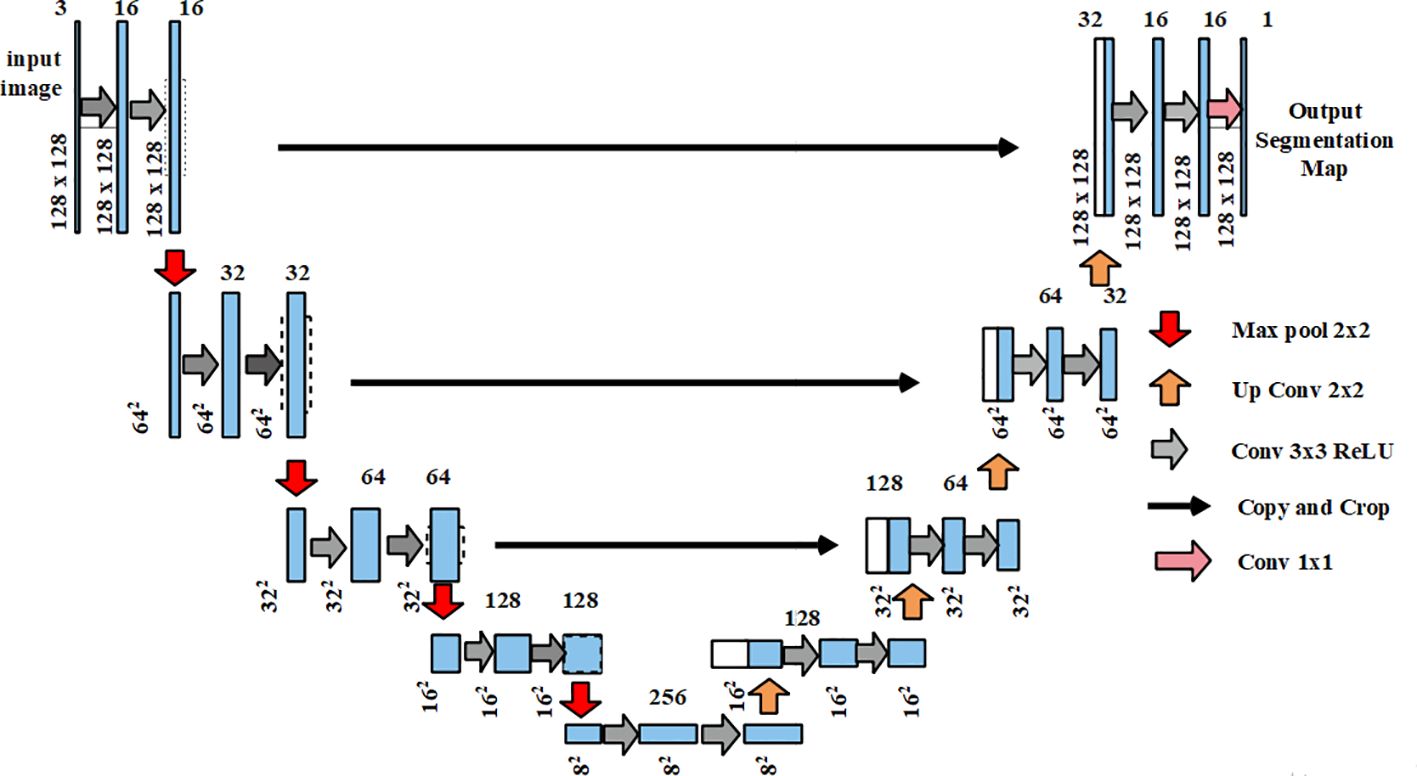

The output of the pre-processing stage was used as the input for segmentation with U-Net (Yin et al., 2022). The U-Net architecture comprises a contracting path on the left and an expansive path on the right. Each stage of the contracting path consists of two unpadded convolutions, each followed by a Rectified Linear Unit (ReLU), and a max-pooling layer. Every stage of the expansive path consists of upsampling followed by a convolution that matches the number of feature channels. In the final layer, a convolutional layer maps each component feature vector to the chosen classes. The U-Net predicts segmentation masks by adjusting its weights according to the difference between the predicted output and the ground truth. For evaluation, U-Net was compared with other models, namely the fully convolutional network (FCN), semantic segmentation network (SegNet), Mask R-CNN, RefineNet, and efficient neural network (ENet), as described in the results section. Figure 3 illustrates the U-Net architecture.

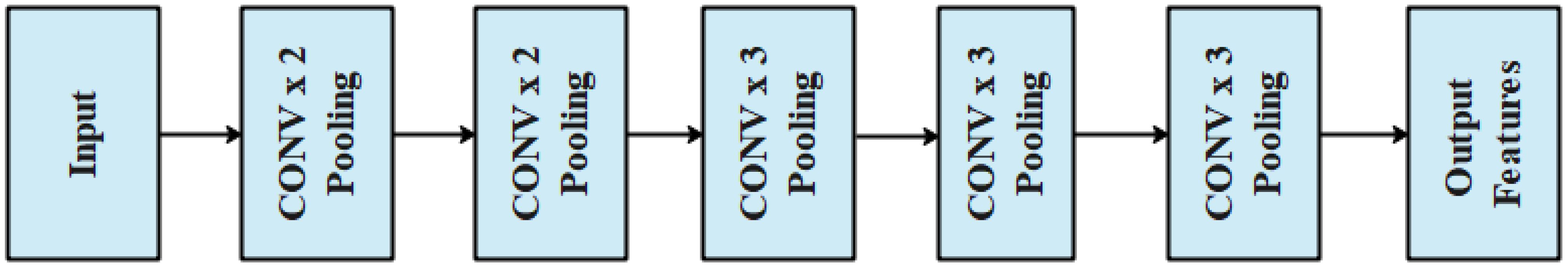
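The effect of unpadded convolutions on the mask size can be worked out with a few lines of arithmetic. The sketch below assumes the configuration of the original U-Net design (3×3 unpadded convolutions, 2×2 max pooling, 2×2 up-convolutions, four resolution levels); the kernel sizes and depth used in this study are assumptions here. With a 572×572 input it reproduces the well-known 388×388 output, which is why an unpadded U-Net must crop its skip-connection feature maps and predicts a mask smaller than its input.

```python
def unet_output_size(size: int, depth: int = 4, kernel: int = 3) -> int:
    """Output side length of a U-Net built from unpadded convolutions.

    Assumed layout (original U-Net design): each level applies two unpadded
    kernel x kernel convolutions, the contracting path halves the size with
    2x2 max pooling and the expansive path doubles it with 2x2 up-convolutions.
    """
    shrink = 2 * (kernel - 1)          # two unpadded convolutions per level
    for _ in range(depth):             # contracting path
        size -= shrink
        if size <= 0 or size % 2:
            raise ValueError("feature map must stay positive and even before pooling")
        size //= 2
    size -= shrink                     # bottleneck convolutions
    for _ in range(depth):             # expansive path: up-convolution, then two convs
        size = size * 2 - shrink
    if size <= 0:
        raise ValueError("input too small for this depth")
    return size
```

For example, `unet_output_size(572)` returns 388, whereas an input of 570 fails because the feature map becomes odd before the second pooling step.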

3.4 Feature extraction using VGG-16

The segmented output was used as the input for feature extraction with the VGG architecture. Specifically, VGG-16 (Zhu et al., 2022) was used to extract the features used for classification. VGG-16 is a CNN capable of extracting deep features. It comprises thirteen convolutional layers with 3×3 filters and 2×2 max-pooling layers. Overall, this structure transforms the pixel values of the segmented image into features. The VGG-16 architecture is presented in Figure 4.

The deep convolutional layers of VGG-16 transform the pixel values of the segmented leaf images into effective features. The features obtained from VGG-16 were compared with those of other feature extraction models, namely GoogleNet, AlexNet, ResNet-50, and Inception Net, with all features classified by the proposed T-LSTM.
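To make the VGG-16 feature dimensions concrete, the sketch below walks the standard VGG-16 convolutional configuration (13 convolutional layers with 3×3 filters and "same" padding, five 2×2 max-pooling layers) and computes the output shape and the number of convolutional parameters. The 224×224×3 input is an assumption. For that input, the convolutional base yields a 7×7×512 feature map (25,088 values when flattened) and 14,714,688 parameters, which matches Keras' `tf.keras.applications.VGG16(include_top=False, input_shape=(224, 224, 3))`.

```python
# VGG-16 convolutional configuration: integers are output channels of 3x3 conv
# layers ("same" padding, stride 1); "M" is a 2x2 max-pooling layer with stride 2.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]

def vgg16_feature_shape(size: int = 224, in_channels: int = 3):
    """Return ((height, width, channels), conv layer count, conv parameter count)."""
    channels, n_conv, n_params = in_channels, 0, 0
    for layer in VGG16_CFG:
        if layer == "M":
            size //= 2                                  # pooling halves the spatial size
        else:
            n_params += 3 * 3 * channels * layer + layer  # 3x3 weights plus biases
            channels = layer
            n_conv += 1
    return (size, size, channels), n_conv, n_params
```

Because "same" padding keeps the spatial size through every convolution, only the five pooling layers shrink the map, which is why a 224-pixel input ends at 224 / 2^5 = 7.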

3.5 Classification of healthy leaves and diseased leaves using T-LSTM with an attention layer

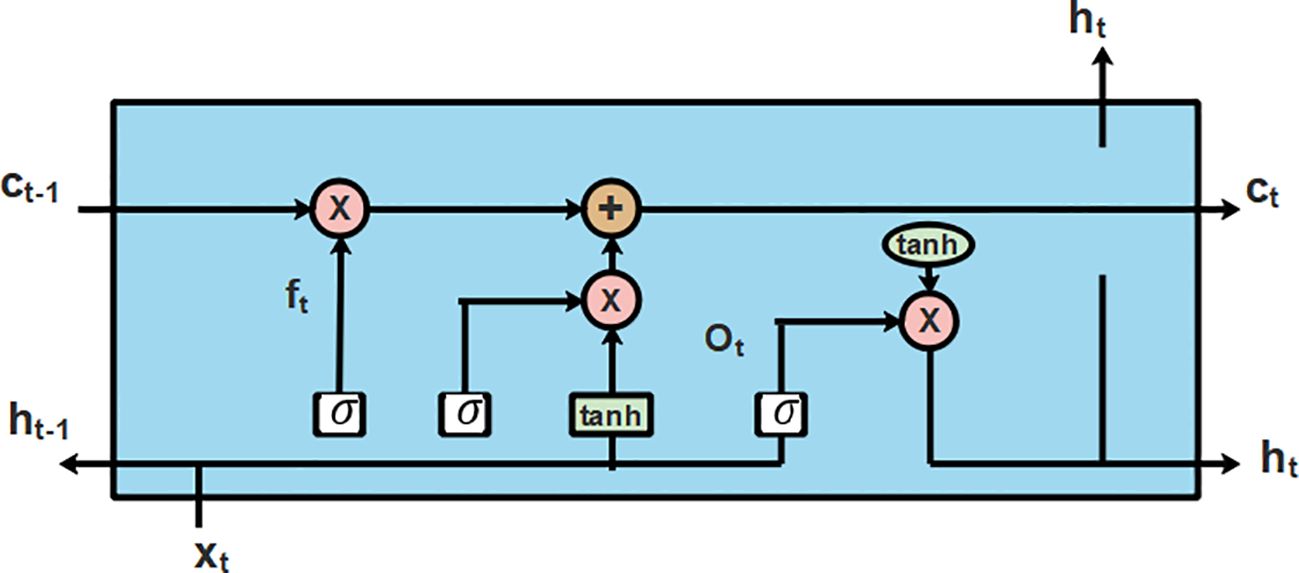

An LSTM (Gill and Khehra, 2022) is a type of recurrent neural network (RNN) that captures dependencies across long distances in a sequence. The LSTM architecture was selected because of its integrated hidden, input, and output layers. These layers allow the LSTM to learn the features needed for an accurate prediction by identifying the functional relationships in the input data. The structure of the LSTM is presented in Figure 5.
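For reference, one step of a standard LSTM cell (input, forget, and output gates plus a candidate cell update) can be written in NumPy as below. This is the textbook LSTM, not the T-LSTM; the gate ordering inside the stacked weight matrices and the random weights used to exercise it are illustrative assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One step of a standard LSTM cell.

    W: (4H, D) input weights, U: (4H, H) recurrent weights, b: (4H,) biases,
    stacked (by assumption) as input gate, forget gate, candidate, output gate.
    """
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:H])            # input gate: how much new information to write
    f = sigmoid(z[H:2 * H])        # forget gate: how much old memory to keep
    g = np.tanh(z[2 * H:3 * H])    # candidate cell update
    o = sigmoid(z[3 * H:4 * H])    # output gate: how much memory to expose
    c_t = f * c_prev + i * g       # new cell state (long-term memory)
    h_t = o * np.tanh(c_t)         # new hidden state (the cell's output)
    return h_t, c_t
```

Running `lstm_step` over a sequence of feature vectors, carrying `h` and `c` forward, yields the sequence of hidden states on which an attention layer can operate.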

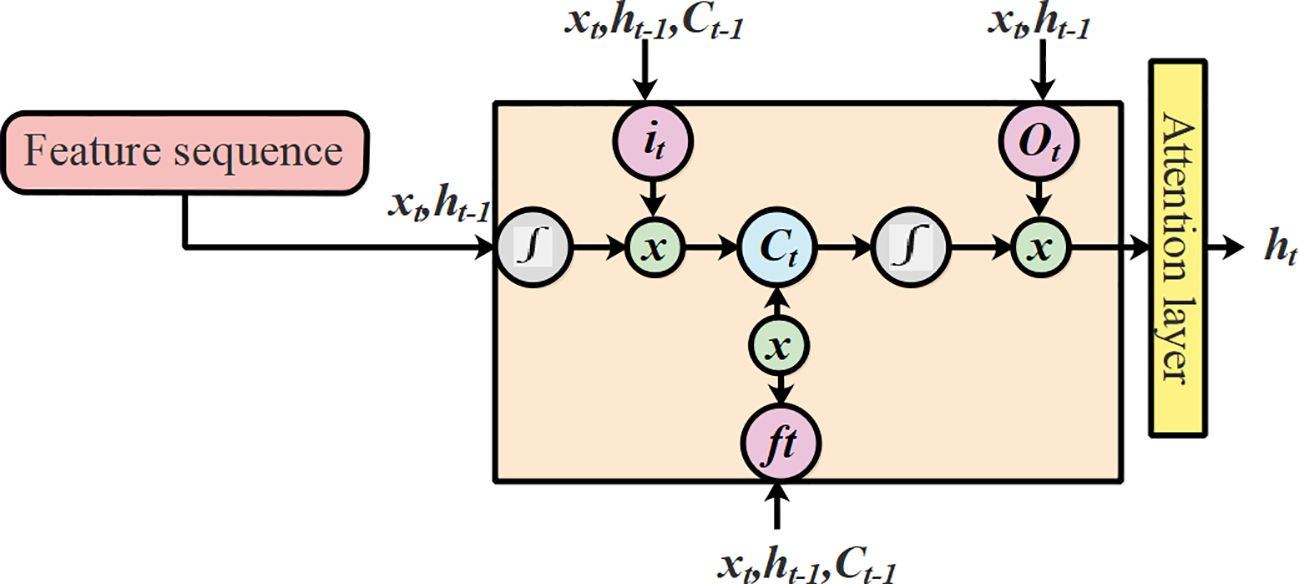

To address the limitations of the standard LSTM, this research used an extended version of the LSTM known as T-LSTM. The process of output prediction using T-LSTM with an attention mechanism is presented in Figure 6.

In the T-LSTM, the influence of the training data on the learned features depends on their relation to the test data point. The aim of the training phase is to improve performance in the neighborhood of the test point and thereby enhance the efficiency of the model. Considering the hidden state, the state-space representation of the T-LSTM model is given in Equation 1 (Peivandizadeh et al., 2024):

Where f(·) and g(·) denote the mapping functions of the cell state and the hidden state, respectively. The remaining terms are the weight and bias parameters, the script value of the sequence, the hidden state, the new input, the previous output, the current cell state, and the cell state at the previous step. Unlike the standard LSTM, the T-LSTM depends on the feature space of the test points. The structure of the T-LSTM is shown in Figure 7.

The script value indicates that the model is fitted with respect to a particular test data point. During training, the function associated with the test point depends on the importance of the training data points closest to it in the feature space. The prediction of the dense layer is given by Equation 2:

Where the weight and bias terms are those of the dense layer. The new hidden point is determined by a resemblance (similarity) factor between each training sample and the test point, which weights every constraint. The objective function is given in Equation 3:

Where a tuning parameter balances the terms of the objective. This transductive tuning is introduced into the LSTM, which otherwise cannot weight its training samples according to the test point. In the feature space, training samples are weighted by their distance to the test point. Because the model parameters depend on the test point, they are re-estimated for each unseen sample, so the constraints differ from one test point to another. By training the LSTM with respect to each test point, the transductive approach improves the precision of its output predictions. The hidden state is updated according to Equation 4:

The final output prediction is obtained using Equation 5:

Where the remaining term denotes the input to the hidden state.
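Because Equations 1 to 5 are not reproduced here, the sketch below only illustrates the transductive idea under stated assumptions: each training sample is weighted by a Gaussian similarity to the test point, and a regularized, similarity-weighted squared error is evaluated per test point, with `gamma` acting as the tuning parameter. The kernel form, `sigma`, and the squared-error loss are hypothetical choices (a classifier would more typically use a weighted cross-entropy), and the sketch covers only the weighting and the objective, not LSTM training.

```python
import numpy as np

def transductive_weights(X_train, x_test, sigma=1.0):
    """Gaussian similarity of each training feature vector to one test point.

    The Gaussian kernel and sigma are assumptions made for illustration.
    """
    sq_dist = np.sum((X_train - x_test) ** 2, axis=1)
    return np.exp(-sq_dist / (2.0 * sigma ** 2))

def weighted_objective(y_true, y_pred, weights, params, gamma=1.0):
    """Regularized, similarity-weighted squared error for one test point.

    gamma (hypothetical) trades the weighted data fit against an L2 penalty on
    the parameter vector, so samples far from the test point barely count.
    """
    fit = np.sum(weights * (y_true - y_pred) ** 2)
    return 0.5 * np.dot(params, params) + 0.5 * gamma * fit
```

Since the weights change with `x_test`, minimizing this objective gives a different parameter vector for every test point, which is the sense in which the parameters are re-estimated per unseen sample.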

3.5.1 T-LSTM with an attention mechanism

Given a query and a set of key-value pairs, an attention mechanism compares the query with the related keys to weight the corresponding values. The query, key, and value are defined in Equation 6: the features serve as the query, the image labels serve as the key, and the value represents the input data.

Where the alignment model used to compute basic dot-product attention is given in Equation 7:

Where the two dimension terms denote the dimensions of the respective matrices. Dot-product attention is fast and efficient because it can be implemented with optimized matrix multiplication. This research used a special form of attention known as scaled dot-product attention, introduced in the Transformer model, which is given in Equation 8:

Where the scaling factor divides the dot products and a transpose is applied to the key matrix. In scaled dot-product attention, the attention mechanism over the input matrix is expressed by Equations 9 to 13:

Where the query and key matrices are computed with the trainable weight matrices, the attention score matrix is computed from the query and key matrices, and the output is the attended representation. The relevant dimensions are the number of time steps, the dimension of the hidden unit, and the second dimension of the query and key matrices. Importantly, the output matrix must have the same dimensions as the input matrix, which is achieved by modifying the scaled dot-product attention: first, the input matrix is used directly as the value matrix, without multiplication by a weight matrix; then, the output is computed with an element-wise product according to Equation 13. The scaling factor was omitted because its value was not large.

Finally, the scaled dot-product attention layer was integrated with the T-LSTM. At every step, it computes the attention weights from the T-LSTM hidden state and the features of the image patches, and it forms a context vector as the weighted sum of the image patches. Using these context vectors, the model learns to focus on the regions of the tomato leaf that carry disease characteristics, which enhances its ability to discriminate among the different classes of tomato leaf disease.
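The sketch below shows, in NumPy, the standard scaled dot-product attention, softmax(QKᵀ/√d_k)V, together with a hypothetical attention-pooling step in the spirit of the description above: a query derived from the final T-LSTM hidden state scores each image-patch feature, and the context vector is the attention-weighted sum of the patches. The projection matrices `W_q` and `W_k` and the use of the final hidden state as the query are assumptions, and the element-wise product of Equation 13 is not reproduced. Passing `scale=False` mirrors the choice to omit the scaling factor.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V for Q: (n, d_k), K: (m, d_k), V: (m, d_v)."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)           # (n, m) alignment scores
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ V, weights

def attention_context(h_last, patches, W_q, W_k, scale=True):
    """Context vector as an attention-weighted sum of image-patch features.

    h_last:  (d_h,) final T-LSTM hidden state, used to form the query (assumption)
    patches: (T, d_p) feature vectors of the T image patches, one per step
    W_q: (d_h, d_a), W_k: (d_p, d_a) hypothetical trainable projections
    """
    q = h_last @ W_q                          # (d_a,) query
    k = patches @ W_k                         # (T, d_a) keys
    scores = k @ q                            # one alignment score per step
    if scale:
        scores = scores / np.sqrt(q.shape[0])
    alpha = softmax(scores)                   # attention weight per step, sums to 1
    return alpha @ patches, alpha             # weighted sum of patch features
```

When all scores are equal (for example, with zero projections), the weights become uniform and the context vector reduces to the mean patch feature; learned projections instead concentrate the weight on the most disease-relevant patches.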

3.6 Experimental setup

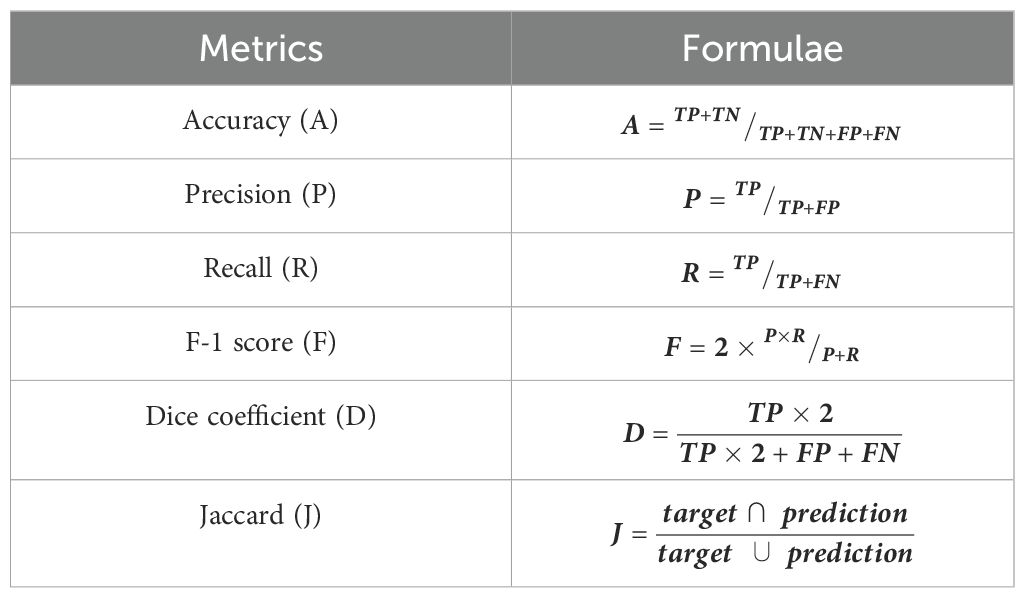

The proposed T-LSTM with an attention mechanism was implemented in Python 3.9, using the Keras 2.12.0 library to construct the T-LSTM. The experiments ran on a Windows 10 computer with an Intel i9 processor and 16 GB of RAM. The formulas used to compute the performance metrics are given in Table 2.

Where TP and TN denote the true positives and true negatives, and FP and FN denote the false positives and false negatives, respectively. For the analysis, the proposed T-LSTM was compared with state-of-the-art techniques, namely RNN, deep belief networks (DBN), and LSTM, as described in the following section.
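Since Table 2 is not reproduced in the text, the sketch below assumes the standard confusion-matrix definitions of accuracy, precision, recall, and F1-score. For the 10-class problem, these would typically be computed per class (one class versus the rest) and then averaged.

```python
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Standard confusion-matrix metrics for one (binary or one-vs-rest) class."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0  # also the true positive rate
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}
```

For example, with TP = 90, TN = 80, FP = 10, and FN = 20, accuracy is 0.85, precision is 0.9, recall is 90/110, and F1 is 6/7 (about 0.857).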

4 Results

This section provides a detailed overview of the outcomes attained when evaluating the proposed T-LSTM with an attention mechanism. The results are assessed by comparing the efficacy of the proposed classifier with the other classification models listed in related works.

4.1 Performance analysis

Here, the performance of different segmentation, feature extraction, and classification techniques is presented. The tomato PlantVillage data were used to assess the efficiency of T-LSTM with an attention mechanism.

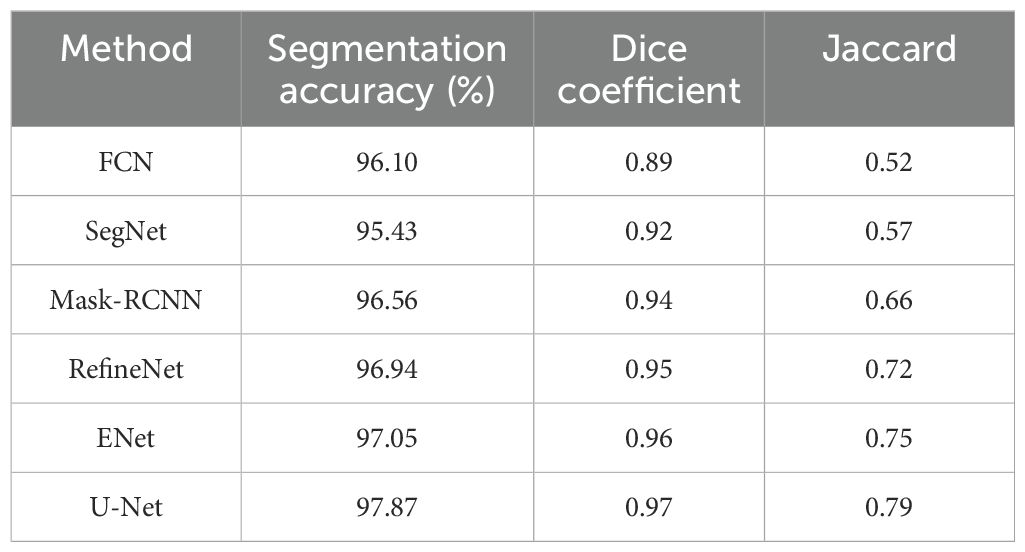

4.1.1 Evaluation of different segmentation techniques

In this section, the segmentation technique (U-Net) is analyzed and compared with state-of-the-art segmentation methods on images from the PlantVillage dataset. Table 3 shows the results of comparing U-Net with FCN, SegNet, Mask R-CNN, RefineNet, and ENet.

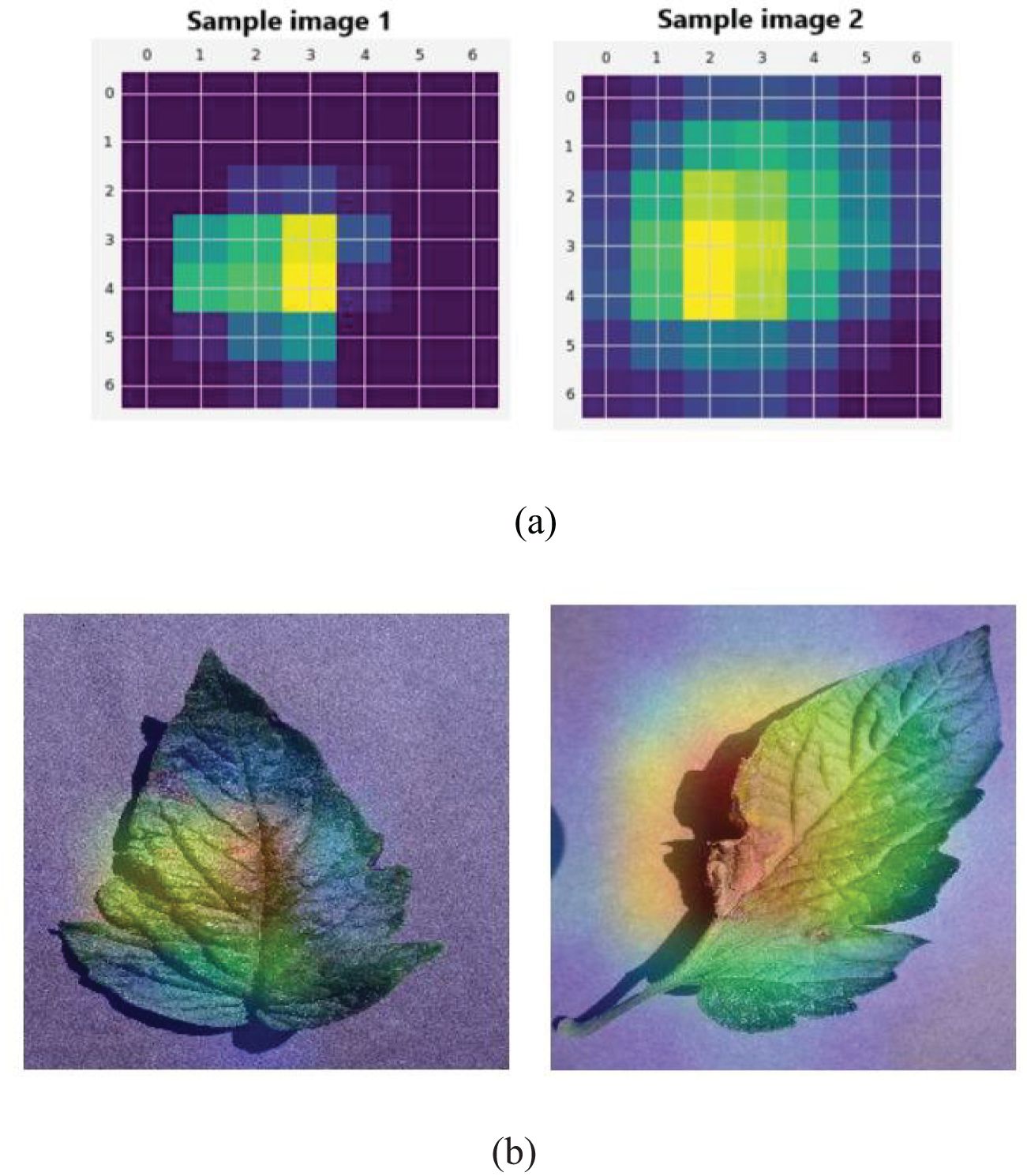

Table 3 shows that the proposed segmentation method performed better on all metrics. For example, the segmentation accuracy of U-Net was 97.87%, higher than that of FCN (96.10%), SegNet (95.43%), Mask R-CNN (96.56%), RefineNet (96.94%), and ENet (97.05%). This result is attributed to U-Net's ability to classify every individual pixel of the leaf image. Figure 8 visualizes the U-Net feature maps and the corresponding Grad-CAM maps.

Figure 8. (A) U-Net feature map visualization for sample images 1 and 2; (B) Grad-CAM visualization.

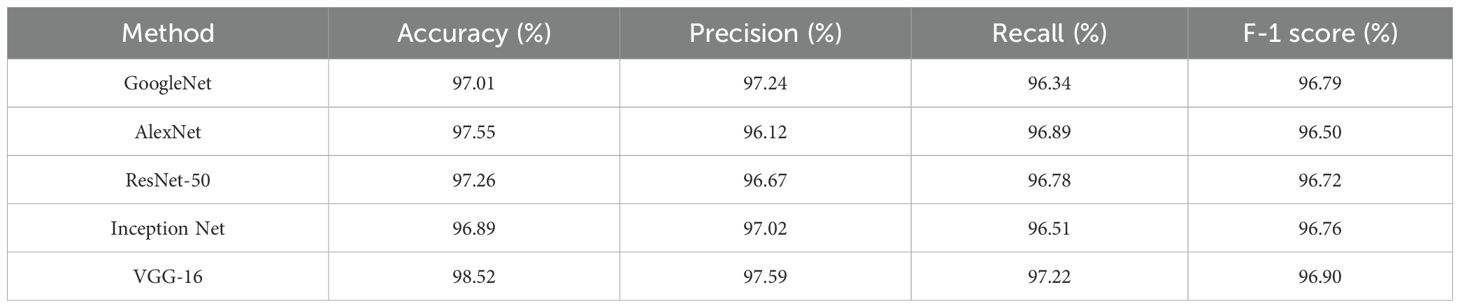
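The exact definition of segmentation accuracy used in Table 3 is not given here. As an assumption, the sketch below computes three common metrics for binary masks: pixel accuracy, intersection over union (IoU), and the Dice coefficient.

```python
import numpy as np

def segmentation_scores(pred: np.ndarray, truth: np.ndarray) -> dict:
    """Pixel accuracy, IoU and Dice for binary masks (nonzero = foreground pixel)."""
    pred, truth = pred.astype(bool), truth.astype(bool)
    inter = np.logical_and(pred, truth).sum()   # pixels marked in both masks
    union = np.logical_or(pred, truth).sum()    # pixels marked in either mask
    total = pred.sum() + truth.sum()
    return {
        "pixel_accuracy": float((pred == truth).mean()),
        # Two empty masks agree perfectly, so both overlap scores default to 1.
        "iou": float(inter / union) if union else 1.0,
        "dice": float(2 * inter / total) if total else 1.0,
    }
```

Pixel accuracy can look high when the foreground (for example, a small lesion) covers few pixels, so overlap measures such as IoU and Dice are usually reported alongside it.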

4.1.2 Evaluation of different feature extraction techniques

The feature extraction technique (VGG-16) was compared with other established models for extracting features from the segmented output of the PlantVillage dataset. Table 4 shows the results of comparing VGG-16 with GoogleNet, AlexNet, ResNet-50, and Inception Net when used with the proposed classification technique. The stated metrics were used to estimate the efficiency of the VGG-16 architecture.

The results in Table 4 show that VGG-16 outperforms GoogleNet, AlexNet, ResNet-50, and Inception Net. VGG-16 achieved an accuracy of 98.52%, higher than the other feature extraction techniques. This result is attributed to VGG-16's ability to learn hierarchical features at multiple levels of abstraction: its shallow layers capture low-level features and its deep layers capture high-level features. Its convolutional filters help capture fine-grained information from the segmented output.

4.1.3 Evaluation of different classification techniques with an attention mechanism

The proposed classification technique (T-LSTM with an attention mechanism) was compared with state-of-the-art classification methods on the task of classifying diseased and healthy leaves from the PlantVillage dataset. Table 5 compares the proposed model with RNN, DBN, LSTM, and T-LSTM. Table 5 also reports the computation time per validation image for each classification model; the proposed T-LSTM with an attention mechanism had a lower computation time (20 ms) than the other classification models.

Table 5 shows that T-LSTM with an attention mechanism obtained superior results on all stated measures for classifying healthy and diseased leaves. For instance, its classification accuracy was 99.98%, higher than the accuracies of the RNN, DBN, and LSTM with attention mechanisms (96.10%, 95.26%, and 97.01%, respectively). This improvement is attributed to the scaled dot-product attention layer integrated with the T-LSTM, which computes the attention weight at every step from the T-LSTM hidden state and the image patch features, and forms the context vector as a weighted sum of the image patches. The attention mechanism lets the model focus on the relevant regions of the leaf image, which improves its ability to discriminate among the classes of tomato leaf disease.

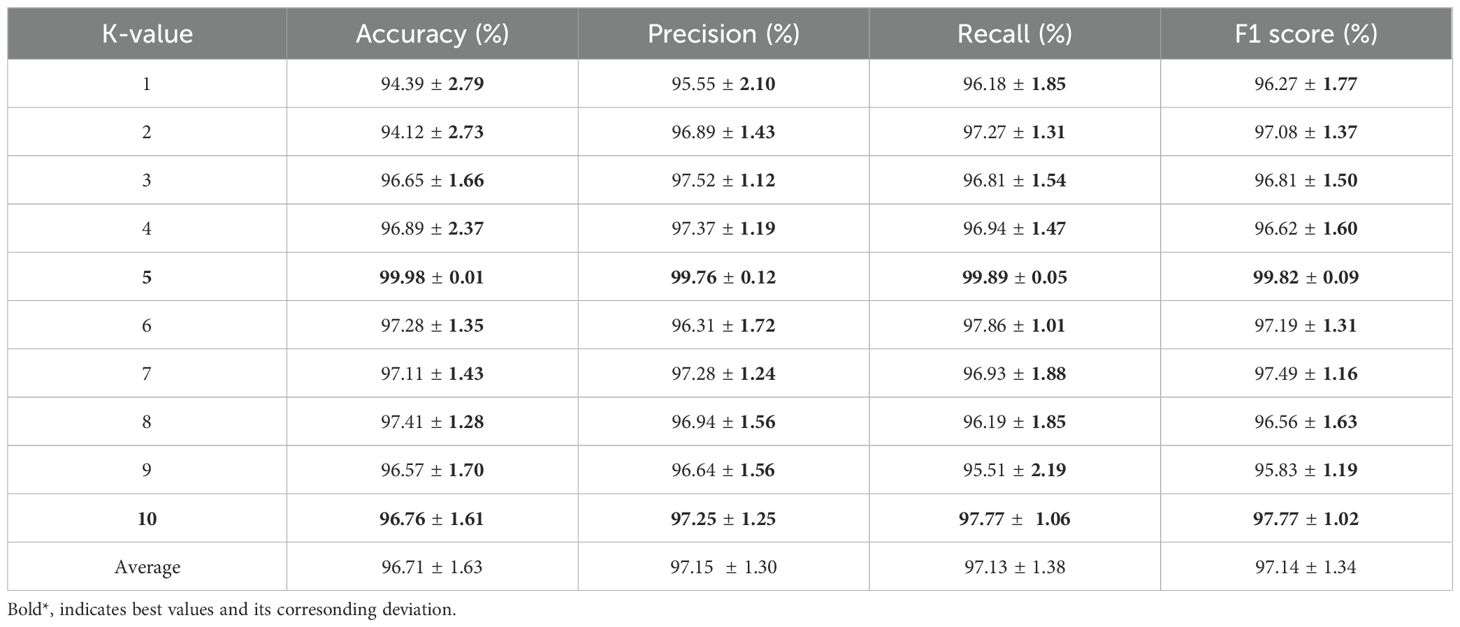

4.1.4 K-fold validation for T-LSTM with an attention mechanism

The efficiency of T-LSTM with an attention mechanism was evaluated for K-values from K=1 to K=10. Table 6 shows the results of evaluating the proposed T-LSTM with an attention mechanism for different K-values.
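A minimal k-fold split can be sketched in plain Python as below. Each fold serves once as the validation set while the remaining folds form the training set; the sketch requires k ≥ 2, since with a single fold no data remain to hold out. In practice the indices would be shuffled first, for example with scikit-learn's `KFold(n_splits=5, shuffle=True, random_state=0)`.

```python
def k_fold_indices(n_samples: int, k: int):
    """Yield (train_idx, val_idx) lists for k contiguous, near-equal folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # The first n_samples % k folds get one extra sample.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, val
        start += size
```

Averaging the metric over the k validation folds gives the cross-validated estimate for each K.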

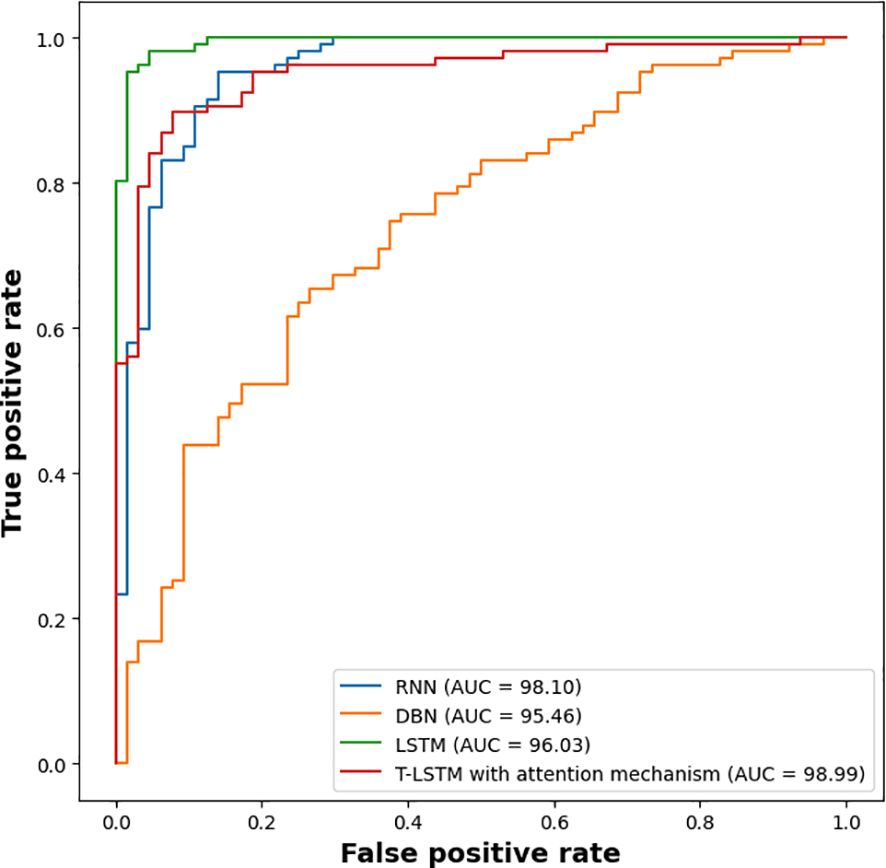

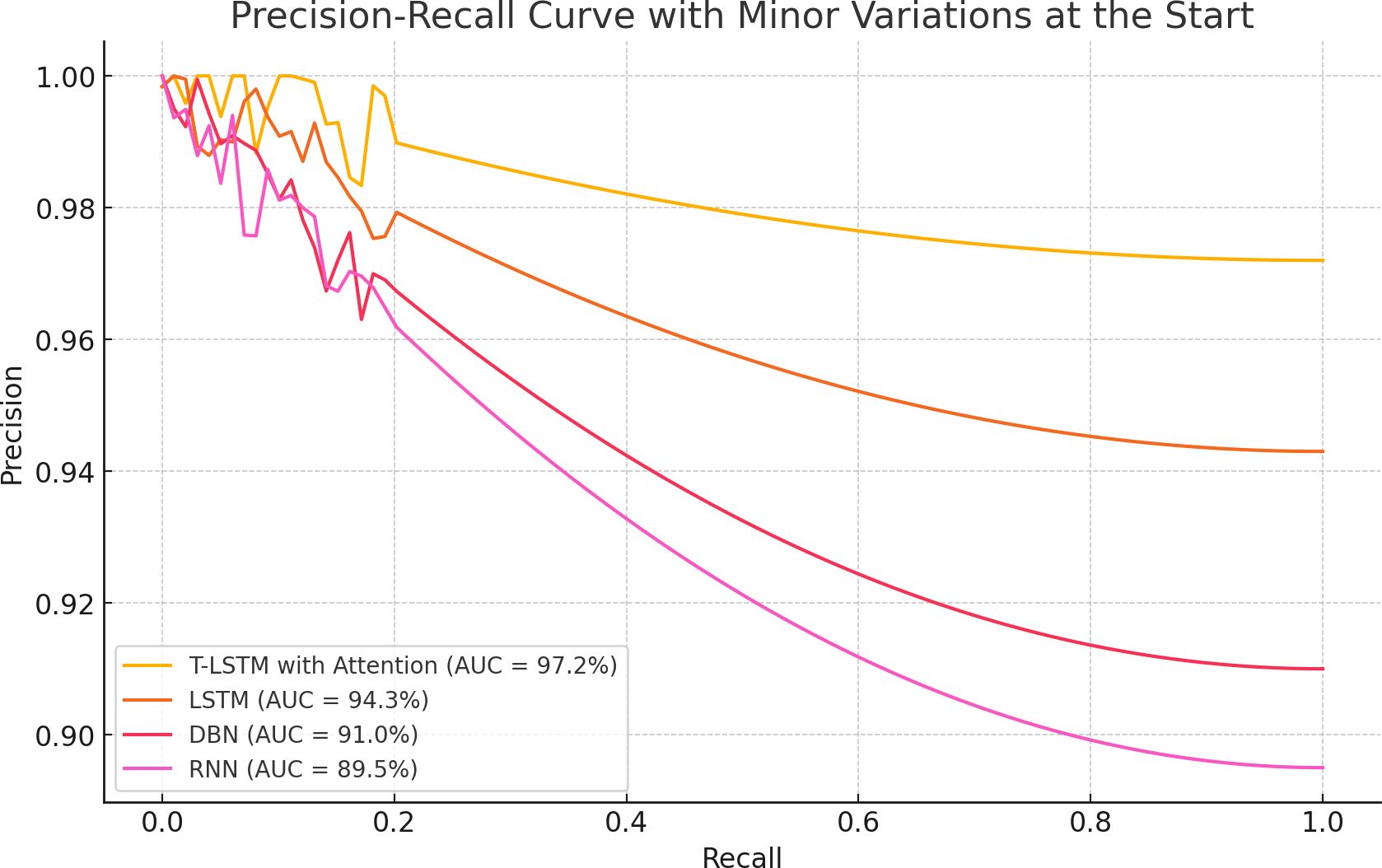

Table 6 shows the results of assessing the proposed classifier for different K-values. The classifier performed best at K=5, with an accuracy of 99.98%. Its efficiency was also evaluated with a receiver operating characteristic (ROC) curve, shown in Figure 9. The area under the curve (AUC) was 98.10 for RNN, 95.46 for DBN, 96.03 for LSTM, and 98.99 for the proposed classifier. The ROC curve shows classification quality by plotting the true positive rate (TPR) against the false positive rate (FPR). Figure 10 illustrates the precision-recall curve.
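The AUC can be computed without plotting the curve: it equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counted as one half. The pairwise sketch below illustrates this for a binary (one-versus-rest) case; scikit-learn's `roc_auc_score` gives the same value. Multiplying the result by 100 expresses it as a percentage, as in the values above.

```python
def roc_auc(scores, labels):
    """AUC as the probability that a random positive outranks a random negative.

    Equivalent to the area under the ROC curve (TPR vs. FPR); ties count 1/2.
    O(P * N) pairwise version, suitable only for small illustrations.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

For example, scores `[0.1, 0.4, 0.35, 0.8]` with labels `[0, 0, 1, 1]` give an AUC of 0.75, because three of the four positive-negative pairs are ranked correctly.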

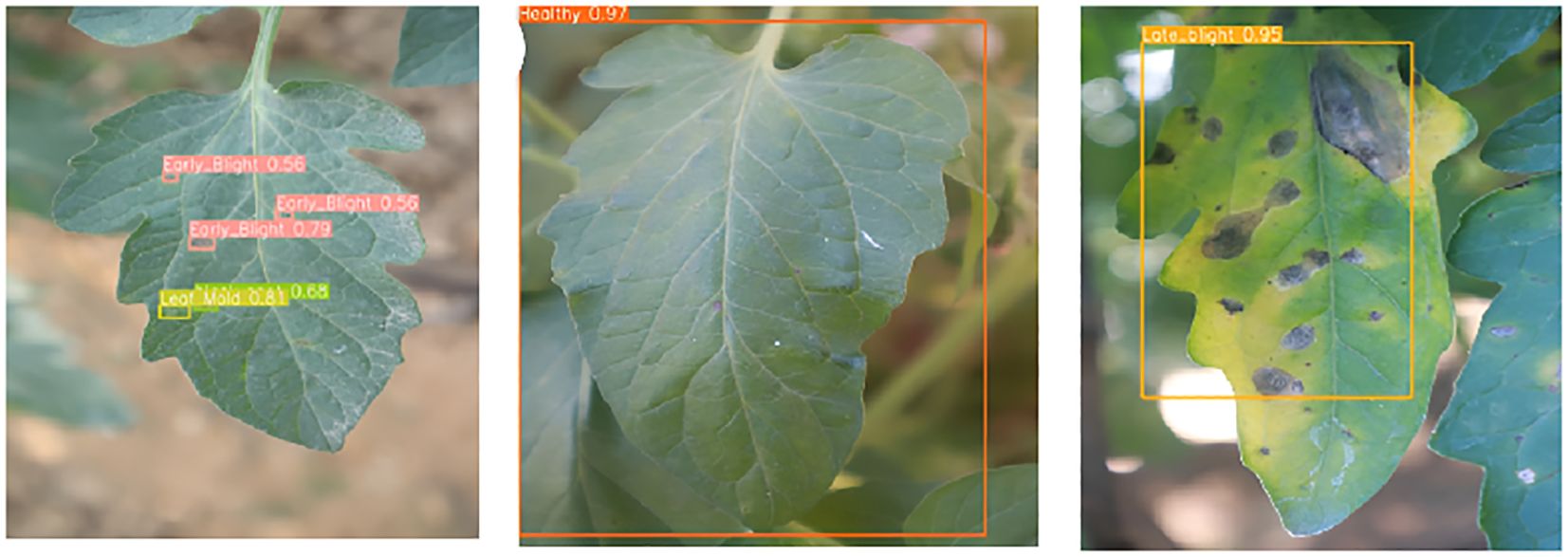

4.1.5 Independent analysis

For the independent analysis, real-world images were obtained; only nine images were gathered because of logistical challenges. In particular, accessing varied agricultural backgrounds, coordinating with farmers, and capturing high-quality images under changing conditions proved difficult. Figures 11 and 12 show the collected real-time images 1 (https://www.kaggle.com/datasets/ashishmotwani/tomato/data) and 2 (https://www.kaggle.com/datasets/farukalam/tomato-leaf-diseases-detection-computer-vision) used for the independent analysis. For real-time image 1, the prediction confidence ranged from 94% to 100%. For real-time image 2, different regions of the leaf were assigned to various categories with the following confidence scores: healthy (0.97), late blight (0.95), early blight (0.56), and leaf mold (0.81).

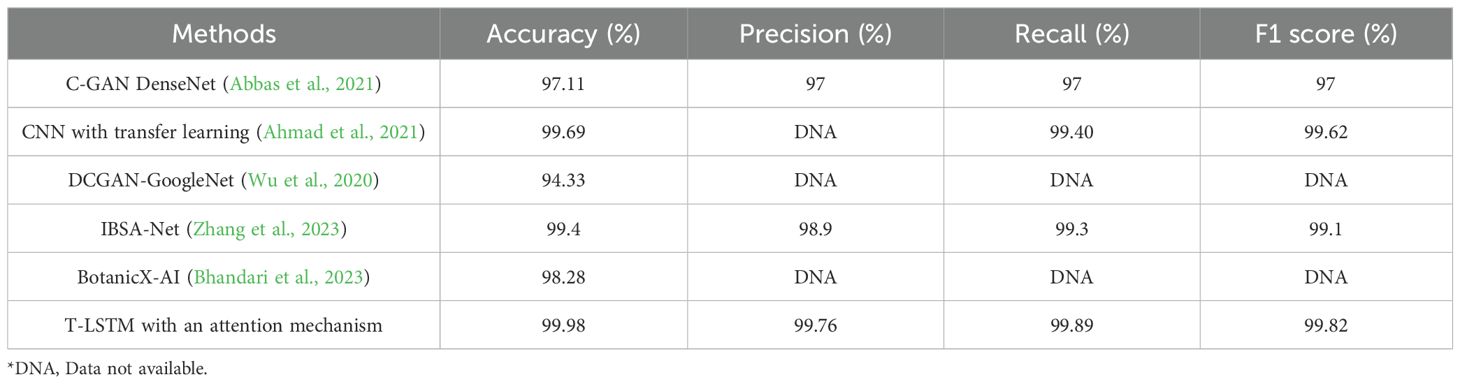

4.2 Comparative analysis

The main objective of this research was to classify tomato leaves effectively as diseased or healthy using T-LSTM with an attention mechanism. The performance of the proposed classification framework was compared with existing techniques such as C-GAN DenseNet (Abbas et al., 2021), CNN with transfer learning (Ahmad et al., 2021), DCGAN-GoogleNet (Wu et al., 2020), IBSA-Net (Zhang et al., 2023), and BotanicX-AI (Bhandari et al., 2023). Table 7 presents the results of comparing the proposed classification technique with these existing ones.

The experimental outcomes shown in Table 7 demonstrate that the proposed classification approach improved on the existing techniques in the stated measures. For instance, T-LSTM with an attention mechanism achieved an accuracy of 99.98%, higher than C-GAN DenseNet (Abbas et al., 2021), CNN with transfer learning (Ahmad et al., 2021), DCGAN-GoogleNet (Wu et al., 2020), IBSA-Net (Zhang et al., 2023), and BotanicX-AI (Bhandari et al., 2023), with classification accuracies of 97.11%, 99.69%, 94.33%, 99.4%, and 98.28%, respectively. This improvement is attributed to the transductive learning introduced in the LSTM architecture and to the scaled dot-product attention layer integrated into T-LSTM. At each step, this layer computes weights from the hidden state and the features of the image patches. The context vector is then obtained as the weighted sum of the image-patch features. Integrating the attention layer into T-LSTM allows the model to focus on the disease-affected regions of the leaf and to differentiate between the classes of tomato leaf disease.
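The following is a minimal NumPy sketch of the scaled dot-product attention step described above. It assumes that the T-LSTM hidden state acts as the query and that the patch features serve as both keys and values, with no learned projection matrices. The paper does not state whether projections are used. The 49 × 512 patch layout corresponds to the 7 × 7 × 512 output of the last VGG-16 convolutional block for a 224 × 224 input. Treating each spatial cell as a patch is an assumption about how patches were formed. Matching the hidden-state size to 512 is also an assumption.

```python
import numpy as np


def softmax(x):
    # Subtract the maximum before exponentiating for numerical stability
    e = np.exp(x - np.max(x))
    return e / e.sum()


def scaled_dot_product_attention(hidden, patches):
    """Attend over image-patch features with the current LSTM hidden state.

    hidden:  (d,) query vector, e.g. the T-LSTM hidden state at one step
    patches: (n, d) patch features used as both keys and values (assumption:
             no learned query/key/value projections)
    Returns the (n,) attention weights and the (d,) context vector.
    """
    d = hidden.shape[0]
    scores = patches @ hidden / np.sqrt(d)  # one similarity score per patch
    weights = softmax(scores)               # non-negative, sums to 1
    context = weights @ patches             # weighted sum of patch features
    return weights, context


# Hypothetical shapes: a 7x7x512 VGG-16 feature map flattened into 49 patches,
# with a 512-dimensional hidden state so query and keys share a dimension
rng = np.random.default_rng(0)
patches = rng.standard_normal((49, 512))
hidden = rng.standard_normal(512)
weights, context = scaled_dot_product_attention(hidden, patches)
print(weights.shape, context.shape)  # (49,) (512,)
```

Dividing by √d keeps the dot products from growing with the feature dimension. Without this scaling, large scores would push the softmax towards a one-hot distribution.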

5 Discussion

This research provides an effective classification of tomato leaf disease using T-LSTM with an attention mechanism, evaluated on the tomato PlantVillage dataset. The proposed T-LSTM with an attention mechanism obtained 99.98% accuracy, which highlights its potential for improving tomato leaf disease detection. This research also demonstrates the effectiveness of transductive learning in capturing complex disease patterns. It further provides a comprehensive comparison with state-of-the-art models, in which the proposed T-LSTM with an attention mechanism performed best. The proposed classifier achieved a classification accuracy of 99.98%, higher than C-GAN DenseNet (Abbas et al., 2021), CNN with transfer learning (Ahmad et al., 2021), DCGAN-GoogleNet (Wu et al., 2020), and IBSA-Net (Zhang et al., 2023). These models achieved accuracies of 97.11%, 99.69%, 94.33%, and 99.4%, respectively, so the proposed classifier made fewer misclassifications. The proposed approach also achieved better results in the remaining metrics, namely precision, recall, and F1-score. The attention layer in the T-LSTM architecture focuses on the disease-affected regions of the leaf and helps distinguish diseased from healthy leaves.
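The paper reports precision, recall, and F1-score without stating how per-class values were averaged across the disease classes. The following is a minimal NumPy sketch that assumes macro averaging, the unweighted mean over classes. It computes these metrics and the accuracy from a confusion matrix. The function names and the toy labels are illustrative only and are not results from the paper.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    # Rows index the true class, columns the predicted class
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def macro_metrics(cm):
    """Accuracy plus macro-averaged precision, recall, and F1 from a confusion matrix."""
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)  # column sums: samples predicted as each class
    actual = cm.sum(axis=1)     # row sums: samples truly in each class
    zeros = np.zeros_like(tp)
    # Define a metric as 0 for classes with an empty denominator
    precision = np.divide(tp, predicted, out=zeros.copy(), where=predicted > 0)
    recall = np.divide(tp, actual, out=zeros.copy(), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=zeros.copy(), where=denom > 0)
    return {
        "accuracy": float(tp.sum() / cm.sum()),
        "precision": float(precision.mean()),
        "recall": float(recall.mean()),
        "f1": float(f1.mean()),
    }


# Toy three-class example (labels are illustrative, not results from the paper)
cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], n_classes=3)
print(cm.tolist())  # [[1, 1, 0], [0, 2, 0], [1, 0, 1]]
print(macro_metrics(cm))
```

Macro averaging weights every disease class equally. If a class is small and frequently misclassified, macro precision and recall fall below the overall accuracy, as they do for class 2 in the toy example.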

The main significance of this study is an early disease detection model that enables timely intervention, minimizing crop damage and the financial burden on farmers. Furthermore, this research presents a highly accurate and efficient method for tomato leaf disease classification. Its impact could extend throughout the agricultural sector, from farmers to policymakers.

6 Conclusion

In this research, an effective classification approach, T-LSTM with an attention mechanism, was introduced to classify tomato leaves as diseased or healthy. Data were acquired from the PlantVillage dataset, and pre-processing was performed through image resizing, color enhancement, and data augmentation. The pre-processed data were then segmented with the U-Net architecture. After segmentation, the VGG-16 architecture was used for feature extraction, and classification was performed by the proposed T-LSTM with an attention mechanism. The T-LSTM is based on a transductive learning approach. Its scaled dot-product attention mechanism evaluates the weight of each step based on the hidden state and the image patches. The results show that the proposed classification technique achieved a classification accuracy of 99.98%. This is higher than existing techniques, namely C-GAN DenseNet, CNN with transfer learning, DCGAN-GoogleNet, and IBSA-Net. In future work, the proposed classifier should be evaluated on real-time datasets.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding author.

Author contributions

AC: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Resources, Visualization, Writing – original draft, Writing – review & editing. DM: Formal analysis, Investigation, Methodology, Software, Validation, Visualization, Writing – review & editing. SA: Funding acquisition, Investigation, Methodology, Project administration, Resources, Supervision, Writing – review & editing. MA: Conceptualization, Formal analysis, Investigation, Methodology, Project administration, Resources, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This project was funded by the Researchers Supporting Project number (RSP2025R167), King Saud University, Riyadh, Saudi Arabia.

Acknowledgments

Researchers Supporting Project number (RSP2025R167), King Saud University, Riyadh, Saudi Arabia.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abbas, A., Jain, S., Gour, M., Vankudothu, S. (2021). Tomato plant disease detection using transfer learning with C-GAN synthetic images. Comput. Electron. Agric. 187, 106279. doi: 10.1016/j.compag.2021.106279

Ahmad, M., Abdullah, M., Moon, H., Han, D. (2021). Plant disease detection in imbalanced datasets using efficient convolutional neural networks with stepwise transfer learning. IEEE Access 9, 140565–140580. doi: 10.1109/ACCESS.2021.3119655

Alzahrani, M. S., Alsaade, F. W. (2023). Transform and deep learning algorithms for the early detection and recognition of tomato leaf disease. Agronomy 13, 1184. doi: 10.3390/agronomy13051184

Arun Pandian, J., Kanchanadevi, K. (2022). An improved deep convolutional neural network for detecting plant leaf diseases. Concurrency Comput. Pract. Exper. 34, e7357. doi: 10.1002/cpe.7357

Ashwinkumar, S., Rajagopal, S., Manimaran, V., Jegajothi, B. (2022). Automated plant leaf disease detection and classification using optimal MobileNet based convolutional neural networks. Materials Today: Proc. 51, 480–487. doi: 10.1016/j.matpr.2021.05.584

Attallah, O. (2023). Tomato leaf disease classification via compact convolutional neural networks with transfer learning and feature selection. Horticulturae 9, 149. doi: 10.3390/horticulturae9020149

Bhandari, M., Shahi, T. B., Neupane, A., Walsh, K. B. (2023). BotanicX-AI: Identification of tomato leaf diseases using an explanation-driven deep-learning model. J. Imaging 9, 53. doi: 10.3390/jimaging9020053

Bhujel, A., Kim, N. E., Arulmozhi, E., Basak, J. K., Kim, H. T. (2022). A lightweight Attention-based convolutional neural networks for tomato leaf disease classification. Agriculture 12, 228. doi: 10.3390/agriculture12020228

Borugadda, P., Lakshmi, R., Sahoo, S. (2023). Transfer learning VGG16 model for classification of tomato plant leaf diseases: A novel approach for multi-level dimensional reduction. Pertanika J. Sci. Technol. 31, 813–841. doi: 10.47836/pjst.31.2.09

Chen, H.-C., Widodo, A. M., Wisnujati, A., Rahaman, M., Lin, J. C.-W., Chen, L., et al. (2022). AlexNet convolutional neural network for disease detection and classification of tomato leaf. Electronics 11, 951. doi: 10.3390/electronics11060951

Chen, J., Zhang, D., Suzauddola, M., Nanehkaran, Y. A., Sun, Y. (2021). Identification of plant disease images via a squeeze-and-excitation mobileNet model and twice transfer learning. IET Image Proc. 15, 1115–1127. doi: 10.1049/ipr2.12090

Chong, H. M., Yap, X. Y., Chia, K. S. (2023). Effects of different pretrained deep learning algorithms as feature extractor in tomato plant health classification. Pattern Recognition Image Analysis 33, 39–46. doi: 10.1134/S1054661823010017

Dataset. Available online at: https://www.kaggle.com/datasets/charuchaudhry/plantvillage-tomato-leaf-dataset (Accessed July 10, 2024).

Espejo-Garcia, B., Mylonas, N., Athanasakos, L., Vali, E., Fountas, S. (2021). Combining generative adversarial networks and agricultural transfer learning for weeds identification. Biosyst. Eng. 204, 79–89. doi: 10.1016/j.biosystemseng.2021.01.014

Fukada, K., Hara, K., Cai, J., Teruya, D., Shimizu, I., Kuriyama, T., et al. (2023). An automatic tomato growth analysis system using YOLO transfer learning. Appl. Sci. 13, 6880. doi: 10.3390/app13126880

Gill, H. S., Khehra, B. S. (2022). An integrated approach using CNN-RNN-LSTM for classification of fruit images. Materials Today: Proc. 51, 591–595. doi: 10.1016/j.matpr.2021.06.016

Harakannanavar, S. S., Rudagi, J. M., Puranikmath, V. I., Siddiqua, A., Pramodhini, R. (2022). Plant leaf disease detection using computer vision and machine learning algorithms. Global Transitions Proc. 3, 305–310. doi: 10.1016/j.gltp.2022.03.016

Huang, X., Chen, A., Zhou, G., Zhang, X., Wang, J., Peng, N., et al. (2023). Tomato leaf disease detection system based on FC-SNDPN. Multimedia Tools Appl. 82, 2121–2144. doi: 10.1007/s11042-021-11790-3

Kaur, P., Harnal, S., Gautam, V., Singh, M. P., Singh, S. P. (2022). An approach for characterization of infected area in tomato leaf disease based on deep learning and object detection technique. Eng. Appl. Artif. Intell. 115, 105210. doi: 10.1016/j.engappai.2022.105210

Kiran, S. M., Chandrappa, D. N. (2023). Plant leaf disease detection using efficient image processing and machine learning algorithms. J. Robotics Control (JRC) 4, 840–848. doi: 10.18196/jrc.v4i6.20342

Lin, L. P., Leong, J. S. (2023). Detection and categorization of tomato leaf diseases using deep learning. Int. J. Appl. Sci. Technol. Eng. 1, 282–291. doi: 10.24912/ijaste.v1.i1.282-291

Mahato, D. K., Pundir, A., Saxena, G. J. (2022). An improved deep convolutional neural network for image-based apple plant leaf disease detection and identification. J. Inst. Eng. India Ser. A 103, 975–987. doi: 10.1007/s40030-022-00668-8

Maliki, I., Prayoga, A. S. (2023). Implementation of convolutional neural network for sundanese script handwriting recognition with data augmentation. J. Eng. Sci. Technol. 18, 1113–1123.

Pandian, J. A., Kanchanadevi, K., Kumar, V. D., Jasińska, E., Goňo, R., Leonowicz, Z., et al. (2022). A five convolutional layer deep convolutional neural network for plant leaf disease detection. Electronics 11, 1266. doi: 10.3390/electronics11081266

Pavan, A. C., Lakshmi, S., Somashekara, M. T. (2023). An improved method for reconstruction and enhancing dark images based on CLAHE. Int. Res. J. Advanced Sci. Hub 5, 40–46. doi: 10.47392/irjash.2023.011

Peivandizadeh, A., Hatami, S., Nakhjavani, A., Khoshsima, L., Qazani, M. R., Haleem, M., et al. (2024). Stock market prediction with transductive long short-term memory and social media sentiment analysis. IEEE Access. doi: 10.1109/ACCESS.2024.3399548

Rahman, S. U., Alam, F., Ahmad, N., Arshad, S. (2023). Image processing based system for the detection, identification and treatment of tomato leaf diseases. Multimedia Tools Appl. 82, 9431–9445. doi: 10.1007/s11042-022-13715-0

Raja Kumar, R., Athimoolam, J., Appathurai, A., Rajendiran, S. (2023). Novel segmentation and classification algorithm for detection of tomato leaf disease. Concurrency Comput. Pract. Exper. 35, e7674. doi: 10.1002/cpe.7674

Reddy, S. R. G., Varma, G. P. S., Davuluri, R. L. (2023). Resnet-based modified red deer optimization with DLCNN classifier for plant disease identification and classification. Comput. Electr. Eng. 105, 108492. doi: 10.1016/j.compeleceng.2022.108492

Saeed, A., Abdel-Aziz, A. A., Mossad, A., Abdelhamid, M. A., Alkhaled, A. Y., Mayhoub, M. (2023). Smart detection of tomato leaf diseases using transfer learning-based convolutional neural networks. Agriculture 13, 139. doi: 10.3390/agriculture13010139

Sagar, S., Singh, J. (2023). An experimental study of tomato viral leaf diseases detection using machine learning classification techniques. Bull. Electrical Eng. Informatics 12, 451–461. doi: 10.11591/eei.v12i1.4385

Sanida, T., Sideris, A., Sanida, M. V., Dasygenis, M. (2023). Tomato leaf disease identification via two–stage transfer learning approach. Smart Agric. Technol. 5, 100275. doi: 10.1016/j.atech.2023.100275

Shahi, T. B., Xu, C.-Y., Neupane, A., Guo, W. (2023). Recent advances in crop disease detection using UAV and deep learning techniques. Remote Sens. 15, 2450. doi: 10.3390/rs15092450

Singh, H., Tewari, U., Ushasukhanya, S. (2023). “Tomato crop disease classification using convolution neural network and transfer learning,” in Proceedings of the 2023 International Conference on Networking and Communications (ICNWC), Chennai, India. 1–6, IEEE. doi: 10.1109/ICNWC57852.2023.10127284

Sreedevi, A., Manike, C. (2023). Development of weighted ensemble transfer learning for tomato leaf disease classification solving low resolution problems. Imaging Sci. J. 71, 161–187. doi: 10.1080/13682199.2023.2178605

Tabbakh, A., Barpanda, S. S. (2023). A deep features extraction model based on the transfer learning model and vision transformer “TLMViT” for plant disease classification. IEEE Access 11, 45377–45392. doi: 10.1109/ACCESS.2023.3273317

Wu, Q., Chen, Y., Meng, J. (2020). DCGAN-based data augmentation for tomato leaf disease identification. IEEE Access 8, 98716–98728. doi: 10.1109/ACCESS.2020.2997001

Yin, X.-X., Sun, L., Fu, Y., Lu, R., Zhang, Y. (2022). U-net-based medical image segmentation. J. Healthc Eng. 2022, 4189781. doi: 10.1155/2022/4189781

Zhang, R., Wang, Y., Jiang, P., Peng, J., Chen, H. (2023). IBSA_Net: A network for tomato leaf disease identification based on transfer learning with small samples. Appl. Sci. 13, 4348. doi: 10.3390/app13074348

Zhong, Y., Teng, Z., Tong, M. (2023). LightMixer: A novel lightweight convolutional neural network for tomato disease detection. Front. Plant Sci. 14. doi: 10.3389/fpls.2023.1166296

Zhou, H., Fang, Z., Wang, Y., Tong, M. (2023). Image generation of tomato leaf disease identification based on small-ACGAN. Computers Materials Continua 76, 175–194. doi: 10.32604/cmc.2023.037342

Keywords: attention mechanism, data augmentation, segmentation, tomato leaf disease, Transductive Long Short-Term Memory

Citation: Chelladurai A, Manoj Kumar DP, Askar SS and Abouhawwash M (2025) Classification of tomato leaf disease using Transductive Long Short-Term Memory with an attention mechanism. Front. Plant Sci. 15:1467811. doi: 10.3389/fpls.2024.1467811

Received: 20 July 2024; Accepted: 18 December 2024;

Published: 21 January 2025.

Edited by:

Tej Bahadur Shahi, Tribhuvan University, Nepal

Reviewed by:

Mohan Bhandari, Samridhhi College, Nepal

Tek Raj Awasthi, Central Queensland University, Australia

Copyright © 2025 Chelladurai, Manoj Kumar, Askar and Abouhawwash. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mohamed Abouhawwash, abouhaww@msu.edu; saleh1284@mans.edu.eg
