- Weill Institute for Cell and Molecular Biology and Section of Plant Biology, School of Integrative Plant Sciences, Cornell University, Ithaca, NY, United States

Arabidopsis thaliana sepals are excellent models for analyzing growth of entire organs due to their relatively small size, which can be captured at a cellular resolution under a confocal microscope. To investigate how differential growth of connected cell layers generates unique organ morphologies, it is necessary to live-image deep into the tissue. However, imaging deep cell layers of the sepal (or plant tissues in general) is practically challenging. Image processing is also difficult due to the low signal-to-noise ratio of the deeper tissue layers, an issue mainly associated with live imaging datasets. Addressing some of these challenges, we provide an optimized methodology for live imaging sepals and for subsequent image processing. For live imaging early-stage sepals, we found that the use of a bright fluorescent membrane marker, coupled with increased laser intensity and an enhanced Z-resolution, produces high-quality images suitable for downstream image processing. Our optimized parameters allowed us to image the bottommost cell layer of the sepal (inner epidermal layer) without compromising viability. We used a ‘voxel removal’ technique to visualize the inner epidermal layer in MorphoGraphX image processing software. We also describe the MorphoGraphX parameters for creating a 2.5D mesh surface for the inner epidermis. Our parameters allow for the segmentation and parent tracking of individual cells through multiple time points, despite the weak signal of the inner epidermal cells. While we have used sepals to illustrate our approach, the methodology will be useful for researchers intending to live-image and track growth of deeper cell layers in 2.5D for any plant tissue.

Introduction

There is a close link between the shape of organs and the specialized function they perform. Multiple studies have shown that differential growth patterns across tissue layers generate characteristic organ shapes, which allow the organs to perform their specific functions (Waddington, 1939; Richman et al., 1975; Tsukaya, 2005; Savin et al., 2011; Varner et al., 2015; Singh Yadav et al., 2024). For example, the twisting of plant roots increases the force generated at the tip, which aids in better soil penetration (Silverberg et al., 2012), while on the other hand, most plant leaves have a smooth shape that enhances photosynthesis efficiency (Evans, 2013). Although the link between organ shape and function is well known, what factors influence tissue growth leading to distinct organ morphologies is an area of active research. Therefore, to fully comprehend the organ development process, a thorough assessment of growth across multiple cell layers is essential.

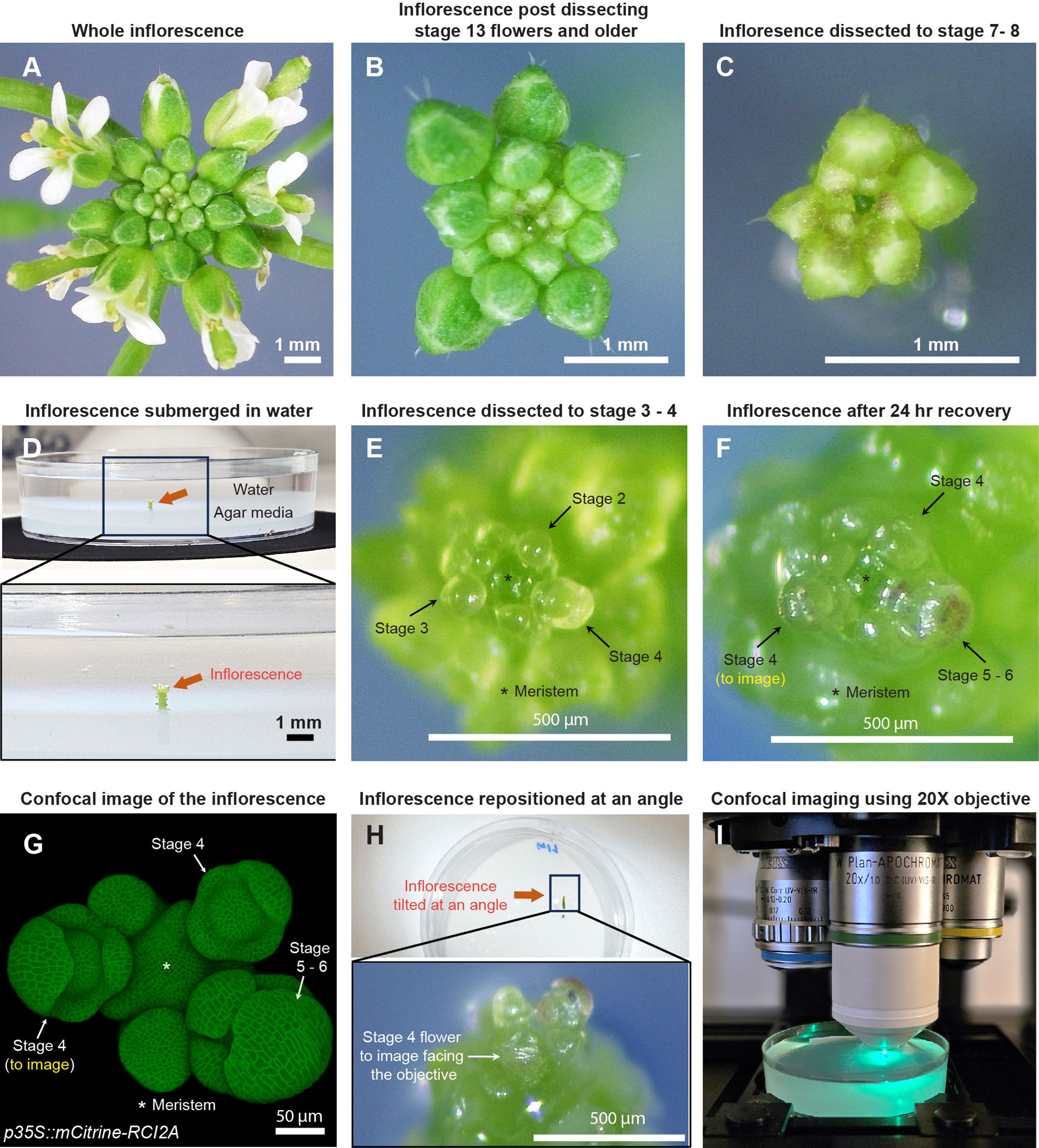

The Arabidopsis thaliana (henceforth Arabidopsis) sepals are the outermost green leaf-like floral organs; their smooth shape aids in properly enclosing and protecting growing flowers through development [Figure 1A (Roeder, 2021)]. Sepals are the first organs to initiate during floral development. Like leaves, sepals have an outer (abaxial) and an inner (adaxial) epidermal layer, encasing the loosely packed mesophyll cells (Roeder, 2021). Being anatomically very similar to leaves, sepals serve as a great model organ for studying how plant aerial organs develop. Their small size and robustness to environmental variation allow us to study organ development at cellular resolution (Roeder, 2021). For live imaging, sepals are easier to access than internal floral organs and young leaves, since removal of the surrounding tissue, which is essential for imaging, is less complex (Roeder, 2021; Harline and Roeder, 2023). To live image sepals from early stages, prior dissection of the older flowers off the inflorescence is imperative, since they obscure the younger flowers from the field of view (Figure 1A; Supplementary Figure S1). This is because flowers initiate from the inflorescence meristem continuously, following a spiral phyllotactic pattern (Smyth et al., 1990; Bradley et al., 1997; Byrne et al., 2003). This arrangement results in the younger flowers being closer to the meristem and the older flowers being located farther away from it (Figure 1A). Since the pedicel also elongates rapidly in an upward direction, the older flowers tend to obscure the younger flowers, and thus dissection of the older flowers becomes necessary. Once the young flowers are exposed after dissection, the sepals can be live imaged through multiple time points for growth analysis.

Figure 1. Sample preparation for live imaging sepals. (A) Whole inflorescence image of Arabidopsis thaliana Ler-0 accession harboring pLH13 (p35S::mCitrine-RCI2A) membrane marker. (B) Same inflorescence after dissecting flowers that are stage 13 (flower buds open with visible petals) or older. Staging performed in accordance with Smyth et al. (1990). (C) Inflorescence dissected down to flowers of stages 8 or below. Below this stage, it is preferable to perform the dissection in water. (D) Inflorescence (from Panel (C), red arrow) set in a petri plate containing agar media and submerged in water for rehydration. (E) Inflorescence dissected down to flowers of stage 4 or below in water. Flowers of stages 2, 3, and 4 are indicated by arrows, with the meristem marked by an asterisk (*). (F) Inflorescence post recovery for 24 hours in growth chamber. Note the progression of flowers through the developmental stages. The stage 4 flower to be imaged is shown. (G) Confocal image of the inflorescence (F) under 20X magnification. (H) The inflorescence (F, G) is angled such that the stage 4 flower faces the objective for imaging. (I) The flower being imaged under a Zeiss LSM 710 confocal microscope with the 20X water-dipping objective (see Materials and Methods) using a 514 nm excitation laser.

In addition to the need for dissection, we need to live-image deeper than previous studies into the sepal tissue to track the growth of the inner epidermis. It is well established that the epidermal layers of leaves and sepals are the primary determinants of organ shape and size (Savaldi-Goldstein and Chory, 2008; Roeder et al., 2010; Werner et al., 2021). The epidermis acts as a mechanical protective layer that can constrain or promote the growth of the underlying tissues. Hence, for assessing what growth properties determine organ shape and size, we mainly focus on analyzing growth of both the outer and inner epidermal layers. Previous research on sepal morphogenesis has focused on the outer epidermis due to technical limitations (Roeder et al., 2010; Tauriello et al., 2015; Hervieux et al., 2016; Hong et al., 2016; Tsugawa et al., 2017). Imaging deeper into the sepal tissue to capture the inner epidermal layer is challenging, primarily due to the following factors that hinder the penetration of light through the tissue. First, the strong autofluorescence of chlorophyll interferes with fluorophore signal detection, which affects imaging depth (Pawley, 1995; Zhou et al., 2005; Vesely, 2007). Second, extracellular air spaces in the mesophyll layer interfere with light transmission through the tissue (Van As et al., 2009; Littlejohn et al., 2014). Third, the intracellular components contribute to variation in refractive indexes, which affects light penetration and thus compromises the signal-to-noise ratio (Kam et al., 2001; Booth, 2014). Optical clearing methods are routinely used to remove chlorophyll and homogenize refractive indexes of intracellular components (Kurihara et al., 2021; Hériché et al., 2022; Sakamoto et al., 2022; Yu et al., 2023). However, since clearing destroys tissue viability, such methods are inapplicable for live imaging. Overall, live imaging sepals through all tissue layers poses significant challenges, thus requiring optimization.

Alongside imaging, an optimized data-driven image processing approach is required for segmentation on a curved surface of the tissue (2.5D) (Strauss et al., 2022). Segmentation in 2.5D provides a reasonable compromise between 2D and 3D segmentation at a low computational cost. It is well suited to cases where volumetric information is not required but the researcher intends to obtain more precise areal measurements than 2D allows. As 2.5D segmentation integrates the depth of the tissue, the distortion typically associated with segmenting curved surfaces in 2D is largely minimized (Barbier de Reuille et al., 2015). This property of 2.5D segmentation allows us to accurately capture spatial details related to the morphology of cells and tissues (Jenkin Suji et al., 2023). Also, since the epidermal layers are composed of a single layer of cells in which growth along the X and Y axes is much larger than growth in thickness (Z axis), analyzing growth in 2.5D is sufficiently informative. For highly curved surfaces like sepals, generating a 2.5D surface for the outer epidermal layers allows for accurate segmentation, lineage tracking and subsequent extraction of areal growth properties of individual cells (Hervieux et al., 2016; Hong et al., 2016; Zhu et al., 2020), since the cell depths remain relatively consistent. However, the challenge remains in generating a 2.5D surface for the curved inner epidermal layer that is good enough to be segmented. This challenge arises from the noise associated with the signal from the inner epidermal layer and from the spatial variation in signal intensity.
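The distortion that 2.5D segmentation avoids can be illustrated with a single tilted mesh triangle. The sketch below is only an illustration under stated assumptions: the facet coordinates and the 60 degree tilt are hypothetical and not taken from our data, and the functions are not part of MorphoGraphX. It compares the area measured on the surface with the area of the same triangle flattened onto the imaging (XY) plane, as a 2D projection would do.

```python
import math

def triangle_area_3d(a, b, c):
    """Area of a triangle from its 3D vertices (half the cross-product norm)."""
    ux, uy, uz = (b[i] - a[i] for i in range(3))
    vx, vy, vz = (c[i] - a[i] for i in range(3))
    cx = uy * vz - uz * vy
    cy = uz * vx - ux * vz
    cz = ux * vy - uy * vx
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)

def triangle_area_projected(a, b, c):
    """Area of the same triangle after flattening it onto the XY plane."""
    flat = [(p[0], p[1], 0.0) for p in (a, b, c)]
    return triangle_area_3d(*flat)

# Hypothetical facet (coordinates in micrometers) tilted 60 degrees
# away from the imaging plane, as on the flank of a curved sepal.
tilt = math.radians(60)
a = (0.0, 0.0, 0.0)
b = (10.0, 0.0, 0.0)
c = (0.0, 10.0 * math.cos(tilt), 10.0 * math.sin(tilt))

surface_area = triangle_area_3d(a, b, c)           # area on the curved surface
projected_area = triangle_area_projected(a, b, c)  # area seen in a 2D projection

print(f"surface: {surface_area:.1f} um^2, projected: {projected_area:.1f} um^2")
```

For this facet the projected area is half the surface area, since projection scales area by the cosine of the tilt angle. Cells on the steep flanks of a sepal would therefore be underestimated in a 2D projection, whereas measurements on a 2.5D mesh are not affected by the tilt.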

Here, we describe a detailed methodology for live-imaging Arabidopsis sepals and processing the images to track the development of individual cells on both outer and inner epidermal layers. We provide an improved and detailed procedure for floral dissection to reveal the young flowers (Chickarmane et al., 2010; Prunet, 2017), with video illustrations. We describe the parameters optimized for confocal live imaging sepals across tissue layers from early developmental stages at high resolution. Using MorphoGraphX (Barbier de Reuille et al., 2015; Strauss et al., 2022) image processing software, we developed a technique for generating 2.5D surfaces of the inner epidermal layers to accurately segment individual cells. Finally, we discuss the parent tracking method using MorphoGraphX to follow individual cell development over time and extract growth related information. This optimized methodology serves as a comprehensive guide for researchers interested in live imaging sepal development.

Results and discussion

Sample preparation for confocal live imaging young flowers

For optimal dissection, we prefer six- to seven-week-old Arabidopsis plants grown in 21°C long-day conditions, which prolongs vegetative growth phase relative to 24 hours light, resulting in larger meristem sizes and thicker inflorescence axes. Although plants can be grown under short day conditions as well, which leads to larger meristem size compared to long-day/24 hour light conditions (Hamant et al., 2019), plants exhibit significant delay in flowering under short day conditions (Mouradov et al., 2002), which is not necessary for our dissection purposes. Dissection can either be performed while the inflorescence is on the plant or on a clipped inflorescence to allow for easier maneuvering during dissection (Supplementary Figure S1). However, clipping the inflorescence bears the risk of faster dehydration, and thus requires quicker dissection. The choice of whether to dissect in planta or on a clipped inflorescence depends on the researcher.

Although the dissection techniques vary among researchers, we describe the procedure that works best for us, resulting in minimal tissue damage. For dissection, we grip the inflorescence in one hand, and use the tweezers to press the base of the pedicel and pull the flower backwards in a gentle yet swift manner (Supplementary Video S1). We adopt a stage-wise dissection approach by rotating the inflorescence, starting with the oldest flowers and gradually progressing to younger ones, all the way down to stages 7 to 8 [Figures 1A–C; Supplementary Video S1, staging performed according to (Smyth et al., 1990)]. The inflorescence is then clipped ~0.5 cm (about 0.2 in) from the apex, and the base of the stem is securely embedded in a live-imaging agar media petri plate (Figure 1D). We then submerge the inflorescence in water for 3 to 4 hours at room temperature to allow for rehydration, a critical step in ensuring sample viability (Figure 1D). Following rehydration, dissection of flowers below stages 7 to 8 is performed under a stereo microscope (Zeiss Stemi 2000-C), while the inflorescence remains submerged in water. This keeps the sample hydrated during dissection of the younger flowers, which requires increased caution and is therefore more time consuming. While using tweezers is an option, we prefer a needle because of its sharper blade (see Materials and Methods for specifics) and the increased precision it offers, which helps to avoid damaging younger adjacent flowers. Dissection in water involves rotating the plate to position the flower to be dissected at a convenient angle, pulling the flower bud backwards using the needle and nipping it from the pedicel base (Supplementary Video S2). These steps are repeated until we dissect down to flowers of stages 3 to 4 (Figure 1E). Post dissection, we drain the water and transfer the sample to a fresh plate. The sample is then allowed to recover for 24 hours under initial growth conditions.
Assuming no flowers were accidentally damaged during dissection, all flowers should transition through their respective developmental stages (Figures 1E–G).

For live imaging, we choose a mid-stage 4 (Smyth et al., 1990) flower (Figures 1F, G). The inflorescence is repositioned at an angle at which the flower to be imaged directly faces the confocal objective (Figure 1H). The sample is then submerged in a 0.01% solution of SILWET-77 (surfactant) in water to reduce surface tension, which helps displace the air bubbles that can obstruct imaging. Any persisting air bubbles can be removed by gently pipetting the solution towards the air bubble. We then allow the sample to stabilize for five to ten minutes, following which the flower is imaged under a confocal microscope using a 20X water-dipping objective (Figure 1I; also see Materials and Methods for specific settings).

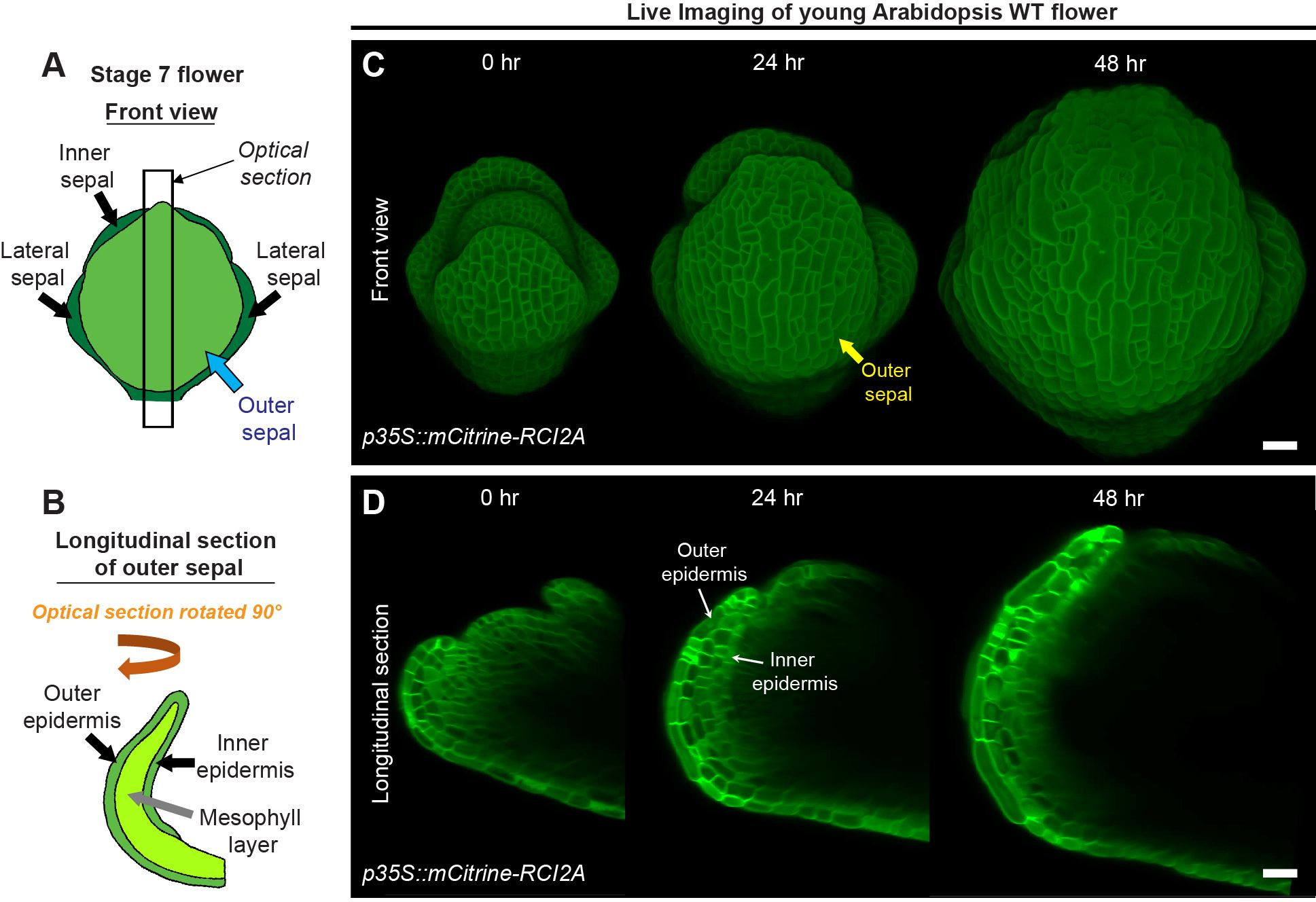

Confocal live imaging to capture sepal growth

The four sepals around Arabidopsis flowers are named according to their position relative to the inflorescence meristem (Zhu et al., 2020). The ‘outer’ sepal refers to the sepal that is positioned furthest from the meristem, while the ‘inner’ sepal is the closest (usually obscured from view, Figure 2A). The sepals on either side are referred to as the ‘lateral’ sepals (Figure 2A) (Zhu et al., 2020; Roeder, 2021). For live imaging, we image the outer sepal due to its accessibility (Figure 2A). For any given sepal, the outer (abaxial) and inner (adaxial) epidermal layers are connected at the margins, enclosing the inner mesophyll layer [Figure 2B (Roeder, 2021)]. During early sepal development, the outer and inner epidermal layers are differentially sized, with the outer epidermis exhibiting a larger surface area than the inner epidermis (Singh Yadav et al., 2024). Anatomically, the inner and outer epidermises emerge from a single epidermal cell layer in the floral meristem and remain connected with one another, while the mesophyll consists of 2-3 loosely packed cell layers with intervening air spaces that derive from the L2 layer of the meristem (Figures 2C, D) (Jenik and Irish, 2000; Roeder, 2021).

Figure 2. Live imaging of growing sepals. (A) Schematic diagram representing a typical stage 7 flower. The four sepals comprise an outer sepal (blue arrow) shown in light green, one inner sepal and two lateral sepals (black arrows) shown in dark green. (B) The schematic illustrates the optical longitudinal section of the outer sepal from the region marked by the black rectangle (A). The outer, inner epidermal layers (black arrows) in green and the mesophyll layer (grey arrow) in lime color are shown. (C) Confocal live imaging of a growing Arabidopsis flower imaged every 24 hours over a course of 48 hours with a high laser intensity for 514 nm wavelength on an argon laser at 20X magnification. The flower harbors pLH13 (p35S::mCitrine-RCI2A) membrane marker. The yellow arrow points to the outer sepal. Scale bar, 20 µm. (D) Longitudinal optical sections of growing flower (C) are shown. Note the reducing visibility of the inner layers over time. The inner and outer epidermal layers are marked (white arrows). This image series is one of the three replicates used for growth analysis in our previous study (Singh Yadav et al., 2024). Scale bar, 20 µm.

To assess cell growth parameters underlying sepal development, we commence imaging sepals from young stages, typically from mid-stage 4 onwards (Figure 2C). At this stage, the inner epidermis has just begun growing, and the sepals barely overlie the floral meristem (Smyth et al., 1990; Singh Yadav et al., 2024). To visualize cell membranes, we dissect flowers from transgenic plants harboring a yellow fluorescent protein-based membrane marker [pLH13, p35S::mCitrine-RCI2A (Robinson et al., 2018)]. Capturing the membrane signal from inner epidermal cells is challenging, mainly due to the highly obscured nature of inner epidermal signal compared to the outer epidermal layer, which prompted us to optimize our imaging process (Figures 2C, D). Firstly, we used the strong 35S viral promoter to drive membrane marker expression, which ensures robust membrane signal. Note, 35S does lead to silencing of the marker in some plants, so seedlings are screened for fluorescence before they are grown to flowering for dissection and imaging. Secondly, to enhance signal capture from deeper tissue layers without compromising sample viability, we used a high laser intensity at which the membrane signal of the outer epidermal cells was just barely saturated while that of the inner epidermal cells was unsaturated. Laser powers depend on the exact type of the laser and will have to be adjusted for each microscope and laser (on our Zeiss 710 microscope with a LASOS argon laser, we used 16 to 20% power of the 514-laser line). There is a point at which increasing laser power no longer improves internal cell layer image intensity and starts to inhibit sample growth and viability, and an optimal laser intensity can be identified before hitting that point. Validation that the laser is not inhibiting sample growth and viability is needed. 
Thirdly, we use isotropic voxels (x:y:z of 0.244 µm) and 1024 × 1024 pixel dimensions for obtaining high resolution images, although this considerably increases imaging time. All three optimized parameters described above were used in combination to capture images of the quality required for the image processing described in the subsequent sections. Despite these adjustments, the visibility of inner epidermal layers diminishes considerably as the flower develops (Figure 2D). We could capture the inner epidermal layer up to stage 7, but not beyond, with this approach. The factors contributing to this opacity remain undetermined, although we suspect that increasing air spaces in the mesophyll tissue may impede either excitation or emission of the signal from deeper layers. In the context of enhancing the signal of the cell outlines, it is worth mentioning that convolutional neural network (CNN)-based cell outline prediction in 3D may improve the visibility of cell outlines (Wolny et al., 2020), thus helping in downstream image processing (Singh Yadav et al., 2024). While we did not employ CNNs in this pipeline, these algorithms, based on our experience, require high quality images with sufficient Z-resolution (below 0.5 µm) and strong membrane signal to begin with. Therefore, the optimized imaging parameters described above can be reliably employed to obtain high resolution images suitable for visualizing inner cell layers.
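To make the cost of isotropic sampling concrete, the following sketch works through the stack geometry implied by the voxel size and frame dimensions given above. The 100 µm imaging depth is a hypothetical value chosen only for illustration, and the function names are our own.

```python
import math

VOXEL_SIZE_UM = 0.244    # isotropic x:y:z voxel edge, from the text
PIXELS_PER_SIDE = 1024   # frame dimensions, from the text

def field_of_view_um(pixels, voxel_um):
    """Lateral extent covered by one frame, in micrometers."""
    return pixels * voxel_um

def slices_for_depth(depth_um, voxel_um):
    """Number of optical sections needed to cover a given depth."""
    return math.ceil(depth_um / voxel_um)

# Hypothetical imaging depth, for illustration only.
depth_um = 100.0

fov = field_of_view_um(PIXELS_PER_SIDE, VOXEL_SIZE_UM)
n_slices = slices_for_depth(depth_um, VOXEL_SIZE_UM)
n_voxels = PIXELS_PER_SIDE * PIXELS_PER_SIDE * n_slices

print(f"field of view: {fov:.1f} um per side")
print(f"slices for {depth_um:.0f} um depth: {n_slices}")
print(f"voxels in stack: {n_voxels:,}")
```

Under these assumptions a single time point covers roughly 250 µm per side and needs about 410 optical sections, or more than 400 million voxels, which is why the isotropic setting lengthens acquisition considerably.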

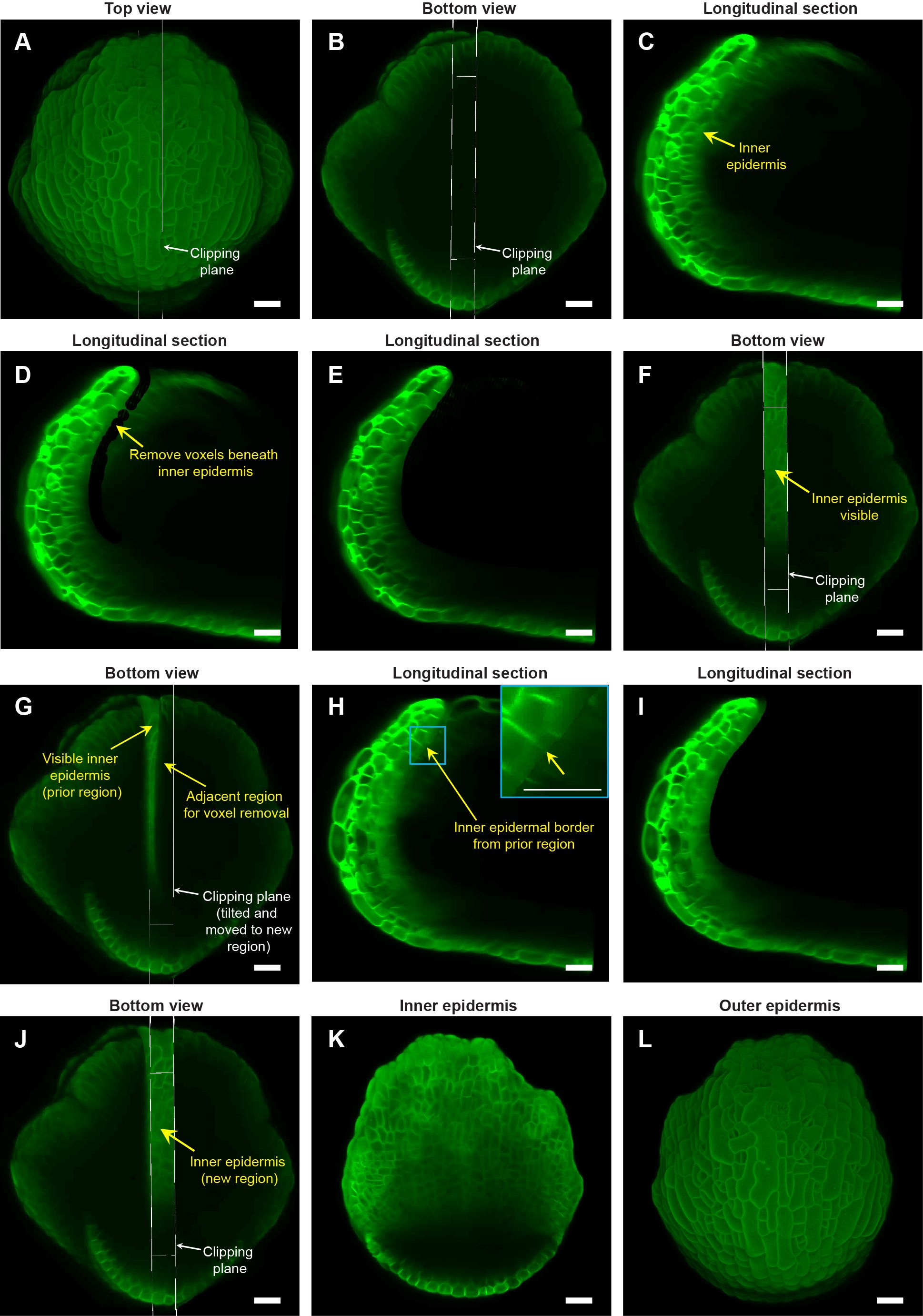

Visualizing the inner epidermal layer of the sepal

Once the flower is imaged, the next step is to reveal the inner epidermal cell layer of the outer sepal through image processing. Due to the small size of the flowers below stage 8 [103-238 µm (Roeder, 2021)], attempting to access the sepal inner epidermis by dissection is impractical and inevitably damages the sepals. To circumvent these limitations, we employed image processing using MorphoGraphX to expose the sepal inner epidermis by removing the voxels associated with the flower meristem regions underneath the inner epidermal layer (hereafter referred to as ‘voxel removal’). We used the ‘Clipping plane’ tool to visualize the longitudinal section through the flower, which enables us to see the sepal internal cell layers (Figures 3A–C; Supplementary Figure S2; also see the MorphoGraphX user manual for more details). The depth of the Clipping plane can be adjusted using the slider under View tab/Clip 1. We recommend maintaining the Clipping plane depth at or below 20 µm, which we determined as the optimal range within which a sepal section exhibits minimum curvature. To initiate voxel removal, we position the longitudinal Clipping plane through the middle of the sepal (Figures 3A, B). This serves as a convenient reference since the middle region of the sepal has reduced curvature compared to peripheral regions. For editing, the image is moved from the ‘Main’ Stack to the ‘Work’ Stack, using the ‘Stack/Multistack/Copy Main to Work Stack’ function. Once the Clipping plane is positioned, enabling it reveals the longitudinal section of the flower (Figure 3C). Visualization of the longitudinal section can be improved by maximizing the image opacity (Main/Stack 1/Opacity) and adjusting the brightness and contrast of the view window, especially given the weak signal of the inner epidermal layer.

Figure 3. Image processing steps to expose the inner (adaxial) epidermis of the sepal. (A) Top view of the stage 7 flower imaged at the 48-hour time point of live imaging, with the longitudinal clipping plane (white rectangular box) through the center of the outer sepal blade. (B) Bottom view of the flower (panel A) with the longitudinal clipping plane positioned through the same region. (C) Longitudinal section of the flower within the clipping plane region. The inner epidermis is marked (yellow arrow). (D) Floral meristem voxels beneath the inner epidermis are removed using the voxel edit tool in MorphoGraphX (Strauss et al., 2022) image processing software. (E) Longitudinal optical section post removal of all voxels beneath the inner epidermis within the clipping plane region. (F) Bottom view of the flower post voxel removal. Note the visibility of the inner epidermis compared to surrounding regions. (G) Clipping plane tilted and moved to the adjacent region on the right with some overlap with the previously edited region (C–E). (H) Longitudinal section displays the inner epidermal border (yellow arrow; also see inset) created by voxel removal in the previously edited region, serving as a guide for voxel removal in the adjacent region. (I) Longitudinal section post voxel removal in the adjacent region. (J) Bottom view of the flower post voxel removal of the adjacent floral meristem region. (K) Complete view of the inner epidermis of the sepal, exposed after voxel removal. (L) Outer epidermis of the sepal. Scale bars (A–L) represent 20 µm.

Deleting flower meristem voxels below the inner epidermis requires the use of ‘Voxel Edit’ tool (activated using the Alt-key), which allows us to remove voxels by simply left-clicking in the desired area (Supplementary Figure S2). The radius of the ‘Voxel Edit’ tool can either be adjusted manually using the slider under View/Stack Editing/Voxel Edit Radius, or by altering the image magnification (Supplementary Figure S2). We recommend keeping the radius of the ‘Voxel Edit’ tool below 5 µm in the initial steps for precise voxel editing. Using this small edit radius, we carefully remove internal floral meristem voxels below the inner epidermis (Figure 3D; Supplementary Video S3). Next, we zoom out to increase the Voxel Edit radius to remove voxels from the remaining internal region of that section (Figures 3E, F; Supplementary Video S3). Finally, any unevenness resulting from voxel removal can be finely smoothed out to prevent irregularities in mesh surface creation in the downstream image processing steps (Supplementary Video S3).

To delete the remaining floral meristem voxels underneath the inner epidermis and expose it for viewing, we undertake a two-step approach. Firstly, we move the clipping plane to the adjacent section while having some overlap with the previously exposed region (Figure 3G; Supplementary Video S4). Having an overlap reveals a residual border from voxel removal in the preceding section (Figure 3H). This border serves as a guide for subsequent voxel removal in the adjacent section. This strategy of using the border as a guide ensures uniform voxel removal by eliminating the chances of under- or over- removal of voxels across discrete longitudinal sections (Figures 3I, J; Supplementary Video S4). Maintaining this uniformity is critical for obtaining a smooth mesh surface and accurately projecting membrane signal in subsequent steps. Secondly, we tilt the clipping plane such that it is roughly perpendicular to the curvature of the sepal in the region from which we intend to remove voxels (Figure 3G; Supplementary Video S4). ‘Voxel Edit’ deletes voxels all the way through the thickness of the section. Since the sepal is a curved tissue, the clipping plane must be perpendicular so that voxels associated with the inner epidermal layer on the opposite side are not accidentally deleted.

The two steps (tilting and moving the clipping plane for voxel removal) are repeatedly performed throughout the flower in a longitudinal section-by-section manner, until we achieve a complete view of the inner epidermis (Figure 3K; Supplementary Video S4). Note that all the steps described above are error-prone; whether voxel removal was optimally performed or not can only be assessed later, based on how good the membrane signal projection is on the mesh surface (described in the next section). Therefore, saving our progress regularly is a smart practice that allows us to revert to any previous versions if any anomalies arise, without having to start all over. To save the current image version, we simply copy the image in the ‘Work’ stack back to the ‘Main’ stack using the process ‘Stack/Multistack/Copy Work to Main Stack’ and then save the stack. Overall, the steps described above allow one to view both the inner and outer epidermis of growing sepals (Figures 3K, L) without the need for physically dissecting the sepal. Although we illustrate the ‘voxel removal’ steps using the 48-hour time point, the same can be applied to visualize the inner epidermis for the other time-points.

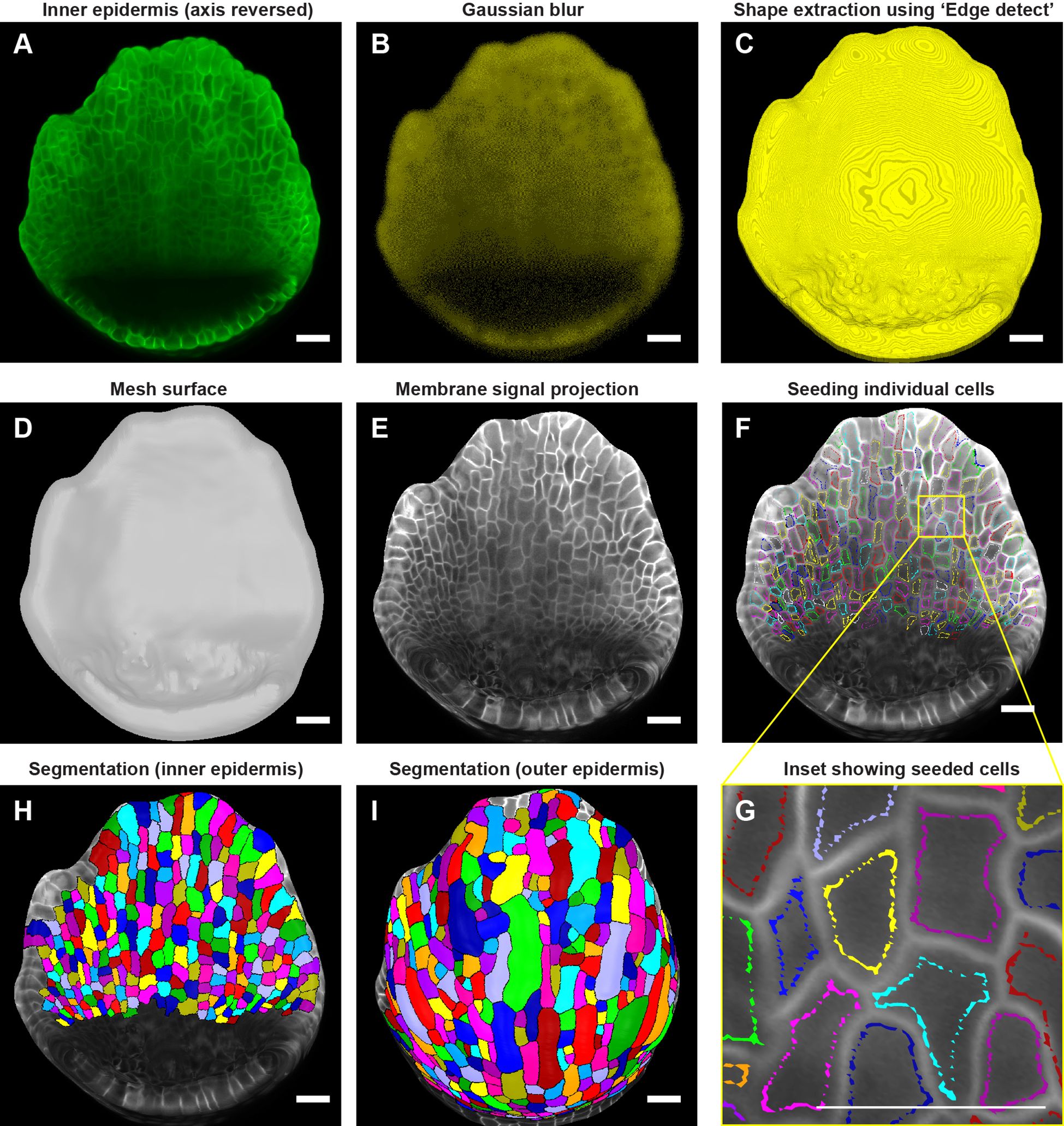

Image processing steps for cell segmentation

After the sepal inner epidermis is exposed, we then proceed with cell segmentation, which is a computational technique for demarcating individual cells that can be used for extracting cellular features (Roeder et al., 2012). We segment the cells on a computational 2.5D mesh surface that represents the sepal morphology. The mesh comprises triangles and segmenting the mesh into discrete cellular units involves labeling the triangle vertices (Barbier de Reuille et al., 2015). The methodology for generating a 2.5D mesh surface is outlined in the MorphoGraphX user manual. Here, we describe how to optimize the parameters for generating a 2.5D mesh surface specifically for the epidermal layers of the sepal. Note that, while we detail the parameter optimization specific to this study, the image processing steps need to be optimized for each individual experiment depending on the type and quality of the images.

To generate the inner epidermal mesh surface, the initial image orientation, which places the inner epidermis at the bottom of the stack, needs to be inverted along the Z-direction (Figures 3K, 4A). Reorienting the image stack by running ‘Stack/Canvas/Reverse Axes’ positions the inner epidermis at the top, which directs MorphoGraphX to create the mesh surface for the inner epidermis. To attenuate image noise that could interfere with inner epidermal shape extraction in the subsequent steps, we run the process ‘Stack/Filters/Gaussian Blur Stack’ with X/Y/Z sigma values between 2 and 2.5 µm (Figure 4B). While lower sigma values (<=1) are generally preferred, images with weaker membrane marker signal require more blurring, which minimizes the occurrence of holes when we extract the global shape. Once the image is blurred, we can extract the global shape of the inner epidermis by running ‘Stack/Morphology/Edge Detect’, with a threshold value between 2000 and 3000 (Figure 4C). While a higher threshold value typically yields a shape closely resembling the image, this usually requires strong cell membrane signals. Weak signal strength (as is the case for the inner epidermis) leads to holes in the structure if higher threshold values are used. Although small holes can be corrected by running ‘Stack/Morphology/Closing’, attempts to fill large holes result in structural distortions.
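The trade-off between threshold and holes can be sketched with a one-dimensional toy example. This is a simplified illustration, not the MorphoGraphX ‘Edge Detect’ implementation: the intensity profile below is hypothetical, and ‘holes’ are counted simply as interior runs of samples that fall below the threshold. The sketch also converts the blur sigma from micrometers to voxels, assuming the 0.244 µm isotropic voxel size used for imaging.

```python
VOXEL_SIZE_UM = 0.244  # isotropic voxel edge used during imaging

def sigma_in_voxels(sigma_um, voxel_um=VOXEL_SIZE_UM):
    """Express a blur sigma given in micrometers as a number of voxels."""
    return sigma_um / voxel_um

def count_holes(profile, threshold):
    """Count interior runs of below-threshold samples in a 1D profile."""
    mask = [value >= threshold for value in profile]
    if True not in mask:
        return 0
    first = mask.index(True)
    last = len(mask) - 1 - mask[::-1].index(True)
    holes = 0
    in_hole = False
    for covered in mask[first:last + 1]:
        if not covered and not in_hole:
            holes += 1  # a new gap in the detected surface starts here
        in_hole = not covered
    return holes

# Hypothetical blurred intensities sampled along a weakly labeled
# inner epidermis (arbitrary 16-bit-like units).
weak_profile = [3200, 2900, 2600, 3400, 2400, 3100, 2700, 3300]

holes_low = count_holes(weak_profile, 2000)
holes_high = count_holes(weak_profile, 3000)

print(f"sigma of 2 um spans {sigma_in_voxels(2.0):.1f} voxels")
print(f"holes at threshold 2000: {holes_low}, at 3000: {holes_high}")
```

In this toy profile the lower threshold keeps the surface continuous, while the higher one fragments it into several gaps, mirroring the behavior described above for weak inner epidermal signal.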

Figure 4. Downstream image processing for cell segmentation. (A) MorphoGraphX (Strauss et al., 2022) processed image of the inner epidermis after reversing the axis along the Z direction. (B) Inner epidermal image stack post running Gaussian Blur filter. (C) Extraction of the global shape of the inner epidermis using MorphoGraphX (Strauss et al., 2022) process “Edge detect”. (D) Mesh surface generated using “Marching Cubes Surface” followed by smoothing and subdividing the mesh. (E) Plasma membrane marker signal projected onto the mesh. (F) Cells seeded (labeled) throughout the inner epidermis. Colors represent individual labels. (G) Inset (F) demonstrates how the cell label follows the cell shape. Note that the labeling close to the cell boundary is essential for accurate segmentation especially when the membrane signal is weak. Scale bar, 2 µm. (H, I) Watershed segmentation of (H) Inner epidermal cells and (I) Outer epidermal cells. Scale bars across panels (A–I) represent 20 µm.

Following global shape extraction, we generate a 2.5D mesh surface by running ‘Mesh/Creation/Marching Cubes Surface’ with a cube size of 3 µm (Figure 4D). Irregularities in the mesh are then smoothed by running ‘Mesh/Structure/Smooth Mesh’ with 10 passes. We usually avoid smoothing the mesh beyond 10 passes as it can shrink the mesh, which in turn can distort membrane signal projection. To enhance mesh resolution, we increase the number of vertices by running ‘Mesh/Structure/Subdivide’. In this case, optimum resolution is achieved with two iterations of subdividing, which typically yields from ~3 million vertices for a stage 4 sepal to ~10 million vertices for a stage 7 sepal. Additional subdivisions beyond two iterations increase the file size of the mesh (and in turn, the computational time for processing) without any discernible improvement in membrane signal resolution. Next, we project the membrane signal onto the mesh using ‘Mesh/Signal/Project Signal’ (Figure 4E). Projecting the signal with minimum and maximum projection distances of 3 and 8 µm, respectively, below the mesh is usually sufficient, although minor adjustments may be necessary. Typically, the lower the ‘Edge Detect’ threshold value used to extract the global shape, the deeper (in microns) the signal needs to be projected onto the mesh surface to capture the membrane signal. We then manually seed the cells using the ‘Mesh tools bar/Add new seed’ tool, activated using the Alt-key (Figure 4F). To prevent labels from bleeding into neighboring cells during segmentation, especially given that the inner epidermal cell membrane signals are weak, care is taken to seed close to the cell membrane outlining the cell shape (Figure 4G). We then perform cell segmentation by running the process ‘Mesh/Segmentation/Watershed Segmentation’, which propagates the respective labels of individual cells (Figure 4H). In the final step, we verify whether the segmentation of each cell accurately represents the cell morphology.
If any discrepancies are found, we delete the segmentation of the given cell and its adjacent cells by filling them with an empty label, using ‘Fill Label’ from ‘Stack tools’, and then re-seed and re-segment the cells. Once we are satisfied with the segmentation, we run the process ‘Mesh/Cell Mesh/Fix Corner Triangles’, which ensures no vertices in the mesh are unlabeled, as unlabeled vertices interfere with the parent tracking process. Note that MorphoGraphX also allows for automatic seeding and segmentation (Barbier de Reuille et al., 2015). This approach is useful for segmenting individual cells in larger samples, such as leaves or fully mature sepals, particularly when the cell boundaries are well defined. However, it also results in under- or over-segmented cells, which need to be manually corrected. In this case, where the number of cells is relatively small and the membrane signal of the inner epidermal cells is weak, manual seeding is more efficient and accurate.

Due to a considerable disparity in signal-to-noise ratio, the surfaces for the inner and outer epidermis need to be created separately to achieve optimal membrane signal projection. To segment the outer epidermal cells from the same sepal, we use the sepal image prior to reorienting the stack. While the same processes described above for creating a surface for the inner epidermis can be followed, the parameters need to be modified to account for the enhanced membrane signal intensity of the outer epidermal cells. Briefly, we run ‘Gaussian blur’ to blur the stack with X/Y/Z sigma (µm) values of 1-1.5, followed by ‘Edge detect’ for global shape extraction with threshold values of 9,000-10,000. The parameters for creating the mesh surface, smoothing, and subdividing the mesh remain consistent with those described for the inner epidermis. Optimal signal projection is typically obtained with min. and max. distances of 2 and 4 µm, respectively. Cells are then carefully seeded according to cell shape, followed by segmentation using the watershed algorithm (Figure 4I). Taken together, the steps described are employed to segment cells on both the inner and outer epidermal layers across time points.

Parent tracking cells through multiple time points

To determine the cell lineages over time, we use the ‘Parent tracking’ process (refer to MorphoGraphX user manual for more details), which involves linking the daughter cell labels [in the later time point (T2)] with their respective parent cell labels [in the earlier time point (T1)]. This process allows us to track how cells grow and divide over time (Figures 5A, B). For parent tracking, the segmented T1 and T2 meshes are loaded into ‘Stack 1’ and ‘Stack 2’, respectively (Supplementary Video S5). For the T1 mesh, we deselect ‘Surface’ in the ‘Stack 1’ view window, check ‘Mesh’ and choose ‘cells’ from the ‘Mesh’ dropdown menu to retain only the segmented cell outlines. For the T2 mesh, we activate ‘Parents’ under the ‘Stack 2’ view window. We prefer distinct colors for segmented cell outlines in the T1 and T2 meshes to avoid confusion, options for which are available under ‘Miscellaneous tools bar/Color Palette’.

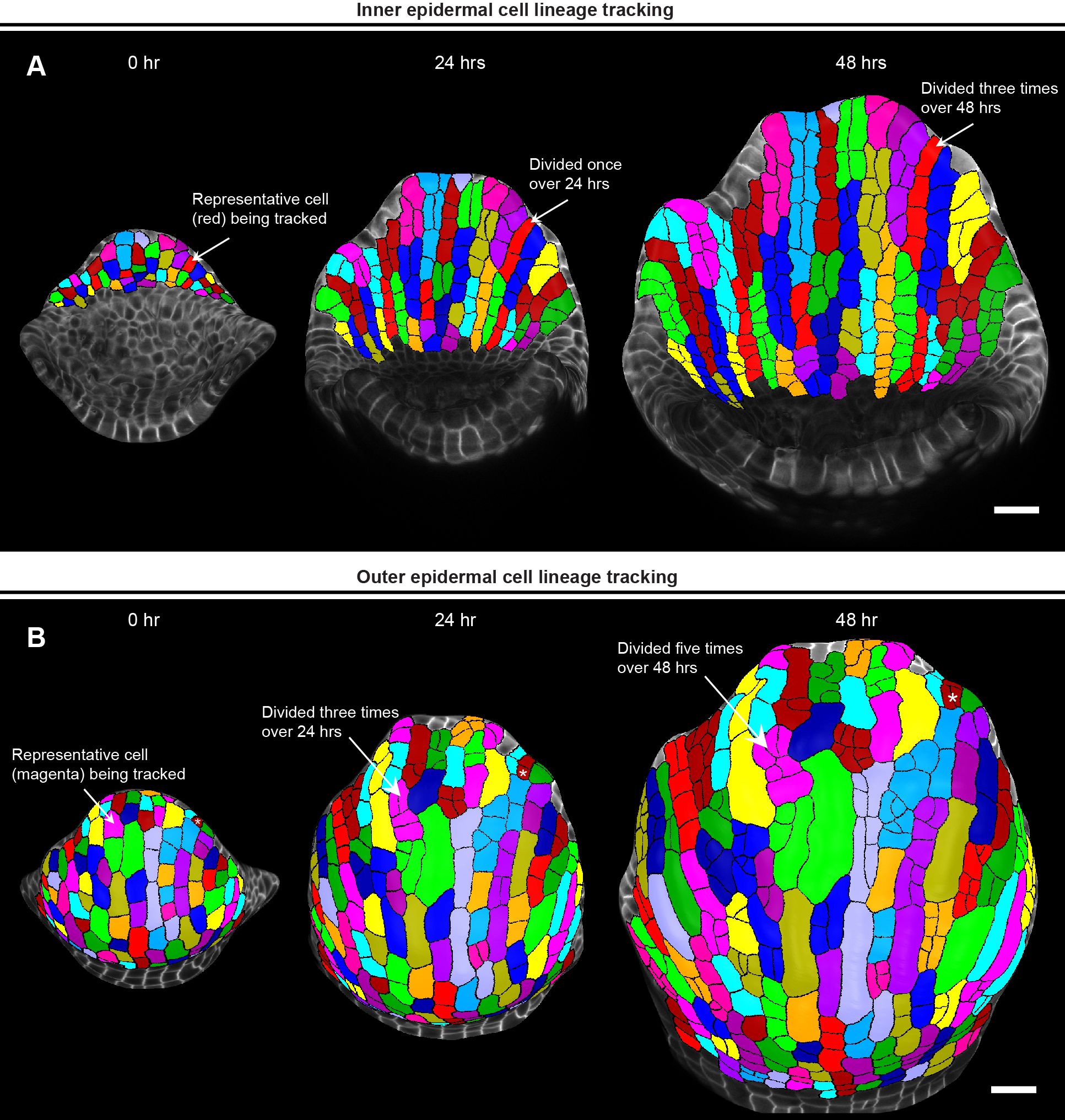

Figure 5. Parent tracking to quantify cell growth properties. (A) MorphoGraphX (Strauss et al., 2022) generated meshes of the growing inner epidermis showing segmented cells parent tracked over 48 hours. The arrow shows a representative cell (red) that divided once to generate two daughter cells in 24 hours, and thrice to generate four daughter cells in 48 hours. Scale bar, 20 µm. (B) Meshes showing segmented cells of the outer epidermis parent tracked over 48 hours. The arrow points to a representative cell (magenta) that has divided three times over 24 hours and an additional two times over 48 hours. Note how a representative guard mother cell undergoes symmetric division to form two guard cells (white asterisk, top right). Scale bar, 20 µm.

To initiate the ‘Automatic parent labeling’ process in MorphoGraphX, manual parent labeling of a few daughter cells, ideally distributed uniformly across the T2 mesh, is necessary. These manually parent labeled cells act as landmarks for auto-parent labeling the remaining daughter cells (Supplementary Figure S3; Supplementary Videos S5, S6). To transfer parent labels, we superimpose the T1 mesh onto the T2 mesh surface such that the parent cell (in T1 mesh) selected for label transfer overlies its corresponding daughter cells (in T2 mesh) (Supplementary Video S5). Using the ‘Mesh tools/Grab label from other surface’ tool, we then simply click within the parent cell region to transfer the label. For manual parent labeling, we prioritize labeling those daughter cells in T2 mesh that are easily recognizable based on their morphological similarity to the parent cells in T1 mesh (Supplementary Video S5). Conceptually, the cell neighbors do not change despite the cells undergoing growth and division. Based on this concept, the daughter cells which can be easily recognized based on their morphological similarity to the parent cell can be used as references to parent label additional daughter cells at distant locations throughout the T2 mesh. We then run the process ‘Mesh/Deformation/Morphing/Set Correct Parents’ to set these manually parent labeled cells as landmarks for auto parent labeling, which is then performed by running ‘Mesh/Deformation/Mesh 2D/Auto Parent Labelling 2D’.

While ‘Auto Parent Labelling 2D’ labels the majority of the daughter cells, the cells that are left out need to be manually parent labeled (Supplementary Video S6). Finally, we verify whether all the parent labels have been assigned correctly by running the ‘Mesh/Cell Axis/PDG/Check Correspondence’ process on the T1 mesh (Supplementary Video S6). This generates a heatmap for both time points, allowing us to visually assess whether cell junctions correspond correctly between T1 and T2 meshes (Supplementary Video S6). The cells with accurately matched junctions are highlighted in blue, while those with errors are highlighted in red. These discrepancies typically arise from either segmentation errors or incorrect assignment of parent labels, which need to be rectified manually, with the objective of having all the cells pass the correspondence check. Following this step, keeping Stack 2 active, we save the parent labels as a .csv file by running ‘Mesh/Lineage Tracking/Save Parents’. Usually, we parent track the cells across two consecutive 24-hour time points and save the parent labels for each pair of consecutive time points. In this case, we parent tracked cells from the 0-to-24-hour and 24-to-48-hour time points for both the outer and inner epidermis and saved the parent labels as .csv files. For parent tracking through multiple time points (Figure 5), we use the .csv files to run a custom R script (combinelineages.r, Supplementary File S1; Hong et al., 2016) to correlate the parent labels, followed by manual correction if needed (in case there were any mistakes in parent tracking between time points). This allows us to trace individual cells from the initial time point (0 hour) up to the final time point (48 hour) (Figure 5).
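
The idea of correlating parent labels across consecutive time points can be illustrated with a minimal Python sketch. This is not the combinelineages.r script from Supplementary File S1, and it is not part of MorphoGraphX. It assumes each saved parent file is a two-column .csv (cell label, then parent label), which should be checked against the actual file layout. The function names and the example labels are hypothetical.

```python
import csv
from typing import Dict, Optional


def read_parents(path: str) -> Dict[int, int]:
    """Read an assumed two-column parent file: cell label, parent label."""
    parents: Dict[int, int] = {}
    with open(path, newline="") as handle:
        for row in csv.reader(handle):
            try:
                label, parent = int(row[0]), int(row[1])
            except (ValueError, IndexError):
                continue  # skip a header line or any malformed row
            parents[label] = parent
    return parents


def combine_lineages(
    late: Dict[int, int], early: Dict[int, int]
) -> Dict[int, Optional[int]]:
    """Map each cell at the last time point to its ancestor at the first.

    late:  cell label at 48 h -> parent label at 24 h
    early: cell label at 24 h -> parent label at 0 h
    A cell whose 24 h parent has no 0 h parent is mapped to None,
    flagging it for manual correction.
    """
    return {cell: early.get(parent) for cell, parent in late.items()}


# Hypothetical labels, for illustration only.
parents_0_24 = {11: 1, 12: 1, 13: 2}
parents_24_48 = {101: 11, 102: 11, 103: 12, 104: 13, 105: 99}

combined = combine_lineages(parents_24_48, parents_0_24)
untracked = [cell for cell, ancestor in combined.items() if ancestor is None]
print(combined)
print("cells needing manual correction:", untracked)
```

Cells that map to `None` are those for which the chain of parent labels is broken between time points, which corresponds to the cases that require manual correction.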

Parent tracking cells on the outer and inner epidermis reveals an interesting pattern of cell growth and division. We found that almost all the inner epidermal cells divide vertically along the longitudinal axis of the sepal (Figure 5A). While cells on the outer epidermis also exhibit cell division along the longitudinal axis, several cells divide along the transverse direction (along the width) as well (Figure 5B). We also see multiple instances of asymmetric cell divisions, wherein the smaller sister cells can be predicted to differentiate into stomata. Additionally, since giant cell specification occurs early during development (Roeder et al., 2010), cells that cease division eventually differentiate into giant cells. Overall, once we have successfully parent tracked the cells, we can extract a multitude of growth-related information for each cell lineage, including growth rate, direction of growth, and cell division rate, as previously shown (Singh Yadav et al., 2024).
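
As a rough illustration of how parent labels feed into such measurements, the sketch below computes an areal growth ratio and a daughter count per lineage. It is a simplified stand-in, not the MorphoGraphX growth analysis used in this study. It assumes per-cell surface areas have been exported for both time points, and it defines growth as the summed daughter area at T2 divided by the parent area at T1. The function name and all numbers are hypothetical.

```python
from collections import defaultdict
from typing import Dict


def lineage_growth(
    area_t1: Dict[int, float],
    area_t2: Dict[int, float],
    parents: Dict[int, int],
) -> Dict[int, dict]:
    """Summarize growth per T1 cell from its T2 daughters.

    area_t1: T1 cell label -> area (square microns)
    area_t2: T2 cell label -> area (square microns)
    parents: T2 cell label -> T1 parent label
    """
    summed = defaultdict(float)
    counts = defaultdict(int)
    for cell, parent in parents.items():
        # Only use daughters that have an area and a measured parent.
        if cell in area_t2 and parent in area_t1:
            summed[parent] += area_t2[cell]
            counts[parent] += 1
    return {
        parent: {
            "area_ratio": summed[parent] / area_t1[parent],
            "n_daughters": counts[parent],
            "divided": counts[parent] > 1,
        }
        for parent in summed
    }


# Hypothetical areas and labels, for illustration only.
area_t1 = {1: 50.0, 2: 40.0}
area_t2 = {11: 40.0, 12: 35.0, 13: 60.0}
parents = {11: 1, 12: 1, 13: 2}

growth = lineage_growth(area_t1, area_t2, parents)
for parent, stats in sorted(growth.items()):
    print(parent, stats)
```

In this toy example both lineages grow by the same area ratio, but only the first one divides, which shows why growth and division are tracked as separate quantities.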

Conclusions

Our optimized methodology offers a seamless workflow from sample preparation to live imaging and image processing for analyzing early sepal development. Despite the faint membrane marker signal from the inner epidermal layer, our pipeline enables reliable parent tracking of cell lineages. It also allows us to bypass the need for sepal dissection, thus preserving sample integrity. Although our manual voxel editing method is time- and labor-intensive, it offers specific advantages over automated methods in extracting cell layers from curved tissues. While automated methods are efficient, they largely rely on robust signal intensity to accurately segment individual cells. This requirement poses a challenge for visualizing deeper cell layers, given the inherently weak signal. This is especially true for live-imaged samples, where maintaining sufficient signal intensity without compromising viability or causing phototoxicity is challenging. Manual extraction allows the investigator to discern subtle differences in membrane signal and identify cells that automated methods can misinterpret, which would otherwise lead to errors in segmentation and downstream growth calculations. Therefore, in this study we outlined the manual extraction method as a reliable approach to extract the inner epidermal layer and segment its cells with reasonable precision. While we demonstrated the usefulness of our computationally inexpensive approach using growing sepals, we believe that our methodology is applicable beyond Arabidopsis sepals, providing researchers with much-needed manual control over signal extraction and subsequent segmentation. For example, our ‘Voxel Edit’ technique can be applied to obtain the bottommost cell layer of curved floral organs such as petals or stamens, or of young leaves during the initial stages of development.
We believe our methodology will be valuable to researchers studying cell growth dynamics of inner plant tissue layers in 2.5D, regardless of tissue type, curvature, and morphology, particularly where the signal strength of inner tissue layers is weak.

Materials and methods

Plant material and growth conditions

In this study, we used Arabidopsis thaliana Landsberg erecta (Ler-0) ecotype plants expressing the pLH13 (p35S::mCitrine-RCI2A) cell membrane marker [CS73559 (Singh Yadav et al., 2024)]. For imaging and subsequent analysis, plants of the T2 generation were used. The plants were segregating for the membrane marker, since homozygous lines for membrane markers driven by the 35S promoter commonly exhibit silencing issues, especially in the younger tissues. The seeds were initially planted on ½ MS media plates (comprising ½ MS salt mixture, 0.05% MES w/v, 1% sucrose w/v, pH ∼ 5.8 adjusted with KOH, and 0.8% phytoagar). The media was supplemented with Hygromycin (GoldBio) at a concentration of 25 µg/ml to select transgenic plants containing the pLH13 construct as previously described (Singh Yadav et al., 2024). For selection on plates, the seeds were sterilized by washing them twice with a sterilization solution containing 70% ethanol + 0.1% Triton X-100, followed by two additional washes with 100% ethanol. Sterilized seeds were then dried on filter paper (Whatman 1), spread evenly on the ½ MS media hygromycin plates, and stratified at 4°C for three days. Following stratification, the plates were transferred to a growth chamber (Percival-Scientific), maintained under long-day light conditions (16 hours fluorescent light/8 hours dark) at a fluorescent light intensity of approximately 100 µmol m⁻² s⁻¹ and a relative humidity of 65%. The seedlings were grown on plates for 1.5 to 2 weeks until the transgenic plants that survived Hygromycin selection were clearly distinguishable from their non-transgenic counterparts. The transgenics were subsequently transplanted into pots (6.5 × 8.5 cm) containing soil mixed with Vermiculite (LM-111), with one plant per pot to facilitate healthy vegetative growth.
For live imaging, the inflorescence samples were cultured in ½ MS media containing 1% sucrose and 1.2% w/v phytoagar, supplemented with 1X Gamborg Vitamin mix [containing final concentration of 100 µg/ml myo-Inositol, 1 ng/ml Nicotinic Acid, 1 ng/ml Pyridoxine HCl, and 1 ng/ml Thiamine HCl (Hamant et al., 2014)] and 1000-fold diluted plant preservative mixture (PPM™, Plant Cell Technology).

Schematic diagrams

The schematic diagrams shown in Figures 2A, B were drawn by hand using the Notability application on an Apple iPad (model A1701).

Dissection

Primary inflorescences were clipped off six- to seven-week-old plants for dissection. For dissecting the inflorescences down to flower stages 7 to 8, Dumont tweezers (Style 5, Electron Microscopy Sciences) were used. For subsequent dissection of the flowers down to stages 3 to 4 in water, we used a BD Micro-Fine IV needle [BD U-100 Insulin Syringes, 0.35 mm (28-gauge) needle, 12.7 mm (1/2 in.) length]. Dissections were performed using either a Zeiss Stemi 2000 or a Stemi 508 stereo microscope.

Capturing inflorescences and whole plants

Photos of the whole plant, clipped inflorescence, and the dissected inflorescence embedded in plates containing live imaging media were taken with a Samsung Galaxy S23+ phone (1848 x 4000, 7 MP). Microscopic images and videos of the inflorescence dissection were captured with an Accu-Scope Excelis 4K UHD camera mounted on a Zeiss Stemi 508 stereo microscope.

Confocal imaging

A Zeiss Axio Examiner.Z1 upright microscope, equipped with a Zeiss LSM710 confocal head, was used for live-imaging the sepals using a 20X water-dipping objective (Plan-Apochromat 20x/1.0 DIC D=0.17 M27 75mm). The microscope is controlled by the Zeiss ZEN user interface software [ZEN 2012 SP5 FP3 (64 bit) version 14.0.27.201], which is installed on a 64-bit Windows 10 system (CPU - Intel Xeon(R) Gold 5222 @ 3.80 GHz, RAM - 64.0 GB). For the 0-hour time point, the sepal of a stage 4 flower was imaged with the following settings: x: y: z spatial scaling of 0.244 µm, image dimensions of 1024 x 1024 x 743 pixels (8-bit), a zoom factor of 1.7, averaging = 1, excitation with an argon laser at 514 nm with 16 to 20% intensity, and a master gain of 485. For the 24-hour time point, the imaging settings were the same, except for image dimensions, which were 1024 x 1024 x 885 pixels (8-bit), and a master gain of 505. For the 48-hour time point, the sepal was imaged with image dimensions of 1024 x 1024 x 895 pixels (8-bit) and a master gain of 513. Across all time points, the emission for mCitrine was collected in the range of 519-596 nm. The images were saved in .lsm file format.
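
To give a sense of scale for these settings, the short Python sketch below converts the reported image dimensions into physical stack size and approximate raw data volume. It assumes that the 0.244 µm scaling applies equally to the x, y, and z axes, and that each stack holds a single uncompressed 8-bit channel. The function and variable names are illustrative.

```python
from typing import Dict, Tuple

VOXEL_UM = 0.244  # reported x: y: z spatial scaling, assumed isotropic

# Image dimensions (x, y, z) in pixels, as reported for each time point.
STACKS: Dict[str, Tuple[int, int, int]] = {
    "0 h": (1024, 1024, 743),
    "24 h": (1024, 1024, 885),
    "48 h": (1024, 1024, 895),
}


def stack_summary(
    dims: Tuple[int, int, int],
    voxel_um: float = VOXEL_UM,
    bytes_per_voxel: int = 1,  # 8-bit image, one channel (assumption)
) -> Dict[str, float]:
    """Return physical extent (microns) and raw size (MiB) of a stack."""
    nx, ny, nz = dims
    return {
        "width_um": nx * voxel_um,
        "height_um": ny * voxel_um,
        "depth_um": nz * voxel_um,
        "size_mib": nx * ny * nz * bytes_per_voxel / 2**20,
    }


for name, dims in STACKS.items():
    s = stack_summary(dims)
    print(
        f"{name}: {s['width_um']:.1f} x {s['height_um']:.1f} x "
        f"{s['depth_um']:.1f} um, about {s['size_mib']:.0f} MiB"
    )
```

Under these assumptions, each stack covers a field of view of roughly 250 µm across and a depth of roughly 180 to 220 µm, with raw data on the order of 0.7 to 0.9 GiB per time point.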

Image processing

Image processing in this study was performed using MorphoGraphX 2.0 r1-294 image processing software (Barbier de Reuille et al., 2015; Strauss et al., 2022), installed on an Ubuntu 20.04.4 LTS (Focal Fossa) 64-bit operating system. The system has NVIDIA Cuda driver version 11.40 installed and is equipped with the following hardware specifications: CPU - Intel(R) Core(TM) i7-5930K @ 3.50GHz with 12 cores, RAM - 64 GB, and GPU - NVIDIA GeForce GTX TITAN X with 12 GB of memory. Our system specifications met the requirements recommended for the MorphoGraphX software, which are 32-64 GB RAM and an NVIDIA graphics card.

For viewing and processing the images in MorphoGraphX, the raw images in .lsm file format were converted to .tiff format using the ImageJ distribution Fiji (Rueden et al., 2017). In general, we import the first image into the ‘Stack 1’ view window under ‘Main’, and any subsequent images into ‘Stack 2’. For taking screenshots of the flowers, the extraneous parts of the image not associated with the flower (such as dissected parts of the inflorescence and/or other flowers) were removed using the ‘Voxel Edit’ tool. All screenshots were captured using the ‘Save screenshot’ tool and saved as .png image files. The videos were captured using the ‘Record Movie’ tool. Briefly, prior to video capturing, the images/meshes were pre-positioned in the view window and then rotated or moved in the view window as required, with the ‘Record Movie’ tool activated. The ‘Record Movie’ tool captures these movements by generating a series of two-dimensional .tiff files. To produce the videos, the .tiff file series were imported into Fiji using ‘File/Import/Image Sequence’ and were subsequently saved as uncompressed .avi video files. Further video processing, including lossless compression, labeling, and annotation, was performed using Adobe Premiere Pro 2023.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: https://doi.org/10.17605/OSF.IO/UMW9B, https://doi.org/10.17605/OSF.IO/P5Q39.

Author contributions

AS: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Validation, Visualization, Writing – original draft, Writing – review & editing. AR: Conceptualization, Data curation, Funding acquisition, Project administration, Supervision, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. Research reported in this publication was supported by the National Institute of General Medical Sciences of the National Institutes of Health under Award Number R01GM134037 to AR. AS is also supported by the 2023 Sam and Nancy Fleming Research Fellowship.

Acknowledgments

We thank Isabella Rose Burda, Shuyao Kong and Michelle Heeney for their comments on the manuscript. We thank Richard Smith (John Innes Centre, UK) for his advice on image processing.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Author Disclaimer

The content of this study is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health and other funders.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpls.2024.1449195/full#supplementary-material

Supplementary Figure 1 | Trimmed inflorescence for dissection, associated with Figure 1. (A) Whole plant image of six to seven-week-old Arabidopsis Ler-0 accession plant grown in long-day conditions (16-hr light/8-hr dark). (B) Inflorescence post-trimming, which has been cut approximately 2-3 cm below the inflorescence apex (red line) for dissection. Scale bar, 1 cm.

Supplementary Figure 2 | The ‘Voxel Edit’ tool and ‘Clipping plane’ on the MorphoGraphX window, associated with Figure 3. (A) The panel shows the location of the ‘Voxel Edit’ tool and the slider under the ‘View’ tab to adjust the area for voxel editing. One can also change the editing area by using the mouse scrolling wheel to zoom in and out. Pressing and holding the Alt-key activates the tool (a white circle will appear), allowing for voxel deletion by left-clicking the mouse. Note that for the ‘Voxel Edit’ option to be available, the image needs to be in the work stack (Process/Stack/MultiStack/Copy Main to Work Stack). (B) Panel shows the clipping plane. In this case, ‘Clip 1’, which is parallel to the Z-direction and clips along the X-direction of the stack, is shown. The slider allows for adjusting the depth (thickness) of the clipping plane.

Supplementary Figure 3 | Semi-automatic parent tracking, associated with Figure 5. (A) MorphoGraphX generated mesh for the inner epidermis at the 24-hour time point showing segmented cells. Colors represent individual cells. (B) Inner epidermis mesh at the 48-hour time point, wherein segmented cells are being parent tracked to cells in the 24-hour time point (panel A). Colors correspond to parent cells in the 24-hour time point mesh. Note that manually parent labeled cells are distributed across the mesh, which greatly aids automatic parent labeling. (C) Cells in the 48-hour time point parent labeled using automatic parent labeling, followed by manual correction. Scale bar, 20 µm.

Supplementary Video 1 | Dissecting Arabidopsis flowers off the inflorescence using tweezers. The flowers are dissected by pressing the pedicel using tweezers while having the inflorescence securely held in one hand. As the flowers are being dissected, the inflorescence is rotated gradually such that the older flowers are dissected first, followed by younger flowers.

Supplementary Video 2 | Dissection of Arabidopsis flowers in water using a needle. The inflorescence, comprising flowers of stages 8 and below, is anchored in agar media while being submerged in water to prevent dehydration during dissection. The plate is rotated such that the flower to be dissected is at a convenient angle. Older flowers are dissected first, followed by the younger flowers.

Supplementary Video 3 | Removing voxels beneath the inner epidermis. Enabling the clipping plane (positioned longitudinally) reveals the longitudinal section of the flower. The ‘Voxel Edit’ tool is used to carefully remove voxels beneath the inner epidermis, without removing voxels associated with the inner epidermis.

Supplementary Video 4 | Sequential voxel removal beneath the sepal inner epidermis in longitudinal sections using the clipping plane to fully expose the inner epidermis. The video shows the 3D view of a typical Arabidopsis stage 7 flower, with cells on both epidermal layers of the outer sepal segmented. To expose the inner epidermis, voxels within a given longitudinal section defined by the clipping plane are removed. The steps for voxel removal involve tilting and moving the clipping plane to the adjacent regions, resulting in a complete view of the inner epidermis. The 3D image of the inner epidermis can then be used to generate a mesh surface onto which the membrane signal is projected for segmenting the cells.

Supplementary Video 5 | Manually assigning parent labels to inner epidermal cells. In this video, the inner epidermal meshes from earlier (T1, 24-hour) and later (T2, 48-hour) time points are loaded onto Stack 1 and Stack 2, respectively. To assign parent labels, the T1 mesh surface is unchecked, leaving only segmented cell outlines visible. With parents active for the T2 mesh, the T1 mesh is overlaid onto the T2 mesh, such that the parent cells lie above their corresponding daughter cells. Parent labels are assigned by clicking within the parent cell area.

Supplementary Video 6 | Semi-automatic parent labeling. In this video, the 24-hour time point (T1) mesh and the 48-hour time point (T2) mesh are loaded onto Stack 1 and Stack 2, respectively. A subset of cells in the T2 mesh are manually parent labeled, which serve as references for the MorphoGraphX process ‘Auto Parent Labelling 2D’ to label the remaining cells. Cells that were not auto-parent labeled were labeled manually. The accuracy of parent labeling was checked by running the process ‘Check Correspondence’, which checks whether cell junctions between the T1 and T2 meshes correspond correctly.

References

Barbier de Reuille, P., Routier-Kierzkowska, A.-L., Kierzkowski, D., Bassel, G. W., Schüpbach, T., Tauriello, G., et al. (2015). MorphoGraphX: A platform for quantifying morphogenesis in 4D. eLife 4, e05864. doi: 10.7554/eLife.05864

Booth, M. J. (2014). Adaptive optical microscopy: the ongoing quest for a perfect image. Light: Sci. Appl. 3, e165. doi: 10.1038/lsa.2014.46

Bradley, D., Ratcliffe, O., Vincent, C., Carpenter, R., Coen, E. (1997). Inflorescence commitment and architecture in arabidopsis. Science 275, 80–83. doi: 10.1126/science.275.5296.80

Byrne, M. E., Groover, A. T., Fontana, J. R., Martienssen, R. A. (2003). Phyllotactic pattern and stem cell fate are determined by the Arabidopsis homeobox gene BELLRINGER. Development 130, 3941–3950. doi: 10.1242/dev.00620

Chickarmane, V., Roeder, A. H., Tarr, P. T., Cunha, A., Tobin, C., Meyerowitz, E. M. (2010). Computational morphodynamics: a modeling framework to understand plant growth. Annu. Rev. Plant Biol. 61, 65–87. doi: 10.1146/annurev-arplant-042809-112213

Evans, J. R. (2013). Improving photosynthesis. Plant Physiol. 162, 1780–1793. doi: 10.1104/pp.113.219006

Hamant, O., Das, P., Burian, A. (2014). Time-lapse imaging of developing meristems using confocal laser scanning microscope. Methods Mol. Biol. 1080, 111–119. doi: 10.1007/978-1-62703-643-6_9

Hamant, O., Das, P., Burian, A. (2019). Time-lapse imaging of developing shoot meristems using A confocal laser scanning microscope. Methods Mol. Biol. 1992, 257–268. doi: 10.1007/978-1-4939-9469-4_17

Harline, K., Roeder, A. H. K. (2023). An optimized pipeline for live imaging whole Arabidopsis leaves at cellular resolution. Plant Methods 19, 10. doi: 10.1186/s13007-023-00987-2

Hériché, M., Arnould, C., Wipf, D., Courty, P.-E. (2022). Imaging plant tissues: advances and promising clearing practices. Trends Plant Sci. 27, 601–615. doi: 10.1016/j.tplants.2021.12.006

Hervieux, N., Dumond, M., Sapala, A., Routier-Kierzkowska, A.-L., Kierzkowski, D., Roeder, A. H.K., et al. (2016). A mechanical feedback restricts sepal growth and shape in arabidopsis. Curr. Biol. 26, 1019–1028. doi: 10.1016/j.cub.2016.03.004

Hong, L., Dumond, M., Tsugawa, S., Sapala, A., Routier-Kierzkowska, A.-L., Zhou, Y., et al. (2016). Variable cell growth yields reproducible organ development through spatiotemporal averaging. Dev. Cell 38, 15–32. doi: 10.1016/j.devcel.2016.06.016

Jenik, P. D., Irish, V. F. (2000). Regulation of cell proliferation patterns by homeotic genes during Arabidopsis floral development. Development 127, 1267–1276. doi: 10.1242/dev.127.6.1267

Jenkin Suji, R., Bhadauria, S. S., Wilfred Godfrey, W. (2023). A survey and taxonomy of 2.5D approaches for lung segmentation and nodule detection in CT images. Comput. Biol. Med. 165, 107437. doi: 10.1016/j.compbiomed.2023.107437

Kam, Z., Hanser, B., Gustafsson, M. G. L., Agard, D. A., Sedat, J. W. (2001). Computational adaptive optics for live three-dimensional biological imaging. Proc. Natl. Acad. Sci. 98, 3790–3795. doi: 10.1073/pnas.071275698

Kurihara, D., Mizuta, Y., Nagahara, S., Higashiyama, T. (2021). ClearSeeAlpha: advanced optical clearing for whole-plant imaging. Plant Cell Physiol. 62, 1302–1310. doi: 10.1093/pcp/pcab033

Littlejohn, G. R., Mansfield, J. C., Christmas, J. T., Witterick, E., Fricker, M. D., Grant, M. R., et al. (2014). An update: improvements in imaging perfluorocarbon-mounted plant leaves with implications for studies of plant pathology, physiology, development and cell biology. Front. Plant Sci. 5, 140. doi: 10.3389/fpls.2014.00140

Mouradov, A., Cremer, F., Coupland, G. (2002). Control of flowering time: interacting pathways as a basis for diversity. Plant Cell 14 Suppl, S111–S130. doi: 10.1105/tpc.001362

Pawley, J. (1995). “Fundamental limits in confocal microscopy,” in Handbook of Biological Confocal Microscopy. Ed. Pawley, J. B. (Springer US, Boston, MA), 19–37.

Prunet, N. (2017). Live confocal imaging of developing arabidopsis flowers. J. Vis. Exp. (122). doi: 10.3791/55156

Richman, D. P., Stewart, R. M., Hutchinson, J. W., Caviness, V. S., Jr. (1975). Mechanical model of brain convolutional development. Science 189, 18–21. doi: 10.1126/science.1135626

Robinson, D. O., Coate, J. E., Singh, A., Hong, L., Bush, M., Doyle, J. J., et al. (2018). Ploidy and size at multiple scales in the arabidopsis sepal. Plant Cell 30, 2308–2329. doi: 10.1105/tpc.18.00344

Roeder, A. H. K. (2021). Arabidopsis sepals: A model system for the emergent process of morphogenesis. Quantit. Plant Biol. 2, e14. doi: 10.1017/qpb.2021.12

Roeder, A. H., Chickarmane, V., Cunha, A., Obara, B., Manjunath, B. S., Meyerowitz, E. M. (2010). Variability in the control of cell division underlies sepal epidermal patterning in Arabidopsis thaliana. PloS Biol. 8, e1000367. doi: 10.1371/journal.pbio.1000367

Roeder, A. H., Cunha, A., Burl, M. C., Meyerowitz, E. M. (2012). A computational image analysis glossary for biologists. Development 139, 3071–3080. doi: 10.1242/dev.076414

Rueden, C. T., Schindelin, J., Hiner, M. C., DeZonia, B. E., Walter, A. E., Arena, E. T., et al. (2017). ImageJ2: ImageJ for the next generation of scientific image data. BMC Bioinf. 18, 529. doi: 10.1186/s12859-017-1934-z

Sakamoto, Y., Ishimoto, A., Sakai, Y., Sato, M., Nishihama, R., Abe, K., et al. (2022). Improved clearing method contributes to deep imaging of plant organs. Commun. Biol. 5, 12. doi: 10.1038/s42003-021-02955-9

Savaldi-Goldstein, S., Chory, J. (2008). Growth coordination and the shoot epidermis. Curr. Opin. Plant Biol. 11, 42–48. doi: 10.1016/j.pbi.2007.10.009

Savin, T., Kurpios, N. A., Shyer, A. E., Florescu, P., Liang, H., Mahadevan, L., et al. (2011). On the growth and form of the gut. Nature 476, 57–62. doi: 10.1038/nature10277

Singh Yadav, A., Hong, L., Klees, P. M., Kiss, A., Petit, M., He, X., et al. (2024). Growth directions and stiffness across cell layers determine whether tissues stay smooth or buckle. bioRxiv 2023.07.22.549953. doi: 10.1101/2023.07.22.549953

Silverberg, J. L., Noar, R. D., Packer, M. S., Harrison, M. J., Henley, C. L., Cohen, I., et al. (2012). 3D imaging and mechanical modeling of helical buckling in Medicago truncatula plant roots. Proc. Natl. Acad. Sci. U.S.A. 109, 16794–16799. doi: 10.1073/pnas.1209287109

Smyth, D. R., Bowman, J. L., Meyerowitz, E. M. (1990). Early flower development in Arabidopsis. Plant Cell 2, 755–767. doi: 10.1105/tpc.2.8.755

Strauss, S., Runions, A., Lane, B., Eschweiler, D., Bajpai, N., Trozzi, N., et al. (2022). Using positional information to provide context for biological image analysis with MorphoGraphX 2.0. eLife 11, e72601.

Tauriello, G., Meyer, H. M., Smith, R. S., Koumoutsakos, P., Roeder, A. H. K. (2015). Variability and constancy in cellular growth of arabidopsis sepals. Plant Physiol. 169, 2342–2358. doi: 10.1104/pp.15.00839

Tsugawa, S., Hervieux, N., Kierzkowski, D., Routier-Kierzkowska, A. L., Sapala, A., Hamant, O., et al. (2017). Clones of cells switch from reduction to enhancement of size variability in Arabidopsis sepals. Development 144, 4398–4405. doi: 10.1242/dev.153999

Tsukaya, H. (2005). Leaf shape: genetic controls and environmental factors. Int. J. Dev. Biol. 49, 547–555. doi: 10.1387/ijdb.041921ht

Van As, H., Scheenen, T., Vergeldt, F. J. (2009). MRI of intact plants. Photosynth. Res. 102, 213–222. doi: 10.1007/s11120-009-9486-3

Varner, V. D., Gleghorn, J. P., Miller, E., Radisky, D. C., Nelson, C. M. (2015). Mechanically patterning the embryonic airway epithelium. Proc. Natl. Acad. Sci. 112, 9230–9235. doi: 10.1073/pnas.1504102112

Vesely, P. (2007). Handbook of Biological Confocal Microscopy, 3rd ed. Ed. Pawley, J. B. (New York: Springer Science + Business Media, LLC). ISBN 10: 0-387-25921-X; hardback; 28 + 985 pages. Scanning 29, 91–91.

Waddington, C. H. (1939). Preliminary notes on the development of the wings in normal and mutant strains of drosophila. Proc. Natl. Acad. Sci. U.S.A. 25, 299–307. doi: 10.1073/pnas.25.7.299

Werner, S., Bartrina, I., Novák, O., Strnad, M., Werner, T., Schmülling, T. (2021). The cytokinin status of the epidermis regulates aspects of vegetative and reproductive development in arabidopsis thaliana. Front. Plant Sci. 12, 613488. doi: 10.3389/fpls.2021.613488

Wolny, A., Cerrone, L., Vijayan, A., Tofanelli, R., Barro, A. V., Louveaux, M., et al. (2020). Accurate and versatile 3D segmentation of plant tissues at cellular resolution. Elife 9. doi: 10.7554/eLife.57613.sa2

Yu, T., Zhong, X., Yang, Q., Gao, C., Chen, W., Liu, X., et al. (2023). On-chip clearing for live imaging of 3D cell cultures. BioMed. Opt. Express 14, 3003–3017. doi: 10.1364/BOE.489219

Zhou, X., Carranco, R., Vitha, S., Hall, T. C. (2005). The dark side of green fluorescent protein. New Phytol. 168, 313–322. doi: 10.1111/j.1469-8137.2005.01489.x

Keywords: growth, 2.5D segmentation, live imaging, image processing, deep tissue imaging, Arabidopsis, MorphoGraphX, sepals

Citation: Singh Yadav A and Roeder AHK (2024) An optimized live imaging and multiple cell layer growth analysis approach using Arabidopsis sepals. Front. Plant Sci. 15:1449195. doi: 10.3389/fpls.2024.1449195

Received: 14 June 2024; Accepted: 29 July 2024;

Published: 03 September 2024.

Edited by:

Jan Kovac, Technical University of Zvolen, Slovakia

Copyright © 2024 Singh Yadav and Roeder. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Adrienne H. K. Roeder
