ORIGINAL RESEARCH article

Front. Plant Sci., 14 March 2024

Sec. Plant Biophysics and Modeling

Volume 15 - 2024 | https://doi.org/10.3389/fpls.2024.1367828

Precise and timely estimation of leaf area index (LAI) for winter wheat is crucial for precision agriculture. High-resolution unmanned aerial vehicle (UAV) data combined with machine learning techniques offer a low-cost approach to fine-scale estimation of wheat LAI. Although machine learning has proven valuable for LAI estimation, model limitations and variations still impede accurate and efficient LAI inversion. This study explores the potential of classical machine learning models and a deep learning model for estimating winter wheat LAI from multispectral images acquired by a drone. First, texture features and vegetation indices were used as inputs to partial least squares regression (PLSR) and random forest (RF) models, which were calibrated against ground-measured LAI to invert winter wheat LAI. In addition, a convolutional neural network (CNN) model that uses only the cropped original images was employed for LAI estimation. The results show that vegetation indices outperform texture features in both their correlation with LAI and estimation accuracy. However, for both conventional machine learning methods, the highest accuracy is achieved by combining vegetation indices and texture features. Among the three models, the CNN yielded the highest LAI estimation accuracy (R2 = 0.83), followed by the RF model (R2 = 0.82), while the PLSR model exhibited the lowest accuracy (R2 = 0.78). The spatial distributions and values of the RF and CNN estimates are similar, whereas the PLSR estimates differ considerably from both. This study achieves rapid and accurate winter wheat LAI estimation using classical machine learning and deep learning methods, and the findings can serve as a reference for real-time wheat growth monitoring and field management.

Wheat, a globally cultivated food grain crop, plays a pivotal role in sustaining approximately 35% of the world’s population. Its growth and yield are paramount for safeguarding food security (IDRC, 2010). Leaf area index (LAI) is intricately linked to fundamental biophysical processes like vegetation light absorption, evapotranspiration, and productivity (Du et al., 2022; Ma et al., 2023). Therefore, obtaining real-time and reliable LAI estimations is essential for assessing wheat growth potential and providing timely technical guidance for subsequent field management practices (Bellis et al., 2022; Yang et al., 2019).

LAI is currently measured by either direct or indirect methods. Direct measurement through field sampling is highly accurate but labor-intensive and destructive, making it impractical for large-scale assessments (Castro-Valdecantos et al., 2022). Indirect methods are based on the Beer-Lambert law and typically use optical instruments for below-canopy, upward-looking hemispherical photography or spectral reflectance measurements (Apolo-Apolo et al., 2020; Yan et al., 2016). However, neither direct nor indirect ground-based approaches can readily be extended to larger fields or regions. Remote sensing offers a viable solution to this challenge. In recent decades, researchers have successfully used medium- and low-resolution satellite datasets, such as Landsat, MODIS (Moderate Resolution Imaging Spectroradiometer), and AVHRR (Advanced Very High Resolution Radiometer), to retrieve LAI over broad areas (Xiao et al., 2016; Kang et al., 2021). However, satellite-based LAI estimates are too coarse for refined field-scale monitoring. Unmanned aerial vehicles (UAVs) offer substantial advantages over satellites in temporal and spatial resolution, as well as greater flexibility (Li et al., 2019; Yin et al., 2023). Multispectral cameras are a popular choice of UAV sensor because they capture spectral information in the red-edge and near-infrared bands, which are crucial for vegetation analysis (Yao et al., 2019). These spectral bands and the vegetation indices derived from them have been used to predict crop LAI with good accuracy (Liu et al., 2021; Wang et al., 2020). However, estimating LAI solely from visible or multispectral vegetation indices has limitations, especially under high crop cover, where the indices tend to saturate (Corti et al., 2022; Zhou et al., 2017).
To address these limitations, some researchers have combined texture features with vegetation indices for crop parameter estimation (Liu et al., 2023b; Xu et al., 2022). For example, Liu et al. (2023a) investigated the use of vegetation indices, structural information, and texture features for estimating winter wheat LAI; the model using only texture features achieved the highest accuracy (R2 = 0.56), surpassing models based solely on vegetation indices or structural information. The success of texture in LAI estimation stems largely from its ability to capture spatial information about crops (Zhang et al., 2022a; Liu et al., 2018). However, existing methods cannot accurately reflect the complex changes in canopy structure across growth stages. It is therefore necessary to explore the extent to which vegetation indices and texture features can improve wheat LAI estimation, especially at the canopy closure and heading stages.

The inversion model plays a pivotal role in accurately quantifying crop LAI. Traditional estimation methods rely primarily on empirical relationships between spectral features and crop parameters, often fitted by linear regression. Although such models are simple to compute and implement, they vary across regions and crop types, even with similar predictors (Chen et al., 2002; Zhou et al., 2021). In addition, traditional statistical regression models struggle with the nonlinear relationships between LAI and spectral features and with multicollinearity among the features. In recent years, machine learning algorithms, including partial least squares regression (PLSR), random forest (RF), and decision trees (DT), have been widely adopted for remote sensing parameter inversion. These algorithms are well suited to modeling nonlinear and heteroscedastic relationships among various feature types (Jordan and Mitchell, 2015; Lary et al., 2016). However, deriving and selecting from a large number of candidate spectral features can be impractical and inefficient, making it difficult to identify the most effective set of predictors and potentially leading to suboptimal performance. The emergence of deep learning (DL) has transformed agricultural applications, offering robust and intelligent solutions (Osco et al., 2021). Unlike shallow neural networks, deep learning models have many more successively connected layers, which allows them to extract more complex relationships (Kattenborn et al., 2021). Convolutional neural networks (CNNs) are designed for spatial feature extraction and are particularly adept at analyzing spatial patterns; they can process raw images directly, without extensive pre-processing (Liang et al., 2018).
The efficacy of UAV-based multispectral data in estimating crop leaf area index (LAI) has fueled advances in agricultural remote sensing (Guo et al., 2023; Wittstruck et al., 2022). Despite the widespread utilization of algorithms for predicting crop LAI, there remains a paucity of studies comparing the performance of wheat LAI estimation using both classical machine learning and deep learning approaches based on multispectral UAV data.

This study uses UAV-derived multispectral data to address two objectives: 1) to compare the predictive potential of vegetation indices and textural features for estimating winter wheat LAI, and 2) to evaluate the performance of traditional machine learning methods (PLSR and RF) and a deep learning method (CNN) for predicting wheat LAI. We hope our findings will provide a technical basis and reference for estimating key crop parameters during critical growth stages of wheat.

The study area is the winter wheat breeding experimental field of the Zhoukou Academy of Agricultural Sciences, Zhoukou, Henan Province, China (114°41′E, 33°39′N), as shown in Figure 1. The primary crops cultivated in the area are winter wheat and summer corn. Dozens of wheat varieties are grown in the field for variety demonstration and breeding screening, which increases the variability of the image dataset. The winter wheat was sown in October 2022 and managed in the same way as the local winter wheat crop.

UAV imagery was acquired on April 5, 2023, at the heading stage, a critical period of wheat growth. A DJI Phantom 4 Multispectral UAV (DJI Technology, Ltd., Shenzhen, China) was used to acquire images of the wheat canopy. Its RGB sensor provides high-resolution visible imagery, while its five monochrome sensors capture data in specific spectral bands (details in Table 1). An integrated light intensity sensor on top of the UAV records solar irradiance, which is used to compensate the images for illumination and exclude interference from ambient light. The UAV platform is also equipped with a real-time kinematic (RTK) module that provides centimeter-level positioning accuracy. Flight routes were planned in DJI GS Pro. Data were collected at an altitude of 70 m under clear, windless conditions between 10:00 and 14:00, at a flight speed of 5 m/s, giving a spatial resolution of 3.7 cm. With 80% forward (heading) overlap and 70% side overlap, the mission acquired 2382 images. The raw images were stitched in DJI Terra as follows: (1) import all images into the software; (2) perform aerial triangulation to calculate the sensor's position and orientation at each exposure and generate a sparse point cloud of the scene; (3) check the quality, run the multispectral stitching reconstruction, and produce the orthomosaic covering the study area.
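The 3.7 cm resolution and the overlap settings are linked by simple pinhole-camera geometry. The Python sketch below is only an illustration: the sensor parameters (about 1600 × 1300 pixels, 5.74 mm focal length, roughly 3.0 µm pixel pitch) are assumed values for the Phantom 4 Multispectral band cameras rather than figures reported in this study, the along-track image dimension is assumed, and the function names are hypothetical.

```python
# Pinhole-camera flight-planning arithmetic (illustrative sketch).
# ASSUMED sensor parameters for the P4 Multispectral band cameras;
# check them against the manufacturer's specification before use.
IMAGE_WIDTH_PX = 1600
IMAGE_HEIGHT_PX = 1300
FOCAL_LENGTH_MM = 5.74
PIXEL_PITCH_UM = 3.0


def ground_sample_distance(altitude_m: float) -> float:
    """Ground size of one pixel in metres: GSD = H * pixel pitch / focal length."""
    return altitude_m * (PIXEL_PITCH_UM * 1e-6) / (FOCAL_LENGTH_MM * 1e-3)


def exposure_spacing(gsd_m: float, pixels: int, overlap: float) -> float:
    """Distance between neighbouring exposures that gives the requested overlap."""
    footprint_m = gsd_m * pixels
    return footprint_m * (1.0 - overlap)


gsd = ground_sample_distance(70.0)                       # altitude from the text
forward = exposure_spacing(gsd, IMAGE_HEIGHT_PX, 0.80)   # assumes image height lies along-track
side = exposure_spacing(gsd, IMAGE_WIDTH_PX, 0.70)       # spacing between flight lines
interval_s = forward / 5.0                               # 5 m/s flight speed from the text

print(f"GSD {gsd * 100:.2f} cm, forward spacing {forward:.1f} m, "
      f"line spacing {side:.1f} m, shot interval {interval_s:.1f} s")
```

With these assumed parameters the GSD works out to about 3.66 cm, in line with the 3.7 cm reported above; the spacing figures simply show how the 80% and 70% overlaps translate into distances on the ground.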

Ground-based measurements of wheat LAI were made within three days of the UAV flight. An LAI-2200C plant canopy analyzer (LI-COR Biosciences, Inc., Lincoln, NE, USA) was used to measure LAI non-destructively. Measurements were taken under suitable sky conditions, either overcast with uniform cloud thickness or clear and cloud-free. A total of 234 quadrats (1 m × 1 m) with uniform wheat growth, evenly distributed across the study area, were measured (Figure 1). In each measurement, one above-canopy reading (A) was taken first, followed by four below-canopy readings (B) along a diagonal spanning two ridges, after which the instrument automatically computed the LAI of the sampling point. Four such LAI values were measured within each quadrat. A 45° view cap was used to keep the observer and direct sunlight out of the sensor's field of view. Additionally, four A readings were taken for scattering correction under sunlit conditions, to mitigate errors caused by scattered sunlight. The quadrat number and the coordinates of each quadrat center were recorded with handheld RTK equipment (Qianxun SI, Ltd., Shanghai, China) using the centimeter-level differential positioning service provided by Qianxun SI.
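The instrument's LAI output rests on the Beer-Lambert gap-fraction relationship. The following is a simplified sketch of that inversion, a discrete form of Miller's integral LAI = 2∫ -ln T(θ) cos θ sin θ dθ, and not LI-COR's firmware algorithm: the ring centre angles and widths are approximate values assumed for the LAI-2200C optical sensor, transmittance per ring is taken as the mean below-canopy (B) reading divided by the above-canopy (A) reading, and the function names are hypothetical.

```python
import math

# ASSUMED approximate geometry of the five concentric detector rings:
# centre zenith angle and angular width of each ring, in degrees.
RING_CENTRES_DEG = [7.0, 23.0, 38.0, 53.0, 68.0]
RING_WIDTHS_DEG = [12.3, 11.9, 11.0, 10.8, 11.8]


def gap_fractions(above, below):
    """Per-ring transmittance T_i = mean(B_i) / A_i for one A and several B readings."""
    n_below = len(below)
    return [sum(b[i] for b in below) / n_below / above[i] for i in range(len(above))]


def lai_from_gap_fractions(trans):
    """Discrete Miller integral: LAI = 2 * sum_i w_i * (-ln T_i) * cos(theta_i)."""
    thetas = [math.radians(a) for a in RING_CENTRES_DEG]
    widths = [math.radians(a) for a in RING_WIDTHS_DEG]
    raw = [math.sin(t) * d for t, d in zip(thetas, widths)]  # sin(theta) * dtheta
    total = sum(raw)
    weights = [r / total for r in raw]                         # normalised to sum to 1
    return 2.0 * sum(w * -math.log(t_i) * math.cos(th)
                     for w, t_i, th in zip(weights, trans, thetas))


# Synthetic check: a random canopy with spherical leaf angles (G = 0.5) and LAI 4.
true_lai = 4.0
above = [100.0] * 5
trans_true = [math.exp(-0.5 * true_lai / math.cos(math.radians(a)))
              for a in RING_CENTRES_DEG]
below = [[100.0 * t for t in trans_true] for _ in range(4)]  # four B readings
estimate = lai_from_gap_fractions(gap_fractions(above, below))
print(round(estimate, 3))  # prints 4.0
```

For a canopy that matches the spherical, random-foliage assumption, each ring contributes the same value of -ln T cos θ, so the normalised weights recover the true LAI exactly; real canopies with clumping or non-spherical leaf angles will deviate from this idealised case.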

Vegetation indices provide a simple and effective means of reflecting vegetation growth characteristics and are widely used to monitor physiological and biochemical parameters, including crop LAI (Bendig et al., 2015; Tao et al., 2020b). Building on previous research, 23 vegetation indices reported to correlate strongly with LAI, including NDVI, NDRE, and OSAVI, were chosen for modeling (Table 1). For each quadrat, each vegetation index was averaged over all pixels in the quadrat, and this mean was paired with the ground-measured LAI for model building. Vegetation index calculation and averaging were performed in R (version 4.3.1).
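To illustrate the per-quadrat averaging, the sketch below computes three of the named indices from reflectance arrays and averages them over a square pixel window. It is written in Python rather than the R used in the study; the OSAVI form shown, (NIR - R)/(NIR + R + 0.16), is one common variant (some formulations also multiply by 1.16), and the 27-pixel window (about 1 m at 3.7 cm resolution), the toy reflectance values, and the function names are assumptions.

```python
import numpy as np


def ndvi(nir, red):
    return (nir - red) / (nir + red)


def ndre(nir, red_edge):
    return (nir - red_edge) / (nir + red_edge)


def osavi(nir, red, soil_factor=0.16):
    return (nir - red) / (nir + red + soil_factor)


def quadrat_mean(index_img, top, left, size):
    """Mean of an index over a size x size window whose top-left pixel is (top, left)."""
    window = index_img[top:top + size, left:left + size]
    return float(np.nanmean(window))  # ignores NaN pixels, e.g. masked areas


# Toy reflectance rasters standing in for the orthomosaic bands.
rng = np.random.default_rng(0)
red = rng.uniform(0.03, 0.08, size=(100, 100))
red_edge = rng.uniform(0.15, 0.25, size=(100, 100))
nir = rng.uniform(0.35, 0.50, size=(100, 100))

QUADRAT_PX = round(1.0 / 0.037)  # about 27 pixels per 1 m side (assumed)
index_images = {"NDVI": ndvi(nir, red), "NDRE": ndre(nir, red_edge), "OSAVI": osavi(nir, red)}
features = {name: quadrat_mean(img, 10, 10, QUADRAT_PX) for name, img in index_images.items()}
print(features)
```

Each quadrat then contributes one row of index means, which is paired with its ground-measured LAI.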

Texture features were extracted using the gray level co-occurrence matrix (GLCM), a spatial analysis technique that captures how often pairs of gray levels occur at a given offset between pixels, and which has proven effective for extracting crop-related information in numerous studies (Ilniyaz et al., 2023). In this study, ENVI software was used to extract 8 GLCM texture features from each of the 5 multispectral bands: dissimilarity (dis), variance (var), entropy (ent), mean (mean), homogeneity (hom), second moment (sec), correlation (cor), and contrast (con). Previous studies have shown that the correlation between GLCM texture features and vegetation physiological parameters is essentially independent of direction and window size (Liu et al., 2022a; Fu et al., 2020). To reduce data dimensionality, this study therefore used a single 3×3 window and a single 45° direction, yielding a total of 40 texture features. For clarity, band names and texture features are joined with “_”; for instance, red_mean and red_var denote the mean and variance texture features extracted from the red band.
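The GLCM statistics can be written out explicitly. The NumPy sketch below builds a symmetric, normalized co-occurrence matrix for one window at a single 45° offset (taken here as the upper-right neighbour) and derives the eight features from textbook definitions. These definitions are assumed to be close to ENVI's, but ENVI's quantization, edge handling, and moving-window implementation may differ; the function names are hypothetical.

```python
import numpy as np


def quantize(band, levels=64):
    """Rescale a reflectance band linearly to integer grey levels 0..levels-1."""
    lo, hi = float(np.min(band)), float(np.max(band))
    if hi == lo:
        return np.zeros(band.shape, dtype=int)
    q = np.floor((band - lo) / (hi - lo) * levels).astype(int)
    return np.clip(q, 0, levels - 1)


def glcm(window, levels, offset=(-1, 1)):
    """Symmetric, normalised GLCM; offset (-1, 1) = neighbour up and to the right (45 deg)."""
    dr, dc = offset
    rows, cols = window.shape
    P = np.zeros((levels, levels))
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = window[r, c], window[r2, c2]
                P[i, j] += 1
                P[j, i] += 1  # count each pair in both directions (symmetric GLCM)
    total = P.sum()
    return P / total if total > 0 else P


def glcm_features(P):
    """Eight texture measures named as in the text."""
    i, j = np.indices(P.shape)
    mean = np.sum(i * P)             # row mean equals column mean for a symmetric GLCM
    var = np.sum((i - mean) ** 2 * P)
    nz = P[P > 0]                    # skip empty cells so 0 * log(0) counts as 0
    return {
        "mean": mean,
        "var": var,
        "hom": np.sum(P / (1.0 + (i - j) ** 2)),
        "con": np.sum((i - j) ** 2 * P),
        "dis": np.sum(np.abs(i - j) * P),
        "ent": -np.sum(nz * np.log(nz)),
        "sec": np.sum(P ** 2),
        # correlation is set to 1.0 for a flat window (zero variance) by convention
        "cor": np.sum((i - mean) * (j - mean) * P) / var if var > 0 else 1.0,
    }


# 3x3 window with a horizontal grey-level gradient, named with the band_feature convention.
win = np.array([[0, 1, 2], [0, 1, 2], [0, 1, 2]])
feats = {f"red_{k}": v for k, v in glcm_features(glcm(win, levels=3)).items()}
print(feats["red_con"], feats["red_hom"])  # prints 1.0 0.5
```

In a full pipeline each band would first be quantized and the window slid across the image, giving one texture image per band and feature, whose quadrat means serve as model inputs.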

This study used the random forest algorithm to compute an importance weight for each feature, and Pearson correlation analysis to quantify the linear relationship between each feature and LAI. Optimal features were selected by combining both criteria: image features with weights exceeding 0.03 and a highly significant correlation with LAI (regardless of the magnitude of the correlation coefficient) were retained for subsequent winter wheat LAI estimation. After this selection, 9 vegetation indices and 10 texture features remained (Table 2).
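The two-criterion filter can be sketched as follows. The importance weights are passed in as an input (for example, random forest importances normalised to sum to 1, which is an assumption about how the 0.03 threshold was applied), the p-value uses Fisher's z approximation rather than the exact t test, and the significance level alpha = 0.01 is assumed because the text does not state the cut-off for "highly significant". The function names and toy data are hypothetical.

```python
import math

import numpy as np


def pearson_r(x, y):
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc, yc = x - x.mean(), y - y.mean()
    return float(np.sum(xc * yc) / math.sqrt(np.sum(xc ** 2) * np.sum(yc ** 2)))


def approx_p_value(r, n):
    """Two-sided p-value for r via Fisher's z transform (large-sample approximation)."""
    r = max(min(r, 0.999999), -0.999999)  # keep atanh finite
    z = math.atanh(r) * math.sqrt(n - 3)
    return math.erfc(abs(z) / math.sqrt(2.0))


def select_features(X, y, names, importances, min_weight=0.03, alpha=0.01):
    """Keep features whose importance exceeds min_weight AND whose correlation is significant."""
    n = len(y)
    kept = []
    for k, name in enumerate(names):
        r = pearson_r(X[:, k], y)
        if importances[k] > min_weight and approx_p_value(r, n) < alpha:
            kept.append(name)
    return kept


# Toy example: "a" tracks LAI, "b" is uncorrelated with it, "c" tracks LAI but has low importance.
lai = np.array([1, 2, 3, 4, 5, 6, 7, 8], dtype=float)
X = np.column_stack([lai * 0.1, [1, -1, -1, 1, 1, -1, -1, 1], lai + 0.5])
kept = select_features(X, lai, ["a", "b", "c"], importances=[0.50, 0.49, 0.01])
print(kept)  # prints ['a']
```

Requiring both conditions keeps only features that the forest actually relies on and that also have a clear linear association with LAI.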

For comparison with the deep learning method, this study employed two classical machine learning models that are widely used in remote sensing and LAI inversion: partial least squares regression (PLSR) and random forest (RF). PLSR is a multivariate regression method that combines the advantages of principal component analysis and multiple linear regression. It reduces data dimensionality by sequentially extracting orthogonal latent factors that maximize the covariance between the independent and dependent variables, which reduces redundancy and improves the computational efficiency of the model (Shao et al., 2023). When a dataset has many variables with strong mutual correlations, traditional linear regression estimates the dependent variable imprecisely, leading to low model accuracy; PLSR improves accuracy under such circumstances. Furthermore, the resulting regression model retains information from all independent variables and provides a comprehensive interpretation of the regression coefficients (Tang et al., 2022; Tao et al., 2020a). The optimal number of PLSR components was determined from the root-mean-square error (RMSE) of the LAI estimates (Cui and Kerekes, 2018), and default values were used for the other parameters. RF is an ensemble learning algorithm introduced by Breiman (2001) that combines multiple decision trees with bagging: each tree is trained on a bootstrap sample drawn with replacement from the training set, and the predictions of all trees are averaged. RF performance hinges on two key parameters: the number of decision trees and the number of variables randomly considered at each split.
Generally, as the number of decision trees increases, the prediction error of the model gradually decreases and then stabilizes. After parameter tuning, the number of decision trees was set to 1000, and the number of variables considered at each split was left at its default value (one third of the number of variables). The models were built and tuned with the R packages “pls” and “randomForest”. The model inputs (independent variables) were the quadrat means (1 m × 1 m) of the vegetation indices and texture features, and the dependent variable was the ground-measured LAI. The training and test samples were the same as those used for the CNN, described in the following section.
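To make the step that selects the number of PLSR components (explanatory variables) by RMSE concrete, the sketch below implements single-response PLSR with the NIPALS algorithm in NumPy and picks the component count with the lowest validation RMSE. It is an illustrative re-implementation, not the R "pls" package used in the study; it assumes a single hold-out split because the original tuning procedure is not described in detail, and the toy data and function names are hypothetical.

```python
import numpy as np


def pls1_fit(X, y, n_components):
    """Fit single-response PLS (NIPALS) and return a prediction function."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    E, f = X - x_mean, y - y_mean            # centred predictors and response
    W, P, q = [], [], []
    for _ in range(n_components):
        w = E.T @ f                          # direction of maximal covariance with y
        w = w / np.linalg.norm(w)
        t = E @ w                            # latent scores
        tt = t @ t
        p = E.T @ t / tt                     # X loadings
        c = (f @ t) / tt                     # y loading
        E = E - np.outer(t, p)               # deflate X
        f = f - c * t                        # deflate y
        W.append(w)
        P.append(p)
        q.append(c)
    W, P, q = np.array(W).T, np.array(P).T, np.array(q)
    B = W @ np.linalg.solve(P.T @ W, q)      # coefficients in the original X space
    return lambda X_new: (X_new - x_mean) @ B + y_mean


def rmse(y, y_hat):
    return float(np.sqrt(np.mean((y - y_hat) ** 2)))


def choose_n_components(X_tr, y_tr, X_val, y_val, max_components):
    """Return the component count with the lowest validation RMSE, plus all RMSEs."""
    scores = [rmse(y_val, pls1_fit(X_tr, y_tr, a)(X_val))
              for a in range(1, max_components + 1)]
    return int(np.argmin(scores)) + 1, scores


# Toy data: 19 correlated predictors (e.g. 9 indices + 10 textures) and a noisy response.
rng = np.random.default_rng(42)
latent = rng.normal(size=(234, 3))
X = latent @ rng.normal(size=(3, 19)) + 0.1 * rng.normal(size=(234, 19))
y = latent @ np.array([1.0, -0.5, 0.3]) + 4.0 + 0.2 * rng.normal(size=234)
best, scores = choose_n_components(X[:164], y[:164], X[164:], y[164:], max_components=10)
print(best, round(scores[best - 1], 3))
```

Because the predictors here are driven by three latent variables, a small number of components usually suffices; adding more mainly fits noise, which is what the RMSE criterion guards against.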

As the number of feature variables grows, the relationships become difficult to express through simple linear functions, so the model requires a stronger capacity for nonlinear fitting. CNNs have been widely adopted because of their robust nonlinear fitting capability and have yielded favorable results (Luo et al., 2023). Consequently, this study also constructed a CNN model for mapping LAI. Because the value ranges of the bands differ markedly, feeding them directly into the CNN could trigger large gradient updates and prevent the network from converging. To remove this effect, standard (z-score) normalization was applied: the mean of each band image was subtracted and the result divided by its standard deviation, giving each band a mean of 0 and a standard deviation of 1.
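A minimal NumPy sketch of the per-band z-score normalization follows. Two details are assumptions: the statistics are computed per band over the whole training stack (the text could also be read as normalizing each image separately), and the same training statistics are reused for the test images. Arrays are assumed to be laid out as (images, height, width, bands), and the toy data are invented.

```python
import numpy as np


def fit_band_stats(images):
    """Per-band mean and standard deviation over an (N, H, W, C) training stack."""
    images = np.asarray(images, dtype=float)
    return images.mean(axis=(0, 1, 2)), images.std(axis=(0, 1, 2))


def standardize(images, mean, std, eps=1e-12):
    """Subtract the band mean and divide by the band standard deviation."""
    return (np.asarray(images, dtype=float) - mean) / (std + eps)


# Toy stack: five bands with very different value ranges.
rng = np.random.default_rng(1)
scales = np.array([0.05, 0.08, 0.2, 0.3, 0.45])
train = rng.uniform(0.5, 1.5, size=(164, 32, 32, 5)) * scales
test = rng.uniform(0.5, 1.5, size=(70, 32, 32, 5)) * scales

mean, std = fit_band_stats(train)
train_z = standardize(train, mean, std)
test_z = standardize(test, mean, std)  # reuse the training statistics
print(train_z.mean(axis=(0, 1, 2)).round(6), train_z.std(axis=(0, 1, 2)).round(6))
```

Reusing the training statistics keeps the test images on exactly the same scale the network saw during training, without letting test data influence the normalization.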

The CNN model used in this study consists of convolutional, batch normalization, pooling, dropout, fully connected, and regression layers. It uses the rectified linear unit (ReLU) as the activation function and mean squared error (MSE) as the loss function, and it is optimized by gradient descent with momentum at a learning rate of 0.001. To mitigate overfitting, a dropout layer randomly sets neuron outputs to zero with a probability of 0.2 during training. This reduces the network's sensitivity to specific neuron weights and thereby improves its generalization ability. The specific structure of the CNN model is shown in Table 3. The CNN input consists of 234 cropped quadrat images, each 32 × 32 pixels. Data augmentation was used to expand the sample dataset. Before augmentation, 70% of the samples (164 images) were allocated to training and validation, and the remaining 30% (70 images) were reserved as the test dataset. Rotation and flipping are widely used for data augmentation (Xiong et al., 2017; Ma et al., 2019); here they were applied to the training and validation data only, while the test dataset was left unchanged for evaluation. Specifically, the images were rotated (90°, 180°, and 270°) and flipped (horizontally and vertically), creating five additional datasets. The training and validation dataset was thus expanded sixfold, to 984 images, of which 80% (787 images) were used for training and 20% (197 images) for validation. Figure 2 shows the data augmentation workflow. The CNN model was implemented in R (4.3.1) using the “keras” and “tensorflow” packages.
The computational setup comprised an NVIDIA GeForce RTX 3090 graphics card (16 GB video memory) (NVIDIA, Inc., Santa Clara, CA, USA), 64 GB of RAM, and an Intel Core i7-11700 CPU (2.50 GHz, 8 cores/16 threads) (Intel, Inc., Santa Clara, CA, USA).
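The sample bookkeeping for the CNN can be reproduced with a short sketch. It is an illustration in NumPy rather than the study's R/keras code; the random seed, the rounding of split sizes, and the function names are assumptions, and the arrays are zero-filled placeholders with the stated 32 × 32 × 5 shape.

```python
import numpy as np


def augment(images):
    """Originals plus five variants each: rotations by 90/180/270 deg, horizontal and vertical flips."""
    out = []
    for img in images:                                   # img has shape (H, W, C)
        out.append(img)
        out.extend(np.rot90(img, k, axes=(0, 1)) for k in (1, 2, 3))
        out.append(np.flip(img, axis=1))                 # horizontal (left-right) flip
        out.append(np.flip(img, axis=0))                 # vertical (up-down) flip
    return np.stack(out)


def split(n, first_fraction, rng):
    """Shuffle indices 0..n-1 and split them into two groups."""
    idx = rng.permutation(n)
    n_first = int(round(n * first_fraction))
    return idx[:n_first], idx[n_first:]


rng = np.random.default_rng(0)
quadrats = np.zeros((234, 32, 32, 5), dtype=np.float32)  # placeholder image stack

trainval_idx, test_idx = split(len(quadrats), 0.70, rng)  # test set is never augmented
augmented = augment(quadrats[trainval_idx])
train_idx, val_idx = split(len(augmented), 0.80, rng)
print(len(trainval_idx), len(test_idx), len(augmented), len(train_idx), len(val_idx))
# prints: 164 70 984 787 197
```

Because the 80/20 split is drawn after augmentation, rotated or flipped copies of one quadrat can land in both the training and validation sets; splitting by quadrat before augmenting is an alternative if strictly independent validation is wanted.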

This study used the coefficient of determination (R2), root mean square error (RMSE), and mean absolute error (MAE) to assess model accuracy. R2 measures the agreement between estimated and measured values; values closer to 1 indicate a better fit. RMSE measures the deviation between estimated and measured values, with smaller values indicating a better fit. MAE measures the average absolute deviation between estimated and measured values, with smaller values indicating higher accuracy. Both MAE and RMSE capture the average difference between estimated and measured values, but RMSE is more sensitive to large prediction errors. The three metrics are defined in Equations (1)-(3):

$$R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2} \tag{1}$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n} (y_i - \hat{y}_i)^2} \tag{2}$$

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n} \lvert y_i - \hat{y}_i \rvert \tag{3}$$

where $y_i$ and $\hat{y}_i$ are the measured and estimated values of the $i$th sample, $\bar{y}$ is the mean of all measured values, and $n$ is the number of samples.
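The three metrics translate directly into code. The NumPy sketch below is a minimal version with hypothetical function names and invented example values. It also illustrates a general property of the two error metrics: because the square root of a mean of squares is never smaller than the mean of absolute values, MAE can never exceed RMSE for the same set of predictions.

```python
import numpy as np


def r2(measured, estimated):
    y, y_hat = np.asarray(measured, float), np.asarray(estimated, float)
    return 1.0 - np.sum((y - y_hat) ** 2) / np.sum((y - y.mean()) ** 2)


def rmse(measured, estimated):
    y, y_hat = np.asarray(measured, float), np.asarray(estimated, float)
    return float(np.sqrt(np.mean((y - y_hat) ** 2)))


def mae(measured, estimated):
    y, y_hat = np.asarray(measured, float), np.asarray(estimated, float)
    return float(np.mean(np.abs(y - y_hat)))


measured = [1.0, 2.0, 3.0, 4.0]
estimated = [1.0, 2.0, 3.0, 5.0]   # a single error of 1.0
print(r2(measured, estimated), rmse(measured, estimated), mae(measured, estimated))
# prints: 0.8 0.5 0.25
```

The single large error is weighted more heavily by RMSE (0.5) than by MAE (0.25), which is the sensitivity to large errors described above.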

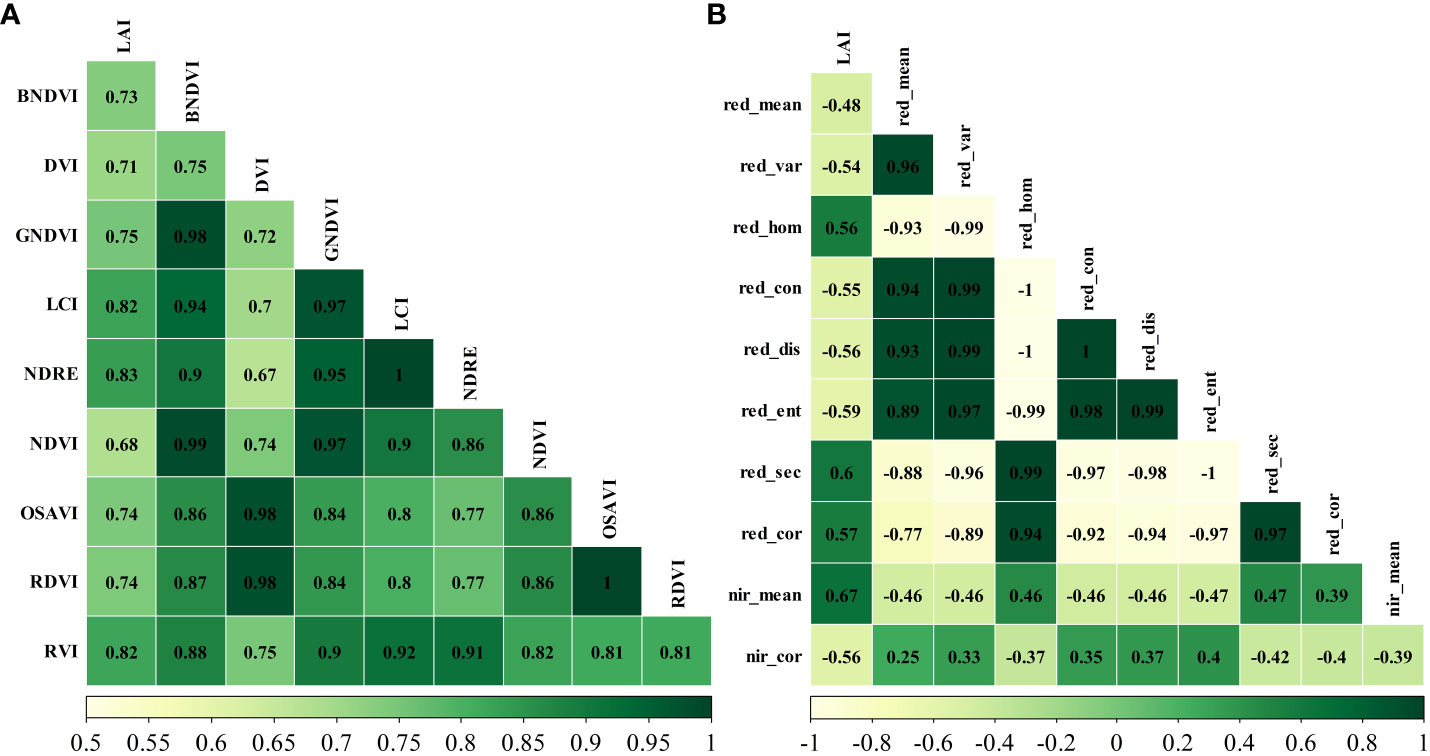

Pearson correlation analysis was performed between LAI and each of the vegetation indices and texture features retained after feature selection. The results for the vegetation indices are shown in Figure 3A. All vegetation indices show a highly significant positive correlation with LAI (p < 0.001), with correlation coefficients ranging from 0.68 to 0.83. NDRE, LCI, and RVI have the highest correlation coefficients, all above 0.8; NDVI has the lowest (0.68); and the other vegetation indices range between 0.7 and 0.8. Among the vegetation indices themselves, the correlation coefficient between DVI and NDRE is approximately 0.7, while all other pairs have correlation coefficients greater than 0.8. The results for the texture features are presented in Figure 3B. Among them, nir_mean, red_sec, red_cor, and red_hom are positively correlated with LAI, whereas the other texture features are negatively correlated with LAI. The absolute correlation coefficients of the texture features range from 0.48 to 0.67; nir_mean and red_sec have the highest, both exceeding 0.6, while red_mean has the lowest (only 0.48). Apart from nir_mean, nir_cor, and a few others, the texture features are relatively weakly correlated with one another (most absolute coefficients below 0.5). Overall, every vegetation index has a larger absolute correlation coefficient with LAI than any texture feature.

Figure 3 Correlation analysis results for vegetation indices (A) and texture features (B). All correlation coefficients in the figure pass the significance test at the p < 0.001 level.

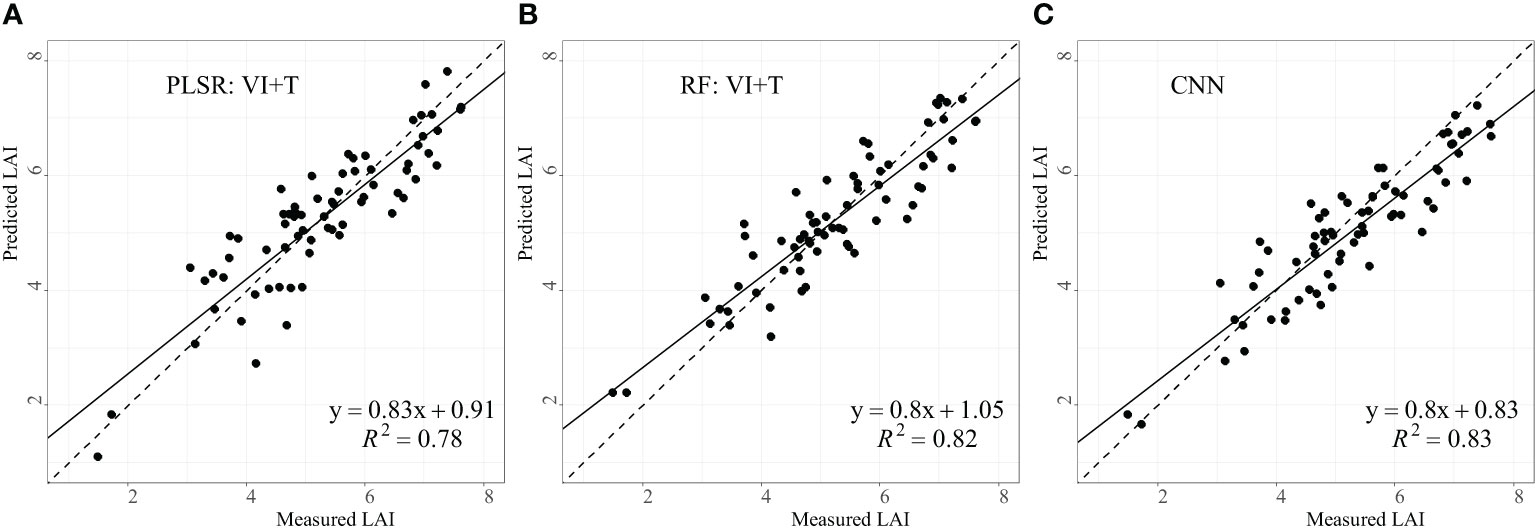

To assess how the type of input variable affects the accuracy of winter wheat LAI estimation, PLSR and RF models were constructed and validated with three combinations of input variables: vegetation indices (VI), texture features (T), and the combination of the two (VI+T). Both VI and T are the outputs of the feature selection described above. The estimation results for the different inputs are presented in Table 4. In the PLSR model, the highest estimation accuracy is achieved with VI+T, with R2, RMSE, and MAE of 0.78, 0.52, and 0.63, respectively. In the RF model, the highest accuracy is achieved with either VI+T or VI, both with R2 of 0.82, and the lowest with T alone (R2 of only 0.66). Overall, combining VI and T gives the best LAI inversion results in both PLSR and RF models. The CNN model achieves high estimation accuracy from the normalized raw image data, with R2 reaching 0.83. The optimal inversion results of the three models are shown in Figure 4. The fitted regression slopes of all three models are slightly less than 1, which suggests that the models tend to overestimate in the low-value range and underestimate in the high-value range. In addition, the regression lines for PLSR and RF intersect the 1:1 line at a measured LAI of approximately 5, whereas the regression line for CNN intersects it at a measured LAI of around 4.

Figure 4 Scatter plots of measured versus estimated LAI for PLSR (A), RF (B), and CNN (C). All statistics in the figure pass the significance test at the p < 0.001 level.
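The link between a slope below 1 and the over/underestimation pattern can be made explicit. If the fitted line is ŷ = a + b·y with b < 1, it meets the 1:1 line at y* = a/(1 - b); below y* the fitted estimates exceed the measured values, and above y* they fall short. The sketch below uses made-up coefficients (a = 1.0, b = 0.8), not the fitted values from Figure 4, and hypothetical function names.

```python
import numpy as np


def fit_line(measured, estimated):
    """Least-squares line: estimated = intercept + slope * measured."""
    slope, intercept = np.polyfit(measured, estimated, 1)
    return float(slope), float(intercept)


def crossover(slope, intercept):
    """Measured value at which the fitted line meets the 1:1 line (requires slope != 1)."""
    return intercept / (1.0 - slope)


measured = np.array([2.0, 3.0, 4.0, 5.0, 6.0, 7.0])
estimated = 1.0 + 0.8 * measured          # illustrative values only
slope, intercept = fit_line(measured, estimated)
y_star = crossover(slope, intercept)
bias = estimated - measured               # positive below y_star, negative above it
print(round(slope, 3), round(intercept, 3), round(y_star, 3))  # prints 0.8 1.0 5.0
```

With these illustrative numbers the crossover falls at a measured LAI of 5, the same kind of intersection point reported for the PLSR and RF regression lines.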

Using the three optimal inversion models, the spatial distribution of wheat LAI in the study area was estimated, as shown in Figure 5. The results show that differences in wheat variety, planting method, planting density, and other factors produce substantial spatial differences in LAI, even though the wheat was grown under the same environmental conditions and management practices. Overall, the spatial patterns of wheat LAI estimated by the three models were similar, with high values (greater than 5) concentrated in the southern and eastern parts of the study area. Regions with LAI below 4 were found mainly in the northwestern and eastern parts of the study area, and in these low-LAI areas the PLSR estimates were lower than those of the other two models.

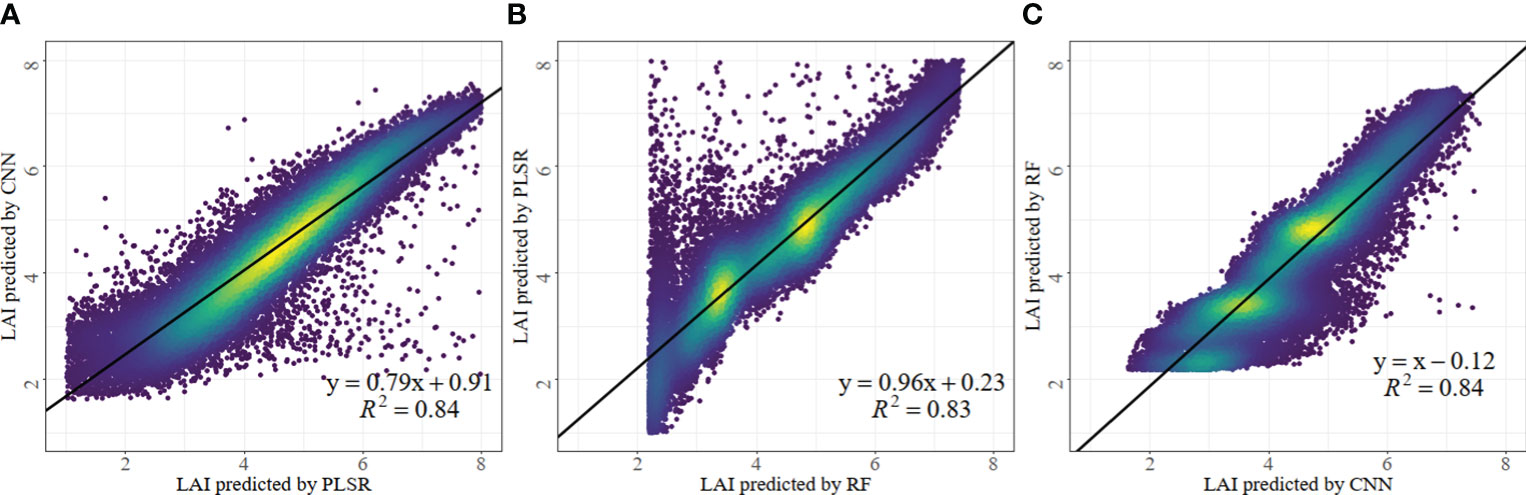

Scatter plots comparing the LAI estimates of the three models are presented in Figure 6. The estimates are strongly correlated for every pair of models (R2 > 0.8). In Figure 6A, the correlation between PLSR and CNN is high (R2 = 0.84) and the points are evenly distributed around the fitted line, but the slope is only 0.79. Thus, although the two models are highly correlated, their LAI estimates agree relatively poorly in magnitude. Scattered points at the lower right of the fitted line suggest that PLSR yields higher LAI estimates than CNN in specific regions. As shown in Figure 6B, the R2 between the RF and PLSR estimates is 0.83, although the points are unevenly distributed. The slope of the fitted line is 0.96, indicating that the two models' estimates are highly similar in magnitude. The many scattered points at the upper left imply that PLSR yields higher LAI estimates than RF in specific regions. As shown in Figure 6C, the R2 between the CNN and RF estimates is 0.84, and the point distribution is again uneven, similar to that for RF and PLSR. However, the slope of the fitted line is approximately 1, so the fitted line essentially coincides with the 1:1 line, indicating that the CNN and RF models produce the most similar LAI estimates. In addition, only a few scattered points lie at the lower right of the fitted line, further indicating that the value distributions of the two models are very close.

Figure 6 Scatter plots of the correlation analysis between the LAI estimates of the three models: PLSR vs. CNN (A), RF vs. PLSR (B), and CNN vs. RF (C). All statistics in the figure pass the significance test at the p < 0.001 level.
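The distinction between correlation and agreement used to interpret Figure 6 can be shown with a toy pair of estimate vectors: if one model's estimates are an exact linear rescaling of another's, R2 is 1 even though the slope departs from 1 and the values differ. The numbers below are invented for illustration, the function name is hypothetical, and R2 here is the squared Pearson correlation between the two sets of estimates, which is assumed (not stated in the text) to be how the pairwise R2 values were computed.

```python
import numpy as np


def agreement_summary(a, b):
    """R2 (squared Pearson r), OLS slope of b on a, and mean absolute difference."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    r = np.corrcoef(a, b)[0, 1]
    slope = np.polyfit(a, b, 1)[0]
    return float(r ** 2), float(slope), float(np.mean(np.abs(b - a)))


model_a = np.array([3.0, 4.0, 5.0, 6.0, 7.0])   # hypothetical LAI estimates
model_b = 2.0 + 0.6 * model_a                   # perfectly correlated but rescaled
r2_ab, slope_ab, mad_ab = agreement_summary(model_a, model_b)
print(round(r2_ab, 3), round(slope_ab, 3), round(mad_ab, 3))  # prints 1.0 0.6 0.48
```

A high R2 therefore says only that the two maps rank quadrats alike; the slope and the absolute differences show whether they also assign similar LAI values.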

To improve the accuracy of wheat LAI inversion, this study used UAV multispectral images to evaluate the potential of vegetation indices and texture features and then compared the estimation accuracy of classical machine learning models (PLSR and RF) with that of a deep learning model (CNN). Vegetation indices were more strongly correlated with LAI than texture features. This likely stems from vegetation indices being combinations of spectral reflectances that partially capture the photosynthetic capacity of vegetation. These findings align with studies such as Han et al. (2022), who reported excellent performance of vegetation indices for LAI estimation. However, the results of this study differ from those of some earlier studies (Ilniyaz et al., 2023; Fan et al., 2023). These discrepancies may be attributed to the sensitivity of texture features to factors such as vegetation type and image resolution. Moreover, the vegetation indices used in this study differ from those used in previous studies, which may also contribute to the differences in results. Although LAI estimation using combined spectral and textural features is more accurate than using either feature set alone, the improvement is modest. This may be because both feature sets are already strongly correlated with LAI, so combining them produces little synergistic effect. These findings are consistent with previous research (Zhang et al., 2022b), which also showed that combining spectral and texture features yields only limited improvement in LAI estimation accuracy.
In addition, of the ten texture features retained after selection, eight were derived from the red band and only two from the near-infrared band. This suggests that red-band texture is more strongly correlated with LAI and that texture in the red band is more sensitive to changes in the wheat canopy.

In this study, traditional machine learning and deep learning models were used to estimate wheat LAI. The CNN model achieved the highest accuracy, followed by the RF model, with PLSR exhibiting the lowest accuracy. This agrees with previous studies reporting higher accuracy for CNN models than for conventional machine learning models (Wittstruck et al., 2022; Yamaguchi et al., 2021). Unlike conventional machine learning approaches, a CNN can extract features directly from raw data (Xu et al., 2021), eliminating laborious manual feature selection and cumbersome computation, which may explain why the CNN outperformed the other models. Extensive studies have shown that the relationships between LAI and spectral information are inherently nonlinear (Ma et al., 2022; Gao et al., 2023). The RF regression model is generally robust in handling high-dimensional data and nonlinear relationships, whereas PLSR, being a linear regression method, cannot adequately capture these nonlinear relationships between spectral reflectance and LAI. As a result, PLSR estimated LAI less accurately than RF.

Despite the high inversion accuracy of the three models, at high LAI values most points in the scatter plots fall below the 1:1 line (Figure 4), indicating that the models tend to underestimate. A likely cause is that at high LAI the canopy becomes denser, making it difficult for light to penetrate into the canopy interior; the resulting saturation effect limits the ability of the images to capture wheat canopy information (Wittstruck et al., 2022; Li et al., 2021). In addition, an imbalanced distribution of LAI values in the dataset may contribute to the underestimation. For instance, the dataset in this study contains fewer samples with high LAI than with medium and low LAI, so the models may not have learned the spectral features associated with high LAI sufficiently.

Our proposed models estimated crop LAI with high precision at the small (field) scale. Such fine-scale estimates can also provide timely validation data and a reference for estimating LAI over larger areas from satellite remote sensing data, such as Landsat and Sentinel. Despite these promising results, this study has certain limitations. Firstly, it uses UAV images of winter wheat from a single growth period only, so the model's ability to generalize across the different growth stages of winter wheat could not be validated or tested. This is crucial for the practical application of the model in agricultural management, which requires accurate LAI estimates across the entire growth cycle (Li et al., 2023). Secondly, the CNN was not compared with mature pre-trained deep learning models. Pre-trained models have previously been trained on large datasets and can be adapted to various tasks. This ability to transfer learned features across different problems is a key advantage of deep learning over traditional shallow learning methods (Chollet et al., 2022). Thirdly, researchers have pointed out that vegetation height is correlated with aboveground biomass (AGB) and can be used for AGB estimation (Liu et al., 2022b; Li et al., 2020). Because AGB is closely related to LAI, vegetation height can also be used for LAI inversion (Wittstruck et al., 2022). Future research may explore incorporating such structural features as model inputs. Finally, the measured LAI values were obtained indirectly, which introduces some error compared with direct destructive measurements. Therefore, future studies should consider the potential discrepancy between these two measurement methods and how it propagates into model training and evaluation.
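The first limitation suggests a concrete validation design for future multi-stage datasets: train on all growth stages but one, test on the held-out stage, and repeat for every stage. The sketch below only generates such leave-one-stage-out index splits; the stage labels in the usage example are hypothetical, and model fitting is left out. scikit-learn's `LeaveOneGroupOut` provides an equivalent splitter for array-based workflows.

```python
def leave_one_group_out(groups):
    """For each distinct group label (in order of first appearance), yield
    (held_out_label, train_indices, test_indices)."""
    for held_out in dict.fromkeys(groups):  # ordered, de-duplicated labels
        train = [i for i, g in enumerate(groups) if g != held_out]
        test = [i for i, g in enumerate(groups) if g == held_out]
        yield held_out, train, test


# Hypothetical growth-stage label for each sampled plot.
stages = ["jointing", "jointing", "booting", "heading", "booting", "heading"]
for stage, train_idx, test_idx in leave_one_group_out(stages):
    # A model would be fitted on train_idx and evaluated on test_idx here.
    print(f"hold out {stage}: train {train_idx}, test {test_idx}")
```

Holding out a whole stage, rather than random samples, tests whether a model trained on some phenological conditions transfers to conditions it has never seen.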

This study compares the performance of classical machine learning methods (PLSR and RF) and a deep learning approach (CNN) in estimating the LAI of winter wheat from UAV multispectral images. For both PLSR and RF, estimation accuracy was markedly higher with vegetation indices than with texture features. For the PLSR model, the R² values obtained using only vegetation indices or only texture features were 0.75 and 0.70, respectively, whereas for the RF model the R² obtained with vegetation indices (0.82) was considerably higher than that obtained with texture features (0.66). The highest accuracy was achieved by combining vegetation indices and texture features, with R² values of 0.78 and 0.82 for PLSR and RF, respectively. The CNN method achieved slightly higher LAI estimation accuracy (R² = 0.83) than both classical methods, demonstrating its ability to learn complex relationships between spectral features and LAI. Moreover, the spatial distribution and numerical values of the LAI estimates from the RF and CNN models were highly similar, suggesting that both methods capture the spatial patterns of LAI well, whereas the PLSR results differed markedly from those of the other two models. In summary, this study successfully employs a CNN with UAV multispectral images to accurately estimate winter wheat LAI. This approach offers a rapid and cost-effective method for monitoring winter wheat growth and a valuable reference for winter wheat field management.
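The similarity between the RF and CNN maps is described qualitatively here. One way to quantify agreement between two LAI maps, sketched under the assumption that both are aligned rasters of equal shape with None marking pixels outside the field, is to compute the pixel-wise Pearson correlation and the mean absolute difference. This is an illustrative metric choice, not necessarily how the authors compared their maps, and the tiny maps in the usage example are made-up values.

```python
import math


def map_agreement(map_a, map_b):
    """Pixel-wise Pearson correlation and mean absolute difference between two
    aligned LAI rasters given as lists of rows. Pixels that are None in either
    map (e.g. outside the field boundary) are skipped."""
    if len(map_a) != len(map_b) or any(len(ra) != len(rb) for ra, rb in zip(map_a, map_b)):
        raise ValueError("maps must have the same shape")
    pairs = [
        (a, b)
        for row_a, row_b in zip(map_a, map_b)
        for a, b in zip(row_a, row_b)
        if a is not None and b is not None
    ]
    n = len(pairs)
    mean_a = sum(a for a, _ in pairs) / n
    mean_b = sum(b for _, b in pairs) / n
    cov = sum((a - mean_a) * (b - mean_b) for a, b in pairs)
    var_a = sum((a - mean_a) ** 2 for a, _ in pairs)
    var_b = sum((b - mean_b) ** 2 for _, b in pairs)
    r = cov / math.sqrt(var_a * var_b)
    mean_abs_diff = sum(abs(a - b) for a, b in pairs) / n
    return r, mean_abs_diff


# Made-up 2 x 3 LAI maps; None marks a no-data pixel.
rf_map = [[2.0, 3.0, None], [4.0, 5.0, 6.0]]
cnn_map = [[2.2, 3.1, 4.0], [3.9, 5.2, 6.1]]
r, mad = map_agreement(rf_map, cnn_map)
print(f"Pearson r = {r:.3f}, mean |difference| = {mad:.2f}")
# Pearson r = 0.997, mean |difference| = 0.14
```

Correlation captures whether two maps share the same spatial pattern, while the mean absolute difference captures whether their values agree; two maps can be highly correlated yet offset, so both are worth reporting.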

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding author.

JZ: Conceptualization, Software, Writing – original draft, Writing – review & editing. HY: Conceptualization, Software, Writing – original draft. JW: Writing – original draft. WC: Writing – original draft. YY: Conceptualization, Writing – review & editing.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This research was funded by the National Natural Science Foundation of China (Grant No. 42001216), the fifth batch of special funds for Bagui scholars and Guangxi Science and Technology Project (Grant No. AD19110143).

We would like to thank the editors and reviewers, who put great effort into their comments on this manuscript. We also thank the data publishers and funding agencies.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Apolo-Apolo, O. E., Pérez-Ruiz, M., Martínez-Guanter, J., Egea, G. A. (2020). Mixed data-based deep neural network to estimate leaf area index in wheat breeding trials. Agronomy 10, 175. doi: 10.3390/agronomy10020175

Bellis, E. S., Hashem, A. A., Causey, J. L., Runkle, B. R. K., Moreno-Garcia, B., Burns, B. W., et al. (2022). Detecting intra-field variation in rice yield with unmanned aerial vehicle imagery and deep learning. Front. Plant Sci. 13. doi: 10.3389/fpls.2022.716506

Bendig, J., Yu, K., Aasen, H., Bolten, A., Bennertz, S., Broscheit, J., et al. (2015). Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Observation Geoinformation 39, 79–87. doi: 10.1016/j.jag.2015.02.012

Castro-Valdecantos, P., Apolo-Apolo, O. E., Pérez-Ruiz, M., Egea, G. (2022). Leaf area index estimations by deep learning models using RGB images and data fusion in maize. Precis. Agric. 23, 1949–1966. doi: 10.1007/s11119-022-09940-0

Chen, J. M., Pavlic, G., Brown, L., Cihlar, J., Leblanc, S. G., White, H. P., et al. (2002). Derivation and validation of Canada-wide coarse-resolution leaf area index maps using high-resolution satellite imagery and ground measurements. Remote Sens. Environ. 80, 165–184. doi: 10.1016/S0034-4257(01)00300-5

Chollet, F., Kalinowski, T., Allaire, J. J. (2022). Deep learning with R. 2nd ed (Shelter Island: Manning), 568.

Corti, M., Cavalli, D., Cabassi, G., Bechini, L., Pricca, N., Paolo, D., et al. (2022). Improved estimation of herbaceous crop aboveground biomass using UAV-derived crop height combined with vegetation indices. Precis. Agric. 24, 587–606. doi: 10.1007/s11119-022-09960-w

Cui, Z., Kerekes, J. P. (2018). Potential of red edge spectral bands in future landsat satellites on agroecosystem canopy green leaf area index retrieval. Remote Sens. 10, 1458. doi: 10.3390/rs10091458

Datt, B. (1999). Remote sensing of water content in Eucalyptus leaves. Aust. J. Bot. 47, 909–923. doi: 10.1071/BT98042

Du, L., Yang, H., Song, X., Wei, N., Yu, C., Wang, W., et al. (2022). Estimating leaf area index of maize using UAV-based digital imagery and machine learning methods. Sci. Rep. 12, 15937. doi: 10.1038/s41598-022-20299-0

Fan, J., Wang, H., Liao, Z., Dai, Y., Yu, J., Feng, H. (2023). Winter wheat leaf area index estimation based on texture-color features and vegetation indices. Trans. Chin. Soc. Agric. Machinery 54, 347–359. doi: 10.6041/j.issn.1000-1298.202307.035

Fitzgerald, G., Rodriguez, D., O’Leary, G. (2010). Measuring and predicting canopy nitrogen nutrition in wheat using a spectral index—The canopy chlorophyll content index (CCCI). Field Crops Res. 116, 318–324. doi: 10.1016/j.fcr.2010.01.010

Fu, Y., Yang, G., Li, Z., Song, X., Li, Z., Xu, X., et al. (2020). Winter wheat nitrogen status estimation using UAV-based RGB imagery and gaussian processes regression. Remote Sens. 12, 3778. doi: 10.3390/rs12223778

Gao, S., Zhong, R., Yan, K., Ma, X., Chen, X., Pu, J., et al. (2023). Evaluating the saturation effect of vegetation indices in forests using 3D radiative transfer simulations and satellite observations. Remote Sens. Environ. 295, 113665. doi: 10.1016/j.rse.2023.113665

Gitelson, A. A., Kaufman, Y. J., Merzlyak, M. N. (1996). Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 58, 289–298. doi: 10.1016/S0034-4257(96)00072-7

Guerrero, J. M., Pajares, G., Montalvo, M., Romeo, J., Guijarro, M. (2012). Support vector machines for crop/weeds identification in maize fields. Expert Syst. Appl. 39, 11149–11155. doi: 10.1016/j.eswa.2012.03.040

Guijarro, M., Pajares, G., Riomoros, I., Herrera, P., Burgos-Artizzu, X., Ribeiro, A. (2011). Automatic segmentation of relevant textures in agricultural images. Comput. Electron. Agric. 75, 75–83. doi: 10.1016/j.compag.2010.09.013

Guo, Y., Xiao, Y., Hao, F., Zhang, X., Chen, J., de Beurs, K., et al. (2023). Comparison of different machine learning algorithms for predicting maize grain yield using UAV-based hyperspectral images. Int. J. Appl. Earth Observation Geoinformation 124, 103528. doi: 10.1016/j.jag.2023.103528

Guoxiang, S., Yongbo, L., Xiaochan, W., Guyue, H., Xuan, W., Yu, Z. (2016). Image segmentation algorithm for greenhouse cucumber canopy under various natural lighting conditions. Int. J. Agric. Biol. Eng. 9, 130–138.

Hague, T., Tillett, N., Wheeler, H. (2006). Automated crop and weed monitoring in widely spaced cereals. Precis. Agric. 7, 21–32. doi: 10.1007/s11119-005-6787-1

Han, J., Feng, C., Peng, J., Wang, Y., Shi, Z. (2022). Estimation of leaf area index of cotton from unmanned aerial vehicle multispectral images with different resolutions. Cotton Sci. 34, 338–349. doi: 10.11963/cs20220017

Hunt, E. R., Daughtry, C., Eitel, J. U., Long, D. S. (2011). Remote sensing leaf chlorophyll content using a visible band index. Agron. J. 103, 1090–1099.

Hunt, E. R., Cavigelli, M., Daughtry, C. S., Mcmurtrey, J. E., Walthall, C. L. (2005). Evaluation of digital photography from model aircraft for remote sensing of crop biomass and nitrogen status. Precis. Agric. 6, 359–378. doi: 10.1007/s11119-005-2324-5

IDRC (2010). Facts and figures on food and biodiversity (Canada: IDRC Communications, International Development Research Centre).

Ilniyaz, O., Du, Q., Shen, H., He, W., Feng, L., Azadi, H., et al. (2023). Leaf area index estimation of pergola-trained vineyards in arid regions using classical and deep learning methods based on UAV-based RGB images. Comput. Electron. Agric. 207, 107723. doi: 10.1016/j.compag.2023.107723

Jordan, C. F. (1969). Derivation of leaf-area index from quality of light on the forest floor. Ecology 50, 663–666. doi: 10.2307/1936256

Jordan, M. I., Mitchell, T. M. (2015). Machine learning: Trends, perspectives, and prospects. Science 349, 255–260. doi: 10.1126/science.aaa8415

Kang, Y., Ozdogan, M., Gao, F., Anderson, M. C., White, W. A., Yang, Y., et al. (2021). A data-driven approach to estimate leaf area index for Landsat images over the contiguous US. Remote Sens. Environ. 258, 112383. doi: 10.1016/j.rse.2021.112383

Kataoka, T., Kaneko, T., Okamoto, H., Hata, S. (2003). “Crop growth estimation system using machine vision,” in Proceedings 2003 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM 2003) (Piscataway: IEEE Service Center), b1079–b1083.

Kattenborn, T., Leitloff, J., Schiefer, F., Hinz, S. (2021). Review on Convolutional Neural Networks (CNN) in vegetation remote sensing. ISPRS J. Photogrammetry Remote Sens. 173, 24–49. doi: 10.1016/j.isprsjprs.2020.12.010

Lary, D. J., Alavi, A. H., Gandomi, A. H., Walker, A. L. (2016). Machine learning in geosciences and remote sensing. Geosci. Front. 7, 3–10. doi: 10.1016/j.gsf.2015.07.003

Li, B., Xu, X., Zhang, L., Han, J., Bian, C., Li, G., et al. (2020). Above-ground biomass estimation and yield prediction in potato by using UAV-based RGB and hyperspectral imaging. ISPRS J. Photogrammetry Remote Sens. 162, 161–172. doi: 10.1016/j.isprsjprs.2020.02.013

Li, S., Yuan, F., Ata-UI-Karim, S. T., Zheng, H., Cheng, T., Liu, X., et al. (2019). Combining color indices and textures of UAV-based digital imagery for rice LAI estimation. Remote Sens. 11, 1763. doi: 10.3390/rs11151763

Li, W., Wang, J., Zhang, Y., Yin, Q., Wang, W., Zhou, G., et al. (2023). Combining texture, color, and vegetation index from unmanned aerial vehicle multispectral images to estimate winter wheat leaf area index during the vegetative growth stage. Remote Sens. 15, 5715. doi: 10.3390/rs15245715

Li, Y., Liu, H., Ma, J., Zhang, L. (2021). Estimation of leaf area index for winter wheat at early stages based on convolutional neural networks. Comput. Electron. Agric. 190, 106480. doi: 10.1016/j.compag.2021.106480

Liang, M., Zhou, T., Zhang, F., Yang, J., Xia, Y. (2018). Research on convolutional neural network and its application on medical image. J. Biomed. Eng. 35, 977–985. doi: 10.7507/1001-5515.201710060

Liu, Y., An, L., Wang, N., Tang, W., Liu, M., Liu, G., et al. (2023a). Leaf area index estimation under wheat powdery mildew stress by integrating UAV−based spectral, textural and structural features. Comput. Electron. Agric. 213, 108169. doi: 10.1016/j.compag.2023.108169

Liu, Y., Feng, H., Yue, J., Fan, Y., Bian, M., Ma, Y., et al. (2023b). Estimating potato above-ground biomass by using integrated unmanned aerial system-based optical, structural, and textural canopy measurements. Comput. Electron. Agric. 213, 108229. doi: 10.1016/j.compag.2023.108229

Liu, Y., Feng, H., Yue, J., Jin, X., Li, Z., Yang, G. (2022a). Estimation of potato above-ground biomass based on unmanned aerial vehicle red-green-blue images with different texture features and crop height. Front. Plant Sci. 13. doi: 10.3389/fpls.2022.938216

Liu, Y., Feng, H., Yue, J., Li, Z., Yang, G., Song, X., et al. (2022b). Remote-sensing estimation of potato above-ground biomass based on spectral and spatial features extracted from high-definition digital camera images. Comput. Electron. Agric. 198, 107089. doi: 10.1016/j.compag.2022.107089

Liu, C., Yang, G., Li, Z., Tang, F., Wang, J., Zhang, C., et al. (2018). Biomass estimation in winter wheat by UAV spectral information and texture information fusion. Scientia Agricultura Sin. 51, 3060–3073. doi: 10.3864/j.issn.0578-1752.2018.16.003

Liu, T., Zhang, H., Wang, Z., He, C., Zhang, Q., Jiao, Y. (2021). Estimation of the leaf area index and chlorophyll content of wheat using UAV multi-spectrum images. Trans. Chin. Soc. Agric. Eng. 37, 65–72. doi: 10.11975/j.issn.1002-6819.2021.19.008

Luo, X., Xie, T., Dong, S. (2023). Estimation of citrus canopy chlorophyll based on UAV multispectral images. Trans. Chin. Soc. Agric. Machinery 54, 198–205. doi: 10.6041/j.issn.1000-1298.2003.04.019

Ma, J., Chen, P., Wang, L. (2023). A comparison of different data fusion strategies’ effects on maize leaf area index prediction using multisource data from unmanned aerial vehicles (UAVs). Drones 7, 605. doi: 10.3390/drones7100605

Ma, J., Li, Y., Chen, Y., Du, K., Zheng, F., Zhang, L., et al. (2019). Estimating above ground biomass of winter wheat at early growth stages using digital images and deep convolutional neural network. Eur. J. Agron. 103, 117–129. doi: 10.1016/j.eja.2018.12.004

Ma, J., Wang, L., Chen, P. (2022). Comparing different methods for wheat LAI inversion based on hyperspectral data. Agriculture 12, 1353. doi: 10.3390/agriculture12091353

Mao, W., Wang, Y., Wang, Y. (2003). “Real-time detection of between-row weeds using machine vision,” in Proceedings of the 2003 ASAE Annual Meeting, 1. doi: 10.13031/2013.15381

Meyer, G. E., Neto, J. C. (2008). Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 63, 282–293. doi: 10.1016/j.compag.2008.03.009

Niu, Q., Feng, H., Yang, G., Li, C., Yang, H., Xu, B., et al. (2018). Monitoring plant height and leaf area index of maize breeding material based on UAV digital images. Trans. Chin. Soc. Agric. Eng. 34, 73–82. doi: 10.11975/j.issn.1002-6819.2018.05.010

Osco, L. P., Marcato Junior, J., Marques Ramos, A. P., de Castro Jorge, L. A., Fatholahi, S. N., de Andrade Silva, J., et al. (2021). A review on deep learning in UAV remote sensing. Int. J. Appl. Earth Observation Geoinformation 102, 102456. doi: 10.1016/j.jag.2021.102456

Peng, X., Han, W., Ao, J., Wang, Y. (2021). Assimilation of LAI derived from UAV multispectral data into the SAFY model to estimate maize yield. Remote Sens. 13, 1094. doi: 10.3390/rs13061094

Sellaro, R., Crepy, M., Trupkin, S. A., Karayekov, E., Buchovsky, A. S., Rossi, C., et al. (2010). Cryptochrome as a sensor of the blue/green ratio of natural radiation in Arabidopsis. Plant Physiol. 154, 401–409. doi: 10.1104/pp.110.160820

Shao, Y., Tang, Q., Cui, J., Li, X., Wang, L., Lin, T. (2023). Cotton leaf area index estimation combining UAV spectral and textural features. Trans. Chin. Soc. Agric. Machinery 54, 186–196. doi: 10.6041/j.issn.1000-1298.2023.06.019

Tang, S., Zhang, X., Qi, W., Zhang, M. (2022). Retrieving grape leaf area index with hyperspectral data based on long short-term memory neural network. Remote Sens. Inf. 37, 38–44. doi: 10.3969/j.issn.1000-3177.2022.05.006

Tao, H., Feng, H., Yang, G., Yang, X., Liu, M., Liu, S. (2020a). Leaf area index estimation of winter wheat based on UAV imaging hyperspectral imagery. Trans. Chin. Soc. Agric. Machinery 51, 176–187. doi: 10.6041/j.issn.1000-1298.2020.01.019

Tao, H., Xu, L., Feng, H., Yang, G., Dai, Y., Niu, Y. (2020b). Estimation of plant height and leaf area index of winter wheat based on UAV hyperspectral remote sensing. Trans. Chin. Soc. Agric. Machinery 51, 193–201. doi: 10.6041/j.issn.1000-1298.2020.12.021

Verrelst, J., Schaepman, M. E., Koetz, B., Kneubühler, M. (2008). Angular sensitivity analysis of vegetation indices derived from CHRIS/PROBA data. Remote Sens. Environ. 112, 2341–2353. doi: 10.1016/j.rse.2007.11.001

Wang, X., Wang, M., Wang, S., Wu, Y. (2015). Extraction of vegetation information from visible unmanned aerial vehicle images. Trans. Chin. Soc. Agric. Eng. 31, 152–159. doi: 10.3969/j.issn.1002-6819.2015.05.022

Wang, L., Xu, J., He, J., Li, B., Yang, X., Wang, L., et al. (2020). Estimating leaf area index and yield of maize based on remote sensing by unmanned aerial vehicle. J. Maize Sci. 28, 88–93. doi: 10.13597/j.cnki.maize.science.20200613

Wittstruck, L., Jarmer, T., Trautz, D., Waske, B. (2022). Estimating LAI from winter wheat using UAV data and CNNs. IEEE Geosci. Remote Sens. Lett. 19, 1–5. doi: 10.1109/LGRS.2022.3141497

Woebbecke, D. M., Meyer, G. E., Von Bargen, K., Mortensen, D. A. (1995). Color indices for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 38, 259–269. doi: 10.13031/2013.27838

Xiao, Z., Liang, S., Wang, J., Xiang, Y., Zhao, X., Song, J. (2016). Long-time-series global land surface satellite leaf area index product derived from MODIS and AVHRR surface reflectance. IEEE Trans. Geosci. Remote Sens. 54, 5301–5318. doi: 10.1109/TGRS.2016.2560522

Xiong, X., Duan, L., Liu, L., Tu, H., Yang, P., Wu, D., et al. (2017). Panicle-SEG: a robust image segmentation method for rice panicles in the field based on deep learning and superpixel optimization. Plant Methods 13, 104. doi: 10.1186/s13007-017-0254-7

Xu, L., Zhou, L., Meng, R., Zhao, F., Lv, Z., Xu, B., et al. (2022). An improved approach to estimate ratoon rice aboveground biomass by integrating UAV-based spectral, textural and structural features. Precis. Agric. 23, 1276–1301. doi: 10.1007/s11119-022-09884-5

Xu, Y., Zhou, Y., Sekula, P., Ding, L. (2021). Machine learning in construction: From shallow to deep learning. Developments Built Environ. 6, 100045. doi: 10.1016/j.dibe.2021.100045

Yamaguchi, T., Tanaka, Y., Imachi, Y., Yamashita, M., Katsura, K. (2021). Feasibility of combining deep learning and RGB images obtained by unmanned aerial vehicle for leaf area index estimation in rice. Remote Sens. 13, 84. doi: 10.3390/rs13010084

Yan, G., Hu, R., Luo, J., Mu, X., Xie, D., Zhang, W. (2016). Review of indirect methods for leaf area index measurement. J. Remote Sens. 20, 958–978. doi: 10.11834/jrs.20166238

Yang, C., Everitt, J. H., Bradford, J. M., Murden, D. (2004). Airborne hyperspectral imagery and yield monitor data for mapping cotton yield variability. Precis. Agric. 5, 445–461. doi: 10.1007/s11119-004-5319-8

Yang, Q., Shi, L., Han, J., Zha, Y., Zhu, P. (2019). Deep convolutional neural networks for rice grain yield estimation at the ripening stage using UAV-based remotely sensed images. Field Crops Res. 235, 142–153. doi: 10.1016/j.fcr.2019.02.022

Yao, H., Qin, R., Chen, X. (2019). Unmanned aerial vehicle for remote sensing applications—A review. Remote Sens. 11, 1443. doi: 10.3390/rs11121443

Yin, Q., Zhang, Y., Li, W., Wang, J., Wang, W., Ahmad, I., et al. (2023). Estimation of winter wheat SPAD values based on UAV multispectral remote sensing. Remote Sens. 15, 3595. doi: 10.3390/rs15143595

Yu, F., Xu, T., Guo, Z., Du, W., Wang, D., Cao, Y. (2020). Remote sensing inversion of chlorophyll content in rice leaves in cold region based on Optimizing Red-edge Vegetation Index (ORVI). Smart Agric. 2, 77–86. doi: 10.12133/j.smartag.2020.2.1.201911-SA003

Zhang, D., Han, X., Lin, F., Du, S., Zhang, G., Hong, Q. (2022a). Estimation of winter wheat leaf area index using multi-source UAV image feature fusion. Trans. Chin. Soc. Agric. Eng. 38, 171–179. doi: 10.11975/j.issn.1002-6819.2022.09.018

Zhang, X., Zhang, K., Sun, Y., Zhao, Y., Zhuang, H., Ban, W., et al. (2022b). Combining spectral and texture features of UAS-based multispectral images for maize leaf area index estimation. Remote Sens. 14, 331. doi: 10.3390/rs14020331

Zheng, H., Ma, J., Zhou, M., Li, D., Yao, X., Cao, W., et al. (2020). Enhancing the nitrogen signals of rice canopies across critical growth stages through the integration of textural and spectral information from unmanned aerial vehicle (UAV) multispectral imagery. Remote Sens. 12, 957. doi: 10.3390/rs12060957

Zhou, X., Zheng, H. B., Xu, X. Q., He, J. Y., Ge, X. K., Yao, X., et al. (2017). Predicting grain yield in rice using multi-temporal vegetation indices from UAV-based multispectral and digital imagery. ISPRS J. Photogrammetry Remote Sens. 130, 246–255. doi: 10.1016/j.isprsjprs.2017.05.003

Keywords: leaf area index, multispectral, UAV, machine learning, convolutional neural network, orthophoto

Citation: Zu J, Yang H, Wang J, Cai W and Yang Y (2024) Inversion of winter wheat leaf area index from UAV multispectral images: classical vs. deep learning approaches. Front. Plant Sci. 15:1367828. doi: 10.3389/fpls.2024.1367828

Received: 09 January 2024; Accepted: 27 February 2024;

Published: 14 March 2024.

Edited by:

Lars Hendrik Wegner, Foshan University, China

Reviewed by:

Juan De Dios Franco-Navarro, Spanish National Research Council (CSIC), Spain

Copyright © 2024 Zu, Yang, Wang, Cai and Yang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yuanzheng Yang, yangyz@nnnu.edu.cn
