EDITORIAL article

Front. Plant Sci., 05 June 2023

Sec. Sustainable and Intelligent Phytoprotection

Volume 14 - 2023 | https://doi.org/10.3389/fpls.2023.1215899

This article is part of the Research Topic AI, Sensors and Robotics in Plant Phenotyping and Precision Agriculture, Volume II

Editorial on the Research Topic

AI, sensors and robotics in plant phenotyping and precision agriculture, volume II

With climate change and population growth, the ratio of food production to demand is steadily shrinking. Plants and plant production are crucial for sustaining natural ecosystems and ensuring human food security (Qiao et al., 2022). With rapid technological progress in robotics and artificial intelligence (AI), plant phenotyping and precision agriculture have begun to play an important role in intelligent phytoprotection, soil protection, reducing chemical and labor costs, and securing the food supply (Qiao et al., 2022). Plant phenotyping refers to measuring the observable characteristics, or traits, of plants. These traits are jointly determined by genotype and environment, and they form during growth and development through the dynamic interaction between a plant's genetic background and the physical environment in which it grows (Li et al., 2020).

Precision agriculture helps maximize the efficiency of soil and water use while minimizing loss and waste. It also increases crop yields while reducing variability and input costs (Cisternas et al., 2020).

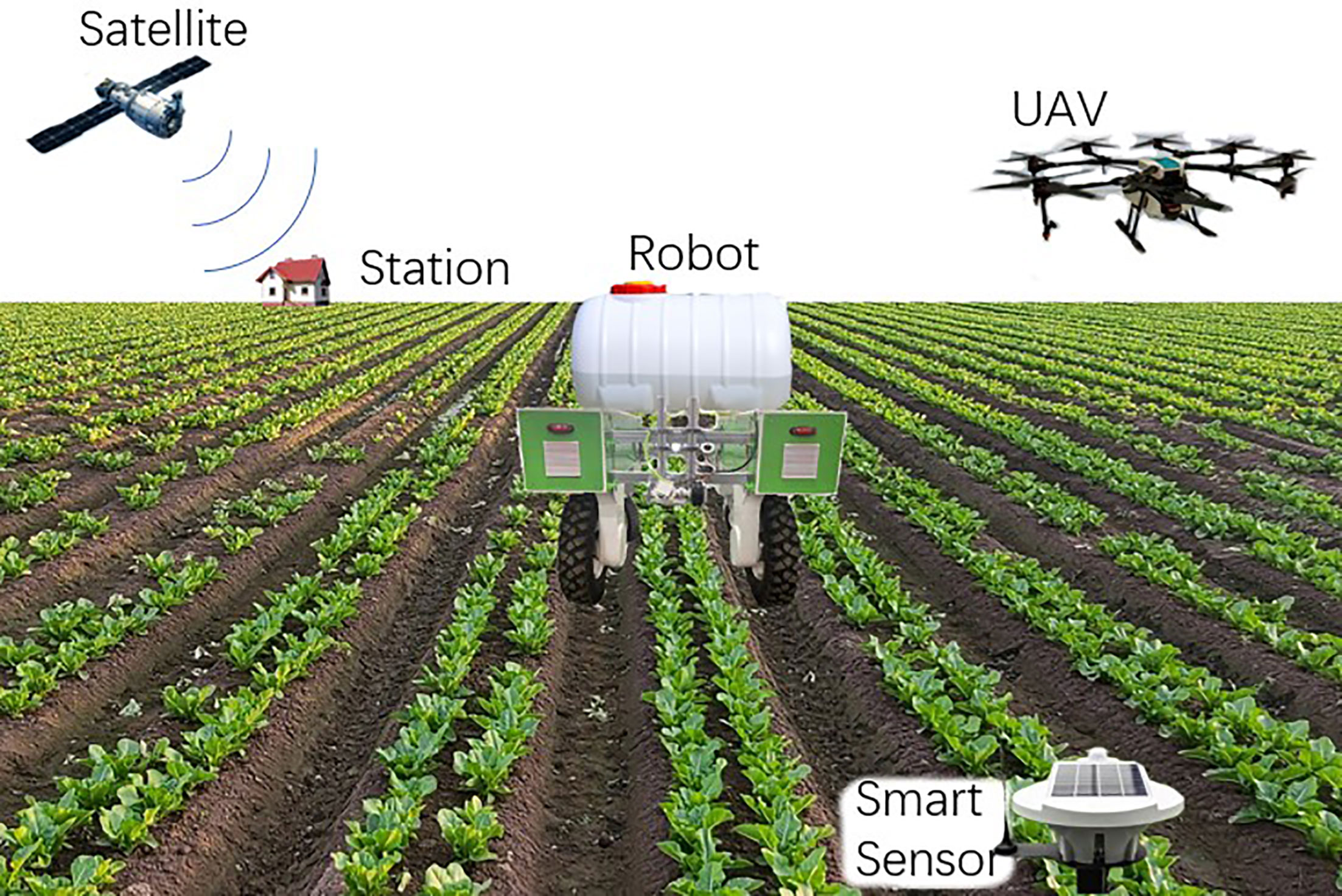

In recent years, researchers have made significant progress in developing AI methods, sensor technologies and agricultural robots for planting and monitoring plants (Weyler et al., 2021; Lottes et al., 2020; Hu et al., 2022; Su et al., 2021), as shown in Figure 1. With AI, a large number of morphological, physiological and chemical plant parameters can be measured rapidly and conveniently (Li et al., 2020). The integration of AI and robotics also enables real-time plant monitoring in complex field and controlled environments (Atefi et al., 2021). Probing the complex physiology of plants through phenotyping makes it possible to obtain higher-quality seeds (Watt et al., 2020). Moreover, in plant protection, the application of pesticides and fertilizers can be reduced, ultimately contributing to a more sustainable agricultural environment (Vélez et al., 2023).

Figure 1. AI-, sensor- and robotics-based dynamic 3-D plant phenotyping and precision agriculture framework.

As an effective tool and process, plant phenotyping is an essential part of modern, intelligent and precise agricultural production. Physiological and morphological plant parameters are acquired by sensors such as RGB cameras, lidar, and multispectral and hyperspectral cameras, and they serve as the basis for decisions in real-time and future plant management (Rivera et al., 2023).

Shen et al. proposed a new backbone network, ResNet50FPN-ED, for the conventional Mask R-CNN instance segmentation model to improve the detection and segmentation of grape clusters in complex field environments. It achieved an average precision (AP) of 60.1% for object detection and 59.5% for instance segmentation. Sun et al. proposed MCBLNet, a multi-scale cotton boll localization method based on point annotation, which achieved an average accuracy of 49.4% on the test dataset, higher than that of traditional point-based localization methods. Based on an improved YOLOv5 model, Wang et al. developed a fast and accurate litchi fruit detection method and a corresponding software program. The mean average precision (mAP) of the improved model was 3.5% higher than that of the original model, and the R² between the yield estimated in the application test and the actual yield was 0.9879. Using imaging technology, Li et al. performed, in sequence, three-dimensional reconstruction, point cloud preprocessing, phenotypic parameter analysis, and stem and leaf recognition and segmentation of corn seedlings, opening a new path for maize phenotyping research. Li et al. proposed a Germination Sparse Classification (GSC) method based on hyperspectral imaging to detect fresh maize with peeling damage. Its overall classification accuracy was 98.33% on the training set and 95.00% on the test set.
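The AP and mAP figures above summarize how well each detector ranks correct detections above incorrect ones. The editorial does not say which evaluation protocol each study used (COCO-style AP, commonly reported for Mask R-CNN, additionally averages over several IoU thresholds), so the following is only a minimal sketch of all-point interpolated AP at a single IoU threshold, assuming each detection has already been labeled as a true or false positive. The function name `average_precision` is hypothetical.

```python
def average_precision(scores, is_true_positive, num_ground_truth):
    """All-point interpolated AP at one IoU threshold (PASCAL VOC 2010+ style).

    scores: confidence of each detection.
    is_true_positive: True if the detection matched a not-yet-matched
        ground-truth object at the chosen IoU threshold (assumed precomputed).
    num_ground_truth: number of ground-truth objects of this class.
    """
    if num_ground_truth == 0:
        return 0.0
    # Rank detections from most to least confident.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:
        if is_true_positive[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Sum rectangle areas wherever recall increases (false positives add 0).
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


# Two objects; the correct detections are ranked first and third.
print(average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, False], 2))
```

In this toy example the AP is about 5/6 rather than 1 because a false positive is ranked above the second true positive. mAP is then the mean of this value over object classes (and, under COCO conventions, over IoU thresholds as well).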

Pests and diseases occur irregularly and damage plant growth and production, so detecting them in time is critical for taking the necessary action. Recent advances in computer vision have made it a popular approach to this task (Guo et al., 2023).

To enable rapid detection of field crop diseases, Dai et al. proposed a novel network architecture, YOLO V5-CAcT, and deployed it with the NCNN inference framework, making it an industrial-grade solution for crop disease recognition. On 59 categories of crop disease images from 10 crop varieties, the average recognition accuracy reached 94.24%, the average inference time was 1.56 ms per sample, and the model size was 2 MB. To quantify the severity of leaf infection, Liu et al. proposed an image-based approach with a deep learning-based analysis pipeline. They tested its effectiveness on images of grape leaves infected with downy mildew (DM) and powdery mildew (PM); on the test images, the mean IoU of DM and PM segmentation exceeded 0.84 and 0.74, respectively.
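Severity quantification of this kind is usually built on per-pixel class maps. As a minimal sketch, assuming a hypothetical label convention (0 = background, 1 = healthy leaf, 2 = lesion) that the editorial does not specify, per-class IoU, mean IoU and a lesion-area severity ratio could be computed as follows. Studies differ in whether "mean IoU" is averaged over classes or over images; this sketch averages over classes.

```python
def class_iou(pred, truth, label):
    """IoU of one class between predicted and reference label maps (lists of rows)."""
    inter = union = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            in_pred, in_truth = p == label, t == label
            inter += int(in_pred and in_truth)
            union += int(in_pred or in_truth)
    # A class absent from both maps has undefined IoU.
    return inter / union if union else float("nan")


def mean_iou(pred, truth, labels):
    """Average of per-class IoU over the given class labels."""
    return sum(class_iou(pred, truth, c) for c in labels) / len(labels)


def severity(label_map, leaf=1, lesion=2):
    """Fraction of leaf area covered by lesions (label values are hypothetical)."""
    flat = [v for row in label_map for v in row]
    leaf_area = flat.count(leaf) + flat.count(lesion)
    return flat.count(lesion) / leaf_area if leaf_area else 0.0


# Tiny 2x3 example: prediction over-segments one lesion pixel.
pred = [[0, 1, 1], [1, 2, 2]]
truth = [[0, 1, 1], [1, 1, 2]]
print(class_iou(pred, truth, 2))  # 0.5
print(severity(pred))  # 0.4
```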

Cotton is an important economic crop, and its pest management has long received attention. Fu et al. proposed a quantitative model for monitoring cotton aphid severity from Sentinel-2 data by combining derivative of ratio spectroscopy (DRS) with the random forest (RF) algorithm. The model achieved an overall classification accuracy of 80% and a kappa coefficient of 0.73, outperforming four conventional methods. To make deep convolutional neural network models easy to deploy in mobile apps, Zhu et al. compressed them with pruning algorithms, comparing compressed versions of VGG16, ResNet164 and DenseNet40. At a compression rate of 80%, the compressed VGG16, ResNet164 and DenseNet40 achieved accuracies of 90.77%, 96.31% and 97.23%, respectively. They also developed a cotton disease recognition app for the Android platform, which took 87 ms on average to process a single image on the test phone.
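Overall accuracy and the kappa coefficient reported above are both derived from a confusion matrix. The sketch below computes them using Cohen's kappa, which discounts the agreement expected by chance from the class marginals; the function name and the matrix in the usage example are hypothetical and are not data from Fu et al.

```python
def accuracy_and_kappa(confusion):
    """Overall accuracy and Cohen's kappa from a square confusion matrix.

    confusion[i][j] counts samples of reference class i predicted as class j.
    """
    k = len(confusion)
    n = sum(sum(row) for row in confusion)
    # Observed agreement: fraction of samples on the diagonal.
    observed = sum(confusion[i][i] for i in range(k)) / n
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(confusion[i][j] for i in range(k)) for j in range(k)]
    # Agreement expected by chance, given the marginal class frequencies.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / (n * n)
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


# Hypothetical two-class matrix (not data from the study).
oa, kappa = accuracy_and_kappa([[20, 5], [10, 15]])
print(round(oa, 2), round(kappa, 2))  # 0.7 0.4
```

Because the chance term is subtracted, kappa is never higher than overall accuracy, which is consistent with the 80% accuracy and 0.73 kappa pairing reported above.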

With the rapid development and spread of mobile robots and unmanned aerial vehicles (UAVs), these platforms are increasingly deployed in agriculture to automate operations that would otherwise be dangerous, repetitive or complicated manual work (Vong et al., 2022; Vélez et al., 2023).

To address harvesting challenges in precision agriculture, Zheng et al. designed a robotic gripper for picking clustered tomatoes. In simulation, the gripper smoothly grasped medium and large tomatoes 65–95 mm in diameter, and all grasps met the minimum-damage force condition. For crop management tasks such as robotic spraying and fertilization, Hu et al. proposed LettuceTrack, a multiple object tracking (MOT) method that detects and tracks individual lettuce plants by building a unique feature for each plant. The method is designed to avoid spraying the same lettuce plant more than once. To address the vibration-induced deformation of corn harvesters during operation, Fu et al. proposed an improved empirical mode decomposition (EMD) algorithm to reduce noise and non-stationary vibration in the field. The proposed model reduced noise interference, effectively recovered the useful information in the original signal, and identified the vibration states of the frame with an accuracy of 99.21%.
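Avoiding repeated spraying reduces to giving each plant a persistent identity across frames and triggering the sprayer only the first time that identity appears. LettuceTrack builds plant-specific features for association; the sketch below substitutes a much simpler greedy IoU match between consecutive frames, so `SprayOnceTracker`, `iou_threshold` and the box format `(x1, y1, x2, y2)` are hypothetical choices, and the sketch has no occlusion handling or re-identification.

```python
from itertools import count


def iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class SprayOnceTracker:
    """Greedy IoU tracker that reports each track id for spraying only once."""

    def __init__(self, iou_threshold=0.3):
        self.iou_threshold = iou_threshold
        self.tracks = {}      # track id -> box seen in the previous frame
        self.sprayed = set()  # ids that have already been sprayed
        self._ids = count()

    def update(self, detections):
        """Associate this frame's boxes with previous tracks; return ids to spray now."""
        unmatched = dict(self.tracks)
        new_tracks, to_spray = {}, []
        for box in detections:
            best_id, best_score = None, self.iou_threshold
            for tid, prev in unmatched.items():
                score = iou(box, prev)
                if score >= best_score:
                    best_id, best_score = tid, score
            if best_id is None:
                best_id = next(self._ids)  # unseen plant: start a new track
            else:
                del unmatched[best_id]     # each previous track matches at most once
            new_tracks[best_id] = box
            if best_id not in self.sprayed:
                self.sprayed.add(best_id)
                to_spray.append(best_id)
        # Tracks not seen in this frame are dropped.
        self.tracks = new_tracks
        return to_spray


tracker = SprayOnceTracker()
print(tracker.update([(0, 0, 10, 10), (20, 0, 30, 10)]))  # [0, 1]
print(tracker.update([(2, 0, 12, 10), (22, 0, 32, 10)]))  # []
print(tracker.update([(4, 0, 14, 10), (24, 0, 34, 10), (40, 0, 50, 10)]))  # [2]
```

As the camera moves, the two known plants keep their ids and are not sprayed again; only the newly visible plant triggers the nozzle.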

UAVs can be used to monitor crop health and soil moisture and to identify areas that require irrigation or fertilization. Equipped with advanced sensors and cameras, drones can capture sensing data and conduct surveys that give farmers valuable insight into crop growth and yield (Zhang et al., 2023). Moreover, researchers have begun investigating various aspects of UAV guidance, navigation and control in agriculture to enable real-time crop management with fleets of autonomous UAVs. Huang et al. proposed a distributed control scheme to solve the collision avoidance problem in multi-UAV systems; numerical simulations showed that the method can effectively control multiple UAVs to complete a plant protection task within a predetermined time. Li et al. proposed a solution for identifying wheat lodging in the field: UAVs were used to acquire 3D point cloud data of wheat, which were processed with a neural network to recognize lodging. The classification model achieved F1 scores of 96.7% at the filling stage and 94.6% at the maturity stage.
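The F1 score reported for lodging recognition is the harmonic mean of precision and recall. A minimal sketch from raw true-positive, false-positive and false-negative counts follows; the function name and the counts in the example are hypothetical, and the editorial does not say how per-stage scores were averaged across classes.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall and F1 (harmonic mean of the two) from raw counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical counts: 90 lodged plots found, 10 false alarms, 5 missed.
p, r, f1 = precision_recall_f1(90, 10, 5)
print(round(f1, 3))  # 0.923
```

Equivalently, F1 = 2TP / (2TP + FP + FN), which here is 180/195.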

Plant growth and agricultural production can be unstable because they are strongly affected by the environment. A healthy ecological environment, including forest, land and water resources, is the basis of sustainable development. Researchers are paying increasing attention to applying AI and sensor technology to ecological systems, contributing to sustainable plant protection by sensing and monitoring ecosystems (Maharjan et al., 2022).

Zheng et al. studied forest fire hazard identification and proposed an improved forest fire recognition algorithm that fuses a backpropagation (BP) neural network with a support vector machine (SVM) classifier. They constructed a forest fire dataset and compared the method against different classification algorithms; it achieved an accuracy of 92.73%, demonstrating its effectiveness. Working with smooth channels and ecological channels of different shapes, Zhou et al. proposed a method for arranging ultrasonic sensors to measure channel flow velocity. The method simplified the sensor arrangement in channel flow and improved the accuracy of flow measurement, which can help promote the construction of ecological channels.
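The editorial does not state the measurement principle behind Zhou et al.'s sensors. Assuming a transit-time ultrasonic arrangement, which is widely used for open-channel velocity measurement, a pulse travelling along an acoustic path of length L at angle θ to the flow has downstream and upstream transit times t_down = L / (c + v cos θ) and t_up = L / (c − v cos θ). Subtracting the reciprocals cancels the speed of sound c, giving v = L / (2 cos θ) · (1/t_down − 1/t_up). The sketch below applies this per path; `path_velocity`, `channel_velocity` and the equal default weights are hypothetical, and real multi-path installations use weights that depend on the path layout and channel shape.

```python
import math


def path_velocity(path_length_m, angle_deg, t_down_s, t_up_s):
    """Mean flow velocity along one acoustic path (transit-time principle).

    The speed of sound cancels out, so water temperature need not be known.
    """
    cos_theta = math.cos(math.radians(angle_deg))
    return path_length_m / (2.0 * cos_theta) * (1.0 / t_down_s - 1.0 / t_up_s)


def channel_velocity(path_velocities, weights=None):
    """Hypothetical weighted average of several paths at different depths."""
    if weights is None:
        weights = [1.0] * len(path_velocities)  # placeholder: equal weights
    return sum(v * w for v, w in zip(path_velocities, weights)) / sum(weights)


# Synthetic transit times for c = 1480 m/s and a true velocity of 0.5 m/s.
L, theta, c, v_true = 1.0, 45.0, 1480.0, 0.5
vc = v_true * math.cos(math.radians(theta))
t_down, t_up = L / (c + vc), L / (c - vc)
print(round(path_velocity(L, theta, t_down, t_up), 6))  # 0.5
```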

Sustainable agricultural development requires efforts from multiple perspectives. We need to create a healthy ecological environment, including water resources, forests and soil, so that plants grow in healthy conditions. More rational sensor placement combined with AI can monitor environmental changes in real time, allowing farmers to take better-informed control measures. In addition, plant phenotyping will play an increasingly important role in future agriculture, including plant breeding and plant parameter acquisition. AI and robotics are increasingly being integrated into plant protection, fertilization and harvesting to achieve higher food quality and yield.

A variety of AI methods, intelligent agricultural robots and equipment have been proposed and proven efficient both in laboratories and in agricultural fields. Deploying these methods and robots in real agricultural production while keeping the cost of the entire process low is an upcoming challenge for both researchers and the agricultural industry. Furthermore, multi-robot collaboration, including ground-to-air cooperation, will help shape better smart agricultural systems and build sustainable, circular agricultural systems for future farming.

DS and YQ wrote the original draft. YJ, JV, ZZ, and DH reviewed and edited the paper. All authors contributed to the article and approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Atefi, A., Ge, Y., Pitla, S., Schnable, J. (2021). Robotic technologies for high-throughput plant phenotyping: contemporary reviews and future perspectives. Front. Plant Sci. 12, 611940. 10.3389/fpls.2021.611940

Cisternas, I., Velásquez, I., Caro, A., Rodríguez, A. (2020). Systematic literature review of implementations of precision agriculture. Comput. Electron. Agric. 176, 105626. 10.1016/j.compag.2020.105626

Guo, Q., Wang, C., Xiao, D., Huang, Q. (2023). Automatic monitoring of flying vegetable insect pests using an RGB camera and YOLO-SIP detector. Precis. Agric. 24, 436–457. 10.1007/s11119-022-09952-w

Hu, N., Su, D., Wang, S., Nyamsuren, P., Qiao, Y., Jiang, Y., et al. (2022). LettuceTrack: detection and tracking of lettuce for robotic precision spray in agriculture. Front. Plant Sci. 13, 1003243. 10.3389/fpls.2022.1003243

Li, Z., Guo, R., Li, M., Chen, Y., Li, G. (2020). A review of computer vision technologies for plant phenotyping. Comput. Electron. Agric. 176, 105672. 10.1016/j.compag.2020.105672

Lottes, P., Behley, J., Chebrolu, N., Milioto, A., Stachniss, C. (2020). Robust joint stem detection and crop-weed classification using image sequences for plant-specific treatment in precision farming. J. Field Robotics 37 (1), 20–34. 10.1002/rob.21901

Maharjan, N., Miyazaki, H., Pati, B. M., Dailey, M. N., Shrestha, S., Nakamura, T. (2022). Detection of river plastic using UAV sensor data and deep learning. Remote Sens. 14 (13), 3049. 10.3390/rs14133049

Qiao, Y., Valente, J., Su, D., Zhang, Z., He, D. (2022). Editorial: AI, sensors and robotics in plant phenotyping and precision agriculture. Front. Plant Sci. 13, 1064219. 10.3389/fpls.2022.1064219

Rivera, G., Porras, R., Florencia, R., Sánchez-Solís, J. P. (2023). LiDAR applications in precision agriculture for cultivating crops: a review of recent advances. Comput. Electron. Agric. 207, 107737. 10.1016/j.compag.2023.107737

Su, D., Qiao, Y., Kong, H., Sukkarieh, S. (2021). Real time detection of inter-row ryegrass in wheat farms using deep learning. Biosyst. Eng. 204, 198–211. 10.1016/j.biosystemseng.2021.01.019

Vélez, S., Del Mar Ariza-Sentís, M., Valente, J. (2023). Mapping the spatial variability of botrytis bunch rot risk in vineyards using UAV multispectral imagery. Eur. J. Agron. 142, 126691. 10.1016/j.eja.2022.126691

Vong, C. N., Conway, L. S., Feng, A., Zhou, J., Kitchen, N. R., Sudduth, K. A. (2022). Corn emergence uniformity estimation and mapping using UAV imagery and deep learning. Comput. Electron. Agric. 198, 107008. 10.1016/j.compag.2022.107008

Watt, M., Fiorani, F., Usadel, B., Rascher, U., Muller, O., Schurr, U. (2020). Phenotyping: new windows into the plant for breeders. Annu. Rev. Plant Biol. 71, 689–712. 10.1146/annurev-arplant-042916-041124

Weyler, J., Milioto, A., Falck, T., Behley, J., Stachniss, C. (2021). Joint plant instance detection and leaf count estimation for in-field plant phenotyping. IEEE Robotics Automation Lett. 6 (2), 3599–3606. 10.1109/LRA.2021.3060712

Zhang, C., Valente, J., Wang, W., Guo, L., Tubau Comas, A., van Dalfsen, P., et al. (2023). Feasibility assessment of tree-level flower intensity quantification from UAV RGB imagery: a triennial study in an apple orchard. ISPRS J. Photogrammetry Remote Sens. 197, 256–273. 10.1016/j.isprsjprs.2023.02.003

Keywords: sensors, robotics, plant phenotyping, precision agriculture, artificial intelligence

Citation: Su D, Qiao Y, Jiang Y, Valente J, Zhang Z and He D (2023) Editorial: AI, sensors and robotics in plant phenotyping and precision agriculture, volume II. Front. Plant Sci. 14:1215899. doi: 10.3389/fpls.2023.1215899

Received: 02 May 2023; Accepted: 19 May 2023;

Published: 05 June 2023.

Edited and Reviewed by:

Lei Shu, Nanjing Agricultural University, China

Copyright © 2023 Su, Qiao, Jiang, Valente, Zhang and He. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yongliang Qiao, yongliang.qiao@ieee.org