ORIGINAL RESEARCH article

Front. Plant Sci. , 24 March 2023

Sec. Sustainable and Intelligent Phytoprotection

Volume 14 - 2023 | https://doi.org/10.3389/fpls.2023.1102855

This article is part of the Research Topic Intelligent Computing in Farmland Water Conservancy for Smart Agriculture

Reservoir operation is an important part of basin water resources management. A rational reservoir operation scheme can not only enhance the basin's capacity for flood control and disaster reduction, but also improve water use efficiency and give full play to the comprehensive role of the reservoir. Conventional decision-making methods for reservoir operation schemes are computationally expensive, subjective, and poorly suited to capturing nonlinear relationships. To solve these problems, this paper proposes a reservoir operation scheme decision-making model, IWGAN-IWOA-CNN, based on artificial intelligence and deep learning. Because the original reservoir operation scheme data are scarce and traditional data augmentation algorithms do little to enrich data characteristics, an improved generative adversarial network algorithm (IWGAN) is proposed. IWGAN uses a loss function that integrates the Wasserstein distance, a gradient penalty and a difference item, and dynamically adds random noise during model training. The whale optimization algorithm is improved by introducing Logistic chaotic mapping to initialize the population, a nonlinear convergence factor, adaptive weights, and a Levy flight perturbation strategy. The improved whale optimization algorithm (IWOA) is used to optimize the hyperparameters of a convolutional neural network (CNN), so as to obtain the best parameters for model prediction. The experimental results show that the data generated by IWGAN are of high quality and representative of the real data; IWOA has faster convergence speed, higher convergence accuracy and better stability; and the IWGAN-IWOA-CNN model has higher prediction accuracy and more reliable scheme selection.

In recent years, demand for water resources has been rising steadily, while water resources face serious problems such as growing shortage, pollution and waste (Boursianis et al., 2021; Duque et al., 2021; Yan et al., 2021). The rational development and utilization of water resources can be realized by building water storage projects (such as reservoirs) and inter-basin water transfer projects according to local conditions, and by managing the overall operation of these water conservancy projects (Shiau et al., 2021). Reservoir operation refers to the regulation of natural runoff using the reservoir's storage capacity (Zio and Bazzo, 2011; Ji and Wei, 2022; Zhang et al., 2022). Rational reservoir operation can not only reduce and relieve floods, but also store flood water to compensate for drought and improve the utilization efficiency of water resources.

Formulating reservoir operation schemes means generating a set of feasible operation schemes from real-time hydrological forecasts once the operation objectives and constraints have been determined (Wu et al., 2022a). Decision-making for reservoir operation schemes means weighing the advantages and disadvantages of several feasible operation schemes for a future period of the reservoir (Zhu et al., 2017a; Vassoney et al., 2021), so that the reservoir obtains the maximum flood control and utilization benefits.

The evaluation of complex system schemes often involves many indicators, and the relationships between indicators are also complex. Conventional scheme decision-making methods mainly combine qualitative and quantitative analysis and fuse objective with subjective information. Examples include the fuzzy optimization method (Xu and Zhao, 2008; Ignatius et al., 2018; Sitorus and Brito-Parada, 2022), grey relational analysis (GRA) (Xia et al., 2016; Tian et al., 2018; Cai et al., 2021), the TOPSIS method (Liu and Zhang, 2014; Imam and Gurol, 2018), projection pursuit (PP) (Lan and Huang, 2018; Lee, 2018; Cho and Lee, 2021), the analytic hierarchy process (AHP) (Wang et al., 2021; Yu et al., 2021; Ye and Chen, 2022) and artificial neural networks (Galdo et al., 2021; Yuan et al., 2021; Leng and Huang, 2022). In the field of reservoir operation scheme decision-making, Zhu et al. (2017b) used the TOPSIS method, the fuzzy optimization method and the fuzzy matter-element method to rank all feasible flood control alternatives of a multi-reservoir system, and the resulting ranking supports decision-making. Yang et al. (2021) proposed a solution framework for multi-attribute decision-making of cascade reservoirs under multiple uncertainties, and adopted an improved SMAA-GCA&TOPSIS for stochastic decision-making. Wang et al. (2020) introduced the concept of the subjective trade-off rate (STOR) to measure decision-makers' preference for each target, and combined it with ecological risk analysis to select the most appropriate operation rules for the Three Gorges Reservoir.

Conventional scheme decision-making methods generally suffer from heavy computation, low efficiency, subjectivity, the curse of dimensionality and poor universality, and they cannot adequately reflect the complex relationship between the evaluation object and the evaluation indexes (Jain et al., 2021). With the development of artificial intelligence (Hassabis et al., 2017; Wu et al., 2022b), deep learning (Janiesch et al., 2021) and big data (Ying, 2014), many scholars have begun to apply intelligent methods to scheme selection. To promote research on intelligent decision-making in joint operations, Hu et al. (2020) proposed a neural-network-based method for selecting air attack operation schemes, which selected schemes better than traditional decision-making methods. Chen (2021) proposed a teaching quality scheme decision-making model based on information fusion and an optimized RBF neural network decision algorithm. Based on big data and neural network technology, Fang et al. (2021) proposed a novel method for selecting football tactical command schemes, which achieved good experimental results and offered a new way of combining football and computer science.

Because neural networks offer high computational efficiency and strong nonlinear fitting ability (Schmidhuber, 2015; Dong et al., 2021; Shi et al., 2022), they can capture the nonlinear relationships that arise in scheme decision-making and therefore come closer to real scheme selection scenarios. Fabianowski et al. (2021) developed a neural network model for bridge management decision-making and ranked the alternatives. A comparison with a hybrid of the Extent Analysis Fuzzy Analytic Hierarchy Process (EAFAHP) and the Dominant Analytic Hierarchy Process (DAHP) verified the high accuracy of the neural network. Abdelrasoul et al. (2022) used a cascade forward backpropagation neural network (CFBPNN) to select the appropriate mining method, compared it with a variety of multi-criteria decision-making methods, and discussed its applicability, subjectivity, handling of qualitative and quantitative data, sensitivity and effectiveness. The experimental results show that CFBPNN is easier to apply and more accurate than traditional tools. Wang and Tian (2018) designed a genetic-algorithm ANN that optimizes the connection weights and thresholds of the BP network, and established the nonlinear relationship between the mining method of a thin coal face and geological conditions. However, because of the small sample size, the neural network established in that study needs to be updated regularly. The biggest difficulty in applying neural networks lies in the network learning process. A neural network adjusts its parameters iteratively to reduce the root mean square error. Trying different input combinations to determine the optimal network architecture can improve network performance, but this requires a large amount of highly diverse input data.

After studying and analyzing the characteristics of reservoir operation scheme data, this paper applies the convolutional neural network (Gu et al., 2018), the generative adversarial network (Goodfellow et al., 2020) and the whale optimization algorithm (Mirjalili and Lewis, 2016) to decision-making for reservoir operation schemes. To address the scarcity of reservoir operation scheme data and the lack of distinctive features in some evaluation index data, the data augmentation algorithm IWGAN is proposed. Building on GAN, IWGAN combines the Wasserstein distance, a gradient penalty and a difference item in its loss function, and dynamically adds random noise during model training. By alternately training the generator and discriminator of IWGAN on the existing data set, the characteristics and patterns of reservoir operation scheme data are learned continuously, and high-quality data are generated for expansion. The whale optimization algorithm is improved by introducing Logistic chaotic map population initialization, a nonlinear convergence factor, an adaptive weight and a Levy flight disturbance strategy. The improved whale optimization algorithm IWOA is used to optimize the CNN hyperparameters of the reservoir operation scheme decision-making model. Finally, experiments verify that IWGAN has a better data augmentation effect, that IWOA has higher convergence accuracy, stronger search ability and better stability, and that the IWGAN-IWOA-CNN decision-making model has higher prediction accuracy and selects reliable schemes.

CNN is a biologically inspired feedforward neural network with a deep structure and translation invariance (Gu et al., 2018). The basic structure of a CNN consists of an input layer, convolution layers, pooling layers, fully connected layers and an output layer. The convolution layer extracts features from the input data, the pooling layer selects features and filters information from the output of the convolution layer, and the fully connected layer uses an activation function to classify or regress on the extracted feature representation. Being data-driven, a convolutional neural network does not require detailed mathematical modeling of the system. It learns the mapping between input and output from sample data and can then predict the output effectively.

GAN is usually composed of two parts: a generator and a discriminator (Goodfellow et al., 2020). The generator aims to learn the underlying distribution of the real sample data and generate new samples that can pass for real ones. The discriminator aims to correctly determine whether its input comes from the real data or from the generator. After continued alternating adversarial training, the two eventually reach Nash equilibrium (Wu et al., 2022c). The basic structure of GAN is shown in Figure 1. Generator G generates synthetic data G(z) from the input random noise z; discriminator D draws its input at random from the combination of the real data set and the data generated by G, and outputs a single probability that the sample comes from the real data set. During training, D should maximize the probability of assigning correct labels to real and generated data, while G should try to generate data so similar to the real data that D cannot distinguish them. The loss function is:

$$\min_G \max_D V(D,G) = \mathbb{E}_{x \sim p_{data}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]$$

where x is the real data, z is the input of the generator, G(z) is the synthetic data generated by G, D(x) is the probability that discriminator D judges real data to be real, D(G(z)) is the probability that D judges generated data to be real, and V(D,G) is the value function optimized during GAN training.
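As a small illustration of the quantities just defined, the sketch below estimates the standard GAN value function, the mean of log D(x) over real samples plus the mean of log(1 − D(G(z))) over generated samples, directly from discriminator outputs. The function name `gan_value` and the sample probabilities are illustrative assumptions, not values from the paper.

```python
import math

def gan_value(d_real, d_fake):
    """Monte Carlo estimate of V(D, G) from discriminator outputs.

    d_real: D(x) for real samples; d_fake: D(G(z)) for generated samples.
    Every entry must be a probability strictly between 0 and 1.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

# A discriminator that cannot tell the sources apart outputs 0.5 everywhere.
v_confused = gan_value([0.5, 0.5], [0.5, 0.5])
# A confident, correct discriminator pushes V(D, G) up toward 0.
v_confident = gan_value([0.99, 0.98], [0.01, 0.02])
```

The discriminator tries to raise this value and the generator tries to lower it; at the point where D outputs 0.5 for every sample the estimate equals −2 log 2.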

Arjovsky et al. (2017b) proposed a generative adversarial network based on the Wasserstein distance (WGAN) to solve GAN's problems of difficult convergence and poor controllability. WGAN replaces the JS divergence (Huang, 2020) used in GAN with the Wasserstein distance to measure the distance between the real and generated data distributions. The Wasserstein distance is defined as:

$$W(P_r, P_g) = \inf_{\delta \in \Pi(P_r, P_g)} \mathbb{E}_{(x,y) \sim \delta}\left[\lVert x - y \rVert\right]$$

where Pr is the real data distribution, Pg is the generated data distribution, and ∏(Pr,Pg) is the set of joint probability distributions whose marginals are Pr and Pg. (x,y)~δ denotes sampling a pair (x,y) from the joint distribution δ; the distance between the pair is computed and then averaged to give the expectation E(x,y)~δ[||x−y||]. W(Pr,Pg) is the infimum of this expectation over all joint distributions δ. The smaller W(Pr,Pg) is, the more similar the real and generated data distributions are. The loss function of WGAN is:

where D∈Lip1 means that the discriminator satisfies the 1-Lipschitz continuity condition.
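To make the Wasserstein distance concrete, the following sketch computes it for two one-dimensional samples of equal size. This is a simplifying assumption: in one dimension the optimal coupling simply pairs the sorted samples, so no critic network is needed, whereas WGAN estimates the distance with a Lipschitz-constrained discriminator. The function name and sample values are illustrative.

```python
def wasserstein_1d(xs, ys):
    """Empirical Wasserstein-1 distance between two equal-size 1-D samples.

    In one dimension the optimal coupling pairs the sorted samples,
    so the infimum reduces to a mean absolute difference.
    """
    if len(xs) != len(ys):
        raise ValueError("this sketch assumes equal sample sizes")
    pairs = zip(sorted(xs), sorted(ys))
    return sum(abs(a - b) for a, b in pairs) / len(xs)

real = [0.0, 1.0, 2.0, 3.0]
close = [0.1, 1.1, 2.1, 3.1]   # generated samples near the real ones
far = [5.0, 6.0, 7.0, 8.0]     # generated samples far from the real ones

w_close = wasserstein_1d(real, close)
w_far = wasserstein_1d(real, far)
```

The sample that sits close to the real data yields a much smaller distance than the shifted one, which is the behaviour the training curves rely on.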

To enforce the Lipschitz continuity condition on the discriminator, WGAN checks, every time the discriminator parameters ω are updated, whether any parameter lies outside a fixed range [−c,c]. Any parameter greater than c is set to c, and any parameter less than −c is set to −c. This weight clipping strategy (Han et al., 2020) changes the structure of the discriminator's parameter matrix and the relationships between parameters, so that parameters pile up at the extreme values and vanishing or exploding gradients occur (Goodfellow, 2016; Gulrajani et al., 2017; Arjovsky and Bottou, 2017a). To solve these problems, this paper proposes an improved WGAN algorithm, IWGAN, which adds a gradient penalty term and a finite difference term to the loss function and introduces a dynamic random noise adjustment algorithm.
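The clipping step described above can be sketched in a few lines. The helper name `clip_weights`, the threshold c = 0.01 and the example weights are illustrative assumptions; the point is only to show how values outside [−c, c] collapse onto the boundary.

```python
def clip_weights(weights, c):
    """Clamp every discriminator parameter into the range [-c, c]."""
    return [max(-c, min(c, w)) for w in weights]

# Hypothetical parameter values before clipping.
before = [-0.5, -0.005, 0.0, 0.02, 0.3]
after = clip_weights(before, c=0.01)

# Count how many parameters ended up exactly on the boundary.
at_boundary = sum(1 for w in after if abs(w) == 0.01)
```

Three of the five example parameters land exactly on ±c, which illustrates the concentration at extreme values that motivates replacing clipping with a gradient penalty.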

WGAN imposes the Lipschitz continuity condition over the whole sample space, whereas IWGAN imposes the gradient penalty constraint only on the region between the real sample data and the generated sample data. The L2 norm of the gradient is constrained to be close to 1 along the optimal path from the generated distribution to the real distribution. Based on the ideas of random interpolation and a two-sided penalty, a gradient penalty term is designed:

where, , , x∈Pr, y∈Pg.

The loss function of adding gradient penalty term is updated as:

where $\lambda\,\mathbb{E}_{x\sim\mathrm{penalty}}\big[(\lVert\nabla_x D(x)\rVert_2-1)^2\big]$ is the penalty term, whose purpose is to make the discriminator smooth and thereby accelerate model convergence; x~penalty means that x is sampled at random between the real data distribution Pr and the generated data distribution Pg (also known as penalty term sampling); and ∇xD(x) is the gradient of D with respect to x.
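A two-sided gradient penalty of this kind can be sketched without a deep learning framework by estimating the critic gradient numerically. Everything below is an illustrative assumption rather than the paper's implementation: the critics are simple linear functions, the gradient is taken by central differences, and λ = 10 is a commonly used default rather than the value used in the experiments.

```python
import math
import random

def grad_norm(critic, x, eps=1e-6):
    """L2 norm of the critic gradient at x, estimated by central differences."""
    squared = 0.0
    for i in range(len(x)):
        hi = x[:i] + [x[i] + eps] + x[i + 1:]
        lo = x[:i] + [x[i] - eps] + x[i + 1:]
        g = (critic(hi) - critic(lo)) / (2 * eps)
        squared += g * g
    return math.sqrt(squared)

def gradient_penalty(critic, real, fake, lam=10.0, rng=random):
    """lam * mean((||grad D(x_hat)||_2 - 1)^2) over random interpolates x_hat."""
    total = 0.0
    for x, y in zip(real, fake):
        e = rng.random()  # interpolation coefficient in [0, 1)
        x_hat = [e * a + (1 - e) * b for a, b in zip(x, y)]
        total += (grad_norm(critic, x_hat) - 1.0) ** 2
    return lam * total / len(real)

# Hypothetical linear critics: gradient norm 1 (no penalty) and norm 5.
unit = lambda x: 0.6 * x[0] + 0.8 * x[1]
steep = lambda x: 3.0 * x[0] + 4.0 * x[1]
real = [[1.0, 2.0], [0.5, 1.5]]
fake = [[0.0, 0.0], [1.0, 1.0]]

gp_unit = gradient_penalty(unit, real, fake)
gp_steep = gradient_penalty(steep, real, fake)
```

The critic whose gradient norm is exactly 1 incurs essentially no penalty, while the steeper critic is penalised by λ(5 − 1)² = 160, which is what pushes the discriminator toward 1-Lipschitz behaviour on the interpolated region.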

Because the gradient penalty enforces the Lipschitz continuity condition only weakly over small ranges, and the constrained function may even be discontinuous in some extreme cases, a difference item is added to the loss function. By strengthening the continuity constraint of the gradient penalty, it accelerates model convergence and improves the stability of network training.

Suppose the discriminator fw(x) is differentiable on Kantorovich-Rubinstein, , x2~pxg, l∈Uniform[0,1], and randomly interpolates between x1 and x2, with xl=(1−l)x1+lx2, meet ||f*(xl1)−f*(xl2)||=||xl1−xl2||, which satisfies the Lipschitz continuity condition. At this point, xl satisfies the distribution pxl.

The gradient constraint items incorporating the idea of difference are as follows:

The loss function of difference item is added as follows:

Where, , γ2, γ3 are super parameters.

During model training, Gaussian noise is added to each layer of the generator network and to the input layer of the discriminator network, and the noise scale is adjusted dynamically. The algorithm is shown in Algorithm 1. In the initial stage of training, the generated data distribution is far from the real data distribution, so adding large noise does not affect the convergence speed of the model. As training progresses, too much noise may cause the model parameters to oscillate near the Nash equilibrium point. Therefore, the noise scale should be reduced gradually as the number of training iterations increases, so as to accelerate convergence. The flow chart of IWGAN is given in Appendix A.
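Algorithm 1 is not reproduced in this text, so the following is only a hypothetical sketch of a decaying noise schedule of the kind described: large noise early in training and progressively smaller noise later. The linear decay, the function names and the default scales are all assumptions.

```python
import random

def noise_scale(epoch, total_epochs, sigma_start=0.1, sigma_end=0.0):
    """Hypothetical linear decay of the noise standard deviation."""
    frac = min(max(epoch / total_epochs, 0.0), 1.0)
    return sigma_start + (sigma_end - sigma_start) * frac

def add_noise(values, sigma, rng=random):
    """Add zero-mean Gaussian noise with standard deviation sigma."""
    return [v + rng.gauss(0.0, sigma) for v in values]

# The scale shrinks from sigma_start at epoch 0 to sigma_end at the last epoch.
schedule = [noise_scale(e, 100) for e in (0, 50, 100)]
noisy = add_noise([1.0, 2.0, 3.0], noise_scale(10, 100))
```

Any monotonically decreasing schedule would serve the same purpose; the linear form is used here only because it is the simplest to read.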

The whale optimization algorithm (WOA) (Mirjalili and Lewis, 2016) simulates the predatory behavior of humpback whales and searches for the optimal solution through three strategies: shrinking encirclement of prey, spiral bubble-net predation and random foraging. In this paper, an improved whale optimization algorithm (IWOA) is proposed to address WOA's slow convergence, its difficulty in balancing global and local search, and its tendency to fall into local optima (Al-Zoubi et al., 2018; Sun et al., 2018). Algorithm 2 shows the IWOA.

First, a chaotic map (Prasad et al., 2021) is used to initialize the whale population so that the initial positions are evenly distributed in the search space, which effectively improves the convergence accuracy and stability of the algorithm. The Logistic chaotic map is used here, and its mathematical expression is as follows:

$$x_{n+1} = \mu x_n (1 - x_n)$$

where, xn is the state quantity and μ is the logistic parameter.
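A population initialised with the logistic map x(n+1) = μ·x(n)·(1 − x(n)) can be sketched as follows. The choices μ = 4 (the fully chaotic regime), the seed value 0.7, and the linear scaling of each state into the search bounds are assumptions made for illustration; the paper's settings are not given in this text.

```python
def logistic_init(pop_size, dim, lower, upper, mu=4.0, x0=0.7):
    """Build an initial population from successive logistic-map states.

    x0 must lie in (0, 1) and avoid the map's fixed points
    (for example 0.25, 0.5 and 0.75 when mu = 4).
    """
    x = x0
    population = []
    for _ in range(pop_size):
        whale = []
        for _ in range(dim):
            x = mu * x * (1.0 - x)                      # logistic map step
            whale.append(lower + x * (upper - lower))   # scale into bounds
        population.append(whale)
    return population

population = logistic_init(pop_size=30, dim=2, lower=-10.0, upper=10.0)
```

Each chaotic state lies in [0, 1], so every coordinate stays inside the search bounds while the sequence never settles into a short cycle.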

The nonlinear convergence factor is designed to decrease slowly in early iterations, keeping the parameter A large and thereby improving global search ability; in later iterations it decreases rapidly, making A smaller and thereby improving local search ability. The formula is as follows:

where T is the maximum number of iterations and t is the current number of iterations. WOA converges slowly in the late stage of iteration and, because its weight is fixed during local search, oscillates around the current optimal solution and falls into local optima. WOA should therefore be able to widen its global search scope and escape local optima while retaining its local exploration ability at the end of the iteration. To this end, an adaptive weight ω is designed, and the formula is as follows:

The location update formula of IWOA is as follows:

The Levy flight disturbance mechanism (Deepa and Venkataraman, 2021) is introduced, and the disturbance is added to the position update so that the algorithm is less prone to local optima and premature convergence. The location update formula is as follows:

where Xl(t) is the position after adding the Levy flight disturbance, α is the step size factor that changes dynamically with the number of iterations, ⊕ denotes element-wise multiplication, and Levy(λ) denotes a Levy distribution with parameter λ. The formula is as follows:

Where, t is the current number of iterations, T is the maximum number of iterations, and is the adjustment factor. The Levy distribution is approximately simulated by Mantegna algorithm (Mantegna, 1994), and the formula is as follows:

Where, , μ and obey the normal distribution of the and . In order to ensure that the new position after disturbance is better than the original position, the greedy selection strategy is used to compare the fitness of the two to retain the new position with better fitness. The formula is as follows:
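As a hedged sketch of the Levy perturbation with greedy selection, the code below draws Levy-distributed steps with the standard form of Mantegna's algorithm and keeps the perturbed position only when its fitness improves. The fixed step factor `alpha`, the exponent β = 1.5, the additive update and the sphere test function are assumptions; the paper's α varies with the iteration count and its exact formulas are not reproduced in this text.

```python
import math
import random

def levy_step(beta=1.5, rng=random):
    """One Levy-distributed step via Mantegna's algorithm."""
    sigma_u = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
               / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))
               ) ** (1 / beta)
    u = rng.gauss(0.0, sigma_u)
    v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)

def levy_perturb(position, fitness, alpha=0.01, beta=1.5, rng=random):
    """Perturb a position and keep it only if fitness improves (minimisation)."""
    candidate = [x + alpha * levy_step(beta, rng) for x in position]
    return candidate if fitness(candidate) < fitness(position) else position

# Hypothetical usage on the sphere function.
sphere = lambda p: sum(x * x for x in p)
start = [1.0, -2.0, 0.5]
result = levy_perturb(start, sphere, rng=random.Random(0))
```

Because of the greedy comparison, the returned position is never worse than the starting one, while the heavy-tailed steps occasionally produce the long jumps that help escape a local optimum.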

Following Hu et al. (2021), this paper uses CNN as the benchmark model for reservoir operation scheme selection and uses fuzzy optimization theory to construct the CNN training samples. First, the evaluation index system is constructed according to fuzzy optimization theory. Second, the weight of each evaluation index is determined: the subjective weight by the analytic hierarchy process, the objective weight by the entropy weight method, and the comprehensive weight by coupling the two through game theory. Finally, the comprehensive evaluation value of each scheme is calculated by fuzzy comprehensive evaluation, which yields the initial samples for the CNN model. Because the original reservoir operation scheme data are scarce, the IWGAN proposed in this paper is used to augment the original data set and thereby improve the prediction accuracy of the model and the reliability of scheme selection. IWOA is used to optimize the parameters of the CNN model and determine the optimal network structure; the specific process is given in Appendix B.
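Of the weighting steps listed above, the entropy weight method is the most mechanical, and a textbook version can be sketched as below. This is a generic formulation under the assumption of a non-negative, already normalised decision matrix; the AHP weights and the game-theory coupling are not shown, and the example matrix is invented for illustration.

```python
import math

def entropy_weights(matrix):
    """Objective indicator weights by the entropy weight method.

    matrix[i][j] is the non-negative normalised score of scheme i on
    indicator j. Indicators whose scores vary more across schemes carry
    more information and receive larger weights.
    """
    m, n = len(matrix), len(matrix[0])
    divergences = []
    for j in range(n):
        column = [row[j] for row in matrix]
        total = sum(column)
        entropy = 0.0
        for value in column:
            p = value / total
            if p > 0:
                entropy -= p * math.log(p)
        divergences.append(1.0 - entropy / math.log(m))
    norm = sum(divergences)
    return [d / norm for d in divergences]

# Three hypothetical schemes scored on three indicators.
scores = [[0.1, 0.5, 0.30],
          [0.9, 0.5, 0.40],
          [0.5, 0.5, 0.35]]
weights = entropy_weights(scores)
```

The second indicator is identical across schemes, so it receives a weight of essentially zero, while the widely spread first indicator dominates.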

The evaluation index and comprehensive evaluation value in the sample data are respectively used as the input and output of CNN model. The comprehensive evaluation value of the scheme is predicted by CNN model, so as to evaluate the advantages and disadvantages of the scheme. According to the principle of maximum membership, the scheme with the maximum comprehensive evaluation value is the optimal scheme. The overall flow chart is shown in Figure 2.

In this paper, the Xidayang reservoir (Hu et al., 2021) is selected as the research object, and a large number of reservoir operation schemes generated by the reservoir's multi-objective operation model are used as the experimental data set. It comprises flood data of the Xidayang reservoir with a 10-year return period (data set 1), a 20-year return period (data set 2) and a 50-year return period (data set 3). Each record has six feature columns: water resources conversion, water level recovery level, peak shaving amplitude, drawdown depth, recovery time and maximum water level variation. The experimental data were randomly divided into training, validation and test sets in a 6:2:2 ratio.
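A random 6:2:2 split of this kind might look like the sketch below. The function name, the fixed seed and the use of integer record identifiers are assumptions for illustration.

```python
import random

def split_622(records, seed=0):
    """Shuffle records and split them 60% / 20% / 20%."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * 6 // 10
    n_val = len(shuffled) * 2 // 10
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test

# Hypothetical data set of 100 scheme identifiers.
train, val, test = split_622(range(100))
```

Fixing the seed keeps the split reproducible across runs, and any remainder from the integer division falls into the test set.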

Data preprocessing includes: (i) missing value processing, in which a missing value is filled with the value at the adjacent pre discharge time under the same pre discharge flow; (ii) normalization, in which the benefit-type and cost-type evaluation indicators are normalized by Equations (19) and (20) respectively; and (iii) denoising, in which the 3σ rule of the normal distribution and the kernel smoothing method (Ahmad et al., 2001) are applied.

where xij (i=1,2,…,m; j=1,2,…,n) is the eigenvalue of the scheme evaluation index, n is the number of schemes to be optimized, and m is the number of evaluation indexes.
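Equations (19) and (20) are not reproduced in this text. One common way to normalise benefit-type and cost-type indicators is min-max scaling, sketched below as an assumption rather than as the paper's exact formulas: benefit-type values map their maximum to 1, and cost-type values map their minimum to 1.

```python
def normalize(values, kind):
    """Min-max normalisation of one indicator across all schemes.

    kind="benefit": larger raw values are better and map toward 1.
    kind="cost": smaller raw values are better and map toward 1.
    """
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0] * len(values)   # indicator does not discriminate
    if kind == "benefit":
        return [(v - lo) / (hi - lo) for v in values]
    if kind == "cost":
        return [(hi - v) / (hi - lo) for v in values]
    raise ValueError("kind must be 'benefit' or 'cost'")

benefit = normalize([1.0, 2.0, 3.0], "benefit")
cost = normalize([1.0, 2.0, 3.0], "cost")
```

After this step every indicator lies in [0, 1] with 1 always meaning best, so indicators of both types can be combined in a single weighted evaluation.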

In order to verify the effectiveness of the IWGAN-IWOA-CNN model proposed in this paper, three experiments are carried out. The first part of the experiment verifies the data augmentation effect of IWGAN, the second part of the experiment verifies the optimization performance of IWOA, and the last part of the experiment compares this model with other scheme decision-making models, so as to verify the superiority of the model proposed in this paper.

To verify the data augmentation effect of IWGAN (the network structure of generator and discriminator is given in Appendix C, and the training process of IWGAN is given in Appendix D), the experimental data set is fed into the IWGAN model for adversarial training. The experiment uses the Adam optimizer with a learning rate of 0.00001, β1 = 0.9, β2 = 0.99, a batch size of 64 and 10000 epochs.

Taking dataset 1 as an example, Figure 3 shows the change process of Wasserstein distance during the training process. It can be seen from the figure that at the beginning of training, the Wasserstein distance value is large, and the similarity between the data generated by the generator and the real data distribution is low. With the progress of training, the Wasserstein distance gradually decreases, indicating that the generated data distribution gradually approaches the real data distribution. When the number of training times reaches about 4000, the Wasserstein distance approaches zero and fluctuates around it, indicating that the generator and the discriminator reach Nash equilibrium.

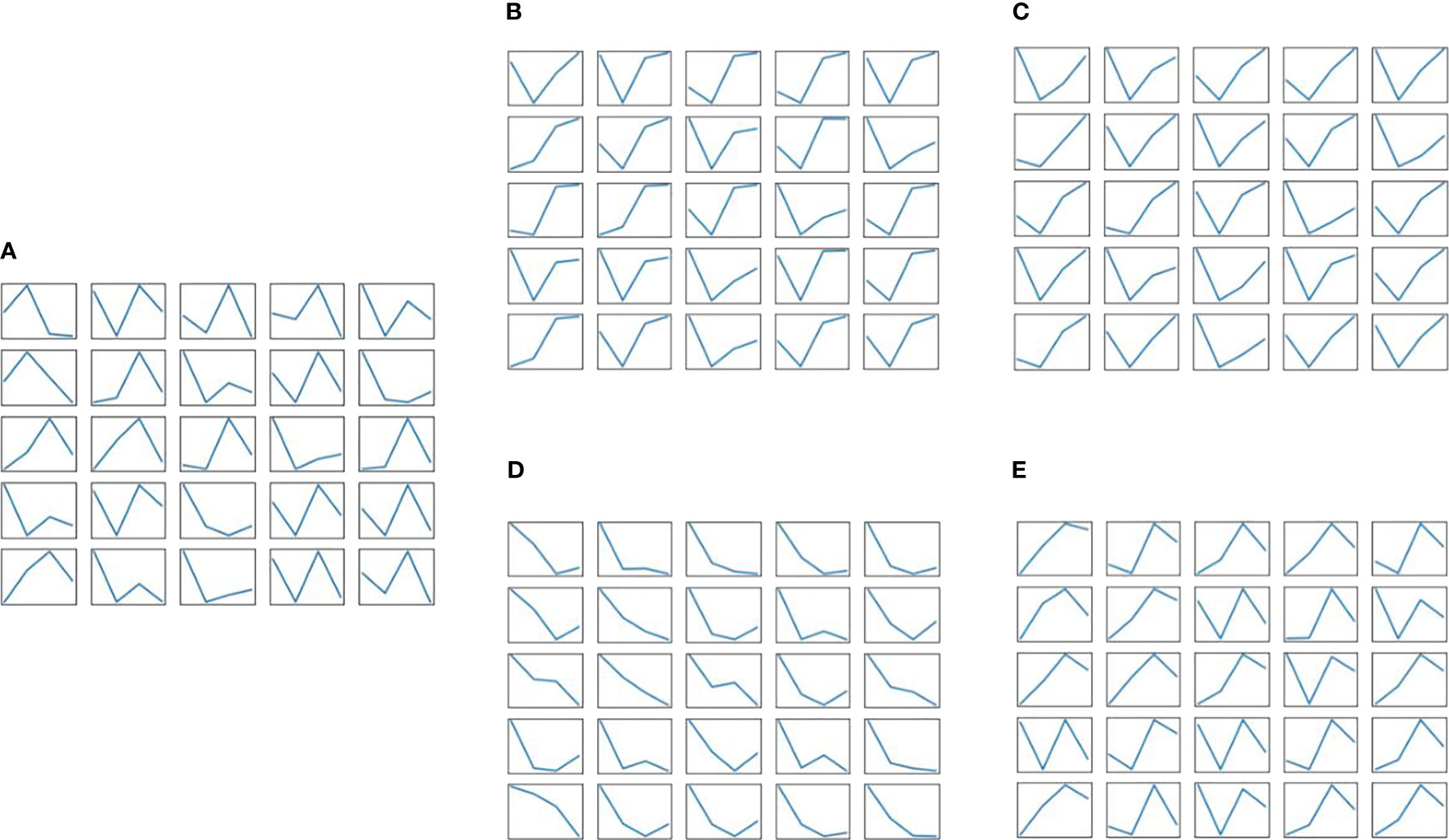

At the same time, in order to reflect the dynamic learning process of IWGAN model, the real sample data and the generated sample data are randomly sampled, and the generated sample data are saved every 50 epochs. Figure 4A is a visual image of real sample data distribution. Figures 4B–E show the generated sample data of IWGAN model when the number of iterations is 50, 1000, 2850 and 4000 respectively. It can be seen that at the initial stage of training e =50, the fluctuation law of the data generated by the generator is quite different from that of the real data. With the deepening of IWGAN training, the model gradually learns the change law of the real data, the distribution of the generated data and the real data is getting closer, and IWGAN is also constantly improving its data augmentation effect and the quality of the generated data. When e =4000, the data generated by IWGAN is basically consistent with the real data distribution. Therefore, the data generated by IWGAN has a certain representation ability, which is applicable to the enhancement of the reservoir operation scheme data in this paper.

Figure 4 The real sample data and the generated sample data are randomly sampled, and the generated sample data are saved every 50 epochs. (A) Real sample data. (B) Generated sample data when e=50. (C) Generated sample data when e=1000. (D) Generated sample data when e=2850. (E) Generated sample data when e=4000.

Through the above analysis, it can also be concluded that the Wasserstein distance in the discriminator is closely related to the data quality generated by the generator. The Wasserstein distance can indicate the training process to a certain extent. The smaller the Wasserstein distance, the better the training effect of IWGAN model. When the number of iterations of the model reaches about 4000, the Wasserstein distance approaches zero and tends to be flat, which proves that the network converges and the model reaches Nash equilibrium. At this time, the generator generates high-quality sample data, and its distribution has been close to the real data distribution. Finally, this paper uses the model with 4000 iterations on dataset 1 for data augmentation.

To verify the performance of IWOA, this paper compares it with the original whale optimization algorithm (WOA), particle swarm optimization (PSO) (Kennedy and Eberhart, 1995), cuckoo search (CS) (Yang and Suash, 2009) and the grey wolf optimizer (GWO) (Mirjalili et al., 2014) on 11 test functions. The test functions are shown in Table 1, and the test results are shown in Table 2. The optimization accuracy of an algorithm is the absolute error between the solution actually found and the theoretical optimal solution. In this paper, the average (Ave) and standard deviation (Std) of the optimization accuracy are used to reflect the convergence accuracy and stability of the algorithm. The calculation formula is as follows:

Where, Xopt is the theoretical optimal solution, and N is the total number of experiments.
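Under the usual definitions, Ave is the mean absolute error over the N independent runs and Std is the standard deviation of those errors. The sketch below assumes the population form (division by N); the paper's formula may instead divide by N − 1, and the run results shown are invented.

```python
import math

def accuracy_stats(results, x_opt):
    """Mean and standard deviation of |result - x_opt| over N runs."""
    errors = [abs(r - x_opt) for r in results]
    ave = sum(errors) / len(errors)
    std = math.sqrt(sum((e - ave) ** 2 for e in errors) / len(errors))
    return ave, std

# Hypothetical best values from four runs on a function with optimum 0.
ave, std = accuracy_stats([1.0, 3.0, 1.0, 3.0], x_opt=0.0)
```

A small Ave indicates high convergence accuracy, and a small Std indicates that the algorithm reaches similar results from run to run.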

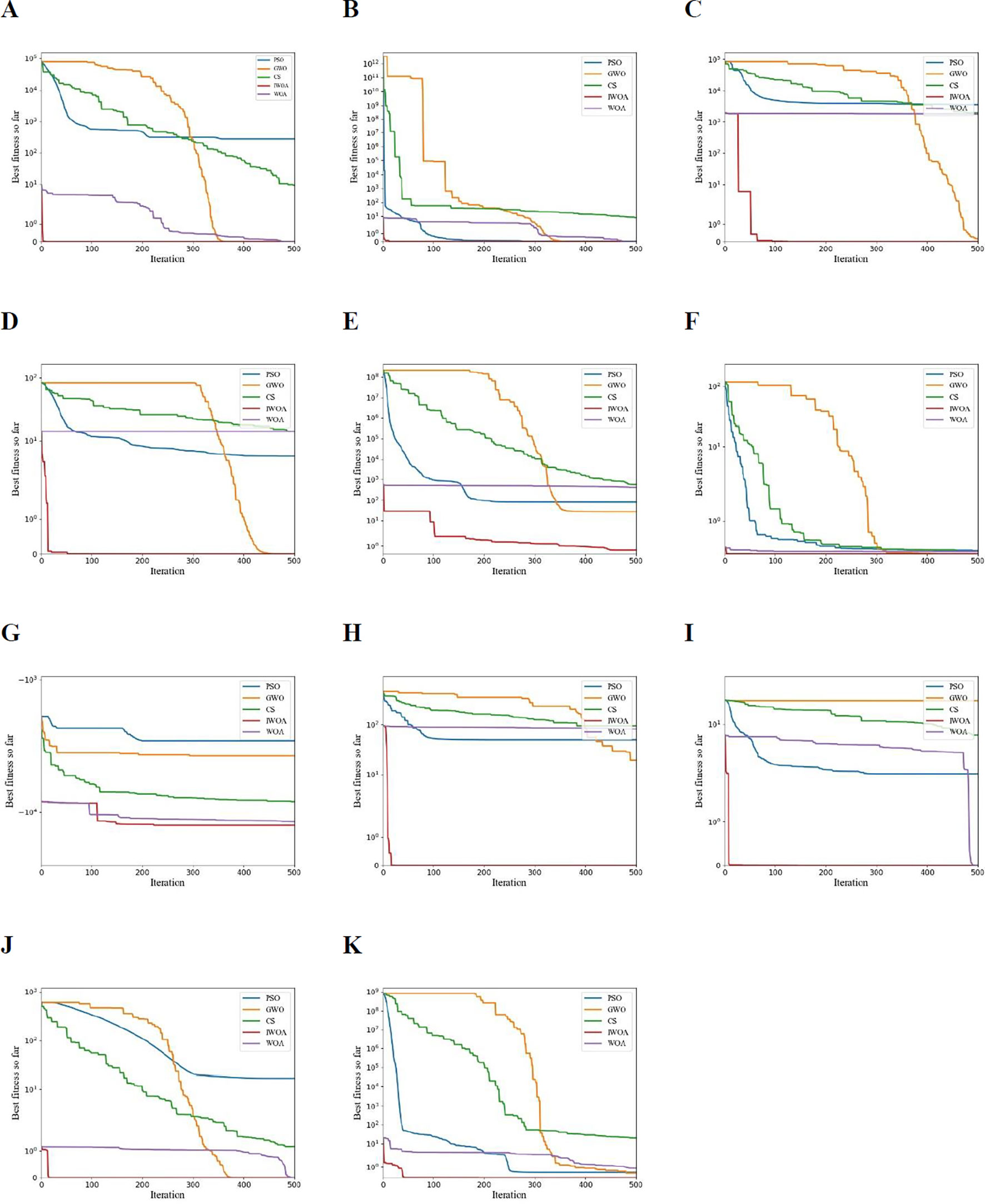

Figure 5 shows the convergence curves of the above five optimization algorithms on 11 test functions. The experiment set the population size of all algorithms N =30, the maximum number of iterations T =500, and each algorithm ran independently for 30 times. Set learning factor c1 = c2 =2, inertia weight wmin =0.1, wmax =0.4 in PSO; Set parameters in CS pa =0.25, Levy flight parameters =0.01, β =1.5.

Figure 5 Convergence curves of different optimization algorithms on 11 test functions, (A–K) correspond to f1~f11 respectively.

It can be seen from Table 2 that IWOA outperforms the other four algorithms in optimization effect. In terms of Ave, IWOA is better on all 11 test functions, which shows that IWOA has higher convergence accuracy and that its optimization results are closer to the theoretical minimum of the test functions. In terms of Std, IWOA is slightly less stable than PSO on one function, but on the other test functions it is more stable than the other four algorithms, which shows that IWOA has better optimization stability.

As can be seen from Figure 5, compared with other algorithms, the convergence curve of IWOA decreases the fastest, and on the 11 test functions, the optimal fitness convergence curve of IWOA is located below the curves of the other four algorithms, indicating that IWOA has faster convergence speed and higher convergence accuracy. It can be seen from the convergence curves of functions , and f8~f11 that the convergence curve of IWOA can be approximately regarded as a straight line. This is due to the introduction of Logistic chaotic map initialization population, nonlinear convergence factor and adaptive weight, so that the algorithm can find the optimal solution after less iterations. It can also be seen from the convergence curve of function and f7 that there are many inflection points in the convergence curve of IWOA, and the curve is abrupt, which shows that IWOA has stronger ability to jump out of local optimum. To sum up, the IWOA proposed in this paper has faster convergence speed, stronger optimization ability and better stability.

In this paper, IWGAN is used to augment the data set of the original reservoir operation scheme, and IWOA is used to optimize the network structure of CNN and determine the optimal structure of the model (see Appendix E). In order to verify the effectiveness and prediction effect of IWGAN-IWOA-CNN reservoir operation scheme decision-making model, this paper conducts comparative experiments from three aspects: different data augmentation algorithms, different optimization algorithms and different scheme decision-making models.

To verify the superiority of IWGAN over other data augmentation algorithms, this section compares the IWGAN-IWOA-CNN model with the IWOA-CNN (no data augmentation), WGAN-IWOA-CNN, GAN-IWOA-CNN and SMOTE (Douzas and Bacao, 2019)-IWOA-CNN models. Table 3 shows the experimental results of the models under different data augmentation algorithms. According to Table 3, the different data augmentation algorithms improve the prediction accuracy of the model to a certain extent, but the IWGAN-CNN model proposed in this paper predicts best. Compared with the CNN, SMOTE-CNN, GAN-CNN and WGAN-CNN models, its MAE decreased by 29%, 27.5%, 14.4% and 13.2% respectively; RMSE decreased by 15.2%, 23.2%, 5% and 9.7% respectively; and R2 increased by 2.5%, 6.1%, 1.4% and 0.4% respectively. Overall, IWGAN has the most significant data augmentation effect: the IWGAN-CNN model has the best robustness and generalization ability and the best scheme selection effect, followed by the WGAN-CNN model. Because the loss function of the GAN discriminator is defined on the JS divergence, GAN struggles with unstable training and mode collapse, so the GAN-CNN model performs slightly worse. SMOTE is essentially an improved oversampling algorithm. It cannot learn the distribution of the real data and adds no effective new information. Moreover, because oversampling is random, model performance varies widely and stability is poor, so the SMOTE-CNN model performs worst.
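For reference, the three evaluation metrics and the relative decrease quoted above can be computed as in the sketch below, using their standard definitions. The function names and the toy values are illustrative and are not taken from Table 3.

```python
import math

def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean square error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

def pct_decrease(baseline, improved):
    """Relative decrease of a metric, in percent of the baseline value."""
    return 100.0 * (baseline - improved) / baseline

# Hypothetical evaluation values and predictions.
y_true = [1.0, 2.0, 3.0]
y_pred = [1.0, 2.0, 4.0]
scores = (mae(y_true, y_pred), rmse(y_true, y_pred), r2(y_true, y_pred))
```

Lower MAE and RMSE and an R2 closer to 1 indicate better predictions; `pct_decrease` expresses how much a metric drops relative to a baseline model.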

The IWGAN-IWOA-CNN model is compared with the IWGAN-WOA-CNN, IWGAN-PSO-CNN, IWGAN-CS-CNN and IWGAN-GWO-CNN models to verify its effectiveness. The experimental results are shown in Table 4. On the whole, except that the IWGAN-GWO-CNN model has the smallest MAE on dataset 1, the MAE and RMSE values of the IWGAN-IWOA-CNN model are the smallest and its R2 is the closest to 1 in all other cases, so this model performs best. This shows that IWOA has higher optimization accuracy and a better optimization effect. IWOA can effectively help CNN find the best network parameters, and the IWGAN-IWOA-CNN model has higher prediction accuracy, better generalization ability and robustness.

The IWGAN-IWOA-CNN model is compared with the IWGAN-IWOA-BP, IWGAN-IWOA-SVR and IWGAN-IWOA-MLP models. The experimental results are shown in Table 5.

According to the analysis chart, the IWGAN-IWOA-CNN model has the smallest MAE and RMSE and the R² closest to 1 on datasets 1-3. Compared with the IWGAN-IWOA-BP, IWGAN-IWOA-MLP and IWGAN-IWOA-SVR models, MAE decreases by 71.8%, 69.2% and 54.7%, RMSE decreases by 69.8%, 66.3% and 55.5%, and R² increases by 5.8%, 4.8% and 2.5%, respectively. In terms of training time, the IWGAN-IWOA-CNN model takes slightly longer to train than the IWGAN-IWOA-BP model on dataset 1, and slightly longer than the IWGAN-IWOA-MLP and IWGAN-IWOA-BP models on dataset 3. However, this slightly longer training time brings a large improvement in prediction accuracy and in the accuracy of scheme evaluation, so the IWGAN-IWOA-CNN model proposed in this paper has the best prediction performance and scheme selection effect.

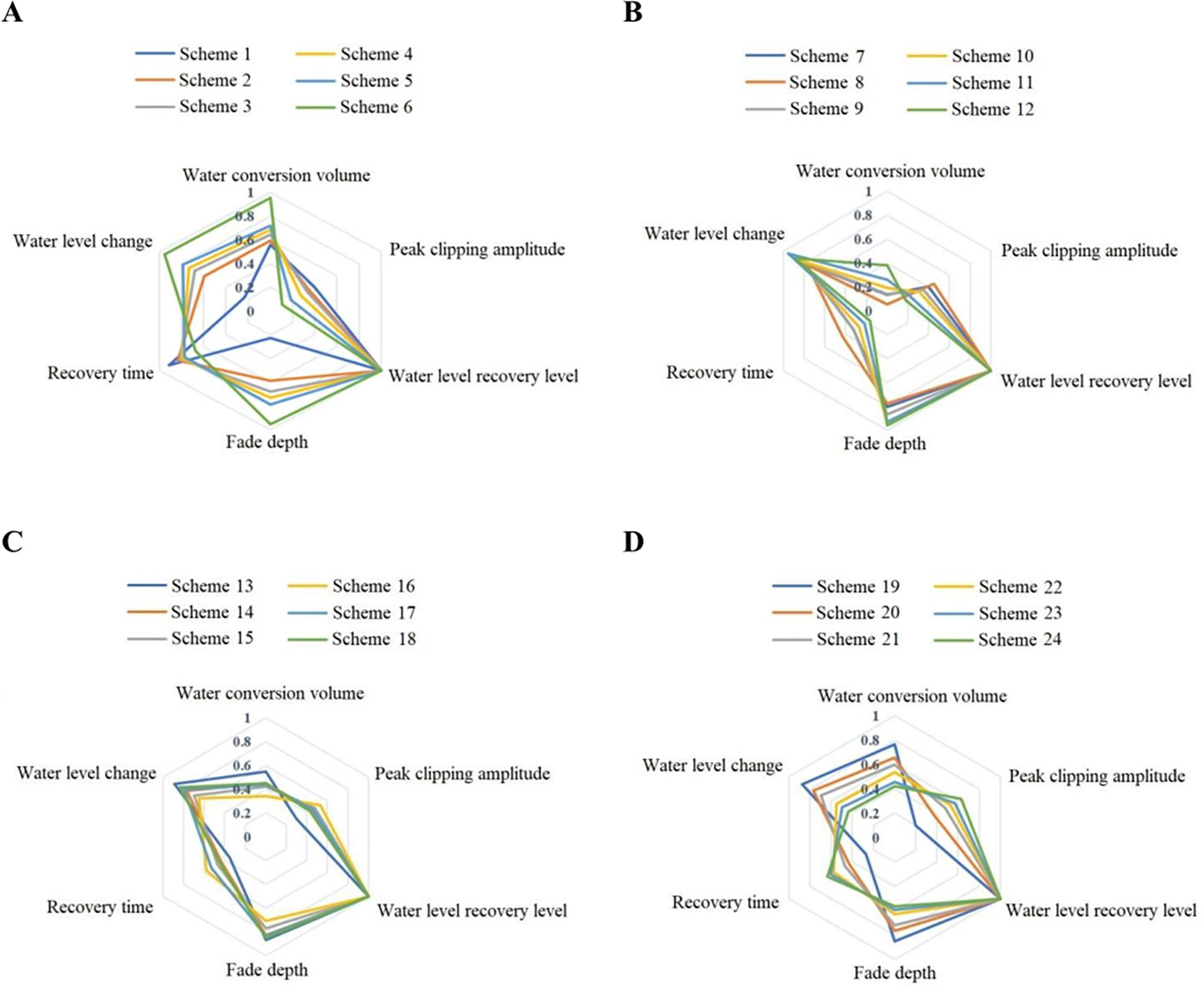

The reservoir operation scheme decision-making model based on IWGAN-IWOA-CNN proposed in this paper is used to evaluate and select the schemes in the datasets. The scheme selection results for some typical test data in datasets 1-3 are taken for empirical analysis. Table 6 shows the evaluation results of some schemes in dataset 1, and Figure 6 shows the evaluation results of some schemes in datasets 1-3. To compare the evaluation indexes of the scheme selection results intuitively, some reservoir operation schemes in dataset 1 (schemes 1-24 in Table 6) are taken as examples and plotted in the comparison diagram in Figure 7.

Figure 6 Comparison of different pre-discharge schemes under different pre-discharge times in datasets 1-3. (A) Dataset 1. (B) Dataset 2. (C) Dataset 3.

Figure 7 Radar chart of decision results of different schemes in dataset 1. (A) Scheme with pre-discharge flow of 130 m³/s. (B) Scheme with pre-discharge flow of 200 m³/s. (C) Scheme with pre-discharge flow of 250 m³/s. (D) Scheme with pre-discharge flow of 300 m³/s.

According to Table 6, the relative error of the IWGAN-IWOA-CNN prediction with respect to the actual comprehensive evaluation value of each reservoir operation scheme is within ±3%, indicating that the model generalizes well and is robust, and that the scheme selection result is ideal. The analysis of Figure 6 shows the following:

(A) For the 10-year flood of Xidayang reservoir (dataset 1):

(1) When the reservoir operation scheme with a pre-discharge flow of 130 m³/s is adopted, the comprehensive evaluation value of the scheme increases gradually as the pre-discharge time increases. When the reservoir operation scheme with a pre-discharge flow of 300 m³/s is adopted, the comprehensive evaluation value of the scheme decreases gradually as the pre-discharge time increases.

(2) When the pre-discharge time is 36 h, the evaluation results of the reservoir operation schemes differ little, and the scheme with a pre-discharge flow of 130 m³/s is slightly better than those with pre-discharge flows of 300 m³/s, 250 m³/s and 200 m³/s.

(3) When the pre-discharge time exceeds 48 h, the scheme with a pre-discharge flow of 130 m³/s is significantly better than those with pre-discharge flows of 200 m³/s, 250 m³/s and 300 m³/s.

(B) For the 20-year flood of Xidayang reservoir (dataset 2):

(1) As the pre-discharge time increases, the comprehensive evaluation value curves of the schemes with pre-discharge flows of 130 m³/s, 200 m³/s, 250 m³/s and 300 m³/s show an overall increasing trend, apart from dips at individual time points. The reservoir operation scheme with a pre-discharge time of 72 hours performs better.

(2) When the pre-discharge time is between 24 h and 36 h, the comprehensive evaluation value curve of the scheme with a pre-discharge flow of 130 m³/s rises the most, indicating that increasing the pre-discharge time helps improve the scheme selection effect.

(C) For the flood with a 50-year return period of Xidayang reservoir (dataset 3):

(1) As the pre-discharge time increases, the comprehensive evaluation curve of the scheme with a pre-discharge flow of 130 m³/s grows continuously, that of the scheme with a pre-discharge flow of 200 m³/s first rises, then falls and then rises again, and that of the scheme with a pre-discharge flow of 250 m³/s first falls and then rises. Whatever the trends of the three curves, the scheme with a pre-discharge time of 72 hours performs best.

(2) The comprehensive evaluation value of the scheme with a pre-discharge flow of 300 m³/s first increases and then decreases as the pre-discharge time increases, and the reservoir operation scheme with a pre-discharge time of 24 h performs best.
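The two readings made above, checking that predictions stay within ±3% of the actual comprehensive evaluation value and picking the best pre-discharge time for each pre-discharge flow, can be sketched as follows. This is an illustrative sketch only: the function names are hypothetical, the scores are invented and are not taken from Table 6 or Figure 6, and "best" is assumed to mean the highest comprehensive evaluation value.

```python
def relative_error_pct(actual, predicted):
    """Signed relative error of a prediction, in percent of the actual value."""
    return 100.0 * (predicted - actual) / actual

def best_time_per_flow(scores):
    """For each pre-discharge flow, return the pre-discharge time with the highest score.

    `scores` maps (flow in m^3/s, time in h) to a comprehensive evaluation value.
    """
    best = {}
    for (flow, time_h), value in scores.items():
        if flow not in best or value > best[flow][1]:
            best[flow] = (time_h, value)
    return {flow: time_h for flow, (time_h, _) in best.items()}

# Hypothetical values for illustration only (NOT the paper's data).
actual = {(130, 12): 0.50, (130, 72): 0.80, (300, 12): 0.70, (300, 72): 0.40}
predicted = {(130, 12): 0.51, (130, 72): 0.79, (300, 12): 0.69, (300, 72): 0.41}

# Check the +/-3% tolerance on every scheme.
errors = {key: relative_error_pct(actual[key], predicted[key]) for key in actual}
all_within_3_pct = all(abs(e) <= 3.0 for e in errors.values())

# Select the preferred pre-discharge time for each flow from the predictions.
best = best_time_per_flow(predicted)
print(all_within_3_pct, best)
```

With these invented numbers the low-flow scheme favors the longest pre-discharge time and the high-flow scheme favors the shortest, which mirrors the qualitative pattern described for dataset 1.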

Figures 7A–D correspond to the four scheme selection curves of dataset 1 in Figure 6. Among the evaluation indicators, "Peak clipping amplitude" and "Recovery time" are cost indicators (the smaller the better), and the others are benefit indicators (the larger the better). The radar chart shows that, for the schemes with pre-discharge flows of 130 m³/s and 200 m³/s, scheme 6 has the smallest peak clipping amplitude and recovery time and the largest values of the other evaluation indexes, so the reservoir operation scheme with a pre-discharge time of 72 hours is the more appropriate choice. When the pre-discharge flow is 250 m³/s or 300 m³/s, the reservoir operation scheme with a pre-discharge time of 12 h is more appropriate.
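The distinction between cost and benefit indicators matters whenever indicators are put on a common scale for comparison. The sketch below uses plain min-max normalization with the direction flipped for cost indicators, so that 1 is always the best value. This is a generic technique assumed here for illustration; it is not necessarily the normalization used in the paper's fuzzy optimization step, and the sample values are invented.

```python
def normalize_indicator(values, kind):
    """Min-max normalize one indicator across schemes so that 1.0 is always best.

    kind="benefit": larger raw values are better.
    kind="cost":    smaller raw values are better, so the scale is flipped.
    """
    lo, hi = min(values), max(values)
    if hi == lo:
        # All schemes tie on this indicator; treat them as equally good.
        return [1.0 for _ in values]
    if kind == "benefit":
        return [(v - lo) / (hi - lo) for v in values]
    if kind == "cost":
        return [(hi - v) / (hi - lo) for v in values]
    raise ValueError("kind must be 'benefit' or 'cost'")

# Invented raw values for three schemes (not from the paper).
recovery_time_h = [10, 20, 30]      # cost indicator
benefit_indicator = [10, 20, 30]    # some benefit indicator

print(normalize_indicator(recovery_time_h, "cost"))
print(normalize_indicator(benefit_indicator, "benefit"))
```

After this transformation a scheme that dominates on every axis has the largest area on a radar chart, regardless of whether the underlying indicator is a cost or a benefit.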

To address the complex decision-making problem of reservoir operation schemes, this paper proposes a reservoir operation scheme decision-making model based on IWGAN-IWOA-CNN. First, the training samples of the CNN are constructed with fuzzy optimization theory. Second, IWGAN is proposed to augment the original reservoir operation scheme dataset. The algorithm uses a loss function that integrates the Wasserstein distance, a gradient penalty and a difference term, and dynamically adds random noise during model training. The experimental results show that the data generated by IWGAN has a certain characterization ability. Third, IWOA is proposed, which introduces Logistic chaotic map population initialization, a nonlinear convergence factor, adaptive weights and a Levy flight perturbation strategy. The algorithm is compared with WOA, PSO, CS and GWO on 11 test functions, and the experimental results show that IWOA converges faster, with higher convergence accuracy and better stability. Finally, the IWOA-CNN algorithm is proposed to optimize the CNN hyperparameters, and the optimal parameters are used for model prediction. The experimental results show that the proposed model achieves higher prediction accuracy and scheme selection accuracy.
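Two of the IWOA ingredients named above, Logistic chaotic map initialization and the Levy flight perturbation, can be illustrated with a minimal sketch. It assumes the common logistic map x ← μx(1 − x) with μ = 4 and a Levy step drawn with Mantegna's algorithm (Mantegna, 1994) with β = 1.5; these parameter values, the function names and the way the chaotic sequence is mapped to the search bounds are assumptions for illustration, not the paper's exact settings.

```python
import math
import random

def logistic_init(pop_size, dim, lower, upper, mu=4.0, seed=None):
    """Initialize a population from a logistic chaotic sequence (assumed mu = 4)."""
    rng = random.Random(seed)
    x = rng.uniform(0.01, 0.99)  # random starting point of the chaotic sequence
    population = []
    for _ in range(pop_size):
        individual = []
        for _ in range(dim):
            x = mu * x * (1.0 - x)                          # logistic map, stays in [0, 1]
            individual.append(lower + x * (upper - lower))  # scale to the search bounds
        population.append(individual)
    return population

def levy_step(beta=1.5, rng=None):
    """Draw one Levy-distributed step length using Mantegna's algorithm."""
    rng = rng or random
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    sigma_u = (num / den) ** (1 / beta)
    u = rng.gauss(0.0, sigma_u)
    v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)

# Usage: five candidate solutions in a 3-dimensional search space.
population = logistic_init(pop_size=5, dim=3, lower=-10.0, upper=10.0, seed=0)
step = levy_step(rng=random.Random(1))
print(len(population), len(population[0]), step)
```

In a whale-optimization loop, a step like this would typically be scaled and added to a candidate position to help it escape local optima; how IWOA combines it with the nonlinear convergence factor and adaptive weights is described in the method section, not reproduced here.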

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

QH, H-XH, and Z-ZL designed and performed the experiments. Z-HC and YZ devised the experiments. QH and Z-HC helped with the data analysis and writing of the manuscript. All authors contributed to the article and approved the submitted version.

This research was supported by the National Key Research and Development Program of China (2018YFC0407904).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpls.2023.1102855/full#supplementary-material

Abdelrasoul, M. E. I., Wang, G., Kim, J., Ren, G., Abd-El-Hakeem Mohamed, M., Ali, M. A. M., et al. (2022). Review on the development of mining method selection to identify new techniques using a cascade-forward backpropagation neural network. Adv. Civ Eng. 2022, 1–16. doi: 10.1155/2022/6952492

Ahmad, I., Chen, X. H., Li, Q. (2001). Model check by kernel methods under weak moment conditions. Comput. Stat. Data Anal. 36, 403–409. doi: 10.1016/S0167-9473(00)00043-8

Al-Zoubi, A. M., Faris, H., Alqatawna, J., Hassonah, M. A. (2018). Evolving support vector machines using whale optimization algorithm for spam profiles detection on online social networks in different lingual contexts. Knowl. Based Syst. 153, 91–104. doi: 10.1016/j.knosys.2018.04.025

Arjovsky, M., Bottou, L. (2017a). Towards principled methods for training generative adversarial networks. Stat. (Int Stat. Inst) 1050. Available at: https://arxiv.org/abs/1701.04862.

Arjovsky, M., Chintala, S., Bottou, L. (2017b) Wasserstein gan. Available at: https://arxiv.org/abs/1701.07875.

Boursianis, A. D., Papadopoulou, M. S., Gotsis, A., Wan, S., Sarigiannidis, P., Nikolaidis, S., et al. (2021). Smart irrigation system for precision agriculture–the arethou5a iot platform. IEEE Sens J. 21, 17539–17547. doi: 10.1109/JSEN.2020.3033526

Cai, S., Sun, L., Liu, Q., Ji, Y., Wang, H. (2021). Research on the dispatching rules of inter-basin water transfer projects based on the two-dimensional scheduling diagram. Front. Earth Sci. (Lausanne) 9, 664201. doi: 10.3389/feart.2021.664201

Chen, Y. J. (2021). College english teaching quality evaluation system based on information fusion and optimized rbf neural network decision algorithm. J. Sens. 2021, 6178569. doi: 10.1155/2021/6178569

Cho, H., Lee, E. K. (2021). Tree-structured regression model using a projection pursuit approach. Appl. Sci. (Basel) 11, 9885. doi: 10.3390/app11219885

Deepa, R., Venkataraman, R. (2021). Enhancing whale optimization algorithm with levy flight for coverage optimization in wireless sensor networks. Comput. Electr. Eng. 94, 107359. doi: 10.1016/j.compeleceng.2021.107359

Dong, S., Wang, P., Abbas, K. (2021). A survey on deep learning and its applications. Comput. Sci. Rev. 40, 100379. doi: 10.1016/j.cosrev.2021.100379

Douzas, G., Bacao, F. (2019). Geometric smote a geometrically enhanced drop-in replacement for smote. Inf Sci. 501, 118–135. doi: 10.1016/j.ins.2019.06.007

Duque, A. F., Campo, R., Del Rio, A. V., Amorim, C. L. (2021). Wastewater valorization: practice around the world at pilot- and full-scale. Int. J. Env. Res. PUB HE 18, 9466. doi: 10.3390/ijerph18189466

Fabianowski, D., Jakiel, P., Stemplewski, S. (2021). Development of artificial neural network for condition assessment of bridges based on hybrid decision making method – feasibility study. Expert Syst. Appl. 168, 114271. doi: 10.1016/j.eswa.2020.114271

Fang, L., Wei, Q., Xu, C. J. (2021). Technical and tactical command decision algorithm of football matches based on big data and neural network. Sci. Program 2021, 5544071. doi: 10.1155/2021/5544071

Galdo, M., Miranda, J. T., Lorenzo, J., Caccia, C. G. (2021). Internal modifications to optimize pollution and emissions of internal combustion engines through multiple-criteria decision-making and artificial neural networks. Int. J. Env. Res. PUB HE 18, 12823. doi: 10.3390/ijerph182312823

Goodfellow, I. (2016) Nips 2016 tutorial: generative adversarial networks. Available at: https://arxiv.org/abs/1701.00160.

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., et al. (2020). Generative adversarial networks. Commun. ACM 63, 139–144. doi: 10.1145/3422622

Gu, J. X., Wang, Z. H., Kuen, J., Ma, L. Y., Shahroudy, A., Shuai, B., et al. (2018). Recent advances in convolutional neural networks. Pattern Recognit 77, 354–377. doi: 10.1016/j.patcog.2017.10.013

Gulrajani, I., Ahmed, F., Arjovsky, M., Dumoulin, V., Courville, A. (2017) Improved training of wasserstein gans. Available at: https://arxiv.org/abs/1704.00028.

Han, W., Wang, L. Z., Feng, R. Y., Gao, L., Chen, X. D., Deng, Z., et al. (2020). Sample generation based on a supervised wasserstein generative adversarial network for high-resolution remote-sensing scene classification. Inf Sci. 539, 177–194. doi: 10.1016/j.ins.2020.06.018

Hassabis, D., Kumaran, D., Summerfield, C., Botvinick, M. (2017). Neuroscience-inspired artificial intelligence. Neuron 95, 245–258. doi: 10.1016/j.neuron.2017.06.011

Hu, H., Miao, Y., Hu, Q., Zhang, Y., Hu, Z., Ma, R. (2021). Optimization of reservoir operation scheme based on fuzzy optimization and convolution neural network. (Singapore: Thirteenth International Conference on Digital Image Processing) 11878. doi: 10.1117/12.2601054

Hu, G., Zhou, C., Zhang, X., Zhang, H., Zhou, Z. (2020). “A Neural Network-Based Intelligent Decision-Making in the Air-Offensive Campaign with Simulation,” in 2020 16th International Conference on Computational Intelligence and Security (CIS), (Guangxi, China) 342–346. doi: 10.1109/CIS52066.2020.00079

Huang, J. C. (2020). Image super-resolution reconstruction based on generative adversarial network model with double discriminators. Multimed Tools Appl. 79, 29639–29662. doi: 10.1007/s11042-020-09524-y

Ignatius, J., Hatami-Marbini, A., Rahman, A., Dhamotharan, L., Khoshnevis, P. (2018). A fuzzy decision support system for credit scoring. Neural Comput. Appl. 29, 921–937. doi: 10.1007/s00521-016-2592-1

Imam, M., Gurol, B. (2018). Measuring the performance of private pension sector by topsis multi criteria decision-making method. Pressacademia 5, 288–295. doi: 10.17261/Pressacademia.2018.937

Jain, N., Tomar, A., Jana, P. K. (2021). A novel scheme for employee churn problem using multi-attribute decision making approach and machine learning. J. Intell. Inf Syst. 56, 279–302. doi: 10.1007/s10844-020-00614-9

Janiesch, C., Zschech, P., Heinrich, K. (2021). Machine learning and deep learning. Electron Mark 31, 685–695. doi: 10.1007/s12525-021-00475-2

Ji, Y., Wei, H. (2022). An approximate dynamic programming method for unit-based small hydropower scheduling. Front. Energy Res. 10, 965669. doi: 10.3389/fenrg.2022.965669

Kennedy, J., Eberhart, R. (1995). Particle swarm optimization. (Perth, WA, Australia: Proceedings of ICNN'95 - International Conference on Neural Networks) 4, 1942–1948. doi: 10.1109/ICNN.1995.488968

Lan, Z. G., Huang, M. (2018). Safety assessment for seawall based on constrained maximum entropy projection pursuit model. Nat. Hazards (Dordr) 91, 1165–1178. doi: 10.1007/s11069-018-3172-8

Lee, E. K. (2018). Pptreeviz: an r package for visualizing projection pursuit classification trees. J. Stat. Softw 83, 1–30. doi: 10.18637/jss.v083.i08

Leng, Y. J., Huang, Y. H. (2022). Power system black-start decision making based on back-propagation neural network and genetic algorithm. J. Electr Eng. Technol. 17, 2123–2134. doi: 10.1007/s42835-022-01041-2

Liu, F., Zhang, W. G. (2014). Topsis-based consensus model for group decision-making with incomplete interval fuzzy preference relations. IEEE Trans. Cybern 44, 1283–1294. doi: 10.1109/TCYB.2013.2282037

Mantegna, R. N. (1994). Fast, accurate algorithm for numerical simulation of levy stable stochastic processes. Phys. Rev. E Stat. Phys. Plasmas Fluids Relat. Interdiscip Topics 49, 4677–4683. doi: 10.1103/physreve.49.4677

Mirjalili, S., Lewis, A. (2016). The whale optimization algorithm. Adv. Eng. Softw 95, 51–67. doi: 10.1016/j.advengsoft.2016.01.008

Mirjalili, S., Mirjalili, S. M., Lewis, A. (2014). Grey wolf optimizer. Adv. Eng. Softw 69, 46–61. doi: 10.1016/j.advengsoft.2013.12.007

Prasad, D., Mukherjee, A., Mukherjee, V. (2021). Temperature dependent optimal power flow using chaotic whale optimization algorithm. Expert. Syst. 38, 12685. doi: 10.1111/exsy.12685

Schmidhuber, J. (2015). Deep learning in neural networks: an overview. Neural Netw. 61, 85–117. doi: 10.1016/j.neunet.2014.09.003

Shi, G., Wu, Y., Liu, J., Wan, S., Wang, W., Lu, T. (2022). Incremental few-shot semantic segmentation via embedding adaptive-update and hyper-class representation. (New York, USA: The 30th ACM International Conference on Multimedia (MM '22)) 5547–5556. doi: 10.48550/arXiv.2207.12964

Shiau, J. T., Wen, H. H., Su, I. W. (2021). Comparing optimal hedging policies incorporating past operation information and future hydrologic information. Water Resour Manag 35, 2177–2196. doi: 10.1007/s11269-021-02834-2

Sitorus, F., Brito-Parada, P. R. (2022). The selection of renewable energy technologies using a hybrid subjective and objective multiple criteria decision making method. Expert Syst. Appl. 206, 117839. doi: 10.1016/j.eswa.2022.117839

Sun, Y. J., Wang, X. L., Chen, Y. H., Liu, Z. J. (2018). A modified whale optimization algorithm for large-scale global optimization problems. Expert Syst. Appl. 114, 563–577. doi: 10.1016/j.eswa.2018.08.027

Tian, G., Zhang, H., Feng, Y., Wang, D., Peng, Y., Jia, H. (2018). Green decoration materials selection under interior environment characteristics: a grey-correlation based hybrid mcdm method. Renewable Sustain. Energy Rev. 81, 682–692. doi: 10.1016/j.rser.2017.08.050

Vassoney, E., Mammoliti Mochet, A., Desiderio, E., Negro, G., Pilloni, M. G., Comoglio, C. (2021). Comparing multi-criteria decision-making methods for the assessment of flow release scenarios from small hydropower plants in the alpine area. Front. Environ. Sci. 9, 635100. doi: 10.3389/fenvs.2021.635100

Wang, X. J., Dong, Z., Ai, X. S., Dong, X., Li, Y. (2020). Multi-objective model and decision-making method for coordinating the ecological benefits of the three gorger reservoir. J. Clean Prod. 270, 122066. doi: 10.1016/j.jclepro.2020.122066

Wang, F., Lu, Y., Li, J., Ni, J. (2021). Evaluating environmentally sustainable development based on the psr framework and variable weigh analytic hierarchy process. Int. J. Env. Res. PUB HE 18, 2836. doi: 10.3390/ijerph18062836

Wang, C., Tian, S. (2018). Evolving neural network using genetic algorithm for mining method evaluation in thin coal seam working face. Int. J. Min Miner Eng. 9, 228–239. doi: 10.1504/IJMME.2018.10017388

Wu, Y., Guo, H., Chakraborty, C., Khosravi, M., Berretti, S., Wan, S. (2022c). Edge computing driven low-light image dynamic enhancement for object detection. IEEE Trans. Netw. Sci. Eng. 1. doi: 10.1109/TNSE.2022.3151502

Wu, X., Yu, L., Wu, S., Jia, B., Dai, J., Zhang, Y., et al. (2022a). Trade-offs in the water-energy-ecosystem nexus for cascade hydropower systems: a case study of the yalong river, china. Front. Environ. Sci. 10, 857340. doi: 10.3389/fenvs.2022.857340

Wu, Y., Zhang, L., Berretti, S., Wan, S. (2022b). Medical image encryption by content-aware dna computing for secure healthcare. IEEE Trans. Industr. Inform. 19 (2), 2089–2098. doi: 10.1109/TII.2022.3194590

Xia, X. F., Sun, Y., Wu, K., Jiang, Q. H. (2016). Optimization of a straw ring-die briquetting process combined analytic hierarchy process and grey correlation analysis method. Fuel Process Technol. 152, 303–309. doi: 10.1016/j.fuproc.2016.06.018

Xu, J., Zhao, L. (2008). A class of fuzzy rough expected value multi-objective decision making model and its application to inventory problems. Comput. Math Appl. 56, 2107–2119. doi: 10.1016/j.camwa.2008.03.040

Yan, X. S., Zhou, Z. C., Hu, C. Y., Gong, W. Y. (2021). Real-time location algorithms of drinking water pollution sources based on domain knowledge. Environ. Sci. pollut. Res. Int. 28, 46266–46280. doi: 10.1007/s11356-021-13352-4

Yang, X. S., Suash, D. (2009). Cuckoo search via lévy flights. (Coimbatore, India: 2009 World Congress on Nature & Biologically Inspired Computing (NaBIC)) 210–214. doi: 10.1109/NABIC.2009.5393690

Yang, Z., Yang, K., Wang, Y. F., Su, L. W., Hu, H. (2021). Long-term multi-objective power generation operation for cascade reservoirs and risk decision making under stochastic uncertainties. Renew Energy 164, 313–330. doi: 10.1016/j.renene.2020.08.106

Ye, J., Chen, T. Y. (2022). Fabric selection based on sine trigonometric aggregation operators under pythagorean fuzzy uncertainty. J. Nat. Fibers. 19 (16), 13928–13942. doi: 10.1080/15440478.2022.2113847

Ying., W. (2014). From information revolution to intelligence revolution: big data science vs. intelligence science. (London, UK: 2014 IEEE 13th International Conference on Cognitive Informatics and Cognitive Computing) 3–5. doi: 10.1109/ICCI-CC.2014.6921432

Yu, D. J., Kou, G., Xu, Z. S., Shi, S. S. (2021). Analysis of collaboration evolution in ahp research: 1982-2018. Int. J. Inf Technol. Decis Mak 20, 7–36. doi: 10.1142/S0219622020500406

Yuan, C. M., Yang, Y. K., Liu, Y. (2021). Sports decision-making model based on data mining and neural network. Neural Comput. Appl. 33, 3911–3924. doi: 10.1007/s00521-020-05445-x

Zhang, P., Mao, J., Tian, M., Dai, L., Hu, T. (2022). The impact of the three gorges reservoir on water exchange between the yangtze river and poyang lake. Front. Earth Sci. (Lausanne) 10, 876286. doi: 10.3389/feart.2022.876286

Zhu, F. L., Zhong, P. A., Sun, Y. M., Xu, B. (2017b). Selection of criteria for multi-criteria decision making of reservoir flood control operation. J. Hydroinform 19, 558–571. doi: 10.2166/hydro.2017.059

Zhu, F. L., Zhong, P. A., Sun, Y. M., Yeh, W. (2017a). Real-time optimal flood control decision making and risk propagation under multiple uncertainties. Water Resour Res. 53, 10635–10654. doi: 10.1002/2017WR021480

Keywords: reservoir operation, decision-making method, convolutional neural network, data augmentation, generative adversarial network, whale optimization algorithm

Citation: Hu Q, Hu H-x, Lin Z-z, Chen Z-h and Zhang Y (2023) A decision-making method for reservoir operation schemes based on deep learning and whale optimization algorithm. Front. Plant Sci. 14:1102855. doi: 10.3389/fpls.2023.1102855

Received: 19 November 2022; Accepted: 28 February 2023;

Published: 24 March 2023.

Edited by:

Shaohua Wan, University of Electronic Science and Technology of China, China

Reviewed by:

Honggang Qi, University of Chinese Academy of Sciences, China

Copyright © 2023 Hu, Hu, Lin, Chen and Zhang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zhen-zhou Lin, linzhenzhou@hhu.edu.cn
