- 1School of Artificial Intelligence and Big Data, Henan University of Technology, Zhengzhou, China

- 2College of Electrical Engineering, Henan University of Technology, Zhengzhou, China

Rice is a necessity for billions of people in the world, and rice disease control has been a major focus of research in the agricultural field. In this study, a new attention-enhanced DenseNet neural network model is proposed. It consists of a lesion feature extractor based on a region of interest (ROI) extraction algorithm and a DenseNet classification model that accurately recognizes the extracted lesion feature maps. The ROI extraction algorithm was found to highlight the lesion area of rice leaves, which makes the neural network classification model pay more attention to the lesion area. Compared with a single rice disease classification model, the classification model combined with the ROI extraction algorithm improves the recognition accuracy of rice leaf disease identification, and the proposed model achieves an accuracy of 96%.

Introduction

The pressure on the agriculture sector will increase as the human population continues to grow, so agri-technology and precision farming have gained considerable importance (Jha et al., 2019). The digital transformation of agriculture has turned many aspects of farm management into artificial intelligence systems that extract value from the ever-increasing data originating from numerous sources (Benos et al., 2021). Rice is one of the most widely consumed grains in the world. China, the most populous country in the world, also consumes more rice than any other country, with about 154.9 million metric tons consumed in 2021/2022. Rice leaf diseases directly affect the quality and yield of rice (Yang et al., 2021). Brown spot, bacterial blight, and leaf blast are three of the most prevalent rice plant diseases (Azim et al., 2021). Therefore, the classification and control of leaf diseases are crucial in rice cultivation. Traditional disease identification relies mainly on manual observation (Dutot et al., 2013), which is labor-intensive and requires extensive experience to perform accurately.

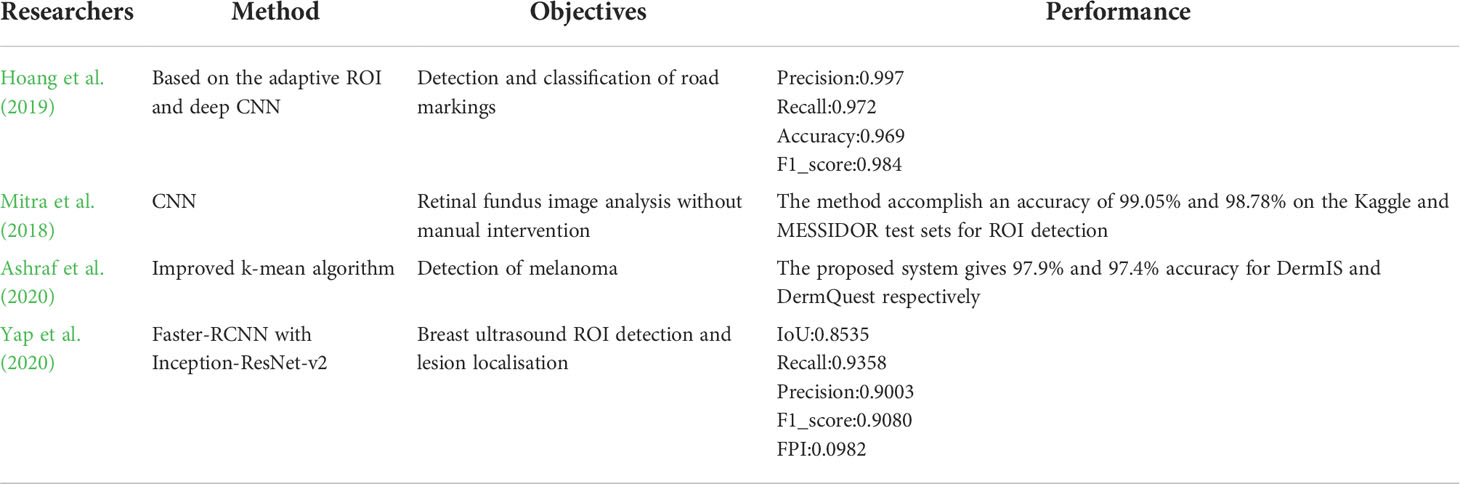

With the rapid advance of deep learning techniques in computer vision, image-based crop disease identification has attracted much attention. Researchers have thus attempted to automate plant disease detection and classification using leaf images (Kaur et al., 2019). The deep convolutional neural network (DCNN), which excels in image classification and detection, is widely used in crop disease identification (Krizhevsky et al., 2017). Xie et al. (2020) designed an improved convolutional neural network to identify grape leaf diseases, reaching an mAP of 81.1%. Jiang et al. (2019) recognized apple leaf diseases with an improved convolutional neural network, reaching an mAP of 78.8%. Chen et al. (2020) achieved an average accuracy of 92.00% for rice plant image class prediction using transfer learning. Goluguri et al. (2021) proposed a technique that combines EAFSO with a DCNN-LSTM and identifies rice diseases with 97.5% accuracy. Jiang et al. (2021) identified rice and wheat leaf diseases through transfer learning, reaching accuracies of 97.22% for rice leaf diseases and 98.75% for wheat leaf diseases. Bao et al. (2021) used Elliptical-Maximum Margin Criterion metric learning to identify wheat leaf diseases; the wheat images were segmented with the Otsu method, and the identification accuracy reached 94.16%. Gao et al. (2020) used machine learning methods to classify hyperspectral images of grape leaves in order to identify grapevine leafroll disease. The highest identification accuracy reached 89.93%, which indicates that machine learning methods can detect grapevine leaf diseases during asymptomatic stages. Long et al. (2018) applied transfer learning to image identification of four kinds of camellia diseases: they pre-trained the AlexNet model on ImageNet, designed a new fully connected layer, and obtained a mean validation accuracy of 91.25%.

Since there are various types of rice leaf diseases and the lesion characteristics exhibited by similar diseases vary greatly, it is difficult to rely on a basic neural network model to classify rice leaf diseases, and the results are not ideal (Feng et al., 2021). Sharma et al. (2022) used transfer learning to identify three rice diseases (bacterial blight, rice blast, and brown spot) with 99.5% accuracy. Neural network technology has thus made its mark in crop disease identification: it not only improves identification accuracy but also saves time and labor cost. The novelty of this study is the use of an improved U-Net for ROI extraction. ROI extraction allows the computer to automatically select the region of interest and to identify the image region that provides more information than the rest. ROI extraction has many applications in computer vision, as shown in Table 1.

After pre-processing healthy and diseased (Brown spot, Leaf Blast, Bacterial Blight) rice leaf images from Kaggle, the present study uses ROI extraction to identify and extract lesion areas. The disease area images are then fed into a hybrid attention-enhanced DenseNet model based on an improved U-Net for accurate identification of disease types. This study uses the Dice coefficient, Accuracy, Precision, Recall, F1-Score, AUC, and the confusion matrix as model evaluation criteria. The experiments show that, on a small dataset, classification accuracy after ROI extraction was significantly higher than without it, and the accuracy on the validation dataset reached 96%, which meets the requirements of rice leaf disease identification and classification.

Materials and equipment

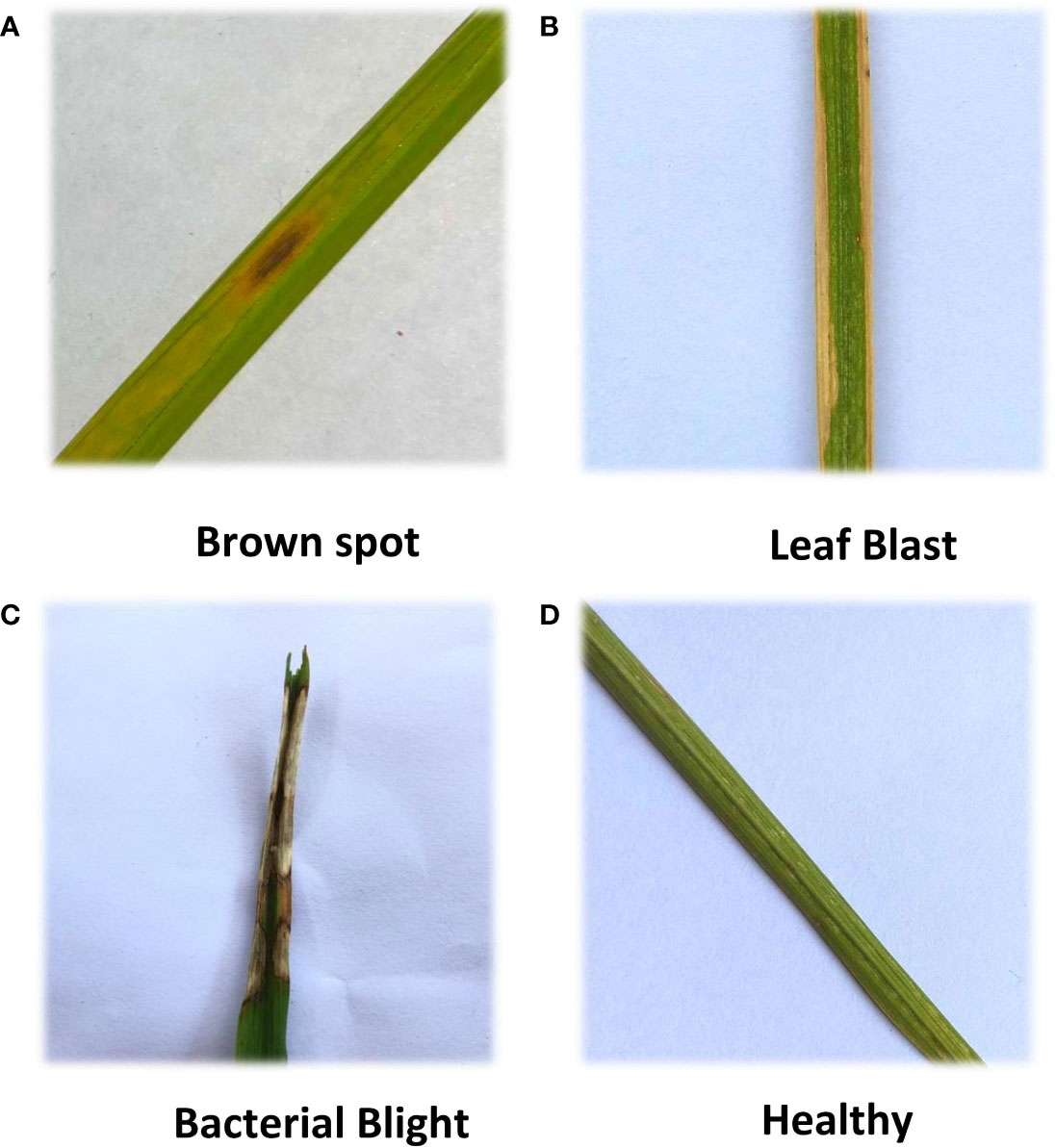

Four types of original rice leaf images (Healthy, Brown spot, Leaf Blast, and Bacterial Blight) were collected from Kaggle as the experimental dataset. The training samples were photographed individually after rice leaf samples were collected in the field. To balance the class distribution, some training samples were generated with a generative adversarial network (GAN) and added to the dataset. The images are stored in PNG format. The four kinds of rice leaf images are shown in Figure 1.

Figure 1 Four kinds of rice leaf images. (A) Brown spot, (B) Leaf Blast, (C) Bacterial Blight, (D) Healthy. Four kinds of rice leaf images showing different morphology.

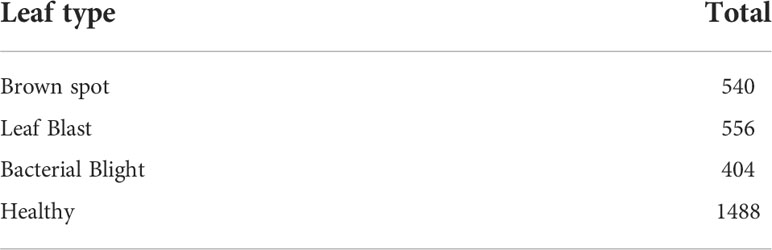

Table 2 lists a total of 2988 images of rice leaves in four categories including Brown spot, Leaf Blast, Bacterial Blight, and Healthy. Of these, 540 were Brown spot, 556 were Leaf Blast, 404 were Bacterial Blight, and 1488 were Healthy.

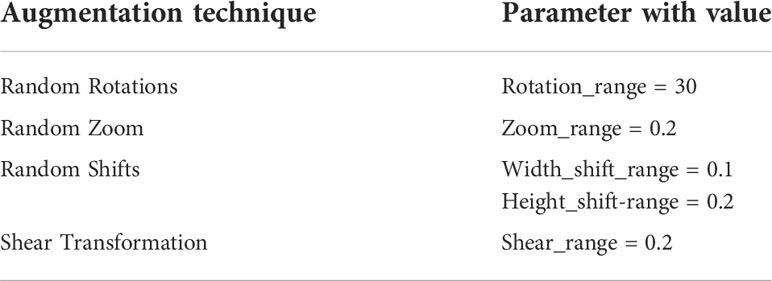

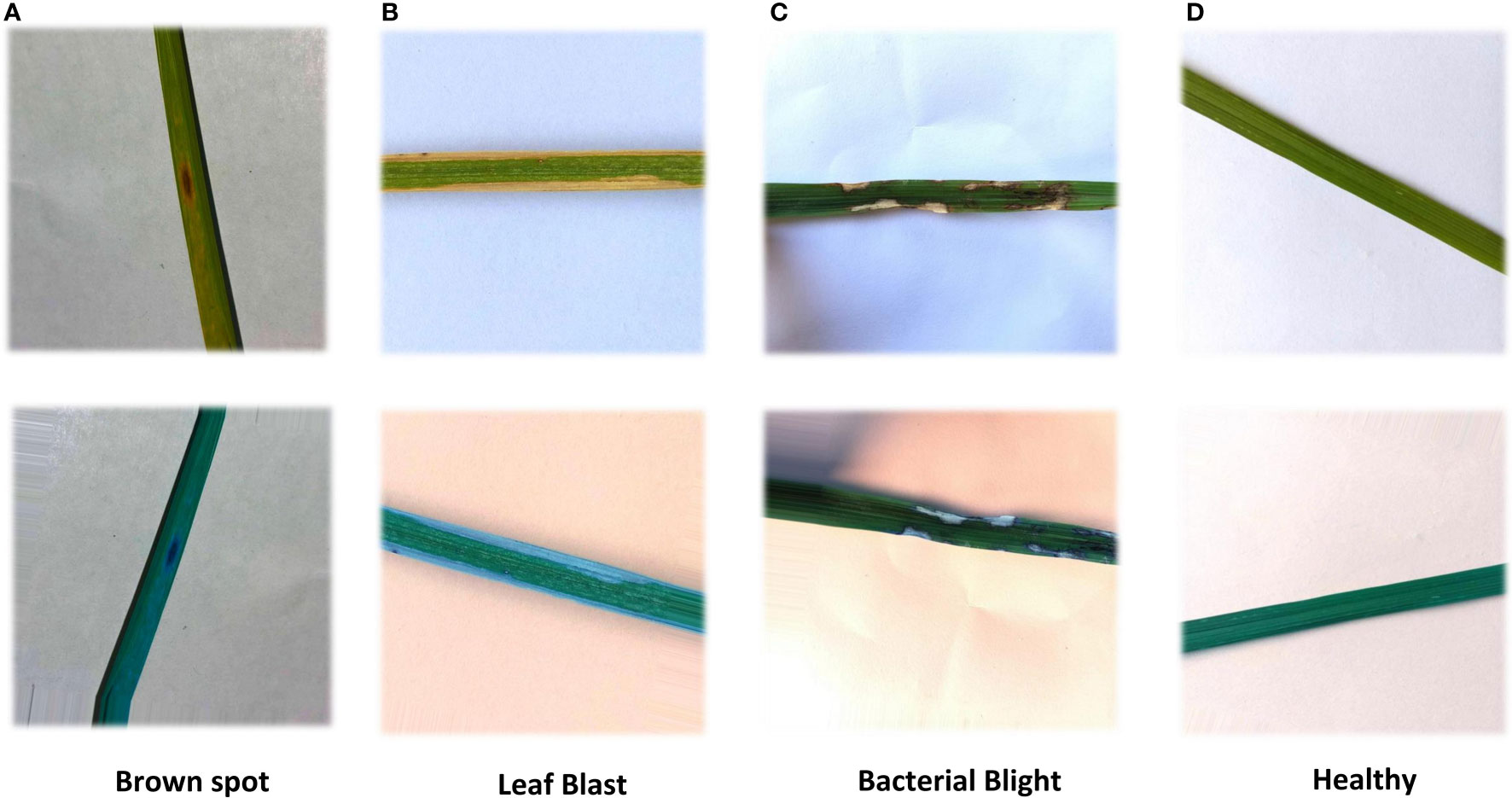

Because the collected rice leaf images had high and varying resolutions, training on them directly would greatly increase the computational load of the model and lengthen training, so the images were cut to 256×256 pixels. Since a larger amount of data can improve the training of a deep neural network, all samples were treated with random image enhancement, including random rotation, random zoom, random shift, and shear transformation. Table 3 shows the augmentation parameters, and the results are shown in Figure 2. In addition, the disease areas were annotated with Labelme to obtain the masks used for training the ROI extraction model, as shown in Figure 3. There are 540 masks for Brown spot, 549 masks for Leaf Blast, and 404 masks for Bacterial Blight, for a total of 1500 masks. Healthy images were not annotated; blank masks were generated for them directly before the model was trained. The dataset was divided into training and test sets at a ratio of 0.7:0.3, giving 2092 samples in the training dataset and 896 samples in the test dataset.
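The 0.7:0.3 split can be checked with a few lines of Python. This is a minimal sketch, not the authors' code: the function name `split_indices`, the shuffling, and the fixed seed are assumptions made for illustration, while the class counts are the ones reported for Table 2.

```python
import random

def split_indices(n_samples, train_fraction=0.7, seed=0):
    """Shuffle sample indices and split them into train and test lists."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # seed is an illustrative assumption
    n_train = round(n_samples * train_fraction)
    return indices[:n_train], indices[n_train:]

# Class counts as reported in the text for Table 2.
class_counts = {
    "Brown spot": 540,
    "Leaf Blast": 556,
    "Bacterial Blight": 404,
    "Healthy": 1488,
}
total = sum(class_counts.values())
train_idx, test_idx = split_indices(total)
print(total, len(train_idx), len(test_idx))
```

With 2988 images, rounding 0.7 × 2988 gives the 2092 training samples and 896 test samples stated above.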

Figure 2 Rice images with random image enhancement. The enhancement includes horizontal flip, vertical flip, scaling, horizontal offset, vertical offset, and shear transform. (A) Brown spot, (B) Leaf Blast, (C) Bacterial Blight, (D) Healthy.

Figure 3 Masks of rice leaf. Rice leaf images were labeled by Labelme to generate masks. The original pictures are shown in (A); the red areas in (B) are the diseased areas.

Deep learning was conducted using Python 3.8 with the TensorFlow 2.4 framework and GPU acceleration. A computer with an AMD Ryzen 5 5600X CPU, 16 GB of RAM, an NVIDIA GeForce RTX 3070 GPU (8 GB of memory, 5888 CUDA cores, CUDA version 11.1), and a 256 GB SSD was used for computation.

Methods

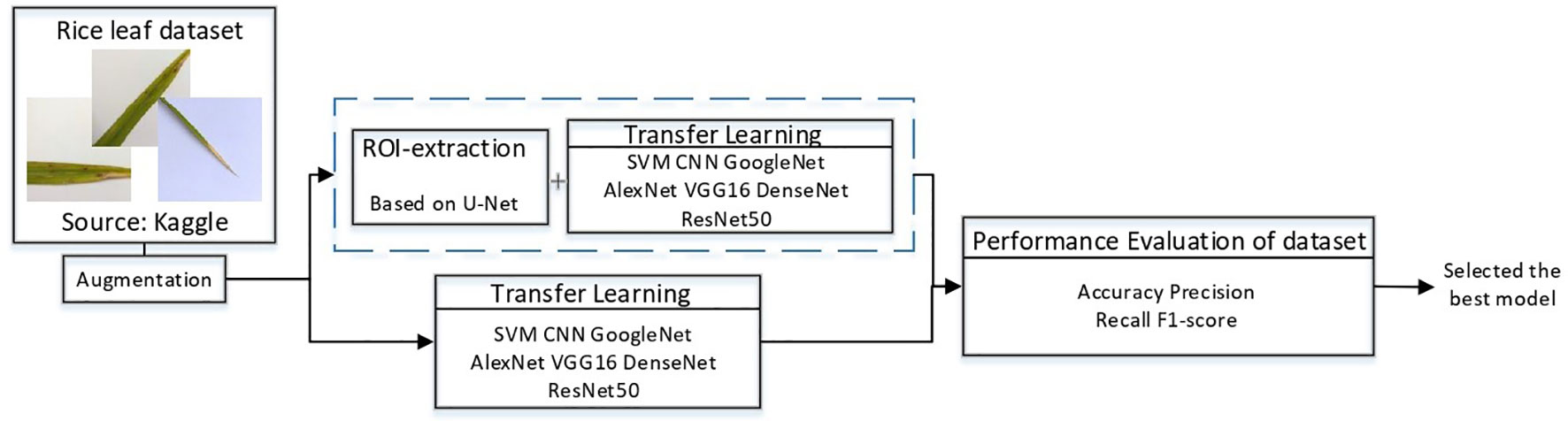

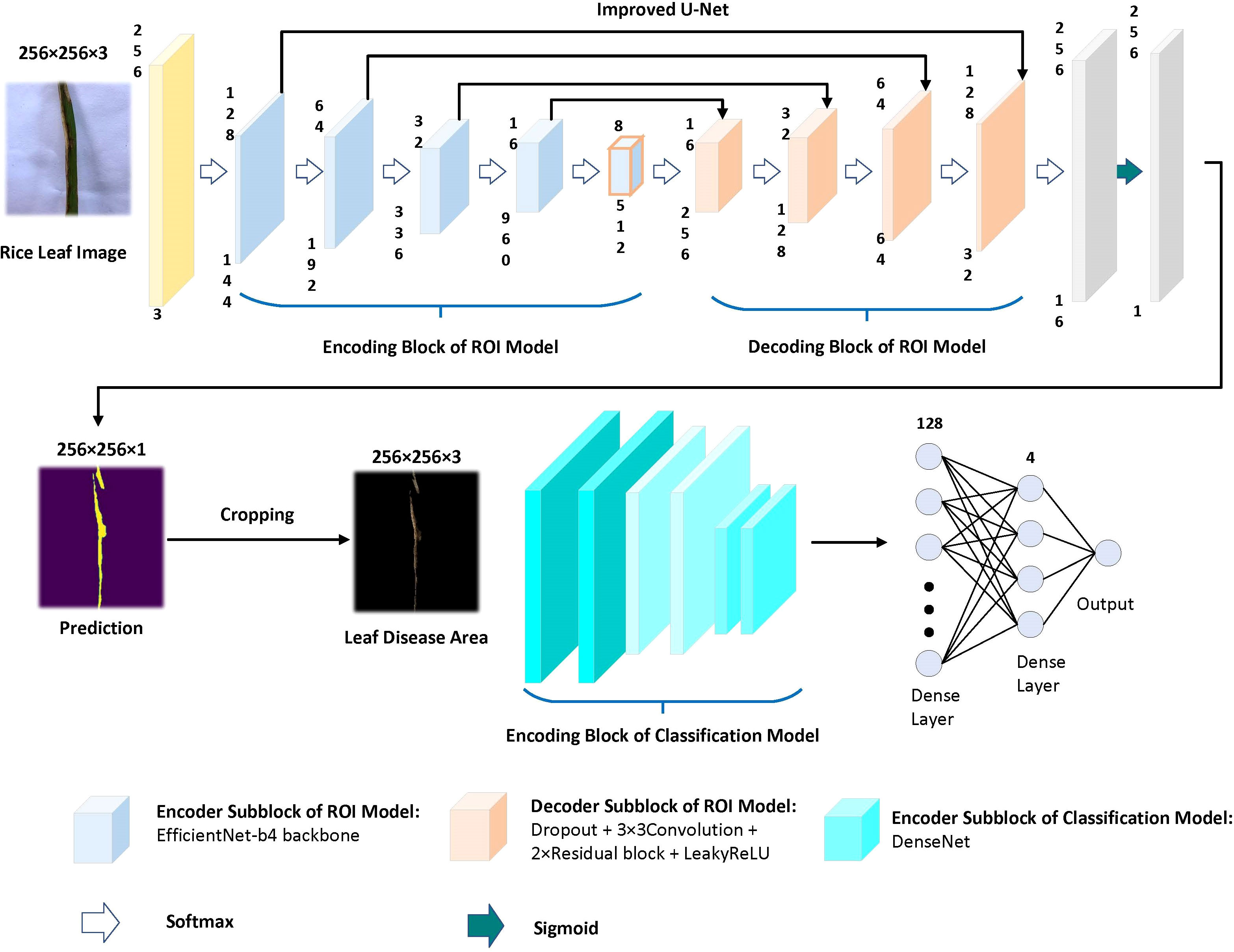

In this study, a deep learning model for rice disease recognition was proposed, as shown in Figure 4. The rice leaf disease identification model proposed in this study consists of two parts: (1) ROI extraction model: input the rice leaf images, then locate and output the disease area pictures. (2) Classification sub-model: input the segmented disease areas and output the exact disease types. Therefore, the model proposed in this study contains two outputs, one is the segmented image of rice leaf disease parts obtained after ROI extraction, and the other is the rice leaf disease species output from the classification sub-model. The general framework of the model is shown in Figure 5.

Figure 5 Overview of the recognition model. The upper part is the ROI extraction model, and the lower part is the DenseNet classification model.
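The two-stage flow described above (segment, keep only the lesion pixels, then classify) can be sketched in plain Python. This is an illustration under stated assumptions: `toy_segment` and `toy_classify` are hypothetical stand-ins for the improved U-Net and the DenseNet classifier, and the 0.5 mask threshold is assumed rather than taken from the paper.

```python
def apply_mask(image, mask, threshold=0.5):
    """Keep pixels whose mask probability exceeds the threshold; zero the rest."""
    return [
        [pixel if prob > threshold else 0 for pixel, prob in zip(image_row, mask_row)]
        for image_row, mask_row in zip(image, mask)
    ]

def identify(image, segment, classify):
    """Stage 1: predict a lesion mask. Stage 2: classify the masked image."""
    mask = segment(image)
    lesion_image = apply_mask(image, mask)
    return lesion_image, classify(lesion_image)

# Hypothetical stand-ins for the trained models (illustration only).
def toy_segment(image):
    return [[1.0 if pixel > 100 else 0.0 for pixel in row] for row in image]

def toy_classify(lesion_image):
    has_lesion = any(pixel > 0 for row in lesion_image for pixel in row)
    return "Diseased" if has_lesion else "Healthy"

image = [[10, 200], [150, 20]]
lesion, label = identify(image, toy_segment, toy_classify)
print(lesion, label)
```

The function returns both outputs named in the text: the segmented lesion image and the predicted class.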

ROI extraction model

In image segmentation, particularly medical image segmentation, the U-Net model has performed extremely well (Siddique et al., 2021). The aim of using U-Net is to create pixel-level masks for each object in the images. The result is the identification of the position and shape of different objects in the images, with each pixel classified as lesioned or non-lesioned (Ghosh et al., 2021). U-Net uses skip connections between encoder and decoder stages at the same level (Ronneberger et al., 2015), rather than supervising and backpropagating the loss only on high-level semantic features. This ensures that the final recovered feature map integrates more low-level features and fuses features at different scales, which allows multi-scale prediction and deep supervision. Up-sampling also recovers finer information, such as the edges of segmented images. Even with a small number of samples, as is common in the medical field, U-Net still performs the image segmentation task well (Du et al., 2020). Medical image segmentation has some similarities with crop disease image segmentation.

Based on this, the improved U-Net model was chosen as the framework for the automatic segmentation model of leaf disease areas in this study. After several experiments, it was found that the improved U-Net combined with the EfficientNet-B4 pre-trained network (trained on the ImageNet dataset) performed best in segmentation training.

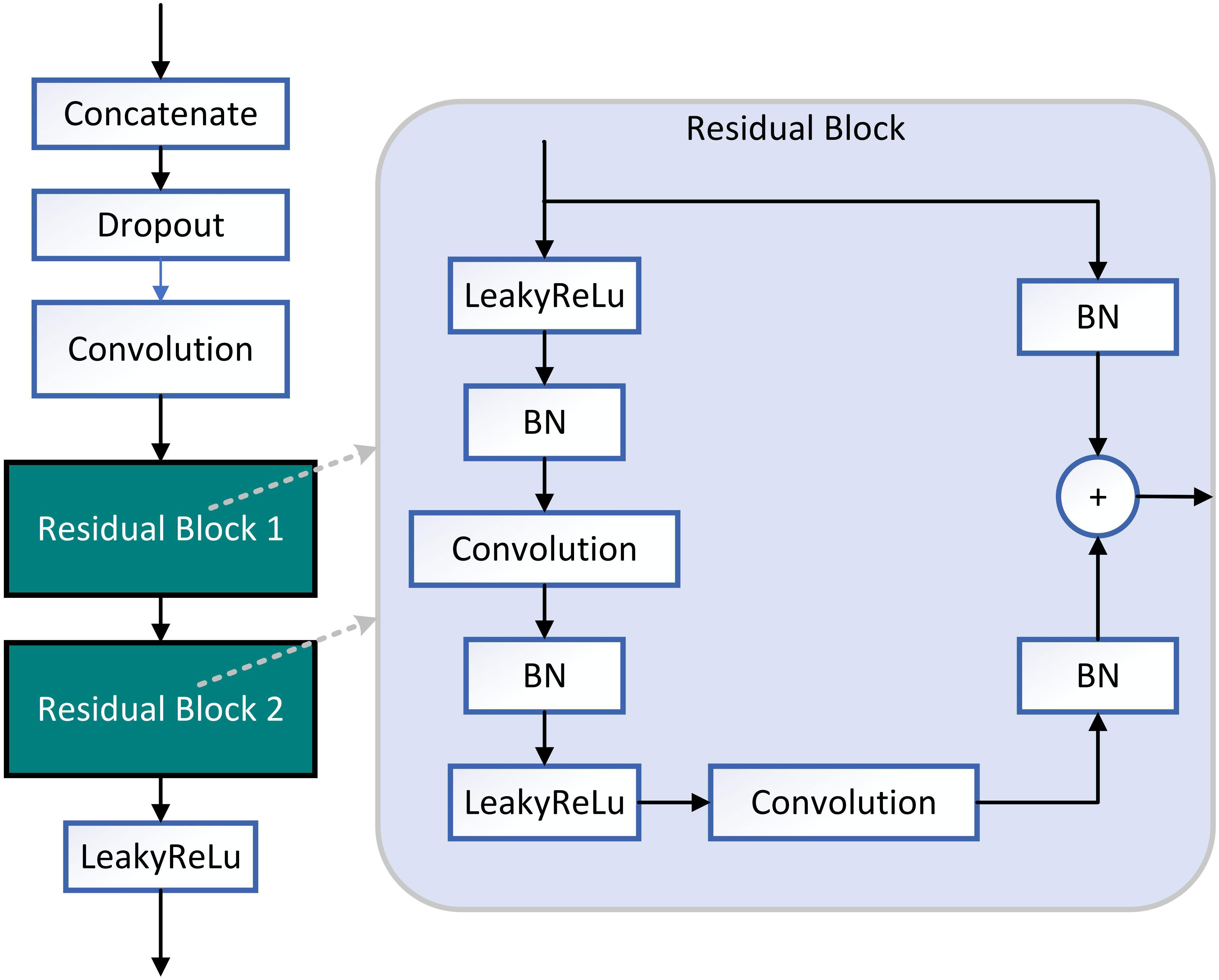

The U-Net network was improved by using the pre-trained EfficientNet-B4 as the encoder and by using residual blocks and the LeakyReLU activation function in the decoder (Liu et al., 2022). The encoder consists of EfficientNet-B4, and the decoder consists of five decoding subblocks, each of which includes a dropout layer, a 2D convolutional layer, a padding layer, two residual blocks, and a LeakyReLU layer, as shown in Figure 6. The dropout layer helps prevent overfitting when the model has many parameters and few training samples. LeakyReLU is used as the activation function of the intermediate layers to avoid neuron death and to improve the generalization ability of the model. The two residual blocks mitigate gradient vanishing and allow better information propagation. In addition, the experiments showed that using two residual blocks in series yielded higher segmentation accuracy. Finally, a 1×1 convolutional layer is applied and the sigmoid activation function (Equation 1) is used to output the mask.

Figure 6 Structure diagram of the decoding subblock. Two residual blocks are used in each decoding subblock. The activation function of the decoding subblock is LeakyReLU.

$$\sigma(x) = \frac{1}{1 + e^{-x}} \tag{1}$$

where x represents the input of the function.

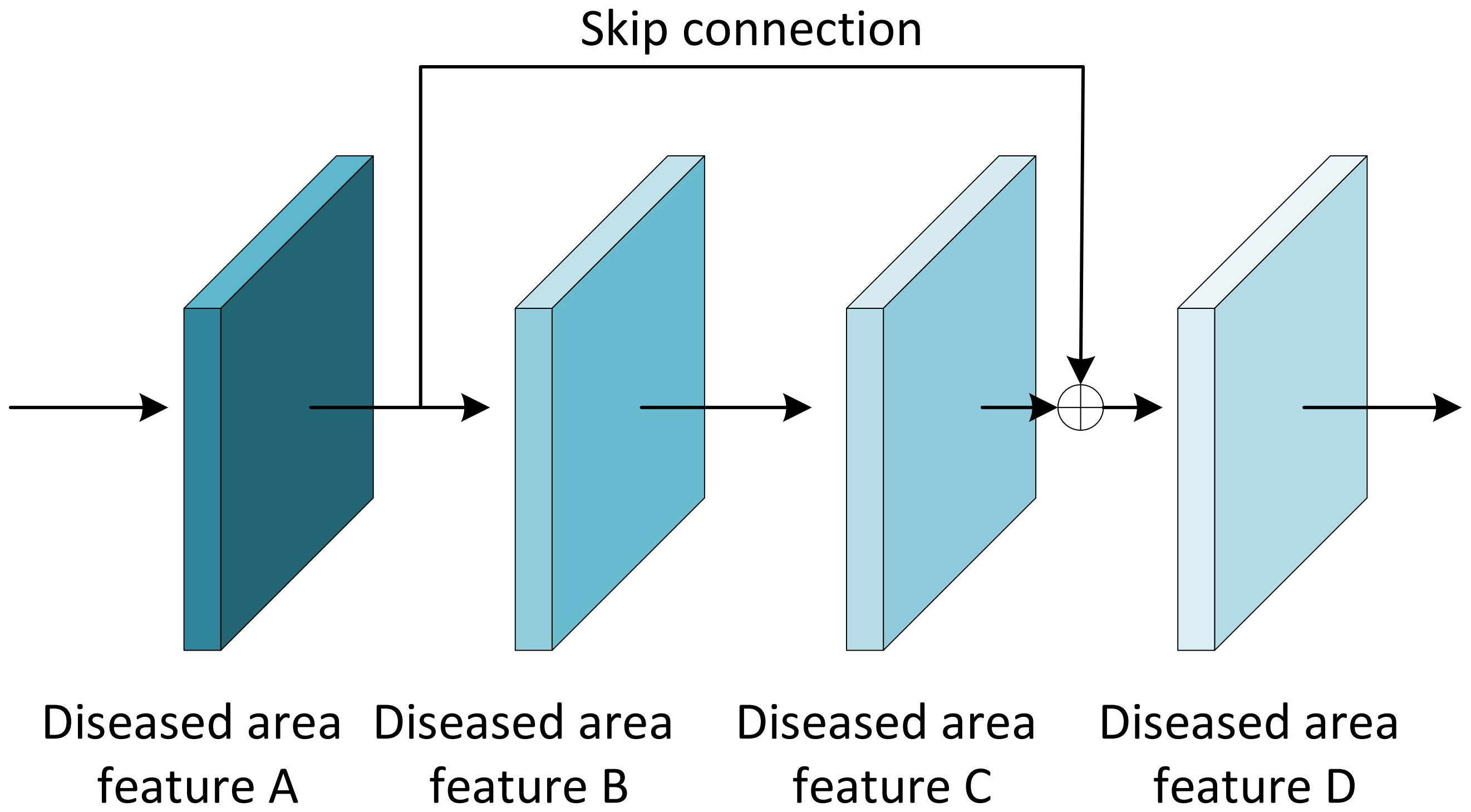

The residual block is the most important module in the ResNet network. It adds a shortcut between the input and output of a network layer (He et al., 2016). The paradox is that while shallow networks do not improve performance significantly, deeper networks suffer from more pronounced gradient vanishing, which limits the effectiveness of training. The shortcut of the residual block mitigates gradient vanishing when the network is deepened, as shown in Figure 7. Even if the gradient decays during back-propagation along A-B-C, the gradient at D can still be transmitted directly to A, so the gradient can propagate across layers. In terms of gradient magnitude, the residual network maintains a large gradient value close to the data layer (input), which mitigates gradient vanishing no matter how deep the network structure is.
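The effect of the shortcut on the gradient can be illustrated with a scalar toy model. This sketch assumes each layer has a small local derivative F'(x); a plain stack multiplies these derivatives, while a residual block y = x + F(x) has derivative 1 + F'(x). The value 0.1 and the depth of 10 are arbitrary illustrative choices.

```python
def plain_chain_gradient(local_grads):
    """Gradient through stacked plain layers: product of local derivatives."""
    grad = 1.0
    for d in local_grads:
        grad *= d
    return grad

def residual_chain_gradient(local_grads):
    """Gradient through stacked residual blocks y = x + F(x): product of (1 + F')."""
    grad = 1.0
    for d in local_grads:
        grad *= 1.0 + d
    return grad

local_grads = [0.1] * 10  # ten layers, each with a small local derivative
print(plain_chain_gradient(local_grads))     # shrinks toward zero
print(residual_chain_gradient(local_grads))  # stays of order one or larger
```

The identity term contributed by the shortcut keeps the product from collapsing toward zero, which is the mechanism described in the paragraph above.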

LeakyReLU is often used as an activation function and works similarly to ReLU. The difference is that when the input is negative, the output of ReLU is 0, while the output of LeakyReLU is negative and has a non-zero gradient (Liu et al., 2019). When ReLU is used as an intermediate layer activation function, neurons whose gradient is 0 do not update their weights and biases during backpropagation. The improved U-Net uses LeakyReLU as the intermediate layer activation function to maintain computational speed, alleviate overfitting, and avoid neuron death.
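The difference between the two activations is easiest to see side by side. In this sketch the negative slope `alpha=0.01` is an assumed value; the paper does not report the slope used in the decoder.

```python
def relu(x):
    return max(0.0, x)

def relu_grad(x):
    return 1.0 if x > 0 else 0.0

def leaky_relu(x, alpha=0.01):
    """alpha is the slope for negative inputs (assumed value)."""
    return x if x > 0 else alpha * x

def leaky_relu_grad(x, alpha=0.01):
    return 1.0 if x > 0 else alpha

for x in (-2.0, 3.0):
    print(x, relu(x), relu_grad(x), leaky_relu(x), leaky_relu_grad(x))
```

For a negative input, ReLU returns 0 with gradient 0, so the neuron receives no update, whereas LeakyReLU returns a small negative value with gradient `alpha`, so the weights can still change.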

Disease classification sub-model

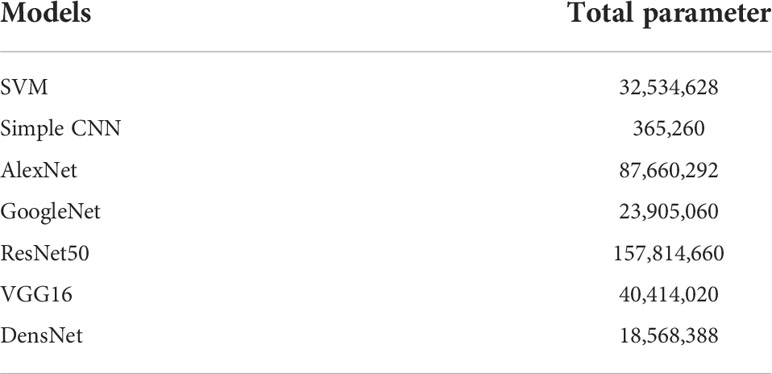

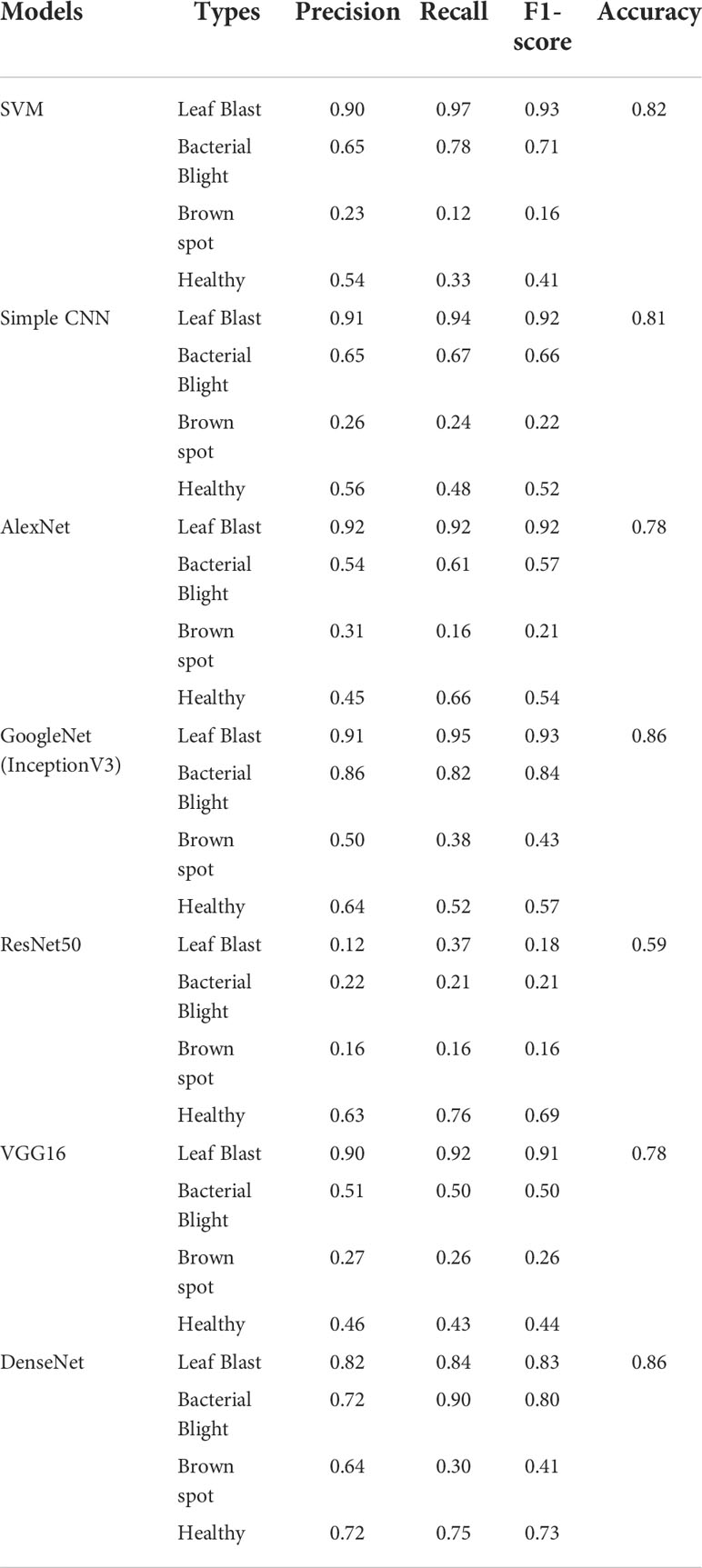

In this study, various pre-trained models (trained on the ImageNet dataset) were tried as tools for extracting image features, and DenseNet, which had the highest classification accuracy, was finally selected. The experiments employed several popular neural network models, including simple CNN, ResNet50, GoogleNet, VGG16, AlexNet, and DenseNet, and also the machine learning method SVM. Each classification model was fine-tuned on this dataset. The total number of parameters of each classification sub-model is shown in Table 4.

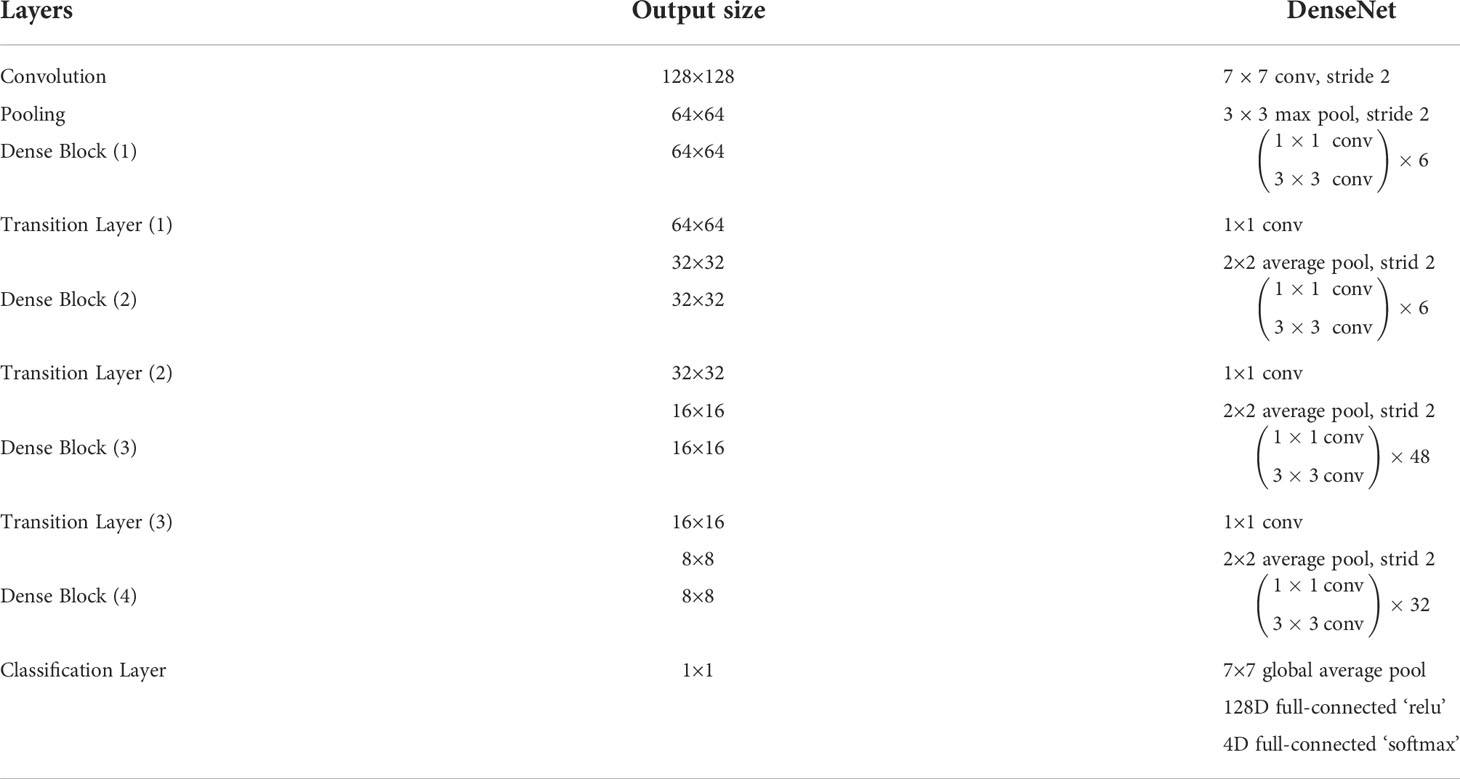

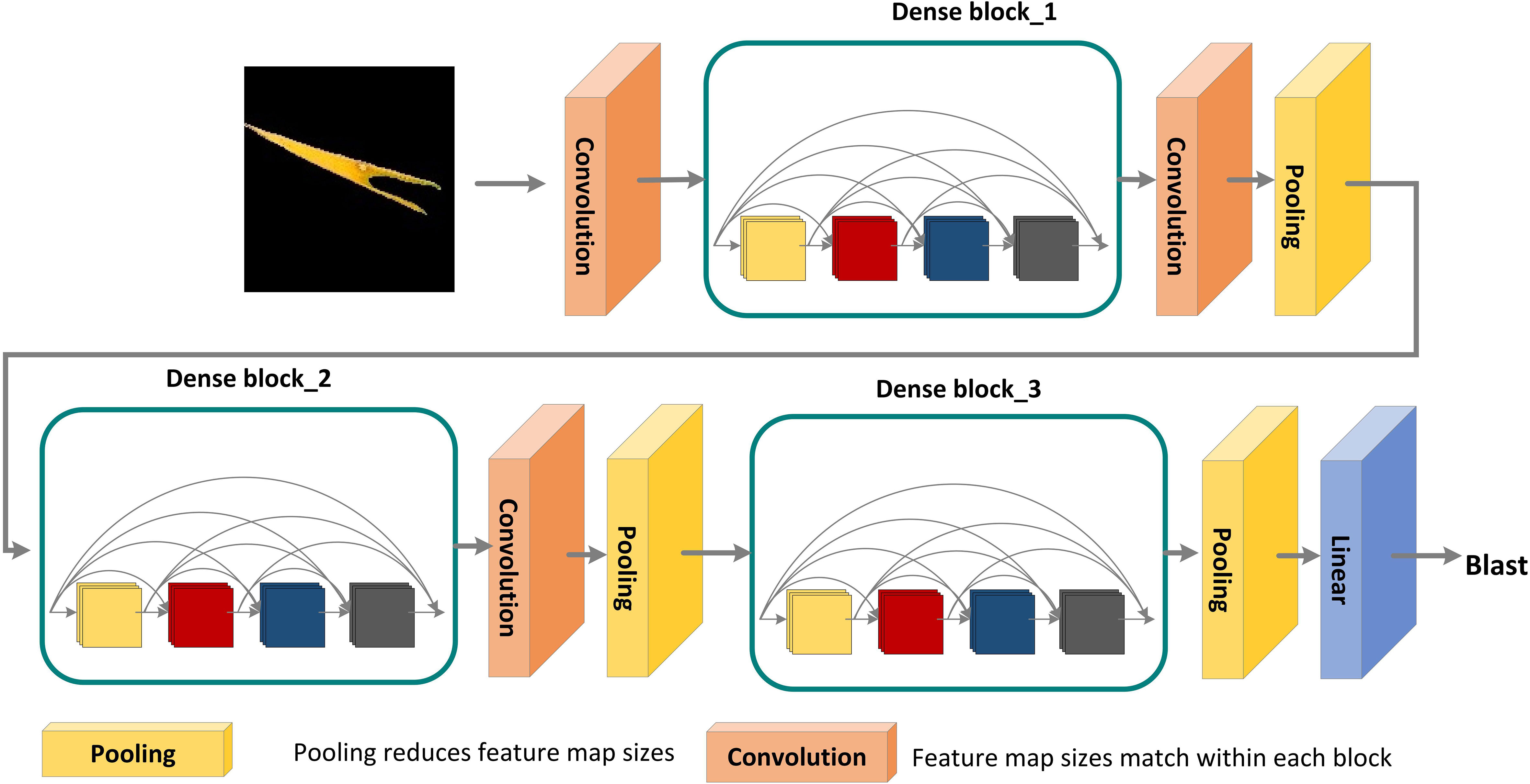

Each layer in the DenseNet model accepts all previous layers as its additional inputs, which is called the dense connectivity mechanism (Iandola et al., 2014). This can achieve feature reuse and reduce the number of parameters and computational cost of the network. To ensure that the size of the feature map is consistent across the layers (Huang et al., 2017), a dense block + transition structure is used in the DenseNet network, with the transition layer varying the feature map size through convolution and pooling, as shown in Figure 8. The transition layers used in the experiments consist of a batch normalization layer and a 1×1 convolutional layer followed by a 2×2 average pooling layer. The output from the DenseNet network is then flattened using the flatten layer, and the image information is parsed through two fully-connected layers with the ‘ReLU’ activation function (Equation 2) and the ‘Softmax’ activation function (Equation 3) to produce the classification results. The number of neurons in the final dense layer is 4 (indicating four classes). The exact network configurations are shown in Table 5.

Figure 8 DenseNet pre-training model for disease areas classification. A deep DenseNet with three dense blocks. The layers between two adjacent blocks are referred to as transition layers and change feature-map sizes via convolution and pooling.
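Dense connectivity and the transition layer can be illustrated without a deep learning framework. In the sketch below, the growth rate (new channels added per layer), the input channel count, and the layer count are illustrative values in the spirit of Huang et al. (2017), not the configuration of Table 5; `avg_pool_2x2` mimics only the 2×2 average pooling step of the transition layer.

```python
def dense_block_channels(in_channels, num_layers, growth_rate):
    """Channels seen by each layer when every layer receives all earlier outputs."""
    per_layer_inputs = [in_channels + i * growth_rate for i in range(num_layers)]
    out_channels = in_channels + num_layers * growth_rate
    return per_layer_inputs, out_channels

def avg_pool_2x2(feature_map):
    """2x2 average pooling with stride 2 on a 2D list with even height and width."""
    height, width = len(feature_map), len(feature_map[0])
    return [
        [
            (feature_map[r][c] + feature_map[r][c + 1]
             + feature_map[r + 1][c] + feature_map[r + 1][c + 1]) / 4.0
            for c in range(0, width - 1, 2)
        ]
        for r in range(0, height - 1, 2)
    ]

inputs, outputs = dense_block_channels(in_channels=64, num_layers=4, growth_rate=32)
print(inputs, outputs)
pooled = avg_pool_2x2([[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]])
print(pooled)
```

The channel count grows linearly inside a block because earlier feature maps are reused rather than recomputed, and the pooling step halves the spatial size between blocks.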

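$$\mathrm{ReLU}(x) = \max(0, x) \tag{2}$$

$$\mathrm{Softmax}(z_i) = \frac{e^{z_i}}{\sum_{c=1}^{C} e^{z_c}} \tag{3}$$

where z represents the output of the last layer, and C represents the dimension of z.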
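The final softmax layer turns the four output scores into class probabilities. The sketch below is a generic softmax with the usual max-subtraction for numerical stability; the class order in `CLASSES` and the example scores are assumptions for illustration.

```python
import math

CLASSES = ["Brown spot", "Leaf Blast", "Bacterial Blight", "Healthy"]  # assumed order

def softmax(z):
    """Softmax over a list of scores, shifted by max(z) for numerical stability."""
    shift = max(z)
    exps = [math.exp(v - shift) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

scores = [2.0, 0.5, 0.1, -1.0]  # made-up outputs of the last dense layer
probs = softmax(scores)
predicted = CLASSES[probs.index(max(probs))]
print(probs, predicted)
```

The probabilities sum to one, and the predicted class is the one with the largest probability.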

Metrics and hyper-parameters

The Dice coefficient (Equation 4) and Dice loss (Equation 5) were chosen as the metrics for the segmentation model, while the metrics for the classification sub-model were Accuracy (Equation 6), Precision (Equation 7), Recall (Equation 8), F1-Score (Equation 9), and AUC (Equation 10).
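As a rough illustration of the segmentation metric, the sketch below uses the standard definition of the Dice coefficient, twice the overlap divided by the total size of the two masks, and takes the Dice loss as one minus that value. The smoothing term `eps` is an assumption added so that two blank masks (as used for Healthy images) score 1 instead of dividing by zero.

```python
def dice_coefficient(pred, target, eps=1e-7):
    """Dice for two flat binary masks; eps (assumed) guards against empty masks."""
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    return (2.0 * intersection + eps) / (total + eps)

def dice_loss(pred, target):
    return 1.0 - dice_coefficient(pred, target)

pred = [1, 1, 0, 0]
target = [1, 0, 0, 0]
print(dice_coefficient(pred, target), dice_loss(pred, target))
```

Here one pixel overlaps, the masks contain three foreground pixels in total, and the Dice coefficient is about 2/3.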

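$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{6}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{7}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{8}$$

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{9}$$

where True Positive (TP) represents the number of correctly predicted positive samples, False Positive (FP) represents the number of incorrectly predicted positive samples, True Negative (TN) represents the number of correctly predicted negative samples, and False Negative (FN) represents the number of incorrectly predicted negative samples.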
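The four count-based metrics follow directly from TP, FP, TN, and FN. The sketch below uses made-up counts purely for illustration; they are not results from this study.

```python
def classification_metrics(tp, fp, tn, fn):
    """Accuracy, precision, recall, and F1 from confusion counts."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2.0 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Illustrative counts only.
metrics = classification_metrics(tp=90, fp=10, tn=85, fn=15)
print(metrics)
```

For a four-class problem such as this one, the counts are usually computed per class (one class against the rest) and then averaged.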

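$$\mathrm{AUC} = \frac{\sum_{i \in \mathrm{positive}} \mathrm{rank}_i - \frac{M(M+1)}{2}}{M \times N} \tag{10}$$

where rank_i is the rank of the i-th positive sample when all samples are sorted by predicted score in ascending order, M represents the number of positive samples, and N represents the number of negative samples.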
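One common way to compute AUC from M positive and N negative samples is the rank-sum form: sort all samples by predicted score, sum the ranks of the positives, subtract M(M+1)/2, and divide by M×N. The sketch below assumes that form and gives tied scores their average rank; the labels and scores are made up for illustration.

```python
def auc_from_scores(labels, scores):
    """Rank-sum AUC for binary labels (1 = positive); ties get the average rank."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        average_rank = (i + j) / 2.0 + 1.0  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    m = sum(labels)
    n = len(labels) - m
    positive_rank_sum = sum(r for r, y in zip(ranks, labels) if y == 1)
    return (positive_rank_sum - m * (m + 1) / 2.0) / (m * n)

print(auc_from_scores([1, 0, 1, 0], [0.9, 0.8, 0.3, 0.1]))
```

The value equals the fraction of positive-negative pairs in which the positive sample receives the higher score; in the example, three of the four pairs are ordered correctly.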

The initial learning rate of the model was set to 0.0002, the optimizer was Adam, the batch size was 2, and the maximum number of epochs was 100. When the Dice of the model did not improve for five epochs, the learning rate was reduced by 50 percent.
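The learning rate rule can be simulated in a few lines. This is a simplified sketch of a reduce-on-plateau schedule using the stated values (initial rate 0.0002, factor 0.5, patience of five epochs); it omits options such as a minimum improvement or a cooldown period, and the Dice history is invented for illustration.

```python
def simulate_lr_schedule(dice_history, initial_lr=2e-4, factor=0.5, patience=5):
    """Return the learning rate used in each epoch under a reduce-on-plateau rule."""
    lr = initial_lr
    best = float("-inf")
    wait = 0
    used = []
    for dice in dice_history:
        used.append(lr)  # rate in effect during this epoch
        if dice > best:
            best = dice
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                lr *= factor  # halve the rate after `patience` stagnant epochs
                wait = 0
    return used

history = [0.5, 0.6] + [0.6] * 5 + [0.7]  # invented validation Dice per epoch
rates = simulate_lr_schedule(history)
print(rates)
```

In this run the Dice stalls at 0.6 for five epochs, so the eighth epoch is trained with half the initial rate.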

Results

ROI extraction model training results

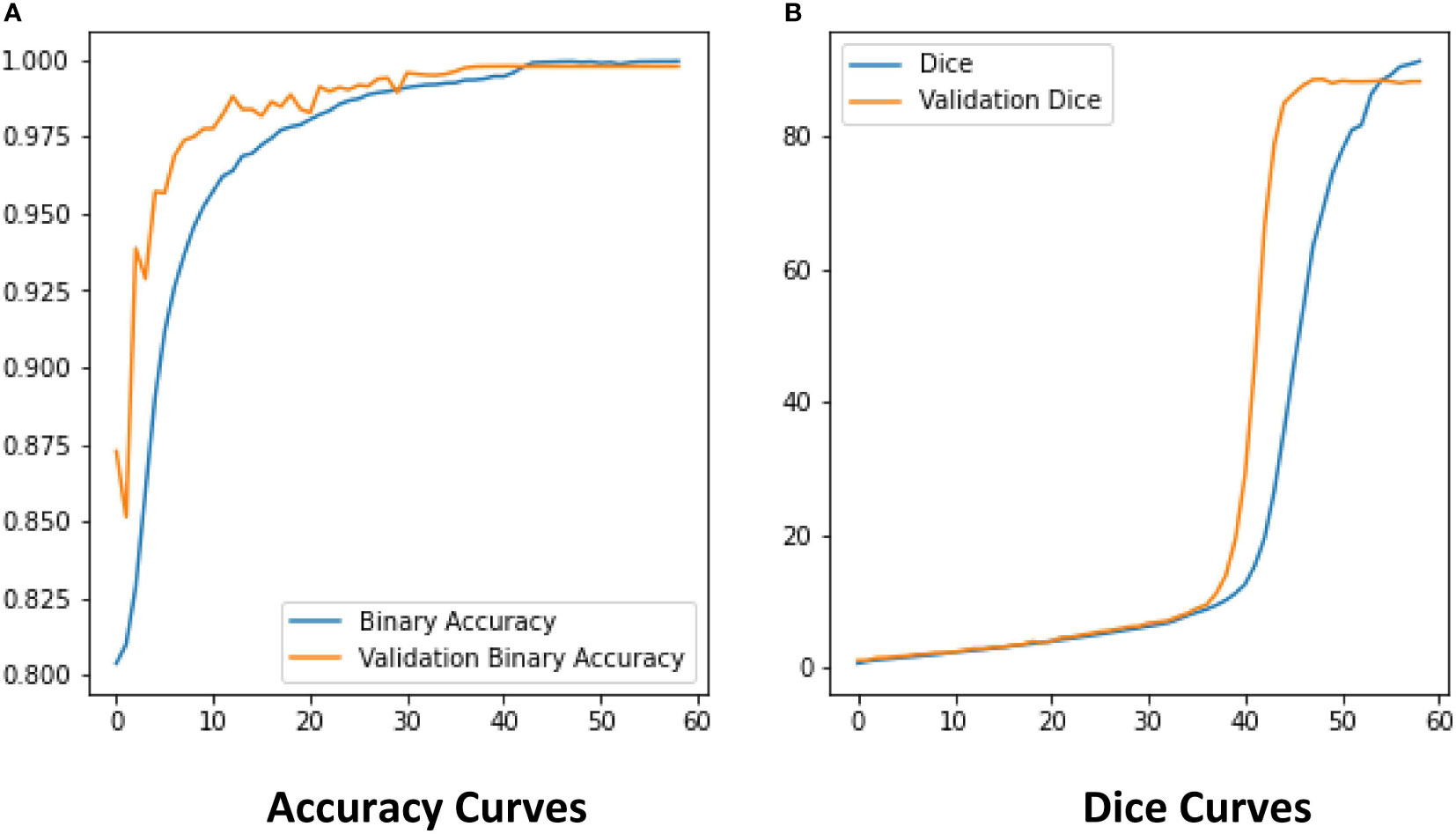

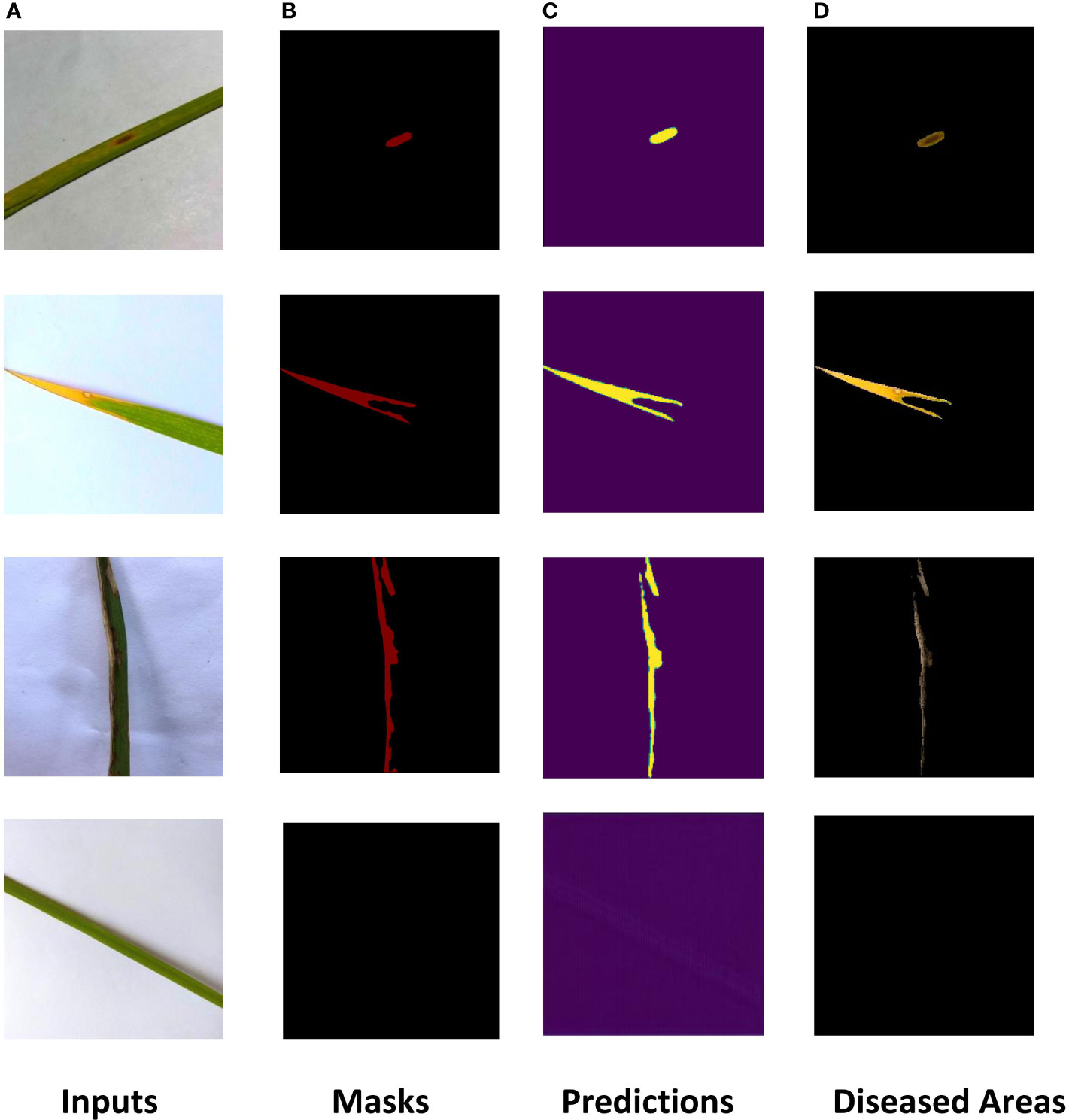

The ROI extraction model under the improved U-Net framework was used to extract the diseased areas of rice leaf images. To prevent the model from overfitting, early stopping was used together with the ReduceLROnPlateau learning rate decay strategy, which lowers the learning rate when the monitored metric stops improving. After the parameters were adjusted several times, the model achieved good segmentation results, and the optimal Dice value of the extraction results reached 0.86 under 5-fold cross-validation. The Dice and Accuracy variation curves of the training process are shown in Figure 9. Figure 10 shows the ROI extraction results, where (A) are the original image inputs, (B) are the masks, (C) are the model predictions, and (D) are the segmented lesion areas.
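For readers unfamiliar with 5-fold cross-validation, the sketch below shows how sample indices can be partitioned so that each sample is used for validation exactly once. It is a generic illustration with a toy sample count; the paper does not describe how its folds were drawn, and in practice the indices would normally be shuffled first.

```python
def k_fold_indices(n_samples, k=5):
    """Split range(n_samples) into k (train, validation) index pairs."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds = []
    start = 0
    for size in fold_sizes:
        validation = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        folds.append((train, validation))
        start += size
    return folds

folds = k_fold_indices(12, k=5)  # toy sample count
for train, validation in folds:
    print(len(train), validation)
```

The reported metric is then typically the average of the per-fold scores.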

Classification sub-model training results

To verify the contribution of the ROI extraction algorithm to the identification accuracy of the classification model, images with and without ROI extraction were used to train the classification sub-model. Considering the size of the dataset, the experiment used the Bootstrap method to enhance the classification effect. Tables 6 and 7 show the disease classification results of rice leaf images without and with ROI extraction, respectively. The compared models were simple CNN, ResNet50, GoogleNet, VGG16, AlexNet, DenseNet, and the machine learning method SVM.
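The paper does not detail how the Bootstrap method was applied, so the following is only a generic sketch of bootstrap resampling: draw as many indices as there are samples, with replacement, and treat the indices that were never drawn as an out-of-bag set. The function name and the seed are assumptions for illustration.

```python
import random

def bootstrap_sample(n_samples, rng):
    """Draw n_samples indices with replacement; return in-bag and out-of-bag indices."""
    in_bag = [rng.randrange(n_samples) for _ in range(n_samples)]
    drawn = set(in_bag)
    out_of_bag = [i for i in range(n_samples) if i not in drawn]
    return in_bag, out_of_bag

rng = random.Random(42)  # fixed seed for a reproducible illustration
in_bag, out_of_bag = bootstrap_sample(20, rng)
print(len(in_bag), len(set(in_bag)), len(out_of_bag))
```

Repeating the draw yields several resampled training sets from one small dataset, which is the usual motivation for bootstrapping when data are limited.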

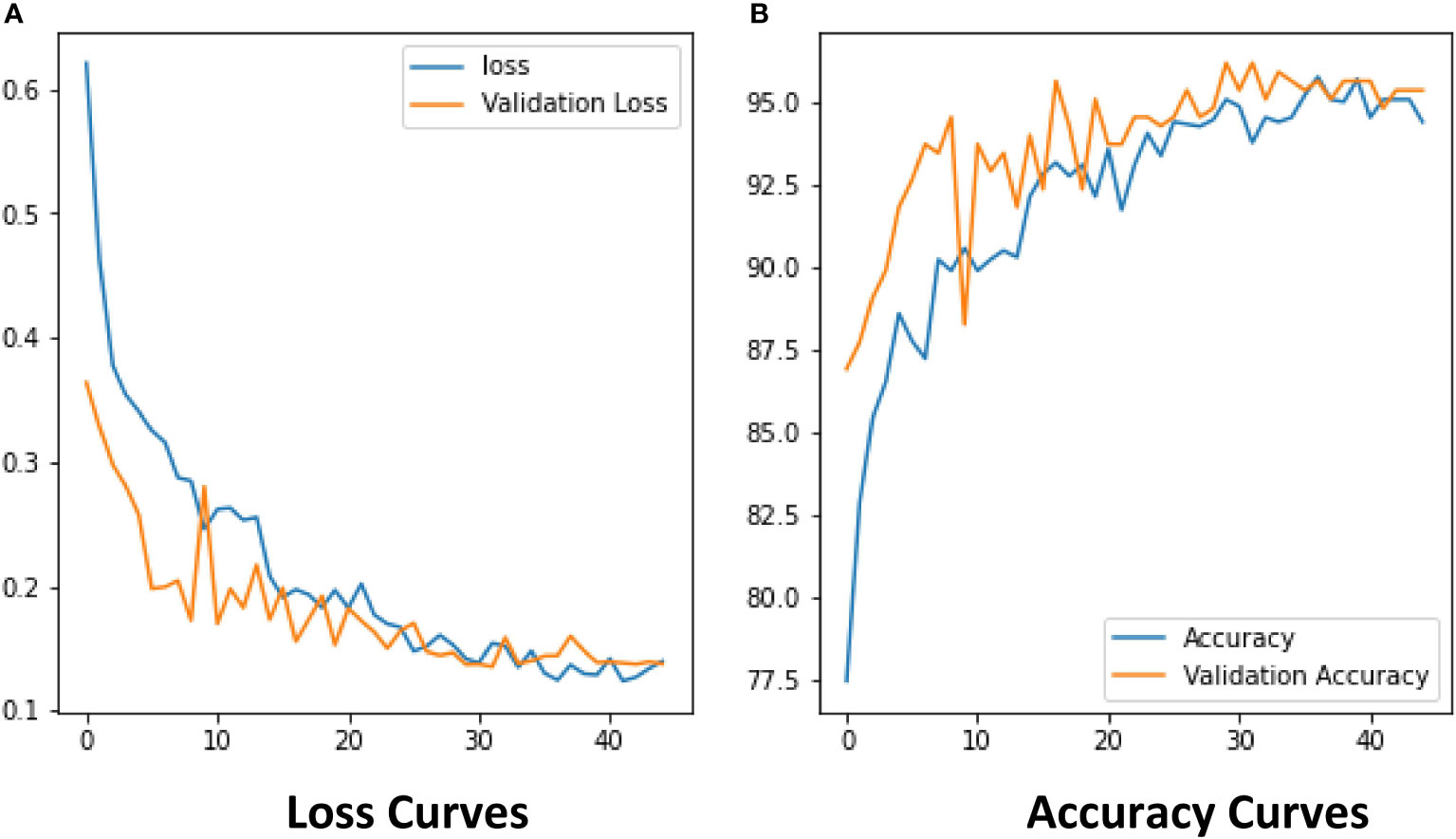

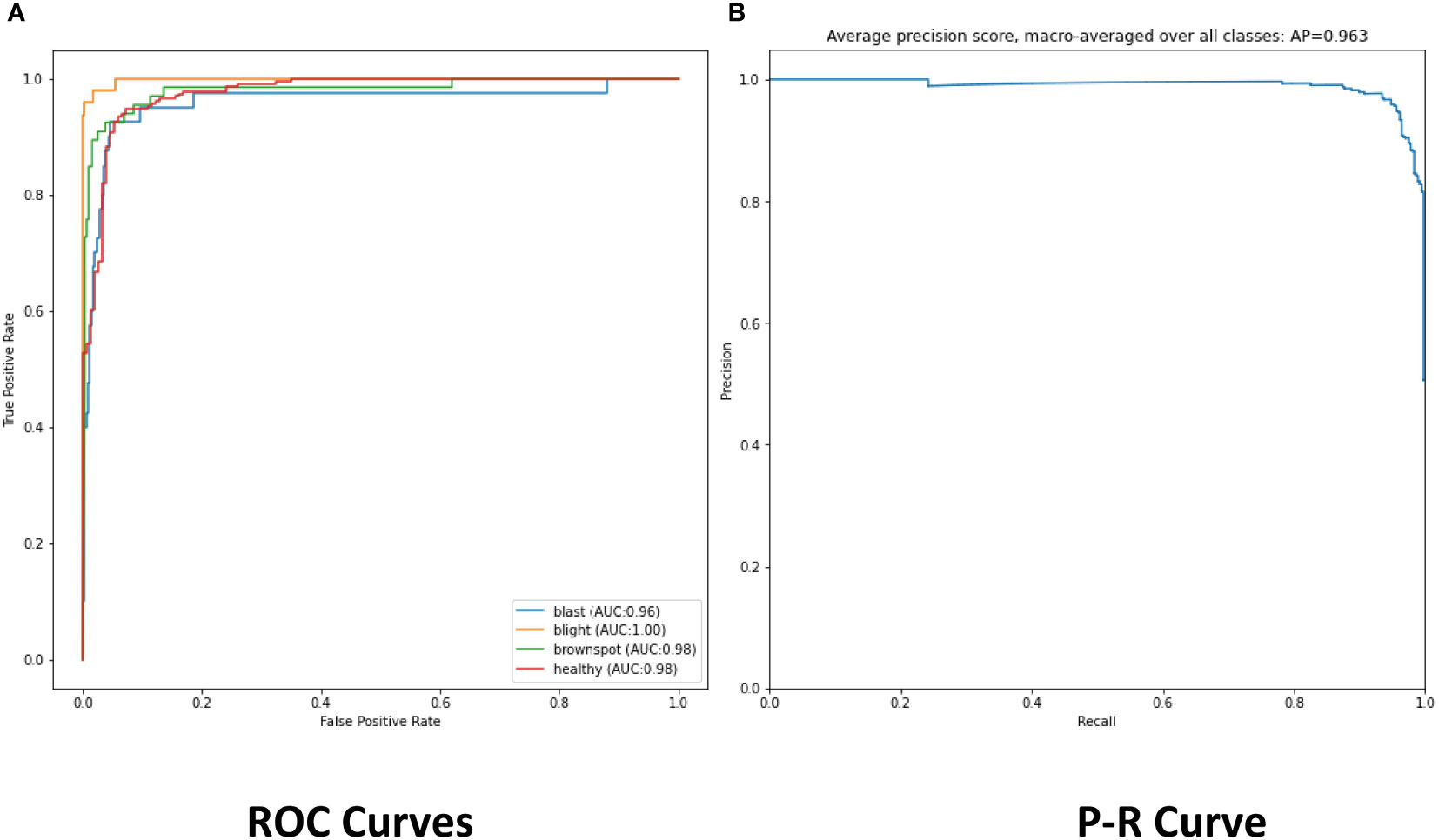

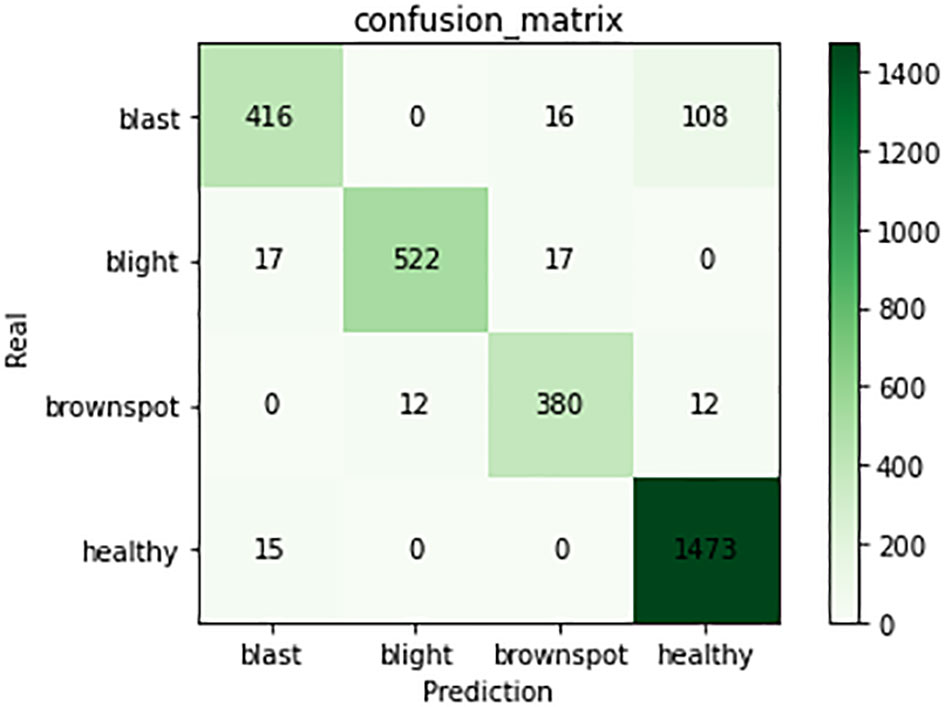

The experimental results show that the DenseNet model performs significantly better in the classification task than the other neural network models in the experiment. The single DenseNet classification model achieved an average disease identification accuracy of 86% under 5-fold cross-validation. In contrast, the ROI feature extraction algorithm combined with the DenseNet model achieved an identification accuracy of 96% under 5-fold cross-validation, an improvement of 10 percentage points over the single classification model. The accuracy and loss curves during the training of the DenseNet classification model combined with the ROI extraction algorithm are shown in Figure 11, and Figure 12 shows the ROC and P-R curves of the identification model. The confusion matrix of classification results is shown in Figure 13.

Figure 12 (A) The ROC of classification model. (B) The Precision and Recall of classification model.

Figure 13 Confusion matrix of classification model. The figure shows the number of predictions for four types of training samples.
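A confusion matrix such as the one in Figure 13 can be built by counting (true class, predicted class) pairs. The sketch below uses a handful of invented labels for illustration; rows are true classes and columns are predicted classes, and the class order is an assumption.

```python
CLASSES = ["Brown spot", "Leaf Blast", "Bacterial Blight", "Healthy"]  # assumed order

def confusion_matrix(y_true, y_pred, classes):
    """Rows index the true class, columns the predicted class."""
    index = {name: i for i, name in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for truth, prediction in zip(y_true, y_pred):
        matrix[index[truth]][index[prediction]] += 1
    return matrix

def overall_accuracy(matrix):
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total

# Invented labels for illustration only.
y_true = ["Healthy", "Healthy", "Brown spot", "Leaf Blast", "Bacterial Blight", "Brown spot"]
y_pred = ["Healthy", "Healthy", "Brown spot", "Brown spot", "Bacterial Blight", "Brown spot"]
matrix = confusion_matrix(y_true, y_pred, CLASSES)
print(matrix, overall_accuracy(matrix))
```

Off-diagonal entries show which classes are confused with each other; in the toy example one Leaf Blast image is predicted as Brown spot.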

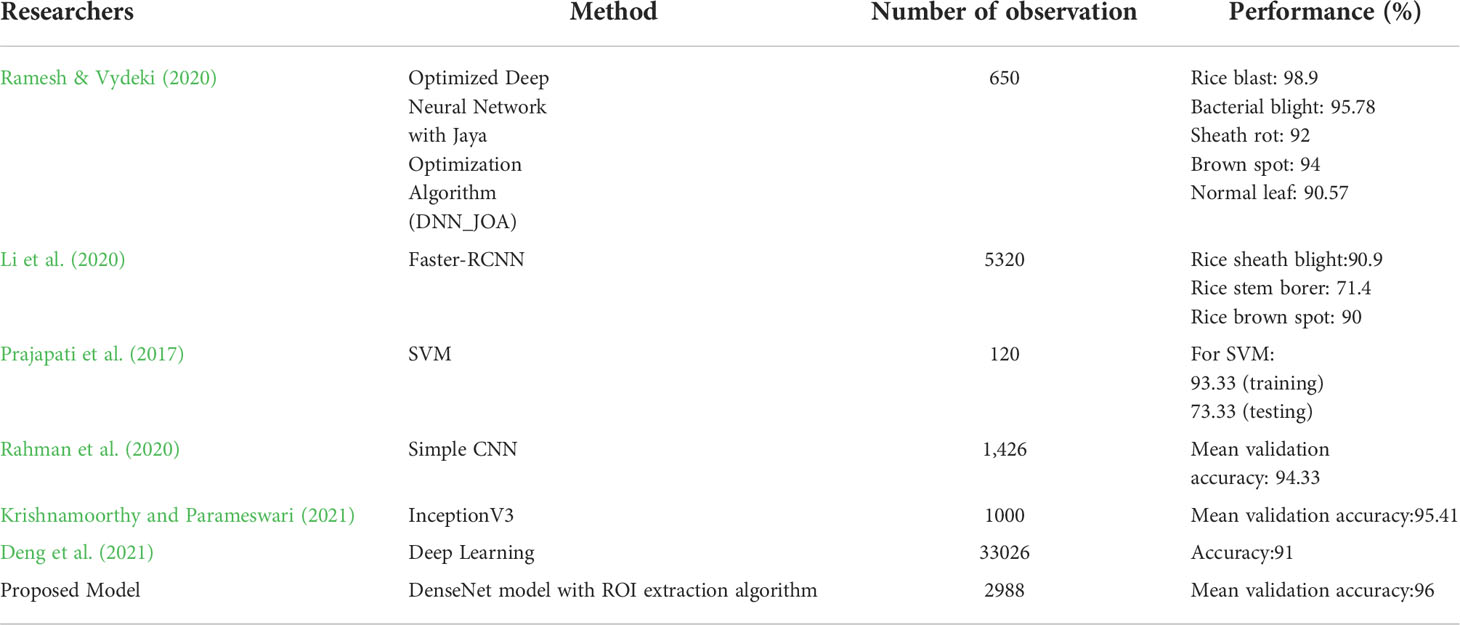

A comparison between this study and existing related studies is shown in Table 8. Ramesh and Vydeki (2020) proposed classifying paddy leaf diseases using a deep neural network optimized with the Jaya algorithm. This method achieved accuracies of 98.9% for blast-affected, 95.78% for bacterial blight, 92% for sheath rot, 94% for brown spot, and 90.57% for normal leaf images. Li et al. (2020) used Faster-RCNN to detect rice plant diseases and pests in videos; the proposed method was more suitable for rice video detection than VGG16, ResNet, and YOLOv3. Prajapati et al. (2017) used SVM for multi-class classification and achieved 93.33% accuracy on the training dataset and 73.33% accuracy on the test dataset. Rahman et al. (2020) proposed a simple CNN model that achieved the desired accuracy of 93.3% with a significantly reduced model size. Krishnamoorthy and Parameswari (2021) used transfer learning to identify leaf blast, bacterial blight, and brown spot; the classification accuracies of the VGG-16, ResNet50, and InceptionV3 CNN models were 87%, 93%, and 95%, respectively. Deng et al. (2021) used an ensemble model to diagnose six types of rice diseases and achieved an overall accuracy of 91%. Their dataset contained 33026 images of six types of rice diseases: leaf blast, false smut, neck blast, sheath blight, bacterial stripe disease, and brown spot. The experimental results of this study are not inferior to those of other studies, and accurate identification of rice leaf diseases can be accomplished.

Discussion

In this study, a hybrid DenseNet model based on an improved U-Net is proposed to replace manual identification, which is time-consuming, inefficient, and poorly coordinated. The improved U-Net is used for ROI extraction and DenseNet is used for image classification. To select the most suitable classification network, seven models (Simple CNN, SVM, ResNet50, GoogleNet, VGG16, AlexNet, and DenseNet) were evaluated on images with and without ROI extraction. Without ROI extraction, the accuracy was 81% for Simple CNN, 82% for SVM, 59% for ResNet50, 86% for GoogleNet, 78% for VGG16, 78% for AlexNet, and 86% for DenseNet. In the hybrid ROI extraction classification experiment, the optimal Dice value of the ROI extraction results reached 0.86. With ROI extraction, the accuracy was 88% for Simple CNN, 92% for SVM, 77% for ResNet50, 86% for GoogleNet, 86% for VGG16, 85% for AlexNet, and 96% for DenseNet. Combining the results of the two experiments, the ROI extraction based on the improved U-Net improves the classification performance of Simple CNN, SVM, ResNet50, GoogleNet, VGG16, AlexNet, and DenseNet on the dataset of this study, with DenseNet performing the best.

Due to the difficulty of collecting original training samples, the dataset used in the present study included images of only three rice leaf diseases. The original training samples were taken under well-lit conditions, which may limit the scenarios in which the model can be used in practice. In addition, only seven models were considered in this study. Although these models met our experimental needs, the possibility that other neural network models would yield better results cannot be ruled out. These issues remain to be addressed in future studies.

Conclusion

This study uses an improved U-Net together with DenseNet to diagnose rice leaf diseases. The method automatically locates and extracts rice leaf disease areas and identifies three common rice leaf diseases (brown spot, leaf blast, and bacterial blight). The segmentation Dice coefficient reaches 0.86 when the lesion area is extracted with the improved U-Net. Among the seven tested models (Simple CNN, SVM, ResNet50, GoogleNet, VGG16, AlexNet, and DenseNet), DenseNet performed best in disease classification on lesion area images, with an accuracy of 96%. ROI extraction with the improved U-Net improved the recognition accuracy of the seven models, with the accuracy of DenseNet increasing by 10 percentage points. The disease area pictures obtained by the segmentation model can assist in localization and identification, which is beneficial to modern farm management, and the classification model can help laymen identify disease species. Assisting disease classification through ROI extraction is convenient, real-time, and practical. Managers can observe the condition of rice fields through drones. In future work, we intend to add more types of rice leaf disease images to the dataset and train on them so that the method can identify more rice diseases. In addition, we intend to add images taken under different environmental conditions, which may improve the reliability of the model. We also intend to consider different crops, e.g., tomato, wheat, and maize, as new research targets, to extend the applicability of this study to disease identification and control for multiple crops.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

WL and LY wrote the main manuscript text. WL, LY, and JL performed experiments and prepared figures. LY cleansed the dataset. LY prepared the dataset and confirmed abnormalities. All authors reviewed the manuscript. All authors contributed to the article and approved the submitted version.

Funding

This work was supported by the Henan University of Technology (No. 2017RCJH12 and No. 2018008).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Ashraf, R., Afzal, S., Rehman, A. U., Gul, S., Baber, J., Bakhtyar, M., et al. (2020). Region-of-interest based transfer learning assisted framework for skin cancer detection. IEEE Access 8, 147858–147871. doi: 10.1109/ACCESS.2020.3014701

Azim, M. A., Islam, M. K., Rahman, M. M., Jahan, F. (2021). An effective feature extraction method for rice leaf disease classification. Telkomnika 19 (2), 463–470. doi: 10.12928/telkomnika.v19i2.16488

Bao, W., Zhao, J., Hu, G., Zhang, D., Huang, L., Liang, D. (2021). Identification of wheat leaf diseases and their severity based on elliptical-maximum margin criterion metric learning. Sustain. Computing: Inf. Syst. 30, 100526. doi: 10.1016/j.suscom.2021.100526

Benos, L., Tagarakis, A. C., Dolias, G., Berruto, R., Kateris, D., Bochtis, D. (2021). Machine learning in agriculture: A comprehensive updated review. Sensors 21 (11), 3758. doi: 10.3390/s21113758

Chen, J., Chen, J., Zhang, D., Sun, Y., Nanehkaran, Y. A. (2020). Using deep transfer learning for image-based plant disease identification. Comput. Electron. Agric. 173, 105393. doi: 10.1016/j.compag.2020.105393

Deng, R., Tao, M., Xing, H., Yang, X., Liu, C., Liao, K., et al. (2021). Automatic diagnosis of rice diseases using deep learning. Front. Plant Sci. 12, 701038. doi: 10.3389/fpls.2021.701038

Du, G., Cao, X., Liang, J., Chen, X., Zhan, Y. (2020). Medical image segmentation based on u-net: A review. J. Imaging Sci. Technol. 64 (2), 20508–20501. doi: 10.2352/J.ImagingSci.Technol.2020.64.2.020508

Dutot, M., Nelson, L. M., Tyson, R. C. (2013). Predicting the spread of postharvest disease in stored fruit, with application to apples. Postharvest Biol. Technol. 85, 45–56. doi: 10.1016/j.postharvbio.2013.04.003

Feng, L., Wu, B., He, Y., Zhang, C. (2021). Hyperspectral imaging combined with deep transfer learning for rice disease detection. Front. Plant Sci. 12, 693521. doi: 10.3389/fpls.2021.693521

Gao, Z., Khot, L. R., Naidu, R. A., Zhang, Q. (2020). Early detection of grapevine leafroll disease in a red-berried wine grape cultivar using hyperspectral imaging. Comput. Electron. Agric. 179, 105807. doi: 10.1016/j.compag.2020.105807

Ghosh, S., Chaki, A., Santosh, K. C. (2021). Improved U-net architecture with VGG-16 for brain tumor segmentation. Phys. Eng. Sci. Med. 44 (3), 703–712. doi: 10.1007/s13246-021-01019-w

Goluguri, N. V., Devi, K. S., Srinivasan, P. (2021). Rice-net: an efficient artificial fish swarm optimization applied deep convolutional neural network model for identifying the oryza sativa diseases. Neural Computing Appl. 33 (11), 5869–5884. doi: 10.1007/s00521-020-05364-x

He, K., Zhang, X., Ren, S., Sun, J. (2016). “Deep residual learning for image recognition,” in 2016 IEEE conference on computer vision and pattern recognition (CVPR). 770–778. doi: 10.1109/CVPR.2016.90

Hoang, T. M., Nam, S. H., Park, K. R. (2019). Enhanced detection and recognition of road markings based on adaptive region of interest and deep learning. IEEE Access 7, 109817–109832. doi: 10.1109/ACCESS.2019.2933598

Huang, G., Liu, Z., van der Maaten, L., Weinberger, K. Q. (2017). “Densely connected convolutional networks,” in 2017 IEEE conference on computer vision and pattern recognition (CVPR). 2261–2269. doi: 10.1109/CVPR.2017.243

Iandola, F., Moskewicz, M., Karayev, S., Girshick, R., Darrell, T., Keutzer, K. (2014). Densenet: Implementing efficient convnet descriptor pyramids. arXiv preprint arXiv:1404.1869. doi: 10.48550/arXiv.1404.1869

Jha, K., Doshi, A., Patel, P., Shah, M. (2019). A comprehensive review on automation in agriculture using artificial intelligence. Artif. Intell. Agric. 2, 1–12. doi: 10.1016/j.aiia.2019.05.004

Jiang, P., Chen, Y., Liu, B., He, D., Liang, C. (2019). Real-time detection of apple leaf diseases using deep learning approach based on improved convolutional neural networks. IEEE Access 7, 59069–59080. doi: 10.1109/ACCESS.2019.2914929

Jiang, Z., Dong, Z., Jiang, W., Yang, Y. (2021). Recognition of rice leaf diseases and wheat leaf diseases based on multi-task deep transfer learning. Comput. Electron. Agric. 186, 106184. doi: 10.1016/j.compag.2021.106184

Kaur, S., Pandey, S., Goel, S. (2019). Plants disease identification and classification through leaf images: A survey. Arch. Comput. Methods Eng. 26 (2), 507–530. doi: 10.1007/s11831-018-9255-6

Krishnamoorthy, D., Parameswari, V. L. (2021). Rice leaf disease detection Via deep neural networks with transfer learning for early identification. Turkish J. Physiother. Rehabil. 32, 2. doi: 10.1016/j.envres.2021.111275

Krizhevsky, A., Sutskever, I., Hinton, G. E. (2017). Imagenet classification with deep convolutional neural networks. Commun. ACM 60 (6), 84–90. doi: 10.1145/3065386

Liu, W., Luo, J., Yang, Y., Wang, W., Deng, J., Yu, L. (2022). Automatic lung segmentation in chest X-ray images using improved U-net. Sci. Rep. 12 (1), 1–10. doi: 10.1038/s41598-022-12743-y

Liu, Y., Wang, X., Wang, L., Liu, D. (2019). A modified leaky ReLU scheme (MLRS) for topology optimization with multiple materials. Appl. Mathematics Comput. 352, 188–204. doi: 10.1016/j.amc.2019.01.038

Li, D., Wang, R., Xie, C., Liu, L., Zhang, J., Li, R., et al. (2020). A recognition method for rice plant diseases and pests video detection based on deep convolutional neural network. Sensors 20 (3), 578. doi: 10.3390/s20030578

Long, M., Ouyang, C., Liu, H., Fu, Q. (2018). Image recognition of camellia oleifera diseases based on convolutional neural network & transfer learning. Trans. Chin. Soc Agricult. Eng. 34 (18), 194–201. doi: 10.11975/j.issn.1002-6819.2018.18.024

Mitra, A., Banerjee, P. S., Roy, S., Roy, S., Setua, S. K. (2018). The region of interest localization for glaucoma analysis from retinal fundus image using deep learning. Comput. Methods Programs Biomed. 165, 25–35. doi: 10.1016/j.cmpb.2018.08.003

Prajapati, H. B., Shah, J. P., Dabhi, V. K. (2017). Detection and classification of rice plant diseases. Intelligent Decision Technol. 11 (3), 357–373. doi: 10.3233/IDT-170301

Rahman, C. R., Arko, P. S., Ali, M. E., Khan, M. A. I., Apon, S. H., Nowrin, F., et al. (2020). Identification and recognition of rice diseases and pests using convolutional neural networks. Biosyst. Eng. 194, 112–120. doi: 10.1016/j.biosystemseng.2020.03.020

Ramesh, S., Vydeki, D. (2020). Recognition and classification of paddy leaf diseases using optimized deep neural network with jaya algorithm. Inf. Process. Agric. 7 (2), 249–260. doi: 10.1016/j.inpa.2019.09.002

Ronneberger, O., Fischer, P., Brox, T. (2015). “U-Net: Convolutional networks for biomedical image segmentation,” in International conference on medical image computing and computer-assisted intervention (Cham: Springer), 234–241.

Shahbandeh, M. (2022). Global rice consumption 2021/22, by country. In: Statista. Available at: https://www.statista.com/statistics/255971/top-countries-based-on-rice-consumption-2012-2013/ (Accessed August 19, 2022).

Sharma, M., Kumar, C. J., Deka, A. (2022). Early diagnosis of rice plant disease using machine learning techniques. Arch. Phytopathol. Plant Prot. 55 (3), 259–283. doi: 10.1080/03235408.2021.2015866

Siddique, N., Paheding, S., Elkin, C. P., Devabhaktuni, V. (2021). U-Net and its variants for medical image segmentation: A review of theory and applications. IEEE Access 9, 82031–82057. doi: 10.1109/ACCESS.2021.3086020

Xie, X., Ma, Y., Liu, B., He, J., Li, S., Wang, H. (2020). A deep-learning-based real-time detector for grape leaf diseases using improved convolutional neural networks. Front. Plant Sci. 11, 751. doi: 10.3389/fpls.2020.00751

Yang, G., Chen, G., Li, C., Fu, J., Guo, Y., Liang, H. (2021). Convolutional rebalancing network for the classification of Large imbalanced rice pest and disease datasets in the field. Front. Plant Sci. 12, 1150. doi: 10.3389/fpls.2021.671134

Keywords: rice leaf disease identification, densenet, U-net, deep learning, convolution networks, ROI extraction

Citation: Liu W, Yu L and Luo J (2022) A hybrid attention-enhanced DenseNet neural network model based on improved U-Net for rice leaf disease identification. Front. Plant Sci. 13:922809. doi: 10.3389/fpls.2022.922809

Received: 18 April 2022; Accepted: 26 September 2022;

Published: 18 October 2022.

Edited by:

Febri Doni, Universitas Padjadjaran, Indonesia

Reviewed by:

Prabira Kumar Sethy, Sambalpur University, India

Mayuri Sharma, Royal Global University, India

Copyright © 2022 Liu, Yu and Luo. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Wufeng Liu, lwf@haut.edu.cn
