ORIGINAL RESEARCH article

Front. Plant Sci., 21 December 2022

Sec. Sustainable and Intelligent Phytoprotection

Volume 13 - 2022 | https://doi.org/10.3389/fpls.2022.1056842

This article is part of the Research Topic: Precision Control Technology and Application in Agricultural Pest and Disease Control

Maize is susceptible to pest diseases, and early disease detection is key to preventing yield losses. The raw data used for plant disease detection are commonly RGB images and hyperspectral images (HSIs). RGB images can be acquired rapidly and at low cost, but the detection accuracy they yield is not satisfactory. In contrast, HSIs tend to yield higher detection accuracy but are difficult and costly to obtain in the field. To resolve this trade-off, we propose a maize spectral recovery disease detection framework with two parts: a maize spectral recovery network based on the advanced hyperspectral recovery convolutional neural network (HSCNN+) and a maize disease detection network based on a convolutional neural network (CNN). The framework takes raw RGB data as input, and the reconstructed HSIs it produces are fed to the disease detection network. As a result, the detection accuracy obtained from low-cost raw RGB data is almost the same as that obtained by using HSIs directly. HSCNN+ was found to suit our spectral recovery model, and the reconstruction fidelity was satisfactory. Experimental results demonstrate that the reconstructed HSIs improve detection accuracy over raw RGB images in the tested scenarios, especially in the complex-environment scenario, for which the detection accuracy increases by 6.14%. The proposed framework is fast, low cost and highly precise. Moreover, it offers the possibility of real-time, precise field disease detection and can be applied in agricultural robots.

Maize is one of the most vital food and industrial crops and is the most important cereal crop worldwide after rice and wheat (Haque et al., 2022). In addition to its edible value, maize serves as a raw material for industrial products and animal fodder (Demetrescu et al., 2016; Samarappuli and Berti, 2018; He et al., 2018). However, maize is susceptible to various pest diseases (Mboya, 2013), and the yield loss they cause has increased sharply. Early detection is an important way to stop the spread of pest diseases, but expert identification is time consuming and costly. Therefore, computer vision and machine learning techniques have attracted considerable attention for detecting infected plants (Chen et al., 2021; Feng et al., 2020; Feng et al., 2021).

The raw data commonly used for disease detection are RGB images, which are generally acquired with a digital camera. Several disease detection models that combine RGB images with machine learning have been proposed in recent years. Zhang et al. (2021) proposed a convolutional neural network (CNN) model optimized by a multi-activation function module to detect maize diseases including maculopathy, rust and blight. Wu (2021) introduced a two-channel CNN built on VGG and ResNet for maize leaf disease detection, which performed better than a single AlexNet model. A CNN model based on a transformer and self-attention was implemented to automatically identify maize leaf diseases against complex backgrounds (Qian et al., 2022). Because RGB data can be acquired efficiently and at low cost, RGB images are the first choice for training deep learning models. However, most current models trained on RGB data perform image-wise classification of plant diseases (Karthik et al., 2020; Wang et al., 2021; Syed-Ab-Rahman et al., 2022). Field applications require precise localization of the diseased area, so pixel-wise detection plays an important part in plant disease detection. An RGB image, however, has only three channels in the spectral domain, and this lack of spectral information makes it barely capable of locating diseased areas accurately.

A hyperspectral image (HSI), regarded as high-dimensional data, provides abundant information in the spectral domain. Unlike an RGB image, which has only three spectral bands, an HSI has many bands that can be used to extract disease characteristics, so it is an ideal candidate for pixel-wise disease detection (Nagasubramanian et al., 2019; Zhang et al., 2020; Feng et al., 2021). Nguyen et al. (2021) extracted disease features from HSI data cubes to detect grapevine vein-clearing virus and accomplished pixel-wise classification with a random forest classifier. By selecting features from shortwave infrared HSIs of peanuts, Qiao et al. (2017) concentrated spectral information into a subspace in which healthy and fungi-contaminated peanuts can be separated easily. Although HSIs provide a large amount of spectral information and locate infected areas effectively, they also have drawbacks. Because a hyperspectral camera normally measures with a line scanner, acquiring an HSI takes much longer than capturing an RGB image with a digital camera (Behmann et al., 2018). Hence, it is hard to complete disease detection quickly and efficiently in the field. Moreover, a hyperspectral imaging system costs much more than a digital camera, which hinders its widespread use.

In summary, neither RGB images nor HSIs alone combine detection accuracy, detection speed, ease of data acquisition, and low cost. Ideally, HSIs would be acquired through a digital RGB camera. This would keep the advantages of both RGB images and HSIs, making accurate disease detection both convenient and affordable. However, recovering HSIs from RGB images is an ill-posed problem, since a large amount of spectral information is lost when RGB sensors capture light (Xiong et al., 2017). Existing methods can typically be categorized into two types. The first builds relatively shallow learning models or sparse coding from a hyperspectral prior (Robles-Kelly, 2015; Arad and Ben-Shahar, 2016; Aeschbacher et al., 2017; Jia et al., 2017; Akhtar and Mian, 2018). However, these methods have poor expressive capacity and therefore limited performance. The second exploits the high correlation between RGB values and the corresponding hyperspectral radiance to learn a mapping between HSIs and RGB images from a large amount of training data (Stiebel et al., 2018; Wang and Wang, 2021). Recently, deep learning methods have been introduced into spectral recovery tasks and have performed well (Shi et al., 2018; Zhao et al., 2020; Zhu et al., 2021). Based on U-Net, Yan et al. (2018) proposed a multi-scale CNN called SRMSCNN, whose symmetrical encoder and decoder form a downsampling-upsampling architecture that jointly encodes image information for spectral reconstruction. Can and Timofte (2018) proposed a model called SREfficientNet, which contains multiple residual blocks to exploit low-level features. By combining local residuals with global residuals to enhance feature expression, this method requires much less computing resources to complete the reconstruction task.

This study aims to explore an effective and cost-saving approach to disease detection, and proposes a spectral recovery disease detection model. Its main contributions are twofold. First, a novel spectral recovery disease detection framework is proposed, which provides a new way of thinking about plant disease detection. Second, a maize spectral recovery dataset is built, and the effect of the spectral recovery model on recovery performance is explored. With the proposed framework, maize HSIs are reconstructed from RGB images, and the recovered HSIs perform well in disease detection, especially in complex-environment scenarios. This means that RGB images can be used to achieve nearly the same disease detection accuracy as HSIs.

The maize plants were cultivated in a field at the Agricultural Experimental Base of Jilin University, Changchun, Jilin Province, China (125°25’43” E, 43°95’18” N). The maize variety was Xianyu 335. To support fast and accurate spectral recovery from RGB images of pest-infected maize, we collected a large number of HSIs and corresponding RGB images of pest-infected maize leaves during mid-August. Each image contains both healthy and diseased maize. Some samples from the dataset are shown in Figure 1. During data collection, the data may be distorted by variations in illumination intensity. It is therefore essential to calibrate each raw hyperspectral image with white and dark references, according to Eq. 1. We carried a neutral reference panel and recalibrated whenever necessary to guarantee the reliability of the data.

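$$I_c = \frac{I_{raw} - I_{dark}}{I_{white} - I_{dark}} \tag{1}$$

where $I_c$ and $I_{raw}$ refer to the calibrated and raw hyperspectral images, respectively, and $I_{white}$ and $I_{dark}$ refer to the white and dark reference images, respectively.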
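The white and dark reference calibration can be illustrated with a minimal Python sketch. It assumes the usual flat-field form, (raw - dark) / (white - dark), applied independently to every band of a pixel. The function name `calibrate`, the guard value `eps`, and the sample numbers are hypothetical and are not taken from the authors' pipeline.

```python
def calibrate(raw, white, dark, eps=1e-12):
    """Flat-field calibration of one spectrum, band by band.

    raw, white and dark are equal-length lists of sensor readings for
    the scene, the white reference panel and the dark reference.
    """
    calibrated = []
    for r, w, d in zip(raw, white, dark):
        denom = w - d
        # Guard against a dead band where the white and dark readings coincide.
        calibrated.append((r - d) / denom if abs(denom) > eps else 0.0)
    return calibrated


# Hypothetical readings for three bands of a single pixel.
raw = [120.0, 300.0, 510.0]
white = [1000.0, 1000.0, 1010.0]
dark = [10.0, 20.0, 10.0]

reflectance = calibrate(raw, white, dark)
print([round(v, 4) for v in reflectance])
```

A full image would be processed the same way, pixel by pixel or with an array library; the per-band arithmetic does not change.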

The hyperspectral sensor used for data collection was the Specim IQ (Specim, Oulu, Finland), an integrated system that can acquire and visualize both HSIs and RGB images. The Specim IQ camera provides images of 512×512 pixels with 204 bands in the 400-1000 nm range. The RGB images and raw HSIs were captured simultaneously by the Specim IQ to avoid pixel position deviation. Because the light conditions were not fixed, the integration time was calculated automatically by the camera. Our goal is to recover HSIs from natural RGB images, whose wavelengths range from about 400 nm to 700 nm. Therefore, to reduce training cost and improve training efficiency, the images were resampled to 31 spectral bands in the visible range from 400 nm to 700 nm with a spectral resolution of 10 nm (Arad et al., 2022). In this study, the maize images were captured at a distance of 1-1.5 m. A neutral reference panel with 99% reflection efficiency was used for spectral calibration.

To relieve the burden on the network and increase the number of training samples, the hyperspectral data and the corresponding RGB data were divided into patches of 31×128×128 and 3×128×128, respectively. The number of patches generated from an image depends on the stride, according to Eq. 2. To improve the generalization ability of the model, rotation and flipping were used to augment the original data.

$$N_p = \left(\frac{W - W_p}{S} + 1\right)^2 \tag{2}$$

where $N_p$ refers to the number of patches, $S$ refers to the stride, and $W$ and $W_p$ refer to the widths of the image and the patch, respectively.
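As a quick check of the patch count, the sketch below enumerates the top-left corners of all sliding-window patches for a square image. It uses the 512-pixel image width, 128-pixel patch width and stride of 16 reported in this article. The helper names are hypothetical, and the sketch assumes that the stride divides the difference between image and patch width exactly.

```python
def patches_per_side(width, patch, stride):
    """Number of patch positions along one image axis."""
    return (width - patch) // stride + 1


def patch_origins(width, patch, stride):
    """Top-left (row, col) corners of every patch in a square image."""
    n = patches_per_side(width, patch, stride)
    return [(r * stride, c * stride) for r in range(n) for c in range(n)]


origins = patch_origins(width=512, patch=128, stride=16)
print(len(origins))   # 625 patches per image
print(origins[-1])    # (384, 384): the last patch ends exactly at pixel 512
```

With these values there are 25 positions per axis, so one 512×512 image yields 25 × 25 = 625 patches before augmentation.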

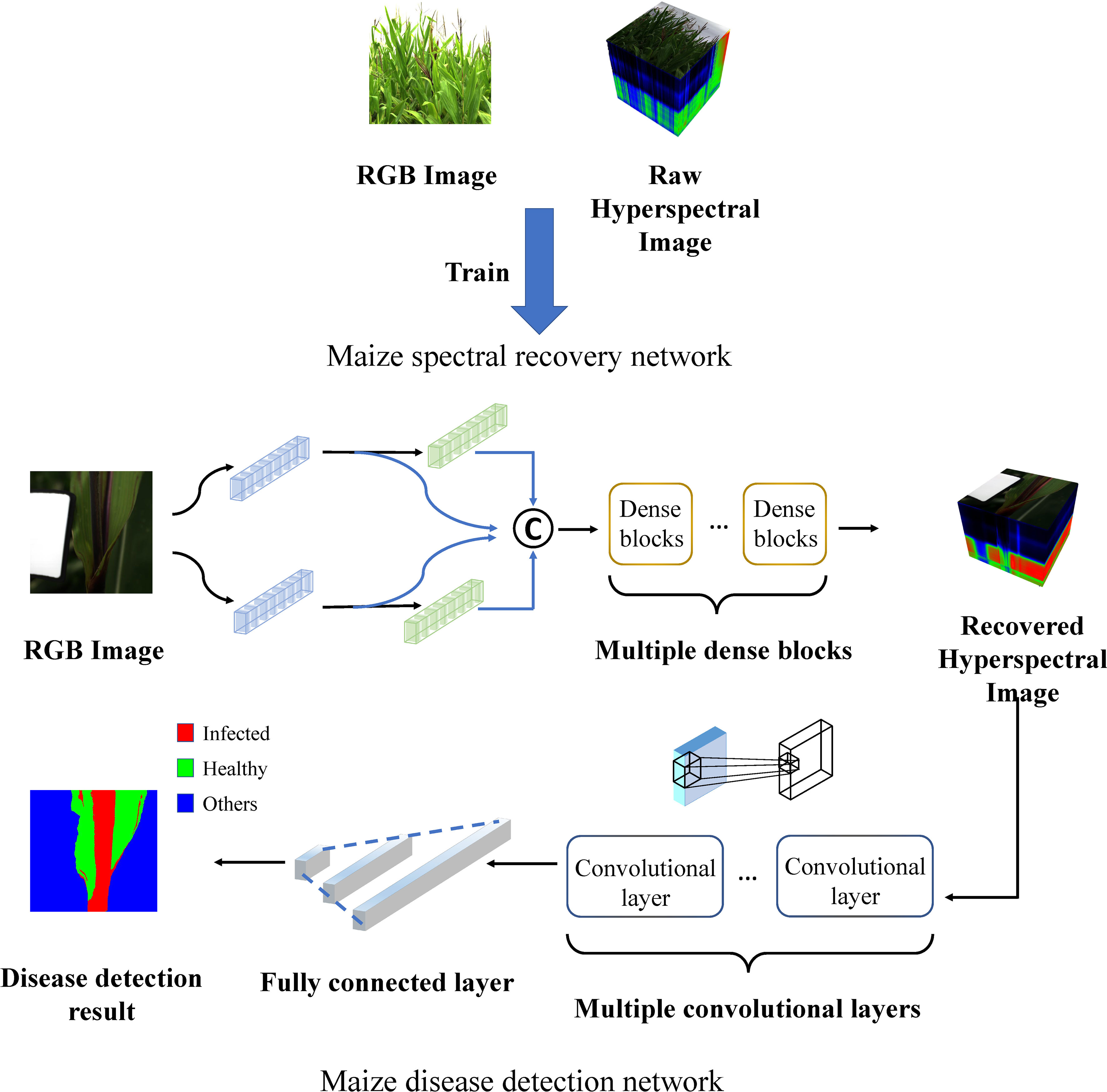

The overall framework is depicted in Figure 2. The maize spectral recovery neural network was first trained on RGB images and the corresponding raw HSIs. Raw RGB images were then fed into the trained network, which produced reconstructed HSIs through feature extraction, mapping and reconstruction. Subsequently, the reconstructed HSIs were fed into the disease detection neural network to complete the disease detection task. The detailed structure is described in the following sections.

Figure 2 Schematic diagram of the overall maize spectral recovery and disease detection network architecture.

Recovering hyperspectral images from RGB images is an ill-posed problem, since a large amount of information is lost when the hyperspectral bands are integrated into RGB values. Traditional spectral recovery methods need hand-crafted priors (Arad and Ben-Shahar, 2016; Akhtar and Mian, 2018), and their performance is barely satisfactory because of their limited representational capacity. Deep learning, which performs well in many computer vision tasks, has been applied successfully to hyperspectral recovery. Given a large amount of training data, a deep neural network can learn a mapping between RGB images and HSIs. Various network structures have been proposed for spectral recovery, such as CNNs and generative adversarial networks (GANs) (Zhang et al., 2022). A GAN contains a generator and a discriminator: the generator learns to reconstruct HSIs from RGB images, and the discriminator judges whether the reconstruction quality is satisfactory. Although GANs can recover HSIs well, their training is unstable and prone to mode collapse, so we chose a more stable model. Recently, deep CNN-based methods have achieved promising performance (Koundinya et al., 2018; Li et al., 2020; Fu et al., 2020). When deep neural networks are trained, the vanishing gradient problem may arise. Residual and dense structures can alleviate this problem. The residual structure adds skip connections among layers and makes deeper networks possible. However, it directly sums the outputs of previous layers, which can distort the distribution of the convolution output and thus reduce the transmission of feature information. In our maize spectral recovery network, we aim to make better use of spectral characteristics, so we adopted the dense structure, which concatenates the outputs of previous layers along the channel dimension.
The advanced hyperspectral recovery convolutional neural network (HSCNN+) contains dense blocks and can learn abundant and natural spectral information. In addition, unlike the hyperspectral recovery convolutional neural network (HSCNN), which requires prior knowledge of the RGB camera hardware, HSCNN+ requires no prior knowledge of the RGB sensor, which makes our framework easier to apply to agricultural field robots. Therefore, HSCNN+, which has superior performance on spectral recovery tasks, was adopted as the backbone of our maize spectral recovery neural network (MSRNN).

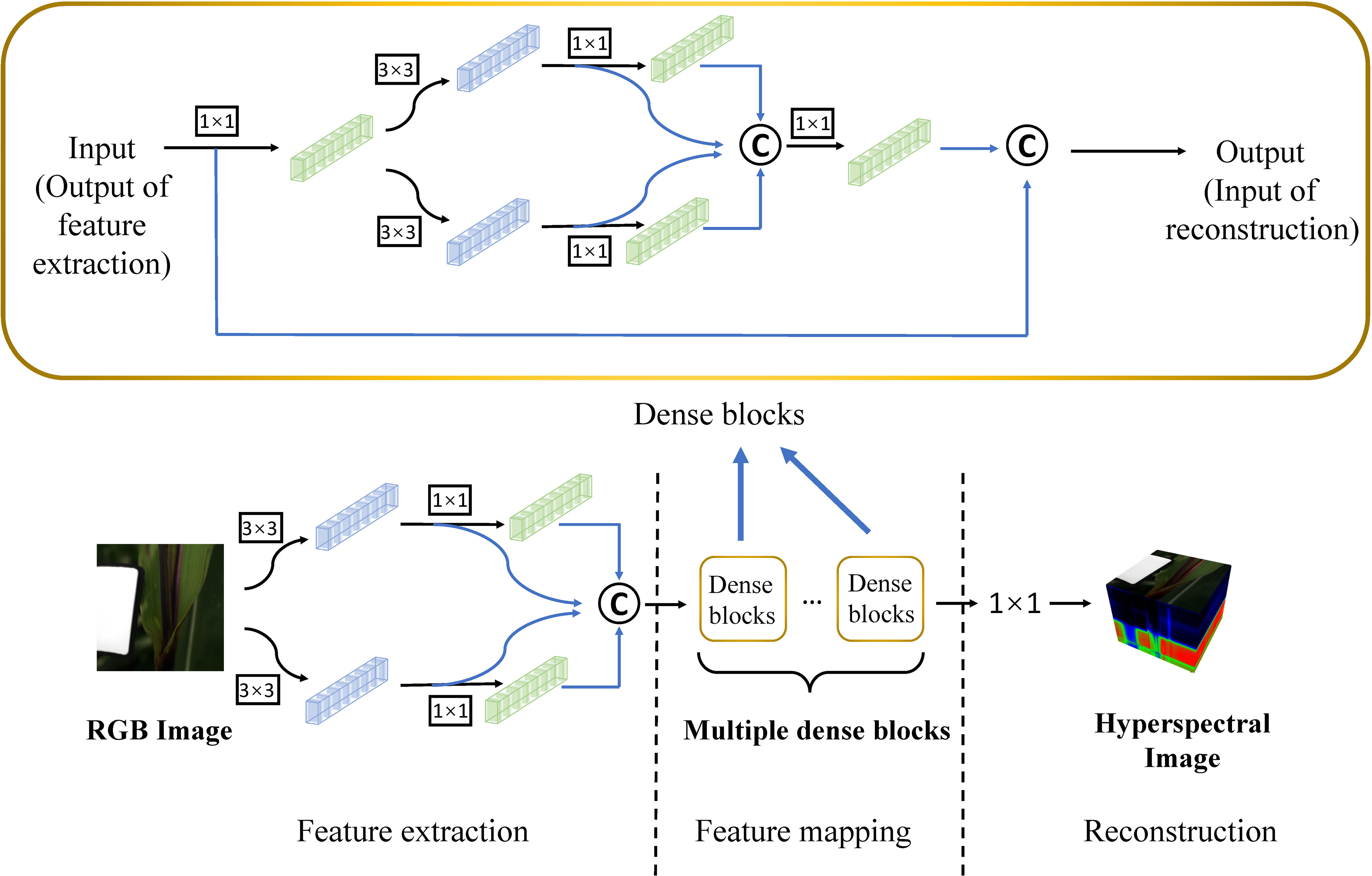

HSCNN is one of the first CNN-based spectral recovery networks, and HSCNN+ was optimized on the basis of HSCNN (Xiong et al., 2017). The HSCNN+ network consists of three parts: feature extraction, feature mapping and reconstruction. The network structure is depicted in Figure 3. The core of the network is the feature mapping part, which contains multiple dense blocks. In each dense block, the output of the previous layer is mapped by 1 × 1, 3 × 3 and 3 × 3 - 1 × 1 convolutions, and the results are concatenated. The dense structure enables the lth layer to receive the features from all preceding layers, which efficiently alleviates the vanishing gradient problem and makes deeper networks possible. Our MSRNN also has three parts. Its feature extraction and reconstruction parts are identical to those of HSCNN+, and its feature mapping part contains 20 dense blocks.

Figure 3 Network structure of the HSCNN+. The notations “1 × 1” and “3 × 3” denote convolutions with kernel sizes of 1 × 1 and 3 × 3, respectively. A notation in a rectangular box denotes a convolution followed by a ReLU activation function. The notation “C” in a circular box denotes the concatenation operation.

Most work on plant disease detection focuses on image-wise detection. However, an image-wise maize disease detection network is hard to apply in the field because of the planting density. Pixel-wise plant disease detection, in turn, requires a large amount of spectral data. HSIs are a good choice for this purpose, so a CNN for HSI classification was adopted as our pixel-wise maize disease detection neural network. The high-dimensional data are fed into convolutional layers, and the output of the convolutional layers is passed to a classifier that contains fully connected layers. All pixels in the spatial domain of the hyperspectral images are classified into three classes: pest-infected maize, healthy maize, and others.

For the spectral recovery network, the dataset contains 100 maize HSIs, split 9:1 into a training set and a test set. We set S in Eq. 2 to 16, so each HSI yields 625 patches for training. We used the Adam solver for optimization with beta set to 0.9. The learning rate was decayed by cosine annealing from 0.001 to 0.00001, and training was stopped when the training loss no longer decreased noticeably. The loss function was MSELoss, which measures the mean squared error (squared L2 norm) between each element of the input and the target.
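The cosine annealing schedule described here can be written out as a short formula. The sketch below is a plain-Python illustration that decays the learning rate from 0.001 to 0.00001 along half a cosine period. The schedule length `TOTAL_STEPS` and the function name are assumptions, since the article does not state how many epochs or iterations the decay spans.

```python
import math


def cosine_annealed_lr(step, total_steps, lr_max=1e-3, lr_min=1e-5):
    """Learning rate at `step` on a single cosine decay from lr_max to lr_min."""
    # Clamp progress to [0, 1] so steps past the end stay at lr_min.
    progress = min(max(step / total_steps, 0.0), 1.0)
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))


TOTAL_STEPS = 100  # hypothetical schedule length, not given in the text
schedule = [cosine_annealed_lr(s, TOTAL_STEPS) for s in range(TOTAL_STEPS + 1)]

print(schedule[0], schedule[TOTAL_STEPS // 2], schedule[-1])
```

The rate starts at the maximum, passes the midpoint of the two bounds halfway through, and ends at the minimum, changing most slowly at both ends of the schedule.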

For the disease detection network, the input data are the output of the spectral recovery network. From the input HSIs, we created patches with a stride of 2 and split them 9:1 into a training set and a test set. The learning rate was set to 0.001, and the cross-entropy function was used as the loss function.

To evaluate the quality of spectral reconstruction, the Mean Relative Absolute Error (MRAE) and the Root Mean Square Error (RMSE) were selected as evaluation metrics. MRAE computes the mean absolute difference between the recovered and ground-truth spectral images over all spectral bands, relative to the ground-truth value. It represents the quality of spectral recovery and is defined in Eq. 3. RMSE computes the root mean square error between the recovered and ground-truth spectral images and is defined in Eq. 4.

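$$\mathrm{MRAE} = \frac{1}{N}\sum_{i=1}^{N}\frac{\left|I_R^{(i)} - I_G^{(i)}\right|}{I_G^{(i)}} \tag{3}$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(I_R^{(i)} - I_G^{(i)}\right)^2} \tag{4}$$

where $N$ refers to the total number of pixels, and $I_R^{(i)}$ and $I_G^{(i)}$ refer to the ith pixel of the recovered spectral images and ground-truth images, respectively.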
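The two reconstruction metrics can be sketched in a few lines of Python. The sketch assumes the standard definitions, in which MRAE averages the absolute error divided by the ground-truth value and RMSE takes the square root of the mean squared error, both over flattened lists of pixel values. The function names and the sample values are illustrative only.

```python
import math


def mrae(recovered, truth):
    """Mean relative absolute error; ground-truth values must be non-zero."""
    return sum(abs(r - g) / g for r, g in zip(recovered, truth)) / len(truth)


def rmse(recovered, truth):
    """Root mean square error between recovered and ground-truth values."""
    return math.sqrt(sum((r - g) ** 2 for r, g in zip(recovered, truth)) / len(truth))


# Hypothetical reflectance values for four pixels in one band.
truth = [0.10, 0.20, 0.30, 0.40]
recovered = [0.10, 0.22, 0.30, 0.41]

print(round(mrae(recovered, truth), 5))
print(round(rmse(recovered, truth), 5))
```

Because MRAE divides by the ground-truth value, errors in dark pixels weigh more heavily than equal errors in bright pixels, whereas RMSE treats all pixels alike.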

In addition to verifying the quality of the spectral recovery model with the above metrics, we used a pest-infected maize detection model to test its effectiveness. This model classifies each pixel into one of three classes: infected part, healthy part and others. The class “others” covers anything that is neither healthy nor infected maize, such as hands, the white panel and stones. When agricultural robots work in the field, they may capture objects unrelated to maize that could disturb the detection results, so such objects are assigned to “others”. We chose precision, recall and F1 score to evaluate our disease detection model. These metrics are calculated by Eqs 5, 6 and 7. We also used the overall accuracy (OA) and average accuracy (AA) to evaluate the detection ability of the model. Here, OA is the number of correctly classified pixels divided by the total number of pixels, and AA is the sum of the per-class accuracies divided by the number of classes.

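$$P = \frac{TP}{TP + FP} \tag{5}$$

$$R = \frac{TP}{TP + FN} \tag{6}$$

$$F1 = \frac{2 \times P \times R}{P + R} \tag{7}$$

where $P$ refers to precision, $R$ refers to recall, $F1$ refers to the F1 score, $TP$ refers to the number of true positives, $FP$ refers to the number of false positives, and $FN$ refers to the number of false negatives.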
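The following sketch shows how these detection metrics could be derived from a three-class confusion matrix whose rows are actual classes and whose columns are predicted classes. The matrix values are invented for illustration. The sketch also assumes that the per-class accuracy used for AA is the per-class recall, which is the common convention in hyperspectral classification but is not spelled out in the article.

```python
def per_class_metrics(cm):
    """Precision, recall and F1 per class from a square confusion matrix.

    cm[i][j] counts pixels of actual class i predicted as class j.
    """
    n = len(cm)
    results = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, actually another class
        fn = sum(cm[k]) - tp                       # actually k, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results.append((precision, recall, f1))
    return results


def overall_accuracy(cm):
    """Correctly classified pixels divided by all pixels."""
    return sum(cm[k][k] for k in range(len(cm))) / sum(sum(row) for row in cm)


def average_accuracy(cm):
    """Mean of the per-class recalls (assumed meaning of per-class accuracy)."""
    return sum(recall for _, recall, _ in per_class_metrics(cm)) / len(cm)


# Hypothetical counts; class order: healthy, infected, others.
cm = [[50, 2, 3],
      [5, 40, 5],
      [0, 10, 85]]

metrics = per_class_metrics(cm)
oa = overall_accuracy(cm)
aa = average_accuracy(cm)
print(oa, round(aa, 4))
```

OA is dominated by the largest class, while AA gives each class equal weight, so reporting both helps when the classes are unbalanced.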

All image preprocessing and the main algorithms were implemented with MATLAB R2021a, Anaconda3 (Python 3.8), the PyTorch library, the scikit-learn library, etc. The proposed model was trained and tested on hardware comprising an Intel(R) i9-10980XE CPU (3.00 GHz), 64 GB of memory, and an NVIDIA RTX A5000 graphics card (CUDA 11.4).

For the conversion of maize RGB images to HSIs, HSCNN+, which we chose for maize spectral recovery, was compared with several state-of-the-art algorithms (Zamir et al., 2020; Cai et al., 2022; Zhao et al., 2020; Shi et al., 2018). The dataset is described in section 2.1, and the test set was never used for training. All compared models used the same patch size as HSCNN+. The initial learning rate of HRNet was 1×10⁻⁴. For MST++ and MIRNet, the learning rate was set to 4×10⁻⁴ and halved every 50 epochs during training. The batch size was 20. Random flipping and rotation were used for data augmentation. To evaluate the effectiveness of HSCNN+, we used the MRAE and RMSE metrics. The experimental results are shown in Table 1.

Table 1 gives the numerical results of the different models on the test set. The MRAE of HSCNN+ reached 0.0713, which was lower than that of MST++ (0.1681), MIRNet (0.3073) and HRNet (0.1120). HSCNN+ thus achieved a 57.6%, 76.8% and 36.3% decrease in MRAE compared with MST++, MIRNet and HRNet, respectively. The RMSE of HSCNN+ was also lower than that of all compared models, with reductions of 1.3%, 8.1% and 6.6%, respectively. This demonstrates that, for maize spectral recovery, the model learned by HSCNN+ is more suitable and generalizes well.
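The relative MRAE decreases quoted here follow directly from the reported MRAE values. The short Python check below reproduces them as (baseline - HSCNN+) / baseline, expressed in percent; only the variable names are new.

```python
hscnn_plus_mrae = 0.0713
baseline_mrae = {"MST++": 0.1681, "MIRNet": 0.3073, "HRNet": 0.1120}

# Relative decrease of HSCNN+ with respect to each baseline, in percent.
decrease = {
    name: 100.0 * (value - hscnn_plus_mrae) / value
    for name, value in baseline_mrae.items()
}

for name, pct in decrease.items():
    print(f"{name}: {pct:.1f}%")
# MST++: 57.6%
# MIRNet: 76.8%
# HRNet: 36.3%
```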

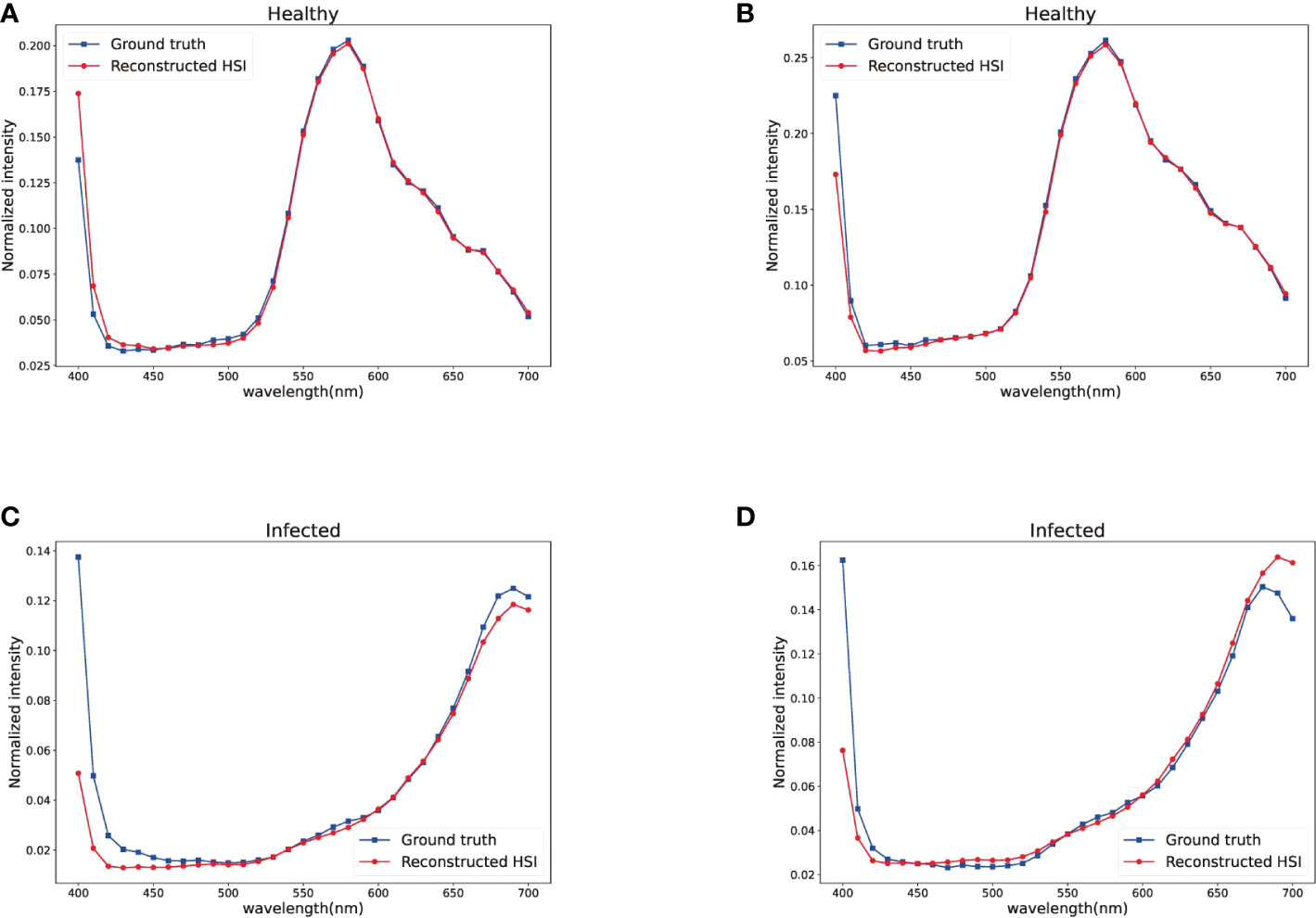

To evaluate the perceptual quality of maize spectral reconstruction, Figure 4 shows the visual results of four selected bands from a test hyperspectral image. The first four rows show the reconstructions of the compared methods, and the last row shows the ground truth. As shown in Figure 4, the spectral recovery models maintained the spatial features well, and HSCNN+ kept more spectral details than the other models. Because most of the recovered HSIs show maize leaves with similar spectral characteristics, details in the dark parts are not obvious, and we recommend that readers concentrate on texture details. Figure 5 further shows the spectral signatures of four selected points from the test data: two were selected randomly from the healthy part and two from the infected part. The recovered HSI and the ground-truth HSI have 31 spectral bands from 400 nm to 700 nm. The spectral curves of the reconstructed HSI are highly similar to the ground truth, which confirms the high reconstruction fidelity of HSCNN+ in maize spectral recovery. However, the largest errors occur at both ends of the spectral range. This may be caused by differences in the acquisition accuracy of the spectral camera, whose precision is higher in the middle bands than at the ends of the spectral range. Therefore, the errors at both ends, introduced during data collection, may affect training accuracy. Fortunately, both ends of the spectral range have little impact on the overall disease detection accuracy.

Figure 5 Signature of four selected spatial points in Figure 4. (A) Point (133,81) of healthy part. (B) Point (307,439) of healthy part. (C) Point (304,191) of infected part. (D) Point (353,277) of infected part.

The above experimental results show that HSCNN+ is more suitable for maize spectral recovery. Raw maize RGB images were converted to reconstructed HSIs by the maize spectral recovery network. To test the effectiveness of the reconstructed HSIs in disease detection, we evaluated their detection performance in different scenarios. The maize spectral recovery disease detection framework is intended for field robots, so it is essential to choose scenarios that such robots are likely to encounter. The four scenarios include three close shots and one complex scene, all of which are likely to appear in the view of an agricultural robot as it works in the field and moves between plants. We ran our disease detection model with raw RGB images, reconstructed HSIs and raw HSIs as input so that the performance of the reconstructed HSIs could be compared directly. The HSI and RGB data collected in the field for the test scenarios are shown in Figure 6. The raw data of these four scenarios were never used to train the maize spectral recovery network. We fed the raw RGB images of each scenario into the maize spectral recovery network to obtain recovered maize HSIs. The reconstructed HSIs, raw RGB images and raw HSIs were then fed into the maize disease detection network to obtain the disease detection results.

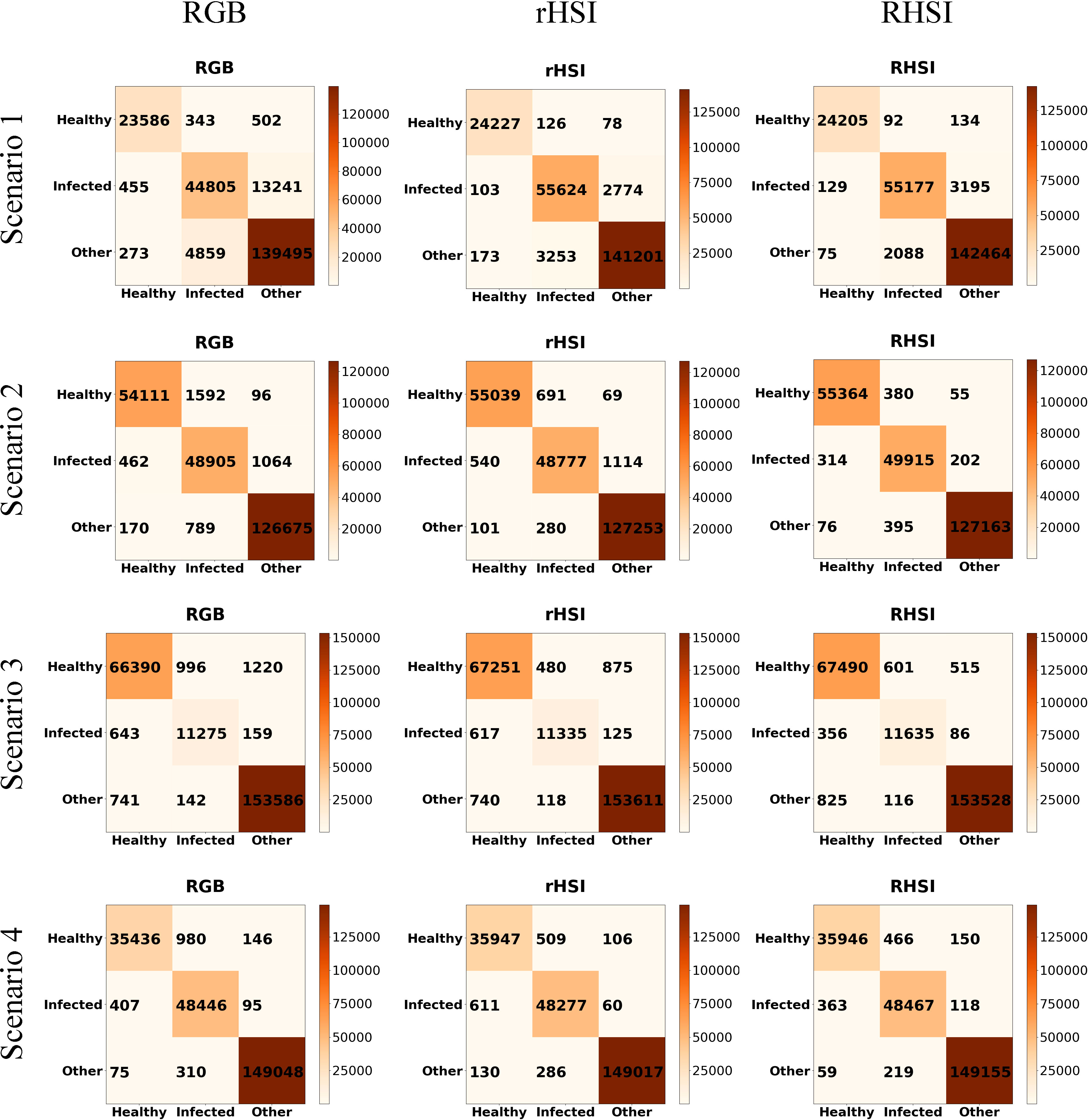

Our maize disease detection network performs pixel-wise detection. All pixels of the HSIs were used as the dataset, and the HSI size is 512×512. The disease detection model contains 3D and 2D convolutional layers to extract features in the spectral and spatial domains, followed by fully connected layers that classify pixels into three classes: healthy, infected and others. The total numbers of labeled pixels in scenarios 1, 2, 3 and 4 are 227559, 233864, 235152 and 234614, respectively. The experiment results are shown in Table 2, and Figure 7 gives a detailed account of the disease detection results in all scenarios.

Figure 7 Confusion matrices of all scenarios. (In each confusion matrix, the abscissa axis represents predicted class and the ordinate axis represents actual class.).

Table 2 compares the performance of the different input data (RGB, raw RGB images; RHSI, raw HSIs; rHSI, reconstructed HSIs) in the four test scenarios. The OA of disease detection reached RGB 91.35%, RHSI 97.49%, rHSI 97.29% in scenario 1; RGB 98.22%, RHSI 99.39%, rHSI 98.80% in scenario 2; RGB 98.34%, RHSI 98.94%, rHSI 98.74% in scenario 3; and RGB 99.14%, RHSI 99.41%, rHSI 99.28% in scenario 4. In all scenarios, the OA obtained with reconstructed HSIs was higher than that obtained with RGB images, which means that the reconstructed HSIs performed better than RGB images. Moreover, although the OA obtained with reconstructed HSIs was slightly lower than that obtained with raw HSIs, the two were very close. In most cases, almost all other evaluation metrics, including precision, recall, F1 score and AA, follow the same pattern as OA. This means that our reconstructed HSIs work nearly as well as raw HSIs and better than raw RGB images. In particular, the recovered HSIs achieved a relatively large improvement over raw RGB images in detecting infected maize. In some cases, RGB images alone already yield high accuracy, mainly because a relatively simple scenario contains less disturbance. There, the information provided by raw RGB images, combined with the corresponding algorithms, is enough to reach relatively high accuracy, so the room for the recovered HSIs to improve on it is very limited.

To validate the detection results of the proposed model, we performed 5-fold cross-validation. Table 3 summarizes the disease detection OA in the different test scenarios for all five folds. The recovered HSIs improved the detection accuracy in all folds, which indicates the generalization capability of the framework.

Figure 7 shows the confusion matrices of all scenarios. The abscissa and ordinate of each confusion matrix represent the predicted class and the actual class, respectively. The great majority of pixel samples lie on the diagonal of the confusion matrices. In most cases, the diagonal values for rHSI are greater than those for RGB, which indicates that the detection model achieves higher accuracy with reconstructed HSIs as input than with RGB images. To further test the effect of the reconstructed HSIs, we chose one scenario and visualized the detection results, as shown in Figure 8. Detection with the recovered HSIs is more stable and precise than detection with the RGB data.

The detection results in scenario 1 show that using the reconstructed HSIs has a considerable effect on disease detection performance. By passing raw RGB data through the spectral recovery network to obtain recovered HSIs, the OA of disease detection improved from 89.86% (using raw RGB images) to 97.29% (using recovered HSIs). This is likely due to the complex detection environment shown in Figure 6A. The spatial features that the disease detection network extracts from raw RGB images are not sufficient to support the disease detection task. Converting raw RGB images to recovered HSIs with the spectral recovery network enriches the spectral features. Compared with the 3 spectral channels of RGB images, the reconstructed HSIs have 31 channels, which allows more accurate disease detection in complex scenes.

In summary, the maize spectral recovery network is first trained on our maize spectral recovery dataset, which contains maize RGB images and the corresponding HSIs, to learn a mapping between raw RGB data and HSI data. Once the spectral features of raw RGB images have been enhanced in this way, the recovered HSIs perform almost as well as raw HSIs in disease detection. This means that original maize RGB data can be obtained quickly with a low-cost digital camera and then fed into our maize spectral recovery network to obtain reconstructed maize HSIs. Detecting diseases with the recovered maize HSIs achieves almost the same accuracy as using raw HSIs. Given the high cost and long acquisition time of HSIs and the operational complexity of hyperspectral cameras, our framework offers a better choice for maize disease detection in the field.

This research proposed a maize spectral recovery disease detection framework, based on HSCNN+ and a maize disease detection CNN, for low-cost and high-precision maize disease detection in the field. We identified a suitable spectral recovery model to reconstruct HSI data from raw maize RGB data and used the recovered HSI data as input for the disease detection network. The spectral information in the raw data was expanded, and the quality of HSI reconstruction was satisfactory. Our framework effectively improved disease recognition accuracy when RGB images were the raw data and achieved excellent disease detection results. The experimental findings demonstrate the efficiency and practicability of the framework, which successfully detected infected maize under various conditions, especially in complex environments. In the future, we plan to put the framework into practice to solve problems in agricultural production. Disease detection robots need real-time data to make quick judgements. Because hyperspectral imaging systems are costly and slow, it is almost impossible to apply them to real-time disease detection in the field. The framework we proposed, however, offers this possibility.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

JL and RZ prepared materials and used the hyperspectral camera to obtain hyperspectral images. JF, JL, and RZ wrote the manuscript. JF and RZ provided funding for this work. ZC provided guidance on writing the manuscript. JL, RZ, and YQ designed the experiment. DL provided guidance on revising the manuscript. All authors contributed to the article and approved the submitted version.

This work was supported by the National Natural Science Foundation of China (No. 62103161), the Science and Technology Project of Jilin Provincial Education Department (No. JJKH20221023KJ), and by the Opening Project of the Key Laboratory of Bionic Engineering (Ministry of Education), Jilin University (No. KF20211005).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Aeschbacher, J., Wu, J., Timofte, R. (2017). “In defense of shallow learned spectral reconstruction from rgb images,” in Proceedings of the IEEE International Conference on Computer Vision Workshops (Venice, Italy: IEEE). 471–479.

Akhtar, N., Mian, A. (2018). Hyperspectral recovery from rgb images using gaussian processes. IEEE Trans. Pattern Anal. Mach. Intell. 42, 100–113. doi: 10.1109/TPAMI.2018.2873729

Arad, B., Ben-Shahar, O. (2016). “Sparse recovery of hyperspectral signal from natural rgb images,” in European Conference on computer vision (Cham: Springer), 19–34.

Arad, B., Timofte, R., Yahel, R., Morag, N., Bernat, A., Cai, Y., et al. (2022). “Ntire 2022 spectral recovery challenge and data set,” in In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (New Orleans, LA, USA: IEEE). 863–881.

Behmann, J., Acebron, K., Emin, D., Bennertz, S., Matsubara, S., Thomas, S., et al. (2018). Specim iq: evaluation of a new, miniaturized handheld hyperspectral camera and its application for plant phenotyping and disease detection. Sensors 18, 441. doi: 10.3390/s18020441

Cai, Y., Lin, J., Hu, X., Wang, H., Yuan, X., Zhang, Y., et al. (2022). “Mask-guided spectral-wise transformer for efficient hyperspectral image reconstruction,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (New Orleans, LA, USA: IEEE). 17502–17511.

Can, Y. B., Timofte, R. (2018). An efficient cnn for spectral reconstruction from rgb images. arXiv preprint arXiv:1804.04647.

Chen, J., Zhang, D., Zeb, A., Nanehkaran, Y. A. (2021). Identification of rice plant diseases using lightweight attention networks. Expert Syst. Appl. 169, 114514. doi: 10.1016/j.eswa.2020.114514

Demetrescu, I., Zbytek, Z., Dach, J., Pawłowski, T., Smurzyńska, A., Czekała, W., et al. (2016). “Energy and economic potential of maize straw used for biofuels production,” in MATEC Web of Conferences (Amsterdam, Netherlands: EDP Sciences), Vol. 60.

Feng, L., Wu, B., He, Y., Zhang, C. (2021). Hyperspectral imaging combined with deep transfer learning for rice disease detection. Front. Plant Sci. 12. doi: 10.3389/fpls.2021.693521

Feng, L., Wu, B., Zhu, S., Wang, J., Su, Z., Liu, F., et al. (2020). Investigation on data fusion of multisource spectral data for rice leaf diseases identification using machine learning methods. Front. Plant Sci. 11, 577063. doi: 10.3389/fpls.2020.577063

Fu, Y., Zhang, T., Zheng, Y., Zhang, D., Huang, H. (2020). Joint camera spectral response selection and hyperspectral image recovery. IEEE Trans. Pattern Anal. Mach. Intell. 44, 256–272. doi: 10.1109/TPAMI.2020.3009999

Haque, M., Marwaha, S., Deb, C. K., Nigam, S., Arora, A., Hooda, K. S., et al. (2022). Deep learning-based approach for identification of diseases of maize crop. Sci. Rep. 12, 1–14. doi: 10.1038/s41598-022-10140-z

He, L., Wu, H., Wang, G., Meng, Q., Zhou, Z. (2018). The effects of including corn silage, corn stalk silage, and corn grain in finishing ration of beef steers on meat quality and oxidative stability. Meat Sci. 139, 142–148. doi: 10.1016/j.meatsci.2018.01.023

Jia, Y., Zheng, Y., Gu, L., Subpa-Asa, A., Lam, A., Sato, Y., et al. (2017). “From rgb to spectrum for natural scenes via manifold-based mapping,” in Proceedings of the IEEE international conference on computer vision (Venice, Italy: IEEE). 4705–4713.

Karthik, R., Hariharan, M., Anand, S., Mathikshara, P., Johnson, A., Menaka, R. (2020). Attention embedded residual cnn for disease detection in tomato leaves. Appl. Soft Computing 86, 105933.

Koundinya, S., Sharma, H., Sharma, M., Upadhyay, A., Manekar, R., Mukhopadhyay, R., et al. (2018). “2d-3d cnn based architectures for spectral reconstruction from rgb images,” in Proceedings of the IEEE conference on computer vision and pattern recognition workshops (Salt Lake City, UT, USA: IEEE). 844–851.

Li, J., Wu, C., Song, R., Li, Y., Liu, F. (2020). “Adaptive weighted attention network with camera spectral sensitivity prior for spectral reconstruction from rgb images,” in In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (Seattle, WA, USA: IEEE). 462–463.

Mboya, R. M. (2013). An investigation of the extent of infestation of stored maize by insect pests in rungwe district, tanzania. Food Secur. 5, 525–531. doi: 10.1007/s12571-013-0279-3

Nagasubramanian, K., Jones, S., Singh, A. K., Sarkar, S., Singh, A., Ganapathysubramanian, B. (2019). Plant disease identification using explainable 3d deep learning on hyperspectral images. Plant Methods 15, 1–10. doi: 10.1186/s13007-019-0479-8

Nguyen, C., Sagan, V., Maimaitiyiming, M., Maimaitijiang, M., Bhadra, S., Kwasniewski, M. T. (2021). Early detection of plant viral disease using hyperspectral imaging and deep learning. Sensors 21, 742. doi: 10.3390/s21030742

Qian, X., Zhang, C., Chen, L., Li, K. (2022). Deep learning-based identification of maize leaf diseases is improved by an attention mechanism: Self-attention. Front. Plant Sci. 1154. doi: 10.3389/fpls.2022.864486

Qiao, X., Jiang, J., Qi, X., Guo, H., Yuan, D. (2017). Utilization of spectral-spatial characteristics in shortwave infrared hyperspectral images to classify and identify fungi-contaminated peanuts. Food Chem. 220, 393–399. doi: 10.1016/j.foodchem.2016.09.119

Robles-Kelly, A. (2015). “Single image spectral reconstruction for multimedia applications,” in Proceedings of the 23rd ACM international conference on Multimedia (New York, NY, USA: Association for Computing Machinery). 251–260.

Samarappuli, D., Berti, M. T. (2018). Intercropping forage sorghum with maize is a promising alternative to maize silage for biogas production. J. Cleaner Prod. 194, 515–524. doi: 10.1016/j.jclepro.2018.05.083

Shi, Z., Chen, C., Xiong, Z., Liu, D., Wu, F. (2018). “Hscnn+: Advanced cnn-based hyperspectral recovery from rgb images,” in In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (Salt Lake City, UT, USA: IEEE). 939–947.

Stiebel, T., Koppers, S., Seltsam, P., Merhof, D. (2018). “Reconstructing spectral images from rgb-images using a convolutional neural network,” in In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (Salt Lake City, UT, USA: IEEE). 948–953.

Syed-Ab-Rahman, S. F., Hesamian, M. H., Prasad, M. (2022). Citrus disease detection and classification using end-to-end anchor-based deep learning model. Appl. Intell. 52, 927–938. doi: 10.1007/s10489-021-02452-w

Wang, W., Wang, J. (2021). Double ghost convolution attention mechanism network: A framework for hyperspectral reconstruction of a single rgb image. Sensors 21 (2), s21020666. doi: 10.3390/s21020666

Wang, Y., Wang, H., Peng, Z. (2021). Rice diseases detection and classification using attention based neural network and bayesian optimization. Expert Syst. Appl. 178, 114770. doi: 10.1016/j.eswa.2021.114770

Wu, Y. (2021). “Identification of maize leaf diseases based on convolutional neural network,” in Journal of physics: Conference series, vol. 1748. (Shenyang, China: IOP Publishing), 032004.

Xiong, Z., Shi, Z., Li, H., Wang, L., Liu, D., Wu, F. (2017). “Hscnn: Cnn-based hyperspectral image recovery from spectrally undersampled projections,” in Proceedings of the IEEE International Conference on Computer Vision Workshops (Venice, Italy: IEEE). 518–525.

Yan, Y., Zhang, L., Li, J., Wei, W., Zhang, Y. (2018). “Accurate spectral super-resolution from single rgb image using multi-scale cnn,” in Chinese Conference on pattern recognition and computer vision (PRCV) (Cham: Springer), 206–217.

Zamir, S. W., Arora, A., Khan, S., Hayat, M., Khan, F. S., Yang, M.-H., et al. (2020). “Learning enriched features for real image restoration and enhancement,” in European Conference on computer vision (Cham: Springer), 492–511.

Zhang, J., Su, R., Fu, Q., Ren, W., Heide, F., Nie, Y. (2022). A survey on computational spectral reconstruction methods from rgb to hyperspectral imaging. Sci. Rep. 12, 1–17. doi: 10.1038/s41598-022-16223-1

Zhang, Y., Wa, S., Liu, Y., Zhou, X., Sun, P., Ma, Q. (2021). High-accuracy detection of maize leaf diseases cnn based on multi-pathway activation function module. Remote Sens. 13 (21), 4218. doi: 10.3390/rs13214218

Zhang, J., Yang, Y., Feng, X., Xu, H., Chen, J., He, Y. (2020). Identification of bacterial blight resistant rice seeds using terahertz imaging and hyperspectral imaging combined with convolutional neural network. Front. Plant Sci. 11, 821. doi: 10.3389/fpls.2020.00821

Zhao, Y., Po, L.-M., Yan, Q., Liu, W., Lin, T. (2020). “Hierarchical regression network for spectral reconstruction from rgb images,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (Seattle, WA, USA: IEEE). 422–423.

Keywords: maize, pest disease detection, spectral recovery, hyperspectral images (HSIs), convolutional neural network (CNN)

Citation: Fu J, Liu J, Zhao R, Chen Z, Qiao Y and Li D (2022) Maize disease detection based on spectral recovery from RGB images. Front. Plant Sci. 13:1056842. doi: 10.3389/fpls.2022.1056842

Received: 29 September 2022; Accepted: 23 November 2022;

Published: 21 December 2022.

Edited by:

Yunchao Tang, Zhongkai University of Agriculture and Engineering, China

Reviewed by:

Jakub Nalepa, Silesian University of Technology, Poland

Copyright © 2022 Fu, Liu, Zhao, Chen, Qiao and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rongqiang Zhao
