ORIGINAL RESEARCH article

Front. Plant Sci., 06 October 2022

Sec. Sustainable and Intelligent Phytoprotection

Volume 13 - 2022 | https://doi.org/10.3389/fpls.2022.1010474

This article is part of the Research Topic Spotlight on Artificial Intelligence (AI) for Sustainable Plant Production

This paper proposes a method for predicting residual film content in the cotton field plough layer based on UAV imaging and deep learning, to address the high labour intensity, low efficiency, and high cost of traditional methods for residual film content monitoring. Images of residual film on the soil surface of cotton fields were collected by UAV, and the residual film content in the plough layer was obtained by manual sampling. Models for segmenting residual film from the cotton field images were built on three deep learning frameworks: LinkNet, FCN, and DeepLabv3. After the segmentation results were compared, DeepLabv3 was selected as the best model for segmenting residual film, and the residual film area was calculated from its output. A linear regression model was then built between the residual film coverage area on the cotton field surface and the residual film content in the plough layer. The coefficient of determination (R2), root mean square error, and average relative error of the predicted residual film content in the plough layer were 0.83, 0.48, and 11.06%, respectively. These results indicate that residual film content in the cotton field plough layer can be predicted quickly and accurately based on UAV imaging and deep learning. This study provides technical support for monitoring and evaluating residual film pollution in the cotton field plough layer.

Agricultural mulch is widely used to increase soil temperature and moisture, suppress pests and weeds, and reduce soil salinity, all of which help improve crop yields and increase farmers’ incomes (Wang et al., 2020; Li et al., 2021). Plastic film mulching was introduced to China in the late 1970s. After decades of development, it has been widely used for the cultivation of cotton, corn, pepper, and other crops in northwest China, especially in Xinjiang Province. It has contributed substantially to increases in the production and income levels of farmers in arid regions (Yan et al., 2006; Hu et al., 2019).

However, the “white pollution” caused by the widespread use of mulch is becoming increasingly prominent (Liu et al., 2014; Zhang et al., 2021; Li et al., 2021). As an economically important crop, cotton is mainly planted with film mulching in Xinjiang, China. Due to the continuous use of plastic mulch in cotton fields and the incomplete recovery of the residual film, the amount of residual film in cotton fields ranges from 42 to 540 kg/hm2, and the average residual amount exceeds 200 kg/hm2 (Wang, 1998; Xu et al., 2005; Zhao et al., 2017). This residual mulch film pollution has a massive impact on cotton production (Zhang et al., 2016). For example, a large amount of plastic film left in the plough layer blocks the migration of water and nutrients, destroys the soil structure, and suppresses the germination of seeds and the growth of crop roots. In addition, the residual film is mixed into the seed cotton, which reduces the weight of the cotton (Zhang et al., 2020; Zong et al., 2021).

Fast and accurate monitoring of residual film pollution in farmland is of great significance for pollution control. At present, the degree of residual film pollution in farmland is assessed mainly through manual sampling. Zhang (2017) adopted the manual stratified sampling method and arranged 7 sampling points at each monitoring site. The data analysis showed that the amount of residual film had an increasing trend. Wang et al. (2018) took layered samples of soil in cotton fields with different mulching years to analyse the areas and net weights of the residual film, and found that the content of residual film increased with the number of mulching years, with an average yearly increase of approximately 10%. In addition, the residual film gradually fragmented and moved down to deeper soil layers during ploughing. Qi et al. (2001) found that the residual film in the soil of cultivated land was mainly distributed in the plough layer (0~10 cm), which accounted for approximately 2/3 of the total residual film. The rest was distributed in the 10~30 cm soil layer, and no residual film was found below 40 cm. However, the manual sampling method used in these studies is labour-intensive and inefficient and cannot meet the demand for rapid and accurate monitoring of residual film pollution.

UAV remote sensing technology has many advantages, such as high operation efficiency, good mobility, low cost, and high spatial resolution (Cao et al., 2021). In recent years, it has been combined with technologies such as artificial intelligence and the Internet of Things and is widely used in agriculture for disease and pest prevention and control, sowing, and other tasks (Li et al., 2021; Su et al., 2021). For monitoring residual film in farmland, some scholars have preliminarily explored the potential of UAV remote sensing and identification methods. For example, Sun et al. (2018) used a six-rotor UAV equipped with a Sony NEX-5k camera for aerial photography and proposed an end-to-end method for identifying greenhouses and mulched farmland from drone images. The average accuracy achieved for the testing area was 97%. Zhu et al. (2019) applied a fusion-based supervised image classification algorithm to drone images of a research area and achieved a recognition accuracy of 94.84%. Ning et al. (2021) proposed an improved deep semantic segmentation model based on the DeepLabv3+ network for plastic film identification and found that this method could effectively segment plastic film from farmland in UAV multispectral remote sensing images, with an identification accuracy 7.1% higher than that of the visible light method. These studies show that residual film in farmland can be detected rapidly with UAV remote sensing imaging. However, the residual film is mainly concentrated in the plough layer. Currently, there are few reports on detecting residual film pollution in the plough layer with UAV images and deep learning, and methods for the rapid detection of residual film pollution in the plough layer are lacking.

Therefore, in this study, the cotton field before spring sowing was taken as the research object, and a method for predicting residual film in the cotton field plough layer based on UAV imaging and deep learning was proposed. By identifying the residual film on the soil surface and fitting its area linearly to the measured weight of residual film in the plough layer, the content of residual film in the cotton field plough layer was predicted rapidly and accurately. This study provides a theoretical basis for further work on rapid and accurate assessment technology for residual film pollution in the plough layer and on related equipment development.

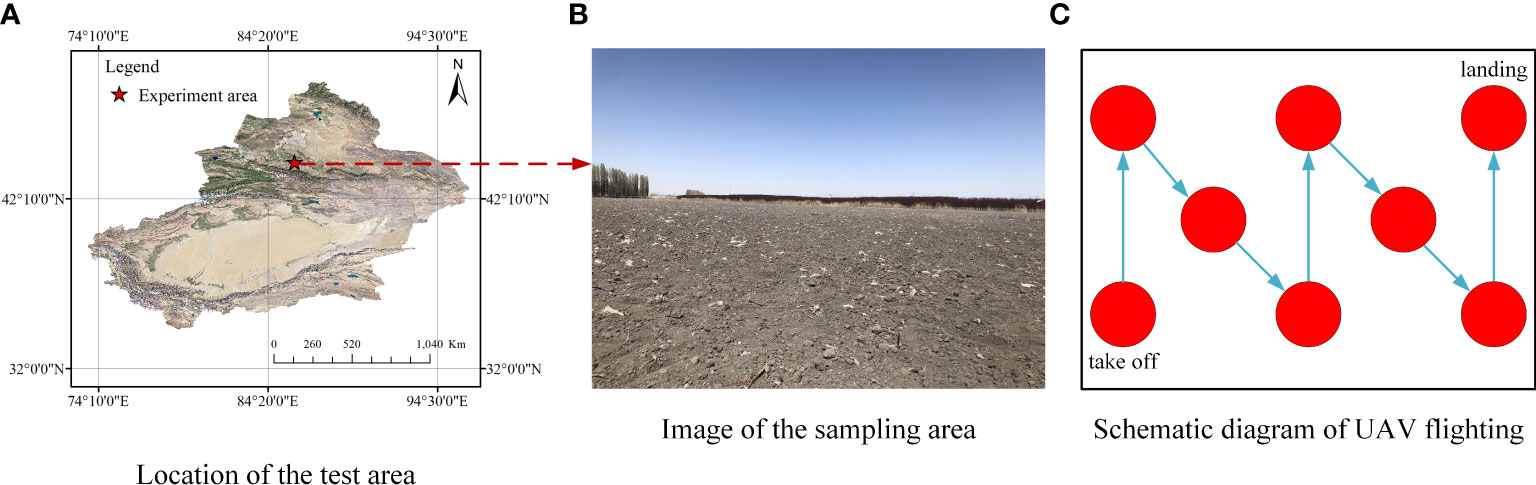

The data were collected in Shihezi City, Xinjiang, China (42°10′~45°21′N, 84°20′~86°55′E, 450.8 m a.s.l.), where film mulching has been used continuously for cotton cultivation for many years. Collection took place before sowing in early April 2021, when a large amount of residual plastic film remained on the soil surface (Figure 1). A total of 30 cotton fields were selected. The images were collected on both sunny and cloudy days to increase the robustness of the subsequent model and its adaptability to lighting. First, images were collected by drone at a height of 5 m; a total of 900 images were collected, which constituted the dataset for surface residual film recognition. Then, ten points (1 m2 per point) were selected in each cotton field to manually collect the residual film in the plough layer (0-10 cm in depth), giving a total of 300 sampling points. All the sampled residual film was placed in labelled bags.

Figure 1 Test area and image acquisition. (A) Location of the test area; (B) Image of the sampling area; (C) Schematic diagram of the UAV flight.

The images of the cotton field were taken by a DJI M200 remote-controlled quadrotor drone (DJI MATRICE 200 V1, DJI, China) equipped with a ZENMUSE X4S gimbal camera (FC6510, DJI, China). The camera had a fixed focal length of 8.8 mm, an aperture range of f/2.8-11, and a field of view (FOV) of 84°. The image resolution was 5472×3078 pixels (JPG format). A DJI ground workstation (DJI, Shenzhen, China) was used to control the flight of the drone and transmit the images. After the drone landed, the images were transferred to a laptop in JPG format and checked for integrity. The sampling tools used to collect the residual film in the plough layer included a 1 m × 1 m folding ruler, a shovel, and a canvas. The equipment configuration and data acquisition process are shown in Figure 2.

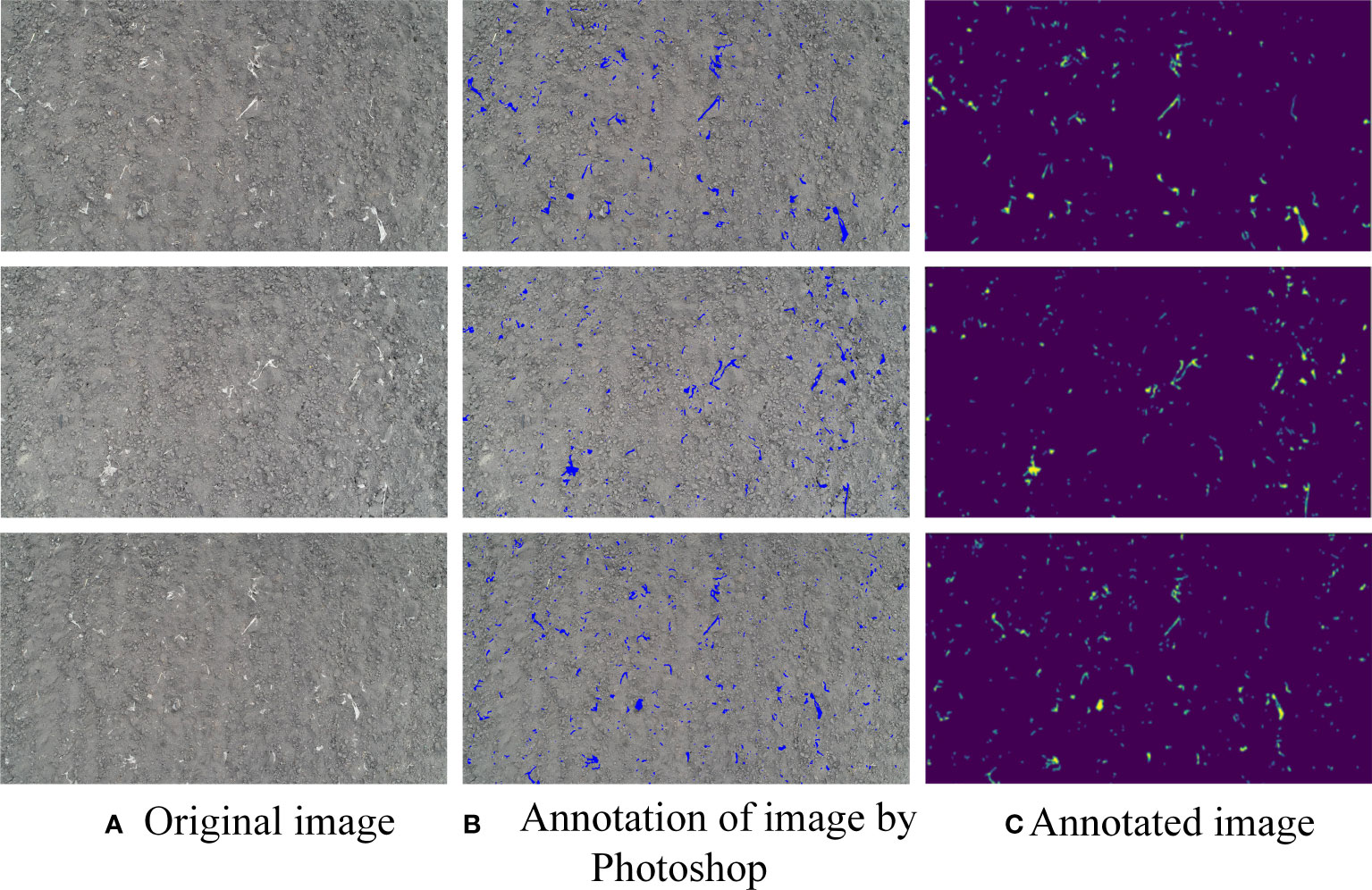

Because of the soil adhering to the surface of the residual film, and the presence of residual straw and drip irrigation belts, it was difficult to identify the residual film with simple image processing methods. In this study, a deep learning-based semantic segmentation method was therefore used to identify the residual film. Semantic segmentation is an important research direction in computer vision: it classifies images at the pixel level, and deep learning-based approaches generally surpass traditional methods in accuracy and efficiency. The residual film in all 900 images was manually marked with Photoshop CS5 and saved in PNG format. The marked PNG images were used as the segmentation labels (Figure 3).

Figure 3 Schematic diagram of data annotation. (A) Original image; (B) Annotation of image by Photoshop; (C) Annotated image.

The marked dataset was augmented by random cropping: the images were resized and then randomly cropped to a common size. Each raw image was normalized, the corresponding marked image was randomly flipped, and the flipped images were then normalized.
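The augmentation steps above can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the function name `augment_pair`, the crop size, the flip probability of 0.5, and the division by 255 for normalization are all assumptions. The one point it is meant to show is that the image and its label mask must receive the same crop window and the same flip so that pixels stay aligned.

```python
import numpy as np

def augment_pair(image, mask, crop_hw, rng):
    """Randomly crop and flip an image and its label mask with identical geometry.

    Hypothetical helper: crop size, flip probability, and the /255 scaling
    are assumptions, not values taken from the paper.
    """
    crop_h, crop_w = crop_hw
    h, w = mask.shape
    # Pick one crop window and apply it to both arrays.
    top = int(rng.integers(0, h - crop_h + 1))
    left = int(rng.integers(0, w - crop_w + 1))
    image = image[top:top + crop_h, left:left + crop_w]
    mask = mask[top:top + crop_h, left:left + crop_w]
    # Flip horizontally with probability 0.5, again on both arrays.
    if rng.random() < 0.5:
        image = image[:, ::-1]
        mask = mask[:, ::-1]
    # Scale pixel values to [0, 1]; the mask keeps its integer class ids.
    image = image.astype(np.float32) / 255.0
    return image, mask

rng = np.random.default_rng(0)
# Synthetic stand-ins for an aerial image and its annotation.
image = rng.integers(0, 256, size=(64, 96, 3), dtype=np.uint8)
mask = (image[..., 0] > 200).astype(np.uint8)
aug_image, aug_mask = augment_pair(image, mask, (32, 32), rng)
print(aug_image.shape, aug_mask.shape)
```

Because the synthetic mask is derived from the red channel, alignment after augmentation can be checked by recomputing the mask from the augmented image.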

LinkNet follows the idea of an autoencoder, with an architecture that includes two parts: an encoder and a decoder (Figure 4). The input block contains 2 convolution layers and 1 pooling layer, the output block contains 2 deconvolution layers, and the middle part contains 4 encoding layers and 4 decoding layers. In the input convolution, the kernel size is 7×7, the number of kernels is 64, and the stride is 2. The pooling layer uses max pooling with a 3 × 3 window and a stride of 2. The upper part is the encoder structure: each encoder module contains 4 convolutional layers. The first 2 convolutional layers downscale the input images, and the image size remains unchanged in the latter 2 convolutional layers. The sum of the outputs of the first 2 convolutional layers and the outputs of the latter 2 convolutional layers enters the decoder module. The decoder module in the lower part contains 2 convolutional layers and 1 deconvolutional layer; it reverses the downscaling of the encoder and enlarges the images. After passing through the decoder module, the images enter the upsampling module. The images then pass through a second convolutional layer and are finally upsampled a second time to obtain the final output images.

A Fully Convolutional Network (FCN) consists of two parts: full convolution layers and deconvolution layers (Figure 5). Following the Visual Geometry Group 16 (VGG16) network structure, pretrained weights were introduced in this study, and the fully connected layer of the VGG16 network was replaced with a 1 × 1 convolutional layer. This removes the restriction that the number of neurons in the fully connected layer must be fixed and allows input images of any size. The number of input convolution kernels was 512, the convolution kernel size was 3 × 3, the input image size was 512 × 1024 pixels, and the number of channels was 3. A submodel was created to obtain the outputs of the middle layers of the VGG16 network. The last layer of the submodel was taken as pool1 and upsampled, the rectified linear unit (ReLU) function was used for activation, and a convolution operation was then performed. Pool1 was added to the corresponding middle layer to obtain pool2; in the same way, pool2 was upsampled, and another convolution operation was performed. Then, pool2 was added to the middle layer to obtain pool3. Pool3 was upsampled, and convolution was performed again. The middle layers were added to obtain pool4, which completed the skip structure. Pool4 was upsampled to obtain the output images, and the outputs were upsampled to obtain the final predicted images with the same size as the input image. The number of output channels was 2.

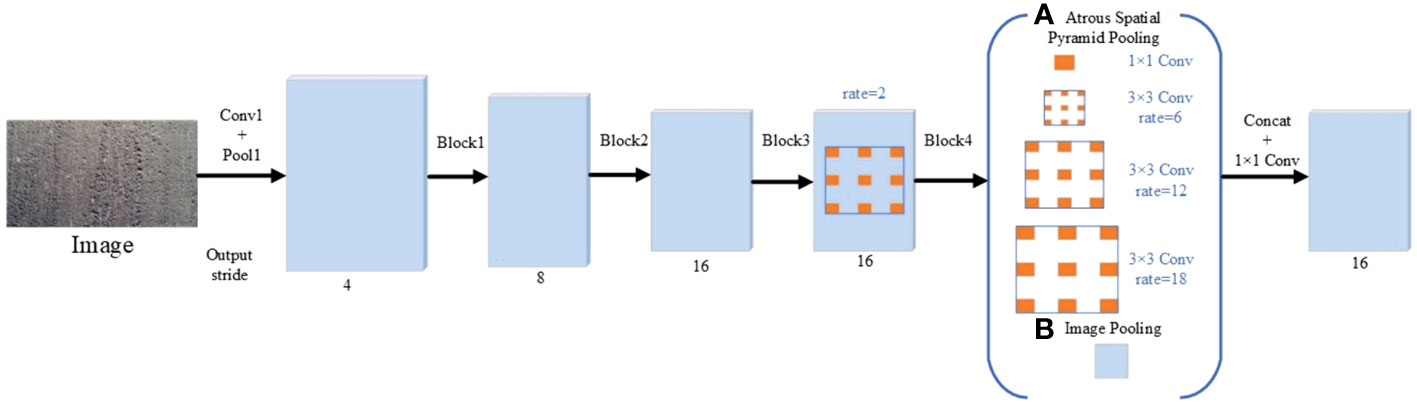

DeepLabv3, a multiscale image segmentation network based on the ResNet structure, is designed with serial and parallel atrous convolution modules. It uses several different convolution modules to obtain multiscale context information, combining atrous (dilated) convolution with atrous spatial pyramid pooling (ASPP). The first three modules use the original convolution module, and the fourth module uses the atrous convolution module. The multi-grid dilation rates of the atrous convolution module are (2, 4, 8), the output stride is 16, and the size of the feature map is 32×32. Furthermore, the ASPP structure contains 4 parallel convolutions: one 1×1 convolution and three 3×3 dilated convolutions. The ASPP structure obtains global context information through a global average pooling layer and uses a 1×1 convolution to fuse the features from all branches (Figure 6).

Figure 6 Schematic diagram of the DeepLabv3 structure. (A) Atrous Spatial Pyramid Pooling; (B) Image Pooling.
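To make the effect of the dilation rates concrete, the sketch below computes the effective extent of a dilated kernel and applies a one-dimensional atrous convolution with NumPy. It is an illustration of the general technique only; the helper names are hypothetical, and the 1-D setting is a simplification of the 2-D convolutions used in DeepLabv3. A kernel of size k with rate r spans k + (k − 1)(r − 1) input positions while still using only k weights.

```python
import numpy as np

def effective_kernel_size(kernel_size, rate):
    """Input span covered by a kernel of the given size at the given dilation rate."""
    return kernel_size + (kernel_size - 1) * (rate - 1)

def atrous_conv1d(signal, kernel, rate):
    """Valid-mode 1-D atrous convolution (correlation form): taps are `rate` samples apart."""
    signal = np.asarray(signal, dtype=float)
    kernel = np.asarray(kernel, dtype=float)
    span = effective_kernel_size(len(kernel), rate)
    out = np.empty(len(signal) - span + 1)
    for i in range(len(out)):
        # Strided slice picks exactly len(kernel) samples spaced by `rate`.
        out[i] = np.dot(signal[i:i + span:rate], kernel)
    return out

# Effective span of a 3-tap kernel for the rates (2, 4, 8) mentioned above.
spans = {rate: effective_kernel_size(3, rate) for rate in (2, 4, 8)}
print(spans)

# A difference kernel at rate 2 compares samples four positions apart.
response = atrous_conv1d(np.arange(10), [1, 0, -1], rate=2)
print(response)
```

Larger rates therefore widen the context seen by each output value without adding parameters, which is the motivation for combining several rates in parallel in the ASPP structure.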

The deep learning models were built with Python 3.7 in the Jupyter Notebook editor on a Windows 10 desktop running on an Intel(R) Xeon(R) Gold 6126 CPU with a base frequency of 2.60 GHz and 64 GB of memory. The graphics card was an NVIDIA GeForce RTX 2060 (6 GB of video memory), and the models were trained with the GPU version of TensorFlow 2.0. In the experiment, 80% of the image samples were randomly selected as the training set, and the remaining 20% were used as the validation set to verify the identification accuracy of each model. The Adam optimizer was used to train the three deep learning models. The learning rate was set to 0.001, training ran for 50 epochs, the attenuation coefficient in the Adam optimizer was set to 0.9, and the loss function was the cross-entropy loss.
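The 80/20 random split described above can be sketched with the standard library alone. The function name, the fixed seed, and the image identifiers are hypothetical; the paper does not state how the random selection was implemented.

```python
import random

def split_dataset(items, train_fraction=0.8, seed=0):
    """Shuffle a copy of the items and split it into training and validation lists."""
    items = list(items)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(items)
    n_train = int(round(train_fraction * len(items)))
    return items[:n_train], items[n_train:]

# Hypothetical identifiers standing in for the 900 annotated images.
image_ids = [f"img_{i:04d}" for i in range(900)]
train_ids, val_ids = split_dataset(image_ids)
print(len(train_ids), len(val_ids))
```

With 900 images this yields 720 training images and 180 validation images, with no image appearing in both sets.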

In this study, five indicators, namely accuracy, the mean intersection over union (MIOU), recall, precision, and F1-score, were used to evaluate the identification accuracy of the models. Accuracy is the proportion of correctly classified samples among all samples (Formula 1). Precision is the proportion of true positive samples among the samples predicted as positive by the model (Formula 2). Recall is the proportion of true positive samples among all actual positive samples (Formula 3). The F1-score is the harmonic mean of precision and recall (Formula 4). The MIOU is the mean of the IOUs of all categories (Formula 5).

Where TP denotes the number of correctly classified residual film pixels, FP denotes the number of background pixels that are misclassified as residual film pixels, FN denotes the number of residual film pixels that are incorrectly classified as background pixels, TN represents the number of correctly classified background pixels, and k is the total number of segmented residual film images.
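As an illustration of how these indicators follow from the four pixel counts, the sketch below computes them for a pair of binary masks using the standard definitions. The function name is hypothetical, and averaging the IOU over the two classes (residual film and background) is an assumption about how the MIOU is formed here.

```python
import numpy as np

def segmentation_metrics(pred, truth):
    """Pixel-level metrics for binary masks (1 = residual film, 0 = background)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))    # film predicted as film
    fp = int(np.sum(pred & ~truth))   # background predicted as film
    fn = int(np.sum(~pred & truth))   # film predicted as background
    tn = int(np.sum(~pred & ~truth))  # background predicted as background
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    # Assumption: MIOU is the mean of the film IOU and the background IOU.
    iou_film = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    iou_background = tn / (tn + fp + fn) if tn + fp + fn else 0.0
    miou = (iou_film + iou_background) / 2
    return {"accuracy": accuracy, "precision": precision, "recall": recall,
            "f1": f1, "miou": miou}

# Tiny hand-made example: TP = 1, FP = 1, FN = 1, TN = 5.
truth = [[1, 1, 0, 0], [0, 0, 0, 0]]
pred = [[1, 0, 1, 0], [0, 0, 0, 0]]
metrics = segmentation_metrics(pred, truth)
print(metrics)
```

In this toy case accuracy is 0.75 while precision, recall, and F1 are all 0.5, which shows how a large share of background pixels can keep accuracy high even when the film class is segmented less well.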

The residual film coverage area S was calculated from the pixel ratio. Let the size of the aerial image be A×B pixels and the total number of residual film pixels be p. As shown in Figure 7, the aerial photography height is h = 5 m, and the camera field of view is θ = 84°. The length d was calculated from h and θ to obtain the length of the hypotenuse 2d of the triangle, and the length a and width b of the rectangle were calculated by the Pythagorean theorem. The actual ground area S1 covered by the photograph was obtained by multiplying the length by the width (Formula 6). The aspect ratio of the images corresponded to that of the actual area (Table 1).
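The geometry above can be worked through numerically. The sketch below is one reading of the description, under stated assumptions: the 84° field of view is taken as the diagonal field of view, the camera points straight down over flat ground, and the function names are hypothetical. Under these assumptions d = h·tan(θ/2), the ground diagonal is 2d, and the sides a and b share the image aspect ratio.

```python
import math

def ground_footprint(height_m, fov_deg, width_px, height_px):
    """Ground rectangle (a, b, area) seen by a nadir camera with a diagonal FOV."""
    # Half of the ground diagonal from the flight height and half the FOV.
    d = height_m * math.tan(math.radians(fov_deg / 2))
    diagonal_m = 2 * d
    # Split the diagonal into sides with the same aspect ratio as the image.
    diagonal_px = math.hypot(width_px, height_px)
    a = diagonal_m * width_px / diagonal_px
    b = diagonal_m * height_px / diagonal_px
    return a, b, a * b

def film_area(film_pixels, width_px, height_px, footprint_m2):
    """Residual film area as the film pixel fraction times the ground footprint."""
    return footprint_m2 * film_pixels / (width_px * height_px)

a, b, s1 = ground_footprint(5.0, 84.0, 5472, 3078)
print(f"a = {a:.2f} m, b = {b:.2f} m, S1 = {s1:.2f} m^2")

# Hypothetical pixel count: 1% of the image classified as residual film.
p = 5472 * 3078 // 100
print(f"S = {film_area(p, 5472, 3078, s1):.3f} m^2")
```

With h = 5 m and a 5472×3078 image, this reading gives a footprint of roughly 7.85 m × 4.41 m, or about 34.6 m2; a different interpretation of the field of view would change these numbers.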

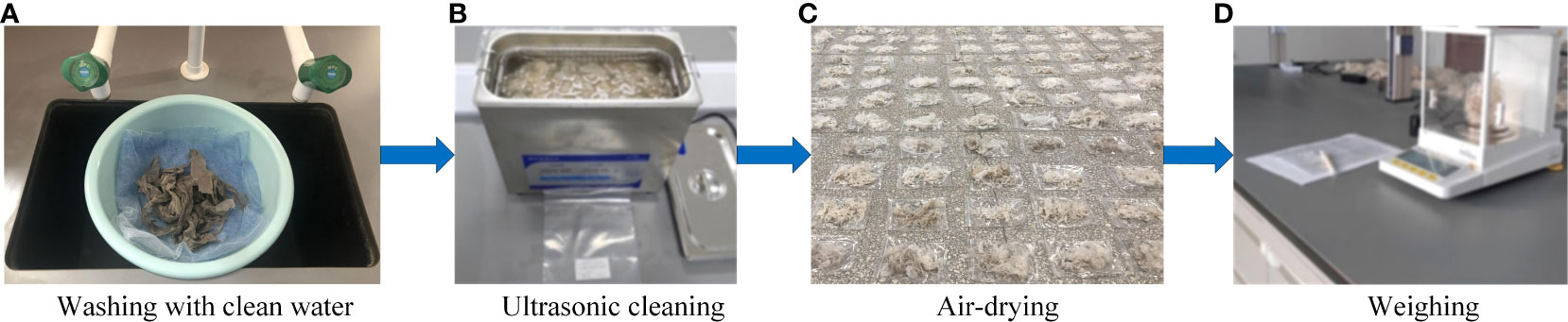

The collected residual film was first washed with clean water. After that, ultrasonic cleaning was performed, followed by air drying. Finally, the air-dried residual film was weighed, counted, and marked (Figure 8). The residual film area calculation method described above was applied to obtain the residual film coverage area S. Regression analysis was carried out between S and the corresponding residual film mass in the 0-10 cm plough layer, and the resulting mathematical relationship was used to predict the content of residual film in the plough layer. The average residual film content of the plough layer over the five sampling points was taken as the residual film weight per unit area.

Figure 8 Calculation process of the residual film weight. (A) Washing with clean water; (B) Ultrasonic cleaning; (C) Air-drying; (D) Weighing.

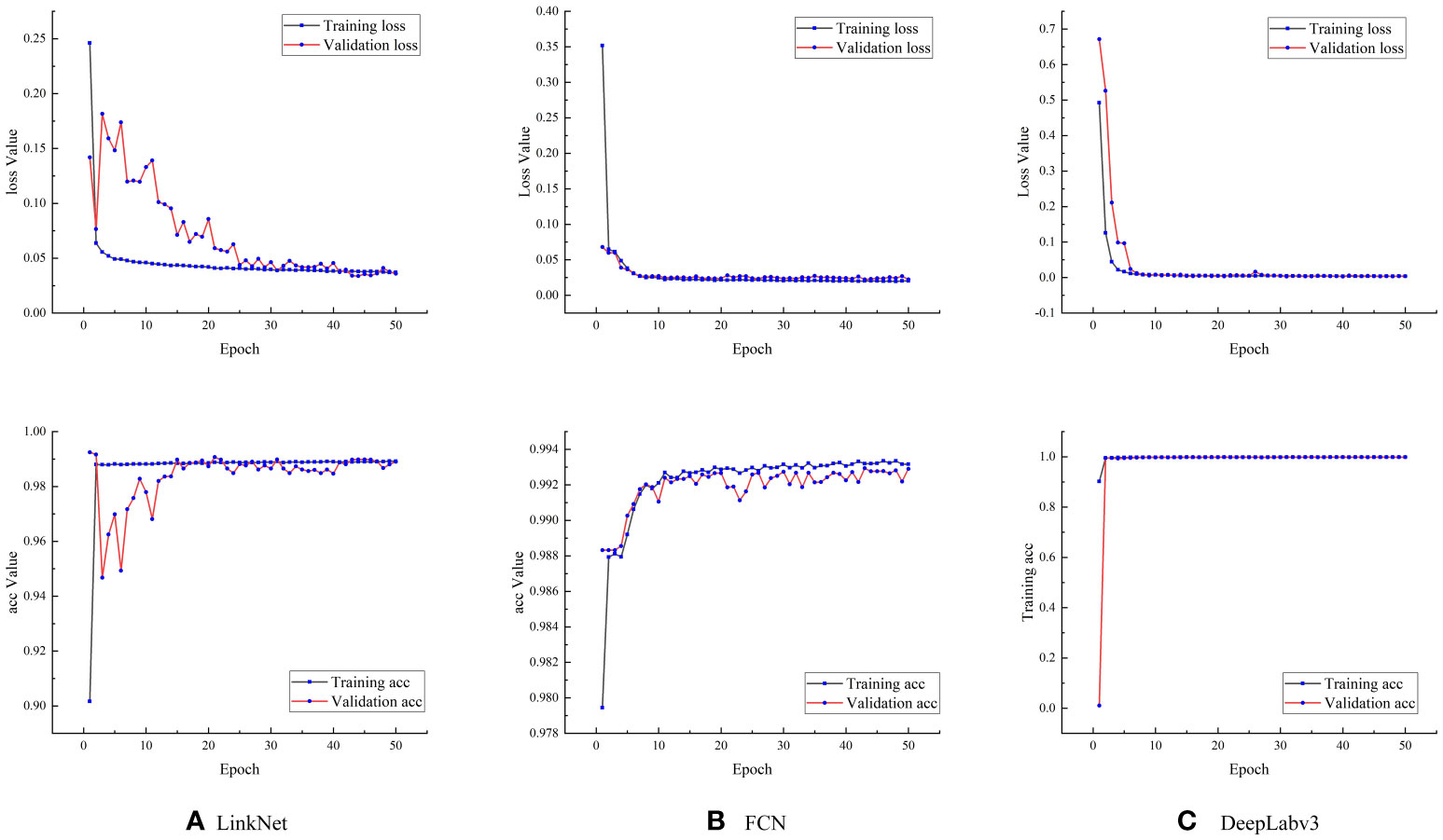

During the training of the three models (LinkNet, FCN, and DeepLabv3), the loss function decreased rapidly, then converged quickly, and finally stabilized (Figure 9). Among the three models, the DeepLabv3 model converged best, followed by the FCN model and then the LinkNet model. The accuracies of the three models were relatively high and converged quickly during training. The DeepLabv3 model had the highest accuracy, followed by the FCN model and then the LinkNet model.

Figure 9 Loss values and accuracies of the three models. (A) Loss value and accuracy of the LinkNet model; (B) Loss value and accuracy of the FCN model; (C) Loss value and accuracy of the DeepLabv3 model.

The identification performance of the three models was generally good. Among them, the DeepLabv3 model performed best, with an accuracy of 99.71%, a precision of 85.29%, a recall of 79.38%, an F1-score of 79.73%, and a MIOU of 74.62% (Table 2). Moreover, the prediction accuracy on the test set was similar to that on the training set, which indicates that there was no overfitting.

The segmentation results predicted by the three models (LinkNet, FCN, and DeepLabv3) for images of 5472 × 3078 pixels (Figure 10) showed that the segmentation performance of the three models was generally good. The DeepLabv3 model had the best segmentation performance, followed by the FCN model and then the LinkNet model. The FCN model failed to identify many small areas of residual film. The LinkNet model misidentified many small soil blocks as residual film, resulting in the worst identification performance.

Therefore, the DeepLabv3 model was selected as the optimal model for predicting the residual film in the plough layer. Linear regression analysis was then performed between the residual film area calculated by the model and the weight of the residual film in the plough layer. To detect and exclude outliers, the Mahalanobis distances of 255 sample data were calculated (Figure 11). For five sets of data, the Mahalanobis distance to the centre of the dataset was more than three times the average distance. These five sets of data were therefore considered outliers and excluded. The remaining 250 sets of data were used for further analysis and modelling.
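The outlier rule described above (distance greater than three times the average distance) can be sketched with NumPy as follows. The data are synthetic and the function names are hypothetical; whether the authors computed the covariance in exactly this way is not stated, so this should be read as one plausible implementation.

```python
import numpy as np

def mahalanobis_distances(data):
    """Mahalanobis distance of each row from the centre of the dataset."""
    data = np.asarray(data, dtype=float)
    centred = data - data.mean(axis=0)
    cov_inv = np.linalg.inv(np.cov(data, rowvar=False))
    # Quadratic form centred_i^T * cov_inv * centred_i for every row i.
    squared = np.einsum("ij,jk,ik->i", centred, cov_inv, centred)
    return np.sqrt(squared)

def drop_outliers(data, factor=3.0):
    """Keep rows whose distance is at most `factor` times the mean distance."""
    data = np.asarray(data, dtype=float)
    distances = mahalanobis_distances(data)
    keep = distances <= factor * distances.mean()
    return data[keep], keep

# Synthetic (area, weight) pairs near a line, plus one gross outlier at the end.
x = np.linspace(0.0, 1.0, 50)
y = 15.76 * x + 0.37 + 0.3 * np.sin(7.0 * np.arange(50))
samples = np.vstack([np.column_stack([x, y]), [0.5, 30.0]])
filtered, keep = drop_outliers(samples)
print(samples.shape, filtered.shape)
```

Because the Mahalanobis distance accounts for the correlation between area and weight, a point far from the trend line is flagged even when each of its coordinates lies within the range of the other samples.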

Figure 12 shows the analysis results obtained for the 250 sample data. The R2 was 0.83, and the root mean square error was 0.48. The fitted expression for predicting the residual film weight in the plough layer was y=15.76x+0.37, where x is the area of the residual film on the soil surface of the cotton field and y is the weight of the residual film in the plough layer.
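A least-squares fit of this form, together with R2 and the root mean square error, can be sketched as below. The sample values are synthetic points placed exactly on the reported line, so the fit simply recovers the reported coefficients; the function names are hypothetical, and the units of x and y are not specified in this text.

```python
import numpy as np

def fit_line(x, y):
    """Ordinary least-squares line with its R2 and root mean square error."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    slope, intercept = np.polyfit(x, y, 1)
    predicted = slope * x + intercept
    ss_res = np.sum((y - predicted) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    r2 = 1.0 - ss_res / ss_tot
    rmse = np.sqrt(ss_res / len(y))
    return slope, intercept, r2, rmse

def predict_plough_layer_weight(surface_area, slope=15.76, intercept=0.37):
    """Apply the reported regression y = 15.76x + 0.37."""
    return slope * surface_area + intercept

# Synthetic areas with weights generated from the reported line.
areas = np.array([0.1, 0.2, 0.3, 0.4, 0.5])
weights = predict_plough_layer_weight(areas)
slope, intercept, r2, rmse = fit_line(areas, weights)
print(slope, intercept, r2, rmse)
print(predict_plough_layer_weight(0.2))
```

On real measurements the residuals would be non-zero, and R2 and the root mean square error would summarise how tightly the samples follow the line, as in the values of 0.83 and 0.48 reported above.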

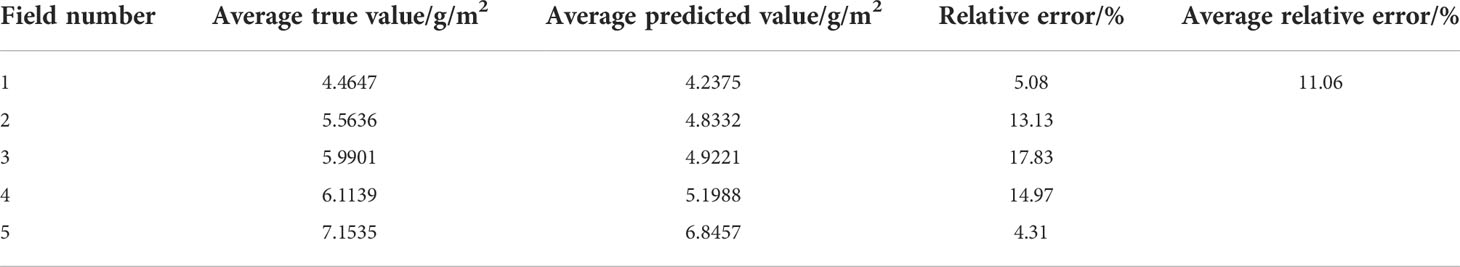

A total of 25 data sets collected from 5 cotton fields were used to verify the prediction model. The drone images were used to calculate the area of the residual film on the soil surface through model identification, and the residual film content of the plough layer was then calculated by the prediction model. The results predicted by the model and the results obtained by manual sampling are shown in Table 3. The average relative error of the predicted residual film content in the plough layer was 11.06%, which indicates that the proposed method has high prediction accuracy.
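The formula for the average relative error is not given in this text; a common definition, assumed here, is the mean of |predicted − measured| / measured, expressed as a percentage. The values in the example are hypothetical and are not taken from Table 3.

```python
def average_relative_error(predicted, measured):
    """Mean of |predicted - measured| / measured, as a percentage."""
    if len(predicted) != len(measured):
        raise ValueError("predicted and measured must have the same length")
    errors = [abs(p - m) / m for p, m in zip(predicted, measured)]
    return 100.0 * sum(errors) / len(errors)

# Hypothetical predicted and manually sampled values, for illustration only.
predicted = [9.0, 11.0, 10.5]
measured = [10.0, 10.0, 10.0]
error_percent = average_relative_error(predicted, measured)
print(f"{error_percent:.2f}%")
```

Since each error is divided by the measured value, samples with little residual film contribute more strongly to this indicator than the same absolute error would on heavily polluted samples.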

Table 3 Comparison of the prediction results regarding the residual film content in the plough layer.

This paper compared the performance of three deep learning-based semantic segmentation algorithms, LinkNet, FCN, and DeepLabv3, in residual film identification and residual film coverage area prediction. The results showed that the value predicted by the LinkNet model was slightly higher than the real value and that its prediction speed was the fastest. This model was originally designed to improve prediction speed. Because of its simple structure and parameter settings, many other objects were misidentified as residual film. The parameters of the FCN model were relatively complex, and pretrained weights were introduced, so the model prediction speed was slower. The FCN model cannot extract fine image details and does not fully consider the relationships between pixels. In addition, it ignores the spatial regularization used in segmentation methods based on pixel classification, so many small pieces of residual film were not identified. The DeepLabv3 model had the best segmentation performance, and its segmentation time was between those of the other two models. Chen (2021) used the threshold segmentation method to identify residual film in cotton fields and found that its identification accuracy was high but that light intensity had a great influence on it. Wu et al. (2020) used the threshold segmentation method to identify residual film in farmland based on colour characteristics and found that the integrity of the residual film was better and that segmenting the residual film was easier compared with other methods. Our study proposed a deep learning method, which can improve the identification accuracy of residual film. However, the dataset of this study needs to be further expanded, and the influence of light intensity on the identification accuracy of the model should be further explored.

In this study, the DeepLabv3 semantic segmentation model was determined as the optimal segmentation model, the area of residual film on soil surface and the residual film weight of the plough layer were analysed by regression analysis, and finally, a regression model was established. The prediction accuracy was high, and the detection speed was greatly improved compared with that of the manual approach. However, the accuracy of the model needs to be further improved, and the influences of different mulching years and different soil qualities on the weight of the residual film in the plough layer should also be considered. Besides, more datasets need to be added to improve the robustness and generalization performance of the model.

To monitor and evaluate the residual film content in the cotton field plough layer, a method based on UAV imaging and deep learning was proposed. The conclusions are as follows.

(1) Among the LinkNet, FCN, and DeepLabv3 models, the DeepLabv3 semantic segmentation model had the best performance, with accuracy, precision, recall, F1-score, and MIOU values of 99.71%, 85.29%, 79.38%, 79.73%, and 74.62%, respectively.

(2) A method for predicting residual film contents in the cotton field plough layer was proposed. The regression model was established by fitting the area of the residual film on soil surface and the weight of the corresponding residual film in the plough layer. The R2 of the regression model was 0.83, and the root mean square error was 0.48.

(3) The accuracy of the proposed method for predicting the residual film content in the cotton field plough layer was verified. The results showed that the proposed method achieved faster detection than manual sampling with high prediction accuracy, and the average relative error was 11.06%. This study addresses the limitation that current monitoring methods can only evaluate the content of residual film on the soil surface and provides an effective method for monitoring and evaluating residual film pollution in the plough layer.

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding authors.

FQ and ZZ who contributed equally to this work share the first authorship. They collected and analysed the data and wrote the manuscript under the supervision of RZ and YL. JY and HW assisted in collecting and analyzing the data. All authors reviewed and revised the manuscript.

The authors gratefully acknowledge the financial support provided by the National Natural Science Foundation of China (32060412), the High-level Talents Research Initiation Project of Shihezi University (CJXZ202104), the Earmarked Fund for China Agriculture Research System (CARS-15-17) and the Graduate Education Innovation Project of Xinjiang Autonomous Region (XJ2022G082).

The authors would like to thank Jie Huang and Hao Pan for their assistance with the experiment.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Cao, Z., Shan, C. F., Yang, C. L., Wang, Q. Y., Wang, G. B. (2021). The application of drones in smart agriculture. Modern. Agric. Technol. 11, 157–158+162. doi: 10.3969/j.issn.1007-5739.2021.11.069

Chen, M. (2021). Design and experiment of prediction system of plastic film residue in cotton field (Tarim University). doi: 10.27708/d.cnki.gtlmd.2021.000216

Hu, C., Wang, X. F., Chen, X. G., Yang, X. Y., Zhao, Y., Yan, C. R. (2019). Current situation and prevention and control strategies of residual film pollution of farmland in Xinjiang. Trans. Chin. Soc. Agric. Eng. 35 (24), 223–234. doi: 10.11975/j.issn.1002-6819.2019.24.027

Li, J. Y., Hu, X. D., Lan, Y. B., Deng, X. L. (2021). 2001-2020 global agricultural UAV research progress based on bibliometrics. Trans. Chin. Soc. Agric. Eng. 37 (09), 328–339. doi: 10.11975/j.issn.1002-6819.2021.09.037

Li, C., Sun, M. X., Xu, X. B., Zhang, L. X. (2021). Characteristics and influencing factors of mulch film use for pollution control in China: Microcosmic evidence from smallholder farmers. Resour. Conserv. Recycling. 164 (4), 105222. doi: 10.1016/j.resconrec.2020.105222

Li, C., Sun, M. X., Xu, X. B., Zhang, L. X., Guo, J. B., Ye, Y. H. (2021). Environmental village regulations matter: Mulch film recycling in rural China. J. Cleaner. Production. 11, 126796. doi: 10.1016/j.jclepro.2021.126796

Liu, E. K., He, W. Q., Yan, C. R. (2014). ‘White revolution’ to ‘white pollution’-agricultural plastic film mulch in China. Environ. Res. Lett. 9 (9), 091001. doi: 10.1088/1748-9326/9/9/091001

Ning, J. F., Ni, J., He, Y. J., Li, L. F., Zhao, Z. X., Zhang, Z. T. (2021). Multi-spectral remote sensing image mulching farmland recognition based on convolution attention. Trans. Chin. Soc. Agric. Machinery. 52 (09), 213–220. doi: 10.6041/j.issn.1000-1298.2021.09.025

Qi, X. J., Gu, Y. Q., Li, W. Z., Wang, G. T. (2001). Investigation on damage of residual film to crops in Inner Mongolia. Inner. Mongolia. Agric. Sci. Technol. (02), 36–37.

Su, B. F., Liu, Y. L., Huang, Y. C., Wei, R., Cao, X. F., Han, D. J. (2021). Dynamic unmanned aerial vehicle remote sensing monitoring method of population wheat stripe rust incidence. Trans. Chin. Soc. Agric. Eng. 37 (23), 127–135. doi: 10.11975/j.issn.1002-6819.2021.23.015

Sun, Y., Han, J. Y., Chen, Z. B., Shi, M. C., Fu, H. P., Yng, M. (2018). UAV aerial photography monitoring method for greenhouse and plastic film farmland based on deep learning. Trans. Chin. Soc. Agric. Machinery. 49 (02), 133–140. doi: 10.6041/j.issn.1000-1298.2018.02.018

Wang, P. (1998). Countermeasures and measures for residual film pollution control. Trans. Chin. Soc. Agric. Eng. 03, 190–193. Available at: https://kns.cnki.net/kcms/detail/detail.aspx?dbcode=CJFD&dbname=CJFD9899&filename=NYGU803.035&uniplatform=NZKPT&v=_b9FwgwXFTBL_XQWnD_4HuSTZ084H10BfXSxbo-OhLTEgjwr3bBoTWk7tFhRWPiZ.

Wang, Y. J., He, K., Zhang, J. B., Chang, H. Y. (2020). Environmental knowledge, risk attitude, and households’ willingness to accept compensation for the application of degradable agricultural mulch film: Evidence from rural China. Sci. Total. Environ. 744, 140616. doi: 10.1016/j.scitotenv.2020.140616

Wang, Z. H., He, H. J., Zheng, X. R., Zhang, J. Z., Li, W. H. (2018). Effects of returning cotton stalks to fields in typical oasis in Xinjiang on the distribution of residual film in cotton fields covered with film-covered drip irrigation. Trans. Chin. Soc. Agric. Eng. 34 (21), 120–127. doi: 10.11975/j.issn.1002-6819.2018.21.015

Wu, X. M., Liang, C. J., Zhang, D. B., Yu, L. H., Zhang, F. G. (2020). Identification method of post-harvest film residue based on UAV remote sensing images. Trans. Chin. Soc. Agric. Machinery. 51 (08), 189–195. doi: 10.6041/j.issn.1000-1298.2020.08.021

Xu, G., Du, X. M., Cao, Y. Z., Wang, Q. H., Xu, D. P., Lu, G. L., et al. (2005). Study on the residual level and morphological characteristics of agricultural plastic film in typical areas. J. Agric. Environ. Sci. 01, 79–83. Available at: https://kns.cnki.net/kcms/detail/detail.aspx?dbcode=CJFD&dbname=CJFD2005&filename=NHBH200501018&uniplatform=NZKPT&v=PxaiLVoRSisEX8I45w4-BQWB1wP9FcM5qqyPBaXu3YPyaQo0dWN5k3p3gc92RVhc.

Yan, C. R., Mei, X. R., He, W. Q., Zheng, S. H. (2006). Current situation and prevention and control of residual pollution of agricultural plastic films. Trans. Chin. Soc. Agric. Eng. 11, 269–272. Available at: https://kns.cnki.net/kcms/detail/detail.aspx?dbcode=CJFD&dbname=CJFD2006&filename=NYGU200611058&uniplatform=NZKPT&v=w4bKOK-bW57RlpN6zUEgxsxVBeVfFoHBO8euovLZqH61hAW4EMOamJcOeVTvDRCo.

Zhang, Y. S. (2017). Monitoring and analysis of cotton mulch residues. Agric. service. 34 (13), 164. Available at: https://kns.cnki.net/kcms/detail/detail.aspx?dbcode=CJFD&dbname=CJFDLAST2017&filename=NJFW201713137&uniplatform=NZKPT&v=sBN3nVhf2SP6ZgVn99KNNPlCo5KI6cgKu0fFnSi01P0g7CnXKCTsQ3n_xKPa_urT.

Zhang, D., Liu, H. B., Hu, W. L., Qin, X. H., Ma, X. W., Yan, C. R., et al. (2016). The status and distribution characteristics of residual mulching film in Xinjiang, China. J. Integr. Agric. 15 (11), 2639–2646. doi: 10.1016/S2095-3119(15)61240-0

Zhang, P., Wei, T., Han, Q. F., Ren, X. L., Jia, Z. K. (2020). Effects of different film mulching methods on soil water productivity and maize yield in a semiarid area of China. Agric. Water Manage. 241, 106382. doi: 10.1016/j.agwat.2020.106382

Zhang, J. J., Zou, G. Y., Wang, X. X., Ding, W. C., Xu, L., Liu, B. Y., et al. (2021). Exploring the occurrence characteristics of microplastics in typical maize farmland soils with long-term plastic film mulching in northern China. Front. Mar. Sci. 8, 800087. doi: 10.3389/fmars.2021.800087

Zhao, Y., Chen, X. G., Wen, H. J., Zheng, X., Niu, Q., Kang, J. M. (2017). Research status and prospect of farmland residual film pollution control technology. Trans. Chin. Soc. Agric. Machinery. 48 (06), 1–14. doi: 10.6041/j.issn.1000-1298.2017.06.001

Zhu, X. F., Li, S. B., Xiao, G. F. (2019). Extraction method of film-covered farmland area and distribution based on UAV remote sensing images. Trans. Chin. Soc. Agric. Eng. 35 (04), 106–113. doi: 10.11975/j.issn.1002-6819.2019.04.013

Keywords: cotton fields, plough layer, residual film pollution, UAV imaging, deep learning

Citation: Qiu F, Zhai Z, Li Y, Yang J, Wang H and Zhang R (2022) UAV imaging and deep learning based method for predicting residual film in cotton field plough layer. Front. Plant Sci. 13:1010474. doi: 10.3389/fpls.2022.1010474

Received: 03 August 2022; Accepted: 09 September 2022;

Published: 06 October 2022.

Edited by:

Muhammad Naveed Tahir, Pir Mehr Ali Shah Arid Agriculture University, Pakistan

Copyright © 2022 Qiu, Zhai, Li, Yang, Wang and Zhang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ruoyu Zhang, enJ5emp1QGdtYWlsLmNvbQ==; Yulin Li, MzkyMTMwOTZAcXEuY29t

†These authors have contributed equally to this work and share first authorship